Windows Azure and Cloud Computing Posts for 8/31/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

Note: Links to Twitter @handles haven’t been working this week for an unknown reason. Epic FAIL!

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Larry Franks posted Ruby and Windows Azure Local Storage on 8/31/2011 to the Windows Azure’s Silver Lining blog:

Recently I’ve been hashing out an idea with Brian on creating a sample multi-tenant application. One of the features we’ve talked about is that it should let users upload and share photos, so this morning I’ve been thinking about storage. Windows Azure Storage Services are pretty well covered for Ruby (as well as other languages: http://blog.smarx.com/posts/windows-azure-storage-libraries-in-many-languages), but there’s another storage option for non-relational data that I think gets overlooked. I’m talking about local storage.

Local Storage

Local storage is a section of drive space allocated to a role. Unlike Windows Azure Blob storage, which has to be accessed via REST, local storage shows up as a directory off c:\ within the role. Before you get all excited about being able to do fast file IO instead of REST, there are a couple drawbacks to it that make local storage undesirable for anything other than temp file storage:

- It isn’t shared between multiple role instances

- It isn’t guaranteed to persist the data*

*While you can set local storage to persist when a role recycles, it does not persist when a role is moved to a different hardware node. Since you have no control over when this happens, don’t depend on data in local storage to persist. If you've set it to persist between recycles and it does, great; you lucked out and are running on the same hardware you were before the instance recycled, but don't count on this happening all the time.

Local storage is great for holding things needed by your instance while it's running, like your Ruby installation, gems, and application code, or for holding temp files like uploads that haven’t been pushed over to blob storage yet. In fact, your application code lives in, and is run from, local storage. But for long-term persistence of data you should use Windows Azure Storage services like tables and blobs.

How to Allocate

If you happened to look through the .NET code for the deployment options mentioned in Deploying Ruby (Java, Python, and Node.js) Applications to Windows Azure, or checked properties on the web role entry in Solution Explorer, you probably noticed some entries for local storage. Here’s a screenshot of the local storage settings from the Smarx Role as an example, which allocates local storage for Git, Ruby, Python, Node.js, and your web application:

You can also modify this in the Service Definition file (ServiceDefinition.csdef), which contains the following XML:

<LocalResources>
  <LocalStorage name="Git" cleanOnRoleRecycle="true" sizeInMB="1000" />
  <LocalStorage name="Ruby" cleanOnRoleRecycle="true" sizeInMB="1000" />
  <LocalStorage name="Python" cleanOnRoleRecycle="true" sizeInMB="1000" />
  <LocalStorage name="App" cleanOnRoleRecycle="true" sizeInMB="1000" />
  <LocalStorage name="Node" cleanOnRoleRecycle="true" sizeInMB="1000" />
</LocalResources>

Note that the total amount of local storage you can allocate is determined by the size of the VM you’re using. You can see a chart of the VM size and the amount of space at http://msdn.microsoft.com/en-us/library/ee814754.aspx.

Unfortunately the Service Definition file is packed into the pre-built deployment packages provided by Smarx Role or AzureRunMe, so you’ll need Visual Studio and the source for these projects to change existing allocations or allocate custom stores. You also need some way to make the local storage directory visible to Ruby, as it's allocated at runtime and the directory path is slightly different for each role instance. This can be accomplished by storing the path into an environment variable, which your application can then read. We'll get to an example of this in a minute.
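As a rough illustration of the environment-variable approach described above, here is a minimal Ruby sketch that reads a storage path from the environment and falls back to the system temp directory when the variable is missing. The variable name APP_LOCAL_STORAGE and the helper scratch_dir are hypothetical; substitute whatever name your host process actually sets.

```ruby
require 'tmpdir'

# Hypothetical variable name; the hosting .NET role would need to set it.
STORAGE_ENV_VAR = 'APP_LOCAL_STORAGE'

# Return the directory the app should use for scratch files.
# env is anything that responds to [] with string keys (ENV or a Hash).
def scratch_dir(env = ENV)
  path = env[STORAGE_ENV_VAR]
  # Fall back to the system temp directory when the variable is unset,
  # empty, or points at a directory that does not exist.
  return Dir.tmpdir if path.nil? || path.empty? || !Dir.exist?(path)
  path
end

puts "Scratch directory: #{scratch_dir}"
```

Passing the environment in as a parameter keeps the lookup easy to exercise without touching the real process environment.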

So why is this important?

One thing that I think gets overlooked or forgotten during application planning is the space used by transient temporary files. For example, file uploads have to go somewhere locally before you can send them over to blob storage. Most applications read the path assigned to the TEMP or TMP environment variable, and while this generally works, there's a gotcha with the default value of these two for Windows Azure: the directory they point to is limited to a max of 100MB (http://msdn.microsoft.com/en-us/library/hh134851.aspx). You can't increase the max allocated for this default path either, but you can allocate a larger chunk of local storage and then modify the TEMP and TMP environment variables to point to this larger chunk.
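To get a feel for how close an application is to that cap, a sketch like the following can total the bytes under a directory and check whether an incoming file would push it over. It assumes the 100MB figure quoted above; the helper names dir_usage_bytes and would_exceed_limit? are made up for illustration and are not part of any Azure library.

```ruby
require 'find'

# Limit taken from the 100MB figure in the text; adjust for your allocation.
TEMP_LIMIT_BYTES = 100 * 1024 * 1024

# Sum the sizes of all regular files under dir, including subdirectories.
def dir_usage_bytes(dir)
  total = 0
  Find.find(dir) do |path|
    begin
      total += File.size(path) if File.file?(path)
    rescue SystemCallError
      # The file vanished or is unreadable; skip it.
      next
    end
  end
  total
end

# True when storing incoming_bytes more would take dir past the limit.
def would_exceed_limit?(dir, incoming_bytes, limit = TEMP_LIMIT_BYTES)
  dir_usage_bytes(dir) + incoming_bytes > limit
end
```

A web application might call would_exceed_limit?(ENV['TEMP'], upload_size) before accepting an upload and reject or defer the request when it returns true.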

Matias Woloski recently blogged about this at http://blogs.southworks.net/mwoloski/2011/08/04/not-enough-space-on-the-disk-windows-azure/ and provided an example of how to work around the 100MB limit by pointing the TEMP & TMP environment variables to a local storage path he’d allocated (tempdir is the name of the local storage allocation in his example.) You should be able to use the same approach by modifying the AzureRunMe, Smarx Role, or whatever custom .NET solution you're using to host your Ruby application.

As an example, here's how to accomplish this with the Smarx Role project (http://smarxrole.codeplex.com/):

- Download and open the project in Visual Studio. Double click on the WebRole entry in Solution Explorer. You'll see a dialog similar to the following:

- Click the Add Local Storage entry, and populate the new field with the following values:

- Name: TempDir

- Size (MB): 1000 (or whatever max size you need)

- Clean on role recycle: Checked

- Select File, and then select Save WebRole to save your changes.

- Back in Solution Explorer, find Program.cs and double click to open it. Find the line that contains the string "public static SyncAndRun Create(string[] args)". Within this method, find the line that states "if (RoleEnvironment.IsAvailable)" and add the following three lines immediately after the opening brace following the if statement.

string customTempLocalResourcePath = RoleEnvironment.GetLocalResource("TempDir").RootPath;
Environment.SetEnvironmentVariable("TMP", customTempLocalResourcePath);
Environment.SetEnvironmentVariable("TEMP", customTempLocalResourcePath);

The if statement should now appear as follows:

if (RoleEnvironment.IsAvailable)
{
    string customTempLocalResourcePath = RoleEnvironment.GetLocalResource("TempDir").RootPath;
    Environment.SetEnvironmentVariable("TMP", customTempLocalResourcePath);
    Environment.SetEnvironmentVariable("TEMP", customTempLocalResourcePath);
    paths.Add(Path.Combine(RoleEnvironment.GetLocalResource("Node").RootPath, "bin"));
    paths.Add(RoleEnvironment.GetLocalResource("Python").RootPath);
    paths.Add(Path.Combine(RoleEnvironment.GetLocalResource("Python").RootPath, "scripts"));
    paths.Add(Path.Combine(
        Directory.EnumerateDirectories(RoleEnvironment.GetLocalResource("Ruby").RootPath, "ruby-*").First(),
        "bin"));
    Environment.SetEnvironmentVariable("HOME", Path.Combine(RoleEnvironment.GetLocalResource("Ruby").RootPath, "home"));
    localPath = RoleEnvironment.GetLocalResource("App").RootPath;
    gitUrl = RoleEnvironment.GetConfigurationSettingValue("GitUrl");
}

- Select File, and then select Save Program.cs.

- Build the solution, and then right click the SmarxRole entry in Solution Explorer and select Package. Accept the defaults and click the Package button.

- You will be presented with a new deployment package and ServiceConfiguration.cscfg.

Once you've uploaded the new deployment package, your web role will now be using the TempDir local storage that you allocated instead of the default TEMP/TMP path. You can use the following Sinatra web site to retrieve the TEMP & TMP paths to verify that they are now pointing to the TempDir local storage path:

require 'sinatra'

get '/' do
  "Temp= #{ENV['TEMP']}<br/>Tmp= #{ENV['TMP']}"
end

If you are using the default TEMP/TMP path, the return value should contain a path that looks like:

C:\Resources\temp\b807eb0fa61741d9b2e77d2d686fce98.WebRole\RoleTemp\

Once you've switched to the TempDir local storage, the path will look something like this:

C:\Resources\directory\b807eb0fa61741d9b2e77d2d686fce98.WebRole.TempDir\

* The GUID value in the paths above will be different for each instance of your role.

Summary

While there's a lot of information on using Windows Azure Storage Services like blobs and tables from a Ruby application, don't forget about local storage. It's very useful for per-instance, runtime data, and it's probably where your data is going when you accept file uploads. If you rely on the default TEMP & TMP paths, be sure to clean up your temp files after you're done with them, and it's probably a good idea to estimate how much temp storage space you'll need in a worst case scenario. If you're going to be anywhere near the 100MB limit of the default TEMP & TMP path, consider allocating local storage and remapping the TEMP & TMP environment variables to it.
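One way to follow the clean-up advice in Ruby is the block form of Tempfile.create from the standard library, which removes the file when the block exits, even on an exception. The sketch below is only an illustration: with_temp_upload is a hypothetical helper, and the block you pass stands in for whatever pushes the file to blob storage.

```ruby
require 'tempfile'

# Write data to a temp file, yield its path to the caller's block, and
# let Tempfile.create delete the file once the block finishes or raises.
def with_temp_upload(data)
  Tempfile.create('upload') do |file|
    file.binmode
    file.write(data)
    file.flush            # make sure the bytes are on disk before use
    yield file.path
  end
end

# Example use: the block would normally send the file to blob storage.
size = with_temp_upload('photo bytes') { |path| File.size(path) }
puts "Processed #{size} bytes; temp file already removed"
```

Tempfile.create places the file under the directory returned by Dir.tmpdir, which normally follows the TEMP/TMP settings, so remapping those variables changes where these files land.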

<Return to section navigation list>

SQL Azure Database and Reporting

Eric Nelson (@ericnel) added background in his No OLEDB support in SQL Server post Denali post of 8/31/2011:

The next release of Microsoft SQL Server, codename “Denali”, will be the last release to support OLE DB. OLE DB will be supported for 7 years from launch, the life of Denali support, to allow you a large window of opportunity for changing your applications before the deprecation. This deprecation applies to the Microsoft SQL Server OLE DB provider only. Other OLE DB providers as well as the OLE DB standard will continue to be supported until explicitly announced. Read more over on the sqlnativeclient blog.

A little history:

Today there are many different ways to connect to SQL Server. What you normally require is a consumer (e.g. the ADO.NET Entity Framework) and a provider (e.g. an ADO.NET Data Provider). ADO.NET Data Providers are the most recent manifestation of providers and are the preferred way to connect to SQL Server today if your consumer is running on the Windows platform. The ADO.NET Data Provider model allows “older” providers to be consumed via modern ADO.NET clients – specifically that means ODBC and OLE DB drivers. This feature is very cool although there are now many great native ADO.NET Data Providers.

Now let's briefly recap ODBC and OLEDB:

- ODBC emerged around 1992. It replaced the world of DB Library, ESQL for C et al and soon became a “standard”

- OLEDB appeared 4 years later in 1996 (which happens to be when I joined Microsoft)

OLE DB was created to be the successor to ODBC – expanding the supported data sources/models to include things other than relational databases. Notably OLEDB was tightly tied to a Windows only technology (COM) whilst ODBC was not (Although we did try and take COM cross platform via partners)

ODBC never did get replaced. What actually happened is that ODBC remained the dominant of the two technologies for many scenarios – and became increasingly used on non-Windows platforms and has become the de-facto industry standard for native relational data access.

Therefore we find ourselves in a world where:

- A new Windows client to SQL Server/SQL Azure will most likely use the ADO.NET Data Provider for SQL Server

- A new non-Windows client to SQL Server/SQL Azure will most likely use the ODBC driver for SQL Server [Emphasis added.]

Notice no mention of … OLEDB.

I know many UK ISVs with older applications that do use OLEDB. Please do check out the related links below and remember this is just about the SQL Server OLEDB Provider.

Related Links

- There is no rush (remember the 7 years) but check out how to migrate from OLEDB to ODBC.

- SQL Server and interoperability

The Windows Azure Service Dashboard reported [SQL Azure Database] [North Central US] [Yellow] SQL Azure Unable to Create Procedure or Table on 8/30/2011:

3:00 PM UTC Investigating - Customers attempting to create a Table or a Stored Procedure may notice that the attempt runs for an extended period of time after which you may lose connectivity to your database. Microsoft is aware and is working to resolve this issue. If you are experiencing this issue please contact support at http://www.microsoft.com/windowsazure/support.

As the screen capture illustrates, the problem affects all current Azure data centers and has not been rectified as of 8/31/2011, 9:50 AM PDT.

Brian Hopkins (@practicingEA) posted Big Data, Brewer, And A Couple Of Webinars to his Forrester blog on 8/31/2011:

Whenever I think about big data, I can't help but think of beer – I have Dr. Eric Brewer to thank for that. Let me explain.

I've been doing a lot of big data inquiries and advisory consulting recently. For the most part, folks are just trying to figure out what it is. As I said in a previous post, the name is a misnomer – it is not just about big volume. In my upcoming report for CIOs, Expand Your Digital Horizon With Big Data, Boris Evelson and I present a definition of big data:

Big data: techniques and technologies that make handling data at extreme scale economical.

You may be less than impressed with the overly simplistic definition, but there is more than meets the eye. In the figure, Boris and I illustrate the four V's of extreme scale:

The point of this graphic is that if you just have high volume or velocity, then big data may not be appropriate. As characteristics accumulate, however, big data becomes attractive by way of cost. The two main drivers are volume and velocity, while variety and variability shift the curve. In other words, extreme scale is more economical, and more economical means more people do it, leading to more solutions, etc.

So what does this have to do with beer? I've given my four V's spiel to lots of people, but a few aren't satisfied, so I've been resorting to the CAP Theorem, which Dr. Brewer presented at a conference back in 2000. I'll let you read the link for the details, but the theorem (proven by MIT) goes something like this:

For highly scalable distributed systems, you can only have two of the following: 1) consistency, 2) high availability, and 3) partition tolerance. C-A-P.

Translating the nerd-speak, as systems scale, you eventually need to go distributed and parallel, which requires tradeoffs. If you want perfect availability and consistency, system components must never fail (partition). If you want to scale using commodity hardware that does occasionally fail, you have to give up having perfect data consistency. How does this explain big data? Big data solutions tend to trade off consistency for the other two – this doesn’t mean they are never consistent, but the consistency takes time to replicate through big data solutions. This makes typical data warehouse appliances, even if they are petascale and parallel, NOT big data solutions. Make sense?
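The "consistency takes time to replicate" idea can be shown with a toy Ruby model. This is a deliberately simplified sketch, not how any particular big data product works: the Replica and Primary classes are invented for illustration, and calling replicate! stands in for the network delay between nodes.

```ruby
# A node that stores key/value pairs.
class Replica
  def initialize
    @data = {}
  end

  def read(key)
    @data[key]
  end

  def apply(key, value)
    @data[key] = value
  end
end

# A node that accepts writes right away and forwards them later.
class Primary < Replica
  def initialize(replica)
    super()
    @replica = replica
    @pending = []          # writes not yet copied to the replica
  end

  def write(key, value)
    apply(key, value)      # visible on the primary immediately
    @pending << [key, value]
  end

  # Stand-in for asynchronous replication catching up.
  def replicate!
    @pending.each { |key, value| @replica.apply(key, value) }
    @pending.clear
  end
end

replica = Replica.new
primary = Primary.new(replica)
primary.write('orders', 42)
puts "Replica before replication: #{replica.read('orders').inspect}"
primary.replicate!
puts "Replica after replication:  #{replica.read('orders').inspect}"
```

Between write and replicate! the two nodes disagree, which is the window of inconsistency the paragraph above describes; both stay available for reads the whole time.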

What are big data solutions? We are giving some webinars on the topic to help you get answers:

- Expand Your Digital Horizons With Big Data on September 7, from 11-12 p.m. Eastern time. I will co-present in this complimentary webinar with Boris Evelson. Non-Forrester clients can go to the link and register.

- Getting A Handle On Big Data – Is It A Big Deal? on September 12, from 1-2 p.m. Eastern time. This is for enterprise architecture professionals who are Forrester Clients.

These will feature material from my recent research, Expand Your Digital Horizon With Big Data, as well as from Big Opportunities In Big Data and many recent inquiries.

Hope to speak with you there. Now, thanks, Dr. B...I need a brew.

<Return to section navigation list>

Marketplace DataMarket and OData

Jeff Dillon of Netflix responded “Yes, we are working on the TV Series availability, and hope to have resolution soon” on 8/29/2011 to an OData Update? question posted by Gerald McGlothin 8/25/2011:

Just checked my ap[p]lication this morning, and it seems there have been some major changes to the OData catalog. For example, the genre "Television" isn't showing any titles, and most of the TV genres only have 1 or 2 titles. Any information on what is being done and when to expect OData to be working properly again?

There have been several complaints about problems with Netflix’s OData catalog recently. We subscribe to Netflix online and are unhappy about the lack of streaming availability of recent PBS series, such as 2010 and 2011 Doc Martin episodes.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Ron Jacobs announced the WF Azure Activity Pack CTP 1 of .NET 4.0 Workflow Foundation and the Windows Azure SDK in an 8/31/2011 post to the AppFabric Team blog:

We’re so excited to announce that the WF Activity Pack for Azure has just been released! Please refer to our CodePlex page for the download information. The package is also available via NuGet; you can type “Install-Package WFAzureActivityPack” in your package manager console to install the activity pack.

The Azure Activity Pack is built on top of Windows Azure SDK (August Update, 2011). Please install the SDK on your development machine.

Introduction

The Microsoft WF Azure Activity Pack CTP 1 is the first community technology preview (CTP) release of Azure activities implementation based on Windows Workflow Foundation in .NET Framework 4.0 (WF4) and Windows Azure SDK. The implementation contains a set of activities based on Windows Azure Storage Service and Windows Azure AppFabric Caching Service, which enables developers to easily access these Azure services within a workflow application.

This Activity Pack includes the following activities:

For Windows Azure Storage Service - Blob

- PutBlob creates a new block blob, or replaces an existing block blob.

- GetBlob downloads the binary content of a blob.

- DeleteBlob deletes a blob if it exists.

- CopyBlob copies a blob to a destination within the storage account.

- ListBlobs enumerates the list of blobs under the specified container or a hierarchical blob folder.

For Windows Azure Storage Service - Table

- InsertEntity<T> inserts a new entity into the specified table.

- QueryEntities<T> queries entities in a table according to the specified query options.

- UpdateEntity<T> updates an existing entity in a table.

- DeleteEntity<T> deletes an existing entity in a table using the specified entity object.

- DeleteEntity deletes an existing entity in a table using partition and row keys.

For Windows Azure AppFabric Caching Service

- AddCacheItem adds an object to the cache, or updates an existing object in the cache.

- GetCacheItem gets an object from the cache as well as its expiration time.

- RemoveCacheItem removes an object from the cache.

Sample

We’ve prepared a sample for this Activity Pack, which is a simple application for users to upload a file, and view all file entities available in the system. The sample solution is created from the template of Windows Azure Project. Please refer to our CodePlex page for the source code and documentation of the sample.

Screenshots

Here’s what they look like in Microsoft Visual Studio.

You can configure the activity in the property grid.

There’re 4 generic activities for Azure Table service, and you need to specify the table entity type when adding them to the designer canvas. The type you specified should inherit from TableServiceEntity.

Notice

Activities in this Activity Pack don’t have special logic for handling potential exceptions. All exception behaviors are consistent with the Managed Library API provided by the Azure Service. For example, if the GetBlob activity tries to get a blob that doesn’t exist, a StorageClientException will be thrown.

So, please get prepared for the potential exceptions that may occur in the workflow. Today, the simplest approach is to wrap a TryCatch activity outside of the Azure activity.

For more information on the error handling in Windows Workflow Foundation, please refer to the “Transactions and Error Handling” section in the article “A Developer's Introduction to Windows Workflow Foundation (WF) in .NET 4”.

Feedback

You’re always welcome to send us your thoughts on WF Azure Activity Pack, and please let us know how we can do it better. You can leave feedback:

- Here on the blog comments.

- Open an Issue at wf.CodePlex.com

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Wade Wegner (@WadeWegner) announced Windows Azure Toolkits for Devices – Now With Android! on 8/31/2011:

I am tremendously pleased to share that today we have released the Windows Azure Toolkit for Android! We announced our intentions to build a toolkit for Android back in May, and it had always been our intention to release this summer (we only missed by a week or so).

In addition to this release of Android, we have also:

- Released the Windows Azure Toolkit for Windows Phone (v1.3.0)

- Released the Windows Azure Toolkit for iOS (v1.2.1)

- Released a new Windows Phone sample called BabelCam both as source code and to the Windows Phone Marketplace

These releases complete our coverage of the three device platforms we intended to cover earlier this year when we started our work – Windows Phone, iOS, and Android.

It’s my belief that cloud computing provides a significant opportunity for mobile device developers, as it gives you the ability to write applications that target the same services and capabilities regardless of the device platform. Furthermore, I believe that Windows Azure is the best place to host these services. Take a look at the post Microsoft Releases the Windows Azure Toolkit for Android for examples of how American Airlines and Linxter are using the toolkits and Windows Azure to build great cross-device applications!

In addition to releasing the Android toolkit, we have released some important updates to the Windows Phone and iOS (i.e. iPhone & iPad) toolkits for Windows Azure. Since I have so many things to cover in this post, let me break it all down in various sections (click the links to jump to the section of choice):

Android

Today we released version 0.8 of the Windows Azure Toolkit for Android. This version includes native libraries that provide support for storage and authN/Z, a sample application, and unit tests. Everything is built in Eclipse and uses the Android SDK.

Here’s the project structure:

- library

- simple (sample application)

- tests

The library project includes the full source code to the storage client and authentication implementations.

Once you configure your workspace in Eclipse, you can run the simple sample application within the Android emulator.

From here you choose to either connect directly to Windows Azure storage using your account name and key or through your proxy services running in Windows Azure. To set your account name and key, modify the ProxySelector.java file …

… found here:

As with the Windows Azure Toolkits for Windows Phone and iOS, we recommend you do not put the storage account name and key in your application source code. Instead, use a set of secure proxy services running in Windows Azure. You can use the Cloud Ready Packages for Devices which contain a set of pre-built services ready to deploy to Windows Azure.

As you can see from its name, the shipping sample is designed to be simple – do not consider this a best practice from a UI perspective. However, it does show you fully how to implement the storage and authentication libraries. For another view of the library implementations, take a look at the unit tests.

Windows Phone

Today we released version 1.3.0 of the Windows Azure Toolkit for Windows Phone. This release includes a number of long awaited features and updates, including:

- Support for SQL Azure as a membership provider.

- Support for SQL Azure as a data source through an OData service.

- Upgraded the web applications to ASP.NET MVC 3.

- Support for the Windows Azure Tools for Visual Studio 1.4 and Windows Phone Developer Tools 7.1 RC.

- Lots of little updates and bug fixes.

I’m most excited about the support for SQL Azure.

The new project wizard now lets you choose where you want to store data in Windows Azure – you can choose both Windows Azure storage and SQL Azure database!

You can choose to enter your SQL Azure credentials into the wizard (which places them securely in your Windows Azure project, not the device) or use a SQL Server instance locally for development.

Once you’ve finished walking through the wizard, you may not immediately notice anything different – that’s a good thing! However, in the background all the membership information has been stored in a SQL database, and you’ll see in the application a new tab for your SQL Azure data that’s consumed through an OData service.

Moving forward we plan to only target the Windows Phone Developer Tools 7.1 releases (i.e. no more WP 7.0). However, we have archived all the 7.0 samples, and will continue to ship them as part of the toolkit as long as the Windows Phone Marketplace accepts 7.0 applications. You can find them organized in two different folders: WP7.0 and WP7.1.

iOS

Over the last few weeks, and with the help of Scott Densmore, we have made a series of important updates to the Windows Azure Toolkit for iOS – namely, bug fixes and project restructuring!

Over the last few weeks, as more and more developers used our iOS libraries, we started getting reports of memory leaks. Scott has spent a considerable amount of time tracking these down, and all these updates have been checked into the repo. Additionally, we have spent some time refactoring our github repos, and you’ll now find everything related to the Windows Azure Toolkit for iOS in a single repository:

This gives us a lot more flexibility for releases, as well as making sure that the resources are easy to use and consume.

What’s Next?

Oh no, we’re not done! There’s still a lot we want to do. We continue to get great feedback from customers and partners using these toolkits.

Over the next few months, here are the things we’ll focus on:

- Continue to update the Windows Azure Toolkits for iOS and Android so that they are in full parity with the Windows Azure Toolkit for Windows Phone.

- Samples, samples, and more samples! We want to have a great set of samples that work across all three device platforms. We’ve got a good start with BabelCam, but we need to bring it to iOS and Android, and then build more!

- Continue to support and fix the toolkits.

Your feedback is invaluable, so please continue to send it our way!

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) posted a Trip Report: LightSwitch Southwest Speaking Tour on 8/31/2011:

This month I spoke in Fresno, L.A., and Phoenix areas on Visual Studio LightSwitch. I broke up the “tour” into two driving trips since I spoke in Fresno on the 11th and then had a week in between before I left for L.A. and Phoenix last week. Of course, road trips would require me to tack on a couple days vacation :-). It’s summer and I LOVE the great outdoors so it was a perfect excuse to get away from home. See below for details on the awesome developer community (and hiking) experiences.

Fresno, CA - Central California .NET User Group

The Central California .NET user group meeting in Fresno was put together by Gustavo Cavalcanti. Gustavo has spoken at our local user group here in the San Francisco Bay Area before and so when he asked me to come to Fresno I wanted to return the favor of course. Fresno is only a few hours away from where I live in the Bay Area and was a perfect opportunity to make my way to Yosemite for the weekend :-). Gustavo and his family graciously let me stay the night at their house after the talk so I could take off to the amazing national park in the morning. (See below for pictures)

About 30 people came to the meeting in Fresno. There were predominantly professional developers in the room (people paid to write code) but there were also a few non-devs, and database and IT admins in the room. I also met an interesting fellow that knew me back in my FoxPro days that had moved onto Cold Fusion/JSP/Java instead of .NET 10 years ago that was extremely interested in LightSwitch. So because of the mixed crowd, I started with a basic intro for 45 minutes and then slowly transitioned into the pro developer uses around custom controls and extensibility. I first explained the SKU lineup and ran through all the designers to create my version of the Vision Clinic sample. I showed how to federate multiple data sources, use the screen templates, program access control, and ran through the deployment options.

I then transitioned to the more programmer-focused tasks. I showed all the method hooks in the “Write Code” button – I called this the “developer escape hatch” and got some smiles. :-) I talked about the 3-tier architecture & file view on the Solution Explorer. I then moved onto the more advanced development scenarios using the Contoso Construction sample and showed how to add your own helper classes to the Client & Server projects in order to customize the code. I also showed how to add a custom Silverlight control to a screen – this got applause. Finally I demonstrated the Extensibility Toolkit and some additional themes and shells. The pro-developers really appreciated the fact you could customize the app in a variety of ways. Most devs had no idea that LightSwitch was this flexible.

Santa Monica, CA - Los Angeles Silverlight User Group

The L.A. Silverlight user group (or LASLUG as they call it) put together a cool venue in Santa Monica to have the meeting called Blank Spaces which lets people rent out office space by the hour. Unfortunately I was a bit late because my smartphone led me over to City Hall instead (argh!), but when I showed up people were still eating their pizza so I was cool :-). It was co-sponsored by LightSwitchHelpWebsite.com, a great LightSwitch developer resource run by Michael Washington that caters more to extending and customizing LightSwitch. The main organizers, Michael and Victor Gaudioso are Silverlight MVPs and considering this was a Silverlight user group I was expecting a room of Silverlight gurus (something that I definitely am not). I have to admit I was slightly nervous. Fortunately (for me) the room of about 40 people was mixed with developers of all skill levels and expertise. Victor later mentioned that they have talks on a variety of .NET technologies so it draws all kinds of .NET developers.

Because the room was almost all professional developers that had seen LightSwitch before, I did a super-quick intro and then ran through the more advanced development scenarios like I did in Fresno. I also showed a sneak peek of the Office Integration Pack that one of our partners is working on. It’s a free extension from Grid Logic that will help LightSwitch developers work with Microsoft Office (Outlook, Word, Excel) in a variety of ways. People were very interested in this as I imagined they would be – making working with Office via COM easier is always welcome by .NET developers ;-). Look for that release within the next couple weeks on the Developer Center.

In addition to questions about Office and LightSwitch, I also had a couple questions after the talk on deployment and pointed people to the Deployment Guide here. And we also had a couple folks from DevExpress, who built the XtraReports extension for LightSwitch, who provided some give-aways and bought me a well-deserved glass of wine after the talk. Thanks Seth and Rachel!

Chandler, AZ - Southeast Valley .NET User Group

The Southeast Valley .NET User Group is run by Joe Guadagno, the president of INETA in North America (which provides support to Microsoft .NET user groups). This venue was the coolest of all. The meeting was held at Gangplank – a FREE collaborative workspace that provides office space and equipment to the public. I spoke in an open space with desks scattered all over the back of the large room filled with people working on all sorts of stuff together, mostly hunched over a computer.

There were about 30 people in the area where I was presenting. What was interesting is that apparently I drew a very different crowd than Joe normally sees. He said he only recognized a few faces in the audience. He had booked 4 hours for the meeting but considering it was 118 degrees, we settled for a 2.5 hour presentation with a cold beer chaser :-). I started with a basic introduction to LightSwitch and then ended with the advanced extensibility similar to the format I did in Fresno. There weren’t very many questions with this group but there definitely was applause at the end so I think people enjoyed it. In fact, I was cc’d on an email to Joe from an attendee saying that he had traveled all the way from northern Arizona to see the presentation and that he really enjoyed it. Plus he won an ultimate MSDN subscription! I have to say Joe definitely had the best SWAG of all the groups. Thanks to all our partners that supported the event.

One of the prizes was a set of tickets to the Diamondbacks game the next evening. So one of the attendees got to come with us to see the Padres lose. …

Beth continues with pictures and stories.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Lori MacVittie (@lmacvittie) took on Examining responsibility for auto-scalability in cloud computing environments in her The Case (For & Against) Application-Driven Scalability in Cloud Computing Environments post of 8/31/2011 to F5’s Dev Central blog:

Today, the argument regarding responsibility for auto-scaling in cloud computing as well as highly virtualized environments remains mostly constrained to e-mail conversations and gatherings at espresso machines. It’s an argument that needs more industry and “technology consumer” awareness, because it’s ultimately one of the underpinnings of a dynamic data center architecture; it’s the piece of the puzzle that makes or breaks one of the highest value propositions of cloud computing and virtualization: scalability.

The question appears to be a simple one: what component is responsible not only for recognizing the need for additional capacity, but acting on that information to actually initiate the provisioning of more capacity? Neither the answer nor the question, it turns out, is as simple as it appears at first glance. There are a variety of factors that need to be considered, and each of the arguments for – and against - a specific component has considerable weight.

Today we’re going to specifically examine the case for the application as the primary driver of scalability in cloud computing environments.

ANSWER: THE APPLICATION

The first and most obvious answer is that the application should drive scalability. After all, the application knows when it is overwhelmed, right? Only the application has the visibility into its local environment that indicates when it is reaching capacity with respect to memory and compute and even application resource. The developer, having thoroughly load tested the application, knows the precise load in terms of connections and usage patterns that causes performance degradation and/or maximum capacity and thus it should logically fall to the application to monitor resource consumption with the goal of initiating a scaling event when necessary.

In theory, this works. In practice, however, it doesn’t. First, application instances may be scaled up in deployment, and the subsequent increase in memory and/or compute resources changes the thresholds at which the application should scale. If the application is developed with the ability to set thresholds based on an external set of values this may still be a viable solution. But once the application recognizes it is approaching a scaling event, how does it initiate that event? If it is integrated with the environment’s management framework – say the cloud API – it can do so. But to do so requires that the application be developed with an end-goal of being deployed in a specific cloud computing environment. Portability is immediately sacrificed for control in a way that locks in the organization to a specific vendor for the life of the application – or until said organization is willing to rewrite the application so that it targets a different management framework.

Some day, in the future, it may be the case that the application can simply send out an SoS of sorts – some interoperable message that indicates it is hovering near dangerous utilization levels and needs help. Such a message could then be interpreted by the infrastructure and/or management framework to automatically initiate a scaling event, thus preserving control and interoperability (and thus portability). That’s the vision of a stateless infrastructure, but one that is unlikely to be an option in the near future.

At this time, back in reality land, the application has the necessary data but is unable to practically act upon it.

But let’s assume it could, or that the developers integrated the application appropriately with the management framework such that the application could initiate a scaling event. This works for the first instance, but subsequently becomes problematic – and expensive. This is because visibility is limited to the application instance, not the application as a whole – the one which is presented to the end-user through network server virtualization enabled by load balancing and application delivery. As application instance #1 nears capacity, it initiates a scaling event which launches application instance #2. Any number of things go wrong at this point:

- Application instance #1 continues to experience increasing load while application instance #2 launches, causing it to (unnecessarily) initiate another scaling event, resulting in application instance #3. Obviously timing and a proactive scaling strategy are required to avoid such a (potentially costly) scenario. But proactive scaling strategies require historical trend analysis, which means maintaining X minutes of data and determining growth rates as a means to predict when the application will exceed configured thresholds and initiate a scaling event prior to that time, taking into consideration the amount of time required to launch instance #2.

- Once two application instances are available, consider that load continues to increase until both are nearing capacity. Both instances, being unaware of each other, will subsequently initiate a scaling event, resulting in the launch of application instance #3 and application instance #4. It is likely the case that one additional instance would suffice, but because the application instances are not collaborating with each other and have no control over each other, it is likely that growth of application instances will occur exponentially assuming a round robin load balancing algorithm and a constant increase in load.

- Because there is no collaboration between application instances, it is nearly impossible to scale back down – a necessary component to maintaining the benefits of a dynamic data center architecture. The decision to scale down, i.e. decommission an instance would need to be made by…the instance. It is not impossible to imagine a scenario in which multiple instances simultaneously “shut down”, neither is it impossible to imagine a scenario in which multiple instances remain active despite overall application load requiring merely one to sustain acceptable availability and performance.
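The doubling effect described in the second bullet can be illustrated with a small simulation. This is only a sketch under stated assumptions, not anything from Lori's post: the capacity of 100 units per instance, the 80% threshold, the load figures and the function names are all hypothetical, and the load balancer is assumed to spread load perfectly evenly (an idealized round robin).

```python
import math

def uncoordinated_scale(total_load, instances, capacity, threshold=0.8):
    """Each instance decides alone, seeing only its own share of the load."""
    per_instance = total_load / instances  # idealized round robin: even split
    if per_instance > threshold * capacity:
        # Every instance sees the same overload and each launches one more,
        # so the instance count doubles.
        return instances * 2
    return instances

def coordinated_scale(total_load, instances, capacity, threshold=0.8):
    """A single controller with a view of the whole application adds only
    as many instances as the aggregate load requires (it never scales down
    in this sketch)."""
    needed = math.ceil(total_load / (threshold * capacity))
    return max(instances, needed)

def simulate(loads, capacity=100):
    """Return (load, uncoordinated_count, coordinated_count) per step."""
    unco, coord = 1, 1
    history = []
    for load in loads:
        unco = uncoordinated_scale(load, unco, capacity)
        coord = coordinated_scale(load, coord, capacity)
        history.append((load, unco, coord))
    return history

if __name__ == "__main__":
    # Hypothetical, steadily increasing aggregate load.
    for load, unco, coord in simulate([90, 170, 330, 650]):
        print(f"load={load:4d}  uncoordinated={unco:2d}  coordinated={coord:2d}")
```

With these made-up numbers the uncoordinated instances grow 2, 4, 8, 16 while a component with visibility into the whole application would need only 2, 3, 5, 9 for the same load.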

These are generally undesirable results; no one architects a dynamic data center with the intention of making scalability less efficient or rendering it unable to effectively meet business and operational requirements with respect to performance and availability. Both are compromised by an application-driven scalability strategy because application instances lack the visibility necessary to maintaining capacity at levels appropriate to meet demand, and have no visibility into end-user performance, only internal application and back-end integration performance. Both impact overall end-user performance, but are incomplete measures of the response time experienced by application consumers.

To sum up, the “application” has limited data and today, no control over provisioning processes. And even assuming full control over provisioning, the availability of only partial data leaves the process fraught with potential risk to operational stability.

NEXT: The Case (For & Against) Network-Driven Scalability in Cloud Computing Environments

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

The Server and Cloud Platform Team (@MSServerCloud) posted Microsoft Path to the Private Cloud Contest: Day 2, Question 2 44 minutes late to the Microsoft Virtualization Team blog on 8/31/2011 at 10:44 AM PDT:

Yesterday we kicked off the “Microsoft Path to the Private Cloud” Contest, read official rules. Today we have another Xbox to give away, so see below for second question in the series of three for the day.

A reminder on how the contest works: We’ll be posting three (3) questions related to Microsoft’s virtualization, system management and private cloud offerings today (8/31), one per hour from 9:00 a.m. – 11:00 a.m. PST. You’ll be able to find the answers on the Microsoft.com sites. Once you have the answers to all three questions, you send us a single Twitter message (direct tweet) with all three answers to the questions to the @MSServerCloud Twitter handle. The person that sends us the first Twitter message with all three correct answers in it will win an Xbox 360 4GB Kinect Game Console bundle for the day.

And the 8/31, 10 am. Question is:

Q: What is the name of the Microsoft program which provides an infrastructure as a service private cloud with a pre-validated configuration from server partners through the combination of Microsoft software; consolidated guidance; validated configurations from OEM partners for compute; network; and storage; and value-added software components?

Remember to gather all three answers before you direct tweet us the message. Be sure to check back here at 11:00 a.m. PST as well for the next question.

Reminder - the 8/31, 9 am. Question was:

Q: Which Microsoft offering provides deep visibility into the health, performance and availability of your datacenter, private cloud and public cloud environments (such as Windows Azure) – across applications, operating systems, hypervisors and even hardware – through a single, familiar and easy to use interface? [See answer below.]

My answer to Question 2 is the Hyper-V Cloud Jumpstart (scroll down to the The Microsoft Server and Cloud Platform Team announced the Hyper-V Jumpstart as a Data Center Solution on 8/29/2011 article).

The Server and Cloud Platform Team (@MSServerCloud) posted Microsoft Path to the Private Cloud [Contest]: Day 2, Question 1 to the Microsoft Virtualization Team blog on 8/31/2011:

Yesterday we kicked off the “Microsoft Path to the Private Cloud” Contest, read official rules. As of the posting of this blog, we still have not received a tweet with the winning answers. That means there are still two Xboxes on the table. But we're keeping things moving and today is the final day of the contest so see below for first question in the series of three for the day.

A reminder on how the contest works: We’ll be posting three (3) questions related to Microsoft’s virtualization, system management and private cloud offerings today (8/31), one per hour from 9:00 a.m. – 11:00 a.m. PST. You’ll be able to find the answers on the Microsoft.com sites. Once you have the answers to all three questions, you send us a single Twitter message (direct tweet) with all three answers to the questions to the @MSServerCloud Twitter handle. The person that sends us the first Twitter message with all three correct answers in it will win an Xbox 360 4GB Kinect Game Console bundle for the day.

And the 8/31, 9 am. Question is:

Q: Which Microsoft offering provides deep visibility into the health, performance and availability of your datacenter, private cloud and public cloud environments (such as Windows Azure) – across applications, operating systems, hypervisors and even hardware – through a single, familiar and easy to use interface? [Emphasis added.]

Let the contest begin!! Remember to gather all three answers before you direct tweet us the message. Be sure to check back here at 10:00 a.m. and 11:00 a.m. PST as well for the next two questions.

The answer obviously is System Center Operations Manager (SCOM), which recently gained the Monitoring Pack for Windows Azure Applications for SCOM 2007 R2 and SCOM 2012 Beta on 8/16/2011. Stay tuned for a tutorial post about setting up the MP4WAz with the SCOM 2012 Beta.

Alex Wilhelm (@alex) posted Dell snubs Microsoft, launches private cloud product with VMware, a concise history of Dell’s WAPA promise, to The Next Web’s Microsoft blog on 8/31/2011:

In March of 2010, Dell announced that it was working in partnership with Microsoft’s Azure team to build ‘Dell-powered Windows Azure platform appliance[s].’

In that statement, Dell elaborated on how close it was with Microsoft: “Dell and Microsoft will collaborate on the Windows Azure platform, with Dell and Microsoft offering services, and Microsoft continuing to invest in Dell hardware for Windows Azure infrastructure.” They were, for all intents, best friends.

Even more, Dell was given a very early invite to Azure, one of the first, in fact. So Microsoft was treating Dell to early access and fat orders, and Dell was set to build cloud tools (in this case the platform appliance product) on top of Azure. Everything looked like it was going swimmingly, as it was a win-win for both companies.

As an aside, the ‘platform appliances’ that were to be built are more or less each a ‘private cloud in a box,’ that can contain, according to Dell, even up to 1,000 servers.

But then something odd happened: nothing. In January of 2011, Mary Jo Foley asked the question that was on everyone’s mind: “Where are those Windows Azure Appliances?” Specifically, where was what Dell had promised twice, early in the previous year? It wasn’t until June of 2011 that Fujitsu announced that it was going to be first to market with an Azure-based platform appliance. It was to launch in August, some 16 months after Dell was announced as a partner with Azure.

Then yesterday happened, with Dell announcing its first major cloud product. The only problem? It did so with VMware, Microsoft’s new arch-rival. This announcement, of course, follows Microsoft’s lampooning of VMware’s cloud solutions, and VMware’s rather stark words about the future viability of the PC market.

GigaOm managed to eke out of Dell that the company still intends to build an Azure-based solution:

[Dell] said clouds based on Microsoft Windows Azure and the open-source OpenStack platforms will come in the next several months. The VMware-based cloud came first because of VMware’s large footprint among potential business customers. Dell’s mantra has been “open, capable, affordable,” he added, which is why the company is building such an expansive portfolio of cloud computing services. Often, he noted, “customers have a bent for one [cloud] or another,” so Dell wants to be able to meet their needs.

That sounds very reasonable, provided you don’t look at the history and language that Dell had used before about Microsoft and its cloud products.

So what happened? We are not sure. We are trying to find the right person at Microsoft to talk to, but that will take some time (we will update this post later, of course). For now, it seems that somewhere down the line, despite what was once a cozy relationship, Dell was converted.

As Dell had very early access to Azure, and the full cooperation of Microsoft, it could have beaten Fujitsu to the market with an Azure-based solution if it had chosen. Therefore, it must have waited on purpose. VMware, it appears, won.

The VMware v. Microsoft wars are heating up. Who will cast the next blow?

Update – Microsoft responded with the following statement: “To reinforce what Dell said earlier, we continue to collaborate closely on the Windows Azure Platform Appliance.”

<Return to section navigation list>

Cloud Security and Governance

Bill Claybrook issued a Warning: Not all cloud licensing models are user-friendly in an 8/31/2011 post to SearchCloudComputing.com:

Cloud licensing models, or cloud licensing management, should focus on the ability to move applications and data from one virtualized environment -- data centers, private clouds and public clouds -- to another. Cloud licensing management also encompasses license mobility, or moving licenses for applications and operating systems from one virtual environment to another, such as from:

- a virtual host to another virtual host within a virtual data center

- one host to another host within a public cloud

- one host to another host within a private cloud

- a virtual data center to a public cloud and back

- a private cloud to a public cloud and back

- a public cloud to another public cloud and back

Traditionally, there are three basic software and hardware licensing types:

- Per user: User is granted a license to use an application or connect to an OS.

- Per device: An application or OS is granted a license on a per-device or per-processor model.

- Enterprise: This licensing model covers all users and devices.

Even though these licensing structures are still in existence and are used today for hardware and software, some of them can lead to some un-user-friendly cloud licensing models. In the virtual data center, VMware created vMotion to dynamically move virtual machines (VMs) from one virtual host to another. However, technology isn't available to easily move an application and its data from one cloud environment to another and then back again, mainly because there are often major differences in cloud implementations.

In cloud computing, the number of servers hosting applications to meet demand increases or decreases dynamically according to uptime, reliability, stability and performance. This dynamic auto-scaling capability could overwhelm enterprises with excessive software licensing costs.

Three choices in cloud software licensing

You can encounter various software licensing issues, no matter which environment you're moving applications or data to or from. In cloud computing licensing models, enterprises have three basic choices: proprietary software, commercial open source licensing and community open source licensing -- each of which has its own pros and cons. For example, while open source software licensing has fewer limitations for customers, proprietary software licenses generally include vendor support.

"If cloud providers get it right and software vendors play ball, the proprietary vs. open source software debate may become more favorable to proprietary software in the cloud than it is on-premises," said William Vambenepe in a blog post. This argument centers on cloud computing providers and vendors removing the usual hassle of cloud license management that users experience.

For large customers with enterprise (i.e., unlimited) licensing agreements, removing the concern of license management may not be a big deal, added Vambenepe. With better, more-friendly cloud license management options, enterprises won't have to track license usage and renewal. In addition, users wouldn't need to worry about switching from a development license to a production license when moving to a production environment.

Proprietary software licensing has traditionally been static and bound to specific locations and hardware. This doesn't necessarily work in virtual data centers or cloud environments though, which have opened up enterprises to a more dynamic world. Proprietary software licensing has not kept up with this shift.

But you can't point a finger solely at proprietary cloud software; commercial open source software also has its share of restrictions and problems. Several open-source vendors such as Eucalyptus Systems and Cloud.com have licensing models that are somewhat similar to proprietary vendors' licensing structures.

Commercial open source vendors typically implement closed-source features that aren't available in the underlying open source project code. These features aren't shared with the associated community until the vendor deems that they no longer provide a competitive advantage. Some say that commercial open source software is "software that is open for all, but better for paying customers."

Eucalyptus Systems is an example of a commercial open source, or open core, cloud vendor. It offers two editions of its Eucalyptus cloud software: Eucalyptus Community Edition and Eucalyptus Enterprise Edition 2.0, the commercial edition. The Community Edition, which is a free download from the Eucalyptus software project site, does not include support. Eucalyptus Systems is the primary contributor to the Eucalyptus project.

Eucalyptus Enterprise Edition 2.0 is the closed source edition of the Eucalyptus open source project. It is licensed based on the number of processor cores on the physical host server. Eucalyptus Systems takes a snapshot of the Eucalyptus open source project code, adds features to it, provides support and charges a licensing fee. Any add-ons are closed source code; however, one industry blogger noted that one of the features previously reserved for Eucalyptus Enterprise Edition 2.0 is now being open sourced.

Community open source cloud software, on the other hand, practically has no limits; you can license it to an unlimited number of users and can run it on an unlimited number of servers -- no matter the number of processors. Community open source cloud software allows you to access the code and make changes -- as long as you have the qualified staff to do so. If the community open source cloud software lacks features that your enterprise needs, there's no need to wait for the next release. You can implement the features, send them to the community open source software project team and lobby to get them included in the mainstream release. There are no licensing costs involved with this, but there is cost in man hours associated with the time your software team spends coding and implementing the features. And you will need to provide your own support or hire a third party to handle support.

OpenStack is the primary example of community open source cloud software. It has received the support of several large vendors, including Dell, HP, Intel, Citrix and Rackspace, and is expected to become a strong candidate for an open standard for cloud computing. If this happens, it will resolve, to a large extent, the issues surrounding cloud interoperability and application mobility and can greatly simplify licensing in hybrid clouds.

Cloud.com, which was acquired by Citrix in July 2011, also provides a closed source version of its CloudStack software. Cloud.com CloudStack 2.0 Community Edition is an open source Infrastructure as a Service (IaaS) software platform that's available under the GNU General Public License. The Community Edition enables users to build, manage and deploy cloud computing environments; it's free of charge and the Cloud.com community provides support.

CloudStack 2.0 Enterprise Edition is open source and includes closed source code. It comes with an enterprise-ready subscription-only feature set and commercial support. Cloud.com's CloudStack 2.0 Service Provider Edition also is open source and includes closed source code. It gives service providers a management software and infrastructure technology to host their own public clouds.

Commercially licensed open source software is sometimes derived from a single vendor-controlled open source project in which the vendor limits contributions from third parties. Copyright ownership and an inability to track code contributions are two reasons why vendors would limit outsider contributions.

Red Hat created its Red Hat Cloud Access program to enable customers to transfer Red Hat Enterprise Linux subscriptions between their own self-hosted infrastructure and Amazon Elastic Compute Cloud (EC2). Customers can use standard licensed support contracts and methods to receive Red Hat support, but not all licenses are eligible for Red Hat Cloud Access.

Customers need to have a minimum of 25 active premium subscriptions and maintain a direct support relationship with Red Hat. Customers that receive Red Hat support through a third party or hardware OEM, such as HP and IBM, are not eligible. A premium subscription costs $1,299 annually, for a server with up to two processor sockets, or $2,499, for a server with three or more sockets. In addition, you can only use a single subscription for one VM at a time.
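To put the eligibility floor in dollar terms, here is a quick back-of-the-envelope sketch using only the figures quoted above (25 premium subscriptions minimum, $1,299 or $2,499 per year depending on socket count). The function and constant names are made up for illustration, and the calculation ignores any discounts or other terms the article doesn't mention.

```python
# Figures quoted in the article (annual, per premium subscription).
PRICE_UP_TO_TWO_SOCKETS = 1299
PRICE_THREE_OR_MORE_SOCKETS = 2499
MIN_PREMIUM_SUBSCRIPTIONS = 25

def annual_subscription_cost(small_servers, large_servers):
    """Annual list cost for servers with <=2 sockets and >=3 sockets."""
    return (small_servers * PRICE_UP_TO_TWO_SOCKETS
            + large_servers * PRICE_THREE_OR_MORE_SOCKETS)

def meets_minimum(small_servers, large_servers):
    """True if the subscription count reaches the quoted 25-subscription floor."""
    return small_servers + large_servers >= MIN_PREMIUM_SUBSCRIPTIONS

if __name__ == "__main__":
    # Cheapest way to reach the floor: 25 two-socket subscriptions.
    print(annual_subscription_cost(25, 0))
    print(meets_minimum(24, 0))
```

On these numbers, the cheapest way to qualify is 25 two-socket subscriptions, or 25 × $1,299 = $32,475 per year.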

A look at Microsoft and Oracle licensing models

Major proprietary software vendors like Microsoft and Oracle have complex cloud licensing models. Both companies, however, are making attempts to make cloud licensing management more customer-friendly and less expensive, though the desire to maintain profit margins still gets in the way of this do-good attitude.

In July 2011, Microsoft initiated cloud mobility, allowing customers to use current license agreements to move server applications from on-premises data centers to clouds. Customers can use license mobility benefits for SQL Server, Exchange Server, SharePoint Server, Lync Server, System Center and Dynamics CRM. One limitation of Microsoft's license mobility is that customers with volume licenses can only move the license between environments once every 90 days. If you move Exchange Server to Amazon EC2, for example, you cannot move it back to an on-premises server until the initial 90 days have passed. If you do, you need to purchase another license for the on-premises server.
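The 90-day restriction is easy to check mechanically. The following is a minimal sketch, not Microsoft's actual licensing logic: the function names are hypothetical, and treating the 90th day itself as the first permitted day is an assumption, since the article only says the move is blocked "until the initial 90 days have passed."

```python
from datetime import date, timedelta

# 90-day window quoted in the article for volume-license mobility.
MOBILITY_WINDOW = timedelta(days=90)

def earliest_next_move(last_move: date) -> date:
    """First date on which the license may move again (assumed inclusive)."""
    return last_move + MOBILITY_WINDOW

def can_move(last_move: date, today: date) -> bool:
    """True if the quoted 90-day window has elapsed since the last move."""
    return today >= earliest_next_move(last_move)

if __name__ == "__main__":
    moved_to_ec2 = date(2011, 7, 1)  # hypothetical move date
    print(earliest_next_move(moved_to_ec2))
    print(can_move(moved_to_ec2, date(2011, 8, 31)))
```

A license moved on the hypothetical date of July 1, 2011 could not move back before September 29, 2011 without buying a second license.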

But there's a catch. Microsoft license mobility is available only to customers who pay extra for Software Assurance (SA), which can nearly double the cost of a license; SA for servers is typically 25% of the cost of a license, multiplied by three years. Customers that want to deploy Windows Server instances in a cloud cannot use their current licenses. They must purchase new licenses.
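The "nearly double" claim follows directly from the quoted rate: 25% per year over three years adds 75% to the license price. A tiny sketch of the arithmetic, using a hypothetical $1,000 license price (the article gives no actual price) and made-up function names:

```python
SA_RATE_PER_YEAR = 0.25  # quoted: SA for servers is typically 25% of license cost
SA_TERM_YEARS = 3        # quoted: multiplied by three years

def software_assurance_cost(license_price):
    """Total SA cost over the term for one license."""
    return license_price * SA_RATE_PER_YEAR * SA_TERM_YEARS

def total_cost_with_sa(license_price):
    """License price plus SA over the full term."""
    return license_price + software_assurance_cost(license_price)

if __name__ == "__main__":
    price = 1000  # hypothetical list price, for illustration only
    print(software_assurance_cost(price))
    print(total_cost_with_sa(price))
```

For the hypothetical $1,000 license, SA adds $750, bringing the three-year total to $1,750, or 1.75 times the bare license.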

And while a Windows Server Datacenter Edition license doesn't limit the number of VMs installed on a physical host, it does cost more. Microsoft is clearly limiting how far its licensing changes extend mobility across clouds in order to maintain profit margins.

Enterprise agreements with SA usually apply to customers with more than 250 users and devices. Smaller customers without volume licensing agreements will have to pay again if they decide to move applications from an on-premises data center to a cloud service.

Amazon gives its Amazon EC2 customers that want to use Windows Server instances a break in complexity. Customers simply pay Amazon on a per-hour basis; they don't have to purchase a license from Microsoft. However, using a Windows Server instance on Amazon EC2 does cost more than using a Linux instance does.

Oracle is another large proprietary vendor with a complex and costly cloud licensing model. When Oracle programs are licensed in the cloud, customers must count each virtual core as a physical core, even though multiple virtual cores could be sharing a physical core. Oracle licensing depends on a few things: the Oracle database edition, software environment, and whether you want to license based on named users or number of processors. Blogger Neeraj Bhatia provides more information on the complexities and cost of licensing Oracle database editions in cloud environments.

No quick answers for cloud licensing confusion

Licensing models for all cloud software -- except community open source software -- haven't caught up with the times and the flexibility and mobility of cloud computing. That means you may have to accept the possibility of having to live with nightmarish cloud license complexity when using proprietary vendors, commercial open source vendors, and vendors such as Red Hat, that provide open source products but sell subscriptions to cover support. And while community open source cloud software offers some reprieve, you'll have to fend for yourself when it comes to support.

Today, the best way to develop, run and license applications with a minimum amount of licensing complexity is to use open source software tools like LAMP or run a single vendor environment such as an all-Microsoft environment using Windows Azure.

Companies like Red Hat that primarily attract only their installed base to their cloud products risk losing share in both the cloud and OS markets if they don't address the pricing and licensing needs of cloud customers. But there are no clear winners yet, either. Given the inherent limitations of cloud licensing among most cloud vendors, a large swath of the vendor pool needs to adapt to the changing computing landscape.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

Christine Drake posted Matching Security to Your Cloud to the Trend Micro blog on 8/30/2011:

There’s a lot of talk about cloud computing and cloud security this week as many people are attending VMworld in Las Vegas (follow Trend Micro at VMworld). But not all types of cloud security are best suited for all types of cloud computing.

When people generically refer to “cloud computing” they usually mean the public cloud. But what about private clouds or hybrid clouds? The May 2011 Trend Micro cloud survey results showed that companies are adopting all three models almost equally. Although there are certainly overlaps in security best practices across these models, there are also differences, and your security should be able to address the security risks in your specific cloud deployment.

For this discussion, let’s look at private, hybrid, and public Infrastructure as a Service (IaaS) clouds. Regardless of which cloud model you deploy, you’ll actually want similar protection as you would on a physical machine—firewall, antimalware, intrusion detection and prevention, application control, integrity monitoring, log inspection, encryption, etc. But you’ve turned to cloud computing to reap the benefits of flexibility, cost savings, and more. Your cloud security should work with your cloud model to maximize these benefits. How this is done varies by type of cloud.

Virtual Data Centers and Private Clouds

In both virtual data centers and private clouds you control the hypervisor. In these environments, your cloud security should integrate with the hypervisor APIs to enable agentless security. This approach deploys a dedicated security virtual machine on each physical host and uses a small footprint driver on each guest VM to coordinate and stagger security scans and updates. This approach has numerous benefits, including better performance, higher VM ratios, faster protection with no off-box security communication needed, and less administrative complexity with no agents to deploy, configure, or update. In addition, security such as hypervisor integrity monitoring can help protect these environments.

Public Clouds

In a public IaaS environment, businesses don’t get control over the hypervisor because it’s a multi-tenant environment. Without hypervisor control, security needs to be deployed as agent-based protection on the VM-level, creating self-defending VMs that stay secure in the shared infrastructure and that help maintain VM isolation. Although the agents put more of a burden on the host, the economies of scale in a public cloud compensate, and there are additional cost benefits with capex savings and a pay-per-use approach.

Hybrid Clouds

With hybrid clouds, you use both a private and public cloud to leverage the different benefits of both. Your cloud security should have flexible deployment options, so you can get better performance in your private cloud with agentless security and can create self-defending VMs in your public cloud deployment. In addition, if you want certain VMs to travel between your private and public clouds, you should be able to use agent-based security that travels with the VMs, but that still coordinates with the dedicated security virtual appliance when in your private cloud to stagger scans and preserve performance. Of course, all of these security deployments should be managed through one console, which should cover your physical, virtual, and private, public, and hybrid cloud server security.

Trend Micro just published a new web page on Total Cloud Protection and a white paper, Total Cloud Protection: Security for Your Unique Cloud Infrastructure. The paper discusses different cloud models, security designed for each model, and Trend Micro solutions. Your cloud security should maximize your cloud benefits and help accelerate your cloud ROI. Why settle for anything less?

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Tim Anderson (@timanderson) reported Heroku gets Java, Salesforce.com embraces HTML5 for mobile in an 8/31/2011 post:

Salesforce.com has made a host of announcements at its Dreamforce conference currently under way in San Francisco. In brief:

- Chatter, the Salesforce.com social networking platform for enterprises, is being extended with presence status, screen sharing, approval actions, and the ability to create groups with customers as well as with internal users. Salesforce.com calls this the Social Enterprise.

- Heroku, a service for hosting Ruby applications which Salesforce.com acquired in 2010, will now also support Java.

- Salesforce.com is baking mobile support into its applications via HTML 5. The new mobile, touch-friendly user interface is called Touch.salesforce.com.

Other announcements include the general availability of database.com, a cloud database service announced at last year’s Dreamforce, and a new service called Data.com which provides company information through a combination of Dun and Bradstreet’s data along with information from Jigsaw.

I spoke to EMEA VP Tim Barker about the announcements. Does Java on Heroku replace the VMForce platform, which lets you run Java applications on VMWare using the Spring framework plus access to Salesforce.com APIs? Barker is diplomatic and says it is a developer choice, but adds that VMForce “was an inspiration for us, to see that we needed Java language on Heroku as well.”

My observation is that since the introduction of VMForce, VMWare has come up with other cloud-based initiatives, and Salesforce.com no longer seems to be a key platform. These two companies have grown apart.

For more information on Java on Heroku, see the official announcement. Heroku was formed in part to promote hosted Ruby as an alternative to Java, so this is a bittersweet moment for the platform, and the announcement has an entertaining analysis of Java’s strengths and weaknesses, including the topic “How J2EE derailed Java”:

J2EE was built for a world of application distribution — that is, software packaged to be run by others, such as licensed software. But it was put to use in a world of application development and deployment — that is, software-as-a-service. This created a perpetual impedance mismatch between technology and use case. Java applications in the modern era suffer greatly under the burden of this mismatch.

Naturally the announcement goes on to explain how Heroku has solved this mismatch. Note that Heroku also supports Clojure and Node.js.

What about Database.com, why is it more expensive than other cloud database services? “It is a trusted platform that we operate, and not a race to the bottom in terms of the cheapest possible way to build an application,” says Barker.

That said, note that you can get a free account, which includes 100,000 records, 50,000 transactions per month and support for three enterprise users.

What are the implications of the HTML5-based Touch.salesforce.com for existing Salesforce.com mobile apps, or the Flex SDK and Adobe AIR support in the platform? “We do have an existing set of apps,” says Barker. “We have Salesforce mobile which supports Blackberry, iOS and Android. We also have an application for Chatter. Native apps are an important part of our strategy. But what we’ve found is that for customer apps and for broad applications, to be able to deliver all the functionality, we’re finding the best approach is using HTML 5.”

The advantage of the HTML5 approach for customers is that it comes for free with the platform.

As for Adobe AIR, it is still being used and is a good choice if you need a desktop application. That said, I got the impression that Salesforce.com sees HTML5 as the best solution to the problem of supporting a range of mobile operating systems.

I have been following Salesforce.com closely for several years, during which time the platform has grown steadily and shown impressive consistency. “We grew 38% year on year in Q2,” says Barker. This year’s Dreamforce apparently has nearly 45,000 registered attendees, which is 50% up on last year, though I suspect this may include free registrations for the keynotes and exhibition. Nevertheless, the company claims “the world’s largest enterprise software conference”. Oracle OpenWorld 2010 reported around 41,000 attendees.


Matthew Weinberger (@MattNLM) reported Dell Reaches Out to SMBs with New Cloud App Offering in an 8/31/2011 post to the TalkinCloud blog:

Dell is starting to see money in the cloud. And in an effort to keep that momentum going, Dell used this week’s Dreamforce ’11 conference to launch Dell Cloud Business Applications, a solution aimed at connecting SMBs with SaaS providers and then providing Boomi integration services to get them up to speed. Oh, and Dell is heavily promoting systems integrators as the key avenue of support for these customers.

As you may have guessed from the venue where Dell chose to make the announcement, the first SaaS vendor included in the offering is Salesforce.com and its cloud CRM solution. Part of the offering will be helping these customers choose the SaaS app that’s right for them, and to that end Dell will be carefully auditing potential vendor partners for its SMB value propositions, according to the press release.

Dell Boomi comes in for cloud data integration and making sure the new SaaS works with existing systems. And Dell Cloud Business Applications comes with analytics and visibility features that work across applications, helping give greater visibility into cloud value. For example, thanks to this newly reinforced partnership, Salesforce CRM data will be available across multiple applications in the Dell Cloud Business Applications catalog for smarter, more detailed reporting.

And as I mentioned above, Dell definitely sees a place for its cloud partners in this new solution. Dell PartnerDirect systems integrators and consultants (read: MSPs) are enabled to provide support and additional value-added services on top of the out-of-the-box deployment you get with Dell Cloud Business Applications.

Dell Cloud Business Applications is available today in the United States, with pricing for Salesforce CRM and Boomi integration services starting at $565 per month. Implementation packages start at $5,000.

Dell’s often built itself up as a technology titan that cares about the smallest of small businesses. I guess Dell is putting its cloud services where its mouth is ahead of a more sweeping cloud update at this October’s Dell World conference.

Read More About This Topic

- Dreamforce ’11: Salesforce Investing $50 Million in Global Partners

- Dreamforce 2011 Agenda: What Cloud Services Providers Can Expect

- LiveOffice Connecting Salesforce and Microsoft Outlook

- Is Salesforce.com Buying Social Media Helpdesk Assistly?

- Salesforce.com Posts Small Q2 Loss, But Raises 2011 Outlook

Jeff Barr (@jeffbarr) announced Elastic Load Balancer SSL Support Options (for PCI compliance) in an 8/30/2011 post:

We've added some additional flexibility to Amazon EC2's Elastic Load Balancing feature:

- You can now terminate SSL sessions at the load balancer and then re-encrypt them before they are sent to the back-end EC2 instances.

- You can now configure the set of ciphers and SSL protocols accepted by the load balancer.

- You can now configure HTTPS health checks.

Terminate and Re-Encrypt

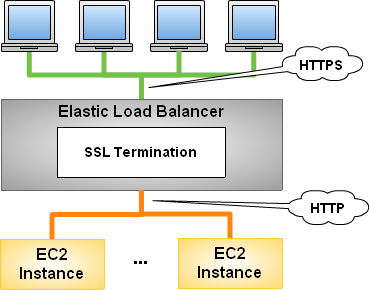

The Elastic Load Balancer has supported SSL for a while. Here's the original model:

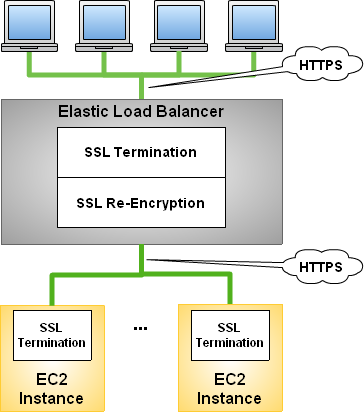

We're enhancing this feature to allow you to terminate a request at the load balancer and then re-encrypt it before it is sent to an EC2 instance:

This provides additional protection for your data, a must for PCI compliance, among other scenarios. As a bonus feature, the Elastic Load Balancer can now verify the authenticity of the EC2 server before sending the request. By allowing the ELB to handle the termination and re-encryption, developers can now take advantage of features like session affinity (sticky sessions) while still using HTTPS.

You can set this up from the AWS Management Console, the Command Line tools, or the Elastic Load Balancer APIs.

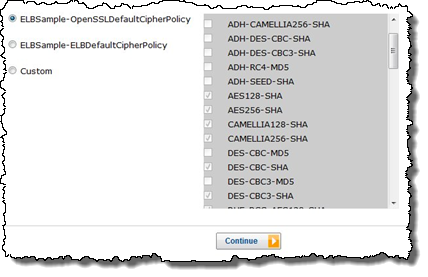
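As a rough illustration of the terminate-and-re-encrypt setup, the Python sketch below builds the kind of listener description the Elastic Load Balancing API takes when both the client-facing and the back-end legs use HTTPS. The helper name `build_reencrypt_listener` and the certificate ARN are hypothetical; the dictionary keys are assumed to follow the API's listener parameters, and the sketch makes no call to AWS.

```python
def build_reencrypt_listener(certificate_id, lb_port=443, instance_port=443):
    """Return a listener description for terminate-and-re-encrypt.

    Hypothetical helper: it only builds a parameter dictionary and
    does not contact AWS.
    """
    if not certificate_id:
        raise ValueError("an SSL certificate ID is required to terminate HTTPS")
    return {
        "Protocol": "HTTPS",          # client -> load balancer leg
        "LoadBalancerPort": lb_port,
        "InstanceProtocol": "HTTPS",  # load balancer -> EC2 instance leg
        "InstancePort": instance_port,
        "SSLCertificateId": certificate_id,
    }


# Placeholder certificate ARN, for illustration only.
listener = build_reencrypt_listener(
    "arn:aws:iam::123456789012:server-certificate/example-cert"
)
print(listener["Protocol"], "->", listener["InstanceProtocol"])
```

Setting the instance-side protocol to plain HTTP instead would give the original model, in which traffic is decrypted at the load balancer and forwarded unencrypted.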

Configurable Ciphers

You may not know it, but SSL can use a variety of different algorithms (also known as ciphers) to encrypt and decrypt requests and responses. The algorithms vary in the level of protection that they offer and in the computational load required to do the work.

Developers have told us that they need additional control over the set of ciphers used by their Load Balancers. In some cases they need to support archaic clients using equally archaic ciphers. More commonly, they want to use only the most recent ciphers as part of a compliance effort. For example, they might want to stick with 256 bit AES, a cipher that the US Government has approved for the protection of top secret information.

You can now control the set of ciphers that each of your Load Balancers presents to its clients. You can do this using the command-line tools, the APIs, or the AWS Management Console:

HTTPS Health Checks

You can (and definitely should) configure your Load Balancers to perform periodic health checks on the EC2 instances under their purview. Up until now these health checks had to be done via HTTP. As of this release, you can now configure HTTPS health checks. This means that you can configure your instances such that they respond only to requests on port 443.

Keep Going

Read more about these new features in the Elastic Load Balancing Developer Guide.
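To make the HTTPS health check described above a little more concrete, here is a minimal Python sketch that assembles a health-check description with an HTTPS target on port 443. The helper name `build_https_health_check`, the `/health` path, and the interval and threshold values are illustrative assumptions; the dictionary keys are assumed to match the Elastic Load Balancing health-check parameters, and nothing here is sent to AWS.

```python
def build_https_health_check(path="/", port=443, interval=30, timeout=5,
                             unhealthy_threshold=2, healthy_threshold=10):
    """Return a health-check description that probes instances over HTTPS.

    Hypothetical helper: it only builds a parameter dictionary.
    """
    if not path.startswith("/"):
        raise ValueError("the ping path must start with '/'")
    if timeout >= interval:
        raise ValueError("timeout must be shorter than the check interval")
    return {
        "Target": f"HTTPS:{port}{path}",  # protocol:port/path
        "Interval": interval,             # seconds between checks
        "Timeout": timeout,               # seconds to wait for a response
        "UnhealthyThreshold": unhealthy_threshold,
        "HealthyThreshold": healthy_threshold,
    }


# Illustrative path; use whatever endpoint the instances actually serve.
check = build_https_health_check(path="/health")
print(check["Target"])
```

With a target like this, the instances would no longer need to expose a plain HTTP port just for the load balancer's probes.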

Tim Anderson (@timanderson) asserted Perfect virtualization is a threat to Windows: VMWare aims to embrace and extinguish and summarized new VMware applications in an 8/31/2011 post:

One advantage Microsoft Windows has in the cloud and tablet wars is that it is, well, Windows. Microsoft’s Office 365 cloud computing product largely depends on the assumption that users want to run Office on their local PC or tablet. Windows 8 tablets will be attractive to enterprises that want to continue running custom Windows applications, though they had better be x86 tablets since applications for Windows on ARM processors will require recompilation.

On the other hand, if you can run Windows applications just as easily on a Mac, Apple iPad or Android tablet, then Microsoft’s advantage disappears. Virtualization specialist VMWare is making that point at its VMworld conference in Las Vegas this week. In a press release announcing “New Products and Services for the Post-PC Era”, VMWare VP Christopher Young says:

As our customers begin to embrace this shift to the post-PC era, we offer a simple way to deliver a better Windows-based desktop-as-a-service that empowers organizations to do more with what they already have. At the same time, we are investing in expertise and delivering the open products needed to accelerate the journey to a new way to work beyond the Windows desktop.

Good for Windows, good for what comes after Windows is the message. It is based on several products: