Windows Azure and Cloud Computing Posts for 8/8/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

• Updated 8/9/2011 7:30 AM PDT with a forgotten article marked • by me.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

My (@rogerjenn) Microsoft's, Google's big data [analytics] plans give IT an edge article of 8/8/2011 for TechTarget’s SearchCloudComputing.com blog describes Microsoft’s HPC Pack (formerly Microsoft Research Dryad), LINQ to HPC (formerly DryadLINQ), Project “Daytona”, and Excel Data Scope for big-data analytics, as well as Google Fusion Tables for visualizing up to 100 MB of data. See the below article for more details.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

My (@rogerjenn) Links to Resources for my “Microsoft's, Google's big data [analytics] plans give IT an edge” Article begins:

My (@rogerjenn) Microsoft's, Google's big data [analytics] plans give IT an edge article of 8/8/2011 for TechTarget’s SearchCloudComputing.com blog contains many linked and unlinked references to other resources for big data, HPC Pack, LINQ to HPC, Project “Daytona”, Excel DataScope, and Google Fusion Tables. From the article’s Microsoft content:

CIOs are looking to pull actionable information from ultra-large data sets, or big data. But big data can mean big bucks for many companies. Public cloud providers could make the big data dream more of a reality.

“[Researchers] want to manipulate and share data in the cloud.”

Roger Barga, architect and group lead, XCG's Cloud Computing Futures (CCF) team

Big data, which is measured in terabytes or petabytes, often is comprised of Web server logs, product sales [information], and social network and messaging data. Of the 3,000 CIOs interviewed in IBM's Essential CIO study [1], 83% listed an investment in business analytics as the top priority. And according to Gartner, by 2015, companies that have adopted big data and extreme information management will begin to outperform unprepared competitors by 20% in every available financial metric [3].

Budgeting for big data storage and the computational resources that advanced analytic methods require [2], however, isn't so easy in today's anemic economy. Many CIOs are turning to public cloud computing providers to deliver on-demand, elastic infrastructure platforms and Software as a Service. When referring to the companies' search engines, data center investments and cloud computing knowledge, Steve Ballmer said, "Nobody plays in big data, really, except Microsoft and Google." [4]

Microsoft's HPC Pack, LINQ to HPC, Project "Daytona" and the forthcoming Excel DataScope were designed explicitly to make big data analytics in Windows Azure accessible to researchers and everyday business analysts. Google's Fusion Tables aren't set up to process big data in the cloud yet, but the app is very easy to use, which likely will increase its popularity. It seems like the time is now to prepare for extreme data management in the enterprise so you can outperform your "unprepared competitors." …

and concludes with a complete table of 34 resources with links that begins:

Read the entire post here.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

Jim O’Neil described Photo Mosaics Part 2: Storage Architecture in an 8/8/2011 post to his Developer Evangelist blog:

Prior to my vacation break, I presented the overall workflow of the Azure Photo Mosaics program, and now it's time to dig a little deeper. I'm going to start off looking at the storage aspects of the application and then tie it all together when I subsequently tackle the three roles that comprise the application processing. If you want to crack open the code as you go through this blog post (and subsequent ones), you can download the source for the Photo Mosaics application from where else but my Azure storage account!

For the Photo Mosaics application, the storage access hierarchy can be represented by the following diagram. At the top is the core Windows Azure Storage capabilities, accessed directly via a REST API but with some abstractions for ease of programming (namely the Storage Client API), and that is followed by my application-specific access layers (in green). In this post I'll cover each of these components in turn.

Windows Azure Storage

As you likely know, Windows Azure Storage has four primary components:

- Tables - highly scalable, schematized, but non-relational storage,

- Blobs - unstructured data organized into containers,

- Queues - used for messaging between role instances, and

- Drives - (in beta) enabling mounting of NTFS VHDs as page blobs

All of your Windows Azure Storage assets (blobs, queues, tables, and drives) fall under the umbrella of a storage account tied to your Windows Azure subscription, and each storage account has the following attributes:

- can store up to 100TB of data

- can house an unlimited number of blob containers

  - with a maximum of 200GB per block blob and

  - a maximum of 1TB per page blob (and/or Windows Azure Drive)

- can contain an unlimited number of queues

  - with a maximum of 8KB per queue message

- can store an unlimited number of tables

  - with a maximum of 1MB per entity ('row') and

  - a maximum of 255 properties ('columns') per entity

- three copies of all data maintained at all times

- REST-based access over HTTP and HTTPS

- strongly (versus eventually) consistent

What about SQL Azure? SQL Azure provides full relational and transactional semantics in the cloud and is an offering separate from the core Windows Azure Storage capabilities. Like Windows Azure Storage though, it's strongly consistent, and three replicas are kept at all times. SQL Azure databases can store up to 50 GB of data each, with SQL Azure Federations being the technology to scale beyond that point. We won't be discussing SQL Azure as part of this blog series, since it's not currently part of the Photo Mosaics application architecture.

REST API Access

The native API for storage access is REST-based, which is awesome for openness to other application platforms - all you need is an HTTP stack. When you create a storage account in Windows Azure, the account name becomes the first part of the URL used to address your storage assets, and the second part of the name identifies the type of storage. For example, in a storage account named azuremosaics:

- http://azuremosaics.blob.core.windows.net/flickr/001302.jpg uniquely identifies a blob resource (here an image) that is named 001302.jpg and located in a container named flickr. The HTTP verbs POST, GET, PUT, and DELETE when applied to that URI carry out the traditional CRUD semantics (create, read, update, and delete).

- http://azuremosaics.table.core.windows.net/Tables provides an entry point for listing, creating, and deleting tables; while a GET request for the URI http://azuremosaics.table.core.windows.net/jobs?$filter=id eq 'F9168C5E-CEB2-4faa-B6BF-329BF39FA1E4' returns the entities in the jobs table having an id property with value of the given GUID.

- A POST to http://azuremosaics.queue.core.windows.net/slicerequest/messages would add the message included in the HTTP payload to the slicerequest queue.

Conceptually it's quite simple and even elegant, but in practice it's a bit tedious to program against directly. Who wants to craft HTTP requests from scratch and parse through response payloads? That's where the Windows Azure Storage Client API comes in, providing a .NET object layer over the core API. In fact, that's just one of the client APIs available for accessing storage; if you're using other application development frameworks there are options for you as well:

- Ruby: WAZ Storage Gem

- PHP: Windows Azure SDK for PHP Developers

- Java: Windows Azure SDK for Java Developers

- Python: Python Client Wrapper for Windows Azure Storage
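The addressing scheme described above (account name, then storage type, then resource path) can be sketched in a few lines of Python. This is only an illustration of how the URIs are composed; the helper name `storage_uri` is hypothetical and not part of any Windows Azure SDK, and the GUID in the filter is a placeholder.

```python
from urllib.parse import quote

STORAGE_SERVICES = ("blob", "table", "queue")


def storage_uri(account, service, path, query=None):
    """Compose a storage URI of the form http://<account>.<service>.core.windows.net/<path>.

    Hypothetical helper for illustration only.
    """
    if service not in STORAGE_SERVICES:
        raise ValueError("unknown storage service: " + service)
    uri = "http://{0}.{1}.core.windows.net/{2}".format(account, service, quote(path))
    if query:
        # Percent-encode spaces and quotes, but keep the OData '$', '=' and '&' readable.
        uri += "?" + quote(query, safe="=$&")
    return uri


blob_uri = storage_uri("azuremosaics", "blob", "flickr/001302.jpg")
queue_uri = storage_uri("azuremosaics", "queue", "slicerequest/messages")
# Placeholder GUID; any id value would be encoded the same way.
table_uri = storage_uri("azuremosaics", "table", "jobs",
                        "$filter=id eq '00000000-0000-0000-0000-000000000000'")

print(blob_uri)
print(queue_uri)
print(table_uri)
```

Issuing a GET, PUT, POST or DELETE against such a URI still requires the authorization header discussed further on, which is one reason the client libraries listed above exist.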

Where's the REST API for Windows Azure Drives? Windows Azure Drives are an abstraction over page blobs, so you could say that the REST API also applies to Drives; however, the entry point for accessing drives is the managed Storage Client API (and that makes sense since Drives are primarily for legacy Windows applications requiring NTFS file storage).

Note too that with the exception of opting into a public or shared access policy for blobs, every request to Windows Azure Storage must include an authorization header whose value contains a hashed sequence calculated by applying the HMAC-SHA256 algorithm over a string that was formed by concatenating various properties of the specified HTTP request that is to be sent. Whew! The good news is that the Storage Client API takes care of all that grunt work for you as well.
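To give a feel for that grunt work, here is a minimal Python sketch of the signing step only: an HMAC-SHA256 over a string-to-sign, keyed with the Base64-decoded account key, and placed in a `SharedKey` authorization header. The string-to-sign below is a deliberately simplified placeholder; the real one is assembled from a specific, ordered set of request headers and a canonicalized resource defined by the storage REST documentation. The key is fake as well.

```python
import base64
import hashlib
import hmac


def sign(string_to_sign, account_key_b64):
    """Return the Base64-encoded HMAC-SHA256 of string_to_sign."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def authorization_header(account, string_to_sign, account_key_b64):
    """Build the value of the Authorization header for Shared Key authentication."""
    return "SharedKey {0}:{1}".format(account, sign(string_to_sign, account_key_b64))


# Fake key, for illustration only; a real key comes from the portal.
demo_key = base64.b64encode(b"not-a-real-account-key").decode("ascii")

# ASSUMPTION: simplified string-to-sign, not the full canonical form.
demo_string = "GET\n\nMon, 08 Aug 2011 12:00:00 GMT\n/azuremosaics/flickr/001302.jpg"

header = authorization_header("azuremosaics", demo_string, demo_key)
print(header)
```

Because the signature depends on the exact bytes of the string-to-sign, a single misplaced newline or header produces an authentication failure, which is why delegating this to a client library is attractive.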

Storage Client API

The Storage Client API, specifically the Microsoft.WindowsAzure.StorageClient namespace, is where .NET Developers will spend most of their time interfacing with Windows Azure Storage. The Storage Client API contains around 40 classes to provide a clean programmatic interface on top of the core REST API, and those classes roughly fall into five categories:

- Blob classes

- Queue classes

- Table classes

- Drive classes

- Classes for cross-cutting concerns like exception handling and access control

Each of the core storage types (blobs, queues, tables, and drives) has what I call an entry-point class that is initialized with account credentials and then used to instantiate additional classes to access individual items such as messages within queues and blobs within containers. For blob containers, that class is CloudBlobClient, and for queues it's CloudQueueClient. Table access is via both CloudTableClient and a TableServiceContext; the latter extends System.Data.Services.Client.DataServiceContext, which should ring a bell for those that have been working with LINQ to SQL or the Entity Framework. And, finally, CloudDrive is the entry point for mounting a Windows Azure Drive on the local file system (well, local to the Web or Worker Role that's mounting it).

While the Storage Client API can be used directly throughout your code, I'd recommend encapsulating it in a data access layer, which not only aids testability but also provides a single entry point to Azure Storage and, by extension, a single point for handling the credentials required to access that storage. In the Photo Mosaics application, that's the role of the CloudDAL project discussed next.

Cloud DAL

Within the CloudDAL, I've exposed three points of entry, one for each of the storage types used in the Photo Mosaics application; I've called them TableAccessor, BlobAccessor, and QueueAccessor. These classes offer a repository interface for the specific data needed for the application and theoretically would allow me to replace Windows Azure Storage with some other storage provider altogether without pulling apart the entire application (though I haven't actually vetted that :)). Let's take a look at each of these 'accessor' classes in turn.

TableAccessor (in Table.cs)

The TableAccessor class has a single public constructor requiring the account name and credentials for the storage account that this particular instance of TableAccessor is responsible for. The class itself is essentially a proxy for a CloudTableClient and a TableServiceContext reference:

- _tableClient provides an entry point to test the existence of and create (if necessary) the required Windows Azure table, and

- _context provides LINQ-enabled access to the two tables, jobs and status, that are part of the Photo Mosaics application. Each table also has an object representation implemented via a descendant of the TableServiceEntity class that has been crafted to represent the schema of the underlying Windows Azure table; here those descendant classes are StatusEntity and JobEntity.

The methods defined on TableAccessor all share a similar pattern of testing for the table existence (via _tableClient) and then issuing a query (or update) via the _context and appropriate TableServiceEntity classes. In a subsequent blog post, we'll go a bit deeper into the implementation of TableAccessor (and some of its shortcomings!).
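The "ensure the table exists, then query through the context" pattern can be sketched independently of the Storage Client API. The Python below is a hypothetical analog, not the CloudDAL code: `InMemoryTableClient` stands in for the real table client, and keying jobs by a `client_id` field is an assumption made for the example.

```python
class InMemoryTableClient:
    """Hypothetical stand-in for a table client; keeps tables in a dict."""

    def __init__(self):
        self.tables = {}

    def create_table_if_not_exist(self, name):
        """Create the table when missing; return True only if it was created."""
        created = name not in self.tables
        self.tables.setdefault(name, [])
        return created

    def insert(self, table, entity):
        self.tables[table].append(entity)

    def query(self, table, predicate):
        return [e for e in self.tables[table] if predicate(e)]


class TableAccessor:
    """Repository-style wrapper: callers never touch the client directly."""

    JOBS = "jobs"

    def __init__(self, client):
        self._client = client

    def add_job(self, client_id, job_id):
        self._client.create_table_if_not_exist(self.JOBS)
        self._client.insert(self.JOBS, {"client_id": client_id, "job_id": job_id})

    def get_jobs_for_client(self, client_id):
        # Same shape as described above: test for the table, then query.
        self._client.create_table_if_not_exist(self.JOBS)
        return self._client.query(self.JOBS, lambda e: e["client_id"] == client_id)


accessor = TableAccessor(InMemoryTableClient())
accessor.add_job("client-a", "job-1")
accessor.add_job("client-b", "job-2")
print(accessor.get_jobs_for_client("client-a"))
```

Because the accessor only depends on the three client methods it calls, swapping the in-memory client for a real storage client (or a test double) does not change the callers, which is the testability benefit mentioned earlier.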

BlobAccessor (in Blob.cs)

BlobAccessor is similarly a proxy for a CloudBlobClient reference and like the TableAccessor is instantiated using a connection string that specifies a Windows Azure Storage Account and its credentials. The method names are fairly self-descriptive, but I'll also have a separate post in the future on the details of blob access within the Photo Mosaics application.

QueueAccessor (in Queue.cs and Messages.cs)

The QueueAccessor class is the most complex (and the image above doesn't even show the associated QueueMessage classes!). Like BlobAccessor and TableAccessor, QueueAccessor is a proxy to a CloudQueueClient reference to establish the account credentials and manage access to the individual queues required by the application.

You may notice though that QueueAccessor is a bit lean on methods, and that's because the core queue functionality is encapsulated in a separate class, ImageProcessingQueue, and QueueAccessor instantiates a static reference to instances of this type to represent each of the four application queues. It's through the ImageProcessingQueue references (e.g., QueueAccessor.ImageRequestQueue) that the web and worker roles read and submit messages to each of the four queues involved in the Photo Mosaics application. Of course, we'll look at how that works too in a future post.

With the CloudDAL layer, I have a well-defined entry point into the three storage types used by the application, and we could stop at that; however, there's one significant issue in doing so: authentication. The Windows Forms client application needs to access the blobs and tables in Windows Azure storage, and if it were to do so by incorporating the CloudDAL layer directly, it would need access to the account credentials in order to instantiate a TableAccessor and BlobAccessor reference. That may be viable if you expect each and every client to have his/her own Windows Azure storage account, and you're careful to secure the account credentials locally (via protected configuration in app.config or some other means). But even so, by giving clients direct access to the storage account key, you could be giving them rope to hang themselves. It's tantamount to giving an end-user credentials to a traditional client-server application's SQL Server database, with admin access to boot!

Unless you're itching for a career change, such an implementation isn't in your best interest, and instead you'll want to isolate access to the storage account to specifically those items that need to travel back and forth to the client. An excellent means of isolation here is via a Web Service, specifically implemented as a WCF Web Role; that leads us to the StorageBroker service.

StorageBroker Service

StorageBroker is a C# WCF service implementing the IStorageBroker interface, and each method of the IStorageBroker interface is ultimately serviced by the CloudDAL (for most of the methods the correspondence in names is obvious). Here, for instance, is the code for GetJobsForClient, which essentially wraps the identically named method of TableAccessor.

    public IEnumerable<JobEntry> GetJobsForClient(String clientRegistrationId)
    {
        try
        {
            String connString = new StorageBrokerInternal().
                GetStorageConnectionStringForClient(clientRegistrationId);
            return new TableAccessor(connString).GetJobsForClient(clientRegistrationId);
        }
        catch (Exception e)
        {
            Trace.TraceError("Client: {0}{4}Exception: {1}{4}Message: {2}{4}Trace: {3}",
                clientRegistrationId,
                e.GetType(),
                e.Message,
                e.StackTrace,
                Environment.NewLine);
            throw new SystemException("Unable to retrieve jobs for client", e);
        }
    }

Since this is a WCF service, the Windows Forms client can simply use a service reference proxy (Add Service Reference… in Visual Studio) to invoke the method over the wire, as in JobListWindow.vb below. This is done asynchronously to provide a better end-user experience in light of the inherent latency when accessing resources in the cloud.

    Private Sub JobListWindow_Load(sender As System.Object, e As System.EventArgs) Handles MyBase.Load
        Dim client = New AzureStorageBroker.StorageBrokerClient()
        AddHandler client.GetJobsForClientCompleted,
            Sub(s As Object, e2 As GetJobsForClientCompletedEventArgs)
                ' details elided
            End Sub
        client.GetJobsForClientAsync(Me._uniqueUserId)
    End Sub

Now, if you look closely at the methods within StorageBroker, you'll notice calls like the following:

    String connString = new StorageBrokerInternal().
        GetStorageConnectionStringForClient(clientRegistrationId);

What's with StorageBrokerInternal? Let's find out.

StorageBrokerInternal Service

Although StorageBrokerInternal service may flirt with the boundaries of YAGNI, I incorporated it for two major reasons:

1. I disliked having to replicate configuration information across each of the (three) roles in my cloud service. It's not rocket science to cut and paste the information within the ServiceConfiguration.cscfg file (and that's easier than using the Properties UI for each role in Visual Studio), but it just seems 'wrong' to have to do that. StorageBrokerInternal allows the configuration information to be specified once (via the AzureClientInterface Web Role's configuration section) and then exposed to any other roles in the cloud application via a WCF service exposed on an internal HTTP endpoint; you might want to check out my blog post on that topic as well.

2. I wanted some flexibility in terms of what storage accounts were used for various facets of the application, with an eye toward multi-tenancy. In the application, some of the storage is tied to the application itself (the queues implementing the workflow, for instance), some storage needs are more closely aligned to the client (the jobs and status tables and imagerequest and imageresponse containers), and the rest sits somewhere in between.

Rather than 'hard-code' storage account information for data associated with clients, I wanted to allow that information to be more configurable. Suppose I wanted to launch the application as a bona fide business venture. I may have some higher-end clients that want a higher level of performance and isolation, so perhaps they have a dedicated storage account for the tables and blob containers, but I may also want to offer a free or trial offering and store the data for multiple clients in a single storage account, with each client's information differentiated by a unique id.

The various methods in StorageBrokerInternal provide a jumping off point for such an implementation by exposing methods that can access storage account connection strings tied to the application itself, or to a specific blob container, or indeed to a specific client. In the current implementation, these methods all do return information 'hard-coded' in the ServiceConfiguration.cscfg file, but it should be apparent that their implementations could easily be modified, for example, to query a SQL Azure database by client id and return the Windows Azure storage connection string for that particular client.

Within the implementation of the public StorageBroker service, you'll see instantiations of the StorageBrokerInternal class itself to get the information (since they are in the same assembly and role), but if you look at the other roles within the cloud service (AzureImageProcessor and AzureJobController) you'll notice they access the connection information via WCF client calls implemented in the InternalStorageBrokerClient.cs file of the project with the same name. For example, consider this line in WorkerRole.cs of the AzureJobController:

    // instantiate storage accessors
    blobAccessor = new BlobAccessor(
        StorageBroker.GetStorageConnectionStringForAccount(requestMsg.ImageUri));

It's clearly instantiating a new BlobAccessor (part of the CloudDAL) but what account is used is determined as follows:

- The worker role accesses the URI of the image reference within the queue message that it's currently processing.

- It makes a call to the StorageBrokerInternal service (encapsulated by the static StorageBroker class) passing the image URI

- The method of the internal storage broker (GetStorageConnectionStringForAccount) does a lookup to determine what connection string is appropriate for the given reference. (Again the current lookup is more or less hard-coded, but the methods of StorageBrokerInternal do provide an extensibility point to partition the storage among discrete accounts in just about any way you'd like).
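The lookup in the last step could take many forms. As one hedged illustration in Python (not the author's implementation), the account name can be read from the host portion of the image URI and used as the key into a table of connection strings. The dictionary contents, the second account name, and the function names are all hypothetical; the account keys are placeholders.

```python
from urllib.parse import urlparse

# ASSUMPTION: in a real deployment this mapping might come from configuration
# or a database; the entries here are placeholders.
CONNECTION_STRINGS = {
    "azuremosaics": "DefaultEndpointsProtocol=https;AccountName=azuremosaics;AccountKey=<placeholder>",
    "premiumclient": "DefaultEndpointsProtocol=https;AccountName=premiumclient;AccountKey=<placeholder>",
}


def account_from_uri(uri):
    """Return the storage account name, i.e. the first label of the host name."""
    host = urlparse(uri).hostname or ""
    return host.split(".")[0]


def connection_string_for_uri(uri):
    """Look up the connection string for the account that owns the given resource."""
    account = account_from_uri(uri)
    if account not in CONNECTION_STRINGS:
        raise LookupError("no connection string configured for account '%s'" % account)
    return CONNECTION_STRINGS[account]


image_uri = "http://azuremosaics.blob.core.windows.net/flickr/001302.jpg"
print(account_from_uri(image_uri))
print(connection_string_for_uri(image_uri))
```

Keeping the mapping behind a single function is what makes it possible to move from one shared account to per-client accounts later without touching the worker roles that call it.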

Is there no authentication on the public StorageBroker service itself? Although the interaction between StorageBroker and StorageBrokerInternal is confined to the application itself (communication is via an internal endpoint accessible only to the Windows Azure role instances in the current application), the public StorageBroker interface is wide open with no authorization or authentication mechanisms currently implemented. That means that anyone can access the public services via the Windows Forms client application or even just via any HTTP client, like a browser!

I'd like to say I meant for it to be that way, but this is clearly not a complete implementation. There's a reason for the apparent oversight! First of all, domain authentication won't really work here, it's a public service and there is no Active Directory in the cloud. Some type of forms-based authentication is certainly viable though, and that would require setting up forms authentication in Windows Azure using either Table Storage or SQL Azure as the repository. There are sample providers available to do that (although I don't believe any are officially supported), but doing so means managing users as part of my application and incurring additional storage costs, especially if I bring in SQL Azure just for the membership support.

For this application, I'd prefer to offload user authentication to someone else, such as by providing customers access via their Twitter or Facebook credentials, and it just so happens that the Windows Azure AppFabric Access Control Service will enable me to do just that. That's not implemented yet in the Photo Mosaics application, partly because I haven't gotten to it and partly because I didn't want to complicate the sample that much more at this point in the process of explaining the architecture. So consider fixing this hole in the implementation as something on our collective 'to do' list, and we'll get to it toward the end of the blog series.

Client

At long last we reach the bottom of our data access stack: the client. Here, the client is a simple Windows Forms application, but since the public storage interface occurs via a WCF service over a BasicHttpBinding, providing a Web or mobile client in .NET or any Web Service capable language should be straightforward. In the Windows Forms client distributed with the sample code, I used the Add Service Reference… mechanism in Visual Studio to create a convenient client proxy (see right) and insulate myself from the details of channels, bindings, and the such.

One important thing to note is that all of the access to storage from the client is done asynchronously to provide a responsive user experience regardless of the latency in accessing Windows Azure storage.
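The same idea, start the call, return immediately, and handle the result in a completion callback, can be sketched in Python with a thread pool. This is an analog of the pattern rather than the WCF proxy itself; `get_jobs_for_client` here is a stand-in that simulates network latency with a short sleep.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def get_jobs_for_client(client_id):
    """Stand-in for a remote service call; the sleep simulates cloud latency."""
    time.sleep(0.05)
    return [client_id + "-job-1", client_id + "-job-2"]


def fetch_jobs_async(executor, client_id, on_completed):
    """Submit the call and invoke on_completed with the result when it finishes."""
    future = executor.submit(get_jobs_for_client, client_id)
    future.add_done_callback(lambda f: on_completed(f.result()))
    return future


received = []
with ThreadPoolExecutor(max_workers=2) as pool:
    pending = fetch_jobs_async(pool, "client42", received.extend)
    # The caller is free to keep the UI responsive here while the call runs.

# Leaving the 'with' block waits for outstanding work, so the callback has run.
print(received)
```

In a real UI application the callback would also need to marshal the result back onto the UI thread, a detail this sketch leaves out.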

Key Takeaways

I realize that was quite a bit to wade through and it's mostly not even specific to the application itself; it's all been infrastructure so far! That observation though is a key point to take away. When you're building applications for the cloud – for scale and growth – you'll want to tackle key foundational aspects first (and not back yourself into a corner later on!):

- How do I handle multi-tenancy?

- How do I expose and manage storage and other access credentials?

- How do I future-proof my application for devices and form factors I haven't yet imagined?

There's no one right approach to all of these concerns, but hopefully by walking you through how I tackled them, I've provided you some food for thought as to how to best architect your own applications as you head to the cloud.

Next up: we'll look at how blob storage is used in the context of the Azure Photo Mosaics application.

<Return to section navigation list>

SQL Azure Database and Reporting

The Windows Azure Team (@WindowsAzure) reported Two New SQL Azure Articles Now Available on TechNet Wiki in an 8/8/2011 post:

The Microsoft TechNet Wiki is always chock-full of the latest and greatest user-generated content about Microsoft technologies. Two new articles that dig deeper into SQL Azure just posted to the wiki are worth checking out:

- Connecting to SQL Azure from Ruby Applications

- How to Manage SQL Azure Servers using Windows PowerShell

You should also check out the updated SQL Azure FAQ, which is available in multiple languages. Please visit the wiki index page for a full list of the latest SQL Azure articles on the wiki.

The TechNet Wiki is also a great place to interact and share your expertise with technology professionals worldwide. Click here to learn how to join the wiki community.

Kalyan Bandarupalli explained Creating Firewall rules using SQL Azure in an 8/7/2011 post to the TechBubbles blog:

The firewall feature in the Windows Azure portal helps you store your data securely in the cloud by denying all connections by default. It lets you specify a list of IP addresses that can access your SQL Azure server. You can also programmatically add connections and retrieve information for a SQL Azure database. This post discusses creating a firewall rule using the Azure portal.

1. Browse to https://windows.azure.com/ and enter your Live account credentials

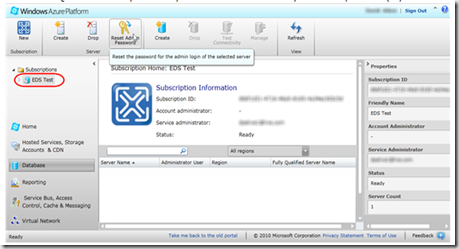

2. In the portal select the database option and select the SQL Azure subscription

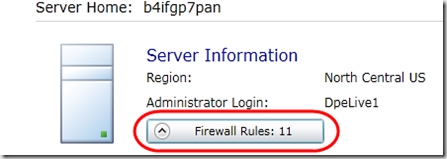

3. Now in the subscription, select the SQL Azure server and press the Firewall Rules button

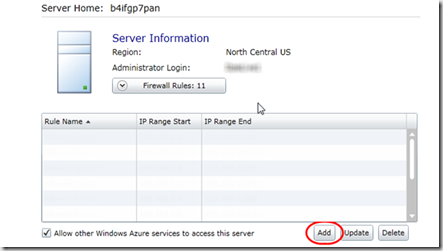

4. Click the Add button as shown below

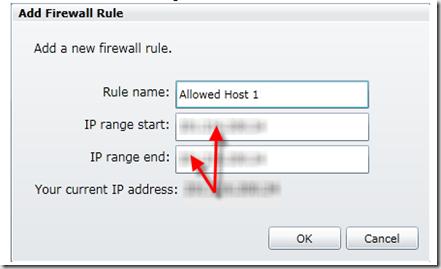

5. In the Add Firewall Rule dialog, give the rule a name and supply the IP range values

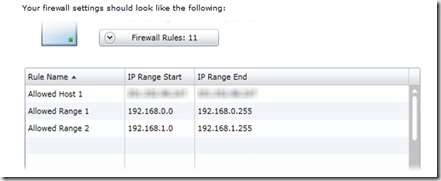

6. After completion, the new rule appears in your firewall settings
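Conceptually, each rule created this way is a named range of IP addresses, and a connection is refused unless its source address falls inside at least one range. The Python sketch below models that deny-by-default check locally; it does not call any SQL Azure API, and the rule name and addresses (taken from a documentation-only address block) are made up for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address


@dataclass(frozen=True)
class FirewallRule:
    """A named, inclusive range of IP addresses."""
    name: str
    start_ip: str
    end_ip: str

    def allows(self, address):
        return ip_address(self.start_ip) <= ip_address(address) <= ip_address(self.end_ip)


def is_allowed(rules, address):
    """Deny by default: allow only when some rule's range contains the address."""
    return any(rule.allows(address) for rule in rules)


# Hypothetical rule for illustration.
rules = [FirewallRule("office", "203.0.113.10", "203.0.113.20")]

print(is_allowed(rules, "203.0.113.15"))
print(is_allowed(rules, "203.0.113.21"))
```

A rule whose start and end values are the same address admits exactly one client machine, which is a common choice for a developer workstation.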

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Lori MacVittie (@lmacvittie) asserted #mobile A single, contextual point of control for access management can ease the pain of managing the explosion of client devices in enterprise environments as an introduction to her Strategic Trifecta: Access Management post of 8/8/2011 to F5’s DevCentral blog:

Regardless of the approach to access management, ultimately any solution must include the concept of control. Control over data, over access to corporate resources, over processes and over actions by users themselves.

The latter requires a non-technological solution – education and clear communication of policies that promote a collaborative approach to security.

As Michael Santarcangelo, a.k.a. The Security Catalyst, explains: “Our success depends on our ability to get closer to people, to work together to bridge the human paradox gap, to partner on how we protect information.” (Why dropping the label of “users” improves how we practice security) This includes facets of security that simply cannot effectively be addressed through technology. Don’t share confidential information on social networks, be aware of corporate data and where it may be at rest and protect it with passwords and encryption if it’s a personal device. Because of the nature of mobile devices, technology cannot seriously address security concerns without extensive assistance from service providers who are unlikely to be willing to implement what would be customer-specific controls over data within their already stressed networks. This means education and clear communication will be imperative to successfully navigating the growing security chasm between IT and mobile devices.

The issues regarding control over access to corporate resources, however, can be addressed through the implementation of policies that govern access to resources in all its aspects. Like cloud, control is at the center of solving most policy enforcement issues that arise and like cloud, control is likely to be difficult to obtain. That is increasingly true as the number of devices grows at a rate nearly commensurate with that of data. IT security pros are outnumbered and attempts to continue manually configuring and deploying policies to govern the access to corporate resources from myriad evolving clients will inevitably end with an undesirable result: failure resulting in a breach of policy.

Locating and leveraging strategic points of control within the data center architecture can be invaluable in reducing the effort required to manually codify policies and provides a means to uniformly enforce policies across devices and corporate resources. A strategic point of control offers both context and control, both of which are necessary to applying the right policy at the right time. A combination of user, location, device and resource must be considered when determining whether access should or should not be allowed, and it is those points within the architecture where resources and users meet that make the most operationally efficient points at which policies can be enforced.
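A contextual decision of this kind can be pictured as a single function that receives all four inputs together. The Python sketch below is purely illustrative: the groups, device classes, locations and resource names are invented, and a real policy engine would load its rules from configuration rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    """The four inputs named above, gathered at the point of control."""
    user_group: str
    location: str
    device: str
    resource: str


# Hypothetical policy data for illustration.
MANAGED_DEVICES = {"corporate-laptop"}
SENSITIVE_RESOURCES = {"payroll"}
KNOWN_GROUPS = {"finance", "staff"}


def is_access_allowed(ctx):
    """Evaluate one policy over the whole context instead of per-device rules."""
    if ctx.user_group not in KNOWN_GROUPS:
        return False
    if ctx.resource in SENSITIVE_RESOURCES:
        # Sensitive resources need the right group, a managed device and an on-site location.
        return (ctx.user_group == "finance"
                and ctx.device in MANAGED_DEVICES
                and ctx.location == "office")
    return True


print(is_access_allowed(AccessContext("finance", "office", "corporate-laptop", "payroll")))
print(is_access_allowed(AccessContext("finance", "cafe", "personal-phone", "payroll")))
```

Because the decision is made in one place, supporting a new device type means adding data to the policy rather than touching each protected application.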

Consider those “security” concerns that involve access to applications from myriad endpoints. Each has its own set of capabilities – some more limited than the others – for participating in authentication and authorization processes. Processes which are necessary to protect applications and resources from illegitimate access and to ensure audit trails and access logs are properly maintained. Organizations that have standardized on Kerberos-based architectures to support both single-sign on efforts and centralize identity management find that new devices often cannot be supported. Allowing users access from new devices lacking native support for Kerberos both impacts productivity in a negative way and increases the operational burden by potentially requiring additional integration points to ensure consistent back-end authentication and authorization support.

Leveraging a strategic point of control that is capable of transitioning between non-Kerberos supporting authentication methods and a Kerberos-enabled infrastructure provides a centralized location at which the same corporate policies governing access can be applied. This has the added benefit of enabling single-sign on for new devices that would otherwise fall outside the realm of inclusion. Aggregating access management at a single point within the architecture allows the same operational and security processes that govern access to be applied to new devices based on similar contextual clues.

That single, strategic point of control affords organizations the ability to consistently apply policies governing access even in the face of new devices because it simplifies the architecture and provides a single location at which those policies and processes are enforced and enabled. It also allows separation of client from resource, and encapsulates access services such that entirely new access management architectures can be deployed and leveraged without disruption. Perhaps a solution to the exploding mobile community in the enterprise is a secondary and separate AAA architecture. Leveraging a strategic point of control makes that possible by providing a service layer over the architectures and subsequently leveraging the organizationally appropriate one based on context – on the device, user and location.

The key to a successful mobile security strategy is an agile infrastructure and architectural implementation. Security is a moving target, and mobile device management seems to make that movement a bit more frenetic at times. An agile infrastructure with a more services-oriented approach to policy enforcement that decouples clients from security infrastructure and processes will go a long way toward enabling the control and flexibility necessary to meet the challenges of a fast-paced, consumerized client landscape.

No significant articles today.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

My (@rogerjenn) Microsoft's, Google's big data plans give IT an edge article of 8/8/2011 for TechTarget’s SearchCloudComputing.com blog begins:

CIOs are looking to pull actionable information from ultra-large data sets, or big data. But big data can mean big bucks for many companies. Public cloud providers could make the big data dream more of a reality.

[Researchers] want to manipulate and share data in the cloud.

Roger Barga, architect and group lead, XCG's Cloud Computing Futures (CCF) team

Big data, which is measured in terabytes or petabytes, often is comprised of Web server logs, product sales data, and social network and messaging data. Of the 3,000 CIOs interviewed in IBM's Essential CIO study, 83% listed an investment in business analytics as the top priority. And according to Gartner, by 2015, companies that have adopted big data and extreme information management will begin to outperform unprepared competitors by 20% in every available financial metric.

Budgeting for big data storage and the computational resources that advanced analytic methods require, however, isn't so easy in today's anemic economy. Many CIOs are turning to public cloud computing providers to deliver on-demand, elastic infrastructure platforms and Software as a Service. When referring to the companies' search engines, data center investments and cloud computing knowledge, Steve Ballmer said, "Nobody plays in big data, really, except Microsoft and Google."

Microsoft's HPC Pack, LINQ to HPC, Project "Daytona" and the forthcoming Excel DataScope were designed explicitly to make big data analytics in Windows Azure accessible to researchers and everyday business analysts. Google's Fusion Tables aren't set up to process big data in the cloud yet, but the app is very easy to use, which likely will increase its popularity. It seems like the time is now to prepare for extreme data management in the enterprise so you can outperform your "unprepared competitors."

See also My (@rogerjenn) Links to Resources for my “Microsoft's, Google's big data [analytics] plans give IT an edge” Article in the Azure Blob, Drive, Table and Queue Services section above.

Patrick Butler Monterde described the Windows Azure Platform Training Kit 2011 August Update in an 8/8/2011 post:

The Windows Azure Platform Training Kit includes a comprehensive set of technical content including hands-on labs, presentations, and demos that are designed to help you learn how to use the Windows Azure platform, including: Windows Azure, SQL Azure and the Windows Azure AppFabric.

Link: http://www.microsoft.com/download/en/details.aspx?displaylang=en&id=8396

August 2011 Update

The August 2011 update of the training kit includes the following updates:

- [New Hands-On Lab] Introduction to Windows Azure Marketplace for Applications

- [Updated] Labs and Demos to leverage the August 2011 release of the Windows Azure Tools for Microsoft Visual Studio 2010

- [Updated] Windows Azure Deployment to use the latest version of the Azure Management Cmdlets

- [Updated] Exploring Windows Azure Storage to support deleting snapshots

- Applied several minor fixes in content

Tony Bailey (a.k.a. tbtechnet and tbtonsan) described Getting a Start on Windows Azure Pricing in an 8/8/2011 post to TechNet’s Windows Azure, Windows Phone and Windows Client blog:

The Windows Azure Platform Pricing Calculator is pretty neat to help application developers get a rough initial estimate of their Windows Azure usage costs. But where to start? What sort of numbers do you plug in? We've taken a crack at some use cases so you can get a basic idea. We've put the Windows Azure Calculator Category Definitions at the bottom of the post.

- Get a no-cost, no credit card required 30-day Windows Azure platform pass

- Get free technical support and also get help driving your cloud application sales

How?

- It's easy. Chat online or call (hours of operation are from 7:00 am - 3:00 pm Pacific Time):

- Or you can use e-mail

1. E-commerce application

a. An application developer is going to customize an existing Microsoft .NET e-commerce application for a national chain, US-based florist. The application needs to be available all the time, even during usage spikes, scale for peak periods such as Mother’s Day and Valentine’s Day

b. You can use the Azure pricing data to figure out what elements of your application are likely to cost the most, and then examine optimization and different architecture options to reduce costs. For example, storing data in binary large object (BLOB) storage will increase the number of storage transactions. You could decrease transaction volume by storing more data in the SQL Azure database and save a bit on the transaction costs. Also, you could reduce the number of compute instances from two to one for the worker role if it’s not involved in anything user-interactive—it can be offline for a couple minutes during an instance reboot without a negative impact on the e-commerce application. Doing this would save $90.00 per month in compute instances (three instead of four instances in use).

2. Asset Tracking Application

a. A building management company needs to track asset utilization in its properties worldwide. Assets include equipment related to building upkeep, such as electrical equipment, water heaters, furnaces, and air conditioners. The company needs to maintain data about the assets and other aspects of building maintenance and have all necessary data available for quick and effective analysis.

b. Hosting applications like this asset tracking system on Windows Azure can make a lot of sense financially and as a strategic move. For about $6,500.00 in the first year, this building management company can improve the global accessibility, reliability, and availability of their asset tracking system. They gain access to a scalable cloud application platform with no capital expenses and no infrastructure management costs, and they avoid the internal cost of configuring and managing an intricate relational database. Even the pay-as-you-go annual cost of about $9,000.00 is a bargain, given these benefits.

3. Sales Training Application

a. A worldwide technology corporation wants to streamline its training process by distributing existing rich-media training materials worldwide over the Internet.

b. Hosting applications like this sales training system on Windows Azure can make a lot of sense financially and as a strategic move. Using the high estimate, for a little less than $10,000.00 a year, this technology company can avoid the cost of managing and maintaining its own CDN while taking advantage of a scalable cloud application platform with no capital expenses and no infrastructure management.

4. Social Media Application

a. A national restaurant chain has decided to use social media to connect directly with its customers. They hire an application developer that specializes in social media to develop a web application for a short-term marketing campaign. The campaign’s goal is to generate 500,000 new fans on a popular social media site.

b. Choosing to host applications on Windows Azure can make financial sense for short-term marketing campaigns that use social media applications because you don’t have to spend thousands of dollars buying hardware that will sit idle after the campaign ends. It also gives you the ability to rapidly scale the application in or out, up or down depending on demand. As a long-term strategic decision, using Azure lets you architect applications that are easy to repurpose for future marketing campaigns.
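The compute-instance saving quoted in the e-commerce use case above (three instances instead of four, saving $90.00 per month) can be checked with a few lines of arithmetic. This is a minimal sketch, not the calculator's actual logic: the hourly rate of $0.12 per instance and the 750-hour billing month are assumptions that the post does not state, chosen only because they reproduce the quoted figure. Amounts are kept in whole cents to avoid floating-point rounding.

```python
# Assumed values (not stated in the post): 12 cents per instance-hour
# and a 750-hour billing month.
ASSUMED_RATE_CENTS_PER_HOUR = 12
ASSUMED_HOURS_PER_MONTH = 750


def monthly_compute_cost_cents(instances,
                               rate_cents=ASSUMED_RATE_CENTS_PER_HOUR,
                               hours=ASSUMED_HOURS_PER_MONTH):
    """Return the monthly compute cost in cents for a number of instances."""
    return instances * rate_cents * hours


def monthly_saving_cents(instances_before, instances_after):
    """Return the monthly saving in cents when the instance count drops."""
    return (monthly_compute_cost_cents(instances_before)
            - monthly_compute_cost_cents(instances_after))


if __name__ == "__main__":
    saving = monthly_saving_cents(4, 3)
    print(f"Monthly saving: ${saving / 100:.2f}")
```

Swapping in the current rate for the instance size you actually plan to use gives a quick first estimate before turning to the pricing calculator.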

| Azure Calculator Category | How does this relate to my application? | What does this look like on Azure? | Measured by |
| --- | --- | --- | --- |
| Compute Instances | This is the raw computing power needed to run your application. | A compute instance consists of CPU cores, memory, and disk space for local storage resources: it’s a pre-configured virtual machine (VM). A small compute instance is a VM with one 1.6 GHz core, 1.75 GB of RAM, and a 225 GB virtual hard drive. You can scale your application out by adding more small instances, or up by using larger instances. | Instance size: Extra small, Small, Medium, Large, Extra large |
| Relational Databases | If your application uses a relational database such as Microsoft SQL Server, how large is your dataset excluding any log files? | A SQL Azure Business Edition database. You choose the size. | GB |
| Storage | This is the data your application stores: product catalog information, user accounts, media files, web pages, and so on. | The amount of storage space used in blobs, tables, queues, and Windows Azure Drive storage. | GB |
| Storage Transactions | These are the requests between your application and its stored data: add, update, read, and delete. | Requests to the storage service: add, update, read, or delete a stored file. Each request is analyzed and classified as either billable or not-billable based on the ability to process the request and the request’s outcome. | # of transactions |
| Data Transfer | This is the data that goes between the external user and your application (e.g., between a browser and a web site). | The total amount of data going in and out of Azure services via the internet. There are two data regions: North America/Europe and Asia Pacific. | GB in/GB out |
| Content Delivery Network (CDN) | This is any data you host in datacenters close to your users. Doing this usually improves application performance by delivering content faster. This can include media and static image files. | The total amount of data going in and out of Azure CDN via the internet. There are two CDN regions: North America/Europe and other locations. | GB in/GB out |
| Service Bus Connections | These are connections between your application and other applications (e.g., for off-site authentication, credit card processing, external search, third-party integration, and so on). | Establishes loosely-coupled connectivity between services and applications across firewall or network boundaries using a variety of protocols. | # of connections |
| Access Control Transactions | These are the requests between your application and any external applications. | The requests that go between an application on Azure and applications or services connected via the Service Bus. | # of transactions |

Martin Ingvar Kofoed Jensen (@IngvarKofoed) described Composite C1 non-live edit multi instance Windows Azure deployment in an 8/7/2011 post:

Introduction

This post is a technical description of how we made a non-live editing, multi-datacenter, multi-instance Composite C1 deployment. A very good overview of the setup can be found here. The setup can be split into three parts. The first is the Windows Azure Web Role. The second is the Composite C1 “Windows Azure Publisher” package. The third is the Windows Azure Blob Storage. The latter is the common resource shared between the first two and, except for its usage, is self-explanatory. In the rest of this blog post I will describe the first two parts of this setup in more technical detail. This setup also supports the Windows Azure Traffic Manager for handling geo DNS and failover, which is a really nice feature to put on top!

The non-live edit web role

The Windows Azure deployment package contains an empty website and a web role. The configuration for the package contains a blob connection string, a name for the website blob container, a name for the work blob container and a display name used by the C1 Windows Azure Publisher package. The web role periodically checks the timestamp of a specific blob in the named work blob container. If this specific blob has changed since the last synchronization, the web role will start a new synchronization from the named website blob container to the local file system. It is an optimized synchronization that I have described in an earlier blog post: How to do a fast recursive local folder to/from azure blob storage synchronization.

Because downloading new files from the blob is time consuming, the synchronization is done to a local folder and not the live website. This minimizes the offline time of the website. All the paths of downloaded and deleted files are kept in memory. When the synchronization is done, the live website is taken offline with app_offline.htm, all downloaded files are copied to the website, and all deleted files are removed from the website. After this, the website is put back online. All this extra work is done to keep the offline time as low as possible.
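The staging-then-swap step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the actual web role code (which is .NET and is not shown in the post): the function names `needs_sync` and `apply_staged_changes` are hypothetical, timestamps are treated as plain comparable values, and the download from blob storage into the staging folder is assumed to have already happened.

```python
import shutil
from pathlib import Path

# ASP.NET takes a site offline while a file with this name exists in its root.
OFFLINE_MARKER = "app_offline.htm"


def needs_sync(last_synced, marker_timestamp):
    """Return True if the marker blob changed since the last synchronization.

    Hypothetical helper: both arguments are any comparable timestamp values.
    """
    return last_synced is None or marker_timestamp > last_synced


def apply_staged_changes(staging_dir, site_dir, downloaded, deleted):
    """Apply already-downloaded changes to the live site while it is offline.

    `downloaded` and `deleted` are lists of paths relative to the site root,
    mirroring the in-memory lists the post mentions.
    """
    staging = Path(staging_dir)
    site = Path(site_dir)
    marker = site / OFFLINE_MARKER
    marker.write_text("<html><body>Updating...</body></html>", encoding="utf-8")
    try:
        for rel in downloaded:
            target = site / rel
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(staging / rel, target)
        for rel in deleted:
            (site / rel).unlink(missing_ok=True)
    finally:
        # Always bring the site back online, even if a copy fails.
        marker.unlink(missing_ok=True)
```

Only local file copies and deletes happen while the marker file exists, which is why the offline window stays short regardless of how long the download took.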

The web role writes its current status (Initialized, Ready, Updating, Stopped, etc.) to an XML file located in the named work blob container. During the synchronization (updating), the web role also includes the progress in the XML file. This XML is read by the local C1’s Windows Azure Publisher and displayed to the user. This is a really nice feature because a web role located in a datacenter on the other side of the planet will take longer to finish the synchronization, and this feature gives the user a full overview of the progress of each web role. See the movie below for what this feature looks like.
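A status file of this kind might look like the following sketch. The post does not show the real file, so the element names (`WebRoleStatus`, `Name`, `Status`, `Progress`) and both function names are hypothetical; only the status values and the idea of an optional progress figure come from the description above.

```python
import xml.etree.ElementTree as ET


def build_status_xml(role_name, status, progress_percent=None):
    """Serialize a web role's status to an XML string (hypothetical schema)."""
    root = ET.Element("WebRoleStatus")
    ET.SubElement(root, "Name").text = role_name
    ET.SubElement(root, "Status").text = status
    # Progress is only present while the role is synchronizing.
    if progress_percent is not None:
        ET.SubElement(root, "Progress").text = str(progress_percent)
    return ET.tostring(root, encoding="unicode")


def read_status_xml(xml_text):
    """Parse the status XML back into a dict, as a publisher UI might."""
    root = ET.fromstring(xml_text)
    progress = root.findtext("Progress")
    return {
        "name": root.findtext("Name"),
        "status": root.findtext("Status"),
        "progress": int(progress) if progress is not None else None,
    }
```

Each web role would overwrite its own file in the work container, and the publisher would poll and parse every file to build the per-role overview.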

All that is needed to start a new Azure instance with this is to create a new blob storage or use an existing one and modify the blob connection string in the package configuration. This is also shown in the movie below.

Composite C1 Windows Azure Publisher

This Composite C1 package adds a new feature to an existing/new C1 web site. The package is installed on a local C1 instance and all development and future editing is done on this local C1 website. The package adds a way of configuring the Windows Azure setup and a way of publishing the current version of the website.

A configuration consists of the blob storage name and access key. It also contains two blob container names. One container is used to upload all files in the website and the other container is used for very simple communication between the web roles and the local C1 installation.

After a valid configuration has been done, it is possible to publish the website. The publish process is a local folder to blob container synchronization with the same optimization as the one I have described in this earlier blog post: How to do a fast recursive local folder to/from azure blob storage synchronization. Before the synchronization is started, the C1 application is halted. This is done to ensure that no changes will be made by the user while the synchronization is in progress. The first synchronization will obviously take some time, ranging from 5 to 15 minutes or even more depending on the size of the website, because all files have to be uploaded to the blob. Subsequent synchronizations are much faster, and if no large files like movies are added, a subsequent synchronization takes less than 1 minute!

The Windows Azure Publisher package also installs a feature that gives the user an overview of all the deployed web roles and their status. When a new publish is finished, all the web roles will start synchronizing, and the current progress of each web role synchronization is also displayed in the overview. The movie below also shows this feature.

Here is a movie that shows: How to configure and deploy the Azure package, Adding the Windows Azure Traffic Manager, Installing and configuring the C1 Azure Publisher package, the synchronization process and overview and finally showing the result.

Gizmox updated its Client/Server Applications to Windows Azure page in 8/2011:

A bridge to the clouds

Visual WebGui Instant CloudMove takes a migration approach combined with a virtualization engine. Through its unique virtualization framework, a layer existing between the web server and the application and its highly automated process, Instant CloudMove converts legacy applications to native .Net code and then optimizes it for Microsoft's Azure platform. This produces new software that runs fast and efficiently on the cloud while retaining the desktop user controls from the original app.

Realizing Azure Cloud economics and scalability

Instant CloudMove is the only solution to migrate Client/Server applications (without rewriting them) to the Cloud and thus a major cost saver for enterprises and ISVs looking to leverage cloud platform technology due to the fact that:

- The path to the Cloud is short, quick and with minimal changes in HR and expertise/skills requirements

- VWG applications are optimized for Azure and run highly efficiently on the Microsoft Azure cloud, consuming less CPU and less bandwidth than competing solutions and therefore cutting running costs.

- It automatically produces applications with unhackable security and a desktop-like richness, just like the original C/S application.

Free assessment of the migration efforts & costs

Visual WebGui free AssessmentTool allows decision makers to get valuable information on their specific project in advance and without any commitment on their side by locally running the AssessmentTool on the applications' executable files:

- An indication of the overall readiness of each .NET application to Windows Azure (automation level score) and a detailed report of any manual work needed, all before actually starting to do any work on it.

- A calculation of the expected Windows Azure resource consumption for each specific application.

Better performance. Less Azure running costs

Automated migration process

The automated migration is done with the unique TranspositionTool (until Beta 1 is released contact us for execution). The tool receives .NET code which it automatically transposes into an ASP.NET-based Visual WebGui application ready for the Windows Azure cloud.

The smart tool is designed to assist in transforming desktop to a Web/Cloud/Mobile based architecture via decision supporting smart advisory and troubleshooting aggregators that do not require specific knowledge or skills.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) reported More New How Do I Videos Released! (Beth Massi) in an 8/8/2011 post to the Visual Studio LightSwitch blog:

We’ve got a couple new “How Do I” videos on the LightSwitch Developer Center to check out:

- #21 - How Do I: Add Commands as Buttons and Links to Screens?

- #22 - How Do I: Show Multiple Columns in an Auto-Complete Dropdown Box?

And if you missed them, you can access all the “How Do I” videos here:

Watch all the LightSwitch How Do I videos

(Please note that if the video doesn’t appear right away, try refreshing. You can also download the video in a variety of formats at the bottom of the video page.)

Next up I’m working on some videos on working with screen layouts as well as data concurrency. Drop me a line and let me know what other videos you’d like to see.

Gill Cleeren posted Looking at LightSwitch - Part 1 to the SilverlightShow blog on 8/8/2011:

In 2010, Microsoft announced LightSwitch, a product aimed at developing Line-of-Business applications with ease. In July 2011, the beta tag was finally removed from the product as Microsoft officially introduced Visual Studio LightSwitch 2011 as a new member of the Visual Studio family. Many however are still unsure about the product. Questions crossing a lot of minds include “Is it something for me?”, “What can I do with it?”, “Where should I use it?”, “Is it more than Microsoft Access?”, “Am I limited to what’s in the box?” and quite a few more.

For this reason, I have decided that a few articles are in place to give you a clear understanding of LightSwitch. I won’t be saying that you should [use] LightSwitch for this or that. Using a sample application we will build throughout the series, I will try giving you an overview of how we can use it to build a real-world application. By reading these articles, I hope you’ll be able to make up your mind whether or not LightSwitch is something for you.

More specifically, I plan the following topics to be tackled along the way:

- What is LightSwitch and where I see it being useful

- The concepts of a LightSwitch application

- Data in more detail

- Screens in more detail

- Queries in more detail

- Writing business logic and validation

- Managing security in a LightSwitch application

- Deploying a LightSwitch application

- Extensions - what and how

- Using custom Silverlight controls in LightSwitch

- Adapting the LightSwitch UI to your needs

- Using RIA Services

In this first article, we’ll start by looking at what LightSwitch is and we’ll look at the concepts as well. First, let’s introduce the scenario we’ll use for this series.

The scenario

Imagine you are asked to build a new system for a movie theatre named MoviePolis. Today, they are using an old application which was written in COBOL and it consists of several old-school screens, with absolutely no user experience at all. A typical interface of such an application is shown below.

As can be seen, this is not how we do things nowadays. That’s why MoviePolis wants a new system.

Their old system manages the following data:

- Movies

- Distributors

- Show times

- Tickets

Movies can be managed; they are owned by a movie distributor. For showing movies, show times are created. For show times, tickets can be purchased. This system already contains several interfaces, which are to be used by different people in the system: a manager will buy movies or add new distributors. An employee can manage show times. Ticket staff can view the interface to sell tickets for a show time.

Let’s now look at where LightSwitch in general can be used and what it really is.

Where is LightSwitch a good fit?

As a consultant, I’ve seen several times that non-technical people (sometimes with some technical background) have created applications, mostly in Excel or Access. There’s nothing wrong with that, absolutely not. Sometimes, even an entire organization runs on such an application. Things however may get difficult if that person decides to leave the company. In some cases, a consultant is hired to figure out how things work. Sadly, that’s costly and often very difficult to find out what is in the “application” and how it works (things like business rules, valid data etc). One of, if not the biggest problems here is that everyone creates such applications in their own way: there’s no consistency whatsoever. These people default to Access or Excel because it doesn’t require them to learn developing applications with a framework such as .NET. They get up-to-speed fast. Here, LightSwitch would be a good alternative. As we’ll see later on, there’s no development knowledge required to build a working application with LightSwitch. Not everything however can be done without writing code, so a good approach here might be that the non-technical/business person creates the rough outline of the application and a developer can then add finishing touches (that is, adding code to validate etc).

Reading this may get you thinking that I see LightSwitch as a tool only for those people. Well, I certainly don’t. In systems that I’ve built, very often typical administration screens need to be built. Things like managing countries, currencies, languages… Screens may have to be built for that type of data while there’s little business value here. Building those screens manually costs time (and therefore money). If we have these generated by LightSwitch, we’ll definitely make some profit.

Also, I see LightSwitch as a valid alternative for small applications. Very often, we as developers spend a lot of time thinking how we are going to build the application. A lot of time is wasted thinking about an architecture, a data access layer… even for small applications. LightSwitch can be a valid development platform here as well, since it has everything on board to get started, based on a solid foundation using Silverlight, RIA Services, MVVM etc.

Finally, using the extensibility features (which we’ll be looking at further down the road), a team of developers can build a number of extensions, typical for the business. Business persons can then use these extensions to create applications that are already tailored to the need of the business, without having to write logic.

Hello LightSwitch

Now that we have a bit of a clearer view on who could use LightSwitch and where it can be used, we can conclude that LightSwitch is a platform, part of the Visual Studio family, that allows building LOB applications in a rapid way. It allows people to build applications without writing code but it doesn’t block you from writing code. As developers, we can use the tool to build real applications, including validations etc. It has a rich extensibility framework that enables us to build extensions to further customize the tool and the application that we are developing.

The applications we build are in fact Silverlight applications. Because of this, LightSwitch applications can be deployed on the desktop (Silverlight out-of-browser application) and in-browser (Silverlight in-browser application). LightSwitch applications can be deployed to Windows Azure as well.

LightSwitch can be downloaded from http://www.microsoft.com/visualstudio/en-us/lightswitch. It can be installed in two ways. If you have Visual Studio 2010 already installed, it will integrate and become a new type of template that you can select. If not, it will install the Visual Studio Shell and it can run stand-alone.

Let’s now take a look at the foundations of a LightSwitch application.

Foundations of LightSwitch development

Any LightSwitch application revolves around 3 important foundations: data, screens and queries.

Data is where it all starts. Since LightSwitch is aimed at building LOB applications, it’s no wonder that we should start with the data aspect. LightSwitch has several options of working with data. It can work with a SQL Express database that it manages itself, it can connect to an existing SQL Server database or it can get data from a SharePoint list. On top of that, LightSwitch can consume WCF RIA Services. Because of this, we can connect to virtually any type of data source where RIA Services serves as some kind of middle tier to get and store data. We’ll look at this further in this series.

The default option is creating the application using an internal SQL Server Express database. In this case, we define the data from within the LightSwitch environment. Based on this data, LightSwitch can then be used to generate screens. Note that in one application, we can connect to an internal LightSwitch database and several external data sources. This way, it’s even possible to create relations between the several data sources: LightSwitch allows creating relations between entities inside the internal database and between the internal and any external database. It cannot change external databases though.

Once the data is defined, we can start generating screens on top of this data. (Note that LightSwitch supports an iterative way of working, so we can easily make changes to the data and alter the screens later on.) A screen can be created based on a template. Out-of-the-box, LightSwitch has some templates already; we as developers can create new templates as well. A screen template is as the word says nothing more than a template. We can change the screen once it’s generated to better fit our application needs.

The third and final foundation is queries. A query is the link between the data and the screen. A screen is always generated based on a query that runs on the data. Even if we don’t specify a query, a default query (Select * from …) is generated. We can change queries and we can create queries as well. We’ll be looking at how to do so later on.

Creating the MoviePolis application

Of course we can’t finish off the first part of this series without showing LightSwitch. Therefore, we’ll start with building the foundations of the application. In the next parts, we’ll be extending this application a lot further.

So open Visual Studio LightSwitch and follow along to build the application. For the purpose of this demo, we create a LightSwitch C# application and name it MoviePolis.

When Visual Studio has finished creating the application from the template, we get the following screen. Note that LightSwitch suggests we connect to data first. For our demo, we are going to use the first option: we’ll create the data from scratch.

Data of MoviePolis

As said, we should start with the data. In the solution explorer, we have 2 options: Data Sources and Screens. Right-click on Data Sources and select Add Table. In the table/entity designer that appears, we can create our entity. Create the Movie entity as shown below.

Next, we’ll create the Distributor entity as shown below.

A Movie is distributed by a Distributor. We should therefore embed this information in a relation. In the designer with the Movie entity open, click on the Add relationship button. A dialog appears as shown below. Create the relationship so that one Distributor can have many Movie entities but each Movie can only have one Distributor.

The result is that this relation is shown in the designer.

For now, that’s enough data; we’ll model the other entities in the following articles.
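The one-to-many relationship between Distributor and Movie described above can be pictured as an ordinary relational schema. The following is a rough sketch using Python's built-in `sqlite3` module rather than anything LightSwitch generates: the column names (`Name`, `Title`, `DistributorId`) and the helper functions are assumptions made for illustration, since the entity fields appear only in the article's screenshots.

```python
import sqlite3


def create_schema(conn):
    """Create Distributor and Movie tables with a one-to-many relationship."""
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE Distributor ("
        "Id INTEGER PRIMARY KEY, Name TEXT NOT NULL)"
    )
    # NOT NULL plus the foreign key means every Movie has exactly one
    # Distributor, while a Distributor may own many Movie rows.
    conn.execute(
        "CREATE TABLE Movie ("
        "Id INTEGER PRIMARY KEY, Title TEXT NOT NULL, "
        "DistributorId INTEGER NOT NULL REFERENCES Distributor(Id))"
    )


def movies_for(conn, distributor_id):
    """Return the titles of all movies owned by one distributor."""
    rows = conn.execute(
        "SELECT Title FROM Movie WHERE DistributorId = ? ORDER BY Title",
        (distributor_id,),
    )
    return [row[0] for row in rows]
```

Inserting a Movie row that points at a non-existent distributor raises `sqlite3.IntegrityError`, which is the database-level equivalent of the "each Movie can only have one Distributor" rule chosen in the relationship dialog.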

Give me some screen

Now that we have our data model created in LightSwitch, we can move to creating some screens. In the solution explorer, right-click on the Screens folder and select Add Screen. In the dialog that appears, we see a list of screen templates that come with LightSwitch. Developers can create new ones, but for now, we’ll use one of the standard ones. Create a new List and Details screen and select that you want to use the Distributor entity. Mark that we also want to be able to edit the Movie data. The screenshot below shows these options.

LightSwitch will now reflect on the entity and generate a screen, based on this template. The screen designer shows a hierarchical view of the generated screen. Note that behind the scenes, code was generated. When we start editing this screen, this code will be changed.

Without any further ado, let’s run the application (remember to have your SQL Express instance running!). You’ll get a screen as shown below. We can edit the Distributor as well as add Movie instances.

At this point, we have a working application that handles things like adding, updating and deleting data.

Of course, we’ve only scratched the surface. In the following article, we’ll do a deep dive in all the options we have to work with data and screens and I’ll be telling you more on what happens behind the scenes. Stay tuned!

Gill is Microsoft Regional Director (www.theregion.com), Silverlight MVP (former ASP.NET MVP), INETA speaker bureau member and Silverlight Insider. He lives in Belgium where he works as .NET architect at Ordina.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: July 2011 of 8/8/2011 shows 0 downtime (forgotten yesterday):

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Azure Web role instances from Microsoft’s US South Central (San Antonio) data center. Here’s its uptime report from Pingdom.com for July 2011 (a bit late this month):

This is the second uptime report for the two-Web role version of the sample project. Reports will continue on a monthly basis. See my Republished My Live Azure Table Storage Paging Demo App with Two Small Instances and Connect, RDP post of 5/9/2011 for more details of the Windows Azure test harness instance.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Yung Chou reported Private Cloud, Public Cloud, and Hybrid Deployment in an 8/8/2011 TechNet post:

As of August, 2011, US NIST has published a draft document (SP 800-145) which defines cloud computing and outlines 4 deployment models: private, community, public, and hybrid clouds. At the same time, Chou has proposed a leaner version with private and public as the only two deployment models in his 5-3-2 Principle of Cloud Computing. The concept is illustrated below.

Regardless of how it is viewed, cloud computing characterizes IT’s capabilities with which a set of authorized resources can be abstracted, managed, delivered, and consumed as a service, i.e. with capacity on demand, without the concerns of underlying infrastructure. Amid a rapid transformation from legacy infrastructure to a cloud computing environment, many IT professionals still struggle to understand what cloud is and how to approach it. IT decision makers need to be crisp on what private cloud and public cloud are before developing a roadmap for transitioning into cloud computing.

Private Cloud and Public Cloud

Private cloud is a “cloud” which is dedicated, hence private. As defined in NIST SP 800-145, private cloud has its infrastructure operated solely for an organization, while the infrastructure may be managed by the organization or a third party and may exist on premise or off premise. By and large, private cloud is a pressing and important topic, since a natural progression in datacenter evolution for the post-virtualization-era enterprise IT is to convert/transform existing establishments, i.e. what have been already deployed, into a cloud-ready and cloud-enabled environment, as shown below.

NIST SP 800-145 points out that public cloud is a cloud infrastructure available for consumers/subscribers and owned by an organization selling cloud services to the public or targeted audiences. Free public cloud services like Hotmail, Windows Live, SkyDrive, etc. and subscription-based offerings like Office 365, Microsoft Online Services, and Windows Azure Platform are available on the Internet. And many simply refer to the Internet as the public cloud. This is however not entirely correct, since the Internet is generally referenced as connectivity and not necessarily a service with the 5 essential characteristics of cloud computing. In other words, just because an application is accessible 24x7 through the Internet does not make it a cloud application. In such a case, cloud computing is nothing more than remote access.

Not Hybrid Cloud, But Hybrid Deployment

According to NIST SP 800-145, hybrid cloud is an infrastructure of a composition of two or more clouds. Here these two or more clouds are apparently related or have some integrated or common components to complete a service or form a collection of services to be presented to users as a whole. This definition is however vague. And the term, hybrid cloud, is extraneous and adds too little value. A hybrid cloud of a corporation including two private clouds from HR and IT, respectively, and both based on corporate AD for authentication is in essence a private cloud of the corporation, since the cloud as a whole is operated solely for the corporation. If a hybrid cloud consists of two private clouds from different companies based on established trusts, this hybrid cloud will still be presented as a private cloud from either company due to the corporate boundaries. In other words, a hybrid cloud of multiple private clouds is in essence one logical private cloud. Similarly a hybrid cloud of multiple public clouds is in essence a logical public cloud. Further, a hybrid cloud of a public cloud and a private cloud is either a public cloud when accessed from the public cloud side or a private cloud when accessed from the private cloud side. It is either “private” or “public.” Adding “hybrid” only confuses people more.

Nevertheless, there are cases in which a cloud and its resources span various deployment models. I call these hybrid deployment scenarios, which include:

- An on-premise private cloud integrated with resources deployed to public cloud

- A public cloud solution integrated with resources deployed on premises

I have previously briefly talked about some hybrid deployment scenarios. In upcoming blogs, I will walk through the architectural components and further discuss both scenarios.

Closing Thoughts

I have a few interesting observations about classifying cloud computing. First, current implementation of cloud computing relies on virtualization and a service is relevant only to those VM instances, i.e. virtual infrastructure, where the service is running. Notice that the classification of private cloud or public cloud is not based on where a service is run or who owns the employed hardware. Instead, the classification is based on whom, i.e. which users, a cloud is operated/deployed for. In other words, deploying a cloud to a company’s hardware does not automatically make it a private cloud of the company. Similarly a cloud hosted on hardware owned by a 3rd party does not make it a public cloud by default either.

Next, at various levels of private cloud, IT is a service provider and a consumer at the same time. In an enterprise setting, a business unit IT may be a consumer of private cloud provided by corporate IT, while also a service provider to users served by the business unit. For example, the IT of an application development department subscribes to a private cloud of IaaS from corporate IT based on a consumption-based charge-back model at a departmental level. This IT of an application development department can then act as a service provider to offer VMs dynamically deployed with lifecycle management to authorized developers within the department. Therefore, when examining private cloud, we should first identify roles, followed by setting proper context based on the separation of responsibilities to clearly understand the objectives and scopes of a solution.

Finally, community cloud as defined in NIST SP 800-145 is really just a private cloud of a community, since the cloud infrastructure is still operated for an organization which now consists of a community. This classification in my view appears academic and extraneous.

<Return to section navigation list>

Cloud Security and Governance

Tom Simonite asserted “Researchers at Microsoft have built a virtual vault that could work on medical data without ever decrypting it” in a deck for his A Cloud that Can't Leak article of 8/8/2011 for the MIT Technology Review:

Imagine getting a friend's advice on a personal problem and being safe in the knowledge that it would be impossible for your friend to divulge the question, or even his own reply.

Researchers at Microsoft have taken a step toward making something similar possible for cloud computing, so that data sent to an Internet server can be used without ever being revealed. Their prototype can perform statistical analyses on encrypted data despite never decrypting it. The results worked out by the software emerge fully encrypted, too, and can only be interpreted using the key in the possession of the data's owner.

Cloud services are increasingly being used for every kind of computing, from entertainment to business software. Yet there are justifiable fears over security, as the attacks on Sony's servers that liberated personal details from 100 million accounts demonstrated.

Kristin Lauter, the Microsoft researcher who collaborated with colleagues Vinod Vaikuntanathan and Michael Naehrig on the new design, says it would ensure that data could only escape in an encrypted form that would be nearly impossible for attackers to decode without possession of a user's decryption key. "This proof of concept shows that we could build a medical service that calculates predictions or warnings based on data from a medical monitor tracking something like heart rate or blood sugar," she says. "A person's data would always remain encrypted, and that protects their privacy."

The prototype storage system is the most practical example yet of a cryptographic technique known as homomorphic encryption. "People have been talking about it for a while as the Holy Grail for cloud computing security," says Lauter. "We wanted to show that it can already be used for some types of cloud service."

Researchers recognized the potential value of fully homomorphic encryption (in which software could perform any calculation on encrypted data and produce a result that was also encrypted) many years ago. But until recently, it wasn't known to be possible, let alone practical. Only in 2009 did Craig Gentry of IBM publish a mathematical proof showing fully homomorphic encryption was possible.

In the relatively short time since, Gentry and other researchers have built on that initial proof to develop more working prototypes, although these remain too inefficient to use on a real cloud server, says Lauter.
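The idea of computing on data that stays encrypted can be illustrated with a much simpler scheme than the one the article describes. The sketch below is a toy Paillier cryptosystem in Python, which is only additively homomorphic (it can add encrypted numbers, not run arbitrary calculations) and is not the design used in the Microsoft prototype. The primes, function names and heart-rate readings are assumptions chosen for illustration, and the key size is far too small for real use.

```python
import math
import secrets

# Two well-known Mersenne primes. Assumption for the demo only:
# real deployments need much larger, randomly generated primes.
P = 2**31 - 1
Q = 2**61 - 1


def keygen(p=P, q=Q):
    """Return (public modulus n, private key (lam, mu))."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) under the public modulus n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    # g^m * r^n mod n^2, using g = n + 1 so that g^m = 1 + m*n (mod n^2)
    return (1 + m * n) % n2 * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    """Recover the plaintext from ciphertext c with the private key."""
    lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n, c1, c2):
    """Multiply ciphertexts to obtain an encryption of the plaintext sum."""
    return c1 * c2 % (n * n)


# The data owner encrypts readings (hypothetical heart-rate samples).
n, priv = keygen()
readings = [72, 80, 77, 91]
ciphertexts = [encrypt(n, m) for m in readings]

# The server sums the readings without ever seeing them.
encrypted_sum = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_sum = add_encrypted(n, encrypted_sum, c)

# Only the key holder can read the result and derive the mean.
total = decrypt(n, priv, encrypted_sum)
mean = total / len(readings)
```

The server side only ever multiplies ciphertexts, so it learns neither the individual readings nor their sum. A fully homomorphic scheme, as defined in the article, would also support multiplication of plaintexts and therefore arbitrary calculations.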


<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

David Strom explained Why You Need to Understand WAN Optimization With AWS Direct Connect in an 8/8/2011 post to the ReadWriteCloud:

With Amazon's Direct Connect announcement last week, it seems we are going back to the past, when dedicated WAN lines connected our computing resources. Way back, like to when IBM's SNA was around in the late 1970s and 1980s and when corporations purchased communication lines at the whopping speed of 19 kbps. Now Amazon can connect one and 10 Gbps networks to their cloud.

There is a catch, of course. Your racks of servers have to be connected via one to three initial providers supporting Direct Connect, and if you don't have this equipment in one of their points of presence, you will have to buy connectivity to get your packets there. Remember, we are talking about communicating outside of the Internet on a private network.

One of these providers is Equinix, who operate 90 high-performance data centers around the world. In their announcement, they provide a selection of use cases including:

- High performance integration with core-internal applications - This allows customers to treat AWS instances as part of the data center LAN and integrate cloud strategy into more applications.

- High volume data processing - This allows customers to efficiently move much larger data volumes into and out of AWS.

- Direct storage connectivity - This allows for more regular migration/replication of data from AWS into customer-managed storage solutions.

- Custom hardware integration - This allows for better integration of custom hardware solutions into the AWS workflow, with real-time streaming of data from/to AWS.

"Cloud access doesn't necessarily equal the Internet, and in many cases the Internet isn't appropriate," says Joe Skorupa, a Gartner research VP on data center issues who has been arguing the need for dedicated links for several years. "First, performance is variable and there are times when a business critical app can be at risk. Second, you don't have control over QoS if you are running multiple services. And service level agreements around a dedicated point-to-point link will be more expensive but there are guarantees around restore times which should be faster. If latency is a problem, then WAN optimization may be very appropriate."

So if you are interested in Direct Connect, your first step is going to be to examine what kind of WAN optimization technology to use to move your data across these private networks. This is because you are charged for every packet, so if you can cache and avoid sending some data over your WAN link, you can save some cash, and up your performance too.

There are many vendors in this space, including:

- Riverbed's Steelhead, long considered the market leader in this space,

- BlueCoat MACH5, a general purpose WAN optimizer that has an interesting twist on the cloud,

- Aspera, which offers a cloud-based subscription service that is billed directly through Amazon,

- Silver Peak Systems, who have both physical and virtual appliances,

- Infineta Systems Data Mobility Switch is the newest entry in this field with specialized hardware-based accelerators.

David Greenfield's Network Computing column earlier this spring talks about some of the issues with actually getting the kind of performance improvements promised by these vendors. He says, "With the ability to save real dollars by improving WAN connections, WAN optimization vendors are encouraged to tout ever greater levels of performance. But those numbers may have little bearing on your reality."

WAN optimizers use a variety of methods to speed up data transfers: acting as proxy caches to deliver frequently requested content, applying application-specific techniques to handle Web and database transactions, and improving overall quality-of-service performance.
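The caching idea can be sketched in a few lines of Python. The example below simulates both ends of a link that remembers chunks it has already transferred and sends only a short hash reference for repeats. The class name, the fixed 4 KB chunk size and the byte accounting are assumptions for illustration; it does not describe how any of the products named above work, and commercial appliances typically add variable-size chunking, compression and protocol-specific handling.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size for the demo


class DedupLink:
    """Simulates a WAN link whose far end caches chunks it has seen."""

    def __init__(self):
        self.remote_cache = {}  # digest -> chunk held by the far-end appliance
        self.bytes_on_wire = 0  # running total of bytes actually transmitted

    def send(self, payload: bytes) -> bytes:
        """Transfer payload and return what the far end reconstructs."""
        received = bytearray()
        for i in range(0, len(payload), CHUNK_SIZE):
            chunk = payload[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).digest()
            if digest in self.remote_cache:
                # Far end already has this chunk: send the reference only.
                self.bytes_on_wire += len(digest)
            else:
                # First sighting: send reference plus data, then cache it.
                self.bytes_on_wire += len(digest) + len(chunk)
                self.remote_cache[digest] = chunk
            received += self.remote_cache[digest]
        return bytes(received)


# Four distinct 4 KB chunks, sent twice over the same link.
data = b"".join(bytes([i]) * CHUNK_SIZE for i in range(4))
link = DedupLink()

first_copy = link.send(data)
first_cost = link.bytes_on_wire

second_copy = link.send(data)
second_cost = link.bytes_on_wire - first_cost
```

In this run the first transfer puts every chunk on the wire, while the repeat transfer costs only one 32-byte digest per chunk. When traffic is billed by volume, that difference is where the savings would come from.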