Windows Azure and Cloud Computing Posts for 8/3/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 8/5/2011 8:00 AM with link to Steve Marx’ detailed Playing with the new Windows Azure Storage Analytics Features article of 8/4/2011 in the Azure Blob, Drive, Table and Queue Services section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

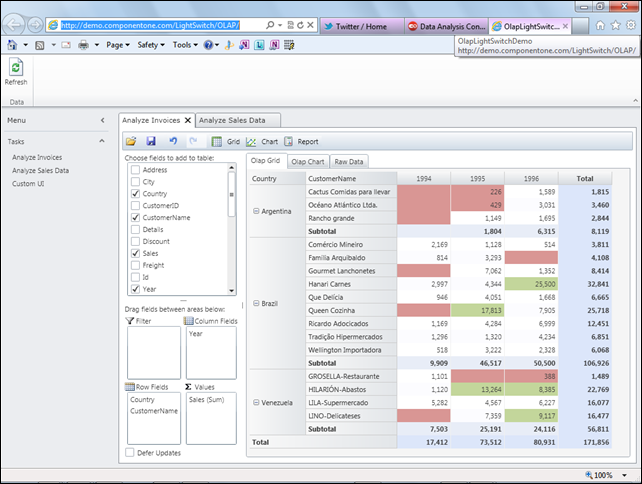

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

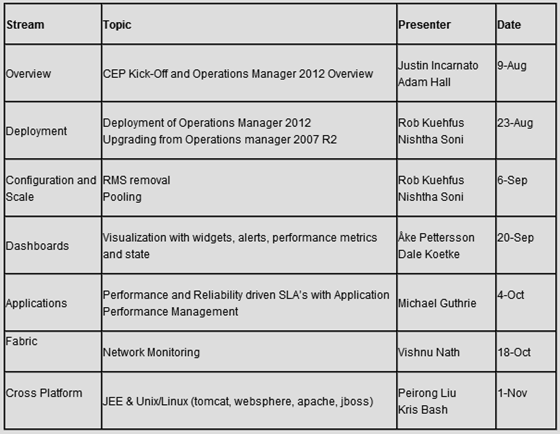

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

The Windows Azure Storage Team explained Windows Azure Storage Analytics in an 8/3/2011 post:

Windows Azure Storage Analytics offers you the ability to track, analyze, and debug your usage of storage (Blobs, Tables and Queues). You can use this data to analyze storage usage and improve the design of your applications and their access patterns to Windows Azure Storage. Analytics data consists of:

- Logs
  - Provide a trace of executed requests for your storage accounts
- Metrics
  - Provide a summary of key capacity and request statistics for Blobs, Tables and Queues

Logs

This feature provides a trace of all executed requests for your storage accounts as block blobs in a special container called $logs. Each log entry in the blob corresponds to a request made to the service and contains information such as the request ID, request URL, HTTP status of the request, requestor account name, owner account name, server-side latency, E2E latency, source IP address for the request, etc.

This data lets you analyze your requests much more closely. It allows you to run the following types of analysis:

- How many anonymous requests is my application seeing from a given range of IP addresses?

- Which containers are being accessed the most?

- How many times is a particular SAS URL being accessed, and how?

- Who issued the request to delete a container?

- For a slow request, where is the time being consumed?

- I got a network error; did the request reach the server?
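The first two questions above can be answered with a few lines of code once log entries have been downloaded from $logs and parsed. The following is a minimal Python sketch, not the log format itself: the `LogEntry` record and its field names are hypothetical, it models only a few of the fields listed above, and it assumes that an empty requestor account name marks an anonymous request and that the source IP is a bare address.

```python
from collections import Counter
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from urllib.parse import urlparse


@dataclass
class LogEntry:
    # Hypothetical, simplified view of one $logs entry (illustrative field names)
    request_id: str
    request_url: str
    http_status: int
    requestor_account: str  # assumed empty for anonymous requests
    source_ip: str          # assumed to be a bare IP address


def anonymous_requests_from(entries, cidr):
    """Count anonymous requests whose source IP falls inside the CIDR range."""
    network = ip_network(cidr)
    return sum(
        1
        for e in entries
        if not e.requestor_account and ip_address(e.source_ip) in network
    )


def most_accessed_containers(entries, n=3):
    """Rank containers by request count, using the first URL path segment."""
    counts = Counter()
    for e in entries:
        path = urlparse(e.request_url).path.lstrip("/")
        if path:
            counts[path.split("/", 1)[0]] += 1
    return counts.most_common(n)
```

For example, `anonymous_requests_from(entries, "10.0.0.0/24")` counts anonymous hits from that /24 block, and `most_accessed_containers(entries)` returns `(container, count)` pairs, busiest first.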

Metrics

Metrics provide a summary of key statistics for Blobs, Tables and Queues for a storage account. The statistics can be categorized as:

- Request information: Provides hourly aggregates of the number of requests, average server-side latency, average E2E latency, average bandwidth, total successful requests, total number of failures, and more. These request aggregates are provided at the service level and per API for the APIs requested in that hour. This is available for the Blob, Table and Queue services.

- Capacity information: Provides daily statistics for the space consumed by the service, the number of containers, and the number of objects stored in the service. Note that this is currently provided only for the Windows Azure Blob service.

All Analytics Logs and Metrics data is stored in your storage account and is accessible via the normal Blob and Table REST APIs. The logs and metrics can be accessed from a service running in Windows Azure or directly over the Internet from any application that can send and receive HTTP/HTTPS requests. You can opt in to store log data, metrics data, or both by invoking a REST API that turns each feature on or off at the service level. Once a feature is turned on, Windows Azure Storage stores analytics data in the storage account: log data is stored as Windows Azure Blobs in a special blob container, and metrics data is stored in special tables in Windows Azure Tables. To ease the management of this data, we have provided the ability to set a retention policy that automatically cleans up your analytics blob and table data.

Please see the following links for more information:

- MSDN Documentation (this link will be live later today)

- Logging: Additional information and Coding Examples

- Metrics: Additional information and Coding Examples

Nothing heard from the Windows Azure Storage Team for three months and suddenly three posts (two gigantic) in two days!

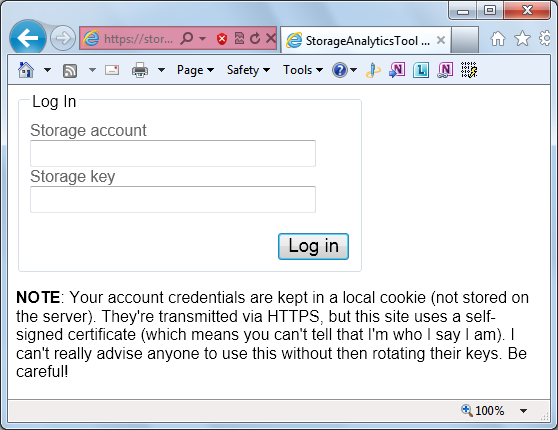

Steve Marx (@smarx) posted a link to his web app to change Windows #Azure storage analytics settings on Twitter: http://storageanalytics.cloudapp.net/. Take heed of Steve’s caution:

The XML elements’ values (white) are editable:

• Steve’s detailed Playing with the new Windows Azure Storage Analytics Features article of 8/4/2011 explains how to use an updated version of http://storageanalytics.cloudapp.net/, which uses a self-signed certificate to encrypt transmissions:

From the introduction to Steve’s post:

Since its release yesterday, I’ve been playing with the new ability to have Windows Azure storage log analytics data. In a nutshell, analytics lets you log as much or as little detail as you want about calls to your storage account (blobs, tables, and queues), including detailed logs (what API calls were made and when) and aggregate metrics (like how much storage you’re consuming and how many total requests you’ve done in the past hour).

As I’m learning about these new capabilities, I’m putting the results of my experiments online at http://storageanalytics.cloudapp.net. This app lets you change your analytics settings (so you’ll need to use a real storage account and key to log in) and also lets you graph your “Get Blob” metrics.

A couple notes about using the app:

- When turning on various logging and analytics features, you’ll have to make sure your XML conforms with the documentation. That means, for example, that when you enable metrics, you’ll also have to add the `<IncludeAPIs />` tag, and when you enable a retention policy, you’ll have to add the `<Days />` tag.

- The graph page requires a browser with `<canvas>` tag support. (IE9 will do, as will recent Firefox, Chrome, and probably Safari.) Even in modern browsers, I’ve gotten a couple bug reports already about the graph not showing up and other issues. Please let me know on Twitter (I’m @smarx) if you encounter issues so I can track them down. Bonus points if you can send me script errors captured by IE’s F12, Chrome’s Ctrl+Shift+I, Firebug, or the like.

- The site uses HTTPS to better secure your account name and key, and I’ve used a self-signed certificate, so you’ll have to tell your browser that you’re okay with browsing to a site with an unverified certificate.

In the rest of this post, I’ll share some details and code for what I’ve done so far.
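Steve's two XML rules (enabled metrics need `<IncludeAPIs>`, an enabled retention policy needs `<Days>`) are easy to check before sending a request. This is a minimal Python sketch using only the standard library's ElementTree; `check_analytics_xml` is a hypothetical helper, and it checks only those two rules, not the full documented schema.

```python
import xml.etree.ElementTree as ET


def check_analytics_xml(xml_text):
    """Return a list of problems in a StorageServiceProperties body.

    Only the two rules noted above are checked: enabled Metrics must
    include IncludeAPIs, and an enabled RetentionPolicy must include Days.
    """
    root = ET.fromstring(xml_text)
    problems = []

    metrics = root.find("Metrics")
    if metrics is not None and metrics.findtext("Enabled", "").strip().lower() == "true":
        if metrics.find("IncludeAPIs") is None:
            problems.append("Metrics: Enabled is true but IncludeAPIs is missing")

    for section in ("Logging", "Metrics"):
        policy = root.find(f"{section}/RetentionPolicy")
        if policy is not None and policy.findtext("Enabled", "").strip().lower() == "true":
            if policy.find("Days") is None:
                problems.append(f"{section}: retention policy enabled but Days is missing")
    return problems
```

An empty list means the body passes these two checks; the service may still reject it for other reasons.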

Jai Haridas, Monilee Atkinson and Brad Calder of the Windows Azure Storage Team described Windows Azure Storage Metrics: Using Metrics to Track Storage Usage on 8/3/2011:

Windows Azure Storage Metrics allows you to track your aggregated storage usage for Blobs, Tables and Queues. The details include capacity, per-service request summaries, and per-API aggregates. The metrics information is useful for seeing an aggregate view of how a given storage account’s blobs, tables or queues are doing over time. It makes it easy to see the types of errors that are occurring, which helps you tune your system and diagnose problems, and to see daily usage trends. For example, the metrics data can be used to understand the breakdown of requests by hour.

The metrics data can be categorized as:

- Capacity: Provides information regarding the storage capacity consumed for the blob service, the number of containers and the total number of objects stored by the service. In the current version, this is available only for the blob service. We will provide table and queue capacity information in a future version. This data is updated daily, and it provides capacity information separately for data stored by the user and data stored for $logs.

- Requests: Provides summary information of requests executed against the service: the total number of requests, total ingress/egress, average E2E latency and server latency, total number of failures by category, etc., at hourly granularity. The summary is provided at the service level, along with aggregates at the API level for the APIs that have been used in the hour. This data is available for all three services: Blobs, Tables, and Queues.

Finding your metrics data

Metrics are stored in tables in the storage account the metrics are for. The capacity information is stored in a separate table from the request information. These tables are created automatically when you opt in to metrics analytics; once created, they cannot be deleted, though their contents can be.

As mentioned above, there are two types of tables which store the metrics details:

- Capacity information. When turned on, the system creates the $MetricsCapacityBlob table to store capacity information for blobs. This includes the number of objects and the capacity consumed by Blobs for the storage account. This information is recorded in the $MetricsCapacityBlob table once per day (see the PartitionKey column in Table 1). We report capacity separately for the amount of data stored by the user and the amount of data stored for analytics.

- Transaction metrics. This information is available for all the services: Blobs, Tables and Queues. Each service gets its own table, so there are three tables:

  - $MetricsTransactionsBlob: This table contains the summary, both at the service level and for each API issued to the blob service.

  - $MetricsTransactionsTable: This table contains the summary, both at the service level and for each API issued to the table service.

  - $MetricsTransactionsQueue: This table contains the summary, both at the service level and for each API issued to the queue service.

A transaction summary row is stored for each service that depicts the total request count, error counts, etc., for all requests issued for the service, at hourly granularity. Aggregated request details are stored per service and for each API issued in the service per hour.

Requests will be categorized separately for:

- Requests issued by the user (authenticated, SAS and anonymous) to access both user data and analytics data in their storage account.

- Requests issued by Windows Azure Storage to populate the analytics data.

It is important to note that the system stores a summary row for each service every hour, even if no API was issued for the service in that hour; such a row shows that there were 0 requests during the hour. This helps applications issue point queries when monitoring the data to perform analysis. API-level metrics behave differently: the system stores a summary row for an individual API only if the API was used in that hour.
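Because a service summary row exists for every hour, a monitor can compute the exact keys it expects and issue one point query per hour instead of a range scan. The following Python sketch only builds the URLs (it does not sign or send them). It assumes the hourly `yyyyMMddTHH00` PartitionKey format and the `"<category>;All"` RowKey for service summary rows, both taken from the DumpMetrics sample code in this post; `hourly_point_query_urls` is a hypothetical helper name.

```python
from datetime import datetime, timedelta
from urllib.parse import quote


def hourly_point_query_urls(account, service, start, end, category="user"):
    """Build one point-query URL per hour for a service summary row.

    Assumes PartitionKey = yyyyMMddTHH00 (UTC hour) and
    RowKey = "<category>;All" for the service summary row.
    """
    table = f"$MetricsTransactions{service.capitalize()}"
    hour = start.replace(minute=0, second=0, microsecond=0)
    urls = []
    while hour <= end:
        pk = hour.strftime("%Y%m%dT%H00")
        flt = f"PartitionKey eq '{pk}' and RowKey eq '{category};All'"
        urls.append(
            f"http://{account}.table.core.windows.net/{table}()?$filter={quote(flt)}"
        )
        hour += timedelta(hours=1)
    return urls
```

Since every hour is guaranteed a row, an empty result for a past hour is itself a signal worth investigating rather than a sign of zero traffic.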

These tables can be read like any other user-created Windows Azure table. The REST URI http://<accountname>.table.core.windows.net/Tables('$MetricsTransactionsBlob') accesses the Blob transaction table, and http://<accountname>.table.core.windows.net/$MetricsTransactionsBlob() accesses the rows stored for the Blob service in the transaction table.

What does my metrics data contain?

As mentioned, there are two different types of tables for the metrics data. In this section we will review the details contained in each table.

Blob capacity metrics data

This is stored in the $MetricsCapacityBlob table. Two records with capacity information are stored in this table for each day: one tracks the capacity consumed by the data the user has stored, and the other tracks the logging data in the $logs container, so the two are reported separately.

Table 1 Capacity Metrics table

Transaction metrics data

This is stored in the $MetricsTransactions<service> tables. All three services (blob, table, queue) use the same table schema, outlined below. At the highest level, these tables contain two kinds of records:

- Service Summary: This record contains hourly aggregates for the entire service. A record is inserted for each hour even if the service has not been used. The aggregates include request count, success count, failure counts for certain error categories, average E2E latency, average server latency, etc.

- API Summary: This record contains hourly aggregates for the given API. The aggregates include request count, success count, failure count by category, average E2E latency, average server latency, etc. A record is stored in the table for an API only if the API has been issued to the service in that hour.

We track user and Windows Azure Storage Analytics (system) requests in different categories.

User requests to access any data under the storage account (including analytics data) are tracked under the “user” category. These request types are:

- Authenticated Requests

- Authenticated SAS requests. All failures after a successful authentication will be tracked including authorization failures. An example of Authorization failure in SAS scenario is trying to write to a blob when only read access has been provided.

- Anonymous Requests: Only successful anonymous requests are tracked, with the following exceptions, which are also tracked:

  - Server errors, typically seen as InternalServerError

  - Client and server timeout errors

  - Requests that fail with 304 (Not Modified)
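The anonymous-request rule above can be expressed as a small predicate. This Python sketch is illustrative only: it assumes "successful" means a 2xx status, "server errors" means a 5xx status (such as 500 InternalServerError), and that timeouts are flagged by the caller because they do not map to a single status code. `anonymous_request_tracked` is a hypothetical name, not part of any Windows Azure API.

```python
def anonymous_request_tracked(status_code, timed_out=False):
    """Return True if an anonymous request would appear in the metrics.

    Assumptions: 2xx = success, 5xx = server error, and client/server
    timeouts are signaled separately through `timed_out`.
    """
    if timed_out:
        return True   # client and server timeout errors are tracked
    if 200 <= status_code < 300:
        return True   # successful anonymous requests are tracked
    if status_code == 304:
        return True   # 304 (Not Modified) is tracked
    if 500 <= status_code < 600:
        return True   # server errors are tracked
    return False      # other anonymous failures (e.g. 403, 404) are not
```

One practical consequence: anonymous 403 and 404 responses will not show up in the metrics, so logs are the place to look for them.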

Internal Windows Azure Storage Analytics requests are tracked under the “system” category:

- Requests to write logs under the $logs container

- Requests to write to the metrics tables

These are tracked as “system” so that they are separated out from the actual requests that users issue to access their data or analytics data.

Note that if a request fails before the service can deduce the type of request (i.e. the API name of the request), then the request is recorded under the API name of “Unknown”.
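When analyzing downloaded rows, the category and API name can be recovered from the RowKey. The DumpMetrics sample in this post splits RowKey on ";" into a category (user or system) and an API name, with "All" marking the service summary row; this Python sketch relies on that same format. Rows are assumed to be plain dicts, and the helper names are hypothetical.

```python
from collections import defaultdict


def group_rows(rows):
    """Group metrics transaction rows by (category, API name).

    Assumes RowKey has the form "<category>;<API name>", where the API name
    is "All" for service summary rows and "Unknown" when the API could not
    be determined.
    """
    grouped = defaultdict(list)
    for row in rows:
        category, api = row["RowKey"].split(";", 1)
        grouped[(category, api)].append(row)
    return dict(grouped)


def service_summaries(rows, category="user"):
    """Keep only the hourly service summary rows for one category."""
    return [r for r in rows if r["RowKey"] == f"{category};All"]
```

Filtering on the `user` category keeps Windows Azure Storage's own analytics writes out of application-level trend reports.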

Before we go over the schema for the metrics transaction tables (the table columns and their definitions), we will define some terms used in this table:

- Service summary vs. API summary: In a service summary row, each column represents aggregate data at the service level. In an API summary row, the same column represents data at API-level granularity.

- Counted For Availability: If an operation result count in the table below is listed as “Counted For Availability”, that result category is included in the availability calculation. Availability is defined as (billable requests)/(total requests). These requests are counted in both the numerator and the denominator of that calculation. Examples: Success, ClientOtherError, etc.

- Counted Against Availability: These requests are counted only in the availability denominator (they are not considered billable requests), so they lower the availability (for example, server timeout errors).

- Billable: These are the requests that are billable. See this post for more information on understanding Windows Azure Storage billing. Example: ServerTimeoutError, ServerOtherError.

NOTE: If a result category is not listed as “Counted For/Against Availability”, then it implies that those requests are not considered in the availability calculations. Examples: ThrottlingError …
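The availability rules above amount to a simple ratio. This Python sketch uses a partial category mapping built only from the examples named in the text (Success and ClientOtherError count for availability, ServerTimeoutError counts against it, ThrottlingError is excluded); the full mapping is in the schema table of the original post, and `availability` is a hypothetical helper that returns a fraction rather than a percentage.

```python
# Partial mapping, taken only from the examples given above
COUNTED_FOR = {"Success", "ClientOtherError"}   # numerator and denominator
COUNTED_AGAINST = {"ServerTimeoutError"}        # denominator only
# Any other category (e.g. ThrottlingError) is left out entirely.


def availability(counts):
    """Compute availability from a {result category: request count} dict."""
    numerator = sum(counts.get(c, 0) for c in COUNTED_FOR)
    denominator = numerator + sum(counts.get(c, 0) for c in COUNTED_AGAINST)
    if denominator == 0:
        return None  # nothing in this hour counts toward availability
    return numerator / denominator
```

For an hour with 90 successes, 5 client errors, 5 server timeouts and 10 throttled requests, the throttled requests drop out and availability is 95 / 100 = 0.95.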

See original post for a five-foot long “Table 2 Schema for transaction metrics tables for Blobs, Tables and Queues.” …

Turning Metrics On

A REST API call, as shown below, is used to turn on Analytics for Metrics. In this example, logging is turned on for deletes and writes, but not for reads. The metrics retention policy is enabled and set to ten days, so the analytics service will take care of deleting metrics data older than ten days for you at no additional cost; the logging retention policy is set to seven days.

Request:

```
PUT http://sally.blob.core.windows.net/?restype=service&comp=properties HTTP/1.1
x-ms-version: 2009-09-19
x-ms-date: Fri, 25 Mar 2011 23:13:08 GMT
Authorization: SharedKey sally:zvfPm5cm8CiVP2VA9oJGYpHAPKxQb1HD44IWmubot0A=
Host: sally.blob.core.windows.net

<?xml version="1.0" encoding="utf-8"?>
<StorageServiceProperties>
  <Logging>
    <Version>1.0</Version>
    <Delete>true</Delete>
    <Read>false</Read>
    <Write>true</Write>
    <RetentionPolicy>
      <Enabled>true</Enabled>
      <Days>7</Days>
    </RetentionPolicy>
  </Logging>
  <Metrics>
    <Version>1.0</Version>
    <Enabled>true</Enabled>
    <IncludeAPIs>true</IncludeAPIs>
    <RetentionPolicy>
      <Enabled>true</Enabled>
      <Days>10</Days>
    </RetentionPolicy>
  </Metrics>
</StorageServiceProperties>
```

Response:

```
HTTP/1.1 202
Content-Length: 0
Server: Windows-Azure-Metrics/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 4d02712a-a68a-4763-8f9b-c2526625be68
x-ms-version: 2009-09-19
Date: Fri, 25 Mar 2011 23:13:08 GMT
```

The logging and metrics sections allow you to configure what you want to track in your analytics logs and metrics data. The metrics configuration values are described here:

- Version – The version of Analytics Logging used to record the entry.

- Enabled (Boolean) – set to true if you want to track metrics data via analytics.

- IncludeAPIs (Boolean) – set to true if you want to track metrics for the individual APIs accessed.

- RetentionPolicy – this is where you set the retention policy to help you manage the size of your analytics data.

  - Enabled (Boolean) – set to true if you want to enable a retention policy. We recommend that you do this.

  - Days (int) – the number of days you want to keep your analytics data. The value must be between 1 and 365 days.
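The request body shown above can be generated rather than hand-written, which makes the IncludeAPIs and Days requirements harder to forget. This Python sketch builds only the XML body; it does not compute the SharedKey signature or send the PUT. `service_properties_xml` and its parameters are hypothetical names, and passing `None` for a retention period is this sketch's convention for a disabled policy.

```python
import xml.etree.ElementTree as ET


def _add_retention(parent, days):
    """Append a RetentionPolicy element; days=None disables the policy."""
    if days is not None and not 1 <= days <= 365:
        raise ValueError("retention Days must be between 1 and 365")
    policy = ET.SubElement(parent, "RetentionPolicy")
    ET.SubElement(policy, "Enabled").text = "false" if days is None else "true"
    if days is not None:
        ET.SubElement(policy, "Days").text = str(days)  # required when enabled


def service_properties_xml(log_delete, log_read, log_write, log_days,
                           metrics_enabled, include_apis, metrics_days):
    """Build a StorageServiceProperties body like the request shown above."""
    root = ET.Element("StorageServiceProperties")

    logging_el = ET.SubElement(root, "Logging")
    ET.SubElement(logging_el, "Version").text = "1.0"
    for name, flag in (("Delete", log_delete), ("Read", log_read), ("Write", log_write)):
        ET.SubElement(logging_el, name).text = str(flag).lower()
    _add_retention(logging_el, log_days)

    metrics_el = ET.SubElement(root, "Metrics")
    ET.SubElement(metrics_el, "Version").text = "1.0"
    ET.SubElement(metrics_el, "Enabled").text = str(metrics_enabled).lower()
    if metrics_enabled:
        # IncludeAPIs must be present whenever metrics are enabled
        ET.SubElement(metrics_el, "IncludeAPIs").text = str(include_apis).lower()
    _add_retention(metrics_el, metrics_days)

    return ET.tostring(root, encoding="unicode")
```

`service_properties_xml(True, False, True, 7, True, True, 10)` reproduces the settings in the example request: deletes and writes logged for seven days, metrics with API detail kept for ten.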

For more information, please see the MSDN Documentation. (this link will be live later today)

How do I cleanup old Metrics data?

As described above, we highly recommend that you set a retention policy for your analytics data. The maximum retention period allowed is 365 days. Once a retention policy is set, the system deletes expired records from the metrics tables and expired log blobs from the $logs container. The retention period for logs can differ from the one for metrics. For example, if a user sets the retention policy to 100 days for metrics and 30 days for logs, all the logs for the queue, blob, and table services will be deleted after 30 days, and records stored in the associated tables will be deleted once they are more than 100 days old. The retention policy is enforced even if analytics is turned off, as long as the retention policy is enabled. If you do not set a retention policy, you can manage your data by manually deleting entities (as you delete entities in regular tables) whenever you wish.
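If you clean up manually instead of setting a retention policy, the first step is selecting the rows that are too old. Because hourly PartitionKeys of the form `yyyyMMddTHH00` (the format used by the DumpMetrics sample in this post) sort chronologically as strings, a single `lt` comparison finds them. This Python sketch only builds the `$filter` expression; the matching entities would still have to be deleted one by one, and `expired_rows_filter` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone


def expired_rows_filter(retention_days, now=None):
    """Build a $filter that selects metrics rows older than retention_days.

    Assumes hourly PartitionKeys in yyyyMMddTHH00 (UTC) format, which sort
    chronologically when compared as strings.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = (now - timedelta(days=retention_days)).strftime("%Y%m%dT%H00")
    return f"PartitionKey lt '{cutoff}'"
```

Keep in mind that deletes you issue yourself are billable, unlike deletes performed by a retention policy.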

Searching your analytics metrics data

Your metrics can be retrieved using the Query Tables and Query Entities APIs. You can query and/or delete records in the tables, but the tables themselves cannot be deleted. These tables can be read like any other user-created Windows Azure table. The REST URI http://<accountname>.table.core.windows.net/Tables('$MetricsTransactionsBlob') accesses the Blob transaction table, and http://<accountname>.table.core.windows.net/$MetricsTransactionsBlob() accesses the rows stored in it. To filter the data, you can use the $filter=<query expression> option of the Query Entities API.

The metrics are stored at hourly granularity. The time key represents the starting hour in which the requests were executed. For example, the metrics with a key of 1:00 represent the requests that started between 1:00 and 2:00. The metrics data is optimized for retrieving historical data by time range.
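Mapping a timestamp to the hour bucket that holds its metrics is a one-liner once the key format is known. This Python sketch assumes the `yyyyMMddTHH00` PartitionKey format used by the DumpMetrics sample in this post, and treats naive datetimes as already being in UTC; `hour_partition_key` is a hypothetical name.

```python
from datetime import datetime, timezone


def hour_partition_key(ts):
    """Return the hourly PartitionKey whose row aggregates requests at `ts`.

    A request that started at 01:37 UTC falls under the 01:00 key.
    Naive datetimes are assumed to already be in UTC.
    """
    if ts.tzinfo is not None:
        ts = ts.astimezone(timezone.utc)
    return ts.strftime("%Y%m%dT%H00")
```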

Scenario: I want to retrieve capacity details for the blob service from 2011/05/20 05:00 a.m. until 2011/05/30 05:00 a.m.

GET http://sally.table.core.windows.net/$MetricsCapacityBlob()?$filter=PartitionKey ge '20110520T0500' and PartitionKey le '20110530T0500'

Scenario: I want to view request details (including API details) for the table service from 2011/05/20 05:00 a.m. until 2011/05/30 05:00 a.m., only for requests made to user data

GET http://sally.table.core.windows.net/$MetricsTransactionsTable()?$filter=PartitionKey ge '20110520T0500' and PartitionKey le '20110530T0500' and RowKey ge 'user;' and RowKey lt 'user<'
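The second query uses a prefix trick: every RowKey that starts with `user;` is at least `user;` and strictly below `user<`, because `<` is the character that immediately follows `;` in ASCII. This Python sketch builds the same filter from datetimes and URL-encodes it; it assumes the hourly `yyyyMMddTHH00` key format, and the helper names are hypothetical. It does not sign or send the request.

```python
from datetime import datetime
from urllib.parse import quote


def prefix_range(prefix):
    """Return (low, high) such that low <= key < high matches the prefix.

    The upper bound increments the prefix's last character, e.g.
    "user;" -> "user<", since "<" follows ";" in ASCII.
    """
    return prefix, prefix[:-1] + chr(ord(prefix[-1]) + 1)


def metrics_filter(start, end, category=None):
    """Build a $filter over an hourly PartitionKey range, optionally by category."""
    parts = [
        f"PartitionKey ge '{start:%Y%m%dT%H00}'",
        f"PartitionKey le '{end:%Y%m%dT%H00}'",
    ]
    if category:
        low, high = prefix_range(category + ";")
        parts += [f"RowKey ge '{low}'", f"RowKey lt '{high}'"]
    return " and ".join(parts)


def query_url(account, table, flt):
    """Combine the table's Query Entities URI with a URL-encoded filter."""
    return f"http://{account}.table.core.windows.net/{table}()?$filter={quote(flt)}"
```

`metrics_filter(datetime(2011, 5, 20, 5), datetime(2011, 5, 30, 5), "user")` yields exactly the filter in the second scenario above.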

To collect trending data you can view historical information (up to the point of your retention policy) which gives you insights into usage, capacity, and other trends.

What charges occur due to metrics?

The billable operations listed below are charged at the same rates applicable to all Windows Azure Storage operations. For more information on how these transactions are billed, see Understanding Windows Azure Storage Billing - Bandwidth, Transactions, and Capacity.

The following actions performed by Windows Azure Storage are billable:

- Write requests to create table entities for metrics

Read and delete requests by the application/client to metrics data are also billable. If you have configured a data retention policy, you are not charged when Windows Azure Storage deletes old metrics data. However, if you delete analytics data, your account is charged for the delete operations.

The capacity used by the $Metrics tables is billable.

The following can be used to estimate the amount of capacity used for storing metrics data:

- If every API in every service is used each hour, approximately 148 KB of data may be stored every hour in the metrics transaction tables when both service-level and API-level summaries are enabled.

- If every API in every service is used each hour, approximately 12 KB of data may be stored every hour in the metrics transaction tables when only the service-level summary is enabled.

- The capacity table for blobs will have 2 rows each day (provided the user has opted in for logs), which adds up to approximately 300 bytes per day.
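These per-hour figures combine with the retention period to give a rough upper bound on metrics storage. This Python sketch simply does that arithmetic using the approximations above; it treats 1 KB as 1,000 bytes when converting the 300-byte daily capacity rows, and `metrics_storage_kb` is a hypothetical helper.

```python
def metrics_storage_kb(days, include_apis=True, capacity_rows=True):
    """Rough worst-case metrics storage, in KB, kept over `days` days.

    Uses the approximations above: ~148 KB/hour with API-level summaries,
    ~12 KB/hour with service-level summaries only, and ~300 bytes/day of
    blob capacity rows (1 KB treated as 1,000 bytes here).
    """
    per_hour_kb = 148 if include_apis else 12
    total = per_hour_kb * 24 * days
    if capacity_rows:
        total += 0.3 * days  # ~300 bytes per day
    return total
```

With a ten-day retention policy and API-level metrics enabled, the transaction tables top out around 148 × 24 × 10 = 35,520 KB, or roughly 35 MB; with service-level summaries only, about 2,880 KB.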

Download Metrics Data

Because listing tables does not return the metrics tables, existing tools will not be able to display them. In the absence of such tools, we wanted to provide a quick reference application with source code to make this data accessible.

The following application takes as arguments the service to download the capacity or request aggregates for, the type of aggregates (capacity or requests), a file to export to, and the start time and optional end time of the report. It then exports all metrics entities from the selected table to the file in CSV format. This CSV file can then be opened in, say, Excel to study trends in availability, errors seen by the application, latency, etc.

For example, the following command downloads all the $MetricsTransactionsBlob table entities for the blob service in the given time range into a file called MyMetrics.txt:

```
DumpMetrics blob requests .\MyMetrics.txt "2011-07-26T22:00Z" "2011-07-26T23:30Z"
```

```csharp
using System;
using System.Configuration;
using System.Data.Services.Client;
using System.Globalization;
using System.IO;
using System.Linq;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

class Program
{
    const string ConnectionStringKey = "ConnectionString";

    static void Main(string[] args)
    {
        if (args.Length < 4 || args.Length > 5)
        {
            Console.WriteLine("Usage: DumpMetrics <service to search - blob|table|queue> <capacity|requests> <file name to export to> <Start time in UTC for report> <Optional End time in UTC for report>.");
            Console.WriteLine("Example: DumpMetrics blob capacity test.txt \"2011-06-26T20:30Z\" \"2011-06-28T22:00Z\"");
            return;
        }

        // The storage connection string is read from the appSettings section of app.config
        string connectionString = ConfigurationManager.AppSettings[ConnectionStringKey];
        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudTableClient tableClient = account.CreateCloudTableClient();

        DateTime startTimeOfSearch = DateTime.Parse(args[3]);
        DateTime endTimeOfSearch = DateTime.UtcNow;
        if (args.Length == 5)
        {
            endTimeOfSearch = DateTime.Parse(args[4]);
        }

        if (string.Equals(args[1], "requests", StringComparison.OrdinalIgnoreCase))
        {
            DumpTransactionsMetrics(tableClient, args[0], startTimeOfSearch.ToUniversalTime(), endTimeOfSearch.ToUniversalTime(), args[2]);
        }
        else if (string.Equals(args[1], "capacity", StringComparison.OrdinalIgnoreCase)
            && string.Equals(args[0], "Blob", StringComparison.OrdinalIgnoreCase))
        {
            // Convert to UTC here as well so the PartitionKey range matches the UTC-based keys
            DumpCapacityMetrics(tableClient, args[0], startTimeOfSearch.ToUniversalTime(), endTimeOfSearch.ToUniversalTime(), args[2]);
        }
        else
        {
            Console.WriteLine("Invalid metrics type. Please provide Requests or Capacity. Capacity is available only for blob service");
        }
    }

    /// <summary>
    /// Given a service, start time and end time to search for, this method retrieves the metrics rows for requests and exports them to a CSV file
    /// </summary>
    /// <param name="tableClient">The table client for the storage account</param>
    /// <param name="serviceName">The name of the service interested in</param>
    /// <param name="startTimeForSearch">Start time for reporting</param>
    /// <param name="endTimeForSearch">End time for reporting</param>
    /// <param name="fileName">The file to write the report to</param>
    static void DumpTransactionsMetrics(CloudTableClient tableClient, string serviceName, DateTime startTimeForSearch, DateTime endTimeForSearch, string fileName)
    {
        // Transaction metrics are keyed by hour (yyyyMMddTHH00); the invariant culture keeps the Gregorian year
        string startingPK = startTimeForSearch.ToString("yyyyMMddTHH00", CultureInfo.InvariantCulture);
        string endingPK = endTimeForSearch.ToString("yyyyMMddTHH00", CultureInfo.InvariantCulture);

        // table names are case insensitive
        string tableName = string.Format("$MetricsTransactions{0}", serviceName);

        Console.WriteLine("Querying table '{0}' for PartitionKey >= '{1}' and PartitionKey <= '{2}'", tableName, startingPK, endingPK);

        TableServiceContext context = tableClient.GetDataServiceContext();

        // turn off entity tracking, as we only want to query and not issue deletes etc.
        context.MergeOption = MergeOption.NoTracking;

        // AsTableServiceQuery returns a query that follows continuation tokens for us
        CloudTableQuery<MetricsTransactionsEntity> query =
            (from entity in context.CreateQuery<MetricsTransactionsEntity>(tableName)
             where entity.PartitionKey.CompareTo(startingPK) >= 0
                && entity.PartitionKey.CompareTo(endingPK) <= 0
             select entity).AsTableServiceQuery<MetricsTransactionsEntity>();

        // now we have the query set. Let us iterate over all entities and store them in an output file,
        // overwriting the file if it already exists
        Console.WriteLine("Writing to '{0}'", fileName);
        using (StreamWriter writer = new StreamWriter(fileName))
        {
            // write the header
            writer.Write("Time, Category, Request Type, Total Ingress, Total Egress, Total Requests, Total Billable Requests,");
            writer.Write("Availability, Avg E2E Latency, Avg Server Latency, % Success, % Throttling Errors, % Timeout Errors, % Other Server Errors, % Other Client Errors, % Authorization Errors, % Network Errors, Success,");
            writer.Write("Anonymous Success, SAS Success, Throttling Error, Anonymous Throttling Error, SAS ThrottlingError, Client Timeout Error, Anonymous Client Timeout Error, SAS Client Timeout Error,");
            writer.Write("Server Timeout Error, Anonymous Server Timeout Error, SAS Server Timeout Error, Client Other Error, SAS Client Other Error, Anonymous Client Other Error,");
            writer.Write("Server Other Errors, SAS Server Other Errors, Anonymous Server Other Errors, Authorization Errors, Anonymous Authorization Error, SAS Authorization Error,");
            writer.WriteLine("Network Error, Anonymous Network Error, SAS Network Error");

            foreach (MetricsTransactionsEntity entity in query)
            {
                // RowKey has the form "<category>;<API name>"
                string[] rowKeys = entity.RowKey.Split(';');
                writer.WriteLine("{0}, {1}, {2}, {3}, {4}, {5}, {6}, {7}, {8}, {9}, {10}, {11}, {12}, {13}, {14}, {15}, {16}, {17}, {18}, {19}, {20}, {21}, {22}, {23}, {24}, {25}, {26}, {27}, {28}, {29}, {30}, {31}, {32}, {33}, {34}, {35}, {36}, {37}, {38}, {39}, {40}",
                    entity.PartitionKey,
                    rowKeys[0], // category - user | system
                    rowKeys[1], // request type is the API name (and "All" for service summary rows)
                    entity.TotalIngress, entity.TotalEgress, entity.TotalRequests, entity.TotalBillableRequests,
                    entity.Availability, entity.AverageE2ELatency, entity.AverageServerLatency,
                    entity.PercentSuccess, entity.PercentThrottlingError, entity.PercentTimeoutError,
                    entity.PercentServerOtherError, entity.PercentClientOtherError,
                    entity.PercentAuthorizationError, entity.PercentNetworkError,
                    entity.Success, entity.AnonymousSuccess, entity.SASSuccess,
                    entity.ThrottlingError, entity.AnonymousThrottlingError, entity.SASThrottlingError,
                    entity.ClientTimeoutError, entity.AnonymousClientTimeoutError, entity.SASClientTimeoutError,
                    entity.ServerTimeoutError, entity.AnonymousServerTimeoutError, entity.SASServerTimeoutError,
                    entity.ClientOtherError, entity.SASClientOtherError, entity.AnonymousClientOtherError,
                    entity.ServerOtherError, entity.SASServerOtherError, entity.AnonymousServerOtherError,
                    entity.AuthorizationError, entity.AnonymousAuthorizationError, entity.SASAuthorizationError,
                    entity.NetworkError, entity.AnonymousNetworkError, entity.SASNetworkError);
            }
        }
    }

    /// <summary>
    /// Given a service, start time and end time to search for, this method retrieves the metrics rows for capacity and exports them to a CSV file
    /// </summary>
    /// <param name="tableClient">The table client for the storage account</param>
    /// <param name="serviceName">The name of the service interested in</param>
    /// <param name="startTimeForSearch">Start time for reporting</param>
    /// <param name="endTimeForSearch">End time for reporting</param>
    /// <param name="fileName">The file to write the report to</param>
    static void DumpCapacityMetrics(CloudTableClient tableClient, string serviceName, DateTime startTimeForSearch, DateTime endTimeForSearch, string fileName)
    {
        // Capacity metrics are recorded once per day, so the key uses T0000
        string startingPK = startTimeForSearch.ToString("yyyyMMddT0000", CultureInfo.InvariantCulture);
        string endingPK = endTimeForSearch.ToString("yyyyMMddT0000", CultureInfo.InvariantCulture);

        // table names are case insensitive
        string tableName = string.Format("$MetricsCapacity{0}", serviceName);

        Console.WriteLine("Querying table '{0}' for PartitionKey >= '{1}' and PartitionKey <= '{2}'", tableName, startingPK, endingPK);

        TableServiceContext context = tableClient.GetDataServiceContext();

        // turn off entity tracking, as we only want to query and not issue deletes etc.
        context.MergeOption = MergeOption.NoTracking;

        CloudTableQuery<MetricsCapacityEntity> query =
            (from entity in context.CreateQuery<MetricsCapacityEntity>(tableName)
             where entity.PartitionKey.CompareTo(startingPK) >= 0
                && entity.PartitionKey.CompareTo(endingPK) <= 0
             select entity).AsTableServiceQuery<MetricsCapacityEntity>();

        // now we have the query set. Let us iterate over all entities and store them in an output file,
        // overwriting the file if it already exists
        Console.WriteLine("Writing to '{0}'", fileName);
        using (StreamWriter writer = new StreamWriter(fileName))
        {
            // write the header
            writer.WriteLine("Time, Category, Capacity (bytes), Container count, Object count");

            foreach (MetricsCapacityEntity entity in query)
            {
                writer.WriteLine("{0}, {1}, {2}, {3}, {4}", entity.PartitionKey, entity.RowKey, entity.Capacity, entity.ContainerCount, entity.ObjectCount);
            }
        }
    }
}
```

The definitions for entities used are:

```csharp
using System.Data.Services.Common;

[DataServiceKey("PartitionKey", "RowKey")]
public class MetricsCapacityEntity
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
    public long Capacity { get; set; }
    public long ContainerCount { get; set; }
    public long ObjectCount { get; set; }
}

[DataServiceKey("PartitionKey", "RowKey")]
public class MetricsTransactionsEntity
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
    public long TotalIngress { get; set; }
    public long TotalEgress { get; set; }
    public long TotalRequests { get; set; }
    public long TotalBillableRequests { get; set; }
    public double Availability { get; set; }
    public double AverageE2ELatency { get; set; }
    public double AverageServerLatency { get; set; }
    public double PercentSuccess { get; set; }
    public double PercentThrottlingError { get; set; }
    public double PercentTimeoutError { get; set; }
    public double PercentServerOtherError { get; set; }
    public double PercentClientOtherError { get; set; }
    public double PercentAuthorizationError { get; set; }
    public double PercentNetworkError { get; set; }
    public long Success { get; set; }
    public long AnonymousSuccess { get; set; }
    public long SASSuccess { get; set; }
    public long ThrottlingError { get; set; }
    public long AnonymousThrottlingError { get; set; }
    public long SASThrottlingError { get; set; }
    public long ClientTimeoutError { get; set; }
    public long AnonymousClientTimeoutError { get; set; }
    public long SASClientTimeoutError { get; set; }
    public long ServerTimeoutError { get; set; }
    public long AnonymousServerTimeoutError { get; set; }
    public long SASServerTimeoutError { get; set; }
    public long ClientOtherError { get; set; }
    public long AnonymousClientOtherError { get; set; }
    public long SASClientOtherError { get; set; }
    public long ServerOtherError { get; set; }
    public long AnonymousServerOtherError { get; set; }
    public long SASServerOtherError { get; set; }
    public long AuthorizationError { get; set; }
    public long AnonymousAuthorizationError { get; set; }
    public long SASAuthorizationError { get; set; }
    public long NetworkError { get; set; }
    public long AnonymousNetworkError { get; set; }
    public long SASNetworkError { get; set; }
}
```

Case Study

Let us walk through a sample scenario showing how this metrics data can be used. As a Windows Azure Storage customer, I would like to know how my service is doing and see the request trend between any two time periods.

Description: The following is a console program that takes as input the service name to retrieve the data for, the start and end times for the report, and the name of the file to export the data to in CSV format.

We will start with the method “ExportMetrics”. The method uses the time range provided as input arguments to create a query filter. Since the PartitionKey is of the format “YYYYMMDDTHH00”, we create the starting and ending PartitionKey filters. The table name is $MetricsTransactions followed by the name of the service to search for. Once we have these parameters, it is as simple as creating a normal table query using the TableServiceContext. We use the AsTableServiceQuery extension method, as it takes care of continuation tokens. The other important optimization is that we turn off entity tracking (MergeOption.NoTracking), the mode in which the context tracks every entity returned in the query response. We can do this here because the query is used solely for retrieval rather than for subsequent operations, such as Delete, on these entities. The class used to represent each row in the response is MetricsEntity, and its definition is given below. It is a plain C# class, except for the DataServiceKey attribute required by WCF Data Services .NET, and it has only the subset of properties that we are interested in.

Once we have the query, all we do is iterate over it, which executes the query. Behind the scenes, this CloudTableQuery instance may lazily execute multiple queries if needed to handle continuation tokens. We then write the results in CSV format. One could also import the data into a Windows Azure table or SQL Azure to provide richer reporting.

NOTE: Exception handling and parameter validation are omitted for brevity.

/// <summary>
/// Given a service name and a start and end time to search for, this method retrieves
/// the request metrics rows and exports them to a CSV file.
/// </summary>
/// <param name="tableClient">The table client used to query the metrics table</param>
/// <param name="serviceName">The name of the service interested in</param>
/// <param name="startTimeForSearch">Start time for reporting</param>
/// <param name="endTimeForSearch">End time for reporting</param>
/// <param name="fileName">The file to write the report to</param>
static void ExportMetrics(CloudTableClient tableClient, string serviceName, DateTime startTimeForSearch, DateTime endTimeForSearch, string fileName)
{
    string startingPK = startTimeForSearch.ToString("yyyyMMddTHH00");
    string endingPK = endTimeForSearch.ToString("yyyyMMddTHH00");

    // table names are case insensitive
    string tableName = "$MetricsTransactions" + serviceName;

    Console.WriteLine("Querying table '{0}' for PartitionKey >= '{1}' and PartitionKey <= '{2}'", tableName, startingPK, endingPK);

    TableServiceContext context = tableClient.GetDataServiceContext();

    // turn off entity tracking as we only want to query and not issue deletes etc.
    context.MergeOption = MergeOption.NoTracking;

    CloudTableQuery<MetricsEntity> query = (from entity in context.CreateQuery<MetricsEntity>(tableName)
                                            where entity.PartitionKey.CompareTo(startingPK) >= 0
                                            && entity.PartitionKey.CompareTo(endingPK) <= 0
                                            select entity).AsTableServiceQuery<MetricsEntity>();

    // now we have the query set. Let us iterate over all entities and store into an output file.
    // Also overwrite the file
    using (Stream stream = new FileStream(fileName, FileMode.Create, FileAccess.ReadWrite))
    {
        using (StreamWriter writer = new StreamWriter(stream))
        {
            // write the header
            writer.WriteLine("Time, Category, Request Type, Total Ingress, Total Egress, Total Requests, Total Billable Requests, Availability, Avg E2E Latency, Avg Server Latency, % Success, % Throttling, % Timeout, % Misc. Server Errors, % Misc. Client Errors, % Authorization Errors, % Network Errors");

            foreach (MetricsEntity entity in query)
            {
                string[] rowKeys = entity.RowKey.Split(';');
                writer.WriteLine("{0}, {1}, {2}, {3}, {4}, {5}, {6}, {7}, {8}, {9}, {10}, {11}, {12}, {13}, {14}, {15}, {16}",
                    entity.PartitionKey,
                    rowKeys[0], // category - user | system
                    rowKeys[1], // request type is the API name (and "All" for service summary rows)
                    entity.TotalIngress,
                    entity.TotalEgress,
                    entity.TotalRequests,
                    entity.TotalBillableRequests,
                    entity.Availability,
                    entity.AverageE2ELatency,
                    entity.AverageServerLatency,
                    entity.PercentSuccess,
                    entity.PercentThrottlingError,
                    entity.PercentTimeoutError,
                    entity.PercentServerOtherError,
                    entity.PercentClientOtherError,
                    entity.PercentAuthorizationError,
                    entity.PercentNetworkError);
            }
        }
    }
}

[DataServiceKey("PartitionKey", "RowKey")]
public class MetricsEntity
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
    public long TotalIngress { get; set; }
    public long TotalEgress { get; set; }
    public long TotalRequests { get; set; }
    public long TotalBillableRequests { get; set; }
    public double Availability { get; set; }
    public double AverageE2ELatency { get; set; }
    public double AverageServerLatency { get; set; }
    public double PercentSuccess { get; set; }
    public double PercentThrottlingError { get; set; }
    public double PercentTimeoutError { get; set; }
    public double PercentServerOtherError { get; set; }
    public double PercentClientOtherError { get; set; }
    public double PercentAuthorizationError { get; set; }
    public double PercentNetworkError { get; set; }
}

The Main method simply parses the input.

static void Main(string[] args)
{
    if (args.Length < 4)
    {
        Console.WriteLine("Usage: MetricsReporter <service to search - blob|table|queue> <Start time in UTC for report> <End time in UTC for report> <file name to export to>.");
        Console.WriteLine("Example: MetricsReporter blob \"2011-06-26T20:30Z\" \"2011-06-28T22:00Z\" report.csv");
        return;
    }

    // ConnectionString is assumed to be a storage account connection string defined elsewhere in the program
    CloudStorageAccount account = CloudStorageAccount.Parse(ConnectionString);
    CloudTableClient tableClient = account.CreateCloudTableClient();

    DateTime startTimeOfSearch = DateTime.Parse(args[1]).ToUniversalTime();
    DateTime endTimeOfSearch = DateTime.Parse(args[2]).ToUniversalTime();

    ExportMetrics(tableClient, args[0], startTimeOfSearch, endTimeOfSearch, args[3]);
}

Once we have the data exported as CSV files, one can use Excel to import the data and create the required charts.

For more information, please see the MSDN documentation.

Jai Haridas, Monilee Atkinson and Brad Calder explained Windows Azure Storage Logging: Using Logs to Track Storage Requests in an 8/2/2011 post to the Windows Azure Storage Team Blog:

Windows Azure Storage Logging provides a trace of the executed requests against your storage account (Blobs, Tables and Queues). It allows you to monitor requests to your storage accounts, understand performance of individual requests, analyze usage of specific containers and blobs, and debug storage APIs at a request level.

What is logged?

You control what types of requests are logged for your account. We categorize requests into 3 kinds: READ, WRITE and DELETE operations. You can set the logging properties for each service to indicate the types of operations you are interested in. For example, opting to have delete requests logged for the blob service will result in blob or container deletes being recorded. Similarly, logging reads and writes will capture reads/writes on properties or on the actual objects in the service of interest. Each of these options must be explicitly set to true (data is captured) or false (no data is captured).

What requests are logged?

The following authenticated and anonymous requests are logged.

- Authenticated Requests:

- Signed requests to user data. This includes failed requests, such as those that were throttled or that failed with network exceptions.

- SAS (Shared Access Signature) requests whose authentication is validated

- User requests to analytics data

- Anonymous Requests:

- Successful anonymous requests.

- If an anonymous request results in one of the following errors, it will be logged:

- Server errors – Typically seen as InternalServerError or ServerBusy

- Client and Server Timeout errors (seen as InternalServerError with StorageErrorCode = Timeout)

- GET requests that fail with 304 (Not Modified)

The following requests are not logged:

- Authenticated Requests:

- System requests to write and delete the analytics data

- Anonymous Requests:

- Failed anonymous requests (other than those listed above) are not logged so that the logging system is not spammed with invalid anonymous requests.

During normal operation all requests are logged, but it is important to note that logging is provided on a best-effort basis. This means we do not guarantee that every request will be logged, because log data is buffered in memory at the storage front-ends before being written out, and if a role is restarted, its buffer of logs is lost.

What data do my logs contain and in what format?

Each request is represented by one or more log records. A log record is a single line in the blob log, and the fields in the log record are ‘;’ separated. We opted for a ‘;’ separated log file rather than a custom format or XML so that existing tools such as LogParser and Excel can easily be used or extended to analyze the logs. Any field that may contain ‘;’ is enclosed in quotes and HTML-encoded (using the HtmlEncode method).

Each record will contain the following fields:

- Log Version (string): The log version. We currently use “1.0”.

- Transaction Start Time (timestamp): The UTC time at which the request was received by our service.

- REST Operation Type (string): One of the REST APIs. See the “Operation Type: What APIs are logged?” section below for more details.

- Request Status (string): The status of the request operation. See the Transaction Status section below for more details.

- HTTP Status Code (string): E.g. “200”. This can also be “Unknown” in cases where communication with the client is interrupted before we can set the status code.

- E2E Latency (duration): The total time in milliseconds taken to complete a request in the Windows Azure Storage Service. This value includes the required processing time within Windows Azure Storage to read the request, send the response, and receive acknowledgement of the response.

- Server Latency (duration): The total processing time in milliseconds taken by the Windows Azure Storage Service to process a request. This value does not include the network latency specified in E2E Latency.

- Authentication type (string): authenticated, SAS, or anonymous.

- Requestor Account Name (string): The account making the request. For anonymous and SAS requests this will be left empty.

- Owner Account Name (string): The owner of the object(s) being accessed.

- Service Type (string): The service the request was for (blob, queue, table).

- Request URL (string): The full request URL that came into the service – e.g. “PUT http://myaccount.blob.core.windows.net/mycontainer/myblob?comp=block&blockId=”. This is always quoted.

- Object Key (string): This is the key of the object the request is operating on. E.g. for Blob: “/myaccount/mycontainer/myblob”. E.g. for Queue: “/myaccount/myqueue”. E.g. For Table: “/myaccount/mytable/partitionKey/rowKey”. Note: If custom domain names are used, we still have the actual account name in this key, not the domain name. This field is always quoted.

- Request ID (guid): The x-ms-request-id of the request. This is the request id assigned by the service.

- Operation Number (int): There is a unique number for each operation executed for the request that is logged. Though most operations will just have “0” for this (see the examples below), some operations will contain multiple entries for a single request.

- Copy Blob will have 3 entries in total and operation number can be used to distinguish them. The log entry for Copy will have operation number “0” and the source read and destination write will have 1 and 2 respectively.

- Table Batch command. An example is a batch command with two Inserts: the Batch would have “0” for operation number, the first Insert would have “1”, and the second Insert would have “2”.

- Client IP (string): The client IP from which the request came.

- Request Version (string): The x-ms-version used to execute the request. This is the same x-ms-version response header that we return.

- Request Header Size (long): The size of the request header.

- Request Packet Size (long): The size of the request payload read by the service.

- Response Header Size (long): The size of the response header.

- Response Packet Size (long): The size of the response payload written by the service.

NOTE: The above request and response sizes may not be filled in if a request fails.

- Request Content Length (long): The value of the Content-Length header. This should be the same as the Request Packet Size (except in error scenarios) and helps confirm the content length sent by clients.

- Request MD5 (string): The value of Content-MD5 (or x-ms-content-md5) header passed in the request. This is the MD5 that represents the content transmitted over the wire. For PutBlockList operation, this means that the value stored is for the content of the PutBlockList and not the blob itself.

- Server MD5 (string): The MD5 value computed on the server. For PutBlob, PutPage and PutBlock, we store the server-side computed content-md5 in this field even if MD5 was not sent in the request.

- ETag (string): For objects for which an ETag is returned, the ETag of the object is logged. Please note that we will not log this in operations that can return multiple objects. This field is always quoted.

- Last Modified Time (DateTime): For objects where a Last Modified Time (LMT) is returned, it will be logged. If the LMT is not returned, it will be ‘’ (empty string). Please note that we will not log this in operations that can return multiple objects.

- ConditionsUsed (string): Semicolon-separated list of ConditionName=value. This is always quoted. ConditionName can be one of the following:

- If-Modified-Since

- If-Unmodified-Since

- If-Match

- If-None-Match

- User Agent (string): The User-Agent header.

- Referrer (string): The “Referrer” header. We log up to the first 1 KB chars.

- Client Request ID (string): This is custom logging information which can be passed by the user via x-ms-client-request-id header (see below for more details). This is opaque to the service and has a limit of 1KB characters. This field is always quoted.
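Because quoted fields may themselves contain ‘;’, a plain string.Split is not enough to read a record. The following is a minimal C# sketch of a quote-aware splitter for version 1.0 records. StorageLogRecord, Parse and the index constants are hypothetical names (the indices are counted from the field list above); it assumes any embedded quotes inside a quoted field come in pairs, and it leaves HTML-decoding of quoted fields (for example with System.Net.WebUtility.HtmlDecode) to the caller.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper, not part of any Windows Azure SDK.
public static class StorageLogRecord
{
    // Zero-based positions of some version 1.0 fields, counted from the field list.
    public const int OperationType = 2;
    public const int RequestStatus = 3;
    public const int HttpStatusCode = 4;
    public const int ObjectKey = 12;
    public const int RequestId = 13;
    public const int OperationNumber = 14;
    public const int ClientRequestId = 29;
    public const int FieldCountV1 = 30;

    // Splits a record on ';' except inside double-quoted fields,
    // then strips one pair of surrounding quotes from each field.
    public static string[] Parse(string line)
    {
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;

        foreach (char c in line)
        {
            if (c == ';' && !inQuotes)
            {
                fields.Add(Unquote(current.ToString()));
                current.Clear();
                continue;
            }
            if (c == '"')
            {
                inQuotes = !inQuotes; // entering or leaving a quoted field
            }
            current.Append(c);
        }
        fields.Add(Unquote(current.ToString()));

        if (fields[0] != "1.0")
            throw new FormatException("Unsupported log version: " + fields[0]);
        // Extra trailing fields are tolerated; only the first 30 are required.
        if (fields.Count < FieldCountV1)
            throw new FormatException("Expected at least 30 fields but found " + fields.Count);

        return fields.ToArray();
    }

    static string Unquote(string field) =>
        field.Length >= 2 && field[0] == '"' && field[field.Length - 1] == '"'
            ? field.Substring(1, field.Length - 2)
            : field;
}
```

Tolerating extra trailing columns keeps such a parser working if new fields are appended to the end of a record.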

Examples

Put Blob

1.0;2011-07-28T18:02:40.6271789Z;PutBlob;Success;201;28;21;authenticated;sally;sally;blob;"http://sally.blob.core.windows.net/thumbnails/lake.jpg?timeout=30000";"/sally/thumbnails/lake.jpg";fb658ee6-6123-41f5-81e2-4bfdc178fea3;0;201.9.10.20;2009-09-19;438;100;223;0;100;;"66CbMXKirxDeTr82SXBKbg==";"0x8CE1B67AD25AA05";Thursday, 28-Jul-11 18:02:40 GMT;;;;"7/28/2011 6:02:40 PM ab970a57-4a49-45c4-baa9-20b687941e32"

Anonymous Get Blob

1.0;2011-07-28T18:52:40.9241789Z;GetBlob;AnonymousSuccess;200;18;10;anonymous;;sally;blob;"http://sally.blob.core.windows.net/thumbnails/lake.jpg?timeout=30000";"/sally/thumbnails/lake.jpg";a84aa705-8a85-48c5-b064-b43bd22979c3;0;123.100.2.10;2009-09-19;252;0;265;100;0;;;"0x8CE1B6EA95033D5";Thursday, 28-Jul-11 18:52:40 GMT;;;;"7/28/2011 6:52:40 PM ba98eb12-700b-4d53-9230-33a3330571fc"

Copy Blob

Copy Blob will have 3 log lines logged. The request ID will be the same, but the operation number will be incremented. The line with operation number 0 represents the entire Copy Blob operation. Operation number 1 represents the source blob retrieval for the copy; this operation is called CopyBlobSource. Operation number 2 represents the destination blob information, and the operation is called CopyBlobDestination. The CopyBlobSource record will not have information such as request size or response size set, and is meant only to provide information such as the name and conditions used for the source blob.

1.0;2011-07-28T18:02:40.6526789Z;CopyBlob;Success;201;28;28;authenticated;account8ce1b67a9e80b35;sally;blob;"http://sally.blob.core.windows.net/thumbnails/lake.jpg?timeout=30000";"/sally/thumbnails/lakebck.jpg";85ba10a5-b7e2-495e-8033-588e08628c5d;0;268.20.203.21;2009-09-19;505;0;188;0;0;;;"0x8CE1B67AD473BC5";Thursday, 28-Jul-11 18:02:40 GMT;;;;"7/28/2011 6:02:40 PM 683803d3-538f-4ba8-bc7c-24c83aca5b1a"

1.0;2011-07-28T18:02:40.6526789Z;CopyBlobSource;Success;201;28;28;authenticated;sally;sally;blob;"http://sally.blob.core.windows.net/thumbnails/lake.jpg?timeout=30000";"/sally/thumbnails/d1c77aca-cf65-4166-9401-0fd3df7cf754";85ba10a5-b7e2-495e-8033-588e08628c5d;1;268.20.203.21;2009-09-19;505;0;188;0;0;;;;;;;;"7/28/2011 6:02:40 PM 683803d3-538f-4ba8-bc7c-24c83aca5b1a"

1.0;2011-07-28T18:02:40.6526789Z;CopyBlobDestination;Success;201;28;28;authenticated;sally;sally;blob;"http://sally.blob.core.windows.net/thumbnails/lake.jpg?timeout=30000";"/sally/thumbnails/lakebck.jpg";85ba10a5-b7e2-495e-8033-588e08628c5d;2;268.20.203.21;2009-09-19;505;0;188;0;0;;;;;;;;"7/28/2011 6:02:40 PM 683803d3-538f-4ba8-bc7c-24c83aca5b1a"

Table Batch Request (entity group transaction) with 2 inserts

The first record stands for the batch request, and the latter two represent the actual inserts. NOTE: the key for the batch is the key of the first command in the batch. The operation number is used to identify the individual commands in the batch.

1.0;2011-07-28T18:02:40.9701789Z;EntityGroupTransaction;Success;202;104;61;authenticated;sally;sally;table;"http://sally.table.core.windows.net/$batch";"/sally";b59c0c76-dc04-48b7-9235-80124f0066db;0;268.20.203.21;2009-09-19;520;100918;235;189150;100918;;;;;;;;"7/28/2011 6:02:40 PM 70b9cb5c-8e2f-4e8a-bf52-7b79fcdc56e7"

1.0;2011-07-28T18:02:40.9701789Z;InsertEntity;Success;202;104;61;authenticated;sally;sally;table;"http://sally.table.core.windows.net/$batch";"/sally/orders/5f1a7147-28df-4398-acf9-7d587c828df9/a9225cef-04aa-4bbb-bf3b-b0391e3c436e";b59c0c76-dc04-48b7-9235-80124f0066db;1;268.20.203.21;2009-09-19;520;100918;235;189150;100918;;;"W/"datetime'2011-07-28T18%3A02%3A41.0086789Z'"";Thursday, 28-Jul-11 18:02:41 GMT;;;;"7/28/2011 6:02:40 PM 70b9cb5c-8e2f-4e8a-bf52-7b79fcdc56e7"

1.0;2011-07-28T18:02:40.9701789Z;InsertEntity;Success;202;104;61;authenticated;sally;sally;table;"http://sally.table.core.windows.net/$batch";"/sally/orders/5f1a7147-28df-4398-acf9-7d587c828df9/a9225cef-04aa-4bbb-bf3b-b0391e3c435d";b59c0c76-dc04-48b7-9235-80124f0066db;2;268.20.203.21;2009-09-19;520;100918;235;189150;100918;;;"W/"datetime'2011-07-28T18%3A02%3A41.0086789Z'"";Thursday, 28-Jul-11 18:02:41 GMT;;;;"7/28/2011 6:02:40 PM 70b9cb5c-8e2f-4e8a-bf52-7b79fcdc56e7"
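Since a Copy Blob or table batch request produces several records that share one request ID, it can help to regroup them when analyzing logs. The C# sketch below is a hypothetical helper (RequestGrouping and GroupByRequest are illustrative names) that assumes each record has already been split into fields and reduced to its request ID, operation number and operation type. Each group is ordered by operation number so that the main record (operation number 0) comes first.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: regroups multi-record requests such as CopyBlob or a table batch.
public static class RequestGrouping
{
    // Maps each request ID to its operation types, ordered by operation number
    // so that the main record (operation number 0) comes first.
    public static Dictionary<string, List<string>> GroupByRequest(
        IEnumerable<(string RequestId, int OperationNumber, string OperationType)> records) =>
        records
            .GroupBy(r => r.RequestId)
            .ToDictionary(
                g => g.Key,
                g => g.OrderBy(r => r.OperationNumber).Select(r => r.OperationType).ToList());
}
```

Applied to the Copy Blob records above, the group for request 85ba10a5-... would read CopyBlob, CopyBlobSource, CopyBlobDestination.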

Operation Type: What APIs are logged?

The APIs recorded in the logs are listed by service below, and they match the REST APIs for Windows Azure Storage. Note that a request that executes multiple operations (e.g. Batch) is logged as multiple records: a main record (with Operation Number ‘0’) and an individual record for each sub-operation.

Blob Service APIs

- AcquireLease

- BreakLease

- ClearPage

- CopyBlob

- CopyBlobSource: Information about the source that was copied and is used only for logs

- CopyBlobDestination: Information about the destination and is used only for logs

- CreateContainer

- DeleteBlob

- DeleteContainer

- GetBlob

- GetBlobMetadata

- GetBlobProperties

- GetBlockList

- GetContainerACL

- GetContainerMetadata

- GetContainerProperties

- GetLeaseInfo

- GetPageRegions

- LeaseBlob

- ListBlobs

- ListContainers

- PutBlob

- PutBlockList

- PutBlock

- PutPage

- ReleaseLease

- RenewLease

- SetBlobMetadata

- SetBlobProperties

- SetContainerACL

- SetContainerMetadata

- SnapshotBlob

- SetBlobServiceProperties

- GetBlobServiceProperties

Queue Service APIs

- ClearMessages

- CreateQueue

- DeleteQueue

- DeleteMessage

- GetQueueMetadata

- GetQueue

- GetMessage

- GetMessages

- ListQueues

- PeekMessage

- PeekMessages

- PutMessage

- SetQueueMetadata

- SetQueueServiceProperties

- GetQueueServiceProperties

Table Service APIs

- EntityGroupTransaction

- CreateTable

- DeleteTable

- DeleteEntity

- InsertEntity

- QueryEntity

- QueryEntities

- QueryTable

- QueryTables

- UpdateEntity

- MergeEntity

- SetTableServiceProperties

- GetTableServiceProperties

Transaction Status

This table shows the different statuses that can be written to your log. …

See the original post for an even longer (~ 10-foot) table.

Client Request Id

One of the logged fields is called the Client Request Id. A client can choose to pass this client-side ID, up to 1 KB in size, in the HTTP header “x-ms-client-request-id” with every request, and it will be logged for that request. Note that if you use the optional client request ID header, it must be included when constructing the canonicalized headers, since all headers starting with “x-ms” are part of the resource canonicalization used for signing a request.

Since this is treated as an ID that a client may associate with a request, it is very helpful when investigating requests that failed due to network or timeout errors. For example, you can search the log for requests with a given client request ID to see whether the request timed out, and whether the E2E latency indicates a slow network connection. As noted above, some requests may not get logged due to node failures.
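A minimal C# sketch of attaching a client request ID, assuming requests are built with System.Net.Http.HttpRequestMessage. ClientRequestIds, Create and Attach are hypothetical names; the timestamp-plus-GUID shape loosely mirrors the IDs in the log examples, and treating the 1 KB limit as 1024 characters is an assumption. Signing is deliberately left out: the header has to be added before the SharedKey signature is computed, because x-ms-* headers are part of the canonicalized headers.

```csharp
using System;
using System.Globalization;
using System.Net.Http;

// Hypothetical helper, not part of the Windows Azure storage client library.
public static class ClientRequestIds
{
    // The post allows up to 1 KB; this sketch treats that as 1024 characters.
    public const int MaxLength = 1024;

    // Builds an ID from a UTC timestamp plus a GUID, so it can be found in the logs later.
    public static string Create(DateTime utcNow) =>
        utcNow.ToString("yyyy-MM-dd'T'HH:mm:ss.fff'Z'", CultureInfo.InvariantCulture) + " " + Guid.NewGuid();

    // Adds the x-ms-client-request-id header. Sign the request only AFTER this call.
    public static void Attach(HttpRequestMessage request, string clientRequestId)
    {
        if (clientRequestId.Length > MaxLength)
            throw new ArgumentException("Client request id exceeds 1 KB.", nameof(clientRequestId));
        request.Headers.Add("x-ms-client-request-id", clientRequestId);
    }
}
```

Keeping the generated ID in your own application logs is what makes the later search in the storage log possible.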

Turning Logging On

A REST API call, as shown below, is used to turn on Logging. In this example, logging is turned on for deletes and writes, but not for reads. The logging retention policy is enabled and set to seven days, so the analytics service will take care of deleting log data older than seven days for you at no additional cost (the metrics retention policy in the same request is set to ten days). Note that you need to turn on logging separately for blobs, tables, and queues. The example below demonstrates how to turn logging on for blobs.

Request:

PUT http://sally.blob.core.windows.net/?restype=service&comp=properties HTTP/1.1
x-ms-version: 2009-09-19
x-ms-date: Fri, 25 Mar 2011 23:13:08 GMT
Authorization: SharedKey sally:zvfPm5cm8CiVP2VA9oJGYpHAPKxQb1HD44IWmubot0A=
Host: sally.blob.core.windows.net

<?xml version="1.0" encoding="utf-8"?>
<StorageServiceProperties>
    <Logging>
        <Version>1.0</Version>
        <Delete>true</Delete>
        <Read>false</Read>
        <Write>true</Write>
        <RetentionPolicy>
            <Enabled>true</Enabled>
            <Days>7</Days>
        </RetentionPolicy>
    </Logging>
    <Metrics>
        <Version>1.0</Version>
        <Enabled>true</Enabled>
        <IncludeAPIs>true</IncludeAPIs>
        <RetentionPolicy>
            <Enabled>true</Enabled>
            <Days>10</Days>
        </RetentionPolicy>
    </Metrics>
</StorageServiceProperties>

Response:

HTTP/1.1 202
Content-Length: 0
Server: Windows-Azure-Metrics/1.0 Microsoft-HTTPAPI/2.0
x-ms-request-id: 4d02712a-a68a-4763-8f9b-c2526625be68
x-ms-version: 2009-09-19
Date: Fri, 25 Mar 2011 23:13:08 GMT

The Logging and Metrics elements in the request above show how to configure what you want to track in your analytics logs and metrics data. The logging configuration values are described below:

- Version - The version of Analytics Logging used to record the entry.

- Delete (Boolean) – set to true if you want to track delete requests and false if you do not

- Read (Boolean) – set to true if you want to track read requests and false if you do not

- Write (Boolean) – set to true if you want to track write requests and false if you do not

- Retention policy – this is where you set the retention policy to help you manage the size of your analytics data

- Enabled (Boolean) – set to true if you want to enable a retention policy. We recommend that you do this.

- Days (int) – the number of days you want to keep your analytics logging data. This can be a max of 365 days and a min of 1 day.
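The logging settings above map directly onto XML elements, so they can be generated rather than hand-written. This is a minimal C# sketch using System.Xml.Linq; LoggingSettings and BuildLoggingElement are hypothetical names. It enforces the 1 to 365 day range described above and, as an assumption about how to express a disabled policy, writes Enabled=false with no Days element when no retention is requested. The caller would still place the element inside <StorageServiceProperties> together with a <Metrics> element, as in the request above, and then sign and send the request.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: builds the <Logging> element of a StorageServiceProperties body.
public static class LoggingSettings
{
    public static XElement BuildLoggingElement(bool delete, bool read, bool write, int? retentionDays)
    {
        // When a retention policy is enabled, Days must be between 1 and 365.
        if (retentionDays.HasValue && (retentionDays.Value < 1 || retentionDays.Value > 365))
            throw new ArgumentOutOfRangeException(nameof(retentionDays), "Retention must be 1 to 365 days.");

        var retention = new XElement("RetentionPolicy",
            new XElement("Enabled", retentionDays.HasValue ? "true" : "false"));
        if (retentionDays.HasValue)
            retention.Add(new XElement("Days", retentionDays.Value));

        // Booleans are written as lowercase "true"/"false", as in the request example.
        return new XElement("Logging",
            new XElement("Version", "1.0"),
            new XElement("Delete", delete ? "true" : "false"),
            new XElement("Read", read ? "true" : "false"),
            new XElement("Write", write ? "true" : "false"),
            retention);
    }
}
```

For example, BuildLoggingElement(true, false, true, 7) reproduces the <Logging> element of the blob request shown earlier.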

For more information, please see the MSDN documentation.

Where can I find the logs and how does the system store them?

The analytics logging data is stored as block blobs in a container called $logs in your blob namespace for the storage account being logged. The $logs container is created automatically when you turn logging on and once created this container cannot be deleted, though the blobs stored in the container can be deleted. The $logs container can be accessed using “http://<accountname>.blob.core.windows.net/$logs” URL. Note that normal container listing operations that you issue to list your containers will not list the $logs container. But you can list the blobs inside the $logs container itself. Logs will be organized in the $logs namespace per hour by service type (Blob, Table and Queue). Log entries are written only if there are eligible requests to the service and the operation type matches the type opted for logging. For example, if there was no table activity on an account for an hour but we had blob activity, no logs would be created for the table service but we would have some for blob service. If in the next hour there is table activity, table logs would be created for that hour.

We use block blobs to store the log entries, as block blobs represent files better than page blobs do. In addition, the two-phase write semantics of block blobs allow our system to write a set of log entries as a block in the block blob. Log entries are accumulated and written to a block when the size reaches 4 MB or if it has been up to 5 minutes since entries were last flushed to a block. The system will commit the block blob if the size of the uncommitted blob reaches 150 MB or if it has been up to 5 minutes since the first block was uploaded, whichever is reached first. Once a blob is committed, it is not updated with any more blocks of log entries. Since a block blob is available to read only after it is committed, you can be assured that once a log blob is committed it will never be updated again.

NOTE: Applications should not take any dependency on the above mentioned size and time trigger for flushing a log entry or committing the blob as it can change without notice.

What is the naming convention used for logs?

Logging in a distributed system is a challenging problem. What makes it challenging is the fact that there are many servers that can process requests for a single account and hence be a source for these log entries. Logs from various sources need to be combined into a single log. Moreover, clock skews cannot be ruled out and the number of log entries produced by a single account in a distributed system can easily run into thousands of log entries per second. To ensure that we provide a pattern to process these logs efficiently despite these challenges, we have a naming scheme and we store additional metadata for the logs that allow easy log processing.

The log name under the $logs container will have the following format:

<service name>/YYYY/MM/DD/hhmm/<Counter>.log

- Service Name: “blob”, “table”, “queue”

- YYYY – The four digit year for the log

- MM – The two digit month for the log

- DD – The two digit day for the log

- hh – The two digit hour (24 hour format) representing the starting hour for all the logs. All timestamps are in UTC

- mm – The two digit number representing the starting minute for all the logs. This is always 00 for this version and kept for future use

- Counter – A zero based counter as there can be multiple logs generated for a single hour. The counter is padded to be 6 digits. The counter progress is based on the last log’s counter value within a given hour. Hence if you delete the last log blob, you may have the same blob name repeat again. It is recommended to not delete the blob logs right away.

The following are properties of the logs:

- A request is logged based on when it ends. For example, if a request starts at 13:57 and lasts for 5 minutes, it is logged at the time it ends, so it will appear in a log with hhmm=1400.

- Log entries can be recorded out of order, which means that inspecting just the first and last log entries is not sufficient to determine whether the log contains the time range you are interested in.
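To make the naming rules concrete, the following C# sketch predicts the $logs blob name for a request from its start time and duration, bucketing by the hour in which the request ends. LogBlobNaming and For are hypothetical names. The counter cannot be derived from the request itself, so it is passed in; the sketch only shows the zero-padded six-digit form.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: predicts the $logs blob name a request would be written to.
public static class LogBlobNaming
{
    public static string For(string service, DateTime requestStartUtc, TimeSpan duration, int counter)
    {
        // Requests are bucketed by the UTC hour in which they END.
        DateTime endUtc = requestStartUtc + duration;

        // <service name>/YYYY/MM/DD/hhmm/<Counter>.log, with mm fixed at 00 and a 6-digit counter.
        return string.Format(CultureInfo.InvariantCulture,
            "{0}/{1:yyyy}/{1:MM}/{1:dd}/{1:HH}00/{2:D6}.log",
            service, endUtc, counter);
    }
}
```

With an illustrative date of 2011-03-04, a blob request starting at 13:57 and lasting 5 minutes maps to blob/2011/03/04/1400/000000.log, matching the hhmm=1400 case described above.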

To aid log analysis we store the following metadata for each of the log blobs:

- LogType = The type of log entries that a log contains. It is described as a combination of read, write and delete. The types will be comma separated. This allows you to download only blobs that have the operation types you are interested in.

- StartTime = The minimum time of a log entry in the log. It is of form YYYY-MM-DDThh:mm:ssZ. This represents the start time for a request that is logged in the blob.

- EndTime = The maximum time of a log entry in the log of form YYYY-MM-DDThh:mm:ssZ. This represents the maximum start time of a request logged in the blob.

- LogVersion = The version of the log format. Currently 1.0. This can be used to process a given blob as all entries in the blob will conform to this version.

With the above, you can list all of the blobs with the “include=metadata” option to quickly see which log blobs contain the time range you want to process.

For example, assume we have a blob log that contains write events generated at 05:10:00 UTC on 2011/03/04 and contains requests that started from 05:02:30.0000001Z until 05:08:05.1000000Z. The log name will be $logs/blob/2011/03/04/0500/000000.log, and the metadata will contain the following properties:

- LogType=write

- StartTime=2011-03-04T05:02:30.0000001Z

- EndTime=2011-03-04T05:08:05.1000000Z

- LogVersion=1.0
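Using that metadata, a log processor can decide which blobs are worth downloading before reading any of them. The C# sketch below is a hypothetical filter (LogBlobFilter and IsCandidate are illustrative names); it assumes the metadata values have already been read from a List Blobs response with include=metadata, checks that LogType includes the wanted operation type, and tests whether the blob's StartTime/EndTime range overlaps the window of interest.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: decides from a log blob's metadata whether it is worth downloading.
public static class LogBlobFilter
{
    // True when the blob's LogType includes wantedType and its [StartTime, EndTime]
    // range of request start times overlaps the window [fromUtc, toUtc].
    public static bool IsCandidate(string logType, string startTime, string endTime,
                                   string wantedType, DateTime fromUtc, DateTime toUtc)
    {
        bool typeMatches = Array.Exists(
            logType.Split(','),
            t => string.Equals(t.Trim(), wantedType, StringComparison.OrdinalIgnoreCase));
        if (!typeMatches)
            return false;

        DateTime start = ParseUtc(startTime);
        DateTime end = ParseUtc(endTime);
        return start <= toUtc && end >= fromUtc;
    }

    // Metadata times end in 'Z'; keep them in UTC rather than converting to local time.
    static DateTime ParseUtc(string value) =>
        DateTime.Parse(value, CultureInfo.InvariantCulture,
                       DateTimeStyles.AdjustToUniversal | DateTimeStyles.AssumeUniversal);
}
```

An overlap test is used rather than checking only the first and last entries, because entries inside a blob can be out of order.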

Note that duplicate log records may exist in logs generated for the same hour; they can be detected by checking for a duplicate RequestId and Operation Number.
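A minimal C# sketch of that duplicate check, assuming each record has already been split into fields and its RequestId, Operation Number and original line extracted. LogDeduplication and Deduplicate are hypothetical names; the first record seen for each (RequestId, Operation Number) pair is kept and input order is preserved.

```csharp
using System.Collections.Generic;

// Hypothetical helper: drops duplicate log records by (RequestId, OperationNumber).
public static class LogDeduplication
{
    public static List<string> Deduplicate(IEnumerable<(string RequestId, int OperationNumber, string Line)> records)
    {
        var seen = new HashSet<(string, int)>();
        var unique = new List<string>();
        foreach (var r in records)
        {
            // HashSet.Add returns false when the key was already present.
            if (seen.Add((r.RequestId, r.OperationNumber)))
                unique.Add(r.Line);
        }
        return unique;
    }
}
```

The operation number must be part of the key; otherwise the legitimate sub-records of a Copy Blob or batch request would be discarded as duplicates.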

Operations on $logs container and manipulating log data

As we mentioned above, once you enable logging, your log data is stored as block blobs in a container called $logs in the blob namespace of your account. You can access your $logs container using http://<accountname>.blob.core.windows.net/$logs. To list your logs you can use the list blobs API. The logs are stored organized by service type (blob, table and queue) and are sorted by generation date/time within each service type. The log name under the $logs container will have the following format: <service name>/YYYY/MM/DD/hhmm/<Counter>.log

The following operations are the operations allowed on the $logs container:

- List blobs in $logs container. (Note that $logs will not be displayed in result of listing all containers in the account namespace).

- Read committed blobs under $logs.

- Delete specific logs. Note: logs can be deleted but the container itself cannot be deleted.

- Get metadata/properties for the log.

An example request for each type of operation can be found below:

- List logs

GET http://sally.blob.core.windows.net/$logs?restype=container&comp=list

- List logs generated for blob service from 2011-03-24 05:00 until 05:59

GET http://sally.blob.core.windows.net/$logs?restype=container&comp=list&prefix=blob/2011/03/24/0500/&include=metadata

- Download a specific log

GET http://sally.blob.core.windows.net/$logs/blob/2011/03/24/0500/000000.log

- Delete a specific log

DELETE http://sally.blob.core.windows.net/$logs/blob/2011/03/24/0500/000000.log

To narrow the enumeration of logs, you can pass a prefix when using the list blobs API. For example, to filter blobs by the date/time the logs were generated, you can pass a date/time as the prefix (blob/2011/04/24/) when using the list blobs API.

Listing all logs generated in the month of March in 2011 for the Blob service would be done as follows: http://myaccount.blob.core.windows.net/$logs?restype=container&comp=list&prefix=blob/2011/03

You can filter down to a specific hour using the prefix. For example, if you want to scan logs generated for March 24th 2011 at 6 PM (18:00), use

http://myaccount.blob.core.windows.net/$logs?restype=container&comp=list&prefix=blob/2011/03/24/1800/

It is important to note that logs are written only if there are requests to the service. For example, suppose an account had blob activity but no table activity for an hour. No logs would be created for the table service for that hour, but there would be some for the blob service. If there is table activity in the next hour, table logs would be created for that hour.
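A tool that scans logs hour by hour could issue one listing per hourly prefix. The Python sketch below generates those prefixes for a time window. It assumes UTC datetime values and assumes the minute part of hhmm is always 00, as in the examples above. hourly_prefixes is a hypothetical helper name.

```python
from datetime import datetime, timedelta
from typing import List


def hourly_prefixes(service: str, start: datetime, end: datetime) -> List[str]:
    """One List Blobs prefix per hour from start to end, inclusive (UTC times)."""
    hour = start.replace(minute=0, second=0, microsecond=0)  # round down to the hour
    prefixes = []
    while hour <= end:
        prefixes.append(f"{service}/{hour:%Y/%m/%d/%H}00/")
        hour += timedelta(hours=1)
    return prefixes


for p in hourly_prefixes("table", datetime(2011, 3, 24, 17, 30), datetime(2011, 3, 24, 19, 0)):
    print(p)
```

Logs exist only for hours in which the service received requests, so an empty listing for one of these prefixes is expected and is not an error.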

What is the versioning story?

The following describes the versioning for logs:

- The version is stored in the blob metadata and as the first field of each log entry.

- All records within a single blob will have the same version.

- New fields may be added at the end of a log entry, and adding fields this way does not change the version. Applications that process the logs should therefore read only the leading columns they expect and ignore any extra columns in a log entry.

- Examples of when a version change could occur:

- The representation needs to change for a particular field (for example, its data type changes).

- A field needs to be removed.
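The versioning rules above suggest a defensive way to read entries. The Python sketch below checks the version in the first field, reads only the columns it knows about, and ignores anything appended after them. The column names are the first five from the header written by the DumpLogs sample later in this post. The version string "1.0" and the names parse_entry, EXPECTED_COLUMNS and SUPPORTED_VERSIONS are assumptions made for illustration.

```python
import csv
from typing import Dict

# Hypothetical subset: the first five column names from the DumpLogs header.
# A real tool would list every column it relies on.
EXPECTED_COLUMNS = ("Log version", "Transaction Start Time", "REST Operation Type",
                    "Transaction Status", "HTTP Status")
SUPPORTED_VERSIONS = {"1.0"}  # assumption: the version string of the current format


def parse_entry(line: str) -> Dict[str, str]:
    """Map the expected leading columns of a ';'-delimited entry; ignore extras."""
    # csv handles quoted fields (e.g. request URLs) that may contain ';'
    fields = next(csv.reader([line], delimiter=";", quotechar='"'))
    if not fields or fields[0] not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported log version: {fields[:1]!r}")
    if len(fields) < len(EXPECTED_COLUMNS):
        raise ValueError("log entry has fewer columns than expected")
    # zip stops at the shorter sequence, so trailing new columns are dropped
    return dict(zip(EXPECTED_COLUMNS, fields))
```

Rejecting unknown versions outright is the conservative choice. A version change signals that a field's representation changed or a field was removed, so positional parsing may no longer be safe.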

What are the scalability targets and capacity limits for logs, and how do they relate to my storage account?

Capacity and scale for your analytics data are separate from those of your ‘regular’ account. A separate 20TB is allocated for analytics data (both metrics and logs), and it does not count toward the 100TB limit for an individual account. In addition, $logs is kept in a separate part of the storage account's namespace, so it is throttled separately from the storage account. Requests that Windows Azure Storage issues to generate or delete these logs do not affect the per-partition or per-account scale targets described in the Storage Scalability Targets blog post.

How do I clean up my logs?

To ease the management of your logs, we provide a retention policy that automatically cleans up ‘old’ logs without charging you for the cleanup. We recommend setting a retention policy for logs so that your analytics data stays within the 20TB limit for analytics data (logs and metrics combined) described above.

A retention policy can be set to at most 365 days. Once a retention policy is set, the system deletes logs as they age beyond the number of days set in the policy. This deletion is done lazily in the background. The retention policy can be turned off at any time. While it is set, it is enforced even if logging is turned off. For example, if you set the logging retention policy for the blob service to 10 days, all blob service logs older than 10 days will be deleted. If you do not set a retention policy, you can manage your data by manually deleting log blobs (the same way you delete regular blobs) whenever you wish.
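If you manage cleanup yourself instead of setting a retention policy, you first need to decide which logs count as old. The Python sketch below applies the 10-day rule from the example to a list of log names. It assumes a log's age is measured from the hour encoded in its name, which may differ from how the service's own policy measures age. It also assumes a minimum of 1 day. expired_logs is a hypothetical helper, and the actual delete requests are not shown.

```python
from datetime import datetime, timedelta
from typing import Iterable, List

MAX_RETENTION_DAYS = 365  # maximum allowed for a retention policy


def expired_logs(log_names: Iterable[str], days: int, now: datetime) -> List[str]:
    """Return the log names whose generation hour (from the name) is older than `days`."""
    if not 1 <= days <= MAX_RETENTION_DAYS:
        raise ValueError(f"retention must be between 1 and {MAX_RETENTION_DAYS} days")
    cutoff = now - timedelta(days=days)
    expired = []
    for name in log_names:
        # name format: <service>/YYYY/MM/DD/hhmm/<Counter>.log
        _service, year, month, day, hhmm, _file = name.split("/")
        generated = datetime(int(year), int(month), int(day), int(hhmm[:2]), int(hhmm[2:]))
        if generated < cutoff:
            expired.append(name)
    return expired
```

Each log selected this way would then need its own delete request. Unlike deletions made by a retention policy, those requests are billable, as described in the next section.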

What charges occur due to logging?

The billable operations listed below are charged at the same rates applicable to all Windows Azure Storage operations. For more information on how these transactions are billed, see Understanding Windows Azure Storage Billing - Bandwidth, Transactions, and Capacity.

The capacity used by $logs is billable and the following actions performed by Windows Azure are billable:

- Requests to create blobs for logging

The following actions performed by a client are billable:

- Read and delete requests to $logs

If you have configured a data retention policy, you are not charged when Windows Azure Storage deletes old logging data. However, if you delete $logs data, your account is charged for the delete operations.
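To make the billing difference concrete, here is a rough Python sketch that counts the requests a client issues to find and delete logs itself. With a retention policy, those deletions cost nothing. It assumes that each List Blobs call returns at most 5,000 results and counts as one billable read, and that each log needs one delete request. Capacity charges are not modeled, and manual_cleanup_transactions is a hypothetical name.

```python
import math

LIST_PAGE_SIZE = 5000  # List Blobs returns at most 5,000 results per call


def manual_cleanup_transactions(num_logs: int, page_size: int = LIST_PAGE_SIZE) -> int:
    """Estimate billable requests to list and then delete num_logs log blobs yourself."""
    list_calls = max(1, math.ceil(num_logs / page_size))  # at least one listing call
    return list_calls + num_logs                          # plus one DELETE per log


print(manual_cleanup_transactions(24))  # one day of hourly logs for a single service
```

The count grows linearly with the number of logs. Letting a retention policy perform the deletes removes the delete requests from the bill entirely.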

Downloading your log data

Because listing containers does not return the $logs container, existing tools cannot display these logs. In the absence of such tools, we wanted to provide a quick reference application, with source code, to make this data accessible.

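The following application takes four arguments: the service whose logs you want to download, the name of the file to export to, and the start and (optional) end time of the log entries of interest. It then exports all entries from the matching logs to the file as delimited text, one entry per line, with fields separated by semicolons.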

For example, the following command selects all table service logs that have entries in the given time range. It downloads all of the entries in those logs into a file called mytablelogs.txt:

DumpLogs table .\mytablelogs.txt "2011-07-26T22:00Z" "2011-07-26T23:30Z"

```csharp
using System;
using System.Collections.Generic;
using System.Configuration;
using System.IO;
using System.Text;
using Microsoft.WindowsAzure;               // CloudStorageAccount (StorageClient 1.x)
using Microsoft.WindowsAzure.StorageClient; // CloudBlobClient, CloudBlob, BlobRequestOptions

class Program
{
    const string ConnectionStringKey = "ConnectionString";
    // Metadata keys on each log blob that hold the time range of its entries
    const string LogStartTime = "StartTime";
    const string LogEndTime = "EndTime";

    static void Main(string[] args)
    {
        if (args.Length < 3 || args.Length > 4)
        {
            Console.WriteLine("Usage: DumpLogs <service to search - blob|table|queue> <output file name> <Start time in UTC> <Optional End time in UTC>.");
            Console.WriteLine("Example: DumpLogs blob test.txt \"2011-06-26T22:30Z\" \"2011-06-26T22:50Z\"");
            return;
        }

        // The storage connection string is read from the ConnectionString app setting
        string connectionString = ConfigurationManager.AppSettings[ConnectionStringKey];
        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudBlobClient blobClient = account.CreateCloudBlobClient();

        DateTime startTimeOfSearch = DateTime.Parse(args[2]);
        DateTime endTimeOfSearch = DateTime.UtcNow; // end time defaults to now
        if (args.Length == 4)
        {
            endTimeOfSearch = DateTime.Parse(args[3]);
        }

        List<CloudBlob> blobList = ListLogFiles(blobClient, args[0], startTimeOfSearch.ToUniversalTime(), endTimeOfSearch.ToUniversalTime());
        DumpLogs(blobList, args[1]);
    }

    /// <summary>
    /// Given service name, start time for search and end time for search, creates a prefix that can be used
    /// to efficiently get a list of logs that may match the search criteria
    /// </summary>
    /// <param name="service">The service: blob, table or queue</param>
    /// <param name="startTime">Start time for the search</param>
    /// <param name="endTime">End time for the search</param>
    /// <returns>A prefix such as $logs/blob/2011/06/27/ or $logs/blob/2011/06/27/0200</returns>
    static string GetSearchPrefix(string service, DateTime startTime, DateTime endTime)
    {
        // The container name is part of the prefix because it is passed to CloudBlobClient.ListBlobsWithPrefix
        StringBuilder prefix = new StringBuilder("$logs/");
        prefix.AppendFormat("{0}/", service);

        // if year is same then add the year
        if (startTime.Year == endTime.Year) { prefix.AppendFormat("{0}/", startTime.Year); }
        else { return prefix.ToString(); }

        // if month is same then add the month
        if (startTime.Month == endTime.Month) { prefix.AppendFormat("{0:D2}/", startTime.Month); }
        else { return prefix.ToString(); }

        // if day is same then add the day
        if (startTime.Day == endTime.Day) { prefix.AppendFormat("{0:D2}/", startTime.Day); }
        else { return prefix.ToString(); }

        // if hour is same then add the hour; log names use hhmm (e.g. 0200), with no "log-" prefix
        if (startTime.Hour == endTime.Hour) { prefix.AppendFormat("{0:D2}00", startTime.Hour); }

        return prefix.ToString();
    }

    /// <summary>
    /// Given a service, start time, end time, provide list of log files
    /// </summary>
    /// <param name="blobClient">Client for the storage account that owns the logs</param>
    /// <param name="serviceName">The name of the service interested in</param>
    /// <param name="startTimeForSearch">Start time for the search</param>
    /// <param name="endTimeForSearch">End time for the search</param>
    /// <returns>The logs that may contain entries in the time range</returns>
    static List<CloudBlob> ListLogFiles(CloudBlobClient blobClient, string serviceName, DateTime startTimeForSearch, DateTime endTimeForSearch)
    {
        List<CloudBlob> selectedLogs = new List<CloudBlob>();

        // form the prefix to search from the parts that start and end time have in common
        string prefix = GetSearchPrefix(serviceName, startTimeForSearch, endTimeForSearch);
        Console.WriteLine("Prefix used for log listing = {0}", prefix);

        // List the blobs using the prefix; a flat listing with metadata returns each log's StartTime/EndTime
        IEnumerable<IListBlobItem> blobs = blobClient.ListBlobsWithPrefix(
            prefix,
            new BlobRequestOptions()
            {
                UseFlatBlobListing = true,
                BlobListingDetails = BlobListingDetails.Metadata
            });

        // iterate through each blob and read the start and end times from the metadata
        foreach (IListBlobItem item in blobs)
        {
            CloudBlob log = item as CloudBlob;
            if (log != null)
            {
                // exclude the file if it has no log entries in the time range of interest
                DateTime startTime = DateTime.Parse(log.Metadata[LogStartTime]).ToUniversalTime();
                DateTime endTime = DateTime.Parse(log.Metadata[LogEndTime]).ToUniversalTime();
                bool exclude = (startTime > endTimeForSearch || endTime < startTimeForSearch);

                Console.WriteLine("{0} Log {1} Start={2:U} End={3:U}.",
                    exclude ? "Ignoring" : "Selected", log.Uri.AbsoluteUri, startTime, endTime);

                if (!exclude)
                {
                    selectedLogs.Add(log);
                }
            }
        }

        return selectedLogs;
    }

    /// <summary>
    /// Dump all log entries to file irrespective of the time.
    /// </summary>
    /// <param name="blobList">The log blobs to dump</param>
    /// <param name="fileName">The output file</param>
    static void DumpLogs(List<CloudBlob> blobList, string fileName)
    {
        if (blobList.Count > 0)
        {
            Console.WriteLine("Dumping log entries from {0} files to '{1}'", blobList.Count, fileName);
        }
        else
        {
            Console.WriteLine("No log files were selected.");
        }

        using (StreamWriter writer = new StreamWriter(fileName))
        {
            // header line naming the fields of each log entry
            writer.Write(
                "Log version; Transaction Start Time; REST Operation Type; Transaction Status; HTTP Status; E2E Latency; Server Latency; Authentication Type; Accessing Account; Owner Account; Service Type;");
            writer.Write(
                "Request url; Object Key; RequestId; Operation #; User IP; Request Version; Request Packet Size; Request header Size; Response Header Size;");
            writer.WriteLine(
                "Response Packet Size; Request Content Length; Request MD5; Server MD5; Etag returned; LMT; Condition Used; User Agent; Referrer; Client Request Id");

            foreach (CloudBlob blob in blobList)
            {
                using (Stream stream = blob.OpenRead())
                using (StreamReader reader = new StreamReader(stream))
                {
                    // copy each log entry (one per line) to the output file
                    string logEntry;
                    while ((logEntry = reader.ReadLine()) != null)
                    {
                        writer.WriteLine(logEntry);
                    }
                }
            }
        }
    }
}
```

Case Study

To put the power of log analytics into perspective, we will end this post with a sample application. Below, we cover a very simple console application that provides grep-like search over logs. One can imagine extending it to download the logs, analyze them, and store the data in structured storage such as Windows Azure Tables or SQL Azure for additional querying.

Description: A console program that takes as input the service name to search, the log type to search, the start and end times for the search, and the keyword to search for in the logs. The output is the log entries that match the search criteria and contain the keyword.
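The heart of such a search tool is a per-entry filter. The Python sketch below keeps an entry only if its transaction start time falls inside the search window and the raw line contains the keyword, which is a case-sensitive substring match like grep. It makes two assumptions. First, the second ';'-delimited field is an ISO 8601 timestamp such as 2011-06-27T02:55:10.1234567Z, and only its first 19 characters (down to seconds) are parsed. Second, the start and end arguments are naive UTC datetime values. search_entries is a hypothetical name.

```python
from datetime import datetime
from typing import Iterable, Iterator


def search_entries(lines: Iterable[str], start: datetime, end: datetime,
                   keyword: str) -> Iterator[str]:
    """Yield log entries whose start time lies in [start, end] and that contain keyword."""
    for line in lines:
        # version and start time never contain ';', so a plain split is enough here
        fields = line.split(";", 2)
        if len(fields) < 2:
            continue  # not a log entry
        request_time = datetime.strptime(fields[1][:19], "%Y-%m-%dT%H:%M:%S")
        if start <= request_time <= end and keyword in line:
            yield line
```

Feeding it the lines of the logs chosen by the prefix and metadata checks keeps the amount of data scanned small, because logs outside the window are never downloaded.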

We will start with the method “ListLogFiles”. The method uses the input arguments to create a prefix for the blob listing operation by calling the utility method GetSearchPrefix, which builds the prefix from the service name and the start and end times. The start and end times are compared, and only the parts that match are used in the prefix. For example, if the start time is "2011-06-27T02:50Z" and the end time is "2011-06-27T03:08Z", the prefix for the blob service will be “$logs/blob/2011/06/27/”. If the hour had matched, the prefix would be “$logs/blob/2011/06/27/0200”. The listing result contains the metadata for each blob. The start time, end time and logging level in that metadata are then checked to see whether the log may contain entries that match the time criteria. If it does, we add the log to the list of logs we want to download; otherwise, we skip the log file.
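The prefix rule and the metadata check described above can be restated compactly in Python. The sketch below transcribes that logic and checks it against the worked example. Like the C# sample, it includes the container name in the prefix because the sample passes the prefix to CloudBlobClient.ListBlobsWithPrefix. When calling the REST List Blobs operation on the $logs container directly, the leading "$logs/" would be dropped. The function names are hypothetical.

```python
from datetime import datetime


def get_search_prefix(service: str, start: datetime, end: datetime) -> str:
    """Keep the date/hour parts shared by start and end; stop at the first difference."""
    prefix = f"$logs/{service}/"
    if start.year != end.year:
        return prefix
    prefix += f"{start.year}/"
    if start.month != end.month:
        return prefix
    prefix += f"{start.month:02d}/"
    if start.day != end.day:
        return prefix
    prefix += f"{start.day:02d}/"
    if start.hour == end.hour:
        prefix += f"{start.hour:02d}00"  # hhmm part of the log name
    return prefix


def overlaps(log_start: datetime, log_end: datetime,
             search_start: datetime, search_end: datetime) -> bool:
    """True unless the log's [StartTime, EndTime] lies entirely outside the search window."""
    return not (log_start > search_end or log_end < search_start)


print(get_search_prefix("blob", datetime(2011, 6, 27, 2, 50), datetime(2011, 6, 27, 3, 8)))
```

The prefix only narrows the listing; it can still return logs outside the window, for example when start and end fall in different hours of the same day. That is why the per-log StartTime/EndTime overlap check is still needed.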

NOTE: Exception handling and parameter validation are omitted for brevity.