Windows Azure and Cloud Computing Posts for 8/19/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

•• Updated 8/20/2011 8:50 AM PDT with a UI screen capture of George Huey’s SQL Azure Federation Data Migration Wizard v0.1 in the SQL Azure Database and Reporting section below.

• Updated 8/19/2011 4:30 PM PDT with articles marked • by George Huey, Avkash Chauhan, Paul Patterson, Joel Foreman, Scott M. Fulton III, and Petri Salonen.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, Traffic Manager, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

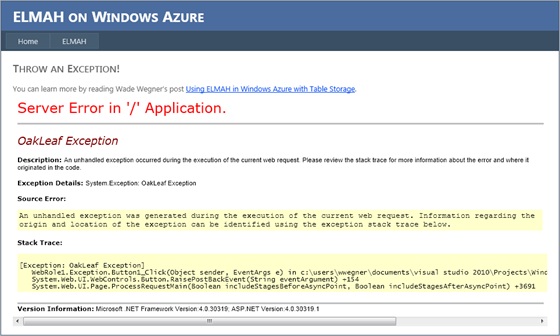

Wade Wegner (@WadeWegner, pictured below) described Using Error Logging Modules and Handlers (ELMAH) in Windows Azure with Table Storage and a live Windows Azure demo in an 8/18/2011 post:

In this week’s episode of Cloud Cover, Steve and I covered Logging, Tracing, and ELMAH in Windows Azure. Steve explored the first two topics while I looked into ELMAH in Windows Azure. You should make sure and take a look at his posts – they’re useful:

ELMAH (Error Logging Modules and Handlers) itself is extremely useful, and with a few simple modifications can provide a very effective way to handle application-wide error logging for your ASP.NET web applications. If you aren’t already familiar with ELMAH, take a look at the ELMAH project page.

In the news from Cloud Cover 56:

- Announcing Simplified Data Transfer Billing Meters and Swappable Compute Instances

- Windows Azure Storage Libraries in Many Languages

- Windows Azure Accelerator for Web Roles Version 1.1

- Debugging an Application in the Cloud

- CloudXplorer Introduces Support for $logs Container

NuGet

Before going any further, I thought I’d let you know that I’ve created a NuGet package that makes this extremely easy to try. You can take a look at ELMAH with Windows Azure Table Storage on the NuGet gallery or immediately try this out with the following command:

Install-Package WindowsAzure.ELMAH.Tables

By default this NuGet package is configured to use the local storage emulator. If you want to use your actual Windows Azure storage account you can uncomment the following line in the Web.Config file:

<!-- <errorLog type="WebRole1.TableErrorLog, WebRole1" connectionString="DefaultEndpointsProtocol=https;AccountName=YOURSTORAGEACCOUNT;AccountKey=YOURSTORAGEKEY" /> -->

Incidentally, if you like NuGet, then you should check out Cory Fowler’s post on Must have NuGet packages for Windows Azure development.

Demo

For those of you unfamiliar with ELMAH, I put together a simple demo. You can try it out on http://elmahdemo.cloudapp.net/. Just enter a message (keep it clean, please!) and throw an exception.

Click the ELMAH button to then load the handler. You’ll see all the errors logged with a lot of great detail.

The great part is that these files are getting serialized into Windows Azure table storage. The benefit of this is you can read them from anywhere – in fact, you don’t have to even deploy the elmah.axd handler with your web application! You could run it locally.

Here’s what [Wade’s original] files look like in table storage:

How Does it Work?

The nice part is you can easily grab the NuGet package to view all the source code. There are two items of interest: ErrorEntity.cs and Web.Config.

In ErrorEntity.cs we first create our ErrorEntity:

public class ErrorEntity : TableServiceEntity
{
    public string SerializedError { get; set; }

    public ErrorEntity() { }

    public ErrorEntity(Error error)
        : base(string.Empty, (DateTime.MaxValue.Ticks - DateTime.UtcNow.Ticks).ToString("d19"))
    {
        this.SerializedError = ErrorXml.EncodeString(error);
    }
}

Then we implement the ErrorLog abstract class from ELMAH to create a TableErrorLog class with all the implementation details.

public class TableErrorLog : ErrorLog
{
    private string connectionString;

    public override ErrorLogEntry GetError(string id)
    {
        return new ErrorLogEntry(this, id, ErrorXml.DecodeString(
            CloudStorageAccount.Parse(connectionString)
                .CreateCloudTableClient().GetDataServiceContext()
                .CreateQuery<ErrorEntity>("elmaherrors")
                .Where(e => e.PartitionKey == string.Empty && e.RowKey == id)
                .Single().SerializedError));
    }

    public override int GetErrors(int pageIndex, int pageSize, IList errorEntryList)
    {
        var count = 0;
        foreach (var error in CloudStorageAccount.Parse(connectionString)
            .CreateCloudTableClient().GetDataServiceContext()
            .CreateQuery<ErrorEntity>("elmaherrors")
            .Where(e => e.PartitionKey == string.Empty).AsTableServiceQuery()
            .Take((pageIndex + 1) * pageSize).ToList().Skip(pageIndex * pageSize))
        {
            errorEntryList.Add(new ErrorLogEntry(this, error.RowKey,
                ErrorXml.DecodeString(error.SerializedError)));
            count += 1;
        }
        return count;
    }

    public override string Log(Error error)
    {
        var entity = new ErrorEntity(error);
        var context = CloudStorageAccount.Parse(connectionString)
            .CreateCloudTableClient().GetDataServiceContext();
        context.AddObject("elmaherrors", entity);
        context.SaveChangesWithRetries();
        return entity.RowKey;
    }

    public TableErrorLog(IDictionary config)
    {
        connectionString = (string)config["connectionString"]
            ?? RoleEnvironment.GetConfigurationSettingValue((string)config["connectionStringName"]);
        Initialize();
    }

    public TableErrorLog(string connectionString)
    {
        this.connectionString = connectionString;
        Initialize();
    }

    void Initialize()
    {
        CloudStorageAccount.Parse(connectionString).CreateCloudTableClient()
            .CreateTableIfNotExist("elmaherrors");
    }
}

Now, to leverage these assets, we update the Web.Config file to include an <elmah> … </elmah> section that specifies our custom error log (and also allows remote access to the handler):

<elmah>
  <security allowRemoteAccess="yes" />
  <errorLog type="WebRole1.TableErrorLog, WebRole1" connectionString="UseDevelopmentStorage=true" />
</elmah>

That’s all there is to it!

Of course, there are many other ways you could define your ErrorEntity and implement the TableErrorLog (i.e. you could extract more details into additional entities within your table), but this way is pretty effective.
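Two details of the code above are easy to miss: the row key is built from DateTime.MaxValue.Ticks minus the current ticks so that the newest error sorts first, and GetErrors pages by taking (pageIndex + 1) * pageSize rows and then skipping the earlier pages on the client. The following is a minimal Python sketch of both ideas, not part of Wade's package; the function names are hypothetical, and the tick constants are the standard .NET values.

```python
from datetime import datetime, timezone

# .NET DateTime.MaxValue.Ticks (100-nanosecond intervals since 0001-01-01).
MAX_TICKS = 3_155_378_975_999_999_999
# Ticks between 0001-01-01 and the Unix epoch (1970-01-01).
EPOCH_TICKS = 621_355_968_000_000_000


def to_ticks(moment: datetime) -> int:
    """Convert an aware datetime to .NET-style ticks using integer arithmetic."""
    delta = moment - datetime(1970, 1, 1, tzinfo=timezone.utc)
    micros = (delta.days * 86_400 + delta.seconds) * 1_000_000 + delta.microseconds
    return EPOCH_TICKS + micros * 10


def inverted_row_key(moment: datetime) -> str:
    """Zero-padded 19-digit key (like ToString("d19")) that sorts newest-first."""
    return f"{MAX_TICKS - to_ticks(moment):019d}"


def page(sorted_rows, page_index, page_size):
    """Mimic Take((pageIndex + 1) * pageSize) followed by Skip(pageIndex * pageSize)."""
    taken = sorted_rows[: (page_index + 1) * page_size]
    return taken[page_index * page_size:]
```

Because table storage returns entities in key order within a partition, a later timestamp yields a lexicographically smaller key, which is why the most recent errors come back first. The paging approach re-reads every earlier page on each request, so it is simple rather than cheap.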

<Return to section navigation list>

SQL Azure Database and Reporting

•• George Huey released v0.1 of his SQL Azure Federation Data Migration Wizard project to CodePlex on 8/19/2011:

From the Home Page:

…

Requirements

SQLAzureFedMW and tools requires SQL Server 2008 R2 bits to run.

SQLAzureFedMW also requires BCP version 10.50.1600.1 or greater

Project Description

SQL Azure Federation Data Migration Wizard simplifies the process of migrating data from a single database to multiple federation members in SQL Azure Federation.

SQL Azure Federation Data Migration Wizard (SQLAzureFedMW) is an open source application that will help you move your data from a SQL database to (1 to many) federation members in SQL Azure Federation. SQLAzureFedMW is a user interactive wizard that walks a person through the data migration process.

The SQLAzureFedMW tool greatly simplifies the data migration process. If you don’t have an SQL Azure account and have been thinking about moving your data to the cloud (SQL Azure), but have been afraid to try because of “unknowns” like cost, compatibility, and unfamiliarity, take advantage of the Try Windows Azure Platform 30 Day Pass.

SQLAzureFedMW Project Details

The SQL Azure Federation Data Migration Wizard (SQLAzureFedMW) allows you to select a SQL Server database and specify which tables (Data Only) to migrate. The data will be extracted (via BCP) and then uploaded to SQL Azure Federation. The BCP data upload process can be done in a sequential process or parallel process (where you specify the number of parallel threads). See Documentation for more detail.

SQL Azure Migration Wizard Utils (SQLAzureMWUtils)

SQLAzureMWUtils is a class library that a developer can use to assist them in developing for SQL Azure.

Note: SQLAzureFedMW expects that your database schema has already been migrated to SQL Azure Federation and that the tables on the source database match the tables in the federated member databases.

SQLAzureFedMW is built off of the SQLAzureMWUtils library found in the CodePlex project SQL Azure Migration Wizard (http://sqlazuremw.codeplex.com).

Further Reading: Microsoft SQL Server Migration Assistant (SSMA): The free Microsoft SQL Server Migration Assistant (SSMA) makes it easy to migrate data from Oracle, Microsoft Access, MySQL, and Sybase to SQL Server. SSMA converts the database objects to SQL Server database objects, loads those objects into SQL Server, migrates data to SQL Server, and then validates the migration of code and data.

For information on SQL Azure and the Windows Azure Platform, please see the following resources:

- Cihan Biyikoglu's blog

- SQL Azure Community Technology Preview (CTP) Import and Export

- SQL Azure

- SQL Azure Videos

- SQL Azure Community

- Microsoft Cloud

- SQL Server 2008: Solutions

- SQL Server 2008 R2: Trial Download

- SQL Server 2008: Express

- Microsoft Visual Studio 2010 Express

- SQL Server 2008 R2 Express

- Windows Azure Platform

- Windows Azure AppFabric

From the Documentation page:

There are two tools (SQLAzureMW and SQLAzureFedMW) that can be used to migrate SQL Server / SQL Azure schema and data to a SQL Azure Federation.

The first thing that you need to do before running either of these tools is to create your ROOT database with your Federation. Note, that I would not SPLIT the Federation until after I had the objects (tables, views, stored procs …) migrated to the Federation. If the amount of data is small, you could also migrate your data at this time.

Once you have your ROOT and Federation created, you can run SQLAzureMW. In the SQLAzureMW.exe.config file, you can preset source server and target server information. Here is an example of target server information:

<add key="TargetConnectNTAuth" value="false"/>
<add key="TargetServerName" value="xxxxxx.database.windows.net"/>
<add key="TargetUserName" value="user@xxxxxx"/>
<add key="TargetPassword" value="TopSecret"/>
<add key="TargetDatabase" value="AdventureWorksRoot" />
<add key="TargetServer" value="TargetServerSQLAzureFed"/>

Valid values for TargetServer are:

- TargetServerSQLAzure = "SQL Azure"

- TargetServerSQLAzureFed = "SQL Azure Fed"

- TargetServerSQLServer = "SQL Server"

Here is another key that you will probably want to modify:

<add key="ScriptTableAndOrData" value="ScriptOptionsTableSchema"/>

Valid values for ScriptTableAndOrData are:

- ScriptOptionsTableSchema = "Table Schema Only"

- ScriptOptionsTableSchemaData = "Table Schema with Data"

- ScriptOptionsTableData = "Data Only"

This key tells SQLAzureMW if you want to migrate schema only, schema and data, or data only. You can also set this during runtime through the advanced options.
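Since a mistyped value in these two keys is easy to overlook, a quick check before launching the wizard can help. The sketch below is not part of SQLAzureMW; it assumes the `<add>` elements sit under the usual .NET `<configuration><appSettings>` wrapper (the excerpt above shows only the `<add>` lines), and `check_settings` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Allowed values as listed in the documentation excerpt above.
VALID_VALUES = {
    "TargetServer": {
        "TargetServerSQLAzure",
        "TargetServerSQLAzureFed",
        "TargetServerSQLServer",
    },
    "ScriptTableAndOrData": {
        "ScriptOptionsTableSchema",
        "ScriptOptionsTableSchemaData",
        "ScriptOptionsTableData",
    },
}


def check_settings(config_xml: str) -> list:
    """Return a list of human-readable problems; an empty list means the keys look valid."""
    root = ET.fromstring(config_xml)
    settings = {add.get("key"): add.get("value") for add in root.iter("add")}
    problems = []
    for key, allowed in VALID_VALUES.items():
        value = settings.get(key)
        if value not in allowed:
            problems.append(f"{key}={value!r} is not one of {sorted(allowed)}")
    return problems


# Assumed file layout, for illustration only.
SAMPLE = """<configuration><appSettings>
  <add key="TargetServer" value="TargetServerSQLAzureFed"/>
  <add key="ScriptTableAndOrData" value="ScriptOptionsTableSchema"/>
</appSettings></configuration>"""
```

Calling `check_settings(SAMPLE)` returns an empty list; using the display text "SQL Azure Fed" instead of the key name TargetServerSQLAzureFed would be reported as a problem.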

Note that the important thing is to set TargetServer to TargetServerSQLAzureFed. If you forget, you can always set this in the advanced options during runtime. Once you are ready, you can run SQLAzureMW and select the option to migrate a database. Select your source database and select the objects that you want to migrate. Once ready, hit Next a couple of times and have SQLAzureMW analyze and create TSQL scripts.

Be sure to look for compatibility errors (like identity columns). You will have to fix them before you proceed. The best way to do this is to copy the generated script to an SSMS query window. Edit the TSQL and remove any compatibility issues AND add “FEDERATED ON (cid=CustomerID)” to the tables you want to federate. You will have to modify cid to match your federation key and CustomerID to match the specific column you shard on.

Once done, you can copy the TSQL from your query window and put it back into SQLAzureMW (if you have no data, you don’t need to go back to SQLAzureMW for the second part; you can just change your connection in the query window and then execute your modified TSQL). If you choose to use SQLAzureMW for the second part (migration to SQL Azure Federation), replace the old TSQL with your modified TSQL and then click Next. SQLAzureMW will prompt you for your SQL Azure Federation (it will be prefilled with data from the config file). Hit Connect when you have verified the information is correct.

SQLAzureMW will then display Federations on the left side and Federation Members on the right side. If you have not done a split yet, then you should only see one Federation Member. Select that Federation Member and then click Next, and SQLAzureMW will execute the TSQL against the target server. With any kind of luck, you should be ready to SPLIT your Federation Members.

If you didn’t have SQLAzureMW copy your data during the initial phase, you can always use SQLAzureMW or SQLAzureFedMW to move your data at a later time. If you SPLIT your Federated Members, then you will have to use SQLAzureFedMW for data movement.

…

George’s original SQL Azure Migration Wizard has been my favorite SQL Azure tool since v0.1 (see my Using the SQL Azure Migration Wizard v3.3.3 with the AdventureWorksLT2008R2 Sample Database post of 7/18/2010.) I’m sure the version for SQL Azure Federations will gain the same status. Stay tuned for examples when the SQL Azure Federations feature releases to the web later this year.

In the meantime, check out my detailed Build Big-Data Apps in SQL Azure with Federation cover article for Visual Studio Magazine’s March 2011 issue.

The Windows Azure Customer Advisory Team posted an SQL Azure Retry Logic library to CodePlex on 8/16/2011 (missed when published). From the Description:

Introduction

This sample shows how to handle transient connection failures in SQL Azure.

The Windows Azure AppFabric Customer Advisory Team (CAT) has developed a library that encapsulates retry logic for SQL Azure, Windows Azure storage, and the AppFabric Service Bus. Using this library, you decide which errors should trigger a retry attempt, and the library handles the retry logic.

This sample assumes you have a Windows Azure Platform subscription. The sample requires Visual Studio 2010 Professional or higher.

A tutorial describing this sample in more detail can be found at Retry Logic for Transient Failures in SQL Azure (TechNet Wiki).*

SQL Azure queries can fail for various reasons – a malformed query, network issues, and so on. Some errors are transient, meaning the problem often goes away by itself. For this subset of errors, it makes sense to retry the query after a short interval. If the query still fails after several retries, you would report an error. Of course, not all errors are transient. SQL Error 102, “Incorrect syntax,” won’t go away no matter how many times you submit the same query. In short, implementing robust retry logic requires some thought.

More Information

For more information, see [Windows Azure and SQL Azure Tutorials] http://social.technet.microsoft.com/wiki/contents/articles/windows-azure-and-sql-azure-tutorials.aspx [Last updated 7/8/2011]

* Thanks to Gregg Duncan for heads-up to this sample and the corrected tutorial location.

From the beginning of the Windows Azure CAT Team’s Retry Logic for Transient Failures in SQL Azure article:

This tutorial shows how to handle transient connection failures in SQL Azure.

SQL Azure queries can fail for various reasons – a malformed query, network issues, and so on. Some errors are transient, meaning the problem often goes away by itself. For this subset of errors, it makes sense to retry the query after a short interval. If the query still fails after several retries, you would report an error. Of course, not all errors are transient. SQL Error 102, “Incorrect syntax,” won’t go away no matter how many times you submit the same query. In short, implementing robust retry logic requires some thought.

The Windows Azure AppFabric Customer Advisory Team (CAT) has developed a library that encapsulates retry logic for SQL Azure, Windows Azure storage, and AppFabric Service Bus. Using this library, you decide which errors should trigger a retry attempt, and the library handles the retry logic. This tutorial will show how to use the library with a SQL Azure database.

Testing retry logic is an interesting problem, because you need to trigger transient errors in a repeatable way. Of course, you could just unplug your network cable, or block port 1433. (SQL Azure uses TCP over port 1433.) But for this tutorial, I’ve opted for something that’s easier to code: Before submitting a query, hold a table-wide lock, which causes a deadlock or a timeout. When the lock is released, the original query can be retried.
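The pattern the tutorial describes (the caller decides which errors are transient, a helper handles the retrying) can be sketched in a few lines. This is an illustrative Python outline, not the CAT library's API: `run_with_retry`, `TransientError`, `PermanentError`, and `make_flaky_query` are all hypothetical names, and the flaky query merely simulates a timeout rather than holding a real table lock.

```python
import time


class TransientError(Exception):
    """Stand-in for a failure worth retrying (for example a timeout or deadlock)."""


class PermanentError(Exception):
    """Stand-in for a failure that retrying cannot fix (for example a syntax error)."""


def run_with_retry(action, is_transient, max_attempts=4, delay_seconds=0.01,
                   sleep=time.sleep):
    """Run action(); retry after a fixed delay while is_transient(error) is true."""
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except Exception as error:
            # Give up immediately on non-transient errors or when out of attempts.
            if not is_transient(error) or attempt == max_attempts:
                raise
            sleep(delay_seconds)


def make_flaky_query(failures_before_success):
    """Build a fake query that fails transiently a set number of times, then succeeds."""
    state = {"calls": 0}

    def query():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise TransientError("simulated timeout")
        return "rows"

    return query, state
```

A fixed delay keeps the sketch short; production retry policies commonly use incremental or exponential back-off instead.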

Prerequisites

This tutorial assumes you have a Windows Azure Platform subscription. For more information on creating a subscription, see Getting Started with SQL Azure using the Windows Azure Platform Management Portal.

This tutorial requires Visual Studio 2010 Professional or higher.

Download the project files.

Patrick O’Keefe described Tales from the Engine Room: Bulk Updates [to SQL Azure] in an 8/18/2011 post:

We have been tweaking the Project Lucy datastore again this week, and have found out a couple of things we think worth sharing. We found out the fastest way to update a lot of rows, was to do the complete opposite of what you’d think was the right thing to do.

Let me paint a scenario – we store wait stats data coming from Spotlight on SQL Server Enterprise customers in a fact table. One of the columns in the fact table is wait_type, which contains the name of the wait. This was recognized as a bad idea some time ago, but we had other fish to fry.

Recently our piscatorial problems have come back to bite us.

Since this column is an nvarchar(100) it takes up beaucoup space, and since it is indexed multiple times, multiply that by the number of indexes. This results in a performance problem, since SQL Azure has to read lots more pages because there are fewer rows per page. Also we have an operational problem – not only are we using more space than we want to (and space costs money), but any DDL operations on the table are expensive because of its size.

So since we have already gone back to the nineties by eschewing the NoSQL-ness of Azure Table Services and adopting SQL Azure in all its relational glory, we thought; why stop there? Why not travel back into the eighties and start doing a bit of data modelling? [Emphasis added.]

Hence, we find ourselves creating a dim_wait_types dimension table and instead of storing the wait type directly in the fact table, we store the id of the related row from the dimension table.

So far so good.

This database stuff is easy! We just create the dimension table and populate it, create a wait_type_id column in the fact table and write an update statement that updates the wait_type_id based on the value of the wait_type column. The update looks something like this:

update sqlserver_fact_waits_by_time
set wait_type_id = 620, wait_category_id = 2
where wait_type = 'XE_DISPATCHER_JOIN'

The table has close to 40 million rows in it, so a single update is out of the question. SQL Azure will throttle us based on log space long before the update completes. We have to break it down, so we decide to do it per wait type, since there are 635 SQL Server wait types (that we know about).

We start running 635 update statements….

- We find each statement does a full table scan, so we get hold of the plan and create the appropriate index

- We find we cannot create the index from on-prem – the CREATE INDEX statement takes too long and the socket between us and the datacenter keeps getting closed. The solution is to remote desktop to one of the roles in the running application, install SQL Server 2008 R2 Express Tools and run the CREATE INDEX from there. Don’t forget to use the WITH (ONLINE=ON) option, otherwise SQL Azure will throttle you because you use too much log space

- Now that the index has been created, running the updates can commence. Chaos ensues. Because each update is doing a bunch of physical IO, all of our queued processing (end-users upload data around the clock) starts failing with timeouts.

- We turn queue processing off, and allow the updates to continue. Each one averages 3-5 minutes and there are 635 of them which is roughly 60hrs in total.

- Realization dawns that we cannot leave processing turned off for 3 days, so we stop the updates and turn queue processing back on. We need to find a way to trade time for concurrency. In other words, we don’t care if the updates take 3 days, but we need to be able to process incoming data whilst the updates are going on

A Solution

We went back to the original query plan for the update and found that 90% of the cost was in actually doing the update, very little cost was extant in actually finding the rows. Also and most significantly, we found that the amount of physical IO required scaled somewhat worse than linearly with the number of rows updated. The answer was to keep the actual number of rows updated small. So now our update looks something like this:

update sqlserver_fact_waits_by_time
set wait_type_id = 620, wait_category_id = 2
from sqlserver_fact_waits_by_time wbt
inner join
    (select top(2500) upload_id, id
     from sqlserver_fact_waits_by_time
     where wait_type_id is null and wait_type = 'XE_DISPATCHER_JOIN') wbt_top
    on wbt.upload_id = wbt_top.upload_id and wbt.id = wbt_top.id

This new update statement takes under 20 seconds to run and we have a command line application run these for all wait types in a loop until there are no rows updated. Whilst these updates are running, user processing continues normally.
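The "loop until no rows are updated" driver is not shown in Patrick's post, so here is a minimal sketch of what such a loop might look like. It uses Python's built-in sqlite3 module as a local stand-in for SQL Azure, which means the batch is limited with `LIMIT` in a subquery rather than `top(2500)`; the table layout, `fact_waits` name, and both function names are simplified assumptions.

```python
import sqlite3


def build_demo(rows):
    """Create an in-memory table with `rows` fact rows that still lack a wait_type_id."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fact_waits ("
        "id INTEGER PRIMARY KEY, wait_type TEXT, wait_type_id INTEGER)"
    )
    conn.executemany(
        "INSERT INTO fact_waits (wait_type) VALUES (?)",
        [("XE_DISPATCHER_JOIN",)] * rows,
    )
    conn.commit()
    return conn


def update_in_batches(conn, wait_type, wait_type_id, batch_size=2500):
    """Repeat a small UPDATE until it touches no rows; return the total rows updated."""
    total = 0
    while True:
        cursor = conn.execute(
            """
            UPDATE fact_waits
               SET wait_type_id = ?
             WHERE id IN (SELECT id FROM fact_waits
                           WHERE wait_type_id IS NULL AND wait_type = ?
                           LIMIT ?)
            """,
            (wait_type_id, wait_type, batch_size),
        )
        conn.commit()  # one short transaction per batch keeps log usage small
        if cursor.rowcount == 0:
            return total
        total += cursor.rowcount
```

The `wait_type_id IS NULL` filter is what makes the loop terminate and lets it be stopped and resumed safely: rows already converted are never selected again.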

What we Learned

- Execute long running DDL operations from within the data centre

- Try to look for ways that allow you to trade time for maintaining concurrency

- On SQL Azure, doing a larger number of small updates (where each update touches fewer rows) took less elapsed time than doing a smaller number of large updates (where each update touches more rows)

Patrick O’Keefe shared his experiences in Tales from the Engine Room: Migrating to SQL Azure to the SQLServerPedia blog on 8/7/2011 (missed when published):

We’ve been very busy here at Project Lucy over the last few weeks. We are “cup-runneth-over” with ideas on how to provide really cool and valuable stuff to our users – only problem was, we were getting bogged down in performance and data storage issues.

The way we were storing our data was not conducive to providing the sorts of features we wanted to provide and something had to change. After we re-architected our approach 4 times, with still no suitable solution, we decided our only two alternatives were between doing something stupid, or going broke.

We decided that we should store our analysed data in SQL Azure. Now we have done this, we will be able to move much faster in providing new features that expose even more interesting things in the data that our users upload.

How we used to do it

It is useful to look at how we did things up until a week ago in order to explain the changes so let’s look at an example – SQL Workload Analysis.

When we process a trace file (this applies to Spotlight on SQL Server uploads that include SQL Analysis data as well), we have a piece of code that detects a SQL event; we will use SQL:BatchCompleted as an example. Way back when we first went live we did some on the fly aggregation of these events but didn’t really store any event data because we had no way to display it. Fast forward to the end of Feb 2011 and we went live with the ability to explore the workload data within your upload using a grid and some ajax magic that allowed you to do multi-level grouping on the data in real-time.

In order to allow you to do grouping in real-time, we couldn’t store aggregates any more – we had to store every event. We could have stored each event as an entity in Table Services if we were prepared to live with the latency of fetching these entities. But we weren’t. You see, some of Project Lucy’s users upload trace files with hundreds of thousands of SQL events and pulling 200,000 entities out of table services is two orders of magnitude slower than a wet week. Another solution was required.

We found that when we stripped all of the text data (SQL, application name, login name, database name) out of the event data, it really wasn’t that big – certainly small enough to fit in memory. So that is what we did; as we were processing the data we put all of the text columns in lookup tables and stored the event data in a big list. What we ended up with was a binary structure that had one big list for the event data, and numerous smaller hash tables for the category (SQL, application, database etc) data. This we then streamed out into a blob.

When a user came to the workload page and started interacting with it, we would check to see if we had the blob that contained the data we wanted to render for the user on the web server. If the file did not exist we would go get it from blob storage and cache it locally. Once the blob was local to the web server, we would load it into memory and perform the query the user had requested using Linq. This worked surprisingly well and the response times even from blob files that contained many hundreds of thousands of events were acceptable.

The downside of this approach was that the data was stored one blob per upload. This meant that a few things were out of reach. Because a user can upload files from multiple servers in an upload, we had no easy way of getting data from just one server.

Also, we had no easy way of querying across time. We wanted to give users the ability to look at performance characteristics over time. In order to do this with our blob approach we would have had to load each blob in turn, get the rows we needed from it (after reading all the rows in it) and then merge those rows at the end. So we decided to invent our own database.

No seriously. We did. We spent some hours basking in warm delusions of our own grandeur, scribbling madly on a whiteboard.

We then came to our senses and decided that inventing our own database was somewhat foolish, and might distract us from our core objective of providing value to our users. So we decided to use SQL Azure to store our data. Now instead of a binary file, we store the event data in a big fact table and we store all the “category” (application, database etc) data in dimension tables. This now means that we can start to build features that allow querying across time – which is what we are working on right now.

What we learnt

As part of our migration to SQL Azure we learnt a few things:

- The database size limit is there to encourage you to think scale out, instead of scale up. This is a good thing – with scale out, you are not constrained by being on one machine. You need to design your scale out strategy from the start. We plan to use SQL Azure Federations when it ships, so our schema is optimised for this

- For tables that you are going to federate, you need to make sure there are no database scoped functions used. This means no identity columns. We used uniqueidentifier with a newid() default constraint

- Make sure you are religious about putting clustered indexes on tables. Operations against tables without clustered indexes will work in the development environment with a local database, but when you push into the cloud it will break

- Using table services for bulk inserts or updates involves high latency. You can only insert/modify 100 entities (rows) at a time and this takes 500ms – designs which involve big fetches of many rows from Table Services will be unsatisfactory

- If you are going to tempt fate and use Table Services, in order to fetch data from table services quickly enough (it takes 500ms per 1000 entities fetched) you have to be very careful about the partition and key values used when storing the data. (The partition+key must be unique for each entity; and you select multiple rows by partition.) The trick is to define your partition key so you can fetch multiple rows at a time, but not too many rows. Invariably this means the same data will need to be stored multiple times, via different partition values. For example, for SQL Server wait statistics, you might need to store data partitioned by time (to service a trend over time chart) and store the same data partitioned by wait-name so you can compare different users with each other. As a consequence of storing the data multiple times, you end up writing a lot more (serialization-deserialization) code than you really feel you should need to

- Tables in Table Services store entities. An entity is an object with arbitrary properties. Just because you can store different entities in the same table doesn’t mean you should. We got clever with C# generics and got this to work and then decided it was just a rod for our own backs

- SQL Azure is expensive (we hope this changes), so make sure you are indexing just enough. You can double your storage requirements by just creating an index – make sure that the index is useful

- High concurrency MERGE SQL statements that update multiple rows were problematic for us in SQL Azure. We had deadlocks, no matter what we tried. The only way we were able to stop the deadlock was with a WITH TABLOCKX locking hint – not really a solution. Until Microsoft provide a way to get the deadlock graph from SQL Azure so you can really troubleshoot the problem rather than guess, we will be left with a cloying sense of dissatisfaction

- We’ve seen really impressive IO rates from our SQL Azure database (7,500 writes per second) – when you consider that this involves replication to two slaves, color us extra impressed

- Keep the persistence layer of your software simple, i.e. where feasible, perform simple SQL select statements from multiple tables and then use something akin to LINQ in C# to merge and project that data. This will make life easier in future – when you decide to locate those tables within different Azure databases, or use federations. We encountered this issue. Our database grew to over 43 GB in size (50 GB is the limit, as at August 2011). We had to move one of our tables into a different database. Consequently, any SQL statements joining this table to other tables had to be achieved via LINQ instead. (There are no cross-database joins in SQL Azure)

- Long running SQL Statements like CREATE INDEX have to be run from within the data centre. This will drive you nuts. The internet will conspire against you to ensure that the socket will get closed while you wait for the query to finish
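The advice in the list above about keeping the persistence layer simple (plain selects per table, with the merge done in application code) can be illustrated with a small sketch. It is written in Python with two separate in-memory SQLite databases standing in for two SQL Azure databases; the table names, columns, and helper functions are assumptions for illustration, and the original team used LINQ in C# rather than anything shown here.

```python
import sqlite3


def fetch_all(conn, sql):
    """Run a simple SELECT against one database and return all rows."""
    return conn.execute(sql).fetchall()


def join_in_memory(fact_rows, dimension_rows):
    """Hash-join (wait_type_id, wait_ms) facts to (id, name) dimension rows."""
    names = {dim_id: name for dim_id, name in dimension_rows}
    return [
        (names[type_id], wait_ms)
        for type_id, wait_ms in fact_rows
        if type_id in names  # inner-join semantics: unmatched facts are dropped
    ]


# Database 1: the large fact table.
facts_db = sqlite3.connect(":memory:")
facts_db.execute("CREATE TABLE fact_waits (wait_type_id INTEGER, wait_ms INTEGER)")
facts_db.executemany("INSERT INTO fact_waits VALUES (?, ?)", [(620, 15), (7, 40)])

# Database 2: the dimension table, moved out to a different database.
dims_db = sqlite3.connect(":memory:")
dims_db.execute("CREATE TABLE dim_wait_types (id INTEGER, name TEXT)")
dims_db.execute("INSERT INTO dim_wait_types VALUES (620, 'XE_DISPATCHER_JOIN')")

joined = join_in_memory(
    fetch_all(facts_db, "SELECT wait_type_id, wait_ms FROM fact_waits ORDER BY wait_ms"),
    fetch_all(dims_db, "SELECT id, name FROM dim_wait_types"),
)
```

Because neither query references the other database, moving a table only changes which connection is passed in, not the SQL itself.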

In general, our experience with SQL Azure has been a positive one, and now having taken the leap, we look forward to building out the new features we have planned in Project Lucy.

<Return to section navigation list>

Marketplace DataMarket and OData

Brandon George posted AX 2012, PowerPivot and a Dash of OData Feeds on 8/17/2011:

Sometime back, I wrote about the importance of Microsoft PowerPivot as well as Open Data Protocol (OData) for Microsoft Dynamics AX 2012. All of those pointed to the fact of what AX 2012 would be able to do with such enabling technologies.

Well now finally we have the launch of Microsoft Dynamics AX 2012, and it's time to start cooking up some self-service BI with PowerPivot and OData feeds!

First it's very important to understand what it means to have data published as OData feeds coming out of Microsoft Dynamics AX 2012. This starts with a query object within AX, as shown below:

This is the basis for an OData feed being published, as it's similar the base for Document Services in AX 2012. This is were we stop though, and go a different path from Doucment services, for enabling these feeds. From here, we need to take and go into the Application Workspace, and navigate to: Organization Administration > Setup >Document Management > Document Data sources.

From here, we need to take and add a new entry. If we do not do this, then nothing will show as active in our list of possible OData feeds. In order to create go to File and then new to create a new document. Once you have the screen up, you should then select the data source type of Query, and select the query we created from the drop down. After that we click "Activate".

At this point, AX system service called ODataQueryService will be what publishes our new query document source we just setup. To test this out, you can open up a IE window, and type the following (Assumes your on the AOS): http://localhost:8101/DynamicsAx/Services/ODataQueryService/

When you do this, and Atom list of queries, that you have security rights too, will appear to work with. That's it for enabling a query to be published as an OData feed. We can start working with this right away yes?

Well, like all great baking stories there are little things that you need to understand so the final product comes out tasty and delicious!

So say, and in the real world this will be the case, that you have Excel and PowerPivot installed on a separate machine from your AOS. If you wanted to work with your published OData feeds from that separate machine, you might think: OK, instead of using localhost I would use the actual server name. Wrong! This will cause a 400 Bad Request to be generated, and the reason is that the implementation of OData does not allow the use of named servers. You have to use the IP address of the server from which the ODataQueryService is being published. So the correct format to access this from a remote server, or really any server other than the one on which the AOS is running, is as follows: http://xxx.xxx.xxx.xxx:8101/DynamicsAx/Services/ODataQueryService/

Now of course, any good cook knows that you replace the xxx with the actual values of the IP address itself; however, for good measure I point that out to you here. Doing this, we are actually able to see the WSDL, after logging into the box when prompted for our AD creds. You do have to log in the first time with your AD creds; however, after that you should be fine.
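As a small illustration of the addressing rule just described, the helper below builds the service URL and refuses anything that is not a literal IPv4 address. It is only a sketch: the function name is hypothetical, the port and path are copied from the post, and 192.0.2.10 is a documentation-only address standing in for a real AOS server.

```python
import ipaddress


def odata_service_url(host, port=8101):
    """Build the ODataQueryService URL for remote use, requiring an IPv4 literal."""
    # IPv4Address raises a ValueError subclass for names such as "myserver".
    ipaddress.IPv4Address(host)
    return f"http://{host}:{port}/DynamicsAx/Services/ODataQueryService/"


url = odata_service_url("192.0.2.10")
```

Failing early on a server name makes the cause obvious, instead of surfacing later as the 400 Bad Request the post describes.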

Now that we have our WSDL showing up, we can launch into PowerPivot, and click to add Feed data sources.

Make sure to enter the URI in the same format as you did before, and click Test Connection. This should come back ready for your use; then hit Next. In doing so, we should see our new query document data source from within AX 2012 being published and therefore able to be consumed as an OData feed.

Now that we have this, we can work with our query document data sources from within PowerPivot to create some wonderful self-service BI goods! It's pretty powerful stuff to be able to do this, and it adheres to the security model within AX. Therefore, if you have rights to a query then you will see it. Otherwise, you will not see it.

Alex James (@adjames) et al. posted Simple Web Discovery for OData Services to CodePlex on 8/12/2011 (missed when published). From the Project Description and Usage Overview:

This Simple Web Discovery (SWD) endpoint provides a uniform access point that tells you (1) where to authenticate and (2) where to get access tokens. It makes it easier to implement OAuth2.0 in a general way. It can also make other info available if need be. Written in C#.

More Details

When creating an OAuth protected OData service (see Alex's blogs for more on this), clients need to know about two additional endpoints:

- Consent Server: where the client actually authenticates and authorizes data access (Google, Facebook, etc.).

- Token Endpoint: where the client exchanges an Authorization code for an Access Token, or where they acquire a new Access Token with a Refresh Token.

A client may want to draw data from many different sources, but there is no standard way to find these two extra endpoints besides hard coding each of them for every single data source. We’d like to make this easier to enable richer uses of data.

The Simple Web Discovery protocol (hereafter SWD) outlines a mechanism for discovering the location(s) of services for a particular principal. In our case the principal will be the OData Service itself. Using a slightly modified version of this protocol, we can expose a standardized endpoint discovery method.

To make this as easy as possible, we are publishing code that will serve both of these necessary service URLs. We have created an Attribute that can just be added onto an existing OData service. It will add a Simple Web Discovery endpoint that provides all of the necessary endpoint information. The code is fairly general, so it can be useful in other contexts too. Let’s get started.

Note: The SWD protocol requires that the .wellknown endpoint extend directly from the root of the domain, but with an OData service this may not be possible without significant server configuration changes. This may not be possible for everyone, so in our case the .wellknown endpoint will extend from the OData service itself. Since we are extending from the service, we already know our principal; it’s already in the URL. Therefore, it does not need to be specified as a query argument to the SWD endpoint. These changes make it extremely easy to add to an existing OData service.

Simple Web Discovery Behavior

Adding a simple web discovery (SWD) endpoint is as easy as adding an attribute to your DataService. We will discuss the OAuthEndpoints class in the next section.

    [SimpleWebDiscoveryBehavior(typeof(OAuthEndpoints))]
    public class WcfDataService : DataService<WorldCupData>
    {
        // your code here
    }

In this example, our service is located at …/WorldCupData.svc. By adding this attribute you will create a queryable endpoint at

…/WorldCupData.svc/$wellknown/simple-web-discovery

If we want to discover the usable consent endpoints for our service we just query the following URL:

…/WorldCupData.svc/$wellknown/simple-web-discovery?service=urn:odata.org/auth/oauth/2.0/consent

This returns a JSON string listing all of the allowed consent servers. It will look something like this:

    { "locations" : [ "http://www.google.com", "http://www.facebook.com" ] }

This gives the client application options to authenticate through many different supported services. For instance, if the client only supports Facebook authentication, they simply choose that particular endpoint and carry on.
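On the client side, picking a consent server from that response might look like the following sketch. It is written in Python rather than C#, the function name is hypothetical, and it assumes only the response shape shown above (a JSON object with a "locations" array).

```python
import json


def choose_location(response_text, supported):
    """Return the first advertised location the client supports, or None."""
    locations = json.loads(response_text)["locations"]
    for location in locations:
        if location in supported:
            return location
    return None


# The sample response from the post; this client only supports Facebook.
response = '{ "locations" : [ "http://www.google.com", "http://www.facebook.com" ] }'
chosen = choose_location(response, {"http://www.facebook.com"})
```

Returning None when nothing matches leaves it to the caller to decide whether to fail or fall back to a hard-coded endpoint.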

Endpoints

We need some way to configure the locations returned by this new endpoint. This is done by extending the Endpoints class. The Endpoints class has a dictionary field mapping service names (keys) to endpoint lists (values). When you query the SWD endpoint for a particular service, it will look the service up in this dictionary and return the locations in the corresponding list. Let’s see it in action.

Example 1

The best way to add your own endpoints is to extend the Endpoints class provided above. Once you have created your endpoint subclass, you can simply add the SimpleWebDiscoveryBehavior attribute to your service. The whole thing will look something like this:

    public class OAuthEndpoints : Endpoints
    {
        public OAuthEndpoints() : base()
        {
            this.AddEndpoint("consent", "http://www.google.com");
        }
    }

    [SimpleWebDiscoveryBehavior(typeof(OAuthEndpoints))]
    public class WcfDataService : DataService<WorldCupData>
    {
        // your code here
    }

Now we can navigate to

…/WorldCupData.svc/$wellknown/simple-web-discovery?service=consent

This returns a JSON string listing all of the allowed consent endpoints. It will look like this:

    { "locations" : [ "http://www.google.com" ] }

Nice! We are pretty close to having a fully functional SWD endpoint.

Example 2

There is just one last thing. To make it more difficult to make a typo when entering keys into the EndpointDictionary, we have provided the static string values ConsentEndpoints and TokenEndpoints in the Endpoints class. This way you don’t need to type out those crazy strings for every endpoint you add.

    public class OAuthEndpoints : Endpoints
    {
        public OAuthEndpoints() : base()
        {
            this.AddEndpoint(Endpoints.ConsentEndpoints, "http://www.google.com");
            this.AddEndpoint(Endpoints.ConsentEndpoints, "http://www.facebook.com");
            this.AddEndpoint(Endpoints.TokenEndpoints, "http://www.bing.com");
        }
    }

    [SimpleWebDiscoveryBehavior(typeof(OAuthEndpoints))]
    public class WcfDataService : DataService<WorldCupData>
    {
        // your code here
    }

Now we will have precisely the behavior described at the beginning of this post:

…/WorldCupData.svc/$wellknown/simple-web-discovery?service=urn:odata.org/auth/oauth/2.0/consent

will return

    { "locations" : [ "http://www.google.com", "http://www.facebook.com" ] }

Furthermore, we’ve seen that you can add as many endpoints as you’d like. In this case we also included a token endpoints service, so

…/WorldCupData.svc/$wellknown/simple-web-discovery?service=urn:odata.org/auth/oauth/2.0/token-endpoint

will return

    { "locations" : [ "http://www.bing.com" ] }

Great, now you have listings of the consent endpoints and token endpoints! You can also add any other endpoint that you may need, so hopefully you can use this code again when OAuth3.0 or HotNewAuthProtocol becomes the cool thing to do.
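The dictionary lookup behind these responses can be modelled in a few lines. The following is a Python sketch of the idea only, not the CodePlex library's C# implementation: the class and method names are illustrative, the two service URNs are taken from the query strings above, and returning an empty list for an unknown service is an assumption.

```python
import json

# Service names as they appear in the query strings shown in the post.
CONSENT = "urn:odata.org/auth/oauth/2.0/consent"
TOKEN = "urn:odata.org/auth/oauth/2.0/token-endpoint"


class Endpoints:
    """Maps a service name to the list of locations that provide it."""

    def __init__(self):
        self._by_service = {}

    def add_endpoint(self, service, location):
        self._by_service.setdefault(service, []).append(location)

    def discover(self, service):
        # Assumption: an unknown service yields an empty list, not an error.
        return json.dumps({"locations": self._by_service.get(service, [])})


endpoints = Endpoints()
endpoints.add_endpoint(CONSENT, "http://www.google.com")
endpoints.add_endpoint(CONSENT, "http://www.facebook.com")
endpoints.add_endpoint(TOKEN, "http://www.bing.com")

consent_json = endpoints.discover(CONSENT)
token_json = endpoints.discover(TOKEN)
```

Keeping the service names in constants mirrors the post's point about avoiding typos in the long URN strings.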

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, Traffic Manager, RDP and CDN

• Avkash Chauhan explained Windows Azure Traffic Manager (WATM) and App Fabric Access Control Service (ACS) in an 8/19/2011 post:

Recently I was asked if Windows Azure App Fabric Access Control Service (ACS) works with Windows Azure Traffic Manager or not.

The current CTP release of Windows Azure Traffic Manager is not supported with App Fabric Access Control Services.

As you may know, Windows Azure Traffic Manager is in CTP now and offers many benefits to Windows Azure hosted services. For more info on Windows Azure Traffic Manager visit:

Avkash Chauhan described Windows Azure Traffic Manager and using your domain name CNAME Entry in an 8/19/2011 post:

With the release of Windows Azure Traffic Manager, Windows Azure users can point their domains, such as www.companydomain.com, to their Azure Traffic Manager policy domain name, such as companydomain.ctp.trafficmgr.com.

The above setting is equivalent to the following DNS record:

www.companydomain.com CNAME companydomain.ctp.trafficmgr.com

Using a www record such as www.companydomain.com is fine with (mostly) everyone, as this can be done using a CNAME, but you may wonder: why not use companydomain.com? The CNAME restriction exists for technical reasons; it is a DNS limitation, not a Windows Azure limitation.

Once you have your application hosted in Windows Azure and a registered domain name, e.g. companydomain.com, you might expect Windows Azure:

- To respond to your domain name without the www in the name (http://companydomain.com only),

- And to have that site running through traffic manager.

Now the problem here is that the above http://companydomain.com configuration won’t work currently because DNS does not allow for a CNAME record to have an empty name. Use of A records is not possible because Windows Azure does not guarantee a static IP address. An A record can be registered with an empty name, but the consequence is that you are then bound to a single IP address, which conflicts with how Traffic Manager works: it uses DNS redirection to an IP address in an appropriate data center. It is not the client that is wrong here; you simply cannot combine a www-less domain name AND Traffic Manager.
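The rule can be expressed as a small check. The sketch below relies on the standard DNS constraint that a CNAME cannot coexist with other records at the same name, while the root of a zone always carries SOA and NS records; the function name is hypothetical and the domain names are the ones used in the post.

```python
def can_use_cname(hostname, zone):
    """True when `hostname` is a subdomain of `zone` rather than the zone root."""
    host = hostname.rstrip(".").lower()
    apex = zone.rstrip(".").lower()
    if host == apex:
        # The zone root already holds SOA and NS records, so no CNAME here.
        return False
    # Names outside the zone are rejected as well.
    return host.endswith("." + apex)


www_ok = can_use_cname("www.companydomain.com", "companydomain.com")
root_ok = can_use_cname("companydomain.com", "companydomain.com")
```

A deployment script could run a check like this before trying to point a name at a Traffic Manager policy domain.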

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Joel Foreman described Building and Deploying Windows Azure Projects using MSBuild and TFS 2010 in an 8/19/2011 post to his Slalom Consulting blog:

Windows Azure Tools for Visual Studio makes it very easy for a developer to build and deploy Windows Azure projects directly from within Visual Studio. This works fine for a single developer working independently. But for developers working on projects as part of a development team, there is the need to integrate the ability to build and deploy the project from source control via a central build process.

There are some good resources out there today that talk about how to approach this. Tom Hollander has a great blog post on Using MSBuild to deploy to multiple Windows Azure environments, which leverages an approach that Alex Lambert blogged about regarding how to handle the ServiceConfiguration file for different environments. However, the new Windows Azure Tools for Visual Studio 1.4 introduced some changes into this process that will now have to be accounted for, such as a separate Windows Azure targets file, and the concept of a Cloud configuration setting.

I thought this would be a good opportunity for me to dive into updating our build process for Windows Azure projects to be in line with these changes. The following post will cover configurations in Visual Studio, packaging from Visual Studio and the command line, packaging on a build server, and finally deploying from a build server.

Before I dug in, I came up with some goals for my Windows Azure build process. Here is what was at the top of my list:

- Being able to build, package, and deploy our projects to multiple Windows Azure environments from a build server using MSBuild

- Being able to retain copies of our packages and configurations for our builds in Windows Azure BLOB Storage for safe keeping, history, easy rollback scenarios, etc.

- Having a build number generated for a build and make sure that build number is present in the name of our packages, configs, and deployment labels in Azure for consistency

- Leveraging the Release Configurations in Visual Studio as much as possible as the designation of our different environments, since Web.Config transformations rely on this pattern already

- Being able to still package and deploy locally, in case there is an issue with the build server for some reason

Let’s start with a new Windows Azure Project in Visual Studio, with a MVC 3 web role. I quickly prepped the MVC project for deployment to Azure by installing the NuGet package for MVC 3 and turning off session state in my web.config. I will use this as my sample project.

Defining Target Configurations and Profiles

Release Configurations and Service Configurations

The first thing I want to examine is how configuration files are now handled for deployments. I see that the Web.config has two release transformations that match up to the “Debug” and “Release” configurations that come with the project. I also see that there are two configs, a ServiceConfiguration.Local.cscfg file and a ServiceConfiguration.Cloud.cscfg file, that represent “Local” and “Cloud” target environments. I have found in my experience that each target environment for a web project needs its own transformation for a web.config and its own version of a ServiceConfiguration file. For example, the ServiceConfiguration might have settings such as the Windows Azure Storage Account to use for Diagnostics, but the web.config has the configuration section for your Windows Azure Cache Settings. I am sure there could be advantages to having the flexibility to have these differ, but for my project I want them to be consistent, in line with our target environments, and to rely on the Release Configuration setting.

I added a Release Configuration for “Test” and “Prod” and removed the one for “Release” to mimic a couple of target environments. This was easily done using the Configuration Manager.

I used the “Update Config Transforms” option by right clicking on the web.config file to generate the new transforms.

Now I just needed to get the ServiceConfiguration files to fall in line. In Windows Explorer, I added ServiceConfiguration.Test.cscfg, and renamed the other two so that I had ServiceConfiguration.Debug.cscfg and ServiceConfiguration.Prod.cscfg. I then unloaded the Cloud Service project in Solution Explorer and edited the project file to include these elements.

After saving and reloading, you can see that all the configurations are showing up and named consistently.

Next, I needed to make sure these would package and publish correctly from Visual Studio. I am happy to see that my changes are recognized and supported. There is a setting for which ServiceConfiguration to use when debugging.

For packaging/publishing my cloud project, I am prompted to select the configurations I want (and in this case I do not have variability in the two). The correct configuration file is staged alongside my package file.

The Service Definition File

In Hollander’s post, he mentioned some good reasons why you might want to have a different ServiceDefinition file for different environments, such as altering the size of the role. I also could envision other cases such as not having an SSL endpoint in a DEV environment, etc. I see this more as a nice to have than a must have. Feel free to skip this section if you are ok with a standard definition file for all of your environments.

For those interested, let’s now tackle adding different ServiceDefinition files for our Release Configurations and making sure those are built as part of our package.

Hollander’s example shows how to use transformations on both the ServiceConfiguration file and ServiceDefinition file. In this example, I am simply going to replace the entire file, to fall in line with what is being done by Visual Studio for the ServiceConfiguration file.

In Windows Explorer, I added a ServiceDefinition.Test.csdef and ServiceDefinition.Prod.csdef, and renamed the existing one to ServiceDefinition.Debug.csdef for consistency. I updated the ccproj file for my cloud service to include those files like I did with the configuration files before, but added a separate section so that VS doesn’t complain about multiple definition files.

They now show up in Solution Explorer.

The part that is a little trickier is making sure the correct ServiceDefinition file for our Release Configuration is chosen when we are packaging/publishing our project. To understand this process, I started looking at the targets file that my project is using, Microsoft.WindowsAzure.targets located at C:\Program Files (x86)\MSBuild\Microsoft\VisualStudio\v10.0\Windows Azure Tools\1.4, which is different from the targets file used in Tom and Alex’s examples before the 1.4 tools update. I also started running msbuild from the command line so that I could see the log file it was generating for the Publish process. Here is an example of the command I was using:

msbuild CloudService.ccproj /t:Publish /p:Configuration=Prod > %USERPROFILE%\Desktop\msbuild.log

On a side note, initially the command fails. In looking at the log file, it was because the variable $(TargetProfile) was not specified for the target “ResolveServiceConfiguration”, which looks for the correct ServiceConfiguration file to use.

I updated my command to the following and ran again:

msbuild CloudService.ccproj /t:Publish /p:Configuration=Prod;TargetProfile=Prod > %USERPROFILE%\Desktop\msbuild.log

This time my project built and packaged successfully and produced the correct configuration file. This property will come in handy later for our Build server configuration.

Now, back to the task of getting the appropriate definition file into our package. After more digging into the targets file and log file, it appears that a target service definition file, ServiceDefinition.build.csdef, gets generated from the original source definition file, and that one is used with cspack to create the package file. The target file is first created in the same directory as your project file, and then it is used with cspack and for reference can be seen in the .csx folder in your bin directory.
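For anyone scripting this step, the command above can be assembled programmatically. The sketch below is in Python and only builds the argument list; the function name is hypothetical, and defaulting TargetProfile to the Configuration value simply reflects the convention this post follows of keeping the two in sync.

```python
def publish_command(project, configuration, target_profile=None):
    """Return the msbuild argument list for packaging a cloud service project."""
    # Assumption: the target profile matches the release configuration
    # unless the caller says otherwise.
    profile = target_profile or configuration
    return [
        "msbuild",
        project,
        "/t:Publish",
        f"/p:Configuration={configuration};TargetProfile={profile}",
    ]


command = publish_command("CloudService.ccproj", "Prod")
```

The list form can be handed to `subprocess.run(command)` on a machine where msbuild is on the PATH, which avoids shell quoting problems with the semicolon.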

In looking back at the Microsoft.WindowsAzure.targets file, we can see that the ResolveServiceDefinition target sets these source and target variables. If I override this target to set the appropriate source definition file variable, then that one will be used. I added the following section to my CloudService.ccproj file to override this target and use the Configuration property as part of the source definition file name (could have also used TargetProfile).

I then ran some builds, packages, and publishes from the command line and from Visual Studio. Everything was ending up in the right place. To verify, you can dig into the CloudService.csx folder for our service located in bin\(Configuration) to find the definition file that was packed. For this “Prod” build we can verify that the correct definition file was used by looking at the contents of this folder:

Now I have my environment definitions and configurations in sync, and all relying on the same Release Configuration property. I am ready to start building from our build machine.

Packaging and Deploying on a Build Machine

Build Machine Setup

I am using an existing instance of TFS 2010 for source control. I now need to configure a machine to be able to build my Azure project. I elected to set up a new virtual machine to serve as a new build server because of the Azure dependencies my build server will have. From some research, it appears that most people are installing Visual Studio, the Windows Azure SDK and the Windows Azure Tools for Visual Studio onto their build server in order to build Azure projects. I briefly looked at what it would take to not go down this path, but decided against it. If anyone has a more elegant solution (that doesn’t involve faking the Azure SDK installation), I am all ears. Due to these requirements, I felt it was best to use a separate VM as a build server.

After provisioning a new Windows Server 2008 R2 VM, I installed the Build Services only for TFS 2010. I did end up creating a new Project Collection on our TFS 2010 server and configured the build controller to be associated to that collection.

I was now ready to install the necessary dependencies to build Windows Azure projects. I installed Visual Studio 2010. I then used the Web Platform Installer to install the Windows Azure SDK and Windows Azure Tools for Visual Studio – August 2011. The Web Platform Installer did a good job of taking care of upstream dependencies for me.

After the installation completed, I double checked that the Microsoft.WindowsAzure.targets file was present on the VM.

Finally, I created a drop location on my build machine and updated the permissions to allow builds to be initially stored on this machine itself. My end goal is to have these builds being pushed to Windows Azure, but I figure it will be nice to verify locally on the machine first.

Packaging on the Build Machine

Back in Visual Studio on my laptop, I created a new Build Definition called “AzureSample-Prod”. I specified the Drop location to the share I created on the build VM. On the Process tab of the wizard I made sure to select my CloudService.ccproj file for my solution under “Items To Build” and also entered the “Prod” Configuration for which Configuration to build. I selected the cloud service project so I can package my solution for Windows Azure. The “Prod” Configuration represents my target environment I will be publishing to.

Next, remembering my experience running msbuild.exe from the command line before, I knew I had to set some MSBuild arguments. The field is located in the Advanced Properties window section on the Process tab. I added an MSBuild argument for $TargetProfile and set it to match my Release Configuration. I also specified an MSBuild argument for the Publish target to indicate that we want to create the package.

This Build Definition should successfully build and package my Azure project. I ran the build and it succeeded. I then went to the drop location that I specified to verify everything was packaged appropriately. To my surprise, the “app.publish” folder that should contain the .cspkg and .cscfg file that was packaged did not exist at the drop location. Strange. I looked over the MSBuild log file for any clues. I also found that the app.publish folder was created at the source build location on the build server. It just looked like it was getting left behind and not making it to the drop location. I am thinking it could be a timing issue for when contents are dropped at the drop location. This was not an issue prior to the 1.4 Tools for Visual Studio.

To get around this problem, I decided to add a simple custom target to my project file to copy the app.publish folder contents to the drop location after they are created. The condition checks the value of a new parameter, which I set as an MSBuild argument, so that this target doesn’t run when building/packaging locally in Visual Studio.

My MSBuild Arguments property now looked like this:

I kicked off another build, and was happy to see the app.publish folder with package file and configuration file in my drop location. Note that my drop location happens to be on my build server, but it really could be another shared location.

Deploying from the Build Machine

The next task was to start deploying from my build machine directly to Windows Azure. Already having a Windows Azure Subscription, I created a new hosted service and storage account for my target environments. To be able to deploy, there are some extra configuration steps to complete on the build machine. In Hollander’s post, he outlines these steps:

- Create a Windows Azure Management Certificate and install it on the build machine and also upload it to the Windows Azure Subscription

- Download and install the Windows Azure Management Cmdlets on the build machine

- Create an AzureDeploy.ps1 PowerShell script and save it to the build machine

After completing these steps, I also made sure to update the execution policy for PowerShell on my build machine, knowing that the script would fail if the execution policy was restricted.

I continued following Tom’s instructions, and updated my project file to add a target that calls the AzureDeploy.ps1 script. I used the AzureDeploy.ps1 “as is” from his post. I did have to modify his “AzureDeploy” target. I had to update some of the parameter values to match my naming conventions and also to match the new app.publish folder where our package is created. I also reused the same “BuildServer” parameter that I used in my other target above. I eliminated the “AzureDeployEnviornment” parameter since we are leveraging the Release Configuration for this.

Finally, I came back to my Build Definition, and updated the MSBuild Arguments on the Process Tab to include the parameters for the Azure Subscription ID, the Hosted Service Name, the Storage Account Name, and the Certificate Thumbprint:

/t:Publish /p:TargetProfile=Test /p:BuildServer=True /p:AzureSubscriptionID="{subscriptionid}" /p:AzureCertificateThumbprint="{thumbprint}" /p:AzureHostedServiceName="{hostedservicename}" /p:AzureStorageAccountName="{storageaccountname}"

I then kicked off a new build and logged onto the Windows Azure Management Portal to watch my service deploy!
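Because a missing property only shows up as a failed deployment, it can help to generate this argument string and validate it first. The following Python sketch is one way to do that; the function name is hypothetical, the property names are the ones listed in the post, and the values in the example are the same placeholders rather than real credentials.

```python
REQUIRED = (
    "AzureSubscriptionID",
    "AzureCertificateThumbprint",
    "AzureHostedServiceName",
    "AzureStorageAccountName",
)


def deploy_arguments(target_profile, settings):
    """Build the MSBuild Arguments string, failing fast on missing values."""
    missing = [name for name in REQUIRED if not settings.get(name)]
    if missing:
        raise ValueError("missing MSBuild properties: " + ", ".join(missing))
    parts = ["/t:Publish", f"/p:TargetProfile={target_profile}", "/p:BuildServer=True"]
    parts += [f'/p:{name}="{settings[name]}"' for name in REQUIRED]
    return " ".join(parts)


# Placeholder values, exactly as they appear in the post.
settings = {
    "AzureSubscriptionID": "{subscriptionid}",
    "AzureCertificateThumbprint": "{thumbprint}",
    "AzureHostedServiceName": "{hostedservicename}",
    "AzureStorageAccountName": "{storageaccountname}",
}
arguments = deploy_arguments("Test", settings)
```

Keeping one settings dictionary per environment makes it straightforward to produce a matching string for each build definition.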

Final Tweaks: Build Numbers, Deployment Labels, Deployment Slots, and Saving Builds in BLOB Storage

Now having my project deploying to Windows Azure, I noticed a few other tweaks I wanted to make to tighten this process up a bit. One of the things that I thought would be valuable was to have the Build Number generated by TFS during the build process be the Label on the deployment in Windows Azure. Then it would be very easy to identify what build is deployed. This turned out to be a little more complicated than I expected.

I knew that if I passed in the build number as a parameter to the AzureDeploy.ps1 script, then I could easily set that to the label for the deployment. But unfortunately, by default the build number value is not available to downstream targets. I found this post by Jason Prickett, which describes an easy update to make to the build template that exposes this value. I made a copy of the default build process template, edited the XML as indicated in that post, and saved the file as a new build process template. You then need to check this template into source control. I edited my Build Definition to use this new template rather than the default template.

Now that I had a variable containing the value of the build number, I updated the target to pass the $BuildNumber into the AzureDeploy.ps1 script. I then updated the script to accept this parameter and use it for the build label. The script already had a variable for “buildlabel” that was being used. I simply set its value to this incoming argument.

$buildLabel = $args[7]

I kicked off another build and went back to the Azure Portal. This time the deployment label matched the build number in TFS!

Next, I took a look at what was being stored in BLOB storage when deploying to Windows Azure with this process. My goal was to have both the package and service configuration file for a build be stored in BLOB Storage for historical purposes, and also to easily support rollback scenarios. I noticed that the Cmdlet being used was placing the package file in a private blob container called “mydeployments” in the storage account I specified. The name of the blob was a current date format plus the name of my package. Also, the configuration file was not present.

I wanted to see the package and configuration file here, and using a naming convention that included the build number so I could distinguish them appropriately. Let’s make this happen.

I opened the solution file for the Windows Azure Management Cmdlets. I looked at the source code for the cmdlet that was being called from the AzureDeploy.ps1 script. Inside NewDeployment.cs, I navigated to the NewDeploymentProcess method. It was easy to see where the package was being uploaded to blob storage. It was eventually calling to a static method called UploadFile in AzureBlob.cs. There it was applying a timestamp to all BLOBs as part of a naming convention, likely to ensure uniqueness. I decided to comment this out, because I plan to make my BLOB names unique myself by using the Build Number.

Next, back in the NewDeploymentProcess method, I added a snippet to also upload the configuration file to BLOB storage, right after the package file. Finally, I was prepared to move these changes onto the build server. I uninstalled the existing Cmdlets using an uninstall script that is provided with the cmdlets. I then removed them entirely from the build server. I zipped up and copied the updated version to the build server and then installed them once again.

The last remaining step was to have the package and configuration file names contain the build number from TFS. I once again modified the target that copied these files to the drop location. I simply included the $(BuildNumber) parameter that we exposed earlier as part of the destination file name.
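The renaming itself is simple string work. The sketch below shows one possible convention, inserting the build number before the file extension; the post does not spell out its exact naming scheme, so the function name, the convention and the sample build number are all hypothetical.

```python
import os


def with_build_number(file_name, build_number):
    """Insert the build number before the extension of a package or config name."""
    stem, extension = os.path.splitext(file_name)
    return f"{stem}.{build_number}{extension}"


# Hypothetical build number for the AzureSample-Prod definition.
build_number = "AzureSample-Prod_20110819.1"
package_name = with_build_number("CloudService.cspkg", build_number)
config_name = with_build_number("ServiceConfiguration.Prod.cscfg", build_number)
```

Using the same function for both files keeps the package and its configuration recognisably paired in the blob container.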

After running a new build, I verified the contents of the “mydeployments” container. I was pleased again to see a package and configuration file labeled by build number.

This now matches the label on my hosted service that was just deployed, and also aligns with the build history in TFS and on the build server. All are now in sync, and centered around the Build Number generated from TFS. If I decided to change my naming convention for builds in my build definition, that change would be represented in these other areas as well.

For extra credit, I made one final improvement. Knowing that I may want to target either the Production or Staging deployment slot for different build definitions, I updated the AzureDeploy.ps1 script to accept deployment slot as an incoming parameter.
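The deployment slot parameter can be sketched in the same spirit. The Python below is only an analogy for a script parameter restricted to two values. The name `normalize_slot`, the default of Staging and the case-insensitive matching are assumptions, not details of the AzureDeploy.ps1 script.

```python
from typing import Optional

# The two deployment slots named in the post.
VALID_SLOTS = ("Production", "Staging")


def normalize_slot(slot: Optional[str], default: str = "Staging") -> str:
    """Map user input to a canonical slot name, or raise if it is not recognized."""
    # Fall back to the (assumed) default when no slot is supplied.
    if slot is None or not slot.strip():
        return default
    wanted = slot.strip().lower()
    for valid in VALID_SLOTS:
        if wanted == valid.lower():
            return valid
    raise ValueError(f"unknown deployment slot: {slot!r}")


if __name__ == "__main__":
    print(normalize_slot("production"))
```

Rejecting unknown values up front means a mistyped slot in a build definition fails before any deployment call is made, rather than part-way through.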

Here is a look at the final version of these targets in my CloudService.ccproj file. You will be able to download the entire solution from a link at the end of this post.

Resources

I hope that this post is helpful in creating and customizing your build process for Windows Azure. If there are better ways to accomplish some of these things, please let me know. Credit to Tom Hollander and others for laying the groundwork for me to follow.

The solution, which includes the ccproj file with my custom targets as well as other files such as the AzureDeploy.ps1 script, is available for download here.

• Petri I. Salonen warned Cloud ISV: Make sure you understand your ecosystem play – example of Intuit and Microsoft collaboration on software platforms to create a foundation for solution developers in an 8/19/2011 post:

I have written several times in my blogs about ecosystems and the role that they play. I recently ran into an interesting article in the Redmond ChannelPartner with the headline “Intuit Extends Cloud Pact with Microsoft”. As I work with the Microsoft ecosystem every single working day, I became interested in what the article was all about. Intuit has been building a Partner Platform (IPP), which Mary-Jo Foley reported on back in January 2010. I am a longtime QuickBooks Online user, so I have had a pretty good picture of Intuit’s SaaS delivery model since at least 2003. I believe Intuit was one of the first software companies to introduce a full-blown accounting solution for the SMB market, and my company still uses it every single day.

In January 2010 Jeffrey Schwartz reported that Microsoft and Intuit struck a cloud pact for small business under which Windows Azure would be the preferred PaaS platform for Intuit and the Intuit App Center. This value proposition is obviously good for ISVs, which can build solutions for the vast QuickBooks ecosystem with integration not only to QuickBooks data but also between QuickBooks applications.

The idea behind this Intuit Partner Platform (IPP) is to help developers to build and deploy SaaS applications that are integrated with QuickBooks data and also to give huge exposure for the ISV on the marketplace that Intuit provides for its partners. This marketplace (Intuit App Center) has thousands of applications that can be used with QuickBooks and other QuickBooks third-party solutions.

Let’s look more closely at why Intuit and Microsoft need each other. I read an interesting blog entry from Phil Wainewright that includes very interesting remarks about software platforms, which happens to be the topic of my Ph.D. dissertation (Evaluation of a Product Platform Strategy for Analytical Application Software). The blog entry from Wainewright includes the following picture:

You can read more about this topic and download Wainewright’s report for Intuit, “Redefining Software Platforms – How PaaS changes the game for ISVs”, by following this link.

When you review the picture above in more detail, you will find interesting and relevant information about how Windows Azure and the Intuit IPP platform play together. According to Wainewright, conventional software platform capabilities are all about the functional scope of the development platform, whereas cloud platforms add three distinct elements: multi-tenancy, cloud reach and service delivery capabilities. The service delivery capabilities have to do with provisioning, pay-as-you-go pricing and billing, service monitoring, etc. Multi-tenancy is typically not something that the PaaS platform provides automatically; the application developer has to build the multi-tenancy logic into the application. I still hear people saying that a legacy application that is migrated to a PaaS platform will automatically become multi-tenant. This is not true, as each application has to be re-architected to take advantage of things such as scalability (the application increases compute instances based on load).

The idea behind the Intuit IPP platform, according to Wainewright, is that Intuit has built service delivery capabilities that can be abstracted from the functional platform on the left-hand side of the picture. Intuit’s initial idea was to support integrating any PaaS platform with IPP, which I think is a good idea but not practical considering how fast the PaaS platforms are evolving and the amount of investment that is put into them.

One thing to remember is that cloud platforms such as Windows Azure have already moved along the horizontal axis, so the clear-cut separation between functional platform and service delivery capabilities is no longer that obvious. This also means that any Microsoft ISV that builds additional infrastructure elements for Windows Azure has to be carefully aligned with Microsoft product teams, as there is a danger of becoming irrelevant when third-party functionality is absorbed into the functional platform itself (a PaaS platform like Windows Azure). I have seen the same situation with some ISVs whose BizTalk extensions suddenly became part of BizTalk itself. Microsoft is very clear with its ISV partners that they should focus on vertical functionality or features that are unlikely to become part of the Microsoft platform in the short term.

A new post from Jeffrey Schwartz on August 11th, 2011 explains how Intuit IPP and Microsoft Azure will be even more integrated, as Intuit will drop its native development stack and instead “focus on the top of the stack to make data and services for developers a top priority”. In reality this means that Intuit will invest heavily in the Windows Azure SDK for IPP and make developing an app on Azure and integrating it with QuickBooks data and IPP’s go-to-market services easy and effective. Microsoft released some more information about this partnership in the Windows Azure blog. The two companies have launched a program called “Front Runner for Intuit Partner Program”, and its site explains what developers get by participating. The site portrays three steps (Develop, Test and Market), and there is a video that explains what it means.

So what should we learn from this blog entry? First of all, every development platform (PaaS etc.) will evolve, and my recommendation for ISVs is to focus on and invest in one that you think is here for the long run. I think Intuit is a great example of a company that initially competed in the PaaS space to some extent, only to conclude that the investment needed to keep competing was just too large, which led it to select Microsoft Azure as the foundation for IPP. Intuit will be much better off focusing on building logic on top of Windows Azure by participating in SDK development and ensuring that any solution-specific development can be easily integrated into the Windows Azure platform. Intuit will therefore focus on providing data and services for developers to use with the Windows Azure PaaS platform.

Microsoft has been in the development tools and platform business since its founding, so it is much better positioned to make those kinds of massive investments. I think this is a very smart move by Intuit, and it gives Intuit a scalable solution that developers can rely on, even if the decision was not easy according to Liz Ngo from Microsoft. Alex Chriss (Director, Intuit Partner Platform) explains in his blog why Windows Azure is a good foundation for Intuit development. Intuit also provides a tremendous opportunity for ISVs, as CoreConnext and Propelware report in the blog post from Liz Ngo.

Software ecosystems will continue to evolve, and EVERY ISV has to figure out how its solutions will meld into different sub-ecosystems. This will also require efficient and well-defined application programming interfaces (APIs) from all parties to create an integrated solution based on service-oriented architecture (SOA).

Let me know if you know of other good examples where software ecosystems mesh nicely with each other.

David Gristwood answered So, Who Else Is Using Windows Azure…? in the UK in an 8/19/2011 post:

If you are in the process of evaluating the Windows Azure platform, one of the questions you may be asking yourself is, who else is using Windows Azure? Our Developer and Platform Group within Microsoft here in the UK has been working closely with a number of early adopters, many of whom are now live with applications and services running on Windows Azure:

At the recent TechDays UK event, I asked four partners that I had been working with to come on stage and talk for around 10 minutes about what they had been doing, why they chose the Windows Azure Platform, and what their experiences have been. They talked very openly and very honestly about their work, and if you want to know what they thought of Windows Azure, and why they and their customers are building on Windows Azure, check out the four videos – they are short, and very watchable:

CisionWire reported Adactus Ltd, Releases New Version of its Pulse Claims Management Software for Windows Azure on 8/19/2011:

London — 19th August 2011 — Adactus Ltd today announced it will launch a new version of its application, Pulse, which works with Microsoft Windows Azure to offer customers enhanced security, as well as innovative user interface features and reliability improvements.

Pulse is a cloud-based claims management software solution that helps the insurance industry better manage the wide variety of insurance property claims. Designed using the very latest Microsoft cloud-based technology with ease of use as a primary design goal, Pulse can be used on a software-as-a-service payment model, which helps the insurance industry cope with variations in claim volumes and significantly reduces the initial level of investment required.

“Our ISV community is alive with innovation, and we’re committed to helping our partners drive the next generation of software experiences,” said Ross Brown, Vice President of ISV and Solutions Partners for the Worldwide Partner Group at Microsoft. “Adding compatibility for the latest Microsoft technologies helps ISVs to stay ahead of the competition and give their customers access to cutting-edge technologies.”

“Adactus is excited to launch this new version of Pulse,” said James Smith, Head Of Software Development at Adactus Ltd. “Making our application compatible with Microsoft Windows Azure helps us offer our customers compelling benefits, including improved security and reliability features, tools to keep them connected to data stored on the Web, sophisticated management features and flexible access administration to improve mobile working.”

Pulse is a Microsoft-approved, cloud-based application operating on the Windows Azure platform and offers unparalleled scalability, flexibility and security in claim management. Pulse is ideally suited to managing high claim volumes during claim surges such as those experienced earlier this year. Commenting on this solution, James Smith, Head of Software Development at Adactus, said, “Our clients are demanding flexibility in the pricing and support models for claims technology solutions and Pulse has been built with that as its core objective. Volumes can vary dramatically based on a number of factors including the environmental conditions and the complexity of claim information required.”

Yvonne Muench (@yvmuench) answered I’ve Built A Windows Azure Application… How Should I Market It? in an 8/19/2011 post to the Windows Azure blog:

We’ve heard from Independent Software Vendors (ISVs) that the technical aspect of building a Windows Azure application is sometimes the easier part. Coming up with the business strategy and transitioning a traditional company to Software-as-a-Service (SaaS) is often more challenging. My team, part of the Developer and Platform Evangelist (DPE) organization, works with ISVs who are building on Windows Azure. We’ve been talking to our early adopter ISVs to see what they're doing and how they’re mitigating the risks. We've found some interesting trends, which I highlighted in a session at the Worldwide Partner Conference (WPC) last month. Here’s a recap.

The first trend involves organizational structure. Rather than change from within, many partners create entirely new entities to pursue SaaS, whether to isolate and reduce conflict between the new and established business, or to hire new staff with the right skill set. We're seeing this all over the world from all types of ISVs, and even systems integrators (SIs) who are becoming ISVs. For example, one of our global ISVs, MetraTech, which is a leader in on-premises billing software, recently launched Metanga, a dedicated multi-tenant, cloud-based billing service on Windows Azure. There are many other examples; here are just a few:

- UK: eTVMedia creates Enteraction, which enables businesses to “gamify” their applications

- Brazil: Connectt creates Zubit, which enables online brand managers to monitor social sites

- Mexico: Netmark creates WhooGoo, an online content and game platform for tweens.