Windows Azure and Cloud Computing Posts for 8/5/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Wade Wegner and Steve Marx (@smarx) produced Cloud Cover Episode 54 - Storage Analytics and the Windows Azure Tools for Visual Studio 1.4 for Channel9 on 8/5/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show at @CloudCoverShow.

In this episode, Steve and Wade dig into new tools and capabilities of the Windows Azure Platform—the Windows Azure Tools for Visual Studio 1.4 and Windows Azure Storage Analytics. To provide additional background on these features, Wade and Steve have written the following blog posts:

- Deploying the Windows Azure ASP.NET MVC 3 Web Role

- Playing with the new Windows Azure Storage Analytics Features [See below]

In the news:

- Announcing The End of The Free Promotional Period for Windows Azure AppFabric Caching

- Must have NuGet Packages for Windows Azure Development

- Updates to the Windows Azure Platform Training Kit

- Announcing the August 2011 Release of the Windows Azure Tools for Microsoft Visual Studio 2010

- Using the New Windows Azure Tools v1.4 for VS 2010

- Announcing Windows Azure Storage Analytics

For all recent posts by the Windows Azure Storage Team about Azure storage metrics and analytics, see my Windows Azure and Cloud Computing Posts for 8/3/2011+ post.

Steve Marx (@smarx) posted Playing with the new Windows Azure Storage Analytics Features on 8/4/2011:

Since its release yesterday, I’ve been playing with the new ability to have Windows Azure storage log analytics data. In a nutshell, analytics lets you log as much or as little detail as you want about calls to your storage account (blobs, tables, and queues), including detailed logs (what API calls were made and when) and aggregate metrics (like how much storage you’re consuming and how many total requests you’ve done in the past hour).

As I’m learning about these new capabilities, I’m putting the results of my experiments online at http://storageanalytics.cloudapp.net. This app lets you change your analytics settings (so you’ll need to use a real storage account and key to log in) and also lets you graph your “Get Blob” metrics.

A couple notes about using the app:

- When turning on various logging and analytics features, you’ll have to make sure your XML conforms with the documentation. That means, for example, that when you enable metrics, you’ll also have to add the <IncludeAPIs /> tag, and when you enable a retention policy, you’ll have to add the <Days /> tag.

- The graph page requires a browser with <canvas> tag support. (IE9 will do, as will recent Firefox, Chrome, and probably Safari.) Even in modern browsers, I’ve gotten a couple bug reports already about the graph not showing up and other issues. Please let me know on Twitter (I’m @smarx) if you encounter issues so I can track them down. Bonus points if you can send me script errors captured by IE’s F12, Chrome’s Ctrl+Shift+I, Firebug, or the like.

- The site uses HTTPS to better secure your account name and key, and I’ve used a self-signed certificate, so you’ll have to tell your browser that you’re okay with browsing to a site with an unverified certificate.

In the rest of this post, I’ll share some details and code for what I’ve done so far.

Turning On Analytics

There’s a new method that’s used for turning analytics on and off called “Set Storage Service Properties.” Because this is a brand new method, there’s no support for it in the .NET storage client library (Microsoft.WindowsAzure.StorageClient namespace). For convenience, I’ve put some web UI around this and the corresponding “Get Storage Service Properties” method, and that’s what you’ll find at the main page on http://storageanalytics.cloudapp.net.

If you want to write your own code to change these settings, the methods are actually quite simple to call. It’s just a matter of an HTTP GET or PUT to the right URL. The payload is a simple XML schema that turns on and off various features. Here’s the relevant source code from http://storageanalytics.cloudapp.net:

    [OutputCache(Location = OutputCacheLocation.None)]
    public ActionResult Load(string service)
    {
        var creds = new StorageCredentialsAccountAndKey(Request.Cookies["AccountName"].Value,
            Request.Cookies["AccountKey"].Value);
        var req = (HttpWebRequest)WebRequest.Create(string.Format(
            "http://{0}.{1}.core.windows.net/?restype=service&comp=properties",
            creds.AccountName, service));
        req.Headers["x-ms-version"] = "2009-09-19";
        if (service == "table") { creds.SignRequestLite(req); } else { creds.SignRequest(req); }
        return Content(XDocument.Parse(new StreamReader(req.GetResponse().GetResponseStream())
            .ReadToEnd()).ToString());
    }

    [HttpPost]
    [ValidateInput(false)]
    public ActionResult Save(string service)
    {
        var creds = new StorageCredentialsAccountAndKey(Request.Cookies["AccountName"].Value,
            Request.Cookies["AccountKey"].Value);
        var req = (HttpWebRequest)WebRequest.Create(string.Format(
            "http://{0}.{1}.core.windows.net/?restype=service&comp=properties",
            creds.AccountName, service));
        req.Method = "PUT";
        req.Headers["x-ms-version"] = "2009-09-19";
        req.ContentLength = Request.InputStream.Length;
        if (service == "table") { creds.SignRequestLite(req); } else { creds.SignRequest(req); }
        using (var stream = req.GetRequestStream())
        {
            Request.InputStream.CopyTo(stream);
            stream.Close();
        }
        try
        {
            req.GetResponse();
            return new EmptyResult();
        }
        catch (WebException e)
        {
            Response.StatusCode = 500;
            Response.TrySkipIisCustomErrors = true;
            return Content(new StreamReader(e.Response.GetResponseStream()).ReadToEnd());
        }
    }

You can find a much more complete set of sample code for enabling and disabling various analytics options on the storage team’s blog posts on logging and metrics, but I wanted to show how straightforward calling the methods can be.
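To make the payload concrete, here is a minimal sketch of building the XML body for a Set Storage Service Properties call with LINQ to XML. The element names follow the version 1.0 analytics schema as described in the MSDN documentation. The AnalyticsSettings class, its Build method, and the seven-day retention value are illustrative assumptions, not code from storageanalytics.cloudapp.net.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper (not part of the original app): builds a
// StorageServiceProperties payload for the blob, table or queue service.
public static class AnalyticsSettings
{
    public static XDocument Build(bool logReads, bool logWrites, bool logDeletes,
                                  bool metricsEnabled, bool includeApis, int retentionDays)
    {
        return new XDocument(
            new XElement("StorageServiceProperties",
                new XElement("Logging",
                    new XElement("Version", "1.0"),
                    new XElement("Delete", Flag(logDeletes)),
                    new XElement("Read", Flag(logReads)),
                    new XElement("Write", Flag(logWrites)),
                    Retention(retentionDays)),
                new XElement("Metrics",
                    new XElement("Version", "1.0"),
                    new XElement("Enabled", Flag(metricsEnabled)),
                    // IncludeAPIs is emitted only when metrics are enabled
                    // (null content is ignored by XElement).
                    metricsEnabled ? new XElement("IncludeAPIs", Flag(includeApis)) : null,
                    Retention(retentionDays))));
    }

    // Assumption for this sketch: zero days means "no retention policy".
    // The Days element is emitted only when the policy is enabled.
    private static XElement Retention(int days)
    {
        bool enabled = days > 0;
        return new XElement("RetentionPolicy",
            new XElement("Enabled", Flag(enabled)),
            enabled ? new XElement("Days", days) : null);
    }

    private static string Flag(bool value)
    {
        return value ? "true" : "false";
    }

    public static void Main()
    {
        // Log writes and deletes, enable metrics without per-API detail, keep 7 days.
        Console.WriteLine(Build(false, true, true, true, false, 7));
    }
}
```

The resulting document could be written to the request stream of the PUT shown in the Save action above. Emitting IncludeAPIs and Days conditionally mirrors the two notes about which tags are required when a feature is turned on.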

Reading the Metrics

The new analytics functionality is really two things: logging and metrics. Logging is about recording each call that is made to storage. Metrics are about statistics and aggregates. The analytics documentation as well as the storage team’s blog posts on logging and metrics have more details about what data is recorded and how you can use it. Read those if you want the details.

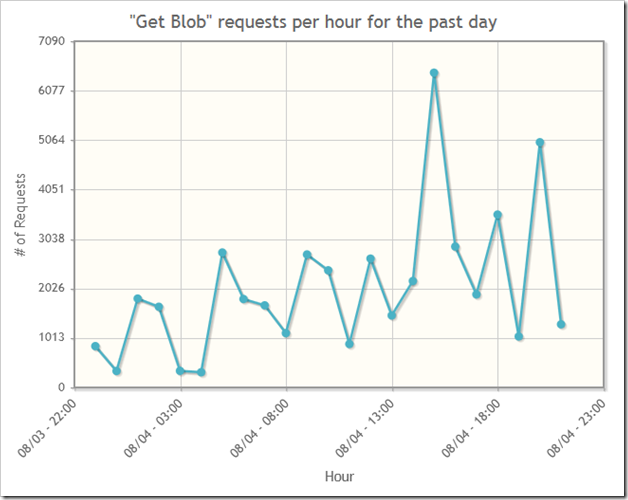

For http://storageanalytics.cloudapp.net, I created just one example of how to use metrics. It draws a graph of “Get Blob” usage over time. Below is a graph of how many “Get Blob” calls have been made via http://netflixpivot.cloudapp.net over the past twenty-four hours. (Note that http://netflixpivot.cloudapp.net is a weird example, since most access goes through a CDN. I think a large number of the requests below are actually generated by the backend, which is doing image processing to construct the Deep Zoom images, involving a high number of calls directly to storage and not through the CDN. This explains the spikiness of the graph, since the images are reconstructed roughly once per hour.)

You can see a similar graph for your blob storage account by clicking on the graph link on the left of http://storageanalytics.cloudapp.net. This graph is drawn by the excellent jqPlot library, and the data is pulled from the $MetricsTransactionsBlob table.

Note that the specially named $logs container and $Metrics* family of tables are simply regular containers and tables within your storage account. (For example, you’ll be billed for their use.) However, these containers and tables do not show up when you perform a List Containers or List Tables operation. That means none of your existing storage tools will show you these logs and metrics. This took me a bit by surprise, and at first I thought I hadn’t turned on analytics correctly, because the analytics didn’t seem to appear in my storage explorer of choice (the ClumsyLeaf tools).

Here’s the code from http://storageanalytics.cloudapp.net that I’m using to query my storage metrics, and specifically get the “Get Blob” usage for the past twenty-four hours:

    public class Metrics : TableServiceEntity
    {
        public string TotalRequests { get; set; }
        public Metrics() { }
    }

    ...

    IEnumerable<Metrics> metrics = null;
    try
    {
        var ctx = new CloudStorageAccount(new StorageCredentialsAccountAndKey(
            Request.Cookies["AccountName"].Value, Request.Cookies["AccountKey"].Value),
            false).CreateCloudTableClient().GetDataServiceContext();
        metrics = ctx.CreateQuery<Metrics>("$MetricsTransactionsBlob").Where(m =>
            string.Compare(m.PartitionKey,
                (DateTime.UtcNow - TimeSpan.FromDays(1)).ToString("yyyyMMddTHH00")) > 0
            && m.RowKey == "user;GetBlob").ToList();
    }
    catch { } // just pass a null to the view in case of errors... the view will display a generic error message
    return View(metrics);

I created a very simple model (just containing the TotalRequests property I wanted to query), but again, the storage team’s blog post on metrics has more complete source code (including a full class for reading entities from this table with all the possible properties).

Download the Source Code

You can download the full source code for http://storageanalytics.cloudapp.net here: http://cdn.blog.smarx.com/files/StorageAnalytics_source.zip

More Information

For further reading, consult the MSDN documentation and the storage team’s blog posts on logging and metrics.
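One detail of the metrics query shown earlier is worth spelling out: it filters by comparing PartitionKey values as strings. That works because the hourly keys are fixed-width, zero-padded UTC timestamps, so they sort in the same order as the times they encode. The sketch below isolates that idea. MetricsKeys, HourKey and IsAfter are hypothetical names for illustration and do not appear in the original source.

```csharp
using System;
using System.Globalization;

// Hypothetical helper, not from the original app.
public static class MetricsKeys
{
    // Formats a UTC time as an hourly key, e.g. "20110804T1300".
    // "T" and "00" are not format specifiers, so they are copied literally.
    public static string HourKey(DateTime utc)
    {
        return utc.ToString("yyyyMMddTHH00", CultureInfo.InvariantCulture);
    }

    // Fixed-width, zero-padded keys sort like the times they encode,
    // so an ordinal string comparison doubles as a time comparison.
    public static bool IsAfter(string partitionKey, DateTime cutoffUtc)
    {
        return string.CompareOrdinal(partitionKey, HourKey(cutoffUtc)) > 0;
    }

    public static void Main()
    {
        var cutoff = new DateTime(2011, 8, 4, 13, 27, 0, DateTimeKind.Utc);
        Console.WriteLine(HourKey(cutoff));                   // 20110804T1300
        Console.WriteLine(IsAfter("20110804T1400", cutoff));  // True
        Console.WriteLine(IsAfter("20110803T2300", cutoff));  // False
    }
}
```

Passing CultureInfo.InvariantCulture is a defensive choice in this sketch so the key does not depend on the server's regional settings.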

Joseph Fultz described Searching Windows Azure Storage with Lucene.Net in his Forecast: Cloudy column for the August 2011 issue of MSDN Magazine:

You know what you need is buried in your data somewhere in the cloud, but you don’t know where. This happens so often to so many, and typically the response is to implement search in one of two ways. The simplest is to put key metadata into a SQL Azure database and then use a WHERE clause to find the Uri based on a LIKE query against the data. This has very obvious shortcomings, such as limitations in matching based only on the key pieces of metadata instead of the document content, potential issues with database size for SQL Azure, added premium costs to store metadata in SQL Azure, and the effort involved in building a specialized indexing mechanism often implemented as part of the persistence layer. In addition to those shortcomings are more specialized search capabilities that simply won’t be there, such as:

- Relevance ranking

- Language tokenization

- Phrase matching and near matching

- Stemming and synonym matching

A second approach I’ve seen is to index the cloud content from the local search indexer, and this has its own problems. Either the document is indexed locally and the Uri fixed up, which leads to complexity in the persistence and indexing because the file must be local and in the cloud; or the local indexing service reaches out to the cloud, which decreases performance and increases bandwidth consumption and cost. Moreover, using your local search engine could mean increased licensing costs, as well. I’ve seen a hybrid of the two approaches using a local SQL Server and full-text Indexing.

Theoretically, when SQL Azure adds full-text indexing, you’ll be able to use the first method more satisfactorily, but it will still require a fair amount of database space to hold the indexed content. I wanted to address the problem and meet the following criteria:

- Keep costs relating to licensing, space and bandwidth low.

- Have a real search (not baling wire and duct tape wrapped around SQL Server).

- Design and implement an architecture analogous to what I might implement in the corporate datacenter.

Search Architecture Using Lucene.Net

I want a real search architecture and, thus, need a real indexing and search engine. Fortunately, many others wanted the same thing and created a nice .NET version of the open source Lucene search and indexing library, which you’ll find at incubator.apache.org/lucene.net. Moreover, Tom Laird-McConnell created a fantastic library for using Windows Azure Storage with Lucene.Net; you’ll find it at code.msdn.microsoft.com/AzureDirectory. With these two libraries I only need to write code to crawl and index the content and a search service to find the content. The architecture will mimic typical search architecture, with index storage, an indexing service, a search service and some front-end Web servers to consume the search service (see Figure 1).

Figure 1 Search Architecture

The Lucene.Net and AzureDirectory libraries will run on a Worker Role that will serve as the Indexing Service, but the front-end Web Role only needs to consume the search service and doesn’t need the search-specific libraries. Configuring the storage and compute instances in the same region should keep bandwidth use—and costs—down during indexing and searching.

Crawling and Indexing

The Worker Role is responsible for crawling the documents in storage and indexing them. I’ve narrowed the scope to handle only Word .docx documents, using the OpenXML SDK 2.0, available at msdn.microsoft.com/library/bb456488. I’ve chosen to actually insert the latest code release for both AzureDirectory and Lucene.Net in my project, rather than just referencing the libraries.

Within the Run method, I do a full index at the start and then fire an incremental update within the sleep loop that’s set up, like so:

    Index(true);
    while (true)
    {
        Thread.Sleep(18000);
        Trace.WriteLine("Working", "Information");
        Index(false);
    }

For my sample, I keep the loop going at a reasonable frequency by sleeping for 18,000 ms. I haven’t created a method for triggering an index, but it would be easy enough to add a simple service to trigger this same index method on demand to manually trigger an index update from an admin console or call it from a process that monitors updates and additions to the storage container. In any case, I still want a scheduled crawl and this loop could serve as a simple implementation of it.
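As one possible way to combine the scheduled crawl with an on-demand trigger, the sleep can be replaced by a timed wait on an event: the loop wakes when the interval elapses or as soon as something signals it. This is only a sketch under that assumption. CrawlScheduler, TriggerNow and the bounded pass count (used here so the example terminates) are hypothetical and not part of the article's sample.

```csharp
using System;
using System.Threading;

// Hypothetical scheduler: runs the supplied index delegate on a timer,
// and earlier than that whenever TriggerNow is called.
public sealed class CrawlScheduler
{
    private readonly AutoResetEvent trigger = new AutoResetEvent(false);
    private readonly TimeSpan interval;
    private readonly Action<bool> index;

    public CrawlScheduler(TimeSpan interval, Action<bool> index)
    {
        this.interval = interval;
        this.index = index;
    }

    // Could be called from an admin service or a process watching the container.
    public void TriggerNow()
    {
        trigger.Set();
    }

    // One full index, then a fixed number of incremental passes.
    // A worker role would loop forever instead of counting passes.
    public void Run(int passes)
    {
        index(true);
        for (int i = 0; i < passes; i++)
        {
            // Returns early if TriggerNow was called, otherwise after the interval.
            trigger.WaitOne(interval);
            index(false);
        }
    }

    public static void Main()
    {
        int fullRuns = 0, incrementalRuns = 0;
        var scheduler = new CrawlScheduler(TimeSpan.FromMilliseconds(50),
            full => { if (full) fullRuns++; else incrementalRuns++; });

        scheduler.TriggerNow();   // the first wait returns immediately
        scheduler.Run(2);
        Console.WriteLine(fullRuns + " full, " + incrementalRuns + " incremental");
        // prints "1 full, 2 incremental"
    }
}
```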

Within the Index(bool) method I first check to see if the index exists. If an index does exist, I won’t generate a new index because it would obliterate the old one, which would mean doing a bunch of unnecessary work because it would force a full indexing run:

    DateTime LastModified = new DateTime(IndexReader.LastModified(azureDirectory), DateTimeKind.Utc);
    bool GenerateIndex = !IndexReader.IndexExists(azureDirectory);
    DoFull = GenerateIndex;

Once I determine the conditions of the index run, I have to open the index and get a reference to the container that holds the documents being indexed. I’m dealing with a single container and no folders, but in a production implementation I’d expect multiple containers and subfolders. This would require a bit of looping and recursion, but that code should be simple enough to add later:

    // Open AzureDirectory, which contains the index
    AzureDirectory azureDirectory = new AzureDirectory(storageAccount, "CloudIndex");

    // Loop and fetch the information for each one.
    // This needs to be managed for memory pressure,
    // but for the sample I'll do all in one pass.
    IndexWriter indexWriter = new IndexWriter(azureDirectory,
        new StandardAnalyzer(Lucene.Net.Util.Version.LUCENE_29),
        GenerateIndex, IndexWriter.MaxFieldLength.UNLIMITED);

    // Get container to be indexed.
    CloudBlobContainer Container = BlobClient.GetContainerReference("documents");
    Container.CreateIfNotExist();

Using the AzureDirectory library allows me to use a Windows Azure Storage container as the directory for the Lucene.Net index without having to write any code of my own, so I can focus solely on the code to crawl and index. For this article, the two most interesting parameters in the IndexWriter constructor are the Analyzer and the GenerateIndex flag. The Analyzer is what’s responsible for taking the data passed to the IndexWriter, tokenizing it and creating the index. GenerateIndex is important because if it isn’t set properly the index will get overwritten each time and cause a lot of churn. Before getting to the code that does the indexing, I define a simple object to hold the content of the document:

    public class DocumentToIndex
    {
        public string Body;
        public string Name;
        public string Uri;
        public string Id;
    }

As I loop through the container, I grab each blob reference and create an analogous DocumentToIndex object for it. Before adding the document to the index, I check to see if it has been modified since the last indexing run by comparing its last-modified time to the LastModified time of the index that I grabbed at the start of the index run. I will also index it if the DoFull flag is true:

    foreach (IListBlobItem currentBlob in Container.ListBlobs(options))
    {
        CloudBlob blobRef = Container.GetBlobReference(currentBlob.Uri.ToString());
        blobRef.FetchAttributes(options);

        // Add doc to index if it is newer than index or doing a full index
        if (LastModified < blobRef.Properties.LastModifiedUtc || DoFull)
        {
            DocumentToIndex curBlob = GetDocumentData(currentBlob.Uri.ToString());
            //docs.Add(curBlob);
            AddToCatalog(indexWriter, curBlob);
        }
    }
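The condition in the loop above can be read as a small predicate. The sketch below isolates it so the three cases are easy to see. NeedsIndexing and the sample timestamps are illustrative names and values, not part of the article's code.

```csharp
using System;

public static class IncrementalCrawl
{
    // Hypothetical helper mirroring the loop's condition: index everything on a
    // full run, otherwise only blobs modified after the index was last written.
    public static bool NeedsIndexing(DateTime indexLastModifiedUtc,
                                     DateTime blobLastModifiedUtc, bool doFull)
    {
        return doFull || indexLastModifiedUtc < blobLastModifiedUtc;
    }

    public static void Main()
    {
        var indexTime = new DateTime(2011, 8, 1, 12, 0, 0, DateTimeKind.Utc);
        var olderBlob = indexTime.AddHours(-1);
        var newerBlob = indexTime.AddMinutes(5);

        Console.WriteLine(NeedsIndexing(indexTime, olderBlob, false)); // False
        Console.WriteLine(NeedsIndexing(indexTime, newerBlob, false)); // True
        Console.WriteLine(NeedsIndexing(indexTime, olderBlob, true));  // True
    }
}
```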

For this simple example, I’m checking that the last modified time of the index is less than that of the document and it works well enough. However, there is room for error, because the index could’ve been updated via optimization and then it would look like the index was newer than a given document, but the document did not get indexed. I’m avoiding that possibility by simply tying an optimization call with a full index run. Note that in a real implementation you’d want to revisit this decision. Within the loop I call GetDocumentData to fetch the blob and AddToCatalog to add the data and fields I’m interested in to the Lucene.Net index. Within GetDocumentData, I use fairly typical code to fetch the blob and set a couple of properties for my representative object:

    // Stream stream = File.Open(docUri, FileMode.Open);
    var response = WebRequest.Create(docUri).GetResponse();
    Stream stream = response.GetResponseStream();

    // Can't open directly from URI, because it won't support seeking
    // so move it to a "local" memory stream
    Stream localStream = new MemoryStream();
    stream.CopyTo(localStream);

    // Parse doc name
    doc.Name = docUri.Substring(docUri.LastIndexOf(@"/") + 1);
    doc.Uri = docUri;

Getting the body is a little bit more work. Here, I set up a switch statement for the extension and then use OpenXml to pull the contents of the .docx out (see Figure 2). OpenXml requires a stream that can do seek operations, so I can’t use the response stream directly. To make it work, I copy the response stream to a memory stream and use the memory stream. Make a note of this operation, because if the documents are exceptionally large, this could theoretically cause issues by putting memory pressure on the worker and would require a little fancier handling of the blob.
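The buffering step described above can be sketched in isolation. This is a minimal example, assuming the whole document fits comfortably in memory. ToSeekable is a hypothetical helper name, and rewinding the buffer is a defensive step added here that the article's snippet does not show.

```csharp
using System;
using System.IO;

public static class StreamBuffering
{
    // Copies a forward-only stream into memory and rewinds it, so that
    // consumers that need Seek (such as a package reader) can use it.
    public static MemoryStream ToSeekable(Stream source)
    {
        var buffer = new MemoryStream();
        source.CopyTo(buffer);   // Stream.CopyTo requires .NET 4 or later
        buffer.Position = 0;     // CopyTo leaves the position at the end
        return buffer;
    }

    public static void Main()
    {
        // A MemoryStream stands in for the HTTP response stream here.
        using (var source = new MemoryStream(new byte[] { 1, 2, 3 }))
        using (var seekable = ToSeekable(source))
        {
            Console.WriteLine(seekable.CanSeek);   // True
            Console.WriteLine(seekable.Length);    // 3
            Console.WriteLine(seekable.Position);  // 0
        }
    }
}
```

For very large blobs, one alternative is to copy to a temporary file stream instead of a MemoryStream, which trades memory pressure for local disk I/O.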

Figure 2 Pulling Out the Contents of the .docx File

    switch (doc.Name.Substring(doc.Name.LastIndexOf(".") + 1))
    {
        case "docx":
            WordprocessingDocument wordprocessingDocument =
                WordprocessingDocument.Open(localStream, false);
            doc.Body = wordprocessingDocument.MainDocumentPart.Document.Body.InnerText;
            wordprocessingDocument.Close();
            break;

        // TODO: Still incomplete
        case "pptx":
            // Probably want to create a generic for DocToIndex and use it
            // to create a pptx-specific that allows slide-specific indexing.
            PresentationDocument pptDoc = PresentationDocument.Open(localStream, false);
            foreach (SlidePart slide in pptDoc.PresentationPart.SlideParts)
            {
                // Iterate through slides
            }
            break;

        default:
            break;
    }

My additional stub and comments show where to put the code to handle other formats. In a production implementation, I’d pull the code out for each document type and put it in a separate library of document adapters, then use configuration and document inspection to resolve the document type to the proper adapter library. Here I’ve placed it right in the switch statement.

Now the populated DocumentToIndex object can be passed to AddToCatalog to get it into the index (Figure 3).

Figure 3 Passing DocumentToIndex to the AddToCatalog Method

    public void AddToCatalog(IndexWriter indexWriter, DocumentToIndex currentDocument)
    {
        Term deleteTerm = new Term("Uri", currentDocument.Uri);
        LuceneDocs.Document doc = new LuceneDocs.Document();
        doc.Add(new LuceneDocs.Field("Uri", currentDocument.Uri, LuceneDocs.Field.Store.YES,
            LuceneDocs.Field.Index.NOT_ANALYZED, LuceneDocs.Field.TermVector.NO));
        doc.Add(new LuceneDocs.Field("Title", currentDocument.Name, LuceneDocs.Field.Store.YES,
            LuceneDocs.Field.Index.ANALYZED, LuceneDocs.Field.TermVector.NO));
        doc.Add(new LuceneDocs.Field("Body", currentDocument.Body, LuceneDocs.Field.Store.YES,
            LuceneDocs.Field.Index.ANALYZED, LuceneDocs.Field.TermVector.NO));
        indexWriter.UpdateDocument(deleteTerm, doc);
    }

I decided to index three fields: Title, Uri and Body (the actual content). Note that for Title and Body I use the ANALYZED flag. This tells the Analyzer to tokenize the content and store the tokens. I want to do this for the body, especially, or my index will grow to be larger in size than all the documents combined. Take note that the Uri is set to NOT_ANALYZED. I want this field stored in the index directly, because it’s a unique value by which I can retrieve a specific document. In fact, I use it in this method to create a Term (a construct used for finding documents) that’s passed to the UpdateDocument method of the IndexWriter. Any other fields I might want to add to the index, whether to support a document preview or to support faceted searching (such as an author field), I’d add here and decide whether to tokenize the text based on how I plan to use the field.

Implementing the Search Service

Once I had the indexing service going and could see the segment files in the index container, I was anxious to see how well it worked. I cracked open the IService1.cs file for the search service and made some changes to the interfaces and data contracts. Because this file generates SOAP services by default, I decided to stick with those for the first pass. I needed a return type for the search results, but the document title and Uri were enough for the time being, so I defined a simple class to be used as the DataContract:

    [DataContract]
    public class SearchResult
    {
        [DataMember]
        public string Title;

        [DataMember]
        public string Uri;
    }

Using the SearchResult type, I defined a simple search method as part of the ISearchService ServiceContract:

    [ServiceContract]
    public interface ISearchService
    {
        [OperationContract]
        List<SearchResult> Search(string SearchTerms);
    }

Next, I opened SearchService.cs and added an implementation for the Search operation. Once again AzureDirectory comes into play, and I instantiated a new one from the configuration to pass to the IndexSearcher object. The AzureDirectory library not only provides a directory interface for Lucene.Net, it also adds an intelligent layer of indirection that caches and compresses. AzureDirectory operations happen locally and writes are moved to storage upon being committed, using compression to reduce latency and cost. At this point a number of Lucene.Net objects come into play. The IndexSearcher will take a Query object and search the index. However, I only have a set of terms passed in as a string. To get these terms into a Query object I have to use a QueryParser. I need to tell the QueryParser what fields the terms apply to, and provide the terms. In this implementation I’m only searching the content of the document:

    // Open index
    AzureDirectory azureDirectory = new AzureDirectory(storageAccount, "cloudindex");
    IndexSearcher searcher = new IndexSearcher(azureDirectory);

    // For the sample I'm just searching the body.
    QueryParser parser = new QueryParser("Body", new StandardAnalyzer());
    Query query = parser.Parse("Body:(" + SearchTerms + ")");
    Hits hits = searcher.Search(query);

If I wanted to provide a faceted search, I would need to implement a means to select the field and create the query for that field, but I’d have to add it to the index in the previous code where Title, Uri and Body were added. The only thing left to do in the service now is to iterate over the hits and populate the return list:

    for (int idxResults = 0; idxResults < hits.Length(); idxResults++)
    {
        SearchResult newSearchResult = new SearchResult();
        Document doc = hits.Doc(idxResults);
        newSearchResult.Title = doc.GetField("Title").StringValue();
        newSearchResult.Uri = doc.GetField("Uri").StringValue();
        retval.Add(newSearchResult);
    }

Because I’m a bit impatient, I don’t want to wait to finish the Web front end to test it out, so I run the project, fire up WcfTestClient, add a reference to the service and search for the term “cloud” (see Figure 4).

Figure 4 WcfTestClient Results

I’m quite happy to see it come back with the expected results.

Note that while I’m running the search service and indexing roles from the Windows Azure compute emulator, I’m using actual Windows Azure Storage.

Search Page

Switching to my front-end Web role, I make some quick modifications to the default.aspx page, adding a text box and some labels.

As Figure 5 shows, the most significant mark-up change is in the data grid where I define databound columns for Title and Uri that should be available in the resultset.

Figure 5 Defining Databound Columns for the Data Grid

I quickly add a reference to the search service project, along with a bit of code behind the Search button to call the search service and bind the results to the datagrid:

    protected void btnSearch_Click(object sender, EventArgs e)
    {
        refSearchService.SearchServiceClient searchService =
            new refSearchService.SearchServiceClient();
        IList<SearchResult> results = searchService.Search(txtSearchTerms.Text);
        gvResults.DataSource = results;
        gvResults.DataBind();
    }

Simple enough, so with a quick tap of F5 I enter a search term to see what I get. Figure 6 shows the results. As expected, entering “neudesic” returned two hits from various documents I had staged in the container.

Figure 6 Search Results

Final Thoughts

I didn’t cover the kind of advanced topics you might decide to implement if the catalog and related index grow large enough. With Windows Azure, Lucene.Net and a sprinkle of OpenXML, just about any searching requirements can be met. Because there isn’t a lot of support for a cloud-deployed search solution yet, especially one that could respect a custom security implementation on top of Windows Azure Storage, Lucene.Net might be the best option out there as it can be bent to fit the requirements of the implementer.

Anders Lybecker (@AndersLybecker) mentioned in a comment to Joseph’s post (above) that he posted a Using Lucene.Net with Microsoft Azure article on 1/16/2011:

Lucene indexes are usually stored on the file system and preferably on the local file system. In Azure there are additional types of storage with different capabilities, each with distinct benefits and drawbacks. The options for storing Lucene indexes in Azure are:

- Azure CloudDrive

- Azure Blob Storage

Azure CloudDrive

CloudDrive is the obvious solution, as it is comparable to on-premises file systems with mountable virtual hard drives (VHDs). CloudDrive is, however, not the optimal choice, because it imposes notable limitations. The most significant one is that only one web role, worker role or VM role can mount the CloudDrive at a time with read/write access. It is possible to mount multiple read-only snapshots of a CloudDrive, but you have to manage the creation of new snapshots yourself, depending on the acceptable staleness of the Lucene indexes.

Azure Blob Storage

The alternative Lucene index storage solution is Blob Storage. Luckily, a Lucene directory (Lucene index storage) implementation for Azure Blob Storage exists in the Azure library for Lucene.Net. It is called AzureDirectory and allows any role to modify the index, but only one role at a time. Furthermore, each Lucene segment (see Lucene Index Segments) is stored in separate blobs, so the index is spread across many blobs. This allows the implementation to cache each segment locally and retrieve blobs from Blob Storage only when new segments are created. Consequently, the compound file format should not be used and optimization of the Lucene index is discouraged.

Code sample

Getting Lucene.Net up and running is simple, and using it with the Azure library for Lucene.Net requires only the Lucene directory to be changed, as shown in the Lucene index and search example below. Most of the code is Azure-specific configuration plumbing.

    Lucene.Net.Util.Version version = Lucene.Net.Util.Version.LUCENE_29;

    CloudStorageAccount.SetConfigurationSettingPublisher(
        (configName, configSetter) =>
            configSetter(RoleEnvironment.GetConfigurationSettingValue(configName)));

    var cloudAccount = CloudStorageAccount.FromConfigurationSetting("LuceneBlobStorage");

    var cacheDirectory = new RAMDirectory();
    var indexName = "MyLuceneIndex";
    var azureDirectory = new AzureDirectory(cloudAccount, indexName, cacheDirectory);
    var analyzer = new StandardAnalyzer(version);

    // Add content to the index
    var indexWriter = new IndexWriter(azureDirectory, analyzer,
        IndexWriter.MaxFieldLength.UNLIMITED);
    indexWriter.SetUseCompoundFile(false);

    foreach (var document in CreateDocuments())
    {
        indexWriter.AddDocument(document);
    }

    indexWriter.Commit();
    indexWriter.Close();

    // Search for the content
    var parser = new QueryParser(version, "text", analyzer);
    Query q = parser.Parse("azure");

    var searcher = new IndexSearcher(azureDirectory, true);
    TopDocs hits = searcher.Search(q, null, 5, Sort.RELEVANCE);

    foreach (ScoreDoc match in hits.scoreDocs)
    {
        Document doc = searcher.Doc(match.doc);
        var id = doc.Get("id");
        var text = doc.Get("text");
    }

    searcher.Close();

Download the reference example, which uses Azure SDK 1.3 and Lucene.Net 2.9 in a console application connecting either to Development Fabric or your Blob Storage account.

Lucene Index Segments (simplified)

Segments are the essential building block in Lucene. A Lucene index consists of one or more segments, each a standalone index. Segments are immutable and are created when an IndexWriter flushes. Deleted or updated documents are therefore not removed from their original segment; they are only marked as deleted there, and the new versions are stored in a new segment.

Optimizing an index reduces the number of segments, by creating a new segment with all the content and deleting the old ones.
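The segment lifecycle can be illustrated with a deliberately tiny model. The sketch below is a toy, not how Lucene.Net is implemented: ToyIndex and its members are hypothetical, documents are plain strings, and "segments" are in-memory dictionaries. It only shows that updates add a new segment and mark the old copy as deleted, while optimizing rewrites everything into a single segment.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy model only: immutable segments, delete markers and optimization.
public sealed class ToyIndex
{
    // Each flushed segment maps document id -> text and is never modified again.
    private readonly List<Dictionary<string, string>> segments =
        new List<Dictionary<string, string>>();
    // Delete markers live beside each segment instead of rewriting it.
    private readonly List<HashSet<string>> deleted = new List<HashSet<string>>();
    private Dictionary<string, string> pending = new Dictionary<string, string>();

    public int SegmentCount { get { return segments.Count; } }

    public void Update(string id, string text)
    {
        // Mark any flushed copy as deleted; the new version waits for the next flush.
        for (int i = 0; i < segments.Count; i++)
            if (segments[i].ContainsKey(id)) deleted[i].Add(id);
        pending[id] = text;
    }

    public void Flush()
    {
        if (pending.Count == 0) return;
        segments.Add(pending);                 // a brand-new segment
        deleted.Add(new HashSet<string>());
        pending = new Dictionary<string, string>();
    }

    public void Optimize()
    {
        Flush();
        // Copy every live document into one new segment and drop the old ones.
        var merged = new Dictionary<string, string>();
        for (int i = 0; i < segments.Count; i++)
            foreach (var pair in segments[i])
                if (!deleted[i].Contains(pair.Key)) merged[pair.Key] = pair.Value;
        segments.Clear();
        deleted.Clear();
        if (merged.Count > 0)
        {
            segments.Add(merged);
            deleted.Add(new HashSet<string>());
        }
    }

    public int LiveDocumentCount()
    {
        int count = 0;
        for (int i = 0; i < segments.Count; i++)
        {
            HashSet<string> marks = deleted[i];
            count += segments[i].Keys.Count(id => !marks.Contains(id));
        }
        return count;
    }

    public static void Main()
    {
        var index = new ToyIndex();
        index.Update("a", "first");
        index.Update("b", "second");
        index.Flush();                                 // segment 1 holds a and b
        index.Update("a", "first, revised");
        index.Flush();                                 // segment 2 holds the new a
        Console.WriteLine(index.SegmentCount);         // 2
        Console.WriteLine(index.LiveDocumentCount());  // 2
        index.Optimize();
        Console.WriteLine(index.SegmentCount);         // 1
        Console.WriteLine(index.LiveDocumentCount());  // 2
    }
}
```

In this model, a flush leaves existing segments untouched, which is what makes per-segment blobs cheap to cache. Optimize replaces every segment at once, which corresponds to re-uploading the whole index.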

Azure library for Lucene.Net facts

- It is licensed under Ms-PL, so you do pretty much whatever you want to do with the code.

- Based on Block Blobs (optimized for streaming) which is in tune with Lucene’s incremental indexing architecture (immutable segments) and the caching features of the AzureDirectory voids the need for random read/write of the Blob Storage.

- Caches index segments locally in any Lucene directory (e.g. RAMDirectory) and by default in the volatile Local Storage.

- Calling Optimize recreates the entire blob, because all Lucene segments are combined into one segment. Consider not optimizing.

- Do not use Lucene compound files, as index changes will recreate the entire blob. Also this stores the entire index in one blob (+metadata blobs).

- Do use a VM role size (Small, Medium, Large or ExtraLarge) where the Local Resource size is larger than the Lucene index, as the Lucene segments are cached by default in Local Resource storage.

Azure CloudDrive facts

- Only Fixed Size VHDs are supported.

- Volatile Local Resources can be used to cache VHD content

- Based on Page Blobs (optimized for random read/write).

- Stores the entire VHD in one Page Blob and is therefore restricted to the Page Blob maximum size of 1 TB.

- A role can mount up to 16 drives.

- A CloudDrive can only be mounted to a single VM instance at a time for read/write access.

- Snapshot CloudDrives are read-only and can be mounted as read-only drives by multiple different roles at the same time.

Additional Azure references

Anders is chief architect at Kring Development A/S, a consultancy firm in Copenhagen, Denmark.

<Return to section navigation list>

SQL Azure Database and Reporting

Susan Price posted Columnstore Indexes: A New Feature in SQL Server known as Project “Apollo” to the SQL Server Team Blog on 8/4/2011. Some NoSQL datastores support columnstore indexes:

Do you have a data warehouse? Do you wish your queries would run faster? If your answers are yes, check out the new columnstore index (aka Project “Apollo”) in SQL Server Code Name “Denali” today!

Why use a column store?

SQL Server’s traditional indexes, clustered and nonclustered, are based on the B-tree. B-trees are great for finding data that match a predicate on the primary key. They’re also reasonably fast when you need to scan all the data in a table. So why use a column store? There are two main reasons:

- Compression. Most general-purpose relational database management systems, including SQL Server, store data in row-wise fashion. This organization is sometimes called a row store. Both heaps and B-trees are row stores because they store the values from each column in a given row contiguously. When you want to find all the values associated with a row, having the data stored together on one page is very efficient. Storing data by rows is less ideal for compressing the data. Most compression algorithms exploit the similarities of a group of values. The values from different columns usually are not very similar. When data is stored row-wise, the number of rows per page is relatively few, so the opportunities to exploit similarity among values are limited. A column store organizes data in column-wise fashion. Data from a single column are stored contiguously. Usually there is repetition and similarity among values within a column. The column store organization allows compression algorithms to exploit that similarity.

- Fetching only needed columns. When data is stored column-wise, each column can be accessed independently of the other columns. If a query touches only a subset of the columns in a table, IO is reduced. Data warehouse fact tables are often wide as well as long. Typical queries touch only 10 – 15% of the columns. That means a column store can reduce IO by 85 – 90%, a huge speedup in systems that are often IO bound, meaning the query speed is limited by the speed at which needed data can be transferred from disk into memory.
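
Both reasons can be illustrated with a small sketch. The sample table, the helper names, and the use of run-length encoding are assumptions for illustration only; the post does not say which compression algorithms SQL Server applies.

```python
from itertools import groupby

# Hypothetical fact table, stored row-wise as tuples.
column_names = ("date", "country", "product", "quantity")
rows = [
    ("2011-08-01", "DK", "widget", 3),
    ("2011-08-01", "DK", "widget", 5),
    ("2011-08-01", "DK", "gadget", 1),
    ("2011-08-02", "SE", "gadget", 2),
    ("2011-08-02", "SE", "gadget", 7),
    ("2011-08-02", "SE", "widget", 4),
]


def to_columns(rows, names):
    """Pivot row-wise tuples into one contiguous list per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def run_length_encode(values):
    """Collapse runs of equal neighbouring values into (value, count) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def io_fraction(columns, needed):
    """Fraction of stored values read when a query touches only `needed` columns."""
    total = sum(len(values) for values in columns.values())
    read = sum(len(columns[name]) for name in needed)
    return read / total


columns = to_columns(rows, column_names)
```

Here `run_length_encode(columns["country"])` collapses six values into two runs, while the values within a single row share nothing that can be collapsed. A query that needs only `quantity` reads one of four equally sized columns, so `io_fraction(columns, ["quantity"])` is 0.25; the same arithmetic turns the 10 – 15% of columns quoted above into an 85 – 90% IO reduction.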

It’s clear that cold start queries, when all the data must be fetched from disk, will benefit from compression and eliminating unneeded columns. Warm start queries benefit too, because more of your working set fits in memory. At some point, however, eliminating IO moves the bottleneck to the CPU. We’ve added huge value here too, by introducing a new query execution paradigm, called batch mode processing. When the query uses at least one columnstore index, batch mode processing can speed up joins, aggregations, and filtering. During batch mode processing, columnar data is organized in vectors during query execution. Sets of data are processed a-batch-at-a-time instead of a-row-at-a-time, using highly efficient algorithms designed to take advantage of modern hardware. The query optimizer takes care of choosing when to use batch mode processing and when to use traditional row mode query processing.
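
The difference between the two execution styles can be sketched as follows. This only shows the shape of the control flow under assumed names and an assumed batch size; plain Python lists do not deliver the hardware benefits that the real batch mode operators are designed for.

```python
def row_mode_sum(quantities, countries, wanted):
    """Row-at-a-time: inspect one row, decide, accumulate, repeat."""
    total = 0
    for quantity, country in zip(quantities, countries):
        if country == wanted:
            total += quantity
    return total


def batches(values, size):
    """Split a column into consecutive vectors of at most `size` values."""
    for start in range(0, len(values), size):
        yield values[start:start + size]


def batch_mode_sum(quantities, countries, wanted, batch_size=4):
    """Batch-at-a-time: each operator handles a whole vector before the next runs."""
    total = 0
    for quantity_vector, country_vector in zip(
        batches(quantities, batch_size), batches(countries, batch_size)
    ):
        # Filter operator: produce a selection vector for the whole batch.
        selected = [country == wanted for country in country_vector]
        # Aggregate operator: consume the batch together with its selection vector.
        total += sum(q for q, keep in zip(quantity_vector, selected) if keep)
    return total
```

Both functions return the same result; the batch version simply moves the per-row decision into vector-sized steps, which is the part a query engine can implement with tight, hardware-friendly loops.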

Why not use a column store for everything?

While it’s possible to build a system that stores all data in columnar format, row stores still have advantages in some situations. A B-tree is a very efficient data structure for looking up or modifying a single row of data. So if your workload entails many single row lookups and many updates and deletes, which is common for OLTP workloads, you will probably continue to use row store technology. Data warehouse workloads typically scan, aggregate, and join large amounts of data. In those scenarios, column stores really shine.

SQL Server now provides you with a choice. You can build columnstore indexes on your big data warehouse tables and get the benefits of column store technology and batch mode processing without giving up the benefits of traditional row store technology when a B-tree is the right tool for the job.

Try it out: Build a columnstore index

Columnstore indexes are available in CTP 3 of SQL Server Code Name “Denali.” You can create a columnstore index on your table by using a slight variation on existing syntax for creating indexes. To create an index named mycolumnstoreindex on a table named mytable with three columns, named col1, col2, and col3, use the following syntax:

    CREATE NONCLUSTERED COLUMNSTORE INDEX mycolumnstoreindex ON mytable (col1, col2, col3);

To avoid typing the names of all the columns in the table, you can use the Object Explorer in Management Studio to create the index as follows:

- Expand the tree structure for the table and then right click on the Indexes icon.

- Select New Index and then Nonclustered columnstore index

- Click Add in the wizard and it will give you a list of columns with check boxes.

- You can either choose columns individually or click the box next to Name at the top, which will put checks next to all the columns. Click OK.

- Click OK.

Typically you will want to put all the columns in your table into the columnstore index. It does not matter what order you list the columns because a columnstore index does not have a key like a B-tree index does. Internally, the data will be re-ordered automatically to get the best compression.

Be sure to populate the table with data before you create the columnstore index. Once you create the columnstore index, you cannot directly add, delete, or modify data in the table. Instead, you can either:

- Disable or drop the columnstore index. You will then be able to update the table and then rebuild the columnstore index

- Use partition switching. If your table is partitioned, you can put new data into a staging table, build a columnstore index on the staging table, and switch the staging table into an empty partition of your main table. Similarly, you could modify existing data by first switching a partition from the main table into a staging table, disable the columnstore index on the staging table, perform your updates, rebuild the columnstore index, and switch the partition back into the main table.

For more information about using columnstore indexes, check out the MSDN article Columnstore Indexes and our new SQL Server Columnstore Index FAQ on the TechNet wiki.

If you haven’t already, be sure to download SQL Server Code Name “Denali” CTP3 and begin testing today!

Susan Price, Senior Program Manager, SQL Server Database Engine Team

It will be interesting to see if future versions of SQL Azure support columnstore indexes.

<Return to section navigation list>

Marketplace DataMarket and OData

SAP AG described the relationship between SAP NetWeaver Gateway and OData on 8/5/2011:

By exposing SAP Business Suite functionality as REST-based OData (Open Data Protocol) services, SAP NetWeaver Gateway enables SAP applications to share data with a wide range of devices, technologies, and platforms in a way that is easy to understand and consume.

Using REST services provides the following advantages:

- Human readable results; you can use your browser to see what data you will get.

- Stateless apps.

- One piece of information probably leads to other, related pieces of information.

- Uses the standard GET, PUT, POST, and DELETE. If you know where to GET data, you know where to PUT it, and you can use the same format.

- Widely used, for example, by Twitter, Twilio, Amazon, Facebook, eBay, YouTube, Yahoo!

What is OData and Why Do we Use It?

OData was initiated by Microsoft to attempt to provide a standard for platform-agnostic interoperability.

OData is a web protocol for querying and updating data, applying and building on web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON (JavaScript Object Notation) to provide access to information from a variety of applications. It is easy to understand and extensible, and provides consumers with a predictable interface for querying a variety of data sources.

AtomPub is the de facto standard for treating groups of similar information snippets as it is simple, extensible, and allows anything textual in its content. However, as so much textual enterprise data is structured, there is also a requirement to express what structure to expect in a certain kind of information snippet. As these snippets can come in large quantities, they must be trimmed down to manageable chunks, sorted according to ad-hoc user preferences, and the result set must be stepped through page by page.

OData provides all of the above as well as additional features, such as feed customization that allows mapping part of the structured content into the standard Atom elements, and the ability to link data entities within an OData service (via “…related…” links) and beyond (via media link entries). This facilitates support of a wide range of clients with different capabilities:

- Purely Atom, simply paging through data.

- Hypermedia-driven, navigating through the data web.

- Aware of query options, tailoring the OData services to their needs.

OData is also extensible, like the underlying AtomPub, and thus allows the addition of features that are required when building easy-to-use applications, both mobile and browser-based.
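
A client that is aware of query options might build its request URLs roughly like this. `$filter`, `$orderby`, `$top`, and `$skip` are standard OData system query options; the service root, the `Customers` entity set, and the helper names are hypothetical and not taken from SAP NetWeaver Gateway.

```python
from urllib.parse import quote


def odata_query_url(service_root, entity_set, filter_expr=None,
                    orderby=None, top=None, skip=None):
    """Build a request URL from OData system query options."""
    options = [
        ("$filter", filter_expr),   # trim the result set
        ("$orderby", orderby),      # sort by ad-hoc preference
        ("$top", top),              # page size
        ("$skip", skip),            # page offset
    ]
    query = "&".join(
        f"{name}={quote(str(value), safe='')}"
        for name, value in options
        if value is not None
    )
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return f"{url}?{query}" if query else url


def page_urls(service_root, entity_set, page_size, pages, **options):
    """URLs for stepping through a result set page by page."""
    return [
        odata_query_url(service_root, entity_set, top=page_size,
                        skip=page * page_size, **options)
        for page in range(pages)
    ]
```

A call such as `odata_query_url("https://example.invalid/odata", "Customers", filter_expr="Country eq 'DE'", orderby="Name desc", top=10)` covers the trimming, sorting, and paging requirements described above in one request.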

OData for SAP Applications

SAP NetWeaver Gateway uses OData for SAP applications, which contains SAP-specific metadata that helps the developer to consume SAP business data, such as descriptions of fields that can be retrieved from the SAP Data Dictionary. The following are examples of OData for SAP applications:

Human-readable, language-dependent labels for all Properties (required for building user interfaces).

Free-text search, within collections of similar entities, and across collections using OpenSearch. OpenSearch can use the Atom Syndication Format for its search results, so the OData entities that are returned by the search fit in, and OpenSearch can be integrated into AtomPub service documents via links with rel=”search”, per collection as well as on the top level. The OpenSearch description specifies the URL template to use for searching, and for collections it simply points to the OData entity set, using a custom query option with the name of “search”.

Semantic annotations, which are required for apps running on mobile devices to provide seamless integration into contacts, calendar, and telephony. The client needs to know which OData Properties contain a phone number, a part of a name or address, or something related to a calendar event.

Not all entities and entity sets will support the full spectrum of possible interactions defined by the uniform interface, so capability discovery will help clients avoid requests that the server cannot fulfill. The metadata document will tell whether an entity set is searchable, which Properties may be used in filter expressions, and which Properties of an entity will always be managed by the server.

Most of the applications for “light-weight consumption” follow an interaction pattern called “view-inspect-act”, “alert-analyze-act”, or “explore & act”, meaning that you somehow navigate (or are led) to an entity that interests you, and then you have to choose what to do. The chosen action eventually results in changes to this entity, or entities related to it, but it may be tricky to express it in terms of an Update operation, so the available actions are advertised to the client as special atom links (with an optional embedded simplified “form” in case the action needs parameters) and the action is triggered by POSTing to the target URI of the link.

The following simplified diagram shows how Atom, OData, and OData for SAP applications fit together:

(SAP NetWeaver Gateway and OData for SAP Applications)

For more information on OData, see www.odata.org.

Matthew Weinberger (@MattNLM) reported SAP Updates SaaS Business ByDesign Management Suite on 8/5/2011:

SAP AG has announced a sweeping series of updates for the second major feature pack release of the Business ByDesign SaaS business management suite, with several enhancements to foster communication between the company and its partners and customers. Let’s take a look.

Here’s how SAP broke down the updates to Business ByDesign in its press release:

- Community-driven enhancements: SAP is now offering an English global community portal for Business ByDesign partners to share ideas and suggestions for the future of the platform. A Chinese version is forthcoming, SAP said.

- Additional professional service functionality: Business ByDesign now supports industry-specific needs including managed services, customer contract management and enhanced revenue recognition automation, following partner suggestions.

- Extended flexibility through co-innovation: SAP is courting ISV partners to develop solutions that enhance the reach and usability of Business ByDesign.

- Procure-to-pay with integration to SAP Business Suite software: SMBs are the target audience for SAP Business ByDesign. But its other core market is subsidiaries of companies running the SAP Business Suite legacy software. With the new feature pack, SAP is supporting additional preconfigured integration scenarios so data is automatically pushed from home base to subsidiary and back.

Here’s what Peter Lorenz, executive vice president of OnDemand Solutions and corporate officer of SAP AG had to say about the release in a prepared statement:

“With the second release of SAP Business ByDesign this year, we are taking another significant step in executing our cloud computing strategy, building on feedback of trusted partners and customers and our commitment to delivering immediate innovation through bi-yearly release cycles. We continue to gain market traction with SAP Business ByDesign, have expanded our ecosystem to 250 partner companies and welcome companies around the world — now also in Australia and Mexico — to experience the new release’s usability and business value.”

I’m a little surprised that SAP’s press materials didn’t mention the company’s recent partnership with Google for mapping big data, but there you have it. Stay tuned to TalkinCloud for updates on SAP’s cloud strategy.


<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, Caching, WIF and Service Bus

Paolo Salvatori described How to use a WCF custom channel to implement client-side caching in a Windows Azure-hosted application in a 5/8/2011 post to the AppFabricCAT blog:

Some months ago I created a custom WCF protocol channel to transparently inject client-side caching capabilities into an existing WCF-enabled application by just changing its configuration file. Since I published my first post on this subject, I have received positive feedback on the client-side caching pattern, so I decided to create a version of my component for Windows Azure. The new implementation is almost identical to the original version and introduces some minor changes. The most relevant addition is the ability to cache response messages in the Windows Azure AppFabric Caching Service. The latter makes it easy to create a cache in the cloud that Windows Azure roles can use to store reference and lookup data. Caching can dramatically increase performance by temporarily storing information from backend stores and sources.

My component boosts the performance of existing WCF-enabled applications running in the Azure environment by injecting caching capabilities into the WCF channel stack that transparently exploit the functionality offered by Windows Azure AppFabric Caching to avoid redundant calls against remote WCF and ASMX services. The new version of the WCF custom channel lets you choose among three caching providers:

- A Memory Cache-based provider: this component internally uses an instance of the MemoryCache class contained in the .NET Framework 4.0.

- A Web Cache-based provider: this provider utilizes an instance of the Cache class supplied by ASP.NET to cache response messages.

- An AppFabric Caching provider: this caching provider leverages the Windows Azure AppFabric Caching Service. To further improve performance, it’s highly recommended that the client application use the Local Cache to store response messages in-process.

As already noted in the previous article, client-side caching and server-side caching are two powerful and complementary techniques to improve the performance of WCF-enabled applications. Client-side caching is particularly suited to applications, like a web site, that frequently invoke one or more back-end systems to retrieve reference and lookup data, that is, data that is typically static and changes infrequently, like the catalog of an online store. By using client-side caching you can avoid making redundant calls to retrieve the same data over time, especially when these calls take a significant amount of time to complete.

For more information on Windows Azure AppFabric, you can review the following articles:

- “Introducing the Windows Azure AppFabric Caching Service” article on the MSDN Magazine site.

- “Caching Service (Windows Azure AppFabric)” article on the MSDN site.

- “Windows Azure AppFabric Caching Service Released and notes on quotas” article on the AppFabric CAT site.

- “How to best leverage Windows Azure AppFabric Caching Service in a Web role to avoid most common issues” article on the AppFabric CAT site.

- “Windows Azure AppFabric Learning Series” samples and videos on the CodePlex site.

- “Azure AppFabric Caching Service” article on Neil Mackenzie’s blog.

Problem Statement

The problem statement that the cloud version of my component intends to solve can be formulated as follows:

How can I implicitly cache response messages within a service or an application running in a Windows Azure role that invokes one or multiple underlying services using WCF and a Request-Response message exchange pattern without modifying the code of the application in question?

To solve this problem, I created a custom protocol channel that you can explicitly or implicitly use inside a CustomBinding when specifying client endpoints within the configuration file or by code using the WCF API.

Design Pattern

The design pattern implemented by my component can be described as follows: the caching channel extends existing WCF-enabled cloud applications and services with client-caching capabilities without the need to explicitly change their code to exploit the functionality supplied by Windows Azure AppFabric Caching. To achieve this goal, I created a class library that contains a set of components to configure, instantiate and use this caching channel at runtime. My custom channel is configured to check for the presence of the response message in the cache and behaves accordingly:

- If the response message is in the cache, the custom channel immediately returns the response message from the cache without invoking the underlying service.

- Conversely, if the response message is not in the cache, the custom channel calls the underlying channel to invoke the back-end service and then caches the response message using the caching provider defined in the configuration file for the actual call.
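
The hit/miss behaviour can be sketched in a few lines. This is a language-neutral illustration in Python, not the WCF component itself: the class names, the `get`/`put` provider shape, and the idea of hashing the request text into a cache key are all assumptions, since the post does not show how the real channel derives its keys.

```python
import hashlib


class MemoryCacheProvider:
    """Hypothetical in-process caching provider with a get/put pair."""

    def __init__(self):
        self._items = {}

    def get(self, key):
        return self._items.get(key)

    def put(self, key, value):
        self._items[key] = value


class ServiceChannel:
    """Stands in for the inner channel that reaches the back-end service."""

    def __init__(self, handler):
        self._handler = handler
        self.calls = 0

    def request(self, message):
        self.calls += 1
        return self._handler(message)


class CachingChannel:
    """Sits on top of an inner channel and answers repeated requests from the cache."""

    def __init__(self, inner, provider):
        self._inner = inner
        self._provider = provider

    @staticmethod
    def _cache_key(message):
        # Assumption: identical request text means an identical response.
        return hashlib.sha256(message.encode("utf-8")).hexdigest()

    def request(self, message):
        key = self._cache_key(message)
        cached = self._provider.get(key)
        if cached is not None:
            return cached                          # hit: the service is not invoked
        response = self._inner.request(message)    # miss: call the underlying channel
        self._provider.put(key, response)
        return response
```

Because the caller only ever sees a `request` method, swapping `ServiceChannel` for `CachingChannel` requires no change in the calling code, which is the property the custom channel is built around.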

You can exploit the capabilities of the caching channel in 2 distinct ways:

- You can configure the client application to use a CustomBinding. In this case, you have to specify the ClientCacheBindingElement as the topmost binding element in the binding configuration. This way, at runtime, the caching channel will be the first protocol channel to be called in the channel stack.

- Alternatively, you can use the ClientCacheEndpointBehavior to inject the ClientCacheBindingElement at the top of an existing binding, for example the BasicHttpBinding or the WSHttpBinding.
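
The second option amounts to copying the binding elements of an existing binding and placing the caching element first. A minimal sketch, treating a binding as an ordered list of element names; the `inject_at_top` helper is hypothetical and the two-element list is only an approximation of what a BasicHttpBinding contains:

```python
def inject_at_top(binding_elements, element):
    """Return a new element list with `element` first, leaving the input untouched."""
    if element in binding_elements:
        return list(binding_elements)      # already present: do not add it twice
    return [element] + list(binding_elements)


# Approximate element list of an existing binding, top of the stack first.
basic_http = ["TextMessageEncodingBindingElement", "HttpTransportBindingElement"]
custom = inject_at_top(basic_http, "ClientCacheBindingElement")
```

Since channels are invoked from the top of the stack down, putting the caching element first gives it the chance to answer from the cache before any other channel runs.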

Scenarios

This section describes 2 scenarios where you can use the caching channel in a cloud application.

First Scenario

The following picture depicts the architecture of the first scenario, which uses Windows Azure Connect to establish a protected connection between a web role in the cloud and a local WCF service, and uses the Windows Azure AppFabric Caching provider to cache response messages in the local and distributed cache. The diagram below shows an ASP.NET application running in a Web Role that invokes a local, on-premises WCF service running in a corporate data center. In particular, when the Service.aspx page first loads, it populates a drop-down list with the names of writers from the Authors table of the notorious pubs database, which runs on-premises in the corporate domain. The page lets the user retrieve the list of books written by the author selected in the drop-down list by invoking the AuthorsWebService WCF service located in the organization’s network, which retrieves data from the Titles table of the pubs database. The page supports the following call modes:

Directly invoke the WCF service via Windows Azure Connect: the Service.aspx page always calls the AuthorsWebService to retrieve the list of titles for the current author using Windows Azure Connect (see below for more information).

Use Caching API Explicitly and via Windows Azure Connect: the page explicitly uses the cache aside programming pattern and the Windows Azure AppFabric Caching API to retrieve the list of titles for the current author. When the user presses the Get Titles button, the page first checks whether data is in the cache: if yes it retrieves titles from the cache, otherwise it invokes the underlying WCF service, writes the data in the distributed cache, then returns the titles.

Use Caching API via caching channel and Windows Azure Connect: this mode uses the caching channel to transparently retrieve data from the local or distributed cache. The caching channel transparently injects client-side caching capabilities into an existing WCF-enabled application without the need to change its code. In this case, it’s sufficient to change the configuration of the client endpoint in the web.config.

Directly invoke the WCF service via Service Bus: the Service.aspx page always calls the AuthorsWebService to retrieve the list of titles for the current author via Windows Azure AppFabric Service Bus. In particular, the web role invokes a BasicHttpRelayBinding endpoint exposed in the cloud via relay service by the AuthorsWebService.

Use Caching API via caching channel and Service Bus: this mode uses the Service Bus to invoke the AuthorsWebService and the caching channel to transparently retrieve data from the local or distributed cache. The ClientCacheEndpointBehavior replaces the original BasicHttpRelayBinding specified in the configuration file with a CustomBinding that contains the same binding elements and injects the ClientCacheBindingElement at the top of the binding. This way, at runtime, the caching channel will be the first channel to be invoked in the channel stack.

Let’s analyze what happens when the user selects the third option to use the caching channel with Windows Azure Connect.

Message Flow:

The user chooses an author from the drop-down list, selects the Use Caching API via WCF channel call mode and finally presses the Get Titles button.

This event triggers the execution of the GetTitles method that creates a WCF proxy using the UseWCFCachingChannel endpoint. This endpoint is configured in the web.config to use the CustomBinding. The ClientCacheBindingElement is defined as the topmost binding element in the binding configuration. This way, at runtime, the caching channel will be the first protocol channel to be called in the channel stack.

The proxy transforms the .NET method call into a WCF message and delivers it to the underlying channel stack.

The caching channel checks whether the response message is in the local or distributed cache. If the ASP.NET application is hosted by more than one web role instance, the response message may have been previously put in the distributed cache by another role instance. If the caching channel finds the response message for the actual call in the local or distributed cache, it immediately returns this message to the proxy object without invoking the back-end service.

Conversely, if the response message is not in the cache, the custom channel calls the inner channel to invoke the back-end service. In this case, the request message goes all the way through the channel stack to the transport channel that invokes the AuthorsWebService.

The AuthorsWebService uses the authorId parameter to retrieve a list of books from the Titles table in the pubs database.

The service reads the titles for the current author from the pubs database.

The service returns a response message to the ASP.NET application.

The transport channel receives the stream of data and uses a message encoder to interpret the bytes and to produce a WCF Message object that can continue up the channel stack. At this point each protocol channel has a chance to work on the message. In particular, the caching channel stores the response message in the distributed cache using the AppFabricCaching provider.

The caching channel returns the response WCF message to the proxy.

The proxy transforms the WCF message into a response object.

The ASP.NET application creates and returns a new page to the browser.
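
The lookup order in this flow (local cache, then distributed cache, then back-end service) can be sketched as follows. The sketch is in Python with hypothetical names; a shared dictionary stands in for the distributed cache, and expiry and invalidation are deliberately left out.

```python
class TwoTierCache:
    """Local (per role instance) cache in front of a shared distributed cache."""

    def __init__(self, distributed):
        self._local = {}
        self._distributed = distributed    # shared dict standing in for the cache service

    def get(self, key):
        if key in self._local:
            return self._local[key]
        if key in self._distributed:
            value = self._distributed[key]
            self._local[key] = value       # keep a local copy for the next lookup
            return value
        return None

    def put(self, key, value):
        self._local[key] = value
        self._distributed[key] = value


def get_titles(cache, author_id, call_service):
    """Return titles from the cache when possible, otherwise from the service."""
    key = f"titles:{author_id}"
    titles = cache.get(key)
    if titles is None:
        titles = call_service(author_id)   # only reached on a miss in both tiers
        cache.put(key, titles)
    return titles
```

With two `TwoTierCache` objects sharing one dictionary, the second role instance finds the titles that the first one stored, so the service is called only once for a given author.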

Second Scenario

The following picture depicts the architecture of the second scenario where the web role uses the Windows Azure AppFabric Service Bus to invoke the AuthorsWebService and Windows Azure AppFabric Caching provider to cache response messages in the local and distributed cache.

Let’s analyze what happens when the user selects the fifth option to use the caching channel with the Service Bus.

Message Flow:

The user chooses an author from the drop-down list, selects the Use Caching API via WCF channel call mode and finally presses the Get Titles button.

This event triggers the execution of the GetTitles method that creates a WCF proxy using the UseWCFCachingChannel endpoint. This endpoint is configured in the web.config to use the CustomBinding. The ClientCacheBindingElement is defined as the topmost binding element in the binding configuration. This way, at runtime, the caching channel will be the first protocol channel to be called in the channel stack.

The proxy transforms the .NET method call into a WCF message and delivers it to the underlying channel stack.

The caching channel checks whether the response message is in the local or distributed cache. If the ASP.NET application is hosted by more than one web role instance, the response message may have been previously put in the distributed cache by another role instance. If the caching channel finds the response message for the actual call in the local or distributed cache, it immediately returns this message to the proxy object without invoking the back-end service.

Conversely, if the response message is not in the cache, the custom channel calls the inner channel to invoke the back-end service via the Service Bus. In this case, the request message goes all the way through the channel stack to the transport channel that invokes the relay service.

The Service Bus relays the request message to the AuthorsWebService.

The AuthorsWebService uses the authorId parameter to retrieve a list of books from the Titles table in the pubs database.

The service reads the titles for the current author from the pubs database.

The service returns a response message to the relay service.

The relay service passes the response message to the ASP.NET application.

The transport channel receives the stream of data and uses a message encoder to interpret the bytes and to produce a WCF Message object that can continue up the channel stack. At this point each protocol channel has a chance to work on the message. In particular, the caching channel stores the response message in the distributed cache using the AppFabricCaching provider.

The caching channel returns the response WCF message to the proxy.

The proxy transforms the WCF message into a response object.

The ASP.NET application creates and returns a new page to the browser.

NOTE

In the context of a cloud application, the use of the caching channel not only improves performance but also decreases the traffic on Windows Azure Connect and Service Bus, and therefore the cost due to operations performed and network bandwidth used.

Windows Azure Connect

In order to establish an IPsec protected IPv6 connection between the Web Role running in the Windows Azure data center and the local WCF service running in the organization’s network, the solution exploits Windows Azure Connect, which is the main component of the networking functionality that will be offered under the Windows Azure Virtual Network name. Windows Azure Connect enables customers of the Windows Azure platform to easily build and deploy a new class of hybrid, distributed applications that span the cloud and on-premises environments. From a functionality standpoint, Windows Azure Connect provides a network-level bridge between applications and services running in the cloud and on-premises data centers. Windows Azure Connect makes it easier for an organization to migrate its existing applications to the cloud by enabling direct IP-based network connectivity with its existing on-premises infrastructure. For example, a company can build and deploy a hybrid solution where a Windows Azure application connects to an on-premises SQL Server database, a local file server or LOB applications running in the corporate network.

For more information on Windows Azure Connect, you can review the following resources:

- “Overview of Windows Azure Connect” article on the MSDN site.

- “Tutorial: Setting up Windows Azure Connect” article on the MSDN site.

- “Overview of Windows Azure Connect When Roles Are Joined to a Domain” article on the MSDN site.

- “Domain Joining Windows Azure roles” article on the Windows Azure Connect team blog.

Windows Azure AppFabric Service Bus

The Windows Azure AppFabric Service Bus is an Internet-scale Service Bus that offers scalable and highly available connection points for application communication. This technology makes it possible to create a new range of hybrid and distributed applications that span the cloud and corporate environments. The AppFabric Service Bus is designed to provide connectivity, queuing, and routing capabilities not only for cloud applications but also for on-premises applications. The Service Bus, and in particular the Relay Service, supports the WCF programming model and provides a rich set of bindings to cover a complete spectrum of design patterns:

- One-way communications

- Publish/Subscribe messaging

- Peer-to-peer communications

- Multicast messaging

- Direct connections between clients and services

The Relay Service is a service residing in the cloud whose job is to assist connectivity by relaying client calls to the service. Both the client and the service can reside either on-premises or in the cloud.

For more information on the Service Bus, you can review the following resources:

“AppFabric Service Bus” section on the MSDN site.

“Getting Started with AppFabric Service Bus” section on the MSDN site.

“Developing Applications that Use the AppFabric Service Bus” section on the MSDN site.

“Service Bus Samples” on the CodePlex site.

“Implementing Reliable Inter-Role Communication Using Windows Azure AppFabric Service Bus, Observer Pattern & Parallel LINQ” article on the AppFabric CAT site.

We are now ready to delve into the code.

Solution

The solution code has been implemented in C# using Visual Studio 2010 and the .NET Framework 4.0. The following picture shows the projects that comprise the WCFClientCachingChannel solution.

A brief description of the individual projects is indicated below:

- AppFabricCache: this caching provider implements the Get and Put methods to retrieve data items from, and store them in, Windows Azure AppFabric Caching.

- MemoryCache: this caching provider provides the Get and Put methods to retrieve and store items in a static in-process MemoryCache object.

- WebCache: this caching provider provides the Get and Put methods to retrieve and store items to a static in-process Web Cache object.

- ExtensionLibrary: this assembly contains the WCF extensions to configure, create and run the caching channel at runtime.

- Helpers: this library contains the helper components used by the WCF extensions objects to handle exceptions and trace messages.

- Pubs WS: this project contains the code for the AuthorsWebService.

- Pubs: this test project contains the definition and configuration for the Pubs web role.

- PubsWebRole: this project contains the code of the ASP.NET application running in Windows Azure.
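The Get and Put contract shared by the caching providers listed above can be sketched as follows. This is only an illustration and not the code from the WCFClientCachingChannel solution: the ICacheProvider interface, the InProcessCacheProvider class, the key names and the timeout parameter are all hypothetical. The sketch assumes .NET Framework 4.0 and a reference to the System.Runtime.Caching assembly.

```csharp
using System;
using System.Runtime.Caching;

// Hypothetical contract: the article only says each provider exposes Get and Put.
public interface ICacheProvider
{
    object Get(string key);
    void Put(string key, object value, TimeSpan timeout);
}

// Sketch of an in-process provider backed by a static MemoryCache instance.
public sealed class InProcessCacheProvider : ICacheProvider
{
    private static readonly MemoryCache Cache = MemoryCache.Default;

    public object Get(string key)
    {
        if (key == null) throw new ArgumentNullException("key");
        // Returns null when the key is absent or the entry has expired.
        return Cache.Get(key);
    }

    public void Put(string key, object value, TimeSpan timeout)
    {
        if (key == null) throw new ArgumentNullException("key");
        if (value == null) throw new ArgumentNullException("value");

        // Absolute expiration: the entry is evicted once the timeout elapses.
        var policy = new CacheItemPolicy
        {
            AbsoluteExpiration = DateTimeOffset.UtcNow.Add(timeout)
        };

        // Set inserts the entry or overwrites an existing one with the same key.
        Cache.Set(key, value, policy);
    }
}

public static class Program
{
    public static void Main()
    {
        ICacheProvider provider = new InProcessCacheProvider();
        provider.Put("author:1", "Example Author", TimeSpan.FromMinutes(5));

        // A cached key returns the stored value; an unknown key returns null.
        Console.WriteLine(provider.Get("author:1") ?? "(miss)");
        Console.WriteLine(provider.Get("author:2") ?? "(miss)");
    }
}
```

Keeping the contract this small is what would let an AppFabric-backed provider and an in-process provider be swapped behind the same caching channel; a provider for Windows Azure AppFabric Caching would presumably implement the same two methods against the cache client API instead of MemoryCache.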

As I mentioned in the introduction of this article, the three caching providers have been modified to be used in a cloud application. In particular, the AppFabricCache project has been modified to use the Windows Azure AppFabric Caching API in place of its on-premises counterpart. Windows Azure AppFabric uses the same cache client programming model as the on-premises solution, Windows Server AppFabric. However, the two APIs are not identical, and there are relevant differences between developing a Windows Azure AppFabric Caching solution and developing an application that leverages Windows Server AppFabric Caching. For more information on this topic, you can review the following articles:

“Differences Between Caching On-Premises and in the Cloud” article on the MSDN site.

“API Reference (Windows Azure AppFabric Caching)” article on the MSDN site.

NOTE

The Client API of Windows Server AppFabric Caching and Windows Azure AppFabric Caching have the same fully-qualified name. So, what happens when you install the Windows Azure AppFabric SDK on a development machine where Windows Server AppFabric Caching is installed? The setup process of Windows Server AppFabric installs the Cache Client API assemblies in the GAC, whereas the Windows Azure AppFabric SDK copies the assemblies to its installation folder but doesn’t register them in the GAC. Therefore, if you create an Azure application on a development machine hosting both the on-premises and cloud versions of the Cache Client API, then even if you reference the Azure version in your web or worker role project, your role will load the on-premises version, that is, the wrong version of the Cache Client API, when you debug the application within the Windows Azure Compute Emulator. Fortunately, the on-premises and cloud versions of the API have the same fully-qualified name but different version numbers, hence you can include the following snippet in the web.config configuration file of your role to reference the right version of the API.

    <!-- Assembly Redirection -->
    <runtime>
      <assemblyBinding xmlns="urn:schemas-microsoft-com:asm.v1">
        <dependentAssembly>
          <assemblyIdentity name="Microsoft.ApplicationServer.Caching.Client" publicKeyToken="31bf3856ad364e35" culture="Neutral" />
          <bindingRedirect oldVersion="1.0.0.0" newVersion="101.0.0.0"/>
        </dependentAssembly>
        <dependentAssembly>
          <assemblyIdentity name="Microsoft.ApplicationServer.Caching.Core" publicKeyToken="31bf3856ad364e35" culture="Neutral" />
          <bindingRedirect oldVersion="1.0.0.0" newVersion="101.0.0.0"/>
        </dependentAssembly>
      </assemblyBinding>
    </runtime>

Paolo continues with thousands of lines of configuration files and C# source code (elided for brevity.)

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Nubifer Blogs posted Kentico Portal, a CMS for the Cloud on 8/5/2011:

Cloud computing has been gaining momentum for the last few years, and has recently become a required ingredient in every robust enterprise IT environment. Leading CMS vendor, and Nubifer partner, Kentico Software, took a step forward recently when they announced that their CMS Portals are now supported by the leading Cloud platforms. This means that you can now decide to deploy Kentico either on premise in your own IT landscape, using a public Cloud platform (such as Amazon or Windows Azure), or leveraging a hybrid model (with a database behind a firewall and a front end in the cloud). [Emphasis added.]

Kentico Software sees cloud computing as an important step for their customers. The recent releases of Kentico CMS “…removes barriers for our customers who are looking at their enterprise cloud computing strategy. Regardless of whether it’s on-premise or in the cloud, Kentico CMS is ready,” says Kentico Software CEO, Petr Palas.

Based on the influence of cloud, mobile devices and social media, the online needs of users and customers have changed significantly in recent years. The days of simple brochure-esque websites targeting traditional browser devices with one-way communication are quickly coming to an end. The web has evolved to become a much more sophisticated medium. A business website is no longer a destination; rather, it is a central nexus for commercial engagement.

Nubifer realizes that a business site today needs to cover the gamut – it needs to be visually appealing, it needs to have an intuitive information architecture, it needs to deliver dynamic, rich, compelling content, it needs to have mechanisms for visitor interaction, it needs to be optimized for speed and responsiveness, it needs to be highly scalable and it needs to deliver an excellent experience to traditional browser devices like desktops and laptops.

Kentico identified that in order to deal with the huge demand for web content from the social and mobile Internet, business websites need to be built with scalability at the forefront of the engineers’ minds. This is where the Cloud and Kentico CMS meet: elastic infrastructure which can be optimized to adapt to the growing needs of your business. Whether this is Infrastructure-as-a-Service (IaaS) or Platform-as-a-Service (PaaS), Kentico CMS provides turn-key solutions to the various options available which will allow your organization’s web properties to scale efficiently and economically.

Kentico’s cloud-optimized CMS platform enables organizations to deploy their portal in minutes and easily create a fully-configured, fault-tolerant and load-balanced cluster. Kentico’s cloud-ready portal deployments automatically scale to meet the needs of customers, which can vary widely depending on the number of projects, the number of people working on each project and users’ geographic locations.

By automatically and dynamically growing and reducing the number of servers on the cloud, those leveraging a Kentico CMS solution can reduce costs, only paying for the system usage as needed, while maintaining optimum system performance.

“Kentico Software shares our vision of driving the expansion and delivery of new capabilities in the cloud,” said Chad Collins, Nubifer CEO. “The Kentico CMS brings automation, increased IT control and visibility to users, who understand the advantages of creating and deploying scalable portal solutions in the cloud.”

About Kentico CMS

Kentico CMS is an affordable Web content management system providing a complete set of features for building websites, community sites, intranets and on-line stores on the Microsoft ASP.NET platform. It supports WYSIWYG editing, workflows, multiple languages, full-text search, SEO, on-line forms, image galleries, forums, groups, blogs, polls, media libraries and is shipped with 250+ configurable Web parts. It’s currently used by more than 6,000 websites in 84 countries.

Kentico Software clients include Microsoft, McDonald’s, Vodafone, O2, Orange, Brussels Airlines, Mazda, Ford, Subaru, Isuzu, Samsung, Gibson, ESPN, Guinness, DKNY, Abbott Labs, Medibank, Ireland.ie and others.

About Kentico Software

Kentico Software (www.kentico.com) helps clients create professional websites, online stores, community sites and intranets using Kentico CMS for ASP.NET. It’s committed to delivering a full-featured, enterprise-class, stable and scalable Web Content Management solution on the Microsoft .NET platform. Founded in 2004, Kentico is headquartered in the Czech Republic and has a U.S. office in Nashua, NH. Since its inception, Kentico has continued to rapidly expand the Kentico CMS user base worldwide.

Kentico Software is a Microsoft Gold Certified Partner. In 2010, Kentico was named the fastest growing technology company in the Czech Republic in the Deloitte Technology FAST 50 awards. For more information about Kentico’s CMS offerings, and how they can add value to your web properties, contact Nubifer today.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Ayende Rahien (@ayende) posted Entity Framework 4.1 Update 1, Backward Compatibility and Microsoft on 8/5/2011:

One of the things that you keep hearing about Microsoft products is how much time and effort is dedicated to ensuring Backward Compatibility. I have had a lot of arguments with Microsoft people about certain design decisions that they have made, and usually the argument ended up with “We have to do it this way to ensure Backward Compatibility”.

That sucked, but at least I could sleep soundly, knowing that if I had software running on version X of Microsoft, I could at least be pretty sure that it would work in X+1.

Until Entity Framework 4.1 Update 1 shipped, that is. Frans Bouma has done a great job in describing what the problem actually is, including going all the way and actually submitting a patch with the code required to fix this issue.

But basically, starting with Entity Framework 4.1 Update 1 (the issue also appears in Entity Framework 4.2 CTP, but I don’t care about this much now), you can’t use generic providers with Entity Framework. Just to explain, generic providers are pretty much the one way that you can integrate with Entity Framework if you want to write a profiler or a cacher or a… you get the point.

Looking at the code, it is pretty obvious that this is not intentional, but just someone deciding to implement a method without considering the full ramifications. When I found out about the bug, I tried figuring out a way to resolve it, but the only workaround would require me to provide a different assembly for each provider that I wanted to support (and there are dozens that we support on EF 4.0 and EF 3.5 right now).

We have currently implemented a workaround for SQL Server only, but if you want to use Entity Framework Profiler with Entity Framework 4.1 Update 1 and a database other than SQL Server, we would have to create a special build for your scenario, rather than have you use the generic provider method, which has worked so far.

Remember the beginning of this post? How I said that Backward Compatibility is something that Microsoft is taking so seriously?

Naturally I felt that this (a bug that impacts anyone who extends Entity Framework in such a drastic way) is an important issue to raise with Microsoft. So I contacted the team with my finding, and the response that I got was basically: Use the old version if you want this supported.

What I didn’t get was:

- Addressing the issue of a breaking change of this magnitude that isn’t even on a major release; it is an update to a minor release.

- Whether they are even going to fix it, and when this is going to be out.

- Whether the next version (4.2 CTP also has this bug) is going to carry on this issue or not.

I find this unacceptable. The fact that there is a problem with a CTP is annoying, but not that important. The fact that a fully released package has this backward compatibility issue is horrible.

What makes it even worse is the fact that this is an update to a minor version; people expect this to be a safe upgrade, not something that requires full testing. And anyone who is going to be running Update-Package in VS is going to hit this problem, and Update-Package is something that people do very often. And suddenly, Entity Framework Profiler can no longer work.

Considering the costs that the entire .NET community has to bear in order for Microsoft to preserve backward compatibility, I am deeply disappointed that it is missing when I actually need it.

This is from the same guys that refused (for five years!) to fix this bug: