Windows Azure and Cloud Computing Posts for 8/11/2011+

• Updated 8/22/2011: Added link to download the code sample for Suren Machiraju’s Running .NET4 Windows Workflows in Azure article in the Windows Azure AppFabric: Apps, Access Control, WIF, WF and Service Bus section below.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF, WF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Jim O’Neil (@jimoneil) continued his series with Photo Mosaics Part 3: Blobs on 8/11/2011:

Given that the Photo Mosaics application's domain is image processing, it shouldn't be a surprise that blobs (namely, images) are the most prevalent storage construct in the application. Blobs are used in two distinct ways:

- image libraries, collections of images used as tiles to generate a mosaic for the image submitted by the client. When requesting that a photo mosaic be created, the end-user picks a specific image library from which the tiles (or tesserae) should be selected.

- blob containers used in tandem with queues and roles to implement the workflow of the application.

Regardless of the blob's role in the application, access occurs almost exclusively via the storage architecture I described in my last post.

Image Libraries

In the Azure Photo Mosaics application, an image library is simply a blob container filled with jpg, GIF, or png images. The Storage Manager utility that accompanies the source code has some functionality to populate two image libraries, one from Flickr and the other from the images in your My Pictures directory, but you can create your own libraries as well. What differentiates these containers as image libraries is simply a metadata element named ImageLibraryDescription.

Storage Manager creates the containers and sets the metadata using the Storage Client API that we covered in the last post; here's an excerpt from that code:

    // create a blob container
    var container = new CloudBlobContainer(i.ContainerName.ToLower(), cbc);
    try
    {
        container.CreateIfNotExist();
        container.SetPermissions(
            new BlobContainerPermissions()
            {
                PublicAccess = BlobContainerPublicAccessType.Blob
            });
        container.Metadata["ImageLibraryDescription"] = "Image tile library for PhotoMosaics";
        container.SetMetadata();
        ...

In the application, the mere presence of the ImageLibraryDescription metadata element marks the container as an image library, so the actual value assigned really doesn't matter.

In the application workflow, it's the Windows Forms client application that initiates a request for all of the image libraries (so the user can pick which one he or she wants to use when generating the photo mosaic). As shown below, first the user selects the browse option in the client application. That invocation traverses the data access stack – asynchronously invoking EnumerateImageLibraries in the StorageBroker service, which in turn calls GetContainerUrisAndDescriptions on the BlobAccessor class in the CloudDAL, which accesses the blob storage service via the Storage Client API.

EnumerateImageLibraries (code shown below) ostensibly accepts a client ID to select which Azure Storage account to search for image libraries, but the current implementation ignores that parameter and defaults to the storage account directly associated with the application (via a configuration element in the ServiceConfiguration.cscfg). Swapping out GetStorageConnectionStringForApplication in line 5 for a method that searches for the storage account associated with a specific client would be an easy next step.

The CloudDAL enters the picture at line 6 via the invocation of GetContainerUrisAndDescriptions, passing in the specific metadata tag that differentiates image libraries from other blob containers in that same account.

     1: public IEnumerable<KeyValuePair<Uri, String>> EnumerateImageLibraries(String clientRegistrationId)
     2: {
     3:     try
     4:     {
     5:         String connString = new StorageBrokerInternal()
                    .GetStorageConnectionStringForApplication();
     6:         return new BlobAccessor(connString)
                    .GetContainerUrisAndDescriptions("ImageLibraryDescription");
     7:     }
     8:     catch (Exception e)
     9:     {
    10:         Trace.TraceError( "Client: {0}{4}Exception: {1}{4}Message: {2}{4}Trace: {3}",
    11:             clientRegistrationId,
    12:             e.GetType(),
    13:             e.Message,
    14:             e.StackTrace,
    15:             Environment.NewLine);
    16:
    17:         throw new SystemException("Unable to retrieve list of image libraries", e);
    18:     }
    19: }

The implementation of GetContainerUrisAndDescriptions appears below. The Storage Client API provides the ListContainers method (Line 4) which yields an IEnumerable that we can do some LINQ magic on in lines 7-10. The end result of this method is to return just the names and descriptions of the containers (as a collection of KeyValuePair) that have the metadata element specified in the optional parameter. If that parameter is null (or not passed), then all of the container names and descriptions are returned.

     1: // get list of containers and optional metadata key value
     2: public IEnumerable<KeyValuePair<Uri, String>> GetContainerUrisAndDescriptions(String metadataTag = null)
     3: {
     4:     var containers = _authBlobClient.ListContainers("", ContainerListingDetails.Metadata);
     5:
     6:     // get list of containers with metadata tag set (or all of them …
     7:     return from c in containers
     8:            let descTag = (metadataTag == null) ? null : HttpUtility.UrlDecode(c.Metadata[metadataTag.ToLower()])
     9:            where (descTag != null) || (metadataTag == null)
    10:            select new KeyValuePair<Uri, String>(c.Uri, descTag);
    11: }

After the list of container names and descriptions has been retrieved, the Windows Forms client displays them in a simple dialog form, prompting the user to select one of them.
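The filtering rule in GetContainerUrisAndDescriptions can be tried out without a storage account. The following is a minimal sketch, not the application's code: FakeContainer and ContainerFilter are hypothetical stand-ins for the Storage Client types, and the URL-decoding step is left out for brevity.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.Specialized;
using System.Linq;

// Hypothetical stand-in for a blob container: just a URI plus its metadata.
public sealed class FakeContainer
{
    public Uri Uri { get; set; }
    public NameValueCollection Metadata { get; set; }
}

public static class ContainerFilter
{
    // Returns (URI, description) pairs for the containers that carry the given
    // metadata tag; when the tag is null, every container is returned.
    public static List<KeyValuePair<Uri, string>> Filter(
        IEnumerable<FakeContainer> containers, string metadataTag = null)
    {
        return (from c in containers
                let descTag = (metadataTag == null)
                    ? null
                    : c.Metadata[metadataTag.ToLower()] // null when the key is absent
                where descTag != null || metadataTag == null
                select new KeyValuePair<Uri, string>(c.Uri, descTag)).ToList();
    }
}
```

Because the NameValueCollection indexer returns null for a missing key, containers without the tag simply drop out of the result instead of raising an exception.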

When the user makes a selection, another request flows through the same data access stack:

- The Windows Forms client invokes EnumerateImages on the StorageBroker service, passing in the URI of the image library (i.e., blob container) selected in the previous dialog

- EnumerateImages invokes RetrieveImageUris on the BlobAccessor class in the CloudDAL, also passing in the URI of the container

- RetrieveImageUris (below) builds a list of blobs in that container that have a content type of jpg, png, or GIF

    // get list of images given blob container name
    public IEnumerable<Uri> RetrieveImageUris(String containerName, String prefix = "")
    {
        IEnumerable<Uri> blobNames = new List<Uri>();
        try
        {
            // get unescaped names of blobs that have image content
            var contentTypes = new String[] { "image/jpeg", "image/gif", "image/png" };
            blobNames = from CloudBlob b in
                            _authBlobClient.ListBlobsWithPrefix(String.Format("{0}/{1}", containerName, prefix),
                                new BlobRequestOptions() { UseFlatBlobListing = true })
                        where contentTypes.Contains(b.Properties.ContentType)
                        select b.Uri;
        }
        catch (Exception e)
        {
            Trace.TraceError("BlobUri: {0}{4}Exception: {1}{4}Message: {2}{4}Trace: {3}",
                String.Format("{0}/{1}", containerName, prefix),
                e.GetType(),
                e.Message,
                e.StackTrace,
                Environment.NewLine);
            throw;
        }

        return blobNames;
    }

What is the prefix parameter in RetrieveImageUris for? In this specific context, the prefix parameter defaults to an empty string, but generally, it allows us to query blobs as if they represented directory paths. When it comes to Windows Azure blob storage, you can have any number of containers, but containers are flat and cannot be nested. You can however create a faux hierarchy using a naming convention. For instance, http://azuremosaics.blob.core.windows.net/images/foods/dessert/icecream.jpg is a perfectly valid blob URI: the container is images, and the blob name is foods/dessert/icecream.jpg – yes, the whole thing as one opaque string. In order to provide some semantic meaning to that name, the Storage Client API method ListBlobsWithPrefix supports designating name prefixes, so you could indeed enumerate just the blobs in the pseudo-directory foods/dessert.

After the call to the service method EnumerateImages has completed, the Windows Forms client populates the list of images within the selected image library.
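The pseudo-directory idea boils down to a plain string-prefix match over flat names. Here is a small sketch that runs without the Storage Client API; FlatBlobListing and its method are hypothetical names, and the real service performs this filtering on the server side.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FlatBlobListing
{
    // Blob names inside a container are flat strings; a "directory" is only a
    // shared name prefix that conventionally ends in '/'.
    public static List<string> ListWithPrefix(IEnumerable<string> blobNames, string prefix)
    {
        return blobNames
            .Where(name => name.StartsWith(prefix ?? "", StringComparison.Ordinal))
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToList();
    }
}
```

Passing "foods/dessert/" selects only the dessert images, while an empty prefix returns every blob, which matches the default used by RetrieveImageUris.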
When a user selects an image in that list, a preview of the tile, in the selected size, is shown.

You might be inclined to assume that another service call has been made to retrieve the individual image tile, but that's not the case! The retrieval occurs via a simple HTTP GET: the blob image URI (e.g., http://azuremosaics.blob.core.windows.net/flickr/5935820179) is assigned to the ImageLocation property of the Windows Forms PictureBox; there are no web service calls, no WCF.

We can do that here because of the access policy we've assigned to the blob containers designated as image libraries. If you revisit the Storage Manager code that I mentioned at the beginning of this post, you'll see that there is a SetPermissions call on the image library containers. SetPermissions is part of the Storage Client API and is used to set the public access policy for the blob container; there are three options:

- Blob – allow anonymous clients to retrieve blobs and metadata (but not enumerate blobs in containers),

- Container – allow anonymous clients to additionally view container metadata and enumerate blobs in a container (but not enumerate containers within an account),

- Off – do not allow any anonymous access; all requests must be signed with the Azure Storage Account key.

By setting the public access level to Blob, each blob in the container can be accessed directly, even in a browser, without requiring authentication (but you must know the blob's URI). By the way, blobs are the only storage construct in Windows Azure Storage that allow access without authenticating via the account key, and, in fact, there's another authentication mechanism for blobs that sits between public access and fully authenticated access: shared access signatures.

Shared access signatures allow you to more granularly define what functions can be performed on a blob or container and the duration those functions can be applied. You could, for instance, set up a shared access signature (which is a hash tacked on to the blob's URI) to allow access to, say, a document for just 24 hours and disseminate that link to the designated audience. While anyone with that shared access signature-enabled link could access the content, the link would expire after a day.
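To make the idea of a time-limited, signed link concrete, here is a generic sketch of the technique. It is not the Windows Azure shared access signature format: the string that gets signed, the query parameter names, and the ExpiringLink class are all assumptions chosen for illustration.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

public static class ExpiringLink
{
    // Signs "path + expiry" with a secret key (HMAC-SHA256) so the service can
    // later detect any change to either value.
    public static string Sign(string path, string expiry, byte[] key)
    {
        using (var hmac = new HMACSHA256(key))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(path + "\n" + expiry));
            return Convert.ToBase64String(hash);
        }
    }

    // Builds a link that carries its own expiry time and signature.
    public static string Create(string path, DateTime expiresUtc, byte[] key)
    {
        string expiry = expiresUtc.ToString("o", CultureInfo.InvariantCulture);
        return path + "?se=" + Uri.EscapeDataString(expiry)
                    + "&sig=" + Uri.EscapeDataString(Sign(path, expiry, key));
    }

    // Accepts the link only if it has not expired and the signature matches.
    public static bool IsValid(string path, string expiry, string sig, byte[] key, DateTime nowUtc)
    {
        DateTime expiresUtc;
        if (!DateTime.TryParse(expiry, CultureInfo.InvariantCulture,
                DateTimeStyles.RoundtripKind, out expiresUtc))
            return false;
        if (nowUtc > expiresUtc)
            return false;
        // Simplification: production code should compare in constant time.
        return Sign(path, expiry, key) == sig;
    }
}
```

Anyone holding the link can use it until the expiry passes, which is exactly the trade-off described above: the signature prevents tampering with the path or the expiry, but it does not identify the caller.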

Blobs in the Workflow

The second use of blobs within the Azure Photo Mosaics application is the transformation of the original image into the mosaic. Recall from the overall workflow diagram (below) that there are four containers used to move the image along as it's being 'mosaicized':

imageinput

This is the entry point of an image into the workflow. The image is transferred from the client via a WCF service call (SubmitImageForProcessing in the JobBroker service of the AzureClientInterface Web Role) and assigned a new name as it's copied to blob storage: a GUID corresponding to the unique job id for the given request.

As you might expect by now, BlobAccessor in the CloudDAL does the heavy lifting via the code below. The entry point is actually the second method (Line 17), in which the image bytes, the imageinput container name, and the GUID are passed in from the service call. Storage Client API methods are used to tag the content type and store the blob (Lines 5 and 11, respectively).

     1: // store image with given URI (with optional metadata)
     2: public Uri StoreImage(Byte[] bytes, Uri fullUri, NameValueCollection metadata = null)
     3: {
     4:     CloudBlockBlob blobRef = new CloudBlockBlob(fullUri.ToString(), _authBlobClient);
     5:     blobRef.Properties.ContentType = "image/jpeg";
     6:
     7:     // add the metadata
     8:     if (metadata != null) blobRef.Metadata.Add(metadata);
     9:
    10:     // upload the image
    11:     blobRef.UploadByteArray(bytes);
    12:
    13:     return blobRef.Uri;
    14: }
    15:
    16: // store image with given container and image name (with optional metadata)
    17: public Uri StoreImage(Byte[] bytes, String container, String imageName, NameValueCollection metadata = null)
    18: {
    19:     var containerRef = _authBlobClient.GetContainerReference(container);
    20:     containerRef.CreateIfNotExist();
    21:
    22:     return StoreImage(bytes, new Uri(String.Format("{0}/{1}", containerRef.Uri, imageName)), metadata);
    23: }

sliceinput

When an image is submitted by the end-user, the 'number of slices' option in the Windows Forms client user interface is passed along to specify how the original image should be divided in order to parallelize the task across the instances of ImageProcessor worker roles deployed in the cloud. For purposes of the sample application, the ideal number to select is the number of ImageProcessor instances currently running, but a more robust implementation would dynamically determine an optimal number of slices given the current system topology and workload. Regardless of how the number of image segments is determined, the original image is taken from the imageinput container and 'chopped up' into uniformly sized slices. Each slice is stored in the sliceinput container for subsequent processing.

The real work is processing the image into slices; once that's done, it's really just a call to StoreImage in the BlobAccessor:

    Uri slicePath = blobAccessor.StoreImage(
        slices[sliceNum],
        BlobAccessor.SliceInputContainer,
        String.Format("{0}_{1:000}", requestMsg.BlobName, sliceNum));

In the above snippet, each element of the slices array contains an array of bytes representing one slice of the original image. As you can see by the String.Format call, each slice retains the original name of the image (a GUID) with a suffix indicating a slice number (e.g., 21EC2020-3AEA-1069-A2DD-08002B30309D_003).

sliceoutput

As each slice of the original image is processed, the 'mosaicized' output slice is stored in the sliceoutput container with the exact same name as it had in the sliceinput container. There's nothing much more going on here!
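The slice naming convention shared by sliceinput and sliceoutput is easy to reproduce in isolation. This is only a sketch: SliceNames is a hypothetical helper, and numbering the slices from zero is an assumption, since the post does not say where the count starts.

```csharp
using System;
using System.Collections.Generic;

public static class SliceNames
{
    // Builds one blob name per slice: "<original blob name>_<three-digit slice number>".
    // Assumption: slices are numbered from 0.
    public static List<string> For(string blobName, int sliceCount)
    {
        var names = new List<string>();
        for (int sliceNum = 0; sliceNum < sliceCount; sliceNum++)
        {
            names.Add(String.Format("{0}_{1:000}", blobName, sliceNum));
        }
        return names;
    }
}
```

The 000 format specifier zero-pads the slice number, so the names sort lexically in slice order, which should help when the processed slices are later pieced back together.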

imageoutput

After all of the slices have been processed, the JobController Worker Role has a bit of work to do to piece together the individual processed slices into the final image, but once that's done it's just a simple call to StoreImage in the BlobAccessor to place the completed mosaic in the imageoutput container.

A Quandary

Since I've already broached the subject of access control with the image libraries, let's revisit that topic again now in the context of the blob containers involved in the workflow. Of the four containers, two (sliceinput and sliceoutput) are used for internal processing and their contents never need to be viewed outside the confines of the cloud application. The original image (in imageinput) and the generated mosaic (in imageoutput) do, however, have relevance outside of the generation process.

In the current client application, the links that show in the Submitted Jobs window (above) directly reference images that are associated with the current user, and that user can access both the original and the generated image quite easily, even via a browser. That's possible since the imageinput and imageoutput containers have a public access policy just like the image libraries; that is, you can access a blob and its metadata if you know its name. Of course, anyone can access those images if they know the name. Granted, the name is somewhat obfuscated since it's a GUID, but it's far from private.

So how can we go about making the images associated with a given user accessible only to that user?

- You might consider a shared access signature on the blob. This allows you to create a decorated URI that allows access to the blob for a specified amount of time. The drawbacks here are that anyone with that URI can still access the blob, so it's only slightly more secure than what we have now. Additionally a simple shared access signature has a maximum lifetime of one hour, so it wouldn't accommodate indefinite access to the blob.

- You can extend shared access signatures with a signed identifier, which associates that signature with a specific access policy on the container. The container access policy doesn't have the timeout constraints, so the client could have essentially unlimited access. The URI can still be compromised, although access can be revoked globally by modifying the access policy that the signed identifier references. Beyond that though, there's a limit of five access policies per container, so if you wanted to somehow map a policy to an end user, you'd be limited to storing images for only five clients per container.

- You could set up customer-specific containers or even accounts, so each client has his own imageinput and imageoutput container, and all access to the blobs is authenticated. That may result in an explosion of containers for you to manage though, and it would require providing a service interface since you don't want the clients to have the authentication keys for those containers. I'd argue that you could accomplish the same goal with a single imageinput and imageoutput container that are fronted with a service and an access policy implemented via custom code.

- Another option is to require that customers get their own Azure account to house the imageinput and imageoutput containers. That's a bit of an encumbrance in terms of on-boarding, but as an added benefit, it relieves you from having to amortize storage costs across all of the clients you are servicing. The clients own the containers so they'd have the storage keys in hand and bear the responsibility for securing them. You'd probably still want a service front-end to handle the access, but the authentication key (or a shared access signature) would have to be provided by the client to the Photo Mosaics application.

My current thinking is that a service interface to access the images in imageinput and imageoutput is the way to go. It's a bit more code to write, but it offers the most flexibility in terms of implementation. In fact, I could even bring in the AppFabric Access Control Service to broker access to the service. Thoughts?

Brent Stineman (@BrentCodeMonkey) posted Introduction to Azure Storage Analytics (YOA Week 6) on 8/10/2011:

Since my update on Storage Analytics last week was so short, I really wanted to dive back into it this week. And fortunately, it’s new enough that there was some new ground to tread here. Which is great because I hate just putting up another blog post that doesn’t really add anything new.

Steve Marx posted his sample app last week and gave us a couple nice methods for updating the storage analytics settings. The Azure Storage team did two solid updates on working with both Metrics and Logging. However, neither of them dove deep into working with the API, and I wanted more meat on how to do exactly this. By digging through Steve’s code and the MSDN documentation on the API, I can hopefully shed some additional light on this.

Storage Service Properties (aka enabling logging and metrics)

So the first step is turning this on. Well, actually it’s understanding what we’re turning on and why, but we’ll get to that in a few. Steve posted on his blog a sample ‘Save’ method. This is an implementation of the Azure Storage Analytics API’s “Set Storage Service Properties” call. However, the key to that method is an XML document that contains the analytics settings. It looks something like this:

    <?xml version="1.0" encoding="utf-8"?>
    <StorageServiceProperties>
      <Logging>
        <Version>version-number</Version>
        <Delete>true|false</Delete>
        <Read>true|false</Read>
        <Write>true|false</Write>
        <RetentionPolicy>
          <Enabled>true|false</Enabled>
          <Days>number-of-days</Days>
        </RetentionPolicy>
      </Logging>
      <Metrics>
        <Version>version-number</Version>
        <Enabled>true|false</Enabled>
        <IncludeAPIs>true|false</IncludeAPIs>
        <RetentionPolicy>
          <Enabled>true|false</Enabled>
          <Days>number-of-days</Days>
        </RetentionPolicy>
      </Metrics>
    </StorageServiceProperties>

Cool stuff, but what does it mean? Well, fortunately, it’s all explained in the API documentation. Also fortunately, I won’t make you click a link to look at it. I’m nice that way.

Version – the service version / interface number to help with service versioning later on; just use “1.0” for now.

Logging->Read/Write/Delete – these nodes determine if we’re going to log reads, writes, or deletes. So you can get just the granularity of logging you want.

Metrics->Enabled – turn metrics capture on/off

Metrics->IncludeAPIs – set to true if you want to include capture of statistics for your API operations (like saving/updating analytics settings). At least I think it is, I’m still playing/researching this one.

RetentionPolicy – Use this to enable/disable a retention policy and set the number of days to retain information for. Now without setting a policy, data will be retained FOREVER, or at least until your 20TB limit is reached. So I recommend you set a policy and leave it on at all times. The maximum value you can set is 365. To learn more about the retention policies, check out the MSDN article on them.
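As a rough idea of what a wrapper around this payload could look like, the sketch below builds the document with LINQ to XML. The AnalyticsSettings class and its parameters are hypothetical; leaving out the Days element when retention is disabled and rejecting values below 1 are assumptions, and the service may enforce further rules that are not modeled here.

```csharp
using System;
using System.Xml.Linq;

public static class AnalyticsSettings
{
    private static string Flag(bool value)
    {
        return value ? "true" : "false";
    }

    // A null retentionDays means "retention policy disabled".
    private static XElement Retention(int? retentionDays)
    {
        var policy = new XElement("RetentionPolicy",
            new XElement("Enabled", Flag(retentionDays.HasValue)));
        if (retentionDays.HasValue)
        {
            policy.Add(new XElement("Days", retentionDays.Value));
        }
        return policy;
    }

    public static XDocument Build(bool logRead, bool logWrite, bool logDelete,
        bool metricsEnabled, bool includeApis, int? retentionDays)
    {
        // 365 is the maximum mentioned above; the lower bound is an assumption.
        if (retentionDays.HasValue && (retentionDays.Value < 1 || retentionDays.Value > 365))
        {
            throw new ArgumentOutOfRangeException("retentionDays");
        }

        return new XDocument(
            new XDeclaration("1.0", "utf-8", null),
            new XElement("StorageServiceProperties",
                new XElement("Logging",
                    new XElement("Version", "1.0"),
                    new XElement("Delete", Flag(logDelete)),
                    new XElement("Read", Flag(logRead)),
                    new XElement("Write", Flag(logWrite)),
                    Retention(retentionDays)),
                new XElement("Metrics",
                    new XElement("Version", "1.0"),
                    new XElement("Enabled", Flag(metricsEnabled)),
                    new XElement("IncludeAPIs", Flag(includeApis)),
                    Retention(retentionDays))));
    }
}
```

Calling AnalyticsSettings.Build(true, true, false, true, true, 7) yields a document with the same element layout as the template above, ready to be written to a request stream.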

Setting Service Properties

Now Steve did a slick little piece of code, but given that I’m not what I’d call “MVC fluent” (I’ve been spending too much time doing middle/backend services I guess), it took a bit of deciphering, at least for me, to figure out what was happening. And I’ve done low level Azure Storage REST operations before. So I figured I’d take a few minutes to explain what was happening in his “Save” method.

First off, Steve set up the HTTP request we’re going to send to Azure Storage:

    var creds = new StorageCredentialsAccountAndKey(Request.Cookies["AccountName"].Value,
        Request.Cookies["AccountKey"].Value);
    var req = (HttpWebRequest)WebRequest.Create(string.Format(
        "http://{0}.{1}.core.windows.net/?restype=service&comp=properties",
        creds.AccountName, service));
    req.Method = "PUT";
    req.Headers["x-ms-version"] = "2009-09-19";

So this code snags the Azure Storage account credentials from the cookies (where they were stored when you entered them). They are then used to generate an HttpWebRequest object using the account name and the service (blob/table/queue) that we want to update the settings for. Lastly, we set the method and x-ms-version properties for the request. Note: the service was posted to this method by the JavaScript on Steve’s MVC-based page.
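The endpoint format is the only service-specific part of that request, so it can be isolated and checked on its own. A small sketch, assuming a hypothetical ServicePropertiesEndpoint helper and treating blob, table, and queue as the only valid service names:

```csharp
using System;
using System.Linq;

public static class ServicePropertiesEndpoint
{
    private static readonly string[] Services = { "blob", "table", "queue" };

    // Builds the "service properties" URI for one storage service of an account.
    public static Uri For(string accountName, string service)
    {
        if (string.IsNullOrEmpty(accountName))
        {
            throw new ArgumentException("Account name is required.", "accountName");
        }
        if (!Services.Contains(service))
        {
            throw new ArgumentException("Service must be blob, table, or queue.", "service");
        }
        return new Uri(string.Format(
            "http://{0}.{1}.core.windows.net/?restype=service&comp=properties",
            accountName, service));
    }
}
```

Validating the service name up front matters a little more than usual here, because in the sample it arrives from a browser post rather than from trusted code.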

Next up, we need to digitally sign our request using the account credentials and the length of our XML analytics configuration document.

    req.ContentLength = Request.InputStream.Length;
    if (service == "table")
        creds.SignRequestLite(req);
    else
        creds.SignRequest(req);

What’s happening here is that our XML document came to this method via the JavaScript/AJAX post to our code-behind method via Request.InputStream. We sign the request using the StorageCredentialsAccountAndKey object we created earlier, doing either a SignRequestLite for a call to the Table service, or SignRequest for the blob or queue service.

Next up, we need to copy our XML configuration settings to our request object…

    using (var stream = req.GetRequestStream())
    {
        Request.InputStream.CopyTo(stream);
        stream.Close();
    }

This chunk of code uses GetRequestStream to get the stream we’ll copy our payload to, copies it over, then closes the stream so we’re ready to send the request.

    try
    {
        req.GetResponse();
        return new EmptyResult();
    }
    catch (WebException e)
    {
        Response.StatusCode = 500;
        Response.TrySkipIisCustomErrors = true;
        return Content(new StreamReader(e.Response.GetResponseStream()).ReadToEnd());
    }

It’s that first line that we care about. req.GetResponse will send our request to the Azure Storage service. The rest of this snippet is really just about exception handling and returning results back to the AJAX code.

Where to Next

I had hoped to have time this week to create a nice little wrapper around the XML payload so you could just have an Analytics configuration object that you could hand a connection to and set properties on, but I ran out of time (again).

I hope to get to it and actually put something out before we get the official update to the StorageClient library. Meanwhile, I think you can see how easy it is to generate your own REST requests to get (which we didn’t cover here) and set (which we did) the Azure Storage Analytics settings.

For more information, be sure to check out Steve Marx’s sample project and the MSDN Storage Analytics API documentation.

<Return to section navigation list>

SQL Azure Database and Reporting

Edu Lorenzo (@edulorenzo) described Migrating your SQL Server data to the cloud in an 8/10/2011 post:

It has been quite a while since SQL Azure came out, but I am still asked: what is the best way to migrate an existing database to a SQL Azure instance? And, almost as always, I start off answering with “it depends”. Because really, that’s what it is. There are a lot of factors that need to be weighed before even considering migrating and then choosing a method. And with that, I won’t be able to provide a generic answer for all, as migration issues must be handled on a case-by-case basis, especially with all the “homegrown” techniques that we apply.

So here, I will just go ahead and timewarp myself to the stage where we have already decided to migrate the database to the app, and then show you the different means of getting there.

I start off with the Database Manager.

A SQL Azure subscription comes with a web based database manager. This is fine and dandy. It hosts a Silverlight powered UI where we will be given a connection to the Azure database and be able to view the database contents and run scripts. For most DBAs, they would say having the ability to run their scripts is all they need.

Here are my pros and cons with this:

Pros:

- You can actually manage the DB from anywhere you have a Silverlight enabled browser and a good internet connection.

Cons:

- You will need a browser with Silverlight installed on it. So it is not 100%. And you might want to turn off pop-up blockers, which are turned on for good reason.

- Being browser based, it is prone to browser based problems like session time-outs and persisting sessions. I once was not able to log on again using the manager after closing the window. As it turns out, I was still logged in.

- Another browser issue might be Zoom level. You might not be able to see some buttons if you are not at 100%

- Again with session issues, once you log in, the state of the database’s properties does not update from that state. So you might miss out on some changes while you are logged on.

- And lastly, because it is browser based and the UI doesn’t always keep up with the processes behind the scenes, sometimes pressing STOP on a process doesn’t really stop it.

The second option: using SQL Server 2008 R2 Management Studio

This might be the option that most will take. With SSMS already installed, the next step is to connect it to the SQL Azure instance. Connection can be done the old fashioned right-click way, providing the proper credentials. Another good thing is that SSMS08R2 has options in the UI to steer you in the right direction when using SQL Azure. Commands that won’t work with SQL Azure are disabled, so we won’t get to mess up.

Pros:

- SSMS is already installed and with the right settings (like enabling TCP and going through 1433), should be able to connect to the SQL Azure instance readily.

- SSMS is already a familiar tool. And its ability to connect to multiple databases beats the Browser based manager. Using SSMS, you can view both your SQL Azure database, and your SQL Server databases at the same time.

- The express edition works too.

- SSMS08, well at least the R2 edition, actually includes SQL Azure among the types of databases that it targets.

Cons:

- Although it is unlikely that you would have a SQL Azure database but no SQL Server database (and that is certainly not the situation of anyone who wants to migrate), I will have to place this as a con: installing JUST SSMS is not possible, and you will need to install SQL Server to get it.

There are also some migration tools that you can find, download and use, like the SQLAzureMigrationWizard that you should be able to find on codeplex.

There are also other tools for other databases

SQL Server Integration Services (SSIS) is also another option, and this should be able to “export” your data to a SQL Azure database.

Transfer data to SQL Azure by using SQL Server 2008 Integration Services (SSIS). SQL Server 2008 R2 or later supports the Import and Export Data Wizard and bulk copy for the transfer of data between an instance of Microsoft SQL Server and SQL Azure. You can use this tool to migrate on-premise databases to SQL Azure.

Another way that you may want to look into is to use the BCP Utility, which can be run using SSMS, to perform a bulk copy.

You can download the current version (v3.7.4) of my favorite, George Huey’s SQL Azure Migration Wizard from CodePlex.

Edu is a Microsoft Philippines ISV-Developer Evangelist.


Marketplace DataMarket and OData

No significant articles today.


Windows Azure AppFabric: Apps, Access Control, WIF, WF and Service Bus

• Suren Machiraju of the Windows Azure CAT Team (formerly the AppFabricCAT Team) described Running .NET4 Windows Workflows in Azure in an 8/11/2011 post:

This blog reviews the current (January 2011) set of options available for hosting existing .NET4 Workflow (WF) programs in Windows Azure and also provides a roadmap to the upcoming features that will further enhance support for hosting and monitoring the Workflow programs. The code snippets included below are also available as an attachment for you to download and try it out yourself.

Workflow in Azure – Today

Workflow programs can be broadly classified as durable or non-durable (aka non-persisted Workflow Instances). Durable Workflow Services are inherently long running, persist their state, and use correlation for follow-on activities. Non-durable Workflows are stateless; effectively, they start and run to completion in a single burst.

Today, non-durable Workflows are readily supported by Windows Azure with a few trivial configuration changes. Hosting durable Workflows today is a challenge, since we do not yet have a ‘Windows Server AppFabric’ equivalent for Azure which can persist, manage and monitor the Service. In brief, the big buckets of functionality required to host the durable Workflow Services are:

- Monitoring store: There is no Event Collection Service available to gather the ETW events and write them to the SQL Azure based Monitoring database. There is also no schema that ships with .NET Framework for creating the monitoring database, and the one that ships with Windows Server AppFabric is incompatible with SQL Azure – an example, the scripts that are provided with Windows Server AppFabric make use of the XML column type which is currently not supported by SQL Azure.

- Instance Store: The schemas used by the SqlWorkflowInstanceStore have incompatibilities with SQL Azure. Specifically, the schema scripts require page locks, which are not supported on SQL Azure.

- Reliability: While the SqlWorkflowInstanceStore provides a lot of the functionality for managing instance lifetimes, the lack of the AppFabric Workflow Management Service means that you need to manually implement a way to start your WorkflowServiceHosts before any messages are received (such as when you bring up a new role instance or restart a role instance), so that the contained SqlWorkflowInstanceStore can poll for workflow service instances having expired timers and subsequently resume their execution.

The above limitations make it rather difficult to run a durable Workflow Service on Azure. The upcoming release of Azure AppFabric (Composite Application) is expected to make it possible to run durable Workflow Services. In this blog, we will focus on the design approaches to get your non-durable Workflow instances running within Azure roles.

Today you can run your non-durable Workflows on Azure. What this means is that your Workflow programs cannot persist their state and wait for subsequent input to resume execution; they must run to completion following their initial launch. With Azure you can run non-durable Workflow programs in one of three ways:

- Web Role

- Worker Role

- Hybrid

The Web Role acts very much like IIS does on premises as an HTTP server; it is easier to configure, requires little code to integrate, and is activated by an incoming request. The Worker Role acts like an on-premises Windows Service and is typically used in backend processing scenarios; it offers multiple options to kick off the processing, which in turn add to the complexity. The hybrid approach, which bridges communication between Azure-hosted and on-premises resources, has multiple advantages: it enables you to leverage existing deployment models and also enables use of durable Workflows on premises as a solution until the next release. The following sections succinctly provide details on these three approaches, and in the ‘Conclusion’ section we also provide pointers on the appropriateness of each approach.

Host Workflow Services in a Web Role

The Web Role is similar to a ‘Web Application’ and can also provide a Service perspective to anything that uses the http protocol – such as a WCF service using basicHttpBinding. The Web Role is generally driven by a user interface – the user interacts with a Web Page, but a call to a hosted Service can also cause some processing to happen. Below are the steps that enable you to host a Workflow Service in a Web Role.

The first step is to create a Cloud Project in Visual Studio and add a WCF Service Web Role to it. Delete the IService1.cs, Service1.svc and Service1.svc.cs files added by the template, since they are not needed and will be replaced by the workflow service XAMLX.

To the Web Role project, add a WCF Workflow Service. The structure of your solution is now complete (see the screenshot below for an example), but you need to add a few configuration elements to enable it to run on Azure.

Windows Azure does not include a section handler in its machine.config for system.xaml.hosting as you have in an on-premises solution. Therefore, the first configuration change (HTTP Handler for XAMLX and XAMLX Activation) is to add the following to the top of your web.config, within the configuration element:

    <configSections>
      <sectionGroup name="system.xaml.hosting"
                    type="System.Xaml.Hosting.Configuration.XamlHostingSectionGroup, System.Xaml.Hosting, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35">
        <section name="httpHandlers"
                 type="System.Xaml.Hosting.Configuration.XamlHostingSection, System.Xaml.Hosting, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
      </sectionGroup>
    </configSections>

Next, you need to add XAML HTTP handlers for the WorkflowService and Activity root element types by adding the following within the configuration element of your web.config, below the configSections element that we included above:

    <system.xaml.hosting>
      <httpHandlers>
        <add xamlRootElementType="System.ServiceModel.Activities.WorkflowService, System.ServiceModel.Activities, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
             httpHandlerType="System.ServiceModel.Activities.Activation.ServiceModelActivitiesActivationHandlerAsync, System.ServiceModel.Activation, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
        <add xamlRootElementType="System.Activities.Activity, System.Activities, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
             httpHandlerType="System.ServiceModel.Activities.Activation.ServiceModelActivitiesActivationHandlerAsync, System.ServiceModel.Activation, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
      </httpHandlers>
    </system.xaml.hosting>

Finally, configure the WorkflowServiceHostFactory to handle activation for your service by adding a serviceActivations element to the system.serviceModel\serviceHostingEnvironment element:

    <serviceHostingEnvironment multipleSiteBindingsEnabled="true">
      <serviceActivations>
        <add relativeAddress="~/Service1.xamlx"
             service="Service1.xamlx"
             factory="System.ServiceModel.Activities.Activation.WorkflowServiceHostFactory" />
      </serviceActivations>
    </serviceHostingEnvironment>

The last step is to deploy your Cloud Project, and with that you now have your Workflow service hosted on Azure (see the graphic below).

Note: Sam Vanhoutte from CODit also elaborates on hosting workflow services in Windows Azure in his blog, focusing on troubleshooting configuration by disabling custom errors; it is worth reviewing.

Host Workflows in a Worker Role

The Worker Role is similar to a Windows Service: it starts up ‘automatically’ and runs all the time. While the Workflow programs could be initiated by a timer, the role can use other means to activate them, such as a simple while (true) loop with a sleep statement. When it ‘ticks’ it performs work. This is generally the option for background or computational processing.

In this scenario you use Workflows to define Worker Role logic. Worker Roles are created by deriving from the RoleEntryPoint Class and overriding a few of its methods. The method that defines the actual logic performed by a Worker Role is the Run Method. Therefore, to get your workflows executing within a Worker Role, use WorkflowApplication or WorkflowInvoker to host an instance of your non-service Workflow (i.e., one that doesn’t use Receive activities) within this Method. In either case, you only exit the Run Method when you want the Worker Role to stop executing Workflows.

The general strategy to accomplish this is to start with an Azure Project and add a Worker Role to it. To this project you add a reference to an assembly containing your XAML Workflow types. Within the Run Method of WorkerRole.cs, you initialize one of the host types (WorkflowApplication or WorkflowInvoker), referring to an Activity type contained in the referenced assembly. Alternatively, you can initialize one of the host types by loading an Activity instance from a XAML Workflow file available on the file system. You will also need to add references to the .NET Framework assemblies System.Activities and, if you wish to load XAML workflows from a file, System.Xaml.

Host Workflow (Non-Service) in a Worker Role

For ‘non-Service’ Workflows, your Run method needs to implement a loop that examines some input data and passes it to a workflow instance for processing. The following shows how to accomplish this when the Workflow type is acquired from a referenced assembly:

    // Requires: using System.Collections.Generic; using System.Diagnostics; using System.Threading;
    public override void Run()
    {
        Trace.WriteLine("WFWorker entry point called", "Information");

        while (true)
        {
            Thread.Sleep(1000);

            /* ...
             * ...Poll for data to hand to WF instance...
             * ...
             */

            // Create a dictionary to hold input data
            Dictionary<string, object> inputData = new Dictionary<string, object>();

            // Instantiate a workflow instance from a type defined in a referenced assembly
            System.Activities.Activity workflow = new Workflow1();

            // Execute the WF passing in parameter data and capture output results
            IDictionary<string, object> outputData =
                System.Activities.WorkflowInvoker.Invoke(workflow, inputData);

            Trace.WriteLine("Working", "Information");
        }
    }

Alternatively, you could perform the above using WorkflowApplication to host the Workflow instance. In this case, the main difference is that you need a synchronization primitive (an AutoResetEvent in the example that follows) to control the flow of execution, because the workflow instances run on threads separate from the one executing the Run method.

    public override void Run()
    {
        Trace.WriteLine("WFWorker entry point called", "Information");

        while (true)
        {
            Thread.Sleep(1000);

            /* ...
             * ...Poll for data to hand to WF...
             * ...
             */

            AutoResetEvent syncEvent = new AutoResetEvent(false);

            // Create a dictionary to hold input data and declare another for output data
            Dictionary<string, object> inputData = new Dictionary<string, object>();
            IDictionary<string, object> outputData = null;

            // Instantiate a workflow instance from a type defined in a referenced assembly
            System.Activities.Activity workflow = new Workflow1();

            // Run the workflow instance using WorkflowApplication as the host.
            System.Activities.WorkflowApplication workflowHost =
                new System.Activities.WorkflowApplication(workflow, inputData);

            // Completed fires on a workflow thread; capture the outputs and signal Run.
            workflowHost.Completed = (e) =>
            {
                outputData = e.Outputs;
                syncEvent.Set();
            };

            workflowHost.Run();
            syncEvent.WaitOne();

            Trace.WriteLine("Working", "Information");
        }
    }

Finally, if instead of loading Workflow types from a referenced assembly you want to load the XAML from a file (for example, one included with the Worker Role or stored on an Azure Drive), you would simply replace the line that instantiates the Workflow in the above two examples with the following, passing the appropriate path to the XAML file to XamlServices.Load:

    System.Activities.Activity workflow =
        (System.Activities.Activity)System.Xaml.XamlServices.Load(@"X:\workflows\Workflow1.xaml");

By and large, if you are simply hosting logic described in a non-durable workflow, WorkflowInvoker is the way to go. As it offers fewer lifecycle features (when compared to WorkflowApplication), it is also more lightweight and may help you scale better when you need to run many workflows simultaneously.
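The two points above (loading the definition from a file and hosting it with WorkflowInvoker) can be combined in a small helper. The following is only a sketch under stated assumptions: the class and method names (XamlWorkflowRunner, RunFromFile) are hypothetical, the one-minute timeout is an arbitrary illustration, and it uses ActivityXamlServices.Load from System.Activities.XamlIntegration, a loader intended for activity XAML, rather than the XamlServices.Load call shown in the article.

```csharp
using System;
using System.Activities;
using System.Activities.XamlIntegration;
using System.Collections.Generic;

// Hypothetical helper, not part of the article's sample project.
public static class XamlWorkflowRunner
{
    // Loads a workflow definition from a XAML file and runs it synchronously.
    public static IDictionary<string, object> RunFromFile(
        string xamlPath, IDictionary<string, object> inputs)
    {
        // Build an Activity from the XAML file on disk.
        Activity workflow = ActivityXamlServices.Load(xamlPath);

        // Invoke on the calling thread; a TimeoutException is thrown if the
        // workflow does not complete within the (assumed) one-minute limit.
        return WorkflowInvoker.Invoke(workflow, inputs, TimeSpan.FromMinutes(1));
    }
}
```

Inside the Run loop, a call such as XamlWorkflowRunner.RunFromFile(@"X:\workflows\Workflow1.xaml", inputData) would then take the place of the instantiate-and-invoke lines. The timeout is a design choice for a polling role: without one, a single stuck workflow would block every later iteration of the loop.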

Host Workflow Service in a Worker Role

When you need to host a Workflow Service in a Worker Role, there are a few more steps to take. Mainly, these exist to address the fact that Worker Role instances run behind a load balancer. At a high level, hosting a Workflow Service means creating an instance of a WorkflowServiceHost based upon an instance of an Activity or WorkflowService defined either in a separate assembly or as a XAML file. The WorkflowServiceHost instance is created and opened in the Worker Role’s OnStart Method, and closed in the OnStop Method. It is important to note that you should always create the WorkflowServiceHost instance within the OnStart Method (as opposed to within Run, as was shown for non-service Workflow hosts). This ensures that if a startup error occurs, the Worker Role instance will be restarted by Azure automatically, which also means the opening of the WorkflowServiceHost will be attempted again.

Begin by defining a class-level field to hold a reference to the WorkflowServiceHost (so that you can access the instance within both the OnStart and OnStop Methods).

    public class WorkerRole : RoleEntryPoint
    {
        System.ServiceModel.Activities.WorkflowServiceHost wfServiceHostA;
        …
    }

Next, within the OnStart Method, add code to initialize and open the WorkflowServiceHost, within a try block. For example:

    public override bool OnStart()
    {
        Trace.WriteLine("Worker Role OnStart Called.");
        //…
        try
        {
            OpenWorkflowServiceHostWithAddressFilterMode();
        }
        catch (Exception ex)
        {
            // Log and rethrow so that Azure restarts the role instance.
            Trace.TraceError(ex.Message);
            throw;
        }
        //…
        return base.OnStart();
    }

Let’s take a look at the OpenWorkflowServiceHostWithAddressFilterMode method implementation, which really does the work. Starting from the top, notice how either an Activity or a WorkflowService instance can be passed to the WorkflowServiceHost constructor; either can even be loaded from a XAMLX file on the file system. Then we acquire the internal instance endpoint and use it to define both the logical and physical address for adding an application service endpoint using a NetTcpBinding. When calling AddServiceEndpoint on a WorkflowServiceHost, you can specify either just the service name as a string or the namespace plus name as an XName (these values come from the Receive activity’s ServiceContractName property).

    private void OpenWorkflowServiceHostWithAddressFilterMode()
    {
        // Workflow service hosting with AddressFilterMode approach

        // Loading from a XAMLX on the file system
        System.ServiceModel.Activities.WorkflowService wfs =
            (System.ServiceModel.Activities.WorkflowService)System.Xaml.XamlServices.Load("WorkflowService1.xamlx");

        // As an alternative you can load from an Activity type in a referenced assembly:
        // System.Activities.Activity wfs = new WorkflowService1();

        wfServiceHostA = new System.ServiceModel.Activities.WorkflowServiceHost(wfs);

        IPEndPoint ip = RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["WorkflowServiceTcp"].IPEndpoint;

        wfServiceHostA.AddServiceEndpoint(
            System.Xml.Linq.XName.Get("IService", "http://tempuri.org/"),
            new NetTcpBinding(SecurityMode.None),
            String.Format("net.tcp://{0}/MyWfServiceA", ip));

        // You can also refer to the implemented contract without the namespace,
        // just passing the name as a string:
        // wfServiceHostA.AddServiceEndpoint("IService",
        //     new NetTcpBinding(SecurityMode.None),
        //     String.Format("net.tcp://{0}/MyWfServiceA", ip));

        wfServiceHostA.ApplyServiceMetadataBehavior(String.Format("net.tcp://{0}/MyWfServiceA/mex", ip));
        wfServiceHostA.ApplyServiceBehaviorAttribute();

        wfServiceHostA.Open();
        Trace.WriteLine("Opened wfServiceHostA");
    }

In order to make our service callable externally, we next need to add an Input Endpoint that Azure will expose at the load balancer for remote clients to use. This is done within the Worker Role configuration, on the Endpoints tab. The figure below shows how we have defined a single TCP Input Endpoint on port 5555 named WorkflowServiceTcp. It is this Input Endpoint, or IPEndpoint as it appears in code, that we use in the call to AddServiceEndpoint in the previous code snippet. At runtime, the variable ip provides the local instance physical address and port which the service must use, and to which the load balancer will forward messages. The port number assigned at runtime (e.g., 20000) is almost always different from the port you specify in the Endpoints tab (e.g., 5555), and the address (e.g., 10.26.58.148) is not the address of your application in Azure (e.g., myapp.cloudapp.net), but rather that of the particular Worker Role instance.

It is very important to know that currently, Azure Worker Roles do not support using HTTP or HTTPS endpoints (primarily due to permissions issues that only Worker Roles face when trying to open one). Therefore, when exposing your service or metadata to external clients, your only option is to use TCP.

Returning to the implementation, before opening the service we add a few behaviors. The key concept to understand is that any workflow service hosted by an Azure Worker Role will run behind a load balancer, and this affects how requests must be addressed. This results in two challenges which the code above solves:

- How to properly expose service metadata and produce metadata which includes the load balancer’s address (and not the internal address of the service hosted within a Worker Role instance).

- How to configure the service to accept messages it receives from the load balancer, that are addressed to the load balancer.

To reduce repetitive work, we defined a helper class that contains extension methods for ApplyServiceBehaviorAttribute and ApplyServiceMetadataBehavior that apply the appropriate configuration to the WorkflowServiceHost and alleviate the aforementioned challenges.

    // Defines extension methods for ServiceHostBase (usable by ServiceHost & WorkflowServiceHost)
    public static class ServiceHostingHelper
    {
        public static void ApplyServiceBehaviorAttribute(this ServiceHostBase host)
        {
            ServiceBehaviorAttribute sba = host.Description.Behaviors.Find<ServiceBehaviorAttribute>();
            if (sba == null)
            {
                // For WorkflowServices, this behavior is not added by default
                // (unlike for traditional WCF services).
                host.Description.Behaviors.Add(
                    new ServiceBehaviorAttribute() { AddressFilterMode = AddressFilterMode.Any });
                Trace.WriteLine("Added address filter mode ANY.");
            }
            else
            {
                sba.AddressFilterMode = System.ServiceModel.AddressFilterMode.Any;
                Trace.WriteLine("Configured address filter mode to ANY.");
            }
        }

        public static void ApplyServiceMetadataBehavior(this ServiceHostBase host, string metadataUri)
        {
            // Must add this to expose metadata externally
            UseRequestHeadersForMetadataAddressBehavior addressBehaviorFix =
                new UseRequestHeadersForMetadataAddressBehavior();
            host.Description.Behaviors.Add(addressBehaviorFix);
            Trace.WriteLine("Added Address Behavior Fix");

            // Add TCP metadata endpoint. NOTE, as for application endpoints,
            // HTTP endpoints are not supported in Worker Roles.
            ServiceMetadataBehavior smb = host.Description.Behaviors.Find<ServiceMetadataBehavior>();
            if (smb == null)
            {
                smb = new ServiceMetadataBehavior();
                host.Description.Behaviors.Add(smb);
                Trace.WriteLine("Added ServiceMetaDataBehavior.");
            }

            host.AddServiceEndpoint(
                ServiceMetadataBehavior.MexContractName,
                MetadataExchangeBindings.CreateMexTcpBinding(),
                metadataUri);
        }
    }

Looking at how we enable service metadata in the ApplyServiceMetadataBehavior method, notice there are three key steps. First, we add the UseRequestHeadersForMetadataAddressBehavior. Without this behavior, you could only get metadata by communicating directly with the Worker Role instance, which is not possible for external clients (they must always communicate through the load balancer). Moreover, the WSDL returned in the metadata request would include the internal address of the service, which is not helpful to external clients either. By adding this behavior, the WSDL includes the address of the load balancer. Next, we add the ServiceMetadataBehavior and then add a service endpoint at which the metadata can be requested. Observe that when we call ApplyServiceMetadataBehavior, we specify a URI which is the service’s internal address with mex appended. The load balancer will now correctly route metadata requests to this metadata endpoint.

The rationale behind the ApplyServiceBehaviorAttribute method is similar to that of ApplyServiceMetadataBehavior. When we add a service endpoint by specifying only the address parameter (as we did above), the logical and physical address of the service are configured to be the same. This causes a problem when operating behind a load balancer: messages coming from external clients via the load balancer are addressed to the logical address of the load balancer, and when the instance receives such a message it rejects it with an AddressFilter mismatch error. This happens because the address in the message does not match the logical address at which the endpoint was configured. With traditional code-based WCF services, we could resolve this simply by decorating the service implementation class with [ServiceBehavior(AddressFilterMode=AddressFilterMode.Any)], which allows the incoming message to have any address and port. This is not possible with Workflow Services (as there is no code to decorate with an attribute), hence we have to add it in the hosting code.

If allowing any incoming address concerns you, an alternative to using AddressFilterMode is simply to specify the logical address that is to be allowed. Instead of adding the ServiceBehaviorAttribute, you open the service endpoint specifying both the logical address (namely, with the port on which the load balancer receives messages) and the physical address (at which your service listens). The only complication is that your Worker Role instance does not know which port the load balancer is listening on, so you need to add this value to configuration and read it from there before adding the service endpoint. To add this to configuration, return to the Worker Role’s properties, Settings tab. Add a string setting with the value of the port you specified on the Endpoints tab, as we show here for the WorkflowServiceEndpointListenerPort.

With that setting in place, the rest of the implementation is fairly straightforward:

    private void OpenWorkflowServiceHostWithoutAddressFilterMode()
    {
        // Workflow service hosting without AddressFilterMode
        // (wfServiceHostB is assumed to be a second WorkflowServiceHost field,
        // declared alongside wfServiceHostA)

        // Loading from a XAMLX on the file system
        System.ServiceModel.Activities.WorkflowService wfs =
            (System.ServiceModel.Activities.WorkflowService)System.Xaml.XamlServices.Load("WorkflowService1.xamlx");

        wfServiceHostB = new System.ServiceModel.Activities.WorkflowServiceHost(wfs);

        // Pull the expected load balancer port from configuration...
        int externalPort = int.Parse(
            RoleEnvironment.GetConfigurationSettingValue("WorkflowServiceEndpointListenerPort"));

        IPEndPoint ip = RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["WorkflowServiceTcp"].IPEndpoint;

        // Use the external load balancer port in the logical address...
        wfServiceHostB.AddServiceEndpoint(
            System.Xml.Linq.XName.Get("IService", "http://tempuri.org/"),
            new NetTcpBinding(SecurityMode.None),
            String.Format("net.tcp://{0}:{1}/MyWfServiceB", ip.Address, externalPort),
            new Uri(String.Format("net.tcp://{0}/MyWfServiceB", ip)));

        wfServiceHostB.ApplyServiceMetadataBehavior(String.Format("net.tcp://{0}/MyWfServiceB/mex", ip));

        wfServiceHostB.Open();
        Trace.WriteLine("Opened wfServiceHostB");
    }

With that, we can return to the RoleEntryPoint definition of our Worker Role and override the Run and OnStop Methods. For Run, because the WorkflowServiceHost takes care of all the processing, we just need to have a loop that keeps Run from exiting.

    public override void Run()
    {
        Trace.WriteLine("Run - WFWorker entry point called", "Information");

        while (true)
        {
            Thread.Sleep(30000);
        }
    }

For OnStop we simply close the WorkflowServiceHost.

    public override void OnStop()
    {
        Trace.WriteLine("OnStop - Called");

        if (wfServiceHostA != null)
            wfServiceHostA.Close();

        base.OnStop();
    }

With the OnStart, Run and OnStop Methods defined, our Worker Role is fully capable of hosting a Workflow Service.
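The OnStop override above closes only wfServiceHostA and calls Close without a timeout. If the second host from the earlier snippet (wfServiceHostB) is also open, or if a host has faulted, a small helper can make shutdown more predictable. This is a minimal sketch using the common WCF close-or-abort pattern; the class name HostShutdownHelper, the method name CloseOrAbort, and the 20-second timeout mentioned afterwards are assumptions for illustration and are not part of the article's sample.

```csharp
using System;
using System.Diagnostics;
using System.ServiceModel;

// Hypothetical helper, not part of the article's sample project.
public static class HostShutdownHelper
{
    // Closes a host gracefully within the timeout; aborts it if it is
    // faulted or if the graceful close fails.
    public static void CloseOrAbort(this ICommunicationObject host, TimeSpan timeout)
    {
        if (host == null)
        {
            return; // Host was never created (e.g., OnStart failed early).
        }

        try
        {
            if (host.State == CommunicationState.Faulted)
            {
                host.Abort(); // Close would throw on a faulted object.
            }
            else
            {
                host.Close(timeout);
            }
        }
        catch (CommunicationException ex)
        {
            Trace.TraceWarning("Close failed, aborting host: " + ex.Message);
            host.Abort();
        }
        catch (TimeoutException ex)
        {
            Trace.TraceWarning("Close timed out, aborting host: " + ex.Message);
            host.Abort();
        }
    }
}
```

With this in place, OnStop could call wfServiceHostA.CloseOrAbort(TimeSpan.FromSeconds(20)) and the same for wfServiceHostB before base.OnStop(). Because CloseOrAbort is an extension method, it is safe to call on a field that is still null.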

Hybrid Approach – Host Workflow On-Premise and Reach From the Cloud

Unlike ‘pure’ cloud solutions, hybrid solutions have a set of “on-premises” components: business processes, data stores, and services. These must be on-premises, possibly due to compliance or deployment restrictions. A hybrid solution is one which has parts of the solution deployed in the cloud while some applications remain deployed on-premises.

This is a great interim approach: it connects on-premises Workflows hosted within on-premises Windows Server AppFabric (as illustrated in the diagram below) to the various components and applications that are hosted in Azure. This approach may also be applied if stateful/durable Workflows are required to satisfy your scenarios. You can build a hybrid solution that runs the Workflows on premises and uses either the AppFabric Service Bus or Windows Azure Connect to reach into your on-premises Windows Server AppFabric instance.

Source: MSDN Blog Hybrid Cloud Solutions with Windows Azure AppFabric Middleware

Conclusion

How do you choose which approach to take? The decision ultimately boils down to your specific requirements, but here are some pointers that can help.

Hosting Workflow Services in a Web Role is very easy and robust. If your Workflow uses Receive Activities as part of its definition, you should host it in a Web Role. While you can build and host a Workflow Service within a Worker Role, you take on the responsibility of rebuilding the entire hosting infrastructure provided by IIS in the Web Role, which is a fair amount of non-value-added work. That said, you will have to host in a Worker Role when you want to use a TCP endpoint, and in a Web Role when you want to use an HTTP or HTTPS endpoint.

Hosting non-service Workflows that poll for their tasks is most easily accomplished within a Worker Role. While you can build another mechanism to poll and then call Workflow Services hosted in a Web Role, the Worker Role is designed to support and keep a polling application alive. Moreover, if your Workflow design does not already define a Service, then you should host it in a Worker Role, as Web Role hosting would require you to modify the Workflow definition to add the appropriate Receive Activities.

Finally, if you have existing investments in Windows Server AppFabric hosted Services that need to be called from Azure hosted applications, then taking a hybrid approach is a very viable option. One clear benefit is that you retain the ability to monitor your system’s status through the IIS Dashboard. Of course, this approach has to be weighed against the obvious trade-offs of added complexity and bandwidth costs.

The upcoming release of Azure AppFabric Composite Applications will enable hosting Workflow Services directly in Azure while providing feature parity to Windows Server AppFabric. Stay tuned for the exciting news and updates on this front.

Sample

The sample project attached provides a solution that shows how to host non-durable Workflows, in both Service and non-Service forms. For non-Service Workflows, it shows how to host using a WorkflowInvoker or WorkflowApplication within a Worker Role. For Services, it shows how to host traditional WCF services alongside Workflow services, in both Web and Worker Roles.

• When I posted this article, there was no link to the sample project. I left a comment to that effect and will update this post when a link is provided.

Suren informed me on 8/22/2011 that the sample code can be downloaded here.

Rick Garibay (@rickggaribay) described a String Comparison Gotcha for AppFabric Queues in an 8/10/2011 post:

I was burning the midnight oil last night writing some samples for a project I’m working on with Azure AppFabric Service Bus Queues and Topics. In short, I was modifying some existing code I had written to defensively check for the existence of a queue so that I could avoid entity conflicts when attempting to create the same queue name twice.

Given a queueName of “Orders”, the first thing I did was to check to see if it exists by using the Client API along with a very simple LINQ query:

    1: var qs = namespaceClient.GetQueues();
    2:
    3: var result = from q in qs
    4:
    5:              where q.Path == queueName
    6:
    7:              select q;

If the result came back as empty, I’d go ahead and create the queue:

    1: if (result.Count() == 0)
    2: {
    3:
    4:     Console.WriteLine("Queue does not exist");
    5:
    6:     // Create Queue
    7:
    8:     Console.WriteLine("Creating Queue...");
    9:
    10:     Queue queue = namespaceClient.CreateQueue(queueName);
    11:
    12: }

This code worked fine the first time, since the queue did not exist, but the next time I ran the code, and every time after that, Line 10 above would execute because result.Count() was always zero.

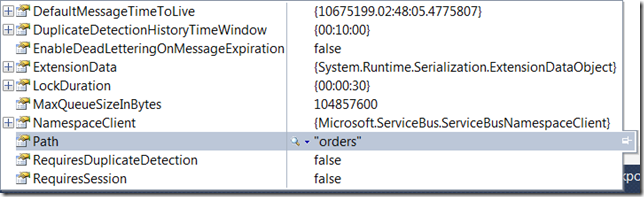

Looking at the queue properties, it was clear that the queue name was stored in lower case, regardless of the case used in my queueName variable:

On the surface, this created an interesting dilemma: even though case didn’t matter when creating the queue, when I was searching for the queue name in Line 5 of my LINQ query, the comparison never matched. Could this be a bug?

My first thought was to go back and make queueName lowercase, changing to “orders”. This fixed the problem on the surface, but it felt dirty and fragile.

After reviewing the code with some colleagues (thanks @clemensv and @briannoyes) it turns out that I had made an esoteric, but key error when checking for equality of the queue path:

    where q.Path == queueName

The full explanation is available in the C# Programming Guide on MSDN, but in a nutshell, I had made the error of using the “==” operator to check for equality. From MSDN: “Use basic ordinal comparisons when you have to compare or sort the values of two strings without regard to linguistic conventions. A basic ordinal comparison (System.StringComparison.Ordinal) is case-sensitive, which means that the two strings must match character for character: "and" does not equal "And" or "AND".”

The solution was to modify the where clause to use the Equals method which takes the object you are comparing to along with a StringComparison rule of StringComparison.OrdinalIgnoreCase:

    var result = from q in qs
                 where q.Path.Equals(queueName, StringComparison.OrdinalIgnoreCase)
                 select q;

Explanation from MSDN: “A frequently-used variation is System.StringComparison.OrdinalIgnoreCase, which will match "and", "And", and "AND". StringComparison.OrdinalIgnoreCase is often used to compare file names, path names, network paths, and any other string whose value does not change based on the locale of the user's computer.”

The AppFabric team is doing exactly the right thing since the queue path corresponds to a network path.
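The difference between the two comparisons can be seen without a Service Bus namespace. The following is a minimal, self-contained sketch: the list existingPaths is a stand-in (an assumption) for the lower-cased paths returned by GetQueues, and the class name QueuePathComparisonDemo is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class QueuePathComparisonDemo
{
    public static void Main()
    {
        // Stand-in for the queue paths reported by the service (stored in lower case).
        var existingPaths = new List<string> { "orders", "invoices" };
        string queueName = "Orders";

        // The == operator on strings is an ordinal, case-sensitive comparison.
        bool foundWithOperator = existingPaths.Any(p => p == queueName);

        // Equals with OrdinalIgnoreCase ignores the difference in casing.
        bool foundIgnoringCase = existingPaths.Any(
            p => p.Equals(queueName, StringComparison.OrdinalIgnoreCase));

        Console.WriteLine(foundWithOperator); // prints "False"
        Console.WriteLine(foundIgnoringCase); // prints "True"
    }
}
```

Using Any rather than Count() == 0 is a small design choice: it stops at the first match instead of enumerating every queue.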

Here is the full code, which allows me to check for the existence of the queue path while ignoring case; the rest is as it was:

    var qs = namespaceClient.GetQueues();

    var result = from q in qs
                 where q.Path.Equals(queueName, StringComparison.OrdinalIgnoreCase)
                 select q;

    if (result.Count() == 0)
    {
        Console.WriteLine("Queue does not exist");

        // Create Queue
        Console.WriteLine("Creating Queue...");
        Queue queue = namespaceClient.CreateQueue(queueName);
    }

When you’ve been programming for a while, it’s sometimes easy to forget the little things, and it is often the least obvious things that create the nastiest bugs.

Hope this saves someone else from having a Doh! moment like I did.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

No significant articles today.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The Visual Studio LightSwitch Team (@VSLightSwitch) published descriptions of a new Visual Studio LightSwitch Technical White Paper Series on 8/22/2011:

Andrew Brust (@andrewbrust) from Blue Badge Insights has just published a great series of whitepapers on Visual Studio LightSwitch aimed at educating you on what LightSwitch is and what it can do for you. Check out the PDFs below.

What is LightSwitch?

This is the first in a series of white papers about Microsoft® Visual Studio® LightSwitch™ 2011, Microsoft’s new streamlined development environment for designing data-centric business applications. We’ll provide an overview of the product that includes analysis of the market need it meets, examination of the way it meets that need relative to comparable products in the software industry, concrete examples of how it works, and discussion of why it’s so important.

Quickly Build Business Apps

In this paper we’ll walk through the development of a sample LightSwitch application and experience a wide range of LightSwitch techniques and features as we present configuration examples, illustrating screenshots, and code listings.

Get More from Your Data

In this paper we’ll explore how to access external data sources, LightSwitch’s data capabilities, and advanced data access and querying techniques.

Wow Your End Users

In this paper we’ll explore the finer points of LightSwitch user interface design, including menus and navigation, screen templates, search, export, and application deployment.

Make Your Apps Do More with Less Work

In this paper, we discuss using LightSwitch extensions to bring extra features, connectivity, and UI sophistication to your applications.

More Visual Studio LightSwitch resources:

- Download the Trial

- Learn how to build LightSwitch Applications

- Watch the instructional LightSwitch "How Do I?" videos

- Download Starter Kits

- Download Code Samples

- Build LightSwitch Extensions

- Ask Questions in the Forums

- Become a LightSwitch Fan on Facebook

- Follow us on Twitter

Enjoy,

-Beth Massi, Visual Studio Community

Erik Ejlskov Jensen (@ErikEJ) described Viewing SQL statements created by Entity Framework with SQL Server Compact in an 8/11/2011 post:

Sometimes it can be useful to be able to inspect the SQL statements generated by Entity Framework against your SQL Server Compact database. This can easily be done for SELECT statements as noted here. But for INSERT/UPDATE/DELETE this method will not work. This is usually not a problem for SQL Server based applications, as you can use SQL Server Profiler to log all SQL statements executed by an application, but this is not possible with SQL Server Compact.

This forum thread contains an extension method that allows you to log INSERT/UPDATE/DELETE statements before SaveChanges is called on the ObjectContext. I have updated and fixed the code to work with SQL Server Compact 4.0, and it is available in the updated Chinook sample available below in the ObjectQueryExtensions class in the Chinook.Data project.

You can now use code like the following to inspect an INSERT statement:

using (var context = new Chinook.Model.ChinookEntities())
{
    context.Artists.AddObject(new Chinook.Model.Artist { ArtistId = Int32.MaxValue, Name = "ErikEJ" });
    string sql = context.ToTraceString();
}

The “sql” string variable now contains the following text:

--=============== BEGIN COMMAND ===============
declare @0 NVarChar set @0 = 'ErikEJ'
insert [Artist]([Name])
values (@0)
; select [ArtistId]
from [Artist]
where [ArtistId] = @@IDENTITY
go
--=============== END COMMAND ===============

This statement reveals some of the magic behind the new support for “server generated” keys with SQL Server Compact 4.0 when used with Entity Framework 4.0. SQL Server Compact is “tricked” into executing multiple statements in a single call.
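The insert-then-select pattern in the trace above can be tried outside Entity Framework. What follows is only a minimal sketch in Python, with SQLite (through the standard sqlite3 module) standing in for SQL Server Compact. The Artist table definition and the insert_artist helper are assumptions made for illustration, and SQLite's last_insert_rowid() plays the role of @@IDENTITY. Unlike the single batch shown in the trace, the sketch issues the two statements one after the other.

```python
import sqlite3


def insert_artist(conn, name):
    """Insert a row, then read back the key the database generated for it.

    Hypothetical helper for illustration; not part of the Chinook sample.
    """
    cur = conn.cursor()
    # Only the name is supplied; the database assigns ArtistId.
    cur.execute("INSERT INTO Artist (Name) VALUES (?)", (name,))
    # Counterpart of "where [ArtistId] = @@IDENTITY" in the trace:
    # fetch the key of the row this connection just inserted.
    cur.execute("SELECT ArtistId FROM Artist WHERE ArtistId = last_insert_rowid()")
    (artist_id,) = cur.fetchone()
    conn.commit()
    return artist_id


# In-memory database with an assumed, simplified Artist table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Artist ("
    "ArtistId INTEGER PRIMARY KEY AUTOINCREMENT, "
    "Name TEXT NOT NULL)"
)

first_id = insert_artist(conn, "ErikEJ")
second_id = insert_artist(conn, "Another Artist")
print(first_id, second_id)
```

As in the trace, the ArtistId supplied by the caller plays no part in the INSERT; the value comes back from the database after the row has been written.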

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

The Windows Azure Team (@WindowsAzure) reported that it’s Looking for Cloud Evangelists to Join the Windows Azure ISV Team in an 8/11/2011 post:

Do you want to be on the leading edge of innovation and get in on the ground floor of helping software vendors accelerate and revolutionize their business with cloud computing?

If you do, this is your lucky day! We’re looking for individuals around the world to support Cloud Service Vendors in developing commercially available applications on the Windows Azure Platform. The Developer and Platform Evangelism (DPE) organization is looking for architect evangelists, developer evangelists, and platform strategy advisors to join the team and help accelerate our partners’ success. Click here for more information about the positions we’re currently looking to fill.

The MSDN blogs were down for maintenance when I posted this (from the Atom feed).

Damon Edwards (@damonedwards) posted a 00:32:01 Full video of Israel Gat interview (Agile in enterprise, DevOps, Technical Debt) to his dev2ops blog on 8/11/2011:

After posting the excerpt on Technical Debt, I've gotten a number of requests to post the full video of my beers-in-the-backyard discussion with Israel Gat (Director of Cutter Consortium's Agile Product & Project Management practice).

We covered a number of interesting topics, including bringing Agile to enterprises, how Israel found himself part of the DevOps movement, and the measurement of Technical Debt.

James Downey (@james_downey, pictured below) commented on the University of Washington’s new Cloud Computing Certificate program in his A Cloud Curriculum post of 8/11/2011:

Erik Bansleben, Program Development Director at the University of Washington, invited me and several other bloggers to participate in a discussion last Friday on UW’s new certificate program in cloud computing. Aimed at students with programming experience and a basic knowledge of networking, the program covers a range of topics from the economics of cloud computing to big data in the cloud.

I had a mixed impression of the curriculum. It looked a bit heavy on big data, an important cloud use case that would perhaps be better suited to a certificate program of its own. And the curriculum looked a bit light on platform-as-a-service and open source platforms for managing clouds such as OpenStack. Since the program is aimed at programmers, perhaps I’d organize it around the challenges of architecting applications to benefit from cloud computing.

But that is nitpicking. It is certainly important for universities to update course offerings based on current trends. And UW has put together an impressive panel of industry experts to guide the program.

Clearly, it’s difficult to put together a program around cloud computing, since there is so much debate around what constitutes cloud computing. And given that just about every tech firm out there has repositioned its products as cloud offerings, it would seem very difficult to achieve sufficient breadth without giving up the opportunity for rigorous depth.

But any interesting new direction in the tech industry would pose such challenges. Indeed, perhaps it is these difficulties that make it worth tackling the topic.

For more information, see the UW website at http://www.pce.uw.edu/certificates/cloud-computing.html.

James is a Solution Architect for Dell Services.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Chris Henley posted Windows Server 2008 R2 SP1 :The Operating System of the Private Cloud. Time to Install Your Trial Edition for Free. (Head in the Clouds Episode 1) to the TechNet blogs on 8/11/2011:

Everyone is talking about Cloud. There are all kinds of claims, but no one is showing you how to actually build it. I want to show you how to implement some of the cloud solutions you have undoubtedly heard so much about. It seems logical to me to begin with your own network, because it is very likely that some of the key components of what we would define as a “Private Cloud” are already built in to your network.

I guess a definition is in order as we get under way.

- Cloud Computing - is the on-demand delivery of standardized IT services on shared resources, enabling IT to be more reliable through greater elasticity and scale, end-users to accelerate access to their IT needs through self-service, and the business to be more efficient through usage-based and SLA-driven services.

- Private Cloud - provides many of the benefits of Cloud Computing plus unique security, control and customization on IT resources dedicated to a single organization.

I wonder if that was written by the marketing department? OK!

So let’s get started building a Private Cloud. Perhaps as we build it we will see its true potential value.

The first piece of any cloud environment (Public or Private) is a platform. Some things just don’t change. You need a platform on which to run all of your stuff. Fear not: the platform is the one you are already used to using. The public and private cloud offerings from Microsoft rely on Windows Server 2008 R2 as the platform. Some of you are scratching your heads thinking, “But isn’t the public cloud platform called Windows Azure?”

Yes, you would be correct! However, it would behoove you to realize that Windows Azure is actually running a series of virtual machine instances on your behalf. Guess which platform those virtual machine instances are running? You guessed it: Windows Server 2008 R2! So whether you choose public or private cloud, you will be working with your favorite platform and mine, Windows Server 2008 R2.

Since we are building a private cloud, we will be using Hyper-V to host virtual machines inside it, so we will want a version of Windows Server 2008 R2 that includes Hyper-V. I would recommend Windows Server 2008 R2 Enterprise Edition with Hyper-V. My reasoning is twofold.

1. Windows Server 2008 R2 Enterprise Edition has better capacity capabilities than the standard edition.

2. From a purely stingy licensing perspective, if you run Windows Server 2008 R2 Enterprise Edition you get 4 free licenses to run Windows Server as virtual machines. That means I can run 1 Hyper-V server and 4 additional servers for no additional licensing costs. Wahoo! When was the last time you went wahoo?

I know someone is wondering about running Datacenter edition. So here’s the deal. If you choose to run Datacenter edition you do get to run as many free virtual machines as you want; however, we license that edition of server on a per-processor basis, so it can get pricey fast. For our purposes we are going to stick with Windows Server 2008 R2 Enterprise Edition SP1.

We are going to be building a private cloud for testing purposes, so we are not going to pay one thin dime for the opportunity to try this stuff out.

Where, pray tell, will I get this free stuff from?

Ask and ye shall receive.

http://technet.microsoft.com/evalcenter/dd459137.aspx?ocid=otc-n-us-jtc-DPR-EVAL_WS2008R2SP1_ChrisH

I have my own personally recognized trial software link. Click the link above and download the trial version of the software. In this case, trial version simply means that in 180 days (by which time we will be long finished with this discussion) the software will simply stop working. Timebomb! Kapow! Boom!

You get the picture!

Once you have signed up for the software, download a copy for yourself and burn it to DVD.

At this point there is a question to be asked and seriously considered. “Should we install the full version, or the Server Core version?”

That is an excellent question and the answer is “It depends!”

The Private Cloud deployment guide states without reservation that you should use the Server Core edition. I really think it depends on your knowledge. If you are not already [familiar] with Server Core installations, this is going to add an extra layer of complexity that you frankly don’t need to deal with right now. I totally understand that the full install uses some local resources, specifically RAM, that we could conserve by using the Server Core installation. Keep that knowledge in the back of your mind for when you’re building out your production systems; maybe you will choose to go Server Core at that point. For now I want you to do the full install.

I realize that this is really just a next, next, next wait 45 minutes [to] finish [the] operation. Go for it!

When you have your operating system installed, kick back, relax, and play some Xbox 360.

Next time we will talk about Hyper-V and the role that it plays in Private Cloud operations.

David Strom reported Altaro Software Brings Hyper-V Backup to SMBs in an 8/11/2011 post to the ReadWriteCloud blog:

Altaro Software announced this week its Hyper-V Backup for SMBs. It comes with a free version that can back up two VMs. While there are numerous backup products that support Microsoft's Hyper-V, this one is geared for smaller enterprises and aims at simplicity. It takes advantage of Microsoft's Volume Shadow Copy technology, so you can perform hot backups, meaning that the VMs can keep running smoothly even during the actual backup process.

In addition to the free version, there is a five-VM license for $345 and an unlimited-VM license for $445, which is significantly less than many of its competitors such as Symantec Backup Exec. Pricing is based on each Hyper-V host. Altaro is based in Malta and sells a variety of other backup software products.
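The pricing described above reduces to a small lookup per Hyper-V host. The sketch below, in Python, is an illustration only: it assumes the tiers apply to the number of VMs backed up on each host and that a host with more than five VMs needs the unlimited license. The function names are hypothetical, and the prices are the ones quoted in the post.

```python
# Tier limits and prices as quoted in the post (US dollars, per Hyper-V host).
FREE_VM_LIMIT = 2
FIVE_VM_LIMIT = 5
FIVE_VM_PRICE = 345
UNLIMITED_PRICE = 445


def license_cost_for_host(vm_count):
    """Return the assumed license cost for one host backing up vm_count VMs."""
    if vm_count < 0:
        raise ValueError("vm_count must not be negative")
    if vm_count <= FREE_VM_LIMIT:
        return 0  # covered by the free version
    if vm_count <= FIVE_VM_LIMIT:
        return FIVE_VM_PRICE
    return UNLIMITED_PRICE


def total_license_cost(vms_per_host):
    """Sum the per-host cost, since pricing is based on each Hyper-V host."""
    return sum(license_cost_for_host(count) for count in vms_per_host)


# Three hosts running 2, 4, and 12 VMs respectively.
print(total_license_cost([2, 4, 12]))
```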

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

The Unbreakable Cloud blog reported Real Time Data Analytics on the cloud using HStreaming Cloud Beta in an 8/11/2011 post:

Hadoop is becoming the new de facto engine for processing large amounts of data, whether the data comes from social web sites, news, log files, or anything else. So far, though, Hadoop has been used mainly for large batch jobs. If you have large volumes of data, even petabytes, you can schedule it to be mined with Hadoop batch processing, but that still takes time because Hadoop was tuned for batch work. Recently, many vendors, especially of business intelligence and analytics engines (Netezza, Greenplum, Teradata, etc.), either already have options for getting there or are working on them.

Since then, various optimizations have improved the speed of Hadoop batch processing. Running a massive data processing engine on commodity servers with local storage is becoming a reality. In other words, Hadoop has been optimized enough to run on big cloud clusters, and on top of that it can now be used for real-time analytics in a big way.

Yahoo is a big user of Hadoop and a big contributor to optimizing it. The Yahoo Hadoop spin-off HStreaming offers HStreaming Cloud Beta, which can do real-time analytics on your data. As the company's name, HStreaming (Hadoop Streaming?), suggests, it takes in real-time streaming data for analysis. Any real-time analytics tool needs some kind of machine learning algorithms and predictive analysis to do its job well.

Predictive and real-time analytics are going to be the future of computing. Whoever gets real-time data, does real-time analytics, and makes real-time connections with users will be the winner. HStreaming seems to be doing exactly that by analyzing real-time streaming data. If the system can find out, in real time, which product is selling like hotcakes and what percentage of that product's online sales goes to a specific company, the business has a wealth of information with which to confront its competitors. This example from SeeVolution shows that type of information arriving in real time.

To do real-time analytics, you have to mine through lots of real-time data, and it has to be mined and analyzed quickly. If the analysis is delayed, the user will already be on some other site, so speed is another important factor.

Real-time analytics is an evolving field with many models and many areas of use. It has many potential applications and the potential to transform the way we do business today. To learn more about HStreaming or explore it with a trial run, please visit HStreaming.