Windows Azure and Cloud Computing Posts for 11/30/2009+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

• Update 12/1/2009 (originally misdated 11/31/2009): Jim O’Neill: Discovering “Dallas”; Panagiotis Kefalidis: Upgrading your table storage schema without disrupting your service; Eric Lai: Microsoft adds app, data marketplace to Windows Azure; James Urquhart: Practice overtaking theory in cloud computing; David Linthicum: Could Ubuntu get enterprises to finally embrace the cloud?; Trevor Clarke: 2009: The year the cloud was seeded; Windows Azure Team: Introducing Windows Azure Diagnostics; Jouni Heikniemi: Get your Azure ideas out (and vote for Mini-Azure); and more. (James Urquhart reminded me that November doesn’t have 31 days.)

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts, Databases, and DataHubs*”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page.

* Content for managing DataHubs will be added when Microsoft releases a CTP of the technology

Off-Topic: OakLeaf Blog Joins Technorati’s “Top 100 InfoTech” List on 10/24/2009, SharePoint Nightmare: Installing the SPS 2010 Public Beta on Windows 7 of 11/27/2009, and Unable to Activate Office 2010 Beta or Windows 7 Guest OS, Run Windows Update or Join a Domain from Hyper-V VM (with fix) of 11/29/2009.

Azure Blob, Table and Queue Services

• Panagiotis Kefalidis explains Patterns: Windows Azure - Upgrading your table storage schema without disrupting your service in this 11/30/2009 post:

In general, there are two kinds of updates you’ll mainly perform on Windows Azure. One of them is changing your application’s logic (the so-called business logic), e.g., the way you handle/read queues, how you process data, or even protocol updates, and the other is schema updates/changes. I’m not referring to SQL Azure schema changes, which is a different scenario and approach, but to Table storage schema changes, and to be more precise, only to specific entity types because, as you already know, Table storage is schema-less.

As with in-place upgrades, the same logic applies here too. Introduce a hybrid version, which handles both the new and the old version of your entity (newly introduced properties), and then proceed to your “final” version, which handles only the new version of your entities (and properties). It’s a very easy technique, and I explain how to add new properties and, of course, how to remove them, although that’s a less likely scenario. …
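Kefalidis’s post has the details. As a rough illustration of the hybrid step only, here is a minimal Python sketch (not his code) that models entities as plain dictionaries, since Table storage imposes no schema. The `Customer` type and the added `Email` property are hypothetical. The hybrid reader accepts entities written by either version and ignores properties it no longer uses, which also covers the removal case.

```python
# Hybrid-version sketch for a Table storage schema change. Entities are plain
# dicts (Table storage is schema-less); Customer and Email are hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    partition_key: str
    row_key: str
    name: str
    email: Optional[str] = None  # property added by the new schema version


def read_customer(entity: dict) -> Customer:
    # Accept old entities (no "Email") and new ones; properties this version
    # no longer uses (e.g. a removed "Fax") are simply ignored.
    return Customer(
        partition_key=entity["PartitionKey"],
        row_key=entity["RowKey"],
        name=entity["Name"],
        email=entity.get("Email"),  # None for entities written by the old version
    )


def write_customer(customer: Customer) -> dict:
    # Emit the new property only when it has a value.
    entity = {
        "PartitionKey": customer.partition_key,
        "RowKey": customer.row_key,
        "Name": customer.name,
    }
    if customer.email is not None:
        entity["Email"] = customer.email
    return entity


old_entity = {"PartitionKey": "p1", "RowKey": "1", "Name": "Ann", "Fax": "555-0100"}
print(read_customer(old_entity))
# Customer(partition_key='p1', row_key='1', name='Ann', email=None)
```

Once every stored entity has been rewritten by the hybrid version, the “final” version can drop the `.get()` fallback and treat `Email` as always present.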

Stephen Forte’s The Open Data Protocol (OData) post of 11/30/2009 describes the renaming of ADO.NET Data Services (a.k.a. Project Astoria) to WCF Data Services:

[At] PDC, Microsoft unveiled the new name for ADO.NET Data Services: WCF Data Services. That makes sense since Astoria was built on top of WCF, not ADO.NET. I thought that Astoria was a cool code name and that ADO.NET Data Services sucked as a product name. At least WCF Data Services sucks less, since it is more accurate and reflects the alignment of WCF, the REST capabilities of WCF, and RIA Services (now WCF RIA Services).

Astoria, I mean WCF Data Services :), is a way to build RESTful services very easily on the Microsoft platform. Astoria implements extensions to AtomPub and uses either Atom or JSON to publish its data. The protocol that Astoria used did not have a name. Since other technology was using this Astoria protocol inside (and even outside of) Microsoft, Microsoft decided to give it a name and place the specification under the Open Specification Promise. Astoria’s protocol is now called the Open Data Protocol, or OData.

If you have been using Astoria and liked it, not much is different. What is cool is that other technology is now adopting OData, including SharePoint 2010, SQL Server 2008 R2, PowerPivot, Windows Azure Table Storage, and third-party products such as IBM’s WebSphere eXtreme Scale project.

With all of this technology supporting OData, Microsoft created the Open Data Protocol Data Visualizer for Visual Studio 2010 Beta 2. The tool gives you the ability to connect to a service and graphically browse the shape of the data. You can download it from here or search the extensions gallery for Open Data. Once you have it installed you can view OData data very easily. Here is how. …
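To make the protocol concrete: an OData read is an HTTP GET against an entity-set URL whose query string carries system query options such as `$filter`, `$orderby` and `$top`. The Python sketch below only composes such a URL; the service root `http://example.com/Northwind.svc` and the `Customers` entity set are placeholders, not a live endpoint, and `odata_query_url` is a hypothetical helper.

```python
# Sketch: composing an OData query URL with system query options.
# The service root and entity set are hypothetical placeholders.
from urllib.parse import quote, urlencode


def odata_query_url(service_root: str, entity_set: str, **options) -> str:
    """Build <root>/<EntitySet>?$option=value&... from keyword options.

    Keyword names omit the leading '$' (filter -> $filter).
    """
    params = {"$" + name: value for name, value in options.items()}
    # Keep '$' and single quotes readable; spaces are encoded as %20.
    query = urlencode(params, quote_via=quote, safe="$'")
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"


url = odata_query_url(
    "http://example.com/Northwind.svc",
    "Customers",
    filter="Country eq 'Germany'",
    orderby="CompanyName",
    top=5,
)
print(url)
# http://example.com/Northwind.svc/Customers?$filter=Country%20eq%20'Germany'&$orderby=CompanyName&$top=5
```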

I like the name Astoria better than WCF Data Services.

Stephen is Chief Strategy Officer at Telerik and a Microsoft regional director.

Rob Gillen published the slides from his Large-Scale Scientific Data Notes from the Field for Climate Analysis presentation on 11/30/2009. His project uses Azure tables, blobs, queues, Web roles and Worker roles. His current data load consists of more than 1.1 billion table entries (lat/lon/value) and more than 250,000 blobs (75 GB of blobs).

T. S. Jensen describes a problem deploying Windows Azure 1.0 CloudTableClient Minimal Configuration in this 11/29/2009 post:

It turns out that using table storage in Windows Azure 1.0 is quite easily done with a minimal amount of configuration. In the new Azure cloud service Visual Studio project template, configuration is significantly simpler than what I ended up with in a previous blog post where I was able to get the new WCF RIA Services working with the new Windows Azure 1.0 November 2009 release and the AspProvider code from the updated demo.

And while the project runs as expected on my own machine, I can’t seem to get it to run in the cloud for some reason. I have not solved that problem. Since I can’t seem to get much of a real reason for the failure via the Azure control panel, I’ve decided to start at the beginning, taking each step to the cloud rather than building entirely on the local simulation environment before taking it to the cloud for test.

His earlier Azure-related posts include Silverlight WCF RIA Services Beta Released of 11/22/2009, Windows Azure Tools SDK (November 2009) Refactor or Restart? of 11/15/2009 and Building Enterprise Applications with Silverlight 3, .NET RIA Services and Windows Azure Part 1 of 10/15/2009.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

David Robinson asks SQL Azure v.Next – What features do you want to see? in this 11/30/2009 post:

Each and every time we are out speaking with customers, we always make sure we thank them for the incredible feedback they have given us. As I have said before, and will continue to say, it’s our awesome users that have shaped SQL Azure into the relational database service it is today.

With that being said, we are looking for feedback. There are two ways you can go and provide us info on the features you want to see in the service.

- http://www.mygreatsqlazureidea.com – This is a great site you can visit, suggest new features and vote for your favorites. The Windows Azure team set up something similar a couple weeks ago and since it is a great avenue for feature requests, we did the same.

- Connect – For those of you that prefer the ‘old school’ way of providing feedback, we have a SQL Azure Feedback Community Engagement Survey that you can fill out. To provide feedback using this method, follow these two easy steps…

Doug Purdy demos “Quadrant”: Three Features in Two Minutes in this brief video posted on 11/29/2009:

The screencast below runs ~2 minutes and is worth watching if you care about interacting with data (and who doesn’t).

What you see are 3 key features of “Quadrant” in the latest CTP:

- Access to SQL Azure databases

- Creating custom views (including master detail) by mashing views together

- See the “markup” of any view in Quadrant and steal it/change it (just like view source in a Web browser)

You also get to see the infinite canvas and the zoom features indirectly.

I am thinking about posting a couple more of these 2 minute demos over the course of the next couple weeks.

If you want to see something more in-depth now, check out the “Quadrant” PDC talk or Quadrant Overview video.

[Updated: There is some issue with the screencast on some machines. I'll rerecord tomorrow.]

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

• Vittorio Bertocci (Vibro) analyzes what makes Good Claims, Bad Claims 1: an Example in this 12/1/2009 post:

Ahh, claims. Aren’t they a thing of beauty? When you first discover them, you’d be tempted to use them for everything up to and including brewing coffee.

Now that we finally have powerful tools at our disposal for actually developing claims-based systems, as opposed to just talking about it, it is time to go past the trivial examples and make some more realistic considerations. Oversimplification is useful for breaking the ice, but we don’t want to fall victim to the hype and do silly things, do we? Just remember that there was a time in which there was the belief that before writing any web service (including the one doing a + b) you should have provided the corresponding XSD… yeah, fits beautifully with the vision but not especially agile in practice :)

We kind of started this process in the “what goes into claims” post, which BTW is being reworked for the claims guide; here, however, I’d like to go a step further and reason about what makes a claim a good or a bad choice for a given scenario. I’ll start by discussing one of the most classic examples of claims in the authorization space, the Action claim, and use it as an example of the kinds of things you want to consider in practice when evaluating which claims are right for your scenario. …

Vibro goes on with a dissertation on Action claims and promises:

In the last several months my typical posts were of the type “announcing sample X” or similar: now that a very successful PDC and WIF launch is behind us, I finally have some time to get back to some good ol’ architecture. In the next few posts I’ll try to reason about claims effectiveness. As I mentioned, there are no hard & fast rules here: my hope for this and the next posts is to make exactly that point, and inspire you to think about your systems and the best way of taking full advantage of the claims-based identity approach. Don’t get caught in information cascades and never suspend your judgment, including (especially) about what you read here! ;)

• Michael Desmond quotes Burley Kawasaki in this Microsoft's New AppFabric Serves Up Composite Apps news article of 11/19/2009 for Visual Studio Magazine:

During the opening keynote at the Microsoft Professional Developers Conference in Los Angeles on Tuesday, Microsoft President of the Server and Tools Business Bob Muglia introduced AppFabric as a foundational component of Microsoft's Windows Server and Windows Azure strategy.

AppFabric comprises a number of already existing technologies bound together in an abstraction layer that will help establish a common set of target services for applications running either on-premise on Windows Server or in the cloud on Windows Azure. Burley Kawasaki, director of Microsoft's Connected System Division, described AppFabric as a logical next step up in .NET abstraction.

"We really see AppFabric as being the next layer that we add to move up the stack, so we can provide a developer with truly symmetrical sets of services, application level services that you can take advantage of, regardless of whether you are targeting on-premise or cloud platforms," Kawasaki said. "This is an additional level of abstraction that is built into the system model, just like SQL as an example uses T-SQL as a common model, whether or not you are on the cloud."

Kawasaki said that AppFabric will help fix a problem facing Microsoft developers today. ".NET is a consistent model today, but when you do File/New to write an app for Azure, your whole application looks a little different than it would if you were just writing an app on-premise." …

Michael is the editor in chief of the online Redmond Developer News magazine.

Dave Kearns reports Microsoft adds identity to cloud in this 11/25/2009 NetworkWorld article sub-captioned “Releases Windows Identity Foundation, formerly the Geneva project:”

Everyone eyeing Azure, their candidate for cloud-based computing, can at least agree on one thing: Redmond is late to the party that's dominated by Salesforce.com, Google, Amazon and a host of others. How can they hope to differentiate themselves?

Microsoft's JG Chirapurath, director of marketing for the Identity and Security Division, knows exactly how, and he told me about it last week. Identity is the key differentiator.

Last spring ("Identity management is key to the proper operation of cloud computing,") I noted that some people were finally beginning to realize that identity had a part to play in cloud-based computing, but very little has been done. Until Microsoft's announcements last week at their Professional Developers Conference (PDC), that is.

As Chirapurath pointed out, along with lots of info about Azure, Microsoft also rolled out what's now called the Windows Identity Foundation (formerly the Geneva project). This is the glue that's needed for third-party developers to work with Windows CardSpace (and other information card technologies) to secure -- among other things -- cloud-based services and applications.

The release of the identity framework puts Microsoft ahead of all of the other cloud-based solution providers (many of whom are still struggling to attempt to adapt OpenID, with its security problems, to their cloud scenarios). …

See Michele Leroux Bustamante’s Securing REST-Based Services with Access Control Service presentation in the Events section.

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

• Jim Nakashima shows you How to: Add an HTTPS Endpoint to a Windows Azure Cloud Service on 12/1/2009 in exhaustive detail:

Back in May I posted about Adding an HTTPS Endpoint to a Windows Azure Cloud Service and with the November 2009 release of the Windows Azure Tools, that article is now obsolete.

In the last week I received a number of requests to post a new article about how to add HTTPS endpoints with the November 2009 release and later and I’m always happy to oblige!

To illustrate how to add an HTTPS endpoint to a Cloud Service, I’ll start with the thumbnail sample from the Windows Azure SDK – the web role in that sample only has an HTTP endpoint, and I’ll walk through the steps to add an HTTPS endpoint to that web role. …

• The Windows Azure Team is Introducing Windows Azure Diagnostics in this 12/1/2009 post:

Windows Azure Diagnostics is a new feature in the November release of the Windows Azure Tools and SDK. It gives developers the ability to collect and store a variety of diagnostics data in a highly configurable way.

Sumit Mehrotra, a Program Manager on the Windows Azure team, has been blogging about the new diagnostics feature. From his first post “Introducing Windows Azure Diagnostics”:

There are a number of diagnostic scenarios that go beyond simple logging. Over the past year we received a lot of feedback from customers regarding this as well. We have tried to address some of those scenarios, e.g.

- Detecting and troubleshooting problems

- Performance measurement

- Analytics and QoS

- Capacity planning

- And more…

Based on the feedback we received, we wanted to keep the simplicity of the initial logging API but enhance the feature to give users more diagnostic data about their service and more control over their diagnostic data. The November 2009 release marks our first release of the Diagnostics feature.

For more information, follow Sumit’s blog and read the Windows Azure Diagnostics documentation on MSDN. You might also want to watch Matthew Kerner’s PDC talk: “Windows Azure Monitoring, Logging, and Management APIs.”

• Mary Jo Foley reports Ruby on Rails becomes latest open-source offering to run on Microsoft's Azure cloud in this 12/1/2009 post to her All About Microsoft blog for ZDNet:

For a while now, Microsoft has been courting open-source software makers to convince them of the wisdom of offering their wares on Windows. So it’s not too surprising that many of those same apps also are being moved to the Windows Azure cloud platform.

At the end of November, Microsoft architect Simon Davies blogged that he had gotten the open-source Ruby on Rails framework to run on Windows Azure. By using a combination of new functionality in the November Windows Azure software development kit (SDK), plus some new Solution Accelerator technology, Davies said he managed to get Ruby on Rails to run. (The fruits of Davies’ labors are available at http://rubyonrails.cloudapp.net/.)

• Jim Nakashima points to a Visual Web Developer blog post that explains how to convert ASP.NET Web Site to Web Application projects in his Web Site Projects and Windows Azure post of 11/30/2009:

Currently (November 2009), the Windows Azure Tools for Visual Studio only support Web Application projects – the type of Web projects that have a project file and are compiled.

For most folks, the reason to choose a Web Site project was the ability to update it easily on the server, and the target customer for Windows Azure is typically using a Web Application project. (For a good article about the differences and when to use each one, please see this post.)

Because Windows Azure has a deployment model where you can’t update the files on the server itself and considering the target customer, this seemed like a reasonable approach.

That said, there are a lot of apps out there that are in the Web Site format that folks want to deploy to Windows Azure and we’re figuring out the best way to support this moving forward. [Emphasis added.]

For the time being, most folks are doing the conversion from Web Site to Web Application project and I wanted to point to a good post off the Visual Web Developer blog that will help make this easier: http://blogs.msdn.com/webdevtools/archive/2009/10/29/converting-a-web-site-project-to-a-web-application-project.aspx

• Jim O’Neill’s Discovering “Dallas” post of 11/30/2009 is a fully illustrated tutorial for Microsoft’s new Data-as-a-Service (DaaS) offering codenamed “Dallas,” not to be confused with Database-as-a-Service (DBaaS?) products or SQL Azure’s codename “Houston”:

While the availability of Windows Azure and the announcement of Silverlight 4 were certainly highlights of PDC 09, I was especially intrigued by the introduction of “Dallas,” the code name for Microsoft’s Data-as-a-Service (DaaS) offering. So after the relatives had left and my turkey coma had subsided, I thought I’d give “Dallas” a whirl this Thanksgiving weekend.

What’s “Dallas” all about?

“Dallas” is essentially a repository of data repositories, a service - built completely on Windows Azure - that allows consumers (developers and information workers) to discover, access, and purchase data, images, and real-time services.

“Dallas” essentially democratizes data, enabling a one-stop shopping place (via PinPoint) for all types of premium content. With “Dallas” one can opt in to a pay-as-you-grow type model, facilitating access to data that may have previously only been accessible via expensive subscriptions directly with the data provider.

Developers can access “Dallas” via REST-based APIs and Atom feeds or in raw format (as many of the content providers had made available pre-“Dallas”). The web-accessible “Dallas” Service Explorer (shown below) allows the consumer to explore the data as well as the HTTP URLs that are constructed and executed to retrieve the data set based on the user-provided parameters. …

Jim continues with detailed instructions for accessing Dallas’ Quick Start page and obtaining an account key to access the “Dallas” Developer Portal, whose Catalog page offers links to offerings by current and forthcoming providers.

Jim is a Developer Evangelist for Microsoft covering the Northeast District (namely, New England and upstate New York.)

Note: Codename “Houston” is the name of a forthcoming Access-like database design tool for SQL Azure that Dave Robinson described near the end of his SVC 27 session, The Future of Database Development with SQL Azure, at PDC 2009.

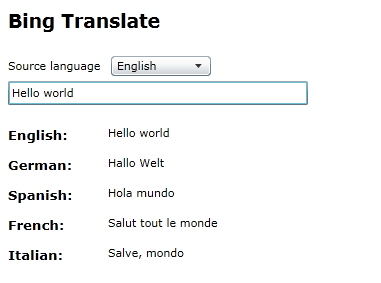

• Matthias Jauernig’s Rx: Bing Translate Example of 11/28/2009 ports Erik Meijer’s Reactive Framework (Rx) Bing Translate demo to a Visual Studio solution, with a live demo hosted in an Azure data center:

In his Rx session at PDC09, Erik Meijer gave a nice example named Bing Translate. It connected to Bing via the Bing Web Service API and translated a term given by the user. It was quite interesting because Erik used the Reactive Framework to handle the translation and composed the incoming results. He took just the first 2 translations and discarded the others. This didn’t make much sense, but it was OK for demonstration purposes. As a follow-up, Somasegar described the example in his blog.

But the complete source code wasn’t available (BTW: Erik Meijer has now posted it on the Rx forum, but not as a VS solution), so I implemented it on my own with some modifications, and now I want to share the whole Visual Studio solution with you.

Live demo

I’ve put my Bing Translate example as a Silverlight 3 (SL3) application on Windows Azure at http://bingtranslate.cloudapp.net, where you can test it directly.

• Gaston Hillar reports “Intel's Parallel Universe Portal is a free developer's service in the cloud” in his Cloud Computing: Detecting Scalability Problems article of 11/23/2009 for Dr. Dobb’s Digest:

You already know that achieving a linear speedup as the number of cores increases in real life parallelized applications is indeed very difficult. However, sometimes, the multicore scalability of certain algorithms for existing multicore systems could be worse than expected. The overhead and the bugs introduced by concurrency could bring really unexpected scalability problems when the number of cores increases. Intel can help you with its Parallel Universe Portal, a free service in the cloud.

Gaston continues with detailed instructions for using Intel’s new service. Most subscribers to developer-oriented magazines have already received Intel’s Parallel Studio CD.

• Eric Lai adds background to Jim O’Neill’s post in his Microsoft adds app, data marketplace to Windows Azure ComputerWorld article of 11/17/2009 that’s subtitled “Cloud platform expanded to take on rivals such as Salesforce.com, Microsoft says at PDC:”

Microsoft Corp. said on Tuesday that its upcoming Windows Azure cloud computing platform will come with marketplaces for both online apps built to run on Azure as well as datasets that companies can use to build their own apps.

PinPoint.com will host business-oriented apps developed by Microsoft partners, chief software architect Ray Ozzie said during a keynote speech at Microsoft's Professional Developers Conference 2009 (PDC09) in Los Angeles.

PinPoint will compete with Salesforce.com Inc.'s 4-year-old AppExchange online marketplace and other more recently-emerging app stores.

Azure will also host "an open catalog and marketplace for public and commercial data" codenamed Dallas, Ozzie said. Developers can use the data to build their own services and mashups. Dallas is now in Commercial Technical Preview. …

Charlie Calvert announces Official Publication of Creating Windows Azure Applications with Visual Studio Videos in this 11/30/2009 post:

Though they were previewed earlier this month, we have now officially released the first of the Windows Azure series of How Do I videos. Below you will find a list of the videos that have been created, along with links to their location in the How Do I Video library.

The Developing Windows Azure Applications with Visual Studio Video Series

In this series of videos Jim Nakashima shows how to develop Windows Azure applications with the tools provided in Visual Studio.

SyntaxC4’s Windows Azure Guest Book – Refactored post of 11/29/2009 describes breaking changes to Windows Azure Platform Training Kit projects:

Due to the namespace change in the Windows Azure SDK, a number of the Windows Azure Platform Training Kit projects were broken. As a part of the Guelph Coffee and Code Windows Azure Technology Focus Panel, I have refactored this project in order to launch it into the cloud as one of my Azure deployments. If you haven’t already, you may want to visit my previous blog post entitled Moving from the ‘ServiceHosting’ to ‘WindowsAzure’ Namespace. In the previous post I reviewed some of the changes in the classes that were contained in the Microsoft.ServiceHosting.ServiceRuntime namespace.

Another change in the Azure SDK is in the RoleEntryPoint class. The Start method, which originally had a void return type, has been renamed OnStart and now returns a Boolean value. The GetHealthStatus() method, which returned a RoleStatus enum value, was removed.

I am still looking for an alternative to one of the most useful debugging features of Windows Azure to date. RoleManager.WriteToLog() was not replaced in the RoleEnvironment class. This was one of the only gateways into writing out some error information without the use of the Storage Services or a SQL Azure database.

All of the changes mentioned above were the reasons the Windows Azure Guest Book from the November Platform Training Kit didn’t work. You can make these changes yourself if you like, or you can download the refactored version.

His other recent Azure-related posts are Moving from the ‘ServiceHosting’ to ‘WindowsAzure’ Namespace (11/29/2009), Making data rain down from the Cloud with SQL Azure (11/24/2009), and Floating up into the Windows Azure Cloud (11/20/2009).

Microsoft’s Partners Expand Services Portfolios, Add Technical Value with the Windows Azure Platform, a November 2009 white paper, provides “Three Case Studies for Technical Decision Makers:”

This white paper describes how Microsoft® system integrator partners are using Windows Azure™, an Internet-scale cloud services platform that is hosted in Microsoft data centers, to develop applications and services that are quick to deploy, easy to manage, readily scalable, and competitively priced.

Because the Windows Azure platform handles the deployment and operations infrastructure, partners can extend their reach into new markets while staying focused on the bottom-line business value of customers’ IT investments.

The three partners are Avanade: High-Volume Applications from Enterprise to Web, CSC: Legal Solutions Suite, and Infosys: mConnect.

<Return to section navigation list>

Windows Azure Infrastructure

• James Urquhart reports Practice overtaking theory in cloud computing in his 11/30/2009 post to CNet News’ Wisdom of Clouds blog:

It's getting harder to focus on the vision of cloud computing these days. While there are still plenty of critical and complex problems to solve, and many, many implications of this disruptive operations model that have yet to be understood, the truth is that we've entered a new phase in the evolution of cloud adoption. Real work now exceeds theory when it comes to both new online content and work produced. …

However, what really made me aware of the changing cloud buzz is what's happening in the software development space. I was shaken awake by Microsoft's brilliant launch of its Azure cloud service. I loved almost everything about how Ray Ozzie and crew positioned and discussed Azure's services to its target market: developers of the next generation of business applications.

The recent (re)unveiling at Microsoft's Professional Developers Conference in Los Angeles included an impressive array of services, customer testimonials, and partner announcements. If it had stopped at that, I would have assumed it was just "Mister Softy's" massive marketing machine in action.

However, I began following the "#azure" tag on Twitter from that day forward, and I've been blown away by the amount of content being generated by developers for developers. For example, this step-by-step guide to installing SQL Server on Azure. Or, how about this list of sessions from PDC from a variety of vendor and customer presenters, covering topics ranging from development basics to "making sense out of ambient data". …

James is market manager for the Data Center 3.0 strategy at Cisco Systems.

• Jouni Heikniemi requests you to Get your Azure ideas out (and vote for Mini-Azure) in this 11/27/2009 post:

The Azure team launched a new product feedback site, and I’m lobbying for one idea on it: Give me mini-sites at a mini-price.

A few days ago the director of Windows Azure Planning, Mike Wickstrand, posted about the launch of a new feedback site with a slightly odd name but with quite a decent purpose: www.mygreatwindowsazureidea.com. If you have ideas on improving the Azure experience, go check the site out!

Mini-Azure wanted for small web properties

One aspect that makes me particularly happy: The most voted suggestion so far is making Azure cheaper for very small web applications. I have been talking about this to various people at Microsoft, with varying response. The site now confirms what I was expecting all along: The current $0.12/hour pricing model (~$86/month) is too expensive for small sites. Hosted LAMP stack offerings are available at a few bucks a month, and that’s a hard price tag to beat.

While I already did post some of my points in the original thread, I want to reiterate my views on (and arguments for) the mini-Azure concept here.

He goes on to describe his proposals for lower-cost Windows Azure Express and Windows Azure Compute Small Business Edition. Jouni has my votes for both.
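The ~$86/month figure in Jouni’s post is simple arithmetic: $0.12 per hour for one compute instance running 24 hours a day. A quick Python check, assuming a single always-on instance and a 30-day month (`monthly_compute_cost` is a hypothetical helper, not part of any Azure billing API):

```python
# Back-of-the-envelope check of "$0.12/hour (~$86/month)".
# Assumes instances run around the clock; 30-day month by default.
HOURS_PER_DAY = 24


def monthly_compute_cost(rate_per_hour: float, instances: int = 1, days: int = 30) -> float:
    """Cost of keeping `instances` compute instances running for `days` days."""
    return rate_per_hour * HOURS_PER_DAY * days * instances


print(round(monthly_compute_cost(0.12), 2))  # 86.4
```

Because the charge accrues per deployed instance-hour, a tiny site pays the same monthly floor as a busy one, which is the heart of the mini-Azure argument.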

• Trevor Clarke’s 2009: The year the cloud was seeded article of 11/26/2009 for Computerworld Australia covers IDC Research’s Powering the Enterprise Cloud Conference in Sydney:

The economic downturn may have dominated the agenda for much of 2009 but it was also the year the cloud was seeded, according to analyst firm IDC.

At its Powering the Enterprise Cloud Conference in Sydney, IDC research manager Matthew Oostveen said the retrospective view of this year for IT managers and CIOs won't necessarily be focussed on the global financial crisis.

"It won't be the economy that we think of, it will actually be the year the cloud was seeded," Oostveen said.

The analyst also laid out IDC's view of cloud computing and noted many IT departments are being stretched by having to continue to provide increasing functionality for line of business requests while also reducing costs. …

Joe Weinman offers a Mathematical Proof of the Inevitability of Cloud Computing in this 11/30/2009 post:

In the emerging business model and technology known as cloud computing, there has been discussion regarding whether a private solution, a cloud-based utility service, or a mix of the two is optimal. My analysis examines the conditions under which dedicated capacity, on-demand capacity, or a hybrid of the two are lowest cost. The analysis applies not just to cloud computing, but also to similar decisions, e.g.: buy a house or rent it; rent a house or stay in a hotel; buy a car or rent it; rent a car or take a taxi; and so forth.

To jump right to the punchline(s), a pay-per-use solution obviously makes sense if the unit cost of cloud services is lower than dedicated, owned capacity. And, in many cases, clouds provide this cost advantage.

Counterintuitively, though, a pure cloud solution also makes sense even if its unit cost is higher, as long as the peak-to-average ratio of the demand curve is higher than the cost differential between on-demand and dedicated capacity. In other words, even if cloud services cost, say, twice as much, a pure cloud solution makes sense for those demand curves where the peak-to-average ratio is two-to-one or higher. This is very often the case across a variety of industries. The reason for this is that the fixed capacity dedicated solution must be built to peak, whereas the cost of the on-demand pay-per-use solution is proportional to the average.
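Joe's first rule comes down to a few lines of arithmetic. What follows is a minimal Python sketch, not Joe's own model. It assumes that demand is sampled at equal time steps, that dedicated capacity is sized to the peak and billed at every step, and that on-demand capacity costs `premium` times the dedicated unit cost for each unit actually used. The function names and the demand curve are hypothetical.

```python
# Minimal sketch of Weinman's pure-cloud vs. pure-dedicated rule.
# Assumptions (not from Joe's post): demand is sampled at equal time steps,
# dedicated capacity is sized to the peak and billed every step, and
# on-demand capacity costs `premium` times the dedicated unit cost per
# unit actually used. Demand must be non-empty with a positive average.


def dedicated_cost(demand: list[float], unit_cost: float) -> float:
    # Fixed capacity must be built to peak and paid for at every time step.
    return max(demand) * unit_cost * len(demand)


def cloud_cost(demand: list[float], unit_cost: float, premium: float) -> float:
    # Pay-per-use cost is proportional to total demand, i.e. to the average.
    return sum(demand) * unit_cost * premium


def cloud_is_cheaper(demand: list[float], premium: float) -> bool:
    # Algebraically the same test: peak-to-average ratio exceeds the premium.
    average = sum(demand) / len(demand)
    return max(demand) / average > premium


if __name__ == "__main__":
    demand = [1, 1, 1, 5]  # hypothetical curve: peak 5, average 2, ratio 2.5
    for premium in (2.0, 3.0):
        print(premium, cloud_cost(demand, 1.0, premium),
              dedicated_cost(demand, 1.0), cloud_is_cheaper(demand, premium))
```

With a peak-to-average ratio of 2.5, the cloud wins at a 2x premium (16 vs. 20 cost units) and loses at 3x (24 vs. 20). That is the crossover Joe describes.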

Also important and not obvious, leveraging pay-per-use pricing, either in a wholly on-demand solution or a hybrid with dedicated capacity, turns out to make sense any time there is a peak of “short enough” duration. Specifically, if the percentage of time spent at peak is less than the inverse of the utility premium, using a cloud or other pay-per-use utility for at least part of the solution makes sense. For example, even if the cost of cloud services were, say, four times as much as owned capacity, they still make sense as part of the solution if peak demand only occurs one-quarter of the time or less. [Emphasis Joe’s.] …
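The hybrid rule follows from pricing each slice of capacity separately. A unit of dedicated capacity costs the same whether or not it's busy. The same unit bought on demand costs `premium` times as much, but only for the fraction of time it's needed. So owning a slice pays off only when that slice is busy more than 1/premium of the time. The Python sketch below applies that marginal test to a simple discrete model. It assumes integer demand levels, equal time steps, and per-step billing, and `hybrid_plan` is a hypothetical name rather than anything from Joe's analysis.

```python
# Minimal sketch of the hybrid (dedicated base + on-demand burst) rule.
# Assumptions: integer demand sampled at equal time steps; each dedicated
# unit costs `unit_cost` per step whether busy or idle; each on-demand unit
# costs `premium * unit_cost` per step, only when used. Demand must be non-empty.


def hybrid_plan(demand: list[int], unit_cost: float, premium: float) -> tuple[int, float]:
    """Return (dedicated_units, total_cost) chosen by the marginal test."""
    steps = len(demand)
    dedicated = 0
    for level in range(1, max(demand) + 1):
        # Fraction of time this slice of capacity is actually needed.
        busy_fraction = sum(1 for d in demand if d >= level) / steps
        if busy_fraction * premium > 1:
            dedicated = level  # cheaper to own this slice than to rent it
        else:
            break  # busy_fraction never rises with level, so stop here
    burst_units = sum(max(0, d - dedicated) for d in demand)
    cost = dedicated * unit_cost * steps + burst_units * unit_cost * premium
    return dedicated, cost


if __name__ == "__main__":
    demand = [2, 2, 2, 6]  # hypothetical: peak occupies 1/4 of the time
    for premium in (3.0, 5.0):
        print(premium, hybrid_plan(demand, 1.0, premium))
```

For the hypothetical curve [2, 2, 2, 6], the peak occupies one-quarter of the time. At a 3x premium, the test picks 2 dedicated units plus cloud bursting, for a total of 20 cost units. That beats both all-dedicated (24) and all-cloud (36). At a 5x premium, the peak slices are busy more than 1/5 of the time, so all 6 units go on dedicated capacity.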

Joe’s family of Web sites includes JoeWeinman.com; ComplexModels.com, a Monte Carlo simulation site addressing cloud computing, network dynamics, and statistical behavior; and The Network Effect blog on networks and their structure, value, and dynamics. GigaOm has published his Compelling Cases for Clouds essay, which he expands on in his Viable Business Models for the Cloud post of 11/15/2009. Joe is an “employee of a very large telecommunications and cloud computing company” (AT&T.)

James Hamilton designates 2010 the Year of MicroSlice Servers in an 11/30/2009 post:

Very low-power scale-out servers -- it’s an idea whose time has come. A few weeks ago Intel announced it was doing Microslice servers: Intel Seeks new ‘microserver’ standard. Rackable Systems (I may never manage to start calling them ‘SGI’ – remember the old MIPS-based workstation company?) was on this idea even earlier: Microslice Servers. The Dell Data Center Solutions team has been on a similar path: Server under 30W.

Rackable has been talking about very low-power servers as physicalization: When less is more: the basics of physicalization. Essentially they are arguing that rather than buying more-expensive scale-up servers and then virtualizing the workload onto those fewer servers, you should buy lots of smaller servers. This avoids the virtualization tax, which can run 15% to 50% in I/O-intensive applications, and smaller, low-scale servers can produce more work done per joule and better work done per dollar. I’ve been a believer in this approach for years and wrote it up for the Conference on Innovative Data Systems Research (CIDR) last year in The Case for Low-Cost, Low-Power Servers.
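The efficiency argument is simple arithmetic: useful throughput after the virtualization tax, divided by power draw (work per joule) or by purchase price (work per dollar). The Python sketch below only illustrates that calculation. The 15% and 50% tax values come from the post, but every hardware figure (throughput, watts, price, server count) is a made-up placeholder, not a measurement. Substitute your own numbers before drawing any conclusion.

```python
# Illustrative work-per-joule / work-per-dollar arithmetic.
# Only the 15%-50% virtualization-tax range comes from the post; all
# hardware figures below are hypothetical placeholders.


def useful_throughput(raw_ops_per_s: float, virtualization_tax: float) -> float:
    # The tax is the fraction of raw capacity lost to the hypervisor layer.
    return raw_ops_per_s * (1.0 - virtualization_tax)


def work_per_joule(ops_per_s: float, watts: float) -> float:
    # Watts are joules per second, so (ops/s) / (J/s) = ops per joule.
    return ops_per_s / watts


def throughput_per_dollar(ops_per_s: float, price: float) -> float:
    # Sustained ops/s bought per dollar of hardware purchase price.
    return ops_per_s / price


# Placeholder figures: one virtualized scale-up box vs. a set of microslices.
BIG = {"ops": 10_000.0, "watts": 400.0, "price": 20_000.0}
SMALL = {"ops": 1_000.0, "watts": 30.0, "price": 1_000.0}
SMALL_COUNT = 8

if __name__ == "__main__":
    small_ops = SMALL["ops"] * SMALL_COUNT  # no hypervisor, so no tax
    for tax in (0.15, 0.50):
        big_ops = useful_throughput(BIG["ops"], tax)
        print(f"tax={tax:.0%}: "
              f"big {work_per_joule(big_ops, BIG['watts']):.1f} ops/J, "
              f"{throughput_per_dollar(big_ops, BIG['price']):.2f} (ops/s)/$; "
              f"small {work_per_joule(small_ops, SMALL['watts'] * SMALL_COUNT):.1f} ops/J, "
              f"{throughput_per_dollar(small_ops, SMALL['price'] * SMALL_COUNT):.2f} (ops/s)/$")
```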

I’ve recently been very interested in the application of ARM processors to web-server workloads:

ARMs are an even more radical application of the Microslice approach. …

Tony Bishop posits Enterprise Clouds Require Service-Level Discipline in this 11/30/2009 post that’s sub-captioned “Real Time Infrastructure Enables Clouds through Quality of Experience (QofE) Management:”

Organizations have become increasingly dependent on technical infrastructure to enable customer interactions. As such, the business has a vested interest in making sure its technology partners understand what constitutes good customer experience so that it’s prepared for projected volumes and rapidly knows how to resolve any impediments.

Yet the industry-standard model and best practices for establishing, managing, and measuring contracts via traditional Service Level Agreements (SLAs) have not been successful. In practice most agreements are based on simple Key Performance Indicators (KPIs) that are grounded in arcane technical concepts and not in the context of the customer consuming services. The result is artificial measurements that lead to IT having a ‘green’ dashboard, but lost business opportunity and irate customers.

The source of this disparity is the traditional agreement which is limited to guarantees of uptime, quantities of dedicated resources, and/or KPIs of utilization. This supply-driven view of the platform does not map back to business events and therefore has limited applicability. Furthermore, this traditional agreement model entirely breaks down when considering the infrastructure in support of a Real Time Enterprise (RTE) operating in the cloud.

Tony is the Founder and CEO of Adaptivity.

Andre Leibovici’s Cloud Computing Offerings – Part 2 – “Wishes” of 11/30/2009 observes:

“A Bird’s-Eye Look at Cloud Computing offerings,” published on November 26, generated a lot of interest from the Cloud providers. Both providers mentioned in it contacted me, offering some corrections to the blog post and requesting additional feedback. A new Cloud start-up even requested some advice and invited me to join its beta program. …

Along the same line of thinking, I came up with some features, not too far from today’s technology, that we would like to see available in the next Cloud release.

- Storage Layering

- CPU Guarantee

- VM Portability for compatible Clouds

- Some other ‘wishes’ but a bit further down the track

Andre details each new feature request.

John Fontana reports Microsoft begins paving path for IT and cloud integration in this two-page 11/23/2009 post to InfoWorld’s Cloud Computing blog that’s subtitled “Microsoft's goal with what it calls the second wave of the cloud trend is to help users run applications that span corporate and cloud networks:”

Microsoft last week launched its first serious effort to build IT into its cloud plans by introducing technologies that help connect existing corporate networks and cloud services to make them look like a single infrastructure.

The concept began to come together at Microsoft's Professional Developers Conference. The company is attempting to show that it wants to move beyond the first wave of the cloud trend, which is defined by the availability of raw computing power supplied by Microsoft and competitors such as Amazon and Google. Microsoft's goal is to supply tools, middleware, and services so users can run applications that span corporate and cloud networks, especially those built with Microsoft's Azure cloud operating system.

"Azure is looking at the second wave," says Ray Valdes, an analyst with Gartner. "That wave is what happens after raw infrastructure. When companies start moving real systems to the cloud and those systems are hybrid and they have to connect back in significant ways to legacy environments. It's a big challenge and a big opportunity for Microsoft."

To attack the opportunity, Microsoft introduced projects called Sydney, AppFabric, Next Generation Active Directory, System Center "Cloud", and updates to the .Net Framework that provide bridges between corporate networks and cloud services. While a small portion of the software is available now, the majority will hit beta cycles in 2010. …

Scott Hanselman’s Hanselminutes on 9 - Guided Tour inside the Windows Azure Cloud with Patrick Yantz of 11/23/2009 carries this description:

Scott [was] at PDC09 in Los Angeles this week and got a great opportunity to get a guided tour of a piece of the Windows Azure Cloud from Patrick Yantz, a Cloud Architect with Data Center Services. You may think it's a Cloud Container, but it's not!

Join me on this very technical 15-minute deep dive inside the making of the hardware behind the Windows Azure Cloud.

<Return to section navigation list>

Cloud Security and Governance

• Scott Morrison asks How Secure is Cloud Computing? on 12/1/2009 and replies with a throwaway “Right now we are still in the early days of cloud computing” subtitle:

Technology Review has published an interview with cryptography pioneer Whitfield Diffie that is worth reading. I had the great pleasure of presenting to Whit down at the Sun campus. He is a great scientist and a gentleman.

In this interview, Diffie–who is now a visiting professor at Royal Holloway, University of London–draws an interesting analogy between cloud computing and air travel:

“Whitfield Diffie: The effect of the growing dependence on cloud computing is similar to that of our dependence on public transportation, particularly air transportation, which forces us to trust organizations over which we have no control, limits what we can transport, and subjects us to rules and schedules that wouldn’t apply if we were flying our own planes. On the other hand, it is so much more economical that we don’t realistically have any alternative.”

Diffie makes a good point: taken as a whole, the benefits of commodity air travel are so high that it allows us to ignore the not insignificant negatives (I gripe as much as anyone when I travel, but this doesn’t stop me from using the service). In the long term, will the convenience of cloud simply overwhelm the security issues? …

Most of us non-airline commercial pilots prefer to “fly our own planes,” regardless of the cost.

Steve Hanna’s Cloud Computing: A Security Analysis starts by advising “Don’t worry about cloud security, ensure it:”

With its ability to provide users dynamically scalable, shared resources over the Internet and avoid large upfront fixed costs, cloud computing promises to change the future of computing. However, storing a lot of data creates a situation similar to storing a lot of money, attracting more frequent assaults by increasingly skilled and highly motivated attackers. As a result, security is one of the top issues, if not the top issue, for users considering cloud computing.

Cloud Security Concerns

Storing critical data on a cloud computing provider's servers raises several questions. Can employees or administrators at the cloud provider be trusted to not look at your data or change it? Can other customers of the cloud provider hack into your data and get access to it? Can your competitors find out what you know: who your customers are, what customer orders you are bidding on, pricing and cost information, and other critical data from your business? This information in the wrong hands would be devastating for a business. And what about privacy issues and government regulations?

He continues with a detailed explanation of cloud security risks and countermeasures to mitigate them.

Steve is co-chair of the Trusted Network Connect Work Group in the Trusted Computing Group and co-chair of the Network Endpoint Assessment Working Group in the Internet Engineering Task Force.

Alexander Wolfe reports Microsoft Seeks Patent For Cloud Data Migration in this analytical 11/30/2009 article for InformationWeek:

On the cusp of launching its Azure cloud computing service, Microsoft (NSDQ: MSFT) is also making a savvy bid to lock up a patent for one of the main worries of cloud users: vendor lock-in. (The other big concern is security.) The folks from Redmond have filed a patent application for migrating data to a new cloud, which is what you'd have to do when you leave your first vendor.

The patent has a number of unusual angles, so bear with me while I deconstruct it.

The application is number 20080080526, filed by Microsoft this past April, and entitled "Migrating Data To New Cloud." (The current application appears to be a refile of a doc originally submitted in Sept. 2006. Such resubmittals are common practice, by the way.)

Two things immediately jump out at the reader. First off is the fact that this patent proposal addresses data migration not so much from a vendor lock-in perspective (as in, you have to migrate your data because you want to bag your provider and go get a better deal) but rather as an auto fail-over data protection mechanism. (I'll get to the second thing, which is that this is constituted as an automated migration process, later on.) …

Michael Vizard claims in his 11/30/2009 post that The Ultimate Arbiter of Cloud Computing will be automated policy engines delivered by cloud providers:

When it comes to cloud computing, it’s pretty apparent that we’re rapidly heading toward commodity services. Obviously, most cloud computing services can’t survive by merely trying to compete on scale. So how will cloud computing services try to differentiate themselves?

Savvis chief technology officer Bryan Doerr says it will all come down to how effectively each cloud computing service provider can execute policies and how flexible that provider is in adapting its policy engines to each customer's specific needs.

This higher level of cloud computing, however, could create a quandary for customers. To really take advantage of it, IT organizations will need some fairly robust IT processes in place before they can think about either automating them on their own or using a cloud computing provider. The cloud computing provider, however, might be eager enough to get the business that it will re-engineer the customer’s application environment to secure the recurring revenue afforded by the cloud computing contract. After all, it’s in the best interest of the provider to modernize the customer’s IT environment, given that the provider now will bear the support costs.

Of course, not all cloud computing providers will be able to do this. Nor are most of them likely to have sophisticated policy engines in place that can adapt to the needs of multiple customers. In fact, once you start analyzing the multitude of cloud computing providers in the market, it becomes apparent that few possess the business process and IT skills required to take cloud computing to the next level.

<Return to section navigation list>

Cloud Computing Events

Michele Leroux Bustamante will present Securing REST-Based Services with Access Control Service on 11/22/2009 at 11:00am – 12:30pm PST:

Abstract: The Access Control Service (ACS), part of Windows Azure platform AppFabric, makes it easy to secure REST-based services using a simple set of standard protocols. In addition to enabling secure calls to REST-based services from any client, the ACS uniquely makes it possible to secure calls from client-side script, and enables federation scenarios with REST-based services. This webcast will provide a tour of ACS features and demonstrate scenarios where the ACS can be employed to secure REST-based WCF services and other web resources. You’ll learn how to configure ACS, learn how to request a token from the ACS, and learn how applications and services can authorize access based on the ACS token.
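The service-side step the abstract mentions, authorizing access based on the ACS token, can be illustrated with a minimal Python sketch. It assumes the Simple Web Token (SWT) format the AppFabric ACS used at the time. In that format, the token carries form-encoded claims such as Issuer, Audience and ExpiresOn, followed by an HMACSHA256 signature computed over the preceding text with a shared key. It also assumes the token arrives in an `Authorization: WRAP access_token="…"` header. Verify those format details against the ACS documentation. `sign_swt`, `validate_swt` and `token_from_header` are hypothetical helpers, not part of any Microsoft SDK, and the issuer URL and key are placeholders.

```python
# Hypothetical SWT-style token signing/validation sketch. The token layout
# (form-encoded claims + "&HMACSHA256=<url-encoded base64 signature>") and
# the "WRAP access_token" header are assumptions about the ACS format.
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional
from urllib.parse import parse_qs, quote, unquote

SIG_FIELD = "&HMACSHA256="
WRAP_PREFIX = 'WRAP access_token="'


def sign_swt(claims: Dict[str, str], key: bytes) -> str:
    """Build a signed token (stands in for the issuer, e.g. for testing)."""
    body = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in claims.items())
    digest = hmac.new(key, body.encode("ascii"), hashlib.sha256).digest()
    return body + SIG_FIELD + quote(base64.b64encode(digest).decode("ascii"), safe="")


def token_from_header(header: str) -> str:
    """Extract the token from an assumed 'WRAP access_token="..."' header."""
    if not (header.startswith(WRAP_PREFIX) and header.endswith('"')):
        raise ValueError("not a WRAP access_token header")
    return header[len(WRAP_PREFIX):-1]


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Dict[str, str]:
    """Check signature, issuer, audience and expiry; return the claims."""
    body, sep, encoded_sig = token.rpartition(SIG_FIELD)
    if not sep:
        raise ValueError("token has no HMACSHA256 signature")
    expected = hmac.new(key, body.encode("ascii"), hashlib.sha256).digest()
    supplied = base64.b64decode(unquote(encoded_sig))
    if not hmac.compare_digest(expected, supplied):  # constant-time compare
        raise ValueError("signature mismatch")
    claims = {k: v[0] for k, v in parse_qs(body).items()}
    if claims.get("Issuer") != issuer:
        raise ValueError("unexpected issuer")
    if claims.get("Audience") != audience:
        raise ValueError("unexpected audience")
    current = time.time() if now is None else now
    if float(claims.get("ExpiresOn", "0")) <= current:  # seconds since epoch
        raise ValueError("token expired")
    return claims
```

Signing and validating with the same shared key mirrors the symmetric-key model assumed here. A service would call `validate_swt` on every request and reject the call with an HTTP 401 when it raises.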

Event ID: 1032435379

Register here.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• David Linthicum asks Could Ubuntu get enterprises to finally embrace the cloud? and replies “Ubuntu's ability to act as a gateway between on-premise IT and multiple clouds, using technology you probably already know, provides a much-needed baby step for IT” in his 12/1/2009 post to InfoWorld’s Cloud Computing blog:

The Ubuntu Enterprise Cloud (UEC) allows you to build your own private cloud on existing hardware platforms that already run (or can run) Ubuntu Server, which is pretty much most of the Intel-based servers you have on hand. UEC is really just an implementation of the Eucalyptus cloud computing architecture, which is interface-level compatible with Amazon.com's cloud. This means that most who understand and deal with AWS will find UEC to be an on-premise extension of that technology, generally speaking.

The UEC architecture uses a front-end computer that functions as the "controller," and one or more "node" systems using either KVM or Xen virtualization technology for running system images. Note that you cannot use just any OS image; it has to be prepared for use within UEC. A few basic images are provided by Canonical, the developer of UEC. …

The larger selling point here is the on-premise compatibility with Amazon Web Services. Those who use Amazon.com's EC2 -- there are legions of such users right now -- will be familiar with Amazon.com's S3, which allows you to persist data for use in the cloud. Eucalyptus offers a similar technology, called Walrus, which is interface-compatible with S3. Thus, if you're already an S3 shop, moving to this technology should not be much of a stretch.

Gary Hamilton, Jocelyn Quimbo and Saurabh Verma describe Database as a Service: A Different Way to Manage Data as “An important tool in a developer’s toolbox for rapid development” but (surprisingly) don’t include SQL Azure in their 11/30/2009 article:

SaaS has rapidly evolved from an online application source to providing application building blocks such as

- Platform-as-a-Service (PaaS)

- Infrastructure-as-a-Service (IaaS) and

- Database-as-a-Service (DaaS)

DaaS is the latest entrant into the "as a Service" realm and typically provides tools for defining logical data structures, data services like APIs and web service interfaces, customizable user interfaces, and data storage, backup, recovery and export policies.

To ensure successful DaaS implementations, developers and database professionals need to address traditional challenges associated with data design and performance tuning. They will also need to address new challenges introduced by the lack of physical access for backup, recovery and integration. …

Two real-world examples of DaaS are Salesforce.com's Force.com, which provides data services in its toolkit for building applications, and Amazon's SimpleDB, which provides an API for creating data stores which can be used for applications or pure data storage. …

The authors are employees of Acumen Solutions.

<Return to section navigation list>