Windows Azure and Cloud Computing Posts for 8/30/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

The SQL Server Team announced Microsoft Aligning with ODBC (and Deprecating OLE DB) in a surprising 8/29/2011 post to the Data Platform Insider blog:

Today we are announcing that Microsoft is aligning with ODBC for native relational data access – the de-facto industry standard. This move supports our commitment to interoperability and our partners and customers’ journey to the cloud. SQL Azure is already aligned with ODBC today. [Emphasis added]

The next release of Microsoft SQL Server, Code Name “Denali,” will be the last release to support OLE DB. OLE DB will be supported for 7 years from launch, the life of SQL Server Code Name “Denali” support, to allow you a large window of opportunity for change before deprecation. We encourage you to adopt ODBC in any future version or new application development.

Conversations with our customers and partners have shown that many of you are already on this path. The marketplace is moving away from OLE DB and towards ODBC, with an eye towards supporting PHP and multi-platform solutions. Making this move to ODBC also drives more clarity for our C/C++ programmers who can now focus their efforts on one API.

- For more information and resources, please see: http://blogs.msdn.com/b/sqlnativeclient/archive/2011/08/29/microsoft-is-aligning-with-odbc-for-native-relational-data-access.aspx

- To submit technical questions, please log onto: http://social.technet.microsoft.com/Forums/en/sqldataaccess/threads

For more on SQL Server and Microsoft’s commitment to interoperability, see:

Lynn Langit (@llangit) posted SQL Server and SQL Azure for Developers on 8/29/2011:

Here are the decks from my presentations for TechEd Australia this week. The sessions will be recorded. I’ll post the links after – thanks.

<Return to section navigation list>

Marketplace DataMarket and OData

See above post.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brian Swan (@brian_swan) posted Introducing the Windows Azure SDK for PHP to the Windows Azure’s Silver Lining blog on 8/30/2011:

The Windows Azure SDK for PHP isn’t exactly new, so maybe it doesn’t need an introduction. However, for anyone building PHP applications for the Windows Azure platform, this SDK is essential. Besides, if you haven’t checked out the 4.0 release (or you have never heard of it), then an introduction is in order. In this post, I’ll provide links to resources that will help you get started with and use the SDK. As you’ll see by the links below, I’m leaning heavily on the AzurePHP site maintained by the Interoperability Team at Microsoft.

What’s in the SDK, Where to get it, and How to set it up

This post, Set up the Windows Azure SDK for PHP, does a great job of providing an overview of the SDK, showing options for getting it, and showing how to set it up. The diagram below (from that post) shows how the SDK can be divided into three main components:

- A class library for accessing Windows Azure storage services, logging information in Blob storage, gathering diagnostics information about your services and deployments, and managing your services and deployments. For API reference documentation, point your favorite PHP documentation generator at the \library directory of the SDK.

- A set of command line tools (package, scaffolder, deployment, certificate, storage, and service) for building, packaging, deploying, and managing projects in Windows Azure. For more detail (including examples), type the tool name and hit enter at the command line after you have set up the SDK.

- A set of project templates (“scaffolds”) in the form of .phar files. (More on these templates in the How to package and deploy applications section below.)

How to package and deploy applications

The 4.0 release of the Windows Azure SDK for PHP introduced the concept of “scaffolds” for packaging PHP applications for deployment to Windows Azure. If you have been using the Windows Azure Command Line Tools for PHP Developers for this purpose, getting your head around scaffolds takes a little work. Once you get used to using scaffolds, they are quite simple and quite powerful. A scaffold is a PHP/Azure project template in the form of a .phar file. The SDK ships with a default template (in the /scaffolders directory) that you can use to deploy an application – just add your application’s source code to the PhpOnAzure.Web directory of the project produced by running the scaffolder against the default scaffold. This post, Build and deploy a Windows Azure PHP application, will walk you through all the details.

The power of scaffolds becomes evident in these posts:

- How to deploy WordPress using the Windows Azure SDK for PHP WordPress scaffold

- How to deploy WordPress Multisite to Windows Azure using the WordPress scaffold

- How to deploy Drupal to Windows Azure using the Drupal scaffold

From those posts you begin to understand how a scaffold can be created for any application. The scaffolder tool then allows anyone to run it and add their application-specific information (e.g. SQL Azure database connection information) and quickly deploy to Azure. For information about creating a scaffold for your own application, see Using Scaffolds.

How to access Windows Azure Blob, Table, and Queue storage services

Within the class library for the SDK, there are classes for accessing the Windows Azure Blob, Table, and Queue storage services. The main classes you’ll use are the Microsoft_WindowsAzure_Storage_Table, Microsoft_WindowsAzure_Storage_Blob, and Microsoft_WindowsAzure_Storage_Queue classes. Details on how to use these classes can be found in these articles (note that some of these tutorials are based on versions of the SDK prior to the 4.0 release, but storage APIs have maintained backward compatibility):

Using Table Storage

Using Blob Storage

Using the Queue Service

Other resources

For reference, here are other resources worth checking out:

- Introduction to “Deal of the Day” – A PHP sample scaling application. This article provides an example application that makes heavy use of the Windows Azure SDK for PHP. It also contains lots of links to other interesting reading.

- Maarten Balliauw’s blog. Maarten has been a key contributor to the development of the Windows Azure SDK for PHP. He often blogs about what’s coming soon.

That’s quite a few resources, but I’m sure there are more out there. If you come across good resources related to the Windows Azure SDK for PHP, please let me know and I’ll add them to this post. As I gather more resources, maybe I can put together a sort of “table of contents” of what’s out there. And, of course, if you are looking for a resource that doesn’t exist, let me know…I’ll write it!

Robin Shahan (@robindotnet) explained “How to handle URLs and configuration settings in a Windows Azure staging server environment” in her Publish a Microsoft Windows Azure Application to a Staging Server article of 8/30/2011 for the DevProConnections blog:

If you are using Microsoft Windows Azure to host your applications and services in the cloud, it's easy to figure out how to publish to the cloud for production. You publish to the production instance with the configuration settings pointing to production, and voila, you're done. In this article, I will discuss how to handle publishing to staging. One issue is the "ever-changing URL" problem, when you publish to a staging instance of an Azure service, and another is the issue of handling different configuration settings for production, staging, and development. Let's start by talking about those pesky staging URLs.

Pesky Staging URLs: The Problem

For production instances, Microsoft sets the URL for your service using the service name you selected when you first set it up. For example, if you have a service called a-contoso, the URL for accessing that service is http://a-contoso.cloudapp.net, as shown in Figure 1 [below].

Most people set up a DNS entry to point to their service rather than using and displaying the cloudapp.net address. For example, the Contoso company might set up www.contoso.com to point to contoso.cloudapp.net. This is what people expect. When you go to microsoft.com, you expect to see microsoft.com, not something like microsoftwebsite.cloudapp.net. (I totally made that up, by the way, so don't bother trying it in Internet Explorer.) (OK, I saw you try that. Told you so.)

Another advantage of using DNS entries is flexibility. If Microsoft wants to move the application to another region or host it on Amazon's cloud service (ha ha!), they can just change the DNS entry to point to the new site, and it has no impact on their customers.

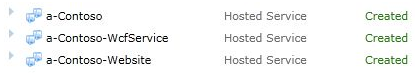

This is all fine and dandy for production, because the URL that is assigned to the production instance of a service doesn't change. But what happens if I publish to the staging instance? I have set up three services for Contoso in my Azure portal, as displayed in Figure 2 [below].

Let's publish my application to the staging instance of a-Contoso, shown in Figure 3.

Figure 3: Publish to staging instance of a-Contoso

After it finishes, you'll see the service address displayed in the Azure portal, shown in Figure 4 [below].

If I publish it to staging again, here's the service address displayed in the Azure portal, shown in Figure 5 [below].

The URL changes every time you publish the application to the staging instance. What if this is a service used by a client application? Or a website accessed by another website? You would need to change those entry points to use the new URL each time. Or if you have a DNS entry for the staging instance, you would have to change it every time you publish the application to the staging instance, then wait for it to propagate before you can try out your new version.

Unfortunately, you can't always schedule this to coincide with lunchtime. If you're like me, this will propagate quickly for everyone except the QA director who is waiting for the next version, and they will be typing "doesn't work for me yet" repeatedly in their IM window because their connection to the network doesn't pick up DNS changes for a long time, for some mysterious reason. This impacts productivity and tends to frustrate and irritate the QA director, and trust me, if you're the head of engineering, you do not want to irritate the head of QA!

This might not seem like a big deal if you only have one service. But what if you have 12? And what if you have interdependencies, like service A calls service B which calls service C, so if you want to test A, you also have to publish B and C. This means you have to publish C, get the URL, and put it in B's configuration, then publish B, get B's URL to put in A's configuration, and then publish A. Are you tired yet?

Pesky Staging URLs: The Solution

There's an easy solution to this problem. Let's say Contoso has three production instances. For each production service, I set up a corresponding "production service" (production to Azure) with the abbreviation "st" in the name to indicate that these are actually the staging version of the services. So now I have my list of services displayed in Figure 6.

I set up DNS entries to point to each of these, and they will never need to be changed. For example, I set up www.contoso-staging.com to point to a-st-contoso.cloudapp.net.

You can decide for yourself what naming convention to use, and whether you want the string that indicates it is staging to appear before or after the service name. You have to think about the sort order. You can basically sort them by service:

a-Contoso

a-Contoso-staging

a-Contoso-WcfService

a-Contoso-WcfService-staging

a-Contoso-Website

a-Contoso-Website-staging

or you can sort them by deployment type:

a-Contoso

a-Contoso-WcfService

a-Contoso-Website

a-st-Contoso

a-st-Contoso-WcfService

a-st-Contoso-Website

I tend to be paranoid about accidentally publishing to production, I don't have services published to staging all the time, and I don't want the staging services mixed in with my production services because it's more clutter, so I sort them by deployment type. …
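The effect of the two naming conventions on sort order can be checked with a few lines of Python. This is only a minimal sketch: the helper names are hypothetical, and it assumes the service list is ordered alphabetically without regard to case, which the article does not state.

```python
# Production service names taken from the lists above.
PRODUCTION = ["a-Contoso", "a-Contoso-WcfService", "a-Contoso-Website"]


def staging_suffix(name):
    """Convention 1: append '-staging' so each staging service sorts
    directly after its production counterpart."""
    return f"{name}-staging"


def staging_infix(name, prefix="a-"):
    """Convention 2: insert 'st-' after the leading prefix so all staging
    services sort together, after the production ones."""
    if not name.startswith(prefix):
        raise ValueError(f"{name!r} does not start with {prefix!r}")
    return f"{prefix}st-{name[len(prefix):]}"


def listing_order(names):
    """Case-insensitive alphabetical sort (an assumption about how a
    service list would be displayed)."""
    return sorted(names, key=str.lower)


by_service = listing_order(PRODUCTION + [staging_suffix(n) for n in PRODUCTION])
by_deployment = listing_order(PRODUCTION + [staging_infix(n) for n in PRODUCTION])

if __name__ == "__main__":
    print("Sorted by service:")
    print("\n".join(by_service))
    print("\nSorted by deployment type:")
    print("\n".join(by_deployment))
```

With the suffix convention each staging service lands next to its production twin; with the "st" infix the staging services form their own group at the end of the list, which is the separation the author prefers.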


Toddy Mladenov posted Microsoft Windows Azure Development Cookbook Review on 8/30/2011:

Recently I got my hands on the Microsoft Windows Azure Development Cookbook, written by Neil Mackenzie (@mknz), and honestly I was impressed with the quality of the examples he gives in it. As a disclaimer, I have to say that the book was sent to me by the publisher; however, there are no incentives for me to write this review. Once again, I think the book provides very good hands-on examples for the complete set of services that Windows Azure offers – starting with Windows Azure Storage, going through the Hosted Services and ending with SQL Azure and AppFabric.

The value I saw in the book is that 1.) it explains each of the services with real examples and walks you step by step through what is happening, and 2.) it is written from the customer’s point of view (Neil is an MVP who has been working with Windows Azure since it was announced at PDC08). I personally found the following three chapters very valuable even for me:

- Using the Shared Access Signature for a container blob

- Using the retry policies with blob operations

- Autoscaling with the Windows Azure Service Management REST API

I am sure that I will refer to those three chapters very often for my own work on Windows Azure.

As a suggestion, I would like to say to Neil that in the next edition I would be happy to see a section dedicated to troubleshooting, debugging and profiling services on Windows Azure. From my own experience I can say that the majority of questions I’ve seen have been in this area, and his experience with the platform as well as his independent view will allow him to provide really good troubleshooting tips and helpful workarounds.

I know Neil remotely, and we have interacted a couple of times on MSDN forums or via Twitter. I can say that he is very knowledgeable about the topic, and you can learn a lot from the book and his personal blog.

I hope you will find the book a good resource, and kudos to Neil for writing it!

Still waiting for my copy to arrive.

Bytes by MSDN released a Bytes by MSDN: August 30 - Thomas Robbins interview on 8/30/2011:

Join Clark Sell, Senior Developer Evangelist at Microsoft, and Thomas Robbins, Chief Product Evangelist at Kentico Software, as they discuss the many exciting opportunities available with the Cloud. Kentico is Windows Azure certified and their focus is essentially to help people build better websites. Their ultimate goal in leveraging Windows Azure, is for customers to take their on-premise contact management systems and upload them to the cloud. Tune in for some great tips!


About Thom

Thom Robbins is the Chief Product Evangelist for Kentico Software LLC. He is responsible for working with Web developers, Web designers and interactive agencies using Kentico CMS. Prior to Kentico, Thom joined Microsoft Corporation in 2000 and served in a number of executive positions. Most recently, he led the Developer Audience Marketing group that was responsible for increasing developer satisfaction with the Microsoft platform. Thom also led the .NET Platform Product Management group responsible for customer adoption and implementation of the .NET Framework and Visual Studio. Robbins was also a Principal Developer Evangelist working with developers across the world on implementing .NET based solutions. Thom can be found on his blog, followed on twitter, or reached at thomasr@kentico.com

About Clark

Clark Sell is a Senior Developer Evangelist at Microsoft. Name a role in the software industry, and Clark has probably played it. He started as a Y2K tester and has since worked as a developer, lead, “build monkey,” solutions architect and product manager. His professional sweet spot however, lies in designing and building software solutions that make life easier – there’s no chance for boredom and constant opportunities for growth.

Clark is MCSD certified and received top Microsoft honors with the Circle of Excellence Award. He’s a graduate of Western Illinois University and before joining Microsoft in 2005, he served as a solutions architect at Allstate Insurance Company. As a senior developer evangelist and Visual Studio team system ranger, Clark brings a good dose of humor and a zest for life to the podium.

You can hear Clark’s technical musings on “The Smackdown” at DeveloperSmackdown.com and The Thirsty Developer podcast – or find him getting grease under his nails in the garage. Clark’s a muscle car fanatic who’s currently finishing a body-off restoration of his 1970 Chevrolet Camaro while driving his 1968 Camaro SS. You can also take a look at what Clark's been up to on his blog.


<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Spursoft Solutions updated its Spursoft LightSwitch Extension offered by the Visual Studio Gallery on 8/28/2010:

This extension contains a menu navigated shell and several themes along with several custom controls.

The Shell is navigated by menus driven from the navigation groups. They can be docked by the user at runtime in one of four positions: horizontally above or below the ribbon bar, or vertically on the right or left side of the active screen area. It also allows the user to decide to dock the active screen tabs on the bottom or the top of the active screen area.

This extension also contains several themes: the Twilight Blue theme from the theme manager in the Silverlight Toolkit, an Office 2010 Black theme from VIBlend, and a Default Theme.

This extension contains several controls such as a drop down list, Horizontal and Vertical Splitters, SQL Server Reporting Server Viewer Control, PDF Viewer Control. The Horizontal and Vertical Splitter allows the designer to split the UI into user resizable panes either vertically or horizontally. The drop down list is a simple list allowing for up and down arrow keys to navigate and select the items from the list. The SSRS Viewer is a WebBrowser based control allowing for the display of SQL Server Reporting Services Reports by way of ReportViewer control, WebService or spoofed Web Page. It can also be set to pop up the report in reusable or new browser windows.

Issues and workarounds

An issue has come to light with the use of this latest version. The issue is that it requires the use of the Silverlight ToolKit from April 10 for Silverlight 4. You can get this from here. After installing this toolkit you need to add a reference to the System.Windows.Controls.Layout.Toolkit assembly to both the Client and ClientGenerated projects. The next release will do this on the use of the Extension but for now this is the workaround. For more in-depth information please see this forum discussion.

This update contains several fixes and adds 2 new locations to dock the tabs and menus. You can now dock the tabs on the left and right with the tabs rotating vertically. You can also dock the menu at the bottom of the active screen.

It will also load the default screen on the load of the shell.

The bug fixes are related to the reports in both the forums and on this site in the Q and A section. Please feel free to continue to ask for features and bug fixes.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “New efforts to tax cloud computing could make it less cost-effective, and certainly messy for both buyers and providers” as a deck for his The taxman cometh for cloud computing post of 8/30/2011 to InfoWorld’s Cloud Computing blog:

You had to see this one coming. As local municipalities and states seek to find additional revenue in this down economy, they now have their sights on the emerging market of cloud computing. As more companies use cloud services, the traditional rules of taxation based on physical presence no longer fit. However, the new set of proposed tax laws could drive more confusion and remove much of the cost savings of transitioning to the cloud.

States recognize the shift in buying patterns from boxed software and hardware to computing services delivered over the Internet. Thus, they want to position or reposition tax laws to make sure they get their traditional share as purchases shift venues.

Many established interests want to shape this movement. Accountants, lawyers, state tax officials, and companies such as Google, Apple, and NetSuite are looking to develop new guidelines for taxing the use of cloud computing. Amazon.com has exited more than a dozen states that changed their laws to consider such affiliates as equivalent to taxable physical presence for distributors. Instead, Amazon is pulling affiliate arrangements to avoid collecting taxes and trying to get a ballot initiative in front of voters to exempt it from a recent decision to tax online retailers' in-state sales.

Now the federal government is chiming in. This includes Sen. Ron Wyden (D-Ore.) and House Judiciary Committee chairman Lamar Smith (R-Texas), who are backing federal legislation that would limit the states' ability to tax "digital goods and services." As you may recall, this was the same type of law that limited the taxation of the then-emerging Internet-based e-commerce industry in the 1990s, and it's based on an old Supreme Court decision that exempts catalog sales from having to collect sales taxes when the customers are in a different state than the retailer.

At the same time, Amazon and others are supporting a bill from Sen. Dick Durbin (D-Ill.) that would impose a streamlined national sales tax for e-commerce, avoiding the complexity of figuring out a hodgepodge of state and local tax rates. As online sales have grown dramatically, states have challenged the catalog sales-based exemption, some imposing sales taxes. …


Scott M. Fulton III (@SMFulton3) reported Microsoft: 'Virtualization Is Not Cloud Computing' from VMworld on 8/30/2011:

At this week's VMworld conference in Las Vegas, attendees are gearing up for a series of events and breakthrough announcements beginning Tuesday, some of which are expected to come directly from the mouth of VMware CEO Paul Maritz. This while the big news already hitting the floor is Citrix's move to full and free open source for its recently acquired Cloud.com infrastructure management system.

Somewhere in the middle of all this is Microsoft, which is not accustomed to playing the role of also-ran. Yesterday that company announced a revised licensing model, moving back to a per-processor scheme with unlimited virtual machine instances. It's part of the company's effort to attack VMware by going after its "V" word - de-emphasizing virtualization.

"Let's be clear: Virtualization is not cloud computing. It is a step on the journey, but it is not the destination." This from Microsoft's Brad Anderson, Corporate VP and head of what's currently being called the Management and Security Division. Under the new corporate setup, Anderson is now the company's chief cloud spokesperson.

"We are entering a post-virtualization era that builds on the investments our customers have been making and are continuing to make," Anderson continues. "This new era of cloud computing brings new benefits - like the agility to quickly deploy solutions without having to worry about hardware, economics of scale that drive down total cost of ownership, and the ability to focus on applications that drive business value - instead of the underlying technology."

It will be difficult to make the case, technologically speaking, that cloud computing is moving beyond virtualization - that's a bit like saying the automotive industry is moving beyond the drivetrain. As Microsoft's own literature on cloud computing (PDF available here) states, "The primary vehicle for cloud infrastructure is virtualization; more specifically, running virtual servers in large data centers, thereby removing the need to buy and maintain expensive hardware, and taking advantages of economies of scale by sharing Infrastructure resources."

But weighing in Microsoft's favor is a publication from arguably the company's key cloud customer, the U.S. Government. In spelling out for itself and prospective vendors just what constitutes "the cloud" and what does not, the National Institute for Standards and Technology specified classes of services, not technical foundations. NIST essentially said (PDF available here) a cloud was a self-service platform enabling rapid access to pooled resources, not a data center running a virtualized infrastructure.

So the shift Anderson is referring to is more of a change of focus, away from the center of gravity that VMware now commands and toward service and management tools where Microsoft is more competitive. In an effort to help customers change their focus along with Microsoft, the company announced yesterday a shift in the licensing strategy for its cloud infrastructure platform, moving back to a per-processor model.

Three years ago, the move away from per-processor licensing seemed to require more than a crowbar. Microsoft's scheme for Windows Server at that time was to disallow any transfer of the operating system image away from the processor to which it was "rooted" - essentially forbidding the whole process of VM migration, which is completely necessary for the cloud principle to work.

The new scheme is with respect to customers building private clouds - elastic computing realms that are built and managed by an organization, and housed on- or off-site. Microsoft's new Enrollment for Core Infrastructure (ECI) plays off the notion that VMware uses per-processor licensing for its core infrastructure tools but per-VM licensing for its services.

ECI, by comparison, enables unlimited (or "unlimnited," as the case may be) VMs of Windows Server, so the new scheme avoids re-introducing prohibition. What's more, as a marketing brochure released yesterday (PDF available here) explains, as businesses deploy more virtual machines, they could be saving about 75% on licensing expenses when the total figure enters the five-digit range.

"VMware's current licensing for private clouds places restrictions on customers and essentially taxes them as they grow," the brochure reads. "While the initial costs may seem tolerable for many IT budgets the long term impact as technology evolves means that costs will rise significantly, and negate a customer's ability to benefit from the economics of cloud computing. With Microsoft, you gain the advantages of cloud economics, based on our unlimited virtualization rights."
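The argument behind the two licensing models comes down to simple arithmetic: a per-VM charge grows with every virtual machine added, while a per-processor charge with unlimited VMs stays flat for a fixed set of hosts. The sketch below is illustrative only. The function names and every price in it are made up for the example and are not taken from the brochure or from any vendor price list.

```python
def per_vm_cost(vm_count, price_per_vm):
    """Total licensing cost when each virtual machine is licensed separately."""
    return vm_count * price_per_vm


def per_processor_cost(processor_count, price_per_processor):
    """Total licensing cost when each physical processor is licensed and the
    number of virtual machines is unlimited (cost ignores VM count)."""
    return processor_count * price_per_processor


def savings_fraction(vm_count, price_per_vm, processor_count, price_per_processor):
    """Fraction saved by per-processor licensing relative to per-VM licensing.
    Negative values mean per-VM licensing is the cheaper option."""
    per_vm = per_vm_cost(vm_count, price_per_vm)
    per_proc = per_processor_cost(processor_count, price_per_processor)
    return 1 - per_proc / per_vm


if __name__ == "__main__":
    # Hypothetical figures: 8 processors at 1000 each, VMs at 100 each.
    for vms in (20, 80, 160):
        fraction = savings_fraction(vms, 100, 8, 1000)
        print(f"{vms} VMs: savings fraction {fraction:+.2f}")
```

Under these invented prices the two models break even at 80 VMs; below that the per-VM model is cheaper, and above it the per-processor model pulls ahead as density increases. Real outcomes depend entirely on actual prices and host counts.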

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Edwin Yuen (@edwinyuen) posted Delivering business value with an app-centric approach to Private Cloud to the Server and Cloud Platform Team blog on 8/30/2011:

Hear more from Edwin Yuen, Director of Cloud and Virtualization Strategy, about Microsoft's approach to private cloud and how that differs from VMware's:

Yesterday in his keynote, Paul Maritz said VMware’s “heart” is in infrastructure. He also talked a lot about VMware’s focus on the cloud. Well, if your heart is in infrastructure (read: virtualization), and you’re focused on the cloud, we think that leads to a virtualization-focused approach to cloud.

At Microsoft our approach to private cloud delivers business value through a focus on the application, not the infrastructure. And, we believe economics is a fundamental benefit of cloud – it is one that customers should benefit from, not just us.

VMware’s private cloud solution reflects that infrastructure/virtualization heart, and that heart beats pretty loudly in their licensing – which is per-VM and per-product. So, as your cloud grows, and density increases, your costs grow right along with it. That doesn’t seem very cloud friendly to me, especially when a VMware private cloud solution can cost 4 to nearly 10 times more than a comparable Microsoft private cloud solution. If you’d like to see the research behind these numbers, take a look at the new whitepaper my team just published.

Your cloud, on your terms, built for the future, ready now – take a look & let us know what you think.

Edwin is Director for Cloud and Virtualization at Microsoft.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Eric Nelson (@ericnel) reported FREE One Day Windows Azure Discovery Workshops – second Tues of every month to his IUpdateable (UK) blog:

Subsequent to the September 12th workshop (which I previously blogged on), we will be delivering workshops every month on the second Tuesday:

- October 11th

- November 8th

- December 13th

- January 10th
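The listed dates can be checked against the "second Tuesday" rule with Python's standard calendar module. This is a small sketch, assuming the October to December dates fall in 2011 and the January date in 2012 (the post does not state the years); `second_tuesday` is a hypothetical helper name.

```python
import calendar
from datetime import date


def second_tuesday(year, month):
    """Return the date of the second Tuesday of the given month."""
    # Weeks start on Monday, so index calendar.TUESDAY (1) is Tuesday.
    # Days that fall outside the month are reported as 0 and skipped.
    weeks = calendar.Calendar(firstweekday=calendar.MONDAY).monthdayscalendar(year, month)
    tuesdays = [week[calendar.TUESDAY] for week in weeks if week[calendar.TUESDAY] != 0]
    return date(year, month, tuesdays[1])


if __name__ == "__main__":
    # Assumed years: the post gives only month and day.
    for year, month in [(2011, 10), (2011, 11), (2011, 12), (2012, 1)]:
        print(second_tuesday(year, month).strftime("%B %d, %Y"))
```

Under that assumption about the years, all four dates in the list above are second Tuesdays.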

These monthly workshops on the Windows Azure Platform are designed to help Microsoft partners who are developing software products and services and would like to explore the relevance and opportunities presented by the Windows Azure Platform for Cloud Computing.

Overview:

The workshops are designed to help partners such as yourself understand what the Windows Azure Platform is, how it is being used today, what resources are available and to drill into the individual technologies such as SQL Azure and Windows Azure. The intention is to ensure you leave with a good understanding of if, why, when and how you would take advantage of this exciting technology plus answers to all (or at least most!) of your questions.

Who should attend:

These workshops are aimed at technical decision makers including CTOs, Technical Directors, senior architects and developers. Attendees should be from companies who create software products or software services used by other organisations, for example Independent Software Vendors.

There are a maximum of 12 spaces per workshop and one space per partner (we can waitlist additional employees).

Format:

This format is designed to encourage discussion and feedback and ensure you get any questions you have about the Windows Azure platform answered.

Topics covered will include:

- Understanding Microsoft’s Cloud Computing Strategy

- Just what is the Windows Azure platform?

- Exploring why software product authors should be interested in the Windows Azure Platform

- Understanding the Windows Azure Platform Pricing Model

- How partners are using the Windows Azure platform today

- Getting started building solutions that utilise the Windows Azure Platform

- Drilling into the key components – Windows Azure, SQL Azure, AppFabric

Timings:

- 09:30 Registration

- 10:00 Workshop starts

- 11:15 Break

- 11:30 Workshop continues

- 13:00 Lunch

- 13:30 Workshop continues

- 15:00 End (although the Microsoft team will be happy to continue the discussion…)

How to register:

If you are interested in attending, please email ukdev@microsoft.com with the following:

- Which month

- Your Company Name

- Your Role

- Why you would like to attend

- Your current knowledge/exposure to Cloud Computing and the Windows Azure Platform

Related Links:

- Sign up to Microsoft Platform Ready for assistance on the Windows Azure Platform

- http://www.azure.com/offers

- http://www.azure.com/getstarted

Lynn Langit (@llangit) posted SQL Server and SQL Azure for Developers on 8/29/2011:

Here are the decks from my presentations for TechEd Australia this week. The sessions will be recorded. I’ll post the links after – thanks.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

The HPC in the Clouds blog reported Fujitsu Develops World's First Cloud Platform to Leverage Big Data in an 8/30/2011 press release:

Fujitsu today announced that it is currently developing a cloud platform, tentatively named the Convergence Services Platform, that will leverage "big data." Fujitsu will offer this platform in the fourth quarter of fiscal 2011, followed by a phased service release.

The Convergence Services Platform is a PaaS platform that accumulates data collected from a variety of sensors or data that has already been collected, integrates it, processes it in real time, and uses it to make future projections. With the Convergence Services Platform, Fujitsu will be the first in the world to deliver a PaaS platform that can securely leverage different types of large data sets on one platform.

Through the use of this platform, customers can enhance the value of their products and services, quickly bring new information services businesses on line, and enhance collaboration by aggregating information from a particular region, thereby creating new value and bringing new dynamism to regional communities.

The Convergence Services Platform is a key technology to realize Fujitsu's vision of a secure and prosperous human-centric intelligent society by leveraging the power of information and communication technology (ICT). Going forward, Fujitsu is committed to do more than just offer ICT platforms; it strives to deliver comprehensive and focused convergence services, including operations centers and expert staff that support the utilization of customer data to provide management of customers' new businesses and in diverse regions.

In recent years, as people's lives and the nature of business have become increasingly varied and complex, the requirements demanded of ICT increasingly extend beyond traditional industry boundaries into realms such as the efficient use of energy, the resolution of such urban problems as traffic jams, and the reduction of spending on healthcare. As its approach to contributing to resolving these types of issues and delivering new value, Fujitsu works to provide convergence services in which large volumes of data are collected, stored, analyzed, and integrated with knowledge in a feedback loop to society.

Fujitsu has been developing its Convergence Services Platform that is essential for making convergence services a reality. It is an integrated platform that can accumulate and analyze big data, process it in real time, and perform contextual processing of data(1). Fujitsu plans to be the first in the world to offer this type of Convergence Services Platform as a PaaS platform.

Features of the New Platform:

1. Comprehensive service that integrates all required technologies

The platform integrates, via the cloud, all of the technologies required to leverage big data, including compound event processing(2), parallel distributed processing, data compression and anonymization, and data mashups(3).

2. Different types of large data sets can be integrated

Because different types of large data sets, including data collected from a variety of sensors, business transaction log data, text data, and binary streams(4), can be integrated for a variety of purposes, it contributes to the creation of new value.

3. Delivery as a cloud service enables compatibility with all required computing resources

Depending on the requirements of the service employed, it can be used starting from even a small scale in terms of data volumes and number of servers employed. Only computing resources that are required are used, making for appropriate operational costs.

Platform Functions

The Convergence Services Platform comprises the following suite of six functions:

- Data collection and detection. By applying rules to the data streaming in from sensors, an analysis of the current status can be performed and, based on that analysis, decisions can be made with navigation or other required procedures performed in real time.

- Data management and integration. A huge volume of diverse data in different formats constantly being collected from sensors is efficiently accumulated and managed through the use of technology that automatically categorizes the data for archive storage.

- Data analysis. The huge volume of accumulated data is quickly analyzed using a parallel distributed processing engine to create value through the analysis of past data, future prediction, or simulations.

- Information application. Using service mashup technology, the analytical results from the platform are integrated with multiple web services to easily create new navigation services. The analysis and judgment results are made visible and used to inform recommendations(5) that are made to support end users.

- Data exchange. Using data federation(6) technology in which the data can be accessed in compliance with authorizations, secure data convergence among tenants (customers) is available in the required format when needed.

- Development support and operational management. Development support functions and operations management functions for the entire platform are provided in a user portal. As a result, all development resources can be managed and operated in an integrated manner.

Delivery Formats for the Platform

The Convergence Services Platform is expected to be provided in the following three formats.

- Integration format: Fujitsu provides the platform as a PaaS and develops the customer's proprietary applications on the platform

- Application services format: Fujitsu provides each type of application service (such as SPATIOWL(7)) via the platform

- Data format: Fujitsu utilizes the environment for analyzing the customer's data as well as the data accumulated on the platform. …

Fujitsu also is the first third party to implement the Windows Azure Platform Appliance to provide Windows Azure as a service.

Matthew Weinberger (@MattNLM) reported Salesforce.com Invests in Cloud Integration Platform Appirio in an 8/30/2011 post to the TalkinCloud blog:

Cloud integration platform provider Appirio is expanding its footprint in the Europe and Asia Pacific regions, thanks to a strategic investment on the parts of cloud CRM provider Salesforce.com and VC firm GGV Capital. Both Salesforce and GGV were existing investors in Appirio.

Chris Barbin, CEO of Appirio, had this to say on the announcement’s timing in a prepared statement:

“Cloud computing is already almost a $70 billion market, but it’s still early days in the overall shift happening in IT – especially outside the U.S. When companies see the impact of powering their business with the cloud, that shift will only accelerate and they’ll need a cloud-focused partner to help them.”

The money apparently will be used for both organic and inorganic growth, so watch for further news of Appirio acquisitions. According to the press release, Appirio has had a presence in Japan since opening a Tokyo regional office in 2008, but this move will see the company expand greatly in the region. What we don’t know is how much Appirio raised in this round, as the company is keeping that information under its hat.

As a refresher, Appirio’s value proposition mainly involves its CloudWorks platform, which integrates various and sundry SaaS applications with each other and on-premises systems, addressing data siloing issues.

Stay tuned for more on both Appirio and Salesforce.com, especially as both will be out in, ahem, force at this week’s Dreamforce ’11.

Read More About This Topic

- Appirio CloudWorks Cloud Integration Platform Gets Update

- Appirio SalesWorks Integrates Salesforce Chatter with Google Apps

- Dreamforce 2011 Agenda: What Cloud Services Providers Can Expect

- LiveOffice Connecting Salesforce and Microsoft Outlook

- Is Salesforce.com Buying Social Media Helpdesk Assistly?

Watch for a change in Matt’s Twitter handle to MattPenton, because Penton acquired TalkinCloud and Nine Lives Media (NLM).

Joe Brockmeier (@jzb) reported VMware Improves View, and Extends Horizon in an 8/30/2011 post to the ReadWriteCloud:

Yesterday's VMware announcements were all about the data center and the global cloud ecosystem. Today the announcements are more end-user focused. Today the company took the wraps off VMware View 5 and updates to VMware Horizon.

Aside from a scenic theme to the announcements, what's going on with View and Horizon? VMware View lets organizations provide personalized virtual desktops to users as a managed service. Users can get their desktop on a mobile device, laptop, desktop, or thin client.

Thanks to improvements in View 5, they can get them faster and with less bandwidth. VMware has enhanced its PCoIP delivery so that you can get up to 75% bandwidth improvement. Better compression, client-side caching, and the choice of lossless options. They've also added performance optimizations that are supposed to reduce the load on CPUs by 5 to 10%.

View 5 also promises to deliver better graphics overall, by using View 5 with vSphere 5. This means that users that get their desktops via View will have the option of using Windows Aero or other 3D applications that require DirectX or OpenGL support. And users can have displays that are up to 1900x1200. Two of them, actually.

The real biggie with View 5 is persona management. This preserves the user's profile between sessions – that includes user-specific desktop settings, application data and settings, and other Windows registry entries configured by applications. This doesn't look quite as comprehensive as the technology Citrix picked up from RingCube, but should be a big boost for shops that are already invested in VMware View and vSphere. VMware's Scott Davis has blogged extensively about the "flavors" of persona management.

There's also an update to the VMware View Client for iPad on the, er, horizon. Pricing for View 5 is $150 per concurrent connection for the enterprise edition and $250 per concurrent user for the premier edition. Both editions include vSphere 5 for desktops, vCenter Server 5 and VMware View Manager 5. Premier adds View Client with Local Mode, ThinApp 4.6, and View Composer and vShield Endpoint.

On the Horizon

In addition to updating View, VMware announced updates to VMware Horizon. Horizon is meant to serve as an "identity-as-a-service" hub to extend user identity from systems like Microsoft Active Directory into the cloud. It consists of Mobile Manager and the Mobile Virtualization Platform (MVP). Mobile Manager is a console for monitoring, updating, and provisioning/de-provisioning virtual workspaces on mobile devices. The MVP is to allow users to run a virtual work environment on their mobile phones that's separate from their personal environment.

This isn't entirely new: VMware previewed MVP earlier this year at the Mobile World Congress. The announcement for this week is that MVP now works with LG and Samsung phones. LG was announced earlier this year; Samsung is new to the family of supported devices.

This is good and bad news for users and organizations. Good in that if they have a supported phone, they can use one phone for everything. Bad in that they're saddled with a limited set of Android devices. And iOS isn't on the list yet, though Raj Mallempati, director product marketing, enterprise desktop solutions for VMware says it's "technically possible" to support iOS. It seems to be more a matter of getting Apple's cooperation. Mallempati says he can't say whether it's likely or not that the iOS version will come to market.

James Urquhart (@jamesurquhart) asked Can any cloud catch Amazon Web Services? Part 1 in an 8/29/2011 post to C|Net News’ Wisdom of Clouds blog:

While there has been an incredible increase in the competitive landscape for various cloud computing services, one company stands out to me as unique in both its approach and its success. That company is Amazon, and their Amazon Web Services offerings.

Before you accuse me of being some kind of fanboy, I want to explain why I say this. And in part 2 of this series, I'll point out how this uniqueness will be challenged in the coming year or two. There is no guarantee that Amazon will be the dominant cloud capacity provider forever, but they deserve their props when it comes to what they have achieved to date, and how--at least for now--they continue to go about staying the leader.

For the most part today, Amazon is competing against hosting companies in the infrastructure-as-a-service (IaaS) market. To understand why Amazon's approach remains so successful relative to those other offerings, you have to look closely at their approach to the cloud relative to those competitors.

Most IaaS is built for operations, Amazon services are built for developers

Hosting companies generally deal with servers and people who operate servers. Yes, managed service offerings can move the conversation to those operating specific applications, but that is different than software development.

Even though cloud computing is an operations model, it is a model that requires applications to be architected a certain way--an architecture that most well-known software packages aren't very good at today. It is also a model that opens the door to whole new application categories that were just not cost effective to approach when everything had to be paid for whether it was used or not.

Both of those conditions require software developers to create the appropriate software to take advantage of cloud's benefits. This means that cloud's success depends on software development, and cloud solutions should target that market. Which is precisely what AWS has done.

Amazon gives developers control with "services as a platform" approach

Coding distributed systems is hard. It requires a huge amount of supporting software to make sure components and systems can communicate, coordinate, and survive negative events.

Amazon gets this, and it provides services that enable some flexibility of application architecture, while eliminating the headache of installing and operating the underlying systems. ElastiCache was the latest, but AWS RDS, S3, SQS, and others are examples of core infrastructure software elements that are now offered as an API to developers for a price.

Of course, developers have to do more work to coordinate scaling and failure recovery of their applications in this model, but they have tremendous control over their application architectures and operations.

Traditional hosters, on the other hand, are placing their bets on "platform as a service" (PaaS), which is a term largely associated with framework-centric development environments, in which developers write their code on top of a set of APIs that define the architecture of the application, and how that architecture deploys, scales, and recovers from failure. That's powerful, but it is also limiting for developers looking to push the boundaries of cloud models, as those boundaries are hard-set by the framework architecture.

Amazon doesn't wait for others to create necessary technologies

I think one reason that Amazon addresses the developer market so well is that they are developers themselves, while most hosting companies are data center operators. Thus, even with respect to core infrastructure automation technologies, Amazon hasn't waited for a known vendor to solve all of their problems, but have attacked solutions themselves head on.

This, in turn, allows them to focus on priorities as determined by their customers, as well as innovate new services that customers didn't even know they wanted. If the technologies exist, they will explore them, and maybe use one. However, for a systems software shop like AWS, often the faster, cheaper route is to create the service themselves, the way they want it.

Hosting companies, on the other hand, generally don't have large software development teams to create new services or address new markets.

Amazon understands economies of scale

Some time ago, Amazon's data center guru, James Hamilton, wrote a post (which I covered) explaining the importance of keeping systems busy to keep costs down. It was brilliant insight, and their maniacal push to find ways to sell capacity (both compute and storage) is one of their strongest advantages.

My favorite example of this is the AWS spot instances, in which users can purchase compute time at a variable price set by Amazon, with the condition that their VMs can be killed if the spot price goes higher than a limit set by the user. Why offer this plan, which clearly takes a fair investment in automation and management software to operate?

As I noted when it was introduced, the plan exists for a simple reason: to encourage people to consume capacity that might otherwise go unused for a variety of reasons. Even at a reduced rate, the capacity is cheaper to operate than to let lie dormant.
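For readers who want to see the spot-instance rule in concrete terms, the following is a minimal Python sketch of the mechanism as the article describes it. It is not Amazon's implementation or API: `SpotRequest`, `simulate`, the hourly price series and the assumption that a terminated instance is never restarted are all hypothetical simplifications, and the sketch assumes the user is charged the prevailing spot price rather than their own limit.

```python
from dataclasses import dataclass


@dataclass
class SpotRequest:
    """Hypothetical spot request: the user states the most they will pay per hour."""
    name: str
    max_price: float  # user's limit, in dollars per instance-hour


def simulate(requests, spot_prices):
    """Replay a series of hourly spot prices against a set of requests.

    An instance keeps running while the spot price is at or below the user's
    limit. Once the price rises above the limit the instance is killed and,
    in this simplified model, is not restarted.
    Returns {name: (hours_run, total_cost)}.
    """
    results = {}
    for req in requests:
        hours, cost = 0, 0.0
        for price in spot_prices:
            if price > req.max_price:
                break  # the provider reclaims the capacity
            hours += 1
            cost += price  # assumption: billed at the spot price, not the limit
        results[req.name] = (hours, round(cost, 4))
    return results


if __name__ == "__main__":
    prices = [0.03, 0.04, 0.06, 0.05, 0.09]  # made-up hourly spot prices
    reqs = [SpotRequest("batch-job", 0.05), SpotRequest("render-farm", 0.10)]
    for name, (hours, cost) in simulate(reqs, prices).items():
        print(f"{name}: ran {hours} h, paid ${cost:.2f}")
```

With these made-up prices, the request with the lower limit loses its instance in the third hour, while the request with the higher limit runs through all five hours. That is the trade-off the plan offers: cheaper capacity in exchange for the risk of interruption.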

This is an argument that I thought hosting companies would understand immediately, but to my surprise I am not aware of any other cloud provider-operated spot market today. So, those providers appear to be at a disadvantage when it comes to squeezing revenue out of cloud servers and disks.

All of this probably makes it sound like I think Amazon's dominance is a foregone conclusion. I don't. In fact, what excites me about the cloud marketplace today is the number of initiatives that are creating challenges to the Amazon ecosystem. I'll explore those in part 2 of this series.

Lydia Leong (@Cloudpundit) described VMware vCloud Global Connect and commoditization in an 8/29/2011 post to her Gartner (CloudPundit) blog:

At VMworld, VMware has announced vCloud Global Connect, a federation between vCloud Datacenter Provider partners.

My colleague Kyle Hilgendorf has written a good analysis, but I wanted to offer a few thoughts on this as well.

The initial partners for the announcement are Bluelock (US, based in Indianapolis), SingTel (Singapore), and SoftBank Telecom (Japan). Notably, these vendors are landlocked, so to speak: they have deployments only within their home countries, and probably will not expand significantly beyond their home territories. Consequently, they’re not able to compete for customers who want multi-region deployments but one throat to choke. (Broadly, there are still an insufficient number of high-quality cloud providers who have multi-region deployments.)

These providers are relatively heavyweight: their typical customers are organizations which are going through a formal sourcing process in order to procure infrastructure, and are highly concerned about security, availability, performance, and alignment with enterprise IT. I expect that anyone who chooses federation with Global Connect is going to apply intense scrutiny to the extension provider, as well. At least because the vCloud Datacenter architecture is to some extent prescriptive, and has relatively high requirements, in theory all federation providers should pass the buyer’s most basic “is this cloud provider architected in a reasonable fashion” checks.

However, I think customers will probably strongly prefer to work with a truly global provider if they need truly global infrastructure (as opposed to simply trying to globally source infrastructure that will be used in unique ways within each region) — and those with specific regional needs are probably going to continue to buy from regional (or local) providers, especially given how fragmented cloud IaaS sourcing frequently is.

It’s an important technical capability for VMware to demonstrate, though, since, implicitly, being able to do this between providers also means that it should be possible to move workloads between internal vClouds and external vClouds, and to disaster-recover between providers.

Importantly, the providers chosen for this launch are also providers who are not especially worried about being commoditized. Their margin is really made on the value-added services, especially managed services, and not so much from just providing compute cycles. Each of them probably gains more from being able to address global customer needs, than they lose from allowing their infrastructure to be used by other providers in this fashion.

I do believe that the core IaaS functionality will be commoditized over time, just like the server market has become commoditized. I believe, however, that IaaS providers will still be able to differentiate — it’ll just be a differentiation based on the stuff on top, not the IaaS platform itself.

In the early years of the market, there is significant difference in features/functionality between IaaS providers (and how that relates to cost), but the roadmaps are largely convergent over the next few years. Just like hosters don’t depend on having special server hardware in order to differentiate from one another, cloud IaaS providers eventually won’t depend on having a differentiated base infrastructure layer — the value will primarily come higher up the stack.

That’s not to say that there won’t still be differences in the quality of the underlying IaaS platforms, and some providers will manage costs better than others. And the jury’s still out on whether providers who build their own intellectual property at the IaaS platform layer, vs. buying into vCloud (or Cloud.com, some future OpenStack-based stack, or one of many other “cloud stacks”), will generate greater long-term value.

(For further perspective on commoditization, see an old blog post of mine.)

Derrick Harris (@derrickharris) quoted VMware’s Maritz: No more putting lipstick on legacy apps in an 8/29/2011 post to GigaOm’s Structure blog:

Speaking to a jam-packed room of thousands, VMware CEO Paul Maritz kicked off today’s VMworld conference by declaring, once again, the advent of the cloud era. If you don’t believe him, just look at the numbers. As Maritz highlighted, there are now more virtual workloads deployed worldwide than there are physical workloads. There is one VM deployed every six seconds. There are more than 20 million VMs deployed overall.

Assuming that most of those are running atop some version of VMware’s hypervisor, there’s a lot of reason to care what Maritz has to say about the future of the cloud. His company will have a major role in defining the transition from virtualization to cloud computing.

Maritz noted that there’s a lot of hype around the cloud, even acknowledging that “We at VMworld are not immune to cloud fever,” but he believes it’s more than just a fad. Maritz thinks there are three very profound, and very real, forces driving the move to cloud computing: modernization of infrastructure, investment in new and renewed applications, and entirely new modes of end-user access.

However, there’s a big difference between what drove the world to deploying 20 million VMs and what will drive it to modernize infrastructure even further with the cloud. Consolidation largely drove the move to virtualization, but applications and mobile devices will drive the move to cloud computing.

On the application front, Maritz looks to what he calls canonical applications. “When canonical applications change, that’s when you see really profound change [across the computing ecosystem],” Maritz said. He pointed to bookkeeping applications as indicative of the mainframe era, and to ERP, CRM and e-commerce as the defining applications of the client-server era.

Real-time and high-scalability capabilities — both in terms of traffic and data — are driving the development of new applications. Being able to analyze data days after it’s generated, or to adapt to new traffic patterns within days, just isn’t good enough anymore. We can’t keep “putting lipstick around” current applications and expect them to meet these new demands, Maritz said.

How we write those applications also will be critical, because they’ll have to run on a variety of non-PC devices. We’re approaching the intersection of consumerization and next-generation enterprise IT, Maritz explained, which means that companies like VMware have to plan for very serious change. Running enterprise applications on consumer devices, especially of the mobile variety, is a big change.

They’ll have to embrace things such as HTML5 to enable cross-platform applications, and new programming frameworks to attract young developers that demand a simple, dynamic development experience. Companies will also have to figure out how to secure corporate data against the myriad threats that accompany employees downloading apps willy-nilly and operating often on unsecured (or at least less-secured) networks. VMware CTO Steve Herrod actually will be highlighting VMware’s role in the mobile ecosystem at our Mobilize conference next month, and it’s safe to assume these will be among the topics he addresses.

Maritz, of course, thinks VMware has strong plays in all of these spaces (vSphere, Cloud Foundry, Horizon, the list goes on) and he highlighted them. However, as my colleague Stacey Higginbotham pointed out while highlighting the key VMworld trends, VMworld isn’t alone in making this realization. It has major competition, including from companies like Microsoft that know both the enterprise and the consumer spaces very well.

Every year at VMworld, Maritz highlights the movement toward cloud computing and how VMware is driving that migration. In large part, he’s right every time on the latter point. Now that almost everyone is on board with Maritz’s vision, though, I’m interested to see how long VMworld, and VMware in general, continues to drive the discussion around the future of IT.

<Return to section navigation list>
