Windows Azure and Cloud Computing Posts for 8/1/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 8/1/2011 4:00 PM PDT with new articles marked • by the Azure AppFabric Team and Beth Massi.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, Cache, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Juan Pablo Garcia Dalolla (@jpgd) published A simple implementation of Windows Azure Storage API for Node.js for blobs to GitHub on 8/13/2011. From the ReadMe:

Installation

waz-storage-js depends on querystring (>= v0.0.1) and xml2js (>= v0.1.9).

To install via npm:

    npm install waz-storage-js

Examples

Initial Setup

    var waz = require('waz-storage-js').establishConnection({
        accountName: 'your_account_name',
        accountKey: 'your_key',
        useSsl: false
    });

Blobs

    // Creating a new container
    waz.blobs.container.create('myContainer', function(err, result){ });

    // Listing existing containers
    waz.blobs.container.list(function(err, result){ });

    // Finding a container
    waz.blobs.container.find('myContainer', function(err, container){
        // Getting container's metadata
        container.metadata(function(err, metadata){ });

        // Adding properties to a container
        container.putProperties({'x-ms-custom' : 'MyValue'}, function(err, result){ });

        // Getting container's ACL
        container.getAcl(function(err, result){ });

        // Setting container's ACL (null, 'blob', 'container')
        container.setAcl('container', function(err, result){ });

        // Listing blobs in a container
        container.blobs(function(err, result){ });

        // Getting blob's information
        container.getBlob('myfolder/my file.txt', function(err, blob){
            // Getting blob's contents
            blob.getContents(function(err, data){ console.log(data); });
        });

        // Uploading a new Blob
        container.store('folder/my file.xml', '<xml>content</xml>', 'text/xml', {'x-ms-MyProperty': 'value'}, function(err, result){ });
    });

    // Deleting containers
    waz.blobs.container.delete('myContainer', function(err){ });

Queues

Coming Soon - If you are anxious, you can always contribute [to] the project :)

Tables

Coming Soon - If you are anxious, you can always contribute [to] the project :)

Remaining Stuff

- Documentation

- SharedAccessSignature

Blobs

- Copy / Delete / Snapshot

- Metadata (get/put)

- Update contents

- Blocks

Queues

- Everything

Tables

- Everything

Known Issues

- Container's putProperties doesn't work.

Authors

License

(The MIT License)

Copyright (c) 2010 by Juan Pablo Garcia Dalolla

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the 'Software'), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED 'AS IS', WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Juan Pablo is a developer with Southworks.

<Return to section navigation list>

SQL Azure Database and Reporting

The SQL Server Team Blog posted Cloud On Your Terms: Enables organizations to create and scale business solutions fast, on your terms from server to private or public cloud on 8/1/2011:

We recognize that each organization has its own unique challenges and requirements, and this is no different when it comes to taking advantage of the cloud. Organizations have different requirements when it comes to security, compliance, application needs, and level of control - which is why it is necessary to choose a solution which is flexible enough to meet an organization’s unique needs while still allowing it to adapt to rapidly changing requirements.

Scale on demand with flexible deployment options: With SQL Server Code Name “Denali,” customers are not constrained to any one environment be it traditional deployments, private clouds or the public cloud. Instead, organizations can “mix and match” and take advantage of a common underlying architecture which gives them maximum flexibility in deploying their solutions across private, public or hybrid environments to meet growing and changing business needs.

Fast time to market: Whether customers want to build it themselves, buy it ready-made or simply consume it as a service, SQL Server Code Name “Denali” gives customers a range of options for quickly building solutions. For those who want to build it themselves, take advantage of reference architectures and tools to rapidly build private cloud solutions. Alternatively, buy ready-made SQL Server appliances that are optimized for workloads and eliminate the time to design, tune and test hardware and software appliances. Or simply consume it as a service from the public cloud with SQL Azure!

Common set of tools across on-premises and cloud: Maximize your skills and be cloud-ready by using the same set of skills and an integrated set of developer and management tools for developing and administering applications across private cloud and public cloud. SQL Server Developer Tools Code Name “Juneau” enables an integrated development experience for developers to build next-generation web, enterprise and data-aware mobile applications within SQL Server Code Name “Denali.” Also, support for enhancements to the Data-tier Application Component (DAC) introduced in SQL Server 2008 R2 further simplifies the management and movement of databases across server, private and public cloud.

Extend any data, anywhere: Extend the reach of your data and power new experiences by taking advantage of technologies such as SQL Azure Data Sync to synchronize data across private and public cloud environments, OData to expose data through open feeds to power multiple user experiences, and Windows Azure Marketplace DataMarket to monetize data or consume it from multiple data providers. Meanwhile, SQL Server Code Name “Denali” delivers extended support for heterogeneous environments by connecting to SQL Server and SQL Azure applications using any industry standard APIs (ADO.NET, ODBC, JDBC, PDO, and ADO) across varied platforms including .NET, C/C++, Java, and PHP.

Download SQL Server Code Name “Denali” CTP3 or SQL Server Developer Tools Code Name “Juneau” CTP bits TODAY at: http://www.microsoft.com/sqlserver/en/us/get-sql-server/try-it.aspx

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, Cache, WIF and Service Bus

• The Windows Azure AppFabric Team posted Announcing The End of The Free Promotional Period for Windows Azure AppFabric Caching on 8/1/2011:

For the past few months, we have offered the Windows Azure AppFabric Caching service free of charge for the promotional period that ends July 31, 2011. For all billing periods beginning on August 1, 2011 onward, we will begin charging at the following standard monthly rates:

- $45.00 for a 128MB cache

- $55.00 for a 256MB cache

- $75.00 for a 512MB cache

- $110.00 for a 1GB cache

- $180.00 for a 2GB cache

- $325.00 for a 4GB cache
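The rate list above lends itself to a quick sizing calculation. The following is a minimal sketch, assuming 1GB is counted as 1024MB; the helper names `smallestCacheFor` and `usdPerMb` are hypothetical and are not part of any Windows Azure API.

```javascript
// Monthly rates quoted in the announcement above (cache size in MB, US dollars).
// Assumption: 1GB = 1024MB.
const CACHE_RATES = [
  { sizeMb: 128, monthlyUsd: 45 },
  { sizeMb: 256, monthlyUsd: 55 },
  { sizeMb: 512, monthlyUsd: 75 },
  { sizeMb: 1024, monthlyUsd: 110 },
  { sizeMb: 2048, monthlyUsd: 180 },
  { sizeMb: 4096, monthlyUsd: 325 },
];

// Hypothetical helper: the smallest listed cache that holds `neededMb`,
// or null when the requirement exceeds the largest (4GB) size.
function smallestCacheFor(neededMb) {
  return CACHE_RATES.find((tier) => tier.sizeMb >= neededMb) || null;
}

// Hypothetical helper: effective price per MB per month, rounded to cents.
function usdPerMb(tier) {
  return Math.round((tier.monthlyUsd / tier.sizeMb) * 100) / 100;
}

const tier = smallestCacheFor(300);
console.log(tier.sizeMb, tier.monthlyUsd, usdPerMb(tier));
```

The per-MB figure falls as the cache grows, so a workload that sits just above a size boundary may be worth trimming before moving up a tier.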

If you are signed up for one of the Windows Azure Platform offers, it may include a 128MB cache option at no additional charge for a certain period of time, depending on the specific offer. You can find out whether your offer includes a 128MB cache at no additional charge by going to our page that lists our various Windows Azure Platform offers.

If you are using caching and do not desire to be billed, please be sure to stop using caching in excess of what is included with your offer immediately.

If you have any questions about the Windows Azure platform or the Windows Azure AppFabric Caching service, please visit our support page.

Ron Jacobs announced Windows Workflow Foundation - WF Migration Kit CTP 2 Released in an 8/1/2011 post to the AppFabric Team blog:

Update

We’re happy to announce that the CTP 2 version of the WF Migration Kit has been released to CodePlex. Please refer to our CodePlex page for more information. The new State Machine activity for WF 4 was released with the .NET Framework 4 Platform Update 1 in April 2011. In our CTP 2 release, we’ve added support for State Machine migration.

If you already have CTP 1 installed, the CTP 2 installation will remove the CTP 1 version from your machine.

Introduction

The WF Migration Kit helps users migrate WF3 (System.Workflow) artifacts to WF4 (System.Activities). The high level goal is to migrate workflow definitions and declarative conditions, but not code (such as workflow code-beside methods). Some WF3 workflows will be fully migratable to WF4, while others will be partially migratable and will require manual editing to complete the migration to WF4.

The WF Migration Kit provides an API as well as a command line executable tool, which is a wrapper around the API. Migrators are included for some, but not all, of the WF3 out-of-box activities. The WF Migration Kit offers an extensibility point so that custom activity migrators can be developed by third parties.

Activity migrators are provided for the following WF3 activities:

- Delay

- Throw

- Terminate

- Send, Receive

- CallExternalMethod, HandleExternalEvent (requires ExternalDataExchangeMapping information)

- Sequence, SequentialWorkflow

- IfElse, IfElseBranch

- While

- Parallel

- Listen

- Replicator

- TransactionScope

- CancellationHandler, FaultHandlers, FaultHandler

- StateMachineWorkflow, State, StateInitialization, StateFinalization, SetState, EventDriven

The WF Migration Kit CTP runs on top of Visual Studio 2010 and .NET Framework 4, which can be found here. Please note that the Client Profile of the .NET Framework 4 is not sufficient since it does not contain the WF3 assemblies. State Machine migration requires .NET Framework 4 Platform Update 1, which can be found here.

The WF Migration Kit is also available via NuGet.

State Machine Migration

Now let’s take a look at how WF Migration Kit can help us with State Machine migration. Please download WF Migration Kit CTP 2 Samples.zip from our CodePlex page.

The overall steps of State Machine migration include:

- Either download and install WF Migration Kit CTP 2 Setup.msi from CodePlex, or download WFMigrationKit package from NuGet.

- Execute migration on the legacy workflow project: DelayStateMachine3.

- Port migrated activities to the new workflow project: DelayStateMachine4.

Installation

You can download and install WF Migration Kit CTP 2 Setup.msi from CodePlex, or download WFMigrationKit package from NuGet.

Download and install WF Migration Kit CTP 2 Setup.msi from CodePlex.

- Visit WF Migration Kit homepage on CodePlex.

- Download WF Migration Kit CTP 2 Setup.msi from the page.

- Install WF Migration Kit CTP 2.

The WF Migration Kit is installed in the %ProgramFiles%\Microsoft WF Migration Kit\CTP 2\ folder, or in %ProgramFiles(x86)%\Microsoft WF Migration Kit\CTP 2\ on 64-bit operating systems.

Download WFMigrationKit package from NuGet.

- Download NuGet Package Manager, and install.

- Open a project in Microsoft Visual Studio 2010

- Right click on References and choose Manage NuGet Packages.

- In the Online tab search for WFMigrationKit. Click on Install button to download the package.

- Close the dialog, open a Command Prompt window and change to the package tools directory. e.g:

cd WF Migration Kit CTP 2 Samples\StateMachineMigrationSamples\Begin\packages\WFMigrationKit.1.1.0.0\tools

Test migration on the legacy workflow project: DelayStateMachine3.

- Compile the DelayStateMachine3 project, which produces an executable DelayStateMachine3.exe.

- Copy DelayStateMachine3.exe to the installation directory (or package tools directory for NuGet) which contains WFMigrator.exe and Microsoft.Workflow.Migration.dll.

- Execute the following command in the previously opened Command Prompt:

    WFMigrator DelayStateMachine3.exe

A DelayWorkflow.xaml file has been generated in the current working directory. The migration completes with information indicating actions required to manually solve custom code activities not migrated during the execution.

Port migrated activities to the new workflow project: DelayStateMachine4.

- Use Microsoft Visual Studio to add the DelayWorkflow.xaml file generated earlier to DelayStateMachine4 project.

- Open DelayWorkflow.xaml.

- Adjust the design canvas as you need.

- Inspect WriteLine activities which have been emitted in place of custom code activities, and develop corresponding WF 4 activities for a manual migration.

- Verify workflow behaviors after migration.

Feedback

You’re always welcome to send us your thoughts on the WF Migration Kit, and please let us know how we can do it better. You can leave feedback:

- Here in the blog comments

- By opening an issue at wf.CodePlex.com

Thanks!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan answered How RDP works in Windows Azure Roles? in an 8/1/2011 post:

The way RDP works is that one of the roles (when you have multiple roles and instances) will have RemoteForwarder running in all of its instances, and all of the roles will have RemoteAccess running. When you RDP into a specific instance, the role which has RemoteForwarder running is listening on port 3389 for all incoming RDP requests to that deployment.

Because all of your role instances are behind the load balancer, any random instance could receive the RDP connection. The RemoteForwarder then internally passes that connection to the RemoteAccess agent running on the specific instance you are trying to connect to.

So if the role which is running RemoteForwarder is cycling, you will not be able to RDP into any other role in that deployment. This can also cause intermittent RDP issues with your instances.

If you want to dig deeper, you can look at the CSDEF file to determine which role is running RemoteForwarder, because that role is the key to RDP enablement overall. If you have multiple roles and know that some are more stable than others, you can move RemoteForwarder to a role which you know is stable.
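The forwarding path described above can be pictured with a small conceptual model. This is only an illustrative sketch in plain JavaScript: `routeRdp`, the `deployment` object and the instance names are hypothetical and do not correspond to any Windows Azure API.

```javascript
// Conceptual model only: names below are illustrative, not Windows Azure APIs.
function routeRdp(deployment, targetInstanceId, pick = Math.random) {
  // 1. The load balancer hands port 3389 traffic to any ready instance of
  //    the role that runs RemoteForwarder.
  const forwarders = deployment.instances.filter(
    (i) => i.role === deployment.forwarderRole && i.ready
  );
  if (forwarders.length === 0) {
    return { ok: false, reason: 'no RemoteForwarder instance is available' };
  }
  const forwarder = forwarders[Math.floor(pick() * forwarders.length)];

  // 2. The forwarder passes the connection to the RemoteAccess agent on the
  //    instance the user actually asked for.
  const target = deployment.instances.find((i) => i.id === targetInstanceId);
  if (!target || !target.ready) {
    return { ok: false, reason: 'target instance is not available' };
  }
  return { ok: true, via: forwarder.id, target: target.id };
}

const deployment = {
  forwarderRole: 'WebRole',
  instances: [
    { id: 'WebRole_IN_0', role: 'WebRole', ready: true },
    { id: 'WebRole_IN_1', role: 'WebRole', ready: true },
    { id: 'WorkerRole_IN_0', role: 'WorkerRole', ready: true },
  ],
};
console.log(routeRdp(deployment, 'WorkerRole_IN_0'));

// If every instance of the forwarder role is cycling, the healthy worker
// role becomes unreachable as well.
deployment.instances.forEach((i) => {
  if (i.role === 'WebRole') i.ready = false;
});
console.log(routeRdp(deployment, 'WorkerRole_IN_0'));
```

The second call fails even though the target instance is healthy, which is the reason for placing RemoteForwarder on the most stable role.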

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team (@WindowsAzure) reminded developers to watch Scott Guthrie Talks About Windows Azure With Steve and Wade on Cloud Cover in an 8/1/2011 post:

Don’t miss the latest episode of Cloud Cover on Channel 9, which includes a discussion about Windows Azure with Scott Guthrie, corporate vice president, Server & Tools Business. In this episode Scott talks with Steve and Wade about what he's been focused on with Windows Azure and, during this conversation, he talks about the good, the bad, and the ugly and also shares some code along the way.

See Matt Weinberger (@MattNLM) reported Fujitsu Launches Microsoft Windows Azure Cloud Middleware in an 8/1/2011 post in the Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds section below.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

• Beth Massi (@bethmassi) suggested Getting Started with the LightSwitch Starter Kits in an 8/1/2011 post:

Visual Studio LightSwitch Starter Kits are project templates that help get you started building specific LightSwitch applications. You can download them right from within Visual Studio LightSwitch, and once installed they show up in your “New Project” dialog. Using the Starter Kits can help you learn about LightSwitch as well as get you started off right with a basic data model, queries and screens that you can customize further. Currently there are Starter Kits for:

- Customer Service

- Expense Tracking

- Issue Tracking

- Job Candidate Tracking

- Performance Review

- Status Reports

- Time Tracking

It’s really easy to get started using them. There are a few ways you can install the Starter Kits. The easiest way is directly through Visual Studio LightSwitch. You can find the Starter Kit templates when you create a LightSwitch project from the “File –> New Project” dialog. Select the “Online Templates” node on the left and you will see the Starter Kits listed. Each Starter Kit comes in both Visual Basic (VB) and C# (CS) versions.

Select the Starter Kit you want, name the project, and click OK. This will download and install the Starter Kit. You can also install the Starter Kits through the Extension Manager. Here you will also find LightSwitch third-party extensions that have been placed on the Visual Studio Gallery. Open up the Extension Manager from the Tools menu.

Then click the “Online Gallery” node on the left side and expand the Templates node and you will see the Starter Kits there. Click the download button to install the one you want.

Once you install the Starter Kits they will be listed in your New Project dialog along with the blank solutions you get out of the box.

You can also obtain the Starter Kits as well as other extensions directly from the Visual Studio Gallery.

Once you create a new project based on the Starter Kit, you are presented with a documentation window that explains how to use the Starter Kit and how the application works. You can then choose to customize the application further for your needs.

That’s it! For more information on building business applications with Visual Studio LightSwitch including articles, videos, and more, see:

Templates such as these are responsible for much of Microsoft Access’s recent success.

Michael Washington (@ADefWebserver) described WCF RIA Service: Combining Two Tables with LightSwitch in an 8/1/2011 post:

Note: If you are new to LightSwitch, it is suggested that you start here: Online Ordering System (An End-To-End LightSwitch Example)

Note: You must have Visual Studio Professional (or higher) to complete this tutorial

LightSwitch is a powerful application builder, but you have to get your data INTO it first. Usually this is easy, but in some cases, you need to use WCF RIA Services to get data into LightSwitch that is ALREADY IN LightSwitch.

LightSwitch operates on one Entity (table) at a time. A Custom Control will allow you to visualize data from two entities at the same time, but inside LightSwitch, each Entity is always separate. This can be a problem if you want to, for example, combine two Entities into one.

LightSwitch won’t do that… unless you use a WCF RIA Service. Now you can TRY to use a PreProcess Query, but a PreProcess Query is only meant to filter records in the Entity it is a method for. You cannot add records, only filter them out (this may be easier to understand if you realize that a PreProcess Query simply tacks on an additional LINQ statement, to “filter” the LINQ statement that is about to be passed to the data source).
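The limitation of a filter-only hook can be shown outside LightSwitch. The sketch below is plain JavaScript rather than LightSwitch code; `preprocessInStock` and the sample rows are hypothetical stand-ins for a PreProcess Query and two entities.

```javascript
// Illustration in plain JavaScript, not LightSwitch code: a "pre-process"
// step receives the pending query and may only chain further filters onto it.
const internalProducts = [
  { id: 1, name: 'Widget', quantity: 5 },
  { id: 2, name: 'Gadget', quantity: 0 },
];
const externalProducts = [{ id: 1, name: 'Sprocket', quantity: 9 }];

// Hypothetical hook: narrows the rows of the entity it belongs to.
function preprocessInStock(rows) {
  return rows.filter((p) => p.quantity > 0);
}

const result = preprocessInStock(internalProducts);

// Every returned row comes from internalProducts. Rows from externalProducts
// can never appear, so combining entities needs another mechanism.
console.log(result.every((row) => internalProducts.includes(row)));
console.log(result.some((row) => externalProducts.includes(row)));
```

However the filter is written, its output stays a subset of the one entity it was attached to.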

In this article, we will create an application that combines two separate entities and creates a single one. Note that this article is basically just a C# rehash of the Eric Erhardt article, but this one will move much slower, and provide a lot of pictures :). We will also cover updating records in the WCF RIA Service.

Also note, that the official LightSwitch documentation covers creating WCF RIA Services in LightSwitch in more detail: http://207.46.16.248/en-us/library/gg589479.aspx.

Create The Application

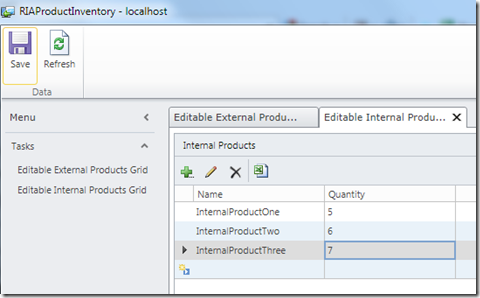

Create a new LightSwitch project called RIAProductInventory.

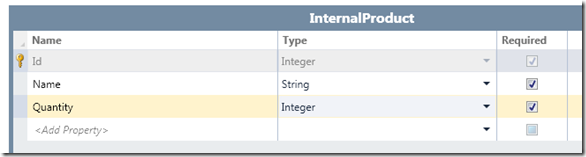

Make a Entity called InternalProduct, with the schema according to the image above.

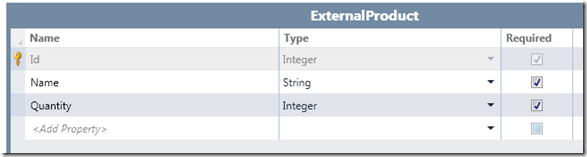

Make a Entity called ExternalProduct, with the schema according to the image above.

Create Editable Grid Screens for each Entity, run the application, and enter some sample data.

The WCF RIA Service

We will now create a WCF RIA Service to do the following things:

- Create a new Entity that combines the two Entities

- Connect to the existing LightSwitch database

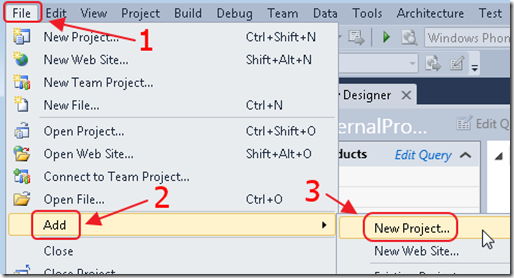

Create a New Project (Note: You must have Visual Studio Professional (or higher) to complete this tutorial).

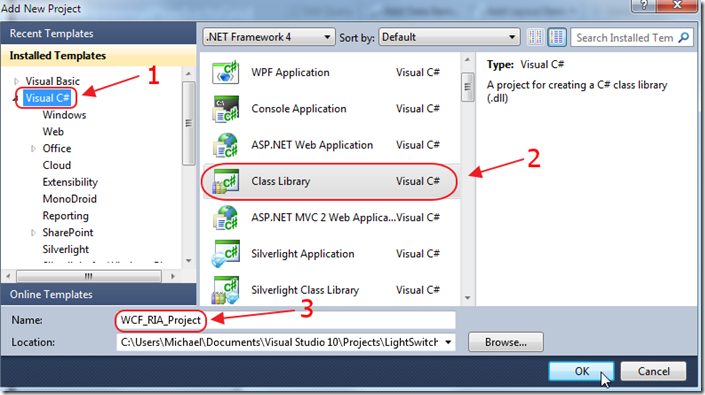

Create a Class Library called WCF_RIA_Project.

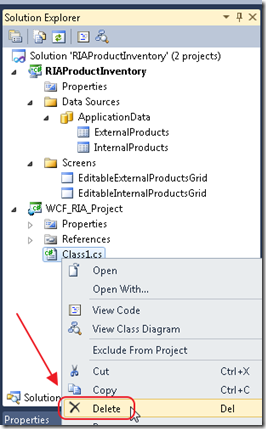

Delete the Class1.cs file that is automatically created.

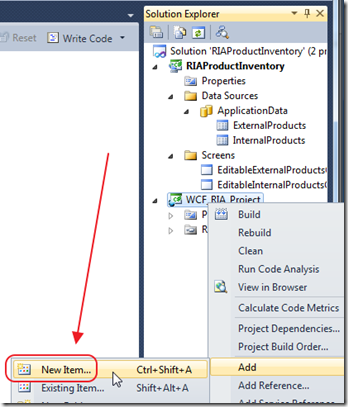

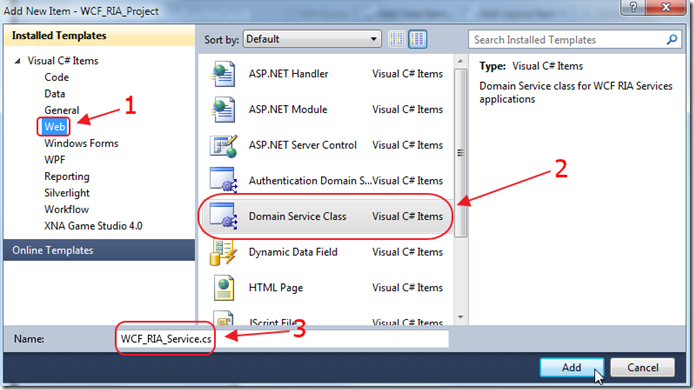

Add a New Item to WCF_RIA_Project.

Add a Domain Service Class called WCF_RIA_Service.

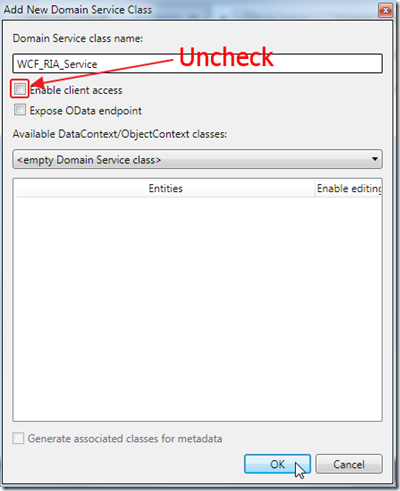

When the Add New Domain Service Class box comes up, uncheck Enable client access. Click OK.

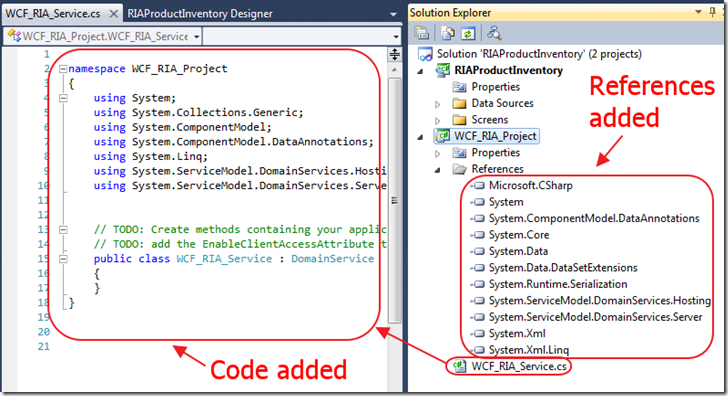

Code and references will be added.

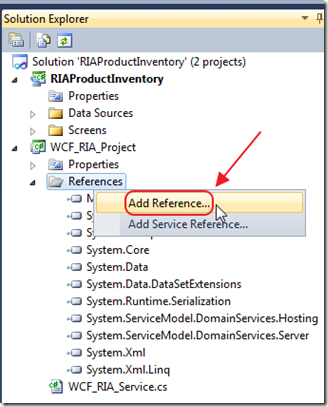

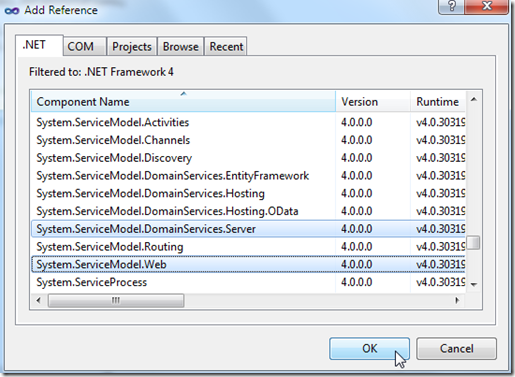

We need additional references. Add the following references to the WCF_RIA_Project:

- System.ComponentModel.DataAnnotations

- System.Configuration

- System.Data.Entity

- System.Runtime.Serialization

- System.ServiceModel.DomainServices.Server (Look in %ProgramFiles%\Microsoft SDKs\RIA Services\v1.0\Libraries\Server if it isn’t under the .Net tab)

- System.Web

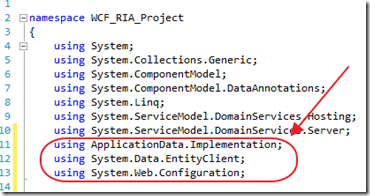

Add the following using statements to the class:

    using ApplicationData.Implementation;
    using System.Data.EntityClient;
    using System.Web.Configuration;

Reference The LightSwitch Object Context

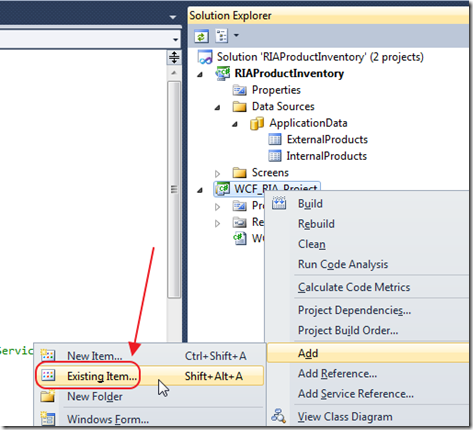

Now, we will add code from the LightSwitch project to our WCF RIA Service project. We will add a class that LightSwitch automatically creates, that connects to the database that LightSwitch uses.

We will use this class in our WCF RIA Service to communicate with the LightSwitch database.

Right-click on the WCF_RIA_Project and select Add then Existing Item.

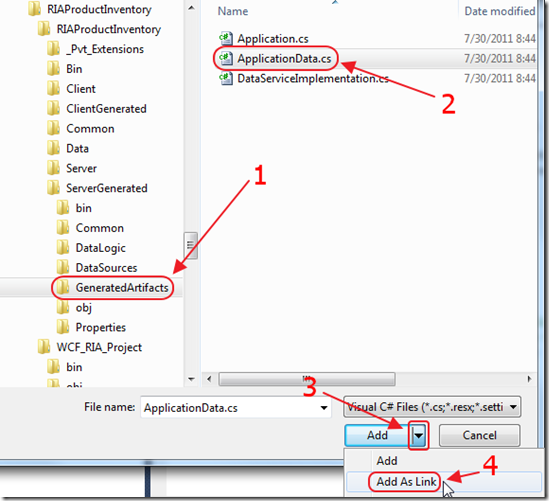

Navigate to ..ServerGenerated\GeneratedArtifacts (in the LightSwitch project), click on ApplicationData.cs, and choose Add As Link.

We used “Add As Link” so that whenever LightSwitch updates this class, our WCF RIA Service is also updated. This is how our WCF RIA Service is able to see any Entities (tables) that are added, deleted, or changed.

Create the Domain Service

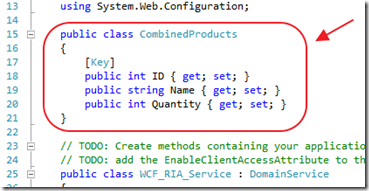

In the WCF_RIA_Service class file, in the WCF RIA project, we now create a class to hold the combined tables.

Note that we do not put the class inside the WCF_RIA_Service class. Doing so would cause an error.

Use the following code:

    public class CombinedProducts
    {
        [Key]
        public int ID { get; set; }
        public string Name { get; set; }
        public int Quantity { get; set; }
    }

Note that the “ID” field has been decorated with the “[Key]” attribute. WCF RIA Services requires a field to be unique. The [Key] attribute indicates that this will be the unique field.

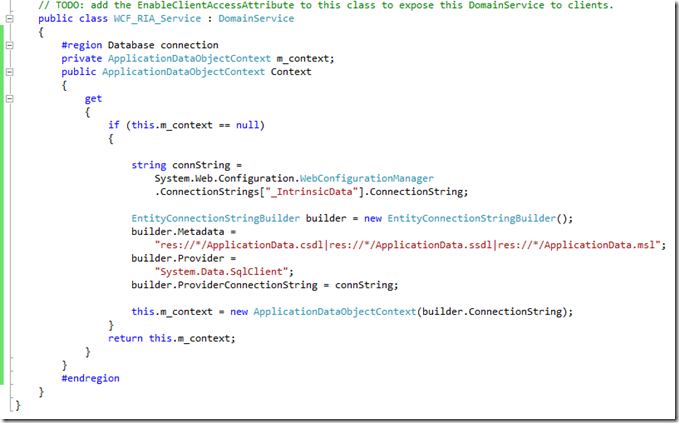

Dynamically Set The Connection String

Next, Add the following code to the WCF_RIA_Service class:

    #region Database connection
    private ApplicationDataObjectContext m_context;
    public ApplicationDataObjectContext Context
    {
        get
        {
            if (this.m_context == null)
            {
                string connString =
                    System.Web.Configuration.WebConfigurationManager
                    .ConnectionStrings["_IntrinsicData"].ConnectionString;
                EntityConnectionStringBuilder builder = new EntityConnectionStringBuilder();
                builder.Metadata =
                    "res://*/ApplicationData.csdl|res://*/ApplicationData.ssdl|res://*/ApplicationData.msl";
                builder.Provider = "System.Data.SqlClient";
                builder.ProviderConnectionString = connString;
                this.m_context = new ApplicationDataObjectContext(builder.ConnectionString);
            }
            return this.m_context;
        }
    }
    #endregion

This code dynamically creates a connection to the database. LightSwitch uses “_IntrinsicData” as its connection string. This code looks for that connection string and uses it for the WCF RIA Service.
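To make the result of that property easier to picture, here is a rough sketch of the general shape of an Entity Framework connection string, assembled in plain JavaScript. It is an approximation only: the real string is produced by .NET's EntityConnectionStringBuilder and may differ in detail, `buildEntityConnectionString` is a hypothetical helper, and the sample store connection string is invented for illustration.

```javascript
// Sketch of the string's general shape; the real work is done by .NET's
// EntityConnectionStringBuilder, and its exact output may differ in detail.
function buildEntityConnectionString(providerConnectionString) {
  // The three metadata resources named in the tutorial's code, pipe-separated.
  const metadata = [
    'res://*/ApplicationData.csdl',
    'res://*/ApplicationData.ssdl',
    'res://*/ApplicationData.msl',
  ].join('|');
  return [
    `metadata=${metadata}`,
    'provider=System.Data.SqlClient',
    `provider connection string="${providerConnectionString}"`,
  ].join(';');
}

// Hypothetical store connection string standing in for "_IntrinsicData".
console.log(buildEntityConnectionString('Data Source=.;Initial Catalog=Example'));
```

The point of the wrapper is that the plain SQL connection string LightSwitch stores is nested inside a larger string that also tells the Entity Framework where to find its model metadata.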

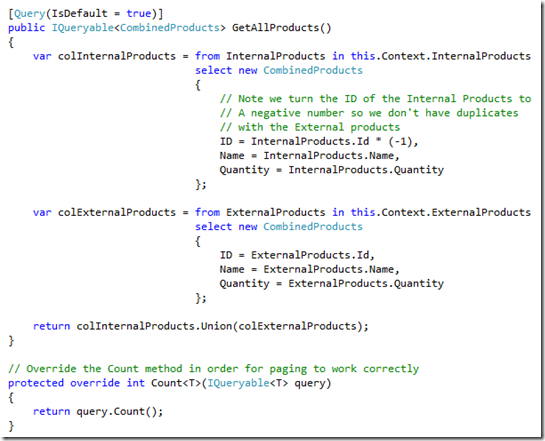

Create The Select Method

Add the following code to the class:

    [Query(IsDefault = true)]
    public IQueryable<CombinedProducts> GetAllProducts()
    {
        var colInternalProducts = from InternalProducts in this.Context.InternalProducts
                                  select new CombinedProducts
                                  {
                                      // Note we turn the ID of the Internal Products to
                                      // A negative number so we don't have duplicates
                                      // with the External products
                                      ID = InternalProducts.Id * (-1),
                                      Name = InternalProducts.Name,
                                      Quantity = InternalProducts.Quantity
                                  };

        var colExternalProducts = from ExternalProducts in this.Context.ExternalProducts
                                  select new CombinedProducts
                                  {
                                      ID = ExternalProducts.Id,
                                      Name = ExternalProducts.Name,
                                      Quantity = ExternalProducts.Quantity
                                  };

        return colInternalProducts.Union(colExternalProducts);
    }

    // Override the Count method in order for paging to work correctly
    protected override int Count<T>(IQueryable<T> query)
    {
        return query.Count();
    }

This code combines the InternalProducts and the ExternalProducts, and returns one collection using the type CombinedProducts.

Note that this method returns IQueryable so that when it is called by LightSwitch, additional filters can be passed, and it will only return the records needed.

Note that the ID column needs to provide a unique key for each record. Because the InternalProducts table and the ExternalProducts table can have records with the same ID, we multiply the ID for the InternalProducts by –1 so that it is always a negative number and never duplicates an ExternalProducts ID, which is always a positive number.

Also notice that the GetAllProducts method is marked with the [Query(IsDefault = true)] attribute. To be used with LightSwitch, one method for each collection type returned must require no parameters and be marked with this attribute.
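The key scheme described above can be tried out in a few lines of plain JavaScript. This is a sketch of the idea only, not WCF RIA Services code: `combineProducts` and the sample rows are hypothetical, array concatenation stands in for the LINQ Union, and it assumes that IDs in both tables are positive integers.

```javascript
// Plain JavaScript illustration of the key scheme; not WCF RIA Services code.
// Assumption: IDs in both source tables are positive integers.
function combineProducts(internal, external) {
  const fromInternal = internal.map((p) => ({
    id: -p.id, // negate so internal keys occupy the negative range
    name: p.name,
    quantity: p.quantity,
  }));
  const fromExternal = external.map((p) => ({
    id: p.id, // external keys stay positive
    name: p.name,
    quantity: p.quantity,
  }));
  return fromInternal.concat(fromExternal);
}

// Hypothetical sample rows; both tables contain a record with ID 1.
const internal = [
  { id: 1, name: 'Widget', quantity: 5 },
  { id: 2, name: 'Gadget', quantity: 3 },
];
const external = [{ id: 1, name: 'Sprocket', quantity: 9 }];

const combined = combineProducts(internal, external);
const distinctIds = new Set(combined.map((p) => p.id));

console.log(combined.map((p) => p.id));
console.log(distinctIds.size === combined.length);
```

The sign of the combined key also records which table a row came from, which is what makes updates possible later.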

Consume The WCF RIA Service

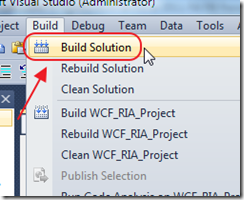

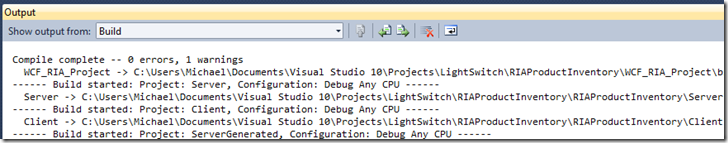

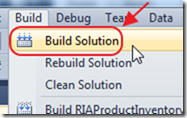

Build the solution.

You will get a ton of warnings. You can ignore them.

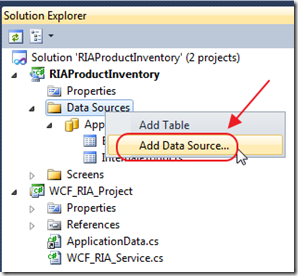

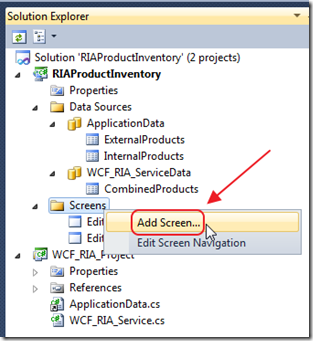

In the Solution Explorer, right-click on the Data Sources folder and select Add Data Source.

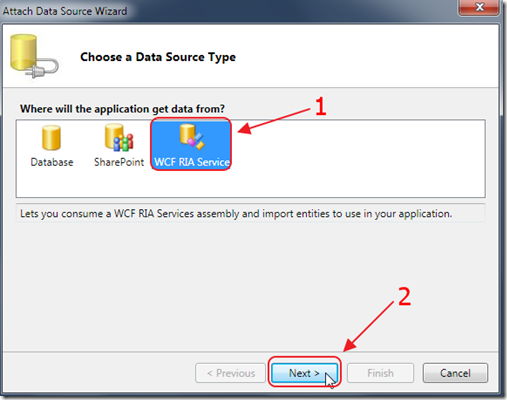

Select WCF RIA Service.

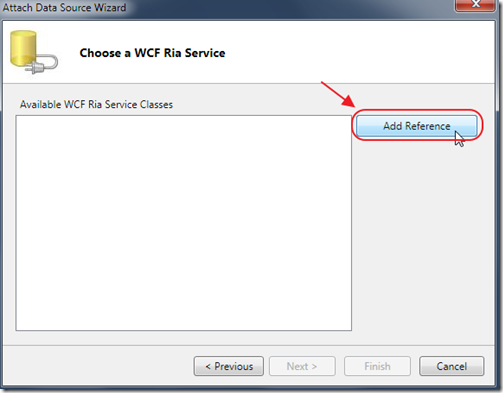

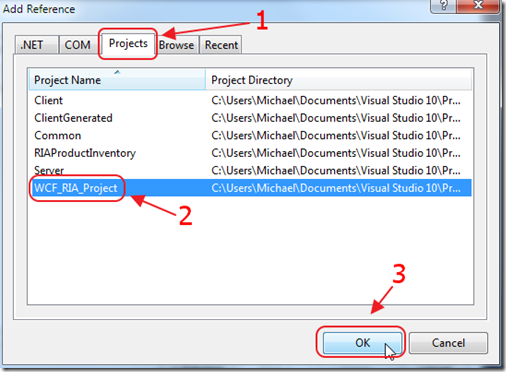

Click Add Reference.

Select the RIA Service project.

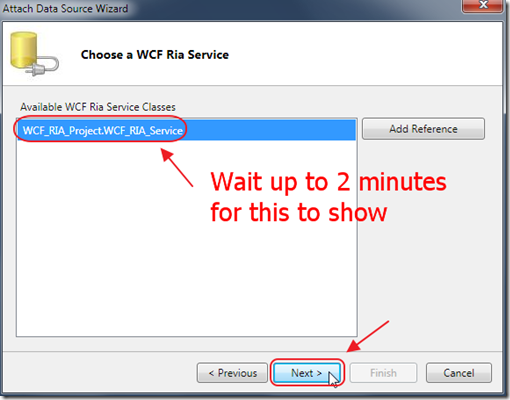

You have to wait for the service to show up in the selection box. Select it and click Next.

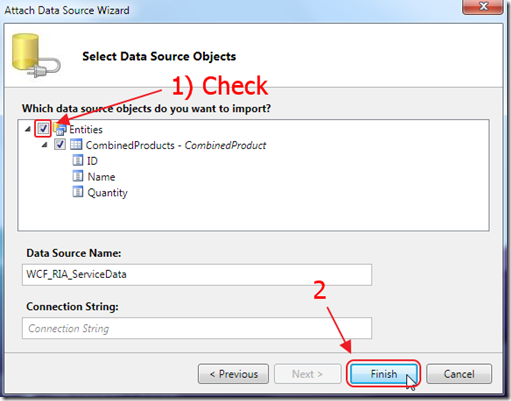

Check the box next to the Entity, and click Finish.

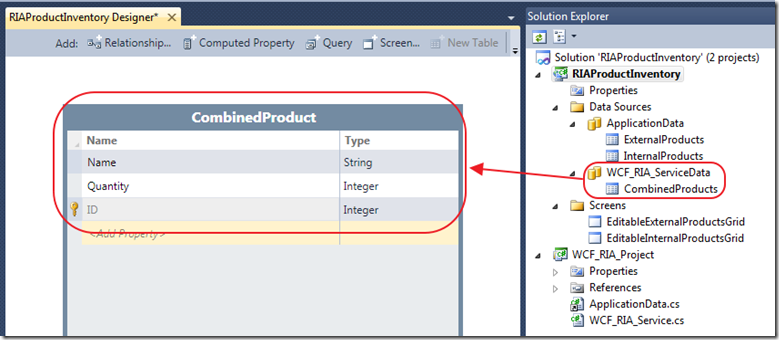

The Entity will show up.

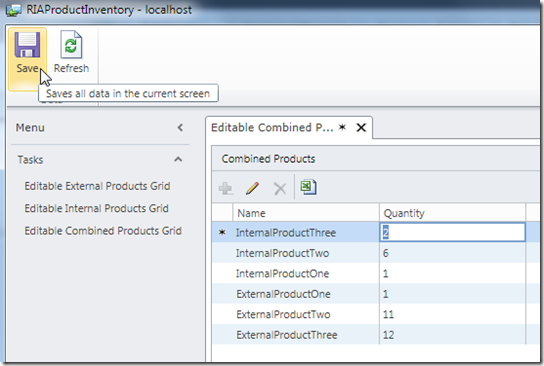

Create a Screen That Shows The Combined Products

Add a Screen.

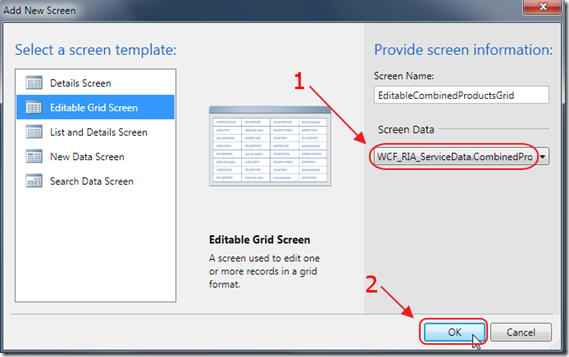

Add an Editable Grid Screen, and select the Entity for the Screen Data.

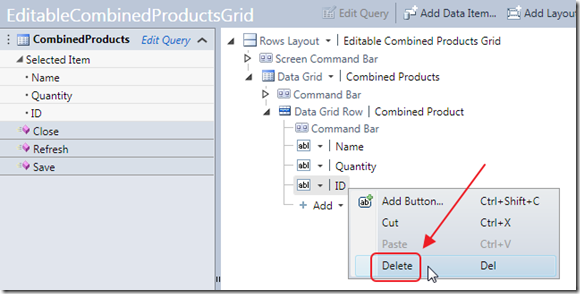

Delete the ID column (because it is not editable anyway).

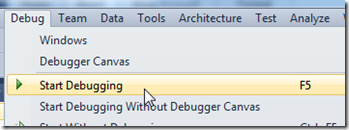

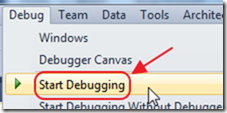

Run the application.

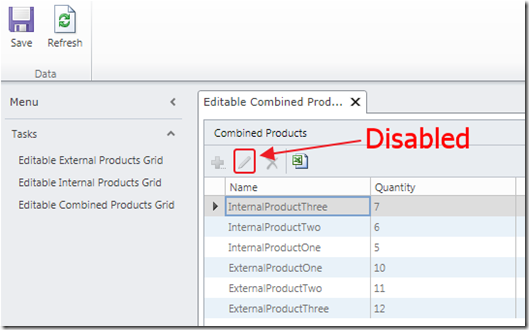

You will be able to see the combined Products.

However, you will not be able to edit them.

Updating Records In A WCF RIA Service

Add the following code to the WCF RIA Service:

    public void UpdateCombinedProducts(CombinedProducts objCombinedProducts)
    {
        // If the ID is a negative number it is an Internal Product
        if (objCombinedProducts.ID < 0)
        {
            // Internal Product ID's were changed to negative numbers
            // change the ID back to a positive number
            int intID = (objCombinedProducts.ID * -1);

            // Get the Internal Product
            var colInternalProducts = (from InternalProducts in this.Context.InternalProducts
                                       where InternalProducts.Id == intID
                                       select InternalProducts).FirstOrDefault();
            if (colInternalProducts != null)
            {
                // Update the Product
                colInternalProducts.Name = objCombinedProducts.Name;
                colInternalProducts.Quantity = objCombinedProducts.Quantity;
                this.Context.SaveChanges();
            }
        }
        else
        {
            // Get the External Product
            var colExternalProducts = (from ExternalProducts in this.Context.ExternalProducts
                                       where ExternalProducts.Id == objCombinedProducts.ID
                                       select ExternalProducts).FirstOrDefault();
            if (colExternalProducts != null)
            {
                // Update the Product
                colExternalProducts.Name = objCombinedProducts.Name;
                colExternalProducts.Quantity = objCombinedProducts.Quantity;
                this.Context.SaveChanges();
            }
        }
    }

Build the solution.
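The routing logic in the update method, which uses the sign of the combined ID to pick the source table, can be sketched in plain JavaScript. This is an illustration under stated assumptions rather than WCF RIA Services code: `updateCombinedProduct` and the in-memory `store` object are hypothetical, and IDs in both tables are assumed to be positive integers.

```javascript
// Plain JavaScript illustration; an in-memory object stands in for the
// database context. Assumption: IDs in both tables are positive integers.
function updateCombinedProduct(store, combined) {
  // A negative combined ID marks an internal product.
  const isInternal = combined.id < 0;
  const table = isInternal ? store.internalProducts : store.externalProducts;

  // Undo the negation to recover the real key in the source table.
  const realId = isInternal ? -combined.id : combined.id;

  const row = table.find((p) => p.id === realId);
  if (!row) return false; // nothing to update

  row.name = combined.name;
  row.quantity = combined.quantity;
  return true;
}

// Hypothetical sample data: both tables contain a record with ID 1.
const store = {
  internalProducts: [{ id: 1, name: 'Widget', quantity: 5 }],
  externalProducts: [{ id: 1, name: 'Sprocket', quantity: 9 }],
};

updateCombinedProduct(store, { id: -1, name: 'Widget v2', quantity: 7 });
console.log(store.internalProducts[0], store.externalProducts[0]);
```

Only the internal row changes in this run, because the combined ID of -1 maps back to ID 1 in the internal table and leaves the external record with the same ID untouched.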

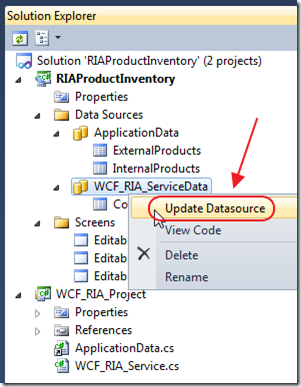

Right-click on the WCF_RIA_Service node in the Solution Explorer, and select Update Datasource.

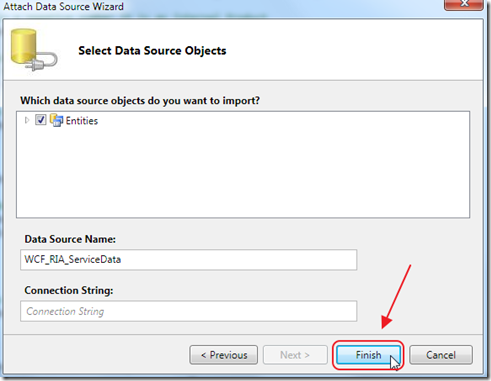

When the wizard shows up click Finish.

Run the application.

You will now be able to update the records.

Download Code

The LightSwitch project is available at http://lightswitchhelpwebsite.com/Downloads.aspx

The Visual Studio LightSwitch Team (@VSLightSwitch) reported Visual Studio LightSwitch Training Kit Updated for RTM in an 8/1/2011 post:

The Visual Studio LightSwitch RTM Training Kit is now live!

The LightSwitch Training Kit contains demos and labs to help you learn to use and extend LightSwitch. The introductory materials walk you through the Visual Studio LightSwitch product. By following the hands-on labs, you'll build an application to inventory a library of books. The more advanced materials will show developers how they can extend LightSwitch to make components available to the LightSwitch developer. In the advanced hands-on labs, you will build and package an extension using the Extensibility Toolkit that can be reused by the Visual Studio LightSwitch end user.

And for more learning resources please visit the LightSwitch Learning Center on MSDN.

Emanuele Bartolesi described how to Install Microsoft Visual Studio Lightswitch in a 7/31/2011 post:

My latest love is called Microsoft Visual Studio LightSwitch.

After the official launch (26th of July) I installed the official release immediately.

The setup is very easy, but I want to share my installation tutorial because LightSwitch was created for developing applications easily and quickly.

That’s why you can install it even if you are not a programmer.

First of all, mount the installation ISO image and launch the setup.

Accept the license.

Click Customize.

Check that the Microsoft Visual Studio installation path is correct.

Click Next and wait for the installation to finish.

Click Launch.

When Visual Studio has started, choose New Project and select the LightSwitch project type.

Select your preferred language (I hope that is C#).

When you see the following screenshot, you can start to enjoy Microsoft Visual Studio LightSwitch.

Michael Washington (@ADefWebserver) wrote a Walkthrough: Creating a Shell Extension topic for MSDN, who posted it on 7/31/2011:

This walkthrough demonstrates how to create a shell extension for LightSwitch. The shell for a Visual Studio LightSwitch 2011 application enables users to interact with the application. It manifests the navigable items, running screens, associated commands, current user information, and other useful information that are part of the shell’s domain. While LightSwitch provides a straightforward and powerful shell, you can create your own shell that provides your own creative means for interacting with the various parts of the LightSwitch application.

In this walkthrough, you create a shell that resembles the default shell, but with several subtle differences in appearance and behavior. The command bar eliminates the command groups and moves the Design Screen button to the left. The navigation menu is fixed in position, no Startup screen is displayed, and screens are opened by double-clicking menu items. The screens implement a different validation indicator, and current user information is always displayed in the lower-left corner of the shell. These differences will help illustrate several useful techniques for creating shell extensions.

The anatomy of a shell extension consists of three main parts:

The Managed Extensibility Framework (MEF), which exports the implementation of the shell contract.

The Extensible Application Markup Language (XAML), which describes the controls that are used for the shell UI.

The Visual Basic or C# code behind the XAML, which implements the behavior of the controls and interacts with the LightSwitch run time.

Creating a shell extension involves a number of tasks and requires the following components:

Visual Studio 2010 SP1 (Professional, Premium, or Ultimate edition)

Visual Studio 2010 SP1 SDK

Visual Studio LightSwitch 2011

Visual Studio LightSwitch 2011 Extensibility Toolkit

Michael continues with lengthy step-by-step instructions and many feet of source code. He concludes with:

This concludes the shell extension walkthrough; you should now have a fully functioning shell extension that you can reuse in any LightSwitch project. This was just one example of a shell extension; you might want to create a shell that is significantly different in behavior or layout. The same basic steps and principles apply to any shell extension, but there are other concepts that apply in other situations.

If you are going to distribute your extension, there are a couple more steps that you will want to take. To make sure that the information displayed for your extension in the Project Designer and in Extension Manager is correct, you must update the properties for the VSIX package. For more information, see How to: Set VSIX Package Properties. In addition, if you are going to distribute your extension publicly, there are several things to consider. For more information, see How to: Distribute a LightSwitch Extension.

Charlie Maitland posted Visual Studio LightSwitch and Dynamics CRM–Why this matters on 7/20/2011 (missed when posted):

Microsoft have released a new component for Visual Studio called LightSwitch.

This is a set of tools that is designed to allow semi-technical users to easily build user interfaces to databases using Silverlight.

I have been following this product through its beta phases and I believe that it will make a massive impact on the on-premise Dynamics CRM world.

The reason I feel this way is that there is a new licencing option available in Dynamics 2011 that is the Employee Self Service CAL (ESS).

The ESS CAL allows users to interact with Dynamics CRM 2011 but NOT to use the native UI. So what better way than through an easy to build Silverlight application? The same applies (to a lesser extent from the UI capability PoV) to external users licenced under the External Connector Licence.

So if you have not looked into the LightSwitch capabilities, you should.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Lori MacVittie (@lmacvittie) asked When there’s a problem with a virtual network appliance installed in “the cloud”, who do you call first? as a preface to her Your Call is Important to Us at CloudCo: Please Press 1 for Product, 2 for OS, 3 for Hypervisor, or 4 for Management Troubles post of 8/1/2011 to F5’s DevCentral blog:

An interesting thing happened on the way to troubleshoot a problem with a cloud-deployed application – no one wanted to take up the mantle of front line support. With all the moving parts involved, it’s easy to see why. The problem could be with any number of layers in the deployment: operating system, web server, hypervisor or the nebulous “cloud” itself. With no way to know where it is – the cloud has limited visibility, after all – where do you start?

Consider a deployment into ESX where the guest OS (hosting a load balancing solution) isn’t keeping its time within the VM. Time synchronization is a Very Important aspect of high-availability architectures. Synchronization of time across redundant pairs of load balancers (and really any infrastructure configured in HA mode) is necessary to ensure that a failover event isn’t triggered by a difference caused simply by an error in time keeping. If a pair of HA devices are configured to fail over from one to another based on a failure to communicate after X seconds, and their clocks are off by almost X seconds…well, you can probably guess that this can result in … a failover event.
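The failure mode described above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical heartbeat scheme in which the standby compares its own clock against the timestamp the active peer put in its last heartbeat. The function name, the 3-second timeout, and the 2.9-second skew are illustrative assumptions, not taken from any particular load balancer.

```python
def should_fail_over(last_heartbeat_ts, standby_now, timeout_s):
    """Return True if the standby believes the active peer has gone silent.

    last_heartbeat_ts is stamped by the ACTIVE peer's clock, while
    standby_now is read from the STANDBY's clock, so any skew between
    the two clocks is silently added to the apparent silence.
    """
    return (standby_now - last_heartbeat_ts) > timeout_s


TIMEOUT_S = 3.0      # hypothetical "X seconds" from the text
sent_at = 100.0      # heartbeat stamped on the active peer's clock
true_elapsed = 0.2   # real time since the heartbeat was sent

# Clocks in sync: 0.2 s of silence is well under the timeout.
in_sync = should_fail_over(sent_at, sent_at + true_elapsed, TIMEOUT_S)

# Standby clock runs 2.9 s ahead (almost X): the same healthy
# heartbeat now looks 3.1 s old and triggers a needless failover.
skewed = should_fail_over(sent_at, sent_at + true_elapsed + 2.9, TIMEOUT_S)

print(in_sync, skewed)
```

Nothing is wrong with the active peer in the second case; the spurious failover comes entirely from comparing timestamps taken on two different clocks.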

Failover events in traditional HA architectures are disruptive; the entire device (virtual or physical) basically dumps in favor of the backup, causing a loss of connectivity and a quick re-convergence required at the network layer. A time discrepancy can also wreak havoc with the configuration synchronization processes while the two instances flip back and forth.

So where was the time discrepancy coming from? How do you track that down and, with a lack of visibility and ultimately control of the lower layers of the cloud “stack”, who do you call to help? The OS vendor? The infrastructure vendor? The cloud computing provider? Your mom?

We’ve all experienced frustrating support calls – not just in technology but other areas, too, such as banking, insurance, etc… in which the pat answer is “not my department” and “please hold while I transfer you to yet another person who will disavow responsibility to help you.” The time, and in business situations, money, spent trying to troubleshoot such an issue can be a definite downer in the face of what’s purportedly an effortless, black-box deployment. This is why the black-box mentality marketed by some cloud computing providers is a ridiculous “benefit” because it assumes the abrogation of accountability on the part of IT; something that is certainly not in line with reality. Making it more difficult for those responsible within IT to troubleshoot and having no real recourse for technical support makes cloud computing far more unappealing than marketing would have you believe with their rainbow and unicorn picture of how great black-boxes really are.

The bottom line is that the longer it takes to troubleshoot, the more it costs. The benefits of increased responsiveness of “IT” are lost when it takes days to figure out where an issue might be. Black-boxes are great in airplanes and yes, airplanes fly in clouds but that doesn’t mean that black-boxes and clouds go together. There are myriad odd little issues like time synchronization across infrastructure components and even applications that must be considered as we attempt to move more infrastructure into public cloud computing environments.

So choose your provider wisely, with careful attention paid to support, especially with respect to escalation and resolution procedures. You’ll need the full partnership of your provider to ferret out issues that may crop up and only a communicative, partnership-oriented provider should be chosen to ensure ultimate success. Also consider more carefully which applications you may be moving to “the cloud.” Those with complex supporting infrastructure may simply not be a good fit based on the difficulties inherent not only in managing them and their topological dependencies but also their potentially more demanding troubleshooting needs. Rackspace put it well recently when they stated, “Cloud is for everyone, not everything.”

That’s because it simply isn’t that easy to move an architecture into an environment in which you have very little or no control, let alone visibility. This is ultimately why hybrid or private cloud computing will stay dominant as long as such issues continue to exist.

JP Morgenthal (@jpmorgenthal) asserted Thar Be Danger in That PaaS in a 7/31/2011 post to his Tech Evangelist blog:

There's a lot of momentum behind moving to Platform-as-a-Service (PaaS) for delivery of applications on the cloud, but is there enough maturity in PaaS to deploy a mission-critical application? Here's a story regarding one loyalty program provider that offers his customers SaaS solutions. This SaaS provider deployed his platform on a PaaS that offered SQL Server and ASP.NET services. The SaaS has been operating perfectly for months handling the needs of his clientele, but unexplainably stopped working midday on a Saturday—a busy retail time period.

While the PaaS provider supposedly offered 24x7 support, they responded only to the first support ticket with a single response, “we really can't identify problems within your application.” The developers did what they could to discern the problem, but all their research pointed to something changing on the server. Upon hearing this, I had a bevy of thoughts regarding the maturity of PaaS:

- With IaaS, the customer takes responsibility for maintenance and updates to the server OS, so, hopefully, they test changes before rolling out into production. SaaS providers also could implement changes that could impact their customers, but, again, I would expect that these would be tested before rolling out into production with the ability to rollback should some unforeseen problem arise. However, PaaS providers can make changes to the platform that are all but impossible to test against every customer's application, meaning that changes the PaaS provider rolls out could shut your application down. Moreover, they may not even be aware of all the nuances of a vendor-supplied patch making it very difficult to recover and correct.

- Out of IaaS, PaaS, & SaaS, the PaaS provider has the greatest likelihood of pointing their finger back at you before pointing at themselves. After all, other customers' applications are up and running, so it must be your application. Hence, a PaaS provider needs to provide much more expensive help desk support than the other two service models in order to be able to ascertain the severity of a problem and get it corrected. Additionally, PaaS providers need to provide more 1-on-1 phone support as these issues are too complex to handle by email and trouble tickets alone.

- IaaS, PaaS and SaaS each offer less visibility into the internals of the service respectively. However, lack of control over the PaaS means that key settings that may affect the performance of your application may be outside your ability to change. Case in point, the specific problem this loyalty SaaS provider had was that the PaaS was reporting a general error and telling him to make certain changes to his application configuration environment. However, the recommended changes were already implemented and they were seemingly ignored by the platform. At this point, the lack of visibility meant that the PaaS service provider was the only one capable of debugging the problem, even if it was the fault of the application, which in this case it was not.

- Which brings us to the next point, the problem according to the PaaS provider was that an update forced the application to run under an incorrect version of .NET. Even though the developers forced the appropriate version through their control panel once problems started occurring, it seems that the control panel changes didn't actually have any effect. Hence, the customer must have a lot of faith that the tools the PaaS provider offers actually do what they state they are doing and if they don't then the visibility issue in point #3 will once again limit any ability to return to full operating status.

All this leads me to question if PaaS is actually a viable model for businesses to rely on. IaaS gives them complete control, but they will need to learn how to architect for scale. SaaS removes all concern for having to manage the underlying architecture and the SaaS provider will live or die based on their ability to manage the user experience. PaaS is fraught with pitfalls and dangers that could cause your application to stop running at any point. Moreover, should this occur, the ability to identify and correct the problem may be so far out of your hands that only by spending an inordinate amount of time with your PaaS provider's support personnel could the problem be corrected.

I welcome input from PaaS providers to explain how they overcome these issues and guarantee service levels to their customers, when they cannot guarantee that customers' applications are properly written, that the libraries they use will run as expected in a multi-tenant environment or that a change they make to the platform won't stop existing applications from running. Additionally, I'd be interested in hearing answers that equate to PaaS solutions that are more than pre-defined IaaS images, such as CloudFoundry or Azure.

I wouldn’t call the Windows Azure Platform a “pre-defined IaaS image.” Would you?

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Matt Weinberger (@MattNLM) reported Fujitsu Launches Microsoft Windows Azure Cloud Middleware in an 8/1/2011 post:

Fujitsu is extending its commitment to the Microsoft Windows Azure platform-as-a-service (PaaS) cloud with the launch of new middleware designed to bring Java and COBOL to Redmond’s SaaS ISV partners. The offering is designed to easily enable enterprises to deploy legacy mission-critical on-premises applications in Microsoft’s cloud.

The new cloud products will be available in the Windows Azure Marketplace app store, and play an important role in building Fujitsu’s overall middleware business, according to the press release. Right now, the Azure application scene mostly consists of Visual C# or Visual Basic .NET applications, but by extending application runtime environments to Windows Azure, Fujitsu is banking on making the platform much more attractive for enterprise developers.

Here’s the skinny on the four new products, taken directly from Fujitsu’s press release:

- Interstage Application Server V1 powered by Windows Azure: Provides the latest Java runtime environment for the Windows Azure Platform

- NetCOBOL for .NET V4.2: Provides a COBOL runtime environment for the Windows Azure Platform

- Systemwalker Operation Manager V1 powered by Windows Azure: Coordinates job scheduling between on-premise systems and the Windows Azure Platform

- Systemwalker Centric Manager V13.5: Central multiplatform system monitoring that includes the Windows Azure Platform

The guest operating system for the entire new line of middleware is Windows Azure Guest OS, which supports Windows Azure SDK 1.4. The middleware is compatible with both Microsoft Windows Azure and the Fujitsu Global Cloud Platform Powered by Windows Azure.

Fujitsu remains a major partner in Microsoft’s quest for cloud domination, receiving at least one onstage shout-out during the keynote sessions at July’s Microsoft Worldwide Partner Conference 2011. I have a strong feeling this isn’t the last we’ve heard from Fujitsu about deepening its ties with Microsoft Windows Azure, so stay tuned.

<Return to section navigation list>

Cloud Security and Governance

James Urquhart (@jamesurquhart, pictured below) posted Regulation, automation, and cloud computing to C|Net News’ The Wisdom of Clouds blog on 8/1/2011:

Chris Hoff, a former colleague now at Juniper Networks, and a great blogger in his own right, penned a piece last week about the weak underbelly of automation: our decreased opportunity to react manually to negative situations before they become a crisis. Hoff put the problem extremely well in the opening of the post:

I'm a huge proponent of automation. Taking rote processes from the hands of humans & leveraging machines of all types to enable higher agility, lower cost and increased efficacy is a wonderful thing.

However, there's a trade off; as automation matures and feedback loops become more closed with higher and higher clock rates yielding less time between execution, our ability to both detect and recover -- let alone prevent -- within a cascading failure domain is diminished.

I've stated very similar things in the past, but Hoff went on to give a few brilliant examples of the kinds of things that can go wrong with automation. I recommend reading his post and following some of the links, as they will open your eyes to the challenges we face in an automated IT future.

One of the things I always think about when I ponder the subject of cloud automation, however, is how we handle one of the most important--and difficult--things we have to control in this globally distributed model: legality and compliance.

If we are changing the very configuration of our applications--including location, vendors supplying service, even security technologies applied to our requirements--how the heck are we going to assure that we don't start breaking laws or running afoul of our compliance agreements?

It wouldn't be such a big deal if we could just build the law and compliance regulations into our automated environment, but I want you to stop and think about that for a second. Not only do laws and regulations change on an almost daily basis (though any given law or regulation might change only occasionally), but there are so many of them that it is difficult to know which rules to apply to which systems for any given action.

In fact, I long ago figured out that we will never codify into automation the laws required to keep IT systems legal and compliant. Not all of them, anyway. This is precisely because humanity has built a huge (and highly paid) professional class to test and stretch the boundaries of those same rules every day: the legal profession.

How is the law a challenge to cloud automation? Imagine a situation in which an application is distributed between two cloud vendor services. A change is applied to key compliance rules by an authorized regulatory body.

That change is implemented by a change in the operations automation of the application within one of the cloud vendors' services. That change triggers behavior in the distributed application that the other cloud vendor sees as an anomalous operational event in that same application.

The second vendor triggers changes via automation that the first vendor now sees as a violation of the newly applied rules, so it initiates action to get back into compliance. The second vendor sees those new actions as another anomaly, and the cycle repeats itself.

Even changes not related to compliance run the risk of triggering a cascading series of actions that result in either failure of the application or unintentionally falling out of compliance. In cloud, regulatory behavior is dependent on technology, and technology behavior is dependent on the rules it is asked to adhere to.

Are "black swan" regulatory events likely to occur? For any given application, not really. In fact, one of the things I love about the complex systems nature of the cloud is the ability for individual "agents" to adapt. (In this case, the "agents" are defined by application developers and operators.) Developers can be aware of what the cloud system does to their apps, or what their next deployment might need to do to stay compliant, and take action.

However, the nature of complex systems is that within the system as a whole, they will occur. Sometimes to great detriment. It's just that the positive effect of the system will outweigh the cost of those negative events...or the system will die.

I stumbled recently on a concept called "systems thinking" which I think holds promise as a framework for addressing these problems. From Wikipedia:

Systems Thinking has been defined as an approach to problem solving, by viewing "problems" as parts of an overall system, rather than reacting to specific parts, outcomes or events and potentially contributing to further development of unintended consequences. Systems thinking is not one thing but a set of habits or practices within a framework that is based on the belief that the component parts of a system can best be understood in the context of relationships with each other and with other systems, rather than in isolation. Systems thinking focuses on cyclical rather than linear cause and effect.

Dealing with IT regulation and compliance in an automated environment will take systems thinking--understanding the relationship between components in the cloud, as well as the instructed behavior of each component with regard to those relationships. I think that's a way of thinking about applications that is highly foreign to most software architects, and will be one of the great challenges of the next five to 10 years.

Of course, to what extent the cloud should face regulation is another nightmare entirely.

Graphics Credit: Flickr/Brian Turner

<Return to section navigation list>

Cloud Computing Events

Barton George (@Barton808) posted OSCON: The Data Locker project and Singly on 8/1/2011:

Who owns your data? Hopefully the answer is you, and while that may be true, it is often very difficult to get your data out of sites you have uploaded it to and move it elsewhere. Additionally, your data is scattered across a bunch of sites and locations across the web. Wouldn’t it be amazing to have it all in one place and be able to mash it up and do things with it? Jeremie Miller observed these issues within his own family so, along with a few friends, he started the Data Locker project and Singly (Data Locker is an open source project and Singly is the commercial entity behind it).

I caught up with Jeremie right after the talk he delivered at OSCON. Here’s what he had to say:

Some of the ground Jeremie covers:

- The concept behind the Data Locker project, why you should care

- How the locker actually works

- The role Singly will play as a host

- Where they are, timeline-wise, on both the project and Singly

Extra-credit reading

- Forbes.com: The Locker Project and Your Digital Wake

- BusinessInsider: The 20 Hot Silicon Valley Startups You Need To Watch

- Matt Zimmerman: Why I’m excited about joining Singly

- OSCON: ex-NASA cloud lead on his OpenStack startup, Piston

- OSCON: How foursquare uses MongoDB to manage its data

Pau for now…

Barton George (@Barton808) posted OSCON: ex-NASA cloud lead on his OpenStack startup, Piston on 7/31/2011:

Last week at OSCON in Portland, I dragged Josh McKenty away from the OpenStack one-year anniversary (that’s what Josh is referring to at the very end of the interview) to do a quick video. Josh, who headed up NASA’s Nebula tech team and has been very involved with OpenStack from the very beginning, has recently announced Piston, a startup that will productize OpenStack for enterprises.

Here is what the always entertaining Josh had to say:

Some of the ground Josh covers:

- What, in a nutshell, will Piston be offering?

- Josh’s work at NASA and how he got involved in OpenStack

- Timing around Piston’s general release and GA

- The roles he plays on the OpenStack boards

- What their offering will have right out of the chute and their focus on big data going forward

Extra-credit reading

- InformationWeek: OpenStack Founder’s Startup, Piston, Eyes Private Cloud Security

- My blog: NASA’s chief cloud architect talks OpenStack

Pau for now…

See the embedded video in Bart’s post.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Matt Weinberger (@MattNLM) asked Is Amazon S3 Cloud Storage Becoming a Hotbed of Cybercrime? in an 8/1/2011 post:

Evidence is emerging that Amazon S3, the cloud storage offering from IaaS giant Amazon Web Services (AWS), has become the go-to location for cybercriminals looking to squirrel away their identity-theft malware in the cloud.

The data in question was revealed by the security researchers at Kaspersky Lab in a series of blog entries at SecureList. In early June, Kaspersky Lab Expert Dmitry Bestuzhev reported that a rootkit worm designed to steal financial data from unwary users and send it back to home base via e-mail was hosted on Amazon S3 by users based in Brazil.

Oh, and by using a legitimate, legal anti-piracy baffler called The Enigma Protector, the criminals were able to delay reverse-engineering efforts. It took AWS more than 12 hours to take down the malware links after Bestuzhev alerted the provider to their presence.

And then late last week, Kaspersky’s Jorge Mieres blogged that the Amazon S3 cloud is what The VAR Guy might call a wretched hive of scum and villainy: cybercriminals are using Amazon’s cloud to host and run SpyEye, an identity theft suite. In fact, it appears the thieves used their ill-gotten identity data to open and fund the AWS accounts they needed.

And while Mieres noted the two incidents are isolated, they definitely speak to a rise in cybercriminals turning to the cloud to mask their illegal actions.

To me, this calls to mind the debate over Wikileaks’ ejection from Amazon EC2. And while I’ll let the scholars debate the legal distinction between the incidents, the takeaway for cloud service providers is the same: In a market where you don’t know who’s using your service, and where you may never meet some of your customers face-to-face, you need an action plan and a process for dealing with the fallout when illegal — or even questionable — material ends up on your servers.

James Hamilton reported SolidFire: Cloud Operators Becomes a Market in an 8/1/2011 post:

It’s a clear sign that the Cloud Computing market is growing fast and the number of cloud providers is expanding quickly when startups begin to target cloud providers as their primary market. It’s not unusual for enterprise software companies to target cloud providers as well as their conventional enterprise customers but I’m now starting to see startups building products aimed exclusively at cloud providers. Years ago when there were only a handful of cloud services, targeting this market made no sense. There just weren’t enough buyers to make it an interesting market. And, many of the larger cloud providers are heavily biased to internal development further reducing the addressable market size.

A sign of the maturing of the cloud computing market is that there are now many companies interested in offering a cloud computing platform, not all of which have substantial systems software teams. There is now a much larger number of companies to sell to, and many are eager to purchase off-the-shelf products. Cloud providers have actually become a viable market to target in that there are many providers of all sizes and the overall market continues to expand faster than any I’ve seen over the last 25 years.

An excellent example of this new trend of startups aiming to sell to the Cloud Computing market is SolidFire, which targets the high-performance block storage market with what can be loosely described as a distributed Storage Area Network. Enterprise SANs are typically expensive, single-box, proprietary hardware. Enterprise SANs are mostly uninteresting to cloud providers due to high cost and the hard scaling limits that come from scale-up solutions. SolidFire implements a virtual SAN over a cluster of up to 100 nodes. Each node is a commodity 1RU, 10-drive storage server. They are focused on the most demanding random IOPS workloads, such as databases, and all 10 drives in the SolidFire node are solid-state storage devices. The nodes are interconnected by up to 2x 1GigE and 2x 10GigE networking ports.

Each node can deliver a booming 50,000 IOPS, and the largest supported cluster, with 100 nodes, can support 5M IOPS in aggregate. The 100 node cluster scaling limit may sound like a hard service scaling limit but multiple storage clusters can be used to scale to any level. Needing multiple clusters has the disadvantage of possibly fragmenting the storage but the advantage of dividing the fleet up into sub-clusters with rigid fault containment between them, limiting the negative impact of software problems. Reducing the “blast radius” of a failure makes moderate sized sub-clusters a very good design point.
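The scaling arithmetic above can be written out as a small sketch. It uses only the two figures quoted in the text (50,000 IOPS per node, 100 nodes per cluster) and assumes, for illustration, that IOPS scale linearly with node count and that clusters are equally sized. The function names and the 12M IOPS target are hypothetical.

```python
import math

IOPS_PER_NODE = 50_000        # per-node figure quoted in the text
MAX_NODES_PER_CLUSTER = 100   # largest supported cluster quoted in the text


def cluster_iops(nodes):
    """Aggregate IOPS of one cluster, assuming linear scaling per node."""
    if not 1 <= nodes <= MAX_NODES_PER_CLUSTER:
        raise ValueError("nodes must be between 1 and 100")
    return nodes * IOPS_PER_NODE


def clusters_needed(target_iops):
    """Number of full-size clusters required to reach target_iops."""
    return math.ceil(target_iops / cluster_iops(MAX_NODES_PER_CLUSTER))


def blast_radius(num_clusters):
    """Fraction of the fleet exposed to a fault contained in one cluster,
    assuming equally sized clusters."""
    return 1 / num_clusters


full = cluster_iops(100)               # 100 * 50,000
needed = clusters_needed(12_000_000)   # hypothetical fleet-wide target
exposed = blast_radius(needed)

print(full, needed, round(exposed, 3))
```

Under these assumptions a 12M IOPS fleet needs three clusters, and a software fault contained within one cluster touches about a third of the fleet rather than all of it.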

Offering a distributed storage solution isn’t that rare – there are many out there. What caught my interest at SolidFire was 1) their exclusive use of SSDs, and 2) an unusually nice quality of service (QoS) approach. Going exclusively with SSDs makes sense for block storage systems aimed exclusively at high random IOPS workloads, but SSDs are not a great solution for storage-bound workloads. The storage for these workloads is normally more cost-effectively hosted on hard disk drives. For more detail on where SSDs are a win and where they are not:

- When SSDs Make Sense in Server Applications

- When SSDs Don’t Make Sense in Server Applications

- When SSDs make sense in Client Applications (just about always)

The usual solution is to do both, but SolidFire wanted a single SSD-optimized solution that would be cost-effective across all workloads. For many cloud providers, especially the smaller ones, a single versatile solution has significant appeal.

The SolidFire approach is pretty cool. They exploit the fact that SSDs have abundant IOPS but are capacity constrained, and trade off IOPS to get capacity. Dave Wright, the SolidFire CEO, describes the design goal as SSD performance at a spinning-media price point. The key tricks employed:

- Multi-Level Cell Flash: They use MLC flash memory since it is far cheaper than Single-Level Cell (SLC). The slightly lower IOPS rate supported by MLC is still more than all but a handful of workloads require, and they can solve the accelerated wear issues with MLC in the software layers above.

- Compression: Aggregate workload dependent gains estimated to be 30 to 70%

- Data Deduplication: Aggregate workload dependent gains estimated to be 30 to 70%

- Thin Provisioning: Only allocate blocks to a logical volume as they are actually written to. Many logical volumes never get close to the actual allocated size.

- Performance Virtualization: Spread all volumes over many servers. Spreading the workload at a sub-volume level allows more control of meeting the individual volume performance SLA with good utilization and without negatively impacting other users

The combination of the capacity gains of thin provisioning, deduplication, and compression brings the dollars per GB of the SolidFire solution very near to some hard disk based solutions at nearly 10x the IOPS performance.
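A rough sketch of how those capacity gains could compound is shown below. It rests on assumptions the text does not state: that the quoted 30 to 70% "gains" are fractions of space saved, that compression and deduplication savings multiply independently, and that thin provisioning is modeled as the fraction of each volume actually written. The raw $10/GB price and the 50% written fraction are made-up inputs for illustration only.

```python
def effective_cost_per_gb(raw_cost_per_gb, compression_saving,
                          dedup_saving, written_fraction):
    """Cost per GB of *provisioned* logical capacity.

    compression_saving / dedup_saving: fraction of space saved (0 <= s < 1).
    written_fraction: share of provisioned blocks actually written
    (thin provisioning only consumes flash for these), 0 < f <= 1.
    """
    for s in (compression_saving, dedup_saving):
        if not 0 <= s < 1:
            raise ValueError("savings must be in [0, 1)")
    if not 0 < written_fraction <= 1:
        raise ValueError("written_fraction must be in (0, 1]")
    physical_per_logical = ((1 - compression_saving)
                            * (1 - dedup_saving)
                            * written_fraction)
    return raw_cost_per_gb * physical_per_logical


# Hypothetical inputs: $10/GB raw flash, the low end (30%) of both quoted
# ranges, and volumes that are on average half written.
cost = effective_cost_per_gb(10.0, 0.30, 0.30, 0.50)
multiplier = 10.0 / cost   # effective capacity gain over raw flash

print(round(cost, 2), round(multiplier, 2))
```

Even at the low end of the quoted ranges, these assumptions cut the effective price to roughly a quarter of the raw flash price, which is the kind of gap that has to close for SSDs to approach disk pricing.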

The QoS solution is elegant in that they have three settings that allow multiple classes of storage to be sold. Each logical volume has two QoS dimensions: 1) bandwidth and 2) IOPS. Each has a min, max, and burst setting. The min sets a hard floor where capacity is reserved to ensure this resource is always available. The burst is the hard ceiling that prevents a single user from consuming excess resources. The max is essentially the target. If you run below the max, you build up credits that allow a certain time over the max. The burst limits the potential negative impact of excursions above max on other users.
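The min/max/burst behavior described above resembles a credit bucket, and a minimal sketch follows. This is not SolidFire's implementation: the class name, the one-second accounting interval, and the `credit_cap` limit on banked credits are all assumptions made for illustration. The min setting is a reservation a real scheduler would honor across volumes; in this single-volume sketch it is only validated.

```python
from dataclasses import dataclass


@dataclass
class VolumeQoS:
    min_iops: int     # reserved floor (validated only in this sketch)
    max_iops: int     # sustained target
    burst_iops: int   # hard ceiling
    credit_cap: int   # hypothetical limit on banked credits
    credits: int = 0

    def __post_init__(self):
        if not 0 <= self.min_iops <= self.max_iops <= self.burst_iops:
            raise ValueError("require 0 <= min <= max <= burst")

    def grant(self, requested):
        """Return the IOPS granted for one one-second interval."""
        if requested <= self.max_iops:
            # Running below max banks the unused headroom as credits.
            unused = self.max_iops - requested
            self.credits = min(self.credit_cap, self.credits + unused)
            return requested
        # Above max: spend credits, but never exceed the burst ceiling.
        wanted_extra = min(requested, self.burst_iops) - self.max_iops
        extra = min(wanted_extra, self.credits)
        self.credits -= extra
        return self.max_iops + extra


vol = VolumeQoS(min_iops=500, max_iops=1000, burst_iops=2000, credit_cap=3000)
# Two quiet seconds bank credits; three demanding seconds spend them.
grants = [vol.grant(r) for r in [200, 200, 5000, 5000, 5000]]
print(grants)
```

In this run the volume bursts to its ceiling while credits last, tapers as they run out, and then settles back at max, which matches the "rare excursions above" pattern such as a database checkpoint.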

This system can support workloads with dead-reliable, never-changing I/O requirements. It can also support dead-reliable average case with rare excursions above (e.g. during a database checkpoint). It’s also easy to support workloads that soak up resources left over after satisfying the most demanding workloads without impacting other users. Overall, a nice simple and very flexible solution to a very difficult problem.

Marcin Okraszewski described resources for Comparing prices of cloud computing providers in an 8/1/2011 post to the Cloud Computing Economics blog:

When considering ROI maximization of cloud computing solutions, it is crucial to minimize the costs of cloud hosting, especially since differences can be very big. Anyone who has tried to compare prices of IaaS providers knows that it is a very tedious task if done manually, since there are different pricing models, subscription plans, etc. It really takes some time to understand a provider’s pricing model. Most users will probably give up after just a few providers. But there are several ways to deal with this.

Probably the most popular way of finding the cheapest provider is studying articles that compare several providers or “which provider is the cheapest” forum questions. These are the most natural way of sharing one’s experience, but each is still only an individual’s research result and, most importantly, matches that individual’s needs. Prices of cloud computing depend on many factors, like the size of a cloud, the proportion of resources needed (one provider may have cheap RAM, while another has cheap storage), bandwidth requirements, or whether you need on-demand or subscription instances. So there is no universally cheapest provider, only the cheapest for particular needs.

One step further is Cloud Price Calculator. Despite what the name suggests, its approach is to define a Cloud Price Normalization index, which is the sum of resources divided by price. Unfortunately, it offers only a handful of configurations from a few providers, which does not cover the full space, and you can only enter your own resources with a price (which you must calculate yourself) to get an index that you can compare with the others.
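As a rough illustration of a "sum of resources divided by price" index, the sketch below assumes an unweighted sum of RAM (GB), CPU cores, and storage (GB) over a monthly price. The real calculator's units and weights are not given in the post, so the function name `normalization_index`, its parameters, and the sample figures are all hypothetical.

```python
def normalization_index(ram_gb, cpu_cores, storage_gb, monthly_price):
    """Resources per unit of price; a higher value means more resources per dollar.

    Assumes an unweighted sum of the three resource amounts, which is a
    simplification made only for this example.
    """
    if monthly_price <= 0:
        raise ValueError("monthly_price must be positive")
    return (ram_gb + cpu_cores + storage_gb) / monthly_price


# Hypothetical configuration: 4 GB RAM, 2 cores, 94 GB storage at 50 per month.
example_index = normalization_index(4, 2, 94, 50)
```

An unweighted sum lets whichever resource has the largest number (usually storage in GB) dominate the index, which is one reason a single figure like this can mislead when your needs are RAM- or CPU-heavy.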

But the ultimate solution may be Cloudorado – Cloud Computing Comparison Engine. With this service you specify the resources you need in your cloud (RAM, CPU, storage, bandwidth, OS), and it calculates the cheapest option at each provider and gives you the price for each of them. It will even take packages and subscription plans into account if you provide a maximum plan duration. In advanced mode you can also calculate more complicated models with multiple servers or servers that do not run continuously, or tell the engine to find the best combination of servers to reach a total amount of a resource (scale horizontally). This allows you to really compare prices and find the cheapest option for your specific needs.

Another interesting option is a recently described Python library for calculating cloud costs. It takes the required resources either from monitoring tools or from a specified usage simulation. In this way you can calculate the price for your historical data or simulate future load. Unfortunately, you will need to write code against the library yourself and, what is probably even more limiting, it currently calculates costs only for Amazon and Rackspace.

There are a couple of ways to find the cheapest cloud provider. Regardless of the way you choose – manual calculations, articles, or automatic price calculation (Cloudorado or the Python library) – keep in mind that there is no universally cheapest cloud provider, only the cheapest provider for specific needs. And take this really seriously: the difference between the cheapest and the most expensive can easily exceed 10x, while the cheapest provider in one configuration might be the most expensive in another!
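The point that the cheapest provider depends on the workload can be shown with a toy comparison. The two providers and their per-unit monthly prices below are invented for illustration and do not describe any real service; the function names are likewise hypothetical.

```python
# Hypothetical per-unit monthly prices; not taken from any real provider.
PRICES = {
    "provider_a": {"ram_gb": 10.0, "storage_gb": 0.05},
    "provider_b": {"ram_gb": 4.0, "storage_gb": 0.20},
}


def monthly_cost(unit_prices, needs):
    """Total cost of a workload: each resource amount times its unit price."""
    return sum(unit_prices[resource] * amount for resource, amount in needs.items())


def cheapest(needs):
    """Name of the provider with the lowest total cost for this workload."""
    return min(PRICES, key=lambda name: monthly_cost(PRICES[name], needs))


ram_heavy = {"ram_gb": 32, "storage_gb": 100}
storage_heavy = {"ram_gb": 2, "storage_gb": 2000}

best_for_ram = cheapest(ram_heavy)
best_for_storage = cheapest(storage_heavy)
```

With these made-up prices the RAM-heavy workload is cheapest on `provider_b` and the storage-heavy workload is cheapest on `provider_a`, so the ranking flips purely because of the resource mix.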

The Unbreakable Cloud reported A flagship NoSQL Database Server Couchbase Server 2.0 released in a 7/31/2011 post:

Couchbase has released a developer preview of Couchbase Server 2.0, which is described as a high-performance, highly scalable, document-oriented NoSQL database. Couchbase Server 2.0 combines the unmatched elastic data management capabilities of Membase Server with the distributed indexing, querying and mobile synchronization capabilities of Apache CouchDB, the most widely deployed open source document database, to deliver the industry’s most powerful, bullet-proof NoSQL database technology.

According to the Couchbase press release, the Couchbase Server 2.0 highlights are:

- Couchbase Server 2.0 integrates the industry’s most trusted and widely deployed open source data management technologies – CouchDB, Membase, and Memcached – to deliver the industry’s most powerful, reliable NoSQL database.

- Couchbase Server 2.0 is a member of the Couchbase family of interoperable database servers – which also includes Couchbase Single Server and Couchbase Mobile. Unique to Couchbase, “CouchSync” technology allows each of these database systems to synchronize its data with any other. For example, mobile applications built with Couchbase Mobile can automatically synchronize data with a cloud- or datacenter-deployed Couchbase cluster whenever network connectivity is available.

- Couchbase also announced its new Couchbase Certification Program. Recognizing that NoSQL database technology is increasingly being viewed as a core component in the modern web application development stack, Couchbase’s new program is designed to help developers and administrators demonstrate proficiency in the leading NoSQL database technology. The first certification exams for the new program were administered this week in conjunction with CouchConf San Francisco, the company’s developer conference.

To explore Couchbase Server 2.0, please contact Couchbase.

<Return to section navigation list>
