Windows Azure and Cloud Computing Posts for 8/6/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

•• Updated 8/7/2011 3:00 PM PDT with new articles marked •• by Wade Wegner, Michael Washington, James Downey, Eric Evans, Alex Popescu, Jonathan Ellis, Lydia Leong, Erik Ejlskov Jensen, Michael Hausenblas, Jeffrey Palermo, and Scott Hanselman.

• Updated 8/6/2011 4:30 PM PDT with new articles marked • by Thomas Rupp, Derrick Harris and Czaroma Roman.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting, SQL Server Compact, NoSQL, et al.

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

•• Wade Wegner (@WadeWegner) explained Using Windows Azure Blob Storage and CDN with WordPress in an 8/7/2011 post:

UPDATE: In publishing this post, I encountered an issue with the plugin. Originally I used OneNote to create screen clippings of all the images in the post, then I pasted them into Live Writer. This is my normal practice for blog posts. Unfortunately, when I published, there ended up being a mismatch between the files stored in blob storage and those included in my blog post. However, when I updated the blog post and made actual JPEGs for each image (instead of screen clippings), everything worked fine. So, until I track down the underlying issue, simply save the image to a file first.

Serving up your blog’s content through a CDN can create a much better experience for your readers (additionally, I’m told it helps with page ranking, since search engines factor in response time). I personally use WordPress running on Windows Server (not yet Windows Azure), and using Windows Azure Blob Storage and CDN seemed daunting until I found a few resources to help. Below you’ll find a tutorial on setting this up yourself.

There are two resources you need to grab in order to easily set this up.

Important: These two resources ship separately, which means that you must ensure you’re grabbing versions designed to work together. In my case, I’ve downloaded v3.0.0 of the Windows Azure SDK for PHP even though v4.0.1 is the most recent drop.

These two resources provide documentation that I assume works well when running on Linux, but there’s hardly any documentation for those of us running WordPress on Windows Server (in my case Windows Server 2008 R2). Here are the steps I took to configure these resources.

Setting up the Windows Azure SDK for PHP Developers v3.0.0

Downloading the v3.0.0 SDK will yield a zip file named PHPAzure-3.0.0.zip. The first thing I did was to unzip it under C:\Program Files (x86)\PHPAzure-3.0.0. Why did I choose this folder structure? Two reasons:

- PHP itself is installed to C:\Program Files (x86)\, so I thought I’d keep this SDK near to PHP.

- It’s possible in the future that I’ll want to use v4.0.1 alongside v3.0.0 (although to be honest I don’t know if this is supported) and I didn’t want to get myself stuck by using a non-versioned folder.

You should now have a folder that looks like this:

In order to take advantage of the SDK, you need to update your php.ini file and add the path to the library folder in the include_path variable. Browse to your PHP folder (in my case it is C:\Program Files (x86)\PHP\v5.2) and open the php.ini file.

Search for the include_path variable. It might help to use “include_path = “ for your search terms. In my case, this variable was commented out, so I had to both uncomment the variable and add my path. In the end, you should have something looking like this:

include_path = ".;c:\Program Files (x86)\PHPAzure-3.0.0"

Pretty reasonable. This is all you need to do with the Windows Azure SDK for PHP Developers.

Setting up Windows Azure Storage for WordPress

Now you can download the Windows Azure Storage for WordPress plugin. This time I unzipped the contents of the folder to my plugins folder at C:\Websites\WadeWegner\wp-content\plugins. Your folder path will most definitely be different; however, it will need to be under “wp-content\plugins” to work correctly.

Once unzipped, you should see the following:

Now, to get this to work, you also have to add the path to the SDK in the windows-azure-storage.php file. It took me a bit to figure out exactly what to update, but in the end it’s not particularly challenging.

We’re going to update the set_include_path method so that it includes the SDK library.

Initially, the code block looks like this:

if (isset($_SERVER["APPL_PHYSICAL_PATH"])) {

set_include_path(

get_include_path() . PATH_SEPARATOR . $_SERVER["APPL_PHYSICAL_PATH"]

);

}

You should update it accordingly (the addition is the trailing PATH_SEPARATOR and SDK library path):

if (isset($_SERVER["APPL_PHYSICAL_PATH"])) {

set_include_path(

get_include_path() . PATH_SEPARATOR . $_SERVER["APPL_PHYSICAL_PATH"] . PATH_SEPARATOR . 'C:\\Program Files (x86)\\PHPAzure-3.0.0\\library'

);

}

Be sure to use the correct path based on where you placed the SDK. Also, be sure to use double backslashes, or it won’t work.
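To make the string concatenation concrete, here is a small Python sketch that mimics what the edited set_include_path call computes. It is not part of the plugin: the helper name `build_include_path` and the sample values (including the assumption that APPL_PHYSICAL_PATH holds the site root) are illustrative only, and the `;` separator reflects the value of PHP's PATH_SEPARATOR on Windows.

```python
# Illustrative only: mimics the PHP expression
#   get_include_path() . PATH_SEPARATOR . APPL_PHYSICAL_PATH . PATH_SEPARATOR . <SDK library>
# The helper name and the sample values below are assumptions, not plugin code.

WINDOWS_PATH_SEPARATOR = ";"  # value of PHP's PATH_SEPARATOR on Windows


def build_include_path(current, app_path, sdk_library):
    """Join the existing include path, the site root, and the SDK library folder."""
    parts = [current, app_path, sdk_library]
    return WINDOWS_PATH_SEPARATOR.join(parts)


result = build_include_path(
    ".",                                                # assumed current include path
    "C:\\Websites\\WadeWegner\\",                       # assumed APPL_PHYSICAL_PATH
    "C:\\Program Files (x86)\\PHPAzure-3.0.0\\library",  # SDK library folder
)
print(result)
```

If activation of the plugin fails later, comparing the path PHP actually reports against a string built this way may help spot a missing separator or a wrong folder.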

At this point you should be ready to activate and configure the plugin.

Activating and Configuring the Windows Azure Storage plugin.

In WordPress, browse to your Installed Plugins. The Windows Azure Storage for WordPress plugin will be deactivated – activate it. If activation fails, then something above is incorrect.

Once activated, you’ll have a Windows Azure link under Settings. Browse to it.

Here’s where you’ll specify information specific to your Windows Azure storage account. In the Windows Azure Platform Management Portal, log in and grab your storage name and key. You can get this by going to Hosted Services, Storage Accounts & CDN –> Storage Accounts. From here, select the appropriate storage account. Under properties you can get the name from the Name property, and you can get your key by clicking the View button under the Primary access key property.

Back in WordPress, enter your Storage Account Name and Primary Access Key. It should look something like this:

Of course, your values will be different.

Click the Save Changes button. Once you’ve saved, the Default Storage Container dropdown list will populate with storage containers listed in your storage account. Here’s what mine looks like:

I created a container called “wordpress”. If you don’t see a container you want to use, you’ll have to create it. To do this easily, I recommend you use a tool like CloudXplorer. This tool is useful not only for creating the container, but also for setting the access control policy to “Public read access (blobs only)” – this is important because by default the container is marked “private” and is not accessible through the browser without the proper credentials.

When you’ve set the policy correctly, it should look like this:

Test this out and make sure you can browse to a file in your container and view it correctly.
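As a rough way to script that check, the Python sketch below builds the public URL for a blob and tries an anonymous request. The account name `myaccount` is a placeholder, both helper names are hypothetical, and the URL pattern assumes the default blob endpoint (`<account>.blob.core.windows.net`) rather than a custom domain.

```python
from urllib.parse import quote
from urllib.request import urlopen


def blob_url(account, container, blob_name):
    """Build the public HTTP URL for a blob on the default storage endpoint."""
    return "http://{0}.blob.core.windows.net/{1}/{2}".format(
        account, container, quote(blob_name)
    )


def is_publicly_readable(url, timeout=10):
    """Return True if an anonymous GET succeeds, False on any HTTP or network error."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # urllib's HTTPError and URLError both derive from OSError, so this
        # covers an error status from a private container as well as timeouts.
        return False


# "myaccount" is a placeholder; substitute your own storage account name.
url = blob_url("myaccount", "wordpress", "Mug-195w.jpg")
print(url)
```

Calling `is_publicly_readable(url)` against your own container should return True once the access policy allows public blob reads; it is left out of the sample run because it needs network access.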

Once you have your container, select it and click Save Changes.

Lastly, I recommend you check the Use Windows Azure Storage for default upload option so that by default your resources will go to Windows Azure storage instead of your server. Click Save Changes when complete.

At this point everything should be set up correctly such that when you publish a blog post – even with a tool such as Windows Live Writer – it will place your images and resources into your Windows Azure blob storage account. This is great! Test it out yourself (but I recommend you publish as a draft post just in case things don’t work correctly).

Now that you have this set up correctly, I think you should strongly consider using the CDN. The primary reason to do this is so that your site loads as fast as possible for your readers. Take a look at the article WordPress + CDN == Fast Load Times for more info.

Setting Up the CDN

While this is a bit more advanced of a topic, it is by no means difficult to accomplish.

The Windows Azure CDN is set up so that you can easily turn it on for your storage account, after which everything stored in blob storage can be served through the CDN. In the Windows Azure Platform Management Portal, select Hosted Services, Storage Accounts & CDN –> CDN. Select your storage account. In the upper left-hand corner of the portal, click the button New Endpoint.

A dialog window will open that gives you the opportunity to choose some settings:

You don’t need to change any of the settings (unless you want to do so). Click OK.

At this point it will start to create and propagate your CDN settings. This will take a while, so don’t get impatient – it needs to propagate to the 26 (or more) CDN nodes worldwide.

Once this process has completed, you’ll see a note saying that the CDN endpoint has been enabled (again, note that the portal will say Enabled before everything has propagated worldwide – patience!).

Now, once this is done, you’ll be able to browse to resources in storage through the CDN URL, which will look something like this:

http://az29238.vo.msecnd.net/images/Mug-195w.jpg

While this works perfectly well, I personally don’t like the non-customized URL. To fix this, you can use a custom domain name of your own choosing with the CDN. Take a look at Steve Marx’s post on Using the New Windows Azure CDN with a Custom Domain. Warning: his post pre-dates the new portal design, but conceptually it’s still the same. Note that once you verify your account it can take another 60 minutes or so for your new custom domain name to propagate to the CDN nodes. The end result should look like this:

http://images.wadewegner.com/images/Mug-195w.jpg
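The two URLs above differ only in their host name, which the following Python sketch makes explicit. The helper `with_custom_domain` is hypothetical and simply swaps the host; it assumes the custom domain has already been verified and mapped to the CDN endpoint.

```python
from urllib.parse import urlsplit, urlunsplit


def with_custom_domain(url, custom_host):
    """Return the same URL with its host replaced by the custom CDN domain."""
    parts = urlsplit(url)
    return urlunsplit(
        (parts.scheme, custom_host, parts.path, parts.query, parts.fragment)
    )


cdn_url = "http://az29238.vo.msecnd.net/images/Mug-195w.jpg"
custom_url = with_custom_domain(cdn_url, "images.wadewegner.com")
print(custom_url)
```

The path (container and blob name) is untouched, which is why existing blob names keep working after the switch to a custom domain.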

If you run nslookup on the custom domain name, you should see that it ultimately resolves to the CDN:

Great, now you have successfully enabled the CDN on your storage account along with a custom domain name. Now we have to perform one last update in WordPress.

Using the CDN with the WordPress Plugin

Last step is to configure the Windows Azure Storage for WordPress plugin to serve your content up via the CDN.

On the plugin settings page you’ll see a CNAME property you can specify. Set the value to your custom domain’s FQDN, like this: http://images.wadewegner.com. It should look like this on the page:

Make sure to click Save Changes.

Test it Out!

The easiest way to test it out is to create a draft blog post with an image. I’ll use Windows Live Writer. Here’s a quick example.

When you go to post, be sure to use Post draft to blog. This way, if you screw up, no one will know.

After you post, take a look at the preview of the post. In particular, right-click on the image and look at the URL:

See how it’s getting served up from the custom CDN domain? Awesome!

Furthermore, take a look at blob storage. You’ll see that your resources/images were uploaded:

That’s it!

I hope you find this useful. I plan to go through all the resources on my blog soon and update them such that the images are served through the CDN, but I’ll save that for another post!

I delayed posting Wade’s article until 8/8/2011 8:00 AM PDT because the original was unavailable and the images were missing from his Atom feed on 8/7.

Brent Stineman (@BrentCodeMonkey) posted Azure Tools for Visual Studio 1.4 August Update–Year of Azure Week 5 on 8/5/2011:

Good evening folks. It’s 8pm on Friday August 5th, 2011 (aka international beer day) as I write this. Last week’s update to my year of Azure series was weak, but this week’s will be even lighter. Just too much to do and not enough time I’m afraid.

As you can guess from the title of this update, I’d like to talk about the new 1.4 SDK update. Now I could go to great length about all the updates, but the Windows Azure team blog already did, and Wade and Steve already covered it in this week’s Cloud Cover show. So instead, I’d like to focus on just one aspect of this update, the Azure Storage Analytics.

I can’t tell you all how thrilled I am. The best part of being a Microsoft MVP is all the great people you get to know. The second best part is getting to have an impact in the evolution of a product you’re passionate about. And while I hold no real illusion that anything I’ve said or done has led to the introduction of Azure Storage analytics, I can say it’s something I (and others) have specifically asked for.

I don’t have enough time this week to write up anything. Fortunately, Steve Marx has already put together the basics on how to interact with it. If that’s not enough, I recommend you go and check out the MSDN documentation on the new Storage Analytics API.

One thing I did run across while reading through the documentation tonight was that the special container that Analytics information gets written to, $Logs, has a 20TB limit. And that this limit is independent of the 100TB limit that is on the rest of the storage account. This container is also subject to being billed for data stored and for read/write actions. However, delete operations are a bit different. If you do it manually, it’s billable. But if it’s done as a result of the retention policies you set, it[’]s not. [Emphasis added.]

So again, apologies for an extremely weak update this week. But I’m going to try and ramp things up and take what Steve did and give you a nice code snippet that you can easily reuse. If possible, I’ll see if I can’t get that cranked out this weekend.

For more detailed information about Windows Azure Storage Analytics, see my Windows Azure and Cloud Computing Posts for 8/5/2011 and Windows Azure and Cloud Computing Posts for 8/3/2011+.

<Return to section navigation list>

SQL Azure Database and Reporting, SQL Server Compact, NoSQL, et al.

•• Alex Popescu reported in an 8/17/2011 post to his myNoSQL blog that Eric Evans (@jericevans, who coined the term “NoSQL”, pictured below) presented Cassandra Query Language: Not Just NoSQL. It’s MoSQL to the Cassandra SF 2011 conference held at the University of California at San Francisco (UCSF) Mission Bay campus on July 11, 2011:

Cassandra 0.8 included the first version of Cassandra Query Language or CQL. Eric Evans gave a talk at Cassandra SF 2011 introducing Cassandra Query Language as an alternative and not replacement of the current Cassandra API:

Eric’s recommended pronunciation for CQL is (not surprisingly) “Sequel.”

Click here for DataStax’s full presentation page for the conference.

•• Jonathan Ellis (@spyced) provided a description and sample queries for CQL in his What’s New in Cassandra 0.8, Part 1: CQL, the Cassandra Query Language post to DataStax’ Cassandra Developer Center blog on 6/9/2011 (missed when published):

Why CQL?

Pedantic readings of the NoSQL label aside, Cassandra has never been against SQL per se. SQL is the original data DSL, and quite good at what it does.

Cassandra originally went with a Thrift RPC-based API as a way to provide a common denominator that more idiomatic clients could build upon independently. However, this worked poorly in practice: raw Thrift is too low-level to use productively, and keeping pace with new API methods to support (for example) indexes in 0.7 or distributed counters in 0.8 is too much for many maintainers.

CQL, the Cassandra Query Language, addresses this by pushing all implementation details to the server; all the client has to know for any operation is how to interpret “resultset” objects. So adding a feature like counters just requires teaching the CQL parser to understand “column + N” notation; no client-side changes are necessary.

CQL drivers are also hosted in-tree, to avoid the problems caused in the past by client proliferation.

At the same time, CQL is heavily based on SQL–close to a subset of SQL, in fact, which is a big win for newcomers: everyone knows what “SELECT * FROM users” means. CQL is the first step to making the learning curve on the client side as gentle as it is operationally.

(The place where CQL isn’t a strict subset of SQL–besides TABLE vs COLUMNFAMILY tokens, which may change soon–is in support for Cassandra’s wide rows and hierarchical data like supercolumns.)

A taste of CQL

Here we’ll use the cqlsh tool to create a “users” columnfamily, add some users and an index, and do some simple queries. (See our documentation for details on installing cqlsh.) Compare to the same example using the old cli interface if you’re so inclined.

cqlsh> CREATE KEYSPACE test with strategy_class = 'SimpleStrategy' and strategy_options:replication_factor=1;

cqlsh> USE test;

cqlsh> CREATE COLUMNFAMILY users (

... key varchar PRIMARY KEY,

... full_name varchar,

... birth_date int,

... state varchar

... );

cqlsh> CREATE INDEX ON users (birth_date);

cqlsh> CREATE INDEX ON users (state);

cqlsh> INSERT INTO users (key, full_name, birth_date, state) VALUES ('bsanderson', 'Brandon Sanderson', 1975, 'UT');

cqlsh> INSERT INTO users (key, full_name, birth_date, state) VALUES ('prothfuss', 'Patrick Rothfuss', 1973, 'WI');

cqlsh> INSERT INTO users (key, full_name, birth_date, state) VALUES ('htayler', 'Howard Tayler', 1968, 'UT');

cqlsh> SELECT key, state FROM users;

key | state |

bsanderson | UT |

prothfuss | WI |

htayler | UT |

cqlsh> SELECT * FROM users WHERE state='UT' AND birth_date > 1970;

KEY | birth_date | full_name | state |

bsanderson | 1975 | Brandon Sanderson | UT |

Current status and the road ahead

cqlsh (included with the Python driver) is already useful for testing queries against your data and managing your schema. The above example works as written in 0.8.0; ALTER COLUMNFAMILY, TTL support, and counter support are already done for 0.8.1.
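To check your reading of the final query in the session above, the following Python sketch reproduces its filter over the three inserted rows in plain Python. It only mirrors the query's logic (an equality match on state plus a range condition on birth_date); it does not talk to Cassandra, and the `select_users` helper is hypothetical.

```python
# The three rows inserted in the cqlsh session, as plain dictionaries.
users = [
    {"key": "bsanderson", "full_name": "Brandon Sanderson", "birth_date": 1975, "state": "UT"},
    {"key": "prothfuss", "full_name": "Patrick Rothfuss", "birth_date": 1973, "state": "WI"},
    {"key": "htayler", "full_name": "Howard Tayler", "birth_date": 1968, "state": "UT"},
]


def select_users(rows, state, born_after):
    """Mirror of: SELECT * FROM users WHERE state=<state> AND birth_date > <born_after>."""
    return [
        row for row in rows
        if row["state"] == state and row["birth_date"] > born_after
    ]


matches = select_users(users, "UT", 1970)
print([row["key"] for row in matches])
```

Only the bsanderson row satisfies both conditions, which matches the single row cqlsh returned.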

Supercolumn support is the main missing feature and will probably come in 0.8.2 (minor releases are done roughly monthly). Removing Thrift as a requirement is a longer-term goal.

CQL does not change the underlying Cassandra data model; in particular, there is no support for JOINs. (For doing analytical queries with SQL against Cassandra, see Brisk.)

The Python and Node.js CQL drivers are ready for wider use; JDBC and Twisted are almost done, and PHP and Ruby are being worked on.

In short, CQL is ready for client authors to get involved. Most application developers should stick with the old Thrift RPC-based clients until 0.8.1*. If you want to give CQL a try, see the installation instructions, and for developers, the drivers tree was recently moved here.

*One such client, Hector, already has CQL query support.

Jonathan is a DataStax co-founder and project chair for Apache Cassandra.

•• Erik Ejlskov Jensen (@ErikEJ) described his SQL Server Compact Toolbox 2.3–Visual Guide of new features in an 8/7/2011 post:

After more than 44,000 downloads, version 2.3 of my SQL Server Compact Toolbox extension for Visual Studio 2010 is now available for download. This blog post is a visual guide to the new features included in this release.

Generate database documentation

This feature allows you to create documentation of all tables and columns in your database, in HTML or XML (raw) format, for use with product documentation etc. If you have added descriptions to database, table or column, these will also be included.

From the database context menu, select Create Database Documentation…

You will be prompted for a filename and can choose between HTML and XML format. The generated document will then open in the associated application (for example your browser).

The format of the HTML and XML file comes from the excellent DB>doc for Microsoft SQL Server CodePlex project. You can use the XML file as the data in your own documentation format.

By default, tables beginning with __ are not included in the documentation (this includes the table with object descriptions). They can optionally be included via a new option:

Please provide any feedback for this new feature to the CodePlex issue tracker

Handle password protected files better

When trying to open a password protected file, where the password is not saved with the connection string, you are now prompted to enter the database password, instead of being faced with an error.

Show result count in status bar

The query editor status bar now displays the number of rows returned.

Other fixes

Improvements to Windows Phone DataContext generation, improved error handling to prevent Visual Studio crashes, and the latest scripting libraries included.

•• Erik (@ErikEJ) listed Windows Phone / SQL Server Compact resources in an 8/3/2011 post (missed when published):

MSDN

- Local Database Overview for Windows Phone

- How to: Create a Basic Local Database Application for Windows Phone

- How to: Create a Local Database Application with MVVM for Windows Phone

- How to: Deploy a Reference Database with a Windows Phone Application

- Local Database Best Practices for Windows Phone

- Local Database Connection Strings for Windows Phone

- LINQ to SQL Support for Windows Phone

- Video: SQL Server Compact and User Data Access in Mango

- PowerPoint slides: SQL Server Compact and User Data Access in Mango

Jesse Liberty

- Coming in Mango–Sql Server CE

- Coming In Mango–Local DB Part 2- Relationships

- Best Practices For Local Databases

- Yet Another Podcast #43–Sean McKenna and Windows Phone Data

Rob Tiffany

Alex Golesh

Windows Phone Geek

- Windows Phone Mango Local Database- mapping and database operations

- Using SqlMetal to generate Windows Phone Mango Local Database classes

- Performance Best Practices: Windows Phone Mango Local Database

- Windows Phone Mango Local Database(SQL CE): Introduction

- Windows Phone Mango Local Database(SQL CE): Linq to SQL

- Windows Phone Mango Local Database(SQL CE): [Table] attribute

- Windows Phone Mango Local Database(SQL CE): [Column] attribute

Arsanth

- Distributing a SQL CE database in a WP7 Mango application

- Arsanth Daily – May 25th

- Arsanth Daily – May 27th

- Windows Phone 7 SQL CE – Column inheritance

- Windows Phone 7 SQL CE – DataContext Tables

- Working with pre-populated SQL CE databases in WP7

- LINQ to SQL CE performance tips for WP7

- Arsanth Daily – June 6th

Kunal Chowdhury

- Windows Phone 7 (Mango) Tutorial - 22 - Local Database Support, Create DataContext

- Windows Phone 7 (Mango) Tutorial - 23 - Local Database Support, Configuring Project

- Windows Phone 7 (Mango) Tutorial - 24 - Local Database Support, CRUD operation with Demo

- Windows Phone 7 (Mango) Tutorial - 25 - Learn about Database Connection String

ErikEJ

- Populating a Windows Phone “Mango” SQL Server Compact database on desktop

- SQL Server Compact Toolbox 2.2–Visual Guide of new features

Sergey Barskiy

Derik Whittaker

- Using SQL CE on WP7 Mango–Getting Started

- Using SQL CE On WP7 Mango–Working with Associations

- Using SQL CE On WP7 Mango–Working with Indexes

Mark Arteaga

Rabeb

JeffCren

Matt Lacey

Corrado

Max Paulousky

SQL Server Compact works with SQL Azure Data Sync and provides the database engine for Microsoft’s IndexedDB implementation for HTML 5.

Stephen O’Grady (@sogrady) asserted It’s Beginning to Look a Lot Like SQL in an 8/5/2011 post to his RedMonk blog:

Besides the fact that it has been used to group otherwise fundamentally dissimilar technologies, the fact is that the fundamental rejection of SQL implied by the term NoSQL misidentifies the issue. As the 451 Group’s Matthew Aslett says, “in most cases SQL itself is not the ‘problem’ being avoided.”

Having a standardized or semi-standardized interface for datasets is, in fact, desirable. Google App Engine, for example, which is backed by a non-relational store, exposes stored data to its own GQL query language. And the demand for a query language is, in fact, the reason that the Hive and Pig projects exist.

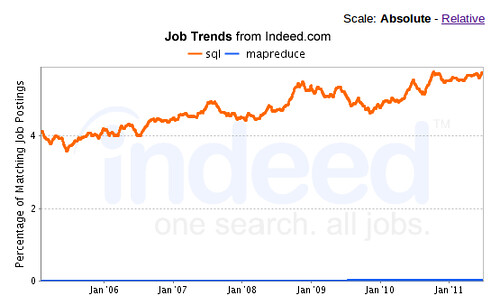

SQL is more easily learned than MapReduce, and an order of magnitude more available from a skills perspective.

That this lesson has not been lost on other non-relational datastore projects is now obvious. Last week saw the introduction of UnQL, a SQL-like query language targeted at semi-structured datasets, document databases and JSON repositories. Backed by Couchbase and SQLite, it is seeking wider adoption within the NoSQL term with its donation to the public domain.

Cassandra, meanwhile, has evolved its own SQL alternative, the Cassandra Query Language (CQL). Described by Jonathan Ellis as a subset of UnQL – CQL has no ORDER BY, for example – the Cassandra project has focused on the features that fit its particular data model best.

MongoDB also makes an effort to relate its querying syntax to SQL-trained developers, as do related projects like MongoAlchemy’s Mongo Query Expression Language.

It is too early to handicap the probable outcomes for these various query language projects. Nor is it certain that NoSQL will achieve the same consolidation the relational market did around a single approach; the differing approaches of the various NoSQL projects argue against this, in fact.

What is apparent is the demand for query languages within the NoSQL world. The category might self-identify with its explicit rejection of the industry’s original query language, but the next step in NoSQL’s evolution will be driven in part by furious implementations of SQL’s children.

Disclosure: Couchbase is a RedMonk client, and we supplied a quote for the release announcing UnQL.

<Return to section navigation list>

Marketplace DataMarket and OData

•• Michael Hausenblas (@mhausenblas) described JSON, data and the REST in an 8/7/2011 post to the Web of Data blog:

Tomorrow, on 8.8. is the International JSON day. Why? Because I say so!

Is there a better way to say ‘thank you’ to a person who gave us so much – yeah, I’m talking about Doug Crockford – and to acknowledge how handy, useful and cool the piece of technology is, this person ‘discovered‘?

From its humble beginning some 10 years ago, JSON is now the light-weight data lingua franca. From the nine Web APIs I had a look at recently in the REST: From Research to Practice book, seven offered their data in JSON. These days it is possible to access and process JSON data from virtually any programming language – check out the list at json.org if you doubt that. I guess the rise of JSON and its continuing success story is at least partially due to its inherent simplicity – all you get are key/value pairs and lists. And in 80% or more of the use cases that is likely all you need. Heck, even I prefer to consume JSON in my Web applications over any sort of XML-based data sources or any given RDF serialization.

But the story doesn’t end here. People and organisations nowadays in fact take JSON as a given basis and either try to make it ‘better’ or to leverage it for certain purposes. Let’s have a look at three of these examples …

JSON Schema

I reckon one of the first and most obvious things people were discussing once JSON reached a certain level of popularity was how to validate JSON data. And what do we do as good engineers? We need to invent a schema language, for sure! So, there you go: json-schema.org tries to establish a schema language for JSON. The IETF Internet draft by Kris Zyp states:

JSON Schema provides a contract for what JSON data is required for a given application and how to interact with it. JSON Schema is intended to define validation, documentation, hyperlink navigation, and interaction control of JSON data.

One rather interesting bit, beside the obvious validation use case, is the support for ‘hyperlink navigation’. We’ll come back to this later.
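To give a feel for the validation use case, here is a toy Python sketch. The schema shape below (field names mapped to expected Python types) is deliberately simplified and made up for illustration; it is not the vocabulary defined in the JSON Schema draft, and the `validate` helper and sample documents are hypothetical.

```python
import json

# A deliberately simplified, made-up schema shape (NOT the JSON Schema draft's
# actual vocabulary): required field names mapped to expected Python types.
USER_SCHEMA = {"name": str, "age": int, "tags": list}


def validate(document, schema):
    """Return a list of problems; an empty list means the document passed."""
    problems = []
    for field, expected_type in schema.items():
        if field not in document:
            problems.append("missing field: " + field)
        elif not isinstance(document[field], expected_type):
            problems.append("wrong type for field: " + field)
    return problems


good = json.loads('{"name": "Ada", "age": 36, "tags": ["json"]}')
bad = json.loads('{"name": "Ada", "age": "unknown"}')

print(validate(good, USER_SCHEMA))
print(validate(bad, USER_SCHEMA))
```

A real schema language adds much more (nested objects, value constraints, documentation), but the contract idea is the same: the application states up front what data it requires, and documents are checked against that statement.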

Atom-done-right: OData

I really like the Atom format as well as the Atom Publishing Protocol (APP). A classic, in REST terms. I just wonder, why on earth is it based on XML?

Enter OData. Microsoft, in a very clever move adopted Atom and the APP and made it core of OData; but they didn’t stop there – Microsoft is using JSON as one of the two official formats for OData. They got this one dead right.

OData is an interesting beast, because here we find one attempt to address one of the (perceived) shortcomings of JSON – it is not very ‘webby’. I hear you saying: ‘Huh? What’s that and why does this matter?’ … well it matters to some of us RESTafarians who respect and apply HATEOAS. Put short: as JSON uses a rather restricted ‘data type’ system, there is no explicit support for URIs and (typed) links. Of course you can use JSON to represent and transport a URI (or many, FWIW). But the way you choose to represent, say, a hyperlink might look different from the way I or someone else does, meaning that there is no interoperability. I guess, as long as HATEOAS is a niche concept, not grokked by many people, this might not be such a pressing issue, however, there are cases where it is vital to be able to unambiguously deal with URIs and (typed) links. More in the next example …
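The interoperability problem can be shown in a few lines of Python. Both services and both link layouts below are invented for illustration; neither is a standard, and the two client functions are hypothetical.

```python
import json

# Two hypothetical services describing the same "next page" link.
# Both field layouts are invented for illustration; neither is a standard.
service_a = json.loads(
    '{"title": "Page 1", "next": "http://example.org/items?page=2"}'
)
service_b = json.loads(
    '{"title": "Page 1", "links": [{"rel": "next", "href": "http://example.org/items?page=2"}]}'
)


def next_link_style_a(document):
    """Client written against service A's convention: a top-level "next" string."""
    return document.get("next")


def next_link_style_b(document):
    """Client written against service B's convention: a list of rel/href objects."""
    for link in document.get("links", []):
        if link.get("rel") == "next":
            return link.get("href")
    return None


print(next_link_style_a(service_a))  # finds the link
print(next_link_style_a(service_b))  # None: same information, different shape
print(next_link_style_b(service_b))  # finds the link
```

Both documents are perfectly valid JSON carrying the same link, yet a client has to know each service's private convention to follow it. That is the gap a shared format for (typed) links is meant to close.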

Can I squeeze a graph into JSON? Sir, yes, Sir!

Some time ago Manu Sporny and others started an activity called JSON-LD (JavaScript Object Notation for Linking Data) that gained some momentum over the past year or so; at the time of writing, support for some popular languages incl. C++, JavaScript, Ruby and Python is available. JSON-LD is designed to be able to express RDF, microformats as well as Microdata. With the recent introduction of Schema.org, this means JSON-LD is something you might want to keep on your radar …

On a related note: initially, the W3C planned to standardize how to serialize RDF in JSON. Once the respective Working Group was in place, this was dropped. I think they made a wise decision. Don’t get me wrong, I’d have also loved to get out an interoperable way to deal with RDF in JSON, and there are certainly enough ways one could do it, but I guess we’re simply not yet there. And JSON-LD? Dunno, to be honest – I mean I like and support it and do use it, very handy, indeed. Will it be the solution for HATEOAS and Linked Data? Time will tell.

Wrapping up: JSON is an awesome piece of technology, largely due to its simplicity and universality and, we should not forget: due to a man who rightly identified its true potential and never stopped telling the world about it.

Tomorrow, on 8.8. is the International JSON day. Join in, spread the word and say thank you to Doug as well!

Nick described Removing Strings in INotifyPropertyChanged and OData Expands in an 8/6/2011 post to his Nick’s .NET Travels blog:

Ok, so this is a rather random post but I wanted to jot down a couple of scenarios where I often see string literals in code.

Scenario 1: String Literals in INotifyPropertyChanged Implementation

The first is the default implementation of INotifyPropertyChanged. If you’ve done data binding (for example with WPF or Silverlight) you’ll be familiar with this interface – when a source property value changes if you raise the PropertyChanged event the UI gets an opportunity to refresh. The standard implementation looks a bit like this:

public class MainPageViewModel: INotifyPropertyChanged { public event PropertyChangedEventHandler PropertyChanged; private string _Title; public string Title { get { return _Title; } set { if (Title == value) return; _Title = value; RaisePropertyChanged("Title"); } } private void RaisePropertyChanged(string propertyName) { if (PropertyChanged != null) { PropertyChanged(this, new PropertyChangedEventArgs(propertyName)); } } }I always cringe when I see this because the name of the property, ie “Title” is passed into the RaisePropertyChanged method. This wouldn’t be so bad except this code block gets repeated over and over and over again – for pretty much any property you end up data binding to. Having string literals littered through your code is BAD as it makes your code incredibly brittle and susceptible to errors (for example you change the property name, without changing the string literal). A while ago I adopted the following version of the RaisePropertyChanged method which accepts an Expression and extracts the property name:

    public void RaisePropertyChanged<TValue>(Expression<Func<TValue>> propertySelector)
    {
        var memberExpression = propertySelector.Body as MemberExpression;
        if (memberExpression != null)
        {
            RaisePropertyChanged(memberExpression.Member.Name);
        }
    }

The only change you need to make in your properties is to use a lambda expression instead of the string literal, for example:

    private string _Title;
    public string Title
    {
        get { return _Title; }
        set
        {
            if (Title == value) return;
            _Title = value;
            RaisePropertyChanged(() => Title);
        }
    }

Scenario 2: String Literals in the Expands method for OData

Let’s set the scene – you’re connecting to an OData source using either the desktop or the new WP7 (Mango) OData client library. Your code might look something similar to the following – the important thing to note is that we’re writing a strongly typed LINQ statement to retrieve the list of customers.

    NorthwindEntities entities = new NorthwindEntities(new Uri("http://services.odata.org/Northwind/Northwind.svc"));

    private void LoadCustomers()
    {
        var customerQuery = from c in entities.Customers
                            select c;

        var customers = new DataServiceCollection<Customer>();
        customers.LoadCompleted += LoadCustomersCompleted;
        customers.LoadAsync(customerQuery);
    }

    private void LoadCustomersCompleted(object sender, LoadCompletedEventArgs e)
    {
        var customers = sender as DataServiceCollection<Customer>;
        foreach (var customer in customers)
        {
            var name = customer.CompanyName;
        }
    }

By default the LINQ expression will only retrieve the Customer objects themselves. If you wanted to not only retrieve the Customer but also their corresponding Orders then you’d have to change the LINQ to use the Expand method:

    var customerQuery = from c in entities.Customers.Expand("Orders")
                        select c;

Now, if you wanted to be more adventurous you could extend this to include the OrderDetails (for each Order) and subsequent Product (1 for each OrderDetails record) and Category (each Product belongs to a category).

    var customerQuery = from c in entities.Customers.Expand("Orders/Order_Details/Product/Category")
                        select c;

The Order is also connected to the Shipper and Employee tables, so you might want to also bring back the relevant data from those tables too:

    var customerQuery = from c in entities.Customers
                            .Expand("Orders/Order_Details/Product/Category")
                            .Expand("Orders/Employee")
                            .Expand("Orders/Shipper")
                        select c;

The result is that you have a number of string literals defining which relationships you want to traverse and bring back. Note that you only need to do this if you want to eager load this information. If you want your application to lazy load the related data you don’t require the Expand method.

The workaround for this isn’t as easy as the RaisePropertyChanged method used to eliminate string literals for the INotifyPropertyChanged scenario. However, we essentially use the same technique – we replace the string literal with an expression that makes the necessary traversals. For example:

    var customerQuery = from c in entities.Customers
                            .Expand(c => c.Orders[0].Order_Details[0].Product.Category)
                            .Expand(c => c.Orders[0].Employee)
                            .Expand(c => c.Orders[0].Shipper)
                        select c;

You’ll notice that in this case where we traverse from Orders to Order_Details we need to specify an array index. This can actually be any number as it is completely ignored – it’s just required so that we can reference the Order_Details property which exists on an individual Order object.

Ok, but how is this going to work? Well, we’ve simply created another extension method for the DataServiceQuery class, also called Expand, that accepts an Expression instead of a string literal. This method expands out the Expression and converts it to a string, which is passed into the original Expand method. I’m not going to step through the following code – it essentially traverses the Expression tree looking for MemberAccess nodes (i.e. property accessors), which it adds to the expand string. It also detects Call nodes (which typically correspond to the array index accessor, e.g. get_Item(0)), which are skipped to move on to the next node in the tree via the Object property.

    using System;
    using System.Data.Services.Client;
    using System.Linq.Expressions;
    using System.Text;

    public static class ODataExtensions
    {
        public static DataServiceQuery<TElement> Expand<TElement, TValue>(
            this DataServiceQuery<TElement> query,
            Expression<Func<TElement, TValue>> expansion)
        {
            var expand = new StringBuilder();
            Expression expression = expansion.Body;

            // Walk from the outermost member back towards the lambda parameter.
            while (expression != null && expression.NodeType != ExpressionType.Parameter)
            {
                if (expression.NodeType == ExpressionType.MemberAccess)
                {
                    // Property accessor: prepend its name to the expand path.
                    if (expand.Length > 0)
                    {
                        expand.Insert(0, "/");
                    }
                    var mex = (MemberExpression)expression;
                    expand.Insert(0, mex.Member.Name);
                    expression = mex.Expression;
                }
                else if (expression.NodeType == ExpressionType.Call)
                {
                    // Indexer call such as get_Item(0): skip it and continue with its target object.
                    expression = ((MethodCallExpression)expression).Object;
                }
                else
                {
                    // Any other node type would otherwise keep the loop spinning forever.
                    throw new NotSupportedException("Unsupported expression node: " + expression.NodeType);
                }
            }

            return query.Expand(expand.ToString());
        }
    }

And there you have it – you can now effectively remove string literals from the Expand method. Be warned though: using the Expand method can result in a large quantity of data being retrieved from the server in one hit. Alternatively if the server has paging enabled you will need to ensure that you traverse any Continuations throughout the object graph (more on that in a subsequent post).
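To see which path string the expression-based Expand hands to the original Expand method, the tree walk can be tried in isolation, without a data service. The following is a minimal sketch rather than the author's code: the entity classes are hypothetical stand-ins for the generated Northwind types (assuming their collections expose an indexer, as List<T> does), and ExpandPath.From is an illustrative helper name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;

// Hypothetical stand-ins for the generated Northwind entity classes.
public class Category { public string CategoryName { get; set; } }
public class Product { public Category Category { get; set; } }
public class Order_Detail { public Product Product { get; set; } }
public class Order { public List<Order_Detail> Order_Details { get; set; } }
public class Customer { public List<Order> Orders { get; set; } }

public static class ExpandPath
{
    // Walks the lambda body from the outermost member back to the parameter,
    // collecting property names into an OData-style "A/B/C" path.
    public static string From<TElement, TValue>(Expression<Func<TElement, TValue>> expansion)
    {
        var names = new Stack<string>();
        Expression node = expansion.Body;

        while (node != null && node.NodeType != ExpressionType.Parameter)
        {
            var member = node as MemberExpression;
            var call = node as MethodCallExpression;

            if (member != null)
            {
                // Property accessor: remember its name and step inwards.
                names.Push(member.Member.Name);
                node = member.Expression;
            }
            else if (call != null)
            {
                // An indexer such as Orders[0] is a call to get_Item(0); skip it.
                node = call.Object;
            }
            else
            {
                throw new NotSupportedException("Unsupported node: " + node.NodeType);
            }
        }

        // A Stack enumerates last-in first-out, so the innermost property comes first.
        return string.Join("/", names);
    }
}

public static class Program
{
    public static void Main()
    {
        var path = ExpandPath.From(
            (Customer c) => c.Orders[0].Order_Details[0].Product.Category);
        Console.WriteLine(path); // prints Orders/Order_Details/Product/Category
    }
}
```

The index values never reach the path because the walk steps straight over each get_Item call, which is why any number works in the lambda.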

Sam Matthews answered Has Netflix abandoned Odata? with a resounding “Yes” in an 8/1/2011 reply in the Netflix API Forums:

wingnutzero, Topic created 1 week ago

The Odata database hasn't been updated in almost 2 months. I use it exclusively for my website because it's so easy to get info from it without having to go through the oauth process, but users are complaining that my info isn't up to date... and they're right!

Any plans to update it? Or will I just have to shut down my site and let Netflix lose the click-through from my 60,000 monthly visitors?

Message edited by wingnutzero 5 days ago

Sam Matthews, 5 days ago

NF has abandoned the API and OData altogether... they are keeping the streaming end just to placate the set-top box manufacturers who are heavily using it.

Netflix was the poster child of OData implementers and most common data source for OData client demos. Sorry to see their feed go.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Charles Babcock asserted “Amazon, Radiant Logic join competition to supply virtual directories that make enterprise identities available in the cloud” in a deck for his Cloud Identity Problems Solved By Federating Directories post of 8/5/2011 to InformationWeek’s Security blog:

Cloud computing has prompted some companies to turn to virtualized directory servers that sit atop enterprise directory resources and allow users to carry their on-premises identities into the cloud.

Radiant Logic wants to play in this space. The third-party company announced recently it can collect users' enterprise identities and allow them to be used inside or outside corporate walls in cloud computing settings.

A company spokesman said July 25 that Radiant Logic creates a virtual directory that "securely links cloud-based apps with all enterprise identity sources," including multiple Active Directories. Radiant Logic's virtual directory will deal with multiple forests--a grouping at the top of the Active Directory hierarchy, such as the name of an enterprise, with tree and domain sub-groupings underneath it.

Other third parties, including Quest Software's Symlabs and Optimal IdM's Virtual Identity Server Federation Services, are also active in the virtual directory space.

Amazon Web Services likewise announced Thursday that it has expanded its Identity and Access Management service, introduced late last year, to federate enterprise directories into a virtual directory for use in EC2's infrastructure.

Getting identity management servers, such as Microsoft's Active Directory, Lotus Notes directories, and Sun Microsystems LDAP-based Identity Manager, to work together in cloud computing is a problem. To do so, a directory set up for one reason is called upon to serve a broader purpose.

Microsoft recognized the problem early and has supplied the means to federate a user's identity through a platform that coordinates on-premises Active Directories and its Windows Azure cloud. Active Directory Federation Services 2.0 works with Windows Azure Access Control Services to create a single sign-on identity for Azure users.

Moving across vendor directories and specific enterprise contexts, however, gets more complicated. Data management teams don't set up user access the same way that identity management teams do. In some data settings, context is important and determines whether a user with general permission has specific permission to view the data.

Radiant spokesmen said in the July 25 announcement that its RadiantOne Virtual Directory Server Plus (VDS+) captures context-sensitive information as well as identity information, then makes it usable to its RadiantOne Cloud Federation Service. Both products were announced at Gartner's Catalyst Conference in San Diego.

Virtual Directory Server Plus uses wizard-driven configuration to create a virtual directory. Cloud Federation Service, in conjunction with VDS+, then becomes a user identity and security token provider to applications running in the cloud. It federates identities from Active Directories, Sun, and other LDAP directories, databases, and Web services.

Cloud Federation Service (CFS) uses SAML 2.0, an OASIS standard that uses security tokens in exchanging authentication information about a user between security domains. CFS can also use WS-Federation, a specification that enables different security domains to interoperate and form a federated identity service.

Both are used by Radiant Logic to allow a user to sign on once to a service in the cloud, then have his credentials carried forward to additional applications and services without requiring follow-up log-ins. CFS is priced starting at $10,000 per CPU. VDS+ is priced starting at $25,000 per CPU.

CFS appears a bit pricey to me when compared with free AppFabric Access Control and AD FS 2.0.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

SD Times Newswire (@sdtimes) reported Kofax announces release of Atalasoft DotImage 10 software development kit on 8/5/2011:

Kofax plc (LSE: KFX), a leading provider of capture driven process automation solutions, today announced the release of Atalasoft DotImage 10, the latest version of its software development toolkit (SDK) for Microsoft .NET developers. DotImage 10 introduces several new capabilities that streamline the process of image enabling cloud based applications and reduce the associated costs and time of doing so for developers.

Atalasoft DotImage is a full featured document imaging toolkit for applications built using the .NET Framework for Microsoft Windows Forms, WPF, ASP.NET and Silverlight. DotImage 10 incorporates controls to add zero footprint web document viewing and annotation support, enabling users to author layered annotations, and view and manipulate TIFF and PDF documents in web browsers without the need to download additional add ons or plug ins.

“Building web based applications that leverage advanced document imaging can be time consuming and difficult,” said William Bither, Founder and General Manager at Atalasoft, a Kofax company. “DotImage’s new capabilities allow developers to build custom cloud based applications that can scan, view and process documents and images with significantly less effort and cost.”

DotImage 10’s new capabilities include:

- New HTML document viewer: Developers can create a full page, zero footprint document viewer with smooth, continuous scrolling for viewing documents in browsers on PCs and mobile devices, such as an iPad, without installing any client software. As such, minimal programming is needed to embed the viewer into any ASP.NET or ASP.NET MVC website, or deploy to Microsoft SharePoint®.

- Silverlight imaging SDK: A large subset of DotImage has been ported to run inside the Silverlight client and other managed environments, such as Windows Phone 7, Office 365 and Partial Trust environments.

- Improved web scanning: Browser based scanning has been significantly improved with the addition of ActiveX TWAIN capabilities and included in DotTwain and DotImage Document Imaging.

- Barcode reader SDK: In addition to Code 39, UPC, PDF417, QR Code, DataMatrix and the rest of DotImage’s 1D and 2D barcodes, DotImage now supports AustraliaPost, OneCode/Intelligent Mail, Planet and RM4SCC with a new managed code engine.

- OCR SDK: DotImage 10 now supports the IRIS IDRS engine, which can process 137 languages including Latin, Greek and Cyrillic with add ons offering Asian, Hebrew and Farsi languages. It also offers zonal and page analysis support, and the IRIS Intelligent Character Recognition (ICR) engine supports spaced and even touching handprint for all Latin languages.

DotImage 10 is now available through Atalasoft's network of system integrators and resellers or directly from Atalasoft.


Visual Studio LightSwitch and Entity Framework 4.1+

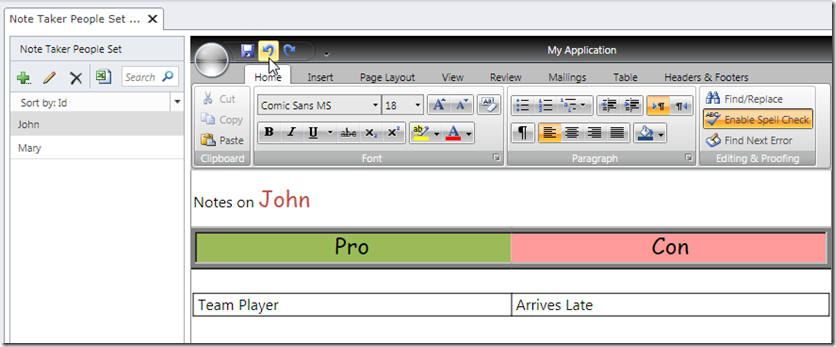

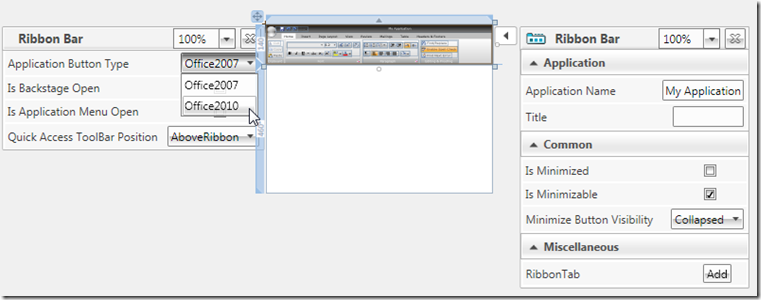

•• Michael Washington (@ADefWebserver) compared three vendors’ LightSwitch-specific controls in The LightSwitch Control Extension Makers Dilemma post of 8/7/2011:

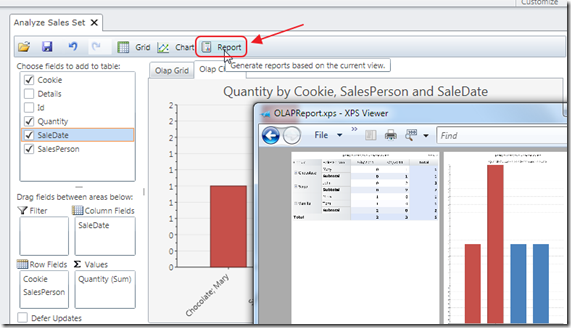

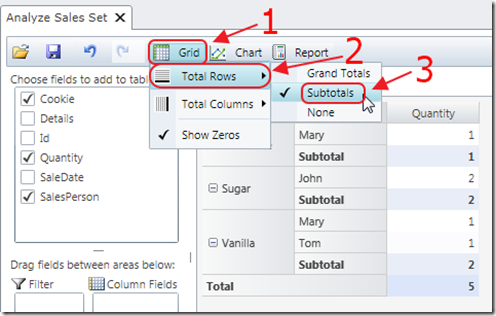

You know, it seems like ComponentOne gets all the breaks. Due to the nature of their control, they were able to take an existing Silverlight control, and create a LightSwitch control, and create what I call the “Killer Application” for LightSwitch, ComponentOne's OLAP for LightSwitch (live demo) (you can get it for $295). Take a look at the walk-thru I did for the control: Using OLAP for LightSwitch.

Compare that to a walk-thru I just did for the Telerik Rich Text Editor control: Using the Telerik Rich Text Editor In Visual Studio LightSwitch. The difference is that it took me a lot longer to explain how to use the Telerik control. This is not really Telerik’s fault. What really happened, is that due to the way LightSwitch is designed, everything went ComponentOne’s way. They got all the upsides and none of the downsides.

The Problem: The Properties

LightSwitch is really a View Model application designer. Yes it has some built-in controls that it dynamically creates, but for real professional work, it simply says “I have the data, you provide the control, and tell me where on the control you want me to bind to”.

This is easy enough, the problem is when you want to set options. Both the Telerik and ComponentOne controls have a ton of options;

however, the ComponentOne OLAP control is designed for the end-user to set the options.

The Telerik control is designed to have the programmer set the options.

With the Telerik Rich Text Editor Control, you have to step outside of the “click, next, click, next” wizard process, and carefully set some properties. With the ComponentOne OLAP Control, you select a table, and then “click, click, F5” and run the working app. NOW you do have to “get your hands dirty” and set a bunch of options, but you are doing it on a working app (besides, YOU are not supposed to do it; all this fun is intended for your end-users).

That’s why I gave ComponentOne the “Killer App” title. ANYONE can use their control. Your success rate is 100%. I cannot say the same thing for the Telerik control or ANY control that needs the programmer to set the options rather than the end-user.

Now, don’t get me wrong, your success rate is WAY higher than any other technology I can think of. Definitely higher than if you coded your Silverlight app by hand without using LightSwitch.

If you don’t believe me, take a look at the MVVM forum on the Silverlight.net site. There, many people are having a hard time performing simple tasks, and that group is mostly professional programmers. Take a look at the LightSwitch forums, and the questions are usually related to non-common scenarios. Remember that group is mostly people who are NOT professional programmers.

What Is A Control Vendor To Do?

So what is the answer? As a control vendor you want to make things as easy as possible for your end-users. LightSwitch does allow a control vendor the ability to provide a custom menu that will allow the programmer to set properties on the control (see Switch on the Light - Bing Maps and LightSwitch).

You can read the documentation on the API here: Defining, Overriding, and Using LightSwitch Control Properties. However, if you look at the Bing Maps example, you still get a property page that looks like this:

The box with the red outline around it is the extent of the customization; the rest of the properties are the default properties.

My point is that Telerik would have a hard time cramming the 1000’s of possible configurations of their Rich Text Editor into a LightSwitch property panel (however, don’t be surprised if they come out with a “light” version of some of their controls in the future).

The Infragistics Solution

Infragistics looks like it is taking the “middle ground”. You can see their controls here: http://labs.infragistics.com/lightswitch/. What they are doing is making their controls available on a granular level.

When the programmer is implementing say, a Slider Control, one instance at a time...

The property page is more manageable, however, they are still really pushing it.

You can see a walk-thru of what their design-time experience is like at these links:

- Understanding NetAdvantage for Visual Studio LightSwitch - Dealing with Charts (Part 1)

- Understanding NetAdvantage for Visual Studio LightSwitch – Using Range Sliders (Part 2)

- Understanding NetAdvantage for Visual Studio LightSwitch – Creating Maps (Part 3)

No Easy Answers… Yet

In the end, we end up with choice. “Choice” is an odd thing: you only want it if you need it; otherwise “choice” causes you to have to do too much work… you have to make a choice. It’s not that you don’t want to do the work, it is just that you are always afraid of making the wrong choice, and that is stressful.

Should Telerik simplify their Rich Text Control to allow it to be configured in a LightSwitch property page? Is it even possible?

I think the real answer may come in LightSwitch version 2. I suspect that a future version of LightSwitch will allow control vendors more customization options for their controls. I also suspect that the reason it was not in version one, is that LightSwitch also needs to allow you to create HTML5 controls…

… but, that’s another story.

•• Jeffrey Palermo (@jeffreypalermo) described Visual Studio LightSwitch, an Upgrade Path for Microsoft Access in a 7/30/2011 post to the Headspring blog:

There are lots of business systems written in Microsoft Access. One of the most successful companies I know is Gladstone, Inc, makers of ShoWorks software. This software runs most of the county fairs in the U.S. From entries, to checks, to vendors, this piece of software does it all to help manage and run a fair and keep track of all the data. And it is written in Access.

Started on Access 97, I have watched this software grow through the various Access upgrades, and it tests the limits of the platform. Its author, Mike Hnatt, is one of the premier Access gurus, and Microsoft has previously invited him up to the Redmond campus to be a part of internal Software Design Reviews, or SDRs. Mike knows the limits of Access, but even with the vast array of other development options out there, nothing comes close to parity with the capabilities he relies on – until today.

This is my first LightSwitch application. I just installed the software, ran it, defined a table structure and a few screens. It’s really simple, and I see that it runs a desktop version of Silverlight. It feels like Access (I have done some of that programming earlier in my career) because you just define the tables and queries, and then ask for screens that work off the data. You can customize the screens to some degree, and you can write code behind the screens, just like you can write VBA behind Access screens. This is my first time looking at LightSwitch in a serious way since it was just released. I will be looking at it more because it belongs in our toolbelt at Headspring. There are plenty of clients who have Access and FoxPro systems. These systems have tremendously useful built-in functionality that is prohibitively expensive to duplicate in a custom way with raw WPF and C#, but LightSwitch provides a possible upgrade path that won’t break the bank.

In case you are wondering what it looks like to develop this, here it is.

Notice that there is a Solution Explorer, and you are in Visual Studio with a new project type. I was really pleased that I could write code easily.

I tried some ReSharper shortcuts, but they didn’t work. I guess we’ll have to wait for ReSharper to enable this project type. Here is my custom button that shows the message box.

I think LightSwitch has a lot of promise for legacy system rewrites, upgrades, and conversions. Because it’s 100% .NET, you can mix and match with web services, desktop, SQL Server, etc.

Jeffrey is COO of Headspring.

It’s nice to see a well-known and respected .NET developer give credit to Microsoft Access where it’s due.

Code Magazine posted CodeCast Episode 109: Launch of Visual Studio LightSwitch 2011 with Beth Massi on 7/27/2011 (missed when published):

In this episode of CodeCast, Ken Levy talks with Beth Massi, a senior program manager at Microsoft on the BizApps team who build the Visual Studio tools for Azure, Office, SharePoint, and LightSwitch. Beth is also a community champion and manager for LightSwitch, Visual Studio based business applications, and Visual Basic developers.

In this special episode, Beth discusses the official release of Visual Studio LightSwitch 2011 with the latest news and resources including availability, pricing, optionally deploying to Azure, extensibility model, and the growing ecosystem with vendors plus the Visual Studio Gallery.

Guest

- Beth Massi - Blog: http://BethMassi.com, Twitter: http://twitter.com/BethMassi

Links

- LightSwitch Developer Center - http://msdn.com/lightswitch

- LightSwitch Team Blog - http://blogs.msdn.com/lightswitch

- LightSwitch 2011 on Microsoft Store

Length: 32:56

Direct download: CodeCast_109.mp3 - Size: 30MB


Windows Azure Infrastructure and DevOps

•• James Downey (@james_downey) asserted The DevOps Gap: A False Premise in an 8/7/2011 post to his Cloud of Innovation blog:

The Wikipedia article on DevOps states well the problem that DevOps seeks to address [Link added]:

Many organizations divide Development and System Administration into different departments. While Development departments are usually driven by user needs for frequent delivery of new features, Operations departments focus more on availability, stability of IT services and IT cost efficiency. These two contradicting goals create a “gap” between Development and Operations, which slows down IT’s delivery of business value.

But is this gap a real problem?

In some cases, friction between developers and system administrators, arising out of their different training, work routines, and priorities, can result in losses. I can think of projects in which the failures of developers and system administrators to communicate caused project delays. In my case, these projects involved exposing corporate data to partners over the internet.

Many of the approaches advocated by DevOps make a lot of sense: training developers to better understand production environments, more effective release management, scripting, and automation. I can think of IT departments that have become too dependent upon vendor-supplied GUIs for system administration, causing much wasted time on repetitive tasks. So there certainly is value to a renewed focus on scripting.

And many DevOps ideas, especially those described as agile infrastructure, tie in well with the concept of private cloud, which is all about elasticity and agility.

However, the notion that Dev and Ops should merge into one harmonious DevOps is neither practical nor desirable; the two groups stand for different values: developers desire rapid change and operations staff demand stability. In heterogeneous, complex IT environments, organizations need advocates for both change and stability. IT needs people at the table with diverse perspectives, even if that means the occasional heated debate. The natural animosity between Dev and Ops, with each wielding influence, creates a healthy system of checks and balances, assuring a better airing of concerns.

The gap between development and operations, the problem that DevOps sets out to solve, represents as much a benefit as a hindrance to IT.

James is a solution architect working for Dell Services.

•• Scott Hanselman (@shanselman, pictured below) posted a 00:31:00 Hanselminutes: Microsoft Web Platform and Azure direction with Scott Hunter (@coolcsh) podcast on 8/5/2011:

Scott Hanselman and Scott Hunter (also known as Scotts the Lesser [when Scott Guthrie is present]) talk about the recent Azure/Web reorg, the direction that ASP.NET and Azure are taking, and how they see open source fitting into the future at Microsoft.

Scott Hunter is Scott Hanselman’s boss at Microsoft. According to Scott Hunter, the combined team reporting to ScottGu is more than 600 people.

• Derrick Harris (@derrickharris) posted Meet the new breed of HPC vendor to GigaOM’s Structure blog on 8/3/2011:

The face of high-performance computing is changing. That means new technologies and new names, but also familiar names in new places. Sure, cluster management is still important, but anyone that doesn’t have a cloud computing story to tell, possibly a big data one too, might start looking really old really quickly.

We’ve been seeing the change happening over the past couple years, as Amazon Web Services and Hadoop, in particular, have changed the nature of HPC by democratizing access to resources and technologies. AWS did it by making lots of cores available on demand, freeing scientists from the need to buy expensive clusters or wait for time on their organization’s system. That story clearly caught on, and even large pharmaceutical companies and space agencies began running certain research tasks on AWS.

Amazon took things a step further by supplementing its virtual machines with physical speed in the form of Cluster Compute Instances. With a 10 GbE backbone, Intel Nehalem processors and the option of Nvidia Tesla GPUs, users can literally have a Top500 supercomputer available on demand for a fraction of the cost of buying one. Cycle Computing, a startup that helps customers configure AWS-based HPC clusters, recently launched a 10,000-core offering that costs only $1,060 per hour.

Hadoop, for its part, made Google- or Yahoo-style parallel data-processing available to anyone with the ambition to learn how to do it — and a few commodity servers. It’s not the be all, end all of the big data movement, but Hadoop’s certainly driving the ship and has opened mainstream businesses to the promise of advanced analytics. Most organizations have lots of data, some of it not suitable for a database or data warehouse, and tools like Hadoop let them get real value from it if they’re willing to put in the effort.

New blood

This change in the way organizations think about obtaining advanced computing capabilities has opened the door for new players that operate at the intersection of HPC, cloud computing and big data.

One relative newcomer to HPC, and someone that should give Appistry and everyone else a run for their money, is Microsoft. It only got into the space in the late ’00s, so it didn’t have much of a legacy business to disrupt when the cloud took over. In a recent interview with HPCwire, Microsoft HPC boss Ryan Waite details, among other things, an increasingly HPC-capable Windows Azure offering and “the emergence of a new HPC workload, the data intensive or ‘big data’ workload.”

Indeed, Microsoft has been busy trying to accommodate big data workloads. It just launched an Azure-based MapReduce service called Project Daytona, and has been developing its on-premise Hadoop alternative called Dryad for quite some time.

The latest company to get into the game is Appistry. As I noted when covering its $12 million funding round yesterday, Appistry actually made a natural shift from positioning itself as a cloud software vendor to positioning itself as an HPC vendor. Sultan Meghji, Appistry’s vice president of analytics applications, explained to me just how far down the HPC path the company already has gone.

Probably the most extreme change is that Appistry is now offering its own cloud service for running HPC computational or analytic workloads. It’s based on a per-pipeline pricing model, and today is targeted at the life sciences community. Meghji said the scope will expand, but the cloud service just “soft launched” in May, and life sciences is a new field of particular interest to Appistry.

The new cloud service is built using Appistry’s existing CloudIQ software suite, which already is tuned for HPC on commodity gear thanks to parallel-processing capabilities, “computational storage” (i.e., co-locating processors and relevant data to speed throughput) and Hadoop compatibility.

Appistry is also tuning its software to work with common HPC and data-processing algorithms, as well as some it’s writing itself, and is bringing in expertise in fields like life sciences to help the company better serve those markets.

“Cloud has become, frankly, meaningless,” Meghji explained. Appistry had a choice between trying to get heard above the noise of countless other private cloud offerings or trying to add distinct value in areas where its software was always best suited. It chose the latter, in part because Appistry’s products are best taken as a whole. If you need just cloud, HPC or analytics, Meghji said, Appistry might not be the right choice.

One would be remiss to ignore AWS as a potential HPC heavyweight, too, although it seems content to simply provide the infrastructure and let specialists handle the management. However, its Cluster Compute Instances and Elastic MapReduce service do open the doors for other companies, such as Cycle Computing, to make their mark on the HPC space by leveraging that readily available computing power.

The old guard gets it

But the emergence of new vendors isn’t to say that mainstay HPC vendors were oblivious to the sea change. Many, including Adaptive Computing and Univa UD, have been particularly willing to embrace the cloud movement.

Platform Computing has really been making a name for itself in this new HPC world. It recently outperformed the competition in Forrester Research’s comparison of private-cloud software offerings, and its ISF software powers SingTel’s nationwide cloud service. Spotting an opportunity to cash in on the hype around Hadoop, Platform also has turned its attention to big data with a management product that’s compatible with a number of other data-processing frameworks and storage engines.

Whoever the vendor, though, there’s lots of opportunity. That’s because the new HPC opens the doors to an endless pipeline of new customers and new business ideas that could never justify buying a supercomputer or developing a MapReduce implementation, but that can enter a credit-card number or buy a handful of commodity servers with the best of them.

• Thomas Rupp posted Platforms – why the concept worked for Microsoft and how Google is porting it into the cloud to his CloudAlps (Hidden Peaks) blog on 8/3/2011:

In essence, “platform” in the IT industry stands for some common foundation that is used by many independent providers (ISV, SI, ..) but typically controlled by just one or few companies (through a formal consortium or by contractual agreements). In the last few decades, large dominant companies have been established based on such platforms (IBM:Mainframe, Microsoft:Windows) that resulted from their R&D efforts. Their critical differentiation allowed them to orchestrate (i.e. control) evolving “value networks” and draw significant economic rents.

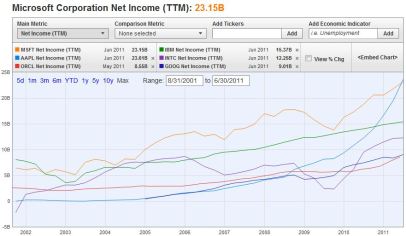

Microsoft, incorporated 1975, is arguably the most successful and instructive case of how an entirely new platform is built from scratch and extended into a dominant business that delivered $174bn of net income over the last 21 fiscal years. Over the longer term (>5y) such profits can only be sustained if the participating members of a value network continue to prosper and therefore have reason to continue supporting the rents extracted by the platform owners. Thousands of ISVs and hundreds of hardware vendors including e.g. Dell only exist because the PC has been so successful which led them to create billions of additional profits thereby strengthening the PC ecosystem and namely Microsoft.

What has been true for the many software companies (ISVs) created in the Windows ecosystem applies to those in the iOS ecosystem – however it is critical to understand that these are different companies, simply because paying 30% revenues (and therefore a multiple of profits) to Apple makes little sense to ISVs that run an established business for one or more decades. On the other end, the platform leaders become busy defending their position – both commercially and in terms of innovation lead. As long as the paradigm (e.g. the concepts of the mainframe, the PC, the AppStore, etc.) on which the leader has built its business remains unchallenged, it is hard for any competitor to challenge that leader.

Cloud Computing or Online Business (in the Web 2.0 sense) qualifies as a developing paradigm shift and hence old and new competitors position themselves to play a significant role, e.g. generate higher profits than those of a contributing member of a platform. Not only does a general platform leader in Cloud Computing not exist yet, but the web was originally seen as a level playing field where thousands of independent “web services” would interact and deliver according to the requirement of customers. More recently, incumbent ISVs and service providers have positioned cloud computing as a mere extension of virtualized IT while targeting their traditional customer base of large, mature companies.

Google – the undisputed leader in online advertising - is building an unparalleled Cloud Platform which is seldom fully understood. While its position during its first decade of existence was built as a consumer play (with the most eyeballs tracked in the most accurate details to attract the most advertising spend), Google’s visible ambitions today exceed consumer online search by far. Whatever Cloud Computing will turn out to be over the next 2-5 years (as per Gartner), it will leverage the gigantic scale of the web datacenters, access to individuals and firms ideally including a billing relationship, mobile devices, cloud-specific software development tools and experts.

Besides technological innovation, the biggest driver of Cloud Computing adoption will be Globalization or more specifically the entrance of new competitors from the Eastern hemisphere in most markets of the West. These new ventures from Asia and India will come without any legacy in terms of IT, supply chain and other infrastructure. The young entrepreneurs running them will be used to Web Services, mobile access and above all “frugal engineering”: highly efficient, functional design and exceptional value. It is hard to argue that anyone but Google is leading in that sort of services already.

[this was planned to be a short post that precedes a longer paper that I am currently writing - please excuse that it got lengthy]

Thomas is an economist & MBA focused on leveraging Disruptive Technologies in IT and Telecommunications, mostly for Enterprise clients, with more than a decade at Microsoft.

The question, of course, is whether Microsoft can exact similar economic rent for Windows Azure as a result of its Windows franchise.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Carl Brooks (@eekygeeky) described transitions from conventional IT architectures to private clouds in his Vendors' cloud prep portends pain for enterprise IT article on 8/5/2011 for SearchCloudComputing.com. From the GreenPages story:

This column is about two vendors (No, no, keep reading! It gets good, I promise) that turned their IT infrastructure on its ear and built out private clouds. Yes, vendors have IT shops too, they need all the same stuff you do while they're picking your pocket, like email servers, app servers, ERP and what have you.

And the vendors, along with the service providers -- a line that is blurring very quickly, by the way -- are by far the hottest spot for cloud computing initiatives. I'd bet 90% of the private cloud efforts underway today are on the sell side because, of course, to vendors IT is the money maker, directly or indirectly.

The fun part? Well, schadenfreude ist die schönste freude, of course. How was your last migration? Now picture this, "It's a little scary considering we'll have a $100 million dollar organization rolling down the road," said Jon Drew, director of IT at Kittery, Maine IT outsourcer GreenPages.

That's his fun plan for August. GreenPages will ship its entire consolidated IT infrastructure from its home on Badgers Island in the middle of a river in Maine, to a Windstream Corp. colocation in Charlestown, Mass., where, not coincidentally, it runs the bulk of customers' IT infrastructure.

Drew said that GreenPages had spent three years collecting its entire infrastructure --basically a pile of unsupported Compaq ProLiants and semi-supported HP ProLiants. It junked them in favor of a UCS, upon which they built an easy access virtualized environment, which had the practical effect of going from a dozen servers to more than 90, but hey. Among other things, there was an application called FileMaker that "crapped all over itself" once virtualized; and the strange fact that Cisco's Unified Communications software simply did not run as a virtual machine unless it was on a UCS chassis.

Once that earthmoving was done, with all the concomitant pain and suffering, Drew discovered the true Achilles heel of a cloud. "Two were on voice, two were really dedicated for data and two were for fax, believe it or not, we get a ton of fax into the building."

T-1 lines to the backbone, that is, so Drew had a spiff 10 GB internal network that amounted to… 3 Mbps for anyone not literally in the building. So they decided to pack it up and move it to Boston, where GreenPages already maintained a bunch of client hardware and infrastructure. But first, that motley, many-thousands-of-dollars-per-month collection of T-1s had to go. That in itself was heartache. "It took us four months to get that DS3 installed."

Now with 40 MB of copper in the building, why move it out to a colo? Well, GreenPages' cloud was parked on a pile of sand in the middle of a river, while the new colo hosts were right next to a bunch of the infrastructure it already maintains for customers. Why not get the same lovin' they gave to the paying public? But that's a relatively straightforward story of moving your IT into the modern day. …

Carl continues with a description of CA Technologies’ migration.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

<Return to section navigation list>

Cloud Security and Governance

K. Scott Morrison (@KScottMorrison) reported a Certificate Program in Cloud Computing from the University of Washington in an 8/6/2011 post:

This fall, the Professional and Continuing Education division at the University of Washington is introducing a new certificate program in cloud computing. It consists of three consecutive courses taken on Monday nights throughout the fall, winter and spring terms. In keeping with the cloud theme, you can attend either in person at the UW campus, or online. The cost is US $2577 for the program.

The organizers invited me on to a call this morning to learn about this new program. The curriculum looks good, covering everything from cloud fundamentals to big data. The instructors are taking a very project-based approach to teaching, which I always find is the best way to learn any technology.

It is encouraging to see continuing ed departments address the cloud space. Clearly they’ve noted a demand for more structured education in cloud technology. No doubt we will see many programs similar to this one appear in the future.

<Return to section navigation list>

Cloud Computing Events

•• Lydia Leong described How to get a meeting with me at VMworld in an 8/6/2011 post:

I will be at VMworld in Las Vegas this year. If you’re interested in meeting with me during VMworld, please do the following:

Gartner clients and current prospects: Please contact your Gartner account executive to have them set up a meeting (they can use a Gartner internal system called WhereRU to schedule it). I’ve set aside Thursday, September 1st, for client meetings. If you absolutely cannot do Thursday, please have your account executive contact me and we’ll see what else we can work out. (Because there are often more meeting requests than there are meeting times available, I will allow our sales team to prioritize my time.)

Non-clients: Please contact me directly via email, with a range of times that you’re available. In general, these will be meetings after 5 pm, although depending on my schedule, I may fit in meetings throughout the day on Wednesday, August 31st, as well.

I am particularly interested in start-ups that have innovative cloud IaaS offerings, or which have especially interesting enabling technologies targeted at the service provider market.

Lydia is an analyst at Gartner, where she covers Web hosting, colocation, content delivery networks, cloud computing, and other Internet infrastructure services.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

•• Lydia Leong reported Amazon and Equinix partner for Direct Connect in an 8/7/2011 post to her CloudPundit blog:

Amazon has introduced a new connectivity option called AWS Direct Connect. In plain speak, Direct Connect allows an Amazon customer to get a cross-connect between his own network equipment and Amazon’s, in some location where the two companies are physically colocated. In even plainer speak, if you’re an Equinix colocation customer in their Ashburn, Virginia (Washington DC) data center campus, you can get a wire run between your cage and Amazon’s, which gives you direct connectivity between your router and theirs.

This is relatively cheap, as far as such things go. Amazon imposes a “port charge” for the cross-connect at $0.30/hour for 1 Gbps or $2.25/hour for 10 Gbps (on a practical level, since cross-connects are by definition nailed up 100% of the time, about $220/month and $1625/month respectively), plus outbound data transfer at $0.02/GB. You’ll also pay Equinix for the cross-connect itself (I haven’t verified the prices for these, but I’d expect they would be around $500 and $1500 per month). And, of course, you have to pay Equinix for the colocation of whatever equipment you have (upwards of $1000/month+ per rack).
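The hourly-to-monthly conversion above can be checked with a short calculation. The sketch below is illustrative only: the hourly port rates and the $0.02/GB outbound rate come from the post, while the 730-hour month (8,760 hours per year divided by 12), the function names and the 5,000 GB traffic figure are assumptions made for the example. It does not include the Equinix cross-connect or colocation fees, which the post gives only as estimates.

```python
# Rough monthly cost of an AWS Direct Connect port, using the rates quoted
# in the post. Everything marked "assumption" is illustrative, not from AWS.

HOURS_PER_MONTH = 730  # assumption: 8,760 hours per year / 12 months

# Hourly port charges quoted in the post (US dollars).
PORT_RATE_PER_HOUR = {"1 Gbps": 0.30, "10 Gbps": 2.25}

# Outbound data transfer rate quoted in the post (US dollars per GB).
OUTBOUND_RATE_PER_GB = 0.02


def monthly_port_charge(rate_per_hour, hours=HOURS_PER_MONTH):
    """Port charge for a cross-connect that is up for every hour of the month."""
    return rate_per_hour * hours


def monthly_aws_cost(port, outbound_gb, hours=HOURS_PER_MONTH):
    """Port charge plus outbound transfer; excludes Equinix fees."""
    port_charge = monthly_port_charge(PORT_RATE_PER_HOUR[port], hours)
    return port_charge + outbound_gb * OUTBOUND_RATE_PER_GB


if __name__ == "__main__":
    for port, rate in PORT_RATE_PER_HOUR.items():
        print(f"{port}: ${monthly_port_charge(rate):,.2f} per month for the port")
    # Hypothetical workload: 5,000 GB of outbound traffic on the 1 Gbps port.
    print(f"1 Gbps with 5,000 GB out: ${monthly_aws_cost('1 Gbps', 5000):,.2f}")
```

With a 730-hour month the port charges come to roughly $219 and $1,643, in line with the rounded figures in the post; a 720-hour (30-day) month gives $216 and $1,620.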

Direct Connect has lots of practical uses. It provides direct, fast, private connectivity between your gear in colocation and whatever Amazon services are in Equinix Ashburn (and non-Internet access to AWS in general), vital for “hybrid cloud” use cases and enormously useful for people who, say, have PCI-compliant e-commerce sites with huge Oracle RAC databases and black-box encryption devices, but would like to put some front-end webservers in the cloud. You can also buy whatever connectivity you want from your cage in Equinix, so you can take that traffic and put it over some less expensive Internet connection (Amazon’s bandwidth fees are one of the major reasons customers leave them), or you can get private networking like ethernet or MPLS VPN (an important requirement for enterprise customers who don’t want their traffic to touch the Internet at all).

This is not a completely new thing — Amazon has quietly offered private peering and cross-connects to important customers for some time now, in Equinix. But this now makes cross-connects into a standard option with an established price point, which is likely to have far greater uptake than the one-off deals that Amazon has been doing.