Rachel Chalmers, "State of the Infrastructure" (14:52)

It’s easy to forget that the story of infrastructure is a human story. In this session, we will take a step back and trace the history of automation and virtualization, touching on specific pioneers and the use cases they developed for both technologies, looking at the emergence of the devops role and its significance as organizations move towards the cloud. State of the Infrastructure Presentation [ZIP].

Rachel has led the infrastructure software practice for The 451 Group since its debut in April 2000. She pioneered coverage on SOA, distributed application management, utility computing and open source software. Today she focuses on datacenter automation and server, desktop and application virtualization.

Tim O'Reilly, "O'Reilly Radar" (10:54)

Tim O’Reilly shares his insights into the world of emerging technology, presenting his take on what matters most – and what will be most disruptive – to the tech community.

Tim O’Reilly is the founder and CEO of O’Reilly Media, Inc. O’Reilly Media also hosts conferences on technology topics, including the O’Reilly Open Source Convention, the Web 2.0 Summit, and the Gov 2.0 Summit.

John Rauser, "Look at Your Data" (17:51)

John has been extracting value from large datasets for 15 years at companies ranging from hedge funds and small data-driven startups to retailing giant Amazon.com. He has deep experience in the areas of personalization, business intelligence, website performance and real-time fault analysis. An empiricist at heart, John’s optimism and can-do attitude make “Just do the experiment!” his favorite call to arms.

Douglas Crockford, "JavaScript & Metaperformance" (15:40)

There are lies, damned lies, and benchmarks. Tuning language processor performance to benchmarks can have the unintended consequence of encouraging bad programming practices. This is the true story of developing a new benchmark with the intention of encouraging good practices.

Douglas Crockford is an Architect at Yahoo! Inc. He discovered JSON while he was CTO of State Software.

Theo Schlossnagle, "Career Development" (12:45)

In this session, we will discuss how operations teams can extract key information from user-perceived performance measurements in real time to make key operational assessments and decisions. Network routing collapses, CDN nodes fail, DNS Anycast horizons can shift, and it can all be shown to you by your users. Often, however, this information isn’t correctly segmented and aggregated, and the gems remain undiscovered. By looking at real-time user performance data and adding a good deal of magic meta information, we can now uncover serious operational problems before they subtly manifest in business reports later.

Through JavaScript, browser web performance information and a good deal of real-time data analysis, you will gain a deeper operational understanding of how your site is used by the world. Your NOC will be enlightened and forever better.
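The segmentation and aggregation step described in this abstract might look something like the following minimal Python sketch. It is not taken from the talk: the beacon format, the `load_ms` and `region` field names, the `slow_segments` helper and the 2x threshold are all assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import median


def slow_segments(beacons, key, threshold=2.0, min_samples=3):
    """Return {segment: median_ms} for segments whose median load time
    is at least `threshold` times the overall median.

    `beacons` is a non-empty list of dicts with a hypothetical "load_ms"
    field plus whatever segment field `key` names (region, ASN, CDN node...).
    """
    overall = median(b["load_ms"] for b in beacons)

    # Group the raw timings by the chosen segment key.
    groups = defaultdict(list)
    for b in beacons:
        groups[b[key]].append(b["load_ms"])

    # Keep only segments with enough samples that look clearly slower.
    return {
        seg: median(times)
        for seg, times in groups.items()
        if len(times) >= min_samples and median(times) >= threshold * overall
    }


# Made-up sample data: one region is far slower than the others.
beacons = [
    {"region": "us-east", "load_ms": 800},
    {"region": "us-east", "load_ms": 900},
    {"region": "us-east", "load_ms": 850},
    {"region": "eu-west", "load_ms": 950},
    {"region": "eu-west", "load_ms": 1000},
    {"region": "eu-west", "load_ms": 900},
    {"region": "ap-south", "load_ms": 4000},
    {"region": "ap-south", "load_ms": 4200},
    {"region": "ap-south", "load_ms": 3900},
]

print(slow_segments(beacons, "region"))
```

The overall median hides the problem here, while the per-region medians make it stand out. A real pipeline would presumably segment on several keys at once and work on a sliding time window.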

Theo Schlossnagle is a Founder and Principal at OmniTI where he designs and implements scalable solutions for highly trafficked sites and other clients in need of sound, scalable architectural engineering.

Mark Burgess, "Change = Mass x Velocity, and Other Laws of Infrastructure" (17:35)

The key challenges for infrastructure designers and maintainers today are scale, speed and complexity. Mark Burgess was one of the first people to look for ways of managing these issues based on theoretical analysis. Much of his work has gone into the highly successful software Cfengine, which is still very much a leading light in the industry. In this session, Mark will ask if we have yet learned the lessons of infrastructure management, and, either way, what must come next.

Mark Burgess is the founder, CTO and principal author of Cfengine. He is Professor of Network and System Administration at Oslo University College and has led the way in theory and practice of automation and policy based management for 20 years.

Vik Chaudhary, "Testing and Monitoring the Smartphone Experience" (18:25)

Keynote demonstrates how you can improve the end-user experience of your latest smartphone apps. This year at Velocity, Keynote debuts Mobile Device Perspective 5.0 (MDP), a cloud based testing and monitoring platform for ensuring the end to end quality of iPhone, Android and BlackBerry mobile apps accessing online content, streaming video, music and games. Learn how MDP’s mobile monitoring capabilities are designed for testing and optimizing smartphone access with gestures and touch events, using real mobile devices connected to the latest 3G and 4G networks in multiple mobile markets across the globe.

Mr. Chaudhary is responsible for leading Keynote’s product management team and has extended the company into new markets via 15 acquisitions.

Steve Souders, Jesse Robbins & John Allspaw, "Opening Remarks" (9:52)

Steve works at Google on web performance and open source initiatives. He previously served as Chief Performance Yahoo!. Steve is the author of High Performance Web Sites and Even Faster Web Sites.

Jesse Robbins (@jesserobbins) is CEO of Opscode and a widely recognized expert in Infrastructure, Web Operations, and Emergency Management. Jesse serves as co-chair of the Velocity Web Performance & Operations Conference and contributes to the O’Reilly Radar.

John has worked in systems operations for over fourteen years in biotech, government and online media. He built the backing infrastructures at Salon, InfoWorld, Friendster, and Flickr. He is now VP of Tech Operations at Etsy, and is the author of The Art of Capacity Planning published by O’Reilly.

Lew Tucker, "Cisco and Open Stack" (12:39)

Cloud computing is changing the way we think about data centers, applications, and services. By pooling resources and aggregating workloads across multiple customers, cloud service providers achieve highly cost effective, multi-tenant, infrastructure as a service. Some applications, however, need a richer set of networking capabilities than are present in today’s environment. We will cover Cisco’s recent participation in the Open Stack community and explore what a networking service might provide for both application developers and service providers.

Lew Tucker is the Vice President and Chief Technology Officer of Cloud Computing at Cisco, where he is responsible for helping to shape the future of cloud and enterprise software strategies.

Jonathan Heiliger, "Facebook Open Compute & Other Infrastructure" (26:48)

Jonathan Heiliger is the Vice President of Technical Operations at Facebook, where he oversees global infrastructure, site architecture and IT. Prior to Facebook, he was a technology advisor to several early-stage companies in connection with Index Ventures and Sequoia Capital. He formerly led the engineering team at Walmart.com, where he was responsible for infrastructure and building scalable systems. Jonathan also spent several years at Loudcloud (which became Opsware and was later acquired by HP) as the Chief Operating Officer.

John Resig, "Holistic Performance" (25:32)

Working on the development of jQuery one tends to learn about all the performance implications of a particular change to a JavaScript code base (whether it be from an API change or a larger internals rewrite). Performance is an ever-present concern for every single commit and for every release. Performance implications must be well-defended and well-tested. In this talk we’re going to look at all the different performance concerns that the project deals with (processor, memory, network) and the tools that are used to make sure development continues to move smoothly.

John Resig is a JavaScript Evangelist for the Mozilla Corporation and the author of the book Pro JavaScript Techniques. He’s also the creator and lead developer of the jQuery JavaScript library.

Paul Querna, "Cast - The Open Deployment Platform" (4:10)

Cast is an open source platform for deploying and managing applications being developed by Rackspace. Cast is the bridge between traditional configuration management like Puppet or Chef, and the longer term shift to Platforms as a Service. Cast is written in Node.js, built entirely on top of HTTP APIs for easy control, and has plugins and hooks to make customization trivial.

Paul Querna is an Architect at Rackspace, and former Chief Architect at Cloudkick.

Jon Jenkins, "Velocity Culture" (15:14)

Operations can play a critical role in driving revenue for the business. This talk will explore some ways in which the ops team at Amazon is thinking outside the box to drive profitability. JJ will also issue a challenge for next year’s Velocity Conference.

Jon Jenkins is the Director of Platform Analysis at Amazon.com. He leads a team focused on designing and implementing highly-available architectures capable of operating within tight performance requirements at massive scale with minimal human intervention.

Michael Kuperman & Ronni Zehavi, "Your Mobile Performance – Analyze and Accelerate" (4:45)

Michael Kuperman has 10 years of experience in the CDN and ADN industry. To his role as VP of Operations at Cotendo he brings deep expertise in deploying and operating mission critical global environments in the US, Europe and Asia. Before joining Cotendo, Michael held senior Operations positions at a number of leading companies in the content delivery network industry.

Ronni Zehavi is CEO and Co-Founder of Cotendo. He has over fifteen years of executive experience in start-up and multi-national high-technology companies. Prior to Cotendo he founded Commtouch Software where he commercialized email security technologies in a distributed global service environment that handled over a billion content transactions per day with 100% uptime.

Patrick Lightbody, "From Inception to Acquisition" (15:29)

Launched in December 2008, BrowserMob set out to change the way performance and load testing is done – all using the cloud. Learn from the founder how he built a high performance testing product on top of dozens of cloud technologies, and how the operational support the cloud provided enabled the company to not only profit from day one, but to be acquired within a year and a half of its launch.

Patrick Lightbody is the founder of BrowserMob, a cutting edge load testing and website monitoring provider, which is now a part of Neustar. He also founded HostedQA, an automated web testing platform.

Ian Flint, "World IPv6 Day: What We Learned" (18:24)

One of the greatest challenges facing an organization that wants to migrate to IPv6 is that the protocol is not supported on all clients. In fact, it is estimated that 1/2000 of clients will be unable to connect to a dual-stack site.

This poses an interesting question: who goes first? Enabling IPv6 in the current climate means sending customers to the competition. But if no one goes first, IPv6 will never happen.

This is the motivating factor behind World IPv6 Day. Major content providers have agreed to simultaneously enable IPv6 for a 24-hour period in early June. What this means is that rather than one site seeming broken, the whole Internet will seem broken to clients with this problem, which will call attention to deficiencies in the infrastructure of the Internet, rather than to individual content providers.

Enabling IPv6 is a full stack exercise – everything from routers to applications needs to be adapted to work with the new protocols. The purpose of this session is to share lessons learned in the preparation for World IPv6 day, and discoveries made on the day itself.
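To put the 1/2000 estimate quoted above in perspective, here is a small back-of-the-envelope sketch in Python. The traffic figures and function names are hypothetical, and the second function assumes that client failures are independent of one another, which is a simplification rather than something the abstract claims.

```python
from fractions import Fraction

# Estimate quoted in the abstract: 1 in 2000 clients cannot reach a dual-stack site.
FAILURE_RATE = Fraction(1, 2000)


def expected_broken_clients(visitors):
    """Expected number of visitors who cannot connect once IPv6 is enabled."""
    return float(visitors * FAILURE_RATE)


def prob_at_least_one_broken(visitors):
    """Probability that at least one of `visitors` clients is affected,
    assuming (hypothetically) that failures are independent."""
    return 1 - (1 - float(FAILURE_RATE)) ** visitors


# Hypothetical site with one million daily visitors.
print(expected_broken_clients(1_000_000))
print(prob_at_least_one_broken(2000))
```

For a site with a million daily visitors, the estimate works out to roughly 500 people a day who would see the site as broken, which illustrates why no single content provider wants to go first.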

Ian Flint is a 14-year veteran of the Internet. He has founded and sold two successful startups, and has been an architect at eBay and Yahoo. He is currently the service architect for communities and communications at Yahoo.

Arvind Jain, "Making the Web Instant" (13:40)

Since last August, you have been able to get Google Search results instantly, even before you are done typing your query. But, that’s not enough. When you click on a result, you want that page to load instantly as well. If you don’t search, but navigate directly to a web page from the address bar, that should load instantly too.

We have implemented prerendering in Chrome to make this possible. This experimental feature uses the time when you are thinking or typing to predict what you need next, and gets it ready for you ahead of time. It is a challenging problem. A wrong prediction makes ads and analytics accounting inaccurate, wastes client resources and increases server load. We discuss how we address these concerns.

Arvind Jain works as a Director of Engineering at Google. He is responsible for making Google products fast and also runs its “Make the Web faster” initiative.

Artur Bergman, "Artur on SSD's" (3:55)

Artur Bergman, hacker and technologist at-large, is the VP of Engineering and Operations at Wikia. He provides the technical backbone necessary for Wikia’s mission to compile and index the world’s knowledge. … In past lives, he’s built high volume financial trading systems, re-implemented Perl 5’s threading system, wrote djabberd, managed LiveJournal’s engineering team, and served as operations architect at Six Apart.

"Lightning Demos Wednesday" (30:46)

"Lightning Demos Thursday" (33:12)

Tim O’Reilly is a strong proponent of free and open-source software but he doesn’t believe conference breakout session and workshop videos should be free and open. You must purchase a $495 online access pass to view the entire conference video archive.

The Velocity 2011 Web Performance & Operations Conference was held 6/22-6/24/2011 at the Santa Clara Convention Center in Santa Clara, CA. O’Reilly Media recorded all Velocity Conference 2011 keynotes, breakout sessions and workshops but only made the video segments of keynote and lightning presentations listed above available for public consumption.

city 2011 Web Performance & Operations Conference conference was held 6/22-6/24/2011 at the Santa Clara Convention Center in Santa Clara, CA. O’Reilly Media recorded all Velocity Conference 2011 keynotes, breakout sessions and workshops but only made the following video segments of keynote and lightning presentations available for public consumption: It’s easy to forget that the story of infrastructure is a human story. In this session, we will take a step back and trace the history of automation and virtualization, touching on specific pioneers and the use cases they developed for both technologies, looking at the emergence of the devops role and its significance as organizations move towards the cloud.

It’s easy to forget that the story of infrastructure is a human story. In this session, we will take a step back and trace the history of automation and virtualization, touching on specific pioneers and the use cases they developed for both technologies, looking at the emergence of the devops role and its significance as organizations move towards the cloud.  Tim O’Reilly shares his insights into the world of emerging technology, presenting his take on what matters most – and what will be most disruptive – to the tech community.

Tim O’Reilly shares his insights into the world of emerging technology, presenting his take on what matters most – and what will be most disruptive – to the tech community. John has been extracting value from large datasets for 15 years at companies ranging from hedge funds and small data-driven startups to retailing giant amazon.com. He has deep experience in the areas of personalization, business intelligence, website performance and real-time fault analysis. An empiricist at heart, John’s optimism and can-do attitude make “Just do the experiment!” his favorite call to arms.

John has been extracting value from large datasets for 15 years at companies ranging from hedge funds and small data-driven startups to retailing giant amazon.com. He has deep experience in the areas of personalization, business intelligence, website performance and real-time fault analysis. An empiricist at heart, John’s optimism and can-do attitude make “Just do the experiment!” his favorite call to arms. There are lies, damned lies, and benchmarks. Tuning language processor performance to benchmarks can have the unintended consequence of encouraging bad programming practices. This is the true story of developing a new benchmark with the intention of encouraging good practices.

There are lies, damned lies, and benchmarks. Tuning language processor performance to benchmarks can have the unintended consequence of encouraging bad programming practices. This is the true story of developing a new benchmark with the intention of encouraging good practices. In this session, we will discuss how operations teams can extract key information from user-perceived performance measurements in real-time to make key operational assessments and decisions. Network routing collapses, CDN nodes fail, DNS Anycast horizons can shift and it can all be shown to you by your users. However, often times, this information isn’t correctly segmented and aggregated and the gems remain undiscovered. By looking at real-time user performance data and adding a good deal of magic meta information, we can now assess uncover serious operational problems before they subtly manifest is business reports later.

In this session, we will discuss how operations teams can extract key information from user-perceived performance measurements in real-time to make key operational assessments and decisions. Network routing collapses, CDN nodes fail, DNS Anycast horizons can shift and it can all be shown to you by your users. However, often times, this information isn’t correctly segmented and aggregated and the gems remain undiscovered. By looking at real-time user performance data and adding a good deal of magic meta information, we can now assess uncover serious operational problems before they subtly manifest is business reports later. The key challenges for infrastructure designers and maintainers today are scale, speed and complexity. Mark Burgess was one of the first people to look for ways of managing these issues based on theoretical analysis. Much of his work has gone into the highly successful software Cfengine, which is still very much a leading light in the industry. In this session, Mark will ask if we have yet learned the lessons of infrastructure management, and, either way, what must come next.

The key challenges for infrastructure designers and maintainers today are scale, speed and complexity. Mark Burgess was one of the first people to look for ways of managing these issues based on theoretical analysis. Much of his work has gone into the highly successful software Cfengine, which is still very much a leading light in the industry. In this session, Mark will ask if we have yet learned the lessons of infrastructure management, and, either way, what must come next. Keynote demonstrates how you can improve the end-user experience of your latest smartphone apps. This year at Velocity, Keynote debuts Mobile Device Perspective 5.0 (MDP), a cloud based testing and monitoring platform for ensuring the end to end quality of iPhone, Android and BlackBerry mobile apps accessing online content, streaming video, music and games. Learn how MDP’s mobile monitoring capabilities are designed for testing and optimizing smartphone access with gestures and touch events, using real mobile devices connected to the latest 3G and 4G networks in multiple mobile markets across the globe.

Keynote demonstrates how you can improve the end-user experience of your latest smartphone apps. This year at Velocity, Keynote debuts Mobile Device Perspective 5.0 (MDP), a cloud based testing and monitoring platform for ensuring the end to end quality of iPhone, Android and BlackBerry mobile apps accessing online content, streaming video, music and games. Learn how MDP’s mobile monitoring capabilities are designed for testing and optimizing smartphone access with gestures and touch events, using real mobile devices connected to the latest 3G and 4G networks in multiple mobile markets across the globe. Steve works at

Steve works at  Jesse Robbins (

Jesse Robbins ( John has worked in systems operations for over fourteen years in biotech, government and online media. He built the backing infrastructures at

John has worked in systems operations for over fourteen years in biotech, government and online media. He built the backing infrastructures at  Cloud computing is changing the way we think about data centers, applications, and services. By pooling resources and aggregating workloads across multiple customers, cloud service providers achieve highly cost effective, multi-tenant, infrastructure as a service. Some applications, however, need a richer set of networking capabilities than are present in today’s environment. We will cover Cisco’s recent participation in the Open Stack community and explore what a networking service might provide for both application developers and service providers.

Cloud computing is changing the way we think about data centers, applications, and services. By pooling resources and aggregating workloads across multiple customers, cloud service providers achieve highly cost effective, multi-tenant, infrastructure as a service. Some applications, however, need a richer set of networking capabilities than are present in today’s environment. We will cover Cisco’s recent participation in the Open Stack community and explore what a networking service might provide for both application developers and service providers. Jonathan Heiliger is the Vice President of Technical Operations at Facebook, where he oversees global infrastructure, site architecture and IT. Prior to Facebook, he was a technology advisor to several early-stage companies in connection with Index Ventures and Sequoia Capital. He formerly led the engineering team at Walmart.com, where he was responsible for infrastructure and building scalable systems. Jonathan also spent several years at Loudcloud (which became Opsware and was later acquired by HP) as the Chief Operating Officer.

Jonathan Heiliger is the Vice President of Technical Operations at Facebook, where he oversees global infrastructure, site architecture and IT. Prior to Facebook, he was a technology advisor to several early-stage companies in connection with Index Ventures and Sequoia Capital. He formerly led the engineering team at Walmart.com, where he was responsible for infrastructure and building scalable systems. Jonathan also spent several years at Loudcloud (which became Opsware and was later acquired by HP) as the Chief Operating Officer. Working on the development of jQuery one tends to learn about all the performance implications of a particular change to a JavaScript code base (whether it be from an API change or a larger internals rewrite). Performance is an ever-present concern for every single commit and for every release. Performance implications must be well-defended and well-tested. In this talk we’re going to look at all the different performance concerns that the project deals with (processor, memory, network) and the tools that are used to make sure development continues to move smoothly.

Working on the development of jQuery one tends to learn about all the performance implications of a particular change to a JavaScript code base (whether it be from an API change or a larger internals rewrite). Performance is an ever-present concern for every single commit and for every release. Performance implications must be well-defended and well-tested. In this talk we’re going to look at all the different performance concerns that the project deals with (processor, memory, network) and the tools that are used to make sure development continues to move smoothly. Cast is an open source platform for deploying and managing applications being developed by Rackspace. Cast is the bridge between traditional configuration management like Puppet or Chef, and the longer term shift to Platforms as a Service. Cast is written in Node.js, built entirely on top of HTTP APIs for easy control, and has plugins and hooks to make customization trivial.

Cast is an open source platform, being developed by Rackspace, for deploying and managing applications. Cast is the bridge between traditional configuration management like Puppet or Chef, and the longer-term shift to Platforms as a Service. Cast is written in Node.js, built entirely on top of HTTP APIs for easy control, and has plugins and hooks to make customization trivial.

Operations can play a critical role in driving revenue for the business. This talk will explore some ways in which the ops team at Amazon is thinking outside the box to drive profitability. JJ will also issue a challenge for next year’s Velocity Conference.

Michael Kuperman has 10 years of experience in the CDN and ADN industry. To his role as VP of Operations at Cotendo he brings deep expertise in deploying and operating mission-critical global environments in the US, Europe and Asia. Before joining Cotendo, Michael held senior Operations positions at a number of leading companies in the content delivery network industry.

Ronni Zehavi is CEO and Co-Founder of Cotendo. He has over fifteen years of executive experience in start-up and multi-national high-technology companies. Prior to Cotendo he founded Commtouch Software where he commercialized email security technologies in a distributed global service environment that handled over a billion content transactions per day with 100% uptime.

Launched in December 2008, BrowserMob set out to change the way performance and load testing is done – all using the cloud. Learn from the founder how he built a high performance testing product on top of dozens of cloud technologies, and how the operational support the cloud provided enabled the company to not only profit from day one, but to be acquired within a year and a half of its launch.

One of the greatest challenges facing an organization that wants to migrate to IPv6 is that the protocol is not supported on all clients. In fact, it is estimated that 1 in 2,000 clients will be unable to connect to a dual-stack site.

Since last August, you have been able to get Google Search results instantly, even before you are done typing your query. But, that’s not enough. When you click on a result, you want that page to load instantly as well. If you don’t search, but navigate directly to a web page from the address bar, that should load instantly too.

Artur Bergman, hacker and technologist at large, is the VP of Engineering and Operations at Wikia. He provides the technical backbone necessary for Wikia’s mission to compile and index the world’s knowledge. … In past lives, he’s built high-volume financial trading systems, re-implemented Perl 5’s threading system, written djabberd, managed LiveJournal’s engineering team, and served as operations architect at Six Apart.

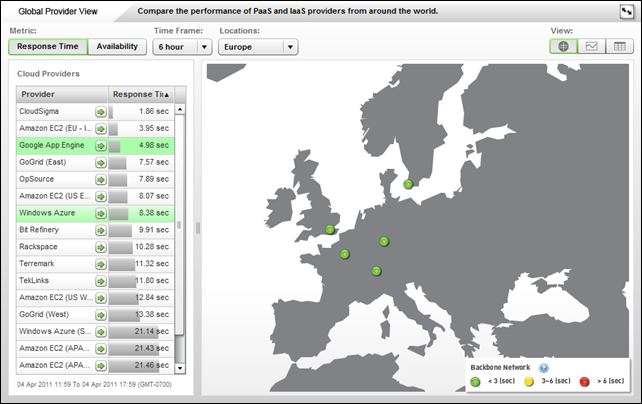

When an article appeared linking cloud performance to an associated average loss of $1M, it caused an uproar in the Twittersphere, at least amongst the Clouderati. There was much gnashing of teeth and pounding of fists, which led to questions about the methodology and ultimately the veracity of the report.

What the Compuware performance survey does is highlight the

In a comparative measure of cloud service providers, Microsoft's Windows Azure has come out ahead. Azure offered the fastest response times to end users for a standard e-commerce application. But the amount of time that separated the top five public cloud vendors was minuscule.

A few weeks ago we released calendar year Q4 “