Windows Azure and Cloud Computing Posts for 5/23/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Jeff00Seattle updated his Windows Azure Drives: Part 1: Configure and Mounting at Startup of Web Role Lifecycle article for The Code Project on 5/23/2010:

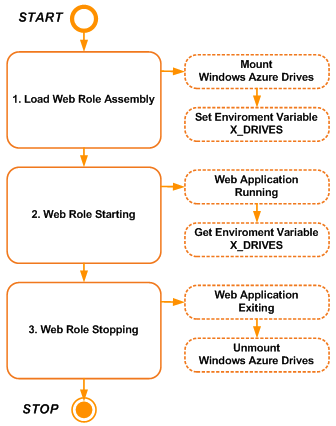

An approach for providing Windows Azure Drives (a.k.a. XDrive) to any cloud-based web applications through RoleEntryPoint callback methods and exposing successful mounting results within an environment variable through Global.asax callback method derived from the HttpApplication base class.

Introduction

This article presents an approach for providing Windows Azure Drives (a.k.a. XDrive) to any cloud-based web application through RoleEntryPoint callback methods, and for exposing successful mounting results within an environment variable through a Global.asax callback method derived from the HttpApplication base class. The article will demonstrate how to use this approach to mount XDrives before a .NET (C#) cloud-based web application is running.

The next article will take this approach and apply it to PHP cloud-based web applications.

Jeff continues with the details for creating a “WebRole DLL in C# that would manage all XDrives' mounting before the PHP web application starts.”
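The hand-off Jeff describes (mount the drive during role startup, publish the result in an environment variable, read it when the application starts) can be sketched in a few lines of Python. This is a language-neutral illustration, not the article's C# code: `mount_drive` is a stand-in for the real SDK mounting call, the `XDRIVE_MOUNTED_PATH` variable name is hypothetical, and the sketch assumes both steps run in the same process so that a variable set at startup is visible to the application.

```python
import os

# Hypothetical variable name; the article's actual name may differ.
XDRIVE_ENV_VAR = "XDRIVE_MOUNTED_PATH"

def on_start(mount_drive):
    """Role-startup step (RoleEntryPoint in the article).

    mount_drive is a hypothetical stand-in for the real mounting call; it
    returns the local path of the mounted drive or raises OSError on failure.
    """
    try:
        path = mount_drive()
    except OSError:
        return False  # mounting failed: publish nothing
    os.environ[XDRIVE_ENV_VAR] = path  # expose only a successful mount
    return True

def application_start():
    """App-startup step (Global.asax in the article): read the mounted path."""
    return os.environ.get(XDRIVE_ENV_VAR)  # None if no drive was mounted
```

Publishing through an environment variable keeps the web application (C# here, PHP in Jeff's follow-up) unaware of how the drive was mounted; it only needs to know the variable's name.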


SQL Azure Database, Codename “Dallas” and OData

Nick Josevski’s OData, AtomPub and JSON of 5/23/2010 continues his OData series:

Continuing my mini-series looking into OData, I thought I would cover the basic structure of AtomPub and JSON. They are both formats in which OData can deliver the requested resources (a collection of entities, e.g. products or customers).

For the most part there isn’t much difference in terms of data volume returned by AtomPub vs JSON, though AtomPub, being XML, is slightly more verbose (opening and closing tags) and references namespaces via xmlns. A plus for AtomPub in your OData service is the ability to declare the data type, as you’ll see below, via m:type, for example Edm.Int32 for an integer. The lack of such features is a plus of a different kind for JSON: it’s simpler, and a language such as JavaScript interprets the values of basic types (string, int, bool, array, etc.) directly.

I’m not attempting to promote one over the other, just saying that each can serve a purpose. If you’re after posts that discuss this in a more critical fashion, have a look at this post by Joe Gregorio.

What I do aim to show is that, compared side by side, there’s only a slight difference between the two, and depending on what you intend to accomplish when processing the data, the choice of format is up to you. If you’re just re-purposing some data in a web interface, JSON would be a suitable choice. If you’re processing the data within another service first, making use of XDocument (C#.NET) would seem suitable.
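To make the m:type point concrete, here is a minimal Python sketch that reads typed values out of an AtomPub `<m:properties>` element and compares them with the same entity expressed in JSON. The entity, its property names and the small EDM-to-Python converter table are hypothetical (not actual NetFlix output), and the JSON shown is just the entity object without the wrapper OData puts around responses; the namespace URIs are the ones OData v1/v2 AtomPub payloads use.

```python
import json
import xml.etree.ElementTree as ET

# OData v1/v2 namespace URIs, in ElementTree's "{uri}" tag/attribute form.
M = "{http://schemas.microsoft.com/ado/2007/08/dataservices/metadata}"
D = "{http://schemas.microsoft.com/ado/2007/08/dataservices}"

# Hypothetical, trimmed <m:properties> block (illustrative only).
ATOM_PROPS = """<m:properties
    xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
    xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <d:Name>Example Title</d:Name>
  <d:ReleaseYear m:type="Edm.Int32">1999</d:ReleaseYear>
  <d:IsAvailable m:type="Edm.Boolean">true</d:IsAvailable>
</m:properties>"""

# The same hypothetical entity in JSON: the JSON syntax itself carries the types.
JSON_ENTITY = '{"Name": "Example Title", "ReleaseYear": 1999, "IsAvailable": true}'

# Hypothetical converters for the few EDM types used above.
EDM_CONVERTERS = {
    "Edm.String": str,
    "Edm.Int32": int,
    "Edm.Boolean": lambda text: text == "true",
}

def parse_atom_properties(xml_text):
    """Turn each <d:...> child into a typed Python value using its m:type."""
    root = ET.fromstring(xml_text)
    values = {}
    for prop in root:
        name = prop.tag[len(D):]                       # strip the d: namespace
        edm_type = prop.get(M + "type", "Edm.String")  # no m:type means string
        values[name] = EDM_CONVERTERS[edm_type](prop.text or "")
    return values

atom_values = parse_atom_properties(ATOM_PROPS)
json_values = json.loads(JSON_ENTITY)
print(atom_values == json_values)  # True: same values, typed two different ways
```

The AtomPub consumer has to consult m:type to recover an integer or a Boolean, while the JSON parser gets those types for free; that is the trade-off described above in a few lines.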

NOTE: In the examples that follow, the returned result data is from the NetFlix OData service. I have stripped out some of the xmlns declarations and shortened/modified the URLs, in particular omitting http://, so that they fit better (less line wrapping).

So let us compare…

AtomPub

Yes, the stuff that makes up web feeds. Example from the NetFlix OData feed, accessed via URL http://odata.netflix.com/Catalog/Titles:

JSON

Yes, that simple text used in JavaScript. Example from the NetFlix OData feed, accessed via URL http://odata.netflix.com/Catalog/Titles?$format=JSON:

Gregg Duncan wrote Happy Birthday Data.gov. You’ve grown so in the last year… (from 47 to 272,677 datasets) on 5/22/2010:

WhiteHouse.gov - Data.gov: Pretty Advanced for a One-Year-Old

“One year ago, data.gov was born with 47 datasets of government information that was previously unavailable to the public. The thinking behind this was that this data belonged to the American people, and you should not only know this information, but also have the ability to use it. By tapping the collective knowledge of the American people, we could leverage this government asset to deliver more for millions of people.

Today, there are more than 250,000 datasets, hundreds of applications created by third parties, and a global movement to democratize data. To date, the site has received 97.6 million hits, and following the Obama Administration’s lead, governments and institutions of all sizes are unlocking the value of data for their constituents. San Francisco, New York City, the State of California, the State of Utah, the State of Michigan, and the Commonwealth of Massachusetts have launched data.gov-type sites, as have countries such as Canada, Australia, and the UK as well as the World Bank.

…”

“…

Data.gov is leading the way in democratizing public sector data and driving innovation. The data is being surfaced from many locations making the Government data stores available to researchers to perform their own analysis. Developers are finding good uses for the datasets, providing interesting and useful applications that allow for new views and public analysis. This is a work in progress, but this movement is spreading to cities, states, and other countries. After just one year a community is born around open government data.

Just look at the numbers:

6 Other nations establishing open data

8 States now offering data sites

8 Cities in America with open data

236 New applications from Data.gov datasets

253 Data contacts in Federal Agencies

272,677 Datasets available on Data.gov…”

Gregg concludes:

If only there were an API for Data.gov (cough… odata/“Dallas” would be very cool here… cough)

Still, there’s a ton of “data” here. Now to only turn it into information and finally wisdom…

Andy Novick’s Introduction to SQL Azure is a 00:02:18 Webcast posted 5/17/2010:

The first step in working with SQL Azure is to set up an account and provision a server. It only takes a couple of minutes, and when you're done you'll have a server name and a full-fledged DNS path to it as well. We'll have more on this topic soon!
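Once a server is provisioned, its DNS path takes the form `<servername>.database.windows.net`. The minimal Python sketch below assembles an ADO.NET-style connection string from that name. The server, database and login values are hypothetical, and the `login@server` user format, TCP port 1433 and `Encrypt=True` setting reflect SQL Azure's documented conventions rather than anything shown in the Webcast.

```python
def sql_azure_connection_string(server: str, database: str,
                                login: str, password: str) -> str:
    """Build an ADO.NET-style connection string for a SQL Azure server.

    `server` is the short server name assigned when the server is provisioned;
    its full DNS name is <server>.database.windows.net.
    """
    host = f"{server}.database.windows.net"
    parts = [
        f"Server=tcp:{host},1433",    # SQL Azure listens on TCP port 1433
        f"Database={database}",
        f"User ID={login}@{server}",  # login@server form used by SQL Azure
        f"Password={password}",
        "Trusted_Connection=False",   # SQL authentication, not Windows auth
        "Encrypt=True",               # encrypt traffic over the internet
    ]
    return ";".join(parts) + ";"

# Hypothetical server name and credentials.
print(sql_azure_connection_string("abc123xyz", "SalesDb", "dbadmin", "p@ssw0rd"))
```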


AppFabric: Access Control and Service Bus

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Microsoft Case Studies posted IT Company [ISHIR Infotech] Attracts New Customers at Minimal Cost with Cloud Computing Solution on 5/19/2010:

ISHIR Infotech is an outsourced product development company. With extensive experience in the product development lifecycle, ISHIR helps emerging software leaders bring superior products to market.

Business Situation

ISHIR wanted to transform its in-house Vendor Management Solution (VMS) application into a commercially-viable solution, but without changing its business model and without capital expenses.

Solution

The company evaluated several service providers, but chose Windows Azure and Microsoft SQL Azure to quickly migrate its existing application to the cloud.

David Pallman outlines and details 11 of Neudesic’s Windows Azure Best Practices in this 4/22/2010 article that I missed when posted:

At Neudesic we do a great deal of Windows Azure consulting and development. Here are the best practices we've identified from our field experience:

- Validate your approach in the cloud early on

- Run a minimum of 2 server instances for high availability

- SOA best practices generally apply in the cloud.

- SOAP is out, REST is in.

- Be as stateless as possible.

- Co-locate code and data as much as possible.

- Take advantage of data center affinity.

- Retry calls to Windows Azure services, SQL Azure databases, and your own web services before failing.

- Use separation of concerns to isolate cloud/enterprise differences.

- Get as current as possible before migrating to Azure.

- Migrate applications one tier at a time.

David fleshes out each item in his post.
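The retry advice in the list is easy to get subtly wrong, so here is a minimal Python sketch of retry with exponential backoff. The attempt count, delays and the set of exceptions treated as transient are assumptions for illustration; in practice you would retry only errors the service documents as transient, and client libraries may already provide their own retry policies.

```python
import random
import time

def call_with_retry(operation, max_attempts=4, base_delay=0.5,
                    retriable=(ConnectionError, TimeoutError),
                    sleep=time.sleep):
    """Call operation(); on a retriable error, back off and try again.

    Delays grow exponentially (0.5 s, 1 s, 2 s, ...) plus up to 10% random
    jitter so that many role instances don't retry in lockstep. Only after
    max_attempts is the last error re-raised, i.e. the call fails only once
    the retries are exhausted. Non-retriable errors propagate immediately.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retriable:
            if attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

Injecting `sleep` as a parameter keeps the function testable without real waiting; production code would simply use the default.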


Windows Azure Infrastructure

Alan Irimie offers a brief review of Sriram Krishnan’s Programming Windows Azure: Programming the Microsoft Cloud Book in this 5/23/2010 post:

Sriram Krishnan’s book, Programming Windows Azure, is now available on Amazon. Sriram Krishnan is a Program Manager on the Windows Azure team at Microsoft. At Windows Azure, he ran the feature teams which built the service management APIs, geo-capabilities and several back-end infrastructure pieces. He is a prolific speaker and has delivered talks at several conferences including PDC and MIX.

The book’s first half focuses on how to write and host application code on Windows Azure, while the second half explains all of the options you have for storing and accessing data on the platform with high scalability and reliability. Lots of code samples and screenshots are available to help you along the way.

Not everything about Windows Azure is covered in this book; it is impossible for one book to cover it all. It is, however, a must-read for any Windows Azure developer.


Cloud Security and Governance

No significant articles today.


Cloud Computing Events

Waldo reviews Freddy Kristiansen’s session at Directions EMEA 2010: Windows Azure Applications on 5/22/2010:

I've been looking forward to this [Directions EMEA 2010] session by Freddy [Kristiansen]. He had been advertising it during our session on Wednesday, and apparently people listened, because lots of the people who attended our session were here as well. Of course, it's a hot topic as well... the cloud... what the hell is that cloud?? And Azure?? I don't know if these questions were really answered during the session, but at least everyone now has a pretty nice picture of what it can be useful for.

I'm not going to blog all the details, because very soon, Freddy will blog all about it on his blog.

He started out with a simple explanation of Windows Azure: it's a kind of "Cloud Services Operating System" and serves as the development, service hosting and service management environment for the Windows Azure platform. Simply said: it's something out there (hosted by Microsoft) that you can use (or abuse) to put your services on, and of course you pay for what you use. If you need a lot of resources, you'll get them automagically (and pay for them, of course); if you don't need them, you won't. For example, tickets for Michael Jackson (he used Bruce Springsteen as an example, but I like Michael Jackson a little bit more :-) ) would have sold out in a matter of minutes. So, in a matter of minutes, you have to be able to sell 40000 tickets, and after that, you need far fewer resources from the server(s). Cloud services are a great way to deal with this, but not only this, of course.

Cloud services are just something out there, hosted, that you can use to make your setup much easier as well. For your internet applications, you don't have to provision some hosted environment to do your stuff. It's already there, on Microsoft hardware, and they guarantee it's scalable, secure and reliable, with an uptime SLA (99.95% for compute when you run at least two instances).

What a lot of people wonder is whether it's good for hosting ERP... well, Freddy stated pretty clearly that it's definitely not intended for that... so now you know ;°).

He continued by explaining that there are multiple ways of using web services, and you can also use NAV 2009 web services on the internet by using a proxy service, which he explains on his blog. The thing is, he showed us how to connect over the cloud (service bus) with a guestbook application and an iPhone app. Quite nice examples, but again, I'm making it easy for myself and not going into details, because Freddy announced multiple times that he is going to publish everything on his blog, so you'll be able to find every detail there shortly.

Now, we'll have to think about applications in which we can use "the cloud". It brings us a lot of opportunities; it's just a matter of how creative we are in developing solutions for it. It's nice to see that Microsoft is bringing services to the cloud as well (like the Dynamics Online Payments thingy...), so we'll have to get on that bus as well.

Good job, Freddy, and keep the blogging comin' :-).
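Waldo's ticket-sale example can be turned into back-of-the-envelope capacity arithmetic. In this Python sketch only the 40000-ticket figure comes from the session; the 10-minute window, requests per purchase and per-instance throughput are hypothetical, and the floor of two instances follows the high-availability advice in Neudesic's best-practices list above.

```python
import math

def instances_needed(tickets, window_seconds, requests_per_ticket,
                     per_instance_rps, min_instances=2):
    """Role instances needed to absorb a burst of sales (illustrative only).

    peak_rps is the average request rate over the sales window; the result is
    rounded up to whole instances and never drops below min_instances.
    """
    peak_rps = tickets * requests_per_ticket / window_seconds
    return max(min_instances, math.ceil(peak_rps / per_instance_rps))

# Hypothetical figures: 10-minute window, 5 requests per purchase,
# 50 requests/second handled by each instance.
print(instances_needed(40000, 600, 5, 50))  # during the on-sale burst
print(instances_needed(100, 600, 5, 50))    # once demand drops off
```

With these assumed numbers the burst needs 7 instances and the quiet period needs only the 2-instance floor; with pay-per-use pricing, the extra instances cost money only while they run.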

David Makogon wrote Richmond Code Camp May 2010 Materials: Azure talk on 5/22/2010:

On May 22, I presented “Azure: Taking Advantage of the Platform.” Here’s the slide deck, sample code, and sample PowerShell script from the talk:

Thanks to everyone who attended, and for all the great questions! Here are a few takeaways from the talk:

- The Azure portal is http://www.azure.com. Here’s where you’ll be able to administer your account. You’ll also see a link to download the latest SDK.

- To set up an MSDN Premium account, visit my blog post here for a detailed walkthrough.

- Download the SDK here. Then grab the Azure PowerShell cmdlets here.

- To understand the true cost of web and worker roles, visit my blog post here, and the follow-up regarding staging here.

- The official pricing plan is here. MSDN Premium pricing details are here.

- The Azure teams have several blogs, as well as voting sites for future features. I compiled a list of the blogs and voting sites here.

- Remember to configure your service and storage to be co-located in the same data center. This is done by setting affinity when creating your services.

- While all storage access is REST-based, the Azure SDK has a complete set of classes that insulate you from having to construct properly-formed REST-based calls.

- We talked about the limited indexing available with table storage (partition key + row key). Don’t let this be a deterrent: Tables are scalable up to 100TB, where SQL Azure is limited to 50GB. Consider using SQL Azure for relational data, and offload content to table storage, creating a hybrid approach that offers both flexible indexing and massive scalability. You can reference partition keys in a relational table, for instance.

- Clarifying timestamps across different data centers and time zones (a question brought up in Brian Lanham’s Azure Intro talk): Timestamps are stored as UTC.

- Don’t forget about queue names: they may contain only lower-case letters, numbers, or dashes (and must start and end with a letter or number).
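The queue-name takeaway can be checked in code before calling the service. In this minimal Python sketch, the character-set and start/end checks come from the list above, while the 3-to-63-character length limit and the ban on consecutive dashes come from Microsoft's published Azure Storage naming rules rather than from the talk.

```python
import re

def is_valid_queue_name(name: str) -> bool:
    """Check a Windows Azure queue name against the storage naming rules."""
    if not 3 <= len(name) <= 63:               # length limit (Azure naming rules)
        return False
    if not re.fullmatch(r"[a-z0-9-]+", name):  # lower-case letters, digits, dash
        return False
    if name[0] == "-" or name[-1] == "-":      # start/end with letter or digit
        return False
    return "--" not in name                    # no consecutive dashes (Azure rules)
```

Validating up front turns a failed create-queue request into an immediate, descriptive error in your own code.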

If anyone’s interested in a 2-day Azure Deep Dive, I’ll be teaching a free 2-day Azure Bootcamp July 7-8 in Virginia Beach. Register here.


Other Cloud Computing Platforms and Services

Derrick Harris explains Why Amazon Should Worry About Google App Engine for Business in this 5/23/2010 post to the GigaOm blog:

I wrote last week that the time may be right for Amazon Web Services to launch its own platform-as-a-service (PaaS) offering, if only to preempt any competitive threat from other providers’ increasingly business-friendly PaaS offerings. The time is indeed right, now that Google has introduced to the world App Engine for Business.

That’s because App Engine for Business further advances the value proposition for PaaS. PaaS offerings have been the epitome of cloud computing in terms of automation and abstraction, but they left something to be desired in terms of choice. With solutions like App Engine for Business, however, the idea of choice in PaaS offerings isn’t so laughable. Python or Java. BigTable or SQL. It’s not AWS (not that any PaaS offering really can be), but it’s a big step in the right direction. App Engine for Business is very competitive in terms of pricing and support, too.

Google is often cited as a cloud computing leader, but until now it had yet to deliver a truly legitimate option for computing in the cloud. Mindshare and a legit product make Google dangerous to cloud providers of all stripes, including AWS.

The integration of the Spring Framework in App Engine for Business is important because it means that customers have the option of easily porting Java applications to a variety of alternative cloud environments. Yes, AWS supports Spring, but the point is that Google is now on board with what is fast becoming the de facto Java framework for both internal and external cloud environments.

Meanwhile, in the IaaS market, AWS is busy trying to distinguish itself on the services and capabilities levels now that bare VMs are becoming commodities. Thus, we get what we saw this week, with AWS cutting storage costs for customers who don’t require high durability (a move some suggest was in response to a leak about Google’s storage announcement), and increasing RDS availability with cross-Availability-Zone database architectures. It’s all about differentiation around capabilities, support and services, and every IaaS provider is engaged in this one-upmanship.

If PaaS is destined to become the preferred cloud computing model, and if the IaaS market is becoming a rat race of sorts, why not free cloud revenues from the IaaS shackles and the threat of PaaS invasion? Amazon CTO Werner Vogels will be among several cloud computing executives speaking at Structure 2010 June 23 & 24, so we should get a sense then of what demands are driving future advances for AWS and other cloud providers. For more on Google vs. Amazon and PaaS vs. IaaS, read my entire post here. [Requires GigaOm Pro subscription.]


Derrick also offers a brief How Google Put the Cloud Computing World on Notice post of the same date:

I wrote last week that the time might be right for Amazon Web Services to launch its own platform-as-a-service (PaaS) offering, if only to preempt any competitive threat from other providers’ increasingly business-friendly PaaS offerings. That stance is firmer than ever now that Google has introduced to the world App Engine for Business, which further advances the PaaS value proposition. Subscribe [to GigaOm Pro] to read the rest of this article.

James Urquhart replies to Geva Perry’s post [see below] with a Does cloud computing need LAMP? article of 5/23/2010 for C|Net News’ The Wisdom of Clouds blog:

The LAMP stack is a collection of open-source technologies commonly integrated to create a platform capable of supporting a wide variety of Web applications. LAMP typically consists of Linux, the Apache HTTP Server, MySQL, and either the PHP, Python or Perl scripting languages. Famously used at some of the best known Web businesses (such as Wikipedia), LAMP has seen widespread adoption in corporate and government settings in the last several years.

My cohost on the occasional Overcast podcast, Geva Perry, recently wrote a blog post asking a simple but profound question: who will build the LAMP cloud? Who will create the first platform as a service (PaaS) offering, a complete programming environment that hides the operational challenges of running applications in the open-source stack while providing all the tools and compatibility LAMP offers today?

As I initially read the post, I thought "good question." As Geva notes, there is a huge gap in the existing market--almost a bias towards Java and Ruby that ignores the value of LAMP:

“Salesforce.com and VMware recently unveiled a Java-focused platform-as-a-service offering, VMForce.com. Meanwhile, Microsoft has Azure, a PaaS offering focused on the .Net stack, and startups Heroku and Engine Yard both deliver Ruby-on-Rails cloud platforms. But who's going to offer a PaaS for LAMP?”

Geva goes on to analyze the key players in the market in some depth. For example, Zend Technologies, a business founded by the creators of PHP, may be planning to do so with $9M in funding secured this week. Google, with App Engine, already has a Python offering that goes some of the way toward LAMP, and recently announced MySQL support for later this year, but also seems to be switching its focus to Java.

Geva also runs down analyses of Microsoft Azure, Heroku and the Ruby community, and Amazon Web Services.

After reading Geva's post, however, I was struck by the very first comment he received from Kirill Sheynkman, arguing that the future of LAMP in the cloud may be a moot point:

“Yes, yes, yes. PHP is huge. Yes, yes, yes. MySQL has millions of users. But, the "MP" part of LAMP came into being when we were hosting, not cloud computing. There are alternative application service platforms to PHP and alternatives to MySQL (and SQL in general) that are exciting, vibrant, and seem to have the new developer community's ear. Whether it's Ruby, Groovy, Scala, or Python as a development language or Mongo, Couch, Cassandra as a persistence layer, there are alternatives. MySQL's ownership by Oracle is a minus, not a plus. I feel times are changing and companies looking to put their applications in the cloud have MANY attractive alternatives, both as stacks or as turnkey services [such as] Azure and App Engine.”

I have to say that Kirill's sentiments resonated with me. First of all, the LA of LAMP are two elements that should be completely hidden from PaaS users, so does a developer even care if they are used anymore? (Perhaps for callouts for operating system functions, but in all earnestness, why would a cloud provider allow that?)

Second, as he notes, the MP of LAMP were about handling the vagaries of operating code and data on systems you had to manage yourself. If there are alternatives that hide some significant percentage of the management concerns, and make it easy to get data into and out of the data store, write code to access and manipulate that data, and control how the application meets its service level agreements, is the "open sourceness" of a programming stack even that important anymore?

This discussion reflects a larger discussion about the future of open source in a world dominated by cloud computing. If you can manipulate code, but not deploy it (because it is the cloud provider's role to deploy platform components), what advantage do you gain over platform components provided to you at a reasonable cost that "just work," but happen to be proprietary?

I'd love to hear your thoughts on the subject. Has cloud computing reduced the relevance of the LAMP stack, and is this indicative of what cloud computing will do to open-source platform projects in general?

Graphics Credit: Fractal Angel

Geva Perry asks Who Will Build the LAMP Cloud? in this 5/22/2010 post to the GigaOm blog:

Zend Technologies, whose founders created the programming language PHP and subsequently touts itself as “the PHP company,” said Monday that it raised an additional $9 million. But while the press release offered little information as to the money’s intended use, it did contain a somewhat cryptic quote from its lead investor and board member, Moshe Mor of Greylock Partners (italics mine):

“Today’s enterprises are looking to agile Web and Cloud-based technologies such as PHP to deliver business value better and faster…We believe that Zend’s leadership position in the PHP space enables the company to drive its solution to deeper adoption across a broad commercial audience in the U.S. and around the globe.”

Since when is PHP a “cloud-based technology” (whatever that means)? I know Mor to be a smart guy, so I can only assume there’s more to his statement than meets the eye — and I believe it has to do with the LAMP stack.

Salesforce.com and VMware recently unveiled a Java-focused platform-as-a-service offering, VMForce.com. Meanwhile, Microsoft has Azure, a PaaS offering focused on the .Net stack, and startups Heroku and Engine Yard both deliver Ruby-on-Rails cloud platforms. But who’s going to offer a PaaS for LAMP?

One candidate is, of course, Zend, the commercial company behind PHP, the biggest P in LAMP. Zend is also the driving force behind the Simple Cloud API, which is intended to simplify integration between PHP applications and cloud services. But for Zend, which has operated under a typical open-source commercialization model by offering services, support and premium commercial licenses for on-premise installations, operating a cloud service is a whole new area of competency that requires an entirely new business model.

Google is another candidate. The search giant already has a PaaS offering, Google App Engine, that supports both Java and Python, another one of the Ps in LAMP. But until recently it’s been accused of being a lightweight offering that creates lock-in by forcing developers to use Google-specific programming models, such as with threading and data structure. In fact, because of this, Google’s platform lacked MySQL support, the M in LAMP. And although Google recently rolled out a version of its App Engine tweaked for the enterprise, including support for MySQL, the focus seems to be on Java, not on LAMP.

Heroku is another possibility, perhaps surprisingly given how much the startup is identified with the Ruby community. As Stacey noted in a post about its recent $10 million investment announcement:

“We don’t think the market is going to end up with a Ruby platform and a Java platform and a PHP platform,” Byron Sebastian, Heroku’s CEO, said to me in an interview. “People want to build enterprise apps, Twitter apps and to do what they want regardless of the language.” Sebastian said he sees the round as a huge validation for the Ruby language as a way to build cloud-based applications, but doesn’t want to tie Heroku too closely to Ruby. “The solution is going to be a cloud app platform, rather than as a specific language as a service,” Sebastian said.

I like Sebastian and the Heroku guys a lot, but my head’s still spinning from that ambivalent statement.

Even Microsoft has committed to supporting PHP and MySQL on its Azure platform, behind which there’s already an open-source project called PHPAzure. But the operating system is still Windows, so the Microsoft initiative does not qualify as a LAMP stack cloud.

Finally, Amazon can never be discarded as a significant player whenever it comes to cloud computing. As Derrick Harris has postulated, there’s a strong possibility that Amazon will come out with a PaaS offering. And if it does, a LAMP stack-focused platform makes a lot of sense, given that it already offers a MySQL database-as-a-service offering with its Amazon RDS service.

Then again, there could always be a startup hard at work building the LAMP Cloud. Do you know of anyone else? Would you want a PHP or LAMP platform as a service? Let us know in the comments.

Vivek Kundra’s 35-page State of Public Sector Cloud Computing white paper PDF of 5/20/2010 carries this Executive Summary:

The Obama Administration is changing the way business is done in Washington and bringing a new sense of responsibility to how we manage taxpayer dollars. We are working to bring the spirit of American innovation and the power of technology to improve performance and lower the cost of government operations.

The United States Government is the world’s largest consumer of information technology, spending over $76 billion annually on more than 10,000 different systems. Fragmentation of systems, poor project execution, and the drag of legacy technology in the Federal Government have presented barriers to achieving the productivity and performance gains found when technology is deployed effectively in the private sector.

In September 2009, we announced the Federal Government’s Cloud Computing Initiative. Cloud computing has the potential to greatly reduce waste, increase data center efficiency and utilization rates, and lower operating costs. This report presents an overview of cloud computing across the public sector. It provides the Federal Government’s definition of cloud computing, and includes details on deployment models, service models, and common characteristics of cloud computing.

As we move to the cloud, we must be vigilant in our efforts to ensure that the standards are in place for a cloud computing environment that provides for security of government information, protects the privacy of our citizens, and safeguards our national security interests. This report provides details regarding the National Institute of Standards and Technology’s efforts to facilitate and lead the development of standards for security, interoperability, and portability.

Furthermore, this report details Federal budget guidance issued to agencies to foster the adoption of cloud computing technologies, where relevant, and provides an overview of the Federal Government’s approach to data center consolidation.

This report concludes with 30 illustrative case studies at the Federal, state and local government levels. These case studies reflect the growing movement across the public sector to leverage cloud computing technologies.


But is it really? Is it really an evolution when you appear to be moving back toward what we had before? Because the only technological difference between isolated, dedicated resources in the cloud and an “outsourced data center” appears to be the way in which the resources are provisioned. In the former they’re mostly virtualized and provisioned on demand. In the latter those resources are provisioned manually. But the resources and the isolation are the same. …


Chrapaty, who heads its infrastructure business, is leaving the software giant to take a top job at Cisco (CSCO), sources said.