Windows Azure and Cloud Computing Posts for 8/13/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

•• Update 8/14/2011 11:30 AM PDT: Added new articles marked •• by Michael Washington, Infragistics, Michael Ossou, O’Reilly Media, Mihail Mateev, Greg Orzell, Mario Kosmiskas, Jim Priestly and Dhananjay Kumar

• Update 8/13/2011 4:00 PM PDT: Added new articles marked • by Cerebrata and Kent Weare.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Caching, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

•• Jim Priestly described Migrating to Azure Blob Storage–Cost and Performance Gains in an 8/14/2011 post:

The Obvious Costs of Hosting Images On-Premise versus Off-Premise

In my previous post, I talked about how we used a phased approach to migrate PlcCenter.com to Azure. For the typical catalog-type website, many static images are served with the product detail pages. There are usually logos, banners and other static content on the site as well. A quick analysis of the server logs will likely show, as we found, that most of the site's raw outbound bandwidth is used to serve this content. You will probably find similar results.

At the time of writing, current Azure pricing makes hosting this content off-site extremely attractive.

Below is a breakdown of actual costs for hosting static content on Azure based on current pricing for traffic of about 20,000 unique visitors per day:

As the chart shows, hosting a 9 GB library of static content, serving 70 GB of bandwidth and handling 11.4 million requests costs only $23.25 per month!
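The chart is not reproduced here, but the $23.25 total can be reproduced from three rates. These are assumed to be the mid-2011 pay-as-you-go storage prices: $0.15 per GB-month stored, $0.15 per GB of outbound transfer from North American and European datacenters, and $0.01 per 10,000 storage transactions. The class and method names below are illustrative only. This is a minimal C# sketch of the arithmetic:

```csharp
// Reproduces a monthly blob-hosting estimate from three usage figures.
// The rates are ASSUMED mid-2011 list prices; check current pricing before relying on them.
public static class BlobCostEstimate
{
    public const decimal StoragePerGbMonth = 0.15m; // $ per GB stored per month
    public const decimal EgressPerGb = 0.15m;       // $ per GB outbound (North America/Europe)
    public const decimal PerTenThousandTx = 0.01m;  // $ per 10,000 storage transactions

    // Returns the estimated monthly cost in US dollars.
    public static decimal Monthly(decimal storedGb, decimal egressGb, long requests)
    {
        decimal storage = storedGb * StoragePerGbMonth;                 // 9 GB  -> 1.35
        decimal bandwidth = egressGb * EgressPerGb;                     // 70 GB -> 10.50
        decimal transactions = requests / 10000m * PerTenThousandTx;    // 11.4M -> 11.40
        return storage + bandwidth + transactions;
    }
}
```

With the figures above, `BlobCostEstimate.Monthly(9m, 70m, 11400000)` gives 1.35 + 10.50 + 11.40 = 23.25. Note that at these rates, transactions cost about as much as bandwidth. The per-request charge is therefore worth watching on sites that serve many small images.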

Hosting this data on-site required a server, power, UPS, cooling and a 10 Mbps Internet connection. The power, cooling and three-year amortization of the server alone far exceeded $24 per month, before we even looked at ISP, server licensing and maintenance labor costs.

The Hidden Cost of Hosting Images On-Premise

Hosting images in a folder on your webserver gives POOR PERFORMANCE!

If you run a small to mid-size site, you are probably also hosting your images from a folder on your web site, for example http://www.mysite.com/images/image.jpg. While this looks correct and works fine, it is a performance trap that does not scale as traffic grows.

IIS7 is a great web server. It’s easy to configure and secure, and it’s robust. On busy sites, it can be tuned to support a heavy load. IIS7 includes both static and dynamic compression. Compression helps with bandwidth, but every image is still a request. Every request, plus the work of compressing it, adds CPU load on your server. Moving your images off-site removes that load, and freeing the CPU from compressing and serving static content lets the site serve dynamic pages faster.

We host many millions of unique catalog pages. In our experience, moving the images from the primary server to Azure increased throughput from 200 to 250 pages per minute on a single IIS7/ASP.NET 2.0 server. The faster Google can crawl the site, the more pages get indexed. Google also ranks faster pages higher, so page performance is critical.

Modern browsers like IE, Chrome and Safari load your site over several parallel connections. This gives the user a better experience, because images load in the background while the browser renders your HTML and executes your script. The hidden catch is that browsers cap the number of simultaneous downloads from any single host name. Older browsers such as IE7 follow the HTTP/1.1 recommendation and open only two connections per host. Simply moving your images from http://www.mysite.com/images/image.jpg to http://images.mysite.com/images/image.jpg lets the browser download from both hosts at once, which will make your site appear faster to users.
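One way to make this move without editing every page by hand is to route all image URLs through a single helper. The static-content host can then be switched in one place, whether it is the web server, a separate images host name or a blob storage endpoint. In this minimal sketch, `StaticContent`, `Url` and the host names are hypothetical placeholders and are not part of the author's site:

```csharp
using System;

// Hypothetical helper: builds image URLs on a separate static-content host so the
// host (web server, images.mysite.com, or a blob endpoint) is configured in one place.
public static class StaticContent
{
    public const string DefaultHost = "http://images.mysite.com"; // placeholder host name

    // Maps a site-relative path such as "/images/image.jpg" or "~/images/image.jpg"
    // to an absolute URL on the static-content host.
    public static string Url(string relativePath, string host = DefaultHost)
    {
        if (string.IsNullOrEmpty(relativePath))
            throw new ArgumentException("A path is required.", "relativePath");

        // Normalize slashes so exactly one '/' separates host and path.
        return host.TrimEnd('/') + "/" + relativePath.TrimStart('~', '/');
    }
}
```

In page markup this could be used as `<img src="<%= StaticContent.Url("/images/image.jpg") %>" />`. Pointing the host at a blob endpoint of the form http://<account>.blob.core.windows.net later becomes a one-line change.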

Summary

Windows Azure Blob Storage provides a platform for inexpensively hosting all of your public static content. Using an off-site hosting service like Azure improves the performance and user experience of your site in three ways. It offloads the CPU work of compressing and serving the content. It lowers your on-premise bandwidth consumption. It also adds a second host from which browsers can download in parallel, increasing page render speed for users.

Microsoft Azure Blob Storage is a cost-effective way to get your static content hosted off-premise, and it is a great first project for exposing your team to the cloud.

In my next few posts on Azure Blob Storage, I’ll discuss setting up Azure Storage, when to use the CDN, how to handle SSL, synchronizing your local content to Azure Storage, configuring your blobs to be cached in users’ browsers, and using the new Azure Storage Analytics to monitor your content.

Jim is the Vice President of Information Technology at Radwell International, Inc. His earlier Migrating to Azure post of 8/8/2011 described how he migrated the PlcCenter.com site to Windows Azure.

• Cerebrata announced the Cerebrata Windows Azure Storage Analytics Configuration Utility - A Free Utility to Configure Windows Azure Storage Analytics in early August 2011:

Recently the Windows Azure team announced the availability of Storage Analytics (http://blogs.msdn.com/b/windowsazurestorage/archive/2011/08/03/windows-azure-storage-analytics.aspx), which lets users get granular information about their storage utilization.

The first step is to enable and configure the Storage Analytics settings. These settings instruct the Windows Azure Storage service to log certain pieces of analytical information, and they specify a data retention policy. Following in the footsteps of Steve Marx (http://blog.smarx.com), I’m pleased to announce the availability of a desktop client for configuring Storage Analytics. Steve has also created a simple web application that does the same thing (https://storageanalytics.cloudapp.net/).

The utility is extremely simple to use. You can set a different analytics configuration for each service type (blobs, tables and queues) separately. Alternatively, you can click the “Quick Configuration” button shown above to apply the same values to all three services.
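Under the hood, each service's settings become an XML body. This body is sent in a PUT to that service's properties endpoint, for example https://<account>.blob.core.windows.net/?restype=service&comp=properties (or the .table. and .queue. hosts). The request is signed with the account key and carries an x-ms-version of 2009-09-19 or later. The sketch below builds such a body. The element names assume the schema published with Storage Analytics in August 2011, so verify them against the current REST reference. `AnalyticsSettings` and `Build` are hypothetical names; this is not Cerebrata's code.

```csharp
using System.Xml.Linq;

// Builds a Storage Analytics "set service properties" request body.
// ASSUMPTION: element names follow the August 2011 Storage Analytics schema.
public static class AnalyticsSettings
{
    static string B(bool value) => value ? "true" : "false";

    // <RetentionPolicy>: Days is emitted only when retention is enabled (days != null).
    static XElement Retention(int? days) =>
        new XElement("RetentionPolicy",
            new XElement("Enabled", B(days.HasValue)),
            days.HasValue ? new XElement("Days", days.Value) : null);

    public static XDocument Build(bool logRead, bool logWrite, bool logDelete,
                                  bool metricsEnabled, bool includeApis, int? retentionDays)
    {
        var logging = new XElement("Logging",
            new XElement("Version", "1.0"),
            new XElement("Delete", B(logDelete)),
            new XElement("Read", B(logRead)),
            new XElement("Write", B(logWrite)),
            Retention(retentionDays));

        var metrics = new XElement("Metrics",
            new XElement("Version", "1.0"),
            new XElement("Enabled", B(metricsEnabled)),
            // IncludeAPIs is only sent when metrics are enabled.
            metricsEnabled ? new XElement("IncludeAPIs", B(includeApis)) : null,
            Retention(retentionDays));

        return new XDocument(new XDeclaration("1.0", "utf-8", null),
            new XElement("StorageServiceProperties", logging, metrics));
    }
}
```

A “Quick Configuration” style setup amounts to sending the same document to all three endpoints. A per-service setup sends a different document to each one.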

What you will need to run this application:

1. .NET Framework 4.0 (full version and NOT the client profile)

2. Cloud storage account credentials.

Download location: You can download this utility from our website @ https://www.cerebrata.com/Downloads/SACU/SACU.application.

This utility is completely FREE, so you don’t need a license for any of our products to use it.

The Configuration Utility worked fine for me when I tested it on 8/13/2011.

<Return to section navigation list>

SQL Azure Database and Reporting

AllComputers.us completed the first three parts of its SQL Azure/SharePoint series with Securing the Connection to SQL Azure (part 3) - Surface SQL Azure Data in a Visual Web Part on 8/11/2011.

Note: This technique doesn’t work with SharePoint Online because it doesn’t support the Secure Store Service (SSS) for the Business Connectivity Service (BCS). You must have access to a SharePoint Server 2010 Standard or Enterprise instance to create the required Application ID object. (See Securing the Connection to SQL Azure (part 1) - Create an Application ID & Create an External Content for more details about SSS.)

4. Surface SQL Azure Data in a Visual Web Part

Open Visual Studio 2010 and click File | New Project. Select the SharePoint 2010 Empty Project template, and click Deploy As A Farm Solution when prompted in the SharePoint Customization Wizard.

Click Finish.

Right-click the newly created project, and select Add | New Item.

In the Add New Item dialog box, select Visual Web Part. Provide a name for the Web Part (for example, SQL_Azure_Web_Part).

In the Solution Explorer, right-click the newly added feature, and select Rename. Provide a more intuitive name for the Feature (for example, SQLAzureWebPartFeature).

Right-click the ASCX file (SQL_Azure_Web_PartUserControl.ascx, for example), and select View Designer.

When the designer opens, ensure that the Toolbox is visible. If it is not, click View, and select Toolbox.

Click the Design tab, and drag a Label, a GridView and a Button onto the designer surface. Use the control types and names shown in the following table.

| Control Type | Control Name |
|---|---|
| Label | lblWebPartTitle |
| GridView | datagrdSQLAzureData |
| Button | btnGetSQLAzureData |

After you’ve added the controls, you can click the Source tab to view the UI code. It should look something similar to the following code (note that you won’t have the onclick event until you double-click the button to add this event):

```aspx
<%@ Assembly Name="$SharePoint.Project.AssemblyFullName$" %>
<%@ Assembly Name="Microsoft.Web.CommandUI, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c" %>
<%@ Register Tagprefix="SharePoint" Namespace="Microsoft.SharePoint.WebControls"
    Assembly="Microsoft.SharePoint, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c" %>
<%@ Register Tagprefix="Utilities" Namespace="Microsoft.SharePoint.Utilities"
    Assembly="Microsoft.SharePoint, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c" %>
<%@ Register Tagprefix="asp" Namespace="System.Web.UI"
    Assembly="System.Web.Extensions, Version=3.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" %>
<%@ Import Namespace="Microsoft.SharePoint" %>
<%@ Register Tagprefix="WebPartPages" Namespace="Microsoft.SharePoint.WebPartPages"
    Assembly="Microsoft.SharePoint, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c" %>
<%@ Control Language="C#" AutoEventWireup="true" CodeBehind="SQL_Azure_Web_PartUserControl.ascx.cs"
    Inherits="C3_SQL_Azure_And_Web_Part.SQL_Azure_Web_Part.SQL_Azure_Web_PartUserControl" %>

<asp:Label ID="lblWebPartTitle" runat="server" Text="SQL Azure Web Part"></asp:Label>
<p>
    <asp:GridView ID="datagrdSQLAzureData" runat="server">
    </asp:GridView>
</p>
<p>
    <asp:Button ID="btnGetSQLAzureData" runat="server"
        onclick="btnGetSQLAzureData_Click" Text="Get Data" />
</p>
```

After you add the controls, edit the Text property of the label so it reads SQL Azure Web Part, and change the button text to Get Data. When you’re done, they should look similar to the following image.

Double-click the Get Data button to add an event to the btnGetSQLAzureData button. Visual Studio should automatically open the code-behind view, but if it doesn’t, right-click the ASCX file in the Solution Explorer, and select View Code.

Add the code shown in the following snippet to your Visual Web Part ASCX code-behind file. You’ll need to replace servername, username and password with your own SQL Azure server name and credentials. For example, use server.database.windows.net for servername, john@server for username, and your own password for password:

```csharp
using System;
using System.Web.UI;
using System.Web.UI.WebControls;
using System.Web.UI.WebControls.WebParts;
using System.Data;
using System.Data.SqlClient;

namespace C3_SQL_Azure_And_Web_Part.SQL_Azure_Web_Part
{
    public partial class SQL_Azure_Web_PartUserControl : UserControl
    {
        string queryString = "SELECT * from Customers.dbo.CustomerData;";
        DataSet azureDataset = new DataSet();

        protected void Page_Load(object sender, EventArgs e)
        {
        }

        protected void btnGetSQLAzureData_Click(object sender, EventArgs e)
        {
            //Replace servername, username and password below with your SQL Azure
            //server name and credentials.
            //(Each string literal must close on its own line before the + operator.)
            string connectionString = "Server=tcp:servername;Database=Customers;" +
                "User ID=username;Password=password;Trusted_Connection=False;" +
                "Encrypt=True;";

            using (SqlConnection connection = new SqlConnection(connectionString))
            {
                //Fill opens and closes the connection itself.
                SqlDataAdapter adaptor = new SqlDataAdapter();
                adaptor.SelectCommand = new SqlCommand(queryString, connection);
                adaptor.Fill(azureDataset);

                //Binding a DataSet binds the GridView to its first table.
                datagrdSQLAzureData.DataSource = azureDataset;
                datagrdSQLAzureData.DataBind();
            }
        }
    }
}
```
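Building the connection string by concatenation, as in the listing, breaks if the password contains a character such as ';'. The following sketch uses the framework's `DbConnectionStringBuilder`, which quotes such values automatically. (`SqlConnectionStringBuilder` in System.Data.SqlClient offers typed properties for the same job.) `AzureConnection` and `Build` are hypothetical names, and the keys simply mirror the string in the listing:

```csharp
using System.Data.Common;

// Hypothetical helper: builds the SQL Azure connection string used by the web part
// without hand-concatenating credentials, so special characters are escaped.
public static class AzureConnection
{
    public static string Build(string serverName, string database,
                               string userName, string password)
    {
        var builder = new DbConnectionStringBuilder();
        builder["Server"] = "tcp:" + serverName;     // e.g. server.database.windows.net
        builder["Database"] = database;
        builder["User ID"] = userName;               // e.g. john@server
        builder["Password"] = password;              // quoted if it contains ';', '=', etc.
        builder["Trusted_Connection"] = "False";
        builder["Encrypt"] = "True";
        return builder.ConnectionString;
    }
}
```

In the click handler, `connectionString` could then be set to `AzureConnection.Build("server.database.windows.net", "Customers", "john@server", password)`. Better still, the values could come from configuration rather than source code.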

Amend the .webpart file to provide some additional information about the Web Part; specifically, amend the Title and Description properties. You can also change the group in which the Web Part appears in the Web Part gallery, using the Group settings in the feature’s Elements.xml file. These amendments are shown in the following code:

```xml
...
<properties>
  <property name="Title" type="string">SQL_Azure_Web_Part</property>
  <property name="Description" type="string">Web part to load SQL Azure data.</property>
</properties>
...

...
<Property Name="Group" Value="SP And Azure" />
<Property Name="QuickAddGroups" Value="SP And Azure" />
...
```

When you’re done, right-click the project, and select Build. When the project successfully builds, right-click the project, and select Deploy.

After the project successfully deploys, navigate to your SharePoint site and create a new Web Part page. (Click Site Actions | View All Site Content | Create | Page | Web Part Page | Create. Then provide a name for the Web Part page, and click Create.)

Click Add A Web Part, navigate to the SP And Azure category, select your newly deployed Web Part, and click Add. The following image illustrates where you’ll find your newly created Web Part—in the SP And Azure group.

Click Stop Editing on the ribbon to save your changes to SharePoint.

Click the Get Data button in the Visual Web Part to retrieve the SQL Azure data and display it in your new Visual Web Part. The result should look similar to the following image.

At this point, you’ve created a Visual Web Part that consumes your SQL Azure data, albeit one that has very little formatting. You can use the GridView Properties window to add some design flair to your Visual Web Part if you like.

You could equally use the SQL connection pattern to integrate SQL Azure with SharePoint in other ways. For example, you could create a Microsoft Silverlight application that displays data in similar ways. You can also use the SqlDataAdapter class to update and delete records in SQL Azure, whether you make the initial call from the Visual Web Part or from Silverlight.

You can wrap integration with a SQL Azure database with a WCF service and use the service as your proxy to the SQL Azure data; you could also create and deploy a Microsoft ASP.NET application to Windows Azure that uses SQL Azure and then integrate that with SharePoint; and you can use the Microsoft ADO.NET Entity Framework and Microsoft Language Integrated Query (LINQ), and develop WCF Data Services and access your SQL Azure data by using REST.

The first two parts of the SharePoint series are here:

- Securing the Connection to SQL Azure (part 2) - Set Permissions for an External Content Type

- Securing the Connection to SQL Azure (part 1) - Create an Application ID & Create an External Content

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Caching, Access Control, WIF and Service Bus

• Kent Weare (@wearsy) described AppFabric Apps (June 2011 CTP) Accessing AppFabric Queue via REST from a Windows 7.1 Phone on 8/13/2011:

I recently watched an episode of AppFabric TV where they were discussing the REST API for Azure AppFabric. I thought the ability to access the AppFabric Service Bus, and therefore other Azure AppFabric services like Queues, Topics and Subscriptions via REST was a pretty compelling scenario. For example, if we take a look at the “Power Outage” scenario that I have been using to demonstrate some of the features of AppFabric Applications, it means that we can create a Windows Phone 7 (or Windows Phone 7.1/Mango Beta) application and dump messages into an AppFabric Queue securely via the AppFabric Service Bus. Currently, the Windows Phone SDK does not allow for the managed Service Bus bindings to be loaded on the phone so using the REST based API over HTTP is a viable option.

Below is a diagram that illustrates the solution that we are about to build. We will have a Windows Phone 7.1 app that will push messages to an Azure AppFabric Queue. (You will soon discover that I am not a Windows Phone developer. If you were expecting to see some whiz bang new Mango features then this post is not for you.)

The purpose of the mobile app is to submit power outage information to an AppFabric Queue. Before we can do this, we need to retrieve a token from the Access Control Service and include it in the header of our AppFabric Queue request. Once the message is in the Queue, we will once again use a Code Service to retrieve messages and insert them into a SQL Azure table.

Building our Mobile Solution

One of the benefits of using AppFabric queues is the loose coupling between publishers and subscribers. With this in mind, we can build the Windows Phone 7 application in its own solution. For the purpose of this blog post I am going to use the latest Mango beta SDK bits, which are available here.

Since I have downloaded the latest 7.1 bits, I am going to target this phone version.

On our WP7 Canvas we are going to add a few controls:

- TextBlock called lblAddress that has a Text property of Address

- TextBox called txtAddress that has an empty Text Property

- Button called btnSubmit that has a Content property of Submit

- TextBlock called lblStatus that has an empty Text Property

Within the btnSubmit_Click event we are going to place our code that will communicate with the Access Control Service.

```csharp
private void btnSubmit_Click(object sender, RoutedEventArgs e)
{
    //https, http, serviceNameSpace, acsSuffix, serviceBusSuffix, issuerName and issuerKey
    //are presumably string fields defined elsewhere in the page class (not shown in the post).

    //Build ACS and Service Bus Addresses
    string acsAddress = https + serviceNameSpace + acsSuffix;
    string relyingPartyAddress = http + serviceNameSpace + serviceBusSuffix;
    string serviceAddress = https + serviceNameSpace + serviceBusSuffix;

    //Formulate Query String
    string postData = "wrap_scope=" + Uri.EscapeDataString(relyingPartyAddress) +
        "&wrap_name=" + Uri.EscapeDataString(issuerName) +
        "&wrap_password=" + Uri.EscapeDataString(issuerKey);

    WebClient acsWebClient = new WebClient();

    //Since Web/Http calls are all async in WP7, we need to register an event handler
    acsWebClient.UploadStringCompleted += new UploadStringCompletedEventHandler(acsWebClient_UploadStringCompleted);

    //instantiate Uri object with our acs URL so that we can provide it in the remote method call
    Uri acsUri = new Uri(acsAddress);
    acsWebClient.UploadStringAsync(acsUri, "POST", postData);
}
```

Since we are making an Asynchronous call to the ACS service, we need to implement the handling of the response from the ACS Service.

```csharp
private void acsWebClient_UploadStringCompleted(object sender, UploadStringCompletedEventArgs e)
{
    if (e.Error != null)
    {
        lblStatus.Text = "Error communicating with ACS";
    }
    else
    {
        //store response since we will need to pull ACS token from it
        string response = e.Result;

        //update WP7 UI with status update
        lblStatus.Text = "Received positive response from ACS";

        //Sleep just for visual purposes. Note: this handler runs on the UI thread,
        //so the label may not repaint until the handler returns.
        System.Threading.Thread.Sleep(250);

        //parsing the ACS token from response (straight quotes, not curly ones, in char literals)
        string[] tokenVariables = response.Split('&');
        string[] tokenVariable = tokenVariables[0].Split('=');
        string authorizationToken = Uri.UnescapeDataString(tokenVariable[1]);

        //Creating the Web client that we will use to populate the Queue
        WebClient queueClient = new WebClient();

        //add our authorization token to our header
        queueClient.Headers["Authorization"] = "WRAP access_token=\"" + authorizationToken + "\"";
        queueClient.Headers[HttpRequestHeader.ContentType] = "text/plain";

        //capture textbox data
        string messageBody = txtAddress.Text;

        //assemble our queue address
        //For example: "https://MyNameSpace.servicebus.appfabriclabs.com/MyQueueName/Messages"
        string sendAddress = https + serviceNameSpace + serviceBusSuffix + queueName + messages;

        //Register event handler
        queueClient.UploadStringCompleted += new UploadStringCompletedEventHandler(queueClient_UploadStringCompleted);
        Uri queueUri = new Uri(sendAddress);

        //Call method to populate queue
        queueClient.UploadStringAsync(queueUri, "POST", messageBody);
    }
}
```
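The handler above assumes that wrap_access_token is the first name/value pair in the ACS response. A WRAP response body has the form `wrap_access_token=...&wrap_access_token_expires_in=...`. The following is a slightly more defensive sketch that looks the token up by name and returns null if it is missing. `WrapResponse` and `GetAccessToken` are hypothetical names, not part of the original post:

```csharp
using System;

// Hypothetical helper: extracts wrap_access_token from an ACS WRAP response body.
public static class WrapResponse
{
    public static string GetAccessToken(string responseBody)
    {
        foreach (string pair in responseBody.Split('&'))
        {
            int eq = pair.IndexOf('=');
            if (eq < 0) continue;                        // skip malformed pairs

            // Exact name match, so wrap_access_token_expires_in is not picked up.
            if (pair.Substring(0, eq) == "wrap_access_token")
                return Uri.UnescapeDataString(pair.Substring(eq + 1));
        }
        return null;                                     // token not present
    }
}
```

In the completion handler, the three parsing lines could be replaced by `string authorizationToken = WrapResponse.GetAccessToken(e.Result);`, followed by a null check that sets `lblStatus.Text` to an error message.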

So at this point we have made a successful request to ACS and received a response that included our token. We then registered an event handler as we will call the AppFabric Service Bus Queue using an Asynchronous call. Finally we made a call to our Service Bus Queue.

We now need to process the response coming back from the AppFabric Service Bus Queue.

```csharp
private void queueClient_UploadStringCompleted(object sender, UploadStringCompletedEventArgs e)
{
    //Update status to user.
    if (e.Error != null)
    {
        lblStatus.Text = "Error sending message to Queue";
    }
    else
    {
        lblStatus.Text = "Message successfully sent to Queue";
    }
}
```

That concludes the code that is required to submit a message securely to the AppFabric Service Bus Queue using the Access Control Service to authenticate our request.

Building our Azure AppFabric Application

The first artifact that we are going to build is the AppFabric Service Bus Queue called QueueMobile.

Much like we have done in previous posts we need to provide an IssuerKey, IssuerName and Uri.

The next artifact that we need to add is a SQL Azure Database.

Adding this artifact is only the beginning. We still need to create our local database in our SQL Express instance. So what I have done is manually created a Database called PowerOutage and a Table called Outages.

Within this table I have two very simple columns: ID and Address.

So the next question is: how do I connect to this database? If you navigate to the AppFabric Applications Manager, which is found within the AppFabric Labs portal, you will see that a SQL Azure DB has been provisioned for us.

Part of this configuration includes our connection string for this Database. We can access this connection string by clicking on the View button that is part of the Connection String panel.

I have covered up some of the core credential details in my connection string for security reasons. To make things a little more consistent, though, I created a SQL Server account with these same credentials in my local SQL Express. This way, when I provision to the cloud, I only need to change my Data Source.

For the time being I am only interested in my local development fabric, so I need to update my connection string to use my local SQL Express version of the database.

With our Queue and Database now created and configured, we need to focus on our Code Service. The purpose of this Code Service is to retrieve messages from our AppFabric Queue and insert them into our SQL Azure table. We will call this Code Service CodeMobileQueue and then will click the OK button to proceed.

We now need to add references from our Code Service to both our AppFabric Queue and our SQL Azure Instance. I always like to rename my references so that they have meaningful names.

Inside our Code Service, it is now time to start focusing on the plumbing of our solution. We need to be able to retrieve messages from the AppFabric Queue and insert them into our SQL Azure table.

```csharp
public void Run(CancellationToken cancelToken)
{
    //Create reference to our Queue Client
    QueueClient qClient = ServiceReferences.CreateQueueMobile();

    //Create reference to our SQL Azure Connection
    SqlConnection sConn = ServiceReferences.CreateSqlQueueMobile();

    MessageReceiver mr = qClient.CreateReceiver();
    BrokeredMessage bm;
    Stream qStream;
    StreamReader sReader;
    string address;

    System.Diagnostics.Trace.TraceInformation("Entering Queue Retrieval " + System.DateTime.Now.ToString());

    //Open the connection to the database once, before the polling loop.
    //Calling Open() on an already-open SqlConnection in every pass would throw.
    sConn.Open();

    while (!cancelToken.IsCancellationRequested)
    {
        while (mr.TryReceive(new TimeSpan(hours: 0, minutes: 30, seconds: 0), out bm))
        {
            try
            {
                //Note: we are using a Stream here instead of a String like in other examples.
                //The reason is that we did not put the message on the wire using a
                //BrokeredMessage (binary format) like in other examples; we just put on raw text.
                //The way around this is to use a Stream and a StreamReader to pull the text out as a String.
                qStream = bm.GetBody<Stream>();
                sReader = new StreamReader(qStream);
                address = sReader.ReadToEnd();

                //remove message from the Queue
                bm.Complete();
                System.Diagnostics.Trace.TraceInformation(string.Format("Message received: ID= {0}, Body= {1}", bm.MessageId, address));

                //Insert Message from Queue and add it to a Database
                using (SqlCommand cmd = sConn.CreateCommand())
                {
                    cmd.Parameters.Add(new SqlParameter("@ID", SqlDbType.NVarChar));
                    cmd.Parameters["@ID"].Value = bm.MessageId;
                    cmd.Parameters.Add(new SqlParameter("@Address", SqlDbType.NVarChar));
                    cmd.Parameters["@Address"].Value = address;
                    cmd.CommandText = "Insert into Outages(ID,Address) Values (@ID,@Address)";
                    cmd.CommandType = CommandType.Text;
                    cmd.ExecuteNonQuery();
                }

                System.Diagnostics.Trace.TraceInformation("Record inserted into Database");
            }
            catch (Exception ex)
            {
                System.Diagnostics.Trace.TraceError("error occurred " + ex.ToString());
            }
        }

        Thread.Sleep(5 * 1000);
    }

    mr.Close();
    qClient.Close();
    sConn.Dispose();
}
```

Testing Application

We are done with all the coding and configuration for our solution. Once again I am going to run this application in the local Dev Fabric, so I am going to go ahead and press Ctrl+F5. Once the Windows Azure Emulator has started and our application has been deployed, we can start our Windows Phone project.

For the purpose of this blog post we are going to run our Windows Phone solution in the provided emulator. However, I have verified that the application can be side-loaded onto a WP7 device and works properly.

We are now going to populate our Address text box with a value. In this case I am going to provide 1 Microsoft Way and click the Submit button.

Once we click the Submit button we can expect our first status message update indicating that we have received a positive response from ACS.

The next update we will have displayed is one that indicates our message has been successfully sent to our AppFabric Queue.

As outlined previously, our WP7 app publishes a message to our AppFabric Queue. From there, our Code Service de-queues the message and inserts a record into a SQL Azure table. So if we check our Outages table, we will discover that a record has been added to our database.

Conclusion

Overall I am pretty please[d] with how this demo turned out. I really like the ability to have a loosely coupled interface that a Mobile client can utilize. What is also nice about using a RESTful interface is that we have a lot of flexibility when porting a solution like this over to other platforms.

Another aspect of this solution that I like is having a durable Queue in the cloud. In this solution we had a code service de-queuing this message in the cloud. However, I could also have some code written that is living on-premise that could retrieve these messages from the cloud and then send them to an LOB system like SAP. Should we have a planned, or unplanned system outage on-premise, I know that all mobile clients can still talk to the Queue in the cloud.

Wely Lau compared Various Options to Manage Session State in Windows Azure in an 8/12/2011 post:

Introduction

The Windows Azure Platform is Microsoft’s cloud platform for building, hosting and scaling web applications in Microsoft datacenters. Customers can scale the number of VM instances up and down in a matter of minutes. Although this flexibility is very useful, it may affect the way we architect and design the solution.

One of the essential aspects we need to take into account is session state. Traditionally, if you run a single server, the default InProc session state works fine. However, when more than one server hosts your application, session state becomes a challenge. The same applies to a cloud environment.

This article describes various options for handling session state in Windows Azure. For each option, I’ll start with a brief introduction, follow with its advantages and disadvantages, and finish with recommendations and suggestions.

As a prerequisite, I assume readers are familiar with the basics of what session state is and how it works.

Various Options to Manage Session State in Windows Azure

1. InProc Session

InProc session state is probably the best-performing option in terms of access time, and it is the default when you don’t specify one. It stores the session in the web server’s memory, so access is very fast.

Last November (2010), I wrote a post describing how InProc session state does not work well in Windows Azure. In fact, it may be fine if you run only a single instance. However, I don’t recommend running a single instance in a production environment unless you can tolerate some downtime. To qualify for the 99.95% SLA, you must run at least two instances per role.

Advantages

- Very fast access since the session information is stored in memory (RAM)

- No extra cost as it will be using your VM’s memory

Disadvantages

- As mentioned above, this works only for a single instance. If you use more than one instance, session data will be inconsistent across instances.

*The rest of the option[s] will tackle the single instance issue as they use a centralized medium.

2. Table Storage Session Provider

The Table Storage Session Provider is part of the Windows Azure ASP.NET Providers sample written by some Microsoft folks. It is a custom provider, compiled into a DLL, that centralizes session information in Windows Azure Table Storage. You can download the package from here. Clicking the “Browse Code” section shows a fairly comprehensive example of how to implement it in your project.

It works by storing each session as a record in Table Storage, as can be seen in the screenshot below. Each record has an expiry column that records when the session expires if there’s no interaction from the user.

Advantages

- Cost effective. In essence, Windows Azure Storage charges only $0.15 per GB per month.

Disadvantages

- Not officially supported by Microsoft

- Performance may not be very good. In my experience, performance was quite poor when using the Windows Azure Storage provider.

- Need to clear unused sessions.

Each time a session is created, a record (with properties including its expiry time) is written to a session table. On subsequent requests, the provider checks the table to see whether the session exists. To enforce a timeout when there is no activity in a session, we need to delete records whose expiry time is equal to or earlier than the current time.

To delete expired sessions automatically, we typically use a Windows Azure Worker Role to perform the batch cleanup.
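The cleanup rule described above, "delete every record whose expiry time is equal to or earlier than now," is small enough to sketch. Data access is deliberately left out. `SessionRecord`, `SessionCleanup` and their members are hypothetical, and the real providers use their own schemas. A worker role loop would feed this with rows queried from the session table and then delete the returned IDs:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of a stored session row (not the provider's actual schema).
public sealed class SessionRecord
{
    public string SessionId { get; set; }
    public DateTime ExpiresUtc { get; set; }
}

public static class SessionCleanup
{
    // A session is expired when its expiry time equals or precedes the current time.
    public static bool IsExpired(SessionRecord s, DateTime nowUtc) => s.ExpiresUtc <= nowUtc;

    // Returns, in input order, the IDs a periodic cleanup job would delete.
    public static List<string> ExpiredIds(IEnumerable<SessionRecord> sessions, DateTime nowUtc) =>
        sessions.Where(s => IsExpired(s, nowUtc)).Select(s => s.SessionId).ToList();
}
```

Using UTC on both sides avoids treating sessions as expired, or as still alive, when the instances and the store disagree about local time zones. Against Table Storage, it is cheaper to push the comparison into the query filter than to load every row.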

3. SQL Azure Session Provider

The SQL Azure Session Provider is a modified version of the SQL Server session provider, with changes to the T-SQL scripts so that they comply with SQL Azure. Some issues were identified in the original script, and a community member posted the fixes; alternatively, you can download the modified script here.

Advantages

- Cost effective. Although it may not be as cheap as Table Storage, it’s still quite affordable, especially if you combine it with your main database.

Disadvantages

- Not officially supported by Microsoft

- You need to clear unused sessions

As with the Table Storage provider, each session is stored as a row with an expiry time. Each request checks the table for the session, and rows whose expiry time is equal to or earlier than the current time must be deleted to enforce the timeout when there is no activity.

To delete expired sessions automatically, we usually use a Windows Azure worker role to perform the cleanup as a batch job.
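The cleanup the worker role performs against the database is a single `DELETE`. The sketch below uses Python's built-in `sqlite3` as a local stand-in for SQL Azure, with a hypothetical, simplified `Sessions(SessionId, Expires)` table storing Unix seconds; the tables created by the real session-state script have more columns and a different layout. On SQL Azure the equivalent T-SQL would compare a datetime column with something like `GETUTCDATE()`.

```python
import sqlite3


def create_demo_table(conn):
    # Hypothetical, simplified schema used only for this illustration.
    conn.execute(
        "CREATE TABLE Sessions (SessionId TEXT PRIMARY KEY, Expires INTEGER NOT NULL)"
    )


def delete_expired_sessions(conn, now_epoch):
    """Delete rows whose Expires (Unix seconds) is <= now; return the number removed."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("DELETE FROM Sessions WHERE Expires <= ?", (now_epoch,))
    return cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_demo_table(conn)
    conn.executemany("INSERT INTO Sessions VALUES (?, ?)", [("a", 100), ("b", 300)])
    conn.commit()
    print("removed", delete_expired_sessions(conn, 200), "expired session(s)")
```

Passing `now_epoch` in as a parameter keeps the function deterministic and lets the scheduler decide which clock to trust.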

AppFabric Caching

AppFabric Caching is the recommended option and is officially supported by Microsoft. It is a distributed, in-memory cache service that is provisioned automatically and is based on Windows Server AppFabric Caching technology.

Advantages

- In-memory cache, so access is very fast

- Officially supported by Microsoft

Disadvantages

- The cost is relatively high: pricing starts at $45 per month for 128 MB and goes up to $325 per month for 4 GB.
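To put the quoted prices side by side, it helps to normalize them to dollars per GB per month. This small Python calculation uses only the figures from the text; `cost_per_gb` is an illustrative helper name.

```python
def cost_per_gb(monthly_price_usd, size_gb):
    """Monthly price divided by capacity in GB."""
    return monthly_price_usd / size_gb


# Prices quoted above, in USD per month, with capacity in GB.
OFFERINGS = {
    "Table Storage, 1 GB": (0.15, 1.0),
    "AppFabric Caching, 128 MB": (45.0, 128 / 1024),
    "AppFabric Caching, 4 GB": (325.0, 4.0),
}

if __name__ == "__main__":
    for name, (price, size_gb) in OFFERINGS.items():
        print(f"{name}: ${cost_per_gb(price, size_gb):,.2f} per GB per month")
```

This works out to $360 per GB for the 128 MB cache and $81.25 per GB for the 4 GB cache, so even the largest cache costs roughly 540 times as much per GB as Table Storage. The comparison is only rough: it ignores other charges such as storage transactions, and the cache buys in-memory speed rather than durable storage.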

Conclusion

To conclude, there are multiple ways of managing session state in Windows Azure, each with pros and cons. It is up to us to decide which one best fits our circumstances.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

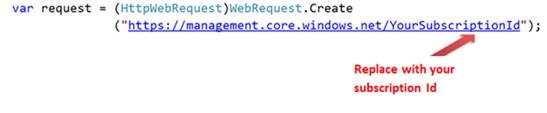

•• Dhananjay Kumar explained Fetching Hosting Services Name using Windows Azure Management API in an 8/14/2011 post:

The Windows Azure Management API enables you to manage an Azure subscription through code. In this post, I will show how to list the hosted services in your subscription programmatically.

The Windows Azure Management API is a REST-based API that lets you perform almost all management-level tasks on an Azure subscription.

Any client calling the Management API must authenticate itself before making calls. Authentication between the Azure portal and the client calling the REST-based Management API is done with certificates.

First, let us create a class representing a hosted service. It has a property holding the name of the hosted service.

    public class HostedServices
    {
        public string serviceName { get; set; }
    }

Essentially, you need to perform four steps:

1. Create a web request to the hosted-services URL for your subscription ID.

2. When making the request, make sure you specify the correct API version and add the corresponding certificate for your subscription.

Note: I have uploaded debugmode.cer to my Azure portal at the subscription level.

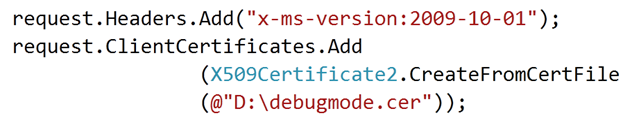

3. Get the response stream and read it into a string.

You will get the XML response in the following format:

4. Once you have the XML as a string, extract each ServiceName element using LINQ to XML.
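For readers outside .NET, step 4 translates almost directly to Python's standard `xml.etree.ElementTree`. This is a sketch that mirrors the C# query: it uses the same namespace and the `HostedService`/`ServiceName` element names the C# code reads. `SAMPLE_RESPONSE` is a trimmed, illustrative document containing only those elements, not a verbatim API response.

```python
import xml.etree.ElementTree as ET

# Namespace used by the C# sample's XNamespace.
AZURE_NS = "http://schemas.microsoft.com/windowsazure"

# Illustrative, trimmed response: only the elements the query reads.
SAMPLE_RESPONSE = """<HostedServices xmlns="http://schemas.microsoft.com/windowsazure">
  <HostedService><ServiceName>debugmode9</ServiceName></HostedService>
</HostedServices>"""


def hosted_service_names(xml_text):
    """Return the ServiceName of every HostedService element."""
    root = ET.fromstring(xml_text)
    # iter() walks all descendants, like Descendants() in LINQ to XML;
    # ElementTree spells namespaced names as {namespace}LocalName.
    return [hs.findtext(f"{{{AZURE_NS}}}ServiceName")
            for hs in root.iter(f"{{{AZURE_NS}}}HostedService")]


if __name__ == "__main__":
    for name in hosted_service_names(SAMPLE_RESPONSE):
        print(name)
```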

When you run it, you should see all of your hosted services. For me there is only one hosted service, debugmode9, under my Azure subscription.

For your reference, the full source code is below:

    using System;
    using System.IO;
    using System.Linq;
    using System.Net;
    using System.Security.Cryptography.X509Certificates;
    using System.Xml.Linq;

    namespace ConsoleApplication26
    {
        public class HostedServices
        {
            public string serviceName { get; set; }
        }

        class Program
        {
            static void Main(string[] args)
            {
                // 1. Create a request for the hosted services in the subscription
                var request = (HttpWebRequest)WebRequest.Create(
                    "https://management.core.windows.net/697714da-b267-4761-bced-b75fcde0d7e1/services/hostedservices");

                // 2. Specify the API version and attach the management certificate
                request.Headers.Add("x-ms-version", "2009-10-01");
                request.ClientCertificates.Add(
                    X509Certificate2.CreateFromCertFile(@"D:\debugmode.cer"));

                // 3. Read the response stream into a string, disposing both when done
                string xmlofResponse;
                using (var response = request.GetResponse())
                using (var reader = new StreamReader(response.GetResponseStream()))
                {
                    xmlofResponse = reader.ReadToEnd();
                }

                // 4. Extract every ServiceName element with LINQ to XML
                XDocument doc = XDocument.Parse(xmlofResponse);
                XNamespace ns = "http://schemas.microsoft.com/windowsazure";
                var servicesName = from r in doc.Descendants(ns + "HostedService")
                                   select new HostedServices
                                   {
                                       serviceName = r.Element(ns + "ServiceName").Value
                                   };

                foreach (var a in servicesName)
                {
                    Console.WriteLine(a.serviceName);
                }

                Console.ReadKey(true);
            }
        }
    }

•• Mario Kosmiskas described Creating a Hadoop Development Cluster in Azure in an 8/12/2011 post:

In my previous post on Hadoop, I showed how you could easily deploy a cluster to run on Azure. What was missing was a way to use the cluster efficiently. You could always use Remote Desktop to connect to the Job Tracker and kick off a job, but there are better ways.

This post is about actually using the cluster once it has been deployed to Azure. I chose the theme of a Development Cluster to justify making a few changes to how I previously configured the cluster and to show some new techniques.

As a developer, I expect easy access to the development cluster. The goal is to allow developers to connect safely to the cluster to deploy and debug their map/reduce jobs. SSH provides all the necessary tools for this: secure connections and tunneling. SSH not only allows developers to establish a secure session with the cluster in Azure, but also integrates fully with IDEs, making typical development tasks a breeze.

I will be referring to two of my previous posts on Setting Up a Hadoop Cluster and Configuring SSH. It would be helpful to have them open as you follow the setup instructions below.

The Development Cluster

In this development-cluster scenario, I will use a single host to run both the Name Node and the Job Tracker. That is obviously not appropriate for every development cluster, but it suffices for this demo. The number of slaves is initially set to 3. You can change the cluster size dynamically, as I demonstrated in my previous post. If you try it, you might also want to adjust the VM size to meet your needs.

The procedure for deploying a Hadoop cluster has not changed, but the dependencies have. First is the Hadoop version: I had previously used 0.21, which many development tools do not support because it is an unstable release. I reverted to the stable line and ended up using 0.20.2. At the time of writing, 0.20.203.0rc1 was out but did not work on Windows. Second, Cygwin needs the OpenSSH package installed to provide the SSH Windows service (see the instructions in the SSH post). Finally, there is YAJSW. It didn't technically need to be updated; I just grabbed the latest drop, which is Beta-10.8.

Just follow the instructions from my previous post using the updated dependencies, but use this cluster configuration template and this Visual Studio 2010 project instead. You should have the following files in a container in your storage account:

You should be able to deploy your development cluster by publishing the HadoopAzure project directly from Visual Studio.

Connecting to the Cluster

A developer needs to connect to the cluster using SSH. I demonstrated how to do that with PuTTY; the only difference here is that we need to set up a couple of tunnels. This screen shows the two tunnels required to access the Name Node and Job Tracker.

Once you connect and log in, you can minimize the PuTTY window. We won't be using it, but it must stay open for the tunnels to remain open.

Accessing the Cluster

With the tunnels open to the development cluster you can use it as if it was local.
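If you prefer an OpenSSH client (for example, the one that ships with Cygwin) to PuTTY, the same two tunnels become a pair of `-L` options. The hypothetical Python helper below only builds the command line. The user name, the host name and the ports 9000/9001 for the Name Node and Job Tracker are placeholders, so substitute the ports from your own cluster configuration. Because this cluster runs both daemons on one host, each tunnel forwards to `localhost` as seen from the SSH server.

```python
def ssh_tunnel_command(user, host, tunnels, ssh_port=22):
    """Build an OpenSSH command that forwards local ports through host.

    tunnels is a list of (local_port, remote_host, remote_port) tuples,
    the same information entered on PuTTY's Connection > SSH > Tunnels page.
    -N tells ssh not to run a remote command; it only holds the tunnels open.
    """
    cmd = ["ssh", "-N", "-p", str(ssh_port)]
    for local_port, remote_host, remote_port in tunnels:
        cmd += ["-L", f"{local_port}:{remote_host}:{remote_port}"]
    cmd.append(f"{user}@{host}")
    return cmd


if __name__ == "__main__":
    # Placeholder values: replace with your cluster's endpoint and ports.
    cmd = ssh_tunnel_command(
        "hadoop", "yourcluster.cloudapp.net",
        [(9000, "localhost", 9000),   # Name Node (assumed port)
         (9001, "localhost", 9001)],  # Job Tracker (assumed port)
    )
    print(" ".join(cmd))
```

As with PuTTY, the tunnels last only as long as this ssh process keeps running.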

Mario is a software architect on the Windows Azure team.

Michael Epprecht listed Ports for Windows Azure [et al.] on your firewall on 8/13/2011:

I often get asked which ports I need to open on my corporate firewall to connect to the various Azure services from within my network.

- SQL Azure: outbound TCP 1433 - http://msdn.microsoft.com/en-us/library/ee621782.aspx

- Windows Azure Connect: outbound TCP 443 (HTTPS) - http://msdn.microsoft.com/en-us/library/gg433061.aspx

- AppFabric Caching: depends on what you configured - http://msdn.microsoft.com/en-us/magazine/gg983488.aspx

- AppFabric Service Bus: depends on your bindings - http://msdn.microsoft.com/en-us/library/ee732535.aspx

- RDP access into your Windows Azure roles: outbound TCP 3389 - http://msdn.microsoft.com/en-us/library/hh124107
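A quick way to confirm from inside your network that one of these outbound ports is open is to attempt a plain TCP connection. The sketch below is a hypothetical Python helper, `can_connect`. The host names in `CHECKS` are placeholders for your own SQL Azure server and cloud service. A successful connect proves only that the port is reachable, not that the service will accept you; SQL Azure, for example, also applies its own server firewall rules.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, DNS failure, blocked by a firewall
        return False


# Outbound ports from the list above; the host names are placeholders.
CHECKS = [
    ("yourserver.database.windows.net", 1433),  # SQL Azure
    ("yourservice.cloudapp.net", 3389),          # RDP into a role
]

if __name__ == "__main__":
    for host, port in CHECKS:
        state = "open" if can_connect(host, port) else "blocked or unreachable"
        print(f"{host}:{port} -> {state}")
```

The entries marked "depends on what you configured" above have no fixed port, so add them to `CHECKS` once you know your own settings.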

Michael is an IT Pro Evangelist at Microsoft Switzerland.

Claire Rogers wrote Wellington firm links up with Pixar on 8/8/2011 for the stuff.co.nz Technology blog:

Wellington supercomputing start-up GreenButton is working with Disney's animation giant Pixar to make its movie rendering software available to anyone over the web. The RenderMan software has been used to produce most of the world's latest hits, including Weta Digital's work on Avatar and Lord of the Rings, the Harry Potter movies, and kids' favourites Cars and Toy Story [Link added].

GreenButton – one of the capital's most promising tech start-ups – has received more than US$1 million from Microsoft and counts several of the country's leading technologists among its investors. Its application can be built into software products and lets computer users access supercomputer power at the click of a button as they need it and without having to invest in their own systems.

Chief marketing officer Vivian Morresey said it planned to rent out the RenderMan software and processing power to users over the internet, and split the revenue with Pixar.

The software and computing grunt needed to power it had traditionally been beyond the budgets of small to mid-tier animation and visual effects firms and one-man operations, Morresey said. "We're empowering any organisation to have access to software and computer power they couldn't normally afford. All the revenue is going to come through us. It absolutely cements our business model. We're going to take Pixar's No1 application to the world."

Weta Digital managing director Joe Letteri has described the RenderMan software as the ultimate creative tool. "It's the easiest and most flexible way to create a picture from an idea".

Morresey said GreenButton would use Microsoft's cloud computing platform Windows Azure to provide processing power for RenderMan customers. [Emphasis added.]

The Wellington firm was last month named the global winner of the Windows Azure independent software vendor partner of the year award. It has a "Global Alliance" with the software giant that will see the two work together to make Azure available to software users around the world through the GreenButton application.

GreenButton investors include the Government's Venture Investment Fund, Movac founder Phil McCaw, and Datacom founder and rich-lister John Holdsworth. Its founder and chief executive is former Weta Digital head of technology Scott Houston and its chairman is Fonterra's former chief information officer Marcel van den Assum. Former Sun Microsystems executive Mark Canepa is also a shareholder and director.

Morresey said the company was talking to firms in data intensive industries, such as the oil and gas, engineering, biological analysis and financial sectors, that could use its application in their software.

GreenButton's application is already embedded in six software products, including Auckland firm Right Hemisphere's Deep Exploration 3-D rendering software, and has more than 4000 registered users in more than 70 countries.


<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

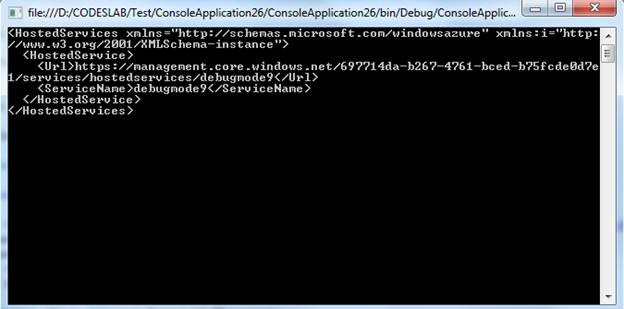

The Microsoft | Volume Licensing site posted Product Use Rights and a Product List for Visual Studio LightSwitch 2010[!] in 8/2011:

Note the incorrect version number in the Product Use Rights and Product List sections. The product was LightSwitch 2010 during the beta period but released as Visual Studio LightSwitch 2011.

•• Michael Washington (@ADefWebserver) described How To Create a Simple Control Extension (Or How To Make LightSwitch Controls You Can Sell) on 8/14/2011:

Even if you are not a Silverlight programmer, LightSwitch is still easy to use; you just need to use LightSwitch Control Extensions. It is important to note the difference between a Silverlight Custom Control and a LightSwitch Control Extension.

Karol Zadora-Przylecki covers the difference in the article “Using Custom Controls to Enhance Your LightSwitch Application UI” (http://blogs.msdn.com/b/lightswitch/archive/2011/01/13/using-custom-controls-to-enhance-lightswitch-application-ui-part-1.aspx).

Essentially, the difference is that a LightSwitch Control Extension is installed into LightSwitch and is meant to be reused across multiple LightSwitch applications, like a built-in LightSwitch control. The downside is that a LightSwitch Control Extension is significantly more difficult and time-consuming to create.

Silverlight Custom Controls are controls created specifically for the LightSwitch application they will be implemented in. They are significantly easier to create.

Control Extensions are harder to create, but they are significantly easier to use. Keep in mind that for full control over the look of the user interface, you will want to use a Silverlight Custom Control, because one disadvantage of Control Extensions is that you can change only the options available in the control's property panel (see: The LightSwitch Control Extension Makers Dilemma). Also note that in this example we will not implement any property panel options for the sample control.

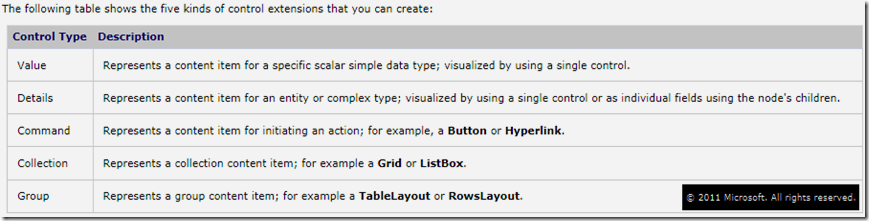

Note that there are five types of Control Extensions you can create. This article demonstrates only the simplest, the Value Control Extension.

For a detailed explanation of the process to create a Value Control Extension, see the article: Walkthrough: Creating a Value Control Extension.

The Process to Use A Custom Control Extension

Let us first look at the process to use a completed LightSwitch Custom Control Extension.

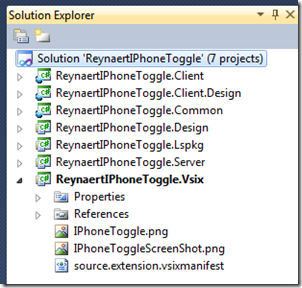

When we create a Custom Control Extension, we get a solution that contains several projects.

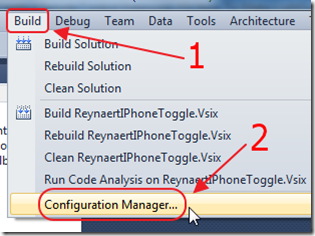

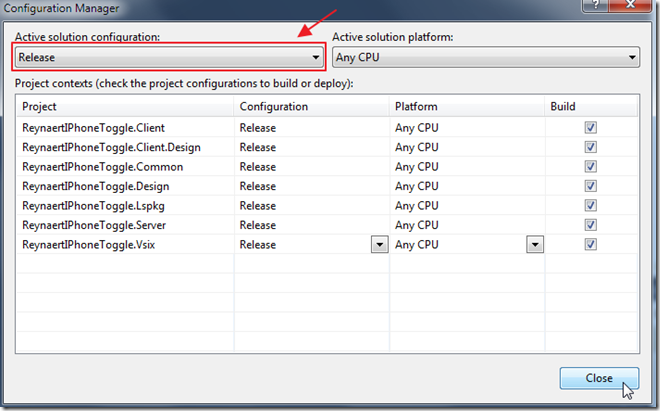

We go into the Configuration Manager of the .Vsix project…

We set the Active Configuration to Release, and we Build the Solution.

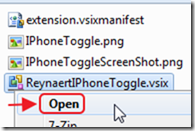

When we look in the ..\ReynaertIPhoneToggle.Vsix\bin\Release folder, we see a .vsix file that we can open…

This allows us to install the extension.

Note: The extension is installed to a subfolder of ..\AppData\Local\Microsoft\VisualStudio\10.0\Extensions. To uninstall an extension, simply delete the folder it is installed in.
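Because uninstalling comes down to deleting a folder, a small script can help you find the right one. This is a hypothetical Python helper that assumes %LOCALAPPDATA% maps to ..\AppData\Local. It also assumes each installed extension's folder contains a `*.vsixmanifest` file, which serves as the marker. Verify both on your own machine before deleting anything.

```python
import os
import tempfile
from pathlib import Path


def default_extensions_root():
    """Per-user extensions folder, assuming %LOCALAPPDATA% is ...\\AppData\\Local."""
    return (Path(os.environ["LOCALAPPDATA"])
            / "Microsoft" / "VisualStudio" / "10.0" / "Extensions")


def find_extension_folders(root):
    """Return each folder under root that holds a *.vsixmanifest file (assumed marker)."""
    return sorted({manifest.parent for manifest in Path(root).rglob("*.vsixmanifest")})


if __name__ == "__main__":
    # Demo against a throwaway tree so nothing real is touched.
    with tempfile.TemporaryDirectory() as tmp:
        ext = Path(tmp) / "SomeAuthor" / "ReynaertIPhoneToggle" / "1.0"
        ext.mkdir(parents=True)
        (ext / "extension.vsixmanifest").write_text("<Vsix />")
        for folder in find_extension_folders(tmp):
            print(folder)
```

Once you have identified the folder, you can delete it in Explorer (or with `shutil.rmtree`). It is safest to do this while Visual Studio is closed.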

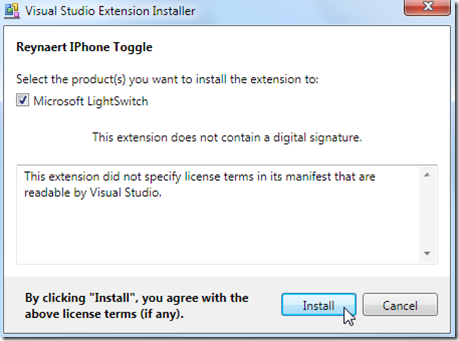

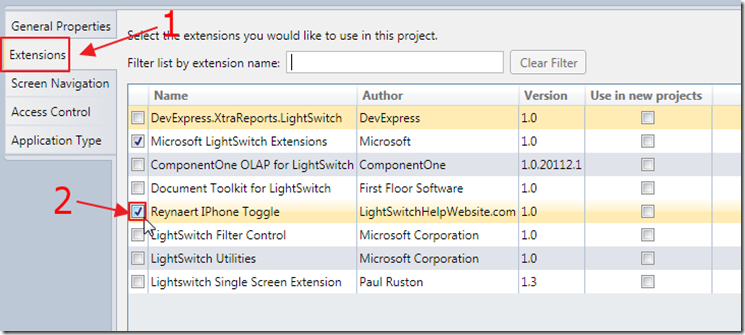

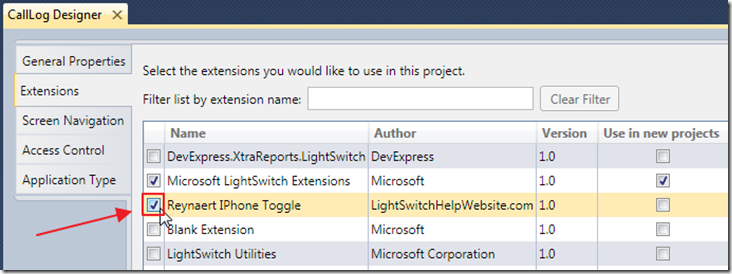

When we go into Properties in our LightSwitch application…

… we can enable the extension by checking the box next to it on the Extensions tab.

This allows us to select the control for any field that it supports on the Screen designer.

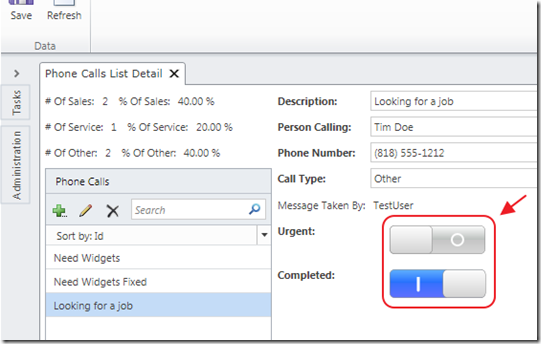

The control shows up when the application is run.

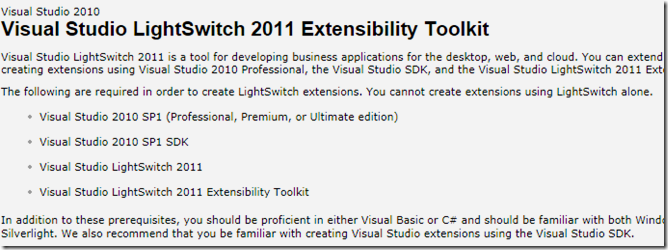

Tools You Need To Create Control Extensions

The first step is to install the LightSwitch Extensibility Toolkit. You will need to make sure you also have the prerequisites. You can get everything at this link: http://msdn.microsoft.com/en-us/library/ee256689.aspx

The Sample Project

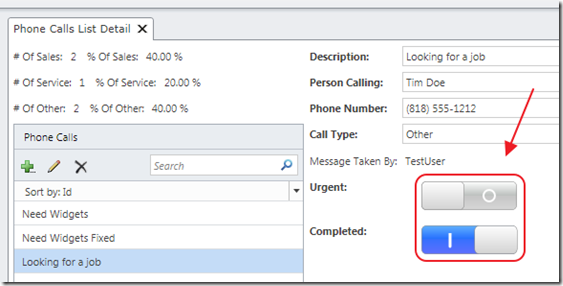

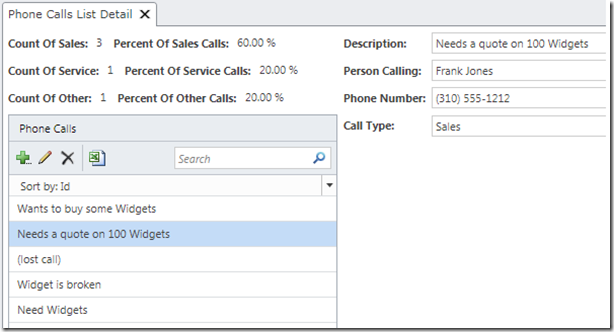

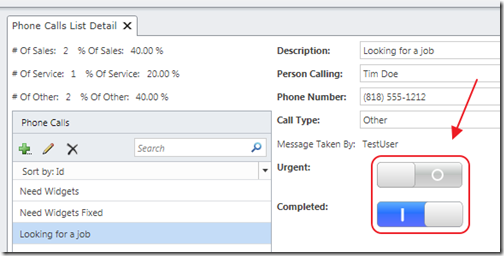

For the sample project, we will start with the application created in It Is Easy To Display Counts And Percentages In LightSwitch. This application allows users to take phone messages.

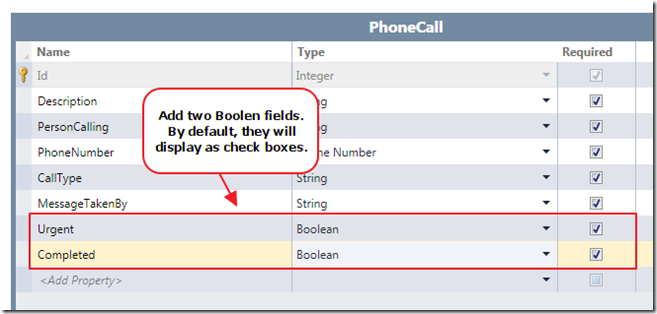

This application only contains one Entity (table) called PhoneCalls. We open the Entity and add two Boolean fields, Urgent and Completed.

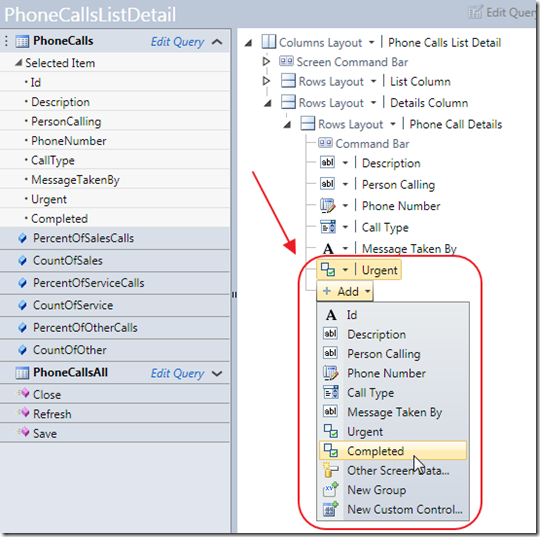

We then add the two fields in the Screen designer.

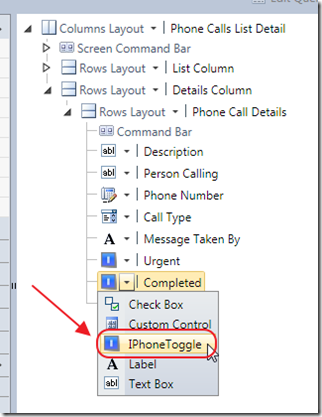

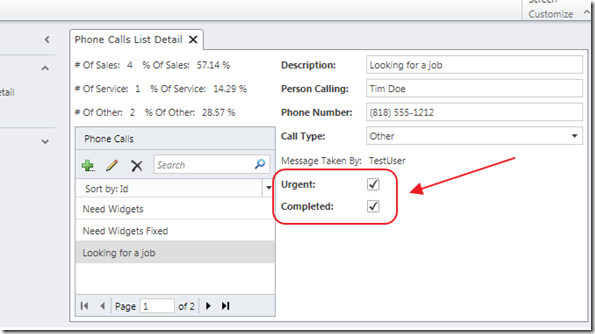

When we run the application, the added fields show up as check boxes.

Check boxes are actually not the best user experience. They are small, so users have to position the mouse carefully on the box and click it precisely to change its value.

What we want is a much larger control that leaves plenty of room for error when changing its value.

Create The LightSwitch Control Extension

Let’s use a Silverlight control that looks good. Alexander Reynaert has created a Silverlight control that resembles an iPhone toggle. You can get the original project here:

http://reynaerta.wordpress.com/2010/12/26/iphone-like-switch-button-in-silverlight/.

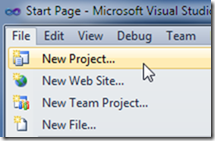

Open Visual Studio and select File then New Project.

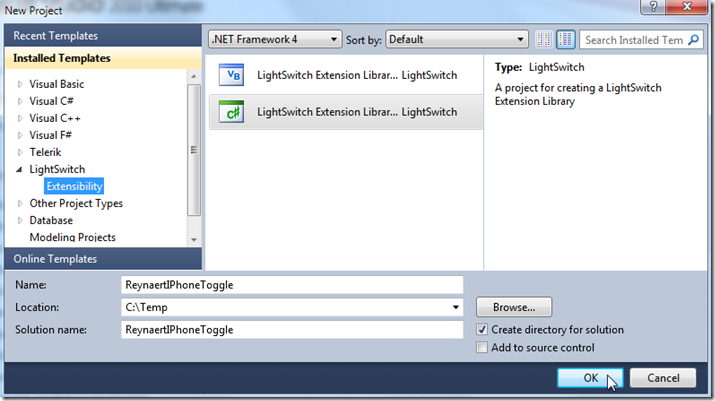

Let’s call the project ReynaertIPhoneToggle.

A number of projects will be created.

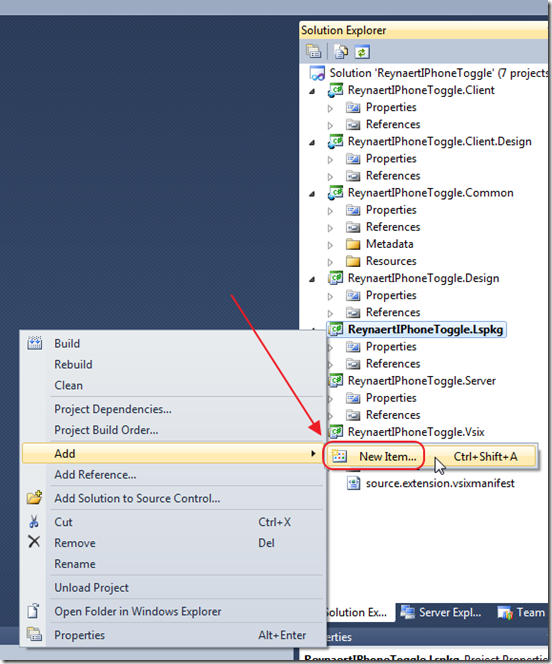

Right-click on the ReynaertIPhoneToggle.Lspkg project and select Add then New Item.

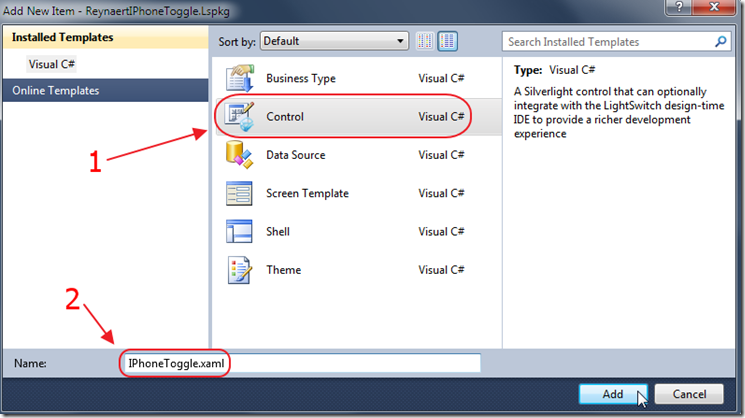

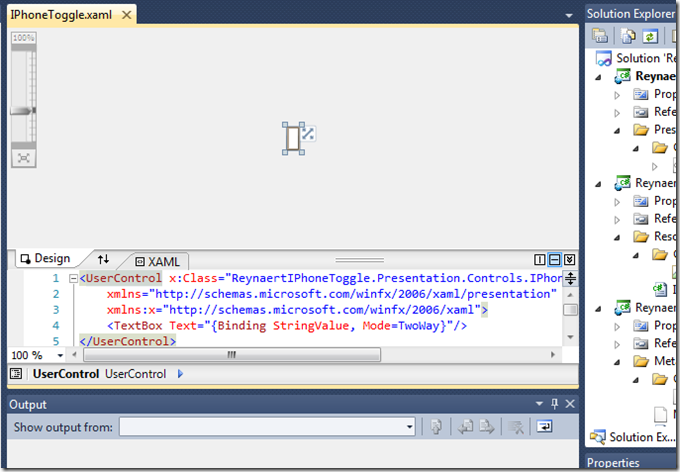

Select Control and name it IPhoneToggle.xaml.

The screen will resemble the image above.

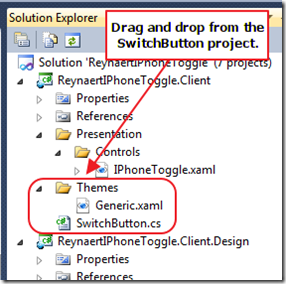

Open the Alexander Reynaert SwitchButton project, then drag the Themes folder (with its Generic.xaml file) and the SwitchButton.cs file into the ReynaertIPhoneToggle.Client project.

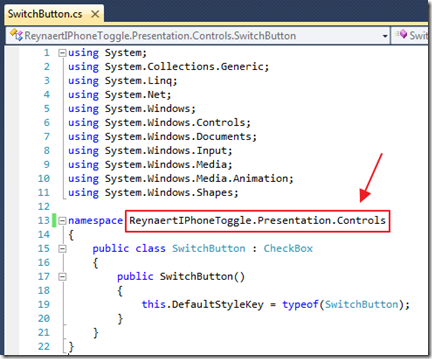

Open the SwitchButton.cs file and change the namespace to ReynaertIPhoneToggle.Presentation.Controls.

Save the file.

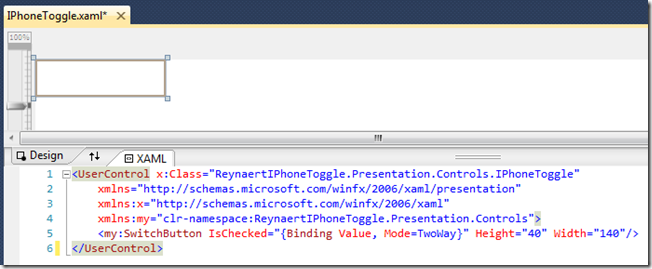

Change the code for the IPhoneToggle.xaml to the following:

    <UserControl x:Class="ReynaertIPhoneToggle.Presentation.Controls.IPhoneToggle"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:my="clr-namespace:ReynaertIPhoneToggle.Presentation.Controls">
        <my:SwitchButton IsChecked="{Binding Value, Mode=TwoWay}" Height="40" Width="140"/>
    </UserControl>

This hosts the SwitchButton control and binds its IsChecked property to Value, which is what LightSwitch binds to. The binding is TwoWay, so LightSwitch can update the control, and changes made in the control update the value stored in LightSwitch. The various forms of binding are covered in Creating Visual Studio LightSwitch Custom Controls (Beginner to Intermediate).

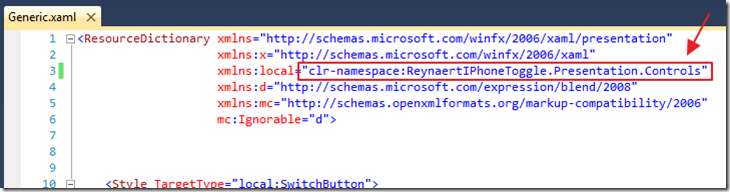

Open the Themes/Generic.xaml file in the ReynaertIPhoneToggle.Client project, and change the “xmlns:local=” line to: "clr-namespace:ReynaertIPhoneToggle.Presentation.Controls".

This is done so that the Generic file that contains the layout for the control is in the same namespace as the control.

Save the file.

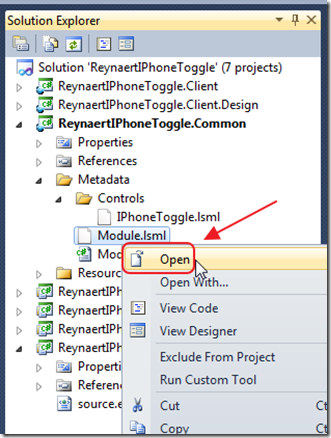

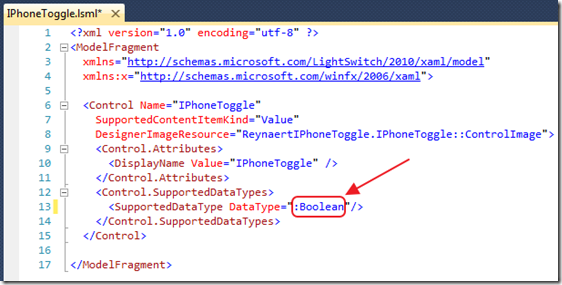

To set the data type that this control will bind to, right-click on the Module.lsml file in the ReynaertIphoneToggle project and select Open.

Set the type as Boolean.

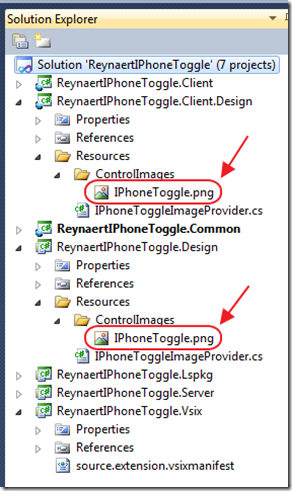

There are IPhoneToggle.png icons that you will want to replace with your own 16x16 images. They are displayed in various menus when the control is used.

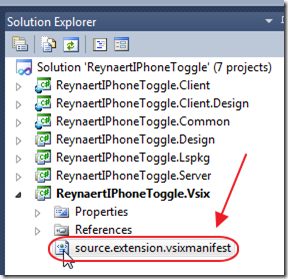

In the Solution Explorer, double-click on the source.extension.vsixmanifest file to open it.

This page allows you to set properties for the control. For more information see: How to: Set VSIX Package Properties and How to: Distribute a LightSwitch Extension.

Test Your Control Extension

Note: All of these values may already be set correctly:

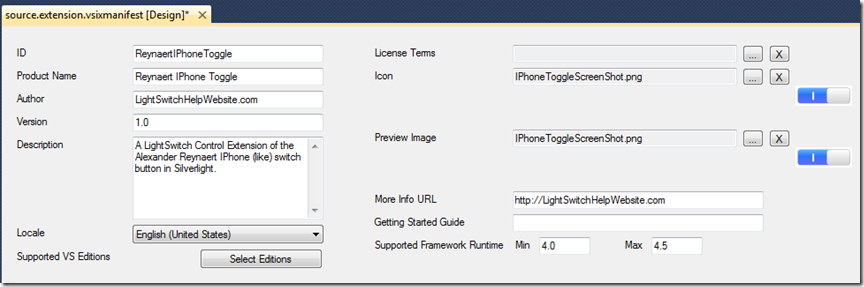

In the Solution Explorer, select the ReynaertIPhoneToggle.Vsix project.

On the menu bar, choose Project, ReynaertIPhoneToggle.Vsix Properties.

On the Debug tab, under Start Action, choose Start external program.

Enter the path of the Visual Studio executable, devenv.exe.

By default on a 32-bit system, the path is C:\Program Files\Microsoft Visual Studio 10.0\Common7\IDE\devenv.exe; on a 64-bit system, it is C:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\devenv.exe.

In the Command line arguments field, type /rootsuffix Exp as the command-line argument.

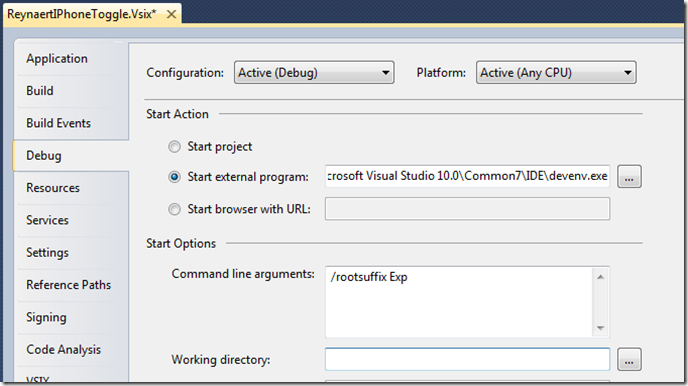

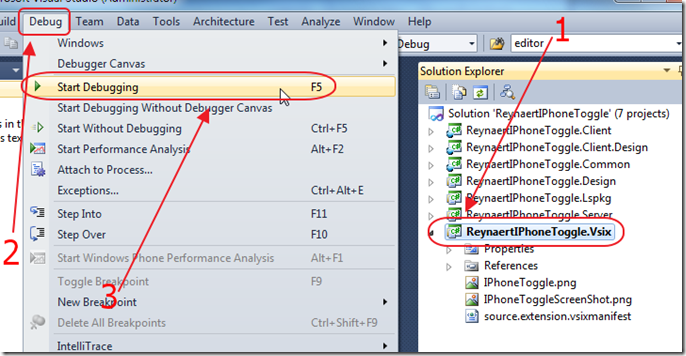

Ensure that the ReynaertIPhoneToggle.Vsix project is selected in the Solution Explorer, then select Debug, then Start Debugging.

This will open another instance of Visual Studio.

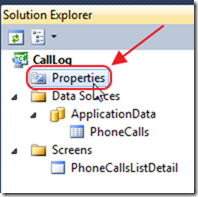

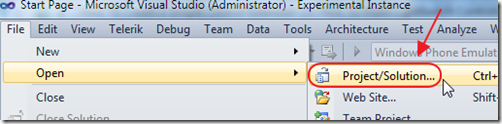

In the new instance, open the CallLog project.

In the Solution Explorer in Visual Studio, double-click on Properties to open properties. Then, select the Extensions tab and check the box next to Reynaert IPhone Toggle.

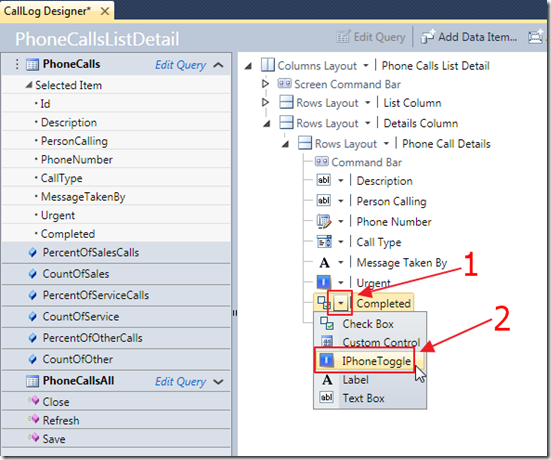

In the Screen designer, change the Completed and Urgent check boxes to use the IPhoneToggle control.

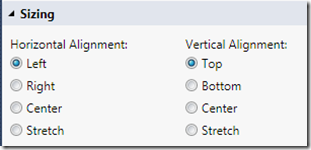

In the Properties for each control, set the Alignment according to the image above.

When you run the application, the toggle controls will display instead of the check boxes. Clicking on them will change their state.

Yes You Can Now Sell Your Control!

Now you have a LightSwitch control that you can easily sell.

Take a look at “My Offer To You” in this article: The Visual Studio LightSwitch Economy.

Download Code

The LightSwitch project is available at http://lightswitchhelpwebsite.com/Downloads.aspx

Also See:

- Walkthrough: Creating a Value Control Extension

- Walkthrough: Creating a Detail Control Extension

- Walkthrough: Creating a Smart Layout Control Extension

- Walkthrough: Creating a Stack Panel Control Extension

- How to: Create a LightSwitch Extension Project

- How to: Debug or Test a LightSwitch Extension

- How to: Set VSIX Package Properties

- How to: Distribute a LightSwitch Extension

•• Infragistics reported on 8/12/2011 a Visual Studio LightSwitch Challenge to be judged on 8/24/2011:

Infragistics, the worldwide leader in user interface / user experience controls and components, is sponsoring a contest to promote the creation of beautiful and useful applications using the Infragistics NetAdvantage for Visual Studio LightSwitch controls! The judging will take place on August 24th at 7pm at the Infragistics office in Sofia.

The rules are simple! The winning entry will be the most attractive and useful LightSwitch application using Infragistics controls! Use your imagination to create something interactive and awesome!

The application must be built using Visual Studio LightSwitch

The application can contain no more than 4 screens

The application must include Infragistics LightSwitch controls in a meaningful scenario using our Map and Charting controls

The prototype must visualize geospatial data and implement a two-way relationship between geographic data and attribute data from the database.

The application must allow different symbology depending on the values of the data (color and/or shape of symbols).

You can use various sources of geographic data (Google Maps, Bing Maps, SQL Spatial, file data such as shapefiles, and others).

The application can use attribute data from any source - database (MS SQL Server, MySQL, Microsoft Access), SharePoint, WCF RIA and others.

Submitted applications, once archived, should be no larger than 10MB.

Send entries to sofiaoffice@infragistics.com

Deadline for receipt of entries: 12:00, August 24, 2011.

Winners must be present to win

Infragistics retains the right to use entries for marketing / advertising purposes and for inclusion as product sample showcases.

Winners will be announced by Jason Beres, VP of Product Management, Community, and Evangelism at Infragistics on August 24th at the Infragistics office. Winners MUST BE PRESENT TO WIN!

Prize Allocation:

1st place prize: 1 Kindle DX with $25.00 gift certificate for books purchase + Infragistics NetAdvantage Ultimate + Icons subscription for 1 year ($3,000 value!)

2nd place prize: Infragistics NetAdvantage Ultimate subscription for 1 year ($1,895 value!)

3rd place prize: All Infragistics Icons packs ($595 value!)

Since we want everyone to be a winner, all entries that are placed in the contest will receive a complimentary NetAdvantage for Visual Studio LightSwitch product!

- When: 24 August 2011, 7PM EET

- Where: Infragistics office in Sofia.

- Address: 110 B, Simeonovsko Shosse Bul., Office Floor II, 1700 Sofia, Bulgaria.

Requiring the winner to be present in Sofia would appear to limit contestants to folks living in Eastern Europe, Greece, or Turkey (zoom out).

•• Michael Ossou (@MikeyDasp) posted a Visual Studio LightSwitch 2011 and You essay on 8/11/2011:

As some of you may know, Microsoft released version 1.0 of Visual Studio LightSwitch 2011. In a nutshell, it's a development tool that allows you to easily build business applications. What does that mean in non-marketing terms? Think of Microsoft Access of old. You could click, drag, tab, and wizard your way into building an application. Only this time, everything is compiled into a Silverlight application for you. Yes, you could customize the application and write code as well, but my question to you is…would you want to?

As an initial test, I downloaded the bits, built a phone book application and deployed it. This whole process took me all of 30 minutes without reading a single article or watching a video. Granted, it’s a silly example, but the point is, with 30 minutes of time invested, I have an application hosted that’s accessible from anywhere, backed by SQL server, and can be used by multiple users at the same time. Doing it a second time around, I could probably do it in 10 minutes. That makes for a pretty compelling story for any development tool.

Looking at the other side of the wall, we don’t live in a world of phone book apps. The more complex the application, the more you have to dig under the abstraction that LightSwitch provides and get your hands dirty, and the more some may question its usefulness. There is a huge grey area in between. How much of that grey area LightSwitch can effectively address will ultimately decide how successful a product it is. And the final judge of that success is you.

So I want to know what you think. Do you plan on hosting a Visual Studio LightSwitch application? Do you have a wish list of applications that you would have liked to have built, but didn’t think they were worthy of building out a full blown web project for? Are you of the opinion that “real developers” build all applications, no matter how trivial, by hand in assembler? Or…do you think the world has gotten too complicated and want to see more stuff that will make life easier?

There is a comments section below. Use it, let me know your thoughts. In fact, feel free to fight it out this time.

•• Mihail Mateev posted Understanding NetAdvantage for Visual Studio LightSwitch – Creating Maps (Part 3) in an 8/5/2011 post to the Infragistics blog:

Infragistics Inc. released the new NetAdvantage for Visual Studio LightSwitch 2011.1. This product includes two map control extensions:

- Infragistics Map Control (value based map)

- Infragistics Geospatial Map (series based map)

Microsoft Visual Studio LightSwitch 2011 uses WCF RIA Services to maintain data. For now, this framework doesn't support spatial data (SQL Server Spatial). That is why the map controls use their own implementation to access spatial data. They also have more settings than the other controls in NetAdvantage for Visual Studio LightSwitch 2011.1, because they must cover different cases when using spatial data.

Visual Studio LightSwitch control extensions fall into three main groups, defined by the type of the bound data:

- Value based controls

- Group based controls

- Collection based controls

This article covers value-based controls, and specifically the Infragistics Map Control.

Value-based controls use scalar values as their data source (that is, the value of a specified property of one entity). They can be used inside collection-based or group-based controls such as List, DataGrid, etc.

The Infragistics Map Control is used to represent spatial objects related to the selected item on the screen. The relation between the selected item and the spatial elements is based on the value of a specified property of the selected object, which should match the Name property of the map elements. How to set the Name property via the DataMapping String is described later in this article.

Sample application.

- The sample application is based on the application used in part 2 of this article.

- The sample database is Northwind. A link can be found in part 1 of this article.

Create a new List and Detail Screen and name it “CustomersMap”.

Change the Customers List to a DataGrid control and leave only three properties in the UI:

- Company Name

- Contact Name

- Contact Title

Add Country a second time in the details column and select “Infragistics Map Control” to represent this value.

Select the map control and press “F4” to see the properties grid.

There are several important properties:

- Map Brushes

- DataMapping String

- Shapefile Projection

- Map Projection

- Map Control SourceUriString

- Show Navigation

- Spatial Data Source

- Use Tile Source

- Highlight Color

- Highlight Selection

- Zoom to Selection

- BingMaps Key

1. Map Brushes set the theme for thematic maps according to the Value mapping in the DataMapping String property.

2. DataMapping String maps four characteristics of the map elements: Name, Value, Caption and ToolTip. Selecting an object is based on a match between the value from the data source and the Name of the map elements. Thematic maps are colored according to the Map Brushes and the Value mapping.

3. Shapefile Projection is the projection the shapefile data is in. The map control provides several of the most popular geographic projections predefined. The default is Spherical Mercator. You can find more about geographic coordinate systems and projections later in this article.

4. Map Projection is the projection used to visualize data in the Infragistics Map Control. All details are the same as for Shapefile Projection. It is possible to have data in one projection and display it in another.

5. Map Control SourceUriString specifies the relative path to the shapefiles from the folder where the client part of the LightSwitch application is generated.

This folder is [LightSwitch Application Project Path]\Bin\[Configuration], where Configuration is Debug, Release or another build configuration.

The relative path has the form [Relative Path]\[Shapefile Name], where Shapefile Name is the name of the file without its extension.

In the sample application, the SourceUriString “ShapeFiles\World\Cntry00” means the relative path is “ShapeFiles\World” and the file name is “Cntry00”.

A shapefile spatial data source is composed of several files with the same name and different extensions. Only two files are required: [shapefile name].shp and [shapefile name].dbf. The first contains the geometry and the second the attribute data. The two files are linked by the record ID in [shapefile name].dbf, so editing the data requires specialized software. The remaining files contain indexes and are optional.

6. Show Navigation shows or hides the navigation panel inside the map control.

7. Spatial Data Source property defines the type of the data source.

Infragistics Map Control supports three spatial data source types:

- Shapefile

- WCF

- Silverlight Enabled WCF

The sample application uses the first one. WCF and Silverlight Enabled WCF provide spatial data as nested collections via WCF services. This post does not cover these two data sources.

8. Use Tile Source includes or excludes raster data from the Bing Maps service. To use it, you need a Bing Maps developer key. You can get a key from here.

9. Highlight Color specifies the color of the highlighted map element.

10. Highlight Selection specifies the map's behavior when the selected element changes. If this property is true (checked), the selected element is highlighted on the map.

11. Zoom to Selection determines whether the map zooms to the area around the selected item.

12. BingMaps Key is used to set the BingMaps Key for tile source.
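To make the SourceUriString rule from item 5 concrete, here is a hypothetical Python helper; `shapefile_paths` and `missing_parts` are illustrative names, not part of the Infragistics API. It resolves the relative path against the client output folder and treats the last segment as the file name without an extension. It then reports which of the two parts the text calls required, .shp and .dbf, are missing.

```python
from pathlib import Path, PureWindowsPath

# The two parts the article says the control requires.
REQUIRED_EXTENSIONS = (".shp", ".dbf")


def shapefile_paths(client_bin_dir, source_uri_string):
    r"""Resolve a SourceUriString such as ShapeFiles\World\Cntry00.

    client_bin_dir is the client output folder, [Project]\Bin\[Configuration].
    The last path segment is the shapefile name without an extension.
    """
    rel = PureWindowsPath(source_uri_string)
    folder = Path(client_bin_dir).joinpath(*rel.parts[:-1])
    return {ext: folder / (rel.name + ext) for ext in REQUIRED_EXTENSIONS}


def missing_parts(client_bin_dir, source_uri_string):
    """List the required extensions whose files do not exist."""
    paths = shapefile_paths(client_bin_dir, source_uri_string)
    return [ext for ext, path in paths.items() if not path.exists()]


if __name__ == "__main__":
    # Placeholder output folder for a LightSwitch project built in Debug.
    bin_dir = Path("MyLightSwitchApp") / "Bin" / "Debug"
    print(missing_parts(bin_dir, r"ShapeFiles\World\Cntry00"))
```

Building the file name as `name + extension` (rather than replacing a suffix) keeps names that contain dots intact.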

Shapefile structure
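The article does not describe the binary layout of the files. As a sketch grounded in ESRI's published Shapefile Technical Description (not in the Infragistics control's own reader), the fixed 100-byte header at the start of a .shp file can be decoded with Python's struct module. Note that the header mixes big-endian and little-endian fields.

```python
import struct

# Shape type codes from the ESRI Shapefile Technical Description.
SHAPE_TYPES = {
    0: "Null", 1: "Point", 3: "PolyLine", 5: "Polygon", 8: "MultiPoint",
    11: "PointZ", 13: "PolyLineZ", 15: "PolygonZ", 18: "MultiPointZ",
    21: "PointM", 23: "PolyLineM", 25: "PolygonM", 28: "MultiPointM",
    31: "MultiPatch",
}

def parse_shp_header(data: bytes) -> dict:
    """Decode the fixed 100-byte header at the start of a .shp file."""
    if len(data) < 100:
        raise ValueError("a shapefile header is 100 bytes long")
    # Bytes 0-27 are big-endian: file code (9994), five unused ints, file length.
    file_code, *_unused, length_words = struct.unpack(">7i", data[:28])
    if file_code != 9994:
        raise ValueError(f"not a shapefile (file code {file_code})")
    # Bytes 28-99 are little-endian: version, shape type, then eight doubles
    # (Xmin, Ymin, Xmax, Ymax, Zmin, Zmax, Mmin, Mmax).
    version, shape_type, *bounds = struct.unpack("<2i8d", data[28:100])
    return {
        "file_length_bytes": length_words * 2,  # stored as a count of 16-bit words
        "version": version,                     # always 1000
        "shape_type": SHAPE_TYPES.get(shape_type, f"unknown ({shape_type})"),
        "bbox": tuple(bounds[:4]),              # Xmin, Ymin, Xmax, Ymax
    }
```

Passing the first 100 bytes of ShapeFiles\World\Cntry00.shp to parse_shp_header would report that layer's shape type and bounding box.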

Geographic Coordinate Systems and Projections

Geographic Coordinate Systems

The most common locational reference system is the spherical coordinate system measured in latitude and longitude. This system can be used to identify point locations anywhere on the earth's surface. Because of its ability to reference locations, the spherical coordinate system is usually referred to as the Geographic Coordinate System, also known as the Global Reference System.

Longitude and latitude are angles measured from the earth's center to a point on the earth's surface. Longitude is measured east and west, while latitude is measured north and south. Longitude lines, also called meridians, stretch between the north and south poles. Latitude lines, also called parallels, encircle the globe with parallel rings.

Latitude and longitude are traditionally measured in degrees, minutes, and seconds (DMS). Longitude values range from 0° at the Prime Meridian (the meridian that passes through Greenwich, England) to 180° when traveling east and from 0° to –180° when traveling west from the Prime Meridian. Latitude values range from 0° at the equator to 90° at the North Pole and –90° at the South Pole.

Geographic Projections

Because it is difficult to make measurements in spherical coordinates, geographic data is projected into planar coordinate systems (often called Cartesian coordinate systems). On a flat surface, locations are identified by x,y coordinates on a grid, with the origin at the center of the grid. Each position has two values that reference it to that central location; one specifies its horizontal position and the other its vertical position. These two values are called the x coordinate and the y coordinate.
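To make both ideas concrete, the sketch below converts a DMS angle to decimal degrees (west and south as negative, matching the sign convention above) and then projects longitude/latitude onto a plane. It uses the spherical (Web) Mercator projection, the one behind Bing Maps tiles, purely as an example; the article does not say which projection the sample shapefiles use, and many other projections exist. The origin (0, 0) falls where the Prime Meridian crosses the equator, at the center of the grid.

```python
import math

EARTH_RADIUS_M = 6378137.0       # WGS 84 semi-major axis, used by Web Mercator
MAX_LATITUDE = 85.05112878       # Bing Maps clips latitude to +/- this value

def dms_to_decimal(degrees: int, minutes: int, seconds: float, negative: bool = False) -> float:
    """Convert degrees/minutes/seconds to decimal degrees; west or south is negative."""
    value = abs(degrees) + minutes / 60 + seconds / 3600
    return -value if negative else value

def to_web_mercator(lon_deg: float, lat_deg: float) -> tuple:
    """Project longitude/latitude (degrees) onto a plane; returns (x, y) in metres."""
    lat_deg = max(-MAX_LATITUDE, min(MAX_LATITUDE, lat_deg))  # poles would map to infinity
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y

lat = dms_to_decimal(51, 28, 38)   # 51° 28' 38" N
print(round(lat, 6))               # 51.477222
print(to_web_mercator(0.0, 0.0))   # origin: Prime Meridian on the equator
```

Longitude maps linearly to x, while y grows ever faster toward the poles, which is why Mercator maps exaggerate areas at high latitudes.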

You can find more information about shapefiles here.

Spatial data in shapefiles can be viewed only with specialized software. One popular solution is the free MapWindow GIS.

Another popular solution is ESRI ArcGIS.

You can create, view, and edit spatial data, including shapefiles, with MapWindow GIS Desktop.

Change the properties in accordance with the screen below:

- Map Control SourceUriString: “ShapeFiles\World\Cntry00”

- Show Navigation: checked

Run the application and change the selected item.

Design in runtime mode

Select Customize Screen and change these properties:

- Map Brushes: Grey Blue Magenta Red Orange

- Highlight Color: Yellow

- Use Tile Source: checked

- Zoom to Selection: unchecked

The changes are reflected in the design screen.

Save the changes and go back to the “Customers Map” screen.

Change the selected customer. Now the map will not zoom to the area around the selected element.

You can find the sample application, including the shapefiles, here: IgLighrSwitchDemo_Part3.zip

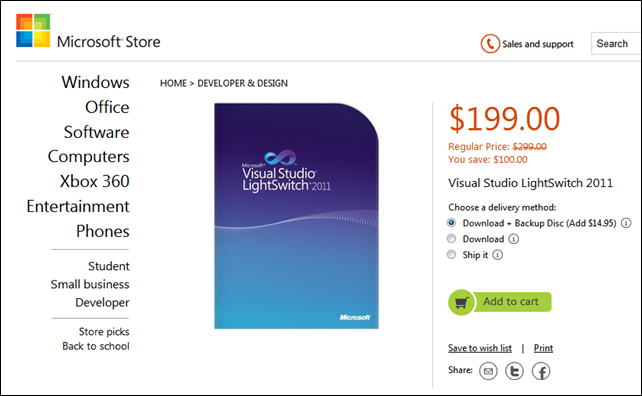

The Microsoft Store offered a $100 discount on orders for standalone Visual Studio LightSwitch 2011 as of 8/2011:

According to Microsoft, this introductory offer is valid through January 31, 2012.

- Visual Studio LightSwitch 2011 RTM Training Kit is available for download here.

- Visual Studio LightSwitch 2011 Extensibility Toolkit is available for download here.

- Visual Studio LightSwitch 2011 RTM Trial is available for download here.

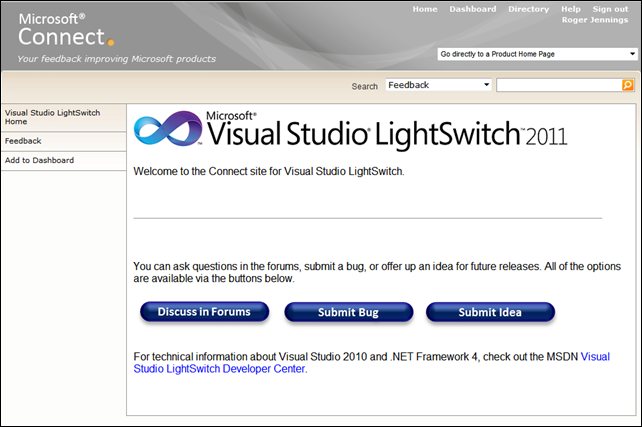

The Visual Studio LightSwitch Team (@VSLightSwitch) added a new Microsoft Connect Site for VS LightSwitch 2011 on 5/11/2011 (missed when published):

Sarah McDevitt described Enums in the Entity Designer v4.1 (June 2011 CTP) in an 8/8/2011 post to the ADO.NET blog:

One of the most highly-requested features for the Entity Framework is first-class support for Enums, and we are happy to provide support for this feature in the Entity Designer in the June 2011 CTP recently released. If you haven’t checked it out already, find the bits here:

http://www.microsoft.com/download/en/details.aspx?displaylang=en&id=26660

We’d like to give you a look at the experience of developing with Enum types in the Entity Designer. If you haven’t yet, take a look at what is going on under the hood with Enums in the Entity Framework in the blog post here:

http://blogs.msdn.com/b/efdesign/archive/2011/06/29/enumeration-support-in-entity-framework.aspx

and you can follow an example of using Enums in the Walkthrough here:

http://blogs.msdn.com/b/adonet/archive/2011/06/30/walkthrough-enums-june-ctp.aspx

While the Walkthrough will take you briefly through the Entity Designer Enums experience, we want to make sure we show what is available. We’d also like you to use this as a way to give direct feedback to the Entity Designer team on the user experience of working with Enums. We are already working on improvements to the experience shipped in the CTP, so please let us know what you think!

Experience

Similar to how Complex Types are represented in the Entity Designer, the primary location to work with Enums is via the Model Browser.

There are two major points to note about using Enums in your Entity Data Model:

1. Enum Types are not supported as shapes on the Entity Designer diagram surface

2. Enum Types are not created in your model via Database-First actions

a. When you create an EDM from an existing database, Enums are not defined in your model.

b. Update Model from Database will preserve your declaration of Enum types, but again, will not detect Enum constructs from your database.

Please let us know your feedback on the importance of these items. For example, how valuable is it to you to be able to convert reference entity types imported from Database-First into enum types?

Enum Type Dialog

Name: Name of Enum Type

Underlying Type: Valid underlying types for enum types are Int16, Int32, Int64, Byte, and SByte.

IsFlags: When checked, denotes that this enum type is used as a bit field, and applies the Flags attribute to the definition of the Enum Type.

Member Name and Value (optional): Type or edit the members for the enum type and define optional values. Values must adhere to the underlying type of the enum.
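IsFlags corresponds to the .NET Flags attribute, which marks an enum as a bit field. The idea is easiest to see in a small example. The sketch uses Python's enum.IntFlag as an analogue of a .NET [Flags] enum, and the FilePermissions type is hypothetical. Each member occupies its own bit, so members combine with bitwise OR and can be tested individually.

```python
from enum import IntFlag

class FilePermissions(IntFlag):
    """Hypothetical bit-field enum: each member is a distinct power of two."""
    NONE = 0
    READ = 1      # bit 0
    WRITE = 2     # bit 1
    EXECUTE = 4   # bit 2

# Combine members with bitwise OR; the stored value is the sum of the set bits.
read_write = FilePermissions.READ | FilePermissions.WRITE
print(int(read_write))                        # 3
print(FilePermissions.WRITE in read_write)    # True
print(FilePermissions.EXECUTE in read_write)  # False
```

A stored value such as 3 can be read unambiguously as READ plus WRITE only because the members do not share bits, which is why bit-field enums usually assign powers of two as member values.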

Errors: Errors with your Enum Type definition will appear in the dialog in red, accompanied by a tooltip explaining what is invalid. Common errors are:

- Not a valid enum member value: A Value entered that is invalid according to the underlying type

- Member is duplicated: Multiple Members entered with the same name

- Not a valid name for an enum member: A Member name entered with unsupported characters

Errors must be fixed before the Enum Type can be created or updated. We do have known issues with our error UI that we are fixing as I write! Feedback is more than welcome as to what is most helpful to you in the dialog.
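The three error checks above can be sketched as a small validator. It is hypothetical and not the designer's code: the value ranges come from the .NET integral types listed under Underlying Type, and Python's str.isidentifier() stands in for the designer's own naming rules, which may differ (for example, in which characters or keywords they accept).

```python
# Value ranges of the .NET integral types the dialog accepts as Underlying Type.
UNDERLYING_RANGES = {
    "Byte": (0, 2**8 - 1),
    "SByte": (-2**7, 2**7 - 1),
    "Int16": (-2**15, 2**15 - 1),
    "Int32": (-2**31, 2**31 - 1),
    "Int64": (-2**63, 2**63 - 1),
}

def validate_enum(underlying_type, members):
    """Check (name, value) pairs; value is None when left blank. Returns error messages."""
    low, high = UNDERLYING_RANGES[underlying_type]
    errors, seen = [], set()
    for name, value in members:
        if not name.isidentifier():   # approximation of the designer's naming rules
            errors.append(f"{name!r}: Not a valid name for an enum member")
        if name in seen:
            errors.append(f"{name!r}: Member is duplicated")
        seen.add(name)
        if value is not None and not low <= value <= high:
            errors.append(f"{name!r}: Not a valid enum member value")
    return errors
```

For example, validate_enum("Byte", [("Red", 256)]) reports an invalid value, because 256 does not fit in a Byte.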

Entry Points

Create new Enum Type and Edit existing Enum Type:

Context Menu on Enum Types folder or existing Enum node in Model Browser

Convert Property to Enum:

Context menu on a Property in an Entity Type

Converting a property to an Enum type will create a new Enum Type. To use an existing Enum Type for a property, select the property and you will find existing Enum Types available in the Property Window in the Type drop down.

Use existing Enum Type:

Type in Property Window

Feedback

As always, please let us know what is most valuable to you for using Enum Types in the Entity Designer. What would help you further about the existing support in CTP1? What would you need to see for Enum support in the Designer for it to be the most valuable to your development?

Feedback is always appreciated!

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

No significant articles today.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

•• O’Reilly Media (@strataconf) posted on 8/11/2011 the Strata Conference New York 2011 Schedule for 9/22 - 9/23/2011 at the New York Hilton:

At our first Strata Conference in February [in Santa Clara, CA], the sold-out crowd of developers, analysts, researchers, and entrepreneurs realized that they were converging on a new profession—data scientist. Since then, demand has skyrocketed for data scientists who are proficient in the fast-moving constellation of technologies for gleaning insight and utility from big data.

“A significant constraint on realizing value from big data will be a shortage of talent, particularly of people with deep expertise in statistics and machine learning, and the managers and analysts who know how to operate companies by using insights from big data.”

[from the] McKinsey Global Institute report, "Big data: The next frontier for innovation, competition, and productivity," May 2011

Strata Conference covers the latest and best tools and technologies for this new discipline, along the entire data supply chain—from gathering, cleaning, analyzing, and storing data to communicating data intelligence effectively. With hardcore technical sessions on parallel computing, machine learning, and interactive visualizations; case studies from finance, media, healthcare, and technology; and provocative reports from the leading edge, Strata Conference showcases the people, tools, and technologies that make data work.

Strata Conference is for developers, data scientists, data analysts, and other data professionals.

Strata Conference Topics and Program

Thank you to everyone who submitted a speaking proposal.

We will be posting the program schedule soon. The topics and themes you can expect to see include:

- Data: Distributed data processing, Real time data processing and analytics, Crowdsourcing, Data acquisition and cleaning, Data distribution and market, Data science best practice, Analytics, Machine learning, Cloud platforms and infrastructure

- Business: From research to product, Data protection, privacy and policy, Becoming a data-driven organization, Training, recruitment, and management for data, Changing role of business intelligence

- Interfaces: Dashboards, Mobile strategy, applications & futures, Visualization and design principles, Augmented reality and immersive interfaces, Connectivity and wireless

Important Dates

- Early Registration ends August 23, 2011

- Strata Conference is September 22-23, 2011

Additional Strata Events

Strata Jumpstart: September 19, 2011

A crash course for managers, strategists, and entrepreneurs on how to manage the data deluge that's transforming traditional business practices across the board: in finance, marketing, sales, legal, privacy/security, operations, and HR. Learn More.

Strata Summit: September 20-21, 2011

Two days on the essential high-level strategies for thriving in "the harsh light of data," delivered by the battle-tested business and technology pioneers who are leading the way. Learn More.

Jeff Price of Terrace Software will present How to Design Cloud Software Using Five Unique Capabilities in Azure on 8/23/2011 9:00 AM PDT at Microsoft’s Mountain View, CA Campus:

Please join Terrace Software for a technical seminar that will explore real-world cases of Windows Azure development and deployment using an Agile approach. We will present several common Azure implementation scenarios and discuss how to implement quick, effective resolutions.

This seminar is intended to save developers days or weeks of development time. We will explore some of the following scenarios of Azure development:

- Designing for Scalability, including caching best practices, sessions and when to use them, Blobs for session storage, and session clean-up approaches.

- Web Roles, including Dynamic File System interaction, Logging Event handling strategies, and the reasons for full package deployments.

- Migrating Legacy Applications to Azure, including asynchronous design, queues, multiple data stores, integrations and OData, and security.

- Certificates, Certificates, Certificates, how to manage them across multiple physical and virtual machines.

- VM Roles, including how surprisingly useful they are, scale out considerations and persistence.

Seminar Title: How to Design Cloud Software Using Five Unique Capabilities in Azure

Seminar Date, Time and Location: Tuesday, August 23, 2011, 9:00 a.m. - 11:30 a.m. (Registration at 8:30 a.m.), Microsoft, 1065 La Avenida Street, Mountain View, CA

Link for Registration and Details: Click to Register

<Return to section navigation list>

Other Cloud Computing Platforms and Services

•• Greg Orzell described Netflix’s DevOps program in his Building with Legos article of 8/13/2011:

In the six years that I have been involved in building and releasing software here at Netflix, the process has evolved and improved significantly. When I started, we would build a WAR, get it set up and tested on a production host, and then run a script that would stop Tomcat on the host being pushed to, rsync the directory structure, and then start Tomcat again. Each host would be manually pushed to using this process, and even with very few hosts this took quite some time and a lot of human interaction (potential for mistakes).

Our next iteration was an improvement in automation, but not really in architecture. We created a web-based tool that would handle the process of stopping and starting things as well as copying into place and extracting the new code. This meant that people could push to a number of servers at once just by selecting check boxes. The tests to make sure that the servers were back up before proceeding could also be automated and have failsafes in the tool.
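The article does not show the tool's code. As a hypothetical sketch of the idea it describes (push to several servers, confirm each one is back up before proceeding, and stop on failure), the loop below takes the deployment and health-check steps as plain functions; push_release, deploy, is_healthy, and max_failures are illustrative names, not Netflix's.

```python
from typing import Callable, List, Sequence, Tuple

def push_release(
    hosts: Sequence[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    max_failures: int = 0,
) -> Tuple[List[str], List[str]]:
    """Push a build to hosts one by one, halting once too many fail their health check."""
    pushed: List[str] = []
    failed: List[str] = []
    for host in hosts:
        deploy(host)              # e.g. stop Tomcat, copy and extract the new code, start Tomcat
        if is_healthy(host):      # confirm the server is back up before moving on
            pushed.append(host)
        else:
            failed.append(host)
            if len(failed) > max_failures:
                break             # failsafe: stop before touching more servers
    return pushed, failed
```

In a real tool, deploy would run the remote commands and is_healthy would poll the application; keeping them as callables lets the control flow be exercised without any servers.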