Links to Resources for my “Microsoft's, Google's big data [analytics] plans give IT an edge” Article

My (@rogerjenn) Microsoft's, Google's big data [analytics] plans give IT an edge article of 8/8/2011 for TechTarget’s SearchCloudComputing.com blog contains many linked and unlinked references to other resources for big data, HPC Pack, LINQ to HPC, Project “Daytona”, Excel DataScope, and Google Fusion Tables. From the article’s Microsoft content:

CIOs are looking to pull actionable information from ultra-large data sets, or big data. But big data can mean big bucks for many companies. Public cloud providers could make the big data dream more of a reality.

“[Researchers] want to manipulate and share data in the cloud.”

Roger Barga, architect and group lead, XCG's Cloud Computing Futures (CCF) team

Big data, which is measured in terabytes or petabytes, often consists of Web server logs, product sales [information], and social network and messaging data. Of the 3,000 CIOs interviewed in IBM's Essential CIO study [1], 83% listed an investment in business analytics as the top priority. And according to Gartner, by 2015, companies that have adopted big data and extreme information management will begin to outperform unprepared competitors by 20% in every available financial metric [3].

Budgeting for big data storage and the computational resources that advanced analytic methods require [2], however, isn't so easy in today's anemic economy. Many CIOs are turning to public cloud computing providers to deliver on-demand, elastic infrastructure platforms and Software as a Service. When referring to the companies' search engines, data center investments and cloud computing knowledge, Steve Ballmer said, "Nobody plays in big data, really, except Microsoft and Google." [4]

Microsoft's HPC Pack, LINQ to HPC, Project "Daytona" and the forthcoming Excel DataScope were designed explicitly to make big data analytics in Windows Azure accessible to researchers and everyday business analysts. Google's Fusion Tables aren't set up to process big data in the cloud yet, but the app is very easy to use, which likely will increase its popularity. Now seems to be the time to prepare for extreme data management in the enterprise so you can outperform your "unprepared competitors."

LINQ to HPC marks Microsoft's investment in big data

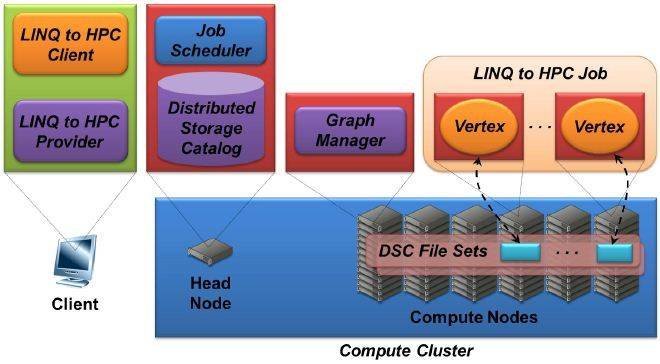

Microsoft has dipped its toe in the big data syndication market with Windows Azure Marketplace DataMarket [5]. However, the company's major investments in cloud-based big data analytics are beginning to emerge as revenue-producing software and services candidates. For example, in June 2011, Microsoft's High Performance Computing (HPC) team released Beta 2 of HPC Pack for Windows HPC Server 2008 clusters and LINQ to HPC R2 SP2 [8].

Bing search analytics use HPC Pack and LINQ to HPC, which were called Dryad and DryadLINQ, respectively, during several years of development at Microsoft Research [8]. LINQ to HPC is used to analyze unstructured big data stored in file sets that are defined by a Distributed Storage Catalog (DSC). By default, three DSC file replicas are installed on separate machines running HPC Server 2008 with HPC Pack R2 SP2 in multiple compute nodes [9]. LINQ to HPC applications, or jobs, process DSC file sets. According to David Chappell, principal, Chappell & Associates, the components of LINQ to HPC are "data-intensive computing with Windows HPC Server" combined with on-premises hardware (Figure 1) [6].

Figure 1. In an on-premises installation, the components of LINQ to HPC are spread across the client workstation, the cluster's head node and its compute nodes.

The LINQ to HPC client contains a .NET C# or VB project that executes LINQ queries, which a LINQ to HPC Provider then sends to the head node's Job Scheduler. LINQ to HPC uses the directed acyclic graph data model. A graph database [7] is a document database that uses relations as documents. The Job Scheduler then creates a Graph Manager that manipulates the graphs.
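To make the graph idea concrete, here is a minimal Python sketch (not LINQ to HPC code) of a job expressed as a directed acyclic graph of stages and walked in dependency order. The stage names and the `run_job` helper are hypothetical, chosen only for illustration; actual LINQ to HPC jobs are written in C# or VB and scheduled on the cluster.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical job graph: each stage maps to the set of stages it depends on.
job_graph = {
    "read_logs": set(),
    "filter_errors": {"read_logs"},
    "count_by_host": {"filter_errors"},
    "count_by_hour": {"filter_errors"},
    "merge_report": {"count_by_host", "count_by_hour"},
}

def run_job(graph):
    """Return the stages in an order that respects every dependency."""
    order = []
    for stage in TopologicalSorter(graph).static_order():
        order.append(stage)  # a real graph manager would dispatch work here
    return order

execution_order = run_job(job_graph)
```

Because the graph has no cycles, a valid order always exists, and the two counting stages could run at the same time since neither depends on the other.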

One major benefit of the LINQ to HPC architecture is that it enables .NET developers to easily write jobs that execute in parallel across many compute nodes, a situation commonly called an "embarrassingly parallel" workload.
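An "embarrassingly parallel" workload can be sketched in a few lines of Python. This is an illustration only, assuming log data that is already split into partitions; local threads stand in for compute nodes, and the function names are hypothetical rather than part of any Microsoft API.

```python
from concurrent.futures import ThreadPoolExecutor

def count_errors(partition):
    """Process one partition independently; no data is shared with other workers."""
    return sum(1 for line in partition if "ERROR" in line)

def parallel_error_count(partitions, workers=4):
    """Fan partitions out to workers, then merge the per-partition results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_errors, partitions))

# Hypothetical log data, already split into partitions (one per compute node).
partitions = [
    ["GET /a 200", "GET /b ERROR"],
    ["POST /c ERROR", "GET /d ERROR"],
    ["GET /e 200"],
]
total = parallel_error_count(partitions)
```

The workload parallelizes easily because each partition is processed without reference to any other; the only coordination is the final sum.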

Microsoft recently folded the HPC business into the Server and Cloud group and increased its emphasis on running HPC in Windows Azure. Service Pack 2 allows you to run compute nodes as Windows Azure virtual machines (VMs) [9]. The most common configuration is a hybrid cloud mode called the "burst scenario" where the head node resides in an on-premises data center and a number of compute nodes run as Windows Azure VMs -- depending on the load -- with file sets stored on Windows Azure drives. In LINQ to HPC, customers can perform data-intensive computing with the LINQ programming model on Windows HPC Server.

Will "Daytona" and Excel DataScope simplify development?

The eXtreme Computing Group (XCG), an organization in Microsoft Research (MSR) established to push the boundaries of computing as a part of the group's Cloud Research Engagement Initiative, released the "Daytona" platform as a Community Technology Preview (CTP) in July 2011 [10]. The group refreshed the project later that month.

Daytona is a MapReduce runtime for Windows Azure that competes with Amazon Web Services' Elastic MapReduce [11], the Apache Software Foundation's Hadoop MapReduce [12], MapR's Apache Hadoop distribution [13] and Cloudera Enterprise Hadoop [14]. A major advantage of "Daytona" is that it's easy to deploy to Windows Azure. The CTP includes a basic deployment package with pre-built .NET MapReduce libraries and host source code, C# code and sample data for k-means clustering [15] and outlier detection analysis [16], as well as complete documentation.
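For readers unfamiliar with the pattern, the following Python sketch shows how one iteration of k-means clustering fits the MapReduce model. It is not Daytona code and does not use Daytona's .NET libraries; the function names and sample points are assumptions made for illustration.

```python
from collections import defaultdict

def map_point(point, centroids):
    """Map: emit (index of nearest centroid, point)."""
    distances = [sum((p - c) ** 2 for p, c in zip(point, centroid))
                 for centroid in centroids]
    return distances.index(min(distances)), point

def reduce_cluster(points):
    """Reduce: average the points assigned to one centroid."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def kmeans_step(points, centroids):
    """Run one map -> shuffle -> reduce pass and return updated centroids."""
    groups = defaultdict(list)               # shuffle: group values by key
    for point in points:
        key, value = map_point(point, centroids)
        groups[key].append(value)
    # Keep a centroid unchanged if no point was assigned to it.
    return [reduce_cluster(groups[i]) if i in groups else centroids[i]
            for i in range(len(centroids))]

# Hypothetical 2-D sample data and starting centroids.
points = [(1.0, 1.0), (1.0, 3.0), (9.0, 9.0), (11.0, 9.0)]
centroids = [(0.0, 0.0), (10.0, 10.0)]
new_centroids = kmeans_step(points, centroids)
```

In a distributed runtime the map calls would run on many workers and the reduce calls would run once per cluster; repeating `kmeans_step` until the centroids stop moving gives the full algorithm.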

"Daytona has a very simple, easy-to-use programming interface for developers to write machine-learning and data-analytics algorithms," said Roger Barga, an architect and group lead on the XCG's Cloud Computing Futures (CCF) team. "[Developers] don't have to know too much about distributed computing or how they're going to spread the computation out, and they don't need to know the specifics of Windows Azure."

Barga said in a telephone interview that the Daytona CTP will be updated in eight-week sprints. This interval parallels the update schedule for Windows Azure CTP during the later stages of its preview in 2010. Plans for the next Daytona CTP update include a RESTful API and performance improvements. In fall 2011, you can expect an upgrade to the MapReduce engine that will enable the addition of stream processing to traditional batch processing capabilities [17]. Barga also said the team is considering an open-source "Daytona" release, depending on community interest in contributing to the project.

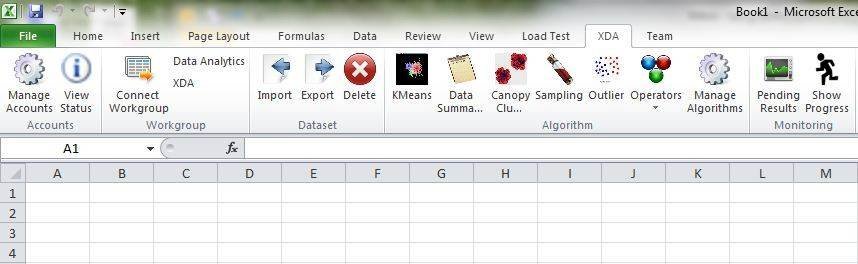

In June 2011, Microsoft Research released Excel DataScope [18, 19], its newest big data analytical and visualization candidate. Excel DataScope lets users upload data, extract patterns from data stored in the cloud, identify hidden associations, discover similarities between datasets and perform time series forecasting using a familiar spreadsheet user interface called the research ribbon (Figure 2).

Figure 2. Excel DataScope's research ribbon lets users access and share computing and storage on Windows Azure.

"Excel presents a closed worldview with access only to the resources on the user's machine. Researchers are a class of programmers who use different models; they want to manipulate and share data in the cloud," explained Barga [20].

"Excel DataScope keeps a session [of] Windows Azure open for uploading and downloading data to a workspace stored in an Azure blob. A workspace is a private sandbox for sharing access to data and analytics algorithms. Users can queue jobs, disconnect from Excel and come back and pick up where they left off; a progress bar tracks status of the analyses." The Silverlight PivotViewer provides Excel DataScope's data visualization feature. Barga expects the first Excel DataScope CTP will drop in fall 2011. …

Read the entire article here.

Articles like the above require considerable research. Here are the references to external resources, listed in the order in which they appeared before final editing:

Klint Finley’s (@Klintron) Microsoft Research Watch: AI, NoSQL and Microsoft's Big Data Future post to the ReadWriteCloud of 3/21/2011 provides the backstory of further-out big data projects at Microsoft Research, such as Probase and Trinity.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.
