Windows Azure and Cloud Computing Posts for 7/14/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform was published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as free HTTP downloads from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry describes how SQL Azure relates to Windows Azure in his SQL Azure and Windows Azure post of 7/14/2010 to the SQL Azure Team blog:

I have been deep diving into SQL Azure by blogging about circular references and connection handling for the last couple of months – which are great topics. However, in an internal meeting with the Windows Azure folks last week, I realized that I hadn’t really talked about what SQL Azure is and how to get started with SQL Azure. So I am going to take a minute to give you my unique perspective on SQL Azure and how it relates to our brethren, Windows Azure.

SQL Azure is a cloud-based relational database service built on SQL Server technologies. That is the simplest sentence that describes SQL Azure. You can find more of a description here.

Windows Azure

SQL Azure is independent from Windows Azure. You don’t need to have a Windows Azure compute instance to use SQL Azure. However, SQL Azure is the best and only place for storing relational data on the Windows Azure Platform. In other words, if you are running Windows Azure, you probably will have a SQL Azure server to hold your data. However, you don’t need to run your application within a Windows Azure account just because you have your data stored in SQL Azure. There are a lot of clients and platforms other than Windows Azure that can make use of SQL Azure, including PowerPivot, WinForms applications (via ADO.NET), JavaScript running in the browser (via OData), Microsoft Access, and SQL Server Reporting Services, to name a few.

Windows Azure Platform Training Kit

You don’t have to download the Windows Azure Platform Training Kit in order to use SQL Azure. The Platform Training Kit is great for learning SQL Azure and getting started; however, it is not required.

Here are some of the highlights from the June 2010 Windows Azure Platform Training Kit:

Hands on Labs

- Introduction to SQL Azure for Visual Studio 2008 Developers

- Introduction to SQL Azure for Visual Studio 2010 Developers

- Migrating Databases to SQL Azure

- SQL Azure: Tips and Tricks

Videos

- What is SQL Azure? (C9 video). You can also view this video here without downloading the Training Kit.

Presentations

- Introduction to SQL Azure (PPTX)

- Building Applications using SQL Azure (PPTX)

- Scaling out with SQL Azure

Demonstrations

- Preparing your SQL Azure Account

- Connecting to SQL Azure

- Managing Logins and Security in SQL Azure

- Creating Objects in SQL Azure

- Migrating a Database Schema to SQL Azure

- Moving Data Into and Out Of SQL Azure using SSIS

- Building a Simple SQL Azure App

- Scaling Out SQL Azure with Database Sharding

Windows Azure SDK

For Windows Azure, there is a development fabric that runs on your desktop to simulate Windows Azure; this is a great tool for Windows Azure development. This development fabric is installed when you download and install the Windows Azure SDK. Installing the SDK is not required for SQL Azure. SQL Server 2008 R2 Express edition is the equivalent of SQL Azure on your desktop. There is no SQL Azure development fabric, nor a simulator for the desktop. This is because SQL Azure is extremely similar to SQL Server, so if you want to test your SQL Azure deployment on your desktop, you can test it in SQL Server Express first. They are not 100% compatible (details here); however, you can work out most of your Transact-SQL queries, database schema design, and unit testing using SQL Server Express.

I personally use SQL Azure for all my development, right after I design my schemas in SQL Server Express edition. It is just as fast for me as SQL Server Express, as long as my queries are not returning a lot of data, i.e., a large number of rows with lots of varbinary(max) or varchar(max) columns.
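The workflow described here (design and test against a local SQL Server Express instance, then point the same code at SQL Azure) usually comes down to swapping a connection string. The following Python sketch builds ADO.NET-style connection strings for either target. The `DbTarget` class, `connection_string` function, and the server, database and login names are hypothetical placeholders; the sketch assumes the SQL Azure conventions of this period: a `tcp:` connection to `<server>.database.windows.net` on port 1433, SQL authentication with a `login@server` user ID, and `Encrypt=True`.

```python
from dataclasses import dataclass


@dataclass
class DbTarget:
    server: str        # local instance name, or the SQL Azure server name (hypothetical)
    database: str
    login: str = ""    # SQL Azure only: SQL authentication login
    password: str = ""


def connection_string(target: DbTarget, use_sql_azure: bool) -> str:
    """Return an ADO.NET-style connection string for local SQL Server Express or SQL Azure."""
    if not use_sql_azure:
        # Local SQL Server Express instance using Windows (integrated) authentication.
        return (f"Server={target.server};Database={target.database};"
                "Integrated Security=True;")
    # SQL Azure: TCP to <server>.database.windows.net on port 1433, SQL authentication
    # with the login@server form, no Windows authentication, and an encrypted connection.
    host = f"tcp:{target.server}.database.windows.net,1433"
    return (f"Server={host};Database={target.database};"
            f"User ID={target.login}@{target.server};Password={target.password};"
            "Trusted_Connection=False;Encrypt=True;")


if __name__ == "__main__":
    # Hypothetical targets: a local Express instance and a SQL Azure server.
    local = DbTarget(server=".\\SQLEXPRESS", database="Inventory")
    cloud = DbTarget(server="myserver", database="Inventory", login="appuser", password="<password>")
    print(connection_string(local, use_sql_azure=False))
    print(connection_string(cloud, use_sql_azure=True))
```

Because only the connection string changes, the Transact-SQL and schema worked out locally can be run against SQL Azure as-is, subject to the compatibility differences noted above.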

Downloads

So if you need neither the Windows Azure Platform Training Kit nor the Windows Azure SDK in order to use SQL Azure, what should you download and install locally? If you are developing on SQL Azure, I recommend SQL Server Management Studio Express 2008 R2. SQL Server Management Studio provides some of the basics for managing your tables, views, and stored procedures, and also helps you visualize your database. If you are using PowerPivot to connect to a “Dallas” or private SQL Azure database, you just need to have PowerPivot installed. The same goes for all the other clients that can connect to SQL Azure; obviously, you have to have them installed.

Purchase

If you are going to store your Windows Azure data in SQL Azure, then there are some good introductory and incentive plans, which can be found here. If you just want to use SQL Azure, then you want to purchase the “Pay as you Go” option from this page. That said, there are always new offers, so things could change after this blog post.

Summary

You can use SQL Azure independently of Windows Azure and there is no mandatory software required for your desktop to use or develop SQL Azure. However, if you are using Windows Azure, SQL Azure is the best storage for your relational data.

M Dingle reports WPC: GCommerce creates cloud-based inventory system using Microsoft SQL Azure to transform the special order process in a post of 7/13/2010 to the TechNet Blogs:

In D.C. this week at WPC, listen to Steve Smith of GCommerce talk about growing market momentum for its Virtual Inventory Cloud (VIC) solution. Built on the Windows Azure platform, VIC enables hundreds of commercial wholesalers and retailers to improve margins and increase customer loyalty through better visibility into parts availability from suppliers.

Read the full release on GCommerce's website.

Chris Pendleton’s Data Connector: SQL Server 2008 Spatial & Bing Maps post of 7/13/2010 to the Bing Community blog notes that the new Bing Data Connector works with SQL Azure’s spatial data types:

For those of you who read my blog, by now I’m sure you are all too aware of SQL Server 2008’s spatial capabilities. If you’re not up to speed with the spatial data support in SQL Server 2008, I suggest you read up on it via the SQL Server site. Now, with the adoption of spatial into SQL Server 2008 there are a lot of questions around Bing Maps rendering support. Since we are one Microsoft I’m sure you heard the announcement of SQL Server 2008 R2 Reporting Services natively supporting Bing Maps.

Okay, so let’s complete the cycle. Now, I want more than just Reporting Services: I want access to all those spatial methods natively built into SQL Server 2008. I want to access the geography and geometry spatial data types for rendering onto the Bing Maps Silverlight Control. Enter the Data Connector. OnTerra has created a simple, open-source way to complete the full cycle of importing data into SQL Server 2008 and rendering it onto Bing Maps as points, lines and polygons. Data Connector is available now on CodePlex, so go get it.

Do you know what this means??? It means with only a few configurations (and basically no coding) you can pull all of your wonderful geo-data out of SQL Server 2008 and render it onto the Bing Maps Platform! You get to tweak the functions for colors and all that jazz; but, holy smokes, this will save you a bunch of time in development. Okay, so using SQL Server 2008 as your database for normal data also gets you free spatial support, and now you have a simple way to visualize and visually analyze all of the information coming out of the database. Did you know that SQL Server 2008 Express (you know, the free desktop version of SQL Server 2008) has spatial support?

Did you know that we launched SQL Server 2008 into Windows Azure calling it SQL Azure? So, if you’re moving to the cloud out of the server farm you have access to all the spatial methods in SQL Server 2008 from the Windows Azure cloud. Any way you want to slice and dice the data we have you covered – desktop, server, cloud; and, Bing Maps with the Data Connector is now ready to easily bring all the SQL Server 2008 data to life without any other software, webware or middleware needed!
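To make the spatial workflow above a little more concrete, the Python sketch below turns latitude/longitude vertices into Well-Known Text (WKT) wrapped in a `geography::STGeomFromText(..., 4326)` call, the kind of value a point, line or polygon column holds before a tool such as the Data Connector renders it on Bing Maps. The helper names (`to_wkt`, `geography_expr`) are hypothetical, and this is not how the Data Connector itself works; the sketch only assumes SQL Server 2008's `geography` type, where WKT lists each vertex as longitude then latitude and polygon rings must be closed.

```python
from typing import List, Tuple

# A vertex as (latitude, longitude) in degrees, the order most people write them.
LatLon = Tuple[float, float]


def _coords(points: List[LatLon]) -> str:
    # WKT lists each vertex as "x y", which for geography means "longitude latitude".
    return ", ".join(f"{lon} {lat}" for lat, lon in points)


def to_wkt(points: List[LatLon], shape: str) -> str:
    """Build WKT for a point, line or polygon from (lat, lon) vertices."""
    if not points:
        raise ValueError("at least one vertex is required")
    if shape == "point":
        if len(points) != 1:
            raise ValueError("a point needs exactly one vertex")
        return f"POINT ({_coords(points)})"
    if shape == "line":
        if len(points) < 2:
            raise ValueError("a line needs at least two vertices")
        return f"LINESTRING ({_coords(points)})"
    if shape == "polygon":
        ring = list(points)
        if ring[0] != ring[-1]:
            ring.append(ring[0])  # close the ring: first and last vertex must match
        if len(ring) < 4:
            raise ValueError("a polygon needs at least three distinct vertices")
        return f"POLYGON (({_coords(ring)}))"
    raise ValueError(f"unknown shape: {shape!r}")


def geography_expr(points: List[LatLon], shape: str, srid: int = 4326) -> str:
    """Wrap the WKT in a T-SQL geography constructor (SRID 4326 = WGS 84)."""
    return f"geography::STGeomFromText('{to_wkt(points, shape)}', {srid})"


if __name__ == "__main__":
    # Hypothetical sample vertex (latitude, longitude).
    print(geography_expr([(47.6, -122.3)], "point"))
```

In real code the WKT string would normally be passed as a query parameter rather than spliced into the statement text.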

Oh, and we have a HUGE Bing Maps booth at the ESRI User Conference going on RIGHT NOW in San Diego. If you’re there, stop by and chat it up with some of the Bing Maps boys. Wish I was there. Also, a reminder that Bing Maps (and OnTerra) are at the Microsoft Worldwide Partner Conference, so if you’re there stop by and talk shop with them too.

AppFabric: Access Control and Service Bus

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Joe Panattieri interviewed Jon Roskill re partners marketing BPOS vs. Windows Azure apps in his Microsoft Channel Chief: Potential BPOS Marketplace? post of 7/14/2010 to the MSPMentor blog:

A few minutes ago, I asked Microsoft Channel Chief Jon Roskill the following question: Can small VARs and MSPs really profit from basic BPOS (Business Productivity Online Suite) applications like SharePoint Online and Exchange Online? Or should those small partners begin to focus more on writing their own targeted cloud applications for Windows Azure? Roskill provided these perspectives.

First, let’s set the stage: VARs and MSPs receive a flat monthly fee for reselling BPOS applications like Exchange Online and SharePoint Online. In stark contrast, Windows Azure is an extendable cloud platform. Partners can use Azure to write and deploy customer applications in the Microsoft cloud. That means partners can create business assets that deliver even more revenue on Azure.

But Roskill offered some caution. He noted that business app stores are quite different from consumer marketplaces like the Apple App Store. Yes, developers can write applications for Azure. But he also sees opportunities for partners to profit from BPOS add-ons.

How? It sounds like Microsoft plans to take some steps to expand BPOS as a platform that VARs and MSPs can use for application extensions. For instance, Roskill mentioned potential workflow opportunities for VARs and MSPs down the road.

Roskill certainly didn’t predict that Microsoft would create a BPOS application marketplace. But the clues he shared suggest BPOS will become more than basic hosted applications. It sounds like Microsoft will find a way for VARs and MSPs to truly add value, rather than simply reselling standard hosted applications.

The press conference with Roskill continues now. Back later with more thoughts.

Watch Jon Roskill’s Vision Keynote video of 7/14/2010 here.

PC Magazine defines an MSP (Managed Service Provider) as follows:

(Managed Service Provider) An organization that manages a customer's computer systems and networks which are either located on the customer's premises or at a third-party datacenter. MSPs offer a variety of service levels from just notifying the customer if a problem occurs to making all necessary repairs itself. MSPs may also be a source for hardware and staff for its customers.

Here’s Joe Panattieri’s promised “more thoughts” about Jon Roskill in a subsequent Microsoft Channel Chief Concedes: I Need to Learn About MSPs post of 7/14/2010:

It was a brief but revealing comment. During a press briefing at Microsoft Worldwide Partner Conference 2010 (WPC10) today, new Microsoft Channel Chief Jon Roskill described his views on VARs, distributors, integrators and other members of the channel partner ecosystem. Then, in an unsolicited comment, he added: “MSP… it’s a piece [of the channel] I need to learn more about.” Rather than being depressed by Roskill’s comment, I came away impressed. Here’s why.

When Roskill entered the press meeting, he and I spoke on the side for a few moments. Roskill mentioned he had read MSPmentor’s open memo to him. Roskill said he agreed with the memo, which called on Microsoft’s channel team to spend more time with MSPs, among other recommendations.

Roskill is a long-time Microsoft veteran but he’s been channel chief for less than a month. He’s drinking from a fire hose — navigating the existing Microsoft Partner program and new announcements here at the conference.

Sticking to the script, Roskill has been reinforcing CEO Steve Ballmer’s core cloud messages to partners — Get in now or get left behind. During today’s press conference, Roskill described why he’s upbeat about BPOS, Azure and the forthcoming Azure Appliance for partners. (I’ll post a video recap, later.)

But Roskill’s actions may wind up speaking louder than his words. During a keynote session on Tuesday, Roskill’s portion of the agenda was hit and miss. On stage under the Tuesday spotlight, he looked like an Olympic skater still getting a feel for the ice.

New Day, New Audience

During the far smaller press conference today (Wednesday), Roskill looked at ease. He even took a few moments to walk the room, shake hands with reporters and introduce himself during brief one-on-one hellos with about 20 members of the media. Some media members may question Microsoft’s channel cloud strategy. But Roskill welcomed the dialog — which is a solid first step as Channel Chief.

No doubt, Roskill still has more work to do. Portions of the media asked — multiple times — why Microsoft won’t let partners manage end-customer billing for BPOS. Each time, Roskill said he’s listening to partner feedback and media inquiries, and Microsoft has adjusted its BPOS strategy from time to time based on that feedback. But ultimately, it sounds like Microsoft thinks it will become too difficult to give each partner so much individual control over billing.

Good Listener?

That said, Roskill is an approachable guy. He’s obviously reading online feedback from the media and from partners. During the 30-minute press conference, he made that 15-second admission about needing to learn more about MSPs.

I respect the fact that he said it, and I look forward to seeing how Microsoft attempts to move forward with MSPs.

Microsoft recently posted a new Why Dynamic Data Center Toolkit? landing page for the Dynamic Data Center Toolkit v2 (DDCTK-H) version for MSBs, which was released in June 2010:

Build Managed Services with the Dynamic Data Center Toolkit for Hosting Providers

Your customers are looking for agile infrastructure delivered from secure, highly available data centers so they can quickly respond to rapid business fluctuations. You can address this need by extending your hosting portfolio with high-value, high-margin services, such as managed hosting, on demand virtualized servers, clustering, and network services.

With the Dynamic Data Center Toolkit for Hosting Providers, you can deliver these services, built on Microsoft® Windows Server® 2008 Hyper-V™ and Microsoft System Center. The toolkit contains guidance to help you establish appropriate service level agreements (SLAs) and create portals that your customers can use to directly provision, manage, and monitor their infrastructure.

In-Depth Resources

The Dynamic Data Center Toolkit enables you to build an ongoing relationship with your customers while you scale your business with these resources:

- Step-by-step instructions and technical best practices to provision and manage a reliable, secure, and scalable data center

- Customizable marketing material you can use to help your customers take advantage of these new solutions

- Sample code and demos to use in your deployment

The MSB landing page offers two screencasts and links to other resources for MSBs. The Microsoft Cloud Computing Infrastructure landing page targets large enterprises.

Josh Greenbaum recommends xRM as an Azure app toolset in his How to Make Money in the Cloud: Microsoft, SAP, the Partner Dilemma and The Tools Solution post of 7/13/2010 to the Enterprise Irregulars blog:

It’s cloud’s illusions I recall, I really don’t know clouds at all…..

One of the primary devils in the details with cloud computing will always be found in the chase for margins, and this is becoming abundantly clear for Microsoft’s market-leading partner ecosystem, gathered this week in Washington, D.C., for their Worldwide Partner Conference. Chief cheerleader Steve Ballmer repeated the standard mantra about how great life will be in the cloud, something I tend to agree with. But missing from Ballmer’s talk was the money quote for Microsoft’s partners: “…..and here’s how, once we’ve stripped the implementation and maintenance revenue from your business, you’ll be able to make a decent margin on your cloud business.”

More than the coolness of the technology, it’s the coolness of the possibility for profit margins that will make or break Microsoft Azure and any other vendor’s cloud or cloud offering. This is hardly just Microsoft’s problem: SAP has grappled with this margin problem as it has moved in fits and starts towards this year’s re-launch of Business ByDesign. And it’s top of mind across the growing legions of ISVs and developers looking at what they can do to capitalize on this tectonic shift in the marketplace.

The trick with chasing cloud margins is that the chase dovetails nicely with a concept I have been touting for a while, the value-added SaaS opportunity. What is obvious from watching Ballmer and his colleagues discuss the future according to Azure is that much of the profit to be gained from moving existing applications and services into the cloud will go to Microsoft. That’s the first-generation SaaS opportunity: save money by flipping existing applications to the cloud and reap the economies of scale inherent in consolidating maintenance, hardware, and support costs. To the owner of the cloud go the spoils.

This is the same issue, by the way, bedeviling SAP’s ByDesign: SAP will run its own ByD cloud services and, in doing so, assuming that the problems with the cost-effectiveness of ByD have been fully resolved, sop up the first-order profits inherent in the economies of scale of the cloud. How SAP’s partners will make the healthy margins they need to be in the game with SAP has been, in retrospect, a bigger problem than the technology issues that stymied ByD’s initial release. And, by the way, thinking that value-added partners (the smart, savvy ones SAP wants to have on board selling ByD) will be happy with a volume business won’t cut it. Smart and savvy won’t be interested in volume, IMO.

But there is an answer to the answer that will appeal to everyone: build net new apps that, in the words of Bob Muglia, president of Microsoft’s server and tools business, weren’t possible before. This act of creation is where the margins will come from. And the trick for partners of any cloud company is how easy the consummation of this act of creation will be.

The easiest way for partners to create this class of apps is for the vendor to offer the highest level of abstraction possible: from a partner/developer standpoint, this means providing, out of the box and in the most consumable manner possible, a broad palette of business-ready services that form the building blocks for net new apps.

Microsoft is starting to do this, and Muglia showed off Dallas, a data set access service that can find publicly and privately available data sets and serve up the data in an Azure application, as an example of what this means. As Azure matures, bits and pieces of Microsoft Dynamics will be available for use in value-added applications, along with value-added services for procurement, credit card banking, and other business services.

This need for value-added apps then raises two important questions for Microsoft and the rest of the market: question one, what basic toolset should be used to get value-added SaaS app development started? And question two: who, as in what kind of developer/partner, is best qualified to build these value-added apps?

I’ll start with the second question first. I have maintained for a while that, when it comes to the Microsoft partner ecosystem, it’s going to be up to the Dynamics partners to build the rich, enterprise-class applications that will help define this value-added cloud opportunity. The main reason for this is that the largest cloud opportunity will lie in vertical, industry-specific applications that require deep enterprise domain knowledge. This is the same class of partner SAP will need for ByD as well.

Certainly there will be opportunities for almost all of the 9 million Microsoft developers out there, especially when it comes to integrating the increasingly complex Microsoft product set (SharePoint, SQL Server, Communications Server, etc.) into the new cloud-based opportunities.

But with more of the plumbing and integration built into Azure, those skills, like the products they are focused on, will become commoditized and begin to wane in importance and value, and therefore limit the ability of these skills to contribute to strong margins.

The skills that will rise like crème to the top will be line of business and industry skills that can serve as the starting point for creating new applications that fill in the still gaping white spaces in enterprise functionality. Those skills are the natural bailiwick of many, but not all, Dynamics partners: Microsoft, like every other channel company, has its share of great partners and not so great partners, depending on the criteria one uses to measure channel partner greatness. Increasingly, in the case of Azure, that greatness will be defined by an ability to create and deliver line of business apps, running in Azure, that meet specific line of business requirements.

The second skillset, really more ancillary than completely orthogonal to the first, involves imagining, and then creating, the apps “that have never been built before.” This may task even the best and brightest of the Dynamics partners, in part because many of these unseen apps will take a network approach to business requirements that isn’t necessarily well-understood in the market. There’s a lot of innovative business thinking that goes into building an app like that, the kind found more often in start-ups than in existing businesses, but either way it will be this class of app that makes Azure really shine.

Back to the toolset question: Microsoft knows no shortage of development tools under Muglia’s bailiwick, but there’s one that comes from his colleague Stephen Elop’s Business Solutions group that is among the best suited for the job. When I asked him directly, Muglia somewhat obliquely acknowledged that this tool would one day be part of the Azure toolset, but it’s clear we disagree about how important that toolset is.

The toolset in question is xRM, the extended CRM development environment that Elop’s Dynamics team has been seeding the market with for a number of years. xRM is nothing short of one of the more exciting ways to develop applications, based on a CRM-like model, that can be run on premises, online today, and on Azure next year. While there are many things one can’t do with xRM, and which therefore require some of Muglia’s Visual Studio tools, Microsoft customers today are building amazingly functional apps in a multitude of industries using xRM.

The beauty of xRM is that development can start at a much higher level of abstraction than is possible with a Visual Studio-like environment. This is due to the fact that it offers up the existing services of Dynamics CRM, from security to workflow to data structures, as development building blocks for the xRM developer. One partner at the conference told me that his team can deliver finished apps more than five times faster with xRM than with Visual Studio, which either means he can put in more features or use up less time or money. Either one looks pretty good to me.

The success of xRM is important not just for Microsoft’s channel partners: the ones who work with xRM fully understand its value in domains such as Azure. xRM also defines a model for solving SAP’s channel dilemma for ByD. The good news for SAP is that ByD will have an xRM-like development environment by year’s end, one that can theoretically tap into a richer palette of processes via ByD than xRM can via Dynamics CRM.

Of course, xRM has had a double head start: it’s widely used in the market, and there are a few thousand partners who know how to deploy it. And when Dynamics CRM 2011 is released (in 2011, duh), xRM developers will have the opportunity of literally throwing a switch and deploying their apps on premises or in the cloud. Thus far, ByD’s SDK only targets on-demand deployments. And there are precious few ByD partners today, and no one has any serious experience building ByD apps.

What’s interesting for the market is that xRM and its ByD equivalent both represent fast-track innovation options that can head straight to the cloud. This ability to innovate on top of the commodity layer that Azure and the like provide is essential to the success of Microsoft’s and SAP’s cloud strategies. Providing partners and developers with a way to actually make money removes an important barrier to entry, and in the process opens up an important new avenue for delivering innovation to customers. Value-added SaaS apps are the future of innovation, and tools like xRM are the way to deliver them. Every cloud has a silver lining: tools like xRM hold the promise of making that lining solid gold.

The Microsoft Case Study Team dropped Software Developers [InterGrid] Offer Quick Processing of Compute-Heavy Tasks with Cloud Services on 7/8/2010:

InterGrid is an on-demand computing provider that offers cost-effective solutions to complex computing problems. While many customers need support for months at a time, InterGrid saw an opportunity in companies that need only occasional bursts of computing power—for instance, to use computer-aided drafting data to render high-quality, three-dimensional images. In response, it developed its GreenButton solution on the Windows Azure platform, which is hosted through Microsoft data centers. Available to software vendors that serve industries such as manufacturing, GreenButton can be embedded into applications to give users the ability to call on the power of cloud computing as needed. By building GreenButton on Windows Azure, InterGrid can deliver a cost-effective, scalable solution and has the opportunity to reach another 100 million industry users with a reliable, trustworthy platform.

Business Situation

The company wanted to give software developers the opportunity to embed on-demand computing options into applications, and it needed a reliable, trustworthy cloud services provider.

Solution

InterGrid developed its GreenButton solution using the Windows Azure platform, giving software users the option to use cloud computing in an on-demand, pay-as-you-go model.

Benefits

- Increases scalability

- Reduces costs for compute-heavy processes

- Delivers reliable solution

Windows Azure Infrastructure

Mary Jo Foley quotes a Microsoft slide from WPC10 in her Microsoft: 'If we don't cannibalize our existing business, others will' post of 7/14/2010 to the All About Microsoft blog:

That’s from a slide deck from one of many Microsoft presentations this week at the company’s Worldwide Partner Conference, where company officials are working to get the 14,000 attendees onboard with Microsoft’s move to the cloud. It’s a pretty realistic take on why Microsoft and its partners need to move, full steam ahead, to slowly but surely lessen their dependence on on-premises software sales.

Outside of individual sessions, however, Microsoft’s messaging from execs like Chief Operating Officer Kevin Turner has been primarily high-level and inspirational.

“We are the undisputed leader in commercial cloud services,” Turner claimed during his July 14 morning keynote. “We are rebooting, re-pivoting, and re-transitioning the whole company to bet on cloud services.”

Turner told partners Microsoft’s revamped charter is to provide “a continuous cloud service for every person and every business.” He described that as a 20-year journey, and said it will be one where partners will be able to find new revenue opportunities. …

Watch Kevin Turner’s Vision Keynote 7/14/2010 video here.

Windows Azure Platform Appliance

Chris Czarnecki claims Private Clouds Should Not Be Ignored in this 7/14/2010 post to Learning Tree’s Perspectives on Cloud Computing blog:

With the publicity surrounding Cloud Computing it is easy to form the opinion that Cloud Computing means using Microsoft Azure, Google App Engine or Amazon EC2. These are public clouds that are shared by many organisations. Related to using a public cloud is the question of security. What often gets missed in these discussions is the fact that Cloud Computing very definitely offers a private cloud option.

Leveraging a private cloud can offer an organisation many advantages, not least of which is a better utilisation of existing on-premise IT resources. The number of products available to support private clouds is growing. For instance Amazon recently launched their Virtual Private Cloud which provides a secure seamless bridge between a company’s existing IT infrastructure and the AWS cloud. Eucalyptus provides a cloud infrastructure for private clouds which in the latest release, includes Windows image hosting, group management, quota management and accounting. This is a truly comprehensive solution for those wishing to run a private cloud.

The latest addition to the private cloud landscape is Microsoft’s launch of the Appliance for building private clouds. Microsoft have developed a private cloud appliance in collaboration with eBay. This product complements Azure by enabling the cloud to be locked down behind firewalls and intrusion detection systems, perfect for handling customer transactions and private data. Interestingly, the private cloud appliance allows Java applications to run as first-class citizens alongside .NET. Microsoft is partnering with HP, Fujitsu and Dell, who will adopt the appliance for their own cloud services.

I am really encouraged by these developments in private cloud offerings, as during consulting and teaching the Learning Tree Cloud Computing course, the concerns of security and data location are offered as barriers to cloud adoption. My argument that private or hybrid clouds offer solutions is being reinforced by the rapidly increasing number of products in this space and their adoption by organisations such as eBay.

Alex Williams is conducting ReadWriteCloud’s Weekly Poll: Will Private Clouds Prevail Or Do Platforms Represent the Future? in this 7/13/2010 post:

The news from Microsoft this week about its private cloud initiative points to an undeniable trend. The concept of the private cloud is here to stay.

This sticks in the craw of many a cloud computing veteran who draws a clear distinction between an Internet environment and an optimized data center.

Amazon CTO Werner Vogels calls the private variety "false clouds." Vogels maintains that you can't add cloud elements to a data center and suddenly have a cloud of your own.

In systems architecture diagrams, servers are represented by boxes and storage by containers. Above it all, the Internet is represented by a cloud. That's how the cloud got its name. Trying to fit the Internet in a data center would be like trying to fit the universe in a small, little house. It's a quantum impossibility.

Our view is more in line with Vogels. In our report on the future of the cloud, Mike Kirkwood wrote that it is the rich platforms that will come to define the cloud. That makes more sense for us as platforms are part of an Internet environment, connected in many ways by open APIs.

But what do you think? Here's our question:

- Will Private Clouds Prevail Or Do Platforms Represent the Future?

- Platforms will prevail as services. They are the true foundation for the organic evolution of the cloud.

- Private clouds are only an intermediary step before going to a public cloud environment.

- Yes, private clouds will rule. The enterprise is too concerned about security to adopt public cloud environments.

- There will be no one cloud environment that prevails. Each has its own value.

- No, private clouds will serve to optimize data centers but they will not be the cloud of choice for the enterprise.

- Private clouds will be the foundation for most companies. They will use public and hybrid clouds but the private variety will be most important.

- Baloney. Private clouds are glorified data centers.

- Other

Go to the ReadWriteCloud and vote!

The concept of the private cloud is perceived as real, no surprise, by the players represented in the vast ecosystem that is the enterprise. You can hear their big sticks rattling as they push for private clouds.

Virtualization is important for data center efficiency. It helps customers make a transition to the public cloud.

But it is not the Internet. Private clouds still mean that the enterprise is hosting its own applications. And for a new generation, that can make it feel a bit like a college graduate of 30 years ago walking into an enterprise dominated by mainframes and green screens.

That's not necessarily a bad thing. A private cloud is just not a modern-oriented vision. To call it cloud computing is just wrong. It's like saying I am going to have my own, private Internet.

Still, we expect the term to be here for the long run, especially as the marketing amps up and "cloud in a box" services start popping up across the landscape.

The only thing we can hope is that these private clouds allow for data to flow into public clouds.

It is starting to feel like the concept of the cloud is at the point where the tide can go either way. Will we turn the cloud into an isolated environment or will the concepts of the Internet prevail? Those are big questions that will have defining consequences.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie describes Exorcising your digital demons in her Out, Damn’d Bot! Out, I Say! post of 7/14/2010 to F5’s DevCentral blog:

Most people are familiar with Shakespeare’s The Tragedy of Macbeth. Of particularly common usage is the famous line uttered repeatedly by Lady Macbeth, “Out, damn’d spot! Out, I say” as she tries to wash imaginary bloodstains from her hands, wracked with the guilt of the many murders of innocent men, women, and children she and her husband have committed.

It might be no surprise to find a similar situation in the datacenter, late at night. With the background of humming servers and cozily blinking lights shedding a soft glow upon the floor, you might hear some of your infosecurity staff roaming the racks and crying out “Out, damn’d bot! Out I say!” as they try to exorcise digital demons from their applications and infrastructure.

Because once those bots get in, they tend to take up a permanent residence. Getting rid of them is harder than you’d think because like Lady Macbeth’s imaginary bloodstains, they just keep coming back – until you address the source.

A RECURRING NIGHTMARE

One of the ways in which a bot can end up in your datacenter wreaking havoc and driving your infosec and ops teams insane is through web application vulnerabilities. These vulnerabilities, in both the underlying language and server software as well as in the web application itself, are generally exploited through XSS (cross-site scripting). While we tend to associate XSS with attempts to corrupt a data source and subsequently use it as a distribution channel for malware, a second use of XSS is to provide the means by which a bot can be loaded onto an internal resource. From there the bot can spread, perform DoS attacks on the local network, be used as a spam bot, or join in a larger bot network as part of a DDoS.

The uses of such deposited bots are myriad and always malevolent.

Getting rid of one is easy. It’s keeping it gone that’s the problem. If it entered via a web application it is imperative that the vulnerability be found and patched. And it can’t wait for days, because the attacker that likely exploited that vulnerability to deploy the bot inside your network is probably coming back. In a few hours. You can remove the one that’s there now, but unless the hole is closed, it’ll be back – sooner rather than later.

According to an Ars Technica report, you may already know this pain as “almost all Fortune 500 companies show Zeus botnet activity”:

Up to 88% of Fortune 500 companies may have been affected by the Zeus trojan, according to research by RSA's FraudAction Anti-Trojan division, part of EMC.

The trojan installs keystroke loggers to steal login credentials to banking, social networking, and e-mail accounts.

This is one of those cases in which when is more important than where the vulnerability is patched initially. Yes, you want to close the hole in the application, but the reality is that it takes far longer to accomplish that than it will take for attackers to redeposit their bot. According to WhiteHat Security’s Fall 2009 Website Security Statistics Report:

a website is 66 percent likely to be vulnerable to an XSS exploit, and it will take an average of 67 days for that vulnerability to be patched.

That’s 67 days in which the ops and infosec guys will be battling with the bot left behind – cleaning it up and waiting for it to show up again. Washing their hands, repeatedly, day and night, in the hopes that the stain will go away.

WHAT CAN YOU DO?

The best answer to keeping staff sane is to employ a security solution that’s just a bit more agile than the patching process. There are several options, after all, that can be implemented nearly immediately to plug the hole and prevent the re-infection of the datacenter while the development team is implementing a more permanent solution.

Virtual patching provides a nearly automated means of plugging vulnerabilities by combining vulnerability assessment services with a web application firewall to virtually patch, in the network, a vulnerability while providing information necessary to development teams to resolve the issue permanently.

Network-side scripting can provide the means by which a vulnerability can be almost immediately addressed manually. This option provides a platform on which vulnerabilities that may be newly discovered and have not yet been identified by vulnerability assessment solutions can be addressed.

A web application firewall can be manually instructed to discover and implement policies to prevent exploitation of vulnerabilities. Such policies generally target specific pages and parameters and allow operations to target protection at specific points of potential exploitation.

All three options can be used together or individually. All three options can be used as permanent solutions or temporary stop-gap measures. All three options are just that, options, that make it possible to address vulnerabilities immediately rather than forcing the organization to continually battle the results of a discovered vulnerability until developers can address it themselves.
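As a rough illustration of the third option, the sketch below shows what a WAF-style policy scoped to specific pages and parameters might look like. It is a minimal sketch under stated assumptions, not how any particular firewall product works: the `VirtualPatch` class, the `POLICIES` list, the `is_request_allowed` function and the `/search` and `/profile` paths are all hypothetical, and the single regular expression is an illustrative XSS signature that a determined attacker could evade.

```python
import re
from dataclasses import dataclass
from urllib.parse import parse_qs, urlsplit


@dataclass(frozen=True)
class VirtualPatch:
    """Hypothetical policy: block suspicious values of one parameter on one page."""
    path: str
    parameter: str
    pattern: re.Pattern


# Illustrative XSS signature only (assumption); real WAF rule sets are far more thorough.
XSS_SIGNATURE = re.compile(r"<\s*script|javascript:|on\w+\s*=", re.IGNORECASE)

# Hypothetical policies, each targeting a specific page and parameter.
POLICIES = [
    VirtualPatch("/search", "q", XSS_SIGNATURE),
    VirtualPatch("/profile", "name", XSS_SIGNATURE),
]


def is_request_allowed(url: str) -> bool:
    """Return False if a targeted parameter on a targeted page matches its signature."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)  # decodes %XX escapes and '+' before matching
    for patch in POLICIES:
        if parts.path != patch.path:
            continue  # the policy only protects the page it names
        for value in params.get(patch.parameter, []):
            if patch.pattern.search(value):
                return False
    return True


if __name__ == "__main__":
    print(is_request_allowed("/search?q=cloud+computing"))
    print(is_request_allowed("/search?q=%3Cscript%3Ealert(1)%3C/script%3E"))
```

Scoping each rule to one page and one parameter mirrors the targeted protection described above. It keeps false positives down on the rest of the site, but it also means unlisted pages get no protection. That is why a rule like this is best treated as a stop-gap that buys time until developers fix the code.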

More important than how is when, and as important as when is that the organization have in place a strategy that includes a tactical solution for protection of exploited vulnerabilities between discovery and resolution. It’s not enough to have a strategy that says “we find vulnerability. we fix. ugh.” That’s a prehistoric attitude that is inflexible and inherently dangerous given the rapid evolution of botnets over the past decade and will definitely not be acceptable in the next one. …

Michael Krigsman posted his Gartner releases cloud computing ‘rights and responsibilities’ analysis to the Enterprise Irregulars blog on 7/14/2010:

Gartner specified “six rights and one responsibility of service customers that will help providers and consumers establish and maintain successful business relationships:”

The right to retain ownership, use and control one’s own data - Service consumers should retain ownership of, and the rights to use, their own data.

The right to service-level agreements that address liabilities, remediation and business outcomes – All computing services – including cloud services – suffer slowdowns and failures. However, cloud services providers seldom commit to recovery times, specify the forms of remediation or spell out the procedures they will follow.

The right to notification and choice about changes that affect the service consumers’ business processes – Every service provider will need to take down its systems, interrupt its services or make other changes in order to increase capacity and otherwise ensure that its infrastructure will serve consumers adequately in the long term. Protecting the consumer’s business processes entails providing advance notification of major upgrades or system changes, and granting the consumer some control over when it makes the switch.

The right to understand the technical limitations or requirements of the service up front – Most service providers do not fully explain their own systems, technical requirements and limitations so that after consumers have committed to a cloud service, they run the risk of not being able to adjust to major changes, at least not without a big investment.

The right to understand the legal requirements of jurisdictions in which the provider operates – If the cloud provider stores or transports the consumer’s data in or through a foreign country, the service consumer becomes subject to laws and regulations it may not know anything about.

The right to know what security processes the provider follows - With cloud computing, security breaches can happen at multiple levels of technology and use. Service consumers must understand the processes a provider uses, so that security at one level (such as the server) does not subvert security at another level (such as the network).

The responsibility to understand and adhere to software license requirements - Providers and consumers must come to an understanding about how the proper use of software licenses will be assured.

Readers interested in this topic should also see enterprise analyst Ray Wang’s Software as a Service (SaaS) Customer’s Bill of Rights. That document describes a set of practices to ensure consumer protections across the entire SaaS lifecycle.

My take. For cloud computing to achieve sustained success and adoption, the industry must find ways to simplify and align expectations between us

Michael is a well-known expert on why IT projects fail and CEO of Asuret, a Brookline, MA consultancy that uses specialized tools to measure and detect potential vulnerabilities in projects, programs, and initiatives. He’s also a popular and prolific blogger, writing the IT Project Failures blog for ZDNet.

The Government Information Security blog offered A CISO's Guide to Application Security white paper from Fortify on 7/14/2010:

Focusing on security features at both the infrastructure and application level isn't enough. Organizations must also consider flaws in their design and implementation. Hackers looking for security flaws within applications often find them, thereby accessing hardware, operating systems and data. These applications are often packed with Social Security numbers, addresses, personal health information, or other sensitive data.

In fact, according to Gartner, 75% of security breaches are now facilitated by applications. The National Institute of Standards and Technology, or NIST, raises that estimate to 92%. And from 2005 to 2007 alone, the U.S. Air Force says application hacks increased from 2% to 33% of the total number of attempts to break into its systems.

To secure your agency's data, your approach must include an examination of the application's inner workings, and the ability to find the exact lines of code that create security vulnerabilities. It then needs to correct those vulnerabilities at the code level. As a CISO, you understand that application security is important. What steps can you take to avoid a security breach?

Read the CISO's Guide to Application Security to learn:

- The significant benefits behind application security

- How to implement a comprehensive prevention strategy against current & future cyberattacks

- 6 quick steps to securing critical applications

<Return to section navigation list>

Cloud Computing Events

The Windows Azure Platform Partner Hub posted Day 2 WPC: Cloud Essentials Pack and Cloud Accelerate Program on 7/14/2010:

The Microsoft Partner Network is trying to make it easier for partners to adopt new cloud technologies and is providing a logo and competency benefits for the partners who are driving successful business by closing deals with Microsoft Online Services, Windows Intune, and the Windows Azure Platform.

Get details on the Cloud Essentials Pack and Cloud Accelerate Program here: www.microsoftcloudpartner.com/

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Liz McMillan asserts “Health information exchange will enable secure web-based access” as a preface to her Verizon Unveils 'Cloud' Solution to Facilitate Sharing of Information post of 7/14/2010:

One of the biggest obstacles to sharing patient information electronically is that health care systems and providers use a wide range of incompatible IT platforms and software to create and store data in various formats. A new service - the Verizon Health Information Exchange - will soon be available via the "cloud" to address this challenge.

The Verizon Health Information Exchange, one of the first services of its kind in the U.S., will consolidate clinical patient data from providers and translate it into a standardized format that can be securely accessed over the Web. Participating exchange providers across communities, states and regions will be able to request patient data via a secure online portal, regardless of the IT systems and specific protocols the providers use. This will enable providers to obtain a more complete view of a patient's health history, no matter where the data is stored.

Having more information at their fingertips will help providers reduce medical errors and duplicative testing, control administrative costs and, ultimately, enhance patient safety and treatment outcomes. With monthly charges based on a provider's patient-record volume, the service is economical because subscribers only pay for what they use.

"By breaking down the digital silos within the U.S. health care delivery system, the Verizon Health Information Exchange will address many of the interoperability barriers that prevent sharing of clinical data between physicians, clinics, hospitals and payers," said Kannan Sreedhar, vice president and global managing director, Verizon Connected Health Care Solutions. "Providing secure access to patient data will enable health care organizations to make a quantum leap forward in the deployment of IT to meet critical business and patient-care issues."

Adoption of health information exchanges is expected to grow as a result of the American Recovery and Reinvestment Act of 2009. As of March, 56 federal grants totaling $548 million have been awarded to states to facilitate and expand the secure electronic movement and use of health information among organizations, using nationally recognized standards.

Strong Security, Comprehensive Service

Because the Verizon Health Information Exchange will be delivered via Verizon's cloud computing platform, health care organizations will be able to use their current IT systems, processes and workflows, without large additional capital expenditures. The service will be ideal for large and small health care providers.

The Verizon Health Information Exchange will use strong identity access management controls to provide security for sensitive patient information. Only authorized users will have access to patient clinical data.

To build out the solution, Verizon will leverage the capabilities of several key technology and service providers - MEDfx, MedVirginia and Oracle - to deliver key features of the service including: clinical dashboard, record locator service, cross-enterprise patient index and secure clinical messaging.

"The ability to dynamically scale technical resources and pay for those used are key benefits of health information exchange platforms hosted in the cloud," said Lynne A. Dunbrack, program director, IDC Health Insights. "Cloud-based platforms will appeal to small to mid-sized organizations looking to shift technology investment from cap-ex to op-ex and to large regional or statewide initiatives that need to establish connectivity with myriad stakeholders with divergent needs and interoperability requirements."

It appears to me that Verizon is entering into competition with existing state HIEs who are or will be the recipients of the $548 million in ARRA grants. Will states be able to use ARRA funds to outsource HIE operations to Verizon?

Nicole Hemsoth reports being caught off-guard in her A Game-Changing Day for Cloud and HPC post of 7/13/2010 to HPC in the Cloud’s Behind the Cloud blog:

On Monday the news was abuzz with word from the Microsoft Partner Conference—tales of its various partnerships to enhance its Azure cloud offering were proliferating, complete with the requisite surprises and some items that dropped off the radar as soon as I heard them given our HPC focus. Monday night, I went to bed comforted by the notion that when I would wake up on Tuesday, for once I would have a predictable amount of news coming directly from the Redmond camp. I wasn’t sure what partnerships they would declare or how they planned on bringing their private cloud in a box offering to real users, but I knew that I would be writing about them in one way or another.

Little did I know that the Tuesday news I was expect[ing] didn’t surface at all and in its place [appeared] this remarkable announcement from Amazon about its HPC-tailored offering, which they (rather clunkily) dubbed “Cluster Compute Instances” --(which is thankfully already being abbreviated CCI).

I almost fell off my chair.

Then I think I started to get a little giddy. Because this means that it’s showtime. Cloud services providers, private and public cloud purveyors—everyone needs to start upping their game from here on out, at least as far as attracting HPC users goes. If I recall, Microsoft at one point noted that HPC was a big part of their market base—a surprise, of course, but it’s clear that this new instance type (that makes it sound so non-newsy, just calling it another new instance type) is set to change things. Exciting stuff, folks.

As you can probably tell from this little stream of consciousness ramble, this release today turned my little world on its head, I must admit. I hate to be too self-referential, but man, I wish I could [go] back now and rewrite some elements of a few articles that have appeared on the site where I directly question the viability of the public cloud for a large number of HPC applications in the near future, including those that invoke the term “MPI”—Actually though, it’s not necessarily me saying these things, it’s a host of interviewees as well. The consensus just seemed to be, Amazon is promising and does work well for some of our applications but once we step beyond that, there are too many performance issues for it to be a viable alternative. Yet. Because almost everyone added that “yet” caveat.

I did not expect to see news like this in the course of this year. I clapped my hands like a little girl when I read the news (which was after I almost fell off my chair). It is exciting because it means big changes in this space from here on out. Everyone will need to step up their game to deliver on a much-given promise of supercomputing for the masses. This means ramped up development from everyone, and what is more exciting than a competitive kick in the behind to get the summer rolling again in high gear on the news-of-progress front?

Microsoft’s news was drowned out today—and I do wonder about the timing of Amazon’s release. Did Amazon really, seriously time this with Redmond’s conference where they’d be breaking big news? Or was the timing of this release a coincidence? This one gets me—and no one has answered my question yet about this from Amazon. What am I thinking though; if I ask a question like that I get a response that is veiled marketing stuff anyway like, “we so firmly believed that the time is now to deliver our product to our customers—just for them. Today. For no other reason than that we’re just super excited.”

We’re going to be talking about this in the coming week. We need to gauge the impact on HPC—both the user and vendor sides, and we also need to get a feel for what possibilities this opens up, especially now that there is a new player on the field who, unlike the boatload of vendors in the space now, already probably has all of our credit card numbers and personal info. How handy.

To review Microsoft’s forthcoming HPC features for Windows Azure, which they announced in mid-May 2010, read Alexander Wolfe’s Microsoft Takes Supercomputing To The Cloud post to the InformationWeek blogs of 5/17/2010:

Buried beneath the bland verbiage announcing Microsoft's Technical Computing Initiative on Monday is some really exciting stuff. As Bill Hilf, Redmond's general manager of technical computing, explained it to me, Microsoft is bringing burst- and cluster-computing capability to its Windows Azure platform. The upshot is that anyone will be able to access HPC in the cloud.

HPC stands for High-Performance Computing. That's the politically correct acronym for what we used to call supercomputing. Microsoft itself has long offered Windows HPC Server as its operating system in support of highly parallel and cluster-computing systems.

The new initiative doesn't focus on Windows HPC Server, per se, which was what I'd been expecting to hear when Microsoft called to corral me for a phone call about the announcement. Instead, it's about enabling users to access compute cycles -- lots of them, as in, HPC-class performance -- via its Azure cloud computing service.

As Microsoft laid it out in an e-mail, there are three specific areas of focus:

- Cloud: Bringing technical computing power to scientists, engineers and analysts through cloud computing to help ensure processing resources are available whenever they are needed—reliably, consistently and quickly. Supercomputing work may emerge as a “killer app” for the cloud.

- Easier, consistent parallel programming: Delivering new tools that will help simplify parallel development from the desktop to the cluster to the cloud.

- Powerful new tools: Developing powerful, easy-to-use technical computing tools that will help speed innovation. This includes working with customers and industry partners on innovative solutions that will bring our technical computing vision to life.

Trust me that this is indeed powerful stuff. As Hilf told me in a brief interview: "We've been doing HPC Server and selling infrastructure and tools into supercomputing, but there's really a much broader opportunity. What we're trying to do is democratize supercomputing, to take a capability that's been available to a fraction of users to the broader scientific computing."

In some sense, what this will do is open up what can be characterized as "supercomputing light" to a very broad group of users. There will be two main classes of customers who take advantage of this HPC-class access. The first will be those who need to augment their available capacity with access to additional, on-demand "burst" compute capacity.

The second group, according to Hilf, "is the broad base of users further down the pyramid. People who will never have a cluster, but may want to have the capability exposed to them in the desktop."

OK, so when you deconstruct this stuff, you have to ask yourself where one draws the line between true HPC and just needing a bunch of additional capacity. If you look at it that way, it's not a stretch to say that perhaps many of the users of this service won't be traditional HPC customers, but rather (as Hilf admitted) users lower down the rung who need a little extra oomph.

OTOH, as Hilf put it: "We have a lot of traditional HPC customers who are looking at the cloud as a cost savings."

Which makes perfect sense. Whether this will make such traditional high-end users more likely to postpone purchase of a new 4P server or cluster in favor of additional cloud capacity is another issue entirely, one which will be interesting to follow in the months to come.

You can read more about Microsoft's Technical Computing Initiative here and here.

Joe Panettieri reported Intel Hybrid Cloud: First Five Partners Confirmed in a 7/13/2010 post to the MSPMentor blog:

There’s been considerable buzz about the Intel Hybrid Cloud in recent days. The project involves an on-premises, pay-as-you-go server that links to various cloud services. During a gathering this evening in Washington, D.C., Intel confirmed at least five of its initial Intel Hybrid Cloud integrated partners. Intel’s efforts arrive as Microsoft confirms its own cloud-enabled version of Windows Small Business Server (SBS), code-named Aurora. Here are the details.

First, a quick background: The Intel Hybrid Cloud is a server designed for managed services providers (MSPs) to deploy on a customer premise. The MSPs can use a range of software to remotely manage the server. And the server can link out to cloud services. Intel is reaching out to MSPs now for the pilot program.

By the end of this year, Intel expects roughly 30 technology companies to plug into the system. Potential partners include security, storage, remote management and monitoring (RMM), and other types of software companies, according to Christopher Graham, Intel’s product marketing engineer for Server CPU Channel Marketing.

Graham and other Intel Hybrid Cloud team members hosted a gathering tonight at the Microsoft Worldwide Partner Conference 2010 (WPC10). It sounds like Graham plans to stay on the road meeting with MSPs that will potentially pilot the system in the next few months.

Getting Started

At least five technology companies are already involved in the project with Intel. They include:

- Astaro, an Internet security specialist focused on network, mail and Web security.

- Level Platforms, promoter of the Managed Workplace RMM platform. Level Platforms also is preloaded on CharTec HaaS servers and HP storage systems for MSPs.

- Lenovo, which launched its first MSP-centric server in April 2010.

- SteelEye Technology Inc., a business continuity and high availability specialist.

- Vembu, a storage company that has attracted more than 2,000 channel partners, according to “Jay” Jayavasanthan, VP of online storage services.

I guess you can consider Microsoft partner No. 6, since Windows Server or Windows Small Business Server (SBS) can be pre-loaded on the Intel Hybrid Cloud solution.

Which additional vendors will jump on the bandwagon? I certainly expect one or two more RMM providers to get involved. But I’m curious to see if N-able — which runs on Linux — will join the party. Intel has been a strong Linux proponent in multiple markets, but so far it sounds like Intel Hybrid Cloud is a Windows-centric server effort…

Market Shifts… And Microsoft

I’m also curious to see how MSPs and small business customers react to Intel Hybrid Cloud. There are those who believe small businesses will gradually — but completely — abandon on-premises servers as more customers shift to clouds.

And Microsoft itself is developing two new versions of Windows Small Business Server — including SBS Aurora, which includes cloud integration for automated backup. I wonder if Microsoft will connect the dots between SBS Aurora and Windows Intune, a remote PC management platform Microsoft is beta testing now.

MSPmentor will be watching both Microsoft and Intel for updates.

<Return to section navigation list>

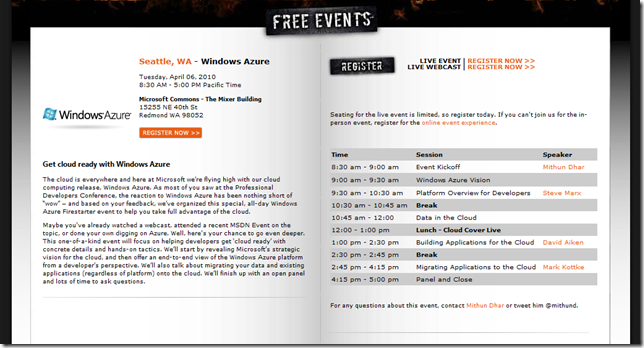

The Windows Azure Firestarter event here in Redmond, WA is coming up in just a few short weeks. You can attend in person or watch the live webcast by registering at


What are the effects of the Oracle acquisition of Sun Microsystems on cloud computing? Well, there have been quite a few if you look at where Sun's best and brightest have moved on to in the past few months.
