Windows Azure and Cloud Computing Posts for 9/1/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: Links to Twitter @handles still fail to populate the page, despite my previous complaint.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Brent Stineman (@BrentCodeMonkey) continued his Year of Azure series with Displaying a List of Blobs via your browser (Year of Azure Week 9) on 9/1/2011:

Sorry folks, you get a short and simple one again this week. And with no planning whatsoever, it continues the theme of the last two items: Azure Storage Blobs.

So in the demo I did last week I showed how to get a list of blobs in a container via the storage client. Well today my inbox received the following message from a former colleague:

Hey Brent, do you know how to get a list of files that are stored in a container in Blob storage? I can’t seem to find any information on that. At least any that works.

Well I pointed out the line of code I used last week, container.ListBlobs(), and he said he was after an approach he’d seen that you could just point a URI at it and have it work. I realized then he was talking about the REST API.

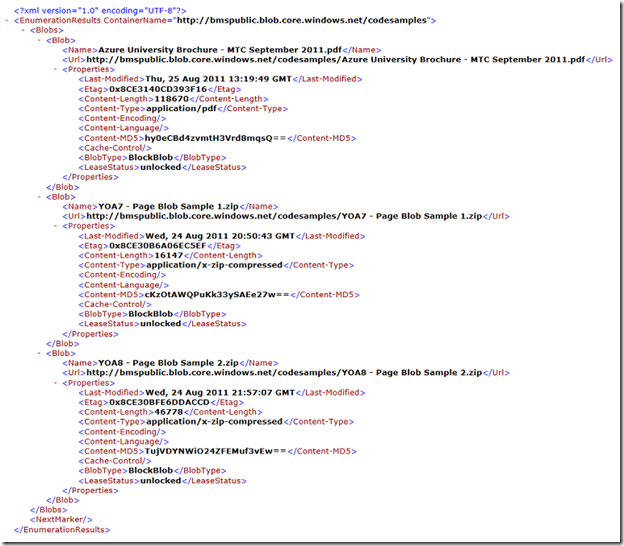

Well, as it turns out, the REST API List Blobs operation just needs a simple GET operation, so we can execute it from any browser. We just need a URI that looks like this:

http://myaccount.blob.core.windows.net/mycontainer?restype=container&comp=list

All you need to do is replace the underlined values (myaccount and mycontainer in the URI above). Well, almost all. If you try this with a browser (which is an anonymous request), you’ll also need to set the container-level access policy to allow full public read access. If you don’t, you may only be allowing public read access for the blobs in the container, in which case a browser request to the URI above will fail.

Now if you’re successful, your browser should display a nice little chunk of XML that you can show off to your friends.

Unfortunately, that’s all I have time for this week. So until next time!
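For readers who would rather script the request than paste it into a browser, here is a minimal Python sketch of the same anonymous List Blobs call. It assumes the container allows full public read access, as described above. The helper names (`list_blobs_uri`, `blob_names`, `fetch_blob_names`) and the sample XML are illustrative only; the sample is trimmed to the blob names, and a real response carries more elements.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode
from urllib.request import urlopen


def list_blobs_uri(account, container):
    """Build the anonymous List Blobs URI for a container."""
    query = urlencode({"restype": "container", "comp": "list"})
    return f"http://{account}.blob.core.windows.net/{container}?{query}"


def blob_names(xml_text):
    """Return the blob names found in a List Blobs XML response."""
    root = ET.fromstring(xml_text)
    return [name.text for name in root.findall("./Blobs/Blob/Name")]


def fetch_blob_names(account, container):
    """Issue the GET request (needs network access and a public container)."""
    with urlopen(list_blobs_uri(account, container)) as response:
        return blob_names(response.read())


# Trimmed, illustrative response body (hypothetical blob names).
SAMPLE_RESPONSE = """
<EnumerationResults ContainerName="http://myaccount.blob.core.windows.net/mycontainer">
  <Blobs>
    <Blob><Name>photo1.jpg</Name></Blob>
    <Blob><Name>photo2.jpg</Name></Blob>
  </Blobs>
</EnumerationResults>
"""

print(list_blobs_uri("myaccount", "mycontainer"))
print(blob_names(SAMPLE_RESPONSE))
```

Parsing is kept separate from the network call so the XML handling can be tried against a saved response without touching a storage account.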

Dhananjay Kumar explained Uploading Stream in AZURE BLOB in a 9/1/2011 post:

In this post I will show you how to upload a stream to an Azure blob.

Set the Connection String as below,

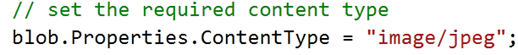

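    using System.IO;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using Microsoft.WindowsAzure.StorageClient;

    public void UploadBlob(Stream s, string fileName, string containerName, string connectionString)
    {
        // connectionString is the name of the configuration setting that holds the connection string
        CloudStorageAccount account = CloudStorageAccount.Parse(
            RoleEnvironment.GetConfigurationSettingValue(connectionString));
        CloudBlobClient blobClient = account.CreateCloudBlobClient();
        CloudBlobContainer container = blobClient.GetContainerReference(containerName);
        CloudBlob blob = container.GetBlobReference(fileName);

        // rewind the stream so the upload starts at the first byte
        s.Seek(0, SeekOrigin.Begin);

        // set the required content type; it is sent along with the upload,
        // so no SetProperties() call is needed before the blob exists
        blob.Properties.ContentType = "image/jpeg";

        BlobRequestOptions options = new BlobRequestOptions();
        options.AccessCondition = AccessCondition.None;
        blob.UploadFromStream(s, options);
    }

You need to set the required content type. If you are uploading a JPEG image, the content type is "image/jpeg", as in the function above.

To use this function you need to pass: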

- Public container name

- BLOB name as filename

- Data connection string
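The function above hard-codes "image/jpeg". If the same upload path handles other file types, the content type could be derived from the blob name instead. The following is only a sketch of that lookup, written in Python for brevity rather than in the post's C#; `content_type_for` and the fallback value are assumptions made for illustration and are not part of the original post.

```python
import mimetypes

# Fallback used when the extension is unknown (an assumption for this sketch).
DEFAULT_CONTENT_TYPE = "application/octet-stream"


def content_type_for(file_name):
    """Guess a content type from the file name, falling back to a generic type."""
    guessed, _encoding = mimetypes.guess_type(file_name)
    return guessed or DEFAULT_CONTENT_TYPE


print(content_type_for("holiday.jpg"))
print(content_type_for("notes.zz-unknown"))
```

The returned string is what would be assigned to the blob's content type before uploading.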

<Return to section navigation list>

SQL Azure Database and Reporting

The SQL Azure Team reported on 9/1/2011 resolving the [SQL Azure Database] [North Central US] [Yellow] SQL Azure Unable to Create Procedure or Table problem originally reported for all SQL Azure data centers on 8/26/2011:

Sep 1 2011 7:50PM Issue is resolved. Problem was found not to be as common as previously believed. Fix is being deployed by SQL Azure.

Aug 26 2011 3:00PM Investigating - Customers attempting to create a Table or a Stored Procedure may notice that the attempt runs for an extended period of time after which you may lose connectivity to your database. Microsoft is aware and is working to resolve this issue. If you are experiencing this issue please contact support at http://www.microsoft.com/windowsazure/support/.

Seems to me the response was a bit slow to arrive.

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Jesus Rodriguez described Cloud wars: Windows Azure AppFabric Caching Service vs. Amazon AWS ElastiCache in a 9/1/2011 post:

A few days ago, Amazon AWS announced the general availability of ElastiCache, a new distributed, in-memory caching service which complements the storage services included in the AWS platform. ElastiCache brings traditional caching capabilities to applications running in the AWS cloud.

Seeing this, you can’t avoid drawing a comparison with existing cloud caching technologies such as the Windows Azure AppFabric Caching Service. Some capabilities of ElastiCache clearly differentiate it from similar technologies, including the Windows Azure AppFabric Caching Service. To mention a few: first, ElastiCache is fully compliant with Memcached, which will facilitate its adoption and interoperability with existing Memcached libraries and tools. Second, ElastiCache is tightly integrated with other AWS technologies, such as Amazon CloudWatch for monitoring and SNS for events and notifications.

The following matrix offers a high-level comparison between ElastiCache and the Windows Azure AppFabric Caching Service.

In my opinion, AWS ElastiCache currently represents a more compelling option if you are looking to enable distributed, in-memory caching capabilities in your cloud applications. As an alternative, remember that you could deploy an on-premises caching technology like Memcached as part of an Azure Worker Role or an AWS EC2 instance.

Richard Seroter (@rseroter) covered Windows Azure AppFabric Applications and BizTalk solutions in an Interview Series: Four Questions With … Ryan CrawCour of 9/1/2011:

The summer is nearly over, but the “Four Questions” machine continues forward. In this 34th interview with a “connected technologies” thought leader, we’re talking with Ryan CrawCour who is a solutions architect, virtual technology specialist for Microsoft in the Windows Azure space, popular speaker and user group organizer.

Q: We’ve seen the recent (CTP) release of the Azure AppFabric Applications tooling. What problem do you think that this is solving, and do you see this as being something that you would use to build composite applications on the Microsoft platform?

A: Personally, I am very excited about the work the AppFabric team, in general, is doing. I have been using the AppFabric Applications CTP since the release and am impressed by just how easy and quick it is to build a composite application from a number of building blocks. Building components on the Windows Azure platform is fairly easy, but tying all the individual pieces together (Azure Compute, SQL Azure, Caching, ACS, Service Bus) is sometimes somewhat of a challenge. This is where AppFabric Applications makes your life so much easier. You can take these individual bits and easily compose an application that you can deploy, manage and monitor as a single logical entity. This is powerful. When you then start looking to include on-premises assets into your distributed applications in a hybrid architecture, AppFabric Applications becomes even more powerful by allowing you to distribute applications between on-premises and the cloud. Wow. It was really amazing when I first saw the Composition Model at work. The tooling, like most Microsoft tools, is brilliant and takes all the guesswork and difficulty out of doing something which is actually quite complex. I definitely see this becoming a weapon in my arsenal. But shhhhh, don’t tell everyone how easy this is to do.

Q: When building BizTalk Server solutions, where do you find the most security-related challenges? Integrating with other line of business systems? Dealing with web services? Something else?

A: Dealing with web services with BizTalk Server is easy. The WCF adapters make BizTalk a first class citizen in the web services world. Whatever you can do with WCF today, you can do with BizTalk Server through the power, flexibility and extensibility of WCF. So no, I don’t see dealing with web services as a challenge. I do however find integrating line of business systems a challenge at times. What most people do is simply create a single service account that has “god” rights in each system and then the middleware layer flows all integration through this single user account which has rights to do anything on either system. This makes troubleshooting and tracking of activity very difficult to do. You also lose the ability to see that user X in your CRM system initiated an invoice in your ERP system. Setting up and using Enterprise Single Sign On is the right way to do this, but I find it a lot of work and the process not very easy to follow the first few times. This is potentially the reason most people skip this and go with the easier option.

Q: The current BizTalk Adapter Pack gives both BizTalk, WF and .NET solutions point-and-click access to SAP, Siebel, Oracle DBs, and SQL Server. What additional adapters would you like to see added to that Pack? How about to the BizTalk-specific collection of adapters?

A: I was saddened to see the discontinuation of adapters for Microsoft Dynamics CRM and AX. I believe that the market is still there for specialized adapters for these systems. Even though they are part of the same product suite they don’t integrate natively and the connector that was recently released is not yet up to Enterprise integration capabilities. We really do need something in the Enterprise space that makes it easy to hook these products together. Sure, I can get at each of these systems through their service layer using WCF and some black magic wizardry but having specific adapters for these products that added value in addition to connectivity would certainly speed up integration.

Q [stupid question]: You just finished up speaking at TechEd New Zealand which means that you now get to eagerly await attendee feedback. Whenever someone writes something, presents or generally puts themselves out there, they look forward to hearing what people thought of it. However, some feedback isn’t particularly welcome. For instance, I’d be creeped out by presentation feedback like “Great session … couldn’t stop staring at your tight pants!” or disheartened by a book review like “I have read German fairy tales with more understandable content, and I don’t speak German.” What would be the worst type of comments that you could get as a result of your TechEd session?

A: Personally I’d be honored that someone took that much interest in my choice of fashion, especially given my discerning taste in clothing. I think something like “Perhaps the presenter should pull up his zipper because being able to read his brand of underwear from the front row is somewhat distracting”. Yup, that would do it. I’d panic wondering if it was laundry day and I had been forced to wear my Sunday (holey) pants. But seriously, feedback on anything I am doing for the community, like presenting at events, is always valuable no matter what. It allows you to improve for the next time.

I half wonder if I enjoy these interviews more than anyone else, but hopefully you all get something good out of them as well!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brian Swan (@brian_swan) described Windows Azure Remote Desktop Connectivity for PHP Applications in a 9/1/2011 post to the Windows Azure’s Silver Lining blog:

If you are someone who is experimenting with PHP on Azure (or even if you have some experience in this area), it is often helpful to have remote access to your running deployments. Remotely accessing deployments can be helpful in debugging problems that might otherwise be very difficult to figure out. In this article, I’ll show you how to configure, deploy, and remotely access a PHP application in Windows Azure.

I’ll assume that you have created a ready-to-deploy Azure/PHP application by following this tutorial: Build and Deploy a Windows Azure PHP Application. (i.e. I’ll assume you have run the scaffolder tool on the default scaffold, you have added your application files to the PhpOnAzure.Web directory, and that you’ve tested your application in the local development environment).

Note: Once you have a ready-to-deploy application, the instructions below apply (it doesn't necessarily have to be a PHP application).

1. Download the encutil.exe. You can download the encutil.exe tool here: http://wastarterkit4java.codeplex.com/releases/view/61026

2. Create a certificate (if you don’t already have one). You can use the encutil.exe to create a self-signed certificate (which is fine for basic testing situations), but using a trusted certificate issued by a certificate authority is the most secure way to go. The following command will create a self-signed certificate in the same directory that the encutil.exe tool is in (you may need to execute this from a command prompt with Administrator privileges):

    c:\>encutil.exe -create -cert "phptestcert.cer" -pfx "phptestcert.pfx" -alias "PhpTestCert" -pwd yourCertificatePassword

You should see two files created: phptestcert.cer and phptestcert.pfx.

3. Get the certificate thumbprint. Execute the following command to get your certificate’s thumbprint:

    c:\>encutil -thumbprint -cert "phptestcert.cer"

This thumbprint (which you will add to your project’s ServiceConfiguration.cscfg file) must correspond to the certificate you upload to Windows Azure in order for your remote access configuration to be valid.

4. Add certificate thumbprint to ServiceConfiguration.cscfg file. To do this, open the ServiceConfiguration.cscfg file in your PhpOnAzure.Web directory and add the following element as a child of the <Role> element (adding your certificate thumbprint):

    <Certificates>
      <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption"
                   thumbprint="your_certificate_thumbprint_here"
                   thumbprintAlgorithm="sha1" />
    </Certificates>

5. Encrypt your remote login password. The following command will encrypt the password you will use to remotely log in to your Windows Azure instance:

    c:\>encutil -encrypt -text YourLoginPassword -cert "phptestcert.cer" >> encryptedPwd.txt

Your encrypted login password will be written to a file (encryptedPwd.txt in the example above) in the same directory that encutil.exe is in.

6. Add remote settings to ServiceConfiguration.cscfg file. Add the elements below as children of the <ConfigurationSettings> element in your ServiceConfiguration.cscfg file. Note that you will need to add values for…

- AccountUsername (the user name you will use to log in remotely)

- AccountEncryptedPassword (from the previous step)

- AccountExpiration (in the format shown)

    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="login_user_name" />
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="your_encrypted_login_password" />
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2011-09-23T23:59:59.0000000-07:00" />
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />

7. Add <Import> elements to ServiceDefinition.csdef file. Add the following elements as children to the <Imports> element in your project’s ServiceDefinition.csdef file.

    <Import moduleName="RemoteAccess" />
    <Import moduleName="RemoteForwarder" />

Your ServiceConfiguration.cscfg and ServiceDefinition.csdef files should now look similar to these:

ServiceConfiguration.cscfg

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceConfiguration serviceName="PhpOnAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="2" osVersion="*">
      <Role name="PhpOnAzure.Web">
        <Instances count="1" />
        <ConfigurationSettings>
          <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="UseDevelopmentStorage=true"/>
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="testuser" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="MIIBnQYJKoZIhvcNAQcDoIIBjjCCAYoCAQAxggFOMIIBSgIBADAyMB4xHDAaBgNVBAMTE1dpbmRvd3MgQXp1cmUgVG9vbHMCEGTrrnxrjs+HQvP1BWHaJGswDQYJKoZIhvcNAQEBBQAEggEAIKtZV4cEog2V9Ktf89s+Km8WBZZ5FPH/TPrc7mQ02lMgn9wblusf5F8xIDQhgzZNRyIPh6wzAeHJ/8jy6i/pBWjcgT1jZ2eHzBxQ78EUpCubuLNYq1WoH6mLQ3Uy9GqCmA3zB67hUOyt7nzjOV8zsYWa8LYF10swWiDIqYKcIbMCACPonSFtY6BEDA/duPoOkcWHytj4FdHLu9yrgscIV/iVT7bJlmbZg7yo8Mb5Svf1DBkLubKHLVaT//0Sj4BUOaqIBpP6l2A5sruNRKBEB9bNX/C8ChlGOWWth5mCDbsg6Qy9njdac9e4KeO4DkGyRbsqMvH9DesneChuudGunDAzBgkqhkiG9w0BBwEwFAYIKoZIhvcNAwcECKskiIAhPkHCgBAQDpUxflSHQCFaHvihEH6y" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2011-09-23T23:59:59.0000000-07:00" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
        </ConfigurationSettings>
        <Certificates>
          <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="7D1755E99A2ECA6B56F8A6D9A4650C65C1B30C72" thumbprintAlgorithm="sha1" />
        </Certificates>
      </Role>
    </ServiceConfiguration>

ServiceDefinition.csdef

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="PhpOnAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WebRole name="PhpOnAzure.Web" enableNativeCodeExecution="true">
        <Sites>
          <Site name="Web" physicalDirectory="./PhpOnAzure.Web">
            <Bindings>
              <Binding name="Endpoint1" endpointName="HttpEndpoint" />
            </Bindings>
          </Site>
        </Sites>
        <Startup>
          <Task commandLine="add-environment-variables.cmd" executionContext="elevated" taskType="simple" />
          <Task commandLine="install-php.cmd" executionContext="elevated" taskType="simple" />
        </Startup>
        <Endpoints>
          <InputEndpoint name="HttpEndpoint" protocol="http" port="80" />
        </Endpoints>
        <Imports>
          <Import moduleName="Diagnostics"/>
          <Import moduleName="RemoteAccess" />
          <Import moduleName="RemoteForwarder" />
        </Imports>
        <ConfigurationSettings>
        </ConfigurationSettings>
      </WebRole>
    </ServiceDefinition>

You are now ready to deploy your application to Windows Azure. Follow the instructions in this tutorial to do so, Deploying your first PHP application to Windows Azure, but be sure to add your certificate when you create the deployment:

Browse to the .pfx file you created in step 2 and supply the password that you specified in that step:

When your deployment is in the Ready state, you can access your running Web role via Remote Desktop by selecting your role in the portal and clicking on Connect (at the top of the page):

Clicking on Connect will download an .rdp file which you can open directly (for immediate remote access) or save and use to gain remote access at any later time.

That’s it! You now have full access to your running Web role. A couple of things to note:

- Your web root directory is E:\approot.

- As mentioned earlier, remote access to running web roles is primarily for debugging purposes. Any changes you make to a running instance will not be propagated to other instances should you spin more of them up.

Happy debugging!
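Because the remote access settings are spread over several elements, it is easy to leave one out before deploying. The Python sketch below checks one role in a ServiceConfiguration.cscfg for the five settings and the certificate entry listed in the steps above. The function name `remote_access_problems` and the placeholder values in the sample are assumptions made for illustration, and the check only covers the single-role layout used in this walkthrough.

```python
import xml.etree.ElementTree as ET

NS = "{http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration}"
PLUGINS = "Microsoft.WindowsAzure.Plugins."
REQUIRED_SETTINGS = (
    PLUGINS + "RemoteAccess.Enabled",
    PLUGINS + "RemoteAccess.AccountUsername",
    PLUGINS + "RemoteAccess.AccountEncryptedPassword",
    PLUGINS + "RemoteAccess.AccountExpiration",
    PLUGINS + "RemoteForwarder.Enabled",
)
CERTIFICATE_NAME = PLUGINS + "RemoteAccess.PasswordEncryption"


def remote_access_problems(cscfg_xml, role_name):
    """List what the named role still lacks for remote access."""
    root = ET.fromstring(cscfg_xml)
    role = next((r for r in root.iter(NS + "Role") if r.get("name") == role_name), None)
    if role is None:
        return [f"role {role_name} not found"]

    # Settings with an empty value count as missing.
    settings = {s.get("name"): s.get("value") for s in role.iter(NS + "Setting")}
    problems = [f"missing setting {name}" for name in REQUIRED_SETTINGS if not settings.get(name)]

    thumbprints = {c.get("name"): c.get("thumbprint") for c in role.iter(NS + "Certificate")}
    if not thumbprints.get(CERTIFICATE_NAME):
        problems.append(f"missing certificate {CERTIFICATE_NAME}")
    return problems


# Placeholder values only; substitute the contents of your own file.
SAMPLE_CSCFG = """
<ServiceConfiguration serviceName="PhpOnAzure"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="PhpOnAzure.Web">
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="login_user_name" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="your_encrypted_login_password" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2011-09-23T23:59:59.0000000-07:00" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
    </ConfigurationSettings>
    <Certificates>
      <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption"
                   thumbprint="your_certificate_thumbprint_here" thumbprintAlgorithm="sha1" />
    </Certificates>
  </Role>
</ServiceConfiguration>
"""

print(remote_access_problems(SAMPLE_CSCFG, "PhpOnAzure.Web"))
```

An empty list means every expected entry is present; it does not confirm that the thumbprint matches the certificate uploaded to Windows Azure.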

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The Visual Studio LightSwitch Team (@VSLightSwitch) reported Update for LightSwitch Released in a 9/1/2011 post:

The LightSwitch team has released a General Distribution Release (GDR) to resolve an issue encountered when publishing to SQL Azure.

In some cases, customers have reported a “SQLServer version not supported” exception when publishing a database to SQL Azure. This is due to a recent SQL Azure server upgrade which caused the version incompatibility. The GDR enables LightSwitch to target the upgraded version. The fix also makes it resilient to future server upgrades.

The GDR and instructions for installing it can be found on the Download Center.

Thank you to all who reported the issue to us. We apologize for any inconvenience.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Michael K. Campbell asserted “Microsoft's Windows Azure marketing is still a bit too cloudy for Michael K. Campbell” as an introduction to his Microsoft Windows Azure: Why I Still Haven't Tried It post of 9/1/2011 to the DevProConnections blog:

Within the next two months I'm hoping to launch a product that requires scalable, redundant, and commoditized hosting for a number of geographically distributed nodes. As of today, I don't think I'll be using Microsoft Windows Azure to host my solution.

Windows Azure and the Cloud

After looking around at various cloud-hosting options for my solution, I've considered the notion of deploying my solution on either a Platform as a Service (PaaS) cloud or going a bit deeper down the "stack" and using an Infrastructure as a Service (IaaS), where I'll be looking for full-blown virtual machine (VM) cloud hosting.

I'm no stranger to the cloud. As I discussed in "Amazon's S3 for ASP.NET Web Developers," I've been actively using it for a number of solutions for myself and clients for years now, and I'm sold on the benefits of being able to easily access scalable, redundant, commodity hardware and services as a quick way to decrease development time.

For some reason, I've yet to actually test an Azure solution. Oh, I've tried to test Azure a few times, but some aspect of the service or sign-up always turns me away without actually setting up an account. So, in an effort to try to be helpful, as was my intent in "A Call to Action on .NET Versioning," I thought I'd share some reasons why.

PaaS and Dumbing Down My Apps

When Windows Azure was first released, I had no qualms about being unimpressed. From my perspective, Microsoft was (once again) late to the game while still acting like they'd invented everything.

Even so, I could see the benefit in Microsoft working on Azure. I was quite hopeful and optimistic that Windows Azure and SQL Azure would eventually bring some big things to SQL Server and the .NET Framework. I still believe those big benefits are on the horizon—just as I feel that Microsoft's focus on the cloud is a great move.

When it comes to deploying my .NET applications to Windows Azure, I start running into problems. In my mind, I have to dumb down my apps to get them to work on Windows Azure or SQL Azure. Ironically, I haven't paid enough attention to Windows Azure to know exactly what kind of changes are required to my sites or apps, but I have paid enough attention to know that I will have to make some changes.

What I do know, however, is that the site I'm directed to from Google is Microsoft's Windows Azure site, which is designed to cater to developers. The sad thing is that the introduction to Azure for developers, Introducing Windows Azure, is a page where I can download a whitepaper that happens to have been written over a year ago.

Since I already feel that one of the biggest pains that I have with .NET is jumping through configuration, deployment, framework versioning, and compatibility hurdles, I just can't compel myself to click on a link that's going to give me year-old information on how I have to do that with handcuffs (Azure limitations) on.

Instead, I'm left wondering why there can't be at least an FAQ that makes it easy for me to get a glimpse of what I need to do to get my apps up and running instead of having to download a white paper.

Azure's PaaS Versus My Need for IaaS

The tiny bit of Googling that I did to determine how hard it would be to deploy a Windows service to an Azure host let me know that while Windows Azure offers both web and worker roles, as the blog post "Windows Service in Azure platform" states, you can't deploy both roles to the same machine or hosting slot.* [Emphasis added.]

More important, that tiny bit of research let me know that Windows Azure doesn't support Windows services. Granted, I'm not at all surprised by the fact that Windows Azure doesn't support services, as they're a special set of operations that are very heavily tied to the OS. So having to modify deployment needs doesn't seem that big of a problem to me if I want to deploy "service-y" solutions.

What did take me aback for a second was the fact that if you need worker and web roles, you'll need two distinct instances of Azure to pull that off. The truth is that this is really just a limitation of how Windows Azure is a PaaS, not an entire piece of the infrastructure.

In fairness, a big part of what continues to keep me from using Azure is that it doesn't quite mesh with my needs. It's only fair that I call that out in an article about why I haven't actually tried Azure for a long time now.

In fact, I don't think I would have even bothered with detailing my own weird misgivings about trying Azure if it weren't for the fact that Azure now offers a Virtual Machine Role, an IaaS offering that does meet my needs and speak my language without the need to dumb anything down.

* An individual Windows Azure compute instance supports multiple (up to 25) Web and Worker roles. See Corey Sanders’ post below.

This article receives my “Non-Sequitur of the Month Award.” If a VM Role meets the author’s needs, why the “Azure's PaaS Versus My Need for IaaS” headline?

Corey Sanders (@CoreySandersWA) reported Number of Roles Allowed In Windows Azure Deployments Increased To 25 in a 9/1/2011 post to the Windows Azure blog:

Windows Azure has increased the maximum number of roles allowed in a deployment from 5 to 25. This change allows customers to deploy up to 25 distinct roles, which can be a mixture of Web Roles, Worker Roles, and Virtual Machine Roles, as part of a single deployment. This increase gives application developers a more granular level of control over the lifecycle of different aspects of their deployment, since each of these 25 roles can be scaled and updated independently.

Additionally, Windows Azure has changed the way in which we account for endpoints. Previously, a deployment was restricted to a maximum of 5 internal endpoints per role. Now, a deployment can have a total of 25 internal endpoints allocated to roles in any combination (including all 25 on the same role).

This aligns with the way input endpoints can be allocated. Windows Azure now supports 25 internal endpoints and 25 input endpoints, allocated in 25 roles in any combination.

Further details on enabling role communication can be found here.
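The new limits are deployment-wide totals rather than per-role caps, which is easy to get wrong when planning a deployment. The Python sketch below encodes the three numbers quoted in the announcement (25 roles, 25 internal endpoints, 25 input endpoints) and reports which of them a planned deployment exceeds. The `Role` class and `limit_violations` function are hypothetical planning helpers, not part of any Windows Azure SDK.

```python
from dataclasses import dataclass

# Limits as stated in the announcement above.
MAX_ROLES = 25
MAX_INTERNAL_ENDPOINTS = 25
MAX_INPUT_ENDPOINTS = 25


@dataclass
class Role:
    name: str
    internal_endpoints: int = 0
    input_endpoints: int = 0


def limit_violations(roles):
    """Return a message for each deployment-wide limit that is exceeded."""
    violations = []
    if len(roles) > MAX_ROLES:
        violations.append(f"{len(roles)} roles exceed the limit of {MAX_ROLES}")

    # Endpoints are counted across the whole deployment, not per role.
    internal = sum(role.internal_endpoints for role in roles)
    if internal > MAX_INTERNAL_ENDPOINTS:
        violations.append(f"{internal} internal endpoints exceed the limit of {MAX_INTERNAL_ENDPOINTS}")

    inputs = sum(role.input_endpoints for role in roles)
    if inputs > MAX_INPUT_ENDPOINTS:
        violations.append(f"{inputs} input endpoints exceed the limit of {MAX_INPUT_ENDPOINTS}")
    return violations


# All 25 internal endpoints on a single role is allowed under the new accounting.
print(limit_violations([Role("Worker", internal_endpoints=25)]))
```

Summing across roles mirrors the "in any combination" wording: one role may use the whole allowance, or it may be spread across many.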

David Linthicum (@DavidLinthicum) recommended “If you plan to implement cloud computing, get your IT assets under control prior to making the move” in a deck to his Shape up before heading to the cloud article for InfoWorld’s Cloud Computing blog:

Enterprises looking to implement cloud computing typically have numerous issues that they hope the move to the cloud will magically solve. It's no surprise that many of today's IT shops face a huge number of negatives: overly complex enterprise architecture, out-of-control data sprawl, and a huge backlog of app dev work that's not being met, typically in an environment of diminishing budgets.

Newer technology approaches like cloud computing have the potential to make things better. However, it's clear to me that cloud computing won't save you unless you get your own IT house in order first. I see this pattern over and over.

The problem comes when enterprises try to push data, processes, and applications outside the firewall without regard for their states. Thus, they end up placing poorly defined, poorly designed, and poorly developed IT assets into public and private clouds only to find that not much changes other than where the assets are hosted.

Sorry to be the buzzkill here. To make cloud computing work for your enterprise, you need to drive significant changes in the existing on-premise systems before or as you move to cloud. This means that you redesign database systems so that they're much less complex and more meaningful to the business problems, invest in process change and optimization, and even normalize the hundreds of stovepipe applications pulled into the enterprise over the years.

Of course, the problem with this necessary approach is that it could take years to complete at a significant cost. As a result, most of those in charge of IT try to make the end run to the cloud -- and hope for the best. As I say in my talks, the crap in the enterprise moved to clouds is just crap in the cloud.

Scott Guthrie (@scottgu) described the reason for his infrequent blog posts in a Let’s get this blog started again… post of 8/31/2011:

A few people have sent me email over the summer about my blog: “hey – does your blog still work?”, “did it move somewhere else?”, “did you die?”. To provide a few quick answers: my blog does still work, it didn’t move anywhere, and I am not dead. :-)

I fell behind on blogging mostly because I’ve been working pretty hard the last few months on a few new products that I haven’t previously been involved in. In particular, I’ve been focusing a lot of time on Windows Azure – which is our cloud computing platform – as well as on some additional server products, frameworks and tools. I’ll be blogging a lot more about them in the future. [Emphasis added.]

To kick-start my blog again, though, I thought I’d start a new blog series that covers some cool new ASP.NET and Visual Studio features that my team has been working on this summer.

Hope this helps,

Scott

I was one of the folks bugging The Gu about his silence after receiving his Windows Azure management assignment.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Tom Nolle (@CIMICorp) described Mapping applications to the hybrid cloud in a 9/1/2011 post to SearchCloudComputing.com:

Most enterprises see public cloud computing as a cooperative technology in the data center; they'll cloud-source some applications, but not all of them. When building a hybrid cloud, the trick is knowing which apps to cloud-source and when.

Mapping the right applications to an enterprise's hybrid cloud vision will almost certainly determine how much value it can draw from the public cloud. The majority of enterprise IT applications fit into one of the following categories, each of which has its own hybridization issues:

- Transactional Web applications: serve Web-connected users and provide entry, customer support or other transaction-oriented activities.

- Private transactional applications: provide worker access to company applications, but do not provide general access to the public or customers.

- SOA-distributed applications: built on a service-oriented architecture componentization model where you can run a portion of the application in a distributed way.

- Data mining applications: access historical data to glean business intelligence (BI).

Transactional Web applications not only support customers or suppliers, but also work with Web front-end applications in the data center and can be accessed by remote workers. Transactional Web cloud models are the most commonly deployed application models in the data center.

The current industry practice is to use public cloud services to host these applications. A VPN link is then used to pass transactions from the Web server to an application server located within the company's data center.

Enterprise data remains in the data center, so information security and data storage costs are lower. The only significant security and compliance issue you may encounter involves securing the Web host-to-application server connection; offloading Web hosting can improve data center resource usage and costs. If you're looking into hybrid cloud, this is a good place to start.

Private transactional is a more problematic application model because it raises the question of where data is stored. Public cloud data storage fees vary considerably; some can be so high that charges for a single month would equal the cost of a disk drive of the same capacity. In enterprises where storage charges are a factor, it's best to store data in the data center and then access it using a remote SQL Server query.

The private transactional model is valuable in scenarios where a worker transaction requires users to access a large amount of data. For security reasons, most enterprises in this situation look for cloud providers who offer provisioned VPN service integration to their cloud computing product. A provisioned VPN lets enterprises obtain a meaningful application service-level agreement (SLA) that the Internet cannot provide.

SOA-distributed applications can increase data storage costs if the application components hosted in the cloud require access to the enterprise data warehouse; remote SQL Server queries can remedy this as well. But distributing application components via SOA can create additional security and performance challenges, particularly if you use the Internet to link enterprise users, enterprise-hosted SOA components and cloud-hosted components.

In this instance, it's important to have a private VPN connection to the cloud via a network provider with a stringent SLA. Even when accessing the cloud through a VPN, you should review how application components are hosted to ensure that work isn't being duplicated between the data center and the cloud. Data duplication could cause delays and negatively affect worker productivity.

Of the four enterprise IT application categories discussed, data mining can create the highest data storage expenses. Despite this, BI and data mining applications are two of the most interesting candidates to cloud-source because of their unpredictable nature. Allowing cloud-based data mining or BI applications to access the data center via remote SQL queries is an option, but the volume of information that would need to move across a network connection could create performance problems.

The best approach to moving data mining applications to a hybrid cloud might be to create abstracted databases that summarize the large quantity of historical data enterprises collect. You then can store the summary databases in the cloud and access them via public cloud BI tools -- without racking up excessive charges. Additionally, there are minimal risks to storing detailed core data in the cloud.
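The summary-database idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `orders` table, its columns and the month/region grouping are hypothetical and do not come from Tom's article, and an in-memory SQLite database stands in for the on-premises data store.

```python
import sqlite3


def build_summary(detail_rows):
    """Roll detailed rows up into one summary row per (month, region).

    detail_rows: iterable of (order_date 'YYYY-MM-DD', region, amount).
    The schema is a hypothetical stand-in for enterprise historical data.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_date TEXT, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", detail_rows)
    # Only these aggregated rows would be copied to cloud storage;
    # the detailed rows stay in the data center.
    summary = conn.execute(
        """
        SELECT substr(order_date, 1, 7) AS month,
               region,
               COUNT(*) AS order_count,
               SUM(amount) AS total_amount
        FROM orders
        GROUP BY substr(order_date, 1, 7), region
        ORDER BY month, region
        """
    ).fetchall()
    conn.close()
    return summary
```

Four detail rows spread over two months and two regions collapse to three summary rows here; with real historical data the reduction, and so the saving in cloud storage and transfer, would be far larger.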

Tom is president of CIMI Corporation, a strategic consulting firm specializing in telecommunications and data communications since 1982.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

<Return to section navigation list>

Cloud Security and Governance

Tanya Forsheit reported California Amends Its Data Breach Law - For Real, This Time! (As California Goes, So Goes the Nation? Part Three) in a 9/1/2011 post to the Information Law Group blog:

California's infamous SB 1386 (California Civil Code sections 1798.29 and 1798.82) was the very first security breach notification law in the nation in 2002, and nearly every state followed suit. Many states added their own new twists and variations on the theme - new triggers for notification requirements, regulator notice requirements, and content requirements for the notices themselves. Over the years, the California Assembly and Senate have passed numerous bills aimed at amending California's breach notification law to add a regulator notice provision and to require the inclusion of certain content. However, Governor Schwarzenegger vetoed the bills on multiple occasions, at least three times. Earlier this year, State Sen. Joe Simitian (D-Palo Alto) introduced Senate Bill 24, again attempting to enact such changes. Yesterday, August 31, 2011, Governor Brown signed SB 24 into law.

SB 24, which will take effect January 1, 2012, requires the inclusion of certain content in data breach notifications, including a general description of the incident, the type of information breached, the time of the breach, and toll-free telephone numbers and addresses of the major credit reporting agencies in California. In addition, importantly, SB 24 requires data holders to send an electronic copy of the notification to the California Attorney General if a single breach affects more than 500 Californians. This adds California to the list of states and other jurisdictions that require some type of regulator notice in the event of certain types of data security breaches (note that California already requires notice to the Department of Public Health for certain regulated entities in the event of a breach involving patient medical information, Health & Safety Code section 1280.15). Other states that require some form of regulator notice in some circumstances for certain kinds of entities (sometimes for a breach, and sometimes to explain why an entity has determined there was no breach) include Alaska, Arkansas, Connecticut, Hawaii, Indiana, Louisiana, Maine, Maryland, Massachusetts, Missouri, New Hampshire, New Jersey, New York, North Carolina, Puerto Rico, South Carolina, Vermont, and Virginia.

You can find the text of SB 24 here.

Tanya L. Forsheit is a Founding Partner of InfoLawGroup LLP and a former partner with Proskauer, where she was Co-Chair of that firm’s Privacy and Data Security practice group.

David Mills reported System Center Data Protection Manager 2012 Beta released! in an 8/31/2011 post to the System Center Team blog:

Today, we’re happy to announce the beta release of Data Protection Manager 2012, with the latest and greatest System Center data protection capabilities. In its fourth generation, Microsoft System Center Data Protection Manager 2012 builds on a legacy of providing unified data protection for Windows servers and clients as a best-of-breed backup & recovery solution from Microsoft, for Windows environments. As a key component of System Center 2012, DPM provides critical data protection and supports restore scenarios from disk, tape and cloud -- in a scalable, manageable and cost-effective way.

So what have customers been asking for since this last release to help us drive even greater value in Data Protection Manager 2012? Probably the biggest request was for a single console for the datacenter that reduces management costs and can fit into the existing environment. To support the increasing need to protect highly-virtualized and private cloud environments, we’ve enabled Hyper-V Item Level Recovery even when DPM is running inside a virtual machine.

Here’s a more detailed list of what’s new:

Provides centralized management

- Centralized monitoring

- Centralized troubleshooting

- Push to resume backups

- Manage DPM 2010 and DPM 2012 from the same console

- Media co-location

Fits existing environment

- Integration into existing ticketing systems, workflows and team structures.

- Enterprise scale, Fault tolerance & Reliability.

- Generic data source protection

- All common Operations Manager 2007 R2 deployment configurations supported

Helps reduce management costs

- Remote administration, corrective actions and recovery

- Certificate based protection

- Prioritize issues with SLA based Alerting, consolidation of alerts and alert categorization

- Role based administration

- Support for item level recovery, even when DPM is in a VM

The System Center team will be hosting a series of online meetings highlighting features and functionality for Data Protection Manager 2012 through the existing Community Evaluation Programs.

- All registered members of the currently running Community Evaluation Programs will be invited to the DPM sessions. If you are having trouble accessing any program you have applied to, please email us at mscep@microsoft.com with your full name and Windows Live ID, and we will check on your status.

- If you have not registered for a program, you can Apply Here. After review of your application, we will send an invitation to your Windows Live ID email with instructions on how to access the portal on Connect. Once you have accepted the invitation, you will have access to the CEP resources and the DPM event invitations.

Throughout the CEP sessions, you will be taken on a guided tour of the Data Protection Manager 2012 Beta, with presentations from the Product Group and guidance on how to evaluate the different features. This is your chance to engage directly with the team!

- Download the DPM 2012 beta here.

- To use DPM 2010 servers with this beta, download the Interoperability hotfix here.

For more product information, refer to the Data Protection Manager 2012 beta web page.

<Return to section navigation list>

Cloud Computing Events

Janet I. Tu reported Microsoft announces speakers for this year's Financial Analysts Meeting in a 9/1/2011 post to the Seattle Times Business / Technology Microsoft Pri0 blog:

Microsoft has posted the line-up of speakers for its annual Financial Analysts Meeting (FAM), taking place this year 1 p.m. to 4 p.m. on Sept. 14 in conjunction with its BUILD conference in Anaheim, Calif.

FAM is where the company talks about its product road map and answers some analysts' questions, with a reception afterward.

This year's speakers include: CEO Steve Ballmer, COO Kevin Turner, CFO Peter Klein, General Manager of Investor Relations Bill Koefoed, President of Online Services Division Qi Lu and President of Server & Tools Satya Nadella.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Chris Hoff (@Beaker) posted Quick Blip: Hoff In The Cube at VMworld 2011 – On VMware Security in a 9/1/2011 post:

John Furrier and Dave Vellante from SiliconAngle were kind enough to have me on the Cube, live from VMworld 2011 on the topic of virtualization/cloud security, specifically VMware…:

Mary Jo Foley (@maryjofoley) reported “Google is preparing to turn Google App Engine — its Windows Azure cloud-platform competitor — into a fully supported product in the second half of September” in a deck for her Google's Windows Azure competitor to finally shed its 'preview' tag post of 9/1/2011 to her All About Microsoft blog for ZDNet:

Google is notifying administrators working with its Google App Engine (GAE) cloud platform that it will be removing the “preview” tag from the product in the second half of September.

Google first released a beta of GAE in April 2008. GAE is Google’s platform-as-a-service (PaaS) offering that competes head-to-head with Windows Azure. GAE allows developers to create and host applications using infrastructure in Google’s own datacenters. At Google I/O in May of this year, Google execs promised that GAE would become a fully supported Google product before the end of 2011.

The GAE team is notifying GAE testers via e-mail of the following new policy and pricing changes that will take effect later this month, including the introduction of a new “Premier Account” offering at $500 per account per month, plus usage fees. From that e-mail:

• For all paid applications using the High Replication Datastore (HRD), we will be introducing a new 99.95% uptime SLA. The current draft form of the SLA can be found at http://code.google.com/appengine/sla.html. For apps not using HRD, we will also soon release a tool that will assist with the migration from Master/Slave (M/S) to HRD (you can sign up to be in the Trusted Tester for the tool here: http://goo.gl/3jrXu). We would like to emphasize that the SLA only applies to applications that have both signed up to be a paid App and use the HRD Datastore.

• App Engine has a 3 year deprecation policy. This policy applies to the entire App Engine platform with the exception of “trusted tester” and “experimental” APIs. This is intended to allow you to develop your app with confidence knowing that you will have sufficient notice if we plan to make any backward-incompatible API changes that will impact your application.

• We will be updating the Terms of Service with language more geared towards businesses. A draft of the new ToS can be found at http://code.google.com/appengine/updated_terms.html.

• We are introducing new Premier Accounts that will have access to Operational Support, invoice-based billing, and allow companies to create as many applications as they need for $500 per account per month (plus usage fees). If you are interested in a Premier Account, please contact us at appengine_premier_requests@google.com.

We will be moving to a new pricing structure that ensures ongoing support of App Engine. Details of the new structure can be found at http://www.google.com/enterprise/cloud/appengine/pricing.html. This includes lowering the free quotas for all Apps. Almost all applications will be billed more under the new pricing. Once App Engine leaves preview this pricing will immediately go into effect, but we’ve done a few things to ease the transition:

• If you sign up for billing or update your budget between now and October 31st we will give you a $50 credit.

• In order to help you understand your future costs we are now providing a side by side comparison of your old bill to what your new bill would be. You can find these in your Admin Console under “Billing history” by clicking on any of your “Usage Reports”. Please review this information. It’s important that you study this projected billing and begin any application tuning that you want to be in effect prior to the new bill taking effect.

• We have created an Optimization Article to help you determine how you could optimize your application to reduce your costs under the new model.

• We have created a Billing FAQ based on the questions many of our customers have had about the new pricing model.

• The new pricing model charges are based on the number of instances you have running. This is largely dictated by the App Engine Scheduler, but for Python we will not be supporting concurrent requests until Python 2.7 is live. Because of this, and to allow all developers to adjust to concurrent requests, we have reduced the price of all Frontend Instance Hours by 50% until November 20th.

• Finally, if you have any additional questions or concerns please contact us at appengine_updated_pricing@google.com. (Thanks to @pradeepviswav for the mail)

Microsoft began charging Azure customers as of February 1, 2010, for its own PaaS service. Microsoft has been continually tweaking Azure pricing in an effort to increase its appeal to smaller developers. The Redmondians are expected to talk up Windows Azure during the second day of the Build conference in mid-September, sharing more with developers about its development tools and technologies there.

And in other cloud-related news…

Google seemingly is not interested in fielding offerings in the private cloud and/or cloud appliance arenas, where Microsoft is playing in addition to the public cloud space.

Microsoft is stepping up its private cloud evangelization and sales efforts, as of late, and published in late August a new whitepaper, entitled “Microsoft Private Cloud: A comparative look at Functionality, Benefits, and Economics.” That paper focuses primarily on comparing Microsoft’s private-cloud products with those from VMware.

Speaking of VMware, Dell and VMware announced this week a joint offering known as Dell Cloud with VMware vCloud Data Services. The pair will offer public, private and hybrid cloud capabilities. The public cloud offering — hosted in a secure Dell data center — sounds similar to the Windows Azure Appliance offering that Dell was on tap to launch with Microsoft late last year or early this year. (The Dell Cloud with VMware offering is in beta and will open to the general public in the U.S. in Q4 2011.)

Of the original Windows Azure Appliance partners — Dell, HP and Fujitsu — so far, only Fujitsu has made the Azure Appliance offering available to customers (and only as of August 2011).

When I asked Dell for an update on its Windows Azure Appliance plans, I received the following statement from a Dell spokesperson: “Dell continues to collaborate with Microsoft on the Windows Azure Platform Appliance and we don’t have any additional details to share at this time.”

Tim Anderson (@timanderson) asserted Google gets serious about App Engine, ups prices in a 9/1/2011 post:

Google App Engine will be leaving preview status and becoming more expensive in the second half of September, according to an email sent to App Engine administrators:

We are updating our policies, pricing and support model to reflect its status as a fully supported Google product … almost all applications will be billed more under the new pricing.

Along with the new prices there are improvements in the support and SLA (Service Level Agreement) for paid applications. For example, Google’s High Replication Datastore will have a new 99.95% uptime SLA.
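To put the 99.95% figure in perspective, here is a rough calculation of the downtime such an SLA permits. The 30-day month is an assumption made for illustration; the measurement window actually used in Google's SLA may differ.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime an uptime percentage permits over `days` days.

    The 30-day default is an illustrative assumption, not a term of
    any particular SLA.
    """
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100
```

For 99.95% over 30 days this works out to roughly 21.6 minutes of permitted downtime.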

Premier Accounts offer companies “as many applications as they need” for $500 per month plus usage fees. Otherwise it is $9.00 per app.
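As a rough guide to when a Premier Account pays for itself, the sketch below computes the break-even number of applications. It assumes that the $9.00 per-app charge is a monthly fee and that usage fees are the same under both plans; neither assumption is confirmed by the text above.

```python
import math


def premier_break_even_apps(premier_monthly=500.0, per_app_monthly=9.0):
    """Smallest app count at which per-app fees meet or exceed the flat fee.

    Both prices are treated as monthly charges (an assumption), and
    usage fees are ignored because they are assumed equal on both plans.
    """
    return math.ceil(premier_monthly / per_app_monthly)
```

Under those assumptions the flat fee becomes the cheaper option at 56 applications, since 55 apps cost $495 and 56 cost $504.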

Free apps are now limited to a single instance and 1GB outgoing and incoming bandwidth per day, 50,000 datastore operations, and various other restrictions. The “Instance” pricing is a new model since previously paid apps were billed on the basis of CPU time per hour. Google says in the FAQ that this change removes a barrier to scaling:

Under the current model, apps that have high latency (or in other words, apps that stay resident for long periods of time without doing anything) can’t scale because doing so is cost-prohibitive to Google. This change allows developers to run any sort of application they like but pay for all of the resources that your applications use.

Having said that, the free quota remains generous and sufficient to run a useful application without charge. Google says:

We expect the majority of current active apps will still fall under the free quotas.

The pricing is complex, and comparing prices between cloud providers such as Amazon, Microsoft and Salesforce.com is even more complex as each one has its own way of charging. My guess is that Google will aim to be at least competitive with AWS (Amazon Web Services), while Microsoft Azure and Salesforce.com seem to be more expensive in most cases.

K. Scott Morrison (@KScottMorrison) described Clouds On A Plane: VMware’s Micro Cloud Foundry Brings PaaS To My Laptop in an 8/31/2011 post:

On the eve of this week’s VMworld conference in Las Vegas, VMware announced that Micro Cloud Foundry is finally available for general distribution. This new offering is a completely self-contained instantiation of the company’s Cloud Foundry PaaS solution, which I wrote about earlier this spring. Micro Cloud Foundry comes packaged as a virtual machine, easily distributable on a USB key (as they proved at today’s session on this topic at VMworld), or as a quick download. The distribution is designed to run locally on your laptop without any external dependencies. This allows developers to code and test Cloud Foundry apps offline, and deploy these to the cloud with little more than some simple scripting. This may be the killer app PaaS needs to be taken seriously by the development community.

The reason Micro Cloud Foundry appeals to me is that it fits well with my own coding style (at least for the small amount of development I still find time to do). My work seems to divide into two different buckets consisting of those things I do locally, and the things I do in the cloud. More often than not, things find themselves in one bucket or the other because of how well the tooling supports my work style for the task at hand.

As a case in point, I always build presentations locally using PowerPoint. If you’ve ever seen one of my presentations, you hopefully remember a lot of pictures and illustrations, and not a lot of bullet points. I’m something of a frustrated graphic designer. I lack any formal training, but I suppose that I share some of the work style of a real designer—notably intense focus, iterative development, and lots of experimentation.

Developing a highly graphic presentation is the kind of work that relies as much on tool capability as it does on user expertise. But most of all, it demands a highly responsive experience. Nothing kills my design cycle like latency. I have never seen a cloud-based tool for presentations that meets all of my needs, so for the foreseeable future, local PowerPoint will remain my illustration tool of choice.

I find that software development is a little like presentation design. It responds well to intense focus and enjoys a very iterative style. And like graphic design, coding is a discipline that demands instantaneous feedback. Sometimes I write applications in a simple text editor, but when I can, I much prefer the power of a full IDE. Sometimes I think that IntelliJ IDEA is the smartest guy in the room. So for many of the same reasons I prefer local PowerPoint for presentations, so too I prefer a local IDE with few if any external dependencies for software development.

What I’ve discovered is that I don’t want to develop in the cloud; but I do want to use cloud services and probably deploy my application into the cloud. I want a local cloud I can work on offline without any external dependency. (In truth, I really do code on airplanes—indeed some of my best work takes place at 35,000 feet.) Once I’m ready to deploy, I want to migrate my app into the cloud without modifying the underlying code.

Until recently, this was hard to do. But it sounds like Micro Cloud Foundry is just what I have been looking for. More on this topic once I’ve had a chance to dig deeply into it.

Micro Cloud Foundry appears to me to correspond to the Windows Azure development fabric.

<Return to section navigation list>
