Windows Azure and Cloud Computing Posts for 8/25/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

• Updated 8/25/2011 at 4:30 PM PDT with added articles by Avkash Chauhan, Tom Winans, Maureen O’Gara, Information Week::analytics, Craig Kitterman, Microsoft Server and Cloud Platform Team, Visual Studio LightSwitch Team and Windows Azure Team.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

• Avkash Chauhan described Windows Azure Table Storage: Problem occurred while inserting a certain record in a Table in an 8/25/2011 post:

Recently I found something very interesting while trying to insert a record in a table in my Windows Azure Table Storage.

The following record was inserted in the Windows Azure Table without any problem:

partitionkey: KSIEIRI6I4ER39GFDGRger4000173KDLWDTTKWKWITO405482950skeiklkKL324444242Ah4u2A1UY41QB4==

rowkey: 0434FDEA4534590808324DA09823423ACB09432409AEFD03295CEA230954049650238CB3F4A764B5DB0CEAD09951D18655E222D72A044F27CBD871E6188BE51B67F114709AE70194AA75B12A9BF625999A553BB9C0D8B997BA08035492CDF344A

Timestamp: 01/01/0001 00:00:00

However the following record failed to insert:

partitionkey: k349slfkLKDOE93KSKLAJK92-Sllldw943543lkEEWRKDR53/8729ejkal4KdgOL5O463wr20l28KH8kd1SJD5aI3==

rowkey: 0843CDF082309349A39948380238CB3F4A764B5DB0CEAD09951D18653864CDE99213AEF93845CDFE919BCA98235DEFCA89048932094CFEBB8214FD8C7D6D00491D57352BA661E2F6192E7C558876719486A91D87A4C90234CDA9093DG09AA6DEF

Timestamp: 01/01/0001 00:00:00

The problem was caused by the “/” character in the partition key: “/” is not allowed in a partition key.

k349slfkLK45OE93KSKLAJK92-Sl3odw943543lkEEWRKDR53/8729ej6al4KdgOL5O463wr20l28KH8kd1SJD5aI3==

The following characters are not allowed in values for the PartitionKey and RowKey properties:

- The forward slash (/) character

- The backslash (\) character

- The number sign (#) character

- The question mark (?) character

More info is available at: http://msdn.microsoft.com/en-us/library/dd179338.aspx
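A check like the one below could catch such keys before an insert is attempted. It is only a sketch: the function names are hypothetical, it covers just the four characters listed above, and the replacement helper is lossy (two different keys can map to the same result), so treat it as an illustration rather than a recommended encoding.

```javascript
// Characters the post lists as not allowed in PartitionKey and RowKey values.
const DISALLOWED_KEY_CHARS = ["/", "\\", "#", "?"];

// Returns the disallowed characters found in a key.
// An empty array means the key passes this particular check.
function findDisallowedKeyChars(key) {
  return DISALLOWED_KEY_CHARS.filter((ch) => key.includes(ch));
}

// Hypothetical workaround: swap every disallowed character for a replacement.
// This is lossy, so it only suits keys that never need to be decoded again.
function replaceDisallowedKeyChars(key, replacement = "_") {
  return Array.from(key)
    .map((ch) => (DISALLOWED_KEY_CHARS.includes(ch) ? replacement : ch))
    .join("");
}

// The partition key from the post that failed to insert.
const failingKey =
  "k349slfkLKDOE93KSKLAJK92-Sllldw943543lkEEWRKDR53/8729ejkal4KdgOL5O463wr20l28KH8kd1SJD5aI3==";

console.log(findDisallowedKeyChars(failingKey)); // [ '/' ]
console.log(findDisallowedKeyChars(replaceDisallowedKeyChars(failingKey))); // []
```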

• Tom Winans claimed Silverlight And Access To Cloud Storage – also read as “You Rich Client Folks Wake Up” in an 8/23/2011 post:

I am gobsmacked to learn that Microsoft Azure storage is not fully accessible to Silverlight, even by Silverlight 4. Storage client libraries are not available in Silverlight form (e.g., they are not compiled as Silverlight libraries, no source code written to current Silverlight APIs appears to be available). Http access is available, but not all storage types support cross domain access (suggesting there might be code base differences between the available Azure libraries and their supposedly common http RESTful service call underpinnings). In sum, there are enough caveats relating to access of Azure Storage (and other cloud storage, for that matter) from Silverlight that make the thought of attempting to get around them off-putting. I see where there is a Silverlight library enabling direct access to Amazon’s S3, but buying it does not meet my need to access cloud storage in general from an in-browser Silverlight client.

While this state is disappointing, one could access cloud storage in Silverlight indirectly through byte arrays and WCF – as inefficient and possibly stupid as this sounds. But this almost ridiculous scheme does result in full access to cloud storage, and it also sidesteps the awkwardness of having to coordinate/catenate series of asynchronous web service or RESTful service calls that become necessary because of Silverlight’s UI-centric view on synchrony (i.e., synchrony is evil).

This sets up the real peeve I have with Silverlight. First – let me say I like Silverlight. But I am beyond irritated with the decisions that have been made by the Silverlight team that constrain my ability to deal with middleware types of concerns in a Silverlight rich client that appear to be motivated by the view that all of the world is asynchronous, and the part that is not can be ostracized to this or that thread pool.

I’ve read blog entry after blog entry that discusses how to almost get around Silverlight’s asynchrony constraint by wrapping asynchronous method calls in some ThreadPool delegate method just so that I can limit callback methods to one.

REALLY? Have you LOOKED at that code? The ridiculousness of WCF and byte array access to cloud storage pales in comparison.

And … you asynchronous UI zealots that think I have not experienced nirvana yet … please don’t tell me I need to become enlightened and then I will understand. The point is not that I cannot see how to make asynchrony work in most cases, it is that things should not be forced into working asynchronously when they are not. Pretending that all things synchronous really can function asynchronously is as silly as pretending that Wall Street mathematics work as well in really bad economic situations as when all is well with the financial world.

No. Not so much!

When bridging user interface and service boundaries, issues like synchrony vs. asynchrony will surface. Middleware is often assigned the role to mediate such, and it is easy to relegate middleware to “the server side” of an application or a platform. But this is an oversight that will result in significant frustration when developers try to develop applications that are more enterprise class (I do not mean to diminish browser applications, etc., but I do wish to make a distinction between application classes): rich client in/out of browser frameworks provide the foundation to build phenomenal applications that, with web and RESTful services, separate architectural concerns and – hopefully – enable us to get beyond limitations of browsers where we need to. The abstraction of adapters and other middleware patterns will be necessary to at least partially implement in the rich client to avoid silly little data hops between services like Cloud Storage/servers where synchrony is supported/the desired rich client end point.

Synchrony is not evil. And, if a more informed point of view prevailed in the Silverlight world, numerous blog entries would not have been written, and Cloud Storage user interfaces would be looking awesome.

Tom Winans is the principal consultant of Concentrum Inc., a professional software engineering and technology diligence consultancy.

<Return to section navigation list>

SQL Azure Database and Reporting

Greg Leake explained Checking Your SQL Azure Server Connection in an 8/25/2011 post to the Windows Azure blog:

As the SQL Azure July 2011 Service Release is being rolled out, we want to advise you of an important consideration for your application logic. Some customers may be programmatically checking to determine if their application is connected to SQL Azure or another edition of SQL Server. Please be advised, you should not base your application logic on the server version number, since it changes across upgrades and service releases. It is okay, however, to use the version number for logging purposes.

If you want to check to see if you’re connected to SQL Azure, use the following SQL statement:

- SELECT SERVERPROPERTY('EngineEdition'), which returns 5 when you are connected to SQL Azure.

See the SQL Server Books Online for a full description of the SERVERPROPERTY function.
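In application code, the advice amounts to branching on the engine edition rather than on the version number. A minimal JavaScript sketch follows; `runScalar` is a hypothetical caller-supplied function that executes a SQL string and resolves to the single value returned, since the post does not name a client library.

```javascript
// Value of SERVERPROPERTY('EngineEdition') that the post associates with SQL Azure.
const SQL_AZURE_ENGINE_EDITION = 5;

const ENGINE_EDITION_QUERY =
  "SELECT SERVERPROPERTY('EngineEdition') AS EngineEdition";

// Pure check, kept separate so it can be used with any data-access layer.
function isSqlAzureEdition(engineEdition) {
  return Number(engineEdition) === SQL_AZURE_ENGINE_EDITION;
}

// `runScalar` is hypothetical: it takes a SQL string and returns a Promise
// for the single value in the result set.
async function connectedToSqlAzure(runScalar) {
  const engineEdition = await runScalar(ENGINE_EDITION_QUERY);
  return isSqlAzureEdition(engineEdition);
}

// Usage with a stand-in for a real database call:
connectedToSqlAzure(async () => 5).then((result) => console.log(result)); // true
```

The version string can still be read and written to a log; it just should not drive any branching.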

The current SQL Azure service release will upgrade the underlying SQL Azure database engine version from 10 to version 11 as it is rolled out across data centers.

You will see this server version number change show up in server-side APIs such as:

- SERVERPROPERTY('ProductVersion')

- @@VERSION

and also client-side APIs such as:

- ODBC: SQLGetInfo (SQL_DBMS_VER)

- SqlClient: SqlConnection.ServerVersion

There will be a period of time during the service upgrade, measured in days, during which some user sessions will connect to version 10.25.bbbb.bb SQL servers and other user sessions will connect to version 11.0.bbbb.bb SQL servers (bbbb.bb is the build #). One given user connection to one database may see different server version numbers reported at different times. At the end of the upgrade all sessions will be connecting to version 11.0.bbbb.bb SQL Servers.

User queries and applications should continue to function normally and should not be adversely affected by the upgrade.

The July 2011 Service Release is foundational, with significant upgrades to the underlying engine that are designed to increase overall performance and scalability. This upgrade also represents a big first step towards providing a common base and feature set between the cloud SQL Azure service and our upcoming release of SQL Server Code Name “Denali”. Please read the blog post, “Announcing: SQL Azure July 2011 Service Release” for more information.

Greg is a product manager on the SQL Server team, focused on SQL Azure and Microsoft cloud technologies.

The SQL Server Team (@SQLServer) announced Microsoft ships CTP of Hadoop Connectors for SQL Server and Parallel Data Warehouse in an 8/25/2011 post:

Two weeks ago, Microsoft announced its commitment to Hadoop and promised to release new connectors to promote interoperability between Hadoop and SQL Server. Today we are announcing the availability of the Community Technology Preview (CTP) of two connectors for Hadoop: The first is a Hadoop connector for SQL Server Parallel Data Warehouse (PDW) that enables customers to move large volumes of data between Hadoop and SQL Server PDW. The second connector empowers customers to move data between Hadoop and SQL Server 2008 R2. Together these connectors will allow customers to derive business insights from both structured and unstructured data.

Hadoop Connector for SQL Server Parallel Data Warehouse

SQL Server PDW is a fully integrated appliance for the most demanding Data Warehouses that offers customers massive scalability to over 600 TB, and breakthrough performance at low cost. PDW is an excellent solution for analyzing large volumes of structured data in an organization. The new Hadoop connector enables customers to move data seamlessly between Hadoop and PDW. With this connector customers can analyze unstructured data in Hadoop and then integrate useful insights back into PDW. As a two-way connector, it also enables customers to move data from PDW to a Hadoop environment. The connector uses SQOOP (SQL to Hadoop) for efficient data transfer between the Hadoop Distributed File System (HDFS) and relational databases. In addition, it uses the high performance PDW Bulk Load/Extract tool for fast import and export of data.

Existing SQL Server PDW customers can obtain the Hadoop connector for PDW from Microsoft’s Customer Support Service, as is also the case for the Microsoft Informatica Connector for SQL Server PDW, and Microsoft SAP Business Objects DI Connector for SQL Server PDW.

Hadoop Connector for SQL Server

This connector enables customers to move data between Hadoop and SQL Server. It is compatible with SQL Server 2008 R2 and SQL Server code named “Denali”. Like the PDW version, this is a two-way connector that allows the movement of data to and from a Hadoop environment. It also uses SQOOP for efficient data movement. The CTP version of this connector is now available for trial. Together these two connectors reaffirm Microsoft’s commitment to interoperability with Hadoop. Customers can use them to derive business insights from all their data – structured or unstructured.

Building on the Business Intelligence Platform and Data Warehousing capabilities provided by SQL Server, the support for near real time insights and analytics into large volumes of complex unstructured data i.e. ‘Big Data’ will continue to be a focus area for us. The connectors are a first step in this journey and you can expect to hear more from us in the coming year.

Test drive these connectors and send us your feedback. Thanks!

Wonder when the SQL Server 2008 R2/Denali Connector will be ported to SQL Azure.

<Return to section navigation list>

Marketplace DataMarket and OData

Carl Bergenhem described AJAX Series Part 2: Moving to the Client – Using jQuery and OData with Third-party Controls in an 8/24/2011 post to the Telerik blog:

Part two of the webinar series occurred yesterday and, just like with the first one, I wanted to follow up with a blog post answering all of the questions that came up. I also wanted to thank everyone that attended, you guys were great and were very attentive (as I saw after reading the chat logs regarding my initial Eval statement!).

Your exact question might not be included here, but that’s just due to a duplicate (or very similar) question. You can find all the answers to the questions below:

- Will this code be made available for download after the webinar?

The code will be available after the conclusion of the series, or part three, which will occur on August 30th at 11 AM EST.

- Will the webinar recordings be placed on Telerik TV?

Each webinar is recorded and placed on Telerik TV, part one has been available since last week and part two was uploaded earlier today.

- What is a common practice to use: OnNeedDataSource or call DataBind on Page load and then use ajax calls?

It all depends on your particular scenario, and both approaches can end up being very similar. If you are doing server-side binding with the RadGrid or RadListView, no matter if it’s through OnNeedDataSource or setting it in Page_Load, the controls will go back to the server when paging/sorting/filtering to grab additional data. So while OnNeedDataSource will be fired when the DataSource property has not been set and any of these commands fire, the same will occur when using the DataSource property in the Page_Load event. To prevent a full postback (which is usually the concern) you could make use of the RadAjax control and all of its sub-controls (RadAjaxPanel, RadAjaxManager etc.). This will also be a topic during the third webinar.

- Is OData different than any standard web service?

This could be answered as yes and no at the same time. If you simply make use of the regular service references and use only the proxy classes which are generated by the web services you might not necessarily notice a difference. However, the main difference with OData is that it has a huge focus on core web principles, such as an HTTP-based uniform interface for interacting with its objects. As I showed off in the webinar, the great thing about it is that I can very easily generate a string using the OData conventions and have the same string execute a query across JavaScript, in my browser URL window, .NET languages, or even any other platform that you can think of. For some further insight into this, see the links in the answer to the question below.

- Where can we find more information about OData?

The best place is honestly OData.org. Here you can find a full list of Producers and Consumers (including some Telerik Products). What is pretty helpful is that the documentation covers the protocol pretty well, so to get a good understanding of how queries work I definitely recommend reading over that page.

- What version of jQuery are you using?

In this particular demo I’m using version 1.6.2, but most of the code used in this example does not make use of any new features in 1.6.2, so older versions can be used as well if need be.

- You mentioned partial postbacks for performance gains, will you be covering this?

This will be covered in the third webinar, so mark your calendar for August 30th :)

Webinar Recording

The second webinar is also now uploaded on Telerik TV. Follow this link to see what we went through in the second webinar. For those of you that have not watched the first webinar, found here on Telerik TV, I recommend that you go watch that first as this webinar builds off of the work we did in the first one.

Don’t forget to sign up for the third webinar!

Important links (in case you just scrolled down ;)):

Part 1 on Telerik TV

Part 2 on Telerik TV

Webinar Registration Link

Todd Anglin of the Kendo UI team explained Cross-Domain Queries to OData Services with jQuery in an 8/23/2011 post:

As more application code moves from the server to the client, it's increasingly common to use JavaScript to load JSON data that lives on a different domain. Traditionally, this cross-domain (or more accurately, cross-origin) querying is blocked by browser security, but there is a popular technique for working around this limit called JSONP (or JSON with Padding).

With JSONP, a server returns JSON data wrapped in JavaScript, causing the response to be evaluated by the JavaScript interpreter instead of being parsed by the JSON parser. This technique takes advantage of a browser's ability to load and execute scripts from different domains, something the XMLHttpRequest object (and, in turn, Ajax) is unable to do. It opens the door that enables JavaScript applications to load data from any remote source that supports JSONP directly from the browser.
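The "padding" is easier to see in code. The sketch below is illustrative only: `toJsonp` and `runAsScript` are hypothetical names, the payload is made up, and `new Function` merely stands in for the `<script>` element a browser would use to execute the response.

```javascript
// Server side (sketch): wrap a JSON payload in a call to the function
// whose name the client supplied.
function toJsonp(callbackName, data) {
  // Accept only simple identifiers so the name cannot inject arbitrary script.
  if (!/^[A-Za-z_$][\w$]*$/.test(callbackName)) {
    throw new Error("Invalid callback name: " + callbackName);
  }
  return callbackName + "(" + JSON.stringify(data) + ");";
}

// Client side (sketch): executing the response as script calls the named
// function with the data. A browser would do this through a <script> tag.
function runAsScript(scriptText, callbackName, callback) {
  const run = new Function(callbackName, scriptText);
  run(callback);
}

let received = null;
const responseBody = toJsonp("handleGenres", { d: [{ Name: "Action" }] });
runAsScript(responseBody, "handleGenres", (data) => {
  received = data;
});

console.log(responseBody); // handleGenres({"d":[{"Name":"Action"}]});
console.log(received.d[0].Name); // Action
```

Because the response is executed rather than parsed, a JSONP endpoint has to be trusted in a way that a plain JSON endpoint does not.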

OData and JSONP

OData, an up and coming RESTful data service schema that is trying to "standardize" the REST vocabulary, can support JSONP when the backing OData implementation supports it. In its most basic form, OData is simply AtomPub XML or JSON that conforms to OData's defined query keywords and response format. How you generate that XML or JSON- and what features you support (like JSONP)- is up to you.

A very popular approach for building OData services (given OData's Microsoft roots), one used by Netflix, is to implement an OData end-point using Microsoft's ADO.NET Data Services. With this implementation, most of the OData heavy-lifting is done by a framework, and most of the common OData schema features are fully supported.

Critically missing with vanilla ADO.NET Data Services, though, is support for JSONP.

To add support for JSONP to ADO.NET Data Service OData implementations, a behavior add-on is needed. Once installed, the new behavior adds two OData keywords to the RESTful query vocabulary:

- $format - which allows explicit control over the response format from the URL (i.e. $format=json)

- $callback - which instructs the server to wrap JSON results in a JavaScript function to support JSONP

With this support, OData services on any remote domain can be directly queried by JavaScript.
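As a rough illustration, the two keywords are ordinary query-string options prefixed with `$`. The helper below is a sketch (the `withODataOptions` name and the `handleGenres` callback are made up for the example); it appends them to a service URL.

```javascript
// Appends OData system query options (written without the "$" prefix in the
// options object) to a service URL. Hypothetical helper for illustration.
function withODataOptions(baseUrl, options) {
  const pairs = Object.keys(options).map(
    (name) => "$" + name + "=" + encodeURIComponent(options[name])
  );
  if (pairs.length === 0) {
    return baseUrl;
  }
  const separator = baseUrl.includes("?") ? "&" : "?";
  return baseUrl + separator + pairs.join("&");
}

const jsonpUrl = withODataOptions("http://odata.netflix.com/v2/Catalog/Genres", {
  format: "json",
  callback: "handleGenres"
});

console.log(jsonpUrl);
// http://odata.netflix.com/v2/Catalog/Genres?$format=json&$callback=handleGenres
```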

Querying Netflix Using jQuery

Let's put our new found JSONP support to the test. First we need a RESTful query:

http://odata.netflix.com/v2/Catalog/Genres

By default, this query will return all Netflix movie genres in XML AtomPub format. Let's make the results more JavaScript friendly by "forcing" a JSON return type (technically not needed in our jQuery code, but it makes debugging easier):

http://odata.netflix.com/v2/Catalog/Genres?$format=json

That's better. Now, how would we use this with jQuery to get some data? Like this:
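The snippet itself did not survive in this copy of the post. The sketch below is a reconstruction from the description that follows, not the author's code: the `genresRequestSettings` name and the handler bodies are assumptions. Note that, as the post goes on to explain, this first version is expected to fail with a "parsererror" until the `$callback` option is added to the URL.

```javascript
// Builds the settings object for jQuery's $.ajax. Kept as a separate function
// so the settings can be inspected without a browser.
function genresRequestSettings(onSuccess, onError) {
  return {
    url: "http://odata.netflix.com/v2/Catalog/Genres?$format=json",
    type: "GET",
    // Request header described in the post.
    contentType: "application/json; charset=utf-8",
    // Tells jQuery to treat the response as JSONP.
    dataType: "jsonp",
    success: onSuccess,
    error: onError
  };
}

// Runs only on a page where jQuery has been loaded.
if (typeof jQuery !== "undefined") {
  jQuery.ajax(
    genresRequestSettings(
      function (data) {
        console.log(data);
      },
      function (jqXHR, textStatus) {
        alert(textStatus);
      }
    )
  );
}
```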

What's going on in this jQuery snippet:

- We're using jQuery's $.ajax API to query our OData endpoint

- We're setting the request contentType header to 'application/json' (which can auto-trigger OData JSON responses)

- We're telling jQuery that this is a JSONP request with the dataType property

- And finally, we're handling the Success and Failure events

Upon running this snippet, though, you may encounter this dreaded JavaScript error, accompanied by a "parsererror" alert:

Uncaught SyntaxError: Unexpected token :

What? Looking at your network traffic, you see the request to Netflix. You see the response with JSON. The JSON data looks good. Why isn't jQuery parsing the JSON correctly?

When you tell jQuery that the dataType is JSONP, it expects the JSON results to be returned wrapped in JavaScript padding. If that padding is missing, this error will occur. The server needs to wrap the JSON data in a JavaScript callback function for jQuery to properly handle the JSONP response. Assuming the OData service you're using has added the proper support, that means we need to modify our RESTful URL query one more time:

http://odata.netflix.com/v2/Catalog/Genres?$format=json&$callback=?

By adding the "$callback" keyword, the OData endpoint is instructed to wrap the JSON results in a JavaScript function (in this case, using a name auto-generated by jQuery). Now our data will be returned and properly parsed.

Using the Kendo UI Data Source

The Kendo UI Data Source is a powerful JavaScript abstraction for binding to many types of local and remote data. Among the supported remote data endpoints is OData. Since the Kendo UI Data Source knows how OData should work, it can further abstract the raw jQuery APIs and properly configure our query URL.

In this example, we can configure a Kendo UI Data Source with a basic OData query, like this:
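The configuration snippet is missing from this copy of the post. A minimal sketch of what such a configuration might look like is shown below, assuming the `kendo.data.DataSource` constructor with its `type` and `transport.read` options; it is not the author's original code.

```javascript
// Options for a Kendo UI Data Source bound to an OData endpoint.
// The URL carries no OData keywords; type "odata" is meant to handle that.
const genresSourceOptions = {
  type: "odata",
  transport: {
    read: "http://odata.netflix.com/v2/Catalog/Genres"
  }
};

// Runs only on a page where Kendo UI has been loaded.
if (typeof kendo !== "undefined") {
  const genresSource = new kendo.data.DataSource(genresSourceOptions);
  genresSource.read();
}
```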

Notice that our URL does not include any of the OData keywords, like $format or $callback. We told the Kendo UI Data Source that this is an OData endpoint by setting the "type" property to "odata," and with this simple configuration, Kendo UI handles the rest. When the data source is used, a request is made to the following RESTful URL:

http://odata.netflix.com/v2/Catalog/Genres?$format=json&$inlinecount=allpages&$callback=callback

As you can see, Kendo UI has automatically added the needed parameters. In fact, Kendo UI can do much more. If we configure the Kendo UI data source to use server paging and filtering, it will automatically build the proper OData URL and push the data shaping to the server. For example, let's only get Genres that start with "A", and let's page our data. Kendo UI configuration is simply:
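This snippet is also missing here. The sketch below shows one way the configuration could be written, assuming the Data Source's `pageSize`, `serverPaging`, `serverFiltering` and `filter` options; the page size of 10 and the `Name` field are assumptions made for the example.

```javascript
// Data Source options that push paging and filtering to the OData service.
const pagedGenresOptions = {
  type: "odata",
  transport: {
    read: "http://odata.netflix.com/v2/Catalog/Genres"
  },
  pageSize: 10, // assumed page size
  serverPaging: true,
  serverFiltering: true,
  // Only genres whose (assumed) Name property starts with "A".
  filter: { field: "Name", operator: "startswith", value: "A" }
};

// Runs only on a page where Kendo UI has been loaded.
if (typeof kendo !== "undefined") {
  const pagedGenres = new kendo.data.DataSource(pagedGenresOptions);
  pagedGenres.read();
}
```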

A quick configuration change, and now we will precisely fetch the needed data from the OData service using this Kendo UI generated URL:

We've gone from very raw, low-level jQuery $.ajax queries, where we had to remember to set the correct content type, request type, data type, and manually construct our query with the needed parameters, to a nicely abstracted JavaScript data source that handles much of the dirty work for us.

What about CORS?

CORS, or Cross-Origin Resource Sharing, is a newer pattern for accessing data across domains using JavaScript. It aims to reduce the need for JSONP-style hacks by providing a native browser construct for using normal, XHR requests to fetch data across domains. Unlike JSONP, which only supports GET requests, CORS offers JavaScript developers the ability to use GET, POST, and other HTTP verbs for a more powerful client-side experience.

Why, then, is JSONP still so popular?

As you might expect, CORS is not as fully supported as JSONP across all browsers, and more importantly, it requires servers to include special headers in responses that indicate to browsers the resource can be accessed in a cross-domain way. Specifically, for a resource to be available for cross-domain (note: I'm saying domain, but I mean "origin" - domain is just a more familiar concept), the server must include this header in the response:

Access-Control-Allow-Origin: *

If this header is present, then a CORS-enabled browser will allow the cross-domain XHR response (except IE, which uses a custom XDomainRequest object instead of reusing XHR for CORS…of course). If the header value is missing, the response will not be allowed. The header can more narrowly grant permissions to specific domains, but the "*" origin is "wide open" access. Mozilla has good documentation on detecting and using CORS, and the "Enable CORS" website has info on configuring server headers for a number of platforms.
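On the server, the choice between "wide open" and a narrower grant comes down to which value is written into that header. The function below sketches that decision; the `corsHeadersFor` name and the example origins are hypothetical, and adding `Vary: Origin` for per-origin responses is a common practice rather than something the post describes.

```javascript
// Returns the CORS response headers for a request, or an empty object when
// the requesting origin is not allowed. `allowedOrigins` is either "*" or an
// array of origin strings.
function corsHeadersFor(requestOrigin, allowedOrigins) {
  if (allowedOrigins === "*") {
    return { "Access-Control-Allow-Origin": "*" };
  }
  if (requestOrigin && allowedOrigins.includes(requestOrigin)) {
    return {
      "Access-Control-Allow-Origin": requestOrigin,
      // The response differs per origin, so caches need to key on it.
      Vary: "Origin"
    };
  }
  return {};
}

console.log(corsHeadersFor("http://app.example", "*"));
console.log(corsHeadersFor("http://app.example", ["http://app.example"]));
console.log(corsHeadersFor("http://other.example", ["http://app.example"]));
```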

Using CORS with jQuery and OData

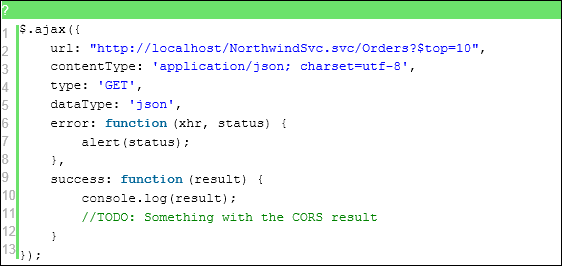

The primary obstacle with CORS is the server. Very few public web services that provide JSON data today have the necessary CORS response headers. If you have a service with the headers, everything else is easy! In fact, you don't really have to change your JavaScript code at all (compared to traditional XHR requests). Assuming I have a CORS-enabled OData service, I would use code like this to query with jQuery:
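The original snippet is not present in this copy. A sketch of such a request follows, with assumptions flagged: the service URL is a placeholder, the function name is made up, and the `contentType` setting is left out so that the request stays a simple GET.

```javascript
// Settings for a plain XHR request to a CORS-enabled OData service.
// No JSONP callback parameter is needed; dataType is "json".
function corsRequestSettings(serviceUrl, onSuccess, onError) {
  return {
    url: serviceUrl,
    type: "GET",
    dataType: "json",
    success: onSuccess,
    error: onError
  };
}

// Placeholder URL for a hypothetical CORS-enabled OData service.
const corsServiceUrl = "http://example.com/odata/Genres?$format=json";

// Runs only on a page where jQuery has been loaded.
if (typeof jQuery !== "undefined") {
  jQuery.ajax(
    corsRequestSettings(
      corsServiceUrl,
      function (data) {
        console.log(data);
      },
      function (jqXHR, textStatus) {
        alert(textStatus);
      }
    )
  );
}
```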

As you can see in this snippet, CORS is completely transparent and supported by jQuery. If we're migrating from JSONP, then we change the "dataType" from "jsonp" to "json" and we remove the JSONP callback parameter from our OData query. jQuery even navigates the different XDomainRequest object in Internet Explorer!

I expect CORS will become much more popular in the next 12 to 18 months. We'll explore ways the Kendo UI Data Source can embrace and enrich this pattern, too.

If you're going to start building JavaScript applications, you must get comfortable querying data from the browser. I've demonstrated a few ways you can use jQuery and Kendo UI to query OData end-points, but many of the concepts translate to other service end points. I hope this helps enrich your understanding of cross-origin data access and helps you build better HTML5 and JavaScript apps!

Michael Crump (@mbcrump) posted Slides / Code / Resources for my OData Talk at devLINK 2011 on 8/21/2011 (missed when posted):

On August 19th 2011 I gave a presentation at devLINK titled, “Producing and Consuming OData in a Silverlight and Windows Phone 7 Application”. As promised, here are the Slides / Code / Resources from my talk.

Slides – The query slide in this deck was taken from a slide in Mike Taulty’s talk.

This is the first time that I used Prezi in a talk and it worked out wonderfully! I want to apologize in advance for embedding a Flash player in my blog. It is all that Prezi supports at this time. =(

Producing and Consuming OData in an Silverlight and Windows Phone 7 application. on Prezi

Free Tools

Silverlight OData Explorer – Special Thanks to Adam Ryder for bringing it up.

Articles

You can read any of the parts by clicking on the links below.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 1) – Creating our first OData Data Source and querying data through the web browser and LinqPad.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 2) – Consuming OData in a Silverlight Application.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 3) – Consuming OData in a Windows Phone 7 Application (pre-Mango).

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 4) – Consuming OData in a Windows Phone 7 Application (Mango).

e-Book:

The e-Book is available on SilverlightShow.net. It includes a .PDF/.DOC/.MOBI of the series with high resolution graphics and complete source code. See below for a table of contents:

Contents:

Chapter 1

Creating our first OData Data Source.

Getting Setup (you will need…)

Conclusion

Chapter 2

Adding on to our existing project.

Conclusion

Chapter 3

Testing the OData Data Service before getting started…

Downloading the tools…

Getting Started

Conclusion

Chapter 4

Testing and Modifying our existing OData Data Service

Reading Data from the Customers Table

Adding a record to the Customers Table

Update a record to the Customers Table

Deleting a record from the Customers Table

Conclusion

Thanks to all for attending my talk and I hope to see you all next year.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

David Strom (@dstrom) reported Okta Has New Cloud Active Directory Integration in an 8/25/2011 post to the ReadWriteCloud blog:

Okta today announced its Directory Integration Edition cloud-based identity service. The idea is that, if it is done correctly, end users should not even know which of their apps are on-premises and which are based in the cloud. And as more apps migrate to the cloud, the seamless integration of where they are located means that the directory services piece has to function better and recognize more off-premises apps with ease.

The notion of directory integration with cloud-based apps isn't new: Previously, Okta offered through its Cloud Service Platform the ability to federate authentication between a single cloud app and AD. And there are lots of others in this space, including Cloudswitch, Centrify, and Microsoft's own Active Directory Federation Services, which have been available for some time. Today's announcement adds a SaaS-based service that replicates an on-premises Active Directory store with a number of cloud-based services. Setup is fairly straightforward and requires a downloadable Windows-based software agent to make an outbound connection across the link.

Okta's Directory Integration Edition goes beyond federation to provision and update user information across the cloud/premises links. It can handle the thousands of cloud apps that Okta has already cataloged for this kind of integration. Here you can see an example of how to set up your Salesforce integration using this service.

This means once a user logs into a Windows domain, their identity is maintained no matter what other app they bring up on the local network or in whatever cloud apps they pull up in their browser.

Pricing is $1 per user per month, with discounts beyond 200 users.

Windows Azure AppFabric Access Control solutions are much less expensive.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• The Windows Azure Team (@WindowsAzure) announced New Bytes by MSDN Delivers the Latest Interviews with Experts on the Cloud and Windows Azure in an 8/25/2011 post:

Bytes by MSDN interviews offer a great way to learn from cloud experts and Microsoft developers as they talk about user-experience, the cloud, phone, and other topics they’re passionate about. This week's featured video is with Rob Gillen and Dave Nielsen, who discuss how the scientific community harnesses the cloud for high performance computing. Rob, from Planet Technologies, illustrates how Cloud Computing fits into the areas of computational biology and image processing and shares a neat example of gene mapping using the Basic Local Alignment Search Tool (BLAST) on Windows Azure.

Other recent Bytes by MSDN videos about the cloud and Windows Azure include:

Jim O’Neil and Dave on Windows Azure and Start-ups

In this discussion, Jim O'Neil, Microsoft’s North East developer evangelist, and Dave Nielsen, co-founder of CloudCamp & principal at Platform D, share real-life examples of start-up companies that are harnessing the cloud to enhance their productivity and grow cost-effectively.

Michael Wood and Dave on Windows Azure And Proof-of-Concept Projects

In this discussion Strategic Data Systems’ Michael Wood and Dave talk about how Cloud Computing can alleviate the backlog and lower the barrier to innovation.

Lynn Langit and Dave on Windows Azure and Big Data

In this talk, Microsoft’s Lynn Langit and Dave offer a few examples of big data, and how data scientists are using the Cloud to manage massive amounts of data. Using SQL Azure as an example, Lynn describes why customers are using a combination of NoSQL Windows Azure tables and SQL Azure relational tables.

Bruno Terkaly and Dave on Windows Azure and Mobile Applications

With 4.5 billion mobile devices around the globe, the cloud enables scale in terms of power and storage to support growing markets. In this video, Microsoft Senior Developer Evangelist Bruno Terkaly and Dave discuss how the cloud is powering the mass consumerization of mobile applications and enables sites with massive storage needs and a growing user base to scale using the cloud.

Check back often for the next installment in the series or subscribe and take it on the go.

Subscribed.

• Craig Kitterman (@craigkitterman) reported From DrupalCon London: Drupal and Microsoft – It’s Jolly Good! on 8/25/2011:

It’s been a busy week here in Croydon (just outside of central London), and Microsoft is very proud to be participating in our 5th DrupalCon. The most exciting bit is the clear progress that’s been made as a result of our engagements with the Drupal community. Last month also marked the one-year anniversary of the work set out by The Commerce Guys on the Drupal 7 Driver for SQL Server and SQL Azure, a solid piece of work that is starting to see great uptake in the Drupal community.

There’s been solid progress, but the journey continues. Over the past 8 months we have received a great deal of feedback on Drupal on Windows and Windows Azure as well as tools/features that would create value to the community and their customers. We took these things to heart and once again engaged the community to help close the gaps in these areas.

drush on Windows

ProPeople (the creators of drush) has independently developed and released drush for Windows with the help of the co-maintainer of drush. From Drupal.org: “drush is a command line shell and scripting interface for Drupal, a veritable Swiss Army knife designed to make life easier for those of us who spend some of our working hours hacking away at the command prompt.” For the Drupal power user, drush is a must and we are proud to have sponsored this initial release. Bringing this capability to Windows unlocks new scenarios for these users and we are really excited to see what's next here!

Windows Azure Integration

There has been steady progress on the journey to delivering a great offering for Windows Azure and I would like to share with you the status of making this a reality.

First, with version 4.0 of the PHP SDK for Windows Azure, the team at Real Dolmen released a new tool that provides the capability to “scaffold” Windows Azure projects based on templates. What this means is that for any project type or application, one can easily create a template that ensures the proper file structure, as well as automatic configuration of the application components. To help those who wish to run Drupal today on Windows Azure, the Microsoft Interop Team has released a simple scaffold template and instructions which provide a shortcut to getting up and running quickly with an instance of Drupal that will scale on Windows Azure. This solution is not going to work for everyone and we are continuing to invest in building an even simpler and more streamlined solution that will be truly ready for the masses – stay tuned for more info on this in the coming months.

And second, The Commerce Guys have released a Windows Azure Integration module. This provides an easy method to offload media storage for your Drupal site to Windows Azure blob storage. There are a number of benefits to using the power of the cloud to store this type of data, regardless of where your application is actually hosted. By leveraging this module along with the Windows Azure Content Delivery Network (CDN), you can with just a few clicks have geo-distributed edge caching of all your Drupal site media assets across 24 CDN nodes worldwide – getting that content closer to the user providing greater performance and lower server loads on your web server(s).

Finally, with continuous improvement of the Drupal 7 Driver for SQL Server and SQL Azure mentioned earlier, SQL Azure support is getting even better giving Drupal users the ability to have an auto-scale, fault tolerant database at their disposal with a couple of clicks without any of the headaches of configuration or management (all handled by Microsoft).
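To make the blob-storage-plus-CDN arrangement described above more concrete, here is a minimal Python sketch of the URL rewrite it implies: media that lives in a blob container is served from a CDN hostname instead of the storage hostname. This is not the integration module's code. The account name, the CDN endpoint name and the `to_cdn_url` helper are all hypothetical placeholders chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical placeholder hosts; substitute your own storage account
# and the CDN endpoint assigned to it.
BLOB_HOST = "myaccount.blob.core.windows.net"
CDN_HOST = "az12345.vo.msecnd.net"


def to_cdn_url(blob_url, blob_host=BLOB_HOST, cdn_host=CDN_HOST):
    """Return the CDN form of a blob URL, or the URL unchanged if it
    does not point at the given storage host."""
    parts = urlsplit(blob_url)
    if parts.netloc.lower() != blob_host:
        return blob_url  # not one of our media assets; leave it alone
    # Only the host changes; the container/blob path and query stay the same.
    return urlunsplit(
        (parts.scheme, cdn_host, parts.path, parts.query, parts.fragment)
    )


if __name__ == "__main__":
    print(to_cdn_url("http://myaccount.blob.core.windows.net/media/logo.png"))
```

Only the hostname differs between the two forms, which is why the application can be hosted anywhere while its media is served from the edge.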

We look forward to continued engagements and discussions with the Drupal community on these great Open Source projects and are really excited about the progress that has been made to date. Together we will have more great news to share in the coming months!

Cheers!

Craig Kitterman

Sr. Technical Ambassador

Aemami claimed Inspired by Windows 8 [and powered by Windows Azure], Weave 3.0 takes Metro on Windows Phone to a new level in an 8/24/2011 press release from WMPowerUser.com:

Seles Games – makers of the popular “Windows Phone News” app – is proud to announce the latest update to their signature news reader Weave. For the first time ever, Weave is now available as a FREE application.

Weave 3.0 has been rebuilt from the ground up to be faster, more beautiful, and easier to use than ever. The redesign of the app was inspired by the new tablet interface for Windows 8. The changes reflect a more evolved take on Metro – ideal for anyone tired of all the various apps that look exactly alike!

- Powered by Windows Azure, all feed parsing now happens “in the cloud”. End result – news articles come in much faster than before, regardless of how many feeds and categories you have enabled [Emphasis added]

- New article coloring: when in an article list, all old articles will appear in your phone’s accent color. New articles, however, will appear in the complementary color of your phone’s accent color.

- The number of new articles is now highlighted in the phone’s accent color on the top right of the article list page, above the page count.

- Article list has a new UI. The application bar at the bottom of the screen has been removed. The ‘refresh’, ‘previous page’, and ‘next page’ buttons have all been moved to the top left side of the page. This frees up more pixels vertically, and we feel leads to a better look overall. Additionally, a settings button has been added to that page.

- The ‘settings’ button now links to a new page that lets you select amongst different preset font sizes. You can also change the font thickness/weight.

- You also have checkboxes to toggle what actions happen when you tap on an article, giving you more control over how Weave functions.

- You can now toggle the System Tray (i.e. time, battery life, WiFi, etc.) on or off on the article list page.

- At the top of the page, you can now tap on the large category name to bring down a navigation menu that takes you directly to another category!

- New ‘share’ button. Tapping it once brings up the share tiles (5 of them: Instapaper, Twitter, email, Facebook, Internet Explorer). Aside from looking cooler, we feel this new approach is easier and faster to use.

- Most animations throughout the application have been sped up.

PRWire Australia announced Object Consulting wins Innovation Partner of the Year Award at Microsoft Australian Partner Awards (2011 MAPA) in an 8/24/2011 press release:

Object Consulting is proud to announce it has been acknowledged as Winner in the Innovation Partner of the Year category at the 2011 Microsoft Partner Awards (MAPA). Object Consulting received this award on the strength of its Connected Experience solutions on the Windows Azure platform.

Connected Experience connects devices and screens together with the Azure cloud to deliver unique, innovative and compelling experiences to link organisations with their customers. Azure provides the Cloud content store that allows all of the connected devices to access shared content. It also stores identity and state such that experiences can be started on one device and then continued across other devices.

Gil Thew, Object Consulting's Executive Director responsible for New Business Development and Strategic Partnerships said at the Awards Event: “Two years ago Object recognised the great potential of Microsoft Surface in the emerging world of systems delivery.

"We have been working on this to perfect Connected Experience, a horizontal solution for any organisation that wants to optimise its contact and business relationship with their consumers. Surface and Connected Experience on the Windows Azure platform is a genuinely successful B2C solution. This award from Microsoft confirms that as much as the recent orders from our clients."

Kevin Francis, Object Consulting’s National Microsoft Practices Manager, added: “All of our cloud solutions, including Connected Experience, work across industries including financial services, healthcare and government, utilities, museums and libraries, retail, hospitality, transport and entertainment.

“Connected Experience is a unique, world-leading solution to the problems of staff and customer engagement.

“Leveraging Windows Azure and a range of exciting technologies like Microsoft Surface, Interactive Digital Signage, Tablet computers and customers’ mobile phones, and home computers, Object Consulting is able to provide a range of consistent, engaging, connected experiences in store, at home and on the move.”

Further details on Object Consulting’s Connected Experience Solution are available at www.connectedexperience.com.au.

…

About Object Consulting (www.objectconsulting.com.au)

Object Consulting is Australia’s leader in delivering enterprise business solutions. With 21 years of experience, Object’s consulting, development, training and support services keep their customers at the forefront of innovation.

Marketwire reported Ariett's Xpense & MyTripNet Solutions Successfully Complete Microsoft(R) Windows Azure Platform Testing in an 8/24/2011 press release:

Ariett, a leading provider of travel, expense, AP invoice and purchasing automation solutions, is pleased to announce that MyTripNet, Xpense and AP Invoice have successfully completed Microsoft® Windows Azure™ platform compatibility testing. This week, thousands of business travelers will gather at the 2011 Global Business Travel Association (GBTA) Convention in Denver, Co., to find cost-effective solutions for several industry challenges. One of the biggest challenges facing travelers and corporations is the need to effectively manage employee travel and expenses. Now business travelers and their companies can be assured of the highest quality platform reliability and security for travel approval and expense reporting with an Ariett Cloud based Software as a Service (SaaS) deployment.

Today, business travelers are working harder than ever, covering more miles and selling across the globe. In order to improve workforce productivity, they need a simple way to handle business trip details. From pre-booking approval to expense reporting, the entire process can be time-consuming, unsystematic, and costly.

With Ariett's MyTripNet and Xpense as a cloud-based service on the Windows Azure platform, Ariett can provide travel and expense software that is easily accessible, extremely secure, and user friendly to businesses. Microsoft manages the platform and Ariett manages the application software and client training, with a full complement of functionality including robust reporting, policy and audit management, corporate credit card integration, multicurrency, automated tax calculations and mobile smart phone support.

"Ariett MyTripNet and Xpense Cloud solutions are a full enterprise-class version of our on premise solution and is not a simplified, light solution," said Glenn Brodie, President of Ariett Business Solutions. "Ariett on Microsoft Windows Azure allow us to deliver dramatic scalability and secure services to customers from 20 to 20,000 users on the same platform."

Web Service Integration

For customers using Microsoft Dynamics GP, Ariett on Windows Azure™ will include optional, secure web service integration. This additional Web service provides a complete closed loop service with seamless integration to the financial system, following the business rules in Dynamics GP for the specific company selected. This capability eliminates the requirement for time consuming and error prone manual data file uploads and download and completes this process at the click of a button and eliminates the need to deploy the integration manager for file uploads. The client-located portion of the Web service will reside at the client's site on their Microsoft IIS Server.

Ariett Cloud services require low capital expenditures and operational costs, resulting in enterprise, global functionality at a mid-market per user or per transaction price. Ariett customers benefit from the Cloud platform by having the ability to utilize multiple global Microsoft data centers for scalability, reliability and security of Microsoft software premier Cloud based computing.

About Ariett Business Solutions Inc.

Ariett provides Purchasing and Expense software solutions for global companies that automate Requisition and Purchase Management, AP Invoice Automation and Employee Expensing Management with Pre-Travel and Booking. Ariett offers enterprise class functionality with superior workflows, web-based analytics and reporting, document management, and electronic receipt & credit card processing. Offered in the Cloud, Software as a Service (SaaS) on Microsoft Windows Azure or deployed on premise, with optional direct integration to Microsoft Dynamics ERP. Visit www.ariett.com for more information.

Wade Wegner (@WadeWegner) updated the Windows Azure Toolkit for iOS (Library) - v1.2.0 to v1.2.1 on GitHub on 8/18/2011:

The Windows Azure Toolkit for iOS is a toolkit for developers to make it easy to access Windows Azure storage services from native iOS applications. The toolkit can be used for both iPhone and iPad applications, developed using Objective-C and XCode.

The toolkit works in two ways – the toolkit can be used to access Windows Azure storage directly, or alternatively, can go through a proxy server. The proxy server code is the same code as used in the WP7 toolkit for Windows Azure (found here) and negates the need for the developer to store the Azure storage credentials locally on the device. If you are planning to test using the proxy server, you’ll need to download and deploy the services found in the cloudreadypackages here on GitHub.

The Windows Azure Toolkit for iOS is made available as an open source product under the Apache License, Version 2.0.

Downloading the Library

To download the library, select a download package (e.g. v1.2.0). The download zip contains binaries for iOS 4.3, targeted for both the simulator and devices. Alternatively, you can download the source and compile your own version. The project file has been designed to work with XCode 4.

Using the Library in your application

To use the library in your own application, add a reference to the static library (libwatoolkitios.a) and reference the include folder on your header search path. The walkthrough document at http://www.wadewegner.com/2011/05/windows-azure-toolkit-for-ios/ provides a more thorough example of creating a new XCode 4 project and adding references to the library.

or

Drag the library project into your Xcode 4 workspace. Set the watoolkitios-lib project as a target dependency of your project and add libwatoolkitios.a to your set of linked libraries in the build phases of your project.

Using the Sample Application

The watoolkitios-samples project contains a working iPhone project sample to demonstrate the functionality of the library. To use this, download the XCode 4 project and compile. Before running, be sure to enter your Windows Azure storage account name and access key in RootViewController.m. Your account name and access key can be obtained from the Windows Azure portal.

Contact

For additional questions or feedback, please contact the team.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

• Steve Hoag of the Visual Studio LightSwitch Team announced Extensibility - Metro Theme Sample Code Released! on 8/25/2011:

This morning the LightSwitch Team released samples in both Visual Basic and C# that contain the code for the recently released Metro Theme for Visual Studio LightSwitch.

For more information on creating extensions for Visual Studio LightSwitch, see Visual Studio LightSwitch 2011 Extensibility Toolkit.

Download the LightSwitch Metro Theme Extension Sample

The sample expands upon the Help topic Walkthrough: Creating a Theme Extension, which demonstrates a simple theme that defines fonts and colors. The Metro theme also makes use of styles, defining new appearance and behavior for the built-in LightSwitch control templates. To provide a consistent experience, you will need to define Resource Dictionaries in the form of a .xaml file for each control template.

Additional styles are defined in the MetroStyles.xaml file, which also contains a MergedDictionaries node that references the other .xaml files. When LightSwitch loads the extension, it reads in all of the style information and applies it to the built-in templates, providing a different look and feel for your application.

There isn't much actual code in this sample; most of the work is done in xaml. You can use this sample as a starting point for your own theme, changing the fonts, colors, and styles to create your own look.

Michael Washington (@ADefWebserver) reported Beth Massi presents on LightSwitch on 8/25/2011:

Beth Massi spoke at the Los Angeles Silverlight User's Group about LightSwitch at Blank Spaces in Santa Monica, CA on 8/24/2011. The event was co-sponsored with the LightSwitchHelpWebsite.com.

The room was packed with over 40 people and she did a special deep dive into extending LightSwitch for advanced situations.

Beth also revealed that her team will introduce some exciting new LightSwitch extensions next week.

(Beth Massi and Michael Washington (of LightSwitchHelpWebsite.com))

(It’s a slow news day.)

See the Michael Washington (@ADefWebServer) will present W8 Unleash The Power: Implementing Custom Shells, Silverlight Custom Controls and WCF RIA Services in LightSwitch at 1105 Media’s Visual Studio Live! conference on 12/7/2011 at the Microsoft Campus in Redmond, WA article in the Cloud Computing Events section below.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• The Information Week::analytics staff released their Network Computing Digital Issue: August 2011 for no-charge download on 8/25/2011:

- Hypervisor Derby: Microsoft and Citrix are closing the gap with VMware. Before you roll out the latest edition of vSphere, reconsider your virtualization platform. [Emphasis added.]

- Cloud Reliability: Into every public cloud a little downtime must fall. We outline the steps to maximize availability and keep you from getting soaked by outages.

- OpenFlow's Possibilities: Mike Fratto discusses the standards effort’s potential to make networks more responsive to application demands.

- VMware Pricing Changes Cause IT Grief: When vendors make major changes out of the blue, IT goes through five stages of grief. Howard Marks helps you get over it.

Table of Contents

3 Preamble: Get Ready For OpenFlow's Smarter Networks

OpenFlow can make networks more automated and intelligent.

4 Datagram: The Five Stages Of IT Grief

When vendors make big changes, IT suffers.

5 Hypervisor Derby

VMware leads on virtualization features, but Microsoft and Citrix are closing the gap. Before you roll out vSphere 5, now's the time to consider switching horses.

13 Intro To Cloud Reliability

Every public cloud suffers downtime. Here's how to maximize availability and keep apps running, rain or shine.

18 Editorial and Business Contacts

About the Author

InformationWeek is the business technology market's foremost multimedia brand. We recognize that business technology executives use various platforms for different reasons throughout the technology decision-making process, and we develop our content accordingly. The real-world IT experience and expertise of our editors, reporters, bloggers, and analysts have earned the trust of our business technology executive audience.

InformationWeek is the anchor brand for the InformationWeek Business Technology Network, a powerful portfolio of resources that span the technology market, including security with DarkReading.com, storage with ByteandSwitch.com, application architecture with IntelligentEnterprise.com, network architecture with NetworkComputing.com, communications with NoJitter.com and internet innovation with InternetEvolution.com.

Through its multi-media platform and unique content-in-context information distribution system, the InformationWeek Business Technology Network provides trusted information developed both by editors and real-world IT professionals delivered how and when business technology executives want it, 24/7.

David Linthicum (@DavidLinthicum) asserted “While businesses are making the move for long-term savings and flexibility, the U.S. government is mired in budget and staffing woes -- and excess desire for control” as a deck for his 3 reasons the feds are avoiding cloud computing post of 8/25/2011 to InfoWorld’s Cloud Computing blog:

A New York Times article does a great job defining the issues around cloud adoption within the U.S. government -- or, I should say, the glaring lack thereof. As the Times reports, "Such high praise for new Internet technologies may be common in Silicon Valley, but it is rare in the federal government."

Convenient excuses for skirting cloud computing are easy to find these days; for example, attacks on internal government systems from abroad this spring and summer are easy to recall. In July, the Pentagon said it suffered its largest breach when hackers obtained 24,000 confidential files.

I suspect that issues such as the recent attacks are going to be more the rule than the exception, so there will always be an excuse not to move to cloud. (I reject the notion that cloud-based systems are fundamentally less secure, by the way.)

It's really how you use security best practices and technology, not so much where the server resides. I'm not suggesting that state secrets go up on Amazon Web Services right now, but almost all of the other information managed by the government is fine for the cloud.

Why the lack of adoption? Once again, the lack of speed in the government's move to the cloud comes down to three factors: money, talent, and control.

No money. Most agencies just don't have the money to move to the cloud at this time. Migration costs are high, and lacking the budget, it's easier for them to maintain systems where they live rather than make the leap to cloud computing. Although the ROI should be readily apparent after a few years, what they lack are "seed" dollars.

No talent. There continues to be a shortage of cloud computing talent in the government and its contractors. There's no experience in moving government IT assets to the cloud, despite what's spun out to the public.

Control. Finally, there's the matter of control. Some IT people define their value around the number of servers they have in the data center. Cloud computing means fewer servers, so they push back using whatever excuses they can find in the tech press, such as security and outages.

I'd really like to see the government solve this one. Because it's workable.

So would I, David.

Andre Yee described Five Challenges to Monitoring Cloud Applications in an 8/12/2011 article for ebizQ’s Cloud Talk blog:

Cloud application monitoring is the Rodney Dangerfield of cloud technologies - it gets no respect... but it ought to. Notice how much of the discussion around cloud computing is about how to design, build or deploy cloud applications. There are healthy debates around frothy topics like security and vendor lock-in. However, when it comes to how cloud applications (SaaS) are monitored and managed on an ongoing basis, there's a dearth of information and interest.

Perhaps there's a working assumption that monitoring cloud applications is only marginally different from monitoring internal enterprise applications. That assumption couldn't be more wrong. Undoubtedly, there are similarities in the monitoring of enterprise applications that transpose seamlessly to the cloud. But monitoring cloud applications brings new challenges that need to be addressed by the IT organization.

Here are just five challenges that make monitoring cloud applications fundamentally different from monitoring the traditional on-premise enterprise application.

Virtualized Resourcing - Virtualization in both private and public clouds poses a new challenge to systems monitoring tools. Instead of simply monitoring the key system elements of each individual physical node, the use of virtualization creates a dynamic capacity pool of compute and storage resources that needs to be monitored and ultimately managed in a completely different way. For example, monitoring the hypervisor layer for performance and resource capacity isn't something traditional systems management tools were built to handle.

Profiling End User Response Times - End user performance monitoring is different for a cloud application for two reasons. First, cloud applications operate across the open public network, a fact that poses its own challenges in being able to effectively monitor response times. Second, due to the nature of SaaS, end users are often distributed across the globe; location is no longer a limitation in application accessibility. This makes determining end user response time particularly difficult. If users in Singapore experience slow performance, how do you measure the effective application response times and determine if it's due to the Internet or the application? Measuring end user response times used to be easier with on-premise enterprise applications.

Web Scale Load Testing - One difference between on-premise enterprise applications and cloud applications is scale: in short, enterprise scale vs. web scale. Enterprise scale is bounded, predictable and measurable. Web scale experienced by cloud applications isn't. The traffic spikes with cloud applications are seemingly unlimited and unpredictable. Performing load testing at web scale is a present challenge in testing and monitoring cloud applications.

Multi-tenancy - Multi-tenancy is an architectural construct that introduces new challenges to monitoring. Conventional multi-tenant architectures provide a single instance of the application, founded on a common data model servicing multiple tenants (customer/client accounts). This logical abstraction makes it more challenging to monitor and profile individual client performance indicators. Application administrators need to be able to monitor not only the overall health of the application but also the performance issues related to a specific tenant.

Trend to Rich Clients/RIA Clients - As cloud applications move toward richer HTML5 clients, profiling application performance will become more difficult. Finding the root cause of performance in older SaaS applications is easier in some ways due to the limited client side processing. With most of the processing happening on server side, it's within the visibility of the IT monitoring team. But the introduction of HTML5 means richer clients with more processing and potentially more systems issues happening client side. However, gaining visibility into the client side processing is near impossible - current monitoring tools are severely limited in this area. It's an example of how old models of monitoring SaaS won't work in the new world. However, due to the widespread and increasing adoption of HTML5, you can expect more tools to show up on the market to address this issue.
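As a rough illustration of the response-time and multi-tenancy challenges above, the following Python sketch groups response-time samples first by tenant and then by region and reports a median and 95th percentile for each group. It is a minimal sketch under stated assumptions, not a description of any monitoring product: the sample records, the field names (`tenant`, `region`, `ms`) and the nearest-rank percentile method are all illustrative choices.

```python
import math
from collections import defaultdict


def percentile(sorted_values, pct):
    """Nearest-rank percentile of a sorted, non-empty list."""
    rank = max(1, math.ceil(len(sorted_values) * pct / 100))
    return sorted_values[rank - 1]


def summarize(samples, key):
    """Group response-time samples by `key` ('tenant' or 'region')
    and compute count, median and 95th percentile per group."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample[key]].append(sample["ms"])
    summary = {}
    for name, values in groups.items():
        values.sort()
        summary[name] = {
            "count": len(values),
            "median_ms": percentile(values, 50),
            "p95_ms": percentile(values, 95),
        }
    return summary


# Hypothetical measurements; a real system would collect these from
# probes or client-side timing.
SAMPLES = [
    {"tenant": "acme", "region": "us-east", "ms": 120},
    {"tenant": "acme", "region": "singapore", "ms": 480},
    {"tenant": "acme", "region": "singapore", "ms": 510},
    {"tenant": "globex", "region": "us-east", "ms": 130},
    {"tenant": "globex", "region": "singapore", "ms": 495},
    {"tenant": "globex", "region": "us-east", "ms": 110},
]

if __name__ == "__main__":
    for key in ("tenant", "region"):
        for name, stats in sorted(summarize(SAMPLES, key).items()):
            print(key, name, stats)
```

Slicing the same samples both ways hints at where to look. If every tenant is slow from one region, the network path is the likelier suspect. If one tenant is slow everywhere, the application or that tenant's data deserves a closer look.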

In a future post, I'll be highlighting some of the tools available today to handle some of these challenges. I'd like to hear your thoughts and stay tuned for more on this topic.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• The Microsoft Server and Cloud Platform Team suggested that you Check out the New Microsoft Server and Cloud Platform Website! on 8/25/2011:

The new site is a comprehensive source for information relating to our private cloud and virtualization solutions, as well as other solutions based on Windows Server, System Center, Forefront and related products. It is designed to help connect you to relevant information across Microsoft web destinations, including TechNet, MSDN, Pinpoint and more - making it a great place to understand what we offer, why you should consider it and how to get started.

Enjoy the new site and stay tuned to the blog for the latest Server & Cloud Platform updates!

Subscribed.

• The Microsoft Server and Cloud Platform Team announced The Microsoft Path to the Private Cloud Contest - Begins August 30th, 2011! in an 8/25/2011 post:

Your opportunity to win one of two Xbox 360 4GB Kinect Game Console Bundles!

Starting next week on August 30th at 9:00 a.m. PST, we’re kicking off our Microsoft Path to the Private Cloud Contest. During the contest you’ll have the opportunity to demonstrate your knowledge of Microsoft virtualization, systems management and private cloud offerings by being the first to provide the correct answers to three questions posted daily on the Microsoft Server and Cloud Platform blog.

Aidan Finn and Damian Flynn are conducting a Survey: Why Hyper-V? on their Hyper-V.nu site:

I’d like to draw your attention to a survey focusing on why you selected Microsoft as your virtualization platform.

This survey aims to get an idea about why you chose Hyper-V, how you use it, and what your experience has been. It has nothing to do with Microsoft; they’ll see the results at the same time you do. And we’re not collecting any personal information.

There are 4 pages of questions. Yes; that might seem like a lot but you’ll get through this in a few minutes. When it is complete, we will be publishing the results and analysis on the following sites. We believe it should give everyone an interesting view on what is being done with Hyper-V worldwide.

You can find the survey here: http://kwiksurveys.com/online-survey.php?surveyID=NLMJNO_8579c98b

Here is Aidan Finn’s blog about The Great Big Hyper-V Survey of 2011: http://www.aidanfinn.com/?p=11486

See the PR2Web reported IBM Unveils New Hybrid Cloud Solution for the Enterprise in an 8/25/2011 press release article in the Other Cloud Computing Platforms and Services section below.

<Return to section navigation list>

Cloud Security and Governance

<Return to section navigation list>

Cloud Computing Events

My (@rogerjenn) Windows Azure and Cloud Computing Videos from Microsoft’s Worldwide Partners Conference 2011 post of 8/25/2011 provides links to the videos:

The WPC 2011 team posted videos of all keynotes and sessions at WPC 2011 in mid-August 2011 (missed when published). Following are links to Windows Azure and cloud-related sessions in two tracks:

- CLD01 - Now is the Time to Build a Successful Cloud...

- CLD03 - Make $$$ in the Cloud with Managed Services

- CLD05 - Embracing the Cloud: Leveraging the Windows...

- CLD07 - Cloud Ascent: Learn How to Evolve Your SI...

- CLD10 - Websites and Profitability in the Cloud with...

- CLD12 - How to Build a Successful Online Marketing and...

Michael Washington (@ADefWebServer) will present W8 Unleash The Power: Implementing Custom Shells, Silverlight Custom Controls and WCF RIA Services in LightSwitch at 1105 Media’s Visual Studio Live! conference on 12/7/2011 at the Microsoft Campus in Redmond, WA:

Do not be fooled into believing that Visual Studio LightSwitch is only for basic forms-over-data applications. LightSwitch is a powerful MVVM (Model-View-ViewModel) application builder that enables you to achieve incredible productivity.

LightSwitch Silverlight Custom Controls and WCF RIA Services provide the professional developer with the tools to unleash the power of this incredible product.

In this session you will learn that there is practically nothing you can do in a normal Silverlight application that you cannot do in LightSwitch.

- When you use a Custom Shell, and Silverlight Custom Controls, you have 100% control of the user interface.

- WCF RIA Services, allow you to access any data source, and to perform complex Linq queries, and operations that are normally not possible in LightSwitch.

- How to create and implement Silverlight Custom Controls in LightSwitch

- How to create and consume WCF RIA Services

- How to use a Custom Shell to have total control over your user interface

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Maureen O’Gara asserted “Price was not disclosed but CloudSwitch raised about $15.4 million from the venture boys” as a deck for her Verizon Buys CloudSwitch post of 8/25/2011 to the Cloudonomics Journal:

Verizon said Thursday that it's bought three-year-old cloud management software start-up CloudSwitch to help its recently acquired Terremark IT services subsidiary move applications or workloads between company data centers and the cloud.

CloudSwitch is supposed to do it without changing the application or the infrastructure layer, which is deemed an adoption hurdle.

CloudSwitch's software appliance technology ensures that enterprise applications remain tightly integrated with enterprise data center tools and policies, and can be moved easily between different cloud environments and back into the data center based on the requirements. They are supposed to feel like they're running locally.

CloudSwitch CEO John McEleney said in a statement that the company's "founding vision has always been to create a seamless and secure federation of cloud environments across enterprise data centers and global cloud services."

Reportedly Terremark and CloudSwitch were already partners.

Price was not disclosed but CloudSwitch raised about $15.4 million from the venture boys.

Anuradha Shukla reported EnterpriseDB Intros Database-as-a-Service for Public and Private Clouds in an 8/25/2011 post to the Cloud Tweaks blog:

EnterpriseDB has introduced a full-featured, enterprise-class PostgreSQL database-as-a-service (DaaS) for public and private clouds with support for Amazon EC2 and Eucalyptus.

The new launch, Postgres Plus Cloud Server, can also be used outside the cloud as an easy-to-use scale-out database architecture for bare-metal deployment in standard data centers. EnterpriseDB provides PostgreSQL and Oracle compatibility products and services. With its new release, it is bringing capabilities expected in a premier cloud database solution. The company is now delivering point-and-click simple setup and management with a web-based interface and transparent/elastic node addition capabilities to its users.

Postgres Plus Cloud Server also provides automatic load balancing and failover; status management and monitoring; online backup and point-in-time recovery; and database cloning. EnterpriseDB will offer two versions of its cloud database within Postgres Plus Cloud Server. The first offering is PostgreSQL 9.0, an advanced open source database. The second, Postgres Plus Advanced Server 9.0, adds enterprise-class performance, scalability and security on top of PostgreSQL.

EnterpriseDB is set to open a private Postgres Plus Cloud Server beta for customers and partners on August 31, 2011.

According to the company, this should be great news because, even though a large number of organizations are enabling applications to be deployed on public and private cloud infrastructure, there are no PostgreSQL DaaS options that have the features, functionality and support required for enterprise environments.

Postgres Plus Cloud Server addresses these requirements and also offers a simple way of moving applications written to run on Oracle to the cloud at a fraction of the cost of Oracle license prices.

Kenneth van Surksum (@kennethvs) reported VMware releases beta of Micro Cloud solution for Cloud Foundry in an 8/25/2011 post:

When VMware announced its Platform as a Service (PaaS) solution, Cloud Foundry, in April this year, it explained that Cloud Foundry would be able to run on several types of clouds, including what VMware called a Micro Cloud, which technically means a VM running under VMware Fusion or Player.

The beta of Micro Cloud Foundry can be downloaded from the Cloud Foundry website; a CloudFoundry.com account is required. Once it is running, developers should be able to use vmc or STS from their client to target the Micro Cloud Foundry instance.

PR2Web announced IBM Unveils New Hybrid Cloud Solution for the Enterprise in an 8/25/2011 press release:

New offering will connect, manage and secure software-as-a-service and on-premise applications.

IBM … today announced a new hybrid cloud solution, building on its acquisition of Cast Iron, to help clients significantly reduce the time it takes to connect, manage and secure public and private clouds. With new integration and management capabilities, organizations of all sizes will gain greater visibility, control and automation into their assets and computing environments, regardless of where they reside.

As a result, it becomes significantly easier to integrate and manage all of an organization's on- and off-premise resources, and a task that once took several months can now be done in a few days.

Demand for Hybrid Cloud Computing Model Grows

Increasing numbers of organizations are looking to leverage the scale and flexibility of public cloud, but are concerned about losing control of resources outside of their four walls. This is causing organizations to embrace a hybrid cloud model, where they can more easily manage some resources in-house, while also using other applications externally as a service.

According to industry analysts, 39 percent of cloud users report that the hybrid cloud is currently part of their strategy, with this number expected to grow to 61 percent in the near future. This is the result of both private and public cloud users evolving towards the use of a hybrid strategy.1

Yet, as businesses adopt a cloud computing model, they are faced with integrating existing on premise software such as Customer Relationship Management (CRM), Enterprise Resource Planning (ERP) and homegrown applications, while managing their usage and security. Managing the complexities within and between such hybrid environments is key to effective enterprise business use of software-as-a-service (SaaS), according to Saugatuck Technology.2

New IBM Technology Will Manage, Connect and Secure Public and Private Clouds.

To overcome this challenge, IBM is expanding its SmartCloud portfolio with new hybrid cloud technology. The solution will build new hybrid cloud capabilities of IBM Tivoli cloud software onto the application and data integration technology from its Cast Iron acquisition to provide:

- Control and Management Resources: The new software will define policies, quotas, limits, monitoring and performance rules for the public cloud in the same way as on premise resources. This allows users to access public cloud resources through a single-service catalog enabling IT staff to govern the access and the usage of this information. As a result, organizations will more easily be able to control costs, IT capacity and regulatory concerns.

- Security: IBM enables better control of users’ access by synching the user directories of on premise and cloud applications. The automated synchronization means users will be able to gain entry to the information they are authorized to access.

- Application Integration: Using a simplified "configuration, not coding" approach to application integration, the software combines the power of native connectivity with industry leading applications to provide best-practices for rapid and repeatable project success.

- Dynamic Provisioning: IBM’s monitoring, provisioning and integration capabilities allow its hybrid cloud to support "cloud bursting," which is the dynamic relocation of workloads from private environments to public clouds during peak times. IBM’s technical and business policies control this sophisticated data integration.
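The "cloud bursting" idea in the last item can be illustrated with a minimal Python sketch. This is not IBM's implementation: the `Workload` type, the `place_workloads` function and the capacity units are hypothetical names invented for illustration. The sketch fills private capacity first and relocates whatever does not fit to a public cloud.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical workload record; 'demand' is in arbitrary capacity units."""
    name: str
    demand: int


def place_workloads(workloads, private_capacity):
    """Greedy first-fit: keep workloads private while capacity lasts, burst the rest.

    Returns a dict mapping each workload name to "private" or "public".
    """
    placement = {}
    remaining = private_capacity
    for w in workloads:
        if w.demand <= remaining:
            placement[w.name] = "private"
            remaining -= w.demand
        else:
            # Peak load: the private environment is full, so burst to the public cloud.
            placement[w.name] = "public"
    return placement


if __name__ == "__main__":
    peak = [Workload("crm", 40), Workload("erp", 50), Workload("reports", 30)]
    print(place_workloads(peak, private_capacity=100))
```

A real policy would also weigh the quotas, security rules and regulatory limits described in the other items; the greedy rule here only shows the overflow behavior.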

…

The press release continues with a paean to IBM Cloud Computing.

Barton George (@Barton808) posted a 00:07:27 Cote’s first 10 days at Dell video interview on 8/25/2011:

A few weeks ago Michael Cote joined Dell from the excellent analyst firm Redmonk, which focuses on software and developers. Cote, who spent five plus years with Redmonk, has joined Dell in our corporate strategy group, focusing on software. I for one am very glad he’s here and feel that he’s joined at the right time in Dell’s trajectory to make a big impact.

I grabbed some time with him to get his initial thoughts and impressions. Here are his thoughts, both witty and wise.

[Note: So there's no confusion, this Michael Cote is Dell's second Michael Cote. The first is the former CEO of SecureWorks, which Dell acquired.]

Some of the ground Cote covers:

- Intro: Man is it hot, Cote’s background

- (0:34) Why Cote made the move: going to the other side of the fence

- (1:55) What is his new position and what will he be doing: his cloudy focus

- (2:44) His first impressions: serious about solutions

- (5:18) What his big goal is while at Dell

Extra-credit reading:

- An Era Ends, An Era Begins – Stephen O’Grady, Redmonk

It sounds to me as if Michael will be focusing primarily on Dell’s cloud computing strategy, rather than software.

Keith Hudgins (@keithhudgins) offered an Introduction to Crowbar (and how you can do it, too!) in an 8/24/2011 post:

Dell's Crowbar is a provisioning tool that combines the best of Chef, OpenStack, and Convention over Configuration to enable you to easily provision and configure complex infrastructure components that would take days, if not weeks, to get up and running without such a tool.

What is Crowbar?

Crowbar was originally written as a simplified installer for OpenStack's compute cloud control system Nova. Because you can't run Nova without having at least three separate components (one of which requires its own physical server), creating an automated install system requires more than just running a script on a server or popping in a disk.

Crowbar consists of three major parts:

- Sledgehammer: A lightweight CentOS-based boot image that does hardware discovery and phones home to Crowbar for later assignment. It can also munge the BIOS settings on Dell hardware.

- OpenStack Manager: This is the Ruby-on-Rails based web UI to Crowbar. (Fun fact: it uses Chef as its datastore... there's some pretty sweet Rails model hackery inside!)

- Barclamps: The meat and potatoes of Crowbar - these are the install modules that set up and configure your infrastructure (across multiple physical servers, even).

Dell and other contributors have been working on extending Crowbar outside of just OpenStack as a generalized infrastructure provisioning tool. This article is the first in a 3-part series describing the development and build-up to a Barclamp (a Crowbar install snippet) that gets CloudFoundry running for you.

So, that's neat and all, but what if you want to hack on this stuff and don't have a half-rack worth of servers lying around? This is DevOps, man, you virtualize it! (Automation will come later. I promise!)

Virtualizing Crowbar

The basics of this are shown on Rob Hirschfeld's blog and a little more on the project wiki. Dell, being Dell, is pretty much a Windows shop. We don't hold that against them, but the cool kids chew their DevOps chops on Mactops and Linux boxen. Since VMware Fusion isn't that far from VMware Workstation, it only took a half day of reading docs and forum posts to come up with a reliable way to do it on a Mac.

Bonus homework: Get that running and read down to the bottom of the DTO Knowledge Base article I just linked, and you'll get a sneak preview of where we're going with this article series.

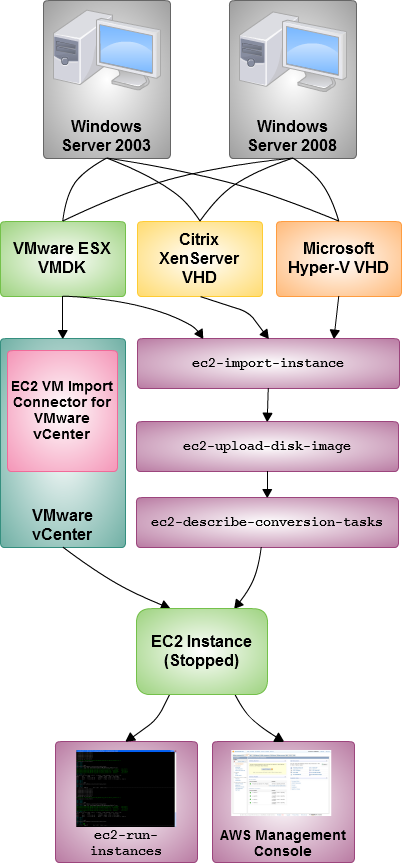

Jeff Barr (@jeffbarr) announced Additional VM Import Functionality - Windows 2003, XenServer, Hyper-V for Amazon Web Services in an 8/24/2011 post:

We've extended EC2's VM Import feature to handle additional image formats and operating systems.

The first release of VM Import handled Windows 2008 images in the VMware ESX VMDK format. You can now import Windows 2003 and Windows 2008 images in any of the following formats:

- VMware ESX VMDK

- Citrix XenServer VHD

- Microsoft Hyper-V VHD
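The supported combinations listed above can be captured in a small lookup. The following Python sketch is only an illustration: `SUPPORTED_OS`, `SUPPORTED_FORMATS` and `is_importable` are hypothetical names, not part of the EC2 API tools, and the sets hold just the operating systems and image formats named in this announcement.

```python
# Values are taken from the announcement; the names below are hypothetical
# and are not part of any AWS tool or SDK.
SUPPORTED_OS = {"Windows 2003", "Windows 2008"}
SUPPORTED_FORMATS = {
    "VMware ESX VMDK",
    "Citrix XenServer VHD",
    "Microsoft Hyper-V VHD",
}


def is_importable(os_name: str, image_format: str) -> bool:
    """Return True if the OS/format pair is listed as importable in the post."""
    return os_name in SUPPORTED_OS and image_format in SUPPORTED_FORMATS


if __name__ == "__main__":
    print(is_importable("Windows 2003", "Microsoft Hyper-V VHD"))
    print(is_importable("Windows 2008", "VirtualBox VDI"))
```

A pre-flight check like this could run before an import job is submitted, so that unsupported images are rejected before any upload starts.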

I see VM Import as a key tool that will help our enterprise customers to move into the cloud. There are many ways to use this service – two popular ways are to extend data centers into the cloud and to be a disaster recovery repository for enterprises.

You can use the EC2 API tools or, if you use VMware vSphere, the EC2 VM Import Connector to import your VM into EC2. Once the import process is done, you will be given an instance ID that you can use to boot your new EC2 instance. You have complete control of the instance size, security group, and (optionally) the VPC destination. Here's a flowchart of the import process:

You can also import data volumes, turning them into Elastic Block Store (EBS) volumes that can be attached to any instance in the target Availability Zone.

As I've said in the past, we plan to support additional operating systems, versions, and virtualization platforms in the future. We are also planning to enable the export of EC2 instances to common image formats.

Read more:

<Return to section navigation list>
