A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Bruce Kyle reported ‘Big Data’ Hadoop Coming to BI on SQL Server, Windows Server, Windows Azure in a 10/15/2011 post:

In his keynote at PASS 2011, Microsoft Corporate Vice President Ted Kummert announced new investments to help customers manage “big data,” including an Apache Hadoop-based distribution for Windows Server and Windows Azure and a strategic partnership with Hortonworks Inc. and Hadoop Connectors for SQL Server and Parallel Data Warehouse.

Microsoft will be working with the Hadoop ecosystem, including core contributors from Hortonworks, to deliver Hadoop-based distributions for Windows Server and Windows Azure that work with industry-leading business intelligence (BI) tools, including Microsoft PowerPivot.

A Community Technology Preview (CTP) of the Hadoop-based service for Windows Azure will be available by the end of 2011, and a CTP of the Hadoop-based service for Windows Server will follow in 2012. Microsoft will work closely with the Hadoop community and propose contributions back to the Apache Software Foundation and the Hadoop project.

Hadoop Connectors

The company also made available final versions of the Hadoop Connectors for SQL Server and Parallel Data Warehouse. Customers can use these connectors to integrate Hadoop with their existing SQL Server environments to better manage data across all types and forms.

More information on the connectors can be found at http://www.microsoft.com/download/en/details.aspx?id=27584.

Vision for Data

See Kummert’s blog post on Technet, Microsoft Expands Data Platform to Help Customers Manage the ‘New Currency of the Cloud’.

About Hadoop

According to the Hadoop Website, “The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using a simple programming model. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-avaiability (sic), the library itself is designed to detect and handle failures at the application layer, so delivering a highly-availabile (sic) service on top of a cluster of computers, each of which may be prone to failures.”

Old news by now, but worth repeating.

Alex Popescu (@al3xandru) quoted Rob Thomas on 10/15/2011 in an Oracle, Big Data, Hadoop...There Is Nothing to See Here post to his MyNoSQL blog:

Oracle, Big Data, Hadoop...There Is Nothing to See Here:

Rob Thomas:

Anyone that has spent any time looking at Hadoop/Big Data and has actually talked to a client, knows a few basic things:

- Big Data platforms enable ad-hoc analytics on non-relational (i.e., unmodelled) data. This allows you to uncover insights to questions that you never think to ask. This is simply not possible in a relational database.

- You cannot deliver true analytics of Big Data relying only on batch insights. You must deliver streaming and real-time analytics. That is not possible if you are biased towards putting everything in a database, before doing anything.

- Clients will demand that Big Data platforms connect to their existing infrastructure. Clients don’t think that Big Data platforms exist solely for the purpose of populating existing relational systems. Big difference.

As I pointed out before, Oracle is neither the first nor the last using this strategy. But I don’t think this “let them believe we are providing Hadoop integration, but all we want is to push our hardware and databases” approach will sell very well.

Maria Deutscher asserted Hadoop Leads Market Trends as Hortonworks gains Traditional Players in a 10/14/2011 post to the Silicon Angle blog:

Open-source big data engine Hadoop has become one of the fastest growing trends in the IT world, and the number of companies that get involved with this ecosystem – both solution providers and users – consistently contributes to their growth. One of the latest names on the list is Microsoft.

On the same day it announced SQL Server 2012, the software maker revealed a strategic partnership with Hortonworks that will help it integrate Hadoop into Windows Server and Windows Azure. A visibility suite called PowerView and a couple of Hadoop connectors for SQL Server 2008 R2 and SQL Server Parallel Data Warehouse have already been launched, and Hortonworks will work with Microsoft’s R&D crew to develop the rest.

Microsoft is hoping to tap a rapidly growing market, which also happens to be a very competitive one. And it seems the competition between Cloudera and Hortonworks on delivering enterprise-level Hadoop services extends beyond market share to a more personal level: Jeff Kelly looks at some important points of comparison between the two companies, noting the perspectives of Cloudera and Hortonworks CEOs.

The Hadoop market also attracted a more traditional player: Oracle. The company announced a NoSQL product at Oracle OpenWorld 2011, which fuses Hadoop and NoSQL with Oracle offerings. Oracle isn’t giving up on its core SQL portfolio just yet in favor of big data. Here we discuss how the Oracle Big Data Appliance, as it’s called, is more of a marketing ploy than a new strategy for the company.

The reason behind all this competition is demand. A survey commissioned by analytics UI developer Karmasphere found that 54 percent of the respondents’ organizations are either considering or already using Hadoop. And as the usage of big data analytics tools becomes more widespread, so does the demand for big data scientists.

In the same vein:

Ercenk Keresteci updated the documentation for the Cloud Ninja project on 10/3/2011 (missed when published):

Project Description

The Cloud Ninja Project is a Windows Azure multi-tenant sample application demonstrating metering and automated scaling concepts, as well as some common multi-tenant features such as automated provisioning and federated identity. This sample was developed by the Azure Incubation Team in the Developer & Platform Evangelism group at Microsoft in collaboration with Full Scale 180. One of the primary goals throughout the project was to keep the code simple and easy to follow, making it easy for anyone looking through the application to follow the logic without having to spend a great deal of time trying to determine what’s being called or have to install and debug to understand the logic.

Key Features

- Metering

- Automated Scaling

- Federated Identity

- Provisioning

- Metering Charts

- Changes to metering views

- Dynamic Federation Metadata Document

Version 3.0 of Cloud Ninja is available. New Features:

- Utilizing Azure Store XRay project for Azure Storage metering and health monitoring [Emphasis added.]

- Storage Analytics with charts exposing storage metrics [Emphasis added.]

- Additions to metering data for storage inbound/outbound bandwidth usage, and billable transactions count per tenant.

Contents

- Sample source code

- Design document

- Setup guide

- Sample walkthrough

Notes

- Project Cloud Ninja is not a product or solution from Microsoft. It is a comprehensive code sample developed to demonstrate the design and implementation of key features on the platform.

- Another sample (also known as Fabrikam Shipping Sample) published by Microsoft provides in-depth coverage of Identity Federation for multi-tenant applications, and we recommend that you review it in addition to Cloud Ninja. In Cloud Ninja we utilized the concepts in that sample but also put more emphasis on metering and automated scaling. You can find the sample here and the related Patterns and Practices team's guidance here.

Team

<Return to section navigation list>

Jeffrey Schwartz reported New Tools Ease Movement of Databases to Microsoft's SQL Azure in a 10/13/2011 post to his Schwartz Cloud Report for RedmondMag.com:

Microsoft's SQL Server database server platform and its cloud-based SQL Azure may share many core technologies, but they are not one and the same. As a result, moving data and apps from one to the other is not all that simple.

Two companies this week set out to address that during the annual PASS Summit taking place in Seattle. Attunity and CA Technologies introduced tools targeted at simplifying the process of moving data from on-premises databases to SQL Azure.

Attunity Replicate loads data from SQL Server, Oracle and IBM's DB2 databases to SQL Azure and does so without requiring major development efforts, claimed Itamar Ankorion, Attunity's VP of business development and corporate strategy.

"The whole idea is to facilitate the adoption of SQL Azure, allow organizations and ISVs to benefit from cloud environments and the promise of SQL Server in the cloud," Ankorion said. "One of the main challenges that customers have is how do they get their data into the cloud. Today, it requires some development effort, which naturally creates a barrier to adoption, more risk for people, more investment. With our tools, it's a click away."

It does so by using Microsoft's SQL Server Integration Services, which provides data integration and transformation, and Attunity's change data capture (CDC) technology, designed to efficiently process and replicate data as it changes. "We wanted to basically provide software that would allow you to drag a source and drag a target, click, replicate and go," Ankorion said.

For its part, CA rolled out a new version of its popular ERwin data modeling tool for SQL Azure. CA ERwin Data Modeler for SQL Azure lets customers integrate their in-house databases with SQL Azure.

"CA ERwin Data Modeler for Microsoft SQL Azure provides visibility into data assets and the complex relationships between them, enabling customers to remain in constant control of their database architectures even as they move to public, private and hybrid IT environments," said Mike Crest, CA's general manager for data management, in a statement.

CA's tool provides a common interface for combining data assets from both on-premises and cloud-based databases. Customers can use the same modeling procedure to maintain their SQL Azure databases.

Full disclosure: I’m a contributing editor for Visual Studio Magazine, a sister publication of RedmondMag.com.

Pekka Heikura (@pekkath) described an OData service using WebApi and NHibernate in a 10/11/2011 post:

OData is a popular format for data services built on top of the Microsoft technology stack. It's very simple to create your own services as long as you are using .NET Framework parts like Entity Framework for data access, but when you try to do it with other data access frameworks, things quickly get very complicated. That is probably the reason developers have quickly stepped away from OData services and used custom services instead.

WCF Web Api is doing the same thing to WCF as ASP.NET MVC did to WebForms by giving control back to the developers. As of this writing, the current version of Web Api is 0.5, which includes some great new features like an OData formatter for data. Previous versions supported creating services that could be queried by using the OData query format but were missing the actual OData serialization for data. With the latest version, it’s quite easy to create OData services that query data from a service and return properly OData-formatted data back to clients.

In this blog post I'm going to show you how to build an OData service that returns data from a database using NHibernate as an ORM. You can find the full sample code under my github account. The sample code is a bit more than just bare-bones code showing an OData service. It uses Autofac for dependency injection and has a custom lifetime extension to manage the lifetime of the NHibernate session during the execution of the service calls.

The best part is that creating a service endpoint that can be queried using the OData query format and returns OData-formatted data is easy.

    [WebGet]
    public IQueryable<Project> Query()
    {
        return this.session.Query<Project>();
    }
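To make the role of the IQueryable return type more concrete, here is a minimal, self-contained sketch of what an OData query string asks the service to do. It runs against an in-memory list rather than NHibernate, and the Project properties (Id, Name), the sample rows and the ODataQuerySketch class are all assumptions made for illustration; the real Project class lives in the sample code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the sample's Project entity.
public class Project
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class ODataQuerySketch
{
    // In-memory rows standing in for session.Query<Project>().
    public static IQueryable<Project> SampleData()
    {
        return new List<Project>
        {
            new Project { Id = 1, Name = "Delta" },
            new Project { Id = 2, Name = "Gamma" },
            new Project { Id = 3, Name = "Beta" },
            new Project { Id = 4, Name = "Alpha" }
        }.AsQueryable();
    }

    // Roughly what a request such as
    //   GET api/projects?$filter=Id gt 1&$orderby=Name&$top=2
    // asks the query provider to do.
    public static List<string> FilterOrderTop(IQueryable<Project> source)
    {
        return source
            .Where(p => p.Id > 1)   // $filter=Id gt 1
            .OrderBy(p => p.Name)   // $orderby=Name
            .Take(2)                // $top=2
            .Select(p => p.Name)
            .ToList();
    }
}
```

Because the endpoint hands back a query rather than a materialized list, the filtering and paging can, in principle, be pushed down to the database by the LINQ provider instead of happening in memory.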

To actually configure OData formatting requires you to register the ODataMediaTypeFormatter. In this sample I'm going to remove the OData Json format so only atom+xml is supported.

    var config = new WebApiConfiguration();
    var odataFormatter = new ODataMediaTypeFormatter();
    odataFormatter.SupportedMediaTypes.Clear();
    odataFormatter.SupportedMediaTypes.Add(new MediaTypeHeaderValue("application/atom+xml"));
    config.Formatters.Clear();
    config.Formatters.Insert(0, odataFormatter);
    routes.SetDefaultHttpConfiguration(config);
    routes.MapServiceRoute<ProjectsApi>("api/projects");

After configuring the service, the rest is basically just getting the NHibernate ISession into the service and managing its lifetime so that the service can be queried.

OData results displayed using soapUI:

That is all you need to do to implement a read-only OData service. The sample code also includes a GET endpoint for creating projects in the database.

Chris Klug (@ZeroKoll) explained Using the Windows Azure Service Bus - Queuing in a 10/10/2011 post (missed when published):

I guess it is time for another look at the Azure Service Bus. My previous posts about it have covered the basics, message relaying and relaying REST. So I guess it is time to step away from the relaying and look at the other way you can work with the service bus.

When I say “the other” way, it doesn’t mean that we are actually stepping away from relaying. All messages are still relayed via the bus, but in “the other” case, we utilize the man in the middle a bit more.

“The other” way means utilizing the message bus for “storage” as well. It means that we send a message to the bus, let the bus store it for us until the service feels like picking it up and handling it.

There are several ways that this can be utilized, but in this post, I will focus on queuing.

The sample in this post requires the same things as the previous posts, that is, the Azure SDK and so on. If this is the first post you are reading from me regarding the bus, I suggest going back to the first code sample and looking at the prerequisites.

Azure Service Bus queues are very similar to the normal Azure Storage queues, as well as any other queue. The biggest difference in the Azure scenario is that with the service bus version, we get some things for free. We don’t have to build our own polling scheme for example, as the queues support long polling from the start.

But before we start looking at code, I want to cover a basic thing, the message. When sending a message to the queue, the message is always of the type BrokeredMessage. A brokered message has a bunch of properties and features which are outside of the scope of this blog post, but some of the main features are the possibility of sending a typed message, meta data/properties for that message, control time to live (TTL) etc.

So the basic flow for using a queue on the service side is – get a reference to the queue, listen for messages on the queue, handle the incoming message. Just as you would expect…

There are a couple of things to remember though. First of all, the queue might not exist already. So make sure it is there! And also, it is a queue, it has its own storage, so when you start listening, you might get flooded by messages straight away!

Ok, so what does it look like on the client? Well, pretty much the same: get a reference to the queue, send the required messages, and then close it… And the same gotchas exist!

So let’s look at some code then…

First we need a message to send. And by that, I mean a typed message to put inside the BrokeredMessage that we send to the queue. This message however is ridiculously easy to create, just create a new class and define what properties you need. However, you need to put it in a separate assembly that both the service and the client can reference.

I start off by creating a new class library project called something good that reflects that it will contain messages for the queue. Inside this project, I create a class called MyMessage.

The MyMessage class gets a single property of the type string. This is just to keep it simple. It could contain a lot more information. Just remember that it is not allowed to be bigger than 256 kB.

    public class MyMessage
    {
        public MyMessage()
        {
        }

        public MyMessage(string msg)
        {
            Message = msg;
        }

        public string Message { get; set; }
    }
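Since the size limit is easy to forget, a rough pre-flight check can help. The sketch below is an assumption-laden estimate, not how the Service Bus itself measures a message: it serializes the body as data-contract XML with the standard DataContractSerializer and compares the byte count against 256 * 1024 bytes. The MessageSizeSketch class, its method names and the reading of "256 kB" as 262,144 bytes are all made up for illustration, and the real limit also has to accommodate message headers and properties.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;

public static class MessageSizeSketch
{
    // Assumed interpretation of the 256 kB limit mentioned above.
    public const long MaxMessageBytes = 256 * 1024;

    // Serializes the body as data-contract XML and returns the byte count.
    public static long EstimateSize(object body)
    {
        var serializer = new DataContractSerializer(body.GetType());
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, body);
            return stream.Length;
        }
    }

    // True when the estimated size is within the assumed limit.
    public static bool FitsInQueue(object body)
    {
        return EstimateSize(body) <= MaxMessageBytes;
    }
}
```

A call such as MessageSizeSketch.FitsInQueue(new MyMessage("hello")) before sending would catch obviously oversized payloads early; anything close to the limit should still be treated with suspicion.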

Next up is the client, so I create a new console application project called QueueClient and add a reference to the Microsoft.ServiceBus, System.Runtime.Serialization and System.Configuration assemblies, as well as my messages project. (Remember to change the target to the full .NET Framework)

Inside of the Program.cs file, I add a new global member of type string, called QueueName. I also make it static. I also add the get-methods for the configuration that I have used before.

    class Program
    {
        private static string QueueName = "TheQueue";

        static void Main(string[] args)
        {
        }

        private static string GetIssuerName()
        {
            return ConfigurationManager.AppSettings["issuer"];
        }

        private static string GetSecret()
        {
            return ConfigurationManager.AppSettings["secret"];
        }

        private static string GetNamespace()
        {
            return ConfigurationManager.AppSettings["namespace"];
        }
    }

To back this up, I obviously need to add the necessary appSettings elements in the app.config file as well. These are however identical to the previous posts, so I won’t show these. But even if you haven’t read the previous posts, it should be fairly obvious what needs to be there…
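For readers who have not seen the earlier posts, a minimal app.config along these lines should be enough. This is a sketch inferred from the three keys the getters read ("issuer", "secret" and "namespace"); the values are placeholders, not real credentials, and the original file may contain more than this.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <appSettings>
    <!-- Placeholder values; replace with your own Service Bus namespace and credentials. -->
    <add key="issuer" value="YOUR-ISSUER-NAME" />
    <add key="secret" value="YOUR-ISSUER-SECRET" />
    <add key="namespace" value="your-namespace" />
  </appSettings>
</configuration>
```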

Next up is the actual functionality… I have to start by getting hold of the URL to the service bus namespace. This is really easy to do using a static class called ServiceBusEnvironment. On this class, you will find a couple of methods and a couple of properties. What I want is the CreateServiceUri() method. It takes the scheme to use, the namespace and the path to the service. In this case, the scheme is “sb” for Service Bus, the namespace comes from configurations, and there is no service name. So it looks like this

var url = ServiceBusEnvironment.CreateServiceUri("sb", GetNamespace(), string.Empty);
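For orientation, the address that comes back has the form sb://your-namespace.servicebus.windows.net/. The following sketch rebuilds that shape with the plain UriBuilder class. It is not the SDK's implementation; the ServiceUriSketch class is hypothetical, and the host suffix is an assumption based on the public Service Bus endpoint.

```csharp
using System;

public static class ServiceUriSketch
{
    // Assumed host suffix for the public Service Bus endpoint.
    private const string HostSuffix = ".servicebus.windows.net";

    // Builds scheme://<namespace>.servicebus.windows.net/<path>.
    public static Uri Build(string scheme, string serviceNamespace, string servicePath)
    {
        var builder = new UriBuilder(scheme, serviceNamespace + HostSuffix)
        {
            Path = servicePath
        };
        return builder.Uri;
    }
}
```

Calling ServiceUriSketch.Build("sb", GetNamespace(), string.Empty) should give an address with the same scheme and host as the CreateServiceUri() call above, which can be handy when checking configuration by eye.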

I also need to provide the service bus with some credentials. This is done using shared secret token provider, which is not at all as complicated to create as it sounds. Just use another static method on a class provided by Microsoft called TokenProvider. The method is called CreateSharedSecretTokenProvider(), which is really descriptive! It takes an issuer name and a secret. Both of these come from configuration, so it simply looks like this

var credentials = TokenProvider.CreateSharedSecretTokenProvider(GetIssuerName(), GetSecret());

Next I need a NamespaceManager, which is newed up by passing in the URL and the token provider. I also need a MessagingFactory, which is created by passing in the same values to a static Create() method on the MessagingFactory class.

var nsm = new NamespaceManager(url, credentials);

var mf = MessagingFactory.Create(url, credentials);

Now that we have all the classes we need, it is time to do some actual work! …I know, that is a lot of things to create before we can even begin…

First out is to verify that the queue exists. Luckily, the NamespaceManager can help me with this. It has a method called QueueExists(), which takes the name of the queue and returns bool.

If the queue doesn’t exist, I create it using the CreateQueue() method on the NamespaceManager. See, the NamespaceManager that we created is really useful. It helps us with managing the “features” in our namespace (not only for queues).

And finally, I use the MessagingFactory to create a client for the queue… And all of that boils down to these 3 lines of code

    if (!nsm.QueueExists(QueueName))
        nsm.CreateQueue(QueueName);
    var qClient = mf.CreateQueueClient(QueueName);

Now, I am ready to send messages to the queue. In this contrived example, I set up a loop that retrieves the message to send from console input until the input is an empty string.

    string msg;
    do
    {
        Console.Write("Message to send: ");
        msg = Console.ReadLine();
        if (!string.IsNullOrEmpty(msg))
            qClient.Send(new BrokeredMessage(new MyMessage(msg)));
    } while (!string.IsNullOrEmpty(msg));

As you can see, if you ignore all of the plumbing, the important stuff is in the qClient.Send() method. The Send() method takes a BrokeredMessage, as mentioned before, but the BrokeredMessage “wraps” my own message. So the service will receive a BrokeredMessage, but it can then get the MyMessage object as well as you will see soon.

The last thing to do is to close the client as soon as the sending is done. This is important as the service bus is charged by connections at the moment. I assume this will change in the future to be charged per message, but for now it is per connection. So you want to keep the connections as short-lived as possible to keep the connection count down.

Closing the connection is done by calling Close() on the QueueClient.

The service end is pretty much as simple, except for some threading things… I create the service in the same way as the client, by adding a new console application project, adding the previously mentioned references and changing the target framework.

I also copy across the app.config and the config “getters” from my client…

The main difference between the service and the client is that I move the QueueClient out to a global member so that I can reach it from several places in the class. I have also changed the sending loop to a receive thingy… It looks like this

    private static QueueClient _qClient;
    private static string QueueName = "TheQueue";

    static void Main(string[] args)
    {
        var credentials = TokenProvider.CreateSharedSecretTokenProvider(GetIssuerName(), GetSecret());
        var url = ServiceBusEnvironment.CreateServiceUri("sb", GetNamespace(), string.Empty);
        var nsm = new NamespaceManager(url, credentials);
        var mf = MessagingFactory.Create(url, credentials);

        if (!nsm.QueueExists(QueueName))
            nsm.CreateQueue(QueueName);

        _qClient = mf.CreateQueueClient(QueueName);
        BeginReceive();

        Console.WriteLine("Waiting for messages...");
        Console.ReadKey();
        _qClient.Close();
    }

As you can see, it is very similar. The sending loop has been replaced with a call to BeginReceive() and a blocking call to Console.ReadKey(). The BeginReceive() method is async, so we need the Console.ReadKey() call to keep the application running.

So what does the BeginReceive() method look like? Well, it is literally a one-liner. It looks like this

    private static void BeginReceive()
    {
        _qClient.BeginReceive(TimeSpan.FromMinutes(5), MessageReceived, null);
    }

It makes a single call to the BeginReceive() method on the QueueClient. In the call, it tells the QueueClient to wait for up to 5 minutes for the message, to pass the message to the MessageReceived() method when received, and that it does not need to pass any state object to the MessageReceived() method.

It is in the MessageReceived method that all the important stuff is happening. First of all, the MessageReceived() method is an async thingy, so it needs to take an IAsyncResult parameter.

But besides that, it is pretty straight forward. It uses the QueueClient’s EndReceive() method to get hold of the potentially received message. I say “potentially” as it might not have received a message, and instead timed out, ie not received a message within 5 mins.

Next, I verify whether or not I did receive a message. If I did, I get the BrokeredMessage’s “body” in the form of a MyMessage instance. I then Console.WriteLine() the message. Finally, I call BeginReceive() again. I also make sure to wrap it all in a try/catch for good measure.

    private static void MessageReceived(IAsyncResult iar)
    {
        try
        {
            var msg = _qClient.EndReceive(iar);
            if (msg != null)
            {
                var myMsg = msg.GetBody<MyMessage>();
                Console.WriteLine("Received Message: " + myMsg.Message);
            }
        }
        catch (Exception ex)
        {
            Console.WriteLine("Exception was thrown: " + ex.GetBaseException().Message);
        }
        BeginReceive();
    }

That’s all there is to it! This will make sure that the service receives any message sent to the queue. It does so in a non-blocking async way, making sure the UI stays responsive. It also turns the BeginReceive() into a loop, making sure that we go back and ask the queue for a new message continuously until we press a key in the console and thus close the client’s connection.

Code is available as usual here: DarksideCookie.Azure.ServiceBusDemo - Queuing.zip

Subscribed.

Chris Klug (@ZeroKoll) described Using Azure Service Bus relaying for REST services in a 10/6/2011 post (missed when published):

I am now about a week and a half into my latest Azure project, which so far has been a lot of fun and educational. But the funky thing is that I am still excited about working with the Service Bus, even though we are a week and a half into the project. I guess there is still another half week before my normal 2 week attention span is up, but still!

So what is so cool about the bus? Well, my last 2 posts covered some of it, but there are just so many cool possibilities that open up with it.

This post has very little to do with what I am currently working on, and to be honest, the sample is contrived and stupid, but it shows how we can use REST based services with the bus.

But before I start looking at code, I would suggest reviewing the previous post for details regarding the NuGet package for the Service Bus stuff, as well as some notes about setting up your project for Azure development and setting up the Azure end of things.

Once that is done, it is time to look at the code, which in this case, once again, will be implemented in a console application.

Start by adding a new interface. Remember, the bus is all about WCF services, and WCF services are all about interfaces. I am calling my interface IIsItFridayService. It has a single method called IsIt(). It takes no parameters, and returns a Stream. I also add a ServiceContract attribute as well as an OperationContract one. These are the standard WCF attributes, unlike the last attribute I add, which is called WebGet. It is a standard WCF attribute as well, but it is not used as much as the other two, which are mandatory. And on top of that, it is located in another assembly that you need to reference, System.ServiceModel.Web. In the end it looks like this:

    [ServiceContract(Name = "IIsItFriday", Namespace = "http://chris.59north.com/azure/restrelaydemo")]
    public interface IIsItFridayService
    {
        [OperationContract]
        [WebGet(UriTemplate = "/")]
        Stream IsIt();
    }

The WebGet attribute has a UriTemplate that defines the template to use when calling it, pretty much like routes in ASP.NET MVC. You can find out more about it here.
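
To get a feel for how such a template is matched against an incoming address, here is a minimal sketch that uses the System.UriTemplate class directly, outside of any service. The base address and the {day} variable are made up for illustration and are not part of the sample, and the sketch assumes a reference to System.ServiceModel on the full .NET Framework 4.

```csharp
using System;

static class UriTemplateDemo
{
    // Returns the value bound to {day}, or null when the candidate does not match.
    public static string MatchDay(Uri baseAddress, Uri candidate)
    {
        // Hypothetical template with a single path variable.
        var template = new UriTemplate("/day/{day}");
        UriTemplateMatch match = template.Match(baseAddress, candidate);
        return match == null ? null : match.BoundVariables["day"];
    }

    static void Main()
    {
        var baseAddress = new Uri("http://localhost");

        // Two path segments, so the template applies and {day} is bound.
        Console.WriteLine(MatchDay(baseAddress, new Uri("http://localhost/day/friday")));

        // Only one segment, so the template does not apply.
        Console.WriteLine(MatchDay(baseAddress, new Uri("http://localhost/other")) ?? "(no match)");
    }
}
```

The same matching is what WCF does on your behalf when it picks the operation for an incoming request, which is why the analogy to routes holds up reasonably well.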

Now that we have an interface/contract, we obviously need to implement it. So a new class needs to be created, and it needs to implement the interface we just designed.

The implementation for my IsItFriday service is ridiculously simple. It uses a standard HTML format string, and inserts a yes or no statement into it based on the day of the week. It then converts the string into a MemoryStream and returns that. Like this:

    public class IsItFridayService : IIsItFridayService
    {
        // {0} is replaced with the answer in both the title and the heading.
        private static string ReturnFormat = "<html><head><title>{0}</title></head><body><h1>{0}</h1></body></html>";

        public Stream IsIt()
        {
            // Tell the browser to interpret the raw stream as HTML.
            WebOperationContext.Current.OutgoingResponse.ContentType = "text/html";
            var str = DateTime.Now.DayOfWeek == DayOfWeek.Friday ? "Yes it is!" : "No it isn't...";
            return new MemoryStream(Encoding.UTF8.GetBytes(string.Format(ReturnFormat, str)));
        }
    }

The only thing that is out of the ordinary is the use of the WebOperationContext class. Using this, I change the content type of the response to enable the browser to interpret it as HTML. If you were to return JSON or XML, then you would set it to that…

The reason for returning a Stream instead of a string is that when we return a string, it will be reformatted and surrounded with an XML syntax. A Stream is rendered as is…
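
The same trick carries over to other content types. The following is a minimal sketch of returning JSON through a Stream; the RawResponse helper and the isFriday property name are hypothetical, and the JSON is built by hand so that no particular serializer has to be assumed.

```csharp
using System;
using System.IO;
using System.ServiceModel.Web;
using System.Text;

static class RawResponse
{
    // Wraps an already-serialized JSON string in a Stream so WCF sends it untouched.
    public static Stream Json(string json)
    {
        // Current is null outside a WCF web operation, so guard the call.
        if (WebOperationContext.Current != null)
            WebOperationContext.Current.OutgoingResponse.ContentType = "application/json";

        return new MemoryStream(Encoding.UTF8.GetBytes(json));
    }

    // Hypothetical operation body: {"isFriday":true} or {"isFriday":false}.
    public static Stream IsItAsJson(DateTime now)
    {
        bool isFriday = now.DayOfWeek == DayOfWeek.Friday;
        return Json("{\"isFriday\":" + (isFriday ? "true" : "false") + "}");
    }
}
```

Passing the date in as a parameter is only there to make the helper easy to try out; inside a real operation you would pass DateTime.Now.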

Ok, now there is a service contract and a service, I guess it is time to host it in the console app. This is done by creating a new WebServiceHost, passing in the type of the service to be hosted. Next we call Open() to open the service ports.

However, as this is a console app, we also need to make sure to keep the thread busy and alive. I do this with a simple Console.ReadKey(), which is followed by host.Close() to make sure we close the host, and thus the service bus connection, before we leave the app:

    class Program
    {
        static void Main(string[] args)
        {
            var host = new WebServiceHost(typeof(IsItFridayService));
            host.Open();

            Console.WriteLine("Service listening at: " + host.Description.Endpoints[0].Address);
            Console.WriteLine("Press any key to exit...");
            Console.ReadKey();

            host.Close();
        }
    }

That’s it! Almost at least… There is obviously a config file somewhere in play here. The host needs configuration, and there isn’t any here.

In the previous post, I did some of the config in code, though not all of it, even though I could have. In this case, I have left it all in the config to make the code easier to read.

The config looks like this

    <?xml version="1.0"?>
    <configuration>
      <system.serviceModel>
        <services>
          <service name="DarksideCookie.Azure.ServiceBusDemo.RelayingREST.IsItFridayService">
            <endpoint contract="DarksideCookie.Azure.ServiceBusDemo.RelayingREST.IIsItFridayService"
                      binding="webHttpRelayBinding" address="http://[NAMESPACE].servicebus.windows.net/IsItFriday/" />
          </service>
        </services>
        <bindings>
          <webHttpRelayBinding>
            <binding>
              <security mode="None" relayClientAuthenticationType="None" />
            </binding>
          </webHttpRelayBinding>
        </bindings>
        <behaviors>
          <endpointBehaviors>
            <behavior>
              <transportClientEndpointBehavior credentialType="SharedSecret">
                <clientCredentials>
                  <sharedSecret issuerName="owner" issuerSecret="[SECRET]" />
                </clientCredentials>
              </transportClientEndpointBehavior>
              <serviceRegistrySettings discoveryMode="Public"/>
            </behavior>
          </endpointBehaviors>
        </behaviors>
        <extensions>
          <bindingExtensions>
            <add name="webHttpRelayBinding" type="Microsoft.ServiceBus.Configuration.WebHttpRelayBindingCollectionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
          </bindingExtensions>
          <behaviorExtensions>
            <add name="transportClientEndpointBehavior" type="Microsoft.ServiceBus.Configuration.TransportClientEndpointBehaviorElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
            <add name="serviceRegistrySettings" type="Microsoft.ServiceBus.Configuration.ServiceRegistrySettingsElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
          </behaviorExtensions>
        </extensions>
      </system.serviceModel>
      <startup>
        <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.0"/>
      </startup>
    </configuration>

And that is a LOT of config for something this trivial. I know! But it all makes sense to be honest. Let’s look at it one part at a time, ignoring the <startup /> element as it just tells the system that it is a .NET 4 app.

I’ll start from below. The <extensions /> element contains new stuff that the machine config doesn’t know about. In this case, it contains one new binding type, and two new behaviors. The binding is the webHttpRelayBinding, which is used when relaying HTTP calls through the service bus. And the 2 behaviors consist of one that handles passing credentials from the service to the service bus, and one that tells the bus to make the service discoverable in the service registry.

The next element is the standard <behaviors /> element. It configures the behaviors to use for the endpoint. By not naming the <behavior /> element, it will be the default and will be used by all endpoints that do not specifically tell it to use another one. The behavior configuration sets up the 2 new extensions added previously.

When trying this, you will have to modify the downloaded config and add your shared secret in here…

The next element in the tree is the <bindings /> one. It configures the webHttpRelayBinding to use no security, which means that it requires no SSL and so on, and to require no relay client authentication. This last part will make sure that you do not get a login prompt when browsing to the service.

And the final element is the service registration with its endpoint…
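
For comparison, roughly the same setup can be expressed in code instead of config. This is only a sketch and has not been checked against the downloadable sample: it assumes that the Microsoft.ServiceBus 1.5 assembly is referenced, the CodeConfiguredHost class and its parameters are invented for illustration, and the namespace and secret are values you supply yourself.

```csharp
using System;
using System.ServiceModel.Description;
using System.ServiceModel.Web;
using Microsoft.ServiceBus;

static class CodeConfiguredHost
{
    // serviceType/contractType would be IsItFridayService and IIsItFridayService.
    public static WebServiceHost Create(
        Type serviceType, Type contractType, string serviceNamespace, string issuerSecret)
    {
        // Same address shape as in the config: http://<namespace>.servicebus.windows.net/IsItFriday/
        Uri address = ServiceBusEnvironment.CreateServiceUri("http", serviceNamespace, "IsItFriday");

        // Mirrors <security mode="None" relayClientAuthenticationType="None" />
        var binding = new WebHttpRelayBinding(
            EndToEndWebHttpSecurityMode.None,
            RelayClientAuthenticationType.None);

        var host = new WebServiceHost(serviceType);
        ServiceEndpoint endpoint = host.AddServiceEndpoint(contractType, binding, address);

        // Mirrors <transportClientEndpointBehavior> with the shared secret of the "owner" issuer
        var credentials = new TransportClientEndpointBehavior();
        credentials.TokenProvider =
            TokenProvider.CreateSharedSecretTokenProvider("owner", issuerSecret);
        endpoint.Behaviors.Add(credentials);

        // Mirrors <serviceRegistrySettings discoveryMode="Public" />
        endpoint.Behaviors.Add(new ServiceRegistrySettings(DiscoveryType.Public));

        return host;
    }
}
```

If you try this route, the <services> section should be removed from the config file, since the endpoint would otherwise be registered twice. The <extensions /> section is only needed when the binding and behaviors are declared in config.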

So, what does spinning this application up do for us? Well, it looks like this

Which is far from impressive. However, if you direct a browser to the URL displayed in the window, you get this

Cool! Well…at least semi cool. If we start thinking about it, I think we would agree. I have managed to create a local application (Console even) that can be reached from any browser in the world through the Service Bus.

It does have some caveats, like the fact that it is pretty specific about the URL for example. It has to end with “/”, and it doesn’t take any parameters. The latter part however can be changed, and the service could be turned into a full blown REST service with all the bells and whistles…
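
As a hedged illustration of that last point, a contract that takes a parameter from the URL could look something like the sketch below. The IIsItDayService contract, the {day} variable and the Answer helper are all made up for this example and are not part of the downloadable code.

```csharp
using System;
using System.IO;
using System.Net;
using System.ServiceModel;
using System.ServiceModel.Web;
using System.Text;

[ServiceContract]
public interface IIsItDayService
{
    // {day} in the template is bound to the string parameter with the same name.
    [OperationContract]
    [WebGet(UriTemplate = "/{day}")]
    Stream IsIt(string day);
}

public class IsItDayService : IIsItDayService
{
    // Pure helper so the decision can be checked without hosting anything.
    // Returns null for unknown names and out-of-range numbers.
    public static string Answer(string day, DateTime now)
    {
        DayOfWeek asked;
        if (!Enum.TryParse(day, true, out asked) || !Enum.IsDefined(typeof(DayOfWeek), asked))
            return null;

        return now.DayOfWeek == asked ? "Yes it is!" : "No it isn't...";
    }

    public Stream IsIt(string day)
    {
        var response = WebOperationContext.Current.OutgoingResponse;
        string answer = Answer(day, DateTime.Now);

        if (answer == null)
        {
            // Signal a bad request instead of pretending the input was fine.
            response.StatusCode = HttpStatusCode.BadRequest;
            answer = "Unknown day: " + day;
        }

        response.ContentType = "text/plain";
        return new MemoryStream(Encoding.UTF8.GetBytes(answer));
    }
}
```

With a contract like this, a request ending in /friday or /monday would be routed to the same operation with a different argument. Note that Enum.TryParse also accepts numeric strings, so /5 would be treated as Friday.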

That was it for me this time! I hope it has shown you a bit more of the power of the bus!

The code is available here: DarksideCookie.Azure.ServiceBusDemo - Relaying REST.zip

Chris Klug (@ZeroKoll) described Using the Windows Azure Service Bus - Message relaying in a 9/29/2011 post (missed when published):

My last post [What is the Windows Azure Service Bus?] was a bit light on the coding side I know. I also know that I promised to make up for that with a post with some actual code. And this is it! Actually, this is one of them. My plan is to walk through several of the features over the next few posts in the n00b kind of way.

That is, I am going to go through the basics for each of the features I deem interesting, making it easy to follow for people who are new to the Service Bus. And please don’t be offended by the “n00b” comment. We are all “n00bs” at some point in everything we do.

Before we can build some cool service bus things, we need to make sure that we have everything we need on the machine, which basically means verifying that we have the Azure SDK installed. In this case, I am using the 1.5 release, that was released recently, but if they have released a new version, hopefully most of the code will port nicely.

If not, it is easy to do using the Web Platform Installer or by going here, which will launch the WPI automatically for you. It is possible to get just the SDK install by clicking here for 64-bit and here for the 32-bit.

Launching the WPI and searching for Azure will give you the following screen

At least at the time of writing.

Just select the Windows Azure AppFabric SDK V<whatever is the current>. As you can see, I am also installing the Azure tools for Visual Studio, but that shouldn’t be required for this demo.

Now that we have the tools, we need to set up a Service bus namespace in Azure. This is not hard at all. Just browse to https://windows.azure.com/ and log in to your account.

If you don’t have an account, you will have to set one up. Currently I believe that there is a queue for trial accounts, but that might change. Otherwise, if you have an MSDN account, this will give you some Azure love as well. But yes, you do need to enter credit card details to cover any costs that you might incur. Generally this will not happen while just testing things. But make sure you stay in the defined limitations of your subscription type unless you have a few dollars to spend…

As you log in, you will see the Azure Management Portal (built in Silverlight of course). In the bottom left corner you will find a button for “Service Bus, Access Control & Caching”.

Click it! Then click the “New” button at the top, followed by the “Service bus” link.

Fill out the window that pops up

And you are done. This should have given you a new Service bus namespace to play with.

I will return to the Azure Management Portal later, so keep the window open.

Next, we can start looking at getting some code up and going. In this case, I have created a solution with 3 projects, one for the server, one for the client and one for common stuff.

I prefer having my common things in a third project, instead of creating duplicates in the other projects. The client and server projects are Console projects, and the common project is a class library.

The first thing we need to do is to create a service contract, which is fairly easy. Just create a new interface in the common project that defines the features you need. In my case, that is a service that will tell me whether or not it is Friday, which would look something like this

    public interface IIsItFridayService
    {
        bool IsItFriday();
    }

However, we need to turn it into a WCF service contract by adding some attributes from the System.ServiceModel assembly. So let’s add a reference to that, and add the ServiceContractAttribute and OperationContractAttribute to the interface.

    [ServiceContract(Name="IsItFridayService", Namespace="http://chris.59north.com/azure/relaydemo")]
    public interface IIsItFridayService
    {
        [OperationContract]
        bool IsItFriday();
    }

Finally, we need to create a channel interface as well. This will be used later on. Luckily, it is a lot easier than it sounds. It is basically an interface that implements the service interface as well as the IChannel interface. Like this

    public interface IIsItFridayServiceChannel : IIsItFridayService, IChannel { }

That’s it! Service contract finished!

Next we have to create the server, and the first thing we have to do is add a reference to the service bus assembly called Microsoft.ServiceBus. However, if you open the “Add Reference” dialog, you will probably realize that it isn’t there. That’s because the Console project for some reason is configured to use the “.NET Framework 4 Client Profile” instead of the full framework.

To solve this, just change the target framework to “.NET Framework 4”, and then you can add the reference. You will need to do the same thing to the client project as well.

Ok…with that out of the way, we can start looking at building the server.

When you create a WCF service, you will first of all need an address where the service is to be exposed. In the case of the service bus, this address is created using a static helper method on a class called ServiceBusEnvironment

This address can then be passed to a standard WCF ServiceHost together with the type of service to host.

    Uri endpointAddress = ServiceBusEnvironment.CreateServiceUri("sb", GetNamespace(), "IsItFridayService");
    var host = new ServiceHost(typeof(IsItFridayService), endpointAddress);

Note that the “sb” in there, is the scheme for the service bus…
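
To make the result a bit more concrete, the sketch below builds the same kind of address by hand. It only illustrates the shape of the URI (scheme, namespace, fixed host suffix, path) as it shows up in the registry feed at the end of this post; BuildRelayUri is a made-up name, and real code should keep using the helper on ServiceBusEnvironment.

```csharp
using System;

static class RelayAddress
{
    // Hand-rolled illustration of the address shape; not a replacement for
    // ServiceBusEnvironment.CreateServiceUri.
    public static Uri BuildRelayUri(string scheme, string serviceNamespace, string path)
    {
        return new Uri(string.Format(
            "{0}://{1}.servicebus.windows.net/{2}/",
            scheme, serviceNamespace, path.Trim('/')));
    }

    static void Main()
    {
        // Prints the address of the sample service in the "darksidecookie" namespace.
        Console.WriteLine(BuildRelayUri("sb", "darksidecookie", "IsItFridayService").AbsoluteUri);
    }
}
```

For the namespace used in this post, that gives sb://darksidecookie.servicebus.windows.net/IsItFridayService/.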

The host however is just a regular WCF service host and has no knowledge of the service bus. So to be able to work with the service bus, we need to add an endpoint behavior called TransportClientEndpointBehavior. This behavior is responsible for authenticating towards the service bus using a token, which it gets from a TokenProvider. In this case, the TokenProvider will be one that uses the issuer name and a secret to create the token. This provider is created by calling the TokenProvider.CreateSharedSecretTokenProvider method, passing in the issuer name and the secret.

There are a couple of other providers as well, but let’s ignore those for now!

The issuer name and secret are available through the Azure Management Portal. Just select the namespace you need them for in the list of service bus namespaces, and then click the little “View” button to the right.

This will give you a window that shows you everything you need.

As soon as we have the TokenProvider and the behavior set up, we need to add the behavior to the host’s endpoints.

    var serviceBusEndpointBehavior = new TransportClientEndpointBehavior();
    serviceBusEndpointBehavior.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(GetIssuerName(), GetSecret());

    foreach (var endpoint in host.Description.Endpoints)
    {
        endpoint.Behaviors.Add(serviceBusEndpointBehavior);
    }

That’s it, next we can call Open() on the host, and await connections!

    host.Open();

    Console.WriteLine("Service listening at: " + endpointAddress);
    Console.WriteLine("Press any key to exit...");
    Console.ReadKey();

    host.Close();
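
The snippets above call GetNamespace(), GetIssuerName() and GetSecret() without showing them. One possible way to write them, assuming the values live in the <appSettings> section of the app.config and that System.Configuration is referenced, is sketched here. The class name and the three key names are hypothetical.

```csharp
using System;
using System.Configuration;

static class ServiceBusSettings
{
    // Hypothetical appSettings keys; pick whatever names suit your project.
    public static string GetNamespace() { return Read("ServiceBus.Namespace"); }
    public static string GetIssuerName() { return Read("ServiceBus.IssuerName"); }
    public static string GetSecret() { return Read("ServiceBus.IssuerSecret"); }

    static string Read(string key)
    {
        string value = ConfigurationManager.AppSettings[key];

        // Fail early with a readable message instead of a failed authentication later on.
        if (string.IsNullOrWhiteSpace(value))
            throw new ConfigurationErrorsException("Missing appSettings value: " + key);

        return value;
    }
}
```

Keeping the secret in a config file is convenient for a demo, but it also means the file should not be checked in or shared with the real value in it.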

The client needs an address to connect to as well, and can use the same helper method as the server. It also needs a TransportClientEndpointBehavior to be able to communicate with the service, and once again, it can be created in the same way as on the server.

    var serviceUri = ServiceBusEnvironment.CreateServiceUri("sb", GetNamespace(), "IsItFridayService");

    var serviceBusEndpointBehavior = new TransportClientEndpointBehavior();
    serviceBusEndpointBehavior.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(GetIssuerName(), GetSecret());

But that’s where the similarities end. The next step is to create a ChannelFactory<T> that is responsible for creating a “channel” to use when communicating with the service. This is where that extra “channel” interface comes into play. The factory will create an instance of a class that implements that interface.

This factory is also responsible for the behavior stuff, so we create it and add the TransportClientEndpointBehavior to the endpoint.

    var channelFactory = new ChannelFactory<IIsItFridayServiceChannel>("RelayEndpoint", new EndpointAddress(serviceUri));
    channelFactory.Endpoint.Behaviors.Add(serviceBusEndpointBehavior);

As soon as the factory is created, we can create a channel, open it, call the method we need to call, and finally close it.

    var channel = channelFactory.CreateChannel();
    channel.Open();

    Console.WriteLine("It is" + (channel.IsItFriday() ? " " : "n't ") + "Friday");

    channel.Close();
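
One thing the snippet leaves out is what happens when the call or the Close() fails. A common WCF pattern, shown here as a sketch rather than as part of the original sample, is to fall back to Abort() in that case. The CloseOrAbort extension method is a made-up helper name.

```csharp
using System;
using System.ServiceModel;

static class ChannelExtensions
{
    // Closes a channel or factory gracefully, falling back to Abort() on failure.
    public static void CloseOrAbort(this ICommunicationObject communicationObject)
    {
        if (communicationObject == null) return;

        try
        {
            // Close() throws on a faulted object, so only try it when not faulted.
            if (communicationObject.State != CommunicationState.Faulted)
            {
                communicationObject.Close();
                return;
            }
        }
        catch (CommunicationException) { }
        catch (TimeoutException) { }

        // Reached when the object was faulted or Close() failed.
        communicationObject.Abort();
    }
}
```

With this in place, the last line of the client could be written as channel.CloseOrAbort(), and the same call works for the channel factory.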

That’s it! More or less at least… If you have ever worked with WCF, you have probably figured out that we are missing some configuration.

The configuration is however not very complicated. For the server, it looks like this

    <?xml version="1.0"?>
    <configuration>
      <system.serviceModel>
        <services>
          <service name="DarksideCookie.Azure.ServiceBusDemo.RelayService.IsItFridayService">
            <endpoint contract="DarksideCookie.Azure.ServiceBusDemo.RelayService.Common.IIsItFridayService" binding="netTcpRelayBinding" />
          </service>
        </services>
        <extensions>
          <bindingExtensions>
            <add name="netTcpRelayBinding"
                 type="Microsoft.ServiceBus.Configuration.NetTcpRelayBindingCollectionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
          </bindingExtensions>
        </extensions>
      </system.serviceModel>
      <startup>
        <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.0"/>
      </startup>
    </configuration>

Nothing complicated there, except for that new binding type that requires us to register a binding extension.

That new binding is what is responsible for communicating with the service bus. This is what makes it possible for us to create a regular WCF service, and have it communicate with the service bus.

The client config is very similar

    <?xml version="1.0"?>
    <configuration>
      <system.serviceModel>
        <client>
          <endpoint name="RelayEndpoint"
                    contract="DarksideCookie.Azure.ServiceBusDemo.RelayService.Common.IIsItFridayService"
                    binding="netTcpRelayBinding"/>
        </client>
        <extensions>
          <bindingExtensions>
            <add name="netTcpRelayBinding"
                 type="Microsoft.ServiceBus.Configuration.NetTcpRelayBindingCollectionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
          </bindingExtensions>
        </extensions>
      </system.serviceModel>
      <startup>
        <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.0"/>
      </startup>
    </configuration>

As you can see, it has the same binding stuff registered, as well as a single client endpoint.

Running the server and client will result in something like this

There is however one more thing I want to look at!

The service works fine, but it requires the client to know exactly where to find it, which might not be a problem. But it is possible for us to actually output some information about the available services.

This is done by “registering” the service in the so-called “service registry” using an endpoint behavior called ServiceRegistrySettings. This behavior will tell the service bus whether the service should be possible to find publicly, or not. By creating an instance, passing in DiscoveryType.Public to the constructor, and adding it to the host’s endpoints, we can enable this feature:

    var serviceRegistrySettings = new ServiceRegistrySettings(DiscoveryType.Public);

    foreach (var endpoint in host.Description.Endpoints)
    {
        endpoint.Behaviors.Add(serviceRegistrySettings);
    }

What does this mean? Well, it means that if we browse to the “Service Gateway” address (available in the Azure Management Portal), we get an Atom feed defining what services are available in the chosen namespace.

So in my case, browsing to http://darksidecookie.servicebus.windows.net, gives me a feed that looks like this

    <feed xmlns="http://www.w3.org/2005/Atom">
      <title type="text">Publicly Listed Services</title>
      <subtitle type="text">This is the list of publicly-listed services currently available</subtitle>
      <id>uuid:206607c9-4819-40ad-bfd6-cd730732cab0;id=31581</id>
      <updated>2011-09-28T17:12:54Z</updated>
      <generator>Microsoft® Windows Azure AppFabric - Service Bus</generator>
      <entry>
        <id>uuid:206607c9-4819-40ad-bfd6-cd730732cab0;id=31582</id>
        <title type="text">isitfridayservice</title>
        <updated>2011-09-28T17:12:54Z</updated>
        <link rel="alternate" href="sb://darksidecookie.servicebus.windows.net/IsItFridayService/"/>
      </entry>
    </feed>

Without that extra behavior, this feed will not disclose any information about the services available in the namespace.
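
Since the registry is plain Atom, a client could in principle read it to discover what is listed. The sketch below parses a trimmed copy of the feed above with LINQ to XML; the RegistryFeed class and ListServices method are hypothetical names, and fetching the feed over HTTP is left out on purpose.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

static class RegistryFeed
{
    static readonly XNamespace Atom = "http://www.w3.org/2005/Atom";

    // Returns (title, address) pairs for every <entry> in the feed.
    public static List<KeyValuePair<string, string>> ListServices(string atomXml)
    {
        return XDocument.Parse(atomXml)
            .Descendants(Atom + "entry")
            .Select(entry => new KeyValuePair<string, string>(
                (string)entry.Element(Atom + "title"),
                // The service address is the href of the rel="alternate" link.
                (string)entry.Elements(Atom + "link")
                             .Where(link => (string)link.Attribute("rel") == "alternate")
                             .Select(link => link.Attribute("href"))
                             .FirstOrDefault()))
            .ToList();
    }

    static void Main()
    {
        // Trimmed copy of the feed shown in the post.
        const string sample =
            "<feed xmlns=\"http://www.w3.org/2005/Atom\">" +
            "<entry><title type=\"text\">isitfridayservice</title>" +
            "<link rel=\"alternate\" href=\"sb://darksidecookie.servicebus.windows.net/IsItFridayService/\"/>" +
            "</entry></feed>";

        foreach (var service in ListServices(sample))
            Console.WriteLine(service.Key + " -> " + service.Value);
    }
}
```

Run against the trimmed sample, this prints a single line pairing isitfridayservice with its sb:// address.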

That’s all there is to it! At least if you are creating something as simple as this. But it is still kind of cool that it is this simple to create a service that can be hosted anywhere and be called from anywhere and having all messages relayed in the cloud for us automatically.

And the code is available as usual here: DarksideCookie.Azure.ServiceBusDemo

<Return to section navigation list>

No significant articles today.

<Return to section navigation list>

Luka Debeljak reported Microsoft Dynamics Powered By Windows Azure on 10/14/2011:

Yesterday some more news was released around the implementation of Microsoft Dynamics NAV “7”, which will run on the Windows Azure platform! Here is a short excerpt from the Convergence blog post:

At Convergence 2011, we announced that we would deliver Microsoft Dynamics NAV on Azure with the next major release of the solution - Microsoft Dynamics NAV “7”. Last night we demonstrated Dynamics NAV running on Azure. The development project is in great shape. We expect to ship this release in September/October 2012.

We also announced a very significant addition to the scope of NAV 7. NAV 7 will ship with a web browser capability – users (whether they are running NAV on premise or in the cloud) will be able to access the product with nothing more than Internet Explorer 9 on their desktop. This web browser capability, together with the Microsoft SharePoint client we have announced previously, will provide our SMB (small and mid-sized businesses) customers with lots of flexibility about how they choose to deploy the product and provide their employees with an interface that makes sense and adds value in the context of their job roles.

Definitely a step in the right direction, exciting times ahead!

Avkash Chauhan (@avkashchauhan) analyzed Windows Azure VM downtime due to Host and Guest OS update and how to manage it in multi-instance Windows Azure Application in a 10/14/2011 post:

I have seen some Azure VM downtime concerns from Windows Azure users who run 2 or more instances to meet the 99.95% SLA. The specific concerns relate to VM downtime when a Guest or Host OS update is scheduled.

So let’s consider the following 3 scenarios:

Scenario 1:

- If you have 1 instance, then this instance will be down and will be ready again after the update is finished, and there is nothing you can do about it.

Scenario 2:

- If you have 2 instances, then only 1 instance will be down at a given time, and you will be running at half capacity during the update process. Even so, the second VM could go down for patching while the first VM is still getting ready, so there may be a very small time slice when neither VM is ready to serve your requests.

Scenario 3:

- If you have many more instances, then again only 1 instance is down at a given time for patching. However, during the update process one or more machines may be going down or coming up at the same time, so having 10 machines in total does not mean that you are guaranteed to have 9 machines ready at any given time.

Note: In Scenarios 2 and 3, you have some control over when and how your instances are updated while the Guest OS is being updated. In these scenarios, the concept of upgrade domains is used when the Guest OS update is performed.
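
As a rough way to reason about capacity under upgrade domains, the following sketch computes the lowest number of instances that would remain available during an update. It rests on two assumptions that are not stated in the post: instances are spread as evenly as possible over the upgrade domains, and exactly one domain is taken offline at a time. The class and method names are hypothetical, and the domain count of 5 in the usage note is only a sample value.

```csharp
using System;

static class UpgradeDomainMath
{
    // Lowest number of instances still running while one upgrade domain is offline,
    // assuming an even spread of instances and one domain updated at a time.
    public static int MinInstancesAvailable(int instanceCount, int upgradeDomainCount)
    {
        if (instanceCount < 1 || upgradeDomainCount < 1)
            throw new ArgumentOutOfRangeException();

        // With fewer instances than domains, only some domains hold an instance.
        int domainsInUse = Math.Min(instanceCount, upgradeDomainCount);

        // Ceiling division: the most populated domain decides the worst case.
        int largestDomain = (instanceCount + domainsInUse - 1) / domainsInUse;

        return instanceCount - largestDomain;
    }
}
```

Under those assumptions, MinInstancesAvailable(10, 5) is 8, MinInstancesAvailable(2, 5) is 1 and MinInstancesAvailable(1, 5) is 0, which lines up with the intuition behind the three scenarios.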

Guest OS Update (OS in your Azure VM):

To master the art of setting upgrade domains and fault domains, I would suggest you read the blog below and architect your multi-instance Windows Azure application after digesting this info:

Host OS Update (OS in which your Azure VM is running):

- You don’t have any control over when and how the Host OS will be updated.

Finally, you should now have a better idea: you can control the timing of your own VM (web/worker role) OS updates to a certain extent using the upgrade domain/fault domain concept, but you cannot control when the host OS is updated.

Now you may ask how often the host OS usually gets updated: monthly, weekly or daily? Also, does it tend to be scheduled during off-peak/night time in the time zone where the to-be-updated datacenter is located, or can it just happen at any time during the day?

- Based on the historic schedule located at http://msdn.microsoft.com/en-us/library/ee924680.aspx, you can see that it happens almost monthly and can happen at any time during the day. In fact, each datacenter takes multiple days to walk all the upgrade domains, so any given tenant within the datacenter can be upgraded at any time over the course of a couple of days. Most of the time the host OS update depends on security fixes, so if the security fixes are not applicable to the Host OS, the interval between host OS updates can be longer than [a] month.

I believe that patching and updates run on the same Update Domain, so Scenario 2 is unlikely to occur (or impossible). Microsoft schedules patching and updates, so it’s easy to make sure that the operations don’t overlap in a domain.

Cain Ullah (@cainullah) asserted “Azure Table service provides a low maintenance, hugely scalable structured data store for Birdsong that just works” in an introduction to the Windows Case Studies group’s Red Badger: Creative Software Consultancy Uses Cloud Operating System for Twitter Push Alerts post of 10/12/2011:

United Kingdom (U.K.) creative software consultancy Red Badger wanted to provide push notifications to users of its Birdsong for Windows Phone Twitter client. Birdsong is a popular social networking application on Windows Phone 7, used in 32 countries. Windows Azure provides a low maintenance platform as a service (PaaS) infrastructure for Birdsong, so Red Badger can scale up to meet demand as its user base grows, without requiring additional capital investment.

Business Needs

Founded in 2010 and based in London, Red Badger is a creative software consultancy that specialises in customised software projects, developer tools, and platforms on Microsoft technologies. Its founders have years of experience developing desktop, web, and mobile applications—and, in recent years, using 3-D gaming technology.

Red Badger is a Microsoft BizSpark partner—a global programme that helps software startups succeed by giving them early and cost-effective access to Microsoft software development tools. BizSpark connects its partners with key industry players, including investors, and provides marketing visibility to help software entrepreneurs to start businesses. Supported by BizSpark, Red Badger has developed a premium Twitter social networking client called Birdsong for Windows Phone.

Cain Ullah, Founder, Red Badger, says: “Birdsong is already an enormously popular social application on the Windows Phone 7 platform and the number one application of its kind in 10 countries.” Part of the appeal of Birdsong is its innovative features such as infinite scrolling and offline viewing, which are powered by a customised document database.

Ullah adds: “Support for push notifications—for example, Live Tile and Toast—has been one of the most requested features from Birdsong users. With thousands of people monitoring their accounts for mentions and direct messages, timely notifications presented a huge challenge.”

Red Badger needed a service for Birdsong that would allow developers to manage increased demand. Ullah says: “It was impossible to predict how popular it would become or how rapidly it would require greater compute power.”

Solution

Red Badger decided to use the Windows Azure cloud-based delivery system to support a push notification capability to Birdsong version 1.3. It developed the tool in the Microsoft Visual Studio 2010 development system.

Rackspace Hosting was a possible alternative hosting partner, but multiple technical advantages favoured Windows Azure, together with a potential time to market of three to six months.

The architecture uses the Windows Azure load balancer, which distributes incoming internet traffic for users transparently. Birdsong also uses the Windows Azure Queue and Table service features to retain a user’s notification preferences. Live Tile images are created dynamically with the unread tweets counted and downloaded via the Microsoft Push Notification Services in Windows Phone 7.

In addition, the Windows Azure Queue feature queues an unlimited number of messages in receipt order.

Commenting on the privacy policy for Birdsong, David Wynne, Founder and Technical Architect, Red Badger, who worked on the development of Birdsong, says: “The content of new messages is not used or stored for any other purpose than to push a notification message to the Birdsong subscriber’s device. It’s easy for subscribers to see updates at a glance thanks to a top bar that displays the exact number of new mentions, messages, and tweets.”

Benefits

Red Badger used Windows Azure to ensure that the performance of its Birdsong for Windows Phone Twitter client would not be affected by large increases in demand. The Microsoft BizSpark programme helped the startup launch the application, and provided easy access to the tools and technology Red Badger is using to give subscribers several unique features, with more to come.

- Windows Azure helps ensure reliable storage for subscribers. Windows Azure offers unlimited space for Birdsong users to store messages—this is a key requirement for the company remaining competitive. Ullah says: “Azure Table service provides a low maintenance, hugely scalable structured data store for Birdsong that just works.”

- Flexibility in processing secures data. With Windows Azure, Red Badger can choose to scale up with roles or processes inside roles. Wynne says: “As a result, we have the confidence that no data will be lost and that the service function will remain the same whatever the demand.”

- Scalability helps avoid need for further capital investment. The scalability of Birdsong running on Windows Azure means that Red Badger can accommodate expansion to another 19 countries. “We pay hour by hour and have a whole range of Microsoft cloud infrastructure and scale available to us at the click of a mouse,” says Ullah.

- Birdsong aims for new-generation Windows Phone 7 Mango. Ullah and his team will use their experience in the Microsoft .NET Framework and Microsoft Silverlight browser plug-in to work on a new release of Birdsong for Windows Phone 7 Mango. Ullah says: “We’re keeping a few features to ourselves for now and are committed to the Windows Phone roadmap.”

This case study is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS SUMMARY.

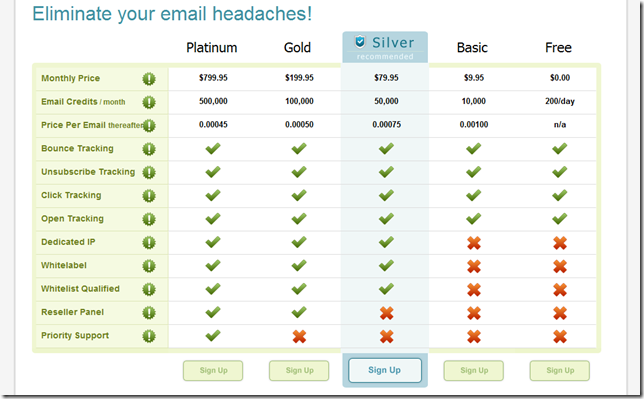

Microsoft’s Public Sector Developer Weblog posted Sending and Receiving Email in Windows Azure on 10/14/2011:

The Windows Azure environment itself does not currently provide an SMTP relay or mail relay service. So how do you send and receive email in your Windows Azure applications? Steve Marx put together a great sample application (available for download here) that does the following:

- It uses a third party service (SendGrid) to send email from inside Windows Azure.

- It uses a worker role with an input endpoint to listen for SMTP traffic on port 25.

- It uses a custom domain name on a CDN endpoint to cache blobs.
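As a rough illustration of the first point, sending mail through an external SMTP relay, here is a minimal Python sketch. It is not Steve Marx's sample (which targets Windows Azure roles): the relay host, port, and credentials are placeholders, and `build_message` and `send_via_relay` are hypothetical helper names. Only standard-library `smtplib` and `email` calls are used.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender, recipient, subject, body):
    """Assemble a plain-text email message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_relay(msg, host, port, username, password, smtp_factory=smtplib.SMTP):
    """Send msg through an authenticated third-party SMTP relay.

    smtp_factory is injectable so the function can be exercised without a
    network connection; by default it is the real smtplib.SMTP client.
    """
    with smtp_factory(host, port) as smtp:
        smtp.starttls()                  # upgrade the connection to TLS
        smtp.login(username, password)   # credentials issued by the relay provider
        smtp.send_message(msg)
```

A call might look like `send_via_relay(msg, "smtp.example.com", 587, "user", "secret")`, where every argument is a placeholder to be replaced with the values your relay provider gives you.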

I reported Steve’s original post in May 2010, but it’s worth posting a reminder now and then.

Kostas Christodoulou (@Kostoua) described The Lights Witch Project(s) in a post of 10/3/2011 to his Visual Studio Tip of the Day blog:

Did you know that when you create a new LightSwitch Application project, 5 projects in total live beneath the Data Sources and Screens “Folders”?

Changing your view to File View only “reveals” 3 of them: Client, Common, Server. If you choose to Show All Files, 2 more appear: Client Generated, Server Generated. Now you have 5 projects in all.

Ok, hurry back to Logical View, pretend you didn’t see this.

Seriously now, the reason I include the beginners tag in posts like this one and this one is that I am trying to make a point. LightSwitch is NOT the new Access. It’s much, much more than Access in terms of both potential and complexity. The LightSwitch Team has done a great job making the Logical View a user-friendly, powerful application design environment. But I believe it’s good to have (at least) a broad idea of what actually happens under the surface. This way when, for some reason (not very probable if you play with the designer only, but always possible), you get a build error, and clicking on that error opens a code file you have never seen before, you will not be terrified by the fact that someone has turned your beautiful screens and tables into ugly, messy code files.

- Client Project: Is the project where the client application and accompanying code is found. In this project, in the UserCode folder, partial classes are automatically created and then populated by code every time you choose (for example) to Edit or Override Execute Code for a screen command. You also put your own classes there (but this is not beginner stuff…). It, very broadly, represents the presentation tier of your application.

- Common Project: Is the project where business logic lies. Well, “Business Logic” has always been a bit vague to me, as deciding what is business logic and what is not is a bit foggy. I mean, is deciding whether a command can be executed under specific conditions business or client? Client is the politically correct answer. To me this has always been a convention of thought. Anyhow, Common contains all code that should be available both at the client and the server. One example is writing code for <Entity Name>_Created. This code is executed either at the client or at the server, hence Common.

- Server Project: Is the project where the web application and the data-tier lie. An example would be <Entity Name>_PreprocessQuery methods: when overridden, the code is included in Server\UserCode\<DataSource Name>Service.cs.

- Client/Server Generated are complementary to the respective projects, containing auto-generated code that you should never edit in any way (any change will be lost during the next build or modification made by the designer), and this is the reason why they are not visible in File View by default.

It’s better to know the enemy…

Click here to see Kostas’ earlier LightSwitch posts. Subscribed.

Nicole Henderson (@NicoleHenderson) reported Quincy, Washington Leases Microsoft Water Treatment Plant in a 10/14/2011 post to the Web Host Industry Review (WHIR) blog:

Microsoft (www.microsoft.com) has agreed to lease its water treatment plant to the city of Quincy, Washington. Microsoft uses the plant to treat the water at its 500,000 square foot data center in Quincy.

According to a blog post, the city will lease the plant for $10 a year and has the option to buy it after 30 years. Quincy will operate, maintain and manage the plant. Through loaning the assets to the city, construction costs for the new reuse system were lowered.

A report by Geekwire says this arrangement is believed to be the first of its kind between a city and a data center operator.

Christian Belady, general manager of data center advanced development at Microsoft, says that the project will promote a long term sustainable use of water and support the Quincy economy for many years to come.

Belady says the current water treatment plant extracts minerals from the potable water supply prior to using the water in cooling its data center. The plant was built to reuse the water from the local food processing plant.

The location of the plant will benefit other businesses as well, according to Belady.

Quincy plans to retrofit the plant as an expanded industrial reuse system in two phases. In the first phase, the system will generate approximately 400,000 gallons per day, and the second upgrade will produce 2.5 to 3 million gallons per day, with approximately 20 percent used by local industries.

"This collaborative partnership with the City of Quincy solves a local sustainability need by taking a fresh look at integrating our available resources and allows Microsoft to focus on its core expertise in meeting the needs of its customers who use our Online, Live and Cloud Services such as Hotmail, Bing, BPOS, Office 365, Windows Live, Xbox Live and the Windows Azure platform," Belady says. [Emphasis added.]

According to the blog post, Microsoft will continue to look for ways to eliminate the use of resources like water in its data center designs.

Microsoft has two data centers in Quincy, but Belady says its new modular data center that went live in January uses airside economization for cooling and substantially less water.

Microsoft appears to have settled its differences with the State of Washington over payment of sales taxes on computing equipment in data centers and expanded the Quincy data center, which was once reported shut down. That being the case, why doesn’t Windows Azure have a North West US region?

Barb Darrow (@gigabarb) asserted Microsoft Azure: B for effort, less for execution in a 10/13/2011 post to GigaOM’s Structure blog:

Microsoft has poured money and resources into Windows Azure, its grand attempt to transport the company’s software dominance into the cloud computing era. How’s it doing? So-so.

Nearly everyone agrees that Azure has huge promise. It’s a soup-to-nuts Platform-as-a-Service (PaaS) for developing, deploying and hosting applications. And yet, much of that promise remains unfulfilled. Despite its support for a full complement of computer languages, Azure remains Windows .NET-centric.

In July 2010, seven months after Azure went live, Microsoft claimed 10,000 users. Since then, it has only said that Azure has added thousands more users monthly. That total number may be big, but it’s unclear how many of these customers are viable commercial users as opposed to tire kickers.

For better or worse, Azure is viewed as an attempt to lock customers into Microsoft’s Windows-centric worldview, this time in the cloud. That may be fine for the admittedly huge population of .NET developers, but new-age web shops don’t take to that idea. Nor do they necessarily like the idea of having Microsoft infrastructure as their only deployment option, which is currently the case.

It’s hard to compete with Amazon

A long-time Microsoft development partner who builds e-commerce websites exemplifies Microsoft’s problem. His tool set includes SQL Server and other parts of the Microsoft stack, but he deploys the sites on Amazon Web Services. He tried Azure, but gave up in frustration.

Why? He gives Azure an F-grade mostly because Azure is all about Azure. “It’s a programming platform that can only be used in Azure. Legacy apps are out in the cold — it’s only new development. And the coup de grace is that five years after [Microsoft leadership] finally figured out that they need to be able to run what amounts to an elastic cloud instance, they’re still only in beta with that,” he said. “Meanwhile, Amazon adds features and flourishes every month.”