Windows Azure, Azure Data Services, SQL Data Services and related cloud computing topics now appear in this weekly series.

•• Updated 5/23 - 5/24/2009: Additions

• Updated 5/20 - 5/22/2009: First SDS Tech*Ed video and several Amazon stories

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

<Return to section navigation list>

•• Sam Johnston asks Is AtomPub already the HTTP of cloud computing? in this 5/24/2009 post and concludes:

The only question that remains is whether or not this is the best we can do... stay tuned for the answer. The biggest players in cloud computing seem to think so (except Amazon, whose APIs predate Google's and Microsoft's) but maybe there's an even simpler approach that's been sitting right under our noses the whole time.

<Return to section navigation list>

•• My CheckScoreWorkflow Sample Appears to Return Wrong Answer post of 5/23/2009 gripes about a pair of typos in the .NET Services SDK (March 2009 CTP)’s CheckScoreWorkflow sample project. The student’s test score sent to the Workflow conflicts with Readme.htm, which cost me a couple of hours of work today.

•• Philip Richardson, a lead program manager for .NET Services, posted Utilizing the Service Bus in Windows Azure on 5/23/2009. His tutorial focuses on two basic scenarios:

- Service Bus Client in a Windows Azure Web or Worker Role

- Service Bus Listener in a Windows Azure Worker Role,

which aren’t covered by the sample projects.

•• Zhong-Wei Wu and Rick Negrin explain The Role of a DBA with SQL Data Services in this seven-minute Tech•Talk from Tech•Ed 2009:

The role of a DBA is as important with SQL Data Services as it is with an on premises database. Come hear from members of the product team as they discuss what tasks are automatically handled for you with SDS and the challenges a SDS DBA will face and how to overcome them with proper planning and data modeling.

Thanks to Dave Robinson for the heads-up.

• David Robinson and Liam Cavanaugh presented Building Applications with Microsoft SQL Data Services and Windows Azure (DAT316) at Tech•Ed 2009. The video of their session is available as of 5/22/2009 to Tech•Ed 2009 attendees only:

Are you looking to reduce the costs of building and maintaining enterprise applications? Do you want to extend the reach of your applications across multiple devices, locations and partners? SQL Data Services and Windows Azure provides you a friction free, highly scalable platform for building applications. The scale and reach of the cloud lights up a new class of application scenarios. Come see how easy it is to consume SQL Data Services from within Windows Azure. In addition, we dive into Microsoft's new "Data Hub" for businesses and see how this SQL Data Services powered synchronization service allows for data aggregation within the Hub to provide straightforward data sharing between on-premises databases, business partners, remote offices, and mobile users.

• Rick Negrin’s What’s New in Microsoft SQL Data Services (DAT202) session is available to Tech•Ed 2009 attendees only:

Come and learn how SQL Data Services has evolved over the past year based on your feedback. In this session, learn how SQL Data Services delivers on promise of Database as a Service (DaaS). See how easy it is to take an existing class of SQL Server applications and extend them to the DaaS service using existing SQL Server knowledge, protocols, client libraries and tools. With minimal changes, your application will be running in a highly available and scalable service. Finally we touch on the business model, terms of use, and present a roadmap for the service.

Instead of being in the Cloud Computing & Web Technologies group, it’s in the Database Platform and Business Intelligence group.

My Ten Slides from the “What’s New in Microsoft SQL Data Services” (DAT202) Tech*Ed 2009 Session has captures of most of Rick’s slides for those who didn’t attend Tech*Ed 2009.

• David Robinson’s Provisioning an SDS Database post of 5/20/2009 says:

The SDS team was extremely busy last week at TechEd North America in L.A.. We presented 3 sessions, recorded 4 TechEd online videos and spent a great deal of time speaking with customers.

Here is a video we did on what the provisioning experience will be like for SDS in the Azure Developer Portal. You can see the video here - Provisioning an SDS Database in the Azure Developer Portal [with Dave and Nino Bice.]

I’ve requested that Dave post links to the other Azure-related session and Tech*Talk videos in his SDS and other Azure blogs.

See the “Azure Infrastructure” section for more Tech*Talk sessions about the Azure Services Platform and cloud computing in general.

<Return to section navigation list>

Donovan Follette interviews Intand President, Bryan Otis, and CIO, Scott Otis, along with Vijay Rajagopalan, Principal Architect, Microsoft Interoperability Strategy, “to drill into how Intand enabled their PHP application to support information cards” in this 5/21/2009 episode of the IdElement video series, Geneva Server, Windows CardSpace Geneva, Information Cards and PHP Interoperability:

This prototype project, for the Lake Washington School District, was based upon the use of Microsoft code name Geneva Server, Windows CardSpace Geneva and Intand’s PHP application using the Zend Framework’s information card support for interoperability. This project was also featured in a video during the Scott Charney, Corporate Vice President, Microsoft, keynote address at the April, 2009 RSA Conference.

Thanks to Vittorio Bertocci for the heads-up.

My Problems Using Self-Issued CardSpace Cards with .NET Service Bus WSHttp Binding Sample of 5/19/2009 describes difficulties I ran into when attempting to use CardSpace information cards to authenticate with the .NET Services SDK (March 2009 CTP) Service Bus samples:

The problem turned out to be due to a combination of the following:

- An incorrect scope URI autogenerated when creating an Access Control Service credential for the solution (bug).

- Incompatibility between the Windows CardSpace “Geneva” and original Windows CardSpace Control Panel applets (unpublicized).

<Return to section navigation list>

•• David Aiken’s More Azure Samples post of 5/23/2009 provides pointers to these new Azure sample projects available from the MSDN Code Gallery:

Bid Now Sample

Bid Now is an online auction site designed to demonstrate how you can build highly scalable consumer applications.

This sample is built using Windows Azure and uses Windows Azure Storage. Auctions are processed using Windows Azure Queues and Worker Roles. Authentication is provided via Live Id. One of the cool things that Bid Now demonstrates is how you can de-normalize data in Windows Azure Table Storage, to build a very scalable application. Check out Bid Now at http://code.msdn.microsoft.com/bidnowsample.

Contoso Cycles

Contoso Cycles shows how the Azure Services Platform can be used to build a supply chain application. .NET Services allows messages to flow between companies across firewalls, NATs and routers. You can download Contoso Cycles from http://code.msdn.microsoft.com/contosocycles.

•• Guy Burstein’s ASP.Net MVC on Windows Azure | ASP.Net MVC Web Role demonstrates creating a new ASP.Net MVC Web Role or moving an existing ASP.Net MVC Application to Windows Azure. Guy writes:

There are 2 ways to do this:

- Manually adding an ASP.Net MVC application as a Web Role (suitable both for a new ASP.Net MVC application and for an existing one).

- Use a Project Template to simply create a new ASP.Net MVC Web Role (new ASP.Net MVC application only).

• Ryan Dunn shows you how to Create and Deploy your Windows Azure Service in 5 Steps in this lavishly illustrated tutorial of 5/22/2009. If you’re new to Azure don’t miss this.

• Sungard Financial Systems presented Using Windows Azure for computationally intensive workloads at Microsoft’s Enterprise Developer and Solutions Conference in New York City on 5/6/2009. You can view a video segment of the session, which describes Sungard’s feasibility study of Windows Azure and Amazon EC2, as well as the F# functional programming language for financial calculations, here.

<Return to section navigation list>

•• Peter Dinham reports in his All pointing skywards for cloud computing post of 5/23/2009 for iTWire:

This take on the development of cloud computing comes from industry analysts, Coda Research Consultancy, who reckon there’s nothing short of a “radical transformation” going on across the technology world, and, “it’s called cloud computing.”

“IT users who do not begin to evaluate cloud computing for their businesses risk seeing their competitiveness, productivity, customer loyalty and revenues all decline relative to their competitors.”

The 17 percent growth in cloud computing over the next six years predicted by Coda, if achieved, would bring revenues worldwide to $180 billion by 2015, from a mere $46 billion just last year.

•• James Hamilton describes Dell’s new, low-power Fortuna server based on the Via Nano, which consumes 15W at idle and 30W at full load, in his Server under 30W post of 5/22/2009:

The name [XS11-VX8] aside, this server is excellent work. It is based on the Via Nano and the entire server is just over 15W idle and just under 30W at full load. It’s a real server with 1GigE ports, full remote management via IPMI 2.0 (stick with the DCMI subset). In a fully configured rack, they can house 252 servers only requiring 7.3KW. Nice work DCS!
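As a rough sanity check of the figures James quotes, here is a minimal Python sketch. The only inputs are the numbers from the excerpt above (252 servers, 7.3KW per rack, about 15W idle and 30W at full load); the variable names are mine, and treating the per-server figures as exactly 15W and 30W is an assumption, since the post says "just over" and "just under."

```python
# Back-of-the-envelope check of the rack figures quoted above.
SERVERS_PER_RACK = 252   # from the quoted post
RACK_POWER_KW = 7.3      # from the quoted post
IDLE_W = 15              # assumption: "just over 15W" rounded down
FULL_LOAD_W = 30         # assumption: "just under 30W" rounded up

# Average power budget per server implied by the rack total.
watts_per_server = RACK_POWER_KW * 1000 / SERVERS_PER_RACK

# Rack totals if every server ran at the rounded idle / full-load figures.
rack_idle_kw = SERVERS_PER_RACK * IDLE_W / 1000
rack_full_kw = SERVERS_PER_RACK * FULL_LOAD_W / 1000

print(f"Implied power per server: {watts_per_server:.1f} W")
print(f"Rack at idle: {rack_idle_kw:.2f} kW, at full load: {rack_full_kw:.2f} kW")
```

The implied per-server draw lands a little below 30W, which is consistent with the "just under 30W at full load" claim.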

• Alin Irimie’s Microsoft Set To Announce Commercial Availability of Windows Azure at PDC This Year post of 5/21/2009 is a detailed analysis of why and how Microsoft will announce the commercial release of the Azure Services Platform at the Professional Developers Conference 2009 in Los Angeles on November 17 – 20, 2009.

• Reuters predicts Mobile Web, cloud to attract tech spending in this 5/22/2009 article by Ritsuko Ando:

While the global economic downturn has forced companies to cut back on spending and favor technology like "cloud computing" that helps save costs, executives at the Reuters Global Technology Summit forecast growth in new wireless and online arenas.

Companies need to invest in "cloud computing," a technology that allows people to access computers and software online and spend less on hardware, space and energy, they said.

IBM plans to launch several cloud computing services this year, joining companies like Amazon.com Inc (AMZN.O: Quote, Profile, Research, Stock Buzz), Google Inc (GOOG.O: Quote, Profile, Research, Stock Buzz) and Salesforce.com Inc (CRM.N: Quote, Profile, Research, Stock Buzz) which were among the first to offer storage and computing over the Web.

Along with cloud computing, Loughridge forecast strong growth in IBM's analytics software and consulting business that helps customers run their businesses more efficiently.

• Tech•Ed 2009 Azure-related Tech Talk videos are coming on line slowly. As of 5/19/2009 these were publicly available:

Patterns for Moving to the Cloud (with Simon Guest and Denny Boynton): Everything that you read these days seems to suggest that you should be moving to the cloud. But where do you start? Which applications and services should you be moving? How do you build the bridge between on-premises and the cloud? And more importantly, what should you be looking out for along the way? In this talk, learn architectural patterns and factors for moving to the cloud. Based on real-world projects, the talk explores building block services, patterns for exposing applications, and challenges involving identity, data federation, and management. This talk provides the tools and knowledge to determine whether cloud computing is right for you, and where to start.

De-Mystifying the Cloud (with Simon Guest and Kevin Remde): Learn IT manager centric view of Microsoft's latest roadmap for online storage and computing as well as the power of choice with the latest from Microsoft Online Services. Kevin Remde and Simon Guest's objective in this talk is to discuss the Microsoft overview and roadmap for cloud computing services and software as a service.

Cloud Computing is for Small Companies, Too (with Shy Cohen and Michael Stiefel): Cloud Computing is often presented as an esoteric technology that is only interesting to large companies. In point of fact, small- and medium-sized companies will find the economics and technology opportunities very compelling. This talk explains why this is so, and why small- and medium-sized companies should look for opportunities in the cloud computing area.

The few session videos that are becoming available are for Tech•Ed 2009 attendees only.

• Carl Brooks asks Cloud security: Sorry, what was that? on 5/21/2009 as a result of attending the Enterprise Cloud Summit in Las Vegas:

IT pros at the Enterprise Cloud Summit confirmed the basic stumbling block for enterprises considering cloud computing: security. Even as evangelists and cloud vendors made attractive pitches on cost and ease of access – the term "business agility" is frequently heard -- attendees made clear that they will need more than enthusiasm to want to participate.

Carl’s 5/19/2009 story, Amazon CTO brushes aside cloud phobias, adds further fuel to the security fire:

Amazon.com CTO Werner Vogels led the cheerleading effort at the Enterprise Cloud Summit this week calling this "day one" for cloud usage in the enterprise. But despite his upbeat attitude, IT shops voiced some fundamental concerns and were not always comforted by his replies.

Dr. Lennox Superville, the CEO of Infosysarchitecture LLC, said he felt Amazon was "hedging" its infrastructure in case of disaster. "Give me some confidence," he told Vogels at a standing room-only session. Security concerns were top of mind with attendees after last week's two-hour outage at Google. Vogels did not have a direct reply, but assured the crowd that Amazon's investment in data centers and hardware made its cloud very safe.

Vogels went on to say that customers with regulatory and security concerns could get privileged access to AWS workings once they had committed as customers. He cited helping customer TC3 Health to become HIPAA compliant.

• Krishnan Subramanian asks Whose Throat Should We Choke? when a Cloud service goes down:

With more and more enterprises jumping into Cloud Computing bandwagon, this question becomes all the more important. It is time for the Cloud vendors to evolve a strategy that will make customers trust Cloud Computing.

• The Cloud Architect (Claude) reports Gartner Says Federal Government Must Play a Major Role in Stimulating Progress Toward Higher Levels of Cybersecurity in this 5/21/2009 press release that asserts:

U.S. national cybersecurity policy needs to take a more operational approach toward stimulating higher levels of security in cyberspace, rather than focusing on strategies to drive higher spending or higher visibility for security, according to Gartner, Inc.

• Jonathan Erickson cautions Cloud Computing Standards: Be Careful What You Wish For in this 5/21/2009 Editor’s Note to Dr. Dobb’s Update. He reports on a less well known standardization effort:

One approach to standards for cloud computing is what Andrzej Goscinski, a professor at [Australia’s] Deakin University is compiling. He is working on a framework for building infrastructures that are more accessible, reliable, efficient, and yes, understandable. Goscinski's approach builds on his earlier work involving a Resources Via Web Instances (RVWI) framework, which bundles state information of a web service into its WSDL. Goscinski and Michael Brock describe RVWI in their paper State Aware WSDL: The Resources Via Web Instances Framework.

Ray Ozzie said Azure is Microsoft’s “20 or 30 year vision” at the J.P. Morgan Technology, Media and Telecom Conference on May 20, according to Mary Jo Foley’s Microsoft's Ozzie defends Microsoft's aggressive online spending post of 5/20/2009:

Ozzie touched on his favorite topics — software plus services, Microsoft’s “three screens and a cloud” (mobile devices, PCs and TV) vision; and the need for Microsoft to field consumer services as a way to show off its cloud-computing prowess. …

The underlying infrastructure Microsoft has built to deploy and run its consumer services is now being extended to support other services throughout the company, he said. Ozzie pointed to “Cosmos,” the high-scale file system that is part of Microsoft’s Azure cloud platform, as ultimately supporting and aiding every consumer, enterprise and developer property at Microsoft. He noted that the management systems for Microsoft’s current and existing cloud services are all derived from the learnings Microsoft has gleaned from managing its consumer online services.

Ozzie said he believed one of Microsoft’s main advantages vis-a-vis its cloud competitors is “the fact we build both platforms and applications.”

Ozzie said Microsoft’s focus on building a cloud operating system differentiates it from other cloud vendors.

Andrea DiMaio’s U.S. Government’s Flavors of Cloud Computing Need More Clarity post of 5/20/2009 contends that questions in the GSA’s Request for Information for capability statements of 5/13/2009:

[A]re relatively sensible, although broadly scoped in places. However there are potential problems with the working definition of cloud computing that NIST has proposed and GSA has adopted and that may generate some confusion among users and vendors.

Charles Babcock reports that “The state's Public Utilities Commission is using the Open Campus application to give employees wireless access as well as virtual desktops and diskless laptops to ensure data security” in his California Agency Embraces Mobility, Cloud Computing post of 5/20/2009 for InformationWeek. Babcock writes:

The California Public Utilities Commission (PUC) has adopted a wireless approach to mobile end-user computing to unleash employees from their desks and allow travel between offices. At the same time, it's giving them the chance to collaborate in the cloud.

The commission's Open Campus application made its debut at the Conference on California's Future in Sacramento, May 11-14. Open Campus is aimed at freeing up 1,200 PUC employees to work on the road, from home, or wherever they otherwise find themselves disconnected from the wired network, said CIO Carolyn Lawson.

James Hamilton’s High-Scale Service Server Counts post of 5/20/2009 quotes a list of 12 organizations that have from 10,000 to 55,000 servers in use, taken from the Data Center Knowledge article Who has the Most Web Servers. The list includes only publicly disclosed server counts, and therefore doesn’t include Google, Microsoft, and Amazon.

Andrea DiMaio asks Is There A European Government Cloud? in this 5/19/2009 post that concludes “there is less excitement about cloud computing in government” in European countries. Andrea notes:

A few months ago the European Commission published a Draft Document as the basis for the European Interoperability Framework Version 2. This document, which was debated extensively by the IT industry and EU member states, introduced a number of concepts that complement the interoperability framework, such as the interoperability strategy, the interoperability architectural guidelines and the European Infrastructure Interoperability Services (or EIIS).

While all the attention focused on the Interoperability Framework and what the adoption of (certain) open standards and open source might mean for the industry, few have given much attention to the EIIS. The document is conspicuous in its silence about what they really are, but looking at the overall interoperability architecture, they seem to be basic infrastructure services that may well be provided centrally, e.g. by a European Commission department or agency.

Rob Douglas’s Pinning Down Enterprise Data Security in the Cloud story of 5/18/2009 for eCommerce Times contends:

For a business of any size, moving any portion of IT operations to a cloud provider means you're putting your data in its hands. Can it protect your data from criminals and accidents as well as or better than you can? A thorough examination of both your own operation and your prospective provider should be undertaken before jumping to the cloud.

Rob goes on with a detailed analysis of “a range of security issues in order to avoid a data security breach that could prove costly to the enterprise's financial and reputational bottom lines.”

John Treadway’s Cross-Border Constraints on Cloud Computing post of 5/18/2009 from the Enterprise Cloud Summit at Interop notes “there were several examples given of constraints imposed by governments on where data and processes can reside,” citing several examples.

Liz McMillan reports on 5/18/2009 that HP, Sun, Dell, and Microsoft Are Among Cloud Computing's Losers according to Roman Stanek, who “delivered his opening keynote today at the Cloud Computing Conference & Expo Europe in Prague:”

Roman Stanek, during his opening keynote at the Cloud Computing Conference & Expo Europe in Prague today, said "Big server vendors such as HP, Sun, Dell, Microsoft, as well as monolithic app providers will be among the losers of the Cloud Computing revolution, while innovation, SMBs, and the little guys will be the winners of the Cloud."

You can view Roman’s slides on SlideShare at Cloud Expo Europe keynote: Building Great Companies on the Cloud. There’s more on Stanek’s keynote on 5/21/2009 at Cloud BI Guru Believes Great Companies Can Be Built in the Cloud.

Naomi Grossman says “Companies looking to reduce costs through cloud computing will have to make some tough decisions about security” in her A Secure Leap into the Cloud article for the May 2009 issue of Redmond Magazine.

Daryl C. Plummer and Gartner coauthors offer Three Levels of Elasticity for Cloud Computing Expand Provider Options and Anatomy of a Cloud 'Capacity Overdraft': One Way Elasticity Happens reports for US$195 each.

<Return to section navigation list>

•• The Microsoft Developer Dinner Series for Partners Presenting: Microsoft Open Government Data Initiative – Cloud Computing, REST, AJAX, CSS, oh my! - May 27, 2009 - Reston, VA has been canceled.

• CloudCamp Paris says on 5/22/2009 Welcome to CloudCamp Paris, June 11th:

Our first CloudCamp in Paris will be held on June 11th, 2009 at 6:30pm

CloudCamp is an unconference where early adopters of Cloud Computing technologies exchange ideas. With the rapid change occurring in the industry, we need a place we can meet to share our experiences, challenges and solutions. At CloudCamp, you are encouraged to share your thoughts in several open discussions, as we strive for the advancement of Cloud Computing. End users, IT professionals and vendors are all encouraged to participate.

When: 6/11/2009, 6:30 – 11:00 PM GMT +01

Where: Institut Telecom (map), 46, rue Barrault, Paris, France

• Reuven Cohen warns URGENT: Federal Cloud Standards Summit Changed to July 13 on 5/21/2009 in the Cloud Computing Interoperability Forum (CCIF).

• Alin Irimie’s Microsoft Set To Announce Commercial Availability of Windows Azure at PDC This Year post of 5/21/2009 is a detailed analysis of why and how Microsoft will announce the commercial release of the Azure Services Platform at the Professional Developers Conference 2009 in Los Angeles on November 17 – 20, 2009. (Repeated from the “Azure Infrastructure” section.)

• Cloudonomics’ Terremark Executives to Present at Kaufman Bros. and Cowen and Company Conferences post of 5/21/2009 says:

Terremark Worldwide, Inc. (NASDAQ:TMRK), a leading global provider of managed IT infrastructure services, today announced that its executives will be presenting at two leading investor conferences. On Wednesday, May 27, 2009, Terremark executives will be participating in a panel discussion at 8:30 a.m. ET and presenting at 10 a.m. ET at the Kaufman Bros., L.P.’s The Big Picture in Cloud Computing Conference in New York City. On Thursday, May 28, 2009 at 3:40 p.m. ET, the Company’s executives will be presenting at Cowen and Company’s 37th Annual Technology Media & Telecom Conference also in New York City.

When: 5/27/2009, 8:30 AM

Where: New York City (address not given)

• Cloudonomics says Rackspace Hosting to Host Investor Webcast on Cloud Computing on 5/20/2009:

Rackspace Hosting, Inc. (NYSE:RAX), the world's leader in hosting, will host a webcast to discuss the Cloud computing landscape, how Cloud computing technologies are enabling businesses to consume on-demand computing services, and Rackspace's position in the broader cloud computing marketplace. The webcast will take place on Friday, May 22, 2009 at 8:00 a.m. PDT/11:00 a.m. EDT.

When: 5/22/2009 8:00 AM PDT

Where: Link to slides: http://researchmedia.ml.com; replay info: ir.rackspace.com

Kevin Jackson reports in NDU (IRM) and DoD CIO (NII) Co-Hosting Cloud Computing and Cyber Security Symposia of 5/20/2009 that:

The Information Resources Management (IRM) College and the Office of the Assistant Secretary of Defense (Networks and Information Integration)/DOD CIO are co-hosting a symposia on cloud computing at the college’s campus on Fort Lesley J. McNair (Washington DC waterfront), Wednesday, July 15, 2009. The event is open to government, private sector, academic, and international attendees.

“The Cloud Computing Symposium” will examine the utility of cloud computing from many angles and offer government IT executives insight into its promise and challenges. The full-day agenda includes:

- A key-note speech by Vivek Kundra, the nation's first Federal CIO; and

- Presentations by executives from Google, Lockheed Martin, OSD, IBM, HP, Gartner, TIBCO, NSA, NDU, and DISA.

When: July 15, 2009

Where: IRM College campus at Fort Lesley J. McNair on the Washington, DC waterfront

Nandita announces Microsoft Developer Dinner Series for Partners Presenting: Microsoft Open Government Data Initiative – Cloud Computing, REST, AJAX, CSS, oh my! - May 27, 2009 - Reston, VA with speakers Marc Schweigert, Developer Evangelist; James Chittenden, User Experience Evangelist; and Vlad Vinogradsky, Architect Evangelist, all from the Microsoft Corporation, US Public Sector Developer and Evangelism Team.

Register here.

When: May 27, 2009

Where: Microsoft Innovation & Technology Center, 12012 Sunset Hills Road, Reston, VA 20190

CloudBook announces its one-hour Research: Cutting Through The Cloud CloudTV presentation:

NTT Europe Online (with researcher Vanson Bourne) recently cut through the hype of cloud computing and uncovered the real views of 100 CIOs and FDs and their plans for application hosting and cloud computing. Robert Steggles, European Marketing Director, NTT Europe Online takes you through the findings of the research including details on:

- IT priorities in the recession and where the cloud fits

- Which applications would/wouldn't they host in the cloud

- What is affecting their decision-making? Who is responsible?

- How comfortable are they with other hosting models?

When: May 27, 2009, 2:30 PM GMT, 7:30 AM PDT

Where: Internet (GoToMeeting)

CloudBook offers an RSS Feed of Cloud Computing Events that you can subscribe to. Each entry has details on the dates, times, and venue, as well as a description of the event. Subscribed.

John Foley reports in his Microsoft's Beginner's Guide To Cloud Computing post of 5/20/2009 that Ron Markezich, a Microsoft VP, “is participating in a panel discussion on cloud computing today at Massachusetts Institute of Technology's CIO Symposium. Markezich will share five tips on how to get started in the cloud, based on the experiences of Microsoft's early cloud customers:”

- Know where and how cloud services fit into your company's IT architecture. This is important as IT departments subscribe to their first cloud services, and even more so as they sign on to services from multiple providers.

- Prepare your company for the changes associated with cloud services. That goes beyond end user readiness to include the company's procurement and legal teams. Cloud services are different from traditional outsourcing services. For example, cloud service options tend to be standardized for all customers; there's less customization.

- Attend to your identity management system. User access, security, and integration with on-premises systems and applications require a "clean," up-to-date identity management system. For Microsoft customers, this means time spent preparing Active Directory.

- Choose the right apps. Most companies will move into the cloud gradually, so it's a matter of deciding where to get started. Markezich says SharePoint Online is very popular as a standalone service, given SharePoint's collaboration capabilities.

- Select the right cloud service provider. "There are a lot of little guys out there today," says Markezich. "You have to ask yourself, 'Are they going to be in it for the long run? Do they have enterprise credibility? Breadth of options?' " All good questions, though it's only fair to point out that Microsoft's own track record in the cloud remains largely unproven.

Read the full press release with Q&A with Ron: Jumping Into the Cloud – Microsoft gives Advice for CIOs

J. Nicholas Hoover reports from Interop: Vendors Still Confuse With Cloud Computing Definitions on 5/19/2009:

The afternoon keynotes at Interop saw IBM, HP and SAP giving their visions of cloud computing. While the companies had a number of real deliverables to talk about, the keynotes also showed that vendors continue to confound and confuse with their various conflicting definitions.

TechWeb wants you to Build partnerships, generate leads, and drive the adoption of cloud computing at Cloud Connect 2010.

When: March 15-18, 2010

Where: Santa Clara Convention Center, Santa Clara (Silicon Valley), CA

<Return to section navigation list>

•• Eric Engleman takes A look at Amazon's evolving government cloud strategy on 5/22/2009:

Amazon.com has targeted its cloud computing business at web startups, large companies, and scientists. But the Seattle online retailer has also been eyeing another potential customer for its cloud: government. The company is quietly building an operation in the Washington, D.C. area, and is aiming to become a key technology provider to federal and state governments and the U.S. military.

•• NASA Ames Research Center posts About the NEBULA Cloud:

NEBULA is a Cloud Computing environment developed at NASA Ames Research Center, integrating a set of open-source components into a seamless, self-service platform. It provides high-capacity computing, storage and network connectivity, and uses a virtualized, scalable approach to achieve cost and energy efficiencies. …

Built from the ground up around principles of transparency and public collaboration, NEBULA is also an open-source project. NEBULA is built on the NEBULA platform.

The home page has links to more details about NEBULA and a Goddard CIO on "Cloud Computing in the Federal Government" blog post.

•• Kent Langley’s Legal Cloud: Virtual Data Centers for Law Firms is a Cloud Service Provider (CSP) with a private (VPN-accessed) specialized cloud that:

[P]rovides virtual data center services, designed specifically to meet the needs of international law firms. Created in consultation with leading CIO's, LegalCloud provides firms of all sizes with the ability to optimize the storage, protection and availability of critical business data and applications.

For more information about the service, read Kent Langley’s Thinking Out Loud About My Private Cloud post of 5/22/2009.

It will be interesting to see how the CSP market for ISVs develops. Gartner’s Daryl Plummer posted Delivering Cloud Services: ISVs - Change or Die or both! on 11/6/2008. Navatar offers a white paper on the topic here.

• Andrea DiMaio comments about the newly opened federal site in Data.gov Goes Live: What’s Next? Andrea writes:

With a lot of anticipation, data.gov, the “one-stop repository of government information and tools to make that information useful” (as defined in the relevant White House announcement) has been launched on May 21st.

As one may expect, there are still few data sets, but the approach is quite clear. Data are provided in raw form (in XML, KML, ESRI, Excel, text, etc) and are described using a common metadata, covering attributes category, data, agency, description and so forth. The site also provides tools such as “widgets and data-mining and extraction tools, applications, and other services” to help use data.

I am sure critics will be abundant. Some will claim that data that are most important to them are not there yet. Some will say that tools could be better. And I guess some will complain that the site provides data in proprietary formats (such as XLS): I can hardly imagine what reaction this single fact would create in many government circles on the other side of the Atlantic.

Who cares though? This is a pragmatic initiative, and the real issue is not how many data gets published and when, but whether and how they will be utilized to create value.

• Paulo Calçada’s Increasing the control over the Cloud - Reducing the EDoS danger post of 5/21/2009 claims:

One of the control technologies that will definitely reduce the dangers of the [Economic Denial of Sustainability] (EDoS) is the new Auto Scaling from Amazon. With this technology Amazon clients will be able to define boundaries that would limit the elasticity of its platforms. With these boundaries they will always control how their platforms will grow and therefore they will no longer be exposed to the EDoS.

See the last item in this section for more on AWS’s new Auto Scaling feature.
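The boundary idea in Paulo's post can be illustrated with a few lines of Python. This is only a sketch of the concept, not Amazon's Auto Scaling API: the function names, the instance limits and the $0.10 hourly rate are all hypothetical values chosen for illustration.

```python
def bounded_instance_count(desired, min_instances, max_instances):
    """Clamp the instance count a scaler asks for to operator-defined bounds."""
    if min_instances > max_instances:
        raise ValueError("min_instances must not exceed max_instances")
    return max(min_instances, min(desired, max_instances))


def worst_case_hourly_cost(max_instances, hourly_rate):
    """Upper limit on hourly spend once an upper bound is in place."""
    return max_instances * hourly_rate


# Hypothetical numbers: a traffic flood "requests" 500 instances,
# but the operator allows between 2 and 20 at an assumed $0.10 per hour.
granted = bounded_instance_count(desired=500, min_instances=2, max_instances=20)
print(granted, worst_case_hourly_cost(20, 0.10))
```

With an upper bound in place, a flood of bogus traffic can degrade service but cannot push the bill past a known ceiling, which is the point of the EDoS argument.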

• Reuven Cohen reports U.S. Government Launches Data.gov Open Government Initiative on 5/21/2009:

I'm happy to announce that the U.S. Federal Government earlier today launched the new Data.Gov website. The primary goal of Data.Gov is to improve access to Federal data and expand creative use of those data beyond the walls of government by encouraging innovative ideas (e.g., web applications). Data.gov strives to make government more transparent and is committed to creating an unprecedented level of openness in Government. The openness derived from Data.gov will strengthen the Nation's democracy and promote efficiency and effectiveness in Government.

Is Ruv becoming an official spokesman for the feds? His update to the NIST cloud computing definition is Federal Government Defining the Expanding World of Cloud Computing of 5/21/2009.

• Sunlight Labs announced Apps for America 2: the data.gov challenge on 5/21/2009:

Apps for America is a special contest we're putting on this year to celebrate the release of Data.gov! We're doing it alongside Google, O'Reilly Media, and TechWeb and the winners will be announced at the Gov2.0 Expo Showcase in Washington, DC at the end of the Summer.

Just as the federal government begins to provide data in Web developer-friendly formats, we're organizing Apps for America 2: The Data.gov Challenge to demonstrate that when government makes data available it makes itself more accountable and creates more trust and opportunity in its actions. The contest submissions will also show the creativity of developers in designing compelling applications that provide easy access and understanding for the public while also showing how open data can save the government tens of millions of dollars by engaging the development community in application development at far cheaper rates than traditional government contractors.

• Werner Vogels’ Expanding the Cloud: Moving large data sets into Amazon S3 with AWS Import/Export post of 5/20/2009 describes Amazon’s new Import/Export service and the reason Amazon Web Services provides it:

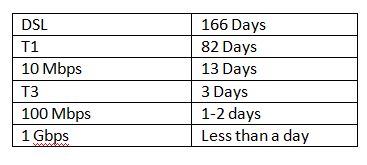

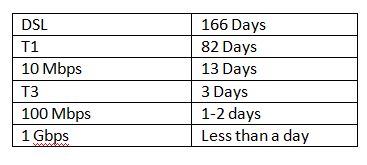

Many of our customers have large datasets and would love to move into our storage services and process them in Amazon EC2. However moving these large datasets over the network can be cumbersome. If you look at typical network speeds and how long it would take to move a terabyte dataset:

Depending on the network throughput available to you and the data set size it may take rather long to move your data into Amazon S3. To help customers move their large data sets into Amazon S3 faster, we offer them the ability to do this over Amazon's internal high-speed network using AWS Import/Export.
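The arithmetic behind this argument is easy to reproduce. The following Python sketch is my own back-of-the-envelope calculation, not the figures from Werner's post: the 80% link utilization, the decimal terabyte and the list of link speeds are assumptions, and the function name is hypothetical.

```python
SECONDS_PER_DAY = 86_400
ONE_TERABYTE = 10**12  # assumption: decimal terabyte, in bytes


def transfer_days(dataset_bytes, link_mbps, utilization=0.8):
    """Days needed to push dataset_bytes through a link rated at link_mbps.

    utilization is the assumed fraction of the nominal rate that is usable.
    """
    usable_bits_per_second = link_mbps * 1_000_000 * utilization
    seconds = dataset_bytes * 8 / usable_bits_per_second
    return seconds / SECONDS_PER_DAY


# Nominal link speeds in megabits per second (illustrative selection).
LINKS_MBPS = {"T1": 1.544, "10 Mbps": 10, "T3": 44.736, "100 Mbps": 100, "1 Gbps": 1000}

for name, mbps in LINKS_MBPS.items():
    print(f"{name:>8}: {transfer_days(ONE_TERABYTE, mbps):7.1f} days")
```

Under these assumptions a terabyte takes more than a week on a 10 Mbps link, which makes shipping a disk by courier look attractive.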

• James Hamilton chimes in with Amazon Web Services Import/Export of 5/19/2009:

Cloud services provide excellent value but it’s easy to underestimate the challenge of getting large quantities of data to the cloud. When moving very large quantities of data, even the fastest networks are surprisingly slow. And, many companies have incredibly slow internet connections. Back in 1996 Minix author and networking expert, Andrew Tanenbaum said “Never underestimate the bandwidth of a station wagon full of tapes hurtling down the highway”. For large data transfers, it’s faster (and often cheaper) to write to local media and ship the media via courier.

• Sally Whittle reports VMware releases its 'cloud OS' on 5/21/2009 for ZDNet UK:

VMware has released the latest version of its core virtualisation platform, vSphere 4, claiming it acts as a cloud operating system to the datacentre.

The product, the renamed successor to VMware Infrastructure 3, was made generally available on Wednesday, having been announced in April. The company says vSphere 4 will allow companies to centrally manage servers, storage and networks in the datacentre as though they were a single pool of computing resource.

• Werner Vogels was a member of the Cloud Computing Power Panel, which SYS-CON presented live from Times Square, New York City on 5/5/2009. The panel consisted of the CTOs of Amazon.com and Rackspace, joined by Sun's Hal Stern and Rod Fontecilla from Booz Allen Hamilton.

CloudBook has this and videos of other Vogels cloud presentations, including “A Head in the Cloud: The Power of Infrastructure as a Service,” a lecture by Dr. Werner Vogels for the Stanford University Computer Systems Colloquium.

John Garing, chief information officer of the Defense Information Systems Agency (DISA), is the 14th of “Fast Company’s 100 Most Creative People in Business” because, inter alia:

John Garing powwowed with such luminaries as Amazon's Werner Vogels and Salesforce.com's Marc Benioff to bring cloud computing, network services, and Web 2.0 tools to the Department of Defense. Garing's biggest challenge: overcoming the "box hugging" impulse to control servers, data, and process. His version of cloud computing, called RACE (rapid-access computing environment), acts as an open-source innovation lab for military developers, complete with peer-review certification. "Projects that used to take seven months to approve now take a matter of days," he says.

Sean Michael Kerner reports Google to Launch Amazon S3 Competitor in this 5/20/2009 Datamation story:

Google's answer to Amazon's S3 cloud storage service is coming 'within weeks' according to Mike Repass, Product Manager at Google. Repass was on the stage at the Interop Enterprise Cloud summit talking about Google's AppEngine and related cloud initiatives.

"It's a feature debt issue," Repass told the audience. "We started out Google AppEngine as an abstract virtualization service. Our static content solution is something we're shipping soon."

The Planet claims “The Planet Provides Highest Performing Cloud Storage Platform” in this 5/19/2009 article:

The white paper — titled “Five Reasons Storage Cloud Is Right for You” — highlights upload and download testing performed on three cloud storage platforms: The Planet Storage Cloud, Amazon S3 and Mosso Cloud Files. The Planet Storage Cloud demonstrated the best upload and download performance among the three, with speeds up to 46 MB/sec. It also delivered the smallest variance in test results among the three companies. Other highlights of the paper include ease of integration, multiple global nodes, flexibility and simplified billing.

To download the complete white paper, please visit: www.theplanet.com/cloud-storage-reasons.

The technical brief – “Performance Test: Comparing Cloud Storage Platforms” – outlines the performance testing methodology and results published in the white paper. This brief – and instructions on how to independently recreate the tests – is available at www.theplanet.com/cloud-storage-performance-test.

Tests were conducted internally by The Planet, and neither the testing criteria nor the results of such testing have been independently verified.

Reuven Cohen reports Amazon Releases Elastic Load Balancing, Auto Scaling, and Amazon CloudWatch in this 5/18/2009 post:

Amazon CloudWatch tracks and stores a number of per-instance performance metrics including CPU load, Disk I/O rates, and Network I/O rates. The metrics are rolled-up at one minute intervals and are retained for two weeks. …

Auto Scaling lets you define scaling policies driven by metrics collected by Amazon CloudWatch. Your Amazon EC2 instances will scale automatically based on actual system load and performance but you won't be spending money to keep idle instances running. …

Finally, the Elastic Load Balancing feature makes it easy for you to distribute web traffic across Amazon EC2 instances residing in one or more Availability Zones. …
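To make the interplay between metric roll-ups and scaling policies concrete, here is a small Python sketch. It does not call any AWS API; the function names, the sample data and the 70%/30% CPU thresholds are hypothetical and only illustrate the general idea of averaging samples per minute and acting on the latest average.

```python
from collections import defaultdict
from statistics import mean


def roll_up_per_minute(samples):
    """Average (timestamp_seconds, cpu_percent) samples into one-minute buckets."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        buckets[int(timestamp // 60)].append(value)
    return {minute: mean(values) for minute, values in sorted(buckets.items())}


def scaling_decision(minute_averages, scale_out_above=70.0, scale_in_below=30.0):
    """Return +1 to add an instance, -1 to remove one, 0 to hold.

    The decision uses only the most recent one-minute average.
    """
    if not minute_averages:
        return 0
    latest = minute_averages[max(minute_averages)]
    if latest > scale_out_above:
        return 1
    if latest < scale_in_below:
        return -1
    return 0


# Hypothetical CPU samples taken every 30 seconds over two minutes.
samples = [(0, 50), (30, 70), (60, 90), (90, 80)]
averages = roll_up_per_minute(samples)
print(averages, scaling_decision(averages))
```

A production policy would normally look at several consecutive intervals before acting, so that a single busy minute does not start or stop instances.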

Technorati Tags: Windows Azure, Azure Services Platform, Azure Storage Services, Azure Table Services, Azure Blob Services, Azure Queue Services, SQL Data Services, SDS, .NET Services, Cloud Computing, Amazon Web Services, Google App Engine
