Windows Azure and Cloud Computing Posts for 7/27/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Don Pattee posted Announcing LINQ to HPC Beta 2 on 7/7/2011 (missed when posted):

We’re pleased to announce the availability of LINQ to HPC Beta 2.

LINQ to HPC enables a new class of data intensive applications for Windows HPC Server by providing a sophisticated distributed runtime and associated programming model for large scale, unstructured data analysis that is easy to use and program. For deeper insights, it integrates with SQL Server 2008, SQL Azure, and the rich portfolio of Business Intelligence offerings from Microsoft such as SQL Server Reporting Services, SQL Server Analysis Services, PowerPivot, and Excel. IT Professionals and developers now have a unified HPC platform that can run both compute and data intensive HPC applications.

Key features of LINQ to HPC include:

- LINQ to HPC enables a new class of data intensive applications by providing a sophisticated distributed runtime and associated programming model for analyzing large volumes of unstructured data using commodity clusters. LINQ to HPC is offered as an integral feature of Windows HPC Server, providing a unified scale-out platform that can run both compute and data intensive HPC applications.

- LINQ to HPC is based on LINQ, a powerful, language integrated query model, offering a higher level of abstraction that makes it easy to develop distributed, scale-out data intensive applications. It integrates with Visual Studio 2010 and the .NET framework for a superior development experience and increased developer productivity.

- For deeper insights, LINQ to HPC can integrate with SQL Server 2008, SQL Azure and the rich portfolio of Business Intelligence offerings from Microsoft such as SQL Server Reporting Services, SQL Server Analysis Services, PowerPivot, and Excel. [Emphasis added.]

- Through Windows HPC Server 2008 R2, LINQ to HPC offers a familiar, easy to use and easy to manage environment that lets you take advantage of your existing IT infrastructure, thereby reducing the learning curve and increasing return on investment.

For more information about LINQ to HPC see Introducing LINQ to HPC and the documentation accompanying the Beta 2 download.

The Beta 2 installer, along with documentation and samples, can be downloaded from https://connect.microsoft.com/hpc. LINQ to HPC Beta 2 requires HPC Pack 2008 R2 SP2.

You will be admitted to the beta program on Microsoft Connect to download HPC Pack 2008 R2 SP2 and LINQ to HPC Beta 2 after you log in with your Windows Live ID and complete an initial survey form.

Don Pattee is a Senior Product Manager at Microsoft.

Following is the “Overview” section of HPC Pack 2008 R2 SP2 from its download page, dated 6/28/2011:

This service pack updates the HPC Pack 2008 R2 products listed in the 'System Requirements' section. It provides improved reliability as well as an enhanced feature set including:

- Enhanced Windows Azure integration - you can now add Azure VM Roles to your cluster, you can Remote Desktop to your Azure nodes, and you can run MPI jobs on Azure nodes. [Emphasis added.]

- Use a new 'resource pool' job scheduling policy that ensures resources are available to multiple groups of users.

- Access job scheduling services through a REST interface or through an IIS-hosted web portal.

- Assign different submission and activation filters to jobs based on job templates.

- Over-subscribe or under-subscribe the number of cores on cluster nodes.

For more information on what's new in this release, please refer to the documentation at http://technet.microsoft.com/en-us/library/hh184314(WS.10).aspx.

Here’s the “Windows Azure Integration” section from the HPC2008 R2 SP2 documentation referenced above:

The following features are new for integration with Windows Azure:

Note: Azure Virtual Machine roles and Azure Connect are pre-release features of Windows Azure. To use these features with Windows HPC, you must join the Azure Beta program. To apply for participation in the beta, log on to the Windows Azure Platform Portal, click Home, and then click Beta Programs. Acceptance in the program might take several days.

- Add Azure VM Roles to the cluster. While SP1 introduced the ability to add Azure Worker nodes to the cluster, SP2 introduces the ability to add Azure Virtual Machine nodes. Azure VMs support a wider range of applications and runtimes than do Azure Worker nodes. For example, applications that require long-running or complicated installation, are large, have many dependencies, or require manual interaction in the installation might not be suitable for worker nodes. With Azure VM nodes, you can build a VHD that includes an operating system and installed applications, save the VHD to the cloud, and then use the VHD to deploy Azure VM nodes to the cluster.

- Run MPI jobs on Azure Nodes. SP2 includes support for running MPI jobs on Azure Nodes. This gives you the ability to provision computing resources on demand for MPI jobs. The MPI features are installed on both worker and virtual machine Azure Nodes.

- Run Excel workbook offloading jobs on Azure Nodes. While SP1 introduced the ability to run UDF offloading jobs on Azure Nodes, SP2 introduces the ability to run Excel workbook offloading jobs on Azure Nodes. This enables you to provision computing resources on demand for Excel jobs. The HPC Services for Excel features for UDF and workbook offloading are included in Azure Nodes that you deploy as virtual machine nodes. Workbook offloading is not supported on Azure Nodes that you deploy as worker nodes.

- Automatically run configuration scripts on new Azure Nodes. In SP2, you can create a script that includes configuration commands that you want to run on new Azure Node instances. For example, you can include commands to create firewall exceptions for applications, set environment variables, create shared folders, or run installers. You upload the script to Azure Storage, and specify the name of the script in the Azure Node template. The script runs automatically as part of the provisioning process, both when you deploy a set of Azure Nodes and when a node is reprovisioned automatically by the Windows Azure system. If you want to configure a subset of the nodes in a deployment, you can create a custom node group to define the subset, and then use the %HPC_NODE_GROUPS% environment variable in your script to check for inclusion in the group before running the command. For more information, see LINK.

- Connect to Azure Nodes with Remote Desktop. In SP2, you can use Remote Desktop to help monitor and manage Azure Nodes that are added to the HPC cluster. As with on-premises nodes, you can select one or more nodes in HPC Cluster Manager and then click Remote Desktop in the Actions pane to initiate a connection with the nodes. This action is available by default with Azure VM roles, and can be enabled for Azure Worker Roles if Remote Access Credentials are supplied in the node template.

- Enable Azure Connect on Azure Nodes. In SP2, you can enable Azure Connect with your Azure Nodes. With Azure Connect, you can enable connectivity between Azure Nodes and on-premises endpoints that have the Azure Connect agent installed. This can help provide access from Azure Nodes to UNC file shares and license servers on-premises.

- New diagnostic tests for Azure Nodes. SP2 includes three new diagnostic tests in the Windows Azure test suite. The Windows Azure Firewall Ports Test verifies connectivity between the head node and Windows Azure. You can run this test before deploying Azure Nodes to ensure that any existing firewall is configured to allow deployment, scheduler, and broker communication between the head node and Windows Azure. The Windows Azure Services Connection Test verifies that the services running on the head node can connect to Windows Azure by using the subscription information and certificates that are specified in an Azure Node template. The Template test parameter lets you specify which node template to test. The Windows Azure MPI Communication Test runs a simple MPI ping-pong test between pairs of Azure Nodes to verify that MPI communication is working.
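The configuration-script item above describes checking %HPC_NODE_GROUPS% before running a command on a subset of nodes. A minimal sketch of that check in Python follows. It assumes the variable holds a comma-separated list of node group names; the quoted documentation does not state the format, so verify it on a real node. The group name "RenderNodes" is hypothetical.

```python
import os


def node_in_group(group, env=None):
    """Return True if `group` appears in HPC_NODE_GROUPS (case-insensitive).

    Assumption: the variable is a comma-separated list of group names.
    """
    env = os.environ if env is None else env
    raw = env.get("HPC_NODE_GROUPS", "")
    groups = {name.strip().lower() for name in raw.split(",") if name.strip()}
    return group.strip().lower() in groups


if __name__ == "__main__":
    # "RenderNodes" is a hypothetical custom node group.
    if node_in_group("RenderNodes"):
        print("In group: run the group-specific setup commands here")
    else:
        print("Not in group: skip the group-specific setup")
```

Passing the environment as a parameter keeps the check easy to exercise off-cluster; a real configuration script would more likely be a batch file that tests the same variable.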

In the 'Downloads' section for HPC2008 R2 SP2 you will see three files:

Note: This update does not support 'uninstallation.' Once installed attempting to remove it will completely remove the HPC Pack 2008 R2 software. We suggest initial testing be done on a non-production system, and that a system backup be performed prior to installing the service pack.

- HPC2008R2SP2-Update-x64 : Download this .zip file to obtain the updater for use on your head nodes, compute nodes, broker nodes, and x64-based clients and workstation nodes. It also contains the Web Components (REST and Web Portal), Azure VM, and KSP ('soft card') installers necessary to use those features. [Emphasis added.]

- HPC2008R2SP2-Update-x86 : This .zip file contains the update for use on 32-bit clients and workstation nodes. It also contains the KSP ('soft card') installer for 32-bit clients.

- SP2 Media Integration Package : For customers who will be doing multiple new cluster installations (setting up brand new head nodes, not just updating existing clusters) you can use this package to update your original ('RTM') installation media to have SP2 integrated from the beginning. (Instead of installing from your RTM DVD, then applying SP1, then applying SP2.) Please refer to the instructions on the HPC team blog for more information.

System requirements

Supported Operating Systems: Windows 7, Windows HPC Server 2008 R2, Windows Server 2003 Service Pack 2, Windows Server 2008, Windows Server 2008 R2, Windows Vista, Windows XP Professional x64 Edition, Windows XP Service Pack 3

An HPC Pack 2008 R2-based cluster with Service Pack 1 installed including:

HPC Pack 2008 R2 Express, HPC Pack 2008 R2 Enterprise, Windows HPC Server 2008 R2 Suite, HPC Pack 2008 R2 Client Utilities, HPC Pack 2008 R2 MS-MPI Redistributable.

Instructions

This service pack need[s] to be run on all nodes and client machines.

Please refer to the documentation at http://technet.microsoft.com/en-us/library/ee783547(WS.10).aspx

The following online documentation is available for HPC Server 2008 R2 Service Pack 2:

- What's New in Windows HPC Server 2008 R2 Service Pack 2

- Release Notes for Microsoft HPC Pack 2008 R2 Service Pack 2

- Deploying Windows Azure VM Nodes in Windows HPC Server 2008 R2 Step-by-Step Guide

- New Feature Evaluation Guide for Windows HPC Server 2008 R2 SP2

- Deploying a Windows HPC Server Cluster for LINQ to HPC Beta 2

The Windows HPC with Burst to Windows Azure: Application Models and Data Considerations white paper, sample data and code samples of 5/11/2011 describe “the supported application models, data considerations, and deployment for bursting to Windows Azure from a Windows HPC Server 2008 R2 SP1 cluster and code samples of parametric sweep, SOA, and Excel UDF applications that can burst to Azure.” Following is the description of the package:

The article provides a technical overview of developing HPC applications that are supported for the Windows Azure burst scenario. The article addresses the application models that are supported, and the data issues that arise when working with Windows Azure and on-premises nodes, such as the proper location for the data, the storage types in Windows Azure, various techniques to upload data to Windows Azure storage, and how to access data from the computational nodes in the cluster (on-premises and Windows Azure). Finally, this article describes how to deploy HPC applications to Windows Azure nodes and how to run these HPC applications from client applications, as well as from the Windows HPC Server 2008 R2 SP1 job submission interfaces.

A Windows HPC with Burst to Windows Azure Training Course resource kit of 5/2011 for SP1 is available also:

This Resource Kit contains samples that demonstrate HPC application types and concepts shown in the article "Windows HPC with Burst to Windows Azure Application Models and Data Considerations".

Units

Parametric Sweep. Parametric sweep provides a straightforward development path for solving delightfully parallel problems on a cluster (sometimes referred to as "embarrassingly parallel" problems, which have no data interdependencies or shared state precluding linear scaling through parallelization). For example, prime numbers calculation for a large range of numbers. Parametric sweep applications run multiple instances of the same program on different sets of input data, stored in a series of indexed storage items, such as files on disk or rows in a database table. Each instance of a parametric sweep application runs as a separate task, and many such tasks can execute concurrently, depending on the amount of available cluster resources.

SOA. Service-oriented architecture (SOA) is an architectural style designed for building distributed systems. The SOA actors are services: independent software packages that expose their functionality by receiving data (requests) and returning data (responses). SOA is designed to support the distribution of an application across computers and networks, which makes it a natural candidate for scaling on a cluster. The SOA support provided by Windows HPC Server 2008 R2 is based on Windows Communication Foundation (WCF), a .NET framework for building distributed applications. Windows HPC Server 2008 R2 SP1 improves SOA support by hosting WCF services inside Windows Azure nodes, in addition to on-premises nodes.

Excel Offloading. The execution of compute intensive Microsoft Excel workbooks with independent calculations can be sometimes scaled using a cluster. The Windows HPC Server 2008 R2 SP1 integration with Windows Azure supports User Defined Functions (UDFs) offloading. Excel workbook calculations that are based on UDFs defined in an XLL file can be installed on the cluster’s nodes (on-premises and/or Windows Azure nodes). With the XLL installed on the cluster, the user can perform the UDF calls remotely on the cluster instead of locally on the machine where the Excel workbook is open.
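The parametric sweep model described above can be sketched in a few lines of Python. This is only an illustration of the idea, not HPC Pack code: each index stands for one sweep task that counts primes in its own slice of numbers, and a thread pool stands in for the cluster scheduler. The function names and the chunk size are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def is_prime(n):
    """Trial-division primality test, adequate for small inputs."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def sweep_task(index, chunk_size=25):
    """One sweep instance: count the primes in the index-th chunk."""
    start = index * chunk_size
    return sum(1 for n in range(start, start + chunk_size) if is_prime(n))


def run_sweep(first_index, last_index, chunk_size=25):
    """Run every index independently; no task shares state with another."""
    indexes = range(first_index, last_index + 1)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda i: sweep_task(i, chunk_size), indexes))


counts = run_sweep(0, 3)
print(counts, sum(counts))  # prints [9, 6, 6, 4] 25
```

Because each task depends only on its index, the tasks can run in any order and on any node, which is what makes this kind of problem scale with the number of available cores.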


SQL Azure Database and Reporting

No significant articles today.


Marketplace DataMarket and OData

Michael Crump (@mbcrump) continued his series with a Producing and Consuming OData in a Silverlight and Windows Phone 7 application (Part 4) post to the Silverlight Show blog on 7/27/2011:

This article is Part 4 of the series “Producing and Consuming OData in a Silverlight and Windows Phone 7 application.”

To refresh your memory on what OData is:

The Open Data Protocol (OData) is simply an open web protocol for querying and updating data. It allows for the consumer to query the datasource (usually over HTTP) and retrieve the results in Atom, JSON or plain XML format, including pagination, ordering or filtering of the data.
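Since OData queries travel as ordinary URLs, the ordering, filtering and paging mentioned above can be sketched as string composition. The Python helper below is an illustration only; `build_odata_url` is a made-up name, and the service root and entity set are the ones used in this walkthrough's local service.

```python
from urllib.parse import quote


def build_odata_url(service_root, entity_set, options=None):
    """Compose an OData query URL from system query options.

    Option names are given without the leading '$' (filter, orderby, top, skip).
    """
    url = service_root.rstrip("/") + "/" + entity_set
    if not options:
        return url
    parts = []
    for name, value in options.items():
        # Percent-encode spaces but keep quotes and commas readable.
        parts.append("$" + name + "=" + quote(str(value), safe="',"))
    return url + "?" + "&".join(parts)


# Second page of ten customers, ordered by first name.
page_two = build_odata_url(
    "http://localhost:6844/CustomerService.svc/",
    "CustomerInfoes",
    {"orderby": "FirstName", "top": 10, "skip": 10},
)
print(page_two)
# prints http://localhost:6844/CustomerService.svc/CustomerInfoes?$orderby=FirstName&$top=10&$skip=10
```

A URL built this way can be pasted into a browser against a running service, which is a quick way to check a query before wiring it into client code.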

To recap what we learned in the previous section:

- We began by creating the User Interface and setting up our bindings on our elements.

- We added a service reference pointing to our OData Data Service inside our project.

- We added code behind to sort and filter the data coming from our OData Data Source into our Silverlight 4 Application.

- Finally, we consumed an OData Data Source using Windows Phone 7 (pre-Mango).

In this article, I am going to show you how to create, read, update and delete OData data using the latest Windows Phone 7 Mango release. Please read the complete series of articles to have a deep understanding of OData and how you may use it in your own applications.

Download the source code for part 4

Testing and Modifying our existing OData Data Service

I would recommend at the very least that you complete part 1 of the series. If you have not, you may continue with this exercise by downloading the completed source code to Part 1 and continue. I would also recommend watching the video to part 1 in order to have a better understanding of the OData protocol.

Before we get started, I have good news: in Mango you do not have to download extra tools or generate your own proxy classes and import them into Visual Studio like you had to do pre-Mango. You will be able to use “Add Service Reference” like we did in Part 2 (Silverlight 4). Let’s go ahead and get started learning more about OData with Mango.

Go ahead and download the completed source code to Part 1 of this series and load it into Visual Studio 2010. Now right click CustomerService.svc and Select “View in Browser”.

If everything is up and running properly, then you should see the following screen (assuming you are using IE8).

Please note that any browser will work. I chose to use IE8 for demonstration purposes only.

If you got the screen listed above, then we know everything is working properly and can continue. If not, download the completed solution and continue with the rest of the article.

We are going to need to make one quick change before continuing. Double click the CustomerService.svc.cs file and change the following line from:

    config.SetEntitySetAccessRule("CustomerInfoes", EntitySetRights.AllRead);

to

    config.SetEntitySetAccessRule("CustomerInfoes", EntitySetRights.All);

This will simply give our service the ability to create, read, update and delete data.
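To see why this one-word change matters, here is a rough Python model of the rights flags. It mirrors the numeric values of the .NET EntitySetRights enumeration as best I recall them, and the verb-to-right table is a simplification (for instance, reading a single entity is governed by its own flag). Treat it as a sketch of the idea rather than the service's actual authorization code.

```python
from enum import IntFlag


class EntitySetRights(IntFlag):
    """Python stand-in for the .NET enumeration of the same name."""
    NONE = 0
    READ_SINGLE = 1
    READ_MULTIPLE = 2
    WRITE_APPEND = 4
    WRITE_REPLACE = 8
    WRITE_DELETE = 16
    WRITE_MERGE = 32
    ALL_READ = READ_SINGLE | READ_MULTIPLE
    ALL_WRITE = WRITE_APPEND | WRITE_REPLACE | WRITE_DELETE | WRITE_MERGE
    ALL = ALL_READ | ALL_WRITE


# Simplified mapping from HTTP verb to the right a request needs.
REQUIRED_RIGHT = {
    "GET": EntitySetRights.READ_MULTIPLE,
    "POST": EntitySetRights.WRITE_APPEND,
    "PUT": EntitySetRights.WRITE_REPLACE,
    "MERGE": EntitySetRights.WRITE_MERGE,
    "DELETE": EntitySetRights.WRITE_DELETE,
}


def is_allowed(granted, http_method):
    """True when the granted rights include the one the verb requires."""
    needed = REQUIRED_RIGHT[http_method]
    return (granted & needed) == needed


print(is_allowed(EntitySetRights.ALL_READ, "POST"))  # prints False
print(is_allowed(EntitySetRights.ALL, "POST"))       # prints True
```

With AllRead the service would reject the Add, Update and Delete buttons built later in the article; All opens every operation, which is convenient for a demo but broader than most production services would want.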

At this point, leave this instance of Visual Studio running. We are going to use this OData Data Service in the “Getting Started” part. You may also want to copy and paste the address URL which should be similar to the following:

http://localhost:6844/CustomerService.svc/

At this point, we are ready to create our Windows Phone 7 Mango Application. So open up a new instance of Visual Studio 2010 and select File –> New Project.

Select Silverlight for Windows Phone –> Windows Phone Panorama Application and give it the name, SLShowODataP4 and finally click OK.

On the next screen make sure you select “Windows Phone 7.1” as the Windows Phone Platform that you want to target.

Once the project loads, the first thing we are going to do is to add a Service Reference to our CustomerService. Simply right click your project and select “Add Service Reference”…

Type the Address of your service (as shown in the first step) and hit “Go”. You should now see Services and you can expand that box to see CustomerInfoes if you wish. Now go ahead and type a name for the Namespace. We are going to use CustomersModel for the name and click OK.

You may notice a few things happened behind the scene. First the reference to “System.Data.Services.Client” was added automatically for us and you now have a Service Reference.

Now we will need to create the User Interface and write the code behind to pull the data into our application.

Double click on the MainPage.xaml file and replace the contents of <!--Panorama item one--> with the following code snippet:

    <!--Panorama item one-->
    <controls:PanoramaItem Header="first item">
        <!--Double line list with text wrapping-->
        <ListBox x:Name="lst" Margin="0,0,-12,0" ItemsSource="{Binding}">
            <ListBox.ItemTemplate>
                <DataTemplate>
                    <StackPanel Margin="0,0,0,17" Width="432">
                        <TextBlock Text="{Binding FirstName}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextExtraLargeStyle}"/>
                        <TextBlock Text="{Binding LastName}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextExtraLargeStyle}"/>
                        <TextBlock Text="{Binding Address}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                        <TextBlock Text="{Binding City}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                        <TextBlock Text="{Binding State}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                        <TextBlock Text="{Binding Zip}" TextWrapping="Wrap" Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                    </StackPanel>
                </DataTemplate>
            </ListBox.ItemTemplate>
        </ListBox>
    </controls:PanoramaItem>

This XAML should be very straightforward. The ListBox contains a DataTemplate with a StackPanel in which each TextBlock binds to a property of the items in our collection: FirstName, LastName, Address, City, State and Zip.

Let’s go ahead and setup our second Panorama Item page which will include the “Read”, “Add”, “Update” and “Delete” buttons by using the following code snippet.

Double click on the MainPage.xaml file and replace the contents of <!--Panorama item two--> with the following code snippet:

    <controls:PanoramaItem Header="second item">
        <!--Double line list with image placeholder and text wrapping-->
        <StackPanel>
            <Button x:Name="btnRead" Content="Read" Click="btnRead_Click"/>
            <Button x:Name="btnAdd" Content="Add" Click="btnAdd_Click" />
            <Button x:Name="btnUpdate" Content="Update" Click="btnUpdate_Click" />
            <Button x:Name="btnDelete" Content="Delete" Click="btnDelete_Click" />
        </StackPanel>
    </controls:PanoramaItem>

Page 2 should look like the following in the design view.

NOTE: You will want to go ahead and make sure the Click Event handlers are resolved before continuing.

Now that we are finished with our UI and adding our Service Reference, let’s add some code behind to call our OData Data Service and retrieve the data into our Windows Phone 7 Application.

Now would be a good time to go ahead and Build our project. Select Build from the Menu and Build Solution.

Reading Data from the Customers Table.

Go ahead and add the following namespaces on the MainPage.xaml.cs file.

    1: using System;
    2: using System.Data.Services.Client;
    3: using System.Linq;
    4: using System.Windows;
    5: using Microsoft.Phone.Controls;
    6: using SLShowODataP4.CustomersModel;

Lines 1-6 are the proper namespaces that we will need to query an OData Data Source. We have already included our CustomersModel so we are ready to go.

Add the following line just before the MainPage() Constructor.

    public CustomersEntities ctx = new CustomersEntities(new Uri("http://localhost:6844/CustomerService.svc/", UriKind.Absolute));

This line just creates a new context with our CustomersEntities (which was generated for us when we added the Service Reference) and passes the URI of our data service, CustomerService.svc, which is hosted in the ASP.NET project that we left running.

Let’s now add code for our Read Button.

     1: private void btnRead_Click(object sender, RoutedEventArgs e)
     2: {
     3:     //Read from OData Data Source
     4:     var qry = from g in ctx.CustomerInfoes
     5:               orderby g.FirstName
     6:               select g;
     7:
     8:     var coll = new DataServiceCollection<CustomerInfo>();
     9:     coll.LoadCompleted += new EventHandler<LoadCompletedEventArgs>(coll_LoadCompleted);
    10:     coll.LoadAsync(qry);
    11:
    12:     DataContext = coll;
    13: }

Lines 4-6 form the query whose results will populate our collection. In this particular query, we are going to select all records and order them by the first name.

Lines 8-12 create a DataServiceCollection, which provides notification if an item is added, deleted or the list is refreshed. We have also subscribed to the LoadCompleted event with a coll_LoadCompleted handler (not shown here) that will display a MessageBox if an error occurred. The collection is then loaded asynchronously using the query we specified earlier. Finally, the DataContext of the page is set to the collection.
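Under the hood, the load is an HTTP GET whose response is a feed of entities. As a language-neutral illustration, the Python sketch below unpacks a response in the verbose JSON format that OData services of this era can return. The payload is made up for the example and was not captured from the article's service, and `extract_entities` is a hypothetical helper name.

```python
import json


def extract_entities(payload_text):
    """Return the list of entities from a verbose-JSON OData feed response."""
    body = json.loads(payload_text)["d"]
    # Version 2 responses wrap the list in "results"; version 1 returns it directly.
    return body["results"] if isinstance(body, dict) else body


# Made-up sample payload, already ordered by FirstName as the query requests.
sample = json.dumps({"d": {"results": [
    {"FirstName": "Andy", "LastName": "Griffith"},
    {"FirstName": "Barney", "LastName": "Fife"},
]}})

names = [customer["FirstName"] for customer in extract_entities(sample)]
print(names)  # prints ['Andy', 'Barney']
```

The ordering is done by the service in response to the orderby clause; the client simply keeps the entities in the order they arrive.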

If we go ahead and run this application and click on the “Read” button on the second page, we will get the following screen.

Notice how our results are in alphabetical order? That occurred because our query on line 4 above specified to get all the Customers and order them by the FirstName. Pretty easy huh? Let’s step it up a notch and actually add a record to our Customers Table.

Adding a record to the Customers Table.

Adding a record is going to be pretty straightforward since we already have a context with CustomersEntities defined. Go ahead and add the following code snippet:

     1: private void btnAdd_Click(object sender, RoutedEventArgs e)
     2: {
     3:     //Adding a new Customer
     4:     var newCustomer = new CustomerInfo();
     5:     newCustomer.FirstName = "Andy1";
     6:     newCustomer.LastName = "Griffith1";
     7:     newCustomer.Address = "1111 New Address";
     8:     newCustomer.City = "Empire";
     9:     newCustomer.Zip = "34322";
    10:     newCustomer.State = "AL";
    11:
    12:     ctx.AddObject("CustomerInfoes", newCustomer);
    13:
    14:     //Code to Add/Update/Delete from OData Data Source
    15:     ctx.BeginSaveChanges(insertUserInDB_Completed, ctx);
    16: }
    17:
    18: private void insertUserInDB_Completed(IAsyncResult result)
    19: {
    20:     ctx.EndSaveChanges(result);
    21: }

Lines 4-10 are just setting up a new CustomerInfo() object. We are going to add all the required fields to create a new Customer.

Line 12 adds the specified object to the set of objects that the DataServiceContext is tracking. In this case, we are going to add our newCustomer that we just created to “CustomerInfoes”.

Lines 15-21 asynchronously submit to the OData service the pending changes that the DataServiceContext has collected since the last time changes were saved. The callback on line 20 calls EndSaveChanges to complete the BeginSaveChanges operation. Now if we click the 1) “Add” button from our second screen then hit 2) “Read” to refresh our screen we shall see the following.
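At the protocol level, saving a new entity amounts to an HTTP POST to the entity set's URL. The Python sketch below builds such a request without sending it. The helper name is hypothetical, and JSON is used for brevity; the payload format the phone client actually sends may differ.

```python
import json
from urllib.request import Request


def build_insert_request(service_root, entity_set, entity):
    """Build (but do not send) the POST that creates a new entity."""
    url = service_root.rstrip("/") + "/" + entity_set
    body = json.dumps(entity).encode("utf-8")
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    return Request(url, data=body, headers=headers, method="POST")


new_customer = {
    "FirstName": "Andy1",
    "LastName": "Griffith1",
    "Address": "1111 New Address",
    "City": "Empire",
    "Zip": "34322",
    "State": "AL",
}
request = build_insert_request(
    "http://localhost:6844/CustomerService.svc/", "CustomerInfoes", new_customer
)
print(request.get_method(), request.full_url)
# prints POST http://localhost:6844/CustomerService.svc/CustomerInfoes
```

Sending the request (for example with urllib.request.urlopen) would require the service from Part 1 to be running with write access enabled.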

Updating a record in the Customers Table.

Now let’s go ahead and update an existing record. In this example, we are going to update the first record titled “Andy Griffith”. We are going to change his address to the value “ New Address”. How creative! Ok, let’s get started.

     1: private void btnUpdate_Click(object sender, RoutedEventArgs e)
     2: {
     3:     var qry = ctx.CreateQuery<CustomerInfo>("CustomerInfoes").AddQueryOption("$filter", "LastName eq 'Griffith'");
     4:
     5:     qry.BeginExecute(r =>
     6:     {
     7:         var query = r.AsyncState as DataServiceQuery<CustomerInfo>;
     8:
     9:         try
    10:         {
    11:             var result = query.EndExecute(r).First();   // first customer matching the filter
    12:             result.Address = " New Address";
    13:             ctx.UpdateObject(result);                   // mark the entity as modified
    14:
    15:             ctx.BeginSaveChanges(changeUserInDB_Completed, ctx);
    16:         }
    17:
    18:         catch (Exception ex)
    19:         {
    20:             MessageBox.Show(ex.ToString());
    21:         }
    22:
    23:
    24:     }, qry);
    25: }
    26:
    27: private void changeUserInDB_Completed(IAsyncResult result)
    28: {
    29:     ctx.EndSaveChanges(result);
    30: }

Lines 1-3 set up the query that we are going to run against the “CustomerInfoes” entity set. As you can see, we are setting the filter to LastName equals Griffith. You could also run the filter command directly inside a web browser. In this case, it is only going to return one record.

Lines 5-13 start an asynchronous network operation that executes the query that we created earlier. Line 11 returns the first element in the sequence. We then specify the new Address value and update the object.

Lines 15-30 asynchronously submit to the OData service the pending changes that the DataServiceContext has collected since the last time changes were saved. Now if we click the 1) “Update” button from our second screen then hit 2) “Read” to refresh our screen we shall see the following.

Deleting a record from the Customers Table.

Now let’s go ahead and delete an existing record. In this example, we are going to delete the first record titled “Andy Griffith”. Go ahead and copy and paste the following code snippet for the “Delete” button.

     1: private void btnDelete_Click(object sender, RoutedEventArgs e)
     2: {
     3:     var qry = ctx.CreateQuery<CustomerInfo>("CustomerInfoes").AddQueryOption("$filter", "LastName eq 'Griffith'");
     4:
     5:     qry.BeginExecute(r =>
     6:     {
     7:         var query = r.AsyncState as DataServiceQuery<CustomerInfo>;
     8:
     9:         try
    10:         {
    11:
    12:             var result = query.EndExecute(r).First();   // first customer matching the filter
    13:             ctx.DeleteObject(result);                   // mark the entity for deletion
    14:
    15:             ctx.BeginSaveChanges(changeUserInDB_Completed, ctx);
    16:         }
    17:
    18:         catch (Exception ex)
    19:         {
    20:             MessageBox.Show(ex.ToString());
    21:         }
    22:
    23:
    24:     }, qry);
    25: }
    26:
    27: private void changeUserInDB_Completed(IAsyncResult result)
    28: {
    29:     ctx.EndSaveChanges(result);
    30: }

Right off the bat you can probably tell that this looks exactly like the update code. You are correct, except it has a ctx.DeleteObject where the update code had a ctx.UpdateObject. Note that changeUserInDB_Completed (lines 27-30) is the same method used by the Update button; if you already added it, do not paste it a second time, because a class cannot define the same method twice. Let’s take a look at the code anyway.

Lines 1-3 set up the query that we are going to run against the “CustomerInfoes” entity set. As you can see, we are setting the filter to LastName equals Griffith. It is only going to return one record.

Lines 5-13 start an asynchronous network operation that executes the query that we created earlier. Line 12 returns the first element in the sequence. We then specify that we wish to delete the Customer that matches the filter.

Lines 15-30 asynchronously submit to the OData service the pending changes that the DataServiceContext has collected since the last time changes were saved. Now if we click the 1) “Delete” button from our second screen then hit 2) “Read” to refresh our screen we shall see that “Griffith” has been removed.
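Both the update and the delete end up as requests against the URL of a single entity, addressed by its key. The Python sketch below builds those two requests without sending them. The key value 7 and the helper names are hypothetical, and the use of MERGE for partial updates reflects the OData protocol versions of this era as I understand them; a service or client configured differently may use PUT instead.

```python
import json
from urllib.request import Request


def entity_url(service_root, entity_set, key):
    """Address one entity by key, e.g. .../CustomerInfoes(7)."""
    return "{0}/{1}({2})".format(service_root.rstrip("/"), entity_set, key)


def build_update_request(url, changes):
    """Partial update: only the changed properties travel in the body."""
    body = json.dumps(changes).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return Request(url, data=body, headers=headers, method="MERGE")


def build_delete_request(url):
    """Delete needs no body, only the entity's URL."""
    return Request(url, method="DELETE")


# 7 is a hypothetical key for the customer returned by the filter query.
target = entity_url("http://localhost:6844/CustomerService.svc/", "CustomerInfoes", 7)
update = build_update_request(target, {"Address": " New Address"})
delete = build_delete_request(target)
print(update.get_method(), delete.get_method(), target)
# prints MERGE DELETE http://localhost:6844/CustomerService.svc/CustomerInfoes(7)
```

This is why the C# code first runs a filtered query: the context needs the tracked entity, and with it the entity's key, before it can issue either request.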

Conclusion

At this point, we have seen how you would produce and consume an OData Data Source using the web browser, LinqPad, Silverlight 4 and Windows Phone 7 pre-Mango and now Mango. We have learned how to do some basic sorting and filtering using all of them. Now that you are equipped with a solid understanding of producing and consuming OData Data Services, you can begin to use this in your own applications. I want to thank you for reading this series and if you ever have any questions feel free to contact me on the various sources listed below. I also wanted to thank SilverlightShow.Net for giving me the opportunity to share this information with everyone.

IDV Solutions announced a New Open Data connector for Visual Fusion unites more data sources for enhanced business intelligence in a 7/26/2011 press release published by Directions magazine:

IDV Solutions today released an Open Data Protocol Connector for their business intelligence software, Visual Fusion. With this release, organizations can easily connect solutions built with Visual Fusion to any source that uses the OData protocol, including the DataMarket on Microsoft's Windows Azure Marketplace.

Visual Fusion is innovative business intelligence software that unites data from disparate sources in a web-based, visual context. Users can analyze their data in the context of location and time, using an interactive map, timeline, data filters and other analytic tools to achieve greater insight and understanding. With today’s release, users can combine OData sources with other business information—including content from SQL Server, SharePoint, Salesforce, Oracle, ArcGIS, and Web feeds.

“The new OData connector enhances one of the key advantages of Visual Fusion—the ability to unite data from virtually any sources in one interactive visualization,” said Riyaz Prasla, IDV’s Program Manager. “It lets users add context to their applications by bringing in data from government sources, the Azure Marketplace, and other organizations that support the protocol.”

OData provides an HTTP-based, uniform interface for interacting with relational databases, file systems, content management systems, or Web sites.

Brian Rhea posted a simple demo of Consuming an OData feed in MVC in a 7/20/2011 post (missed when posted):

I created this quick demo on consuming a WCF Data Service to get my feet wet with OData feeds. I thought it might be a good quick start guide for anyone getting started. Enjoy!

Referencing the Service

After creating the MVC 3 Application, our first step is to add the service reference to leverage the proxy class generated for us.

Now that we have our proxy class let’s go ahead and add a strongly-typed partial view in the Home folder for the products.

Once we have a list view to show the products, we can add our call to get the view into the main page using the helper Html.RenderPartial.

On the controller side, we will access the products from the OData feed and return them to the view. Since the OData feed is a RESTful service we could just add /Products to the service url and retrieve them:

http://services.odata.org/Northwind/Northwind.svc/Products

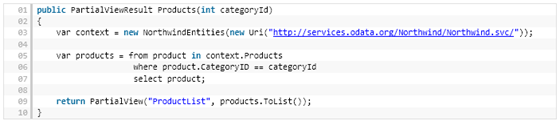
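The URL convention described above can be sketched in a few lines. This is a minimal illustration in Python rather than the post's own MVC code; the helper name `odata_url` is hypothetical, and the `CategoryID` and `ProductName` property names are assumed to exist on the Northwind `Products` entity set. It only builds the request URLs and does not call the service.

```python
from urllib.parse import quote, urlencode

# Public Northwind sample service named in the post.
SERVICE_ROOT = "http://services.odata.org/Northwind/Northwind.svc"


def odata_url(service_root, entity_set, **options):
    """Build an OData URL; keyword names map to $-prefixed query options.

    Hypothetical helper for illustration only.
    """
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if not options:
        return url
    # e.g. filter="CategoryID eq 1" becomes $filter=CategoryID%20eq%201
    query = {f"${name}": str(value) for name, value in options.items()}
    return url + "?" + urlencode(query, quote_via=quote, safe="$")


# Appending the entity set name to the service root addresses the whole feed.
all_products = odata_url(SERVICE_ROOT, "Products")

# Query options narrow and order the feed (property names are assumptions).
by_category = odata_url(
    SERVICE_ROOT, "Products", filter="CategoryID eq 1", orderby="ProductName"
)

print(all_products)
print(by_category)
```

Keeping the query options as keyword arguments means the same helper covers filtering, ordering and paging without hand-assembling the query string.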

But since I have the generated class available I can just use its context and LINQ to get the products:

The last thing we need to do is show a select list of categories to sort by and wire it up to change the list using some jQuery binding.

Our page now looks like this:

And in our controller we add the method to get our categories:

Run this and we see our results based on the category chosen:

Output Caching

Okay, so we have our products and can change them by category. Let’s take advantage of Output Caching since the products will not change much (except of course the units in stock). Output Caching is easy to accomplish by adding an attribute to the controller action. If you want you can also use Action Filtering to handle all controllers and actions, providing a great level of caching granularity. Back to our products, just add the OutputCache attribute to the action along with some parameters you can play with to see it working.

Let’s look at this in action in Firebug to really see what’s happening. The first three calls show the first time I select the category. I then select each category again and we can see the difference in response time:

And there we have it. Consuming a WCF Data Service public OData feed and caching the output by parameter.
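The idea of caching output by parameter can be illustrated with a small sketch. This is not ASP.NET's OutputCache attribute; it is a rough Python analogue under stated assumptions: `output_cache` and `products_for_category` are hypothetical names, the 60-second duration is arbitrary, and the function body is a stand-in for the real OData request.

```python
import time
from functools import wraps


def output_cache(duration_seconds, clock=time.monotonic):
    """Cache a function's result per argument tuple for a fixed duration."""
    def decorator(func):
        store = {}  # maps argument tuple -> (expiry time, cached result)

        @wraps(func)
        def wrapper(*args):
            now = clock()
            hit = store.get(args)
            if hit is not None and hit[0] > now:
                return hit[1]  # still fresh: skip the expensive call
            result = func(*args)
            store[args] = (now + duration_seconds, result)
            return result
        return wrapper
    return decorator


calls = []  # records which categories actually reached the "service"


@output_cache(duration_seconds=60)
def products_for_category(category_id):
    calls.append(category_id)  # stand-in for the OData request
    return f"product list for category {category_id}"


products_for_category(1)
products_for_category(2)
products_for_category(1)  # same parameter again: served from the cache
print(calls)
```

Each distinct category value gets its own cache entry, which mirrors the behavior the Firebug timings describe: the first selection of a category pays the full cost, and repeat selections return quickly until the entry expires.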

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Sean Deuby reported about “Cloud Identity and Security Up in the Mountains” in his Keeping Cool At The Cloud Identity Summit post of 7/26/2011 to the Windows IT Pro blog:

I spent most of last week beating the Dallas heat in Keystone, Colorado, for the second annual Cloud Identity Summit, hosted by Ping Identity. This is a great conference for the "identerati" - key players in cloud identity security and standards from companies like Ping, Google, Microsoft, Salesforce.com, and UnboundID - and increasingly, high-level identity professionals that want to learn how to address their own identity issues in our hybrid on-premises / cloud future. And it certainly lived up to expectations.

The conference has about doubled in size from last year, but it's still only about 250 attendees. This allows everyone to rub elbows with everyone else, and participate in some really interesting conversations. It's part of what makes CIS special. You could tell how the conversation has evolved from last year. In 2010, most of the talk was about the existing and emerging protocols that make cloud identity work such as SAML, OpenID, and OAuth. This year the talk has moved both deeper (Shibboleth’s Ken Klingenstein gave a breakout session named “Killer Attributes”) and broader (for example addressing the need for standardized just-in-time provisioning / de-provisioning of accounts at the service provider).

Monday and Tuesday were reserved for pre-conference workshops held by experts from salesforce.com, Google, Microsoft, and Ping.

There was also an OpenID Summit held at the same time to work on the evolving standards of this important authentication protocol. Wednesday and Thursday were the big days, however, with keynotes and two breakout tracks each afternoon. Andre Durand, the founding CEO of Ping Identity, acted as master of ceremonies; he obviously respects his speakers and indeed shares a long technical history with many of them. His short speech focused on the importance of the formal and informal standards work being done at the conference, quoting Konrad Friedemann’s “Every undertaking…is generally accompanied by unforeseen repercussions that can overshadow the principal behavior.” (If you need an example, think of the IBM PC.) He quite rightly noted that results from a conference like this that focuses on cloud computing security and identity standards will affect literally billions of people in the future.

Gunnar Peterson, Principal Consultant for the Arctec Group and overall highly respected security guy, led off the keynotes with “Cloud Identity: Yesterday, Today, and Tomorrow”. He demonstrated how, as software architecture has evolved over the last ten years, the security model (firewall and SSL) has not evolved to keep pace. He pointed out what he considers to be one of the most important statements in the Cloud Security Alliance (CSA) Security Guidance paper: "A portion of the cost savings from cloud computing must be invested into increased scrutiny of the security capabilities...to ensure requirements are continuously met." In other words, companies should take some of the capital they’ve saved by the move to cloud computing and invest it in security – because today’s firewalls and SSL transport encryption aren’t up to the job.

Patrick Harding, Ping’s CTO, presented a “State Of Cloud Identity” keynote. He mentioned how he was recently interviewed by CNN on cloud security, and one of the instructions CNN gave was that he talk about the topic as though he were addressing an eight-year old. (It’s good guidance to keep in mind when you talk about cloud security to non-technologists; it forces you to boil complicated concepts down to their essence.) Most of his keynote covered an important project named SCIM, for Simple Cloud Identity Management. SCIM is a specification to create a standard for adding, changing, and deleting user accounts at service providers. Currently there is no standard, and as a result setting up a connector between the identity provider (e.g. your enterprise) and the service provider (e.g. one of the thousands of SaaS applications available) requires custom work for each vendor. I’ll talk more about SCIM in a future article, and Patrick has posted a short SCIM tutorial video about it.

Pamela Dingle of Ping moderated a panel about the future of identity and mobility featuring speakers from Google, Verizon, and LG. The standout quote came from Paul Donfried, CTO for Identity and Access Management at Verizon. Answering a question about location privacy on mobile devices, he quipped, “People worry about service providers locating their phones. Well, if we don’t know where the hell your phone is, we can’t make it ring.”

In “Federated IT and Identity”, Microsoft Technical Fellow John Shewchuk talked about how, since last year, “we’ve really hit the tipping point on cloud”. The conversation has moved from just the infrastructure to the applications on top of the infrastructure (such as productivity, mobility and consumerization) that are driving cloud computing’s adoption. “Applications are pulling in customers, and customers are pulling through the infrastructure…it’s not about the identity erector set, it’s about the solutions (customers) can get.” 50,000 organizations, for example, signed up for Office 365 the first two weeks after the product launch.

He also mentioned what must be the largest Active Directory-like directory in the world: The Windows Azure directory. This is a multi-tenant, highly available, highly scaled directory, “built on the same model that we have with Active Directory.” It houses the synchronized identities of customers using Azure, Office 365 / Live@edu, and presumably many other Microsoft online properties. His key message was that, in the new world of federated IT, companies must empower IT professionals to empower users or IT will become less and less relevant as users find their own way without IT.

Gartner Vice President and Distinguished Analyst Bob Blakley got everyone’s interest with his session title: “Death of Authentication”. His premise was that, unlike in the real world, authentication took place because there wasn’t enough information about you online to identify you. With the increasing ubiquity of the internet and the rise of social media, plus the explosion in mobility, that’s rapidly changing. People will be able to identify – not just authenticate – someone using their mobile devices, which will collect and correlate a wealth of public information about a subject to give the user an augmented reality. (Think of James Cameron’s Terminator head-up display.) The trick, of course, is to use this augmented reality wisely and enact restrictions on retaining data after the subject has been identified.

Finally (though there were other excellent presentations), Jeremy Grant of the National Institute of Standards and Technology (NIST) presented a US government strategy with the potential to greatly increase the individual’s online security. Called the National Strategy for Trusted Identities in Cyberspace (NSTIC, pronounced “n-stick”), it envisions an identity ecosystem composed of private identity providers working within a framework of standards they participate in creating. This ecosystem will allow individuals and organizations to use enhanced, more secure versions of their accounts with existing and new identity providers to access a range of online services well beyond what’s available today. Participation in the system will be voluntary, but the goal is to make the system so useful and secure that it will be voluntarily adopted. The government intends to only provide a general framework, coordination, and "grease the skids" (for example, volunteer agencies to act as early adopters) to keep things moving forward.

If you’re suspicious of the government having any involvement in cloud security, know that remarks and tweets from almost all of the identerati present were favorable after listening to Jeremy’s engaging presentation. It’s the right direction, and the right idea. But even though everyone wished Jeremy good luck, the devil is in the details. The strategy is just getting started, and it could be derailed by any number of road blocks or political interference in technical issues. I’ll examine the NSTIC strategy in more detail in an upcoming article. Jeremy’s standout quote: “We think the password is fundamentally insecure and needs to be shot.”

This year’s Cloud Identity Summit exceeded the expectations set by last year’s inaugural event. It combined technology learning, getting trends and opinions from the people that know, one-on-one networking, and standards work so important in this early phase of cloud computing security. Andre Durand and Ping Identity deserve special thanks for organizing and putting resources into a conference that is not, by any means, just about them. The Cloud Identity Summit is all about getting the right people together (evidenced by the stellar array of speakers and the fact the conference was sponsored by both Microsoft AND Google) and watching the unforeseen repercussions reverberating through the cloud.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team (@WindowsAzure) posted Just Announced: Windows Azure Toolkit for iOS Now Supports Access Control Service, Includes Cloud Ready Packages on 7/27/2011:

Wade Wegner just announced in his blog post, “Windows Azure Toolkit for iOS Now Supports the Access Control Service” the release of an update to the Windows Azure Toolkit for iOS.

This new version of the toolkit includes three key components Wade describes as incredibly important when trying to develop iOS applications that use Windows Azure:

- Cloud Ready Packages for Devices

- Configuration Tool

- Support for ACS

Read Wade’s blog post to learn more about these updates and download all the bits here:

- watoolkitios-lib UPDATED

- watoolkitios-samples UPDATED

- watoolkitios-doc UPDATED

- watoolkitios-configutility NEW

- cloudreadypackages NEW

Mary Jo Foley (@maryjofoley) reported Microsoft gives Windows Phone developers a refreshed (non-RTM) Mango build in a 7/27/2011 post to her All About Microsoft blog for ZDNet:

On the heels of its announcement that it had released to manufacturing the Windows Phone operating system 7.5 — better known as “Mango” — Microsoft execs said they’re now updating Mango developers with a near-final build. [See below post.]

The developer update released via the Microsoft Connect site on July 27 is Build 7712. The RTM build is believed to be 7720. The Windows Phone Dev Podcast team hinted yesterday that Microsoft might be ready to launch the 7712 build as early as today.

The big question on many developers’ minds: Why not just give Mango devs the actual RTM bits? Windows Phone Senior Product Manager Cliff Simpkins provided an explanation in a new blog post on the Windows Phone Developer blog:

“For the folks wondering why we’re not providing the ‘RTM’ version, there are two main reasons. First, the phone OS and the tools are two equal parts of the developer toolkit that correspond to one another. When we took this snapshot for the refresh, we took the latest RC drops of the tools and the corresponding OS version. Second, what we are providing is a genuine release candidate build, with enough code checked in and APIs locked down that this OS is close enough to RTM that, as a developer, it’s more than capable to see you through the upcoming RC drop of the tools and app submission. It’s important to remember that until the phone and mobile operator portion of Mango is complete, you’re still using a pre-release on your retail phone – no matter the MS build. Until that time, enjoy developing and cruising around on build 7712 – it’s a sweet ride, to be sure.”

Developers got their first “Beta 2” test build of Mango in late June. This refresh includes a number of updates, including locked application platform programming interfaces; a screenshot capability built into the emulator; an update to the profiler to include memory profiling; the ability to install NuGet into the free version of the Windows Phone SDK tools; and an “initial peek” at the Marketplace Test Kit.

Microsoft execs said last week that the company was planning to deliver a Release Candidate build of the Mango phone software development kit in late August. From today’s post, the RC plan still seems to remain a go.

Mango is now in telco and handset makers’ hands for testing. Microsoft officials have said Mango will be pushed to existing Windows Phone users this fall and be available on/with new handsets around the same time. Mango includes a number of new features, ranging from the inclusion of an HTML5-compliant IE 9 browser, to third-party multitasking, to Twitter integration.

On July 27, Fujitsu-Toshiba announced what are expected to be the first Mango phones. Due out in Japan in “September or beyond,” the IS12T is a waterproof handset that will come in yellow, pink and black and include a 13.2 megapixel camera.

Rob Tiffany (@RobTiffany) reported Windows Phone “Mango” has been Released to Manufacturing! in a 7/27/2011 post:

On July 26th, the Windows Phone development team officially signed off on the release to manufacturing (RTM) build of “Mango,” which is the latest version of the Windows Phone operating system. We now hand over the code to OEMs to tailor and optimize the OS for their phones. After that, our Mobile Operator partners will do the same in order to prepare the phones for their wireless networks.

This is an amazing milestone for the Windows Phone team and Microsoft. With hundreds of new features including the world’s fastest mobile HTML5 web browser, Windows Phone “Mango” promises to make a huge impact in the mobile + wireless space this fall. This “splash” is made even bigger around the world as we expand our Windows Phone language support to include Brazilian Portuguese, Chinese (simplified and traditional), Czech, Danish, Dutch, Finnish, Greek, Hungarian, Japanese, Korean, Norwegian (Bokmål), Polish, Portuguese, Russian, and Swedish.

Maarten Balliauw (@maartenballiauw) announced Windows Azure SDK for PHP 4 released on 7/27/2011:

Only a few months after the Windows Azure SDK for PHP 3.0.0, Microsoft and RealDolmen are proud to present you the next version of the most complete SDK for Windows Azure out there (yes, that is a rant against the .NET SDK!): Windows Azure SDK for PHP 4. We’ve been working very hard with an expanding globally distributed team on getting this version out.

The Windows Azure SDK [for PHP] 4 contains some significant feature enhancements. For example, it now incorporates a PHP library for accessing Windows Azure storage, a logging component, a session sharing component and clients for both the Windows Azure and SQL Azure Management API’s. On top of that, all of these API’s are now also available from the command-line both under Windows and Linux. This means you can batch-script a complete datacenter setup including servers, storage, SQL Azure, firewalls, … If that’s not cool, move to the North Pole.

Here’s the official change log:

- New feature: Service Management API support for SQL Azure

- New feature: Service Management API's exposed as command-line tools

- New feature: Microsoft_WindowsAzure_RoleEnvironment for retrieving environment details

- New feature: Package scaffolders

- Integration of the Windows Azure command-line packaging tool

- Expansion of the autoloader class increasing performance

- Several minor bugfixes and performance tweaks

Some interesting links on some of the new features:

- Setup the Windows Azure SDK for PHP

- Packaging applications

- Using scaffolds

- A hidden gem in the Windows Azure SDK for PHP: command line parsing

- Scaffolding and packaging a Windows Azure project in PHP

Also keep an eye on www.sdn.nl where I’ll be posting an article on scripting a complete application deployment to Windows Azure, including SQL Azure, storage and firewalls.

And finally: keep an eye on http://azurephp.interoperabilitybridges.com and http://blogs.technet.com/b/port25/. I have a feeling some cool stuff may be coming following this release...

As an aside, www.sdn.nl is in Dutch, which isn’t surprising because it’s domiciled in the Netherlands, but I didn’t see an English translation option.

Web Host Industry Review posted Q&A: DotNetNuke’s Mitch Bishop Discusses Version 6 on 7/27/2011:

On July 20, open source software developer DotNetNuke (www.dotnetnuke.com) released Version 6 of its ASP.NET content management system.

DotNetNuke says version 6 offers a significant improvement over its predecessor, including a simplified interface that allows developers, designers and content owners to effectively design, deliver and update websites.

The cloud-focused CMS software has built-in integration for cloud-based services such as Amazon Web Services and Windows Azure, offers the ability to publish files managed in Microsoft Office SharePoint to external websites, and the entire core platform has been rewritten in C# to make it more accessible and customizable to the developer community.

Along with the new version, DotNetNuke has also launched the Snowcovered (www.snowcovered.com) store, which offers some 10,000 DotNetNuke modules, skins, and application extensions for developers to download. The company acquired Snowcovered in August of 2009, and has built it up to better support the CMS.

In an email interview, Mitch Bishop, chief marketing officer at DotNetNuke, discusses the new version, its many benefits, and how it will provide developers with a range of cloud tools.

WHIR: The press release states that DNN 6 is the most "cloud-ready" version ever to be released. Can you explain and elaborate on this?

Mitch Bishop: DNN 6 offers two cloud options. The first is the ability to host your DotNetNuke website in the Windows Azure Cloud Hosting platform. This capability is available in both the free and commercial editions of the product. The second is the ability to store files in either the Amazon S3 or Windows Azure File Storage offerings. Any files needed by your website (images, for example) can be stored either locally, on the website server, or in the cloud using one of these offerings. The developer can set it up so that the files are served up seamlessly, i.e. the website user will not know where the files are coming from – it all looks like one seamless experience. The cloud file storage capability is only offered in the commercial versions of the DotNetNuke product.

WHIR: How will the new DNN Store benefit users?

MB: The store (Snowcovered.com) is an online marketplace that offers 10,000 extensions that help developers add on customized capabilities and skins (site designs) to their website. Having a rich set of extensions means that developers will not have to waste time building their own custom modules or designs – there's a good chance that required functionality already exists in the store. The DotNetNuke 6 release supports a direct connection to the store that dramatically simplifies the shopping, acquisition, installation and maintenance of these add-on extensions.

WHIR: What kinds of applications are available through the Snowcovered store?

MB: Modules, skins and other apps (functional website code) that make it easy for developers to extend the capabilities of their site.

WHIR: Tell me a bit about the new eCommerce module and how it will help users?

MB: The eCommerce module gives companies a quick way to offer robust online shopping experiences for their customers. The benefit is speed; installing the module and populating the product list is fast and easy. Since payment options are included, there is no time wasted building this functionality on your own.

WHIR: What are the main differences among the three versions (community, professional, enterprise) and what kind of user is each version intended for?

MB: The Community (free) version of the DotNetNuke platform has all the features needed to build a robust website. The platform is flexible, extensible and secure, making it perfect for thousands of business and hobbyist sites. The Professional and Enterprise Editions offer additional capabilities for mid-market enterprises that need more content control and flexibility for customizing and monitoring their web sites.

Tony Bishop (a.k.a. tbtechnet) described a New, Low Friction Way to Try Out Windows Azure and Get Free Help in a 7/26/2011 post to TechNet’s Windows Azure, Windows Phone and Windows Client blog:

If you’re a developer interested in quickly getting up to speed on the Windows Azure platform there are excellent ways to do this.

- Get a no-cost, no credit card required 30-day Windows Azure platform pass

- Get free technical support and also get help driving your cloud application sales. How?

- It's easy. Chat online or call: http://www.microsoftplatformready.com/us/home.aspx

- Need help? Visit http://www.microsoftplatformready.com/us/dashboard.aspx

- Just click on Windows Azure Platform under Technologies

- How much capacity?

- Should be enough to try out Azure

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Anne Grubb listed LightSwitch articles for DevProConnections by Jonathan Goodyear, Michael K. Campbell, Michael Otey, Tim Huckaby, and Don Kiely in a 7/26/2011 post:

Visual Studio LightSwitch, which was originally announced nearly a year ago, is a simplified version of the Visual Studio IDE geared toward both non-developer users and developers who want to build small-scope, data-driven line-of-business applications quickly. LightSwitch applications use Silverlight for the front end and can pull data from multiple sources, such as SQL Server, SQL Azure, SharePoint, and Microsoft Access.

Jason Zander, corporate vice president for the Visual Studio team in the Developer Division at Microsoft, blogged about the Visual Studio LightSwitch announcement here. In the blog, Zander discusses highlights of the release, including starter kits, the ability to publish LightSwitch applications to Windows Azure, and LightSwitch extensions.

DevProConnections has been covering Visual Studio LightSwitch since its announcement in August 2010. Check out the following articles on LightSwitch for independent developer perspectives on the product by several DevProConnections authors--Jonathan Goodyear, Michael K. Campbell, Michael Otey, Tim Huckaby, and Don Kiely. And look for more in-depth technical articles on LightSwitch coming soon!

- Visual Studio LightSwitch: Mort Lives!

Jonathan Goodyear explains why Microsoft's Visual Studio LightSwitch should be a big favorite with Mort, the classic Visual Basic persona for the non-developer interested in creating LOB apps for his team.

- Visual Studio LightSwitch: A Useful Rapid Development Tool for Building Data Applications

Don Kiely appreciates Visual Studio LightSwitch, now in Beta 2, because it's based on tried-and-true Microsoft technologies, like Silverlight 4, WCF, and SQL Server, and provides a good way for developers to build data-centric applications for small businesses or groups quickly.

- Visual Studio LightSwitch: A Silverlight Application Generator

Visual Studio LightSwitch is a uniquely SKU’d version of Visual Studio that has tools to quickly build Silverlight applications through a series of wizard choices and configuration settings.

- Visual Studio LightSwitch and WebMatrix: Are They Good for Professional Developers?

Microsoft's recent releases of the WebMatrix and Visual Studio LightSwitch tools for non-developers shows real vision in encouraging businesses to use the Microsoft stack for ALL their development needs.

- Visual Studio LightSwitch’s True Target Audience

Here's why despite Microsoft positioning Visual Studio LightSwitch as an end-user developer tool, it’s actually a better fit for professional developers.

- Turning On to LightSwitch

Microsoft once owned the novice developer space with Visual Basic (VB) 6. When Microsoft took a turn down the .NET road, VB6 with its simplicity and productivity was lost for good. This is where the new Visual Studio LightSwitch product comes in.

Andrew Brust (@andrewbrust) asserted LightSwitch: The Answer to the Right Question in a 7/27/2011 post to his Redmond Review blog for 1105 Media:

After nearly a full year in beta, Visual Studio LightSwitch was released on July 26. And not a moment too soon: Microsoft needs an approachable line-of-business dev tool in its bag of tricks and customers need one to get their apps done. But many developers I'm friendly with are dismissive of LightSwitch. I've been trying to formulate an answer to their challenge. In thinking about it, I believe they may be asking the wrong question.

People in the dev world tend to split products up into framework-based tools that generate code, and elaborate development platforms where code is crafted. Framework-based tools provide efficiencies, but seem to do that at the cost of power and flexibility. Rich coding platforms eliminate limitations and, while they lack certain efficiencies, their power appears to make them more practical overall. Under this dichotomy, only the elaborate development platforms appear acceptable for real-world applications. So LightSwitch is dismissed.

But that's based on a false choice. People tend to fit things they haven't seen before into categories they already know. That's a reasonable thing to do, but sometimes a new template is required. I believe that's the case with LightSwitch.

Why It's Different

LightSwitch defies the frameworked versus crafted categorization scheme. It encourages declarative and schematic specification of an application, and yet it still accommodates imperative code. This isn't about painting controls on a form and then throwing in a bunch of load/save and validation code. Developers build data models inside LightSwitch, and define queries, validation and business rules as part of that model. Screens aren't designed in a WYSIWYG fashion, but instead are described, in terms of layout and data content. It's as if developers are editing markup, but in a UI rather than with angle brackets in a text editor.

Most tools automate at the expense of offering control. Somehow, LightSwitch provides for both. Sure, screens can be generated on the fly at runtime or statically at design time. But they can also be designed manually, or first generated and then heavily customized. A rich event model lets developers write lots of code, if that's truly needed. And because LightSwitch is part of Visual Studio and .NET, it permits development of conventional classes and can reference external assemblies in its projects. Support for six types of extensions, from screen templates up to full-on Silverlight controls, allows for a collaborative workflow between hardcore .NET developers and focused LightSwitch pros.

Perhaps most important, LightSwitch targets Windows Azure and SQL Azure -- and I dare say it does so better than anything else in the Microsoft stack. Come armed with the appropriate IDs and credentials, and LightSwitch can shift gears from desktop to cloud, simply by taking you through a different path in its publish wizard.

Honest Appraisal

LightSwitch isn't a code generator. It's not Access or SharePoint, and it's certainly not Excel. But LightSwitch can be used in place of those tools to create a bunch of applications that otherwise would live in Access databases, SharePoint lists or, even more likely, Excel spreadsheets. We're much better off letting LightSwitch implement those apps using responsible technologies like Silverlight, SQL Server, RIA Services, the Entity Framework, IIS and Windows Azure. Somehow, the LightSwitch team eliminated the stack's complexity without compromising its power. Yet another apparent tradeoff transcended.

There are many workers who are organized, logical thinkers, and in their work have well-defined routines and procedures that manage structured data. These routines and procedures constitute bona fide systems. Many of these systems may be maintained in spreadsheets, where they're essentially manual; the spreadsheet is not much different from a paper notebook when used in this manner.

In terms of justification, using LightSwitch to materialize these systems into real applications and databases is no different from developers organizing repetitive code into well-written functions and services. In both cases, refactoring occurs. In both cases, the outcome is positive. And while the personnel and skill sets may differ in each scenario, the validity of each is unified and unshakable. One team at Microsoft really gets that. I think the market should, as well.

Bruce Kyle posted Get Azure Cloud Apps Up, Running in Minutes with LightSwitch to the US ISV Evangelism Blog on 7/27/2011:

Microsoft Visual Studio LightSwitch is now available to MSDN subscribers with general availability on Thursday. LightSwitch is a simplified self-service development tool that enables you to build business applications quickly and easily for the desktop and cloud.

Visual Studio LightSwitch enables developers of all skill levels to build line of business applications for the desktop, web, and cloud quickly and easily. LightSwitch applications can be up and running in minutes with templates and intuitive tools that reduce the complexity of building data-driven applications, including tools for UI design and publishing to the desktop or to the cloud with Windows Azure.

LightSwitch simplifies attaching to data with data source wizards or creating data tables with table designers. It also includes screen templates for common tasks so you can create clean interfaces for your applications without being a designer. Basic applications can be written without a line of code. However, you can add custom code that is specific to your business problem without having to worry about setting up classes and methods.

For more information, see the blog post at Microsoft® Visual Studio® LightSwitch™ 2011 is Available Today and a video, LightSwitch Overview with Jason Zander.

How to Get LightSwitch

Get Visual Studio LightSwitch 2011 (MSDN Subscribers). If you’re not an MSDN Subscriber, you can download a 90-day trial.

See the LightSwitch site about Thursday’s general availability.

For the complete VS LightSwitch RTW story, see Windows Azure and Cloud Computing Posts for 7/26/2011+.

SD Times on the Web reported ComponentOne Releases a LightSwitch Extension Offering Instant Business Intelligence in a 7/27/2011 press release:

ComponentOne, a leading component vendor in the Microsoft Visual Studio Industry Partner program, today announced the release of ComponentOne OLAP for LightSwitch, the first OLAP screen for Microsoft LightSwitch. Microsoft Visual Studio LightSwitch is the latest addition to the Visual Studio family and offers its users a way to create high-quality business applications for the desktop and the cloud without code.

ComponentOne OLAP for LightSwitch provides custom controls and screen templates for displaying interactive pivot tables, charts, and reports. OLAP tools provide analytical processing features similar to those found in Microsoft Excel Pivot tables and charts.
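For readers unfamiliar with pivot-style analysis, the following is a minimal Python sketch of the kind of aggregation a pivot table performs: grouping records by one field for rows, another for columns, and summing a value. It is an illustration only and does not represent ComponentOne's implementation or API; the `pivot` helper, the field names, and the sales records are all hypothetical.

```python
from collections import defaultdict


def pivot(rows, row_key, col_key, value_key):
    """Sum value_key for every (row_key, col_key) pair, as a pivot table would."""
    table = defaultdict(lambda: defaultdict(float))
    for record in rows:
        table[record[row_key]][record[col_key]] += record[value_key]
    # Convert nested defaultdicts to plain dicts for easier inspection.
    return {row: dict(cols) for row, cols in table.items()}


# Hypothetical sales records, invented for this illustration.
sales = [
    {"region": "East", "quarter": "Q1", "amount": 100.0},
    {"region": "East", "quarter": "Q2", "amount": 150.0},
    {"region": "West", "quarter": "Q1", "amount": 80.0},
    {"region": "East", "quarter": "Q1", "amount": 25.0},
]

result = pivot(sales, "region", "quarter", "amount")
for region in sorted(result):
    print(region, result[region])
```

Swapping the `row_key` and `col_key` arguments regroups the same records along the other axis, which is roughly what dragging a field between the row and column areas of a pivot view does.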

"OLAP for LightSwitch preserves the spirit of Visual Studio for LightSwitch by allowing users to create interactive data views without a deep level of programming knowledge," said Dan Beall, product manager at ComponentOne. "It allows any level of user to snap a pivoting data screen into Visual Studio LightSwitch and instantly get in-depth business intelligence functionality," said Beall.

The Microsoft LightSwitch team created a video demonstrating the OLAP for LightSwitch product, which is available for viewing on the company's product page.

"What sets this extension apart is the ability to simply add this extension to your project and get a tool that creates interactive tables, charts, and reports similar to those found in Microsoft Excel Pivot tables and charts. Drag-and-drop views give you real-time information, insights, and results in seconds. All this without cubes or code!" said Beall. "There are online tutorials, forums, documentation and diagrams available as part of the ComponentOne LightSwitch experience," said Beall. "We are pleased to welcome this product to the ComponentOne family of products and our online community."

In the OpenLight blog, Microsoft Silverlight MVP Michael Washington writes, "Ok folks, I am 'gonna call it', we have the 'Killer Application' for LightSwitch, ComponentOne's OLAP for LightSwitch, you can get it for $295." Washington continues, "Well, I think ComponentOne's OLAP for LightSwitch may be 'The One' for LightSwitch. This is the plug-in that for some, becomes the deciding factor to use LightSwitch or not." Washington goes on to explain that a "Killer App" is an application that provides functionality so important that it drives the desire to use the product on which it depends.

Pricing and Availability

As Mr. Washington mentioned, the product is available for immediate download and the cost is $295.00 per license. ComponentOne offers online purchase options at www.componentone.com and by telephone at 412.681.4343 or 1.800.858.2739.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Jim O’Neil posted Inside the Cloud on 7/27/2011:

The Global Foundation Services team, the folks at Microsoft who 'run the cloud,' have just released a video tour of a few of the Microsoft data centers (including the Azure data centers in Chicago, Illinois and Dublin, Ireland). The clip is a little over 10 minutes long and well worth the time in my opinion. I found the evolution of the infrastructure that powers these data centers to be a fascinating story in itself - cutting energy utilization by 50% and water usage by two orders of magnitude over traditional data centers! Take a quick break and watch it here:

This is the same video that yesterday’s post offered.

David Linthicum (@DavidLinthicum) asserted “There seem to be more cloud construction projects than there is talent to support them. That could spell real trouble” as a deck for his Why the shortage of cloud architects will lead to bad clouds article of 7/27/2011 for InfoWorld’s Cloud Computing blog:

Designing and building cloud computing-based systems is nothing like building traditional applications and business systems. Unfortunately, many in IT find that out only when it's too late.

The complexities around multitenancy, resource sharing and management, security, and even version control lead cloud computing startups -- and enterprises that build private and public clouds -- down some rough roads before they start to learn from their mistakes. Or perhaps they just have to kill the project altogether as they discover all that investment is unsalvageable.

I've worked on cloud-based systems for years now, and the common thread to cloud architecture is that there are no common threads to cloud architecture. Although you would think that common architectural patterns would emerge, the fact is clouds do different things and must use very different architectural approaches and technologies. In the world of cloud computing, that means those who are smart, creative, and resourceful seem to win out over those who are just smart.

The demand has exploded for those who understand how to build clouds. However, you have pretty much the same number of cloud-experienced architects being chased by an increasing number of talent seekers. Something has to give, and that will be quality and innovation as organizations settle for what they can get versus what they need.

You won't see it happen right away. It will come in the form of outages and security breaches as those who are less than qualified to build clouds are actually allowed to build them. Moreover, new IaaS, SaaS, and PaaS clouds -- both public and private -- will be functional copies of what is offered by the existing larger providers, such as Google, Amazon Web Services, and Microsoft. After all, when you do something for the first time, you're more likely to copy rather than innovate.

If you're on the road to cloud computing, there are a few things you can do to secure the talent you need, including buying, building, and renting. Buy the talent by stealing it from other companies that are already building and deploying cloud-based technology -- but count on paying big for that move. Build by hiring consultants and mentors to both do and teach cloud deployment at the same time. Finally, rent by outsourcing your cloud design and build to an outside firm that has the talent and track record.

Of course, none of these options are perfect. But they're better than spending all that time and money on a bad cloud.

Joe Panettieri reported Red Hat Warns Government About Cloud Lock-In in a 7/26/2011 post to the TalkinCloud blog:

In an open letter of sorts, Red Hat is warning U.S. policy makers and government leaders about so-called cloud lock-in — the use of proprietary APIs (application programming interfaces) and other techniques to keep customers from switching cloud providers. The open letter, in the form of a blog entry from Red Hat VP Mark Bohannon, contains thinly veiled criticism of Microsoft and other companies that are launching their own public clouds.

Bohannon penned the blog to recap a new TechAmerica report, which seeks to promote policies that accelerate cloud computing’s adoption. The blog is mostly upbeat and optimistic about cloud computing. But Bohannon also mentions some “strong headwinds” against cloud computing — including:

“steps by vendors to lock in their customers to particular cloud architecture and non-portable solutions, and heavy reliance on proprietary APIs. Lock-in drives costs higher and undermines the savings that can be achieved through technical efficiency. If not carefully managed, we risk taking steps backwards, even going toward replicating the 1980s, where users were heavily tied technologically and financially into one IT framework and were stuck there.”

Microsoft Revisited

By pointing to the 1980s, Bohannon is either referring to (A) old proprietary mainframes, (B) proprietary minicomputers or (C) the rise of DOS and then Microsoft Windows. My bet is C, since Red Hat back in 2009 warned its own customers and partners about potential lock-in to Windows Azure, Microsoft’s cloud for platform as a service (PaaS).

For its part, Microsoft has previously stated that Windows Azure supports a range of software development standards, including Java and Ruby on Rails.

Still, Bohannon reinforces his point by pointing government officials to open cloud efforts like the Open Virtualization Alliance (OVA); Red Hat’s own OpenShift PaaS effort; and Red Hat’s CloudForms for Infrastructure-as-a-Service (IaaS).

Concluded Bohannon: “The greatest challenge is to make sure that with the cloud, choice grows rather than shrinks. This effort will be successful so long as users are kept first in order of priority, and remain in charge.”

I understand Bohannon’s concern about cloud lock-in. But I’m not ready to sound the alarm over Windows Azure. Plenty of proprietary software companies and channel partners are shifting applications into the Azure cloud. We’ll continue to check in with partners to measure the challenges and dividends.

“Bohannon’s concern about cloud lock-in” is his worry that anyone using a platform other than Red Hat’s potentially decreases his bonus. The fact that a cloud computing platform uses open source code doesn’t mean that one can more easily move a cloud application to or from another open or closed source platform.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Hanu Kommalapati (@hanuk) posted links to current Microsoft Cloud/Azure Security Resources on 7/27/2011:

- Technical Overview of the Security Features in the Windows Azure Platform: http://www.microsoft.com/online/legal/?langid=en-us&docid=11

- Windows Azure Security Overview: http://www.globalfoundationservices.com/security/documents/WindowsAzureSecurityOverview1_0Aug2010.pdf

- Windows Azure Privacy: http://www.microsoft.com/online/legal/?langid=en-us&docid=11

- Securing Microsoft Cloud Infrastructure: http://www.globalfoundationservices.com/security/documents/SecuringtheMSCloudMay09.pdf

- Security Best Practices For Developing Windows Azure Applications: http://www.globalfoundationservices.com/security/documents/SecurityBestPracticesWindowsAzureApps.pdf

<Return to section navigation list>

Cloud Computing Events

Paula Rooney (@PaulaRooney1) reported Microsoft: Cloud need only be open at the surface, not open source in a 7/27/2011 article for ZDNet’s Linux and Open Source blog:

Microsoft is more open — at least on the surface — and that’s all that matters in the cloud era, one company exec maintains.

At OSCON 2011, Gianugo Rabellino, Senior Director for Open Source Communities at Microsoft, said as long as the APIs, protocols and standards for the cloud are open, that is, open surface, customers don’t care about the underlying platform.

So, it does not matter that the cloud is built primarily on open source technologies, notably Linux?

“Am I saying that openness doesn’t matter in the cloud? No, openness is extremely important [but] I argue that in the cloud the source code is the Terms of Use and the SLA,” Rabellino said, referring to service-level agreements.

He coined these terms — open surface and open core — to describe a continued commingling — or a blurring — of open source and closed source software that lies at the core of the enterprise and the cloud.

Open core, or open source, is the existing model in which core features are open source and value-added proprietary commercial software is built on top of it to monetize the technology. The open surface model, Microsoft’s approach, can be done with APIs, protocols and standards, the Microsoft exec said. The two models are coming together nicely.

He noted, for example, that PHP and Red Hat Enterprise Linux 6 run on Microsoft’s Azure cloud platform “pretty well” and that Microsoft is working with WordPress, Drupal, Joomla, Eclipse and other open source projects to ensure interoperability on Azure.

“This is what’s happening in the cloud. The cloud changes a lot of things. The [traditional] yardsticks we had aren’t there anymore. What version of Facebook are you running? It doesn’t matter anymore,” he noted. “In the cloud, you have all this technology blurring. Sometimes you don’t see them and when you see services, does it matter? Can you tell what stack has been producing JSON or XML feed? No.”

Microsoft also announced that a new version of the Azure SDK for PHP is now available, and the company is announcing new tools to cloud-enable applications developed with open source technologies for Azure. The company is also working with almost 400 open source projects to ensure interoperability, including an open source project called PHP Cloud Sniffer.

Rabellino, who came to Microsoft from Italy nine months ago, said employees in more than half of Microsoft’s 60 buildings in Seattle are working with open source projects.