Windows Azure and Cloud Computing Posts for 5/28/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series. |

• Updated 5/29/2010 with:

- Rinat Abdullin’s Salescast - Scalable Business Intelligence on Windows Azure post which provides more details on Lokad’s new Azure forecasting application (see the Live Windows Azure Apps, APIs, Tools and Test Harnesses section)

- Dinakar Nethi’s and Michael Thomassy’s Developing and Deploying with SQL Azure whitepaper of 5/28/2010 (see the SQL Azure Database, Codename “Dallas” and OData section).

- Jomo Fisher’s Creating an OData Web Service with F# demo project of 5/29/2010 (see the SQL Azure Database, Codename “Dallas” and OData section)

- David Aiken’s Remember to update your DiagnosticsConnectionString before deploying Windows Azure projects of 5/28/2010 (see the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below Lokad)

- Herve Roggero’s SQL Azure: Notes on Building a Shard Technology of 5/28/2010 (see the SQL Azure Database, Codename “Dallas” and OData section)

- Chad Collins demonstrating the Nubifer Cloud:Link hosted on Microsoft Windows Azure with Microsoft in this 00:10:08 YouTube video (see the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below Lokad)

- Jeffrey Schwartz’ in-depth ADFS 2.0 Opens Doors to the Cloud article of 5/29/2010 for Redmond Magazine’s June 2010 issue (see the AppFabric: Access Control and Service Bus section)

Updates will be irregular over the Memorial Day weekend. Today’s extra long issue (50 768-px pages) should hold your attention until Tuesday.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Jai Haridas of the Windows Azure Storage Team posted WCF Data Service Asynchronous Issue when using Windows Azure Tables from SDK 1.0/1.1 on 5/27/2010:

We have received a few reports of problems when using the following APIs in Windows Azure Storage Client Library (WA SCL) for Windows Azure Tables and the following routines:

- SaveChangesWithRetries,

- BeginSaveChangesWithRetries/EndSaveChangesWithRetries,

- Using CloudTableQuery to iterate query results or using BeginExecuteSegmented/EndExecuteSegmented

- BeginSaveChanges/EndSaveChanges in WCF Data Service Client library

- BeginExecuteQuery/EndExecuteQuery in WCF Data Service Client library

The problems can surface in any one of these forms:

- Incomplete callbacks in WCF Data Service client library which can lead to 90 second delays

- NotSupportedException – the stream does not support concurrent IO read or write operations

- System.IndexOutOfRangeException – probable I/O race condition detected while copying memory

This issue stems from a bug in asynchronous APIs (BeginSaveChanges/EndSaveChanges and BeginExecuteQuery/EndExecuteQuery) provided by WCF Data Service which has been fixed in .NET 4.0 and .NET 3.5 SP1 Update. However, this version of .NET 3.5 is not available in the Guest OS and SDK 1.1 does not support hosting your application in .NET 4.0.

The available options for users to deal with this are:

- Rather than using the WA SCL Table APIs that provide continuation token handling and retries, use the WCF Data Service synchronous APIs directly until .NET 3.5 SP1 Update is available in the cloud.

- As a workaround, if the application can tolerate the occasional delay from using the unpatched version of .NET 3.5, it can look for the StorageClientException with the message “Unexpected Internal Storage Client Error” and status code “HttpStatusCode.Unused”. When such an exception occurs, the application should dispose of the context that was being used and must not reuse that context for any other operation.

- Use the next version of the SDK when it comes out, since it will allow your application to use .NET 4.0
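The second option (discard the context after the known failure and never reuse it) can be sketched in a language-neutral way. The Python below is only an illustration of the pattern, not the Storage Client Library API: `UnexpectedInternalError`, `FlakyContext` and `save_with_fresh_context` are hypothetical names, and the retry on a fresh context is an assumption added for the example.

```python
class UnexpectedInternalError(Exception):
    """Hypothetical stand-in for the 'Unexpected Internal Storage Client Error' failure."""


class FlakyContext:
    """Hypothetical data context; its save fails when `fail` is True."""

    def __init__(self, fail):
        self.fail = fail
        self.closed = False
        self.pending = []

    def add(self, entity):
        self.pending.append(entity)

    def save_changes(self):
        if self.fail:
            raise UnexpectedInternalError("Unexpected Internal Storage Client Error")
        saved, self.pending = self.pending, []
        return saved

    def close(self):
        self.closed = True


def save_with_fresh_context(make_context, entities, attempts=2):
    """Save entities, creating a brand-new context for every attempt."""
    last_error = None
    for _ in range(attempts):
        context = make_context()      # never reuse a context that has failed
        try:
            for entity in entities:
                context.add(entity)
            return context.save_changes()
        except UnexpectedInternalError as error:
            last_error = error        # retry on a new context (assumption)
        finally:
            context.close()           # dispose whether or not the save worked
    raise last_error
```

The point of the design is that the failed context is closed and dropped inside the loop, so no later operation can pick it up by accident.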

Jai provides more details on the three options.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• Dinakar Nethi’s and Michael Thomassy’s Developing and Deploying with SQL Azure 16-page whitepaper of 5/28/2010 observes:

SQL Azure is built on the SQL Server’s core engine, so developing against SQL Azure is very similar to developing against on-premise SQL Server. While there are certain features that are not compatible with SQL Azure, most T-SQL syntax is compatible. The MSDN link http://msdn.microsoft.com/en-us/library/ee336281.aspx provides a comprehensive description of T-SQL features that are supported, not supported, and partially supported in SQL Azure.

The release of SQL Server 2008 R2 adds client tools support for SQL Azure including added support to Management Studio (SSMS). SQL Server 2008 R2 (and above) have full support for SQL Azure – in terms of seamless connectivity, viewing objects in the object explorer, SMO scripting etc.

At this point of time, if you have an application that needs to be migrated into SQL Azure, there is no way to test it locally to see if it works against SQL Azure. The only way to test is to actually deploy the database into SQL Azure.

This document provides guidelines on how to deploy an existing on-premise SQL Server database into SQL Azure. It also discusses best practices related to data migration.

• Jomo Fisher explains Creating an OData Web Service with F# in this demo project of 5/29/2010:

I’ve spent a little time looking at the OData Web Service Protocol. There is quite a lot to like about it. It is an open, REST-ful format for communicating with a web service as if it were a database. You can query an OData service with LINQ.

To help me learn about it I set out to write a simple OData web service. I expected to have to do quite a lot of work because OData is very rich and just parsing the urls, which can contain SQL-like queries, looked to be hard work.

It turned out, however, to be pretty straight-forward. You basically just need to implement IDataServiceQueryProvider and IDataServiceMetadataProvider. All the heavy-lifting in terms of parsing is done for you. You get a nice System.Linq Expression which is easy to deal with (mainly because my service uses LINQ to Objects under the covers).

If you’d like to take a look at the service I wrote, I published it on the Visual Studio Gallery. You just need to go to File\New Project in Visual Studio 2010:

Please let me know what you think, and whether you have any issues or spot any bugs.

• Herve Roggero’s SQL Azure: Notes on Building a Shard Technology of 5/28/2010 describes working with SQL Server (not SQL Azure) vertical partition shards with the SqlClient library:

In Chapter 10 of the book on SQL Azure (http://www.apress.com/book/view/9781430229612) I am co-authoring, I am digging deeper into what it takes to write a Shard. It's actually a pretty cool exercise, and I wanted to share some thoughts on how I am designing the technology.

A Shard is a technology that spreads the load of database requests over multiple databases, as transparently as possible. The type of shard I am building is called a Vertical Partition Shard (VPS). A VPS is a mechanism by which the data is stored in one or more databases behind the scenes, but your code has no idea at design time which data is in which database. It's like having a mini cloud for records instead of services.

Imagine you have three SQL Azure databases that have the same schema (DB1, DB2 and DB3), you would like to issue a SELECT * FROM Users on all three databases, concatenate the results into a single resultset, and order by last name. Imagine you want to ensure your code doesn't need to change if you add a new database to the shard (DB4).

Now imagine that you want to make sure all three databases are queried at the same time, in a multi-threaded manner so your code doesn't have to wait for three database calls sequentially. Then, imagine you would like to obtain a breadcrumb (in the form of a new, virtual column) that gives you a hint as to which database a record came from, so that you could update it if needed. Now imagine all that is done through the standard SqlClient library... and you have the Shard I am currently building.

Here are some lessons learned and techniques I am using with this shard:

- Parallel Processing: Querying databases in parallel is not too hard using the Task Parallel Library; all you need is to lock your resources when needed

- Deleting/Updating Data: That's not too bad either as long as you have a breadcrumb. However it becomes more difficult if you need to update a single record and you don't know in which database it is.

- Inserting Data: I am using a round-robin approach in which each new insert request is directed to the next database in the shard. Not sure how to deal with Bulk Loads just yet...

- Shard Databases: I use a static collection of SqlConnection objects which needs to be loaded once; from there on all the Shard commands use this collection

- Extension Methods: In order to make it look like the Shard commands are part of the SqlClient class I use extension methods. For example I added ExecuteShardQuery and ExecuteShardNonQuery methods to SqlClient.

- Exceptions: Capturing exceptions in a multi-threaded code is interesting... but I kept it simple for now. I am using the ConcurrentQueue to store my exceptions.

- Database GUID: Every database in the shard is given a GUID, which is calculated based on the connection string's values.

- DataTable. The Shard methods return a DataTable object which can be bound to objects.
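A few of the techniques in the list above (parallel fan-out, the breadcrumb column, round-robin inserts) can be sketched briefly. This is not Herve's code, which uses SqlClient and the Task Parallel Library; it is a minimal Python illustration that assumes in-memory SQLite databases as stand-ins for the shard members, and the names `Shard`, `make_db` and the `_db` breadcrumb key are hypothetical.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor


def make_db(rows):
    """Create one in-memory database with the shared Users schema."""
    # check_same_thread=False lets a worker thread use the connection;
    # each connection is still used by only one thread at a time here.
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute("CREATE TABLE Users (first_name TEXT, last_name TEXT)")
    conn.executemany("INSERT INTO Users VALUES (?, ?)", rows)
    conn.commit()
    return conn


class Shard:
    def __init__(self, databases):
        self.databases = dict(databases)   # name -> connection, loaded once
        self._inserts = 0                  # drives the round-robin choice

    def query(self, sql, params=()):
        """Run the same query on every database in parallel and merge rows."""
        def run(item):
            name, conn = item
            cursor = conn.execute(sql, params)
            columns = [d[0] for d in cursor.description]
            # The extra "_db" key is the breadcrumb naming the source database.
            return [dict(zip(columns, row), _db=name) for row in cursor.fetchall()]

        with ThreadPoolExecutor(max_workers=len(self.databases)) as pool:
            parts = list(pool.map(run, self.databases.items()))
        return [row for part in parts for row in part]

    def insert(self, sql, params):
        """Send each new insert to the next database in the shard."""
        names = list(self.databases)
        name = names[self._inserts % len(names)]
        self._inserts += 1
        conn = self.databases[name]
        conn.execute(sql, params)
        conn.commit()
        return name
```

Usage: build the shard from three databases, call `shard.query("SELECT * FROM Users")`, and sort the merged rows by `last_name` in the caller. Adding a fourth database only changes the dictionary passed to `Shard`, not the calling code.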

I will be sharing the code soon as an open-source project in CodePlex. Please stay tuned on twitter to know when it will be available (@hroggero). Or check www.bluesyntax.net for updates on the shard.

I’m still waiting for the SQL Azure team’s “Best Practices for Sharding SQL Azure Databases” (or whatever) white paper. Dave Robinson, are you listening?

Paul Jenkins announced the availability of his OoO: Demo Solution for OpenID, OData and OAuth on 5/28/2010:

So it took me a little more than the initial couple of days I estimated, but you can finally download the VS2010 Solution for my OoO (aka OpenID, oData and OAuth together) post.

Please don’t take this as gospel or even an example of good practices.

Fire up the website, then fire up the command line client, which will redirect you to the Login page which requires OpenID credentials. There isn’t a fancy OpenID Relying Party selector, you’ll just have to type it in yourself. That will then let you generate a verifier token, which lets the CLI client access the OData service.

This example ignores the scope values being passed in.

Would I use OData?

Yes and no. I’d use OData if I just wanted people to have LINQ access to various resources – but probably resources I wouldn’t try and protect. Why not? While oData lets you define service methods which let you perform more advanced operations and still return the OData format it doesn’t expose these service methods to the WCF Proxy Client (ie, what is generated from Add Service Reference..), but instead requires you to use magic strings. That is, something like:

DataServiceQuery<Product> q = ctx.CreateQuery<Product>("GetProducts")
    .AddQueryOption("category", "Boats");
List<Product> products = q.Execute().ToList();

WCF Data Services lost me on the magic strings bit. I’d probably go for a plain ole WCF Service with SOAP/JSON endpoints if I needed methods instead of just the data.

Jew Paltz issued A call to action for a new Heterogeneous Sync Framework on 5/28/2010:

We need a framework to sync separate unrelated data sources. Like LDAP & Outlook, or Outlook and your custom in-house CRM.

I am constantly coming across scenarios that sound exactly like this, and every time it seems like I am re-inventing the wheel and building the entire solution over again. I am actually quite confident that everyone reading this post will have dozens of examples similar to what is described here.

I came to the conclusion a while ago that this problem domain needs to be abstracted and then we can build a framework to handle some of the recurring themes. I was really excited when I found Microsoft Sync Framework because I thought it was exactly that type of abstraction. However, after researching it for about two months now and implementing it a few times, I have to conclude that it is not what we are looking for.

We need a Heterogeneous Sync Framework. A Framework to sync unrelated (but naturally relatable) Data Sources.

The Microsoft Sync Framework deals too much with relationships and change tracking. It assumes that you are starting with matching data, and that only then does it change over time.

We need a Sync Framework that assumes that we have conflicting data at every step, and we have no idea how it got that way. Because the data is coming from two sources that couldn't give a darn about each other. …

The prospects for syncing with Outlook are much better now that Microsoft does right by freeing Outlook archives (an article by Stephen Shankland for C|Net News on 5/27/2010). See also the Microsoft Sync Framework Developer Center.

Glennen reported in the OData Support section of the LINQ to SQL templates for T4 CodePlex project on 5/24/2010:

I've extended L2ST4 with OData support i.e. adding DataServiceKey attribute for primarykeys and IgnoreProperties attribute for Enums. Should I submit a patch? I guess one could add the full IUpdateable implementation for [LINQ to SQL] as well (http://code.msdn.microsoft.com/IUpdateableLinqToSql).

Microsoft’s Damien Guard is the project moderator (and T4 guru).

Pål Fossmo wrote WCF Data Services: A perfect fit for our CQRS implementation on 5/19/2010:

When we started implementing CQRS [Command and Query Responsibility Segregation] architecture in the project I'm working on at the moment, we used NHibernate to get the data from the database on the query side and to the view model in the GUI. This worked fine, but it took a bit of time to implement what was needed to get the data through “all” the layers and to the GUI. It was tedious and error prone.

We started to look for other ways to do this. We considered using plain SQL through ADO.NET, but we wanted something that was simpler and faster to implement. By using ADO.NET we still had to create web services and do a bit of mapping. WCF Data Services ended up being our “savior”.

A quick update on WCF Data Services

WCF Data Services is built upon the Open Data Protocol (OData), and OData is built upon the AtomPub protocol. OData is developed at Microsoft, but published as an open standard. You can read more about it at www.odata.org. I think OData is very exciting, and there are already other languages supporting this protocol besides .NET, to mention some: PHP and Java.

A cool thing: if a data store has a LINQ provider, it’s possible to expose the data through the OData protocol using WCF Data Services in a few simple steps. If I’m not mistaken, there is a provider for db4o and a provider under development for MongoDB.

How we did it

To set up WCF DS over the read database, the only things we had to do were to create the table(s) we wanted exposed through WCF DS, add an EDMX model to an empty ASP.NET web project, and finally add a WCF Data Service to the web project with the entity set as a data source. (We also added some security, but when it comes to security in the OData protocol, you’re on your own. OData doesn’t say anything about how you should do this.)

So, to fetch data into the view model in the presentation layer, we simply use a LINQ query to get the data we want. DataServiceContext is one of the main actors in this process. The result from the query is added to the presentation model. WCF DS comes with an object-to-object mapper that makes the job of mapping to the presentation model very simple. You can set it up to map all the properties in the presentation model and throw an exception if a property is missing, or set it up to ignore missing properties.

A small code example:

DataServiceContext context = new DataServiceContext(...);
context.IgnoreMissingProperties = true;

The OData protocol gives us a lot of stuff for free; like getting the top ten records, paging, sorting, and other stuff you expect when querying a relational database. And this is just some of the abilities you get with WCF DS. Read about the conventions at http://www.odata.org/developers/protocols/uri-conventions
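The "top ten, paging, sorting" features mentioned above map onto the OData system query options `$top`, `$skip`, `$orderby` and `$filter`. As a rough sketch of what such a request URI looks like, the Python helper below assembles one; the function name `odata_query_url`, the service root and the `Products` entity set are assumptions made up for illustration, not part of Pål's project.

```python
from urllib.parse import quote


def odata_query_url(service_root, entity_set, filter=None, orderby=None,
                    skip=None, top=None):
    """Build an OData query URI from a few system query options."""
    options = [("$filter", filter), ("$orderby", orderby),
               ("$skip", skip), ("$top", top)]
    parts = []
    for name, value in options:
        if value is not None:
            # Percent-encode spaces but keep quotes, commas and parentheses.
            parts.append(name + "=" + quote(str(value), safe="',()"))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(parts) if parts else "")


# Top ten products by descending price (hypothetical service and entity set).
print(odata_query_url("http://example.org/Sales.svc", "Products",
                      orderby="Price desc", top=10))
```

Paging is then a matter of combining `skip` and `top`, for example `skip=20, top=10` for the third page of ten records.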

This has simplified the process of adding new view models to the query side and has made us more productive.

Although we only have implemented this on the query side, I see no problems using the same approach on the command side.

Chris Sells wrote an extensive Open Data Protocol by Example topic for the MSDN library in March 2010, which I missed when posted. The topic begins:

The purpose of the Open Data protocol[i] (hereafter referred to as OData) is to provide a REST-based protocol for CRUD-style operations (Create, Read, Update and Delete) against resources exposed as data services. A “data service” is an endpoint where there is data exposed from one or more “collections” each with zero or more “entries”, which consist of typed named-value pairs. OData is published by Microsoft under the Open Specification Promise so that anyone that wants to can build servers, clients or tools without royalties or restrictions.

Exposing data-based APIs is not something new. The ODBC (Open DataBase Connectivity) API is a cross-platform set of C language functions with data source provider implementations for data sources as wide ranging as SQL Server and Oracle to comma-separated values and Excel files. If you’re a Windows programmer, you may be familiar with OLEDB or ADO.NET, which are COM-based and .NET-based APIs respectively for doing the same thing. And if you’re a Java programmer, you’ll have heard of JDBC. All of these APIs are for doing CRUD across any number of data sources.

Since the world has chosen to keep a large percentage of its data in structured format, whether it’s on a mainframe, a mini or a PC, we have always needed standardized APIs for dealing with data in that format. If the data is relational, the Structured Query Language (SQL) provides a set of operations for querying data as well as updating it, but not all data is relational. Even data that is relational isn’t often exposed for use in processing SQL statements over intranets, let alone internets. The structured data of the world is the crown jewels of our businesses, so as technology moves forward, so must data access technologies. OData is the web-based equivalent of ODBC, OLEDB, ADO.NET and JDBC. And while it’s relatively new, it’s mature enough to be implemented by IBM’s WebSphere[ii], be the protocol of choice for the Open Government Data Initiative[iii] and is supported by Microsoft’s own SharePoint 2010 and WCF Data Services framework[iv]. In addition, it can be consumed by Excel’s PowerPivot, plain vanilla JavaScript and Microsoft’s own Visual Studio development tool.

In a web-based world, OData is the data access API you need to know. …

And continues with detailed Atom and C# examples.
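For readers who want the gist before opening the topic, the CRUD operations in OData correspond to ordinary HTTP verbs on collection and entry URIs. The Python sketch below only computes the verb and URI for each operation; it is not taken from Chris's article, and the function name `crud_request`, the service root and the `Products` collection are assumptions for illustration.

```python
def crud_request(operation, service_root, collection, key=None):
    """Return the (HTTP method, URI) pair for a CRUD operation on an OData service."""
    verbs = {"create": "POST", "read": "GET", "update": "PUT", "delete": "DELETE"}
    method = verbs[operation]
    url = f"{service_root.rstrip('/')}/{collection}"
    if operation == "create":
        return method, url                # new entries are POSTed to the collection
    if key is None:
        if operation == "read":
            return method, url            # reading without a key returns the collection
        raise ValueError(f"{operation} needs the key of the entry to change")
    return method, f"{url}({key})"        # a single entry is addressed as Collection(key)
```

For example, `crud_request("update", "http://example.org/Sales.svc", "Products", 1)` yields a `PUT` to `http://example.org/Sales.svc/Products(1)`. OData also defines a `MERGE` verb for partial updates, which this sketch leaves out.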

<Return to section navigation list>

AppFabric: Access Control and Service Bus, CDN

• Jeffrey Schwartz asserts “The new Microsoft Active Directory Federation Services release promises to up the ante on cloud security” in the deck of his in-depth ADFS 2.0 Opens Doors to the Cloud article of 5/29/2010 for Redmond Magazine’s June 2010 issue:

Microsoft Active Directory Federation Services (ADFS) 2.0, a key add-in to Windows Server 2008, was released in May. It promises to simplify secure authentication to multiple systems, as well as to the cloud-based Microsoft portfolio. In addition, the extended interoperability of ADFS 2.0 is expected to offer the same secure authentication now provided by other cloud providers, such as Amazon.com Inc., Google Inc. and Salesforce.com Inc.

ADFS 2.0, formerly known as "Geneva Server," is the long-awaited extension to Microsoft Active Directory that provides claims-based federated identity management. By adding ADFS 2.0 to an existing AD deployment, IT can allow individuals to log in once to a Windows Server, and then use their credentials to sign into any other identity-aware systems or applications.

Because ADFS 2.0 is already built into the Microsoft cloud-services portfolio -- namely Business Productivity Online Suite (BPOS) and Windows Azure -- applications built for Windows Server can be ported to those services while maintaining the same levels of authentication and federated identity management.

"The bottom line is we're streamlining how access should work and how things like single sign-on should work from on-premises to the cloud," says John "J.G." Chirapurath, senior director in the Microsoft Identity and Security Business Group.

Unlike the first release, ADFS 2.0 supports the widely implemented Security Assertion Markup Language (SAML) 2.0 standard. Many third-party cloud services use SAML 2.0-based authentication; it's the key component in providing interoperability with other applications and cloud services.

"We look at federation and claims-based authentication and authorization as really critical components to the success and adoption of cloud-based services," says Kevin von Keyserling, president and CEO of Independence, Ohio-based Certified Security Solutions Inc. (CSS), a systems integrator and Microsoft Gold Certified Partner.

While ADFS 2.0 won't necessarily address all of the security issues that surround the movement of traditional systems and data to the cloud, by all accounts it removes a key barrier -- especially for applications such as SharePoint, and certainly for the gamut of applications. Many enterprises have expressed reluctance to use cloud services, such as Windows Azure, because of security concerns and the lack of control over authentication.

"Security [issues], particularly identity and the management of those identities, are perhaps the single biggest blockers in achieving that nirvana of cloud computing," Chirapurath says. "Just like e-mail led to the explosive use of Active Directory, Active Directory Federation Services will do the same for the cloud."

Because ADFS 2.0 is already built into Windows Azure, organizations can use claims-based digital tokens, or identity selectors, that will work with both Windows Server 2008 and the cloud-based Microsoft services, enabling hybrid cloud networks. The aim is to let a user authenticate seamlessly into Windows Server or Windows Azure and share those credentials with applications that can accept a SAML 2.0-based token.

Windows 7 and Windows Vista have built-in CardSpaces, which allow users to input their identifying information. Developers can also make their .NET applications identity-aware with Microsoft Windows Identity Foundation (WIF).

WIF provides the underlying framework of the Microsoft claims-based Identity Model. Implemented in the Windows Communication Foundation of the Microsoft .NET Framework, apps developed with WIF present authentication schema, such as identification attributes, roles, groups and policies, along with a means of managing those claims as tokens. Applications built by enterprise developers and ISVs based on WIF will also be able to accept these tokens.

Pass-through authentication in ADFS 2.0 is enabled by accepting tokens based on both the Web Services Federation (WSFED), WS-Trust and SAML standards. While Microsoft has long promoted WSFED, it only agreed to support the more widely adopted SAML spec 18 months ago.

"The bottom line is we're streamlining how access should work and how things like single sign-on should work from on-premises to the cloud." John Chirapurath, Senior Director, Identity and Security Business Group, Microsoft.

Jeff continues with pages 2 and 3 of his article.

Clemens Vasters announced he’ll present TechEd: ASI302 Design Patterns, Practices, and Techniques with the Service Bus in Windows Azure AppFabric with Juval Lowy to TechEd North America attendees in this 5/28/2010 post:

Session Type: Breakout Session

Track: Application Server & Infrastructure

Speaker(s): Clemens Vasters, Juval Lowy

Level: 300 - Advanced

The availability of the Service Bus in Windows Azure AppFabric is disruptive since it enables new design and deployment patterns that are simply inconceivable without it, opening new horizons for architecture, integration, interoperability, deployment, and productivity. In this unique session organized especially for Tech·Ed, Clemens Vasters and Juval Lowy share their perspective, techniques, helper classes, insight, and expertise in architecting solutions using the service bus. Learn how to manage discrete events, how to achieve structured programming over the Service Bus buffers, what options you have for discovery and even how to mimic WCF discovery, what are the recommended options for transfer security and application authentication, and how to use AppFabric Service Bus for tunneling for diagnostics or logging, to enabling edge devices. The session ends with a glimpse at what is in store for the next versions of the service bus and the future patterns.

Yes, that's Juval and myself on the same stage. That'll be interesting.

Added on 5/28/2010 to the Breakout sessions list of my Updated List of 75 Cloud Computing Sessions at TechEd North America 2010 post of 5/22/2010.

The Windows Azure Team’s Announcing Pricing for the Windows Azure CDN post of 5/28/2010 begins:

Last November, we announced a community technology preview (CTP) of the Windows Azure Content Delivery Network (CDN). The Windows Azure CDN enhances end user performance and reliability by placing copies of data, at various points in a network, so that they are distributed closer to the user. The Windows Azure CDN today delivers many Microsoft products – such as Windows Update, Zune videos, and Bing Maps - which customers know and use every day. By adding the CDN to Windows Azure capabilities, we’ve made this large-scale network available to all our Windows Azure customers.

To date, this service has been available at no charge. Today, we’re announcing pricing for the Windows Azure CDN for all billing periods that begin after June 30, 2010. The following three billing meters and rates will apply for the CDN:

- $0.15 per GB for data transfers from European and North American locations

- $0.20 per GB for data transfers from other locations

- $0.01 per 10,000 transactions

With 19 locations globally (United States, Europe, Asia, Australia and South America), the Windows Azure CDN offers developers a global solution for delivering high-bandwidth content. The Windows Azure CDN caches your Windows Azure blobs at strategically placed locations to provide maximum bandwidth for delivering your content to users. You can enable CDN delivery for any storage account via the Windows Azure Developer Portal.

Windows Azure CDN charges will not include fees associated with transferring this data from Windows Azure Storage to CDN. Any data transfers and storage transactions incurred to get data from Windows Azure Storage to the CDN will be charged separately at our normal Windows Azure Storage rates. CDN charges are incurred for data requests it receives and for the data it transfers out to satisfy these requests.
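To see how the three billing meters combine, here is a small worked calculation in Python. The rates come from the announcement above; the function name `cdn_charge`, the sample volumes, and the choice to prorate partial blocks of 10,000 transactions linearly are assumptions for illustration. Storage-to-CDN transfer fees, which are billed separately, are not included.

```python
from decimal import Decimal

RATE_NA_EU_PER_GB = Decimal("0.15")          # Europe and North America
RATE_OTHER_PER_GB = Decimal("0.20")          # all other locations
RATE_PER_10K_TRANSACTIONS = Decimal("0.01")


def cdn_charge(gb_na_eu, gb_other, transactions):
    """Estimate the CDN charge in US dollars for one billing period."""
    transfer = (RATE_NA_EU_PER_GB * Decimal(gb_na_eu)
                + RATE_OTHER_PER_GB * Decimal(gb_other))
    # Assumption: partial blocks of 10,000 transactions are prorated.
    requests = RATE_PER_10K_TRANSACTIONS * Decimal(transactions) / Decimal(10_000)
    return (transfer + requests).quantize(Decimal("0.01"))


# 100 GB from NA/EU, 50 GB from elsewhere, one million transactions:
# 100 * 0.15 + 50 * 0.20 + (1,000,000 / 10,000) * 0.01 = 15 + 10 + 1
print(cdn_charge(100, 50, 1_000_000))  # 26.00
```

Pass whole numbers or strings rather than floats so that `Decimal` keeps the amounts exact.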

All usage for billing periods beginning prior to July 1, 2010 will not be charged. To help you determine which pricing plan best suits your needs, please review the comparison table, which includes this information.

To learn more about the Windows Azure CDN and how to get started, please be sure to read our previous blog post or visit the FAQ section on WindowsAzure.com.

Vittorio Bertocci (a.k.a. Vibro) posted Interviste su Punto Informatico e DotNetCenter.it on 5/28/2010, which begins:

Dear English-speaking reader, this post is about two interviews I gave to Italian media about WIF and claims based identity in general; henceforth, the post itself is in Italian. If you are curious and trust machine translations they are here and here.

I’ve lost any fluency I once had in Italian (as the result of being married briefly to an Italian girl when much younger), but I believe the translations are reasonably accurate and readable.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

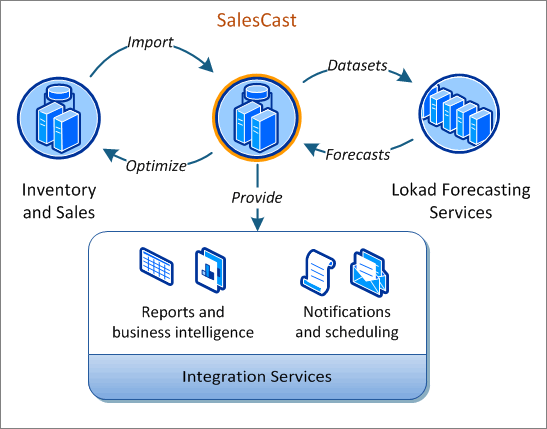

• Rinat Abdullin provides more details about Lokad’s new Azure forecasting application (see below) in his Salescast - Scalable Business Intelligence on Windows Azure post of 5/29/2010:

Yesterday we finally released the first version of Salescast. There is an official announcement from Lokad. In this article we'll talk a bit about how it was built, focusing on the technology, Windows Azure and what this means to customers in terms of business value.

What does Salescast do?

This web application offers smart integration between various inventory and sales management solutions and Lokad Forecasting Services.

Basically, if you have some eCommerce shop that you want to run through some analytics and get forecasts on the future sales, Salescast can help out and handle the routine. It will detect your solution, retrieve the data, process and upload it to Lokad Forecasting Services and assemble the results into nice reports. This decision support comes with some extra integration experience on top of that to automate and streamline the process further:

- Reduce inventory costs and over-stocks.

- Improve customer satisfaction.

- Increase overall sales.

- Ease relationships with suppliers.

How was it Built?

Salescast runs on the Windows Azure platform and was architected to take full advantage of the cloud computing features it provides. For Lokad this meant coming up with architecture principles, development approaches and frameworks that make it possible to use all of that potential efficiently.

These principles were based on adapting CQRS and DDD to Windows Azure and on the great tools and frameworks that Microsoft provides with it. In order to fill some (temporary) gaps in this ecosystem, a custom Enterprise Service Bus for Azure was created.

We are planning to share experience, technological principles and frameworks with the community, just like it has been done with Lokad Shared Libraries, Lokad.Cloud and the other projects. For those, who are following xLim line of research and development, this will match with version 4: CQRS in the Cloud.

At the moment, let's focus briefly on the business value all this technology creates for the customers.

Handling any Scale

Salescast has implicit scale-out capabilities. It does not matter how large the customer's inventory or sales history is. Starting from a few hundred products, up to hundreds of thousands and above, Salescast can handle it.

So if you are a large retailer, you don't need to sign a large contract just to try how the solution works for your network of warehouses. Nor do you need to wait for development teams to scale the architecture and procure hardware resources.

It's all already there, powered by the scalability of CQRS architecture, ESB for Azure and virtually unlimited cloud computing resources of Windows Azure.

Anticipating the Unexpected

Salescast can work reliably and incrementally with any data source. If the sales and inventory management solution is persisted in SQL Azure, great. If it is an eCommerce shop running on MySQL in a shared environment that tends to time out from time to time, Salescast is designed to handle it without stressing the other end.

We understand that unexpected things can happen in the real world. More than 95% of possible problems will be handled automatically and gracefully. If something goes really wrong (e.g., the eCommerce server or the firewall rules are changed), then we'll know about the problem, will be able to handle it on a case-by-case basis and then continue the process from where it stopped.

Principles of enterprise integration and reliable messaging with ESB on top of Azure Queues help to achieve this.

Designed for Evolution

Salescast is designed for evolution.

We understand that customers might use rare systems, custom setups or unique in-house solutions. They could need some specific integration functionality in order to handle specific situations.

In order to provide a successful integration experience in this context, Salescast will have to evolve, adapt and get smarter. In fact, the future evolution of this solution is already built into the architecture and the implemented workflows.

For example, if there is some popular eCommerce solution that we didn't think of integrating with, we'll teach Salescast how to handle it, for free. The next customer that attempts to optimize sales managed by a similar solution will get it auto-detected and integrated instantly. This applies to new versions and flavors of these systems as well.

Basic principles of efficient development, Inversion of Control, pluggable architecture and some schema-less persistence helped to achieve this. Domain Driven Design played a significant role here as well.

Cost-Effective

Salescast is designed to be cost-effective. In fact, it's effective to the point of being provided for free. This comes from the savings that are passed to the customers. They are based upon:

- an environment that allows efficient and smart development that is fast and does not require large teams;

- efficient maintenance that is automated and requires human intervention only in exceptional cases;

- elastic scaling out that uses only the resources that are needed;

- pricing of the Windows Azure Platform itself.

Obviously you still need to pay for the consumption of Lokad Forecasting Services. But their pricing is cost-effective, as well (to the point of being 10x cheaper than any competition). So there are some tangible benefits for the money being spent.

Secure and Reliable

Salescast, as a solution, is based on the features provided by the Microsoft Windows Azure Platform. This includes:

- Service Level Agreements for the computing, storage and network capacities.

- Hardware reliability of geographic distribution of Microsoft data centers.

- Regular software upgrades and prompt security enhancements.

Lokad pushes this further:

- Secure HTTPS connections and industry-grade data encryption.

- Redundant data persistence.

- Regular backups.

- Reliable OpenID authentication.

Summary

This was a quick technological overview of the Salescast solution from Lokad, along with the features and benefits it is capable of providing just because it is standing on the shoulders of giants. Some of these giants are:

- Windows Azure Platform for Cloud Computing and the ecosystem behind it.

- Time-proven principles of development and scalable architecture.

- Various open source projects and the other knowledge shared by the development community.

Lokad will continue sharing and contributing back to help make this environment even better.

From this point you can:

- Check out the official public announcement of Salescast and subscribe to the Lokad company blog to stay tuned for company updates.

- Subscribe to the updates of this Journal on Efficient Development

- Check out xLim materials to see what is already shared within this body of knowledge on efficient development.

I'd also love to hear any comments, thoughts or questions you've got!

Lokad claims Salescast, sales forecasting made WAY EASIER in this 5/28/2010 post:

We are proud to announce that we have just released the first version of Salescast.

Salescast is a web application hosted on Windows Azure that delivers sales forecasts, the easy way. Feature-wise, Salescast is very close to our Lokad Safety Stock Calculator (LSSC), a desktop app which, despite its name, also delivers sales forecasts.

Why another client app?

Over the two years of existence of LSSC, we noticed that data integration represented the No. 1 issue faced by our clients. Forecasting issues were simply dwarfed by the sheer amount of data import issues. We have been trying to add more and more data adapters to LSSC, reaching more than 20 supported apps, and yet LSSC keeps failing at properly importing data, as there are thousands of apps in the market.

Stepping back from the actual situation, we asked ourselves: why should clients even bother about data integration? Shouldn't this sort of thing be part of the service in the first place? Letting our prospects and clients face such issues was not acceptable.

Thus, we decided to address the problem from a radically different angle: just grant Lokad an access to your data, and Lokad will figure out the rest; and the project codenamed Salescast was born.

Salescast needs a single piece of information to set up the whole forecasting process: a SQL connection to your database. Nearly every modern ERP, CRM, accounting, shopping cart, POS, MRP ... software stores data in a SQL database. Most of your business apps are probably powered by SQL. Salescast also supports the different SQL flavors: MSSQL, MySQL, Oracle SQL.

Do you believe in magic?

We haven't discovered the ultimate technology that would make all data integration issues go away. In order to solve data integration issues, Salescast uses a combination of technology and process.

1. Salescast attempts to auto-detect the target application by leveraging its library of known apps, and if the data format is recognized, it proceeds with the forecasting process.

2. If the database is not recognized, then a Lokad engineer gets appointed to design the data adapter. Once the adapter is designed we get back to step 1.
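The two-step process above can be pictured as a lookup against a library of adapters with a fallback that adds a new adapter and retries. The following is only a rough sketch of that idea, not Lokad's implementation: the `Adapter` and `AdapterLibrary` classes, the table-name matching rule and the `design_adapter` callback are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Adapter:
    """Hypothetical data adapter: a name plus a schema-recognition rule."""
    name: str
    matches: Callable[[set], bool]


class AdapterLibrary:
    """Hypothetical library of known apps, searched in registration order."""

    def __init__(self):
        self._adapters = []

    def register(self, adapter):
        self._adapters.append(adapter)

    def detect(self, table_names) -> Optional[Adapter]:
        # Compare case-insensitively, since SQL flavors differ in casing.
        tables = {t.lower() for t in table_names}
        for adapter in self._adapters:
            if adapter.matches(tables):
                return adapter
        return None


def integrate(library, table_names, design_adapter):
    """Step 1: try auto-detection. Step 2: have an adapter designed, then retry."""
    adapter = library.detect(table_names)
    if adapter is None:
        # Stands in for the engineer who designs the missing adapter.
        library.register(design_adapter(table_names))
        adapter = library.detect(table_names)
    return adapter


library = AdapterLibrary()
library.register(Adapter("shop-a", lambda t: {"orders", "order_items"} <= t))
```

Once an adapter has been registered for one customer, the next database with a similar schema is recognized in step 1 without any manual work.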

How much is this going to cost?

We don't intend to charge anything beyond our primary per-forecast pricing. More precisely, we promise to try integrating every single app that gets plugged into Salescast. If the integration proves to be really complicated (which happens with old ad-hoc systems), then we might come back with a quote to complete the job. Naturally, you're free to refuse the quote.

What about the future of Salescast?

For the initial release of Salescast, we have focused exclusively on SQL databases. Later on, we plan to add a wider range of data formats (Excel spreadsheets, REST APIs, ...). We will also enhance the reporting capabilities of Salescast. Just let us know what you need.

Salescast is readily available, get your forecasts now!

• David Aiken suggests that you Remember to update your DiagnosticsConnectionString before deploying Windows Azure projects in this 5/28/2010 post:

In the ServiceConfiguration.cscfg file, you have a DiagnosticsConnectionString which, by default, is linked to local storage.

<Setting name="DiagnosticsConnectionString" value="UseDevelopmentStorage=true" />

When you deploy your package it will never start correctly (stuck in Busy/Initializing), because you will be trying to log to the local storage, which doesn’t exist in the cloud.

Fixing this is easy:

- Create a storage account if you don’t already have one.

- In Visual Studio, bring up the properties of your project, then click settings.

- Click on the … next to the DiagnosticsConnectionString.

- Enter your storage details in the dialog.

- Save then deploy.
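One way to catch this before deploying is a small pre-deployment check that scans the configuration file for settings still pointing at development storage. The sketch below is an assumption-laden illustration rather than part of the Azure tooling: the function name is made up, and the sample configuration, role name, account name and key are placeholders.

```python
import xml.etree.ElementTree as ET

DEV_STORAGE = "UseDevelopmentStorage=true"


def local_name(tag):
    """Strip an XML namespace prefix such as '{uri}Setting' down to 'Setting'."""
    return tag.rsplit("}", 1)[-1]


def find_dev_storage_settings(cscfg_xml):
    """Return (role, setting) pairs whose value still uses development storage."""
    root = ET.fromstring(cscfg_xml)
    offenders = []
    for role in root.iter():
        if local_name(role.tag) != "Role":
            continue
        for setting in role.iter():
            if local_name(setting.tag) != "Setting":
                continue
            if DEV_STORAGE.lower() in setting.get("value", "").lower():
                offenders.append((role.get("name"), setting.get("name")))
    return offenders


# Illustrative configuration; the account name and key are placeholders.
SAMPLE = """<ServiceConfiguration serviceName="Demo"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DiagnosticsConnectionString" value="UseDevelopmentStorage=true" />
      <Setting name="DataConnectionString"
               value="DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=PLACEHOLDER" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

for role, setting in find_dev_storage_settings(SAMPLE):
    print("Role %s: %s still points at development storage" % (role, setting))
```

A check like this could run as a build step and fail the build whenever the returned list is not empty.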

THIS POSTING IS PROVIDED “AS IS” WITH NO WARRANTIES, AND CONFERS NO RIGHTS

Chad Collins demonstrates the Nubifer Cloud:Link hosted on Microsoft Windows Azure with Microsoft in this 00:10:08 YouTube video interview by Suresh Sreedharan:

Suresh Sreedharan talks with Chad Collins, CEO at Nubifer Inc. about their cloud offering on the Windows Azure platform. Nubifer Cloud:Link monitors your enterprise systems in real time, and strengthens interoperability with disparate owned and leased SaaS systems.

Ryan Dunn describes Hosting WCF in Windows Azure in this 5/28/2010 post:

This post is a bit overdue: Steve threatened to blog it himself, so I figured I should get moving. In one of our Cloud Cover episodes, we covered how to host WCF services in Windows Azure. I showed how to host both publicly accessible ones as well as how to host internal WCF services that are only visible within a hosted service.

In order to host an internal WCF Service, you need to set up an internal endpoint and use inter-role communication. The difference between doing this and hosting an external WCF service on an input endpoint is mainly in the fact that internal endpoints are not load-balanced, while input endpoints are hooked to the load-balancer.

Hosting an Internal WCF Service

Here you can see how simple it is to actually get the internal WCF service up and listening. Notice that the only thing that is different is that the base address I pass to my ServiceHost contains the internal endpoint I created. Since the port and IP address I am running on is not known until runtime, you have to create the host and pass this information in dynamically.

    public override bool OnStart()
    {
        // Set the maximum number of concurrent connections
        ServicePointManager.DefaultConnectionLimit = 12;

        DiagnosticMonitor.Start("DiagnosticsConnectionString");

        // For information on handling configuration changes
        // see the MSDN topic at http://go.microsoft.com/fwlink/?LinkId=166357.
        RoleEnvironment.Changing += RoleEnvironmentChanging;

        StartWCFService();

        return base.OnStart();
    }

    private void StartWCFService()
    {
        var baseAddress = String.Format(
            "net.tcp://{0}",
            RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["EchoService"].IPEndpoint);

        var host = new ServiceHost(typeof(EchoService), new Uri(baseAddress));
        host.AddServiceEndpoint(typeof(IEchoService), new NetTcpBinding(SecurityMode.None), "echo");
        host.Open();
    }

Consuming the Internal WCF Service

From another role in my hosted service, I want to actually consume this service. From my code-behind, this was all the code I needed to actually call the service.

    protected void Button1_Click(object sender, EventArgs e)
    {
        var factory = new ChannelFactory<IEchoService>(new NetTcpBinding(SecurityMode.None));
        var channel = factory.CreateChannel(GetRandomEndpoint());
        Label1.Text = channel.Echo(TextBox1.Text);
    }

    private EndpointAddress GetRandomEndpoint()
    {
        var endpoints = RoleEnvironment.Roles["WorkerHost"].Instances
            .Select(i => i.InstanceEndpoints["EchoService"])
            .ToArray();

        var r = new Random(DateTime.Now.Millisecond);

        // Random.Next(maxValue) excludes maxValue, so pass the full length;
        // passing Length - 1 would never select the last instance.
        return new EndpointAddress(
            String.Format(
                "net.tcp://{0}/echo",
                endpoints[r.Next(endpoints.Length)].IPEndpoint));
    }

The only bit of magic here was querying the fabric to determine all the endpoints in the WorkerHost role that implemented the EchoService endpoint and routing a request to one of them randomly. You don't have to route requests randomly per se, but I did this because internal endpoints are not load-balanced. I wanted to distribute the load evenly over each of my WorkerHost instances.
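Because nothing sits in front of internal endpoints, the caller has to spread requests itself. As a language-neutral illustration of that idea (not the WCF code from the post), here is a small sketch of two client-side strategies, random selection and round-robin. The endpoint addresses and function names are hypothetical.

```python
import itertools
import random

# Hypothetical internal endpoint addresses, one per worker instance.
ENDPOINTS = [
    "net.tcp://10.0.0.1:5000/echo",
    "net.tcp://10.0.0.2:5000/echo",
    "net.tcp://10.0.0.3:5000/echo",
]


def random_endpoint(endpoints, rng=random):
    """Pick one endpoint uniformly at random.

    randrange's upper bound is exclusive, so passing len(endpoints)
    keeps every instance, including the last one, eligible.
    """
    return endpoints[rng.randrange(len(endpoints))]


def round_robin(endpoints):
    """Return an endless iterator that visits the endpoints in turn."""
    return itertools.cycle(endpoints)


picker = round_robin(ENDPOINTS)
print(random_endpoint(ENDPOINTS))
print(next(picker))
```

Random selection needs no shared state between callers, while round-robin gives a perfectly even spread from a single caller. Either is a reasonable choice when the instances do roughly equal work per request.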

One tip that I found out is that there is no need to cache the IPEndpoint information you find. It is already cached in the API call. However, you may want to cache your ChannelFactory according to best practices (unlike me).

Hosting Public WCF Services

This is all pretty easy as well. The only trick to this is that you need to apply a new behavior that knows how to deal with the load balancer for proper MEX endpoint generation. Additionally, you need to include a class attribute on your service to deal with an address filter mismatch issue. This is pretty well documented, along with links to download the QFE that contains the behavior patch, under Known Issues in the WCF Azure Samples project on Code Gallery. Jim Nakashima also posted about this in detail on his blog the other day, so I won't dig into this again here.

Lastly, if you just want the code from the show, have at it!

Lee Pender asserts “Microsoft might be ‘all in’ for the cloud -- where it now has competition from Salesforce.com and VMware -- but its Dynamics applications will likely benefit most from a hybrid model” as the deck for his Convergence in the Cloud: Dynamics, Azure and Going Hybrid article for the June 2010 issue of Redmond Magazine posted on 5/28/2010:

Never before has the word "convergence" been so appropriate a description of Microsoft enterprise-software offerings. The company has long called its annual Dynamics conference (last held in late April) Convergence, but now products and product categories really are beginning to converge in Redmond.

Just a couple of years ago, enterprise resource planning (ERP) applications were the centerpiece of the Microsoft Dynamics product collection, which consists of four ERP suites and a multi-platform customer relationship management (CRM) offering. But now Redmond is selling CRM first -- particularly the Dynamics CRM Online service the company hosts itself.

Microsoft offers three versions of Dynamics CRM -- its own hosted service (Dynamics CRM Online), a version hosted by partners and an on-premises deployment. At Convergence, it was Dynamics CRM Online that got most of the attention, says Josh Greenbaum, principal at Enterprise Applications Consulting in Berkeley, Calif.

But it's a hybrid model, Greenbaum believes -- rather than a pure hosted model -- which will be most critical for Dynamics.

"Windows Azure is turning out to be the thing that's going to propel Dynamics into the forefront of Microsoft strategy," he says. "The value-add is going to come from the business services that Microsoft can provide on top of Windows Azure." [Emphasis added.]

Java and the Cloud

Two Microsoft competitors, one of which is not a proponent of the hybrid model, made news in April with a Platform as a Service (PaaS) offering that will provide some competition for Windows Azure. Hosted-applications giant Salesforce.com Inc. and virtualization titan VMware Inc. have joined forces to create VMforce, a Java-based cloud-development platform that's due out for a preview in the second half of this year. The VMforce platform will be a cloud-based development platform for Java developers, who number about 6 million worldwide, Salesforce.com and VMware officials say. With VMforce, developers can create .WAR files in Java and drop them into VMforce. VMforce is a "hermetically sealed environment," says Mitch Ferguson, senior director of alliances at VMware. "The Java developer downloads [the .WAR file] into VMforce, and we handle everything from there," Ferguson explains.

New Battlegrounds

The new platform will provide some competition to Windows Azure, says Ray Wang, partner for enterprise strategy at Altimeter Group LLC in San Mateo, Calif., but it'll mainly serve as a rival to other Java-based platforms. "VMforce is more a threat to other Java vendors," Wang says. "The decision to go .NET PaaS via Windows Azure remains safely in Microsoft's camp, but the Java and .NET wars now move to a new battleground."

It's on this battleground that Windows Azure, VMforce and other platforms will fight the wars of product convergence. Enterprise apps like Dynamics will end up running on or combining with one platform or the other -- or both -- but Microsoft and the VMforce team have thus far set out different visions for how the convergence of apps and platforms will take place.

Salesforce.com and VMware emphasize the software-free nature of VMforce, touting its automation capabilities and its lack of complexity.

"We're going to make [VMforce] a more abstract platform where you don't have to deal with the lower-level infrastructure as you do with the Windows Azure platform," says Eric Stahl, senior director of product marketing at Salesforce.com.

Greenbaum, however, contends that hybrid connections with on-premises software will make Windows Azure more appealing than VMforce.

"VMforce put a stake in the ground that I think Windows Azure can compete against very successfully," he says.

Steve Marx explains how he coded (and debugged) his live Windows Azure Swingify demo app with Python in this 00:33:08 Cloud Cover Episode 13 - Running Python - the Censored Edition Channel9 video of 5/28/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow

In this special censored episode:

- We show you how to run Python in the cloud via a swingin' MP3 maker

- We talk about how Steve debugged the Python application

- Ryan and Steve join a boy band

Show Links:

SQL Azure Session ID Tracing

Windows Azure Guidance Part 2 - AuthN, AuthZ

Running MongoDb in Windows Azure

We Want Your Building Block Apps

Reprinted from Windows Azure and Cloud Computing Posts for 5/27/2010+.

Steve Marx’ Making Songs Swing with Windows Azure, Python, and the Echo Nest API post of 5/27/2010 begins:

I’ve put together a sample application at http://swingify.cloudapp.net that lets you upload a song as an MP3 and produces a “swing time” version of it. It’s easier to explain by example, so here’s the Tetris theme song as converted by Swingify.

Background

The app makes use of the Echo Nest API and a sample developed by Tristan Jehan that converts any straight-time song to swing time by extending the first half of each beat and compressing the second half. I first saw the story over on the Music Machinery blog and then later in the week on Engadget.
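To make the arithmetic behind "extend the first half, compress the second half" concrete, here is a small sketch that computes, for each beat, how much each half would need to be stretched. It is only an illustration: the 2:1 split (`long_share = 2/3`) is an assumed swing ratio, the function name is made up, and the actual sample may use different values and works on real audio through the Echo Nest API.

```python
def swing_plan(beat_starts, long_share=2 / 3):
    """Return (start, end, stretch) segments, two per beat.

    beat_starts holds beat boundaries in seconds. Each beat is cut at its
    midpoint. The first half is stretched to fill long_share of the beat and
    the second half is squeezed into the rest, so the beat length (and the
    overall tempo) stays the same. stretch > 1 lengthens audio, < 1 shortens.
    """
    plan = []
    for start, end in zip(beat_starts, beat_starts[1:]):
        mid = (start + end) / 2
        # Each half originally covers 1/2 of the beat, hence the factor 2.
        plan.append((start, mid, 2 * long_share))
        plan.append((mid, end, 2 * (1 - long_share)))
    return plan


# Two beats at 120 bpm (0.5 s each), boundaries in seconds.
for start, end, stretch in swing_plan([0.0, 0.5, 1.0]):
    print("%.2f-%.2f s: stretch x%.3f" % (start, end, stretch))
```

With the assumed 2:1 ratio, the first half of every beat is played at 4/3 of its original length and the second half at 2/3, which is the familiar "long-short" triplet feel.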

I immediately wanted to try this with some songs of my own, and I thought others would want to do the same, so I thought I’d create a Windows Azure application to do this in the cloud.

How it Works

We covered this application on the latest episode of the Cloud Cover show on Channel 9 (to go live tomorrow morning – watch the teaser now). In short, the application consists of an ASP.NET MVC web role and a worker role that is mostly a thin wrapper around a Python script.

The ASP.NET MVC web role accepts an MP3 upload, stores the file in blob storage, and enqueues the name of the blob:

    [HttpPost]
    public ActionResult Create()
    {
        var guid = Guid.NewGuid().ToString();
        var file = Request.Files[0];
        var account = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");
        var blob = account.CreateCloudBlobClient().GetContainerReference("incoming").GetBlobReference(guid);
        blob.UploadFromStream(file.InputStream);
        account.CreateCloudQueueClient().GetQueueReference("incoming").AddMessage(new CloudQueueMessage(guid));
        return RedirectToAction("Result", new { id = guid });
    }

The worker role mounts a Windows Azure drive in OnStart(). Here I used the same tools and initialization code as I developed for my blog post “Serving Your Website From a Windows Azure Drive.” In OnStart():

    var cache = RoleEnvironment.GetLocalResource("DriveCache");
    CloudDrive.InitializeCache(cache.RootPath.TrimEnd('\\'), cache.MaximumSizeInMegabytes);
    drive = CloudStorageAccount.FromConfigurationSetting("DataConnectionString")
        .CreateCloudDrive(RoleEnvironment.GetConfigurationSettingValue("DriveSnapshotUrl"));
    drive.Mount(cache.MaximumSizeInMegabytes, DriveMountOptions.None);

Then there’s a simple loop in Run():

    while (true)
    {
        var msg = q.GetMessage(TimeSpan.FromMinutes(5));
        if (msg != null)
        {
            SwingifyBlob(msg.AsString);
            q.DeleteMessage(msg);
        }
        else
        {
            Thread.Sleep(TimeSpan.FromSeconds(5));
        }
    }

Steve continues with code for the implementation of SwingifyBlob(), which calls out to python.exe on the mounted Windows Azure drive and suggests running “the Portable Python project, which seems like an easier (and better supported) way to make sure your Python distribution can actually run in Windows Azure.”
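The worker loop relies on a common queue pattern: a message that has been read is hidden for a visibility timeout (five minutes in the post) and is deleted only after processing succeeds, so work that fails midway becomes visible again and is retried. The following in-memory simulation sketches that behavior under stated assumptions. `InMemoryQueue`, `Message` and `process_available` are illustrative names, not the Windows Azure storage client API.

```python
class Message:
    """A queued item; hidden from readers until visible_at."""

    def __init__(self, body):
        self.body = body
        self.visible_at = 0.0


class InMemoryQueue:
    """Toy stand-in for a cloud queue with visibility timeouts."""

    def __init__(self, clock):
        self._clock = clock  # callable returning the current time in seconds
        self._messages = []

    def add_message(self, body):
        self._messages.append(Message(body))

    def get_message(self, visibility_timeout):
        now = self._clock()
        for msg in self._messages:
            if msg.visible_at <= now:
                # Hide the message instead of removing it.
                msg.visible_at = now + visibility_timeout
                return msg
        return None

    def delete_message(self, msg):
        self._messages.remove(msg)


def process_available(queue, handler, visibility_timeout=300.0):
    """Handle every currently visible message; return how many succeeded."""
    handled = 0
    while True:
        msg = queue.get_message(visibility_timeout)
        if msg is None:
            return handled
        handler(msg.body)          # if this raises, the message is not deleted
        queue.delete_message(msg)  # delete only after successful processing
        handled += 1
```

The timeout has to be longer than the slowest expected job. Otherwise a second worker could pick up a message that is still being processed, which is presumably why the post uses a generous five minutes for a song conversion.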

Reprinted from Windows Azure and Cloud Computing Posts for 5/27/2010+.

War Room’s New House GOP site costs more money if it succeeds post of 5/27/2010 to Salon.com confirms Republican house members’ use of Windows Azure to host their new AmericaSpeakingOut.com voter-participation site:

… The way it works is, basically, anyone who wants to can post whatever they want, and Republicans will use their comments in town halls and campaign manifestoes this fall. So far, the site has mostly been notable for two things. The first is strange comments: "Congressmen and women should wear Saran Wrap to work" was featured on the site's "Transparency/Open Government" section on Thursday morning, and the featured idea under "Constitutional Limits" was, "We should apologize to King George III. He was mentally handicapped, and he couldn't help but horribly mismanage the government." The second, of course, is the fact that taxpayer dollars are funding the whole thing. The site is being paid for out of the GOP leadership budget, despite its dot-com (not dot-gov) name.

The worst part about it, though, is that the more successful the site is, the more money it will cost you. Both Microsoft, which provided the software platform and servers that run the site, and House Republican aides confirmed to Salon Thursday that the more traffic the site generates, the more tax dollars the GOP will have to pay in fees to host it.

"The Microsoft TownHall software, which is the underlying software on which the America Speaking Out website was built, is available at no cost," Microsoft spokeswoman Sarah Anissipour said in an e-mailed statement. "Customers only pay to host their TownHall solution on Windows Azure, which is available using a scalable, pay-only-for-usage model. The number of people visiting a website does have a direct correlation to Windows Azure usage." Translated out of P.R.-speak, that means the more people who come and post crazy ideas on the site, the more it costs. Brendan Buck, a House GOP leadership aide, confirmed that Republicans are using Azure to host the site. [Emphasis added.] …

John C. Stame suggests Business Solution Workloads for the Cloud in this 5/27/2010 post:

Almost a year ago, I wrote about the business value that cloud computing offers. In that post, I wrote about some well-known benefits that are realized by leveraging the cloud for SaaS (Software as a Service) and PaaS (Platform as a Service). For example, cloud computing transfers the traditional capital expenditure (CapEx) model common in data centers today to an operational expenditure (OpEx) model. Cloud Services like Azure Services Platform and Microsoft Online Services allow CIOs and CFOs to control costs more effectively through these cloud computing service offerings.

With Windows Azure, Microsoft’s Cloud Services Platform, in the market now for over 6 months, I wanted to highlight some real business workload scenarios that are great candidates for the cloud and offer potentially excellent business value running in the cloud. Here are a few key areas businesses are looking at:

Web Applications – This is probably the most obvious workload that makes sense moving to the cloud in today’s SaaS world. It seems like there are more and more solutions that are used in this model. It's not only email services like Hotmail, Yahoo mail, or GMail, but also CRM applications, and of course ecommerce. Businesses and ISVs can focus on their core business models and software development rather than worry about data centers and how many servers and how much bandwidth will be necessary for the web application to scale out, as well as enter new markets quickly. A cloud services platform like Windows Azure Platform provides a flexible and economic option for quickly deploying rich web applications.

HPC (High Performance Computing) – HPC workloads use pools of computing power to solve advanced computational equations or problems. In these types of scenarios, the application typically includes very complex mathematical computations. The term generally refers to the engineering applications associated with cluster-based computing (like computational fluid dynamics, or building and testing of virtual prototypes), as well as business uses of cluster-based computing or parallel processing / computing, such as data warehouses and high-volume transaction processing. These workload scenarios are well suited for Windows Azure. There is a great case study published highlighting RiskMetrics Group's use of Windows Azure to support large bursts in computing activity over short periods of time.

LOB (Line of Business) Applications – Enterprises often have many, sometimes hundreds or thousands, of departmental LOB applications servicing specific business processes and business units. These applications can be distressing to IT management as they sometimes end up with a lack of centralized management, policy compliance, and hardware security. Yet, they often provide the business critical services, and sometimes require quick deployment times. A cloud services platform like Windows Azure provides an enterprise class on-demand computing environment with the flexibility that the business needs, and the security and reliability that centralized IT demands.

Let me know if you have any other ideas or questions, comments, or would like to discuss…

See also John’s The Business Value of Cloud Computing of 6/29/2009.


Windows Azure Infrastructure

Lori MacVittie reports Ask and ye shall receive – F5 joins Microsoft’s Dynamic Data Center Alliance to bring network automation to a Systems Center Operations Manager near you in her F5 Friday: I Found the Infrastructure Beef post of 5/28/2010 to the F5 DevCentral blog:

You may recall that last year Microsoft hopped into Infrastructure 2.0 with its Dynamic Datacenter Toolkit (DDTK) with the intention of providing a framework through which data center infrastructure could be easily automated and processes orchestrated as a means to leverage auto-scaling and faster, easier provisioning of virtualized (and non-virtualized in some cases) resources. You may also recall a recent F5 Friday post on F5’s Management pack capabilities regarding monitoring and automatic provisioning based on myriad application-centric statistics.

You might have been thinking that if only the two were more tightly integrated you really could start to execute on that datacenter automation strategy.

Good news! The infrastructure beef is finally here.

WHERE DID YOU FIND IT?

I found the infrastructure beef hanging around, waiting to be used. It’s been around for a while hiding under the moniker of “standards-based API” and “SDK” – not just here at F5 but at other industry players’ sites. The control plane, the API, the SDK – whatever you want to call it – is what enables the kind of integration and collaboration necessary to implement a dynamic infrastructure such as is found in cloud computing and virtualization. But that dynamism requires management and monitoring – across compute, network, and storage services – and that means a centralized, extensible management framework into which infrastructure services can be “plugged.”

That’s what F5 is doing with Microsoft.

Recently, Microsoft announced that it would open its Dynamic Datacenter Alliance to technology partners, including the company’s compute, network, and storage partners. F5 is the first ADN (Application Delivery Network) partner in this alliance. Through this alliance and other partnership efforts, F5 plans to further collaborate with Microsoft on solutions that promote the companies’ shared vision of dynamic IT infrastructure.

Microsoft envisions a dynamic datacenter which maximizes the efficiency of IT resource allocation to meet demand for services. In this vision, software and services are delivered through physical or virtualized computing, network and storage solutions unified under the control of an end-to-end management system. That management system monitors the health of the IT environment as well as the software applications and constantly reallocates resources as needed. In order to achieve such a holistic view of the datacenter, solutions must be integrated and collaborative, a la Infrastructure 2.0. The automated decisions made by such a management solution are only as good as the data provided by managed components.

Microsoft’s Dynamic Datacenter Toolkit (DDTK) is a dynamic datacenter framework that gives organizations and providers the means not only to automate virtualized resource provisioning but also to manage compute, network, and storage resources. F5 now supports comprehensive integration with Microsoft System Center, Virtual Machine Manager, Windows Hyper-V, and more. Both physical (BIG-IP Local Traffic Manager) and virtual (BIG-IP Local Traffic Manager Virtual Edition) deployment options are fully supported through this integration. The integration with DDTK also provides the management system with the actionable data required to act upon and enforce application scalability and performance policies as determined by administrator-specified thresholds and requirements.

Some of the things you can now do through SCOM include:

- Discover BIG-IP devices

- Automatically translate System Center health states for all managed objects

- Generate alerts and configure thresholds based on any of the shared metrics

- Use the PowerShell API to configure managed BIG-IPs

- Generate and customize reports such as bandwidth utilization and device health

- Automatically synchronize object-level configuration at the device level (across BIG-IP instances/devices)

- Monitor real-time statistics

- Manage both BIG-IP Global Traffic Manager and Local Traffic Manager

- Deepen just-in-time diagnostics through iRules integration by triggering actions in System Center

- Migrate live virtual machines

- Monitor network spikes in the virtual machine monitor console, eliminating bottlenecks before they happen

There’s a lot more, but as with PowerPoint, it’s never a good idea to over-use bullet points. A more detailed and thorough discussion of the integration can be read at your leisure in the F5 and Microsoft Solution Guide [PDF].

Mary Jo Foley reported in her As the Microsoft org chart turns post of 5/28/2010 that “…As part of STB’s (Server and Tools business’) strategy to align future Windows endpoint security and systems management engineering, the Forefront endpoint protection development team will join the System Center development team within STB’s Management and Services Division, which is led by Brad Anderson.”

Grant KD announced Microsoft introduces Cloud Guide for IT Leaders in this 5/28/2010 post to the Server and Tools Business News Bytes blog:

Today, Microsoft launched its Cloud Guide for IT Leaders, an online digital resource for information technology and business leaders interested in cloud computing.

Wondering how the roles of IT staffers will change with cloud computing? Curious why organizations have left Google Apps? The Cloud Guide for IT Leaders provides access to a steady stream of rich content—highlight videos, executive interviews, images, blog entries and keynote replays—addressing those issues.

Learn more by visiting the Cloud Guide at http://www.microsoft.com/presspass/presskits/cloud/. You can also follow us on Twitter via http://twitter.com/STBNewsBytes.

More Windows Azure agitprop; the Cloud Computing group must have a humungous PR budget. The “steady stream of rich content—highlight videos, executive interviews, images, blog entries and keynote replays” sounds like the OakLeaf blog’s content.

Satinder Kaur’s India to emerge as central hub for cloud computing article of 5/28/2010 for the ITVoir Network quotes Steve Ballmer:

Microsoft CEO Steve Ballmer, who is currently in India, stated that India is a hot destination for cloud computing vendors, sources confirmed.

The technology “Cloud Computing” has taken the world by storm. It is the most discussed technology globally and India seems to be a hot bet for the same. India seems to be the global hub for cloud computing as companies would look to India to support transitions and deployment. Along with various vendors betting on cloud computing, Microsoft is also betting heavily on the segment. In this segment, Microsoft is rivaling Amazon, Google and AT&T. The company is trying to convince enterprises to switch to managed data centers.

Mr. Ballmer further emphasized the importance of Microsoft’s cloud computing platform, Azure. People can use applications hosted online on Azure, from email to payroll systems.

Furthermore, citing India as one of the hot bets for cloud vendors, Mr. Ballmer stated that the cloud opportunity would create around 3 lakh [300,000] jobs in the coming five years and that the business would grow to $70 billion by that time.

In India, cloud would act as catalyst for IT adoption.

Bruce Guptil wrote Trip Report: Too Late for the Cloud? Not By A Long Shot! as a Saugatuck Research Alert on 5/26/2010 (site registration required):

… Both [cloud] events [with Saugatuck participants] had very different audiences; the Sage Insights event was focused on partners, while AribaLive was aimed at buyers, users, and suppliers of what it calls “business commerce” software and services. But both were, fundamentally, Cloud-oriented events, focusing on strategic approaches to Cloud IT and markets by the vendors themselves, and highlighting strategic approaches by partners and customers.

At both events, Saugatuck presented our latest research, analysis and guidance for vendors and users regarding areas of Cloud opportunity, timing, and how to manage. We spent much of our time speaking with attendees between sessions, after hours, and in Q&A sessions after our presentations.

We came away from these events with three significant impressions:

- Cloud IT is not the wave of the future; it is the wave of Right Now. It is fully integrated into the thinking of vendors and users alike, and dominates discussion among not only IT professionals but business executives, from C-level to Finance to HR and Procurement.

- While Cloud IT dominates thinking, there is significant, practically massive, uncertainty as to how to approach and execute Cloud as either a business or IT strategy.

- And even though almost all attendees we spoke with agreed that Cloud IT itself is in very early stages, and that Cloud development, adoption, and usage strategies and practices have yet to be well-formulated and refined, a recurring question asked of and discussed with Saugatuck at these events was whether or not technologies, providers, and users are already too late to “win” in Cloud IT. The general sentiment is that if they have not yet established a solid Cloud strategy and made significant investments, they might be too far behind the curve, and are destined to lose business to firms that have already made such moves.

Bruce continues with Saugatuck’s usual Why Is It Happening? and Market Impact topics.

Michael Pastore claims “Cloud computing offers significant advantages for businesses of all sizes, and it's easier to get started than you think. Microsoft makes Windows Azure compute time available for MSDN subscribers, as well as for software start-ups through the Microsoft BizSpark program” and suggests “Learn why cloud computing is a good fit for you and how you can get started” in his With MSDN and BizSpark, Cloud Computing is Closer than You Think post to Internet.com’s Cloud Computing Showcase (sponsored by the Microsoft Windows Azure Platform):

If there is one word continually associated with cloud computing, it's "agility." Cloud computing allows developers and IT departments to increase their agility by moving any or all parts of an application or data storage to the cloud—Internet-based storage on remotely hosted servers. The cloud allows organizations to save money on IT infrastructure and also provides them with an infrastructure they can use on-demand. They can easily add compute power for a busy shopping season or test a new application without an upfront infrastructure investment.

While a great deal of the talk around cloud computing is centered around its implications for the enterprise data center, a number of cloud-based services provide developers with a set of features to help them create applications without the need for infrastructure. Among the best known are Microsoft's Windows Azure platform, Amazon's Web Services, and Google App Engine.

These platforms enable agility by allowing developers working alone or as part of a large enterprise IT organization to create applications that use the cloud for some or all of their computing power and storage. They allow developers to add cloud-based functionality or storage to on-premise applications. Regardless of the size of the application or the organization, software developers can have a cloud-based application up and running using any of these platforms in a fairly short amount of time.

The Microsoft Windows Azure platform is probably the most complete cloud computing platform available to software developers. Azure is an example of a "PaaS" solution (platform-as-a-service); for developers that means they can focus on the application they're developing and not the need to manage underlying infrastructure. It provides Windows-based compute and storage services for cloud applications using three main components: Windows Azure, SQL Azure, and Windows Azure platform AppFabric.

Each of these components plays a specific role in Microsoft's cloud platform. Windows Azure is the Windows-based environment that runs cloud-based applications and stores non-relational data at Microsoft data centers. It automates how your app scales out, scales back, and handles such tasks as failover and maintenance. SQL Azure provides the relational data services for cloud applications. AppFabric provides connectivity and access control for solutions between the cloud and on-premise.

Don't let the Microsoft nomenclature confuse you. The Windows Azure platform is built to embrace interoperability, which is important if you want to develop cloud functionality for existing on-premise applications that might not be built on the Microsoft platform. There are Azure tools for the Eclipse IDE; Windows Azure SDKs for Java and PHP; and AppFabric SDKs for PHP and Ruby developers, and the Tomcat server. …

Michael continues with examples and benefits offered to MSDN subscribers and startups.


Cloud Security and Governance

Adron B. Hall’s Your Cloud, My Cloud, Security in the Cloud of 5/28/2010 discusses security issues with Web analytics in the cloud:

I had a great conversation the other night while at the Seattle Web Analytics Wednesday (#waw) with Carlos (@inflatemouse) and a dozen others. @inflatemouse brought up the idea that an analytics provider using the cloud increases, or at least possibly increases, the risk of a security breach to the data. This is, after all, a valid point, but because of the inherent way web analytics works this is and is not a concern.

Web Analytics is Inherently Insecure

Web analytics data is collected with a JavaScript tag. Omniture, Webtrends, Google, Yahoo, and all of the analytics providers use JavaScript. JavaScript is a scripting language, which is not compiled and is stored in plain text in the page or an include, or passed into the URI when needed. This plain text JavaScript is all over the place, and able to be read merely by looking at it. So the absolute first point of data collection, the JavaScript tags, is 100% insecure.
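
To make the plain-text point concrete, here is a minimal Python sketch of what anyone watching the request can read when a tag passes its data in the URI. The beacon host, path, and parameter names are invented for illustration; they are not taken from any real analytics provider.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical beacon URL of the kind a tracking tag might request.
# The host and the parameter names are assumptions for illustration only.
BEACON_URL = (
    "http://stats.example.com/collect"
    "?page=%2Fcheckout&referrer=http%3A%2F%2Fexample.com%2Fcart&visitor=abc123"
)


def read_beacon(url: str) -> dict:
    """Return the query-string fields of a beacon URL as readable text."""
    query = urlsplit(url).query
    # parse_qs undoes the percent-encoding and returns a list per key;
    # each key appears once here, so keep the first value.
    return {key: values[0] for key, values in parse_qs(query).items()}


fields = read_beacon(BEACON_URL)
for name, value in fields.items():
    print(f"{name} = {value}")
```

Nothing here requires a key or special access: percent-encoding is a transport format, not encryption, so the collected values are recoverable by anyone who sees the URL.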

The majority of data is not private. So this insecurity isn't a huge risk or at least should not be. If it is, you have larger issues before you even contemplate using an on-premise and cloud solution to bump up your compute and storage capabilities. Collecting data that needs to be secure via web analytics is an absolute no. Do NOT collect secure, private, or other important pieces of data this way. If you have even the slightest legal breach in this context, your entire analytics provision could have this data scraped, possibly used in court in a class action suit, or used in other ways.

For the rest of this write up, I will assume that you’ve appropriately encrypted, or enabled SSL, or otherwise secured your analytics or data collection in some way.

Getting that Boost on Black Friday

E-commerce has gotten HUGE over the last decade. The last Black Friday sales and holiday season saw the largest e-commerce activity in history. Omniture, Webtrends, and all of the other web analytics providers often see a tenfold increase in web traffic over this period of time. Sometimes, for some clients, this traffic is handled flawlessly by racks and racks of computers sitting in multiple colocation facilities around the world. However, for some clients that have exceedingly large traffic boosts, data is lost. (Yes, ALL the providers lose data, more so during these massive boosts.) The reason is simple: the machines can't process in time or handle the incoming traffic because the extra throughput isn't available to scale.

Enter the cloud. The cloud has vastly more scalability, almost an infinite supply by comparison, than any of the infrastructure available to the analytics providers. As a matter of fact, the cloud has more scale available than all of the analytics providers. This is actually saying a lot, because I know that Webtrends (and maybe some of the others) does an amazing job with its scalability and data collection, arguably more accurate and consistent than any of the other providers (especially since many of them just sample and "guess" at the data).

So when you extend your capabilities to the cloud for web analytics, do you really increase your security vulnerability? Most of the providers of web analytics have their own array of security measures, which I won't go into here. However, does introducing the cloud change anything? Does it alter the architecture so significantly as to introduce legitimate security concerns?

Immediately, from a functional point of view, assuming good architecture, intelligent system design, and good security practices are in use already, introducing the cloud should be, and is, transparent to clients. For the provider it should not increase legal concerns, functional concerns, or otherwise, provided the aforementioned items are taken care of appropriately. But that is just it: every single current provider has legacy architecture and various other elements that do not provide a solid basis for a migration to the cloud for that extra bump of power and storage.

So what should be done? What if a provider wants that extra power? Can the technical debts be paid to use the awesome promises of the cloud? Is the security really secure enough?

Probably not. Probably so. But . . .

This provides a prospective opportunity for a new solution for web analytics to be provided. It provides a great opportunity for a modern cloud-based solution that provides more than just a mere JavaScript tag and insecure unencrypted data to be collected for analysis. It provides the grand opportunity to design an architecture that could truly lead the industry into the future. Will Webtrends, Omniture, Unica, or someone else step in to lead the analytics industry into the future?

At this point I'm not really sure, but it definitely is an interesting thought and a conversation that I have had with a lot of people at #altnet meetings and cloud meetups, and with cloud architects, engineers, and others that have similar curiosities. I await impatiently to see someone or some business take the lead!

J. D. Meier offers a comprehensive patterns & practices Security Guidance Roundup in this 5/28/2010 post:

This is a comprehensive roundup of our patterns & practices security guidance for the Microsoft platform. I put it together based on customers looking for our security guidance, but having a hard time finding it. While you might come across a guide here or a How To there, it can be difficult to see the full map, including the breadth and depth of our security guidance. This is a simple map, organized by “guidance type” (i.e. Guides, App Scenarios, Checklists, Guidelines, How Tos, … etc.)

Books / Guides (“Blue Books”)

If you’re familiar with IBM Redbooks, then you can think of our guides as Microsoft “Blue Books.” Our patterns & practices Security Guides provide prescriptive guidance and proven practices for security. Each guide is a comprehensive collection of principles, patterns, and practices for security. These are also the same guides used to compete in competitive platform studies. Here are our patterns & practices Security Guides:

- Building Secure ASP.NET Applications

- Improving Web Application Security - Threats and Countermeasures

- Improving Web Services Security: Scenarios and Implementation Guidance for WC