Windows Azure and Cloud Computing Posts for 5/21/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

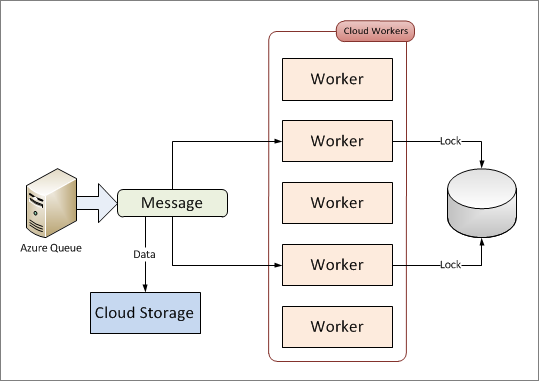

Rinat Abdullin asserts “Not all queues are created equal” in his MSMQ Azure vs. Scalable Queue with Concurrency Locks post of 5/21/2010:

While talking about the development friction points around Azure Queues I've mentioned using some types of locks. These locks are needed in order to perform message de-duplication, which guarantees that this "ChargeCustomerCommand" will be processed once and only once.

We don't want to charge the customer twice, do we? So in order to guarantee that this never (ever) happens in our Azure application, we need to implement message de-duplication and locking.

But why don't we want to use some of these smart locking approaches with Azure Queues? Here are a few reasons.

First of all, this adds some complexity to the solution. Just take a look at the simplest implementation, which uses cloud storage (Azure Blob) to keep messages larger than 8KB, while relying on an RDBMS (SQL Azure) to guarantee message de-duplication.

The implementation could be even more complex (e.g., using the RDBMS only for the concurrency locks, while keeping actual message states in cloud storage). Yet, does your solution really need this level of complexity and potential scalability? How many transactions per second do you need to process?

Jonathan Oliver has written a lot on concurrency locks, idempotency patterns and message de-duplication in cloud environments.

But that's all complex stuff. Complex things in your solution tend to increase development costs exponentially and you probably don't want to do that.

Wouldn't it be simpler just to:

Second, even if we are OK with the development tax of concurrency locking against Azure Queues, this does not save us from answering the following questions:

How do we handle transactions and roll back on failures?

How exactly do we persist messages?

How do we handle visibility timeouts and message reprocessing in case of failure?

How do we roll back our message, if it consists of two parts - message header within the Azure Queue and actual serialized message within the Blob storage?

How do we redirect or publish large messages? Do we download and create new copies of every message, or do we try to be smart by copying only the Azure Queue messages that reference serialized data in Azure Blob?

How do we keep track of these serialized data chunks and clean them up when there are no references left? Do we implement our own garbage collector service for the messages?

In the middleware world such questions are normally answered by the middleware server, and not the developer. One of these established middleware servers is MSMQ. So why not let it handle the job? This will significantly simplify initial development for any message-based cloud solution, reducing complexity, development and maintenance costs.

Those few cloud software applications that outgrow the performance offered by an MSMQ server can move towards implementing purely scalable queues on top of Windows Azure or go towards refactoring to avoid such bottlenecks. NServiceBus users mentioned multi-server systems that processed millions of messages per hour, so this is achievable (NSB uses MSMQ as a messaging transport).

The third reason is pure performance. Throughput of Azure Queues is not that big at the moment. People have been reporting something like 500 messages per minute in total. That's fewer than 10 messages per second in the case where we don't have any BLOBs to read or locks to manage.

Azure Queues, as a tool, have quite a few uses as well, especially given their pricing model and implicit scalability. Yet, in my opinion, they can't replace a messaging transport with MSMQ behavior, especially in the case of xLim and CQRS architecture approaches.

Would your Windows Azure solution benefit more from the plain Azure Queue or MSMQ Azure?

Sean Kearon commented “Very interesting article. It's easy to assume that Azure Queues will behave like MSMQ - I did!” Agreed. Read more about MSMQ on MSDN.

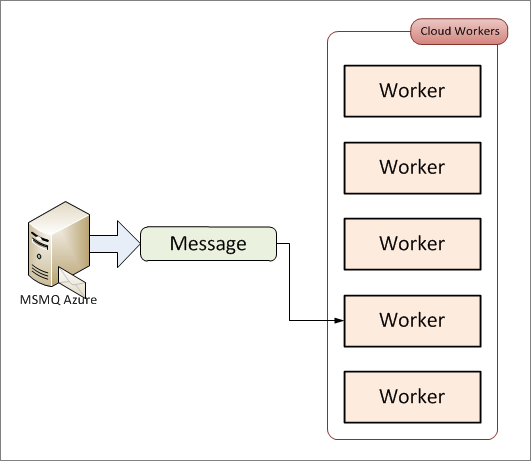

Rinat Abdullin compares Azure Queues and Microsoft Message Queue Service (MSMQ) in his Windows Azure Most Wanted - MSMQ Azure post of 5/21/2010:

As we all know, Windows Azure provides cloud computing services on an "Infrastructure as a Service" basis. This includes the usual storage, network and computing capacities as well as a few things on top of that:

- Windows Azure Queues;

- Azure Table Storage;

- Azure Dev Fabric and terrific Visual Studio 2010 integration;

- SQL Azure.

It seems like everything is in place to build truly scalable applications on top of Windows Azure. In fact, that's how we do this at Lokad (BTW, it feels really interesting to watch in real time how Azure provisions 20+ worker roles to absorb the load and then lets them go).

Yet, there is one really important missing piece that would've simplified large-scale enterprise development a lot. It would've lowered the entry barrier for the development of simple cloud applications as well.

I'm talking about something like MSMQ Azure (Microsoft Message Queuing), just like we have SQL Azure.

Microsoft Message Queuing or MSMQ is a Message Queue implementation developed by Microsoft and deployed in its Windows Server operating systems since Windows NT 4 and Windows 95.

Wait, but doesn't Windows Azure already have messaging in the form of Azure Queues? Well, not quite.

MSMQ server provides non-elastic message queues that can be transactional and durable. In a sense that's similar to any RDBMS engine (non-elastic, transactional and durable). And that's how SQL Azure is.

Azure Queues, on the other hand, are designed for cloud elasticity. This means that any number of messages and message queues could exist for your application. You don't even have to bother about the servers, capacities or scaling; the infrastructure will handle these details. As a trade-off, Azure Queues have to implement a lightweight transactional model in the form of message visibility timeouts. This guarantees that a message will be processed at least once, but not that it will be processed only once (forcing us to do some sort of locking in sensitive scenarios). We additionally lose System.Transactions support.
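To make the at-least-once behavior concrete, here is a minimal Python sketch, not taken from Rinat's post. `VisibilityQueue` is a hypothetical in-memory stand-in rather than the Azure Queue API, and the set of processed ids stands in for whatever durable lock store a real solution would use (the post mentions falling back to SQL Server for that). It shows a message reappearing after a worker fails to delete it, and a de-duplication check that keeps the charge from being applied twice.

```python
import itertools


class VisibilityQueue:
    """In-memory stand-in for a queue with visibility timeouts (hypothetical, not the Azure API)."""

    def __init__(self, visibility_timeout):
        self.visibility_timeout = visibility_timeout
        self._messages = {}  # message id -> [body, time at which it becomes visible]
        self._ids = itertools.count(1)

    def put(self, body):
        msg_id = next(self._ids)
        self._messages[msg_id] = [body, 0]
        return msg_id

    def get(self, now):
        """Return the first visible message and hide it until the timeout elapses."""
        for msg_id, entry in self._messages.items():
            if entry[1] <= now:
                entry[1] = now + self.visibility_timeout
                return msg_id, entry[0]
        return None

    def delete(self, msg_id):
        self._messages.pop(msg_id, None)


def handle(queue, now, processed_ids, charges, crash_before_delete=False):
    """Process one message; skip the side effect if this id was already handled."""
    received = queue.get(now)
    if received is None:
        return False
    msg_id, body = received
    if msg_id not in processed_ids:      # de-duplication check
        charges.append(body)             # the side effect we must not repeat
        processed_ids.add(msg_id)
    if not crash_before_delete:
        queue.delete(msg_id)
    return True


queue = VisibilityQueue(visibility_timeout=30)
queue.put("ChargeCustomerCommand")
processed_ids, charges = set(), []

# First worker charges the customer but "crashes" before deleting the message.
handle(queue, now=0, processed_ids=processed_ids, charges=charges, crash_before_delete=True)
# After the timeout the message is delivered again; the check suppresses a second charge.
redelivered = handle(queue, now=31, processed_ids=processed_ids, charges=charges)
```

In a real deployment the check and the side effect would have to be made atomic against a durable store, which is exactly the extra machinery the post is complaining about.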

So Azure Queues have scalability that would work for a huge enterprise, but have a number of pain points that such an enterprise would have to deal with:

- Implementing transaction scopes (at least in the form of volatile enlistments);

- Implementing some sort of locks (these normally fall back to your good old MS SQL Server);

- Implementing BLOB persistence for the messages above the Azure Queue message limit (which gets hit really fast).

At Lokad, we've been working around these problems in the OSS projects Lokad.Cloud (Azure Object/Cloud Mapper and distributed execution framework) and Lokad Cloud ESB (Azure Service Bus Implementation), which I'll talk more about later. Yet, a lot of things would've been simpler if MSMQ Azure were provided along similar lines to SQL Azure.

An MSMQ server is not scalable on its own, but how many solutions really need to process hundreds of transactions per second? Probably 95% of applications would live happily with a single MSMQ Azure instance, just like SQL Azure is enough. And if the application gets stressed to the limits of a single MSMQ Azure instance, then the business behind it is probably profitable enough to have some development resources to invest into partitioning or fine-tuning performance bottlenecks.

Additionally, MSMQ Azure would open a direct transition path towards the cloud for OSS enterprise service bus implementation projects like NServiceBus, MassTransit and Rhino ESB (at the moment, disparities between the durable transactional message transports and cloud queues keep them away from the cloud despite all the interest in the community).

And with the proper Cloud ESB available within Windows Azure one could build really scalable, flexible and efficient enterprise solutions with the rich toolset and experience of CQRS and DDD.

If we look at the problem of porting existing code that uses MSMQ, it feels like a sizable and expensive challenge to port it to the Azure Queues transport. If we are talking about an enterprise solution in production, I doubt it will be easy to justify a migration to the cloud to your CTO in such a scenario.

In the next blog post we'll talk about Azure Queues with concurrency locks vs. MSMQ.

What do you think? Do you agree with the proposition (you can vote for it on uservoice) or are you happy with the Azure Queues?

I agree about the utility of MSMQ Azure but not the characterization of Windows Azure as IaaS with added features. AFAIK, most everyone considers Azure to be a PaaS implementation. I’ve added 3 votes on uservoice, and copied Rinat’s suggestion to Implement MSMQ Azure (from Rinat Abdullin) on the Windows Azure Feature Voting Forum.

Maor David-Pur observes that you can’t Update [an] Azure Service While It Is Running in this 5/21/2010 post:

Let's assume that we are doing a project that needs to update the endpoint configuration (for example, add new internal/input endpoints) and even create a new worker role while the service is running. According to http://msdn.microsoft.com/en-us/library/dd179341.aspx#Subheading2 it seems we can’t change the service definition when the service is running. So what options are there to do this?

The only option here is to make your service definition changes, delete the running service and recreate/deploy. Changing endpoints, as well as adding additional roles, requires a schema change to the service.

Eugenio Pace describes deferred writes with queues in Windows Azure Guidance – Yet another way of writing records to store and dealing with failures on 5/20/2010:

This series of posts dealt with various aspects of dealing with failures while saving information on Windows Azure Storage:

- Windows Azure Guidance - Additional notes on failure recovery on Windows Azure

- Windows Azure Guidance – Failure recovery and data consistency – Part II

- Windows Azure Guidance – Failure recovery – Part III (Small tweak, great benefits)

We discussed several improvements on the code to make it more resilient. In all the cases discussed, we assumed that persisting changes on the system was a synchronous operation. Even though the articles focused mainly on the lowest levels of the app (from the Repository classes and below), it is likely these are called from a web page or some other components where a user is waiting for a result:

The solutions proposed focused on the highlighted area above and are especially useful if the changes need to be reflected immediately to the user afterwards. But that’s not always the case.

A further improvement to the system’s scalability and resilience can be achieved if, instead of saving synchronously, we defer the write to the future. This should be straightforward for readers of this blog who are familiar with Windows Azure:

Writing to a Windows Azure Queue is an atomic operation.

Caveats:

- Queue messages can only hold 8 KB of data. If your entities/records/etc. fit in one message, great! If not, then you probably need to write the whole thing to a blob and then to the queue. Because there are no transactions between queues and blobs, all considerations in the referenced articles still apply.

- The system assumes users can afford to wait the “delta T” in the diagram above (sort of an “eventual consistency”). This is true for many use cases (but not in all cases of course)

- The worker logic to save records to the storage system needs to deal with failures anyway (for example, using the Get/Delete pattern).
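The caveats above can be sketched in a few lines of Python. This is an illustrative sketch only: `blob_store`, `queue` and `table` are hypothetical in-memory stand-ins, not Windows Azure Storage calls, and the 8 KB threshold is applied to the serialized record as an assumption. Small records travel inside the queue message, large ones are parked in the blob store first, and the worker deletes the message only after the save has succeeded.

```python
import json
import uuid
from collections import deque

MAX_QUEUE_BYTES = 8 * 1024  # the 8 KB limit mentioned above

blob_store = {}   # hypothetical stand-in for blob storage
queue = deque()   # hypothetical stand-in for a storage queue
table = {}        # hypothetical stand-in for the final record store


def enqueue_write(record):
    """Defer a write: inline small records, park large ones in the blob store first."""
    payload = json.dumps(record).encode("utf-8")
    if len(payload) <= MAX_QUEUE_BYTES:
        queue.append({"kind": "inline", "data": payload})
    else:
        blob_name = str(uuid.uuid4())
        blob_store[blob_name] = payload                      # step 1: write the blob
        queue.append({"kind": "blob", "name": blob_name})    # step 2: enqueue the reference


def process_one():
    """Worker side: get the message, save the record, and only then delete."""
    if not queue:
        return False
    message = queue[0]                            # "get" without removing
    if message["kind"] == "inline":
        payload = message["data"]
    else:
        payload = blob_store[message["name"]]
    record = json.loads(payload)
    table[record["id"]] = record                  # upsert keyed by record id, safe to repeat
    queue.popleft()                               # "delete" only after the save succeeded
    if message["kind"] == "blob":
        blob_store.pop(message["name"], None)     # clean up the parked payload
    return True


enqueue_write({"id": "small", "note": "fits in one message"})
enqueue_write({"id": "large", "note": "x" * 10000})
while process_one():
    pass
```

Because the save is an upsert keyed by record id, a message that is processed twice after a failure leaves the store in the same state, which is what makes the get-then-delete ordering safe in this sketch.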

In general, deferred writes are a great way to improve scalability of your system. Of course, this is not new. COM+ supported this architecture. Similar solutions are available on other platforms.

I wonder how many of my readers recognize EXEC CICS READQ TD QUEUE(MYQUEUE)… :-)

WordPress.org’s Plugin Directory lists Windows Azure Storage for WordPress:

This WordPress plugin allows you to use Windows Azure Storage Service to host your media for your WordPress powered blog. Windows Azure Storage is an effective way to scale storage of your site without having to go through the expense of setting up the infrastructure for a content delivery.

Please refer to UserGuide.pdf to learn more about the plugin.

For more details on Windows Azure Storage Services, please visit the Windows Azure Platform web-site.

Related Links: Plugin Homepage

SQL Azure Database, Codename “Dallas” and OData

Alex James explains Using Restricted Characters in Data Service Keys in this 5/21/2010 post to the WCF Data Services blog:

If your Data Service includes entities with a string key, you can run into problems if the keys themselves contain certain restricted characters.

For example OData has this uri convention for identifying entities:

~/Feed('key')

So if the 'key' contains certain characters it can confuse Uri parsing and processing.

Thankfully though Peter Qian, a developer on the Data Services team, has put together a very nice post that covers a series of workarounds for the problems you might encounter.

Peter says the restricted characters are: %, &, *, :, <, >, +, #, /, ? and \
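As a rough illustration of the problem, and not one of the workarounds from Peter's post, the Python sketch below flags keys that contain any of the listed characters and builds a `~/Feed('key')` style URI with the key percent-encoded. The function names and the `Products` feed are hypothetical. Whether percent-encoding alone is enough depends on the host that parses the URI, which is presumably why the post offers several workarounds.

```python
from urllib.parse import quote

# Characters Peter's post lists as restricted in string keys.
RESTRICTED = set("%&*:<>+#/?\\")


def restricted_chars_in(key):
    """Return the restricted characters that appear in a key, in sorted order."""
    return sorted(set(key) & RESTRICTED)


def entity_uri(feed, key):
    """Build a ~/Feed('key') style URI, percent-encoding the key segment."""
    escaped = key.replace("'", "''")          # OData doubles single quotes inside string literals
    return "~/{}('{}')".format(feed, quote(escaped, safe=""))


plain = entity_uri("Products", "ABC123")
tricky = entity_uri("Products", "A/B?C")
flagged = restricted_chars_in("A/B?C")
```

A check like `restricted_chars_in` could run when keys are created, so that problematic values are caught before they ever reach a URI.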

Wayne Walter Berry explains BCP and SQL Azure in this 5/21/2010 post to the SQL Azure Team blog:

BCP is a great way to back up your SQL Azure data locally, and by modifying your BCP-based backup files you can import data into SQL Azure as well. In this blog post, we will learn:

- How to export data out of tables in SQL Azure server into a data file by using BCP and

- How to use the BCP utility to import new rows from a data file into SQL Azure tables.

What is BCP?

The bcp utility is a command line utility that ships with Microsoft SQL Server. It bulk copies data between SQL Azure (or SQL Server) and a data file in a user-specified format. The bcp utility that ships with SQL Server 2008 R2 is fully supported by SQL Azure.

You can use BCP to back up and restore your data on SQL Azure.

You can import large numbers of new rows into SQL Azure tables or export data out of tables into data files by using bcp.

The bcp utility is not a migration tool. It does not extract or create any schema or format information from/in a data file or your table. This means, if you use bcp to back up your data, make sure to create a schema or format file somewhere else to record the schema of the table you are backing up. bcp data files do not include any schema or format information, so if a table or view is dropped and you do not have a format file, you may be unable to import the data. The bcp utility has several command line arguments. For more information on the arguments, see SQL Server Books Online documentation. For more information on how to use bcp with views, see Bulk Exporting Data from or Bulk Importing Data to a View.

Exporting Data out of SQL Azure

Imagine you are a developer or a database administrator. You have a huge set of data in SQL Azure that your boss wants you to back up.

To export data out of your SQL Azure database, you can run the following statement at the Windows command prompt:

bcp AdventureWorksLT2008R2.SalesLT.Customer out C:\Users\user\Documents\GetDataFromSQLAzure.txt -c -U username@servername -S tcp:servername.database.windows.net -P password

This will produce the following output in the command line window:

The following screenshot shows the first 24 rows in the GetDataFromSQLAzure.txt file:

Wayne continues with a similar approach for Importing Data to SQL Azure.
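For scripted backups, the export command quoted above could be assembled programmatically. The Python sketch below is an assumption-laden illustration, not part of Wayne's post: `bcp_command` is a hypothetical helper that only builds the argument list, using the same switches as the quoted command (`-c`, `-U`, `-S`, `-P`) and bcp's `out`/`in` direction keywords. It does not run anything.

```python
def bcp_command(table, direction, data_file, server, user, password):
    """Build the bcp argument list; direction is 'out' (export) or 'in' (import)."""
    if direction not in ("out", "in"):
        raise ValueError("direction must be 'out' or 'in'")
    return [
        "bcp", table, direction, data_file,
        "-c",                                         # character data format
        "-U", "{}@{}".format(user, server),
        "-S", "tcp:{}.database.windows.net".format(server),
        "-P", password,
    ]


export_cmd = bcp_command(
    "AdventureWorksLT2008R2.SalesLT.Customer", "out",
    r"C:\Users\user\Documents\GetDataFromSQLAzure.txt",
    server="servername", user="username", password="password",
)
# To run it on a machine with bcp installed: subprocess.run(export_cmd, check=True)
```

Passing the arguments as a list rather than one string avoids shell quoting problems with the Windows path and the password.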

Chris Sells chronicles a whole new [data-driven] sellsbrothers.com site in this 5/21/2010 post:

The new sellsbrothers.com implementation has been a while in the making. In fact, I've had the final art in my hands since August of 2005. I've tried several times to sit down and rebuild my 15-year-old sellsbrothers.com completely from scratch using the latest tools. This time, I had a book contract ("Programming Data," Addison-Wesley, 2010) and I needed some real-world experience with Entity Framework 4.0 and OData, so I fired up Visual Studio 2010 a coupla months ago and went to town.

The Data Modeling

The first thing I did was design my data model. I started very small with just Post and Comment. That was enough to get most of my content in. And that led to my first principle (we all need principles):

thou shalt have no .html files served from the file system.

On my old site, I had a mix of static and dynamic content which led to all kinds of trouble. This time, the HTML at least was going to be all dynamic. So, once I had my model defined, I had to import all of my static data into my live system. For that, I needed a tool to parse the static HTML and pull out structured data. Luckily, Phil Haack came to my rescue here.

Before he was a Microsoft employee in charge of MVC, Phil was the well-known author of the SubText open source CMS project. A few years ago, in one of my aborted attempts to get my site into a reasonable state (it has evolved from a single static text file into a mish-mash of static and dynamic content over 15 years), I asked Phil to help me get my site moved over to SubText. To help me out, he built the tool that parsed my static HTML, transforming the data into the SubText database format. For this, all I had to do was transform the data from his format into mine, but before I could do that, I had to hook my schema up to a real-live datastore. I didn't want to have to take the old web site down at all; I wanted to have both sites up and running at the same time. This led to principle #2:

thou shalt keep both web sites running with the existing live set of data.

And, in fact, that's what happened. For many weeks while I was building my new web site, I was dumping static data into the live database. However, since my old web site sorted things by date, there was only one place to even see this old data being put in (the /news/archive.aspx page). Otherwise, it was all imperceptible.

To make this happen, I had to map my new data model onto my existing data. I could do this in one of two ways:

- I could create the new schema on my ISP-hosted SQL Server 2008 database (securewebs.com rocks, btw -- highly recommended!) and move the data over.

- I could use my existing schema and just map it on the client-side using the wonder and beauty that was EF4.

Since I was trying to get real-world experience with our data stack, I tried to use the tools and processes that a real-world developer has and they often don't get to change the database without a real need, especially on a running system. So, I went with option #2.

And I'm so glad I did. It worked really, really well to change names and select fields I cared about or didn't care about, all from the client side without ever touching the database. Sometimes I had to make database changes and when that happened, I was careful and deliberate, making the case to my inner DB administrator, but mostly I just didn't have to.

And when I needed whole new tables of data, that led to another principle:

build out all new tables in my development environment first.

This way, I could make sure they worked in my new environment and could refactor to my heart's content before disturbing my (inner) DB admin with request after request to change a live, running database. I used a very simple repository pattern in my MVC2 web site to hide the fact that I was actually accessing two databases, so when I switched everything to a single database, none of my view or controller code had to change. Beautiful!
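The idea of hiding two databases behind one repository can be sketched as follows. This is a hypothetical Python illustration, not Chris's MVC2/EF4 code: the class and function names are invented, and plain dictionaries stand in for the two databases. The point is only that the calling code produces the same result whether the repository wraps two stores or one.

```python
class PostRepository:
    """Single entry point for posts; callers never learn how many stores sit behind it."""

    def __init__(self, *stores):
        self._stores = stores

    def get(self, post_id):
        for store in self._stores:
            if post_id in store:
                return store[post_id]
        raise KeyError(post_id)

    def all_titles(self):
        return sorted(post["title"] for store in self._stores for post in store.values())


def render_titles(repository):
    """Stand-in for view/controller code: depends only on the repository interface."""
    return ", ".join(repository.all_titles())


legacy_db = {1: {"title": "Old post"}}     # existing live database (stand-in)
dev_db = {2: {"title": "New post"}}        # new tables built in development (stand-in)

two_databases = PostRepository(legacy_db, dev_db)
one_database = PostRepository({**legacy_db, **dev_db})
```

Swapping `two_databases` for `one_database` requires no change to `render_titles`, which mirrors the claim that no view or controller code had to change.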

Chris continues with “Data Wants To Be Clean,” “Forwarding Old Links,” “What's New?,” “The Room for Improvement” and concludes:

Where are we?

All of this brings me back to one more principle. I call it Principle Zero:

thou shalt make everything data-driven.

I'm living the data-driven application dream here, people. When designing my data model and writing my code, I imagined that sellsbrothers.com was but one instance of a class of web applications and I kept all of the content, down to my name and email address, in the database. If I found myself putting data into the code, I figured out where it belonged in the database instead.

This led to all kinds of real-world uses of the database features of Visual Studio, including EF, OData, Database projects, SQL execution, live table-based data editing, etc. I lived the data-driven dream and it yielded a web site that's much faster and runs on much less code:

- Old site:

- 191 .aspx files, 286 KB

- 400 .cs files, 511 KB

- New site:

- 14 .aspx files, 19 KB

- 34 .cs files, 80 KB

Do the math and that's 100+% of the content and functionality for 10% of the code. I knew I wanted to do it to gain experience with our end-to-end data stack story. I had no idea I would love it so much.
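Doing the math explicitly with the figures listed above (a quick Python check, added here for illustration):

```python
old_kb = 286 + 511      # .aspx + .cs sizes for the old site
new_kb = 19 + 80        # .aspx + .cs sizes for the new site
old_files = 191 + 400
new_files = 14 + 34

size_ratio = new_kb / old_kb        # about 0.124
file_ratio = new_files / old_files  # about 0.081
```

So the new site is roughly 12% of the old one by size and 8% by file count, which brackets the "10% of the code" figure.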

Pablo Castro announced on 5/20/2010 that the OData team is Adding support for HTTP PATCH to an OData service:

Back when we were working on the initial version of OData (Astoria back then) there was some discussion about introducing a new HTTP method called PATCH, which would be similar to PUT but instead of having to send a whole new version of the resource you wanted to update, it would let you send a patch document with incremental changes only. We needed exactly that, but since the proposal was still in flux we decided to use a different word (to avoid any future name clash) until PATCH was ready. So OData services use a custom method called "MERGE" for now.

Now HTTP PATCH is official. We'll queue it up and get it integrated into OData and our .NET implementation WCF Data Services. Of course those things take a while to rev, and we have to find a spot in the queue, etc. In the meanwhile, this extremely small WCF behavior adds PATCH support to any service created with WCF Data Services. This example is built using .NET 4.0, but it should work in 3.5 SP1 as well. You can get the code and a little example from here:

http://code.msdn.microsoft.com/DataServicesPatch.

To use it, just add [PatchSupportBehavior] as an attribute to your service class. For example:

    [PatchSupportBehavior]
    public class Northwind : DataService<NorthwindEntities>
    {
        // your data service code
    }

As for how it works, the behavior catches all requests before they go to the WCF Data Services runtime. For each request it inspects the HTTP method used, and if it is PATCH then it changes it to MERGE to make the system believe it was MERGE all along.
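The same method-rewriting idea can be shown outside WCF. The Python sketch below is an analogy under stated assumptions, not Pablo's behavior: it uses a WSGI middleware, and `data_service` is a hypothetical stand-in for a service that only understands MERGE.

```python
class PatchToMergeMiddleware:
    """WSGI middleware that presents PATCH requests to the wrapped app as MERGE."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        if environ.get("REQUEST_METHOD") == "PATCH":
            environ["REQUEST_METHOD"] = "MERGE"   # the wrapped app never sees PATCH
        return self.app(environ, start_response)


def data_service(environ, start_response):
    """Hypothetical stand-in for a service that only understands MERGE."""
    method = environ["REQUEST_METHOD"]
    status = "204 No Content" if method == "MERGE" else "405 Method Not Allowed"
    start_response(status, [])
    return [b""]


def call(app, method):
    """Invoke a WSGI app with a minimal environ and return the status line."""
    seen = []
    app({"REQUEST_METHOD": method}, lambda status, headers: seen.append(status))
    return seen[0]


wrapped = PatchToMergeMiddleware(data_service)
```

Calling `call(data_service, "PATCH")` yields a 405 status, while `call(wrapped, "PATCH")` yields 204, because the rewrite happens before the service inspects the method.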

To try it out you can use any tool or library that lets you do HTTP directly. For example, using CURL:

curl -v -X PATCH -H content-type:application/json -d "{CategoryName: 'PatchBeverages'}" http://localhost:29050/Northwind.svc/Categories(1)
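For readers who prefer a library over curl, a roughly equivalent request can be built with Python's standard library. This sketch reuses the URL and body from the command above and only constructs the request; sending it assumes the sample service is running locally on that port.

```python
import urllib.request

# Same body and URL as the curl command above; the port is specific to that example.
body = b"{CategoryName: 'PatchBeverages'}"
request = urllib.request.Request(
    "http://localhost:29050/Northwind.svc/Categories(1)",
    data=body,
    method="PATCH",
    headers={"Content-Type": "application/json"},
)

# Sending requires the service to be running locally:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```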

Pablo continues with examples of HTTP responses before and after applying the attribute. It’s nice to hear from Pablo again; his posts are far less frequent since Astoria became OData.

Egil Hansen posted a minified version of his .NET OData Plugin for jQuery to GitHub on 5/16/2010.

AppFabric: Access Control and Service Bus

Vittorio Bertocci (Vibro) announced the Information Card Issuance CTP in this 5/21/2010 post:

Greetings from Melbourne! It’s 4:00am here and I just jumped out of bed; today I take the flight back to Redmond. If you know me you know I am not a morning person, you can imagine the mood I would normally be in right now… and instead I am pretty happy, since I bear good news [everyone]. I’ll relay them in the words of the GETeam, since they put it so beautifully (and my Broca’s area can’t yet simulate English at this time of the day).

Today, Microsoft is announcing the availability of the Information Card Issuance Community Technical Preview (CTP) to enable the following scenarios with Active Directory Federation Services 2.0 RTM:

- Administrators can install an Information Card Issuance component on AD FS 2.0 RTM servers and configure Information Card Issuance policy and parameters.

- End users with IMI 1.0- or IMI 1.1 (DRAFT)-compliant identity selectors can obtain Information Cards backed by username/password, X.509 digital certificate, or Kerberos.

- Continued support for Windows CardSpace 1.0 in Windows 7, Windows Vista and Windows XP SP 3 running .NET 3.5 SP1.

We are also adding two new mechanisms for interaction and feedback on this topic, a dedicated Information Card Issuance Forum and a monitored e-mail alias ici-ctp@microsoft.com.

Now that’s good stuff! What are you waiting for, download the Information Card Issuance Community Technical Preview and live the dream!

Strangely, the “Geneva” Team Blog, a.k.a. the CardSpace Blog hadn’t announced the CTP by the time of Vibro’s post.

The “Geneva” Team Blog posted AD FS 2 proxy management on 5/21/2010:

Since the AD FS 2.0 release candidate (RC), the AD FS product team got feedback that the experience of setting up AD FS proxy server and making it work with AD FS Federation Service is cumbersome, as it involves multiple steps across both AD FS proxy and AD FS Federation Service machines.

In AD FS 2.0 RC, after an IT admin installs the AD FS 2 proxy server on the proxy machine, she runs the proxy configuration wizard (PCW) and needs to:

- Select or generate a certificate as the identity of the AD FS 2 proxy server.

- Add the certificate to AD FS Federation Service trusted proxy certificates list

- Outside of AD FS management console, make sure the certificate’s CA is trusted by AD FS Federation Service machines.

These steps are needed to set up a level of trust between the AD FS proxy server and the AD FS Federation Service. The AD FS proxy server might live in the DMZ and provides one layer of insulation from outside attack.

The AD FS administrator needs to keep track of the proxy identity certificate's lifetime and proactively renew it to make sure it does not expire and disrupt service.

There are several pain points around the AD FS proxy setup and maintenance experience in the AD FS 2 RC version:

- Setting up proxy involves touching multiple machines (both proxy and Federation Service machines)

- Maintaining AD FS proxy working state involves manual attention and steps

In RTW, the above issues are addressed by:

- Easy provisioning: The AD FS admin sets up the proxy with the AD FS Federation Service by specifying the username/password of an account that is authorized by the AD FS Federation Service to issue a proxy trust token to identify AD FS proxy servers. The proxy trust token is a form of identity issued by the AD FS Federation Service to the AD FS proxy server to identify established trust. By default, domain accounts which are part of the Administrators group on the AD FS Federation Service machines or the AD FS Federation Service domain service account are granted such privilege to provision trust by proxy from the AD FS Federation Service. Such privilege is expressed via access control policy and is configurable via PowerShell. By default, the proxy trust token is valid for 15 days.

- Maintenance free: Over time, the AD FS proxy server periodically renews the proxy trust token from the AD FS Federation Service to maintain AD FS proxy server in a working state. By default AD FS proxy server tries to renew proxy trust token every 4 hours.

- Revocation support: If, for whatever reason, established proxy trust needs to be revoked by the AD FS Federation Service, the AD FS Federation Service has both PowerShell and UI support to do that. All proxies are revoked at the same time. There is no support for individual proxy server revocation.

- Repair support: When proxy trust expires or is revoked, AD FS administrator can repair such trust between AD FS proxy server and AD FS Federation Service by running PCW in UI mode or command line mode (fspconfigwizard.exe).
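The defaults quoted above imply a generous margin for transient failures. The Python sketch below works through that arithmetic and models the repair condition. It is an illustration only: the function names are hypothetical, and it assumes a token counts as expired exactly at the end of its lifetime, which the post does not state.

```python
from datetime import datetime, timedelta

TOKEN_LIFETIME = timedelta(days=15)    # default validity stated above
RENEW_INTERVAL = timedelta(hours=4)    # default renewal attempt interval stated above


def attempts_before_expiry(lifetime=TOKEN_LIFETIME, interval=RENEW_INTERVAL):
    """Number of renewal attempts that fit inside one token lifetime."""
    return lifetime // interval


def needs_repair(issued_at, now, revoked=False, lifetime=TOKEN_LIFETIME):
    """True when the trust has expired or been revoked and must be re-established."""
    return revoked or now >= issued_at + lifetime


issued = datetime(2010, 5, 21)
```

With these defaults, `attempts_before_expiry()` evaluates to 90, so under this model a proxy would have to fail every renewal attempt for the full 15 days before the administrator needs to run the repair step.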

The team continues with “Management Support” and “Workflow” topics.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Fox describes Integrating Azure & Sharepoint 2010 [& Codename “Dallas”] in this detailed 5/21/2010 post:

Azure is Microsoft’s platform in the cloud that provides you with an endpoint for your custom services and data storage. It also provides you with a great development environment to build and deploy solutions into the cloud. To get more information on how to get started with Windows Azure, you can go here: http://www.microsoft.com/windowsazure/. So the question is: what can we do with Azure and SharePoint 2010? The answer is: a lot.

First, think about the new features in SharePoint 2010 that make it possible to, for example, interact with data in different ways. I’m specifically thinking of BCS—which you could use to model the data that you get from Azure. Also, think about the ease with which you can now create objects such as Web Parts or Events in SharePoint 2010—you could also apply service endpoints from the cloud and integrate with these artifacts. Further, think about the ability to create and distribute Azure services to your customer in WSPs, which is the standard way of building and deploying SharePoint solutions. This opens the way for you to build and deliver cloud-based solutions of an array of shapes and sizes.

One interesting way that you can integrate Azure today with SharePoint is through the use of Azure Dallas, the code-name for a public cloud data technology. You can go here for more info: http://www.microsoft.com/windowsazure/dallas/. Specifically, you can take data that is hosted in the cloud and then use that in your solutions. We’re working on a solution now that does just this; that is, it takes Dallas data hosted on Azure and pulls it into SharePoint (specifically using a Silverlight application to render the data) and then pushes the data from Azure into a SharePoint list. This is a light integration, but in the grander scheme is pretty interesting as it shows Azure data integration and mild integration with SharePoint 2010. I showed this at a recent keynote at the SharePoint Pro Summit in Las Vegas and talked to it again today at Microsoft’s internal TechReady 10 conference.

What you’re seeing is crime and business data overlaid on a Bing map of the Seattle area. Why would I do this? Because pulling this type of data into SharePoint can help me, as a real estate decision maker, understand high-crime areas along with business data (e.g. types of businesses along with real estate data) to aid me in making the right decisions for my customers. Fairly interesting, yes?

While the solution is not completed yet, I did want to post some of the hook-up code, else, well, rotten tomatoes would surely be flying my way. So, to get you started, I’ve added the high-level steps it would take for you to get a solution running on SharePoint that integrates Azure/Dallas with SharePoint 2010—specifically a custom Web Part.

Steve continues with the details for implementing the Web Part.

Timothy Dalby’s Find-A-Home app that runs under Windows Azure became a finalist in Canada’s Make Web Not War contest on 5/20/2010:

Find-A-Home is an innovative decision engine that simplifies the process of finding a new home by automatically researching the area a house is located in and providing each home with a score based on a number of metrics. The engine uses a number of data sets, including the locations of City Parks, Schools, Bus Stops, Police Stations, Fire Stations, and Recreation Centers to build a ranking for each house.
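The excerpt does not say how Find-A-Home computes its scores. Purely as an illustration of the general idea, the sketch below gives a home credit for each amenity category that has at least one location within a fixed radius. The function names, weights, radius and coordinates are all assumptions made for this example, not details of the actual application.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def amenity_score(home, amenities, weights, radius_km=1.0):
    """Sum the weight of every category with an amenity within radius_km.

    home      -- (lat, lon) of the house
    amenities -- iterable of (category, lat, lon) tuples
    weights   -- dict mapping category name to a numeric weight (hypothetical)
    """
    nearby = {
        category
        for category, lat, lon in amenities
        if haversine_km(home[0], home[1], lat, lon) <= radius_km
    }
    return sum(weights.get(category, 0) for category in nearby)


if __name__ == "__main__":
    home = (0.0, 0.0)
    amenities = [("park", 0.0, 0.005), ("school", 0.0, 0.1)]
    weights = {"park": 2.0, "school": 3.0}
    print(amenity_score(home, amenities, weights))
```

A real engine would presumably tune the weights and use graded rather than all-or-nothing distances; the point here is only that open data sets of locations can be reduced to a single comparable number per house.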

Go to the Property Listings page:

and click a View link to display data for a fictitious address:

Vote for who YOU think should win:

The audience will vote based on what they hear/see/read, which means your votes via twitter and twtpoll will make a difference! Here’s how:

Tweet with a hashtag for only ONE application above (#tholus or #findahome or #taxicity) + #webnotwar + link to the FTW! site: http://bit.ly/ftwvote (in no particular order)

EXAMPLE: OMG, #findahome totally rocks and should win! http://bit.ly/ftwvote #webnotwar

PR Newswire reports Akvelon Successfully Ports Drupal, Joomla! into Microsoft's Windows Azure™ Platform on 5/21/2010:

In modifying Drupal and Joomla! for use on the Windows Azure platform, leading cloud computing consultants Akvelon Inc. (http://www.akvelon.com) proved this week that open source and the cloud are indeed compatible -- a concept many had questioned before today.

Microsoft's first cloud computing offering, the Windows Azure™ cloud operating system, allows users to develop, store, manage and host applications from the cloud. But until now, the popular open-source content management systems Drupal and Joomla! had not been ported into the Windows Azure platform.

"Akvelon is pleased to leverage the power of Windows Azure to help developers use Drupal and Joomla! open-source technologies in the cloud," stated Sergei Dreizin, CEO of Akvelon. "The strengths of these technologies ensure a robust cloud-based platform for developing and deploying applications and services."

The company saw the need to bring Drupal and Joomla! into the cloud, and Windows Azure was its cloud services operating system of choice.

"Windows Azure is powerful and flexible enough to allow developers to really take advantage of all Drupal and Joomla! have to offer the cloud," said Dreizin. "We needed a cloud service that was robust, nimble, scalable and cost-effective for Drupal and Joomla! users. We found all that and more in Windows Azure." …

Microsoft Dynamics CRM reports on 5/21/2010 “Just one month left” to enter the xRM Showcase Challenge:

One Framework: Many Applications.

Showcase your work creativity by submitting your solution to share with the community. Not only will you earn "street cred", but you may also win a great prize. Be a contestant and a judge!

Microsoft Dynamics CRM and its flexible application development framework, xRM, can help you build quality custom applications to track any business relationship. But you already know that because you’ve created great solutions using these tools.

Now it’s time to take the challenge: enter your solution in our contest today. Win enough votes from online community members and your solution will be showcased on the Microsoft Dynamics CRM Web site. Plus, you’ll win a very cool prize!

Be sure to check back to see how you rank, and cast your five votes for the solutions of your choice (yes, you can vote for your own, and, no, we won’t tell). Your votes can be cast based on creativity, functionality, problem-solving, return on investment, or any other reason.

After you submit your solution, promote it on Twitter with hash tag #xrmshowcase.

Eric Nelson asks Q&A: Does it make sense to run a personal blog on the Windows Azure Platform? and answers “NO!” in this 5/20/2010 post:

I keep seeing people wanting to do this (or something very similar) and then being surprised at how much it might cost them if they went with Windows Azure. Time for a Q&A.

Short answer: No, definitely not. Madness, sheer madness. (Hopefully that was clear enough)

Longer answer:

No, because it would cost you a heck of a lot more than just about any other approach to running a blog. A site that can easily be run on a shared hosting solution (as many blogs do today) does not require the rich capabilities of Windows Azure, such as simplified deployment and management, dedicated resources, elastic resources, and “unlimited” storage. It is simply not the type of application the Windows Azure Platform has been designed for.

Dave Courbanou asks Capgemini: Giving Microsoft BPOS A SaaS Boost? in this 5/20/2010 post to The VAR Guy blog:

Capgemini, the technology and outsourcing consulting service, and Microsoft have inked an alliance to help businesses embrace the cloud. The move could also give Microsoft’s Business Productivity Online Suite (BPOS) SaaS platform a lift. Here’s why.

Capgemini plans on marketing and delivering BPOS to its customers. The consulting firm says the move builds on Capgemini’s existing relationship with the Microsoft Windows Azure team. While BPOS involves Exchange Online, SharePoint Online and other Microsoft-centric applications, Windows Azure is a cloud operating system for a range of third-party applications.

Microsoft is building similar SaaS relationships with other big integrators. One prime example involves Computer Sciences Corp., which helped Microsoft to win a major BPOS and SaaS engagement with the U.K.’s Royal Mail Group.

Back in March 2010, Microsoft Channel Chief Allison Watson called on partners to more aggressively embrace both BPOS and Windows Azure. Some VARs think the Microsoft cloud platforms offer a clear path into recurring revenue opportunities, but other partners worry about Microsoft controlling billing, branding and customer relationships for the platforms.

Sumit Chawla announced a New version of the Command-Line Tools for PHP to Deploy Applications on Windows Azure in this 5/20/2010 post to the Interoperability @ Microsoft blog:

Today at Tek-X during the “Tips & Tricks to get the most of PHP with IIS, and the Windows Azure Cloud” session, Microsoft showcased the new version of the Windows Azure Command-line Tool for PHP available for download under an open source BSD license at: http://azurephptools.codeplex.com/.

Announced in March 2010, the Windows Azure Command-line Tool for PHP enables developers to easily package and deploy new or existing PHP applications to Windows Azure using a simple command-line tool without an Integrated Development Environment (IDE). Developers have an option of deploying to the Development Fabric (a sort of local cloud for development and test) or directly to the Windows Azure Cloud. The new version of the Windows Azure Command-line Tool for PHP supports both Web and Worker service roles allowing developers the freedom to customize their applications to their needs (Web roles are the internet facing applications, and Worker roles are for background tasks).

This project initially was started as the result of feedback we received from PHP developers who are using various IDEs (or none), who told us that a command-line tool would be a great addition to the Windows Azure Tools for Eclipse project.

To get familiar with the tools, you can read the post New command-line tool for PHP to deploy applications on Windows Azure or watch this video on Channel9, where I presented the new features and demonstrated how to deploy a PHP application (using WordPress with SQL Server Build) to Windows Azure.

Watch the 00:16:13 Webcast here.

This demo is actually an abstract of the “Welcome to the Cloud: Windows Azure Command-line tools for PHP” webcast I presented last Friday as part of the PHP Architect webcast series. The entire recording will be available soon at: http://www.phparch.com/. Stay tuned!

As always, if you have feedback, questions, or wishes, please join us on the project site: http://azurephptools.codeplex.com/.

<Return to section navigation list>

Windows Azure Infrastructure

Marius Oiaga reports BizTalk Server 2010 Hits Beta: RTM in Q3 2010 in this 5/21/2010 post to Softpedia:

BizTalk Server 2010 is one step closer to finalization, having hit an important development milestone on May 20, 2010. The Beta bits of the next generation of BizTalk Server are now available for download, with Microsoft encouraging early adopters to grab the release and start testing. In the Redmond company’s perspective, BizTalk Server 2010 is a key piece of Microsoft’s application infrastructure technologies puzzle, along with offerings such as Windows Azure AppFabric and Windows Server AppFabric.

Of course, the Redmond company’s vision is much more complex and spans across a wide range of products, including some released recently.

“BizTalk Server 2010 aligns with the latest Microsoft platform releases, including SQL Server 2008 R2, Visual Studio 2010 and SharePoint 2010, and will integrate with Windows Server AppFabric. This alignment, along with the availability of a wide array of platform adapters, allows customers to stitch together more flexible and manageable composite applications,” revealed a member of the BizTalk Server team.

According to current plans, BizTalk Server 2010 will be released to manufacturing in the third quarter of 2010. Microsoft hasn’t provided a specific availability deadline so far, and it isn’t expected to until the development process of the product is almost finalized. BizTalk Server 2010 is, of course, the successor of Microsoft’s current version of the Integration and connectivity server solution, BizTalk Server 2009, and the seventh release of the product.

“Updates include enhanced trading partner management, a new BizTalk mapper and simplified management through a single dashboard that enables customers to backup and restore BizTalk configurations. Also included in this release are new Business to Business integration capabilities such as rapidly on-board and easy management of trading partners and secure FTP adapter (FTPS),” the BizTalk Server team representative added.

BizTalk Server 2010 Beta is available for download here.

David Linthicum posted links to his cloud-related white papers and InfoWorld articles to a New from David Linthicum page of the Bick Group Web site on 5/21/2010.

Michael Vizard posted Putting a Cost on Cloud Computing to the ITBusinessEdge blog on 5/20/2010:

Figuring out the real cost of IT and the projected return on those investments has never been very easy. And with the rise of cloud computing services where pricing can change on a daily basis, it's only going to get more difficult.

To help address this issue, Digital Fuel, a provider of applications for managing IT budgets, is rolling out a cloud computing extension to its suite of IT financial-management applications that are delivered as a service.

According to Digital Fuel CEO Yisrael Dancziger, the service includes an IT Cloud Cost Management Module that features connectors to all the major cloud computing services that track pricing. Dancziger concedes that volume pricing and spot pricing for cloud computing services may affect costs, but most of the major services, such as Amazon, have pretty consistent pricing.

The real issue, says Dancziger, is that IT organizations are going to need a framework to compare public versus private cloud computing platforms and any hybrid approach to cloud computing that they may decide to pursue.

As IT evolves into a service managed by internal IT departments relying on a mix of internal and external resources, figuring out the financing of IT is getting more complex. The concept behind Digital Fuel and similar applications and services is to give IT access to the same type of finance applications that every other sector that invests in major capital equipment projects already has.

<Return to section navigation list>

Cloud Security and Governance

Maxwell Cooter questions a “Changing attitude towards security?” in his How managers stopped worrying and learned to trust the cloud to NetworkWorld’s Security blog on 5/21/2010:

More than half of European organisations believe that cloud computing will result in an improvement in security defences. That's according to a survey conducted on the behalf of IT conference 360.

In February, a survey from Symantec found that only a third of IT managers thought cloud computing would improve security - the same number that thought cloud would make their systems more insecure.

The survey could herald a change in attitude by IT managers. Security frequently features as the number one inhibitor when it comes to looking at cloud. Only this week, SNIA Europe chairman Bob Plumridge described how it was the main concern of user companies. And in March, the former US National Security Agency technical director, Brian Snow, said that he didn't trust cloud services.

According to the 360IT poll, only 25 percent of managers surveyed thought that the onset of cloud computing would make security worse.

Commenting on the survey, Richard Hall, CEO of CloudOrigin, said that the current trend of businesses migrating their IT systems into the cloud does not mean a reduction in security defences.

"After decades performing forensic and preventative IT security reviews within banking and government, it was already clear to me that the bulk of security breaches and data losses occur because of a weakness of internal controls," he said in his 360IT blog post.

According to Hall, the complete automation by public cloud providers means the dynamic provision, use and re-purposing of a virtual server occurs continuously within encrypted sub-nets. "That's why solutions built on commodity infrastructure provided by the likes of Amazon Web Services have already achieved the highest standards of operational compliance and audit possible," he said.

Interesting about-face, but I believe the assertion that “solutions built on commodity infrastructure provided by the likes of Amazon Web Services have already achieved the highest standards of operational compliance and audit possible” is epic over-reaching.

<Return to section navigation list>

Cloud Computing Events

Eventbrite lists the UK AzureNET User Group: The Power of Three meeting on 5/27/2010 at Microsoft Cardinal Place London:

After our fantastic adventure at Techdays, the UK AzureNET user group is back at Microsoft Cardinal Place London. We have squeezed 3 Azure talks into an unmissable evening of presentations and giveaways. We have got FREE licenses for Cerebrata Studio (http://www.cerebrata.com), books, and much more to give away.

Follow us on Twitter @ukazurenet for more details as they are published.

Agenda:

6:00pm-6:30pm: Registration

6:30pm: Interoperability on Windows Azure.

Rob Blackwell (AWS)

Most people are (understandably) building Azure applications in C Sharp and .NET, but the AzureRunMe project (http://azurerunme.codeplex.com) makes it easy to run third party technologies such as Java, Python, Ruby and even Clojure.

Windows Azure uses open APIs, a design decision that makes storage and AppFabric easily accessible to developers on non-Microsoft platforms. I’ll run through some examples and demos and show why I think that Azure has a useful part to play in interoperability scenarios.

7:00pm: Patterns for the Windows Azure Platform.

Marcus Tillett (@drmarcustillett), Dot Net Solutions

The Windows Azure Platform is a fast-evolving platform, and the patterns for its use continue to evolve. In this session we will take a quick look at some common patterns that are applicable to Azure.

7:30pm: Break for Pizza

8:15pm: Azure gotchas - What to look out for

Daniel May (sharpcloud)

Having worked with Azure and Silverlight to help build the sharpcloud software (www.sharpcloud.com), Daniel has encountered a number of different issues, some that are easily fixed and others that require more attention. This session will go over the general gotchas you might fall into at some point during your use of Azure.

9:15pm: Wrap up and close

Brent Howard and David Burela will present on the Dynamics CRM SDK and integrating Dynamics CRM with Azure at the Melbourne Dynamics CRM Users Group meeting of 6/3/2010:

In this month’s session we will be covering some of the new features and changes to the Microsoft Dynamics CRM SDK 4.0.12 and integrating CRM to Azure.

We will be focusing mostly on the “Advanced Developer Extensions for Microsoft Dynamics CRM 4.0” and how this can help improve seamless integration to your application.

As always we will be kicking off around 5:30pm with pizzas and soft drinks ready to dive in at 6.

The sessions will be presented by Brent Howard and David Burela.

Please RSVP at http://xrmazure.eventbrite.com

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Veronica C. Silva wrote about Cloud Commons in her Industry players collaborate on cloud computing post of 5/20/2010 for NetworkWorld’s DataCenter blog:

Major industry players recently forged an alliance to collaborate on cloud computing, which has recently become the buzzword in the IT industry.

Industry players, including a university and an investment firm, organised 'Cloud Commons', a Web-based collaborative community that seeks to help IT professionals learn more about cloud computing through sharing and feedback from peers.

With IT professionals sharing best practices and their expertise, Cloud Commons organisers expect IT professionals to be properly guided on how best to implement cloud computing to meet their organisation's business objectives.

The founding collaborators of Cloud Commons include CA Technologies, TM Forum, Red Hat, Carnegie Mellon University and Insight Investments.

"Today, there is no comprehensive, unbiased source that solicits and aggregates the most current and relevant knowledge about cloud computing and the accumulated, actual experiences that organisations are having with the cloud," said David Hodgson, senior vice president of the cloud products and solutions business line at CA Technologies. "Cloud Commons will address this need--providing IT professionals with situational awareness and visibility into what is possible with the cloud."

Collaborative features

As a collaboration tool, the Web community contains features that encourage sharing of ideas and learning to allow IT professionals to implement cloud computing in their organisations. There is an area on the website that will allow participants to provide qualitative feedback on their experience with third-party cloud services. Participants can also post comments to share their best practices.

Carnegie Mellon University will also make available the Service Measurement Index (SMI) it is developing. The index is intended as a standard for quantifying and evaluating cloud computing services and addresses the need for industry-wide, globally accepted measures for calculating the benefits and risks of cloud computing services.

The website will also be informative, with articles from industry analysts and subject matter experts, blog feeds from industry thought leaders, white papers and stories on real experiences from IT professionals.

There will also be a marketplace of cloud computing service offerings to include vendor service ratings to enable participants to compare alternate service options.

Industry partners

"Demand for cloud services holds significant potential for the technology and communications industries, but many barriers still exist to widespread adoption at an enterprise level," said Martin Creaner, president, TM Forum, an industry association of suppliers and providers of communications solutions and services.

"We are fully supportive of this type of initiative to improve the uptake and development of a vibrant and open cloud services market," Creaner added.

CA Technologies said it has invested in independent research as initial information that can help IT professionals learn more about cloud computing services. But it expects the online community to contribute more information through the website.

Veronica continues on page 2. I’m not at all convinced that “TM Forum, Red Hat, Carnegie Mellon University and Insight Investments” are “major players” in the cloud industry. It’s my opinion that Cloud Commons syndication services will be at least as biased as mine, but toward the offerings of the founders.

Barb Mosher reports Google Offers A Business Edition of the Google App Engine in her 5/20/2010 post to CMSWire:

With Google I/O happening right now, we all knew there would be lots of new things coming from Google. We also all know how much the company wants to lead in the enterprise over Microsoft. So it should not really be surprising that they now have a business edition of their hosted application environment, Google App Engine. The question is, will anyone buy it?

Google App Engine Two Years Ago

We introduced the Google App Engine two years ago when it was first announced at the first ever Google I/O conference. At the time of its release it was in private beta and free, designed to compete against Amazon for developer attention to host and run their web applications.

Two years later we see the Google App Engine for Business.

Google App Engine for Business

The Google App Engine for Business offers enterprise customers a scalable infrastructure for hosting their own web applications. Currently in preview, it is open to a limited number of enterprises. What new capabilities does it offer over the free version?

- Centralized Management: Using a single administration console you can manage what applications are hosted in your secure Google Apps domain. Using access control lists, you control who can deploy new code and who can access the application data. New applications deployed into the domain are actually hosted in a subdomain, so they automatically pick up a URL (i.e., *.apps.example.com).

- Access to Google APIs: Not limited to the business edition, you can develop applications in Java or Python that can access Google's services through a number of APIs.

- Hosted SQL Databases: The free version of the Google App Engine does not include a relational database. Instead it uses its BigTable database system. But for enterprises, a relational database is a must (even Microsoft shifted to offering this capability on Windows Azure). SQL database support provides developers a fully functional, dedicated relational database without the hassle of managing the database itself. Just what databases will be supported isn't specified.

- SSL on Your Domain: Enterprises can add the extra security of SSL communications to their hosted applications.

Both Hosted SQL and SSL support are still works in progress. According to Google's roadmap, Hosted SQL will be available sometime before the end of Q3, with SSL support coming by the end of the year. Both will be in limited release.

The Google App Engine for Business offers a 99.9% SLA plus premium developer support at your fingertips. What does it cost? Each hosted application will cost US$8 per user per month, up to a maximum of US$1,000 per month. So you pay based on the number of users who access the application.
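The pricing rule quoted above can be worked through as simple arithmetic. The following minimal Python sketch assumes the cap applies per application per month, as the excerpt states. The function and constant names are illustrative only and are not part of any Google API.

```python
# Hypothetical helper for the quoted pricing; not a Google API.
PRICE_PER_USER_USD = 8    # per user, per application, per month (from the excerpt)
MONTHLY_CAP_USD = 1000    # maximum per application, per month (from the excerpt)


def monthly_app_cost(users: int) -> int:
    """Return the monthly cost in US$ for one hosted application."""
    if users < 0:
        raise ValueError("users must be non-negative")
    return min(users * PRICE_PER_USER_USD, MONTHLY_CAP_USD)


if __name__ == "__main__":
    for users in (10, 125, 500):
        print(users, monthly_app_cost(users))
```

Under these assumptions the cap is reached at 125 users (125 × 8 = 1,000), so an application with 500 users would cost the same per month as one with 125.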

If you want to host your public website or another publicly accessible web application on your domain, you can, but the pricing for that hasn't been worked out yet.

A Partnership with VMWare

Not only will enterprises be able to develop solutions for Google's cloud infrastructure, but thanks to a new partnership between Google and VMWare, they will also be able to link their on-premise apps with their cloud-based apps, or other clouds altogether (like Amazon).

A Threat to Amazon and Azure

Google App Engine for Business was always a potential threat to Amazon EC2, especially when Google finally offered support for Java. But the new Business edition also may make waves for Microsoft's hosted platform, Azure.

Of course, Google App Engine for Business is only in preview and some of the enterprise capabilities aren't even available in preview yet, so it's going to be a while before that threat has any strength.

Add this news to Google's announcement of storage for developers and we see the momentum Google is building. What's next? We are watching.

Rob Conery’s In The Wild: How We Do It at Tekpub post of 5/19/2010 compares the cost of Ruby On Rails/MongoDB/MySQL with Windows Azure and SQL Server:

I've received a flood of emails since we launched the revamped Tekpub site (using Rails and MongoDb) a few months back. Many people have been surprised to find out that we use both MongoDb *and* MySQL. Here's the story of how we did it and why.

Let's Get This Out Of The Way First

Some people might read this as "Rob's defecting" or "Rob's a 'Rails Guy' now". If you're looking for drama and sassy posts on The One True Framework - there's a site for that. Our choice to move to Rails/NoSQL was largely financial - you can read more about that here. What follows can easily be translated to whatever framework you work with - assuming a Mongo driver exists for it.

TL;DR Summary

We split out the duties of our persistence story cleanly into two camps: reports of things we needed to know to make decisions for the business and data users needed to use our site. Ironically these two different ways of storing data have guided us to do what's natural: put the application data into a high-read, high-availability environment (MongoDb) - put the historical, reporting data into a system that is built to answer questions: a relational data store.

It's remarkable how well this all fits together into a natural scheme. The high-read stuff (account info, productions and episode info) is perfect for a "right now" kind of thing like MongoDb. The "what happened yesterday" stuff is perfect for a relational system. There are other benefits as well, and one of them is a very big thing for us:

We don't want to run reports on our live server. You don't know how long they will take - nor what indexing they will work over (limiting the site's perf). Bad. Bad-bad.

The Verdict

It works perfectly. I could *not* be happier with our setup. It's incredibly low maintenance, we can back it up like any other solution, and we have the data we need when we need it.
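The split Rob describes, with site-facing data in a document store and historical data in a relational store, can be sketched in a few lines. This is a hedged illustration only, not Tekpub's code: a plain Python dict stands in for MongoDb, an in-memory SQLite database stands in for MySQL, and every function, table and field name is an assumption made for the example.

```python
import sqlite3

# Stand-ins, assumed for illustration only: a dict plays the role of the
# document store (MongoDb in the post) and in-memory SQLite plays the role
# of the relational reporting store (MySQL in the post).
accounts = {}  # application data: read often, keyed by email

reporting = sqlite3.connect(":memory:")
reporting.execute(
    "CREATE TABLE orders (email TEXT, production TEXT, amount_cents INTEGER)"
)


def record_purchase(email, production, amount_cents):
    """Update the 'right now' view and append a row for later reporting."""
    account = accounts.setdefault(email, {"email": email, "productions": []})
    account["productions"].append(production)
    reporting.execute(
        "INSERT INTO orders VALUES (?, ?, ?)", (email, production, amount_cents)
    )
    reporting.commit()


def can_watch(email, production):
    """Site-facing read: answered from the document store only."""
    return production in accounts.get(email, {}).get("productions", [])


def revenue_by_production():
    """Reporting query: answered from the relational store only."""
    rows = reporting.execute(
        "SELECT production, SUM(amount_cents) FROM orders "
        "GROUP BY production ORDER BY production"
    )
    return dict(rows.fetchall())
```

In this sketch the site never queries the reporting table and reports never touch the account documents. That separation is what lets reports run without competing with live traffic.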

Dramatis Personae

The actors in this play are making their way to the stage now... as the curtain rises...

Ruby on Rails 2.3.5

Ruby 1.8.7

MongoMapper gem from John Nunemaker

MySQL 5.1

DataMapper gem

... and of course MongoDb

The server that we're running is Ubuntu 9.1 up on Amazon EC2 - large instance with prepay.

Rob goes on to explain how the site works and what it costs on Amazon EC2 versus what it might cost on Windows Azure. Much of the conversation at the end deals with SQL Server licensing costs, which could be avoided by using SQL Azure. …

David Methvin takes Microsoft to task for how it upgraded MSDN blogs, missing IMAP in Hotmail, and complex Windows Azure diagrams in his Microsoft's Eating Caviar In The Cloud post of 5/18/2010:

One of my favorite terms in Microsoft-speak is "dogfooding", the act of using a product or service internally so that its developers can experience it the same way that customers will. When it comes to cloud computing, though, Microsoft seems to have lost its way. Their cloud efforts reflect an internal diet that's more like caviar than dogfood.

Here's an example. This week, Microsoft is upgrading their MSDN blogging platform. For the next week, bloggers will not be able to make new posts unless they were queued before the upgrade started. Also, nobody will be able to post comments on new or existing blog entries. So here we have one whole week of downtime for Microsoft's communication with their users. Could any of Microsoft's own customers afford the luxury of a week-long offline upgrade for their own companies?

Another case is Windows Live Hotmail. An update is coming soon to Hotmail. Another blog entry talks about how Microsoft uses feedback from Hotmail users to decide which features to implement. This new release has a lot of "social" features, but still lacks the basic IMAP functionality that any decent mail system should have. Users hunger for standard IMAP connectivity, but Microsoft serves them the hacky Outlook Connector instead.

Both of these examples expose Microsoft's version-oriented and feature-oriented view of software. Rather than seeing the web as a series of services that are always online, Microsoft seems to have no problem with taking their blogs offline for a week, which is an eternity in Internet time. Rather than incrementally improving Hotmail with small tweaks over time, Microsoft focuses on big-bang releases and still manages to ignore the need for basic IMAP functionality.

Still not convinced? Here's another experiment you can try. Spend five minutes looking at the Amazon Web Services page. Now head over to the Microsoft Azure page with the same five-minute study period. Which offering do you think you understand better? Which one seems to reflect the needs of companies building web-based services? To me, Amazon wins on both counts. Microsoft's Azure diagram, no doubt exported from a lengthy PowerPoint deck, talks in vague generalities but never seems to really explain what is being offered.

Rumor is that Google will be following Amazon and announcing a similar set of services at their Google I/O conference this week. This is further bad news for the caviar consumers at Microsoft. Amazon and Google aren't coming up with some untested ivory-tower idea of what their customers might buy. Instead, they're selling versions of the same services that they use on their own web sites, ones that have been tested, proven, and refined over several years of real use. Now that's dogfooding.

<Return to section navigation list>