Windows Azure and Cloud Computing Posts for 5/13/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

• Update 5/14/2010: My Q&A with Microsoft about SharePoint 2010 OnLine with Access Services post of 5/12/2010 (copied from my Amazon Author Blog on 5/14/2010) in the Windows Azure Infrastructure section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Fritz Onion announced a new Windows Azure Storage - Tables and Queues Pluralsight On-Demand! course on 5/13/2010:

Windows Azure Storage - Tables and Queues

| This module covers the concepts and programming model for working with Azure tables and queues. Coverage includes requirements for each, using the StorageClient classes for managing and working with queues and tables, and how to configure connections. |

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

David Robinson offers SQL Azure Quick Links in a 5/13/2010 post to the SQL Azure Team blog:

This is a work-in-progress blog post that gives you easy links to SQL Azure resources. We will be updating it frequently with current links and additional resources. Make sure to bookmark it.

Management

- SQL Azure Portal: Where you manage your servers and databases, including creating new databases, configuring your firewall, etc.

- Microsoft Online Customer Portal: Manage your account, billing, and services.

- Windows Azure Platform Status Dashboard: Provides the current health status of the Windows Azure Platform. You can also subscribe to an RSS feed here.

- Windows Azure Platform Support Links: Here you can report an issue with your service or bill, request an increase to your quota, and find links to the Windows Azure Platform forums.

Development

- MSDN Development Center: Jump off site for information on developing and managing SQL Azure.

- Channel 9 Videos: Videos tagged with SQL Azure in Channel 9.

- MSDN Documentation: MSDN Documentation on SQL Azure.

- SQL Azure Forums: Ask questions and get help. This is the direct link as noted in the support links above.

SQL Azure Data Sync

- Data Sync Quick Start: Quick start for developers using SQL Azure Data Sync.

- Data Sync Download: Download SQL Azure Data Sync. Read the Quick Start first so that you have the prerequisites installed.

Marketing

- Microsoft.com Marketing Site: Official site giving details of the database product and services available.

News

- SQL Azure Team Blog: News, Development Tips. You are here.

John R. Durant shows you how to Connect Microsoft Excel To SQL Azure Database in this 4/5/2010 article, which I missed when posted:

A number of people have found my post about getting started with SQL Azure pretty useful. But it's all worthless if it doesn't add up to user value. Databases are like potential energy in physics: a promise that something could be put in motion. Users actually making decisions based on analysis is analogous to kinetic energy in physics. It's the fulfillment of the promise of potential energy.

So what does this have to do with Office 2010? In Excel 2010 we made it truly easy to connect to a SQL Azure database and pull down data. Here I explain how to do it.

By following these steps you will be able to:

- Create an Excel data connection to a SQL Azure database

- Select the data to import into Excel

- Perform the data import

…

John continues with a table of illustrated instructions for each of the preceding steps. Thanks to David Robinson for the 5/13/2010 heads-up.

Guy Barrette’s brief SQL Azure vs. SQL Server post of 5/13/2010 points to:

If you’d like to know the differences between SQL Server and SQL Azure, check this white paper:

This paper compares SQL Azure Database with SQL Server in terms of logical administration vs. physical administration, provisioning, Transact-SQL support, data storage, SSIS, along with other features and capabilities.

This FAQ is also interesting:

Microsoft SQL Azure FAQ

<Return to section navigation list>

AppFabric: Access Control and Service Bus

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team’s Real World Windows Azure: Interview with Pankaj Arora, Senior Solution Manager on the 2009 Giving Campaign Auction Tool Team at Microsoft post of 5/13/2010 begins:

As part of the Real World Windows Azure series, we talked to Pankaj Arora, Senior Solution Manager at Microsoft, about using the Windows Azure platform to deliver the company's 2009 Giving Campaign Auction Tool. Here's what he had to say:

MSDN: Tell us about the annual Giving Campaign Auction at Microsoft.

Arora: The Giving Campaign Auction is a grassroots initiative that runs an online auction site, called the Giving Campaign Auction Tool, where donors can list items and services for buyers to bid on. All of the money raised goes to charity. It is a yearly volunteer effort within Microsoft IT; without dedicated resources to develop and run the site, the volunteer teams usually struggle to find available resources.

MSDN: What was the biggest challenge the Auction Tool team faced prior to implementing Windows Azure?

Arora: With an initiative that only runs once a year for one month, the Auction Tool is highly cyclical in nature, and it boasts a high volume of site traffic. In fact, during the 2008 Giving Campaign, the Auction Tool went down during the last minutes of the auction when we see the most traffic, and we suspected that increased load was a contributing factor. So, what we were looking for was a solution that we could quickly scale to handle peak traffic spikes. Plus, because we're an all-volunteer team, we needed a solution that would enable developers to quickly build the Auction Tool in their off-time.

MSDN: Can you describe the solution you built to help address your need for a scalable solution that could handle traffic spikes?

Arora: We migrated the Giving Campaign Auction Tool to the Windows Azure platform with only minor code changes. It took the equivalent of one-and-a-half developers, who had no experience using Windows Azure, only two weeks to migrate the existing tool to Windows Azure. We started with four Web roles for the front-end service, but eventually we added an additional 20 Web roles to handle burst traffic. With Windows Azure, we can just click a button, add more Web roles, and scale up without worrying about traffic spikes. We also used Microsoft SQL Server on-premises, which worked seamlessly with the cloud components. To top things off, we used Microsoft Silverlight to build a new interface for the application.

MSDN: What makes your solution unique?

Arora: We raised a lot of money for charity with the 2009 Auction Tool, and part of that is because, with Windows Azure, we were able to sponsor the initiative without any interruption in service. In one month, the site hosted auctions for 875 items, had 15,838 unique visitors, supported 56,346 total sessions, and raised nearly U.S.$500,000, including $505 for the world's best bologna sandwich.

…

The Q&A continues in the standard case-study format.

Marcello Tonarelli describes the Customer Portal Accelerator for Microsoft Dynamics CRM and its use with Windows Azure in this 5/13/2010 post:

The Customer Portal accelerator for Microsoft Dynamics CRM provides businesses the ability to deliver portal capabilities to their customers while tracking and managing these interactions in Microsoft Dynamics CRM.

This accelerator combines all the functionality previously released in the individual eService, Event Management and Portal Integration Accelerators. Customers can turn on or off this functionality depending on their specific requirements.

Customers can also choose whether they wish to deploy their portal on their own web servers or in the cloud with Windows Azure.

The Customer Portal Accelerator for Microsoft Dynamics CRM will work with all deployment models for Microsoft Dynamics CRM including on-premise, Microsoft Dynamics CRM Online and partner-hosted.

The Customer portal Accelerator for Microsoft Dynamics CRM introduces new Content Management capabilities in addition to all the functionality of eService, Event Management and Portal Integration.

Customers can still choose to install and configure the previous versions of the portal accelerators individually but you are strongly urged to install this new portal framework for your portal requirements.

Download the code from http://customerportal.codeplex.com/.

Fritz Onion announced a new Windows Azure Roles Pluralsight On-Demand! course on 5/13/2010:

Windows Azure Roles

| This module discusses Windows Azure Roles and how to use them. Specifically, focus is placed on how roles make development different than standard web development, and how worker roles operate. |

Pat McLoughlin’s CITYTECH Azure Calculator BETA post of 5/12/2010 announced:

The CITYTECH Azure Calculator (CAC) is now ready with a BETA release! After a few last-minute hiccups the application is up on CITYTECH’s Windows Azure instance and ready for use. Check it out here. The CITYTECH Azure Calculator is a Silverlight-based application that is served up via Windows Azure. Currently the application runs completely within a user’s browser on the Silverlight framework. In future releases we hope to leverage some more Worker Roles to let Azure do a lot of the heavy lifting.

For more information about the history of the CITYTECH Azure Calculator please check out my previous blog post.

The CAC is very simple to use. All you need to use the CAC is at least one IIS log file. If you don’t have any log files, but still want to see how the calculator works go to the About page and you can download a zip file with a few log files in it.

Once you have some IIS log files the CAC is very easy to use. Go to the Home page (Clicking on the CITYTECH Azure Calculator image will take you there) and click the Select Files button. This will open a dialog up that allows you to select multiple files. Once you have selected your files click the Process Files button.

The CAC will process for a little bit. (The processing time depends on the number and size of the files that are uploaded.) Once it is done processing you will be taken to the Usage page. The Usage page shows a graph that approximates how much it would cost to run the applications in the log files on Windows Azure.
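The post does not say how the CAC turns log files into a cost figure, so the following is only a rough sketch of one way such an estimate could start: summing the bytes sent and received that IIS records in W3C extended log files. It assumes the logs include a `#Fields:` directive with `sc-bytes` and `cs-bytes` columns (these are optional in IIS logging), and the per-GB rates are hypothetical placeholders, not Windows Azure prices or CITYTECH's method.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical per-GB rates for illustration only; not actual Windows Azure prices.
RATE_OUT_PER_GB = 0.15
RATE_IN_PER_GB = 0.10
BYTES_PER_GB = 1024 ** 3


def _to_int(value: Optional[str]) -> int:
    """Convert a log field to int, treating missing or '-' values as zero."""
    return int(value) if value and value.isdigit() else 0


def sum_transfer_bytes(lines: Iterable[str]) -> Tuple[int, int]:
    """Return (bytes_sent, bytes_received) totals from W3C extended log lines."""
    fields: List[str] = []
    sent = received = 0
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # The directive names the columns used by the entries that follow.
            fields = line[len("#Fields:"):].split()
            continue
        if line.startswith("#") or not fields:
            continue  # skip other directives and entries with no known layout
        row: Dict[str, str] = dict(zip(fields, line.split()))
        sent += _to_int(row.get("sc-bytes"))
        received += _to_int(row.get("cs-bytes"))
    return sent, received


def estimate_transfer_cost(sent: int, received: int) -> float:
    """Apply the placeholder rates to the byte totals."""
    return (sent / BYTES_PER_GB * RATE_OUT_PER_GB
            + received / BYTES_PER_GB * RATE_IN_PER_GB)


SAMPLE_LOG = """#Software: Microsoft Internet Information Services 7.0
#Fields: date time cs-method cs-uri-stem sc-status sc-bytes cs-bytes
2010-05-12 10:00:00 GET /default.aspx 200 5120 300
2010-05-12 10:00:01 GET /logo.png 200 20480 280
""".splitlines()

if __name__ == "__main__":
    sent_bytes, received_bytes = sum_transfer_bytes(SAMPLE_LOG)
    print(sent_bytes, received_bytes, estimate_transfer_cost(sent_bytes, received_bytes))
```

A fuller estimate would also need compute hours and storage, which request logs alone do not provide.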

Pat continues with more illustrated help topics.

Ammalgam reports 6 Hot new SaaS, Cloud Computing Startups found at the All About the Cloud Conference this week in San Francisco:

At the All About The Cloud conference in San Francisco, a handful of new cloud computing and software-as-a-service (SaaS) companies were revealed:

New York-based AppFirst offers SaaS-based real-time application monitoring. The software offering lets users see into the “black box” that is their applications, regardless of application language, components, server type (physical or virtual) and whether on premise or in the cloud. The SaaS offering from AppFirst digs into applications to give IT a view into how the apps work and to address issues before they become a problem.

Cloudkick takes management and portability to the next level. Cloudkick is a management tool and portability layer for cloud infrastructure, offering a common set of tools like performance trending and fault-detection that works across various cloud providers, including Amazon.com and Rackspace. San Francisco-based Cloudkick also launched a hybrid offering, aptly named Hybrid Cloudkick, that lets companies manage their existing non-cloud infrastructure as if it were in the cloud.

Identity and access management in the cloud has been tricky, and Emillion hopes to demystify it. With its official launch at All About The Cloud, Finland-based Emillion showcased Distal, its identity and access management offerings for SaaS and cloud applications. Distal lets cloud and SaaS providers offer customers single sign-on capabilities and automated user management based on their existing directories. Distal requires no installation, programming or new training: The company said users just place a text file on an existing intranet server and set a couple of parameters.

San Diego-based Everyone Counts drew its inspiration from the 2000 presidential election, searching to find a way to deliver state-of-the-art technology to the election process to reduce the limitations and potential errors of the paper-based standard. Comprised of a team of information security and elections experts, Everyone Counts developed a SaaS platform for secure elections.

Govascend has built a platform that lets state and local governments build and deploy custom mobile applications. The Broomfield, Colo.-based company offers a development platform that enables mobile cloud applications to be developed and modified in-house; lets applications be shared between agencies in a Web-based library and enables one-click application deployment. Govascend’s offering requires no infrastructure and is based on the Microsoft Windows Azure cloud platform. [Emphasis added.]

Less Software’s supply chain management application can boost efficiency in acquiring, managing and selling inventory by enabling better management of logistics and order fulfillment processes, the company said. Based in Thousand Oaks, Calif., Less Software is available in an on-demand, pure SaaS model, meaning less software is required on premise.

Check them out when you get a chance.

James Broome describes Developing outside the cloud with Azure in this 5/10/2010 article that I missed when posted:

My colleague Simon Evans and I recently presented at the UK Azure .Net user group in London about a project that we have just delivered. The project is a 100% cloud solution, running in Windows Azure web roles and making use of Azure Table and Blob Storage and SQL Azure. Whilst development on the project is complete, the site is not yet live to the public, but will be soon, which will enable me to talk more freely on this subject.

A few of the implementation specific details that we talked about in the presentation focused on how we kept the footprint of Azure as small as possible within our solution. I explained why this is a good idea and how it can be achieved, so for those who don’t want to watch the video, here’s the write-up. …

James continues with “Working with the Dev Fabric,” “Data Access and the Repository Pattern,” “Dependency Injection and stub Repositories,” and “Diagnostics” topics and delivers this summary:

Hopefully this has shown that developing an Azure application does not have to be a process that takes over your entire solution and forces you down a particular style of development.

By architecting your solution well you can minimise the footprint that Azure has within your solution, which means that it’s easy to break the dependencies if required.

Being able to run an application in a normal IIS setup, without the Dev Fabric speeds up the development process, especially for those working higher up the stack and dealing solely with UI and presentation logic.

Using RoleEnvironment.IsAvailable to determine whether you’re running “in the cloud” or not allows you to act accordingly if you want to provide alternative scenarios.

By reducing the footprint of Azure within the solution, inexperienced teams can work effectively and acquire the Azure specific knowledge as and when required.
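James's article targets .NET, where `RoleEnvironment.IsAvailable` is the actual check. As a language-neutral illustration of the pattern he summarizes (a repository interface, a stub implementation for local work, and a single place that decides which one to use), here is a minimal Python sketch. The `RUNNING_IN_CLOUD` environment variable, the class names and the `build_repository` helper are all hypothetical stand-ins, not part of his solution or of any Azure SDK.

```python
import os
from abc import ABC, abstractmethod
from typing import Dict, Mapping, Optional


class ProfileRepository(ABC):
    """Storage-agnostic interface that the rest of the application depends on."""

    @abstractmethod
    def get(self, key: str) -> Optional[str]:
        ...

    @abstractmethod
    def save(self, key: str, value: str) -> None:
        ...


class InMemoryProfileRepository(ProfileRepository):
    """Stub repository for running outside the cloud (plain web server, tests)."""

    def __init__(self) -> None:
        self._items: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._items.get(key)

    def save(self, key: str, value: str) -> None:
        self._items[key] = value


class CloudProfileRepository(ProfileRepository):
    """Placeholder for a cloud-storage-backed repository; storage calls omitted."""

    def get(self, key: str) -> Optional[str]:
        raise NotImplementedError("cloud storage access is outside this sketch")

    def save(self, key: str, value: str) -> None:
        raise NotImplementedError("cloud storage access is outside this sketch")


def running_in_cloud(environ: Optional[Mapping[str, str]] = None) -> bool:
    """Hypothetical stand-in for RoleEnvironment.IsAvailable."""
    env = os.environ if environ is None else environ
    return env.get("RUNNING_IN_CLOUD") == "1"


def build_repository(environ: Optional[Mapping[str, str]] = None) -> ProfileRepository:
    """The one place where the cloud dependency is decided."""
    if running_in_cloud(environ):
        return CloudProfileRepository()
    return InMemoryProfileRepository()
```

Because callers only see `ProfileRepository`, UI and presentation code can run against the in-memory stub without any cloud tooling installed.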

<Return to section navigation list>

Windows Azure Infrastructure

Mary Jo Foley asks and answers, in part, How quickly can Microsoft close the SharePoint-SharePoint Online gap? in this detailed 5/13/2010 post to her All About Microsoft blog for ZDNet:

I’ve seen more than a few customers and press/bloggers wondering about how and when Microsoft plans to roll out some of the new SharePoint 2010 capabilities to customers of its hosted SharePoint Online service.

When Microsoft was developing SharePoint 2010, officials told me that the team was taking a new tack: The SharePoint 2010 and SharePoint Online teams were working together on the latest release (instead of the software team passing the baton to the Online team only after the release was finished). The goal was to minimize the gap between Microsoft’s rollout of the software and the service.

So how much did Microsoft actually manage to minimize that gap with the 2010 release?

At the Office 2010/SharePoint 2010 launch in New York City on May 12, Microsoft execs said to expect the company to release SharePoint Online “for our largest customers” updated with 2010 functionality “later this year.” (Translation: SharePoint Online Dedicated customers get the update first.) Some time after that, SharePoint Online Standard users will get a refresh with 2010 functionality. (SharePoint Online Standard is the SKU typically purchased by SMBs and others who don’t mind multitenant/shared infrastructure.) Microsoft is on the same schedule for getting Exchange Server 2010 functionality into Exchange Online, officials added.

An internal Microsoft slide I ran on my blog a few months ago showed Microsoft’s original plan (as of November 2009) was to get SharePoint 2010 functionality into the Business Productivity Online Suite (BPOS) Dedicated release in the spring (March) of 2010 and to BPOS Standard users before the end of calendar 2010. I’m thinking that those dates have probably slipped, given that SharePoint Online is one of the pieces of the BPOS offering, and SharePoint Online Dedicated isn’t getting 2010 functionality until later this year. Microsoft Senior Vice President Kurt Delbene did say that a beta of the 2010 feature refresh would make it to SharePoint Online Standard customers before the end of this calendar year, but wouldn’t provide a year as to when the final version would be released.

Here are a couple more slides from November that break out in a more granular way which SharePoint 2010 features are likely to make it into the SharePoint Online release. (Not all of them ever will; some are on-premises software features only, by design.) Given these slides are from a Microsoft presentation from a few months ago, these plans still could change.

Here’s the SharePoint Online Dedicated rollout feature slide:

And here’s the SharePoint Online Standard rollout feature slide:

…

Notice the inclusion of Access Services as a W14 item as the second feature below the Other button above. Access Hosting currently offers hosted Access Services for $79 per month plus SharePoint 2010 Standard and Enterprise CALs for $7.50 per month for additional users.

William Weinberger reports Microsoft SharePoint 2010: The Reviews are In in this 5/13/2010 post to the VAR Guy blog:

After months of buildup, Microsoft has released SharePoint 2010, the latest version of their collaboration platform. It’s garnering praise for tight integration with the new Office 2010, but SharePoint 2010 seems to otherwise be a fairly incremental release. And some folks are concerned about SharePoint 2010’s SaaS challenges. Here are the details for your potential customer migrations.

One of SharePoint 2010’s most-touted new features is the real-time collaborative functionality it adds to Microsoft Office apps Word, Excel, and PowerPoint. A NetworkWorld review says that feature works as advertised, but the real draw of the new version is the enhanced page customization functionality — something that didn’t get a lot of pre-launch lip service.

That functionality comes in the form of an AJAX-powered editor that lets you make WYSIWYG changes in a very Wiki-like format, as CMSWire points out, with the ease of page-linking that implies. Better yet, it supports changing the language of the browser chrome with a few clicks.

But SharePoint 2010 comes with its caveats. It’s 64-bit only, so VARs may have to upgrade some older hardware if they want to get in on this. Moreover, it doesn’t support Internet Explorer 6 at all, and older versions of most other Microsoft software constrain large chunks of functionality, so investing in a SharePoint 2010 license could mean investing in Windows Server 2008 R2 and Office 2010 whether you want to or not.

The other problem is that it’s simply not cloud-ready. When I spoke to Apptix yesterday, they told me that while hosted SharePoint 2010 is definitely on their roadmap, the product that Microsoft delivered isn’t a true multi-tenant solution and can’t be delivered as-a-service. It’s a stark contrast to Steve Ballmer’s public pro-cloud stance, and one that Salesforce.com executive and friend of The VAR Guy Peter Coffee noted in a blog entry. [Emphasis added.]

Of course, companies like Azaleos are offering managed SharePoint 2010 deployments. But that’s not really the same thing as real SaaS solutions, and when Coffee asks why SharePoint wasn’t built on Microsoft’s Windows Azure cloud platform, I have to nod in agreement. …

Access Hosting is delivering Access Services as a hosted service, as noted in the previous post above. For more background on the SharePoint 2010 Online controversy, see my Windows Azure and Cloud Computing Posts for 5/12/2010+ post.

• Update 5/14/2010: My Q&A with Microsoft about SharePoint 2010 OnLine with Access Services post of 5/12/2010 (copied from my Amazon Author Blog on 5/14/2010) provides the official reply of a Microsoft spokesman to my following two questions:

When will SharePoint 2010 be available online from Microsoft?

This is Clint from the Online team. It will be available to our larges[t] online customers this year, and we will continue rolling out the 2010 technology to our broad base of online customers, with updates coming every 90 days. You can expect to see a preview of these capabilities later this year.

Will SharePoint 2010 Online offer the Enterprise Edition or at least Access Services to support Web databases?

This is Clint from the Online team. We have not disclosed the specific features that will be available in SharePoint Online at this time, but you can expect most of the Enterprise Edition features to be available. Stay tuned for more details in the coming months.

John Soat asks Do You Have/Need a Cloud Computing Strategy? in a Microsoft-sponsored Cloud Perspectives blog post for InformationWeek of 5/13/2010:

The short answer is probably not/probably so. The cloud is not a point solution or a short-term fix, though it is tempting to view it that way. The cloud is a shift in computing architecture and IT philosophy that demands a serious strategic approach to understand where it fits within your overall technology deployment blueprint now and going forward.

According to a recent InformationWeek Analytics 2010 survey, more than half of software-as-a-service users (59%) look on SaaS as a tactical point solution “for specific functionality.” That’s all well and good, given that SaaS started out that way, mostly in terms of specific applications (CRM, HR), and its primary appeal is still speed of implementation, which is a tactical maneuver.

However, SaaS is only one aspect of the cloud. Infrastructure-as-a-service, known in a previous life as co-location or data center outsourcing, already should be a strategic weapon rather than a tactical one. Platform-as-a-service, which requires serious intent and investment, immediately smacks of strategy, and should be approached thusly.

Even SaaS should no longer be looked on as just a quick fix. In the InformationWeek Analytics survey, about a third of SaaS users (32%) say their “long-term vision [is] to primarily use SaaS applications.” That’s a significant chunk of true believers, and a (growing) cloud constituency that’s hard to ignore.

Still, you don’t have to throw everything over to embrace the cloud. As systems and business processes run their course, cloud services should be considered as replacements. The cloud, or even SaaS by itself, doesn’t have to be the whole of your IT strategy to be part of your IT strategy.

Sounds to me like a good question to ask the SharePoint 2010 Online team.

Elizabeth White prefixes her Cloud Computing Market to Reach $12.6 Billion in 2014 –IDC post of 5/13/2010 with “Enterprise Cloud Server Hardware Offers Increased Efficiencies”:

The breaking dawn of economic recovery combined with an aging server installed base and IT managers' desire to rein in increasingly complex virtual and physical infrastructure is driving demand for both public and private cloud computing. According to new research from International Data Corporation (IDC), server revenue for public cloud computing will grow from $582 million in 2009 to $718 million in 2014. Server revenue for the much larger private cloud market will grow from $7.3 billion to $11.8 billion in the same time period.

"Now is a great time for many IT organizations to begin seriously considering this technology and employing public and private clouds in order to simplify sprawling IT environments," said Katherine Broderick, research analyst, Enterprise Platforms and Datacenter Trends.

IDC defines the public cloud as being open to a largely unrestricted universe of potential users; designed for a market, not for a single enterprise. Private cloud deployment is designed for, and access restricted to, a single enterprise (or extended enterprise); an internal shared resource, not a commercial offering; IT organization as "vendor" of a shared/standard service to its users. "Many vendors are strategically advancing into the private and public cloud spaces, and these players are widely varied and have differing levels of commitment," Broderick added.

Additional findings from IDC's research include the following:

- Public cloud computing has lower ASVs than an average x86-based server

- Public cloud seems less likely to be broadly adopted than private

- Public clouds will be less enterprise focused than private clouds

- According to recent IDC survey results, almost half of respondents, 44%, are considering private clouds

The IDC study, Worldwide Enterprise Server Cloud Computing 2010-2014 Forecast (IDC #223118) presents market size and forecast information for server hardware infrastructure supporting public and private clouds from 2009 through 2014. This is IDC's first cloud forecast from the server hardware perspective and will be built upon in the future. This document presents the opportunity for servers in the cloud by customer revenue, units, CPU type, form factor, and workload.
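As a quick check on what the server revenue figures quoted above imply, the compound annual growth rate can be worked out directly. This sketch assumes five annual periods between 2009 and 2014 and uses only the dollar amounts given in the excerpt; the function name is mine, not IDC's.

```python
def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate between two values over a number of periods."""
    if start <= 0 or periods <= 0:
        raise ValueError("start and periods must be positive")
    return (end / start) ** (1.0 / periods) - 1.0


# Server revenue in millions of U.S. dollars, as quoted from the IDC release.
PUBLIC_2009, PUBLIC_2014 = 582.0, 718.0
PRIVATE_2009, PRIVATE_2014 = 7300.0, 11800.0
YEARS = 5  # assumption: 2009 through 2014 treated as five annual periods

if __name__ == "__main__":
    print(f"Public cloud server revenue CAGR:  {cagr(PUBLIC_2009, PUBLIC_2014, YEARS):.1%}")
    print(f"Private cloud server revenue CAGR: {cagr(PRIVATE_2009, PRIVATE_2014, YEARS):.1%}")
```

On those assumptions the public cloud figure grows a little over 4 percent a year and the private cloud figure about 10 percent a year.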

Lori MacVittie claims Standardization at the application platform layer enables specialization of infrastructure resulting in greater economy of scale in a preface to her How Standardization Enables Specialization post of 5/13/2010:

In all versions of Dungeons and Dragons there is a nifty arcane spell for wizards called “Mirror Image.” What this nifty spell does is duplicate the image of the wizard. This is useful because it’s really hard for all those nasty orcs, goblins, and bugbears to tell which image is the real one. Every image does exactly what the “real” wizard does. The wizard is not impacted by the dismissal of one of the images; neither are the other images impacted by the dismissal of other images. Each one is exactly the same and yet each one is viewed by its opponents as an individual entity. An illusion, of course, but an effective one.

In the past an “application” has been a lot like that wizard. It’s a single entity, albeit one that might be comprised of several tiers of functionality. Cloud computing, specifically its ability to enable scale in an elastic manner, changes that dramatically, making an “application” more like a wizard plus all his mirror images than a single entity. This is the epitome of standardization at the platform layers and what ultimately allows infrastructure to specialize and achieve greater economies of scale.

ECONOMY of SCALE

Some of the benefits of cloud computing will only be achieved through standardization. Not the kind of standardization that comes from RFCs and vendor-driven specifications but the standardization that happens within the organization, across IT. It’s the standardization of the application deployment environment, for example, that drives economy of scale into the realm of manageable. If every JavaEE application is deployed on the same, standardized “image”, then automation becomes a simple, repeatable process of rolling out new images (provisioning) and hooking up the server in the right places to its supporting infrastructure. It makes deployment and ultimately scalability an efficient process that can be easily automated and thus reduce the operational costs associated with such IT tasks. This also results in a better economy of scale, i.e. the process doesn’t exponentially increase costs and time as applications scale.

But just because the application infrastructure may be standardized does not mean the applications deployed on those platforms are standardized. They’re still unique and may require different configuration tweaking to achieve optimal performance…which defeats the purpose of standardization and reverses the operational gains achieved. You’re right back where you started.

Unless you apply a bit of “application delivery magic.”

STANDARDIZATION ENABLES SPECIALIZATION

Many of the “tweaks” applied to specific application servers can be achieved through an application delivery platform. That’s your modern load balancer, by the way, and it’s capable of a whole lot more than just load balancing. It’s the gatekeeper through which application messages must flow to communicate with the application instances, and when it’s placed in front of those images it becomes, for communications purposes, the application. That means it is the endpoint to which clients connect, and it, in turn, communicates with the application instances.

This gives the application delivery platform the strategic position necessary to provide application and network optimizations (tweaks) for all applications. Furthermore, it can apply application specific – not application platform specific, but application specific – optimizations and tweaks. That means you can standardize your application deployment platform across all applications because you can leverage a centralized, dynamic application delivery platform to customize and further optimize the application’s performance in a non-disruptive, transparent way.

It sounds counter-intuitive, but standardization at the application platform layer allows for specialization at the application delivery layer.
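The split Lori describes can be illustrated with a minimal Python sketch. It is not taken from her post or from any vendor's product: the image name, the `DeliveryPolicy` fields and the `DeliveryPlatform` class are all hypothetical, chosen only to show identical provisioning on one side and per-application settings on the other.

```python
from dataclasses import dataclass, field

# Hypothetical image name: every application server is built from this one image.
STANDARD_IMAGE = "javaee-standard-v1"


@dataclass(frozen=True)
class DeliveryPolicy:
    """Per-application tweaks applied at the delivery layer, not on the servers."""
    compress_responses: bool = False
    cache_static_content: bool = False
    idle_timeout_seconds: int = 60


@dataclass
class DeliveryPlatform:
    """Toy stand-in for an application delivery platform (a modern load balancer)."""
    backends: dict = field(default_factory=dict)
    policies: dict = field(default_factory=dict)

    def register(self, app_name: str, instance_count: int, policy: DeliveryPolicy) -> None:
        # Provisioning is identical for every application: same image, N copies.
        self.backends[app_name] = [STANDARD_IMAGE] * instance_count
        # Specialization lives here, keyed by application.
        self.policies[app_name] = policy

    def policy_for(self, app_name: str) -> DeliveryPolicy:
        # Unknown applications fall back to the default policy.
        return self.policies.get(app_name, DeliveryPolicy())


platform = DeliveryPlatform()
platform.register("storefront", 4, DeliveryPolicy(compress_responses=True, cache_static_content=True))
platform.register("reporting", 2, DeliveryPolicy(idle_timeout_seconds=300))
```

Both applications run on the same image, so rolling out another instance is the same operation for each; only the policy lookup differs per application.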

Lori continues with an explanation of why her assertion is true.

Reuven Cohen reports that The White House Further Outlines Federal Cloud Strategy in this 5/13/2010 post:

Interesting post today over at the Whitehouse.gov blog by Vivek Kundra, the U.S. Chief Information Officer. The post describes both the rationale for cloud computing and what it is, or at least what it means to the US Government.

Here are a couple of the more interesting parts.

"For those of you not familiar with cloud computing, here is a brief explanation. There was a time when every household, town, or village had its own water well. Today, shared public utilities give us access to clean water by simply turning on the tap. Cloud computing works a lot like our shared public utilities. However, instead of water coming from a tap, users access computing power from a pool of shared resources. Just like the tap in your kitchen, cloud computing services can be turned on or off as needed, and, when the tap isn’t on, not only can the water be used by someone else, but you aren’t paying for resources that you don’t use. Cloud computing is a new model for delivering computing resources – such as networks, servers, storage, or software applications."

The post clearly outlines the use of Cloud Computing within the US federal government's IT strategy. "The Obama Administration is committed to leveraging the power of cloud computing to help close the technology gap and deliver for the American people. I am hopeful that the Recovery Board’s move to the cloud will serve as a model for making government’s use of technology smarter, better, and faster."

<Return to section navigation list>

Cloud Security and Governance

Elizabeth White reports “Less than half of cloud services are vetted for security” in her Cloud Security Study Finds IT Unaware of All Cloud Services Used post of 5/13/2010:

CA, Inc. and the Ponemon Institute, an independent research firm specializing in privacy, data protection and information security policy, on Wednesday announced a study analyzing significant cloud security concerns that persist among IT professionals when it comes to cloud services used within their organization.

The study, entitled "Security of Cloud Computing Users," reveals that more than half of U.S. organizations are adopting cloud services, but only 47 percent of respondents believe that cloud services are evaluated for security prior to deployment. Of equal concern, more than 50 percent of respondents in the U.S. say their organization is unaware of all the cloud services deployed in their enterprise today.

"Organizations put themselves at risk if they fail to evaluate cloud services for security and don't have a view of what cloud services are in use throughout the business," said Dave Hansen, corporate senior vice president and general manager for CA's Security business unit. "All parties - IT, the end user, and management - should be involved in the decision making process, and need to build guidance around cloud computing adoption to help their organizations more securely deploy cloud services."

Findings also showed that there was a substantial concern across industries in maintaining security for mission critical data sets and business processes in the cloud. The surveyed IT practitioners noted that a variety of data sets were still too risky to store in the cloud:

- 68 percent thought that cloud computing was too risky to store financial information and intellectual property;

- 55 percent did not want to store health records in the cloud; and

- 43 percent were not in favor of storing credit card information in the cloud.

Additional key findings from the study included:

- Less than 30 percent of respondents were confident they could control privileged user access to sensitive data in the cloud.

- Only 14 percent of respondents believe cloud computing would actually improve their organization's security posture.

- Just 38 percent of respondents agreed that their organization had identified information deemed too sensitive to be stored in the cloud.

The research suggests that IT personnel should take a full inventory of their organization's cloud computing resources, closely evaluate cloud providers, and assess the steps taken to mitigate risks. Going forward, IT should institute policies around what data is appropriate for cloud use and should evaluate deployments before they are made. …

<Return to section navigation list>

Cloud Computing Events

Beth Massi will present Creating and Consuming OData Services in Business Applications to the North Bay .NET User Group on Tuesday, 5/18/2010 7:00 to 8:00 PM PDT at O'Reilly Media in Sebastopol, Tarsier Conference Room (between Building B and Building C), 1003-1005 Gravenstein Highway North, Sebastopol (8 miles west of Santa Rosa):

The Open Data Protocol (OData) is a REST-ful protocol for exposing and consuming data on the web and is becoming the new standard for data-based services. In this session you will learn how to easily create these services using WCF Data Services in Visual Studio 2010 and will gain a firm understanding of how they work. You'll also see how to consume these services and connect them to other data sources in the cloud to create powerful BI data analysis in Excel 2010 using the PowerPivot add-in. Finally, we will build our own add-in that consumes OData services exposed by SharePoint 2010 as well as take advantage of the new sparkline data visualizations in Excel 2010.

Beth is a Senior Program Manager on the Visual Studio team.

For more information, go to http://www.baynetug.org/.
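Because OData is REST-ful, consuming a service largely comes down to composing a URL with system query options such as `$filter` and `$top`. The Python sketch below is only an illustration of that idea and is not from Beth's session: the service root, the `Products` entity set and the `build_odata_url` helper are hypothetical.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root: str, entity_set: str, **options) -> str:
    """Compose an OData query URL; each keyword becomes a $-prefixed query option."""
    query = {f"${name}": str(value) for name, value in options.items()}
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if query:
        # Keep "$" literal and encode spaces as %20 rather than "+".
        url += "?" + urlencode(query, quote_via=quote, safe="$")
    return url


# Hypothetical service root and entity set, for illustration only.
url = build_odata_url(
    "https://example.com/odata.svc",
    "Products",
    filter="Price gt 20",
    top=5,
)
```

An HTTP GET against a URL of this shape is what a client library issues on the caller's behalf.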

Many years ago, I designed an apple vacuum-drying facility and warehouse on South Gravenstein Highway for Vacu-Dry Corporation. In those days, Sebastopol was the apple capital of Northern California, but Vacu-Dry sold its apple-processing business to Tree Top Inc. and closed the plant in 1999. Now Sebastopol competes with Berkeley as the computer book capital of Northern California.

Gartner, Inc. delivered its updated Events Calendar for June and the remainder of 2010 via e-mail on 5/13/2010 with this introduction:

Cloud computing was recently named the top strategic technology for 2010 on the CIO’s agenda. Across all of our 2010 Gartner events we’ll give you advice on many cloud topics, including:

- How your organization can benefit from cloud computing for agility, efficiency or creating new business models

- How to evaluate and mitigate the real cost and risks of moving to the cloud

- When and how to adopt cloud computing to get maximum business value

- How to source, select and manage cloud vendors and solutions

- And more...

Click Events Calendar for the browser-based version.

Dennis Chung’s Up Close with Zane Adams - Deciphering the cloud (17 May 2010) in Singapore post of 5/13/2010 announces:

Special Invite Agenda

Date: 17th May 2010 (Monday)

Time: 7.00pm to 8.30pm (Registration Starts at 6.30pm)

Venue: 1 Marina Boulevard #21-00, MS Singapore – Auditorium Level 21

6.30pm: Registration (Light refreshments will be served)

7.00pm: Cloud Computing Deciphered for IT Pros

8.30pm: Home Sweet Home

Cloud Computing Deciphered for IT Pros

Is Cloud Computing confusing? The answer is No. It is about choice and options. Join us in this special behind-the-doors session with Zane Adam, General Manager, SQL Azure and Middleware. Zane will present Microsoft’s overall cloud strategy and how Microsoft helps customers and partners in building private and public cloud infrastructure. We will hear about the many choices and options available to businesses and individuals, covering what and how SQL Azure, Windows Azure, AppFabric, BPOS and Virtualization stack up in our cloud vision and the path forward.

Skill Level: 100-200

About Zane Adam

Zane Adam

General Manager

Product Management

SQL Azure and Middleware

Microsoft Corporation

Zane leads the product management group on SQL Azure, Windows Server AppFabric, Windows Azure AppFabric, BizTalk and related technologies critical to our innovation and industry. He is focused on helping customers and partners in building private and public cloud infrastructure for the future.

rPath announced on 5/12/2010 a Webinar to Discuss New Methods for Bringing Scalable IT Automation to Windows Application Deployment and Configuration. WEBINAR: rPath to Slay .NET Deployment Dragon, on 5/20/2010 at 11:00 AM PDT:

Releasing .NET applications into production is complex, manual, cumbersome and excessively costly for even the best-run IT organizations. The reason? Today’s .NET production environments are diverse assemblies of scripts and manual processes cobbled together by harried operations engineers trying to cope with unstable, rapidly changing applications and exploding scale. And there are scarcely few tools and automation available to IT operations groups who deploy, configure, maintain and troubleshoot .NET applications.

In an upcoming webinar sponsored by rPath, an innovator in automating system deployment and maintenance, industry experts will discuss emerging approaches for automating .NET application deployment and configuration—bringing consistency, control and scale to .NET applications running in production.

- What: “Slaying the .NET Deployment Dragon: Bringing Scalable IT Automation to Windows Application Deployment and Configuration” webinar

- Who: Jeffrey Hammond, principal analyst, Forrester Research; Joe Baltimore, .NET consultant and solution provider; Shawn Edmondson, director of product management, rPath

- When: Thursday, May 20, 2010, at 2:00 p.m. Eastern time (11:00 a.m. Pacific time)

- How: Register for this free event at bit.ly/bDUn87.

Attendees will learn:

- How to remediate a .NET deployment bottleneck through automation

- The integrated dev/ops process for fast and seamless deployment and change

- How to create a low-latency, low-overhead application release process

- Packaging .NET applications for portability across physical, virtual and cloud environments

All registrants will receive a copy of the whitepaper “Automating .NET Application Deployment and Configuration.”

For more information on rPath, please visit www.rpath.com. For additional perspectives, please visit and subscribe to rPath RSS blog feeds at blogs.rpath.com/wpmu/. Follow rPath on Twitter at @rpath.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Rich Miller reports Car Crash Triggers Amazon Power Outage in this 5/13/2010 post to the Data Center Knowledge blog:

Amazon’s EC2 cloud computing service suffered its fourth power outage in a week on Tuesday, with some customers in its US East Region losing service for about an hour. The incident was triggered when a vehicle crashed into a utility pole near one of the company’s data centers, and a transfer switch failed to properly manage the shift from utility power to the facility’s generators.

Amazon Web Services said a “small number of instances” on EC2 lost service at 12:05 p.m. Pacific time Tuesday, with most of the interrupted apps recovering by 1:08 p.m. The incident affected a different Availability Zone than the ones that experienced three power outages last week.

The sequence of events was reminiscent of a 2007 incident in which a truck struck a utility pole near a Rackspace data center in Dallas, taking out a transformer. The outage triggered a thermal event when chillers struggled to restart during multiple utility power interruptions.

Crash Triggers Utility Outage

“Tuesday’s event was triggered when a vehicle crashed into a high voltage utility pole on a road near one of our datacenters, creating a large external electrical ground fault and cutting utility power to this datacenter,” Amazon said in an update on its Service Health Dashboard. “When the utility power failed, most of the facility seamlessly switched to redundant generator power.”

A ground fault occurs when electrical current flows into the earth, creating a potential hazard to people and equipment as it seeks a path to the ground.

“One of the switches used to initiate the cutover from utility to generator power misinterpreted the power signature to be from a ground fault that happened inside the building rather than outside, and immediately halted the cutover to protect both internal equipment and personnel,” the report continued. “This meant that the small set of instances associated with this switch didn’t immediately get back-up power. After validating there was no power failure inside our facility, we were able to manually engage the secondary power source for those instances and get them up and running quickly.”

Switch Default Setting Faulted

Amazon said the switch that failed arrived from the manufacturer with a different default configuration than the rest of the data centers’ switches, causing it to misinterpret this power event. “We have already made configuration changes to the switch which will prevent it from misinterpreting any similar event in the future and have done a full audit to ensure no other switches in any of our data centers have this incorrect setting,” Amazon reported.

Amazon Web Services said Sunday that it is making changes in its data centers to address a series of power outages last week. Amazon EC2 experienced two power outages on May 4 and an extended power loss early on Saturday, May 8. In each case, a group of users in a single availability zone lost service, while the majority of EC2 users remained unaffected.

It’s a rather dumb move not to inspect and test the configuration of cutover switches when installing them to connect utility power, standby generators and server racks.
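The failure mode and the audit Amazon describes can be modeled in a few lines of Python. This is a toy sketch under stated assumptions, not Amazon's or any switch vendor's logic: Amazon did not disclose the actual setting, so the `assume_ground_fault_is_internal` flag, the switch names and the `audit` helper are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class FaultLocation(Enum):
    EXTERNAL = "external"  # fault on the utility side, outside the building
    INTERNAL = "internal"  # fault inside the facility


@dataclass
class TransferSwitch:
    name: str
    # Hypothetical setting: when True, every ground-fault signature is treated
    # as internal, which blocks the cutover even for utility-side faults.
    assume_ground_fault_is_internal: bool = False

    def on_utility_loss(self, actual_fault: FaultLocation) -> str:
        perceived = (
            FaultLocation.INTERNAL
            if self.assume_ground_fault_is_internal
            else actual_fault
        )
        # An internal fault halts the cutover to protect equipment and people.
        return "halted" if perceived is FaultLocation.INTERNAL else "generator"


def audit(switches, expected_setting=False):
    """Return the names of switches whose setting differs from the fleet standard."""
    return [
        s.name
        for s in switches
        if s.assume_ground_fault_is_internal != expected_setting
    ]


fleet = [
    TransferSwitch("ats-1"),
    TransferSwitch("ats-2", assume_ground_fault_is_internal=True),  # odd factory default
    TransferSwitch("ats-3"),
]
```

Running `audit(fleet)` at installation time would flag the mismatched switch before a real utility failure does.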

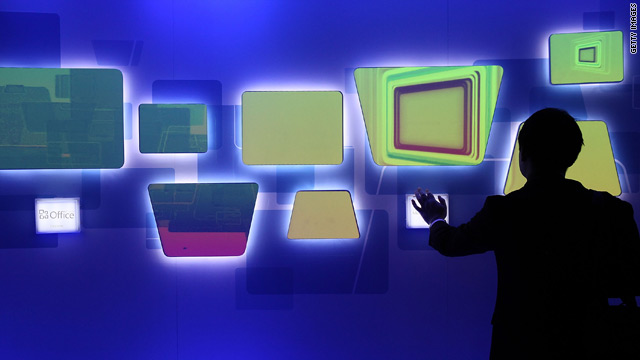

Lara Farrar asks Can Microsoft beat Google in the battle to rule cloud computing? in this 5/13/2010 story for CNN:

Microsoft made a major leap skywards this week with the release of a cloud-based version of its Office software to businesses called Office Web Apps.

But can it dominate cloud-based computing or will new giants emerge?

Microsoft's new product launch counters what many tech commentators thought the preeminent software company would never do.

"A lot of people say we will see pigs fly before we see Microsoft Office running in the cloud," said Tim O'Brien, Microsoft's senior director of platform strategy.

"We are betting big on the cloud with our most successful product, and we are investing heavily both in the product engineering side and the physical infrastructure side."

While Microsoft has offered varying cloud services for a while -- 15 years, according to O'Brien -- the launch of Office 2010, one of Microsoft's most lucrative products, is noteworthy.

It means a company that has made its mark selling shrink-wrapped software will be going head-to-head with Google and other firms that have grown up offering productivity tools online.

"It goes without saying that this is a massive step-function for the industry and it is a disruptive one," O'Brien told CNN. …

However, Google does not see its applications in direct competition to Microsoft.

"I certainly would characterize our Google Apps business very, very differently," Ken Norton, a senior project manager at Google, said. "It has been hugely successful."

More than 2 million businesses run Google Apps with more than 3,000 signing up each day, according to the company. Norton says one of the biggest challenges for Microsoft is whether it can innovate rapidly in the ever-changing environment of the Web, pointing out that Google had an "optimized" version of Gmail available for the iPad almost immediately after Apple released the device last month.

The search giant is also continuing to release new features on Google Labs, its site used to demonstrate and test new products, he said.

"In traditional software, you have to plan really far ahead," Norton told CNN.

"We can do releases constantly, which allows us to do innovative things... and get immediate responses from our end users. We are in a position where we are able to respond very quickly."

…

<Return to section navigation list>