Windows Azure and Cloud Computing Posts for 5/1/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

• Updates moved from Windows Azure and Cloud Computing Posts for 4/30/2010+ on 5/2/2010 due to excessive length

•• Updates added on 5/2/2010

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

•• Steve Marx claims Shared Access Signatures Are Easy These Days and proves it in this 5/2/2010 post:

I wrote a blog post back when Shared Access Signatures were first released called “New Storage Feature: Shared Access Signatures,” which gave some sample code to use what was then a brand new feature in Windows Azure storage (and not supported by the storage client library).

These days, using Shared Access Signatures is much simpler. I just wrote some .NET code that uses the Microsoft.WindowsAzure.StorageClient library to do the following:

- Create a blob.

- Generate a Shared Access Signature (SAS) for that blob that allows read and write access.

- Display a working URL to the blob.

- Modify and read back the blob using only the SAS for authorization.

Here’s the code:

    // regular old blob storage
    var account = CloudStorageAccount.DevelopmentStorageAccount; // or your cloud account
    var container = account
        .CreateCloudBlobClient()
        .GetContainerReference("testcontainer");
    container.CreateIfNotExist();
    var blob = container.GetBlobReference("test.txt");
    blob.Properties.ContentType = "text/plain";
    blob.UploadText("Hello, World!");

    // create a shared access signature (looks like a query param: ?se=...)
    var sas = blob.GetSharedAccessSignature(new SharedAccessPolicy()
    {
        Permissions = SharedAccessPermissions.Read
            | SharedAccessPermissions.Write,
        SharedAccessExpiryTime = DateTime.UtcNow + TimeSpan.FromMinutes(5)
    });
    Console.WriteLine("This link should work for the next five minutes:");
    Console.WriteLine(blob.Uri.AbsoluteUri + sas);

    // now just use the SAS to do blob operations
    var sasCreds = new StorageCredentialsSharedAccessSignature(sas);

    // new client using the same endpoint (including account name),
    // but using the SAS as the credentials
    var sasBlob = new CloudBlobClient(account.BlobEndpoint, sasCreds)
        .GetBlobReference("testcontainer/test.txt");
    sasBlob.UploadText("Hello again!");
    Console.WriteLine(sasBlob.DownloadText());

There’s nothing more to it than that! For more details about Shared Access Signatures, see “Cloud Cover Episode 8: Shared Access Signatures” or the MSDN documentation on the details of the signature itself.
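For readers curious about what sits behind that "?se=..." query string, here is a rough sketch of how a blob-level signature could be computed by hand with only the .NET base class library. It is not Steve's code or the storage client library's implementation. The field order in the string-to-sign (permissions, start, expiry, resource, identifier) and the parameter names sr, sp and sig are assumptions based on the MSDN documentation of this era, and the class and method names are hypothetical. Check the MSDN page before relying on any of it.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

public static class SasSketch
{
    // Builds the query string for a blob-level SAS with no start time and
    // no stored access policy. expiryUtc must already be a UTC time.
    public static string BuildBlobSas(string accountName, string base64Key,
        string container, string blobName, string permissions, DateTime expiryUtc)
    {
        // ISO 8601 UTC timestamp, e.g. 2010-05-02T12:00:00Z
        string expiry = expiryUtc.ToString("yyyy-MM-dd'T'HH:mm:ss'Z'",
            CultureInfo.InvariantCulture);

        // Canonicalized resource: /account/container/blob
        string resource = "/" + accountName + "/" + container + "/" + blobName;

        // ASSUMED field order: permissions, start, expiry, resource, identifier.
        // Start time and identifier are left empty in this sketch.
        string stringToSign = permissions + "\n" + "" + "\n" + expiry + "\n"
            + resource + "\n" + "";

        // The account key is Base64 text; the signature is an HMAC-SHA256
        // over the UTF-8 bytes of the string-to-sign, re-encoded as Base64.
        using (var hmac = new HMACSHA256(Convert.FromBase64String(base64Key)))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            string signature = Convert.ToBase64String(hash);

            return "?se=" + Uri.EscapeDataString(expiry)
                + "&sr=b"                        // resource type: blob
                + "&sp=" + permissions           // e.g. "rw" for read + write
                + "&sig=" + Uri.EscapeDataString(signature);
        }
    }
}
```

Calling SasSketch.BuildBlobSas with an account name, a Base64 key, "testcontainer", "test.txt", "rw" and an expiry five minutes out yields a string shaped like the one GetSharedAccessSignature returns in the listing above. In practice the library call is the better choice, because it tracks whatever signing rules the service actually enforces.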

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

•• The Strategy and Architecture Council (Microsoft Developer & Platform Evangelism - U.S. West Region) blog announced a one-hour OData and You: An everyday guide for Architects Webcast by Doug Purdy on 5/7/2010 at 12:00 Noon PDT:

Join Doug Purdy as he talks about the importance of the Open Data Protocol (OData) to Architects. Doug will provide context regarding the rise of OData, and the challenges it seeks to solve. Then, through both demo and discussion, Doug will explain why knowledge of OData is as important – if not more so – for Architects as it is for Developers. To conclude, Doug will discuss the implications OData has to service orientation and what he sees as future trends. If you missed Doug in the MIX 2010 keynote be sure to catch him here at the Architect Café!

Link to Register: http://bit.ly/OData_Guide

Presenter: Douglas Purdy, CTO, Data & Modeling, Microsoft

See additional description in the Microsoft Worldwide Events announced OData and You: An everyday guide for Architects Featuring Douglas Purdy post in the Cloud Computing Events section below.

•• Rex Hanson explains Using the SQL Server Spatial library in Windows Azure with the Microsoft.SqlServer.Types.dll and SqlServerSpatial.dll libraries that are compatible with Azure’s MSVCR80.dll version:

Microsoft recently announced that SQL Azure will support working with native spatial data in June of this year. This is great news, and significantly enhances the usability of SQL Azure as a geographic data repository. As a result, many folks may want to start utilizing spatial data in their Azure hosted Web applications. Working with SQL Server spatial data in a .NET application usually involves working with the SQL Server Spatial (SqlServerSpatial.dll) and Types (Microsoft.SqlServer.Types.dll) libraries included with the SQL Server System CLR Types feature pack. The technical capabilities of the SQL Server Spatial library have been established in numerous blog posts, conference sessions, forums, and in the product documentation.

Basically the library offers a standard, light-weight set of spatial operators built on geometry and geography spatial data types that are relatively easy to use and integrate in a .NET application. You can download, install, use, and distribute these libraries as needed. The latest edition of the SQL CLR Types was released in November 2009 for SQL Server 2008 R2. If the .NET application that uses the SQL spatial libraries is installed on a local machine or hosted on a local server, you have complete control over the environment in which it operates.

However, if you choose to deploy an ASP.NET application as a Windows Azure web service, you will encounter a limitation. The platform for Windows Azure is a flavor of 64-bit Windows Server 2008 prior to the R2 release. The Nov 2009 SQL Spatial library is dependent on the system library MSVCR80.dll, which is not included with this operating system. As a result, when you attempt to use the SQL Spatial library in your Azure hosted ASP.NET web service, you'll likely see the following error:

Unable to load DLL 'SqlServerSpatial.dll': The application has failed to start because its side-by-side configuration is incorrect. Please see the application event log for more detail. (Exception from HRESULT: 0x800736B1)

MSVCR80.dll is required by the SQL Spatial library and must be installed/configured on the host operating system; it cannot simply be dropped in the same directory. In addition, you cannot install features and components in your Windows Azure workspace. So how can you use the SQL Spatial library in an Azure hosted application? Simple: include a SQL Spatial library from a SQL Server 2008 (not R2) edition of the CLR Types. The functionality is basically unchanged. …

•• Bruce Johnson (@LACanuck) offers the slide deck from his Setting Your Data Free with OData presentation to the Toronto Code Camp 2010 on 5/2/2010.

• Charles Babcock claims SugarCRM VP Clint Oram “Thinks customers will like the option of moving software in-house” in his SugarCRM Gives Azure A Try article of 5/1/2010 for InformationWeek’s Windows blog:

Clint Oram isn't picky about cloud platforms. He wants SugarCRM to be run as a service from any one that customers want. "Our strategy is to run on all cloud platforms," says Oram, founder and VP of the open source CRM company.

That said, he does give a nod to Azure, where SugarCRM has been running for the past few months for a few customers. "Azure is by far one of the strongest platforms," he says. Oram adds that SugarCRM could move its code to Azure with very little engineering, despite being in PHP. Besides .Net, Azure now supports Java, Ruby, and Python as well as PHP. That's important, as the platform-as-a-service competition expands: VMware and Salesforce.com just teamed up to bring Java to Salesforce's Force platform (see "VMware Plus Salesforce.com, An Unlikely Pair").

Oram thinks customers will like that Azure makes it easy to move both an application and data between an in-house data center and Microsoft's. While SugarCRM supports both MySQL and Microsoft SQL Server, it runs SQL Azure in Microsoft's cloud. Oram says companies will take advantage of that compatibility. "You can opt to run SugarCRM on premises, keeping your data private, then decide to migrate to a SaaS strategy with very little engineering effort," he says. Moving to the cloud gives companies benefits such as automated high availability, he adds.

Since Azure offers standard relational database access to CRM data, companies could, Oram says, synchronize their data in the cloud with a matching set on premises. That would chew through some of the cloud's cost savings, but Oram believes it could become standard practice at some companies.

Makes sense to me. SQL Azure Data Sync could handle the synchronization scenario with little or no development cost.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

•• Cliff Simpkins (@cliffsimpkins) announced AppFabric Virtual Launch on May 20th – Save the Date for private cloud devotees at 8:30 AM PDT on 4/29/2010 in The .NET Endpoint blog:

To close out the month of April, I’m happy to share news of the event that the group will be conducting next month to launch Windows Server AppFabric and BizTalk Server. [Emphasis added.]

On May 20th @8:30 pacific, we will be kicking off the Application Infrastructure Virtual Launch Event on the web. The event will focus on how your current IT assets can harness some of the benefits of the cloud, bringing them on-premises, while also connecting them to new applications and data running in the cloud.

We have some excellent keynote speakers for the virtual launch:

- Yefim Natis, Vice President and Distinguished Analyst at Gartner: Yefim will discuss the latest trends in the application infrastructure space and the role of application infrastructure in helping business benefit from the cloud.

- Abhay Parasnis, General Manager of Application Server Group at Microsoft: Abhay will discuss how the latest Microsoft investments in the application infrastructure space will begin delivering the cloud benefits to Windows Server.

In addition to the keynotes, there will be additional sessions providing additional details around the participating products and capabilities that we will be delivering in the coming months. Folks who would likely get the most from the event are dev team leads and technical decision makers, as much of the content will focus more on benefits of the technologies, and much less on the implementation and architectural details.

That being said - the virtual launch event is open to all and requires no pre-registration, no hotel bookings, and no time waiting/boarding/flying on a plane. In the spirit of bringing cloud benefits to you, the team has decided to host our event in the cloud. :)

The Application Infrastructure Virtual Launch Event website is now live at http://www.appinfrastructure.com/ – today, you can add a reminder to your calendar and submit feedback on the topic you are most interested in. On Thursday, May 20th, at 8:30am Pacific Time, you can use the URI to participate in the virtual launch event.

Cliff’s blog post emphasizes Windows Server AppFabric, but Windows Azure AppFabric is included in the event also. See the footer of the Applications Infrastructure: Cloud benefits delivered page below:

Application Infrastructure Virtual Launch Event

While you explore how to use the cloud in the long-term, find out how to harness the benefits of the cloud here and now. In this virtual event, you’ll learn how to:

- Increase scale using commodity hardware.

- Boost speed and availability of current web, composite, and line-of-business applications.

- Build distributed applications spanning on-premises and cloud.

- Simplify building and management of composite applications.

- And much, much more!

Microsoft experts will help you realize cloud benefits in your current IT environment and also connect your current IT assets with new applications and data running in the cloud.

This is a valuable opportunity to learn about cloud technologies and build your computing skills. Don’t miss it!

••• Update 5/3/2010: Mary Jo Foley posted May 20: Microsoft to detail more of its private cloud plans on 5/3/2010 to her All About Microsoft blog:

Microsoft officials have been big on promises but slow on delivery, in terms of the company’s private-cloud offerings. However, it looks like the Redmondians are ready to talk more about a couple of key components of that strategy — including the Windows Server AppFabric component, as well as forthcoming BizTalk Server releases.

Microsoft has scheduled an “Application Infrastructure Virtual Launch Event” for May 20. According to a new post on the .Net Endpoint blog, “The event will focus on how your current IT assets can harness some of the benefits of the cloud, bringing them on-premises, while also connecting them to new applications and data running in the cloud.”

Windows Server AppFabric is the new name for several Windows infrastructure components, including the “Dublin” app server and “Velocity” caching technology. In March, Microsoft officials said to expect Windows Server AppFabric to ship in the third calendar quarter of 2010. Microsoft last month made available the final version of Windows Azure AppFabric, which is the new name for Microsoft’s .Net Services (the service bus and access control services pieces) of its cloud-computing platform.

BizTalk Server — Microsoft’s integration server — also is slated for a refresh this year, in the form of BizTalk Server 2010. That release will add support for Windows Server 2008 R2, SQL Server 2008 R2 and Visual Studio 2010, plus a handful of other updates. Company officials have said Microsoft also is working on a new “major” version of BizTalk, which it is calling BizTalk Server vNext. This release will be built on top of Microsoft’s Windows AppFabric platform. So far, the Softies have not provided a release target for BizTalk Server vNext. …

Mary Jo continues with an analysis of Microsoft’s “private cloud play,” such as the Dynamic Data Center Toolkit [for Hosters], announced more than a year ago, Windows Azure for private clouds, and Project Sydney for connecting public and private into hybrid clouds.

Due to their importance, the two preceding articles will be repeated in the Windows Azure and Cloud Computing Posts for 5/3/2010+ post.

•• Vittorio Bertocci (a.k.a., Vibro) wrote and illustrated Claims on the Client… (… and fire in the sky ♫) on 5/2/2010:

Don’t you hate it when a technical blog devolves in what is for the most part a series of announcements? That’s kind of what happened to mine. The reality is that making the things which get announced here take an inordinate amount of time, and that every remaining moment (usually none) should be spent writing the WIF book *gasps*.

Well, this time I am making an exception and spending some of this Seattle-Paris flight discussing a topic which came up very often in the last few months: how to obtain, and how to use, claims on a client. I had various discussions about it both internally and more recently during the workshops, and given the originality of my positions I think it’s high time I actually write it down and point people to it instead of restarting every time the conversation from the beginning.

Remember this blog’s disclaimer, those are MY thoughts (as opposed to the official IDA blog, please always refer to it first).

The Problem

Let’s say that you learned how to use WIF for driving the behavior of your web application (or web service). IClaimsPrincipal is your friend: You use claims for authorizing access to certain pages/services, you customize the appearance of the pages according to what the claims tell you about the user, and so on: all without the need of actually knowing what goes on under the hood. Life is good.

Let’s say that now you are asked to create a Silverlight application, let’s say in-browser for the sake of simplicity. You run the federation wizard on the hosting website, like you are used to for the web application case, and you obtain the usual redirect behavior to the identity provider. So far so good. However, when you go into the Silverlight code and search for the claims, perhaps for deciding if a certain portion of the UI should be enabled or disabled according to the user’s role, they are nowhere to be found. What’s going on?

Identity-wise, a classic Silverlight application is kind of a strange animal. The application has its home on a web site, the one on which the XAP resides, but the UI is in fact executed within the browser process, back on the client machine. It’s like a cat sleeping on the web server, which unfolds a very long neck and pops up its cute face in the client’s browser when you navigate there.

In the case above, when you ask the Identity Provider for a token you are in fact asking for it on behalf of the body of the cat, the web site. The code which drives the redirects, understands the token format, performs the various validations and so on all resides on the web server. In this case, the Silverlight code (the cat’s head) does not even see the token; and no token, no claims [noisily uncork a bottle of sparkly wine for reinforcing the point]. …

Vibro continues with a description of issues that occur when “writing a SL application for Windows Phone 7” and shows you “How to Make Claims Available to the Client.”

His Programming Windows Identity Foundation book for Microsoft Press is scheduled for publication on 9/15/2010.

• Vittorio Bertocci announced on 5/1/2010 that he will present three sessions about Windows Azure and Windows Identity Foundation (WIF) at the European Identity Conference & Cloud Summit 2010 in Munich:

I just (barely) made it back a few days ago from a 3-week EU trip (Belgium & UK WIF Workshops, Belgium TechDays and Italian VS2010 launch), and I am already setting my out of office message again.

In a few hours I have a flight for Munich, where I’ll deliver the German WIF Workshop and attend the European Identity Conference & Cloud Summit 2010. Looking forward to hanging out with all the friends working in the identity space! Despite all the travel I’ve been doing this year, I had to skip the classic events where the identity people gather (RSA, IIW) and there’s certainly no shortage of news to discuss.

Here are my sessions:

- 05/05/2010 16:30-17:30: Claims Based Identity and the Cloud

- 05/06/2010 16:30-17:30: Panel: Windows Azure, force.com, Google AppEngine, J2EE-based Approaches – What’s the Best Take for a Cloud Platform? [Emphasis added.]

- 05/07/2010 13:30-16:00: Claims & Cloud: Using Windows Identity Foundation in Windows Azure [Emphasis added.]

Microsoft is present in force at the EIC: Kim Cameron, Carla Canavor, Ariel Gordon, Tony Nadalin and Ronny Bjones will all be there. All our sessions are nicely summarized in this post on the government engagement team blog.

Well, I know that I always say it, but I am really looking forward to the workshop and the EIC :-)

If you happen to be at the conference in Munich don’t be shy, come to say hi!

If you read Italian or trust automated translation, check out this side-by-side translation by the Microsoft Translator of Vibro’s Nessuno e’ profeta in patria… (Nobody is [a] winner at home ...) post of 5/1/2010 about the Visual Studio 2010 Launch Event in Italy.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Eugenio Pace recommends designing for “unplug scenarios” in his Windows Azure Guidance – Failure recovery and data consistency – Part II post of 5/2/2010:

I had some great answers on my previous post question, like Simone’s. Some were closer than others, but in general you got it right. Thanks!

The recovery strategy depicted there assumes that all failures are external. That is, writing to a table fails, for example, and you have a chance to run the clean up code. But what happens if your own code fails? Remember: the entire VM can go away at any point in time!

Note: as someone told me once, you should design for “unplug scenarios”. That is, at any point in time your system should recover from someone unplugging your server.

For example, what happens if your VM evaporates just before it executes the SaveChanges:

Then you end up with some blobs, a few messages in a queue notifying a worker that image compression is needed, a record in the “Expense” table (the “write master” in the diagram above), but no details….

The additional problem is that the user can go back to the site and might even see the expense in the grid, but then when attempting to navigate to the details, guess what… nothing will be there.

The background process that looks for orphaned records might eventually pick up a “detail-less” Expense record and clean it. But this is probably not a great solution.

A small change can greatly improve the user experience. Can you suggest one?
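One possible answer, which is not necessarily the one Eugenio has in mind, is to reverse the write order: save the detail records first and the master “Expense” record last, so the master acts as a commit marker. The sketch below simulates that idea with in-memory dictionaries standing in for the Azure tables. The ExpenseStore class, its members and the crashBeforeMaster flag are all hypothetical names introduced for illustration.

```csharp
using System;
using System.Collections.Generic;

public class ExpenseStore
{
    // In-memory stand-ins for the master ("Expense") and detail tables.
    public readonly Dictionary<string, string> Masters =
        new Dictionary<string, string>();
    public readonly Dictionary<string, List<string>> Details =
        new Dictionary<string, List<string>>();

    // Writes the details first and the master record last.
    // crashBeforeMaster simulates the VM vanishing between the two writes.
    public void Save(string id, string title, List<string> items,
        bool crashBeforeMaster)
    {
        Details[id] = new List<string>(items);

        if (crashBeforeMaster)
            throw new InvalidOperationException("simulated VM loss");

        Masters[id] = title; // the expense becomes visible only now
    }

    // What the user's grid lists: only expenses whose master record exists.
    public IEnumerable<string> VisibleExpenseIds()
    {
        return Masters.Keys;
    }

    // Background cleanup: removes details that never got a master record
    // and returns how many orphaned entries were dropped.
    public int RemoveOrphanedDetails()
    {
        var orphans = new List<string>();
        foreach (string id in Details.Keys)
        {
            if (!Masters.ContainsKey(id)) orphans.Add(id);
        }
        foreach (string id in orphans) Details.Remove(id);
        return orphans.Count;
    }
}
```

With this ordering, an “unplug” between the two writes leaves orphaned details that the user never sees, instead of a visible expense with nothing behind it. The background cleanup process can then remove the orphans at its leisure.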

•• The Strategy and Architecture Council (Microsoft Developer & Platform Evangelism - U.S. West Region) announced the Azure Skittles – Series demo Azure project on 5/2/2010:

This skittles game is a very simple walkthrough of creating and debugging a Windows Azure application locally on a Windows 7 development machine with Visual Studio 2010.

We will have a WPF application that calls a WCF Web Role in Azure. The Web Role will enqueue a work request into an Azure Queue. The Worker Role will remove the work request from the Queue, process it and do something with it. The something I’ve drawn out is to place it into a SQL Azure database. I will do that part in a future post. I am going to go through the key steps. I’m not going to go into the nitty gritty or talk about best patterns & practices surrounding the application (this is skittles after all). This example was made on the RC of VS2K10, but also works on the RTM.

Read the entire series on John Alioto’s blog:

- Azure Skittles Part 1 (figures appear fixed)

- Azure Skittles Part 2

- Azure Skittles Part 3

- Azure Skittles Part 4

- Azure Skittles Part 5
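The message flow the series describes (a Web Role enqueues a work request, a Worker Role dequeues and processes it) can be pictured with a plain in-memory queue. This is only a sketch of the shape of the flow, not John's code. The SkittlesPipeline class and its members are hypothetical, and an ordinary Queue<string> stands in for the Azure Queue.

```csharp
using System;
using System.Collections.Generic;

public class SkittlesPipeline
{
    // Stand-in for the Azure Queue between the two roles.
    private readonly Queue<string> workQueue = new Queue<string>();

    // Stand-in for wherever the worker puts its results
    // (the series plans to use a SQL Azure database).
    private readonly List<string> store = new List<string>();

    // Web Role side: accept a request from the client and enqueue it.
    public void SubmitFromWebRole(string request)
    {
        if (string.IsNullOrEmpty(request))
            throw new ArgumentException("request is required");
        workQueue.Enqueue(request);
    }

    // Worker Role side: one polling pass that drains the queue,
    // "processes" each request and stores the result.
    // Returns the number of requests handled.
    public int RunWorkerPass()
    {
        int handled = 0;
        while (workQueue.Count > 0)
        {
            string request = workQueue.Dequeue();
            store.Add(request.ToUpperInvariant()); // placeholder processing
            handled++;
        }
        return handled;
    }

    public IList<string> StoredResults
    {
        get { return store.AsReadOnly(); }
    }
}
```

A real Azure Queue behaves differently in one important way: retrieving a message only hides it for a while, and the worker must delete it explicitly once processing succeeds. The in-memory Dequeue call above glosses over that step.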

•• Joannes Vermorel announced that Lokad’s Forecasting API v3 is live (REST + SOAP) on 5/2/2010:

About 18 months ago, we were announcing the release of the API v2. Today, we are proud to announce that Forecasting API v3 is live.

Both Safety Stock Calculator and Call Center Calculator have been already upgraded toward API v3 starting from the versions 2.5 and above.

As a primary benefit the 1h wait delay between data upload and forecast download is no more. We recommend to upgrade toward the latest versions of those apps.

(note: our Excel add-in has not been upgraded yet)

The primary focus of this release is simplicity. Indeed, the API v2 had incrementally grown to +20 web methods with both a certain level of redundancy and a lack of separation of concern.

Then, over the last 18 months, thanks to our growing partner network, we discovered many minor yet annoying glitches in API v2 related to popular programming environments (Java, Python, C++, Apex, ...). Thus, we made sure API v3 would avoid designs that prove to be troublesome in some environments.

Also, API v3 comes with both REST and SOAP endpoints. Indeed, REST has emerged as THE approach to ensure maximal interoperability in modern web-oriented enterprise environments, and we are very committed in staying up to the industry standards.

Here are a few facts about API v3:

- 8 web methods (and only 4 of them actually [are] required for production usage) while API v2 had 20+ methods. Less methods mean less time to spend deciphering our API spec.

- The .NET Forecasting Client for API v3 has been reduced to about 1k lines of code against +10k lines of code for the previous API v2 version.

Since API v3 provides all the features of API v2 while being much simpler, we have already started to migrate all existing service in production toward API v3.

API v2 won't be available for new users any more, but we will make sure all customers in production are properly migrated before shutting down the service for API v2.

Don't hesitate to contact us if you need any assistance in this process.

•• The OnWindows.com site reported Ignify streamlines payment process on 4/29/2010:

Ignify, a global customer relationship management and enterprise resource planning (ERP) consulting firm and e-commerce provider, has released ePayments for Microsoft Dynamics, a hosted invoice payment and management service that gives customers of companies using Microsoft ERP solutions a self-service portal for viewing, managing and paying invoices.

The service was developed in combination with Windows Azure, Microsoft’s cloud services platform, designating Ignify a member of Microsoft’s Front Runner programme, and is an extension of Ignify’s payment support for e-commerce sites currently used by over 200 large and medium sized businesses throughout the world.

“Through the technical and marketing support provided by the Front Runner programme, we are excited to see the innovative solutions built on the Windows Azure platform by the ISV community,” said Doug Hauger, general manager for Windows Azure, Microsoft. “The companies who choose to be a part of the Front Runner programme show initiative and technological advancement in their respective industries.”

ePayments for Microsoft Dynamics is a subscription service based on the software-as-a-service model and offers a viable approach for streamlining accounting operations and improving cash flow by reducing accounts receivables, simplifying direct payment practices, reducing the time to clear checks and eliminating paper checks altogether. …

<Return to section navigation list>

Windows Azure Infrastructure

•• Chris Murphy claims “Its Azure and apps strategies are giving businesses new choices” in his Microsoft's Cloud Plan: What's In It For You? article of 5/1/2010 for InformationWeek:

"We're actually trying to help people do what they really will need to do for the modern time. You don't get that out of what Amazon is doing."

-- Steve Ballmer

Microsoft's cloud computing strategy is a tale of two clouds. There are its popular software-as-a-service offerings: SharePoint, Exchange, Dynamics CRM, and the soon-to-be-released new Web version of Office 2010. And there's Microsoft's emerging platform as a service, Azure.

How quickly and easily customers adopt these offerings is an open question, but Microsoft CEO Steve Ballmer, in an interview at the company's Redmond, Wash., headquarters, says that every CIO he talks to is at least considering the move. And just in case they aren't, all of Microsoft's 2,000 account managers are being required to make a cloud pitch to each of their customers.

One of the main cogs of Microsoft's cloud strategy is Azure, its approach to selling computing power over the Internet based on usage, as customers need it. Microsoft has some enterprise customers such as Kelley Blue Book and Domino's testing key Web applications on Azure, and some smaller tech companies--including SugarCRM--sell software services running on the platform. But Azure continues to be a work in progress.

Azure is a long-term bet because, for it to be a blockbuster the way Microsoft envisions, software needs to be written differently--just as Web apps are different from client-server apps, which are different from mainframe software. Microsoft sees the switch to cloud development as being as significant as those earlier generational shifts. Developers will write applications, for example, so they take advantage of key features of a cloud architecture, such as the ability to automatically scale server resources up and down as needed for demand, treating their computing capacity as a pooled resource. Companies that run huge data centers to run Web apps--Google, Facebook, Microsoft, Amazon--do that today, but most enterprise data centers don't. …

You can download a free extended version of Chris’s article as a Microsoft's Cloud Plan Cloud Computing Brief from InformationWeek::Analytics. Highly recommended.

Kevin Jackson reports Open Group Publishes Guidelines on Cloud Computing ROI and claims the guidelines “Introduce the main factors affecting ROI from Cloud Computing” in this 5/2/2010 post:

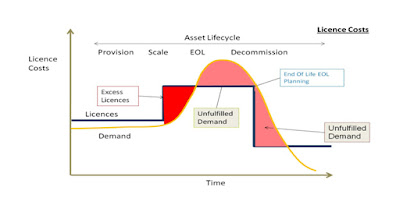

In an important industry contribution, The Open Group has published a white paper on how to build and measure cloud computing return on investment (ROI). Produced by the Cloud Business Artifacts (CBA) project of The Open Group Cloud Computing Work Group, the document:

- Introduces the main factors affecting ROI from Cloud Computing, and compares the business development of Cloud Computing with that of other innovative technologies;

- Describes the main approaches to building ROI by taking advantage of the benefits that Cloud Computing provides; and

- Describes approaches to measuring this ROI, absolutely and in comparison with traditional approaches to IT, by giving an overview of Cloud Key Performance Indicators (KPIs) and metrics.

In presenting their model, business metrics were used to translate indicators of cloud computing capacity-utilization curves into direct and indirect business benefits. The metrics used include:

Speed of Cost Reduction;

Optimizing Ownership Use;

[The Guidelines are] Available online here.

• tbtechnet’s On-ramp: Delivering your Applications over the Web post of 4/30/2010 points to the Microsoft Partner Network’s ISV Application Hosting and Consulting - S + S Incubation Center (Worldwide) page:

Are you an independent software vendor (ISV) interested in delivering your applications over the Web? Adding software-as-a-service delivery to your offerings provides you with an additional revenue stream and expands your customer service options. Experienced Microsoft hosting partners can ease the commercial, financial, and technical challenges you might encounter while adding service-based delivery to your applications.

The ISV Application Hosting and Consulting catalog includes Gold Certified hosting partners who guide ISVs through a structured series of business and architectural consulting sessions to ensure your business model and applications are ready for service-based delivery. These Software-plus-Services Incubation Centers will help you rapidly deploy your hosted applications in a reliable, secure environment.

Search the ISV Application Hosting and Consulting catalog to find a hosting partner in your area who can help you add software-as-a-service delivery to your applications.

I was surprised to find only four Gold Certified and one Registered Partners in the worldwide S + S Incubation Center list:

- NaviSite (Gold Certified Partner)

- New World Apps (Gold Certified Partner)

- OpSource (Gold Certified Partner)

- Tenzing (Gold Certified Partner)

- StrataScale (Registered Partner)

•• Richard Mayo and Charles Perng co-authored IBM’s Cloud Computing Payback: An Explanation of Where the ROI Comes From in November 2009, but ZDNet offered it as a download in May 2010. The authors assert the “Purpose of this Document” is:

As companies throughout the world examine the business value of Cloud Computing, it is important to understand how Cloud can lower IT expenses. Understanding how long it takes until your business can recoup the investment it made in Cloud Computing is the “payback” period. In today’s economic environment, this is a critical component of any investment analysis. When we speak with IT executives, regardless of the industry they are in, they want to have important answers to key cost saving questions about Cloud Computing. The intent of this document is to inform the reader about the five (5) key areas of costs savings that are associated with every Cloud infrastructure implementation.

Each of the five areas will be defined in terms of their characteristics, the savings that your company would anticipate achieving based on the size of your environment and the data elements that need to be benchmarked and tracked to project and calculate the savings. The tracking and calculation of savings is a key element of any Cloud implementation program. We will also provide the list of underlying projects that comprise each cost saving area. These projects are the “action steps” that can be undertaken to obtain the savings for your organization.

The savings described in this document are based on the implementation of a cloud computing environment versus a traditional infrastructure. A traditional infrastructure typically has the characteristics of a single application per server with manual provisioning and a siloed management environment, which results in high costs and low productivity. An IBM study has shown that moving from a traditional infrastructure to a public cloud can yield a modest reduction in costs. However, for significant cost reductions a private cloud infrastructure is required. This is due to the fact that the underlying cost savings of the public cloud accrue to the public cloud provider and are only partially passed on to the public cloud user. Setting up a private cloud computing environment results in the largest cost savings based on today’s public cloud pricing models.
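The “payback” period the authors describe reduces to simple arithmetic: the up-front investment divided by the savings realized per period. The following is a minimal sketch of that calculation, not the IBM paper's model; the function name and the dollar figures are hypothetical and chosen only for illustration.

```python
def payback_period_months(upfront_investment, monthly_savings):
    """Months of savings needed to recoup an up-front investment.

    Assumes savings are constant from month to month, which is a
    simplification; real savings usually ramp up over time.
    """
    if monthly_savings <= 0:
        raise ValueError("monthly_savings must be positive")
    return upfront_investment / monthly_savings


# Hypothetical figures, not taken from the IBM paper.
months = payback_period_months(upfront_investment=600_000, monthly_savings=25_000)
print(f"Payback period: {months:.1f} months")
```

With these assumed numbers, a $600,000 investment that saves $25,000 per month pays for itself in 24 months. Tracking the actual monthly savings against this projection is the kind of benchmarking the paper recommends.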

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

•• Cliff Simpkins (@cliffsimpkins) announced AppFabric Virtual Launch on May 20th – Save the Date for private cloud devotees at 8:30 AM PDT on 4/29/2010 in The .NET Endpoint blog. See the full text of the announcement (and a link to Mary Jo Foley’s coverage of the event) in the AppFabric: Access Control and Service Bus section above.

•• Microsoft Worldwide Events announced OData and You: An everyday guide for Architects Featuring Douglas Purdy to be presented on 5/7/2010 at 12:00 noon PDT:

Event Overview

- What are the challenges we’re trying to solve?

- What is OData?

- Why OData?

- Example: MIX OData API and Netflix OData API

- Why should architects care about this? Isn’t this just a developer consideration?

- What are the implications to my service architecture?

- How can I get started?

- Conclusion

- Q&A

Registration Options: Event ID: 1032450906

•• O’Reilly Media’s Web 2.0 Expo will be held at San Francisco’s Moscone West convention center on 5/3 to 5/6/2010. Following is the session schedule for the Cloud Computing track as of 5/2/2010:

Schedule: Focus on Cloud Computing sessions

Any web business has to consider using a cloud service. The decision will be based on economics, reliability and your risk tolerance. This track will cover the latest tools and technologies used to minimize your risk. We thank Joyent for supporting the event.

- 9:00am Tuesday, 05/04/2010

- I Made a Map of the Internet - And Other Lessons About Speeding Up Websites

- Location: 2006

- Tom Hughes-Croucher (Yahoo!)

I have made a map of everything involved in getting from your computer, via your ISP, to a web site and back. It's exhaustive, but that's the point. Where the heck should we optimize? This talk will take the audience on a journey through the guts of the internet to learn how and where to optimize for speed. Read more.

- 1:30pm Tuesday, 05/04/2010

- How to Make Cloud Computing into Smart Computing - Sponsored Session

- Location: 2014

- Jason Hoffman (Joyent, Inc.)

Many businesses are challenged when trying to make the reality of cloud computing meet the promise of cloud computing. They hope to eliminate the hassle of managing infrastructure, get rid of up-front capital costs, and make it possible to scale applications worry-free, but they hit roadblocks on the way. Read more.

- 11:00am Wednesday, 05/05/2010

- The Hidden Lessons of Cloud Computing

- Location: 2010

- Christopher Brown (Opscode)

While everyone has been loudly arguing the hype and kicking the tires, cloud computing has been quietly teaching us some valuable lessons. Which vendors or APIs succeed in the marketplace will be less interesting than the improved infrastructure, application architecture and even corporate IT deployments made possible by what we've learned. Read more.

- 1:30pm Wednesday, 05/05/2010

- Latency Trumps All

- Location: 2010

- Chris Saari (Yahoo!)

Addressing latency is what drives much of the real-time web revolution as we know it today. From Twitter’s up-to-the-moment updates to Google serving a search result in the blink of an eye, dealing with latency is the key to keeping the web, and your own computer, running quickly. Learn how to conquer latency for building fantastic products by looking at examples from around the industry. Read more.

- 2:35pm Wednesday, 05/05/2010

- A View From the Clouds

- Location: 2010

- Rajen Sheth (Google, Inc.)

Google Enterprise is at the forefront of the cloud computing momentum. In this session Google Senior Product Manager Rajen Sheth will talk about building Google Apps from the idea phase to today's suite used by millions worldwide. He'll also touch on how cloud providers work with businesses, schools and organizations interested in cloud computing to address the issues they face. Read more.

- 3:40pm Wednesday, 05/05/2010

- What the Platform Needs Next: Cloud Users Speak Up

- Location: 2010

- Moderated by: Alistair Croll (Bitcurrent)

- Panelists: Adrian Cole (jclouds), Jeremy Edberg (reddit.com), John Fan (Cardinal Blue Software), Eran Shir (Dapper)

What's most important in clouds? What killer features are missing, and what should clouds offer next? This panel will bring together heavyweight end users to look at the limitations of on-demand computing platforms today, and to suggest what needs to be built next in order for developers to truly embrace elastic computing. Read more.

I wonder why Microsoft, a Platinum Sponsor, didn’t present or sponsor a session or two about Windows Azure and SQL Azure, considering the company’s expenditure for Cloud Expo New York 2010 (see below.)

•• Bill Zack reviewed Microsoft’s participation in Cloud Expo New York 2010 in his Microsoft is “All In” Cloud Expo in New York post of 5/2/2010:

Lest there be any doubt that Microsoft is “All In” the cloud, we recently were one of the primary sponsors of Cloud Expo at New York’s Javits Convention Center.

The highlight of the expo was a complete Azure Data Center in a box, a 30-foot compute and storage container shipped in and assembled on the spot! More about that later.

"We are very serious about the cloud. We view it as a natural extension of on-premise software," said Yousef Khalidi, a Microsoft distinguished engineer working on the Microsoft Azure cloud offering, during his talk. "We believe in a hybrid model going forward, that would span the whole spectrum." (taken from an InfoWorld article)

Cloud Computing Expo was hosted by Sys-Con. It is “the premier industry showcase on the East Coast and featured the brightest, most well-respected thought leaders and practitioners in the Cloud/Utility Computing space.” It featured four days of presentations, panels and expo hall. The event brought together approximately 3,000 Senior Technologists including CIOs, CTOs, VPs of technology, IT Directors and managers, network and storage managers, network engineers, enterprise architects, communications and networking specialists from organizations of all sizes. It was four days of keynote presentations, panel sessions, and a tradeshow floor jam-packed with cloud-focused companies.

Microsoft’s goal for participating at Cloud Computing Expo was to convince the world that we are very serious about the Cloud.

Highlights of the event:

- Approximately 3,000 attendees

- Microsoft was a GOLD sponsor, with 6 sessions on the schedule presented by Microsoft and/or Microsoft partners.

- The Microsoft Global Foundation Services (GFS) datacenter container in our space

- 4 key Microsoft partners participating (Avanade, Cognizant, Infosys and SOASTA) were there to tell our entire Cloud story

General Takeaways:

- Attendees at the booth responded consistently that we certainly conveyed our holistic approach and the “We’re all in” messaging really came across well.

- Continued comments were “What’s Azure?” and “I didn’t know Microsoft had a CRM tool!?”

- The container rocks! It makes something intangible called the cloud very “real” and concrete for people. PLUS, it was just really cool!

- Having Microsoft partners woven into the presence and the sessions also made it clear that our customers can really get their solutions “landed” with Microsoft technologies. Microsoft has always had a very strong partner ecosystem and this event was no exception. Having partners with us at the event was key in lending credibility and offering a different perspective to attendees.

- Great interest in security and interoperability of Azure and the ability to easily develop and manage on premises and cloud apps seamlessly. …

Microsoft Conference Sessions:

Microsoft and/or Microsoft partners presented 6 sessions including:

- Programmed Session: Patterns for Cloud Computing Bill Zack, Architect Evangelist, Microsoft

- Programmed Session: CMO’s Toolkit: Harnessing Facebook using Cloud Computing Jeff Barnes, Architect Evangelist, Microsoft

- General Session (sponsored) : Microsoft’s Cloud Services Approach Yousef Khalidi, Distinguished Engineer, Microsoft

- Sponsored Session: Securing the Cloud Infrastructure at Microsoft Mark Estberg, Senior Director Information Security Risk & Compliance Management, Microsoft

- Sponsored Session: Cloud Computing Rules of Thumb and Best Practices Keith Pijanowski, PSA, Microsoft (Moderator); Becca Bushong Meadows, Director, Business Intelligence, Stanley Associates, Inc.; Al Perez, Chief Software Architect, Total Computer

- End User Power Panel: Who's Doing What (and Where) with Cloud Computing? …

•• David Chou announced Azure – the cool parts! A BizSpark Camp on 5/1/2010:

Want to know what makes Azure different? Learn how to deploy your own application to Azure in this free developer’s training and you could win a Zune HD! Light breakfast and lunch included!

This event will have a heavy technical focus, so make sure you don’t leave your web development skills at home!

Schedule for the day:

| Begins | Ends | Activity |
|---|---|---|
| 8 am | 8:30 am | Registration & continental breakfast |
| 8:30 am | 10:15 am | Azure presentations and demos |
| 10:15 am | 10:30 am | Break |
| 10:30 am | 12 pm | Azure presentations and demos, continued. |
| 12 pm | 1 pm | Lunch |
| 1 pm | 4:45 pm | Azure walkthrough followed by a "hackathon". |
| 4:45 pm | 5 pm | Zune HD giveaway |
| 5 pm | 6 pm | Networking |

Date & Registration Information:

- May 7, 2010: San Francisco: http://bizsparkcampsf.eventbrite.com

- June 4, 2010: Los Angeles: http://bizsparkcampla.eventbrite.com

•• James Governor’s IBM Impact: Saturday night thoughts post of 5/1/2010 begins:

I am in Las Vegas for IBM Impact 2010- Big Blue’s business alignment/middleware event. Why am I missing a bank holiday weekend for a tech show? Because this is one of the most important events of the year- if you’re an enterprisey geek, that is. IBM is a major Redmonk client, and I have been covering IBM middleware for 15 years now.

The main reason to be here- the people. Clients, friends, prospects, there will be as many people here for Impact as the Mayweather fight…

Having a paid gig while I am here doesn’t hurt either, and explains my early arrival. I will be presenting tomorrow at Sandy Carter’s Cool Cloud Cash workshop – which is all about how IBM business partners can make money from the cloud. I will be talking about onboarding and hand-holding, workload analysis and triage, security and data governance, and explaining why the cloud is just the same old burger, with a new relish.

Cloud will of course be a big deal at Impact this year, and I have to say IBM has done some outstanding work in the last 12 months in the space. There should be some meaty announcements to take in. …

• Vittorio Bertocci (a.k.a., Vibro) announced on 5/1/2010 that he will present three sessions about Windows Azure and Windows Identity Foundation (WIF) at the European Identity Conference & Cloud Summit 2010 in Munich. See details in the AppFabric: Access Control and Service Bus section above.

• The Citrix Web site claims the “Complete Session Catalog is now available” for Citrix Synergy 2010, San Francisco to be held at Moscone Center West, San Francisco, on 5/12 through 5/14/2010:

Synergy San Francisco, May 12-14, offers a packed agenda of breakout sessions, hands-on training labs, keynotes, demos and special activities designed to help attendees take advantage of the latest technologies. The complete Session Catalog is now live: it provides details on more than 60 sessions in three tightly focused tracks:

- Desktop Virtualization

- Datacenter/Cloud

- IT Business and Strategy, produced by CIO magazine and designed for senior IT executives

Synergy is the definitive conference on desktop virtualization and one of the most pragmatic events for what’s real in the cloud—public and private. Through open discussion, real-world use cases and practical skills training, Synergy will show you how the convergence of virtualization, networking and cloud computing can result in a simpler, more cost-effective IT infrastructure. Attending this global event is a strategic investment in the future of your computing environment—and your professional capabilities. …

Follow #Synergy, #Synergy2010, #CitrixSynergy and @Citrix on Twitter. Hashtag overpopulation, anyone?

<Return to section navigation list>

Other Cloud Computing Platforms and Services

•• Derrick Harris claims VMforce: Good for VMware, Bad for Oracle in this 5/2/2010 post to GigaOM:

Much has already been written about this week’s VMforce announcement, but my biggest question still hasn’t been answered: Who’s the biggest winner in this partnership – Salesforce.com or VMware? I’m also interested in who the biggest loser is, as Microsoft, Oracle, IBM and the entire SaaS-based CRM community all seem to have taken hits.

A Big Winner: Salesforce.com

As I wrote last week, the combination of SaaS and PaaS could prove to be powerful, and Salesforce.com was poised to capitalize on this if it only expanded its Force.com user base. Enter VMforce. Now, Salesforce.com can bring in a new — and much, much larger — developer community to build applications atop Force.com. Once they’re in, the hope is that the hooks into Salesforce.com’s various collaboration, support and SaaS tools will make them want to stay, and maybe even expand into Salesforce.com’s other services.

The Biggest Winner: VMware

I suspect VMforce represents a mere seed from which will sprout a vast PaaS empire. If VMware expands its PaaS partnerships beyond Salesforce.com – in the manner it has grown its vCloud ecosystem – users will be able to port both VMs and code from on-premise environments into the cloud, and then across a variety of cloud providers’ services. The one piece that makes all this flexibility possible: VMware. VMware also is facing an all-out assault on the virtualization front, and rather than battling simultaneously with Microsoft, Citrix, Oracle and Red Hat, it’s changing the nature of the conflict. If it were a matter of comparing apples to apples, customers would face a difficult choice, but VMware is trying to show them they can have an entire fruit basket.

The Biggest Loser: Oracle

Compared with Oracle, Salesforce.com now looks even more appealing as a SaaS option, and VMware looks more appealing as both a virtualization and Java platform option. IBM hasn’t gone anywhere either, and is pushing its cloud offerings hard. Even Microsoft enables Java development on Windows Azure, as does Google on App Engine. Oracle said it won’t be pursuing Sun’s cloud ambitions, but it might be time to rethink those plans, at least in terms of a PaaS offering.

Read the full article here, and be sure to attend Structure 2010 in June, where we’ll certainly hear more about VMforce from keynote speakers Paul Maritz and Marc Benioff.

Just couldn’t resist running another VMforce article! (Reading the full article requires a GigaOM Pro subscription.)

• Alex Williams quotes Eric Engleman’s Puget Sound Business Journal (Seattle) in Alex’s Amazon Pursues The Feds and the Potential Billions in Cloud Computing Services report of 5/1/2010 for the ReadWriteCloud blog:

Amazon is quietly pursuing the multi-billion dollar federal cloud computing market, intensifying an already fast accelerating sales and marketing effort by Google, Microsoft and a host of others.

According to the Seattle Business Journal, Amazon is making progress with federal agencies and is being included in a host of proposals.

It's a potentially huge market. According to the Business Journal, the federal government will spend $76 billion this year on IT. That includes $20 billion to pay for infrastructure.

Federal Chief Information Officer Vivek Kundra is leading a charge for the Obama administration to invest in cloud computing. This could represent any number of services, be it application hosting, test and development or for managing certain operations.

This is where Amazon may have sway. The company has the most experience of any cloud computing service provider. It is focusing on Amazon Web Services as the most profitable part of the business, exceeding annual e-commerce revenues.

The Seattle Business Journal cites one bid the company made with a Virginia-based IT Service company:

"In a sign of Amazon's interest in the federal cloud market, the company last year teamed with Apptis, the Chantilly, Va.-based government IT services company, to respond to a request for quotes (RFQ) put out by the General Services Administration. The GSA was seeking information on "Infrastructure-as-a-Service" offerings for the government, including cloud storage, virtual machines and cloud web hosting.

The GSA later canceled the RFQ, saying the cloud market had matured and it needed to make changes to the solicitation. The agency will put out a revised RFQ in the coming weeks. Amazon spokeswoman Kay Kinton called Apptis a "good partner" and said "we expect to keep working with them," but did not elaborate. She declined to talk about Amazon's plans for the new RFQ, saying the company hasn't seen it yet."

The Business Journal states that Amazon has an office in Washington, D.C. and has hired a former FBI officer with a background in computer security to help with developing relationships. …

I believe the assertion that Amazon Web Services is “exceeding annual e-commerce revenues” is overreaching or at least very premature. I don’t see this claim in the Puget Sound Business Journal’s Amazon quietly pursues federal cloud-computing work article.

• See The Citrix Web site claims the “Complete Session Catalog is now available” for Citrix Synergy 2010, San Francisco to be held at Moscone Center West, San Francisco, on 5/12 through 5/14/2010 in the Cloud Computing Events section above.

<Return to section navigation list>