Windows Azure and Cloud Computing Posts for 5/11/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Brad Calder’s Windows Azure Storage Abstractions and their Scalability Targets of 5/10/2010 is a comprehensive analysis of Windows Azure storage methodology:

The four object abstractions Windows Azure Storage provides for application developers are:

- Blobs – Provides a simple interface for storing named files along with metadata for the file.

- Tables – Provides massively scalable structured storage. A Table is a set of entities, which contain a set of properties. An application can manipulate the entities and query over any of the properties stored in a Table.

- Queues – Provides reliable storage and delivery of messages, so an application can build loosely coupled and scalable workflow between its different parts (roles).

- Drives – Provides durable NTFS volumes for Windows Azure applications to use. This allows applications to use existing NTFS APIs to access a network attached durable drive. Each drive is a network attached Page Blob formatted as a single volume NTFS VHD. In this post, we do not focus on drives, since their scalability is that of a single blob.

The accompanying slide shows the Windows Azure Storage abstractions and the URIs used for Blobs, Tables and Queues. In this post we will (a) go through each of these concepts, (b) describe how they are partitioned, and (c) talk about the scalability targets for these storage abstractions.

Storage Accounts and Picking their Locations

In order to access any of the storage abstractions you first need to create a storage account by going to the Windows Azure Developer Portal. When creating the storage account you can specify what location to place your storage account in. The six locations we currently offer are:

- US North Central

- US South Central

- Europe North

- Europe West

- Asia East

- Asia Southeast

As a best practice, you should choose the same location for your storage account and your hosted services, which you can also do in the Developer Portal. This allows the computation to have high bandwidth and low latency to storage, and the bandwidth is free between computation and storage in the same location.

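The slide also shows the URI used to access each data object:

- Blobs – http://<account>.blob.core.windows.net/<container>/<blobname>

- Tables – http://<account>.table.core.windows.net/<tablename>?$filter=<query>

- Queues – http://<account>.queue.core.windows.net/<queuename>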

The first thing to notice is that the storage account name you registered in the Developer Portal is the first part of the hostname. This is used via DNS to direct your request to the location that holds all of the storage data for that storage account. Therefore, all of the requests to that storage account (inserts, updates, deletes, and gets) go to that location to access your data. Finally, notice in the above hostnames the keyword “blob”, “table” and “queue”. This directs your request to the appropriate Blob, Table and Queue service in that location. Note, since the Blob, Table and Queue are separate services, they each have their own namespace under the storage account. This means in the same storage account you can have a Blob Container, Table and Queue each called “music”.
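The hostname rules above can be illustrated with a small Python sketch that builds the per-service endpoints from an account name. It assumes the http://<account>.<service>.core.windows.net pattern listed in the post. The account name myaccount, the resource names and the filter expression are hypothetical, and the helper functions are illustrative only; they are not part of any Azure SDK.

```python
# Sketch: compose Windows Azure storage URIs from an account name, assuming the
# http://<account>.<service>.core.windows.net pattern quoted in the post.
# Helper names and the sample account/resource names are hypothetical.
from typing import Optional
from urllib.parse import quote

SERVICES = ("blob", "table", "queue")


def service_host(account: str, service: str) -> str:
    """Return the hostname that DNS maps to the account's storage location."""
    if service not in SERVICES:
        raise ValueError(f"unknown storage service: {service}")
    # The account name is the leftmost label, so every request for the
    # account resolves to the location that holds its data.
    return f"{account}.{service}.core.windows.net"


def blob_uri(account: str, container: str, blob: str) -> str:
    return f"http://{service_host(account, 'blob')}/{container}/{blob}"


def table_uri(account: str, table: str, filter_expr: Optional[str] = None) -> str:
    uri = f"http://{service_host(account, 'table')}/{table}"
    if filter_expr:
        # Percent-encode the query expression for use in the URI.
        uri += "?$filter=" + quote(filter_expr)
    return uri


def queue_uri(account: str, queue: str) -> str:
    return f"http://{service_host(account, 'queue')}/{queue}"


# Blob, Table and Queue have separate namespaces, so one account can hold a
# container, a table and a queue that are all named "music".
print(blob_uri("myaccount", "music", "song.mp3"))
print(table_uri("myaccount", "music", "Genre eq 'Jazz'"))
print(queue_uri("myaccount", "music"))
```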

Now that you have a storage account, you can store all of your blobs, entities and messages in that storage account. A storage account can hold up to 100 TB of data. There is no other storage capacity limit for a storage account. In particular, there is no limit on the number of Blob Containers, Blobs, Tables, Entities, Queues or Messages that can be stored in the account, other than that they must all add up to less than 100 TB. …

Brad continues with details for Windows Azure Blobs, Tables, Queues, Partitions, Scalability and Performance Targets, and concludes with comments on scaling and latency:

See the next set of upcoming posts on best practices for scaling the performance of Blobs, Tables and Queues.

Finally, one question we get is what the expected latency is for accessing small objects:

- The latency is typically around 100ms when accessing small objects (less than 10s of KB) from Windows Azure hosted services that are located in the same location (sub-region) as the storage account.

- Once in a while the latency can increase to a few seconds during heavy spikes while automatic load balancing kicks in (see the upcoming post on Windows Azure Storage Architecture and Availability).

SQL Azure Database, Codename “Dallas” and OData

David Robinson’s Connections and SQL Azure post of 5/11/2010 to the SQL Azure Team blog begins:

When you use a web-enabled database like SQL Azure, you need connections over the Internet or other complex networks, so you should be prepared to handle unexpectedly dropped connections. Established connections include connections that are returning data, open connections in the connection pool, and connections cached in client-side variables. When you connect to SQL Azure, connection loss is a valid scenario that you need to plan for in your code. The best way to handle connection loss is to re-establish the connection and then re-execute the failed commands or query.

Network reliability

The quality of all network components between the machine running your client code and the SQL Azure servers is at times outside of Microsoft’s sphere of control. Any number of problems on the Internet may disconnect your session. When you run applications in Windows Azure, the risk of disconnection is significantly reduced because the distance between the application and the server is reduced.

When a network problem causes a disconnection, SQL Azure has no opportunity to return a meaningful error to the application because the session has already been terminated. However, when you reuse this connection (for example, through connection pooling), you will get error 10053: “A transport-level error has occurred when receiving results from the server. (provider: TCP Provider, error: 0 - An established connection was aborted by the software in your host machine.)”

Redundancy Requires Connection Retry Considerations

If you are used to connecting to a single SQL Server within the same LAN and that server fails or goes down for an upgrade, your application gets disconnected permanently. However, if you have been coding against a redundant SQL Server environment, you might already have code in place to reconnect to your redundant server when your primary server is unavailable. In this situation you suffer a short disconnect instead of extended downtime. SQL Azure behaves much like a redundant SQL Server cluster. The SQL Azure fabric manages the health of every node in the system. If the fabric notices that a node is unhealthy, or that a node is ready to be taken offline (for example, for an upgrade), it automatically reconnects your session to a replica of your database on a different node.

Currently, some failover actions abruptly terminate a session, and the client then receives a generic network disconnect error (“A transport-level error has occurred when receiving results from the server. (provider: TCP Provider, error: 0 - An established connection was aborted by the software in your host machine.)”). The best course of action in these situations is to reconnect; SQL Azure will automatically connect you to a healthy replica of your database.

Resource Management by SQL Azure

Like any other database, SQL Azure will at times terminate sessions due to errors, resource shortages and other transient reasons. In these situations SQL Azure will always attempt to return a specific error if the client connection has an active request. It is important to note that SQL Azure may not be able to return an error to a client application that has no pending requests. For example, if you are connected to your database through SQL Server Management Studio for longer than 30 minutes without any active request, your session will time out. Because there are no active requests, SQL Azure can’t return an error.

In these circumstances, SQL Azure will close an already established connection:

- An idle connection was held by an application for more than 30 minutes.

- You went to lunch and left your SQL Server Management Studio connection idle for longer than 30 minutes.
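A client can avoid reusing a connection that SQL Azure has already closed by checking idle time before taking the connection from a pool. The sketch below is hypothetical: PooledConnection and prune_idle are illustrative names, not part of ADO.NET or any driver, and real pools track idle time internally. In practice you might use a limit somewhat below 30 minutes as a safety margin.

```python
# Sketch: drop pooled connections that have been idle longer than the
# 30-minute limit before reusing them. PooledConnection and prune_idle are
# hypothetical names; real connection pools handle this internally.
import time
from dataclasses import dataclass, field
from typing import List

IDLE_LIMIT_SECONDS = 30 * 60  # idle limit described in the post


@dataclass
class PooledConnection:
    conn_id: int
    last_used: float = field(default_factory=time.monotonic)


def prune_idle(pool: List[PooledConnection], now: float) -> List[PooledConnection]:
    """Keep only connections whose idle time is within the limit."""
    # A connection idle past the limit has probably been closed by the
    # server without an error, because no request was pending.
    return [c for c in pool if now - c.last_used <= IDLE_LIMIT_SECONDS]


now = 10_000.0
pool = [PooledConnection(1, last_used=now - 60),       # idle for 1 minute
        PooledConnection(2, last_used=now - 45 * 60)]  # idle for 45 minutes
print([c.conn_id for c in prune_idle(pool, now)])  # [1]
```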

SQL Azure Errors

Before we start writing code to handle connection loss, note that a few other SQL Azure errors also call for re-establishing the connection and then re-executing the failed commands or query. They include:

- 40197 - The service has encountered an error processing your request. Please try again.

- 40501 - The service is currently busy. Retry the request after 10 seconds.
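The reconnect-and-re-execute pattern for these error numbers can be sketched in Python. This is not Dave's C# code. TransientDbError, open_connection and run_query are hypothetical stand-ins for your data-access library, and the 1-second pause for errors other than 40501 is an assumption. Retrying is only safe for commands that can be repeated without side effects.

```python
# Sketch of "reconnect, then re-execute" for the transient SQL Azure errors
# quoted in this post. TransientDbError, open_connection and run_query are
# hypothetical stand-ins for a real data-access library.
import time

RETRYABLE_ERRORS = {10053, 40197, 40501}


class TransientDbError(Exception):
    def __init__(self, number: int, message: str = ""):
        super().__init__(message or f"SQL error {number}")
        self.number = number


def execute_with_retry(open_connection, run_query, max_attempts=3, sleep=time.sleep):
    """Open a fresh connection and run the query, retrying transient failures."""
    for attempt in range(1, max_attempts + 1):
        try:
            conn = open_connection()  # always re-establish the connection first
            return run_query(conn)    # then re-execute the failed command
        except TransientDbError as err:
            if err.number not in RETRYABLE_ERRORS or attempt == max_attempts:
                raise
            # 40501 asks the caller to wait 10 seconds; 1 second is an assumed
            # pause for the other retryable errors.
            sleep(10 if err.number == 40501 else 1)


# Usage with fakes: the first call fails with 40501, the second succeeds.
attempts = []

def flaky_query(conn):
    attempts.append(conn)
    if len(attempts) == 1:
        raise TransientDbError(40501, "The service is currently busy.")
    return "42 rows"

waits = []
print(execute_with_retry(lambda: object(), flaky_query, sleep=waits.append))  # 42 rows
print(waits)  # [10]
```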

Dave continues with C# SQL Azure connection code to accommodate the preceding issues.

Alex James continues his series with OData and Authentication – Part 2 – Windows Authentication of 5/11/2010:

Imagine you have an OData Service installed on your domain somewhere, probably using the .NET Data Services producer libraries, and you want to authenticate clients against your corporate Active Directory.

How do you do this?

On the Serverside

First on the IIS box hosting your Data Service you need to turn on integrated security, and you may want to turn off anonymous access too.

Now all unauthenticated requests to the website hosting your data service will be issued an HTTP 401 challenge.

For Windows Authentication the 401 response will include these headers:

WWW-Authenticate: NTLM

WWW-Authenticate: Negotiate

The NTLM header means you need to use Windows Authentication.

The Negotiate header means that the client can try to negotiate the use of Kerberos to authenticate. That is only possible if both the client and server have access to a shared Kerberos Key Distribution Centre (KDC). If, for whatever reason, a KDC isn’t available, a standard NTLM handshake will occur.
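A client that receives these challenges has to pick a scheme from the WWW-Authenticate headers. The sketch below assumes a simple preference order (Negotiate, then NTLM, then Basic) for illustration. choose_scheme is a hypothetical helper, and it does not perform the Kerberos or NTLM handshake itself.

```python
# Sketch: choose an authentication scheme from a 401 response's
# WWW-Authenticate headers. The preference order is an assumption.
from typing import Iterable, Optional

PREFERENCE = ("negotiate", "ntlm", "basic")


def choose_scheme(www_authenticate: Iterable[str]) -> Optional[str]:
    """Return the most preferred scheme the server offered, or None."""
    # The scheme name is the first token of each header value.
    offered = {h.split(None, 1)[0].lower() for h in www_authenticate if h.strip()}
    for scheme in PREFERENCE:
        if scheme in offered:
            return scheme
    return None


print(choose_scheme(["NTLM", "Negotiate"]))            # negotiate
print(choose_scheme(['Basic realm="mydomain.com"']))   # basic
```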

Detour: Basic Authentication

While we are looking into Windows Authentication it is worth quickly covering basic auth too because the process is very similar.

When you configure IIS to use Basic Auth the 401 will have a different header:

WWW-Authenticate: Basic realm="mydomain.com"

This tells the client to do a Basic Auth handshake to provide credentials for 'mydomain.com'. The ‘handshake’ in Basic Auth is very simple, and very insecure unless you are also using HTTPS.
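Basic Auth is insecure without HTTPS because the credentials in the Authorization header are only base64-encoded, not encrypted (the Basic scheme is specified in RFC 7617). This minimal sketch builds such a header; the user name and password are made up.

```python
# Sketch: build a Basic Authorization header. Base64 is an encoding, not
# encryption, which is why Basic Auth should only be used over HTTPS.
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Return the Authorization header value for the Basic scheme."""
    credentials = f"{user}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


print(basic_auth_header("alice", "secret"))  # Basic YWxpY2U6c2VjcmV0
```

Anyone who captures the header can reverse it with a single base64 decode, which is the practical meaning of "very insecure" above.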

On the Clientside

Now that an NTLM challenge has been made, what happens next?

In the browser:

Most browsers will present a logon dialog when they receive a 401 challenge. So, assuming the user provides valid credentials, they are typically free to start browsing the rest of the site and, by extension, the OData service.

Occasionally the browser and the website can "Negotiate" and agree to use Kerberos, in which case the authentication can happen automatically without any user input.

The key takeaway though is that in a browser it is pretty easy to authenticate against a Data Service that is secured by Windows Authentication.

Alex continues with examples for .NET client and Silverlight client apps, and concludes:

As you can see, using Windows Authentication with OData is pretty simple, especially if you are using the Data Services libraries.

But even if you can’t, the principles are easy enough, so clients designed for other platforms should be able to authenticate without too much trouble too.

Next time out we’ll cover a more complicated scenario involving OAuth.

Alex James started the series with OData and Authentication – Part 1 on 5/10/2010.

Marcelo Lopez Ruiz continues his WCF Data Service series with WCF Data Service Projections - null handling and expansion of 5/10/2010:

One of the capabilities of WCF Data Service projections on the client that I wanted to call out is the ability to include expanded entities.

So I can write something like the following, which returns the ID, LastName and manager's ID and LastName for each employee. This is just a Northwind database with the 'Employee1' property renamed to 'Manager'.

var q = from e in service.Employees
        select new Employee()
        {
            EmployeeID = e.EmployeeID,
            LastName = e.LastName,
            Manager = new Employee()
            {
                EmployeeID = e.Manager.EmployeeID,
                LastName = e.Manager.LastName,
            }
        };

This query works because we're projecting values into entity types without doing any transformations, so the values will generally round-trip correctly.

For the expanded Manager employee, note that we must initialize it from the projected Manager employee as well. Mixing properties from different entities into a single one would corrupt the entity state, so the local query processor would reject such a query.
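On the wire, a projection like Marcelo's corresponds roughly to an OData query that combines $expand for the Manager navigation property with $select for the projected properties. The Python helper below is a hypothetical illustration of that mapping. The exact URL that the WCF Data Services client generates may differ.

```python
# Sketch: map projected property paths to an OData $expand/$select query.
# projection_query is a hypothetical helper; the URL the WCF Data Services
# client actually emits may differ in detail.
from typing import List


def projection_query(entity_set: str, paths: List[str]) -> str:
    """Build '<set>?$expand=...&$select=...' from paths like 'Manager/LastName'."""
    expands: List[str] = []
    for path in paths:
        if "/" in path:
            # Everything before the last segment is a navigation property.
            nav = path.rsplit("/", 1)[0]
            if nav not in expands:
                expands.append(nav)
    options = []
    if expands:
        options.append("$expand=" + ",".join(expands))
    options.append("$select=" + ",".join(paths))
    return f"{entity_set}?" + "&".join(options)


print(projection_query("Employees", ["EmployeeID", "LastName",
                                      "Manager/EmployeeID", "Manager/LastName"]))
# Employees?$expand=Manager&$select=EmployeeID,LastName,Manager/EmployeeID,Manager/LastName
```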

Marcelo continues with more details and sample code.

AppFabric: Access Control and Service Bus

Wade Wegner explains Using the .NET Framework 4.0 with the Azure AppFabric SDK in this 5/11/2010 post:

The other day I attempted to build a sample application that communicated with the Azure AppFabric Service Bus by creating a Console application targeting the .NET Framework 4.0. After adding a reference to Microsoft.ServiceBus I was bewildered to see that my Service Bus bindings in the system.ServiceModel section were not recognized.

I soon realized that the issue was the machine.config file. When you install the Azure AppFabric SDK the relevant WCF extensions are added to the .NET Framework 2.0 machine.config file, which is shared by .NET Framework 3.0 and 3.5. However, .NET Framework 4.0 has its own machine.config file, and the SDK will not update the WCF extensions.

Fortunately, there’s an easy solution to this issue: use the CLR’s requiredRuntime feature.

- Create a configuration file named RelayConfigurationInstaller.exe.config in the “%ProgramFiles%\Windows Azure platform AppFabric SDK\V1.0\Assemblies\” folder with the following code:

<?xml version="1.0"?>
<configuration>
  <startup>
    <requiredRuntime safemode="true"
                     imageVersion="v4.0.30319"
                     version="v4.0.30319"/>
  </startup>
</configuration>

- Open an elevated Visual Studio 2010 Command Prompt, browse to the directory, and run: RelayConfigurationInstaller.exe /i
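If you need the configuration file on several machines, you can generate it instead of typing it. This Python sketch uses the standard xml.etree.ElementTree module to emit the same requiredRuntime settings shown above. relay_installer_config is a hypothetical helper. You would still copy its output to the SDK's Assemblies folder and run the installer as described.

```python
# Sketch: generate RelayConfigurationInstaller.exe.config with the standard
# library. relay_installer_config is a hypothetical helper name.
import xml.etree.ElementTree as ET


def relay_installer_config(clr_version: str = "v4.0.30319") -> str:
    """Return config XML that makes the installer run on the given CLR."""
    configuration = ET.Element("configuration")
    startup = ET.SubElement(configuration, "startup")
    ET.SubElement(startup, "requiredRuntime", safemode="true",
                  imageVersion=clr_version, version=clr_version)
    return '<?xml version="1.0"?>\n' + ET.tostring(configuration, encoding="unicode")


print(relay_installer_config())
```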

Your .NET Framework 4.0 machine.config file will now have the required configuration settings for the Service Bus bindings. Thanks to Vishal Chowdhary for the insight!

Vittorio Bertocci (Vibro) announced The New Release of the Identity Training Kit works on VS2010! on 5/10/2010:

Fig 1. The Identity Developer Training Kit in all its new-vs2010-theme-design awesomeness

In the last few months we have seen a growing demand for making our samples & guidance available to VS2010 users. I am happy to announce that we finally have a VS2010 RTM version of the Identity Developer Training Kit!

Based on the latest build of the WIF SDK, the April 2010 release of the Training Kit works with VS2010 RTM & the .NET fx 4.0 and removes all dependencies on VS2008 and .NET 3.5 (only exception being the Windows Azure labs, which still require the presence of .NET 3.5 since the Windows Azure VMs are 3.5 based). All instructions have been updated, and all the screenshots & code snippets are now targeted at VS2010. Yes, I know, today it’s already May… don’t ask :-)

We decided to keep the former version (March 2010) of the training kit available on the download page as well, so that if you work with VS2008 you still have something to refer to. However, be aware that going forward we will update and maintain only the VS2010-compatible version. Ah, one last thing: just as the new WIF SDK 4.0 samples won’t work side by side (SxS) with the samples in the WIF SDK 3.5, the March 2010 and April 2010 versions of the training kit will NOT work side by side. If you have both VS2010 and VS2008 on your machine, you will have to choose which environment to use with the training kit and install the appropriate package.

Well, that’s it: welcome to the new decade! ;-)

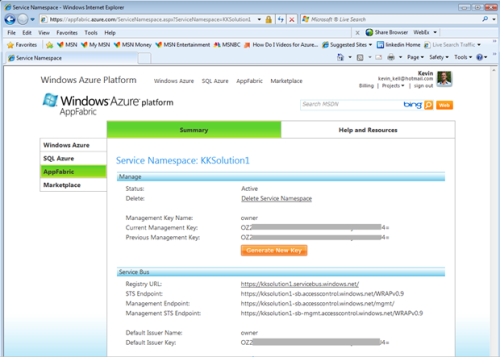

Kevin Kell’s Azure Platform AppFabric Service Bus post of 5/10/2010 for LearningTree.com describes the Azure AppFabric:

Okay, back to Windows Azure and the Azure Platform …

My personal opinion is that Azure is a very compelling cloud offering. While certainly not perfect, and not without the need for some developer effort, I continue to believe that Azure is a very attractive option for a variety of reasons. This is particularly true for .NET developers.

Organizations wishing to develop a “hybrid” cloud solution should definitely consider the AppFabric Service Bus as a technology of choice. If the hybrid solution is based on Windows and .NET, then this choice is extremely appealing.

So what exactly is the Service Bus and what can you use it for?

Well, basically, the Service Bus allows interoperability between applications and services transparently over the Internet. Service Bus circumvents the need to deal with network address translation, firewalls, opening new ports, etc. Applications and services that can authenticate with the Service Bus can communicate with one another without knowing any of the details of where the other is running. Essentially the Service Bus acts as a trusted third party in the communication between an arbitrary host and client. This is perfect for allowing communication between something running in a private data center and something running on the cloud (aka a “hybrid” solution)!
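The relay idea can be illustrated with a toy in-memory model in which the host and the client only call out to a shared relay, never to each other. This is a conceptual sketch, not the AppFabric API. Relay, listen and send are hypothetical names, the sb:// address is made up, and a real Service Bus relay also authenticates both parties.

```python
# Sketch: a toy relay. The host registers a handler and the client sends to
# an address; neither knows where the other runs. Not the AppFabric API.
from typing import Callable, Dict

Handler = Callable[[str], str]


class Relay:
    def __init__(self) -> None:
        self._listeners: Dict[str, Handler] = {}

    def listen(self, address: str, handler: Handler) -> None:
        # The host connects outbound and registers; no inbound port is opened.
        self._listeners[address] = handler

    def send(self, address: str, message: str) -> str:
        # The client knows only the relay address, not the host's location.
        handler = self._listeners.get(address)
        if handler is None:
            raise LookupError(f"no listener registered at {address}")
        return handler(message)


relay = Relay()
relay.listen("sb://contoso/payments", lambda msg: f"charged {msg}")
print(relay.send("sb://contoso/payments", "order 17"))  # charged order 17
```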

How does this work in practice?

Well, the first thing you have to do is to create an AppFabric project:

Figure 1 Creating an AppFabric Project

Within the project you can define multiple namespaces. Within each namespace you have access to endpoints, names and keys you need to utilize the Service Bus.

Figure 2 The Service Namespace

Then, you can use these endpoints and keys in your client and host projects to enable them to communicate.

Let’s consider an example:

http://www.youtube.com/watch?v=8muyy_vC_sg

So, enabling communications between applications is perhaps not as simple as one might like. The purpose of the Service Bus, though, is to make implementing these communications as straightforward as possible.

Enroll in Learning Tree’s Windows Azure Programming course to learn in much more detail how to utilize the AppFabric Service Bus in your hybrid cloud solutions!

Mario Szpuszta’s (mszCool) TechDays 2010 – Windows Azure AppFabric Session – Download a Sample – From all On-Premise to partial Cloud partial on-premise… post of 5/9/2010 begins:

In my last posting I published the first set of downloads for my sessions at TechDays 2010 in Basel, Switzerland. I delivered two sessions at the conference:

- Designing and implementing Claims-Based Security-Solutions with WIF (see my last posting for all the details)

- Understanding and using Windows Azure AppFabric

  - Presentation for Download

  - Code Samples for Download

  - Video of my presentation

In this post I’ll explain what I did in my session at TechDays and why. Because it’s going to be a little long, I’ll write a separate post explaining how to set up the complete demo environment. In this posting you’ll learn about a scenario where using Azure AppFabric to integrate cloud and on-premise solutions makes perfect sense.

Again, I used the same sample as I did for the WIF session at TechDays; I just modified it in a different way during the session (okay, that was to save time :)). The original scenario is made up of two web shop applications that call a payment web service as follows:

In the original solution everything runs on-premise. When thinking about the cloud it’s obvious what I want to do:

- The shop-applications should run in the cloud! They can benefit from the elasticity, the scale-out and the pay-per-use model of the cloud. At the same time, these shop-applications do not really store sensitive data.

- The payment-service should stay on-premise! Why? Because it stores highly sensitive data, our customers’ payment information, and I don’t know whether I am allowed to store this data outside of our country. Therefore I want to keep it in our own data centers to keep control over this sensitive data.

But the shop needs to call the payment service. The shop front ends running in the cloud need to be able to call the payment service without any hurdles. Of course you can do this by opening up firewall ports, registering names, dealing with proxies and network address translation and all that stuff. But with AppFabric you can do this in a different way, and in my opinion it has advantages. The modified architecture after the session looked as follows:

The Windows Azure AppFabric and its Service Bus component act as a communication relay between the service consumer and the service provider. Both connect outbound (inside-out) from their data centers to the AppFabric. All access to the Service Bus itself and between the involved service providers and consumers is secured by a component called Access Control Service.

The ACS (access control service) allows you to do fine-grained, claims-based authorization based on standards (OAuth WRAP at the moment, later it will be extended with a full-blown STS using WS-* and SAML). …
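An OAuth WRAP exchange with ACS consists of posting form-encoded credentials and then presenting the returned token in an Authorization header. The sketch below only builds and parses those messages and makes no network call. The field names follow the OAuth WRAP (v0.9) account-and-password profile as I understand it. The issuer name, key, scope URL and token are made-up values, and the ACS endpoint address is deliberately left out.

```python
# Sketch: build and parse OAuth WRAP messages for an ACS token request.
# No network call is made; all values below are made up.
from urllib.parse import parse_qs, urlencode


def wrap_token_request_body(name: str, password: str, scope: str) -> str:
    """Form-encoded body POSTed to the ACS WRAP endpoint."""
    return urlencode({"wrap_name": name, "wrap_password": password,
                      "wrap_scope": scope})


def parse_wrap_token_response(body: str) -> str:
    """Extract the access token from ACS's form-encoded response."""
    return parse_qs(body)["wrap_access_token"][0]


def wrap_authorization_header(token: str) -> str:
    """Authorization header value presented to the protected service."""
    return f'WRAP access_token="{token}"'


request = wrap_token_request_body("owner", "issuer-key",
                                  "http://contoso.servicebus.windows.net/payments")
response = "wrap_access_token=abc%3D%3D&wrap_access_token_expires_in=1200"
token = parse_wrap_token_response(response)
print(wrap_authorization_header(token))  # WRAP access_token="abc=="
```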

Mario continues with a description of the project and concludes:

Stay tuned for a subsequent post where I will provide step-by-step instructions to setup my demo-environment.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Tayloe Stansbury describes Intuit’s Principles for Creating and Driving Value in the Cloud in this 00:37:05 video archive of his 5/11/2010 keynote to the SIIA’s All About the Cloud conference in San Francisco:

Tayloe, who’s Senior VP and Chief Technology Officer, describes how Intuit has moved from a boxed software publisher to primarily a cloud-based Software as a Service business. Intuit has 4 million small-business customers in the Americas. Intuit has teamed up with Microsoft to provide Windows Azure SDK 1.0 for the Intuit Partner Platform. The SDK supports Intuit Data Services (IDS) and makes it easy for developers to program against customers' QuickBooks data within their applications. The SDK also includes an IDS-integrated sample app.

This keynote is highly recommended for anyone considering marketing commercial consumer-oriented software in the cloud.

Repeated in the Cloud Computing Events section below.

Intuit announces it will Acquire Healthcare Communications Leader Medfusion in this 5/10/2010 press release:

Intuit Inc. (Nasdaq: INTU) has signed a definitive agreement to acquire privately held Medfusion, a Cary, N.C. based leader in patient-to-provider communications. Medfusion offers enhanced online solutions that enable healthcare providers to offer superior service to their patients while improving office efficiency and generating revenue. The cash transaction is valued at approximately $91 million.

The acquisition will accelerate Intuit's healthcare strategy by combining the company's proven track record in creating innovative, easy-to-use consumer and small business solutions with Medfusion's industry-leading patient-to-provider communication solutions. Together the companies will be able to resolve healthcare provider and consumer concerns, such as:

- Enabling more effective and efficient patient interactions online.

- Accessing and managing their personal health information.

- Creating more efficient ways for patients to settle and track their healthcare expenses.

"This transaction expands our software-as-a-service offerings with a solution currently used by more than 30,000 healthcare providers, the vast majority of whom are essentially small businesses," said Brad Smith, Intuit president and chief executive officer. "The combination of Medfusion's industry-leading patient-provider communication solutions and Intuit's expertise in creating innovative solutions that improve the financial lives of small businesses and consumers, will help us create new solutions that make the clinical, administrative and financial side of healthcare easier for everyone." …

John Moore comments on Intuit’s acquisition in his Intuit Makes a Move, Acquires Medfusion post of 5/11/2010 to the Chilmark Research blog:

Yesterday, Intuit announced its intent to acquire HIT vendor Medfusion for roughly $91M. This is an interesting and savvy move by Intuit. It is interesting in that Intuit is signaling an intent to enter the small physician market more directly. Chilmark also sees this as a savvy move, as this acquisition gives Intuit a relatively inexpensive point of access to some 30,000+ physicians that Medfusion currently serves. This provides Intuit an opportunity to sell its financial accounting solutions (QuickBooks) to this market, but we do not believe this is the real intent of this acquisition.

The real intent is longer-term and likely more lucrative for Intuit: the ability to leverage the Medfusion acquisition to embed the relatively new Quicken Health solution right into a physician’s Medfusion-based website. As Chilmark has stated many times before, consumers’ use of HIT will first revolve around specific transactional processes such as appointment scheduling, online Rx refill requests, and paying bills. Having seen a demo of Quicken Health, Chilmark is quite impressed with its ease of use and rich functionality. This is truly a consumer-focused product. Providing physician practices an ability to embed Quicken Health into their existing Medfusion website looks pretty sweet from this vantage point and will ultimately put pressure on other physician website vendors.

(Note: last year Medfusion made an acquisition of its own, acquiring the slowly fading PHR vendor Medem for an undisclosed sum.)

Scott Golightly answers How Do I: Run Java Applications in Windows Azure? in this 00:22:45 Webcast of 5/11/2010:

Windows Azure is an open platform. This means you can run applications written in .NET, PHP, or Java. In this video Scott Golightly will show how to create and run an application written in Java in Windows Azure. We will create a simple Java application that runs under Apache Tomcat and then show how it can be packaged and deployed to the Windows Azure development fabric.

Ryan Dunn announced Windows Azure MMC v2 Released on 5/10/2010:

I am happy to announce the public release of the Windows Azure MMC - May Release. It is a very significant upgrade to the previous version on Code Gallery. So much, in fact, I tend to unofficially call it v2 (it has been called the May Release on Code Gallery). In addition to all-new and faster storage browsing capabilities, we have added service management as well as diagnostics support. We have also rebuilt the tool from the ground up to support extensibility. You can replace or supplement our table viewers, log viewers, and diagnostics tooling with your own creation.

This update has been in the pipeline for a very long time. It was actually finished and ready to go in late January. Given the amount of code however that we had to invest to produce this tool, we had to go through a lengthy legal review and produce a new EULA. As such, you may notice that we are no longer offering the source code in this release to the MMC snap-in itself. Included in this release is the source for the WASM cmdlets, but not for the MMC or the default plugins. In the future, we hope to be able to release the source code in its entirety.

How To Get Started:

There are so many features and updates in this release that I have prepared a very quick 15-min screencast on the features and how to get started managing your services and diagnostics in Windows Azure today!

Windows Azure Infrastructure

Phil Dunn claims “End-to-end interoperability and an open approach to language, development tools and Internet protocols eases pressure on IT. Just ask Domino's Pizza.” in the preface to his Overcome Lock-In Fears with Microsoft's Windows Azure Cloud Computing article of 5/11/2010, which “was commissioned by and prepared for Microsoft Corporation”:

When IT staff, software developers, and business leaders put their heads together to consider "cloud computing" scenarios, the word "Microsoft®" often conjures mixed emotions. Even though most enterprises leverage Microsoft solutions across their systems and development environments, they fear vendor lock-in.

The conventional thinking goes something like this: We're a mixed development environment, so we don't want to get stuck in a cloud environment that excludes our heterogeneous investments. Eventually we'll move several applications to the cloud, but we're not sure which ones right now. So, we want to be careful about what that environment looks like.

Fortunately, the folks in Redmond designed their cloud environment to address these concerns specifically. The open Windows® Azure™ cloud computing environment plays "agnostic," allowing mixed languages, development tools, applications, and Internet protocols to co-exist in Microsoft's public cloud.

Pizzas from the Cloud

And how does that apply to the real world? Domino's Pizza® provides a compelling use case. Rather than purchase and provision servers by the boatload, Domino's wanted to leverage the cloud to scale for seasonal and weekly pizza demand fluctuations.

Think about it. On one day, Super Bowl Sunday, every time zone in the world orders at the same time, and a sizeable portion of this is during halftime. That's a 50 percent volume spike on one day. A similar effect happens to a lesser degree at noon Eastern Time, then Central, then Mountain, and finally Pacific as people order pizza for lunch, and on Friday nights, during popular sporting events, and during finals at college campuses. Domino's is one of the busiest e-commerce sites in the world, with more than 20 million pizzas ordered online per year.

In the past, Domino's was forced to buy hardware to support the Super Bowl Sunday phenomenon and keep it up and running the remaining 364 days of the year. Naturally, the company wanted to move to a smarter, more agile, less costly approach.

At first glance, there might seem to be a problem. Domino's uses the Microsoft stack for its network of in-store systems, but its Web environment is Java and Tomcat. Initially, Domino's thought it might be forced to change development technologies to realize the benefit of the cloud or look elsewhere.

That's not true. Using Windows Azure, Domino's is developing a solution that will deploy and run its Java/Tomcat code in the cloud. The benefits are significant. For starters, server costs are all borne by the Microsoft datacenter (not Domino's). This includes expenses for infrastructure housing, powering, cooling, and maintenance, as well as the less apparent but important cost of assigning Domino's staff to maintain, patch, and upgrade all of the cloud infrastructure. The cloud subscription model helps Domino's save money on capital expenses while offering the company the flexibility to ramp up computing power when it needs it and dial it down when pizza demand is low. …

David Linthicum claims “Today's generation of college graduates is the first that will be wholly affected by the shift to cloud computing” in a preface to his The commencement of the cloud post of 5/11/2010 to InfoWorld’s Cloud Computing blog:

It was my honor to deliver the commencement address at the Indiana University of Pennsylvania's Eberly College of Business over the weekend. Of course, my topic was cloud computing -- specifically, what it means to the new generation graduating this year. Here is an excerpt of the speech:

In case you haven't noticed, the world of computing is a world of change. Where once we could see and touch the hardware and software we use and the places we store our data, we now abstract away the computer systems and software that process and store our information, both personal and business-oriented.

The facts speak for themselves:

- 56 percent of Internet users use Webmail services such as Hotmail, Gmail, or Yahoo Mail.

- 34 percent store personal photos online.

- 29 percent use online applications such as Google Documents or Adobe Photoshop Express.

- 7 percent store personal videos online.

- 5 percent pay to store computer files online.

- 5 percent back up hard drives to an online site.

I'm sure most of you are on Facebook and are sneaking a quick look at Twitter on your phones during my speech today. I know that you, the class of 2010, "gets it."

These days the focus is on cloud computing, or the ability to leverage complex and high-value computer systems over the Internet -- as you need them and when you need them. Using cloud technology, the playing field for businesses is much more level. Small businesses that once could not afford high-end enterprise applications can now do so by using cloud computing providers such as Google, Amazon.com, and Salesforce.com. …

Dave concludes:

The movement and direction are clear: Based upon current trends, market experts predict an annual growth rate of 20 percent for cloud computing, set against the overall software market growing at only around 6 percent. Global revenue from cloud computing will top $150 billion by 2013. This includes the shift from on-premise to cloud-based providers. There's a need for talented people to assist in this migration. Hopefully a few of you will consider that career path.

How we consume computing is changing, and it will change forever. You will be the first graduating class that is wholly affected by this change, no matter what your career path holds. Just as the Web revolutionized the way we consumed content in the 1990s, cloud computing will revolutionize the way we consume applications in the coming decade, and how we do enterprise architecture and IT planning moving forward.

I wish you all a great adventure on the road ahead.

Lori MacVittie advises “Don’t get caught in the trap of thinking dynamic infrastructure is all about scalability” in her It’s a Trap! post of 5/11/2010:

If it were the case that a “dynamic infrastructure” was focused solely on issues of scalability, then I’d have nothing left to write. That problem, the transparent, non-disruptive scaling of applications in both directions, has already been solved. Modern load balancers handle such scenarios with alacrity.

Luckily, it’s not the case that dynamic infrastructure is all about scalability. In fact, that’s simply one facet in a much larger data center diamond named context-awareness.

“Fixed, flat, predictable, no-spike workloads” do not need dynamic infrastructure. That’s the claim, anyway, and it’s one I’d like to refute now before it grows into a data center axiom.

All applications in a data center benefit from a dynamic infrastructure, whether they are cloud, traditional, legacy, or on-premise, and in many cases off-premise applications benefit as well (we’ll get around to how dynamic infrastructure can extend control and services to off-premise applications in a future post).

So let me sum up: a dynamic infrastructure is about adaptability.

It is the adaptable nature of a dynamic infrastructure that gives it the agility necessary to scale up or scale down on demand, and to adjust application delivery policies in real time to meet the specific conditions in the network, client, and application infrastructure at the time the request and response are being handled. It is also the adaptable nature of a dynamic infrastructure that makes it programmable, able to be extended programmatically to address the unique issues that arise around performance, access, security, and delivery when network, client, and application environments collide in just the right way.
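Lori's argument is conceptual. As a purely hypothetical illustration of "adjusting delivery policy per request," the sketch below makes a fresh decision for every request from current network, client, and server signals, so even an application with a flat workload gets different treatment as conditions around it change. Every field name, threshold, and action name here is an invented assumption for illustration; none of it is the API of a real load balancer or application delivery controller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    """Hypothetical per-request signals a delivery tier might observe."""
    client_bandwidth_kbps: int
    is_mobile: bool
    content_type: str
    server_cpu_pct: float
    threat_score: float  # 0.0 = benign, 1.0 = certainly hostile


def delivery_policy(ctx, slow_link_kbps=1000, hot_cpu_pct=80.0,
                    threat_cutoff=0.8):
    """Choose delivery actions for one request from current conditions.

    The application itself may be 'fixed, flat, predictable'; the decisions
    change because network, client, and server conditions change.
    """
    if ctx.threat_score >= threat_cutoff:
        return ["reject"]  # the security decision overrides everything else
    actions = []
    if (ctx.content_type.startswith("text/")
            and ctx.client_bandwidth_kbps < slow_link_kbps):
        actions.append("compress")  # text compresses well; slow links gain most
    if ctx.is_mobile:
        actions.append("optimize_for_mobile")
    if ctx.server_cpu_pct >= hot_cpu_pct:
        actions.append("serve_from_cache")  # shield a busy back end
    return actions or ["pass_through"]
```

The same request for the same static page can yield "compress" over a slow mobile link and "pass_through" over a fast office LAN, which is the adaptability (rather than scalability) point the post makes.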

The trap here is that one assumes that “fixed, flat, predictable, no-spike workload” applications are being delivered in a vacuum: that they are not delivered over the same network that is constantly under a barrage of legitimate traffic, of illegitimate traffic, and of traffic from applications that are variable, bursty, and unpredictable in their resource consumption. The trap is assuming that applications are delivered to clients who always access the application over the same network, in the same conditions, using the same client.

The trap is to assume that dynamic infrastructure is all about scalability and not about adaptability.

Lori concludes with a “DYNAMIC is about REAL-TIME DECISIONS” section:

… Dynamic infrastructure is about delivery of applications, encompassing security, scalability, reliability, performance, and availability. And those concerns are valid for even the most static of applications, because even though the application may be static in its capacity and consumption of resources, the infrastructure over and through which it is delivered is not.

<Return to section navigation list>

Cloud Security and Governance

J. D. Meier describes ASP.NET Security Scenarios on Azure in this 5/11/2010 post:

As part of our patterns & practices Azure Security Guidance project, we’re putting together a series of Application Scenarios and Solutions. Our goal is to show the most common application scenarios on the Microsoft Azure platform. This is your chance to give us feedback on whether we have the right scenarios, and whether you agree with the baseline solution.

ASP.NET Security Scenarios on Windows Azure

We’re taking a crawl, walk, run approach and starting with the basic scenarios first. This is our application scenario set for ASP.NET:

- ASP.NET Forms Auth to Azure Storage

- ASP.NET Forms Auth to SQL Azure

- ASP.NET to AD with Claims

- ASP.NET to AD with Claims (Federation)

ASP.NET Forms Auth to Azure Storage

Scenario

Solution

Solution summary table not shown. …

ASP.NET Forms Authentication to SQL Azure

Scenario

Solution

Solution summary table not shown. …

J.D. continues with ASP.NET to AD with Claims and ASP.NET to AD with Claims (Federation) topics. I’m anxiously awaiting patterns & practices’ publication of the guidance manual. CodePlex says “NOTE - This project is currently postponed with a planned start later in 2010.”

Chris Hoff (@Beaker) asserts Virtualization & Cloud Don’t Offer An *Information* Security Renaissance… in this 5/11/2010 post:

I was reading the @emccorp Twitter stream this morning from EMC World and noticed some interesting quotes from RSA’s Art Coviello as he spoke about Cloud Computing and security:

Fundamentally, I don’t disagree that virtualization (and Cloud) can act as fantastic forcing functions that help us focus on securing the things that matter most if we agree on what that is, exactly.

We’re certainly gaining better tools to help us understand how dynamic infrastructure, amorphous perimeters, mobility and collaboration are affecting our “craft.” However, I disagree that we’re going to enjoy anything resembling a “turnaround.” I’d suggest it’s more accurate to describe it as a “reach around.”

How, what, where, who and why we do what we do has been dramatically impacted by virtualization and Cloud. For the most part, these impacts are largely organizational and operational, not technological. In fact, most of the security industry (and networking, for that matter) has been caught flat-footed by this shift, which is, unfortunately, well underway, with the majority of the market leaders scrambling to adjust roadmaps.

The premise that your information in a Public Cloud Computing model can be located and operated on by multiple (potentially hostile) actors means we have to refocus on the boring and laborious basics of risk management and information security.

Virtualization and Cloud computing are simply platforms and operational models, respectively. Security is as much a mindset as it is the cliché three-legged stool of “people, process and technology.” While platforms are important as “vessels” within and upon which we build our information systems, it’s important to realize that at the end of the day, the stuff that matters most, regardless of disruption and innovation in technology platforms, is the information itself.

“Embed[ding] security in” to the platforms is a worthy goal, building survivable systems is paramount, and doing a better job of ensuring we consider security at an inflection point such as this is certainly very important. However, focusing on infrastructure alone shows that we are still blind to the reality that applications and information (infostructure) and the protocols that transport them (metastructure) remain disconnected from the cogs that house them (infrastructure).

Focusing back on infrastructure is not heaven and it doesn’t represent a “do-over,” it’s simply perpetuating a broken model.

We’re already in security hell — or at least one of Dante’s circles of the Inferno. You can’t dig yourself out of a hole by continuing to dig…we’re already not doing it right. Again.

Two years ago at the RSA Security Conference, the theme of the show was “information centricity,” and, unfortunately, given the hype and churn of virtualization and Cloud, we’ve lost touch with this focus. Abstraction has become a distraction. Embedding security into the platforms won’t solve the information security problem. We need to focus on being information centric and platform independent.

By the way, this is exactly the topic of my upcoming Black Hat 2010 talk: “CLOUDINOMICON: Idempotent Infrastructure, Survivable Systems & Bringing Sexy Back to Information Centricity.” Go figure.

<Return to section navigation list>

Cloud Computing Events

Doug Hauger’s Impact of Cloud Innovation keynote of 5/11/2010 for SIIA’s All About the Cloud 2010 conference in San Francisco is partly archived on video:

Cloud computing is changing the physics of how developers and businesses can deliver software innovation to customers. In this session, Doug Hauger will discuss some of these industry trends and why they are exciting for both customers and technology providers like Microsoft. The session will discuss the opportunities and challenges for the business and also outline Microsoft’s cloud computing strategy. He will discuss the journey and the approach that Microsoft is taking to bring cloud innovation to its customer and developer base.

Doug is Microsoft’s general manager for Windows Azure. Click here to learn more about Origin Digital, an Accenture company, which Doug mentioned during this keynote.

Phil Wainwright posted his Cloud: it's not all or nothing article to ZDNet’s Software as Services blog on 5/11/2010 from the SIIA’s All About the Cloud conference in San Francisco:

I’m at the opening session of All About The Cloud, the SaaS industry conference that results from the merger of OpSource’s successful SaaS Summit with the Software and Information Industry Association’s influential OnDemand event. Apart from Dreamforce, this has now become the must-attend event on the SaaS industry conference calendar and provides an invaluable opportunity to get up to date with sentiment among SaaS and cloud vendors [disclosure: I'm a speaker at the event, paying my own way, and both OpSource and SIIA are past consulting clients].

From a sentiment perspective, the opening session has set an interesting tone, perhaps best summed up by Saugatuck Technology analyst and CEO Bill McNee, who has just completed a presentation on current market trends. His very first statement was that the world is not moving wholesale to the cloud — the cloud will co-exist with on-premises IT infrastructure for the foreseeable future.

“I don’t like the word hybrid,” he added. “We call it an interwoven world. The vast majority of the new money will go to the cloud, but we’ll build bridges between these two environments.”

The opening keynote, by Intuit CTO Tayloe Stansbury, reinforced this message from a different perspective. “Likely you know us for boxes of software that people run on their desktops,” he began. He went on to discuss Intuit’s early commitment to using the cloud to deliver services, beginning with the launch of the web version of TurboTax in 1999, and ran through the company’s now vast catalog of online software and services. All of its SaaS lines, he revealed, amount to $1 billion in revenues, and the total revenues of what Intuit calls connected services (which adds to the SaaS total other important online services, such as merchant services and tax e-filing, that don’t fall into classic definitions of software) come to $2 billion. “That accounts for about 60% of our company’s revenue,” he concluded (should someone tell Sage, I wonder?).

So conventional software vendors are becoming cloud providers, while cloud providers are having to learn how to work in harmony with conventional IT infrastructure. Cloud is here to stay, but I sense a new maturity at this year’s conference, which sees it taking its place within the evolving mainstream software industry. What does that mean for concepts like private cloud? Well that’s the subject of the panel I’ll be moderating tomorrow, so let me leave the answer to that question until then.

Tayloe Stansbury describes Intuit’s Principles for Creating and Driving Value in the Cloud in this 00:37:05 video archive of his 5/11/2010 keynote to the SIIA’s All About the Cloud conference in San Francisco:

Tayloe, who’s Senior VP and Chief Technology Officer, describes how Intuit has moved from a boxed software publisher to primarily a cloud-based Software as a Service business. Intuit has 4 million small-business customers in the Americas. Intuit has teamed up with Microsoft to provide Windows Azure SDK 1.0 for the Intuit Partner Platform. The SDK supports Intuit Data Services (IDS) and makes it easy for developers to program against customers' QuickBooks data within their applications. The SDK also includes an IDS-integrated sample app.

Repeated from the Live Windows Azure Apps, APIs, Tools and Test Harnesses section above.

Chris Woodruff reports OData Blowout in June… 8 OData Workshops in Raleigh, Charlotte, Atlanta, Chicago and NYC in this 5/10/2010 post:

Interested in OData? Want to discover what the next revolution in data will be? In this workshop, the attendee is invited to consider the many opportunities and challenges for data-intensive applications, interorganizational data sharing for “data mashups,” the establishment of new processes and pipelines, and an agenda to exploit the opportunities as well as stay ahead of the data deluge.

June 2 – Raleigh, NC 8:30am – 12pm @ The Jane S. McKimmon Conference & Training Center http://odataworkshop.eventbrite.com/

June 3 – Charlotte, NC Details to come

June 4 – Atlanta 8:30am – 12pm @ 115 Perimeter Center Place NE, Suite 250, Atlanta, GA 30346 http://odataatlanta.eventbrite.com/

June 21 and 22 Chicago (downtown and suburbs)

- 6/21 – 8:30am – 12pm @ Microsoft Office – Downers Grove http://tinyurl.com/ODataChicago1

- 6/21 – 1pm – 4:30pm @ Microsoft Office – Downers Grove http://tinyurl.com/ODataChicago2

- 6/22 – 8:30am – 12pm @ Microsoft Office – Chicago http://tinyurl.com/ODataChicago3

- 6/22 – 1pm – 4:30pm @ Microsoft Office – Chicago http://tinyurl.com/28y5hfx

June 28 – NYC Details to come

Abstract

The Open Data Protocol (OData) is an open protocol for sharing data. It provides a way to break down data silos and increase the shared value of data by creating an ecosystem in which data consumers can interoperate with data producers in a way that is far more powerful than currently possible, enabling more applications to make sense of a broader set of data. Every producer and consumer of data that participates in this ecosystem increases its overall value.

OData is consistent with the way the Web works – it makes a deep commitment to URIs for resource identification and commits to an HTTP-based, uniform interface for interacting with those resources (just like the Web). This commitment to core Web principles allows OData to enable a new level of data integration and interoperability across a broad range of clients, servers, services, and tools.
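Because OData identifies every resource with a URI and reads it through a plain HTTP GET, a client can query a producer largely by composing URIs. The following is a minimal sketch, assuming a hypothetical service root at example.com and hypothetical Products, Customers, and People entity sets; it builds key-addressed entity URIs and collection URIs narrowed with the standard $filter, $orderby, $top, and $select system query options. It only constructs URIs and makes no network calls.

```python
from urllib.parse import quote, urlencode

# Hypothetical service root; any OData producer exposes a similar base URI.
SERVICE_ROOT = "https://example.com/odata.svc"


def entity_url(service_root, entity_set, key):
    """Address a single entity by key, e.g. Products(1) or Customers('ALFKI')."""
    if isinstance(key, str):
        # OData string literals are single-quoted; embedded quotes are doubled.
        key = "'" + key.replace("'", "''") + "'"
    return f"{service_root.rstrip('/')}/{entity_set}({key})"


def query_url(service_root, entity_set, filter_expr=None, orderby=None,
              top=None, select=None):
    """Address an entity set and narrow it with OData system query options."""
    options = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr
    if orderby is not None:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    if select is not None:
        options["$select"] = ",".join(select)
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Percent-encode spaces as %20 but leave '$' and ',' readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$,")
    return url


if __name__ == "__main__":
    print(entity_url(SERVICE_ROOT, "Customers", "ALFKI"))
    print(query_url(SERVICE_ROOT, "Products", filter_expr="Price gt 20",
                    orderby="Price desc", top=5))
```

The same URIs serve the rest of the uniform interface: POST to an entity set creates an entity, while PUT (or MERGE, in the 2010-era protocol) and DELETE against an entity URI update and remove it.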

OData is released under the Open Specification Promise to allow anyone to freely interoperate with OData implementations.

In this talk, Chris will provide in-depth coverage of this protocol, how to consume an OData service, and finally how to implement an OData service on Windows using the WCF Data Services product.

Agenda

- Introductions (5 minutes)

- Overview of OData (10 minutes)

- The OData Protocol (1 hour)

- 15 minute break

- Producing OData Feeds (1 hour)

- Consuming OData Feeds (1 hour)

MSDN Events Presents: Launch 2010 Highlights on 6/15/2010 at 1:00 PM to 5:00 PM at the Microsoft Silicon Valley Campus, 1065 La Avenida, Bldg 1, Mountain View, CA 94043:

Event Overview

Find out firsthand about new tools that can boost your creativity and performance at Launch 2010, featuring Microsoft® Visual Studio® 2010. Learn how to improve the process of changing your existing code base and drive tighter collaboration with testers. Explore innovative web technologies and frameworks that can help you build dynamic web applications and scale them to the cloud. And, learn about the wide variety of rich application platforms that Visual Studio 2010 supports, including Windows 7, the Web, SharePoint, Windows Azure, SQL, and Windows Phone 7 Series. There’s a lot that’s new!

Registration Options

- Event ID: 1032446405

- Register by Phone: 1-877-673-8368

- Register with a Windows Live™ ID: Register Online

Note that the Launch 2010 Highlights in Sacramento, CA on 6/19/2010 is full and isn’t accepting new reservations.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

USA Today reports Salesforce's Benioff: Microsoft, IBM play catchup in cloud computing in this 5/11/2010 story by Byron Acohido:

With Google, IBM and Microsoft suddenly racing to deliver Software-as-a-Service to small businesses, Marc Benioff, outspoken co-founder and CEO of Salesforce.com, couldn't be more tickled.

Benioff has been championing the service as disruptive technology for more than a decade. Technology Live caught up with Benioff shortly after he delivered this keynote presentation at a Google event promoting Google Apps Marketplace. Excerpts of that interview:

TL: So what do you make of all of these clever, discrete hosted services suddenly becoming available to small business?

Benioff: No one has to buy software anymore. The software has gone online. That's where the software lives. It's not anything more than that.

TL: So what's driving this?

Benioff: Users are just completely frustrated with the lack of innovation that has occurred in traditional business software. You look at products like Microsoft SharePoint or IBM's Lotus Notes and they're designed for systems whose days are gone by.

TL: Why are you such an enthusiastic backer of Google Apps Marketplace?

Benioff: Google Apps Marketplace is kind of like Apple's iPhone apps store, except it's for business software. The idea is that customers can choose from a lot of different vendors and technologies and easily integrate them. That's where the power is. There are a lot of different ways you can integrate these services together.

TL: Surely you're not ruling out Microsoft and IBM in this emerging space?

Benioff: No. I'm not ruling out Microsoft and IBM, but they have done a terrible job over the last decade. That's just material fact. Certainly their investors have paid the price for their inability to innovate and their attempts to try to hold on to the old paradigms instead of innovating aggressively in the Internet. It's not about Microsoft or IBM; they're just trying to figure out how they can tactically catch up.

TL: Where do you see this going in the next year or two?

Benioff: We are entering the second generation of cloud computing, call it Cloud 2.0. It's about enabling a whole new level of capabilities for business users delivered on the next generation of BlackBerries, iPhones and iPads. It's about all these cloud services providing a greater level of collaboration, social computing, communication, entertainment and information management.

TL: Do you see cloud computing emerging as an X-factor; can it help small business boost the economic recovery?

Benioff: Yes, this is fantastic for small business because it gives them the power of big business. We have large customers and we have small customers. Cisco has 50,000 users with us, Dell has 80,000 users. But we have lots of customers with five users, 10 users and 30 users. They're all running the exact same code from the exact same servers. It used to be small business lined up over here to buy this software, medium business lined up over here and large business lined up over here. Cloud computing blurs those lines. All businesses are now able to get this kind of capability with a level of equality that's never been possible before. It makes it a more democratic world.

Graphic credit: Salesforce.com.

Sharon Gaudin reports “Q&A: Dave Girouard discusses what it's like to lock horns with Microsoft and what he thinks Google's advantages are over its Redmond rival” in her Google exec: Microsoft is years behind in cloud apps story of 5/11/2010 for Computerworld:

Of the challenges that could keep Google from reaching its goal of using Google Docs to move into the enterprise, Microsoft may be one of the biggest barriers.

Google and rival Microsoft are battling on several fronts: search, browsers, operating systems, and office software. And the office software front is heating up as Google continues to push Google Docs to the forefront of cloud computing and Microsoft readies to move its ubiquitous Office suite into the cloud as well.

However, Dave Girouard, president of Google's enterprise division, tells Computerworld that when it comes to competition, there's Microsoft and then there's ... well, there's Microsoft. But he seems to be really looking forward to locking horns with his Redmond, Wash., counterparts.

In a one-on-one interview, Girouard talks about competing with a company known for its office apps, Google's own growth in the enterprise, and what he thinks Google's advantages are over Microsoft. …

Sharon continues with the Q&A transcript.

Lars Fosberg claims “Enomaly Helps Best Buy Leverage Cloud Computing to Connect with Customers” in his Best Buy Goes to the Cloud post of 5/11/2010:

In late May [2009], Gary Koelling and Steve Bendt came to Enomaly looking for our help to realize their latest brainchild, called "Connect". We'd previously worked with Best Buy to develop an internal social networking site called "Blueshirt Nation" and we were eager for another opportunity to collaborate with them.

Inspired by Ben Herington's ConnectTweet, the concept was simple: connect BlueShirts directly with customers via Twitter. In less than two months, Enomaly developed a Python application on Google App Engine to syndicate tweets from Best Buy employees and rebroadcast them, allowing customers to ask questions via Twitter (@twelpforce) and have the answers crowdsourced to a vast network of Best Buy employees who participate in the service. Now the answer to "looking for a Helmet Cam I can use for snowboarding and road trips" is only a tweet away.
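The post gives only the outline of how the syndication works. The following is a hypothetical sketch in plain Python (not App Engine code, and with no Twitter API calls) of the filter-and-rebroadcast step. It assumes employee tweets arrive as simple records, that employees opt in by including a tag such as #twelpforce, and that rebroadcasts use an "RT @user:" prefix; the tag, account names, and function names are illustrative assumptions, not Enomaly's implementation.

```python
import re
from dataclasses import dataclass

# Hypothetical opt-in tag; the post does not say how employees flag answers.
MARKER = "#twelpforce"
# Twitter's per-tweet character limit at the time (2009-2010).
MAX_TWEET_LEN = 140


@dataclass(frozen=True)
class Tweet:
    author: str
    text: str


def rebroadcasts(tweets, employees, marker=MARKER, limit=MAX_TWEET_LEN):
    """Return retweet-style messages for employee tweets that carry the marker."""
    tag = re.compile(re.escape(marker), re.IGNORECASE)
    messages = []
    for tweet in tweets:
        if tweet.author not in employees:
            continue  # syndicate only registered employee accounts
        if not tag.search(tweet.text):
            continue  # employees opt in per tweet by including the marker
        # Strip the marker and collapse the leftover whitespace.
        body = " ".join(tag.sub("", tweet.text).split())
        message = f"RT @{tweet.author}: {body}"
        if len(message) > limit:
            message = message[: limit - 3] + "..."  # stay within the tweet limit
        messages.append(message)
    return messages


if __name__ == "__main__":
    sample = [
        Tweet("bb_jane", "@alex try a helmet cam with a wide-angle lens #twelpforce"),
        Tweet("outsider", "spam #twelpforce"),
        Tweet("bb_sam", "lunch break"),
    ]
    for line in rebroadcasts(sample, employees={"bb_jane", "bb_sam"}):
        print(line)
```

Requiring both a known employee account and an explicit tag is one simple way to keep the public feed limited to deliberate answers; a production service would also need deduplication and rate limiting.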

The application, which has since come to be named Twelpforce, is a prime example of innovators like Best Buy leveraging cloud computing to enhance their business. The response to the service since it launched on Sunday has been very positive, and it's exciting to be an integral part of bringing this project to life. We're interested in hearing your feedback on the service, so please feel free to leave a comment!

Editorial note: This post originally appeared on the bestbuyapps.com blog.

Not exactly news, but an interesting example of commercial adoption of social networking.

<Return to section navigation list>