Windows Azure and Cloud Computing Posts for 5/22/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

The Windows Azure Team announced New Plugin Enables WordPress To Use Windows Azure Storage Services on 5/21/2010:

A new post on the Interoperability team blog outlines a use case showcasing a new plugin for WordPress that enables users to use Windows Azure Storage Service to host media for a WordPress powered blog. The plugin, developed by Microsoft, is now available as an open source project from the WordPress repository.

Register for your Windows Azure account (try for free till July 31, 2010 with the Introductory Special), install the plugin and get started!

Additional links

- Installing WordPress on Windows with the Web Platform Installer: http://www.microsoft.com/web/gallery/WordPress.aspx

- Running WordPress on Windows with SQL Server: http://wordpress.visitmix.com/

- Windows Azure SDK for PHP: http://www.interoperabilitybridges.com/projects/php-sdk-for-windows-azure

About Windows Azure Storage

Windows Azure Storage enables applications to store and manipulate large objects and files in the cloud via blobs, manipulate service state via tables, and provide reliable delivery of messages using queues. You can read more about Windows Azure Storage here.

If you want to manage your media (images or any file offered for download) in a consistent way and share them across multiple websites, then you might want to consider using Windows Azure Storage blobs. Windows Azure includes a service called Windows Azure Content Delivery Network (CDN), which offers developers a solution for delivering high-bandwidth content. Windows Azure CDN currently has 18 locations globally (United States, Europe, Asia, Australia and South America). Windows Azure CDN caches your Windows Azure blobs at strategically placed locations to provide maximum bandwidth for delivering your content to users. The benefit of using a CDN is better performance and experience for users who are farther from the source of the content stored in the Windows Azure Storage blobs. You can read more on MSDN here.
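A minimal sketch of how a blob, or its CDN-cached copy, is addressed. It assumes the standard `<account>.blob.core.windows.net` endpoint and that a CDN endpoint exposes the same `/<container>/<blob>` path; the `blob_url` helper, the account name and the CDN host are hypothetical:

```python
from urllib.parse import quote

def blob_url(account, container, blob_name, cdn_host=None, use_https=True):
    """Build a public blob URL (hypothetical helper).

    Assumes the default blob endpoint <account>.blob.core.windows.net and,
    if cdn_host is given, that the CDN mirrors the /<container>/<blob> path.
    """
    host = cdn_host or f"{account}.blob.core.windows.net"
    scheme = "https" if use_https else "http"
    # Keep '/' so "virtual directories" in blob names survive; escape spaces etc.
    path = f"{quote(container)}/{quote(blob_name, safe='/')}"
    return f"{scheme}://{host}/{path}"

print(blob_url("myblog", "media", "2010/05/photo 1.png"))
print(blob_url("myblog", "media", "2010/05/photo 1.png", cdn_host="az1234.vo.msecnd.net"))
```

Serving from the CDN host instead of the storage host changes only the host name, which is why a site can switch to the CDN without reorganizing its stored media.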

Jean-Christophe Cimetiere expands on the Azure Team’s post with his Taking advantage of Windows Azure Storage from PHP: example with a WordPress plugin post of 5/21/2010 to the Interoperability @ Microsoft blog:

Continuing our efforts on improving interoperability between PHP and Microsoft technologies, we have created an example showcasing a new plugin for WordPress that allows WordPress developers to take advantage of the storage capacity of Windows Azure. This plugin enables WordPress to use Windows Azure Storage Service to host media for a WordPress-powered blog.

The plugin, developed by Microsoft, is now available as an open source project from the WordPress repository: http://wordpress.org/extend/plugins/windows-azure-storage/

About Windows Azure Storage and Content Delivery Network (CDN)

Windows Azure Storage enables applications to store and manipulate large objects and files in the cloud via blobs, manipulate service state via tables, and provide reliable delivery of messages using queues. You can read more about Windows Azure Storage here.

If you want to manage your media (images or any file offered for download) in a consistent way and share them across multiple websites, then you might want to consider using Windows Azure Storage blobs. Windows Azure includes a service called Windows Azure Content Delivery Network (CDN), which offers developers a solution for delivering high-bandwidth content. Windows Azure CDN currently has 18 locations globally (United States, Europe, Asia, Australia and South America). Windows Azure CDN caches your Windows Azure blobs at strategically placed locations to provide maximum bandwidth for delivering your content to users. The benefit of using a CDN is better performance and experience for users who are farther from the source of the content stored in the Windows Azure Storage blobs. You can read more on the Windows Azure Team Blog and on MSDN.

Windows Azure Storage from PHP with a WordPress plug-in.

The Windows Azure Storage plugin for WordPress allows developers running their own instance of WordPress to take advantage of the Windows Azure Storage services, including the Content Delivery Network (CDN) feature. It provides a consistent storage mechanism for WordPress Media in a scale-out architecture where the individual web servers don’t share a disk. Note that this scenario goes beyond WordPress and could also be very compelling for any other web application that needs to load balance across a number of web servers without a shared disk.
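To make the "no shared disk" problem concrete, here is a toy Python model of two load-balanced web servers. It is not the plugin's code: the `WebServer` class and `upload_then_fetch` function are hypothetical, and a plain dictionary stands in for blob storage. A file uploaded through one server is invisible to the other unless both use a shared store:

```python
import itertools

class WebServer:
    """Toy web front end; media goes to whatever store it was given."""

    def __init__(self, store=None):
        # Without a shared store, each server gets its own private "local disk".
        self.store = store if store is not None else {}

    def upload(self, name, data):
        self.store[name] = data

    def serve(self, name):
        return self.store.get(name)  # None means "not found on this server"

def upload_then_fetch(shared):
    """Upload through one server, then fetch through the next (round robin)."""
    shared_store = {} if shared else None  # the dict stands in for blob storage
    servers = [WebServer(shared_store), WebServer(shared_store)]
    balancer = itertools.cycle(servers)
    next(balancer).upload("2010/05/photo.png", b"image-bytes")
    return next(balancer).serve("2010/05/photo.png")

print(upload_then_fetch(shared=False))  # the second server never saw the upload
print(upload_then_fetch(shared=True))
```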

The plugin is a regular WordPress plugin developed in PHP, and can be deployed on any WordPress instance (running on Windows or Linux - requires at least version 2.8.0 and tested up to version 2.8.4). The plugin uses the Windows Azure SDK for PHP to handle the interactions with Windows Azure.

Once the plugin is installed you’ll see it in the WordPress plugins management interface.

Once the plugin is activated and configured, which simply consists of setting your Windows Azure account information and a few options, you can use it directly through the blog post editor:

To include an image in the post, just click on the “Azure” icon. The following screen will pop up:

From here you simply pick the image you want to include.

When the plugin is installed, you can also choose to have all media uploaded through the WordPress Media Management interface, or brought in during imports, go to Windows Azure blob storage. That media then shows up in the regular list of media elements, not just under the Azure button.

Once you have published the post you can see that your image lives on Windows Azure Storage, although your WordPress applications can be hosted anywhere else.

Give it a try!

The plugin is now available from the WordPress repository: http://plugins.svn.wordpress.org/windows-azure-storage/. Register for your Windows Azure account (try for free till July 31, 2010 with the Introductory Special), install the plugin, and get started!

Dave Simpson predicts Cloud storage is due for a shakeout in this 5/21/2010 post to the InfoStor blog:

I realize that it may seem ludicrous to predict a shakeout in a market that hasn’t even taken off, but I’m afraid that’s what’s in storage for the cloud storage market.

From where I sit (an editor’s desk, or the receiving end of the PR Gatling guns) there seems to be a startup getting into cloud storage every week. And since this has been going on for well over a year, those dozens of cloud storage providers from last year have probably blossomed into hundreds by now.

IDC predicts that cloud storage will grow from 9% of the overall IT cloud services market in 2009 to 14% in 2013. In dollar terms, that means revenues from cloud storage of $1.57 billion in 2009 to $6.2 billion in 2013.
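The IDC figures imply steep growth. A quick back-of-the-envelope check (my arithmetic, not IDC's) of the compound annual growth rate, and of the overall IT cloud services market implied by the 9% and 14% shares:

```python
def cagr(start, end, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

storage_2009, storage_2013 = 1.57, 6.2  # cloud storage revenue, $ billions (IDC)
rate = cagr(storage_2009, storage_2013, 2013 - 2009)

# Total IT cloud services market implied by storage's share of it.
total_2009 = storage_2009 / 0.09
total_2013 = storage_2013 / 0.14

print(f"Cloud storage CAGR 2009-2013: {rate:.1%}")
print(f"Implied total market: ${total_2009:.1f}B (2009), ${total_2013:.1f}B (2013)")
```

This works out to roughly 41% growth per year, with an implied overall market of about $17.4 billion in 2009 and $44.3 billion in 2013.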

There would appear to be more than enough room for dozens (hundreds?) of players in a market of that size, right? Wrong. There’s only room for a handful of profitable RAID vendors, just to take one example, and the RAID array market is way bigger than the cloud storage market.

What are these legions of cloud storage startups smoking? Or shooting? How can they possibly expect to turn a profit? There are a number of problems:

If I was going to start a storage company (pity the fool), I’d rather be a RAID vendor than a cloud storage provider. In RAID, at least you can undercut EMC and the boys on price. Maybe even technology. You’re not going to be able to do that with cloud storage.

Cloud storage vendors already provide storage at pennies (ok, nickels in some cases) per GB per month. What are you going to do – Provide it for drachmas per GB? Not much profit in that business model. A better bet would be to start a disk drive manufacturing business!

There are a number of other obvious impediments for small cloud storage providers (small providers, not clouds).

Look who’s already in the cloud storage business.

Amazon didn’t invent the term ‘cloud storage,’ but they’re almost synonymous with it.

And earlier this week, Google appeared to put its toes into cloud storage (see “Google Launches Online Data Storage Service for Developers” on Enterprise Storage Forum). After some free initial capacity, pricing appears to be 17 cents/GB/month, plus 10 cents/GB for uploading and 15 cents/GB for downloading.
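Using the prices quoted above, a rough monthly bill is easy to estimate. This sketch ignores the free initial capacity and any per-request charges, which the post doesn't quantify; the function name and the sample volumes are illustrative:

```python
def monthly_cost(stored_gb, uploaded_gb, downloaded_gb,
                 storage_rate=0.17, upload_rate=0.10, download_rate=0.15):
    """Estimated monthly charge in US dollars at the quoted per-GB rates."""
    return (stored_gb * storage_rate
            + uploaded_gb * upload_rate
            + downloaded_gb * download_rate)

# Example: 100 GB stored, 10 GB uploaded and 50 GB downloaded in one month.
print(f"${monthly_cost(100, 10, 50):.2f}")  # 17.00 + 1.00 + 7.50 = $25.50
```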

Sure, Google’s cloud storage service is just for developers now, but if cloud storage truly is a billion dollar market you know that Google will chase Amazon.

And some people think that Microsoft will get into this space (google “windows azure”). Again, mostly for developers, but why stop there?

And then there’s EMC (Mozy, Atmos), the storage vendor that every storage vendor just loves competing with.

And cloud storage consumer kings such as Carbonite, who are moving up the food chain into SMB land.

The list of impediments to succeeding in the cloud storage market goes on and on, just like the list of vendors entering the space.

Don’t get me wrong: Cloud storage is a great thing (no matter how you define it, which can be like trying to nail a blob of mercury to a ceiling of Jell-o), but there just won’t be room for all the vendors clamoring for a piece of the pie.

So, if you’re a cloud storage startup and you still have some capital left . . . Wanna start a RAID company?

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Joel Forman briefly describes Creating Logins and Users in SQL Azure in this 5/21/2010 post to the SlalomWorks blog:

I recently needed to create a user with read-only access to a SQL Azure database. I thought that this task would make for a good blog post, given that managing users and access levels is a little different in SQL Azure than in a traditional hosted environment.

There is some good documentation out there on the T-SQL syntax that SQL Azure supports for these types of tasks. Here is a link to one resource that I found helpful: Managing Databases and Logins in SQL Azure

The first step is to create a new login. You will need to connect to the master database using your SQL Azure administrator account that you set up for your SQL Azure server in the developer portal, sql.azure.com. Here is the syntax I used:

```sql
-- Run against the master database. SQL Azure enforces password complexity,
-- so substitute a strong password for the placeholder.
CREATE LOGIN myreadonlylogin WITH PASSWORD = '<strong password>';

-- Confirm that the new login exists.
SELECT * FROM sys.sql_logins;
```

The next step is to create a user in your SQL Azure database for this login. Disconnect from the master database and connect to your SQL Azure database (SQL Azure doesn't support the USE statement for switching databases). Then, against your SQL Azure database, you can run the following syntax to create a user:

```sql
CREATE USER myreadonlyuser FROM LOGIN myreadonlylogin;
```

Finally, you need to assign this user permissions in this database. I ran the following to assign read-only access:

```sql
EXEC sp_addrolemember 'db_datareader', 'myreadonlyuser';
```
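If you script this setup instead of typing it, the statements can be generated with proper quoting. A minimal Python sketch: the helper names are hypothetical, and it only builds the T-SQL text, leaving execution (against master first, then the user database) to whatever client library you use:

```python
def quote_ident(name):
    """Bracket-quote a T-SQL identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"

def quote_literal(value):
    """Single-quote a T-SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"

def readonly_user_script(login, user, password):
    """T-SQL for a read-only user, grouped by the database it must run in."""
    return {
        # Run while connected to master as the server administrator.
        "master": [
            f"CREATE LOGIN {quote_ident(login)} WITH PASSWORD = {quote_literal(password)};",
        ],
        # Run while connected to the user database itself.
        "database": [
            f"CREATE USER {quote_ident(user)} FROM LOGIN {quote_ident(login)};",
            f"EXEC sp_addrolemember 'db_datareader', {quote_literal(user)};",
        ],
    }

script = readonly_user_script("myreadonlylogin", "myreadonlyuser", "Str0ng!Passw0rd")
for database, statements in script.items():
    for statement in statements:
        print(f"-- {database}\n{statement}")
```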

Mike Amundsen lists his reservations about OData in a Thinking about REST and HTTP thread of 5/21/2010 on the DevComments list:

For me, the OData format has the following shortcomings WRT the REST architectural style:

- OData uses Atom as an envelope for a custom payload

- OData is an Object Transfer pattern, not a state transfer pattern

- OData has limited hypermedia support

- OData relies on URI Convention

Below are some details and ideas on how these shortcomings might be addressed:

Atom as an Envelope

OData uses the Atom format as an envelope for a custom XML payload. I would prefer that a dedicated media type be developed that does not have the extra "baggage" of Atom. For example, in order for me to write a client application that uses the OData format, I need to encode understanding of two Atom RFCs (Atom Syndication [1] and Atom Publishing [2]) _and_ I need to encode into my client the details of the custom payload that appears within the <content /> element (the stuff I'm really interested in anyway). A better choice, IMO, would have been to use some version of the data format employed for the now defunct SQL Server Data Services (SSDS/SDS). It was lean, specific, and provided the same functionality in a much smaller payload that was easier to encode into clients.
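The two layers Amundsen describes are easy to see in code. In this minimal Python sketch, a client must first navigate the Atom envelope (`entry/content`) before reaching the OData-specific `m:properties` payload. The namespace URIs are the ones used by OData V1/V2 Atom payloads; the sample entry and the `example.org` service URL are made up for illustration:

```python
import xml.etree.ElementTree as ET

NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

# Made-up single-entry response; real feeds carry more Atom elements.
ENTRY = """<entry xmlns="http://www.w3.org/2005/Atom"
    xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
    xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <id>http://example.org/Nwind.svc/Customers('ALFKI')</id>
  <title type="text" />
  <updated>2010-05-21T00:00:00Z</updated>
  <author><name /></author>
  <content type="application/xml">
    <m:properties>
      <d:CustomerID>ALFKI</d:CustomerID>
      <d:CompanyName>Alfreds Futterkiste</d:CompanyName>
    </m:properties>
  </content>
</entry>"""

def entry_properties(xml_text):
    """Unwrap the Atom envelope and return the OData properties as a dict."""
    entry = ET.fromstring(xml_text)
    # Layer 1: Atom (entry/content). Layer 2: OData (m:properties/d:*).
    properties = entry.find("atom:content/m:properties", NS)
    return {prop.tag.split("}", 1)[1]: prop.text for prop in properties}

print(entry_properties(ENTRY))
```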

Object Transfer Pattern & Limited Hypermedia Options

Similar to Atom, OData is really an object transfer format.

OData servers currently support two different _serializations_ (JSON and XML), but I am not able to send anything other than an "entry" object (or a batch of them, etc.). Essentially, I can't transfer arbitrary state, just predefined objects. Since I can only transfer predefined objects within the Atom envelope, I have very few hypermedia options to provide to my clients. I can't see how to send directions for custom queries, or ways to accomplish specific tasks on the server (compute this month's totals and return the results, approve the remaining open invoices for processing, etc.). HTML uses the <form /> element with varying method and format instructions for this. SMIL uses the <send /> element along with an XPath query to indicate which portions of the message are to be returned, etc.

I see reference to this kind of thing in 2.13 Invoking Service Operations [3], but this is a very limited situation. While I see it is possible for clients to execute these GET methods with arbitrary URIs, I see nothing in the docs that indicates how I can fashion a response from the server that _tells_ clients this operation is possible. One way to resolve this would be to expand the media type to include similar message blocks that would contain a link relation, URI, and one or more template elements that clients could fill in themselves or present to users for population. I show some rudimentary examples of this (in simple XML) in a recent blog post [4].
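To illustrate the kind of message block Amundsen proposes, here is a hypothetical response fragment and a Python client that fills it in, much as a browser handles an HTML GET `<form />`. The `<action>` and `<field>` element names, the `rel` value and the URL are invented for this sketch; they are not part of OData or taken from his blog post:

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Hypothetical server response; the element and attribute names are made up.
RESPONSE = """<actions>
  <action rel="open-invoices" href="http://example.org/invoices" method="GET">
    <field name="status" value="open"/>
    <field name="month"/>
  </action>
</actions>"""

def find_action(xml_text, rel):
    """Return the advertised action with the given link relation."""
    for action in ET.fromstring(xml_text).iter("action"):
        if action.get("rel") == rel:
            return action
    raise KeyError(rel)

def build_request(action, **user_values):
    """Fill in the advertised fields and return (method, url), like a GET form."""
    # Start from server-supplied defaults; only advertised fields are accepted.
    params = {field.get("name"): field.get("value", "") for field in action.iter("field")}
    unexpected = set(user_values) - set(params)
    if unexpected:
        raise ValueError(f"fields not advertised by the server: {sorted(unexpected)}")
    params.update(user_values)
    return action.get("method", "GET"), action.get("href") + "?" + urlencode(params)

method, url = build_request(find_action(RESPONSE, "open-invoices"), month="2010-05")
print(method, url)
```

The client hard-codes only the meaning of `rel="open-invoices"`; the URL and the parameter names come from the server and can change without re-coding the client.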

Reliance on URI Convention

Much of the documentation for OData is spent outlining URI conventions that need to be encoded into the client application. I would prefer the use of the URI-Templates [5] model for simple filter cases. This would allow clients to simply encode the rules for URI templates and execute them for templated links rather than requiring programmers to commit specific URI conventions directly to code. Using templates also means servers can modify the arrangement of the URI/query without requiring re-coding of the clients.
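Level 1 of the URI Template specification (later published as RFC 6570) is simple enough to sketch in a few lines of Python. This minimal version handles only simple `{var}` expressions and ignores operators such as `{?query}`; the template and URL are hypothetical:

```python
import re
from urllib.parse import quote

# Matches simple {varname} expressions (RFC 6570 level 1 only).
_EXPRESSION = re.compile(r"\{([A-Za-z0-9_]+)\}")

def expand(template, **values):
    """Expand {var} expressions; undefined variables become empty strings."""
    def substitute(match):
        value = values.get(match.group(1))
        # Level 1 percent-encodes everything except unreserved characters.
        return "" if value is None else quote(str(value), safe="")
    return _EXPRESSION.sub(substitute, template)

# A server could advertise this template in a response instead of
# documenting a URI convention for clients to hard-code.
template = "http://example.org/Nwind.svc/Customers?city={city}"
print(expand(template, city="Sao Paulo"))
```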

For more complex queries (basically arbitrary filters, sorts, etc.) I'd prefer that OData advertise support for one or more query media types themselves (accept: text/t-sql, text/linq, text/yql, etc. [sigh, no registered types *yet*]). This would reduce the need to define complex URI conventions and provide greater flexibility in the future when other query languages become more desirable (e.g. application/sparql-query [6], etc.). In all these cases, clients can code for the query language, not the URI convention.
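This suggestion amounts to ordinary HTTP content negotiation applied to query languages. A minimal sketch, assuming the service advertises an Accept-style list with optional `q` weights and the client picks the highest-weighted language it can generate; as Amundsen notes, types such as `text/t-sql` and `text/linq` aren't registered, so they are placeholders here:

```python
def parse_accept(header):
    """Parse an Accept-style list into (q, media_type) pairs."""
    prefs = []
    for part in header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media_type, q = fields[0].lower(), 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                q = float(value)
        if media_type:
            prefs.append((q, media_type))
    return prefs

def choose_query_type(advertised, client_supports):
    """Pick the highest-weighted advertised query type the client can produce."""
    best = None
    for q, media_type in parse_accept(advertised):
        if q > 0 and media_type in client_supports and (best is None or q > best[0]):
            best = (q, media_type)
    return best[1] if best else None

print(choose_query_type("text/linq;q=0.5, application/sparql-query",
                        {"application/sparql-query", "text/t-sql"}))
```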

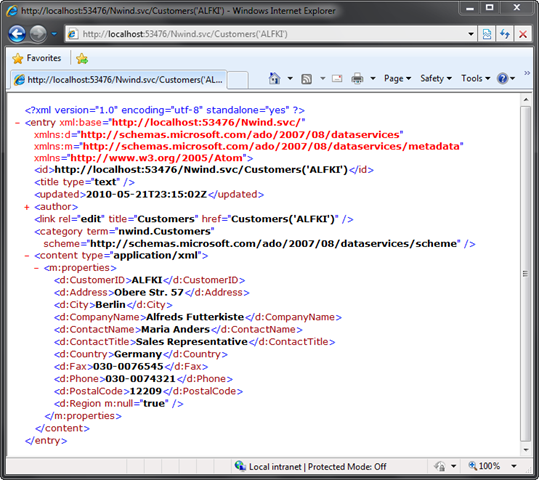

Azret Botash shows you how to use the WCF Data Service Provider for [DevExpress] XPO in this 5/21/2010 tutorial:

The Open Data Protocol (OData) is quickly becoming the format of choice when it comes to exposing data. The growing number of client-side libraries and all the new features in WCF Data Services are what make developers switch from proprietary formats (custom REST APIs, SOAP, binary formats, etc.) to OData.

The simplest way to expose your data as OData is by using WCF Data Services, which currently comes with a couple of out-of-the-box providers (EF, Reflection, etc.). Each provider requires its own data model, and if you have made XPO your ORM of choice, switching to another model is out of the question. Adding an additional data layer is not something you would want to do either; maintaining more than one is rarely a good practice.

The cool thing about the WCF Data Services is that you can plug in custom providers. A provider for XPO can be downloaded from CodePlex. We’ll develop it together in this series of blog posts, but first, I want to show you how easy it is to expose your XPO model using the XPO Data Service Provider.

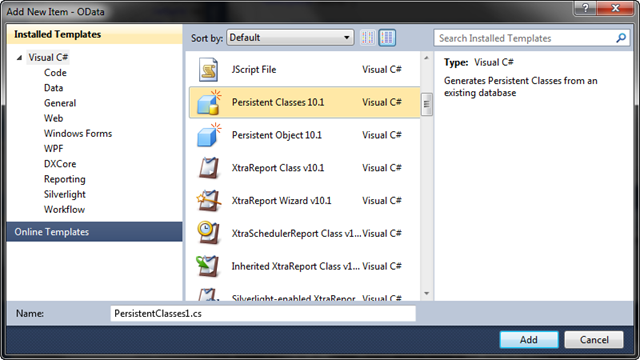

Step 1: Have your XPO Model Ready

For demonstration purposes my persistent objects are mapped to the Nwind access database. I used the Add New Persistent Classes Wizard to generate mine.

```csharp
using DevExpress.Xpo;

// Generated by the Add New Persistent Classes Wizard; remaining members elided.
public class Customers : XPLiteObject {
    string fCustomerID;

    [Key]
    [Size(5)]
    public string CustomerID {
        get { return fCustomerID; }
        set { SetPropertyValue<string>("CustomerID", ref fCustomerID, value); }
    }

    ...
}

public class Products : XPLiteObject {
    int fProductID;

    // Key(true): XPO auto-generates the key value.
    [Key(true)]
    public int ProductID {
        get { return fProductID; }
        set { SetPropertyValue<int>("ProductID", ref fProductID, value); }
    }

    ...
}
```

Step 2: Add a new WCF Data Service to your project

```csharp
using System.Data.Services;
using System.Data.Services.Common;

// Template generated by Visual Studio; the TODOs are filled in at Step 4.
public class Nwind : DataService< /* TODO: put your data source class name here */ >
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        // TODO: set rules to indicate which entity sets and service operations are visible, updatable, etc.
        // Examples:
        // config.SetEntitySetAccessRule("MyEntityset", EntitySetRights.AllRead);
        // config.SetServiceOperationAccessRule("MyServiceOperation", ServiceOperationRights.All);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }
}
```

Step 3: Add a Reference to DevExpress.Xpo.Services.v10.1.dll

Step 4: Configure your WCF Data Service

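```csharp
using System.Data.Services;
using System.Data.Services.Common;
// XpoDataService and the XpoConnectionString attribute come from
// DevExpress.Xpo.Services.v10.1.dll (Step 3).

// "Nwind" names the connection string defined in Web.config.
[XpoConnectionString("Nwind")]
public class Nwind : XpoDataService
{
    public static void InitializeService(DataServiceConfiguration config)
    {
        // Expose every entity set as read-only.
        config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }
}
```

File: Web.config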

```xml
<connectionStrings>
  <add name="Nwind" connectionString='Provider=Microsoft.Jet.OLEDB.4.0;Data Source="nwind.mdb"'/>
</connectionStrings>
```

And that’s it! We can now explore our data using a number of different clients that support OData or simply view the feeds in the browser.
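Besides the browser, any HTTP client can query the feeds with OData's system query options. A minimal Python sketch that builds such URLs; the service root `http://localhost/Nwind.svc`, the assumption that entity sets are named after the persistent classes (`Customers`, `Products`), and the `Country`/`CompanyName` properties (elided from the class listing above) are all illustrative:

```python
from urllib.parse import quote

def _encode(value):
    # Percent-encode spaces etc., but keep OData's single-quoted literals readable.
    return quote(str(value), safe="'")

def odata_query(service_root, entity_set, filter_expr=None, orderby=None,
                top=None, skip=None):
    """Build an OData URL using the $filter, $orderby, $top and $skip options."""
    options = [("$filter", filter_expr), ("$orderby", orderby),
               ("$top", top), ("$skip", skip)]
    query = "&".join(f"{name}={_encode(value)}"
                     for name, value in options if value is not None)
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return f"{url}?{query}" if query else url

print(odata_query("http://localhost/Nwind.svc", "Customers",
                  filter_expr="Country eq 'Germany'", orderby="CompanyName", top=5))
```

Pasting the printed URL into a browser should return the matching Atom feed from the read-only service configured in Step 4.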

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Maureen O’Gara claims the group is “Supposed to identify gaps in existing identity management standards” in her OASIS Forms ID-in-the-Cloud Group post of 5/22/2010:

OASIS, the standards consortium, has formed a new group to address the security challenges posed by identity management in cloud computing.

The new OASIS Identity in the Cloud (IDCloud) Technical Committee is supposed to identify gaps in existing identity management standards and investigate the need for profiles to achieve interoperability in current standards. [Link added.]

Committee members will do the risk and threat analyses on collected use cases and produce guidelines for mitigating vulnerabilities.

The 451 Group anticipates that the IDCloud profiles that result will enable a consistent set of policies that do the job of encapsulating business logic across multiple domains.

Committee members include Alfresco, CA, Capgemini, Cisco, Cognizant, Boeing, eBay, IBM, Microsoft, Novell, Ping Identity, Red Hat, SafeNet, SAP, Skyworth TTG, Symantec, Vanguard and VeriSign, with others expected to join. Microsoft and Red Hat are co-chairing the effort.

The idea is to extend the foundational security standards such as XACML, SAML, WS-Security and WS-Trust that were developed at OASIS in the first place.

Anthony Nadalin of Microsoft and Red Hat’s Anil Saldhana are IDCloud co-chairs.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Zhiming Xue reports Ford and Microsoft Showcase Fiestaware Custom Application Platform at Maker Faire in this 5/21/2010 post:

Ford and Microsoft partnered to create an application platform to build custom apps for the car as part of a project called "American Journey 2.0". The platform itself -- called "Fiestaware" -- is built on top of Windows 7 and Robotics Developer Studio, and includes components optimized to work with Windows Azure. Ford, Microsoft, and University of Michigan are showing the Fiestaware application platform at Maker Faire this weekend (May 22-23rd) in San Mateo, CA, running inside of two Ford Fiestas, along with apps built on the platform by Ford and by students at the University of Michigan.

The project was supported by Ford, University of Michigan, Microsoft and other partners. Cumulux provided developers the early builds of the Fiestaware platform. Intel donated the Dell Studio PCs to run in the Fiestas and Intel SSDs to run inside the Dell Studios. Sprint provided 3G and 4G wireless communications during the road trip. Note that this project is not directly related to Ford SYNC. Ford SYNC is a production offering that helps you bring your digital life into your car. American Journey 2.0 is a research platform exploring how to bring your car into your digital life.

If you are curious about the Fiestaware platform technology components, the list below should satisfy your curiosity.

- Platform is built on Windows 7

- It supports natural user interfaces, such as touch and speech interaction

- Its user interface is built with .NET (Windows Presentation Foundation)

- Platform supports using SQL CE on the PC in the vehicle to cache/synchronize with SQL Azure in order to handle intermittent connectivity gracefully [Emphasis added.]

- Application development environment is Visual Studio 2008 with Microsoft Robotics Developer Studio. Microsoft Robotics Developer Studio is used to manage access and coordinate use of resources on the embedded vehicle network (e.g., vehicle sensor data) and in Windows 7 (e.g., Windows 7 Speech API).

- Additionally, Microsoft provided access to the Windows Azure Platform. [Emphasis added.]
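Handling intermittent connectivity with a local cache that later synchronizes with the cloud is a common pattern. The sketch below is a generic, simplified Python model of it (a local cache plus an outbox of pending changes); it is not how Fiestaware or SQL Server Compact synchronization actually works, and plain dictionaries stand in for SQL CE and SQL Azure:

```python
class OfflineFirstStore:
    """Local cache plus an outbox of changes waiting to reach the cloud."""

    def __init__(self, remote):
        self.local = {}      # stands in for the in-vehicle SQL CE cache
        self.outbox = []     # changes not yet pushed to the remote store
        self.remote = remote # stands in for the cloud database

    def write(self, key, value):
        # Writes always succeed locally, connected or not.
        self.local[key] = value
        self.outbox.append((key, value))

    def read(self, key):
        return self.local.get(key)

    def sync(self, connected):
        """Push pending changes in order when a connection is available."""
        if not connected:
            return 0
        pushed = 0
        while self.outbox:
            key, value = self.outbox.pop(0)
            self.remote[key] = value
            pushed += 1
        return pushed

cloud = {}
car = OfflineFirstStore(cloud)
car.write("speed_mph", 42)
print(car.sync(connected=False), cloud)  # nothing pushed while offline
print(car.sync(connected=True), cloud)
```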

Check out more detail by clicking the picture below.

See the Windows Azure Team’s New Plugin Enables WordPress To Use Windows Azure Storage Services post of 5/21/2010 in the Azure Blob, Drive, Table and Queue Services section above.

<Return to section navigation list>

Windows Azure Infrastructure

No significant articles yet today.

<Return to section navigation list>

Cloud Security and Governance

See Maureen O’Gara’s OASIS Forms ID-in-the-Cloud Group post of 5/22/2010 in the AppFabric: Access Control and Service Bus section above.

See Jean-Christophe Cimetiere’s Taking advantage of Windows Azure Storage from PHP: example with a WordPress plugin post of 5/21/2010 to the Interoperability @ Microsoft blog in the Azure Blob, Drive, Table and Queue Services section above.

<Return to section navigation list>

Cloud Computing Events

My Updated List of 74 Cloud-Computing Sessions at TechEd North America 2010 of 5/22/2010 includes the date, time, room, and complete description for each session, categorized in the following groups:

- Breakout Session (45)

- Interactive Session (10)

- Pre-Conference Seminar (1)

- Birds-of-a-Feather (6)

- Hands-on Lab (4)

- TLC Demo Station (8)

- Certification Preparation (0)

Update 5/23/2010: Due to the extreme length of the page, added links by category.

My List of 9 OData-Related Sessions at TechEd North America 2010 of 5/22/2010 is categorized like the preceding cloud-computing list.

Bruce Kyle performs an early post-mortem in his 1100 Developers Join in Portland Code Camp post of 5/22/2010. Following are the introduction and Azure-related sections:

Greetings from Portland Code Camp hosted at University of Portland in Portland, OR. More than 1000 developers have signed up. Check it out on twitter with the #devsat tag to see what people are saying about 100 or so presenters in 16 sessions each hour.

I had the privilege of doing several talks throughout the day. And the number one question is where to get the decks and resource material. So here's a set of links to the resources so you can follow up. I also get to acknowledge the great work of my colleagues who helped create the original decks, Phil Pennington, Simon Guest, and David Robinson.

I caught up with organizers Stuart Celarier and Arnie Rowland. They are thrilled that the Portland Mayor will be addressing the lunch crowd. Both had touted that this was the first mayor to speak to the informal group.

I'll post a movie montage up on my facebook page on Sunday. And I'll update this blog post later in the day.

About Portland Code Camp

Portland Code Camp and SQL Saturday are combining and coordinating efforts to bring 700–800 regional software development professionals together for the opportunity to immerse themselves in seminars, presentations, group exploration, and networking. Participants will be able to engage in their preferred technology, as well as to sample other options, with a focus on extending information exchange and enhancing the cross-pollination of ideas.

…

Patterns of Windows Azure Applications

There are key patterns in developing or migrating your software to Windows Azure, and there are good reasons for doing so. There are five main patterns:

- Using the cloud for scale

- Using the cloud for multi-tenancy

- Using the cloud for compute

- Using the cloud for (infinite) storage

- Using the cloud for communications

I made great use of Simon Guest's slide presentation. You can find the slides here: Patterns For Moving To The Cloud.

Stefan Tilkov put together a great set of notes on the talk QCon SF 2009: Simon Guest, Patterns of Cloud Computing.

Get started with Windows Azure at the Channel 9 Learning Center. You'll also find a link to the latest Windows Azure Developer Training Kit.

SQL Azure 101

I generously borrowed from David Robinson's presentation at the Windows Azure Firestarter. You can see his presentation on Channel 9 Windows Azure FireStarter: SQL Azure with David Robinson.

The key takeaways are that you use the same tools and skills in getting your application to run in the cloud as you do to run on premises.

Learn more about SQL Azure on MSDN.

Front Runner

If you're working on a software project that uses Windows Server 2008 R2, Windows 7, Windows Azure or SQL Azure, join Front Runner. Front Runner provides you with phone and online tech support, and offers ISVs additional marketing support.

Join at http://msdev.com/frontrunner

ISV Videos on Windows Azure

See how other software companies are getting their applications up on Windows Azure on Channel 9. Most videos are under ten minutes:

- David Bankston of INgage Networks talks about social networking and Azure

- Gunther Lenz, Microsoft, chats with Michael Levy, SpeechCycle

- Gunther Lenz, Microsoft, chats with Guy Mounier, BA-Insight, about BA-Insight's next Generation solution leveraging Windows Azure

- Aspect leverages storage + compute in call center application

- From Paper to the Cloud -- Epson's Cloud App for Printer, Scanner and Windows Azure

- Vidizmo - Nadeem Khan, President, Vidizmo talks about technology, roadmap and Microsoft partnership

- Murray Gordon talks with ProfitStars at a recent Microsoft event

- Datacastle Brings Data Protection From the Cloud Using Windows Azure

- Making business decisions with rules technology from InRule Technology

- Talking with Chatfe at the recent BizSpark camp for Windows Azure in New York

- Talking with Conexus Software at the Microsoft BizSpark Camp for Windows Azure in New York

- Talking with Marketers Anonymous at the recent Microsoft BizSpark camp for Windows Azure in New York

- Talking with Open Solutions at the recent Microsoft BizSpark event for Windows Azure in New York

- Talking with Summit Cloud at the recent Microsoft BizSpark event on Windows Azure in New York

- Talking with YouMD at the recent Microsoft BizSpark camp for Windows Azure in New York

- Talking with Pervasive Software Inc. about electronic document exchange and Azure

- Linxter brings MonitorGrid to manage servers from cloud

- LessMeeting improves the productivity and efficiency of enterprise meetings

- Izenda brings the power of self-serve BI from cloud

- FandomU brings worldwide enthusiasts and passionate individuals closer via events/conferences

- EyeMail delivers personalized audio/video emails from Windows Azure

- CoreMotives combines the power of Dynamics xRM and Windows Azure Platform to offer new Marketing Automation solution

- ChasingSavings.com adopts the Windows Azure Platform to meet the needs of their growing business

Greg Ness posted Future In Review Panel: Is the Network Ready for Cloud Computing? on 5/21/2010 to his Senior Tech Execs on Networks and Cloud Computing series:

Future in Review is Mark Anderson’s annual tech conference, described by The Economist as “the best technology conference in the world.” Our session on networks and clouds followed Mark’s interview with Microsoft CTO Ray Ozzie on “The Complex World of Emerging Platforms, from Cloud to Phone.” After Ray and Mark set the stage by talking about new platforms and complexity, we had the opportunity to explain why today’s networks need to be automated.

This 30 minute panel “Is the Network Ready for Cloud Computing” includes:

- Glenn Dasmalchi, Tech Chief of Staff, Office of the CTO, Cisco Systems;

- Lew Tucker, former Cloud CTO, Cloud Computing, Sun Microsystems;

- Mark Thiele, VP Data Center Strategy, ServiceMesh;

- Yousef Khalidi, Distinguished Engineer, Windows Azure, Microsoft;

- Richard McDougall, Chief Cloud Application Architect and Principal Engineer, Office of the CTO, VMware; and

- Greg Ness (blog author), Vice President, Infoblox.

You can view the session here. [Emphasis added.]

<Return to section navigation list>

Other Cloud Computing Platforms and Services

John E. Dunn claims “Star UK startup bought out” in his Oracle acquires Secerno for database firewall post of 5/21/2010 to the CIO.co.uk blog:

Oracle has bought UK database security startup Secerno for an undisclosed sum, bringing to an end the independence of one of the last decade’s most unusual UK-based startups.

As CIO sister title Techworld pointed out in the first news item ever written on the company just before its 2006 product launch, UK technology start-ups with saleable products are a rarity. For one to get bought for decent money in short order is vanishingly rare. More often, the UK tech start-ups, especially university spin-offs, sell on their IP for pennies or just fade away to nothing.

The deal is expected to close at the end of June, after which Oracle will incorporate the company’s DataWall database firewall, a multi-platform system used to analyse transactions for suspicious queries.


Apart from working across different databases, including virtualised databases, the system’s strength is that it can work in real time by comparing each query to an established profile of what is ‘normal’ for that database. Unusual queries – typical of hacking attempts – are blocked.
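Secerno's actual analysis technique isn't described here, so the following is only a toy Python illustration of the general idea of profiling "normal" queries: learn the shape of known queries with their literal values stripped out, then flag any query whose shape has never been seen. The names and sample queries are hypothetical, and a production firewall would parse SQL properly rather than use regular expressions:

```python
import re

_STRING = re.compile(r"'(?:[^']|'')*'")      # single-quoted SQL string literals
_NUMBER = re.compile(r"\b\d+(?:\.\d+)?\b")   # numeric literals
_SPACE = re.compile(r"\s+")

def normalize(sql):
    """Reduce a query to its 'shape' by replacing literals with placeholders."""
    shape = _STRING.sub("?", sql)
    shape = _NUMBER.sub("?", shape)
    return _SPACE.sub(" ", shape).strip().lower()

class QueryProfile:
    """Set of query shapes observed during a learning period."""

    def __init__(self):
        self.known = set()

    def learn(self, sql):
        self.known.add(normalize(sql))

    def allows(self, sql):
        return normalize(sql) in self.known

profile = QueryProfile()
profile.learn("SELECT * FROM Customers WHERE CustomerID = 'ALFKI'")
print(profile.allows("select * from Customers where CustomerID = 'BONAP'"))
print(profile.allows("SELECT * FROM Customers WHERE CustomerID = '' OR 1=1 --'"))
```

The first query has a known shape with a different value and passes; the injected `OR 1=1` changes the shape, so it would be blocked.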

“Secerno’s database firewall product acts as a first line of defence against external threats and unauthorised internal access with a protective perimeter around Oracle and non-Oracle databases,” said Oracle’s database senior vice president, Andrew Mendelsohn.

The official press release did not make clear which of Secerno’s team will remain in place but the assumption is that the core, including CEO Steve Hurn, and original co-founder and CTO, Steve Moyle, will be among them. Co-founder Paul Davie departed the company earlier this year.

The sale will have been driven in part by Secerno’s big-shot investors, one of which is Amadeus Capital Partners, itself co-founded by Hermann Hauser, a key figure in the setting up of the famous Acorn microcomputer company in the 1980s. That company in turn spun out ARM, the chip used today in the iPhone and many other low-power devices.

Protecting cloud databases probably is one of Oracle’s primary incentives for the acquisition.

EDL Consulting reports Business intelligence software leaders [SAP, Cisco, EMC and VMware] team to boost cloud computing in this 5/20/2010 post:

At the ongoing SAP Sapphire NOW conference taking place in both Orlando, Florida and Frankfurt, Germany, SAP announced that it has partnered with Cisco, EMC and VMware to help promote the use of cloud computing in the business community. Through the development of advanced SaaS business applications and reliable cloud-based data centers, the companies hope to instill confidence in organizations hesitant to move to the cloud.

Using clothing manufacturer Levi Strauss as an example, SAP and its partners pointed to a reduction of total cost of IT operations for Levi after it implemented cloud computing. Levi reported that cloud computing also provided it with greater agility to quickly make changes and fix problems as they arose.

"This collaborative demonstration of success is only possible when combining the strength of SAP's world-class business applications and the offerings from global technology leaders such as Cisco, EMC and VMware," Vishal Sikka of SAP said.

SAP applications make up more than 22 percent of the global business intelligence software market, according to Gartner. As the company makes more SaaS applications available, the use of cloud computing in the business community is likely to rise. Its most recent SaaS release, Business ByDesign, is an application ideal for small businesses to leverage SAP's reliability.

<Return to section navigation list>

![Editing with the Windows Azure Storage plugin for WordPress[6] Editing with the Windows Azure Storage plugin for WordPress[6]](http://blogs.msdn.com/blogfiles/interoperability/WindowsLiveWriter/TakingadvantageofWindowsAzureStoragefrom_9FDE/Editing%20with%20the%20Windows%20Azure%20Storage%20plugin%20for%20WordPress[6]_thumb.png)