Windows Azure and Cloud Computing Posts for 5/6/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

No significant articles yet today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Alex James posted OData and Messenger across the web to the OData blog on 5/6/2010:

The Windows Live team recently started talking about the new version of Messenger, which will include a RESTful interface that supports OData.

This is a very exciting development for the OData eco-system.

Read all about it here. [Windows blog.]

Herve Roggero announced in a 5/6/2010 post to LinkedIn’s SQL Azure and SQL Server Security group that he’s Developing a SHARD Library for .NET 3.5 - Looking for partners to test:

I am currently developing a Shard Library for .NET 3.5 and I am looking for a few companies willing to test it out, for which I would provide a full license at no cost - I may decide to provide this library as an open source project at some point but depending on the amount of work needed this may turn into a licensed product. The Shard library I am developing is a high-performance implementation with round-robin Inserts, smart Updates/Deletes and merged Selects from multiple sources, including SQL Azure. [Emphasis added.]

If you are interested and you have some time in the next few weeks/months to work on this with me, I will provide you with an NDA to sign so we can begin working together.

ARE YOU ALL IN? Then join me!

By Herve Roggero, Managing Partner, Pyn Logic
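The announcement does not describe how the library works internally, so the following is only a minimal sketch of the two ideas it names, round-robin inserts and merged selects. It assumes in-memory SQLite databases as stand-ins for SQL Azure shards; the `ShardSet` class, the `customers` table and the broadcast-style update are hypothetical illustrations, not Herve's implementation.

```python
import itertools
import sqlite3


class ShardSet:
    """Hypothetical shard router; each shard is an in-memory SQLite database."""

    def __init__(self, shard_count):
        self.shards = [sqlite3.connect(":memory:") for _ in range(shard_count)]
        for conn in self.shards:
            conn.execute(
                "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)"
            )
        # Endless iterator that hands out the shards in rotation.
        self._next_shard = itertools.cycle(self.shards)

    def insert(self, customer_id, name):
        """Round-robin insert: each new row goes to the next shard in turn."""
        conn = next(self._next_shard)
        conn.execute(
            "INSERT INTO customers (id, name) VALUES (?, ?)", (customer_id, name)
        )
        conn.commit()

    def select_all(self):
        """Merged select: run the same query on every shard and combine rows."""
        rows = []
        for conn in self.shards:
            rows.extend(conn.execute("SELECT id, name FROM customers").fetchall())
        return sorted(rows)

    def update_name(self, customer_id, name):
        """Naive broadcast update (assumption): try every shard, count changes."""
        changed = 0
        for conn in self.shards:
            cursor = conn.execute(
                "UPDATE customers SET name = ? WHERE id = ?", (name, customer_id)
            )
            changed += cursor.rowcount
            conn.commit()
        return changed
```

A "smart" update would presumably route the statement only to the shard that holds the row; the broadcast version here simply keeps the sketch short.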

David Robinson asks Are You Ready to jump in and learn SQL Azure? on 5/6/2010 and offers resources, if you are:

Ready to jump in and learn SQL Azure? Here are some resources from Microsoft’s Channel 9.

Introduction to SQL Azure

In this lab, you will walk through a series of simple use cases for SQL Azure such as: preparing your account, managing logins, creating database objects and querying your database. Here are the direct links to the various sections:

- Overview

- Exercise 1: Preparing Your SQL Azure Account

- Exercise 2: Working with Data. Basic DDL and DML

- Exercise 3: Build a Windows Azure Application that Accesses SQL Azure

- Exercise 4: Connecting via Client Libraries

- Summary

Migrating Databases to SQL Azure

In this lab, you will use the AdventureWorksLT2008 database and show how to move an existing on-premise database to SQL Azure including modifying the DDL and moving data via BCP and SQL Server Integration Services. Here are the direct links to the various sections:

- Overview

- Exercise 1: Moving an Existing Database to the Cloud

- Exercise 2: Using BCP for Data Import and Export

- Exercise 3: Using SSIS for Data Import and Export

- Summary

- Known Issues

SQL Azure: Tips and Tricks

In general, working with a database in SQL Azure is the same as working against an on-premise SQL Server, with some additional considerations covered in this lab.

- Overview

- Exercise 1: Manipulating the SQL Azure firewall via API’s

- Exercise 2: Managing Connections – Logging SessionIds

- Exercise 3: Managing Connections – Throttling, latency and transactions

- Exercise 4: Supportability – Usage Metrics

- Summary

What is SQL Azure?

Just what is SQL Azure? Join Zach in this brief video for an introduction to SQL Azure exploring what makes SQL Azure just like SQL Server and what makes it different and ready for the cloud.

The Open Data Protocol’s Roadshow subsite announced on 5/5/2010 the OData Roadshow:

Join us for the OData Roadshow and learn how to implement Web APIs using the Open Data Protocol. This event will include in-depth presentations and hands-on time for you to explore ideas on building an API for your web application.

An important part of the OData Roadshow will be the afternoon hacking sessions, where you'll have a chance to work OData into your own projects on whatever platform you choose. Bring your laptop, ready to experiment with your ideas and pound out some code.

Agenda: Morning

- OData introduction, along with the ecosystem of products that support it

- Implementing and consuming OData services

- Hosting your OData assets in Azure

- Monetizing your OData services via "Dallas"

- Real-world tips & tricks to consider when developing an OData service

Agenda: Afternoon

- Open discussion and hands-on coding time to experiment with ideas and uses for OData in their current/future projects.

Speakers

Douglas Purdy (@douglasp), CTO, Data and Modeling in Microsoft’s Business Platform Division.

Jonathan Carter (@lostintangent), Technical Evangelist in Microsoft's Developer & Platform Evangelism group.

Want to tweet about the OData Roadshow? Use the hash tag #OData.

For the schedule, see Mike Flasko's 5/5/2010 announcement that the WCF Data Services Team will sponsor an OData Roadshow, in the Cloud Computing Events section below.

It appears to me that Doug and Jon will be spending more time in aircraft than in the classrooms.

Dan Jones reports SQL Azure Now Supports Data-tier Applications in this 5/5/2010 post:

As announced a few weeks ago on the SQL Azure blog, SQL Azure now supports Data-tier Applications, which were introduced with Visual Studio 2010 and SQL Server 2008 R2. Here’s a quick tour of the feature.

Here’s what I did:

- Launch Visual Studio 2010 and create a new Data-tier Application project

- Import my pubs database from a local instance

- Set my deployment properties to point to my SQL Azure account

- Build and deploy from Visual Studio

It’s that simple!

So what does this look like? Here are some screen shots:

Dan continues with additional screen captures and concludes:

Connecting SQL Server 2008 R2 Management Studio to my SQL Azure instance I see the databases as well as the meta-data for the Data-tier Application.

With Visual Studio 2010, SQL Server 2008 R2 Management Studio and the latest release of SQL Azure we have a symmetrical experience for creating, registering, and deploying Data-tier Applications. Unfortunately at this time we don’t support Upgrading a Data-tier Application on SQL Azure, as we do with an on-Premise install of SQL Server. To move your database to the next version of your application you’d deploy side-by-side and migrate your data using your favorite data migration tool.

Try this out for yourself and I think you’ll find this to be extremely valuable. In addition, if you want to share your database schema with other people you no longer have to provide a bunch of Transact-SQL scripts. You can give them a DAC Pack file. The file will contain the name of the application, the version, and the database schema definition. This will make management of applications, including deployment much simpler!

<Return to section navigation list>

AppFabric: Access Control and Service Bus

See Sanjay Jain announced Released: Microsoft Dynamics CRM Statement of Direction April-2010 on 5/6/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Fox Business reports in the GCommerce Creates Cloud-Based Inventory System Using Microsoft SQL Azure to Transform the Special Order Process press release of 5/6/2010:

The $300 billion automotive aftermarket parts industry (http://www.aftermarket.org/) is undergoing a transformation through the introduction of an innovative cloud-based solution created by GCommerce (http://www.gcommerceinc.com/) with support from Microsoft Corp. A new market technology solution called the Virtual Inventory Cloud (VIC(TM)) provides inventory visibility and efficiency for drop ship special orders between distributors/retailers and manufacturers/suppliers in the automotive aftermarket. GCommerce has emerged as the leading provider of a B2B solution for the automotive aftermarket by providing Software as a Service (SaaS) using elements of cloud-based technologies to automate procurement and purchasing for national retailers, wholesalers, program groups and their suppliers.

"VIC(TM) enables hundreds of distributors and retailers in the automotive aftermarket to improve margins and increase customer loyalty through better visibility into parts availability from suppliers. The solution opens the door to connect into capabilities that only the largest companies could afford through heavy IT investments," said Rick Main, executive vice president, Sales & Marketing, GCommerce. "This is representative of a new transformative wave of business innovation that extends capabilities beyond the four walls of an enterprise, enabled through adoption of cloud platforms. The cloud will exponentially accelerate GCommerce's ability to serve the automotive aftermarket market and others like it." …

"Customers are increasingly turning to cloud-based solutions for the innovation needed in their supply chains because the centralized access to processes and information is well suited to the multi-enterprise nature of today's supply chains," said Dennis Gaughan, vice president, AMR Research.

Windows Azure and Microsoft SQL Azure enable VIC to be a more efficient trading system capable of handling tens of millions of transactions per month for automotive part suppliers. GCommerce's VIC implementation with support from Microsoft allows suppliers to create a large-scale virtual data warehouse that reduces dependency on paper-based processes and leverages a technology-based automation system that empowers people. This platform has potential to transform distribution supply chain transactions and management across numerous industries.

"As today's announcement demonstrates, Microsoft SQL Azure and Windows Azure enable mission-critical enterprise processes that deliver agile, cloud-based solutions for our partners," said Rahul Auradkar, director of the Cloud Services Team in the Business Platforms Division at Microsoft. "The automotive aftermarket industry faces a large-scale, complex challenge in implementing business processes like the real-time inventory and procurement process for drop ship special orders. Microsoft SQL Azure and Windows Azure deliver the agility, efficiency and scalability that GCommerce needs to meet this challenge and transform the automotive aftermarket supply chain."

The Windows Azure Team blog posted its Real World Windows Azure: Interview with Martin Szugat, Chief Product Officer at SnipClip case study about an Azure-based Facebook app on 5/6/2010. It begins:

As part of the Real World Windows Azure series, we talked to Martin Szugat, Chief Product Officer at SnipClip, about using the Windows Azure platform to deliver its social-media application and the benefits that Windows Azure provides. Here's what he had to say:

MSDN: Tell us about SnipClip and the services you offer.

Szugat: SnipClip is a social-collecting game on Facebook that enables people to collect short video clips of their favorite brand, artist, or movie by clipping them into a digital collector's album. People can trade and gift duplicate collectibles with friends and compete with other fans to complete a collection and win prizes. Companies with consumer focus and media brands use SnipClip to activate and engage their Facebook fans, to increase their fans' purchasing intent, and to promote or monetize their content.

MSDN: What was the biggest challenge SnipClip faced prior to implementing Windows Azure?

Szugat: Several of our customers and partners wanted SnipClip to guarantee high availability and scalability for the application. Specifically, customers and partners wanted to trust that we could scale from a few hundred to hundreds of thousands of users within hours if demand dictated it. We couldn't guarantee that kind of scalability with a physical server infrastructure at an even remotely reasonable cost; a cloud solution was the only viable option.

MSDN: Can you describe the solution you built with Windows Azure to help address your need for a scalable and highly available Web application?

Szugat: To start, SnipClip built a Web service based on the Windows Communication Foundation programming model. Then, we migrated that Web service to a Web role in Windows Azure, which provides us with quick computing scalability to handle demand. This step took us only two hours. The service uses Microsoft SQL Azure to store relational data, which has high availability and built-in fault tolerance. SnipClip takes advantage of Blob storage in Windows Azure to store image files. …

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Visit SnipClip at: www.snipclip.com

John O’Donnell’s Announcing Microsoft Dynamics CRM SDK 4.0.12 post of 5/6/2010 to the US ISV Developer blog advises:

Microsoft Dynamics CRM SDK 4.0.12 is now available for download! This update contains some very exciting additions:

Advanced Developer Extensions

Advanced Developer Extensions for Microsoft Dynamics CRM, also referred to as Microsoft xRM, is a new set of tools included in the Microsoft Dynamics CRM SDK that simplifies the development of Internet-enabled applications that interact with Microsoft Dynamics CRM 4.0. It uses well known ADO.NET technologies. This new toolkit makes it easy for you to build an agile, integrated Web solution! [Link to http://xrm.com/ added.]

Advanced Developer Extensions for Microsoft Dynamics CRM supports all Microsoft Dynamics CRM deployment models: On-Premises, Internet-facing deployments (IFDs), and Microsoft Dynamics CRM Online. The SDK download contains everything you need to get started: binaries, tools, samples and documentation.

Authentication for Microsoft Dynamics CRM Online

We have added new authentication documentation and sample code for Microsoft Dynamics CRM Online that does not require using certificates, making it easier for you to write code for your online solutions.

Sanjay Jain announced Released: Microsoft Dynamics CRM Statement of Direction April-2010 on 5/6/2010:

Learn the latest updates on CRM “5”, Accelerators, Integration Adaptors, SDK, CRM Online, Globalization, and Azure AppFabric. You can download the Statement-of-Direction here (requires access to PartnerSource). [Emphasis added.]

Sanjay Jain, ISV Architect Evangelist, Microsoft Corporation

- Blog: http://Blogs.msdn.com/SanjayJain

- Twitter: http://twitter.com/SanjayJain369

I’ve asked Sanjay for a simplified method by which registered partners can access the Statement of Direction without signing up to sell Microsoft Dynamics CRM at retail. I’ll update this post if and when he replies.

Mary Jo Foley reported Microsoft to deliver its cloud-hosted CRM 5 first, software later on 5/6/2010:

I asked a Microsoft spokesperson for verification [that CRM 5 Online is still on track to ship late this year, but the on-premises version won’t be available until 2011]. This is all the company is willing to say: “CRM5 will be available late 2010 beginning with Online in 32 markets and 41 languages, followed by the on-premises version.”

The Windows Azure Team reported Windows Azure and Lokad Founder Help Students Build Open Source Project on 5/5/2010:

For the past four years, Joannes Vermorel, founder of Lokad, has taught the course, Software Engineering and Distributed Computing, at the École Normale Supérieure (ENS) in Paris. The course covers the fundamental concepts underlying software engineering, with a particular interest in complex and/or distributed systems such as cloud computing, and we have provided Windows Azure resources to support the course and its students.

As part of the course every year, Joannes asks a dozen first-year students to take over a software project. Last year, they created Clouster, a scalable clustering algorithm that runs on Windows Azure, and this year they created an online multiplayer strategy game built on Windows Azure called Sqwarea (square+war+area). In order to further challenge the students, Vermorel mandated a complex connectivity rule in the game that required students to leverage some of the scalability features in Windows Azure. Check out the game here and, if you're interested in learning more, be sure to read Vermorel's post.

Mike Taulty’s KoodibooK Book Publishing – WPF, Silverlight, ASP.NET MVC2, Azure post of 5/5/2010 begins:

Earlier in the year I dropped in to see some former colleagues of mine who are working on a new product/service called KoodibooK at their software company in Bath (UK).

KoodibooK is a product that allows you to build up a set of photographs into a book, publish that book to an online store and then buy copies of the book that you made or, equally, books that other people have made and published.

I really like the application - you can try it yourself in beta from http://www.koodibook.com – it looks great and “feels” really slick, snappy and just “friendly” to use.

I’ve put together photograph books like this before myself using iPhoto on my Mac and I hadn’t come across a Windows solution quite as rich as this one – I plan to try it for my next photo book and see how it works out.

On the tech side - what I really found interesting was that the client applications and the websites/services that the guys have brought together cover such a wide range of the Microsoft stack.

The book creation part of the application needs to be rich and so there’s a Windows application written in WPF that leads the user from the creation of their book through to publishing it to the website.

On the website there’s still a need to show the book off in its best light and so Silverlight steps in ( with some re-use from the desktop code base ) in order to ensure that a very wide range of users can still see the books that are available with high fidelity even though they may not have the book creation application installed.

And those Silverlight “islands of richness” are hosted within web pages written with ASP.NET MVC2 and hosted in Windows Azure – KoodibooK is “living the dream” when it comes to bringing together all those platform pieces :-) …

Mike continues with additional project details and an interview with “Richard Godfrey and Paul Cross of KoodibooK captured on video talking about the application ( which they demo ).”

CoreMotives explains why the company uses Windows Azure and Dynamics CRM as the platforms for their Marketing Suite in this recent update to their Web site:

All CoreMotives solutions are powered by the Windows Azure cloud and run inside Microsoft Dynamics CRM. There is no software to install or maintain. We allow you the freedom to introduce powerful tools to your organization without the IT burden traditionally associated with adding Microsoft CRM marketing automation solutions.

Because our solutions are hosted and have zero-footprint, you can start using them immediately, regardless of your Microsoft CRM flavor: On Premise, Partner Hosted or Microsoft CRM Online.

CoreMotives selected Windows Azure as our architecture platform because it provides a scalable, secure and powerful method of delivering high quality software solutions. Windows Azure is a “cloud-based” infrastructure with processing and storage capabilities. What this means to our clients is that they can use our solutions without installing software on their own servers. They have nothing to maintain, or support over time – thus lower costs.

CoreMotives is 100% focused on Microsoft Dynamics CRM. This is the only CRM system for which we develop products, not Salesforce.com, not Sugar – just Microsoft CRM. Yes, we run our business with Microsoft CRM, and we fuel our sales by using our own Marketing Suite.

We support all versions of Microsoft CRM: 4.0 OnPremise, Partner Hosted, or CRM Online and we also support CRM 3.0. All variations are supported such as 32 & 64 bit, IFD, SSL, client-side certificates, etc.

It’s a 15-minute provisioning process to begin using all the features of our marketing suite. And there is nothing to install on your CRM server. This is a zero footprint solution.

<Return to section navigation list>

Windows Azure Infrastructure

Lori MacVittie claims If you don’t know how scaling services work in a cloud environment you may not like the results in her Cloud Load Balancing Fu for Developers Helps Avoid Scaling Gotchas post of 5/6/2010:

One of the benefits of cloud computing, and in particular IaaS (Infrastructure as a Service) is that the infrastructure is, well, a service. It’s abstracted, and that means you don’t need to know a lot about the nitty-gritty details of how it works. Right?

Well, mostly right.

While there’s no reason you should need to know how to specifically configure, say, an F5 BIG-IP load balancing solution when deploying an application with GoGrid, you probably should understand the implications of using the provider’s API to scale using that load balancing solution. If you don’t you may run into a “gotcha” that either leaves you scratching your head or reaching for your credit card. And don’t think you can sit back and be worry free, oh Amazon Web Services customer, because these “gotchas” aren’t peculiar to GoGrid. Turns out AWS ELB comes with its own set of oddities and, ultimately, may lead many to come to the same conclusion cloud proponents have come to: cloud is really meant to scale stateless applications.

Many of the “problems” developers are running into could be avoided by a combination of more control over the load balancing environment and a basic foundation in load balancing. Not just how load balancing works, most understand that already, but how load balancers work. The problems that are beginning to show themselves aren’t because of how traffic is distributed across application instances or even understanding of persistence (you call it affinity or sticky sessions) but in the way that load balancers are configured and interact with the nodes (servers) that make up the pools of resources (application instances) it is managing.

LOAD BALANCING FU – LESSON #1

Our first lesson revolves around nodes and the way in which load balancers interact with them. This one impacts the way in which you orchestrate processes, automate tasks, and interact with cloud framework APIs that in turn interact with load balancing solutions. It also has the potential to influence your choice of provider or core application design decisions, such as how state is handled and persisted.

In general, a load balancing virtual server interacts with a pool (farm) of nodes (applications). Because of the way in which networks are architected today, i.e. they’re IP-based for interoperability, a node is identified by an IP address. When the virtual server receives a request it chooses the appropriate pool comprising one or more nodes based on the associated algorithm. Cloud computing providers today appear to be offering primarily one of two industry standard algorithms: round robin and least connections. There are many more algorithms, standard and not, but these two seem to be the most popular.

When a load balancing solution detects a problem with a node (it’s monitoring the node by sending ICMP pings or opening a TCP connection or doing an HTTP GET, depending on the provider and implementation) it marks it as “down”. Nodes can also be marked as “down” purposefully, in the event you want to perform some maintenance or find a problem with the application running and need to address it.
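The two algorithms and the "down" marking described above can be sketched in a few lines. This is an illustration under stated assumptions, not any provider's API: the `Node` and `Pool` classes and the `record_health_check` method are hypothetical names, and the health check result is passed in rather than measured by ICMP, TCP or HTTP.

```python
from itertools import count


class Node:
    """One application instance, identified by its IP address."""

    def __init__(self, ip):
        self.ip = ip
        self.up = True
        self.active_connections = 0


class Pool:
    """Hypothetical pool of nodes behind one load balancing virtual server."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._turn = count()  # ever-increasing counter for round robin

    def _available(self):
        # Nodes marked "down" never receive new requests.
        nodes = [n for n in self.nodes if n.up]
        if not nodes:
            raise RuntimeError("no nodes are up")
        return nodes

    def round_robin(self):
        """Hand out the available nodes in rotation."""
        nodes = self._available()
        return nodes[next(self._turn) % len(nodes)]

    def least_connections(self):
        """Pick the available node with the fewest open connections."""
        return min(self._available(), key=lambda n: n.active_connections)

    def record_health_check(self, ip, healthy):
        """Mark a node up or down from a monitor result or a manual request."""
        for node in self.nodes:
            if node.ip == ip:
                node.up = healthy
```

Calling `record_health_check("10.0.0.2", False)` takes that node out of both selection methods without removing it from the pool, which is the distinction the rest of the lesson turns on.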

Lori continues with more of Lesson #1 and a “SCALING SERVICES IMPACT DEVELOPER DECISIONS” topic, which concludes:

In all cases, when you’re scaling down you don’t really want to just terminate the instance; you want to mark the node as down and allow the connections to bleed off before terminating it and deleting it from the load balancing solution. That’s the way it should work. By simply terminating the instance the node is marked as down and may continue to incur charges because health checks are using bandwidth to determine the status of the node. In the optimal case the load balancing service would offer a “conditional” delete function in its API that marked the node as “down” and, when all connections were completed, would automatically remove the node from the load balancing service. This is not the case today, for many reasons, but is where we hope to see such services go in the future.
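Because the "conditional" delete Lori describes is not offered by the services she discusses, a client would have to orchestrate it. The sketch below shows one way that might look; `drain_and_remove`, the `Node` class, the list used as a pool and the `terminate` callback are all hypothetical stand-ins for whatever a provider's API actually exposes.

```python
import time


class Node:
    """Stand-in for a load-balanced instance (hypothetical)."""

    def __init__(self, ip, active_connections=0):
        self.ip = ip
        self.up = True
        self.active_connections = active_connections


def drain_and_remove(pool, node, terminate, poll_seconds=1.0,
                     timeout_seconds=300.0, sleep=time.sleep):
    """Mark the node down, let connections bleed off, then remove and terminate.

    Returns the number of seconds spent waiting for connections to finish.
    """
    node.up = False  # stop sending new requests to this node
    waited = 0.0
    while node.active_connections > 0 and waited < timeout_seconds:
        sleep(poll_seconds)
        waited += poll_seconds
    pool.remove(node)  # delete from the load balancer so health checks stop
    terminate(node)    # only now shut the instance down
    return waited
```

The timeout is a design choice rather than something from the post: without it, one stuck connection would keep the instance (and its charges) alive indefinitely.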

Andrew R. Hickey asserts “Software-as-a-service (SaaS) usage is on a staggering path to growth, according to a recent survey by Gartner” in his SaaS Set To Explode In 2010: Survey article of 5/5/2010 for ChannelWeb:

According to Gartner, more than 95 percent of organizations will maintain or grow their SaaS use through 2010 fueled mostly by integration requirements, a change in sourcing strategy, and total cost of ownership.

“SaaS applications clearly are no longer seen as a new deployment model by our survey base, with almost half of those surveyed affirming use of SaaS applications in their business for more than three years,” said Sharon Mertz, research director at Gartner, in the survey. “The varying levels of maturity within the user base suggest growing opportunities for service providers along the adoption curve, as organizations seek assistance with initiatives ranging from process redesign to implementation to integration services.”

Despite the pending upswing in SaaS use, Gartner also found that many companies don’t have policies governing the evaluation and uses of SaaS. The survey revealed that 39 percent of respondents had policy or processes governing SaaS, which was up just one percent from 2008.

Gartner conducted the survey in December 2009 and January 2010, querying 270 IT and business management professionals involved in the implementation, support, planning and budgeting decisions regarding enterprise application software.

The survey found that the most popular SaaS applications include email, financial management and accounting, sales force automation, customer service and expense management, with more than 30 percent of survey respondents using those applications.

Additionally, 53 percent of organizations expect to increase their SaaS investment slightly over the next two years and 19 percent expect to boost SaaS investments significantly. Still, about 25 percent of respondents expect SaaS investments to remain level and 4 percent will reduce their SaaS spend slightly. Meanwhile, 72 percent of respondents believe SaaS investments will increase compared to current investments while 45 percent said on-premise budgets will increase compared to where they currently are. …

Reuven Cohen explains How to Make Money in Cloud Computing in this 5/6/2010 post, which begins:

It's been fairly quiet on the blog front lately, mostly because of my ridiculous travel schedule as well as an endless series of meetings with both new customers and partners. A recurring question I've been asked lately has revolved around one of the more difficult questions to answer in cloud computing. The question is how does one make money in cloud computing? A very good question, one that I've been asking myself for quite some time. So let me begin by giving you a brief history of my company, Enomaly.

Over the last 6 1/2 years since we founded Enomaly Inc I've often considered myself a bootstrapper: someone with the ability to build a self-sustaining business that succeeds without external financial support, built and supported by the money we make. In my case Enomaly was formed out of the previous consulting work I was doing in enterprise content management; back then (pre-2004) I focused on open source CMS products. (I made money on others' free software.) Basically I built Enomaly by taking the money I made as an independent consultant / freelancer / contractor, bringing together a founding team and creating Enomaly.

The original partners, George, a financial whiz, looked after the operations and Lars looked after both the product and project management; they both excelled in areas I found myself weak in. As time passed we used the revenue to hire more people and develop our products, all the while gradually growing the business. This time gave us the ability to both grow and adapt to emerging market trends in what we called "elastic computing", now generally referred to as "Infrastructure as a service" or Cloud Computing. One of our biggest advantages was time.

Ruv continues with more details about Enomaly Inc and concludes:

So how do you make money in Cloud Computing? By making and more importantly "selling" products and services people want to buy.

Alan Le Marquand posted a detailed explanation of the Windows Azure Platform. Inside the Cloud. Microsoft's Cloud World Explained Part 2. on 5/5/2010 to the TechNet Edge blog:

In this post we will explore the Windows Azure Platform in more detail. After reading it you'll have an understanding of the components that make up the platform, what functions they perform as well as an idea of what applications are good candidates to run on Windows Azure Platform and the considerations for making that choice.

[Click to read the full post]

Here’s the link to Part 1 of Alan’s series: Head in the Clouds. Microsoft's Cloud World Explained Part 1 of 4/23/2010 and a few other TechNet Edge links from Part 2:

<Return to section navigation list>

Cloud Security and Governance

See SeedTS and Layer 7 Technologies announce a joint Webinar, Identity and Policy Management in SOA Governance Thursday, 5/6/2010 10:00am PDT presented by Jean Rodrigues and K. Scott Morrison in the Cloud Computing Events section below.

K. Scott Morrison claimed “Cloud is now mature enough that we can begin to identify anti-patterns associated with using these services” as a preface to his Top Five Mistakes People Make When Moving to the Cloud post of 5/3/2010:

Cloud is now mature enough that we can begin to identify anti-patterns associated with using these services.

Keith Shaw from Network World and I spoke about worst practices in the cloud last week, and our conversation is now available as a podcast.

Come and learn how to avoid making critical mistakes as you move into the cloud.

Charlie Kaufman presents a 00:20:37 Azure Network Security Webinar according to this TechNet Edge post of 5/6/2010:

Charlie Kaufman, security architect for Azure, gives us details on how the network communications work and are secure within Azure. Here are the topics we get into:

- 5:30 – Why don’t we use IPSec between the Compute node and store?

- 6:43 – Breakdown of the virtualization security in Azure

- 10:50 – How do we protect against malicious users doing things like Denial of Service (DoS) attacks?

- 15:15 – How to protect against users who create a bogus account and try to make attacks from inside the Azure framework?

Length: 20:37

Watch other IT Pro Azure videos on the Edge Cloud Page

See Law Seminars International presents A Comprehensive Two-Day Seminar on Cutting Edge Developments in Cloud Computing: New business models and evolving legal issues on 5/17 and 5/18/2010 at the Crowne Plaza Hotel in Seattle, WA in the Cloud Computing Events section.

<Return to section navigation list>

Cloud Computing Events

Mike Flasko announced on 5/5/2010 that the WCF Data Services Team will sponsor an OData Roadshow:

We are taking the MIX10 Services Powering Experiences content on the road.

New York, NY – May 12, 2010

Chicago, IL – May 14, 2010

Mountain View, CA – May 18, 2010

Shanghai, China – June 1, 2010

Tokyo, Japan – June 3, 2010

Reading, United Kingdom – June 15, 2010

Paris, France – June 17, 2010

One full day of OData and Azure fun!

More details at http://www.odata.org/roadshow

David Chou announced on 5/5/2010 Free Training – Microsoft Web Camps in Mountain View on May 27 & 28, 2010:

The Microsoft Web Team is excited to announce a new series of events called Microsoft Web Camps!

function WebCamps () {

Day1.Learn();

Day2.Build();

}

Interested in learning how new innovations in Microsoft's Web Platform and developer tools like ASP.NET 4 and Visual Studio 2010 can make you a more productive web developer? If you're currently working with PHP, Ruby, ASP or older versions of ASP.NET and want to hear how you can create amazing websites more easily, then register for a Web Camp near you today!

Microsoft's Web Camps are free, two-day events that allow you to learn and build on the Microsoft Web Platform. At camp, you will hear from Microsoft experts on the latest components of the platform, including ASP.NET Web Forms, ASP.NET MVC, jQuery, Entity Framework, IIS, Visual Studio 2010 and much more. See the full agenda here.

Register now and we look forward to seeing you at camp soon!

Mountain View, CA May 27 & 28

1065 La Avenida Map

Mountain View, CA 94043

Phone: (650) 693-4000

Speakers

Jon Galloway works for Scott Hanselman as an ASP.NET Community Program Manager helping to evangelize and promote the ASP.NET framework and Web Platform. Jon previously worked at Vertigo Software, where he worked on several Microsoft conference websites (PDC08, MIX09, WPC09), built the CBS March Madness video player, and led a team which created several Silverlight advertising demos for MIX08. Prior to that, he worked in a wide range of web development shops, from scrappy startups to Fortune 500 financial companies. He was an ASP.NET and Silverlight Insider, ASP.NET MVP, published author, and regular contributor to several open source .NET projects. He runs the Herding Code podcast (http://herdingcode.com) and blogs at http://weblogs.asp.net/jgalloway.

SeedTS and Layer 7 Technologies announce a joint Webinar, Identity and Policy Management in SOA Governance Thursday, 5/6/2010 10:00am PDT:

Webinar Overview:

Digital Identity is at the heart of the modern business ecosystem. However, governing SOA interactions - particularly across a distributed or federated environment - creates complexity not found in typical user-machine exchanges. Separating policy creation and enforcement from service implementation can simplify SOA governance without compromising scalability, flexibility and auditability - accommodating even the most elaborate, heterogeneous IT landscapes. This Webinar will review best practices for building and operating a policy-centered, identity-driven SOA.

What You Will Learn:

- The connection between identity management and SOA governance

- The importance of separating policy and entitlements from service implementation

- The challenges of managing and validating identity in SOA interactions

- Options for managing and enforcing identity-driven SOA policies

- How to implement identity-based governance in real-world SOA

Presented by: Jean Rodrigues - Enterprise Solutions Director at SeedTS, and K. Scott Morrison, CTO and Chief Architect at Layer 7 Technologies.

Please note that the first 15 minutes of this webinar will be in Portuguese and the remainder will be in English.

Register Now!!! You will receive a confirmation email with the details. We look forward to seeing you there!

Law Seminars International presents A Comprehensive Two-Day Seminar on Cutting Edge Developments in Cloud Computing: New business models and evolving legal issues on 5/17 and 5/18/2010 at the Crowne Plaza Hotel in Seattle, WA:

Who Should Attend

Attorneys, business professionals and cloud service providers

Why You Should Attend

In the future, will all computing be cloud computing? As the economic case for cloud computing grows, will the international legal system be able to adequately define and enforce the rights and liabilities of cloud service providers, their partners, commercial clients and ordinary consumers?

This timely two-day conference will explore different cloud computing service models and the challenges they pose to traditional concepts of data ownership and control, contractual rights, privacy and security, law enforcement, copyrights and trademarks, and conflicts of law. In each of these areas, our faculty of leading in-house lawyers, private lawyers and academics with extensive experience in cloud computing will discuss the law today, where it is going, and where it should be going.

~ Barry J. Reingold, Esq. and Steven D. Young, Esq., Program Co-Chairs

What You Will Learn

- Newly evolving business models

- Governance in the Cloud

- The complexities of moving data between jurisdictions

- Security in the Cloud

- Dealing with law enforcement agencies

- Special issues with government data

- Intellectual property protection

- Use of Open Source Software

- Strategies of the cloud providers

- Terms of service for consumer cloud services

- Key terms in B2B contracts

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Bob Evans claims Oracle's New Strategy Unfolds in this May 2010 Cloud Computing Brief for InformationWeek::Analytics:

Life is all about tradeoffs. Time with kids or answer a few more e-mails? Grab that airport cheeseburger or mu[n]ch on some carrots? Follow the Pittsburgh Pirates or decide to root for a real Major League team?

We assess our needs, evaluate our options, make decisions, and realize that some of those decisions are much more difficult and complex than others.

Well, in that category of difficult decisions, I've got a doozy: You know you're spending too much on infrastructure and maintenance versus growth projects and innovation, and you are committed to changing that. You know your current roster of global systems could serve as an archeological technology museum showcasing every type of hardware, software and network, and you're determined to update all that. And you also know that all CIOs take a sacred oath vowing to love the one-stop-shop IT vendor in theory but to avoid it like the bubonic plague in practice.

And then you hear Charles Phillips, Oracle's president, make a cogent and persuasive argument that provides the answer to all of your problems, including your overly strained budget, your brittle systems, your inability to get out ahead of the busy work, your aging and mismatched applications, and most of all your inability to meet the CEO's expectation that you become a full-time driver of growth and innovation.

Under Phillips' plan, your cost of internal operations plummet, your integration headaches vanish, your performance problems disappear, and your CEO views you as a business leader who's taken on and overcome a massive and seemingly intractable problem.

But here's the tradeoff: To get all that, you have to standardize on Oracle. The whole stack. Storage to applications. The thing you swore in blood you'd never do.

Phillips' solutions sound almost too good to be true--and perhaps they are. Then again, Oracle's strategy and Oracle's technology are unlike anything else in the IT industry today, due to their breadth and ability to extend from systems to storage to middleware to OLTP to databases and ERP and vertical-market expertise.

Table of Contents

2 Oracle's Phillips Says Standardizing On Oracle Is The IT Cure

6 Phillips Says 22% Annual Fees Are Great For CIOs

9 Oracle's Larry Ellison Declares War on IBM and SAP

14 Oracle's Dazzling Profit Machine Threatened by Rimini Suit

17 Larry Ellison's Nightmare: 10 Ways SAP Can Beat Oracle

22 Oracle's Larry Ellison Mixes Fiction with Facts on SAP

25 Oracle, SAP, and the End of Enterprise Software Companies

28 10 Things SAP's Co-CEOs Should Focus On

31 Oracle Sues Rimini Street for "Massive Theft"

33 In Oracle Vs. SAP, IBM Could Tip Balance

If you believe Charles Phillips, please make me an offer for my slightly used Bay Bridge span.

Alex Williams reports VMware Acquires Gemstone in Deal To Fortify New Java Platform with Salesforce.com in this 5/6/2010 post to the ReadWriteCloud blog:

VMware is acquiring Gemstone, a Beaverton, Or.-based company that develops real-time caching and distributed database technologies that serve as an in-memory data fabric for massive data processing and transaction environments.

The technology is used as middleware for SpringSource, the platform VMware acquired recently. SpringSource is the foundation for VMforce, the Java-based application development platform it launched with Salesforce.com last week.

Gemstone represents that data fabric for VMware. It provides the capability to develop read/write applications. This comes from Gemstone's ability to transact massive data sets.

That's a critical aspect of the VMforce platform. Apps need to have that interaction capability. It's the key aspect of the VMforce service in the capability to create micro-applications for any number of enterprise uses.

Gemstone has historically worked with large companies in financial services, for instance, that require big data, real-time transaction environments.

Terms of the deal were not disclosed.

Alex Handy writes SpringSource [not VMware] to buy GemStone Systems in this 5/6/2010 article for SDTimes on the Web:

SpringSource today announced that it will acquire privately held GemStone Systems, with the idea of integrating that company’s distributed caching data fabric into SpringSource’s cloud-targeted Java stacks. Terms of the deal, which is expected to close by the end of next week, were not disclosed.

Rod Johnson, general manager of VMware's SpringSource division, said that the GemStone acquisition is intended to round out the VMware Java middleware portfolio. In March, SpringSource acquired RabbitMQ, a company that supports the Advanced Message Queuing Protocol implementation.

“Fundamentally, we're building out our middleware capabilities, but we're doing it with an eye towards what we think of as next-generation middleware,” said Johnson. “We're choosing things like our TC Server product, GemStone and RabbitMQ that are highly relevant to solving the new problems of cloud deployment.”

Johnson went on to state that this acquisition will help developers to deal with data management in live applications. “We think data management is becoming increasingly important. As you move to cloud, you potentially have different data stores underneath. You need to scale that to a degree that's problematic for relational databases," he said.

One alternative to relational databases has been the NoSQL movement, but that doesn't mean NoSQL is the only answer. Johnson said that caching is an ideal solution for enterprises, as the transactional limits on NoSQL databases are prohibitive for large businesses with complex Java applications. He said that using a caching layer like GemStone's data fabrics can allow data stored in relational databases to scale similarly to NoSQL's, but without removing the benefits of a relational database. …

Who’s on first? Of course, VMware owns (or will soon own) SpringSource, so the distinction isn’t all that significant.
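Johnson's point about putting a caching layer in front of a relational database can be illustrated with a minimal read-through cache. This is only a sketch under stated assumptions: the `ReadThroughCache` class, the `load_customer` loader and the `CUSTOMERS` dictionary (standing in for a relational table) are hypothetical names, and nothing here reflects GemStone's actual API or its distributed data fabric.

```python
from typing import Any, Callable, Dict, Hashable


class ReadThroughCache:
    """Tiny in-process read-through cache (illustrative, not GemStone's API)."""

    def __init__(self, load_from_db: Callable[[Hashable], Any]) -> None:
        self._load_from_db = load_from_db      # hypothetical loader that queries the database
        self._entries: Dict[Hashable, Any] = {}
        self.db_reads = 0                      # counts how often the database is actually hit

    def get(self, key: Hashable) -> Any:
        # Only go to the database on a cache miss; later reads are served from memory.
        if key not in self._entries:
            self.db_reads += 1
            self._entries[key] = self._load_from_db(key)
        return self._entries[key]

    def invalidate(self, key: Hashable) -> None:
        # Call after the underlying row changes so the next read reloads it.
        self._entries.pop(key, None)


# Stand-in for a relational table (assumption for this sketch).
CUSTOMERS = {1: "Alice", 2: "Bob"}


def load_customer(customer_id: int) -> str:
    return CUSTOMERS[customer_id]


cache = ReadThroughCache(load_customer)
names = [cache.get(1), cache.get(1), cache.get(2)]
print(names, "database reads:", cache.db_reads)
```

The repeated read of customer 1 never reaches the backing store, which is the effect that lets the relational database remain the system of record while the cache absorbs read traffic.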

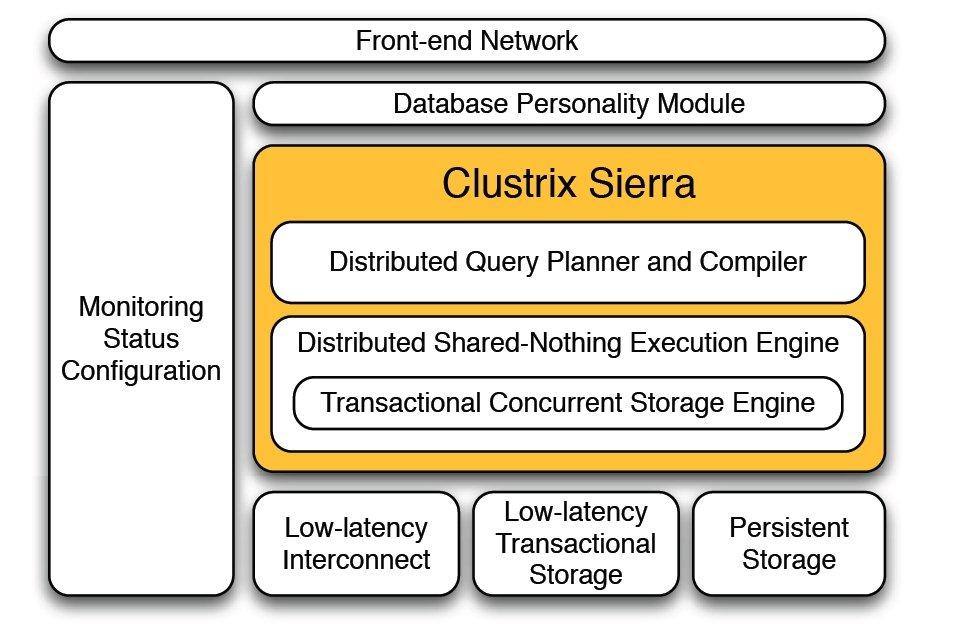

James Hamilton analyzes the Clustrix Database Appliance and gives it thumbs up in this 5/6/2010 post:

Earlier this week Clustrix announced a MySQL compatible, scalable database appliance that caught my interest. Key features supported by Clustrix:

- MySQL protocol emulation (MySQL protocol supported so MySQL apps written to the MySQL client libraries just work)

- Hardware appliance delivery package in a 1U package including both NVRAM and disk

- Infiniband interconnect

- Shared nothing, distributed database

- Online operations including alter table add column

I like the idea of adopting a MySQL programming model. But, it’s incredibly hard to be really MySQL compatible unless each node is actually based upon the MySQL execution engine. And it’s usually the case that a shared nothing, clustered DB will bring some programming model constraints. For example, if global secondary indexes aren’t implemented, it’s hard to support uniqueness constraints on non-partition key columns and it’s hard to enforce referential integrity. Global secondary index maintenance implies that a single insert, update, or delete that would normally require only a single-node change would instead require atomic updates across many nodes in the cluster, making updates more expensive and susceptible to more failure modes. Essentially, making a cluster look exactly the same as a single very large machine with all the same characteristics isn’t possible. But, many jobs that can’t be done perfectly are still well worth doing. If Clustrix delivers all they are describing, it should be successful.
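The uniqueness problem described above can be sketched in a few lines. This is an illustration only, not Clustrix's implementation: the four-node cluster, the `owner` partitioner (CRC32 modulo the node count) and the `users` rows keyed by `id` with a unique `email` column are all assumptions made for the example.

```python
import zlib
from typing import Dict, List, Set, Tuple

NODE_COUNT = 4  # hypothetical cluster size


def owner(key: object) -> int:
    """Deterministically map a key to a node (stand-in for a real partitioner)."""
    return zlib.crc32(str(key).encode("utf-8")) % NODE_COUNT


# Table rows are partitioned by id: each node maps id -> email.
nodes: List[Dict[int, str]] = [dict() for _ in range(NODE_COUNT)]
# A global secondary index on email, itself partitioned by email: email -> id.
email_index: List[Dict[str, int]] = [dict() for _ in range(NODE_COUNT)]


def email_taken_without_index(email: str) -> Tuple[bool, int]:
    """No global index: every partition may hold the email, so scan node by node."""
    visited = 0
    for rows in nodes:
        visited += 1
        if email in rows.values():
            return True, visited
    return False, visited


def email_taken_with_index(email: str) -> Tuple[bool, int]:
    """Global index: exactly one index partition can hold the email."""
    return email in email_index[owner(email)], 1


def insert_user(user_id: int, email: str) -> Set[int]:
    """Write the row and its index entry; returns the set of nodes written.

    In a real cluster these two writes would need to commit atomically
    (for example with two-phase commit), which is the extra cost noted above.
    """
    if email_taken_with_index(email)[0]:
        raise ValueError(f"duplicate email: {email}")
    row_node, index_node = owner(user_id), owner(email)
    nodes[row_node][user_id] = email
    email_index[index_node][email] = user_id
    return {row_node, index_node}


touched = insert_user(1, "ann@example.com")
print("nodes written by one insert:", sorted(touched))
print("check without index:", email_taken_without_index("bob@example.com"))
print("check with index:", email_taken_with_index("bob@example.com"))
```

Without the index, confirming that an email is unused means visiting every node; with it, the check touches one node but each insert may have to update two nodes as a single atomic unit.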

I also like the idea of delivering the product as a hardware appliance. It keeps the support model simple, reduces install and initial setup complexity, and enables application specific hardware optimizations.

Using Infiniband as a cluster interconnect is a nice choice as well. I believe that 10GigE with RDMA support will provide better price performance than Infiniband but commodity 10GigE volumes and quality RDMA support are still 18 to 24 months away so Infiniband is a good choice for today.

Going with a shared nothing architecture avoids dependence on expensive shared storage area networks and the scaling bottleneck of distributed lock managers. Each node in the cluster is an independent database engine with its own physical (local) metadata, storage engine, lock manager, buffer manager, etc. Each node has full control of the table partitions that reside on that node. Any access to those partitions must go through that node. Essentially, bringing the query to the data rather than the data to the query. This is almost always the right answer and it scales beautifully.

In operation, a client connects to one of the nodes in the cluster and submits a SQL statement. The statement is parsed and compiled. During compilation, the cluster-wide (logical) metadata is accessed as needed and an execution plan is produced. The cluster-wide (logical) metadata is either replicated to all nodes or stored centrally with local caching. The execution plan produced by the query compilation will be run on as many nodes as needed with the constraint that table or index access be on the nodes that house those table or index partitions. Operators higher in the execution plan can run on any node in the cluster. Rows flow between operators that span node boundaries over the Infiniband network. The root of the query plan runs on the node where the query was started and the results are returned to the client program using the MySQL client protocol.
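The execution flow described above (leaf operators pinned to the nodes that own the partitions, with the root operator merging results on the node the client connected to) can be sketched as a scatter-gather aggregate. Everything in this sketch is an assumption for illustration: the three-node cluster, the `orders` data, and the `leaf_partial_sum` and `run_query` function names. In a real appliance the partial results would travel over the interconnect rather than through local function calls.

```python
from typing import Dict, List, Tuple

NODE_COUNT = 3  # hypothetical cluster size

# Each node holds one partition of an "orders" table as (order_id, amount) rows.
partitions: List[List[Tuple[int, int]]] = [[] for _ in range(NODE_COUNT)]


def load(order_id: int, amount: int) -> None:
    """Place a row on the node that owns its partition (simple modulo partitioning)."""
    partitions[order_id % NODE_COUNT].append((order_id, amount))


def leaf_partial_sum(node_id: int, min_amount: int) -> Tuple[int, int]:
    """Leaf operator: must run on the node that houses the partition it scans."""
    matching = [amount for _, amount in partitions[node_id] if amount >= min_amount]
    return sum(matching), len(matching)


def run_query(coordinator: int, min_amount: int) -> Dict[str, int]:
    """Root operator: runs on the node the client connected to and merges partials.

    Roughly: SELECT SUM(amount), COUNT(*) FROM orders WHERE amount >= min_amount
    """
    partials = [leaf_partial_sum(node_id, min_amount) for node_id in range(NODE_COUNT)]
    return {
        "coordinator": coordinator,
        "total": sum(partial_total for partial_total, _ in partials),
        "count": sum(partial_count for _, partial_count in partials),
    }


for order_id, amount in [(1, 10), (2, 25), (3, 40), (4, 5), (5, 60)]:
    load(order_id, amount)

result = run_query(coordinator=0, min_amount=20)
print(result)
```

Only small partial aggregates cross node boundaries here, never the raw rows, which is the sense in which the query is brought to the data.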

As described, this is a very big engineering project. I’ve worked on teams that have taken exactly this approach and they took several years to get to the first release and even subsequent releases had programming model constraints. I don’t know how far along Clustrix is at this point but I like the approach and I’m looking forward to learning more about their offering.

- White paper: Clustrix: A New Approach

- Press Release: Clustrix Emerges from Stealth Mode with Industry’s First Clustered DB

For my initial report on the Clustrix appliance, see Stacey Higginbotham claims Clustrix Builds the Webscale Holy Grail: A Database That Scales in her 5/3/2010 post to the GigaOm blog in Windows Azure and Cloud Computing Posts for 5/4/2010+.

Bill McNee and Charlie Burns co-authored IBM Impact 2010: Markers in an Evolving Cloud Strategy on 5/5/2010 as a Saugatuck Research Alert (site registration required):

What is Happening?

This week Saugatuck’s Founder and CEO, Bill McNee, attended IBM’s annual software conference, Impact 2010, in Las Vegas, Nevada. This year’s event – with business agility as its major theme – drew approximately 6,000 attendees representing over 1,200 customers and 850 business partners.

Among the many product announcements and customer presentations delivered at Impact, Saugatuck found two that were particularly important markers that reflect IBM’s evolving Cloud strategy:

A. IBM’s acquisition of Cast Iron Systems, a leading provider of cloud-based integration services

B. IBM’s subtle introduction of an emerging vision for “shared Cloud middleware”

Why Is It Happening?

Acquisition of Cast Iron Systems – As projected by Saugatuck beginning as far back as April 2006, and supported by our ongoing research ever since, customer use of Cloud-based offerings continues to grow and to evolve from point solutions to increasingly integrated and complex business process solutions (please see Research Report “Lead, Follow, and Get Out of the Way: A Working Model for Cloud IT through 2014”, SSR706, 25Feb2010).

As we detailed in a recently published QuickTake assessment, Cast Iron Systems has been building expertise and a strong customer portfolio and growing market presence since being founded in 2001 (see Cast Iron Systems: Facilitating Integration of Existing Systems with SaaS and Cloud Offerings, QT-645, 25Sept09). …

Does Saugatuck’s trademark graphic remind you of Northwind Traders? It does me.

Alex Williams reports Yahoo! Looks Into its Future and Sees Hadoop in this 5/5/2010 post to the ReadWriteCloud blog:

Hadoop is gaining more commercial acceptance. We see a number of signs of its growing popularity. It became abundantly clear in a recent conversation we had with a Yahoo! executive who says the company is rebuilding its future on the distributed warehousing and analytics technology.

It's a similar track we are seeing with the larger consumer social networks and cloud computing providers. Facebook uses Hadoop to do deep social analytics which powers the ability to provide its established level of personal interaction. Windows Azure is adopting Hadoop. …

Yahoo! started using Hadoop initially in 2006 as a science project to process and analyze massive data sets. They developed a prototype on 20 nodes. Today, Yahoo! manages more than 25,000 nodes for data processing and analytics.

Yahoo! found that product development could be done in a fraction of the time. They found they could just throw machines at a project to do the processing. What once took 29 days could be accomplished in less than one.

As a result, Yahoo! began integrating Hadoop for all parts of its business. The company offloaded storage from the IT department and put the data in a cluster.

Today, Yahoo! uses Hadoop for determining best advertising placement and for content optimization. For example, the company started testing how the optimization worked on the home page by serving up content relevant to the user. It worked. Yahoo! saw a 150 percent increase in user engagement measurements.

The next step is to use Hadoop for optimizing latency, a major issue for scaling data networks in the cloud.

Hadoop is becoming the standard for data processing and analytics in social networks, genome projects and beyond. Some see that as proof it has gained commercial acceptance.

So now it's on to the next big data dicing project. And what will that be?

Cassandra for one.

More to come.

The preceding are just some of the reasons that Microsoft reportedly is looking into implementing Hadoop on the Windows Azure platform (see Microsoft [is] readying Hadoop for Windows Azure, a 5/3/2010 two-page article for SD Times on the Web linked in my Windows Azure and Cloud Computing Posts for 5/3/2010+.)

<Return to section navigation list>