Windows Azure and Cloud Computing Posts for 5/3/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in April 2010 to reflect the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Phani Raju offered an example of Server Driven Paging II, Implementing a Smart Auto-Paging Enumerable on 5/1/2010:

What is this?

An “Auto-paging Enumerable” is an IEnumerable implementation that allows the application to automatically fetch the next page of results if the current page of results is enumerated and the application wants more.

By “Smart”, we mean that we only load the next page when the current page runs out of results. We don’t load a page into memory unless the application needs it.

How do I use this in my applications?

Add the “ServerPagedQueryExtensions.cs” file into your application and use one of the following patterns:

- Binding to User Interface controls

XAML code for a WPF ListBox:

```xml
<ListBox DisplayMemberPath="Name" Name="lbCustomers" />
```

C# code to bind the collection to the ListBox:

```csharp
CLRProvider Context = new CLRProvider(new Uri("http://localhost:60901/DataService.svc/"));
//Extension method on DataServiceQuery<T>
var pagedCustomerRootQuery = (from c in Context.Customers
                              select new Customer() { Name = c.Name }) as DataServiceQuery<Customer>;
lbCustomers.ItemsSource = pagedCustomerRootQuery.Page(Context, maxItemsCount);
```

- With anonymous types in projections

```csharp
CLRProvider Context = new CLRProvider(new Uri("http://localhost:60901/DataService.svc/"));
short maxItemsCount = 6;
//Factory method
var customerPaged = ServerPagedEnumerableFactory.Create(
    from c in Context.Customers select new { Name = c.Name },
    Context, maxItemsCount);
```

- In non-user interface backend code

```csharp
foreach (Customer instance in Context.Customers.Page(Context, maxItemsCount))
{
    //Do something with instance variable here
}
```
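The "fetch the next page only when the current one runs out" behavior can be sketched outside WCF Data Services as a Python generator. This is a minimal sketch, not Phani's `ServerPagedQueryExtensions` code. It assumes a hypothetical `fetch_page(token)` callable that returns one page of items plus a continuation token, or `None` when the server has no more pages. The `fake_fetch` function is an in-memory stand-in for the service.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical page-fetch signature: takes a continuation token (None for the
# first request) and returns (items_on_this_page, next_token_or_None).
FetchPage = Callable[[Optional[str]], Tuple[List[str], Optional[str]]]


def auto_paging(fetch_page: FetchPage) -> Iterator[str]:
    """Yield items one at a time, requesting the next page only after the
    caller has consumed every item on the current page."""
    token: Optional[str] = None
    while True:
        items, token = fetch_page(token)
        # The generator pauses between yields, so no further request is made
        # until the caller asks for more items.
        yield from items
        # No continuation token means the server has no further pages.
        if token is None:
            return


# In-memory stand-in for a server that returns three results per page.
DATA = [f"Customer{i}" for i in range(8)]
calls: List[Optional[str]] = []


def fake_fetch(token: Optional[str]) -> Tuple[List[str], Optional[str]]:
    calls.append(token)  # record each "HTTP request" for inspection
    start = int(token) if token else 0
    end = start + 3
    next_token = str(end) if end < len(DATA) else None
    return DATA[start:end], next_token
```

If a caller takes only the first four customers, only two page requests are made. A full enumeration makes three requests.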

You can download the source code for the sample application as ServerDrivenPagingServer.zip from SkyDrive. Phani continues with a detailed “How this was built” topic.

Jai Haridas gives very detailed instructions for Protecting Your Tables Against Application Errors in this lengthy 5/3/2010 post to the Windows Azure Storage Team blog, which includes many source code samples:

“Do applications need to backup data in Windows Azure Storage if Windows Azure Storage already stores multiple replicas of the data?” For business continuity, it can be important to protect the data against errors in the application, which may erroneously modify the data.

If there are problems at the application layer, the errors will get committed on the replicas that Windows Azure Storage maintains. So, to go back to the correct data, you will need to maintain a backup. Many application developers today have implemented their own backup strategy. The purpose of this post is to cover backup strategies for Tables.

To back up tables, we would have to iterate through the list of tables and scan each table to copy the entities into blobs or a different destination table. Entity group transactions could then be used to speed up the process of restoring entities from these blobs. Note that the example in this post is a full backup of the tables, not a differential backup.
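An entity group transaction can only contain entities that share one PartitionKey, and the Table service caps each one at 100 entities. A restore job therefore has to group backed-up entities before submitting batches. This is a minimal sketch that assumes entities are plain dicts with `PartitionKey` and `RowKey` fields. Submitting each batch through a storage client is not shown, and `restore_batches` is a hypothetical helper, not part of Jai's code.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, Iterable, List

# Table service limit per entity group transaction (all entities in one partition).
MAX_BATCH_SIZE = 100


def restore_batches(entities: Iterable[Dict[str, str]],
                    max_batch: int = MAX_BATCH_SIZE) -> List[List[Dict[str, str]]]:
    """Group entities into batches that each share one PartitionKey and
    contain no more than max_batch entities."""
    # groupby only merges adjacent items, so sort by partition (then row) first.
    ordered = sorted(entities, key=itemgetter("PartitionKey", "RowKey"))
    batches: List[List[Dict[str, str]]] = []
    for _, group in groupby(ordered, key=itemgetter("PartitionKey")):
        partition = list(group)
        # Split a large partition into chunks that fit in one transaction.
        for i in range(0, len(partition), max_batch):
            batches.append(partition[i:i + max_batch])
    return batches
```

For example, 250 entities in partition "A" plus one in "B" produce batches of 100, 100, 50 and 1 entities.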

Table Backup

We will go over a simple full backup solution here. The strategy will be to take as input a list of tables and for each table a list of keys to use to partition the table scan. The list of keys will be converted into ranges such that separately backing up each of these ranges will provide a backup of the entire table. Breaking the backing up of the table into ranges this way allows the table to be backed up in parallel. The TableKeysInfo class will encapsulate the logic of splitting the keys into ranges as shown below:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// PartitionKeyRange is defined elsewhere in Jai's post (not shown in this excerpt).
public class TableKeysInfo
{
    private List<PartitionKeyRange> keyList = new List<PartitionKeyRange>();

    /// <summary>
    /// The table to backup
    /// </summary>
    public string TableName { get; set; }

    public TableKeysInfo(string tableName, string[] keys)
    {
        if (tableName == null)
        {
            throw new ArgumentNullException("tableName");
        }
        if (keys == null)
        {
            throw new ArgumentNullException("keys");
        }
        this.TableName = tableName;

        // sort the keys
        Array.Sort<string>(keys, StringComparer.InvariantCulture);

        // split key list {A, M, X} into {[null-A), [A-M), [M-X), [X-null)}
        this.keyList.Add(new PartitionKeyRange(null, keys.Length > 0 ? keys[0] : null));
        for (int i = 1; i < keys.Length; i++)
        {
            this.keyList.Add(new PartitionKeyRange(keys[i - 1], keys[i]));
        }
        if (keys.Length > 0)
        {
            this.keyList.Add(new PartitionKeyRange(keys[keys.Length - 1], null));
        }
    }

    /// <summary>
    /// The ranges of keys that will cover the entire table
    /// </summary>
    internal IEnumerable<PartitionKeyRange> KeyRangeList
    {
        get { return this.keyList.AsEnumerable<PartitionKeyRange>(); }
    }
}
```
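Sorted keys are turned into half-open ranges because those ranges are disjoint and together cover every possible PartitionKey. Each range can therefore be scanned by a separate worker. Below is a Python sketch of the same splitting logic, plus a parallel scan over an in-memory stand-in for a table.

Several simplifications apply:

- `scan_range` is hypothetical. A real backup would issue a range query against Table storage.
- The "table" here holds one entity per partition key.
- Python's `sorted()` compares strings by code point, while the C# class uses `StringComparer.InvariantCulture`, so the two orders can differ for some inputs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Optional, Tuple

# A half-open range: low <= key < high, where None means "unbounded".
KeyRange = Tuple[Optional[str], Optional[str]]


def split_into_ranges(keys: List[str]) -> List[KeyRange]:
    """Same idea as TableKeysInfo: {A, M, X} -> [(None,A), (A,M), (M,X), (X,None)]."""
    bounds: List[Optional[str]] = [None, *sorted(keys), None]
    return list(zip(bounds[:-1], bounds[1:]))


def in_range(key: str, r: KeyRange) -> bool:
    low, high = r
    return (low is None or key >= low) and (high is None or key < high)


def scan_range(table: Dict[str, dict], r: KeyRange) -> List[str]:
    """Hypothetical stand-in for a range query: partition keys that fall in r."""
    return sorted(k for k in table if in_range(k, r))


def parallel_backup(table: Dict[str, dict], keys: List[str]) -> List[str]:
    ranges = split_into_ranges(keys)
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Each range is scanned independently; map() returns results in range order.
        parts = list(pool.map(lambda r: scan_range(table, r), ranges))
    return [k for part in parts for k in part]
```

Because the ranges neither overlap nor leave gaps, concatenating the per-range results yields every key exactly once.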

Jai continues with more backup details and “Table Restore,” as well as “Libraries that Support Backup” topics. The latter includes a link to the Table Storage Backup & Restore for Windows library.

David Worthington claims Microsoft [is] readying Hadoop for Windows Azure, according to an anonymous Microsoft source, in this 5/3/2010 two-page article for SD Times on the Web:

Microsoft is preparing to provide Hadoop, a Java software framework for data-intensive distributed applications, for Windows Azure customers.

Hadoop offers a massive data store upon which developers can run map/reduce jobs. It also manages clusters and distributed file systems. Microsoft will provide Hadoop within a "few months," said a Microsoft executive who wished to remain anonymous.

The technology makes it possible for applications to analyze petabytes of both structured and unstructured data. Data is stored in clusters, and applications work on it programmatically.

"They are probably seeing Hadoop adoption trending up, and possibly have some large customers demanding it," said Forrester principal analyst Jeffrey Hammond.

"Microsoft is all about money first; PHP support with IIS and the Web PI initiative were all about numbers and creating platform demand. If Hadoop support helps create platform demand for Azure, why not support it? Easiest way to lead a parade is to find one and get in front of it."

Microsoft's map/reduce solution, codenamed "Dryad," is still a reference architecture and not a production technology.

Further, AppFabric, a Windows Azure platform for developing composite applications, currently lacks support for data grids. Microsoft has experienced difficulty in porting Velocity, a distributed in-memory application cache platform, to Windows Azure, because Velocity requires administrative privileges to install, the anonymous executive told SD Times. …

The Azure platform is not restricted to .NET development. Microsoft partnered with Soyatec to produce the Azure SDK for Java. However, using Hadoop as a data grid solution is a departure from Microsoft's approach to data access. …

Microsoft can gain a data grid capability in Azure by acquiring a partner such as ScaleOut Software or Alachisoft, said Forrester senior analyst Mike Gualtieri. Microsoft is not far behind other platform players in providing distributed data grids on the cloud, he added.

ScaleOut Software uses a memory-based approach to map/reduce analysis. Its parallel method invocation integrates parallel query with map/reduce on object collections stored in the grid.
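Map/reduce splits a job into two steps. A map step runs independently on each partition of the data, and a reduce step combines the partial results. The following is a minimal in-process sketch over in-memory partitions, using a word-count job as the assumed example. It illustrates only the general technique; it is not Hadoop's or ScaleOut's API.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import List


def map_partition(partition: List[str]) -> Counter:
    """Map step: count words within one partition, independently of the others."""
    counts: Counter = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def reduce_counts(a: Counter, b: Counter) -> Counter:
    """Reduce step: merge two partial word counts."""
    return a + b


def word_count(partitions: List[List[str]]) -> Counter:
    # Run the map step on all partitions in parallel, then fold the results together.
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(map_partition, partitions))
    return reduce(reduce_counts, partials, Counter())
```

Because each map call touches only its own partition, the map step scales out across workers or machines. Only the much smaller partial results need to be combined.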

“In-memory, object-oriented map/reduce within the business logic layer offers important advantages in reducing development time and maximizing performance,” said William Bain, CEO of ScaleOut Software. “Over time, we are confident that this approach will efficiently handle petabyte data sets, which currently rely on file-based implementations.”

Additionally, there are open-source map/reduce solutions for .NET. A project called hadoopdotnet ports Hadoop to the .NET platform. It is available under the Apache 2.0 open-source license. MySpace Qizmt, a map/reduce framework built using .NET, is another alternative. It is licensed under GNU General Public License v3.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

The SQL Server 2008 R2 Report Builder 3.0 Team has produced a Getting Data from the SQL Azure Data Source Type (Report Builder 3.0) topic for SQL Server 2008 R2 Books Online:

Microsoft SQL Azure Database is a cloud-based, hosted relational database built on SQL Server technologies. To include data from SQL Azure Database in your report, you must have a dataset that is based on a report data source of type SQL Azure. This built-in data source type is based on the SQL Azure data extension. Use this data source type to connect to and retrieve data from SQL Azure Database.

This data extension supports multivalued parameters, server aggregates, and credentials managed separately from the connection string.

SQL Azure is similar to an instance of SQL Server on your premises, and getting data from SQL Azure Database is, with a few exceptions, identical to getting data from SQL Server. SQL Azure Database features align with SQL Server 2008.

For more information about SQL Azure, see "SQL Azure" on msdn.microsoft.com.


Brazilian blogger Felipe Lima posted a mini-review of WCF Data Services and the OData protocol in his 4/24/2010 post:

Over the last few days, I've been watching some cool MIX'10 videos with Scott Hanselman. One of them caught my attention especially: Beyond File | New Company: From Cheesy Sample to Social Platform.

Scott takes a simple website like nerddinner.com and shows how they tuned it up to enable several different social collaboration, integration and emergent features in it. It is really worth the time watching.

One of the points that really made me curious was the new OData protocol. It was introduced in .NET 4 with WCF Data Services (formerly ADO.NET Data Services) and aims to unlock data from the silos that exist in major applications today. What's really impressive about it is that you are able to query it as a web service and perform complex LINQ queries against it (even inserting, updating and deleting are supported!). All this, over a common RESTful HTTP interface. OData is built upon the AtomPub format, so there is nothing being invented here. This presentation at PDC'09 with Pablo Castro provides further technical details, and I definitely recommend watching it if you plan to make use of this technology.
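OData lets you query a service through its URL: the query is expressed as system query options such as `$filter`, `$orderby`, `$top` and `$skip` appended to a resource address. Below is a minimal sketch that builds such a URL with the Python standard library. The service root, the `Customers` entity set and the `City`/`Name` properties are assumptions for illustration, and `odata_url` is a hypothetical helper.

```python
from typing import Dict, Optional
from urllib.parse import quote, urlencode


def odata_url(service_root: str, entity_set: str, *,
              filter_expr: Optional[str] = None,
              orderby: Optional[str] = None,
              top: Optional[int] = None,
              skip: Optional[int] = None) -> str:
    """Build an OData query URL from optional system query options."""
    options: Dict[str, str] = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr
    if orderby is not None:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    if skip is not None:
        options["$skip"] = str(skip)
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # quote (not quote_plus) encodes spaces as %20; keeping '$' unescaped
        # leaves the option names readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url
```

Calling `odata_url("http://example.org/odata.svc", "Customers", filter_expr="City eq 'London'", orderby="Name", top=10)` yields a URL whose query string is `$filter=City%20eq%20%27London%27&$orderby=Name&$top=10`. A client can issue that URL as an ordinary HTTP GET.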

I'm planning on implementing this on a Website CMS I'm working on and will write about my findings, barriers found and solutions here in the near future. Stay tuned.

Will do. Felipe is from Porto Alegre in Rio Grande do Sul (southern Brazil), one of my favorite parts of the world. (I have many fond memories of the time I spent in Joinville, the largest city in Santa Catarina state. I’m surprised that Bing doesn’t have a street-level map of Joinville, which has a population of about 500,000, many of whom are of German descent. Here’s Google’s map of Joinville.)

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Ron Jacobs advertised endpoint.tv - Troubleshooting with [Windows Server] AppFabric on 5/3/2010:

Troubleshooting applications in production is always a challenge. With AppFabric monitoring your workflows and services, you get great information about exactly what is happening, including notices about unhandled exceptions.

In this episode, Michael McKeown will show you more about how you can use these features to troubleshoot problems with your applications.

Be sure to check out the AppFabric Wiki for more great tips, and to share yours as well.

• Cliff Simpkins (@cliffsimpkins) announced AppFabric Virtual Launch on May 20th – Save the Date for private cloud devotees at 8:30 AM PDT on 4/29/2010 in The .NET Endpoint blog:

To close out the month of April, I’m happy to share news of the event that the group will be conducting next month to launch Windows Server AppFabric and BizTalk Server. [Emphasis added.]

On May 20th @8:30 pacific, we will be kicking off the Application Infrastructure Virtual Launch Event on the web. The event will focus on how your current IT assets can harness some of the benefits of the cloud, bringing them on-premises, while also connecting them to new applications and data running in the cloud.

We have some excellent keynote speakers for the virtual launch:

- Yefim Natis, Vice President and Distinguished Analyst at Gartner:

Yefim will discuss the latest trends in the application infrastructure space and the role of application infrastructure in helping business benefit from the cloud.

- Abhay Parasnis, General Manager of Application Server Group at Microsoft:

Abhay will discuss how the latest Microsoft investments in the application infrastructure space will begin delivering the cloud benefits to Windows Server.

In addition to the keynotes, there will be additional sessions providing additional details around the participating products and capabilities that we will be delivering in the coming months. Folks who would likely get the most from the event are dev team leads and technical decision makers, as much of the content will focus more on benefits of the technologies, and much less on the implementation and architectural details.

That being said - the virtual launch event is open to all and requires no pre-registration, no hotel bookings, and no time waiting/boarding/flying on a plane. In the spirit of bringing cloud benefits to you, the team has decided to host our event in the cloud. :)

The Application Infrastructure Virtual Launch Event website is now live at http://www.appinfrastructure.com/ – today, you can add a reminder to your calendar and submit feedback on the topic you are most interested in. On Thursday, May 20th, at 8:30am Pacific Time, you can use the URI to participate in the virtual launch event.

Cliff’s blog post emphasizes Windows Server AppFabric, but Windows Azure AppFabric is included in the event also. See the footer of the Applications Infrastructure: Cloud benefits delivered page below:

![image_thumb[2] image_thumb[2]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEi5sFev-ZqQ6S3XreRj7pQjbMBIEP_Ia0w4BFT-TcE0I0dEW1M380BvGx-qTKirP-gkb66asR4Jzb4btBWu7Okb52q38eOxDGeIlCdL-VZ5sUTcByU9wutw2YQAhwONxZe_qIlvtujK/?imgmax=800)

Application Infrastructure Virtual Launch Event

While you explore how to use the cloud in the long-term, find out how to harness the benefits of the cloud here and now. In this virtual event, you’ll learn how to:

- Increase scale using commodity hardware.

- Boost speed and availability of current web, composite, and line-of-business applications.

- Build distributed applications spanning on-premises and cloud.

- Simplify building and management of composite applications.

- And much, much more!

Microsoft experts will help you realize cloud benefits in your current IT environment and also connect your current IT assets with new applications and data running in the cloud.

This is a valuable opportunity to learn about cloud technologies and build your computing skills. Don’t miss it!

• Update 5/3/2010: Mary Jo Foley posted May 20: Microsoft to detail more of its private cloud plans on 5/3/2010 to her All About Microsoft blog:

Microsoft officials have been big on promises but slow on delivery, in terms of the company’s private-cloud offerings. However, it looks like the Redmondians are ready to talk more about a couple of key components of that strategy — including the Windows Server AppFabric component, as well as forthcoming BizTalk Server releases.

Microsoft has scheduled an “Application Infrastructure Virtual Launch Event” for May 20. According to a new post on the .Net Endpoint blog, “The event will focus on how your current IT assets can harness some of the benefits of the cloud, bringing them on-premises, while also connecting them to new applications and data running in the cloud.”

Windows Server AppFabric is the new name for several Windows infrastructure components, including the “Dublin” app server and “Velocity” caching technology. In March, Microsoft officials said to expect Windows Server AppFabric to ship in the third calendar quarter of 2010. Microsoft last month made available the final version of Windows Azure AppFabric, which is the new name for Microsoft’s .Net Services (the service bus and access control services pieces) of its cloud-computing platform.

BizTalk Server — Microsoft’s integration server — also is slated for a refresh this year, in the form of BizTalk Server 2010. That release will add support for Windows Server 2008 R2, SQL Server 2008 R2 and Visual Studio 2010, plus a handful of other updates. Company officials have said Microsoft also is working on a new “major” version of BizTalk, which it is calling BizTalk Server vNext. This release will be built on top of Microsoft’s Windows AppFabric platform. So far, the Softies have not provided a release target for BizTalk Server vNext. …

Mary Jo continues with an analysis of Microsoft’s “private cloud play,” such as the Dynamic Data Center Toolkit [for Hosters], announced more than a year ago, Windows Azure for private clouds, and Project Sydney for connecting public and private into hybrid clouds.

Due to its importance, the preceding article was reposted from Windows Azure and Cloud Computing Posts for 5/1/2010+.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Rinat Abdullin complains Microsoft is Reinventing CQRS for Windows Azure, but without DDD in this 5/4/2010 post (CQRS = Command-Query Responsibility Segregation; DDD = Domain-Driven Design):

MSDN has recently got a Bid Now sample application for the Windows Azure Cloud Computing Framework.

Bid Now is an online auction site designed to demonstrate how you can build highly scalable consumer applications.

This sample is built using Windows Azure and uses Windows Azure Storage. Auctions are processed using Windows Azure Queues and Worker Roles. Authentication is provided via Live Id.

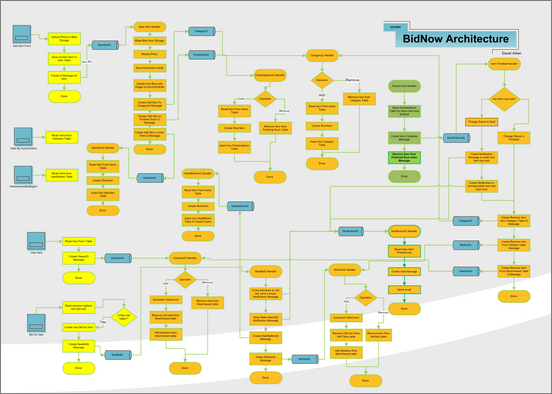

It is an extremely nice starter. Yet, I think there are at least three major directions, in which this sample could be improved in order to serve as a proper architectural guidance and reference implementation for building cloud computing solutions.

First, even such a simple application already has quite a complex architecture. There is no strict logical structure around the messages, handlers, objects and behaviors. It is possible to draw a diagram sketching all their relations, but it will be rather hard to understand and work with:

…

Command-Query Responsibility Segregation already provides a well-known and established body of knowledge on defining the architecture of such consumer applications and delivering them. More importantly, there is a clear migration path for existing brownfield solutions towards CQRS.

CQRS couples nicely with the DDD (guidance on understanding, modeling and evolving the domain synchronously with the business changes) and Event Sourcing (persistence-independent approach on persisting the domain that brings forward the scalability, simplicity and numerous business benefits).
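Below is a minimal sketch of the command/query split with event sourcing, using a hypothetical auction example loosely inspired by Bid Now. None of these class or event names come from the Bid Now sample or from Rinat's post.

- The write side validates each command against state rebuilt from past events, then appends new events.
- The read side is a separate, denormalized view that answers queries and is updated from those events.

The projection is updated synchronously here for brevity. In a cloud deployment the events would more plausibly travel over a queue to a worker that updates the view.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass(frozen=True)
class AuctionOpened:
    auction_id: str
    title: str


@dataclass(frozen=True)
class BidPlaced:
    auction_id: str
    bidder: str
    amount: int


Event = Union[AuctionOpened, BidPlaced]


class AuctionView:
    """Read side: a denormalized projection that only answers queries."""

    def __init__(self) -> None:
        self.summaries: Dict[str, Dict[str, object]] = {}

    def apply(self, event: Event) -> None:
        if isinstance(event, AuctionOpened):
            self.summaries[event.auction_id] = {"title": event.title, "top_bid": 0, "leader": None}
        elif isinstance(event, BidPlaced):
            summary = self.summaries[event.auction_id]
            summary["top_bid"] = event.amount
            summary["leader"] = event.bidder

    def summary(self, auction_id: str) -> Dict[str, object]:
        return dict(self.summaries[auction_id])


class AuctionCommands:
    """Write side: validates commands and records the resulting events."""

    def __init__(self, store: List[Event], view: AuctionView) -> None:
        self.store = store  # the event log is the source of truth
        self.view = view

    def _is_open(self, auction_id: str) -> bool:
        return any(isinstance(e, AuctionOpened) and e.auction_id == auction_id for e in self.store)

    def _highest_bid(self, auction_id: str) -> int:
        # Rebuild the state needed for validation from the event history.
        bids = [e.amount for e in self.store if isinstance(e, BidPlaced) and e.auction_id == auction_id]
        return max(bids, default=0)

    def open_auction(self, auction_id: str, title: str) -> None:
        self._publish(AuctionOpened(auction_id, title))

    def place_bid(self, auction_id: str, bidder: str, amount: int) -> None:
        if not self._is_open(auction_id):
            raise ValueError("unknown auction")
        if amount <= self._highest_bid(auction_id):
            raise ValueError("bid must exceed the current highest bid")
        self._publish(BidPlaced(auction_id, bidder, amount))

    def _publish(self, event: Event) -> None:
        self.store.append(event)  # persist the event
        self.view.apply(event)    # keep the read model up to date
```

Queries never touch the event log, and commands never read the view. That separation is what allows each side to be stored and scaled independently.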

There is already plenty of material on CQRS by Greg Young, Udi Dahan, Mark Nijhof, Jonathan Oliver and many others (links).

Given all this, I’d think that instead of inventing a new Azure guidance for developing enterprise applications, Microsoft might simply adopt established and documented principles, adjusting them slightly to the specifics of the Windows Azure environment and filling up the missing tool set (or just waiting till OSS community does this). This approach might provide better ROI for the resources at hand along with the faster and more successful adoption of Windows Azure for the enterprise applications.

What do you think?

Despite support from EA authority Udi Dahan, I don’t believe CQRS has hit the DDD mainstream yet.

Toddy Mladenov’s Collecting Event Logs in Windows Azure post of 5/3/2010 expands on his earlier Windows Azure Diagnostics – Where Are My Logs?:

While playing with Windows Azure Diagnostics for my last post, Windows Azure Diagnostics – Where Are My Logs?, I noticed a few things related to collecting Event Logs.

Configuring Windows Event Logs Data Source

First let’s configure the Event Logs data source. Here are the four simple lines that do that:

```csharp
DiagnosticMonitorConfiguration dmc = DiagnosticMonitor.GetDefaultInitialConfiguration();
dmc.WindowsEventLog.DataSources.Add("Application!*");
dmc.WindowsEventLog.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
DiagnosticMonitor.Start("DiagnosticsConnectionString", dmc);
```

I would highly recommend defining the “Application!*” string globally and making sure it is not misspelled. I spent quite some time wondering why my Event Logs were not showing up in my storage table, and the reason was my bad spelling skills. BIG THANKS to Steve Marx for opening my eyes! If you want to see other event logs, like System and Security, you should add those as data sources before you call the DiagnosticMonitor.Start() method.
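Toddy's advice to define the data-source string once can be taken a step further. You can keep the event log channel names in one place and check them before they reach the diagnostics configuration. Below is a Python sketch of that idea. The `event_log_source` helper and the `KNOWN_CHANNELS` set are hypothetical. The only format assumed is the `Channel!XPathQuery` shape of strings such as `Application!*`.

```python
from typing import FrozenSet, List

# Define the channel names once instead of retyping string literals.
APPLICATION = "Application"
SYSTEM = "System"
SECURITY = "Security"
KNOWN_CHANNELS: FrozenSet[str] = frozenset({APPLICATION, SYSTEM, SECURITY})


def event_log_source(channel: str, xpath: str = "*") -> str:
    """Build a 'Channel!XPathQuery' data-source string, rejecting typos early."""
    if channel not in KNOWN_CHANNELS:
        raise ValueError(f"unknown event log channel: {channel!r}")
    if not xpath:
        raise ValueError("XPath query must not be empty")
    return f"{channel}!{xpath}"


# Data sources to register before the diagnostics monitor is started.
DATA_SOURCES: List[str] = [event_log_source(APPLICATION), event_log_source(SYSTEM)]
```

A misspelled channel such as "Aplication" now fails loudly at startup instead of silently producing an empty storage table.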

Toddy continues with “Filtering Events,” “How are Windows Event Logs Transferred to Storage?” and “Guidelines for Capturing Windows Events Using Windows Azure Diagnostics” topics.

Bob Familiar’s ARCast.TV - Accenture: Enabling the Mobile Workforce with Windows Azure of 5/3/2010 points to a new Webcast:

When most people think of the cloud, they generally look at it as a means of building and hosting highly-scalable, highly-available applications for their customers and partners. However, many forward-looking companies see the cloud as a way to enable and empower their mobile workforces, giving people the ability to work on the go wherever and whenever they need.

This is what Accenture has done using Windows Azure. In this episode of ARCast, Denny Boynton sits down with Joseph Paradi, Innovation Lead for Internal IT at Accenture to discuss why they chose the cloud to keep their dynamic and mobile workforce connected to their business.

Watch the Webcast here.

Chris J. T. Auld’s Windows Azure Certificates for Self management Scenarios post of 5/3/2010 is an illustrated tutorial for processing the X.509 certificates needed by the Azure Management API:

The Windows Azure Management API uses X.509 certificates to authenticate callers. In order to make a call to the API, you need a certificate with both public and private keys at the client, and the public key uploaded into the Azure portal. But if you then want to call the management API from your Windows Azure VMs, you’ll also need to install the cert into the instances by defining it in the service definition. This post will show you how.

I found it a bit of a pain to get going so here’s my simple guide. I used this to setup the certs for my favourite open source Azure toolkit Lokad-Cloud. We’ll be creating a self signed certificate, then uploading that certificate into the Windows Azure management portal. Finally we’ll add the certificate to our service model to ensure that Windows Azure installs the certificate into our VM instance when it is started.

Here’s the approach in pictures so you can follow along.

- Create a self-signed certificate in the IIS7 Manager:

  - Open IIS7 Manager.

  - Expand the node for your local machine.

  - Double-click Server Certificates.

…

Chris continues with two more steps, then explains how to export the certificate with its private key and upload it for use by Windows Azure.
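When the certificate is added to the service model, the service configuration identifies it by its thumbprint. Windows computes the thumbprint as the SHA-1 hash of the DER-encoded certificate. Below is a minimal Python sketch for checking that value from a PEM export, for example one converted with OpenSSL. The `thumbprint_from_pem` helper is hypothetical and uses only standard-library calls. It reads the first certificate block in the text and ignores any surrounding lines.

```python
import hashlib
import ssl

BEGIN = "-----BEGIN CERTIFICATE-----"
END = "-----END CERTIFICATE-----"


def thumbprint_from_pem(pem_text: str) -> str:
    """Return the SHA-1 thumbprint (upper-case hex) of the first PEM certificate."""
    # Cut out the first certificate block; exports may include other text or keys.
    start = pem_text.index(BEGIN)
    end = pem_text.index(END, start) + len(END)
    der_bytes = ssl.PEM_cert_to_DER_cert(pem_text[start:end])
    # The thumbprint is the SHA-1 digest of the DER bytes, shown as hex.
    return hashlib.sha1(der_bytes).hexdigest().upper()
```

Comparing this value with the thumbprint shown in the certificate's Windows properties dialog is a quick way to catch uploading or referencing the wrong certificate.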

Rahul Johri confirms Java supported on Windows Azure in this 5/3/2010 post from Microsoft Hyderabad:

Some good news for some of my Java friends from the Azure team - Windows Azure does support multiple languages (.NET, PHP, Ruby, Python or Java) and development tools (Visual Studio or Eclipse), along with the interoperable approach, which does make it a strong contender in Java development niche market.

Read for more…

Not exactly news, but the Windows Azure Platform and Interoperability page appears to have been updated recently.

<Return to section navigation list>

Windows Azure Infrastructure

K. Scott Morrison names The Top 5 Mistakes People Make When Moving to the Cloud in this 5/3/2010 podcast with Keith Shaw:

Cloud is now mature enough that we can begin to identify anti-patterns associated with using these services. Keith Shaw from Network World and I spoke about worst practices in the cloud last week, and our conversation is now available as a podcast.

Come and learn how to avoid making critical mistakes as you move into the cloud.

Dana Gardner reports Hot on heels of smartphone popularity, cloud-based printing scales to enterprise mainstream in this 5/3/2010 post to ZDNet’s BriefingsDirect blog:

As enterprises focus more on putting applications and data into Internet clouds, a new trend is emerging that also helps them keep things tangibly closer to terra firma, namely, printed materials – especially from mobile devices.

Major announcements from HP and Google are drawing attention to printing from the cloud. But these two heavy-hitters aren’t the only ones pushing the concept. Lesser-known brands like HubCast and Cortado got out in front with cloud printing services that work to route online print orders to printer choices via the cloud. [Disclosure: HP is a sponsor of BriefingsDirect podcasts.]

Although cloud printing is still a nascent concept, some forward-thinking enterprises are moving to understand what printing from the cloud really means, what services are available, why they should give it a try, how to get started, and what’s coming next. Again, we’re early in the cloud printing game, but when Fortune 500 tech companies start advocating for a better way to print, it’s worth investigating. …

Dana continues with separate analyses of HP CloudPrint and Google cloud-based printing initiatives.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff’s (@Beaker) Security: In the Cloud, For the Cloud & By the Cloud… post of 5/3/2010 describes three models for use when discussing cloud security:

When I interact with folks and they bring up the notion of “Cloud Security,” I often find it quite useful to stop and ask them what they mean. I thought perhaps it might be useful to describe why.

In the same way that I differentiated “Virtualizing Security, Securing Virtualization and Security via Virtualization” in my Four Horsemen presentation, I ask people to consider these three models when discussing security and Cloud:

- In the Cloud: Security (products, solutions, technology) instantiated as an operational capability deployed within Cloud Computing environments (up/down the stack.) Think virtualized firewalls, IDP, AV, DLP, DoS/DDoS, IAM, etc.

- For the Cloud: Security services that are specifically targeted toward securing OTHER Cloud Computing services, delivered by Cloud Computing providers (see next entry) . Think cloud-based Anti-spam, DDoS, DLP, WAF, etc.

- By the Cloud: Security services delivered by Cloud Computing services which are used by providers in option #2 which often rely on those features described in option #1. Think, well…basically any service these days that brand themselves as Cloud…

At any rate, I combine these with other models and diagrams I’ve constructed to make sense of Cloud deployment and use cases. This seems to make things more clear. I use it internally at work to help ensure we’re all speaking the same language.

Lori MacVittie claims Deep packet inspection is useless when you’re talking about applications in her That’s Awesome! And By Awesome I Mean Stupid post of 5/3/2010:

Back in the early days of networking (when the pipes were small and dumb) the concept of “Deep Packet Inspection” started to bubble up the network stack. Deep Packet Inspection describes the ability of a networking device to fully inspect an Ethernet packet; essentially it’s the ability to examine the data in the payload that’s actually being transported across the network. This is a Very Good Thing because it allows myriad networking devices to perform interesting and useful functions like sniffing out malicious activity (attacks, attempted breaches) and, in many cases, stop them.

The reason it’s called Deep Packet Inspection, of course, is because it’s based on the limited ability of network devices to inspect the flow of data. That is, they were only able to do so on a packet by packet basis. That’s a problem when you start trying to examine application data because of the way that data is bifurcated into packets at the lowest layers of the network stack. …

Lori continues with a “LIKE OLD SKOOL WORD WRAP” topic that analyzes the problems with deep-packet inspection of 1,500-byte Ethernet packet payloads. She concludes:

That’s why the term Deep Packet Inspection is not only inaccurate, it’s misleading and it should be removed from the vernacular of any solution that provides services for applications and not individual packets. And if you should see the term, it should set off an alarm bell to ask if they really mean data or packets, because the difference has a dramatic effect on the functionality and capabilities of the solution being offered. It may be the case that the solution isn’t actually doing Deep Packet Inspection, but rather it’s doing application payload inspection and stream-based processing, but if you don’t ask, you might end up with a solution that’s incapable of doing what you need it to do. So understand what you need to do (or might need to do in the future) and then make sure your solution of choice is capable of doing it.
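Lori's point about application data being split across packets is easy to demonstrate. The sketch below uses a made-up signature and an artificially tiny 8-byte payload size so that the split is visible; real Ethernet payloads are up to 1,500 bytes. Matching each packet on its own misses a signature that straddles a packet boundary. A stream-based matcher that carries the tail of each payload into the next one finds it. `SIGNATURE` and all function names are hypothetical.

```python
from typing import List

SIGNATURE = b"DROP TABLE"  # hypothetical pattern an inspection device looks for


def packetize(stream: bytes, payload_size: int) -> List[bytes]:
    """Split an application byte stream into fixed-size packet payloads."""
    return [stream[i:i + payload_size] for i in range(0, len(stream), payload_size)]


def per_packet_match(packets: List[bytes], signature: bytes) -> bool:
    """Inspect each packet in isolation, as packet-by-packet inspection does."""
    return any(signature in p for p in packets)


def streaming_match(packets: List[bytes], signature: bytes) -> bool:
    """Inspect the data as a stream, keeping the last len(signature)-1 bytes
    of previous payloads so matches across packet boundaries are found."""
    carry = b""
    keep = len(signature) - 1
    for p in packets:
        window = carry + p
        if signature in window:
            return True
        carry = window[-keep:] if keep > 0 else b""
    return False
```

With `packetize(b"id=1; DROP TABLE users", 8)`, the signature is split across the first two payloads. `per_packet_match` returns `False`, while `streaming_match` returns `True`. The carry-over buffer keeps memory bounded, unlike reassembling the whole stream.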

<Return to section navigation list>

Cloud Computing Events

• Cliff Simpkins (@cliffsimpkins) announced AppFabric Virtual Launch on May 20th – Save the Date for private cloud devotees at 8:30 AM PDT on 4/29/2010 in The .NET Endpoint blog. See the full text of the announcement (and a link to Mary Jo Foley’s coverage of the event) in the AppFabric: Access Control and Service Bus section above.

Due to its importance, the preceding article was reposted from Windows Azure and Cloud Computing Posts for 5/1/2010+.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeremy Geelan claims “The IBM Software Group has acquired more than 55 companies since 2003” as a preface to his IBM To Turn Cast Iron into Cloud Computing Gold post of 5/3/2010:

IBM, which has eleven cloud computing labs around the world, including one that is due to open in Singapore later this week, already unveiled last November the world's largest private cloud computing environment for business analytics, an internal cloud called BlueInsight. Today, it has rounded out its cloud strategy by acquiring Cast Iron Systems, advancing in a single step IBM’s capabilities for a so-called "hybrid" cloud model, which is attractive to enterprises because it allows them to blend data from on-premise applications with public and private cloud systems.

"IBM’s acquisition of Cast Iron Systems will help make cloud computing a reality for organizations around the world that are seeking greater flexibility and cost efficiency in their IT operations," the company stated in its announcement earlier today.

Cast Iron Systems is a privately held company based in Mountain View, CA.

IBM’s industry-leading business process and integration software portfolio grew more than 20 percent in the first quarter of 2010, a growth that this deal will bolster.

“The integration challenges Cast Iron Systems is tackling are crucial to clients who are looking to adopt alternative delivery models to manage their businesses,” said Craig Hayman, general manager, IBM WebSphere (pictured). “The combination of IBM and Cast Iron Systems will make it easy for clients to integrate business applications, no matter where those applications reside. This will give clients greater agility and as a result, better business outcomes,” he added.

“Through IBM, we can bring Cast Iron Systems’ capabilities as the world’s leading provider of cloud integration software and services to a global customer set,” said Ken Comée, president and chief executive officer, Cast Iron Systems. He continued:

“Companies around the world will now gain access to our technologies through IBM’s global reach and its vast network of partners. As part of IBM, we will be able to offer clients a broader set of software, services and hardware to support their cloud and other IT initiatives.”

James Governor analyzes IBM’s latest cloud acquisition in his IBM acquires Cast Iron Systems: Cloud Services integration for Enterprise post of 5/3/2010:

I got up early this morning for an invite only breakfast with WebSphere GM Craig Hayman.

Turns out IBM just acquired Cast Iron Systems. What’s the big idea?

Take companies onto the cloud. That’s right, folks: it’s a cloud onboarding play. The firm has 75 employees, was founded in 2001, and has a stellar list of partners… and adapters to integrate with them. Pretty much every SaaS company of note is on the roster. We’re not just talking NetSuite, salesforce.com and RightNow Technologies, though; ADP is another example.

One interesting issue will be immediate contention with partners. When you see CA and BMC Remedy on a partner list and IBM is doing the acquiring you know that Switzerland is about to become a little less neutral.

One interesting element of the Cast Iron “cloud” play is that its OmniConnect is an on-premise integration appliance. The software allows for drag-and-drop integration of on-premise and cloud apps. So now IBM Software Group has another appliance play to join DataPower.

In fact as Hayman said:

“In the early days we said, ‘Jeez, you could do that with DataPower.’ But… they had specific application integration patterns for the space.” In other words, IBM acquired Cast Iron Systems to accelerate its own cloud play. IBM is being onboarded to the cloud as much as its customers are…

The case studies IBM talked about this morning were pretty compelling.

Companies like ADP that normally take 4 months to bring a new customer onboard are now able to do it in a matter of weeks.

“The biggest issue is migration of data and so on. They said, ‘Yeah, we’ve heard it all before…’ But they were ‘a little taken aback’: ‘You guys and your drag-and-drop technology are removing the effort of building code.’”

Cast Iron’s CEO continued:

“Google is doing a lot [in terms of application capability]. It’s great, but the data they need, for example in SAP, is held behind the firewall. So we help with integration of that data through Google App Engine.” “You can’t have a SaaS app that talks to quick time to value, if it then needs three months to integrate…”

Cast Iron is available as an appliance, or as a cloud service for a monthly charge. It’s already available on the IBM product list. …

Looks to me like more Windows Azure competition is in the works.

James continues with his IBM’s Cast Iron Fix for API Proliferation footnote, posted on 5/3/2010 at about noon PDT:

I hate it when that happens. Just came into the analyst general session and IBM WebSphere CTO Gennaro Cuomo reminded me of Redmonk’s own research agenda and how it relates to IBM’s decision to acquire Cast Iron Systems.

Here is the thing – one of Stephen [O’Grady]’s predictions for 2010 was that API proliferation will become a real problem this year. Clearly it already is. I had talked to Jerry at length about the need to take a registry/repository approach to Cloud-based APIs last year. Well, it seems that’s the space Cast Iron is in. At first glance I was thinking about the deal in more traditional middleware terms: adapters and integration.

But in fact Cast Iron may be way more interesting than that. It’s an API management platform: IBM will do the work to track all these APIs and help organisations build apps that target them. Unlike many web companies, enterprises don’t really have time to concentrate on tracking API changes.

Holy crap. I just realised it’s also an amazing data play. Cast Iron effectively instruments the world of APIs. IBM is going to know exactly what’s going on in cloud development: what’s hot and what’s not. This really could be a transformative acquisition.

disclosure: IBM is a client.

Phil Wainwright claims “IBM bought Cast Iron Systems because it simply had nothing in its huge WebSphere toolbox that could do cloud integration” in his IBM buys itself a cloud integration toolbox post of 5/3/2010 to ZDNet’s Software as Services blog:

IBM bought Cast Iron Systems because it simply had nothing in its huge WebSphere toolbox that could do cloud integration. I just heard Steve Mills, SVP of the company’s software group, admit this in today’s IBM press briefing about the acquisition. While IBM has a massive catalog of technologies for integrating applications within a company, he confessed that tiny Cast Iron were the masters when it came to any form of integration that extends beyond the enterprise firewall: “Cast Iron connects customers to external suppliers. They do the inter-enterprise integration better than anyone else does,” he admitted.

There are other striking admissions implicit in IBM’s acquisition today. Did you hear the sharp intake of breath that reverberated across IBM Global Services on reading these two telling sentences in IBM’s press release announcing the acquisition today?

“Using Cast Iron Systems’ hundreds of pre-built templates and services expertise, expensive custom coding can be eliminated, allowing cloud integrations to be completed in the space of days, rather than weeks or longer.”

As WebSphere GM Craig Hayman told Redmonk analyst James Governor over breakfast this morning, Cast Iron’s “drag and drop technology” means that companies like ADP have cut new customer onboarding time from months to weeks, “removing the effort of building code.” For many long years, IBM — along with every other established enterprise software vendor — has avoided offering its customers such short-cuts. But, as Cast Iron’s CEO divulged to Governor (aka @monkchips), the cloud doesn’t work like that: “You can’t have a SaaS app that talks to quick time to value, if it then needs three months to integrate …” …

Stacey Higginbotham claims Enterprise Cloud Adoption Is Changing the Playing Field in this 5/3/2010 post to GigaOm:

Cloud computing has played a starring role in the technology press for the past 2-3 years, but it’s now moving from the haven of startups and random corporate side projects seeking flexible and cheap computing on Amazon’s Web Services to enterprises figuring out how to use on-demand compute capacity to change their IT cost structure and eventually link their internal applications to public clouds. So get ready for another round of acquisitions and maybe investments.

IBM today purchased a cloud computing startup called Cast Iron that helps tie internal software to applications running in the cloud, and Intel contributed to a $40 million first round of funding for an infrastructure-as-a-service provider. Both investments are aimed squarely at helping enterprise buyers adopt cloud computing by addressing some of the shortfalls that have so far kept enterprise IT from doing so.

One reason IBM was so keen on Cast Iron is that it has three different business offerings: one that links on-premise apps to other on-premise apps, one that links on-premise apps to the cloud and one that links clouds to one another, said Promod Haque, a managing partner with Norwest Venture Partners, a Cast Iron investor. He noted that IBM liked that Cast Iron wasn’t focused on one small aspect of the cloud computing opportunity, but could help enterprises support apps across a variable environment.

That’s a wise move, as enterprises aren’t going to hop wholesale into the cloud. CA has also recently decided to acquire several startups aimed at performing a similar support function for corporate customers trying out infrastructure, platforms or even software as a service. Most enterprise customers will have a strategy that covers on-premise clouds with other service providers, be they infrastructure providers like Savvis or software such as Salesforce.com.

Stacey continues with additional private cloud adoption analysis.

Savio Rodrigues claims “Red Hat's new cloud support for RHEL unlikely to help stem Ubuntu cloud usage” in a preface to his “New Red Hat cloud offering poses high barrier to adoption” post of 4/28/2010:

Red Hat's cloud strategy appears focused on retaining existing customers, not attracting new customers.

There's little argument that Red Hat is the undisputed leader in the enterprise Linux market by the measure that counts most: revenue. However, Red Hat's position as the leading Linux vendor for cloud workloads remains in dispute at best, and far from reality at worst. All signs point to Ubuntu as the future, if not current, leader in the Linux cloud workload arena. …

First, data from the Ubuntu User Survey [PDF] decidedly points to Ubuntu's readiness for mission-critical workloads, with more than 60 percent of respondents considering Ubuntu a viable platform for cloud-based deployments.

Second, statistics taken from Amazon EC2 and synthesized by The Cloud Market clearly point to Ubuntu's leading position against other cloud operating systems in EC2 instances today. With these facts in hand, one could have expected Red Hat to take steps to grow Red Hat Enterprise Linux (RHEL) adoption in cloud environments. In fact, Red Hat's Cloud Access marketing page makes a bold claim:

“Red Hat is the first company to bring enterprise-class software, subscriptions, and support capabilities all built in to business and operational models that were designed specifically for the cloud.”

However, Red Hat announced a program in which existing customers or new customers willing to purchase at least 25 active subscriptions of RHEL Advanced Platform Premium or RHEL Server Premium could deploy unused RHEL subscriptions on Amazon EC2. With the minimum support price of $1,299 for RHEL Advanced Platform Premium and a minimum of 25 subscriptions, the price of entry is $32,475. Well, you'll actually need at least 26 subscriptions, so you can move subscription No. 26 to Amazon EC2 with full 24/7 Red Hat support. As such, the price of entry is $33,774.
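The entry-price arithmetic in the preceding paragraph can be checked with a few lines of Python. The $1,299 per-subscription figure and the 25-subscription minimum come from Savio’s post. `entry_price` is a hypothetical helper, and the sketch ignores taxes, discounts and any Amazon EC2 instance charges.

```python
# Checks the RHEL Cloud Access entry-price arithmetic quoted above.
# Figures are taken from the post; the helper name is hypothetical.
MIN_SUBSCRIPTIONS = 25           # minimum active subscriptions required
PRICE_PER_SUBSCRIPTION = 1_299   # USD, RHEL Advanced Platform Premium

def entry_price(subscriptions: int, unit_price: int = PRICE_PER_SUBSCRIPTION) -> int:
    """Total subscription cost in USD for the given number of subscriptions."""
    return subscriptions * unit_price

# 25 subscriptions meet the minimum; a 26th is the unused one moved to EC2.
print(entry_price(MIN_SUBSCRIPTIONS))      # 32475
print(entry_price(MIN_SUBSCRIPTIONS + 1))  # 33774
```

Both results match the $32,475 and $33,774 figures in the post.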

Guy Rosen’s State of the Cloud – May 2010 post of 5/3/2010 shows that “Amazon now controls more than 50% of cloud-hosted sites”:

A new month, a new State of the Cloud post! In this month’s post we’ll revisit the relative sizes of the top providers and see just how much of the cloud market the biggest players own.

But first, this month’s figures:

…

Let’s take a look at cloud providers from another angle. We last did this back in February.

Our first conclusion: Amazon now controls more than 50% of cloud-hosted sites. The second conclusion: by this metric at least, the cloud race continues to be a two-horse race: Amazon and Rackspace together control 94%, and all the rest of the providers retain but a sliver of control.

Graphics credit: Guy Rosen. As mentioned in OakLeaf extracts from previous Guy Rosen articles, Windows Azure, Google App Engine, Engine Yard and Heroku are Platforms as a Service (PaaS). Guy covers only Infrastructure as a Service (IaaS) in his “State of the Cloud” posts.

<Return to section navigation list>