Windows Azure and Cloud Computing Posts for 5/15/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Don MacVittie claims “We take tables for granted. Really take them for granted” as a preface to his Tiering is Like Tables, or Storing in the Cloud Tier post of 5/15/2010:

… I should not have to care about NAS vs. SAN anymore, we’re in the twenty-first century after all, and storage is as important to our systems as tables are to our lives. And in many ways they serve the same purpose – a place to store stuff, a place to drop stuff temporarily while we do something else… You get the analogy. Let us face basic facts here, an Open Source group (or ten) was able to come up with a way to host both proprietary OS’s and Open Source OS’s on the same hardware, determining what to use when, and that’s not even touching on the functionality of USB auto-detection, so why is it that you can’t auto-detect whether my share is running CIFS or NFS, or for that matter in an IP world, iSCSI?

For all of my “use caution when approaching cloud” writing – that is mostly to offset the “everything will be in the cloud yesterday!” crowd - I do see one bit that would drive me to look closer – RAIN (Redundant Array of Independent Nodes) plus a cloud gateway like that offered by Nasuni offers you highly redundant storage without having to care how you access it. Yes, there are drawbacks to this method of access, but the gateway makes it look like a NAS, meaning you can treat your cloud the same as you treat your NAS boxes. No increase in complexity, but access to essentially unlimited archival storage… Sounds like a plan to me.

There are some caveats of course, you’d need to put all things that were highly proprietary or business critical on disks that weren’t copied out to the cloud, at least for now, since security is absolutely less certain than within your data center, and anyone who argues that point is likely selling cloud storage services. There aren’t other people accessing data on your SAN or NAS boxes, only employees. In the cloud, others are sharing your space. People you don’t know. That carries additional security risk. End of debate.

Don continues with the details of F5’s File Virtualization product, ARX.

SQL Azure Database, Codename “Dallas” and OData

Andrew Fryer’s SQL Azure – Back to the Future post of 5/15/2010 to his TechNet blog is an introduction to SQL Azure, which mentions Project “Houston”:

As I write this I am setting up a SQL Azure database to use as the source for some of my BI demos over the coming months, and the experience takes me back to when I first started in business intelligence.

If you haven’t come across SQL Azure, imagine someone has set up SQL Server for you on a server and all you have to do is create your databases, database objects and permissions for your users to use them. The someone is Microsoft, and the service is available on a pay-per-use basis. It’s sufficiently like SQL Server that you can use all the tools you are familiar with to manage it and access data from it. But there are differences.

The lack of tools and capabilities in SQL Azure reminds me particularly of SQL Server 6.5, hence the title of the post. For example there’s no mirroring or clustering, as in SQL Azure there is triple failover built in, it’s just the user doesn’t have any control over it.

Some of the key new features of SQL Server 2008 are also missing, some of which is good and some bad. It doesn’t matter that management features like Policy Based Management and Resource Governor aren’t there, as they are irrelevant in an Azure world where Microsoft is managing the instance and underlying server for you. However, other things are still being added, like the new data types introduced in SQL Server 2008 (geospatial, hierarchy and the other CLR data types).

Coming back to the subject of tools, what is not obvious is that management studio in the recently released SQL Server 2008 R2 allows you to also manage your SQL Azure databases.

Simply connect...

and it’s business as usual (note the odd version number for SQL Azure: 10.25!).

BTW my good friend Eric Nelson has an excellent post on that here.

The good news here is that there is a web based Silverlight management application in the works, called project Houston, which will allow you to do all the basic stuff in your browser like managing tables and there are third party tools also out there as well. [Emphasis added.]

This leads me to one of the best things about SQL Azure namely upgrades. As SQL Azure is enhanced with features like geospatial, project Houston etc. your database gets upgraded which takes the major headache of upgrades out of the equation. The releases for SQL Azure are every 90 days compared with 3 years for SQL Server that you install on your server. Next up for release in June is the geospatial data type and where databases are currently limited to 1 or 10 GB (and priced accordingly) there will be a new 50 GB version.

To quote my good friend Keith Burns at the recent TechDays event, “SQL Azure is actually pretty boring” and when it comes to databases boring is good, so this SQL Azure thing might well be the future.

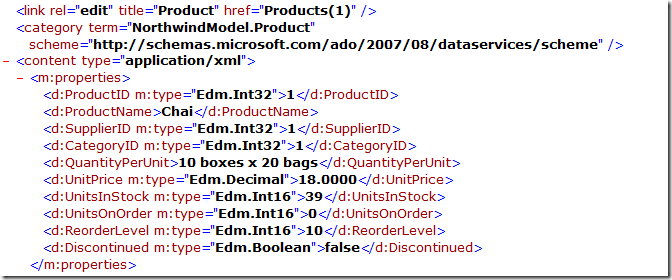

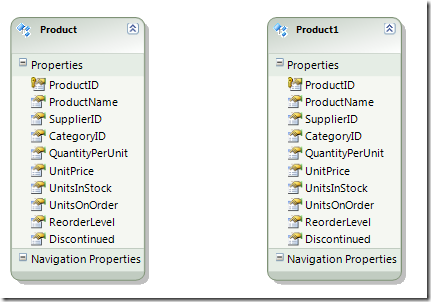

Raj Kaimal’s Pre-filtering and shaping OData feeds using WCF Data Services and the Entity Framework - Part 2 post of 5/15/2010 continues where Pre-filtering and shaping OData feeds using WCF Data Services and the Entity Framework - Part 1 left off:

Shaping the feed

The Product feed we created earlier returns too much information about our products. Let’s change this so that only the following properties are returned – ProductID, ProductName, QuantityPerUnit, UnitPrice, UnitsInStock. We also want to return only Products that are not discontinued.

Splitting the Entity

To shape our data according to the requirements above, we are going to split our Product Entity into two and expose one through the feed. The exposed entity will contain only the properties listed above. We will use the other Entity in our Query Interceptor to pre-filter the data so that discontinued products are not returned.

Go to the design surface for the Entity Model and make a copy of the Product entity. A “Product1” Entity gets created.

Raj continues with his illustrated tutorial and concludes:

Download Sample Project for VS 2010 RTM: NortwindODataFeed.zip

Make sure you have the right Entity Connection String. Depending on how you have your server/user set up, you may have to include the Catalog name like so:

…\sqlexpress;Initial Catalog=Northwind;Integrated Security=True;MultipleActiveResultSets=True"" …

Tip: To debug your feed, set config.UseVerboseErrors = true in the InitializeService method.

Pop Quiz: What happens when we add this config.SetEntitySetAccessRule("ProductDetails", EntitySetRights.AllRead); ?

The SSIS Team Blog reveals ADO.NET Destination package format change in SQL 2008 R2 in this 5/14/2010 post:

As I previously mentioned, SQL 2008 R2 added new Bulk Insert functionality to the ADO.NET Destination. This changed the component version, which means that if you edit/save packages that have ADO.NET Destination components in R2, the package will give you a loading error when you use it on a 2008 (R1/RTM) machine.

SQL MVP John Welch has a very good write up of the issue, and provides steps (as well as an XSLT script) for “downgrading” your package to the 2008 format.

Note, this problem only happens with packages that use the ADO.NET Destination. All other packages can be moved between R2 and 2008 without any problems.

Also note that even without modification, the package will load in the 2008 designer (with errors). Replacing the old destination with a new one (and re-connecting the metadata) will resolve the issue.

This issue is likely to affect users of SSIS workflows to migrate schemas and data from SQL Server to SQL Azure.

Lauren Amundson (@laurenamundson) begins a new OData series with her OData: Part 1 of 5 – Introduction post of 5/7/2010:

I'm really glad that I started this blog when I did. VS 2010 is out. .NET 4.0 is out of beta. There's just lots of super cool new toys to play with and explore. One of those is OData. Our world runs on data. We are like Number Five from Short Circuit. Need more Input! OData, which is the Open Data Protocol is here to help us consume and expose our data in a standard way.

Honestly, I am inspired to drill into this topic because I'm excited for the OData Live WebCast by Douglas Purdy tomorrow afternoon. It's going to be awesome.

So, a disclaimer. Anything involving data access isn't going to be able to be discussed very succinctly so if this piques your interest I would recommend drilling in further or grabbing a book or staying tuned for the other parts of this series.

Basically the goal of OData is to kick all of the data silos around the data-world to the curb and replace the myriad of different data-handling "standards" with a real single standard to query and update data. Tall order, and not what you as an architect/designer/developer might want, or it could be the next best thing for data access since <insert something witty />.

OData is not Microsoft's. It used to be, but now it is its own thing released under the OSP (Open Specification Promise). Microsoft, after hearing that we devs and architects wanted to increase utilization of standards-based approaches, has created OData and is working to create an independent community around it. The whole developing something and then calling it a standard does sound so Microsoft. But, there is a need for a standard way to deal with data and someone has got to do it. For what it's worth, so far it looks like they are doing it very well. This isn't Microsoft's first foray into open source as AJAX.NET is open source and seems to be going very well...I use it and have gotten wonderful results.

Microsoft's OData functionality is the protocol formerly known as Astoria which encapsulates ADO.NET and is the next step of ADO.NET. It combines the best of HTTP, REST, AtomPub and JSON. I'm going to assume you all know what the HTTP protocol is. REST's stateless communication leverages these HTTP verbs in web services often unlocking AJAX functionality. AtomPub is an application-level protocol that uses HTTP to publish and edit web "stuff". JSON is a string representation of a JavaScript object and is also extremely helpful in AJAX. Each was only a sentence so that's a bit simple to the point of almost being wrong, but at least we have a starting point to know what OData is built upon/using. OData is RESTful extensions to AtomPub which uses JSON and ATOM to expose the data which can be both read and edited using HTTP.
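To make the "RESTful extensions over HTTP" idea concrete, here is a minimal Python sketch of how a client might compose an OData query URL from a few system query options ($select, $filter, $top, $format). The service root, the Products entity set and the property names are hypothetical placeholders rather than anything taken from Lauren's post, and whether a particular service honors each option depends on that service.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root, entity_set, select=None,
                    filter_expr=None, top=None, fmt=None):
    """Compose an OData query URL from a few common system query options."""
    options = {}
    if select:
        options["$select"] = ",".join(select)
    if filter_expr:
        options["$filter"] = filter_expr
    if top is not None:
        options["$top"] = str(top)
    if fmt:
        options["$format"] = fmt

    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Keep "$" and "," readable; percent-encode spaces and other characters.
        url += "?" + urlencode(options, safe="$,", quote_via=quote)
    return url


if __name__ == "__main__":
    # Hypothetical service root and entity set, for illustration only.
    print(build_odata_url(
        "https://example.com/Northwind.svc",
        "Products",
        select=["ProductID", "ProductName", "UnitPrice"],
        filter_expr="Discontinued eq false",
        fmt="json",
    ))
```

The resulting URL is fetched with a plain HTTP GET, which is the point of the protocol: no special client library is strictly required to read a feed.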

Lauren continues her essay with descriptions of additional benefits from the use of OData. Subscribed.

AppFabric: Access Control and Service Bus

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Cory Fowler shows you How to Configure the Guest OS in Windows Azure in this 5/15/2010 post:

It’s an exciting time with the release of Visual Studio 2010, .NET 4 and ASP.NET MVC 2. I made the move to rewriting my Azure Email Queuer Application to ASP.NET MVC and leveraging some Telerik ASP.NET MVC Extension Controls to kick up the project a little bit.

In making this move it made it necessary to upgrade from the default Guest OS for my Windows Azure Hosted Service. Luckily I was pointed to the “Windows Azure Guest OS Versions and SDK Compatibility Matrix” by Brent Stineman [@BrentCodeMonkey] which gave me the low down on how to configure Azure for the Guest OS I needed to target.

Here is how to get the “osVersion” attribute in your Cloud Service Configuration (.cscfg) File.

Generating a Windows Azure Configuration File

Obviously, the easiest way to create a Cloud Service Configuration file for Windows Azure is to use the Visual Studio templates for a Cloud Service. This is all fine and dandy for those of us that are using the .NET Framework on Azure, but Azure is a Robust Cloud Service Model that allows Open Source Languages like PHP, Python, Ruby and even Java. How do those folks generate a Cloud Service Configuration file?

This task is made simple with the Windows Azure Command-line Tool which is part of the Windows Azure SDK.

In the Azure Command-Line tool, you should start by adding the following to the %PATH%:

    C:\Program Files\Windows Azure SDK\v1.1\bin

Here’s where things get fun: using the CSPack.exe file, you can use a flag to generate a skeleton Windows Azure Configuration File. You’ll have to first save the following Service Definition File (I’m not sure why they don’t have a tool to generate this):

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="[Service Name]" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WebRole name="[Must be a valid folder path]">
        <InputEndpoints>
          <InputEndpoint name="HttpIn" protocol="http" port="80" />
        </InputEndpoints>
        <ConfigurationSettings>
          <Setting name="DiagnosticsConnectionString" />
        </ConfigurationSettings>
      </WebRole>
      <WorkerRole name="[Must be a valid folder path]">
        <ConfigurationSettings>
          <Setting name="DiagnosticsConnectionString" />
        </ConfigurationSettings>
      </WorkerRole>
    </ServiceDefinition>

Once you’ve replaced the Square Bracketed names with valid data, save the above XML to a file called “ServiceDefinition.csdef” and run the following command in your command-line tool:

    c:\samples\HelloWorld>cspack HelloWorld\ServiceDefinition.csdef /generateConfigurationFile:ServiceConfiguration.cscfg

This will probably cause an error as there is a good chance there isn’t an endpoint defined for the Roles. However, it does generate the necessary Cloud Service Configuration File. In order to do the next step you’ll need to deploy a project; this will require that you use cspack to package your configuration to a cspkg file.

This can be done using the following:

    c:\samples\HelloWorld>cspack HelloWorld\ServiceDefinition.csdef /role:HelloWorld_WebRole;HelloWorld_WebRole /out:HelloWorld.cspkg

Got my Configuration File and Package, Now What?

Now we’ll log in to the Windows Azure Development Portal, which currently needs a Windows Live ID to log in [The Windows Azure team is looking for feedback on other login methods that would be handy for Cloud login, if you’d like to share your Great Windows Azure Idea].

After you’ve created a new Hosted Service to host your application, you’ll have to deploy your project, seen below:

Once the Deployment finishes uploading you will need to hit the configure button.

After deployment, Windows Azure adds some additional configuration settings to the Configuration file. Opening the Configuration file in the Deployment Portal will allow you to modify your deployment’s configuration; note that this is also the way that you scale up/down the number of running instances of your application.

For this exercise to make sense you will have to Understand Which Windows Azure Guest OS is Required for your application. On the Details page for the Guest OS that you have selected you will see the Configuration Value for the Guest OS which will start with WA-GUEST-OS. Copy the entire value, as you will be pasting it into the osVersion attribute for your Cloud Service Configuration.

If you specify an osVersion this will keep your deployment targeted to the Guest OS you chose. Note that future service updates may contain patches to the deployment which may fix security vulnerabilities, it is not guaranteed that these patches won’t affect your deployment so each set of security deployments may be hosted under a different Guest OS. You will be able to test out the newest Guest OS in your Staging environment before upgrading your Production deployment. …
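For readers who script their deployments rather than edit the file in the portal, the osVersion step could be sketched in Python as below. This is a minimal illustration under stated assumptions, not Cory's method: the sample configuration document is a hand-written skeleton, and the osVersion value is a made-up placeholder that must be replaced with the real WA-GUEST-OS configuration value copied from the Guest OS details page.

```python
import xml.etree.ElementTree as ET

# Namespace used by Cloud Service Configuration (.cscfg) files.
CONFIG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"

# Hand-written skeleton for illustration; a real file comes from cspack or Visual Studio.
SAMPLE_CSCFG = f"""<ServiceConfiguration serviceName="HelloWorld" xmlns="{CONFIG_NS}">
  <Role name="HelloWorld_WebRole">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DiagnosticsConnectionString" value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""


def set_os_version(cscfg_xml, os_version):
    """Return the configuration XML with osVersion set on the root element."""
    # Serialize with a default namespace instead of generated ns0: prefixes.
    ET.register_namespace("", CONFIG_NS)
    root = ET.fromstring(cscfg_xml)
    if root.tag != f"{{{CONFIG_NS}}}ServiceConfiguration":
        raise ValueError("not a ServiceConfiguration document")
    root.set("osVersion", os_version)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Placeholder value: substitute the WA-GUEST-OS string for your target Guest OS.
    print(set_os_version(SAMPLE_CSCFG, "WA-GUEST-OS-PLACEHOLDER"))
```

Leaving the attribute out keeps the deployment on whatever default Guest OS the platform assigns, so the function only needs to run when a specific Guest OS is required.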

Joannes Vermorel describes his Really Simple Monitoring application monitoring system in this 5/15/2010 post:

Moving toward cloud computing relieves us from (most) hardware downtime worries, yet cloud computing is no magic pill that guarantees that every single one of our apps is ready to serve users as expected.

You need a monitoring system to achieve this. In particular, OS uptime and simple HTTP responsiveness are only scratching the surface as far as monitoring is concerned.

In order to go beyond plain uptime monitoring, Lokad has started a new Windows Azure open source project named Lokad.Monitoring. The project comes with several tenets:

- A monitoring philosophy,

- An XML format, Really Simple Monitoring (shamelessly inspired by RSS),

- A web client for Windows Azure

A beta version is already in production. Check the project introduction [Google Code] page.

The project is BSD-licensed.

The Phat Site Blog asks (but doesn’t answer) Azure in Australia: is Microsoft too late? in this 5/15/2010 post:

Microsoft is an entrenched name in the industry with hordes of developers flocking to its banner. Easing the way for developers to bring their applications to the cloud is the essence of Azure.

Microsoft will have to fight less than other providers to lure developers to its platform, because of the very large number of existing Microsoft developers who will want to use the platform to move their products into the cloud.

“If you think of it with the functionality of .NET, the 50,000 or so developers that exist in Australia who are .NET developers automatically become cloud developers overnight,” Microsoft developer and platform evangelism director Gianpaolo Carraro says.

Developers scale the amounts of computer storage they need for their applications by entering a ratio by which they want to change their uptake. Microsoft also aims to make it even easier by offering a cloud-based pay-per-use SQL database, connectivity between Azure and on-premise applications, as well as single sign-on functionality.

Of course, anyone using an infrastructure-as-a-service cloud product from a cloud competitor will be able to install their own SQL database on virtual machines, or write their own single sign-on and app connectivity. However, with Azure, Microsoft has done the leg work for developers, exacting a price for that higher level of support. The idea is that developers can stop wasting time on lower level issues and spend more time on their apps.

“Development houses try to spend most of their time developing the features of their software product themselves, not the infrastructure in terms of the connectivity, the web hosting, the databases,” Carraro says.

One such developer is catering specialist CaterXpress. Director Anthony Super says that CaterXpress is currently in the process of moving all its clients using its apps from on-premise servers to Azure, which will mean being hosted on Microsoft’s servers in Singapore.

According to Super, this means a 60 per cent saving in costs for the company. “You’re looking at the monthly expenditure on running our own equipment at the moment, not even to mention the labour costs on top of that versus a fully managed version of Windows Azure,” he says.

CaterXpress has had to ask its clients if they were willing to have the app hosted overseas, but Super says there has as yet been “no hesitation” because of the more rigorous redundancy and back-up Azure offers. Geography isn’t relevant, he believes. “I guess because we deal with small-to-medium-sized businesses those sort of questions just aren’t asked.”

If some of the customers don’t agree, CaterXpress will simply keep them running on the in-house servers, but those customers won’t be receiving the cost savings that CaterXpress will pass onto the others.

Apparently CaterXpress believes the answer is “No.”

John O’Donnell announced New Video - John Ayres of Symon Communications talks with Jared Bienz at MIX10 in his 5/14/2010 post:

Video Link - John Ayres of Symon Communications talks with Jared Bienz at MIX10

John Ayers from Symon talks to Jared Bienz from Microsoft about building powerful mobile applications. John talked about their mobile player and plans to use Windows Azure for scale.

Lauren Amundson (@laurenamundson) explains why she’s using Azure for her new social networking venture with Pete Amundson in her Cloud Computing: Azure and it's Competition post of 5/13/2010:

As a child I would often get yelled at for having my head up in the clouds. Little did anyone know, but one day I would be programming on Windows Azure, a Microsoft platform that runs my web app and stores its data in the cloud. So, while my head isn’t in the cloud, my ideas are.

The “cloud” is getting a lot of talk recently. It’s a fun word that everyone can latch on to, and many of us are already using cloud applications. Google Docs, anyone? The term "cloud" is a convenient metaphor for what is going on. The “stuff” you are using isn’t local it’s out there somewhere in the ether…or cloud. What’s nice is that, like the electricity grid, resources are shared. This makes the cloud very scalable. So, if you need electricity you don’t have to make your own and have all the equipment, you just buy the power from the electricity company. So, from a server side, if you don’t need to own the box that transmits the data and you just need the ability to transmit it then why would you want the overhead of that box? You can avoid the capital expenditure for the hardware, server software, etc and just pay for the service.

There are lots of reasons why I am using Azure for my new social networking venture with Pete Amundson. First, we already mentioned the avoidance of some of the capital expenditure, which is big for us. Also, since this new venture could expand quickly (fingers crossed) we want something super agile that can rapidly expand as load increases. We’ll make it scalable, of course, but it’s much easier to make software scalable than it is hardware. Furthermore, we don’t need to have the hardware to handle our peak load at all times. Since the resources are shared we can grab and drop as need be in the same way that I don't have to pay for the electricity to power a light bulb that isn't on. Until I flip the switch I am not chewing through my financial resources. The cloud gives us that.

Lauren continues with descriptions of “other features of the cloud that Sippity will not utilize but that other companies, like my day job, might utilize.”

Windows Azure Infrastructure

Moshe Kaplan’s Memories from David Chappell's Lecture or Why [is] MS Azure still in its Alpha? post of 5/11/2010 (updated 5/16/2010) analyzes Windows Azure from an Israeli developer’s standpoint:

Clarification: This post is based on my understanding of the cloud computing market. I may not have fully understood the full Microsoft offer, and probably in some aspects my colleague David Chappell and I have different perspectives and opinions.

UPDATE 1: (May 16, 2010) Based on conversation with David Chappell.

I attended yesterday David Chappell's great lecture regarding the Microsoft Azure platform. Except for letting you know that you cannot find a decent WiFi Access in the Microsoft R&D offices, I would like to share with you several technical and product details regarding Azure and my opinion regarding Microsoft Cloud Computing GTM:

- Both auto scale and getting back to the original state are supported through the API and not automatically. You should do it manually. (UPDATE 1)

- There is no admin mode: you can install (almost) nothing, no SQL Server, no PHP, no MySQL in service mode and no RDP... or in other words total vendor lock-in. Since an HA system requires service mode, you must use the Azure stack for HA systems. (UPDATE 1)

- No imaging or "suspended staged server" mode where you pay only for the storage. You must pay for suspended staged machines (like in GoGrid, unlike Amazon EBS-based machines).

- Bandwidth - few people complain regarding it, because few people use it: "there is a lot of room right now...". Please notice that having a lot of room is good. However, only time will tell how Microsoft will

- Storage - S3, SimpleDB and SQS like storage mechanism that is accessed using REST (no SOAP in the system):

- Blobs:

- EBS like binary data storage

- Can be mapped to "Azure Drives" that can be used as EBS: NTFS files

- CDN mechanism Support

- Tables:

- Key value storage (NoSQL)

- Table>Entity>Property {@Name;@Type;@Value}.

- Don't expect SQL, and it is really scalable. You are right, it sounds just like SimpleDB.

- Queues: SQS like

- Used for task distribution between workers

- Please notice that you should delete messages from the Q, in order to make sure that the message does not appear again (there is a configurable 30 seconds timeout till messages will reappear)

- Access: grouping data into storage accounts

- Fabric:

- Fabric Controller: controls the VMs, networking and etc. A fabric agent is installed on every machine. It is interesting that Microsoft exposes this element and tells us about it, while Amazon keeps it hidden... (UPDATE 1): Please notice that this is a plus for MS, as turns out that Amazon for example does not tell us everything about its infrastructure, and maybe it is time to expose this information.

- Azure Development Fabric: a downloadable framework that can be installed for development purposes on your premises. Why should you have this mess of incompatibility between development and production environments for the aim of saving few dozens of USD a month? Maybe MS knows... (UPDATE 1): Please notice that saving few bucks is a great idea. However, based on my experience WAN effects and real hosting environment can present different behavior than the one you had on your development premises. Therefore, I highly recommend you to install both your integration and production instances in the same environment.

- SQL Azure Database:

- Up to 10GB database. Sharding is a must if you really have data. But hey, if you really had data, you were not here... (UPDATE 1): If you have critical systems with a lot of data, I don't recommend using Azure yet. However, you can find a previous post of mine regarding SQL Server Sharding implementation.

- Windows Azure Platform AppFabric

- Service Bus: a SOA-like solution that is aimed to connect between intranet web services and the cloud. This is a major component that does not exist in other players' offerings, due to the MS Go To Market (GTM) strategy (see below). If you use another cloud computing environment, you may use this service while integrating it with your internal IT environment.

- Access Control
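The queue note in the list above (a message must be deleted once processed, otherwise it reappears after the timeout) can be illustrated with a small in-memory simulation. This is only a sketch of the visibility-timeout idea in Python: the VisibilityQueue class and its method names are hypothetical and are not the Azure Queue API, and time is passed in explicitly so the behavior is easy to follow.

```python
import itertools


class VisibilityQueue:
    """Toy queue that hides a dequeued message until a timeout expires."""

    def __init__(self, visibility_timeout=30.0):
        self.visibility_timeout = visibility_timeout
        self._ids = itertools.count(1)
        self._messages = {}  # message id -> [body, time at which it is visible]

    def put(self, body):
        msg_id = next(self._ids)
        self._messages[msg_id] = [body, 0.0]
        return msg_id

    def get(self, now):
        """Return (id, body) of the first visible message, or None."""
        for msg_id, entry in self._messages.items():
            body, visible_at = entry
            if visible_at <= now:
                # Hide the message; it reappears unless deleted before the timeout.
                entry[1] = now + self.visibility_timeout
                return msg_id, body
        return None

    def delete(self, msg_id):
        """Remove a message for good once the worker has finished with it."""
        self._messages.pop(msg_id, None)


if __name__ == "__main__":
    queue = VisibilityQueue(visibility_timeout=30.0)
    job = queue.put("send welcome email")
    print(queue.get(now=0))    # a worker picks the job up
    print(queue.get(now=10))   # hidden while the first worker holds it
    print(queue.get(now=30))   # the worker never deleted it, so it is back
    queue.delete(job)
    print(queue.get(now=100))  # deleted messages do not come back
```

The design consequence for workers is that processing should be idempotent: if a worker crashes or is slow, another worker will see the same message again.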

Pricing

Pricing is the #1 reason for using the cloud, and it seems that Microsoft is lagging behind in this:

- Computing: $0.12-$0.96 per hour. That is X3 AWS spot prices. (UPDATE 1): Please notice that spot prices are not the official Amazon price list. However, it is common method to use it and it is well explained in a previous post of mine.

- Storage: $0.15/GB per month + $0.01/10K operations

- SQL Azure: $10/GB per month. Yes it is limited to 10GB. (UPDATE 1): Please notice that the Amazon offering is extremely different both in options and in pricing model, so decisions should be taken based on detailed calculation.

- Traffic:

- America/Europe: $0.1/GB in, $0.15/GB out

- APAC: $0.3/GB in, $0.45/GB out
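As a rough worked example of the "detailed calculation" the list calls for, the Python sketch below adds up a monthly bill from the figures quoted above. It assumes the low end of the compute range ($0.12 per hour), the America/Europe traffic rates, and a flat $10/GB for SQL Azure as stated in the list; the function name and the sample workload are illustrative assumptions, and real invoices may be computed differently.

```python
from decimal import Decimal

# Rates as quoted in the list above (USD); compute uses the low end of the range.
RATES = {
    "compute_per_hour": Decimal("0.12"),
    "storage_per_gb_month": Decimal("0.15"),
    "storage_per_10k_ops": Decimal("0.01"),
    "sql_per_gb_month": Decimal("10"),
    "traffic_in_per_gb": Decimal("0.10"),   # America/Europe
    "traffic_out_per_gb": Decimal("0.15"),  # America/Europe
}


def estimate_monthly_cost(instance_hours, storage_gb, storage_ops,
                          sql_gb, gb_in, gb_out, rates=RATES):
    """Return a per-item breakdown plus a total, all as Decimal values."""
    def d(value):
        # Convert via str so that float inputs do not carry binary noise.
        return Decimal(str(value))

    items = {
        "compute": d(instance_hours) * rates["compute_per_hour"],
        "storage": d(storage_gb) * rates["storage_per_gb_month"],
        "storage_ops": d(storage_ops) / Decimal(10000) * rates["storage_per_10k_ops"],
        "sql": d(sql_gb) * rates["sql_per_gb_month"],
        "traffic_in": d(gb_in) * rates["traffic_in_per_gb"],
        "traffic_out": d(gb_out) * rates["traffic_out_per_gb"],
    }
    items["total"] = sum(items.values(), Decimal("0"))
    return items


if __name__ == "__main__":
    # Hypothetical workload: one instance for a 30-day month (720 hours).
    for name, amount in estimate_monthly_cost(720, 50, 100000, 10, 10, 100).items():
        print(f"{name}: ${amount:.2f}")
```

Even with one small instance, the 10GB database dominates the bill under these figures, which is why the comparison with other providers depends heavily on how much relational storage an application needs.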

Target Applications

The Azure platform best fits the following scenarios according to David Chappell (David does not work for Microsoft, nor does he speak for them):

- Massive scale web applications

- HA apps

- Apps with variable loads

- Short/Unpredictable life time applications: marketing campaigns, pre sale...

- Parallel processing applications: finance

- Startups

- Applications that do not fit well to the current organizations:

- No data center cases

- Joint venture with other parties

- When biz guys don't want to see the IT

Microsoft Go To Market Strategy

(UPDATE 1): Please notice that this is my analysis regarding Microsoft's Go To Market strategy; it is based on my market view and on conversations with market leaders, and may not reflect David Chappell's opinion:

- Microsoft is building on their partnerships and marketing force rather than providing the best technical/pricing solution. They build on these ISVs to expand their existing platform to Azure as part of their online/cloud/SaaS strategy and portfolio. (UPDATE 1): I believe that currently the Azure solution is less flexible than IaaS offers in the market, and with higher cost.

- MS currently focuses on establishing itself as a valid player in the cloud computing market, by comparing itself to Amazon, Google, Force.com and VMware. (UPDATE 1): Microsoft, of course, does not say this itself. However, it sponsors respected industry analysts to pass this message.

- It seems that Microsoft was trying to focus on solving enterprises' requirements, rather than approaching the Cloud native players: web 2.0 and internet players that are native early adopters... Is it a correct marketing move? Are enterprises really heading to this platform, when there are better and more stable platforms? Will they head at all?

- My 2 Cents: We probably gonna see here another Java/.Net round. However, this time is not just operating system and development environment, but the whole stack from hardware to applications: Microsoft oriented players will try the Azure platform, while others will probably use other solutions. However, this time Microsoft is not in a good position, since (1) major part of the new players in the market prefer Java/Python/PHP/Erlang stacks instead of the .Net stack; and (2) other players already offer Microsoft offering as part of their stack.

(UPDATE 1): Personally I think that Microsoft has a great development force, and it has shown in the past that it can get into existing markets with strong leaders (Netscape in the browsers, Oracle in the database, Oracle and SAP in ERP and CRM) and turn into a leader in the market. I believe that this is their target in the cloud computing arena and I'm sure that in the future Azure will be a competent player. However, this is still a first version, and I would not recommend you to run your critical system based on it, as you probably did not run your critical system on the first MS SQL Server version.

Keep Performing,

Luke Puplett analyzes Windows Azure and Web hosting costs in the UK in his Compare the Meerkat: Windows Azure Cost Planning post of 5/15/2010:

This weekend I had planned to get my data into the cloud, Microsoft's cloud to be precise, but was confronted with Microsoft's version of an online shopping service before I could provision my little slice of the cloud. Probably foolishly, I was expecting to just walk right in with my Live ID and MSDN sub, but it gave me the opportunity to compare the Windows Azure prices with the rest of the colo and hosting market.

As with any cost planning, a load of assumptions have to be made about capacity and requirements. In the ordinary world of hosting, this means basically wondering if you'll use all the RAM on the supplied box or not, but with the Windows Azure model so granularly broken down, it makes it slightly more trifling.

Azure has prices for storage transactions as well as for things like AppFabric Access Control transactions and Service Bus connections. For the sake of my planning I have conveniently pretended that they're not there in a sort of ignorance is bliss line of thinking. Well, I actually don't think I'll use this stuff for my project, yet.

I'll delay no longer and get to the interesting part. For £129.22 I'll be getting an equivalent of a 1GHz processor running at full chat for a month, as well as 1Mbit of full chat bandwidth, 0.1Mbit incoming and 50GB storage used. Oh and not forgetting 10GB of SQL Azure - 1GB being really rather too measly for anyone's use of a full blown RDBMS.

What's perhaps interesting is that MS don't seem to charge for extra RAM. To access more RAM you take a bigger instance with more processors, but if your workload is the same the clock cycles will cost the same, albeit spread over more cores.

For comparison's sake here's a similar costing scenario I did with a bunch of hosting companies in and around the UK. Stop squinting.

The company I had previously chosen was Fido.net, which charges about £100p/m for a dedicated dual-core box with a single 500GB HDD, Windows and SQL Server 2008. They give you 10Mbit for about £30p/m, which equates to around 3TB of in/out data. …

Luke continues with a “Half Conclusion:”

Fido.net's £100 + £30 is very much like Azure's £129 and so this leaves me in a quandary. I could go with Azure and its instant scalability and other features to plug into etc. but that £130 in the Fido purse gives me much more than what I get with Azure, if I choose to use it. Remember that with Azure I'm pricing that exact usage, whereas with the others, there's a lot more room left before I need to buy a bigger or another dedicated server.

If I were to compare the cost of a fully utilised dedicated server with Azure, the dedicated box would win hands down. And therein lies the rub: those damned usage assumptions.

Mostly though, I am put off by that SQL Azure price; £60p/m for 10GB when normal hosters will dish out the whole server and SQL Server with the freedom to fill the whole disk with a 250GB database if you so desire.

I hope this gives someone food for thought, even if my costings aren't terribly scientific.

P.S. Doesn't factor in the 'offers' that Microsoft have for new joiners, which are about 50% off for 6 months ish.

I believe Luke has missed the issue of additional compute-time charges for each additional Web or Worker role his app needs.
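As a rough check on Luke's bandwidth figure and on the compute-time point above, here is a minimal Python sketch. It assumes a 30-day month, decimal units (1 TB = 10^12 bytes) and a link that runs flat out the whole time. The function names are my own, and the per-instance charge is a purely hypothetical placeholder: Luke's excerpt does not break out a compute price per role instance.

```python
SECONDS_PER_DAY = 86_400


def monthly_transfer_tb(rate_mbit_per_s: float, days: int = 30) -> float:
    """Data moved in `days` days at a sustained rate, in decimal terabytes."""
    bytes_per_second = rate_mbit_per_s * 1_000_000 / 8  # megabits -> bytes
    return bytes_per_second * SECONDS_PER_DAY * days / 1e12


def cost_with_extra_roles(base_monthly: float, extra_instances: int,
                          per_instance_monthly: float) -> float:
    """Monthly bill when every extra role instance adds its own compute charge."""
    return base_monthly + extra_instances * per_instance_monthly


if __name__ == "__main__":
    print(f"10 Mbit/s sustained: {monthly_transfer_tb(10):.2f} TB/month")
    print(f"1 Mbit/s sustained: {monthly_transfer_tb(1) * 1000:.0f} GB/month")
    # HYPOTHETICAL: 50.0 is a made-up per-instance charge, for illustration only.
    print(f"One extra role: £{cost_with_extra_roles(129.22, 1, 50.0):.2f}/month")
```

A sustained 10 Mbit/s works out to about 3.24 TB a month, which agrees with Luke's "around 3Tb" for the Fido.net link, while the 1 Mbit/s he priced for Azure is roughly a tenth of that. Whatever the real per-instance charge is, the second function shows why the Azure total grows with each additional Web or Worker role.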

Art Whitman claims “When you lay out the ROI case for cloud services, it becomes clear that it will be an ancillary application delivery method for most companies” in a preface to his Practical Analysis: Our Maturing View Of Cloud Computing article of 5/15/2010 for Information Week’s Software Insights blog:

Our latest survey on cloud computing deals with return on investment (the full report will be out this summer). In many ways, thinking about ROI of any new technology forces decision makers to cut through both their own hype and the stuff vendors manufacture to take a studied look at benefits and risks. It's one thing to echo back the desires of line-of-business adherents in pursuit of new investment; it's a far different thing to haul out a spreadsheet in front of the CEO and CFO and claim that you have a handle on the real financial impact of a new technology.

Through all of our surveys on cloud computing, we've seen both excitement and skepticism from IT pros, executives, and line-of-business managers alike, but this was the first survey where both the responses and free-form comments showed the sort of pragmatism that IT professionals usually show toward new technologies. For instance, practically gone is the notion of calculating a one-year ROI. IT pros realize that, for better or worse, moving to software as a service and other cloud services means moving from a model that requires large capex outlays followed by much smaller opex ones to a model that calls for almost no capex but regular, larger opex. That means that what looks like a good deal in years one and two won't look as good in years four and five. In fact, unless the application itself changes, moving an application to a service provider will usually increase its overall cost over the long run.

And that five-year case had better be a compelling one, particularly for existing applications. For existing apps, adopting a SaaS model means introducing new risks into their operation, as well as additional configuration, integration, and training costs. Some 59% of respondents to our survey take risk into consideration as part of their ROI analysis of cloud apps (and hopefully everything else). The point here isn't that your SaaS vendor may have poorer security or reliability than your own company has; the point is that you can't easily evaluate its security or reliability. That amounts to risk, and that should be viewed as an expense. …
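The capex-versus-opex argument is easy to see with a small cumulative-cost sketch in Python. All of the figures below are hypothetical and chosen only to illustrate the shape of the curves; they do not come from the InformationWeek survey, and the function names are my own.

```python
from typing import List, Optional


def cumulative_costs(capex: float, annual_opex: float, years: int) -> List[float]:
    """Cumulative spend at the end of each year, with capex paid up front."""
    return [capex + annual_opex * year for year in range(1, years + 1)]


def first_year_service_costs_more(on_premises: List[float],
                                  service: List[float]) -> Optional[int]:
    """First year (1-based) in which the service's cumulative cost is higher."""
    for year, (owned, rented) in enumerate(zip(on_premises, service), start=1):
        if rented > owned:
            return year
    return None


if __name__ == "__main__":
    # HYPOTHETICAL figures in arbitrary currency units.
    on_premises = cumulative_costs(capex=100, annual_opex=10, years=5)
    service = cumulative_costs(capex=0, annual_opex=40, years=5)
    print("On-premises:", on_premises)
    print("Service:    ", service)
    print("Service costs more from year:",
          first_year_service_costs_more(on_premises, service))
```

With these made-up numbers the service is cheaper through year three and more expensive from year four on, which is the pattern Art describes. The crossover year depends entirely on the ratio of up-front capex to the difference in annual opex, and the sketch ignores discounting and the risk costs he mentions.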

Stuart Corner reports “The ITU has set up a new focus group on cloud computing ‘to enable a global cloud computing ecosystem where interoperability facilitates secure information exchange across platforms.’” in his ITU to examine cloud computing standards post of 5/15/2010 to ITWire:

The ITU says the group will take a global view of standards activity around cloud computing and will define a future path for greatest efficiency, creating new standards where necessary while taking into account the work of others and proposing them for international standardisation.

The group's chairman, Vladimir Belenkovich, said: "Specifically, we will identify potential impacts in standards development in other fields such as NGN, transport layer technologies, ICTs and climate change, and media coding.

"A first brief exploratory phase will determine standardisation requirements and suggest how these may be addressed within ITU study groups. Work will then quickly begin on developing the standards necessary to support the global rollout of fully interoperable cloud computing solutions."

Malcolm Johnson, director of ITU's Telecommunication Standardisation Bureau, said: "Cloud is an exciting area of ICTs where there are a lot of protocols to be designed and standards to be adopted that will allow people to best manage their digital assets. Our new Focus Group aims to provide some much needed clarity in the area."

A recently published ITU-T Technology Watch report titled 'Distributed Computing: Utilities, Grids and Clouds' describes the advent of clouds and grids, the applications they enable, and their potential impact on future standardisation.

The International Telecommunication Union is the oldest organization in the UN family still in existence. It was founded as the International Telegraph Union in Paris on 17 May 1865 and is today the leading United Nations agency for information and communication technology issues, and the global focal point for governments and the private sector in developing networks and services.

I’m suspicious of any governmental standardization action, especially by the ITU.

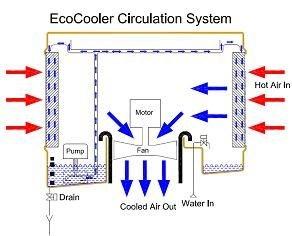

James Hamilton explains Computer Room Evaporative Cooling in this 5/14/2010 post:

I recently came across a nice data center cooling design by Alan Beresford of EcoCooling Ltd. In this approach, EcoCooling replaces the CRAC units with a combined air mover, damper assembly, and evaporative cooler. I’ve been interested in evaporative coolers and their application to data center cooling for years and they are becoming more common in modern data center deployments (e.g. Data Center Efficiency Summit).

An evaporative cooler is a simple device that cools air by taking water through a state change from liquid to vapor. They are incredibly cheap to run and particularly efficient in locales with lower humidity. Evaporative coolers can allow the power-intensive process-based cooling to be shut off for large parts of the year. And, when combined with favorable climates or increased data center temperatures, they can entirely replace air conditioning systems. See Chillerless Datacenter at 95F; for a deeper discussion, see Costs of Higher Temperature Data Centers; and for a discussion of server design impacts, see Next Point of Server Differentiation: Efficiency at Very High Temperature.

In the EcoCooling solution, they take air from the hot aisle and release it outside the building. Air from outside the building is passed through an evaporative cooler and then delivered to the cold aisle. For days too cold outside for direct delivery to the datacenter, outside air is mixed with exhaust air to achieve the desired inlet temperature.

This is a nice clean approach to substantially reducing air conditioning hours. For more information see: Energy Efficient Data Center Cooling or the EcoCooling web site: http://www.ecocooling.co.uk/.
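The cold-day mixing step James describes can be approximated with a simple energy balance. The Python sketch below assumes both air streams have the same density and specific heat and ignores humidity and the evaporative cooler itself, so it is only a first-order estimate. The function names and the example temperatures are my own assumptions, not EcoCooling's control logic.

```python
def outside_air_fraction(t_outside: float, t_exhaust: float,
                         t_target: float) -> float:
    """Fraction of outside air in the mix that yields t_target at the inlet.

    Temperatures share one unit (for example, degrees Celsius).
    """
    if t_exhaust <= t_target:
        raise ValueError("exhaust air must be warmer than the target inlet temperature")
    if t_outside >= t_target:
        # Outside air is already at or above the target: no exhaust is mixed in.
        return 1.0
    return (t_exhaust - t_target) / (t_exhaust - t_outside)


def mixed_temperature(fraction_outside: float, t_outside: float,
                      t_exhaust: float) -> float:
    """Temperature of the blend for a given outside-air fraction."""
    return fraction_outside * t_outside + (1.0 - fraction_outside) * t_exhaust


if __name__ == "__main__":
    # HYPOTHETICAL temperatures: 5 C outside, 35 C hot aisle, 20 C desired inlet.
    fraction = outside_air_fraction(t_outside=5.0, t_exhaust=35.0, t_target=20.0)
    print(f"Outside-air fraction: {fraction:.2f}")
    print(f"Resulting inlet temperature: {mixed_temperature(fraction, 5.0, 35.0):.1f}")
```

The colder it is outside, the smaller the share of outside air needed, which is why the dampers recirculate more hot-aisle exhaust in winter.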

Cloud Security and Governance

No significant articles today.

Cloud Computing Events

BrightTalk announces a Cloud Computing Virtual Summit on 5/25/2010:

Gartner identified Cloud Computing as the top technology for 2010, yet the number of enterprises choosing to adopt the cloud is not as high as anticipated. Implementation of cloud infrastructure is a strategic IT decision that involves careful evaluation and promises long term benefits, while application deployment has been more readily adopted in the short term. The experts at this summit will highlight best practices for managing cloud infrastructure, discuss how to distribute workloads in the cloud and give you an overview of what services are available in the market today.

The page lists nine Webcasts.

Other Cloud Computing Platforms and Services

No significant articles today.