Windows Azure and Cloud Computing Posts for 5/8/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

• Updated for articles added on 5/9/2010: Happy Mothers Day!

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Stu Charlton’s WS-REST musings: Investment in REST (it's not where we might have expected) essay of 5/8/2010 asserts:

Most of those I spoke to at WS-REST felt that media types, tools, and frameworks for REST have been growing slowly and steadily since 2008, but lately there's been a major uptick in activity: HTML5 is giving browsers and web applications a makeover, OAuth is an RFC, several Atom extensions have grown, Facebook has adopted RDFa, Jersey is looking at Hypermedia support, etc.

But, coming out of the WS-* vs. REST debates, it is interesting to note what hasn't happened in the past two years, since the W3C Workshop on the Web of Services, which was a call to bridge-building. As expected, SOAP web services haven't really grown on the public-facing Internet. And, also as expected, RESTful services have proliferated on the Internet, though with varying degrees of quality. What hasn't happened is any real investment in REST on the part of traditional middleware vendors in their servers and toolsets. Enterprises continue to mostly build and consume WSDL-based services, with REST being the exception. There have been, at best, "checkbox" level features for REST in toolkits ("Oh, ok, we'll enable PUT and DELETE methods"). Most enterprise developers still, wrongly, view REST as "a way of doing CRUD operations over HTTP". The desire to apply REST to traditional integration and enterprise use cases has remained somewhat elusive and limited in scope.

Why was this?

Some might say that REST is a fraud and can't be applied to enterprise integration use cases. I've seen the counter-evidence, and obviously disagree with that assessment, though I think that REST-in-practice (tools, documentation, frameworks) could be improved on quite a lot for the mindset and skills of enterprise developers & architects. In particular, the data integration side of the Web seems confused, with the common practice being "make it really easy to perform the required neurosurgery" with JSON. I still hold out hope for reducing the total amount of point-to-point data mapping & transformation through SemWeb technologies like OWL or SKOS.

Perhaps it was the bad taste left from SOA, or a consequence of the recession. Independently of the REST vs. WS debate, SOA was oversold and under-performed in the marketplace. Money was made, certainly, but nowhere near as much as BEA, IBM, TIBCO, or Oracle wanted, and certainly not enough for most Web Services-era middleware startups to thrive independently. It's kind of hard to spend money chasing a "new technology" after spending so much money on the SOAP stack (and seeing many of the advanced specs remain largely unimplemented and/or unused, similar to CORBA services in the 90's).

Or, maybe it was just the consequence of the REST community being a bunch of disjointed mailing list gadflys, standards committee wonks, and bloggers, who all decided to get back to "building stuff" after the AtomPub release, and haven't really had the time or inclination to build a "stack", as vendors are prone to.

Regardless of the reason, the recent uptick in activity hasn't come from the enterprise or the enterprise software vendors offering a new stack. The nascent industries that have invested in REST are social computing, exemplified by Twitter, Facebook, etc. and cloud computing, with vCloud, OCCI and the Amazon S3 or EC2 APIs leading the way.

The result has been a number of uneven media types, use of generic formats (application/xml or application/json), mixed success in actually building hypermedia instead of "JSON CRUD over HTTP", and a proliferation of toolkits in JavaScript, Java, Ruby, Python, etc.

We're going to be living with the results of this first generation of HTTP Data APIs for quite some time. It's time to apply our lessons learned into a new generation of tools and libraries.

You also might enjoy Stu’s WS-REST musings: Put Down the Gun, Son of 5/7/2010, WS-REST Workshop Themes of 5/6/2010 and Building a RESTful Hypermedia Agent, Part 1 of 3/29/2010.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• Karl Shiflett uses NetFlix’s OData feed to power his Stuff – WPF Line of Business Using MVVM Video Tutorial as described in this 5/9/2010 post that includes links to a complete video tutorial:

Stuff is an example application I wrote for WPF Line of Business Tour at Redmond event. During some of the event sessions I used the code in Stuff to demonstrate topics I was teaching.

During the event, we did not do an end-to-end examination of Stuff, that is the purpose of this blog post.

Stuff

In its current form, Stuff is a demo application that allows you to store information about the movies you own. It uses the Netflix OData cloud database for movie look up, which makes adding a movie to your collection very easy.

One goal for Stuff was that a developer could take the code, open it in Visual Studio 2010 and press F5 and run the application. I didn’t want to burden developers with setting up a database, creating an account to use an on-line service or having to mess with connection strings, etc.

The Netflix OData query service made my goal achievable because it has a very low entry bar.

Stuff v2. Shortly, I’ll modify Stuff by swapping out the remote data store layers and point it at Amazon’s web service so that I can not only look up movies but books, music and games too. The reason I didn’t do this up front was because developers would have to go and get an Amazon account just to use and learn from the application.

Another goal I had for Stuff was to limit referencing other assemblies or frameworks including my own. All the code for Stuff is in the solution except the Prism EventAggregator. I hope that trimming the code down to exactly what is being used in the application helps with the learning process.

There is a complete solution in both C# and VB.NET.

Karl continues with links to 1400 x 900 vimeo Web casts and downloadable code.
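For readers who haven't queried an OData feed directly, here is a minimal sketch of the kind of title lookup that Stuff performs. It is written in Python rather than Karl's C# or VB.NET, and it only builds the request URL. The service root, the `Titles` entity set and the `Name` property are assumptions for illustration; check the feed's metadata before relying on them. The helper names are hypothetical.

```python
from urllib.parse import quote


def odata_string_literal(value: str) -> str:
    """Wrap a value as an OData string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_title_query(service_root: str, entity_set: str, title: str, top: int = 5) -> str:
    """Build a URL that filters an entity set by exact Name and caps the result count."""
    # 'Name' is an assumed property name for this sketch.
    filter_expr = "Name eq " + odata_string_literal(title)
    # Percent-encode spaces and other reserved characters, but keep quotes readable.
    query = "$filter=" + quote(filter_expr, safe="'") + "&$top=" + str(top)
    return service_root.rstrip("/") + "/" + entity_set + "?" + query


if __name__ == "__main__":
    # Assumed service root and entity set; substitute the feed you actually use.
    print(build_title_query("http://odata.netflix.com/Catalog", "Titles", "Ocean's Eleven"))
```

Because no account or connection string is involved, the resulting URL can be pasted into a browser to inspect the feed, which is the "low entry bar" Karl mentions.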

David Robinson wrote his SQL Azure FAQ Available For Download on 5/8/2010:

Our SQL Azure FAQ is available for download. The paper provides an architectural overview of SQL Azure Database, and describes how you can use SQL Azure to augment your existing on-premises data infrastructure or as your complete database solution.

You can download it here.

The download page offers this overview:

SQL Azure Database is a cloud based relational database service from Microsoft. SQL Azure provides relational database functionality as a utility service. Cloud-based database solutions such as SQL Azure can provide many benefits, including rapid provisioning, cost-effective scalability, high availability, and reduced management overhead. This paper provides an architectural overview of SQL Azure Database, and describes how you can use SQL Azure to augment your existing on-premises data infrastructure or as your complete database solution.

Shayne Burgess reports availability of an ADO.NET Data Services Update for .NET Framework 3.5 SP1 – Refresh in this 6/8/2010 post to the WCF Data Services (née Project “Astoria”) blog:

The Data Services team has released a refresh of the ADO.NET Data Services Update for .NET Framework 3.5 SP1 today and provides fixes for issues reported to us in the update to .NET Framework 3.5 SP1. For Windows Vista, Windows XP, Windows Server 2003 and Windows Server 2008 the refresh can be downloaded here. For Windows 7 and Server 2008 R2 the refresh can be downloaded here. The installer for the refresh is cumulative with the original ADO.NET Data Services Update for .NET Framework 3.5 SP1 and can be installed both on systems with that update installed and on systems without the update installed.

This update fixes the following issues:

- Single quote (') characters in JSON server responses incorrectly escaped

- Requests containing filter expressions on string, byte or GUID failing when server is in medium trust

- Intermittent MethodAccessException during request processing on 64-bit server running in medium trust

- MethodAccessException on medium trust server with Feed Customization (Friendly Feed) mappings

- Duplicate object instances in client library when the entity key contains an escape character

Ashay Choudhary asks Not much activity on PDO, does this mean "it just works" and we ship it? in this 5/8/2010 post:

Time and time again we have been told that Microsoft needs to provide a good PDO driver as this is the future direction for PHP. Given how many different feature requests were filed and comments posted (not just on this forum, on our Connect site, blog, survey, etc.), the download and forum activity statistics for the version 2.0 CTP are significantly lower than our previous CTPs and Final releases.

“How much lower?” one may ask. Currently it is at about 25% of the peak we had for our previous v1.1 CTP that we released in May 2009.

Couple that with the fact that we have only a handful of support posts on our MSDN forum, again much lower than for our v1.1 CTP, and that Drupal 7 has successfully adopted it for their SQL Server / SQL Azure support, it is indeed quite surprising. [Emphasis added.]

Does this mean that people just didn’t need it and we shouldn’t have done it?

Or does it mean that it is so broken that we need to start from scratch and do it over?

Or does it mean that it meets your needs and, except for the few issues reported, we should consider shipping it very soon?

Well, it is kind of too late for the first, and the forum activity doesn’t quite support the second. Which leaves us with the third scenario, and not quite what we expected.

The Drupal, Doctrine and Zend folks did and sent us some feedback. Our PDO driver is available on our download center as well as a referenceable component in the Web Platform Installer.

So, I urge you all to download it and try it out.

Ashay describes the version 2.0.0 CTP release in his SQL Server Driver for PHP 2.0 CTP adds PHP's PDO style data access for SQL Server post of 4/19/2010.

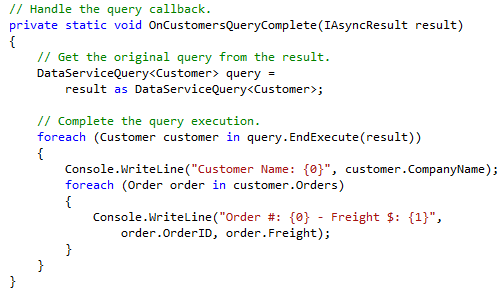

Jon Watson shows you how to fire asynchronous OData queries in his OData Mix10 – Part Dos post of 5/7/2010:

The other day I had a snazzy post on fetching all the video (WMV) files from Mix ‘10. A simple, console application that grabbed the urls from the OData feed and downloaded the videos. I wanted to change that app to fire the OData query asynchronously so here’s what resulted:

There are two important things here that are not explained well in the

- See lines 5 and 6? That’s where I query for the WMV files and it returns an IQueryable<T>. You *have* to cast that to a DataServiceQuery<T> and then call BeginExecute. The documented example does not filter so it didn’t show that step.

- Line 16 shows the correct way to get the previously executed DataServiceQuery<T> from the async result. If you looked at the MSDN example docs it shows (incorrectly) just casting the result, like this:

// wrong
var query = result as DataServiceQuery<Mix.File>;

Other than those items it is relatively straightforward and we’re all async-ified. Enjoy!

Jon’s earlier post on the topic was Using OData to get Mix10 files of 4/6/2010.
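Jon's C# listing isn't reproduced here, so the following is only a rough Python analogue of the general idea: start the queries without blocking, and keep each query associated with its pending operation rather than trying to recover it from the result. It uses `concurrent.futures` instead of .NET's Begin/End pattern, and the function names and the injected `fetch` callable are assumptions made for this sketch.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, List


def run_queries(urls: List[str], fetch: Callable[[str], str]) -> Dict[str, str]:
    """Start every query without blocking, then collect each result by its URL."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Remember which query each future belongs to, instead of trying to
        # recover the query from the result object itself.
        pending: Dict[Future, str] = {pool.submit(fetch, url): url for url in urls}
        for future, url in pending.items():
            results[url] = future.result()  # blocks until that query finishes
    return results


def fetch_with_urllib(url: str) -> str:
    """Real network fetch; swap in a stub when running offline."""
    from urllib.request import urlopen

    with urlopen(url, timeout=30) as response:
        return response.read().decode("utf-8")
```

Passing `fetch` in as a parameter keeps the sketch testable without a network connection; call `run_queries(urls, fetch_with_urllib)` to hit a live feed.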

Bruce Kyle reports SQL Server 2008 R2 Released to MSDN, Trials Available in this 5/7/2010 post to the US ISV Developer blog:

Subscribers to Microsoft's TechNet and Microsoft Developer Network (MSDN) services now have access to four editions of SQL Server 2008 R2. Products currently available for download on those subscriber portals include the Standard, Enterprise, Developer and Workgroup editions.

The releases are in English only, according to the SQL Server team blog. They can be obtained in either 32-bit or 64-bit editions. See SQL Server 2008 R2 is now available to MSDN and TechNet subscribers.

Trial editions of SQL Server 2008 R2 are also available through the Microsoft Evaluation Centers for TechNet (here) and MSDN (here).

Additional language support will be released in May.

Download SQL Server 2008 R2 Trials, Feature Pack, Tools

See Download Trial Software to:

- Download the SQL Server 2008 Feature Pack

- Download a free, fully functional 180-day trial edition of SQL Server 2008 Enterprise today and take advantage of SQL Server security, scalability, developer productivity, and the industry’s lowest total cost of ownership.

- Download SQL Server 2008 Assessment and Migration Tools

Digital Launch

View a SQL Server 2008 R2 Digital Tour at www.sqlserverlaunch.com.

About SQL Server 2008 R2, Getting Started

For more information about SQL Server 2008 R2, including how to get started developing for it, see my blog post Microsoft Brings Business Intelligence Deep to Enterprise With SQL Server 2008 R2.

For more information about the Express Edition, see SQL Server 2008 R2 Express Raises Max Database to 10 Gigs. [Emphasis added.]

For help in getting your application up and running on SQL Server 2008 R2, join Front Runner for SQL Server. You can access one-on-one technical support by phone or e-mail from our developer experts, who can help you get your application compatible with SQL Server 2008 R2. Once your application is compatible, you’ll get a range of marketing benefits to help you let your customers know that you’re a Front Runner.

David Robinson links to Two great MSDN videos on SQL Azure in this 5/7/2010 post:

MSDN Video: Microsoft SQL Azure RDBMS Support (Level 200)

This session will cover the following topics: Creating, accessing and manipulating tables, views, indexes, roles, procedures, triggers, and functions, inserts, updates, and deletes, constraints, transactions, temp tables, query support. Watch the Video on MSDN.

MSDN Video: Microsoft SQL Azure Security Model (Level 200)

This session will cover the following topics: Authentication and Authorization to SQL Azure. Watch the Video on MSDN.

Shayne Burgess announced Windows Phone Developer Tools April 2010 Refresh and the OData Client in this 5/7/2010 post to the WCF Data Services blog:

Two months ago we released an OData Library for the Windows Phone 7 – see the blog post here. If you have downloaded the recent April 2010 Refresh of the Windows Phone Developer Tools you will have found that there is an issue in the refresh of the tools with loading the OData library. If you reference the System.Data.Services.Client.dll assembly from the April 2010 Refresh of the tools and try to deploy your project you will see the following message (thanks to Tim Heuer for pointing this out):

The specific text of the error message is “Could not load file or assembly ‘{File Path}\System.Data.Services.Client.dll’ or one of its dependencies”.

This is an issue with the way Authenticode signed assemblies are loaded in the new version of the tools. Included in the blog post by Tim Heuer is a PowerShell script that resolves the issue when run against the System.Data.Services.Client.dll assembly from the OData Windows Phone 7 library.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Bruce Kyle explains Single Sign-On Simplified With AD FS 2.0 in this 5/8/2010 post to the US ISV Developer blog:

Active Directory Federation Services 2.0 (AD FS 2.0) has been finalized and is now available for download. This is the server part of how you can handle identity in your applications.

AD FS 2.0 is a role in Windows Server that simplifies access and single sign-on to both on-premises and cloud-based applications. Using “claims-based” identity technology, it helps enable secure business collaboration and productivity within the enterprise, across organizations, and on the Web.

Some of the top scenarios AD FS 2.0 will support are:

- Collaboration with Office documents and SharePoint across companies with single sign-on access.

- Single sign-on access to hosted/cloud services, extended from on-premises Active Directory to Microsoft (or other) cloud services.

- Implementation of access security and management policies to many different applications with varied security requirements. [Emphasis added.]

Overall, AD FS 2.0 will help you streamline user access management with a simpler, unified approach and native single sign-on. It builds on Active Directory and interoperates with other directories via WS* and SAML support, too.

You can get AD FS 2.0 at Federation Services.

Read the AD FS 2.0 datasheet to learn more.

About Active Directory Federation Services 2.0 and Windows Identity Foundation

Active Directory Federation Services 2.0 is a security token service for IT that issues and transforms claims and other tokens, manages user access and enables federation and access management for simplified single sign-on.

.NET developers use Windows Identity Foundation (WIF) to externalize identity logic from their application, improving developer productivity, enhancing application security, and enabling interoperability. Enjoy greater productivity, applying the same tools and programming model to build on-premises software as well as cloud services. Create more secure applications by reducing custom implementations and using a single simplified identity model based on claims. Enjoy greater flexibility in application deployment through interoperability based on industry standard protocols, allowing applications and identity infrastructure services to communicate via claims. See Windows Identity Foundation Simplifies User Access for Developers.

Learn more about how you can use AD FS 2 and WIF in your applications at Identity Developer Training Course on Channel 9. You will find a developer training kit and hands on labs.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bill Wilder’s Two Roles and a Queue – Creating an Azure Service with Web and Worker Roles Communicating through a Queue post of 5/8/2010 begins with these links:

Visualizer: http://baugfirestarter.cloudapp.net/

Running example of sample designed below: http://bostonazuresample.cloudapp.net/

The following procedure assumes Microsoft Visual Web Developer 2010 Express on Windows 7. The same general steps apply to Visual Studio 2008, Visual Studio 2010, and Web Developer 2008 Express versions, though details will vary.

Bill continues with an illustrated tutorial for creating his simple (but live) Azure queued messaging application.
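The two-roles-and-a-queue pattern is easy to picture with a small in-process stand-in. The sketch below is not Bill's sample and does not use Azure Queue storage; it assumes Python's standard `queue.Queue` as a substitute for the cloud queue and a thread as a substitute for the worker role. All names are hypothetical.

```python
import queue
import threading
from typing import List

STOP = None  # sentinel that tells the worker to shut down


def web_role(work_queue: "queue.Queue", messages: List[str]) -> None:
    """Front end: accept requests and enqueue them; it never does the heavy work itself."""
    for message in messages:
        work_queue.put(message)
    work_queue.put(STOP)


def worker_role(work_queue: "queue.Queue", processed: List[str]) -> None:
    """Back end: take messages off the queue and process each one until told to stop."""
    while True:
        message = work_queue.get()
        if message is STOP:
            break
        processed.append(message.upper())  # placeholder for real processing


def run_demo(messages: List[str]) -> List[str]:
    """Wire one web role to one worker role through a shared queue."""
    work_queue: "queue.Queue" = queue.Queue()
    processed: List[str] = []
    worker = threading.Thread(target=worker_role, args=(work_queue, processed))
    worker.start()
    web_role(work_queue, messages)
    worker.join()
    return processed
```

The point of the design is that the two roles share nothing but the queue, so either side can be scaled or restarted independently.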

Nitin Salgar describes changes coming in the 50-GB version of SQL Azure in this 5/2/2010 post:

I recently received an email from a Microsoft associate inviting me to participate in the 50GB preview for SQL Azure (find my tweet here).

I've been doing quite a bit of poking around on it which resulted in me blogging my findings on it.

One of the most fundamental changes is that all tables need to have a Clustered Index. A day after I found this out, SQL Azure team members posted a Blog about this.*

XML and several other datatypes are now being supported on it unlike the previous version.

SSMS(R2 Nov CTP onwards) is still the predominant tool for programming. However, there are a couple of tools available in the market for evaluation.

Redgate has two tools namely

- SQL Compare

- SQL Data Compare

These two tools have been in the market for several years. [H]owever, their support for Azure is something new and is available only for Beta Testers.

Embarcadero DBArtisan is another tool providing SQL Azure Access. This tool includes Querying capability unlike Redgate tools. Initial use of the DBArtisan gave me a grouse feeling. There were many instances where the tool was incapable of performing tasks that were possible in SSMS, which I tweeted here.

SQL Azure team has been working really hard on several counts and trying to make it happen. The new release exposed new DMV's.

The DMV's prove that Azure will be bringing in more capabilities like SQL CLR.

It’s nice to try out the improvements as they happen. You can find more about it by following the team blog and, of course, by following me on Twitter @nitinsalgar.

More Later

* The requirement for a clustered index on each SQL Azure table has been present since early CTPs.

David Lemphers’ Windows Azure - Sending SMTP Emails! post of 1/8/2009 explains a technique that remains popular today:

A question popped up today about sending emails from within the Windows Azure environment, specifically using SmtpClient.

So, to cover off some basics:

- The Windows Azure environment itself does not currently provide a SMTP relay or mail relay service

- .NET applications run under a variation of the standard ASP.NET medium trust policy called Windows Azure Trust policy

- Port 25 is open (well, all ports are open actually) to outbound traffic for Windows Azure applications

As we don't provide a host for sending or relaying SMTP mail messages, you'll need to find one that will. Your ISP or free mail provider will do providing they are setup to enable SMTP relaying.

Next, there is an issue with using the SmtpClient class with its Port property set to something other than 25 and its trust election, see this forum thread:

So to demo this, I whipped up a quick sample app that sends SMTP emails through a relay host as a web role:

The code is here:

And the app [was] deployed here, [but appears to be gone]:
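Since David's code and deployment links are no longer reachable, here is a minimal sketch of the same idea, sending mail through an external relay on port 25. It uses Python's standard `smtplib` rather than .NET's SmtpClient, so it illustrates the approach and is not a reconstruction of his sample. The relay host name and addresses in the usage note are placeholders, and the function names are hypothetical.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Assemble a plain-text message with the usual headers."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.set_content(body)
    return message


def send_via_relay(message: EmailMessage, relay_host: str, port: int = 25) -> None:
    """Hand the message to an external SMTP relay; the host must permit relaying."""
    with smtplib.SMTP(relay_host, port, timeout=30) as smtp:
        smtp.send_message(message)
```

A call would look like `send_via_relay(build_message("me@example.com", "you@example.com", "Test", "Hello"), "smtp.example.com")`, where `smtp.example.com` stands in for whatever relay your ISP or mail provider offers.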

<Return to section navigation list>

Windows Azure Infrastructure

Ammalgam posted Video: Secure network communications within Windows Azure to Cloud Computing Zone’s blog on 5/7/2010:

Charlie Kaufman (from Microsoft), gives us details on how the network communications work and are secure within Windows Azure.

Topics:

- Why don’t we use IPSec between Compute node and store?

- Breakdown of virtualization security in Azure

- How do we protect against malicious users doing things like Denial of Service (DoS) attacks?

- How to protect against users who create a bogus account and try to make attacks from inside Azure framework?

Arthur Cole claims “Cloud computing is a lot like sex” as the prefix to his Sex and the Cloud post of 5/7/2010 to the ITBusinessEdge blog:

Now that I've grabbed your attention, let me explain. Like sex, everybody seems to be talking about it but few are actually doing it. And like sex, the media tends to tout all the wonders to be had without bothering to highlight the responsibilities involved or the trouble in store if you're not careful.

Because the fact is, things can go south on the cloud. While Informationweek's David Linthicum overstates it a bit when he says concerns like over-hype, security and costs are enough to kill the cloud outright, he does make a point that the cloud is uncharted territory for IT, so it's best to ensure that you keep at least one foot on solid ground as you venture out.

Fortunately, enterprises consist of more than just IT techs. While the geeks are awestruck by the incredible scalability and flexibility, the lawyers and accountants and business managers are all evaluating it from their own perspectives. That's why we're seeing more and more discussion regarding the cloud's audit and compliance capabilities as things move forward. The fact that nearly two-thirds of enterprises say they are just as comfortable or more with their cloud's compliance features as with their own internal systems makes me wonder how well-prepared most organizations are for a significant discovery order.

These and other issues are at the heart of the rollout of probably the biggest cloud user/provider to date: the federal government. The General Services Administration's Apps.gov site was launched to great fanfare late last year, but so far has registered only 170 actual transactions. The vast majority of traffic seems to be people looking for information rather than actually running apps. Still, expectations are that nearly half of government offices will be using the cloud within the next two years.

And that's why I still think the cloud at large has some strong legs under it. Sure, there are challenges to be faced, and in many respects the cloud will provide only marginal, or even negative, improvements over current data architectures. But according to the latest IDC estimates, the worldwide data load will hit 1.2 Zettabytes this year -- that's more than one trillion GB and represents a 50 percent increase over 2009. The cloud itself won't be able to provide more resources to handle that load, but it will allow the IT community to manage it more effectively by spreading it out over the broadest possible set of existing resources.

In that vein, then, the cloud is not just probable, it's inevitable. There simply is no other way to effectively handle all the data coming our way.

<Return to section navigation list>

Cloud Security and Governance

• W. Scott Blackmer offered an essay about Information Governance on 5/6/2010:

When it comes to creating policies for handling personal data in an organization, who decides? How are those policy decisions made and kept up to date?

These are questions of governance – I would call it “information governance.” Most large enterprises have established responsibilities and procedures for information technology governance and specifically for IT security policies, procedures, procurement, management, and training. In many cases, however, these have not been fully mapped to personal data compliance and risk management requirements, which should be defined and monitored by a somewhat different group of people, from departments beyond IT and security. Unless privacy issues are visible in the internal governance process, the organization – and the individuals that deal with it -- may be exposed to some nasty surprises.

One consequence of the growing body of laws, regulations, standards, and contractual requirements dealing with protected categories of personally identifiable information (PII) is a heightened awareness of the importance of establishing effective internal governance mechanisms. The organization needs to be clear on who decides, and how, key questions such as these:

- Which kinds of PII should be collected in the first place?

- Which categories of PII require particular safeguards or treatment, either legally or because the information is considered especially sensitive by customers and employees, or by the organization itself?

- How should PII be secured?

- Who should be given access to PII, and for what purposes?

- How are individuals informed of events (such as business changes and security breaches) and options (such as opt-in or opt-out choices) that affect their privacy and personal security?

- How should PII be disposed of at the end of its useful life?

In some cases, legislators, regulators, and industry standards bodies provide guidance on PII management and governance, at least by implication. But for the most part, organizations must find their own way to weave privacy compliance and PII risk management into effective internal governance procedures. Adding privacy to the organization’s governance structure, with constant reference to evolving privacy rules and standards, is one way to avoid costly mistakes and arm the organization with legal defenses in the event of a security breach or a serious privacy complaint. …

Scott concludes:

From our observation, and from reports by professional associations and conference participants, it appears that two elements are key to the success of organizations that have established effective information governance relating to PII: a high-level champion that the CEO, board, and business managers will listen to, and a liaison team to review PII issues and make recommendations to management. Depending on the structure and mission of the organization, the privacy liaison team might include representatives of several functions that deal with PII: IT, security, HR, customer relations, marketing, government relations, labor relations, legal, compliance, audit, procurement or contract management, product development, international subsidiaries (subject to different PII rules). It is not hard to imagine who should have a seat at the table (or more likely on the email list and occasional conference call), but it may be a challenge to identify who will convene and lead the team, unless the organization has already designated a chief privacy officer or equivalent position.

In the end, good information governance depends not only on procedures and tools but on the quality, drive, and authority of those who lead the effort.

• David Navetta’s The Legal Defensibility Era is Upon Us post of 5/4/2010 to the InformationLawGroup Web site carries the following abstract:

The era of legal defensibility is upon us. The legal risk associated with information security is significant and will only increase over time. Security professionals will have to defend their security decisions in a foreign realm: the legal world. This article discusses implementing security that is both secure and legally defensible, which is key for managing information security legal risk. …

Final thoughts. As legal risk increases a legal defensibility approach will become more important and eventually commonplace. Our data driven society, and the legal risks arising out of it, dictate that we work together. Now is the time for legal, privacy and security professionals to break down arbitrary and antiquated walls that separate their professions. The distinctions between security, privacy and compliance are becoming so blurred as to ultimately be meaningless. Like it or not, it all must be dealt with holistically, at the same time, and with expertise from multiple fronts. In this regard we must all develop thick skins and be not afraid to stop zealously guarding turf. The reality is, the legal and security worlds have collided, and most lawyers don't know enough about security, and most security professionals don't know enough about the law. Let's change that. With the era of legal defensibility upon us, it is past time that this conversation went to the next level. So please take a look at my article. I sincerely look forward to your comments and constructive criticism on my thoughts.

Download the article here.

<Return to section navigation list>

Cloud Computing Events

Bruce Kyle announced Windows Azure FireStarter Videos Up on Channel 9 on 5/9/2010:

The videos from the Windows Azure FireStarter event in Redmond are now available on Channel 9. You can watch or click to see the videos:

- Windows Azure FireStarter- Windows Azure Storage with Brad Calder

- Windows Azure FireStarter- SQL Azure with David Robinson

- Windows Azure FireStarter- Bui…for the Cloud with David Aiken

- Windows Azure FireStarter- Migrating Applications to the Cloud with Mark Kottke

- Windows Azure FireStarter- Panel Q&A

• Dom Green’s Cloud Coffee at #dddscot post of 5/9/2010 points to his Windows Azure presentation to DDD Scotland this weekend:

DDD Scotland has been a great weekend of lots of geek-ery, fun and fire. It was also my first experience of speaking at a large conference and gave me the opportunity to talk about some of the things I had learned whilst developing on the Windows Azure platform over the past year, working with the European Environmental Agency and RiskMetrics.

View more presentations from domgreen.

• Steven Nagy describes his CodeCampOz- Five Azure Submissions in this 5/9/2010 post:

CodeCampOz is a once-a-year event over the course of a weekend where code freaks from all around Australia descend on the small town of Wagga Wagga in central NSW.

The next one will be held on November 20th and 21st, according to this announcement. The call for speakers has also gone out. I’ve submitted five presentations, I’m hoping at least one will get accepted. I thought I would just quickly post the five submissions here for your review:

Title: Windows Azure Compute For Developers

Level: 300

Outline:

The barrier to entry of a new technology can often seem daunting at first. However the Microsoft hype is true: .Net developers ARE cloud developers! This session will demonstrate how cloud apps are just like apps you’ve already been writing. We’ll also go through some of the API functionality that you can use if you like to add more ‘cloudness’ to your applications and we’ll focus on some key abstractions to help make your applications hot-swappable between on-premise and Azure servers.

Title: Architecting For Azure

Level: 300

Outline:

Organisations are looking to Azure for two primary reasons: cost-reduction and elasticity. While it is easy to port your applications to Azure, it does not mean you will automatically reap the benefits of ‘running in the cloud’. This session will take a look at some key philosophies to keep in mind when architecting your applications to run on Azure. (This session is a rework of my Azure launch Philippines presentation and my upcoming Remix10 presentation.)

Title: Hybrid Azure

Level: 300

Outline:

Don’t want to store your data in the cloud? Still looking to leverage from elasticity? Azure isn’t just about moving your whole world into a Microsoft data centre. There are many scenarios in which you will want to utilise specific features of the Azure platform without swallowing the whole pill, and this session will explore just a few.

Title: Building the Windows Azure Platform

Level: 200

Outline:

Windows Azure provides millions of compute and storage instances across multiple data centres around the world and manages all the applications that run on top of them, configuring load balancers, VLANs and DNS entries dynamically to meet the scalable needs of millions. And it does all this automatically! In this high level discussion we will peel back the layers of abstraction to reveal how Windows Azure really works under the hood to automatically provision virtual machines and applications in a highly fault tolerant manner.

Title: Azure By Example

Level: 400

Outline:

This no-slides presentation will walk through a sample application with high scalability and redundancy needs that utilises all the parts of the Windows Azure platform. Focusing on reusability and key points of abstraction, we’ll demonstrate how Windows Azure Compute, Storage, Sql Azure, Service Bus and Access Control Services all come together to form the architecture of a highly scalable application.

If you think of anything else you’d like to see around Azure at CodeCampOz, let me know and I’ll make another submission.

Wagga Wagga is about 240 road km west of Canberra, for those interested in attending.

• Andre Durand announced the Cloud Identity Summit: Building Credibility in the Cloud to be held 7/20 to 7/22/2010 in Keystone CO, USA:

As Cloud computing matures, organizations are turning to well-known protocols and tools to retain control over data stored away from the watchful eyes of corporate IT. The Cloud Identity Summit is an environment for questioning, learning, and determining how identity can solve this critical security and integration challenge.

The Summit features thought leaders, visionaries, architects and business owners who will define a new world delivering security and access controls for cloud computing. The conference offers discussion sessions, instructional workshops and hands-on demos in addition to nearly three days of debate on standards, trends, how-to’s and the role of identity to secure the cloud.

Topics

Understanding the Cloud Identity Ecosystem

The industry is abuzz with talk about cloud and cloud identity. Discover the definition of a secure cloud and learn the significance of terms such as identity providers, reputation providers, strong authentication, and policy and rights management mediators.

Recognizing Cloud Identity Use-Cases

The Summit will highlight how to secure enterprise SaaS deployments, and detail what is possible today and what’s needed for tomorrow. The requirements go beyond extending internal enterprise systems, such as directories. As the cloud takes hold, identity is a must-have.

Standardizing Private/Public Cloud Integration

The cloud is actually made up of a collection of smaller clouds, which means agreed upon standards are needed for integrating these environments. Find out what experts are recommending and how companies can align their strategies with the technologies.

Dissecting Cloud Identity Standards

Secure internet identity infrastructure requires standard protocols, interfaces and APIs. The summit will help make sense of the alphabet soup presented to end-users, including OpenID, SAML, SPML, XACML, OIDF, ICF, OIX, OSIS, OAuth IETF, OAuth WRAP, SSTC, WS-Federation, WS-SX (WS-Trust), IMI, Kantara, Concordia, Identity in the Clouds (new OASIS TC), Shibboleth, Cloud Security Alliance and TV Everywhere?

Who's in your Cloud and Why? Do you even know?

Privileged access controls take on new importance in the cloud because many security constructs used to protect today’s corporate networks don't apply. Compliance and auditing, however, are no less important. Learn how to document who is in your cloud, and who is managing it.

Planning for 3rd Party (Cloud-Based) Identity Services

What efficiencies in user administration will come from cloud-based identity and outsourced directory providers? How can you use these services to erase the access control burdens internal directories require for incorporating contractors, partners, customers, retirees or franchise employees? Can clouds really reduce user administration?

Preparing for Consumer Identity

OpenID and Information Cards promise to give consumers one identity for use everywhere, but where are these technologies in the adoption life-cycle? How do they relate to what businesses are already doing with their partners, and what is required to create a dynamic trust fabric capable of making the two technologies a reality?

Making Cloud Identity Provisioning a Reality

The access systems used today to protect internal business applications take a backseat when SaaS is adopted. How do companies maintain security, control and automation when adopting SaaS? The Summit will answer questions around secure SaaS usage: Do privileged account controls extend to the cloud? Are corporate credentials accidentally being duplicated in the cloud? Does the enterprise even know about it?

Securing & Personalizing Mashups

Many SOA implementations are using WS-Trust, but in the cloud OAuth is becoming the standard of choice for lightweight authorization between applications. Learn about what that means for providing more personalized services and how it provides more security to service providers.

Enterprise Identity meets Consumer Identity

There are two overlapping sets of identity infrastructures being deployed that have many duplications, including Single Sign-On to web applications. How will these infrastructures align or will they? How will enterprises align security levels required for their B2B interactions with the convenience they have with their customer interactions?

Monitoring Cloud Activity

If you've invested in SIEM, you can see what’s happening behind your firewall, but can you see beyond into employee activity in the cloud? What is being done to align controls on activity beyond enterprise walls with established internal controls?

Note that Christofer Hoff, Director Cloud & Virtualization for Cisco and John Shewchuk, Microsoft Technical Fellow, Cloud Identity & Access are among the featured speakers.

• Clemens Vasters describes his forthcoming tour of Asia as a “traveling talking head” in his UA 875 SEA NRT post of 5/8/2010 en route to Tokyo Narita airport:

… I’ll spend two days in Singapore as part of a Windows Azure ISV workshop series that has been organized by our field colleagues in the APAC region; the first day I’ll be presenting the all-up Windows Azure Platform – Compute, Storage, Management and Diagnostics, Database, Service Bus, Access Control, and the additional capabilities we’ll be adding over the next several months. On day two, I’ll be meeting for 1:1s with a range of customers about their plans to move applications to the cloud. That pattern will repeat over the next two weeks in Kuala Lumpur/Malaysia (this Thu/Fri), in Manila/Philippines (next Mon/Tue), and in Seoul/South Korea (next Wed/Thu). From Seoul onwards, some of my colleagues will take over and go to Sydney and Auckland, while I’m flying further westwards to Europe to speak at the NT Konferenca in Slovenia before returning to Seattle after a short stopover in Germany to see the folks.

Once I’m back in Seattle I’ve got 5 days at the office to debrief and prep for TechEd North America and then it’s off to New Orleans for the week and then, after a weekend stopover in Seattle, I’m off to the NDC 2010 conference in Oslo/Norway. It’s definitely the most flying I’ve done since I [went to] work for Microsoft. …

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Williams reports Heroku: Ruby Platform Sees 50 Percent Increase in Apps Since November in this 5/7/2010 post to the ReadWriteCloud blog:

The number of apps hosted on the Ruby platform Heroku has increased 50 percent since November, pointing out how cloud-based platforms are becoming the norm for the capabilities the services provide in terms of testing and reliability.

Heroku had more than 40,000 apps on its platform last November. Now more than 60,000 apps are using the Heroku service.

Heroku is not alone in its success. Amazon Web Services (AWS) and Rackspace are rapidly expanding. AWS announced its expansion to the Asia Pacific region this past week. In its announcement, AWS featured Kim Eng, one of Asia's largest securities brokers. The firm said the availability of the AWS service helped minimize latency for its KE Trade iPhone application.

Rackspace developer Mike Mayo built the company's latest iPad app for customers to monitor Rackspace cloud networks. He said the cloud provides a way for developers to test with better efficiency. For example, using cloud platforms, apps are continually tested for bugs. Those bugs can be fixed one at a time as they pop up. That's easier than doing a build and then fixing the 20 bugs that are discovered.

Heroku

Heroku is a Ruby-on-Rails platform that according to the company web site "eliminates the need to manage servers, slices or clusters." Developers focus on the code and that's pretty much it.

Heroku hosts the Flightcaster application, an interesting example of a service that uses two cloud providers to serve its app. Flightcaster is a service that checks your flight to see if it is on time. It can predict up to six hours in advance if your flight will be late.

Flightcaster uses Heroku to serve the application. But the actual data analysis is done through Amazon S3 where Hadoop manages the data analytics. The data is passed from Heroku to Amazon S3 and then back to Heroku where it is served back to the customer making the request.

Heroku is an example of a service that is making it infinitely easier for developers to develop and manage applications. It's part of a new breed of platforms emerging that are growing in popularity.

We'll see if Heroku will grow 100 percent over the span of a year. That would mean 80,000 apps. Seems plausible, doesn't it?
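The growth figures quoted above can be checked with a few lines of arithmetic. The sketch below treats the article's "more than 40,000" and "more than 60,000" as exact counts, which is an assumption made only for illustration; the function names are hypothetical.

```python
def percent_increase(before: int, after: int) -> float:
    """Return the percentage growth from `before` to `after`."""
    return (after - before) / before * 100


def grow(before: int, percent: float) -> float:
    """Return the value reached after growing `before` by `percent` percent."""
    return before * (1 + percent / 100)


# Assumed exact for illustration; the article says "more than" in both cases.
november_apps = 40_000
current_apps = 60_000

print(percent_increase(november_apps, current_apps))  # 50.0
print(grow(november_apps, 100))                       # 80000.0
```

Under these assumptions, the 50 percent increase since November and the 80,000-app target for 100 percent annual growth are consistent with each other.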

Chris Kanaracus claims Plattner to Renew Pitch for In-memory Databases in this 5/6/2010 article for PCWorld’s BusinessCenter blog:

SAP co-founder Hasso Plattner is set to revisit the topic of in-memory databases during a keynote address at the vendor's upcoming Sapphire conference on May 19.

The news was revealed this week in a video interview with Plattner, who interviewed himself with the help of some camera trickery and costumes.

In-memory databases store information in a system's main memory, instead of on disk, providing a performance boost. The technology, which Plattner also discussed at last year's Sapphire event, is now beginning to be reflected in SAP BI (business intelligence) products, such as BusinessObjects Explorer.

During his self-interview, Plattner indicated the German software giant has ambitious plans in the works.

Powered by ever-increasing amounts of RAM, in-memory computing will usher in an era of "completely new applications" in areas such as predictive analytics, Plattner said. Users will be able to conduct "multi-step queries at human speed, I mean less than one second. I think there's a revolution coming on the application side based on the speed."

Plattner described a hybrid approach to database management and data processing. For example, in the event of a power failure, information held in-memory would be lost. But the data could be quickly reloaded and restructured from permanent storage, using solid-state drives instead of traditional disk, according to Plattner. …
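The hybrid idea Plattner describes (serve data from memory, rebuild it from permanent storage after a failure) can be illustrated with a toy sketch. This is not SAP's design: the `HybridStore` class, its append-only JSON log, and the `demo` function are all hypothetical names invented for illustration, and a temporary file stands in for solid-state storage.

```python
import json
import os
import tempfile


class HybridStore:
    """Toy key-value store: reads hit a dict in RAM, writes are also logged to disk."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._reload()

    def _reload(self):
        # Rebuild the in-memory dict by replaying the on-disk log, oldest first.
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as log:
            for line in log:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def put(self, key, value):
        # Persist first, then update RAM, so RAM never gets ahead of disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        self.data[key] = value

    def get(self, key):
        # Reads never touch the disk.
        return self.data.get(key)


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "store.log")
        store = HybridStore(path)
        store.put("revenue_q1", 1250)
        store.put("revenue_q1", 1300)   # the later record wins on replay
        del store                       # simulate losing the in-memory copy
        recovered = HybridStore(path)   # reload from permanent storage
        return recovered.get("revenue_q1")


print(demo())  # 1300
```

Replaying a full log on every restart is the simplest possible recovery scheme; a real system would presumably add snapshots or a columnar on-disk format to shorten reload time, but the article does not go into that detail.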