Windows Azure and Cloud Computing Posts for 5/19/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Update 5/19/2010 11:00 AM PDT: First details on Google App Engine for Business are in the Other Cloud Computing Platforms and Services section below.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Jai Haridas of the Windows Azure Storage Team reports a problem with (and workaround for) the StorageClient library’s CopyBlob implementation in Updating Metadata during Copy Blob and Snapshot Blob of 5/19/2010:

When you copy or snapshot a blob in the Windows Azure Blob Service, you can specify the metadata to be stored on the snapshot or the destination blob. If no metadata is provided in the request, the server will copy the metadata from the source blob (the blob to copy from or to snapshot). However, if metadata is provided, the service will not copy the metadata from the source blob and just store the new metadata provided. Additionally, you cannot change the metadata of a snapshot after it has been created.

The current Storage Client Library in the Windows Azure SDK allows you to specify the metadata to send for CopyBlob, but there is an issue that causes the updated metadata not actually to be sent to the server. This can cause the application to incorrectly believe that it has updated the metadata on CopyBlob when it actually hasn’t been updated.

Until this is fixed in a future version of the SDK, you will need to use your own extension method if you want to update the metadata during CopyBlob. The extension method takes the metadata to send to the server. If the application wants to add metadata to the existing metadata of the blob being copied, it must first fetch the metadata of the source blob and then send all of the metadata, including the new entries, to the CopyBlob extension method.

In addition, the SnapshotBlob operation in the SDK does not allow sending metadata. For SnapshotBlob, we gave an extension method that does this in our post on protecting blobs from application errors. This can be easily extended for “CopyBlob” too and we have the code at the end of this post, which can be copied into the same extension class.

Jai continues with C# code that demonstrates the problem and a CopyBlob extension method that solves it.
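The metadata rules Jai describes can be modeled in a few lines. The following Python sketch is a hypothetical illustration, not the StorageClient library or Jai's C# extension method: `server_copy_metadata` mimics the service behavior quoted above (metadata in the request replaces the source blob's, otherwise the source blob's metadata is copied), and `merged_copy_metadata` shows the workaround of fetching the source metadata and overlaying the new entries before the copy. The `x-ms-copy-source` and `x-ms-meta-*` header names come from the Blob service REST API; the account, container and blob names in the usage are made up.

```python
from typing import Dict, Optional


def server_copy_metadata(source_meta: Dict[str, str],
                         request_meta: Optional[Dict[str, str]]) -> Dict[str, str]:
    """Model of the metadata a destination blob ends up with after Copy Blob."""
    if request_meta:
        # Any metadata sent with the request replaces the source's entirely.
        return dict(request_meta)
    # No metadata in the request: the service copies the source's metadata.
    return dict(source_meta)


def merged_copy_metadata(source_meta: Dict[str, str],
                         extra_meta: Dict[str, str]) -> Dict[str, str]:
    """Workaround: start from the fetched source metadata, then add new entries."""
    merged = dict(source_meta)
    merged.update(extra_meta)  # new values win when a key already exists
    return merged


def copy_blob_headers(copy_source: str, metadata: Dict[str, str]) -> Dict[str, str]:
    """Build Copy Blob request headers (header names per the REST API)."""
    headers = {"x-ms-copy-source": copy_source}
    for name, value in metadata.items():
        headers["x-ms-meta-" + name] = value
    return headers
```

Because the service replaces rather than merges, sending only `{"status": "final"}` for a source blob carrying `{"author": "jai", "status": "draft"}` would drop `author`; sending the result of `merged_copy_metadata` keeps both entries.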

See Matthew Weinberger’s Datacastle Brings Laptop Encryption to Windows Azure Cloud post of 5/19/2010 to the MSPMentor blog, which summarizes Datacastle’s relationship with Azure, in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

See Werner Vogels’ explanation of a new Amazon Web Services feature in his Expanding the Cloud - Amazon S3 Reduced Redundancy Storage post of 5/19/2010 in the Other Cloud Computing Platforms and Services section below.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

The SQL Azure Team explains backing up SQL Azure data to a local SQL Server instance in its illustrated, 18-step Exporting Data from SQL Azure: Import/Export Wizard tutorial of 5/19/2010:

In this blog post we will show you how to export data from your SQL Azure database to a local SQL Server database using the SQL Server Import and Export Wizard in SQL Server Management Studio 2008 R2. This is a great technique to back up your data on SQL Azure to your local SQL Server.

We have installed the Adventure Works database for SQL Azure to test with on our SQL Azure account; you can find that database here.

The first thing we need to do is connect SQL Server Management Studio 2008 R2 to SQL Azure; in this demonstration we are running the SQL Server Import and Export Wizard from SQL Server Management Studio. How to connect to SQL Azure was covered in this blog post.

Here is how to import from SQL Azure:

1. In SQL Server Management Studio, connect to your local SQL Server (this could be SQL Server Express Edition 2008 R2).

2. Create a new database named: AdventureWorksDWAZ2008R2.

3. Right-click that database and choose All Tasks | Import Data… from the context menu.

4. This will open the SQL Server Import and Export Wizard dialog.

5. Click Next to get past the starting page.

6. On the next page of the wizard you choose a data source. In this example, this is SQL Azure. The data source you need to connect to SQL Azure is the .NET Framework Data Provider for SqlServer.

7. Scroll to the bottom of the properties; this is where you need to enter your SQL Azure information.

8. Under Security set Encrypt to True.

9. For the Password enter your SQL Azure password.

10. For User ID enter your SQL Azure Administrative username.

11. Under Source for Data Source enter the full domain name (Server Name) for your account on SQL Azure. You can get this from the SQL Azure Portal.

12. For Initial Catalog enter the database name on SQL Azure.

The tutorial continues with choosing a destination, selecting source tables and views, and performing the backup.
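The data-source properties entered in steps 8 through 12 are the same keywords an ADO.NET SqlClient connection string uses. As a rough illustration only, this Python sketch assembles such a string; the server, database and credential values are hypothetical placeholders, and the function rejects values containing a semicolon rather than attempting to quote them.

```python
from typing import List, Tuple


def sql_azure_connection_string(server: str, database: str,
                                user_id: str, password: str) -> str:
    """Join the wizard's data-source properties into a SqlClient-style string."""
    properties: List[Tuple[str, str]] = [
        ("Data Source", server),        # step 11: full server domain name
        ("Initial Catalog", database),  # step 12: database name on SQL Azure
        ("User ID", user_id),           # step 10: administrative user name
        ("Password", password),         # step 9: SQL Azure password
        ("Encrypt", "True"),            # step 8: encrypt the connection
    ]
    for key, value in properties:
        if ";" in value:
            # This sketch does not implement connection-string quoting rules.
            raise ValueError(f"{key} contains ';', which this sketch cannot quote")
    return ";".join(f"{key}={value}" for key, value in properties)
```

For example, `sql_azure_connection_string("myserver.database.windows.net", "mydatabase", "myadmin", "...")` yields a string that starts with `Data Source=myserver.database.windows.net;` and ends with `Encrypt=True`.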

Andrew Fryer asks Why SQL Azure? and gives reasons in this 5/19/2010 post:

There are probably three reasons why you might need a cloud based database like SQL Azure:

- You want to run a third party application but don’t want the bother of managing it yourself.

- You have developed your own application but don’t want to or can’t host it on your own infrastructure. This might be because of a standards issue, or because you want to run some tactical or experimental application without investing in a lot of infrastructure.

- A variation of either of the above but where the application needs to be internet facing and you can’t or won’t open up your own infrastructure to do this.

- You’re just starting your business and don’t want to make heavy investments in IT, so the pay-per-use model is attractive. It could also be that you have more flexibility with opex than capex anyway.

However there may well be a list of objections that is even longer:

- Data privacy

- Regulatory compliance

- Connectivity

My answer to the privacy question is that most of us already have a lot of confidential information about ourselves in the cloud, and are happy to have our most sensitive emails and conversations stored in this way today.

Regulatory compliance is often used as an automatic response to resist change, e.g. “that contravenes the data protection act”. Of course, careful consideration of compliance is essential to maintaining the prestige and credibility of an organisation, but in a lot of cases this should be no barrier to SQL Azure adoption because:

- You can elect to have your data stored inside the EU when you set it up.

- It is ISO 27001 certified.

- There are already 500 government customers including county councils in the UK using this and other Microsoft cloud services

Connectivity may also be seen as a blocker to moving to the cloud, but this will depend on your user base. Everyone in a building with slow bandwidth hitting cloud applications is a potential problem; conversely, a mobile workforce might actually get a better experience from Azure, as they will not have to fight over inbound connections to get into your servers.

My final point about SQL Azure is that it deals with AND very well. Most of today’s cloud offerings are all about OR: you can run your infrastructure on premises OR in the cloud. Azure, BPOS etc. are designed to work in a mixed economy. For example, your internet store could be on SQL Azure but hook up to other services for payment (obviating the need to be PCI compliant), AND you could pull data from it down to your office and mix it with other sources for BI using PowerPivot or Reporting Services.

The MSDN Library’s System.ServiceModel.DomainServices.Hosting.OData Namespace topic documents the DomainDataService class for working with a data service:

Provides a data service on top of a domain service.

Namespace: System.ServiceModel.DomainServices.Hosting.OData

Assembly: System.ServiceModel.DomainServices.Hosting.OData (in System.ServiceModel.DomainServices.Hosting.OData.dll)

Syntax

[BrowsableAttribute(false)]
public class DomainDataService : DataService<Object>, IServiceProvider

Inheritance Hierarchy

Object

DataService<Object>

System.ServiceModel.DomainServices.Hosting.OData.DomainDataService

Thread Safety

Any public static (Shared in Visual Basic) members of this type are thread safe. Any instance members are not guaranteed to be thread safe.

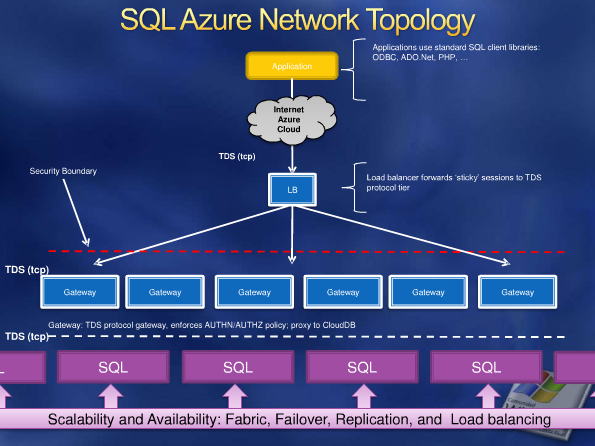

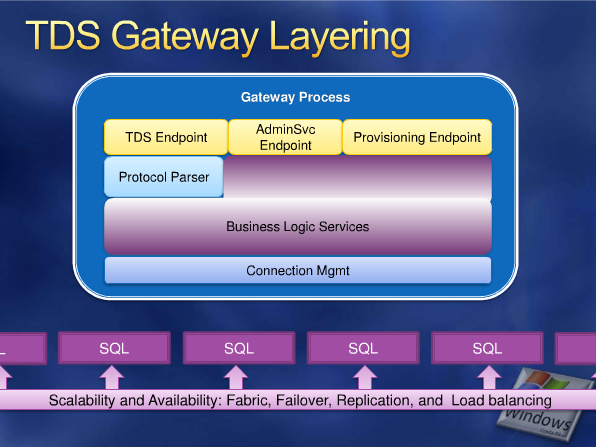

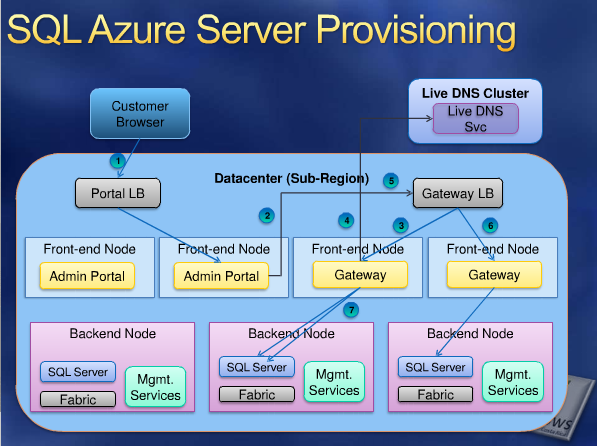

Eduardo Castro’s SQL [Azure] Under the Hood presentation includes many data flow diagrams for SQL Azure that aren’t readily available elsewhere:

Slide 9 of 25:

Slide 10 of 25:

Slide 12 of 25:

Slide 15 of 25:

<Return to section navigation list>

AppFabric: Access Control and Service Bus

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruce Kyle announced on 5/19/2010 in an ISV Video: Nubifer Expands Enterprise Mashups to Windows Azure:

Cloud:Link from Nubifer monitors cloud-based applications and any URI in an enterprise from Windows Azure. It monitors your enterprise systems in real time and strengthens interoperability with disparate owned and leased SaaS systems, including Windows Azure.

Nubifer Cloud:Link monitors your enterprise systems in real time and strengthens interoperability with disparate owned and leased SaaS systems. When building "Enterprise Mash-ups", engineers create custom addresses and custom source code to bridge "the white space", or "electronic hand-shakes", between the various enterprise applications within your organization. By using Nubifer Cloud:Link, you gain a real-time and historic view of system-based interactions.

Cloud:Link is designed and configured via robust admin tools to monitor custom "Enterprise Mash-ups" and deliver real-time notifications, warnings and performance metrics for your separate but interconnected business systems. Cloud:Link offers the technology and functionality to help your company monitor and audit your enterprise system configurations.

Video Link: Nubifer cloud solution on Windows Azure. Suresh Sreedharan talks with Chad Collins, CEO at Nubifer Inc. about Nubifer's cloud offering on the Windows Azure platform.

About Nubifer

Nubifer provides mid- to large-size companies with scalable, cloud-hosted enterprise applications: SaaS (software as a service), PaaS (platform as a service), HRIS (human resource information systems), CRM (customer relationship management) and data storage offerings. In addition, Nubifer provides its noted consulting support and cloud infrastructure consulting services.

Other Videos About How ISVs Use Windows Azure

- David Bankston of INgage Networks, talks about social networking and Azure

- Gunther Lenz, Microsoft, chats with Michael Levy, SpeechCycle

- Gunther Lenz, Microsoft, chats with Guy Mounier, BA-Insight, about BA-Insight's next generation solution leveraging Windows Azure

- Aspect leverages storage + compute in call center application

- From Paper to the Cloud -- Epson's Cloud App for Printer, Scanner and Windows Azure

- Vidizmo - Nadeem Khan, President, Vidizmo talks about technology, roadmap and Microsoft partnership

- Murray Gordon talks with ProfitStars at a recent Microsoft event

Matthew Weinberger’s Datacastle Brings Laptop Encryption to Windows Azure Cloud post of 5/19/2010 to the MSPMentor blog summarizes Datacastle’s relationship with Azure:

Data security vendor Datacastle is now delivering their RED laptop backup and encryption endpoint solution as a service through Microsoft’s Windows Azure cloud platform. Here’s the lowdown.

Datacastle RED provides secure, policy-based encrypted data backup, data deduplication, remote data deletion, and more to protect business data on laptop machines, according to their press release. By building and managing their solutions from Azure, Datacastle claims it can scale deployments to any size.

“The Windows Azure platform provides greater choice and flexibility in how we develop and deploy the Datacastle RED cloud service to our customers,” said Ron Faith, CEO of Datacastle, in a prepared statement.

The Datacastle RED service speaks for itself. But I’m always interested to see companies jump to Windows Azure — overall, I think it’s been quiet on that front so far, and I’m waiting for their developer base to reach critical mass.

Simon Guest presents Patterns for Cloud Computing, a 01:05:47 Webcast about Windows Azure, in this 5/16/2010 video from InfoQ and QCon:

Summary

Simon Guest presents 5 cloud computing patterns along with examples and Azure solutions for scaling, multi-tenancy, computing, storage and communication.

Bio

Simon Guest is Senior Director at Microsoft in the Developer and Platform Evangelism group. Since joining Microsoft in 2001, Simon has led the Platform Architecture Team, acted as Editor-in-Chief of the Microsoft Architecture Journal, pioneered the area of .NET and J2EE interoperability, and has been a regular speaker at many conferences worldwide, including PDC, TechEd, and JavaOne.

<Return to section navigation list>

Windows Azure Infrastructure

Lori MacVittie asserts Three simple action items can help ensure your next infrastructure refresh cycle leaves your data center prepared and smelling minty fresh, and asks Are You Ready for the New Network? in this 5/19/2010 post to the F5 DevCentral blog:

Most rational folks agree: public cloud computing will be an integral piece of data center application deployment strategy in the future, but it will not replace IT. Just as Web 2.0 did not make extinct the client-server model (which did not completely eradicate the mainframe model) neither will public cloud computing marginalize the corporate data center.

But it will be a part of that data center; integrated and controlled and leveraged via the new network.

As it implies, your network today is probably not the “new” network. It’s not integrated, it’s not collaborative, it’s not dynamic. Yet. It’s still a disconnected set of components covering a variety of application-related services: acceleration, optimization, security, load balancing. It’s still an unrelated set of policies spread across a variety of control mechanisms such as firewalls and data leak prevention systems. It’s still an unmanageable, untamable beast that requires a lot of spreadsheets, scripts, and manual attention to keep it reliable and running smoothly.

But it shouldn’t be for long IF there’s an efficient, collaborative infrastructure in the future of your data center. There probably is, given the heavy emphasis of late on green computing (as in both cash and grass) and the more practical goal of more efficient data centers.

Lori continues with a “WHAT YOU CAN DO TO PREPARE” topic with three action items.

John Treadway’s IT Disintermediation and The Cloud essay of 5/18/2010 explains the role of IT “in managing everything above the hypervisor”:

On a fairly regular basis I get into a discussion with people that starts something like this:

Hey John, with the cloud is IT even necessary anymore? I mean, if I can buy computing and storage at Amazon and they manage it, what will happen to all of those IT guys we’re paying? Do we just get rid of them?

Well, no, not exactly. But their jobs are changing in fairly meaningful ways and that will only accelerate as cloud computing penetrates deeper and deeper into the enterprise.

It’s no big news that most new technologies enter the enterprise at the department or user level and not through IT. This is natural given the disincentive for risk taking by IT managers. As a rule, new things tend to fail more often (and often more spectacularly) than the mature stuff that’s been hanging around the shop. And this is not good for IT careers (CIO does often stand for “career is over” after all).

This departmental effect happened with minicomputers, workstations, and eventually PCs. Sybase and Sun got into Wall Street firms through the trading desk after Black Monday (Oct. 19, 1987) because older position-keeping systems could not keep up with the volume (effectively shutting down most trading floors). IT does not lead technology revolutions. Often times IT works to hinder the adoption of shiny new objects. But there are good reasons for that…

For many years, these incursions were still traditional on-premise systems. In most cases, over time, IT eventually found a way to assert control over these rogue technologies and install the governance processes and stability of operations that users and executive management expected.

More recently, in the SaaS era, Salesforce.com’s success hinged on getting sales reps to adopt it outside of the official SFA procurement process supported by IT. Marketing apps often come in through the marketing department with no IT foreknowledge, but then are partly turned over to IT when integrating them with existing systems proves to be no simple matter. I say partly because the physical servers and software running SaaS apps never get into IT’s sphere of control. Often, the level of control turns into one of customization, oversight, monitoring, and involvement in the procurement process.

Like it or not, in a SaaS world, a fair bit of the traditional work of IT is forever more disintermediated. But how about in the IaaS world?

As we move to IaaS, the level of disintermediation actually diminishes – a lot. Most people assume the opposite without stopping to think about it.

In the IaaS world, however, IT has a HUGE role to play in managing everything above the hypervisor. Vendor management, ITSM, monitoring, configuration, service catalogs, deployment management, automation, and many more core IT concepts are still very much alive and well – thank you! The only difference is that IT gets out of the real estate, cabling, power, and racking business – and most would agree that is a good thing.

What else changes?

First off, the business process around provisioning needs to change. If I can get a virtual machine at Rackspace in 5 minutes, don’t impose a lot of overhead on the process and turn that into 5 days (or more!) like it is in-house at many enterprises. But do keep track of what I’m doing, who has access, what my rights and roles are, and ensure that production applications are deployed on a stack that is patched, plugged and safe from viruses, bots and rootkits.

Second, IT needs to go from being the gatekeeper to the gateway of the cloud. Open the gates, tear down the walls, but keep me safe and we’ll get along nicely (thank you). IT will need to use new tools, like newScale, enStratus, RightScale, Ping Identity and more to manage this new world. And, IT will need to rethink their reliance on decades-old IT automation tools from BMC, HP, IBM and others.

Lastly, the cloud enables a huge explosion in new technologies and business models that are just now starting to emerge. The MapReduce framework has been used very successfully for a variety of distributed analytics workloads on very large data sets, and is now being used to provide sentiment analysis in real-time to traders on Wall Street. Scale out containers like GigaSpaces enable performance and cost gains over traditional scale-up environments. And there are many more. IT needs to develop a broad knowledge of the emerging cloud market and help their partners on the business side leverage these for competitive advantage.

If there’s one thing that the cloud can change about IT, it’s to increase the strategic contribution of technology to the business.

<Return to section navigation list>

Cloud Security and Governance

See Matthew Weinberger’s Datacastle Brings Laptop Encryption to Windows Azure Cloud post of 5/19/2010 to the MSPMentor blog, which summarizes Datacastle’s relationship with Azure, in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section above.

<Return to section navigation list>

Cloud Computing Events

The Bellevue .NET User Group announced on 5/19/2010 that it will hold a Talk on Windows Azure on 5/23/2010:

- Date - Sunday 23rd May 2010

- Agenda - This session will discuss the emerging Microsoft technology named Windows Azure: its features, the technical jargon associated with it, and the scenarios it best fits.

- Location - 14040 NE 8th St Bellevue WA 98007 (Pearl Solutions LLC conf room)

- Start Time - 12:45 PM

- End Time - 1:45 PM

- Contact Person - Vidya Vrat Agarwal

- Contact Number - 425-516-2058

Strange way to spend a Sunday.

Wayne Walter Berry reports on 5/19/2010 Bruce Kyle at Portland Code Camp 5/22 Getting Started With SQL Azure:

Time: 3:15 PM – 4:30 PM, Saturday, May 22, 2010

Location: Franz Hall, Room 217

Bruce Kyle (@brucedkyle) will show how to get started using SQL Azure. Working with SQL Azure should be a familiar experience for most developers, because it supports the same tooling and development practices currently used for on-premises SQL Server applications. He will show how to use familiar tools and share some tips and tricks that are important to working with SQL Azure, such as managing your connection in the event of throttling and querying the metrics views.
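The abstract mentions managing connections when SQL Azure throttles requests but doesn't describe Bruce's approach. A common general technique is to retry transient failures with exponential backoff; the Python sketch below shows that idea only. `TransientError` is a hypothetical stand-in (real code would have to decide which driver errors indicate throttling), and the attempt limit and delays are arbitrary illustrative values.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a throttling or 'service busy' error."""


def call_with_retries(operation: Callable[[], T],
                      max_attempts: int = 4,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run operation, retrying after TransientError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the retry policy testable without real waiting; in production the default `time.sleep` applies.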

That is not all he is presenting that day, click here for all his presentations, which include:

Patterns in Windows Azure Platform: Session Details

Time: 10:45 AM – 12:00 PM, Saturday, May 22, 2010

Location: Franz Hall, Room 026

Tags: Architecture, Azure, Cloud

Bruce will present the four main patterns for the Windows Azure Platform. These are architectural patterns for high availability, scalability, and computing power with Windows Azure.

The Microsoft UK Small Business Specialist recommends that you Register now for Windows Azure Accelerator workshops: May 2010 in England:

Delivered via a workshop format, this training will focus on Windows Azure, SQL Azure and .NET Services. The Windows Azure Platform is an internet-scale cloud services platform hosted in Microsoft data centres, which provides an operating system and a set of developer services that can be used individually or together. Azure’s flexible and interoperable platform can be used to build new applications to run from the cloud or enhance existing applications with cloud-based capabilities.

Register now to attend these workshops, which will be held at:

- Microsoft offices in Thames Valley Park, Reading on 24 May (code: 44WW8) and 25 May (code: 44WW9)

- Microsoft offices at Cardinal Place, in London on 26 May (code: 44WW10)

- Black Marble offices in Yorkshire on 27 May (code: 44WW11).

Also don’t forget to sign up for the Live Meeting follow-up session on 28 May (code: 44WW12), which provides an opportunity to test your knowledge with the assistance of subject matter experts.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Google announced the Google App Engine for Business on 5/19/2010 at its Google I/O conference in San Francisco’s Moscone Center:

- A formal Service Level Agreement (SLA)

- Secure Sockets Layer on your company’s domain for transport security

- An SQL database (in addition to current BigTable-based storage)

- Developer dashboard

- A charge of US$8 per user per app per month, with a maximum of US$1,000 per app per month

- Access is limited to members of your Google Apps domain by default
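Assuming the announced pricing is simply US$8 per user, capped at US$1,000 per app each month, with no other usage charges (an assumption for illustration; Google hadn't published full details), the monthly charge for one app is min(8 × users, 1000), and the cap is reached at 1,000 / 8 = 125 users. A Python sketch of that arithmetic:

```python
# Per-user pricing with a per-app cap, as summarized in the list above.
# Treating these as the only charges is an assumption for illustration.
PER_USER_PER_APP_MONTHLY = 8   # US$ per user per app per month
PER_APP_MONTHLY_CAP = 1000     # US$ maximum per app per month


def monthly_app_charge(users: int) -> int:
    """Monthly charge in US$ for one app with the given number of users."""
    if users < 0:
        raise ValueError("users must be non-negative")
    return min(users * PER_USER_PER_APP_MONTHLY, PER_APP_MONTHLY_CAP)


def users_at_cap() -> int:
    """Smallest user count at which the cap applies (ceiling division)."""
    return -(-PER_APP_MONTHLY_CAP // PER_USER_PER_APP_MONTHLY)
```

Under this model an app with 50 users would cost US$400 a month, while any app with 125 or more users would cost the US$1,000 maximum.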

App Engine for Business isn’t available yet, but there’s a Google App Engine Road Map and more details are available on the Introducing App Engine for Business page. Also check out the Enabling Cloud Portability with Google App Engine for Business and VMware page.

Additional links:

- Leena Rao for TechCrunchIT: Google Launches Business Version Of App Engine; Collaborates With VMware

- Google App Engine Blog: App Engine goes to work with App Engine for Business

- Mike Kirkwood for the ReadWriteCloud: Storm of Innovation: Google Partners with VMware for Apps, Clouds, and Widgets

- The Official Google Blog: Google I/O 2010 Day 1: A more powerful web in more places

I’m signed up for the preview and waiting for a shoe or two to drop in Redmond.

Jessie Jiang posted Google Storage for Developers: A Preview on 5/19/2010:

As developers and businesses move to the cloud, there’s a growing demand for core services such as storage that power cloud applications. Today we are introducing Google Storage for Developers, a RESTful cloud service built on Google’s storage and networking infrastructure.

Using this RESTful API, developers can easily connect their applications to fast, reliable storage replicated across several US data centers. The service offers multiple authentication methods, SSL support and convenient access controls for sharing with individuals and groups. It is highly scalable - supporting read-after-write data consistency, objects of hundreds of gigabytes in size per request, and a domain-scoped namespace. In addition, developers can manage their storage from a web-based interface and use GSUtil, an open-source command-line tool and library.

We are introducing Google Storage for Developers to a limited number of developers at this time. During the preview, each developer will receive up to 100GB of data storage and 300GB monthly bandwidth at no charge. To learn more and sign up for the waiting list, please visit our website.

We’ll be demoing the service at Google I/O in our session and in the Developer Sandbox. We’re looking forward to meeting those of you who are attending.

I wasn’t able to sign up for the preview waiting list; the signup form wouldn’t open. Not a good sign.

Werner Vogels explains a new Amazon Web Services feature in his Expanding the Cloud - Amazon S3 Reduced Redundancy Storage post of 5/19/2010:

Today a new storage option for Amazon S3 has been launched: Amazon S3 Reduced Redundancy Storage (RRS). This new storage option enables customers to reduce their costs by storing non-critical, reproducible data at lower levels of redundancy. This has been an option that customers have been asking us about for some time so we are really pleased to be able to offer this alternative storage option now.

Durability in Amazon S3

Amazon Simple Storage Service (S3) was launched in 2006 as "Storage for the Internet" with the promise to make web-scale computing easier for developers. Four years later it stores over 100 billion objects and routinely performs well over 120,000 storage operations per second. Its use cases span the whole storage spectrum; from enterprise database backup to media distribution, from consumer file storage to genomics datasets, from image serving for websites to telemetry storage for NASA.

Core to its success has been its simplicity: no matter how many objects you want to store, how small or big those objects are, or what object access patterns you have to deal with, you can rely on Amazon S3 to take away the headaches of dealing with the hard issues typically associated with big storage systems. All the complexities of scalability, reliability, durability, performance and cost-effectiveness are hidden behind a very simple programming interface.

Under the covers Amazon S3 is a marvel of distributed systems technologies. It is the ultimate incrementally scalable system; simply by adding resources it can handle scaling needs in storage and performance dimensions. It also needs to handle every possible failure of storage devices, of servers, networks and operating systems, all while continuing to serve hundreds of thousands of customers.

The goal for operational excellence in Amazon S3 (and for all other AWS services) is that it should be "indistinguishable from perfect". While individual components may be failing all the time, as is normal in any large scale system, the customers of the service should be completely shielded from this. For example to ensure availability of data, the data is replicated over multiple locations such that failure modes are independent of each other. The locations are chosen with great care to achieve this independence and if one or more of those locations becomes unreachable, S3 can continue to serve its customers. Some of the biggest innovations inside Amazon S3 have been how to use software techniques to mask many of the issues that would easily have paralyzed every other storage system.

The same goes for durability; core to the design of S3 is that we go to great lengths to never, ever lose a single bit. We use several techniques to ensure the durability of the data our customers trust us with, and some of those (e.g. replication across multiple devices and facilities) overlap with those we use for providing high-availability. One of the things that S3 is really good at is deciding what action to take when failure happens, how to re-replicate and re-distribute such that we can continue to provide the availability and durability the customers of the service have come to expect. These techniques allow us to design our service for 99.999999999% durability.

Relaxing Durability

There are many innovative techniques we deploy to provide this durability and a number of them are related to the redundant storage of data. As such, the cost of providing such a high durability is an important component of the storage pricing of S3. While this high durability is exactly what most customers want, there are some usage scenarios where customers have asked us if we could relax the durability in exchange for a reduction in cost. In these scenarios, the customers are able to reproduce the object if it would ever be lost, either because they are storing another authoritative copy or because they can reconstruct the object from other sources.

We can now offer these customers the option to use Amazon S3 Reduced Redundancy Storage (RRS), which provides 99.99% durability at significantly lower cost. This durability is still much better than that of a typical storage system as we still use some forms of replication and other techniques to maintain a level of redundancy. Amazon S3 is designed to sustain the concurrent loss of data in two facilities, while the RRS storage option is designed to sustain the loss of data in a single facility. Because RRS is redundant across facilities, it is highly available and backed by the Amazon S3 Service Level Agreement.
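To put the two durability figures in perspective: if durability is read as the annual probability that a given object survives, and losses are assumed independent (both assumptions for illustration; the post doesn't define the figure that precisely), the expected number of objects lost per year is the object count times (1 − durability). For 10,000 objects that is roughly one object a year with RRS and about 0.0000001 with standard storage.

```python
# Durability figures quoted above; the per-object annual interpretation
# and the independence of losses are assumptions of this sketch.
STANDARD_DURABILITY = 0.99999999999  # 99.999999999%
RRS_DURABILITY = 0.9999              # 99.99%


def expected_annual_losses(num_objects: int, durability: float) -> float:
    """Expected number of objects lost per year under the stated assumptions."""
    if not 0.0 <= durability <= 1.0:
        raise ValueError("durability must be a probability between 0 and 1")
    return num_objects * (1.0 - durability)
```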

More details on the new option and its pricing advantages can be found on the Amazon S3 product page. Other insights in the use of this option can be read on the AWS developer blog.

It will be interesting to see when and how the Windows Azure Storage team responds to AWS' latest move. According to AWS' S3 Prices, RRS reduces storage charges from US$0.15/GB to US$0.10/GB (33%) for the first 50 TB and by slightly smaller percentages for additional storage.
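To make the first-tier savings concrete, here is a minimal sketch. It assumes the quoted prices are per GB per month, that all stored data falls within the first 50 TB tier, and that request and data-transfer charges are ignored. The function names are illustrative only and are not part of any AWS API.

```python
from decimal import Decimal

# First-tier (first 50 TB) storage prices quoted above, in US$.
# ASSUMPTION: prices are per GB per month; request/transfer fees are ignored.
STANDARD_PER_GB = Decimal("0.15")
RRS_PER_GB = Decimal("0.10")

def monthly_storage_charge(gb: int, price_per_gb: Decimal) -> Decimal:
    """Charge for storing `gb` gigabytes for one month, assuming all of it
    falls in the first pricing tier."""
    return gb * price_per_gb

def rrs_savings_percent() -> Decimal:
    """Percentage reduction of the RRS price relative to standard storage."""
    return (STANDARD_PER_GB - RRS_PER_GB) / STANDARD_PER_GB * 100

gb = 500
std = monthly_storage_charge(gb, STANDARD_PER_GB)
rrs = monthly_storage_charge(gb, RRS_PER_GB)
print(f"{gb} GB: standard ${std}, RRS ${rrs}, savings {rrs_savings_percent():.1f}%")
```

`Decimal` is used instead of `float` so that currency amounts such as 0.15 and 0.10 are represented exactly.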

Stacey Higginbotham claims Amazon Tries to Take the Commodity Out of Cloud Computing in this 5/19/2010 post to the GigaOm blog:

Amazon will offer a lower-priced, less reliable tier of its popular Simple Storage Service, the retailer said today. The offering, called Reduced Redundancy Storage, is aimed at companies that wouldn’t be utterly bereft if the less reliable storage fails. From the Amazon release:

“Amazon S3’s standard and reduced redundancy options both store data in multiple facilities and on multiple devices, but with RRS, data is replicated fewer times, so the cost is less. Once customer data is stored, Amazon S3 maintains durability by quickly detecting failed, corrupted, or unresponsive devices and restoring redundancy by re-replicating the data. Amazon S3 standard storage is designed to provide 99.999999999% durability and to sustain the concurrent loss of data in two facilities, while RRS is designed to provide 99.99% durability and to sustain the loss of data in a single facility.”

As the market for infrastructure-as-a-service platforms grows, Amazon is trying to offer variations and services that distinguish its compute and storage cloud from those of Rackspace and Verizon and from platforms such as Microsoft's Azure or VMforce. Cheaper storage with a lower service level is one such way, and its spot-priced instances are another.

On his blog, Amazon's Jeff Barr offers an overview of the RRS offering. For more detail, check out Amazon CTO Werner Vogels' explanation of how S3 works and what the magic behind RRS is.

For more on the economics of cloud computing and how they will evolve, visit our Structure 2010 conference on June 23 and 24, where Amazon CTO Werner Vogels will be a keynote speaker.

Mike Kirkwood reports Memcached and Beyond: NorthScale Raises Bar with $10m Series B and the NoSQL movement in this 5/19/2010 post to the ReadWriteCloud blog:


NorthScale is on the move. Fresh from winning several awards, including best-in-show, at the 2010 Under the Radar event, the company has announced a successful round of financing from the Mayfield Fund.

The successful release of its initial products in March has put the company on the map as a defining leader in the NoSQL approach to data persistence. The company has already gained Zynga as a customer of its commercial version of Memcached and has announced plans to go to the next level by providing elastic data infrastructure as an approach to scaling applications in the cloud.

Along with the new funding, the company has attracted industry veteran Bob Wiederhold to join as CEO.

In Wiederhold's first blog post as CEO, "Simply Transformational", he sums up the company's opportunity in a word: simple.

If the company can make it easier to scale high-performance web applications on cloud infrastructure, it has a big opportunity to capture a large share of the data persistence market.

Scaling Out: noSQL Approach

Here is the company's presentation (along with Q&A) from Under the Radar.

In this demonstration, the company describes where the NoSQL approach works well, along with its vision for moving beyond Memcached to scale both within and beyond the walls of the data center.

Scaling: It is The Biggest Show on Earth

One of the satisfying things about NorthScale and the growth of Memcached is how web usage patterns have changed the underlying assumptions of data architecture.

The reality is that web applications now need access to nearly all information. Because web applications scale out in the cloud, the data layer must scale the same way to avoid becoming the bottleneck.
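A common way a Memcached tier keeps the database from becoming that bottleneck is the cache-aside pattern: check the cache first, query the database only on a miss, then store the result for later reads. The following is a minimal sketch under simplifying assumptions. A tiny in-process dictionary with expiry stands in for a networked memcached server, and `TTLCache`, `cache_aside_get` and `fake_db` are hypothetical names, not NorthScale's or any memcached client library's API.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

class TTLCache:
    """Hypothetical in-process stand-in for a memcached server; real memcached
    runs as a separate networked process."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._data[key]  # lazily evict an expired entry
            return None
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._data[key] = (time.monotonic() + ttl_seconds, value)

def cache_aside_get(cache: TTLCache, key: str,
                    load_from_db: Callable[[str], str],
                    ttl_seconds: float = 60.0) -> str:
    """Cache-aside read: try the cache, fall back to the database on a miss,
    then populate the cache so subsequent reads skip the database."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)
        cache.set(key, value, ttl_seconds)
    return value

# Usage: record how often the simulated database is actually queried.
db_calls: List[str] = []

def fake_db(key: str) -> str:
    db_calls.append(key)
    return f"profile-for-{key}"

cache = TTLCache()
for _ in range(3):
    cache_aside_get(cache, "user:42", fake_db)
print(len(db_calls))  # only the first read reaches the database
```

Three reads of the same key produce a single database query. With many web servers sharing one cache tier, this is how repeated reads are kept off the database.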

The list of Memcached users includes many of today's leaders in scaling web architecture, such as Facebook, YouTube, and Zynga, all of which rely on its simple yet effective approach.

On Facebook's Open Source page, the company describes its involvement with Memcached:

memcached is a distributed memory object caching system. Memcached was not originally developed at Facebook, but we have become the largest user of the technology.
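Memcached servers do not coordinate with each other. The "distributed" part happens in the client, which decides which server owns each key, and many client libraries use some form of consistent hashing for this. The following is a minimal sketch of that technique, not the algorithm of any particular client. It places each server on a hash ring at many virtual points, so adding or removing a server remaps only a fraction of the keys. The host names are hypothetical, and 11211 is memcached's default port.

```python
import bisect
import hashlib
from typing import Dict, List

class ConsistentHashRing:
    """Maps keys to servers on a hash ring. Each server is placed at many
    virtual points so that keys spread evenly across servers."""

    def __init__(self, servers: List[str], replicas: int = 100) -> None:
        self._replicas = replicas
        self._ring: List[int] = []        # sorted hash positions
        self._owners: Dict[int, str] = {}  # hash position -> server
        for server in servers:
            self.add(server)

    @staticmethod
    def _hash(value: str) -> int:
        # MD5 is used only to spread keys evenly, not for security.
        digest = hashlib.md5(value.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add(self, server: str) -> None:
        for i in range(self._replicas):
            h = self._hash(f"{server}#{i}")
            bisect.insort(self._ring, h)
            self._owners[h] = server

    def remove(self, server: str) -> None:
        for i in range(self._replicas):
            h = self._hash(f"{server}#{i}")
            self._ring.remove(h)
            del self._owners[h]

    def server_for(self, key: str) -> str:
        """Return the server owning the first ring point clockwise of the key."""
        if not self._ring:
            raise ValueError("no servers on the ring")
        idx = bisect.bisect(self._ring, self._hash(key)) % len(self._ring)
        return self._owners[self._ring[idx]]

# Usage: add a fourth (hypothetical) cache server and count remapped keys.
ring = ConsistentHashRing(["cache-a:11211", "cache-b:11211", "cache-c:11211"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.server_for(k) for k in keys}
ring.add("cache-d:11211")
moved = [k for k in keys if ring.server_for(k) != before[k]]
print(f"{len(moved)} of {len(keys)} keys moved")
```

Only keys that now land on the new server move. A naive `hash(key) % num_servers` scheme would instead remap most keys whenever the server count changes, and that would empty most of the cache at once.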

NorthScale is building its business on the premise that elastic data infrastructure is a key layer in the stack. From what we see, meeting this need across the full gamut of distributed applications will be necessary to reach the scale-out potential of cloud computing.

Will NorthScale help Memcached evolve from a high-scaling web object caching technology into a ubiquitous data tier used by the majority of cloud applications?

Josh Greenbaum weighs in on the Microsoft Sues Salesforce.com topic in this 5/18/2010 post to the Enterprise Irregulars blog:

I just got a copy of a brief Microsoft filed in the U.S. District Court in Seattle alleging that Salesforce.com infringed on nine of Microsoft's patents. It's not clear at this writing how valid a case Microsoft has, nor has there been any official response from Salesforce.com posted on the company's website. So a full analysis will have to wait until tomorrow.

But several things are clear from a first reading of the brief. Microsoft is using some fairly broad patents covering such things as "system and method for controlling access to data entities in a computer network", and "method and system for identifying and obtaining computer software from a remote computer" that hardly seem to be something only Salesforce.com is doing. Indeed, the rather sparse descriptions of the patents and the rather simple allegations that Salesforce.com is doing these things make it seem that Microsoft could potentially sue a large number of companies for violating these patents if it so chooses.

Which leads to the second point: the fact that Microsoft is suing Salesforce.com at all. There are usually two reasons competitors sue each other: out of desperation or out of a perceived sense that prevailing is a near certainty. The former is usually seen in David vs. Goliath lawsuits that often involve Microsoft in the role of Goliath against some soon-to-be-former competitor looking for injunctive relief from being crushed to death. Regardless of the merits of the suit, the plaintiff is rarely heard from again.

The other reason, the one that comes with a strong assumption that the suit will be successful, was seen recently when Oracle sued SAP over allegations that SAP's former TomorrowNow subsidiary illegally obtained Oracle's IP. The gist of that suit, while still unsettled, has largely been conceded by SAP, which has since disbanded TomorrowNow.

My sense is that Microsoft is operating under this latter assumption. While Salesforce.com's CRM business is significantly larger than Microsoft's, Microsoft hardly fits into the dying David mold, and this suit hardly seems the desperate gasp of a soon-to-be-extinct competitor.

Rather, this suit is meant to be played to the hilt, and I expect Microsoft to do exactly that. I fully expect Salesforce.com to fight back just as vigorously, as it surely must. The result promises to be a protracted battle of egos and lawyers for some time to come.
