Windows Azure and Cloud Computing Posts for 5/20/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in May 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

tbtechnet suggests you Watch the Eclipse: Data Storage on the Microsoft Windows Azure platform in this 5/20/2010 post to the Windows Azure Platform, Web Hosting and Web Services blog:

There’s a brand new lab that is intended to show PHP developers how they can use Windows Azure Tools for the Eclipse development environment to work with data storage on the Microsoft Windows Azure platform.

This lab will walk you through how to write code for Windows Azure storage, and how to perform basic operations using the graphic interface provided by the Windows Azure Storage Explorer running within Eclipse. There is a video that accompanies this lab, so that you can follow along, step-by-step.

The lab should take you about an hour: http://www.msdev.com/Directory/Description.aspx?eventId=1853

SQL Azure Database, Codename “Dallas” and OData

Liam Cavanagh wrote in his See You at Tech Ed North America post of 5/10/2010:

Just a quick post to let you know that a few of the engineers and I will be attending TechEd North America 2010. This year we have a booth (look for the "SQL Azure Data Sync" banners) and a few sessions, including one I am presenting titled "Using Microsoft SQL Azure as a Datahub to Connect Microsoft SQL Server and Silverlight Clients", as well as some content in a few other sessions including one by Patric McElroy titled "What’s New in Microsoft SQL Azure".

I can't give away too much, but we do have a couple of great announcements coming that relate to our work on synchronization with SQL Azure.

So if you happen to be at the conference, hopefully I will see you either at my session or at the booth.

The SQL Azure team managed to crowd the SQL Server News Hour with David Robinson into a 00:02:50 YouTube video featuring [who else but] David Robinson:

Buck Woody hosts the SQL Server News Hour with this week's guest David Robinson (Senior Program Manager, Microsoft) to discuss SQL Azure.

Michael Washington posted Simple Silverlight 4 Example Using oData and RX Extensions to the Code Project on 5/20/2010:

This is part II to the previous Blog (http://openlightgroup.net/Blog/tabid/58/EntryId/98/OData-Simplified.aspx) where we looked at a simple OData example. This time we will make a simple Silverlight application that talks to an oData service.

Note, for this tutorial, you will also need to download and install RX Extensions from: http://msdn.microsoft.com/en-us/devlabs/ee794896.aspx, and the Silverlight Toolkit from: http://silverlight.codeplex.com.

As with the last tutorial, we will not use a database, just a simple collection that we are creating programmatically. This will allow you to see just the oData parts.

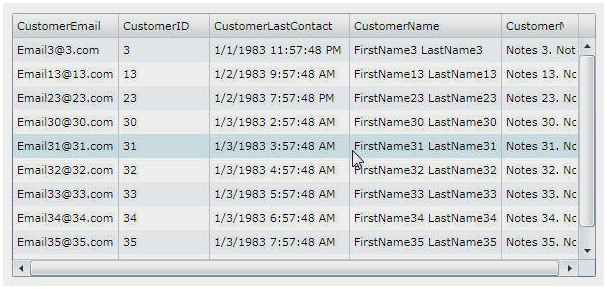

Michael continues with an illustrated, step-by-step tutorial to display the following DataGrid:

Douglas Purdy announced the availability of OData Roadshow Update/Slides in this 5/20/2010 post in the middle of his world-wide OData hegira:

We just completed the US leg of the roadshow. Thanks to everyone who attended. We really hit our stride in Mountain View (it is home court for me), although the NYC and Chicago stops offered some really great customer interactions that we are still talking about.

We’ll be doing a keynote at the European VC Summit (Guy Kawasaki is the MC which I am excited about) and then it is off to Asia, TechEd US, and back to Europe.

We will be taping at least one of those events, which I will post here.

In the meantime, you can get the PDF (PPT is too big for WP to upload and I am too tired to SSH in to the server and fix it).

Wayne Walter Berry explains the workaround for an SSIS Error to SQL Azure with varbinary(max) with SQL Server 2008 R2’s Import and Export Wizard in his 5/20/2010 post to the SQL Azure Team blog:

In using the SQL Server Import and Export Wizard found in SQL Server 2008 R2 to export data to SQL Azure, I noticed that it was having trouble with a column that was the data type of varbinary(max). This article will discuss how to fix the issue I encountered in hopes that it will help you.

The Problem

In using the SQL Server Import and Export Wizard, I was using a .NET Framework Data Provider for SqlServer data source to connect to SQL Azure, and a SQL Server Native Client 10.0 data source to connect to my local server. I was exporting from my local server into SQL Azure. I described the process of using the SQL Server Import and Export Wizard in this blog post.

When exporting one particular table that had a varbinary(max) column, the SQL Server Import and Export Wizard (using SSIS) was throwing a red icon (critical error unable to export), because the .NET Framework Data Provider for SqlServer didn’t know how to translate the varbinary(max) column. The error I was getting was this:

SSIS Type: (Type unknown ...)

Background

When SSIS goes between two dissimilar data sources it must convert the data types of one data source to the other. To do this, it uses a set of mapping files found in: C:\Program Files\Microsoft SQL Server\100\DTS\MappingFiles. The two files involved in my case are: SqlClientToSSIS.xml and MSSQLToSSIS10.xml. The SqlClientToSSIS.xml was the one being used by the .NET Framework Data Provider for SqlServer data source to translate the datatype, and the SQL Server Native Client 10.0 data source was using MSSQLToSSIS10.xml.

Solution

With the SQL Server 2008 R2 release, the MSSQLToSSIS10.xml file has a definition for varbinarymax in a dtm:DataTypeName element. However, the SqlClientToSSIS.xml file doesn’t contain a definition for varbinarymax. This leaves SSIS unable to map this data type when moving data between these two providers. The solution was to add this definition to the SqlClientToSSIS.xml file:

Note: I had to grant myself write permissions to SqlClientToSSIS.xml in Windows Explorer so that I could modify it.
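Wayne’s fix is a manual edit to the mapping file. Before you make that edit, you might want to confirm whether a given mapping file already defines a source type. The C# sketch below does not come from Wayne’s post, and the class name SsisMappingCheck is hypothetical. It also assumes that each mapping nests a dtm:DataTypeName element inside a dtm:SourceDataType element. That assumption is about the file layout; the post does not state it. The sketch compares local element names only, so it does not depend on the dtm namespace URI.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical diagnostic (not from the original post): does an SSIS data type
// mapping file already contain a mapping for a given source type name?
public static class SsisMappingCheck
{
    // ASSUMPTION: each mapping nests <dtm:DataTypeName> inside <dtm:SourceDataType>.
    // Matching on local names avoids depending on the dtm namespace URI.
    public static bool HasSourceType(XDocument mappingFile, string typeName)
    {
        return mappingFile.Descendants()
            .Where(e => e.Name.LocalName == "DataTypeName"
                        && e.Parent != null
                        && e.Parent.Name.LocalName == "SourceDataType")
            .Any(e => string.Equals(e.Value.Trim(), typeName,
                                    StringComparison.OrdinalIgnoreCase));
    }
}
```

For example, HasSourceType(XDocument.Load(@"C:\Program Files\Microsoft SQL Server\100\DTS\MappingFiles\SqlClientToSSIS.xml"), "varbinarymax") should return false on a file that lacks the definition described above and true after you add it. Destination type names such as those under a DestinationDataType element are deliberately ignored.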

AppFabric: Access Control and Service Bus

Cliff Simpkins exclaimed Now available!: Windows Server AppFabric RC and BizTalk Server 2010 beta in this 5/20/2010 post to The .NET Endpoint blog:

Several weeks ago, I told you about our upcoming Application Infrastructure Virtual Launch event. Today, I am pleased to announce the availability of the Windows Server AppFabric Release Candidate (RC). To learn more, I recommend tuning into the keynote (and the many other sessions we have going on) today at the App Infrastructure Virtual Launch event!

Here’s a brief overview of the announcements we’re making during the event this morning:

First off, we’re officially launching Windows Server AppFabric, with the immediate availability of the Windows Server AppFabric Release Candidate (RC); the final RTM release will be available for download in June. I would like to invite you to check out the new Windows Server AppFabric MSDN page (also revamped today!) and download the release candidate to get started.

Also today, we’re excited to announce the availability of the first BizTalk Server 2010 Beta, which now seamlessly integrates with Windows Server AppFabric, combining the rich capabilities of BizTalk Server integration and the flexible development experience of .NET to allow customers to easily develop and manage composite applications. To learn more (and download the beta), visit the BizTalk website at www.microsoft.com/biztalk.

Together with the already available Windows Azure AppFabric, Windows Server AppFabric and BizTalk Server 2010 form Microsoft’s application infrastructure technologies, bringing even more value to the Windows Server application server. These offerings benefit developers and IT pros by delivering cloud-like elasticity, high availability, faster performance, seamless connectivity, and simplified composition for the most demanding enterprise applications.

If you’ve been following this blog, we hope you’ve been enjoying the technical insights that the product team has been providing into AppFabric and the underlying technologies (WCF and WF). To gain a broader context about our technologies, and to gain access to a wealth of technical resources, be sure to visit the virtual launch event. In particular, here are some specific sessions and content that the team would like to highlight for your consideration:

- Application Server Session

- Enterprise Integration Session

- Windows Server AppFabric Product Stand

Following the Virtual Launch Event, there will be a number of local community launch events around the globe where developers and IT Pros can attend, in-person, to learn more about Microsoft’s application infrastructure and talk with others in the .NET community about how these technologies make the lives of developers and IT Pros easier. Be sure to check with your local user group to see if we have an event near you.

Seems strange to me to have a “Launch Event” for an RC.

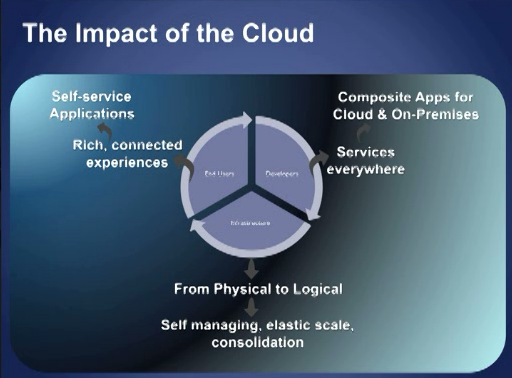

Application Infrastructure: Cloud Benefits Delivered was the theme of Microsoft’s Application Infrastructure Virtual Launch Event of 5/20/2010:

“Application infrastructure” (often referred to as middleware) is an essential enabling technology for helping IT meet the challenges of evolving their business applications. Enterprises of all sizes experience tremendous cost and complexity when extending and customizing their applications today. Given the constraints of the economy, IT must increasingly find new ways to do more with less, and this means finding less expensive ways to develop new capabilities to meet the needs of the business. At the same time, the demands of business users are ever increasing.

Environments of great predictability and stability have given way to business conditions that are continually changing, with shorter windows of opportunity and greater impacts of globalization and regulation. All of this puts tremendous stress on IT to find new ways to bridge the demanding needs of the users/business and the reality of their packaged applications.

Microsoft’s Application Infrastructure

Microsoft’s industry-leading Application Infrastructure technologies consist of the following:

- Windows Server AppFabric provides a set of application services focused on improving the speed, scale, and management of web and composite applications. Existing web applications are faster and more scalable through distributed in-memory caching; and building and managing composite applications is simplified with out-of-the-box management and monitoring infrastructure for workflows and services.

- Windows Azure AppFabric connects hybrid applications and services running on-premises and in the cloud. This includes applications running on Windows Azure, Windows Server and a number of other platforms including Java, Ruby, PHP and others. It delivers secure connectivity between loosely-coupled services and applications, enabling them to navigate firewalls or network boundaries.

- BizTalk Server enables organizations to connect and extend heterogeneous systems across the enterprise and with trading partners. BizTalk Server 2010 comes pre-integrated with Windows Server AppFabric to allow .NET developers to more rapidly build composite applications that connect to line-of-business systems. This brings the familiar programming model of .NET to complex enterprise integration scenarios involving LOB systems.

This Application Infrastructure builds upon a consistent and proven foundation of developer frameworks (.NET Framework), IT Pro management tools (System Center, IIS & PowerShell), and an enterprise ready platform (Windows Server/Azure & SQL Server).

Ellen Messmer reports “New offerings from Novell and Symplified give customers more control” in her Cloud-based identity management gets a boost post of 5/19/2010 to NetworkWorld’s Security blog:

Giving network managers a way to provide access, single sign-on and provisioning controls in cloud-computing environments got a boost today from both Novell and a much smaller competitor, start-up Symplified.

Novell said its Identity Manager 4.0 product, expected out in the third quarter, will be able to work with Salesforce.com and Google Apps, as well as Microsoft SharePoint and SAP applications, to support a federated identity structure in the enterprise.

Symplified broke new ground with what it's calling Trust Cloud for EC2, software that provides access management, authentication, user provisioning and administration, single sign-on and usage auditing for enterprise applications running on the Amazon EC2 platform. It can be ordered through Symplified's Trust Cloud site and automatically deployed on the Amazon EC2 virtual-machine instances that customers request under an arrangement with Amazon.

Out and available now, Trust Cloud for EC2, "is a big deal," says Burton Group senior analyst Ian Glazer, because it offers what promises to be the most comprehensive approach yet to exerting identity management controls over enterprise data running in Amazon's EC2 infrastructure-as-a-service data centers. "You can put controls into the EC2 environment, even make the data always flow the way you want."

Symplified, which also has other proxy-based products for integrating enterprise identity management functions with Google and Salesforce.com applications, believes the central issue in tackling the security challenges in Amazon's EC2 environment is designing security for "multi-tenancy," says Eric Olden, Symplified CEO. "It's like an apartment complex."

Amazon's EC2 data centers, of which there are about 35 around the world, constitute a massive virtualized universe of primarily Linux machines running on the Xen hypervisor, which Amazon refers to as the "Amazon machine image," or AMI, Olden says.

Like Google and Salesforce.com, Amazon supports the Security Assertion Markup Language (SAML) protocol, seen as a standard building block for identity management interoperability. But only about 5% of the estimated 2,200 service providers in the burgeoning cloud-computing market appear to support SAML, Olden says, so Symplified also elected to support a variety of non-SAML-based protocols, such as those used at cloud-based recruiting and personnel management application provider Taleo, for example.

Analyst Glazer says cloud computing is having a profound effect on vendors in the identity management arena, which spent years arguing over and developing SAML, only to find that one of its most promising uses is not just inside the fortress of the enterprise, controlling provisioning and other functions in corporate networks, but now also in the cloud.

Ellen continues with additional quotes from Glazer.

The Windows Azure Platform AppFabric Team posted IIS Hosted WCF Services using AppFabric Service Bus the evening of 5/19/2010:

Through a number of customer engagements we’ve heard time and again how extending current SOA investments to the cloud, through the use of Windows Azure AppFabric, has been transformative for our customers’ businesses.

Customers have accrued value in many areas, but one theme that gets repeated over and over again is taking current back-end systems and connecting those systems to AppFabric Service Bus.

Whether companies are extending high-value on-premises systems to the cloud for mobile workforce access or partner access, AppFabric Service Bus, coupled with AppFabric Access Control, has allowed these customers to extend the life of their systems with new cloud-enabled scenarios. The really interesting part here is that these customers have been able to cloud-enable existing systems without the cost and risk of rewriting and hosting them in the cloud.

In cases where customers are aggressively moving their software assets to the cloud, they too have leveraged the connectivity provided by Windows Azure AppFabric to keep their Tier 1 applications running in their own datacenters. With AppFabric, they are able to maintain control of these business critical systems, letting them run in their own datacenter(s), managed by their own IT staff, but cloud-enable them through AppFabric.

In an effort to further cloud-enable existing systems, one of our team members wrote a whitepaper that shows how to take WCF services hosted in IIS 7.5 and connect them up to the AppFabric Service Bus. This has been a frequently requested feature, so we’re excited to get this whitepaper out.

If you have WCF services hosted in IIS 7.5 and want to connect them to the cloud, this whitepaper will show you how to enable that scenario.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

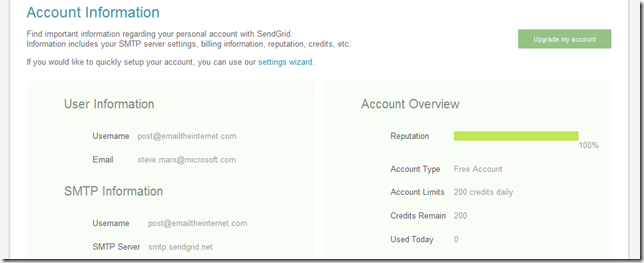

Steve Marx shows you how to EmailTheInternet.com: Sending and Receiving Email in Windows Azure in this 5/20/2010 post:

Running right now at http://emailtheinternet.com is my latest demo, which lets you send anything via email and posts it to a public URL on the web.

This sample, which you can download here, does three things of technical interest:

- It uses a third party service (SendGrid) to send email from inside Windows Azure.

- It uses a worker role with an input endpoint to listen for SMTP traffic on port 25.

- It uses a custom domain name on a CDN endpoint to cache blobs.

Background: Email and Windows Azure

Sending email is more complicated than you might think. Jeff Atwood has a blog post called “So You’d Like to Send Some Email (Through Code)” that sums up some of the complexities.

Sending email directly from a cloud like Windows Azure presents further challenges, because you don’t have a dedicated IP address, and it’s quite likely that spammers will use Windows Azure (if they haven’t already) to send truckloads of spam. Once that happens, spam blacklists will quickly flag the IP range of Windows Azure data centers as sources of spam. That means your legitimate email will stop getting through.

The best solution to these challenges is to not send email directly from Windows Azure. Instead, relay all email through a third-party SMTP service (like SendGrid or AuthSMTP) with strict anti-spam rules and perhaps dedicated IP addresses.

Note that receiving email is a completely different story. As long as people are willing to send email to your domain, you can receive it in Windows Azure by just listening on port 25 for SMTP traffic.
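The following is a minimal C# sketch that shows what "just listening on port 25" involves on the receiving side. It is not Steve’s code, which is described in the full post, and MiniSmtp, HandleSession and ListenAsync are hypothetical names. The sketch understands only HELO/EHLO, MAIL, RCPT, DATA and QUIT. It leaves out the authentication, TLS, size limits, recipient checks and error handling that a real receiver needs. The session logic is separate from the socket code so that you can exercise it without a network.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Hypothetical, minimal SMTP receiver sketch (not the EmailTheInternet.com code).
// Supports HELO/EHLO, MAIL, RCPT, DATA and QUIT only: no AUTH, TLS or size limits.
public static class MiniSmtp
{
    // Runs one SMTP conversation; returns the message text received after DATA.
    public static string HandleSession(TextReader input, TextWriter output)
    {
        output.WriteLine("220 localhost SMTP ready");
        var data = new StringBuilder();
        string line;
        while ((line = input.ReadLine()) != null)
        {
            string verb = (line.Length >= 4 ? line.Substring(0, 4) : line).ToUpperInvariant();
            switch (verb)
            {
                case "HELO":
                case "EHLO":
                case "MAIL":
                case "RCPT":
                    output.WriteLine("250 OK");
                    break;
                case "DATA":
                    output.WriteLine("354 End data with <CR><LF>.<CR><LF>");
                    // A line holding only "." ends the message; otherwise a leading "."
                    // is removed (dot-stuffing, RFC 5321 section 4.5.2).
                    while ((line = input.ReadLine()) != null && line != ".")
                        data.AppendLine(line.Length > 0 && line[0] == '.' ? line.Substring(1) : line);
                    output.WriteLine("250 OK: message accepted");
                    break;
                case "QUIT":
                    output.WriteLine("221 Bye");
                    return data.ToString();
                default:
                    output.WriteLine("502 Command not implemented");
                    break;
            }
        }
        return data.ToString();
    }

    // Accepts connections forever on the given port (25 for SMTP).
    public static async Task ListenAsync(int port)
    {
        var listener = new TcpListener(IPAddress.Any, port);
        listener.Start();
        while (true)
        {
            TcpClient client = await listener.AcceptTcpClientAsync();
            _ = Task.Run(() =>
            {
                using (client)
                using (NetworkStream stream = client.GetStream())
                using (var reader = new StreamReader(stream, Encoding.ASCII))
                using (var writer = new StreamWriter(stream, Encoding.ASCII) { NewLine = "\r\n", AutoFlush = true })
                {
                    string message = HandleSession(reader, writer);
                    Console.WriteLine("Received {0} characters", message.Length);
                }
            });
        }
    }
}
```

In a worker role, you would normally take the port from the input endpoint declared in the service definition, for example via RoleEnvironment.CurrentRoleInstance.InstanceEndpoints[name].IPEndpoint. Avoid hard-coding it.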

Sending email using a third-party service

The first thing I did was sign up for a free account with SendGrid. The free tier allows me to send up to 200 emails per day, but it doesn’t give me access to some of the advanced features. I’d recommend using at least the Silver tier if you’re serious about your email being delivered and looking correct for all users. …

Once I completed the quick signup, I took down all the details, which I added as configuration settings to my project:

Actually sending email is incredibly easy using the System.Net.Mail namespace. Here’s the code that sends email replies for EmailTheInternet.com:

```csharp
using System;
using System.Net;
using System.Net.Mail;
using System.Text;
using Microsoft.WindowsAzure.ServiceRuntime;

// msg is the incoming message already parsed by the worker role; body is the reply text.
var reply = new MailMessage(
    RoleEnvironment.GetConfigurationSettingValue("EmailAddress"),
    msg.FromAddress)
{
    // don't stack "RE:" prefixes on a subject that already has one
    Subject = msg.Subject.StartsWith("RE:", StringComparison.InvariantCultureIgnoreCase)
        ? msg.Subject
        : "RE: " + msg.Subject,
    Body = body,
    IsBodyHtml = msg.HasHtmlBody // send HTML if we got HTML
};
if (!reply.IsBodyHtml) reply.BodyEncoding = Encoding.UTF8;

// make it a proper reply
reply.Headers["References"] = msg.MessageID;
reply.Headers["In-Reply-To"] = msg.MessageID;

// use our SMTP server, port, username, and password to send the mail
(new SmtpClient(
    RoleEnvironment.GetConfigurationSettingValue("SmtpServer"),
    int.Parse(RoleEnvironment.GetConfigurationSettingValue("SmtpPort")))
{
    Credentials = new NetworkCredential(
        RoleEnvironment.GetConfigurationSettingValue("SmtpUsername"),
        RoleEnvironment.GetConfigurationSettingValue("SmtpPassword"))
}).Send(reply);
```

Yes, it’s really that easy. If you take a look at the code, you’ll see that there’s much more work involved in constructing the HTML body of the email than in sending it via SMTP.

Note that for EmailTheInternet.com, I’m using SendGrid’s free pricing tier, which means I get a limited number of emails per day, and I don’t get a dedicated IP address or whitelabeling. Because of this, my emails may not all make it through spam filters, and some email clients will show the emails as coming from sendgrid “on behalf of post@emailtheinternet.com.” This is simply because I haven’t paid for those services, not because of some limitation of this approach.

Steve continues with the code and instructions for Receiving email using a worker role and details about Custom domains and CDN. Steve concludes:

Download the code

You can download the Visual Studio 2010 solution here, but note that I have not included the dependencies. Here’s where you can download them:

Thanks, Twitter followers!

A special thanks to my followers on Twitter who helped me test this (and fix a few bugs) before I released the code here. I really appreciate the help!

Thanks, Steve, for a true Azure tour de force.

Eric Nelson reminded developers that Windows Azure BidNow Sample [is] definitely worth a look in his 5/20/2010 post from Microsoft UK:

[Quicklink: download new Windows Azure sample from http://bit.ly/bidnowsample]

On Monday (17th May), during the 6 Weeks of Windows Azure training (now full) Live Meeting call, Adrian showed BidNow as a sample application built for Windows Azure.

I was aware of BidNow but had not found the time to take a look at it, nor seen it running before. Adrian convinced me it was worth a further look.

In brief

- I like it :-)

- It is more than Hello World, but still easy enough to follow.

- Bid Now is an online auction site designed to demonstrate how you can build highly scalable consumer applications using Windows Azure.

- It is built using Visual Studio 2008 and Windows Azure, and uses Windows Azure Storage.

- Auctions are processed using Windows Azure Queues and Worker Roles. Authentication is provided via Live Id.

- Bid Now works with the Express versions of Visual Studio and above.

- There are extensive setup instructions for local and cloud deployment
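BidNow processes auctions with Windows Azure queues and worker roles. The usual worker-role pattern works like this: get a message, process it, and delete it only after processing succeeds. If a worker crashes before the delete, the queue's visibility timeout lets the message reappear for another attempt. The C# sketch below illustrates that loop under stated assumptions and is not BidNow's code. IWorkQueue, QueueItem, InMemoryQueue and AuctionWorker are hypothetical. The method names loosely echo the StorageClient library's CloudQueue.GetMessage and DeleteMessage, but the sketch does not call that library. The in-memory queue simulates the visibility timeout through an explicit ExpireInFlight call.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction over a work queue such as a Windows Azure queue.
public interface IWorkQueue
{
    QueueItem GetMessage();             // next visible message, or null if none
    void DeleteMessage(QueueItem item); // permanently remove a handled message
}

public sealed class QueueItem
{
    public string Id { get; set; }
    public string Body { get; set; }
    public int DequeueCount { get; set; }
}

// In-memory stand-in: a fetched message stays "in flight" until deleted, and
// ExpireInFlight() simulates the visibility timeout making it visible again.
public sealed class InMemoryQueue : IWorkQueue
{
    private readonly Queue<QueueItem> visible = new Queue<QueueItem>();
    private readonly Dictionary<string, QueueItem> inFlight = new Dictionary<string, QueueItem>();

    public void AddMessage(string body) =>
        visible.Enqueue(new QueueItem { Id = Guid.NewGuid().ToString(), Body = body });

    public QueueItem GetMessage()
    {
        if (visible.Count == 0) return null;
        QueueItem item = visible.Dequeue();
        item.DequeueCount++;
        inFlight[item.Id] = item;
        return item;
    }

    public void DeleteMessage(QueueItem item) => inFlight.Remove(item.Id);

    public void ExpireInFlight()
    {
        foreach (QueueItem item in inFlight.Values) visible.Enqueue(item);
        inFlight.Clear();
    }
}

public static class AuctionWorker
{
    // One pass of a worker-role loop: delete only after successful processing,
    // and drop "poison" messages once they have been delivered maxAttempts times.
    public static int DrainOnce(IWorkQueue queue, Func<string, bool> process, int maxAttempts = 3)
    {
        int processed = 0;
        QueueItem item;
        while ((item = queue.GetMessage()) != null)
        {
            if (process(item.Body))
            {
                queue.DeleteMessage(item);
                processed++;
            }
            else if (item.DequeueCount >= maxAttempts)
            {
                queue.DeleteMessage(item); // give up on a message that keeps failing
            }
            // otherwise leave it in flight; the visibility timeout will redeliver it
        }
        return processed;
    }
}
```

A real worker role would run DrainOnce inside its Run loop and sleep briefly when the queue is empty. Because a message can be delivered more than once, each processing step should be safe to repeat.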

You can download from http://bit.ly/bidnowsample (http://code.msdn.microsoft.com/BidNowSample) and also check out David’s original blog post.

Related Links

- UK based? Sign up to UK fans of Windows Azure on ning

- Check out the Microsoft UK Windows Azure Platform page for further links

The Windows Azure Team posted Real World Windows Azure: Interview with Sean Nolan, Distinguished Engineer at Microsoft on 5/20/2010:

As part of the Real World Windows Azure series, we talked to Sean Nolan, Distinguished Engineer in the Health Solutions Group at Microsoft, about using Windows Azure to deliver the H1N1 Response Center Web site and the benefits that Windows Azure provides. Here's what he had to say:

MSDN: Tell us about the H1N1 Response Center.

Nolan: In October 2009, public health officials were concerned that the H1N1 pandemic was becoming a serious issue for our national ability to deliver quality care. When faced with flu symptoms, people don't always know what to do (stay home and rest, visit their physician, or go to the emergency room), so we saw an opportunity to help. We teamed up with experts from Emory University to create the H1N1 Response Center, a Web site where people can receive guidance based on a self-assessment.

MSDN: What was the biggest challenge you faced with the H1N1 Response Center Web site before implementing Windows Azure?

Nolan: The ability to handle burst traffic was the biggest issue. For example, when the media broke a story about the virus, it would cause dramatic increases in site visits. The same would happen in response to other trigger events, such as school closings. And of course, the most important time for our service to be available was during those bursts, so we needed a solution that could handle them seamlessly.

MSDN: Can you describe the solution you built with Windows Azure to help address your need for a scalable solution?

Nolan: We're hosting the H1N1 Response Center on Windows Azure. Visitors fill out a self-assessment that includes questions about age, gender, symptoms, and key medical conditions. Emory's algorithms determine the severity of the situation and offer guidance that individuals can use as input to make decisions about next steps. If a trip to a doctor is appropriate, people can take advantage of an additional service built on top of Microsoft HealthVault healthcare Web site technology to prepare for the visit. We also send real-time data to public health officials by using Microsoft Amalga software for healthcare. Queue storage services and Worker roles in Windows Azure help us move data effectively between these components. …

Figure 1. The H1N1 Response Center Web site.

John O’Donnell posted this brief (00:03:28) 422Group adopts Windows Azure as part of their Continuum 422 CRM Webcast to Channel9 on 5/20/2010:

Keith Beindorf from 422Group shares benefits of Azure for their on-demand solution for students.

The Systems Integrator Launches Innovative Software with Minimal Capital Investment case study of Dot Net Solutions’ use of Windows Azure was re-featured at Microsoft’s Application Infrastructure Virtual Launch Event of 5/20/2010:

Dan Scarfe, Chief Executive Officer, Dot Net Solutions:

To improve its own development process, Dot Net Solutions created a virtual project-collaboration application. When the software, called ScrumWall, drew great interest from customers, the company used Windows Azure™ to offer it as a hosted service. The solution made it possible for Dot Net Solutions to bring its new product to market quickly, with minimal investment costs, while offering superior performance to users.

Windows Azure enables us to move into the realm of the ISV. We’re already experts at delivering custom software for customers. We can now take these skills and build a software product, delivering it to a potentially massive user base—but without the risk of hosting it on our own infrastructure.

The version from the launch event is read-only.

Eugenio Pace posted Windows Azure Architecture Guide – Part 1 – Complete, Starting Part 2 on 5/19/2010 after the cutoff for yesterday’s issue:

We are “done” with the first part of the guide. You can download the guide release candidate from here.

I want to thank the long list of reviewers and contributors for this first deliverable. Thanks much!!

Windows Azure Architecture Guide – Part 2

After a long star career with us, Adatum is taking some time off. Please welcome TailSpin, our star in this second part of the Windows Azure Architecture Guide.

TailSpin is a startup ISV that has specialized in SaaS solutions. One of their flagship applications is Surveys, a system for polls and surveys. Surveys is offered as a service and customers would typically subscribe to the app and pay a monthly fee for a number of seats.

TailSpin has decided to host Surveys on Windows Azure for many reasons:

- They are a rather small company and want to “grow as they go”. They want to avoid big upfront investments, and Windows Azure gives them the opportunity to acquire resources as their customer base grows.

- Surveys are seasonal in essence. For example, retail companies might run surveys around big shopping seasons like Christmas, etc. Windows Azure elasticity would help them follow demand more efficiently.

- They want to deploy their solution globally. Windows Azure geo-distributed datacenters will help with this.

TailSpin developers are seasoned .NET developers, very experienced with the Microsoft technology stack and also experienced in building high scale solutions.

Surveys is primarily a web application built with ASP.NET MVC 2.0. It has 3 different types of users:

- TailSpin operations, who use it to manage all customers’ subscriptions, usage metrics, etc.

- TailSpin customers (a.k.a. “subscribers” or “tenants”). They use the application to create and launch surveys, analyze results, etc.

- TailSpin customers’ customers. These, in most cases, are just the public that answer the surveys.

Surveys is a multitenant application. There are instances of the application deployed all over the world to serve customers in each geography.

TailSpin wants to target a wide variety of customers: big, medium and small companies all over the world. This, of course, affects the capabilities customers expect: big companies will demand more sophisticated integration with their own existing systems (e.g. SSO with their own identity infrastructure, data stores and applications), while smaller organizations will go with simpler versions.

The “hidden agenda” for Part 2

As usual, this story serves one purpose only: to illustrate design considerations that we find interesting and challenging. I’m sure you can now guess some of these based on the description above:

- How to automate provisioning and on-boarding?

- Should we have a single data repository or multiple?

- How will customers tweak and extend “their” app? (e.g. data, UI)

- How will TailSpin ensure scalability of their solution as more and more customers subscribe to it?

- How will they handle versioning?

- How does authentication & authorization work for each customer segment? Should they use identity federation always?

- How will they take advantage of Windows Azure geo-distribution? What’s the impact on the infrastructure (e.g. DNS, SSL, certificates)?

- Should TailSpin consider a hybrid data model (SQL Azure + Windows Azure storage) to satisfy different requirements in Surveys?

Technologies

There’s no legacy involved here, so we plan to make use of the latest technology available to us:

- Visual Studio 2010

- ASP.NET MVC 2.0

- .NET 4.0

The tools in particular include significant improvements we’d like to use. (Check Jim’s blog for more details).

As usual, we’d love your feedback and input.

Windows Azure Infrastructure

Lori MacVittie asserts Network Optimization Won’t Fix Application Performance in the Cloud in this 5/20/2010 post to the F5 DevCentral blog:

… where response time and speed are concerned, many businesses automatically assume Google.com- and Amazon.com-levels of performance from services such as Google App Engine and Amazon EC2, but this can be a mistake.

-- ESJ, “Q&A: Managing Performance of Cloud-Based Applications and Services”

A big mistake, indeed. While the underlying systems may be optimized and faster than fast, that doesn’t mean that applications won’t suffer poor performance. There are many other factors that determine how an application will perform, and most of them are variable. They can change from day to day, hour to hour, and from user to user. It certainly won’t hurt to optimize the network and, for providers at least, it’s about the only thing they can do to help customers whose applications may be suffering from performance problems.

Network optimization is a very different game from application and application delivery optimization. The former focuses on, well, the network, and doesn’t take into consideration anything about the application, like protocols, connections and repetitive data delivery. The ability to accelerate an application (because that’s really what we’re talking about) is not something that can be achieved solely by optimizing the network. Optimizing the network does not improve the application’s ability to process requests and return responses, nor does it increase the capacity of the application, nor does it reduce the chattiness of an application protocol. It doesn’t do anything for the application because it is, appropriately, focused on the network.

Application performance issues can be caused by poorly performing networks, yes. But a provider has control over only one leg of that network: the one it operates. Poor performance caused by networks outside the organizational boundary of control cannot be addressed by localized network optimization.

TODAY is not TOMORROW is not YESTERDAY

Always-on connectivity is now a life-style and it certainly doesn’t appear to be slowing down. Users connect to applications at all hours of the day and night and from disparate places from day to day. They use a wide variety of devices that connect over multiple networks. And while 80% of all successful cloud computing providers may soon implement network optimization as predicted by Gartner, they can only optimize what they control – and what they control is their network, not the networks over which users connect.

An application deployed in the cloud may perform perfectly fine over a corporate LAN connection, and perhaps even over the corporate wireless network. But once a user moves to their own wireless network, or a cellular network, or the Internet cafe down the street’s network, all guarantees regarding performance are off.

What’s necessary to solve the problems of application performance in the cloud is not simply faster networks but smarter networks. Smarter networks that are capable of adapting in real-time to changing conditions that impact application performance; conditions that cannot be addressed by network optimizations that are constrained by organizational control boundaries.

Our focus thus far regarding the new network has been on operational concerns: the ability of infrastructure components to integrate and collaborate with each other in new ways to ensure rapid adaptation to changing operational events within the data center. But the other side of the new network is the ability to handle with alacrity the dynamism inherent in users: their desire and ability to move seamlessly from desktop to laptop to mobile device, from corporate LAN to remote office to Internet cafe. Each of these endpoints and networks has different performance characteristics and capabilities and thus may require specific network and application delivery network policies to address the specific problems that hinder acceptable application performance.

Complicating the matter is the fact that different user + network combinations can have dramatically different impacts on the application servers. Devices with lower bandwidth capabilities consume application resources for longer periods of time because it takes them longer to send and receive data. More mobile users thus increase the number of concurrent connections a single application instance must sustain to deliver the desired performance, which may result in the need to scale sooner than if all users were accessing the application from a desktop over a fat LAN connection.
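The slow-client effect described above is an instance of Little’s law: average concurrency equals the arrival rate times the time each request spends in the system. The minimal Python sketch below works that arithmetic; the request rate, per-request connection hold times and per-instance connection limit are hypothetical numbers chosen for illustration, not figures from Lori’s post.

```python
import math

def concurrent_connections(requests_per_second: float, seconds_per_request: float) -> float:
    """Little's law: average concurrency L = arrival rate (lambda) * time in system (W)."""
    return requests_per_second * seconds_per_request

def instances_needed(connections: float, per_instance_limit: int) -> int:
    """Smallest number of instances that keeps each one at or under its connection limit."""
    return math.ceil(connections / per_instance_limit)

# Hypothetical workload: the same 100 requests/second from two client populations.
RATE = 100.0           # requests per second (assumed)
LAN_SECONDS = 0.2      # assumed time a fast LAN client holds a connection
MOBILE_SECONDS = 1.5   # assumed time a slow mobile client holds a connection
LIMIT = 50             # assumed connections one instance can serve at target latency

lan = concurrent_connections(RATE, LAN_SECONDS)
mobile = concurrent_connections(RATE, MOBILE_SECONDS)
print(f"LAN clients:    {lan:.0f} connections -> {instances_needed(lan, LIMIT)} instance(s)")
print(f"Mobile clients: {mobile:.0f} connections -> {instances_needed(mobile, LIMIT)} instance(s)")
```

In this made-up example the mobile population needs three instances where the LAN population needs one, even though both send exactly the same number of requests: the "need to scale sooner" effect in miniature.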

Lori concludes with an “APPLICATION PERFORMANCE REALLY MEANS APPLICATION DELIVERY PERFORMANCE” topic.

Martin Veitch claims Cloud computing: Still pie in the sky to many in this 5/20/2010 article for the CIO.co.uk blog:

Sometimes the disconnect between media hype and reality is almost palpable. For over a year now we've been using the term "cloud computing" to describe a Venn diagram of complementary trends. God knows that 'cloud' is a better tag than SaaS or other descriptors for this unwieldy crew of services - especially if you have to come up with hundreds of punning headlines for a living -- but the overwhelming fact that I have been forced to confront is that not a lot of people know what the 'cloud' is, and many of the minority of people who think they do have some very odd ideas about it. And finally, a fair number of these people are CIOs or others paid to come up with answers at some point.

I've probably heard the views of over 1000 people on the subject and it's clear to me that we in the media have sped off way down the road and, having seen the blueprint for a brave new world, we've gone ahead and put up the signs and organised the party, assuming that incoming tenants will sort themselves out. The problem is that there's no guarantee that they will and we could be left with a tattered mess of ill-defined sprawl.

There are plenty of numbers around but given the state of some of the research I'd rather report some ad hoc opinions.

Definitions of cloud computing: There are loads of them but precious little exists in the way of agreement. Some people use 'cloud' to mean anything connected to the internet; others seem to use it as a proxy for 'datacentre'. Broad interpretations bracket services that make little sense to deliver any other way than over the internet (WebEx, message security scanning, antivirus) with siloed applications reached over the web (Google Docs or Gmail), platform-as-a-service approaches such as Salesforce.com's Force.com, and fully-fledged (fluffed?) public cloud environments such as Amazon.com's AWS and Microsoft's Windows Azure. And then of course there are... [Emphasis added.]

Private clouds: Cloud computing not complicated enough for you? Try bringing in internal environments that take on some characteristics of public clouds such as metered billing or support for line-of-business chargeback but keep systems in-house for security/governance/other reasons and therefore lose benefits such as economies of scale.

Why you would do the cloud thing: If your business is in crisis - say you run a media business or the UK government - then it's virtually the only game in town for slashing IT costs and it's tempting to bet the farm. Alternatively, if you need to move fast and just have to go now without untold budget to throw at the problem, the cloud is attractive. It also looks nice if your business is spiky and you don't want to pay for a lot of under-utilised kit. And it's also a quick win and sop to CEOs and CFOs as you save on admins, software licences, servers and datacentres.

Why you wouldn't do the cloud thing: Concerns over who gains access to sensitive data, especially abroad. Worries over stability of suppliers. Flaky network connections. Pesky regulators with rulebooks drafted in tablets of stone relating to where data sits and pesky legal departments that have read ancient charters. You're secretly scared by the possibility of losing the IT department, or you're even more scared by the possibility of losing your own job. You've been so busy that you haven't got a clue what people are talking about.

This, it seems to me, is where we are with cloud computing -- only just starting out. Perhaps by the time journalists and analysts have started to flag, the real interest will begin.

<Return to section navigation list>

Cloud Security and Governance

David Linthicum asserts “If you view users as just revenue sources, you forget all about service -- the cloud's key value” in his Facebook's critical lesson for cloud providers post of 5/20/2010 to InfoWorld’s Cloud Computing blog:

The privacy issues around Facebook are so widely covered that I'd rather not beat that dead horse here. However, there are many lessons around the Facebook debacle for the rank-and-file cloud computing providers, whose numbers are growing weekly.

The core issue is that Facebook has been focused on the data it is gathering and not the users it is serving. Facebook seems to view users as content providers and does not consider their core needs.

Cloud providers could be making the same mistake as Facebook: viewing users as accounts that generate revenue, instead of as people and businesses that need to have their core requirements understood. I'm seeing this a bit today with some of the larger cloud computing providers focusing more on numbers than on service.

In Facebook's case, its "numbers before customers" mistake was in privacy settings, where the default opted users into a more public profile than they would likely want -- with the unpleasant result of letting stalkers track their victims or letting your coworkers discover your interest in collecting comics. Facebook has compounded that mistake with the arrogant belief that only the tech media care about its privacy problems.

In the case of the cloud providers, their mistake is about not learning how their users need to deal with security, and not making sure to be highly secure. Truth be told, cloud providers today pay lip service to security, but don't meet the core security requirements that most enterprises and governments seek. Moreover, if they ever change or break their security systems, without working with their users, they will be history -- and fast.

D E Levin uses Amazon S3 RRS as an example of a service for “data that is not mission-critical” in his Data Ownership, Access, Protection... the Discussion Grows post of 5/20/2010 to the Voices for Innovation blog:

People around the world -- myself and a lot of policy makers included -- are giving more and more thought to issues of data: data ownership, data use, data access, data storage, data back-up, data protection, and so on. A range of innovation -- cloud computing, social media services, broadband, and more -- has driven this issue to the fore. The conversation is already under way... in companies, in the media, and among policy makers. If you're concerned as a citizen and/or have a stake as a tech professional, business owner, etc., you should try to stay informed and take part in the conversation. Some issues will shake out in the marketplace and some ultimately in the public policy arena.

A range of news this week heightened my attention to this issue. To a large extent, we consciously create and are associated with data. You have a (changing) address, a (fixed) birthdate, three credit scores, a tax ID number, and so on. You also have created a list of contacts with phone numbers, email, and so on. But in the digital age, we also broadcast information even if we don't associate ourselves with it. The Electronic Frontier Foundation showed how we can leave behind digital fingerprints through our browser. Google's mea culpa on its practice of collecting and storing data packets as it accumulated Street View data offered another reminder that we can broadcast info unthinkingly from unsecured networks.

These two news stories raise alarms, but a service story -- from Amazon -- adds nuance. Amazon is planning to offer a less reliable, more affordable data storage solution (see Gigaom story). This serves as a reminder that, hey, some data isn't mission critical, and if we can't get to it right away, we can live with that (and pay less money for it). This seems like a reasonable marketplace response, and Amazon is being transparent about the offering. David Pogue, the NY Times tech columnist, writes today that we can become too concerned about data security; he gives the example of network security at his daughter's elementary school. Some in healthcare reasonably argue that data safeguards threaten data aggregation and analysis that could lead to better health outcomes and cost controls.

There is no doubt that these are complex issues that will evolve over time. Give some thought to your data and data in general. What are some of the base level rules, if any, you would like to see promoted by policy makers or championed by technology providers? The answer to this question will likely inform your digital life in the years to come.

<Return to section navigation list>

Cloud Computing Events

All About the Cloud’s Conference Media page of 5/20/2010 offers:

Keynotes:

- Principles for Creating and Driving Value in the Cloud | Video | Slides

Tayloe Stansbury, SVP & Chief Technology Officer, Intuit

- Impact of Cloud Innovation | Video | Slides

Doug Hauger, General Manager, Windows Azure, Microsoft

- Companies Need More Than Servers: How and Why the Ecosystem is Critical to Cloud Success | Video | Slides

Treb Ryan, CEO, OpSource, Inc.

- Cloud Revolution: Beyond Computing | Video | Slides

Maynard Webb, Chairman & CEO, LiveOps

Presentations:

- Reshaping the Enterprise IT Landscape: SaaS and Cloud Go Mainstream | Slides

William McNee, Founder & Chief Executive Officer, Saugatuck Technology Inc.

- Partnering with Oracle in the Cloud | Slides

Kevin O'Brien, Senior Director, ISV & SaaS Strategy for Worldwide Alliances and Channels, Oracle Corporation

- SaaS Business Analytics Put Line of Business Managers in the Drivers Seat | Slides

Scott Wiener, CTO, Cloud9 Analytics

- Looking "Between the Apps": What Matters to CIO's Evaluating Cloud Services | Slides

Rick Nucci, Chief Technology Officer & Co-founder, Boomi

- Democratizing the Cloud - Enterprise Cloud Development | Slides

Chris Keene, CEO, WaveMaker Software

- Fast Track to SaaS: From On-Premise to On-Demand! | Slides

Feyzi Fatehi, Chief Executive Officer, Corent Technology, Inc.

- Cloud Security - Moving beyond Single sign on in 2010 | Slides

Anita Moorthy, Sr. Solutions Marketing Manager, Novell, Inc.

- Built to Last - for SaaS | Slides

William Soward, President & Chief Executive Officer, Adaptive Planning

- On-Demand Decision Making In Cloudy Times | Slides

Jon Kondo, Chief Executive Officer, Host Analytics Inc.

- SharePoint Easy Button | Slides

Rob LaMear, Founder & CEO, Fpweb.net

- Moving Software Products to the Cloud | Slides

Eileen Boerger, President, Agilis Solutions

- Accelerating the Transformation to the Cloud | Slides

Hyder Ali, Industry Solutions Director, Hosting, Microsoft

Trina Horner, US SaaS/Cloud Channel Development Strategist, Microsoft Corporation

- Delivering on the Full Promise of the Cloud | Slides

Greg Gianforte, Founder, Chairman & CEO, RightNow Technologies, Inc.

- Securing the Cloud Ecosystem | Slides

Marc Olesen, Senior Vice President and General Manager, McAfee SaaS

- Private Cloud vs. Public Cloud: An Analyst Perspective | Slides

Agatha Poon, Research Manager, Global Cloud Computing, Tier1 Research

Panels:

- Private Clouds | Video

Phil Wainewright, Director, Procullux Ventures (Moderator)

Zorawar Biri Singh, VP, IBM Enterprise Initiatives, Cloud Computing, IBM

Brian Byun, VP & GM, Cloud, VMware, Inc.

Jeff Deacon, Managing Director, Cloud Services, Verizon

Rebecca Lawson, Enterprise Business Marketing, Hewlett Packard

- Public Clouds | Video

Jeffrey Kaplan, Managing Director, THINKstrategies (Moderator)

Scott McMullan, Google Apps Partner Lead, Google Enterprise

Jim Mohler, Sr. Director, Product Development, NTT America

Steve Riley, Sr. Technical Program Manager, Amazon Web Services

John Rowell, Co-Founder & Chief Technology Officer, OpSource, Inc.

Matt Thompson, General Manager, Developer and Platform Evangelism, Microsoft

I’m disappointed that there’s no video of Bill McNee’s presentation.

CKC-Seminars announced on 5/20/2010 that the 3rd International SOA Symposium and the 2nd International Cloud Symposium will be held 10/5 and 10/6/2010 at the Berliner Congress Center, Berlin, Germany:

The 3rd International SOA Symposium and the 2nd International Cloud Symposium continue to be the largest and most comprehensive SOA event of the year. This event is dedicated to showcasing the world's leading SOA experts and authors. Themes and topics are as follows:

Exploring Modern Service Technologies & Practices

- Service Architecture & Service Engineering

- Service Governance & Scalability

- SOA Case Studies & Strategic Planning

- REST Service Design & RESTful SOA

- Service Security & Policies

- Semantic Services & Patterns

- Service Modeling & BPM

Scaling Your Business Into the Cloud

- The Latest Cloud Computing Technology Innovation

- Building and Working with Cloud-Based Services

- Cloud Computing Business Strategies

- Case Studies & Business Models

- Understanding SOA & Cloud Computing

- Cloud-based Infrastructure & Products

Following the success of previous years' International SOA Symposia in Amsterdam and Rotterdam and two SOA Symposia held in the USA (sponsored by the U.S. Department of Defense), this edition will be held at the Berlin Congress Center in Berlin, Germany on October 5th to 6th, 2010. A series of SOA and Cloud Computing Certified Professional Workshops will also take place from October 7th to 13th, 2010.

Every SOA Symposium event is dedicated to providing valuable content specifically for IT practitioners. The program committee judges each speaker's session to ensure it complies with the content-driven philosophy of this conference series.

SOA Symposium events feature internationally recognized speakers from organizations such as Oracle, Microsoft, IBM, Sun, Software AG, Amazon, Google, Red Hat, Capgemini, HP, Logica, Ordina, SOA Systems, AmberPoint, Salesforce.com, US Department of Defense, Burton Group/Gartner and Accenture. Expert speakers, such as Thomas Erl, Grady Booch, Anne Thomas Manes, David Chappell, Dirk Krafzig, Paul Brown, Mark Little, Toufic Boubez, Clemens Utschig, Tony Chan and many others, provide new and exclusive coverage of the most relevant and critical topics in the SOA industry today.

Bruce Kyle recommends that you Spend an Hour in the Cloud with Windows Azure in this 5/20/2010 post to the US ISV Developer blog:

You are invited to spend an hour learning about Windows Azure. In this Windows Azure training session you will learn about Azure: how to develop and launch your own application, as well as how it impacts your daily life.

Sessions occur each Wednesday and Friday through the beginning of July.

The Agenda

- Overview of cloud computing

- Introduction to Windows Azure

- Tour of the Azure Portal

- Uploading your first Azure package

- Real world scenario

- Experiencing your first cloud app & behind the scenes

- Q & A

All presentations begin at 11:00 AM Central time (9:00 AM Pacific, noon Eastern).

See Bruce’s post for dates and registration links.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Stefan Ried’s VMware’s Cloud Portability Promise Powered By Google post of 5/20/2010 to the Forrester blog adds another slant on how the VMware/Google partnership affects cloud-vendor lock-in:

Every week the platform as a service (PaaS) market has something exciting happening. After VMware recently announced a partnership with salesforce.com to jointly develop vmforce, the virtualization expert today managed to be part of Google’s latest announcement, Google App Engine for Business. This is particularly important for ISVs.

Still, one of the biggest strategic concerns that ISVs have in moving their applications into the cloud is the long-term safety of an investment in a single technology stack or hosted PaaS offering. Led by IBM and other major vendors (except Google), the Open Cloud Manifesto was launched last year along with other standards efforts to make the cloud more interoperable and portable. Actually, many cloud offerings even mean a double lock-in for ISVs – into the specific new technology stack and in many cases into the single hosting service of the PaaS vendor. The history of Java and web services teaches us that the path through standards bodies can be a solid basis for avoiding these vendor lock-in situations. However, the tech industry has also learned, mainly from Microsoft, that the establishment of de-facto standards, evolved out of originally proprietary approaches, can in some cases be a faster path to market share.

Today’s surprise was Google’s joint commitment with VMware to establish such a de-facto cloud platform standard. An application developed once on VMware’s Spring Framework will be, so the vendors state, deployable in many options:

- On Google’s App Engine for Business.

- On salesforce.com’s Force.com data center, where the high level PaaS functionality of vmforce is also available.

- On premise in a customer’s own data center virtualized by VMware’s vSphere.

- On basically any independent hosting provider which runs a vCloud environment by VMware to virtualize its hosting services to a subscriber.

This clearly addresses the problem of deployment lock-in very well. This, combined with the broad acceptance of the open source Spring Framework, means that the other lock-in factor, the technology stack, was never as great a concern for millions of Java developers. Thus, Forrester considers VMware’s Spring Framework an increasingly good platform choice for ISVs' application development in the cloud and on premise.

Beyond the new partnership with VMware, the Google App Engine has evolved significantly. It started on a niche path with a Python-based programming model, but finally gained recognition from more developers when it began to support at least plain Java. To help navigate the cloud, Forrester defines PaaS as a pre-integrated technology stack with a set of rich platform components for the development and deployment of general business applications. Compared to this, Google’s App Engine, with its plain Java capabilities, was not really a PaaS offering. It sat more at the intersection between infrastructure as a service (IaaS) and PaaS.

In addition, Google was actually only able to address less than one-third of the overall PaaS market. We predicted about a year ago that platforms in the cloud will be attractive in three major use cases or major groups of buyers:

- ISVs which migrate or re-implement their packaged software in the cloud.

- Corporate application developers who are considering cloud alternatives to a traditional middleware stack, in public and/or in private clouds.

- Systems integrators, outsourcers, and hosting providers, which run a PaaS to build an ecosystem of SaaS solutions from ISVs; hoping to remain attractive and competitive to their traditional customers.

Google’s App Engine basically lacked the capabilities to serve as a migration target for ISVs with more complex Java apps. It also failed to address corporate development purposes because, after development and testing took place in the cloud, no environment for production deployment on premise was available as a licensed software stack. Finally, Google’s hosting approach obviously overlaps with the hosting services of established enterprise-level hosting providers.

The game changed significantly with Google’s announcement today of the preview availability of the Google App Engine for Business. Please see Google’s detailed roadmap here.

First of all, a couple of basic key features were added to move a bit closer to a real PaaS stack. Most important is the announcement of an SQL database service and a block storage service. Support of Google’s Web Toolkit (GWT) also provides some offline functionality to web-centric applications deployed on the new App Engine. This is the same offline approach that Google’s applications took after Google Gears was retired. Beyond the technical capabilities, Google’s commitment to provide a 99.9% SLA finally justifies the “for Business” name tag, and makes the risk more predictable for ISVs deploying their apps here.
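To make the 99.9% figure concrete, here is a back-of-the-envelope Python sketch of how much downtime such an SLA tolerates. It assumes a simple uptime fraction over a 30-day month (and over a 365-day year); Google’s actual SLA terms, measurement windows and exclusions are not described in the post, so treat the result as an approximation only.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30.0) -> float:
    """Downtime permitted by an uptime SLA over a period, assuming a simple uptime fraction."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)

# Assumed periods: a 30-day month and a 365-day year.
for label, days in (("30-day month", 30), ("365-day year", 365)):
    minutes = allowed_downtime_minutes(99.9, days)
    print(f"99.9% over a {label}: {minutes:.1f} minutes ({minutes / 60:.2f} hours)")
```

Under these assumptions, 99.9% works out to roughly 43 minutes of downtime a month, or under nine hours a year.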

Forrester had the chance to talk to Google and VMware in advance of today’s Google I/O conference and is confident that the current preview availability will be followed by general availability by the end of 2010. The pricing is, by the way, very competitive, based on $8 per named user per month. ISVs that develop consumer-facing applications will welcome Google’s statement to Forrester that only named users who use an application at least once in a calendar month will be counted. So thousands of registered users will not be charged to an ISV if they are not actively using a deployed application (WAR file). As with other PaaS offerings, Google takes care of scalability, and unlike with simple IaaS offerings, nobody has to manually manage virtual instances. [Emphasis added, see below.]

Finally, despite all the euphoria, everybody considering a major application implementation on this basis should recall that:

- Spring by no means provides high-level business process platform components, such as a BPM service. If this is required, the announced vmforce stack is a better partner to VMware than Google. Maybe Google or even VMware itself will close this gap by acquiring a BPM engine, similar to salesforce.com’s acquisition of Informavores, now (vm)force.com’s BPM component.

- There are still tons of reasons why an enterprise-class Java application should be deployed on a traditional Oracle or IBM middleware stack. Nevertheless, the traditional middleware vendors should speed up building their multi-tenant hosting ecosystems to defend their market share now.

- In addition to the pure technology decision, the marketing momentum of a platform is a major aspect of ISVs’ platform selection process. Explore recent Forrester market data here.

Let us know if you register for the preview of the new App Engine for Business and share your experiences in a comment. Also see other Forrester analysts like Frank Gillett sharing their assessment.

This sounds to me like settling for VMware lock-in to avoid hosting lock-in. However, as I mentioned in a comment to the above article and a Tweet today, “Many stories (e.g. PC World) about #GAE4Business that I'm seeing fail to note that the US$8/user*month charge is per application #io2010.”
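The pricing rule Forrester reports (US$8 per named user per month, counting only users active at least once in the calendar month), combined with the per-application point above, can be sketched as a small billing calculation. The event-log format, the function name `monthly_charges` and the sample data are hypothetical; this is not Google’s billing API, and any caps or discounts Google may apply are not modeled.

```python
from collections import defaultdict
from datetime import date

PRICE_PER_ACTIVE_USER_USD = 8  # per named user, per application, per month (as reported)

def monthly_charges(events, year, month):
    """Return {app_id: charge_in_usd} for one calendar month.

    events: iterable of (user_id, app_id, day) tuples, a hypothetical access log.
    A user is billed once per application in which they were active at least once
    during the month; registered users with no activity never appear, so cost nothing.
    """
    active_users = defaultdict(set)  # app_id -> set of distinct active user_ids
    for user_id, app_id, day in events:
        if day.year == year and day.month == month:
            active_users[app_id].add(user_id)
    return {app: len(users) * PRICE_PER_ACTIVE_USER_USD for app, users in active_users.items()}

# Hypothetical log: alice uses two apps, bob was active only in April.
log = [
    ("alice", "crm", date(2010, 5, 3)),
    ("alice", "crm", date(2010, 5, 20)),  # repeat visits do not add charges
    ("alice", "hr", date(2010, 5, 4)),    # same user, different app: billed again
    ("bob", "crm", date(2010, 4, 30)),    # outside May: not billed for May
    ("carol", "crm", date(2010, 5, 31)),
]
print(monthly_charges(log, 2010, 5))
```

Because charges are keyed by application, a single user active in two apps is billed twice, which is the detail many early stories about the offering omitted.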

David M. Smith rings in about pricing of the Google App Engine for Business (#GAE4Business, my hashtag) in his Big implications for the web and the cloud at Google I/O post of 5/19/2010 to the Gartner Blogs:

I spent today at the Google I/O developer conference. Fun Fact: Google claims it stands for Innovation and Open. Day one (unfortunately only able to attend one day) had two main themes: one was about the web, the other about the cloud. Big implications for both.

The Web, including HTML5, video and a web app store

…

The other main theme was “Bringing the cloud to the enterprise”.

First, VMware CEO Paul Maritz talked about Cloud portability and the role that Java, specifically the Spring framework, can play here. Through technologies that VMware acquired from SpringSource, it has begun to drive Spring as a viable way to develop Java apps for the cloud. It spans not only the new version of Google App Engine (for business) but also its recently announced VMForce joint venture with Salesforce.com, as well as private clouds running vSphere and other public clouds running vCloud. VMware is poised to fill the void in enterprise cloud leadership. While there are certainly cloud leaders in general (e.g., Google, Amazon), there are no natural enterprise leaders. Microsoft is trying, but it is early. [Emphasis added.]

GAE for Business was also announced as an enterprise-focused offering that takes GAE and adds Spring framework support along with management tools, SLAs, SSL, a SQL database, and revised pricing. The pricing model derives more from Google Apps (SaaS-style subscription vs. IaaS-style ‘pay as you go’, which is how GAE (non-business) is priced). It is interesting that this pricing model was talked about, whereas for VMForce, it was not finalized. I view this as an indicator that the SaaS subscription model will be more popular for “PaaS” style offerings such as GAE. [Emphasis added.]

Clearly big news on both fronts. More to come. Oh and more tomorrow but I’ll have to get it second hand.

I believe the most popular payment model for GAE by far will be no charge for staying within GAE’s free service thresholds. Microsoft should adopt free thresholds for Windows Azure and possibly for SQL Azure, depending on Google’s pricing for the forthcoming SQL DBMS.

William Vambenepe claims “2010 is the year of PaaS” and analyzes VMware’s two most recent partnerships in his From VMWare + SalesForce.com (VMForce) to VMWare + Google: VMWare’s PaaS milestones post of 5/19/2010:

Three weeks ago, VMWare and Salesforce.com launched VMForce, a Salesforce-hosted PaaS solution based on VMWare runtime technology and force.com application services. In my analysis of the announcement, I wrote:

VMWare wants us to know they are under the covers because of course they have much larger aspirations than to be a provider to SalesForce. They want to use this as a proof point to sell their SpringSource+VMWare stack in other settings, such as private clouds and other public cloud providers (modulo whatever exclusivity period may be in their contract with SalesForce)

Well, looks like there wasn’t much of an exclusivity period after all, as today VMWare is going on another date, with Google App Engine (GAE) this time. But a comparison of the two partnerships reveals that there is a lot more in the VMForce announcement than in today’s collaboration.

The difference in features

Before VMForce, we could not deploy Java applications on force.com. After VMForce we can (with the restriction that they have to be Spring-based). That’s a big difference. On the GAE side, the announcement today that we can now more easily run Spring Roo applications on GAE is nothing drastic. We could do it before, it was just harder. There was an ongoing effort to simplify it and there was no secret about this. Looks like it has delivered some improvements as part of the 1.1.0.M1 release. This only becomes major news via the magic of being announced at Google I/O rather than as an email on a developer mailing list.

Now, I don’t want to belittle the benefit of making things simpler. Simplicity is a feature. Especially for Roo where it is key to the value proposition. This looks very nice (and will probably push me over the edge to actually give Roo a spin now that I have an easy way to host whatever toy app I produce). It just doesn’t represent a major technology announcement.

The difference in collaboration

Salesforce is using VMWare infrastructure to run VMForce.com. They present a unified customer support service across the companies. They also presumably have some kind of revenue-sharing agreement. That makes for a close integration as far as business partnerships go. No such thing that I can see on the Google side. No VMWare hypervisor is getting inside the Google infrastructure. No share of revenues from Google App Engine for Business goes to VMWare (any SpringSource infrastructure used is brought by the customer, not provided by Google, unlike with VMForce). And while Rod Johnson asserts that “today’s announcement makes Spring the preferred programming model for Google App Engine” (a sentence repeated on the vCloud blog), I don’t see any corresponding declaration on the Google side that would echo the implicit deprioritization of Python and non-Spring Java support. Though I am sure they had nice things to say on stage at Google I/O. From Google’s perspective this sounds more like “we’re happy that they’ve made their tools work well with OUR infrastructure” than an architectural inflection. I think Google has too much pride in their infrastructure to see VMWare/SpringSource as an infrastructure provider rather than just a tool/library. But maybe I’m just jealous… And tools are important anyway.

What we’re not seeing

These VMWare+Salesforce and VMWare+Google announcements are also interesting for what is NOT there. Isn’t it interesting that the companies that VMWare enables with a PaaS platform are those which… already have a PaaS platform? What we haven’t seen is VMWare enabling a mid-tier telco to become a PaaS provider: someone who has power, servers and wires and wants to become a Cloud provider. VMWare is just starting to sell them an IaaS platform (vCloud) and cannot yet provide them with a turnkey PaaS platform, for lack of application services (IDM, storage…) and of a comprehensive (i.e. not just virtualization) management platform. Make a list of the application services you think a PaaS platform requires and you probably have a good idea of the VMWare shopping list. But with Salesforce and Google this is not a problem, as they already have these services and all they need from VMWare is an application runtime (or just a runtime interface for Google) and some development tools.

The application services are also where the real PaaS portability issues appear. This is also probably why Salesforce.com and Google are not too worried about being commoditized as interchangeable Spring runtime infrastructures. They know that the differentiation is with the application services. Their respective values in this domain are their business focus (processes, analytics, SaaS integration) for Salesforce and their scalability and low cost for Google (and more and more also the SaaS part). Not to mention Amazon, which is not resting on its IaaS laurels and also keeps innovating in Cloud application services (e.g. S3 Reduced Redundancy Storage, RRS, just yesterday). On Amazon's side, the value proposition seems to be centered on practicality and scale.

This is good news overall. As Steve Herrod writes, SpringSource has indeed been very busy and displays an impressive amount of energy in figuring this PaaS thing out. We’re still in the very early days of the battle of the Cloud Frameworks and at this point the armies are establishing beachheads and not yet running into one another. There is plenty of space for experimentation. 2010 is the year of PaaS.

VMware’s PR Department issued its VMware to Collaborate with Google on Cloud Computing press release on 5/19/2010:

Companies Address Need for Cloud Portability by Harnessing VMware vCloud Technologies, SpringSource, Google Web Toolkit, and Google App Engine to Simplify Modern Application Development and Deployment

SAN FRANCISCO, May 19, 2010 -- VMware, Inc. (NYSE: VMW), the global leader in virtualization and cloud infrastructure, today announced a series of technology collaborations with Google to deliver solutions that make enterprise software developers more efficient at building, deploying and managing applications within any cloud environment: public, private and hybrid. Announced today onstage at the Google I/O conference in San Francisco by Paul Maritz, president and CEO of VMware, the collaborations will bring together the two companies' technologies and expertise to help accelerate enterprise adoption of cloud computing.

“Companies are actively looking to move toward cloud computing. They are certainly attracted by the economic advantages associated with cloud, but increasingly are focused on the business agility and innovation promised by cloud computing,” said Paul Maritz, president and CEO of VMware. “VMware and Google are aligning to reassure our mutual customers and the Java community that choice and portability are of utmost importance to both companies. We will work to ensure that modern applications can run smoothly within the firewalls of a company’s datacenter or out in the public cloud environment.”

VMware and Google are collaborating on multiple fronts to make cloud applications more productive, portable, and flexible. These projects will enable Java developers to build rich web applications, use Google and VMware performance tools on cloud apps, and deploy Spring Java applications on Google App Engine.

“Developers are looking for faster ways to build and run great web applications, and businesses want platforms that are open and flexible,” said Vic Gundotra, Google vice president of developer platforms. “By working with VMware to bring cloud portability to the enterprise, we are making it easy for developers to deploy rich Java applications in the environments of their choice.”

Spring, Google App Engine, and SpringSource Tool Suite

Google is announcing support for Spring Java apps on Google App Engine as part of a shared vision to make it easy to build, run, and manage applications for the cloud, and to do so in a way that makes the applications portable across clouds. Using the Eclipse-based SpringSource Tool Suite, developers can build Spring applications in a familiar and productive way and have the flexibility to choose to deploy their applications in their current private VMware vSphere environment, in VMware vCloud partner clouds, or directly to Google App Engine.

Spring Roo and Google Web Toolkit

VMware and Google are working together to combine the speed of development of Spring Roo, a next generation rapid application development tool, with the power of the Google Web Toolkit (GWT) to build rich browser apps. These GWT powered applications can leverage modern browser technologies such as AJAX and HTML5 to create the most compelling end user experience on both smart phones and computers.

Spring Insight and Google Speed Tracer

The two companies are also collaborating to more tightly integrate VMware’s Spring Insight performance tracing technology within the SpringSource tc Server application server with Google’s Speed Tracer technology to enable end-to-end performance visibility of cloud applications built using Spring and Google Web Toolkit.

Juan Carlos Perez reports Google's App Engine Now in Business Version on 5/19/2010 for PCWorld’s Business Center:

Google will make a strong pitch to enterprise programmers at its I/O developer conference Wednesday with the unveiling of a business version of its App Engine application hosting service and with new cloud portability initiatives in partnership with VMware.

With the announcements, Google hopes to tap into what it sees as rising demand from enterprises to create and host custom-built applications in a cloud architecture to have more deployment flexibility and reduce infrastructure management costs and complexity.

"What we hear loud and clear from medium and large enterprise customers is wanting that cloud platform to build their own applications on," said Matthew Glotzbach, Google Enterprise director of product management.

Google launched App Engine two years ago primarily for developers of consumer-oriented Web applications who wanted to host their software on the Google cloud infrastructure.

While businesses liked the App Engine concept, many felt the product lacked some key enterprise features required by IT departments, so Google is now filling those gaps with this new version, said David Glazer, a Google engineering director.

For example, App Engine for Business has a central IT administration console designed to manage all of an organization's applications, as well as a 99.9 percent uptime service-level guarantee and technical support.

App Engine for Business also lets IT administrators set security policies for accessing the organization's applications, and features a pricing scheme of US$8 per user per month, up to a $1,000 monthly maximum. The product is currently available to a limited number of customers, but Google hopes to broaden access to it later this year. [Emphasis added; see note below.]

Later, Google will let developers host SQL databases in App Engine for Business, offering another option to the Google Big Table data store, and add SSL to protect application communications.

Through a partnership with VMware, Google is working to provide enterprises that use App Engine with portability capacity, so that they can deploy their applications in a variety of Java-compatible settings, whether in App Engine itself, VMware-based private or partner clouds or another hosted application platform service such as Amazon's EC2. …

As I mentioned in a comment to the above article and a Tweet today, “Many stories (e.g. PC World) about #GAE4Business that I'm seeing fail to note that the US$8/user*month charge is per application #io2010.”
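To make the per-application point concrete, here is a minimal Python sketch of the arithmetic. It assumes only the reported terms (US$8 per user per month, capped at US$1,000 per month) and assumes the cap applies separately to each application, as noted above. The function names are hypothetical illustrations, not part of any Google API, and the sketch ignores any other charges Google might levy.

```python
from typing import Iterable

# Reported App Engine for Business terms (May 2010); the per-application
# scope of the cap is an assumption based on the note above.
PRICE_PER_USER = 8     # US$ per user per month
MONTHLY_CAP = 1_000    # US$ maximum per application per month


def monthly_charge_per_app(users: int) -> int:
    """Hypothetical helper: estimated monthly charge (US$) for ONE application."""
    if users < 0:
        raise ValueError("users must be non-negative")
    return min(users * PRICE_PER_USER, MONTHLY_CAP)


def monthly_charge_total(users_per_app: Iterable[int]) -> int:
    """Hypothetical helper: each application is billed and capped on its own."""
    return sum(monthly_charge_per_app(u) for u in users_per_app)


if __name__ == "__main__":
    for users in (50, 125, 500):
        # 50 users -> 400; 125 users -> 1000 (cap reached); 500 users -> 1000 (capped)
        print(users, monthly_charge_per_app(users))
    # Three applications used by the same 500 employees: 3 x 1000 = 3000, not 1000
    print(monthly_charge_total([500, 500, 500]))
```

Because the cap is applied per application before summing, an organization that runs several applications for the same users reaches the US$1,000 ceiling once per application rather than once overall.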
