Windows Azure and Cloud Computing Posts for 7/1/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Mark Kromer (@mssqldude) posted a list of links to his Complete Microsoft Cloud BI Series on 7/1/2011:

I completed my 5 part series on Microsoft Cloud BI for the SQL Server Magazine BI Blog today. To read the entire series from start to finish, these are the links to each of the pieces:

Thanks, Mark

<Return to section navigation list>

Marketplace DataMarket and OData

The Windows Azure Team (@WindowsAzure) asked and answered Love Baseball? Check Out The MLB Head-to-Head Stats Dashboard On Windows Azure DataMarket on 7/1/2011:

Are you a baseball fan? Would you like to know the best Major League Baseball (MLB) players right now or compare real-time stats for two MLB teams head-to-head? What if you could see it all in a real-time dashboard for free? Sound Good? Then we have great news for you: welcome to the MLB Head-to-Head Stats Dashboard!

The MLB Head-to-Head Stats Dashboard is built on Windows Azure and the STATS.com datasets available on the Windows Azure Marketplace DataMarket, The dashboard allows you to compare MLB player performance and see stats and insights about the current season -- all in real-time. Information is continually updated with the latest game data so the dashboard always shows the latest statistics. A free trial mode is available that enables you to compare two MLB teams; a full STATS account will allow you to compare head-to-head stats of all MLB players.

Click here to learn more and sign up for your free trial.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

Steve Marx (@smarx) posted Cloud Cover Episode 49 - Access Control in the Windows Azure Toolkit for Windows Phone 7 on 7/1/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show at @CloudCoverShow.

In this episode, Vittorio Bertocci joins Steve as they discuss the role of Windows Azure Access Control in the Windows Azure Toolkit for Windows Phone 7.

In the news:

- New Windows Azure Traffic Manager Features Ease Visibility Into Hosted Service Health

- Rock, Paper, Azure! - The Grand Tournament

- Hosting Services with WAS and IIS on Windows Azure

- Porting Node to Windows with Microsoft's Help

Be sure to check out http://windowsazure.com/events to see events where Windows Azure will be present!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan explained the relationship between Windows Azure VM Role and SYSPREP in a 7/1/2011 post:

When someone prepare[s] VHD for VM Role, they might have the question if SYSPREP is really necessary or not.

First I would describe some of very important VM Role Image configuration achieved using SYSPREP as below:

VM image should be clean and in generalized state which is considered default image. Using a generalized image is the cleanest and most reliable method for deploying a Windows OS image on new hardware.

- VM should not have hardware configuration embedded in it. A generalized VM Image allows Windows Setup to properly re-evaluate the hardware to run smoothly. When VM runs on guest OS it configure itself to run based on Guest OS hardware and because the target hardware where your VM role will be running will be different from your own hardware where you have created the VM image so you need SYSPREP to setup OS in hardware neutral mode, so when it runs first time, it can actively re-evaluate the hardware environment.

- SYSPREP is prerequisite for cloning and duplicating machines. Through this process, each VM Image receive a fresh identity. If you are to create more than one instance from the parent, SYSPREP ensures that there are no ugly side effects.

- VM image “machine name” does matter when same image is used to deploy multiple instances as needed. SYSPREP erases the machine name to a state in which new name is given when VM is deployed. If the image is not SYSPREP, the machine name will remain the same and during multiple instances, duplicate machine name creates several conflicts.

- SYSPREP sets to image to non-activated state (or called default most – VL Activation) so when it is deployed or started, it can be activated depend on the environment . When VM Role image is deployed in cloud, the Windows Azure Integration Components are used to activate the VM Role image OS for Volume Licensing, regardless of which product key is present in the image.

- SYSPREP sets base OS locale to EN-US.

- VM Role image must be set to UTC time zone. SYSPREP is used to set this accurately.

- You must understand then Windows Azure does deploy instance or multiple instances form your VHD deployment. So it is must that your based VHD should be clean, and in such state that it can be used to deploy instances after instances as needed. SYSPREP is the best way to bring VM Image to this state.

- SYSPREP also sets up Administrator account to default state

As you can see SYSPREP really does help to create a VM Role image which can be effectively used for deploying multiple instances as needed so i would say SYSPREP indeed is needed.

Read more:

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

I had a hard time trying to get some diagnostics from a RoleEntryPoint.

I was doing some setup in this entry point and getting some errors.

So I tought, mmmm: this is a Task for the super Azure DiagnosticMonitor.

And I added a bunch of Trace statements and waited to get some output in my WADLogsTables, but NOTHING!!! ZERO NILCH! NADA!!

What happenned!!!

I took me a while to get to it. So

This is the things. I had to do.

1. First add a file called WaIISHost.exe.config

2. Add the Azure Diagnostics Trace Listener

<?xml version="1.0"?> <configuration> <system.diagnostics> <trace> <listeners> <add name="AzureDiagnostics" type="Microsoft.WindowsAzure.Diagnostics.DiagnosticMonitorTraceListener, Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"> <filter type="" /> </add> </listeners> </trace> </system.diagnostics> </configuration>3. And very very important, you must go to Copy to Output Directory property for this file and set it to Copy Always.

4. And another thing that you need is a lot of patience. The Diagnostics infraestructure takes a while.

So you add a Thread.Sleep after the Start callDiagnosticMonitor.Start(storageAccount, configuration);

Thread.Sleep(10000);5. After you do that you will be able to collect some information from the WADLogsTables

Tony Bailey (a.k.a. tbtechnet) posted Getting a Start on Windows Azure Pricing on 6/30/2011:

The Windows Azure Platform Pricing Calculator is pretty neat to help application developers get a rough initial estimate of their Windows Azure usage costs. But where to start? What sort of numbers do you plug in? We've taken a crack at some use cases so you can get a basic idea. We've put the Windows Azure Calculator Category Definitions at the bottom of the post.

Get a free 30-day Windows Azure pass no credit card required. Promo code = TBBLIF

- Get free technical support for your Windows Azure development. Just click on Windows Azure Platform under Technologies

1. E-commerce application

a. An application developer is going to customize an existing Microsoft .NET e-commerce application for a national chain, US-based florist. The application needs to be available all the time, even during usage spikes, scale for peak periods such as Mother’s Day and Valentine’s Day

b. You can use the Azure pricing data to figure out what elements of your application are likely to cost the most, and then examine optimization and different architecture options to reduce costs. For example, storing data in binary large object (BLOB) storage will increase the number of storage transactions. You could decrease transaction volume by storing more data in the SQL Azure database and save a bit on the transaction costs. Also, you could reduce the number of compute instances from two to one for the worker role if it’s not involved in anything user-interactive—it can be offline for a couple minutes during an instance reboot without a negative impact on the e-commerce application. Doing this would save $90.00 per month in compute instances (three instead of four instances in use).

2. Asset Tracking Application

a. A building management company needs to track asset utilization in its properties worldwide. Assets include equipment related to building upkeep, such as electrical equipment, water heaters, furnaces, and air conditioners. The company needs to maintain data about the assets and other aspects of building maintenance and have all necessary data available for quick and effective analysis.

b. Hosting applications like this asset tracking system on Windows Azure can make a lot of sense financially and as a strategic move. For about $6,500.00 in the first year, this building management company can improve the global accessibility, reliability, and availability of their asset tracking system. They gain access to a scalable cloud application platform with no capital expenses and no infrastructure management costs, and they avoid the internal cost of configuring and managing an intricate relational database. Even the pay-as-you-go annual cost of about $9,000.00 is a bargain, given these benefits.

3. Sales Training Application

a. A worldwide technology corporation wants to streamline its training process by distributing existing rich-media training materials worldwide over the Internet.

b. Hosting applications like this sales training system on Windows Azure can make a lot of sense financially and as a strategic move. Using the high estimate, for a little less than $10,000.00 a year, this technology company can avoid the cost of managing and maintaining its own CDN while taking advantage of a scalable cloud application platform with no capital expenses and no infrastructure management.

4. Social Media Application

a. A national restaurant chain has decided to use social media to connect directly with its customers. They hire an application developer that specializes in social media to develop a web application for a short-term marketing campaign. The campaign’s goal is to generate 500,000 new fans on a popular social media site.

b. Choosing to host applications on Windows Azure can make financial sense for short-term marketing campaigns that use social media applications because you don’t have to spend thousands of dollars buying hardware that will sit idle after the campaign ends. It also gives you the ability to rapidly scale the application in or out, up or down depending on demand. As a long-term strategic decision, using Azure lets you architect applications that are easy to repurpose for future marketing campaigns.

Windows Azure Calculator Category Definitions

Azure Calculator Category

How does this relate to my application?

What does this look like on Azure?

Measured by

This is the raw computing power needed to run your application.

A compute instance consists of CPU cores, memory, and disk space for local storage resources—it’s a pre-configured virtual machine (VM). A small compute instance is a VM with one 1.6 GHz core, 1.75 GB of RAM, and a 225 GB virtual hard drive. You can scale your application out by adding more small instances, or up by using larger instances.

Instance size:

Extra small

Small

Medium

Large

Extra large

If you application uses a relational database such as Microsoft SQL Server, how large is your dataset excluding any log files?

A SQL Azure Business Edition database. You choose the size.

GB

This is the data your application stores—product catalog information, user accounts, media files, web pages, and so on.

The amount of storage space used in blobs, tables, queues, and Windows Azure Drive storage

GB

Storage Transactions

These are the requests between your application and its stored data: add, update, read, and delete.

Requests to the storage service: add, update, read, or delete a stored file. Each request is analyzed and classified as either billable or not-billable based on the ability to process the request and the request’s outcome.

# of transactions

Data Transfer

This is the data that goes between the external user and your application (e.g., between a browser and a web site).

The total amount of data going in and out of Azure services via the internet. There are two data regions: North America/Europe and Asia Pacific.

GB in/GB out

Content Delivery Network (CDN)

This is any data you host in datacenters close to your users. Doing this usually improves application performance by delivering content faster. This can include media and static image files.

The total amount of data going in and out of Azure CDN via the internet. There are two CDN regions: North America/Europe and other locations.

GB in/GB out

Service Bus Connections

These are connections between your application and other applications (e.g., for off-site authentication, credit card processing, external search, third-party integration, and so on).

Establishes loosely-coupled connectivity between services and applications across firewall or network boundaries using a variety of protocols.

# of connections

Access Control Transactions

These are the requests between your application and any external applications.

The requests that go between an application on Azure and applications or services connected via the Service Bus.

# of transactions

Jonathan Rozenblit (@jrozenblit) posted What’s In the Cloud: Canada Does Windows Azure - Scanvee on 6/30/2011:

Since it is the week leading up to Canada day, I thought it would be fitting to celebrate Canada’s birthday by sharing the stories of Canadian developers who have developed applications on the Windows Azure platform. A few weeks ago, I started my search for untold Canadian stories in preparation for my talk, Windows Azure: What’s In the Cloud, at Prairie Dev Con. I was just looking for a few stores, but was actually surprised, impressed, and proud of my fellow Canadians when I was able to connect with several Canadian developers who have either built new applications using Windows Azure services or have migrated existing applications to Windows Azure. What was really amazing to see was the different ways these Canadian developers were Windows Azure to create unique solutions.

This week, I will share their stories.

Leveraging Windows Azure for Mobile Applications

Back in May, we talked about leveraging Windows Azure for your next app idea. We talked about the obvious scenarios of using Windows Azure – web applications. Lead, for example, is a web-based API hosted on Windows Azure. Election Night is also a web-based application, with an additional feature – it can be repackaged as a software-as-a-service (hosted on a platform-as-a-service). (More on this in future posts) Connect2Fans is an example of a web-based application hosted on Windows Azure. With Booom!!, we saw Windows Azure being used as a backend to a social application.

Today, we’ll see how Tony Vassiliev from Gauge Mobile and his team used Windows Azure as the backend for their mobile application.

Gauge Mobile

Gauge Mobile’s focus lies in mobile communications solutions. Scanvee, their proprietary platform, provides consumers, business and agencies the ability to create, manage, modify and track mobile barcode (ie. QR codes) and Near Field Communication (NFC) campaigns. Scanvee offers various account options to accommodate all business needs, from a basic free account to a fully integrated enterprise solution. Essentially, Gauge Mobile helps businesses of all sizes communicate more effectively with their consumers through mobile devices. They provide the tools to track and analyze their activities and make improvement in future communications.

Jonathan: When you guys were designing Scanvee, what was the rationale behind your decision to develop for the Cloud, and more specifically, to use Windows Azure?

Tony: Anything in the cloud makes more sense for a start-up. The advantages which equally apply to mobility can always be summed up to: affordability, scalability, and risk mitigation by reducing administrative requirements and increasing reliability. Microsoft has a definitive advantage when it comes to supporting their developer community. Windows Azure integration into existing development environments such as Visual Studio and SQL Server makes it easier and more efficient to develop for Azure. As well, naturally, utilization of existing technologies such as .Net framework and SQL Server are beneficial. Lastly, Platform-as-a-service model works better for us as a start-up than infrastructure-as-a-service offerings such as Amazon EC2. Azure offers turnkey solutions for load balancing, project deployment, staging environments and automatic upgrades. In conjunction with SQL Azure which replicates SQL Server in the cloud, total risk and administration costs of Azure solutions are less than an investment in EC2 where we still need to setup, configure and maintain our development and production environments internally.

Jonathan: What Windows Azure services are you using? How are you using them?

Tony: We are using a Windows Azure compute services - a web role (to which the mobile application connects) at the moment and we have a worker role in the works for background processing of large data inputs, outputs, invoice generation and credit card processing. We are also using one business SQL Azure database to hold the application's data and one for QA. We will be using sharding once we are at a point where we need to scale. Access Control and SQL Azure Reporting are two other features that we have on our roadmap. Access Control for integration into third party social networks and SQL Azure Reporting for our analytics. We may also end up using SQL Azure Sync depending on the state of SQL Azure and upcoming features for back up and point in time restores..

Jonathan: During development, did you run into anything that was not obvious and required you to do some research? What were your findings? Hopefully, other developers will be able to use your findings to solve similar issues.

Tony: There were few minor hiccups but nothing significant, everything was pretty straight forward!

Jonathan: Lastly, how did you and your team ramp up on Windows Azure? What resources did you use? What would you recommend for other Canadian developers to do in order to ramp up and start using Windows Azure??

Tony: Our senior engineer and architect is a .NET expert who had previously heard of Azure. He came equipped with all of Microsoft's development tools which provide good integration for Azure development. We started with a free account on Azure, created a prototype web role, deployed it, and sure enough it was a very simple and elegant procedure and we have continued to use Azure since.

As you can see, when it comes to mobile platforms, Windows Azure offers you an easy way to add backend services for your applications without having to put strain on the device. If your application has intensive processing and data requirements, such as Scanvee, you’ll need the infrastructure capacity in the backend to be able to support those requirements. Windows Azure can do that for you in a matter of a few clicks with no upfront infrastructure or configuration costs. Check out Connecting Windows Phone 7 and Slates to Windows Azure on the Canadian Mobile Developers’ Blog to get a deeper understand of how these platforms can work together. Once you’ve done that, get started by downloading the Windows Azure Toolkit for Windows Phone 7 or for iOS and working through Getting Started With The Windows Azure Toolkit for Windows Phone 7 and iOS.

I’d like to take this opportunity to thank Tony for sharing his story. For more information on Gauge Mobile, check out their site, follow them on Twitter, Facebook, and LinkedIn.

Tomorrow – another Windows Azure developer story.

Join The Conversation

What do you think of this solution’s use of Windows Azure? Has this story helped you better understand usage scenarios for Windows Azure? Join the Ignite Your Coding LinkedIn discussion to share your thoughts.

Previous Stories

Missed previous developer stories in the series? Check them out here.

Jas Sandhu (@jassand) reported Windows Azure Supports NIST Use Cases using Java in a 6/29/2011 post to the Interoperability @ Microsoft blog:

We've been participating in creating a roadmap for adoption of cloud computing throughout the federal government, with the National Institute for Standards and Technology (NIST) , an agency of the U.S. Department of Commerce, and the United States first federal physical science research laboratory. NIST is also known for publishing the often-quoted Definition of Cloud Computing, used by many organizations and vendors in the cloud space.

Microsoft is participating in the NIST initiative to jumpstart the adoption of cloud computing standards called Standards Acceleration to Jumpstart the Adoption of Cloud Computing, (SAJACC).The goal is to formulate a roadmap for adoption of high-quality cloud computing standards. One way they do this is by providing working examples to show how key cloud computing use cases can be supported by interfaces implemented by various cloud services available today. Microsoft worked with NIST and our partner, Soyatec, to demonstrate how Windows Azure can support some of the key use cases defined by SAJACC using our publicly documented and openly available cloud APIs.

NIST works with industry, government agencies and academia. They use an open and ongoing process of collecting and generating cloud system specifications. The hope is to have these resources serve to both accelerate the development of standards and reduce technical uncertainty during the interim adoption period before many cloud computing standards are formalized.

By using the Windows Azure Service Management REST APIs we are able to manage services and run simple operations including simple CRUD operations, solve simple authentication and authorizations using certificates. Our Service management components are built with RESTful principles and support multiple languages and runtimes including Java, PHP and .NET as well as IDEs including Eclipse and Visual Studio.

It also provides rich interfaces and functionality that provide scalable access to public, private and hosted clouds. All of the SDKs are available as open source too. With the Windows Azure Storage Service REST APIs we can use 3 sets of APIs that provide storage management support for Tables, Blobs and Queues with the same RESTful principles using the same set of languages. These APIs as well are available as open source.

We also have an example that we have created called SAJACC use case drivers to demonstrate this code in action. In this demonstration written in Java we show the basic functionality demonstrated for the NIST Sample. We created the following scenarios and corresponding code …

1. Copying Data Objects into a Cloud, the user is able to copy items on their local machine (client) and copy to the Windows Azure Storage without any change in the file; the assumptions are to have credential with a pair of account name and key. The scenario involves generating a container with a random name in each test execution to avoid possible name conflicts. The container uses the Windows Azure API. With the credential previously created the user prepares the Windows Azure Storage execution context. Then a blob container is created, with optional custom network connection timeout and retry policy, you are able to easily recover from network failure. Then we will create a block blob and transfer a local file to it. We will then compute a MD5 hash for the local file, get one for the blob and compare it to show there are equivalent and no data was lost

2. Copying Data Objects Out of a Cloud, repeats what we do from the first use case, Copying Data Objects into a Cloud. Additionally we will include another scenario, where set public access to the blob container and get its public URL; we will then as an un-identified (public) user retrieve the blob using an http GET request and save it to the local file system. We will then generate a MD5 hash for this file and compare it to the originals we used previously

3. Erasing Data Objects in a Cloud erases a data object on behalf of a user. With the credentials and data you created in the previous examples we will use the public URL of the blob and delete it by using its blob name. We will verify by using an http GET request to confirm that it has been erased.

4. VM Control: Allocating VM Instance, the user is able to create a VM image to compute on that is secure and performs well. The scenario involves creating a Java Keystore and Truststore from a user certificate to support SSL transport (described below). We will also create Windows Azure management execution context to issue commands from and create a hosted service using it. We will then prepare a Windows Azure service package and copy it to the blob we created in the first use case. We will then deploy in the hosted service using its name and service configuration information including the URL of the blob and the number of instances. We can then change the instance count to as many roles we want to execute using what we deploy and verify the change by getting status information from it.

5. VM Control: Managing Virtual Machine Instance State, the user is able to stop, terminate, reboot, and start the state of a virtual instance. We will first prepare an app to run as the Web Role in Windows Azure. The program will add a Windows Azure Drive to keep some files persistent when the VM is killed or rebooted. We will have two web pages, one where a random file is created inside the mounted drive, and another to list all the files on the drive. Then we will build and package the program and deploy the Web Role create as a hosted service on Window Azure using the portal. We will then create another program to manage the VM instance state similar to what we had done before in the previous use case, VM Control: Allocating VM Instance. We will use http GET requests to visit the first web page to create a random file on the Windows Azure Drive and the second web page to lists the files to show that they are not empty. We will then use the management execution context to stop the VM and disassociate the IP address and confirm this by visiting the second web page which will not be available. We will then use the same management execution context to restart the VM and confirm that the files in the drive are persistent between the restarts of the VM.

6. Copying Data Objects between Cloud-Providers, the user is able to copy data objects from one Windows Azure Storage account to another. This example involves creating a program to run as a worker role where a storage execution context is created. We will use the container as per the first use case, Copying Data Objects into a Cloud. We will download the blob to a local file system. We will then then create a second storage execution context and transfer the downloaded file to this new storage execution context. Then as per the first use case we will create a new program and deploy it to retrieve the two blobs and compare and verify the contents MD5 hashes are the same.

Java code to test the Service Management API

Test Results

Managing API Certificates

For the Java examples (use cases 4-6), we need to have key credentials. In our download we demonstrate the Service Management API being called with an IIS certificate. We will take you through generating an X509 certificate for the Windows Azure Management API. We show the management console for IIS7 and certificate manager in Windows. Creating the self-signed server certificates and exporting them to the Windows Azure portal and generate a JKS format key store for the Java Azure SDK. We will then upload it to the Azure account and converting the keys for use in the Java Keystore and for calling the Service Management API from Java.

We then demonstrate the Service Management API using the Java Key tool Certificates. We will use the Java Keystore and export an X.509 certificate to the Windows Azure Management API. Then we upload certificate to an Azure account. We will then construct a new Service Management Rest object with the specific parameters and end by testing the Services Management API from Java

To get more information, the Windows Azure Storage Services REST API Reference and the Windows Azure SDK for PHP Developers are useful resources to have. You may also want to explore more with the following tutorials:

- Table Storage service, offers structured storage in the form of tables. The Table service API is a REST API for working with tables and the data that they contain.

- Blob Storage service, stores text and binary data. The Blob service offers the following three resources: the storage account, containers, and blobs

- Queue Service, stores messages that may be read by any client who has access to the storage account. A queue can contain an unlimited number of messages, each of which can be up to 8 KB in size

With the above tools and Azure cloud services, you can implement most of the Use Cases listed by NIST for use in SAJACC. We hope you find these demonstrations and resources useful, and please send feedback!

Resources:

Patrick Butler Monterde described Developing and Debugging Azure Management API CmdLets in a 6/29/2011 post:

The Azure Management API Cmdlets enable you to use PowerShell Cmdlets to manage your Azure projects. You can access this project from the following link: http://wappowershell.codeplex.com/

Working with the Azure Management API Cmdlets

1. Follow the instructions to install the Cmdlets

2. Installing a certificate is needed for executing the Cmdlets:

For creating the certificate follow this instructions: http://blogs.msdn.com/b/avkashchauhan/archive/2010/12/30/handling-issue-csmanage-cannot-establish-secure-connection-to-management-core-windows-net.aspx

Viewing Certificates: http://windows.microsoft.com/en-US/windows-vista/View-or-manage-your-certificates

Lessons Learned

Install the certificate your are going to be using as the Azure Management API certificate in the computer you are using for Execution/development. If this is not done you will get errors like this one: Windows Azure PowerShell CMDLET returns "The HTTP request was forbidden with client authentication scheme 'Anonymous'" error message. Link: http://blogs.msdn.com/b/avkashchauhan/archive/2011/06/13/what-to-do-when-windows-azure-powershell-cmdlet-returns-quot-the-http-request-was-forbidden-with-client-authentication-scheme-anonymous-quot-error-message.aspx

Developing and Debugging Recommendations

1. Closing the PowerShell Windows for Compiling

The PowerShell shell windows will lock the build and rebuild of the project because it using the Azure Management Cmdlets compiled libraries. You need to close any PowerShell window you may have open before Building the Azure Cmdlets project and for un-installation of the Cmdlets.

2. Streamline Testing

Create a text file with the variables and values, open a PowerShell window and copy and paste the variables. The variables will be available for the entire life of the window. Example:

$RolName = "WebRole1_IN_0"

$RolName2= "WebRole1_IN_1"

$Slot = "Production"

$ServiceName = "Mydemo"

$SubId = "6cad9315-45a8-3e4r4e-8bdc-3e3rfdeerrrf"

$Cert = "C:\certs\AzureManagementAPICertificate.cer"Reboot-RoleInstance -RoleInstanceName $RolName -Slot $Slot -SubscriptionId $SubId -Certificate $Cert -ServiceName $ServiceName

ReImage-RoleInstance -RoleInstanceName $RolName2 -Slot $Slot -SubscriptionId $SubId -Certificate $Cert -ServiceName $ServiceName

Visual studio Azure Management Cmdlets debugging Steps:

- Open the Azure Management Cmdlets Solution in Visual Studio

- Close all open PowerShell windows

- Open a CMD Windows and Run the Cmdlets Uninstall script:

- Run the script “uninstallPSSnapIn.bat”

- Find location “C:\AzureServiceManagementCmdlets\setup\dependency_checker\scripts\tasks”

- Recompile the project in Visual studio

- (as needed) Put your breakpoints

- On the CMD Window Run the Cmdlets installation script: installPSSnapIn.cmd

- Find location “C:\AzureServiceManagementCmdlets\setup\dependency_checker\scripts\tasks”

- Run the script “installPSSnapIn.cmd”

- Open a PowerShell Window. Run the following commands in the PowerShell window

- Add-PSSnapin AzureManagementToolsSnapIn

- Get-Command -PSSnapin AzureManagementToolsSnapIn

- Go back to Visual studio and Attach the Debugger to the PowerShell

- Tools Tab –> Attach to Process… –> Attach to the PowerShelll process.

- Go back to the the PowerShell Window

- Setup the variables you may need:

$RolName = "WebRole1_IN_0"

$RolName2= "WebRole1_IN_1"

$Slot = "Production"

$ServiceName = "Mydemo"

$SubId = "6cad9315-45a8-3e4r4e-8bdc-3e3rfdeerrrf"

$Cert = "C:\certs\AzureManagementAPICertificate.cer- Run the CmdLet you want to debug >> The execution will stop on the BreakPoint in Visual Studio

- For Example: Reboot-RoleInstance -RoleInstanceName $RolName -Slot $Slot -SubscriptionId $SubId -Certificate $Cert -ServiceName $ServiceName

Running the Commands:

Thanks to David Aiken for the PowerShell tips and tricks.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework

Joe Kunk (@JoeKunk) asserted “Learn how to extend support for Microsoft C#-only BlankExtension/BizType projects to Visual Basic” in a deck for his An Encrypted String Data Type for Visual Studio LightSwitch article for the July 2001 edition of Visual Studio Magazine’s On VB column:

In my recent article, "Is Visual Studio LightSwitch the New Access?", I looked at the suitability of LightSwitch as a replacement tool for departmental applications developed in Microsoft Access. LightSwitch is being positioned as a tool for the power user to develop Microsoft .NET Framework applications without having to face the substantial learning curve of the full .NET technology stack. I described each of the six extension types available to add the functionality to LightSwitch that an experienced .NET developer might want to use to make development in LightSwitch easier, or that a designer may want to use to build a visually appealing application.

This article demonstrates the usefulness of LightSwitch extension points by showing how to build a custom business data type to provide automatic encryption to a database string field. Once installed, the "Encrypted String" field data type can be available for use in any table in a LightSwitch project with the extension enabled. When a field of the Encrypted String data type is used in a LightSwitch screen, the field automatically decrypts itself when the cursor enters the field and automatically encrypts itself whenever the cursor leaves the field. The LightSwitch developer can store sensitive information in the database in encrypted form with no more effort than selecting the "Encrypted String" data type when designing the table.

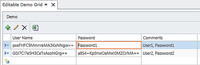

Figure 1 shows an example of a LightSwitch grid with two rows of data, each row having User Name and Password as the Encrypted String data type. The cursor is in the Password field of the first row, so it's shown and editable as plain text -- which is automatically encrypted when the cursor leaves the field. The Comments column shows the decrypted value of each field. The database sees and saves the field value in its encrypted form; be sure to allow sufficient length in the database field for the encrypted string value.

[Click on image for larger view.]Figure 1. The Encrypted String business type being edited in a LightSwitch screen.

Installing and Using the Encrypted String Business Type

In order to run LightSwitch, you must be using Visual Studio Professional or higher with Service Pack 1 installed, and also the Visual Studio LightSwitch beta 2 (available for download here). Beta 1 must be uninstalled before installing beta 2.To install the Encrypted String business type into LightSwitch, download the code from this article and extract all files. Close all instances of Visual Studio. Double-click the Blank.Vsix.vsix file found in the Blank.VSIX\bin folder. You'll see the Visual Studio Extension Installer dialog box. Make sure the Microsoft LightSwitch checkbox is checked and click the Install button. You'll see an Installation Complete confirmation dialog box once the install is done. The Visual Studio Extension Manager will now show Encrypted String Extension in the Installed Extensions list.

The Encrypted String extension must be enabled in any LightSwitch project that wishes to use it. In the LightSwitch project, right-click on the project's properties and choose the Extensions tab. Click on the Encrypted String Extension item to activate it for the project, as shown in Figure 2. Now the Encrypted String data type is available for use when creating a table in the same manner as an Integer, Date Time or String field.

[Click on image for larger view.]Figure 2. Including the Encrypted String Extension in the LightSwitch project.

To remove existing LightSwitch extensions, go to the Extension Manager in Visual Studio. Choose Tools and then Extension Manager, then select the extension and choose Uninstall. Close Visual Studio for the change to take effect.

Creating the Custom Business Type Extension

On March 16, 2011, the LightSwitch team published the "LightSwitch Beta 2 Extensibility Cookbook". In addition to the Cookbook document, the post has a BlankExtension.zip file, which is the completed version of the Cookbook's instructions to create a sample extension of each type in the Cookbook. This completed sample will serve as the starting point to create the Encrypted String custom business type extension. The instructions in this section serve as a guide for how you can modify this sample project to implement your own custom business type, as I did.Download the BlankExtension.zip file and extract the "Blank Extension - BizType" project to a location of your choosing. Load the BlankExtension solution into Visual Studio. Examine each project in the Blank Extension solution to ensure all LightSwitch references are correct in the Blank.Client, Blank.ClientDesign and Blank.Common projects. Note that the LightSwitch beta 2 product does not install into the Global Assembly Cache, or GAC, but instead into the C:\Program Files\Microsoft Visual Studio 10.0\Common7\IDE\LightSwitch\1.0 folder. Remove any incorrect references and browse to the needed assemblies in the Client subfolder of the LightSwitch folder.

Julie Lerman (@julielerman) described Entity Framework June 2011 CTP: TPT Inheritance Query Improvements in a 7/1/2011 post to her Don’t Be Iffy blog:

I want to look at some of the vast array of great improvements coming to EF that are part of the June 2011 CTP that was released yesterday.

Everyone’s going on and on about the enums.

There’s a lot more in there. Not sure how many I’ll cover but first up will be the TPT store query improvements.

I’ll use a ridiculously simple model with code first to demonstrate and I’ll share with you a surprise discovery I made (which began with some head scratching this morning).

Beginning with one base class and a single derived class.

public class Base { public int Id { get; set; } public string Name { get; set; } } public class TPTA: Base { public string PropA { get; set; } }By default, code first does TPH and would create a single database table with both types represented. So I use a fluent mapping to force this to TPT. (Note that you can’t do this configuration with Data Annotations).

public class Context: DbContext { public DbSet<Base> Bases { get; set; } protected override void OnModelCreating(DbModelBuilder modelBuilder) { modelBuilder.Entity<Base>().Map<TPTA>(m => m.ToTable("TPTTableA")); } }Here’s my query:

from c in context.Bases select new {c.Id, c.Name};Notice I’m projecting only fields from the base type.

And the store query that results:

SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name] FROM [dbo].[Bases] AS [Extent1]Not really anything wrong there. So what’s the big deal? (This is where I got confused…

)

Now I’ll add in another derived type and I’ll modify the configuration to accommodate that as well.

public class TPTB: Base { public string PropB { get; set; } } modelBuilder.Entity<Base>().Map<TPTA>(m => m.ToTable("TPTTableA"))

.Map<TPTB>(m => m.ToTable("TPTTableB"));Execute the query again and look at the store query now!

SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name] FROM [dbo].[Bases] AS [Extent1] LEFT OUTER JOIN (SELECT [Extent2].[Id] AS [Id] FROM [dbo].[TPTTableA] AS [Extent2] UNION ALL SELECT [Extent3].[Id] AS [Id] FROM [dbo].[TPTTableB] AS [Extent3]) AS [UnionAll1] ON [Extent1].[Id] = [UnionAll1].[Id]Egad!

Now, after switching to the new bits (retargeting the project and removing EntityFramework.dll (4.1) and referencing System.Data.Entity.dll (4.2) instead)

The query against the new model (with two derived types) is trimmed back to all that’s truly necessary:

SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name] FROM [dbo].[Bases] AS [Extent1]Here’s some worse ugliness in EF 4.0. Forgetting the projection, I’m now querying for all Bases including the two derived types. I.e. “context.Bases”.

SELECT CASE WHEN (( NOT (([UnionAll1].[C2] = 1) AND ([UnionAll1].[C2] IS NOT NULL)))

AND ( NOT (([UnionAll1].[C3] = 1) AND ([UnionAll1].[C3] IS NOT NULL)))) THEN '0X' WHEN (([UnionAll1].[C2] = 1)

AND ([UnionAll1].[C2] IS NOT NULL)) THEN '0X0X' ELSE '0X1X' END AS [C1], [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], CASE WHEN (( NOT (([UnionAll1].[C2] = 1) AND ([UnionAll1].[C2] IS NOT NULL))) AND ( NOT (([UnionAll1].[C3] = 1)

AND ([UnionAll1].[C3] IS NOT NULL)))) THEN CAST(NULL AS varchar(1)) WHEN (([UnionAll1].[C2] = 1)

AND ([UnionAll1].[C2] IS NOT NULL)) THEN [UnionAll1].[PropA] END AS [C2], CASE WHEN (( NOT (([UnionAll1].[C2] = 1) AND ([UnionAll1].[C2] IS NOT NULL))) AND ( NOT (([UnionAll1].[C3] = 1)

AND ([UnionAll1].[C3] IS NOT NULL)))) THEN CAST(NULL AS varchar(1)) WHEN (([UnionAll1].[C2] = 1)

AND ([UnionAll1].[C2] IS NOT NULL)) THEN CAST(NULL AS varchar(1)) ELSE [UnionAll1].[C1] END AS [C3] FROM [dbo].[Bases] AS [Extent1] LEFT OUTER JOIN (SELECT [Extent2].[Id] AS [Id], [Extent2].[PropA] AS [PropA], CAST(NULL AS varchar(1)) AS [C1], cast(1 as bit) AS [C2], cast(0 as bit) AS [C3] FROM [dbo].[TPTTableA] AS [Extent2] UNION ALL SELECT [Extent3].[Id] AS [Id], CAST(NULL AS varchar(1)) AS [C1], [Extent3].[PropB] AS [PropB], cast(0 as bit) AS [C2], cast(1 as bit) AS [C3] FROM [dbo].[TPTTableB] AS [Extent3]) AS [UnionAll1] ON [Extent1].[Id] = [UnionAll1].[Id]And now with the new CTP:

SELECT CASE WHEN (( NOT (([Project1].[C1] = 1) AND ([Project1].[C1] IS NOT NULL)))

AND ( NOT (([Project2].[C1] = 1) AND ([Project2].[C1] IS NOT NULL)))) THEN '0X' WHEN (([Project1].[C1] = 1)

AND ([Project1].[C1] IS NOT NULL)) THEN '0X0X' ELSE '0X1X' END AS [C1], [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], CASE WHEN (( NOT (([Project1].[C1] = 1) AND ([Project1].[C1] IS NOT NULL))) AND ( NOT (([Project2].[C1] = 1)

AND ([Project2].[C1] IS NOT NULL)))) THEN CAST(NULL AS varchar(1)) WHEN (([Project1].[C1] = 1)

AND ([Project1].[C1] IS NOT NULL)) THEN [Project1].[PropA] END AS [C2], CASE WHEN (( NOT (([Project1].[C1] = 1) AND ([Project1].[C1] IS NOT NULL))) AND ( NOT (([Project2].[C1] = 1)

AND ([Project2].[C1] IS NOT NULL)))) THEN CAST(NULL AS varchar(1)) WHEN (([Project1].[C1] = 1)

AND ([Project1].[C1] IS NOT NULL)) THEN CAST(NULL AS varchar(1)) ELSE [Project2].[PropB] END AS [C3] FROM [dbo].[Bases] AS [Extent1] LEFT OUTER JOIN (SELECT [Extent2].[Id] AS [Id], [Extent2].[PropA] AS [PropA], cast(1 as bit) AS [C1] FROM [dbo].[TPTTableA] AS [Extent2] ) AS [Project1] ON [Extent1].[Id] = [Project1].[Id] LEFT OUTER JOIN (SELECT [Extent3].[Id] AS [Id], [Extent3].[PropB] AS [PropB], cast(1 as bit) AS [C1] FROM [dbo].[TPTTableB] AS [Extent3] ) AS [Project2] ON [Extent1].[Id] = [Project2].[Id]At first glance you may think “but it’s just as long and just as ugly” but look more closely:

Notice that the first query uses a UNION for the 2nd derived type but the second uses another LEFT OUTER JOIN. Also the “Cast 0 as bit” is gone from the 2nd query. I am not a database performance guru but I’m hoping/guessing that all of the work involved to make this change was oriented towards better performance. Perhaps a DB guru can confirm. Google wasn’t able to.

I did look at the query execution plans in SSMS. They are different but I’m not qualified to understand the impact.

Here’s the plan from the first query (from EF 4 with the unions)

and the one from the CTP generated query:

The ADO.NET Team published Announcing the Microsoft Entity Framework June 2011 CTP on 6/30/2011:

We are excited to announce the availability of the Microsoft Entity Framework June 2011 CTP. This release includes many frequently requested features for both the Entity Framework and the Entity Framework Designer within Visual Studio.

What’s in Entity Framework June 2011 CTP?

The Microsoft Entity Framework June 2011 CTP introduces both new runtime and design-time features. Here are some of the new runtime features:

- The Enum data-type is now available in the Entity Framework. You can use either the Entity Designer within Visual Studio to model entities that have Enum properties, or use the Code First workflow to define entities that have Enum objects as properties. You can use your Enum property just like any other scalar property, such as in LINQ queries and updates.

- Two new spatial data-types for Geography and Geometry are now natively supported by the Entity Framework. You can use these types and methods on these types as part of LINQ queries, for example to find the distance between two locations as part of a query.

- You can now add table-valued functions to your entity data model. A table-valued function is similar to a stored procedure, but the result of executing the table-valued function is composable, meaning you can use it as part of a LINQ query.

- Stored procedures can now have multiple result sets in your entity data model.

- The Entity Framework June 2011 CTP includes several SQL generation improvements, especially around optimizing queries over models with table-per-type (TPT) inheritance.

- LINQ queries are now automatically compiled and cached to improve query performance. In previous releases of the Entity Framework, you could use the CompiledQuery class to compile a LINQ query explicitly but in this CTP this happens automatically for LINQ queries.

There are several new features for the Entity Framework Designer within Visual Studio:

- The Entity Designer now supports creation of Enums, spatial data-types and table-value functions from the designer surface.

- You can now create multiple diagrams for each entity data model. Each diagram can contain entities and relationships to make visualizing your model easier. You can switch between diagrams using the Model Browser and include related entities on each diagram as an optional command.

- The StoreGeneratedPattern for key columns can now be set on an entity Properties window and this value will propagate from your entity model down to the store definition.

- Diagram information is now stored in a separate file from the edmx or entity code files.

- When you import stored procedures using the Entity Model Wizard, you can now batch import your stored procedures as function imports. The result shape of each stored procedure will automatically become a new complex type in your entity model. This makes getting started with stored procedures very easy.

- The Entity Designer surface now supports selection driven highlighting and entity shape coloring.

Getting the Microsoft Entity Framework June 2011 CTP

The Microsoft Entity Framework June 2011 CTP is available on the Microsoft Download Center and there are three separate installers. You will need to have Visual Studio 2010 SP1 to install and use the new Entity Designer. All three installers are available here and should be installed in the following order:

- Download and install the runtime installer for the Entity Framework 2011 CTP runtime components.

- Download and install the WCF Data Services 2011 CTP installer to use new features in WCF Data Services with the Entity Framework.

- Download and install the designer installer to use the new Entity Framework 2011 CTP entity designer within Visual Studio 2010 SP1.

Getting Started

There are a number of resources to help you get started with this CTP.

Targeting the Microsoft Entity Framework June 2011 CTP Runtime

The easiest way to use the CTP is to set your project’s target framework to Microsoft Entity Framework June 2011 CTP. This target framework allows use of the CTP versions of the Entity Framework assemblies in addition to the standard .NET 4.0 RTM assemblies.

To re-target a C# project:

- Right-click the project in the Solution Explorer and choose Properties or select <Project> Properties from the Project menu.

- On the Application tab, select Microsoft Entity Framework June 2011 CTP or Microsoft Entity Framework June 2011 CTP Client Profile from the Target framework dropdown.

- A dialog box will appear indicating that the project needs to be closed and reopened to retarget the project. Click Yes.

To re-target a VB.NET project:

- Right-click the project in the Solution Explorer and choose Properties or select <Project> Properties from the Project menu.

- Select the Compile tab and click the Advanced Compile Options… button on the bottom left of the dialog.

- Select Microsoft Entity Framework June 2011 CTP or Microsoft Entity Framework June 2011 CTP Client Profile from the Target framework dropdown.

- Click Ok.

- A dialog box will appear indicating that the project needs to be closed and reopened to retarget the project. Click Yes.

Walkthroughs

For assistance in using the new design-time and runtime features please refer to the following walkthroughs [see posts below]:

Support

The Entity Framework Pre-Release Forum can be used for questions relating to this release.

Uninstalling

We recommend installing the Microsoft Entity Framework June 2011 CTP in a non-production environment to avoid any risk associated with installing and uninstalling pre-release software. If the CTP is uninstalled, existing functionality installed with Visual Studio 2010 (such as the Entity Designer) may no longer be available. To restore the features that were removed, complete the following steps:

- Locate the Visual Studio 2010 installation DVD and insert it into your computer. If you are using an Express SKU of Visual Studio and don’t have the installation media, you can obtain new media here

- Launch an administrative-level command prompt and execute one of the following commands. These commands restore versions of the EF Tools files that were initially shipped with Visual Studio 2010.

With Ultimate, Pro, or other non-Express SKUs:

[DVD drive letter]:\WCU\EFTools\ADONETEntityFrameworkTools_enu.msi USING_EXUIH=1With C# Express, VB Express, and Visual Web Developer Express SKUs:

[DVD drive letter]:\[VBExpress, VCSExpress, or VWDExpress]\WCU\EFTools\ADONETEntityFrameworkTools_enu.msi USING_EXUIH=1

- To update the EF Tools with what shipped with Visual Studio Service Pack 1, there are two options:

- To run an update only for EF Tools, create a DVD with Visual Studio Service Pack 1 and execute the following command from an administrative-level command prompt. This update will only take a few minutes to complete.

msiexec /update [DVD drive letter]:\VS10sp1-KB983509.msp /package {14DD7530-CCD2-3798-B37D-3839ED6A441C}

- To avoid creating a DVD, reapply SP1. Launch the Uninstall/Change programs applet in the Control Panel, double-click on Microsoft Visual Studio 2010 Service Pack 1, and then choose the option to “Reapply Microsoft Visual Studio Service Pack 1.” This approach will take longer since all of SP1 will be applied, not just the EF Tools file updates.

What’s Not in the Entity Framework June 2011 CTP?

There are a number of commonly requested features that did not make it into this CTP of the Entity Framework. We appreciate that these are really important to you and our team has started work on a number of them already. We will be reaching out for your feedback on these features soon:

- Stored Procedure or table-valued function support in Code First

- Migration support in Code First

- Customizable conventions in Code First

- Unique constraints support

- Batching create-update-delete statements during save

- Second level caching

Thank You

It is important for us to hear about your experience using the Entity Framework and we appreciate any and all feedback you have regarding this CTP or other aspects of the Entity Framework. We thank you for giving us your valuable input and look forward to working together on this release.

ADO.NET Entity Framework Team

Pedro Ardila described Walkthrough: Spatial (June CTP) on 6/30/2011:

Spatial data is one of the new features in Entity Framework June 2011 CTP. Spatial data allows users to represent locations on a map as well as points, geometric shapes, and other data which relies on a coordinate system. There are two main types of spatial data: Geography and Geometry. Geography data takes the ellipsoid nature of the earth into account while Geometry bases all measurements and calculations on Euclidean data. Please see this blog post if you would like to understand more about the Spatial design in EF.

In this walkthrough we will see how to use EF to interact with spatial data in a SQL Server database. We will create a console application in Visual Studio using the Code First and Database First Approach. Our application will use a LINQ query to find all landmarks within a certain distance of a person’s location.

Pre-requisites

- Visual Studio 2010 Express and SQL Server 2008 R2 Express or Higher. Click here to download the Express edition of VS and SQL Server Express

- Microsoft Entity Framework June 2011 CTP. Click here to download.

- Microsoft Entity Framework Tools June 2011 CTP. Click here to download.

- Microsoft SQL Server 2008 Feature Pack. This pack contains the SQL Spatial Types assembly used by Entity Framework. Click here to download.

- You can also get the types by installing SQL Server Management Studio Express or higher. You can download Express here.

- Your Visual Studio 2010 setup might include these types already, in which case you won’t need to install additional components.

- SeattleLandmarks database. You can find it at the bottom of this post.

Setting up the Project

- Launch Visual Studio and create a new C# Console application named SeattleLandmarks.

- Make sure you are targeting the Entity Framework June 2011 CTP. Please see the EF June 2011 CTP Intro Post to learn how to change the target framework.

Code First Approach

We will first see how to create our application using Code First. You could alternatively use a Database First approach, which you will see below.

Creating Objects and Context

First we must create the classes we will use to represent People and Landmarks, as well as our context. For the sake of this walkthrough, we will create these in Program.cs. Normally you would create these in separate files. The classes will look as follows:

public class Person { public int PersonID { get; set; } public string Name { get; set; } public DbGeography Location { get; set; } } public class Landmark { public int LandmarkID { get; set; } public string LandmarkName { get; set; } public DbGeography Location { get; set; } public string Address { get; set; } } public class SeattleLandmarksEntities : DbContext { public DbSet<Person> People { get; set; } public DbSet<Landmark> Landmarks { get; set; } }

Database InitializerWe will use a database initializer to add seed data to our Landmarks and People tables. To do so, create a new class file called SeattleLandmarksSeed.cs. The contents of the file are the following:

using System; using System.Collections.Generic; using System.Linq; using System.Text; using System.Data.Entity; using System.Data.Spatial; namespace SeattleLandmarks { class SeattleLandmarksSeed : DropCreateDatabaseAlways<SeattleLandmarksEntities> { protected override void Seed(SeattleLandmarksEntities context) { var person1 = new Person { PersonID = 1, Location = DbGeography.Parse("POINT(-122.336106 47.605049)"), Name = "John Doe" }; var landmark1 = new Landmark { LandmarkID = 1, Address = "1005 E. Roy Street", Location = DbGeography.Parse("POINT(-122.31946 47.625112)"), LandmarkName = "Anhalt Apartment Building" }; var landmark2 = new Landmark { LandmarkID = 2, Address = "", Location = DbGeography.Parse("POINT(-122.296623 47.640405)"), LandmarkName = "Arboretum Aqueduct" }; var landmark3 = new Landmark { LandmarkID = 3, Address = "815 2nd Avenue", Location = DbGeography.Parse("POINT(-122.334571 47.604009)"), LandmarkName = "Bank of California Building" }; var landmark4 = new Landmark { LandmarkID = 4, Address = "409 Pike Street", Location = DbGeography.Parse("POINT(-122.336124 47.610267)"), LandmarkName = "Ben Bridge Jewelers Street Clock" }; var landmark5 = new Landmark { LandmarkID = 5, Address = "300 Pine Street", Location = DbGeography.Parse("POINT(-122.338711 47.610753)"), LandmarkName = "Bon Marché" }; var landmark6 = new Landmark { LandmarkID = 6, Address = "N.E. corner of 5th Avenue & Pike Street", Location = DbGeography.Parse("POINT(-122.335576 47.610676)"), LandmarkName = "Coliseum Theater Building" }; var landmark7 = new Landmark { LandmarkID = 7, Address = "", Location = DbGeography.Parse("POINT(-122.349755 47.647494)"), LandmarkName = "Fremont Bridge" }; var landmark8 = new Landmark { LandmarkID = 8, Address = "", Location = DbGeography.Parse("POINT(-122.335197 47.646711)"), LandmarkName = "Gas Works Park" }; var landmark9 = new Landmark { LandmarkID = 9, Address = "", Location = DbGeography.Parse("POINT(-122.304482 47.647295)"), LandmarkName = "Montlake Bridge" }; var landmark10 = new Landmark { LandmarkID = 10, Address = "1932 2nd Avenue", Location = DbGeography.Parse("POINT(-122.341529 47.611693)"), LandmarkName = "Moore Theatre" }; var landmark11 = new Landmark { LandmarkID = 11, Address = "200 2nd Avenue N./Seattle Center", Location = DbGeography.Parse("POINT(-122.352842 47.6186)"), LandmarkName = "Pacific Science Center" }; var landmark12 = new Landmark { LandmarkID = 12, Address = "5900 Lake Washington Boulevard S.", Location = DbGeography.Parse("POINT(-122.255949 47.549068)"), LandmarkName = "Seward Park" }; var landmark13 = new Landmark { LandmarkID = 13, Address = "219 4th Avenue N.", Location = DbGeography.Parse("POINT(-122.349074 47.619589)"), LandmarkName = "Space Needle" }; var landmark14 = new Landmark { LandmarkID = 14, Address = "414 Olive Way", Location = DbGeography.Parse("POINT(-122.3381 47.612467)"), LandmarkName = "Times Square Building" }; var landmark15 = new Landmark { LandmarkID = 15, Address = "5009 Roosevelt Way N.E.", Location = DbGeography.Parse("POINT(-122.317575 47.665229)"), LandmarkName = "University Library" }; var landmark16 = new Landmark { LandmarkID = 16, Address = "1400 E. Galer Street", Location = DbGeography.Parse("POINT(-122.31249 47.632342)"), LandmarkName = "Volunteer Park" }; context.People.Add(person1); context.Landmarks.Add(landmark1); context.Landmarks.Add(landmark2); context.Landmarks.Add(landmark3); context.Landmarks.Add(landmark4); context.Landmarks.Add(landmark5); context.Landmarks.Add(landmark6); context.Landmarks.Add(landmark7); context.Landmarks.Add(landmark8); context.Landmarks.Add(landmark9); context.Landmarks.Add(landmark10); context.Landmarks.Add(landmark11); context.Landmarks.Add(landmark12); context.Landmarks.Add(landmark13); context.Landmarks.Add(landmark14); context.Landmarks.Add(landmark15); context.Landmarks.Add(landmark16); context.SaveChanges(); } } }

Adding Entities containing Spatial Data

In the code above, we added a number of landmarks containing spatial data. Note that to initialize a spatial property we use the DbGeography.Parse method which takes WellKnownText. In this case, we passed a point with a longitude and latitude.Invoking the Initializer

Now, to make sure the Initializer gets invoked, we must add the following lines to App.config inside <configuration>:

<appSettings> <add key="DatabaseInitializerForType SeattleLandmarks.SeattleLandmarksEntities, SeattleLandmarks" value="SeattleLandmarks.SeattleLandmarksSeed, SeattleLandmarks" /> </appSettings>Writing the App (Code First)

- Open Program.cs and enter the following code:

using System; using System.Collections.Generic; using System.Linq; using System.Text; using System.Data.Spatial; using System.Data.Entity; namespace ConsoleApplication4 { class Program { static void Main(string[] args) { using (var context = new SeattleLandmarksEntities()) { var person = context.People.Find(1); var distanceInMiles = 0.5; var distanceInMeters = distanceInMiles * 1609.344; var landmarks = from l in context.Landmarks where l.Location.Distance(person.Location) < distanceInMeters select new { Name = l.LandmarkName, Address = l.Address }; Console.WriteLine("\nLandmarks within " + distanceInMiles + " mile(s) of " + person.Name + "'s location:"); foreach (var loc in landmarks) { Console.WriteLine("\t" + loc.Name + " (" + loc.Address + ")"); } Console.WriteLine("Done! Press ENTER to exit."); Console.ReadLine(); } } } public class Person { public int PersonID { get; set; } public string Name { get; set; } public DbGeography Location { get; set; } } public class Landmark { public int LandmarkID { get; set; } public string LandmarkName { get; set; } public DbGeography Location { get; set; } public string Address { get; set; } } public class SeattleLandmarksEntities : DbContext { public DbSet<Person> People { get; set; } public DbSet<Landmark> Landmarks { get; set; } } }The first thing we do is create a context. Then we create distance and person variables. In line 14, we use the Find method in DbSet to retrieve the first person. We use both of these in the LINQ query in line 19. In this query we select all the locations within a half mile of the given person’s location. To compute the distance, we use the Distance method in System.Data.Spatial.DbGeography. You can explore the full list of methods by browsing through the System.Data.Spatial namespace in the Class View window.The output is the following:

Landmarks within 0.5 mile(s) of John Doe's location:

Bank of California Building (815 2nd Avenue)

Ben Bridge Jewelers Street Clock (409 Pike Street)

Bon Marché (300 Pine Street)

Coliseum Theater Building (N.E. corner of 5th Avenue & Pike Street)

Done! Press ENTER to exit.

Database First Approach

Here are the steps to follow if you would like to use Database First:

Attaching the Seattle Landmarks Database

- Launch SQL Server Management Studio and connect to your instance of SQL Express.

- Right click on the Databases node and select Attach…

- Under Databases to attach, click Add… and then browse to the location of SeattleLandmarks.mdf. Click Add and then click OK.

Creating a Model

- Add a new ADO.NET Entity Data Model to your project by right clicking on the project and navigating to Add > New Item.

- In the Add New Item window, click Data, and then click ADO.NET Entity Data Model. Name your model ‘SeattleLandmarksModel’ and click Add.

- The Entity Data Model Wizard will open. Click on Generate from database and then click Next>.

- Select New Connection… on the Wizard. Under Server name, enter the name of your SQL Server instance (if you installed SQL Express, the server name should be .\SqlExpress)

- Under Connect to a database, select SeattleLandmarks from the first dropdown menu.

- The Connection Properties window should look as follows. Click OK to proceed

- On the Entity Data Model Wizard, click Next, then click on the Tables checkbox to include People and Landmarks on your model. Click Finish.

The resulting Model is very simple. It includes two entities named Landmark and Person. Each of these contains a Spatial Property called Location:

Writing the App (Database First)

Our program above would work against Database First with a few minor changes: On line 15. Rather than using the Find() method, we will use the Take() method in ObjectContext to retrieve the first person; Lastly, the entities and context we need are automatically generated by the VS tools when using Database First. Therefore, we don’t need the People, Landmarks, and SeattleLandmarksEntities classes defined from line 43 onwards.

How It Works

EF treats Geometry and Geography as primitive types. This allows us to use them in the same way we would use integers and strings as properties in an entity. The SSDL for our entities is the following:

<Schema Namespace="SeattleLandmarksModel.Store" Alias="Self" Provider="System.Data.SqlClient" ProviderManifestToken="2008" xmlns:store="http://schemas.microsoft.com/ado/2007/12/edm/EntityStoreSchemaGenerator" xmlns="http://schemas.microsoft.com/ado/2009/11/edm/ssdl"> <EntityContainer Name="SeattleLandmarksModelStoreContainer"> <EntitySet Name="Landmarks" EntityType="SeattleLandmarksModel.Store.Landmarks" store:Type="Tables" Schema="dbo" /> <EntitySet Name="People" EntityType="SeattleLandmarksModel.Store.People" store:Type="Tables" Schema="dbo" /> </EntityContainer> <EntityType Name="Landmarks"> <Key> <PropertyRef Name="LandmarkID" /> </Key> <Property Name="LandmarkID" Type="int" Nullable="false" StoreGeneratedPattern="Identity" /> <Property Name="LandmarkName" Type="nvarchar" MaxLength="50" /> <Property Name="Location" Type="geography" /> <Property Name="Address" Type="nvarchar" MaxLength="100" /> </EntityType> <EntityType Name="People"> <Key> <PropertyRef Name="PersonID" /> </Key> <Property Name="PersonID" Type="int" Nullable="false" StoreGeneratedPattern="Identity" /> <Property Name="Name" Type="nvarchar" MaxLength="50" /> <Property Name="Location" Type="geography" /> </EntityType> </Schema>Notice that the type for Location in both entities is geography. You can see this in the VS designer by right clicking on Location and selecting Properties.Conclusion

In this walkthrough we have seen how to create an application which leverages spatial data using the Code First and Database First approaches. Using spatial functions, we calculated the distance between two locations. You can find out more about spatial types in EF here. Lastly, we appreciate your feedback, so please feel free to leave your questions and comments below.

Pedro Ardila

Program Manager – Entity Framework

Pawel Kadluczka posted a Walkthrough: Enums (June CTP) on 6/30/2011:

This post will provide an overview of working with enumerated types (“enums”) introduced in the Entity Framework June 2011 CTP. We will start from discussing the general design of the feature then I will show how to define an enumerated type and how to use it in a few most common scenarios.

Where to get the bits?

To be able to use any of the functionality discussed in this post you first need to download and install Entity Framework June 2011 CTP bits. For more details including links installation instructions see the Entity Framework June 2011 CTP release blog post.

Intro

Enumerated types are designed to be first class citizen in the Entity Framework. The ultimate goal is to enable them in most places where primitive types can be used. This is not the case in this CTP release (see Limitations in Entity Framework June 2011 CTP section) but we are working on addressing most of these limitations in post-CTP releases. In addition enumerated types are also supported by the designer, code gen and templates that ship with this CTP.

Design/Theory

As per MSDN enumerated types in the Common Language Runtime (CLR) are defined as “a distinct type consisting of a set of named constants”. Since enums in the Entity Framework are modeled on CLR enums this definition holds true in the EF (Entity Framework) as well. The EF enum type definitions live in conceptual layer. Similarly to CLR enums the EF enums have underlying type which is one of Edm.SByte, Edm.Byte, Edm.Int16, Edm.Int32 or Edm.Int64 with Edm.Int32 being the default underlying type if none has been specified. Enumeration types can have zero or more members. When defined, each member must have a name and can optionally have a value. If the value for the member is not specified then the value will be calculated based on the value of the previous member (by adding one) or set to 0 if there is no previous member. If the value for a given member is specified it must be in the range of its enum underlying type. It is fine to have values specified only for some members and it is fine to have multiple members with the same name but you can’t have more than one member with the same name (case matters). There are two main takeaways from this:

conceptually, the way you define enums in EF is very similar to the way you define enums in C# or VB.NET which makes mapping enum types defined in your program to EF enum types easy

if you happen to have at least one member without specified value then the order of the members matters, otherwise it does not

The last piece of information is that enumerated types can have “IsFlags” attribute. This attribute indicates if the type can be used as set of flags and is an equivalent of [Flags] attribute you can put on an enum type in your C# or VB.NET program. At the moment this attribute is only used for code generation.

Limitations in Entity Framework June 2011 CTP

The biggest limitations of Enums as shipped in the CTP are:

Properties that are of enumerated type cannot be used as keys

It is not possible to create EntityParameters that are enumerated types

System.Enum.HasFlag method is not supported in LINQ queries

EntityDataSource works with enums only in some scenarios

Properties that are of enum type cannot be used as conditions in the conceptual model (C-Side conditions)

It is not possible to specify enum literals as default values for properties in the conceptual model (C-Space)

Practice

Now that we know a bit about enums let’s see how they work in practice. To make things simple, in this walkthrough we will create a model that contains only one entity. The entity will have a few properties – including an enum property. In the walkthrough I will use “Model First” approach since it does not require having a database (note that there is no difference in how the tools in VS work for “Database First” approach). After creating a database we will populate it with some data and write some queries which will involve enum properties and values.

Preparing the model

- Properties that are of enumerated type cannot be used as keys

- Launch Visual Studio and create a new C# Console Application project (in VS File à New à Project or Ctrl + Shift + N) which we will call “EFEnumTest”

- Make sure you are targeting the new version of EF. To do so, right click on your project on the Solution Explorer and select Properties.

- Select Microsoft Entity Framework June 2011 CTP from the Target framework dropdown.

Figure 1. Targeting the in Entity Framework 4.2 June 2011 CTP

Press Ctrl + S to save the project. Visual Studio will ask you for permission to close and reopen the project. Click Yes

Add a new model to the project - right click EFEnumTest Project à Add New Item (or Ctrl + Shift + A), Select ADO.NET Data Entity Model from Visual C# Items and call it “EnumModel.edmx”. Then click Add.

Since we are focusing on “Model First” approach select Empty Model in the wizard and press Finish button.

Once the model has been created add an entity to the model. Go to Toolbox and double click Entity or right click in the designer and select Add New à Entity

Rename the entity to Product

Add properties to the Product entity:

- Id of type Int32 (should actually already be created with the entity)

- Name of type String

- Category of type Int32

- Change the type of the property to enum. The Category property is currently of Int32 type but it would make much more sense for this property to be of an enum type. To change the type of this property to enum right click on the property and select Convert to Enum option from the context menu as shown on Figure 2 (there is a small glitch in the tools at the moment that is especially visible when using “Model First” approach – the “Convert to Enum” menu option will be missing if the type of the property is not one of the valid underlying types. In “Model First” approach when you create a property it will be of String type by default and you would not be able to find the option to convert to the enum type in the menu – you would have to change the type of the property to a valid enum underlying type first – e.g. Int32 to be able to see the option).

Figure 2. Changing the type of a property to enum

Define the enum type. After selecting Conver to Enum menu option you will see a dialog that allows defining the enum type. Let’s call the type CategoryType, use Byte as the enum underlying type and add a few members:

- Beverage

- Dairy

- Condiments

We will not use this type as flags so let’s leave the IsFlag checkbox unchecked. Figure 3 shows how the type should be defined.

Figure 3. Defining an enum type

Press OK button to create the type.

This caused a few interesting things to happen. First the type of the property (if the properties of the property are not displayed right click the property and select Properties) is now CategoryType. If you open the Type drop down list you will see that the newly added enum type has been added to the list (by the way, did you notice two new primitive types in the drop down? Yes, Geometry and Geography are new primitive types that ship in CTP and make writing geolocation apps much simpler). You can see that on Figure 4.

Figure 4. The type of the property changed to "CategoryType"

Having enum types in this dropdown allows you changing the type of any non-key (CTP limitation) scalar property in your model to this enum type just by selecting it from the drop down list. Another interesting thing is that the type also appeared in the Model Browser, EnumModel à Enum Types node. Note that Enum Types node is new in this CTP and allows defining enum types without having to touch properties – you can just right click it and select Add Enum Type option from the menu. Enum types defined this way will appear in the Type drop down list and therefore can be used for any non-key (CTP limitation) scalar property in the model.

Save the model.

Inspecting the model

In the Solution Explorer right click on the EnumModel.edmx

Choose Open With… option from the context menu

Select Xml (Text) Editor from the dialog

Press OK button

Confirm that you would like to close the designer

Now you should be able to see the model in the Xml form (note that you will see some blue squiggles indicating errors. This is because the database has not been yet created and there is no mappings for the Product entity – you can ignore these errors at the moment)

Navigate to CSDL content - we are only interested in changes to conceptual part of the edmx file as there are no changes related to enums in SSDL and C-S mapping changes – and you should see something like:

<EntityType Name="Product"> <Key> <PropertyRef Name="Id" /> </Key> <Property Type="Int32" Name="Id" Nullable="false" annotation:StoreGeneratedPattern="Identity" /> <Property Type="String" Name="Name" Nullable="false" /> <Property Type="EnumModel.CategoryType" Name="Category" Nullable="false" /> </EntityType> <EnumType Name="CategoryType" UnderlyingType="Byte"> <Member Name="Beverage" /> <Member Name="Dairy" /> <Member Name="Condiments" /> </EnumType>What you can see in this fragment is the entity type and the enum type we created in the designer. If you look carefully at the Category property you will notice that the type of the property refers to the EnumModel.CategoryType which is our enum type.

Inspecting code generated for the model

In Solution Explorer double click EnumModel.Designer.cs

Expand Enums region in the file that opened. The definition for our enum type looks like this: