Windows Azure and Cloud Computing Posts for 7/22/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 7/24/2011 at 1:30 PM PDT with new articles marked • by Michael Washington, ComponentOne, Herve Roggero, Paul MacDougall, BPN.com and Microsoft TechNet.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Brent Stineman (@BrentCodeMonkey) explained Long Running Queue Processing (Year of Azure Week 3) in a 7/22/2011 post:

Sitting in Denver International Airport as I write this. I doubt I’ll have time to finish it before I have to board and my portable “Azure Appliance” (what a few of us have started calling my 8lb 17” wide-screen laptop) simply isn’t good for working with on the plane. I had hoped to write this while working in San Diego this week, but simply didn’t have the time.

And no, I wasn’t at Comic Con. I was in town visiting a client for a few days of cloud discovery meetings. Closest I came was a couple blocks away and bumping into two young girls in my hotel dressed as the “Black Swan” and Sookie from True Blood. But I’m rambling now.. fatigue I guess.

Anyway, a message came across on a DL I’m on about long running processing of Azure Storage queue messages. And while unfortunately there’s no way to renew the “lease” on a message, it did get me to thinking that this week my post would be about one of the many options for processing a long running queue message.

My approach will only work if the long running process takes less than the maximum visibilitytimeout value allowed for a queue message. Namely, 2 hours. Essentially, I’ll spin the message processing off into a background thread and then monitor that thread from the foreground. This approach would allow me to multi-thread processing of my messages (which I won’t demo this week because of lack of time). It also allows me to monitor the processing more easily since I’m doing it from outside of the process.

Setting up the Azure Storage Queue

It’s been a long time since I’ve published an Azure Storage queue sample, and the last time I messed with them I was hand coding against the REST API. This time we’ll use the StorageClient library.

    // create storage account and client
    var account = CloudStorageAccount.FromConfigurationSetting("AzureStorageAccount");
    CloudQueueClient qClient = account.CreateCloudQueueClient();

    // create a queue object to use to manipulate the queue
    CloudQueue myQueue = qClient.GetQueueReference("tempqueue");

    // make sure our queue exists
    myQueue.CreateIfNotExist();

Pretty straightforward code here. We get our configuration setting, and create a CloudQueueClient that we’ll use to interact with our Azure Storage service. I then use that client to create a CloudQueue object which we’ll use to interact with our specific queue. Lastly, we create the queue if it doesn’t already exist.

Note: I wish the Azure AppFabric queues had this “Create If Not Exist” method. *sigh*

Inserting some messages

Next up we insert some messages into the queue so we’ll have something to process. That’s even easier….

    // insert a few (5) messages
    int iMax = 5;
    for (int i = 1; i <= iMax; i++)
    {
        CloudQueueMessage tmpMsg = new CloudQueueMessage(string.Format("Message {0} of {1}", i, iMax));
        myQueue.AddMessage(tmpMsg);
        Trace.WriteLine("Wrote message to queue.", "Information");
    }

I insert 5 messages numbered 1-5 using the CloudQueue AddMessage method. I should really trap for exceptions here, but this works for demonstration purposes. Now for the fun part..

Setting up our background work process

Ok, now we have to set up an object that we can use to run the processing on our message. Stealing some code from the MSDN help files, and making a couple minor mods, we end up with this…

    class Work
    {
        public void DoWork()
        {
            try
            {
                int i = 0;
                // 30 iterations of 1 second each = 30 seconds of simulated work
                while (!_shouldStop && i < 30)
                {
                    Trace.WriteLine("worker thread: working…");
                    i++;
                    Thread.Sleep(1000); // sleep for 1 sec
                }
                Trace.WriteLine("worker thread: terminating gracefully.");
                // report success only if we were not asked to stop early
                isFinished = !_shouldStop;
            }
            catch
            {
                // we should do something here
                isFinished = false;
            }
        }

        // the queue message this worker is processing
        public CloudQueueMessage Msg;

        // true only when processing ran to completion
        public bool isFinished = false;

        public void RequestStop()
        {
            _shouldStop = true;
        }

        // Volatile is used as hint to the compiler that this data
        // member will be accessed by multiple threads.
        private volatile bool _shouldStop;
    }

The key here is the DoWork method. This one is set up to take 30 seconds to “process” a message. We also have the ability to abort processing using the RequestStop method. It’s not ideal but this will get our job done. We really need something more robust in my catch block, but at least we’d catch any errors and indicate processing failed.

Monitoring Processing

Next up, we need to launch our processing and monitor it.

    // start processing of message
    // (aMsg is the CloudQueueMessage retrieved from the queue beforehand)
    Work workerObject = new Work();
    workerObject.Msg = aMsg;
    Thread workerThread = new Thread(workerObject.DoWork);
    workerThread.Start();

    // poll until the worker thread exits
    while (workerThread.IsAlive)
    {
        Thread.Sleep(100);
    }

    if (workerObject.isFinished)
        myQueue.DeleteMessage(aMsg.Id, aMsg.PopReceipt); // I could just use the message, illustrating a point
    else
    {
        // here, we should check the message's dequeue count
        // and move the msg to poison message queue
    }

Simply put, we create our worker object, give it a parameter (our message) and create/launch a thread to process the message. We then use a while loop to monitor the thread. When it’s complete, we check isFinished to see if processing completed successfully.
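One gap in the loop above is that nothing stops a worker that runs past the message's visibility timeout. A minimal sketch of one way to add a deadline follows. It uses only System.Threading, so there are no Azure types here; TimedWork and WorkerMonitor are hypothetical stand-ins for the Work class and the monitoring code, and the deadline value is an assumption you would set somewhat below the visibility timeout you requested.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for the Work class; each step simulates a unit of work.
class TimedWork
{
    private volatile bool _shouldStop;
    private readonly int _steps;
    public volatile bool IsFinished;

    public TimedWork(int steps) { _steps = steps; }

    public void RequestStop() { _shouldStop = true; }

    public void DoWork()
    {
        int i = 0;
        while (!_shouldStop && i < _steps)
        {
            i++;
            Thread.Sleep(10); // simulate one unit of work
        }
        // Report success only if every step ran.
        IsFinished = (i == _steps);
    }
}

static class WorkerMonitor
{
    // Returns true if the work completed before the deadline expired.
    public static bool RunWithDeadline(TimedWork work, TimeSpan deadline)
    {
        Thread worker = new Thread(work.DoWork);
        worker.Start();

        // Join blocks until the thread exits or the deadline passes.
        if (!worker.Join(deadline))
        {
            work.RequestStop(); // cooperative cancel, then wait for the exit
            worker.Join();
        }
        return work.IsFinished;
    }

    static void Main()
    {
        // Short job, generous deadline: finishes.
        Console.WriteLine(RunWithDeadline(new TimedWork(5), TimeSpan.FromSeconds(5)));
        // Long job, tight deadline: gets stopped and reports failure.
        Console.WriteLine(RunWithDeadline(new TimedWork(1000), TimeSpan.FromMilliseconds(50)));
    }
}
```

In the queue scenario, a true result would lead to deleting the message, while a false result would leave it to reappear on the queue (or be moved to a poison queue).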

Next Steps

This example is pretty basic but I hope you get the gist of it. In reality, I’d probably be spinning up multiple workers and throwing them into a collection so that I can then monitor multiple processing threads. As more messages come in, I can put them into the collection in place of threads that have finished. I could also have the worker process itself do the deletion if the message was successfully processed. There are lots of options.

But I need to get rolling so we’ll have to call this a day. I’ve posted the code for this sample if you want it, just click the icon below. So enjoy! And if we get the ability to renew the “lease” on a message, I’ll try to swing back by this and put in some updates.

Download Brent’s YOA - Week 3.zip source code from Windows Live Skydrive here.

<Return to section navigation list>

SQL Azure Database and Reporting

• Herve Roggero described Preparing for Data Federation in SQL Azure in a 7/23/2011 post to Geeks with Blogs:

This blog will help you prepare for an upcoming release of SQL Azure that will offer support for Data Federation. While no date has been provided for this feature, I was able to test an early preview and compiled a few lessons learned that can be shared publicly. Note however that certain items could not be shared in this blog because they are considered NDA material; as a result, you should expect additional guidance in future posts when the public Beta will be made available.

What is Data Federation?

First and foremost, allow me to explain what Data Federation is in SQL Azure. Many terms have been used, like partitioning, sharding and scale out. Other sharding patterns exist too, which could be described in similar terms; so it is difficult to paint a clear picture of what Data Federation really is without comparing it with other partitioning models. (To view a complete description of sharding models, take a look at a white paper I wrote on the topic, which is linked from this page: http://bluesyntax.net/scale.aspx)

The simplest model to explain is the linear shard: each database contains all the necessary tables for a given customer (in which case the customer ID would be the dimension). So in a linear shard, you will need 100 databases if you have 100 customers.

Another model is the compressed shard: a container (typically a schema) inside a database contains all the necessary tables for a given customer. So in a compressed shard, each database could contain one or more customers, usually separated logically by a schema (providing a strong data security enforcement model). As you will see, Data Federation offers an alternative to schema separation.

The scale up model (which is not a shard technically) uses a single database in which customer records are identified by an identifier. Most applications fall in this category today. However the need to design flexible and scalable components in a cloud paradigm makes this design difficult to embrace.

Data Federation, as it turns out, is a mix of a compressed shard and a scale up model as described above, but instead of using a schema to separate records logically, it uses a federation (a new type of container) which is a set of physical databases (each database is called a federation member). So within each database (a federation member) you can have multiple customers. You are in control of the number of customers you place inside each federation member, and you can either split customers (i.e. create new database containers) or merge them back at a later time (i.e. reduce the number of databases making up your shard).
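To make the member and split idea a little more concrete, here is a small in-memory sketch. It is not the SQL Azure API: FederationMap, SplitAt and MemberFor are hypothetical names, and the sketch assumes that each member owns a contiguous range of customer IDs whose low boundary is inclusive.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of a federation: each member owns the customer
// IDs from its low boundary up to (but not including) the next member's boundary.
class FederationMap
{
    // Sorted low boundaries; one entry per federation member.
    private readonly List<long> _lows = new List<long> { long.MinValue };

    public int MemberCount { get { return _lows.Count; } }

    // Split the member that contains 'boundary' into two at that value.
    public void SplitAt(long boundary)
    {
        int idx = _lows.BinarySearch(boundary);
        if (idx >= 0) return;          // this boundary already exists
        _lows.Insert(~idx, boundary);  // ~idx is the sorted insertion point
    }

    // Return the index of the member that holds the given customer ID.
    public int MemberFor(long customerId)
    {
        int idx = _lows.BinarySearch(customerId);
        return idx >= 0 ? idx : ~idx - 1;
    }
}

static class FederationDemo
{
    static void Main()
    {
        FederationMap map = new FederationMap(); // starts as a single database
        map.SplitAt(100);
        map.SplitAt(200);

        Console.WriteLine(map.MemberCount);    // 3
        Console.WriteLine(map.MemberFor(5));   // 0
        Console.WriteLine(map.MemberFor(100)); // 1
        Console.WriteLine(map.MemberFor(999)); // 2
    }
}
```

The point of the sketch is only that routing becomes a range lookup on the customer ID, and that a split adds a boundary without changing how callers find a member.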

For a more complete description of Data Federation, see Cihan [Biyikoglu’s] blog here: http://blogs.msdn.com/b/cbiyikoglu/archive/2010/10/30/building-scalable-database-solution-in-sql-azure-introducing-federation-in-sql-azure.aspx

As a result, Data Federation allows you to start with a single database (similar to a scale up model), and create federations over time (moving into the compressed shard territory). When you create a federation, you specify a range over the customer IDs you would like to split, and SQL Azure will automatically move the records into the appropriate database (federation member). You could then, in theory, design “as usual” using a scale up model and use Data Federation at a later time when performance requirements dictate.

I did say in theory.

Design Considerations

So at this point you are probably wondering… what’s the catch? It’s pretty simple, really. It has to do with the location of the data and performance implications of storing data across physical databases: referential integrity. [Read Cihan’s blog about the limitations on referential integrity here: http://blogs.msdn.com/b/cbiyikoglu/archive/2011/07/19/data-consistency-models-referential-integrity-with-federations-in-sql-azure.aspx]

Data Federation is indeed very powerful. It provides a new way to spread data access across databases, hence offering a shared-nothing architecture across federation members hosting your records. In other words: the more members your federation has (i.e. databases) the more performance is at your fingertips.

But in order to harvest this new power, you will need to make certain tradeoffs, one of which is referential integrity. While foreign key relationships are allowed within a federated member, they are not allowed between members. So if you are not careful, you could duplicate unique IDs in related tables. Also, since you have distributed records, you may need to replicate certain tables on all your federated members, hence creating a case of data proliferation which will not be synchronized across federation members for you.

If you are considering using Data Federation in the future and would like to get an early start on your design, I will make the following recommendations:

- The smaller the number of tables, the easier it will be for you to reconcile records and enforce referential integrity in your application

- Do not assume all existing Referential Integrity (RI) constructs will still exist in a federated database; plan to move certain RI rules into your application

- It is possible that the more federation members you create, the more expensive your implementation will be (no formal pricing information is available at the time of this writing)

- Data Federation still uses the scale up model as its core, since each federated table needs to contain the customer ID in question; so record security and access control is similar to a scale up model

- You will need to leverage parallel processing extensively to query federated members in parallel if you are searching for records across your members, so if you are not familiar with the Task Parallel Library (TPL), take a look. You will need it
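As a rough illustration of that last recommendation, the sketch below fans one search out to every member with the Task Parallel Library and merges the results. The members are plain in-memory lists standing in for databases, and FanOutQuery and SearchAll are hypothetical names; a real implementation would open one connection per federation member inside each task.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

static class FanOutQuery
{
    // Runs the same search against every member in parallel and merges the hits.
    public static List<string> SearchAll(
        IList<List<string>> members, Func<string, bool> predicate)
    {
        // One task per federation member (each list stands in for a database).
        Task<List<string>>[] tasks = members
            .Select(m => Task.Factory.StartNew(() => m.Where(predicate).ToList()))
            .ToArray();

        Task.WaitAll(tasks); // block until every member has answered

        // Merge and sort so the result does not depend on task timing.
        return tasks.SelectMany(t => t.Result)
                    .OrderBy(s => s, StringComparer.Ordinal)
                    .ToList();
    }

    static void Main()
    {
        var members = new List<List<string>>
        {
            new List<string> { "alice", "bob" },
            new List<string> { "carol", "alan" }
        };

        List<string> hits = SearchAll(members, name => name.StartsWith("a"));
        Console.WriteLine(string.Join(", ", hits)); // alan, alice
    }
}
```

Note that the merge step is where cross-member concerns such as ordering and de-duplication end up living, which is part of the tradeoff described above.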

In summary, Data Federation is for you if you need to scale your database to dozens of databases or more for performance and scalability reasons. It goes without saying that the simpler the database, the easier the adoption of Data Federation will be. Performance and scalability improvements often come with specific tradeoffs; Data Federation is no exception and as expected the tradeoff is referential integrity (similar to NoSQL database systems).

Klint Finley (@Klintron) asked in a Cloud Poll: Can Microsoft's Distributed Analytics Tools Compete with Hadoop? posted to the ReadWriteCloud on 7/22/2011:

This week Microsoft Research released Project Daytona MapReduce Runtime, a developer preview of a new product designed for working with large distributed data sets. Microsoft also has a big data analytics platform that uses LINQ instead of MapReduce called LINQ to HPC. Notably, LINQ to HPC is used in production at Microsoft Bing.

But Microsoft is entering an increasingly crowded market. There's the open source Apache Hadoop, which is now being sold in different flavors by companies such as Cloudera, DataStax, EMC, IBM and soon a spin-off of Yahoo. Not to mention HPCC, which will be open-sourced by LexisNexis.

Microsoft's products are currently in early, experimental stages and the company may never step up the development and marketing of these to be serious Hadoop and HPCC competitors. But could Microsoft be competitive here if it wants to?

[Poll:] Can Microsoft's Distributed Analytics Tools Compete with Hadoop?

<Return to section navigation list>

Marketplace DataMarket and OData

Steve Fox reported SharePoint and Windows Phone 7 Developer Training Kit Ships! on 7/22/2011:

Today, the SharePoint and Windows Phone 7 Developer Training Kit shipped. This is a great milestone because there is not a lot of good material out there on how to build phone apps for SharePoint 2010. The kit comprises a number of units:

Introduction to Windows Phone 7 Development

In this unit you will understand real world examples of connecting to and consuming information stored in SharePoint with custom applications that you write with Visual Studio on Windows Phone 7. With the introduction of Windows Phone 7, .Net developers have the tools and resources available to create, test, deploy and sell stunning applications using the same familiar tools that you have been using for years. You don’t need to learn a new language; you just need to learn how the platform works and start building applications in Silverlight or XNA Framework. We have a lot to teach you in this course. You will start by building the familiar “Hello World” application and then dive into connecting to SharePoint and building applications that can improve your business.

Setting Up A SharePoint and Windows Phone 7 Development Environment

Getting started developing Windows Phone 7 applications for SharePoint can be a little difficult. In this unit you will learn how to set up a developer environment and explore some of the options to consider. You will see how to set up and configure a development environment in both a physical and virtual environment.

SharePoint 2010 Mobile Web Development

SharePoint supports mobile views of sites, lists and libraries. Many modern mobile browsers do not need this support as they work well with SharePoint without the need for the mobility redirection. But there are scenarios where you want to provide the best app-like experience. The end user experience is focused on collaboration with access to Lists and Libraries. The mobile viewers make it easier for users to view Office documents on their mobile devices. If you are planning to use mobile Web Parts and Field Controls you need to plan for how the controls will render.

Integrating SharePoint Data in Windows Phone 7 Applications

Windows Phone 7 can integrate with SharePoint like any other remote application. In this unit you will learn how to access SharePoint List data, user profile data, and social data. You will understand how to use alerts, site data, and views.

Advanced SharePoint Data Access in Windows Phone 7 Applications

Accessing server data is one of the most important aspects of Phone 7 development. In this unit you will learn how to Create, Edit, Update, and Delete data. You will learn other advanced topics such as data paging and working with images from SharePoint.

Security With SharePoint And Windows Phone 7 Applications

Connecting to and consuming data from the SharePoint server can be challenging given some of the security restrictions. In this unit you will learn about connecting to data both inside and outside of your corporate network. You will learn about some of the options using Forms Based Authentication (FBA) and Unified Access Gateway (UAG). You will also learn how the various security techniques affect your application design.

Integrating Push Notifications with SharePoint Data in Windows Phone 7 Applications

The Microsoft Push Notification Service in Windows Phone offers third-party developers a resilient, dedicated, and persistent channel to send data to a Windows Phone application from a web service in a power-efficient way. In this unit you will learn how to create push notifications in SharePoint using event handlers.

Integrating SharePoint 2010 and Windows Azure

Working in the cloud is becoming a major initiative for application development departments. Windows Azure is Microsoft's cloud incorporating data and services. In this unit you will learn how to leverage SharePoint and Azure data and services in your Windows Phone 7 applications.

Deploying Windows Phone 7 Applications

Once you have completed your Windows Phone 7 application you will need to publish it to the Marketplace. In this unit you will learn how to avoid some common publishing mistakes. You will also see how to protect and secure your applications. You will also learn about a couple of techniques to make your applications private.

SharePoint and Windows Phone 7 Tips and Best Practices

Windows Phone 7 and SharePoint applications enable you to create very powerful enterprise collaboration applications, but there are still advanced topics to master. In this unit you will learn how to optimize performance with large data sets. Learn how to look up a SharePoint user’s metadata. Learn how to read list schemas to create dynamic applications that do not require recompilation when a list schema changes. Understand how to implement a pure MVVM pattern. See how to use phone actions to integrate SharePoint data with phone functionality.

To check out the new kit online, you can go here: http://msdn.microsoft.com/en-us/SharePointAndWindowsPhone7TrainingCourse.

To download an offline version of the kit, go here: http://www.microsoft.com/download/en/details.aspx?displaylang=en&id=26813

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

The Azure Incubation Team in the Developer & Platform Evangelism group updated the Cloud Ninja Project to v2.1 (Stable) on 6/29/2011 (missed when updated):

Project Description

The Cloud Ninja Project is a Windows Azure multi-tenant sample application demonstrating metering and automated scaling concepts, as well as some common multi-tenant features such as automated provisioning and federated identity. This sample was developed by the Azure Incubation Team in the Developer & Platform Evangelism group at Microsoft in collaboration with Full Scale 180. One of the primary goals throughout the project was to keep the code simple and easy to follow, making it easy for anyone looking through the application to follow the logic without having to spend a great deal of time trying to determine what’s being called or have to install and debug to understand the logic.

Key Features

- Metering

- Automated Scaling

- Federated Identity [Emphasis added]

- Provisioning

Version 2.0 of Project Cloud Ninja is in progress and the initial release is available. In addition to a few new features in the solution we have fixed a number of bugs.

New Features

- Metering Charts

- Changes to metering views

- Dynamic Federation Metadata Document

Contents

- Sample source code

- Design document

- Setup guide

- Sample walkthrough

Notes

- Project Cloud Ninja is not a product or solution from Microsoft. It is a comprehensive code sample developed to demonstrate the design and implementation of key features on the platform.

- Another sample published by Microsoft (also known as the Fabrikam Shipping Sample) provides in-depth coverage of Identity Federation for multi-tenant applications, and we recommend that you review it in addition to Cloud Ninja. In Cloud Ninja we utilized the concepts in that sample but also put more emphasis on metering and automated scaling. You can find the sample here and the Patterns and Practices team's related guidance here.

Team

- Bhushan Nene (Microsoft)

- Kashif Alam (Microsoft)

- Dan Yang (Microsoft)

- Ercenk Keresteci (Full Scale 180)

- Trent Swanson (Full Scale 180)

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

The Windows Azure Connect Team reported Windows Azure Connect Endpoint Upgrade also available from Microsoft Update on 7/22/2011:

As part of the Windows Azure Management Portal update, a new release of the Windows Azure Connect (which is in CTP) endpoint software (1.0.0952.2) is available. In addition to bug fixes, the Connect endpoint is localized into 11 languages, with a language choice selection at the beginning of the interactive client install.

For corporate machines distributing updates with WSUS (Windows Server Update Services) or SCCM (System Center Configuration Manager), the endpoint update can be imported into WSUS from the Microsoft Update Catalog http://catalog.update.microsoft.com/v7/site/Search.aspx?q=windows%20azure%20connect .

For machines not receiving updates from WSUS (Windows Server Update Services), just start Windows Update, Check for updates, and select Windows Azure Connect Endpoint Upgrade.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Paul MacDougall asserted “’Project Daytona’ tools let researchers use Windows Azure cloud platform to crunch data, and some tech from archrival Google plays a part” as a deck for his Microsoft Azure Does Big Data As A Service article of 7/20/2011 for InformationWeek (missed when posted):

Microsoft unveiled new software tools and services designed to let researchers use its Azure cloud platform to analyze extremely large data sets from a diverse range of disciplines--and it's borrowing technology from archrival Google to help gird the platform.

The software maker unveiled the initiative, known as Project Daytona, at its Research Faculty Summit in Redmond, Wash., this week. The service was developed under the Microsoft Extreme Computing Group's Cloud Research Engagement program, which aims to find new applications for cloud platforms.

"Daytona gives scientists more ways to use the cloud without being tied to one computer or needing detailed knowledge of cloud programming--ultimately letting scientists be scientists," said Dan Reed, corporate VP for Microsoft's technology policy group.

To sort and crunch data, Daytona uses a runtime version of Google's open-license MapReduce programming model for processing large data sets. The Daytona tools deploy MapReduce to Windows Azure virtual machines, which subdivide the information into even smaller chunks for processing. The data is then recombined for output.
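For readers who have not met the MapReduce model, the toy word count below shows the split, process and recombine shape that the paragraph describes. It is a generic sketch written with PLINQ on a single machine, not Project Daytona code; MiniMapReduce, Map and WordCount are hypothetical names, and each input string plays the part of one chunk of data handed to a worker.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class MiniMapReduce
{
    // Map: turn one chunk of text into (word, 1) pairs.
    static IEnumerable<KeyValuePair<string, int>> Map(string chunk)
    {
        return chunk.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
                    .Select(w => new KeyValuePair<string, int>(w.ToLowerInvariant(), 1));
    }

    // Reduce: group the pairs by word and sum the counts for each word.
    public static Dictionary<string, int> WordCount(IEnumerable<string> chunks)
    {
        return chunks.AsParallel()                    // chunks are processed in parallel
                     .SelectMany(chunk => Map(chunk)) // map phase
                     .GroupBy(kv => kv.Key)           // shuffle: collect pairs per word
                     .ToDictionary(g => g.Key, g => g.Sum(kv => kv.Value)); // reduce phase
    }

    static void Main()
    {
        Dictionary<string, int> counts =
            WordCount(new[] { "the cloud", "The data the" });

        Console.WriteLine(counts["the"]);   // 3
        Console.WriteLine(counts["cloud"]); // 1
    }
}
```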

Daytona is geared toward scientists in healthcare, education, environmental sciences, and other fields where researchers need--but don't always have access to--powerful computing resources to analyze and interpret large data sets, such as disease vectors or climate observations. Through the service, users can upload pre-built research algorithms to Microsoft's Azure cloud, which runs across a highly distributed network of powerful computers.

Daytona "will hopefully lead to greater scientific insights as a result of large-scale data analytics capabilities," said Reed.

Along with cloud, the development of tools that make it easier for customers to store and analyze big data has become a priority of late for the major tech vendors. Demand for such products is being driven by the fact that enterprises are faced with ever-increasing amounts of information coming in through sources as diverse as mobile devices and smart sensors affixed to everything from refrigerators to nuclear power plants.

Oracle has come to the table with its Exadata system, which can run in private clouds, while IBM spent $1.8 billion last year to acquire Netezza and its technologies for handling big data.

Microsoft sees its Azure public cloud as the ideal host for extremely large data sets. The Project Daytona architecture breaks data into chunks for parallel processing, and the cloud environment allows users to quickly and easily turn on and off virtual machines, depending on the processing power required.

The idea, in effect, is to provide data analytics as a service. Microsoft noted that Project Daytona remains a research effort that "is far from complete," and said it plans to make ongoing enhancements to the service. Project Daytona tools, along with sample code and instructional materials, can be downloaded from Microsoft's research site.

• BPN.com reported Singularity completes Proof of Concept on Windows Azure Platform with integration of multiple Microsoft Cloud products in a 7/18/2011 post (missed when posted):

In an intensive two-week Proof of Concept (PoC) at the Microsoft Technology Centre (MTC), Singularity has proven its Case Management & BPM product, TotalAgility, to work on the Azure Platform and to interact seamlessly with Dynamics CRM, SharePoint and Exchange in the cloud.

"In the new era of people-driven, cloud-based processes Singularity is extending its Case Management industry leadership¹ so that our clients can further reduce cost and risk, improve their customers' experience and more easily meet changing regulatory and policy requirements," said Dermot McCauley, Singularity's Director of Sales and Marketing. "Our strategic partnership with Microsoft enables us to provide cloud-based services that make mobile and desk-based workers more productive. We are already seeing commercial benefits from this PoC."

The Proof of Concept began with the successful porting of the Singularity TotalAgility platform from a .Net server to the Azure cloud service. After the successful port, further cloud-based integration with Dynamics CRM 2011, SharePoint Online and Exchange was achieved, allowing case workers, such as insurance underwriters, fraud investigators, customer service engineers and others to manage relationships, data, documents, processes and communications without on-premise software installation. The result is a new market advantage for organizations who leverage Singularity and the Windows Azure Platform as a rapid on-ramp to flexible cloud computing.

David Gristwood of Microsoft [pictured at right], who led the PoC, said "In a very short space of time Singularity built a compelling solution that leverages TotalAgility, Dynamics CRM, SharePoint and Exchange in the cloud to bring real business benefit to information and knowledge workers. Singularity clearly understands the business benefit for their clients of cloud adoption. The scalability and reliability of the Azure Platform allows Singularity clients to rapidly design and execute agile Case Management business processes that can effortlessly scale throughout the organization."

"What we have shown," remarked Peter Browne, Singularity's CTO, "is the realizable potential of the Microsoft cloud services. By offering Case Management in the cloud, Singularity enables its clients to manage and execute critical business processes anywhere and from any device. And the scalability and resilience of Microsoft Windows Azure provides the flexible growth path our clients need so they can adopt process across their organization at a pace that suits them, without the need to install additional infrastructure."

¹Read the report of independent industry experts in 'The Forrester Wave™: Dynamic Case Management' available free at:

http://www.singularitylive.com/Campaigns/forrester-wave-090211.aspx

Supporting Resources:

- For more information on TotalAgility and Microsoft Lync visit: http://www.singularitylive.com/solutions/bpm-lync.aspx

- To view the TotalAgility v5.2 Press Release visit: http://www.singularitylive.com/campaigns/5.2PR

- To download a copy of the Case Management for Microsoft Whitepaper visit: http://www.singularitylive.com/solutions/case-management-ms.aspx

- For more information on TotalAgility and Case Management for SharePoint visit: http://www.singularitylive.com/solutions/bpm-sharepoint.aspx

Andy Cross (@andybareweb) described Port Control in Windows Azure SDK 1.4 Compute Emulator in a 7/23/2011 post:

A question I have been asked a number of times about developing for Windows Azure using the local compute Emulator (devfabric) is “how do I control the port used to launch my application”? The short of it is that you can’t, but this post shows you a few ideas about how to get control back over your dev ports.

When you are developing for Windows Azure, you may find that undesirable things happen to the port used in a Web Role when you are in a debugging cycle. For instance, the “Endpoint” port might be defined as 80, but when debugging the web application may start on port 81, and a quick “Stop Debugging, Start Debugging ” action might result in the web application running on port 82.

This is sometimes inconvenient and can lead to other problems: if your default browser is Chrome it will not load at port 87 as it deems it an “Unsafe Port” - do a quick internet search for disabling this and you can find a solution that uses a command line argument. Other problems that can happen are that your hyperlinks go awry if you are trying to specify the port, web services can become orphaned from clients … etc

The background for why this happens is that the Compute Emulator will detect in-use ports and increment the port number it is using until it finds a free port. So, if you have IIS already running on port 80, the first available port for the Compute Emulator might be 81. Furthermore, in the case that you are working TOO QUICKLY you may close a debugging session and immediately start a new one, only in the background the Compute Emulator is still tearing down the previous deployment, and is still using the port. This means that the Compute Emulator will have to use the next available port again, say 82, and by acting quickly you can reliably increment (or walk) the ports from 82 to 83, 84, 85 etc.
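The increment-until-free behaviour described above can be sketched in a few lines. This is only an illustration of the idea, not the Compute Emulator's actual code: PortWalk, NextFreePort and IsPortInUse are hypothetical names, and the loopback bind in IsPortInUse is just one assumed way of testing whether a port is taken.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Net.Sockets;

static class PortWalk
{
    // Returns the first port at or above 'preferred' that is not in use.
    public static int NextFreePort(int preferred, Func<int, bool> inUse)
    {
        for (int port = preferred; port <= 65535; port++)
        {
            if (!inUse(port)) return port;
        }
        throw new InvalidOperationException("No free port found.");
    }

    // Real probe: tries to bind a listener on the loopback address.
    // Any socket error (including lack of permission) is treated as "in use".
    public static bool IsPortInUse(int port)
    {
        try
        {
            TcpListener listener = new TcpListener(IPAddress.Loopback, port);
            listener.Start();
            listener.Stop();
            return false;
        }
        catch (SocketException)
        {
            return true;
        }
    }

    static void Main()
    {
        // Simulated case: IIS on 80 and a deployment still tearing down on 81.
        var busy = new HashSet<int> { 80, 81 };
        Console.WriteLine(NextFreePort(80, busy.Contains)); // 82

        // Real probe; the result depends on what is running on this machine.
        Console.WriteLine(NextFreePort(8080, IsPortInUse));
    }
}
```

Seen this way, port walking is simply what happens when the set of busy ports still contains the port from the previous debugging session.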

In order to solve this, you can shut down the Compute Emulator. As described here: http://michaelcollier.wordpress.com/2011/03/20/windows-azure-compute-emulator-and-ports/, you may have to exit completely if the port was used the first time the Compute Emulator was running.

Another solution is to just “Shutdown the Compute Emulator”, which if the scenario is port walking as above is sufficient to solve the problem.

Since this happens to me quite often (I’m a quick debugger … or maybe I just create a lot of silly bugs!) I chose to add a shortcut to Visual Studio in order to be able to do this.

Firstly you must add an external tool:

Add External Tool

This provides you with a Window into which you can enter details of an external tool that Visual Studio will launch for you on command. This command will be the one that resets the Compute Emulator.

Add Tool

The details for this tool are:

Title: Reset DevFabric Ports

Command: C:\Program Files\Windows Azure SDK\v1.4\bin\csrun.exe

Arguments: /devfabric:shutdown

Use Output window: checked
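The same command can also be issued from a script rather than from the Visual Studio menu. The sketch below is one possible way to do that in Python; the path and argument are taken from the tool settings above, while the helper names `shutdown_command` and `shutdown_emulator` are hypothetical and the call only makes sense on a Windows machine with the SDK installed at that location.

```python
import subprocess
from pathlib import PureWindowsPath

# Path taken from the tool settings above; adjust if the SDK is installed elsewhere.
CSRUN = PureWindowsPath(r"C:\Program Files\Windows Azure SDK\v1.4\bin\csrun.exe")


def shutdown_command(csrun=CSRUN):
    """Build the argument list for shutting down the Compute Emulator."""
    return [str(csrun), "/devfabric:shutdown"]


def shutdown_emulator(run=subprocess.run):
    """Run csrun and return its exit code (hypothetical helper, Windows only)."""
    completed = run(shutdown_command(), capture_output=True, text=True)
    return completed.returncode
```

Passing the command as a list avoids quoting problems with the space in "Program Files".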

This will create an entry in the Tools menu above where you previously found the External Tools option.

Optional – Create a toolbar button

To make it even easier to reset your ports, I added this command to a toolbar.

Double Click on a toolbar or in the space around your toolbars in order to bring up the Customise dialog, click “New”:

After you have clicked “New”, enter a name for your toolbar, customise the new toolbar and then click “Add Command”

New Toolbar

Click this new Down Arrow

Select Customise...

Click Add Command

This dialog that loads gives you the ability to add many commands that are available within Visual Studio, so we will be able to find our External Tool in here. The first thing to do is find the Tools list item on the left box, and then find the External Tool [n] on the right. The exact number for you may be different, for me it was 6. You can find this number up front by going to the External Tools dialog and counting the index of your item in the list box. Note that the first command is command 1, not command 0 – so if the item is the sixth in the list, choose External Command 6.

Find the External Command

Once you click OK, you will be able to see your new button in Visual Studio:

Your new button

In Action

Since we selected for Visual Studio to use the output window, this is where we’ll see the results:

Starting

Complete

Once this has completed, you can see that the Compute Emulator is stopped:

Compute Emulator is shutdown

The next time you start debugging, the Compute Emulator will automatically restart, with the first port being the first available; in my case back to 81.

Zoiner Tejada (@ZoinerTejada) explained “How to use unit testing and mocking to build tests for an Azure-hosted website” in his Practical Testing Techniques for Windows Azure Applications post of 7/22/2011 to the DevProConnections blog:

As I undertake this discussion of testing Windows Azure applications, I realize that you might be brand-new to Azure. Additionally, you might be unfamiliar with the concept of unit testing and the related notions of Inversion of Control (IoC), dependency injection, and test-driven development (TDD). When you combine all these tools, the volume of information can prove overwhelming.

In this article, then, my aim is to provide a clear overview of the various testing technologies (unit testing frameworks, mocking frameworks, and automated unit-test generation tools). To this end, I'll focus on how you can leverage the tools to test your Azure applications and how to apply various testing technologies in a practical way that does not require you to refactor any Azure code that you may have already written (or inherited). At the same time, I'll try to provide a good road map for improving the testability of your projects through better design, wherever possible.

The main reason to consider unit and integration testing of your Azure projects is to save time. Read through the following list of rationales for testing, and I'm sure you'll agree that any small investment in testing can pay big dividends in time savings:

- to prevent situations in which you have to wait for deployments to Azure or for the cloud emulator to fire up

- to avoid simple mistakes and regression failures

- to prevent troubleshooting of problems related to temporary environmental issues (such as network outages)

- to generate builds that can quickly verify continued functionality without impeding the code-build-debug cycle

- to create an environment in which you can quickly test newly added functionality

Over time, building your suite of unit tests will help you avoid the accumulation of technical debt.

Unit Test Types

I'll focus primarily on unit tests and their more robust relatives, integration tests. Let's get some basic terminology out of the way before we dive in.

Unit tests are narrowly focused and are designed to exercise one specific bit of functionality. This functionality is commonly referred to as the Code Under Test (CUT). Any dependencies taken by the CUT are abstracted away either by hard-coding values (i.e., providing stub implementations) or by dynamically substituting production implementations with "mock" implementations from the test. As an example of the latter process, a unit test of a business layer can examine the validation logic for creating a new domain entity by simulating the actual interaction of the entity with the database through a Data Access Layer (DAL).

Integration tests are broader tests that, by their nature, test multiple bits of functionality at the same time. In many cases, integration tests are akin to unit tests for which the stubs use production classes. For example, an integration test might create the domain entity, write this entity to a real database, and verify that the correct result was written.
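To make the unit-test idea concrete, here is a minimal sketch of a business-layer test that replaces the DAL with a mock. The article itself works with MSTest and .NET mocking frameworks; this example swaps in Python's standard `unittest` and `unittest.mock` purely for illustration, and `CustomerService`, `create_customer`, and `insert_customer` are hypothetical names.

```python
import unittest
from unittest.mock import Mock


class CustomerService:
    """Hypothetical business-layer class; the code under test (CUT)."""

    def __init__(self, dal):
        self._dal = dal  # the data access layer is injected, so tests can replace it

    def create_customer(self, name):
        if not name or not name.strip():
            raise ValueError("name is required")
        return self._dal.insert_customer(name.strip())


class CustomerServiceTests(unittest.TestCase):
    def test_valid_name_is_trimmed_and_saved(self):
        dal = Mock()
        dal.insert_customer.return_value = 42
        service = CustomerService(dal)

        self.assertEqual(service.create_customer("  Contoso "), 42)
        dal.insert_customer.assert_called_once_with("Contoso")

    def test_blank_name_never_reaches_the_dal(self):
        dal = Mock()
        service = CustomerService(dal)

        with self.assertRaises(ValueError):
            service.create_customer("   ")
        dal.insert_customer.assert_not_called()
```

Neither test touches a real database, so both run in milliseconds. An integration test of the same class would pass in a production DAL and then read the row back from storage.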

Azure Applications from a Dependency Perspective

When you consider all the aspects of unit tests, it should become clear that we must look at Azure applications from the perspective of their components and the dependencies that they take. Azure applications can consist of web roles plus their related websites, web services, and RoleEntryPoint code. They can also contain worker roles and the RoleEntryPoint code that defines the worker logic.

For Azure applications, the most common dependencies are those to the data sources, such as Windows Azure Tables, BLOBs, queues, AppFabric Service Bus Durable Message Buffers, Microsoft SQL Azure, cloud drives, AppFabric Access Control Service, and various third-party services. When you build tests for Azure applications, you want to replace these dependencies so that your tests can focus more narrowly on exercising the desired logic.

You typically employ the following items to test your Azure applications:

- a unit testing framework to define and run the tests

- a mocking framework to help you isolate dependencies and build narrowly scoped unit tests

- tools that aid automatic unit test generation to provide increased code coverage

- other frameworks that can help you use testable designs by leveraging dependency injection and applying the IoC pattern

I'll touch on all these items, but I'll pay particular attention to the unit testing and mocking frameworks.

Selecting a Unit Testing Framework

Unit testing frameworks assist you as you work with coding, managing, executing, and analyzing unit tests. You definitely don't want to build one of these yourself, as plenty of good frameworks are already available. We'll focus on the Visual Studio Unit Testing Framework (aka MSTest), because it's included in Visual Studio 2010, feels familiar, and does a good job. Other popular .NET unit testing frameworks include NUnit, xUnit, MbUnit, and MSpec. …

Mike Tillman reported Windows Azure Code Samples Page Gets a Makeover in a 7/22/2011 post to the Windows Azure blog:

We're very happy to announce the new Windows Azure Code Samples Gallery that makes it easier for you to find the code samples that you need and provides the ability to add and maintain code samples in our growing catalog. This page is built on Microsoft's Code Gallery site, and has been customized to allow you to browse code samples within the familiar context of the Windows Azure website.

One of the nice features of the gallery is that you can filter the samples based on programming language, affiliation, technology, and other keyword data. To help you further zoom in on the right sample, each sample has a user rating, the number of times the sample has been downloaded, and the last date the sample was modified. Once you've found the sample, you can browse the code before downloading it and even ask the sample author questions through the Q and A feature.

We're still working on making sure all the great samples we had on the previous code samples page can be found here, but we wanted everyone to know about this new experience. Take a look and let us know what you think by emailing us at azuresitefeedback@microsoft.com.

Wade Wegner (@WadeWegner) posted Cloud Cover Episode 52 - Tankster and the Windows Azure Toolkit for Social Games on 7/22/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show at @CloudCoverShow.

In this episode, Steve and Wade talk about the newly released Windows Azure Toolkit for Social Games. The toolkit enables unique capabilities for social gaming prerequisites, such as storing user profiles, maintaining leader boards, in-app purchasing, and more. The toolkit also comes complete with reusable server side code and documentation, as well as Tankster, a new proof-of-concept game built with HTML5.

- Microsoft Delivers New Cloud Tools and Solutions at the Worldwide Partner Conference

- Extending the Windows Azure Accelerator for Web Roles with PHP and MVC3

- Announcing: SQL Azure July 2011 Service Release

- Build Your Next Game with the Windows Azure Toolkit for Social Games

- Windows Azure Platform Management Portal Updates Now Available

Matias Woloski (@woloski) uploaded the source code for Windows Azure Accelerator for Worker Role – Update your workers faster on 7/22/2011:

During the last couple of years I worked quite a lot with Windows Azure. There is no other choice if you work with the Microsoft DPE team, like we do at Southworks.

The thing is that we usually have to deal with last minute deployments to Azure that can take more than a minute <grin>. The good news is that some of that pain started to be eased lately.

- First, the Azure team enabled Web Deploy. For development this helped a lot.

- Then, we helped DPE to build the Windows Azure Accelerators for Web Roles, announced by Nate last week. I explained how the accelerator works in a previous post. We actually used the Web Role Accelerator to deploy www.tankster.net (the social game announced on Wednesday). We have the game backend running in two small instances and we had everything ready for the announcement last week, but of course there were tweaks to the game until the last minute. We did about 10 deployments in the last day before the release. 10 * 15 minutes per deploy is two and a half hours. Using the accelerator instead, each deploy took us 30 seconds. The dev team is happy with getting more than two hours of our lives back.

- Now, to complete the whole picture I saw it might be a good idea to have the same thing but for Worker Roles.

So I teamed with Alejandro Iglesias and Fernando Tubio from the Southworks crew and together we created the Windows Azure Accelerator for Worker Roles.

How it works?

You basically deploy the accelerator with your Windows Azure solution and the “shell” worker will be polling a blob storage container to find and load the “real worker roles”. We made it easy so you don’t have to change any line of code of your actual worker role. Simply throw the entry point assembly and its dependencies in the storage container, set the name of the entry point assembly in a file (__entrypoint.txt) and the accelerator will pick it up, unload the previous AppDomain (if any) and create a new AppDomain with the latest version.
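The polling behaviour described above can be sketched as follows. This is a simplified Python model, not the accelerator's code: the real project is .NET and swaps AppDomains, the storage container is stood in for by a plain dictionary of blob name to bytes, and the content hash used to detect a new version is an assumption (the post does not say how changes are detected). `ShellWorker`, `fingerprint`, and `start_worker` are hypothetical names.

```python
import hashlib

ENTRYPOINT_BLOB = "__entrypoint.txt"  # file name given in the post


def fingerprint(blobs):
    """Hash blob names and contents so any upload changes the fingerprint."""
    digest = hashlib.sha256()
    for name in sorted(blobs):
        digest.update(name.encode("utf-8"))
        digest.update(b"\0")
        digest.update(blobs[name])
    return digest.hexdigest()


class ShellWorker:
    """Hypothetical stand-in for the accelerator's "shell" worker."""

    def __init__(self, start_worker):
        # start_worker(entry_point, blobs) must return an object with stop()
        self._start_worker = start_worker
        self._current = None
        self._seen = None

    def poll_once(self, blobs):
        """Reload the worker if the container changed; return True on reload."""
        if ENTRYPOINT_BLOB not in blobs:
            return False  # nothing deployed yet
        version = fingerprint(blobs)
        if version == self._seen:
            return False  # same files as last time; keep the running worker
        if self._current is not None:
            self._current.stop()  # analogous to unloading the previous AppDomain
        entry_point = blobs[ENTRYPOINT_BLOB].decode("utf-8").strip()
        self._current = self._start_worker(entry_point, blobs)
        self._seen = version
        return True
```

A host process would call `poll_once` on a timer with the current container contents; uploading a new assembly changes the fingerprint and triggers a stop and restart without redeploying the role.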

How to use it?

You can find the project on GitHub; there is a README on the home page that explains the steps to use it. Download it and let us know what you think!

I would like to have a Visual Studio template to make it easier to integrate with existing solutions.

Enjoy!

Robin Shahan (@RobinDotNet) described How to install IIS Application Request Routing in Windows Azure in a 7/21/2011 post:

A few months ago, I needed to use IIS Application Request Routing for my company’s main website, which runs in Windows Azure. We wanted to have some of the pages redirect to a different web application, but still show the original domain name. We wanted to whitelist most of our current website, and let everything else redirect to the other site.

We could RDP into the instances of our web role and install ARR and put the configuration information for the reverse proxy into the web.config, and it worked great. The problem is whenever Microsoft installed a patch, or we published a new version, our changes would get wiped out. So I needed to figure out how to have this be installed and configured when the Azure instance starts up. I figured I could do this with a startup task in my web role, but what would I actually put in the script to do that?

I remembered something useful I saw at the MVP Summit (that wasn’t covered by NDA) – a cool website by Steve Marx (who’s on the Windows Azure team at Microsoft) showing cool things you can do in Azure, and one of them was installing ARR. He provides the basic commands needed. I’ll show you how to set up the whole process from soup to nuts.

Steve gives information both for running the web installation and for installing from an msi. I chose to use the msi, because I know I have tested that specific version, and I know the final version of my install scripts work with it. I was concerned about the links for the web installation changing or the version being updated and impacting my site, and I tend to be ultra-careful when it comes to things that could bring down my company’s website. I haven’t been called even once in the middle of the night since we moved to Azure, and I have found that I like sleeping through the night.

A prerequisite for the ARR software is the Web Farm Framework. So you need to download both of these msi’s. The only place I could find these downloads available was this blog. You’ll need the 64-bit versions, of course.

That article states that the URLRewrite module is also required, but it’s already included in Windows Azure, so you don’t have to worry about it.

So now you have your msi’s; when I downloaded them, they were called requestRouter_amd64_en-US.msi and webfarm_amd64_en-US.msi. Add the two MSI’s to your web role project. Right-click on the project and select “Add Existing Item”, and browse to them and select them. In the properties for each one, set the build action to ‘content’ and set ‘copy to output directory’ to ‘copy always’. If you don’t do this, they will not be included in your deployment, which makes it difficult for Azure to run them.

Now you need to write a startup task. I very cleverly called mine “InstallARR.cmd”. To create this, open Notepad or some plain text editor. Here is the first version of my startup task.

    cd /d "%~dp0"
    msiexec /i webfarm_amd64_en-US.msi /qn /log C:\installWebfarmLog.txt
    msiexec /i requestRouter_amd64_en-US.msi /qn /log C:\installARRLog.txt
    %windir%\system32\inetsrv\appcmd.exe set config -section:system.webServer/proxy /enabled:"True" /commit:apphost >> C:\setProxyLog.txt
    %windir%\system32\inetsrv\appcmd.exe set config -section:applicationPools -applicationPoolDefaults.processModel.idleTimeout:00:00:00 >> C:\setAppPool.txt
    exit /b 0

This didn’t work every time. The problem is that the msiexec calls run asynchronously, and they only take a couple of seconds to run. So about half the time, the second one would fail because the first one hadn’t finished yet. Since they are running as silent installs (/qn), there wasn’t much I could do about this.

I realized I needed to put a pause in after each of the installs to make sure they are done before it continues. There’s no Thread.Sleep command. So the question I have to ask you here is, “Have you ever pinged one of your Azure instances?” I have, and it doesn’t respond, but it takes 3-5 seconds to tell you that. So what could I put in the script that would stop it for 3-5 seconds before actually continuing? Yes, I did. Here’s my final script, but with my service names changed to protect the innocent.

    cd /d "%~dp0"
    msiexec /i webfarm_amd64_en-US.msi /qn /log C:\installWebfarmLog.txt
    ping innocent.goldmail.com
    msiexec /i requestRouter_amd64_en-US.msi /qn /log C:\installARRLog.txt
    ping notprovenguilty.goldmail.com
    %windir%\system32\inetsrv\appcmd.exe set config -section:system.webServer/proxy /enabled:"True" /commit:apphost >> C:\setProxyLog.txt
    %windir%\system32\inetsrv\appcmd.exe set config -section:applicationPools -applicationPoolDefaults.processModel.idleTimeout:00:00:00 >> C:\setAppPool.txt
    exit /b 0

This worked perfectly.
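The underlying requirement is that the second installer must not start until the first has finished. The author's script gets there with a ping-based pause. The sketch below shows a different approach, not the one used in the post: the calling script waits on each installer process and checks its exit code before moving on. It is written in Python and assumes a Python runtime is available on the instance, which the post does not say; `run_in_order` is a hypothetical helper and this variant has not been tried on an Azure role.

```python
import subprocess

# Installer commands copied from the startup script above.
INSTALL_STEPS = [
    ["msiexec", "/i", "webfarm_amd64_en-US.msi", "/qn", "/log", r"C:\installWebfarmLog.txt"],
    ["msiexec", "/i", "requestRouter_amd64_en-US.msi", "/qn", "/log", r"C:\installARRLog.txt"],
]


def run_in_order(steps, run=subprocess.run):
    """Run each command to completion before starting the next.

    Returns the number of steps that finished with exit code 0,
    stopping at the first failure.
    """
    done = 0
    for command in steps:
        # subprocess.run blocks until the child process has exited
        if run(command).returncode != 0:
            break
        done += 1
    return done
```

Stopping at the first non-zero exit code means a failed Web Farm Framework install is reported as such, instead of surfacing later as a failed ARR install.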

Save this script as InstallARR.cmd. Add it to your project (File/AddExisting), set the build action to ‘content’ and set ‘copy to output directory’ to copy always. If you don’t do this, it won’t be included in your deployment, and Windows Azure won’t be able to run it. (Are you having a feeling of déjà vu?)

So how do you get Windows Azure to run it? You need to add it to your Service Definition file (the .csdef file in your cloud project). Just edit that file and add this right under the opening element for the <WebRole>.

    <Startup>
      <Task commandLine="InstallARR.cmd" executionContext="elevated" taskType="background" />
    </Startup>

Setting the executionContext to “elevated” means the task will run under the NT AUTHORITY\SYSTEM account, so you will have whatever permissions you need.

As recommended by Steve Marx, I’m running this as a background task. That way if there is a problem and it loops infinitely for some reason, I can still RDP into the machine.

I think you also need your Azure instance to be running Windows Server 2008 R2, so change the osFamily at the end of the <ServiceConfiguration> element in the Service Configuration (cscfg) file, or add it if it’s missing.

    osFamily="2" osVersion="*"

To configure the routing, add the rewrite rules to the <system.webServer> section of your web.config. Here’s an example. If it finds any matches in the folder or files specified in the first rule, it doesn’t redirect – it shows the page in the original website. If it doesn’t find any matches in the first rule, it checks the second rule (which in this case, handles everything not listed specifically in the first rule) and redirects to the other website.

    <rewrite>
      <rules>
        <rule name="Reverse Proxy to Original Site" stopProcessing="true">
          <match url="^(folder1|folder2/subfolder|awebpage.html|anasppage.aspx)(.*)" />
        </rule>
        <rule name="Reverse Proxy to Other Site" stopProcessing="true">
          <match url="(.*)" />
          <action type="Rewrite" url="http://www.otherwebsite.com/{R:1}" />
        </rule>
      </rules>
    </rewrite>

Now when you publish the web application to Windows Azure, it will include the MSI’s and the startup task, run the startup task as it’s starting up the role, install and enable the IIS Application Request Routing, and use the configuration information in the web.config.
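The first-match-wins ordering of the two rules can be checked with a small simulation. The Python sketch below is an approximation of how the rules are evaluated, not IIS itself: it assumes the pattern is tested against the request path without its leading slash, that matching is case-insensitive, and that the first rule (which has no action element) leaves the request on the original site. The `route` function is a hypothetical name.

```python
import re

# Patterns and target URL copied from the rewrite rules above.
RULES = [
    ("Reverse Proxy to Original Site",
     r"^(folder1|folder2/subfolder|awebpage.html|anasppage.aspx)(.*)",
     None),  # no rewrite: the request stays on the original site
    ("Reverse Proxy to Other Site",
     r"(.*)",
     "http://www.otherwebsite.com/{R:1}"),
]


def route(path):
    """Return (rule name, rewritten URL or None) for a path without its leading slash."""
    for name, pattern, target in RULES:
        match = re.search(pattern, path, re.IGNORECASE)
        if match is None:
            continue  # rule does not apply; fall through to the next one
        if target is None:
            return name, None
        # {R:1} is a back-reference to the first capture group of the match.
        return name, target.replace("{R:1}", match.group(1))
    return None, None
```

With these rules, `route("folder1/page.aspx")` is handled by the first rule and stays local, while `route("products/list")` falls through to the second rule and is rewritten to `http://www.otherwebsite.com/products/list`.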

Jas Sandhu posted Neo4j, the open source Java graph database, and Windows Azure to the Interoperability @ Microsoft blog on 7/5/2011 (missed when posted):

Recently I was travelling in Europe. I always find it a pleasure to see a mixture of varied things nicely co-mingling together. Old and new, design and technology, function and form all blend so well together and there is no better place to see this than in Malmö, Sweden at the offices of Diversify Inc., situated in a building built in the 1500’s with a new savvy workstyle. This was also echoed at the office of Neo Technology in a slick and fancy incubator, Minc, situated next to the famous Turning Torso building and Malmö University in the new modern development of the city.

My new good friends, Diversify's Magnus Mårtensson, Micael Carlstedt, Björn Ekengren, Martin Stenlund and Neo Technology's Peter Neubauer hosted my colleague Anders Wendt from Microsoft Sweden and me. The topic of this meeting was Neo Technology’s Neo4j, an open source graph database, and Windows Azure. Neo4j is written in Java, but also has a RESTful API and supports multiple languages. The database works as an object-oriented, flexible network structure rather than as strict and static tables. Neo4j is also based on graph theory; it can digest and work with lots of data and it scales, which makes it well suited to the cloud. Diversify has been doing some great work getting Java to work with Windows Azure and has given us on the Interoperability team a lot of great feedback on the tools Microsoft is building for Java. They have also been working with some live customers and have released a new case study published in Swedish and an English version made available by Diversify on their blog.

I took the opportunity to take a video where we discuss the project, getting up on the cloud with Windows Azure and what's coming up on InteropBridges.tv:

Video Interview on Channel9: Neo4j the Open Source Java graph database and Windows Azure

Related to this effort and getting Java on Windows Azure there are a few more goodies to check out …

Video of the presentation (skip ahead to ~12 mins) by Magnus (blog post) and Björn (blog post) at the Norwegian Developer Conference (NDC) on hosting a Java application on Windows Azure and their experiences using them together.

Magnus and Björn also got to do a radio interview on .NET Rocks (mp3) on Windows Azure and Java.

I want to thank the guys for taking the time to do the interview, meet with customers, getting some business out of the way and also being fabulous hosts and showing me around town. I am grateful for your Swedish hospitality!

Resources:

Jas is an Evangelist based out of Microsoft's Silicon Valley Campus who works on Open Source and Interoperability Strategy.

Jas didn’t mention that Microsoft Research’s Dryad is a graph database generator for Windows Server. From the Microsoft Research Dryad site:

… The Dryad Software Stack

As a proof of Dryad's versatility, a rich software ecosystem has been built on top of Dryad:

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

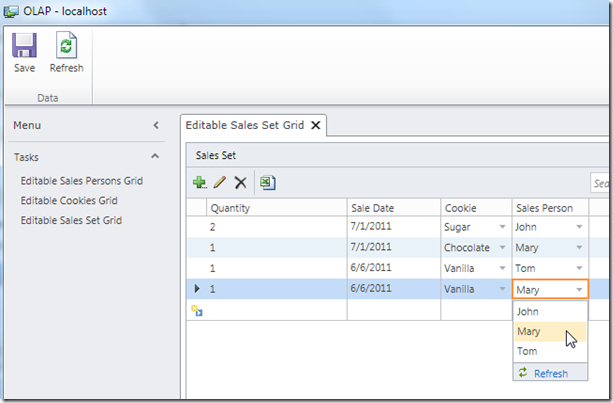

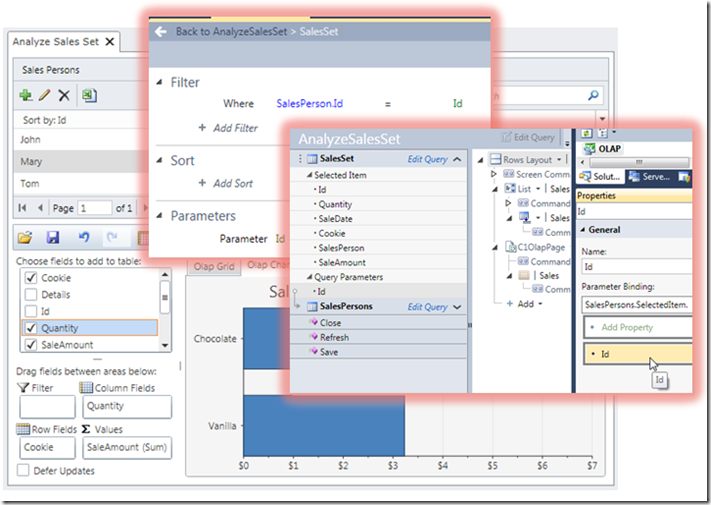

• Michael Washington (@ADefWebserver) wrote a tutorial for Using OLAP for LightSwitch in a 7/24/2011 post to his Visual Studio LightSwitch Help Website:

ComponentOne’s OLAP for LightSwitch is a “pivoting data analyzer” control that plugs into LightSwitch. It has been called the ‘Killer App’ for LightSwitch.

You can download the demo at this link: http://www.componentone.com/SuperProducts/OLAPLightSwitch/

This provides a .zip file that contains two files. Double-click on the C1.LightSwitch.Olap.vsix file to run it.

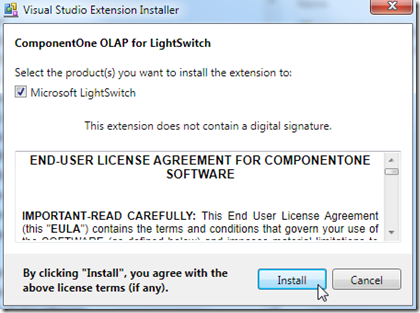

This brings up the installer box. Click Install.

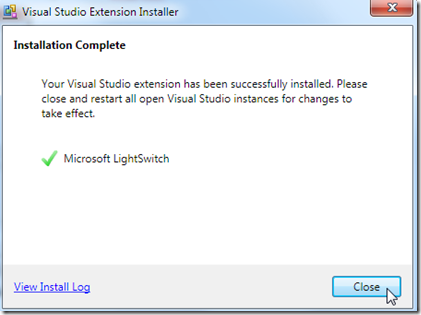

It installs really fast. Click the Close button.

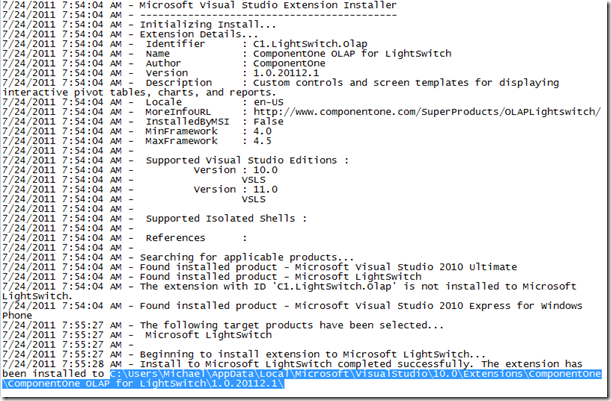

If you do look at the log, you will see it simply copies files to the: C:\Users\...\AppData\Local\Microsoft\VisualStudio\10.0\Extensions\ComponentOne\ComponentOne OLAP for LightSwitch\1.0.20112.1\ directory.

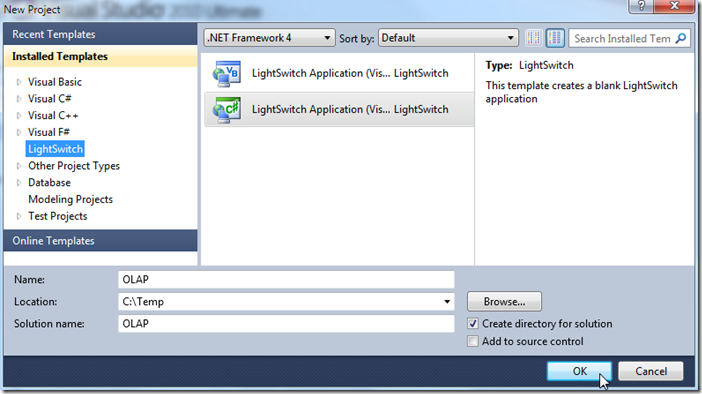

Create The Sample Application

Create a new LightSwitch project.

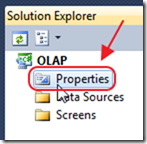

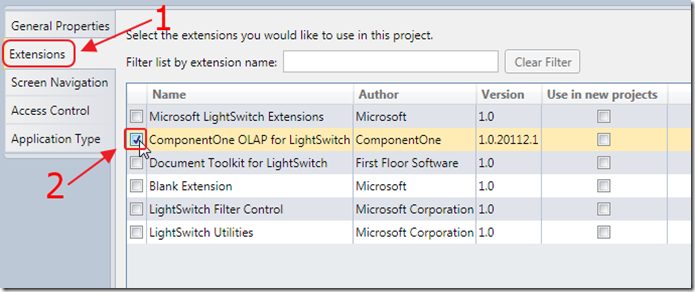

In the Solution Explorer, double-click on Properties.

In Properties, select Extensions, then check the box next to ComponentOne OLAP for LightSwitch.

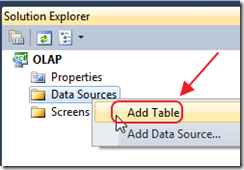

In the Solution Explorer, right-click on Data Sources, and select Add Table.

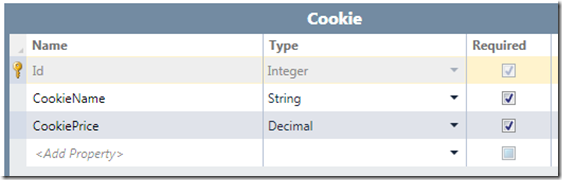

Make a table called Cookie according to the image above.

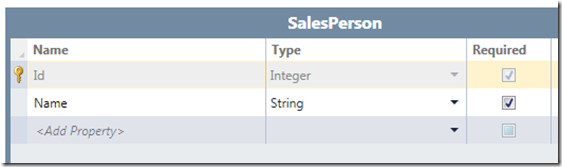

Make a table called SalesPerson according to the image above.

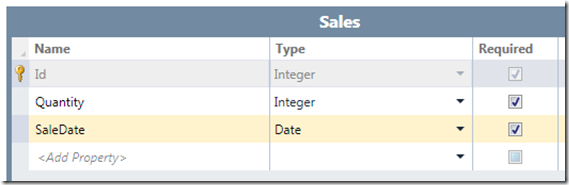

Make a table called Sales according to the image above.

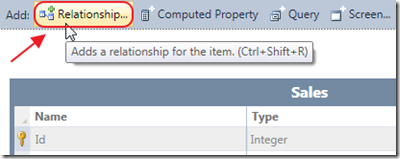

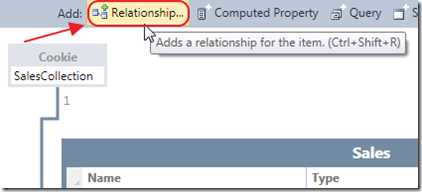

Click the Relationship button.

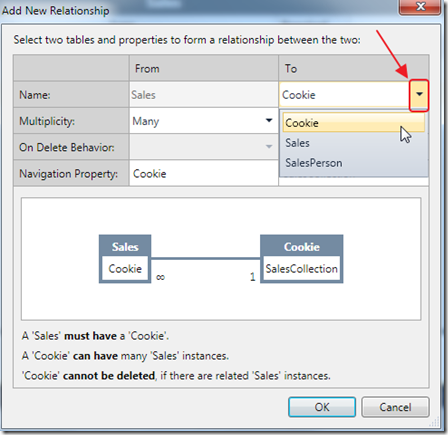

Make a relationship to Cookie.

Click the Relationship button again.

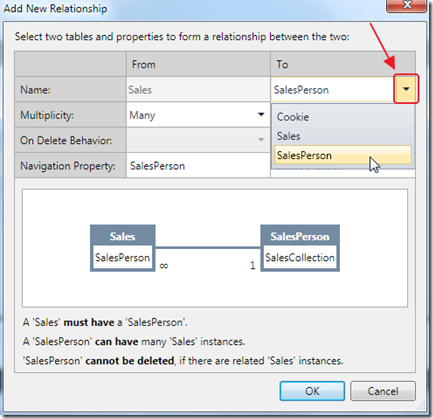

Make a relationship to SalesPerson.

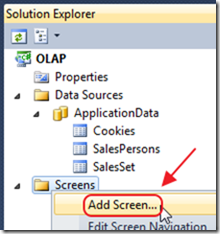

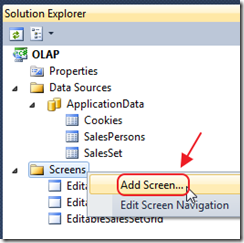

In the Solution Explorer, right-click on Screens, and select Add Screen.

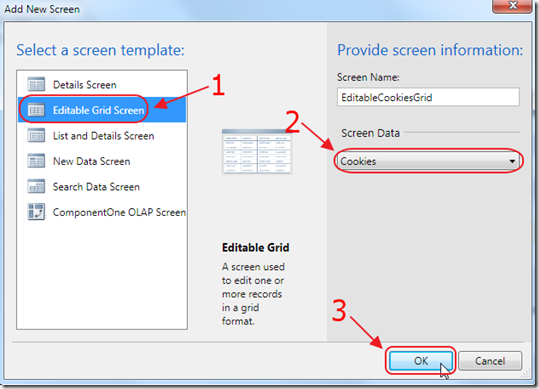

Create an Editable Grid Screen with Cookies as the Screen Data.

Also make an Editable Grid Screen for SalesPersons and Sales (the name of the Sales table may have been changed to SalesSet by LightSwitch).

Hit the F5 key to run the application.

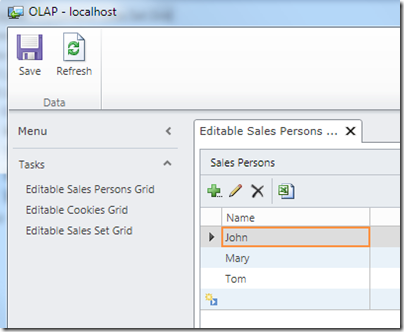

Enter Sales people (remember to click the Save button).

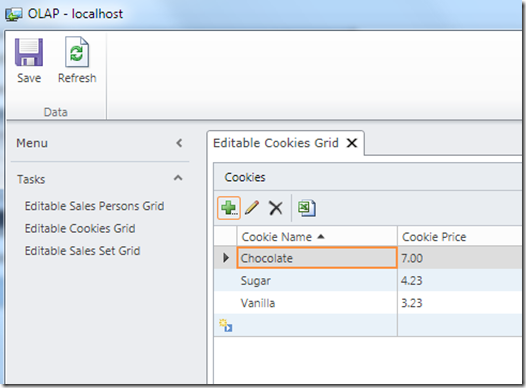

Enter Cookies.

Enter Sales.

Close the window to stop the program.

Use the OLAP Control

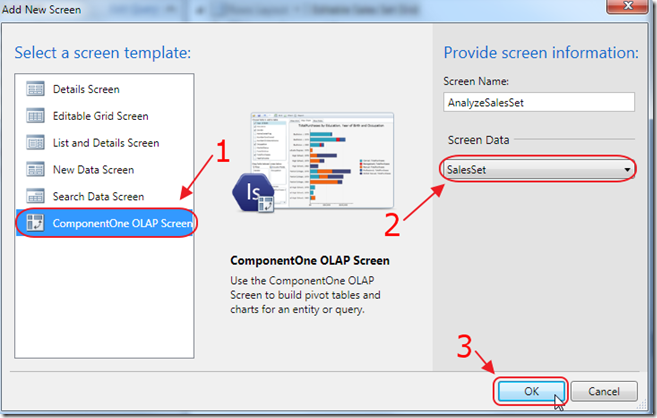

Add a new Screen.

Select the ComponentOne OLAP Screen template, and SalesSet for Screen Data.

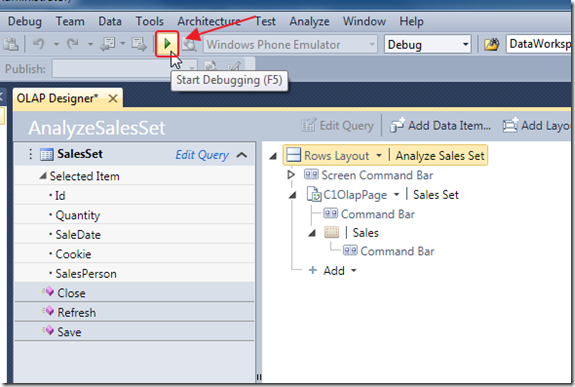

The Screen will be created.

Run the application.

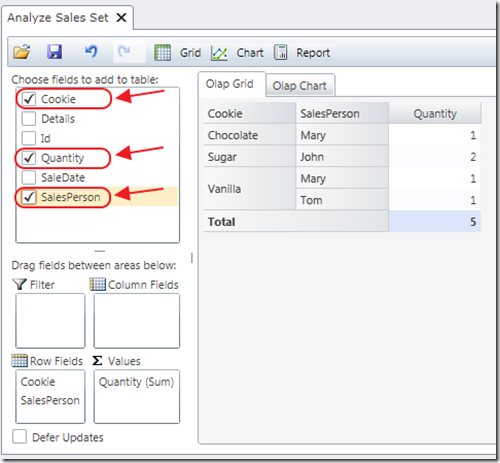

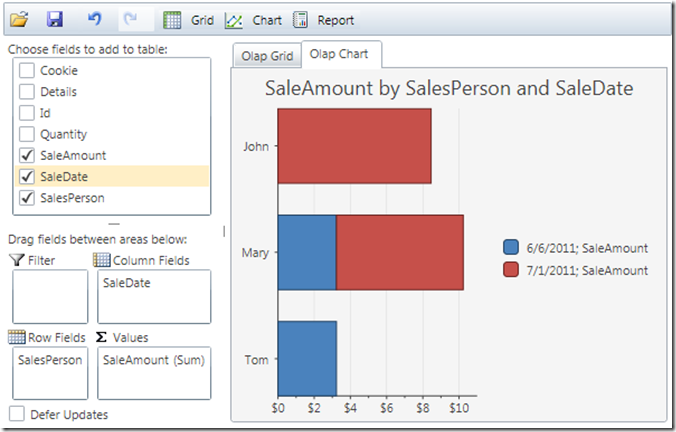

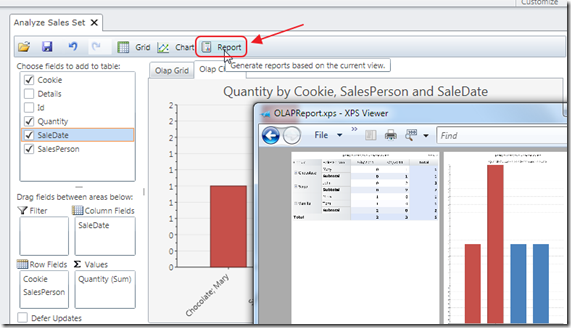

If we select Cookie, Quantity, and SalesPerson, we can see the sales.

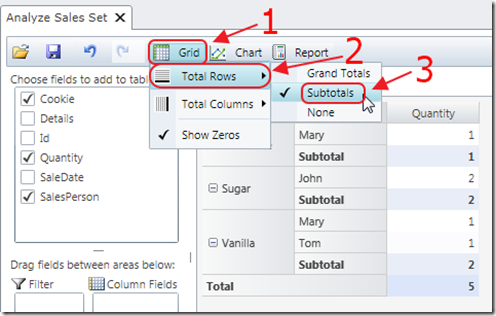

We can also show SubTotals.

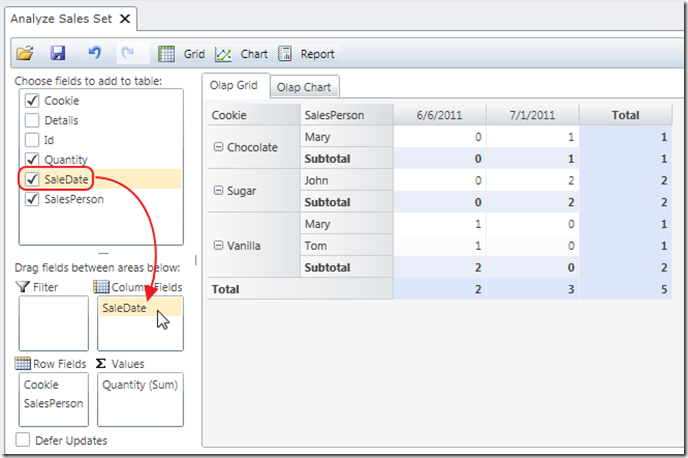

We can drag a field to the Column Fields to add it to the analysis.

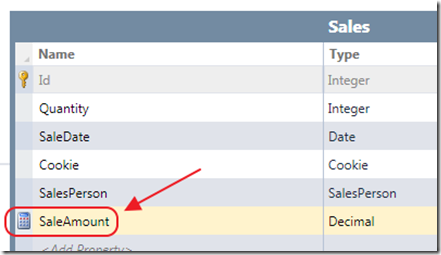

For deeper analysis we can create computed fields.

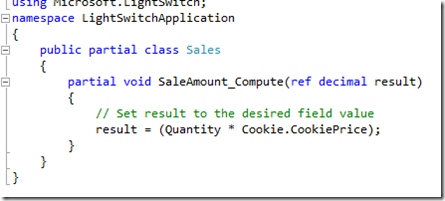

Using code such as the code above.

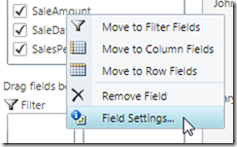

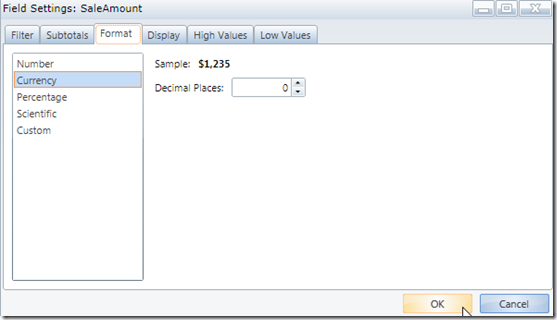

We can change various settings.

We can Format fields.

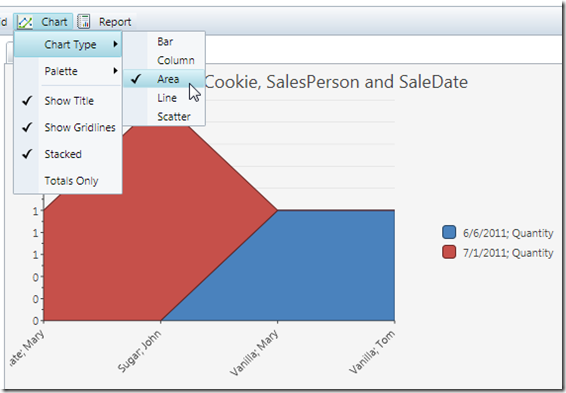

We can also see OLAP charts.

There are many types of charts.

All the normal LightSwitch tools work, so we can filter the data to allow us to select a sales person (and/or a date range).

You can also print reports. [Emphasis added.]

Full Documentation

For full documentation on this product, see: http://helpcentral.componentone.com/nethelp/c1olaplightswitch/

• Michael Washington (@ADefWebserver) reported LightSwitch: We Now Have Our 'Killer App' in a 7/23/2011 post to the OpenLightGroup.net blog:

** Update: See my walk-thru at: Using OLAP for LightSwitch ** [See above post.]

Ok folks, I am ‘gonna call it’, we have the “Killer Application” for LightSwitch, ComponentOne's OLAP for LightSwitch (live demo) (you can get it for $295).

Remember when Oprah Winfrey called Barack Obama “The One”? Well, I think ComponentOne's OLAP for LightSwitch may be “The One” for LightSwitch. This is the plug-in that, for some, becomes the deciding factor in whether to use LightSwitch or not.

A “Killer App” is an application that provides functionality so important that it drives the desire to use the product it depends upon. The Halo series made the Xbox what it is today. For the Xbox, Halo was the “killer app”.

The funny thing is that ComponentOne already has an OLAP for Silverlight. The reason it is a “Killer App” is how it leverages what is so great about LightSwitch. ComponentOne's “Nice App” becomes a “Killer App” when it is “inside” of LightSwitch.

All the major control vendors have products for LightSwitch that they have already announced. The “dirty little secret” is that all they are doing is repackaging existing products to work with LightSwitch. In case you didn’t know what is really going on with the Visual Studio LightSwitch launch (let me just stop for a moment and remind you that this is all my opinion, and I do not speak for Microsoft, and I actually know nothing for certain):

- LightSwitch is being marketed to people who may not otherwise use Visual Studio. This will allow Visual Studio to grow market share.

- EVERYONE is on board with this (including yours truly). This plan has no downside, however, it does require control vendors to re-package their controls as LightSwitch extensions.

- LightSwitch works great as a superior easy to use View Model Framework for building professional applications (ahem… I wrote a book on the subject, feel free to get a copy at this link).

- Did I mention that EVERYONE is on board with this plan?

But now we have a “Killer App”. No need to get into tireless arguments with developers who are afraid of the changes in the status quo that a tool such as LightSwitch brings. Now we can simply show a person this video, and say “you can build this app, with no programming, in less than 2 minutes… period”.

ComponentOne’s OLAP For Silverlight Control came out 4 months ago; the difference is that you could not create a complete application in 2 minutes unless you used it in LightSwitch. Also, you can use the LightSwitch Excel Importer to quickly get data into your application.

Yes, this is a game changer. LightSwitch has always been a game changer; now it has its “Killer App”.

It’s likely that the OLAP for LightSwitch control will attract new users, just as crosstab queries made Microsoft Access 1.0 a commercial success. However, at $295 for the standard edition, the control is a bit pricey.

• ComponentOne offers a live demo and free download of its OLAP for LightSwitch control at Business Intelligence in the Flip of a Switch:

Try an application built with OLAP for LightSwitch or download the extension and try it for yourself.

Live Demo

Test drive a fully functional Silverlight application built with OLAP for LightSwitch: See it Here

Free Trial

Download a trial copy to try OLAP for LightSwitch in your environment. This download is a .zip file containing a Visual Studio LightSwitch template (.vsix) and a Help file. Install the .vsix and OLAP for LightSwitch will be available. See the Help file for a Quick Start tutorial: Download it Here.

Seems a bit pricey to me.

Clint Edmonson posted a Visual Studio LightSwitch 2011 Resource Guide to the NotSoTrivial.net blog on 7/12/2011 (missed when posted):

Microsoft Visual Studio LightSwitch gives you a simpler and faster way to create high-quality business applications for the desktop and the cloud, regardless of your development skills. LightSwitch is a new addition to the Visual Studio family designed to simplify and shorten the development of typical forms-over-data business applications.

With the impending release on July 26th, I’ve received a ton of requests for more information. Here is a collection of links to resources to get you started:

Websites & Portals

- LightSwitch 2011 Portal – Official product portal where you can download the current beta and get updates on the official launch.

- LightSwitch Developer Center – With LightSwitch, building business apps has never been easier. Stay up to date and learn more about features and capabilities of LightSwitch.

- Official LightSwitch team blog – Public voice of the team who created LightSwitch. You’ll hear the latest news here first.

- LightSwitch Help Website – Forums, How To’s and FAQs driven by the community.

Tutorials & Training

- What is LightSwitch? – See how Visual Studio LightSwitch can help you develop business applications that run on the desktop or the cloud quickly.

- Hello World Tutorial – This blog post by Jason Zander walks you through creating your first LightSwitch application.

- LightSwitch Videos on Channel 9 – A collection of recordings from near and far all about LightSwitch.

- LightSwitch Beta 2 Training Kit – The Visual Studio LightSwitch Training Kit contains demos and labs to help you learn to use and extend LightSwitch.

- LightSwitch Beta 2 Developer Forums – Questions and discussions around the Visual Studio LightSwitch Beta release.

- Exploring the LightSwitch Architecture – LightSwitch applications are built on a classic three-tier architecture, on top of existing .NET technologies and proven architectural design patterns. See how LightSwitch works under the covers.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Microsoft TechNet posted links to and descriptions of all Windows Azure Forums in 7/2011:

Windows Azure Platform Development: General Windows Azure platform development topics: Getting started, development strategies, design, web services, samples, tools, SDK, APIs & how to best use Windows Azure platform.

Windows Azure Platform Troubleshooting, Diagnostics & Logging: Troubleshoot & diagnose deployment errors, service & application issues for applications running on the Windows Azure Platform. Discuss application monitoring & logging.

Windows Azure Storage, CDN and Caching: Discuss Windows Azure Storage (BLOB service, table service, queue service & Windows Azure Drive), Windows Azure Content Delivery Network (CDN), Windows Azure AppFabric Caching (distributed, in-memory, application cache service) & other non-relational solutions for storing & accessing data for the Windows Azure platform.

SQL Azure: Discuss the various SQL Azure technologies, including Database, Reporting and the Data Synchronization Service.

Connectivity and Messaging - Windows Azure Platform: Windows Azure Connect, Windows Azure Traffic Manager, connecting Windows Azure Platform services to on-premise servers & virtual networking. Windows Azure AppFabric Service Bus for secure messaging and connectivity capabilities.

Managing Services on the Windows Azure Platform: Deploying & managing services on the Windows Azure platform: Using the Windows Azure Portal, Service management tools, samples, APIs & account/subscription management.

Security for the Windows Azure Platform: Windows Azure platform security best practices, data security for Windows Azure Storage & SQL Azure, Windows Azure AppFabric Access Control, authentication & authorization methods.

Windows Azure Platform Purchasing, Pricing & Billing: Discuss Windows Azure Platform offers, pricing, estimating usage/costs, billing & comparisons.

Lori MacVittie (@lmacvittie) asserted Differences in terminology, technology foundations and management have widened the “gap” between dev and ops to nearly a chasm in an introduction to her F5 Friday: The Gap That become a Chasm post to F5’s DevCentral blog:

There has always been a disconnect between “infrastructure” and “applications” and it is echoed through organizational hierarchies in every enterprise the world over. Operations and network teams speak one language, developers another. For a long time we’ve just focused on the language differences, without considering the deeper, underlying knowledge differences they expose.

Application Delivery Controllers, a.k.a. load balancers, are network-deployed solutions that, because of their role in delivering applications, are a part of the “critical traffic path”. That means if they are misconfigured or otherwise acting up, customers and employees can’t conduct business via web applications. Period. Because of their critical nature and impact on the network, the responsibility for managing them has for the most part been relegated to the network team. That, coupled with the increasingly broad network switching and routing capabilities required by application delivery systems, has led to most ADCs being managed with a very network-flavored language and model of configuration.

Along comes virtualization, cloud computing and the devops movement. IT isn’t responsive enough – not to its internal customers (developers, system administrators) nor to its external customers (the business folks). Cloud computing and self-service will solve the problems associated with the length of time it takes to deploy applications! And it does, there’s no arguing about that. Rapid provisioning of applications and automation of simple infrastructure services like load balancing have become common-place.

But it’s not the end of the journey, it’s just the beginning. IT is expected to follow through, completely, to provide IT as a Service. What that means, in Our view (and that is the Corporate Our), is a dynamic data center. For application delivery systems like BIG-IP, specifically, it means providing application delivery services such as authentication, data protection, traffic management, and acceleration that can be provisioned, managed and deployed in a more dynamic way: as services able to be rapidly provisioned. On-demand. Intuitively.

Getting from point A to point B takes some time and requires some fundamental changes in the way application delivery systems are configured and managed. And this includes better isolation such that application delivery services for one application can be provisioned and managed without negatively impacting others, a common concern that has long prevented network admins from turning over the keys to the configuration kingdom. These two capabilities are intrinsically tied together when viewed through the lens of IT as a Service. Isolating application configurations only addresses the underlying cross-contamination impact that prevents self-service; it does not fix the language gap between application and network admins. Conversely, fixing the language gap doesn’t address the need to instill in network admins confidence in the ability to maintain the integrity of the system when the inevitable misconfiguration occurs.

We need to address both by bridging what has become a chasm between application and network admins by making it possible for application admins (and perhaps even one day the business folks) to manage the applications without impacting the network or other applications.

SERVICES versus CONFIGURATION

A very simple example might be the need to apply rate shaping services to an application. The application administrator understands the concept – the use of network capabilities to limit application bandwidth by user, device or other contextual data – but may not have the underlying network knowledge necessary to configure such a service. It’s one thing to say “give these users with this profile priority over those users with that profile” or “never use more than X bandwidth for this application” but quite another to translate that into all the objects and bits that must be flipped to put that into action. What an application administrator needs to be able to do is, on a per-application basis, attach policies to that application that define maximum bandwidth or per-user limitations based on their contextual profile. How does one achieve that in a system where the primary means of configuration is based on protocol names and behavior and not the intended result?

Exactly. They don’t. The network admin has to do that with his limited understanding of what the application admin really wants and needs, because they’re speaking different languages. The network admin has to codify the intent using protocol-based configuration and often this process takes weeks or more to successfully complete. Even what should be a simple optimization exercise – assigning TCP configuration profiles based on anticipated network connectivity to an application – requires the ability to translate the intention into network-specific language and configuration options. What we need is to be able to present the application admin with an interface that lets them easily specify anticipated client network conditions (broadband, WLAN, LAN, etc…) and then automatically generate all the appropriate underlying network and protocol-specific configurations for that application – not a virtual IP address that is, all too often, shared with other applications for which configurations can be easily confused.

What’s needed to successfully bridge the chasm is a services-oriented, application-centric management system. If combined with the proper multi-tenant capabilities, such a system would go far toward achieving a self-service style, on-demand provisioning and management system. It would take us closer to IT as a Service.
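The intent-to-configuration translation described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `AppIntent` type, the `render_config` function, the profile names and all tuning values are hypothetical and do not correspond to any real application delivery controller's API or recommended settings. The application admin states only the anticipated client network and a bandwidth ceiling, and the protocol-level settings are generated per application.

```python
from dataclasses import dataclass

# Hypothetical protocol-level settings per anticipated client network.
# The values are placeholders for illustration, not tuning advice.
TCP_PROFILES = {
    "lan": {"nagle": False, "receive_window_kb": 64, "delayed_ack": False},
    "broadband": {"nagle": False, "receive_window_kb": 128, "delayed_ack": True},
    "wlan": {"nagle": True, "receive_window_kb": 256, "delayed_ack": True},
}


@dataclass(frozen=True)
class AppIntent:
    """What the application admin specifies, in application terms."""
    app_name: str
    client_network: str        # e.g. "LAN", "broadband", "WLAN"
    max_bandwidth_mbps: float  # ceiling for this application only


def render_config(intent: AppIntent) -> dict:
    """Translate an application-level intent into network-level settings."""
    network = intent.client_network.strip().lower()
    if network not in TCP_PROFILES:
        raise ValueError(f"unknown client network: {intent.client_network!r}")
    if intent.max_bandwidth_mbps <= 0:
        raise ValueError("max_bandwidth_mbps must be positive")
    return {
        # Settings are keyed by application, not by a shared virtual IP.
        "application": intent.app_name,
        # Copy the profile so one application's settings never alias another's.
        "tcp_profile": dict(TCP_PROFILES[network]),
        "rate_limit_kbps": int(intent.max_bandwidth_mbps * 1000),
    }


if __name__ == "__main__":
    print(render_config(AppIntent("crm", "WLAN", 20)))
```

Keying the generated settings by application and copying the profile for each one reflects the isolation concern raised earlier: a change made for one application should not leak into another's configuration.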

It may be that We (and that is the Corporate We) have a solution that bridges the chasm between network and application administration, between the static configuration of traditional application delivery systems and the application-focused, service-oriented dynamic data center architecture. It may be that We will be letting you know what that is very soon….

Joe Panettieri reported Microsoft Mum on Cloud Revenues in a post to the TalkinCloud blog of 7/21/2011:

Microsoft announced record Q4 revenues today but the earnings release made no mention of cloud revenues. In stark contrast, IBM earlier this week said its cloud-related revenues will double this year, and VMware largely credited cloud computing in a strong earnings report earlier this week. So, should Wall Street and partners worry about Microsoft’s silence on cloud revenues?

Actually, no. From where I sit, Microsoft’s two major cloud platforms — Office 365 and Windows Azure — remain well-positioned for long-term success. Office 365 debuted June 28, far too recent for Microsoft to comment about initial revenues. And Windows Azure, Microsoft’s platform as a service (PaaS), only has one year under its belt. Azure revenues are surely small since most customers are still kicking the tires. But we do hear from a growing number of readers that are launching applications in the Azure cloud. Key examples include:

- Quosal, a quoting software platform for VARs and MSPs, now available on Azure.

- CA ARCserve, the backup software platform, coming to Windows Azure later this year.

- MediaValet, a digital asset management system (DAMS), which seems to be catching on in Azure.

The momentum story seems less clear for Office 365. The platform’s predecessor, BPOS (Business Productivity Online Suite), remains online and continues to suffer from occasional failures, giving Microsoft’s cloud efforts a black eye. Microsoft claims to have more than 40,000 cloud partners, but how many of them actually generate noteworthy revenue from Microsoft’s cloud? The answer to that question is unknown.

Still, Microsoft COO Kevin Turner in a prepared statement said Microsoft was well-positioned for cloud computing over the long haul. And I concede: Deadlines kept me away from the actual earnings call, which may have contained some cloud chatter.

Also, plenty of technology giants decline to discuss cloud revenues. A key example: When Google recently announced quarterly results, the company said nothing about Google Apps revenues and the Google Apps partner program — though the Google Apps reseller program has more than 1,000 members at last count.

Read More About This Topic

- Excel Micro Offering Customers Tablets for Google Apps Moves

- Microsoft Gives University of Nebraska $250k to Move to Office 365

- Microsoft Shutting BPOS Customers Out of Office 365, For Now

- Microsoft Office 365 Now Available for Public Consumption

- Google Offers Perspective on Microsoft Office 365 Launch

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) posted More On Security & Big Data…Where Data Analytics and Security Collide on 7/22/2011:

My last blog post, “InfoSec Fail: The Problem With Big Data Is Little Data,” prattled on a bit about how large data warehouses (or data lakes, from “Big Data Requires A Big, New Architecture”) at the intersection of next generation data centers, mobility and cloud computing were putting even more stress on “security”:

As Big Data and the databases/datastores it lives in interact with the proliferation of PaaS and SaaS offers, we have an opportunity to explore better ways of dealing with these problems; this is the benefit of mass centralization of information.

Of course there is an equal and opposite reaction to the “data gravity” property: mobility…and the replication (in chunks) and re-use of the same information across multiple devices.

This is when Big Data becomes Small Data and the ability to protect it gets even harder.

With the enormous amounts of data available, mining it — regardless of its source — and turning it into actionable information (nee intelligence) is really a strategic necessity, especially in the world of “security.”

Traditionally we’ve had to use tools such as security information and event management (SIEM) tools or specialized visualization* suites to make sense of what ends up being telemetry which is often disconnected from the transaction and value of the asset from which it emanates.

Even when we do start to be able to integrate and correlate event, configuration, vulnerability or logging data, it’s very IT-centric. It’s very INFRASTRUCTURE-centric. It doesn’t really include much value about the actual information in use/transit or the implication of how it’s being consumed or related to.

This is where using Big Data and collective pools of sourced “puddles” as part of a larger data “lake” and then mining it using toolsets such as Hadoop come into play.

We’re starting to see the commercialization of Hadoop outside of vertical use cases for financial services and healthcare and more broadly adopted for analytics across entire lines of business, industry and verticals. Combine the availability of cheap storage with ever more powerful and cost-effective compute and network and you’ve got a goldmine ready to tap.

One such solution you’ll hear more about is Zettaset, which commercializes and productizes Hadoop to enable the construction of enormously powerful data security warehouses and analytics.

Zettaset is a key component of a solution offering that is doing what I describe above for a CISO of a large company who integrates enormous amounts of disparate and seemingly unrelated data to make managed risk decisions that are fed to humans and automated processes alike.

These data are sourced from all across the business, including IT, and allow the teams and constituent interested parties from across the company to slice and dice data from petabytes of information which previously would have been siloed. Powerful.