Windows Azure and Cloud Computing Posts for 7/25/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 7/25/2011 5:00 PM with new articles marked • by Rob Hirschfeld, Windows Azure Team, Martin Tantow, Marcelo Lopez Ruiz and Alex Williams.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Lynn Langit (@llangit) posted SQL Server Developer Tools (Juneau)–first look on 7/25/2011:

I have been preparing a talk (for an internal Microsoft product training) on the new SQL Server Developer Tools (or SSDT – code named ‘Juneau’), based on the SQL Server vNext CTP 3 (code named ‘Denali’).

I encourage you to try this out. To do so, you’ll need to have Visual Studio 2010 with SP1 installed. Then you’ll need to download and install a version of SQL Server Denali CTP 3. After that, you can download and install the CTP for Juneau.

Start by taking a look at the new node in the Visual Studio Server Explorer > SQL Server (as shown below with the [new] local instance, a SQL Azure instance, a SQL Denali instance, and a SQL Server 2008 R2 instance). Right click on any database in this node to try out the new ‘Create new project’ (for off-line database development) or the ‘Schema Compare’ features.

Of particular interest to SQL Azure developers is that the Juneau tools are version-aware. What this means is that you can target your development to SQL Azure (or other versions) and the tools will provide you with warnings, highlights, etc…in your T-SQL code that are specific to that particular version.

There are a couple of good talks from TechEd North America on the topic of Juneau as well. The first one covers the new features around database lifecycle management.

The second video covers future features (the bits don’t seem to be released to the public yet) in Entity Framework and Juneau.

As I work on my talk I am wondering how those of you who have been using ‘Data Dude’ are finding SSDT? Drop me a note via this blog.

Also, I’ll publish the presentation after I give it.

Tim Anderson (@timanderson) asked “Tasty Visual Studio upgrade or 'One step forward, two steps back'?” in a deck for his Microsoft previews 'Juneau' SQL Server tools article of 7/25/2011 for The Register:

Microsoft has released a third preview of SQL Server 2011, codenamed "Denali" and including the "Juneau" toolset.

In the Denali database engine there are new features that support high availability and improve the performance of data warehousing queries. Then there's FileTable, a special table type that is also published as a Windows network share, and which enables file system access to data managed by SQL Server.

For business intelligence, Denali includes tabular modelling, which means in-memory databases that support business intelligence analysis, and a new interactive visualisation and reporting client called Project Crescent.

Interestingly, Project Crescent is built with Silverlight rather than HTML5, despite Microsoft's new-found commitment to all things HTML.

Alongside these new database features Microsoft is introducing a new set of tools delivered as add-ins to Visual Studio, and which work with older versions of SQL Server as well as Denali. This SQL Server Development Tools (SSDT) collection, codenamed "Juneau", is an ambitious project that builds on what was done for the existing Visual Studio 2008 and 2010 database projects – although, curiously, some features currently found in database projects are missing in Juneau.

The database being a key part of most business applications, the goal of Juneau is to integrate database development with application development, and to bring capabilities like testing, debugging, version control, refactoring, dependency checking, and deployment to the database. In addition, the query and design tools should mean that developers rarely need to switch to SQL Server Management Studio.

Juneau previews database changes and warns of the consequences

Juneau provides a new SQL Server project type in Visual Studio for database design and debugging. A SQL Server database project stores the entire database schema as the Transact-SQL (T-SQL) scripts that are required to create it, including code that runs in the database, such as stored procedures and triggers. You can import the schema from an existing database, or you can build it from scratch.

Since it's code, the schema is amenable to code-oriented operations such as versioning and refactoring, and it enables features such as Go to Definition.

Juneau uses a new testing and debugging method that works with a local, single-user database that's created automatically for each database project. When you debug the project, the database schema is applied to this local instance.

There are some limitations to this method, such as no support for full-text indexes. You can, however, debug SQL CLR, which is custom .NET code running in the database – SQL Server 2011 uses .NET Framework 4.0.

Unlike the old Visual Studio database projects, Juneau has a visual table designer. That said, some actions are not really visual and simply generate a template line of T-SQL for you to edit. The T-SQL editor, however, stays automatically in sync with the visual designer and includes smart code completion based on the underlying schema. Apparently this uses the actual parser from the SQL Server database engine under the covers.

Compare and contrast

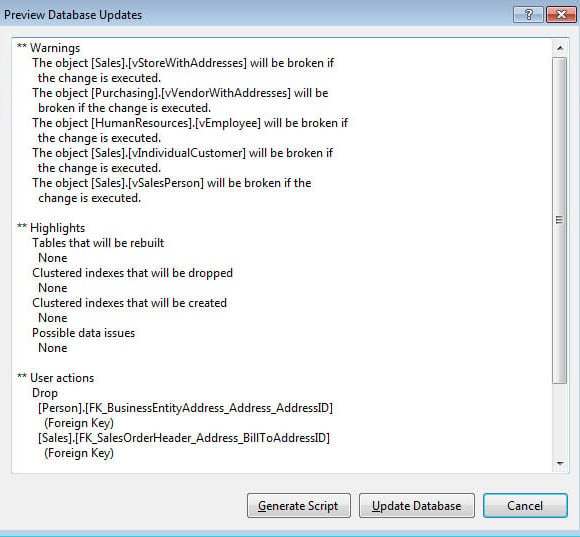

A key part of the SSDT collection is a tool called Schema Compare. This takes the schema in your project, compares it to a target database, and reports any differences. For example, if a database admin has made some changes since the schema was imported to the project, Schema Compare will highlight them. The tools are also able to generate update scripts that apply the project schema to the target, and warn you of consequences such as data loss.

The SSDT Juneau tools always use a two-stage process to amend an existing database. Rather than apply the changes immediately, it previews the update, warns you about what will break, and offers the choice of a generated script or immediate update. This buffered approach is a strong feature, and will help to prevent mistakes.
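The compare-then-preview flow described above can be illustrated with a small sketch. It is not how Juneau is implemented: it assumes a hypothetical model in which a schema is just a mapping from object name to its T-SQL definition, and `SchemaDiff` and `compare_schemas` are names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaDiff:
    """Changes needed to make the target database match the project (illustrative)."""
    to_create: list = field(default_factory=list)
    to_alter: list = field(default_factory=list)
    to_drop: list = field(default_factory=list)

    def warnings(self):
        # Dropping an object from the target may destroy data, so flag it
        # before anything is applied.
        return [f"Dropping {name} may cause data loss" for name in self.to_drop]


def compare_schemas(project, target):
    """Compare two {object_name: definition} mappings (a hypothetical model)."""
    diff = SchemaDiff()
    for name, definition in sorted(project.items()):
        if name not in target:
            diff.to_create.append(name)
        elif target[name] != definition:
            diff.to_alter.append(name)
    diff.to_drop = sorted(name for name in target if name not in project)
    return diff


project = {
    "dbo.Customers": "CREATE TABLE dbo.Customers (Id INT, Name NVARCHAR(50))",
    "dbo.Orders": "CREATE TABLE dbo.Orders (Id INT, CustomerId INT)",
}
target = {
    "dbo.Customers": "CREATE TABLE dbo.Customers (Id INT)",
    "dbo.Audit": "CREATE TABLE dbo.Audit (Id INT)",
}

# Stage one: preview the differences and the warnings. Stage two (not shown)
# would either emit a script or apply the changes.
preview = compare_schemas(project, target)
print(preview.to_create, preview.to_alter, preview.to_drop)
for warning in preview.warnings():
    print(warning)
```

Keeping the preview as a plain data object, separate from any code that applies it, mirrors the buffered approach: nothing touches the target until the developer has seen the warnings.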

Another notable feature is deployment. The Publish Database tool lets you specify a target which can be an on-premise SQL Server or SQL Azure, hosted on Microsoft's cloud. [Emphasis added.]

Be forewarned: not all the features of Juneau are in the current preview. One missing piece – so far, at least – is the application project and database project integration, which will link SQL Server projects to the Entity Framework for object-relational mapping.

While Juneau's general approach looks strong, it is puzzling that some of the best parts of the old database projects – such as the Schema Dependency Viewer and Data Compare tool – are not in the preview version. As one developer on Microsoft's discussion forum put it, "It feels like 1 step forward, 2 steps backwards."

As of today, Juneau's visual design tools are weak, there's no visual query designer, and no database diagramming or visual modelling tool. Some of these gaps may be filled by the time the tools are released – or, failing that, in a future update. If so, the new SSDT could be an excellent set of tools – although of course you're tied to SQL Server.

Although the Juneau tools currently install into Visual Studio 2010, Microsoft says they will be part of the next version of Visual Studio. They can also be used standalone, though they still use the Visual Studio shell. Expect more detail on this at the Build conference in September.

You can find more information about Juneau and download the preview here.

<Return to section navigation list>

Marketplace DataMarket and OData

• The Windows Azure (Marketplace) Team (@WindowsAzure) announced Available Now: Windows Azure Marketplace Content & Tools Update in a 7/25/2011 post:

We are very excited today to announce our latest content release, which makes some great new data available on the Windows Azure Marketplace, as well as the release of our new Offer Submission page!

Are you looking to publish your application or data on the Marketplace? Check out https://marketplace.windowsazure.com/publishing for all the information you need including samples, documentation and to access the new Offer Submission page!

Also available today is BranchInfo™ 2011 from our friends at RPM Consulting. BranchInfo 2011 is a historical database of every bank branch location in the U.S., containing branch level information carefully address-matched and geocoded by institution and site across a 5 year timeframe. This allows analysts to focus on current office locations, and gives them the capability to trace and project the development and ownership of branches over time. Users can also drill down into one mile trade areas for each branch, to determine deposits and share, market potential, and competition.

RPM Consulting has also just released MarketBank™ 2011, providing estimated usage and balances of financial products and services among America’s households. Data are provided at the block group level, and aggregated to branch trade areas and other standard and custom business and community geographies. Estimates are based upon a proprietary model utilizing data from the Federal Reserve Bank’s Survey of Consumer Finances, and other industry data to market populations as defined by ESRI 2010/2015 Updated Demographics.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Vittorio Bertocci (@vibronet) described Using the Windows Azure Access Control Service in iOS Applications in a 7/25/2011 post:

Nossir, there is nothing wrong with my blog. The screenshot above is indeed taken from a Mac desktop, and the one you see on the center is actually an instance of the iPhone emulator. What you should notice, however, is the list of Identity Providers it shows, so strangely similar to what we have shown on Windows Phone 7 and ACS… but I better start from the beginning.

A couple of months ago the Windows Azure Platform Evangelism team, in which I worked until recently, released a toolkit for taking advantage of the Windows Azure platform services from Windows Phone 7 applications. The toolkit featured various integration points with ACS, as explained at length here.

At about the same time, the team (and Wade specifically) released a version of the same toolkit tailored to iOS developers. That first iOS version integrated with the core Windows Azure services, but didn’t take advantage of ACS.

Well, today we are releasing a new version of the Windows Azure toolkit for iOS featuring ACS integration!

Dear iOS friends landing on this blog for the first time: of course I understand you may not be familiar with the Windows Azure Access Control Service. You can get a quick introduction here, however for the purpose of providing context for this post let me just say the following: ACS is a fully cloud-hosted service which helps you to add to your application sign-in capabilities from many user sources such as Windows Live ID, Facebook, Google, Yahoo, arbitrary OpenID providers, local Active Directory instances, and many more. Best of all, it allows you to do so without having to learn each and every API or SDK; the integration code is the same for everybody, and extremely straightforward. All communications are done via open protocols, hence you can easily take advantage of the service from any platform, as this very post demonstrates. Try it!

I am no longer on the Evangelism team, but the ACS work for this deliverable largely took place while I was still on it: recording the screencast and writing this blog post provides nice closure. Thanks to Wade for having patiently prepped & provided a Mac already perfectly configured for the recording! Also, for driving the entire project, IMO one of the coolest things we’ve done with ACS so far.

And now, for something completely different:

The Release

As usual, you’ll find everything in DPE’s GitHub repository: https://github.com/microsoft-dpe

There will be four main entries you’ll want to pay attention to:

- watoolkitios-lib

This is a library of Objective-C snippets which can help you to perform a number of common tasks when using Windows Azure. For the specific ACS case, you’ll find code for listing identity providers, acquiring and handling tokens, invoking the ACS management APIs, and so on.

- watoolkitios-doc

As expected, some documentation.

- watoolkitios-samples

A sample application which demonstrates how to put the various snippets together.

- cloudreadypackages

Those are a set of ready-to-go packages that can be directly uploaded and launched in Windows Azure, without requiring you to have access to Visual Studio or the Windows Azure SDK: all you need is to deploy them via the portal (which works on Mac, too). The packages can be used as test backend for your iOS applications.

The packages take advantage of the technique described here to allow changes in the config settings even after deploy time. Which is a great segue for…

- The ACS config tool for iOS

In the Windows Azure Toolkit for Windows Phone 7 we included some Visual Studio templates which contain all the necessary logic for wiring up a phone application to ACS and configure ACS to issue tokens for that app. In iOS/Xcode there’s no direct equivalent of those templates, but we still wanted to shield the developer from many of the low level details of using Windows Azure. To that end, we created a tool which can automatically configure the application, ACS and Windows Azure.

Using the ACS Configuration Tool for iOS in the Toolkit

If you want to see the tool in action, check out the webcast; here I will give you few glimpses, just to whet your appetite.

This is a classic wizard, and it opens with a classic welcome page. We don’t like surprises, hence we announce what the tool is going to do. Let’s click next.

The first screen gathers info about the Windows Azure storage account you want to use; nothing to do with ACS yet. Next.

The next screen gathers the certificate used for doing SSL with the cloud package. Again, no ACS yet. Next.

Ahh, NOW we are talking business. Just like in the toolkit for Windows Phone 7 we offered the possibility of using the membership provider or ACS, here we do the same: depending on which option you pick, the way in which the user will be prompted for credentials and how calls will be secured will differ accordingly. Here we go the ACS way, of course.

I would say this is the key screen in the entire process. Here we prompt the developer to provide the ACS namespace they want to use with their iOS application, and the management key we need to modify the namespace settings accordingly. If you are unsure about how to obtain those values, a helpful link points to a document which will briefly explain how to navigate the ACS portal to get those.

In this wizard we try to strike a balance between showing you the power of the services we use and keeping the experience simple. As we did for the WP7 toolkit, here we apply some defaults (Google, Yahoo and Windows Live ID as identity providers, pass-through rules for all) that will show how ACS works without offering too many knobs and levers to operate. If you are unhappy with the defaults, you can always go directly to the portal and modify the settings accordingly. For example you may add a Facebook app as identity provider, and that will show up automatically in the phone application without any changes to the code.

The final screen of the wizard informs you that it has enough info to start the automatic configuration process. First it will generate a ServiceConfiguration.cscfg file, which you’ll use for configuring the Windows Azure backend (your cloudready package) via the portal. Then the wizard will reach out directly to the ACS management endpoint, and will add all the settings as specified.

As soon as you hit Save the wizard will ask you for a location for the cscfg file, then it will contact ACS and show you a bar as it progresses thru the configuration. Pretty neat!

Above you can see the generated ServiceConfiguration.cscfg. Of course the entire point of generating the file is so that you don’t have to worry about the details, but if you are curious you can poke around. You’ll mainly find the connection strings for the Windows Azure storage and the settings for driving the interaction with ACS.
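For readers who want to poke around such a file programmatically, here is a minimal sketch that lists the settings in a service configuration file. The XML namespace is the standard one for .cscfg files; the service, role and setting names in the sample (`ExampleService`, `ExampleWebRole`, `DataConnectionString`, `AcsNamespace`) are placeholders invented for this example and are not taken from the file the wizard generates.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows Azure service configuration (.cscfg) files.
NS = {"sc": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"}

# Hypothetical sample: names and values are placeholders, not the wizard's output.
SAMPLE_CSCFG = """
<ServiceConfiguration serviceName="ExampleService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="ExampleWebRole">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DataConnectionString"
               value="DefaultEndpointsProtocol=https;AccountName=example;AccountKey=PLACEHOLDER" />
      <Setting name="AcsNamespace" value="example-namespace" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
"""


def read_settings(cscfg_text):
    """Return {(role_name, setting_name): value} for every setting in the file."""
    root = ET.fromstring(cscfg_text.strip())
    settings = {}
    for role in root.findall("sc:Role", NS):
        for setting in role.findall("sc:ConfigurationSettings/sc:Setting", NS):
            settings[(role.get("name"), setting.get("name"))] = setting.get("value")
    return settings


for (role_name, setting_name), value in read_settings(SAMPLE_CSCFG).items():
    print(f"{role_name}: {setting_name} = {value}")
```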

All you need to do is to navigate (via the Windows Azure management portal) to the hosted service you are using for your backend, hit Configure and paste in the autogenerated ServiceConfiguration.cscfg.

The next step in the screencast shows how to run the sample application, already properly configured, in Xcode. If you hit the play button, you’ll be greeted by the screen with which I opened the post.

The rest is business as usual: the application follows the same pattern as the ACS phone sample and labs: an initial selection driven by browser based sign in protocols to obtain and cache the token from ACS (a SWT) and subsequent web service calls secured via OAuth. Below a Windows Live ID prompt, followed by the first screen of the app upon successful authentication.
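To make the token step a bit more concrete, here is a rough sketch of how a service might check a Simple Web Token before trusting it. It reflects my reading of the SWT format: a form-encoded list of name/value pairs whose last pair, `HMACSHA256`, is a base64 HMAC-SHA256 signature over everything that precedes it. The function names, the key and the issuer and audience values are assumptions made up for the example; the toolkit itself is written in Objective-C and may do this differently.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import parse_qsl, quote, unquote

SIGNATURE_MARKER = "&HMACSHA256="


def compute_signature(unsigned_token, key):
    """Base64 HMAC-SHA256 of the unsigned token text (key given as raw bytes)."""
    digest = hmac.new(key, unsigned_token.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def sign_swt(unsigned_token, key):
    """Append the URL-encoded signature as the final name/value pair."""
    return unsigned_token + SIGNATURE_MARKER + quote(compute_signature(unsigned_token, key), safe="")


def validate_swt(token, key, now=None):
    """Return the claims as a dict if signature and expiry check out, else None."""
    unsigned_token, marker, presented = token.rpartition(SIGNATURE_MARKER)
    if not marker:
        return None  # no signature present
    expected = compute_signature(unsigned_token, key)
    if not hmac.compare_digest(expected.encode("ascii"), unquote(presented).encode("utf-8")):
        return None  # signature mismatch
    claims = dict(parse_qsl(unsigned_token))
    current_time = time.time() if now is None else now
    if int(claims.get("ExpiresOn", "0")) <= current_time:
        return None  # expired, or no expiry claim at all
    return claims


# Hypothetical values for illustration only.
demo_key = b"not-a-real-signing-key"
unsigned = (
    "Issuer=" + quote("https://example.accesscontrol.windows.net/", safe="")
    + "&Audience=" + quote("http://localhost/", safe="")
    + "&ExpiresOn=2000000000"
)
demo_token = sign_swt(unsigned, demo_key)
print(validate_swt(demo_token, demo_key, now=1311552000))
```

A real relying party would also compare the `Issuer` and `Audience` claims against the values it expects; the sketch stops at signature and expiry to stay short.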

Well, that’s it folks! I know that Wade and the gang will keep an eye on the GitHub repository: play with the code, let them know what you like and what you don’t like, branch the code and add the improvements you want, go crazy!

Vittorio Bertocci (@vibronet) reported New in ACS: Portal in Multiple Languages, a New Rule Type… and Wave Bye-Bye to Quotas in a 7/25/2011 post:

Big news in ACSland today! There are a few new key features that - I am sure – many of you will welcome with a big smile.

As usual, for the full scoop take a look at the announcement and the release notes; here I’ll just give you few highlights & customarily lighthearted commentary.

The Portal Comes in 11 Languages

Riding the wave of the general localization effort sweeping the Windows Azure portal, the ACS portal can now entertain users in 10 extra languages, such as Japanese, Chinese (simplified and traditional), Korean, Russian, Portuguese, Spanish, German, French and even Italian.

Switching it is pretty trivial, to the point that I am daring to switch to Chinese without (too much) fear of not being able to revert to English. Just pick the language you want in the dropdown on the top right corner, and the UI will switch immediately. Also note the URL (in my case it moved to https://windows.azure.com/Default.aspx?lang=zh-Hans).

From that moment on, everything will be localized accordingly: for example if I invoke the management portal for one namespace, I get the HRD page localized accordingly:

And of course, the portal itself is now fully localized:

Note that I can override the language settings directly from the ACS portal, as highlighted in the image above.

Biographic note: I always have a lot of fun checking out the Italian versions of the software I use. The reason is that everybody has a different threshold about what should be translated and what should remain in its original formulation (why translating IP to “provider di identita’” but leaving RP as “relying party”? (or even why keeping “provider” but translating “identity”?)), and for expats like myself that threshold is often 0 (as in “do not translate at all”). Mismatches in expectations lead to those “benign violations” that McGraw claims constitute the basis of humor. But I digress: ignore my pet peeves, I am sure that having the portal available in multiple languages will be of enormous help for making ACS even easier to use. Good job guys!

Quotas Are No More

Ah, this one is as simple as it will be appreciated, I have not the slightest doubt about it.

Some of you occasionally stumbled on quotas: deliberate restrictions which capped the maximum number of entities (rules, trusted IPs, RPs, etc etc) that could be created within a given namespace. Well, rejoice: those restrictions are now all gone. Have fun!

Rules Accept Up To 2 Input Claims

Here I risk throwing myself in a somewhat lengthy explanation, which I know many of my colleagues will deem unnecessary (as in “why does he always take hours to get to the point?!”). In order to preempt their complaints, here are the sheer facts about the new rules:

From this release on, you have the option of specifying up to two claims as input for claims transformation rules. If claims triggering both input conditions are present (logical AND), then the rule will trigger. The input claims must both be from the same identity provider, as there is no flow that would allow ACS to gather claims from multiple sources at once; alternatively, they can mix one identity provider and ACS itself.

That’s all very straightforward. When you create your rule, specify your input claim conditions as usual; you’ll have the chance of adding a second input claim, by clicking on “Add a second input claim” as shown above.

That opens up a new area in the UI, where you can specify the details of the second input claim. It’s that easy! Note that only newly created rules will allow a second input claim, and that rules created via the Generate command won’t have the second input claim either.

One application of this new rule type is pretty obvious: you can express logic which depends on more than one factor (two, in fact) in the input token. As in “you get to be in the ‘Gold’ role only if you are in the group ‘Managers’ AND in the group ‘Partners’”, which was impossible to express before introducing the new rule type. Unless you enlist in the process the administrator of the IP and you convince them to add the rule in THEIR system directly at the origin, but that would be cheating.
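The ‘Gold’ example can be modeled in a few lines. This is only a toy illustration of the logical AND between two input claims, not the ACS rules engine or its management API: `Claim` and `Rule` are invented names, the issuer and claim types are placeholders, and matching is by exact value only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    issuer: str
    type: str
    value: str


@dataclass(frozen=True)
class Rule:
    """A transformation rule with one or two input claims (toy model)."""
    inputs: tuple
    output: Claim

    def __post_init__(self):
        if not 1 <= len(self.inputs) <= 2:
            raise ValueError("a rule takes one or two input claims")

    def apply(self, token_claims):
        # Logical AND: every input condition must be present in the token.
        if all(condition in token_claims for condition in self.inputs):
            return self.output
        return None


# Hypothetical issuer and claim types, for illustration only.
managers = Claim("ExampleIdP", "group", "Managers")
partners = Claim("ExampleIdP", "group", "Partners")
gold = Claim("ACS", "role", "Gold")

gold_rule = Rule(inputs=(managers, partners), output=gold)

print(gold_rule.apply({managers, partners}))  # both groups present: rule fires
print(gold_rule.apply({managers}))            # only one group: no output claim
```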

Another application is slightly less obvious: it is the chance of composing the current input with decisions taken in former iterations. I know, that’s not especially clear. That’s why I am throwing myself in the lengthy explanation in this other post, which is totally optional.

That’s it folks! Once again, don’t rely on this unreliable blog and read for yourself about the news in the announcement and the release notes. I am sure you’ll surprise us with real creative uses of those new features now at your disposal!

The Identity and Access Control Team posted Announcing July 2011 update to Access Control Service 2.0 on 7/25/2011:

Windows Azure AppFabric Access Control Service (ACS) 2.0 received a service update. All customers with ACS 2.0 namespaces automatically received this update, which primarily contained bug fixes in addition to a few new features and service changes:

Localization in eleven languages

The ACS management portal is now available in 11 languages. Newly-supported languages include Japanese, German, Traditional Chinese, Simplified Chinese, French, Italian, Spanish, Korean, Russian, and Brazilian Portuguese. Users can choose their desired language from the language chooser in the upper-right corner of the portal.

Rules now support up to two input claims

The ACS 2.0 rules engine now supports a new type of rule that allows up to two input claims to be configured, instead of only one input claim. Rules with two input claims can be used to reduce the overall number of rules required to perform complex user authorization functions. For more information on rules with two input claims, see http://msdn.microsoft.com/en-us/library/gg185923.aspx.

Encoding is now UTF-8 for all OAuth 2.0 responses

In the initial release of ACS 2.0, the character encoding set for all HTTP responses from the OAuth 2.0 endpoint was US-ASCII. In the July 2011 update, the character encoding of HTTP responses is now set to UTF-8 to support extended character sets.
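A quick way to see why the change matters is to try both encodings on a value that contains characters outside the ASCII range. The snippet below is a generic illustration rather than ACS code, and the claim value is made up.

```python
def can_encode(text, charset):
    """Report whether every character in text is representable in charset."""
    try:
        text.encode(charset)
    except UnicodeEncodeError:
        return False
    return True


claim_value = "Müller 田中"  # hypothetical display name with non-ASCII characters

print(can_encode("Smith", "us-ascii"))      # True
print(can_encode(claim_value, "us-ascii"))  # False
print(can_encode(claim_value, "utf-8"))     # True

# UTF-8 round-trips the value without loss.
assert claim_value.encode("utf-8").decode("utf-8") == claim_value
```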

Quotas Removed

The previous quotas on configuration data have been removed in this update. This includes removal of all limitations on the number of identity providers, relying party applications, rule groups, rules, service identities, claim types, delegation records, issuers, keys, and addresses that can be created in a given ACS namespace.

Please use the following resources to learn more about this release:

For any questions or feedback please visit the Security for the Windows Azure Platform forum.

If you have not signed up for Windows Azure AppFabric and would like to start using these new capabilities, be sure to take advantage of our free trial offer. Just click on the image below and get started today!

The Windows Azure AppFabric Team (@Azure_AppFabric) posted Announcing the Windows Azure AppFabric July release on 7/25/2011:

Today we are excited to announce several updates and enhancements made to the Windows Azure AppFabric Management Portal and the Access Control service.

Management Portal

Localization

As announced on the Windows Azure blog: Windows Azure Platform Management Portal Updates Now Available, the Windows Azure Platform Management Portal now supports localization in 11 languages. The newly supported languages are Japanese, German, Traditional Chinese, Simplified Chinese, French, Italian, Spanish, Korean, Russian, and Brazilian Portuguese.

Users can choose their desired language from the language chooser in the top left pane of the Portal.

You can read about additional enhancements in the blog post: Windows Azure Platform Management Portal Updates Now Available.

Co-admin support

Customers can grant access to additional users (Co-Administrators) on the Windows Azure Management Portal as documented here: How to Setup Multiple Administrator Accounts.

These Co-administrators will now have access to the AppFabric section of the portal.

For any questions or feedback regarding the Management Portal please visit the Managing Services on the Windows Azure Platform forum.

Access Control

The following updates have been made to all ACS 2.0 namespaces.

Rules now support up to two input claims

The ACS 2.0 rules engine now supports a new type of rule that allows up to two input claims to be configured, instead of only one input claim. Rules with two input claims can be used to reduce the overall number of rules required to perform complex user authorization functions. For more information on rules with two input claims, see http://msdn.microsoft.com/en-us/library/gg185923.aspx.

Encoding is now UTF-8 for all OAuth 2.0 responses

In the initial release of ACS 2.0, the character encoding set for all HTTP responses from the OAuth 2.0 endpoint was US-ASCII. In the July 2011 release, the character encoding of HTTP responses is now set to UTF-8 to support extended character sets.

Quotas Removed

The previous quotas on configuration data have been removed in this release. This includes removal of all limitations on the number of identity providers, relying party applications, rule groups, rules, service identities, claim types, delegation records, issuers, keys, and addresses that can be created in a given ACS namespace.

Please use the following resources to learn more about this release:

For any questions or feedback regarding the Access Control service please visit the Security for the Windows Azure Platform forum.

If you have not signed up for Windows Azure AppFabric and would like to start using these new capabilities, be sure to take advantage of our free trial offer. Just click on the image below and get started today!

• Martin Tantow (@mtantow) asserted The Battle For The Social Cloud Has Just Started in a 7/25/2011 post to the CloudTimes blog:

The new technology trend next year will be about strong connections between various electronic gadgets, PCs and post-PC devices. It will be about the cloud dominion using shared interfaces, wireless communications, cloud-based database storage, which will highlight connections without using cords.

This is what Google mobile apps are all about. With the launch of OS X Lion last Tuesday, Apple is further developing the App Store and iCloud. This is also what Microsoft is aiming for with its forthcoming launch of Windows 8, where the Redmond company is focused on creating a single, consolidated ID across Windows Phone 7, Office, Xbox and Skype.

Google’s mobile apps for Android and iOS already showcase these features and are continuously developing its web capabilities using their strong social network partners.

Google mobile apps have strong social networking features; however, everyone must understand that it isn’t anything like just another Twitter or Facebook account. With Google mobile apps it is not just viewing public profiles or just making friends on social network sites, rather it is about creating valid identities across varying cloud based services. Multiple devices like Facebook, Twitter, Amazon, Apple, Google and Microsoft will now have the capability to provide more substantial information like Social Security information, Driver’s license, car keys, passport and credit cards.

According to Edd Dumbill of O’Reilly Radar, this new feature will now have the capacity to provide user identity, sharing information, communication with other users, annotation capabilities and notification alerts. Dumbill said, Google will be “The social backbone of the web,” which is now an important part of the entire technology ecosystem.

Eric Schmidt, Google’s chairman now talks about Facebook as an identity drawer instead of a social network site. This is why Google+ was developed and started over a year ago to be at par with this feature of Facebook. According to Schmidt, mobile cloud computing is about customization and personalization, communication and identity.

Meanwhile, Facebook continues to get the upper hand on this with their new identity machines like Spotify for login credentials, Gawker Media for the communication threads, Bing for a more customized search and an integrated contact details management for Windows Phone 7.

Google+ webapps hopes to deliver the same if not exceed its performance in this area through its strong web partners who are all willing to take advantage of Facebook’s millions of accounts. They can also take advantage of Google’s ability to get access from their own mobile applications through Android.

These social network giants continue to battle for the cloud and now not just with their newest product applications, but with their extended users and partners. The faceoff between these rivals was initially seen through their video chat offerings, Facebook acquired Skype, while Google is powered by Hangouts squaring. Microsoft is one perfect example of the link between these two giants. Skype, which happens to be one of Microsoft’s newest partnerships in providing Skype’s voice and video chat facilities, is also pushing partnerships with Google, and Baidu.

Google’s strategy in all these is to have social platforms like Twitter, Linkedin, Tumbler and Quora to defend itself against the solid partnership of Microsoft and Facebook, which is effectively doing the trick of putting pressure on their products.

It was actually their way of getting users’ attention that is captured in their clever ads that say, “What G+ is all about (psst!!! It’s not social).” Google is seriously building and “fixing collaborations and sharing across apps and across platforms,” Vincent Wong of Google said. Beyond the posts and updates of social networking, Google focuses more on its strengths in Gmail, Documents, Calendar, Web, Reader and Photos. Wong said, “That’s almost everything you use on your computer!”

Google’s strong alliances are evidenced by Google+ Apps releasing the iOS app. Here Google plans to share its users’ content to target Twitter and LinkedIn. Google+ for iPhone will work like a notification machine that will pull everyone towards their own set of extended circles.

The Definition of the Future eStore

At the rate Google is going on with its business strategies, it is so easy to share pictures, videos and links, but with Google+ mobile apps, it will no longer be impossible to share office documents, news and shopping discounts and maps.

Google will now become the virtual mobile wallet system, when the previous identity system becomes a strong purchasing system. So an example will be, if Silicon Valley were to host a basketball tournament, which is shared on the public cloud, then we will soon be seeing the Final Four that includes Google, Apple with Twitter, Facebook with Microsoft and Amazon.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Wade Wegner (@WadeWegner) filled in a few more gaps in the ACS story with Windows Azure Toolkit for iOS Now Supports the Access Control Service in a 7/25/2011 post:

Today we released an update to our Windows Azure Toolkit for iOS that provides some significant enhancements – in particular, we now provide support for using the Windows Azure Access Control Service (ACS) from an iOS application. You can get all the bits here:

- (updated) watoolkitios-lib

- (updated) watoolkitios-samples

- (updated) watoolkitios-doc

- (new) watoolkitios-configutility

- (new) cloudreadypackages

We first released this toolkit on May 6th, and since then we’ve released two minor updates and even accepted a merge request from the community. This toolkit has been a real pleasure to work on. Not only has it been fun to break out of the traditional Microsoft stack and learn about new languages and environments, but it’s also been great to introduce a lot of Objective-C and iOS developers to the power of Windows Azure.

There are three key aspects to version 1.2 of the iOS toolkit:

- Cloud Ready Packages for Devices

- Configuration Tool

- Support for ACS

These three pieces are incredibly important when trying to develop iOS applications that use Windows Azure; consequently, let me try and explain each of these components and how they help to make development easier.

Cloud Ready Packages for Devices

One of the biggest challenges when using Windows Azure for an iOS developer today is the inability to create a package that can be deployed to Windows Azure. To make this easier, we have pre-built four Cloud Ready Packages for Devices so that you – the iOS developer – don’t have to set up Windows 7 and run CSPACK. Instead, you simply have to download the most appropriate cloud ready package, update the .CSCFG file, then deploy through the Windows Azure Portal.

We have four “flavors” of the Cloud Ready Packages:

- ACS + APNS – this version allows you to use the Access Control Service and register your certificate for the Apple Push Notification Service

- ACS – this version allows you to use the Access Control Service

- Membership + APNS – this version allows you to use a simple membership store in Windows Azure table storage for users and register your certificate for the Apple Push Notification Service

- Membership – this version allows you to use a simple membership store in Windows Azure table storage for users

For more information on how to use and deploy these packages, take a look at this video on deploying the Cloud Ready Packages for Devices.

Configuration Tool

Along with the CSPKG you need a CSCFG to deploy your application to Windows Azure. The CSCFG file is an xml document that helps to describe elements of your application to Windows Azure so that it is able to correctly run your application.

In Visual Studio we have tools that make it easy to update the CSCFG file without having to open up the XML, but of course you cannot do this on a Mac. To make this easier, we created a tool that you can use on the Mac to walk through and generate the CSCFG file with all the appropriate details. Once created, you can use this CSCFG file along with the downloaded CSPKG file to deploy your application.

In addition to creating the CSCFG file, the configuration tool will also update ACS with all the appropriate settings so that you can build & run your application quickly. For all the details, please take a look at Vittorio Bertocci’s post on Using the Windows Azure Access Control Service in iOS Applications.
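To make the CSCFG description above more concrete, here is a minimal Python sketch of what editing such a file by hand amounts to. It is not the configuration tool from the toolkit. The service name, role name, and setting name are hypothetical, and the sample document is a simplified service configuration that assumes the standard ServiceConfiguration XML namespace.

```python
import xml.etree.ElementTree as ET

# Namespace assumed for Windows Azure service configuration (.cscfg) files.
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"

# Hypothetical sample: the service, role, and setting names are made up.
SAMPLE_CSCFG = """
<ServiceConfiguration serviceName="MyDeviceService" xmlns="{ns}">
  <Role name="WebRole">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DataConnectionString" value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
""".format(ns=CSCFG_NS).strip()


def set_setting(root, role_name, setting_name, value):
    """Update one <Setting> value for a role; return True if it was found."""
    ns = {"sc": CSCFG_NS}
    for role in root.findall("sc:Role", ns):
        if role.get("name") != role_name:
            continue
        for setting in role.findall("sc:ConfigurationSettings/sc:Setting", ns):
            if setting.get("name") == setting_name:
                setting.set("value", value)
                return True
    return False


def render(root):
    """Serialize the document, keeping the default namespace unprefixed."""
    ET.register_namespace("", CSCFG_NS)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    doc = ET.fromstring(SAMPLE_CSCFG)
    set_setting(doc, "WebRole", "DataConnectionString",
                "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=PLACEHOLDER")
    print(render(doc))
```

The Cloud Ready Packages presumably expect their own setting names, so check the package documentation before editing a real file this way.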

Support for ACS

Everything I’ve described above is designed to make it easier for an iOS developer to quickly and easily use the Access Control Service. To use the library for authenticating to ACS, it’s really quite simple:

NSLog(@"Initializing the Access Control Client...");
WACloudAccessControlClient *acsClient = [WACloudAccessControlClient accessControlClientForNamespace:@"iostest-walkthrough" realm:@"uri:wazmobiletoolkit"];
[acsClient showInViewController:self.viewController allowsClose:NO withCompletionHandler:^(BOOL authenticated) {
    if (!authenticated) {
        NSLog(@"Error authenticating");
    } else {
        NSLog(@"Creating the authentication token...");
        WACloudAccessToken *token = [WACloudAccessControlClient sharedToken];
        /* Do something with the token here! */
    }
}];

I’ll post more walkthroughs and documentation shortly.

As always, please let me know what you think of the release! Your feedback is important to us, especially as it pertains to prioritizing future features and capabilities.

See the Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus section above for more details of ACS support.

Cory Fowler (@SyntaxC4) described Configure Windows Azure Diagnostics–One Config To Rule Them All! in a 7/25/2011 post:

One thing each developer strives for is maintaining less code. I don’t know why anyone would code something more than once, or have to modify code when it could be placed in a configuration file, which is much simpler to modify, not to mention easier to share between projects.

At first, I considered creating a base class for RoleEntryPoint which contained my Diagnostics Configuration for my Windows Azure Projects. Although this would have done a decent job of facilitating my Diagnostics Configuration, it didn’t quite sit right with me: it would need to either load a large set of configurations from the Cloud Service Configuration file, which would need to be added to every project, or I would be stuck hardcoding a number of defaults which I deemed to be a good baseline set. Neither of these options was very appealing.

Configuration Settings could have been handled by creating a new Project Template within Visual Studio but this still seems like a larger effort than is needed.

I started thinking about how a Windows Azure VM Role would configure its diagnostics and started searching around the web for a solution. I’m glad I did as I ended up finding this Golden Nugget. Enter Windows Azure Diagnostics Configuration File:

Web Role Placement: Role [Root] Bin Directory

Worker Role Placement: Role Root

VM Role Placement: %ProgramFiles%\Windows Azure Integration Components\<VersionNumber>\Diagnostics

Windows Azure Diagnostics Configuration File

Schema: %ProgramFiles%\Windows Azure SDK\<VersionNumber>\schemas\DiagnosticsConfig201010.xsd

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
    configurationChangePollInterval="PT1M"
    overallQuotaInMB="4096">
  <DiagnosticInfrastructureLogs bufferQuotaInMB="1024" scheduledTransferLogLevelFilter="Verbose" scheduledTransferPeriod="PT1M" />
  <Logs bufferQuotaInMB="1024" scheduledTransferLogLevelFilter="Verbose" scheduledTransferPeriod="PT1M" />
  <Directories bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M">
    <!-- These three elements specify the special directories that are set up for the log types -->
    <CrashDumps container="wad-crash-dumps" directoryQuotaInMB="256" />
    <FailedRequestLogs container="wad-frq" directoryQuotaInMB="256" />
    <IISLogs container="wad-iis" directoryQuotaInMB="256" />
    <!-- For regular directories the DataSources element is used -->
    <DataSources>
      <DirectoryConfiguration container="wad-panther" directoryQuotaInMB="128">
        <!-- Absolute specifies an absolute path with optional environment expansion -->
        <Absolute expandEnvironment="true" path="%SystemRoot%\system32\sysprep\Panther" />
      </DirectoryConfiguration>
      <DirectoryConfiguration container="wad-custom" directoryQuotaInMB="128">
        <!-- LocalResource specifies a path relative to a local resource defined in the service definition -->
        <LocalResource name="MyLoggingLocalResource" relativePath="logs" />
      </DirectoryConfiguration>
    </DataSources>
  </Directories>
  <PerformanceCounters bufferQuotaInMB="512" scheduledTransferPeriod="PT1M">
    <!-- The counter specifier is in the same format as the imperative diagnostics configuration API -->
    <PerformanceCounterConfiguration counterSpecifier="\Processor(_Total)\% Processor Time" sampleRate="PT5S" />
  </PerformanceCounters>
  <WindowsEventLog bufferQuotaInMB="512" scheduledTransferLogLevelFilter="Verbose" scheduledTransferPeriod="PT1M">
    <!-- The event log name is in the same format as the imperative diagnostics configuration API -->
    <DataSource name="System!*" />
  </WindowsEventLog>
</DiagnosticMonitorConfiguration>

For more information, see the Windows Azure Library Article: Using the Windows Azure Diagnostics Configuration File.

I would suggest reading the Section: “Installing the Diagnostics Configuration File” which outlines how the diagnostics configuration file is handled alongside the DiagnosticManager configurations.
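As a quick sanity check on a configuration file like the one above, the following Python sketch sums the top-level bufferQuotaInMB values and compares them with overallQuotaInMB. It also converts the simple ISO 8601 durations used for transfer periods (PT1M, PT5S) into seconds. This is an illustrative helper, not part of the Windows Azure SDK. The embedded XML is a trimmed copy of the sample, and the premise that the buffer quotas should fit within the overall quota is my understanding of the diagnostics monitor rather than something stated in the post.

```python
import re
import xml.etree.ElementTree as ET

WAD_NS = "http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"

# Trimmed copy of the sample configuration: top-level buffers only.
SAMPLE_WADCFG = """
<DiagnosticMonitorConfiguration xmlns="{ns}"
    configurationChangePollInterval="PT1M" overallQuotaInMB="4096">
  <DiagnosticInfrastructureLogs bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M" />
  <Logs bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M" />
  <Directories bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M" />
  <PerformanceCounters bufferQuotaInMB="512" scheduledTransferPeriod="PT1M" />
  <WindowsEventLog bufferQuotaInMB="512" scheduledTransferPeriod="PT1M" />
</DiagnosticMonitorConfiguration>
""".format(ns=WAD_NS)

# Only hour/minute/second time durations are handled here (no dates, no fractions).
_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def duration_seconds(text):
    """Convert a simple ISO 8601 time duration such as PT1M or PT5S to seconds."""
    match = _DURATION.match(text)
    if not match or not any(match.groups()):
        raise ValueError("unsupported duration: " + text)
    hours, minutes, seconds = (int(g or 0) for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def buffer_quota_report(xml_text):
    """Return (overall quota, per-element buffer quotas, their sum) in MB."""
    root = ET.fromstring(xml_text.strip())
    overall = int(root.get("overallQuotaInMB"))
    buffers = {
        child.tag.split("}")[1]: int(child.get("bufferQuotaInMB", 0))
        for child in root
    }
    return overall, buffers, sum(buffers.values())


if __name__ == "__main__":
    overall, buffers, total = buffer_quota_report(SAMPLE_WADCFG)
    print("overall:", overall, "allocated:", total, "fits:", total <= overall)
    print("transfer period in seconds:", duration_seconds("PT1M"))
```

In the sample the five buffers add up to exactly the 4096 MB overall quota, so any buffer you enlarge means shrinking another or raising the overall quota.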

Mary Jo Foley (@maryjofoley) reported Microsoft updates Azure toolkit for Apple's iOS to support federation with Facebook, Google and more on 7/25/2011 (see also the Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus section):

Microsoft rolled out an updated version (1.2) of its Windows Azure toolkit for iOS on July 25.

Included in the latest update is support for the Azure Access Control Service (ACS). According to a blog post by Wade Wegner, an Azure Technical Evangelist, this ACS support “makes it extremely easy for an Objective-C and iOS developer to leverage ACS to provide identity federation to existing identity providers (such as Live ID, Facebook, Yahoo!, Google, and ADFS,” or Active Directory Federation Services).

ACS is one of the elements of Windows Azure AppFabric.

Today’s 1.2 release is the fourth update to the toolkit since Microsoft initially delivered it in early May. Microsoft also has released similar toolkits for Windows Phones and for Android phones.

Other new features in the 1.2 iOS toolkit include:

- A number of “Cloud Ready Packages” that can be deployed to Azure, eliminating the need for developers to run Windows and/or Visual Studio to create the package being deployed to Microsoft’s cloud OS

- A polished sample app that now supports ACS

- A native OSX application that can both create the .CSCFG file with the correct values and configure the Access Control Service

Last week, Microsoft made available an alpha of an Azure toolkit for social-game developers.

More details about the Access Control Services features are in the Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus section above.

Bill Wilder described his Talk: Architecture Patterns for Scalability and Reliability in Context of Azure Platform in a 7/21/2011 post (missed when posted):

I spoke last night to the Boston .NET Architecture Study Group about Architecture Patterns for Scalability and Reliability in Context of the Windows Azure cloud computing platform.

The deck is attached at the bottom, after a few links of interest for folks who want to dig deeper.

Command Query Responsibility Segregation (CQRS):

- I’m a big fan of Bertrand Meyer‘s work, and I just learned that CQRS is based on his earlier CQR pattern

- Martin Fowler has an entry on CQRS (recently added, I will now read this)

- CQRS on Windows Azure (MSDN Magazine article)

- .NET Rocks podcast: Episode 639 Udi Dahan Clarifies CQRS (That same podcast episode is also included in the Azure Top 40 feed that I curate: Azure Top 40 http://bit.ly/azuretop40)

- http://abdullin.com/cqrs/

Sharding is hard:

- Foursquare down for 11 hours from imbalanced shards: http://blog.foursquare.com/2010/10/05/so-that-was-a-bummer/ (though the High Scalability blog says it was longer)

NoSQL:

- NoSQL and the Windows Azure Platform whitepaper from Microsoft (which I found to be a very good read)

CAP Theorem:

PowerPoint slide deck used during my talk:

Janie Chang posted a description of Microsoft Research’s forthcoming Excel DataScope application as From Excel to the Cloud on 6/13/2011 (missed when posted):

On June 15, Microsoft’s Washington (D.C.) Innovation and Policy Center will host thought leaders, policymakers, analysts, and press for Microsoft Research’s D.C. TechFair 2011. The event showcases projects from Microsoft Research facilities around the world and provides a strategic forum for researchers to discuss with a broader community their work in advancing the state of the art in computing. Microsoft researchers and attendees alike will have an opportunity to exchange ideas on how technology and the policies concerning those technologies can improve our future.

Roger Barga, architect in the Cloud Research Engagement team within the eXtreme Computing Group (XCG), looks forward to the D.C. TechFair, where he will demonstrate Excel DataScope, a Windows Azure cloud service for researchers that simplifies exploration of large data sets.

The audience for the event—members of the Obama administration and staff, members of the U.S. Congress and staff, representatives of prominent think tanks, academics, and members of the media—also will get an opportunity to explore cutting-edge research projects in other areas, such as natural user interfaces, the environment, healthcare, and privacy and security.

Opportunities in Big Data

“Big data” is a term that refers to data sets whose size makes them difficult to manipulate using conventional systems and methods of storage, search, analysis, and visualization.

“Scientists tend to talk about big data as a problem,” Barga says, “but it’s an ideal opportunity for cloud computing. How large data sets can be addressed in the cloud is one of the important technology shifts that will emerge over the next several years. Microsoft Research’s Cloud Research Engagement projects push the frontiers of client and cloud computing by making investments in projects such as Excel DataScope to support researchers in the field.”

As one of XCG’s cloud-research projects, Excel DataScope offers data analytics as a service on Windows Azure. Users can upload data, extract patterns from data stored in the cloud, identify hidden associations, discover similarities, and forecast time series. The benefits of Excel DataScope go beyond access to computing resources: Users become productive almost immediately because Microsoft Excel acts as an easy-to-use interface for the service.

“Excel is a leading tool for data analysis today,” Barga explains. “With 500,000,000 licensed users, there are incredible numbers of people already comfortable with Excel. In fact, the spreadsheet itself is a fine metaphor for manipulating data. It’s friendly, and it allows different data types, so it’s a good technology ramp to the cloud for data analysts.”

The Excel DataScope research ribbon adds new algorithms and analytics that users can execute in Windows Azure.

The project enables the use of Excel on the cloud through an add-in that displays as a research ribbon in the spreadsheet’s toolbar. The ribbon provides seamless access to computing and storage on Windows Azure, where users can share data with collaborators around the world, discover and download related data sets, or sample from extremely large (terabyte-sized) data sets in the cloud. The Excel research ribbon also provides new data-analytics and machine-learning algorithms that execute transparently on Windows Azure, leveraging dozens or even hundreds of CPU cores.

“All Excel DataScope does,” Barga explains, “is start up an analytics algorithm in the cloud. You get to visualize the results and never have to move the actual data out of the cloud. We don’t want a data analyst to learn much more than the names of the algorithms and what they do. Users should just think that Excel has a new capability which opens up great opportunities for extracting new insights out of massive data sets.”

Opportunities for Access

While the cloud puts massively scalable computing resources into the hands of users, Barga notes that there are performance differences between cloud-based computers and supercomputers. Supercomputers and high-performance computing clusters are designed to share data at high frequencies and with low latency; in this respect, cloud computing is slower. Another key differentiator is storage services. High-performance clusters have storage arrays that provide high-speed, high-bandwidth pathways to storage. This is not the case in clouds, where storage often resides separately from computing nodes, with multiple routers or hops widening the distance between them.

Barga believes the highly available nature of cloud computing mitigates these differences and changes the game when it comes to opportunities for accessing computing resources.

“Our observation,” he muses, “is that while a cloud may be slower in some regards, you get the computing resources when you want and as much as you want. Many of the major data labs in the country, who have some of the biggest iron around, have wait times of weeks for jobs in the queue, so, in terms of elapsed time, your cloud job could have run ages ago, and your report could be written up by now.”

Successful university research groups and companies are interested in Microsoft’s Cloud Research Engagement initiatives because, while their labs have plenty of processing power, when there are situations that require fast decision making, such as pandemics or a new crop virus, it’s hard to secure enough CPU cycles on short notice. The cloud, therefore, is a game-changer even for groups that already have many computing resources.

Opportunities in Analysis

In the same way that Excel was the logical interface choice for the project, the research team selected algorithms to include on the research ribbon based on popularity.

“It turns out there’s a fairly consistent set of tasks,” Barga says, “to making sense out of data, whether in the social sciences, engineering, or oceanography. You need clustering, for example, to see how the data groups together. You want to look for outliers and run regression analysis to understand how the data trends. We felt if we implemented the top two dozen or so algorithms, we would have a good starter set. It’s extensible, so people can add their own analytics over time. That’s when things will get really exciting.”
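Two of the tasks Barga mentions, regression and outlier detection, can be illustrated with a few lines of Python. This is only a local, single-machine sketch of the general techniques (ordinary least squares on one variable, and a z-score threshold). It says nothing about how Excel DataScope implements its algorithms on Windows Azure, and the function names and the threshold of 2.0 are my own choices.

```python
from statistics import mean, pstdev


def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def outliers(values, threshold=2.0):
    """Return values more than `threshold` population standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    slope, intercept = linear_fit([0, 1, 2, 3], [1, 3, 5, 7])
    print("trend: y = %.1f * x + %.1f" % (slope, intercept))
    print("outliers:", outliers([10, 11, 9, 10, 12, 10, 50]))
```

On terabyte-sized data the same calculations would have to be partitioned across many cores, which is the part a cloud service is meant to hide from the analyst.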

Barga and his colleagues want users to write their own algorithms for the cloud, then upload and register the code on the service. Once that happens, the next time the user logs into Excel DataScope, the algorithm will appear on the research ribbon. When users begin to publish algorithms into a shared workspace, things get even more interesting.

“That’s the vision: for users to publish high-value or specialized algorithms in a viewable library that others can access to try out, install, and make part of their working set of algorithms,” Barga says. “This is where it gets exciting, when experts in particular domains start contributing algorithms that unlock the value of data.”

Opportunities for Collaboration

The ability to share both data and algorithms has been one of the project’s design goals. Excel DataScope includes the notion of security-enhanced workspaces, where users can upload data sets to share with research colleagues anywhere in the world. This opens opportunities for cross-discipline collaboration that is nothing less than transformative.

For example, say that an expert in oceanography works within a particular discipline and with data specific to an area of study. Understanding and predicting the effects of an oil spill, however, requires knowledge from multiple disciplines, such as ocean chemistry, biology, and ecology. Simulations of complex oceanographic and atmospheric models require mining, searching, and analysis of huge data sets in near-real time, across disciplines, as never before. The ability to collaborate and extract insight from large data sets is part of a shift from traditional paradigms of theory, experimentation, and computation to data-driven discovery.

Barga and colleagues from the Cloud Research Engagement team are busy publicizing the resources they offer to researchers in the field. The response they receive from talks and introductory videos validates the research community’s interest in data-driven discovery.

“We hear a lot of excitement,” he says, “and there are always researchers in the back of the room who want to know whether we have algorithms or data sets for a particular domain. We’ll say, ‘Sorry, we don’t have those,’ but they’ll say: ‘No, that’s good. We have expertise in that area, and we’d love to build a library of algorithms, contribute data, and make it available to the rest of the world,’ which is very encouraging—not to mention very cool.”

Democratizing Access to Data

During the D.C. TechFair, Barga will discuss the value of cloud computing in the context of the Open Government Directive, which makes data available to the public through websites such as data.gov.

“The government is contributing data sets,” he explains, “and we’d like to engage with scientists who are willing to add other data sets to data.gov. But data by itself is not enough. We’d like to see people proposing analytics associated with those data sets, so that when you go to data.gov, you also find useful algorithms to run against the data. We’d like to talk to scientists who want to extract insights or craft policies based on that data.”

At the moment, relatively few scientists or organizations can perform analysis of big data, either because of a lack of knowledge or a lack of resources. This distances data from the people who need to make decisions. The team plans to expand the Excel DataScope initiative later this year to include more of the research community and to release a programming guide that will explain how to write algorithms that scale out on Azure.

Barga sees the project as revolutionary: It democratizes access to data and, consequently, insights into data. He envisions a future data market in which users can find data sets and, with a few mouse clicks, select algorithms that the system identifies as relevant to the selected data, then start analyzing.

“We have architected a pathway from Excel to the cloud,” he says. “We have an infrastructure that sets the stage for a future where a decision maker can select from huge data sets and ask about trends in healthcare, poverty, or education. When we get there, it will have a huge economic impact, not just for scientific research, but also for businesses and for the country. This is a dialogue we’re trying to open and the space we are trying to move into with this project.”

Jared Jackson presented a 00:02:57 Cloud Data Analytics from Excel Webcast on 6/12/2011 (missed when posted):

In this video, Jared Jackson, researcher in the eXtreme Computing Group at Microsoft Research, provides an overview of the features of Excel DataScope, a tool that enables researchers and data analysts to seamlessly access the resources of the cloud, via Windows Azure, from the familiar interface of Microsoft Excel. By using Excel DataScope, you can download and use extremely large data collections; extract insight by using machine learning and data analytics libraries; and take action exploring the data, sharing the data, and publishing the data to the cloud.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The ADO.NET Team announced EF 4.1 Update 1 Released on 7/25/2011:

Back in April we announced the release of Entity Framework 4.1 (EF 4.1). Today we are releasing Entity Framework 4.1 – Update 1. This is a refresh of the EF 4.1 release that includes a small number of bug fixes and some new types to support the upcoming migrations preview.

What’s in Update 1?

Update 1 includes a small set of changes including:

- Bug fix to remove the need to specify ‘Persist Security Info=True’ in the connection string when using SQL authentication. In the EF 4.1 release ‘Persist Security Info’ was required for Code First to be able to create a database for a connection using SQL Authentication. The update includes a fix to remove this requirement. Note that ‘Persist Security Info’ is still required if you construct a DbContext using a DbConnection instance that has already been opened and closed.

- Introduction of new types to facilitate design-time tools for Code First. Update 1 introduces a set of types to make it easier for design time tools to interact with derived DbContexts:

- DbContextInfo can be used to instantiate and interact with a derived context as well as determine information about the origin of the connection string etc.

- IDbContextFactory<TContext> is used to let DbContextInfo know how to construct derived DbContext types that do not expose a default constructor. If your context does not expose a default constructor then an implementation of IDbContextFactory should be included in the same assembly as your derived context type.

How Do I Get Update 1?

Entity Framework 4.1 – Update 1 is available in a couple of places:

- Download the stand alone installer

Note: This is a complete install of EF 4.1 and does not require a previous installation of the original EF 4.1 RTM.

- Add or upgrade the ‘EntityFramework’ NuGet package

Note: If you have previously run the EF 4.1 stand alone installer you will need to upgrade or remove the installation before using the updated NuGet package. This is because the installer will add the EF 4.1 assembly to the Global Assembly Cache (GAC). When available the GAC’d version of the assembly will be used at runtime.

Note: The NuGet package only includes the EF 4.1 runtime and does not include the Visual Studio item templates for using DbContext with Model First and Database First development.

Getting Started with EF 4.1

There are a number of resources to help you get started with EF 4.1:

- ADO.NET Entity Framework page on the MSDN Data Developer Center

There is lots of great new content on this site, including ‘Getting Started’ videos for the new features in EF 4.1

- MSDN Documentation

- ADO.NET Entity Framework Forum

- Code First walkthrough

- Model First / Database First walkthrough

Support

This release can be used in a live operating environment subject to the terms in the License Terms. The ADO.NET Entity Framework Forum can be used for questions relating to this release.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Bruce Kyle reported Windows Azure Supports NIST Standards Acceleration for Cloud Computing on 7/25/2011:

Microsoft is participating in the National Institute of Standards and Technology (NIST) initiative to jumpstart the adoption of cloud computing standards, called Standards Acceleration to Jumpstart the Adoption of Cloud Computing (SAJACC). The goal is to formulate a roadmap for adoption of high-quality cloud computing standards.

One way they do this is by providing working examples to show how key cloud computing use cases can be supported by interfaces implemented by various cloud services available today.

NIST works with industry, government agencies, and academia. They use an open and ongoing process of collecting and generating cloud system specifications. The hope is to have these resources serve to both accelerate the development of standards and reduce technical uncertainty during the interim adoption period before many cloud computing standards are formalized.

By using the Windows Azure Service Management REST APIs, we are able to manage services and run simple operations, including basic CRUD operations, and to handle simple authentication and authorization using certificates. Our service management components are built on RESTful principles and support multiple languages and runtimes, including Java, PHP and .NET, as well as IDEs including Eclipse and Visual Studio.
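As a rough illustration of certificate-authenticated calls to the Service Management REST API, here is a hedged Python sketch that builds, and optionally sends, a request to list hosted services. Python is my choice of language; the post itself names Java, PHP and .NET. The subscription ID, the PEM file path, and the x-ms-version value are placeholders or assumptions to verify against the Service Management API reference.

```python
import http.client
import ssl

MANAGEMENT_HOST = "management.core.windows.net"
API_VERSION = "2011-06-01"  # assumed value; confirm against the API reference


def list_hosted_services_request(subscription_id):
    """Build the method, path, and headers for a 'list hosted services' call."""
    path = "/{0}/services/hostedservices".format(subscription_id)
    headers = {"x-ms-version": API_VERSION}
    return "GET", path, headers


def send(subscription_id, pem_path):
    """Send the request using a management certificate.

    pem_path is a hypothetical file holding the certificate and its private key.
    """
    context = ssl.create_default_context()
    context.load_cert_chain(pem_path)
    method, path, headers = list_hosted_services_request(subscription_id)
    conn = http.client.HTTPSConnection(MANAGEMENT_HOST, context=context)
    try:
        conn.request(method, path, headers=headers)
        response = conn.getresponse()
        return response.status, response.read().decode("utf-8")
    finally:
        conn.close()


if __name__ == "__main__":
    # Prints the request that would be sent; no network access is needed for this.
    print(list_hosted_services_request("00000000-0000-0000-0000-000000000000"))
```

Keeping request construction separate from sending makes the URL and header logic easy to check without a subscription or certificate.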

For more information, see Windows Azure Supports NIST Use Cases using Java.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• Martin Tantow (@mtantow) posted Piston Cloud Computing Takes on Private Cloud Security on 7/25/2011:

Piston Cloud Computing recently secured $4.5 million in funding to supply software in the crowded private cloud computing space. Earlier this year, Piston participated for several months in the NASA Nebula project, and for a while it has been the favored beta software for testing by early users. Joshua McKenty, CEO and co-founder of Piston, said the company will announce a new set of products in the near future, but in the meantime it will remain a small group of developers working on its cloud platform.

It is a fact that users have varying user experience from even the top tablet PC’s. To make this on field level, take a close look at the strengths and weaknesses of Android/Honeycomb, Apple iOS and RIM’S QNX OS. McKenty, one of the founders of the OpenStack was the brains behind Nebula. OpenStack has contributed to Rackspace as well as NASA on a service-for-a-fee cloud infrastructure and hopes to become the standard for the cloud-based environment as preferred by users and suppliers.

On the NASA project, McKenty said, “Most of what we did at NASA around security couldn’t be released.” This is understandable, since any leak from their security domain would compromise the entire system, so the government gave specific instructions that no information should enter the public domain.

All IT platforms in general should operate as a business working within another business. This is when resources for business are provided by the company to get a good return of their revenue investment. McKenty said “Some aspects of security are being addressed (in OpenStack) and others are not. Security is a logical place to build a start-up, open source business.”

Piston products will be offered similar to the components of OpenStack, which are built on Python. It will hopefully arrive at a balance between being a major contributor to OpenStack and reserving some important code for Piston as a value-added feature of the product.

According to McKenty, OpenStack’s Project Policy Board is the governing body for Piston. He said at the last poll survey, Piston landed at number three among the contributors for OpenStack codes and he said he will be glad for them to land at number one.

On adding security to big data that has already been set up, McKenty stated that although the cloud is the perfect place for huge data, it will be complex to add security once the cloud platform is already in place. In addition, security added after the data is in place may not be as efficient as security built in at the same time. “You have to take the right architectural approach to keep them both performing well,” McKenty said.

Another cloud player based on OpenStack is Cloud.com, which combines its Java-based extensions to create CloudStack. Cloud.com was recently acquired by Citrix. Amazon’s former VP of engineering, Chris Pinkham, has a new project to create a generic edition of Amazon Services, to avoid being tied up with Amazon’s API virtual machine. To date, Piston has partnered with Hummer Winblad, Divergent Ventures, and True Ventures (see quick announcement here) for its funding.

<Return to section navigation list>

Cloud Security and Governance

Jon Shende prefixed his Risk and Its Impact on Security Within the Cloud - Part 1 article of 7/25/2011 for the Cloud Security Journal with “The effect of people and processes on cloud technologies”:

These days when we hear the term "cloud computing" there is an understanding that we are speaking about a flexible, cost-effective, and proven delivery platform that is being utilized or will be utilized to provide IT services over the Internet. As end users or researchers of all things "cloud" we expect to hear about how quickly processes, applications, and services can be provisioned, deployed and scaled, as needed, regardless of users' physical locations.

When we think of the typical traditional IT security environment, we have to be cognizant of the potential for an onslaught of attacks, be they zero-day exploits, ever-evolving malware engines or the increase in attacks via social engineering. The challenge for any security professional is to develop and ensure as secure an IT system as possible.

Thoughts on Traditional Security and Risk

Common discussions within the spectrum of IT security are risks, threats and vulnerability, and an awareness of the impact of people and processes on technologies. Having had opportunities to work on data center migrations as well as cloud services infrastructures, a primary question of mine has been: what then of the cloud and cloud security and the related risk derived from selected services being outsourced to a third-party provider?

ISO 27005 defines risk as a "potential that a given threat will exploit vulnerabilities of an asset or group of assets and thereby cause harm to the organization."

In terms of an organization, risk can be mitigated, transferred or accepted. Calculating risk usually involves:

- Calculating the value of an asset

- Giving it a weight of importance in order to prioritize its ranking for analysis

- Conducting a vulnerability analysis

- Conducting an impact analysis

- Determining its associated risk.
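The steps above can be sketched in a few lines of Python. This is only an illustration: the article gives no formula, so the multiplicative score, the field names and the sample figures below are all assumptions chosen to show how value, weight, vulnerability and impact might combine into a ranking.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    value: float          # estimated asset value (hypothetical units)
    weight: float         # importance weight used to prioritize, 0..1
    vulnerability: float  # assumed likelihood a threat exploits a weakness, 0..1
    impact: float         # assumed fraction of value lost if exploited, 0..1


def risk_score(asset: Asset) -> float:
    """Illustrative score: weighted value x likelihood x impact (an assumption)."""
    return asset.value * asset.weight * asset.vulnerability * asset.impact


def rank_by_risk(assets: list[Asset]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest risk first."""
    scored = [(a.name, risk_score(a)) for a in assets]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up sample data, for demonstration only.
assets = [
    Asset("public web site", value=50_000, weight=0.5, vulnerability=0.5, impact=0.4),
    Asset("customer database", value=500_000, weight=1.0, vulnerability=0.2, impact=0.8),
]

for name, score in rank_by_risk(assets):
    print(f"{name}: {score:,.0f}")
```

A real assessment would likely replace the single multiplication with whatever scoring scheme the organization's risk methodology prescribes; the point here is only that each listed step maps to one input.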

As a security consultant, I also like the balanced scorecard as proposed by Robert Kaplan and David Norton, especially when aimed at demonstrating compliance with policies that will protect my organization from loss.

Cloud Security and Risk

In terms of cloud security, one key point to remember is that there is an infrastructure somewhere that supports and provides cloud computing services. In other words, the same mitigating factors that apply to ensure security within a traditional IT infrastructure will apply to a cloud provider's infrastructure. All this is well and good within the traditional IT environment, but how then can we assess, or even forecast for and/or mitigate risk when we are working with a cloud computing system? Some argue that "cloud authorization systems are not robust enough with as little as a password and username to gain access to the system, in many private clouds; usernames can be very similar, degrading the authorization measures" (Curran, Carlin 2011).

We have had the arguments that the concentrated IT security capabilities at a cloud service provider (CSP) can be beneficial to a cloud service customer (CSC); however, businesses are in the realm of business to ensure a profit from their engagements. One study by P. McFedries (2008) found that "disciplined companies achieved on average an 18% reduction in their IT budget from cloud computing and a 16% reduction in data center power costs."

To mitigate this concern, a CSC will need to ensure that their CSP defines the cloud environment as the customer moves beyond their "protected" traditional perimeter. Both organizations need to ensure that all high risk security impact to the customer organization meets or exceeds the customer organization's security policy and requirements and their proposed mitigation measures. As part of a "cloud policy" a CSC security team should identify and understand any cloud-specific security risks and their potential impact to the organization.

Additionally a CSP should leverage their economies of scale when it comes to cloud security (assets, personnel, experience) to offer a CSC an amalgamation of security segments and security subsystem boundaries. Any proficient IT Security practitioner then can benefit from the advantage of leveraging a cloud provider's security model. However, when it applies to business needs the 'one size fits all' cloud security strategy will not work.

Of utmost importance when looking to engage the services of a cloud provider is gaining a clear picture of how the provider will ensure the integrity of data to be held within their cloud services. That said, all the security in the world would not prevent the seizure of equipment by government agencies investigating a crime. Such a seizure can interrupt business operations or even totally halt business for an innocent CSC sharing a server that hosts the VM of an entity under investigation. One way to manage the impact on a CSC function within the cloud, as suggested by Chen, Paxson and Katz (2010), is the concept of "mutual auditability."

The researchers further went on to state that CSPs and CSCs will need to develop a mutual trust model, "in a bilateral or multilateral fashion." The outcome of such a model will allow a CSP "in search and seizure incidents to demonstrate to law enforcement that they have turned over all relevant evidence, and prove to users that they turned over only the necessary evidence and nothing more."

Is it then feasible for a CSC to calculate the risk associated with such an event and ensure that there is a continuity plan in place to mitigate such an incident? That will depend on the business impacted.

Another cause for concern is that cloud computing introduces a shared-resource environment in which an attacker can exploit covert and side channels.

Risks such as this need to be acknowledged and addressed when documenting the CSP-CSC Service Level Agreement (SLA). This of course may be in addition to demands with respect to concerns for Availability, Integrity, Security, Privacy and Reliability. Would a CSC feel assured that their data is safe when a CSP provides assurance that they follow the traditional static risk assessment models?

I argue not, since we are working within a dynamic environment. According to Kaliski, Ristenpart, Tromer, Shacham, and Savage (2009) "neighbouring content is more at risk of contamination, or at least compromise, from the content in nearby containers."

So how then should we calculate risk within the Cloud? According to Kaliski and Pauley of the EMC Corporation, "just as the cloud is "on-demand," increasingly, risk assessments applied to the cloud will need to be "on-demand" as well."

The suggestion by Kaliski and Pauley was to implement a risk as a service model that integrates an autonomic system, which must be able to effectively measure its environment as well as "adjust its behaviour based on goals and the current context".

Of course this is a theoretical model, and further research will have to be conducted to gather data points and to build "an autonomic manager that analyses risks and implements changes".

For now, I believe that if we can utilize a portion of a static risk assessment, define specific controls and control objectives, and either map them to those within a CSP or define them during the SLA process, a CSC can then observe control activities that manage and/or mitigate risk to their data housed at the CSP.

Traditional governance and compliance requirements should also still apply to the CSP; e.g., there must be a third-party auditor for the CSP cloud services, and these services should have industry-recognized security certificates where applicable.

Conclusion

Some things that a CSC needs to be cognizant of with regard to cloud security, in addition to traditional IT security measures with a CSP, are:

- The ability of the CSP to support dynamic data operation for cloud data storage applications while ensuring the security and integrity of data at rest

- Have a process in place to challenge the cloud storage servers to ensure the correctness of the cloud data with the ability of original files being able to be recovered by interacting with the server (Wang 2011)

- Encryption-on-demand ability or other encryption metrics that meet an industry standard, e.g., NIST

- A privacy-preserving public auditing system for data storage security in Cloud Computing (W. L. Wang 2010)

- Cloud application security policies automation

- Cloud model-driven security process, broken down in the following steps: policy modelling, automatic policy generation, policy enforcement, policy auditing, and automatic update (Lang 2011)
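As a rough illustration of the second item in the list above, challenging a storage server to show it still holds correct data, here is a minimal Python sketch. It is not the scheme from the cited Wang papers; it is a much simpler spot-check that assumes the customer precomputes a few nonce-and-hash pairs before upload. All function names and the tiny block size are hypothetical.

```python
import hashlib
import hmac
import secrets

BLOCK_SIZE = 4  # deliberately tiny, for the demo only


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut the file into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def respond(blocks: list[bytes], index: int, nonce: bytes) -> bytes:
    """What the storage server would compute over the block it actually holds."""
    return hashlib.sha256(nonce + blocks[index]).digest()


def make_challenge(blocks: list[bytes], index: int) -> tuple[int, bytes, bytes]:
    """Customer side, before upload: keep (index, nonce, expected answer)."""
    nonce = secrets.token_bytes(16)
    return index, nonce, respond(blocks, index, nonce)


def verify(server_blocks: list[bytes], challenge: tuple[int, bytes, bytes]) -> bool:
    """Send index and nonce to the server and compare its answer in constant time."""
    index, nonce, expected = challenge
    return hmac.compare_digest(respond(server_blocks, index, nonce), expected)


original = split_blocks(b"cloud data at rest")
challenges = [make_challenge(original, i) for i in range(len(original))]

# Simulate a server that silently corrupted the first block.
tampered = list(original)
tampered[0] = b"XXXX"

print(verify(original, challenges[0]))   # intact copy
print(verify(tampered, challenges[0]))   # corrupted block is challenged
print(verify(tampered, challenges[1]))   # untouched block is challenged
```

Each precomputed challenge in this sketch is good for one use only, and the approach does not handle data that changes after upload, which is part of what the cited work sets out to address.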

Works Cited

- Carlin, Sean, and Kevin Curran. "Cloud Computing Security." International Journal of Ambient Computing and Intelligence, 2011: 38-46.

- Lang, Ulrich. Model-driven cloud security. IBM, 2011.

- Thomas Ristenpart, Eran Tromer, Hovav Shacham, and Stefan Savage. Hey, You, Get Off of My Cloud! Exploring Information Leakage in Third-Party Compute Clouds. CCS 2009, ACM Press, 2009.

- Wang, Wang, Li, Ren. Privacy-Preserving Public Auditing for Data Storage Security in Cloud Computing. IEEE INFOCOM, 2010.

- Wang, Wang, Li, Ren, Lou. "Enabling Public Verifiability and Data Dynamics for Storage Security in Cloud Computing." Chicago, 2011.

- Yanpei Chen, Vern Paxson, Randy H. Katz. What's New About Cloud Computing Security? Berkeley: University of California at Berkeley, 2010.

Marcia Savage reported ISACA releases cloud computing governance guide to the SearchCloudSecurity.com site on 7/25/2011:

ISACA released a new guide designed to help organizations understand how to implement effective cloud computing governance.

The guide, IT Control Objectives for Cloud Computing, aims to help readers understand cloud computing and how to build the relevant controls and governance around their cloud environments. It also provides guidance for companies considering cloud services.

Governance becomes more critical than ever for organizations utilizing cloud services, according to the guide from ISACA, a non-profit global organization focused on information systems assurance and security, and enterprise governance. Companies need to implement a cloud computing governance program to effectively manage increasing risk and multiple regulations, and ensure continuity of critical business processes in the cloud, according to ISACA.

In these economic times, executive management is excited about the potential for the cloud to reduce costs and increase the value of IT, but “getting that value is part of a good governance program,” said Jeff Spivey, international vice president of ISACA, and president of Security Risk Management Inc., a consulting firm based in Charlotte, N.C. “And making sure when you are getting the value, that you’re also managing the risk as opposed to jumping blindly off the cliff and hoping there’s water down there,” he added.

The cloud computing governance guide outlines how COBIT and other IT governance tools developed by ISACA can help organizations in managing cloud environments. Spivey said COBIT can be applied to a number of different scenarios, including cloud technologies. ISACA is accepting public comment on the latest version, COBIT 5, through July 31.

The ISACA guide is complementary to work from the Cloud Security Alliance by focusing on ISACA’s strength in governance, said Spivey, who was a founding member of the CSA.

As the cloud evolves and companies increasingly adopt cloud services, there’s still a lot of ambiguity around the topic and a need for guidance, he said. The ISACA guide can help organizations make sure they have the right controls in place and assist others contemplating the cloud to understand its complexities, he added.

IT Control Objectives for Cloud Computing, the third in ISACA’s IT Control Objectives series, is available at www.isaca.org/ITCOcloud. The first book in the series focused on Sarbanes-Oxley.

Full disclosure: I’m a paid contributor to SearchCloudComputing.TechTarget.com, a sister publication.

<Return to section navigation list>

Cloud Computing Events

• Marcelo Lopez Ruiz (@mlrdev) reported on 7/25/2011 that he’ll present datajs at DevCon5 on 7/27/2011 at 11:45 AM to 1:00 PM:

Later this week I'll be speaking at DevCon5 in New York. We'll look at how the browser landscape is evolving and where it's going, and present some of the work we've done in layering conventions over REST in producing OData, as well as the work we've been doing in datajs to leverage the increase in capabilities.

Hope to see any readers of this blog at the conference - drop me a comment if you want to meet up during/after the conference, or catch me on Twitter at @mlrdev.

Marcelo’s session is named HTML5 and the future JavaScript platform.

O’Reilly Media’s Open Source Convention (OSCON, @oscon) began 7/25/2011 with the following Cloud Computing track:

Open source played a vital role in making cloud technologies possible. It underpins much cloud infrastructure, has driven down costs, and led innovation in cloud management. Yet the cloud model of software as a service presents direct challenges to the freedom and principles of open source.

- 9:00am Monday, 07/25/2011

- Learning Puppet - A Tutorial for Beginners