Windows Azure and Cloud Computing Posts for 7/13/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Steve Marx (@smarx) explained Using a Local Storage Resource From a Startup Task in a 7/13/2011 post:

You probably already knew that you should always declare local storage via the LocalStorage element in your ServiceDefinition.csdef when you need disk space on your VM in Windows Azure. The common use for this sort of space is as a scratch disk for your application, but it’s also a handy place to do things like install apps or runtimes (like Ruby, Python, and Node.js, as I do in the Smarx Role). You’ll typically do that from a startup task, where it may not be obvious how to discover the local storage path.

You can, of course, simply write your startup task in C# and use the normal RoleEnvironment.GetLocalResource method to get the path, but startup tasks tend to be implemented in batch files and PowerShell. When I need to do this, I use a short PowerShell script to print out the path (getLocalResource.ps1 from Smarx Role):

```powershell
param($name)
# Load the Windows Azure service runtime assembly so RoleEnvironment is available
[void]([System.Reflection.Assembly]::LoadWithPartialName("Microsoft.WindowsAzure.ServiceRuntime"))
# Print the local resource's root path without its trailing backslash
write-host ([Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment]::GetLocalResource($name)).RootPath.TrimEnd('\\')
```

and then use it from a batch file like this (installRuby.cmd from Smarx Role):

```bat
REM Lift the execution policy so getLocalResource.ps1 is allowed to run
powershell -c "set-executionpolicy unrestricted"
REM Capture the path printed by the script into the RUBYPATH variable
for /f %%p in ('powershell .\getLocalResource.ps1 Ruby') do set RUBYPATH=%%p
```

I’ve also, in the past, used a small C# program (Console.WriteLine(RoleEnvironment.GetLocalResource(args[0]).RootPath);) in place of the PowerShell script. (I still use the same for loop in the batch file, just calling GetLocalResource.exe instead.) The PowerShell script just seems scripty-er and thus feels better in a startup task. Otherwise I don’t see a difference between these two approaches.
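The for /f line above simply runs a helper, captures what it prints, and stores it in an environment variable for later commands in the startup task. As a rough illustration of that capture step outside of batch, here is a minimal Python sketch; the function name, the stand-in helper command and the RUBYPATH value are assumptions for illustration, not part of Smarx Role. Like set inside a for /f loop, it keeps the last non-empty line of output, but unlike for /f with its default delimiters it keeps the whole line rather than only the first space-delimited token.

```python
import os
import subprocess
import sys


def capture_path_into_env(cmd, var_name):
    """Run a helper command and store the path it prints in os.environ.

    Hypothetical helper: `cmd` stands in for something like
    `powershell .\\getLocalResource.ps1 Ruby`.
    """
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    # Like `set` inside a `for /f` loop, the last non-empty line wins.
    lines = [line.strip() for line in result.stdout.splitlines() if line.strip()]
    if not lines:
        raise RuntimeError("helper printed no path")
    # Mirror TrimEnd('\\') in getLocalResource.ps1: drop trailing backslashes.
    path = lines[-1].rstrip("\\")
    os.environ[var_name] = path
    return path


if __name__ == "__main__":
    # Stand-in helper that prints a Windows-style path with a trailing backslash.
    helper = [sys.executable, "-c", r"print('C:\\Resources\\Directory\\abc.WebRole.Ruby\\')"]
    print(capture_path_into_env(helper, "RUBYPATH"))
```

Trimming the trailing backslash matters for the same reason in both versions: later commands can append a subfolder to the variable without producing a doubled separator.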

<Return to section navigation list>

SQL Azure Database and Reporting

BusinessWire reported IFS to Develop and Deploy Enterprise Smartphone Apps Using Windows Azure in a 7/13/2011 press release:

IFS, the global enterprise applications company, announced today its intent to develop and deploy additional IFS Touch Apps, a series of smartphone apps that extend the capabilities of IFS Applications, using the Windows Azure platform.


IFS Touch Apps is a set of apps to allow users of the IFS Applications software suite to access this ERP and other functionality through smartphones. IFS Touch Apps targets the mobile individual who works on the move, using a smartphone to perform quick tasks.

IFS Touch Apps can also speed up business processes as there will be less time waiting for mobile individuals to perform their step in the process - for example approving purchases or authorizing expenses.

Microsoft Corp. technology will be running behind IFS Touch Apps because the apps will connect to the IFS Cloud—a set of services running inside the Windows Azure cloud environment, where they serve as the backbone of the smartphone apps. IFS customers will be able to “uplink” their IFS Applications installations to IFS Cloud and benefit from the new IFS Touch Apps. This model of deployment via the Windows Azure Platform enables automatic updates of the apps and cloud services, reduces the overhead of providing apps for multiple smartphone platforms, and disconnects the apps from dependencies on specific versions of IFS Applications. In practice this greatly reduces the effort needed by companies to adopt enterprise apps and keep up-to-date with the fast moving world of smartphones.

IFS also announced its intent to offer IFS Touch Apps for the Windows Phone 7. As a result, IFS customers will be able to choose to run the first IFS Touch Apps on Windows Phone 7, iPhone or Android.

“IFS Touch Apps and IFS Cloud are a great example of how IFS is moving in the direction of software plus services in a more advanced way,” IFS Chief Technology Officer Dan Matthews said. “In addition to the core platform of Windows Azure, IFS will become one of the first enterprise software vendors to make use of Microsoft SQL Azure, a new data storage part of the Azure cloud environment. SQL Azure will be used as the backbone for the IFS Cloud customer portal, where customers can view statistics and manage their usage of IFS Touch Apps.”

The first IFS Touch Apps will be rolled out to early adopter users later this year. IFS has held customer focus groups to gather input on the initial apps and to determine which apps should receive the highest priority and meet the most pressing needs of customers.

“Focus groups and early adopter programs are a key way for IFS to keep the development process close to customers,” Matthews said. “Our goal is to address our customers’ most pressing needs rather than to offer a broad spectrum of apps that customers may purchase, only to find that the app is not helpful to them or does not deliver the benefit they anticipated.”

“We are excited IFS has chosen the Windows Azure platform for the development of the IFS Touch Apps, since they are making advances in apps for the mobile workforce and use of cloud computing. We are also pleased to be able to support their initiative and to have them as one of our Global Independent Software Vendor partners,” said Kim Akers, general manager for global ISV partners at Microsoft.

About IFS

IFS is a public company (OMX STO: IFS) founded in 1983 that develops, supplies, and implements IFS Applications™, a component-based extended ERP suite built on SOA technology. IFS focuses on industries where any of four core processes are strategic: service & asset management, manufacturing, supply chain and projects. The company has 2,000 customers and is present in more than 50 countries with 2,700 employees in total. For more information about IFS, please visit: www.IFSWORLD.com

<Return to section navigation list>

MarketPlace DataMarket and OData

David Linthicum (@DavidLinthicum) asserted “Though many call for metadata to provide better approaches to data management in the cloud, there are no easy answers” in a deck for his Can metadata save us from cloud data overload? article of 7/14/2011 for InfoWorld’s Cloud Computing blog:

It's clear that the growth of data is driving the growth of the cloud; as data centers run out of storage, enterprises spin up cloud storage instances.

Indeed, IDC's latest research of the digital universe suggests that data volumes continue to accelerate. Recently Paul Miller at GigaOm chimed in on this issue: "While the cost of storing and processing data is falling, the sheer scale of the problem suggests that simply adding more storage is not a sustainable strategy."

As Miller points out, metadata is one way to address data growth. The use of metadata allows users to effectively curate the bits and bytes for which they are responsible. But this may be a bit more difficult in practice than most expect.

The idea is simple. Much of the growth in data is due to a less-than-comprehensive understanding of the core data of record and, thus, the true meaning of the data. As a result, we're compelled to store everything and anything, including massive amounts of redundant information, both in the cloud and in the enterprise. Although it seems that the simple use of metadata will reduce the amount of redundant data, the reality is that it's only one of many tools that need to be deployed to solve this issue.

The management of data needs to be in the context of an overarching data management strategy. That means actually considering the reengineering of existing systems, as well as understanding the common data elements among the systems. Doing so requires much more than just leveraging metadata; it calls for understanding the information within the portfolio of applications, cloud or not. It eventually leads to the real fix.

The problem with this approach is that it's a scary concept to consider. You'll have to alter existing applications, systems, and databases so that they're more effective, including how they use and manage information. That's a systemic change, which is much harder and riskier to do than spinning up a cloud server or adding more storage. But in the end, it addresses the problem the right way, avoiding an endless stream of stopgaps and Band-Aids.

OData’s built-in metadata features make it more useful but also more verbose.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

Michael Collier (@MichaelCollier) described Deploying My First Windows Azure AppFabric Application in a 7/13/2011 post:

In my last post I walked through the basic steps on creating my first Windows Azure AppFabric Application. In this post I’m going to walk through the steps to get that basic application deployed and running in the new Windows Azure AppFabric Application Manager.

Before I get started, I wanted to point out a few important things:

- If you don’t already have access to the June CTP, head over to http://portal.appfabriclabs.com now to create a namespace and request access.

- For a great overview on Windows Azure AppFabric Applications, be sure to check out Windows Azure MVP Neil Mackenzie’s blog post (http://convective.wordpress.com/2011/07/01/windows-azure-appfabric-applications).

- Create your own Windows Azure account FREE for 30 days at http://bit.ly/MikeOnAzure with pass “MikeOnAzure”.

To get started, I need to go to the AppFabric labs portal at http://portal.appfabriclabs.com. From there I click the “AppFabric Services” button at the bottom of the page, and then the “Applications” button in the tree menu on the left. I had previously requested and been granted a namespace, so my portal looks like the screenshot below:

I noticed that I had namespaces related to Windows Azure storage and SQL Azure; these are separate from any other storage or SQL Azure accounts you may already have. The CTP labs environment provides one SQL Azure database to get started with, but you can’t create new databases, because the provided user doesn’t have the necessary rights (see this forum post for additional details). I can open Access Control Services v2 by highlighting my namespace and then clicking the green “Access Control Portal” toolbox in the top ribbon. To launch the Windows Azure AppFabric Application Manager, I simply highlight my namespace and then click the blue “Application Manager” icon in the top ribbon. Let’s do that.

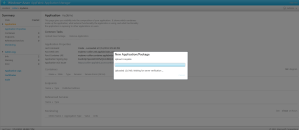

From here I can see any Windows Azure AppFabric Applications that are deployed as well as some application logging (within scope of the AF App Manager). In order to create a new application, I can click on the “New Application” link on the Application Dashboard, or also the “New Application” option under the “Actions” menu in the upper-right hand corner of the dashboard.

Let’s assume for now that I am not finished with my application, but I would like to reserve the domain name. This is similar to creating a new Windows Azure hosted service (which reserves the domain name but doesn’t require that a package be uploaded to Windows Azure at the time). When I create that new application, I am presented with a dialog that lets me upload the package and specify the domain name I’d like to use.

When I click on the “Submit” button, I will see a progress dialog to show me the status of the package creation. This only takes a few seconds.

Now that my application is created in the Windows Azure AppFabric AppManager, I can click the name of the application to open a new screen containing all sorts of details about the application.

From this screen I can see properties related to the application, any containers used by my application (great explanation in Neil’s blog post), the endpoints I have created, any referenced services, as well as some monitoring metrics for my application. At this point these fields are empty since I haven’t deployed anything yet. I also noticed that I can drill into details related to logging, certifications, or scalability options by navigating the links on the menu on the left side of the window.

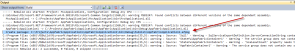

In my last post I created a very simple ASP.NET MVC application that uses SQL Azure and Windows Azure AppFabric Cache. I would now like to take my application and deploy it to the Windows Azure AppFabric Application Manager. To do so I have two options: publish from Visual Studio 2010 or upload the application via the AppFabric Application Manager. For the purposes of this initial walkthrough, I decided to use the AppFabric Application Manager. I click on the “Upload New Package” link and am prompted for the path to the AppFabric package. I figured the process to get the package would be similar to that of a standard Windows Azure application: from Visual Studio, right-click on my cloud project, select “Publish” and choose to not deploy but just create the package. I was wrong. In the June CTP for AppFabric Applications, it doesn’t work like that (personally, I hope this changes to be more like standard Azure apps). I’ll need to get the path to the AppFabric package (an .afpkg file) from the build output window (or browse to the .\bin\Debug\Publish subdirectory of the application).
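If you rebuild often, finding the newest package can be scripted instead of copied from the build output window. The sketch below relies only on what the post states (an .afpkg file in the application's bin\Debug\Publish subdirectory); the function name is hypothetical, and the configuration parameter assumes, without confirmation from the post, that a Release build would land in a parallel bin\Release\Publish folder.

```python
from pathlib import Path


def find_afpkg_packages(project_dir, configuration="Debug"):
    """Return .afpkg files in <project_dir>/bin/<configuration>/Publish, newest first.

    Hypothetical helper; the folder layout for "Debug" comes from the post,
    other configurations are an assumption.
    """
    publish_dir = Path(project_dir) / "bin" / configuration / "Publish"
    if not publish_dir.is_dir():
        return []
    packages = [p for p in publish_dir.glob("*.afpkg") if p.is_file()]
    # Sort by modification time so the package from the latest build comes first.
    return sorted(packages, key=lambda p: p.stat().st_mtime, reverse=True)
```

The first entry of the returned list is the path to paste into the “Upload New Package” dialog.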

Now that I have the package, I can upload it via the AppFabric Application Manager. I’ll receive a nice progress dialog while the package is being uploaded.

Once uploaded, I received a dialog to let me know that I’ll soon be able to deploy the application. This is somewhat similar to traditional Windows Azure applications – I first upload the package to Windows Azure and then I can start the role instances.

Note: While working in the AppFabric Manager I did notice there is no link back to the main portal. I found that kind of “funny” since there was a link from the portal to the AppFabric Manager. I opened a second browser tab so I could have the portal in one and the manager in another.

Now that my application is imported into the Windows Azure AppFabric Application Manager, I will need to deploy it. I can see in the summary screen for my application the various aspects of my application. In my case I can see the services (the AppFabric cache and SQL services) and endpoints (my ASP.NET MVC app endpoint). I also notice the containers are listed as “Imported”.

In order to deploy my application, I simply click the “Deploy Application” link on the Summary screen. Doing so will bring up a dialog showing what will be deployed, along with an option to start the application, or not, after the application is deployed.

While deploying the application, I noticed the State of each item under Containers updated to “Deploying…”. This process takes a few minutes. The State changed from “Deploying” to “Stopped” to “Starting” and finally to “Started”.

This process takes about 10 minutes to get up and running and usable. Most of that time was spent waiting on DNS to update so that I could access the application. It was about 4 minutes to go from “Deploying” to fully “Started”.

Now my application is up and running! I didn’t have to mess with determining what size VMs I wanted, how many instances, or any “infrastructure” stuff like that. I just needed to write my application, provide credentials for SQL Azure and AppFabric Cache, and deploy. Pretty nice! This is a very easy way to deploy applications without having to worry about many of the underlying details. In my opinion, Azure AppFabric Applications provide a whole new, and good, layer of abstraction on the Windows Azure platform. It may not be for every app, but I certainly feel it fits a good many applications.

UPDATE 7/13/2011: Corrected statement related to use of AppFabric CTP-provided SQL Azure and storage accounts.

Damir Dobric (@ddobric) began an AppFabric Applications - Part 1 series on 7/13/2011:

Last year at PDC, Microsoft announced a platform on AppFabric that should simplify the development of composite applications. After a lot of discussion about so-called “Comp[osite] Apps,” the final name was changed to avoid confusion with the term Composite Services and Co. So we now have AppFabric Applications. To me this is a great experiment, which shows how some very complex things in the life of a software developer can be simplified.

You will probably notice that we now have the following major programming models in the world of Microsoft:

1. .NET on premises

2. Windows Azure .NET programming model

3. Windows Azure AppFabric model called “AppFabric Applications”

I do not want to discuss now which one should win. What matters for now is that the Windows Azure programming model introduces nice things, but it does not solve many important problems. This is why the AppFabric Applications model is required. In this context, a service exposes an endpoint, and other services access it by adding a service reference to that endpoint. In general, AppFabric Applications consist of services that communicate using endpoints and service references.

The AppFabric Applications model is based on the Service Groups concept. By definition, a Service Group can contain one or more services. The relationships between the services in these groups define the AppFabric Applications model. Additionally, this model introduces a number of services (components). Every service is in fact a project type in Visual Studio that implements some service. For example, the ASP.NET service (component) is a typical ASP.NET project.

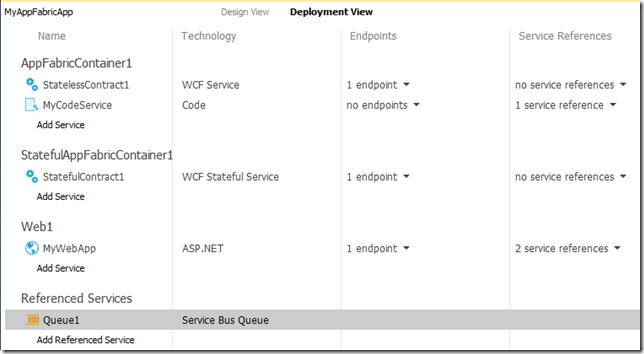

Depending on the service group, only the appropriate services can be added to the group. For example, in the Web service group you can add a WCF Web Service and an ASP.NET service, and so on. (I know the naming is a bit confusing.) Services and Service Groups are the primary building blocks of an AppFabric Application. To simplify this story, think of Service Groups as a set of configuration properties that apply to all services in the group. For example, they define how scalable or how available the group will be at runtime. The following picture shows the model of one AppFabric Application.

This model (application) contains one ASP.NET service and two WCF services. It can be built and deployed in Windows Azure.
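Dobric's description boils down to a small data model: services expose endpoints and hold references to other services' endpoints, and each service lives in a group whose kind limits which service types it accepts and whose settings (such as scale) apply to every member. The Python sketch below captures that model under stated assumptions: the class names, the instances setting and the unresolved_references check are illustrative inventions, not AppFabric APIs, and the allowed-type table paraphrases the Web and Stateless service groups described in this post.

```python
from dataclasses import dataclass, field

# Illustrative rules paraphrasing the June CTP service groups described in the
# post; this is not an AppFabric API.
ALLOWED_SERVICE_TYPES = {
    "Web": {"WCF Service", "ASP.NET"},
    "Stateless": {"WCF Service", "Workflow", "Code"},
}


@dataclass
class Service:
    name: str
    service_type: str
    endpoints: list = field(default_factory=list)   # endpoints this service exposes
    references: list = field(default_factory=list)  # endpoints of other services it calls


@dataclass
class ServiceGroup:
    name: str
    kind: str
    instances: int = 1  # hypothetical group-level setting shared by all members
    services: list = field(default_factory=list)

    def add(self, service):
        """Add a service, rejecting types the group kind does not allow."""
        allowed = ALLOWED_SERVICE_TYPES.get(self.kind, set())
        if service.service_type not in allowed:
            raise ValueError(f"{service.service_type} is not allowed in a {self.kind} group")
        self.services.append(service)


def unresolved_references(groups):
    """Return referenced endpoints that no service in the application exposes."""
    exposed = {ep for g in groups for s in g.services for ep in s.endpoints}
    referenced = {r for g in groups for s in g.services for r in s.references}
    return sorted(referenced - exposed)


if __name__ == "__main__":
    # One ASP.NET service calling two WCF services, as in the model described above.
    web = ServiceGroup("WebGroup", "Web", instances=2)
    web.add(Service("Web1", "ASP.NET", ["Web1.Http"], ["Service1.Http", "Service2.Http"]))
    backend = ServiceGroup("ServiceGroup1", "Stateless")
    backend.add(Service("Service1", "WCF Service", ["Service1.Http"]))
    backend.add(Service("Service2", "WCF Service", ["Service2.Http"]))
    print(unresolved_references([web, backend]))  # prints []
```

Keeping scale on the group rather than on each service reflects Dobric's point that Service Groups are essentially shared configuration for their members.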

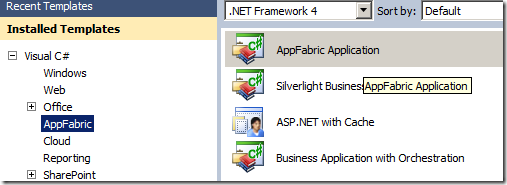

In general, an AppFabric application can be created by simply choosing “AppFabric Application”.

You might ask yourself what a “Business Application with Orchestration” could be. It creates an application with Workflow (a replacement for BizTalk orchestration), SQL Azure, Cache, a WCF service and an ASP.NET web application.

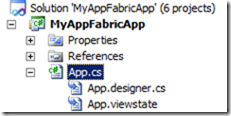

After the AppFabric Application project template is chosen, the following project is automatically created:

This is a kind of project container (like a solution) that holds the model and the relations between all other services in this application. When you double-click App.cs, a diagram like the next one appears.

In this diagram you can select between the deployment view (sorted by service groups, as in the picture above) and the design view.

Additionally you can select the model (diagram) view:

This will show the application model shown above (see first picture).

Supported Services

The June CTP currently supports the following services (components):

Supported Service Groups

There are currently the following service groups:

- Web: used for WCF services and ASP.NET applications

- Stateless (AppFabric Container): used for WCF, WF and Code services

- Stateful: used for stateful services

- Referenced: used for externally referenced endpoints such as SQL, Cache, Queue, etc.

To be continued…

Matias Woloski (@woloski) explained Windows Azure Accelerators for Web Roles or How to Convert Azure into a dedicated hosting elastic automated solution in a 7/13/2011 post:

Yesterday Nathan announced the release of the Windows Azure Accelerators for Web Roles. If you are using Windows Azure today, this can be a relief if you’ve gotten used to waiting 15 minutes (or more) every time you deploy to Windows Azure, only to discover afterward that something was wrong in the package and you’ve lost 15 minutes of your life.

Also, as the title says (and as Maarten says in his blog), if you have lots of small websites, you don’t want to pay for 100 different web roles, because that adds up to a lot of money. Since Windows Azure SDK 1.3 you can use Full IIS support, but the experience is not optimal from a management perspective, because you have to redeploy each time you add a new website to the service definition (.csdef).

In short, the best way I can describe this accelerator is:

It transforms your Windows Azure web roles into a dedicated, elastic hosting solution with farm support and a very nice IIS web interface to manage the websites.

I won’t go into much more detail on the WHAT, since Nathan and Maarten already did a great job in their blogs. Instead I will focus on the HOW. We all love it when things work, but when they don’t, you want to know where to look. So, below you can find the blueprints of the engine.

Below are some key code snippets that show how things work.

The snippet below is the WebRole entry point’s Run method. Here we spin up the synchronization service, which blocks execution. Since this is a web role, IIS is launched as well and runs the sites as usual.

```csharp
public override void Run()
{
    Trace.TraceInformation("WebRole.Run");

    // Initialize SyncService: resolve the local storage folders used for the sites
    var localSitesPath = GetLocalResourcePathAndSetAccess("Sites");
    var localTempPath = GetLocalResourcePathAndSetAccess("TempSites");

    // Semicolon-separated list of directories the synchronization should skip
    var directoriesToExclude = RoleEnvironment.GetConfigurationSettingValue("DirectoriesToExclude").Split(';');

    // Polling interval, in seconds, read from the service configuration
    var syncInterval = int.Parse(RoleEnvironment.GetConfigurationSettingValue("SyncIntervalInSeconds"), CultureInfo.InvariantCulture);

    this.syncService = new SyncService(localSitesPath, localTempPath, directoriesToExclude, "DataConnectionstring");

    // Blocks here; the sites themselves are served by IIS
    this.syncService.SyncForever(TimeSpan.FromSeconds(syncInterval));
}
```

The other important piece is the SyncForever method, which does the following on each pass:

- Update the IIS configuration using the IIS ServerManager API, reading the site definitions from table storage

- Synchronize the WebDeploy package from blob storage to local storage (point 4 in the diagram)

- Deploy the sites using the WebDeploy API, taking the package from local storage

- Create and copy the WebDeploy package from IIS (if something changed)

```csharp
public void SyncForever(TimeSpan interval)
{
    while (true)
    {
        Trace.TraceInformation("SyncService.Checking for synchronization");

        // Each step has its own try/catch, so one failure does not skip the rest
        try
        {
            // Apply the site definitions stored in table storage to IIS
            this.UpdateIISSitesFromTableStorage();
        }
        catch (Exception e)
        {
            Trace.TraceError("SyncService.UpdateIISSitesFromTableStorage{0}{1}", Environment.NewLine, e.TraceInformation());
        }

        try
        {
            // Download the WebDeploy packages from blob storage to local storage
            this.SyncBlobToLocal();
        }
        catch (Exception e)
        {
            Trace.TraceError("SyncService.SyncBlobToLocal{0}{1}", Environment.NewLine, e.TraceInformation());
        }

        try
        {
            // Deploy the sites from the local packages with WebDeploy
            this.DeploySitesFromLocal();
        }
        catch (Exception e)
        {
            Trace.TraceError("SyncService.DeploySitesFromLocal{0}{1}", Environment.NewLine, e.TraceInformation());
        }

        try
        {
            // Package changed sites from IIS back to local storage
            this.PackageSitesToLocal();
        }
        catch (Exception e)
        {
            Trace.TraceError("SyncService.PackageSitesToLocal{0}{1}", Environment.NewLine, e.TraceInformation());
        }

        Trace.TraceInformation("SyncService.Synchronization completed");
        Thread.Sleep(interval);
    }
}
```

My advice: If you are using Windows Azure today, don’t waste more time on lengthy deployments. Download the Windows Azure Accelerators for Web Roles.
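The essential design choice in SyncForever is that each of the four steps has its own try/catch, so a failure in one step (say, a bad package in blob storage) is traced but does not prevent the others from running, and the loop then sleeps for the configured interval. A minimal Python sketch of that pattern follows; the step names and stub functions are placeholders, and the max_iterations and sleep parameters are assumptions added only so the loop can be stopped and tested (the C# version loops with while (true)).

```python
import logging
import time

log = logging.getLogger("syncservice")


def sync_forever(steps, interval_seconds, max_iterations=None, sleep=time.sleep):
    """Run every (name, callable) step once per pass, then sleep.

    A failing step is logged and the remaining steps still run, mirroring the
    per-step try/catch in the C# SyncForever. max_iterations is a test hook;
    None means loop forever, like while (true).
    """
    passes = 0
    while max_iterations is None or passes < max_iterations:
        log.info("Checking for synchronization")
        for name, step in steps:
            try:
                step()
            except Exception:
                # Record the failure with its traceback and move on to the next step.
                log.exception("%s failed", name)
        log.info("Synchronization completed")
        passes += 1
        sleep(interval_seconds)
    return passes


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def failing_step():
        raise RuntimeError("simulated blob storage error")

    # Placeholder stubs named after the four C# steps.
    steps = [
        ("UpdateIISSitesFromTableStorage", lambda: None),
        ("SyncBlobToLocal", failing_step),
        ("DeploySitesFromLocal", lambda: None),
        ("PackageSitesToLocal", lambda: None),
    ]
    sync_forever(steps, interval_seconds=0.1, max_iterations=2)
```

Because every pass re-reads its inputs, a transient failure simply gets another chance on the next pass instead of stopping the role.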

Alan Smith described Building AppFabric Application with Azure AppFabric [Video] in a 00:19:24 Webcast of 7/12/2011 from the D’Technology:

In this episode Alan Smith uses the June 2011 CTP of Windows Azure AppFabric to create an AppFabric Application that consumes Windows Azure Storage services.

A prototype website for an Azure user group will be developed that allows meeting details to be added to Azure Table Storage and photos of meetings and presenters to be uploaded to Azure Blob Storage.

Avkash Chauhan described ACS Federation, Live ID, SAML 2.0 Support, and AppFabric Service Bus Connections Quota in a 7/11/2011 post:

In ACS v2 you can use identity provider integration, i.e., Live ID, Google, Facebook, Yahoo, etc. If we dig deeper, we find that:

- Windows Live ID uses WS-Federation Passive Requestor Profile

- Google and Yahoo use OpenID, including the OpenID Attribute Exchange extension.

- Facebook uses Facebook Graph, which is based on OAuth 2.0.

All of the above types of identity provider can be used seamlessly with ACS v2.0. Using ACS, you don't need to worry about the details of federating with each identity provider, because ACS abstracts this information away from you, making your work much simpler.

ACS v2 does not currently support the SAML 2.0 protocol. For now you can try using it through the “intermediation” of an ADFS 2.0 service; direct SAML 2.0 support, however, is not available.

AppFabric Service Bus Connection Quota:

When exposing an on-premises service over AppFabric Service Bus, you may wonder how many concurrent connections Service Bus supports. Previously, the Service Bus connection limit was based on quotas that ranged from 50 concurrent connections to 825 concurrent connections, depending on the connection pack size purchased (e.g., pay-as-you-go, 5, 25, 100, or 500). With recent changes, connection pack size is no longer the basis of your maximum quota. Instead, all connection packs now default to 2,000 concurrent connections.

So whether your connection pack size is pay-as-you-go, 5, 25, 100, or 500, your connection quota will be 2,000.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan reported When you RDP to your Web Role you might not be able to access IIS log folder in a 7/13/2011 post:

When you log into a Windows Azure VM running a Windows Azure web role, you might have problems accessing the IIS logs: when you try to open the IIS logs folder, you get an "access denied" error. Oddly, this issue happens randomly.

IIS Logs Location:

C:\Resources\Directory\<guid>.<role>.DiagnosticStore\LogFiles\W3SVC*
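When you hit the "access denied" error, it can help to check which of the W3SVC* folders are actually readable from your RDP session. The Python sketch below assumes only the folder layout shown above and that a Python interpreter is available on the VM; the function name is hypothetical, and it merely reports which folders are unreadable rather than fixing the permissions (the post does not give a fix).

```python
import glob
import os


def find_iis_log_dirs(resources_root=r"C:\Resources"):
    """Return (path, readable) for each W3SVC* folder matching
    <resources_root>\\Directory\\<guid>.<role>.DiagnosticStore\\LogFiles\\W3SVC*.

    Hypothetical helper: readable is False when listing the folder raises
    PermissionError (the "access denied" case).
    """
    pattern = os.path.join(resources_root, "Directory", "*.DiagnosticStore", "LogFiles", "W3SVC*")
    results = []
    for path in sorted(glob.glob(pattern)):
        if not os.path.isdir(path):
            continue
        try:
            os.listdir(path)  # raises PermissionError on "access denied"
            results.append((path, True))
        except PermissionError:
            results.append((path, False))
    return results


if __name__ == "__main__":
    for path, readable in find_iis_log_dirs():
        print(("OK      " if readable else "DENIED  ") + path)
```

Running it a few times in the same session is one way to confirm the intermittent behavior the post describes.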

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bharat Ahluwalia posted Real World Windows Azure: Interview with Andrew O’Connor, Head of Sales & Marketing at InishTech to the Windows Azure Team Blog on 7/14/2011:

MSDN: Tell us about your company and the solution you have created for Windows Azure.

O’Connor: InishTech is a little bit unusual. Our Software Licensing and Protection Services (SLPS) platform is a classic multi-tenant Windows Azure application, utilizing the Windows Azure SDK, Windows Azure Dev Fabric and Visual Studio 2008. It has over 100 tenants and serves hundreds of thousands of end users. But it’s what SLPS actually does that makes us different – our customers are actually software companies building for Windows Azure. We help ISVs to make money from the cloud, and SLPS is how we do it.

SLPS can be used by anyone developing in .NET, but it’s particularly appropriate for Software-as-a-Service (SaaS). In a nutshell, SLPS is an easy-to-use, easy-to-integrate service that enables you to manage and control the licensing, packaging and activation needs of your software in the marketplace. This video explains why flexible licensing and packaging is so important for SaaS.

MSDN: What relevance does your solution have for the cloud?

O’Connor: Most ISVs must reinvent their business for the cloud; what we do is help work out the business end of the transition by answering critical monetization questions such as: “How can I offer managed trials for SaaS?” “How do I link consumption, entitlements and limitations in my software?” and “How do I offer distinct service packages?”

MSDN: What are the key benefits of your solution for Windows Azure?

O’Connor: SLPS provides a valuable and important bolt-on service for your Windows Azure application that adds real value in three ways:

- Tenant Entitlement Management: The ability to create & manage tenant entitlements to your application based on any conceivable business model gives you the versatility to meet customer demand & the intelligence to monetize it.

- Software Packaging Agility: The ability to configure & package your application independent of the development team dramatically reduces cost and drives choice and differentiation. In this context, a picture is worth a thousand words.

- License Analytics: The ability to learn how products are being used and consumed via tight feedback loop is hugely beneficial for both a business and technical audience.

MSDN: So how does SLPS work?

O’Connor: During pre-release, your development team and product management team sit down and agree on the parameters for setting up the service for your applications in terms of products, features, SKUs, etc. The developers then create the hooks within the application while the product managers configure the SLP Online account. Once you launch your application, it can be as automated as you like, particularly for a SaaS application where you want quick, pain-free provisioning.

MSDN: Is it available today?

O’Connor: Yes it is, and we’re offering a 20% discount to customers building applications for Windows Azure. Visit our website to learn more.

SurePass announced SurePass Announces Secure Cloud Services (SCS) which runs on Windows Azure in a 7/14/2011 press release issued during the Microsoft Worldwide Partner Conference:

SurePass, a software and hardware security company specializing in securing cloud-based applications, today announced the availability of a comprehensive suite of Secure Cloud Services (SCS), which run on Windows Azure to enable technology providers and application developers to easily deploy the additional security elements needed to deliver a secure application to their enterprise and consumer clients alike.

Mark Poidomani, CEO – SurePass said: “As the IT paradigm shifts to cloud based computing to meet the enterprise’s need for flexibility and cost-savings, network and identity security has become a paramount concern for all parties. SurePass offers application developers and enterprises alike the opportunity to provide the added layer of security needed to prevent data breaches and identity theft to their clients as they move to the Cloud.”

Working with Microsoft, SurePass developed their Secure Cloud Services to run on Windows Azure and with Secure Token Services (STS), Active Directory, Forefront Threat Management Gateway (TMG), and SQL Server 2008, giving Microsoft customers further tools to move to the cloud in a seamless, secure and protected manner. SurePass is delivering a significant value both in cost savings and market credibility through next generation Cloud based security solutions.

“By leveraging Microsoft’s identity and security capabilities and applying them to their Windows Azure-based services, SurePass gives customers a security solution that builds on the strengths of the Microsoft platform,” said Prashant Ketkar, director of product management, Windows Azure, at Microsoft.

SurePass Secure Cloud Services protect the front door of their customers’ networks with identity verification and management, and the back door through data encryption, representing significant value to their clients. SurePass is one of the first in the industry to deliver a full suite of next-generation security solutions that protect the enterprise from fraud, data breaches and identity theft for private, public and hybrid cloud and distributed applications. SurePass offers cost-effective solutions that can be implemented within 24 hours, as well as professional services to customize the right solution for your particular application.

Robert Duffner posted Thought Leaders in the Cloud: Talking with Jared Wray, Founder and CTO of Tier3 to the Windows Azure Team Blog on 7/14/2011:

Jared Wray is the CTO and architect of Tier 3’s Enterprise Cloud Platform. Wray founded Tier 3 in 2006 to address the emerging need for enterprise on-demand services. Wray oversees the company’s development, support and operations teams and is responsible for the company’s intellectual property strategy and new product development. A serial entrepreneur, Wray previously founded Dual, an interactive development firm with clients such as Microsoft and Nintendo.

- Role of partners in providing customized cloud solutions

- SLAs and cloud outages

- Migrating to the cloud vs. building for the cloud

- Things in clouds work better together

ROBERT DUFFNER: Jared, please take a minute to introduce yourself and Tier 3.

JARED WRAY: I'm Jared Wray and I'm the CTO and a founder of Tier 3. Tier 3 has been around for the last six years. It is an enterprise cloud platform company that focuses on the mid-tier to enterprise, with the emphasis on moving back-office, production, mission-critical apps to the cloud in a very secure, compliant manner and with key features enterprises need, like performance optimization and high availability -- stuff that really isn't there right now in other cloud products in the market.

Before Tier 3, my background was in architecting and managing large Web-scale computing environments for companies here in the Seattle area, focusing on the enterprise. I've worked for three of the four Gates companies: Microsoft, on Microsoft.com; Corbis Corporation, where I ran their large Web environment; and Ascentium. I also founded a company called Dual, which was an interactive agency that focused on Flash when it started becoming hot. Some of our customers were Sony, Microsoft, and Nintendo.

ROBERT DUFFNER: Okay, so you recently wrote in your blog, Can You Build Profitable Public Clouds out of Enterprise Technology?, where you questioned Douglas Gourlay's main points on what it takes to build profitable clouds. Can you please expand your thoughts on that question?

JARED WRAY: While Gourlay made some interesting points, the article missed two main areas of focus for building a profitable public cloud: who you're going to be focusing on (what your market is) and how you build it (how you architect it and how you scale it).

One of the things that Gourlay doesn't focus on at all is who the market is. Today most public clouds serve the developer, or what we would call the QA lab environment. These are low-capex, cost-focused customers. That audience doesn't care about uptime, they don't care about SLAs; they only care about getting the cheapest computing resources they can. And with that market the cloud provider can play the open source game with a lot of commodity or white-label boxes, trying to drive that infrastructure price down to zero. Since those customers are just looking to offload workloads that are not mission-critical and don't need high performance, that approach is fine.

But let’s contrast that with the enterprise market. That market needs high service-level agreements with guaranteed uptime. These guys can't have three to four days’ worth of downtime, as it would simply kill their business. With that type of market, the cloud provider needs to consider what that market requires and what the total architecture is. That's where you start bringing in enterprise-grade gear to do an enterprise-grade job.

So there are two points to consider in serving these markets when looking at how you build it: the cost model and the architecture. If you're thinking about going open source, it is important to know the cost-model tradeoffs. A lot of companies don't realize that when they're buying an appliance or licensed software, they're buying IP someone else has created for them. That’s the tradeoff with open source. If you build a public cloud all on open source, you have to have that expertise in house. So you're going to pay for it one way (via IP given to you at a licensed rate) or another (via expert manpower on staff). And that cost model is one thing that's really confusing for a lot of people.

On the architecture side it’s the same thing. Providers have to figure out what to do architecture-wise to expand and scale. Do they offer an open source-type quality service or something that's white-labeled, with low-end cost efficiencies and lower SLAs, or do they provide a secure, mission-critical environment for customers who care about the SLA? Most public cloud providers these days don't play a very good SLA game; they really play it like a hosting company where they think “hey, we'll just plan on it going down and we'll just credit people back.” Providing a secure, mission-critical environment for customers who care about those SLAs takes enterprise gear. You have to have an enterprise backbone, so you have to use one of the top three switch providers in the world and build your backbone off of that, and you have to have an enterprise-grade storage platform. And so on.

Gourlay says for dev/qa the only way to build is open source and white-label boxes, that's the only way to scale. And my opinion is, yeah, that's not really the case for all cloud providers, and it won't be the case long term for most providers because the market is shifting now to the enterprise. Now the big enterprises are looking to use the cloud, which wasn't the case before and drastically changes the landscape of requirements.

ROBERT DUFFNER: Jay Heiser from Gartner recently wrote a blog post entitled How long does it take to reboot a cloud?, in which he talks about the relative ability of any cloud service provider to recover your data and restore your services. Heiser notes that it took Google 4 days to restore 0.02% of the users of a single service and it took Amazon 4 days to recover from a limited outage, and they were never able to get all the data back. How do you architect for data backup and disaster recovery?

JARED WRAY: The pretty amazing thing to think about is the data expansion. Even at Tier 3 we're doubling our storage every single year, and that's becoming a common trend for a lot of companies. Storage expansion is growing so fast that many providers can’t keep up with how to retain it or even maintain it overall.

Storage architecture failure happened with another cloud computing company recently also. Why? Because they built their own storage platform. They didn't go with a trusted enterprise-grade storage product that's been around for ten years. They built it in-house with their own devs, so it's essentially on version one. Even though AWS has been around for quite a long time, this storage platform is theirs, it's internal, and it's never had [a] whole bunch of different customers poking at it and finding the bugs. That means they carry all the risk on their side.

Google also recently had a storage failure and lost email from their Gmail service – again a custom storage architecture which they maintain internally. But, the best thing Google ever did was go to tape backups because that is how they restored all that data.

With that said, these companies have the resources to really invest in this approach and they will work to get it right. But I have a very hard time thinking they will not have more bumps along the way.

As an enterprise cloud provider, we have to think outside the box: instead of thinking about how we are going to reduce the data, we think about how we are going to have a recovery time that's optimal for everybody and also how we are going to maintain the storage in a realistic way, keeping it simplified. We asked ourselves early on, “Why would we build our own storage architecture and platform?” We knew that what we are about is storage management and how we manage that storage overall. So we still use enterprise hardware and leverage storage vendors who provide enterprise-quality backup. We know that what they're providing is tried and true.

With data expansion growing and outpacing many of the technologies out there, many cloud providers really need to invest in a solid storage management approach and architecture. They need to quit thinking that data reduction is the key and just start to look at how to enable large data management.
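Wray's remark that Tier 3 is doubling its storage every year compounds quickly. As a rough illustration, assuming growth stays at a steady annual doubling and starting from a hypothetical 100 TB (not a figure from the interview), the capacity after n years is:

```latex
S_n = S_0 \cdot 2^{n}, \qquad
S_0 = 100\,\text{TB} \;\Rightarrow\; S_3 = 100 \cdot 2^{3} = 800\,\text{TB}, \quad S_5 = 100 \cdot 2^{5} = 3200\,\text{TB}
```

Five years of doubling is a 32-fold increase, which is the scale behind his argument for investing in storage management rather than data reduction.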

ROBERT DUFFNER: Let’s talk about cloud computing for small and medium businesses. Clearly, software-as-a-service is a no-brainer, but what about infrastructure-as-a-service or even platform-as-a-service? When is it the right time to go to the cloud?

JARED WRAY: With the emergence of enterprise-grade public clouds, small businesses have some critical thinking to do about how they manage their IT environments. Do they want to pay for the overall expertise of running a big back-end environment, or can they move to a cloud to manage it and have that help and expertise built in? If they already have IT management services on site, they are paying a lot for very expensive engineers to just maintain their systems -- and those guys don’t have time to really focus on the core business. Most of the time, however, these small businesses don't really have the overall expertise of running a big environment, only enough to manage what they need -- mail services, file services, things like that. So the big opportunity right now is for those guys to move to the cloud and maintain it and get that extra expertise.

The greatest thing about the enterprise-grade public cloud is that it's taking enterprise-level services and slicing off a piece for everybody else; these small to medium businesses are getting a little slice of enterprise-grade heaven. They’re getting access to the type of enterprise environment they simply couldn’t build: the reliability is going to be there, the uptime is going to be there, and the support is going to be there. The other side that's really great for these small companies is the management aspect. Many of these companies have extra staff right now doing backups, SAN administration, and support on services that have really become commodities by moving to the cloud. By eliminating that, teams that are now running at 200 percent can run at 100 percent and really focus on the application level and the software that runs the company. And it's going to be a huge relief for the IT team to offload so much of the infrastructure that really isn’t core to the business.

The most important thing for the IT team at that small company is to pick the right enterprise cloud provider. They can't just look for the cheapest price. These people need to move their back office, and those are the keys to the castle; it's everything that they have. What we've seen over the lifespan of our company is that in these cloud provider decisions, price is always a big point, but the deciding factor is the reliability of the system. We've seen multiple cloud providers in the past couple of months have huge outages, three to four days, and then they've completely lost data. That is just not acceptable for a small company. That could put these companies out of business completely. Companies need to evaluate that risk and find a provider that's going to be able to give them the uptime and support that they need. And even the recovery time: how do you recover from something like that?

I honestly think in the next two to three years we're going to see a large majority of small businesses just moving to the cloud because they can actually move everything, have it completely secure and maintainable. But the big thing is, find the right provider, find the right solution, and make sure that that risk is being evaluated. It's not really price, it's the risk that's being associated with it.

ROBERT DUFFNER: You know, so one of my favorite questions I ask on this Thought Leaders blog is on the subject of infrastructure, platform, and software-as-a-service (SaaS). This has definitely served as a good industry taxonomy for cloud computing. However, do you see these distinctions blurring? It sure does seem that way based on what's happening in the market with Amazon's Beanstalk, Salesforce.com’s push into platform-as-a-service, and Windows Azure offering more infrastructure-as-a-service capabilities.

JARED WRAY: Yeah, I completely agree. The analysts have tried to label all the cloud providers in this rigid service matrix where one company is just infrastructure. The discussion should really be about what service they're offering and how that affects the customer. With most cloud providers, when they start becoming more and more successful, they have to blend. They can't really offer just infrastructure as a service and maintain it long-term, because the technology is going to roadmap itself up into the application layer. It's going to start blending beyond what we're thinking now. Even software as a service needs partners who are doing infrastructure as a service and platform as a service, all combined into one thing, to make that software as a service more functional.

So when you think about going up the stack, those lines are going to blur really quickly. At Tier 3 we are actually the perfect hybrid: we literally offer platform-as-a-service-type functionality, and yet it's still infrastructure as a service when you think about it in a holistic way. We're still offering CPU, memory, and storage, but we're offering intelligence and bundling of those services into a single enterprise platform. And that's really where the key to platform-as-a-service is going to be.

I honestly think infrastructure-as-a-service and platform-as-a-service are going to merge. The only time you're going to see pure platform-as-a-service is when it's vertically based: one programming language or maybe a couple of languages. Long term, these services will be taken over by cloud providers that are running up the application stack and will be able to support much more.

ROBERT DUFFNER: James Staten of Forrester Research recently wrote a great blog post entitled Getting Private Cloud Right Takes Unconventional Thinking, where he addresses the confusion amongst enterprise IT professionals between infrastructure-as-a-service, private clouds, and server virtualization environments. He basically states that few enterprises, about 6 percent, operate at the level of sophistication required to get a private cloud right. Do you have any thoughts on this, and can you expand more on what you're seeing in the market?

JARED WRAY: I would actually completely agree with him on that front. One of the things we've noticed is that for customers running any private-cloud-type system using industry hypervisors, it is unbelievably hard to maintain, even if they have the expertise in house. It is extremely hard to keep IT staff up to date, to maintain the cloud, and to handle the system as a big resource engine instead of just a couple of applications that you're running in your environment.

The real change with private cloud is in the IT support model. Before, every single app had its own dedicated servers, and IT supported the servers and the applications. With cloud, IT supports a pool of resources. That's a huge shift with big ramifications. Instead of knowing what's going on, they have to give any user in their environment control; they have to go to some business owner and say, okay, have your developers use this resource and you can do whatever you want. That's a really painful, common scenario, because now in this private cloud they have to figure out how to make that resource pool very flexible. The only way they could do that in house is to spend a lot of cap-ex to have extra resources available for when that business unit doesn't calculate or forecast correctly, so they can handle that excess load. Or when the business unit takes off, they start using all the system, and suddenly they start affecting everybody in the environment because now they're running hotter than the entire environment can handle. Not ideal, and a common private cloud scenario.

The other scenario we're seeing is when companies turn to infrastructure-as-a-service. When a company decides not to build its own private cloud because it is too hard or costly and turns to infrastructure-as-a-service instead, it faces a completely different set of challenges. While they can enable business owners to tap into whatever IaaS service they want, IT is not in control of the environment those apps land on: they can't control it, they don't know what's going on, and suddenly they have compliance or business continuity problems, without even simple backups. Tons of resources, huge cowboy-ish environment! Think about the ramifications of this for the company. Who is in charge of security reviews, compliance, backups, or even patch management? They're not the castle anymore, and they don’t have the expertise or experience to handle those IT requirements.

The single biggest challenge that we've seen in our company with customers coming on is how they control the ramp up of cloud services and enablement for the business. It's basically releasing the valve for most of these companies. We'll have companies come on and literally double their infrastructure within 30 days, and that's just amazing! But there is also the other side where these companies need to think outside of the box on how to keep the processes in place for compliance, security, and even overall application structure.

ROBERT DUFFNER: In another blog post you wrote, What You Think You Need vs. What You Really Need, you talk about how cloud computing is changing the way people think about their IT infrastructure. Please expand on that.

JARED WRAY: This gets back to the old-school way of doing planning for infrastructure (thinking back five to ten years), and some companies still do it this way today. When you're dealing with physical infrastructure, the average IT guy always does the same thing, which is they talk to the business owner, and the business owner says “this is going to be huge, this project is going to grow beyond what you can even imagine, it's going to be massive!” And so the IT infrastructure guy in charge of planning is thinking “how is it going to run on our infrastructure and what type of hardware does this new product/feature need to be able to support that type of scale?” So they create a plan over three- to five-year cycles of hardware: what to start out with and what hardware to run this application on for the next three to five years with the new expected growth pattern that was explained. It's a lot of guesswork, and really just not an easy way to handle scale, with statistics showing that over 70% of projects fail or do not meet expectations. That is a major capital risk the business is taking on.

With most of these companies, even when they go to the cloud, we've seen the same thing: they're trying to plan for three years, even though flexibility is completely inherent and they can just grow on the fly. That's really a big benefit. When companies come to us for a quote on a cloud environment, they often start with a baseline of the hardware that they have now. That infrastructure was planned for three years on a guesstimate and is usually over-provisioned on most systems. In 15 years in infrastructure, I have probably been right 15 percent of the time in guessing what infrastructure is going to be required to run an application over the next three years. It's literally impossible. Business has become so agile that you have no idea what you're going to need for infrastructure in the next three years. Our job is to help them think about this in a new way: what they need vs. what they think they need.

ROBERT DUFFNER: Building apps from scratch for the cloud is definitely easier than migrating existing apps to the cloud. Should enterprises be migrating mission-critical apps to the public cloud?

JARED WRAY: Mission-critical apps can easily move to an enterprise cloud provider. What matters is who the provider is and how they set it up. Most cloud providers have focused on developers or getting that easy project live. But, companies like us, Tier 3, we do full migrations of mission critical production apps right into the cloud. It is literally like it's just an extended network for our IT customers and most of their customers don't even realize that they're now running in the cloud.

Most modern applications are very good at migrating to cloud environments now, especially in the back office. Microsoft's done an amazing job with that and the right enterprise cloud enables it to be easy to migrate. Most of our customers we see are fully migrated within 60 days to the cloud.

ROBERT DUFFNER: What I've been seeing as I talk to our enterprise customers is that the hybrid cloud is really going to be the way enterprises adopt the cloud; in other words, the ability to federate internal and external resources. It sounds great, but how do you make this work?

JARED WRAY: I think hybrid is going to be the biggest approach, especially for the big corporations. They already have internal infrastructure; most of them are starting to build their own private clouds or already have a private cloud that they're managing internally. You have to realize that with big corporations, data security is paramount, so trusting a third-party provider is going to take a while, and the cloud's still young. If we're in a baseball game, and social media was in the eighth inning, cloud right now is probably in the second inning, and a lot of things are still happening. Providers like Tier 3, Microsoft, and AWS are on the same page: we have to address and prove that the cloud is going to be more reliable, have better security, and be a better solution overall for their company.

But when you go to hybrid, it's nice because you're putting a toe in the water, which is good for them. All they have to do is put a toe in the water, they hook up a provider that they trust. They can have all the security checks, and compliance items addressed with the provider and then they can use the provider to offload resources that are lower risk and help the company at the same time.

It's the same thing like we talked about before about what you think you need versus what you really need, right? Most companies are building for excess of three years, so they're at least 30 percent over-provisioned all the time.
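One simple way to see where an over-provisioning figure like this can come from is a compound-growth model, offered as an illustrative sketch rather than Tier 3's method. It assumes, hypothetically, that a company buys today the capacity it expects to need after T years of steady annual demand growth g, so the share of that capacity idle on day one is 1 - 1/(1 + g)^T. The growth rates below are placeholders, not numbers from the interview.

```python
def idle_fraction(annual_growth: float, years: int) -> float:
    """Share of capacity idle on day one when buying for demand `years` out.

    Hypothetical model: demand compounds by `annual_growth` per year and
    capacity is sized to the final year's demand.
    """
    if annual_growth < 0 or years < 0:
        raise ValueError("growth and years must be non-negative")
    # Planned capacity relative to today's demand (today's demand = 1.0).
    planned_capacity = (1 + annual_growth) ** years
    return 1 - 1 / planned_capacity


for growth in (0.10, 0.15, 0.25):
    print(f"{growth:.0%} growth, 3-year plan: {idle_fraction(growth, 3):.0%} idle at purchase")
```

Under this model, 10 percent annual growth leaves roughly a quarter of a three-year build idle on day one, and 15 percent pushes it past one third. Real demand rarely grows this smoothly, which is Wray's larger point about guesswork.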

When you add a cloud provider, big projects that ramp up and ramp down, you can use those cloud providers for those resources so you're not taking the cap-ex burn on your side. This is a huge consideration for these companies if you think about the total cost of purchasing hardware, putting it into the lab. With the cloud you use the resources and then a year later, it's all gone and there is no waste. The project's done, it's now moved to production. That gear, most of the time probably half of it only gets reused, so it's a complete waste. Whereas if they have a good cloud provider and they're running a hybrid-type solution where the company’s network is extended to the cloud provider in a secure manner, they can use these resources, be enabled, IT is not the bottleneck, and now they can get everything up and running and even stuff that's non-mission-critical or some things that they don't want to manage anymore can be put in that hybrid environment and really put that toe in the water and eventually put the whole foot in.

I honestly think that hybrid is basically the two- to three-year stepping stone for companies. You're going to see a lot of corporations just saying, we're going to get out of the cap-ex game, we don't need to be into it anymore.

ROBERT DUFFNER: Late last year Ray Wang wrote in Forbes his predictions in 2011 for cloud computing. One of his predictions stated that development-as-a-service (DaaS), or the creation layer will be the primary way in which advanced customers will shift custom app development to the cloud. What are your thoughts on this?

JARED WRAY: I agree with him that custom app development is going to shift to the cloud and that's going to be the primary approach moving forward, just because it's the easiest, fastest way of doing things. If you think about development teams, one of the big bottlenecks they have is: how do we build and spin up an environment for this project and then take it down whenever we want?

What's interesting about this is when you think about development as a whole, everybody in the cloud is really focusing on what's the future. How are we going to get custom app development or even development as a service really moving forward? The problem with that is that 80 percent of the applications in the world are all in the back office, and everybody's forgotten about those. If you think about when Windows came out it was a mainframe world. Everything was owned by mainframes, and now it's taken, you know, 20 to 30 years for us to even get to the point where it's all client-server, right? We still have some mainframes around; big banks still use them, things like that. And it's taken 20 to 30 years.

So let's just say that at the fast pace we're going now, it takes 10 to 15 years. All those custom apps that are behind the firewall in these corporations aren't going to be on a new development platform. They're going to still be around. So as cloud providers we need to figure out how to move those into the environment. You know, that's one of the things that we specialize in at Tier 3. We literally take those custom apps that are behind the firewall (that 80 percent that everybody still is using) and move them into the enterprise cloud. Another big focus point is how we maintain them better in the cloud than on internal infrastructure. That's going to be what everybody needs to focus on in the next ten years. Yeah, custom apps are going to be huge, and it's going to be huge for the future, but remember the development cycle: releasing a product is one thing, but getting all your customers to upgrade is a two- to five-year plan, at least.

Of course, engineering for the future, that's what we're supposed to be doing, but also how do we maintain the past and be able to migrate them into the cloud and get all the features and the things that they want right now?

ROBERT DUFFNER: In your recent article on TechRepublic, 10 things software vendors should consider when going SaaS, you talk about the need for independent software vendors (ISVs) to make architectural and platform decisions to ensure they deliver the best customer experience. Can you highlight some of the important considerations?

JARED WRAY: I'll focus on two main ones that we've seen over time that really pop up.

First is what are they trying to achieve for their own customers and their business model. Are customers asking for a “SaaS” model? Are they saying “I don't want to manage the software anymore”? They could also be saying “You guys are the experts, you know what's going on, you know the patches, and we want you to maintain it”. This is all about the service contract staying with the ISV instead of the customer. This is really a big services play.

First, let’s deal with moving the software to a service. ISVs have written this program, they've probably had it around for five to ten years, and they have a good installed base. Moving that installed base is very hard. It starts with changing their software to a multi-tenant architecture where multiple customers can use the same software platform. This is very hard and sometimes impossible. It's a lot of development, a lot of scoping of the application and figuring out what you can salvage in the current code base. It requires evolving from an architecture where every customer gets their own install to one where every customer connects to one big platform that takes care of all the customers. That's a big multi-tenancy architecture and it is hard; it could be two to three years of rewriting code.

So what we're talking about with a lot of our customers is what they are trying to achieve: multi-tenancy and security. Our answer is for ISVs to give every single customer their own instance and use the cloud to do the multi-tenancy of that software layer, with the scale and flexibility the cloud is known for. They have their customers connect privately over a secured VPN or a direct connection and access the software, which they would otherwise run locally, in the cloud just like an extended network. They don't have to worry about it, and the main focus with this is how they maintain support. ISVs need to be able to spin up the environment and have it online without rewriting the software, but really engage on a service level with their customers. This monthly service arrangement gives a recurring revenue model that all ISVs would love to have.

The second thing that they always have to consider is really how are they going to handle security long-term? It's great to build software that can handle multiple different customers at the same time, but remember one thing: If you are going from a model where you're installing that software down in their office or even data center, that's a secure model for them, it's on-premises, they're dealing with their own security, and sometimes that private information is too great to even think about going into a multi-tenant environment where it's an entire platform. Think about places who are doing credit card processing or online-type capabilities or they're keeping customer records, even though your software could go into a multi-tenancy architecture, most of the time you're going to want to have physical security splitting every single customer up.

So sometimes that architecture can work for you, where you could go and do the Salesforce model where everybody's connecting and all that data is in a big database somewhere, but it's basically split by customer. That's a big security concern that you have to realize. You will also have to consider how your customers are going to connect. If you're dealing with big corporations, they're not going to like the approach where it's public on the Internet. They're going to want a private VPN or a direct connection to just the servers that are dedicated to that customer.

Cloud companies like us at Tier 3, which have built-in ways of doing multi-tenancy really easily and spinning up environments on the fly, can give you this kind of approach, with much less development involvement than building an entire new platform for SaaS.

ROBERT DUFFNER: Jared, that's all the prepared questions that I had. Did you have any closing thoughts before we wrap up?

JARED WRAY: The cloud is ready for the enterprise as long as you find the right provider and really think about the entire solution. What you're seeing now in the market is that development teams have adopted cloud, and there have been a couple of bumps in the road, but they're still adopting it at a pretty fast rate.

The real key is when we're going to see the big companies really start adopting cloud through a hybrid solution like we've talked about or even how we are going to have the small to medium businesses start adopting it at a very fast rate. The security is there in an enterprise cloud, the maintenance is there, and what we can do in the cloud is better than what can be done onsite.

ROBERT DUFFNER: Jared this is great. I appreciate your time.

JARED WRAY: Yes, thank you.

Avkash Chauhan explained Windows Azure Web Role: How to enable 32bit application mode in IIS Application Pool using Startup Task in a 7/14/2011 post:

You may need to run a 32-bit application inside a Windows Azure web role. To do so, you have to configure IIS to allow 32-bit applications in the application pool, and in Windows Azure that means writing a startup script that applies this configuration.

Here are the instructions:

Step 1: First, create a simple text file named startup.cmd (or <anyname>.cmd) and add to it the command-line script that configures the IIS application pool:

Startup.cmd:

%windir%\system32\inetsrv\appcmd set config -section:applicationPools -applicationPoolDefaults.enable32BitAppOnWin64:true

Note: Please make sure that the CMD file works as expected when you launch it. Test it thoroughly for correctness and the expected result.

Step 2: Update your Windows Azure Service Definition file to include the startup task, as shown below, with “Elevated” permission, because changing the IIS configuration requires administrator rights.

ServiceDefinition.csdef

<Startup>
  <Task commandLine="Startup.cmd" executionContext="elevated" taskType="simple">
  </Task>
</Startup>

Step 3: Make sure that the Startup.cmd file is part of your web role project (VS2010 solution) and set its “Copy to Output Directory” property to “Copy always” so that the file is included in the package when you publish it.
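Because the IIS change silently fails if the task does not run with administrator rights, it can help to check the service definition before packaging. The following is a minimal Python sketch, not part of the original post: it assumes the .csdef uses the 2008/10 ServiceDefinition namespace, and the function `find_elevated_startup_tasks`, the service name `MyService`, and the second task `Other.cmd` are hypothetical, for illustration only.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Default namespace of ServiceDefinition.csdef files (assumed: the 2008/10 schema).
CSDEF_NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}


def find_elevated_startup_tasks(csdef_xml: str) -> List[Tuple[str, str]]:
    """Return (role name, commandLine) for each startup task that runs elevated."""
    root = ET.fromstring(csdef_xml)
    elevated = []
    # Each direct child of <ServiceDefinition> is a role (WebRole, WorkerRole, ...).
    for role in root:
        for task in role.findall("sd:Startup/sd:Task", CSDEF_NS):
            # Compare case-insensitively so "Elevated" and "elevated" both match.
            if task.get("executionContext", "").lower() == "elevated":
                elevated.append((role.get("name"), task.get("commandLine")))
    return elevated


# Hypothetical service definition with one elevated and one limited task.
SAMPLE_CSDEF = """<ServiceDefinition name="MyService" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="WebRole1">
    <Startup>
      <Task commandLine="Startup.cmd" executionContext="elevated" taskType="simple" />
      <Task commandLine="Other.cmd" executionContext="limited" taskType="simple" />
    </Startup>
  </WebRole>
</ServiceDefinition>"""

print(find_elevated_startup_tasks(SAMPLE_CSDEF))
```

Run against the sample, it prints `[('WebRole1', 'Startup.cmd')]`; if Startup.cmd is missing from the result, the appcmd call above would not have the rights it needs.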

To learn more about how to use Windows Azure startup tasks, please study the blog post below:

Steve Marx (@smarx) described his Ruby-Based Command-Line Tool for the Windows Azure Service Management API in a 7/13/2011 post:

I’ve created my first ever Ruby Gem, “waz-cmd,” to let me manipulate my Windows Azure applications and storage accounts from the command line on any system that has Ruby. (Mostly this is for my friends on Macs, where the PowerShell Cmdlets aren’t a great option.) Please give it a try and let me know what you think. Basic instructions from waz-cmd’s home on GitHub:

Installation

To install, just

gem install waz-cmd

Example usage

c:\>waz generate certificates
Writing certificate to 'c:\users\smarx/.waz/cert.pem'
Writing certificate in .cer form to 'c:\users\smarx/.waz/cert.cer'
Writing key to 'c:\users\smarx/.waz/key.pem'
To use the new certificate, upload 'c:\users\smarx/.waz/cert.cer' as a management certificate in the Windows Azure portal (https://windows.azure.com)

c:\>waz set subscriptionId XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX

c:\>waz deploy blobedit staging c:\repositories\smarxrole\packages\ExtraSmall.cspkg c:\repositories\smarxrole\packages\ServiceConfiguration.blobedit.cscfg
Waiting for operation to complete...
Operation succeeded (200)

c:\>waz show deployment blobedit staging
STAGING
Label: ExtraSmall.cspkg2011-07-0120:54:04
Name: 27efeebeb18e4eb582a2e8fa0883957e
Status: Running
Url: http://78a9fdb38bc442238739b1154ea78cda.cloudapp.net/
SDK version: #1.4.20407.2049
ROLES
WebRole (WA-GUEST-OS-2.5_201104-01)
2 Ready (use --expand to see details)
ENDPOINTS
157.55.181.17:80 on WebRole

c:\>waz swap blobedit
Waiting for operation to complete...
Operation succeeded (200)

c:\>waz show deployment blobedit production
PRODUCTION
Label: ExtraSmall.cspkg2011-07-0120:54:04
Name: 27efeebeb18e4eb582a2e8fa0883957e
Status: Running
Url: http://blobedit.cloudapp.net/
SDK version: #1.4.20407.2049
ROLES
WebRole (WA-GUEST-OS-2.5_201104-01)
2 Ready (use --expand to see details)
ENDPOINTS
157.55.181.17:80 on WebRole

c:\>waz show configuration blobedit production
WebRole
Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString: UseDevelopmentStorage=true
GitUrl: git://github.com/smarx/blobedit
DataConnectionString: DefaultEndpointsProtocol=http;AccountName=YOURACCOUNT;AccountKey=YOURKEY
ContainerName:
NumProcesses: 4

Documentation

Run waz help for full documentation.

One of the coolest features is waz show history, which uses the relatively new List Subscription Operations method to show the history of actions taken in the portal or via the Service Management API, including who performed those actions. This is very handy when something goes wrong and you want to figure out how it happened. Let the finger pointing begin!

The source code is also a decent complement to the Service Management API documentation if you want to write your own code.
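For readers who want to write their own client, here is a minimal Python sketch of how a List Subscription Operations request can be assembled. It is not taken from waz-cmd: the endpoint `https://management.core.windows.net/<subscription-id>/operations`, the `StartTime`/`EndTime` query parameters, and the `x-ms-version` value of `2011-02-25` are assumptions based on the classic Service Management API of the time, and `build_list_operations_request` is a hypothetical helper. It only builds the URL and headers; sending the request (over HTTPS with the management certificate) is not shown.

```python
from datetime import datetime, timezone
from typing import Dict, Tuple
from urllib.parse import urlencode

# Assumed values for the classic Service Management API.
MANAGEMENT_ENDPOINT = "https://management.core.windows.net"
API_VERSION = "2011-02-25"


def build_list_operations_request(
    subscription_id: str, start: datetime, end: datetime
) -> Tuple[str, Dict[str, str]]:
    """Build the URL and headers for a List Subscription Operations GET request.

    start and end must be timezone-aware; they are converted to UTC.
    The request itself must be sent over HTTPS using a management certificate
    (for example, the cert.pem/key.pem pair that waz generates).
    """
    if start.tzinfo is None or end.tzinfo is None:
        raise ValueError("start and end must be timezone-aware")
    if end <= start:
        raise ValueError("end must be later than start")
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    # urlencode percent-encodes the colons in the timestamps.
    query = urlencode({
        "StartTime": start.astimezone(timezone.utc).strftime(fmt),
        "EndTime": end.astimezone(timezone.utc).strftime(fmt),
    })
    url = f"{MANAGEMENT_ENDPOINT}/{subscription_id}/operations?{query}"
    return url, {"x-ms-version": API_VERSION}
```

For example, `build_list_operations_request("my-subscription-id", datetime(2011, 7, 1, tzinfo=timezone.utc), datetime(2011, 7, 13, tzinfo=timezone.utc))` yields a URL ending in `operations?StartTime=2011-07-01T00%3A00%3A00Z&EndTime=2011-07-13T00%3A00%3A00Z`, covering the kind of time window waz show history reports on.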

The Windows Azure Team (@WindowsAzure) posted Real World Windows Azure: Interview with Mandeep Khera, Chief Marketing Officer at Cenzic to its blog on 7/13/2011:

MSDN: Tell us about Cenzic and the solutions you offer.

Khera: Businesses and governments increasingly rely on Web-based applications to interact with their customers and partners. These Web applications may contain many security vulnerabilities, which makes them ideal targets for attacks. As the only stand-alone provider of dynamic, black box testing of Web applications, Cenzic addresses these security risks head on by detecting vulnerabilities for remediation in order to stay ahead of the hacker curve with software, managed services, and cloud products that protect Websites against hacker attacks.

MSDN: Could you give us more details on the ClickToSecure Cloud solution?

Khera: 80% of hacks happen through websites, and there are more than 250 million websites out there. With ClickToSecure Cloud, any business, even a small mom-and-pop shop, can get an application security vulnerability assessment service and easily run a scan (similar to an antivirus scan on the desktop) to find out where its security vulnerabilities are, along with detailed remediation information. This report can then be sent to developers so they can fix these security holes. Some of our packages even help companies comply with regulatory and other standards, such as PCI 6.6 and OWASP.

Cenzic ClickToSecure Cloud also allows Windows Azure users to test all of their Web applications built on Windows Azure for application vulnerabilities. This testing can be done in a few minutes or hours, depending on the size and complexity of the application and type of assessment running. Built on Microsoft technologies, Cenzic ClickToSecure will be available through the Microsoft Azure Marketplace soon.

MSDN: What are the key benefits for Windows Azure customers?

Khera: One of the issues developers face is that as they develop Web applications, they are not able to find the security defects in their code before deployment. And once that application is deployed, any security vulnerabilities make the application, and its users, susceptible to attacks. Cenzic ClickToSecure Cloud enables customers to test Web applications built on Windows Azure for security vulnerabilities before putting them into production.


MSDN: Is this solution available to the public right now?

Khera: Yes. As a special incentive, we are offering all Windows Azure customers a 20% discount off our list price. The first 500 people to sign up will also get a free HealthCheck scan.

Please visit the Cenzic website for more information.

Anuradha Shukla reported KPI Deploys Cloud for Microsoft Windows Azure in a 7/13/2011 post to the CloudTweaks blog:

Full-service systems integrator and implementation consultancy Key Performance Ideas has deployed Star Command Center Cloud Service for the Microsoft Windows Azure Cloud platform.

Star Analytics provides application process automation and integration software. Its founder and CEO, Quinlan Eddy, noted that collaboration with Microsoft to serve enterprises running on the Azure platform will simplify the deployment and automation of business applications on-premise and in the Cloud. According to Allan Naim, architect evangelist – Cloud Computing, Microsoft Corporation, Star Command Center running on Microsoft Azure can orchestrate the range of cross-vendor enterprise applications that corporations depend on, with Azure’s high availability.

Currently, more than 30 popular companies are using Star Analytics solutions to automate applications and processes across Cloud and on-premise computing environments without having to maintain complex code or engage IT professionals. KPI decided to use these solutions because it was impressed by Star Analytics’ ability to automate and orchestrate workflows for enterprise applications that co-exist across hybrid BI computing environments. The solutions can also improve compliance and auditing support by centralizing automation lifecycle management (LCM), deliver self-service automation capabilities for business users, and offer plug-ins with native support for a variety of BI applications deployed by KPI clients.

“Star Command Center Azure Edition strengthens our System Maintenance And Remote Troubleshooting, or SMART, offering and provides clients real-time management and monitoring of their Enterprise Performance Management (EPM) and Business Intelligence (BI) systems,” said Garry Saperstein, chief technology officer of Key Performance Ideas.

“As a full-service systems integrator and implementation consultancy, we strive in helping our clients leverage technology to its fullest potential. Command Center removes the burden of custom coding with compliant, supportable and dependable software, allowing Key Performance Ideas to deliver a superior client experience and state-of-the-art EPM and BI solutions.”

Jonathan Rozenblit (@jrozenblit) asserted ISVs Realizing Benefits of the Cloud in a 7/13/2011 post to his DevPulse blog:

ISVs, one by one, across the country are realizing the benefits of Windows Azure and are sharing their stories. Last week, I shared the story of Connect2Fans and how they are successfully using Windows Azure to support their product. In the weeks to come, I will be sharing more of those stories.

These stories clearly demonstrate realized benefits; however, a new study by Forrester Consulting, now available, looks at them in depth.

The study interviewed six ISVs that had developed applications on the Windows Azure platform (Windows Azure, SQL Azure, AppFabric). These ISVs were able to gain access to new customers and revenue opportunities and were able to capitalize on these opportunities using much of their existing code, skills, and prior investments.

The study found that the ISVs were able to:

- Port 80% of existing .NET code onto Windows Azure by simply recompiling the code.

- Transfer existing coding skills to develop applications targeting the Windows Azure platform.

- Leverage the Windows Azure flexible resource consumption model.

- Use the service-level agreement (SLA) from Microsoft to guarantee high availability and performance.

- Extend reach into global markets and geographically distant customers.

I highly recommend reading through the full study if you are considering Cloud options and are looking to understand the details behind these findings.

This article also appears on I See Value – The Canadian ISV Blog

Bart Robinson posted Cloud Elasticity - A Real-World Example on 5/3/2011 (missed when posted):

Windows Azure is the cloud-based development platform that lets you build and run applications in the cloud, launch them in minutes instead of months and code in multiple languages or technologies including .NET, PHP, and Java.

One of the promises of Windows Azure and cloud computing in general is elasticity: the ability to quickly and easily expand and contract computing resources based on demand. A common example is that of retailers during the holiday season. There is a huge spike of activity beginning on “Black Friday” and, traditionally, a lot of money is spent preparing for the peak load.

Social eXperience Platform (SXP) is a multi-tenant web service that powers community and conversations for many sites on microsoft.com. A great example of one of our tenants is the Cloud Power web site. SXP is responsible for providing the conversation content on the site while the remainder of the site is served from the standard microsoft.com infrastructure. When the Cloud Power site sees an increase in traffic, SXP also sees an increase in traffic, which is exactly what happened in April.

In this case, the web traffic spikes were due to ads, which typically ran for a day or two. Compared to March’s average daily traffic (represented by the 100% bar), SXP’s traffic spiked to over 700%. Here’s a graph that shows 72 hours of traffic while an ad campaign was active. The blue area is normal traffic and the red area is the additional traffic generated by the ads. Since the ad was targeted to the US, the traffic shows a heavy US business-hours bias during both the soft launch and the full run.

There are lots of examples of traffic spikes like this taking web sites down or causing such slow response that they appear to be down. Traditionally, the only way to handle such spikes was to over-purchase capacity. The majority of the time, the capacity isn't needed, so it sits mostly idle, consuming electricity and generating cooling costs. Windows Azure has a better approach.

The engineering team had a discussion with our business partners and learned that due to a couple of ad buys, SXP would see increased traffic on several days in April. In advance of the ads running, we worked with the Microsoft.com operations team and decided to double our Windows Azure compute capacity to ensure that we could handle the load.

This is where Windows Azure really made things easy. We went from 3 servers to 6 servers on our web tier within an hour of making the decision. The total human time to accomplish this was a couple of minutes. Our Ops lead changed one value in an XML file and Windows Azure took care of the rest. Within half an hour, we validated via the logs that we had doubled our capacity and all web servers were taking traffic.
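The post says only that the Ops lead “changed one value in an XML file.” Assuming that value was the `count` attribute of the web role’s `<Instances>` element in ServiceConfiguration.cscfg, which is where Windows Azure role instance counts are set, the 3-to-6 change can be sketched in Python as below. The 2008/10 ServiceConfiguration namespace, the helper `set_instance_count`, and the sample service name `sxp` are assumptions for illustration; the sketch only rewrites the XML in memory and does not upload the new configuration.

```python
import xml.etree.ElementTree as ET

# Default namespace of ServiceConfiguration.cscfg files (assumed: the 2008/10 schema).
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
# Serialize that namespace as the default one instead of an "ns0:" prefix.
# Note: register_namespace changes module-wide ElementTree state.
ET.register_namespace("", CSCFG_NS)


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return a copy of the .cscfg XML with role_name's instance count set to count."""
    if count < 1:
        raise ValueError("a role needs at least one instance")
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{CSCFG_NS}}}Role"):
        if role.get("name") == role_name:
            instances = role.find(f"{{{CSCFG_NS}}}Instances")
            if instances is None:
                raise ValueError(f"role {role_name!r} has no <Instances> element")
            instances.set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"role {role_name!r} not found")


# Hypothetical, trimmed-down configuration with a 3-instance web tier.
SAMPLE_CSCFG = (
    f'<ServiceConfiguration serviceName="sxp" xmlns="{CSCFG_NS}">'
    '<Role name="WebRole"><Instances count="3" /></Role>'
    "</ServiceConfiguration>"
)

# Double the web tier from 3 to 6 instances, as described for the April ad campaign.
print(set_instance_count(SAMPLE_CSCFG, "WebRole", 6))
```

Keeping the change to a single attribute is what makes the operation so cheap in human time: the package is untouched, and only the configuration that tells Windows Azure how many instances to run differs.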