Windows Azure and Cloud Computing Posts for 7/2/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 7/4/2011 with new articles marked • by Dhruba Borthakur (Facebook), Ray De Pena (Cloud Tweaks), Paul Patterson (LightSwitch), Silverlight Show (OData eBook), Eric Nelson (SQL Azure), Kim Spilker (Microsoft Press), Kent Weare (AppFabric), Bruce Kyle (Windows Phone SDK)

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Applications, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

• Eric Nelson (@ericnel) posted Simplifying Multi-Tenancy with SQL Azure Federations to the UK ISV Evangelism Team blog on 7/4/2011:

Somehow I missed this post in March. Multi-Tenancy is something which regularly comes up in our conversations with ISVs as they consider the move to SaaS (and ultimately for some, a SaaS solution running on the Windows Azure PaaS). I welcome any technology which can be applied to simplify the creation of a multi-tenanted solution or at least offer new alternatives. Turns out SQL Azure Federations is just such a technology.

Cihan Biyikoglu (Program Manager in the SQL Azure team) has written an interesting post on Moving to Multi-Tenant Database Model Made Easy with SQL Azure Federations which looks at how SQL Azure Federations can help by allowing multiple tenant databases to reside within a single database using federations. This approach gives the following advantages:

- The multi-tenant database model with federations allows you to dynamically adjust the number of tenants per database at any moment in time. Repartitioning commands such as SPLIT allow you to perform these operations without any downtime.

- Simply rolling multiple tenants into a single database optimizes the management surface and allows batching of operations for higher efficiency.

- With federations you can connect through a single endpoint and don't have to worry about connection pool fragmentation for your application's regular workload. SQL Azure's gateway tier does the pooling for you.
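To make the SPLIT idea concrete, the following Python sketch models a federation as a sorted list of member databases, each owning a contiguous range of a distribution key (here, a tenant ID). It assumes range partitioning on that key; the class and method names are hypothetical, the split is a plain in-memory data move, and it does not attempt to model the online, no-downtime behavior described above.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class FederationMember:
    """One member database holding the tenant-ID range [low, high)."""
    low: int
    high: int
    tenants: dict = field(default_factory=dict)  # tenant_id -> list of rows


class Federation:
    """Hypothetical model: range-partitioned members reached through one logical endpoint."""

    def __init__(self, low: int, high: int):
        self.members = [FederationMember(low, high)]

    def _index_for(self, tenant_id: int) -> int:
        # Members stay sorted by lower bound, so a binary search locates the owning range.
        idx = bisect_right([m.low for m in self.members], tenant_id) - 1
        if idx < 0 or tenant_id >= self.members[idx].high:
            raise KeyError(f"tenant {tenant_id} is outside the federation range")
        return idx

    def insert(self, tenant_id: int, row) -> None:
        member = self.members[self._index_for(tenant_id)]
        member.tenants.setdefault(tenant_id, []).append(row)

    def query(self, tenant_id: int) -> list:
        # Callers never address a member directly; routing is by tenant ID.
        return self.members[self._index_for(tenant_id)].tenants.get(tenant_id, [])

    def split_at(self, boundary: int) -> None:
        """SPLIT analog: [low, high) becomes [low, boundary) and [boundary, high)."""
        idx = self._index_for(boundary)
        old = self.members[idx]
        if boundary == old.low:
            raise ValueError("boundary must fall strictly inside the member's range")
        new = FederationMember(boundary, old.high)
        # Move every tenant at or above the boundary into the new member.
        for tid in [t for t in old.tenants if t >= boundary]:
            new.tenants[tid] = old.tenants.pop(tid)
        old.high = boundary
        self.members.insert(idx + 1, new)
```

Because callers only ever go through `query` and `insert`, the number of tenants per member can change after a split without any change to application code, which is the property the first bullet describes.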

On the flip side, v1 of federations does not help with issues such as tenant data isolation and governance between tenants.

SQL Azure Federations will be available later this year (2011) and are explained in detail over on the TechNet Wiki - SQL Azure Federations: Building Scalable, Elastic and Multi-tenant Database Solutions.

Related Links:

- Sign up to Microsoft Platform Ready for assistance on the Windows Azure Platform

- http://www.azure.com/offers

- http://www.azure.com/getstarted

For more details about SQL Azure Federations, see my Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s March 2011 issue.

Mark Kromer (@mssqldude) finished his SQL Azure series for SQL Server Magazine with All the Pieces: Microsoft Cloud BI Pieces, Part 5 (FINAL 5 of 5) on 7/1/2011:

Well, readers, all good things must come to an end. This is part 5, the final part of my series in which I've walked you through the tools, techniques and pieces of the Microsoft Cloud BI puzzle that you'll need to start developing your own Microsoft Cloud BI solutions (mostly cloud, perhaps a bit hybrid).

We've talked about creating data marts in Microsoft's cloud database, SQL Azure, based on your large on-premises SQL Server data warehouse, using SSIS or data synch. We've touched on creating analysis reports and dashboards with PowerPivot, SSRS and SQL Azure Reporting Services directly from that cloud data. And then we created some Azure-based dashboards and Web apps that used ASP.NET and Silverlight to present the business intelligence reports back to the user on a variety of screens, from PCs to laptops to mobile devices.

As promised, I am wrapping things up with a look into the future of Microsoft Cloud BI. I am going to break this look into the future into 3 bullet items: SQL Server Data Mining in the Cloud, BI 3.0: Social BI and Cloud BI in Denali.

1. SQL Server Data Mining in the Cloud

The Microsoft BI Labs team has been working on a project to put SQL Server data mining in the cloud. If you've ever used the SQL Server data mining add-in for Excel, then you'll be familiar with this new lab tool. It has the same concept of importing data into a spreadsheet and then running one of the SQL Server data mining algorithms against your data for the purposes of finding key influencers, market basket analysis, classification, etc. This site is a great example of BI in the cloud, but it is experimental, so not all of the models that you've come to expect from the Excel add-in are available in this glimpse-into-the-future project.

2. BI 3.0: Social Business Intelligence

A very important set of use cases for Cloud BI has emerged around Social BI or BI 3.0. Some vendors are running with the concepts of Social BI 3.0, such as what Panorama is doing with their Necto product. Social collaboration and social networking woven directly into the business intelligence experience is a direction within the BI solution space that I am very excited by. We pioneered these efforts at Microsoft with the integration of SharePoint, PerformancePoint, SSRS and SQL Server, where SharePoint is the key to BI 3.0 through its social collaboration capabilities. This is a natural progression from BI 2.0, which was pioneered by efforts like our Microsoft Enterprise Cube (MEC) project, which brought interactive Silverlight visualization together with the existing out-of-the-box visualizations of PerformancePoint and SSRS in SharePoint. Once social media became a natural part of life and enterprise collaboration, adding that capability through SharePoint was an easy and natural extension of BI 2.0, as Bart Czernicki described in his Silverlight for BI book.

3. SQL Server Denali

There are a couple of recently announced advances in Denali that will help you on the road to Cloud BI with SQL Server. First, one of the most talked-about features in Denali is Project Crescent, which provides report authors with a fully browser-based Silverlight experience for report authoring. You could use that tool instead of the thick-client Report Builder tool. Also, the new DAC and data synch capabilities in both Azure and Denali will make it much easier to migrate on-premises databases to the cloud and to keep them synched up. If you implement the architecture suggested in this series, an on-premises EDW, SQL Server Denali will help with your data warehouse by providing columnstore indexes for your fact tables, and you can then synch that data to SQL Azure data marts with data synch.

<Return to section navigation list>

Marketplace DataMarket and OData

• The Silverlight Show reported on 7/4/2011 a New eBook: Producing and Consuming OData in a Silverlight and WP7 App available and an Azure eBook coming:

Today we release as an eBook another SilverlightShow series: Producing and Consuming OData in a Silverlight and WP7 App by Michael Crump.

This ebook collects the 3 parts of the series Producing and Consuming OData in a Silverlight and Windows Phone 7 Application together with slides and source code.

Both the article series and the ebook will be soon updated to reflect the recent Mango updates. Everyone who purchased the ebook will be emailed the updated copy too.

We'll be giving some eBooks for free in our next webinars and upcoming trainings, so stay tuned for our news and announcements on Twitter, Facebook and LinkedIn!

New eBooks coming soon:

- The 8-part Windows Phone 7 series by Andrea Boschin

- The 2-part Silverlight in the Azure cloud series by Gill Cleeren

In the SilverlightShow Ebook area you will find all available ebook listings!

Sudhir Hasbe posted on 7/1/2011 WPC2011: Grow your business with Windows Azure Marketplace, a 00:01:06 YouTube preview of his Worldwide Partners Conference 2011 session:

The Windows Azure Marketplace is an online marketplace to find, share, advertise, buy and sell building block components, premium data sets and finished applications.

In this video, we will discuss how ISVs and Data Providers can leverage Windows Azure Marketplace to grow their business. You can hear how other ISVs are participating in Windows Azure Marketplace to drive new business.

Sudhir’s session wasn’t included in my Worldwide Partners Conference 2011 Breakout Sessions in the Cloud Services Track list of 7/3/2011.

The MSDN Library published a How to: Persist the State of an OData Client for Windows Phone for the Windows Phone OS 7.1 on 6/26/2011 (missed when posted):

This is pre-release documentation for the Windows Phone OS 7.1 development platform.

Persisting state in an application that uses the Open Data Protocol (OData) client library for Windows Phone requires that you use an instance of the DataServiceState class. For more information, see Open Data Protocol (OData) Client for Windows Phone.

The examples in this topic show how to persist data service state for a single page in the page’s state dictionary when the OnNavigatingFrom(NavigatingCancelEventArgs) method is called, and then restore this data when OnNavigatedTo(NavigationEventArgs) is called. This demonstrates the basics of how to use the DataServiceState class to serialize objects to persist state and how to restore them. However, we recommend employing a Model-View-ViewModel (MVVM) design pattern for your multi-page data applications. When using MVVM, the data is stored in the application’s State dictionary in the Deactivated event handler method and is restored in the Activated event handler. In this case, the activation and deactivation should be performed by the ViewModel.

The examples in this topic extend the single-page application featured in How to: Consume an OData Service for Windows Phone. This application uses the Northwind sample data service that is published on the OData website. This sample data service is read-only; attempting to save changes will return an error.

The following example shows how to serialize data service state and store it in the state dictionary for the page. This operation is performed in the OnNavigatingFrom(NavigatingCancelEventArgs) method for the page.

```csharp
protected override void OnNavigatingFrom(System.Windows.Navigation.NavigatingCancelEventArgs e)
{
    // Define a dictionary to hold an existing
    // DataServiceCollection<Customer>.
    var collections = new Dictionary<string, object>();
    collections.Add("Customers", customers);

    // Serialize the data service data and store it in the page state.
    this.State["DataServiceState"] = DataServiceState.Serialize(context, collections);
}
```

The following example shows how to restore data service state from the page's state dictionary. This restore is performed from the OnNavigatedTo(NavigationEventArgs) method for the page.

```csharp
protected override void OnNavigatedTo(System.Windows.Navigation.NavigationEventArgs e)
{
    // Get the object data if it is not still loaded.
    if (!this.isDataLoaded)
    {
        object storedState;
        DataServiceState state;

        // Try to get the serialized data service state.
        if (this.State.TryGetValue("DataServiceState", out storedState))
        {
            // Deserialize the DataServiceState object.
            state = DataServiceState.Deserialize(storedState as string);

            // Set the context from the stored object.
            var restoredContext = (NorthwindEntities)state.Context;

            // Set the binding collections from the stored collection.
            var restoredCustomers = state.RootCollections["Customers"]
                as DataServiceCollection<Customer>;

            // Use the returned data to load the page UI.
            this.LoadData(restoredContext, restoredCustomers);
        }
        else
        {
            // If we don't have any data stored,
            // we need to reload it from the data service.
            this.LoadData();
        }
    }
}
```

Note that in this example, we must first check the isDataLoaded variable to determine whether the data is still active in memory, which can occur when the application is dormant but not terminated. If the data is not in memory, we first try to restore it from the state dictionary and call the version of the LoadData method that uses the stored data to bind to the UI. If the data is not stored in the state dictionary, we call the version of the LoadData method that gets the data from the data service; this is done the first time that the application is executed.

This example shows the two LoadData method overloads that are called from the previous example.

```csharp
// Display data from the stored data context and binding collection.
public void LoadData(NorthwindEntities context, DataServiceCollection<Customer> customers)
{
    this.context = context;
    this.customers = customers;

    // Rebind the restored collection; a recreated page has no DataContext yet.
    this.LayoutRoot.DataContext = customers;
    this.isDataLoaded = true;
}

private void LoadData()
{
    // Initialize the context and the binding collection.
    context = new NorthwindEntities(northwindUri);
    customers = new DataServiceCollection<Customer>(context);

    // Define a LINQ query that returns all customers.
    var query = from cust in context.Customers
                select cust;

    // Register for the LoadCompleted event.
    customers.LoadCompleted +=
        new EventHandler<LoadCompletedEventArgs>(customers_LoadCompleted);

    // Load the customers feed by executing the LINQ query.
    customers.LoadAsync(query);
}

void customers_LoadCompleted(object sender, LoadCompletedEventArgs e)
{
    if (e.Error == null)
    {
        // Handle a paged data feed.
        if (customers.Continuation != null)
        {
            // Automatically load the next page.
            customers.LoadNextPartialSetAsync();
        }
        else
        {
            // Set the data context of the list box control to the sample data.
            this.LayoutRoot.DataContext = customers;
            this.isDataLoaded = true;
        }
    }
    else
    {
        MessageBox.Show(string.Format("An error has occurred: {0}", e.Error.Message));
    }
}
```
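To see the control flow of the state handling and paging above in isolation, here is a Python sketch that stands in for the page. It is an assumption-laden model, not Windows Phone code: a plain dict plays the page's State dictionary, JSON replaces DataServiceState.Serialize/Deserialize (which also capture the context, not just the collection), `FakeFeed` and `CustomersPage` are hypothetical, and pages are fetched in a synchronous loop rather than through LoadNextPartialSetAsync callbacks.

```python
import json


class FakeFeed:
    """Hypothetical paged OData-style feed: each call returns rows plus a continuation."""

    def __init__(self, rows, page_size):
        self.rows = rows
        self.page_size = page_size
        self.requests = 0

    def load_page(self, continuation=None):
        self.requests += 1
        start = continuation or 0
        page = self.rows[start:start + self.page_size]
        nxt = start + self.page_size
        # A None continuation means this was the last page.
        return page, (nxt if nxt < len(self.rows) else None)


class CustomersPage:
    def __init__(self, feed, state):
        self.feed = feed
        self.state = state            # stands in for the page's State dictionary
        self.customers = []
        self.is_data_loaded = False

    def on_navigating_from(self):
        # Analog of DataServiceState.Serialize: store the collection as a string.
        self.state["DataServiceState"] = json.dumps({"Customers": self.customers})

    def on_navigated_to(self):
        if self.is_data_loaded:
            return "in-memory"        # app was dormant, data is still in memory
        stored = self.state.get("DataServiceState")
        if stored is not None:
            self.customers = json.loads(stored)["Customers"]
            self.is_data_loaded = True
            return "restored"
        self.load_from_service()      # first run: nothing saved yet
        return "loaded"

    def load_from_service(self):
        self.customers = []
        continuation = None
        while True:
            page, continuation = self.feed.load_page(continuation)
            self.customers.extend(page)
            if continuation is None:  # no more pages, so the data is complete
                break
        self.is_data_loaded = True
```

Calling `on_navigated_to` on a fresh `CustomersPage` that shares only the saved state dictionary mimics returning to a tombstoned application: the data comes back without another round trip to the feed.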


<Return to section navigation list>

Windows Azure AppFabric: Applications, Access Control, WIF and Service Bus

• Kent Weare posted AppFabric Apps (June 2011 CTP) – Simple Workflow App to his new MiddleWareInTheCloud blog on 7/3/2011:

In this post, I am going to walk you through creating a very simple AppFabric Application that includes invoking a Workflow. Since I still don't have access to the Labs environment (*hint Microsoft hint*), this demo will run in the local dev fabric.

The first step is to create a new project in Visual Studio 2010 and select AppFabric Application.

The User Interface for our application will be an ASP.Net Web App. To add this Web App, we need to click the Add New Service label, which you can find in the Design View when you double-click the App.cs file.

Once the New Service dialog appears, we have the ability to select ASP.Net, provide a name for this application and then click OK.

Next we are going to perform the same actions to add a Workflow.

The purpose of this simple application is to convert kilometers to miles and vice versa. The Workflow will be used to determine which calculation needs to take place, i.e., Miles –> Kilometers or Kilometers –> Miles.

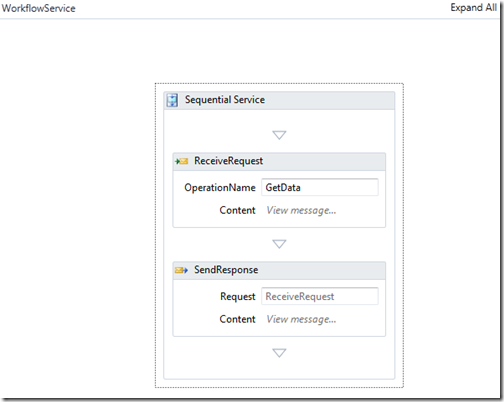

When we add our Workflow service we will see a Sequential Service Activity with our Request and Response activities.

The first thing we are going to do is delete the existing data variable and add a few new ones. The first one is called DistanceValue which is of type System.Double and the second is called DistanceMeasurement and it is of type System.String. The final variable that we are going to set is called Result and will be used to return our converted result to our calling client.

Next we are going to change our OperationName. We are going to set this to a value of ConvertDistance.

Since the default service that is generated by Visual Studio includes a single input parameter, we need to make some changes by clicking on the View parameter… label.

We want to set our input parameters to the variables that we previously created.

Next, we want to perform some logic by adding an If activity. If the target DistanceMeasurement is equal to KM, then we want to convert Miles to Kilometers; otherwise, we want to convert Kilometers to Miles.
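The If activity's decision can be expressed as a small function. This Python sketch assumes the exact international mile (1.609344 km) because the post does not show the conversion expressions used inside the workflow; `convert_distance` is a hypothetical name whose parameters mirror the DistanceValue and DistanceMeasurement variables.

```python
KM_PER_MILE = 1.609344  # international mile; an assumption, the post does not state a factor


def convert_distance(distance_value: float, distance_measurement: str) -> float:
    """Mirror of the workflow's If activity.

    distance_measurement is the *target* unit: "KM" converts miles to kilometers,
    anything else (e.g. "MI") converts kilometers to miles.
    """
    if distance_measurement.upper() == "KM":
        return distance_value * KM_PER_MILE   # Miles -> Kilometers
    return distance_value / KM_PER_MILE       # Kilometers -> Miles
```

The 10-mile and 16-kilometer figures in the post are rounded; with this factor, 10 miles is 16.09 km and 16 km is 9.94 miles.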

With our Workflow logic in place we now want to deal with returning our response to our client. In order to do so, we need to deal with our outbound parameters by clicking on the View parameter… label in our SendResponse activity.

Once inside the Content Definition dialog, we can create a parameter called outResult and assign our variable Result to it.

The last step that we should do within our workflow is to set the ServiceContractName. I have changed this value to be {http://MiddlewareInTheCloud/SimpleWorkflow/}ConvertDistance

Since communication will occur with this Workflow via the AppFabric ServiceBus, we need to set our URI and related credentials.

Before we can set a Service reference to our Workflow Service, we need to expose our endpoint. To do so we will pull down the no endpoints dropdown and select Add Endpoint.

We can now select ConvertDistance and provide a suitable Name.

With our endpoint set, we should be able to add a reference from our Web Application to our Workflow service. We no longer do this by right-clicking Project References and selecting Add Service Reference.

Instead we add a Service Reference from the Design View on our App.cs file.

Once we have selected Add Service Reference, we should be prompted with our ConvertDistance Workflow service.

Once added, we will now see a service reference called Import1.

Now that our references are set, we can take a look at the Diagram that Visual Studio has generated for us by right-clicking our App.cs file and selecting View Diagram.

With our Workflow built and configured, we now will focus on our ASP.Net Web app. We will use a simple web form and call our Workflow from the code behind in our Web Form. Before we can do so, we need to add some controls to our Default.aspx page. In the markup of our Default.aspx webpage we are going to add the following:

Now we need to add an event handler for our btnSumbit button. Within this code we are instantiating a proxy object for our Workflow service and passing in values from our ASP.Net Web Form. We will then receive the result from our Workflow service and display it in a label control.

Testing

With our application now built, it is time to test it out. We will do so in the local development fabric and can load our Windows Azure compute emulator by pressing Ctrl+F5. It will take a few minutes, but eventually our Web Form will be displayed in our browser.

If we put in a value of 10 miles in our Distance to Convert field and click on our Button we will see that our workflow is called and returns a value of 16 Kilometers.

Conversely, if we set our Convert Distance to field to MI we will convert 16 Kilometers to 10 miles.

Conclusion

So as you can see, wiring up Workflow services with ASP.Net web applications is pretty straightforward in AppFabric applications. Once I get access to the Labs environment, I will continue this post with deploying and managing this application in the cloud.

Kent is a BizTalk MVP residing in Calgary, Alberta, Canada, where he manages a couple of teams focused on BizTalk middleware and IT Tools such as Exchange, OCS and System Center.

Neil MacKenzie (@mknz) described Windows Azure AppFabric Applications in a 7/1/2011 post:

The Windows Azure AppFabric team is on a bit of a roll. After releasing Service Bus Queues and Topics into CTP last month, the team has just released AppFabric Applications into CTP. Nominally, AppFabric Applications simplifies the task of composing services into an application but, in practice, it appears to rewrite the meaning of platform-as-a-service (PaaS).

The Windows Azure Platform has been the paradigm for PaaS. The platform supports various services such as ASP.NET websites in web roles, long running services in worker roles, highly-scalable storage services in the Windows Azure Storage Service, and relational database services in SQL Azure. However, the Windows Azure Platform does not provide direct support, other than at the API level, for integrating these various services into a composite application.

With the introduction of AppFabric Applications, the Windows Azure Platform now provides direct support for the composition of various services into an application. It does this by modeling the various services and exposing, in a coherent form, the properties required to access them. This coherence allows for the creation of Visual Studio tooling supporting the simple composition of services and the provision of a Portal UI supporting the management of deployed applications.

In AppFabric Applications, services are allocated to deployment containers which control the scalability of the services in them. Different containers can run different numbers of instances. These containers are hosted on the Windows Azure fabric. The services use that fabric in a multi-tenant fashion with, for example, instances of a stateless WCF service being injected into already deployed Windows Azure worker role instances.

In classic Windows Azure, the developer must be aware of the distinction between roles and instances, and how an instance is deployed into a virtual machine (VM). This need vanishes in AppFabric Applications, where the developer need know only about services and need know nothing about how they are deployed onto Windows Azure role instances.

The AppFabric Applications Manager runs on the AppFabric Labs Portal, and provides a nice UI allowing the management of applications throughout their deployment lifecycle. Unlike the regular Windows Azure Portal, the AppFabric Applications Manager provides direct access to application monitoring and tracing information. In future, it will also support changes to the configuration of the application and the services it comprises.

The AppFabric team blog post announcing the Windows Azure AppFabric June CTP, which includes AppFabric Applications, describes how to request access to the CTP. Karandeep Anand and Jurgen Willis introduced AppFabric applications at Tech-Ed 11 (presentation, slides). Alan Smith has posted two good videos on AppFabric Applications – one is an introduction while the other shows how to use Service Bus Queues in an AppFabric Application. The MSDN documentation for AppFabric Applications is here.

This post is the latest in a sequence of posts on various aspects of Windows Azure AppFabric. The earlier posts are on the Windows Azure AppFabric Caching service and Service Bus Queues and Topics.

Applications, Containers and Services

AppFabric Applications supports the deployment of composite applications to a Windows Azure datacenter. An application comprises one or more services, each of which is deployed into a Container (or Service Group) hosted by Windows Azure. The container is the scalability unit for deployments of AppFabric Applications. An application can have zero or more of each type of container, and each container can be deployed as one or more instances.

AppFabric Applications supports three types of container:

- AppFabric Container

- Stateful AppFabric Container

- Web

An AppFabric Container can contain the following stateless services:

- Code

- WCF Service

- Workflow

A Code service is used to create long-running services in an AppFabric Application, and essentially provides the functionality of a worker role in classic Windows Azure. A WCF Service is a stateless WCF service. A Workflow service is a Windows Workflow 4 service.

A Stateful AppFabric Container can contain the following stateful services:

- Task Scheduler

- WCF Stateful Service

A Task Scheduler service is used to schedule tasks. A WCF Stateful service supports the creation of services which preserve instance state. To enhance scalability, WCF Stateful services support the partitioning of data and its replication across multiple instances. Furthermore, to ensure the fair allocation of resources in a multi-tenant environment, WCF Stateful Services also implement the eviction of data when the 500MB limit per service is reached.
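The post does not describe how the June CTP partitions, replicates or evicts data, so the following Python sketch only illustrates the general techniques named above: hash partitioning by key, writing every value to each replica of its partition, and evicting entries once a size budget is exceeded. Least-recently-used eviction is an assumed policy, `StatefulStore` and `Partition` are hypothetical names, and a budget of a few bytes stands in for the 500MB cap.

```python
import hashlib
from collections import OrderedDict


class Partition:
    """One partition; every write goes to all of its replicas (plain dicts here)."""

    def __init__(self, replica_count: int):
        self.replicas = [dict() for _ in range(replica_count)]
        self.size = 0


class StatefulStore:
    def __init__(self, partitions: int, replicas: int, limit_bytes: int):
        self.partitions = [Partition(replicas) for _ in range(partitions)]
        self.limit_bytes = limit_bytes
        self.lru = OrderedDict()  # keys in recency order, oldest first

    def _partition(self, key: str) -> Partition:
        # Stable hash so the same key always lands on the same partition.
        digest = hashlib.sha1(key.encode()).digest()
        return self.partitions[int.from_bytes(digest[:4], "big") % len(self.partitions)]

    def total_size(self) -> int:
        return sum(p.size for p in self.partitions)

    def put(self, key: str, value: bytes) -> None:
        part = self._partition(key)
        if key in part.replicas[0]:
            part.size -= len(part.replicas[0][key])
        for replica in part.replicas:
            replica[key] = value
        part.size += len(value)
        self.lru[key] = None
        self.lru.move_to_end(key)  # mark as most recently used
        self._evict_if_needed()

    def get(self, key: str):
        part = self._partition(key)
        if key not in part.replicas[0]:
            return None
        self.lru.move_to_end(key)
        return part.replicas[0][key]

    def _evict_if_needed(self) -> None:
        # Drop least-recently-used keys from every replica until under the limit.
        while self.total_size() > self.limit_bytes and self.lru:
            victim, _ = self.lru.popitem(last=False)
            part = self._partition(victim)
            part.size -= len(part.replicas[0][victim])
            for replica in part.replicas:
                del replica[victim]
```

A real service would replicate across machines and bound size per service instance; the sketch keeps everything in one process purely to show how the three mechanisms interact.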

A Web container can contain:

- ASP.NET

- WCF Domain Service – Web

- WCF Service – Web

The ASP.NET service is an ASP.NET (or MVC) web application. The WCF Domain Service contains the business logic for a WCF RIA Services application. The WCF Service is a web-hosted WCF service.

Applications are composed using more or less arbitrary mixes of these services. The services are related by adding, to an invoking service, a reference to the endpoint of another service. For example, a reference to the endpoint of a stateless WCF service could be added to an ASP.NET service. A simple application could comprise, for example, 2 instances of a Web container hosting an ASP.NET service with 1 instance of an AppFabric Container hosting a stateless WCF service.
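The container rules listed above lend themselves to a small validation model. In this hypothetical Python sketch, each container records its type, scaleout count and hosted services, and the example composition from the previous paragraph (a Web container with 2 instances hosting ASP.NET, plus one AppFabric Container instance hosting a stateless WCF service) serves as the usage example. The names are illustrative, not the AppFabric Applications object model.

```python
from dataclasses import dataclass, field

# Service types each container type may host, per the June CTP lists above.
ALLOWED_SERVICES = {
    "AppFabric Container": {"Code", "WCF Service", "Workflow"},
    "Stateful AppFabric Container": {"Task Scheduler", "WCF Stateful Service"},
    "Web": {"ASP.NET", "WCF Domain Service - Web", "WCF Service - Web"},
}


@dataclass
class Container:
    kind: str
    instances: int = 1  # scaleout count: the container is the unit of scaling
    services: list = field(default_factory=list)

    def add(self, service_type: str, name: str) -> None:
        if service_type not in ALLOWED_SERVICES[self.kind]:
            raise ValueError(f"{service_type} cannot be hosted in a {self.kind} container")
        self.services.append((service_type, name))


@dataclass
class Application:
    containers: list = field(default_factory=list)

    def running_instances(self, service_name: str) -> int:
        # A service runs on every instance of each container that hosts it.
        return sum(c.instances for c in self.containers
                   if any(n == service_name for _, n in c.services))
```

Because scaleout is a container property, the only way to run more copies of `Service1` in this model is to raise the `instances` count of the container that hosts it, which mirrors the role the text gives containers.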

When a service reference is added to a service, the reference is given a default name of Import1. This name can be changed to a more useful value. The Visual Studio tooling then adds a generated file, ServiceReferences.g.cs, to the project for the service importing the endpoint for the service. The following is an example of the generated file when a stateless WCF service is added to another service (using the default name of Import1):

```csharp
class ServiceReferences
{
    public static AppFabricContainer1.StatelessContract1.Service1.IService1 CreateImport1()
    {
        return Service.ExecutingService.ResolveImport<AppFabricContainer1.StatelessContract1.Service1.IService1>("Import1");
    }

    public static AppFabricContainer1.StatelessContract1.Service1.IService1 CreateImport1(
        System.Collections.Generic.Dictionary<string, object> contextProperties)
    {
        return Service.ExecutingService.ResolveImport<AppFabricContainer1.StatelessContract1.Service1.IService1>("Import1", null, contextProperties);
    }
}
```

This generated file adds two methods that return a proxy object that can be used inside the service to access the stateless WCF service, as with traditional WCF programming. Note that if the reference name had been changed from the default Import1 to MyWcfService, the methods would have been named CreateMyWcfService instead of CreateImport1.

As well as the core services, AppFabric Applications also supports the use of referenced services, which are services not hosted in AppFabric Applications containers. The following referenced services are supported directly by the June CTP:

- AppFabric Caching

- Service Bus Queue

- Service Bus Subscription

- Service Bus Topic

- SQL Azure

- Windows Azure Blob

- Windows Azure Table

Both core services and referenced services are modeled so that the Visual Studio tooling can handle them. (To avoid confusion referenced services should really have been called something like external services.) This modeling provides for the capture, through a properties window, of the core properties needed to configure the referenced service. For example, for Windows Azure Blob these properties include the storage service account name and key, the container name, whether development storage should be used and whether HTTPS should be used.

The modeled properties typically include a ProvisionAction and an UnprovisionAction providing the information required to provision and de-provision the referenced service. For example, for a SQL Azure referenced service the ProvisionAction is either InstallIfNotExist or None, while the UnprovisionAction is either DropDatabase or None. In this case, a ProvisionAction of InstallIfNotExist is invoked only if a Data Tier Applications (DAC) package is provided as an Artifact for the SQL Azure referenced service.
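The provisioning rules for a SQL Azure referenced service reduce to a few branches. This hypothetical Python sketch encodes them; the enum values mirror the property values named above, while the function names, the in-memory set standing in for existing databases and the `Grocery.dacpac` file name are assumptions for illustration only.

```python
from enum import Enum
from typing import Optional


class ProvisionAction(Enum):
    INSTALL_IF_NOT_EXIST = "InstallIfNotExist"
    NONE = "None"


class UnprovisionAction(Enum):
    DROP_DATABASE = "DropDatabase"
    NONE = "None"


def provision(action: ProvisionAction, dac_package: Optional[str],
              existing: set, db_name: str) -> str:
    if action is ProvisionAction.NONE:
        return "skipped"
    if dac_package is None:
        # InstallIfNotExist only takes effect when a DAC package artifact is supplied.
        return "skipped: no DAC package"
    if db_name in existing:
        return "exists"
    existing.add(db_name)
    return f"installed from {dac_package}"


def unprovision(action: UnprovisionAction, existing: set, db_name: str) -> str:
    if action is UnprovisionAction.DROP_DATABASE and db_name in existing:
        existing.discard(db_name)
        return "dropped"
    return "kept"
```

Keeping None as an explicit choice on both sides makes it possible to deploy against a database that is managed outside the application and to leave it in place when the application is removed.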

The effect of adding a referenced service to a service is the same as with core services. The following is an example of the generated file when a Windows Azure Table referenced service is added to a stateless WCF service (using the default name of Import1):

```csharp
namespace StatelessContract1
{
    class ServiceReferences
    {
        public static Microsoft.WindowsAzure.StorageClient.CloudTableClient CreateImport1()
        {
            return Service.ExecutingService.ResolveImport<Microsoft.WindowsAzure.StorageClient.CloudTableClient>("Import1");
        }

        public static Microsoft.WindowsAzure.StorageClient.CloudTableClient CreateImport1(
            System.Collections.Generic.Dictionary<string, object> contextProperties)
        {
            return Service.ExecutingService.ResolveImport<Microsoft.WindowsAzure.StorageClient.CloudTableClient>("Import1", null, contextProperties);
        }
    }
}
```

This generated file adds two methods that return a CloudTableClient object that can be used inside the service to access the Windows Azure Table Service. Note that if the reference name had been changed from the default Import1 to CloudTableClient, the methods would automatically have been named CreateCloudTableClient instead of CreateImport1. The following is the service implementation for a WCF Stateless Service taken from a sample in the MSDN documentation:

```csharp
using Microsoft.WindowsAzure.StorageClient;

namespace GroceryListService
{
    public class Service1 : IService1
    {
        private const string tableName = "GroceryTable";
        private CloudTableClient tableClient;

        public Service1()
        {
            // Resolve the Windows Azure Table referenced service (renamed to CloudTableClient).
            tableClient = ServiceReferences.CreateCloudTableClient();
            tableClient.CreateTableIfNotExist(tableName);
        }

        public void AddEntry(string strName, string strQty)
        {
            GroceryEntry entry = new GroceryEntry() { Name = strName, Qty = strQty };
            TableServiceContext tableServiceContext = tableClient.GetDataServiceContext();
            tableServiceContext.AddObject(tableName, entry);
            tableServiceContext.SaveChanges();
        }
    }
}
```

The service objects exposed through ServiceReferences.g.cs when a referenced service is imported by another service are as follows:

| Referenced service | Object returned |
|---|---|
| Azure Blob | Microsoft.WindowsAzure.StorageClient.CloudBlobClient |
| Azure Table | Microsoft.WindowsAzure.StorageClient.CloudTableClient |
| Caching | Microsoft.ApplicationServer.Caching.DataCache |
| Service Bus Queue | Microsoft.ServiceBus.Messaging.QueueClient |
| Service Bus Subscription | Microsoft.ServiceBus.Messaging.SubscriptionClient |
| Service Bus Topic | Microsoft.ServiceBus.Messaging.TopicClient |
| SQL Azure | System.Data.SqlClient.SqlConnection |
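The generated ServiceReferences class is essentially a set of Create&lt;Name&gt; wrappers around a resolver keyed by import name, which is why renaming Import1 renames the methods. The Python sketch below models that pattern; `ExecutingService`, `resolve_import` and `generate_service_references` are hypothetical stand-ins, not the AppFabric runtime API, and the registered factory returns a simple tuple where the real runtime would return one of the typed clients listed in the table.

```python
class ExecutingService:
    """Hypothetical stand-in for the runtime that resolves named imports to client objects."""

    def __init__(self):
        self._factories = {}

    def register(self, import_name, factory):
        self._factories[import_name] = factory

    def resolve_import(self, import_name, context_properties=None):
        factory = self._factories[import_name]
        return factory(context_properties or {})


def generate_service_references(runtime, import_names):
    """Build a class with one Create<ImportName> method per import, like ServiceReferences.g.cs."""
    methods = {}
    for name in import_names:
        # Bind `name` as a default argument so each method resolves its own import.
        def create(context_properties=None, _name=name):
            return runtime.resolve_import(_name, context_properties)
        methods[f"Create{name}"] = staticmethod(create)
    return type("ServiceReferences", (), methods)
```

The optional `context_properties` argument plays the role of the second generated overload, which passes a dictionary of context properties through to the resolver.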

The Service Bus Queues and Topics API exposes a .NET API using QueueClient, SubscriptionClient and TopicClient. Service Bus Queues can also be used through a WCF binding for service bus messaging that allows queues to be used for disconnected access to a service contract. In addition to the standard technique of using the methods exposed in ServiceReferences.g.cs it is also possible to access the service contract using the WCF binding for a Service Bus Queue. For example, a service can use a Service Bus Queue referenced service (SendToQueueImport) to invoke a service contract (IService1) exposed in another service by retrieving a proxy as follows:

```csharp
IService1 proxy = Service.ExecutingService.ResolveImport<IService1>("SendToQueueImport");
```

This proxy can be used to invoke methods exposed by the contract, with the communication going through a Service Bus Queue. If IService1 contains a contract for a method named Approve() then it could be invoked through:

```csharp
proxy.Approve(loan);
```
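The disconnected-access idea can be illustrated without Service Bus at all. The following JavaScript sketch is a hypothetical, in-memory analogy, not the AppFabric or Service Bus API: calls on a proxy are turned into queued messages, and the receiving service handles them later when it drains the queue. `InMemoryQueue`, `createQueuedProxy`, `drainQueue` and the `approve` method are names invented for this illustration.

```javascript
// In-memory stand-in for a queue (hypothetical; not the Service Bus API).
class InMemoryQueue {
  constructor() {
    this.messages = [];
  }
  send(message) {
    this.messages.push(message);
  }
  receive() {
    // Returns undefined when the queue is empty.
    return this.messages.shift();
  }
}

// Builds a proxy whose method calls become queue messages instead of
// being executed immediately.
function createQueuedProxy(queue, methodNames) {
  const proxy = {};
  for (const name of methodNames) {
    proxy[name] = (...args) => queue.send({ method: name, args: JSON.stringify(args) });
  }
  return proxy;
}

// The receiving service drains the queue whenever it is running and
// dispatches each message to its implementation of the contract.
function drainQueue(queue, implementation) {
  let handled = 0;
  let message;
  while ((message = queue.receive()) !== undefined) {
    implementation[message.method](...JSON.parse(message.args));
    handled += 1;
  }
  return handled;
}

const queue = new InMemoryQueue();
const proxy = createQueuedProxy(queue, ["approve"]);
proxy.approve({ id: 42, amount: 1000 }); // returns at once; nothing processed yet

// Later, when the receiving service comes online:
const approvedLoanIds = [];
const handledCount = drainQueue(queue, {
  approve: (loan) => approvedLoanIds.push(loan.id),
});
console.log(handledCount, approvedLoanIds); // 1 [ 42 ]
```

The caller never waits for the receiver, which is the property the WCF binding for Service Bus Queues provides for a service contract.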

Development Environment

The AppFabric Applications SDK adds Visual Studio tooling to support the visual composition of applications. As services are added to the application, the tooling ensures that the appropriate projects are created and the relevant assembly references added to them. When a service reference is added to a service, the tooling adds the relevant generated file and assembly references to the project for the service.

When an AppFabric Application solution is created in Visual Studio, it adds an Application project to the solution. The app.cs file in this project contains the definition of the application and all the services and artifacts used by it. Double-clicking app.cs brings up a list view showing either the Design View or the Deployment View. Right-clicking it lets you select the View Diagram menu item, which displays the application design as a diagram. This is very useful when the application design is complex. (The app.designer.cs file contains the really gory details of the application composition.)

In the Design View of app.cs in Visual Studio, the services and referenced services in the application are grouped by service type. In the Deployment View, they are grouped by container (or service group). The scale-out count and the trace source level are container-level properties that can be changed in the Deployment View. They will also be modifiable on the AppFabric Labs Portal, but the current CTP does not support this capability.

Applications can be instrumented using System.Diagnostics.Trace. The TraceSourceLevel can be set at the container (service group) level in the Deployment View of the application in Visual Studio.

When an application is deployed in the local environment, Visual Studio launches the Windows Azure Compute Emulator and the Windows Azure Storage Emulator. It then deploys a ContainerService with three roles:

- ContainerGatewayWorkerRole (1 instance)

- ContainerStatefulWorkerRole (2 instances)

- ContainerStatelessWorkerRole (2 instances)

Application services in AppFabric Containers and Stateful AppFabric Containers are then injected into these instances. Application services in a Web Container are injected into a second deployment which adds a web role instance. Note that the first deployment remains running after the application has been terminated, and is reused by subsequent deployments. However, I have run into an issue where an application containing no Web Container is not deleted after I exit the service. I have had to manually delete the deployment from the c:\af directory.

A basic application with an ASP.NET service consequently requires 6 instances running in the Compute Emulator. This puts significant memory pressure on the system, which can delay the deployment. Following deployment, the first few attempts to access a web page in an ASP.NET service frequently seem to fail. A few refreshes and a little patience usually bring success (assuming the code is fine). (On the VM on which I tried out AppFabric Applications, things went better after I pushed more memory and disk into it; a little patience was not sufficient to cure the out-of-disk-space errors I received during deployment.)
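The count of six follows directly from the role list above. As a trivial tally (role names and counts are taken from this section; the single web role instance applies only when the application contains a Web Container):

```javascript
// Instances started by the local ContainerService deployment.
const containerServiceRoles = {
  ContainerGatewayWorkerRole: 1,
  ContainerStatefulWorkerRole: 2,
  ContainerStatelessWorkerRole: 2,
};

// A Web Container adds a second deployment with one web role instance.
const webRoleInstances = 1;

function totalEmulatorInstances(roles, extraInstances) {
  const workerInstances = Object.values(roles).reduce((sum, n) => sum + n, 0);
  return workerInstances + extraInstances;
}

const total = totalEmulatorInstances(containerServiceRoles, webRoleInstances);
console.log(total); // 6
```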

Following a deployment in the development environment, the application assemblies are located in the c:\af directory. The development environment stores various log files temporarily in files under this directory and c:\AFSdk. The latter contains directories – GW0, SF0, SF1, SL0 and SL1 – corresponding to the instances of the ContainerService deployment. Once the application terminates, these logs are persisted into AppFabric Application Container-specific blobs in Storage Emulator containers named similarly to wad-appfabric-tracing-container-36eenedmci4e3bdcpnknlhezmi, where the final string represents the specific deployment.

An issue I came across was that the June CTP requires SQL Server Express. I have configured the Windows Azure Storage Emulator to use SQL Server 2008 R2. Consequently, both SQL Server Express and SQL Server 2008 R2 must be running when an application is deployed to the Compute Emulator. This is not an issue when the Storage Emulator uses SQL Server Express, which is the default for the Windows Azure SDK.

Production Environment

The AppFabric Application Manager displays information about the current lifecycle state of the application, such as uploaded or started. It supports the management of that lifecycle, including the uploading of application packages and the subsequent deployment and starting of the application.

The AppFabric Manager also supports the display of application logs containing various metrics from containers, services and referenced services. For containers, these include metrics like CPU usage and network usage. The metrics include information such as ASP.NET requests and WCF call latency for services. The client-side monitoring of referenced services captures information such as SQL client execution time and Cache client get latency. The application logs can be downloaded and viewed in a trace viewer. This is all a huge improvement over the diagnostic capability exposed in the portal for classic Windows Azure.

The deployment and startup of an application with three trivial Code services distributed across two containers took less than 2 minutes. The deployment and startup of the GroceryList sample application with a web site, a stateless WCF service and a SQL Azure database took 8 minutes. This speedup, over the deployment of an instance of a worker or web role in classic Windows Azure, comes from the multi-tenant nature of AppFabric Applications which deploys services into an already running instance.

Summary

I am a great advocate of the PaaS model of cloud computing. I believe that AppFabric Applications represents the future of PaaS for use cases involving the composition of services that can benefit from the cost savings of running in a multi-tenanted environment. AppFabric Applications reduces the need to understand the service hosting environment, allowing the focus to remain on the services. That is what PaaS is all about.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan discussed Windows Azure and UDP Traffic in a 7/2/2011 post:

As you may very well know, Windows Azure Web and Worker Roles can have only HTTP, HTTPS or TCP endpoints, which means you can't use Web and Worker Roles for UDP traffic. The endpoint definition accepts only these protocols:

```xml
<InputEndpoint name="<input-endpoint-name>" protocol="[http|https|tcp]" port="<port-number>" />
```

What if you would want UDP traffic in Windows Azure?

Your answer is Windows Azure VM Role & Windows Azure Connect.

- Windows Azure Connect provides a secure connection at the IP level and does not restrict port numbers. You should check your local machine's firewall settings, making sure they allow incoming UDP traffic to reach the port the application is listening on.

- Using Windows Azure Connect, you can allow UDP traffic from your local network to the Windows Azure VM Role without any problem. Azure Connect allows UDP traffic in any direction; however, make sure to configure the firewalls on every machine involved, including the Windows Azure VM Role machines.

For example, Windows Azure VM Role with Windows Azure Connect can be used to create a forest of game servers that communicate over UDP.
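To make the game-server scenario concrete, here is a minimal UDP echo server built on Node.js's standard `dgram` module. It only illustrates the kind of process you might run inside a VM Role instance reached over Windows Azure Connect; Node.js, the echo behavior and the OS-assigned port (0) are assumptions for this sketch. Whatever port you choose would still need an inbound Windows Firewall rule on each machine, as described above.

```javascript
const dgram = require("dgram");

// Starts a UDP echo server on the given port (0 lets the OS choose one).
// onListening receives the bound socket once it is ready.
function startUdpEchoServer(port, onListening) {
  const server = dgram.createSocket("udp4");
  server.on("message", (msg, rinfo) => {
    // Send each datagram straight back to its sender.
    server.send(msg, rinfo.port, rinfo.address);
  });
  server.bind(port, () => onListening(server));
  return server;
}

// Usage: send one datagram over loopback and print the echo.
startUdpEchoServer(0, (server) => {
  const client = dgram.createSocket("udp4");
  client.on("message", (msg) => {
    console.log(`echo: ${msg.toString()}`);
    client.close();
    server.close();
  });
  client.send("ping", server.address().port, "127.0.0.1");
});
```

In a real deployment you would bind a fixed, firewall-allowed port rather than 0.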

Avkash Chauhan discussed Windows Azure VM Role and Firewall in a 7/2/2011 post:

In VM Role, Windows Firewall configuration is up to the user. If you are developing a VM Role image, then you should configure the firewall as you see fit for your objective. For Web and Worker Roles, the virtual machine is locked down and endpoints are configured during VM initialization, based on the role's endpoint configuration. So when you use VM Role, you can configure Windows Firewall in whatever way your application needs.

- VM Role Training Lab: http://msdn.microsoft.com/en-us/gg502178

- VM Role Articles: http://blogs.msdn.com/b/avkashchauhan/archive/tags/vm+role/

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Bruce Kyle reported New Beta Adds Features to 'Windows Phone Developer Tools' on 7/4/2011 to the US ISV Evangelism Blog:

We are happy to announce that the new beta release of the Windows Phone SDK (renamed from 'Windows Phone Developer Tools') is available for download now.

New Features

The new beta Windows Phone SDK improves on last month's beta, and provides additional developer tooling features and capabilities, including:

- Updated Profiler. The updated profiler feature enables developers to profile performance of UI and code within an app. To use the profiler, you need to deploy and run your app on a Windows Phone device running a pre-release build of Mango – please see how to get the pre-release build of Mango below.

- Improved Emulator. The improved emulator provides stability and performance enhancements.

- Isolated Storage Explorer. The isolated storage explorer allows you to download and upload contents to/from the isolated storage of an application deployed to either the device or emulator.

- Advertising SDK. The Microsoft Advertising SDK is now included as part of the Windows Phone SDK.

Download

We have also updated our official documentation and introduced new educational material and code samples that provide more coverage across the platform. Check out What's New in Windows Phone Developer Tools.

Download the new beta Windows Phone SDK here and please let us know what you think by providing feedback on the App Hub Forums or on our WPDev UserVoice site.

You can use the SDK with Windows Phone OS 7.0 or 7.1.

• Kim Spilker (@kimspilker) suggested Create the Next Big App: Webcast Series in a 7/4/2011 post to the Microsoft Press blog:

Start from a scenario relevant to you – mobile and SharePoint apps, Facebook promos, Access databases – and see how the cloud addresses relevant gaps.

Learn about the Windows Azure Platform, an Internet-scale cloud computing and services platform hosted in Microsoft data centers. The webcast series will show you how the Windows Azure Platform provides you with a range of functionality to build applications from consumer Web to enterprise scenarios and includes an operating system with a set of developer services. The Windows Azure Platform provides a fully interoperable environment with support of industry standards and Web protocols, including REST, SOAP, and XML. Dive into these resources to learn more about how you can utilize this powerful solution to build new applications or extend existing ones.

See webcast titles and schedules: http://www.microsoft.com/events/series/azure.aspx?tab=webcasts&id=44705

Then try Azure at no cost with the code webcastpass, and deploy your first app in as little as 30 minutes.

Virtual Labs

Test drive Windows Azure Platform solutions in a virtual lab. See how you can quickly and easily develop applications running in the cloud by using your existing skills with Microsoft Visual Studio and the Microsoft .NET Framework. Virtual labs are simple, with no complex setup or installation required. You get a downloadable manual for the labs and you work at your own pace, on your own schedule.

- MSDN Virtual Lab: Advanced Web and Worker Roles

- MSDN Virtual Lab: Building Windows Azure Services

- MSDN Virtual Lab: Building Windows Azure Services with PHP

- MSDN Virtual Lab: Debugging Applications in Windows Azure

- MSDN Virtual Lab: Getting Started with Windows Azure Storage

- MSDN Virtual Lab: Exploring Windows Azure Storage

- MSDN Virtual Lab: Using Windows Azure Tables

- MSDN Virtual Lab: Windows Azure Native Code

- MSDN Virtual Lab: Windows Phone 7 and The Cloud

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: June 2011 post of 7/3/2011 shows 0 downtime for June:

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Azure Web role instances from Microsoft’s US South Central (San Antonio) data center. Here’s its uptime report from Pingdom.com for June 2011:

This is the first uptime report for the two-Web role version of the sample project. Reports will continue on a monthly basis.

Scott Densmore (@scottdensmore) recommended More Windows Azure Goodness - StockTrader 5.0 in a 7/3/2011 post:

A new Windows Azure end-to-end sample application that can be used locally, in Windows Azure or as a hybrid application. If you are interested in enterprise technologies and Windows Azure, I would recommend checking it out here. You can read about it, try it and download the source here.

Matias Woloski (@woloski) described an Ajax, Cross Domain, jQuery, WCF Web API or MVC, Windows Azure project in a 7/2/2011 post:

The title is SEO-friendly, as you can see. This week, while working on a cool project, we had to explore options to expose a web API and make cross-domain calls from an HTML5 client. Our specific scenario is this: we develop the server API and another company is building the HTML5 client. Since we are in the development phase, we wanted to be agile and work independently from each other. The fact that we are using Azure and WCF Web API is an implementation detail; this can be applied to any server-side REST framework and any platform.

We wanted a non-intrusive solution. This means being able to use the usual jQuery client (not learning a new client JS API) and keeping the changes to the client and server code to a minimum. JSONP is an option, but it does not work for POST requests. CORS is another option that I would like to try, but I haven't found a good jQuery plugin for it.

So in the end this is what we decided to use:

- WCF Web API to implement the REST backend (works also with MVC)

- jQuery to query the REST backend

- jQuery plugin (flXHR from flensed) that overrides the jQuery AJAX transport with a headless flash component

- Windows Azure w/ WebDeploy enabled to host the API

Having a working solution requires the following steps:

- Download jQuery flXHR plugin and add it to your scripts folder

- Download the latest flXHR library

- Put the cross domain policy xml file in the root of your server (change the allowed domains if you want)

```xml
<?xml version="1.0"?>
<!DOCTYPE cross-domain-policy SYSTEM "http://www.macromedia.com/xml/dtds/cross-domain-policy.dtd">
<cross-domain-policy>
  <allow-access-from domain="*" />
  <allow-http-request-headers-from domain="*" headers="*" />
</cross-domain-policy>
```

Here is some JavaScript code that registers flXHR as a jQuery AJAX transport and makes an AJAX call when a button is clicked:

```html
<script type="text/javascript">
    var baseUrl = "http://api.cloudapp.net/";

    $(function () {
        // Route requests for baseUrl through the flXHR (Flash) transport.
        jQuery.flXHRproxy.registerOptions(baseUrl, {
            xmlResponseText: false,
            loadPolicyURL: baseUrl + "crossdomain.xml"
        });

        // Bind the click handler once the DOM is ready.
        $("#btn").click(function () {
            $.ajax({
                url: baseUrl + "resources/1",
                success: function (data) { alert(data); }
            });
        });
    });

    $.ajaxSetup({ error: handleError });

    function handleError(jqXHR, errtype, errObj) {
        // __flXHR__ is only present when the request went through flXHR.
        var XHRobj = jqXHR.__flXHR__ || {};
        alert("Error: " + errObj.number +
              "\nType: " + errObj.name +
              "\nDescription: " + errObj.description +
              "\nSource Object Id: " + XHRobj.instanceId);
    }
</script>
```

It's important to set the ajaxSetup; otherwise POST requests will be converted to GET requests (this seems to be a bug in the library).

Finally, make sure to include the following scripts:

```html
<script src="/Scripts/jquery-1.6.2.js" type="text/javascript"></script>
<script type="text/javascript" src="/Scripts/flensed/flXHR.js"></script>
<script src="/Scripts/jquery.flXHRproxy.js" type="text/javascript"></script>
```

The nice thing about this solution is that you can set the baseUrl to an empty string and remove the "registerOptions" call, and everything will keep working just fine from the same domain using the usual jQuery client.
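Building on that observation, the sketch below decides whether the flXHR transport needs to be registered at all: only when `baseUrl` points at a different origin than the page. `needsCrossDomainProxy` is a hypothetical helper, not part of flXHR or jQuery; the commented `registerOptions` call is the one used above.

```javascript
// Returns true when requests to baseUrl would be cross-origin for a page
// served from pageOrigin. An empty baseUrl means "same origin".
function needsCrossDomainProxy(baseUrl, pageOrigin) {
  if (!baseUrl) {
    return false;
  }
  // Resolve relative URLs against the page origin before comparing.
  return new URL(baseUrl, pageOrigin).origin !== pageOrigin;
}

// In the browser, the registration could then be made conditional:
// if (needsCrossDomainProxy(baseUrl, window.location.origin)) {
//   jQuery.flXHRproxy.registerOptions(baseUrl, {
//     xmlResponseText: false,
//     loadPolicyURL: baseUrl + "crossdomain.xml"
//   });
// }

console.log(needsCrossDomainProxy("http://api.cloudapp.net/", "http://localhost:81")); // true
console.log(needsCrossDomainProxy("", "http://localhost:81")); // false
```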

This is the client (default.html):

This is the server implemented with WCF Web API running in Azure

Turning on the network monitoring on IE9, we can see what is going on behind the scenes.

Notice the last two calls, initiated by Flash: the first downloads the cross-domain policy file, and the second is the actual call to the API.

Some gotchas:

- I wasn’t able to send http headers (via beforeSend). This means that you can’t set the Accept header, it will always be */*

- There is no support for verbs other than GET and POST (this is a Flash limitation).

I uploaded the small proof of concept here.

Jonathan Rozenblit (@jrozenblit) continued his series with What’s In the Cloud: Canada Does Windows Azure - PhotoPivot on 7/2/2011:

Happy Canada Day! Let’s celebrate with yet another Windows Azure developer story!

A few weeks ago, I started my search for untold Canadian stories in preparation for my talk, Windows Azure: What’s In the Cloud, at Prairie Dev Con. I was just looking for a few stories, but I was surprised, impressed, and proud of my fellow Canadians when I was able to connect with several Canadian developers who have either built new applications using Windows Azure services or migrated existing applications to Windows Azure. What was really amazing to see was the different ways these Canadian developers were using Windows Azure to create unique solutions.

This is one of those stories.

Leveraging Windows Azure for Applications That Scale and Store Data

Back in May, we talked about leveraging Windows Azure for your next app idea, and specifically, usage scenarios around websites. We talked about how Windows Azure is ideal for sites that have to scale quickly and for sites that store massive amounts of data. Today, we’ll chat with Morten Rand-Hendriksen (@mor10) and Chris Arnold (@GoodCoffeeCode) from PhotoPivot.com and take a deep dive into the intricate ways they’ve used Windows Azure as a backend processing engine and mass storage platform for PhotoPivot.

PhotoPivot

PhotoPivot is an early-stage, self-funded start-up with the potential for internet-scale growth as a value-add to existing photo platforms, adding a DeepZoom layer to people's entire image collections. This, coupled with its unique front-ends, creates a great user experience. PhotoPivot experiences a huge, sporadic processing burden to create this new layer and is constantly in need of vast amounts of storage.

Jonathan: When you guys were designing PhotoPivot, what was the rationale behind your decision to develop for the Cloud, and more specifically, to use Windows Azure?

Morten: Cloud gives us a cost-effective, zero-maintenance, highly scalable approach to hosting. It enables us to spend our valuable time focusing on our customers, not our infrastructure. Azure was the obvious choice. Between Chris and me, we’ve developed on the Microsoft stack for 2 decades, and Azure's integration into our familiar IDE was important. As a BizSpark member, we also get some great, free benefits. This enabled us to get moving fast without too much concern over costs.

Chris: I like integrated solutions. It means that if (when?) something in the stack I'm using goes wrong I normally have one point of contact for a fix. Using something like AWS would, potentially, put us in a position of bouncing emails back and forth between Amazon and Microsoft - not very appealing. I've also been a .NET developer since it was in Beta so using a Windows-based platform was the obvious choice.

Jonathan: What Windows Azure services are you using? How are you using them?

Chris: We use Windows Azure, SQL Azure, Blob Storage and CDN. Currently our infrastructure consists of an ASP.NET MVC front-end hosted in Extra Small web roles (Windows Azure Compute). We will soon be porting this to WordPress hosted on Azure. We also have a back-end process that is hosted in worker roles. These are only turned on, sporadically, when we need to process new users to the platform (and subsequently turned off when no longer needed so as to not incur costs). If we have a number of pending users we have the option to spin up as many roles as we want to pay for in order to speed up the work. We are planning to make good use of the off-peak times to spin these up - thus saving us money on data transfers in.

We use SQL Azure to store all the non-binary, relational data for our users. This is potentially large (due to all the Exif data etc. associated with photography) but, thankfully, it can be massively normalised. We use Entity Framework as our logical data layer and, from this, we automatically generated the database.

We use Blob storage for all of the DeepZoom binary and xml data structures. Public photos are put in public containers and can be browsed directly whilst non-public photos are stored in private containers and accessed via a web role that handles authentication and authorization.
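The split between public and private containers comes down to a simple URL decision. The sketch below is hypothetical: `resolvePhotoUrl`, the photo object shape, the `examplephotos` account name and the `/secure-media` handler path are invented for illustration. Only the `https://<account>.blob.core.windows.net/<container>/<blob>` address form is the standard blob storage URL format.

```javascript
// Public DeepZoom assets are addressed directly in blob storage; private
// ones go through a web role endpoint that authenticates and authorizes
// the caller before streaming the blob.
function resolvePhotoUrl(photo, options) {
  const { accountName, authHandlerBase } = options;
  // Encode each path segment but keep "/" separators in the blob name.
  const blobPath = [photo.container, ...photo.blobName.split("/")]
    .map(encodeURIComponent)
    .join("/");
  if (photo.isPublic) {
    return `https://${accountName}.blob.core.windows.net/${blobPath}`;
  }
  return `${authHandlerBase}/${blobPath}`;
}

const options = { accountName: "examplephotos", authHandlerBase: "/secure-media" };
console.log(resolvePhotoUrl({ container: "public-photos", blobName: "123/photo.dzi", isPublic: true }, options));
// https://examplephotos.blob.core.windows.net/public-photos/123/photo.dzi
console.log(resolvePhotoUrl({ container: "private-photos", blobName: "456/photo.dzi", isPublic: false }, options));
// /secure-media/private-photos/456/photo.dzi
```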

One 'interesting' aspect to this is the way we generate the DeepZoom data. The Microsoft tools are still very wedded to the filing system. This has meant us using local storage as a staging platform. Once generated, the output is uploaded to the appropriate container. We are working on writing our own DeepZoom tools that will enable us to target any Stream, not just the filing system.
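At its core, a Deep Zoom image pyramid is simple arithmetic, independent of whether tiles end up on the file system or in a Stream. In this sketch the full-resolution image is the highest level, each level below halves the dimensions (rounding up) until a 1x1 image remains at level 0, and every level is cut into square tiles. The 254-pixel tile size is an assumption, tile overlap is ignored for brevity, and this is not the API of the Microsoft Deep Zoom tools.

```javascript
// Computes the levels of a Deep Zoom image pyramid for a width x height
// image. Level maxLevel is full resolution; level 0 is 1x1.
function deepZoomLevels(width, height, tileSize = 254) {
  const maxLevel = Math.ceil(Math.log2(Math.max(width, height)));
  const levels = [];
  for (let level = 0; level <= maxLevel; level++) {
    // Each step down the pyramid halves the dimensions, rounding up.
    const scale = 2 ** (maxLevel - level);
    const levelWidth = Math.ceil(width / scale);
    const levelHeight = Math.ceil(height / scale);
    levels.push({
      level,
      width: levelWidth,
      height: levelHeight,
      columns: Math.ceil(levelWidth / tileSize),
      rows: Math.ceil(levelHeight / tileSize),
    });
  }
  return levels;
}

const pyramid = deepZoomLevels(3000, 2000);
console.log(pyramid.length); // 13
console.log(pyramid[pyramid.length - 1]);
// { level: 12, width: 3000, height: 2000, columns: 12, rows: 8 }
```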

Our existing data centre was in the US. Because our Silverlight front-end does a lot of async streaming, users in the UK noticed the 100ms lag. Using the CDN gives us a trivially simple way to distribute our image data and give our worldwide users a great experience.

Jonathan: During development, did you run into anything that was not obvious and required you to do some research? What were your findings? Hopefully, other developers will be able to use your findings to solve similar issues.

Chris: When designing something as complex as PhotoPivot, you’re bound to run into a few things:

- Table storage seemed the obvious choice for all our non-binary data. Using a NoSQL approach removes a layer from your stack and simplifies your application. Azure's table storage has always been touted as a fantastically cheap way to store de-normalised data. And, it is - as long as you don't need to access it frequently. We eventually changed to SQL Azure. This was, firstly, for the ability to query better and, secondly, because there's no per-transaction cost. BTW - setting up SQL Azure was blissfully simple - I never want to go back to manually setting database file locations etc!

- There's no debugging for deployment issues without IntelliTrace. This is OK for us as we have MSDN Ultimate through BizSpark. If you only have MSDN Professional, though, you won’t have this feature.

- Tracing and debugging are critical. We wrote new TraceListeners to handle Azure's scale-out abilities. Our existing back-end, pending user process, was already set up to use the standard Trace subsystems built into .NET. This easily allows us to add TraceListeners to dump info into files or to the console. There are techniques for doing this with local storage and then, periodically, shipping the logs to blob storage, but I didn't like the approach. So, I created another Entity Data Model for the logging entities and used that to auto-generate another database. I then extended the base TraceListener class and created one that accepted the correct ObjectContext as a constructor parameter and took care of persisting the trace events. Because the connection strings are stored in the config files, this also gives us the ability to use multiple databases and scale out as far as required.

- The local emulators are pretty good, but depending on what you’re doing, there’s no guarantee that your code will work as expected in the Cloud. This can definitely slow up the development process.

- Best practice says to never use direct links to resources because it introduces the 'Insecure Direct Object Reference' vulnerability. In order to avoid this, though, we would have to pay for more compute instances. Setting our blob containers to 'public' was cheaper and no security risk, as they are isolated storage.
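The custom TraceListener approach described in the list above (subclass a base listener and hand it its persistence target through the constructor) translates to other languages. Here is a hypothetical JavaScript analogue; `TraceListener`, `StoreTraceListener`, the in-memory store and the `instanceId` field are stand-ins invented for illustration, with the store playing the role of the Entity Framework ObjectContext. It is not the .NET `System.Diagnostics.TraceListener` API.

```javascript
// Minimal base class: concrete listeners decide where events go.
class TraceListener {
  traceEvent(level, message) {
    throw new Error(`traceEvent not implemented (${level}: ${message})`);
  }
}

// Listener that persists each event through an injected store, tagging it
// with the instance that produced it so scaled-out roles can share one log.
class StoreTraceListener extends TraceListener {
  constructor(store, instanceId) {
    super();
    this.store = store;
    this.instanceId = instanceId;
  }

  traceEvent(level, message) {
    this.store.insert({
      timestamp: new Date().toISOString(),
      instanceId: this.instanceId,
      level,
      message,
    });
  }
}

// In-memory store standing in for a database context.
const memoryStore = {
  rows: [],
  insert(row) {
    this.rows.push(row);
  },
};

const listener = new StoreTraceListener(memoryStore, "WorkerRole_IN_0");
listener.traceEvent("Information", "Imported user 42");
console.log(memoryStore.rows.length); // 1
```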

Jonathan: Lastly, what were some of the lessons you and your team learned as part of ramping up to use Windows Azure or actually developing for Windows Azure?

Chris: Efficiency is everything. When you move from a dedicated server to Azure, you have to make your storage and processes as efficient as possible, because they directly affect your bottom line. We spent time refactoring our code to 'max out' both CPU and bandwidth simultaneously. Azure can be a route to creating a profitable service, but you have to work harder to achieve this.

How did we do it? Our existing back-end process (that, basically, imports new users) ran on a dedicated server. Using 'Lean Startup' principles I wrote code in a manner that allowed me to test ideas quickly. This meant that it wasn't as efficient or robust as production code. This was OK because we were paying a flat-rate for our server. Azure's pay-as-you-go model means that, if we can successfully refactor existing code so that it runs twice as fast, we'll save money.

Our existing process had 2 sequential steps:

- Download ALL the data for a user from Flickr.

- Process the data and create DeepZoom collections.

During step 1 we used as much bandwidth as possible but NO CPU cycles. During step 2, we didn't use ANY bandwidth but lots of CPU cycles. By changing our process flow, we were able to utilise both bandwidth and CPU cycles simultaneously and get through the process quicker. For example:

- Download data for ONE photo from Flickr.

- Process that ONE photo and create DeepZoom images.

- Goto 1.
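One way to get that overlap in code is to prefetch: start downloading the next photo before processing the current one. The JavaScript sketch below shows the pattern; `downloadPhoto` and `processPhoto` are hypothetical async functions supplied by the caller, and the delays in the usage are fake. It illustrates the idea and is not PhotoPivot's actual code.

```javascript
// Processes photos one at a time, but always has the next download in
// flight while the current photo is being processed.
async function importUserPhotos(photoIds, downloadPhoto, processPhoto) {
  const results = [];
  let pending = photoIds.length > 0 ? Promise.resolve(downloadPhoto(photoIds[0])) : null;
  for (let i = 0; i < photoIds.length; i++) {
    const data = await pending;
    // Kick off the next download before doing CPU work on this photo.
    pending = i + 1 < photoIds.length ? Promise.resolve(downloadPhoto(photoIds[i + 1])) : null;
    if (pending) {
      // Mark as handled so an early failure in processPhoto does not leave
      // an unhandled rejection behind; awaiting it later still rethrows.
      pending.catch(() => {});
    }
    results.push(await processPhoto(data));
  }
  return results;
}

const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const events = [];

const demo = importUserPhotos(
  [1, 2, 3],
  async (id) => {
    events.push(`download ${id}`);
    await delay(10); // pretend network time
    return { id };
  },
  async (photo) => {
    events.push(`process ${photo.id}`);
    await delay(10); // pretend CPU time
    return photo.id * 10;
  }
);

demo.then((results) => console.log(results, events));
```

The event log shows "download 2" starting before "process 1", which is exactly the overlap of bandwidth and CPU described above.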

Another HUGELY important aspect is concurrency. Fully utilising the many classes in the TPL (Task Parallel Library) is hard, but necessary if you are going to develop successfully on Azure (or any pay-as-you-go platform). Gone are the days of writing code in series.
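The TPL is .NET-specific, but the underlying idea, keeping several units of work in flight with a cap on parallelism, can be sketched in any language. This JavaScript helper is hypothetical (the name `mapWithConcurrency` and the limit of 2 are illustrative); it runs at most `limit` async tasks at once and returns results in input order.

```javascript
// Runs worker(item, index) for every item with at most `limit` calls in
// flight at once; results are returned in input order.
async function mapWithConcurrency(items, limit, worker) {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }
  const results = new Array(items.length);
  let nextIndex = 0;

  // Each runner repeatedly claims the next unprocessed index.
  async function runner() {
    while (nextIndex < items.length) {
      const index = nextIndex++;
      results[index] = await worker(items[index], index);
    }
  }

  const runners = [];
  for (let i = 0; i < Math.min(limit, items.length); i++) {
    runners.push(runner());
  }
  await Promise.all(runners);
  return results;
}

// Usage: square five numbers with at most two tasks running concurrently.
let active = 0;
let maxActive = 0;
const squares = mapWithConcurrency([1, 2, 3, 4, 5], 2, async (n) => {
  active++;
  maxActive = Math.max(maxActive, active);
  await new Promise((resolve) => setTimeout(resolve, 5));
  active--;
  return n * n;
});

squares.then((values) => console.log(values, maxActive));
```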

Thank you, Chris and Morten, for taking us on this deep dive exploring the inner workings of PhotoPivot.

In Moving Your Solution to the Cloud, we talked about two types of applications – compatible with Windows Azure and designed for Windows Azure. You can consider the original, dedicated-server-hosted version of PhotoPivot as compatible with Windows Azure. Would it work in Windows Azure if it were deployed as is? Yes, absolutely. However, as you can see above, in order to really reap the benefits of Windows Azure, Chris had to make a few changes to the application. Once those were done, PhotoPivot became an application designed for Windows Azure, leveraging the platform to the max to reduce costs and maximize scale.

If you’re a Flickr user, head over to photopivot.com and sign up to participate in the beta program. Once you see your pictures in these new dimensions, you’ll never want to look at them in any other way. From the photography aficionados to the average point-and-shooter, this is a great visualization tool that will give you a new way of exploring your picture collections. Check it out.

Join The Conversation

What do you think of this solution’s use of Windows Azure? Has this story helped you better understand usage scenarios for Windows Azure? Join the Ignite Your Coding LinkedIn discussion to share your thoughts.

Previous Stories

Missed previous developer stories in the series? Check them out here.

Gunther L posted Getting Real with Windows Azure: Real world Use Cases - Hosted by Microsoft and Hanu Software to the US ISV Evangelism blog on 7/1/2011:

Day & Time: Wednesday, July 6th from 1 – 2 PM Eastern

Recommended Audiences: Technology Executives, IT Managers, IT Professionals, COO, CTO, IT Directors, Solution Architects, Product Managers, Business Decision Maker, Technical Decision Makers, Developers, Chief Development Officers (CDO), Chief Information Officers (CIO)

You’ve probably been hearing about cloud computing. But when you get beyond the hype, most cloud solutions offer little more than an alternate place to store your data.

Join us for a cloud power event, and we’ll show you how we leveraged Microsoft cloud solutions and developed a value-added Windows Azure solution for a real world business case.

This webinar is for you:

- If you are looking to build SaaS applications or migrate existing desktop applications to the cloud.

- If you are looking to focus on your applications, not your infrastructure.

- If you are looking to leverage your existing Visual Studio and .NET, PHP or Java skills to build compelling cloud applications.

- If you are looking to build high-availability, high-performance, scalable applications.

- If you are looking to adopt the cloud but are confused about where to start.

Presenters:

- Bob Isaacs, Platform Strategy Advisor, Azure ISV - Microsoft Corporation

Bob works with Independent Software Vendors to help them realize the value of the Windows Azure Platform.

- Vyas Bharghava, Technology Head – Hanu Software

Vyas helps ISVs and Enterprises adopt Microsoft Cloud Platform (Windows Azure).

To register, click: http://www.clicktoattend.com/?id=156172

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework

• Paul Patterson posted Microsoft LightSwitch – Using User Information for Lookup Lists on 6/30/2011:

Here is a little tip on how to get user information from your LightSwitch application, and then use that information as a source for assigning things to users.

I have an application that users sign in to in order to view and manage tasks for the projects they are working on. The application contextually serves up data specifically for the user that is logged in. This is easily achieved by filtering the data via the query (see here for a great example of this).

To get this filtering to work, I needed a way to assign projects and tasks to users. Here is an example of how I did this…

Fire up LightSwitch and create a new project. I called mine LSUserLookups.

Create a table named Project, like this…

Create another table named ProjectUser, and then add a relationship to the Project table, like this…

Now for some fun stuff. To get at the user information in LightSwitch, we’ll create a WCF service that will query the LightSwitch application instance for the user information.

Select to add a new project to the existing solution (File > Add > New Project). I named the new project LSUserLookupWCFService. Remember, we are adding a new project to the existing solution, not creating a new solution.

Delete the class file (Class1.vb) that gets created automagically.

In the same LSUserLookupWCFService project, add references to:

- System.ComponentModel.DataAnnotations,

- Microsoft.LightSwitch, which is probably located in C:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\LightSwitch\1.0\Client on your computer, and

- System.Transactions

Next, add a new class file to the new project and name it LSUser.vb. Then add the following code to the file:

```vb
Imports System.ComponentModel.DataAnnotations

Public Class LSUser
    Private m_UserName As String
    Private m_UserFullName As String

    ' The Key attribute identifies the entity key for LightSwitch.
    <Key()> _
    Public Property UserName() As String
        Get
            Return m_UserName
        End Get
        Set(value As String)
            m_UserName = value
        End Set
    End Property

    Public Property UserFullName() As String
        Get
            Return m_UserFullName
        End Get
        Set(value As String)
            m_UserFullName = value
        End Set
    End Property
End Class
```

Make sure that the LSUser class includes the import of the DataAnnotations namespace. This is necessary so that the Key attribute can be applied to a property in the class, here the UserName property. The Key attribute is needed when we add the soon-to-be-created WCF service to our LightSwitch application.

This LSUser class is going to be used for holding the user information that will be retrieved and used in the LightSwitch application.

Next, we need to create the WCF service that will make the query for user information and then serve up the results as a collection of LSUser objects.

Add a Domain Service Class to the project. I named mine ProxySecurityDataService.vb.

In the Add New Domain Service Class dialog, I unchecked the Enable client access checkbox.

I updated the ProxySecurityDataService.vb file with the following code:

```vb
Option Compare Binary
Option Infer On
Option Strict On
Option Explicit On

Imports System
Imports System.Collections.Generic
Imports System.ComponentModel
Imports System.ComponentModel.DataAnnotations
Imports System.Linq
Imports System.ServiceModel.DomainServices.Hosting
Imports System.ServiceModel.DomainServices.Server
Imports System.Transactions
Imports Microsoft.LightSwitch
Imports Microsoft.LightSwitch.Framework.Base

Public Class ProxySecurityDataService
    Inherits DomainService

    ' Default query that LightSwitch calls to retrieve the LSUser entities.
    <Query(IsDefault:=True)> _
    Public Function GetRoles() As IEnumerable(Of LSUser)
        ' Step outside any ambient transaction while reading the security data.
        Dim currentTrx As Transaction = Transaction.Current
        Transaction.Current = Nothing
        Try
            Dim workspace As IDataWorkspace = _
                ApplicationProvider.Current.CreateDataWorkspace()
            Dim users As IEnumerable(Of Microsoft.LightSwitch.Security.UserRegistration) = _
                workspace.SecurityData.UserRegistrations.GetQuery().Execute()
            ' Project each registered user into an LSUser; ToList() materializes
            ' the results before the Finally block restores the transaction.
            Return (From u In users _
                    Select New LSUser() With {.UserName = u.UserName, .UserFullName = u.FullName}).ToList()
        Catch ex As Exception
            Return Nothing
        Finally
            ' Put the caller's ambient transaction back.
            Transaction.Current = currentTrx
        End Try
    End Function
End Class
```

So what does this bad boy class do? Well, it simply takes the current context it is working in, which will be the LightSwitch application, and queries the application for the users that are registered with it. It then takes the users it found and returns an enumerable list of LSUser objects containing their information. All of this is wrapped up in a DomainService that LightSwitch will recognize as a data source. That’s it for the WCF service. Now back to LightSwitch.
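Before moving on, one detail of GetRoles deserves a closer look. It saves Transaction.Current and sets it to Nothing before creating the data workspace, presumably so that the LightSwitch security query does not enlist in whatever ambient transaction the domain service call carries; the usual form of this pattern restores the saved transaction in a Finally block. The C# sketch below isolates that save/clear/restore step using only System.Transactions. The helper name `WithoutAmbientTransaction` is hypothetical, and the CommittableTransaction merely simulates an ambient transaction.

```csharp
using System;
using System.Transactions;

// Hypothetical helper: runs `work` with the ambient transaction suspended,
// then restores whatever transaction was ambient before, even if work() throws.
T WithoutAmbientTransaction<T>(Func<T> work)
{
    Transaction saved = Transaction.Current;   // remember the caller's ambient transaction
    Transaction.Current = null;                // run the work outside of it
    try
    {
        return work();
    }
    finally
    {
        Transaction.Current = saved;           // always put it back
    }
}

// Simulate an ambient transaction, as a caller of the domain service might have.
using var tx = new CommittableTransaction();
Transaction.Current = tx;

bool sawNone = WithoutAmbientTransaction(() => Transaction.Current == null);
bool restored = Transaction.Current == tx;
Console.WriteLine($"Suspended inside: {sawNone}, restored afterwards: {restored}");

Transaction.Current = null;                    // clear it before tx is disposed
```

The try/finally shape is the point of the sketch: without the restore, the caller would silently lose its ambient transaction after the query returns.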

In the LightSwitch project, right-click Data Sources and select Add Data Source…

In the Attach Data Source Wizard dialog, select WCF RIA Service and then click Next…

In the next dialog, which may take a few seconds, click the Add Reference button. Then use the Projects tab to add the LSUserLookupWCFService project and press OK.

After some time the wizard should present you with the WCF service class that we created earlier. Select it and click the Next button.

Next, the wizard will ask you which entities to use from the service. Expand the Entities node to take a peek at what is being offered. I simply checked the Entities node to select everything (which really means just the one entity that is in there). Click the Finish button.

Now LightSwitch should present you with the entity designer screen for the newly added LSUser data source.

Cool beans! Now let’s get crazy.

I want to assign users to projects. To do this, I simply create a relationship between the LSUser entity and the ProjectUser entity.

Open up the ProjectUser entity, and add the following relationship…

And presto, a relationship to the registered users in the application…

Now go ahead and add a screen to the application like so…

WHOA!! Slow down! Don’t be so impatient and start F5’ing the app to see your fabulous handiwork. We need to add some users to the application so that we actually have some to choose from.

Open the properties of the LightSwitch application, and on the Access Control tab select Use Forms authentication. Also make sure to check the Granted for debug checkbox.

NOW you can launch the application (press F5).

When the application launches, navigate to the Users item in the Administration menu. Add some users, for example…

Next, navigate to the Project List Detail screen. Add some projects, and then assign some users to the projects. Like so …

… and for your development pleasure, here is the source: LSUserLookups.zip (2 MB)

Thanks to Michael Washington (@ADefWebserver) for the heads up.

Michael Washington (@ADefWebserver) reported on 7/2/2011 Visual Studio LightSwitch is launching July 26th!:

Hello everyone, great news!

With Microsoft Visual Studio LightSwitch 2011, building business applications has never been easier, and it’s launching on July 26! See: Visual Studio LightSwitch is launching July 26th!

The latest articles on The LightSwitch Help Website:

- LightSwitch Concurrency Checking: One of the greatest benefits of using LightSwitch is that it automatically manages data integrity when multiple users are updating data. It also provides a method to resolve any errors that it detects.

- LightSwitch–Modal Add and Edit Windows: The default Add and Edit screens generated by LightSwitch often don’t allow the control you need to meet the business requirements.

- Creating a Simple LightSwitch RIA Service (using POCO): WCF RIA Services are the “fix any data issue” solution. If the problem is getting data to your LightSwitch application, and the normal create or add table will not work, the answer is to use a WCF RIA Service.

- Saving Files To File System With LightSwitch (Uploading Files): This article describes how you can upload files using LightSwitch, and store them on the server hard drive. This is different from uploading files and storing them in the server database.

Also:

- The LightSwitch Help Website has just launched the LightSwitch Community Announcements Forum at: http://lightswitchhelpwebsite.com/Forum/tabid/63/aff/20/Default.aspx

- If you have a Blog, Publication, Service, etc., related to LightSwitch, you are welcome to post there.

- You can subscribe to the forum so you will be emailed when there is a posting. This should make it easier than trying to hunt for "the latest new thing" in this forum or Twitter.

Fritz Onion reported a New course on Lightswitch Beta 2 in a 7/2/2011 post to the Pluralsight blog:

Matt Milner has just published a new course: Introduction to Lightswitch Beta 2

In this course you will learn how to create, access and manipulate data in Visual Studio LightSwitch applications. You will also learn about the screen creation capabilities and how to create rich applications quickly.

Julie Lerman (@julielerman) posted Entity Framework/WCF Data Services June 2011 CTP: Derived Entities with Related Data to her Don’t Be Iffy blog on 7/2/2011:

Well, this change really came in the March 2011 CTP but I didn’t realize it until the June CTP was out, so I’ll call it a wash.

WCF Data Services has had a bad problem with inherited types where the derived type had a relationship to yet another type. Take, for example, this model, where TPTTableA is a derived type (from Base) and has related data (BaseChildren).

If you exposed just the Bases EntitySet (along with its derived type) in a WCF Data Service, that was fine. I could browse to http://localhost:3958/WcfDataService1.svc/Bases easily.

But if I also exposed the related type (BaseChild) then the data service would have a hissy fit when you tried to access the hierarchy base (same URI as before). If you dig into the error it tells you:

“Navigation Properties are not supported on derived entity types. Entity Set 'Bases' has a instance of type 'cf_model.ContextModel.TPTTableA', which is an derived entity type and has navigation properties. Please remove all the navigation properties from type 'cf_model.ContextModel.TPTTableA'”

Paul Mehner blogged about this and I wrote up a request on Microsoft Connect. Here’s Paul’s post: Windows Communication Foundation Data Services (Astoria) – The Stuff They Should Have Told You Before You Started

But it’s now fixed!

Using the same model, I can expose all of the entity sets in the data service and I’m still able to access the types in the inheritance hierarchy.

Here is an example where I am looking at a single Base entity that in fact happens to be one of the derived types; notice that the term in its category is cf_model.TPTA. The entity is strongly typed.

You can see that strong typing in the link to the related data:

Bases(1)/cf_model.TPTA/BaseChildren

That’s how the data service is able to see the relationship: only off of the specific type that owns it.

So accessing the relationship is a little different than normal. I struggled with this but was grateful for some help from data wizard, Pablo Castro.

The Uri to access the navigation requires that type specification:

http://localhost:1888/WcfDataService1.svc/Bases(1)/cf_model.TPTA/BaseChildren

You also need that type if you want to eager load the type along with its related data:

http://localhost:1888/WcfDataService1.svc/Bases(1)/cf_model.TPTA?$expand=BaseChildren
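To make the shape of these two addresses explicit, here is a minimal C# sketch that assembles them from their parts: entity set, key, the namespace-qualified type-cast segment, and the navigation property (either as a path segment or as the `$expand` value). The helper names `NavigationUri` and `ExpandUri` are hypothetical; the service root and names are the ones from the URIs above.

```csharp
using System;

// Hypothetical helpers: build WCF Data Services URIs that reach a navigation
// property defined only on a derived type (here cf_model.TPTA).
const string serviceRoot = "http://localhost:1888/WcfDataService1.svc";

// Entity set and key, then the type-cast segment, then the navigation property.
string NavigationUri(string entitySet, int key, string derivedType, string navProperty) =>
    $"{serviceRoot}/{entitySet}({key})/{derivedType}/{navProperty}";

// Same type-cast segment, plus $expand to eager-load the related entities.
string ExpandUri(string entitySet, int key, string derivedType, string navProperty) =>
    $"{serviceRoot}/{entitySet}({key})/{derivedType}?$expand={navProperty}";

Console.WriteLine(NavigationUri("Bases", 1, "cf_model.TPTA", "BaseChildren"));
Console.WriteLine(ExpandUri("Bases", 1, "cf_model.TPTA", "BaseChildren"));
```

The sketch assumes an integer key; in OData, string keys are written in single quotes inside the parentheses, so a real helper would need to format keys by type.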

Note that I was still having a little trouble with the navigation (the first of these two Uris). It turns out that Cassini (i.e., the ASP.NET Web Development Server) was having a problem with the period (.) between cf_model and TPTA.

Once I switched the service to use IIS Express (which was my first time using IIS Express and shockingly easy!), it was fine. (Thanks again to Pablo for setting me straight on this problem.)

So it’s come a long way, and if this is how it is finalized, I can live with it, though I guess it would be nice to have the URIs cleaned up.

Of course you’re more likely to use one of the client libraries that hide much of the Uri writing from us, so maybe in the end it will not be so bad. I have not yet played with the new client library that came with this CTP so I can’t say quite yet.

Julie Lerman (@julielerman) also posted Entity Framework & WCF Data Services June 2011 CTP : Auto Compiled LINQ Queries on 7/2/2011:

Ahh another one of the very awesome features of the new CTP!

Pre-compiled LINQ to Entities queries (LINQ to SQL has them too) are an incredible performance boost. Entity Framework has to do a bit of work to read your LINQ to Entities query, then scour through the metadata to figure out what tables & columns are involved, then pass all of that info to the provider (e.g., System.Data.SqlClient) to get a properly constructed store query. If you have queries that you use frequently, even if they have parameters, it is a big benefit to do this once and then reuse the store query.
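The core idea, translating a query shape once and reusing the resulting store query for different parameter values, can be sketched in a few lines of C#. This is a conceptual illustration only, not how Entity Framework implements auto-compiled queries internally; `Translate`, `GetStoreQuery`, and the query-shape string are hypothetical stand-ins, and the "store query" is just a placeholder string.

```csharp
using System;
using System.Collections.Generic;

// Conceptual sketch, NOT Entity Framework's implementation: cache the store
// query per query shape so translation runs once, while parameter values
// are supplied separately on each execution.
var planCache = new Dictionary<string, string>();
int translations = 0;

// Stand-in for the expensive step: LINQ query -> metadata lookup -> provider SQL.
string Translate(string queryShape)
{
    translations++;
    return $"SELECT ... /* translated from: {queryShape} */";
}

// Returns the cached store query for a shape, translating it only on first use.
string GetStoreQuery(string queryShape)
{
    if (!planCache.TryGetValue(queryShape, out var storeQuery))
    {
        storeQuery = Translate(queryShape);
        planCache[queryShape] = storeQuery;
    }
    return storeQuery;
}

// Same shape with different parameter values: only the first call translates.
const string shape = "Contacts.Include(Addresses).Where(c => c.ContactID == @id)";
foreach (int id in new[] { 3, 100, 113, 196 })
{
    string sql = GetStoreQuery(shape);
    Console.WriteLine($"{sql} with @id = {id}");
}
Console.WriteLine($"Translations performed: {translations}");
```

Keeping the parameter out of the cache key is what lets one cached entry serve every ContactID value.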

I’ve written about this a lot. It’s a bit of a PI[T]A to do especially once you start adding repositories or other abstractions into your application. And the worst part is that they are tied to ObjectContext and you cannot even trick a DbContext into leveraging CompiledQuery. (Believe me I tried and I know the team tried too.)

So, they’ve come up with a whole new way to pre-compile and invoke these queries and the best part is that it all happens under the covers by default. Yahoo!

Plus you can easily turn the feature off (and back on) as needed. With CompiledQuery in .NET 3.5 & .NET 4.0, the cost of pre-compiling a query so that it can be invoked later is higher than the one-time cost of transforming an L2E query into a store query. Auto-compiled queries work very differently, so I don’t know if you need to have the same concern about turning the feature off for single-use queries. My educated guess is that it’s the same: EF still has to work out the query, then it has to cache it, then it has to look for it in the cache. So if you won’t benefit from having the store query cached, why pay the cost of storing it in and reading it from the cache?
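That guess can be made concrete with a deliberately simplified cost model. The symbols are illustrative assumptions, not measured EF costs: T is the cost of translating a LINQ to Entities query into a store query, S the cost of adding it to the cache, L the cost of one cache lookup (with L < T), and n the number of times the same query shape runs. With caching on, every run does a lookup, and the first run also translates and stores.

```latex
\begin{aligned}
C_{\text{off}}(n) &= nT \\
C_{\text{on}}(n)  &= T + S + nL \\
C_{\text{on}}(n) < C_{\text{off}}(n) &\iff (n-1)\,T > nL + S
\end{aligned}
```

For a single-use query (n = 1) the condition becomes 0 > L + S, which never holds, so caching is pure overhead; as n grows, the saving per run approaches T − L.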

I highly recommend reading the EF team’s walk-through on Auto-Compiled Queries for information on performance and more details about this feature and how it works, especially its note that CompiledQuery is still faster.

A bit of testing

I did a small test where I ran a simple query 10 times using the default (compiled) and 10 times with compilation turned off. I also started with a set of warmup queries to make sure that none of the queries I was timing would be affected by EF application startup costs. Here you can see the key parts of my test. Note that I’m using ObjectContext here, and that’s where the ContextOptions property lives (the same options where you find LazyLoadingEnabled, etc.). You can get there from an EF 4.1 DbContext by casting back to an ObjectContext, that is, by casting the DbContext to IObjectContextAdapter and reading its ObjectContext property.

```csharp
// Key parts of the test. BAEntities and Contact are the context and entity
// types generated from the model; FirstOrDefault requires using System.Linq.

// Default behavior in the June 2011 CTP: queries are auto-compiled and the
// resulting store queries cached.
public static void CompiledQueries()
{
    using (var context = new BAEntities())
    {
        FilteredQuery(context, 3).FirstOrDefault();
        FilteredQuery(context, 100).FirstOrDefault();
        FilteredQuery(context, 113).FirstOrDefault();
        FilteredQuery(context, 196).FirstOrDefault();
    }
}

// The same queries with auto-compilation (query plan caching) switched off.
public static void NonCompiledQueries()
{
    using (var context = new BAEntities())
    {
        context.ContextOptions.DefaultQueryPlanCachingSetting = false;
        FilteredQuery(context, 3).FirstOrDefault();
        FilteredQuery(context, 100).FirstOrDefault();
        FilteredQuery(context, 113).FirstOrDefault();
        FilteredQuery(context, 196).FirstOrDefault();
    }
}

// One query shape; only the id parameter changes between calls.
internal static IQueryable<Contact> FilteredQuery(BAEntities context, int id)
{
    var query = from c in context.Contacts.Include("Addresses")
                where c.ContactID == id
                select c;
    return query;
}
```

I used Visual Studio’s profiling tools to measure the time taken to run the compiled queries and the time taken to run the queries with compilation turned off.
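If you don't have Visual Studio's profiler handy, a rougher alternative is to time the two methods with System.Diagnostics.Stopwatch. In this sketch the `TimeRuns` helper and the `Workload` stand-in are hypothetical; in the real test you would pass CompiledQueries or NonCompiledQueries in place of the workload. The untimed warmup calls play the same role as the warmup queries described above.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper: make `warmups` untimed calls (to absorb startup costs
// such as EF metadata loading), then time `runs` calls of the same action.
TimeSpan TimeRuns(Action action, int runs, int warmups = 1)
{
    for (int i = 0; i < warmups; i++) action();
    var stopwatch = Stopwatch.StartNew();
    for (int i = 0; i < runs; i++) action();
    stopwatch.Stop();
    return stopwatch.Elapsed;
}

// Stand-in workload; replace with CompiledQueries or NonCompiledQueries.
int calls = 0;
void Workload()
{
    calls++;
    Thread.SpinWait(10_000);
}

TimeSpan total = TimeRuns(Workload, runs: 10);
Console.WriteLine($"10 timed runs: {total.TotalMilliseconds:F2} ms ({calls} calls including warmup)");
```

Dividing the non-compiled total by the compiled total gives the kind of ratio reported below; like those numbers, Stopwatch timings are indicative rather than benchmarks.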

When I executed each method (CompiledQueries and NonCompiledQueries) 3 times, I found that the compiled queries ran about 5 times faster in total than the non-compiled queries.

When I executed each method 10 times, the compiled queries ran about 3 times faster in total than the non-compiled queries.

Note that these are not benchmark numbers, just a general idea of the performance savings. The performance gain from using pre-compiled queries is not news, although, again, auto-compiled queries are not as fast as invoking a CompiledQuery. What’s news is that you now get the performance savings for free. Many developers aren’t even aware of compiled queries. Some are daunted by the code it takes to create them. And in some scenarios they are just too hard, or, in the case of DbContext, impossible, to leverage.

WCF Data Services

I mentioned data services in the blog post title. Because this compilation is the default, when you build WCF Data Services on top of an Entity Framework model, the services automatically get the performance savings as well.