Windows Azure and Cloud Computing Posts for 7/7/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

No significant articles today.


Marketplace DataMarket and OData

SAP provided updated details about their Administration Settings for OData Channel in a 7/7/2011 help topic:

When using OData Channel, you retrieve your data from a backend system, an SAP Business Suite system where you define your service. The application logic and the metadata are thus hosted on the SAP Business Suite system.

SAP NetWeaver Gateway exposes the OData services for OData Channel Object Model Groups, so you need to activate your service in your SAP NetWeaver Gateway system. For this, an IMG activity is available in the SAP NetWeaver Gateway Implementation Guide (IMG): in transaction SPRO, open the SAP Reference IMG and navigate to SAP NetWeaver > Gateway > Administration > OData Channel > Register Remote Service.

After you enter the System Alias for your backend system and, if needed, the Service Group (the Model Group on the SAP Business Suite system), a list of available services is displayed. You can now activate a service from the SAP Business Suite system by selecting it in the list and choosing Enable Service. This activation stores the relationship between the service, the system alias and the related model. You are then shown a URL pointing to the metadata document of the newly activated service, so you can test the service via Call Browser.
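By OData convention, a service's metadata document lives at $metadata under the service root, and queries append system options such as $top to an entity-set URL. The sketch below builds both URLs; the host, path, service name and the Products entity set are hypothetical placeholders, not SAP NetWeaver Gateway's actual URL layout.

```csharp
using System;

// Hypothetical service root; substitute the URL shown after "Enable Service".
var serviceRoot = new Uri("https://gateway.example.com/odata/SampleService/");

// OData convention: the metadata document is <service root>/$metadata.
Uri MetadataUri(Uri root) => new Uri(root, "$metadata");

// A query against a (hypothetical) entity set, limited with the $top option.
Uri TopQueryUri(Uri root, string entitySet, int top) =>
    new Uri(root, $"{entitySet}?$top={top}");

Console.WriteLine(MetadataUri(serviceRoot));
Console.WriteLine(TopQueryUri(serviceRoot, "Products", 5));
```

Because the service root ends with a slash, both relative URIs resolve underneath it rather than replacing its last path segment.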

Prerequisites

The SAP Business Suite system supports the OData Channel functionality.

An Object Model as well as an Object Model Group have been created in the SAP Business Suite system.

An RFC connection to the SAP Business Suite system is available.

A valid system alias exists for the SAP Business Suite system.

You have the following authorizations:

S_CTS_ADMI (Administration functions in the Change and Transport System)

S_CTS_SADM (System-specific administration, transport)

S_DEVELOP (ABAP Workbench development)

S_ICF_ADM (Administration for the Internet Communication Framework)

S_TABU_DIS (table maintenance via standard tools such as SM30)



Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Maarten Balliauw (@maartenballiauw) delivered A first look at Windows Azure AppFabric Applications in a 7/7/2011 post:

After the Windows Azure AppFabric team announced the availability of Windows Azure AppFabric Applications (preview), I signed up for early access immediately and got in. After installing the tools and creating a namespace through the portal, I decided to give it a try to see what it’s all about. Note that Neil Mackenzie also has an extensive post on “WAAFapps”, which I recommend reading as well.

So what is this Windows Azure AppFabric Applications thing?

Before answering that question, let’s have a brief look at what Windows Azure is today. According to Microsoft, Windows Azure is a “PaaS” (Platform-as-a-Service) offering. What that means is that Windows Azure offers a series of platform components like compute, storage, caching, authentication, a service bus, a database, a CDN, … to your applications.

Consuming those components is pretty low-level, though: to use caching, say, one has to add the required references, make some web.config changes and open a connection to the service. OK, an API is provided, but you can’t seamlessly integrate caching into an application in seconds (the way you literally can integrate file system access in seconds).

Meet Windows Azure AppFabric Applications. Windows Azure AppFabric Applications (why such long names, Microsoft!) redefine the concept of Platform-as-a-Service: where Windows Azure out of the box is more like a “Platform API-as-a-Service”, Windows Azure AppFabric Applications is offering tools and platform support for easily integrating the various Windows Azure components.

This “redefinition” of Windows Azure introduces some new concepts: in Windows Azure you have roles and role instances. In AppFabric Applications you don’t have that concept: AFA (yes, I managed to abbreviate it!) uses so-called Containers. A Container is a logical unit in which one or more services of an application are hosted. For example, if you have 2 web applications, caching and SQL Azure, you will (by default) have one Container containing 2 web applications + 2 service references: one for caching, one for SQL Azure.

Containers are not limited to one role or role instance: a container is a set of predeployed role instances on which your applications will run. For example, if you add a WCF service, chances are that this will be part of the same container. Or a different one if you specify otherwise.

It’s pretty interesting that you can scale containers separately. For example, one can have 2 scale units for the container holding the web applications, 3 for the WCF container, and so on. A scale unit is not necessarily just one extra instance; it may depend on how many services are in a container. In fact, you shouldn’t have to care about role instances and virtual machines anymore: with AFA (my abbreviation for Windows Azure AppFabric Applications, remember) one can truly care about only one thing: the application you are building.

Hello, Windows Azure AppFabric Applications

Visual Studio tooling support

To demonstrate a few concepts, I decided to create a simple web application that uses caching to store the number of visits to the website. After installing the Visual Studio tooling, I started with one of the templates contained in the SDK:

This template creates a few things. To start with, 2 projects are created in Visual Studio: one MVC application in which I’ll create my web application, and one Windows Azure AppFabric Application containing a file App.cs, which seems to be a DSL for building Windows Azure AppFabric Applications. Opening this DSL file gives the following canvas in Visual Studio:

As you can see, this is an overview of my application’s components and how they interact with each other. For example, “MVCWebApp” has 1 endpoint (to serve HTTP requests) + 2 service references (to Windows Azure AppFabric caching and SQL Azure). This is an important notion, as it will generate integration code for you. For example, in my MVC web application I can find the ServiceReferences.g.cs file containing the following code:

```csharp
class ServiceReferences
{
    public static Microsoft.ApplicationServer.Caching.DataCache CreateImport1()
    {
        return Service.ExecutingService.ResolveImport<Microsoft.ApplicationServer.Caching.DataCache>("Import1");
    }

    public static System.Data.SqlClient.SqlConnection CreateImport2()
    {
        return Service.ExecutingService.ResolveImport<System.Data.SqlClient.SqlConnection>("Import2");
    }
}
```

Wait a minute… This looks like a cool thing! It’s basically a factory for components that may be hosted elsewhere! Calling ServiceReferences.CreateImport1() will give me a caching client that I can immediately work with! ServiceReferences.CreateImport2() (you can change these names by the way) gives me a connection to SQL Azure. No need to add connection strings in the application itself, no need to configure caching in the application itself. Instead, I can configure these things in the Windows Azure AppFabric Application canvas and just consume them blindly in my code. Awesome!
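Conceptually, the generated class is a thin factory over a name-to-component lookup. A minimal sketch of that pattern, assuming an in-process registry (the Register and Resolve helpers are hypothetical and are not the AppFabric runtime's API), shows why the consuming code needs no connection strings or cache configuration of its own:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical registry mapping an import name to a factory. In AppFabric
// Applications the runtime plays this role; nothing here is its real API.
var factories = new Dictionary<string, Func<object>>();

// Host side: decide what a given import name resolves to.
void Register<T>(string importName, Func<T> factory) where T : class =>
    factories[importName] = () => factory();

// Consumer side: ask for a component by name and expected type.
T Resolve<T>(string importName) where T : class
{
    if (!factories.TryGetValue(importName, out var create))
        throw new KeyNotFoundException($"No import registered as '{importName}'.");
    return (T)create();
}

// A dictionary stands in for a cache client behind "Import1".
Register("Import1", () => new Dictionary<string, object>());

var cache = Resolve<Dictionary<string, object>>("Import1");
cache["visits"] = 1;
Console.WriteLine(cache["visits"]);   // 1
```

In this sketch every Resolve call runs the factory again; how the real runtime manages instance lifetime is not something it shows.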

Here’s the code for my HomeController, where I consume the cache. Even my grandmother can write this!

```csharp
using System.Web.Mvc;

[HandleError]
public class HomeController : Controller
{
    public ActionResult Index()
    {
        var count = 1;
        var cache = ServiceReferences.CreateImport1();

        // No "visits" item yet means this is the first visit.
        var countItem = cache.GetCacheItem("visits");
        if (countItem != null)
        {
            count = ((int)countItem.Value) + 1;
        }
        cache.Put("visits", count);

        ViewData["Message"] = string.Format("You are visitor number {0}.", count);

        return View();
    }

    public ActionResult About()
    {
        return View();
    }
}
```
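One caveat with this controller: reading the count and writing it back are two separate cache calls, so two simultaneous visits can both read N and both store N+1. The sketch below reproduces that with an in-process ConcurrentDictionary standing in for the cache (it is not the DataCache API) and contrasts it with an atomic per-key update.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// In-process stand-in for a shared cache (NOT the AppFabric DataCache API).
var store = new ConcurrentDictionary<string, int>();

// Read-modify-write in two steps, like the controller: not atomic.
int IncrementNaive(string key)
{
    store.TryGetValue(key, out var current);   // 0 if the key is missing
    var next = current + 1;
    store[key] = next;                          // may overwrite a concurrent write
    return next;
}

// AddOrUpdate retries its compare-and-swap until the increment lands.
int IncrementAtomic(string key) =>
    store.AddOrUpdate(key, 1, (_, current) => current + 1);

Parallel.For(0, 10_000, _ => IncrementAtomic("visits"));
Console.WriteLine($"atomic: {store["visits"]}");   // always 10000

store.Clear();
Parallel.For(0, 10_000, _ => IncrementNaive("visits"));
Console.WriteLine($"naive:  {store["visits"]}");   // may be less than 10000
```

With a distributed cache, the same fix needs an atomic or optimistic-concurrency operation on the cache itself; check what your cache client offers before relying on a read-then-write counter.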

Now let’s go back to the Windows Azure AppFabric Application canvas, where I can switch to “Deployment View”:

Deployment View basically lets you decide in which container one or more applications will be running and how many scale units a container should span (see the properties window in Visual Studio for this).

Right-clicking and selecting “Deploy…” deploys my Windows Azure AppFabric Application to the production environment.

The management portal

After logging in to http://portal.appfabriclabs.com, I can manage the application I just published:

I’m not going to go into much detail but will highlight some features. The portal enables you to manage your application: deploy/undeploy, scale, monitor, change configuration, … Basically everything you would expect to be able to do. And more! If you look at the monitoring part, for example, you will see some KPIs for your application. Here’s what my sample application shows after being deployed for a few minutes:

Pretty slick. It even monitors average latencies etc.!

Conclusion

As you can read in this blog post, I’ve been exploring this product and trying out its basics. I’m not sure yet whether this model will fit every application, but I’m sure a solution like this is where the future of PaaS lies: no longer caring about servers, VMs or instances, just deploying and letting the platform figure everything out. My business value is my application, not the fact that it spans 2 VMs.

Now, when I say “future of PaaS”, I’m also a bit skeptical… Most customers I work with use COM, require startup scripts to configure the environment, and care about the server their application runs on. In fact, some applications will never be deployable on this solution because of that. Where Windows Azure already represents a major shift in development paradigm (too large a step for many!), I think the step to Windows Azure AppFabric Applications is a bridge too far for most people. At present.

But then there are corporations… As corporations are always 10 steps behind, I foresee that this will only become mainstream within the next 5-8 years (for enterprise). Too bad! I wish most corporate environments moved faster…

If Microsoft wants this thing to succeed, I think they need to work even more on shifting minds to the cloud paradigm, and more specifically to the PaaS paradigm. Perhaps Windows 8 can be a vehicle for this: if Windows 8 shifts from “programming for a Windows environment” to “programming for a PaaS environment”, people will start following that direction. What the heck, maybe this is even a great model for Joe Average to create “apps” for Windows 8! Just as one submits an app to the App Store or Marketplace today, he or she could submit an app to a “Windows Marketplace” which in the background just drops everything on a technology like Windows Azure AppFabric Applications?

Mark O’Neill described Choosing standards for cloud authentication in a 7/7/2011 post to the SD Times on the Web blog:

CIOs naturally expect their organizations to make use of cloud applications, and often that means knitting cloud-based applications onto existing on-premise applications. The benefit is that users won’t have to enter more passwords or sign in a second time. The challenge is in choosing an authentication standard for authenticating users to the cloud services.

The Dutch computer scientist Andrew Tanenbaum said, “The nice thing about standards is that you have so many to choose from.” That describes the world of cloud authentication, except that in this case there are also some non-standards in the mix.

When choosing how to manage connections to Cloud-based services, developers have a choice between using actual approved standards (OAuth in particular, but also SAML) or de facto industry standards, which are proprietary specifications pushed by one big company (like Amazon's Query API).

OAuth and SAML are real standards. Amazon’s Query API is not, but it’s how you work with Amazon. Given the range of choice, developers often ask: is it wrong not to use the standard protocols available? From a pragmatic point of view, can you and your developers wait for your preferred cloud service providers to implement standards across the board? This might take years, and if you wait, you risk losing the advantages of being an early mover in cloud adoption.
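To show what working with a provider-specific scheme like Amazon’s Query API involves, here is a rough sketch of HMAC request signing modeled on AWS Signature Version 2: sort the query parameters, percent-encode them, sign the verb, host, path and query with HMAC-SHA256, and Base64-encode the result. Treat it as an illustration under those assumptions rather than a verified client; the access key id, secret and parameter values are placeholders.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Sort parameters by name (ordinal/byte order) and percent-encode per RFC 3986.
string Canonicalize(IDictionary<string, string> parameters) =>
    string.Join("&", parameters
        .OrderBy(kv => kv.Key, StringComparer.Ordinal)
        .Select(kv => $"{Uri.EscapeDataString(kv.Key)}={Uri.EscapeDataString(kv.Value)}"));

// HMAC-SHA256 over verb, lower-cased host, path and canonical query, Base64-encoded.
string Sign(string secretKey, string verb, string host, string path,
            IDictionary<string, string> parameters)
{
    var stringToSign = $"{verb}\n{host.ToLowerInvariant()}\n{path}\n{Canonicalize(parameters)}";
    using var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(secretKey));
    return Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));
}

// Illustrative request; the key id and secret are placeholders.
var query = new Dictionary<string, string>
{
    ["Action"] = "DescribeInstances",
    ["AWSAccessKeyId"] = "EXAMPLEKEYID",
    ["SignatureMethod"] = "HmacSHA256",
    ["SignatureVersion"] = "2",
    ["Timestamp"] = "2011-07-07T12:00:00Z",
};
query["Signature"] = Sign("example-secret", "GET", "ec2.amazonaws.com", "/", query);
Console.WriteLine(query["Signature"]);
```

None of this carries over to another provider, which is the practical cost of a non-standard scheme.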

More heavyweight applications (as in “SOAP-based”) are typically better suited to the SAML standard, and more lightweight applications (REST-based) are better suited to the OAuth standard. In terms of maturity, SAML is a tried-and-tested standard. SAML is widely used in the mainstream and is broadly supported. For example, it is used by Salesforce.com. However, SAML does not apply comfortably to REST services and APIs.

On the other hand, OAuth, while less mature, is enjoying increasing adoption and is maturing rapidly. It is a more appropriate standard for use with REST services and APIs as it is more lightweight. Additionally, OAuth is easier to bind to lightweight REST APIs, especially with mobile applications. …
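The weight difference is easy to see on the wire. A SAML assertion is an XML document, typically carried in a SOAP header or a browser POST, whereas an OAuth 2.0 client usually just adds a bearer token to each HTTP request (the scheme later standardized as RFC 6750). A minimal sketch that builds, but does not send, such a request; the endpoint and token are placeholders.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Build a REST request that carries an OAuth 2.0 bearer token.
// The endpoint and token value are placeholders; nothing is sent.
HttpRequestMessage BuildRequest(string accessToken)
{
    var request = new HttpRequestMessage(HttpMethod.Get, "https://api.example.com/v1/contacts");
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
    request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
    return request;
}

var req = BuildRequest("example-access-token");
Console.WriteLine(req.Headers.Authorization);   // Bearer example-access-token
```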

Michael Collier (@MichaelCollier) described Creating My First Windows Azure AppFabric Application in a 7/4/2011 post (missed when posted):

Microsoft recently made available the Windows Azure AppFabric June CTP to get started developing Windows Azure AppFabric applications. Read the announcement post from the AppFabric Team for a good overview of the Azure AppFabric application model. Be sure to download the CTP SDK too. You can also get a FREE 30-day Windows Azure pass at http://bit.ly/MikeOnAzure, using the promo code “MikeOnAzure”.

Once you have the SDK installed, you’ll want to get started creating your first Azure AppFabric application. Launch Visual Studio 2010 and create a new project. In the New Project Template wizard, you’ll see a new “AppFabric” section. You might have expected the Azure AppFabric projects to be located under the “Cloud” area, as that is where the other Azure project templates are. I did too. My assumption here is that this is a CTP and the location of the templates is likely to change before the final release, or that Microsoft is looking to further merge the AppFabrics (Windows Server and Windows Azure), thus making the choice a deployment target and not a specific type of project.

For the purposes of this walk-through, I’m going to create a new “AppFabric Application”. I’ll pick a cool name for my project and let Visual Studio create it. This will create an empty AppFabric Application in which I can start to add my services/components. There are a few oddities with the new project, and I will chalk this up to being an early CTP. The first is that there is no visual indication that the project is an “AppFabric” application. For instance, the project icon doesn’t contain the “AppFabric” icon overlay that is on the New Project template selection. The second thing I find odd is the “App.cs” file. At first glance, this looks like a regular C# file. There’s no visual clue that it is a special type of file. Double-clicking on the App.cs file will open the Design View for your AppFabric Application.

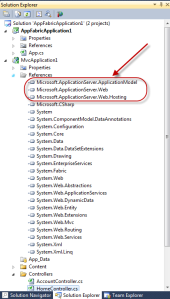

I can now add a new service by clicking on the “Add New Service” link/button in the upper-right corner of the Design View window. In this case I’m going to add a new ASP.NET MVC2 project. When that is done, the standard ASP.NET MVC2 project is added to the solution. Since I’m adding this application as part of a Windows Azure AppFabric Application, Visual Studio automatically adds a few new assemblies related to hosting my web app in the AppFabric Container. The new assemblies for the AppFabric Container are:

- Microsoft.ApplicationServer.ApplicationModel

- Microsoft.ApplicationServer.Web

- Microsoft.ApplicationServer.Web.Hosting

The Design View will also update to reflect the addition of my new ASP.NET MVC2 application. From there I can tell that my web app contains one endpoint and it does not reference any other services.

At this point I’m ready to go. To see the basic setup, I select “Start Without Debugging” (or press CTRL+F5) from the Debug menu. There is no F5 debugging option in this CTP release. Visual Studio will kick off the build and deployment process. I can view the deployment output in the Output window. Notice here that Visual Studio is starting a new emulator, the Windows Azure AppFabric Emulator. Visual Studio will also start the standard Windows Azure compute and storage emulators.

From the Output window I can see the Application Id for my deployment. Also of interest is the path to the various log files that are created as part of running in the Windows Azure AppFabric container. It is my assumption that these log files would be similar to the logging available when running the application in a production Azure AppFabric container (upcoming post to walk through that process).

Visual Studio will also launch a new console window whose title includes the Application Id for my application. This is the new emulator for Windows Azure AppFabric Applications. Right now it’s pretty rough-looking; I’d expect it to get better before the final release. I don’t see a whole lot here while the application is running. I did notice the first time I started the application that the web browser (if creating a web application) will launch and Visual Studio will report the app is deployed and ready a little prematurely. In that case, wait until the AppFabric Application emulator reports the app is ready and then refresh the browser. I only noticed this on the initial deployment for the application; subsequent deployments seem to work as expected.

Now I would like to add a new SQL Azure “service” to my application. Notice that the component is supposedly a SQL Azure service, yet the description indicates a SQL Server database (nearly any variant). Possibly a CTP issue?

At this point Visual Studio will set up the SQL Azure information for me. The design view will indicate a new SQL Azure instance is available, and viewing the Properties will allow me to set the database name, connection string, and actions to take against the database upon deployment and teardown of the application. I did notice the provisioning process did not create the database for me as I would have expected. (UPDATE: Learned from Neil Mackenzie’s recent post that the SQL actions to provision and de-provision apply only if using a DAC pack.) Also, the default setup is to use SQL Express, not SQL Azure, although changing to SQL Azure should be a simple connection string change.

Now I need to wire up the connection between my web app and the database. To do so, simply add the SQL service as a Service Reference.

At this point, I can easily add some code to query my newly created database (note that I created a database manually and configured the connection details in the Properties panel).

```csharp
using System.Data;
using System.Data.SqlClient;

// Inside the controller action:
string result;
using (SqlConnection sqlConnection = ServiceReferences.CreateSQLAzureImport())
using (SqlCommand cmd = sqlConnection.CreateCommand())
{
    sqlConnection.Open();
    cmd.CommandText = "SELECT TOP 1 ProjectName FROM Project";
    cmd.CommandType = CommandType.Text;
    // ExecuteScalar returns null when no row matches; "as string" also tolerates DBNull.
    result = cmd.ExecuteScalar() as string;
}
```

At this point I can press CTRL+F5 to run my application. I expected my home page to show some data from my SQL connection. Instead, I was greeted with an error message stating that I didn’t have permissions to access the database. That’s odd... I’m an admin on the machine!

Applications running in the AppFabric container run under the NT AUTHORITY\NETWORK SERVICE security context, and as such don’t have many rights. I changed the SQL connection string from the default Integrated Security to instead use SQL authentication (by setting User ID and Password properties).
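The fix amounts to rewriting the connection string. A sketch using the framework’s general-purpose DbConnectionStringBuilder; the server, database, user name and password are placeholders. SQL Azure itself accepted only SQL authentication at the time, so the same User ID/Password form applies there, with the server address pointing at database.windows.net.

```csharp
using System;
using System.Data.Common;

// Placeholder default connection string using Windows (integrated) authentication.
var builder = new DbConnectionStringBuilder
{
    ConnectionString = @"Data Source=.\SQLEXPRESS;Initial Catalog=ProjectsDb;Integrated Security=True"
};

// Replace integrated security with SQL authentication (User ID / Password).
void UseSqlAuthentication(DbConnectionStringBuilder b, string userId, string password)
{
    b.Remove("Integrated Security");   // key lookup is case-insensitive
    b["User ID"] = userId;
    b["Password"] = password;
}

UseSqlAuthentication(builder, "appuser", "example-password");
Console.WriteLine(builder.ConnectionString);
```

In a real deployment the credentials belong in the service’s configuration properties, not in code.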

To complete my fun little app, I decided to add a reference to Windows Azure AppFabric Cache to cache data instead of hitting the SQL Azure database each time. To use it, I simply entered my cache authorization token and cache service URL in the service properties panel.
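Caching data instead of querying the database on every request is the cache-aside pattern: look in the cache, and only on a miss (or after expiry) load from the source and store the result with a time-to-live. A self-contained sketch with an in-memory dictionary standing in for AppFabric Caching and an injectable clock, both assumptions made so the example runs anywhere:

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-in for a cache: key -> (value, absolute expiry in UTC).
var entries = new Dictionary<string, (object Value, DateTime ExpiresUtc)>();
DateTime now = DateTime.UtcNow;   // injectable clock; tests can move it forward

T GetOrAdd<T>(string key, Func<T> load, TimeSpan ttl)
{
    if (entries.TryGetValue(key, out var entry) && entry.ExpiresUtc > now)
        return (T)entry.Value;                 // hit: skip the expensive load
    var value = load();                        // miss or expired: reload
    entries[key] = (value, now + ttl);
    return value;
}

int loads = 0;
DateTime LoadTime() { loads++; return DateTime.UtcNow; }

var first = GetOrAdd<DateTime>("time", LoadTime, TimeSpan.FromMinutes(5));
var second = GetOrAdd<DateTime>("time", LoadTime, TimeSpan.FromMinutes(5));
Console.WriteLine($"loads = {loads}, same value: {first == second}");   // loads = 1, same value: True
```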

The code to work with AF Cache in an AF Application is pretty darn easy.

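```csharp
using System;
using Microsoft.ApplicationServer.Caching;

DataCache cache = ServiceReferences.CreateDataCacheImport();
DateTime? time = cache.Get("time") as DateTime?;
if (time == null)
{
    // Cache miss: compute the value and keep it for five minutes.
    time = DateTime.UtcNow;
    // Put overwrites; Add would throw if a concurrent request stored "time" first.
    cache.Put("time", time, TimeSpan.FromMinutes(5));
}
```

When done, the deployment diagram for my simple app looks like this: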

Overall, I’m pretty happy with this first CTP of the Windows Azure AppFabric Application SDK. It has its rough edges, but it’s pretty nice overall. I can definitely see the value-add and the direction looks really good. Exciting!!

In an upcoming post I’ll walk through the process of deploying this application to the Windows Azure AppFabric container.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team (@WindowsAzure) posted Now Available: Building blocks to help ISVs develop Multi-Tenant SaaS solutions on Windows Azure on 7/7/2011:

Software-as-a-Service (SaaS) is outpacing traditional packaged software in terms of market growth, according to some recent market research. As new ISVs develop SaaS solutions or traditional ISVs transform their business to the SaaS model, they face some challenges in building a core SaaS framework that includes scaling, managing and metering their service.

In building SaaS solutions, multi-tenancy helps ISVs optimize resource utilization and lower the cost of goods sold (COGS). For an ISV to build a successful multi-tenant SaaS solution, it needs to address the operations needs of the service in the form of monitoring, metering and scaling. The Windows Azure ISV team at Microsoft has developed a set of building blocks to help ISVs with these operations.

This building blocks sample provides developers with the functionality to handle overall and per-tenant metering (database, storage, bandwidth, compute-surrogates), scaling (time-based and KPI based), as well as the ability to monitor and chart a set of KPIs for a visual representation of the health of the system. The building blocks also include self-service provisioning and identity management in a multi-tenanted environment. These facets are core to any multi-tenant solution and the sample provides a great starting point for ISVs to help incorporate these features in their apps.
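Per-tenant metering boils down to tagging every usage reading with a tenant and rolling the readings up, both per tenant and overall. A minimal sketch of that aggregation; the tenants, resource names and quantities are made up, and this is not Cloud Ninja’s metering code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical raw meter readings: (tenant, resource, quantity).
var samples = new List<(string Tenant, string Resource, double Quantity)>
{
    ("contoso",  "StorageGB",   1.5),
    ("contoso",  "BandwidthGB", 0.25),
    ("fabrikam", "StorageGB",   3.0),
    ("contoso",  "StorageGB",   0.5),
};

// Per-tenant totals for each resource.
Dictionary<(string Tenant, string Resource), double> PerTenant(
    IEnumerable<(string Tenant, string Resource, double Quantity)> readings) =>
    readings.GroupBy(r => (r.Tenant, r.Resource))
            .ToDictionary(g => g.Key, g => g.Sum(r => r.Quantity));

// Overall totals for each resource across all tenants.
Dictionary<string, double> Overall(
    IEnumerable<(string Tenant, string Resource, double Quantity)> readings) =>
    readings.GroupBy(r => r.Resource)
            .ToDictionary(g => g.Key, g => g.Sum(r => r.Quantity));

foreach (var kv in PerTenant(samples))
    Console.WriteLine($"{kv.Key.Tenant} {kv.Key.Resource}: {kv.Value}");
```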

Check out the [Cloud Ninja] project published on CodePlex [See post below.] The project includes the following artifacts:

- Source code (this is the complete source code, it is componentized for easy consumption)

- Documentation (this details the core features of the building blocks, the design decisions and technology aspects of the solution; high level and detailed architecture for each component etc.)

- User guide (project setup and configurations)

- Walkthrough (provides details for the app feature set by walking through the functionality)

Please submit your feedback and questions regarding this sample here.

The Windows Azure ISV Team posted the Cloud Ninja Project v2.1 to CodePlex on 6/29/2011 (missed when posted, see above post):

Project Description

The Cloud Ninja Project is a Windows Azure multi-tenant sample application demonstrating metering and automated scaling concepts, as well as some common multi-tenant features such as automated provisioning and federated identity. This sample was developed by the Azure Incubation Team in the Developer & Platform Evangelism group at Microsoft in collaboration with Full Scale 180. One of the primary goals throughout the project was to keep the code simple and easy to follow, making it easy for anyone looking through the application to follow the logic without having to spend a great deal of time trying to determine what’s being called or have to install and debug to understand the logic.

Key Features

- Metering

- Automated Scaling

- Federated Identity

- Provisioning
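Cloud Ninja combines time-based and KPI-based scaling. The sketch below shows one way such rules can be combined into a target instance count; the thresholds, business hours and limits are hypothetical values chosen for illustration, not the project’s settings.

```csharp
using System;

// Hypothetical policy: illustrative thresholds, not Cloud Ninja's actual rules.
int DesiredInstances(int current, double avgCpuPercent, DateTime utcNow,
                     int min = 2, int max = 10, int businessHoursMin = 4)
{
    // Time-based rule: raise the floor on weekdays between 08:00 and 18:00 UTC.
    bool weekday = utcNow.DayOfWeek != DayOfWeek.Saturday && utcNow.DayOfWeek != DayOfWeek.Sunday;
    bool businessHours = weekday && utcNow.Hour >= 8 && utcNow.Hour < 18;
    int floor = businessHours ? Math.Max(min, businessHoursMin) : min;

    // KPI-based rule: add an instance above 75% CPU, remove one below 25%.
    int target = avgCpuPercent > 75 ? current + 1
               : avgCpuPercent < 25 ? current - 1
               : current;

    // Never go below the floor or above the hard maximum.
    return Math.Min(Math.Max(target, floor), max);
}

var thursdayAfternoon = new DateTime(2011, 7, 7, 14, 0, 0, DateTimeKind.Utc);
Console.WriteLine(DesiredInstances(3, 82.0, thursdayAfternoon));   // 4
```

Stepping by one instance per evaluation keeps the sketch simple; a real policy would also need a cool-down so it does not oscillate.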

Version 2.0 of Project Cloud Ninja is in progress and the initial release is available. In addition to a few new features in the solution, we have fixed a number of bugs.

New Features

- Metering Charts

- Changes to metering views

- Dynamic Federation Metadata Document

Contents

- Sample source code

- Design document

- Setup guide

- Sample walkthrough

Notes

- Project Cloud Ninja is not a product or solution from Microsoft. It is a comprehensive code sample developed to demonstrate the design and implementation of key features on the platform.

- Another sample (also known as the Fabrikam Shipping Sample) published by Microsoft provides in-depth coverage of Identity Federation for multi-tenant applications, and we recommend you review it in addition to Cloud Ninja. In Cloud Ninja we utilized the concepts in that sample but put more emphasis on metering and automated scaling. You can find the sample here and the related Patterns and Practices team's guidance here.

Team

- Bhushan Nene (Microsoft)

- Kashif Alam (Microsoft)

- Dan Yang (Microsoft)

- Ercenk Keresteci (Full Scale 180)

- Trent Swanson (Full Scale 180)


Kevin Kell described issues with Interoperability in the Cloud in a 7/7/2011 post to the Learning Tree blog:

One of the nice things about cloud computing is that it allows for choice.

That is, I am free to choose from any and all available technologies at any time. Yes, vendor lock-in is a concern, but I am not really that concerned about it! Here’s why: in the cloud, there are almost always multiple ways to make something work. A bold assertion perhaps, but here is what I mean.

Let’s say you come from a Windows programming background. Let’s say you want to deploy a simple contact management application to the Cloud. Cool. The Azure Platform has you covered. You could easily create and deploy your app to Azure. Probably you need some kind of persistent storage and, being a relational database kind of person, you choose SQL Azure.

So, here is that app: http://mycontacts.cloudapp.net/

(You may see a certificate warning, which you can ignore; I assure you the site is safe!)

Now let’s say you really like the relational database that SQL Azure offers, but, for some reason, you don’t want to host your application on Windows Azure. Why not? Well, for one thing, it may be too expensive, at least for what you want to do right now. How can we reduce the startup cost? Sure, if this application goes viral you may need to scale it … but for now what? Maybe you could choose to deploy to an EC2 t1.micro Instance, monitor it, and see what happens.

So, here is that app: http://50.18.104.190/MyContacts/

If some readers recognize this application as one created with Visual Studio LightSwitch they are correct! The same app has been seamlessly deployed both to Azure and EC2 right from within Visual Studio. They both hit the same backend database on SQL Azure.

Here are the Economics:

There are differences, of course. Azure is a PaaS whereas EC2 is IaaS. If you are unclear on the difference please refer to this excellent article by my colleague Chris Czarnecki.

The point is developers (and organizations) have choice in the cloud. Choice is a good thing. In the future perhaps I will port the front end to Java and host it on Google App Engine, but that is a topic for another time!

Go ahead … add yourself to my contacts. Let’s see how this thing scales!

Brian Tinman asserted “Most manufacturing ERP users are unable to access the software from their mobile devices, according to a study commissioned by IFS North America” in a deck for his ERP users can’t access data from their mobiles article for the WorksManagement.co.uk site:

In its study of more than 281 manufacturing executives, conducted with US-based analyst Mint-Jutras, only 27% of respondents were performing any functions with their ERP software using a smart phone.

Nearly half (47%) said they had little to no access to ERP from any mobile device. And among CRM (customer relationship management) users, 32% said they had little to no mobile access to their applications.

Interestingly, however, respondents also indicated that mobile interfaces to ERP will start to figure in their software selection processes, particularly where ERP and CRM are concerned.

"We realise how important mobile access to key enterprise data is today and will be in the future," says IFS CTO Dan Matthews.

"That is why we are … delivering mobile functionality through the IFS Cloud – a set of web services running inside Microsoft's Windows Azure cloud environment," he adds. [Emphasis added.]

Matthews explains that by putting most of the logic for the apps inside IFS Cloud, IFS also reduces the overhead of providing apps for multiple device platforms, "allowing us to get more mobile functionality to customers to market faster than would otherwise be the case".

IFS recently launched its Touch Apps initiative, which gives smart phone access to users of the IFS Applications enterprise suite via Microsoft's Windows Azure Cloud.

Jialiang Ge posted Channel 9 Visual Studio Toolbox: demoing All-In-One Code Framework on 7/7/2011 to the Microsoft All-In-One Code Framework blog:

Mei Liang, one of the owners of All-In-One Code Framework, was interviewed by Robert Green, host of Channel 9’s Visual Studio Toolbox, to introduce All-In-One Code Framework and demonstrate the Sample Browser Visual Studio extension.

http://channel9.msdn.com/Shows/Visual-Studio-Toolbox/Visual-Studio-Toolbox-All-In-One-Code-Framework

All-In-One Code Framework Sample Browser and Sample Browser Visual Studio Extension give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and automatically be notified about sample updates.

Sample download and management used to be a pain for many developers. In the past, developers downloaded various samples to their local folders, easily forgot where a sample had been downloaded, and had to download it again and again. Now, Sample Browser allows you to download code samples with a single click. It automatically manages all downloaded samples in a centralized place. If a sample has already been downloaded, Sample Browser displays the “Open” button next to the code sample. If an update becomes available for a code sample, an [Update Available] button will remind you to download the updated code sample.
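The download-management behavior described above reduces to a small decision per sample. A hypothetical sketch: the sample ids, version numbers and the “Download” label are assumptions, and only “Open” and “Update Available” come from the description.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical local index: sample id -> version that was downloaded.
var downloaded = new Dictionary<string, int> { ["SampleA"] = 1 };

// Decide which action to offer next to a sample, given the catalog's latest version.
string ButtonFor(string sampleId, int latestVersion)
{
    if (!downloaded.TryGetValue(sampleId, out var localVersion))
        return "Download";                       // not on disk yet
    return localVersion < latestVersion
        ? "Update Available"                     // newer version in the catalog
        : "Open";                                // up to date locally
}

Console.WriteLine(ButtonFor("SampleA", 2));   // Update Available
```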

You can try out Sample Browser and Sample Browser Visual Studio extension from the following links:

http://1code.codeplex.com/releases/view/64539

http://visualstudiogallery.msdn.microsoft.com/4934b087-e6cc-44dd-b992-a71f00a2a6df

At the end of July, you will see new versions of the Sample Browsers with many new features. Please follow us on Twitter, Facebook or the blog RSS feed to get first-hand news. You are also welcome to email onecode@microsoft.com with your suggestions.

Here’s a screen capture of the browser with Windows Azure samples selected for Visual Studio 2010:

Tim Anderson (@timanderson) explained The frustration of developing for Facebook with C# in a 7/7/2011 post:

I am researching a piece on developing for Facebook with Microsoft Azure, and of course the first thing I did was to try it out.

It is not easy. The first problem is that Facebook does not care about C#. There are four SDKs on offer: JavaScript, Apple iOS, Google Android, and PHP. This has led to a proliferation of experimental and third-party SDKs which are mostly not very good.

The next problem is that the Facebook API is constantly changing. If you try to wrap it neatly in an SDK, it is likely that some things will break when the next big change comes along.

This leads to the third problem, which is that Google may not be your friend. That helpful article or discussion on developing for Facebook might be out of date now.

Now, there are a couple of reasons why it should be getting better. Jim Zimmerman and Nathan Totten at Thuzi (Totten is now a technical evangelist at Microsoft) created a new C# Facebook SDK, needing it for their own apps and frustrated with what was on offer elsewhere. The Facebook C# SDK looks like it has some momentum.

C# 4.0 actually works well with Facebook, thanks to the dynamic keyword, which makes it easier to cope with Facebook’s changes and also lets it map closely to the official PHP SDK, as Totten explains.
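C# 4.0's dynamic keyword resolves member access at run time, so code that reads a Graph API response does not need a class for every shape Facebook returns. Python has no `dynamic` keyword, but parsing JSON into `types.SimpleNamespace` objects gives a similar feel. This is a rough analogue for illustration, not the Facebook C# SDK, and the sample response is made up.

```python
import json
from types import SimpleNamespace


def parse_graph_response(text: str):
    """Parse JSON so fields are read as attributes, resolved only at run time."""
    return json.loads(text, object_hook=lambda d: SimpleNamespace(**d))


if __name__ == "__main__":
    # Made-up response; extra or missing fields do not break parsing.
    me = parse_graph_response(
        '{"id": "1000", "name": "Jane Doe", "location": {"name": "Seattle"}}'
    )
    print(me.name, me.location.name)
    # A field the API might drop or rename: check for it instead of assuming it.
    print(getattr(me, "email", "no email field"))
```

The trade-off is the one the post goes on to describe: an editor cannot offer completions for `me.location.name`, because nothing declares it.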

Nevertheless, there are still a few problems. One is that documentation for the SDK is sketchy to say the least. There is currently no reference for it on the Codeplex site, and most of the comments are the kind that produces impressive-looking automatic documentation but actually tells you nothing of substance. Plucking one at random:

FacebookClient.GetAsync(System.Collections.Generic.IDictionary<string,object>)

Summary:

Makes an asynchronous GET request to the Facebook server.

Parameters:

parameters: The parameters.

Another problem, inherent to dynamic typing, is that IntelliSense (auto-completion in Visual Studio) has limited value. You constantly need to reference the Facebook documentation.

Finally, the SDK has changed quite a bit in different versions and some of the samples reference old versions.

In particular, I found it a struggle getting OAuth authentication and access token retrieval working and ended up borrowing Totten’s sample code here, which mostly works. Note, though, that the code in the sample does not cope with the same user logging out and logging in again; I fixed this by changing his InMemoryUserStore to use a ConcurrentDictionary instead of a ConcurrentBag, though there are plenty of other ways you can store users.
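Anderson doesn't show his change, but the idea behind it is easy to sketch. One plausible reading is that a bag can only accumulate entries, so a returning user is hard to find and replace, while a dictionary keyed by user ID simply overwrites the old entry. The Python class below models that with a lock around a `dict`; `InMemoryUserStore` and `FacebookUser` here are hypothetical stand-ins, not Totten's C# classes.

```python
import threading
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class FacebookUser:
    user_id: str
    access_token: str


class InMemoryUserStore:
    """Thread-safe user store keyed by user ID (hypothetical sketch)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._users: Dict[str, FacebookUser] = {}

    def add_or_update(self, user: FacebookUser) -> None:
        # Logging in again replaces the previous entry instead of adding a duplicate.
        with self._lock:
            self._users[user.user_id] = user

    def get(self, user_id: str) -> Optional[FacebookUser]:
        with self._lock:
            return self._users.get(user_id)

    def remove(self, user_id: str) -> None:
        # Logging out drops the user; unknown IDs are ignored.
        with self._lock:
            self._users.pop(user_id, None)

    def __len__(self) -> int:
        with self._lock:
            return len(self._users)
```

In .NET, ConcurrentDictionary's indexer setter or AddOrUpdate method performs the same replacement atomically, without an explicit lock.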

I’m puzzled why Microsoft does not invest more in making this easier. Microsoft invested in Facebook and it is easy to get the impression that Microsoft and Facebook are in some sort of informal alliance versus Google. Windows Phone 7, for example, ties in closely with Facebook and is probably the best Facebook phone out there.

As it is, although I prefer coding in C# to PHP, I would say that choosing PHP as the platform for your Facebook app will present less friction.


Cory Fowler (@SyntaxC4) described Dealing with Windows Azure SDK1.3 MachineKey issue causing inconsistent Hash/Encryption with Powershell [Work in Progress] in a 7/6/2011 post:

There has been a rise in the number of encryption questions on the Windows Azure Forums. First off, I’d like to applaud those who are playing it safe in the cloud by encrypting their data.

With the FBI’s recent takeover of Instapaper’s servers during an unrelated raid, business and technical decision makers are wondering how secure their systems would be in a similar scenario. Fortunately, Instapaper had encrypted its users’ passwords; however, other potentially sensitive data, such as email addresses and bookmark information, was not encrypted, which may have exposed a number of its users.

[NOTE: This post is currently a work in progress, but I wanted to share what I was working on. Currently this workaround for the SDK 1.3 Machine Key issue is subject to a race condition, which is described in more detail in my previous post: Windows Azure Role Startup Life-Cycle]

Known Issue: Windows Azure Machine Key is replaced on Deployment

To better understand the machineKey element in the web.config file, check out its documentation in the MSDN Library. You can also generate a machine key using an online tool provided by ASPNetResources.com.
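If you prefer not to use an online generator, random key material can also be produced locally. This Python sketch uses the standard `secrets` module. The byte lengths are an assumption chosen to match the keys in this post's example command line (64 hex characters for the AES decryption key, 128 for the SHA1 validation key), so check the MSDN machineKey documentation for the lengths your configuration accepts.

```python
import secrets


def generate_machine_key(decryption_bytes: int = 32, validation_bytes: int = 64) -> dict:
    """Return random machineKey attribute values as upper-case hex strings."""
    return {
        "decryption": "AES",
        "decryptionKey": secrets.token_hex(decryption_bytes).upper(),
        "validation": "SHA1",
        "validationKey": secrets.token_hex(validation_bytes).upper(),
    }


if __name__ == "__main__":
    for name, value in generate_machine_key().items():
        print(f'{name}="{value}"')
```

`secrets` draws from the operating system's cryptographically secure random source, which is what key material needs; the general-purpose `random` module is not suitable here.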

This post was prompted by a known issue, introduced in Windows Azure SDK 1.3, that replaces the machine key in your web.config file on deployment. A workaround for this issue is posted in the Windows Azure documentation on MSDN; however, that solution carries a security warning [See Below], which this solution tries to mitigate.

Security Note

Elevated privileges are required to use IIS 7.0 Managed Configuration API for any purpose, such as programmatic configuration of sites.

The role entry point must run with elevated privileges for this workaround to function correctly. The steps below configure the role for elevated execution privilege. This configuration applies only to the role entry point process; it does not apply to the site itself. Note that the role entry point process remains running with elevated privileges indefinitely. Exercise caution.

Avoiding an Indefinite Elevation of Privileges with Startup Tasks

Startup tasks, which were also introduced in Windows Azure SDK 1.3, are a very powerful tool in a Windows Azure developer’s arsenal.

Startup tasks can execute any file the command shell can run, including .msi, .bat, .cmd, and PowerShell (.ps1) files. Their purpose is to modify the base operating system image so that it works for the application being deployed.

Each startup task can be given its own privilege level, so only the task that needs elevation has to run elevated.

Scripting a Machine Key into the web.config of each Website within IIS with Powershell

PowerShell is a powerful scripting language that provides access to IIS through its WebAdministration module. Below you will find a reusable script that replaces the machineKey in the web.config file located in the ‘siteroot’ of a Windows Azure deployment.

param([string]$DecryptionToken, [string]$DecryptionKey, [string]$ValidationToken, [string]$ValidationKey)

Import-Module WebAdministration

# Get-Website with no arguments returns every site defined in IIS.
foreach ($site in Get-Website) {
    $curSite = $site.Name.ToString()
    $psPath = "IIS:\Sites\$curSite"
    # Write each machineKey attribute into the site's root web.config.
    Set-WebConfigurationProperty -Filter /system.web/machineKey -PSPath $psPath -Name decryption -Value $DecryptionToken
    Set-WebConfigurationProperty -Filter /system.web/machineKey -PSPath $psPath -Name decryptionKey -Value $DecryptionKey
    Set-WebConfigurationProperty -Filter /system.web/machineKey -PSPath $psPath -Name validation -Value $ValidationToken
    Set-WebConfigurationProperty -Filter /system.web/machineKey -PSPath $psPath -Name validationKey -Value $ValidationKey
}

Remove-Module WebAdministration

This script could potentially be cleaned up by someone with more PowerShell experience, but it achieves the goal that has been set out.
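To see what the script changes on disk, this Python sketch performs the same edit on a single web.config document with the standard `xml.etree.ElementTree` module: it finds (or creates) `<system.web><machineKey>` and sets the four attributes. It is an offline illustration, not a replacement for the IIS cmdlets, and it assumes the `<configuration>` root element carries no XML namespace.

```python
import xml.etree.ElementTree as ET


def _child(parent: ET.Element, tag: str) -> ET.Element:
    """Return the first direct child with this tag, creating it if absent."""
    for element in parent:
        if element.tag == tag:
            return element
    return ET.SubElement(parent, tag)


def set_machine_key(web_config: str, decryption: str, decryption_key: str,
                    validation: str, validation_key: str) -> str:
    """Return the web.config text with its machineKey attributes set."""
    root = ET.fromstring(web_config)  # the <configuration> element
    machine_key = _child(_child(root, "system.web"), "machineKey")
    machine_key.set("decryption", decryption)
    machine_key.set("decryptionKey", decryption_key)
    machine_key.set("validation", validation)
    machine_key.set("validationKey", validation_key)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    before = ('<configuration><system.web><compilation debug="false" />'
              '</system.web></configuration>')
    print(set_machine_key(before, "AES", "AB12", "SHA1", "CD34"))
```

Reusing an existing `machineKey` element rather than appending a new one matters: running the edit twice must not leave two conflicting elements in the file.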

Slight Script Assumption

As you can see, this script iterates over all of the sites within IIS, so each site gets the same machine key in its individual web.config file. Whether this is acceptable depends on your particular scenario; it might not be desirable for a multi-tenant application.

The PowerShell script above is only one part of the equation. The second piece is the call to this script, which can be placed in a command script (.cmd) to be used as our startup task.

Powershell.exe -ExecutionPolicy RemoteSigned -Command ".\ChangeMachineKey.ps1" 'AES' 'BC69E927D76C3B2C8BA96683AEE46324FC94C4760596238B5BB444E04F5C643C' 'SHA1' '81CB49C2CC63FC38B93A19010953E91F605B67103101CBE64A9B81386137556E3DADA60D2AD2DE61EDA2B0071C84E4904722EA0BB79A7750DCBB79A860C66E60'

In order to run PowerShell script files within Windows Azure you *MUST* change the ExecutionPolicy; I suggest using RemoteSigned. Next, -Command references the PowerShell script and passes the arguments for the decryption type, decryption key, validation type, and validation key, which are the values required by the attributes of the machineKey element.
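Because the four values are positional, a typo or swapped argument is easy to miss in a long one-line .cmd file. A small generator can assemble the line and reject keys that are not hexadecimal before anything is deployed. This Python sketch is hypothetical tooling, not part of the Windows Azure SDK; it only builds the command string in the argument order shown above and does not run PowerShell.

```python
import re

_HEX = re.compile(r"[0-9A-Fa-f]+")


def build_startup_command(decryption: str, decryption_key: str,
                          validation: str, validation_key: str,
                          script: str = r".\ChangeMachineKey.ps1") -> str:
    """Return the PowerShell line for the startup .cmd file."""
    for label, key in (("decryption key", decryption_key),
                       ("validation key", validation_key)):
        if not _HEX.fullmatch(key):
            raise ValueError(f"{label} must be a hexadecimal string")
    # Same order the script's param() block expects.
    args = " ".join(f"'{value}'" for value in
                    (decryption, decryption_key, validation, validation_key))
    return f'Powershell.exe -ExecutionPolicy RemoteSigned -Command "{script}" {args}'


if __name__ == "__main__":
    # Shortened keys, for illustration only.
    print(build_startup_command("AES", "BC69E927", "SHA1", "81CB49C2"))
```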

Then, to set up the startup task, add the following to the service definition file within the WebRole definition.

<Startup>
  <Task commandLine="PowershellRun.cmd" executionContext="elevated" taskType="background" />
</Startup>

When dealing with startup tasks, it is important to set them up properly within your web project file [Read: How to Resolve Cannot Find File Error for Windows Azure Startup Tasks in Visual Studio].

Cory Fowler (@SyntaxC4) explained How to Resolve ‘error: Cannot find file named 'approot\bin\startup.cmd'’ for Windows Azure Startup Tasks in Visual Studio in a 7/6/2011 post:

A number of people have been coming across an issue when attempting to create Startup Tasks in their Windows Azure Deployments. Typically I’ve been packaging my deployments with the CSPack Utility which is part of the Windows Azure Tools for Visual Studio. [Read: Installing PHP in Windows Azure Leveraging Full IIS Support: Part 3]

You can resolve this issue by selecting your startup script file in Solution Explorer and opening its Properties [Alt + Enter]. The second item in the list is “Copy to Output Directory”; set it to either “Copy Always” or “Copy if Newer”.

In the example above, my startup script is named PowershellRun, but the same fix applies to a script named startup.cmd, as in this post’s title.
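The “Copy to Output Directory” setting is stored in the project file as a `CopyToOutputDirectory` element on the file's item, with “Copy always” saved as `Always` and “Copy if newer” as `PreserveNewest`. The Python sketch below checks a Visual Studio 2010-style project file for that setting before you package. The function name is hypothetical, and it assumes the MSBuild 2003 XML namespace that those project files declare.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = "{http://schemas.microsoft.com/developer/msbuild/2003}"
COPIES = {"Always", "PreserveNewest"}  # "Copy always", "Copy if newer"


def is_copied_to_output(project_xml: str, file_name: str) -> bool:
    """True if the item whose Include matches file_name is copied to the output folder."""
    root = ET.fromstring(project_xml)
    for item_group in root.iter(MSBUILD_NS + "ItemGroup"):
        for item in item_group:
            if item.get("Include", "").lower() != file_name.lower():
                continue
            for setting in item:
                if (setting.tag == MSBUILD_NS + "CopyToOutputDirectory"
                        and (setting.text or "").strip() in COPIES):
                    return True
    return False


if __name__ == "__main__":
    project = ('<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003">'
               '<ItemGroup><Content Include="startup.cmd">'
               '<CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>'
               '</Content></ItemGroup></Project>')
    print(is_copied_to_output(project, "startup.cmd"))
```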

<Return to section navigation list>

Visual Studio LightSwitch

Sheel Shah described Creating a Custom Add or Edit Dialog (Sheel Shah) in a 7/7/2011 post to the Visual Studio LightSwitch blog:

LightSwitch provides built-in functionality to quickly add or edit data in a grid or list. Unfortunately, this modal dialog is not customizable. In more complex data entry scenarios, the built-in dialogs may not fulfill your customer's needs. There are a couple of options in this situation.

- Provide specific screens for adding/editing records. For extremely complex data entry or manipulation scenarios, this may be the best option. However, your application will need to manually manage refreshing data on the list or grid screens.

- Replace the built-in modal dialog with your own. This is ideal for quick edit or add functionality but does require a bit of code to achieve.

This article will describe how to provide a customized dialog for adding or editing records in a grid.

Getting Set Up

Create a new LightSwitch application. Add a Customer table with the following fields:

- FirstName (String, Required)

- MiddleInitial (String, Not Required)

- LastName (String, Required)

- PhoneNumber (Phone Number, Required)

Create a new Editable Grid screen for Customers.

Define the Modal Window

On the Editable Grid screen, right click on the top-most item and select "Add Group".

On the group, select the dropdown next to "Rows Layout" and change this to "Modal Window". Select the "Add" button underneath the modal window and choose Selected Item. This will display the currently selected customer in the modal window. This view can now be customized as necessary.

By default, a modal window group will display a button on the screen. This button can be used to launch the window. In our scenario, we don't want this default button display - instead, we'd like to launch the modal window whenever the user clicks the Add or Edit button in a list or grid. Select the modal window and in the properties grid set the "Show Button" property to false. We can also change the name of the group to CustomerEditDialog.

Modifying the Grid’s Add/Edit Functionality

We can now override the grid's add and edit buttons to instead launch our modal window. Open the Command Bar for the data grid, right click on the "Add…" button and select "Override Code".

Add the following code for the Execute and CanExecute methods. This code will add a new record to the collection and open the modal window.

Private Sub gridAddAndEditNew_CanExecute(ByRef result As Boolean)
    result = Me.Customers.CanAddNew()
End Sub

Private Sub gridAddAndEditNew_Execute()
    Dim newCustomer As Customer = Me.Customers.AddNew()
    Me.Customers.SelectedItem = newCustomer
    Me.OpenModalWindow("CustomerEditDialog")
End Sub

Similarly, right-click on the "Edit…" button in the screen editor, select "Override Code", and add the following code.

Private Sub gridEditSelected_CanExecute(ByRef result As Boolean)
    result = Me.Customers.CanEdit AndAlso Me.Customers.SelectedItem IsNot Nothing
End Sub

Private Sub gridEditSelected_Execute()
    Me.OpenModalWindow("CustomerEditDialog")
End Sub

Test the Application

Run the application and click on the Add or Edit buttons in the Editable Customer Grid. This should launch our custom dialog.

Unfortunately, the dialog we've created is quite limited in functionality. It does not include the Ok and Cancel buttons of the built-in dialog. It also does not update its title based on whether it is editing or creating a customer.

Providing Additional Functionality

In the screen designer, right click on the Modal Window and select "Add Button...". Choose "New Method" and name the method EditDialogOk. In the property sheet, change the Display Name of the button to just Ok.

Add a similar button called EditDialogCancel. Again, change the display name of the button to just Cancel.

Double-click on the Ok button to add code. The following code closes the modal dialog when either Ok or Cancel is pressed.

Private Sub EditDialogOk_Execute()
    Me.CloseModalWindow("CustomerEditDialog")
End Sub

Private Sub EditDialogCancel_Execute()
    Me.CloseModalWindow("CustomerEditDialog")
End Sub

This code simply closes the dialog when the user presses Cancel. This is not ideal: when Cancel is pressed, we'd like new records removed and changes to existing records reverted. This is possible, but requires a fair bit of code. The following helper class will provide this functionality.

Public Class DialogHelper
    Private _screen As Microsoft.LightSwitch.Client.IScreenObject
    Private _collection As Microsoft.LightSwitch.Client.IVisualCollection
    Private _dialogName As String
    Private _isEditing As Boolean = False
    Private _entity As IEntityObject

    Public Sub New(ByVal visualCollection As Microsoft.LightSwitch.Client.IVisualCollection, ByVal dialogName As String)
        _screen = visualCollection.Screen
        _collection = visualCollection
        _dialogName = dialogName
    End Sub

    Public Sub InitializeUI()
        'This code may not work in Beta 2. Fixed in final release.
        AddHandler _screen.FindControl(_dialogName).ControlAvailable,
            Sub(sender As Object, e As ControlAvailableEventArgs)
                Dim childWindow As System.Windows.Controls.ChildWindow = e.Control
                AddHandler childWindow.Closed,
                    Sub(s1 As Object, e1 As EventArgs)
                        If _entity IsNot Nothing Then
                            DirectCast(_entity.Details, IEditableObject).CancelEdit()
                        End If
                    End Sub
            End Sub
    End Sub

    Public Function CanEditSelected() As Boolean
        Return _collection.CanEdit() AndAlso (Not _collection.SelectedItem Is Nothing)
    End Function

    Public Function CanAdd() As Boolean
        Return _collection.CanAddNew()
    End Function

    Public Sub AddEntity()
        _isEditing = False
        _collection.AddNew()
        _screen.FindControl(_dialogName).DisplayName = "Add " + _collection.Details.GetModel.ElementType.Name
        BaseOpenDialog()
    End Sub

    Public Sub EditSelectedEntity()
        _isEditing = True
        _screen.FindControl(_dialogName).DisplayName = "Edit " + _collection.Details.GetModel.ElementType.Name
        BaseOpenDialog()
    End Sub

    Private Sub BaseOpenDialog()
        _entity = _collection.SelectedItem()
        If _entity IsNot Nothing Then
            Dispatchers.Main.Invoke(Sub()
                                        DirectCast(_entity.Details, IEditableObject).EndEdit()
                                        DirectCast(_entity.Details, IEditableObject).BeginEdit()
                                    End Sub)
            _screen.OpenModalWindow(_dialogName)
        End If
    End Sub

    Public Sub DialogOk()
        If _entity IsNot Nothing Then
            Dispatchers.Main.Invoke(Sub()
                                        DirectCast(_entity.Details, IEditableObject).EndEdit()
                                    End Sub)
            _screen.CloseModalWindow(_dialogName)
        End If
    End Sub

    Public Sub DialogCancel()
        If _entity IsNot Nothing Then
            Dispatchers.Main.Invoke(Sub()
                                        DirectCast(_entity.Details, IEditableObject).CancelEdit()
                                    End Sub)
            If _isEditing = False Then
                _entity.Delete()
            End If
            _screen.CloseModalWindow(_dialogName)
        End If
    End Sub
End Class

Using the Helper Class

First, we'll need to add the class to our project. Right-click on the application in Solution Explorer and select "View Application Code (Client)". Paste the code from above beneath the Application class. This will also require importing the Microsoft.LightSwitch.Threading namespace.

Imports Microsoft.LightSwitch.Threading

Namespace LightSwitchApplication
    Public Class Application
    End Class

    Public Class DialogHelper
        'Code from above
    End Class
End Namespace

This helper library requires a reference to an additional library. Select the application in Solution Explorer and switch to File View. Right-click on the Client application and select "Add Reference". Select the "System.Windows.Controls" component and press Ok.

On our existing grid screen for Customer, we'll need to initialize and use this helper class. The code for many of our existing methods is replaced by calls to the helper class. The final code for our screen is shown below.

Public Class EditableCustomersGrid
    Private customersDialogHelper As DialogHelper

    Private Sub EditableCustomersGrid_InitializeDataWorkspace(saveChangesTo As System.Collections.Generic.List(Of Microsoft.LightSwitch.IDataService))
        customersDialogHelper = New DialogHelper(Me.Customers, "CustomerEditDialog")
    End Sub

    Private Sub EditableCustomersGrid_Created()
        customersDialogHelper.InitializeUI()
    End Sub

    Private Sub gridAddAndEditNew_CanExecute(ByRef result As Boolean)
        result = customersDialogHelper.CanAdd()
    End Sub

    Private Sub gridAddAndEditNew_Execute()
        customersDialogHelper.AddEntity()
    End Sub

    Private Sub gridEditSelected_CanExecute(ByRef result As Boolean)
        result = customersDialogHelper.CanEditSelected()
    End Sub

    Private Sub gridEditSelected_Execute()
        customersDialogHelper.EditSelectedEntity()
    End Sub

    Private Sub EditDialogOk_Execute()
        customersDialogHelper.DialogOk()
    End Sub

    Private Sub EditDialogCancel_Execute()
        customersDialogHelper.DialogCancel()
    End Sub
End Class

Run the Application

Run the application again. Click on the "Add" button for the grid. This will open our modal dialog with the title appropriately updated. If we click on the "Cancel" button, the newly added record will be removed. Similarly, if a record is being edited and "Cancel" is clicked, the changes will be reverted.
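The Ok/Cancel behavior that DialogHelper gets from LightSwitch's IEditableObject support can be modeled in a few lines: take a snapshot when editing begins, restore it on cancel, and delete the record on cancel if it was newly added. This Python sketch only models those semantics with hypothetical class names (`EditableRecord`, `DialogSession`); it is not LightSwitch code.

```python
import copy
from typing import Any, Dict, List, Optional


class EditableRecord:
    """Record with IEditableObject-style begin/end/cancel editing."""

    def __init__(self, **fields: Any) -> None:
        self.fields: Dict[str, Any] = dict(fields)
        self._snapshot: Optional[Dict[str, Any]] = None

    def begin_edit(self) -> None:
        self._snapshot = copy.deepcopy(self.fields)  # remember the original values

    def end_edit(self) -> None:
        self._snapshot = None  # accept the current values

    def cancel_edit(self) -> None:
        if self._snapshot is not None:
            self.fields = self._snapshot  # restore the original values
            self._snapshot = None


class DialogSession:
    """Hypothetical model of DialogHelper's Ok/Cancel handling over a list."""

    def __init__(self, collection: List[EditableRecord]) -> None:
        self.collection = collection
        self.entity: Optional[EditableRecord] = None
        self.is_new = False

    def open_add(self, **fields: Any) -> EditableRecord:
        self.entity = EditableRecord(**fields)
        self.collection.append(self.entity)
        self.is_new = True
        self.entity.begin_edit()
        return self.entity

    def open_edit(self, entity: EditableRecord) -> None:
        self.entity = entity
        self.is_new = False
        entity.begin_edit()

    def ok(self) -> None:
        if self.entity is not None:
            self.entity.end_edit()

    def cancel(self) -> None:
        if self.entity is None:
            return
        self.entity.cancel_edit()
        if self.is_new:
            self.collection.remove(self.entity)  # a cancelled Add leaves no record
```

The `is_new` flag plays the role of DialogHelper's `_isEditing` field (inverted): restoring a snapshot is enough for an edit, but a cancelled add also has to remove the record that was created when the dialog opened.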

While a little involved, this technique can be applied to any of your other screens. If you have more than one list on a screen, multiple custom dialogs can be added. The DialogHelper class utilizes some of the more advanced API options available within LightSwitch. We’re also planning on wrapping this sample into a screen template that will automatically generate the modal window and code that is needed.

Sudhir Hasbe reminds the two people who aren’t yet aware that LightSwitch, a developer tool, will launch on July 26 in a 7/7/2011 post:

Article in Windows 7 News on LightSwitch. LightSwitch is really awesome…

Microsoft’s LightSwitch to Launch on July 26. This program will give developers a simpler and faster way to create high-quality business applications for the desktop and the cloud. LightSwitch is a new addition to the Visual Studio family.

Create Custom Business Applications

Microsoft may not be winning the mobile phone war, but it is certainly on top of the PC war. With LightSwitch, Microsoft hopes to let developers build custom applications that rival off-the-shelf solutions, giving developers who want to pursue more serious applications than “Apps” a way to do so. There will be preconfigured screen templates that give your application a familiar look and feel, prewritten code and other reusable components to handle routine application tasks, and step-by-step guidance tools. If you need to write custom code, you can use Visual Basic .NET or C#. You can deploy to the desktop, browser, or cloud to share your application with others more easily, without cumbersome installation processes.

System Data Access

LightSwitch supports exporting data to Excel, and you can also attach your application to existing data sources like SQL Server, Microsoft SQL Azure, SharePoint, Microsoft Office Access (post-Beta), and other third-party data sources.

LightSwitch will also allow developers to create custom applications in tune with the way you do business, building scalable applications that match your needs now and in the future without tying developers to current technologies. Applications can grow to meet increasing technical demands by using the Microsoft Windows Azure cloud hosting option.

Extend Applications

The development environment for LightSwitch is Visual Studio, and LightSwitch includes a lot of prebuilt components. LightSwitch applications will support extensions for templates, data sources, shells, themes, business data types, and custom controls. Get extensions from component vendors or develop them yourself using Visual Studio Professional, Premium, or Ultimate.

You can compare LightSwitch with Visual Studio.

You can download the LightSwitch Beta version.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

My Video Archives from the Best of the Microsoft Management Summit, Belgium – June 15, 2011 post of 7/7/2011 provides:

Links to more than 30 TechNet/Edge videos of Microsoft System Center technical sessions recorded at Microsoft’s Best of Microsoft Management Summit, held at ALM Point, Antwerp, Belgium on 6/15/2011, and related sessions from Tech Ed North America 2011, held in Atlanta, GA on 5/16 to 5/19/2011, and Tech Days 2011 Belgium, held at Metropolis Antwerpen, Antwerp, Belgium on 4/26 to 4/28/2011.

Following is an example:

Introduction to Opalis and a Sneak Peek at System Center Orchestrator

Get a sneak peek at System Center Orchestrator and see what Opalis can do in your environment today.

R “Ray” Wang posted an Executive Profiles: Disruptive Tech Leaders In Cloud Computing – Bob Kelly, Microsoft interview on 7/7/2011:

Welcome to an on-going series of interviews with the people behind the technologies in Social Business. The interviews provide insightful points of view from a customer, industry, and vendor perspective. A full list of interviewees can be found here.

Bob Kelly – Corporate Vice President, Microsoft’s Windows Azure Marketing, Microsoft

Biography

Kelly began his career with Microsoft in 1996 with the Windows NT Server 3.51 marketing team. He later transitioned to group manager of Windows NT Server and Windows 2000 Server marketing. Following the launch of Windows 2000 Server, Kelly helped to form the company’s U.S. subsidiary. He has held a series of marketing and product management roles, both in the field and corporate offices, such as general manager of Windows Server Product Management and general manager of infrastructure server marketing. Recently, Bob was named CVP for Windows Azure Marketing focusing on Microsoft’s cloud platform execution.

A Massachusetts native, Kelly earned his master’s degree and doctorate in English literature from the University of Dallas. In addition to enjoying spending time at home with his wife and four children, he’s active in local civic organizations. …

Read the interview here.

David Linthicum (@DavidLinthicum) asserted “The use of cloud computing to work around IT shines a bright light on what needs to change” as a deck for his What 'rogue' cloud usage can teach IT post of 7/7/2011 to InfoWorld’s Cloud Computing blog:

Many people in enterprises use cloud computing -- not because it's a more innovative way to do storage, compute, and development, but because it's a way to work around the IT bureaucracy that exists in their enterprise. This has led to more than a few confrontations. But it could also lead to more productivity.

One of the techniques I've used in the past is to lead through ambition. If I believed things were moving too slowly, rather than bring the hammer down, I took on some of the work myself. Doing so shows others that the work both has value and can be done quickly. Typically, they mimic the ambition.

The same thing is occurring in employee usage of cloud services to get around IT. As divisions within companies learn they can allocate their own IT resources in the cloud and, thus, avoid endless meetings and pushback from internal IT, they are showing IT that it's possible to move fast and quickly deliver value to the business. They can even do so with less risk.

Although many in traditional IT shops view this as a clear threat and in many cases reprimand the "rogue" parties, they should reflect on the inefficiencies that have taken hold in their own shops over the years. Moreover, the use of cloud computing shines a brighter light on how much more easily IT could do things in the cloud. It becomes a clear gauge of the difference between what IT can do now and what the technology itself can achieve when not held back.

I'm sure reader comments will be full of security, governance, and responsibility issues that most IT pros believe are a burden that they, and only they, must bear. They'll say that although they might seem like the bad guys, they're taking on important responsibilities for everyone's good, and that those who work around them are not helping. That might be a comforting view, but it's also self-defeating.

The truth is that those who create "rogue" clouds demonstrate what is possible and perhaps raise the bar on how fast enterprise IT should be moving these days. IT should use those efforts as a sort of mirror; sometimes looking at your reflection shows flaws that are easily fixed.

Bruce Kyle posted Second Service Pack Ties High Performance Computing to Azure on 7/7/2011 to the US ISV Evangelism blog:

The second ‘service pack’ (SP2) for Windows HPC Server 2008 R2 focuses on providing customers with a great experience when expanding their on-premises clusters to Windows Azure, and we’ve also included some features for on-premises clusters.

You can download the service pack at HPC Pack 2008 R2 Service Pack 2 download.

- HPC direct integration with the Windows Azure APIs to provide a simplified experience for provisioning compute nodes in Windows Azure for applications and data.

- Tuned MPI stack for the Windows Azure network and support for the Windows Azure VM role (currently in beta).

- Automatic configuration of the Windows Azure Connect preview to allow Windows Azure based applications to reach back to enterprise file server and license servers via virtual private networks.

For details, see the HPC Pack 2008 R2 Service Pack 2 (SP2) Released on the Windows Azure blog.

Future Roadmap

In the coming days, we will release a second beta for building data intensive applications (previously codenamed Dryad). We’re now calling it ‘LINQ to HPC’. LINQ has been an incredibly successful programming model and with LINQ to HPC we allow developers to write data intensive applications using Visual Studio and LINQ and deploy those data intensive applications to clusters running Windows HPC Server 2008 R2 SP2.

About Windows Server HPC

Windows HPC delivers the power of supercomputing in a familiar environment.

High Performance Computing gives analysts, engineers and scientists the computation resources they need to make better decisions, fuel product innovation, speed research and development, and accelerate time to market. Some examples of HPC usage include: decoding genomes, animating movies, analyzing financial risks, streamlining crash test simulations, modeling global climate solutions and other highly complex problems.

To evaluate Windows Server HPC, see Try Windows HPC Server Today.

David Bridgwater posted a Puppet Labs CEO: Cloud Is No Panacea for Developers article to DrDobbs.com on 7/6/2011:

A wake-up call for developers who think the cloud offers a pre-baked infrastructure layer with fewer deployment challenges?

The chief of open source data center automation company Puppet Labs has said that developers should exercise caution before jumping on the cloud computing bandwagon.

Emphasizing that deployment in the cloud doesn't remove the need to consider traditional application architecture issues like scaling, redundancy, security, and performance, Puppet Labs CEO Luke Kanies insists that cloud applications can often present the traditional performance and scaling perils of a physical infrastructure.

"The performance characteristics of the cloud can even inhibit your ability to scale. These limitations can also result in application architecture being designed around the cloud's shortfalls rather than in an optimal manner," said Kanies.

Developers need to remember that one of the great benefits of cloud computing is portability and that application architecture must be designed to be flexible, says the Puppet Labs boss. Kanies suggests that this allows workloads to be migrated from private cloud to public cloud and back, which inevitably maximizes the potential cost and performance benefits.

"In light of recent cloud outages, cloud marketing may also be giving developers and businesses a false sense of security about the availability of their applications. Cloud platforms are generally built to be robust and redundant, but it is still infrastructure — and infrastructure faults can mean business outages. Deploying on cloud platforms doesn't mean developers can get away with designing applications that are not robust and redundant," said Kanies.

Puppet Labs also hints at similar issues with managing security in the cloud. Echoing the mantra much repeated by cloud providers themselves, the company says that applications deployed in the cloud require the same security architecture and testing as applications deployed in-house.

"Cloud computing represents a great way to save money and speed up your deployments, but deploying your applications there is not without risk. Developers should keep in mind that many of the challenges of traditional application development still exist in the cloud and need to be considered," said Kanies.

Adam Hall posted Migrating from Opalis – Identifying activities that have changed in Orchestrator to the System Center Orchestrator Team Blog on 7/6/2011:

As we have previously indicated, there are some changes in the Standard Activities (what were referred to as Foundation Activities in the Opalis product) with the Orchestrator release.

This raises a question for customers migrating from Opalis to Orchestrator: how can they identify which Opalis workflows contain objects that are deprecated runbook activities in Orchestrator?

The following SQL query can be run against either the Opalis 6.3 database or the Orchestrator database. It identifies the Opalis 6.3 workflows that use objects no longer available in Orchestrator, returning both the Opalis 6.3 workflow name and the name of the object in the workflow. Any workflow identified by this query will need to be updated after it has been imported into Orchestrator to remove the reference to the deprecated object.

```sql
-- List Opalis 6.3 workflows (policies) that contain objects deprecated in Orchestrator
SELECT
    policies.[Name] AS [Policy Name],
    objects.[Name]  AS [Object Name]
FROM [Objects] objects
JOIN [Policies] policies
    ON objects.[ParentID] = policies.[UniqueID]
WHERE objects.objecttype IN (
    '2081B459-88D2-464A-9F3D-27D2B7A64C5E',
    '6F0FA888-1969-4010-95BC-C0468FA6E8A0',
    '8740DB49-5EE2-4398-9AD1-21315B8D2536',
    '19253CC6-2A14-432A-B4D8-5C3F778B69B0',
    '9AB62470-8541-44BD-BC2A-5C3409C56CAA',
    '292941F8-6BA7-4EC2-9BC0-3B5F96AB9790',
    '98AF4CBD-E30E-4890-9D26-404FE24727D7',
    '2409285A-9F7E-4E04-BFB9-A617C2E5FA61',
    'B40FDFBD-6E5F-44F0-9AA6-6469B0A35710',
    '9DAF8E78-25EB-425F-A5EF-338C2940B409',
    'B5381CDD-8498-4603-884D-1800699462AC',
    'FCA29108-14F3-429A-ADD4-BE24EA5E4A3E',
    '7FB85E1D-D3C5-41DA-ACF4-E1A8396A9DA7',
    '3CCE9C71-51F0-4595-927F-61D84F2F1B5D',
    '96769C11-11F5-4645-B213-9EC7A3F244DB',
    '6FED5A55-A652-455B-88E2-9992E7C97E9A',
    '9C1DF967-5A50-4C4E-9906-C331208A3801',
    '829A951B-AAE9-4FBF-A6FD-92FA697EEA91',
    '1728D617-ACA9-4C96-ADD1-0E0B61104A9E',
    'F3D1E70B-D389-49AD-A002-D332604BE87A',
    '2D907D60-9C25-4A1C-B950-A31EB9C9DB5F',
    '6A083024-C7B3-474F-A53F-075CD2F2AC0F',
    '4E6481A1-6233-4C82-879F-D0A0EDCF2802',
    'BC49578F-171B-4776-86E2-664A5377B178'
);
```

The migration guide on the Orchestrator TechNet Library is currently being updated with this information.
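The following sketch shows how the query's join and filter behave without a live Opalis or Orchestrator database. It uses Python's built-in `sqlite3` module with an in-memory mock of the `Objects` and `Policies` tables. Several details are assumptions made for illustration. The mock schema keeps only the columns the query references. All workflow and object names are hypothetical. Only two of the deprecated `ObjectType` GUIDs from the query are included. The GUID ending in `...0001` is a made-up placeholder for an activity that is still supported. SQLite compares text case-sensitively, unlike SQL Server's `uniqueidentifier`, so the mock stores GUIDs in the same upper-case form as the query.

```python
import sqlite3

# Subset of the deprecated ObjectType GUIDs from the Opalis query (for brevity).
DEPRECATED_TYPES = [
    "2081B459-88D2-464A-9F3D-27D2B7A64C5E",
    "BC49578F-171B-4776-86E2-664A5377B178",
]


def find_deprecated(conn, deprecated_types):
    """Return (policy name, object name) pairs whose object type is in deprecated_types."""
    placeholders = ", ".join("?" for _ in deprecated_types)
    sql = f"""
        SELECT policies.Name AS PolicyName, objects.Name AS ObjectName
        FROM Objects AS objects
        JOIN Policies AS policies ON objects.ParentID = policies.UniqueID
        WHERE objects.ObjectType IN ({placeholders})
        ORDER BY PolicyName, ObjectName
    """
    return conn.execute(sql, deprecated_types).fetchall()


# Hypothetical, simplified mock of the two tables the query joins.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Policies (UniqueID TEXT PRIMARY KEY, Name TEXT);
    CREATE TABLE Objects (UniqueID TEXT PRIMARY KEY, ParentID TEXT,
                          Name TEXT, ObjectType TEXT);
""")
conn.executemany("INSERT INTO Policies VALUES (?, ?)", [
    ("p1", "Nightly Backup"),
    ("p2", "Ticket Sync"),
])
conn.executemany("INSERT INTO Objects VALUES (?, ?, ?, ?)", [
    ("o1", "p1", "Deprecated Activity A", "2081B459-88D2-464A-9F3D-27D2B7A64C5E"),
    ("o2", "p1", "Current Activity", "00000000-0000-0000-0000-000000000001"),
    ("o3", "p2", "Deprecated Activity B", "BC49578F-171B-4776-86E2-664A5377B178"),
])

# Each workflow listed here would need editing after import into Orchestrator.
for policy, obj in find_deprecated(conn, DEPRECATED_TYPES):
    print(f"{policy}: {obj}")
```

Passing the GUIDs as bound parameters to an `IN (...)` list keeps the filter in one place. Adding or removing a deprecated type then means editing the Python list, not the SQL text.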

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Elizabeth White asserted “Use of protocol to benefit the cloud consumer” as a deck for her Cloud Security Alliance Announces Licensing Agreement with CSC post of 7/7/2011 to the Cloud Security Journal blog:

The Cloud Security Alliance has received a no-cost license for the CloudTrust Protocol (CTP) from CSC. The CTP is being integrated as the fourth pillar of the CSA's cloud Governance, Risk and Compliance (GRC) stack, which provides a toolkit for enterprises, cloud providers, security solution providers, IT auditors and other key stakeholders to instrument and assess both private and public clouds against industry-established best practices, standards and critical compliance requirements. The GRC stack, an integrated suite of CSA initiatives (CloudAudit, the Cloud Controls Matrix and the Consensus Assessments Initiative Questionnaire), is available for free download.

Jim Reavis, director of the non-profit Cloud Security Alliance, noted that the CloudTrust Protocol represents "an increasingly important step in helping organizations realize the ultimate promise of cloud computing, and is a perfect addition to our evolving business."

CSC created the CTP to give the cloud consumer the right information to confidently choose which processes and data to put into which type of cloud, and to sustain information risk management decisions about cloud services. It provides transparency into cloud service delivery. It gives cloud consumers important information about service security, and it gives cloud service providers a standard technique to prepare and deliver information to clients about their data. In so doing, the CTP generates the evidence needed to verify that all of a company's activity in the cloud is happening as described.

"The CTP puts IT risk decision-making back in the hands of the cloud consumer by providing the data they need as they need it," said Ron Knode, CSC trust architect and creator of the CTP.

Because it provides transparency into the cloud and into organizations' cloud activity, the CTP gives clients a way to inquire about configurations, vulnerabilities, access, authorization, policy, accountability, anchoring and operating status conditions. These pieces of information give insight into the essential security configurations and operational characteristics of systems deployed in the cloud. The CTP also gives cloud service providers a standard way to prepare and deliver information to the customer in response to requests about elements of transparency, and to determine the best way to respond to cloud inquiries.
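The inquiry-and-response pattern described above can be sketched as a small data model. This is a hypothetical illustration only, not the CTP's actual message format. The enum values encode the elements of transparency named in the description. The `Inquiry`, `Response` and `Provider` classes, the consumer name and the sample evidence text are all assumptions made for the sketch.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Element(Enum):
    """Elements of transparency named in the CTP description (hypothetical encoding)."""
    CONFIGURATION = "configuration"
    VULNERABILITY = "vulnerability"
    ACCESS = "access"
    AUTHORIZATION = "authorization"
    POLICY = "policy"
    ACCOUNTABILITY = "accountability"
    ANCHORING = "anchoring"
    OPERATING_STATUS = "operating status"


@dataclass(frozen=True)
class Inquiry:
    """A consumer's request for evidence about one element (hypothetical shape)."""
    consumer: str
    element: Element


@dataclass(frozen=True)
class Response:
    """A provider's answer; available is False when no evidence has been prepared."""
    element: Element
    available: bool
    evidence: str


class Provider:
    """Hypothetical provider that answers inquiries from evidence it prepared in advance."""

    def __init__(self, evidence: dict[Element, str]):
        self._evidence = dict(evidence)

    def answer(self, inquiry: Inquiry) -> Response:
        text = self._evidence.get(inquiry.element)
        if text is None:
            return Response(inquiry.element, False, "no evidence prepared")
        return Response(inquiry.element, True, text)


# Usage with made-up sample values.
provider = Provider({Element.OPERATING_STATUS: "all regions nominal"})
print(provider.answer(Inquiry("contoso", Element.OPERATING_STATUS)))
```

In this sketch, the provider prepares evidence ahead of time and the consumer requests it one element at a time. That separation reflects the two roles the CTP description gives consumers and providers.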

The CSA will convene a CTP working group, chaired by Knode, to continue discussion on how to use the protocol to benefit the cloud consumer. More about the CTP can be found here.

Jonathan Penn reported Data Protection In The Cloud: The Facade Of Vendor Trust Is Crumbling in a 7/6/2011 post to his Forrester security blog:

Many non-US organizations operate under privacy regulations that require customer data to remain within their countries or jurisdictions. In response, US cloud providers build data centers and host their applications inside the countries where they sell their solutions.

However, all this is nothing but theater. These requirements are far more useful as a local jobs program than as an effective privacy practice. I've warned about this before: any US vendor is going to hand over data under a US subpoena, and most certainly under a National Security Letter. It doesn't matter whether the data resides in a data center on US soil, in the EU, or even in outer space.

So it's refreshing to see a vendor openly admit this, as Microsoft has.

Maybe now that we're all starting to be honest with each other, this issue will gain some traction, and vendors will begin to incorporate real data protection measures into our cloud environments, such as encrypting the data so that only customers, not the cloud providers, have the keys. I suspect we'll start to see such requirements show up in a lot of RFPs.


It’s not difficult to encrypt Windows Azure tables, blobs and queues, but I haven’t received a positive response from the SQL Azure team to my request for Transparent Data Encryption (TDE) for databases.
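Penn proposes encrypting data so that only the customer holds the keys. The sketch below shows that client-side approach: data is encrypted before it is uploaded to a table, blob or queue, so the provider stores only ciphertext. This is a stdlib-only teaching sketch, not a vetted cipher. It assumes HMAC-SHA256 in counter mode as the keystream and uses encrypt-then-MAC with a separate HMAC key. The function names are hypothetical, and the sketch does not show the Windows Azure storage API. In production, use a vetted authenticated cipher such as AES-GCM from a maintained cryptography library.

```python
import hashlib
import hmac
import secrets

NONCE_LEN = 16
TAG_LEN = 32  # HMAC-SHA256 output size


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Teaching-only keystream: HMAC-SHA256(key, nonce || counter), concatenated."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest())
        counter += 1
    return bytes(out[:length])


def encrypt(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC; returns nonce || ciphertext || tag, the only thing uploaded."""
    nonce = secrets.token_bytes(NONCE_LEN)
    ks = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    """Verify the tag in constant time, then strip the keystream."""
    if len(blob) < NONCE_LEN + TAG_LEN:
        raise ValueError("ciphertext too short")
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    ks = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, ks))


# The customer generates and keeps both keys; the cloud provider never sees them.
enc_key, mac_key = secrets.token_bytes(32), secrets.token_bytes(32)
stored = encrypt(enc_key, mac_key, b"customer record")
print(decrypt(enc_key, mac_key, stored))
```

The provider can still be compelled to hand over what it stores. Without the customer's keys, however, that data is only a random nonce, ciphertext and tag.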

Ryan Ko reported Top submissions to be recommended to Wiley Security and Communication Networks Journal Special Issue in a 7/5/2011 post to the IEEE TSCloud 2011 blog:

We are pleased to announce that the top submissions from both the Main Track and the Demo Challenge [of the 1st IEEE International Workshop on Trust and Security in Cloud Computing (IEEE TSCloud 2011)] will be recommended for full journal submission to the Special Issue on Trust and Security in Cloud Computing for the SCI-E indexed journal Security and Communication Networks (Wiley) (ISSN: 1939-0114).

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Cloud Cover TV posted a Service providers dominate cloud business video segment on 7/6/2011 to SearchCloudComputing.com:

Things continue to change in the cloud, and the real cloud action is falling to service providers instead of big names like Amazon and enterprise private clouds. But this shift has some consequences for other players in the cloud computing market. Geva Perry, cloud consultant, analyst and blogger for "Thinking Out Cloud," stops by to share his thoughts on what's going on in the cloud market now in this week's episode of Cloud Cover TV.

We discuss:

- This week Dimension Data bought OpSource and GoDaddy was bought by a private equity consortium

- Amazon Web Services is on the fringes, and private cloud in the enterprise is still new

- Service providers of all shapes and sizes are where the cloud action is

- What does this mean for other players in the cloud market?

- GoDaddy is preparing to launch an Infrastructure as a Service called Data Center On-Demand

- Geva Perry, cloud consultant, analyst and blogger, shares his thoughts on what’s happening in the cloud computing market

- Why the big telcos in the U.S. aren’t in the cloud, but international ones are

- Why Amazon and Google were able to pull off the cloud in the U.S.

Watch the video here.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

<Return to section navigation list>

