Windows Azure and Cloud Computing Posts for 7/12/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi posted Announcing: SQL Azure July 2011 Service Release on 7/13/2011:

We’re about to make some updates to the SQL Azure service, which will pave the way for some significant enhancements and announcements we plan to make later this year. This July 2011 Service Release is foundational in many ways, with significant upgrades to the underlying engine designed to increase overall performance and scalability. This upgrade is also significant in that it represents a big first step towards providing a common base and feature set between the cloud SQL Azure service and our upcoming release of SQL Server Code Name “Denali”.

What’s coming in the SQL Azure July Service Release?

We’ll implement these upgrades over the coming weeks in all our global datacenters. Once these upgrades are complete, co-administrator support will enable customers to specify multiple database administrators, and SQL Azure will have increased capability for using spatial data types, making it the perfect cloud database for location-aware cloud and mobile applications.

To prepare for the service release, we urge all customers and developers to download the latest updates to the SQL Server Management Studio tools; otherwise, you may experience connectivity issues when connecting to the database. Users who only use the database manager tools in the Windows Azure developer portal will be unaffected. The details and download links to accomplish this are below.

Applications running in Windows Azure, on-premises, or with a hoster that interact with data in SQL Azure will be unaffected and will continue to work normally.

Preparing for the Service Release: Download updated management tools

As the datacenters get upgraded, the version number of the database engine will increase, which will result in errors when connecting with the SQL Server Management Studio (SSMS) management tool.

To prevent experiencing any connectivity issues with management tools, we encourage you to immediately download and install the latest updates to the SQL Server Management Studio (SSMS) tools for managing your SQL Server and SQL Azure databases. The links to get the latest updates available in SQL Server 2008 R2 SP1 are:

Applications that utilize SQL Server Management Objects (SMO) will need to apply the Cumulative Update Package 7 for SQL Server 2008 R2.

If you encounter connectivity issues with SSMS over the next few weeks, please double-check that you have the latest updates. To verify you have the latest version of SSMS, open the tool, click the Help menu, and select ‘About’. You’ll get a dialog box listing version numbers of various SQL Server components. The first row, “Microsoft SQL Server Management Studio”, should have a version greater than or equal to 10.50.1777.0 once you’ve installed the updates.
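The version check described above is easy to script. The following is a minimal Python sketch, not part of the original announcement: the function names are illustrative, and it assumes you have already copied the version string from the About dialog. Comparing the parts as integers avoids the trap of comparing dotted versions as plain strings.

```python
MINIMUM_SSMS_VERSION = "10.50.1777.0"  # threshold quoted in the announcement


def parse_version(text):
    """Split a dotted version string into a tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def ssms_is_current(installed, minimum=MINIMUM_SSMS_VERSION):
    """Return True when the installed version is at or above the minimum."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    # Sample version strings, chosen only for illustration.
    for version in ("10.50.1600.1", "10.50.1777.0", "10.50.2500.0"):
        status = "OK" if ssms_is_current(version) else "needs update"
        print(version, status)
```

A string comparison would wrongly rank "10.50.999.0" above "10.50.1777.0"; the tuple comparison does not.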

Alex Handy asserted If you've given up on relational databases for the cloud... You're nuts! and gets his facts about Amazon SimpleDB mixed up in this seven-page story of 7/13/2011 for the SD Times on the Web blog:

The move to the cloud has brought many changes to software development, but few shifts have been as radical as those occurring in the database market right now. So different is the cloud for software architects that the first response from developers was to build entirely new databases to solve these new problems.

Thus, 2010 was the year of the NoSQL database. But as time has moved on and NoSQLs have become more mature, developers are figuring out that the old relational ways of doing things shouldn't be thrown out with the metaphorical bathwater. While relational databases were considered old world just a year ago, a new crop of options for in-cloud development has brought them racing back to the forefront. A combination of new relational databases aimed at the cloud, coupled with more mature in-cloud relational offerings, such as Microsoft's SQL Azure database and Amazon's SimpleDB, have presented some compelling reasons to ditch the new-fangled NoSQLs.

Amazon's SimpleDB, for example, is a cloud-based relational data storage system focused on simplicity, as the name implies. Rather than cram caching, transformations and compromise solutions to the CAP problem (Consistency, Availability, Partitions: You can only choose two) into a new-world database, SimpleDB eschews futuristic ideas in favor of a clean, easy-to-use data store that can form the backbone of scalable applications while providing the 20% of functionality needed by 80% of users.

Adam Selipsky, vice president of product management and developer relations for Amazon Web Services, said that SimpleDB is about choice and ease of use. “Running a relational database, irrespective of where you do it, takes a certain amount of work and administration," he said.

"There are a lot of use cases where people don't need that full functionality of a relational database. SimpleDB is really meant to be the Swiss Army knife of databases. You're not going to do joins, you're not going to do complex math procedures. If you want to do data indexing and querying, then it can take all the scaling hassles away from you, and you don't have to worry about schemas."

Read more: Next Page, 2, 3, 4, 5, 6, 7

SimpleDB is a key-value, NoSQL database, not “a cloud-based relational data storage system.” Amazon’s RDS (Relational Database Service) is its implementation of the MySQL RDBMS for AWS. SimpleDB is similar to Windows Azure table storage.

The SQL Azure Team (@SQLAzure) posted Announcing SQL Server Migration Assistant (SSMA) v.5.1 on 7/12/2011:

Automating database migration from Oracle, Sybase, MySQL, and Access databases to SQL Azure and SQL Server “Denali”.

Microsoft announced today the release of SQL Server Migration Assistant (SSMA) v.5.1, a family of products to automate database migration to all editions of SQL Server (including SQL Server Express) and SQL Azure.

What’s New in this Release?

SSMA v5.1 supports conversion to the new features in SQL Server “Denali”, including:

- Conversion of Oracle Sequence to SQL Server "Denali" sequence

- Conversion of MySQL LIMIT clause to SQL Server "Denali" OFFSET / FETCH clauses
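To illustrate the LIMIT conversion listed above, the Python sketch below builds the "Denali" paging clause from a MySQL row count and optional offset. It is not SSMA's actual implementation, and the function name is hypothetical. Note that in SQL Server, OFFSET / FETCH must follow an ORDER BY clause and the FETCH row count must be greater than zero.

```python
def limit_to_offset_fetch(row_count, offset=0):
    """Build a T-SQL OFFSET / FETCH clause equivalent to MySQL's
    LIMIT row_count OFFSET offset. The clause must follow an ORDER BY."""
    if row_count < 1:
        # SQL Server rejects a FETCH clause with zero or negative rows.
        raise ValueError("row_count must be at least 1")
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return f"OFFSET {offset} ROWS FETCH NEXT {row_count} ROWS ONLY"


if __name__ == "__main__":
    # MySQL:    SELECT * FROM orders ORDER BY id LIMIT 10 OFFSET 20
    # "Denali": SELECT * FROM orders ORDER BY id OFFSET 20 ROWS FETCH NEXT 10 ROWS ONLY
    print("SELECT * FROM orders ORDER BY id " + limit_to_offset_fetch(10, 20))
```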

Free Downloads:

SSMA v5.1 is available for FREE and can be downloaded from the following:

Customers and partners can provide feedback or receive FREE SSMA technical support from Microsoft Customer Service and Support (CSS) through email at ssmahelp@microsoft.com.

Resources:

For more information and video demonstration of SSMA and how it can help with your database migration, please visit:

- SQL Server Migration Resources, including reasons to migrate and case studies

- SSMA introduction videos

- Automating Database Migration (North America TechEd 2011 recording)

- Oracle to SQL Server Migration (step by step walkthrough)

- Access to SQL Server Migration (step by step walkthrough)

- MySQL to SQL Server Migration (step by step walkthrough)

- Sybase to SQL Server Migration (step by step walkthrough)

- MSDN Database Migration Forum

The SQL Azure Team (@SQLAzure) described Converting Oracle Sequence to SQL Server "Denali" in a 7/12/2011 post:

One of the new features in SQL Server Migration Assistant (SSMA) for Oracle v5.1 is support for converting Oracle sequences to SQL Server “Denali” sequences. Although the functionality is similar to Oracle’s sequences, there are a few differences. This blog article describes the differences and how SSMA handles them during conversion.

Data Type

SQL Server, by default, uses the INTEGER data type to define sequence values. The maximum number supported by the INTEGER type is 2147483647. Oracle supports 28-digit integer values in its sequence implementation. SQL Server, however, supports different data types when creating a sequence. When migrating a sequence from Oracle, SSMA creates the sequence object in SQL Server with a type of NUMERIC(28). This ensures that the converted sequence can store all possible values from the original sequence object in Oracle.

| Oracle | SQL Server |
| --- | --- |
| CREATE SEQUENCE MySequence; | CREATE SEQUENCE MySequence AS numeric (28,0) |
| CREATE TABLE MyTable (id NUMBER, text VARCHAR2(50)); | CREATE TABLE MyTable (id float, text VARCHAR(50)) |
| INSERT INTO MyTable (id, text) VALUES (MySequence.NEXTVAL, 'abc'); | INSERT INTO MyTable (id, text) VALUES (NEXT VALUE FOR MySequence, 'abc') |
| UPDATE MyTable SET id=MySequence.NEXTVAL WHERE id = 1; | UPDATE MyTable SET id= NEXT VALUE FOR MySequence WHERE id = 1 |

Starting Value

Unless explicitly defined, SQL Server creates a sequence whose starting number is the lowest number in the data type's range. For example, a simple CREATE SEQUENCE MySequence in SQL Server “Denali” creates a sequence with a starting value of -2147483648, as opposed to Oracle, which uses a default starting value of 1.

Another migration challenge is maintaining continuity after the database migration, so that the next sequence value generated after migration to SQL Server is consistent with that of Oracle. To resolve this, SSMA creates the SQL Server sequence with the START WITH property set to the Oracle sequence's last_number property.

| Oracle | SQL Server |
| --- | --- |
| CREATE SEQUENCE MySequence; -- last_number is 10 | CREATE SEQUENCE MySequence AS numeric (28,0) START WITH 10; |

Maximum CACHE Value

Oracle supports positive integers of up to 28 digits for CACHE. SQL Server supports up to 2147483647 for the CACHE property value (even when the SEQUENCE is created with a data type of NUMERIC(28)).

SSMA sets CACHE to the maximum supported value if the CACHE value in Oracle is set greater than 2147483647.

| Oracle | SQL Server |
| --- | --- |
| CREATE SEQUENCE MySequence CACHE 2147483648; | CREATE SEQUENCE MySequence AS numeric (28,0) START WITH 10 CACHE 2147483647; |

ORDER|NOORDER Property

SQL Server does not support the ORDER|NOORDER property in sequences. SSMA ignores this property when migrating a sequence object.

| Oracle | SQL Server |
| --- | --- |
| CREATE SEQUENCE MySequence NOORDER; | CREATE SEQUENCE MySequence AS numeric (28,0) START WITH 10; |
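The conversion rules described so far (NUMERIC(28) as the data type, START WITH taken from last_number, CACHE capped at 2147483647, ORDER|NOORDER dropped) can be summarized in a few lines. The Python sketch below is only an illustration of those rules as the post states them; it is not SSMA's code, and the function and parameter names are hypothetical.

```python
MAX_DENALI_CACHE = 2147483647  # upper bound for CACHE cited in the post


def convert_sequence(name, last_number, cache=None):
    """Return a "Denali" CREATE SEQUENCE statement for an Oracle sequence.

    Oracle's ORDER / NOORDER setting is deliberately not a parameter,
    because the post says SSMA ignores it.
    """
    parts = [
        f"CREATE SEQUENCE {name}",
        "AS numeric (28,0)",          # wide enough for Oracle's 28-digit values
        f"START WITH {last_number}",  # continue where the Oracle sequence left off
    ]
    if cache is not None:
        # Clamp oversized Oracle CACHE values to the SQL Server maximum.
        parts.append(f"CACHE {min(cache, MAX_DENALI_CACHE)}")
    return " ".join(parts) + ";"


if __name__ == "__main__":
    print(convert_sequence("MySequence", 10))
    print(convert_sequence("MySequence", 10, cache=2147483648))
```

With a last_number of 10 and an Oracle CACHE of 2147483648, the sketch produces the same statement as the CACHE example in the post.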

CURRVAL

SQL Server does not support obtaining the current value of a sequence. You can look up the current_value attribute in sys.sequences. Note, however, that current_value represents the global value of the sequence (across all sessions), whereas Oracle's CURRVAL returns the current value for each session. Consider the following example:

| Session 1 (Oracle) | Session 2 (Oracle) |
| --- | --- |
| SELECT MySequence.nextval FROM dual; returns NEXTVAL = 1 | |
| SELECT MySequence.currval FROM dual; returns CURRVAL = 1 | |
| | SELECT MySequence.nextval FROM dual; returns NEXTVAL = 2 |
| | SELECT MySequence.currval FROM dual; returns CURRVAL = 2 |
| SELECT MySequence.currval FROM dual; returns CURRVAL = 1 | |

The current_value column of sys.sequences records the latest value across all sessions. Hence, in the example above, current_value is the same for every session (current_value = 2).
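The difference between a per-session CURRVAL and a global current_value can be modeled in a few lines. The Python class below is a toy model written for this illustration, not database code: the class name and the session labels are made up, and it only mirrors the behavior described above.

```python
class OracleStyleSequence:
    """Toy model: one global counter plus a per-session CURRVAL."""

    def __init__(self, start=1):
        self._next = start
        self.current_value = None   # global, like sys.sequences.current_value
        self._session_currval = {}  # session id -> last value that session drew

    def nextval(self, session):
        """Draw the next value and remember it for this session."""
        value = self._next
        self._next += 1
        self.current_value = value
        self._session_currval[session] = value
        return value

    def currval(self, session):
        """Return the last value drawn by this session only."""
        if session not in self._session_currval:
            # Oracle also raises an error when NEXTVAL has not been called
            # in the session yet.
            raise LookupError("CURRVAL is not yet defined in this session")
        return self._session_currval[session]


if __name__ == "__main__":
    seq = OracleStyleSequence()
    seq.nextval("session 1")
    seq.nextval("session 2")
    print(seq.currval("session 1"), seq.currval("session 2"), seq.current_value)
```

Session 1 still sees its own value after session 2 advances the sequence, while the global current_value reflects only the most recent draw.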

When converting PL/SQL statement containing CURRVAL to SQL Server Denali, SSMA generates the following error:

* SSMA error messages:

* O2SS0490: Conversion of identifier <sequence name> for CURRVAL is not supported.

When you encounter this error, consider rewriting your statement to store the next sequence value in a variable and then read the variable as the current value. For example:

Oracle:

    CREATE PROCEDURE create_employee (Name_in IN NVARCHAR2, EmployeeID_out OUT NUMBER) AS
    BEGIN
        INSERT INTO employees (employeeID, Name)
        VALUES (employee_seq.nextval, Name_in);
        SELECT employee_seq.currval INTO EmployeeID_out FROM dual;
    END;

SQL Server:

    CREATE PROCEDURE create_employee @Name_in NVARCHAR(50), @EmployeeID_out INT OUT AS
    SET @EmployeeID_out = NEXT VALUE FOR employee_seq
    INSERT INTO employees (employeeID, Name)
    VALUES (@EmployeeID_out, @Name_in)

If you use CURRVAL extensively in your application and rewriting all statements with CURRVAL is not possible, an alternative approach is to convert the Oracle sequence to the SSMA custom sequence emulator. When migrating to versions of SQL Server prior to "Denali", SSMA converts Oracle sequences using the custom sequence emulator. You can use this conversion approach, which supports conversion of CURRVAL, when migrating to SQL Server "Denali" by changing the SSMA project setting (Tools > Project Settings > General > Conversion > Sequence Conversion).

This discussion is of interest to SQL Azure developers, because SQL Azure is likely to require use of the Sequence object to enable use of bigint identity columns as federation keys. For more information on sharding SQL Azure databases with federations, see my Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s 3/2011 issue.

<Return to section navigation list>

Marketplace DataMarket and OData

The Windows Azure Team (@WindowsAzure) Announced at WPC11: Windows Azure Marketplace Powers SQL Server Codename “Denali” to Deliver Data Quality Services in a 7/13/2011 post:

Yesterday at WPC11 in Los Angeles, we announced CTP 3 of SQL Server Codename “Denali”, which includes a key new feature called Data Quality Services. Data Quality Services enables customers to cleanse their existing data stored in SQL databases, such as customer data stored in CRM systems that may contain inaccuracies created due to human error.

Data Quality Services leverages Windows Azure Marketplace to access real-time data cleansing services from leading providers, such as Melissa Data, Digital Trowel, Loqate and CDYNE Corp. Here’s what they have to say about Windows Azure Marketplace and today’s announcement:

Loqate

“Technology that works seamlessly is what customers expect today", said Martin Turvey, CEO and president of Loqate. “Loqate provides global address verification that provides that seamless integration across 240 countries. Our new agreement with Microsoft to provide Loqate products and services through the Windows Azure Marketplace enables our joint customers to easily access our industry-leading technology. The integration with Windows SQL Server makes achieving the business benefits of accurate address verification and global geocoding quicker, faster, and easier.”

CDYNE Corp.

“Instead of requiring our customers to connect to CDYNE data services from scratch, which can take days, Microsoft has made it easy for everyone by including the ability to use Data Quality Services right from within SQL Server, leveraging Windows Azure Marketplace,” said William Chenoweth, vice president of Marketing and Sales, CDYNE Corporation.

Digital Trowel

“These are valuable services that update and enhance customer and prospect data using Digital Trowel’s award-winning information extraction, identity resolution and semantic technology. The breadth, depth and accuracy of Digital Trowel’s data goes well beyond what is currently available in the market”, said Digital Trowel CEO Doron Cohen. “Easy access to these powerful services is made directly from SQL Server Data Quality Services, and also through the Windows Azure Marketplace."

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

Richard Seroter (@rseroter) described Event Processing in the Cloud with StreamInsight Austin: Part I-Building an Azure AppFabric Adapter in a 7/13/2011 post:

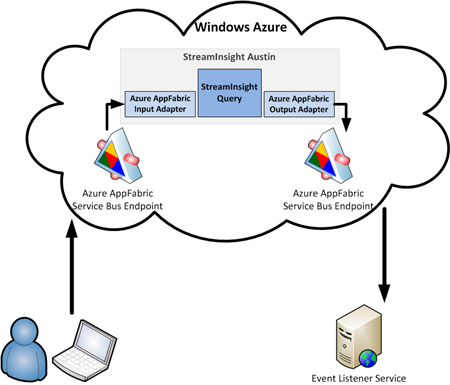

StreamInsight is Microsoft’s (complex) event processing engine which takes in data and does in-memory pattern matching with the goal of uncovering real-time insight into information. The StreamInsight team at Microsoft recently announced their upcoming capability (code named “Austin”) to deploy StreamInsight applications to the Windows Azure cloud. I got my hands on the early bits for Austin and thought I’d walk through an example of building, deploying and running a cloud-friendly StreamInsight application. You can find the source code here.

You may recall that the StreamInsight architecture consists of input/output adapters and any number of “standing queries” that the data flows over. In order for StreamInsight Austin to be effective, you need a way for the cloud instance to receive input data. For instance, you could choose to poll a SQL Azure database or pull in a massive file from an Amazon S3 bucket. The point is that the data needs to be internet accessible. If you wish to push data into StreamInsight, then you must expose some sort of endpoint on the Azure instance running StreamInsight Austin. Because we cannot directly host a WCF service on the StreamInsight Austin instance, our best bet is to use Windows Azure AppFabric to receive events.

In this post, I’ll show you how to build an Azure AppFabric adapter for StreamInsight. In the next post, I’ll walk through the steps to deploy the on-premises StreamInsight application to Windows Azure and StreamInsight Austin.

As a reference point, the final solution looks like the picture below. I have a client application which calls an Azure AppFabric Service Bus endpoint started up by StreamInsight Austin, and then I take the output of the StreamInsight query and send it through an adapter to an Azure AppFabric Service Bus endpoint that relays the message to a subscribing service.

I decided to use the product team’s WCF sample adapter as a foundation for my Azure AppFabric Service Bus adapter. However, I did make a number of changes in order to simplify it a bit. I have one Visual Studio project that contains shared objects such as the input WCF contract, output WCF contract and StreamInsight Point Event structure. The Point Event stores a timestamp and dictionary for all the payload values.

    [DataContract]
    public struct WcfPointEvent
    {
        /// <summary>
        /// Gets the event payload in the form of key-value pairs.
        /// </summary>
        [DataMember]
        public Dictionary<string, object> Payload { get; set; }

        /// <summary>
        /// Gets the start time for the event.
        /// </summary>
        [DataMember]
        public DateTimeOffset StartTime { get; set; }

        /// <summary>
        /// Gets a value indicating whether the event is an insert or a CTI.
        /// </summary>
        [DataMember]
        public bool IsInsert { get; set; }
    }

Each receiver of the StreamInsight event implements the following WCF interface contract.

    [ServiceContract]
    public interface IPointEventReceiver
    {
        /// <summary>
        /// Attempts to dequeue a given point event. The result code indicates whether the operation
        /// has succeeded, the adapter is suspended -- in which case the operation should be retried later --
        /// or whether the adapter has stopped and will no longer return events.
        /// </summary>
        [OperationContract]
        ResultCode PublishEvent(WcfPointEvent result);
    }

The service clients which send messages to StreamInsight via WCF must conform to this interface.

    [ServiceContract]
    public interface IPointInputAdapter
    {
        /// <summary>
        /// Attempts to enqueue the given point event. The result code indicates whether the operation
        /// has succeeded, the adapter is suspended -- in which case the operation should be retried later --
        /// or whether the adapter has stopped and can no longer accept events.
        /// </summary>
        [OperationContract]
        ResultCode EnqueueEvent(WcfPointEvent wcfPointEvent);
    }

I built a WCF service (which will be hosted through the Windows Azure AppFabric Service Bus) that implements the IPointEventReceiver interface and prints out one of the values from the dictionary payload.

    public class ReceiveEventService : IPointEventReceiver
    {
        public ResultCode PublishEvent(WcfPointEvent result)
        {
            WcfPointEvent receivedEvent = result;
            Console.WriteLine("Event received: " + receivedEvent.Payload["City"].ToString());
            result = receivedEvent;
            return ResultCode.Success;
        }
    }

Now, let’s get into the StreamInsight Azure AppFabric adapter project. I’ve defined a “configuration object” which holds values that are passed into the adapter at runtime. These include the service address to host (or consume) and the password used to host the Azure AppFabric service.

    public struct WcfAdapterConfig
    {
        public string ServiceAddress { get; set; }
        public string Username { get; set; }
        public string Password { get; set; }
    }

Both the input and output adapters have the required factory classes and the input adapter uses the declarative CTI model to advance the application time. For the input adapter itself, the constructor is used to initialize adapter values including the cloud service endpoint.

    public WcfPointInputAdapter(CepEventType eventType, WcfAdapterConfig configInfo)
    {
        this.eventType = eventType;
        this.sync = new object();

        // Initialize the service host. The host is opened and closed as the adapter is started
        // and stopped.
        this.host = new ServiceHost(this);

        //define cloud binding
        BasicHttpRelayBinding cloudBinding = new BasicHttpRelayBinding();

        //turn off inbound security
        cloudBinding.Security.RelayClientAuthenticationType = RelayClientAuthenticationType.None;

        //add endpoint
        ServiceEndpoint endpoint = host.AddServiceEndpoint((typeof(IPointInputAdapter)), cloudBinding, configInfo.ServiceAddress);

        //define connection binding credentials
        TransportClientEndpointBehavior cloudConnectBehavior = new TransportClientEndpointBehavior();
        cloudConnectBehavior.CredentialType = TransportClientCredentialType.SharedSecret;
        cloudConnectBehavior.Credentials.SharedSecret.IssuerName = configInfo.Username;
        cloudConnectBehavior.Credentials.SharedSecret.IssuerSecret = configInfo.Password;
        endpoint.Behaviors.Add(cloudConnectBehavior);

        // Poll the adapter to determine when it is time to stop.
        this.timer = new Timer(CheckStopping);
        this.timer.Change(StopPollingPeriod, Timeout.Infinite);
    }

On “Start()” of the adapter, I start up the WCF host (and connect to the cloud). My Timer checks the state of the adapter and if the state is “Stopping”, the WCF host is closed. When the “EnqueueEvent” operation is called by the service client, I create a StreamInsight point event and take all of the values in the payload dictionary and populate the typed class provided at runtime.

    foreach (KeyValuePair<string, object> keyAndValue in payload)
    {
        //populate values in runtime class with payload values
        int ordinal = this.eventType.Fields[keyAndValue.Key].Ordinal;
        pointEvent.SetField(ordinal, keyAndValue.Value);
    }

    pointEvent.StartTime = startTime;

    if (Enqueue(ref pointEvent) == EnqueueOperationResult.Full)
    {
        Ready();
    }

There is a fair amount of other code in there, but those are the main steps. As for the output adapter, the constructor instantiates the WCF ChannelFactory for the IPointEventReceiver contract defined earlier. The address passed in via the WcfAdapterConfig is applied to the Factory. When StreamInsight invokes the Dequeue operation of the adapter, I pull out the values from the typed class and put them into the payload dictionary of the outbound message.

    // Extract all field values to generate the payload.
    result.Payload = this.eventType.Fields.Values.ToDictionary(
        f => f.Name,
        f => currentEvent.GetField(f.Ordinal));

    //publish message to service
    client = factory.CreateChannel();
    client.PublishEvent(result);
    ((IClientChannel)client).Close();

I now have complete adapters to listen to the Azure AppFabric Service Bus and publish to endpoints hosted on the Azure AppFabric Service Bus.
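The two translation steps the adapters perform (payload dictionary to ordinal-indexed fields on input, and back again on output) can be sketched independently of StreamInsight. The Python below is a conceptual illustration only: the function names are hypothetical, and assigning ordinals by sorting field names is an assumption made for the sketch, not something the post states.

```python
def build_ordinals(field_names):
    """Map each field name to a position (ordinal) in the typed event.

    Sorting by name is an assumption made for this sketch.
    """
    return {name: index for index, name in enumerate(sorted(field_names))}


def payload_to_fields(payload, ordinals):
    """Input side: place each payload value at its field's ordinal."""
    fields = [None] * len(ordinals)
    for key, value in payload.items():
        fields[ordinals[key]] = value  # KeyError if the payload has an unknown field
    return fields


def fields_to_payload(fields, ordinals):
    """Output side: rebuild the name/value dictionary from the typed fields."""
    return {name: fields[index] for name, index in ordinals.items()}


if __name__ == "__main__":
    ordinals = build_ordinals(["City", "Product"])
    fields = payload_to_fields({"Product": "Widget", "City": "Seattle"}, ordinals)
    print(fields)
    print(fields_to_payload(fields, ordinals))
```

Because the adapter only ever sees names and ordinals, it never needs compile-time knowledge of the event class, which is the property the post relies on.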

I’ll now build an on-premises host to test that it all works. If it does, then the solution can easily be transferred to StreamInsight Austin for cloud hosting. I first defined the typed class that defines my event.

    public class OrderEvent
    {
        public string City { get; set; }
        public string Product { get; set; }
    }

Recall that my adapter doesn’t know about this class. The adapter works with the dictionary object and the typed class is passed into the adapter and translated at runtime. Next up is setup for the StreamInsight host. After creating a new embedded application, I set up the configuration object representing both the input WCF service and output WCF service.

    //create reference to embedded server
    using (Server server = Server.Create("RSEROTER"))
    {
        //create WCF service config
        WcfAdapterConfig listenWcfConfig = new WcfAdapterConfig()
        {
            Username = "ISSUER",
            Password = "PASSWORD",
            ServiceAddress = "https://richardseroter.servicebus.windows.net/StreamInsight/RSEROTER/InputAdapter"
        };

        WcfAdapterConfig subscribeWcfConfig = new WcfAdapterConfig()
        {
            Username = string.Empty,
            Password = string.Empty,
            ServiceAddress = "https://richardseroter.servicebus.windows.net/SIServices/ReceiveEventService"
        };

        //create new application on the server
        var myApp = server.CreateApplication("DemoEvents");

        //get reference to input stream
        var inputStream = CepStream<OrderEvent>.Create("input", typeof(WcfInputAdapterFactory), listenWcfConfig, EventShape.Point);

        //first query
        var query1 = from i in inputStream select i;

        var siQuery = query1.ToQuery(myApp, "SI Query", string.Empty, typeof(WcfOutputAdapterFactory), subscribeWcfConfig, EventShape.Point, StreamEventOrder.FullyOrdered);

        siQuery.Start();
        Console.WriteLine("Query started.");

        //wait for keystroke to end
        Console.ReadLine();

        siQuery.Stop();
        // "host" is not declared in this excerpt
        host.Close();
        Console.WriteLine("Query stopped. Press enter to exit application.");
        Console.ReadLine();
    }

This is now a fully working, cloud-connected, onsite StreamInsight application. I can take in events from any internal/external service caller and publish output events to any internal/external service. I find this to be a fairly exciting prospect. Imagine taking events from your internal Line of Business systems and your external SaaS systems and looking for patterns across those streams.

Looking for the source code? Well here you go. You can run this application today, whether you have StreamInsight Austin or not. In the next post, I’ll show you how to take this application and deploy it to Windows Azure using StreamInsight Austin.

The Microsoft All-In-One Code Framework Team reported New code sample release from Microsoft All-In-One Code Framework in July in a 7/13/2011 post:

New Windows Azure Code Samples: CSAzureWebRoleIdentity

Download: http://code.msdn.microsoft.com/CSAzureWebRoleIdentity-004bc8dd

CSAzureWebRoleIdentity is a web role hosted in Windows Azure. It federates the authentication to a local STS. This separates the authentication code from the business logic so that web developers can offload the authentication and authorization to the STS with the help of WIF.

This code sample is created to answer a hot Windows Azure sample request: webrole-ADFS authentication with over 25 customer votes.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Erik Oppedijk reported Digging into the Azure Accelerator for Web Roles in a 7/13/2011 post:

Intro

I get a lot of questions on how to maximize the use of a web role in Windows Azure, most of the time for multiple small(er) websites. A manual solution was to create a single project containing multiple sites (new feature since Azure SDK 1.3) but the big challenge here is how to update a single site and deploy it. The new WebDeploy option seems to fulfill this, but it only works on a single instance, and there is no persistence of the deploy after a recycle of the role.

The Windows Azure Accelerator for Web Roles is a codeplex project, and this tool will help us overcome some problems with Azure Deployment:

- Quick WebDeploy (around 30 seconds)

- Persistent updating of a site

- Run multiple websites in the same role

- Management Portal

- Centralized Trace logs

- SSL certificate management

Read the introduction and installation post by Nathan Totten for a step by step tutorial

Architecture

The basic idea behind the accelerator is a new Windows Azure Cloud Project, which hosts the logic. We can deploy this application to one or more instances in the cloud. So no sites are initially deployed, just a placeholder/management website.

Each instance polls the Windows Azure Blob Storage for (updated) deployments and if available automatically installs them.

From the management portal we can register a new site, and a developer can use WebDeploy to publish his application to a running instance. This instance will save the deployment files to Blob Storage. Now the other instances can deploy the same site from the storage and the recycling of a single role will cause the sites to be automatically redeployed.
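The synchronization flow described above (publish to one instance, persist to Blob Storage, let the other instances poll and install) can be sketched with an in-memory stand-in for storage. This Python model is an illustration under stated assumptions, not the accelerator's code: the class names, the version counter, and the instance names are all hypothetical.

```python
class BlobStore:
    """In-memory stand-in for the blob container that holds site packages."""

    def __init__(self):
        self.packages = {}  # site name -> (version, package bytes)

    def upload(self, site, package):
        version = self.packages.get(site, (0, b""))[0] + 1
        self.packages[site] = (version, package)


class Instance:
    """One web role instance that syncs its sites from the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store
        self.installed = {}  # site name -> version currently deployed locally

    def publish(self, site, package):
        """Web Deploy target: save to storage so peers can pick it up."""
        self.store.upload(site, package)
        self.sync()

    def sync(self):
        """One polling pass; returns the sites that were (re)installed."""
        updated = []
        for site, (version, _package) in self.store.packages.items():
            if self.installed.get(site) != version:
                self.installed[site] = version
                updated.append(site)
        return updated

    def recycle(self):
        """Simulate a role recycle: local state is lost, storage is not."""
        self.installed = {}


if __name__ == "__main__":
    store = BlobStore()
    first, second = Instance("IN_0", store), Instance("IN_1", store)
    first.publish("contoso", b"zip-bytes")
    print(second.sync())   # the second instance picks up the new site
    second.recycle()
    print(second.sync())   # after a recycle, the site is redeployed from storage
```

A real instance would repeat the sync pass on a timer rather than calling it by hand; the point of the sketch is that storage, not any single instance, is the source of truth.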

Behind the scenes

There is a default SyncIntervalInSeconds of 15 seconds for the polling.

NuGet packages are used (WindowsAzureWebHost.Synchronizer and WindowsAzureWebHost.WebManagerUI).

Azure Tables being used:

- BindingCertificates

- Bindings

- WebHostTracingEntries

- WebSites

- WebSiteSyncStatus

Blob Containers being used:

- sites

- bindingcertificates

Tips

Don't turn on WebDeploy during a publish of the Accelerator application; there is a startup script that will do this for you!

Maarten Balliauw (@maartenballiauw) described Windows Azure Accelerator for Web Roles in a 7/13/2011 post:

One of the questions I often get around Windows Azure is: “Is Windows Azure interesting for me?”. It’s a tough one, because most of the time when someone asks that question they currently already have a server somewhere that hosts 100 websites.

In the full-fledged Windows Azure model, that would mean 100 x 2 (we want the SLA) = 200 Windows Azure instances. And a stroke at the end of the month when the bill arrives. Microsoft’s DPE team have released something very interesting for those situations though: the Windows Azure Accelerator for Web Roles.

In short, the WAAWR (no way I’m going to write Windows Azure Accelerator for Web Roles out every time!) is a means of creating virtual web sites on the IIS server running on a Windows Azure instance. Add “multi-instance” to that and have a free tool to create a server farm for you!

The features on paper:

- Deploy sites to Windows Azure in less than 30 seconds

- Enables deployments to multiple Web Role instances using Web Deploy

- Saves Web Deploy packages & IIS configuration in Windows Azure storage to provide durability

- A web administrator portal for managing web sites deployed to the role

- The ability to upload and manage SSL certificates

- Simple logging and diagnostics tools

Interesting… Let’s go for a ride!

Obtaining & installing the Windows Azure Accelerator for Web Roles

Installing the WAAWR is as easy as download, extract, buildme.cmd and you’re done. After that, Visual Studio 2010 (or Visual Studio Web Developer Express!) features a new project template:

Click OK, enter the required information (such as: a storage account that will be used for synchronizing the different server instances and an administrator account). After that, enable remote desktop and publish. That’s it. I’ve never ever set up a web farm more quickly than that.

Creating a web site

After deploying the solution you created in Visual Studio, browse to the live deployment and log in with the administrator credentials you created when creating the project. This will give you a nice looking web interface which allows you to create virtual web sites and have some insight into what’s happening in your server farm.

I’ll create a new virtual website on my server farm:

After clicking create we can try to publish an ASP.NET MVC application.

Publishing a web site

For testing purposes I created a simple ASP.NET MVC application. Since the default project template already has a high enough “Hello World factor”, let’s immediately right-click the project name and hit Publish. Deploying an application to the newly created Windows Azure webfarm is as easy as specifying the following parameters:

One Publish click later, you are done. And deployed on a web farm instance, I can now see the website itself but also… some statistics :-)

Conclusion

The newly released Windows Azure Accelerator for Web Roles is, IMHO, the easiest, fastest manner to deploy a multi-site webfarm on Windows Azure. Other options like the ones proposed by Steve Marx on his blog do work, but are only suitable if you are billing your customer by the hour.

The fact that it uses web deploy to publish applications to the system and the fact that this just works behind a corporate firewall and annoying proxy is just fabulous!

This also has a downside: if I want to push my PHP applications to Windows Azure in a jiffy, chances are this will be a problem. Not on Windows (but not ideal there either), but certainly when publishing apps from other platforms. Is that a self-imposed challenge? Nope. Web deploy does not seem to have an open protocol (that I know of) and while reverse engineering it is possible I will not risk the legal consequences :-) However, some reverse-engineering of the WAAWR itself taught me that websites are stored as a ZIP package on blob storage and there’s a PHP SDK for that. A nifty workaround is possible as such, if you get your head around the ZIP file folder structure.
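The packaging half of that workaround might look like the sketch below. It is Python rather than PHP and it only builds the ZIP in memory. The flat, root-relative layout it produces is an assumption: the folder structure the WAAWR actually expects is not described here and would need to be checked against a package the accelerator itself wrote to blob storage. The function name `build_site_package` is hypothetical, and the upload step is left to whichever storage SDK you use.

```python
import io
import zipfile
from pathlib import Path


def build_site_package(site_root):
    """Zip every file under site_root, keeping paths relative to that root.

    Assumption: a flat, root-relative layout. The real WAAWR package
    layout is not documented in this post and may differ.
    """
    root = Path(site_root)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Archive names use forward slashes regardless of the host OS.
                archive.write(path, path.relative_to(root).as_posix())
    return buffer.getvalue()
```

The returned bytes can then be handed to a blob upload call. Inspecting an existing package with `zipfile.ZipFile(...).namelist()` is a quick way to learn the layout the accelerator really uses.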

My conclusion in short: if you ever receive the question “Is Windows Azure interesting for me?” from someone who wants to host a bunch of websites on it, the answer is yes. And it’s easy.

The Windows Azure Team (@WindowsAzure) reported NOW AVAILABLE: Windows Azure Accelerator for Web Roles in a 7/12/2011 post:

We are excited to announce the release of the Windows Azure Accelerator for Web Roles. This accelerator makes it quick and easy for you to deploy one or more websites across multiple Web Role instances using Web Deploy in less than 30 seconds.

Feature highlights:

- Deploy websites to Windows Azure in less than 30 seconds.

- Enable deployments to multiple Web Role instances using Web Deploy from both Visual Studio and WebMatrix.

- Saves Web Deploy packages & IIS configuration in Windows Azure storage to provide durability.

- A Visual Studio Project template that allows you to create your web deploy host.

- Full source and NuGet packages that you can use to build custom solutions.

- Documentation to help you get started.

You can watch this video for more information about getting started:

After you have deployed the accelerator to your Windows Azure role, you can deploy your web applications to Windows Azure quickly and easily with Web Deploy. You can use Visual Studio to deploy a web application to Windows Azure.

Alternatively, you can use WebMatrix to deploy a web application to Windows Azure.

For additional information visit the following sites:

- Windows Azure Accelerator for Web Roles

- Nathan Totten’s blog post, “Windows Azure Accelerator for Web Roles”

- Getting Started Webcast

<Return to section navigation list>

Visual Studio LightSwitch

John Chen of the Visual Studio LightSwitch Team showed How to Create a Search Screen that Can Search Properties of Related Entities (John Chen) in a 7/13/2011 post:

LightSwitch has a powerful search data screen template that lets you quickly create a search screen in your LightSwitch application. You can watch Beth’s How Do I video to get started: How Do I: Create a Search Screen in a LightSwitch Application?

In this blog, I am going to answer one frequently asked question: How do I create a search screen that can search properties of related entities?

Let’s start with an example to illustrate the question. Assume that your application has two entities, Category and Product, from the sample Northwind database as shown below (Figure 1).

Figure 1. The entities in the application

Category and Product have a one-to-many relationship, as shown by the connecting line from Category to Product. It means a Category can have many Product instances while a Product is associated with one Category. Now let’s create a default search screen on the Product table. The screen designer will look like Figure 2 below:

Figure 2. The default search screen designer view

You can see that the related entity Category (highlighted) is shown in the Data Grid’s row. For demonstration purposes, I deleted some fields (‘Units In Stock’, etc.) from the screen designer.

Now run the app and you can see the search screen in action as shown in Figure 3, where I have typed ‘ch’ in the search box located on the top right of the screen (highlighted).

Figure 3. Search screen in action

Even though the Category field is shown in the result (as a summary field), it is not searchable. For example, if you type ‘_chen’ in the search box, you will not get any result even though you might think that it would have results where the Category is Beverages_chen.

This is because the built-in search function does not cover the related entity fields. Fortunately, it is not difficult to overcome this by using queries (please see MSDN topic Filtering Data with Queries). There are multiple approaches depending on your usage scenarios. How to Create a Screen with Multiple Search Parameters in LightSwitch is one example of how to define your own search parameters in your screen. Alternatively, you can create a screen with one search parameter to search multiple fields including the related entity fields like this example:

Figure 4. Query multiple fields with one parameter

In the following, I will demonstrate one simple approach by modifying the query in the search screen. My goal is to use one search screen to search the product entities as well as filtering the result with the Category Name, which is a related entity field.

Let’s start with the SearchProducts screen in the screen designer in the previous example (see Figure 2).

First, I would edit the SearchProduct screen’s query by clicking on the Edit Query command on the top left portion of the designer (Figure 5, highlighted). Notice that this modifies only the query related to the screen itself. You could also create a global query that you could use across screens (see MSDN topic How to: Add, Remove, and Modify a Query)

Figure 5. Edit query on the screen designer

When the query designer shows up, I will click on the Add Filter button. Then leave the first filter column as ‘Where’ (Figure 6 - 1) and click on the second column’s drop down (Figure 6 - 2). Now you will see the list of fields available for the Product entity. You will also see that the related entity Category is in the list as a group. Expand the Category node (Figure 6 - 3), and choose CategoryName (Figure 6 - 4).

Figure 6. Editing the query expression

Set the condition column (Figure 6 - 5) as ‘contains’, the parameter type column (Figure 6 - 6) as ‘@Parameter’ and the parameter column (Figure 6 - 7) as ‘Add New’. Leave the new parameter name as CategoryName. The final query expression should look like Figure 7 below.

Figure 7. The final query expression
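As a rough illustration of what that filter expresses, here is the same condition written as plain Python. This is not LightSwitch code: the `Category` and `Product` classes and the `filter_by_category_name` function are hypothetical stand-ins for the Northwind entities and the designer-built query, and the case-insensitive comparison is an assumption (in practice it depends on the database collation).

```python
from dataclasses import dataclass


@dataclass
class Category:
    category_name: str


@dataclass
class Product:
    product_name: str
    category: Category


def filter_by_category_name(products, category_name):
    """Keep products whose related Category name contains the parameter text."""
    needle = category_name.lower()  # assumption: case-insensitive match
    return [p for p in products if needle in p.category.category_name.lower()]


beverages = Category("Beverages_chen")
condiments = Category("Condiments")
catalog = [
    Product("Chai", beverages),
    Product("Chang", beverages),
    Product("Aniseed Syrup", condiments),
]
matches = filter_by_category_name(catalog, "_chen")
```

The point of the sketch is that the condition reaches through the relationship (`p.category.category_name`), which is exactly what the built-in search box does not do.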

Now click on the Back to SearchProducts link (on the top left of the query designer) to return to the SearchProducts screen designer. Notice that in the left pane, there is a new group called Query Parameters (Figure 8-1); expand it if it is collapsed. You can see the CategoryName parameter (Figure 8-2) under Query Parameters. Drag the CategoryName parameter and drop it under the Screen Command Bar node in the content tree (Figure 8-3).

Figure 8. Drag and drop CategoryName parameter to the content tree

Now you will notice that a Text Box called Category Name (Figure 9 -1) is created on the drop location. Additionally, you will see a screen property called CategoryName is created (Figure 9-2). The Category Name Text Box is data bound to the CategoryName screen property, which is consumed by the query as well.

Figure 9. The final look of the search screen designer

That is all the design work needed to set up a search text box for the CategoryName parameter. Let’s run the app. You should see a screen similar to Figure 3, except that there is now an additional Category Name search box at the top of the screen. You can filter by category name first (Figure 10-1), then search again with the default search box (Figure 10-2) to search the product’s own fields. Figure 10 shows a search result in action.

Figure 10. The final search screen in action

Furthermore, you can also hide the built-in search box and use just your own search box. In the example above, you can go back to the SearchProducts screen designer and click on the Products table (Figure 11-1). Hit F4 to ensure the Properties pane is shown. From the Properties pane, uncheck the Support search check box (Figure 11-2).

Figure 11. Hide the built-in search box

You can then modify the query and modify the screen designer accordingly to add as many search fields in Products and its related fields in Categories as needed.


Beth Massi (@bethmassi) reported More “How Do I” Videos Released– Setting Field Values Automatically for Easy Data Entry (Beth Massi) in a 7/12/2011 post to the Visual Studio LightSwitch Team Blog:

We just released a couple more “How Do I” videos on the LightSwitch Developer Center:

#19 - How Do I: Set Default Values on Fields when Entering New Data?

#20 - How Do I: Copy Data from One Row into a New Row?

In these videos I show you how to write a bit of code to set values on fields in a couple different ways in order to make it faster for users to enter data.

And don’t forget you can watch all the How Do I videos here: LightSwitch “How Do I” Videos on MSDN

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Lori MacVittie (@lmacvittie) asserted #IPv6 Integration with partners, suppliers and cloud providers will make migration to IPv6 even more challenging than we might think… as an introduction to her The IPv6 Application Integration Factor post of 7/13/2011 to F5’s DevCentral blog:

My father was in the construction business most of the time I was growing up. He used to joke with us when we were small that there was a single nail in every house that – if removed – would bring down the entire building.

Now that’s not true in construction, of course, but when the analogy is applied to IPv6 it may be more true than we’d like to think, especially when that nail is named “integration”.

Most of the buzz around IPv6 thus far has been about the network; it’s been focused on getting routers, switches and application delivery network components supporting the standard in ways that make it possible to migrate to IPv6 while maintaining support for IPv4 because, well, we aren’t going to turn the Internet off for a day in order to flip from IPv4 to IPv6. Not many discussions have asked the very important question: “Are your applications ready for IPv6?” It’s been ignored so long that many, likely, are not even sure about what that might mean let alone what they need to do to ready their applications for IPv6.

IT’S the INTEGRATION

The bulk of the issues that will need to be addressed in the realm of applications when the inevitable migration takes off are in integration. This will be particularly true for applications integrating with cloud computing services. Whether the integration is at the network level – i.e. cloud bursting – or at the application layer – i.e. integration with SaaS such as Salesforce.com or through PaaS services – once a major point of integration migrates it will likely cause a chain reaction, forcing enterprises to migrate whether they’re ready or not. Consider, for example, that cloud bursting assumes a single, shared application “package” that can be pushed into a cloud computing environment as a means to increase capacity. If – when – a cloud computing provider decides to migrate to IPv6 this process could become a lot more complicated than it is today. Suddenly the “package” that assumed IPv4 internal to the corporate data center must assume IPv6 internal to the cloud computing provider. Reconfiguration of the OS, platform and even application layer becomes necessary for a successful migration.

Enterprises reliant on SaaS for productivity and business applications will likely be first to experience the teetering of the house of (integration) cards.

Enterprises are moving to the cloud, according to Yankee Group’s 2011 US FastView: Cloud Computing Survey.

Approximately 48 percent of the respondents said remote/mobile user connectivity is driving the enterprises to deploy software as a service. This is significant as there is a 92 percent increase over 2010.

Around 38 percent of enterprises project the deployment of over half of their software applications on a cloud platform within three years compared to 11 percent today, Yankee Group said in its “2011 Fast View Survey: Cloud Computing Motivations Evolve to Mobility and Productivity.”

-- Enterprise SaaS Adoption Almost Doubles in 2011: Yankee Group Survey

Enterprises don’t just adopt SaaS and cloud services, they integrate them. Data stored in cloud-hosted software is invaluable to business decision makers but first must be loaded – integrated – into the enterprise-deployed systems responsible for assisting in analysis of that data. Secondary integration is also often required to enable business processes to flow naturally between on- and off-premise deployed systems. It is that integration that will likely first be hit by a migration on either side of the equation. If the enterprise moves first, they must address the challenge of integrating two systems that speak incompatible network protocol versions. Gateways and dual-stack strategies – even potentially translators – will be necessary to enable a smooth transition regardless of who blinks first in the migratory journey toward IPv6 deployment.

Even that may not be enough. Peruse RFC 4038, “Application Aspects of IPv6 Transition”, and you’ll find a good number of issues that are going to be as knots in wood to a nail, including DNS, conversion functions between hostnames and IP addresses (implying underlying changes to development frameworks that would certainly need to be replicated in PaaS environments, about which, according to a recent report from Gartner, inquiries have increased 267% this year alone), and storage of IP addresses – whether for user identification, access policies or integration purposes.
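As a minimal sketch of two of those items (hostname-to-address conversion and address storage), the Python below uses the standard library’s `socket.getaddrinfo` and `ipaddress` module, both of which handle IPv4 and IPv6 through one interface. The function names `resolve_all` and `normalize_address` are illustrative only, and this is one possible approach rather than anything the RFC prescribes for a given framework.

```python
import ipaddress
import socket


def resolve_all(host, port):
    """Return (family, sockaddr) pairs for every address the resolver offers.

    AF_UNSPEC asks for IPv4 and IPv6 results alike, so the caller never
    hard-codes a protocol version.
    """
    infos = socket.getaddrinfo(
        host, port, family=socket.AF_UNSPEC, type=socket.SOCK_STREAM
    )
    return [(family, sockaddr) for family, _type, _proto, _canon, sockaddr in infos]


def normalize_address(text):
    """Parse an IPv4 or IPv6 literal and return its canonical text form."""
    return ipaddress.ip_address(text).compressed


stored_v4 = normalize_address("192.0.2.10")
stored_v6 = normalize_address("2001:0DB8:0000:0000:0000:0000:0000:0001")
```

Normalizing before storage matters because one IPv6 address has many textual spellings, and a column sized for IPv4’s 15 characters will not hold an IPv6 address, which can run to 39 characters in full hexadecimal form.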

Integration is the magic nail; the one item on the migratory checklist that is likely to make or break the success of IPv6 migration. It’s also likely to be the “thing” that forces organizations to move faster. As partners, sources and other integrated systems make the move it may cause applications to become incompatible. If one environment chooses an all or nothing strategy to migration, its integrated partners may be left with no option but to migrate and support IPv6 on a timeline not their own.

TOO TIGHTLY COUPLED

While the answer for IPv6 migration is generally accepted to be found in a dual-stack approach, the same cannot be said for Intercloud application mobility. There’s no “dual stack” in which services aren’t tightly coupled to IP address, regardless of version, and no way currently to depict an architecture without relying heavily on topological concepts such as IP. Cloud computing – whether IaaS or PaaS or SaaS – is currently entrenched in a management and deployment system that tightly couples IP addresses to services. Integration relying upon those services, then, becomes heavily reliant on IP addresses and by extension IP, making migration a serious challenge for providers if they intend to manage both IPv4 and IPv6 customers at the same time. But eventually, they’ll have to do it. Some have likened the IPv4 –> IPv6 transition to the network’s “Y2K”. That’s probably apposite but incomplete. The transition will also be as challenging for the application layers as it will for the network, and even more so for the providers caught between two versions of a protocol upon which so many integrations and services rely. Unlike Y2K we have no deadline pushing us to transition, which means someone is going to have to be the one to pull the magic nail out of the IPv4 house and force a rebuilding using IPv6. That someone may end up being a cloud computing provider as they are likely to have not only the impetus to do so to support their growing base of customers, but the reach and influence to make the transition an imperative for everyone else.

IPv6 has been treated as primarily a network concern, but because applications rely on the network and communication between IPv4 and IPv6 without the proper support is impossible, application owners will need to pay more attention to the network as the necessary migration begins – or potentially suffer undesirable interruption to services.

David Linthicum (@DavidLinthicum, pictured below) asserted “Although many view cloud migration fees as payola, the charges are exactly what's needed from cloud computing companies” in a deck for his Why Microsoft's 'cloud bribes' are the right idea article of 7/13/2011 for InfoWorld’s Cloud Computing blog:

InfoWorld's Woody Leonhard uncovered the fact that Microsoft is paying some organizations to adopt its Office 365 cloud service, mostly in funds that Microsoft earmarks for their customers' migration costs and other required consulting. Although this raised the eyebrows of some bloggers -- and I'm sure Google wasn't thrilled -- I think this is both smart and ethical. Here's why.

Those who sell cloud services, which now includes everyone, often don't consider the path from the existing as-is state to the cloud. If executed properly, there is a huge amount of work and a huge cost that can remove much of the monetary advantages of moving to the cloud.

Microsoft benefits from subsidizing the switch because it can capture a customer that will use that product for many years. Thus, the money spent to support migration costs will come back 20- or 30-fold over the life of the product. This is the cloud computing equivalent to free installation of a cable TV service.

The incentive money does not go into the pockets of the new Office 365 user; it's spent on consulting organizations that come in and prepare the organization for the arrival of the cloud. This includes migrating existing documents and data, training users, creating a support infrastructure, and doing other necessary planning and prep work.

Others should follow Microsoft's lead, and some cloud vendors are doing so already.

As a rule of thumb, I would allocate about one-eighth of the first year's service revenue to pay for planning and migration costs. If it's a complex migration, such as from enterprise to IaaS (infrastructure as a service), I would budget more. Budget less if you're dealing with a simple SaaS (software as a service) migration.

Migrating to cloud computing requires effort, risk, and cash. Although the end state should provide much more agility and value, many steps separate where you are and where you need to be. Considering that the cloud providers like Microsoft, Amazon.com, and Google will benefit directly from our move to their cloud services, let them pay us to get there.

I agree with David on this point, although I believe that case studies and other marketing collateral that results should disclose that the service provider subsidized the migration.

David Pallman posted Introducing the Windows Azure Cost Modeler on 7/12/2011:

I’m pleased to introduce the Windows Azure Cost Modeler, a free online tool from Neudesic for estimating Windows Azure operational costs that can handle advanced scenarios.

Why another pricing calculator, when we already have several? There’s Microsoft’s new Windows Azure Pricing Calculator (nicely done), the Windows Azure TCO Calculator, and Neudesic’s original Azure ROI Calculator. These tools are great but they focus on the every-month-is-the-same scenario; unless your load will be constant month after month you’ll find yourself having to make some of the calculations yourself.

With the cost modeler, you can easily explore fluctuating-load scenarios such as these:

• Seasonal businesses that run a larger configuration during their busy season

• On-off processing where solutions are not constantly deployed year-round

• Configurations that increase or decrease over time to match projected changes in load

• Hosted services that are not deployed every day of the month (such as weekdays only)

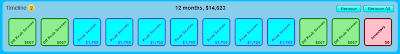

The Windows Azure Cost Modeler user interface is shown above. It includes a standard pricing calculator (middle section). It can track multiple configurations, represented as color-coded profile cards (top). Profile cards can be arranged in a storyboard sequence to define a timeline (bottom), one for each month. What we’ve strived for is flexibility in modeling while keeping the interface simple.

Profiles

A profile is a monthly pricing configuration that you can assign a name and color to. Profiles are arranged at the top of the tool. The pricing calculator shows the details of the currently selected profile and allows you to make changes. Buttons at the top right of the pricing calculator allow you to clear the current profile (resetting its inputs to 0), create a new profile, remove the current profile, or remove all profiles.

Pricing Calculator

The pricing calculator has similar inputs to the other Windows Azure pricing calculators and includes all platform services which have been commercially released, including Windows Azure Compute, Windows Azure Storage, Content Delivery Network, SQL Azure Database, AppFabric Access Control Service, AppFabric Caching, and AppFabric Service Bus. You can specify values using the sliders or you can directly edit the numbers.

In the Windows Azure Compute area of the calculator you specify the number of instances you want for each VM size. There is also an input for the number of hours deployed per month, which is handy if you don’t deploy your compute instances for every hour of the month. For example, if you only require your instances to be deployed for the first day of the month you would set hours/month to 24.
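The hours/month input amounts to a simple product of instances, hourly rate and hours deployed. A small sketch, using a made-up hourly rate (the real rates are whatever Azure.com currently lists and are not reproduced here) and a hypothetical function name:

```python
from decimal import Decimal

# Hypothetical rate for illustration only; check Azure.com for real pricing.
HYPOTHETICAL_HOURLY_RATE = Decimal("0.12")


def compute_charge(instances, hours_per_month, hourly_rate=HYPOTHETICAL_HOURLY_RATE):
    """Monthly compute charge: instances x hours deployed x hourly rate."""
    return instances * hours_per_month * hourly_rate


one_day = compute_charge(instances=2, hours_per_month=24)      # first day only
full_month = compute_charge(instances=2, hours_per_month=720)  # 30 days x 24 hours
```

With these illustrative numbers, two instances deployed for a single day cost a thirtieth of what the same two instances cost when left running for a 30-day month, which is the difference the hours/month input is there to capture.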

The Windows Azure Storage & CDN area is where you specify data size and number of transactions for Windows Azure Storage. If you’re using the Content Delivery Network, also estimate your data transfers.

For SQL Azure Database, select the number of databases for each of the available sizes.

In the Data Transfer area, specify the amount of egress (outbound data transfers). There is no input for ingress because inbound data transfers are now free in Windows Azure.

The AppFabric area is where you specify consumption of AppFabric Services. For Access Control Service, enter the number of transactions. For Caching, choose one of the six available cache sizes. For Service Bus, define the number of connections. The Service Bus price calculation will automatically select the best combination of connection packs.
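How the tool picks the best combination of connection packs is not described, but the problem can be sketched as a small dynamic program: for each number of connections, find the cheapest set of packs that covers at least that many. The pack sizes and prices below are invented for illustration and are not the real Service Bus rates; `cheapest_pack_combination` is a hypothetical name.

```python
from decimal import Decimal

# Hypothetical pack sizes and prices, for illustration only.
HYPOTHETICAL_PACKS = {1: Decimal("4.00"), 5: Decimal("10.00"), 25: Decimal("45.00")}


def cheapest_pack_combination(connections, packs=HYPOTHETICAL_PACKS):
    """Return (total_price, {pack_size: count}) covering at least `connections`."""
    best_cost = [Decimal("0")]  # best_cost[n] = cheapest way to cover n connections
    best_pick = [None]          # best_pick[n] = pack size bought last to reach n
    for needed in range(1, connections + 1):
        cost, pick = min(
            (price + best_cost[max(needed - size, 0)], size)
            for size, price in packs.items()
        )
        best_cost.append(cost)
        best_pick.append(pick)

    # Walk back through the recorded picks to recover the combination.
    chosen = {}
    remaining = connections
    while remaining > 0:
        size = best_pick[remaining]
        chosen[size] = chosen.get(size, 0) + 1
        remaining = max(remaining - size, 0)
    return best_cost[connections], chosen
```

Note that the cheapest answer can overshoot: with these made-up prices, 23 connections are covered most cheaply by a single 25-pack rather than by four 5-packs plus three singles.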

The Estimated Charges panel shows the charges, itemized by service and totaled, with monthly and annual columns. By default amounts are rounded up to the nearest dollar; if you prefer to see the full amounts including cents, clear the “Round up to nearest dollar” checkbox.

At the top left of the Estimated Charges panel is a U.S. flag, indicating the rates are for North America and calculations are shown in U.S. dollars. The tool does not yet support other currencies, nor the slightly different rates in Asian data centers, but this is coming.

At the top right of the Estimated Charges panel is a help button, which will display the rates and also offer to send you to Azure.com to verify the rate information is still current.

Timeline

Once you’ve defined your profiles you can add them to the timeline at bottom. To add a profile to the timeline, select the profile you want and click the Add to Timeline button. The profile card appears in the timeline.

If you’ve added a profile card one or more times to the timeline and then need to make changes to the original profile, you can update the profiles in the timeline by clicking the Apply button at the top of the pricing calculator.

To remove a card, click the Remove button at the top right of the timeline. The selected card will be deleted. To remove all cards, click the Remove All button.

Scenario Examples

Here are a few examples of how you can model scenarios in the cost modeler.

Example: Ramping Up

In this scenario, a business wants to model their first year of Windows Azure costs. They will use a free trial offer for one month, then work on a Proof-of-Concept for two months, and then go into production. In the modeler, we create 3 profiles named Free, POC, and Production and add 1-Free, 2-POC, and 9-Production cards to the timeline to model Year 1 operational expenses.
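The arithmetic behind such a timeline is a sum over twelve profile cards. The sketch below assumes invented monthly totals for the three profiles and assumes that rounding up to the nearest dollar is applied per month; neither detail comes from the tool itself, and the name `timeline_total` is hypothetical.

```python
import math
from decimal import Decimal

# Hypothetical monthly totals per profile, for illustration only.
profiles = {
    "Free": Decimal("0"),
    "POC": Decimal("95.50"),
    "Production": Decimal("410.25"),
}

# One card per month: 1 Free, 2 POC, 9 Production.
timeline = ["Free"] + ["POC"] * 2 + ["Production"] * 9


def timeline_total(profiles, timeline, round_up=True):
    """Sum the monthly charge of each card in the timeline."""
    total = Decimal("0")
    for name in timeline:
        monthly = profiles[name]
        # Assumption: rounding up applies to each month, not to the yearly sum.
        total += Decimal(math.ceil(monthly)) if round_up else monthly
    return total


year_one_rounded = timeline_total(profiles, timeline)
year_one_exact = timeline_total(profiles, timeline, round_up=False)
```

The same function covers the other scenarios by changing only the `timeline` list, which is the flexibility the storyboard of profile cards is meant to provide.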

Example: Seasonal Business

In this scenario, a tax preparation service has a very seasonal business: March-May is the busy season, when a lot of computing power is needed, and the rest of the year is the off season, when very little compute power is needed. In the modeler, we create a Peak and an Off Peak profile and arrange them in the timeline to show the year has 2-Off Peak, 3-Peak, and 7-Off Peak.

Example: End of Month Bursting

In this scenario, a solution runs in the cloud only on the last day of each month to perform month-end batch processing and reporting tasks. If it is also the end of the quarter another day is needed to run end-of-quarter processing. If it is also the end of the year a third day is needed to run end-of-year processing.

We can define 3 profiles, one each for end of month (1 day of processing), end of quarter (2 days of processing), and end of year (3 days of processing). The correct profile for each calendar month is then very easy to assign: each quarter repeats the sequence EndMonth + EndMonth + EndQuarter except the final quarter, which is EndMonth + EndMonth + EndYear.

Summary

We hope you find Windows Azure Cost Modeler (http://azurecostmodeler.com) and Neudesic’s other tools and services helpful. If you have any feedback about how we can improve our offerings, please contact me through this blog.

For a deeper analysis of whether Windows Azure makes sense for you, I urge you to take advantage of a Cloud Computing Assessment.

Damon Edwards (@damonedwards) posted a DevOps in the Enterprise: Whiteboard Session at National Instruments (Video) Webcast on 7/12/2011:

I was recently in Austin, Texas and had a chance to visit National Instruments and talk DevOps.

National Instruments is a rare case study. While it's common to hear DevOps stories coming from web startups, National Instruments definitely falls into the category of a traditional legacy enterprise.

After meeting the larger team, I settled into a conference room with Ernest Mueller and Peco Karayanev to get a deeper dive into both their DevOps and multi-vendor Cloud initiatives. Below is the video from that session.

0:00 - Intro

Topics include:

- How Ernest, Peco, and National Instruments got from traditional legacy IT operations thinking to DevOps thinking

- Scaling a single web app in a startup vs. managing the "long tail of long tails" in a heterogeneous enterprise environment

- Challenge of moving developers from a desktop software mindset to developing for the cloud

10:37 - Whiteboard

Topics include:

- "Sharing is the Devil"... Managing complexity through isolation by design

- Overview of NI cloud architecture

- "Operations as a Service"

- Model-driven provisioning

- Building cloud apps that span multiple clouds (Azure and AWS)

- Working with deployment tools (and enabling developer self-service/maintenance)

- Tools the team is publishing as open source projects (Only the second time that National Instruments has released something under an open source license)

The Windows Azure Team (@WindowsAzure) announced a New Forrester Report Outlines The ISV Business Case for Windows Azure on 7/12/2011:

News from WPC11 this week has demonstrated that the partner opportunity with Microsoft has never been stronger. Validating that opportunity, a recently commissioned study conducted by Forrester Consulting, “The ISV Business Case For The Windows Azure Platform” found that software partners deploying solutions on Windows Azure are generating 20% - 250% revenue growth after nine to 14 months of operations.

Available for download here, the report examines the economic impact and business case for ISVs building applications on Windows Azure. To arrive at these findings, Forrester interviewed six geographically dispersed ISVs of various sizes who developed applications on Windows Azure. Key benefits for ISVs outlined in this report include the ability to:

- Re-use existing code. The ISVs were able to port up to 80 percent of their existing Microsoft code onto Windows Azure by simply recompiling it.

- Leverage Windows Azure’s flexible resource consumption model. The ISVs tuned their applications to minimize Windows Azure resource usage and make it more predictable.

- Reach completely new customers. Having cloud-based applications that could be offered globally allowed the ISVs to sell into new market segments and to geographically distant customers.

The report outlines how, with Microsoft as a partner, ISVs successfully moved their applications to Windows Azure, gaining access to new customers and revenue opportunities, while also preserving their prior investments in existing code, and leveraging existing development skillsets. Download the report here to take a deeper look into the benefits of Windows Azure for ISVs.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Mark Hassell of the Microsoft Server and Cloud Platform Team (@MSServerCloud) suggested that you Take your business to the next level with new partner programs and incentives for private cloud computing in a 7/13/2011 post:

There’s a lot of hype around the evolution of virtualization and management, and the emergence of cloud computing. One thing that everyone can agree upon however is that most customers want to take virtualization and management to the next level by migrating to a cloud infrastructure and are looking to partners to help them decide on the best path to get there. So virtualization and management deliver new business opportunities today, and expansion into cloud computing, specifically private cloud, offers the prospect of even more opportunities in the future. With this in mind, Microsoft is announcing a series of new initiatives designed to aid channel partners in designing effective, profitable business models while working with their customers to stay on top of their datacenter infrastructure and cloud computing needs.

Microsoft Management & Virtualization Competency

Firstly, we are merging together the Systems Management and Virtualization competencies to form a new competency which reflects this continued convergence of management and virtualization and prepares partners to deliver a new breed of Private Cloud solutions. Whether you design, sell, deploy, or consult on server infrastructure, the Microsoft Management & Virtualization competency will help you to expand your role as a trusted advisor, create new business models, and generate new cloud-based revenue streams. Attaining this new competency will provide you with access to relevant resources, tools, and training for Microsoft System Center, virtualization and private cloud technologies and help to grow your business. And reaching Gold competency status will qualify you to earn channel incentives through the Management & Virtualization Solution Incentive Program (SIP) and through Private Cloud, Management and Virtualization Deployment Planning Services (PVDPS). The new Management and Virtualization competency won’t go live until May 2012 but to qualify partners will be required to demonstrate expertise in both Windows Server 2008 R2 Hyper-V and System Center. So you’ll have plenty of time to attain any new exam requirements – and with this in mind stop by our booth (# 1221) at WPC to learn about our readiness offer and save at least 20% on virtualization and management training.

Microsoft Solutions Incentives Program (SIP)

The second announcement we are making is an enhancement to the Microsoft Solutions Incentives Program (SIP), which rewards partners that identify and sell solutions that include Microsoft Virtualization, System Center and Forefront solutions, as well as associated licensing suites (ECI, SMSD, SMSE). This year we are increasing the size of payouts, so Core Infrastructure partners with a Gold competency can earn fees up to 30% of the deal size on eligible opportunities. Read the FAQ for partners or contact your Microsoft Partner Account Manager (PAM) to learn more.

Microsoft Private Cloud, Management & Virtualization Deployment Planning Services (PVDPS)

Complementing SIP is our third announcement, a brand new program called the Microsoft Private Cloud, Management & Virtualization Deployment Planning Services (PVDPS). This program helps Software Assurance (SA) customers take advantage of infrastructure solutions based on Windows Server, System Center and Hyper-V, which can greatly reduce their datacenter costs and increase business agility. Customers can redeem their SA vouchers and receive a structured consulting engagement through partners that have attained a Gold competency in either Virtualization, Systems Management, Server Platform or Identity and Security. To learn more about PVDPS and to register to become a provider, visit the PVDPS website.

Microsoft Sales Specialist Accreditation

Our final announcement is about the Microsoft Sales Specialist accreditation, which is designed to help sales professionals strengthen their solution knowledge and gain recognition for solution selling expertise associated with select Microsoft solutions. At WPC we are introducing a new Private Cloud sales accreditation to help professionals communicate the benefits of private cloud computing, help customers overcome obstacles, and position product, technology and licensing requirements to differentiate a Microsoft solution from the competition. Attaining the Private Cloud sales accreditation will not only strengthen your solution knowledge, but help you to move business forward and deliver solutions more quickly – leading to deeper customer relationships and greater revenues. Go to the Microsoft Sales Specialist website to learn more.

In conclusion, if you are not enrolled in the Microsoft Sales Specialist program, if your organization is not active in the Solutions Incentive Program, or if you don’t qualify for Deployment Planning Services, you should consider attaining a Gold Core Infrastructure competency, which can not only help to differentiate your organization in the market but qualify your organization to earn valuable rewards and incentives. You should also start thinking about how you can drive revenue by migrating existing server infrastructure solutions to the cloud, building Hyper-V-based virtualization and System Center into your business practices and service offerings, and looking for ways to engage with Microsoft on joint private cloud opportunities.

I’d be happy to discuss any of your questions if you stop by our Private Cloud and Server Platform lounge at WPC and introduce yourself. Here’s how to stay up-to-date on all the latest Microsoft Server and Cloud Platform news during and after WPC:

- Read our new Server and Cloud Platform blog: http://blogs.technet.com/b/server-cloud/

- “Like” us on our new Facebook page: http://www.facebook.com/Server.Cloud

- Follow us on Twitter: @MSServerCloud

Microsoft’s Server and Tools Business News Bytes Blog reported Microsoft Delivers New Cloud Tools and Solutions at the Worldwide Partner Conference on 7/12/2011:

Cloud computing is as big a transformation, and opportunity, as the technology industry has ever seen. Partners and customers can look to Microsoft for the most comprehensive cloud strategy and offerings, in order to improve their business agility, focus and economics. Today, at the Worldwide Partner Conference, Microsoft announced tools and solutions to help partners capitalize on the opportunities, as well as examples of partners and customers already finding success.

Windows Azure Platform:

- Windows Azure Marketplace: The Windows Azure Marketplace will now enable application sales, allowing partners and customers to easily sell, try and buy Windows Azure-based applications. This capability builds on 99 partner data offerings already available for sale through the Marketplace and more than 450 currently listed applications. The new Windows Azure Marketplace application selling capability is available today. More information is available on the Windows Azure blog at http://blogs.msdn.com/b/windowsazure/.

- New examples of Innovative Solutions built on Windows Azure:

- Microsoft partner Wire Stone recently helped Boeing launch its “737 Explained Experience” on Windows Azure to give prospective 737 customers a rich, visually immersive tour of the plane. A case study is available here.

- Microsoft partner BrandJourney Venturing helped General Mills create a new business outlet on Windows Azure that enables consumers to find and purchase gluten-free products. A case study is available here.

- Microsoft yesterday released a commissioned study conducted by Forrester Consulting entitled “The ISV Business Case For The Windows Azure Platform.” Findings include that software partners deploying solutions on Windows Azure are generating 20% to 250% new revenue by reaching new customers, saving up to 80% on their hosting costs, and are able to reuse up to 80% of their existing .NET code when moving to Windows Azure. The study is available here.

- Windows Azure Platform Appliance Progress:

- Fujitsu announced in June that they would be launching the Fujitsu Global Cloud Platform (FGCP/A5) service in August 2011, running on a Windows Azure Platform Appliance at their datacenter in Japan. By using FGCP/A5, customers will be able to quickly build elastically-scalable applications using familiar Windows Azure platform technologies, streamline their IT operations management and be more competitive in the global market. In addition, customers will have the ability to store their business data domestically in Japan if they prefer.

- HP also intends to use the appliance to offer private and public cloud computing services, based on Windows Azure. They have an operational appliance at their datacenter that has been validated by Microsoft to run the Windows Azure Platform and they look forward to making services available to their customers later this year.

- eBay is in the early stages of implementing on the Windows Azure platform appliance and has successfully completed a first application on Windows Azure (ipad.ebay.com). eBay is continuing to evaluate ways in which the Windows Azure platform appliance can help improve engineering agility and reduce operating costs.

Private Cloud Computing with Windows Server and System Center:

- Windows Server 8: In his keynote, STB president Satya Nadella highlighted that the next version of Windows Server, codenamed Windows Server 8, will be the next step in private cloud computing. More information will be shared at the BUILD conference in September.

- System Center 2012:

- Microsoft announced a beta of the Operations Manager capability of System Center 2012. System Center 2012 will further help partners and customers build private cloud services that focus on the delivery of business applications, not just infrastructure. Operations Manager is a key component that provides end-to-end application service monitoring and diagnostics across Windows, other platforms and Windows Azure. Operations Manager will fully integrate technology from the AVIcode acquisition for monitoring and deep insights into applications. The beta will be available next week through this site and more information can be found next week on the System Center blog.

- “Project Concero” is now officially the App Controller capability within System Center 2012. Demonstrated during Satya Nadella’s keynote today, App Controller is a new solution in System Center that gives IT managers control across both private and public cloud assets (Windows Azure) and gives application managers across the company the ability to deploy and manage their applications through a self-service experience.

SQL Server “Denali”:

- SQL Server “Denali” Community Technology Preview (CTP): SQL Server “Denali” is a cloud-ready information platform to build and deliver apps across the spectrum of infrastructure. Customers and partners can use this opportunity to preview the marquee capabilities and new enhancements coming in the next release of SQL Server with the community technology preview available through this site.

- For the first time, customers can begin testing the much anticipated features of “Denali” with today’s CTP, including SQL Server AlwaysOn and Project “Apollo” for added mission critical confidence, Project “Crescent” for highly visual data exploration that unlocks breakthrough insights, and SQL Server Developer Tools code named “Juneau” for a modern development experience across server, BI, and cloud development projects. For more information about “Denali” or the new CTP, visit the SQL Server Data Platform Insider blog.

Notice that there was still no mention of Dell Computer’s intention to implement or resell WAPA. [See post below.]

Gavin Clarke (@gavin_clarke) analyzed the preceding blog post and asserted “Deadlines reset, evaluations ongoing” as a deck for his Microsoft: HP, eBay polishing Azure cloud-in-a-box (honest) article of 7/12/2011 for The Register:

A year after they were announced and six months after they were to be delivered, Microsoft has offered a fleeting glimpse of its Windows Azure cloud-in-a-box appliance partnerships with HP and eBay.

At its Worldwide Partners Conference (WPC) in Los Angeles on Tuesday, Microsoft said that HP has a Windows Azure appliance running in its data center, that it has been validated by Microsoft, and that HP "looks forward" to making services available to customers "later this year".

The PC and server maker intends to offer both private and public cloud services on Windows Azure, according to Microsoft.

eBay's progress and commitment look a little less firm than those of HP. Microsoft said that eBay is "in the early stages of implementing on the Windows Azure platform appliance."

A year after eBay was named as a Windows Azure appliance partner by Microsoft along with HP, Dell, and Fujitsu, the online auction shop has completed a grand total of just one single application on Windows Azure: ipad.ebay.com.

eBay is "continuing to evaluate ways in which the Windows Azure platform appliance can help improve engineering agility and reduce operating costs," Microsoft said.

Tuesday's announcements were the second time this year that Microsoft has gone on the offense to "prove" that the Windows Azure appliance strategy is actually happening and hasn't fizzled.

One year ago, also at WPC, Microsoft named Dell, HP, Fujitsu, and eBay as early adopters of what it said was a "limited production" release of the planned Windows Azure appliance. The plan was for the first Windows Azure appliance to appear later in 2010, with companies building and running services before the end of that year.

Dell, HP, and Fujitsu would become service providers by hosting services for their customers running on Azure. They may also help Microsoft design, develop, and deploy the appliances to others.

Since then, not only have the target dates been missed by Microsoft's quartet without any explanations, but all the companies have been shy about saying what's going on – or even if they remained committed to Microsoft's cloud-in-a-box.

HP CEO Léo Apotheker, when asked by The Reg this March whether the planned cloud services that he was announcing would run on Azure, would neither confirm nor recommit.

Dell, speaking separately in March, said it planned to offer at least two public clouds, one based on Windows Azure but the other on something else that it would not detail. Fujitsu and Microsoft in June announced that Fujitsu's Global Cloud Platform, based on Azure, would launch in August. [Fujitsu’s] services [have] been in beta testing with at [least] twenty companies since April.

<Return to section navigation list>

Cloud Security and Governance

Chris Czarnecki reported Missile Defence Agency Adopts Cloud Computing in a 7/13/2011 post to the Learning Tree blog:

Today, whilst on a consulting assignment related to mobile development, we discussed the integration of mobile and Cloud Computing. My client immediately said “the big problem with cloud computing is security, applications co-hosted with other organisations’ applications is dangerous …”. As you know, this is something that I have written about before. Having then begun to discuss the merits of Cloud Computing to provide a more balanced view to my client, an email arrived that did the job for me – in fact in a much better way than I was doing. The email contained an article by Jim Armstrong, CIO of the Missile Defence Agency. In the article he explained how the agency had deployed the cloud to better serve their customers. Key features in achieving this were to provide:

- Optimal service

- Reduced failure points

- More maintainable environment

- Reduced operating costs