Windows Azure and Cloud Computing Posts for 6/30/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

Mark Teasdale (@MTeasdal) continued his series with a Blob Storage – Part Two post on 6/30/2011:

Continuing our discussion of blob storage, I thought it would be interesting to look at how blob storage can answer a perennial criticism of Azure, i.e. that it is just too expensive for hobbyists and individuals to use. I have worked in the IT community for quite a while as an independent contractor, and I know a lot of people within that community. A common requirement for contractors is a web site advertising their skill set, portfolio and clients. Nine times out of ten the site is static: there is absolutely no need for a data store, there are no financial transactions being pushed through the site, and there is probably no need for video, etc.

Quite often I hear members of this community say that Azure is too pricey, and for their needs I have to agree. However, I suspect that they are going down the route of having a web role (extra small instance), let's say 10 GB of storage and a CDN. Give or take, this is going to cost in the region of £26.50 or $47 a month. Straightaway, it is no challenge to find a far cheaper equivalent offer elsewhere… an absolute no-brainer.

However, remember that we only want to serve up a static web site or Silverlight application. Could blob storage come to our rescue? We know that blobs can be stored in a public container and are accessible via a public URI over an HTTP connection. The great thing about this storage mechanism is that it allows you to configure MIME types to ensure that the browser can correctly render the served content. We are going to look at hosting a static web site, but it's just as viable to serve up a Silverlight application.
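Blob storage serves each blob with whatever Content-Type was set when it was uploaded, and falls back to `application/octet-stream` when none was set, which browsers typically download rather than render. A minimal sketch, assuming you choose the content type from the file extension before uploading; the `content_type_for` helper and the small extension table are illustrative and not part of any Azure SDK.

```python
from pathlib import PurePosixPath

# Illustrative extension-to-MIME table for a static site or Silverlight app.
# Extend it to cover every file type your site actually serves.
CONTENT_TYPES = {
    ".htm": "text/html",
    ".html": "text/html",
    ".css": "text/css",
    ".js": "application/javascript",
    ".png": "image/png",
    ".jpg": "image/jpeg",
    ".gif": "image/gif",
    ".xap": "application/x-silverlight-app",  # Silverlight application package
}

# Blob storage's default when no Content-Type is supplied on upload.
DEFAULT_CONTENT_TYPE = "application/octet-stream"


def content_type_for(blob_name: str) -> str:
    """Return the Content-Type to set on a blob, based on its file extension."""
    suffix = PurePosixPath(blob_name).suffix.lower()
    return CONTENT_TYPES.get(suffix, DEFAULT_CONTENT_TYPE)


if __name__ == "__main__":
    for name in ("HelloWorld.htm", "css/site.css", "app/MyApp.xap", "notes.bin"):
        print(f"{name} -> {content_type_for(name)}")
```

Whatever client library does the upload, the value returned here is what belongs in the blob's content-type property.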

In this blog post we are going to serve a static HTML file from blob storage, but first we need to upload it. I created a very simple Windows application to upload the file to the blob storage account I created in Azure. I am assuming that you have already created a storage account.

The upload code is a pretty standard use of the API that wraps the REST service interface. However, I did notice quite a nice way of instantiating access to your account without the configuration overhead normally required when you start with a web role template project in Azure.

This involves creating a StorageCredentialsAccountAndKey object that takes the name of the storage account you created in Azure and its key. True, this method is never going to be flexible enough to be acceptable in an enterprise scenario, but for a quick proof of concept, or a small application designed to get you up to speed, it is a nice way to get stuff up to Azure without having to write code to read and write a configuration file.
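Account-name-and-key credentials such as StorageCredentialsAccountAndKey authenticate each REST call with Shared Key authentication: the client builds a canonical "string-to-sign" from the request, computes an HMAC-SHA256 over it keyed with the Base64-decoded account key, and sends the Base64 result in an `Authorization: SharedKey <account>:<signature>` header. This is also why a hard-coded key is only acceptable for a proof of concept: anyone holding it can sign any request. A minimal sketch of the signing step only, assuming the string-to-sign has already been built as the Storage REST documentation describes; the account name and key below are made-up placeholders.

```python
import base64
import hashlib
import hmac


def shared_key_authorization(account_name: str, account_key_b64: str,
                             string_to_sign: str) -> str:
    """Build a Shared Key Authorization header value.

    The account key is stored Base64-encoded; it is decoded to raw bytes and
    used as the HMAC-SHA256 key over the UTF-8 string-to-sign. The digest is
    Base64-encoded and prefixed with "SharedKey <account>:".
    """
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {account_name}:{signature}"


if __name__ == "__main__":
    # Placeholder values only; never hard-code a real account key.
    fake_key = base64.b64encode(b"not-a-real-storage-key").decode("ascii")
    print(shared_key_authorization("myaccount", fake_key, "placeholder-string-to-sign"))
```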

Notice also that in the code we use to create the container, we make sure that the container name is specified in lower case. Once the file is uploaded, we can access it through its URL, which in my case was

https://harkatcomputingtraining.blob.core.windows.net/mytestcontainer/7ef585ed-5ad0-439e-9dc0-d60b7fd204e9/HelloWorld.htm
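The lower-case rule is one of several container naming rules in the Blob service: names are 3 to 63 characters long, use only lower-case letters, digits and hyphens, start with a letter or digit, and every hyphen must be immediately preceded and followed by a letter or digit. A minimal sketch that checks those rules and then builds a public blob URL of the same shape as the one above; the helper names are illustrative, and the URL is only reachable anonymously if the container allows public read access.

```python
import re
from urllib.parse import quote

# 3-63 chars; lower-case letters, digits and hyphens; starts with a letter or
# digit; every hyphen must be followed by a letter or digit (so no "--" and no
# trailing hyphen).
_CONTAINER_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")


def is_valid_container_name(name: str) -> bool:
    """Check a name against the Blob service container naming rules."""
    return _CONTAINER_NAME.fullmatch(name) is not None


def public_blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the public URL of a blob in a container with public read access."""
    if not is_valid_container_name(container):
        raise ValueError(f"invalid container name: {container!r}")
    # Percent-encode the blob name but keep "/" so virtual folders survive.
    return (f"https://{account}.blob.core.windows.net/"
            f"{container}/{quote(blob_name, safe='/')}")


if __name__ == "__main__":
    print(public_blob_url(
        "harkatcomputingtraining", "mytestcontainer",
        "7ef585ed-5ad0-439e-9dc0-d60b7fd204e9/HelloWorld.htm"))
```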

Because I haven't created a web role to host this page, the monthly price drops considerably, to $7.75, which starts to put Azure in the same ballpark as other hosting solutions.
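Taking the two monthly figures quoted in this post at face value ($47 for the web-role setup and $7.75 for serving from blob storage without a web role), the relative saving works out as:

$$
\frac{\$47.00 - \$7.75}{\$47.00} = \frac{\$39.25}{\$47.00} \approx 0.835
$$

That is, the monthly estimate falls by roughly 83.5%.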

Directory Structure and Content Delivery Networks

Web sites are, of course, structured hierarchically in folders, whereas the blobs in a container live in a flat namespace; you can simulate folders by putting a slash in the blob name. We can also significantly improve performance by using a Content Delivery Network (CDN). Basically, a CDN delivers content from an optimised edge server located as close to the requesting client as possible. Content is uploaded to the origin server, which delivers it to the edge server nearest to the request if that edge server does not already have a copy.
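The slash convention works because the Blob service's List Blobs operation accepts a `prefix` and a `delimiter` (usually "/"): it returns the blobs at the current level plus "blob prefixes" standing in for the virtual subfolders. A minimal local sketch of that grouping over an in-memory list of names, to show how the convention behaves; it does not call the service, and the sample site layout is invented.

```python
from typing import Iterable, List, Tuple


def list_level(names: Iterable[str], prefix: str = "",
               delimiter: str = "/") -> Tuple[List[str], List[str]]:
    """Split flat blob names into (blobs, virtual_dirs) at one 'folder' level.

    A name under `prefix` that contains another delimiter after the prefix is
    rolled up into a virtual directory (the prefix up to and including that
    delimiter); any other name under `prefix` is reported as a blob.
    """
    blobs: List[str] = []
    dirs: List[str] = []
    for name in sorted(names):
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        cut = rest.find(delimiter)
        if cut == -1:
            blobs.append(name)
        else:
            virtual_dir = prefix + rest[:cut + len(delimiter)]
            if virtual_dir not in dirs:
                dirs.append(virtual_dir)
    return blobs, dirs


if __name__ == "__main__":
    site = ["index.htm", "about/cv.htm", "about/clients.htm",
            "images/logo.png", "images/portfolio/shot1.png"]
    print(list_level(site))             # (['index.htm'], ['about/', 'images/'])
    print(list_level(site, "images/"))  # (['images/logo.png'], ['images/portfolio/'])
```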

CDN edge servers are located in the major data centers of:

- Los Angeles

- Dublin

- New York

- London

- Dubai

- Hong Kong

- Tokyo
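The pull-through behaviour described above (an edge server answers from its cache if it already holds a copy, otherwise it fetches the content once from the origin and keeps it) can be modelled as a simple cache-miss loop. This is a toy sketch under stated assumptions: the `EdgeServer` class, the dict standing in for blob storage and the fixed time-to-live are illustrative, not how the Windows Azure CDN is implemented. The TTL does show one practical consequence: in this model, an updated blob is not seen at the edge until the cached copy expires.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class EdgeServer:
    """Toy pull-through cache standing in for one CDN edge location."""

    def __init__(self, origin: Dict[str, bytes], ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin        # stands in for the blob storage origin
        self.ttl = ttl_seconds      # how long a cached copy is considered fresh
        self.clock = clock
        self.cache: Dict[str, Tuple[bytes, float]] = {}
        self.origin_fetches = 0     # counts trips back to the origin

    def get(self, path: str) -> Optional[bytes]:
        now = self.clock()
        hit = self.cache.get(path)
        if hit is not None and now - hit[1] < self.ttl:
            return hit[0]           # served from the edge, no origin traffic
        body = self.origin.get(path)  # cache miss or stale copy: go to origin
        self.origin_fetches += 1
        if body is not None:
            self.cache[path] = (body, now)
        return body


if __name__ == "__main__":
    origin = {"/mytestcontainer/HelloWorld.htm": b"<h1>Hello</h1>"}
    london = EdgeServer(origin)
    for _ in range(3):
        london.get("/mytestcontainer/HelloWorld.htm")
    print(london.origin_fetches)  # 1: only the first request reached the origin
```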

The origin server's sole purpose is to serve content to the edge servers, and requests are redirected to the nearest edge server. If we click the CDN menu under the storage account in the Azure portal, we can enable a new CDN endpoint through the screen that appears.

This then gives us a new CDN endpoint.

With a new endpoint comes a new address for accessing content, and this has to be incorporated into the access URL.

Admittedly, this isn't very user friendly, but remember that you can add your own domain name to make it more accessible; we will cover this in a later blog post.
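Switching a page over to the CDN mostly means swapping the host name in each asset URL while keeping the container and blob path. A minimal sketch, assuming the CDN endpoint serves the same `/container/blob` path as the storage account; `CDN_HOST` is a placeholder for whatever endpoint address the portal assigns, not a real address.

```python
from urllib.parse import urlsplit, urlunsplit

# Placeholder: substitute the endpoint host name shown in the portal.
CDN_HOST = "your-cdn-endpoint.example.net"


def to_cdn_url(blob_url: str, cdn_host: str = CDN_HOST) -> str:
    """Rewrite a *.blob.core.windows.net URL to the equivalent CDN URL.

    Only the host changes; the scheme, the /container/blob path and any query
    string are kept as-is.
    """
    parts = urlsplit(blob_url)
    if not parts.netloc.endswith(".blob.core.windows.net"):
        raise ValueError(f"not a blob storage URL: {blob_url}")
    return urlunsplit((parts.scheme, cdn_host, parts.path, parts.query, parts.fragment))
```

Keeping the rewrite in one helper also means the later move to a custom domain is a change to a single host value.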

What we have achieved is a cheaper Azure hosting solution for a certain type of site, either web or Silverlight. It shows that, with a bit of imagination, Azure can be a viable platform for hobbyists, very small companies and technology professionals alike. The blob storage option gives us a cheap alternative to some of the more expensive components of Azure, such as compute instances and SQL Azure.

Mark published Azure Blob Storage Basics Part 1 on 6/12/2011.

Jerry Huang described Gladinet CloudAFS 3 in a 6/29/2011 post:

Gladinet CloudAFS is reaching version 3, the same version level as the other two products (Cloud Desktop & Cloud Backup) in the same Gladinet Cloud Storage Access Suite.

CloudAFS is an acronym for Cloud Attached File Server. Its basic function is to attach cloud storage services to a file server as a mountable volume, thus expanding the file server with cloud storage capability.

Its functionality includes: Backup of file server shares to the cloud, Access to cloud storage as a network share, Sync of file servers across two different locations, Integration of existing applications such as Backup Exec with cloud storage, and Connecting a group of users over cloud storage. It serves the same BASIC (Backup, Access, Sync, Integrate, Connect) use cases for cloud storage as the other products in the suite.

1. Enhanced User Interface

The CloudAFS user interface has been updated to share the same look and feel as Gladinet Cloud Desktop and Cloud Backup. Once you are familiar with one of the products, you are pretty much familiar with all of them.

2. Mount Cloud Storage Service

More and more cloud storage services are available to mount into the file server. For example, CloudAFS supports Amazon S3 (all regions), AT&T Synaptic Storage, EMC Atmos, Google Storage, Internap XIPCloud, KT ucloud, Mezeo, Nirvanix, OpenStack, Peer1, Rackspace Cloud Files and Windows Azure Storage. As more cloud storage service providers come to market, more of them are coming to Gladinet CloudAFS too.

3. Manage Local Directories

You can keep your local directories as your network shares and use cloud storage service to protect them.

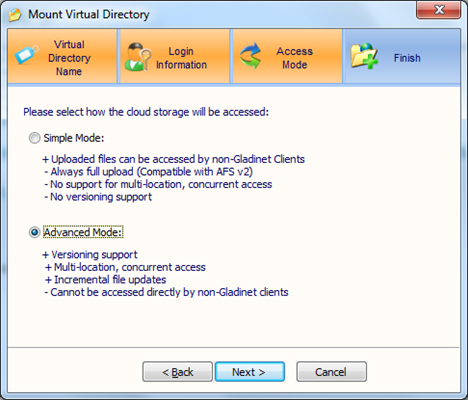

4. Cloud Storage Advanced Mode

In the advanced mode, a cloud storage service can be mounted with cloud sync folder capability, thus allowing two CloudAFS servers to sync to each other.



SQL Azure Database and Reporting

OneNumbus and Jasco posted Introducing Jasco® 2SQL™ Azure™ about a service for converting Access databases to SQL Server (and SQL Azure) on 6/30/2011:

Help enable your employees to easily access up-to-date information for more effective decision making.

Jasco 2SQL quickly and securely migrates mission critical data to Microsoft SQL Server or Microsoft Azure, which helps ensure your data can be used more effectively across your organisation and remains accessible to your users.

For more details about moving Access tables to SQL Server and SQL Azure, see my Bibliography and Links for My “Linking Access tables to on-premise SQL Server 2008 R2 Express or SQL Azure in the cloud” Webcast of 4/26/2011 post of 4/24/2011.


Marketplace DataMarket and OData

The WCF Data Services Team announced datajs V1 Now Available in a 6/29/2011 post:

Over the last few months, we have been hard at work on the datajs library, releasing four preview versions and working with web developers to fine-tune the library. Today, we’re proud to announce that the first version of datajs is now available for download.

What does it do?

datajs is a JavaScript library for web applications that supports the latest version of the OData protocol and HTML5 features such as local storage. It provides a simple, extensible API that can help you write better web applications, faster. Our goal is to simplify working with data on the web, and to leverage improvements in modern browsers.
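datajs talks to services using the OData protocol over plain HTTP, and an OData read request is a URL that can carry system query options such as `$filter`, `$orderby`, `$top` and `$select`. For readers new to the protocol, a minimal sketch (in Python rather than the JavaScript that datajs targets) that composes such a URL; the service root `http://example.org/Service.svc` and the `Products` entity set are hypothetical, and none of datajs's own API is shown or implied.

```python
from typing import Optional, Sequence
from urllib.parse import quote


def odata_query_url(service_root: str, entity_set: str, *,
                    filter_expr: Optional[str] = None,
                    orderby: Optional[str] = None,
                    top: Optional[int] = None,
                    select: Optional[Sequence[str]] = None) -> str:
    """Compose an OData read URL from the standard system query options."""
    options = []
    if filter_expr:
        # Percent-encode spaces etc., but keep quotes, parens and commas readable.
        options.append("$filter=" + quote(filter_expr, safe="'(),"))
    if orderby:
        options.append("$orderby=" + quote(orderby, safe=","))
    if top is not None:
        options.append(f"$top={top}")
    if select:
        options.append("$select=" + ",".join(select))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")


if __name__ == "__main__":
    print(odata_query_url("http://example.org/Service.svc", "Products",
                          filter_expr="Price gt 20", orderby="Name desc",
                          top=5, select=["Name", "Price"]))
```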

Where do I get it?

You can download the development and minified versions at http://datajs.codeplex.com/. The source code for the release is also available, distributed under the MIT license. You can also use NuGet to download the datajs package.

We hope this helps build a better web, and we look forward to hearing more from your experience.


Windows Azure AppFabric: Access Control, WIF and Service Bus

No significant articles today.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

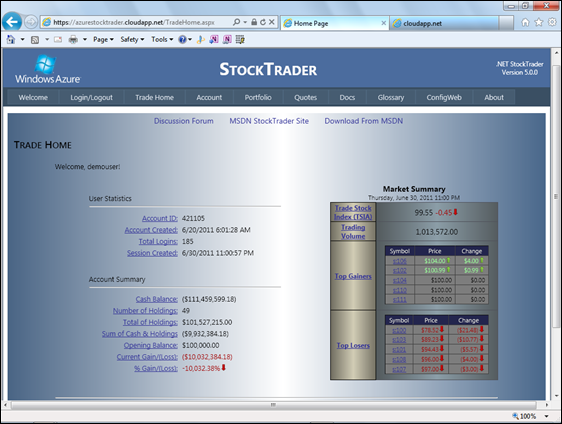

The Windows Azure Team reported Just Released: StockTrader 5.0, Windows Azure Platform End-to-End Sample Application with a live cloudapp.net demo in a 6/30/2011 post:

A new end-to-end sample application called StockTrader 5.0 is now available for free download. This sample application illustrates the use of secure services to integrate private on-premises resources (business services and SQL Server) with the public-cloud Web application running in a Windows Azure Web Role. The application also demonstrates the use of SQL Azure and/or SQL Server to store and manage application configuration data, with one-click changes to configuration settings that automatically get propagated across all Windows Azure or on-premises nodes running the various tiers.

The application shows a complete enterprise application that was migrated from a purely on-premises application to a Windows Azure cloud application. The application also includes a Visual Studio template and wizard that will enable you to create a multi-tier, structured Visual Studio solution based on the same high-performance design pattern as the sample itself. The template can be used to target both on-premises and Windows Azure/SQL Azure deployments.

The sample is easy to set up, with an automated installer that installs the application and databases for use on-premises, as well as on Windows Azure with SQL Azure. Full source code is included.

The application is also running live on Windows Azure, using a SQL Azure backing database, here. The live version gives you access to a Web UI that shows the Windows Azure instances, SQL Azure databases, and Windows Azure AppFabric caches the application uses—including live status information, latency information, and SQL Azure query performance statistics. You can also browse the on-premise deployed service domains that are running in a private data center on Hyper-V virtual machines managed by System Center and VMM 2012 (beta).

This end-to-end application scenario:

- Demonstrates one code base deployed on-premises in private clouds, on the Windows Azure public cloud, or in hybrid cloud environments.

- Includes a single high-performance data access layer that works with both SQL Server and SQL Azure.

- Demonstrates the use of Windows Azure AppFabric and Windows Server AppFabric caching.

- Demonstrates a multi-tier design, with the use of secure services to integrate service domains distributed across the Internet.

- Can be seamlessly scaled across many Windows Azure instances, or across many on-premise Hyper-V VMs (managed by System Center Virtual Machine Manager).

- Provides an optional desktop smart client (WPF) application that uses Windows Azure-hosted business services and SQL Azure.

Click here to learn more and download StockTrader 5.0. Click here to see the live version.

Steve Yi reported Now Available: Windows Phone 7 SDK Release for Project Hawaii in a 6/30/2011 post to the Windows Azure Team blog:

Microsoft Research has just released an SDK preview download of its Windows Phone 7 + Cloud Services Software Development Kit (SDK). The SDK is designed for developing Windows Phone 7 applications with services that are not available to the general public. These services include computation (Windows Azure), storage (Windows Azure), authentication (Windows Live ID), notification, client backup, client-code distribution and location.

Microsoft Research’s Windows Phone 7 + Cloud Services SDK page describes the SDK as follows:

This is a software-development kit (SDK) for the creation of Windows Phone 7 (WP7) applications that leverage research services not yet available to the general public. The primary goal of this SDK is to support the efforts of Project Hawaii, a student-focused initiative for exploration of how cloud-based services can be used to enhance the WP7 experience.

and shows the date published as 1/25/2011. I assume that date is incorrect.

Acumatica announced JAMS Manufacturing for Acumatica delivers Advanced Manufacturing Software on the Microsoft Windows Azure Cloud in a 6/30/2011 press release:

BETHESDA, Md.--(BUSINESS WIRE)--Acumatica and JAAS Systems, providers of Cloud ERP software, today announced the availability of a fully integrated manufacturing, distribution, and accounting solution on the Windows Azure Cloud. Mid-sized manufacturing companies can use the solution to manage sales, inventory, material planning, master production schedules, and much more from a web-browser no matter where they are located.


JAAS Systems developed JAMS Manufacturing for Acumatica using Acumatica’s web-based software development kit and Microsoft Visual Studio. The integration delivers a seamless user experience with a common user interface, integrated menus, and shared database elements.

“We took over 100 years of manufacturing software expertise and used Acumatica’s tools to move it to the Cloud in a few months,” said Fred Szumlic, General Manager at JAAS Systems. “Acumatica’s platform allowed us to inexpensively develop a web-based solution with fully integrated accounting and distribution. Windows Azure allowed us to meet customer demand by quickly launching a SaaS solution on the Cloud without the expense of building and managing a datacenter.”

JAMS Manufacturing for Acumatica integrates with the Acumatica Financial Suite, Distribution Suite, and Customer Management Suite to deliver a complete solution for make-to-stock, make-to-order, job-shop, and repetitive manufacturing companies. The entire solution can be run on a customer’s premise or deployed as a service in the Cloud to save money on initial deployment costs and ongoing maintenance.

“Windows Azure allows software companies to utilize their existing skills to develop Cloud applications without needing to worry about infrastructure, data replication, and scalability,” said Kim Akers, general manager for global ISV partners at Microsoft Corp. “Collaboration between Cloud ISVs such as Acumatica and JAAS Systems allows businesses to gain the benefits of the Cloud as well as the ability to integrate applications.”

“Several customers that were using our software to manage distribution wanted to extend the benefits of Cloud ERP to their manufacturing processes,” said Ezequiel Steiner, CEO of Acumatica. “By partnering with JAAS Systems we are able to deliver a complete manufacturing solution with access from anywhere, scalability, reduced maintenance, and deployment options.”

Availability:

JAMS Manufacturing for Acumatica is available today. Customers can purchase a software license or a turnkey annual subscription service (SaaS) that runs on Microsoft Windows Azure. To learn more visit www.acumatica.com/manufacturing.

About Acumatica

Acumatica develops web-based ERP software that delivers the benefits of Cloud and SaaS without sacrificing customization, control, security, or speed. Acumatica can be deployed on premise, hosted at a datacenter, or run on a Cloud computing platform. Learn more about Acumatica’s Cloud ERP solution at www.acumatica.com.

About JAAS Systems

JAAS Systems Ltd is a leading provider of manufacturing software for mid-sized businesses. JAAS Advanced Manufacturing Software delivers powerful manufacturing applications for make-to-stock, make-to-order, engineer-to-order, job-shop, and repetitive-process manufacturing companies. By integrating with leading accounting solutions JAAS can provide customers with a complete and cost effective business management solution. Learn more at www.jaas.net.

Insite Software asserted “Microsoft-centric solution provides .NET developers with advanced cloud computing solution and SDK for more profitable and rapid ecommerce deployments” in an introduction to its Insite Software Delivers B2B Ecommerce Platform Certified by Microsoft for Windows Azure press release of 6/30/2011:

Minneapolis, MN (PRWEB) June 30, 2011. Insite Software, a leading provider of B2B and B2C ecommerce platforms and shipping solutions, today announced that Microsoft Corporation has certified InsiteCommerce as one of the first enterprise B2B ecommerce platforms powered by Windows Azure for cloud computing. The latest version of InsiteCommerce provides the Microsoft .NET development community and design agencies with advanced cloud deployment and an easy-to-use SDK allowing firms to rapidly develop and deliver scalable, high-performing B2B ecommerce projects for their manufacturing, distribution and retail clients.

“.NET developers have been looking for a reliable, scalable, ecommerce platform that delivers the B2B capabilities in the cloud, as Microsoft Commerce Server does not currently support the Windows Azure platform,” said Brian Strojny, CEO of Insite Software. “InsiteCommerce is designed for cloud computing and leverages the benefits of Windows Azure to help developers provide faster, scalable and more profitable B2B ecommerce deployments. We are pleased to be one of the first enterprise B2B ecommerce platforms certified by Microsoft and look forward to working more closely with the .NET development community.”

The InsiteCommerce ecommerce platform was built to support the manufacturing, distribution, and retail industries with industry-leading B2B and B2C web strategies including custom website design, content management, and integration to back-end ERP systems. Its latest SDK reduces the complexity of designing and maintaining premier ecommerce sites by offering .NET developers complete control. The advanced toolkit enables .NET development firms to become self-sufficient and deliver more ecommerce projects in a short period of time.

“Movement of integration and experiences to the cloud offers opportunities for lowered costs, lower operational burden, and shorter time-to-market,” stated the August 2010 Forrester Research, Inc. report, What Every Exec Needs to Know About the Future of eCommerce Technology. “In the near future, we will see solutions that will eliminate standalone applications altogether and provide Web services to power sites and the increasing variety of user experiences that businesses will need to meet changing customer needs.”

To download more information on InsiteCommerce ecommerce platform for Windows Azure, visit http://www.insitesoft.com/partners/developersresellers/azurecertifiedecommerce.html.

About Insite Software:

Insite Software is a leading provider of B2B and B2C ecommerce platforms and shipping solutions, serving more than 700 customers, design agencies and .NET development firms across the globe. Headquartered in Minneapolis, Minnesota, Insite Software’s solutions are used by leading manufacturers, distributors and retailers to sell and distribute their products to dealers, franchisers, stores, contractors, consumers and others. Insite offers ecommerce solutions for companies at all stages of adoption and addresses the unique needs of B2B and B2C sites. InsiteCommerce’s SDK offers .NET and other development firms an advanced toolkit to become self-sufficient and deliver more ecommerce projects in a short period of time. Insite Software’s customers select the InsiteCommerce edition that fits the demands of their buyers, timeframe and ecommerce strategy, ranging from a pre-wired Express service to a more flexible Professional edition and the fully customizable Enterprise solution. All InsiteCommerce editions are fully integrated with a wide range of cloud platforms, CMS, CRM, and ERP providers including Epicor, Infor, Microsoft Dynamics, Sage, SAP, Sitecore, and Windows Azure. More information is available online at http://www.insitesoft.com, by phone at 866.746.0377, by email at info(at)insitesoft(dot)com, or on our blog.

Avkash Chauhan reported a workaround for Windows Azure Web Role Error: "Faulting application WaHostBootstrapper.exe" on 6/29/2011:

The Web Role was stuck, as shown below:

6:09:56 PM - Preparing...

6:09:56 PM - Connecting...

6:09:59 PM - Uploading...

6:11:15 PM - Creating...

6:12:28 PM - Starting...

6:13:20 PM - Initializing...

6:13:21 PM - Instance 0 of role AvkashTestWebRole is initializing

6:18:39 PM - Instance 0 of role AvkashTestWebRole is busy

6:21:51 PM - Instance 0 of role AvkashTestWebRole is stopped

6:21:51 PM - Warning: All role instances have stopped

6:22:23 PM - Instance 0 of role AvkashTestWebRole is busy

6:23:26 PM - Instance 0 of role AvkashTestWebRole is stopped

6:23:26 PM - Warning: All role instances have stopped

When I logged into the Azure VM, I saw exceptions logged in the Application event log and in the BootStrapper logs.

The following exception, related to WaHostBootstrapper.exe, was the first in the list:

Log Name: Application

Source: Application Error

Date: 6/29/2011 7:40:42 PM

Event ID: 1000

Task Category: (100)

Level: Error

Keywords: Classic

User: N/A

Computer: RD00155D4224C7

Description:

Faulting application WaHostBootstrapper.exe, version 6.0.6002.18009, time stamp 0x4d6ac763, faulting module ntdll.dll, version 6.0.6002.18446, time stamp 0x4dcd9861, exception code 0xc0000005, fault offset 0x000000000001b8a1, process id 0xbe8, application start time 0x01cc36946462e8a0.

The following BootStrapper log excerpt shows where WaHostBootstrapper.exe was stuck:

[00001744:00001280, 2011/06/29, 19:57:47.150, 00040000] Process exited with 0.

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] <- WaitForProcess=0x0

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] <- ExecuteProcessAndWait=0x0

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] -> WapRevertEnvironment

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] <- WapRevertEnvironment=0x0

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] -> WapModifyEnvironment

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] -> WapUpdateEnvironmentVariables

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] -> WapUpdateEnvironmentVariable

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] <- WapUpdateEnvironmentVariable=0x0

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] <- WapUpdateEnvironmentVariables=0x0

[00001744:00001280, 2011/06/29, 19:57:47.150, 00010000] -> WapGetEnvironmentVariable

[00001744:00001280, 2011/06/29, 19:57:47.150, 00100000] <- WapGetEnvironmentVariable=0x800700cb

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] <- WapModifyEnvironment=0x0

[00001744:00001280, 2011/06/29, 19:57:47.181, 00040000] Executing Startup Task type=2 cmd=E:\plugins\RemoteAccess\RemoteAccessAgent.exe

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> ExecuteProcess

[00001744:00001280, 2011/06/29, 19:57:47.181, 00040000] Executing E:\plugins\RemoteAccess\RemoteAccessAgent.exe.

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] <- ExecuteProcess=0x0

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> WapRevertEnvironment

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] <- WapRevertEnvironment=0x0

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> WapModifyEnvironment

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> WapUpdateEnvironmentVariables

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> WapUpdateEnvironmentVariable

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] <- WapUpdateEnvironmentVariable=0x0

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] <- WapUpdateEnvironmentVariables=0x0

[00001744:00001280, 2011/06/29, 19:57:47.181, 00010000] -> WapGetEnvironmentVariable

[00001744:00001280, 2011/06/29, 19:57:47.181, 00100000] <- WapGetEnvironmentVariable=0x800700cb

[00001744:00001280, 2011/06/29, 19:57:47.228, 00010000] <- WapModifyEnvironment=0x0

[00001744:00001280, 2011/06/29, 19:57:47.228, 00040000] Executing Startup Task type=0 cmd=E:\plugins\RemoteAccess\RemoteAccessAgent.exe /blockStartup

[00001744:00001280, 2011/06/29, 19:57:47.228, 00010000] -> ExecuteProcessAndWait

[00001744:00001280, 2011/06/29, 19:57:47.228, 00010000] -> ExecuteProcess

[00001744:00001280, 2011/06/29, 19:57:47.228, 00040000] Executing E:\plugins\RemoteAccess\RemoteAccessAgent.exe /blockStartup.

[00001744:00001280, 2011/06/29, 19:57:47.228, 00010000] <- ExecuteProcess=0x0

[00001744:00001280, 2011/06/29, 19:57:47.228, 00010000] -> WaitForProcess
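Reading a long BootStrapper log by hand is easier with a little tooling. In these traces `->` appears to mark entry into a function and `<- Name=0x...` its return with a result code, so a call that was entered but never returned is where the bootstrapper is blocked. A minimal sketch, assuming that reading of the format (it is inferred from the excerpt above, not from any documentation), that replays the lines with a stack and reports the calls still open at the end of the log. Over the excerpt above it reports `ExecuteProcessAndWait` and `WaitForProcess`, i.e. the bootstrapper waiting on `RemoteAccessAgent.exe /blockStartup`.

```python
import re
from typing import Iterable, List

# Matches e.g. "[...] -> WaitForProcess" and "[...] <- WaitForProcess=0x0".
_CALL = re.compile(r"\]\s+(->|<-)\s+(\w+)")


def open_calls(log_lines: Iterable[str]) -> List[str]:
    """Return calls that were entered but not yet exited, outermost first."""
    stack: List[str] = []
    for line in log_lines:
        m = _CALL.search(line)
        if not m:
            continue  # informational lines such as "Executing ..." are skipped
        arrow, name = m.groups()
        if arrow == "->":
            stack.append(name)
        elif name in stack:
            # Pop back to the most recent matching entry.
            idx = len(stack) - 1 - stack[::-1].index(name)
            del stack[idx:]
        # Exits with no matching entry (the excerpt starts mid-run) are ignored.
    return stack
```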

The following event log entry shows that the error is related to some missing DLLs:

Log Name: Application

Source: ASP.NET 4.0.30319.0

Date: 6/29/2011 7:49:54 PM

Event ID: 1310

Task Category: Web Event

Level: Warning

Keywords: Classic

User: N/A

Computer: Machine_Name

Description:

Event code: 3008

Event message: A configuration error has occurred.

Event time: 6/29/2011 7:49:54 PM

Event time (UTC): 6/29/2011 7:49:54 PM

Event ID: ************************

Event sequence: 1

Event occurrence: 1

Event detail code: 0

Application information:

Application domain: /LM/W3SVC/********/*****************

Trust level: Full

Application Virtual Path: /

Application Path: E:\sitesroot\0\

Machine name: <Machine_Name>

Process information:

Process ID: 2788

Process name: w3wp.exe

Account name: NT AUTHORITY\NETWORK SERVICE

Exception information:

Exception type: ConfigurationErrorsException

Exception message: Exception of type 'System.OutOfMemoryException' was thrown.

at System.Web.Configuration.CompilationSection.LoadAssemblyHelper(String assemblyName, Boolean starDirective)

at System.Web.Configuration.CompilationSection.LoadAllAssembliesFromAppDomainBinDirectory()

at System.Web.Configuration.AssemblyInfo.get_AssemblyInternal()

at System.Web.Compilation.BuildManager.GetReferencedAssemblies(CompilationSection compConfig)

at System.Web.Compilation.BuildManager.CallPreStartInitMethods()

at System.Web.Hosting.HostingEnvironment.Initialize(ApplicationManager appManager, IApplicationHost appHost, IConfigMapPathFactory configMapPathFactory, HostingEnvironmentParameters hostingParameters, PolicyLevel policyLevel, Exception appDomainCreationException)

Exception of type 'System.OutOfMemoryException' was thrown.

at System.Reflection.RuntimeAssembly._nLoad(AssemblyName fileName, String codeBase, Evidence assemblySecurity, RuntimeAssembly locationHint, StackCrawlMark& stackMark, Boolean throwOnFileNotFound, Boolean forIntrospection, Boolean suppressSecurityChecks)

at System.Reflection.RuntimeAssembly.InternalLoadAssemblyName(AssemblyName assemblyRef, Evidence assemblySecurity, StackCrawlMark& stackMark, Boolean forIntrospection, Boolean suppressSecurityChecks)

at System.Reflection.RuntimeAssembly.InternalLoad(String assemblyString, Evidence assemblySecurity, StackCrawlMark& stackMark, Boolean forIntrospection)

at System.Reflection.Assembly.Load(String assemblyString)

at System.Web.Configuration.CompilationSection.LoadAssemblyHelper(String assemblyName, Boolean starDirective)

Request information:

Request URL: http:// <Internal_IP_Address>/default.aspx

Request path: /default.aspx

User host address: <Internal_IP_Address>

User:

Is authenticated: False

Authentication Type:

Thread account name: NT AUTHORITY\NETWORK SERVICE

Thread information:

Thread ID: 6

Thread account name: NT AUTHORITY\NETWORK SERVICE

Is impersonating: False

Stack trace: at System.Web.Configuration.CompilationSection.LoadAssemblyHelper(String assemblyName, Boolean starDirective)

at System.Web.Configuration.CompilationSection.LoadAllAssembliesFromAppDomainBinDirectory()

at System.Web.Configuration.AssemblyInfo.get_AssemblyInternal()

at System.Web.Compilation.BuildManager.GetReferencedAssemblies(CompilationSection compConfig)

at System.Web.Compilation.BuildManager.CallPreStartInitMethods()

at System.Web.Hosting.HostingEnvironment.Initialize(ApplicationManager appManager, IApplicationHost appHost, IConfigMapPathFactory configMapPathFactory, HostingEnvironmentParameters hostingParameters, PolicyLevel policyLevel, Exception appDomainCreationException)

Launching the web application from inside the virtual machine, using its local IP address, I see the following error:

Server Error in '/' Application.

________________________________________

Exception of type 'System.OutOfMemoryException' was thrown.

Description: An unhandled exception occurred during the execution of the current web request. Please review the stack trace for more information about the error and where it originated in the code.

Exception Details: System.OutOfMemoryException: Exception of type 'System.OutOfMemoryException' was thrown.

Source Error:

An unhandled exception was generated during the execution of the current web request. Information regarding the origin and location of the exception can be identified using the exception stack trace below.

Stack Trace:

[OutOfMemoryException: Exception of type 'System.OutOfMemoryException' was thrown.]
System.Reflection.RuntimeAssembly._nLoad(AssemblyName fileName, String codeBase, Evidence assemblySecurity, RuntimeAssembly locationHint, StackCrawlMark& stackMark, Boolean throwOnFileNotFound, Boolean forIntrospection, Boolean suppressSecurityChecks) +0
System.Reflection.RuntimeAssembly.InternalLoadAssemblyName(AssemblyName assemblyRef, Evidence assemblySecurity, StackCrawlMark& stackMark, Boolean forIntrospection, Boolean suppressSecurityChecks) +567
System.Reflection.RuntimeAssembly.InternalLoad(String assemblyString, Evidence assemblySecurity, StackCrawlMark& stackMark, Boolean forIntrospection) +192
System.Reflection.Assembly.Load(String assemblyString) +35
System.Web.Configuration.CompilationSection.LoadAssemblyHelper(String assemblyName, Boolean starDirective) +118

[ConfigurationErrorsException: Exception of type 'System.OutOfMemoryException' was thrown.]
System.Web.Configuration.CompilationSection.LoadAssemblyHelper(String assemblyName, Boolean starDirective) +11396867
System.Web.Configuration.CompilationSection.LoadAllAssembliesFromAppDomainBinDirectory() +484
System.Web.Configuration.AssemblyInfo.get_AssemblyInternal() +127
System.Web.Compilation.BuildManager.GetReferencedAssemblies(CompilationSection compConfig) +334
System.Web.Compilation.BuildManager.CallPreStartInitMethods() +280
System.Web.Hosting.HostingEnvironment.Initialize(ApplicationManager appManager, IApplicationHost appHost, IConfigMapPathFactory configMapPathFactory, HostingEnvironmentParameters hostingParameters, PolicyLevel policyLevel, Exception appDomainCreationException) +1087

[HttpException (0x80004005): Exception of type 'System.OutOfMemoryException' was thrown.]
System.Web.HttpRuntime.FirstRequestInit(HttpContext context) +11529072
System.Web.HttpRuntime.EnsureFirstRequestInit(HttpContext context) +141
System.Web.HttpRuntime.ProcessRequestNotificationPrivate(IIS7WorkerRequest wr, HttpContext context) +4784373

________________________________________

Version Information: Microsoft .NET Framework Version:4.0.30319; ASP.NET Version:4.0.30319.1

Note:

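The key point is that the OutOfMemoryException is misleading: the actual cause is a missing referenced DLL. The exception hierarchy shows where it happens. The OutOfMemoryException is raised while ASP.NET loads the assemblies in the application's bin directory (LoadAllAssembliesFromAppDomainBinDirectory), and it is then wrapped in a ConfigurationErrorsException and finally an HttpException.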

Solution:

A few references were not set to Copy Local = True. Setting all necessary references to Copy Local = True and then upgrading the deployment solved the problem.
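To find such references before deploying, you can scan the project file. In Visual Studio 2010 project files, a reference's Copy Local setting is stored as a `<Private>` element under its `<Reference>` item. The Python sketch below is a hypothetical helper, not part of the Azure SDK or Visual Studio. It assumes the MSBuild 2003 project-file namespace and lists every reference that is not explicitly marked Copy Local = True.

```python
import xml.etree.ElementTree as ET
from typing import List, Union

# Namespace used by Visual Studio 2010-era (MSBuild 2003 schema) project files.
MSBUILD_NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}


def references_not_copied_locally(project_xml: Union[str, bytes]) -> List[str]:
    """List <Reference> items whose Copy Local (<Private>) is not explicitly True.

    A missing <Private> element means Visual Studio applies its default,
    which for GAC-installed assemblies is NOT to copy them to bin.
    """
    root = ET.fromstring(project_xml)
    flagged = []
    for ref in root.iterfind(".//msb:ItemGroup/msb:Reference", MSBUILD_NS):
        private = ref.find("msb:Private", MSBUILD_NS)
        is_copy_local = (
            private is not None and (private.text or "").strip().lower() == "true"
        )
        if not is_copy_local:
            flagged.append(ref.get("Include"))
    return flagged
```

For example, run `references_not_copied_locally(open("WebRole1.csproj", "rb").read())`. The project name is only illustrative. Framework assemblies that are already installed on the role instance will also be listed, so treat the output as a list to review, not a set of references to flip to True.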

DevX.com asserted “New Eclipse tool plug-in for Microsoft Azure aims to simplify Java development on Microsoft's cloud platform” in a deck for its Microsoft Debuts Upgraded Eclipse Tool for Azure Cloud article of 6/29/2011:

Microsoft has released a community technology preview (CTP) of the Windows Azure Plugin for Eclipse with Java. The tool makes it easier for Eclipse users to package their Java applications for deployment on Microsoft's Azure cloud computing service. "There are many ways to build packages to deploy to Windows Azure, including Visual Studio and command line-based tools. For Java developers, we believe the Eclipse plug-in we are providing may be the easiest," said Microsoft's Martin Sawicki. "And we have received a strong response from developers that these serve as great learning tools to understand how to take advantage of the Windows Azure cloud offering."

New features in the plug-in include a UI for remote access configuration, schema validation and auto-complete for *.cscfg and *.csdef Azure configuration files, an Azure project creation wizard, sample utility scripts for downloading or unzipping files, shortcuts to test deployment in the Azure compute emulator, an Ant-based builder and a project properties UI for configuring Azure roles.

Jonathan Rozenblit (@jrozenblit) continued his What’s In the Cloud: Canada Does Windows Azure - Booom!! series on 6/29/2011:

Since it is the week leading up to Canada Day, I thought it would be fitting to celebrate Canada’s birthday by sharing the stories of Canadian developers who have developed applications on the Windows Azure platform. A few weeks ago, I started my search for untold Canadian stories in preparation for my talk, Windows Azure: What’s In the Cloud, at Prairie Dev Con. I was just looking for a few stories, but I was surprised, impressed, and proud of my fellow Canadians when I connected with several Canadian developers who have either built new applications using Windows Azure services or migrated existing applications to Windows Azure. What was really amazing to see was the different ways these Canadian developers were using Windows Azure to create unique solutions.

This week, I will share their stories.

Leveraging Windows Azure for Social Applications

Back in May, we talked about leveraging Windows Azure for your next app idea. We talked about the obvious scenario for using Windows Azure: web applications. Lead, for example, is a web-based API hosted on Windows Azure. Election Night is also a web-based application, with an additional feature: it can be repackaged as software-as-a-service (hosted on a platform-as-a-service). (More on this in future posts.) Connect2Fans is another example of a web-based application hosted on Windows Azure. But we also talked about other, less obvious scenarios such as extensions for SharePoint and CRM, mobile applications, and social applications.

Today, we’ll see how Karim Awad and Trevor Dean of bigtime design used Windows Azure to build their social application, Booom!! (Recognize the application? Booom!! was also featured at the Code Your Art Out Finals.)

Booom!!!

Booom!! allows you to receive the information you want from your favourite brands and pages on Facebook. No more piles of messages in the "Other" box or constant posts to your wall. Booom!! only sends the stuff you want and drops it right into your personal Booom Box. Booom!! provides a simplified messaging system for both page admins and Facebook users.

At the Code Your Art Out Finale, I had a chance to chat with Karim and Trevor, the founders and developers of Booom!! about how they built Booom!! using Windows Azure.

Jonathan: When you guys were designing Booom!!, what was the rationale behind your decision to develop for the Cloud, and more specifically, to use Windows Azure?

Karim: We chose cloud technologies for the app not only because it was a technical requirement for the competition (Code Your Art Out), but also because we are always conscious of scalability in everything that we build. We want our applications to be able to grow with their user base, and cloud technology allows us to accomplish this without much financial investment.

Trevor: We were in the final stages of evaluating Amazon’s EC2 when we entered the Code Your Art Out competition. We were excited to hear that Windows Azure was a technical requirement because we wanted to evaluate Microsoft’s cloud solution as well. I found the Azure management portal to be very intuitive, and with little effort I had commissioned a database and deployed a WCF service to the Cloud. This same scenario on Amazon would have taken at least twice as long, and I would also have had to install SQL Server and any other supporting applications beforehand. One feature I saw as a real benefit was being able to promote your code from staging servers to production servers. It was a no-brainer after the first deployment attempt. We are a start-up with limited resources and limited finances; using Azure helps to ease those pains and remain competitive.

Jonathan: What Windows Azure services are you using? How are you using them?

Trevor: We have a .NET WCF service hosted in a Windows Azure hosted service – a web role. Our PHP-based Facebook front-end application talks to the service in Azure. We are currently working on deploying the front-end onto Azure as well, and then we will also start taking advantage of the storage services to optimize the front-end client performance. Then, we will be “all in”!

Jonathan: During development, did you run into anything that was not obvious and required you to do some research? What were your findings? Hopefully, other developers will be able to use your findings to solve similar issues.

Trevor: We did experience some issues with getting PHP deployed to the Cloud and we are still working through those issues. We used the command line tool (Windows Azure Command-line Tools for PHP) to create the deployment package and everything worked great in the DevFabric but when I deployed, I would get a "500 - Internal server error". I have determined the issue is with my "required" declaration but I'm not sure why and I could not find much documentation on this or on how to enable better error messaging or get access to logs. Still working on that. Otherwise, everything was smooth!

Jonathan: Lastly, what were some of the lessons you and your team learned as part of ramping up to use Windows Azure or actually developing for Windows Azure?

Trevor: Microsoft has always built intuitive tools and Azure is another great example. There will be many things I'm sure we will learn about cloud technologies and Azure specifically but for now it was nice to see that what we did already know was reinforced.

Karim: Azure is an impressive piece of technology that will help many small to large businesses grow and scale. It's nice to see Microsoft playing nicely with other technologies as well – WordPress, PHP, MySQL, etc.

I’d like to take this opportunity to thank Karim and Trevor for sharing their story. When you have a chance, check out Booom!!. Enter your email address so that you can receive an invite to the beta release when it is ready. I had the opportunity to see Booom!! being demonstrated – looks pretty cool. I, for one, am looking forward to giving it a test drive. Stay tuned for pictures and videos from the demonstration.

Tomorrow – another Windows Azure developer story.

Join The Conversation

What do you think of this solution’s use of Windows Azure? Has this story helped you better understand usage scenarios for Windows Azure? Join the Ignite Your Coding LinkedIn discussion to share your thoughts.

Previous Stories

Missed previous developer stories in the series? Check them out here.

This post also appears in Canadian Developer Connection.

<Return to section navigation list>

Visual Studio LightSwitch

The Visual Studio LightSwitch Team (@VSLightSwitch) updated its Excel Importer for Visual Studio LightSwitch add-in on 6/24/2011:

Update: The Excel importer will now allow CSV files to be imported from LightSwitch web applications.

Introduction

Excel Importer is a Visual Studio LightSwitch Beta 2 Extension. The extension will add the ability to import data from Microsoft Excel to Visual Studio LightSwitch applications. The importer can validate the data that is being imported and will even import data across relationships.

Getting Started

To install and use the extension in your LightSwitch applications, unzip the ExcelImporter zip file into your Visual Studio Projects directory (My Documents\Visual Studio 2010\Projects) and double-click on the LightSwitchUtilities.Vsix.vsix package located in the Binaries folder. You only need LightSwitch installed to use the Excel Importer.

In order to build the extension sample code, Visual Studio 2010 Professional, Service Pack 1, the Visual Studio SDK and LightSwitch are required. Unzip the ExcelImporter zip file into your Visual Studio Projects directory (My Documents\Visual Studio 2010\Projects) and open the LightSwitchUtilities.sln solution.

Building the Sample

To build the sample, make sure that the LightSwitchUtilities solution is open and then use the Build | Build Solution menu command.

Running the Sample

To run the sample, navigate to the Vsix\Bin\Debug or the Vsix\Bin\Release folder. Double click the LightSwitchUtilities.Vsix package. This will install the extension on your machine.

Create a new LightSwitch application. Double-click the Properties node underneath the application in Solution Explorer. Select Extensions and check LightSwitch Utilities. This will enable the extension for your application.

Using the Excel Importer

In your application, add a screen for a table. For example, create a table called Customer with a single String property called Name. Add a new Editable Grid screen for Customers. Create a new button for the screen called ImportfromExcel. In the button's code, call LightSwitchUtilities.Client.ImportFromExcel, passing in the screen collection you'd like to import the data into.

Run the application and click the Import From Excel button. This should prompt you to select an Excel file. Due to Silverlight limitations, the Excel file must be located in the Documents directory. The Excel file's first row should identify each column of data with a title. These titles will be displayed in the LightSwitch application so that you can map them to properties on the corresponding LightSwitch table.
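The column-to-property mapping step can be illustrated with a small sketch. The Python below is not the extension's code, which runs in the Silverlight client, and in the real extension you pick the mappings interactively. This version handles the CSV case mentioned in the update note. It reads the first row as titles, matches titles to entity property names case-insensitively, and holds back rows that leave a required property empty. The function name and its `required` parameter are hypothetical.

```python
import csv
import io
from typing import Dict, Iterable, List, Tuple


def import_rows(csv_text: str, property_names: Iterable[str], required: Iterable[str] = ()):
    """Map CSV columns to entity properties using the first-row titles.

    Returns (records, unmapped_titles, errors); errors holds
    (line_number, missing_required_properties) for each rejected row.
    """
    reader = csv.reader(io.StringIO(csv_text))
    titles = next(reader, [])
    # Case-insensitive lookup from title text to the entity's property name.
    lookup = {name.lower(): name for name in property_names}
    column_map: Dict[int, str] = {}
    unmapped: List[str] = []
    for index, title in enumerate(titles):
        prop = lookup.get(title.strip().lower())
        if prop is None:
            unmapped.append(title)  # e.g. a column the table has no property for
        else:
            column_map[index] = prop

    required = list(required)
    records: List[Dict[str, str]] = []
    errors: List[Tuple[int, List[str]]] = []
    # Data starts on line 2 (line 1 holds the titles); assumes no multi-line cells.
    for line_no, row in enumerate(reader, start=2):
        record = {prop: (row[i].strip() if i < len(row) else "")
                  for i, prop in column_map.items()}
        missing = [p for p in required if not record.get(p)]
        if missing:
            errors.append((line_no, missing))
        else:
            records.append(record)
    return records, unmapped, errors
```

With the Customer example above, a file whose header row reads `Name,Phone` maps only the Name column. Phone is reported as unmapped, and any row with an empty Name is rejected along with its line number.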

For more details, please see How to Import Data from Excel Using the Excel Import Extension.

For more information on how to develop your own extensions for the community please see the Extensibility Cookbook. And please ask questions in the LightSwitch Extensibility forum.

Don Kiely offers 7+ hours of training for LightSwitch development in his Exploring Visual Studio LightSwitch course for LearnDevNow.com:

Course Description: LightSwitch is one of the most interesting development technologies to come out of Microsoft in a long time. Built on Visual Studio, it is truly a rapid application development tool in a way that Visual Basic and Access never were. It provides a solid foundation and infrastructure for common business applications, built using tried and true technologies. Yet it is highly extensible and flexible. It is not right for all applications, but when it is a good fit, it is pretty sweet. In this course, you will get a thorough overview of LightSwitch so that you can make a decision about whether it will work for you and the kinds of applications you build.

7+ hours of media runtime

Visual Studio LightSwitch

- A New Way to Develop

- When is LightSwitch Appropriate?

- Installing LightSwitch

- Demo: LightSwitch App

LightSwitch Development Environment

- Building a New LightSwitch App

- Components of a LightSwitch App

- Demo: LightSwitch Tour

Creating a New LightSwitch App

- The Sample Application

- Demo: Creating the Application

Application Architecture

- Application Architecture

LightSwitch Data Sources

- Data Access Layer

- LightSwitch Data Types

- SQL Server Provider Specifics

- Intrinsic SQL Database

- Entity Relationships

Exploring Data Sources

- Demo: Data Entities

- Computed Fields

- Limitations on Computed Fields

- Demo: Computed Fields

Using Data Objects

- Creating Choice Lists

- Demo: Choice Lists

- Setting Default Values

- Demo: Default Values

- Handling Data Events

- LightSwitch Data Objects

- Collection Object Performance

- Comparison of Entity Objects

Queries

- Queries

- Designing Queries

- Sorting and Parameters

- Handling Query Events

- Extending Queries in Code

- Demo: Extending Queries

- Retrieving Query Data Using Code

- Demo: Retrieving Query Data

Application User Interface

- Presentation Layer

- LightSwitch Shell

- Business Objects and Rules

- Screens

- Screen Data Management

Creating Screens

- Creating Screens

- Handling Screen Events

- Demo: Screens

Validating User Input

- Validating User Input

- LightSwitch Validation Rules

- Types of Validation Rules

- Validation Rule Execution

- Writing Custom Validation Rules

- ValidationResultsBuilder Collection

- Validation Errors in UI

- Demo: Validation

LightSwitch Development Detail

- Customizing the Navigation Menu

- Demo: Customizing the Menu

- LightSwitch Extensibility

- Installing and Using Extension

- Demo: Extensions

- LightSwitch Diagnostics

- Demo: Diagnostics

- Controlling Startup State

- Demo: Changing Startup State

Application Security

- LightSwitch Security

- Role-Based Authorization

- LightSwitch Permissions

- Testing Security

- Other Security Features

- Demo: Implementing Security

Deploying an Application

- Deploying an Application

- Demo: 2 Tier App Package

- Demo: Installing the App

- Deploying a 3-Tier App

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

James Urquhart (@jamesurquhart) issued an All cloud roads lead to applications post mortem for Giga Om’s Structure 2011 conference to his Wisdom of Clouds C|Net blog on 6/30/2011:

Last week's Structure conference in San Francisco was fascinating to me on several levels. The conference centered much more on the business and market dynamics of cloud than pure technology and services, so there was significantly more coherence to the talks as a whole than in previous years.

Vendors had products and services, and customers had private and public cloud deployments; as opposed to previous years where the "vaporware" almost formed storm clouds.

Another key observation from the show was the change in emphasis this year from virtual machines (2009) and "workloads" (2010) to an almost maniacal focus on the term "application." Everyone was talking about the importance of supporting application development, operating applications in the cloud, integrating applications, and so on. In 2011, applications are king.

In fact, for the foreseeable future, applications are king.

And that is as it should be. I've noted in the past that I believe cloud computing is an operations model, not a technology. There is nothing in the execution stack that is "cloud." Management and monitoring protocols don't change for cloud. The secret sauce of cloud is in how you provision and operate those elements: on demand and at the appropriate scale.

What's more, cloud is an application centric operations model. As I note in my "Cloud and the Future of Networked Systems" presentation which I gave earlier this year, IT operations is moving from a server-centric to application-centric operations model, where more and more of the management and monitoring tasks will be defined by (and targeted at) the application itself, not the underlying infrastructure.

The application-centricity of cloud leads to an interesting corollary: if the focus of cloud is on applications, then all cloud solutions must look at the problem they solve from the perspective of the application. In other words, designing a tool for virtual machine management in the cloud is a short-term game. Eventually, the application will begin to hide the containers in which it runs, and the concept of managing a guest operating system in a virtual machine won't make sense.

However, designing tools around the development, deployment, and operation of code and data in the cloud model has tremendous promise. It's a disruptive concept--displacing our server-centric past--and one for which few enterprises have any serious tools or processes. Certainly not in terms of operations.

One example of application-centricity can be found in cloud tools like enStratus and RightScale. (Disclosure: I am an adviser to enStratus.) Both of these vendors are still too VM-centric for my taste, but each ideally wants to provide tools for managing applications across disparate cloud systems. The VM view is simply an artifact of how most applications are built and deployed today.

So, how can one achieve application centricity? Well, there are a few choices:

Use management tools that are application aware for deployment to infrastructure as a service, including development tools and platforms, cloud management tools like the ones I mentioned above, and organize your operations tasks around applications, not server groups.

Build to a platform as a service environment that hides operations tasks from you and lets you focus on only the application itself.

Ditch building your own applications and acquire functionality through the software as a service model, where the vendor operates all aspects of the application for you, except the use of the application itself.

I should be clear about one thing before I wrap up, however. In addition to application-centric operations, someone has to deliver the service that the application is deployed to (or the application itself, in the case of software as a service) and the infrastructure that supports the service.

Data center operations and managed services do not go away. Rather, they become the responsibility of the cloud provider (public or private), not the end user.

Ultimately, I think this new separation of concerns in operations is at the heart of the difficult cultural change that many IT organizations face. However, the result of that change is the ability for business units to focus on business functionality. For real this time. Not like before the application became the center of the cloud computing universe.

David Linthicum (@DavidLinthicum) asserted “It sounds like denial: Microsoft's new cloud chief says cloud computing won't hurt the software giant” in a deck for his Is Microsoft whistling past the software graveyard? article of 6/30/2011 for InfoWorld’s Cloud Computing blog:

After taking over for Microsoft's Bob Muglia, Microsoft's new cloud chief, Satya Nadella, says the cloud is a "sales opportunity" for Microsoft, not a threat. He claims the cloud will not hurt Microsoft, and the company will be able to hold onto its existing market share and dominance on the PC, while at the same time gaining a larger and larger share of the emerging cloud computing market.

Let's check the logic here.

Let's say you're a huge software company that sells through thousands and thousands of channels. Your products include operating systems, office automation software, enterprise software, and development tools. Moreover, you charge a lot of money because you can. After all, you're a leader in those markets.

Now, you're looking to provide most of that same technology through the public cloud, which bypasses your established channels, and you can no longer charge the same premium. I'm talking Windows Azure and Office 365. Furthermore, you're a follower and not a leader in the still-emerging cloud computing marketplace.

Despite Nadella's optimism, this sounds like a bag of hurt to me.

The fact is, all large software providers moving their software services to cloud services will ultimately hinder their own sales process and perhaps cannibalize their own market base. It can't be avoided.

That's why Oracle, IBM, and Hewlett-Packard have not played up any public cloud offering, and instead opt to push the local data-center-centric private cloud options. They see the public cloud pushing down their traditional software business, as white-belted salesmen roaming enterprise halls are quickly replaced with Web browsers.

In many respects, Microsoft is actually being much more proactive than its counterparts, building out a public cloud service despite the certainty it will see some initial rough waters. However, "no pain"? Sorry, but this is going to hurt a lot.

Patrick Butler Monterde reported Azure and HPC Server: You can now run MPI Workloads in Azure in a 6/29/2011 post:

Exciting news in the Azure HPC space. With the new release of HPC Pack 2008 R2 Service Pack 2 (SP2), you can now run MPI workloads in Azure, in addition to batch jobs and parametric sweeps.

The second service pack to the HPC Pack 2008 R2 software is now available!

This update includes a number of great new features:

- Enhanced Azure capabilities, such as adding Azure VM nodes to your cluster, creating Azure node configuration scripts, and supporting Remote Desktop connections

- Ability to run MPI-based applications in Azure

- Job scheduling support through a REST interface and an IIS-hosted web portal

- A new job scheduling policy that uses 'resource pools' to ensure compute access to different user groups

- SOA improvements, such as in-process broker support for increased speed, and a new common data staging feature

- and all the 'normal' service pack stability improvements
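The post does not say how the resource-pool policy divides the cluster. As a generic illustration only, and an assumption rather than HPC Pack's actual algorithm, a weighted-share scheme gives each pool a guaranteed slice of cores in proportion to its weight, then lends unused cores to pools that still have queued work. The pool names in the usage note are hypothetical.

```python
from typing import Dict, Tuple


def allocate_cores(total_cores: int, pools: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Weighted-share allocation with borrowing (illustrative only).

    pools maps a pool name to (weight, cores_currently_requested).
    """
    total_weight = sum(weight for weight, _ in pools.values())
    allocation: Dict[str, int] = {}
    # 1. Each pool gets its guaranteed share, but never more than it asks for.
    for name, (weight, demand) in pools.items():
        guaranteed = total_cores * weight // total_weight
        allocation[name] = min(guaranteed, demand)
    # 2. Cores left idle (by lightly loaded pools or by rounding) are lent
    #    to pools that still have queued work, highest weight first.
    spare = total_cores - sum(allocation.values())
    for name, (weight, demand) in sorted(pools.items(), key=lambda item: -item[1][0]):
        extra = min(spare, demand - allocation[name])
        allocation[name] += extra
        spare -= extra
    return allocation
```

For example, on a 100-core cluster where an "engineering" pool (weight 60) wants 100 cores and a "finance" pool (weight 40) wants only 10, finance keeps its 10 cores and engineering borrows the idle 30 on top of its guaranteed 60. If finance's demand grows, its guaranteed 40 cores are available again at the next allocation.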

For more information on those, and other, new features available in Service Pack 2 please see our documentation on TechNet.

The single SP2 installer applies to both Express and Enterprise installations, as well as the standalone 'Client Utilities' and 'MS-MPI' packages. You can download it from the Microsoft Download Center.

An important note is that this service pack cannot be uninstalled by itself. Uninstalling the service pack will also uninstall the HPC Pack itself, so you'll need to take a full backup (including the SQL databases) before installation if you want to be able to 'roll back.'

If you do not have an HPC Pack 2008 R2 cluster, you can download a free Windows HPC Server 2008 R2 evaluation version. Before you install, you can try out the new Installation Preparation Wizard which can help analyze your environment for common issues and provide some best practice guidance to help ensure an easy HPC cluster setup.

Let us know your thoughts over on our Windows HPC discussion forums.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Jo Maitland (@JoMaitlandTT) interviewed Dow Jones’ Altaf Rupani in her Dow Jones pushes limits of hybrid cloud post of 6/30/2011 to the SearchCloudComputing.com blog:

Altaf Rupani, vice president of global strategic planning and architecture at Dow Jones, is on a mission to get the best out of new cloud computing architectures inside his company for all the usual reasons: reducing time to market on new apps and avoiding the capital cost of new hardware. In this interview, Rupani discusses his company’s private cloud rollout and the challenges it still faces working with service providers to get a hybrid cloud system up and running.

How long did it take to build your private cloud?

Rupani: About a year and a half.

Why did you go this route versus tapping into readily available public cloud resources like Amazon Web Services?

Rupani: We don't use EC2 for business critical apps; the public cloud isn't ready for the enterprise, there needs to be more governance controls that cater to the enterprise.

Aren't these kinds of controls tough to build in a private cloud environment, too? Or can anybody at your company jump on your private cloud and provision services?

Rupani: Yes, you need to establish governance and rules and introduce rigor so that you are following role-based access controls, but this is easier to do today inside your own four walls.

Who has access to your private cloud?

Rupani: Developers, marketing folks, business analysts, people who are tech-savvy on the business side of the house… It is there for anyone that needs it.

Is there training involved, how do you get employees up and running, and what are the potential pitfalls?

Rupani: We have an on-boarding process; we enlighten and educate people on the portal. Otherwise you could shoot yourself in the foot if you let people on who don't know what they are doing. We leave it to the tech leads to spread the word. Otherwise you could have 400 virtual machines or 4,000 provisioned for 10 minutes of use.

What systems did you put in place to guard against that?

Rupani: You need to create an auto-approval process for certain groups of users. For example, developers can provision assets without as many hoops to jump through as other employees less familiar with the system. Our mobile development team can provision as many instances at a time as they need as this is a high priority job.

How large is your private cloud?

Rupani: All new instances are provisioned through our private cloud and we have 350 active instances, but this spikes up or down depending on workloads. [He declined to say what percentage of Dow Jones' total server environment the private cloud represents, but it is likely less than 10% today.]
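Rupani's auto-approval process lets some groups provision freely while sending other requests to review. It can be sketched as a simple per-group quota check that also guards against the "400 or 4,000 VMs for 10 minutes" problem. The group names and limits below are invented for illustration; the interview does not describe Dow Jones' actual rules.

```python
from typing import Dict, Optional

# Hypothetical per-group limits on instances that may run without a human
# approver. None means "no cap" (e.g. a high-priority mobile team).
AUTO_APPROVE_LIMITS: Dict[str, Optional[int]] = {
    "mobile-dev": None,
    "developers": 20,
    "business-analysts": 2,
}


def review_request(group: str, requested: int, already_running: int) -> str:
    """Return "auto" if a provisioning request can be approved automatically,
    otherwise "manual" so that it is routed to an approver."""
    if group not in AUTO_APPROVE_LIMITS:
        return "manual"  # unknown groups always get a human review
    limit = AUTO_APPROVE_LIMITS[group]
    if limit is None or already_running + requested <= limit:
        return "auto"
    return "manual"
```

A developer with 10 instances running who asks for 5 more is approved automatically. The same developer asking for 15 more exceeds the hypothetical cap of 20 and goes to an approver.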

Do hardware choices, HP versus Dell for example, give you any advantage in your private cloud architecture?

Rupani: No. We use off-the-shelf hardware. Dell, HP, IBM -- it doesn't matter, we just need a service-level agreement (SLA) for response time, a de-dupe rate for storage etc… We created the framework for a resilient cloud first, then we picked vendors that met that criteria.

What software are you using for virtualization and automation?

Rupani: VMware and DynamicOps.

DynamicOps is based on Microsoft technologies and uses the .NET framework. Does this mean you'll use Windows Azure for application development?

Rupani: I’m bound by NDAs. Get back to me in three months.

What applications are running in production on your private cloud today?

Rupani: Corporate applications, including back office stuff like SharePoint 2010, have been consolidated from five separate instances to one instance running on the private cloud. B2B apps on the cloud include DowJonesNews.com and our archive. B2C apps include WSJ.com, MarketWatch.com and Barron’s. All of these have some presence on the private cloud and are using it more and more.

What advantages have you seen so far?

Rupani: One of the biggest advantages is that we no longer need to spend so much money on transitional technology setups for new projects. It's a cost-avoidance strategy as we don't need net new assets. There's also a cost-efficiency advantage as we are getting better usage out of our existing servers. We tripled our average utilization to 35 to 40% per physical machine.

That still seems low. Why not 60 to 70% utilization?

Rupani: We leave headroom to account for peaks.

What about labor? Do you save costs there?

Rupani: Yes. Cloud instances are half the cost of physical instances including labor.

How many administrators maintain your private cloud?

Rupani: It's less than five.

What about hybrid cloud? Does that make sense for your company?

Rupani: We'd like to extend our internal private cloud to public cloud in a hybrid model, but we're still working on the SLAs and data residency mandates with public cloud providers to make that viable.

When will that happen?

Rupani: Before the end of the calendar year we'll be able to use hybrid; through APIs we will be able to plumb the providers' capacity behind our portal.

Give us an example of why that would be useful.

Rupani: Let's say there's an employee in Europe working on a big marketing launch, but there's no Dow Jones capacity there. The system will say here are the templates available for services and it's the same workflow and policies as internal services, but it launches on the public cloud. It federates with the enterprise.

What challenges have you faced in getting this hybrid model to work?

Rupani: When the provider is a black box it's not good; single sign-on and identity and access control is not easy to get done. The portal in the U.S. is just a façade; what you need is an access point for the consumer that is the shortest path, local to the U.K. if the person is in the U.K., not in the U.S. This requires bifurcation; directional DNS and load balancing that can intercept and redirect the user to the resource.

These are things that are common in serving Web pages today, but haven't been there for enterprise systems?

Rupani: Right.

Are there other challenges with the hybrid model?

Rupani: Service providers had not envisaged the workflow we needed, so we are really pioneering this path; it takes a lot of trial and error.

Which service providers are you working with?

Rupani: I can’t say just yet. Get back to me in a few months, but it's the usual suspects.

How have your users responded to the private cloud?

Rupani: People are lining up to use it. The time-to-market for new apps is so much faster. Users are willing to pay more [for it] as they get their server before they come back from lunch instead of in three weeks. [That] is awesome from an application delivery standpoint.

What are your goals for the private cloud this year?

Rupani: To move more applications to it where appropriate and train all our users on it.

Jo Maitland is the Senior Executive Editor of SearchCloudComputing.com.
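Rupani's utilization figures lend themselves to quick arithmetic. If average utilization tripled to 35 to 40%, the earlier average was roughly 11.7 to 13.3%, and the same load fits on about a third as many hosts. The sketch below works through that and the headroom argument. The 12-host workload and the 2x peak-to-average ratio are assumptions for illustration, not figures from the interview.

```python
def implied_previous_utilization(current_pct: float, factor: float = 3.0) -> float:
    """Average utilization before an improvement of `factor` times."""
    return current_pct / factor


def hosts_needed(busy_host_equivalents: int, avg_utilization_pct: int) -> int:
    """Physical hosts required to carry a workload at a given average utilization.

    busy_host_equivalents is the load expressed as hosts running flat out.
    Integer ceiling division avoids floating-point rounding surprises.
    """
    return -(-busy_host_equivalents * 100 // avg_utilization_pct)


def peak_utilization_pct(avg_utilization_pct: int, peak_to_average: int) -> int:
    """Utilization reached during a peak, assuming load scales uniformly."""
    return avg_utilization_pct * peak_to_average


# "Tripled ... to 35 to 40%" implies an earlier average of about 11.7% to 13.3%.
print(round(implied_previous_utilization(35), 1), round(implied_previous_utilization(40), 1))
# A hypothetical load worth 12 busy hosts: 100 hosts at 12%, 34 hosts at 36%.
print(hosts_needed(12, 12), hosts_needed(12, 36))
# With an assumed 2x peak-to-average ratio, a 40% average peaks at 80%,
# while a 70% average would need 140% of the hosts' capacity at peak.
print(peak_utilization_pct(40, 2), peak_utilization_pct(70, 2))
```

This is why "tripled to 35 to 40%" and "we leave headroom to account for peaks" are consistent. If peaks reach about twice the average load, a 60 to 70% average would leave no room for them.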

For more details about DynamicOps, see Derrick Harris’ Dell Cloud OEM Partner DynamicOps Gets $11M post of 2/28/2011 to Giga Om’s Structure blog.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.
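Rupani's point about routing a user to the nearest access point, such as a U.K. endpoint for a user in the U.K., is the decision that geo-aware (directional) DNS makes when it answers a lookup. A minimal sketch of just that selection step follows. It assumes the client has already been geolocated to coordinates, and the endpoint names and locations are hypothetical. A real deployment also weighs latency measurements, health checks and capacity, not just distance.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]

# Hypothetical regional access points (latitude, longitude in degrees).
ENDPOINTS: Dict[str, LatLon] = {
    "us-east": (40.71, -74.01),   # New York
    "uk": (51.51, -0.13),         # London
    "asia": (22.32, 114.17),      # Hong Kong
}

EARTH_RADIUS_KM = 6371.0


def great_circle_km(a: LatLon, b: LatLon) -> float:
    """Haversine distance between two points on a spherical Earth."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_endpoint(client: LatLon, endpoints: Dict[str, LatLon] = ENDPOINTS) -> str:
    """Pick the access point closest to the (already geolocated) client."""
    return min(endpoints, key=lambda name: great_circle_km(client, endpoints[name]))
```

A user in Manchester resolves to the "uk" endpoint and one in Chicago to "us-east". Both therefore reach a local access point instead of a single U.S. façade.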

Adam Hall posted Announcing the availability of the VMware and IBM Netcool IPs for Orchestrator! to the System Center Orchestrator blog on 6/28/2011:

Today we are very excited to announce the availability of 2 new Integration Packs (IPs) for System Center Orchestrator.

We have published the new IPs for IBM Netcool and VMware vSphere which are available for immediate download by clicking the links.

Further details about the two IPs are below:

System Center Orchestrator 2012 Integration Pack for VMware vSphere

This version of the integration pack for VMware vSphere contains new activities, new features, and enhancements. You can read the release notes here.

New Activities

| Activity | Description |
|---|---|
| Maintenance Mode | Provides functionality to both enter and exit maintenance mode for an ESX host. Entering maintenance mode prevents VMs from powering up on, or failing over to, the host if it is part of a high-availability cluster. Maintenance mode is normally enabled before powering off the host for hardware maintenance. |
| Get Host Properties | Retrieves a list of properties for a specified host in the VMware vSphere cluster. Examples of these properties are Connection Status (powered on, disconnected, etc.) and Maintenance Mode state. |
| Get Host Datastores | Retrieves a list of datastores available for a specified host managed by the VMware vSphere server. This can be used to check the system's capacity when automatically adding a new VM to the managed host. |
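The Get Host Datastores activity is described as a way to check capacity before automatically adding a VM. As a rough illustration of that placement decision only, here is a minimal Python sketch. The `Datastore` record, the `pick_datastore` helper and the 10% headroom rule are hypothetical and not part of the integration pack, which wires such logic together from Orchestrator activities rather than code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Datastore:
    # Hypothetical record for one entry returned by a datastore listing; sizes in GB
    name: str
    capacity_gb: float
    free_gb: float


def pick_datastore(datastores: List[Datastore], required_gb: float,
                   headroom: float = 0.10) -> Optional[Datastore]:
    """Return the datastore with the most free space that can hold a new VM of
    required_gb while still leaving `headroom` (a fraction of capacity) unused.
    Returns None when no datastore qualifies."""
    candidates = [
        ds for ds in datastores
        if ds.free_gb - required_gb >= ds.capacity_gb * headroom
    ]
    return max(candidates, key=lambda ds: ds.free_gb, default=None)


stores = [
    Datastore("ds-01", 500, 60),
    Datastore("ds-02", 1000, 400),
    Datastore("ds-03", 2000, 150),
]
print(pick_datastore(stores, 100).name)  # ds-02
```

Choosing the datastore with the most free space is just one placement policy; a runbook could equally take the first fit or prefer a particular storage tier.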

New Features

This integration pack includes the following new features:

- The Get Hosts activity now returns, in the published data, the state of the Host in the cluster, such as running or maintenance mode.

- The Clone VM activity no longer shows unavailable datastores or hosts in the object's subscription list. Previously all datastores/hosts were returned; now only the available ones are returned.

- New timeout field added to the options menu for all VMware vSphere activities. If no value is given, this will default to 100 seconds.

- Support added for ESX 4.1, which in turn adds support for Windows 7 and the other guest operating systems listed below:

- The 32-bit and 64-bit editions of Windows 7

- The 64-bit edition of Windows Server 2008 R2

- The 32-bit and 64-bit editions of Windows Vista

Issues Fixed

The following issues have been fixed in this integration pack:

- The Clone VM activity now correctly configures DNS settings as supplied. The value is a comma-separated list of DNS servers.

- You can use a custom port to connect to Virtual Center. The port value is blank by default; a blank value means the default port is used.
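The timeout, DNS and port notes in these release notes all describe how option fields are interpreted. Here is a minimal Python sketch of those rules. It assumes the DNS entries are IP addresses, and it assumes a blank port means vCenter's standard HTTPS port 443 (the release notes only say "the default port"). The function names are hypothetical.

```python
import ipaddress
from typing import List, Optional

DEFAULT_TIMEOUT_SECONDS = 100  # default timeout stated in the release notes
DEFAULT_VCENTER_PORT = 443     # assumption: vCenter's standard HTTPS port


def parse_dns_servers(value: str) -> List[str]:
    """Split a comma-separated DNS server list, validating each entry as an IP address."""
    servers = []
    for item in value.split(","):
        item = item.strip()
        if item:  # skip empty entries left by stray or trailing commas
            # ip_address() raises ValueError for anything that is not a valid IPv4/IPv6 address
            servers.append(str(ipaddress.ip_address(item)))
    return servers


def resolve_option(value: Optional[str], default: int) -> int:
    """A blank or missing option field means 'use the default'."""
    if value is None or not value.strip():
        return default
    return int(value)


print(parse_dns_servers("10.0.0.10, 10.0.0.11"))     # ['10.0.0.10', '10.0.0.11']
print(resolve_option("", DEFAULT_VCENTER_PORT))       # 443
print(resolve_option("8443", DEFAULT_VCENTER_PORT))   # 8443
print(resolve_option(None, DEFAULT_TIMEOUT_SECONDS))  # 100
```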

System Center Orchestrator 2012 Integration Pack for IBM Tivoli Netcool/OMNIbus

This version of the integration pack for IBM Tivoli Netcool/OMNIbus contains new features and enhancements. You can read the release notes here.

New Features

This integration pack supports the following new features:

- Activities support connection to IBM Tivoli Netcool/OMNIbus v7.3

- All activities can connect to the Netcool Object Server using an SSL Connection.

- All features now use the same logging framework. There is no longer a requirement for Java-specific logging information.

- All activities now publish UTC fields in two formats: the original long timestamp (1269986359) and the OIS standard format (M/d/yyyy h:m:s tt).
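To make the two UTC formats concrete, here is a minimal Python sketch that renders the long timestamp in the M/d/yyyy h:m:s tt pattern. It assumes the long value is Unix epoch seconds in UTC. That is how the example value decodes, but the release notes do not say so explicitly. `to_ois_format` is a hypothetical helper, not part of the integration pack.

```python
from datetime import datetime, timezone


def to_ois_format(epoch_seconds: int) -> str:
    """Render Unix epoch seconds (assumed UTC) as M/d/yyyy h:m:s tt."""
    dt = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    hour12 = dt.hour % 12 or 12                # 0 -> 12 (AM), 13 -> 1 (PM)
    meridiem = "AM" if dt.hour < 12 else "PM"
    # Single-letter specifiers (M, d, h, m, s) are not zero-padded,
    # so the string is built by hand instead of with strftime's padded %m/%d/%I.
    return f"{dt.month}/{dt.day}/{dt.year} {hour12}:{dt.minute}:{dt.second} {meridiem}"


print(to_ois_format(1269986359))  # 3/30/2010 9:59:19 PM
```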

Issues Fixed

The following issues are fixed in this integration pack:

- Previously, activities would fail if a string contained a combination of a single quote and a question mark.

- The Monitor Alert activity no longer allows published data in Monitor Alert fields. Monitor Alert fields accept only variables.

Ellen Messmer reported Gartner: IT should be planning, moving to private clouds in a 6/15/2011 post to NetworkWorld’s Cloud Computing blog (missed when posted):

ORLANDO -- If speedy IT services are important, businesses should be shifting from traditional computing into virtualization in order to build a private cloud that, whether operated by their IT department or with help from a private cloud provider, will give them that edge.

That was the message from Gartner analysts this week, who sought to point out paths to the private cloud to hundreds of IT managers attending the Gartner IT Infrastructure, Operations & Management Summit 2011 in Orlando. Transitioning a traditional physical server network to a virtualized private cloud should be done with strategic planning in capacity management and staff training.

"IT is not just the hoster of equipment and managing it. Your job is delivery of service levels at cost and with agility," said Gartner analyst Thomas Bittman. He noted that virtualization is the path to operating a private cloud, where IT services can be quickly supplied to those in the organization who demand them, often on a chargeback basis.

Gartner analysts emphasized that building a private cloud is more than just adding virtual machines to physical servers, which is already happening with dizzying speed in the enterprise. Gartner estimates about 45% of x86-based servers carry virtual-machine-based workloads today, with that number expected to jump to 58% next year and 77% by 2015. VMware is the distinct market leader, but Microsoft with Hyper-V is regarded as growing, and Citrix with XenServer, among others, is a contender as well.

"Transitioning the data center to be more cloud-like could be great for the business," said Gartner analyst Chris Wolf, adding, "But it causes you to make some difficult architecture decisions, too." He advised Gartner clientele to centralize IT operations, look to acquiring servers with Intel and AMD processors optimized for virtualized environments, and "map security, applications, identity and information management to cloud strategy."

Although cloud computing equipment vendors and service providers would like to insist that it's not really a private cloud unless it's fully automated, Wolf said the reality is "some manual processes have to be expected." But the preferred implementation would not give the IT admin the management controls over specific virtual security functions associated with the VMs.

Regardless of which VM platform is used — there is some mix-and-match in the enterprise today though it poses specific management challenges — Gartner analysts say there is a dearth of mature management tools for virtualized systems.

"There's a disconnect today," said Wolf, noting that at a recent forum Gartner held for more than a dozen CIOs whose organizations are building private clouds, more than 75% said they were using home-grown management tools for things like hooking into asset management systems and ticketing.

Nevertheless, Gartner is urging enterprises to put together a long-term strategy for the private cloud and to brace for fast-paced changes among vendors and providers, which will bring new products and services and, no doubt, a number of market drop-outs along the way. …

<Return to section navigation list>

Cloud Security and Governance

SDTimes on the Web posted a Cloud Computing Interoperability Presents a Greater Challenge to Adoption Than Security, Say IEEE Cloud Experts press release on 6/20/2011:

The greatest challenge facing longer-term adoption of cloud computing services is not security, but rather cloud interoperability and data portability, say cloud computing experts from IEEE, the world's largest technical professional association. At the same time, IEEE's experts say cloud providers could reassure customers by improving the tools they offer enterprise customers to give them more control over their own data and applications while offering a security guarantee.

Today, many public cloud networks are configured as closed systems and are not designed to interact with each other. The lack of integration between these networks makes it difficult for organizations to consolidate their IT systems in the cloud and realize productivity gains and cost savings. To overcome this challenge, industry standards must be developed to help cloud service providers design interoperable platforms and enable data portability, topics that will be addressed at IEEE Cloud 2011, 4-9 July in Washington, D.C.

"Security is certainly a very important consideration, but it's not what will inhibit further adoption," said Dr. Alexander Pasik, CIO at IEEE and an early advocate of cloud computing as an analyst at Gartner in the 1990s. "To achieve the economies of scale that will make cloud computing successful, common platforms are needed to ensure users can easily navigate between services and applications regardless of where they're coming from, and enable organizations to more cost-effectively transition their IT systems to a services-oriented model."

According to industry research firm IDC, revenue from public cloud computing services is expected to reach US$55.5 billion by 2014, up from US$16 billion in 2009. Cloud computing plays an important role in people's professional and personal lives by supporting a variety of software-as-a-service (SaaS) applications used to store healthcare records, critical business documents, music and e-book purchases, social media content, and more. However, lack of interoperability still presents challenges for organizations interested in consolidating a host of enterprise IT systems on the cloud.
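The IDC forecast implies a steep growth rate. As a back-of-the-envelope check (derived here from the two figures quoted, not a number IDC published), the compound annual growth rate over the five years from 2009 to 2014 works out to roughly 28% a year:

$$
\text{CAGR} = \left(\frac{55.5}{16}\right)^{1/5} - 1 = 3.46875^{1/5} - 1 \approx 0.282
$$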

According to IEEE Fellow Elisa Bertino, professor of Computer Science at Purdue University and research director at the Center for Education and Research in Information Assurance, the interoperability issue is more pressing than perceived data security concerns. "Security in the cloud is no different than security issues that impact on-premises networks. Organizations are not exposing themselves to greater security risks by moving data to the cloud. In fact, an organization's data is likely to be more secure in the cloud because the vendor is a technology specialist whose business model is built on data protection." …


<Return to section navigation list>

Cloud Computing Events

Plankytronixx (@plankytronixx) posted Windows Azure Bootcamp: Complete run-through on 6/30/2011:

I’ve created a complete run through of everything in the UK bootcamp, all in order. Click the first video, watch, click the second video, watch, etc., etc. from beginning to end.

Click the image to go to the complete Bootcamp run-through.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Carl Brooks (@eekygeeky) explained OpSource exit shows the power of the platform in a 6/30/2011 post to SearchCloudComputing.com’s Troposphere blog:

OpSource has been bought by ICT and IT services giant Dimension Data. This tells us several important things about the cloud computing market when we look at some of the details. It’s mostly positive unless you’re a private cloud cultist or one of the vendor giants enabling private cloud cargo cults in various areas of IT.

OpSource likely made out here, too; Yankee Group analyst Camille Mendler said NTT, which now owns Dimension Data and was a 5% equity investor in OpSource, is well known for piling up money to get what it wants. “NTT was an early investor in OpSource years ago. They always pay top dollar (see DiData price, which turned off other suitors),” Mendler said in a message.

Mendler also pointed out the real significance of the buy: the largest providers are moving to consolidate their delivery arms and their channel around cloud products, because that’s where the action is right now. Amazon Web Services is an outlier, and private cloud in the enterprise is in its infancy, but service providers in every area are migrating wholesale into, and delivering, cloud computing environments. OpSource already runs in some NTT data center floor space; DiData has a massive SP/MSP customer base, which is OpSource’s true strength as well.

DiData is already actively engaged with customers that are doing cloudy stuff, said Mendler, and they basically threw up their hands and bought out the best provider-focused cloud platform and service provider they could find. “There’s a white label angle, not just enterprise,” she said.

And it’s not NTT’s only cloud-for-providers deal, either: in May it bought a controlling interest in an Australian MSP with a cloud platform. Gathering in OpSource means NTT has a stake in most of the world in a fairly serious fashion when it comes to the next wave of public and hosted cloud providers.

What else?

Well, DiData is a huge firm in IT services. They have all the expertise and software they’d ever need, but instead of developing a platform or an IaaS to sell to customers, they bought one outright and are starting up a cloud services business unit to sell it pretty much as is. That means, as has been pointed out many times before, that building a cloud is hard work, and quite distinct from the well-understood data center architectures around virtualization and automation as we used to know them.

It also means there was a pressing need for a functioning cloud business today, or more likely yesterday. “Essentially, what Dimension has said is ‘nothing changes with OpSource,’” said OpSource CTO John Rowell.