Windows Azure and Cloud Computing Posts for 11/30/2010+

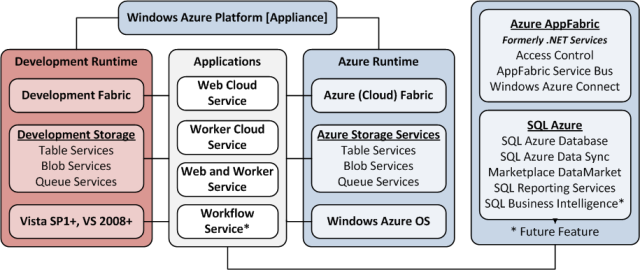

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V or Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Jai Haridas reported Changes in Windows Azure Storage Client Library – Windows Azure SDK 1.3 in an 11/30/2010 post to the Windows Azure Storage Team blog:

We recently released an update to the Storage Client library in SDK 1.3. We wanted to take this opportunity to go over some breaking changes that we have introduced and also list some of the bugs we have fixed (compatible changes) in this release.

Thanks for all the feedback you have been providing us via forums and this site, and as always please continue to do so as it helps us improve the client library.

Note: We have used Storage Client Library v1.2 to indicate the library version shipped with Windows Azure SDK 1.2 and v1.3 to indicate the library shipped with Windows Azure SDK 1.3.

Breaking Changes

1. Bug: FetchAttributes ignores blob type and lease status properties

In Storage Client Library v1.2, a call to FetchAttributes never checks if the blob instance is of valid type since it ignores the BlobType property. For example, if a CloudPageBlob instance refers to a block blob in the blob service, then FetchAttributes will not throw an exception when called.

In Storage Client Library v1.3, FetchAttributes records the BlobType and LeaseStatus properties. In addition, it will throw an exception when the blob type property returned by the service does not match the type of the class being used (i.e., when a CloudPageBlob is used to represent a block blob or vice versa).

2. Bug: ListBlobsWithPrefix can display same blob Prefixes multiple times

Let us assume we have the following blobs in a container called photos:

- photos/Brazil/Rio1.jpg

- photos/Brazil/Rio2.jpg

- photos/Michigan/Mackinaw1.jpg

- photos/Michigan/Mackinaw2.jpg

- photos/Seattle/Rainier1.jpg

- photos/Seattle/Rainier2.jpg

Now to list the photos hierarchically, I could use the following code to get the list of folders under the container “photos”. I would then list the photos depending on the folder that is selected.

    IEnumerable<IListBlobItem> blobList = client.ListBlobsWithPrefix("photos/");
    foreach (IListBlobItem item in blobList)
    {
        Console.WriteLine("Item Name = {0}", item.Uri.AbsoluteUri);
    }

The expected output is:

- photos/Brazil/

- photos/Michigan/

- photos/Seattle/

However, assume that the blob service returns Rio1.jpg through Mackinaw1.jpg along with a continuation marker which an application can use to continue listing. The client library would then continue the listing with the server using this continuation marker, and with the continuation assume that it receives the remaining items. Since the prefix photos/Michigan is repeated again for Mackinaw2.jpg, the client library incorrectly duplicates this prefix. If this happens, then the result of the above code in Storage Client v1.2 is:

- photos/Brazil/

- photos/Michigan/

- photos/Michigan/

- photos/Seattle/

Basically, Michigan would be listed twice. In Storage Client Library v1.3, we collapse this to always provide the same result for the above code irrespective of how the blobs may be returned in the listing.
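The collapsing behavior can be illustrated with a minimal sketch. It is written in Python rather than with the Storage Client Library, and it assumes that the service returns items in lexical order, so a repeated prefix can only sit next to its previous occurrence. The function name and the segment contents are hypothetical.

```python
from typing import Iterable, Iterator, List


def collapse_prefixes(segments: Iterable[List[str]]) -> Iterator[str]:
    """Yield each prefix once, even when it recurs across listing segments.

    Assumes lexically ordered results, so duplicates are always adjacent.
    """
    last = None
    for segment in segments:
        for prefix in segment:
            if prefix != last:
                yield prefix
            last = prefix


# Hypothetical segments: the first response stops after Mackinaw1.jpg, so the
# second response reports the Michigan prefix again for Mackinaw2.jpg.
first = ["photos/Brazil/", "photos/Michigan/"]
second = ["photos/Michigan/", "photos/Seattle/"]
folders = list(collapse_prefixes([first, second]))
print(folders)
```

Tracking only the last prefix seen keeps the sketch streaming-friendly: no set of all prefixes has to be held in memory while the listing continues.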

3. Bug: CreateIfNotExist on a table, blob or queue container does not handle container being deleted

In Storage Client Library v1.2, when a deleted container is recreated before the service’s garbage collection finishes removing the container, the service returns HttpStatusCode.Conflict with StorageErrorCode as ResourceAlreadyExists, with extended error information indicating that the container is being deleted. This error code is not handled by the Storage Client Library v1.2, which instead returns false, giving the impression that the container exists.

In Storage Client Library v1.3, we throw a StorageClientException exception with ErrorCode = ResourceAlreadyExists and exception’s ExtendedErrorInformation’s error code set to XXXBeingDeleted (ContainerBeingDeleted, TableBeingDeleted or QueueBeingDeleted). This exception should be handled by the client application and retried after a period of 35 seconds or more.

One approach to avoid this exception while deleting and recreating containers/queues/tables is to use dynamic (new) names when recreating instead of using the same name.
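For applications that must reuse the same name, the handle-and-retry advice above might look like the following sketch. It is Python, not the C# client library: the exception class, the `create_with_retry` helper and the simulated service are all hypothetical stand-ins, and only the 35-second wait comes from the text.

```python
import time


class ResourceBeingDeleted(Exception):
    """Hypothetical stand-in for the v1.3 'XXXBeingDeleted' conflict."""


def create_with_retry(create, attempts=5, delay_seconds=35.0, sleep=time.sleep):
    """Call create() until it stops raising ResourceBeingDeleted.

    Returns whatever create() returns; re-raises after the final attempt.
    """
    for attempt in range(1, attempts + 1):
        try:
            return create()
        except ResourceBeingDeleted:
            if attempt == attempts:
                raise
            sleep(delay_seconds)


# Simulated service: the first two calls hit a container still being deleted.
calls = {"n": 0}


def fake_create():
    calls["n"] += 1
    if calls["n"] <= 2:
        raise ResourceBeingDeleted("ContainerBeingDeleted")
    return True


waits = []  # record the requested delays instead of actually sleeping
created = create_with_retry(fake_create, sleep=waits.append)
```

Passing the sleep function as a parameter keeps the example quick to run; production code would leave the default `time.sleep` in place.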

4. Bug: CloudTableQuery retrieves up to 100 entities with Take rather than the 1000 limit

In Storage Client Library v1.2, using CloudTableQuery limits the query results to 100 entities when Take(N) is used with N > 100. We have fixed this in Storage Client Library v1.3 by setting the limit appropriately to Min(N, 1000), where 1000 is the server-side limit.

5. Bug: CopyBlob does not copy metadata set on destination blob instance

As noted in this post, Storage Client Library v1.2 has a bug in which metadata set on the destination blob instance in the client is ignored, so it is not recorded with the destination blob in the blob service. We have fixed this in Storage Client Library v1.3, and if the metadata is set, it is stored with the destination blob. If no metadata is set, the blob service will copy the metadata from the source blob to the destination blob.

6. Bug: Operations that return PreConditionfailure and NotModified report BadRequest as the StorageErrorCode

In Storage Client Library v1.2, PreConditionfailure and NotModified errors lead to StorageClientException with StorageErrorCode mapped to BadRequest.

In Storage Client Library v1.3, we have correctly mapped the StorageErrorCode to ConditionFailed.

7. Bug: CloudBlobClient.ParallelOperationThreadCount > 64 leads to NotSupportedException

ParallelOperationThreadCount controls the number of concurrent block uploads. In Storage Client Library v1.2, the value can be anywhere between 1 and int.MaxValue. However, when a value greater than 64 is set, the UploadByteArray, UploadFile and UploadText methods start uploading blocks in parallel but eventually fail with a NotSupportedException. In Storage Client Library v1.3, we have reduced the maximum to 64. A value greater than 64 causes an ArgumentOutOfRangeException as soon as the property is set.

8. Bug: DownloadToStream implementation always does a range download that results in md5 header not returned

In Storage Client Library v1.2, DownloadToStream, which is used by other variants – DownloadText, DownloadToFile and DownloadByteArray, always does a range GET by passing the entire range in the range header “x-ms-range”. However, in the service, using range for GETs does not return content-md5 headers even if the range encapsulates the entire blob.

In Storage Client Library v1.3, we now do not send the “x-ms-range” header in the above mentioned methods which allows the content-md5 header to be returned.
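Once the content-md5 header comes back, a caller can use it to check a download. The sketch below is Python and independent of the client library. It assumes the header carries the Base64 form of the 16-byte MD5 digest (the usual Content-MD5 convention), and the helper names and the plain-dictionary headers are hypothetical.

```python
import base64
import hashlib
from typing import Mapping, Optional


def content_md5(data: bytes) -> str:
    """Base64 of the raw MD5 digest, the form used by Content-MD5 headers."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def verify_download(data: bytes, headers: Mapping[str, str]) -> Optional[bool]:
    """Return True/False for a match, or None when no header was returned."""
    expected = headers.get("Content-MD5")
    if expected is None:
        return None  # nothing to compare against, as with the v1.2 range GETs
    return expected == content_md5(data)


payload = b"hello blob"
good = verify_download(payload, {"Content-MD5": content_md5(payload)})
tampered = verify_download(payload + b"!", {"Content-MD5": content_md5(payload)})
missing = verify_download(payload, {})
```

Returning `None` rather than `False` for a missing header lets the caller tell "unverified" apart from "corrupt".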

9. CloudBlob retrieved using container’s GetBlobReference, GetBlockBlobReference or GetPageBlobReference creates a new instance of the container.

In Storage Client Library v1.2, the blob instance always creates a new container instance that is returned via the Container property in CloudBlob class. The Container property represents the container that stores the blob.

In Storage Client Library v1.3, we instead use the same container instance which was used in creating the blob reference. Let us explain this using an example:

    CloudBlobClient client = account.CreateCloudBlobClient();
    CloudBlobContainer container = client.GetContainerReference("blobtypebug");
    container.FetchAttributes();
    container.Attributes.Metadata.Add("SomeKey", "SomeValue");
    CloudBlob blockBlob = container.GetBlockBlobReference("blockblob.txt");
    Console.WriteLine("Are instances same={0}", blockBlob.Container == container);
    Console.WriteLine("SomeKey metadata value={0}", blockBlob.Container.Attributes.Metadata["SomeKey"]);

For the above code, in Storage Client Library v1.2, the output is:

    Are instances same=False
    SomeKey metadata value=

This signifies that the blob creates a new instance that is then returned when the Container property is referenced. Hence the metadata “SomeKey” set is missing on that instance until FetchAttributes is invoked on that particular instance.

In Storage Client Library v1.3, the output is:

    Are instances same=True
    SomeKey metadata value=SomeValue

We set the same container instance that was used to get a blob reference. Hence the metadata is already set.

Due to this change, any code relying on the instances to be different may break.

10. Bug: CloudQueueMessage is always Base64 encoded allowing less than 8KB of original message data.

In Storage Client Library v1.2, the queue message is Base64 encoded which increases the message size by 1/3rd (approximately). The Base64 encoding ensures that message over the wire is valid XML data. On retrieval, the library decodes this and returns the original data.

However, we want to provide an alternative in which data that is already valid XML is transmitted and stored in raw format, so an application can store a full-size 8KB message.

In Storage Client Library v1.3, we have provided a flag “EncodeMessage” on the CloudQueue that indicates if it should encode the message using Base64 encoding or send it in raw format. By default, we still Base64 encode the message. To store the message without encoding one would do the following:

    CloudQueue queue = client.GetQueueReference("workflow");
    queue.EncodeMessage = false;

Be careful when using this flag, so that your application does not turn off message encoding on an existing queue with encoded messages in it. The other thing to ensure when turning off encoding is that the raw message has only valid XML data, since PutMessage is sent over the wire in XML format and the message is delivered as part of that XML.

Note: When turning off message encoding on an existing queue, one can prefix the raw message with a fixed version header. Then when a message is received, if the application does not see the new version header, then it knows it has to decode it. Another option would be to start using new queues for the un-encoded messages, and drain the old queues with encoded messages with code that does the decoding.
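Two points above lend themselves to a short sketch: the space lost to Base64 and the version-header idea from the note. The code is Python, not the C# library. The `v2:` header is a hypothetical marker chosen because `:` never appears in Base64 output, so an encoded message cannot be mistaken for a raw one. The 8KB figure is the limit quoted in the post.

```python
import base64

MAX_MESSAGE_BYTES = 8 * 1024  # 8KB limit on the stored message, per the post


def max_raw_payload(limit: int = MAX_MESSAGE_BYTES) -> int:
    """Largest raw payload whose Base64 form still fits in `limit` bytes.

    Base64 turns every 3 input bytes into 4 output characters.
    """
    return (limit // 4) * 3


RAW_HEADER = "v2:"  # hypothetical version marker for un-encoded messages


def read_message(body: str) -> str:
    """Return the original text of a message from a queue in transition."""
    if body.startswith(RAW_HEADER):
        return body[len(RAW_HEADER):]  # new style: stored raw after the header
    return base64.b64decode(body).decode("utf-8")  # old style: Base64 encoded


old_style = base64.b64encode("resize photo 42".encode("utf-8")).decode("ascii")
new_style = RAW_HEADER + "resize photo 42"
print(max_raw_payload(), read_message(old_style) == read_message(new_style))
```

Under these assumptions an encoded message tops out at 6144 bytes of original data, which is the "less than 8KB" the bug title refers to.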

11. Inconsistent escaping of blob name and prefixes when relative URIs are used.

In Storage Client Library v1.2, the rules used to escape a blob name or prefix provided to APIs such as the constructors, GetBlobReference, ListBlobXXX, etc., are inconsistent when relative URIs are used. The relative URI name for the blob or prefix passed to these methods is treated as an escaped or un-escaped string depending on the input.

For example:

    a) CloudBlob blob1 = new CloudBlob("container/space test", service);
    b) CloudBlob blob2 = new CloudBlob("container/space%20test", service);
    c) ListBlobsWithPrefix("container/space%20test");

In the above cases, v1.2 treats the first two as "container/space test". However, in the third, the prefix is treated as "container/space%20test". To reiterate, relative URIs are inconsistently evaluated as seen when comparing (b) with (c). (b) is treated as already escaped and stored as "container/space test". However, (c) is escaped again and treated as "container/space%20test".

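In Storage Client Library v1.3, we treat relative URIs as literal, basically keeping the exact representation that was passed in. In the above examples, (a) would be treated as "container/space test" as before. The latter two, i.e. (b) and (c), would be treated as "container/space%20test". Here is a table showing how the names in the three examples above are treated in v1.2 compared to in v1.3:

| Example | Name passed in | Treated in v1.2 as | Treated in v1.3 as |
| --- | --- | --- | --- |
| (a) | container/space test | container/space test | container/space test |
| (b) | container/space%20test | container/space test | container/space%20test |
| (c) | container/space%20test | container/space%20test | container/space%20test |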

12. Bug: ListBlobsSegmented does not parse the blob names with special characters like ‘ ‘, #, : etc.

In Storage Client Library v1.2, folder names with certain special characters like #, ‘ ‘, : etc. are not parsed correctly for list blobs leading to files not being listed. This gave a perception that blobs were missing. The problem was with the parsing of response and we have fixed this problem in v1.3.

13. Bug: DataServiceContext timeout is in seconds but the value is set as milliseconds

In Storage Client Library v1.2, the timeout is incorrectly set as milliseconds on the DataServiceContext. DataServiceContext treats the integer as seconds and not milliseconds. We now correctly set the timeout in v1.3.

14. Validation added for CloudBlobClient.WriteBlockSizeInBytes

WriteBlockSizeInBytes controls the block size when using block blob uploads. In Storage Client Library v1.2, there was no validation done for the value.

In Storage Client Library v1.3, we have restricted the valid range for WriteBlockSizeInBytes to be [1MB-4MB]. An ArgumentOutOfRangeException is thrown if the value is outside this range.
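The fail-fast range checks described here for WriteBlockSizeInBytes, and in item 7 for ParallelOperationThreadCount, can be modeled with property setters. This is a toy Python model, not the real CloudBlobClient: the class name and starting values are arbitrary assumptions, and `ValueError` stands in for .NET's ArgumentOutOfRangeException.

```python
MB = 1024 * 1024


class BlobClientSettings:
    """Toy model of two range-checked settings; not the real CloudBlobClient."""

    def __init__(self):
        # Starting values are arbitrary picks inside the valid ranges.
        self._write_block_size_in_bytes = 4 * MB
        self._parallel_operation_thread_count = 1

    @property
    def write_block_size_in_bytes(self):
        return self._write_block_size_in_bytes

    @write_block_size_in_bytes.setter
    def write_block_size_in_bytes(self, value):
        # Reject bad values when the property is set, not later during upload.
        if not 1 * MB <= value <= 4 * MB:
            raise ValueError("block size must be between 1MB and 4MB")
        self._write_block_size_in_bytes = value

    @property
    def parallel_operation_thread_count(self):
        return self._parallel_operation_thread_count

    @parallel_operation_thread_count.setter
    def parallel_operation_thread_count(self, value):
        if not 1 <= value <= 64:
            raise ValueError("thread count must be between 1 and 64")
        self._parallel_operation_thread_count = value


settings = BlobClientSettings()
settings.write_block_size_in_bytes = 2 * MB
settings.parallel_operation_thread_count = 64
```

Validating in the setter is what moves the failure "right upfront": a bad value never reaches the upload path.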

Other Bug Fixes and Improvements

1. Provide overloads to get a snapshot for blob constructors and methods that get reference to blobs.

The CloudBlob, CloudBlockBlob and CloudPageBlob constructors, and methods such as CloudBlobClient’s GetBlobReference, GetBlockBlobReference and GetPageBlobReference, all have overloads that take a snapshot time. Here is an example:

    string blobName = "photos/seattle.jpg";
    CloudStorageAccount account = CloudStorageAccount.Parse(Program.ConnectionString);
    CloudBlobClient client = account.CreateCloudBlobClient();
    CloudBlob baseBlob = client.GetBlockBlobReference(blobName);
    CloudBlob snapshotBlob = baseBlob.CreateSnapshot();

    // Save the snapshot time for later use
    DateTime snapshotTime = snapshotBlob.SnapshotTime.Value;

    // Use the saved snapshot time to get a reference to the snapshot blob
    // by utilizing the new overload, and then delete the snapshot
    CloudBlob snapshotReference = client.GetBlobReference(blobName, snapshotTime);
    snapshotReference.Delete();

2. CloudPageBlob now has a ClearPages method.

We have provided a new API to clear pages in a page blob:

    public void ClearPages(long startOffset, long length)

3. Bug: Reads using BlobStream issue a GetBlockList even when the blob is a Page Blob

In Storage Client Library v1.2, a GetBlockList is always invoked even for reading Page blobs leading to an expected exception being handled by the code.

In Storage Client Library v1.3 we issue FetchAttributes when the blob type is not known. This avoids an erroneous GetBlockList call on a page blob instance. If the blob type is known in your application, please use the appropriate blob type to avoid this extra call.

Example: when reading a page blob, the following code will not incur the extra FetchAttributes call since the BlobStream was retrieved using a CloudPageBlob instance.

    CloudPageBlob pageBlob = container.GetPageBlobReference("mypageblob.vhd");
    BlobStream stream = pageBlob.OpenRead();
    // Read from stream…

However, the following code would incur the extra FetchAttributes call to determine the blob type:

    CloudBlob pageBlob = container.GetBlobReference("mypageblob.vhd");
    BlobStream stream = pageBlob.OpenRead();
    // Read from stream…

4. Bug: WritePages does not reset the stream on retries

As we had posted on our site, in Storage Client Library v1.2 the WritePages API does not reset the stream on a retry, leading to exceptions. We have fixed this when the stream is seekable by resetting the stream back to the beginning when doing the retry. For non-seekable streams, we throw a NotSupportedException.

5. Bug: Retry logic retries on all 5XX errors

In Storage Client Library v1.2, HttpStatusCode NotImplemented and HttpVersionNotSupported results in a retry. There is no point in retrying on such failures. In Storage Client Library v1.3, we throw a StorageServerException exception and do not retry.
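A minimal sketch of the revised rule, in Python rather than the library's C#. It models only the status-code part of the decision described above and assumes nothing about the library's retry policies, back-off or handling of other errors. The function name is hypothetical.

```python
# HTTP 501 Not Implemented and 505 HTTP Version Not Supported will fail the
# same way every time, so retrying them only wastes time.
NON_RETRYABLE_5XX = {501, 505}


def should_retry(status_code: int) -> bool:
    """Retry server errors (5XX) except those that can never succeed."""
    if status_code in NON_RETRYABLE_5XX:
        return False
    return 500 <= status_code <= 599


for code in (500, 501, 503, 505):
    print(code, should_retry(code))
```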

6. Bug: SharedKeyLite authentication scheme for Blob and Queue throws NullReference exception

We now support SharedKeyLite scheme for blobs and queues.

7. Bug: CreateTableIfNotExist has a race condition that can lead to an exception “TableAlreadyExists” being thrown

CreateTableIfNotExist first checks for the table existence before sending a create request. Concurrent requests of CreateTableIfNotExist can lead to all but one of them failing with TableAlreadyExists and this error is not handled in Storage Client Library v1.2 leading to a StorageException being thrown. In Storage Client Library v1.3, we now check for this error and return false indicating that the table already exists without throwing the exception.
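The check-then-create race and the v1.3 handling can be sketched as follows. This is Python with an in-memory stand-in for the table service. Every class and function name here is hypothetical, and the "racing" service simply simulates a rival request landing between the existence check and the create call.

```python
class TableAlreadyExists(Exception):
    """Hypothetical stand-in for the service's TableAlreadyExists error."""


class FakeTableService:
    def __init__(self):
        self.tables = set()

    def exists(self, name):
        return name in self.tables

    def create(self, name):
        if name in self.tables:
            raise TableAlreadyExists(name)
        self.tables.add(name)


class RacingService(FakeTableService):
    """Simulates a rival creating the table right after our existence check."""

    def exists(self, name):
        found = super().exists(name)
        self.tables.add(name)  # rival request lands between check and create
        return found


def create_table_if_not_exist(service, name) -> bool:
    """Return True only if this call created the table."""
    if service.exists(name):
        return False
    try:
        service.create(name)
    except TableAlreadyExists:
        return False  # another caller won the race; treat as "already there"
    return True


quiet = FakeTableService()
first_call = create_table_if_not_exist(quiet, "orders")
second_call = create_table_if_not_exist(quiet, "orders")
raced = create_table_if_not_exist(RacingService(), "orders")
```

The existence check alone cannot close the window, which is why the error from the create call has to be handled as well.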

8. Provide property for controlling block upload for CloudBlob

In Storage Client Library v1.2, block upload is used only if the upload size is 32MB or greater. Up to 32MB, all blob uploads via UploadByteArray, UploadText, UploadFile are done as a single blob upload via PutBlob.

In Storage Client Library v1.3, we have preserved the default behavior of v1.2 but have provided a property called SingleBlobUploadThresholdInBytes, which can be set to control what size of blob will upload via blocks versus a single put blob. For example, the following code will upload all blobs up to 8MB as a single put blob and will use block upload for blob sizes greater than 8MB.

    CloudBlobClient.SingleBlobUploadThresholdInBytes = 8 * 1024 * 1024;

The valid range for this property is [1MB – 64MB).
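How the threshold steers the upload path can be sketched in a few lines of Python. This is an illustration, not the library's code. It assumes that a blob exactly at the threshold still goes up in a single request (the text is ambiguous on that boundary) and that blocks are 4MB each. The `plan_upload` name is hypothetical.

```python
MB = 1024 * 1024


def plan_upload(blob_size, single_upload_threshold=32 * MB, block_size=4 * MB):
    """Return ("single", 1) or ("blocks", number_of_blocks) for a blob size.

    Assumption: sizes up to and including the threshold use one PutBlob.
    """
    if blob_size <= single_upload_threshold:
        return ("single", 1)
    block_count = -(-blob_size // block_size)  # ceiling division
    return ("blocks", block_count)


print(plan_upload(20 * MB))                                  # default threshold
print(plan_upload(8 * MB, single_upload_threshold=8 * MB))   # at the threshold
print(plan_upload(9 * MB, single_upload_threshold=8 * MB))   # just above it
```

Lowering the threshold trades one large request for several smaller ones that can be retried individually and uploaded in parallel.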

See also The Windows Azure Team released Windows Azure SDK Windows Azure Tools for Microsoft Visual Studio (November 2010) [v1.3.11122.0038] to the Web on 11/29/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

SQL Azure Database and Reporting

TechNet’s Cloud Scenario Hub team recently added How to Build and Manage a Business Database Application on SQL Azure:

Faced with the need to create a business database, most people who think "small database" think of Microsoft Access. They might also add SQL Server Express edition or, perhaps, SQL Server Standard edition, to an Access implementation to provide users with relational features.[*]

What all these solutions have in common is that they run on-premise -- and therefore require a server to run on, a file location to live in, license management and a back-up and recovery strategy.

A new alternative to these approaches to the on-premise database is SQL Azure, which delivers the power of SQL Server without the on-premise costs and requirements.

Microsoft SQL Azure Database is a secure relational database service based on proven SQL Server technologies. The difference is that it is a fully managed cloud database, offered as a service, running in Microsoft datacenters around the globe. It is highly scalable, with built in high-availability and fault tolerance, giving you the ability to start small or serve a global customer base immediately.

The resources on this page will help you get an understanding of using SQL Azure as an alternative database solution to Access or SQL Express.

An overview of SQL Azure

This 60-minute introduction to SQL Azure explores what’s possible with a cloud database.

Scaling Out with SQL Azure

This article from the TechNet Wiki gives an IT Pro view of building SQL Azure deployments.

How Do I: Calculate the Cost of Azure Database Usage? (Video)

Max Adams walks through the process of calculating real-world SQL Azure costs.

Comparing SQL Server with SQL Azure

This TechNet Wiki article covers the differences between on-premise SQL Server and SQL Azure.

Getting Started with SQL Azure

This Microsoft training kit comes with all the tools and instructions needed to begin working with SQL Azure.

Try SQL Azure 1GB Edition for no charge

Start out with SQL Azure at no cost, then upgrade to a paid plan that fits your operational needs.

Developing and Deploying with SQL Azure

Get guidelines on how to migrate an existing on-premise SQL Server database into SQL Azure. It also discusses best practices related to data migration.

Walkthrough of Windows Azure Sync Service

This TechNet Wiki article explains how to use Sync Services to provide access to, while isolating, your SQL Azure database from remote users.

SQL Azure Migration Tool v.3.4

Ask other IT Pros about how to make SharePoint Online work with on-premise SharePoint.

Microsoft Learning—Introduction to Microsoft SQL Azure

This two-hour self-paced course is designed for IT Pros starting off with SQL Azure.

How Do I: Introducing the Microsoft Sync Framework Powerpack for SQL Azure (Video)

Microsoft’s Max Adams explains the Sync Framework and tools for synchronizing local and cloud databases.

Connection Management in SQL Azure

This TechNet Wiki article explains how to optimize SQL Azure services and replication.

Backing Up Your SQL Azure Database Using Database Copy

The SQL Azure Team Blog explains the SQL copy process between two cloud database instances.

Security Guidelines for SQL Azure

Get a complete overview of security guidelines for SQL Azure access and development.

SQL Azure Connectivity Troubleshooting Guide

The SQL Azure Team Blog explores common connectivity error messages.

TechNet Forums on Windows Azure Data Storage & Access

Your best resource is other IT Pros working with SQL Azure databases. Join the conversation.

Creating a SQL Server Control Point to Integrate On-Premise and Cloud Databases

When using multiple SQL Server Utilities, be sure to establish one and only one control point for each.

How Do I: Configure SQL Azure Security? (Video)

Microsoft’s Max Adams introduces security in SQL Azure, covering logins, database access control and user privilege configuration.

[*] You don’t need SQL Server “to provide users with relational features.” Access offers relational database features unless you use SharePoint lists to host the data or publish an Access application to a Web Database on SharePoint Server.

Markus ‘maol’ Perdrizat posted SQL In the Cloud on 11/30/2010:

CloudBzz has a little intro to SQL In the Cloud, and with that they mean the public cloud. Good overview of existing offerings, and the suggestion that

Cloud-based DBaaS options will continue to grow in importance and will eventually become the dominant model. Cloud vendors will have to invest in solutions that enable horizontal scaling and self-healing architectures to address the needs of their bigger customers

I can only agree. What I wonder, though, is how much these DBaaS offerings are based on traditional RDBMSs such as MySQL, and what kind of changes we’ll eventually see to better support the elasticity requirements of cloud DBs. E.g. I hear anecdotally that in SQL Azure, every cloud DB is just a table in the underlying SQL Server infrastructure (see also Inside SQL Azure) [*], and the good folks at Xeround are also investing heavily in their Virtual Partitioning scheme.

[*] The way I read the SQL Azure tea leaves: “Every cloud DB is just an SQL Server database with replicated data in a customized, multi-tenant SQL Server 2008 instance.”

Markus is a Database Systems Architect at one of the few big Swiss banks.

Marketplace DataMarket and OData

See The Windows Azure Team reported Just Released: Windows Azure SDK 1.3 and the new Windows Azure Management Portal on 11/30/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below for new Windows Azure Marketplace DataMarket features.

Julian Lai of the WCF Data Services team described a new Entity Set Resolver feature in this 11/29/2010 post:

Problem Statement:

In previous versions, the WCF Data Services .NET and Silverlight client libraries always assumed that all collections (aka entity sets) had the same base URI. This assumption was in place because the library used the URI provided in the DataServiceContext constructor to generate collection URIs using the OData addressing conventions. For example:

- Base URI: http://localhost:1309/NorthwindDataService.svc/

- Sample collection: Customers

- Collection URI by convention: http://localhost:1309/NorthwindDataService.svc/Customers

This simple convention based approach works when all collections belong to a common root, but a number of scenarios, such as partitioning, exist where two collections may have different base URIs or the service needs more control over how URI paths are constructed. For example, imagine a case where collections are partitioned across different servers for load balancing purposes. The “Customers” collection might be on a different server from the “Employees” collection.

To solve this problem, we added a new feature we call an Entity Set Resolver (ESR) to the WCF Data Services client library. The idea behind the ESR feature is to provide a mechanism that allows the client library to be driven entirely by the server’s response payloads (the URIs of collections, in this case).

Design:

For the ESR feature, we have introduced a new ResolveEntitySet property to the DataServiceContext class. This new property returns a delegate that takes the collection name as input and returns the corresponding URI for that collection:

    public Func<string, Uri> ResolveEntitySet { get; set; }

This new delegate will then be invoked when the client library needs the URI of a collection. If the delegate is not set or returns null, then the library will fall back to the conventions currently used. For example, the API on the DataServiceContext class to insert a new entity looks like:

public void AddObject(string entitySetName, Object entity)

Currently this API inserts the new entity by taking the collection/entitySetName provided and appending it to the base URI passed to the DataServiceContext constructor. As previously stated, this works if all collections are at the same base URI and the server follows the recommended OData addressing scheme. However, as stated above, there are good reasons for this not to be the case.

With the addition of the ESR feature, the AddObject API will no longer first try to create the collection URI by convention. Instead, it will invoke the ESR (if one is provided) and ask it for the URI of the collection. One possible way to utilize the ESR is to have it parse the server’s Service Document -- a document exposed by OData services that lists the URIs for all the collections exposed by the server. Doing this allows the client library to be decoupled from the URI construction strategy used by an OData service.

It is important to note that since the ESR will be invoked during the processing of an AddObject call, the ESR should not block. Building upon the ESR parsing the Service Document example above, a good way is to have the collection URIs stored in a dictionary where the key is the collection name and the value is the URI. If this dictionary is populated during the DataServiceContext initialization time, users can avoid requesting and parsing the Service Document on demand. In practice this means the ESR should return URIs from a prepopulated dictionary and not request and parse the Service Document on demand.

The ESR feature is not scoped only to insert operations (AddObject calls). The resolver will be invoked anytime a URI to a collection is required and the URI hasn’t previously been learnt by parsing responses to prior requests sent to the service. The full list of client library methods which will cause the client library to invoke the ESR are: AddObject, AttachTo, and CreateQuery.

As mentioned above, one of the ways to use the ESR is to parse the Service Document to get the collection URIs. Below is sample code to introduce this process. The code returns a dictionary given the Service Document URI. For the dictionary, the key is the collection name and the value is the collection URI. To see the ESR in use, check out the sources included with the OData client for Windows Live Services blog post.

    public Dictionary<string, Uri> ParseSvcDoc(string uri)
    {
        var serviceName = XName.Get("service", "http://www.w3.org/2007/app");
        var workspaceName = XName.Get("workspace", "http://www.w3.org/2007/app");
        var collectionName = XName.Get("collection", "http://www.w3.org/2007/app");
        var titleName = XName.Get("title", "http://www.w3.org/2005/Atom");
        var document = XDocument.Load(uri);

        return document.Element(serviceName).Element(workspaceName).Elements(collectionName)
            .ToDictionary(e => e.Element(titleName).Value,
                          e => new Uri(e.Attribute("href").Value, UriKind.RelativeOrAbsolute));
    }

While initializing the DataServiceContext, the code can also parse the Service Document and set the ResolveEntitySet property. In this case, ResolveEntitySet is set to the GetCollectionUri method:

    DataServiceContext ctx = new DataServiceContext(new Uri("http://localhost:1309/NorthwindDataService.svc/"));
    ctx.ResolveEntitySet = GetCollectionUri;
    Dictionary<string, Uri> collectionDict = new Dictionary<string, Uri>();
    collectionDict = ParseSvcDoc(ctx.BaseUri.OriginalString);

The GetCollectionUri method is very simple. It just returns the value in the dictionary for the associated collection name key. If this key does not exist, the method will return null and the collection URI will be constructed using OData addressing conventions.

    public Uri GetCollectionUri(string collectionName)
    {
        Uri retUri;
        collectionDict.TryGetValue(collectionName, out retUri);
        return retUri;
    }
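The same resolve-then-fall-back idea can be tried without a running service. The sketch below is Python rather than the WCF Data Services client. The inline Service Document, the `server2.example` host and all function names are made up for illustration. It parses the document once up front, so the resolver itself only does a dictionary lookup and never blocks.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

APP = "{http://www.w3.org/2007/app}"
ATOM = "{http://www.w3.org/2005/Atom}"
BASE_URI = "http://localhost:1309/NorthwindDataService.svc/"

# Hypothetical Service Document: Employees lives on a different server.
SERVICE_DOC = """<service xmlns="http://www.w3.org/2007/app"
         xmlns:atom="http://www.w3.org/2005/Atom">
  <workspace>
    <atom:title>Default</atom:title>
    <collection href="Customers"><atom:title>Customers</atom:title></collection>
    <collection href="http://server2.example/Employees"><atom:title>Employees</atom:title></collection>
  </workspace>
</service>"""


def parse_service_document(xml_text, base_uri):
    """Map each collection title to its absolute URI."""
    root = ET.fromstring(xml_text)
    collections = {}
    for coll in root.iter(APP + "collection"):
        title = coll.find(ATOM + "title").text
        collections[title] = urljoin(base_uri, coll.get("href"))
    return collections


def collection_uri(name, base_uri, resolver=None):
    """Ask the resolver first; fall back to the addressing convention."""
    uri = resolver(name) if resolver else None
    return uri or urljoin(base_uri, name)


collection_dict = parse_service_document(SERVICE_DOC, BASE_URI)
resolver = collection_dict.get  # returns None for unknown collection names

print(collection_uri("Employees", BASE_URI, resolver))
print(collection_uri("Orders", BASE_URI, resolver))
```

Because `dict.get` returns `None` for a missing key, it plays the same role as the GetCollectionUri method above: unknown names fall through to the convention-based URI.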

For those of you working with Silverlight, I’ve added an async version of the ParseSvcDoc. It parses the document in the same manner but doesn’t block when retrieving the Service Document. Check out the attached samples for this code.

Attachment:

SampleAsyncParseSvcDoc.cs

Windows Azure AppFabric: Access Control and Service Bus

Bill Zack charts The Future of Windows Azure AppFabric in this brief reference of 11/30/2010 to a Gartner research report:

Windows Azure AppFabric has an interesting array of features today that can be used by ISVs and other developers to architect hybrid on-premise/in-cloud applications. Components like Service Bus, Access Control Service and the Caching Service are very useful in their own right when used to build hybrid applications.

Other announced features such as the Integration Service and Composite Applications are coming features that will make AppFabric even more powerful as an integration tool.

It is important to know where AppFabric is going in the next 2-3 years so that you can take advantage of the features that exist today while making sure to plan for features that will emerge in the future. This research paper from Gartner is an analysis of where they believe Windows AppFabric is headed. Remember that this is an analysis and opinion from Gartner and not a statement of policy or direction from Microsoft.

Note: Windows Azure and Cloud Computing Posts for 11/29/2010+ included an article about the Gartner research report.

The Claims-Based Identity Team (a.k.a. CardSpace Team) posted Protecting and consuming REST based resources with ACS, WIF, and the OAuth 2.0 protocol on 11/29/2010:

ACS (Azure Access Control Service) recently added support for the OAuth 2.0 protocol. If you haven’t heard of it, OAuth is an open protocol that is being developed by members of the identity community to solve the problem of allowing users to grant third-party applications access to their data without providing their passwords. In order to show how this can be done with WIF and ACS, we have posted a sample on Microsoft Connect that shows an end-to-end scenario.

The scenario in the sample is meant to be as simple as possible to show the power of the OAuth protocol to enable web sites to access resources on behalf of a user without the user providing his or her credentials to that site. In our scenario, Contoso has a web service that exposes customer information that needs to be protected. Fabrikam has a web site and wants users to be able to view their Contoso data directly on it. The user doesn’t have to log in to the Fabrikam site, but gets redirected to a Contoso-specific site in order to log in and give consent to access data on their behalf.

The Contoso web service requires OAuth access tokens from ACS to be attached to incoming requests. The necessary protocol flow for the Fabrikam web site (in OAuth terms, the web server client), including redirecting the user to log in and give consent, requesting access tokens from ACS, and attaching the token to outgoing requests to the service, is taken care of under the covers. The sample contains a walkthrough that describes the components in more detail.
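The two client-side steps of that flow that are easiest to show in isolation are building the redirect URL and attaching the token. The following is a rough sketch under stated assumptions, not the sample's actual code: every endpoint, client identifier, and token value is hypothetical, the parameter names follow the published OAuth 2.0 specification, and the `Bearer` header scheme comes from the final specification, so the draft implemented by ACS at the time may differ.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Headers;

// Step 1: build the URL the client site redirects the browser to, so the user
// can log in and give consent. All values passed in below are hypothetical.
string BuildAuthorizationUrl(string endpoint, string clientId, string redirectUri, string scope, string state)
{
    var parameters = new[]
    {
        ("response_type", "code"),   // ask for an authorization code
        ("client_id", clientId),
        ("redirect_uri", redirectUri),
        ("scope", scope),
        ("state", state),            // opaque value echoed back to the client
    };
    string query = string.Join("&",
        parameters.Select(p => p.Item1 + "=" + Uri.EscapeDataString(p.Item2)));
    return endpoint + "?" + query;
}

// Step 2: once an access token has been obtained, attach it to the outgoing request.
HttpRequestMessage CreateProtectedRequest(string resourceUrl, string accessToken)
{
    var request = new HttpRequestMessage(HttpMethod.Get, resourceUrl);
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
    return request;
}

string authorizationUrl = BuildAuthorizationUrl(
    "https://login.contoso.example/authorize",
    "fabrikam-web",
    "https://fabrikam.example/callback",
    "https://contoso.example/customers",
    "xyz123");
Console.WriteLine(authorizationUrl);

var protectedRequest = CreateProtectedRequest("https://contoso.example/customers", "sample-access-token");
Console.WriteLine(protectedRequest.Headers.Authorization);
```

The step in between, exchanging the authorization code for an access token, is omitted because it requires a live token endpoint.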

Try it out here, and tell us what you think!

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

See The Windows Azure Team reported Just Released: Windows Azure SDK 1.3 and the new Windows Azure Management Portal on 11/30/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below for the new Windows Azure Connect CTP.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Robert Duffner posted Thought Leaders in the Cloud: Talking with Roger Jennings, Windows Azure Pioneer and Owner of OakLeaf Systems to the Windows Azure Team blog on 11/30/2010:

Roger Jennings is the owner of OakLeaf Systems, a software consulting firm in northern California. He is also a prolific blogger and author, including a book on Windows Azure. Roger is a graduate of the University of California, Berkeley.

In this interview we discuss:

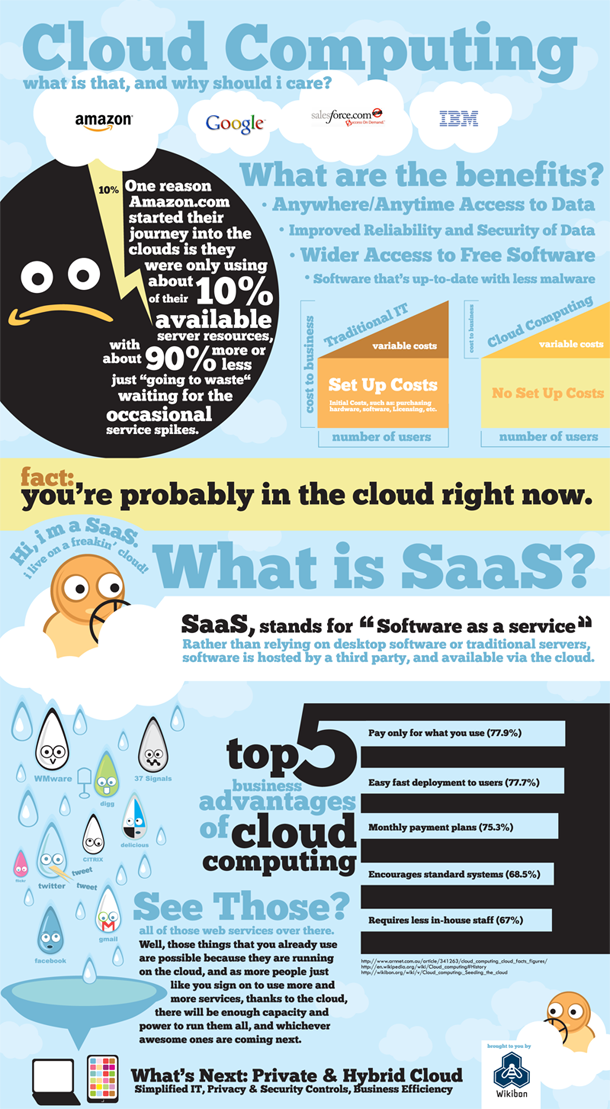

- What gets people to have the "Ah-ha" moment to understand what the cloud is about

- Moving to the cloud to get out of the maintenance business

- Expected growth of cloud computing

- The suitability of the cloud for applications with compliance requirements

- How the cloud supports entrepreneurism

Robert Duffner: Roger, tell us a little bit about yourself and your experience with cloud computing and development.

Roger Jennings: I've been consulting and writing articles, books, and what have you about databases for about the past 15 years. I was involved with Azure from the time it started as an alpha product a couple of years ago, before the first PDC 2008 presentation.

I wrote a book for Wrox called Cloud Computing with the Windows Azure Platform, which we got out in time for the 2009 PDC meeting.

Robert: You've seen a lot of technology come and go. What was it about Windows Azure that got your attention and made it something that you really wanted to stay on top of?

Roger: My work with Visual Studio made the concept of platform-as-a-service interesting to me. I had taken a look at Amazon Web Services; I had done some evaluations and simple test operations. I wasn't overwhelmed with AWS SimpleDB because of its rather amorphous design characteristics, lack of schema, and so on, which was one of the reasons I wasn't very happy with Windows Azure's SQL Data Services (SDS) or SQL Server Data Services (SSDS), both of which were Entity-Attribute-Value (EAV) tables grafted to a modified version of SQL Server 2008.

I was one of those that were agitating in late 2008 for what ultimately became SQL Azure. And changing from SDS to SQL Azure was probably one of the best decisions the Windows Azure team ever made.

Robert: Well, clearly you're very well steeped in the cloud, but probably the majority of developers haven't worked hands-on with the cloud platform. So when you're talking about cloud development, what do you say that helps people have that "Aha!" moment where the light bulb turns on? How do you help people get that understanding?

Roger: The best way for people to understand the process is that, when I'm consulting with somebody on cloud computing, I'll do an actual port of a simple application to my cloud account. The simplicity of migrating an application to Windows Azure is what usually sells them.

Another useful approach is to show people how easily they can move between on-premises SQL Server and SQL Azure in the cloud. The effort typically consists mainly of just changing a connection string, and that simplicity really turns them on.
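As a rough illustration of that point, the sketch below keeps two connection strings side by side and selects one with a flag. The server, database, and user names are placeholders invented for this example, and the SQL Azure string follows the commonly documented format of the time (`tcp:` server prefix, `user@server` login, encryption on); treat the exact keywords as an assumption to verify against your own account.

```csharp
using System;

// Both strings are hypothetical; server, database, and user names are placeholders.
const string OnPremises =
    @"Server=.\SQLEXPRESS;Database=Northwind;Integrated Security=True;";
const string SqlAzure =
    "Server=tcp:myserver.database.windows.net;Database=Northwind;" +
    "User ID=appuser@myserver;Password=<password>;Encrypt=True;";

// The data-access code stays the same; only the string handed to it changes.
string SelectConnectionString(bool useCloud) => useCloud ? SqlAzure : OnPremises;

Console.WriteLine(SelectConnectionString(useCloud: false));
Console.WriteLine(SelectConnectionString(useCloud: true));
```

In practice the string would usually live in a configuration file, so that the switch requires no recompilation.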

I do a lot of consulting for very large companies who are still using Access databases, and one of the things they like the feel of is migrating to SQL Azure instead of SQL Server as the back end to Access front ends.

Robert: It's one thing to move an application to the cloud, but it's another thing to architect an application for the cloud. How do you think that transition's going to occur?

Roger: I don't see a great deal of difference, in terms of a cloud-based architecture as discrete from a conventional, say, MVC or MVVM architecture. I don't think that the majority of apps being moved to the cloud are being moved because they need 50, 100 or more instances on order.

I think most of them will be moderate-sized applications that IT people do not want to maintain -- either the databases or the app infrastructure.

Robert: That's a good point, even though an awful lot of the industry buzz about the cloud is around the idea of scale-out.

Roger: I'm finding that my larger clients are business managers who are particularly interested in cloud for small, departmental apps. They don't want to have departmental DBAs, for example, nor do they want to write a multi-volume justification document for offshoring the project. They just want to get these things up and running in a reliable way without having to worry about maintaining them.

They can accomplish that by putting the SQL Azure back end up and connecting an Azure, SharePoint or Access front end to it, or else they can put a C# or VB Windows app on the front of SQL Azure.

Robert: According to the Gartner Hype Cycle, technologies go through inflated expectations, then disillusionment, and then finally enlightenment and productivity. They have described cloud computing as having already gone over the peak of inflated expectations, and they say it's currently plummeting to the trough of disillusionment.

Roger: I don't see it plummeting. If you take a look at where the two cloud dots are on the Gartner Hype Cycle, they're just over the cusp of the curve, just on the way down. I don't think there's going to be a trough of disillusionment of any magnitude for the cloud in general.

I think it's probably just going to simply maintain its current momentum. It's not going to get a heck of a lot more momentum in terms of the acceleration of acceptance, but I think it's going to have pretty consistent growth year over year.

The numbers I have seen suggest 35 percent growth, and something like compounded growth at an annual rate in the 30 to 40 percent range does not indicate to me running down into the trough of disillusionment. [laughs]

Robert: So how have Azure and cloud computing changed the conversations you have with your customers about consulting and development?

Roger: They haven't changed those conversations significantly, as far as development is concerned. In terms of consulting, most customers want recommendations as to what cloud they should use. I don't really get into that, because I'm prejudiced toward Azure, and I'm not really able to give them an unbiased opinion.

I'm really a Microsoft consultant. My Access book, for instance, has sold about a million copies overall in English, so that shows a lot of interest. Most of what I do in my consulting practice with these folks is make recommendations for basic design principles, and to some extent architecture, but very often just the nitty-gritty of getting the apps they've written up into the cloud.

Robert: There was a recent article, I think back in September in "Network World," titled "When Data Compliance and Cloud Computing Collide." The author, a CIO named Bernard Golden, notes that the current laws haven't kept up with cloud computing.

Specifically, if a company stores data in the cloud, the company is still liable for the data, even though the cloud provider completely controls it. What are your thoughts on those issues?

Roger: Well, I've been running a campaign on that issue with the SQL Azure guys to get transparent data encryption (TDE) features implemented. The biggest problem is, of course, the ability of a cloud provider's employees to snoop the data, which presents a serious compliance issue for some clients.

The people I'm dealing with are not even thinking about putting anything that is subject to HIPAA or PCI requirements in a public cloud computing environment until TDE is proven by SQL Azure. Shared secret security for symmetrical encryption of Azure tables and blobs is another issue that must be resolved.

Robert: The article says that SaaS providers should likely have to bear more of the compliance load than cloud providers do, because SaaS operates in a specific vertical with specific regulatory requirements, whereas a service like Azure, Amazon, or even Google can't know the specific regulations that may govern every possible user. What are your thoughts on that?

Roger: I would say that the only well-known requirements are HIPAA and PCI. You can't physically inspect the servers that store specific users' data, so PCI audit compliance is an issue. These things are going to need to be worked out.

I don't foresee substantial movement of that type of data to the cloud immediately. The type of data that I have seen being moved up there is basically departmental or divisional sales and BI data. Presumably, if somebody worked at it hard enough, they could intercept it. But it would require some very specific expertise to be an effective snooper of that data.

Robert: In your book, you start off by talking about some of the advantages of the cloud, such as the ability for a startup to greatly reduce the time and cost of launching a product. Do you think that services like Azure lower the barrier to entrepreneurship and invention?

Roger: Definitely for entrepreneurship, although not necessarily for invention. It eliminates that terrible hit you take from buying conventional data center-grade hardware, and it also dramatically reduces the cost of IT management of those assets. You don't want to spend your seed capital on hardware; you need to spend it on employees.

Robert: You've probably seen an IT disaster or two. Just recently there was a story where a cloud failure was blamed for disrupting travel for 50,000 Virgin Blue airline customers.

Roger: I've read that it was a problem with data replication on a NoSQL database.

Robert: What are some things you recommend that organizations should keep in mind as they develop for the cloud, to ensure that their applications are available and resilient?

Roger: Automated testing is a key, because the cloud is obviously going to be good for load testing and automated functional testing. I'm currently in the midst of a series of blog posts about Windows Azure load testing and diagnostics.

Robert: What do you see as the biggest barriers to the adoption of cloud computing?

Roger: Data privacy concerns. Organizations want their employees to have physical possession of the source data. Now, if they could encrypt it, if they could do transparent data encryption, for instance, they would use it, because people seem to have faith in that technology, even though not a lot of people are using it. Cloud computing can eliminate, or at least reduce, the substantial percentage of privacy breaches resulting from lost or stolen laptops, as well as employee misappropriation.

Robert: That's all of the questions I had for you, but are there some interesting things you're working on or thinking about that we haven't discussed?

Roger: I've been wrapped up in finishing an Access 2010 book for the past three to four months, so other than writing this blog and trying to keep up with what's going on in the business, I haven't really had a lot of chance to work with Azure recently as much as I'd like. But my real long-term interest is the synergy between mobile devices and the cloud, and particularly Windows Phone 7 and Azure.

I'm going to be concentrating my effort and attention for at least the next six months on getting up to speed on mobile development, at least far enough that I can provide guidance with regard to mobile interactions with Azure.

Robert: People think a lot about cloud in terms of the opportunity for the data center. You mentioned moving a lot of the departmental applications to the cloud as kind of the low hanging fruit, but you also talked about Windows Phone. Unpack that for us a little bit, because I think that's a use case that you don't hear the pundits talking about all the time.

Roger: IBM has been the one really promoting this, and they've got a study out saying that within five years, I think it's sixty percent of all development expenditures will be for cloud-to-mobile operations. And it looked to me as if they had done their homework on that survey.

Robert: There are a lot of people using Google App Engine as kind of a back-end for iPhone apps and things like that. It seems like people think a lot about websites and these department-level apps running in the cloud. Whether it's for consumers or corporate users, though, they are all trying to get access to data through these devices that people carry around in their pockets. Is that the opportunity that you're seeing?

Roger: Yes; I think that's a key opportunity, but it's for all IaaS and PaaS providers, not just Google. A Spanish developer said "Goodbye Google App Engine" in a November 22, 2010 blog post about his problems with the service that received 89,000 visits and more than 120 comments in a single day.

Robert: Well, I want to thank you for taking the time to talk today.

Roger: It was my pleasure.

The Windows Azure Team reported Just Released: Windows Azure SDK 1.3 and the new Windows Azure Management Portal on 11/30/2010:

At PDC10 last month, we announced a host of enhancements for Windows Azure designed to make it easier for customers to run existing Windows applications on Windows Azure, enable more affordable platform access and improve the Windows Azure developer and IT Professional experience. Today, we're happy to announce that several of these enhancements are either generally available or ready for you to try as a Beta or Community Technology Preview (CTP). Below is a list of what's now available, along with links to more information.

The following functionality is now generally available through the Windows Azure SDK and Windows Azure Tools for Visual Studio release 1.3 and the new Windows Azure Management Portal:

- Development of more complete applications using Windows Azure is now possible with the introduction of Elevated Privileges and Full IIS. Developers can now run a portion or all of their code in Web and Worker roles with elevated administrator privileges. The Web role now provides Full IIS functionality, which enables multiple IIS sites per Web role and the ability to install IIS modules.

- Remote Desktop functionality enables customers to connect to a running instance of their application or service in order to monitor activity and troubleshoot common problems.

- Windows Server 2008 R2 Roles: Windows Azure now supports Windows Server 2008 R2 in its Web, worker and VM roles. This new support enables you to take advantage of the full range of Windows Server 2008 R2 features such as IIS 7.5, AppLocker, and enhanced command-line and automated management using PowerShell Version 2.0.

- Multiple Service Administrators: Windows Azure now supports multiple Windows Live IDs to have administrator privileges on the same Windows Azure account. The objective is to make it easy for a team to work on the same Windows Azure account while using their individual Windows Live IDs.

- Better Developer and IT Professional Experience: The following enhancements are now available to help developers see and control how their applications are running in the cloud:

- A completely redesigned Silverlight-based Windows Azure portal to ensure an improved and intuitive user experience

- Access to new diagnostic information including the ability to click on a role to see role type, deployment time and last reboot time

- A new sign-up process that dramatically reduces the number of steps needed to sign up for Windows Azure.

- New scenario based Windows Azure Platform forums to help answer questions and share knowledge more efficiently.

The following functionality is now available as beta:

- Windows Azure Virtual Machine Role: Support for more types of new and existing Windows applications will soon be available with the introduction of the Virtual Machine (VM) role. Customers can move more existing applications to Windows Azure, reducing the need to make costly code or deployment changes.

- Extra Small Windows Azure Instance, which is priced at $0.05 per compute hour, provides developers with a cost-effective training and development environment. Developers can also use the Extra Small instance to prototype cloud solutions at a lower cost.

Developers and IT Professionals can sign up for either of the betas above via the Windows Azure Management Portal.

- Windows Azure Marketplace is an online marketplace for you to share, buy and sell building block components, premium data sets, training and services needed to build Windows Azure platform applications. The first section in the Windows Azure Marketplace, DataMarket, became commercially available at PDC 10. Today, we're launching a beta of the application section of the Windows Azure Marketplace with 40 unique partners and over 50 unique applications and services.

We are also making the following available as a CTP:

- Windows Azure Connect (formerly Project Sydney), which enables a simple and easy-to-manage mechanism to set up IP-based network connectivity between on-premises and Windows Azure resources, is the first Windows Azure Virtual Network feature that we're making available as a CTP. Developers and IT Professionals can sign up for this CTP via the Windows Azure Management Portal.

If you would like to see an overview of all the new features that we're making available, please watch an overview webcast that will happen on Wednesday, December 1, 2010 at 9AM PST. You can also watch on-demand sessions from PDC10 that dive deeper into many of these Windows Azure features; check here for a full list of sessions. A full recap of all that was announced for the Windows Azure platform at PDC10 can be found here. For all other questions, please refer to the latest FAQ.

Ryan Dunn (@dunnry) delivered a workaround for Using Windows Azure MMC and Cmdlet with Windows Azure SDK 1.3 on 11/30/2010:

If you haven't already read on the official blog, Windows Azure SDK 1.3 was released (along with Visual Studio tooling). Rather than rehash what it contains, go read that blog post if you want to know what is included (and there are lots of great features). Even better, go to microsoftpdc.com and watch the videos on the specific features.

If you are a user of the Windows Azure MMC or the Windows Azure Service Management Cmdlets, you might notice that stuff gets broken on new installs. The underlying issue is that the MMC snapin was written against the 1.0 version of the Storage Client. With the 1.3 SDK, the Storage Client is now at 1.1. So, this means you can fix this two ways:

- Copy an older 1.2 SDK version of the Storage Client into the MMC's "release" directory. Of course, you need to either have the .dll handy or go find it. If you have the MMC installed prior to SDK 1.3, you probably already have it in the release directory and you are good to go.

- Use Assembly Redirection to allow the MMC or Powershell to call into the 1.1 client instead of the 1.0 client.

To use Assembly Redirection, just create a "mmc.exe.config" file for MMC (or "Powershell.exe.config" for cmdlets) and place it in the %windir%\system32 directory. Inside the .config file, just use the following xml:

    <configuration>
      <runtime>
        <assemblyBinding xmlns="urn:schemas-microsoft-com:asm.v1">
          <dependentAssembly>
            <assemblyIdentity name="Microsoft.WindowsAzure.StorageClient" publicKeyToken="31bf3856ad364e35" culture="neutral" />
            <bindingRedirect oldVersion="1.0.0.0" newVersion="1.1.0.0" />
          </dependentAssembly>
          <dependentAssembly>
            <assemblyIdentity name="Microsoft.WindowsAzure.StorageClient" publicKeyToken="31bf3856ad364e35" culture="neutral" />
            <publisherPolicy apply="no" />
          </dependentAssembly>
        </assemblyBinding>
      </runtime>
    </configuration>

Bill Zack reported Windows Azure Platform Training Kit – November Update with Windows Azure SDK v1.3 updates on 11/30/2010:

There is a newly updated version of the Windows Azure Platform Training Kit that matches the November 2010 SDK 1.3 release. This release of the training kit includes several new hands-on labs for the new Windows Azure features and the new/updated services we just released.

The updates in this training kit include:

- [New lab] Advanced Web and Worker Role – shows how to use admin mode and startup tasks

- [New lab] Connecting Apps With Windows Azure Connect – shows how to use Project Sydney

- [New lab] Virtual Machine Role – shows how to get started with VM Role by creating and deploying a VHD

- [New lab] Windows Azure CDN – simple introduction to the CDN

- [New lab] Introduction to the Windows Azure AppFabric Service Bus Futures – shows how to use the new Service Bus features in the AppFabric labs environment

- [New lab] Building Windows Azure Apps with Caching Service – shows how to use the new Windows Azure AppFabric Caching service

- [New lab] Introduction to the AppFabric Access Control Service V2 – shows how to build a simple web application that supports multiple identity providers

- [Updated] Introduction to Windows Azure - updated to use the new Windows Azure platform Portal

- [Updated] Introduction to SQL Azure - updated to use the new Windows Azure platform Portal

In addition, all of the HOLs have been updated to use the new Windows Azure Tools for Visual Studio version 1.3 (November release). We will be making more updates to the labs in the next few weeks. In the next update, scheduled for early December, we will also include presentations and demos for delivering a full 4-day training workshop.

You can download the November update of the Windows Azure Platform Training kit from here: http://go.microsoft.com/fwlink/?LinkID=130354

Finally, we’re now publishing the HOLs directly to MSDN to make it easier for developers to review and use the content without having to download an entire training kit package. You can now browse to all of the HOLs online in MSDN here: http://go.microsoft.com/fwlink/?LinkId=207018

Wade Wegner (@WadeWegner) described Significant Updates Released in the BidNow Sample for Windows Azure for the Windows Azure SDK v1.3 in an 11/30/2010 post to his personal blog:

There have been a host of announcements and releases over the last month for the Windows Azure Platform. It started at the Professional Developers Conference (PDC) in Redmond, where we announced new services such as Windows Azure AppFabric Caching and SQL Azure Reporting, as well as new features for Windows Azure, such as VM Role, Admin mode, RDP, Full IIS, and a new portal experience.

Yesterday, the Windows Azure team released the Windows Azure SDK 1.3 and Tools for Visual Studio and updates to the Windows Azure portal (for all the details, see the team post). These are significant releases that provide a lot of enhancements for Windows Azure.

One of the difficulties that come with this many updates in a short period of time is keeping up with the changes and updates. It can be challenging to understand how these features and capabilities come together to help you build better web applications.

This is why my team has released sample applications like BidNow, FabrikamShipping SaaS, and myTODO.

What’s in the BidNow Sample?

Just today I posted the latest version of BidNow on the MSDN Code Gallery. BidNow has been significantly updated to leverage many pieces of the Windows Azure Platform, including many of the new features and capabilities announced at PDC and that are a part of the Windows Azure SDK 1.3. This list includes:

- Windows Azure (updated)

  - Updated for the Windows Azure SDK 1.3

  - Separated the Web and Services tier into two web roles

  - Leverages Startup Tasks to register certificates in the web roles

  - Updated the worker role for asynchronous processing

- SQL Azure (new)

  - Moved data out of Windows Azure storage and into SQL Azure (e.g. categories, auctions, bids, and users)

  - Updated the DAL to leverage Entity Framework 4.0 with appropriate data entities and sources

  - Included a number of scripts to refresh and update the underlying data

- Windows Azure storage (updated)

  - Blob storage only used for auction images and thumbnails

  - Queues allow for asynchronous processing of auction data

- Windows Azure AppFabric Caching (new)

  - Leveraging the Caching service to cache reference and activity data stored in SQL Azure

  - Using local cache for extremely low latency

- Windows Azure AppFabric Access Control (new)

  - BidNow.Web leverages WS-Federation and Windows Identity Foundation to interact with Access Control

  - Configured BidNow to leverage Live ID, Yahoo!, and Facebook by default

  - Claims from ACS are processed by the ClaimsAuthenticationManager such that they are enriched by additional profile data stored in SQL Azure

- OData (new)

  - A set of OData services (i.e. WCF Data Services) provide an independent services layer to expose data to different clients

  - The OData services are secured using Access Control

- Windows Phone 7 (new)

  - A Windows Phone 7 client exists that consumes the OData services

  - The Windows Phone 7 client leverages Access Control to access the OData services

Yes, there are a lot of pieces to this sample, but I think you’ll find that it mimics many real world applications. We use Windows Azure web and worker roles to host our application, Windows Azure storage for blobs and queues, SQL Azure for our relational data, AppFabric Caching for reference and activity data, Access Control for authentication and authorization, OData for read-only services, and a Windows Phone 7 client that consumes the OData services. That’s right – in addition to the Windows Azure Platform pieces, we included a set of OData services that are leveraged by a Windows Phone 7 client authenticated through Access Control.

High Level Architecture

Here’s a high level look at the application architecture:

Compute: BidNow consists of three distinct Windows Azure roles – a web role for the website, a web role for the services, and a worker role for long running operations. The BidNow.Web web role hosts Web Forms that interact with the underlying services through a set of WCF Service clients. These services are hosted in the BidNow.Services.Web web role. The worker role, BidNow.Worker, is in charge of handling the long running operations that could hurt the website's performance (e.g. image processing).

Storage: BidNow uses three different types of storage. There’s a relational database, hosted in SQL Azure, that contains all the auction information (e.g. categories, auctions, and bids) and basic user information, such as Name and Email address (note that user credentials are not stored in BidNow, as we use Access Control to abstract authentication). We use Windows Azure Blob storage to store all the auction images (e.g. the thumbnail and full image of the products), and Windows Azure Queues to dispatch asynchronous operations. Finally, BidNow uses Windows Azure AppFabric Caching to store reference and activity data used by the web tier.
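The caching arrangement described here is commonly implemented as a cache-aside lookup: check the cache first and fall back to the database only on a miss or an expired entry. The sketch below illustrates that pattern only. It is not BidNow's code and does not use the AppFabric Caching API; an in-memory dictionary stands in for the cache, `LoadCategoriesFromDatabase` stands in for the SQL Azure query, and the ten-minute lifetime is an arbitrary assumption.

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-in for the distributed cache (hypothetical; not the AppFabric API).
var cache = new Dictionary<string, (DateTime Expires, IReadOnlyList<string> Value)>();
int databaseHits = 0;

// Stand-in for a query against the relational store.
IReadOnlyList<string> LoadCategoriesFromDatabase()
{
    databaseHits++;
    return new[] { "Electronics", "Books" };
}

// Cache-aside: serve from the cache while the entry is fresh, otherwise reload and store.
IReadOnlyList<string> GetCategories(DateTime now)
{
    if (cache.TryGetValue("categories", out var entry) && entry.Expires > now)
    {
        return entry.Value;
    }

    IReadOnlyList<string> fresh = LoadCategoriesFromDatabase();
    cache["categories"] = (now.AddMinutes(10), fresh);
    return fresh;
}

DateTime start = new DateTime(2010, 11, 30, 9, 0, 0);
GetCategories(start);                 // miss: loads from the database stand-in
GetCategories(start.AddMinutes(5));   // hit: served from the cache
GetCategories(start.AddMinutes(15));  // expired: reloads from the database stand-in
Console.WriteLine(databaseHits);      // prints 2
```

Passing the clock in as a parameter keeps the expiry behavior easy to exercise without waiting.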

Authentication & Authorization: In BidNow, users are allowed to navigate through the site without any access restrictions. However, to bid on or publish an item, authentication is required. BidNow leverages the identity abstraction and federation aspects of Windows Azure AppFabric Access Control to validate the user's identity against different identity providers – by default, BidNow leverages Windows Live ID, Facebook, and Yahoo!. BidNow uses Windows Identity Foundation to process identity tokens received from Access Control, and then leverages claims from within those tokens to authenticate and authorize the user.
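To give a feel for the claims enrichment step, here is a small sketch that adds profile claims to an incoming identity. It is an illustration under assumptions, not BidNow's implementation: it uses the `System.Security.Claims` types rather than the Windows Identity Foundation classes the sample relies on, the `profiles` dictionary stands in for the SQL Azure user table, and the identifier and profile values are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Claims;

// Hypothetical profile store standing in for the user table in SQL Azure.
var profiles = new Dictionary<string, (string Name, string Email)>
{
    ["live-id-12345"] = ("Ana Example", "ana@example.org"),
};

// Looks up the profile by the identifier claim issued by the identity provider
// and adds name and email claims when a matching profile exists.
ClaimsPrincipal Enrich(ClaimsPrincipal incoming)
{
    Claim id = incoming.FindFirst(ClaimTypes.NameIdentifier);
    if (id != null
        && profiles.TryGetValue(id.Value, out var profile)
        && incoming.Identity is ClaimsIdentity identity)
    {
        identity.AddClaim(new Claim(ClaimTypes.Name, profile.Name));
        identity.AddClaim(new Claim(ClaimTypes.Email, profile.Email));
    }
    return incoming;
}

// Simulates the identity produced from a token: only an identifier claim is present.
var tokenIdentity = new ClaimsIdentity(
    new[] { new Claim(ClaimTypes.NameIdentifier, "live-id-12345") },
    "Federation");

ClaimsPrincipal principal = Enrich(new ClaimsPrincipal(tokenIdentity));
Console.WriteLine(principal.FindFirst(ClaimTypes.Email)?.Value);
```

Authorization checks later in the request can then read the enriched claims instead of querying the database again.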

Windows Phone 7: In today’s world it’s a certainty that your website will have mobile users. Often, to enrich the experience, client applications are written for these devices. The BidNow sample also includes a Windows Phone 7 client that interacts with a set of OData services that run within the web tier. This sample demonstrates how to build a Windows Phone 7 client that leverages Windows Azure, OData, and Access Control to extend your application.

Of course, there’s a lot more that you’ll discover once you dig in. Over the next few weeks, I’ll write and record a series of blog posts and web casts that explore BidNow in greater depth.

Getting Started

To get started using BidNow, be sure you have the following prerequisites installed:

- Windows 7, Vista SP1 or Windows Server 2008

- Windows PowerShell

- Windows Azure Software Development Kit 1.3

- Windows Azure AppFabric SDK 2.0

- Internet Information Services 7.0 (or greater)

- Microsoft .NET Framework 4

- Microsoft SQL Express 2008

- Microsoft Visual Web Developer 2010 Express, or Microsoft Visual Studio 2010

- Windows Identity Foundation Runtime

- Windows Identity Foundation SDK 4.0

As you’ve come to expect, BidNow includes a Configuration Wizard that includes a Dependency Checker (for all the items listed immediately above) and a number of Setup Scripts you can walk through to configure BidNow. At the conclusion of the wizard you can literally hit F5 and go.

Of course, don’t forget to download the BidNow Sample. For a detailed walkthrough on how to get started with BidNow, please see the Getting Started with BidNow wiki page.

If you have any feedback or questions, please send us an email at bidnowfeedback@microsoft.com.

Steve Plank (@plankytronixx) summarized new Windows Azure SDK v1.3 features in his Windows Azure 1.3 SDK released post of 11/30/2010:

The long-awaited 1.3 SDK with support for the following (from the download page):

- Virtual Machine (VM) Role (Beta): Allows you to create a custom VHD image using Windows Server 2008 R2 and host it in the cloud.

- Remote Desktop Access: Enables connecting to individual service instances using a Remote Desktop client.

- Full IIS Support in a Web role: Enables hosting Windows Azure web roles in an IIS hosting environment.

- Elevated Privileges: Enables performing tasks with elevated privileges within a service instance.

- Virtual Network (CTP): Enables support for Windows Azure Connect, which provides IP-level connectivity between on-premises and Windows Azure resources.

- Diagnostics: Enhancements to Windows Azure Diagnostics enable collection of diagnostics data in more error conditions.

- Networking Enhancements: Enables roles to restrict inter-role traffic, fixed ports on InputEndpoints.

- Performance Improvement: Significant performance improvement in local machine deployment.

It could be quite easy to get carried away and think “oh great, I can use Windows Azure Connect or VM Role now”. But note that these are the features of the SDK, not the entire Windows Azure Infrastructure. You still need to be accepted on to the VM Role and Windows Azure Connect CTP programs.

You can download the SDK from here.

The Windows Azure Team released Windows Azure SDK Windows Azure Tools for Microsoft Visual Studio (November 2010) [v1.3.11122.0038] to the Web on 11/29/2010:

Windows Azure Tools for Microsoft Visual Studio, which includes the Windows Azure SDK, extends Visual Studio 2010 to enable the creation, configuration, building, debugging, running, packaging and deployment of scalable web applications and services on Windows Azure.

Overview

Windows Azure™ is a cloud services operating system that serves as the development, service hosting and service management environment for the Windows Azure platform. Windows Azure provides developers with on-demand compute and storage to host, scale, and manage web applications on the internet through Microsoft® datacenters.

Windows Azure is a flexible platform that supports multiple languages and integrates with your existing on-premises environment. To build applications and services on Windows Azure, developers can use their existing Microsoft Visual Studio® expertise. In addition, Windows Azure supports popular standards, protocols and languages including SOAP, REST, XML, Java, PHP and Ruby. Windows Azure is now commercially available in 41 countries.

Windows Azure Tools for Microsoft Visual Studio extend Visual Studio 2010 to enable the creation, configuration, building, debugging, running, packaging and deployment of scalable web applications and services on Windows Azure.

New for version 1.3:

- Virtual Machine (VM) Role (Beta): Allows you to create a custom VHD image using Windows Server 2008 R2 and host it in the cloud.

- Remote Desktop Access: Enables connecting to individual service instances using a Remote Desktop client.

- Full IIS Support in a Web role: Enables hosting Windows Azure web roles in an IIS hosting environment.

- Elevated Privileges: Enables performing tasks with elevated privileges within a service instance.

- Virtual Network (CTP): Enables support for Windows Azure Connect, which provides IP-level connectivity between on-premises and Windows Azure resources.

- Diagnostics: Enhancements to Windows Azure Diagnostics enable collection of diagnostics data in more error conditions.

- Networking Enhancements: Enables roles to restrict inter-role traffic, fixed ports on InputEndpoints.

- Performance Improvement: Significant performance improvement in local machine deployment.

Windows Azure Tools for Microsoft Visual Studio also includes:

- C# and VB Project creation support for creating a Windows Azure Cloud application solution with multiple roles.

- Tools to add and remove roles from the Windows Azure application.

- Tools to configure each role.

- Integrated local development via the compute emulator and storage emulator services.

- Running and Debugging a Cloud Service in the Development Fabric.

- Browsing cloud storage through the Server Explorer.

- Building and packaging of Windows Azure application projects.

- Deploying to Windows Azure.

- Monitoring the state of your services through the Server Explorer.

- Debugging in the cloud by retrieving IntelliTrace logs through the Server Explorer.

Repeated from yesterday’s post due to importance.

The Microsoft Case Studies Team added a four-page Custom Developer [iLink] Reduces Development Time, Cost by 83 Percent for Web, PC, Mobile Target case study on 11/29/2010:

Organization Profile: iLink Systems, an ISO and CMMI certified global software solutions provider, offers system integration and custom development. Based in Bellevue, Washington, it has 250 employees.

Business Situation: iLink wanted to enter the fast-growing market for cloud computing solutions, and wanted to conduct a proof of concept to see if the Windows Azure platform could live up to expectations.

Solution: iLink teamed with medical content provider A.D.A.M. to test Windows Azure for a disease assessment engine that might see millions of hits per hour should it go into production use.

Benefits:

- Multiplatform approach reduced development time, cost by 83 percent

- Deployments can be slashed from “months to minutes”

- Updates implemented quickly, easily by business analysts

- Confidence in business direction gained from trustworthy platform

Software and Services:

- Windows Azure

- Microsoft Visual Studio 2010 Ultimate

- Microsoft Expression Blend 3

- Microsoft Silverlight 4

- Microsoft .NET Framework 4

- Microsoft SQL Azure

Vertical Industries: High Tech and Electronics Manufacturing

Country/Region: United States

Business Need: Business Productivity

IT Issue: Cloud Services

Srinivasan Sundara Rajan described “Multi-Tiered Development Using Windows Azure Platform” in his Design Patterns in the Windows Azure Platform post of 11/29/2010:

While most Cloud Platforms are viewed in terms of Virtualization, Hypervisors, Elastic Instances and the infrastructure-related flexibility that lets you arrive at a dynamic infrastructure, Windows Azure is a complete development platform where the scalability of multi-tiered systems can be enabled through the use of ‘Design Patterns'.

Below are the common ‘Design Patterns' that can be realized using Windows Azure as a PaaS platform.

Web Role & Worker Role

Windows Azure currently supports the following two types of roles:

- Web role: A web role is a role that is customized for web application programming as supported by IIS 7 and ASP.NET.

- Worker role: A worker role is a role that is useful for generalized development, and may perform background processing for a web role.

A service must include at least one role of either type, but may consist of any number of web roles or worker roles.

Role Network Communication

A web role may define a single HTTP endpoint and a single HTTPS endpoint for external clients. A worker role may define up to five external endpoints using HTTP, HTTPS, or TCP. Each external endpoint defined for a role must listen on a unique port. Web roles may communicate with other roles in a service via a single internal HTTP endpoint. Worker roles may define internal endpoints for HTTP or TCP.

Both web and worker roles can make outbound connections to Internet resources via HTTP or HTTPS and via Microsoft .NET APIs for TCP/IP sockets.
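The external-endpoint limits quoted above lend themselves to a small validation routine. The sketch below encodes only the rules stated in the text (one HTTP and one HTTPS endpoint per web role, up to five external endpoints per worker role, unique ports). The dictionary layout and the `validate_role` helper are hypothetical and are not the Windows Azure service definition schema.

```python
# Sketch of the endpoint limits quoted in the text.
# The dict layout is a made-up stand-in for a real service definition.

def validate_role(role):
    """Return a list of rule violations for one role definition."""
    errors = []
    external = role.get("external_endpoints", [])
    protocols = [ep["protocol"] for ep in external]
    ports = [ep["port"] for ep in external]

    if role["type"] == "web":
        for proto in ("http", "https"):
            if protocols.count(proto) > 1:
                errors.append("web role allows a single %s endpoint" % proto)
        if any(p not in ("http", "https") for p in protocols):
            errors.append("web role external endpoints must be http or https")
    elif role["type"] == "worker":
        if len(external) > 5:
            errors.append("worker role allows at most five external endpoints")
        if any(p not in ("http", "https", "tcp") for p in protocols):
            errors.append("worker role external endpoints must be http, https, or tcp")
    else:
        errors.append("unknown role type: %r" % role["type"])

    if len(set(ports)) != len(ports):
        errors.append("each external endpoint must listen on a unique port")
    return errors
```

Returning a list of messages instead of raising on the first problem lets a caller report every violation in a definition at once.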

So we can have an ASP.NET front-end application hosted on a VM that is a web role and a WCF service hosted on a VM that is a worker role, and the following design patterns can be applied.

Session Facade

A Worker Role VM acting as a facade is used to encapsulate the complexity of interactions between the business objects participating in a workflow. The Session Facade manages the business objects, and provides a uniform coarse-grained service access layer to clients. The diagram below shows the implementation of the Session Facade pattern in Windows Azure.
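As a rough illustration of the pattern itself, independent of Azure, the sketch below puts one coarse-grained call in front of two fine-grained business objects. The `Inventory`, `Billing`, and `OrderFacade` classes are hypothetical and do not come from the original post; in a real deployment the facade would sit behind a service endpoint in the worker role.

```python
# Hypothetical business objects; names are illustrative only.

class Inventory:
    def __init__(self, stock):
        self._stock = dict(stock)

    def reserve(self, item, qty):
        if self._stock.get(item, 0) < qty:
            raise ValueError("insufficient stock for " + item)
        self._stock[item] -= qty

    def available(self, item):
        return self._stock.get(item, 0)


class Billing:
    def __init__(self, prices):
        self._prices = dict(prices)

    def charge(self, item, qty):
        return self._prices[item] * qty


class OrderFacade:
    """Coarse-grained entry point a worker role might expose to web roles."""

    def __init__(self, inventory, billing):
        self._inventory = inventory
        self._billing = billing

    def place_order(self, item, qty):
        # One call from the client hides the multi-step workflow.
        self._inventory.reserve(item, qty)
        total = self._billing.charge(item, qty)
        return {"item": item, "quantity": qty, "total": total}
```

The web tier calls only `place_order`, so the order of the reservation and billing steps can change without touching any client.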

Business Delegate / Service Locator

The Business Delegate reduces coupling between presentation-tier clients and business services. The Business Delegate hides the underlying implementation details of the business service, such as lookup and access details of the Azure / Worker role architecture. The Service Locator object abstracts server lookup and instance creation. Multiple clients can reuse the Service Locator object to reduce code complexity, provide a single point of control, and improve performance by providing a caching facility.

These two patterns together provide valuable support for Dynamic Elasticity and Load Balancing in a cloud environment. We can have logic in these roles (Business Delegate, Service Locator) such that the Virtual Machines with the least load are selected to service requests, which provides higher scalability.
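A Service Locator that prefers the least-loaded instance and caches its answer could look roughly like the sketch below. It assumes a caller-supplied `load_reader` function that returns a mapping of instance address to current load; that function, the time-to-live value, and the class itself are hypothetical and not a Windows Azure API.

```python
import time


class ServiceLocator:
    """Picks the least-loaded instance and caches the choice briefly."""

    def __init__(self, load_reader, ttl_seconds=5.0, clock=time.monotonic):
        self._load_reader = load_reader  # callable returning {address: load}
        self._ttl = ttl_seconds
        self._clock = clock              # injectable so the cache is testable
        self._cached = None
        self._expires = 0.0

    def locate(self):
        now = self._clock()
        if self._cached is None or now >= self._expires:
            loads = self._load_reader()
            if not loads:
                raise LookupError("no worker instances available")
            # Choose the address with the smallest reported load.
            self._cached = min(loads, key=loads.get)
            self._expires = now + self._ttl
        return self._cached
```

The short cache lifetime is the trade-off here: lookups stay cheap for clients, at the cost of routing to a slightly stale choice until the entry expires.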

Typical Activities that can be loaded to a Business Delegate Worker Role are:

- Monitoring Load Metrics using APIs for all the other Worker Role VMs

- Gather and Persist Metrics

- Rule Based Scaling

- Adding and Removing Instances

- Maintain and Evaluate Business Rules for Load Balancing

- Auto Scaling

- Health Monitoring

- Abstract VM migration details from Web Roles
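The metric-gathering and rule-based scaling activities in this list can be sketched as a simple threshold rule. The thresholds, the instance limits, and both function names below are assumptions chosen for illustration; actually adding or removing instances would go through the platform's management interfaces, which this sketch does not model.

```python
def average(samples):
    """Mean of the gathered load samples; empty input counts as idle."""
    return sum(samples) / len(samples) if samples else 0.0


def desired_instances(current, avg_cpu, scale_out_at=75.0, scale_in_at=25.0,
                      min_instances=2, max_instances=10):
    """Return the instance count a simple threshold rule would request."""
    if avg_cpu > scale_out_at:
        target = current + 1   # under pressure: add one instance
    elif avg_cpu < scale_in_at:
        target = current - 1   # mostly idle: remove one instance
    else:
        target = current       # inside the comfort band: no change
    # Clamp so the rule never drops below the floor or exceeds the ceiling.
    return max(min_instances, min(max_instances, target))
```

Changing the count by one instance per evaluation and keeping a gap between the two thresholds are both meant to stop the rule from oscillating when load hovers near a boundary.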

Other Patterns

Windows Azure's coarse-grained, asynchronous architecture of Web Roles and Worker Roles facilitates several other common design patterns mentioned below, which makes this a robust enterprise development platform and not just an Infrastructure Virtualization Enabler.

- Transfer Object Assembler

- Value List Handler

- Transfer Object

- Data Access Object

- Model View Controller Architecture

- Front controller

- Dispatcher View

Summary

Design patterns help in modularizing the software development and deployment process, so that the building blocks can be developed independently and yet tied together without tight coupling between them. Utilizing them for Windows Azure development will complement the benefits already provided by the Cloud platform.

<Return to section navigation list>

Visual Studio LightSwitch

Bruce Kyle recommended that you Get Started with LightSwitch at New Developer Center in an 11/30/2010 post to the US ISV Evangelism blog:

LightSwitch is a rapid development tool for building business applications. LightSwitch simplifies the development process, letting you concentrate on the business logic and doing much of the remaining work for you. By using LightSwitch, an application can be designed, built, tested, and in your users’ hands quickly.

In fact, it is possible to create a LightSwitch application without writing a single line of code. For most applications, the only code you have to write is the code that only you can write: the business logic.

An updated LightSwitch Developer Center on MSDN shows you how to get started. The site includes links to the download site, How-Do I videos, tutorials, the developer training kit and forums.

LightSwitch allows ISVs to create [their] own shells, themes, screen templates and more. We are working with partners and control vendors to create these extensions so you will see a lot more available as the ecosystem grows.

Also check out the team blog that describes how you can get started with LightSwitch:

Basics:

- How To Use Lookup Tables with Parameterized Queries

- How To Create a Custom Search Screen

- How Do I: Import and Export Data to/from a CSV file

- How Do I: Create and Use Global Values In a Query

- How Do I: Filter Items in a ComboBox or Modal Window Picker in LightSwitch

Advanced:

<Return to section navigation list>

Windows Azure Infrastructure

Zane Adam described the NEW! Windows Azure Platform Management Portal in an 11/30/2010 post:

We have launched the new Windows Azure Platform Management Portal, and with it the database manager for SQL Azure (formerly known as Project Houston). Now, you can manage all your Windows Azure platform resources from a single location – including your SQL Azure database.

The management portal seamless[ly] allows for complete administration of all Windows Azure platform resources, streamlines creating and managing SQL Azure databases, and allows for ultra-efficient administration of SQL Azure.

The new management portal is 100% Silverlight and features getting started wizards to walk you through the process of creating subscriptions, servers, and databases along with integrated help, and a quick link ribbon bar.

The database manager (formerly known as Project “Houston”) is a lightweight and easy to use database management tool for SQL Azure databases. It is designed specifically for web developers and other technology professionals seeking a straightforward solution to quickly develop, deploy, and manage their data-driven applications in the cloud. Project “Houston” provides a web-based database management tool for basic database management tasks like authoring and executing queries, designing and editing a database schema, and editing table data.

Major features in this release include:

- Navigation pane with object search functionality

- Information cube with basic database usage statistics and resource links

- Table designer and table data editor

- Aided view designer

- Aided stored procedure designer

- T-SQL editor

To reach the new management portal, go to http://windows.azure.com; for the time being, the old management portal can still be reached at http://sql.azure.com.