Windows Azure and Cloud Computing Posts for 11/1/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Azure DataMarket and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Azure DataMarket and OData

Sudhir Hasbe reported New Content available on Windows Azure Marketplace - DataMarket!!! on 11/1/2010:

Since we launched Windows Azure Marketplace on Friday, we have been getting questions on what offerings were already available and what are coming soon. Elisa has a new post listing exactly this information.

With the launch of our new marketplace yesterday we introduced a number of exciting new datasets – both public and commercial! Check out some of the great new data we have:

- 5 Offerings from Alteryx – Demographic, Expenditure, Geography and Map Data

- 19 Offerings from UN – International Statistics Data

- 6 Offerings from STATS – Sports Statistics

- 2 Offerings from Weather Central – Weather Forecast and Imagery

- 1 Offering from WeatherBug – Historical Weather Observations

- 3 Offerings from Zillow – Home Valuation, Mortgage Information and Neighborhood Data

- 1 Offering from Melissa Data – US Zip code Data

- 2 Offerings from Modul1 – Swedish Education and Unemployment Statistics

- 1 Offering from CDYNE – US National Death Index

- 2 Offerings from Data.gov – US Crime and Recovery Data

- 1 Offering from EEA – European Greenhouse Gas Emissions Data

- 1 Offering from places.sg - Places of Interest in Singapore

- 1 Offering from Wolfram Alpha – Answers to questions from fitness to food, math and medicine among others

- 1 Offering from XeBral – Japanese public company financial and statistical data

We also announced a list of datasets that you can expect to see soon. (You can read more about them on DataMarket, but note the blue Coming Soon logo on the right of the page.)

New Content on DataMarket - Windows Azure Marketplace DataMarket Blog - Site Home - MSDN Blogs

Steve Yi recommended this Video: Building Scale-Out Database Solutions on SQL Azure on 11/1/2010:

SQL Azure provides an information platform that you can easily provision, configure, and use to power your cloud applications. In this session Lev Novik explores the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available, and scalable SQL Azure Database service. The talk will detail modern scalable application design techniques such as sharding and horizontal partitioning and dive into future enhancements to SQL Azure Databases.

Watch the PDC 2010 session Building Scale-Out Database Solutions on SQL Azure by Lev Novik.

- Read more about SQL Azure Federation: Building Scalable Database Solution with SQL Azure - Introducing Federation in SQL Azure
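The session abstract above mentions sharding and horizontal partitioning. As a rough illustration of the general idea only (not the approach shown in the session, and not the SQL Azure Federation API), the following minimal sketch routes rows to one of several databases by hashing a partition key. The shard names, the `customer_id` key field, and the helper functions are all hypothetical.

```python
import hashlib

# Hypothetical shard names; in practice each would be a separate database.
SHARDS = ["customers_db_0", "customers_db_1", "customers_db_2", "customers_db_3"]


def shard_for(key, shards=SHARDS):
    """Return the shard that owns `key`, using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer and map it to a shard.
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


def partition(rows, key_field="customer_id", shards=SHARDS):
    """Group rows (dicts) by the shard that owns each row's partition key."""
    placement = {name: [] for name in shards}
    for row in rows:
        placement[shard_for(str(row[key_field]), shards)].append(row)
    return placement


if __name__ == "__main__":
    sample = [{"customer_id": i, "name": "customer-%d" % i} for i in range(10)]
    for shard, rows in partition(sample).items():
        print(shard, [r["customer_id"] for r in rows])
```

A simple modulo scheme like this keeps every row for a given key on one shard, but it remaps most keys when the number of shards changes; range-based or directory-based partitioning are common alternatives when shards must be split or merged.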

Dan Jones posted Project “Houston” Grows up to become Database manager for SQL Azure on 10/31/2010:

I’ve blogged before on Project “Houston” (here and here). At PDC this past week we announced that as part of the Developer Portal refresh Project “Houston” will be known as “Database manager for SQL Azure”. Database manager is a Silverlight client (running in the browser) for schema design and editing, entering data into tables, and querying the database.

There are other announcements for SQL Azure: SQL Azure Reporting CTP and SQL Azure Data Sync CTP2. You can read more about Database manager and these additional features here.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

J. D. Meier announced the availability of an ADO.NET Developer Guidance Map on 11/1/2010:

If you’re interested in Microsoft data access (ADO.NET, Entity Framework, etc.), this map is for you. Microsoft has an extensive collection of developer guidance available in the form of Code Samples, How Tos, Videos, and Training. The challenge is -- how do you find all of the various content collections? … and part of that challenge is knowing *exactly* where to look. This is where the map comes in. It helps you find your way around the online jungle and gives you short-cuts to the treasure troves of available content.

The ADO.NET Developer Guidance Map helps you kill a few birds with one stone:

- It shows you the key sources of data access content and where to look (“teach you how to fish”)

- It gives you an index of the main content collections (Code Samples, How Tos, Videos, and Training)

- You can also use the map as a model for creating your own map of developer guidance.

Download the ADO.NET Developer Guidance Map

Contents at a Glance

- Introduction

- Sources of Data Access Developer Guidance

- Topics and Features Map (a “Lens” for Finding ADO.NET Content)

- Summary Table of Topics

- How The Map is Organized (Organizing the “Content Collections”)

- Getting Started

- Architecture and Design

- Code Samples

- How Tos

- Videos

- Training

Mental Model of the Map

The map is a simple collection of content types from multiple sources, organized by common tasks, common topics, and ADO.NET features.

Special Thanks …

Special thanks to Chris Sells, Diego Dagum, Mechele Gruhn, Paul Enfield, and Tobin Titus for helping me find and round up our various content collections.

Enjoy and share the map with a friend.

J. D. also published a Windows Azure Developer Guidance Map on 10/31/2010:

If you’re a Windows Azure developer or you want to learn Windows Azure, this map is for you. Microsoft has an extensive collection of developer guidance available in the form of Code Samples, How Tos, Videos, and Training. The challenge is -- how do you find all of the various content collections? … and part of that challenge is knowing *exactly* where to look. This is where the map comes in. It helps you find your way around the online jungle and gives you short-cuts to the treasure troves of available content.

The Windows Azure Developer Guidance Map helps you kill a few birds with one stone:

- It shows you the key sources of Windows Azure content and where to look (“teach you how to fish”)

- It gives you an index of the main content collections (Code Samples, How Tos, Videos, and Training)

- You can also use the map as a model for creating your own map of developer guidance.

Download the Windows Azure Developer Guidance Map

Contents at a Glance

- Introduction

- Sources of Windows Azure Developer Guidance

- Topics and Features Map (a “Lens” for Finding Windows Azure Content)

- Summary Table of Topics

- How The Map is Organized (Organizing the “Content Collections”)

- Getting Started

- Architecture and Design

- Code Samples

- How Tos

- Videos

- Training

Mental Model of the Map

The map is a simple collection of content types from multiple sources, organized by common tasks, common topics, and Windows Azure features.

Special Thanks …

Special thanks to David Aiken, James Conard, Mike Tillman, Paul Enfield, Rob Boucher, Ryan Dunn, Steve Marx, Terri Schmidt, and Tobin Titus for helping me find and round up our various content collections.

Enjoy and share the map with a friend.

Wes Yanaga reported that Windows Phone Radio Episodes [Are] Available Online on 11/1/2010:

With the rush of announcements regarding Windows Phone 7, I wanted to make sure you’re aware of a series of podcasts called Windows Phone Radio.

The program is hosted weekly by Matt Akers and Brian Seitz. Each podcast episode features behind the scenes information and conversation with key personalities inside Microsoft and our partner friends. Visit the site and download or listen.

J. D. Meier continued his run with Code Sample Collections Roundup for ADO.NET, ASP.NET, Silverlight, WCF, Windows Azure, and Windows Phone on 10/31/2010:

Code Sample Collections are a simple way to gather, organize, and share code samples. Rather than a laundry list, each collection is organized by a set of common categories for that specific technology. The collections of code samples are created by mapping out the existing code samples from various sources including MSDN Library, Code Gallery, Channel9, and CodePlex.

Here is a roundup of my most recent Code Sample Collections:

- ADO.NET Code Samples Collection

- ASP.NET Code Samples Collection

- Silverlight Code Samples Collection

- WCF Code Samples Collection

- Windows Azure Samples Collection

- Windows Phone Code Samples Collection

And yet another Windows Phone Code Samples Collection of the same date:

The Microsoft Windows Phone Code Samples Collection is a roundup and map of Windows Phone code samples from various sources including the MSDN library, Code Gallery, CodePlex, and Microsoft Support.

You can add to the Windows Phone code examples collection by sharing in the comments or emailing me at FeedbackAndThoughts at live.com.

Common Categories for Windows Phone Code Samples

The Windows Phone Code Samples Collection is organized using the following categories:

Windows Phone Code Samples Collection

Category and Items

- Accelerometer

- Application Bar

- Control Tilt Effect

- Data Access

  - ODATA; Developing a Windows Phone 7 Application that Consumes ODATA

  - ODATA; Odata Client Library for WP7

- General

- Globalization and Localization

- Location Service

- Maps

- Media

- Orientation

- Panorama / Pivot

- Settings Page

- Splash Screen

- WebBrowser Control

- Web Services

- XNA

Be sure to check out his earlier Windows Azure Scenarios Map of 10/31/2010.

Bruce Kyle reported Windows Phone 7 App Certification Requirements Updated on 10/31/2010:

The requirements for Windows Phone 7 applications to be published in Marketplace have been updated.

You can find the requirements on the AppHub (http://create.msdn.com). Here’s a direct link to the PDF file. The WP7 App Certification Requirements lay out all the policies developers are required to follow in their WP7 apps & games.

You’ll find some new guidance and requirements for media applications. Also, the maximum size of apps was changed from 400 MB to 225 MB.

You’ll find a summary at Updated Version of Windows Phone 7 Application Certification Requirements.

David Pallman delivered his First Impressions Moving from an iPhone to Windows Phone 7 on 10/31/2010:

Along with the other attendees of Microsoft’s Professional Developers Conference last week I received a Windows Phone, an LG E900 to be precise. It’s now been several days since I switched over from the iPhone I’d been using for the last couple of years to a Windows Phone and I thought I would share my first impressions. I’ll tell you up-front, I really like it.

iPhone Experience

First off, why was I on an iPhone to begin with? When I was ready for a new phone a few years back there really wasn’t anything else in the iPhone’s class of user experience and innovation, so it was a simple decision. Moreover, coverage where I live is poor for many of the main carriers but AT&T comes in strong, which was another point in the iPhone’s favor.

I’ve enjoyed using my iPhone, but I kind of got disenchanted with Apple earlier this year when their version 4 Phone OS came out. The iTunes sync tool offered to upgrade my iPhone 3 to the version 4 OS so I accepted, and boy was I sorry! The phone became a near-useless piece of junk, with sporadic fits of slowness or outright unresponsiveness. It took a lot of research to find the right painstaking process to get back to the original OS. Apple was no help whatsoever, all but denying there was a problem. They also made some less than encouraging admissions, such as this one confessing that the algorithm for showing bars of signal strength has been misleading. Moreover, owners of the iPhone 4 seemed to be having a lot of pain. I wasn’t sure I wanted to stay with Apple, which put me in the mood for looking around at other options. I knew Windows Phone was coming, and of course Android has been making quite a splash. I was waiting for something to push me in a particular direction.

The LG E900

That push came at PDC when attendees were given Windows Phones. The evening after receiving it I unwrapped the phone from its packaging box with anticipation, noting with some surprise the packaging claim that this phone was intended for use in Europe. I presume that’s just an artifact of this being a give-away. The “European” claim was reinforced by a Frankenstein’s monster of an adaptor, and by the fact that I wasn’t able to find an online user guide except on LG’s UK site. These are things I can overlook in a free phone.

I immediately noticed the form factor difference: the LG E900 is longer and thinner than an iPhone and also noticeably heavier, which gave me some concern at first; however, I’ve since found the weight doesn’t bother me. I have to say, there wasn’t much in the way of instructions. The online user manual was of some help, once I tracked it down, but apparently you’re supposed to just figure out most things by exploring. For the most part this worked but it was a little frustrating not knowing how to do some basic things at first.

Making the Switch

I’m not a phone expert, and wondered to myself what I would have to do to get service on the phone. Then it dawned on me that perhaps I could just move the SIM card from my iPhone over to the Windows Phone. Would that work? I decided to find out.

An hour later, I still hadn’t gotten the SIM card out of my iPhone. That’s because it sits behind a door that isn’t easy to open unless you have a special tool or a paper clip. While this sounds simple, good luck doing it if you don’t have a paper clip handy: I tried a ballpoint pen, a plastic toothpick, a twist tie I scraped the insulation off of, and anything else I could find in my hotel room. After many tries but no success, I did what I should have done originally and went down to the hotel front desk and asked for a paper clip. Five minutes later I had my SIM card out. It wasn’t obvious to me which way the card was oriented in the LG phone but I eventually figured it out through trial and error. A phone call and a text to my wife confirmed my phone service was working.

As I started to play with the phone I realized that my voice and text service was functional but not my data service. I could not browse the web or get email working. Fortunately I stumbled on this post which explains the set up steps to get your data working if you move from an iPhone to an LG E900 Windows Phone. After I’d followed these instructions to define an “APN”, data worked like a charm.

The User Interface

I hadn’t expected to like the UI of the Windows Phone all that much, for several reasons. First, it’s hard not to admire the iPhone’s user interface: the layout, size, and appearance of application icons as well as the design of many of the screens just feel right. Secondly, I’d seen the Windows Phone UI in conferences, online videos and television ads. I knew Microsoft had to strike out in a unique direction but it didn’t look all that appealing to me.

However, actually using one is a different story. The interface really works well. Three buttons on the bottom of the phone let you navigate back, go to the Start page, or search contextually within whatever app you’re in. There’s a cool feeling you get sliding and flipping around that you can’t appreciate when watching someone else do it. Those television ads that say we need “a phone to save us from our phones” are right on the money: you can get in and do your work really quickly on a Windows Phone. I like it.

I will say I wasn’t thrilled with the default red theme on my Start screen which I promptly changed to an appealing blue. Also, whatever theme color you do choose (there are ten to choose from, shown below), that color does dominate the user interface. Most of your Start screen tiles will be that color. The UI could benefit from a 2-color theme over a single color theme in my opinion. Speaking of the Start screen, those tiles are “live tiles” that contain data and some of them animate. This allows you at a glance to know if you have voice mail, text message, email, new apps in the marketplace, and so on.

Setting up Outlook for my corporate mail could not have gone easier. Hotmail, on the other hand, was a different story. It wouldn’t sync, giving a mysterious error code and message that it was having trouble connecting to the Hotmail service after a few seconds. The fix turned out to be changing the mail account definition’s sync time from All Messages to Last 3 Days. This was discovered after a lot of trial and error: the error message certainly didn’t give a hint about that, nor is it clear to this day why All Messages doesn’t work (for me, anyway).

A few things took me some time to figure out. I couldn’t find a battery indicator at first; this turns out to be on the cover screen that you normally slide away right off the bat when you go to use the phone. Vibrate/ring also eluded me until I realized hitting the volume controls pops up an area for setting that.

Video and Audio

The video and audio quality are quite good. Streaming a movie from Netflix was excellent over a wifi connection, but rather blocky and dissatisfying without wifi. I watched Iron Man over the phone via Netflix and the picture was truly outstanding.

There's a 5 megapixel camera on the LG E900. It's terrific: it takes amazingly good photos and video.

Office

You get Office on the Windows Phone which is pretty cool. On my flight from Seattle back to Southern California I used Word on the phone to write a document and it was usable. It’s a little slow for me because I’m still getting used to this phone’s keyboard but that’s temporary.

App Store and Zune

The App Store experience is good and similar to the iPhone’s App Store. The number one phone app I use is email and that’s already there. Other apps I use that are already available for Windows Phone include Netflix and Twitter. Another app I like to use on the iPhone is Kindle: fortunately at PDC it was announced Kindle is coming soon for Windows Phone and it was demonstrated in the keynote talk. Another favorite iPhone app of mine is Scrabble; we’ll have to see if that becomes available on Windows Phone. The app store experience is good but of course there aren’t as many apps yet for Windows Phone as for longer-established phones. We developers need to get busy creating great Windows Phone apps.

Just as iTunes is both the music/video store and sync tool for iPhone, Zune serves that purpose for Windows Phone. Once I installed Zune on my PC and connected the phone to it, I was able to download music (on a 14-day trial) and move music and pictures over to the phone.

Development

Another reason to get excited about Windows Phone is that you can program for it in Silverlight, which is a technology I use quite often. Moreover, Windows Phone and Windows Azure cloud computing go very well together. At PDC, Steve Marx gave a really great session on joint phone-and-cloud development which I highly recommend. There’s definitely some phone+cloud development in my future.

Conclusions

It’s not fair to compare a phone you’ve been using for a couple of days against one you’ve been using for a couple of years, but these are my first impressions. So far, I haven’t looked back!

Nancy Gohring asserted “Some enterprises are finding that cloud-based applications make it possible to roll out mobile enterprise apps” in a deck for her Cloud services spurring mobile enterprise apps article of 10/29/2010 for InfoWorld’s Mobilize/News blog:

Mobilizing enterprise apps is nothing new, yet beyond email and a few other horizontal applications, it's still a niche market. But combining the cloud with the newest generation of smartphones is just starting to change that.

Juniper Research expects the total market for cloud-based mobile apps to grow 88 percent between 2009 and 2014. About 75 percent of that market will be enterprise users, Juniper predicts.

An ABI Research study from a year ago predicts that a new architecture for mobile apps based on the cloud will drastically change the way mobile apps are developed, acquired and used. Cloud services can make it easier for developers by minimizing the amount of code they have to customize for each of the phone platforms. "This trend is in its infancy today, but ABI Research believes that eventually it will become the prevailing model for mobile applications," ABI Research analyst Mark Beccue wrote in the report.

Connecting a mobile app to a cloud-based service has other upsides for enterprises, said John Barnes, CTO of Model Metrics, a company that develops mobile apps that work in conjunction with Salesforce.com and Amazon Web Services. "One advantage of these cloud platforms is you can synch from the mobile device to the cloud without intermediate servers or a VPN," he said.

That means deployment can be simpler for companies that don't want to manage in-house servers that support the mobile app.

Salesforce is one cloud provider aiming to make it easier for businesses to mobilize their cloud-based services. "We see that mobile devices are changing how business applications are deployed," said Ariel Kelman, vice president, platform product marketing at Salesforce.com. Salesforce offers APIs and toolkits to help businesses extend applications they've built on Force.com to the iPhone or BlackBerry phones.

Rehabcare is one organization that used those tools to build an application for its workers. Based in St. Louis, Rehabcare owns and operates 34 hospitals nationwide and also operates more than 1,000 facilities on behalf of hospital owners.

The mobile application built on Force.com allows Rehabcare to be more competitive when bidding for patients, Dick Escue, CIO for the company, said. Typically, when a patient is being discharged from a hospital but needs further care, the hospital will put out a broad call to all nearby facilities asking if they want to care for the patient.

In the past, one worker in each of Rehabcare's markets was responsible for filling in a seven-page form about the patient by hand and faxing it to the medical directors at Rehabcare facilities, who would then decide whether to take on the new patient. It was a time-consuming process that was dependent on the legibility of the worker's handwriting and on the medical director seeing the fax as soon as it came in.

"In our CEO's opinion, we were taking way too long to respond to these referrals," he said.

J. Peter Bruzzese wrote “Love it or hate it, the new Windows Phone 7 mobile OS has success written all over it” as a preface to his Windows Phone 7 will be a serious game-changer article of 10/27/2010 for InfoWorld’s Enterprise Windows blog:

I use an Android smartphone and I like it. Although iPhones continue to catch my attention and are certainly more polished in many ways, I'm not one to jump on that fad. The BlackBerry is still loved by huge numbers of loyal fans, and the BlackBerry Torch may keep RIM in the game a bit longer. But the new player in the months ahead, the one everyone is going to be watching, is Windows Phone 7. Tired of being mocked in the mobile market, Microsoft has put a lot of effort into Windows Phone 7 and is anticipating a decent following as folks defect from their existing devices. Will it happen and will it be worth it?

Beyond the walls of Microsoft, very few have even seen the new Windows Phone 7 up close, though developers have had plenty of chances to make use of the simulator and start creating all those mobile apps in the new platform (let me put in my plug now for Angry Birds for Windows Phone 7). At Tech Ed in New Orleans this past summer, journalists were allowed to hold one and play with it for a while, though this demo did nothing for me personally.

[ For a contrarian point of view: InfoWorld's Galen Gruman says Windows Phone 7 is a disaster, and early reviews have been negative. …]

The only person outside of Microsoft who has had the chance to really work with it is Paul Thurrott, who has just finished up the book "Windows Phone 7 Secrets" and is obviously an expert on the new platform. For years, Thurrott has been known for his frank and honest evaluation of technology, and he doesn't mince words. His comments on the Windows Phone? He says, "Windows Phone is not just another smartphone. It's a revolution."

One key reason, I believe, that Windows Phone 7 will succeed is that Microsoft is pulling away from its former policy of letting vendors take the Windows Mobile OS and tweak it to oblivion. Instead, Microsoft is mimicking its iPhone competitor Apple (which controls the software and hardware of every device it sells) in part by requiring vendors to meet very specific minimum hardware requirements and even decreeing that similar hardware buttons be available on all phones and in the same locations on the phone. For example, the devices must have:

- A Back button, a Start button, and a Search button

- A minimum 5-megapixel camera and flash

- Assisted GPS, a compass, an accelerometer, and an FM tuner

While OEMs have some say as to what they do with the phone operating system, Microsoft has made major improvements by not allowing them to issue junk hardware or drastically alter the operating system to suit their agenda. There are some standard tile services when you start the phone that cannot be altered by the OEM, nor can the OEMs remove any built-in apps. They can, however, install their own services and apps.

Users cannot remove any of the built-in apps but can remove any OEM apps on the phone. The Windows Phone 7 Marketplace app store can include additional OEM stores, but the official Marketplace cannot be replaced by the OEM. All apps go through the Microsoft store. These kinds of changes in the way Microsoft works with OEMs for its mobile world will ensure a consistent experience for users regardless of their specific phone.

Tom Espiner wrote Microsoft provides Azure access to academic projects on 10/27/2010 for ZDNet UK’s Cloud News and Analysis section:

Microsoft will provide access to its Azure cloud platform to two academic projects for three years.

Horizon, a research project run by the University of Nottingham, and Inria, a French computer science collaboration, will use Azure for three years without charge, Microsoft said on Wednesday. The deal will see the projects use Azure for scalable computing, storage, and web management research.

Horizon and Inria are researching technologies that can be used for societal benefit.

Microsoft researchers previously collaborated with the projects, Dan Reed, Microsoft's corporate vice president of the eXtreme Computing Group, told ZDNet UK.

"In the collaboration with both institutions, Microsoft [previously] focused on research projects rather than computing resources," said Reed.

The outlay for Microsoft for the Azure provision was difficult to gauge, said Reed, but Microsoft's spending on the projects will run to "a substantial number of millions of dollars".

Microsoft will make resources such as Azure support available to the projects, and work with researchers on academic aspects. According to Reed, 10 Microsoft researchers will contribute, focusing on building software, tools and technologies on Azure. Reed said that a majority of this software will be made open source.

"Researchers will use whatever model is appropriate, but our plan is to make most of the software open source," said Reed. "Whatever [Microsoft] develop[s], we will make available as open source."

One of the aims of the Microsoft researchers will be to develop desktop software that can handle interfacing with back-end high-performance computing systems.

"Excel is a killer app for researchers, and very popular as a desktop tool," said Reed. "We want to create a capability for desktop tools to scale and power... [and] run computations that are far more complex. People want a simple, easy way to use interfaces." Reed said that during the three years, Microsoft was "hoping to stimulate models [of computing] that researchers and agencies can acquire on an ongoing basis".

Derek McAuley, director of the University of Nottingham Horizon project, told ZDNet UK on Wednesday that Horizon planned to research how Azure can be used as a technology to deliver services, and how small businesses can use Azure in their business models.

One ongoing Horizon project will test how cloud computing can be combined with social networking and apps on mobile devices for car-sharing purposes, said McAuley.

"[Researchers will look at] apps for location-based services that integrate social networking with car-sharing," said McAuley. "Car-sharing is a social issue."

Another strand of research will focus on how small businesses and the public sector can use Azure, said McAuley.

"[We'll] sit down and draw out the business case," said McAuley. "The cost of cloud computing is low, but it can scale massively." McAuley's team of 30 staff members already have access to paid-for cloud like Amazon Elastic Cloud Compute (EC2). When the three-year period for Azure use is over, the university may pay to continue Azure research, said McAuley.

"We're already paying for other cloud resources," said McAuley. "If we continue to use Azure, aside from knocking on the door and asking Microsoft [to extend the deal], we'll end up paying for it."

French researchers for Inria will use Azure for research projects such as neuroscience research.

"We are excited that our neuroscience imaging project will have access to this cloud resource and the continued collaboration," said Jean-Jacques Lévy, director of the Microsoft Research-Inria Joint Centre, in a statement.

Microsoft will also continue collaborating with the Venus-C project, a European collaboration that seeks to deploy cloud for biomedicine, civil engineering and science, said the statement.

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

Daniel J. St. Louis reported Microsoft Helps Customers, Partners Harness ‘Cloud Power’ on 11/1/2010:

Microsoft launches a global campaign to provide guidance to businesses on leveraging the public cloud, private cloud and cloud productivity.

REDMOND, Wash. — Nov. 1, 2010 — Today, Microsoft takes its cloud computing investments a step further by kicking off a global “Cloud Power” campaign, which gives customers considering the cloud much-needed information about the potential benefits for their business as well as advice on how to get started.

View one of the TV ads from the "Cloud Power" campaign educating businesses about the benefits of Microsoft’s cloud offerings.

Microsoft has repeatedly made its commitment to the cloud very clear and has made repeated updates to its cloud offerings. For example, last week at the Microsoft Professional Developers Conference (PDC), Microsoft announced several new updates to the Windows Azure platform – the most comprehensive operating system for Platform-as-a-service – that will help customers create rich applications that enable new business scenarios in the cloud.

These announcements followed the announcement in October of the new Office 365, which brings cloud productivity to businesses of all sizes, helping them save time and money and free up valuable resources. And back in July, Microsoft inked major deals with fellow tech giants HP, eBay, Dell and Fujitsu to deliver the Windows Azure platform appliance, a new offering that brings the Windows Azure platform to their datacenters, making it easier for them to deliver cloud services to customers and other partners.

The “Cloud Power” campaign focuses on three key scenarios: public cloud, private cloud and cloud productivity, said Gayle Troberman, chief creative officer for the Central Marketing Group.

“Making sense of all of the options and helping customers harness the power of the cloud in the manner that best suits their business needs is the goal of this effort,” Troberman said. “Whether an organization is just starting to explore the cloud or is at the right point in its IT evolution to start making major investments, we have the right products and the unique expertise to help.”

Microsoft offers customers a solid blend of real world experience – the company has been offering cloud services for well over a decade – as well as the only set of solutions that spans Platform-as-a-Service (PaaS), Infrastructure-as-a-Service (IaaS) and Software-as-a-Service (SaaS). This unique combination provides customers with choice, flexibility and functionality when they transition to cloud computing. The company’s cloud customers already include seven of the top 10 global energy companies, 13 of the top 20 global telecom firms, 15 of the top 20 global banks, and 16 of the top 20 global pharmaceutical companies.

On the new Cloud Power site, customers talk about their experiences with Microsoft’s cloud technologies. A case study on 3M, for example, demonstrates how Windows Azure helped the company speed up its development environment. "With Windows Azure, we get a highly scalable environment, pay only for the resources we need, and relieve our IT staff of the systems management and administration responsibilities of supporting a dynamic infrastructure," said Jim Graham, a technical manager at 3M.

Microsoft Online Services helped Coca-Cola better engage its employees, another case study showed. "John Brock, our CEO, challenged us to identify better ways to connect all our employees,” Kevin Flowers, director of Enabling Technologies, said in the case study. “Microsoft helped us launch from a legacy infrastructure to a solution that provided better business value to all our people."

The new campaign has an international focus. Microsoft will run a series of educational events that will help businesses around the world learn more about cloud computing. Events will be hosted by Microsoft subsidiaries and tailored based on local business needs. More than 150 such events are already planned for the coming months.

“Cloud Power” also appears in a new ad campaign that begins today, and includes print, online, radio, and TV. Over the next several weeks, the ads will roll out globally in magazines and newspapers, on billboards and airport signs, and during TV programs such as Monday Night Football tonight. The ads will hit on three of Microsoft’s core cloud computing technologies: Office 365, Windows Azure and Windows Server Hyper-V.

Visit the Cloud Power campaign site.

Learn more about Windows Azure, Office 365, and Windows Server Hyper-V.

Source: Microsoft

HPC in the Cloud announced Microsoft as the winner of its Cloud Outreach and Education Innovators award in its HPC in the Cloud Reveals 2010 Editors' Choice Awards post of 11/1/2010:

HPC in the Cloud, an online publication which covers the extreme-scale leading-edge of cloud computing -- where high performance computing (HPC) and cloud computing intersect, revealed the winners of its inaugural Editors' Choice Awards at 2010 Cloud Expo West in Santa Clara, Calif. The HPC in the Cloud team, including Tomas Tabor, publisher of HPC in the Cloud, and Nicole Hemsoth, managing editor for the online publication, are presenting the awards on the show floor during the Cloud Expo West event.

"It's exciting to present this award, in its first year of inception, honoring achievement at the extreme-scale leading-edge of cloud computing," said Nicole Hemsoth [pictured at right], managing editor of HPC in the Cloud. "After much collaboration with our editors and contributors, we came up with a list of winners who were truly pushing the envelope in high performance cloud computing, and really driving the technology forward."

In its first year, the HPC in the Cloud Editors' Choice Awards are determined through a rigorous selection process involving the publication's editors and industry luminaries to identify the leaders in the extreme-scale leading-edge of cloud computing. The categories include Cloud Innovation Leader, Cloud Security Leader, Cloud Platform Innovators, Cloud Ecosystem Innovators, Cloud Management Innovators, Cloud Networking Innovators, Enterprise Cloud Leader, and Cloud Outreach and Education Innovators. The awards are an annual feature of the HPC in the Cloud publication.

The full list of winners for the 2010 awards can be found at the HPC in the Cloud website.

The HPC in the Cloud 2010 Editors' Choice Awards

2010 Cloud Innovation Leader

Editor's Choice: Platform Computing

2010 Cloud Security Leader

Editor's Choice: Tie – IBM & Novell

Cloud Platform Innovators

Editor's Choice: Amazon Web Services

Cloud Ecosystem Innovators

Editor's Choice: CA Technologies

Cloud Management Innovators

Editor's Choice: RightScale, Inc.

Cloud Networking Innovators

Editor's Choice: Mellanox Technologies

Enterprise Cloud Leader

Editor's Choice: CSC Software

Cloud Outreach and Education Innovators

Editor's Choice: Microsoft [Emphasis added.]

Sounds like “damnation by faint praise” to me.

Randy Bias asserted Elasticity is NOT #Cloud Computing … Just Ask Google on 11/1/2010:

In my keynote presentation (free registration required to download) on Day 1 of the Enterprise Cloud Summit at Interop NYC last week some interesting comments followed up in person and on twitter. I think one particular notion that surprised people was the assertion that elasticity, a property commonly associated with ‘cloud’ and ‘cloud computing’, wasn’t a part of cloud computing, but rather a side effect.

This really goes back to the definition problem with cloud computing. I have alluded to this in the past; that the Cloudscaling definition is functionally different from many of the common definitions out there today, including the famous NIST definitions. Why do we think about this differently and why does that mean that elasticity is overblown as a property of cloud computing?

The rest of this post is an illustrated walk-through of our thinking. Please click through (below).

The Start of a Thought

As many of you know, I’ve been working in the cloud computing space and blogging about this area for a very long time. When the definitional problems started, along with the bickering in mid-2008, I started thinking: Are ‘cloud’ and ‘cloud computing’ the same? Is there commonality between SaaS, PaaS, and IaaS that we’re missing? What did Salesforce.com/Force, Amazon EC2/Amazon.com, and Google/Google App Engine all have in common?

The conclusion I came to was a sort of ‘aha!’ moment. I realized that the large web operators had essentially built whole new ways of building IT. Something that was fundamentally different to what went before. I also realized that a big part of why the definition problems existed is because the change was so massive that it was affecting everything in IT, not just some part, like storage or application development.

Finally, I also realized that the bottom layers of IT, particularly the most common ones used everywhere such as servers, storage, and networking, were being treated in a completely different way by Amazon, Google, and company. These folks were not following the standard playbook. In some ways they were following the playbook with the layers above servers, storage, and networking. For example, they used standard programming languages to build their applications, such as Python and Java. These had not changed. The applications being provided were the same as those before, like e-mail, search, and recommendation systems. Storage, compute, and networks were very different, however, as were the ‘platform’ layers directly above them that allowed ‘pooling’ of these resources (e.g. GoogleFS).

What really matters, though, is that many of these pioneers took a whole new approach to running the lower layers of IT: datacenter facilities, the infrastructure architecture, and the direct layer above physical resources that creates a common datacenter platform. Something Google calls warehouse computing (PDF), but we simply call “cloud computing.”

Public utility cloud providers exist because “warehouse” (cloud) computing is now possible at a massive scale for low cost. Commoditization begets utilitization. Something pioneered not by enterprise vendors, but by Amazon, Google, Microsoft, and the like.

Cloud Computing as a Continuum of IT Infrastructure

In Nick Carr’s famous book, Does IT Matter, he argued eloquently, providing copious examples, that most business infrastructure goes through a fairly common cycle. This cycle is well-understood and more of a force of nature than anything else. What we are seeing now with cloud computing is nothing more than this cycle replayed again with information technology (IT), just like it has with electricity, roads/highways, banking, and telecommunications before it. Here is a diagram that illustrates the cycle.

This cycle also maps directly to the three clear phases that we see with IT infrastructure:

I think this really says it all. For us, cloud computing is a whole new way of delivering IT services, particularly at the infrastructure level that does not look anything like what has come before. In the same way that Mainframe and Client-Server computing are very very different.

Another way to illustrate this is to look at the approaches that each of these take:

I’m not going to go into this matrix in detail right now, but whether you disagree with aspects or not, I’m certain you can see the trend occurring in the diagram. Cloud computing definitely appears to be an evolution of the way that we create IT.

Elasticity As Side-Effect

Which brings me to the basic argument. If the following are true about cloud computing:

- It is something new …

- … developed by the giant web businesses in order to get to massive scale

- … and an evolution of how IT infrastructure is created

Then we have to look carefully at how and why an Amazon or Google did what they did. The diagram I used to explain during my keynote:

Large Internet businesses needed scale, cost-efficiency, and agility to be competitive. Google is 1 million servers. Amazon.com releases new code thousands of times per day. Microsoft runs 2,000 physical servers per headcount. Google runs 10,000 per headcount and aspires to 100,000. Google and Amazon use little or no ‘enterprise computing’ solutions.

So what happened? The causation resulted in high levels of automation, a devops culture, use of standardized commodity hardware, a focus on homogeneity, etc. The end result is a system that lends itself to being turned into a utility (aka ‘utilitization’). Hence the arrival of public clouds. One of the side-effects of using cloud computing techniques to build an IT infrastructure is that now those platforms or applications built on top of it can leverage the automation to get elasticity (benefit), pay-only-for-what-you-use with metering (benefit), and other autonomous functions (benefit).

Again, these benefits are essentially side effects of cloud computing, not cloud computing itself. The gray section labeled results above represents a number of the core aspects and features of cloud computing. This is why the arguments about the existence of internal ‘private’ clouds can be so bitter[1]. From a public cloud provider perspective, an internal infrastructure cloud is simply an automated virtual server on-demand system, missing many of the aspects of cloud computing above.

Conclusion

Are on-demand automated virtual machines an infrastructure cloud? I would argue no. That’s not ‘new’. Again, we need to look at what the large web businesses such as Amazon and Google did that has changed the game. It wasn’t elasticity, it wasn’t automation, and it wasn’t virtual machines. It was a whole new way of providing and consuming information technology (IT). If you aren’t following that path, you aren’t building a cloud. Ask yourself, before you buy that new shiny hardware appliance or enterprise vendor cloud-washed hardware solution:

“What would Google do?“

Adron Hall (@adronbh) started his Cloud Throw Down: Part 1 series with a post on 11/1/2010:

The clouds available from Amazon Web Services, Windows Azure, Rackspace and others have a few things in common. They’re all providing storage, APIs, and other bits around the premise of the cloud. They all also run on virtualized operating systems. In this blog entry I’m going to focus on some key features and considerations I’ve run into over the last year or so working with these two cloud stacks. I’ll discuss a specific feature and then rate it, declaring a winner.

Operating System Options

AWS runs on their AMI OS images, running Linux, Windows, or whatever you may want. They allow you to execute these images via the EC2 instance feature of the AWS cloud.

Windows Azure runs on Hyper-V with Windows 2008. Their VM runs Windows 2008. Windows Azure allows you to run roles which execute .NET code or Java, PHP, or other languages, and allows you to boot up a Windows 2008 OS image on a VM and run whatever that OS might run.

Rating & Winner: Operating Systems Options goes to Amazon Web Services

Development Languages Supported

AWS has .NET, PHP, Ruby on Rails, and other SDK options. Windows Azure also has .NET, PHP, Ruby on Rails, and other SDK options. AWS provides first-class support and development around any of the languages; Windows Azure does not, but is changing that for Java. Overall, both platforms support what 99% of developers build applications with; however, Windows Azure does have a few limitations on non-.NET languages.

Rating & Winner: Development Languages Supported is a tie. AWS & Windows Azure both have support for almost any language you want to use.

Operating System Deployment Time

AWS can deploy any one of hundreds of operating system images with a few clicks within the administration console. Linux instances take about a minute or two to start up, with Windows instances taking somewhere between 8 and 30 minutes to start up. Windows Azure boots up a web, service, or CGI role in about 8-15 minutes. The Windows Azure VM Role reportedly boots up in about the same amount of time that AWS takes to boot a Windows OS image.

The reality of the matter is that both clouds provide similar boot up times, but the dependent factor is which operating system you are using. If you’re using Linux you’ll get a boot up time that is almost 10x faster than a Windows boot up time. Windows Azure doesn’t have Linux so they don’t gain any benefit from this operating system. So really, even though I’m rating the cloud, this is really a rating about which operating system is faster to boot.

Rating & Winner: Operating System Deployment Time goes to AWS.

Today’s winner is hands down AWS. Windows Azure, being limited to Windows OS at the core, has some distinct and problematic disadvantages with bootup & operating system support options. However I must say that both platforms offer excellent language support for development.

To check out more about either cloud service navigate over to:

That’s the competition for today. I’ll have another throw down tomorrow when the tide may turn against AWS – so stay tuned! :)

Shane O’Neill wrote “Before you ever saw Windows Azure, Microsoft CIO Tony Scott had to use it for two years. In this Q&A, he shares what he's learned about cloud moves, security, application portability and how CIOs can prepare” as a deck for his Q&A: Microsoft CIO Eats His Own Cloud Dog Food 11/1/2010 article for CIO.com:

A big part of Microsoft CIO Tony Scott's job in Redmond is to personally use all of Microsoft's technologies, including its cloud products. Microsoft types call this "eating your own dog food."

Microsoft IT was an early test environment for cloud-based productivity suite, Office 365, and Windows Azure, the company's platform-as-a-service offering that is the basis for its cloud strategy.

Research firm Gartner said at its Gartner Symposium recently that despite the complexity of Microsoft's cloud services, the company has "one of the most visionary and complete views of the cloud." Late last week at Microsoft's PDC (Professional Developers Conference) event in Redmond, the company stirred the Windows Azure pot by announcing new virtualization capabilities designed to entice developers.

In an interview with CIO.com's Shane O'Neill, Microsoft's Scott [pictured below] discussed some best practices for CIOs that he's learned from "dogfooding" Windows Azure. He also shares his views on how the cloud will change the financing of business projects and how CIOs can prepare accordingly.

CIO.com recently published a story about cloud adoption that cited a survey where the number one reason for not moving to the cloud was "Don't Understand Cloud Benefits." How is Microsoft cutting through the noise and confusion to clearly outline for CIOs how a cloud model will help their organization?

In my experience CIOs are practical folks, with a healthy level of skepticism about the "next big thing." Conceptually, they all get the benefits of the cloud. But there's a lot of uncertainty out there about how to get started. It can be a little bit intimidating.

Our role at Microsoft IT is to dogfood all our own products, so we started by moving some basic apps to Azure. This was two years ago, before the product was released to the public. We were just getting our feet wet, and I saw some healthy skepticism at the time even in my own organization.

But once you dip your toe in, the learning process begins. We saw an improvement in the quality of the apps we moved to Azure. One example is MS.com, which we moved to Azure and were able to scale the services based on demand versus based on peak capacity. We really saw the advantages of a standardized cloud platform versus fine-tuning every server to each application.

A great example of this is our Microsoft Giving Campaign tool, which we use for internal fund-raising for non-profit organizations. That app gets most of its usage once a year in October during the Giving Campaign. The rest of the year it sits idle. That is a perfect application for the cloud. The new Giving Campaign tool was built on Azure and on the last two days of the campaign, where we often see the peak loads, it never slowed down and the results were phenomenal. We raised twice as much money as we had in any prior year.


Lori MacVittie (@lmacvittie) described her Infrastructure Scalability Pattern: Sharding Sessions post of 11/1/2010 as A deeper dive on how to apply scalability best practices using infrastructure services:

So it’s all well and good to say that you can apply scalability patterns to infrastructure and provide a high-level overview of the theory but it’s always much nicer to provide more detail so someone can actually execute on such a strategy. Thus, today we’re going to dig a bit deeper into applying a scalability pattern – horizontal partitioning, to be exact – to an application infrastructure as a means to scale out an application in a way that’s efficient and supports growth and that leverages infrastructure, i.e. the operational domain.

This is the reason for the focus on “devops”; this is certainly an architectural exercise that requires an understanding of both operations and the applications it is supporting, because in order to achieve a truly scalable sharding-based architecture it’s going to have to take into consideration how data is indexed for use in the application. This method of achieving scalability requires application awareness on the part of not only the infrastructure but the implementers as well.

It should be noted that sharding can be leveraged with its sibling, vertical partitioning (by type and by function) to achieve Internet-scale capabilities.

SHARDING SESSIONS

One of the more popular methods of scaling an application infrastructure today is to leverage sharding. Sharding is a horizontal partitioning pattern that splits (hence the term “shard”) data along its primary access path. This is most often used to scale the database tier by splitting users based on some easily hashed value (such as username) across a number of database instances.
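As a rough illustration of the username-hashing idea described above, the following minimal sketch maps each user to one of several database instances. The shard names and the `shard_for` helper are hypothetical and do not come from the article; a stable digest is used because Python's built-in `hash()` is salted per process.

```python
import hashlib

# Hypothetical shard names; the article does not name any database instances.
SHARDS = ["db-0", "db-1", "db-2", "db-3"]


def shard_for(username: str, shards=SHARDS) -> str:
    """Map a username to one database instance via a stable hash."""
    # Normalize case so "Alice" and "alice" land on the same shard.
    digest = hashlib.sha256(username.lower().encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce modulo the shard count.
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    for name in ("alice", "bob", "carol"):
        print(name, "->", shard_for(name))
```

Note that plain modulo hashing reassigns most users when the number of shards changes, so a scheme that must support repartitioning would likely need a lookup table or consistent hashing instead.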

Developer-minded folks will instantly recognize that this is hardly as easy as it sounds. It is the rare application that is developed with sharding in mind. Most applications are built to connect to a single database instance, and thus the ability to “switch” between databases is not inherently a part of the application. As with other scalability patterns, applying such a technique to a third-party or closed-source application, too, is nearly impossible without access to the code. Even with access to the code, such as an open-source solution, it is not always in the best interests of long-term sustainability of the application to modify it and in some cases there simply isn’t time or the skills necessary to do so.

In cases where it is simply not feasible to rewrite the application it is possible to leverage the delivery infrastructure to shard data along its primary incoming path and implement a horizontal partitioning strategy that, as required, allows for repartitioning as may be necessary to support continued growth.

What we want to do is leverage the context-aware capabilities of an application delivery controller (a really smart load balancer, if you will) to inspect requests and ensure that users are routed to the appropriate pool of application server/database combinations. Assuming that the application is tied to a database, there will be one database per user segment defined. This also requires that the application delivery controller be capable of full-proxy inspection and routing of every request, because even when a user’s primary storage is in one database, a request for a user’s data that is stored in another database will need to be directed appropriately. This can be accomplished using the inspection capabilities and a set of network-side scripts that intercept, inspect, and then conditionally route each request based on the determination of which pool of resources holds the requested data. This will often take the form of a hashed value, but as this can add latency to the total response time, an alternative to ascertaining the appropriate hashed value for each request is to compute the hashed value once (it is used to choose the appropriate pool of resources) and then set a cookie with that value that can be easily accessed upon subsequent requests. Other methods might include simply examining the first X digits of some form of ID carried in a header value. Because the choice at the application delivery layer directly correlates to persistent data, it is important that whatever method is used be always available. Immutable and always available data should be used as the conditional value upon which routing decisions are made, lest users end up directed to a pool of resources in which their persistent data does not reside or, worse, rejected completely.

Alternatively, another approach is to shard data based on location of the user. Again, this requires the ability of the infrastructure to determine at request time the location of the user and route requests appropriately. For smaller sites this type of infrastructure may be self-contained, but for large “Internet” scale applications this may actually go one step further and leverage global application delivery techniques like location-based load balancing that first directs users to a specific data center, usually physically located near them, and then internally leverages either additional sharding or another partitioning scheme to enable granular scalability throughout the entire infrastructure.
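The compute-once-then-cookie idea in the paragraph above might look roughly like the sketch below. It is not an application delivery controller script; it only models the routing decision in plain Python. The pool names, the `shard` cookie name, and the `route` function are assumptions made for illustration.

```python
import hashlib
from typing import Dict, Optional, Tuple

# Hypothetical pool names and cookie name, chosen only for illustration.
POOLS = ["pool-a", "pool-b", "pool-c"]
COOKIE_NAME = "shard"


def pool_index(user_id: str, pool_count: int) -> int:
    """Hash an immutable user identifier to a pool index."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % pool_count


def cached_index(cookies: Dict[str, str]) -> Optional[int]:
    """Return the pool index stored in the cookie, or None if absent or invalid."""
    try:
        index = int(cookies.get(COOKIE_NAME, ""))
    except ValueError:
        return None
    return index if 0 <= index < len(POOLS) else None


def route(user_id: str, cookies: Dict[str, str]) -> Tuple[str, Optional[Tuple[str, str]]]:
    """Return (pool, cookie_to_set); cookie_to_set is None when the cookie was reused."""
    index = cached_index(cookies)
    if index is not None:
        return POOLS[index], None  # fast path: no hashing on this request
    index = pool_index(user_id, len(POOLS))
    return POOLS[index], (COOKIE_NAME, str(index))
```

Because a cookie is supplied by the client, a real deployment would presumably sign or otherwise validate it before trusting the value; that step is omitted here.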

SHARDING DATA is COMPLICATED

Sharding data – whether within the application or in the infrastructure – is complicated. It is no simple thing to spread data across multiple databases and provide the means by which such data might be shared across all users, regardless of their data “home”. Applications that use a shared session database architecture are best suited for a sharding-based scalability strategy because session data is almost never shared across users, making it easier to implement as a means to scale existing applications without modification.

It is not impossible, however, to implement a sharding-based strategy to scale applications in which other kinds of data are split across instances. It becomes necessary then to more carefully consider how data is accessed and what infrastructure components are responsible for performing the necessary direction and redirection to ensure that each request lands in the right data pool. The ability to load balance across databases, of course, changes only where the partitioning occurs. Rather than segment application server and database together, another layer of load balancing is introduced between the application server and the database, and requests are distributed across the databases.

This change in location of segmentation responsibility, however, does not impact the underlying requirement to distinguish how a request is routed to a particular database instance. Using sharding techniques to scale the database layer still requires that requests be inspected in some way to ensure that requests are routed to where the requested data lives. One of the most common location-based sharding patterns is to separate read and write, with write services being sharded based on location (because inserting into a database is more challenging to scale than reading) with read being either also sharded or scaled using a central data store and making heavy use of memcache technology to alleviate the load on the persistent store. Data must be synchronized / replicated from all write data stores into the centralized read store, however, which eliminates true consistency as a possibility. For applications that require Internet-scale but are not reliant on absolute consistency, this model can scale out much better than those requiring near-perfect consistency.
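A minimal sketch of the read/write separation just described, assuming writes are identified by HTTP method and the user's region is already known. The store names, the region keys, and the `target_store` function are hypothetical.

```python
# Hypothetical store names and regions, used only to illustrate the pattern.
WRITE_SHARDS = {"us": "write-us", "eu": "write-eu", "asia": "write-asia"}
DEFAULT_WRITE_SHARD = "write-us"
READ_STORE = "read-central"  # fed by replication from every write shard
WRITE_METHODS = {"POST", "PUT", "PATCH", "DELETE"}


def target_store(method: str, region: str) -> str:
    """Send writes to the shard for the user's region and reads to the central store."""
    if method.upper() in WRITE_METHODS:
        return WRITE_SHARDS.get(region, DEFAULT_WRITE_SHARD)
    # Reads may lag behind writes because the central store is populated by replication.
    return READ_STORE
```

For example, `target_store("POST", "eu")` selects the European write shard, while any `GET` goes to the central read store regardless of region.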

And of course if the data source is shared and scaled out as a separate tier, with sessions stored in the application layer tier, then such concerns become moot because the data source is always consistent and shared and only the session layer need be sharded and scaled out as is required.

DEVOPS – FOR THE WIN

This horizontal scaling pattern requires even more of an understanding of application delivery infrastructure (load balancing and application switching), web operations administration, and application architecture than vertical scaling patterns. This is a very broad set of skills to expect from any one individual, but it is the core of what comprises the emerging devops discipline.

Devops is more than just writing scripts and automating deployment processes; it’s about marrying knowledge and skills from operations with application development and architecting broader, more holistic solutions. Whether the goal is better security, faster applications, or higher availability, devops should be a discipline every organization is looking to grow.

In our next deep dive we’ll dig into THE Non-Partitioned Dynamic Scalability PATTERN

Microsoft’s North Central (Chicago) and South Central (San Antonio) data centers had four-hour service management problems on 11/1/2010, according to these two posts:

[Windows Azure Compute] [South Central US] [Green(Info)] Intermittent service management issues

Today, November 01, 2010, 3 hours ago

Nov 1 2010 11:00AM There are intermittent service management issues that may cause some deployment operations to fail. Hosted services that are already running are not impacted and will continue to run normally. Some deployment management operations may however fail intermittently. Retrying the failed operation is the recommended course of action until the root cause is identified and resolved. Storage functionality is not impacted.

Nov 1 2010 3:00PM The intermittent service management issues have been resolved. Running applications were not impacted. Storage functionality was not impacted.

[Windows Azure Compute] [North Central US] [Green(Info)] Intermittent service management issues

Today, November 01, 2010, 6 hours ago

Nov 1 2010 11:00AM There are intermittent service management issues that may cause some deployment operations to fail. Hosted services that are already running are not impacted and will continue to run normally. Some deployment management operations may however fail intermittently. Retrying the failed operation is the recommended course of action until the root cause is identified and resolved. Storage functionality is not impacted.

Nov 1 2010 3:00PM The intermittent service management issues have been resolved. Running applications were not impacted. Storage functionality was not impacted.

I’m surprised that these problems would occur simultaneously in two US data centers.

James Hamilton claimed Datacenter Networks are in my Way in this 10/31/2010 essay:

I did a talk earlier this week on the sea change currently taking place in datacenter networks. In Datacenter Networks are in my Way I start with an overview of where the costs are in a high scale datacenter. With that backdrop, we note that networks are fairly low power consumers relative to the total facility consumption and not even close to the dominant cost. Are they actually a problem? The rest of the talk argues that networks are actually a huge problem across the board, including cost and power. Overall, networking gear lags behind the rest of the high-scale infrastructure world, blocks many key innovations, and actually is both a cost and a power problem when we look deeper.

The overall talk agenda:

- Datacenter Economics

- Is Net Gear Really the Problem?

- Workload Placement Restrictions

- Hierarchical & Over-Subscribed

- Net Gear: SUV of the Data Center

- Mainframe Business Model

- Manually Configured & Fragile at Scale

- New Architecture for Networking

In a classic network design, there is more bandwidth within a rack and more within an aggregation router than across the core. This is because the network is over-subscribed. Consequently, instances of a workload often need to be placed topologically near to other instances of the workload, near storage, near app tiers, or on the same subnet. All these placement restrictions make the already over-constrained workload placement problem even more difficult. The result is that either the constraints are not met, which yields poor workload performance, or the constraints are met but overall server utilization is lower as a consequence. What we want is all points in the datacenter equidistant and no constraints on workload placement.

Continuing on the over-subscription problem mentioned above, data intensive workloads like MapReduce and high performance computing workloads run poorly on oversubscribed networks. It's not at all uncommon for a MapReduce workload to transport the entire data set at least once over the network during a job run. The cost of providing a flat, all-points-equidistant network is so high that most just accept the constraint and either run MapReduce workloads poorly or run them only in narrow parts of the network (accepting workload placement constraints).
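To make the over-subscription penalty concrete, here is a rough back-of-the-envelope sketch. Every number in it (1 Gbps server links, an 80:1 over-subscription ratio across the core, 1,000 servers, a 100 TB data set) is an assumption chosen for illustration and does not come from the talk.

```python
def effective_bandwidth_gbps(link_gbps, oversubscription):
    """Worst-case per-server bandwidth across an over-subscribed layer."""
    return link_gbps / oversubscription


def transfer_hours(dataset_tb, servers, per_server_gbps):
    """Hours to move dataset_tb terabytes once, spread evenly over servers."""
    total_bits = dataset_tb * 8e12  # 1 TB = 8e12 bits (decimal units)
    aggregate_bps = servers * per_server_gbps * 1e9
    return total_bits / aggregate_bps / 3600


# All numbers below are assumed for illustration only.
flat = effective_bandwidth_gbps(1.0, 1)      # non-blocking network
oversub = effective_bandwidth_gbps(1.0, 80)  # hypothetical 80:1 across the core

for label, bw in (("flat", flat), ("80:1", oversub)):
    hours = transfer_hours(dataset_tb=100, servers=1000, per_server_gbps=bw)
    print(f"{label}: {bw:.4f} Gbps per server, {hours:.2f} h to move 100 TB")
```

Under these assumptions, moving the data set once takes roughly a quarter of an hour on the flat network and close to 18 hours when all traffic has to cross the over-subscribed core, which is why placement near the data matters so much.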

Net gear doesn’t consume much power relative to total datacenter power consumption – other gear in the data center is, in aggregate, much worse. However, network equipment power is absolutely massive today and it is trending up fast. A fully configured Cisco Nexus 7000 requires 8 circuits of 5kW each. Admittedly some of that power is for redundancy, but how can 120 ports possibly require as much power provisioned as 4 average-sized full racks of servers? Net gear is the SUV of the datacenter.

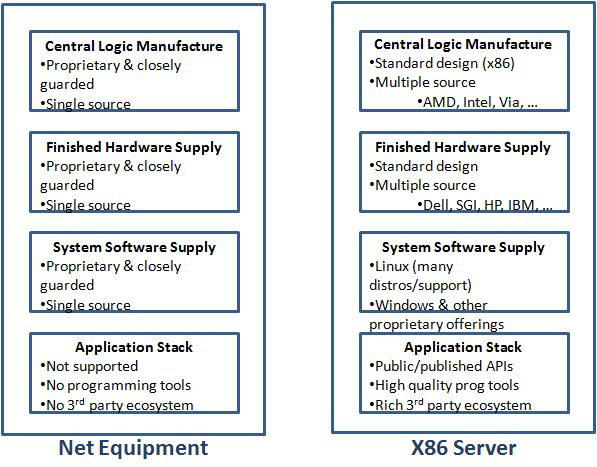
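The arithmetic behind the Nexus 7000 comparison can be spelled out in a few lines. The four input figures come from the paragraph above; the per-port and per-rack values are simply derived from them and are provisioned (not measured) power.

```python
CIRCUITS = 8          # from the talk: circuits for a fully configured Nexus 7000
KW_PER_CIRCUIT = 5    # from the talk
PORTS = 120           # from the talk
EQUIVALENT_RACKS = 4  # from the talk: "4 average sized full racks of servers"

provisioned_kw = CIRCUITS * KW_PER_CIRCUIT
watts_per_port = provisioned_kw * 1000 / PORTS
implied_kw_per_rack = provisioned_kw / EQUIVALENT_RACKS

print(f"Provisioned power: {provisioned_kw} kW")
print(f"Per port: {watts_per_port:.0f} W")
print(f"Implied average rack: {implied_kw_per_rack:.0f} kW")
```

That works out to 40 kW provisioned, about 333 W per port, and an implied 10 kW for an average full rack of servers.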

The network equipment business model is broken. We love the server business model where we have competition at the CPU level, more competition at the server level, and an open source solution for control software. In the networking world, it’s a vertically integrated stack and this slows innovation and artificially holds margins high. It’s a mainframe business model.

New solutions are now possible with competing merchant silicon from Broadcom, Marvell, and Fulcrum and competing switch designs built on all three. We don’t yet have the open source software stack but there are some interesting possibilities on the near-term horizon, with OpenFlow being perhaps the most interesting enabler. More on the business model and why I’m interested in OpenFlow at: Networking: The Last Bastion of Mainframe Computing.

Talk slides: http://mvdirona.com/jrh/TalksAndPapers/JamesHamilton_POA20101026_External.pdf

Paul Krill asserted “Windows Azure Virtual Machine role enables migration of existing Windows Server systems” as the introduction to his Microsoft taps virtualization to move apps to cloud article of 10/29/2010 for InfoWorld’s Cloud Computing blog:

Microsoft is looking to make it easier to move existing Windows Server applications to the company's Windows Azure cloud platform via virtual machine technology.

The company this week unveiled Windows Azure Virtual Machine Role, intended to ease migration of these applications by eliminating the need to make costly application changes. Customers would get the benefit of lower application management costs by moving software to the cloud, said Jamin Spitzer, Microsoft director of platform strategy.

"I think Virtual Machine Roles get customers and partners more comfortable with Windows Azure as a platform," Spitzer said.

Virtual Machine Role leverages Microsoft's Hyper-V virtualization technology. A public beta release of Virtual Machine Role is due by the end of 2010.

Microsoft announced Virtual Machine Role at PDC. At the event, the company also reiterated its support for Java on Azure, intending to make it a "first class citizen" on the cloud, and stressed efforts to improve Eclipse tooling for Azure. "It will get even better than it is today," Spitzer said.

Improved Java enablement is due in 2011.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

Mary Jo Foley claimed Microsoft plays the PaaS card to better position its cloud platform on 11/1/2010:

Microsoft has used a number of different metaphors to explain its cloud-computing positioning and strategy over the past couple of years.

There was Software+Services. Then talk switched to three screens and a cloud. Earlier this year, there was “We’re All In.” And during the summer, there was talk of Microsoft as providing “IT as a Service” (a catch phrase a few of Microsoft’s competitors have used as well).

At the company’s Professional Developers Conference in Redmond late last week, Microsoft execs fielded another new positioning attempt. Microsoft is claiming to be the leading general purpose platform-as-a-service (PaaS) cloud platform.

Microsoft execs aren’t saying the company is No. 1 in the infrastructure as a service (IaaS) or software as a service (SaaS) arenas. Microsoft does have offerings in both those categories, however.

IaaS is the cloud tier which provides developers with basic compute and storage resources. PaaS is more like full operating system/services infrastructure for the cloud. And SaaS is the cloud-hosted applications tier. IaaS players include Amazon (with EC2) and VMware (with vCloud). PaaS players, in addition to Microsoft with Azure, include Google (with Google App Engine) and Salesforce (with Force.com). In the SaaS space, there are numerous players, including Microsoft (with Office 365/Office Web Apps), Google (with Gmail, Google Apps/Docs), Salesforce and more.

“Platform as a service is where the advantages of the cloud are (evident),” said Amitabh Srivastava, Senior Vice President of Microsoft’s Server and Cloud Division.

Another way that Microsoft is attempting to differentiate itself from the cloud pack is by emphasizing that virtualization and cloud computing are not one and the same. At the PDC, Server and Tools President Bob Muglia told attendees that there’s a difference between managing a virtual machine and “true” cloud computing.

“If you are managing a VM, it’s not PaaS,” said Muglia.

Even though Microsoft will provide a Virtual Machine Role capability, it is offering this to customers who want a way to move an existing application to the cloud, knowing they won’t be able to take advantage of the cloud’s scalability and multi-tenancy functionality right off the bat by doing so.

Windows in the Cloud

Since it first outlined its plans for Azure — back when the cloud OS was known by its codename “Red Dog” — Microsoft intended to build its cloud environment in a way that made it look and work like “Windows in the cloud.”

Mark Russinovich, a Microsoft Technical Fellow who joined the Azure effort this summer, described Azure as “an operating system for the datacenter,” during one of his presentations at PDC. Azure “treats the datacenter as a machine” by handling resource management, provisioning and monitoring, as well as managing the application lifecycle. Azure provides developers with a shared pool of compute, disk and networking resources in a way that makes them appear “boundless.”

By design, Azure was built to look and work like Windows Server (minus the many interdependencies that Microsoft has been attempting to untangle via projects like MinWin). The “kernel” of Windows Azure is known as the Fabric Controller. In the case of Azure, the Fabric Controller is a distributed, stateful application running on nodes (blades) spread across fault domains, Russinovich said. There are other parallels between Windows and Windows Azure as well. In the cloud, services are the equivalent of applications, and “roles” are the equivalent of DLLs (dynamic link libraries), he said.

By the same token, SQL Azure is meant, by design, to provide developers with as much of SQL Server’s functionality as it makes sense to put in the cloud. Microsoft’s announcement last week of SQL Azure Reporting tools — which will allow users to embed reports in their Azure applications, making use of rich data visualizations, exported into Word, Excel and PDF formats — was welcomed by a number of current and potential Azure customers. (A Community Technology Preview of SQL Azure Reporting is due out before the end of this year, with the final release due in the first half of 2011.)

Microsoft also is working to deliver by mid-2011 the final version of a SQL Azure Data Sync tool that will allow developers to create apps with geo-replicated SQL Azure data that can be synchronized with on-premises and mobile applications. And the “Houston” technology, now known as Database Manager for SQL Azure, will provide a lightweight Web-based data management and querying capability when it is released in final form before the end of 2010.

Microsoft is rolling out a number of other enhancements to its Azure platform over the next six to nine months. Srivastava categorized some of these coming technologies as “bridges” to Microsoft’s core PaaS platform, and others as “onramps” onto it.

One example of an Azure “bridge,” according to Srivastava, is “Project Sydney.” Sydney, now known officially as Windows Azure Connect, is technology that will allow developers to create an IP-based network between on-premises and Windows Azure resources. The CTP of Azure Connect is due out before the end of this year and will be generally available in the first half of 2011, Microsoft officials said last week.

Srivastava cited new technologies like the coming Azure Virtual Machine Role, Server Application Virtualization and the Admin Mode (via which a team can work on the same Azure account using their multiple Windows Live IDs) as examples of “onramps” to Azure.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Eric Nelson (@ericnel) announced on 11/1/2010 a FREE Technical Update Event for UK Independent Software Vendors, 25th Nov in Reading:

My team is pulling together a great afternoon on the 25th of this month to help UK ISVs get up to speed on the latest technologies from Microsoft and explore their impact on product roadmaps. The session is intended for technical decision makers including senior developers and architects.

Technologies such as the Windows Azure Platform, SQL Server 2008, Windows Phone 7, SharePoint 2010 and Internet Explorer 9.0 will be represented.

Check out the agenda and register before all the seats vanish.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Handy claimed Free tier of cloud hosting is Amazon's answer to Google in an 11/1/2010 article for SDTimes on the Web:

Amazon was first out of the gate as cloud hosting began, but since then, Google’s free services have steadily gained on Amazon’s pay-per-hour services. Today, Amazon looked to tilt the balance back to its side with the announcement of a new free tier of hosting in its Web services cloud.

The new plan offers one year of free hosting on a micro-Linux image with just over 600MB of RAM. Additionally, developers will be able to use Amazon's S3 storage, Elastic Block storage, load balancing and free data transfers. The service will be free for one year for new users, after which it reverts to traditional pricing by the hour.

"We're excited to introduce a free usage tier for new AWS customers to help them get started on AWS," said Adam Selipsky, vice president of Amazon Web Services. "Everyone from entrepreneurial college students to developers at Fortune 500 companies can now launch new applications at zero expense and with the peace of mind that they can instantly scale to accommodate growth."

In addition to the now-free versions of Amazon's services, developers will be able to use some of Amazon's in-cloud services for free, such as Amazon’s Elastic Storage, the SimpleDB distributed database, and a notification service. Users of the free tier of AWS will also be able to use the following free (though limited) services: 1GB of storage on SimpleDB, 5GB of storage on S3, and an overall bandwidth cap of 15GB in and 15GB out per month.
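As a quick illustration of how those caps might be checked, the sketch below compares a month's usage against the limits quoted above. The resource names, the `over_limit` helper and the sample usage figures are all hypothetical; only the four limits come from the article, and nothing here reflects an actual AWS API or billing rule.

```python
# Limits quoted in the article, in GB.
FREE_TIER_GB = {
    "simpledb_storage": 1,
    "s3_storage": 5,
    "bandwidth_in": 15,
    "bandwidth_out": 15,
}


def over_limit(usage_gb):
    """Return {resource: GB over the free limit} for each exceeded resource."""
    return {
        name: used - FREE_TIER_GB[name]
        for name, used in usage_gb.items()
        if name in FREE_TIER_GB and used > FREE_TIER_GB[name]
    }


# Hypothetical usage for one month.
usage = {
    "simpledb_storage": 0.4,
    "s3_storage": 7.5,
    "bandwidth_in": 3,
    "bandwidth_out": 15,
}
print(over_limit(usage))
```

With this sample usage only S3 storage exceeds its cap, by 2.5GB; usage exactly at a limit (15GB out) is treated as still within the free tier.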

Amazon and Google are now competing directly with each other in the market for free cloud hosting. Google's AppEngine, however, is focused on specific application architectures: Java, or Python and Django, all using MySQL. Amazon, on the other hand, does not limit developers' choices, and it offers full hosting of anything that will run on the computers it hosts within its cloud.

The year limit, however, means that developers beginning for free on Amazon will eventually have to turn into paying customers, and this tier of free services will be offered only to new customers.