Windows Azure and Cloud Computing Posts for 11/15/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi recommended a Video: What’s New in Microsoft SQL Azure from TechEd Europe 2010 in an 11/15/2010 post:

In this demo-intensive video, David Robinson shows how to build Web applications quickly with SQL Azure Databases and familiar Web technologies. He also demonstrates several new enhancements Microsoft has added to SQL Azure, including the new SQL Azure Portal and these key features:

- Silverlight based

- Clean, intuitive design

- Integrated experience with other Windows Azure services

- Wizards to guide you through common tasks

- Rich filtering & pagination support

- View all your subscriptions at a single time

- Database managers (formerly Project ‘Houston’) integrated.

View the Video: What’s New in Microsoft SQL Azure.

Dave was the technical editor for my Cloud Computing with the Windows Azure Platform book.

My Windows Azure, SQL Azure, OData and Office 365 Sessions at Tech*Ed Europe 2010 post (updated 11/14/2010) provides a complete list of cloud-related presentations at Tech*Ed Europe with links to videos and PowerPoint slides.

<Return to section navigation list>

Marketplace DataMarket and OData

Tyler Bell (@twbell) asserted “Linked data allows for deep and serendipitous consumer experiences” as a subhead for his Where the semantic web stumbled, linked data will succeed post of 11/15/2010 to the O’Reilly Radar blog:

In the same way that the Holy Roman Empire was neither holy nor Roman, Facebook's OpenGraph Protocol is neither open nor a protocol. It is, however, an extremely straightforward and applicable standard for document metadata. From a strictly semantic viewpoint, OpenGraph is considered hardly worthy of comment: it is a frankenstandard, a mishmash of microformats and loosely-typed entities, lobbed casually into the semantic web world with hardly a backward glance.

But this is not important. While OpenGraph avoids, or outright ignores, many of the problematic issues surrounding semantic annotation (see Alex Iskold's excellent commentary on OpenGraph here on Radar), criticism focusing only on its technical purity is missing half of the equation. Facebook gets it right where other initiatives have failed. While OpenGraph is incomplete and imperfect, it is immediately usable and sympathetic with extant approaches. Most importantly, OpenGraph is one component in a wider ecosystem. Its deployment benefits are apparent to the consumer and the developer: add the metatags, get the "likes," know your customers.

Such consumer causality is critical to the adoption of any semantic mark-up. We've seen it before with microformats, whose eventual popularity was driven by their ability to improve how a page is represented in search engine listings, and not by an abstract desire to structure the unstructured. Successful adoption will often entail sacrificing standardization and semantic purity for pragmatic ease-of-use; this is where the semantic web appears to have stumbled, and where linked data will most likely succeed.

Linked data intends to make the Web more interconnected and data-oriented. Beyond this outcome, the term is less rigidly defined. I would argue that linked data is more of an ethos than a standard, focused on providing context, assisting in disambiguation, and increasing serendipity within the user experience. This idea of linked data can be delivered by a number of existing components that work together on the data, platform, and application levels:

- Entity provision: Defining the who, what, where and when of the Internet, entities encapsulate meaning and provide context by type. In its most basic sense, an entity is one row in a list of things organized by type -- such as people, places, or products -- each with a unique identifier. Organizations that realize the benefits of linked data are releasing entities like never before, including the publication of 10,000 subject headings by the New York Times, admin regions and postcodes from the UK's Ordnance Survey, placenames from Yahoo GeoPlanet, and the data infrastructures being created by Factual [disclosure: I've just signed on with Factual].

- Entity annotation: There are numerous formats for annotating entities when they exist in unstructured content, such as a web page or blog post. Facebook's OpenGraph is a form of entity annotation, as are HTML5 microdata, RDFa, and microformats such as hcard. Microdata is the shiny, new player in the game, but see Evan Prodromou's great post on RDFa v. microformats for a breakdown of these two more established approaches.

- Endpoints and Introspection: Entities contribute best to a linked data ecosystem when each is associated with a Uniform Resource Identifier (URI), an Internet-accessible, machine readable endpoint. These endpoints should provide introspection, the means to obtain the properties of that entity, including its relationship to others. For example, the Ordnance Survey URI for the "City of Southampton" is http://data.ordnancesurvey.co.uk/id/7000000000037256. Its properties can be retrieved in machine-readable format (RDF/XML,Turtle and JSON) by appending an "rdf," "ttl," or "json" extension to the above. To be properly open, URIs must be accessible outside a formal API and authentication mechanism, exposed to semantically-aware web crawlers and search tools such as Yahoo BOSS. Under this definition, local business URLs, for example, can serve in-part as URIs -- 'view source' to see the semi-structured data in these listings from Yelp (using hcard and OpenGraph), and Foursquare (using microdata and OpenGraph).

- Entity extraction: Some linked data enthusiasts long for the day when all content is annotated so that it can be understood equally well by machines and humans. Until we get to that happy place, we will continue to rely on entity extraction technologies that parse unstructured content for recognizable entities, and make contextually intelligent identifications of their type and identifier. Named entity recognition (NER) is one approach that employs the above entity lists, which may also be combined with heuristic approaches designed to recognize entities that lie outside of a known entity list. Yahoo, Google and Microsoft are all hugely interested in this area, and we'll see an increasing number of startups like Semantinet emerge with ever-improving precision and recall. If you want to see how entity extraction works first-hand, check out Reuters-owned Open Calais and experiment with their form-based tool.

- Entity concordance and crosswalking: The multitude of place namespaces illustrates how a single entity, such as a local business, will reside in multiple lists. Because the "unique" (U) in a URI is unique only to a given namespace, a world driven by linked data requires systems that explicitly match a single entity across namespaces. Examples of crosswalking services include: Placecast's Match API, which returns the Placecast IDs of any place when supplied with an hcard equivalent; Yahoo's Concordance, which returns the Where on Earth Identifier (WOEID) of a place using as input the place ID of one of fourteen external resources, including OpenStreetMap and Geonames; and the Guardian Content API, which allows users to search Guardian content using non-Guardian identifiers. These systems are the unsung heroes of the linked data world, facilitating interoperability by establishing links between identical entities across namespaces. Huge, unrealized value exists within these applications, and we need more of them.

- Relationships: Entities are only part of the story. The real power of the semantic web is realized in knowing how entities of different types relate to each other: actors to movies, employees to companies, politicians to donors, restaurants to neighborhoods, or brands to stores. The power of all graphs -- these networks of entities -- is not in the entities themselves (the nodes), but how they relate together (the edges). However, I may be alone in believing that we need to nail the problem of multiple instances of the same entity, via concordance and crosswalking, before we can tap properly into the rich vein that entity relationships offer.

The approaches outlined above combine to help publishers and application developers provide intelligent, deep and serendipitous consumer experiences. Examples include the semantic handset from Aro Mobile, the BBC's World Cup experience, and aggregating references on your Facebook news feed.

Linked data will triumph in this space because efforts to date focus less on the how and more on the why. RDF, SPARQL, OWL, and triple stores are onerous. URIs, micro-formats, RDFa, and JSON, less so. Why invest in difficult technologies if consumer outcomes can be realized with extant tools and knowledge? We have the means to realize linked data now -- the pieces of the puzzle are there and we (just) need to put them together.

Linked data is, at last, bringing the discussion around to the user. The consumer "end" trumps the semantic "means."

It’s surprising that the only mention of OData was in the comments.

<Return to section navigation list>

Azure AppFabric: Access Control and Service Bus

Arman Kurtagic explained Securing WCF REST Service with Azure AppFabric Access Control Service and OAuth(WRAP) in this 11/12/2010 tutorial:

Introduction

There are lots of code examples showing how to create REST services, but few examples of how to secure them. As security is one of the most important things when building any kind of system, it should not be underestimated. Most approaches to securing WCF REST services use SSL, some kind of custom implementation, and/or ASP.NET features. There have been some attempts to create OAuth channels for WCF REST services, and there are many implementations out there, but choosing among them is not easy and can be very confusing. How can I, very simply, use OAuth, for example, with my WCF REST services?

Handling security in RESTful applications can be done using transport security (SSL), message security (encrypting), authentication (signing messages, tokens) and authorization (which is controlled by the service).

When using token-based authentication (OAuth), the client retrieves a token from the service by sending a user name and password; in some cases it also sends a secret key and/or application key.

If you use WCF Data Services (formerly Astoria, ADO.NET Data Services), this can be done relatively easily, but in this case we will create a secure WCF REST service using Azure AppFabric Access Control Service.

As we will use Azure AppFabric Access Control, here are its key features as listed on CodePlex:

- Integrates with Windows Identity Foundation (WIF) and tooling

- Out-of-the-box support for popular web identity providers including: Windows Live ID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth 2.0 (draft 10), WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

Implementation

Steps that we will perform will look like this:

1. Configure Windows Azure Access Control via its portal.

2. Create a “secure” local WCF REST service using the WCF REST service template and implement a custom ServiceAuthorizationManager.

3. Create a test client that authenticates and retrieves a token from Access Control Service, which the WCF REST service then uses to validate calls. This can be viewed in the figure below.

Figure 1: Secure WCF REST service with ACS.

Configure Windows Azure Access Control (Define rules, keys, claims)

An administrator registers claims and keys with the ACS management service or through the Management Portal. ACS takes care of security and signs all tokens with a key that is generated in ACS.

The WCF service holds the key, which it uses to validate the token received from the client. I will go through all the steps to configure ACS via the management portal; this is also covered in the ACS labs on CodePlex. Otherwise, go to step 2.

1. Log in to https://portal.appfabriclabs.com

2. Press the project name to reach a new page where you can Add Service Namespace.

3. Then press the Access Control link.

4. Press the Manage button to be redirected to the Access Control Service main menu. Here we can administer tokens, certificates, groups, claims, and so on.

5. Press Relying Party Applications and then Add Relying Party Application to configure the relying party (the WCF REST service).

Here we are going to configure the service endpoint that will be “secure”.

a) Write the name of the service under Name.

b) Write the URL of the service that will be “secure” (in our case, a local service).

c) Under Token format I changed SAML 2.0 to SWT (optional).

d) Under Identity providers, unselect Windows Live ID.

e) Under Token Signing Options, press Generate to generate a new token signing key, which will be used by our service. You can copy this key now or retrieve it later.

6. Press Save and go back to the main menu (see the image under 4). Press Rule Groups; there should be a Default Rule Group for WCF Rest Azure Service (the one we created in the previous step).

a) Press Default Rule Group for WCF Rest Azure Service (or your relying party name).

b) Press Add Rule.

c) After pressing Add Rule you will be redirected to another page where you can choose Access Control Service under Claim Issuer. All other settings can keep their default values.

7. The last step is to create an identity for the service that the client (consumer) will use. In the main menu (under 4) press Service Identities and then Add Service Identity. This service identity name will then be used as a login name in the client code. Press Save.

8. Finally, press Add Credentials, enter a name and password, and then save.

Now we can work with our service code. This configuration can also be done programmatically. More information can be found at http://acs.codeplex.com.

Creating Secure WCF REST Service

Create a new Windows Azure Cloud Service and add a WCF Web Role. I used the new WCF REST template for that.

In Web.config add values for:

- Service Namespace (which we created under point 2), in my case armanwcfrest.

- Hostname for ACS.

- Signing key which we have generated (under point 5-e)

<appSettings>

<add key="AccessControlHostName" value="accesscontrol.appfabriclabs.com"/>

<add key="AccessControlNamespace" value="armanwcfrest"/>

<add key="IssuerSigningKey" value="yourkey"/>

</appSettings>

Now we need to create a custom authorization manager to authenticate each call to our service. For that purpose we create a custom class that inherits from ServiceAuthorizationManager.

ServiceAuthorizationManager is called once for each message that the service is going to process. It determines whether a user can perform an operation before deserialization or method invocation occurs on the service instance. That makes it preferable to PrincipalPermission, which invokes the method first; there is no reason to do that if the user will be rejected anyway. Another advantage of ServiceAuthorizationManager is that it separates the service's business logic from its authorization logic.

This custom class will do following things:

- Override CheckAccessCore to check for Authorization HttpHeader

- Check if that header begins with WRAP

- Take access_token value and use TokenValidator class (help class provided in code) to validate token.

- Return true if token validation succeeded or false if not.

65 lines of C# code elided for brevity.
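Because the tutorial's C# listing is elided here, the following is only a rough, language-neutral sketch in Python of the checks listed above; it is not the author's AccessControlServiceAuthorizationManager or TokenValidator code. It assumes the token is a Simple Web Token (SWT): URL-encoded name/value pairs whose last pair, HMACSHA256, carries a Base64 HMAC-SHA256 signature computed over everything before it with the Base64-decoded signing key. The function names and the Issuer, Audience and ExpiresOn checks are illustrative assumptions.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import parse_qsl

SIGNATURE_FIELD = "HMACSHA256"  # name of the SWT signature pair (assumed SWT layout)


def extract_wrap_token(authorization_header):
    """Return the access_token from a WRAP Authorization header, or None."""
    prefix = 'WRAP access_token="'
    if not authorization_header or not authorization_header.startswith(prefix):
        return None
    if not authorization_header.endswith('"'):
        return None
    return authorization_header[len(prefix):-1]


def sign(unsigned_token, signing_key_b64):
    """Compute the Base64 HMAC-SHA256 signature over the unsigned token text."""
    key = base64.b64decode(signing_key_b64)
    digest = hmac.new(key, unsigned_token.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def validate_swt(token, signing_key_b64, issuer, audience, now=None):
    """Check signature, issuer, audience and expiry of a Simple Web Token."""
    unsigned, marker, _ = token.rpartition("&" + SIGNATURE_FIELD + "=")
    if not marker:
        return False  # no signature pair at all
    claims = dict(parse_qsl(token))  # URL-decodes every name and value
    expected = sign(unsigned, signing_key_b64)
    presented = claims.get(SIGNATURE_FIELD, "")
    if not hmac.compare_digest(expected.encode("utf-8"), presented.encode("utf-8")):
        return False
    if claims.get("Issuer") != issuer or claims.get("Audience") != audience:
        return False
    try:
        expires_on = int(claims.get("ExpiresOn", ""))
    except ValueError:
        return False
    current = time.time() if now is None else now
    return expires_on > current


def check_access(authorization_header, signing_key_b64, issuer, audience, now=None):
    """Rough counterpart of CheckAccessCore: True only for a valid WRAP token."""
    token = extract_wrap_token(authorization_header)
    if token is None:
        return False
    return validate_swt(token, signing_key_b64, issuer, audience, now)
```

In the tutorial's setup, the signing key would be the IssuerSigningKey value from Web.config, and the issuer and audience would be derived from the ACS namespace and the relying party URL configured earlier.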

In Global.asax.cs I have created SecureWebServiceHostFactory that will be using AccessControlServiceAuthorizationManager class.

28 lines of C# source code elided for brevity.

Client Code

- The client application will retrieve a token from Azure AppFabric Access Control Service for a specific address (URL).

- It will send a message to our service with the token inside the Authorization header.

- Hopefully, it will receive a response.

Client.cs

39 lines of C# source code elided for brevity.
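Since the client listing is elided, here is a minimal Python sketch of the three client steps above; it is not the author's Client.cs. It assumes the OAuth WRAP v0.9 conventions: the token endpoint lives at a /WRAPv0.9/ path under the namespace host, the request is a form post carrying wrap_name, wrap_password and wrap_scope, and the response is a form-encoded body containing wrap_access_token. The function names are hypothetical, and request_token is the only function that touches the network.

```python
import urllib.request
from urllib.parse import parse_qs, urlencode


def token_endpoint(service_namespace, acs_host):
    """Build the ACS token URL (the /WRAPv0.9/ path is an assumption)."""
    return "https://{0}.{1}/WRAPv0.9/".format(service_namespace, acs_host)


def build_token_request(name, password, scope):
    """Form-encode the service identity credentials and the relying party URL."""
    fields = {"wrap_name": name, "wrap_password": password, "wrap_scope": scope}
    return urlencode(fields).encode("ascii")


def parse_token_response(body):
    """Pull the access token out of the form-encoded ACS response body."""
    tokens = parse_qs(body).get("wrap_access_token")
    if not tokens:
        raise ValueError("response does not contain wrap_access_token")
    return tokens[0]


def authorization_header(token):
    """Format the header value that the secured service expects."""
    return 'WRAP access_token="{0}"'.format(token)


def request_token(service_namespace, acs_host, name, password, scope):
    """Post the credentials to ACS and return the issued token (network call)."""
    request = urllib.request.Request(
        token_endpoint(service_namespace, acs_host),
        data=build_token_request(name, password, scope),
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return parse_token_response(response.read().decode("utf-8"))
```

The value returned by authorization_header would be sent as the Authorization header of each call to the REST service, where the service name and password are the service identity credentials created in the portal.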

Fiddler View client requesting token

Client call with Authorization Token and response from the service.

Resources:

More information about OAuth, identity and access control in the cloud can be found in Vittorio Bertocci’s session at PDC 2010.

- Documentation about OAuth.

- REST libraries: Hammock, RestSharp, WCF Web API.

- Source Code: RestService

Note: Many thanks to my friend Herbjörn for reviewing this post. Do not miss his presentation at Azure Summit, 17-18 Nov (2010).

David Kearns asserted “If you think of cloud computing as just the latest in a string of buzzwords, then you need to read ‘The Definitive Guide to Identity Management’” as a deck for his Book reveals truths about the cloud article of 11/12/2010 for NetworkWorld’s Security blog:

I've known Archie Reed for many years, since he was vice president of strategy for TruLogica back in the early years of this century. He went with that company when it was acquired by Compaq, then stayed when that organization was bought by HP, filling various roles involving identity and security until ending up, today, as HP's chief technologist for cloud security.

Back in 2004, I was touting Archie's eBook The Definitive Guide to Identity Management, still available today from Realtime Publishers (and worth reading if you haven't already).

Today Archie has another eBook out, Silver Clouds, Dark Linings: A Concise Guide to Cloud Computing, available for your Kindle and other eBook platforms.

If, like me, you think of cloud computing as just the latest in a string of buzzwords (service-oriented architecture, network computing, software-as-a-service, client server, etc.) for, essentially, the same thing then you need this book.

If you think that there's no need for an identity layer in cloud computing then you need this book.

For me, chapter 8 "Cloud Governance, Risk, and Compliance" is worth the price of the book, with its sub-chapters:

- Risk Management

- Governance

- Compliance

- Cohesive GRC

- Cloud GRC Model

- Cloud Service Portfolio Governance

- Cloud Service Consumer/Producer Governance

- Cloud Asset Vitality

- Cloud Organization Governance

- GRC Is a Process, Not a Project!

Reed and his co-author (Stephen G. Bennett, senior enterprise architect at Oracle) conclude the chapter with three essential truths:

- The actions of individuals can undermine your cloud service adoption efforts. Therefore define an appropriate cloud GRC model to address your planning, control and monitoring needs.

- Cloud services present many unique situations for you to address. Therefore, you must consider and weigh up the risks against any monetary gains.

- Effective cloud governance provides organizations with visibility into and oversight of cloud services used across the organization.

The rest of the book tells you how to get there.

Download the book to your preferred eBook reader then keep it handy whenever yet another business line manager casually mentions that they implemented yet another cloud computing service.

Alik Levin updated CodePlex’s Access Control Service Samples and Documentation (Labs) on 11/8/2010 (missed when posted):

Introduction

The Windows Azure AppFabric Access Control Service (ACS) makes it easy to authenticate and authorize users of your web sites and services. ACS integrates with popular web and enterprise identity providers, is compatible with most popular programming and runtime environments, and supports many protocols including: OAuth, OpenID, WS-Federation, and WS-Trust.

Contents

- Prerequisites: Describes what's required to get up and running with ACS.

- Getting Started: Walks through the simple end-to-end scenario.

- Working with the Management Portal: Describes how to use the ACS Management Portal.

- Working with the Security Token Service: Provides technical reference for working with the ACS Security Token Service:

  - ACS Token Anatomy: coming soon

  - WS-Federation: coming soon

  - WS-Trust: coming soon

  - OAuth 2.0: coming soon

- ACS Entities: Describes the entities used by ACS and how they impact token issuing behavior.

- Working with the Management Service: coming soon

- Samples: Descriptions and Readmes for the ACS Samples.

- ACS Content Map: Collection of external ACS-related content.

Key Features

- Integrates with Windows Identity Foundation and tooling (WIF)

- Out-of-the-box support for popular web identity providers including: Windows Live ID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth 2.0 (draft 10), WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

Platform Compatibility

ACS is compatible with virtually any modern web platform, including .NET, PHP, Python, Java, and Ruby. For a list of .NET system requirements, see Prerequisites.

Core Scenario

Most of the scenarios that involve ACS consist of four autonomous services:

- Relying Party (RP): Your web site or service

- Client: The browser or application that is attempting to gain access to the Relying Party

- Identity Provider (IdP): The site or service that can authenticate the Client

- ACS: The partition of ACS that is dedicated to the Relying Party

The core scenario is similar for web services and web sites, though the interaction with web sites utilizes the capabilities of the browser.

Web Site Scenario

This web site scenario is shown below:

1. The Client (in this case a browser) requests a resource at the RP. In most cases, this is simply an HTTP GET.

2. Since the request is not yet authenticated, the RP redirects the Client to the correct IdP. The RP may determine which IdP to redirect the Client to using the Home Realm Discovery capabilities of ACS.

3. The Client browses to the IdP authentication page, which prompts the user to log in.

4. After the Client is authenticated (e.g. enters credentials), the IdP issues a token.

5. After issuing a token, the IdP redirects the Client to ACS.

6. The Client sends the IdP issued token to ACS.

7. ACS validates the IdP issued token, inputs the data in the IdP issued token to the ACS rules engine, calculates the output claims, and mints a token that contains those claims.

8. ACS redirects the Client to the RP.

9. The Client sends the ACS issued token to the RP.

10. The RP validates the signature on the ACS issued token, and validates the claims in the ACS issued token.

11. The RP returns the resource representation originally requested in (1).

Web Service Scenario

The core web service scenario is shown below. It assumes that the web service client does not have access to a browser and the Client is acting autonomously (without a user directly participating in the scenario).

1. The Client logs in to the IdP (e.g. sends credentials).

2. After the Client is authenticated, the IdP mints a token.

3. The IdP returns the token to the Client.

4. The Client sends the IdP-issued token to ACS.

5. ACS validates the IdP issued token, inputs the data in the IdP issued token to the ACS rules engine, calculates the output claims, and mints a token that contains those claims.

6. ACS returns the ACS issued token to the Client.

7. The Client sends the ACS issued token to the RP.

8. The RP validates the signature on the ACS issued token, and validates the claims in the ACS issued token.

9. The RP returns the resource representation originally requested in (1).
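The rules-engine step in both scenarios, where ACS turns the claims in the IdP issued token into output claims, can be pictured with a toy model. The Python sketch below is purely illustrative and is not how ACS is implemented: the Claim and Rule shapes, the use of None as a wildcard or pass-through, and the apply_rules function are all assumptions made for the example.

```python
from collections import namedtuple

# A claim as seen by the toy engine: who asserted it, its type, and its value.
Claim = namedtuple("Claim", "issuer type value")

# A rule matches input claims from one issuer. in_type / in_value of None match
# anything; out_type / out_value of None pass the input through unchanged.
Rule = namedtuple("Rule", "in_issuer in_type in_value out_type out_value")


def apply_rules(rules, input_claims, output_issuer):
    """Return the output claims produced by running every rule on every input claim."""
    output = []
    for claim in input_claims:
        for rule in rules:
            if rule.in_issuer != claim.issuer:
                continue
            if rule.in_type is not None and rule.in_type != claim.type:
                continue
            if rule.in_value is not None and rule.in_value != claim.value:
                continue
            out_type = rule.out_type if rule.out_type is not None else claim.type
            out_value = rule.out_value if rule.out_value is not None else claim.value
            produced = Claim(output_issuer, out_type, out_value)
            if produced not in output:  # avoid emitting the same claim twice
                output.append(produced)
    return output


# Example: pass e-mail claims through and grant a role to one known address.
rules = [
    Rule("Google", "email", None, None, None),
    Rule("Google", "email", "admin@example.com", "role", "administrator"),
]
incoming = [
    Claim("Google", "email", "admin@example.com"),
    Claim("Google", "name", "Ann"),
]
issued = apply_rules(rules, incoming, "ACS")
```

In this model an input claim that matches no rule (the name claim here) simply does not appear in the minted token, which is why the RP only needs to trust claims issued by ACS.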

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

David Gristwood reported on Windows Azure learnings - Mydeo's 2 Week Proof of Concept on 11/15/2010:

Another video about Microsoft partners using Windows Azure, this time with Mydeo at the end of an intense 2 week Windows Azure “Proof of Concept” activity at our Microsoft Technology Center (MTC) in the UK.

In the video they talk about their motivation for looking at Windows Azure, the architecture they came up with, and what they achieved in the 2 weeks working with Windows Azure.

The application was log processing for a streaming video content delivery network.

Rubel Khan recommended Windows Azure/Cloud Computing Learning Snacks on 11/14/2010:

Learn more about Microsoft Windows Azure Platform, Microsoft Online Services, and other Microsoft cloud offerings by watching the short, bite-sized snacks featured on the Microsoft Silverlight Learning Snacks page.

Cloud computing is a paradigm shift that provides computing over the Internet. It offers infrastructure, platform, and software as services; organizations can use any of these services, depending on their business needs. Organizations can simply connect to the cloud and use the available resources on a pay-per-use basis. Microsoft offers flexible cloud-computing solutions that help communicate, collaborate, and store data in a cloud. The cloud services offered by Microsoft are cost effective, secure, always available, and platform independent. This snack explains the concept of cloud computing and its advantages. The snack also provides an overview of Microsoft’s cloud-computing strategy and offerings.

Brian Swan posted Resources from PHP World Kongress Presentation on 11/9/2010 (missed when posted):

I’m just posting to share the resources from my presentation today at the PHP World Kongress in Munich, Germany. I’ve attached the slide deck that I used, but I’m not sure how useful it will be since most of my presentation was demos. (I hope to get video versions of the demos posted soon. If the deck doesn’t make sense now on its own, hopefully it will make sense when I get videos posted.) Here’s the links from my “Resources” slide, which is what most folks asked for:

Downloads and Project Sites:

- WinCache: http://pecl.php.net/package/WinCache

- PHP Manager for IIS: http://phpmanager.codeplex.com/

- URL Rewrite module for IIS: http://www.iis.net/download/URLRewrite

- Windows Azure Command Line Tools for PHP: http://azurephptools.codeplex.com/

- Windows Azure Tools for Eclipse for PHP Developers: http://www.windowsazure4e.org/

- Windows Azure SDK for PHP: http://phpazure.codeplex.com/

- Windows Azure Companion: http://code.msdn.microsoft.com/azurecompanion

- SQL Server Reporting Services SDK for PHP: http://ssrsphp.codeplex.com/

- SQL Azure Migration Wizard: http://sqlazuremw.codeplex.com/

General information:

- Windows Azure platform: http://www.microsoft.com/windowsazure/

- Interoperability Team blog: http://blogs.msdn.com/b/interoperability/

- SQL Server Drivers for PHP Team blog: http://blogs.msdn.com/b/sqlphp/

- IIS Team blogs: http://blogs.iis.net/

OK…on to Berlin and TechEd tomorrow!

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

Tim Ferguson offered “Tips and tools for businesses to get on the private cloud bandwagon” in his Microsoft unveils Hyper-V Cloud scheme to push private clouds post to Silicon.com of 11/15/2010:

Microsoft has announced a new package of services aimed at businesses wanting to create their own private cloud infrastructures based on the Windows Server operating system.

Private cloud infrastructures allow organisations to use cloud computing to deliver hosted applications while keeping data within the company or at least isolated from other businesses. It is often touted as a way to gain the benefits of traditional public cloud computing, such as flexibility and scalability, while still guaranteeing data security.

Microsoft has already made moves in the private cloud field with the launch earlier this year of Windows Azure Platform Appliance. The appliance enables service providers and large customers to run the Azure development and hosting platform as a private cloud from their own datacentres, allowing developers to build new applications and run them in a cloud environment.

Now Microsoft has announced the Hyper-V Cloud, an initiative focused on the infrastructure-as-a-service side of private cloud, such as hosted computing power, networking and storage.

Microsoft's Hyper-V Cloud initiative is intended to help businesses implement private clouds more quickly (Photo credit: Shutterstock)

The Hyper-V Cloud initiative unveiled last week aims to provide tools to help speed private cloud deployments, among them deployment guides for using different hardware and software combinations to underpin rollouts - for example, an IBM server system, networking technology and storage that has been configured to work out of the box with Windows Server 2008 and Windows System Center as a part of a private cloud set-up.

The guides are based on work done by Microsoft Consulting Services and include modules covering architecture, deployment, operations, project validation and project planning.

The Hyper-V Cloud scheme also offers a list of 70 service providers around the world that provide hosted versions of infrastructures based on Microsoft technology.

In addition, part of the programme known as the Hyper-V Cloud Fast Track gives fixed combinations of software and hardware - such as networking and storage kit - that have been put together by Microsoft and partners including Dell, Fujitsu, Hitachi, HP and IBM to be used to support private cloud rollouts.

Dell, HP and IBM have all put together reference architectures for using their own virtualisation technology in conjunction with Windows 2008 Hyper-V and Microsoft System Center to provide foundations for running business applications in a cloud environment.

James Staten (@staten7) posted Cloud Predictions For 2011: Gains From Early Experiences Come Alive to his Forrester Research blog on 11/15/2010:

The second half of 2010 has laid a foundation in the infrastructure-as-a-service (IaaS) market that looks to make 2011 a landmark year. Moves by a variety of players may just turn this into a vibrant, steady market rather than today’s Amazon Web Services and a distant race for second.

- VMware vCloud Director finally shipped after much delay — a break from VMware’s rather steady on-time execution prior — and will power both ISP public clouds and enterprise private efforts in 2011.

- VMops changed its name and landed a passel of service providers; we’ll see if they live up to be the “.com” in Cloud.com.

- OpenStack came out of the gate with strong ISV support and small ISP momentum; 2011 may prove a make-or-break year for the open source upstart. And nearly every enterprise software player and professional services organization moved from learning about cloud to delivering value-add around it.

- Kudos to BMC, Novell, TrendMicro, and CA’s bank account for some particularly smart moves. Sadly, Oracle went from dismissal to misinformation when they cloudwashed San Francisco’s Moscone Center, despite actually making some solid moves in the cloud.

But enough with the past, what matters as 2010 edges towards a close is what enterprise Infrastructure & Operations (I&O) professionals should be planning for in the coming year. My esteemed colleague Gene Leganza has compiled the top 15 technology trends to watch over the next three years with cloud computing serving as an engine behind many of them. But let’s drill in more specifically on IaaS.

Not all moves that show promise today will result in a sustainable harvest come 12 months, but a few trends are likely to play out. Here are my top expectations:

- And The Empowered Shall Lead Us. In Forrester’s new book Empowered, we profile a new type of IT leader, and they don’t work for you. They work for the business, not I&O, and are leveraging technologies at the edge of the business to change relationships, improve customer support, design new products, and deliver value in ways you could not have foreseen. And, despite your “better judgment” you need to help them do this. Your frontline employees are the ones who see the change in the market first and are best positioned to guide the business on how to adjust. They can turn — and are turning — to cloud services to make this change happen but don’t always know how to leverage it best. This is where you must engage.

- You will build a private cloud, and it will fail. And this is a good thing. Because through this failure you will learn what it really takes to operate a cloud environment. Knowing this, your strategy should be to fail fast and fail quietly. Don’t take on a highly visible or wildly ambitious cloud effort. Start small, learn, iterate, and then expand.

- Hosted private clouds will outnumber internal clouds 3:1. The top reason empowered employees go to public cloud services is speed. They can gain access to these services in minutes. Private clouds must meet this demand and not once, but consistently. That means standardized procedures executed by automation software, not hero VMware administrators. And most enterprises aren’t ready to pass the baton. But service providers will be ready in 2011. This is your fast path to private cloud, so take it.

- Community clouds will arrive, thanks to compliance. The biotech field is already heading this direction. Federal government I&O teams are piloting them. And security and compliance will bring them together. Why struggle alone adapting your processes to meet FDA requirements when everyone else in your industry is doing the same? Cmed Technology is onto something here.

- Workstation applications will bring HPC to the masses. Autodesk’s Project Cumulus and the ISVs lining up behind GreenButton are showing the way, and they’ll do it because it expands — not threatens — their market. Both these companies have figured out how to put a cloud behind applications and in so doing deliver game-changing productivity: the kind of performance that can potentially match traditional grid computing but with nearly no effort by the customer. These moves leverage cloud economics and may disrupt supercomputing.

- Cloud economics gets switched on. Being cheap is good. We all know the basics of cloud economics (pay only for what you use), but the mechanism isn’t the lesson; it’s just the tool. Cloud economics 101 is matching elastic applications to cloud platforms and moving transient apps in and out so their costs are constantly returning to zero. Cloud economics 201 is designing and optimizing applications to take greatest advantage. Cloud economics 301 is knowing when and which cloud to use for maximum profitability. Look to early efforts such as Amazon Web Services’ Spot Instances and Enomaly’s SpotCloud to show the way here and the Cloud Price Calculator to help you normalize costs. As cloud segments such as IaaS commoditize, tools that let you play the market will grow in importance.

- The BI gap will widen. If business intelligence to you means a secure data warehouse, you will quickly learn which side of this gap you are on. Cloud technologies such as AWS’ Elastic Map Reduce, 1010Data, and BI unification will deliver real-time intelligence and cross-system insights that help businesses skate to where the puck is going and see — and make — the market shift before their competitors.

- Information is power and a new profit center. Not only will cloud computing help leading enterprises gain greater insight from their information, it will help them derive revenue from it too. Services such as Windows Azure DataMarket will help enterprises leverage data sources more easily and become one of those providers themselves. The Associated Press, Dun & Bradstreet, and ESRI are the model. Are you the next great provider? What value data are you keeping locked up in your vault?

- Cloud standards still won’t be here — get over it. Despite promising efforts by the DMTF, NIST, and the Cloud Security Alliance, this market will still be too immature for standardization. But that won’t mean a lack of progress in 2011. Expect draft specifications and even a possible ratification or two next year, but adoption of the standard will remain years off. Don’t let that hold you back from using cloud technologies, though, as most are built on the backs of prior standards efforts. Existing security, Web services, networking, and protocol standards are all in use by clouds. And cloud management tools are doing their best to abstract the difference from cloud to cloud.

- Cloud security will be proven but not by the providers alone. Because cloud security isn’t their responsibility — it’s shared. The cloud-leading enterprises get this, and we have already seen HIPAA, PCI, and other compliance standards met in the cloud. The cloud providers are certainly doing their part as evidenced by AWS's recent ISO 27001 certification. The best practices for doing this will spread in 2011, but we should all remember that you shouldn’t hold off on cloud computing until you solve these high-bar security challenges. Get started with applications that are easier to protect.

Simon Wardley (@swardley) described the “underlying transition (evolution) behind cloud” in his IT Extremists post of 11/14/2010:

The problem with any transition is that inevitably you end up with extremists, cloud computing and IT are no exception. I thought I'd say a few words on the subject.

I'll start with highlighting some points regarding the curve which I use to describe the underlying transition (evolution) behind cloud. I'm not going to simplify the graph quite as much as I normally do but then I'll assume it's not the first time readers have seen this.

Figure 1 - Lifecycle (click on image for higher resolution)

The points I'll highlight are :-

- IT isn't one thing, it's a mass of activities (the blue crosses)

- All activities are undergoing evolution (commonly known as commoditisation) from innovation to commodity.

- As activities shift towards more of a commodity, the value is in the service and not the bits. Hence the use of open source has natural advantages, particularly in provision of a marketplace of service providers.

- Commoditisation of an activity not only enables innovation of new activities (creative destruction), it can accelerate the rate of innovation (componentisation) of higher order systems and even accelerate the process of evolution of all activities (increase communication, participation etc).

- Commoditisation of an activity can result in increased consumption of that activity through price elasticity, long tail of unmet demand, increased agility and co-evolution of new industries. These are the principal causes of Jevons' paradox.

- As an activity evolves between different stages, risks occur including disruption (including previous relationships, political capital & investment), transition (including confusion, governance & trust) and outsourcing risks (including suitability, loss of strategic control and lack of pricing competition).

- Benefits of the evolution of an activity are standard and include increased efficiencies (including economies of scale, balancing of heterogeneous demand etc), ability of user to focus on core activities, increased rates of agility and tighter linking between expenditure and consumption.

- Within a competitive ecosystem, adoption of a more evolved model creates pressure for others to adopt (Red Queen Hypothesis).

- The process of evolution is itself driven by end user and supplier competition.

- The general properties of an activity change as it evolves from innovation (i.e. dynamic, deviates, uncertain, source of potential advantage, differential) to more of a commodity (i.e. repeated, standard, defined, operational efficiency, cost of doing business).

The above is a summary of some of the effects, however I'll use this to demonstrate the extremist views that appear in our IT field.

Private vs Public Cloud: in all other industries which have undergone this transition, a hybrid form (i.e. public + private) appeared and then the balance between the two extremes shifted towards more public provision as marketplaces developed. Whilst private provision didn't achieve (in general) the efficiencies of public provision, it can be used to mitigate transitional and outsourcing risks. Cloud computing is no exception, hybrid forms will appear purely for the reasons of balancing benefits vs risks and over time the balance between private and public will shift towards public provision as marketplaces form. Beware ideologists saying cloud will develop as just one or the other, history is not on their side.

Commoditisation vs Innovation: the beauty of commoditisation is that it enables and accelerates the rate of innovation of higher order systems. The development of commodity provision of electricity resulted in an explosion of innovation in things which consumed electricity. This process is behind our amazing technological progress over the last two hundred years. Beware those who say commoditisation will stifle innovation, history says the reverse.

IT is becoming a commodity vs IT isn't becoming a commodity: IT isn't one thing, it's a mass of activities. Some of those activities are becoming a commodity and new activities (i.e. innovations) are appearing all the time. Beware those describing the future of IT as though it's one thing.

Open Source vs Proprietary : each technique has a domain in which it has certain advantages. Open source has a peculiarly powerful advantage in accelerating the evolution of an activity towards being a commodity, a domain where open source has natural strengths. The two approaches are not mutually exclusive i.e. both can be used. However, as activities become provided through utility services, the economics of the product world doesn't apply i.e. most of the wealthy service companies in the future will be primarily using open source and happily buying up open source and proprietary groups. This is diametrically opposed to the current product world where proprietary product groups buy up open source companies. Beware the open source vs proprietary viewpoint and the application of old product ideas to the future.

I could go on all night and pick on a mass of subjects including Agile vs Six Sigma, Networked vs Hierarchical, Push vs Pull, Dynamic vs Linear ... but I won't. I'll just say that in general where there exist two opposite extremes, the answer normally involves a bit of both.

<Return to section navigation list>

Cloud Security and Governance

Todd Hoff asserted Strategy: Biggest Performance Impact is to Reduce the Number of HTTP Requests in an 11/15/2010 post to the High Scalability blog:

Low Cost, High Performance, Strong Security: Pick Any Three by Chris Palmer is a funny and informative presentation whose main message is: reduce the size and frequency of network communications, which will make your pages load faster, which will improve performance enough that you can use HTTPS all the time, which will make you safe and secure on-line, which is a good thing.

The benefits of HTTPS for security are overwhelming, but people are afraid of the performance hit. The argument is successfully made that the overhead of HTTPS is low enough that you can afford the cost if you do some basic optimization. Reducing the number of HTTP requests is a good source of low hanging fruit.

From the Yahoo UI Blog:

Reducing the number of HTTP requests has the biggest impact on reducing response time and is often the easiest performance improvement to make.

From the Experience of Gmail:

…we found that there were between fourteen and twenty-four HTTP requests required to load an inbox… it now takes as few as four requests from the click of the “Sign in” button to the display of your inbox.

So, design higher granularity services where more of the functionality is on the server side than the client side. This reduces the latency associated with network traffic and increases performance. More services less REST?

Other Suggestions for Reducing Network Traffic:

- DON’T have giant cookies, giant request parameters (e.g. .NET ViewState).

- DO compress responses (gzip, deflate).

- DO minify HTML, CSS, and JS.

- DO use sprites. DO compress images at the right compression level, and DO use the right compression algorithm for the job.

- DO maximize caching.
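Two of the suggestions above, compressing responses and cutting the request count, can be roughly quantified. The following is only a minimal sketch: the JSON body, the 100 ms round trip, and the 300 ms transfer time are assumed values chosen for illustration, and `page_load_ms` is a deliberately crude serial model rather than a measurement of any real site. Only the request counts of 24 and 4 come from the Gmail quote.

```python
import gzip
import json


def gzip_savings(payload: bytes) -> tuple:
    """Return (raw_size, compressed_size) in bytes for a response body."""
    compressed = gzip.compress(payload)
    return len(payload), len(compressed)


def page_load_ms(requests: int, round_trip_ms: float, transfer_ms: float) -> float:
    """Crude serial model: each request pays one round trip, plus a fixed transfer time."""
    return requests * round_trip_ms + transfer_ms


# Hypothetical, repetitive JSON body standing in for an inbox listing.
body = json.dumps(
    [{"id": i, "status": "unread", "folder": "inbox"} for i in range(200)]
).encode("utf-8")

raw, packed = gzip_savings(body)
print(f"raw={raw} bytes, gzip={packed} bytes")

# Request counts from the Gmail quote; the timing figures are assumptions.
before = page_load_ms(24, 100.0, 300.0)
after = page_load_ms(4, 100.0, 300.0)
print(f"before={before} ms, after={after} ms")
```

Under these assumed numbers the round trips dominate, which is why reducing the request count pays off before any tuning of the payload itself.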

<Return to section navigation list>

Cloud Computing Events

Watch live video from the UP 2010 Cloud Computing Conference on LiveStream. The following capture is from Doug Hauger’s The Move Is On: Cloud Strategies for Business keynote of Day 1: 11/15/2010:

K. Scott Morrison reported on 11/14/2010 that he will Talk at Upcoming Gartner AADI 2010 in LA: Bridging the Enterprise and the Cloud to be held 11/16/2010 at the JW Marriott at LA Live hotel:

I’ll be speaking this Tuesday, Nov 16 at the Gartner Application Architecture, Development and Integration Summit in Los Angeles. My talk is during lunch, so if you’re at the conference and hungry, you should definitely come by and see the show. I’ll be exploring the issues architects face when integrating cloud services—including not just SaaS, but also PaaS and IaaS—with on-premise data and applications.

I’ll also cover the challenges the enterprise faces when leveraging existing identity and access management systems in the cloud. I’ll even talk about the thinking behind Daryl Plummer’s Cloudstreams idea, which I wrote about last week.

Come by, say hello, and learn not just about the issues with cloud integration, but real solutions that will allow the enterprise to safely and securely integrate this resource into their IT strategy.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr described Amazon Web Services’ New EC2 Instance Type - The Cluster GPU Instance in an 11/15/2010 post:

If you have a mid-range or high-end video card in your desktop PC, it probably contains a specialized processor called a GPU or Graphics Processing Unit. The instruction set and memory architecture of a GPU are designed to handle the types of operations needed to display complex graphics at high speed.

The instruction sets typically include instructions for manipulating points in 2D or 3D space and for performing advanced types of calculations. The architecture of a GPU is also designed to handle long streams (usually known as vectors) of points with great efficiency. This takes the form of a deep pipeline and wide, high-bandwidth access to memory.

A few years ago advanced developers of numerical and scientific applications started to use GPUs to perform general-purpose calculations, termed GPGPU, for General-Purpose computing on Graphics Processing Units. Application development continued to grow as the demands of many additional applications were met with advances in GPU technology, including high performance double precision floating point and ECC memory. However, accessibility to such high-end technology, particularly on HPC cluster infrastructure for tightly coupled applications, has been elusive for many developers. Today we are introducing our latest EC2 instance type (this makes eleven, if you are counting at home) called the Cluster GPU Instance. Now any AWS user can develop and run GPGPU on a cost-effective, pay-as-you-go basis.

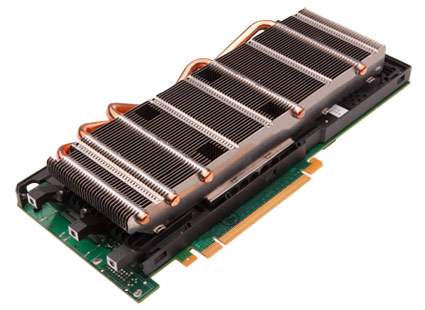

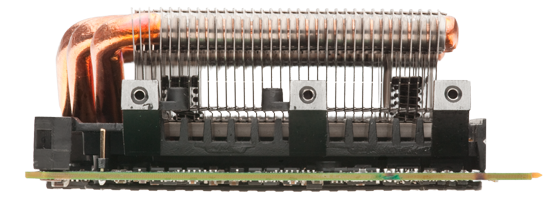

Similar to the Cluster Compute Instance type that we introduced earlier this year, the Cluster GPU Instance (cg1.4xlarge if you are using the EC2 APIs) has the following specs:

- A pair of NVIDIA Tesla M2050 "Fermi" GPUs.

- A pair of quad-core Intel "Nehalem" X5570 processors offering 33.5 ECUs (EC2 Compute Units).

- 22 GB of RAM.

- 1690 GB of local instance storage.

- 10 Gbps Ethernet, with the ability to create low latency, full bisection bandwidth HPC clusters.

Each of the Tesla M2050s contains 448 cores and 3 GB of ECC RAM and is designed to deliver up to 515 gigaflops of double-precision performance when pushed to the limit. Since each instance contains a pair of these processors, you can get slightly more than a trillion FLOPS per Cluster GPU instance. With the ability to cluster these instances over 10Gbps Ethernet, the compute power delivered for highly data parallel HPC, rendering, and media processing applications is staggering. I like to think of it as a nuclear-powered bulldozer that's about 1000 feet wide that you can use for just $2.10 per hour!

Each AWS account can use up to 8 Cluster GPU instances by default with more accessible by contacting us. Similar to Cluster Compute instances, this default setting exists to help us understand your needs for the technology early on and is not a technology limitation. For example, we have now removed this default setting on Cluster Compute instances and have long had users running clusters up through and above 128 nodes as well as running multiple clusters at once at varied scale.
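The arithmetic behind these figures is easy to check. The sketch below uses only numbers quoted above (two GPUs per instance, 515 double-precision gigaflops per GPU, $2.10 per hour, a default limit of 8 instances); the function names are illustrative, and the results are theoretical peaks, not measured throughput.

```python
GPUS_PER_INSTANCE = 2
PEAK_DP_GFLOPS_PER_GPU = 515      # double precision, per Tesla M2050
PRICE_PER_HOUR_USD = 2.10         # per Cluster GPU instance
DEFAULT_INSTANCE_LIMIT = 8


def peak_gflops(instances: int = 1) -> int:
    """Theoretical double-precision peak for a number of instances."""
    return instances * GPUS_PER_INSTANCE * PEAK_DP_GFLOPS_PER_GPU


def hourly_cost_usd(instances: int = 1) -> float:
    """On-demand cost per hour for a number of instances."""
    return instances * PRICE_PER_HOUR_USD


def usd_per_tflops_hour(instances: int = 1) -> float:
    """Price of one peak teraflops for one hour."""
    return hourly_cost_usd(instances) / (peak_gflops(instances) / 1000)


print(peak_gflops(1))                                  # one instance, in gigaflops
print(peak_gflops(DEFAULT_INSTANCE_LIMIT))             # default-limit cluster
print(round(hourly_cost_usd(DEFAULT_INSTANCE_LIMIT), 2))
print(round(usd_per_tflops_hour(), 2))
```

A single instance works out to 1,030 peak gigaflops, which is the "slightly more than a trillion FLOPS" in the text, and a cluster at the default limit costs $16.80 per hour.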

You'll need to develop or leverage some specialized code in order to achieve optimal GPU performance, of course. The Tesla GPUs implement the CUDA architecture. After installing the latest NVIDIA driver on your instance, you can make use of the Tesla GPUs in a number of different ways:

- You can write directly to the low-level CUDA Driver API.

- You can use higher-level functions in the C Runtime for CUDA.

- You can use existing higher-level languages such as FORTRAN, Python, C, C++, or Java.

- You can use CUDA versions of well-established packages such as CUBLAS (BLAS), CUFFT (FFT), and LAPACK.

- You can build new applications in OpenCL (Open Compute Language), a new cross-vendor standard for heterogeneous computing.

- You can run existing applications that have been adapted to make use of CUDA.

Elastic MapReduce can now take advantage of the Cluster Compute and Cluster GPU instances, giving you the ability to combine Hadoop's massively parallel processing architecture with high performance computing. You can focus on your application and Elastic MapReduce will handle workload parallelization, node configuration, scaling, and cluster management.

Here are some resources to help you to learn more about GPUs and GPU programming:

- NVIDIA GPU Computing Developer Home Page.

- CUDA Toolkit Download.

- CUDA By Example, published earlier this year.

- Programming Massively Parallel Processors, also published this year.

- The gpgpu.org site has a lot of interesting articles.

So, what do you think? Can you make use of this "bulldozer" in your application? What can you build with this much on-demand computing power at your fingertips? Leave a comment, let me know!

Werner Vogels (@werner) continued the Cluster GPU discussion with Expanding the Cloud - Adding the Incredible Power of the Amazon EC2 Cluster GPU Instances on 11/15/2010:

Today Amazon Web Services takes another step on the continuous innovation path by announcing a new Amazon EC2 instance type: The Cluster GPU Instance. Based on the Cluster Compute instance type, the Cluster GPU instance adds two NVIDIA Tesla M2050 GPUs offering GPU-based computational power of over one TeraFLOPS per instance. This incredible power is available for anyone to use in the usual pay-as-you-go model, removing the investment barrier that has kept many organizations from adopting GPUs for their workloads even though they knew there would be significant performance benefit.

From financial processing and traditional oil & gas exploration HPC applications to integrating complex 3D graphics into online and mobile applications, the applications of GPU processing appear to be limitless. We believe that making these GPU resources available for everyone to use at low cost will drive new innovation in the application of highly parallel programming models.

From CPU to GPU

Building general purpose architectures has always been hard; there are often so many conflicting requirements that you cannot derive an architecture that will serve all, so we have often ended up focusing on one side of the requirements that allow you to serve that area really well. For example, the most fundamental abstraction trade-off has always been latency versus throughput. These trade-offs have even impacted the way the lowest level building blocks in our computer architectures have been designed. Modern CPUs strongly favor lower latency of operations with clock cycles in the nanoseconds and we have built general purpose software architectures that can exploit these low latencies very well. Now that our ability to generate higher and higher clock rates has stalled and CPU architectural improvements have shifted focus towards multiple cores, we see that it is becoming harder to efficiently use these computer systems.

One trade-off area where our general purpose CPUs were not performing well was that of massive fine grain parallelism. Graphics processing is one such area with huge computational requirements, but where each of the tasks is relatively small and often a set of operations are performed on data in the form of a pipeline. The throughput of this pipeline is more important than the latency of the individual operations. Because of its focus on latency, the generic CPU yielded a rather inefficient system for graphics processing. This led to the birth of the Graphics Processing Unit (GPU) which was focused on providing a very fine grained parallel model, with processing organized in multiple stages, where the data would flow through. The model of a GPU is that of task parallelism describing the different stages in the pipeline, as well as data parallelism within each stage, resulting in a highly efficient, high throughput computation architecture.

The early GPU systems were very vendor specific and mostly consisted of graphic operators implemented in hardware being able to operate on data streams in parallel. This yielded a whole new generation of computer architectures where suddenly relatively simple workstations could be used for very complex graphics tasks such as Computer Aided Design. However these fixed functions for vertex and fragment operations eventually became too restrictive for the evolution of next generation graphics, so new GPU architectures were developed where user specific programs could be run in each of the stages of the pipeline. As each of these programs was becoming more complex and demand for new operations such as geometric processing increased, the GPU architecture evolved into one long feed-forward pipeline consisting of generic 32-bit processing units handling both task and data parallelism. The different stages were then load balanced across the available units.

General Purpose GPU programming

Programming the GPU evolved in a similar fashion; it started with the early APIs being mainly pass-through to the operations programmed in hardware. The second generation APIs to GPU systems were still graphics-oriented but under the covers implemented dynamic assignments of dedicated tasks over the generic pipeline. A third generation of APIs, however, left the graphics-specific interfaces behind and instead focused on exposing the pipeline as a generic highly parallel engine supporting task and data parallelism.

Already with the second generation APIs researchers and engineers had started to use the GPU for general purpose computing as the generic processing units of the modern GPU were extremely well suited to any system that could be decomposed into fine grain parallel tasks. But with the third generation interfaces, the true power of General Purpose GPU programming was unlocked. In the taxonomy of traditional parallelism, the programming of the pipeline is a combination of SIMD (single instruction, multiple data) inside a stage and SPMD (single program, multiple data) for how results get routed between stages. A programmer will write a series of threads each defining the individual SIMD tasks and then an SPMD program to execute those threads and collect and store/combine the results of those operations. The input data is often organized as a Grid.

NVIDIA's CUDA SDK provides a higher level interface with extensions in the C language that supports both multi-threading and data parallelism. The developer writes single C functions, each dubbed a "kernel", that operate on data and are executed by multiple threads according to an execution configuration. To easily facilitate different input models, threads can be organized into thread-blocks that are hierarchies for one-, two- and three-dimensional processors of vectors, matrices and volumes. Memories are organized into global memory, per-thread-block memory and per-thread private memory.
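The kernel and execution-configuration idea can be mimicked on a CPU to show how each thread finds its own element. This is a plain Python emulation, not CUDA code: `launch` and `saxpy_kernel` are hypothetical names, the nested loops run serially where a GPU would run the threads in parallel, and only a one-dimensional grid is modeled.

```python
def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, out):
    """Body executed once per (block, thread) pair: out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx   # global index of this thread
    if i < len(x):                           # guard: the last block may overhang the data
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Serial stand-in for a launch: visit every thread of every block."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n

block_dim = 4                                    # threads per block (assumed value)
grid_dim = (n + block_dim - 1) // block_dim      # enough blocks to cover n elements

launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)
```

The "execution configuration" here is the pair `(grid_dim, block_dim)`; rounding the grid size up and guarding the index inside the kernel is the usual way to handle inputs that are not a multiple of the block size.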

This combination of very basic primitives drives a whole range of different programming styles: map & reduce, scatter & gather & sort, as well as stream filtering and stream scanning. All running at extreme throughputs as high-end GPUs such as those supporting the Tesla "Fermi" CUDA architecture have close to 500 cores generating well over 500 GigaFLOPS per GPU.
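Two of the styles named above, reduce and stream scanning, share a pattern: the work is arranged in rounds, and every operation within a round is independent and could be given to its own thread. The sketch below shows that structure in serial Python. The tree reduction and the Hillis-Steele scan are well-known formulations chosen here for illustration; the source does not say which algorithms any particular GPU library uses.

```python
def tree_reduce(values):
    """Sum in about log2(n) rounds; additions within one round do not depend on each other."""
    data = list(values)
    if not data:
        return 0
    n = len(data)
    stride = 1
    while stride < n:
        # Each i below could be handled by a separate thread in the same round.
        for i in range(0, n - stride, 2 * stride):
            data[i] += data[i + stride]
        stride *= 2
    return data[0]


def inclusive_scan(values):
    """Hillis-Steele prefix sum: each round adds the element `offset` places to the left."""
    data = list(values)
    n = len(data)
    offset = 1
    while offset < n:
        prev = data[:]                      # double buffer: reads see the previous round only
        for i in range(offset, n):
            data[i] = prev[i] + prev[i - offset]
        offset *= 2
    return data


print(tree_reduce([1, 2, 3, 4, 5]))
print(inclusive_scan([1, 2, 3, 4, 5]))
```

The copy in `inclusive_scan` stands in for the synchronization a parallel version would need between rounds, so that no thread reads a value another thread has already overwritten.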

The NVIDIA "Fermi" architecture as implemented in the NVIDIA Tesla 20-series GPUs (where we are providing instances with Tesla M2050 GPUs) is a major step up from the earlier GPUs as it provides high performance double precision floating point operations (64FP) and ECC GDDR5 memory.

The Amazon EC2 Cluster GPU instance

Last week it was revealed that the world's fastest supercomputer is now the Tianhe-1A with a peak performance of 4.701 PetaFLOPS. The Tianhe-1A runs on 14,336 Xeon X5670 processors and 7,168 Nvidia Tesla M2050 general purpose GPUs. Each node in the system consists of two Xeon processors and one GPU.

The EC2 Cluster GPU instance provides even more power in each instance: the two Xeon X5570 processors are combined with two NVIDIA Tesla M2050 GPUs. This gives you more than a TeraFLOPS of processing power per instance. By default we allow any customer to instantiate clusters of up to 8 instances, making the incredible power of 8 TeraFLOPS available for anyone to use. This instance limit is a default usage limit, not a technology limit. If you need larger clusters we can make those available on request via the Amazon EC2 instance request form. If you are willing to switch to single precision floating point, the Tesla M2050 will even give you a TeraFLOPS of performance per GPU, doubling the overall performance.

We have already seen early customers out of the life sciences, financial, oil & gas, movie studios and graphics industries becoming very excited about the power these instances give them. Although everyone in the industry has known for years that General Purpose GPU processing is a direction with amazing potential, making major investments has been seen as high-risk given how fast moving the technology and programming was.

Cluster GPU programming in the Cloud with the Amazon Web Services changes all of that. The power of the world's most advanced GPUs is now available for everyone to use without any up-front investment, removing the risks and uncertainties that owning your own GPU infrastructure would involve. We have already seen with the EC2 Cluster Compute instances that "traditional" HPC has been unlocked for everyone to use, but Cluster GPU instances take this one step further, making innovative resources that were even outside the reach of most professionals now available for everyone to use at very low cost. An 8 TeraFLOPS HPC cluster of GPU-enabled nodes will now only cost you about $17 per hour.

CPU and/or GPU

As exciting as it is to make GPU programming available for everyone to use, unlocking its amazing potential, it certainly doesn't mean that this is the start of the end of CPU based High Performance Computing. Both GPU and CPU architectures have their sweet spots and although I believe we will see a shift in the direction of GPU programming, CPU based HPC will remain very important.

GPUs work best on problem sets that are ideally solved using massive fine-grained parallelism, using for example at least 5,000 - 10,000 threads. To be able to build applications that exploit this level of parallelism one needs to enter a very specific mindset of kernels, kernel functions, threads-blocks, grids of threads-blocks, mapping to hierarchical memory, etc. Configuring kernel execution is not a trivial exercise and requires GPU device specific knowledge. There are a number of techniques that every programmer has grown up with, such as branching, that are not available, or should be avoided on GPUs if one wants to truly exploit its power.
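One common way to sidestep data-dependent branching is to compute a 0/1 mask and blend the two candidate results arithmetically, so every thread follows the same instruction path. The sketch below shows the idea in Python with integer data. It illustrates the formulation only: the function names are hypothetical, and Python itself gains nothing from the rewrite, since the benefit appears only on hardware where threads executing together must share one path.

```python
def clamp_branching(value: int, floor: int) -> int:
    """Conventional version: control flow depends on the data."""
    if value < floor:
        return floor
    return value


def clamp_select(value: int, floor: int) -> int:
    """Mask-and-blend version: same arithmetic for every input, no data-dependent jump."""
    below = int(value < floor)            # 1 when the value must be raised, else 0
    return below * floor + (1 - below) * value


samples = [-3, 0, 2, 5, 9]
floor = 2
print([clamp_select(v, floor) for v in samples])
```

Both functions return the same results; the second simply expresses the choice as data rather than as control flow.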

HPC programming for CPUs is very convenient compared to GPU programming as the power of traditional serial programming can be combined with that of using multiple powerful processors. Although efficient parallel programming on CPUs absolutely also requires a certain level of expertise, its models and capabilities are closer to that of traditional programming. Where kernel functions on GPUs are best written as simple data operations combined with specific math operations, CPU based HPC programming can take on any level of complexity without any of the restrictions of, for example, the GPU memory models. Applications, libraries and the tools for CPU programming are plentiful and very mature, giving developers a wide range of options and programming paradigms.

One area where I expect progress will be made with the availability of the Cluster GPU instances is a combination of both HPC programming models that combines the power of CPUs and GPUs; after all, the Cluster GPU instances are based on the Cluster Compute Instances with their powerful quad core i7 processors.

Some good insight into the work that is needed to convert certain algorithms to run efficiently on GPUs can be found in the UCB/NVIDIA "Designing Efficient Sorting Algorithms for Manycore GPUs" paper.

Cluster Compute, Cluster GPU and Amazon EMR

Amazon Elastic MapReduce (EMR) makes it very easy to run Hadoop's (MapReduce) massively parallel processing tasks. Amazon EMR will handle workload parallelization, node configuration and scaling, and cluster management, such that our customers can focus on writing the actual HPC programs.
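
For readers unfamiliar with the MapReduce model that Hadoop implements, the following toy sketch runs the map, shuffle and reduce phases in a single Python process. It does not use any Amazon EMR or Hadoop API; the function names and the word-count job are assumptions chosen purely for illustration, and the distribution of work across nodes that EMR handles is not modeled at all.

```python
from collections import defaultdict

# Toy, single-process illustration of the MapReduce phases.
# No EMR or Hadoop API is used; all names here are hypothetical.

def map_phase(record):
    """Emit a (word, 1) pair for each word in one input line."""
    return [(word.lower(), 1) for word in record.split()]


def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values seen for one key into a single result."""
    return key, sum(values)


def run_job(records):
    mapped = [pair for record in records for pair in map_phase(record)]
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


lines = ["GPU cluster", "CPU cluster", "GPU GPU"]
counts = run_job(lines)
print(counts)
```

In a real cluster each call to `map_phase` and `reduce_phase` could run on a different node, which is why only those two functions need to be written by the developer.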

Starting today Amazon EMR can take advantage of the Cluster Compute and Cluster GPU instances, giving customers ever more powerful components on which to base their large-scale data processing and analysis. Programs that rely on significant network I/O will also benefit from the low latency, full bisection bandwidth 10Gbps Ethernet network between the instances in the clusters.

Where to go from here?

For more information on the new Cluster GPU Instances for Amazon EC2, visit the High Performance Computing with Amazon EC2 page. For more information on using the HPC Cluster instances with Amazon Elastic MapReduce, see the Amazon EMR detail page. More details can also be found on the AWS Developer blog.

Werner is Amazon.com’s Chief Technology Officer.

James Hamilton contributed his Amazon Cluster GPU analysis in his HPC in the Cloud with GPGPUs post of 11/15/2010:

A year and a half ago, I did a blog post titled heterogeneous computing using GPGPUs and FPGA. In that note I defined heterogeneous processing as the application of processors with different instruction set architectures (ISA) under direct application programmer control and pointed out that this really isn’t all that new a concept. We have had multiple ISAs in systems for years. IBM mainframes had I/O processors (Channel I/O Processors) with a different ISA than the general CPUs, many client systems have dedicated graphics coprocessors, and floating point units used to be independent from the CPU instruction set before that functionality was pulled up onto the chip. The concept isn’t new.

What is fairly new is 1) the practicality of implementing high-use software kernels in hardware and 2) the availability of commodity priced parts capable of vector processing. Looking first at moving custom software kernels into hardware, Field Programmable Gate Arrays (FPGA) are now targeted by some specialized high level programming languages. You can now write in a subset of C++ and directly implement commonly used software kernels in hardware. This is still the very early days of this technology but some financial institutions have been experimenting with moving computationally expensive financial calculations into FPGAs to save power and cost. See Platform-Based Electronic System-Level (ESL) Synthesis for more on this.

The second major category of heterogeneous computing is much further evolved and is now beginning to hit the mainstream. Graphics Processing Units (GPUs) essentially are vector processing units in disguise. They originally evolved as graphics accelerators but it turns out a general purpose graphics processor can form the basis of an incredible Single Instruction Multiple Data (SIMD) processing engine. Commodity GPUs have most of the capabilities of the vector units in early supercomputers. What’s missing is that they have been somewhat difficult to program, in that the pace of innovation is high and each model of GPU has differences in architecture and programming model. It’s almost impossible to write code that will directly run over a wide variety of different devices. And the large memories in these graphics processors typically are not ECC protected. An occasional pixel or two wrong doesn’t really matter in graphical output but you really do need ECC memory for server side computing.

Essentially we have commodity vector processing units that are hard to program and lack ECC. What to do? Add ECC memory and an abstraction layer that hides many of the device-to-device differences. With those two changes, we have amazingly powerful vector units available at commodity prices. One abstraction layer that is getting fairly broad pickup is Compute Unified Device Architecture or CUDA developed by NVIDIA. There are now CUDA runtime support libraries for many programming languages including C, FORTRAN, Python, Java, Ruby, and Perl.

Current generation GPUs are amazingly capable devices. I’ve covered the speeds and feeds of a couple in past postings: ATI RV770 and the NVIDIA GT200.

Bringing it all together, we have commodity vector units with ECC and an abstraction layer that makes it easier to program them and allows programs to run unchanged as devices are upgraded. Using GPUs to host general compute kernels is generically referred to as General Purpose Computation on Graphics Processing Units (GPGPU). So what is missing at this point? The pay-as-you-go economics of cloud computing.

You may recall I was excited last July when Amazon Web Services announced the Cluster Compute Instance: High Performance Computing Hits the Cloud. The EC2 Cluster Compute Instance is capable but lacks a GPU:

- 23GB memory with ECC

- 64GB/s main memory bandwidth

- 2 x Intel Xeon X5570 (quad-core Nehalem)

- 2 x 845GB HDDs

- 10Gbps Ethernet Network Interface

What Amazon Web Services just announced is a new instance type with the same core instance specifications as the cluster compute instance above, the same high-performance network, but with the addition of two NVIDIA Tesla M2050 GPUs in each server. See supercomputing at 1/10th the Cost. Each of these GPGPUs is capable of over 512 GFLOPs and so, with two of these units per server, there is a booming teraFLOP per node.

Each server in the cluster is equipped with a 10Gbps network interface card connected to a constant bisection bandwidth networking fabric. Any node can communicate with any other node at full line rate. It’s a thing of beauty and a forerunner of what every network should look like.

There are two full GPUs in each Cluster GPU instance, each of which dissipates 225W TDP. This felt high to me when I first saw it but, looking at work done per watt, it’s actually incredibly good for workloads amenable to vector processing. The key to the power efficiency is the performance. At over 10x the performance of a quad core x86, the package is both power efficient and cost efficient.
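
The work-per-watt argument can be checked with back-of-the-envelope arithmetic. The sketch below uses the 512 GFLOPS and 225W figures quoted in this post for each GPU; the CPU figures are assumptions that do not come from the post (a quad-core Xeon X5570 at 2.93 GHz, 4 double-precision floating point operations per core per cycle, and a 95W TDP), and all numbers are theoretical peaks rather than measured performance.

```python
# Peak-performance arithmetic only; nothing here is a measured benchmark.
gpu_gflops = 512.0           # per Tesla M2050, lower bound quoted in the post
gpu_watts = 225.0            # per-GPU TDP quoted in the post

# Assumptions not taken from the post: X5570 clock, flops/cycle and TDP.
cpu_cores = 4
cpu_ghz = 2.93
cpu_flops_per_cycle = 4      # assumed double-precision SSE throughput per core
cpu_watts = 95.0
cpu_gflops = cpu_cores * cpu_ghz * cpu_flops_per_cycle

speedup = gpu_gflops / cpu_gflops   # one GPU versus one quad-core CPU
gpu_eff = gpu_gflops / gpu_watts    # GFLOPS per watt
cpu_eff = cpu_gflops / cpu_watts

print(f"peak speedup: {speedup:.1f}x")
print(f"GPU: {gpu_eff:.2f} GFLOPS/W, CPU: {cpu_eff:.2f} GFLOPS/W")
print(f"efficiency ratio: {gpu_eff / cpu_eff:.1f}x")
```

Under these assumptions one GPU has a little over ten times the peak throughput of one quad-core CPU, which is consistent with the "over 10x" claim, and delivers several times more peak work per watt despite the higher TDP.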

The new cg1.4xlarge EC2 instance type:

- 2 x NVIDIA Tesla M2050 GPUs

- 22GB memory with ECC

- 2 x Intel Xeon X5570 (quad-core Nehalem)

- 2 x 845GB HDDs

- 10Gbps Ethernet Network Interface

With this most recent announcement, AWS now has dual quad core servers each with dual GPGPUs connected by a 10Gbps full-bisection bandwidth network for $2.10 per node hour. That’s roughly $2.10 per teraFLOP per hour. Wow.
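
The pricing arithmetic can be sketched as follows, using only figures quoted in this post: over 512 GFLOPS per GPU, two GPUs per node, and $2.10 per node hour. The eight-node cluster size is taken from the 8 TeraFLOPS example quoted earlier; these are peak figures, so sustained performance on a real workload would be lower.

```python
# All inputs are figures quoted in this post; throughput is theoretical peak.
gflops_per_gpu = 512.0        # lower bound ("over 512 GFLOPs")
gpus_per_node = 2
price_per_node_hour = 2.10    # US dollars
nodes = 8                     # cluster size from the 8 TeraFLOPS example

tflops_per_node = gflops_per_gpu * gpus_per_node / 1000.0
cluster_tflops = tflops_per_node * nodes
cluster_cost_per_hour = price_per_node_hour * nodes
cost_per_tflop_hour = price_per_node_hour / tflops_per_node

print(f"{tflops_per_node:.3f} TFLOPS per node")
print(f"{cluster_tflops:.2f} TFLOPS for ${cluster_cost_per_hour:.2f} per hour")
print(f"${cost_per_tflop_hour:.2f} per peak TFLOP-hour")
```

Eight nodes come to $16.80 per hour, which matches the "about $17 per hour" figure for an 8 TeraFLOPS cluster.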

- The Amazon Cluster GPU Instance type announcement: Announcing Cluster GPU Instances for EC2

- More information on the EC2 Cluster GPU instances: http://aws.amazon.com/ec2/hpc-applications/

James is a Vice President and Distinguished Engineer on the Amazon Web Services team where he is focused on infrastructure efficiency, reliability, and scaling. Prior to AWS, James was architect on the Microsoft Data Center Futures team and before that he was architect on the Live Platform Services team.

Tim Anderson (@timanderson) rings in with his Now you can rent GPU computing from Amazon analysis of 11/15/2010:

I wrote back in September about why programming the GPU is going mainstream. That’s even more the case today, with Amazon’s announcement of a Cluster GPU instance for the Elastic Compute Cloud. It is also a vote of confidence for NVIDIA’s CUDA architecture. Each Cluster GPU instance has two NVIDIA Tesla M2050 GPUs installed and costs $2.10 per hour. If one GPU instance is not enough, you can use up to 8 by default, with more available on request.

GPU programming in the cloud makes sense in cases where you need the performance of a super-computer, but not very often. It could also enable some powerful mobile applications, maybe in financial analysis, or image manipulation, where you use a mobile device to input data and view the results, but cloud processing to do the heavy lifting.

One of the ideas I discussed with someone from Adobe at the NVIDIA GPU conference was to integrate a cloud processing service with Photoshop, so you could send an image to the cloud, have some transformative magic done, and receive the processed image back.

The snag with this approach is that in many cases you have to shift a lot of data back and forth, which means you need a lot of bandwidth available before it makes sense. Still, Amazon has now provided the infrastructure to make processing as a service easy to offer. It is now over to the rest of us to find interesting ways to use it.
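
The bandwidth snag can be framed as a simple break-even test: offloading pays off only when upload time plus remote processing time plus download time is shorter than processing locally. The sketch below is illustrative only; the function names and every number in the two scenarios (image size, link speeds, processing times) are hypothetical assumptions, not measurements.

```python
# Break-even sketch for cloud offload. All names and numbers are hypothetical.

def transfer_seconds(megabytes, megabits_per_second):
    """Time to move a payload over a link, ignoring latency and overhead."""
    return megabytes * 8.0 / megabits_per_second


def offload_wins(local_s, remote_s, upload_mb, download_mb, up_mbps, down_mbps):
    """Return (worth_offloading, total_round_trip_seconds)."""
    round_trip = (transfer_seconds(upload_mb, up_mbps)
                  + remote_s
                  + transfer_seconds(download_mb, down_mbps))
    return round_trip < local_s, round_trip


# Hypothetical job: a 50 MB image, 60 s to process locally, 3 s on a GPU node.
slow = offload_wins(60.0, 3.0, 50.0, 50.0, up_mbps=2.0, down_mbps=10.0)
fast = offload_wins(60.0, 3.0, 50.0, 50.0, up_mbps=100.0, down_mbps=100.0)
print("slow link:", slow)
print("fast link:", fast)
```

With these made-up numbers the slow link spends far longer moving the image than the local machine would spend processing it, while the fast link makes the round trip worthwhile.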

Derrick Harris added GPGPU background in his Amazon Gets Graphic with Cloud GPU Instances post to the GigaOm blog of 11/15/2010:

Amazon Web Services today upped its high-performance computing portfolio by offering servers that will run graphics processors. The move comes on the heels of AWS releasing its Cluster Compute Instances in July, and validates the idea that specialized hardware may be better suited for certain types of computing in the cloud.

Sharing the same low-latency 10 GbE infrastructure as the original Cluster Compute Instances, GPU Instances include two Nvidia Tesla M2050 graphical processing units apiece to give users an ideal platform for performing graphically intensive or massively parallel workloads. AWS isn’t the first cloud provider to incorporate GPUs, but it’s certainly the most important one to do so. Furthermore, the advent of GPU Instances is one more sign that HPC need not be solely the domain of expensive on-premise clusters.

As I pointed out back in May, even Argonne National Laboratory’s Ian Foster, widely credited as a father of grid computing, has noted the relatively comparable performance between Amazon EC2 resources and supercomputers for certain workloads. Indeed, even with supercomputing resources having been available “on demand,” for quite a while, industries like pharmaceuticals – and even space exploration – have latched onto Amazon EC2 for access to cheap resources at scale, largely because of its truly on-demand nature. Cluster Compute Instances no doubt made AWS an even more appealing option thanks to its high-throughput, low-latency network, and GPU Instances could be the icing on the cake.

GPUs are great for tasks like video rendering and image modeling, as well as for churning through calculations in certain financial simulations. Skilled programmers might even write applications that offload only certain application tasks to GPU Instances, while standard Cluster Compute Instances handle the brunt of the work. This is an increasingly common practice in heterogeneous HPC systems, especially with specialty processors like IBM’s Cell Broadband Engine Architecture.

AWS will be competing with other cloud providers for HPC business, though. In July, Peer1 Hosting rolled out its own Nvidia-powered cloud that aims to help developers add 3-D capabilities to web applications (although, as Om noted then, movie studios might comprise a big, if not the primary, user base for such an offering). Also in July, New Mexico-based cloud provider Cerelink announced that it won a five-year deal with Dreamworks Animation to perform rendering on Cerelink’s Xeon-based HPC cluster. IBM has since ceased production of the aforementioned Cell processor, but its HPC prowess and new vertical-focused cloud strategy could make a CPU-GPU cloud for the oil and gas industry, for example, a realistic offering.

Still, it’s tough to see any individual provider stealing too much HPC business from AWS. By-the-hour pricing and the relative ease of programming to EC2 have had advanced users drooling for years, if only it could provide the necessary performance. By and large, the combination of Cluster Compute and GPU Instances solves that problem, especially for ad hoc jobs or those that don’t require sharing data among institutions.

Amazon’s GPU Instances come as the annual Supercomputing conference kicks off in New Orleans, an event at which cloud computing has taken on a greater presence over the past few years. Already today, Platform Computing announced a collection of capabilities across its HPC product line to let customers manage and burst to cloud-based resources. Platform got into the cloud business last year with an internal-cloud management platform targeting all types of workloads.

<Return to section navigation list>
