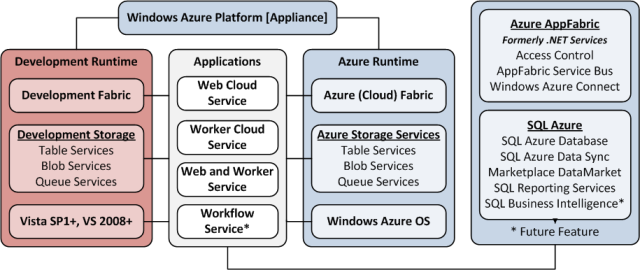

Windows Azure and Cloud Computing Posts for 11/16/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Wayne Walter Berry (@WayneBerry) reported the availability of a new article about SQL Azure Backup and Restore Strategy in an 11/16/2010 post to the SQL Azure Team blog:

A new article about developing a SQL Azure Backup and Restore Strategy has been posted in the wiki section of TechNet. This article discusses how to port your existing backup and restore strategy to SQL Azure to cover against hardware failure, force majeure, and user error. Along with developing your strategy, it covers which tools to use and best practices when backing up SQL Azure.

Sreedhar Pelluru announced Sync Framework 4.0 October 2010 CTP Refreshed on 11/16 in an 11/16/2010 post to the Sync Framework Team blog:

We just refreshed the Sync Framework 4.0 CTP bits to add the following two features:

- Tooling Wizard UI: This adds a UI wizard on top of the command line based SyncSvcUtil utility. This wizard allows you to select tables, columns, and even rows to define a sync scope, provision/de-provision a database and generate server-side/client-side code based on the data schema that you have. This minimizes the amount of code that you have to write yourself to build sync services or offline applications. This Tooling Wizard can be found at: C:\Program Files (x86)\Microsoft SDKs\Microsoft Sync Framework\4.0\bin\SyncSvcUtilHelper.exe.

- iPhone Sample: This sample shows you how to develop an offline application on iPhone/iPad with SQLite for a particular remote schema by consuming the protocol directly. The iPhone sample can be found at: C:\Program Files (x86)\Microsoft SDKs\Microsoft Sync Framework\4.0\Samples\iPhoneSample.

More information about this release:

Download: The refreshed bits can be found in the same place as the public CTP released at PDC. You can find the download instructions here.

Documentation: We also refreshed the 4.0 CTP Documentation online at MSDN here.

PDC Session: To learn more about this release 4.0 in general, take a look at our PDC session recording "Building Offline Applications using Sync Framework and SQL Azure".

<In this release, we decided to bump the version of all binaries to 4.0, skipping version 3.0 to keep the version number consistent across all components.>

Sreedhar Pelluru reported the availability of Updated Sync Framework 4.0 CTP Documentation in an 11/16/2010 post:

The Sync Framework 4.0 CTP documentation on MSDN library (http://msdn.microsoft.com/en-us/library/gg299051(v=SQL.110).aspx) has been updated. It now includes the documentation for the SyncSvcUtilHelper UI tool, which is built on top of the SyncSvcUtil command-line tool. This UI tool exposes all the functionalities supported by the command-line tool. In addition, it lets you create or edit a configuration file that you can use later to provision/deprovision SQL Server/SQL Azure databases and to generate server/client code.

The ADO.NET Team posted Connecting to SQL Azure using Entity Framework to its blog on 11/15/2010:

The Entity Framework provides a very powerful way to access data stored in the cloud, particularly in SQL Azure. Over the past month the SQL Azure team has been posting some great info on how to use Entity Framework:

Using EF’s Model First capability with SQL Azure

This walkthrough shows how you can use EF to actually build your tables in SQL Azure directly from the EF designer.

Demo: Using Entity Framework to Create and Query a SQL Azure Database In Less Than 10 Minutes

In this article you’ll see two great videos – in the first, Faisal Mohamood explains what EF is and some of the benefits it offers .NET developers. In the second, you’ll see how EF can be used to create a SQL Azure database from scratch, populate it with data, and query it – all in less than 10 minutes!

Porting the MVC Music Store to SQL Azure

In this article you’ll see how to take the MVC Music Store tutorial application (which uses EF) and port it to SQL Azure.

Finally, check out this month’s issue of MSDN Magazine where Julie Lerman talks about some of the performance considerations when using EF with SQL Azure.

<Return to section navigation list>

Marketplace DataMarket and OData

Julian Lai described Additional Feed Customization Support for WCF Data Services in an 11/15/2010 post to the WCF Data Services Team blog:

1. Problem:

In the previous version, WCF Data Services introduced the feed customization feature. This feature enabled users to map properties to certain elements in the Atom document. As an example, users could map a name property to the Atom title element. This is especially useful for generic feed readers which may not know how to interpret the properties in the content element but understand other Atom elements.

The OData protocol, and therefore our implementation, did not allow users to map to the repeating Atom link and category elements. In addition, users never had the ability to map properties based on a condition.

2. Design:

We have extended the set of Atom elements that can be mapped and introduced a new feature known as Conditional Entity Property Mapping (EPM) to solve the issues discussed above. This new feature includes 2 parts. First, users can specify standard, unconditional mapping to Atom link and category elements. Second is the ability to map to the Atom link and category elements based on a condition. For example, only apply the mapping when the link element’s rel attribute (denoted as link/@rel) has a value equal to “http://MyValue”. We’ll get into some specifics later on in this post but the condition only applies to the link/@rel and category/@scheme attributes. Conditional mapping is not supported for other Atom or Custom feed elements at this time.

3. Understanding the Feature:

3.1 Extending Feed Customizations (Unconditional Mappings):

Let’s first start with unconditional mapping to Atom link and category elements. This is the same process as the feed customization introduced in the previous version.

Mappings are described on classes through the EntityPropertyMappingAttribute. This attribute is present on generated client classes to describe the mapping, and is used to define mappings exposed by the reflection provider:

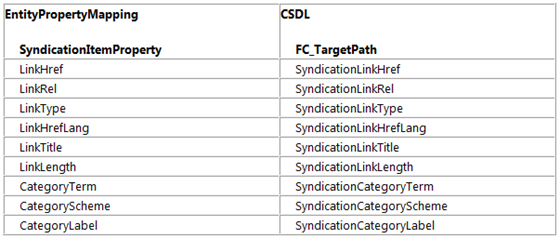

    public EntityPropertyMappingAttribute(
        string sourcePath,
        SyndicationItemProperty targetSyndicationItem,
        SyndicationTextContentKind targetTextContentKind,
        bool keepInContent
    )

We updated the SyndicationItemProperty enum to include the link and category element’s attributes. The full list of updates can be found in the table below.

A service describes mapping through the CSDL exposed by its $metadata endpoint. These same CSDL annotations are used to describe mappings to the Entity Framework (EF) provider. The set of valid values for FC_TargetPath has been extended to include the new link and category attributes:

    <Property Name="Term" Type="String" Nullable="false" MaxLength="40"
              Unicode="true" FixedLength="false"
              m:FC_TargetPath="SyndicationCategoryTerm"
              m:FC_ContentKind="text"
              m:FC_KeepInContent="false" />

The additions to both the SyndicationItemProperty enum and FC_TargetPath attribute can be found in the table below:

In the mapping described above, the “Term” property is mapped to “SyndicationCategoryTerm” and the property is also kept in the content element. Assuming the value of the “Term” property is “MyTermValue” and no other mappings exist, that section of the entry would look something like:

    …
    <category term="MyTermValue" />
    <content type="application/xml">
      <m:properties>
        …
      </m:properties>
    </content>

When mapping to the Atom link element, there is an additional rule to consider. Since an empty link/@rel value is interpreted as “alternate” (RFC4287), and the OData protocol does not allow mapping link/@rel to simple identifiers (e.g., edit, self, alternate, …), when defining unconditional mapping to the link element we must specify a mapping to link/@rel:

    [EntityPropertyMappingAttribute("MyHref", SyndicationItemProperty.LinkHref, SyndicationTextContentKind.Plaintext, true)]
    [EntityPropertyMappingAttribute("MyRel", SyndicationItemProperty.LinkRel, SyndicationTextContentKind.Plaintext, true)]
    [DataServiceKeyAttribute("id")]
    public class Photos
    {
        public int id { get; set; }
        public string MyHref { get; set; }
        public string MyRel { get; set; }
    }

3.2 New Conditional Entity Property Mappings:

Now that we’ve covered the extensions to feed customization mappings, let’s look at the second part of this feature, conditional mapping. There are situations where a user may only want to map to Atom link and category elements when they satisfy a certain condition. For example, assume we have a model that contains a collection of photos. These photos may differ in their resolution (e.g., hi-res, low-res, thumbnail). It makes sense to map the resolution to a category element since it helps to distinguish the different photos. Conditions are useful here because there may be other category elements we don’t care about.

In order to use conditions in the mappings, we introduced a new EntityPropertyMappingAttribute constructor:

    public EntityPropertyMappingAttribute(
        string sourcePath,
        SyndicationItemProperty targetSyndicationItem,
        bool keepInContent,
        SyndicationCriteria criteria,
        string criteriaValue
    )

Notice the addition of the criteria and criteriaValue parameters. The SyndicationCriteria parameter is a new enum with values:

- LinkRel

- CategoryScheme

Similarly, we are introducing new CSDL attributes for FC_Criteria and FC_CriteriaValue. For FC_Criteria, the corresponding values are:

- SyndicationLinkRel

- SyndicationCategoryScheme

The criteria indicates the attribute on which the condition exists. For example, if the criteria equals “CategoryScheme”, the condition would depend on the value of the category/@scheme.

The criteriaValue parameter specifies the value that must be matched in order for the mapping to apply. To make this clearer, let’s look at the following example:

    [EntityPropertyMappingAttribute("Term",          // sourcePath
        SyndicationItemProperty.CategoryTerm,        // targetPath
        false,                                       // keepInContent
        SyndicationCriteria.CategoryScheme,          // criteria
        "http://foo.com/MyPhotos")]                  // criteriaValue
    [DataServiceKeyAttribute("id")]
    public class Category
    {
        public int id { get; set; }
        public string Term { get; set; }
    }

To understand what this mapping describes, we’ll break it down into the serialization and deserialization steps. When serializing to a feed, the “Term” property will be mapped to category/@term with category/@scheme equal to the criteriaValue, “http://foo.com/MyPhotos”. Assuming the “Term” property has a value of “MyTermValue”, the mapping above would generate a feed like:

    <entry>
      …
      <category term="MyTermValue" scheme="http://foo.com/MyPhotos" />
      <content type="application/xml">
        <m:properties>
          …

For deserialization, if the entry has a category element where category/@scheme equals “http://foo.com/MyPhotos”, the mapping will occur. All other category elements will be ignored by this mapping. It is important to know that the attribute value and criteriaValue are compared as non-escaped strings, and that the comparison is case-insensitive.
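The matching rule for deserialization can be illustrated outside of WCF Data Services. The following is only a sketch in Python using the standard library's XML parser; it is not the library's implementation. The function name `conditional_category_term`, the sample entry, and the `http://example.org/other` scheme are all hypothetical and chosen for illustration. It assumes the rule as described above: pick the category whose scheme equals the criteria value, ignoring case, and skip every other category element.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def conditional_category_term(entry_xml, criteria_value):
    """Return the term of the first category whose scheme matches
    criteria_value (case-insensitively), or None if nothing matches.

    Hypothetical helper: it mimics the conditional-mapping rule described
    in the post, not the actual WCF Data Services code path.
    """
    entry = ET.fromstring(entry_xml)
    for category in entry.findall(f"{{{ATOM_NS}}}category"):
        scheme = category.get("scheme", "")
        # Case-insensitive comparison of the plain (non-escaped) strings.
        if scheme.lower() == criteria_value.lower():
            return category.get("term")
    return None


# Illustrative entry: one category that should be ignored and one that
# matches even though its scheme differs in letter case.
SAMPLE_ENTRY = """<entry xmlns="http://www.w3.org/2005/Atom">
  <category term="Ignored" scheme="http://example.org/other" />
  <category term="MyTermValue" scheme="HTTP://FOO.COM/MyPhotos" />
</entry>"""

if __name__ == "__main__":
    print(conditional_category_term(SAMPLE_ENTRY, "http://foo.com/MyPhotos"))
```

In this sketch the first category is skipped because its scheme differs, while the second is selected despite the difference in letter case.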

To finish off the examples, here is a conditional mapping to the link element:

    [EntityPropertyMappingAttribute("MyHref",        // sourcePath
        SyndicationItemProperty.LinkHref,            // targetPath
        false,                                       // keepInContent
        SyndicationCriteria.LinkRel,                 // criteria
        "http://MyRelValue")]                        // criteriaValue
    [DataServiceKeyAttribute("id")]
    public class Photos
    {
        public int id { get; set; }
        public string MyHref { get; set; }
    }

Notice how the condition is on link/@rel. Since the criteriaValue is not a simple identifier, this mapping automatically satisfies the simple identifier rule described above. When serializing to a feed, this will look like:

    …
    <link href="http://hrefValue.com/" rel="http://MyRelValue" />
    …

To summarize, this new feature includes 2 parts. The first part extends Feed Customization to the Atom link and category elements. The second part introduces mapping based on conditions.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Yefim V. Natis, David Mitchell Smith and David W. Cearley co-authored a Windows Azure AppFabric: A Strategic Core of Microsoft's Cloud Platform research report for Gartner on 11/15/2010:

Continuing strategic investment in Windows Azure is moving Microsoft toward a leadership position in the cloud platform market, but success is not assured until user adoption confirms the company's vision and its ability to execute in this new environment.

If you’re not a Gartner client, you can read this report by paying US$2,495 for it.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

Cumulux explained Microsoft Azure Connect (formerly Project “Sydney”) in an 11/16/2010 post:

The Windows Azure Connect feature announced at Microsoft's Professional Developers Conference 2010 provides a simple, easy-to-manage mechanism to set up IP-based connectivity between Azure and on-premises data resources. This makes it easier for an organization to move its currently running applications to the cloud by enabling direct IP-based network connectivity with its existing on-premises infrastructure.

For example, a company can deploy a Windows Azure application that connects to an on-premises SQL Server database, or domain-join Windows Azure services to their Active Directory deployment. In addition, Windows Azure Connect helps users connect securely to their cloud-hosted virtual machines. This feature will power safe remote administration and troubleshooting using the same tools the users use for on-premises applications.

A CTP will be released at the end of 2010. It will include an on-premises agent that lets Azure connect to on-premises (non-Azure) resources. Windows Server 2008, Windows Server 2008 R2, Windows 7, Windows Vista SP1 and later versions will be supported. Future versions will give users an enhanced ability to connect to Azure securely through the VPN services their organizations already use.

This will be a pivotal feature because it lays the foundation for the connections that will power secure communication and transactions between cloud and on-premises resources.

For [a] more detailed technical overview, [check out] Microsoft PDC 2010 session on Azure Connect.

Bill Zack reported VM Role, Extra Small Instance and Windows Azure AppFabric Connect – Coming soon to Windows Azure! in an 11/16/2010 post to the Ignition Showcase blog:

These new Windows Azure features will be here soon:

- VM Role is not Infrastructure as a Service (IaaS) but it does combine the best of IaaS and PaaS (Platform as a Service). ISVs and SIs can leverage the VM Role as a transition tool to be used in moving an existing application to the cloud.

- Extra Small Instance is a subset of the existing Small role size that is priced even lower. This role size provides you with a cost-effective training and development environment, the ability to prototype cloud solutions at a lower cost, and a way to experiment in the cloud before running your service at full scale.

- Windows Azure AppFabric Connect will give you more flexibility in integrating in-cloud services with your own data center based components. It also allows the integration of your Windows Azure applications and data with your customer’s internal networks in a secure and safe manner.

If you would like to be notified when new Windows Azure features are available, as well as when we start accepting registrations for the VM role, Extra Small instance Beta and the Windows Azure Connect CTP, please click here to sign up.

Bill Zack

I’ve signed up; you should, too.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruce Kyle reported Bioscience “BLAST” Comes to Windows Azure, HPC Connects to the Cloud in an 11/16/2010 post to the US ISV Evangelism blog:

Today at the Supercomputing (SC) 2010 conference, Microsoft Corp. announced the release of NCBI BLAST on Windows Azure. The new application enables a broader community of scientists to combine desktop resources with the power of cloud computing for critical biological research. At the conference, Microsoft showcased the enormous scale of the application on Windows Azure, demonstrating its use for 100 billion comparisons of protein sequences in a database managed by the National Center for Biotechnology Information (NCBI).

About BLAST

Researchers in bioinformatics, energy, drug research and many other fields use the Basic Local Alignment Search Tool (BLAST) to sift through large databases, to help identify new animal species, improve drug effectiveness and produce biofuels, and for other purposes. NCBI BLAST on Windows Azure provides a user-friendly Web interface and access to Windows Azure cloud computing for very large BLAST computations, as well as smaller-scale operations. The application will allow scientists to use and collaborate with their private data collections, as well as data hosted on Windows Azure, including NCBI public protein data collections and the results of Microsoft’s large protein comparison.

NCBI BLAST on Windows Azure software is available from Microsoft at no cost, and Windows Azure resources are available at no charge to many researchers through Microsoft’s Global Cloud Research Engagement Initiative. More information is available at http://research.microsoft.com/azure.

Connecting Windows HPC Server to Windows Azure

At SC 2010 Microsoft also announced that by the end of the year it will release Service Pack 1 for Windows HPC Server 2008 R2, allowing customers to connect their on-premises high-performance computing systems to Windows Azure. This capability provides customers with on-demand scale and capacity for high-performance computing applications, lowering IT costs and speeding discovery.

In addition, Microsoft announced that Windows HPC Server has surpassed a petaflop of performance, a degree of scale achieved by fewer than a dozen supercomputers worldwide. The Tokyo Institute of Technology has verified that its Tsubame 2.0 supercomputer running on Windows HPC Server has exceeded the ability to execute a quadrillion mathematical computations per second. The achievement demonstrates that Windows HPC Server can provide world-class high-performance computing on cost-effective software accessible to a wide range of organizations.

Matthew Weinberger reported Mimecast Extends Security and Archiving to Microsoft Cloud in an 11/16/2010 post to the MSPMentor blog:

Mimecast, which already provides cloud-based email security, continuity, archiving and policy control, now plans to support the Microsoft BPOS SaaS productivity and collaboration suite. The company says the new support seeks to solve customers’ migration headaches. Here are the details.

While Mimecast previously offered support for backing up on-premise Microsoft Exchange 2010 deployments, this is the first time they’re marrying their cloud solution to Microsoft BPOS — short for Business Productivity Online Suite. Mimecast is promising a 100% uptime SLA and full integration with the BPOS suite, according to the official press release.

If the solution works as promised, the benefits of using Mimecast with BPOS include:

- acting as a failsafe during migrations, meaning that even in the event of outage Mimecast will have a copy of your data so that continuity can be maintained;

- independent archiving, with the option of saving even deleted e-mails for up to ten years;

- advanced eDiscovery and legal hold to help with regulatory compliance requirements.

Mimecast does have a partner program, meaning MSPs can potentially resell this service to their customers — especially useful if you’re a Microsoft BPOS partner or potential Office 365 partner.

It’s interesting that Mimecast chooses to highlight that it can aid with Microsoft BPOS compliance – after all, the fact that the suite meets most compliance laws is a selling point… lawsuits aside.

Planky reported the availability of VIDEO: Ross Scott’s talk at the inaugural Cloud Evening event, 11th November, London, UK. Experiences of a small start-up with Windows Azure in an 11/16/2010 post to his Plankytronixx blog:

At last I have rendered and posted Ross Scott’s talk about the experiences of a small startup with Windows Azure.

To view it click here.

I’ll be posting Mark Rendle’s talk later when I’ve rendered it (that’s a lengthy overnight job!)…

David Pallmann announced AzureDesignPatterns.com Re-Launched in an 11/15/2010 post:

AzureDesignPatterns.com has been re-launched after a major overhaul. This site catalogues the design patterns of the Windows Azure Platform. These patterns will be covered in extended detail in my upcoming book, The Azure Handbook.

This site was originally created back in 2008 to catalog the design patterns for the just-announced Windows Azure platform. An overhaul has been long overdue: Azure has certainly come a long way since then and now contains many more features and services--and accordingly many more patterns. Originally there were about a dozen primitive patterns and now there are over 70 catalogued. There are additional patterns to add but I believe this initial effort decently covers the platform including the new feature announcements based on what was shown at PDC 2010.

The first category of patterns is Compute Patterns. This includes the Windows Azure Compute Service (Web Role, Worker Role, etc.) and the new AppFabric Cache Service.

The second category of patterns is Storage Patterns. This includes the Windows Azure Storage Service (Blobs, Queues, Tables) and the Content Delivery Network.

The third category of patterns is Communication Patterns. This covers the Windows Azure AppFabric Service Bus.

The fourth category of patterns is Security Patterns. This covers the Windows Azure AppFabric Access Control Service. More patterns certainly need to be added in this area and will be over time.

The fifth category of patterns is Relational Data Patterns. This covers the SQL Azure Database Service, the new SQL Azure Reporting Service, and the DataMarket Service (formerly called Project Dallas).

The sixth category of patterns is Network Patterns. This covers the new Windows Azure Connect virtual networking feature (formerly called Project Sydney).

The original site also contained an Application Patterns section which described composite patterns created out of the primitive patterns. These are coming in the next installment.

I’d very much like to hear feedback on the pattern catalog. Are key patterns missing? Are the pattern names and descriptions and icons clear? Is the organization easy to navigate? Let me know your thoughts.

Alan Le Marquand posted Migration planning made easier with MAP Toolkit 5.5 Beta on 11/15/2010:

I’m a fan of this toolkit, not just because I’ve worked with the people who build it, but also because I’ve seen what it can do. It’s used internally by us when we visit sites, and partners also use it for their customers.

Over the years it’s been improved and expanded, both through changing technologies and through feedback from these beta programs.

So once again a new beta is available, and with it the opportunity to try it and provide feedback. For those of you familiar with MAP, jump to the bottom of this post to find the sign-up details.

For those not familiar with MAP, what does it do?

In a nutshell, “Simplify planning for upgrade or migration to the latest Microsoft products and technologies.” It provides reports and analysis of your environment to help you make decisions about migration and upgrade. The 5.5 version helps with the following.

Assess your environment for upgrade to Windows 7 and Windows Internet Explorer 8 (or the latest version). This tool helps simplify your organization's migration to Windows 7 and Internet Explorer 8 and, in turn, helps you enjoy improved desktop security, reliability and manageability. The MAP 5.5 Internet Explorer Upgrade Assessment inventories your environment and reports on deployed web browsers, Microsoft ActiveX controls, plug-ins and toolbars, and then generates a migration assessment report and proposal with information to help you more easily migrate to Windows 7 and Internet Explorer 8 (or the latest version).

Identify and analyse web application and database readiness for migration to Windows Azure and SQL Azure. You can simplify your move to the cloud with the MAP 5.5 automated discovery and its detailed inventory reporting on database and web application readiness for Windows Azure and SQL Azure. MAP identifies web applications, IIS servers, and SQL Server databases, analyses their performance characteristics, and estimates required cloud features such as number of Windows Azure compute instances, number of SQL Azure databases, bandwidth usage, and storage.

Discover heterogeneous database instances for migration to SQL Server. Now with heterogeneous database inventory supported, MAP 5.5 helps you accelerate migration to SQL Server with network inventory reporting for MySQL, Oracle, and Sybase instances.

Enhanced server consolidation assessments for Hyper-V. Enhanced server consolidation capabilities help you save time and effort when creating virtualization assessments and proposals. Enhancements include:

- Updated hardware libraries allowing you to select from the latest Intel and AMD processors.

- Customized server selection for easy editing of assessment data.

- Data collection and storage every five minutes for more accurate reporting.

- Better scalability and reliability, requiring less oversight of the data collection process.

- Support for more machines.

If you are interested in this then read on…

Join the MAP beta review program for MAP Toolkit 5.5. We’d like your feedback too. Visit Microsoft Connect to download the beta materials.

Tell us what you think! Test drive our beta release, and send us your constructive feedback. Send your feedback and questions to the MAP Development Team. We value your input; this is the perfect opportunity to be heard. MAP 5.5 will be available for beta download through January 14, 2011.

Already using Solution Accelerators? We’d like to hear about your experiences. Please send comments and suggestions to satfdbk@microsoft.com.

<Return to section navigation list>

Visual Studio LightSwitch

Karol Zadora-Przylecki explained How to use lookup tables with parameterized queries with a LightSwitch project in an 11/16/2010 tutorial for the LightSwitch Team blog:

Suppose you have two tables participating in a 1:many relationship, like Regions and Territories from the Northwind sample database:

The task is to build a screen that lets the user select a Region from a drop-down list and displays Territories that are within the selected region. This is possible to do with LightSwitch without writing any code. In this post I am going to show you how.

Start with the data

First you need to get the definitions of tables participating in the relationship into your application. In this post we will be using Regions and Territories from the Northwind sample database, but you can also create the tables from scratch in LightSwitch. For more information about attaching to existing databases and creating tables in LightSwitch please refer to How to: Connect to Data and How to: Create Data Entities topics in LightSwitch documentation. At the end you should have two tables linked by a 1:many relationship (see below)

Create a parameterized query

Next, create a parameterized query that will list the territories within a given region. To do it, right-click the Territories table in Solution Explorer and choose “Add Query”. Name the query “TerritoriesByRegion” and add a parameterized filter clause to select the appropriate territories:
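For readers who think in SQL, the filter amounts to a query with one parameter on the foreign key. The sketch below is an illustration only, written in Python against an in-memory SQLite database; it is not what LightSwitch generates. The function names, the `TerritoryDescription` column, and the sample rows are assumptions made for the example, while `RegionID` and `RegionDescription` are the property names used later in this walkthrough.

```python
import sqlite3


def build_sample_db():
    """Create an in-memory database with two tables in a 1:many relationship.

    The schema and rows are illustrative stand-ins for Regions and Territories.
    """
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Regions (
            RegionID INTEGER PRIMARY KEY,
            RegionDescription TEXT NOT NULL
        );
        CREATE TABLE Territories (
            TerritoryID TEXT PRIMARY KEY,
            TerritoryDescription TEXT NOT NULL,
            RegionID INTEGER NOT NULL REFERENCES Regions(RegionID)
        );
        INSERT INTO Regions VALUES (1, 'Eastern');
        INSERT INTO Regions VALUES (2, 'Western');
        INSERT INTO Territories VALUES ('01581', 'Westboro', 1);
        INSERT INTO Territories VALUES ('02116', 'Boston', 1);
        INSERT INTO Territories VALUES ('98004', 'Bellevue', 2);
        """
    )
    return conn


def territories_by_region(conn, region_id):
    """Return territory descriptions for one region, sorted by name.

    The '?' placeholder plays the role of the query parameter: the value is
    supplied at execution time rather than being written into the query text.
    """
    rows = conn.execute(
        "SELECT TerritoryDescription FROM Territories "
        "WHERE RegionID = ? ORDER BY TerritoryDescription",
        (region_id,),
    )
    return [row[0] for row in rows]


if __name__ == "__main__":
    db = build_sample_db()
    print(territories_by_region(db, 1))
```

Binding the parameter to the ID of the selected region is the same idea the screen uses later, when the query parameter is wired to the ComboBox selection.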

Create a screen

Next, create a screen based on the TerritoriesByRegion query. We’ll use editable grid screen template here but you can use another template as well. When a parameterized query is used to create a screen, LightSwitch will automatically create a TextBox UI for the query parameter, together with the associated screen field. This works, but requires the end user of the app to remember and type in the name of the Region(s) of interest, which is not ideal. Instead, we want the list of the regions to be retrieved from the database and presented to the end user as a list of choices, so we are going to delete the textbox UI and the corresponding screen field that LightSwitch has created. After that your screen should look like this in the designer:

Set up the parameter UI

To make the parameter UI work you need three ingredients:

- A screen member (collection) to hold the list of choices,

- A screen member (single Region instance) to represent the selected region, and

- A ComboBox control wired up to the query parameter, so that when the selection changes, the TerritoriesByRegion query is executed and new territories are displayed

Let’s do it! Click the “Add Screen Item” button at the top of the screen designer, choose “Queries” option, select “Regions* (SelectAll)” from the list and name the query “AllRegions”

Next add a member for the selected Region—this time use “Local Property” option in Add Screen Item dialog:

Drag and drop the newly created SelectedRegion screen item on the left to screen content tree in the center of the screen designer. Change the control to ComboBox and customize the entry template so that it uses Horizontal Stack instead of Summary control. Leave only RegionDescription property in the stack (delete the RegionID which is redundant and not meaningful to the end user). Your screen content tree should look like this now:

Select the “SelectedRegion” ComboBox in the screen content tree. In the property sheet set the Choices property to “AllRegions”. This will ensure that the ComboBox is populated correctly:

Finally, we need to link the SelectedRegion ComboBox to the query parameter. Select the parameter in the screen members area (it is under TerritoryCollection | Query Parameters) and set its Parameter Value property to “SelectedRegion.RegionID”:

That’s it!

You can now hit F5 and enjoy the newly created screen. When the user selects a region from the ComboBox, the territories in that region are displayed:

Of course, if you created the data schema from scratch, you will need to create some Regions and Territories data first before testing the TerritoriesByRegion screen. ;-)
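The wiring between the three ingredients can be sketched outside LightSwitch as a small Python model. Everything here is an assumption made for illustration: `RegionScreen`, `query_territories`, and the sample data are hypothetical, and LightSwitch performs this binding declaratively through the designer rather than through code like this.

```python
from typing import Callable, Dict, List, Optional


class RegionScreen:
    """Toy stand-in for the screen: a list of choices, a selected item, and a bound query."""

    def __init__(self, regions: Dict[int, str], query: Callable[[int], List[str]]) -> None:
        self.all_regions = regions        # plays the role of AllRegions (the ComboBox choices)
        self._query = query               # plays the role of TerritoriesByRegion
        self._selected_region_id: Optional[int] = None
        self.territories: List[str] = []  # what the grid would display

    @property
    def selected_region_id(self) -> Optional[int]:
        return self._selected_region_id

    @selected_region_id.setter
    def selected_region_id(self, region_id: int) -> None:
        if region_id not in self.all_regions:
            raise ValueError(f"unknown region id: {region_id}")
        self._selected_region_id = region_id
        # Changing the selection re-runs the query with the new parameter value.
        self.territories = self._query(region_id)


# Made-up data, for illustration only.
REGIONS = {1: "Eastern", 2: "Western"}
TERRITORY_ROWS = [("Westboro", 1), ("Bellevue", 2), ("Hoffman Estates", 2)]


def query_territories(region_id: int) -> List[str]:
    """Return the names of the territories in the given region."""
    return [name for name, rid in TERRITORY_ROWS if rid == region_id]


screen = RegionScreen(REGIONS, query_territories)
screen.selected_region_id = 2  # simulates the user picking "Western" in the ComboBox
print(screen.territories)
```

The property setter is the counterpart of setting Parameter Value to “SelectedRegion.RegionID”: the query parameter is never typed in by the user, it is derived from the current selection.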

<Return to section navigation list>

Windows Azure Infrastructure

Geva Perry announced the availability of his Evaluating Cloud Computing Services: Criteria to consider article for TechTarget in this 11/16/2010 post:

Today TechTarget published a piece I wrote for them about criteria to consider when selecting a cloud provider. Here's the opening paragraph:

Selecting a cloud computing provider is becoming increasingly complex. As cloud environments mature, many cloud providers attempt to differentiate themselves by focusing on specific aspects of their offerings, such as technology stacks or service-level agreements (SLAs). In short, not all cloud providers are created equal.

At the same time, enterprises are beginning to rely on cloud providers for hosting mission-critical applications, which raises the stakes for selecting the right cloud service. So how do organizations navigate this multifarious landscape? Below you'll find a few key factors for evaluating services as well as some resources to use.

I then go on to cover six key areas:

- Performance

- Technology stack

- SLAs and Reliability

- APIs: lock-in, community and ecosystem

- Security and compliance

- Cost

Please read the full article here.

Buck Woody posted the first episode of his Windows Azure Learning Plan on 11/16/2010:

Because Windows Azure is a platform, like Windows or Linux, there are a lot of things to know about it. Whenever I'm faced with learning such a broad technology I try to work "outside in" - that is, from a general perspective (understanding what it is) to a specific set of knowledge (knowing how to use it).

There are lots of resources for learning Windows Azure, so I thought I would put together a series of posts that lets you focus in on what you want to care about. Because the technology changes a great deal, I'll try and keep this "head" post up to date, and if you see anything I'm missing, feel free to post a comment here. Note that this isn’t all of the information you can find - it's the list I use to help others get up to speed. It's my "window" into Azure.

The first section (this post) is the "general overview" section of links and resources. It covers the "what is this thing" question, and of course each of these links will spawn off to more of them.

(Note: If the area says (Not yet linked) it means I haven't made that blog post yet. Be patient - it's coming!)

General Information

Audience: Everyone

Overview and general information about Windows Azure - what it is, how it works, and where you can learn more.

General Overview Whitepaper

Official Microsoft Site

Five facts technical pros should know about cloud computing

http://www.infoworld.com/d/cloud-computing/five-facts-every-cloud-computing-pro-should-know-174

Video: Lap Around the Windows Azure Platform

Architecture

(Not yet linked)

Audience: Systems and Programming Architects

Patterns and practices for Windows Azure, how it works, and internals.

Compute

(Not yet linked)

Audience: Developers

Information on Web, Worker, VM and other roles, and how to program them.

Storage

(Not yet linked)

Audience: Developers, Data Professionals

Blobs, Tables, Queues, and other storage constructs, and how to program them.

Application Fabric

(Not yet linked)

Audience: Developers, System Integrators

Service Bus, Authentication and Caching, in addition to other constructs in the Application Fabric space.

Security

(Not yet linked)

Audience: Developers, Security Analysts

General and specific security considerations, remunerations and remediation.

SQL Azure

(Not yet linked)

Audience: Developers, Data Professionals

SQL Server Azure information for architecting and managing applications in the cloud RDBMS.

Elizabeth White asserted “The cloud will be a growing part of the mix of resources used by enterprise IT organizations” as a deck for her Harris Interactive Report: Cloud Computing report of 11/16/2010:

[A] Harris Interactive survey shows surprisingly strong interest in public and private cloud computing. The survey, sponsored by Novell, reveals much broader adoption of cloud computing than has been suggested by previous research, and shows accelerating momentum behind developing private cloud infrastructures. The cloud will be a growing part of the mix of resources used by enterprise IT organizations.

David Linthicum claimed “In the flood of new cloud projects, a few are committing suicide -- find out why” as a preface to his 3 surefire ways to kill a cloud project article of 11/16/2010 for InfoWorld’s Cloud Computing blog:

Many enterprises are working on cloud computing projects, ranging from simple and quick prototypes to full-blown enterprise system migration. While most are succeeding, a few are biting the dust.

The reasons for failure vary, but common patterns are beginning to emerge. I keep seeing the same three reasons that cloud computing projects fail over and over again.

1. Not understanding compliance. There seem to be two modes: One is to assume that data can't reside anywhere but the data center, citing compliance issues. But no one bothers to test this assumption, and often there is no true issue with leveraging clouds. Two is assuming there are no compliance issues and getting in trouble quickly as legally restricted data finds itself in places it really should not be. Either approach is cloud computing poison.

2. Betting on the wrong horse. Not all cloud computing providers are equal, and a few have the nasty habit of going out of business or shutting down to avoid channel conflicts. We saw this when EMC shut down the Atmos storage-as-a-service provider to work on issues in its channels. Chances are we'll see a few more of these pop up at the larger on-premise players as they realize the cloud is a replacement for -- not an enhancement of -- their technology.

3. Not including IT. Although you'd think IT is driving most cloud computing endeavors, some projects are working around IT to get a software-as-a-service provider online, increase their storage, or support a rogue application development effort. I understand that in many instances IT is the department of "no," and eventually it will find you out and shut you down. The real answer to this problem, though, is that IT needs to be more open-minded about the use of cloud computing to move faster in creating infrastructure and applications that better support the business.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

Chris Czarnecki posted Microsoft Announces Private Cloud Solution: Hyper-V Cloud to the Learning Tree blog on 11/16/2010:

Following its recent announcements enhancing the Azure PaaS offering, Microsoft has now announced comprehensive support for private clouds: Hyper-V Cloud. In response to customer demand for private clouds and the advantages they provide (better use of IT resources, elastic scalability, self-service, etc.), Microsoft has provided an Infrastructure as a Service (IaaS) solution for private clouds.

Hyper-V cloud offers three different approaches to building a private cloud.

Build your own requires buying hardware from recommended partners and software from Microsoft to build your own on-premise cloud. Microsoft has signed deals with Dell, Fujitsu, Hitachi, HP, IBM and NEC as recommended hardware partners.

Hyper-V Cloud Fast Track is an approach where an off-the-shelf private cloud, including the appropriate software from Microsoft, can be purchased from the above hardware partners. The advantage of this approach over build your own is that it reduces risk and speeds deployment of the private cloud, based on the experience of the Microsoft partners. The partners also offer the potential to customise the cloud to meet an organisation's exact requirements.

Use a service provider who will host the private cloud for you off-premise. Microsoft already has over 70 service providers offering private clouds based on Microsoft technology; a list of the providers can be found here.

These announcements are without doubt an exciting proposition and, after a slow start with Azure, begin to position Microsoft as the organisation that has the most comprehensive cloud computing offering, providing Software as a Service (SaaS) through its online application programs, PaaS through Azure, and now IaaS for on-premise private clouds as well as on Azure. If they manage to deliver on their promise, this will be a set of solutions that will leave Amazon and Google trailing.

As Cloud Computing continues to develop at such a relentless pace, keeping up with the products and new developments and how they can impact your organisation is a difficult and time-consuming task. Why not short-cut the research process by attending Learning Tree’s comprehensive hands-on course on Cloud Computing, which provides vendor-neutral analysis and insights into the offerings from all the major Cloud Computing vendors and how your organisation may benefit from the advantages, whilst also being aware of the associated risks?

<Return to section navigation list>

Cloud Security and Governance

Boris Segalis, Esq. Ponder[s] the Role of Privacy Lawyers: From Jerusalem to New York in this 11/16/2010 article for the Information Law Group blog:

During the final week of October and beginning of November, I attended two privacy events that were set far apart geographically and philosophically: the Data Protection Commissioners Conference in Jerusalem and the ad:tech conference in New York City. The Jerusalem event had a decidedly pro-privacy flavor, while at ad:tech businesses showcased myriad ways for monetizing personal information. Both conferences posed interesting questions about the future of privacy, but as a privacy lawyer I was more interested in learning and observing than engaging in the privacy debates. The events’ apparently divergent privacy narratives made me ponder where a privacy lawyer may fit on the privacy continuum between these two great cities.

In Jerusalem, regulators and privacy advocates from around the world called for greater privacy protections. A few industry representatives who suggested that the industry was doing a good job protecting privacy seemed to be drowned out by regulators and privacy advocates, as well as other industry representatives who took a decidedly pro-consumer view of privacy protection, seeing it as a good business practice. Participants discussed boxing businesses that stray from certain principles of processing personal information in public shaming, investigations, privacy suits and enforcement actions. Aside from Facebook, businesses that are fueled by collecting, using and sharing personal information seemed significantly underrepresented in Jerusalem. These companies are critical players in the information economy to which speakers and panelists often referred, but their take on privacy remains largely unpopular with regulators and privacy advocates.

Seemingly on the other end of the privacy continuum was ad:tech at the Javits Center in New York City. For many New York lawyers, visiting the lower level of the Javits Center must be an eerie experience, because this is where thousands of us took the bar exam. Fortunately, this time the basement was not filled with endless rows of beige tables and matching folding plastic chairs. Instead, businesses from around the world working in the interactive advertising and technology field were exhibiting their ability to track users’ online activities, build user profiles online and offline, combine personal information from multiple sources into sophisticated marketing profiles, and help advertisers target individuals ever more precisely. Many of the companies have built detailed databases containing the profiles of tens or hundreds of millions of consumers. Walking the aisles of the exposition, I tried to imagine what the Jerusalem conference participants would think of ad:tech. My gut feeling was that they would think they were in a parallel universe. Here, far from walking on eggshells around privacy issues, talented and enthusiastic entrepreneurs (some of whom I met in person) arguably had no qualms about collecting and using personal information to create business value. Looking at the vibrant sea of colors and people that filled the space, and experiencing the excitement of business leaders who told me about their companies, it was hard to argue with the innovation and enormous value these businesses bring to the economy. It would be unfair to say that ad:tech exhibitors had no concern about the privacy of the individuals whose personal information drives their businesses. But to what extent these businesses are focused on privacy concerns such as those raised in Jerusalem is an open question.

There clearly is a significant divide between how privacy was seen in Jerusalem and New York. I do not know if regulators, privacy advocates and the industry can easily bridge this divide on their own. I believe, however, that privacy lawyers can contribute significantly to building a bridge between these somewhat parallel universes. Privacy lawyers can do this in an old-fashioned way, by helping the various stakeholders understand each other better.

Today, U.S. privacy lawyers are facing a complex legal landscape. While there are well-established privacy laws (for example, GLB, FCRA, HIPAA and state breach notification laws), overall, the privacy landscape is unstable and evolving, and combines many legal and non-legal challenges. A number of factors contribute to this complexity. For example, privacy is a hot topic that continuously garners publicity. Privacy advocacy groups and more recently journalists are on the lookout for privacy practices they deem unfair. As a result, companies’ privacy practices and mistakes are often exposed instantaneously to unpredictable results. Another factor is that personal information crosses national borders at the speed of light, whether between people and organizations sending and receiving information, or for processing in the cloud. This movement of data leads to overlapping claims of jurisdiction. On one of the panels in Jerusalem, for example, several European lawyers and regulators disagreed sharply about jurisdiction when offered a complicated fact pattern of data transfers to and from Europe. Even in the U.S., privacy laws are constantly evolving with states enacting privacy and information security statutes at an alarming rate, courts and regulators reinterpreting privacy rights, and regulators and industry groups issuing their own guidance. Privacy may seem like a minefield for which there is no map.

Privacy lawyers strive to survey and understand this minefield and translate it into a roadmap that helps businesses not only to avoid the mines, but to think about privacy in a positive way. We want our clients to know that privacy is not a prohibition against collecting and using personal information, but a commitment to collect and use the information in a fair and transparent manner. We help our clients be proactive in addressing privacy and understanding that privacy can and should be good for business. On the other hand, we help companies frame their business models, personal information processing activities and privacy programs in a manner that helps privacy regulators and privacy advocates view our clients’ businesses and privacy programs in a positive light.

As privacy lawyers, we take on this complex task of achieving privacy harmony, and I believe we are best-suited to succeed in this quest.

<Return to section navigation list>

Cloud Computing Events

David Pallmann [pictured below] reported Webcast: Microsoft Cloud Computing Assessments: Determining TCO and ROI in an 11/15/2010 post:

On Tuesday 11/16/10 my Neudesic colleague Rinat Shagisultanov will deliver Part 2 in our webcast series on cloud computing assessments, this time dealing with how to make the business case by computing TCO and ROI.

Microsoft Cloud Computing Assessments: Determining TCO and ROI:

- Event Type: Webcast - Pacific Time

- Event Start Date: 11/16/2010 10:00 AM

- Event End Date: 11/16/2010 11:00 AM

- Presenter: Rinat Shagisultanov, Principal Consultant II, Neudesic

- Registration: https://www.clicktoattend.com/invitation.aspx?code=151052

Cloud computing can benefit the bottom line of nearly any company, but how do you determine the specific ROI for your applications? In this webcast you'll see how to compute the Total Cost of Ownership (TCO) for your on-premise applications and estimate what the TCO in the cloud will be to gauge your savings. You'll see how your Return on Investment (ROI) can be calculated by considering TCO, migration costs, and application lifetime. Knowing the ROI helps you make informed decisions about risk vs. reward and which opportunities will bring you the greatest value.
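One simple way to combine these inputs, offered here only as a sketch and not as the method presented in the webcast, is to treat the migration cost as the investment and the TCO difference over the application's lifetime as the return. The function name, the formula's exact shape, and all figures below are assumptions made for illustration.

```python
def simple_roi(on_prem_annual_tco: float,
               cloud_annual_tco: float,
               migration_cost: float,
               lifetime_years: float) -> float:
    """Return ROI as a fraction: (lifetime savings - migration cost) / migration cost.

    Assumes a constant annual TCO on both sides and ignores discounting.
    """
    if migration_cost <= 0:
        raise ValueError("migration_cost must be positive")
    annual_savings = on_prem_annual_tco - cloud_annual_tco
    lifetime_savings = annual_savings * lifetime_years
    return (lifetime_savings - migration_cost) / migration_cost


if __name__ == "__main__":
    # Hypothetical figures: $100,000/year on-premise, $60,000/year in the cloud,
    # a one-time $50,000 migration, and a three-year application lifetime.
    roi = simple_roi(100_000, 60_000, 50_000, 3)
    print(f"ROI over the application lifetime: {roi:.0%}")
```

With these made-up numbers the lifetime savings are $120,000 against a $50,000 migration, so the ROI comes to 140%. A negative result would indicate that the migration does not pay for itself within the assumed lifetime.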

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr reported AWS Receives ISO 27001 Certification on 11/16/2010:

We announced the successful completion of our first SAS 70 Type II audit just about a year ago. Earlier this year I talked about an application that had successfully completed the FISMA Low assessment and then received the necessary Authority to Operate.

Today I am happy to announce that we have been awarded ISO 27001 certification.

The full name of this certification is "ISO/IEC 27001:2005 - Information technology — Security techniques — Information security management systems — Requirements." This is a comprehensive international standard and one that should be of special interest to customers from an information security perspective. SAS 70, a third party opinion on how well our controls are functioning, is often thought of as showing "depth" of security and controls because there's a thorough investigation and testing of each defined control. ISO 27001, on the other hand, shows a lot of "breadth" because it covers a comprehensive range of well recognized information security objectives. Together, SAS 70 and ISO 27001 should give you a lot of confidence in the strength and maturity of our operating practices and procedures over information security.

We receive requests for many different types of reports and certifications and we are doing our best to prioritize and to respond to as many of them as possible. Please let me know (comments are fine) which certifications would let you make even better use of AWS.

About time!

Microsoft had ISO/IEC 27001:2005 certificates from BSI on all its data centers in 2009 and has the same for 2010. The third-party (BSI) ISO certification link for each MSFT data center is here. The 2009 certificates are discussed in my Cloud Computing with the Windows Azure Platform book.

See Jay Heiser’s The SAS 70 Charade post to the Gartner blogs on 7/5/2010 in my Windows Azure and Cloud Computing Posts for 7/5/2010+ (scroll down). See also Chris Hoff’s Observations on “Securing Microsoft’s Cloud Infrastructure” of 6/1/2009.

<Return to section navigation list>
