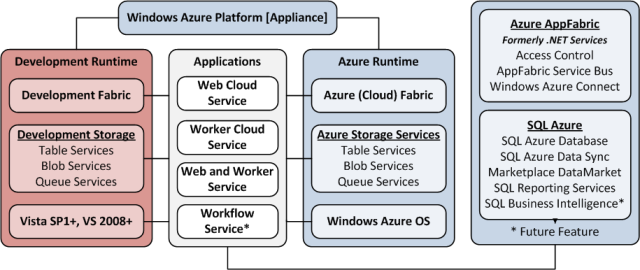

Windows Azure and Cloud Computing Posts for 11/18/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

My (@rogerjenn) Azure Storage Services Test Harness: Table Services 1 – Introduction and Overview post announced that source code is available for download as SampleWebCloudService.zip from my Windows Live SkyDrive account.

See Updated Source Code for OakLeaf Systems’ Azure Table Services Sample Project Available to Download of 11/17/2010 for more details.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi announced Forrester Reports: SQL Azure Raises the Bar On Cloud Databases in an 11/18/2010 post to the SQL Azure team blog:

Over the past year, since the release of SQL Azure, we’ve been focused on delivering the highest-quality database in the cloud. SQL Azure builds on the already strong SQL Server platform, but delivers a multi-tenant, cloud-based relational database, which is truly unique in the market.

Independent research firm Forrester Research, Inc. took note of this. On November 2, 2010, Forrester published “SQL Azure Raises The Bar On Cloud Databases.” Forrester interviewed 26 companies using Microsoft SQL Azure over the past six months. As we also hear from our customers, most stated that SQL Azure delivers a reliable cloud database platform to support various small to moderately sized applications as well as other data management requirements such as backup, disaster recovery, testing, and collaboration.

In the report, Principal Analyst Noel Yuhanna states that key enhancements have been made that position SQL Azure as a leader in the rapidly emerging category of cloud databases and writes that it “delivers the most innovative, integrated and flexible solution.”

Key advantages identified include:

- Low-cost acquisition and use

- Minimal effort for administration and configuration

- Easy to provision, on-demand capacity

- High availability at no extra effort or cost

- Scale-out capacity growth via a sharded data platform

- Transparent data access for applications and tools

There are also great customer testimonials, such as:

- “We did our cloud ROI analysis, and SQL Azure clearly stood out when it comes to offering a database platform at an attractive price. We are now considering more apps on SQL Azure.” (Andy Lapin, director of enterprise architecture, Kelley Blue Book)

- “Getting a database that’s 24x7 at a fraction of the cost, even if only for our less-critical applications, is a dream come true. Previously those less-critical apps went down too often because we did not have budget for highly resilient servers and databases. SQL Azure definitely changes the game.” (Database administrator, telco)

We encourage you to check out his research here: SQL Azure Raises The Bar On Cloud Databases.

Todd Hoff posted Announcing My Webinar on December 14th: What Should I Do? Choosing SQL, NoSQL or Both for Scalable Web Applications to the High Scalability blog on 11/18/2010:

It's time to do something a little different and for me that doesn't mean cutting off my hair and joining a monastery, nor does it mean buying a cherry red convertible (yet), it means doing a webinar!

- On December 14th, 2:00 PM - 3:00 PM EST, I'll be hosting What Should I Do? Choosing SQL, NoSQL or Both for Scalable Web Applications.

- The webinar is sponsored by VoltDB, but it will be completely vendor independent, as that's the only honor preserving and technically accurate way of doing these things.

- The webinar will run about 60 minutes, with 40 minutes of speechifying and 20 minutes for questions.

- The hashtag for the event on Twitter will be SQLNoSQL. I'll be monitoring that hashtag if you have any suggestions for the webinar or if you would like to ask questions during the webinar.

The motivation for me to do the webinar was a talk I had with another audience member at the NoSQL Evening in Palo Alto. He said he came from a Java background and was confused about the future. His crystal ball wasn't working anymore. Should he invest more time on Java? Should he learn some variant of NoSQL? Should he focus on one of the many other alternative databases? Or do what exactly?

He was exasperated at the bewildering number of database options out there today and asked my opinion on what he should do. I get that question a lot. And the 30 seconds before the next session started was not enough time for a real answer. So I hope to give the answer that I wanted to give then, here, in this webinar.

We've all probably had that helpless feeling of facing a massive list of strangely named databases, each matched against a list of a dozen cryptic-sounding features, wondering how the heck we should make a decision. In the past there was a standard set of options. A few popular relational databases ruled and your job as a programmer was to force the square peg of your problem into the round hole of the relational database.

Then a few intrepid souls, like LiveJournal and Google, went off script and paved their own way, building specialized systems that solved their own specific problems. Over time those systems have generalized into the abundance we have today. It's as Mae West, seductive siren of the silver screen, once said "Too much of a good thing can be wonderful!"

We are in a time of great change, creativity, and opportunity. It's a little confusing, sure, but it's also a cool time, an optimistic time. We can now work together to solve problems faster, better, and in larger numbers than ever before. We can now build something new and different and it's faster, easier, and cheaper than ever before. The question is, where to start?

In this webinar what I hope to do is help you figure out how to answer the "What should I do" question for yourself, like what I try to do in my blog, only more conversational. We'll take a use case approach. I promise we won't spend 20 minutes on CAP or other eyes-glazing-over topics. We'll try to look at what you need to do and use your requirements to figure out which product, or more likely, set of products to use.

That's the plan. I really hope you can attend. If you like this blog I think you'll like the webinar too. And if you have a friend or coworker you think could benefit from the webinar please forward them this link.

This is my first webinar, so if you have any advice on how not to suck please comment here, email me directly, or use the SQLNoSQL hashtag and I'll see it. I'd appreciate the advice. If you have suggestions about what you would like me to talk about or what you think the right answer is, please let me know that too. All inputs welcome.

<Return to section navigation list>

Marketplace DataMarket and OData

Karsten Januszewski (@irhetroric) described Using Excel and PowerPivot For Data Mining Twitter in this 11/17/2010 post:

Out of the box, The Archivist (our Twitter archival and analysis service) provides six visualizations of sliced Twitter data. However, there are some visualizations that The Archivist doesn't provide.

For example, consider an archive on the term soundcloud with 388,000+ tweets. The Archivist will show you the top 25 users who tweeted about soundcloud. I can learn from The Archivist that the user who tweeted the most about soundcloud was top100djsgirls. But what if I wanted to look at just the tweets from top100djsgirls? The Archivist can’t do that.

Similarly, The Archivist provides a tweet by volume chart. I can see the peak day over the last 4 months for the term soundcloud was in mid-August of 2010. What if I’d like to look at just the tweets from the high volume day?

Or, what if I’d like to see a pie chart that shows distribution based on the language of a tweet? How’s about looking at the distribution of tweets over time filtered on a given user?

Pulling an archive into Excel and using PowerPivot to slice and dice the data makes it possible to answer these kinds of questions (and more). PowerPivot is designed for working with large datasets (100,000+ rows) inside Excel, so it's perfect for mining large tweet archives.

In the screencast below, I'll walk you through exactly how to do this:

For more information about the Archivist, see my Archive and Mine Tweets In Azure Blobs with The Archivist Application from MIX Online Labs post of 7/11/2010.
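The slices described above (one user's tweets, the peak-volume day, distribution by language, and one user's volume over time) are what PowerPivot computes interactively. For readers who would rather script them, here is a minimal Python sketch. It assumes each archived tweet has been exported as a record with hypothetical `user`, `day`, and `lang` fields; The Archivist's actual export format may differ, and the sample rows are made up for illustration.

```python
from collections import Counter
from datetime import date

# Made-up sample rows; the field names are assumptions, not The Archivist's schema.
tweets = [
    {"user": "user_a", "day": date(2010, 8, 15), "lang": "en"},
    {"user": "user_a", "day": date(2010, 8, 16), "lang": "en"},
    {"user": "user_b", "day": date(2010, 8, 16), "lang": "es"},
    {"user": "user_c", "day": date(2010, 8, 16), "lang": "en"},
    {"user": "user_a", "day": date(2010, 8, 17), "lang": "fr"},
]


def tweets_from(rows, user):
    """Keep only the tweets posted by one user."""
    return [t for t in rows if t["user"] == user]


def peak_day(rows):
    """Return the busiest day and the tweets posted on it."""
    busiest, _ = Counter(t["day"] for t in rows).most_common(1)[0]
    return busiest, [t for t in rows if t["day"] == busiest]


def language_distribution(rows):
    """Fraction of tweets per language: the data behind a pie chart."""
    counts = Counter(t["lang"] for t in rows)
    total = sum(counts.values())
    return {lang: n / total for lang, n in counts.items()}


def volume_by_day(rows, user=None):
    """Tweets per day, optionally restricted to one user, in date order."""
    if user is not None:
        rows = tweets_from(rows, user)
    return dict(sorted(Counter(t["day"] for t in rows).items()))


day, busiest_tweets = peak_day(tweets)
print(day, len(busiest_tweets))        # 2010-08-16 3
print(language_distribution(tweets))   # {'en': 0.6, 'es': 0.2, 'fr': 0.2}
```

PowerPivot remains the better fit for ad hoc exploration of hundreds of thousands of rows; a script like this is mainly useful when the same slices must be rerun against fresh archives.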

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Vittorio Bertocci (@VibroNet) posted a description of his two TechEd China 2010 sessions in his Speaking at TechEd China! post of 11/17/2010:

[thanks to Dan Yang for the translation :-)]

I’ll be presenting two sessions, both on December 2. Here is the schedule:

- Session Code: COS-300-1

- Time: 9:45-10:45

- Identity & Access Control in the Cloud

- Session Code: ARC-300-4

- Time: 17:00-18:00

- Developing SaaS Solutions with the Windows Azure Platform

The last time I was in Beijing was January 2009, which was a long time ago. I can’t wait to see your beautiful city again and meet your outstanding developers!

Tim Anderson (@timanderson) asked WS-I closes its doors–the end of WS-* web services? in this 11/17/2010 article:

The Web Services Interoperability Organization has announced [pdf] the “completion” of its work:

After nearly a decade of work and industry cooperation, the Web Services Interoperability Organization (WS-I; http://www.ws-i.org) has successfully concluded its charter to document best practices for Web services interoperability across multiple platforms, operating systems and programming languages.

In the wacky world of software, though, completion is not a good thing when it means, as it seems to here, an end to active development. The WS-I is closing its doors and handing maintenance of the WS interoperability profiles to OASIS:

Stewardship over WS-I’s assets, operations and mission will transition to OASIS (Organization for the Advancement of Structured Information Standards), a group of technology vendors and customers that drive development and adoption of open standards.

Simon Phipps blogs about the passing of WS-I and concludes:

Fine work, and many lessons learned, but sadly irrelevant to most of us. Goodbye, WS-I. I know and respect many of your participants, but I won’t mourn your passing.

Phipps worked for Sun when the WS-* activity was at its height and WS-I was set up, and describes its formation thus:

Formed in the name of "preventing lock-in" mainly as a competitive action by IBM and Microsoft in the midst of unseemly political knife-play with Sun, they went on to create massively complex layered specifications for conducting transactions across the Internet. Sadly, that was the last thing the Internet really needed.

However, Phipps links to this post by Mike Champion at Microsoft which represents a more nuanced view:

It might be tempting to believe that the lessons of the WS-I experience apply only to the Web Services standards stack, and not the REST and Cloud technologies that have gained so much mindshare in the last few years. Please think again: First, the WS-* standards have not in any sense gone away, they’ve been built deep into the infrastructure of many enterprise middleware products from both commercial vendors and open source projects. Likewise, the challenges of WS-I had much more to do with the intrinsic complexity of the problems it addressed than with the WS-* technologies that addressed them. William Vambenepe made this point succinctly in his blog recently.

It is also important to distinguish between the work of the WS-I, which was about creating profiles and testing tools for web service standards, and the work of other groups such as the W3C and OASIS which specify the standards themselves. While work on the WS-* specifications seems much reduced, there is still work going on. See for example the W3C’s Web Services Resource Access Working Group.

I partly disagree with Phipps about the work of the WS-I being “sadly irrelevant to most of us”. It depends who he means by “most of us”. Granted, all this stuff is meaningless to the world at large; but there are a significant number of developers who use SOAP and WS-* at least to some extent, and interoperability is key to the usefulness of those standards.

The Salesforce.com API is mainly SOAP based, for example, and although there is a REST API in preview it is not yet supported for production use. I have been told that a large proportion of the transactions on Salesforce.com are made programmatically through the API, so here is one place at least where SOAP is heavily used.

WS-* web services are also built into Microsoft’s Visual Studio and .NET Framework, and are widely used in my experience. Visual Studio does a good job of wrapping them so that developers do not have to edit WSDL or SOAP requests and responses by hand. I’d also suggest that web services in .NET are more robust than DCOM (Distributed COM) ever was, and work successfully over the internet as well as on a local network, so the technology is not a failure.
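For readers who have never looked beneath a generated proxy, here is a minimal Python sketch of the kind of SOAP 1.1 request envelope that Visual Studio's tooling writes for you. The `GetQuote` operation, its `symbol` parameter, and the `http://example.com/quotes` namespace are hypothetical; only the SOAP 1.1 envelope namespace is standard.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SERVICE_NS = "http://example.com/quotes"                 # hypothetical service namespace


def build_soap_request(operation, params):
    """Wrap one operation element and its parameters in a SOAP 1.1 Envelope/Body."""
    ET.register_namespace("soap", SOAP_ENV)
    ET.register_namespace("q", SERVICE_NS)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    op = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        # Each parameter becomes a child element of the operation element.
        ET.SubElement(op, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="utf-8", xml_declaration=True)


request = build_soap_request("GetQuote", {"symbol": "MSFT"})
print(request.decode("utf-8"))
```

A real client would also POST this body with the HTTP `SOAPAction` header, and WS-* extensions such as WS-Security would add elements under a `Header`; a REST-style design for the same hypothetical service might instead be a plain `GET` on a resource URL.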

That said, I am sure it is true that only a small subset of the WS-* specifications are widely used, which implies a large amount of wasted effort.

Are SOAP and WS-* dying, and is REST the future? The evidence points that way to me, but I would be interested in other opinions.

I doubt that WS-* Web services will die that easily. They’re still a foundation for secure, digitally signed SOA messaging among enterprises. REST still hasn’t captured significant enterprise SOA mindshare.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

My Load-Testing the OakLeaf Systems Azure Table Services Sample Project with up to 25 LoadStorm Users post of 11/18/2010 described tests I conducted with LoadStorm’s on-demand web application load testing tool:

Here’s the final analysis after completing a 5-minute test with 5 to 25 users:

Each test included paging to the Next Page and First Page.
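Next Page and First Page navigation over Azure Table storage is typically built on continuation tokens: a query returns at most 1,000 entities per response, and when more remain the service returns the PartitionKey/RowKey pair to resume from (in the `x-ms-continuation-NextPartitionKey` and `x-ms-continuation-NextRowKey` headers). The Python sketch below models that paging pattern against a hypothetical in-memory table; it is not the sample project's code and doesn't call the storage service.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Key = Tuple[str, str]  # (PartitionKey, RowKey)


class InMemoryTable:
    """Hypothetical stand-in for a table whose entities sort by (PartitionKey, RowKey)."""

    def __init__(self, keys: List[Key]):
        self.keys = sorted(keys)

    def query(self, take: int, start: Optional[Key] = None) -> Tuple[List[Key], Optional[Key]]:
        """Return up to `take` entities beginning at `start`, plus a continuation
        token (the next entity's key) or None when nothing remains."""
        i = 0 if start is None else bisect_left(self.keys, start)
        page = self.keys[i:i + take]
        token = self.keys[i + take] if i + take < len(self.keys) else None
        return page, token


class Pager:
    """Keeps the latest continuation token so a UI can offer First Page / Next Page."""

    def __init__(self, table: InMemoryTable, page_size: int):
        self.table = table
        self.page_size = page_size
        self.token: Optional[Key] = None

    def first_page(self) -> List[Key]:
        # Starting over simply means querying without a continuation token.
        page, self.token = self.table.query(self.page_size)
        return page

    def next_page(self) -> List[Key]:
        if self.token is None:  # already on the last page
            return []
        page, self.token = self.table.query(self.page_size, self.token)
        return page

    @property
    def has_next(self) -> bool:
        return self.token is not None


table = InMemoryTable([("Customers", f"{n:03d}") for n in range(5)])
pager = Pager(table, page_size=2)
print(pager.first_page())                 # [('Customers', '000'), ('Customers', '001')]
print(pager.next_page())                  # [('Customers', '002'), ('Customers', '003')]
print(pager.next_page(), pager.has_next)  # [('Customers', '004')] False
```

Because only the forward token is kept, going back a page would require storing earlier tokens; returning to the First Page needs no token at all, which is why it is cheap to offer alongside Next Page.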

David MacLaren described his company’s MediaValet application in a Guest Post by David MacLaren, president & CEO, VRX Studios to the Windows Azure Team blog:

VRX Studios has been in the business of providing photography and content services to the global hotel industry for more than 10 years and we currently work with more than 10,000 hotels around the world. With this many customers who are so widely dispersed, you might think operations and logistics are our biggest challenges. They're not.

Today, our biggest headache comes from managing terabytes of media assets for dozens of large hotel brands with thousands of individual hotels and tens of thousands of stakeholders. Ironically, we've found that our large hospitality customers have the same content management headaches that we do.

Two years ago, we decided to take a serious look at this problem and try to find a solution. We started by looking at what was available from other vendors. A year and a half later, after several failed attempts to work with third-party digital asset management software providers, we gave up. Licensing a digital asset management system was not a viable solution for us. In addition to not meeting our core day-to-day content management needs, it was too expensive to license, set up, co-locate, support, and maintain. Six months ago, out of frustration, we started exploring the idea of using our years of content production, management and distribution experience to design and build our own digital asset management system, and we were surprised at what we found.

Technology over the past two years has taken huge strides toward making an undertaking like creating a brand-new, enterprise-class digital asset management system from scratch a viable and financially feasible option. The most critical advancement was the maturation of cloud computing. It presented us with the ability to confidently, easily and cost-effectively deploy a global SaaS solution without the hefty capital expenditures and operational complexity required to set up and operate our own servers in data centers around the world. This was key, but we still faced the challenge of creating an enterprise-class digital asset management system in the cloud to take advantage of the access, distribution, scalability, storage and backup benefits that the cloud now provides. Windows Azure was the answer we were looking for.

MediaValet screenshot showing the main Categories page of the VRX Studios implementation.

We reviewed every cloud-computing offering on the market and Windows Azure met our needs perfectly. Windows Azure has the best geo-location capabilities, enabling easy and fast deployment in every corner of the world, and it provides the developer tools and platform that we needed to develop a robust, scalable, and reliable enterprise-class software-as-a-service offering in the cloud.

Operationally, Windows Azure provides the monitoring, load balancing, scalability, and storage technology and support that will enable us to quickly outclass other digital asset management systems on the market today. Combined with our existing customer base and global reputation, we're in a good position as we launch the community preview of our new, 100 percent cloud-based, enterprise-class, digital asset management system, MediaValet™, this month.

For more information, visit: www.mediavalet.co | www.vrxstudios.com | www.vrxworldwide.com

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) announced LightSwitch Team “How To” Articles and New Developer Center Content on 11/18/2010:

Over on the LightSwitch team blog we’ve been posting a series of “How To” articles that have gotten pretty popular, so you’ll probably want to check them out if you’re evaluating LightSwitch. Most of these How To articles cover topics not already covered in the How Do I videos.

Basics:

- How To Use Lookup Tables with Parameterized Queries

- How To Create a Custom Search Screen

- How Do I: Import and Export Data to/from a CSV file

- How Do I: Create and Use Global Values In a Query

- How Do I: Filter Items in a ComboBox or Modal Window Picker in LightSwitch

Advanced:

- How to Communicate across LightSwitch Screens

- How to create a RIA service wrapper for OData Source

- How to Configure a Machine to Host a 3-tier LightSwitch Beta 1 Application

Check this How To article feed as we post more on the team blog!

Developer Center Update:

We also made some updates to the LightSwitch Developer Center that hopefully will make it easier to navigate task-based content. The LightSwitch Developer Center is your one-stop-shop for getting started with and learning about LightSwitch :-). If you take a look at the home page and Learn page, we’ve added a section that calls out some focused learning content around building & deploying applications as well as some good links to learn more about the architecture of LightSwitch.

More content will build up as we approach Beta 2 so I wanted to start organizing things by tasks/topics. We’re also putting together a list of community sites and third-party extensions as we approach RTM so if you’re talking/teaching about LightSwitch right now I want to hear from you. ;-)

Sheel Shah explained How to Communicate Across LightSwitch Screens in an 11/17/2010 post to the Visual Studio LightSwitch Team blog:

One of the more common scenarios that we’ve encountered in business applications is a data creation screen that involves related data. For example, an end user may be creating a new order record that requires the selection of a customer. In LightSwitch, this involves using a modal picker control. If this customer does not yet exist, the end user may navigate to a different screen to create a customer.

Unfortunately, once the new customer has been added, the modal picker on the new order screen does not automatically refresh. However, we can write some code in our application to get the behavior we want. This involves the following:

- Setting up the modal picker on our new order screen to use a screen query instead of the automatic query.

- Creating an event on our application object that we raise whenever a new customer is added.

- Handling the event in our new order screen and refreshing the list of customers.

Setting up the Application

Create a table called ‘Customer’ with a string property called ‘Name’. Create another table called ‘Order’ with a string property called ‘Name’. Create a 1-to-Many relationship between ‘Customer’ and ‘Order’ (each ‘Customer’ has many ‘Orders’).

Define a ‘New Data Screen’ for ‘Order’. The name of this screen should be ‘CreateNewOrder’. On the screen, select ‘Add Data Item…’ and add a query property for ‘Customers’. Name this query ‘CustomerChoices’.

Select the modal picker for ‘Customer’ and in the property sheet, change the ‘Choices’ property to ‘CustomerChoices’. This will set the modal picker to use the ‘CustomerChoices’ query to determine the list of possible customer choices. We can then refresh this query from code when a customer is added.

Define CustomerAdded Event on the Application Object

First, define a ‘New Data Screen’ for ‘Customer’ called ‘CreateNewCustomer’. We now need to navigate to the code file for our application object to add the CustomerAdded event. On your ‘CreateNewCustomer’ screen, select the Write Code dropdown and choose ‘CanRunCreateNewCustomer’. This will navigate to the application code file.

In the application code file define an event called ‘CustomerAdded’ and a method used to raise the event. LightSwitch runs each screen’s logic on a different thread. The overall application also runs on its own thread. We will need to ensure that the event we define is raised on the correct thread. Since it is an application-wide event, we should ensure that it is raised on the application’s thread. This is covered in the code below.
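The LightSwitch code for this step does not survive in this copy of the post. To illustrate the threading idea only, here is a hypothetical Python model: the application owns a dispatcher thread, and raising the event from any screen's thread posts the handler calls to that dispatcher, so subscribers always run on the application's thread. `Dispatcher`, `begin_invoke`, and `raise_customer_added` are illustrative names, not LightSwitch APIs.

```python
import queue
import threading


class Dispatcher:
    """Runs queued callables one at a time on a single dedicated thread."""

    def __init__(self, name):
        self._work = queue.Queue()
        self.thread = threading.Thread(target=self._run, name=name, daemon=True)
        self.thread.start()

    def _run(self):
        while True:
            item = self._work.get()
            if item is None:  # sentinel posted by shutdown()
                break
            item()

    def begin_invoke(self, fn):
        """Queue fn to run asynchronously on this dispatcher's thread."""
        self._work.put(fn)

    def shutdown(self):
        self._work.put(None)
        self.thread.join()


class Application:
    """Hypothetical application object owning the CustomerAdded event."""

    def __init__(self):
        self.dispatcher = Dispatcher("application")
        self._customer_added = []  # subscribed handlers

    def add_customer_added_handler(self, handler):
        self._customer_added.append(handler)

    def raise_customer_added(self):
        """Safe to call from any screen thread: handlers run on the application thread."""
        def fire():
            for handler in list(self._customer_added):
                handler()
        self.dispatcher.begin_invoke(fire)


app = Application()
ran_on = []
app.add_customer_added_handler(lambda: ran_on.append(threading.current_thread().name))
screen = threading.Thread(target=app.raise_customer_added, name="CreateNewCustomer")
screen.start()
screen.join()
app.dispatcher.shutdown()  # queued work runs before the sentinel stops the loop
print(ran_on)              # ['application']
```

Posting to a queue rather than calling handlers directly is the design choice that matters: the screen thread returns immediately, and every subscriber sees the event on one well-known thread.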

Raise the CustomerAdded Event from CreateNewCustomer

Navigate to the ‘CreateNewCustomer’ screen. We will need to add code to the Saved method on our screen that triggers the ‘CustomerAdded’ event. Select the Write Code dropdown and select CreateNewCustomer_Saved.

Modify the existing code to raise the event. This is shown below.

Handle CustomerAdded Event in CreateNewOrder

Navigate to the ‘CreateNewOrder’ screen. We will need to add code that will listen for the ‘CustomerAdded’ event and refresh the ‘CustomerChoices’ query when it is raised. Click on the Write Code button and modify the code as follows.
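A hypothetical Python model of the whole round trip may also help: the CreateNewCustomer screen's save handler raises the application-level event, and the CreateNewOrder screen subscribes to it and reloads its customer choices. The event is raised synchronously here, omitting the thread marshalling the post describes, and all class and method names are illustrative rather than LightSwitch's generated code.

```python
class Application:
    """Hypothetical application object: shared customer data plus a CustomerAdded event."""

    def __init__(self):
        self.customers = []        # stands in for the Customer table
        self._customer_added = []  # subscribed handlers

    def on_customer_added(self, handler):
        self._customer_added.append(handler)

    def raise_customer_added(self):
        # Synchronous for brevity; thread marshalling is omitted here.
        for handler in list(self._customer_added):
            handler()


class CreateNewOrderScreen:
    def __init__(self, app):
        self.app = app
        self.customer_choices = list(app.customers)  # query results loaded once
        app.on_customer_added(self.refresh_customer_choices)

    def refresh_customer_choices(self):
        """Re-run the CustomerChoices query so the picker sees new customers."""
        self.customer_choices = list(self.app.customers)


class CreateNewCustomerScreen:
    def __init__(self, app):
        self.app = app

    def save(self, name):
        self.app.customers.append(name)
        self.saved()

    def saved(self):
        """Plays the role of CreateNewCustomer_Saved: announce the new customer."""
        self.app.raise_customer_added()


app = Application()
order_screen = CreateNewOrderScreen(app)
print(order_screen.customer_choices)  # []
CreateNewCustomerScreen(app).save("Contoso")
print(order_screen.customer_choices)  # ['Contoso']
```

Without the subscription in `CreateNewOrderScreen.__init__`, `customer_choices` would keep its original snapshot, which mirrors the stale modal picker the post sets out to fix.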

Test the Application

We can now test our application. Run the application and open the ‘CreateNewOrder’ screen. Enter a name for the order and open the Customer picker. There are no customers added to the system. Now open the ‘CreateNewCustomer’ screen. Enter a new customer and save the screen. Navigate back to the ‘CreateNewOrder’ screen. Open the Customer picker again. The added customer should now be displayed.

The functionality we’ve described can be extended to other scenarios (such as workflow style applications).

<Return to section navigation list>

Windows Azure Infrastructure

Bruce Guptil wrote SMB CEOs on Cloud IT: Conference Conversations Show Strong Interest, Broad Lack of Knowledge on 11/18/2010 as a Saugatuck Technologies Research Alert (site registration required):

What is Happening?

Chief executives from smaller firms around the US are bullish on Cloud IT, their own business, and the US economy. However, they are unclear on what they know, what they need to know, and where to gain the needed knowledge in order to make Cloud IT work for their business. They can’t get enough of the knowledge and support that they need from Cloud IT providers to make decisions or purchases.

This could lead to a widening of the competitive gap between small and large firms, in a time when small business growth is sorely needed. It could also lead to delays for Cloud IT providers in reaching, serving, and building presence in the largest potential market for their services. …

Bruce continues with a “Why Is It Happening” section but the usual Market Impact section is missing.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

Alex Williams posted his WeeklyPoll: Microsoft's 'To The Cloud' TV Campaign to the ReadWriteCloud on 11/17/2010:

Microsoft has launched an advertising campaign with the catch phrase: "To the Cloud."

As is typical for Microsoft, some people love the advertisements and others think the campaign is just awful.

The campaign will run across television, magazines, the Web, billboards and airport signs. It is designed to show the customer benefits of cloud computing.

According to WinRumors, Microsoft is also kicking off a campaign called "Cloud Power," designed to show off the benefits of the "private cloud." [Emphasis added.]

To the Cloud

Let's take a look at three spots from the "To the Cloud" campaign. The first video features a couple at the airport who have just learned their flight may be delayed anywhere from 15 minutes to three hours. They go "to the cloud" to remotely access a show on the personal computer at their house. The woman mellows out, blissful to watch her show about celebrities on parole.

In the second example, a CEO of a nascent startup is at a coffee shop and he needs to share a document. The business plan is due in two hours and it needs a bit of work. He goes "to the cloud." The marketing guy looks at it from the office. The CFO takes a look from his house while his kids bop him on the head with a rubber toy. The CEO can now quit his day job.

In a third example, a woman is editing a photo of her family. Her daughter is texting. The boys are fighting and Dad is trying to break it up. She goes "to the cloud," and edits the photo. Behind her, the family is sitting on the couch where the picture was taken. She clicks a big Facebook icon and says: "Finally, a photo I can share without ridicule. Windows gives me the family nature never could."

The ads are at once brilliant and a bit aggravating, too. Moving walls reveal a new laptop for the characters so that, in essence, they can go "to the cloud." It's all playful and fun. It's all so easy. Cloud computing: it's for sharing photos, watching movies and starting a company.

What do you think of these ads? Do they trivialize cloud computing or make it accessible for us all? And is that so bad?

John C. Stame (@JCStame) questioned the Economics of [Private] Cloud[s] in an 11/17/2010 post:

I wrote about the Business Value of Cloud Computing back in June 2009. In that post, I highlighted why enterprises were interested in cloud computing from a business value perspective and discussed why this style of computing has been, and will continue to be, a huge platform shift. As I discussed then, the transformation to cloud computing will come with significant improvements in efficiency, agility and innovation.

Recently, the Corporate Strategy Group at Microsoft did an extensive analysis of the economics associated with cloud computing, leveraging Microsoft’s experience with cloud services like Windows Azure, Office 365, Windows Live, and Bing. The result was a blog post and a whitepaper, “The Economics of the Cloud.”

The paper highlights how these economics affect public clouds and private clouds to different degrees and describes how to weigh the trade-off that this creates. Private clouds address many of the concerns IT leaders have about cloud computing, and so they may be perfectly suited for certain situations. But because of their limited ability to take advantage of demand-side economies of scale and multi-tenancy, the paper concludes that private clouds may someday cost substantially more than public clouds.

<Return to section navigation list>

Cloud Security and Governance

The SD Times Newswire posted Cloud Security Alliance Unveils Governance, Risk Management and Compliance (GRC) Stack on 11/17/2010:

The Cloud Security Alliance (CSA) today announced the availability of the CSA Governance, Risk Management and Compliance (GRC) Stack, a suite of enabling tools for GRC in the cloud, now available for free download at www.cloudsecurityalliance.org/grcstack.

Achieving GRC goals requires appropriate assessment criteria, relevant control objectives and timely access to necessary supporting data. Whether implementing private, public or hybrid clouds, the shift to compute-as-a-service presents new challenges across the spectrum of GRC requirements. The CSA GRC Stack provides a toolkit for enterprises, cloud providers, security solution providers, IT auditors and other key stakeholders to instrument and assess both private and public clouds against industry established best practices, standards and critical compliance requirements.

“When cloud computing is treated as a governance initiative, with broad stakeholder engagement and well-planned risk management activities, it can bring tremendous value to an enterprise,” said Emil D'Angelo, CISA, CISM, international president of ISACA, a founding member of the Cloud Security Alliance and a co-developer of the GRC stack.

"Gaining visibility into service provider environments and governing them according to overall enterprise GRC strategy have emerged as major concerns for organizations when considering the use of public cloud services," said Eric Baize, Senior Director of Cloud Security Strategy at RSA, The Security Division of EMC. "The Cloud Security Alliance has acted in a timely manner to enable a concrete cloud GRC stack that will foster transparency and confidence in the public cloud. RSA will build these standards into its own RSA Archer eGRC platform, the foundation for the RSA Solution for Cloud Security and Compliance which will allow organizations to assess cloud service providers using the same tool that is used widely to manage risk and compliance across the enterprise.”

The Cloud Security Alliance GRC Stack is an integrated suite of three CSA initiatives: CloudAudit, Cloud Controls Matrix and Consensus Assessments Initiative Questionnaire:

- CloudAudit: aims to provide a common interface and namespace that allows cloud computing providers to automate the Audit, Assertion, Assessment, and Assurance (A6) of their infrastructure (IaaS), platform (PaaS), and application (SaaS) environments and allow authorized consumers of their services to do likewise via an open, extensible and secure interface and methodology. CloudAudit provides the technical foundation to enable transparency and trust in private and public cloud systems.

- Cloud Controls Matrix (CCM): provides a controls framework that gives a detailed understanding of security concepts and principles aligned to the Cloud Security Alliance guidance in 13 domains. As a framework, the CSA CCM provides organizations with the needed structure, detail and clarity relating to information security tailored to the cloud industry.

- Consensus Assessments Initiative Questionnaire (CAIQ): The CSA Consensus Assessments Initiative (CAI) performs research, creates tools and creates industry partnerships to enable cloud computing assessments. The CAIQ provides industry-accepted ways to document what security controls exist in IaaS, PaaS, and SaaS offerings, providing security control transparency. The questionnaire (CAIQ) provides a set of questions a cloud consumer and cloud auditor may wish to ask of a cloud provider.
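To make the questionnaire idea concrete, here is a minimal Python sketch of how a cloud consumer might record a provider's yes/no answers and group the gaps by control. The `Answer` type, the question IDs (`Q-1` ...) and the control IDs (`CTRL-A` ...) are hypothetical placeholders, not actual CAIQ or CCM identifiers, and the real questionnaire's format may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    question_id: str   # hypothetical question identifier
    control_id: str    # hypothetical control the question maps to
    implemented: bool  # provider's yes/no response


def coverage(answers):
    """Fraction of questions answered 'yes' (0.0 if there are none)."""
    if not answers:
        return 0.0
    return sum(a.implemented for a in answers) / len(answers)


def control_gaps(answers):
    """Group 'no' answers by control so gaps can be followed up with the provider."""
    gaps = {}
    for a in answers:
        if not a.implemented:
            gaps.setdefault(a.control_id, []).append(a.question_id)
    return gaps


responses = [
    Answer("Q-1", "CTRL-A", True),
    Answer("Q-2", "CTRL-A", False),
    Answer("Q-3", "CTRL-B", True),
    Answer("Q-4", "CTRL-C", False),
]
print(coverage(responses))      # 0.5
print(control_gaps(responses))  # {'CTRL-A': ['Q-2'], 'CTRL-C': ['Q-4']}
```

Grouping by control rather than by question mirrors the idea that the questionnaire documents which controls exist; a consumer or auditor would then chase the listed gaps with the provider.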

“Cloud computing brings tremendous benefits to business, but these models also raise questions around compliance and shared responsibility for data protection,” said Scott Charney, Corporate Vice President for Microsoft’s Trustworthy Computing Group. “With the Cloud Security Alliance’s guidance, providers and enterprises can use a common language to ensure the right security issues are being considered and addressed for each type of cloud environment.”



Cloud Computing Events

Eric Nelson (@ericnel) reminds UK ISVs about a FREE technical update event on Windows Azure, SQL Server 2008 R2, Windows Phone 7 and more on the 25th Nov at Microsoft Ltd., Chicago 1, Building 3, Microsoft Campus, Thames Valley Park, Reading, Berkshire RG6 1WG, United Kingdom:

Just a brief reminder that if your company makes commercial software (in other words, you don’t just develop it for your own employees’ use), then you should check out the FREE technical event my team is putting on next Thursday (25th Nov 2010). We will also have an Xbox 360 with Kinect to give away, plus free books and other goodies. I’m currently looking after the Xbox and have so far resisted peeking in… but I make no promises! :-)

Note that we have gone for zero breaks with the intention of making sure we have plenty of time at the end of the session for informal Q&A. The 3:30pm finish is “notional” :-)

Lunch 12pm to 1pm

1pm to 1:45pm: Microsoft Technology Roadmap

Technologies such as the Windows Azure Platform, Windows Phone 7, SharePoint 2010 and Internet Explorer 9.0 all present new opportunities to solve the needs of your customers in new and interesting ways. This session will give an overview of the latest technologies from Microsoft which can significantly impact your own product roadmaps.

1:45pm to 3:15pm: Technology drill downs

In this session we will drill into the technical details behind three of the key technologies:

- Windows Azure Platform – learn how instant provisioning, no capital costs and the promise of “infinite scalability” make this a technology that every ISV should explore.

- Windows Phone 7 – understand where and how you can take advantage of this great device in your own solutions.

- SQL Server 2008 R2 – learn about the improvements that make this an obvious choice for data-rich applications.

3:15pm to 3:30pm: What next?

Today will just scratch the surface, and hopefully you will want to know more. This session will summarise the resources that will help you further explore and ultimately adopt the latest technologies.

Register today, as space is limited: http://bit.ly/isvtechupdate25thnov

P.S. On the same day we have a morning session for business decision makers in ISVs. You can find out more and register at http://bit.ly/isvbusupdate25thnov . The intention is to have pretty much zero overlap between the two parts of the day – so you may well want to come along for the entire day.


Other Cloud Computing Platforms and Services

Randy Bias (@randybias) asked Grid, Cloud, HPC … What’s the Diff? in an 11/18/2010 post to his Cloudscaling blog:

It’s always nice when another piece of the puzzle comes into focus. In this case, my time speaking at the first-ever International Super Computer (ISC) Cloud Conference the week before last was well spent. The conference was heavily attended by people from the grid computing space, and I learned a lot about both cloud and grid. In particular, I think I finally understand what causes some to view grid as a precursor to cloud while others view it as a different beast only tangentially related.

This really comes down to a particular TLA (three-letter acronym) used to describe grid: High Performance Computing, or HPC. HPC and grid are commonly used interchangeably. Cloud is not HPC, although now it can certainly support some HPC workloads, as with Amazon’s EC2 HPC offering. No, cloud is something a little bit different: High Scalability Computing, or simply HSC here.

Let me explain in some depth …

Scalability vs. Performance

First, it’s critical for readers to understand the fundamental difference between scalability and performance. While the two are frequently conflated, they are quite different. Performance is the capability of a particular component to provide a certain amount of capacity, throughput, or ‘yield’. Scalability, in contrast, is about the ability of a system to expand to meet demand. This is quite frequently measured by looking at the aggregate performance of the individual components of a particular system and how they function over time.

Put more simply, performance measures the capability of a single part of a large system, while scalability measures the ability of a large system to grow to meet growing demand.

Scalable systems may have individual parts that are relatively low performing. I have heard that the Amazon.com retail website’s web servers went from 300 transactions per second (TPS) to a mere 3 TPS each after moving to a more scalable architecture. The upside is that while each web server had lower individual performance, the overall system became significantly more scalable, and new web servers could be added ad infinitum.

High-performing systems, on the other hand, focus on eking out every ounce of resource from a particular component rather than on the big picture. A very scalable system may or may not be built from high-performance components.

For most purposes, scalability and performance are orthogonal, but many either equate them or believe that one breeds the other.
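A toy calculation can illustrate the distinction, assuming throughput adds up linearly as identical servers are added (real systems rarely scale perfectly). The per-server figures (300 and 3 TPS) come from the Amazon.com anecdote above; the `max_servers` ceiling on the less scalable design is a hypothetical stand-in for whatever shared bottleneck limited it.

```python
import math
from typing import Optional


def servers_needed(target_tps: float, per_server_tps: float,
                   max_servers: Optional[int] = None) -> Optional[int]:
    """Return how many identical servers are needed to reach target_tps.

    Assumes aggregate throughput = per_server_tps * servers (linear scaling).
    Returns None if the design cannot grow past max_servers (a hypothetical
    ceiling standing in for a shared bottleneck).
    """
    n = math.ceil(target_tps / per_server_tps)
    if max_servers is not None and n > max_servers:
        return None
    return n


# High per-node performance, but (hypothetically) capped at 20 servers.
print(servers_needed(3_000, 300, max_servers=20))   # 10
print(servers_needed(30_000, 300, max_servers=20))  # None
# Low per-node performance, but no ceiling on the number of servers.
print(servers_needed(30_000, 3))                    # 10000
```

Under these assumptions, the 300 TPS design reaches 3,000 TPS with 10 servers but cannot reach 30,000 TPS at all, while the 3 TPS design gets there with 10,000 servers: lower per-node performance, higher scalability.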

Grid & High Performance Computing

The origins of HPC/grid lie within the academic community, where the need to crunch large data sets arose very early on. Think satellite data, genomics, nuclear physics, etc. Grid, effectively, has been around since the beginning of the enterprise computing era, when it became easier for academic research institutions to move away from large mainframe-style supercomputers (e.g. Cray, Sequent) towards a more scale-out model using lots of relatively inexpensive x86 hardware in large clusters. The emphasis here is on *relatively*.

Most x86 clusters today are built out for very high performance *and* scalability, but with a particular focus on the performance of individual components (servers) and the interconnect network, for reasons that I will explain below. The price/performance of the overall system is not as important as the aggregate throughput of the entire system. Most academic institutions build out a grid to the full extent of their budget, attempting to eke out every ounce of performance from each component.

This is not the way that cloud pioneers such as Amazon.com and Google built their infrastructures.

Cloud & High Scalability Computing

Cloud, or HSC, by contrast, focuses on hitting the price/performance sweet spot, using truly commodity components and buying *lots* more of them. This means building very large and scalable systems.

I was surprised at the ISC Cloud Conference when I heard one participant bragging about their cluster with 320,000 ‘cores’. Amazon EC2 (sans the new HPC offering) is at roughly 500,000 cores, quite possibly more. And Google is probably on the order of 10 million+ cores. Clouds built around High Scalability Computing are an order of magnitude larger than most grid clusters and are designed to handle generic workloads, which requires hitting the price/performance sweet spot when building them.
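The two purchasing philosophies can be contrasted in a small Python sketch. All prices and throughput figures below are hypothetical, and the model assumes an embarrassingly parallel workload whose throughput simply adds up across servers; it ignores the interconnect requirements that push grid builders toward high-performance components.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerOption:
    name: str
    price: float       # hypothetical cost per server
    throughput: float  # hypothetical work units per second per server


def best_per_node(options):
    """Grid/HPC-style pick: the fastest individual server."""
    return max(options, key=lambda o: o.throughput)


def best_price_performance(options):
    """Cloud/HSC-style pick: the most throughput per unit of cost."""
    return max(options, key=lambda o: o.throughput / o.price)


def fleet_throughput(option, budget):
    """Aggregate throughput from spending the whole budget on one option,
    assuming throughput adds up linearly (an EPP-like workload)."""
    return int(budget // option.price) * option.throughput


options = [
    ServerOption("high-end", price=20_000, throughput=100),
    ServerOption("commodity", price=2_000, throughput=15),
]
budget = 1_000_000

hpc = best_per_node(options)
hsc = best_price_performance(options)
print(hpc.name, fleet_throughput(hpc, budget))  # high-end 5000
print(hsc.name, fleet_throughput(hsc, budget))  # commodity 7500
```

With these made-up numbers, spending the same budget on the price/performance winner yields more aggregate throughput (7,500 vs. 5,000 units per second), which is the HSC argument; for tightly coupled workloads, the comparison would also have to account for network latency.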

Grid workloads can be very, very different.

Some Grid Workloads Drive the Grid Community

In talking to the grid community, I learned that there are effectively two key types of problems that are solved on large-scale computing clusters: MPI (Message Passing Interface) and ‘embarrassingly parallel’ problems. I’m using terms I heard at the conference, but will use MPI and EPP (embarrassingly parallel problem) as shorthand throughout the rest of this article.

MPI is essentially a programming paradigm that allows for taking extremely large sets of data and crunching the information in parallel WHILE sharing the data between compute nodes. Sometimes this is also referred to as ‘clustering’, although that term is frequently overloaded today. Certain kinds of problems necessitate this sharing, as the computed results on one node may affect the computed results on another node in the grid. MPI-based grids, the de facto standard for most academic institutions, are built for maximum throughput and performance per system, including the lowest possible latency. Most of them use Infiniband technology, for example, to effectively turn the entire grid into a single ‘supercomputer’. In fact, most of these MPI-based grids are ranked in the Top500 supercomputer list.

An MPI grid/cluster, in many ways, looks more like an old-school mainframe, and technology such as Infiniband essentially turns the network into a high-speed bus, just like a PCI bus inside a typical x86 server.
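The data-sharing requirement that distinguishes MPI-style problems can be illustrated with a sequential Python simulation of domain decomposition: a 1-D array is split across three hypothetical ‘nodes’, and each smoothing step needs boundary (‘ghost’) values from neighboring nodes. This sketch does not use MPI itself; a real code would exchange those values with MPI point-to-point calls such as MPI_Send/MPI_Recv. The smoothing rule and array size are illustrative assumptions.

```python
def step_global(u):
    """One Jacobi smoothing step on the whole array; the two endpoints stay fixed."""
    inner = [(u[i - 1] + u[i] + u[i + 1]) / 3 for i in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def split(u, parts):
    """Give each hypothetical node an equal, contiguous chunk (len(u) % parts == 0)."""
    size = len(u) // parts
    return [u[i * size:(i + 1) * size] for i in range(parts)]


def step_chunk(chunk, left_ghost, right_ghost):
    """Update one node's chunk. A ghost value of None means no neighbor data is
    available, so that edge cell is held fixed."""
    out = list(chunk)
    for i, value in enumerate(chunk):
        lo = chunk[i - 1] if i > 0 else left_ghost
        hi = chunk[i + 1] if i < len(chunk) - 1 else right_ghost
        if lo is not None and hi is not None:
            out[i] = (lo + value + hi) / 3
    return out


def step_distributed(chunks, exchange=True):
    """One step on every node; with exchange=True, each node first 'receives'
    its neighbors' boundary values (the data sharing MPI provides)."""
    new_chunks = []
    for k, chunk in enumerate(chunks):
        left = chunks[k - 1][-1] if exchange and k > 0 else None
        right = chunks[k + 1][0] if exchange and k < len(chunks) - 1 else None
        new_chunks.append(step_chunk(chunk, left, right))
    return new_chunks


def flatten(chunks):
    return [x for chunk in chunks for x in chunk]


u = [100.0] + [0.0] * 11   # hot left end, 12 cells
global_u = u
shared = split(u, 3)       # nodes that exchange boundary data
isolated = split(u, 3)     # nodes that never talk to each other
for _ in range(20):
    global_u = step_global(global_u)
    shared = step_distributed(shared, exchange=True)
    isolated = step_distributed(isolated, exchange=False)

print(flatten(shared) == global_u)    # True
print(flatten(isolated) == global_u)  # False
```

With the exchange, the distributed result matches the single-array result exactly; without it, heat never crosses from the first node's chunk into the others, so the answers diverge. That per-step dependency on neighbors is why interconnect latency matters so much for MPI workloads.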

EPP workloads, by contrast, have no data sharing requirements. A very large dataset is chopped into pieces, distributed to a large pool of workers, and then the data is brought back and reassembled. Does this sound familiar? It should: it’s very similar to Google’s MapReduce functionality and the open source tool Hadoop. EPP workloads are very commonly run on top of MPI clusters, although some academic institutions build out separate or smaller grids to run them instead.
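An EPP job follows the scatter/compute/gather pattern just described. The following Python sketch counts words MapReduce-style; a thread pool stands in for the ‘large pool of workers’ (a real deployment would spread chunks across machines, e.g. with Hadoop), and the input lines and chunk size are arbitrary. The key point is that `count_words` never needs data from another chunk.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def chunk(data, size):
    """Scatter: chop the dataset into independent pieces."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def count_words(lines):
    """Work done by one worker; it needs no data from any other piece."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def epp_word_count(lines, chunk_size=2, workers=4):
    pieces = chunk(lines, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_words, pieces))
    total = Counter()
    for partial in partials:  # gather: reassemble the partial results
        total.update(partial)
    return total


lines = ["the cloud scales", "the grid performs", "cloud and grid", "the end"]
result = epp_word_count(lines)
print(result["the"], result["cloud"], result["grid"])  # 3 2 2
```

Because the pieces are independent, adding workers (or machines) requires no change to `count_words`; only the scatter and gather steps touch more than one piece.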

The majority of grid workloads are of the EPP type. The diagram below shows this.

I had one person confide in me that “MPI power users drive grid requirements for vendors and assume that if their problems are solved, then the problems of [EPP] users are solved.”

This is interesting, since these two types of workloads have different needs.

HPC vs. HSC

The reality is that High Scalability Computing is ideal for the majority of EPP grid workloads. In fact, large amounts of this kind of work, in the form of MapReduce jobs, have been running on Amazon EC2 since its beginning and have driven much of its growth.

HPC is a different beast altogether, as many MPI workloads require very low latency and servers with individually high performance. It turns out, however, that not all MPI workloads are the same. The lower portion of the top part of that pyramid is filled with MPI workloads that require a great network, but not an Infiniband network:

In keeping with Amazon Web Services’ tendency to build out using commodity (cloud) techniques, its new HPC offering does not use Infiniband but instead opts for 10 Gigabit Ethernet. This makes the network great, but not awesome, and allows Amazon to create a cloud service tailored for many HPC jobs. In fact, a recent benchmark posting by Cycle Computing shows that AWS’ cloud HPC system has impressive performance, particularly for many MPI workloads.
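Why some MPI jobs tolerate a ‘great, but not awesome’ network while others do not can be shown with a crude cost model: total time is computation plus one network latency per message. The latency values below are hypothetical placeholders, not measurements of Infiniband or of Amazon’s 10 Gigabit Ethernet, and the model ignores bandwidth and any overlap of communication with computation.

```python
def job_time(compute_s: float, messages: int, latency_s: float) -> float:
    """Crude model: computation time plus one network latency per message.
    Ignores bandwidth, contention, and overlap of communication with compute."""
    return compute_s + messages * latency_s


LOW_LATENCY = 2e-6   # hypothetical low-latency interconnect, seconds/message
ETHERNET = 50e-6     # hypothetical commodity Ethernet, seconds/message

for name, messages in [("chatty MPI job", 10_000_000), ("coarse MPI job", 1_000)]:
    fast = job_time(100.0, messages, LOW_LATENCY)
    slow = job_time(100.0, messages, ETHERNET)
    print(f"{name}: {slow / fast:.2f}x the low-latency run time")
```

Under these assumptions, the chatty job takes about 5 times as long on the higher-latency network, while the coarse-grained job barely notices the difference; the latter is the kind of MPI workload a commodity-network cloud can serve well.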

HSC designed to accommodate HPC!

Which brings us back.

The Moral of the Story

So, what we have learned is that scalable computing is different from computing optimized for performance, and that cloud can accommodate grid *and* HPC workloads but is not itself necessarily a grid in the traditional sense. More importantly, an extremely overlooked segment of grid (EPP) has pressing needs that can be accommodated by run-of-the-mill clouds such as EC2. In addition to supporting EPP workloads that run on the ‘regular’ cloud, some clouds may also build out an area designed specifically for ‘HPC’ workloads.

In other words, grid is not cloud, but there are some relationships, and there is obviously a huge opportunity for cloud providers to accommodate this market segment. Amazon, at least, is spending tens of millions of dollars to do so, so why not you?

My (@rogerjenn) When Your DSL Connection Acts Strangely, First Reboot Your Modem/Router post of 11/18/2010 recounts:

Yesterday (Wednesday 11/17/2010) afternoon, my wife and I encountered a slowdown in connecting to certain Internet hosts, such as microsoft.com, msdn.com, yahoo.com, cloudapp.net (Windows Azure) and others, over our AT&T DSL connection. Other hosts, such as google.com and twitter.com, behaved as expected. I attributed the problem to temporary congestion or a routing mixup, but didn’t investigate further and turned off the four computers in the house.

This morning, I started my Windows Server 2003 R2 domain controller, as well as Windows Vista and Windows 7 clients, and found that none could connect to microsoft.com, msdn.com, yahoo.com, cloudapp.net or att.com. I didn’t receive immediate 404 errors; IE 8 remained in a “Connecting” state for several minutes. But google.com, twitter.com and a few other hosts continued to behave as expected. Gmail and CompuServe (AOL) mail worked as usual, but Microsoft Online Services wouldn’t connect.

…

Headslap! I hadn’t rebooted the old-timey Cayman 3220-H modem/router provided by Pacific Bell; rebooting usually was required to restore dropped DSL connections. I pulled the power cable, waited 15 seconds, plugged it back in, and received the expected “three green” (the same as for retractable aircraft landing gear in the down-and-locked position).

Eureka! The reboot solved the problem. All hosts behaved as expected. I have no idea why the router had problems with only certain hosts.

…


