Windows Azure and Cloud Computing Posts for 11/20/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Kyle McClellan described Windows Azure Table Storage LINQ Support in this 11/22/2010 post:

Windows Azure Table storage has minimal support for LINQ queries. It supports a few key operations, but most operators are unsupported. For RIA developers used to Entity Framework development, this is a significant difference. I wanted to put together this short post to draw your attention to this link.

When you use an unsupported operator, your application will fail at runtime. Typically the error is something along these lines:

An error occurred while processing this request.

at System.Data.Services.Client.DataServiceRequest.Execute[TElement](DataServiceContext context, QueryComponents queryComponents)

at System.Data.Services.Client.DataServiceQuery`1.Execute()

at System.Data.Services.Client.DataServiceQuery`1.GetEnumerator()

at SampleCloudApplication.Web.MyDomainService.GetMyParentEntitiesWithChildren()

at …

with an InnerException that looks like this:

<?xml version="1.0" encoding="utf-8" standalone="yes"?>

<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">

<code>NotImplemented</code>

<message xml:lang="en-US">The requested operation is not implemented on the specified resource.</message>

</error>

To solve this problem, you will need to bring the data to the mid-tier (where your DomainService is running) using the .ToArray() method. For example, “return this.EntityContext.MyEntities.OrderBy(e => e.MyProperty)” will fail, but “return this.EntityContext.MyEntities.ToArray().OrderBy(e => e.MyProperty)” will succeed.
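To see which operators in a query would be sent to the Table service, you can walk the LINQ expression tree before executing it. The sketch below is illustrative only: TableQueryChecker and MyEntity are hypothetical names, an in-memory IQueryable stands in for this.EntityContext.MyEntities, and the "supported" set (Where, Take, First, FirstOrDefault, Select) is an assumption based on the Table service's supported-operator documentation of that era. It also shows the .ToArray() workaround, after which OrderBy runs as LINQ to Objects on the mid-tier.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

public class MyEntity
{
    public string MyProperty { get; set; }
}

public static class TableQueryChecker
{
    // Assumption: the operators the Table service accepts; anything else fails at runtime.
    private static readonly HashSet<string> Supported = new HashSet<string>
    {
        "Where", "Take", "First", "FirstOrDefault", "Select"
    };

    // Lists the Queryable operators in the expression tree that are not in Supported.
    public static List<string> UnsupportedOperators(IQueryable query)
    {
        var collector = new OperatorCollector();
        collector.Visit(query.Expression);
        return collector.Operators.Where(op => !Supported.Contains(op)).Distinct().ToList();
    }

    private sealed class OperatorCollector : ExpressionVisitor
    {
        public readonly List<string> Operators = new List<string>();

        protected override Expression VisitMethodCall(MethodCallExpression node)
        {
            // Record only LINQ operators declared on System.Linq.Queryable.
            if (node.Method.DeclaringType == typeof(Queryable))
                Operators.Add(node.Method.Name);
            return base.VisitMethodCall(node);
        }
    }
}

public static class Program
{
    public static void Main()
    {
        // In-memory stand-in for this.EntityContext.MyEntities.
        IQueryable<MyEntity> myEntities = new[]
        {
            new MyEntity { MyProperty = "b" },
            new MyEntity { MyProperty = "a" }
        }.AsQueryable();

        // Against the Table service this whole tree would be sent, including OrderBy.
        var remote = myEntities.OrderBy(e => e.MyProperty);
        Console.WriteLine(string.Join(", ", TableQueryChecker.UnsupportedOperators(remote))); // OrderBy

        // ToArray() executes the unsorted query first; OrderBy then runs in memory.
        var local = myEntities.ToArray().OrderBy(e => e.MyProperty);
        Console.WriteLine(string.Join(", ", local.Select(e => e.MyProperty))); // a, b
    }
}
```

The trade-off is that everything the supported part of the query returns is pulled to the mid-tier, so it pays to keep any supported Where conditions ahead of the .ToArray() call.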

The lack of support is an issue on the mid-tier but has very little effect on the client. The base class, TableDomainService, does a lot of work processing the query to correctly handle most things sent from the client. The one caveat is that some .Where filters are too complex to be processed. For example, most string operations like Contains can’t be used, and string equality is case sensitive (so be sure to set FilterDescriptor.IsCaseSensitive to true when using DomainDataSource filters). Just as with the other unsupported operators, you can still implement these filters on the mid-tier by calling .ToArray() before performing the complex .Where query.
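A minimal sketch of such a mid-tier filter, assuming a hypothetical entity with a Name property: the entities are materialized with ToArray() and the Contains-style test runs in memory. Making the comparison case-insensitive (StringComparison.OrdinalIgnoreCase) is a choice of this sketch, not something the Table service or TableDomainService provides.

```csharp
using System;
using System.Linq;

public class MyEntity
{
    public string Name { get; set; }   // hypothetical property
}

public static class MidTierFilters
{
    // String containment is not translated for the Table service, so bring the
    // entities to the mid-tier first and filter them with LINQ to Objects.
    public static MyEntity[] NameContains(IQueryable<MyEntity> entities, string term)
    {
        return entities
            .ToArray()
            .Where(e => e.Name != null &&
                        e.Name.IndexOf(term, StringComparison.OrdinalIgnoreCase) >= 0)
            .ToArray();
    }
}

public static class Program
{
    public static void Main()
    {
        IQueryable<MyEntity> entities = new[]
        {
            new MyEntity { Name = "Seattle" },
            new MyEntity { Name = "san francisco" },
            new MyEntity { Name = "Portland" }
        }.AsQueryable();

        foreach (var e in MidTierFilters.NameContains(entities, "SEA"))
            Console.WriteLine(e.Name);   // Seattle
    }
}
```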

The Azure Cloud Computing Developers Group will hold their December Meetup on 12/6/2010 at 6:30 PM at Microsoft San Francisco (in Westfield Mall where Powell meets Market Street), 835 Market Street Golden Gate Rooms - 7th Floor, San Francisco CA 94103:

We have an exciting event planned for Dec 6th. First, the talented Neil Mackenzie, expert in the architecture of data-related software systems, will talk about the critically important topic of Windows Azure Diagnostics. Neil will delight you with information about the design, configuration, and management of Windows Azure Diagnostics.

Windows Azure Diagnostics enables you to collect diagnostic data from a service running in Windows Azure. You can use diagnostic data for tasks like debugging and troubleshooting, measuring performance, monitoring resource usage, traffic analysis and capacity planning, and auditing. Once collected, diagnostic data can be transferred to a Windows Azure storage account for persistence. Transfers can either be scheduled or on-demand.

The second topic will be using unit tests to understand how the Windows Azure Storage system works. Specifically, I will illustrate how to create, delete and update Azure storage mechanisms, including Tables, Blobs, and Queues. Unit tests typically aren't used as a teaching tool, but I have found them to be a great way to quickly and easily grasp core concepts, including the related C# code.

Please join us for a very informative evening.

Bruno Terkaly, Organizer

See my Adding Trace, Event, Counter and Error Logging to the OakLeaf Systems Azure Table Services Sample Project post of 11/22/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section for more details on Windows Azure diagnostics.

Adron Hall (@adronbh) concluded his series with Windows Azure Table Storage Part 2 of 11/22/2010 (with C# code elided for brevity):

In the first part of this two part series I reviewed what table storage in Windows Azure is. In addition I began a how-to for setting up an ASP.NET MVC 2 Web Application for accessing the Windows Azure Table Storage (sounds like keyword soup all of a sudden!). This sample so far is enough to run against the Windows Azure Dev Fabric.

However, I still need to set up the creation, update, and deletion views for the site, so without further ado, let’s roll.

- In the Storage directory of the ASP.NET MVC Web Application, right-click and select Add and then View. In the Add View dialog, select the Create a strongly-typed view option. From the View data class drop-down, select EmailMergeManagement.Models.EmailMergeModel, select Create from the View content drop-down box, and uncheck the Select master page check box. When complete, the dialog should look as shown below.

Add New View to Project Dialog

- Now right click and add another view using the same settings for Delete and name the view Delete.

- Right click and add another view using the same settings for Details and name the view Details.

- Right click and add another view for Edit and List, naming these views Edit and List respectively. When done, the Storage directory should have the following views: Create.aspx, Delete.aspx, Details.aspx, Edit.aspx, Index.aspx, and List.aspx.

The next step is to set up a RoleEntryPoint class for the web role to handle configuration and initialization of the storage table. The first part of this code starts diagnostics using the diagnostics connection string and wires up the event for the role environment changing. Next, the cloud storage account’s configuration setting publisher is set so the configuration settings can be used. Finally, the role environment is set up to recycle so that the latest settings and credentials are used when executing.

- Create a new class in the root of the ASP.NET MVC Web Application and call it EmailMergeWebAppRole.

- Add the following code to the EmailMergeWebAppRole class. …

Now open up the StorageController class in the Controllers directory.

- Add an action method for the List view with the following code. …

- On the Index.aspx view add some action links for the following commands.

- Open up the Create.aspx view and remove the following HTML & other related markup from the page. …

There is now enough collateral in the web application to run the application, create new EmailMergeModel items and view them with the List view. Press F5 to run the application. The first page that should come up is show[n] below.

Windows Azure Storage Samples Site Home Page

Click on the Windows Azure Table Storage link to navigate to the main view of the storage path. There are now Create and List links here. Click on the Create link and add a record to table storage. The Create view will look like the one below when you run it.

Windows Azure Storage Samples Site Create Email Merge Listing

I’ve added a couple just for viewing purposes, with the List view now looking like this.

Windows Azure Storage Samples Site Listings

Well, that’s a fully functional CRUD (Create, Read, Update, and Delete) Web Application running against Windows Azure, with a details screen to boot.

Peter Kellner explained Using Cloud Storage Studio From Cerebrata Software For Azure Storage Viewing in this 11/21/2010 post:

Just a quick shout out to Cerebrata Software, the makers of Cloud Storage Studio. Thanks for a great product offering! I’ve been doing quite a bit of work recently with Microsoft Windows Azure Blob Storage and have really appreciated the insight into that storage that Cloud Studio gives me. I had been using another product to do the same thing, which I had thought was easier and faster, but after just a couple hours of working with Cloud Studio, I’m finding I’ve really been missing out.

I’m attaching a screen shot below which shows what is actually in the storage (not a hierarchical false view) as well as a display of all the metadata. Either of those two features alone would be enough for me to switch.

If you have not used it, give it a try (and have some patience; it takes a little bit of practice to find the true value).

Good luck!

<Return to section navigation list>

SQL Azure Database and Reporting

Cihangir Biyikoglu posted SQL Azure Federations – Sampling of Scenarios where federations will help! on 11/22/2010:

The sharding pattern has been popularized by internet-scale applications, so scenarios in this category turn out to be perfect fits for SQL Azure federations. I spent the last few years building one of these types of apps, working on an electronic health record store on the web called HealthVault. HealthVault is designed to store electronic health records for the population of a whole country. It is available in the US and a number of other countries. Users and applications can put and get anything from small data such as body weight all the way to huge blobs like MRIs into their health records. Much like many other applications on the web, the traffic on the site changes as news articles, blogs and TV appearances attract users to the site.

Capacity requirements shift rapidly, and even though it is possible to predict capacity a few days or weeks in advance, predicting next month’s requirements is much more difficult. Pick your favorite free site and it is easy to generate examples like this: imagine a news website or a blog site. On a given day, there isn’t always a way to predict which articles will go viral. As people read and comment on the popular viral articles, the database traffic goes up. The best way to handle such rapid shifts in resource requirements is to repartition data to evenly distribute the load and take advantage of the full capacity of all machines in your cluster. Such unpredictability makes day-to-day capacity planning a tough challenge. Add on top of this the unpredictable capacity requirements of software updates to these sites, with new and improved functionality, and one thing is crystal clear:

There isn’t a scale-up machine you can buy that can handle HealthVault or other internet-scale application workloads at their peak loads. This is where federations shine! Federations provide the ability to repartition data online. With no loss of availability, you can initiate an “ALTER FEDERATION … SPLIT AT”, for example, to spread the workload of a federation member database that contains hundreds or thousands of news articles across two equal federation members, each covering half the news articles, and get twice the throughput.
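To make the effect of a split on key ranges concrete, here is a toy in-memory model, not the SQL Azure API or its T-SQL syntax. FederationMap, FederationMember and the member names are hypothetical, and the federation key is assumed to be a long (for example a news article ID). Each member owns a half-open range [Low, High); SplitAt replaces the member holding the split point with two members, and Route plays the part of connection routing.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of a range-partitioned federation member.
public sealed class FederationMember
{
    public FederationMember(string name, long low, long high)
    {
        Name = name; Low = low; High = high;
    }

    public string Name { get; }
    public long Low { get; }    // inclusive lower bound of the key range
    public long High { get; }   // exclusive upper bound (long.MaxValue itself is not covered in this toy)

    public bool Covers(long key) => key >= Low && key < High;
    public override string ToString() => Name + "[" + Low + ", " + High + ")";
}

public sealed class FederationMap
{
    private readonly List<FederationMember> members = new List<FederationMember>();
    private int nextId = 1;

    public FederationMap()
    {
        // A new federation starts with one member covering the whole key space.
        members.Add(new FederationMember("member" + nextId++, long.MinValue, long.MaxValue));
    }

    public IReadOnlyList<FederationMember> Members => members;

    // Connection routing: the member whose range holds the federation key value.
    public FederationMember Route(long key) => members.Single(m => m.Covers(key));

    // Models SPLIT AT: the member holding splitPoint becomes [Low, splitPoint)
    // and [splitPoint, High), so each new member carries part of the workload.
    public void SplitAt(long splitPoint)
    {
        FederationMember target = Route(splitPoint);
        if (target.Low == splitPoint)
            throw new InvalidOperationException("Split point is already a member boundary.");

        int index = members.IndexOf(target);
        members[index] = new FederationMember("member" + nextId++, target.Low, splitPoint);
        members.Insert(index + 1, new FederationMember("member" + nextId++, splitPoint, target.High));
    }
}

public static class Program
{
    public static void Main()
    {
        var map = new FederationMap();
        map.SplitAt(1000);                          // article IDs below 1000 vs. 1000 and up
        Console.WriteLine(map.Route(42).Name);      // member2
        Console.WriteLine(map.Route(5000).Name);    // member3
    }
}
```

Whether the two halves really end up with equal load depends on where the split point falls relative to the hot keys; the model only shows the range bookkeeping.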

Another great scenario is multi-tenant ISV apps. Much like us on the Azure teams, any software vendor who is building solutions for an unpredictable number of customers has to consider the long tail of customers they will need to handle as well as the large head. Imagine a web front end for solo doctors’ offices or small bed & breakfast operators that manages scheduling and reservations, or a human resources app tracking each store of a franchise… Given the hurried pace of business, customers use self-service UIs, provision, and then use the app right away… As your customers’ load shifts, you can adjust the tenant count per database through online SPLITs and MERGEs of your databases.

Some ISVs try to manage each customer with a dedicated-database approach, and that works when you have a small number of customers to serve. However, for anyone serving a moderate to large number of customers, managing hundreds or thousands of databases quickly becomes impractical. Federations provide a great way to work with tenants, customers or stores as atomic units and to use FILTERING connections that automatically filter based on a federation key instance. (In case you have not seen one of the talks at PDC or PASS on SQL Azure federations, I will talk about this more in upcoming posts.)
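The idea behind a filtering connection can be pictured with a small in-memory model: once a connection is bound to one federation key value, every query through it sees only that tenant’s rows, so tenant code never writes the filter itself. FilteringConnection, Order and CustomerId are hypothetical names; this illustrates the concept only and is not how SQL Azure implements FILTERING or its connection syntax.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class Order
{
    public int CustomerId { get; set; }   // federation key value (the tenant)
    public string Item { get; set; }
}

// Hypothetical model: a connection bound to one federation key value.
public sealed class FilteringConnection
{
    private readonly IEnumerable<Order> memberRows;

    public FilteringConnection(IEnumerable<Order> memberRows, int customerId)
    {
        this.memberRows = memberRows;
        CustomerId = customerId;
    }

    public int CustomerId { get; }

    // The connection applies the tenant filter to every query it serves.
    public IEnumerable<Order> Orders => memberRows.Where(o => o.CustomerId == CustomerId);
}

public static class Program
{
    public static void Main()
    {
        // One federation member database holding several tenants' rows.
        var rows = new List<Order>
        {
            new Order { CustomerId = 1, Item = "Room 101, Dec 6" },
            new Order { CustomerId = 2, Item = "Room 7, Dec 8" },
            new Order { CustomerId = 2, Item = "Room 9, Dec 9" }
        };

        var tenant2 = new FilteringConnection(rows, 2);
        Console.WriteLine(tenant2.Orders.Count());   // 2
    }
}
```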

Federations can also help with sliding-window scenarios, where you manage time-based data such as inventory over months, or with entity-based federations, where a ticker symbol could be used to manage asks and bids in a stock trading platform. In all these partitioned cases, if you’d like to repartition data without losing availability and with robust connection routing, federations will help.

I am certain there are many more examples out there brewing. I hope some of these examples help solidify the concept of SQL Azure federations for you and help you visualize how to use them. If you have scenarios you’d like to share, just leave a note on the blog…

<Return to section navigation list>

Marketplace DataMarket and OData

Gunnar Peipman described Creating [a] Twitpic client using ASP.NET and OData in this 11/22/2010 post:

OData

Open Data Protocol (OData) is one of the new HTTP-based protocols for updating and querying data. It is a simple protocol that makes use of other protocols and formats like HTTP, ATOM and JSON. One of the sites that allows you to consume its data over the OData protocol is Twitpic, the picture service for Twitter. In this posting I will show you how to build a simple Twitpic client using ASP.NET.

Source code

You can find the source code for this example in the Visual Studio 2010 experiments repository at GitHub.

Source code repository: GitHub

This example belongs to the Experiments.OData solution; the project name is Experiments.OData.TwitPicClient.

Sample application

My sample application is simple. It has some usernames on the left side that the user can select. There is also a textbox to filter images: if the user enters something in the textbox, that string is searched for in the message part of the pictures. Results are shown as in the following screenshot.

The maximum number of images returned by one request is limited to ten. You can change the limit or remove it; it is here just to show you how queries can be done.

Querying Twitpic

I wrote this application in Visual Studio 2010. To get Twitpic data I did nothing special; I just added a service reference to the Twitpic OData service located at http://odata.twitpic.com/. Visual Studio understands the format and generates all the classes for you, so you can start using the service immediately.

Now let’s see how to query the OData service. The query is built in the Page_Load method of my default page. Note how I built the LINQ query step by step.

My query is built in the following steps:

- create a query that joins users and images and adds a condition for the username,

- if the search textbox was filled in, add a search condition to the query,

- sort the data by timestamp in descending order,

- make the query return only the first ten results.
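The four steps above can be sketched as a method that composes an IQueryable conditionally. This is not the post’s Page_Load code: TwitpicImage and its properties are hypothetical stand-ins for the generated service classes, and step 1 is simplified to a UserName filter, whereas the real client navigates Users('name')/Images(). Against a WCF Data Services context, Contains and ToLower are translated to substringof and tolower, OrderByDescending to $orderby … desc, and Take to $top; here the source is in memory so the sketch runs without the service.

```csharp
using System;
using System.Linq;

public sealed class TwitpicImage
{
    public string UserName { get; set; }
    public string Message { get; set; }
    public DateTime Timestamp { get; set; }
}

public static class TwitpicQuery
{
    public static IQueryable<TwitpicImage> Build(IQueryable<TwitpicImage> images, string userName, string search)
    {
        // Step 1: restrict to one user's images.
        IQueryable<TwitpicImage> query = images.Where(i => i.UserName == userName);

        // Step 2: add the search condition only when the textbox has a value.
        if (!string.IsNullOrWhiteSpace(search))
        {
            string term = search.ToLower();
            query = query.Where(i => i.Message != null && i.Message.ToLower().Contains(term));
        }

        // Steps 3 and 4: newest first, at most ten results.
        return query.OrderByDescending(i => i.Timestamp).Take(10);
    }
}

public static class Program
{
    public static void Main()
    {
        var start = new DateTime(2010, 11, 1);
        IQueryable<TwitpicImage> images = Enumerable.Range(0, 15)
            .Select(n => new TwitpicImage
            {
                UserName = "gpeipman",
                Message = n % 2 == 0 ? "Belgrade at night " + n : "Tallinn " + n,
                Timestamp = start.AddDays(n)
            })
            .AsQueryable();

        foreach (var image in TwitpicQuery.Build(images, "gpeipman", "belgrade"))
            Console.WriteLine(image.Timestamp.ToShortDateString() + " " + image.Message);
    }
}
```

Because nothing executes until the result is enumerated, the optional Where in step 2 simply becomes part of the single request when the textbox is filled in.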

Now let’s see the OData query that is sent to the Twitpic OData service.

This is the query that our service client sent to the OData service (I searched my pictures for “Belgrade”):

/Users('gpeipman')/Images()?$filter=substringof('belgrade',tolower(Message))&$orderby=Timestamp%20desc&$top=10

If you look at the request string, you can see that all the conditions I set through LINQ are represented in this URL. The URL is not very short, but it has a simple syntax and is easy to read. The answer to our query is plain XML that is mapped to our object model when the answer arrives. As you can see, there is nothing complex here.
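To show how the pieces of that URL line up with the LINQ conditions, here is a hypothetical helper that assembles the same string by hand; it is not part of the generated client. It lower-cases the search term, as the substringof/tolower filter implies, doubles single quotes inside OData string literals, and percent-encodes only spaces, which is enough for this example but far less than a real client escapes.

```csharp
using System;
using System.Collections.Generic;

public static class TwitpicUrl
{
    // Builds a query URL of the same shape as the one the service client sent.
    public static string ForUserImages(string user, string search, int top)
    {
        var options = new List<string>();
        if (!string.IsNullOrEmpty(search))
        {
            // OData string literals are single-quoted; embedded quotes are doubled.
            string literal = search.ToLowerInvariant().Replace("'", "''");
            options.Add("$filter=substringof('" + literal + "',tolower(Message))");
        }
        options.Add("$orderby=Timestamp desc");
        options.Add("$top=" + top);

        string path = "/Users('" + user.Replace("'", "''") + "')/Images()";
        // Only spaces are encoded in this sketch.
        return (path + "?" + string.Join("&", options)).Replace(" ", "%20");
    }
}

public static class Program
{
    public static void Main()
    {
        // /Users('gpeipman')/Images()?$filter=substringof('belgrade',tolower(Message))&$orderby=Timestamp%20desc&$top=10
        Console.WriteLine(TwitpicUrl.ForUserImages("gpeipman", "Belgrade", 10));
    }
}
```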

Conclusion

Getting our Twitpic client working was an extremely simple task. We did nothing special; we just added a reference to the service and wrote one simple LINQ query whose results we gave to the repeater that shows the data. As we saw from the monitoring proxy server’s report, the answer is a little bit messy but still simple XML that we could also read with an XML library if we don’t have any better option. You can find more OData services on the OData producers page.

David Giard reported [Deep Fried Bytes] Episode 126: Chris Woodruff on OData on 11/22/2010:

In this interview, Deep Fried Bytes host Chris Woodruff explains the standard OData protocol and how to use .NET tools to create and consume data in this format.

The Deep Fried Bytes blog wasn’t up to date when I checked on 11/22/2010.

Scott Guthrie (@scottgu) listed additional Upcoming Web Camps that will include OData hands-on development in an 11/21/2010 post:

Earlier this year I blogged about some Web Camp events that Microsoft is sponsoring around the world. These training events provide a great way to learn about a variety of technologies including ASP.NET 4, ASP.NET MVC, VS 2010, Web Matrix, Silverlight, and IE9. The events are free and the feedback from people attending them has been great.

A bunch of additional Web Camp events are coming up in the months ahead. You can find out more about the events and register to attend them for free here.

Below is a snapshot of the upcoming schedule as of today:

One Day Events

One day events focus on teaching you how to build websites using ASP.NET MVC, WebMatrix, OData and more, and include presentations & hands on development. They will be available in 30 countries worldwide. Below are the current known dates with links to register to attend them for free:

| City | Country | Date | Technology | Registration Link |
|---|---|---|---|---|
| Bangalore | India | 16-Nov-10 | ASP.NET MVC | Already Happened |
| Paris | France | 25-Nov-10 | TBA | Register Here |
| Bad Homburg | Germany | 30-Nov-10 | ASP.NET MVC | Register Here |
| Bogota | Colombia | 30-Nov-10 | Multiple | Register Here |
| Chennai | India | 1-Dec-10 | ASP.NET MVC | Register Here |
| Seoul | Korea | 2-Dec-10 | WebMatrix | Coming Soon |
| Pune | India | 3-Dec-10 | ASP.NET MVC | Register Here |
| Moulineaux | France | 8-Dec-10 | TBA | Register Here |
| Sarajevo | Bosnia and Herzegovina | 10-Dec-10 | ASP.NET MVC | Register Here |
| Toronto | Canada | 11-Dec-10 | ASP.NET MVC | Register Here |
| Bad Homburg | Germany | 16-Dec-10 | ASP.NET MVC | Register Here |
| Moulineaux | France | 11-Jan-11 | TBA | Register Here |
| Cape Town | South Africa | 22-Jan-11 | WebMatrix | Coming Soon |
| Johannesburg | South Africa | 29-Jan-11 | WebMatrix | Coming Soon |
| Tunis | Tunisia | 1-Feb-11 | ASP.NET MVC | Register Here |
| Cape Town | South Africa | 12-Feb-11 | ASP.NET MVC | Coming Soon |
| San Francisco, CA | USA-West | 18-Feb-11 | ASP.NET MVC | Coming Soon |
| Johannesburg | South Africa | 19-Feb-11 | ASP.NET MVC | Coming Soon |
| Redmond, WA | USA-West | 18-Mar-11 | OData | Coming Soon |
| Munich | Germany | 31-Mar-11 | WebMatrix | Register Here |
| Moulineaux | France | 5-Apr-11 | TBA | Register Here |
| Moulineaux | France | 17-May-11 | TBA | Register Here |
| Irvine, CA | USA-West | 10-Jun-11 | ASP.NET MVC | Coming Soon |
| Moulineaux | France | 14-Jun-11 | TBA | Register Here |

Two Day Events

Two day Web Camps go into even more depth. These events will cover ASP.NET, ASP.NET MVC, WebMatrix and OData, and will have presentations on day 1 with hands on development on day 2. Below are the current dates for the events:

| City | Country | Date | Presenters | Registration Link |
|---|---|---|---|---|
| Hyderabad | India | 18-Nov-10 | James Senior & Jon Galloway | Already Happened |
| Amsterdam | Netherlands | 20-Jan-11 | James Senior & Scott Hanselman | Coming Soon |
| Paris | France | 25-Jan-11 | James Senior & Scott Hanselman | Coming Soon |
| Austin, TX | USA | 7-Mar-11 | James Senior & Scott Hanselman | Coming Soon |
| Buenos Aires | Argentina | 14-Mar-11 | James Senior & Phil Haack | Coming Soon |
| São Paulo | Brazil | 18-Mar-11 | James Senior & Phil Haack | Coming Soon |
| Silicon Valley | USA | 6-May-11 | James Senior & Doris Chen | Coming Soon |

More Details

You can find the latest details and registration information about upcoming Web Camp events here.

Hope this helps,

Scott

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Wade Wegner announced the availability of his Code for the Windows Azure AppFabric Caching demo at PDC 2010 in this 11/22/2010 post:

This post is long overd[ue]. At PDC10 I recorded the session Introduction to Windows Azure AppFabric Caching, where I introduced the new Caching service. As part of the presentation I gave a demo where I updated an existing ASP.NET web application running in a Windows Azure web role to use the caching service. For details on the caching service, please watch the presentation.

In this presentation I showed three uses of the caching service:

- Using the Caching service as the session state provider.

- Using the Caching service as an explicit cache for reference data stored in SQL Azure.

- Using the Caching service along with the local cache feature to store resource data in the web client.
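The second use, an explicit cache for reference data, follows the cache-aside pattern: check the cache, and on a miss load from the database and store the result. A minimal sketch, assuming a hypothetical ICache interface with Get/Put in place of the AppFabric Caching client (whose exact API isn’t shown in this post) and an in-memory dictionary so it runs without a cache endpoint. A real distributed cache stores serialized copies, so cached types need to be serializable.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache abstraction; in the demo the Caching service fills this role.
public interface ICache
{
    object Get(string key);
    void Put(string key, object value);
}

// In-memory stand-in so the sketch runs locally.
public sealed class InMemoryCache : ICache
{
    private readonly Dictionary<string, object> items = new Dictionary<string, object>();

    public object Get(string key)
    {
        object value;
        return items.TryGetValue(key, out value) ? value : null;
    }

    public void Put(string key, object value) => items[key] = value;
}

public sealed class ProductCatalog
{
    private const string CacheKey = "products";
    private readonly ICache cache;
    private readonly Func<List<string>> loadFromDatabase;   // stands in for the SQL Azure query

    public ProductCatalog(ICache cache, Func<List<string>> loadFromDatabase)
    {
        this.cache = cache;
        this.loadFromDatabase = loadFromDatabase;
    }

    public int DatabaseCalls { get; private set; }

    // Cache-aside: serve reference data from the cache, loading and storing it on a miss.
    public List<string> GetProducts()
    {
        var cached = cache.Get(CacheKey) as List<string>;
        if (cached != null)
            return cached;

        DatabaseCalls++;
        List<string> products = loadFromDatabase();
        cache.Put(CacheKey, products);
        return products;
    }
}

public static class Program
{
    public static void Main()
    {
        var catalog = new ProductCatalog(new InMemoryCache(),
            () => new List<string> { "Chai", "Chang", "Aniseed Syrup" });

        catalog.GetProducts();
        catalog.GetProducts();
        Console.WriteLine(catalog.DatabaseCalls);   // 1
    }
}
```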

If you want to see this application running in Windows Azure, take a look here: http://cachedemo.cloudapp.net/

I had meant to immediately post the code to this application so that you can try it out yourself, but alas PDC10 and TechEd EMEA set me back quite a bit. So, without further ado, here are the files you’ll need:

CacheService.zip contains the solution and projects required to run the application. NothwindProducts.zip contains the SQL scripts required to set up the SQL Azure database you’ll need to use (if you want to use SQL Server, you’ll have to update the connection string accordingly).

You’ll have to update a few items in the web.config file. Search and replace the following items:

- YOURCACHE – get this from the AppFabric portal

- YOURTOKEN – get this from the AppFabric portal

- YOURDATABASE (2 instances) – get this from the SQL Azure portal

- YOURUSERNAME – get this from the SQL Azure portal

- YOURPASSWORD – get this from the SQL Azure portal

Once you have made these updates, hit F5 to run locally.

Please note: when you run this locally against the Caching service, you will encounter significant network latency! This is to be expected, as you are making a number of network hops to get to the data center in order to reach your cache. To get the best results, deploy your application to South Central US as this is where the AppFabric LABS portal creates your cache.

If you have any problems or questions, please let me know.

Will Perry posted Getting Started with Service Bus v2 (October CTP) - Connection Points on 11/1/2010 (missed when posted):

Last week at PDC we released a Community Technology Preview (CTP) of a new version of Windows Azure AppFabric Service Bus. You can download the SDK, Samples and Help on the Microsoft Download Center. There’s plenty new in this release and the first is the notion of Explicit Connection Point Management.

In v1, whenever you started up a service and relayed it through the cloud over Service Bus, we just quietly cooperated – this seems like a great idea until you think about a couple of advanced scenarios. Firstly, what happens when your service is offline but a sender (client) tries to connect to it? Well, we don’t know whether the service is offline or the address is wrong (and there’s no way to differentiate); there’s no way to determine what’s a valid service connection point and what isn’t. There’s also no way to specify metadata about the service that connects (this is an HTTP endpoint, this endpoint is only Oneway). Also, you might have noticed that we added support for Anycast in v2 – how can you determine which connection points are Unicast (one listener/server and many senders/clients) and which are Anycast (many load-balanced listeners and many clients)? That’s what connection points are for.

A Connection Point defines the metadata for a Service Bus connection. This includes the Shape (Duplex, RequestReply, Http or Oneway), the Maximum Number of Listeners, and the Runtime Uri, which acts as the service endpoint. Connection Points are managed as an Atom Feed using standard REST techniques. You’ll find the feed at the Connection Point Management Uri (https://<YourServiceNamespace>-mgmt.servicebus.appfabriclabs.com/Resources/ConnectionPoints)¹ and it’ll look a little bit like this:

<feed xmlns="http://www.w3.org/2005/Atom">

<title type="text">ConnectionPoints</title>

<subtitle type="text">This is the list of ConnectionPoints currently available</subtitle>

<id>https://willpe-blog-mgmt.servicebus.appfabriclabs.com/Resources/ConnectionPoints</id>

<updated>2010-11-01T20:53:53Z</updated>

<generator>Microsoft Windows Azure AppFabric - Service Bus</generator>

<link rel="self"

href="https://willpe-blog-mgmt.servicebus.appfabriclabs.com/Resources/ConnectionPoints"/>

<entry>

<id>https://willpe-blog-mgmt.servicebus.appfabriclabs.com/Resources/ConnectionPoints(TestConnectionPoint)</id>

<title type="text">TestConnectionPoint</title>

<published>2010-11-01T20:51:11Z</published>

<updated>2010-11-01T20:51:11Z</updated>

<link rel="self"

href="https://willpe-blog-mgmt.servicebus.appfabriclabs.com/Resources/ConnectionPoints(TestConnectionPoint)"/>

<link rel="alternate"

href="sb://willpe-blog.servicebus.appfabriclabs.com/services/testconnectionpoint"/>

<content type="application/xml">

<ConnectionPoint xmlns="" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<MaxListeners>2</MaxListeners>

<ChannelShape>RequestReply</ChannelShape>

</ConnectionPoint>

</content>

</entry>

</feed>

Writing some code to get your list of connection points is easy – in the rest of this post, I’ll show you how to (very simply) List, Create and Delete connection points. Before getting started, make sure that you’ve installed the October 2010 Windows Azure AppFabric SDK, and then create a new .NET Framework 4.0 (Full) Console Application in Visual Studio 2010.
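The feed shown above is ordinary Atom, so once it has been downloaded (the WebClient step below returns it as a string), LINQ to XML can turn it into objects instead of printing raw XML. A sketch under that assumption: ConnectionPointInfo and ConnectionPointFeed are hypothetical names, and the element names (title, the alternate link, ConnectionPoint/MaxListeners/ChannelShape in the empty namespace) are taken from the sample feed in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public sealed class ConnectionPointInfo
{
    public string Name { get; set; }
    public Uri RuntimeUri { get; set; }
    public int MaxListeners { get; set; }
    public string ChannelShape { get; set; }
}

public static class ConnectionPointFeed
{
    private static readonly XNamespace Atom = "http://www.w3.org/2005/Atom";

    public static List<ConnectionPointInfo> Parse(string feedXml)
    {
        return XDocument.Parse(feedXml).Root
            .Elements(Atom + "entry")
            .Select(entry =>
            {
                // ConnectionPoint is declared with xmlns="", so it has no namespace.
                XElement cp = entry.Element(Atom + "content").Element("ConnectionPoint");
                XElement alternate = entry.Elements(Atom + "link")
                    .First(l => (string)l.Attribute("rel") == "alternate");
                return new ConnectionPointInfo
                {
                    Name = (string)entry.Element(Atom + "title"),
                    RuntimeUri = new Uri((string)alternate.Attribute("href")),
                    MaxListeners = (int)cp.Element("MaxListeners"),
                    ChannelShape = (string)cp.Element("ChannelShape")
                };
            })
            .ToList();
    }
}

public static class Program
{
    public static void Main()
    {
        // Trimmed copy of the sample feed above.
        const string feed = @"<feed xmlns=""http://www.w3.org/2005/Atom"">
  <entry>
    <title type=""text"">TestConnectionPoint</title>
    <link rel=""alternate"" href=""sb://willpe-blog.servicebus.appfabriclabs.com/services/testconnectionpoint""/>
    <content type=""application/xml"">
      <ConnectionPoint xmlns="""">
        <MaxListeners>2</MaxListeners>
        <ChannelShape>RequestReply</ChannelShape>
      </ConnectionPoint>
    </content>
  </entry>
</feed>";

        foreach (var cp in ConnectionPointFeed.Parse(feed))
            Console.WriteLine(cp.Name + " " + cp.ChannelShape + " max=" + cp.MaxListeners + " " + cp.RuntimeUri);
    }
}
```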

- Add a reference to the Microsoft.ServiceBus.Channels assembly (it’s in the GAC, or at C:\Program Files\Windows Azure AppFabric SDK\V2.0\Assemblies\Microsoft.ServiceBus.Channels.dll if you need to find it on disk).

- Add three string constants containing your Service Namespace, Default Issuer Name and Default Issuer Key. You can get this information at the Windows Azure AppFabric Portal:

private const string serviceNamespace = "<YourServiceNamespace>";

private const string username = "owner";

private const string password = "base64base64base64base64base64base64base64==";

- Now define the hostname of your service bus management service (you can find this in the portal, too):

private static readonly string ManagementHostName = serviceNamespace + "-mgmt.servicebus.appfabriclabs.com";

Access to Service Bus Management is controlled by the Access Control Service. You’ll need to present the ‘net.windows.servicebus.action’ claim with a value ‘Manage’ in order to have permission to Create, Read and Delete connection points. The default issuer (owner) is configured to have this permission by default when you provision a new account.
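GetAuthToken isn’t listed in this post; according to footnote 2, token-retrieval code is included in the download. As a rough idea of what such a helper handles, here is a sketch of the string-handling halves of an OAuth WRAP v0.9 username/password request: building the form-encoded body (wrap_name, wrap_password, wrap_scope) and pulling wrap_access_token out of the form-encoded response. WrapToken is a hypothetical name, the sample values are made up, and the ACS token endpoint for your namespace is deliberately not guessed here; the scope is the http management realm described in the next paragraph.

```csharp
using System;
using System.Net;

public static class WrapToken
{
    // Form body for a WRAP username/password token request.
    public static string BuildRequestBody(string issuerName, string issuerKey, string scope) =>
        "wrap_name=" + WebUtility.UrlEncode(issuerName) +
        "&wrap_password=" + WebUtility.UrlEncode(issuerKey) +
        "&wrap_scope=" + WebUtility.UrlEncode(scope);

    // The token response is form-encoded; find and decode wrap_access_token.
    public static string ExtractAccessToken(string responseBody)
    {
        foreach (string pair in responseBody.Split('&'))
        {
            int eq = pair.IndexOf('=');
            if (eq > 0 && pair.Substring(0, eq) == "wrap_access_token")
                return WebUtility.UrlDecode(pair.Substring(eq + 1));
        }
        throw new FormatException("wrap_access_token not found in response.");
    }

    // Authorization header value in the form used by the WebClient calls in this post.
    public static string AuthorizationHeader(string token) => "WRAP access_token=\"" + token + "\"";
}

public static class Program
{
    public static void Main()
    {
        // Hypothetical issuer values; the realm uses the http scheme.
        Console.WriteLine(WrapToken.BuildRequestBody("owner", "base64base64==",
            "http://yournamespace-mgmt.servicebus.appfabriclabs.com/"));

        // Made-up response body in the form-encoded shape WRAP returns.
        string token = WrapToken.ExtractAccessToken(
            "wrap_access_token=Issuer%3Downer%26HMACSHA256%3Dabc&wrap_access_token_expires_in=1200");
        Console.WriteLine(WrapToken.AuthorizationHeader(token));   // WRAP access_token="Issuer=owner&HMACSHA256=abc"
    }
}
```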

We’ll need to go ahead and get a Simple Web Token from the Access Control Service for our request. Note that, by default, the realm (relying party address) used for service bus management has the http scheme: you must retrieve a token for the management service using a Uri with the default port and http scheme even though you will in fact connect to the service over https:

- Get an auth token from ACS² for the management service’s realm (remember, this must specify an http scheme):

string authToken = GetAuthToken(new Uri("http://" + ManagementHostName + "/"));

- Add the token to the Authorization header of a new WebClient and download the feed (note that here we specify https):

using (WebClient client = new WebClient())

{

client.Headers.Add(HttpRequestHeader.Authorization, "WRAP access_token=\"" + authToken + "\"");

var feedUri = "https://" + ManagementHostName + "/Resources/ConnectionPoints";

var connectionPoints = client.DownloadString(feedUri);

Console.WriteLine(connectionPoints);

}

Compile and run the app to see your current connection points (probably none). Now let’s go ahead and create a Connection Point; to do this we just POST an Atom entry to the ConnectionPoint Management service. You can achieve this using classes from the System.ServiceModel.Syndication namespace³, but for clarity and simplicity we’ll stick to using a big old block of XML encoded as a string. It’s not pretty this way, but it gets the job done!
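As a middle ground between the string block and the Syndication classes, LINQ to XML can build the same entry while handling escaping of the name and address for you. This is a swapped-in technique, not the post’s approach: ConnectionPointEntry is a hypothetical name, the id uses a fresh GUID in the post’s uuid:…;id=… shape, and the element layout is copied from the string version. You would write entry.ToString() to the request stream in place of the string.

```csharp
using System;
using System.Xml.Linq;

public static class ConnectionPointEntry
{
    private static readonly XNamespace Atom = "http://www.w3.org/2005/Atom";

    // Builds an Atom entry shaped like the string version in this post.
    public static XElement Build(string name, int maxListeners, string channelShape, Uri endpointAddress)
    {
        return new XElement(Atom + "entry",
            new XElement(Atom + "id", "uuid:" + Guid.NewGuid() + ";id=1"),
            new XElement(Atom + "title", new XAttribute("type", "text"), name),
            // The runtime Uri goes in as the entry's alternate link.
            new XElement(Atom + "link",
                new XAttribute("rel", "alternate"),
                new XAttribute("href", endpointAddress.AbsoluteUri)),
            new XElement(Atom + "updated", DateTime.UtcNow),
            new XElement(Atom + "content", new XAttribute("type", "application/xml"),
                // No namespace here, so LINQ to XML emits xmlns="" as in the post.
                new XElement("ConnectionPoint",
                    new XElement("MaxListeners", maxListeners),
                    new XElement("ChannelShape", channelShape))));
    }
}

public static class Program
{
    public static void Main()
    {
        // Hypothetical namespace; the post builds this Uri with ServiceBusEnvironment.CreateServiceUri.
        var endpoint = new Uri("sb://yournamespace.servicebus.appfabriclabs.com/services/TestConnectionPoint");
        XElement entry = ConnectionPointEntry.Build("TestConnectionPoint", 2, "RequestReply", endpoint);
        Console.WriteLine(entry);
    }
}
```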

- Define the properties of the new Connection Point – you need to specify a name (alphanumeric), the maximum number of listeners, a channel shape (Duplex, Http, Oneway or RequestReply) and the endpoint address (sometimes called a Runtime Uri) for the service:

string connectionPointName = "TestConnectionPoint";

int maxListeners = 2;

string channelShape = "RequestReply";

Uri endpointAddress = ServiceBusEnvironment.CreateServiceUri(

"sb", serviceNamespace, "/services/TestConnectionPoint");

- Now, go ahead and define the new Atom Entry for the connection point. Note how the name is set as the entry’s title and the endpoint address is set as an alternate link for the entry.

var entry = @"<entry xmlns=""http://www.w3.org/2005/Atom"">

<id>uuid:04d8d317-511c-4946-bc1d-6f49b6f66385;id=3</id>

<title type=""text"">" + connectionPointName + @"</title>

<link rel=""alternate"" href=""" + endpointAddress + @""" />

<updated>2010-11-01T20:44:08Z</updated>

<content type=""application/xml"">

<ConnectionPoint xmlns:i=""http://www.w3.org/2001/XMLSchema-instance"" xmlns="""">

<MaxListeners>" + maxListeners + @"</MaxListeners>

<ChannelShape>" + channelShape + @"</ChannelShape>

</ConnectionPoint>

</content>

</entry>";

- Create an HttpWebRequest, add the ACS auth token, set the HTTP method to POST and configure the content type as ‘application/atom+xml’:

var request = (HttpWebRequest)WebRequest.Create("https://" + ManagementHostName + "/Resources/ConnectionPoints");
request.Headers.Add(HttpRequestHeader.Authorization, "WRAP access_token=\"" + authToken + "\"");
request.ContentType = "application/atom+xml";
request.Method = "POST";

- Finally, write the new Atom entry to the request stream and retrieve the response. You'll discover an Atom entry describing your newly created connection point in that response:

using (var writer = new StreamWriter(request.GetRequestStream()))
{
    writer.Write(entry);
}

// Dispose the response and reader so the connection is released.
using (WebResponse response = request.GetResponse())
using (var reader = new StreamReader(response.GetResponseStream()))
{
    string connectionPoint = reader.ReadToEnd();
    Console.WriteLine(connectionPoint);
}

If you re-run your application now, you'll see that the connection point you created is listed in the feed we retrieved earlier. The last thing to do now is clean up after ourselves by issuing a DELETE against the resource we just created:

- Connection Point management addresses are similar to OData addresses: we address the specific connection point to delete by appending the name of the item to delete, in parentheses, to the connection point management URI:

var connectionPointManagementAddress =
    "https://" + ManagementHostName + "/Resources/ConnectionPoints(" + connectionPointName + ")";

- Add the ACS auth token to the headers of a WebClient and issue a DELETE against the entry:

using (WebClient client = new WebClient())
{
    client.Headers.Add(HttpRequestHeader.Authorization, "WRAP access_token=\"" + authToken + "\"");
    client.UploadString(connectionPointManagementAddress, "DELETE", string.Empty);
}

So, there we go: listing, creating and deleting Connection Points. Download the full example from SkyDrive:

___

1 You’ll need to have a WRAP token in the Http Authorization Header to access this resource, so you can’t just navigate to it in a browser.

2 Read more about the Access Control Service at Justin Smith’s blog. Sample code to retrieve a token is included in the download at the end of this post.

3 An example of using the Syndication primitives to manipulate connection points is available in the October 2010 Windows Azure AppFabric sample ‘ManagementOperations’.
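The walkthrough above builds the Atom entry by string concatenation, which produces invalid XML if a value ever contains a character such as `&` or `<`. A minimal sketch of the same requests built with LINQ to XML (System.Xml.Linq), offered as an alternative rather than as the author's code: the class and method names are hypothetical, a fresh `Guid` replaces the hard-coded entry id, and the token parser assumes the form-encoded OAuth WRAP v0.9 response (`wrap_access_token=...&wrap_access_token_expires_in=...`).

```csharp
using System;
using System.Net;
using System.Xml.Linq;

// Hypothetical helper: the walkthrough's requests, built so that connection point
// names and endpoint URIs are XML-escaped automatically.
public static class ConnectionPointRequests
{
    static readonly XNamespace Atom = "http://www.w3.org/2005/Atom";
    static readonly XNamespace Xsi = "http://www.w3.org/2001/XMLSchema-instance";

    // Builds the Atom entry that is POSTed to .../Resources/ConnectionPoints.
    public static string BuildEntry(string name, int maxListeners, string channelShape, Uri endpointAddress)
    {
        var entry = new XElement(Atom + "entry",
            // Assumption: a fresh uuid per entry instead of the fixed id used in the walkthrough.
            new XElement(Atom + "id", "uuid:" + Guid.NewGuid()),
            new XElement(Atom + "title", new XAttribute("type", "text"), name),
            new XElement(Atom + "link",
                new XAttribute("rel", "alternate"),
                new XAttribute("href", endpointAddress.AbsoluteUri)),
            // A UTC DateTime is written in xs:dateTime form with a trailing "Z".
            new XElement(Atom + "updated", DateTime.UtcNow),
            new XElement(Atom + "content",
                new XAttribute("type", "application/xml"),
                // ConnectionPoint is in no namespace, so LINQ to XML emits xmlns="" as the original does.
                new XElement("ConnectionPoint",
                    new XAttribute(XNamespace.Xmlns + "i", Xsi.NamespaceName),
                    new XElement("MaxListeners", maxListeners),
                    new XElement("ChannelShape", channelShape))));
        return entry.ToString();
    }

    // OData-style address of one connection point, used for the DELETE.
    // Names are alphanumeric, so escaping is a defensive no-op for valid names.
    public static string ManagementAddress(string managementHostName, string name) =>
        "https://" + managementHostName + "/Resources/ConnectionPoints(" + Uri.EscapeDataString(name) + ")";

    // Value for the HTTP Authorization header, formatted as in the walkthrough.
    public static string WrapAuthorizationHeader(string accessToken) =>
        "WRAP access_token=\"" + accessToken + "\"";

    // Assumption: the ACS token response body is form-encoded (OAuth WRAP v0.9).
    public static string ParseWrapAccessToken(string responseBody)
    {
        foreach (string pair in responseBody.Split('&'))
        {
            int eq = pair.IndexOf('=');
            if (eq > 0 && pair.Substring(0, eq) == "wrap_access_token")
                return WebUtility.UrlDecode(pair.Substring(eq + 1));
        }
        throw new FormatException("The response does not contain wrap_access_token.");
    }
}
```

Usage would be `string entry = ConnectionPointRequests.BuildEntry(connectionPointName, maxListeners, channelShape, endpointAddress);` followed by the same POST as before. The design choice is simply to let the XML library handle escaping and namespace declarations rather than hand-writing them.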

MSDN presented Exercise 1: Using the Windows Azure AppFabric Caching for Session State in 11/2010 as the first part of the Building Windows Azure Applications with the Caching Service training course:

In this exercise, you explore the use of the session state provider for Windows Azure AppFabric Caching as the mechanism for out-of-process storage of session state data. For this purpose, you will use the Azure Store, a sample shopping cart application implemented with ASP.NET MVC. You will run this application in the development fabric and then modify it to take advantage of Windows Azure AppFabric Caching as the back-end store for the ASP.NET session state. You start with a begin solution and explore the sample using the default ASP.NET in-proc session state provider. Next, you add references to the Windows Azure AppFabric Caching assemblies and configure the session state provider to store the contents of the shopping cart in the distributed cache cluster provided by Windows Azure AppFabric.

Here are links to the remaining exercises and parts:

Admin published Azure Extensions for Microsoft Dynamics CRM to the Result on Demand blog on 11/21/2010:

Microsoft Dynamics CRM 2011 supports integration with the AppFabric Service Bus feature of the Windows Azure platform. By integrating Microsoft Dynamics CRM with the AppFabric Service Bus, developers can register plug-ins with Microsoft Dynamics CRM 2011 that can pass run-time message data, known as the execution context, to one or more Azure solutions in the cloud. This is especially important for Microsoft Dynamics CRM Online, as the AppFabric Service Bus is one of two supported solutions for communicating run-time context obtained in a plug-in to external line of business (LOB) applications. The other solution is the external endpoint access capability available to a plug-in registered in the sandbox.

The service bus combined with the AppFabric Access Control services (ACS) of the Windows Azure platform provides a secure communication channel for CRM run-time data to external LOB applications. This capability is especially useful in keeping disparate CRM systems or other database servers synchronized with CRM business data changes.

In This Section

- Introduction to Windows Azure Integration with Microsoft Dynamics CRM

- Provides an overview of the integration implementation between Microsoft Dynamics CRM 2011 and Windows Azure.

- Configure Windows Azure Integration with Microsoft Dynamics CRM

- Provides an overview of configuring Microsoft Dynamics CRM 2011 and a Windows Azure solution for correct integration.

- Write a Listener for a Windows Azure Solution

- Provides guidance about how to write a Microsoft Dynamics CRM 2011-aware listener application for a Windows Azure solution.

- Write a Custom Azure-aware Plug-in

- Provides guidance about how to write a custom Microsoft Dynamics CRM plug-in that can send business data to the Windows Azure AppFabric Service Bus.

- Send Microsoft Dynamics CRM Data over the AppFabric Service Bus

- Provides information about how to use the Azure-aware plug-in that is included with Microsoft Dynamics CRM 2011.

- Walkthrough: Configure CRM for Integration with Windows Azure

- Provides a detailed walkthrough about how to configure Microsoft Dynamics CRM 2011 for integration with Windows Azure platform AppFabric.

- Walkthrough: Configure Windows Azure ACS for CRM Integration

- Provides a detailed walkthrough about how to configure Windows Azure ACS for integration with Microsoft Dynamics CRM 2011.

- Sample Code for CRM-AppFabric Integration

- Provides sample code that demonstrates Microsoft Dynamics CRM 2011 integration with Windows Azure platform AppFabric.

Related Sections

Plug-ins for Extending Microsoft Dynamics CRM


<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

My (@rogerjenn) Adding Trace, Event, Counter and Error Logging to the OakLeaf Systems Azure Table Services Sample Project post of 11/22/2010 describes adding and analyzing Azure diagnostic features with LoadStorm and Cerebrata apps:

I described my first tests with LoadStorm’s Web application load-testing tools with the OakLeaf Systems Azure Table Services Sample Project in a Load-Testing the OakLeaf Systems Azure Table Services Sample Project with up to 25 LoadStorm Users post of 11/18/2010. These tests were sufficiently promising to warrant instrumenting the project with Windows Azure Diagnostics.

Users usually follow this pattern when opening the project for the first time (see above):

- Click the Next Page link a few times to verify that GridView paging works as expected.

- Click the Count Customers button to iteratively count the Customer table’s 91 records.

- Click the Delete All Customers button to remove the 91 rows from the Customers table.

- Click the Create Customers button to regenerate the 91 table rows from a Customer collection.

- Click the Update Customers button to add a + suffix to the Company name field.

- Click Update Customers again to remove the + suffix.

Only programmers and software testers would find the preceding process of interest; few would find it entertaining.

Setting Up and Running Load Tests

Before you can run a load test, you must add a LoadStorm.html page to your site root folder or a <!-- LoadStorm ##### --> (where ##### is a number assigned by LoadStorm) HTML comment in the site’s default page.

LoadStorm lets you emulate user actions by executing links with HTTP GET operations (clicking Next Page, then First Page) and clicking the Count Customers, Delete All Customers and Create Customers buttons in sequence with HTTP POST operations. …

The post continues with additional details of adding and testing Azure diagnostics.

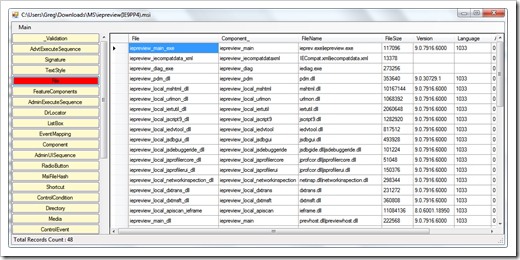

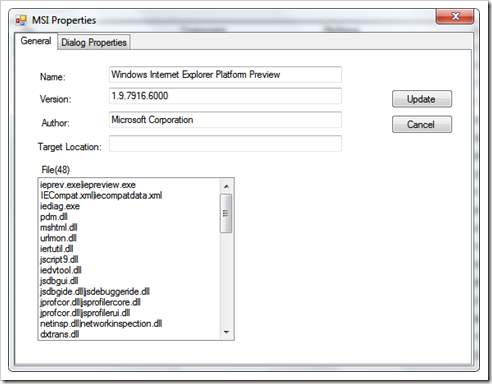

Gregg Duncan recommended MSI Explorer - A quick and simple means to spelunk MSIs on 11/21/2010:

“Most of us are quite familiar [with] creating MSI or Setup [projects] for our applications. By using an MSI, we can make sure all dependencies for running the application will be placed properly. MSI is the best option available on Windows OS for packaging and distributing our application. For .NET developers, Visual Studio presents a lot of features for creating setup and deployment projects for our applications. But there are no built-in tools in Visual Studio to look into the MSI contents. On top of that, making even a small change to an MSI requires rebuilding the entire Setup project. So, I think it's better to design an application that will analyze the MSI and show its details, along with the capability of updating it without rebuilding.

Features present in this application:

- It allows us to look into the contents of the MSI.

- It allows us to export the contents of the MSI.

- It allows us to update commonly changing properties without rebuilding it.

- Easy to use UI.

- Updating an MSI is now quite simple.

…

While Orca has been around for like a billion years or so (since about when MSIs were introduced), it's not the easiest tool to find, download and use. (To get the official version, you have to download the entire Microsoft Windows SDK (493 MB), install that, etc., etc.)

That’s what I really liked about the MSI Explorer, how easy it was to download and run. Just download the ZIP, unzip it, and assuming you have the .Net framework installed, run the EXE. Done. No install, no fuss, no muss. Quick, simple, easy and priced just right, free.

Snap of the ZIP’s contents:

The app running (with the IE9 Platform Preview 4 MSI open):

Related Past Post XRef:

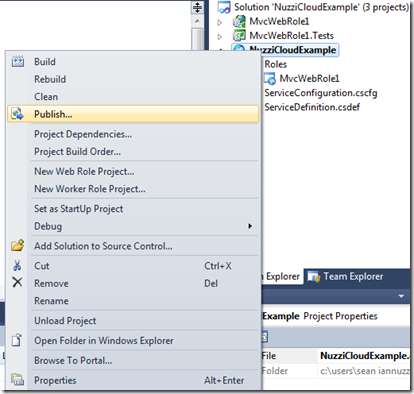

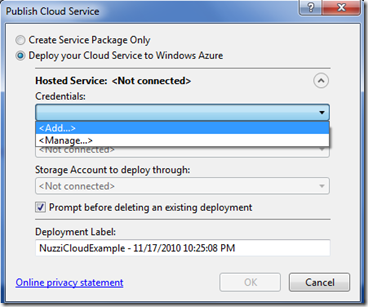

Sean Iannuzzi explained Publishing to Windows Azure using Windows Azure Service Management API on 11/17/2010 (missed when posted):

In many cases publishing to Windows Azure is fairly straightforward, and it has become even more so with the fully integrated Windows Azure Service Management API. Before the API was enabled, you had to create a package and deploy it to the cloud manually by selecting your package and configuration files. This is no longer the case with the Windows Azure Service Management API.

In addition, the Windows Azure Service Management API seems to be a bit more integrated into Windows Azure. This could just be my perception, but when I cancel or stop a publish that is already in progress, it seems to work faster using the API. I have also seen cases where the Cloud Services dashboard appears to lag a bit behind the actual status of the deployment.

In this post I will provide the information you need to set up the Windows Azure Service Management API, which requires registering a client-side certificate and your subscription ID. Below you will find screenshots of each step and the final result of a publish using the Management API.

Note:

- Publishing with the Management API also provides an automatic label so that you can easily track your deployments.

- Before you begin the steps below, open your existing Windows Azure project or create a new one.

Figure 1.00 – Publish to Azure using the Management API – Publish

Right-click your cloud project and click Publish.

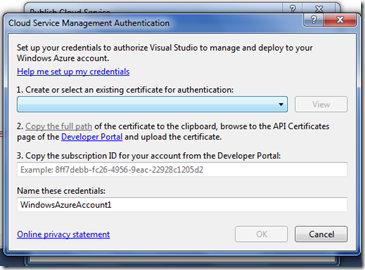

Figure 1.01 – Publish to Azure using the Management API – Add New Hosted Service Credential

In the Publish Cloud Service dialog, open the Credentials drop-down list and click “Add”.

Figure 1.02 – Publish to Azure using the Management API – Add New Hosted Service Credential

An authentication dialog prompts you to “Select” or “Create” a certificate that will be used to authenticate with the Management API. I suggest you create a new certificate.

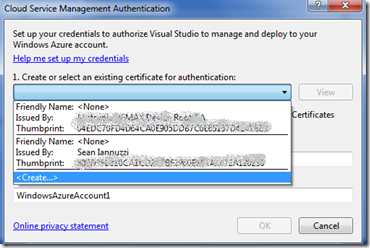

Figure 1.03 – Publish to Azure using the Management API – Create New Authentication Certificate

In the authentication certificate drop-down list, click “Create”.

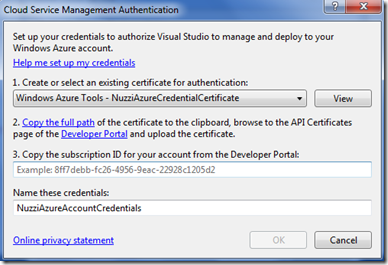

Figure 1.04 – Publish to Azure using the Management API – Enter a Friendly Name for your Authentication Certificate

Enter a friendly name for your authentication certificate.

Figure 1.05 – Publish to Azure using the Management API – Certificate Created.

Now that you have created the certificate the next step will be to upload the certificate to the Cloud and associate it with your Subscription.

Figure 1.06 – Publish to Azure using the Management API – Copy Certificate Path

To save time click on the Copy Certificate Path link as shown below.

NOTE:

For these next steps you will need to login to your Windows Azure Account.

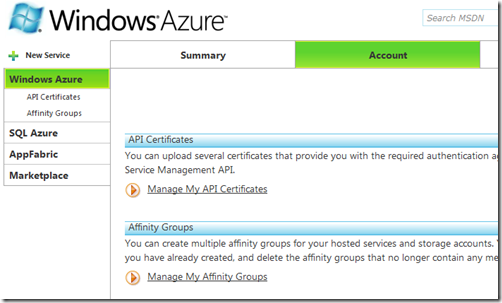

Figure 1.07 – Publish to Azure using the Management API – Managing My API Certificates

- Login to Windows Azure

- Click on your Azure Services

- Click on the Account Tab

- Click on the “Manage My API Certificates” Link

Figure 1.08 – Publish to Azure using the Management API – Paste the Copied Certificate Path

- Click in the Textbox Area or Click on the Browse button

- Paste in the path to the certificate you generated earlier.

Figure 1.09 – Publish to Azure using the Management API – Certificate Verification

Once you have uploaded your certificate you are just about finished. Verify that your certificate was installed as shown below.

Figure 1.10 – Publish to Azure using the Management API – Certificate Verification

- Next click back on the Account Tab

- Then locate and select your Subscription ID

- Once you have selected your Subscription ID navigate back to your Visual Studio 2010 Windows Azure Project where the dialog is still shown and paste in the Subscription ID as shown below.

Figure 1.11 – Publish to Azure using the Management API – Management API Services Enabled

Once you have completed the steps above the Management API Deployment Services should be connected to your Windows Azure Cloud Services and show you a list of available deployment options.

Note: When you use the API, the Deployment Wizard adds a label by default so that you can track your deployments.

Figure 1.12 – Publish to Azure using the Management API – Deployment – Cancel

At this point you should be all set to use the Management API to publish your cloud application. One of the nice features of the Management API is the ability to cancel or stop a deployment in progress. While you can do this from the Azure dashboard, I have found that the API seems a bit more integrated, or simply works faster. Judge for yourself, but this is a very nice feature that is completely seamless.

Figure 1.13 – Publish to Azure using the Management API – Deployment Successful

At this point everything should be set up and enabled so that you can deploy your cloud application using the Windows Azure Service Management API.
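The publish dialog hides the REST calls it makes with the certificate and subscription ID configured above. A minimal sketch of using the same credentials to call the Service Management API directly, assuming the classic endpoint `https://management.core.windows.net/<subscription-id>/services/hostedservices` (List Hosted Services) and an `x-ms-version` value of `2010-10-28`; the class and method names are hypothetical, and the certificate is assumed to sit in the current user's personal store, where the uploaded .cer's private key lives.

```csharp
using System;
using System.Net;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper for calling the Service Management REST API directly.
public static class ServiceManagementSketch
{
    // Assumption: an API version the service accepted at the time of writing.
    public const string ApiVersion = "2010-10-28";

    // Finds the management certificate (with its private key) in CurrentUser\My.
    public static X509Certificate2 FindCertificate(string thumbprint)
    {
        var store = new X509Store(StoreName.My, StoreLocation.CurrentUser);
        store.Open(OpenFlags.ReadOnly);
        try
        {
            var matches = store.Certificates.Find(X509FindType.FindByThumbprint, thumbprint, false);
            if (matches.Count == 0)
                throw new InvalidOperationException("Certificate not found: " + thumbprint);
            return matches[0];
        }
        finally
        {
            store.Close();
        }
    }

    // Prepares, but does not send, a "List Hosted Services" request for the subscription.
    public static HttpWebRequest CreateListHostedServicesRequest(string subscriptionId, X509Certificate2 certificate)
    {
        var uri = new Uri("https://management.core.windows.net/" + subscriptionId + "/services/hostedservices");
        var request = (HttpWebRequest)WebRequest.Create(uri);
        request.Method = "GET";
        request.Headers.Add("x-ms-version", ApiVersion);
        // The portal holds only the public key; the client presents the matching private-key certificate.
        if (certificate != null)
            request.ClientCertificates.Add(certificate);
        return request;
    }
}
```

Sending the request is then a matter of `using (var response = (HttpWebResponse)request.GetResponse()) { ... }`; the response body should be an XML list of the subscription's hosted services. Separating request construction from sending keeps the URI and header logic checkable without network access.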

<Return to section navigation list>

Visual Studio LightSwitch

The Virtual Realm blog published a comprehensive LightSwitch Links and Resources linkpost on 11/22/2010:

Microsoft Downloads

Visual Studio LightSwitch Beta 1 Training Kit (Link)

Microsoft Support Forums

MSDN Videos

- How Do I: Define My Data in a LightSwitch Application? (Link)

- How Do I: Create a Search Screen in a LightSwitch Application? (Link)

- How Do I: Create an Edit Details Screen in a LightSwitch Application? (Link)

- How Do I: Format Data on a Screen in a LightSwitch Application? (Link)

- How Do I: Sort and Filter Data on a Screen in LightSwitch Application? (Link)

- How Do I: Create a Master-Details (One to Many) Screen in a LightSwitch Application? (Link)

- How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application? (Link)

- How Do I: Write business rules for validation and calculated fields in a LightSwitch Application? (Link)

- How Do I: Create a Screen that can both Edit and Add Records in a LightSwitch Application? (Link)

- How Do I: Create and Control Lookup Lists in a LightSwitch Application? (Link)

- How Do I: Set up Security to Control User (Link)

- How Do I: Deploy a Visual Studio LightSwitch Application? (Link)

Channel 9 Videos

- Steve Anonsen and John Rivard: Inside LightSwitch (Link)

- Visual Studio LightSwitch Beyond the Basics (Link)

- Introducing Visual Studio LightSwitch (Link)

LightSwitch Bloggers

- Matt Thalman's - http://blogs.msdn.com/b/mthalman/

- LightSwitch Team Blog - http://blogs.msdn.com/b/lightswitch/

- Beth Massi - http://blogs.msdn.com/b/bethmassi/

- Alessandro Del Sole’s Blog - http://community.visual-basic.it/alessandroenglish/

LightSwitch Team Blogs

- How to Communicate Across LightSwitch Screens (Link)

- How to use Lookup Tables with Parameterized Queries (Link)

- Creating a Custom Search Screen in Visual Studio LightSwitch – Beth Massi (Link)

- How to Create a RIA service wrapper for OData Source (Link)

- How Do I: Import and Export Data to and from a CSV File (Link)

- How Do I: Create and Use Global Values in a Query (Link)

- How Do I: Filter Items in a ComboBox or Modal Window Picker in LightSwitch (Link)

- Overview of Data Validation in LightSwitch Applications (Link)

- The Anatomy of a LightSwitch Application Part 3, the logic Tier (Link)

- The Anatomy of a LightSwitch Application Part 2, the presentation Tier (Link)

- The Anatomy of a LightSwitch Application Part 1, Architecture Overview (Link)

General Links and Resources

- Microsoft LightSwitch Application using SQL Azure Database (Link)

- Switch On The Light - Bing Maps and LightSwitch (Link)

- How Do I: Import and Export Data to/from a CSV File (Dan Seefeldt) (Link)

- Visual Studio LightSwitch - Help Website (Link)

- How to Reference security entities in LightSwitch (Link)

- Filtering data based on current user in LightSwitch Apps (Link)

- Telerik Blogs - Getting Started with #LightSwitch and OpenAccess (Link)

- LightSwitch Student Information System (Link)

- LightSwitch Student Information System (Part 2): Business Rules and Screen Permissions (Link)

- LightSwitch Student Information System (Part 3): Custom Controls (Link)

- Pluralcast 23: Visual Studio LightSwitch with Jay Schmelzer (Link)

- How Do I: Filter Items in a ComboBox or Modal Window Picker in LightSwitch (Link)

- How Do I: Create and Use Global Values in a Query (Link)

- Filtering data based on current user in LightSwitch Applications (Link)

- Printing SQL Server Reports (.rdlc) with LightSwitch (Link)

- CodeProject - Beginners Guide to Visual Studio LightSwitch (Link)

- TechEd Africa 2010 - Presentation by Lisa Feigenbaum (DTL217) #LightSwitch - Future of Business Application Development (Link)

- Visual Studio LightSwitch: Connecting to SQL Azure Databases (Link)

- Visual Studio LightSwitch: Implementing and Using Extension Methods with Visual Basic (Link)

- Rapid Application Development with Visual Studio LightSwitch (Link)

The South Bay .NET User Group announced that Introduction to Visual Studio LightSwitch will be the topic of the 12/1/2010 meeting at the Microsoft Mt. View Campus, 1065 La Avenida Street, Bldg 1, Mountain View, CA 94043, from 6:30 to 8:45 PM:

Event Description:

LightSwitch is a new product in the Visual Studio family aimed at developers who want to easily create business applications for the desktop or the cloud. It simplifies the development process by letting you concentrate on the business logic while LightSwitch handles the common tasks for you.

In this demo-heavy session, you will see, end-to-end, how to build and deploy a data-centric business application using LightSwitch. We'll also go beyond the basics of creating simple screens over data and demonstrate how to create screens with more advanced capabilities.

You’ll see how to extend LightSwitch applications with your own Silverlight custom controls and RIA services. We'll also talk about the architecture and additional extensibility points that are available to professional developers looking to enhance the LightSwitch developer experience.

Presenter's Bio: Beth Massi is a Senior Program Manager on the Visual Studio BizApps team at Microsoft who build the Visual Studio tools for Azure, Office, SharePoint as well as Visual Studio LightSwitch. Beth is a community champion for business application developers and is responsible for producing and managing online content and community interaction with the BizApps team.

She has over 15 years of industry experience building business applications and is a frequent speaker at various software development events. You can find her on a variety of developer sites including MSDN Developer Centers, Channel 9, and her blog http://www.bethmassi.com/. Follow her on twitter @BethMassi.

Drew Robbins explained Building Business Applications with Visual Studio LightSwitch in his DEV206 session at TechEd Europe 2010:

Visual Studio LightSwitch is the simplest way to build business applications for the desktop and cloud. LightSwitch simplifies the development process by letting you concentrate on the business logic, while LightSwitch handles the common tasks for you. In this demo-heavy session you will see, end-to-end, how to build and deploy a data-centric business application using LightSwitch as well as how you can use Visual Studio 2010 Professional and Expression Blend 4 to customize and extend the presentation and data layers of a LightSwitch application for when the requirements grow beyond what is supported by default.

<Return to section navigation list>

Windows Azure Infrastructure

Wriju summarized What’s new in Windows Azure on 11/21/2010:

What’s new in Windows Azure, and what’s coming up next? At PDC 2010, Microsoft announced a bunch of new features, such as:

- Virtual Machine Role

- Extra Small Instance

- Virtual Network

- Remote Desktop

- Azure Marketplace

And many more. To find out more, see http://www.microsoft.com/windowsazure/pdcannouncements/ [new]

I feel strongly that, with the VM Role and Remote Desktop, migration will be much easier, because we can install and configure third-party components.

Adrian Sanders asserted “Obviously we’re drinking the cloud kool-aid: maybe you should too!” in a deck for his The Top 5 Overlooked Reasons Why Business Belongs in the Cloud post of 11/21/2010:

There are plenty of “Top 5 lists” with generic reasons for why businesses should migrate into SaaS and cloud computing. Scalability, cost, mobility – they’re good reasons, sure, but we’ve heard them before: what else does cloud computing offer? If you’re thinking about moving your business into the cloud but haven’t yet, here are five reasons that are often overlooked:

1. Clients notice. Traditionally, IT has served a “backend” role in business. With the exception of email and websites, most businesses hide their IT solutions from clients, and with good reason: IT is ugly. Cloud computing changes that. Many SaaS offerings and cloud-based applications incorporate new ways of reaching clients as part of their workflow solutions. For example, Solve360, a popular online CRM, allows users to “publish” select materials from project workspaces, enabling real-time client collaboration. E-signature services allow clients to sign documents via a slick, paperless delivery model, and Helpdesk software lets clients access knowledge base forums and ticketed support in a branded, easy to use online environment. When it works, clients notice that you’re new, different, modern, and “slick.” IT itself becomes a branding mechanism.

2. Smarter architecture. Amid all the fuss about cloud differentiation it’s easy to forget that, aside from being cloud-based, many cloud apps are simply designed better than their on-premise counterparts. This could be attributable to a whole host of reasons, the most prominent of which is that (good) cloud apps have been designed entirely from the ground up. Whereas most on-premise solutions have strong ancestral roots in software designed 10-20 years ago, cloud apps have been developed much more recently, meaning they’ve benefited from years of accumulated programming and business experience. Cloud apps are designed for modern businesses: most on-premise apps simply aren’t.

3. Usability. One of the great innovations of cloud-computing has been the focus put on end-users. Many legacy apps put function first and usability second (MS Access, anyone?), whereas good cloud apps don’t see a difference between the two. This key principle can’t be underestimated: software is only as powerful as the people using it. Generally speaking (and yes, there are exceptions to this) cloud-based software understands that people matter, creating a better user experience and increasing efficiency.

4. Integration. We just published a blog post blasting API integration, but it’s worth noting that at least cloud-based software makes API integration a viable and affordable workflow solution. Good luck getting anything to work well with a legacy app, especially on the cheap: compare that reality with the generous and freely available APIs that most SaaS and cloud-based vendors offer and it’s an easy sell.

5. Quality of Service. This only applies to SaaS, but it’s a powerful enough attribute that I’m listing it as an argument for all cloud-computing. In a traditional IT setting, clients have a one-time transaction with vendors, repeated every few years for product upgrades. In the world of SaaS, clients generally pay vendors month-to-month and upgrades and bug-fixes are released on a significantly ramped-up timescale. This means that: A) clients can drop out at any time, giving vendors a perpetual incentive to innovate, and: B) clients get a product that’s updated far, far more frequently than before. In addition, SaaS vendors increasingly have robust forums and user communities where support questions and feature requests are addressed quickly, effectively, and by multiple user types. This establishes a culture of support and user-driven innovation that has long been missing from on-premise software.

Obviously we’re drinking the cloud kool-aid. Maybe you should too.

Adrian is the founder of VM Associates, an end-user consultancy for businesses looking to move to the cloud from pre-existing legacy systems.

Tim Crawford observed that Servers Purchased Today Will Not Be Replaced in an 11/20/2010 post:

In the spirit of paradigm shifts, here’s one to think about.

Servers that are purchased today will not be replaced. Servers have a useful lifespan, typically 3-5 years depending on their use. A number of factors contribute to this: the cost to operate a server grows over time until it becomes less expensive to purchase a new one, and the server's performance eventually becomes inadequate for newer workloads. These (and other) factors determine the useful lifespan of a server.

At the current adoption rates of cloud-based services, said servers will not be replaced. But rather, the services provided from those systems will move to cloud-based services. Of course there are corner cases. But as the cloud market matures, it will drive further adoption of services. Within the same timeframe, when existing servers become obsolete, many of those services will move to cloud-based services.

This shift requires several actions depending on your perspective.

- Server/ Channel Provider: How will you shift revenue streams to alternative offerings? Are you only a product company and can you make the move to a services model? Are you able to expand your services to meet the demand and complexities?

- IT Organizations: It causes a shift in budgetary, operational and process changes. Not to mention potential architecture and integration challenges for applications and services.

These types of changes take time to plan and develop before implementation. 3-5 years is not that far away in the typical planning cycle for changes this significant. The suggestion would be to get started now if you haven’t started already. There are great opportunities available today as a way to start “kicking the tires”.

This doesn’t bode well for server vendors.

Brent Stineman (@BrentCodeMonkey) offered Microsoft Digest for November 19th, 2010 in a post of the same date to the Sogeti Blogs:

So PDC10 and TechEd Europe have both come and gone. You’d think that information would have dried up following these two huge brain dumps. However, there’s still been a huge amount of data being sent out. Here we go…

In my last Microsoft-specific cloud update, I talked about some of the new PDC10 announcements. If you’d like to hear a more definitive view on them, check out James Conard’s update. For those of you not familiar with Mr. Conard, he’s the Sr. Director of Microsoft’s Developer & Platform Evangelism (DPE) group.

TechNet has published an article that takes a look Inside SQL Azure. This paper covers many great topics but the key one I think anybody working with SQL Azure should look at is three paragraphs on throttling. I still don’t consider this information comprehensive enough, but it’s a start.

If you’re more interested in new features such as SQL Azure Reporting Services than in the internals, look no further.

One of the new features of the Azure AppFabric is explicit connection point management. Will, a member of the team, has published a quick tutorial on how to get started with this great new feature.

Clemens Vasters also recently updated his blog with samples from his PDC10 presentation on Azure AppFabric Service bus futures. This includes his Service Bus Management Tool, a new sample echo client/service, and a sample of the new durable message buffer.

Speaking of PDC10, many of the session materials (slide-decks, samples, etc..) have been made available. You can find them at: http://player.microsoftpdc.com/Schedule/Sessions

I’ve been digging into the Service Bus a bit myself lately and realized all the samples use the owner for security. This didn’t seem realistic and Richard Seroter was kind enough to see my questions and blog on how he addressed it.

The Windows Azure team updated their blog with some additional info on Azure packages and their impact on pricing. They also announced that they’ve updated the David Chappell whitepapers for the latest enhancements.

I was presenting to a group that was going through some Azure training yesterday, discussing the latest PDC announcements. A question came up regarding the new SQL Azure partition federations and Azure Storage. This question, when to use which, comes up often. There’s no simple answer, but I did recently run across a TechNet article that helps you understand your options better. This will hopefully lead to better informed decisions. If this isn’t enough, then you might also want to check out the Azure Storage Team’s blog for how to get the most out of Azure Tables.

One feature a lot of folks are looking forward to is the upcoming Remote Desktop feature. If you want a walk-through, you’ve got it!

Tech is all good, but as more folks start to adopt Azure, there’s more of a demand for guidance on patterns and best practices. Fortunately, it looks like the patterns & practices (p&p) team is already aware of it and working to fill that need.

To round out this digest, I’ll get a little less technical. There are always folks asking for a comparison between Microsoft’s and Amazon’s offerings. There have been a ba-gillion comparisons, but IT World has put up one more that highlights five key differences.

Robert Duffner has also been updating the Azure Team blog with some interviews with cloud thought leaders. One I’ve found particularly interesting is Robert’s interview with Charlton Barreto, Intel’s Technology Strategist.

This will likely be my final Microsoft specific digest before the end of the year. I have presentations I need to prepare for as well as some technical blogging I want to do. Combine those with the coming holidays and I think you can understand.

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

Tim Anderson (@timanderson) described The Microsoft Azure VM role and why you might not want to use it in this 11/22/2010 article:

I’ve spent the morning talking to Microsoft’s Steve Plank – whose blog [Plankytronixx] you should follow if you have an interest in Azure – about Azure roles and virtual machines, among other things.

Windows Azure applications are deployed to one of three roles, where each role is in fact a Windows Server virtual machine instance. The three roles are the web role for IIS (Internet Information Services) applications, the worker role for general applications, and, newly announced at the recent PDC, the VM role, which you can configure any way you like. The normal route to deploying a VM role is to build a VM on your local system and upload it, though in future you will be able to configure and deploy a VM role entirely online.

It’s obvious that the VM role is the most flexible. You will even be able to use 64-bit Windows Server 2003 if necessary. However, there is a critical distinction between the VM role and the other two. With the web and worker roles, Microsoft will patch and update the operating system for you, but with the VM role it is up to you.

That does not sound too bad, but it gets worse. To understand why, you need to think in terms of a golden image for each role, which is stored somewhere safe in Azure and gets deployed to your instance as required.

In the case of the web and worker roles, that golden image is constantly updated as the system gets patched. In addition, Microsoft takes responsibility for backing up the system state of your instance and restoring it if necessary.

In the case of the VM role, the golden image is formed by your upload and only changes if you update it.

The reason this is important is that Azure might at any time replace your running VM (whichever role it is running) with the golden image. For example, if the VM crashes, or the machine hosting it suffers a power failure, then it will be restarted from the golden image.

Now imagine that Windows Server needs an emergency patch because of a newly discovered security issue. If you use the web or worker role, Microsoft takes responsibility for applying it. If you use the VM role, you have to make sure it is applied not only to the running VM, but also to the golden image. Otherwise, you might apply the patch, and then Azure might replace the VM with the unpatched golden image.

Therefore, to maintain a VM role properly you need to keep a local copy patched and refresh the uploaded golden image with your local copy, as well as updating the running instance. Apparently there is a differential upload to reduce the upload time.
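A toy model can make the golden-image argument concrete. The Python sketch below is purely illustrative: Anderson notes he isn't sure how Azure actually implements golden images, and `RoleInstance`, `platform_patch`, `patch_running`, `patch_golden`, and `reimage` are hypothetical names, not an Azure API. It simply models an instance that can be rebuilt from a stored image, with a `managed` flag standing in for the web/worker roles that Microsoft patches.

```python
from dataclasses import dataclass, field


@dataclass
class RoleInstance:
    """Toy model of a role instance rebuilt from a stored golden image.

    Hypothetical illustration only; this is not how Azure is implemented.
    """

    golden_image: set[str]        # patches baked into the stored image
    managed: bool = False         # True ~ web/worker role, False ~ VM role
    running: set[str] = field(default_factory=set, init=False)

    def __post_init__(self) -> None:
        # Copy the caller's set; a fresh instance starts as a copy of the image.
        self.golden_image = set(self.golden_image)
        self.running = set(self.golden_image)

    def platform_patch(self, patch: str) -> None:
        # Platform-applied update: only managed (web/worker) roles receive it,
        # and it lands in both the golden image and the running instance.
        if self.managed:
            self.golden_image.add(patch)
            self.running.add(patch)

    def patch_running(self, patch: str) -> None:
        # Customer patches the live VM only.
        self.running.add(patch)

    def patch_golden(self, patch: str) -> None:
        # Customer refreshes the uploaded image from an updated local copy.
        self.golden_image.add(patch)

    def reimage(self) -> None:
        # Crash or host failure: the instance is rebuilt from the golden image.
        self.running = set(self.golden_image)


# VM role: patching only the running instance is undone by a reimage.
vm = RoleInstance(golden_image={"base-os"})
vm.patch_running("emergency-fix")
vm.reimage()
print("emergency-fix" in vm.running)   # False

# VM role: also refreshing the golden image keeps the fix.
vm.patch_running("emergency-fix")
vm.patch_golden("emergency-fix")
vm.reimage()
print("emergency-fix" in vm.running)   # True

# Web/worker role: the platform maintains the image for you.
web = RoleInstance(golden_image={"base-os"}, managed=True)
web.platform_patch("emergency-fix")
web.reimage()
print("emergency-fix" in web.running)  # True
```

The point of the model is the asymmetry: for the VM role, every change has to be made twice (instance and image), while for managed roles the platform owns the image.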

The same logic applies to any other changes you make to the VM. It is actually more complex than managing VMs in other scenarios, such as the Linux VM on which this blog is hosted.

Another feature that all Azure developers must understand is that you cannot safely store data on your Azure instance, whichever role it is running. Microsoft does not guarantee the safety of this data, and it might get zapped if, for example, the VM crashes and gets reverted to the golden image. You must store data in SQL Azure or Windows Azure blob storage instead.
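The same toy approach shows why instance-local data is unsafe. In this hypothetical sketch, one dictionary stands in for the VM's local disk and another for durable storage outside the instance (SQL Azure or blob storage in the article); `Deployment` and its fields are illustrative names, and no real Azure storage API is used.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Toy model: instance-local disk vs. durable external storage (hypothetical)."""

    local_disk: dict[str, str] = field(default_factory=dict)     # lives on the VM
    durable_store: dict[str, str] = field(default_factory=dict)  # stand-in for SQL Azure/blob storage

    def reimage(self) -> None:
        # Reverting to the golden image wipes anything written to the local disk;
        # data held outside the instance is untouched.
        self.local_disk.clear()


d = Deployment()
d.local_disk["order-42"] = "pending"     # unsafe: tied to this VM's disk
d.durable_store["order-43"] = "pending"  # safe: stored outside the instance
d.reimage()
print(sorted(d.local_disk))     # []
print(sorted(d.durable_store))  # ['order-43']
```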

This also affects the extent to which you can customize the web and worker VMs. Microsoft will be allowing full administrative access to the VMs if you require it, but it is no good making extensive changes to an individual instance, since they could get reverted to the golden image. The guidance is that if manual changes take more than 5 minutes to do, you are better off using the VM role.

A further implication is that you cannot realistically use an Azure VM role to run Active Directory, since Active Directory does not take kindly to being reverted to an earlier state. Plank says that third parties may come up with solutions that involve persisting Active Directory data to Azure storage.

Although I’ve talked about golden images above, I’m not sure exactly how Azure implements them. However, if I have understood Plank correctly, it is conceptually accurate.

The bottom line is that the best scenario is to live with a standard Azure web or worker role, as configured by you and by Azure when you created it. The VM role is a compromise that carries a significant additional administrative burden.

Cloud Security and Governance

Patrick Butler Monterde published a current list of Azure Security Papers on 11/22/2010:

This is a list of Azure Security Papers published by Microsoft Global Foundation Services (GFS), the team that manages and supports the Azure data centers.

Information Security Management System for Microsoft Cloud Infrastructure (November 2010)

This paper describes the Information Security Management System program for Microsoft's Cloud Infrastructure, as well as some of the processes and benefits realized from operating this model. It also includes an overview of the key certifications and attestations Microsoft maintains to prove to cloud customers that information security is central to Microsoft cloud operations.

Windows Azure™ Security Overview (August 2010)

To help customers better understand the array of security controls implemented within Windows Azure from both the customer's and Microsoft operations' perspectives, this paper provides a comprehensive look at the security available with Windows Azure. The paper provides a technical examination of the security functionality available, the people and processes that help make Windows Azure more secure, as well as a brief discussion about compliance.

Security Best Practices for Developing Windows Azure Applications (June 2010)

This white paper focuses on the security challenges and recommended approaches to design and develop more secure applications for Microsoft’s Windows Azure platform. It is intended to be a resource for technical software audiences: software designers, architects, developers and testers who design, build and deploy more secure Windows Azure solutions.

Chris Hoff (@Beaker) asked if The Future Of Audit & Compliance Is…Facebook? and answered in this 11/20/2010 post:

I’ve had an epiphany. The future is coming wherein we’ll truly have social security…

As the technology and operational models of virtualization and cloud computing mature and become operationally ubiquitous, ultimately delivering on the promise of agile, real-time service delivery via extreme levels of automation, the ugly necessities of security, audit and risk assessment will also require an evolution via automation to leverage the same.

At some point, that means the automated collection and overall assessment of posture (from a security, compliance, and risk perspective) will automagically occur (lest we continue to be the giant speed bump we’re described to be,) and pop out indicatively with glee with an end result of “good,” “bad,” or “pass,” “fail,” not unlike one of those in-flesh turkey thermometers that indicates doneness once a pre-set temperature is reached.

What does that have to do with Facebook?

Simple.

When we’ve all been sucked into the collective hive of the InterCloud matrix, the CISO/assessor/auditor/regulator will look at the score, the resultant assertions and the supporting artifacts gathered via automation and simply click on a button:

You see, the auditor/regulator really is your friend.

It’s a cruel future. We’re all Zuck’d.

/Hoff

Image by Getty Images via @daylife and @Beaker.

Chris Hoff (@Beaker) posted Incomplete Thought: Compliance – The Autotune Of The Security Industry on 11/20/2010:

I don’t know if you’ve noticed, but lately the ability to carry a tune while singing is optional.

Thanks to Cher and T-Pain, the rampant use of the Autotune in the music industry has enabled pretty much anyone to record a song and make it sound like they can sing (from the Autotune of encyclopedias, Wikipedia):

Auto-Tune uses a phase vocoder to correct pitch in vocal and instrumental performances. It is used to disguise off-key inaccuracies and mistakes, and has allowed singers to perform perfectly tuned vocal tracks without the need of singing in tune. While its main purpose is to slightly bend sung pitches to the nearest true semitone (to the exact pitch of the nearest tone in traditional equal temperament), Auto-Tune can be used as an effect to distort the human voice when pitch is raised/lowered significantly.[3]

A similar “innovation” has happened to the security industry. Instead of having to actually craft and execute a well-tuned security program which focuses on managing risk in harmony with the business, we’ve simply learned to hum a little, add a couple of splashy effects and let the compliance Autotune do its thing.

It doesn’t matter that we’re off-key. It doesn’t matter that we’re not in tune. It doesn’t matter that we hide mistakes.

All that matters is that auditors can sing along, repeating the chorus and ensure that we hit the Top 40.

/Hoff

Image by Getty Images via @daylife

Cloud Computing Events

Azure Users Group (AZUG, Belgium) announced on 11/22/2010 a Sinterklaas goes cloudy! Integrating xRM meeting on 12/7/2010 at 6:00 PM at a location to be determined:

- Date: 12/7/2010

- Start Time: 6:00 PM

- End Time: 8:00 PM

- Location: TBD

- Ticket Price: Free

- Register for free

AZUG is organizing its third event in 2010. Come join us for an interesting session!

Integrating xRM by Yves Goeleven

Tired of writing the same type of application over and over again? Feel you don’t have enough time to spend on optimizing that complex algorithm because you have to create all of those data management screens? Well, those days are over! Let me introduce you to the Dynamics CRM Online platform and its eXtended Relationship Management (xRM) capabilities, which you can harness for your Windows Azure applications. In this session I will show you how to get the most out of both services!

Bruce Kyle noted on 11/20/2010 Northwest Gamers Come to the Cloud in Sessions on Windows Azure on 12/15/2010 at 5:30 PM in Seattle, WA:

Knowing the competitive landscape of gaming, especially here in the Northwest, we want to invite you to a two-hour session to learn about building your next game on Windows Azure.

Hear from a game developer just like you, Sneaky Games, who has successfully leveraged the cloud and Windows Azure to build and deploy Facebook games such as Fantasy Kingdoms. The Windows Azure Platform can revolutionize the way you do business, making your company more agile, efficient and flexible while allowing you to grow your business, enhance your customers’ experience and save money towards your bottom line.

Join us December 15 at 5:30 PM in Seattle, WA to learn more about what the Windows Azure Platform and cloud computing can do for you as a game developer, network with fellow developers and enjoy open bar and appetizers on us!

Register here.

K. Scott Morrison reported in his How to Fail with Web Services post of 11/19/2010:

I’ve been asked to deliver a keynote presentation at the 8th European Conference on Web Services (ECOWS) 2010, to be held in Ayia Napa, Cyprus, on Dec 1-3. My topic is an exploration of the anti-patterns that often appear in Web services projects.

Here’s the abstract in full:

How to Fail with Web Services

Enterprise computing has finally woken up to the value of Web services. This technology has become a basic foundation of Service Oriented Architecture (SOA), which despite recent controversy is still very much the architectural approach favored by sectors as diverse as corporate IT, health care, and the military. But despite strong vision, excellent technology, and very good intentions, commercial success with SOA remains rare. Successful SOA starts with success in an actual implementation; for most organizations, this means a small proof-of-concept or a modest suite of Web services applications. This is an important first step, but it is here where most groups stumble.