Windows Azure and Cloud Computing Posts for 11/24/2010

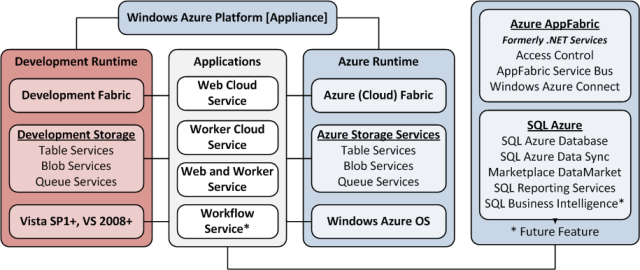

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Have a Happy and Bountiful Thanksgiving!

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Joseph Fultz compared SQL Azure and Windows Azure Table Storage in an article for the November 2010 issue of MSDN Magazine:

A common scenario that plays out in my family is that we decide to take it easy for the evening and enjoy a night out eating together. Everyone likes this and enjoys the time to eat and relax. We have plenty of restaurant choices in our area so, as it turns out, unless someone has a particularly strong preference, we get stuck in the limbo land of deciding where to eat.

It’s this same problem of choosing between seemingly equally good choices that I find many of my customers and colleagues experience when deciding what storage mechanism to use in the cloud. Often, the point of confusion is understanding the differences between Windows Azure Table Storage and SQL Azure.

I can’t tell anyone which technology choice to make, but I will provide some guidelines for making the decision when evaluating the needs of the solution and the solution team against the features and the constraints for both Windows Azure Table Storage and SQL Azure. Additionally, I’ll add in a sprinkle of code so you can get the developer feel for working with each.

Data Processing

SQL Azure and other relational databases usually provide data-processing capabilities on top of a storage system. Generally, RDBMS users are more interested in data processing than the raw storage and retrieval aspects of a database.

For example, if you want to find out the total revenue for the company in a given period, you might have to scan hundreds of megabytes of sales data and calculate a SUM. In a database, you can send a single query (a few bytes) to the database that will cause the database to retrieve the data (possibly many gigabytes) from disk into memory, filter the data based on the appropriate time range (down to several hundred megabytes in common scenarios), calculate the sum of the sales figures and return the number to the client application (a few bytes).

To do this with a pure storage system requires the machine running the application code to retrieve all of the raw data over the network from the storage system, and then the developer has to write the code to execute a SUM on the data. Moving a lot of data from storage to the app for data processing tends to be very expensive and slow.

SQL Azure provides data-processing capabilities through queries, transactions and stored procedures that are executed on the server side, and only the results are returned to the app. If you have an application that requires data processing over large data sets, then SQL Azure is a good choice. If you have an app that stores and retrieves (scans/filters) large datasets but does not require data processing, then Windows Azure Table Storage is a superior choice.

—Tony Petrossian, Principal Program Manager, Windows Azure
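To make the sidebar's point concrete, here is a minimal Python sketch. It uses an in-memory SQLite database as a stand-in for SQL Azure. That is an assumption: SQLite is not SQL Azure, but the SUM semantics are the same. `revenue_server_side` sends one small query and gets back a single number. `revenue_client_side` mimics a pure storage system: it pulls every row into the application and sums locally. The table name, columns, and sample rows are hypothetical.

```python
import sqlite3


def build_sales_db(rows):
    """Create a throwaway in-memory sales table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (sale_date TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    return conn


def revenue_server_side(conn, start, end):
    """Database-style processing: the filter and SUM run inside the engine,
    and only one aggregate row travels back to the caller."""
    (total,) = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM sales "
        "WHERE sale_date >= ? AND sale_date < ?",
        (start, end),
    ).fetchone()
    return total


def revenue_client_side(conn, start, end):
    """Storage-style processing: fetch every raw row, then filter and sum in
    application code. Also reports how many rows had to be transferred."""
    total = 0
    rows_transferred = 0
    for sale_date, amount in conn.execute("SELECT sale_date, amount FROM sales"):
        rows_transferred += 1
        if start <= sale_date < end:  # ISO dates compare correctly as strings
            total += amount
    return total, rows_transferred


if __name__ == "__main__":
    db = build_sales_db([
        ("2010-10-05", 100.0),
        ("2010-11-02", 250.5),
        ("2010-11-20", 75.25),
        ("2010-12-01", 40.0),
    ])
    print(revenue_server_side(db, "2010-11-01", "2010-12-01"))
    print(revenue_client_side(db, "2010-11-01", "2010-12-01"))
```

Both functions return the same November total of 325.75. However, the client-side version had to move all four rows to get there. With millions of rows that transfer is the cost the sidebar describes.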

Reviewing the Options

To convey a bit of the big picture, I’ll briefly expand the scope to include the other storage mechanisms. At a high level, it’s easy to separate storage options into these big buckets:

- Relational data access: SQL Azure

- File and object access: Windows Azure Storage

- Disk-based local cache: role local storage

However, to further qualify the choices, you can start asking some simple questions such as:

- How do I make files available to all roles commonly?

- How can I make files available and easily update them?

- How can I provide structured access semantics, but also provide sufficient storage and performance?

- Which provides the best performance or best scalability?

- What are the training requirements?

- What is the management story?

The path to a clear decision starts to muddy and it’s easy to get lost in a feature benefit-versus-constraint comparison. Focusing back on SQL Azure and Windows Azure Table Storage, I’m going to describe some ideal usage patterns and give some code examples using each.

SQL Azure Basics

SQL Azure provides the base functionality of a relational database for use by applications. If an application has data that needs to be hosted in a relational database management system (RDBMS), then this is the way to go. It provides all of the common semantics for data access via SQL statements. In addition, SQL Server Management Studio (SSMS) can hook directly up to SQL Azure, which provides for an easy-to-use and well-known means of working with the database outside of the code.

For example, setting up a new database happens in a few steps that need both the SQL Azure Web Management Console and SSMS. Those steps are:

- Create database via Web

- Create rule in order to access database from local computer

- Connect to Web database via local SSMS

- Run DDL within context of database container

If an application currently uses SQL Server or a similar RDBMS back end, then SQL Azure will be the easiest path in moving your data to the cloud.

SQL Azure is also the best choice for providing cloud-based access to structured data. This is true whether the app is hosted in Windows Azure or not. If you have a mobile app or even a desktop app, SQL Azure is the way to put the data in the cloud and access it from those applications.

Using a database in the cloud is not much different from using one that’s hosted on-premises—with the one notable exception that authentication needs to be handled via SQL Server Authentication. You might want to take a look at Microsoft Project Code-Named “Houston,” which is a new management console being developed for SQL Azure, built with Silverlight. Details on this project are available at sqlazurelabs.cloudapp.net/houston.aspx.

SQL Azure Development

Writing a quick sample application that’s just a Windows Form hosting a datagrid that displays data from the Pubs database is no more complicated than it was when the database was local. I fire up the wizard in Visual Studio to add a new data source and it walks me through creating a connection string and a dataset. In this case, I end up with a connection string in my app.config that looks like this:

```xml
<add name="AzureStrucutredStorageAccessExample.Properties.Settings.pubsConnectionString"
     connectionString="Data Source=gfkdgapzs5.database.windows.net;Initial Catalog=pubs;Persist Security Info=True;User ID=jofultz;Password=[password]"
     providerName="System.Data.SqlClient" />
```

Usually Integrated Authentication is the choice for database security, so it feels a little awkward to be using SQL Server Authentication again. SQL Azure minimizes exposure by enforcing an IP access list, to which you will need to add an entry for each range of IPs that might be connecting to the database.
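One way to picture the IP access list is as a set of allowed address ranges, with every incoming connection checked against them. The Python sketch below models that check with the standard `ipaddress` module. The rule names and ranges are hypothetical, and this is only a conceptual model of the firewall behavior, not SQL Azure's implementation.

```python
import ipaddress

# Hypothetical firewall rules: name -> (start address, end address), inclusive.
FIREWALL_RULES = {
    "office": ("203.0.113.0", "203.0.113.255"),
    "home": ("198.51.100.17", "198.51.100.17"),
}


def is_allowed(client_ip, rules=FIREWALL_RULES):
    """Return the name of the first rule whose range contains client_ip,
    or None if no rule matches. IPv4 only in this sketch."""
    addr = ipaddress.ip_address(client_ip)
    for name, (start, end) in rules.items():
        if ipaddress.ip_address(start) <= addr <= ipaddress.ip_address(end):
            return name
    return None
```

In this model a client at 192.0.2.1 matches no rule, so `is_allowed` returns `None`. That is why each range your clients connect from needs its own entry.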

Going back to my purposefully trivial example, by choosing the Titleview View out of the Pubs database, I also get some generated code in the default-named dataset pubsDataSet, as shown in Figure 1.

Figure 1 Automatically Generated Code for Accessing SQL Azure

I do some drag-and-drop operations by dragging a DataGridView onto the form and configure the connection to wire it up. Once it’s wired up, I run it and end up with a quick grid view of the data, as shown in Figure 2.

Figure 2 SQL Azure Data in a Simple Grid

I’m not attempting to sell the idea that you can create an enterprise application via wizards in Visual Studio, but rather that the data access is more or less equivalent to SQL Server and behaves and feels as expected. This means that you can generate an entity model against it and use LINQ just as you would if the database were local instead of hosted (see Figure 3).

Figure 3 Using an Entity Model and LINQ

A great new feature addition beyond the scope of the normally available SQL Server-based local database is the option (currently available via sqlazurelabs.com) to expose the data as an OData feed. You get REST queries such as this:

```
https://odata.sqlazurelabs.com/OData.svc/v0.1/gfkdgapzs5/pubs/authors?$top=10
```

This results in either an OData response or, using the $format=JSON parameter, a JSON response. This is a huge upside for application developers, because not only do you get the standard SQL Server behavior, but you also get additional access methods through configuration, without writing a single line of code. This lets you focus on the service or application layers that add business value rather than on the plumbing that moves data in and out of the store across the wire.
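The feed URL above follows a base/server/database/entity-set layout, with OData system query options appended. Here is a minimal Python sketch that composes such URLs. It assumes the labs preview endpoint and path layout exactly as quoted in the example. It covers only `$top` and `$format`, the two options the text mentions, and `build_odata_url` is a hypothetical helper, not part of any SDK.

```python
from urllib.parse import urlencode

# Base address of the SQL Azure Labs OData preview, as quoted in the article.
ODATA_BASE = "https://odata.sqlazurelabs.com/OData.svc/v0.1"


def build_odata_url(server, database, entity_set, top=None, fmt=None):
    """Compose base/server/database/entity_set plus optional query options.

    top maps to the $top option; fmt maps to $format (e.g. "JSON")."""
    url = f"{ODATA_BASE}/{server}/{database}/{entity_set}"
    options = {}
    if top is not None:
        options["$top"] = top
    if fmt is not None:
        options["$format"] = fmt
    if options:
        # Leave "$" unescaped so the system query options stay readable.
        url += "?" + urlencode(options, safe="$")
    return url
```

Calling `build_odata_url("gfkdgapzs5", "pubs", "authors", top=10)` reproduces the example query. Adding `fmt="JSON"` appends `&$format=JSON` to request the JSON representation.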

If an application needs traditional relational data access, SQL Azure is most likely the better and easier choice. But there are a number of other reasons to consider SQL Azure as the primary choice over Windows Azure Table Storage.

The first reason is if you have a high transaction rate, meaning there are frequent queries (all operations) against the data store. There are no per-transaction charges for SQL Azure.
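A back-of-the-envelope calculation shows why the transaction rate matters when a store bills per transaction. The Python sketch below estimates one month of such charges. The price per 10,000 transactions is an input you would take from the current price list. The 0.01 in the example is a placeholder, not a quoted rate.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def monthly_transaction_cost(tx_per_second, price_per_10k, days=30):
    """Estimate per-transaction charges for one billing period.

    tx_per_second: average storage operations per second (every query counts).
    price_per_10k: price charged per 10,000 transactions (placeholder input).
    Returns (total transactions, estimated cost)."""
    transactions = tx_per_second * SECONDS_PER_DAY * days
    return transactions, transactions / 10_000 * price_per_10k
```

At a sustained 100 operations per second, a 30-day month comes to 259,200,000 transactions. At the placeholder price, that is about 259.2 in charges. Per the article, SQL Azure has no such per-transaction component.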

SQL Azure also gives you the option of setting up SQL Azure Data Sync (sqlazurelabs.com/SADataSync.aspx) between various Windows Azure databases, along with the ability to synchronize data between local databases and SQL Azure installations (microsoft.com/windowsazure/developers/sqlazure/datasync/). I’ll cover design and use of SQL Azure and DataSync with local storage in a future column, when I cover branch node architecture using SQL Azure. …

Joe continues with a description of Windows Azure Table Storage.


Marketplace DataMarket and OData

James Clark argues that JSON is the new way to go in his XML vs the Web post of 11/24/2010:

Twitter and Foursquare recently removed XML support from their Web APIs, and now support only JSON. This prompted Norman Walsh to write an interesting post, in which he summarised his reaction as "Meh". I won't try to summarise his post; it's short and well-worth reading.

From one perspective, it's hard to disagree. If you're an XML wizard with a decade or two of experience with XML and SGML before that, if you're an expert user of the entire XML stack (eg XQuery, XSLT2, schemas), if most of your data involves mixed content, then JSON isn't going to be supplanting XML any time soon in your toolbox.

Personally, I got into XML not to make my life as a developer easier, nor because I had a particular enthusiasm for angle brackets, but because I wanted to promote some of the things that XML facilitates, including:

- textual (non-binary) data formats;

- open standard data formats;

- data longevity;

- data reuse;

- separation of presentation from content.

If other formats start to supplant XML, and they support these goals better than XML, I will be happy rather than worried.

From this perspective, my reaction to JSON is a combination of "Yay" and "Sigh".

It's "Yay", because for important use cases JSON is dramatically better than XML. In particular, JSON shines as a programming language-independent representation of typical programming language data structures. This is an incredibly important use case and it would be hard to overstate how appallingly bad XML is for this. The fundamental problem is the mismatch between programming language data structures and the XML element/attribute data model of elements. This leaves the developer with three choices, all unappetising:

- live with an inconvenient element/attribute representation of the data;

- descend into XML Schema hell in the company of your favourite data binding tool;

- write reams of code to convert the XML into a convenient data structure.

By contrast with JSON, especially with a dynamic programming language, you can get a reasonable in-memory representation just by calling a library function.
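Clark's contrast is easy to see in Python. The sketch below parses the same hypothetical record from JSON and from XML. The JSON side is a single `json.loads` call. The XML side needs hand-written mapping code, which is the third "unappetising" choice above. The record's fields are invented for illustration.

```python
import json
import xml.etree.ElementTree as ET

# The same (hypothetical) record in both formats.
JSON_TEXT = '{"name": "joe", "age": 42, "tags": ["xml", "json"]}'
XML_TEXT = (
    "<person><name>joe</name><age>42</age>"
    "<tags><tag>xml</tag><tag>json</tag></tags></person>"
)


def from_json(text):
    # One library call yields native dicts, lists, ints and strings.
    return json.loads(text)


def from_xml(text):
    # The element tree has no notion of "number" or "list", so the mapping
    # back to a native structure has to be written (and maintained) by hand.
    root = ET.fromstring(text)
    return {
        "name": root.findtext("name"),
        "age": int(root.findtext("age")),
        "tags": [t.text for t in root.findall("tags/tag")],
    }
```

Both functions produce `{'name': 'joe', 'age': 42, 'tags': ['xml', 'json']}`. The XML version, however, breaks as soon as the document shape changes.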

Norman argues that XML wasn't designed for this sort of thing. I don't think the history is quite as simple as that. There were many different individuals and organisations involved with XML 1.0, and they didn't all have the same vision for XML. The organisation that was perhaps most influential in terms of getting initial mainstream acceptance of XML was Microsoft, and Microsoft was certainly pushing XML as a representation for exactly this kind of data. Consider SOAP and XML Schema; a lot of the hype about XML and a lot of the specs built on top of XML for many years were focused on using XML for exactly this sort of thing.

Then there are the specs. For JSON, you have a 10-page RFC, with the meat being a mere 4 pages. For XML, you have XML 1.0, XML Namespaces, XML Infoset, XML Base, xml:id, XML Schema Part 1 and XML Schema Part 2. Now you could actually quite easily take XML 1.0, ditch DTDs, add XML Namespaces, xml:id, xml:base and XML Infoset and end up with a reasonably short (although more than 10 pages), coherent spec. (I think Tim Bray even did a draft of something like this once.) But in 10 years the W3C and its membership has not cared enough about simplicity and coherence to take any action on this.

Norman raises the issue of mixed content. This is an important issue, but I think the response of the average Web developer can be summed up in a single word: HTML. The Web already has a perfectly good format for representing mixed content. Why would you want to use JSON for that? If you want to embed HTML in JSON, you just put it in a string. What could be simpler? If you want to embed JSON in HTML, just use <script> (or use an alternative HTML-friendly data representation such as microformats). I'm sure Norman doesn't find this a satisfying response (nor do I really), but my point is that appealing to mixed content is not going to convince the average Web developer of the value of XML.

There's a bigger point that I want to make here, and it's about the relationship between XML and the Web. When we started out doing XML, a big part of the vision was about bridging the gap from the SGML world (complex, sophisticated, partly academic, partly big enterprise) to the Web, about making the value that we saw in SGML accessible to a broader audience by cutting out all the cruft. In the beginning XML did succeed in this respect. But this vision seems to have been lost sight of over time to the point where there's a gulf between the XML community and the broader Web developer community; all the stuff that's been piled on top of XML, together with the huge advances in the Web world in HTML5, JSON and JavaScript, have combined to make XML be perceived as an overly complex, enterprisey technology, which doesn't bring any value to the average Web developer.

This is not a good thing for either community (and it's why part of my reaction to JSON is "Sigh"). XML misses out by not having the innovation, enthusiasm and traction that the Web developer community brings with it, and the Web developer community misses out by not being able to take advantage of the powerful and convenient technologies that have been built on top of XML over the last decade.

So what's the way forward? I think the Web community has spoken, and it's clear that what it wants is HTML5, JavaScript and JSON. XML isn't going away but I see it being less and less a Web technology; it won't be something that you send over the wire on the public Web, but just one of many technologies that are used on the server to manage and generate what you do send over the wire.

In the short-term, I think the challenge is how to make HTML5 play more nicely with XML. In the longer term, I think the challenge is how to use our collective experience from building the XML stack to create technologies that work natively with HTML, JSON and JavaScript, and that bring to the broader Web developer community some of the good aspects of the modern XML development experience.

OData supports both the AtomPub XML format and JSON, so all isn’t lost. Thanks to Dare Obasanjo (@Carnage4Life) for the heads-up and comment: “It's official. JSON has killed XML on the Web.”


Windows Azure AppFabric: Access Control and Service Bus

Wade Wegner explained Using the SAML CredentialType to Authenticate to the Service Bus in this 11/24/2010 post:

The Windows Azure AppFabric Service Bus uses a class called TransportClientEndpointBehavior to specify the credentials for a particular endpoint. There are four options available to you: Unauthenticated, SimpleWebToken, SharedSecret, and SAML. For details, take a look at the CredentialType member. The first three are pretty well described and documented – in fact, if you’ve spent any time at all looking at the Service Bus, you’ve probably seen the following code:

SharedSecret Credential Type

```csharp
TransportClientEndpointBehavior relayCredentials = new TransportClientEndpointBehavior();
relayCredentials.CredentialType = TransportClientCredentialType.SharedSecret;
relayCredentials.Credentials.SharedSecret.IssuerName = issuerName;
relayCredentials.Credentials.SharedSecret.IssuerSecret = issuerSecret;
```

This code specifies that the client credential is provided as a self-issued shared secret that is registered and managed by Access Control, along with the Issuer Name and Issuer Secret that you can grab (or generate) in the AppFabric portal. Quick and easy, but only marginally better than a username/password.

The use of a SAML token is more interesting, and in fact lately I’ve seen a number of requests for a sample. Unfortunately, it doesn’t appear that anyone has ever created a sample on how this should work. It’s one thing to say that the above code would change to the following …

Saml Credential Type

```csharp
TransportClientEndpointBehavior samlCredentials = new TransportClientEndpointBehavior();
samlCredentials.CredentialType = TransportClientCredentialType.Saml;
```

… and something else to provide a sample that works. What makes this challenging is the creation of the SAML token – this is a complex process, and difficult to set up, test, or fake. Fortunately for you, I have put together a sample on how to use a SAML token as the CredentialType of the TransportClientEndpointBehavior.

DISCLAIMER: I am not an identity expert. You should look to people like Justin Smith, Vittorio Bertocci, Hervey Wilson, and Maciej ("Ski") Skierkowski for specific identity guidance. I’m simply leveraging much of the material, samples, and code they’ve written in order to enable this particular scenario.

In order to get this sample to work I’ve used a number of frameworks. You’ll need to download and install these to get everything to work properly.

- .NET 4.0 Framework – I’ve only tested this with Visual Studio 2010 and the .NET 4.0 Framework.

- Windows Azure AppFabric SDK V1.0 – this SDK provides you with all the assemblies you’ll need in order to use the Service Bus.

- Windows Identity Foundation – WIF allows you to externalize user access from applications via claims (which is what we want to do).

- Windows Identity Foundation SDK – the WIF SDK provides you with templates and code samples to get going. Technically I don’t think we’re using this, but I have it installed and I’m not going to uninstall it to test.

I also used a number of samples in order to build this sample. You won’t need to download and install these in order to use this sample – I’m providing the source of the modified code below – but you might want to take a look at them anyway.

- Windows Azure AppFabric SDK Samples (C#) – specifically, I used the Service Bus EchoSample solution and Access Control AcmBrowser application.

- Fabrikam Shipping SaaS Demo – this is a great sample application, and I suggest you take a look. I personally used the SelfSTS and TestClient solutions – more on this in a moment.

Okay, now that we’ve got the various components and samples described, let’s get to the sample.

Overview

When I first started writing this post, I thought it’d be short and sweet. As you can see, it’s anything but. However, when you look through everything, I think you’ll see that there’s quite a bit to this sample.

There are three major pieces in this sample:

- Using a security token service (STS) to generate a SAML token.

- Configuring the Access Control service to accept the SAML token and authorize our WCF service to Send or Listen across the Service Bus.

- Modifying the EchoSample WCF service to get a SAML token and send it to Access Control.

Let’s walk through the pieces.

The Security Token Service

Remember my disclaimer above? Since I’m not an identity guy, I decided to use a tool built by an identity guy to act as my STS. Vittorio has created a fabulous tool called SelfSTS, which exposes a minimal WS-Federation STS endpoint. In the Fabrikam Shipping SaaS Demo Source Code, he provided an updated version of the SelfSTS tool that will also issue via WS-Trust, along with a sample on how to acquire the token and send it to ACS.

If you download FabrikamShippingSaaS and install it in the default location, you can find these two assets in the following locations:

- Updated SelfSTS: C:\FabrikamShippingSaaS\assets\SelfSTS\SelfSTS.sln

- Token acquisition and ACS submittal example: C:\FabrikamShippingSaaS\code\TestClient.sln

Definitely take a look at these resources. I highly encourage you to use these as much and as often as you like – the SelfSTS tool is invaluable when working with Access Control and WIF. In the TestClient solution, the method we’re most interested in is the GetSAMLToken method found in the Program.cs file. Take a look at it, but realize that we’re going to make a few changes to it for our example.

For the purposes of this sample, I took the SelfSTS project and included it in the EchoSampleSAML solution that you can find below. The only update I made to the tool was to extract a method I called Start – which encapsulates all of the logic needed to start the tool – and call it in the Main method.

Go ahead and run the SelfSTS project in the EchoSampleSAML solution below by right-clicking and choosing Debug –> Start New Instance. It looks like this:

There are two items that we’ll leverage below:

- The Endpoint: http://localhost:8000/STS/Issue/

- The “Metadata” URL: http://localhost:8000/STS/FederationMetadata/2007-06/FederationMetadata.xml

Quickly stop the SelfSTS and click “Edit Claim Types and Values”.

These are the claims that the SelfSTS will return. I’ve highlighted “joe” because we’re going to use this claim below when configuring Access Control. Make a mental note, because I want you to be aware of where “joe” comes from.

Go ahead and keep this tool running the entire time – you’ll leverage it throughout this process.

Configuring Access Control

The production Access Control service does not provide a UI in the portal to configure issuers and rules. Consequently, we’ll use the AcmBrowser tool found within the Windows Azure AppFabric SDK Samples. Depending on where you unzip the samples, you’ll find it here:

C:\WindowsAzureAppFabricSDKSamples_V1.0-CS\AccessControl\ExploringFeatures\Management\AcmBrowser

When you open the solution you’ll have to upgrade it for Visual Studio 2010. Start the application. You’ll see something like the following:

This is a very handy tool, but unfortunately it takes a little time to get used to it. The very first thing to do is enter your Service Namespace and Management Key (see A above). You can get these off of the AppFabric portal (i.e. http://appfabric.azure.com) in the following places:

Note: Make sure to put a “-sb” at the end of your Service Namespace in the AcmBrowser tool. This is because the tool calls the Access Control Management Endpoint, which by convention is your service namespace with a “-sb” appended to it.

Once you’ve entered these values, click the “Load from Cloud” button (see B above). This will grab your existing settings and load them into the Resources list. I recommend you click the “Save” button (see C above) to save a copy of them locally – this way you’ll have something to revert to if you make a mistake.

Okay, now that you have the basics loaded into AcmBrowser, here are the steps to set up Access Control:

- Right-click “Issuers” and click “Create”. Enter the following values and press OK.
  - Display Name: SelfSTS
  - Algorithm: FedMetadata
  - Endpoint URI: http://localhost:8000/STS/FederationMetadata/2007-06/FederationMetadata.xml (from the SelfSTS)
- Expand Scopes –> ServiceBus Scope for … –> Rules.
- Create a rule that will allow “joe” to Send messages to the Service Bus endpoint (remember “joe” from above?). Right-click “Rules” and click “Create”. Enter the following values and press OK.
  - Display Name: test send
  - Type: Simple
  - Input Claim
    - Issuer: SelfSTS
    - Type: http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name
    - Value: joe
  - Output Claim
- Create a rule that will allow “joe” to Listen on the Service Bus endpoint. Right-click “Rules” and click “Create”. Enter the following values and press OK.
  - Display Name: test listen
  - Type: Simple
  - Input Claim
    - Issuer: SelfSTS
    - Type: http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name
    - Value: joe
  - Output Claim
- Now you need to update Access Control. Unfortunately, AcmBrowser can’t simply update Access Control in place, so you have to clear it and then save.
  - Click the “Clear Service Namespace in Cloud” button (see D above).
  - Click the “Save to Cloud” button (see E above).

That’s it for Access Control. We’ve set up the SelfSTS as a trusted Issuer, and we’ve defined two rules – Send and Listen – for the claim “name” with a value of “joe”.
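Conceptually, each Simple rule works like this: if the incoming token carries this claim from this issuer, emit that output claim. The Python sketch below models that matching to show why the “joe” name claim from SelfSTS ends up granting both Send and Listen. It is an illustration only, not how Access Control is implemented. The output-claim values are not listed in the steps above, so the `action` type and the `Send`/`Listen` values used here are assumptions.

```python
from typing import NamedTuple

NAME_CLAIM = "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name"


class Claim(NamedTuple):
    issuer: str
    type: str
    value: str


class SimpleRule(NamedTuple):
    display_name: str
    input_claim: Claim
    output_claim: tuple  # (type, value); assumed names, see lead-in


RULES = [
    SimpleRule("test send", Claim("SelfSTS", NAME_CLAIM, "joe"), ("action", "Send")),
    SimpleRule("test listen", Claim("SelfSTS", NAME_CLAIM, "joe"), ("action", "Listen")),
]


def evaluate(incoming_claims, rules=RULES):
    """Return the output claims of every rule whose input claim (issuer,
    type, and value) appears exactly among the incoming claims."""
    incoming = set(incoming_claims)
    return [rule.output_claim for rule in rules if rule.input_claim in incoming]
```

A token from SelfSTS carrying name=joe yields both output claims. The same name issued by any other issuer yields nothing, which is why registering SelfSTS as a trusted issuer comes first.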

Modifying the EchoSample to Use SAML

The rest of this sample is really pretty straightforward – we’ve either already done most of the work, or we’ll leverage a few code snippets from different resources. The first thing we’ll do is add a GetSAMLToken method to the Service project in the EchoSample. Add the following method in the Program.cs file:

Getting the SAML Token

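```csharp
// Program.cs needs these using directives (WIF on .NET 4.0):
// using System;
// using System.IdentityModel.Tokens;                  // GenericXmlSecurityToken
// using System.ServiceModel;                          // WS2007HttpBinding, EndpointAddress
// using System.ServiceModel.Channels;                 // IChannel, AddressHeaderCollection
// using System.ServiceModel.Security;                 // TrustVersion, X509CertificateValidationMode
// using Microsoft.IdentityModel.Protocols.WSTrust;    // WSTrustChannelFactory, RequestSecurityToken
// using Microsoft.IdentityModel.SecurityTokenService; // KeyTypes
private static string GetSAMLToken(string identityProviderUrl, string identityProviderCertificateSubjectName, string username, string password, string appliesTo)
{
    GenericXmlSecurityToken token = null;

    // Username/password credentials over WS-Trust message security.
    var binding = new WS2007HttpBinding();
    binding.Security.Mode = SecurityMode.Message;
    binding.Security.Message.ClientCredentialType = MessageCredentialType.UserName;
    binding.Security.Message.EstablishSecurityContext = false;
    binding.Security.Message.NegotiateServiceCredential = true;

    using (var trustChannelFactory = new WSTrustChannelFactory(binding, new EndpointAddress(new Uri(identityProviderUrl), new DnsEndpointIdentity(identityProviderCertificateSubjectName), new AddressHeaderCollection())))
    {
        trustChannelFactory.Credentials.UserName.UserName = username;
        trustChannelFactory.Credentials.UserName.Password = password;
        // Skips STS certificate validation: acceptable only for a local SelfSTS test.
        trustChannelFactory.Credentials.ServiceCertificate.Authentication.CertificateValidationMode = X509CertificateValidationMode.None;
        trustChannelFactory.TrustVersion = TrustVersion.WSTrust13;

        WSTrustChannel channel = null;
        try
        {
            // Request a bearer token scoped to the Access Control endpoint.
            var rst = new RequestSecurityToken(WSTrust13Constants.RequestTypes.Issue);
            rst.AppliesTo = new EndpointAddress(appliesTo);
            rst.KeyType = KeyTypes.Bearer;

            channel = (WSTrustChannel)trustChannelFactory.CreateChannel();
            token = channel.Issue(rst) as GenericXmlSecurityToken;
            ((IChannel)channel).Close();
            channel = null;
            trustChannelFactory.Close();

            if (token == null)
                throw new InvalidOperationException("The STS did not return a GenericXmlSecurityToken.");

            // TransportClientEndpointBehavior expects the raw SAML XML as a string.
            string tokenString = token.TokenXml.OuterXml;
            return tokenString;
        }
        finally
        {
            // Abort anything that did not close cleanly.
            if (channel != null)
            {
                ((IChannel)channel).Abort();
            }
            if (trustChannelFactory != null)
            {
                trustChannelFactory.Abort();
            }
        }
    }
}
```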

This method is almost identical to the GetSAMLToken found in FabrikamShippingSaaS’s TestClient. Here are the changes I made:

- Method signature: I changed the return type from SecurityToken to string. This is because the TransportClientEndpointBehavior will want the SAML token as a string.

- Binding setup: I moved the code from the GetSecurityTokenServiceBinding method into the GetSAMLToken method. This is simply for readability in this blog post.

- Request construction: I removed the following code: rst.Entropy = new Entropy(CreateEntropy());

- The tokenString line: Added this line to grab the token XML out of the GenericXmlSecurityToken object.

- The return statement: Returning the tokenString instead of the token.

Now, there are a few values that we’re going to store in the App.config file. Here they are:

App.Config file

```xml
<appSettings>
  <add key="identityProviderCertificateSubjectName" value="adventureWorks" />
  <add key="identityProviderUrl" value="http://localhost:8000/STS/Username" />
  <add key="appliesTo" value="https://{0}-sb.accesscontrol.windows.net/WRAPv0.9" />
  <add key="serviceNamespace" value="YOURSERVICENAMESPACE" />
  <!-- this is the user and pwd of the user in AdventureWorks IdP -->
  <add key="username" value="joe" />
  <add key="password" value="p@ssw0rd" />
</appSettings>
```

Replace YOURSERVICENAMESPACE with your actual service namespace.

Now, let’s create some static variables for these values in the Program.cs file:

Static Variables

```csharp
// ConfigurationManager requires a reference to System.Configuration.dll
// and "using System.Configuration;" at the top of Program.cs.
static string identityProviderUrl = ConfigurationManager.AppSettings["identityProviderUrl"];
static string identityProviderCertificateSubjectName = ConfigurationManager.AppSettings["identityProviderCertificateSubjectName"];
static string username = ConfigurationManager.AppSettings["username"];
static string password = ConfigurationManager.AppSettings["password"];
static string serviceNamespace = ConfigurationManager.AppSettings["serviceNamespace"];
// Substitutes the namespace into {0}, yielding the "-sb" Access Control endpoint.
static string appliesTo = String.Format(ConfigurationManager.AppSettings["appliesTo"], serviceNamespace);
```
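The static fields read appSettings and, for appliesTo, substitute the namespace into the {0} placeholder. If you want to check the resulting URL without building the C# project, here is a Python sketch of the same two steps. The helper names are hypothetical; in .NET, ConfigurationManager and String.Format do this work for you. Note that Python's `str.format` treats `{0}` the same way String.Format does here.

```python
import xml.etree.ElementTree as ET

# A trimmed copy of the appSettings section above; the namespace is a placeholder.
APP_CONFIG = """<configuration>
  <appSettings>
    <add key="identityProviderUrl" value="http://localhost:8000/STS/Username" />
    <add key="appliesTo" value="https://{0}-sb.accesscontrol.windows.net/WRAPv0.9" />
    <add key="serviceNamespace" value="YOURSERVICENAMESPACE" />
  </appSettings>
</configuration>"""


def load_app_settings(config_xml):
    """Collect the <add key=... value=...> pairs under appSettings into a dict."""
    root = ET.fromstring(config_xml)
    return {add.get("key"): add.get("value") for add in root.findall("appSettings/add")}


def resolve_applies_to(settings):
    # Same substitution as String.Format: {0} becomes the service namespace,
    # producing the "<namespace>-sb" Access Control endpoint.
    return settings["appliesTo"].format(settings["serviceNamespace"])
```

With a namespace of `contoso`, for example, `resolve_applies_to` returns `https://contoso-sb.accesscontrol.windows.net/WRAPv0.9`. This matches the "-sb" convention used for AcmBrowser.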

Now, we’re going to change a number of things within the Main method. First, remove the Console.Write() and Console.ReadLine() sections – we’re grabbing those out of the App.Config file. Next, we need to grab the SAML token. Write the following line:

```csharp
string samlToken = GetSAMLToken(identityProviderUrl, identityProviderCertificateSubjectName, username, password, appliesTo);
```

This will call the GetSAMLToken method and pass along all the values required in order to generate the SAML token. When this runs, it will call out to SelfSTS and pass along the username/password specified in the App.Config file and, in return, get a SAML token that includes all the claims we want – including the “name”.

Now, we have to take this SAML token and use it with the TransportClientEndpointBehavior. Remove the existing TransportClientEndpointBehavior, and replace it with the following code:

```csharp
// Requires: using Microsoft.ServiceBus; (Windows Azure AppFabric SDK)
TransportClientEndpointBehavior samlCredentials = new TransportClientEndpointBehavior();
samlCredentials.CredentialType = TransportClientCredentialType.Saml;
samlCredentials.Credentials.Saml.SamlToken = samlToken;
```

We’re now creating a new TransportClientEndpointBehavior with the CredentialType of TransportClientCredentialType.Saml, and setting the SamlToken property to our samlToken. Mission accomplished! (Note: since I changed the variable name to samlCredentials, be sure to update the code below where the TransportClientEndpointBehavior is added as a behavior.)

Feels good. Now all we have to do is run it!

Wrapping Up

Alright, so now we’re ready to run it. Grab the source code below. When you run it, be sure to update YOURSERVICENAMESPACE (two instances) and YOURISSUERSECRET (one instance). Also, be sure to start the projects in the following order:

- SelfSTS – we need to make sure our STS is running first.

- Service – when this starts it will grab the SAML token from SelfSTS, then connect to the Service Bus endpoint as a listener.

- Client – when this starts it will use the SharedSecret to connect to the Service Bus as a sender.

When the Service starts and successfully connects as a listener, it should look like this:

After you start up the Client and echo some text, you’ll see the text reflected in the console window:

And that’s it!

Now, the whole purpose of this is to highlight how to leverage the SAML token within the TransportClientEndpointBehavior class. Obviously you’ll want to use something like ADFS 2.0 and not the SelfSTS in production, but fortunately I can refer you to my disclaimer above and wish you good luck!

Hope this helps!

Special thanks to Vittorio Bertocci and Maciej ("Ski") Skierkowski for their help, and Scott Seely for asking the question that got me to pull this together.

Brian Hitney continued his series with Distributed Cache in Azure: Part III on 11/24/2010:

It has been a while since my last post on this, but here is the completed project, just in time for distributed cache in the Azure AppFabric! : ) In all seriousness, even with the AppFabric Cache, this is still a nice, viable solution for smaller-scale applications.

To recap, the premise here is that we have multiple website instances all running independently, but we want to be able to sync cache between them. Each instance will maintain its own copy of an object in the cache, but in the event that one instance sees a reason to update the cache (for example, a user modifies their account or some other non-predictable action occurs), the other instances can pick up on this change.

It’s possible to pass objects across the WCF service; however, because each instance knows how to get the objects itself, it’s a bit cleaner to broadcast ‘flush’ commands. So that’s what we’ll do. While it’s completely possible to run this outside of Azure, one of the nice benefits of Azure is that the fabric controller maintains the RoleEnvironment class, so all of your instances can (if internal endpoints are enabled) be aware of each other, even if you spin up new instances or reduce instance counts.
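The flush-broadcast idea can be sketched without Azure at all. The class below is a hypothetical, in-process illustration, not code from Brian's sample: CacheNode, FlushEverywhere and the loader delegate are names invented here, and the peer list stands in for what the real project would discover through RoleEnvironment and reach over WCF internal endpoints. It shows why broadcasting a flush is enough: each instance knows how to reload the value itself, so peers only need to be told to drop their stale copy.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, in-process sketch of "broadcast a flush" cache syncing.
// In the real sample, peers would be found via RoleEnvironment and called
// over WCF internal endpoints; here they are plain object references.
public class CacheNode
{
    private readonly Dictionary<string, object> cache = new Dictionary<string, object>();
    private readonly Func<string, object> loader;   // how this instance (re)loads a value
    private readonly List<CacheNode> peers = new List<CacheNode>();

    public string Name { get; private set; }

    public CacheNode(string name, Func<string, object> loader)
    {
        Name = name;
        this.loader = loader;
    }

    public void AddPeer(CacheNode peer) { peers.Add(peer); }

    // Returns the cached value, loading it from the backing store on first use.
    public object Get(string key)
    {
        object value;
        if (!cache.TryGetValue(key, out value))
        {
            value = loader(key);
            cache[key] = value;
        }
        return value;
    }

    // Drops only the local copy; the next Get reloads it.
    public void Flush(string key) { cache.Remove(key); }

    // Called by the instance that changed the underlying data:
    // flush locally, then tell every peer to flush the same key.
    public void FlushEverywhere(string key)
    {
        Flush(key);
        foreach (CacheNode peer in peers)
            peer.Flush(key);   // in the real sample this would be a WCF call
    }
}
```

Because only a key travels between instances, the message stays tiny no matter how large the cached object is; the trade-off is one extra reload from storage on each instance after a flush.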

You can download this sample project here:

When you run the project, you’ll see a simple screen like so:

Specifically, pay attention to the Date column. Here we have three web role instances running. Notice that the dates match; this is the last updated date/time of some fictitious settings we’re storing in Azure Storage. If we add a new setting or just click Save New Settings, the web role updates the settings in Storage and then broadcasts a ‘flush’ command to the other instances. After clicking, notice that the dates on all three instances change to reflect the update:

In a typical cache situation, you’d have no way to easily update the other instances to handle ad-hoc updates. Even if you don’t need a distributed cache solution, the code base here may be helpful for getting started with WCF services for inter-role communication. Enjoy!

Brian is a Developer Evangelist with Microsoft Corporation, covering North and South Carolina.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

Techy Freak published a detailed, illustrated Guide To Windows Azure Connect on 11/15/2010 (missed when posted):

Building applications for the cloud and hosting them there is one of the great things to happen in recent times. However, you might have a number of existing applications that you wish to migrate to the cloud without moving your database server there. Or you might want to create a new application and host it in the cloud, but this new application needs to communicate with existing on-premises applications hosted in your enterprise's network. Another case might be that the new application you wish to host in the cloud relies on your enterprise's Active Directory for authentication.

What options do you have? You could rewrite your on-premises applications for Azure and host them there, or, in the case of database servers, move your databases to SQL Azure in the cloud. But there is another, easier option, announced at PDC10 and due for release by the end of this year: Windows Azure Connect.

Later in this post we will talk about how to set up Windows Azure Connect and about Azure Connect use cases and scenarios. But first, let's go through what Connect is and why we need it.

What is Windows Azure Connect

Windows Azure Connect provides secure network connectivity between your on-premises environments and Windows Azure through standard IP protocols such as TCP and UDP. Connect provides IP-level connectivity between a Windows Azure application and machines running outside the Microsoft cloud.

Figure 1: Windows Azure Connect

These connections can serve a variety of scenarios, which we will see later in the Use Cases/Scenarios section.

In the first CTP release, we can access Azure resources by installing the Connect agent on non-Azure resources. The upcoming release will support Windows Server 2008 R2, Windows Server 2008, Windows 7, and Windows Vista SP1 and up. Note that this isn't a full-fledged virtual private network (VPN). However, future plans are to extend the current functionality to enable connectivity through the on-premises VPN devices your organization already uses today.

Setup Azure Connect

Windows Azure Connect is a simple solution. Setting it up doesn't require contacting your network administrator; all that's required is the ability to install the endpoint agent on the local machine. The process for setting up Azure Connect involves three steps:

- Enable Windows Azure roles for external connectivity. This is done through the service model.

- Enable your on-premises/local computers for connectivity by installing the Windows Azure Connect agent.

- Manage network policy through the Windows Azure portal.

The final step, configuring and defining network policy, determines which of the Windows Azure roles and local computers you have enabled for Connect are able to communicate with each other. This is done in the Windows Azure admin portal, which provides very granular control.

1. Enable Windows Azure Roles for external connectivity

To use Connect with a Windows Azure service, we need to enable one or more of its roles. These can be web or worker roles, or the new VM role announced at PDC10.

For a web or worker role, the only thing we need to do is add an entry to the service definition (.csdef) file: a line of XML that imports the Windows Azure Connect plug-in. Then you specify your ActivationToken in the service configuration (.cscfg) file. The ActivationToken is unique per subscription, so if you have two different Azure subscriptions, you will have a separate activation token for each. The token is obtained directly from the admin UI.

For VM role, install the Connect Agent in VHD image using the Connect VM install package. This package is available through Windows Azure Admin Portal and it contains the ActivationToken within itself.

Also, in your .cscfg file you can specify optional settings for managing AD domain-join and service availability. Once this configuration is done for a role, the Connect agent will automatically be deployed to each new role instance that starts up. This means that if, for example, you add more instances to the role tomorrow, each new instance will automatically be provisioned to use Azure Connect.
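As a rough illustration of the two configuration steps just described, the fragments below show what the plug-in import and the activation token setting could look like. This is a sketch, assuming the element and setting names used by the Connect plug-in in the Windows Azure SDK 1.3 (the CTP-era tooling may differ); the role name WebRole1 and the token value are placeholders, and a real .csdef role element contains more than is shown here.

```xml
<!-- ServiceDefinition.csdef (fragment): import the Connect plug-in into the role -->
<WebRole name="WebRole1">
  <Imports>
    <Import moduleName="Connect" />
  </Imports>
</WebRole>

<!-- ServiceConfiguration.cscfg (fragment): supply the per-subscription activation token -->
<Role name="WebRole1">
  <Instances count="2" />
  <ConfigurationSettings>
    <!-- placeholder: copy the real token from the Windows Azure admin portal -->
    <Setting name="Microsoft.WindowsAzure.Plugins.Connect.ActivationToken"
             value="YOUR-ACTIVATION-TOKEN" />
  </ConfigurationSettings>
</Role>
```

When the plug-in is imported, the tooling may also expect the plug-in's other settings (such as the domain-join ones) to be present in the .cscfg, even with empty values, so check the SDK documentation if packaging complains about missing settings.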

2. Enable your on-premise/local computers for connectivity

Your local on-premises computers are enabled for connectivity with Azure services by installing and activating the Connect agent. The Connect agent can be installed on your local computers in two ways:

- Web-based installation: From your Windows Azure admin portal you get a web-based installation link. This link is issued on a per-subscription basis and has the activation token embedded in the URL.

- Standalone install package: The other option is to use a standalone installation package. You can run this package using any standard software distribution tool in your environment, as you do for other programs. It adds the activation token to the registry and reads its value from there.

Once the Connect agent is installed, you will also have a client UI on your system, as well as a Connect agent icon in your system tray. The tray icon and client UI let you view the current status of the Azure Connect agent, both its activation status and its network connectivity status. They also provide basic tasks such as refreshing network policy.

Connect agent automatically manages network connectivity between your local computers and Azure services/apps. To do this it does several things including:

- Sets up a virtual network adapter

- “Auto-connects” to Connect relay service as needed

- Configures IPSec policy based on network policy

- Enables DNS name resolution

- Automatically syncs latest network policies

3. Manage Network Policy

Once you have identified the Azure roles that need to connect to on-premises computers, and have installed and activated Connect agents on your local computers, you need to configure which roles connect to which of those local computers. To do this, we specify network policy, which is managed through the Windows Azure admin portal. Again, this is done on a per-subscription basis.

The management model for Connect is pretty simple. There are three types of operations you can perform:

1. You can take your local computers that have been enabled and activated for Windows Azure Connect (by installing Connect agents on them) and organize them into groups. For example, you might create a group that contains the SQL Server computers one of your Azure roles needs to connect to, and call it "SQL Server Group". Or you might put all your developer laptops into a "My Laptops" group, or put the computers related to a given project into a group. However, there are two constraints. First, a computer can belong to only one group at a time. Second, when you install the Windows Azure Connect agent on a new computer, the newly activated computer is unassigned by default, meaning it doesn't belong to any group and therefore won't have connectivity.

Figure 2: Connect Groups and Hosted Relay

As shown in Figure 2 above, we can put local development machines into a group called “Development Computers” and have our Windows Azure role “Role A” connect to this group. Similarly, we can put our DB servers into a group called “Database Servers” and have “Role B” connect to it. All of this is configured from the Azure admin portal.

2. Windows Azure roles can be connected to groups of local computers from the admin UI. The connection applies to all of the instances that make up that Azure role and all the local computers in that group. One thing to note is that WINDOWS AZURE CONNECT DOES NOT CONTROL CONNECTIVITY WITHIN AZURE. In other words, you can't use WA Connect to connect two roles that are part of a service, or two instances that are part of the same role, because mechanisms for doing this already exist. WA Connect is all about connecting Azure roles to computers outside of Azure.

3. You can also connect groups of local computers to other groups of local computers, which enables network connectivity between the computers in those groups. In addition, you can enable interconnectivity within a given group, which allows all computers in that group to connect to each other. This functionality is useful in ad-hoc or roaming scenarios, e.g. if you'd like your developer laptop to have secure connectivity back to a server that resides in your corporate network.
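To make the three operations and the two group constraints concrete, here is a small, hypothetical model of them in C#. It is not an Azure API (Connect policy is managed in the portal), and the class and method names are invented for illustration. It simply encodes the rules stated above: a machine belongs to at most one group, unassigned machines get no connectivity, roles connect to groups, groups connect to groups, and connectivity within a group must be switched on explicitly. Role-to-role traffic is deliberately not modeled, since Connect does not control connectivity within Azure.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the Connect network-policy rules described above.
// Not an Azure API; it only encodes the stated rules.
public class ConnectPolicy
{
    // machine -> group; a missing entry means "unassigned" (no connectivity)
    private readonly Dictionary<string, string> groupOf = new Dictionary<string, string>();
    // role -> groups that role is connected to
    private readonly Dictionary<string, HashSet<string>> roleLinks = new Dictionary<string, HashSet<string>>();
    // unordered pairs of groups connected to each other
    private readonly HashSet<string> groupLinks = new HashSet<string>();
    // groups whose members may talk to each other
    private readonly HashSet<string> interconnected = new HashSet<string>();

    // Assigning again moves the machine: one group at a time.
    public void Assign(string machine, string group) { groupOf[machine] = group; }

    // Operation 2: connect a role (all of its instances) to a group.
    public void ConnectRole(string role, string group)
    {
        HashSet<string> groups;
        if (!roleLinks.TryGetValue(role, out groups))
        {
            groups = new HashSet<string>();
            roleLinks[role] = groups;
        }
        groups.Add(group);
    }

    // Operation 3: connect two groups, or enable connectivity inside one group.
    public void ConnectGroups(string g1, string g2) { groupLinks.Add(PairKey(g1, g2)); }
    public void EnableInterconnect(string group) { interconnected.Add(group); }

    // Can instances of a role reach a given local machine?
    public bool RoleCanReach(string role, string machine)
    {
        string group;
        HashSet<string> groups;
        return groupOf.TryGetValue(machine, out group)
            && roleLinks.TryGetValue(role, out groups)
            && groups.Contains(group);
    }

    // Can two local machines reach each other through Connect?
    public bool MachinesCanReach(string m1, string m2)
    {
        string g1, g2;
        if (!groupOf.TryGetValue(m1, out g1) || !groupOf.TryGetValue(m2, out g2)) return false;
        if (g1 == g2) return interconnected.Contains(g1);
        return groupLinks.Contains(PairKey(g1, g2));
    }

    // Order-independent key for a pair of group names.
    private static string PairKey(string a, string b)
    {
        return String.CompareOrdinal(a, b) < 0 ? a + "|" + b : b + "|" + a;
    }
}
```

For example, after assigning a hypothetical sql01 to “Database Servers” and connecting “Role B” to that group, RoleCanReach("Role B", "sql01") is true, while a freshly activated, unassigned machine is unreachable until it is placed in a group.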

Networking Behavior

Once you have defined network policy, the Azure Connect service will automatically set up secure IP-level network connections between all of the role instances you enabled for connectivity and your local computers, based on the network policy you specified.

All of the traffic is tunneled through a hosted relay service. This ensures that Windows Azure Connect lets you connect to resources regardless of the firewall and NAT configuration of the environment they reside in. Through WA Connect, your Azure role instances and your local computers all have secure IP-level connectivity, established regardless of the physical network topology of those resources. Because of firewalls or NATs, many local computers might not have public IP addresses. But using Windows Azure Connect you can establish connectivity through all of those firewalls and NATs, as long as those machines have outbound HTTPS access to the hosted relay service.

Figure 2 above shows how local on-premise computers are connected to web role instances through Hosted Relay Service.

Another point to note is that the network connectivity Windows Azure Connect establishes is fully secured end to end using IPSec, and the Azure Connect agent takes care of setting this up automatically.

Each connected machine has a routable IPv6 address. An important point is that, even as part of a Windows Azure Connect network, your existing network behavior remains unchanged. Connect doesn't alter the behavior of your existing network configuration; it just joins you to an additional network.

Once network connectivity is established, applications running either in Azure or on local systems will be able to resolve names to IP addresses using the DNS name resolution that Connect provides.

Use Cases/Scenarios

Let's go through a few of the scenarios where Windows Azure Connect might be the solution:

- Enterprise app migrated to Windows Azure that requires access to an on-premises SQL Server. This is the most widely seen scenario. You have an on-premises application that connects to your on-premises database server. Now you want to move the application to Azure, but for business reasons the database it uses needs to stay on premises. Using Windows Azure Connect, the web role converted from the on-premises ASP.NET application can access the on-premises database directly. The wonderful thing here is that it doesn't even require changing the connection string, as both the web role and the database server are on the same network.

- Domain-joined to the on-premises environment. This scenario opens up various other use cases. One is letting the application use existing Active Directory accounts and groups for access control to your Azure application, so you don't need to expose your AD to the Internet to provide access control in the cloud. Using Windows Azure Connect, your Azure services/apps are domain-joined with your Active Directory domain. This lets you control who can access Azure services based on your existing Windows user or group accounts. Domain-joining also allows single sign-on to the cloud application by on-premises users, and lets your Azure services connect to an on-premises SQL Server using Windows Integrated Authentication. We will see in a separate post how to domain-join Azure roles with Active Directory using Connect.

- Since you have direct connectivity to the VMs running in the cloud, you can remotely administer and troubleshoot your Windows Azure roles.

Techy Freak continued his Windows Azure Connect series with Active Directory Domain Join of Azure Roles Using Connect of 11/16/2010:

We have already seen in the previous post how Windows Azure Connect can help us connect our Windows Azure role instances to our local computers. We also saw, in the use cases/scenarios for Azure Connect, that we can domain-join our Windows Azure roles with our on-premises Active Directory, which is possible by using the Connect plug-in.

This is a very useful feature provided by Windows Azure Connect. Active Directory domain join can help you in the following ways:

- You can control access to your Azure role instances based on domain accounts.

- You can provide access control using Windows authentication with an on-premises SQL Server.

- In general, as customers migrate existing line-of-business applications to the cloud, many of those applications are written for, or assume, a domain-joined environment. Domain-joining your Azure roles to your on-premises AD makes that migration simpler.

The process to set up, enable and configure Active Directory domain join using Connect involves the following steps:

- Enable one of your domain controller/DNS servers for connectivity by installing the Windows Azure Connect agent on that machine. Many customers have multiple domain controllers; for such environments, it is recommended to create a dedicated AD site to be used for domain-joining your Azure roles.

- Configure your Windows Azure Connect plug-in to automatically domain-join your Azure role instances to Active Directory. Domain joining requires specific settings in the service configuration (.cscfg) file:

  - credentials (a domain account that has permission to domain-join the new instances coming online)

  - the target organizational unit (OU) where your Azure role instances should be located within your AD

  - a list of domain users or groups that will automatically be added to the local Administrators group of your Azure role instances

- Configure your network policy. This specifies which roles connect to which Active Directory servers, and is done from the admin portal.

- New Windows Azure role instances will then automatically be domain-joined.
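A hedged sketch of the domain-join settings listed above, assuming the Microsoft.WindowsAzure.Plugins.Connect.* setting names from the Windows Azure SDK 1.3 Connect plug-in; every value (domain, controller, account, OU, group, site name) is a hypothetical placeholder. Check the SDK documentation for the exact names, and for how the domain password must be protected, before relying on this.

```xml
<!-- ServiceConfiguration.cscfg (fragment): Connect domain-join settings; all values are placeholders -->
<ConfigurationSettings>
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.ActivationToken" value="YOUR-ACTIVATION-TOKEN" />
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.EnableDomainJoin" value="true" />
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainFQDN" value="corp.contoso.com" />
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainControllerFQDN" value="dc01.corp.contoso.com" />
  <!-- domain account with permission to join the new instances to the domain -->
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainAccountName" value="corp\azurejoin" />
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainPassword" value="PROTECTED-PASSWORD" />
  <!-- target OU for the role instances -->
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainOU" value="OU=AzureRoles,DC=corp,DC=contoso,DC=com" />
  <!-- domain users/groups added to the instances' local Administrators group -->
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.Administrators" value="corp\AzureAdmins" />
  <!-- the dedicated AD site recommended for multi-DC environments -->
  <Setting name="Microsoft.WindowsAzure.Plugins.Connect.DomainSiteName" value="AzureRolesSite" />
</ConfigurationSettings>
```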

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

tbtechnet announced It’s Back: 30-days Windows Azure Platform: No Credit Card Required in an 11/24/2010 post to TechNet’s Windows Azure Platform, Web Hosting and Web Services blog:

If you’re a developer interested in quickly getting up to speed on the Windows Azure platform there are excellent ways to do this.

- Get no-cost technical support with Microsoft Platform Ready: http://www.microsoftplatformready.com/us/dashboard.aspx

- Just click on Windows Azure Platform under Technologies

Get a no-cost, no-credit-card-required Windows Azure platform 30-day pass

- Promo code is MPR001

Brian Loesgen added more details of the offer in his FREE 30-day Azure developer accounts! Hurry! post of the same date:

If you’ve worked with Azure you know that the SDK provides a simulation environment that lets you do a lot of local Azure development without even needing an Azure account. You can even do hybrid (something I like to do) where you’re running code locally but using Azure storage, queues, SQL Azure.

However, wouldn’t it be nice if you had a credit-card free way that you could get a developer account to really push stuff up to Azure, for FREE? Now you can! I’m not sure how long this will last, or if there’s a limit on how many will be issued, but right now, you can get (FOR FREE):

The Windows Azure platform 30-day pass includes the following resources:

- Windows Azure

  - 4 small compute instances

  - 3 GB of storage

  - 250,000 storage transactions

- SQL Azure

  - Two 1 GB Web Edition databases

- AppFabric

  - 100,000 Access Control transactions

  - 2 Service Bus connections

- Data transfers (per region)

  - 3 GB in

  - 3 GB out

Important: At the end of the 30 days all of your data will be erased. Please ensure you move your application and data to another Windows Azure Platform offer.

You may review the Windows Azure platform 30 day pass privacy policy here.

I believe giving potential Windows Azure developers four compute instances for 30 days was a great idea. I’ve signed up for the offer and have received an email acknowledgement that my account will be active in two to three business days. Developers who signed up for earlier calendar-month passes will receive an email from Tony Bailey with the details for signing up for the new 30-day (not calendar month) pass.

Bill Zack posted SharePoint and Azure? to his Architecture & Stuff blog on 11/24/2010:

Of course SharePoint does not work in Windows Azure (yet), but there are ways to integrate with and leverage SharePoint capabilities with Windows Azure. Here are some resources that illustrate how to do this. (See Steve Fox’s blog for more ideas on the subject.)

- PDC 2010 Session: Integrating SharePoint with Windows Azure

- MSDN Magazine article: Connecting SharePoint to Windows Azure with Silverlight Web Parts

- MSDN Webcast: SharePoint 2010 and Windows Azure (Level 300)

- PowerPoint Deck – SharePoint and Windows Azure: How They Play Together

- Several Steve Fox blog posts:

  - SharePoint and Azure: How They Play Together? (this includes code snippets, etc.)

Of course if you need SharePoint running in the cloud today you should investigate Office 365 which provides many of the features of on-premise SharePoint via SharePoint Online.

The Windows Azure Team posted Real World Windows Azure: Interview with Allen Smith, Chief of Police for the Atlantic Beach Police Department on 11/24/2010:

As part of the Real World Windows Azure series, we talked to Allen Smith, Chief of Police for the Atlantic Beach Police Department, about using the Windows Azure platform for its first-responders, law enforcement solution. Here's what he had to say:

MSDN: Tell us about the Atlantic Beach Police Department and the services you offer.

Smith: Our mission at the Atlantic Beach Police Department is to deliver "community-oriented policing." That is, to join in a partnership with our residents, merchants, and visitors to provide a constant, safe environment through citizen and police interaction in the town of Atlantic Beach, North Carolina.

MSDN: What were the biggest challenges that you faced prior to implementing the Windows Azure platform?

Smith: We constantly seek new ways to protect citizens and police officers. We are a small police department with only 18 full-time police officers, and one of our biggest challenges is cost. We have to operate within a budget, so we need cost-effective ways to systematically solve our challenges, whether they're current or potential future challenges. One area where we saw an opportunity for improvement was the number of electronic devices that officers use. Police officers use multiple devices, such as in-car cameras, portable computers, and mobile telephones. We wanted to find a way to cost-effectively consolidate these devices.

MSDN: Can you describe the first-responders, law enforcement solution you built with the Windows Azure platform?

Smith: We use a geo-casting system developed by IncaX that is hosted in the cloud on Windows Azure and installed on several models of commercial, off-the-shelf smartphones. With the smartphones, officers can make phone calls and connect to the Internet and web-based applications that help them perform common tasks, such as checking for warrants during traffic stops. The smartphones act as in-car cameras, and they are equipped with geographic information system (GIS) software that enables us to locate officers wherever they are. This allows us to direct other responders to a scene or help officers when they require assistance. All of the data we collect is stored in Windows Azure Table storage.

The geographic information system in the smartphones enables the Atlantic Beach Police Department to locate police officers wherever they are, and streams a live camera feed to the Chief of Police's desktop computer.

MSDN: What makes your solution unique?

Smith: Though we are still in the testing phase, one thing that makes our solution unique, and we'll be one of the first police departments in the world to do this, is that we will be able to consolidate three devices that are regular tools for police officers down to one device that acts as an in-car camera, computer, and mobile phone. We can locate officers wherever they are, and I will have a live camera feed from the police cruiser to the desktop computer in my office. We're always connected.

MSDN: What kinds of benefits are you realizing with the Windows Azure platform?

Smith: We have a first-responders application for law enforcement officers that is GIS-enabled, which helps better protect citizens and police officers. That's our number one priority, so it is the underlying benefit we've gained from the solution. At the same time, by using Windows Azure to host the application at Microsoft data centers, we're able to reduce our IT maintenance and management costs. In addition, we're able to reduce hardware costs by consolidating our devices, which is something we would not have been able to do without hosting our application on Windows Azure.

To learn more about the Windows Azure solution used by the Atlantic Beach Police Department, and its broader applicability to you as a customer or partner, please contact Ranjit Sodhi, Microsoft Services Partner Lead: http://customerpartneradvocate.wordpress.com/contact

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

The Microsoft patterns & practices group updated their Windows Phone 7 Developer Guide release candidate status on 11/2/2010 (missed when posted):

This new guide from patterns & practices will help you design and build applications that target the new Windows Phone 7 platform.

The key themes for these projects are:

As usual, we'll periodically publish early versions of our deliverable on this site. Stay tuned!

- Just released! The guide Release candidate is now available for download here

- Project Overview

- High level Architecture

- UI Mockups

Project's scope

Drop #5 for WP7 Developer Guide (Release Candidate)

11/2/2010 - New update on the code! (Signed now)

This is our release candidate for the guide contents (docs and code).

Steps:

- Download WP7Guide-Code-RC file

- Unblock the content

- Run it on the box you want to copy the files to (you will have to accept the license)

- Select a folder in your system to extract files to

- Run the CheckDependencies.bat file

- Read the "readme"

Release notes:

- Make sure you run the Dependency Checker tool to verify external components & tools that are needed.

- IMPORTANT: the DependencyChecker is NOT an installer. If you have problems with it, look in the EventLog for exceptions in your environment. The self-extractable zip file is now signed, so the previous workarounds should not be needed.

- As before, the entire source tree is available. But to keep things simpler, we have included 2 solutions:

- A "Phone Only" solution that doesn't open the entire application (it doesn't open the backend and mocks all calls to it)

- An entire solution that includes everything (Phone project, Windows Azure backend, etc)

<Return to section navigation list>

Visual Studio LightSwitch

Bill Zack described Visual Studio LightSwitch – New .NET Development Paradigm in an 11/19/2010 post to the Ignition Showcase blog (missed when posted):

This post will be covering a new tool from Microsoft, Visual Studio LightSwitch, currently in Beta.

We will be talking specifically about:

- What it is

- Why it is a very significant development

- Why you as an Independent Software Vendor should care

Visual Studio LightSwitch is one of the most significant development products to come out of Microsoft in a long time.

The .NET platform is an extremely rich, powerful and deep development platform. The very richness of the platform has created criticism in some areas. Many developers new to .NET have been faced with a steep learning curve. Many developers creating new applications (such as simple forms over data applications) have also felt strongly that developing these applications should be a lot simpler than it is.

LightSwitch combines the ease reminiscent of Access development with the sophistication of being able to customize those applications using standard .NET tools and techniques if those applications need to do things not directly supported by LightSwitch.

Via a wizard driven process you can create a forms over data application with a two or three tier architecture, choosing a user interface selected from one of several pre-defined UI templates. When you do that a standard .NET Solution is generated such that it can be enhanced via normal .NET development techniques if the generated solution does not do exactly what you want.

When you use LightSwitch to generate an application, it automatically bakes in proven design patterns (such as MVVM) and proven .NET components such as Silverlight and RIA Services. Because it is based on a data provider model, it also supports easy connection to a myriad of data sources, including SQL Server, SQL Azure, web services and others. In fact, we expect a rich ecosystem of third-party add-ins to be developed to extend the product. As one example, Infragistics provides a touch-enabled UI template to easily touch-enable a generated application.

Two very impressive features of LightSwitch are:

1. The ability to change your mind after development and generate a solution for a different deployment platform. For instance, you can generate and deploy a desktop application and then, by changing a parameter, regenerate it as a browser or Windows Azure application. Yes, even the cloud has been incorporated and baked into its design.

2. The ability to let you customize the user interface of the application while it is still running and see the results of the change immediately. In a way this is like Visual Studio Edit and Continue on steroids!

Currently the tool is in beta so download it, try it out, give the development team your feedback and help us to shape the product.

For more details see Jason Zander’s blog post, this video of a talk that he gave at VSLive and visit the LightSwitch web site for more details, how-to videos and to download the Beta.

<Return to section navigation list>

Windows Azure Infrastructure

Srinivasan Sundara Rajan compared Dynamic Scaling and Elasticity: Windows Azure vs. Amazon EC2 on 11/24/2010:

A key benefit of cloud computing is the ability to add and remove capacity as and when it is required. This is often referred to as elastic scale. Being able to add and remove this capacity can dramatically reduce the total cost of ownership for certain types of applications; indeed, for some applications cloud computing is the only economically feasible solution to the problem.
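To make the cost claim concrete, here is a rough worked example. It assumes compute is billed per instance-hour at a flat rate and borrows the schedule used later in this post for Windows Azure (20 instances from 8 am to 10 am, two instances at all other times), comparing it with statically provisioning the 20-instance peak around the clock.

```latex
\begin{aligned}
H_{\text{static}}  &= 20 \times 24 = 480 \ \text{instance-hours/day} \\
H_{\text{elastic}} &= 20 \times 2 + 2 \times 22 = 84 \ \text{instance-hours/day} \\
\text{saving}      &= 1 - \frac{H_{\text{elastic}}}{H_{\text{static}}} = 1 - \frac{84}{480} = 0.825
\end{aligned}
```

Under those assumptions, elastic scaling cuts compute spend for this workload by about 82.5%; real savings depend on pricing, minimum instance counts and how spiky the load actually is.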

This property is likely to be the most valued criterion when evaluating a cloud platform, so I have compared two of the major IaaS platforms:

- Amazon EC2

- Windows Azure (which is also a PaaS layered on top of its IaaS offering)

Amazon EC2

Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides resizable compute capacity in the cloud. It is designed to make web-scale computing easier for developers.

Amazon EC2 provides out-of-the-box support for elasticity and auto scaling through several components.

Amazon CloudWatch

Amazon CloudWatch is a web service that provides monitoring for AWS cloud resources, starting with Amazon EC2. It provides visibility into resource utilization, operational performance, and overall demand patterns - including metrics such as CPU utilization, disk reads and writes, and network traffic.

Auto Scaling

Auto scaling allows you to automatically scale your Amazon EC2 capacity up or down according to conditions you define. With auto scaling, you can ensure that the number of Amazon EC2 instances you're using scales up seamlessly during demand spikes to maintain performance, and scales down automatically during demand lulls to minimize costs. Auto scaling is particularly well suited for applications that experience hourly, daily, or weekly variability in usage. Auto scaling is enabled by Amazon CloudWatch and available at no additional charge beyond Amazon CloudWatch fees. Auto scaling uses the following concepts:

- An AutoScalingGroup is a representation of an application running on multiple Amazon Elastic Compute Cloud (EC2) instances.

- A Launch Configuration captures the parameters necessary to create new EC2 instances. Only one launch configuration can be attached to an AutoScalingGroup at a time.

- In Auto Scaling, the trigger mechanism uses defined metrics and thresholds to initiate scaling of AutoScalingGroups.
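The three Auto Scaling concepts above can be modeled in a few lines. This is a hypothetical Python sketch, not the AWS API: the class names mirror the terms in the text, while the field names (`min_size`, `max_size`, `increment` and so on), the CPU thresholds and the averaging of samples are illustrative assumptions standing in for what CloudWatch and the Auto Scaling service do on Amazon's side.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class LaunchConfiguration:
    """Parameters needed to start a new instance (hypothetical subset)."""
    image_id: str
    instance_type: str


@dataclass(frozen=True)
class Trigger:
    """A metric plus lower/upper thresholds that initiate scaling."""
    metric: str
    lower_threshold: float
    upper_threshold: float
    increment: int = 1


@dataclass
class AutoScalingGroup:
    """An application running on several instances, with one launch configuration."""
    name: str
    launch_config: LaunchConfiguration  # only one attached at a time
    min_size: int
    max_size: int
    desired: int
    trigger: Trigger

    def evaluate(self, samples: Sequence[float]) -> int:
        """Compare the average of recent (non-empty) metric samples with the
        trigger thresholds, adjust the desired capacity within
        [min_size, max_size], and return the new capacity."""
        avg = mean(samples)
        if avg > self.trigger.upper_threshold:
            self.desired = min(self.max_size, self.desired + self.trigger.increment)
        elif avg < self.trigger.lower_threshold:
            self.desired = max(self.min_size, self.desired - self.trigger.increment)
        return self.desired


if __name__ == "__main__":
    group = AutoScalingGroup(
        name="web",
        launch_config=LaunchConfiguration("ami-hypothetical", "m1.small"),
        min_size=2, max_size=10, desired=2,
        trigger=Trigger("CPUUtilization", lower_threshold=30.0, upper_threshold=70.0),
    )
    print(group.evaluate([85.0, 90.0, 75.0]))  # average 83.3 > 70, scale out to 3
    print(group.evaluate([10.0, 12.0, 8.0]))   # average 10 < 30, scale in to 2
```

Keeping the launch configuration as a single immutable field reflects the rule that only one can be attached to a group at a time.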

The following diagram, courtesy of the Amazon EC2 site, gives an overview of auto scaling in Amazon EC2.

Windows Azure

The Windows Azure platform consists of the Windows Azure cloud-based operating system, which provides the core compute and storage capabilities required by cloud-based applications as well as some constituent services - specifically the service bus and access control - that provide other key connectivity and security-related features.

Windows Azure also provides robust dynamic scaling capabilities, but there are no named products like EC2's; at this time a custom-coding approach is used instead. Third-party providers are, however, in the process of developing dynamic scaling applications.

In Windows Azure, auto scaling is achieved by changing the instance count in the service configuration. Increasing the instance count will cause Windows Azure to start new instances; decreasing the instance count will in turn cause it to shut instances down.
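A minimal sketch of what changing the instance count means in practice, assuming the standard service configuration (.cscfg) layout in which each `<Role>` element carries an `<Instances count="..."/>` child. The function name `set_instance_count` and the sample service and role names are hypothetical, and editing the XML alone changes nothing until the new configuration is submitted to Windows Azure.

```python
import xml.etree.ElementTree as ET

# Default XML namespace of a Windows Azure service configuration (.cscfg) file.
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return the configuration with <Instances count="..."> changed for one role."""
    if count < 1:
        raise ValueError("a role needs at least one instance")
    ET.register_namespace("", CSCFG_NS)  # serialize without an 'ns0:' prefix
    root = ET.fromstring(cscfg_xml)
    ns = {"sc": CSCFG_NS}
    for role in root.findall("sc:Role", ns):
        if role.get("name") == role_name:
            instances = role.find("sc:Instances", ns)
            if instances is None:
                raise ValueError(f"role {role_name!r} has no <Instances> element")
            instances.set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"role {role_name!r} not found")


SAMPLE = f"""<ServiceConfiguration serviceName="MyService" xmlns="{CSCFG_NS}">
  <Role name="WebRole1">
    <Instances count="2" />
  </Role>
</ServiceConfiguration>"""

if __name__ == "__main__":
    # Scale the hypothetical WebRole1 from 2 to 20 instances.
    print(set_instance_count(SAMPLE, "WebRole1", 20))
```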

Decisions around when to scale and by how much will be driven by some set of rules. Whether these are implicit and codified in some system or simply stored in the head of a systems engineer, they are rules nonetheless.

Rules for scaling a Windows Azure application will typically take one of two forms. The first is a rule that adds or removes capacity at a given time, for example: run 20 instances between 8 am and 10 am each day and two instances at all other times. The second form comprises rules that respond to metrics, and this form warrants more discussion.
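The two forms of rule can be sketched as plain functions that return a target instance count. The time-based rule encodes the example above (reading "8 am to 10 am" as 08:00 inclusive to 10:00 exclusive); the metric-based rule's CPU thresholds, one-instance step and bounds are hypothetical values chosen for illustration, and neither function is part of any Windows Azure API.

```python
from datetime import datetime
from typing import Sequence


def scheduled_instance_count(now: datetime) -> int:
    """Time-based rule: 20 instances from 08:00 to 10:00, two otherwise."""
    return 20 if 8 <= now.hour < 10 else 2


def metric_based_instance_count(current: int, cpu_samples: Sequence[float],
                                high: float = 75.0, low: float = 25.0,
                                min_count: int = 2, max_count: int = 20) -> int:
    """Metric-based rule (hypothetical thresholds): add one instance when average
    CPU is above `high`, remove one when it is below `low`, within the bounds."""
    if not cpu_samples:
        return current  # no data, so no change
    avg = sum(cpu_samples) / len(cpu_samples)
    if avg > high:
        return min(max_count, current + 1)
    if avg < low:
        return max(min_count, current - 1)
    return current


if __name__ == "__main__":
    day = datetime(2010, 11, 24)
    hours = sum(scheduled_instance_count(day.replace(hour=h)) for h in range(24))
    print(hours)  # 20*2 + 2*22 = 84 instance-hours per day
    print(metric_based_instance_count(4, [80.0, 90.0]))  # 5
```

Either function's result would then be written into the service configuration as the new instance count.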

Scaling actions in Windows Azure are exposed through the management API. Through a call to this API it is possible to change the instance count in the service configuration and in doing so to change the number of running instances.
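As a hedged sketch of that call: the Service Management REST API offers a Change Deployment Configuration operation that takes the new .cscfg as a base64-encoded `<Configuration>` element. The URL shape, the `x-ms-version` value and the body below are assumptions based on the 2009/2010-era API and should be checked against the current reference before use. The code only builds the request and does not send it, since a real call must also be authenticated with the X.509 management certificate uploaded to the subscription; the subscription ID and service name are placeholders.

```python
import base64
from dataclasses import dataclass
from typing import Dict

MANAGEMENT_HOST = "https://management.core.windows.net"  # assumed endpoint


@dataclass
class ManagementRequest:
    """An HTTP request description; sending it is left to the caller."""
    method: str
    url: str
    headers: Dict[str, str]
    body: str


def build_change_configuration_request(subscription_id: str, service_name: str,
                                       slot: str, cscfg_xml: str,
                                       api_version: str = "2009-10-01") -> ManagementRequest:
    """Describe a POST that replaces a deployment's service configuration,
    which is how a new instance count would reach Windows Azure."""
    if slot not in ("production", "staging"):
        raise ValueError("slot must be 'production' or 'staging'")
    url = (f"{MANAGEMENT_HOST}/{subscription_id}/services/hostedservices/"
           f"{service_name}/deploymentslots/{slot}/?comp=config")
    # The whole .cscfg document travels base64-encoded inside <Configuration>.
    encoded = base64.b64encode(cscfg_xml.encode("utf-8")).decode("ascii")
    body = ('<?xml version="1.0" encoding="utf-8"?>'
            '<ChangeConfiguration xmlns="http://schemas.microsoft.com/windowsazure">'
            f"<Configuration>{encoded}</Configuration>"
            "</ChangeConfiguration>")
    headers = {"x-ms-version": api_version, "Content-Type": "application/xml"}
    return ManagementRequest("POST", url, headers, body)


if __name__ == "__main__":
    req = build_change_configuration_request(
        "my-subscription-id", "myservice", "production", "<ServiceConfiguration/>")
    print(req.method, req.url)
```

Separating request construction from transport keeps the scaling logic testable without a live subscription.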

Summary

Auto scaling is the number one feature in a cloud platform that directly addresses the need to reduce cost for large enterprises. Both EC2 and Azure support auto scaling. While EC2 provides a backbone and framework for auto scaling, Azure provides an API that can be extended. Already we are seeing several third-party providers delivering tools for Azure auto scaling.

Srinivasan is a solution architect for Hewlett-Packard.

Bruce Guptil posted Over the River and to The Cloud: Giving Thanks as a Saugatuck Technology Research Alert on 11/24/2010 (site registration required):

What is Happening? The U.S. Thanksgiving holiday this week provides a unique opportunity to examine what it is about Cloud IT for which IT users, buyers, and providers should be thankful. So our crack analyst team here at Saugatuck developed the following list, sorted by IT buyers and organizations, IT vendors (including Cloud providers), consumers/end users, and the IT industry as a whole.

On the IT buyer and organizational level, we can be thankful for:

- Improved levels of service at lower costs. With Cloud IT in all its forms, IT organizations have, for the first time in memory, true opportunities to acquire and use leading-edge, proven IT without the risk and cost inherent in traditional capital investment. This translates to an opportunity – not a guarantee – for significantly improved levels of IT service over time without the cost levels of IT management traditionally required. IT executives thus should be thankful for gaining the ability to do what they truly desire – improve the ability of IT to serve the company – while doing what they are most often evaluated on – keeping IT costs low and in control.

- Faster, Better, Cheaper – Really. What’s coming from the Cloud, especially in regard to software-as-a-service solutions, is usually the latest, the most advanced, the most powerful, and on a per-user basis, the most affordable IT ever seen. Advances in user interfaces, data integration, and functionality are made available as part of ongoing subscriptions rather than as biennial upgrades that cause major business and budget disruption. Users can be thankful that at least parts of IT may finally be becoming what IT has always been sold as.

- Affordable Business Improvement and Competitive Strength. Businesses and other user organizations of all sizes and types tell Saugatuck that one of their main interests in Cloud IT is its use as an affordable means to bridge gaps in business management, operations, and systems: gaps that would previously have required significant new investment in traditional technologies. Cloud IT is not filling all gaps in all places, but it is providing the opportunity for “self service IT” and helping millions of businesses worldwide to quickly and affordably add operational capabilities that in turn help them remain competitive, and even gain competitive advantage, while minimizing risk to their own business and budgets. The economics and ease-of-use of the Cloud can often make it so inexpensive that ideas previously deemed unaffordable or unattainable are now worth trying. That means a lot in uncertain, even recessionary, economic times and markets.

- Markets, More Affordably. It’s already a marketing cliché, but the Cloud truly enables a global ability to compete for almost any size or type of business or organization. Companies of all sizes (and across a wide spectrum of industries) are creating new cloud-enabled business services that supplement, and in some cases replace, traditional brick-and-mortar services. Marketing, sales, and support are cost-effectively available at the touch of a screen (and the transfer of monthly payments for IT and business services). This goes for IT providers, as well. Markets that may have been unreachable, or that did not even exist a few years ago, are now open for business. And more are coming, especially as the prime movers of Cloud IT – economic improvement, and affordable and reliable broadband networking (wireless and otherwise) – expand to include new countries and regions.

IT providers can also be thankful for the following:

- More Opportunity. Like real-world clouds, Cloud IT markets have no limits; they are constantly expanding, changing, and shifting. Therefore they present an almost unending array of opportunity. Even the most traditional of IT vendors and service providers are finding opportunity to grow and profit from Cloud IT, whether as critical components of Cloud delivery stacks, enabling technologies for Cloud-based applications and services, or using Cloud as a new channel. But also like real-world clouds, Cloud IT markets can shift, shrink, or even disperse unexpectedly. With great opportunity must also come a significantly improved ability to understand and manage that opportunity.

- New, More, and Bigger Ecosystems. A big part of “More Opportunity” for IT providers is the Cloud-based ability to find and work with more types of partners in more ways. For example: The collaborative capabilities of Cloud IT can enable dramatically improved channel communication and interaction, improving IT vendor market influence while reducing the costs of managing channels. The Cloud, properly utilized and managed, can thus enable new or better offerings and relationships that boost top-line revenues, market strength, and bottom-line profitability.

On an IT industry and market level, we can be thankful for:

- A Greener and More Sustainable Industry. Widespread use of Cloud IT promises better resource utilization, more efficient energy use, and reduced facilities footprints for most user IT organizations, and even for many IT providers. Yes, much if not most Cloud use is basically shifting IT to other locations, and Cloud overall is actually helping IT to grow farther and bigger than ever. But frankly, such growth was going to happen regardless. Sourcing to the Cloud enables tremendous gains in efficiency and cost, removes or reduces per-site pollution and resource use, and, with sufficient regulation and management, enables vastly more efficient utilization of energy and other resources by Cloud providers.

- New Excitement. Cloud IT has brought new excitement to IT. It’s a level of IT buzz not seen since the web/dot-com boom, and it is driving similar or even greater levels of capital investment in technology and services firms by shareholders and VCs. The influx of investment is increasing the attractiveness of technology-centered education and careers, helping to ameliorate recent challenges in finding skilled IT staff and managers. And it is helping to loosen the purse strings of large and small businesses, which have been hesitant to invest in anything in recent years, but now see Cloud IT as a means of doing more, and winning more, at less cost.

- Innovation and Value Creation – Within and Outside of IT. Just when you thought it was safe to rest on your laurels, something as transformative as the Cloud rears up like Bruce the shark in “Jaws” to shock you into awareness and creativity. New businesses are popping up overnight like mushrooms, and some of them will one day be the next IBM or Microsoft or Oracle or HP – or ZipCars, Netflix, or Amazon.

- Opportunity. The Cloud opens the door to new expertise and offers employment opportunity in exciting new areas to those who choose to pursue it. The Cloud is a ready-made jobs creation program at a time when talented people are struggling to find meaningful employment.

And on the consumer/end user level, let’s give thanks for:

- Global Interaction. The Cloud brings together interactions from geographically dispersed people to share and create value collaboratively. Not only do long-lost friends find each other and themselves on Facebook, but John in London, Beatrice in São Paulo, Brian in Christchurch, and Kim in Hong Kong can share their experiences and get answers to questions they have been unable to answer. Even when co-workers are on the other side of the continent, we can still work together on projects and do meaningful work despite the distances and time zones between us.

- Entrepreneurial Empowerment. The Cloud empowers individuals and businesses, large and small, to meet their computing needs with little or no investment in expensive technology – and without the need to build a full-time IT staff. Who has money these days? Let’s rejoice that the Cloud makes it possible to start a new business on a shoestring, add new capability to an existing business system, or connect with like-minded people to share information, without emptying our wallets.

- Free-range Computing – and Lifestyles. The Cloud also empowers travelers, whether on business or on holiday, to find and use the information they need and do the things they need to do with their mobile devices. When the flight is delayed and you need to cancel or book a hotel room, when you suddenly find yourself with a free evening and want to find something interesting to do, or when you just need to while away a few moments between things or before a meeting, the Cloud awaits your command. …

Bruce continues with the usual “Why Is It Happening?” and “Market Impact” sections.

David Lemphers recommended Choose your Cloud on Capability, Not Cost! in this 11/24/2010 post: