Windows Azure and Cloud Computing Posts for 11/11/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as free HTTP downloads from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Cumulux reviewed SQL Azure Data Sync on 11/10/2010:

Microsoft SQL Azure Database is a cloud-based relational database. Built with high availability and fault tolerance, it delivers a highly available, scalable, multi-tenant database service. Because it integrates with existing tool sets and provides symmetry between on-premises and cloud databases, costs can be tightly controlled.

Microsoft SQL Azure Data Sync is a data synchronization service built on Microsoft Sync Framework technologies. Synchronization is bidirectional, and the infrastructure ensures data integrity. The SQL Azure Data Sync service can be used for geo-replication of SQL Azure databases [How to do Geo-replication of SQL Azure databases]. By synchronizing data and ensuring data integrity across all geographically distributed datacenters, it can dramatically improve how quickly servers respond.

At the recent Microsoft Professional Developers Conference 2010 (PDC 2010), Microsoft announced that the SQL Azure Data Sync service has been enhanced to synchronize data between on-premises and cloud databases. This preserves existing infrastructure investments and saves costs. The synchronized data from the cloud can be extended to remote offices and retail stores. It will also give rise to innovative solutions in which the synchronized cloud data is used by other applications, both on-premises and in the cloud.

The main components of this feature include the following:

- Schedule Sync: Users can set the interval at which synchronization takes place.

- No-Code Sync Configuration: Users can define the data to be synchronized with easy-to-use tools available with the service.

- Data Subsetting: Users can select the tables to be synchronized between SQL Azure databases.

- Logging and Monitoring: All transactions are logged, and synchronization can be tracked effectively with the Logging and Monitoring services.

For a more detailed technical overview, see the PDC 2010 session on SQL Azure Data Sync.

For more information, see the SQL Azure Data Sync Blog.

Users with a Windows Live ID can access SQL Azure Labs.

Srinivasan Sundara Rajan ranks “SQL Azure, Amazon RDS and others...” in his Top Five Cloud Databases post of 11/10/2010:

Cloud storage is an important aspect of a successful cloud migration. Hence any migration of enterprise applications to the cloud, whether private or public, requires stronger database platform support.

Database needs also differ according to the cloud service model.

SaaS

In this service model, the cloud consumer need not worry about the underlying database because its implementation is hidden; the cloud provider, however, must choose an appropriate cloud database to meet the agreed QoS of the software service. As a consumer of Google Gmail, for example, you probably aren't concerned about its underlying database platform.

IaaS

Because IaaS providers need to offer a catalog of database servers, these are mostly existing popular database servers served over the cloud platform. For example, Amazon EC2 provides a catalog of major databases, including:

- IBM DB2

- IBM Informix Dynamic Server

- Oracle Database 11g

- MySQL Enterprise

PaaS

In this model, what matters most to you as a consumer developing cloud applications is proper database support for cloud enablement. For example, the better the features of the PaaS platform's cloud database, the more likely the application is to be highly scalable in the cloud. Some of the databases in the PaaS category whose features have been tailored specifically for the cloud are listed below.

1. SQL Azure

Microsoft SQL Azure is a multitenant cloud-based relational database built on the SQL Server engine. SQL Azure offers the same Tabular Data Stream (TDS) interface for communicating with the database that is used to access any on-premises SQL Server database. As a result, developers can use the same tools and libraries with SQL Azure that they use to build client applications for SQL Server.

Pros:

- A true, scalable cloud database, with much of the administrative work handled by the platform

Cons:

- Although touted as just another version of SQL Server, it differs in functionality

- Space limitations have yet to be addressed in future versions

2. Amazon RDS

Amazon Relational Database Service (Amazon RDS) is a web service that makes it easier to set up, operate, and scale a relational database in the cloud. It gives you access to the full capabilities of a MySQL 5.1 database running on your own Amazon RDS database instance.

Pros:

- Although RDS is itself a PaaS platform, it can be bundled as part of the EC2 IaaS offering to give customers more flexibility.

Cons:

- Apart from features such as ‘Read Replica’, the scalability options have yet to mature

- Tools like AppFabric (of the Azure Platform) for migrating existing data and applications need to evolve

3. Google BigQuery

BigQuery is a web service for querying large datasets. It supports very fast execution of select-and-aggregate queries on tables with billions of records. It is a scalable, PaaS-based database service that supports SQL-like syntax.

Pros:

- Whereas most cloud databases have size limitations, you can execute queries over billions of rows of data.

- It is compatible with Google Storage as its persistence mechanism

Cons:

- Although it supports SQL-like query syntax, it is still unstructured and non-relational, so enterprise applications may not adopt this design for storing their data; BigQuery does not currently support joins.

- Requires a new learning curve, unlike SQL Azure or Amazon RDS, which use existing database interfaces

4. Amazon SimpleDB

Amazon SimpleDB is a highly available, scalable, and flexible non-relational data store that offloads the work of database administration. Developers simply store and query data items via web services requests, and Amazon SimpleDB does the rest. It avoids complex database design and administration and keeps data access simple.

Pros:

- A good choice for low-end needs, and in line with the rest of Amazon's AWS strategy

- Cost effective and flexible

Cons:

- All Amazon SimpleDB information is stored in domains, which are similar to tables that contain similar data. You can execute queries against a domain, but you cannot execute joins between domains. SimpleDB is therefore not well suited to enterprise-class database designs, which involve heavy normalization of tables (domains).

- Locking and performance-tuning options are limited
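Because SimpleDB cannot join across domains, any join has to happen in application code after each domain has been queried separately. The following is a minimal sketch of that client-side join, assuming the items of two hypothetical domains (customers and orders) have already been fetched into memory; the Item class and the CustomerId and CompanyName attribute names are illustrative assumptions, and the code uses LINQ to Objects rather than any SimpleDB API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a SimpleDB item: an item name plus its attributes.
// SimpleDB stores attribute values as strings, so the dictionary is string-to-string.
public class Item
{
    public string Name { get; }
    public Dictionary<string, string> Attributes { get; }

    public Item(string name, Dictionary<string, string> attributes)
    {
        Name = name;
        Attributes = attributes;
    }
}

public static class ClientSideJoin
{
    // Inner-joins order items to customer items on Orders.CustomerId == customer item name.
    // SimpleDB cannot do this across domains, so the application does it in memory.
    public static List<string> OrdersWithCompanyNames(
        IEnumerable<Item> customers, IEnumerable<Item> orders)
    {
        return (from o in orders
                join c in customers on o.Attributes["CustomerId"] equals c.Name
                select o.Name + ":" + c.Attributes["CompanyName"])
               .ToList();
    }
}
```

Every join moves work and data transfer to the client, which is why heavily normalized designs fit SimpleDB poorly.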

5. Other Big Enterprise Databases in IaaS or ‘Cloud in a Box’ Mode

Oracle 11g: Although Oracle has yet to release anything equivalent to SQL Azure, Oracle 11g is available as part of cloud platforms and cloud appliances, which makes it a candidate for cloud databases.

- Oracle Exadata Cloud Appliance: The Oracle Exadata Database Machine is the only database machine that provides extreme performance for both data warehousing and online transaction processing (OLTP) applications, making it the ideal platform for consolidating onto grids or private clouds.

- Amazon EC2: Amazon EC2 enables partners and customers to build and customize Amazon Machine Images (AMIs) with software based on their needs. In that context, Oracle 11g is available as one of the AMI options.

DB2: Although IBM has yet to release anything equivalent to SQL Azure, DB2 is available as part of cloud platforms and cloud appliances, which makes it a candidate for cloud databases.

- WebSphere CloudBurst Appliance: WebSphere CloudBurst V2.0 includes support for a virtual image that contains DB2 9.7 Data Server for WebSphere CloudBurst Appliance. A 90-day trial of the DB2 V9.7 Enterprise Data Server for WebSphere CloudBurst virtual image is pre-loaded in the WebSphere CloudBurst V2.0 catalog.

- Amazon EC2: Amazon EC2 enables partners and customers to build and customize Amazon Machine Images (AMIs) with software based on their needs. In that context, IBM DB2 is available as one of the AMI options.

Pros:

- These proven, enterprise-class databases need no introduction

Cons:

- Appliances meant for private clouds carry a price tag, and enterprise databases on the EC2 IaaS platform still require the administration and other maintenance typical of these enterprise databases.

<Return to section navigation list>

Marketplace DataMarket and OData

Ahmed Mustafa posted Introduction to Multi-Valued Properties to the WCF Data Services Team Blog on 11/11/2010:

Although you may see both terms, “Bags” and “Multi-Valued Property”, they refer to the same thing. This naming change was not included in the latest WCF Data Services 2010 October CTP release, but based on community feedback, we’ve decided to use Multi-Valued Property for all future releases.

What is a Bag Type?

Today, entities in WCF Data Services (Customer, Order, etc.) may have properties whose values are primitive types or complex types, or that represent a reference to another entry or collection of entries. However, there is no support for properties that *contain* a collection (or Bag) of values; the Bag type is being introduced to support such scenarios. The protocol changes needed to support the Bag type can be found here.

How can I specify a Bag property in CSDL?

As you know, WCF Data Services may expose a metadata document ($metadata endpoint) which describes the data model exposed by the service. Below is an example of the extension of the metadata document to define Bag properties. In this case, the Customer Entry has a Bag of email addresses and apartment addresses.

How are Bags represented on the client?

From a WCF Data Services client perspective, a Bag property is an ICollection<T>, where in the above example T is SampleModel.Address or SampleModel.String. When you use “Add Service Reference” against a WCF Data Service in Visual Studio, WCF Data Services generates an ObservableCollection<T> for each Bag property in the model. If you use DataSvcUtil.exe without specifying the “/DataServiceCollection” flag, System.Collections.ObjectModel.Collection<T> is used to represent the Bag properties of the entity type.

A representation of the above example in a .NET type would be:

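using System.Collections.Generic;

public class Customer
{
    public int ID { get; set; }
    public string Name { get; set; }

    // Bag (multi-valued) properties; Address is the SampleModel.Address complex type
    public ICollection<Address> Addresses { get; set; }
    public ICollection<string> EmailAddresses { get; set; }
}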

How are Bags used on the client?

In terms of client CRUD operations, a Bag is like any other complex type in the system, with the notable exception that Bag properties cannot be null; they can only be empty.

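using System;
using System.Linq;

// NorthwindEntities is the client context generated for the Northwind service
NorthwindEntities svc = new NorthwindEntities(new Uri("http://foobar/Northwind.svc/"));

// Project only the Addresses bag property of each customer
var customerAddresses = svc.Customers.Select(c => new { Addresses = c.Addresses });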
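The rule that a Bag property can be empty but never null can be mirrored in a plain .NET class. This is a minimal sketch, not the code that DataSvcUtil.exe or Add Service Reference generates: the hypothetical Address members and the null guard in each setter are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;

// Hypothetical complex type standing in for SampleModel.Address.
public class Address
{
    public string Street { get; set; }
    public string City { get; set; }
}

public class Customer
{
    // Bag (multi-valued) properties start out empty rather than null.
    private ICollection<Address> addresses = new Collection<Address>();
    private ICollection<string> emailAddresses = new Collection<string>();

    public int ID { get; set; }
    public string Name { get; set; }

    public ICollection<Address> Addresses
    {
        get { return addresses; }
        // Reject null so the "empty, never null" rule holds on the client.
        set { addresses = value ?? throw new ArgumentNullException(nameof(value)); }
    }

    public ICollection<string> EmailAddresses
    {
        get { return emailAddresses; }
        set { emailAddresses = value ?? throw new ArgumentNullException(nameof(value)); }
    }
}
```

Initializing the collections in the class means client code can call Add on a new entity without a null check.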

<Return to section navigation list>

AppFabric: Access Control and Service Bus

The Windows Azure AppFabric Team wrote an Introduction to the Windows Azure AppFabric CTP October Release and posted it to MSDN in early November 2010:


[This is prerelease documentation and is subject to change in future releases. Blank topics are included as placeholders.]

Windows Azure AppFabric is a middleware platform and a suite of services that makes it easy to build and manage reliable, scalable, fast and secure composite applications that run on Windows Azure. Windows Azure AppFabric consists of the following services:

Windows Azure AppFabric Service Bus

The Windows Azure AppFabric Service Bus helps provide secure connectivity between loosely-coupled services and applications, which enables them to navigate firewalls or network boundaries and to use a variety of communication patterns. Services that register on the AppFabric Service Bus can easily be discovered and accessed, across any network topology. In addition to connectivity, the AppFabric Service Bus also provides a message buffer service that provides durable buffer functionality for asynchronous messaging. Messages can be pushed to the buffer using HTTP, and message recipients can retrieve and consume those messages at a later point. The durable message buffer service also supports the peek-lock and delete patterns so that messages can be guaranteed to be delivered at least one time, even in the face of client failure. For more information about this service, see Durable Message Buffers.
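The peek-lock pattern can be illustrated with an in-memory stand-in. This is a hypothetical sketch, not the AppFabric Service Bus client API: the PeekLockBuffer class and its Send, PeekLock and Delete methods are assumptions. PeekLock hands a message to a receiver and hides it until the lock expires, Delete removes it only while the receiver still holds the lock, and an expired lock makes the message visible again, which is why delivery is at least once rather than exactly once.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory model of a durable buffer with peek-lock semantics.
public class PeekLockBuffer
{
    private class Entry
    {
        public Guid LockToken;
        public string Body;
        public DateTime LockedUntil = DateTime.MinValue; // MinValue means unlocked
    }

    private readonly List<Entry> entries = new List<Entry>();
    private readonly TimeSpan lockDuration;

    public PeekLockBuffer(TimeSpan lockDuration) { this.lockDuration = lockDuration; }

    public int Count => entries.Count;

    public void Send(string body) => entries.Add(new Entry { Body = body });

    // Locks and returns the first message whose lock has expired (or was never taken),
    // or null if every message is locked or the buffer is empty.
    public (Guid token, string body)? PeekLock(DateTime now)
    {
        Entry e = entries.FirstOrDefault(x => x.LockedUntil <= now);
        if (e == null) return null;
        e.LockToken = Guid.NewGuid();
        e.LockedUntil = now + lockDuration;
        return (e.LockToken, e.Body);
    }

    // Deletes the message only if the caller's lock token is current and unexpired.
    public bool Delete(Guid token, DateTime now)
    {
        Entry e = entries.FirstOrDefault(x => x.LockToken == token && x.LockedUntil > now);
        if (e == null) return false;
        entries.Remove(e);
        return true;
    }
}
```

The clock is passed in explicitly so that lock expiry can be simulated deterministically; a receiver that crashes before calling Delete simply lets its lock lapse, and the next receiver gets the same message.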

The Windows Azure AppFabric CTP October release introduces several new capabilities in the AppFabric Service Bus on both the service and the client side. Microsoft is very interested in customer feedback on those new capabilities. If you have suggestions or comments on the API surface or the new capabilities introduced here, visit the MSDN forum.

In the Windows Azure AppFabric CTP October release, the AppFabric Service Bus client assembly and the client-side classes are significantly different from the client API for the September release of Windows Azure AppFabric. The CTP releases are meant to provide an early view of the future direction of the AppFabric Service Bus and the other services that reside under the Windows Azure AppFabric umbrella. In making these releases available, the goal is to allow for experimentation, proof-of-concept building, and to solicit feedback from the developer community.

For more information about specific differences between the September release of Windows Azure AppFabric and the Windows Azure AppFabric CTP October release, see the Release Notes for the Windows Azure AppFabric CTP October Release.

Windows Azure AppFabric Caching

You can provision AppFabric caches in Windows Azure using the Windows Azure AppFabric caching feature. You can use an AppFabric cache for ASP.NET session state or output caching, or you can directly call APIs to programmatically add and retrieve .NET objects from the cache. Caching can improve application performance, enable applications to scale to handle increased load, and potentially achieve cost savings. Until the Windows Azure AppFabric CTP October Release, caching was only available in the on-premises Windows Server AppFabric solution. By using Windows Azure AppFabric caching, you get the benefits of a distributed in-memory cache without having to manage the cache cluster or the individual cache hosts in that cluster. Windows Azure AppFabric manages the cache cluster for you and lets you focus on your caching strategy and application design. For more information about this service, see Windows Azure AppFabric CTP Caching.
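Calling cache APIs directly usually follows a cache-aside pattern: look in the cache first, and on a miss load the object from its source and store it for later requests. The sketch below assumes a hypothetical ICache interface and a hypothetical ProductRepository; it is not the AppFabric caching API, and the dictionary-backed InMemoryCache exists only so the example runs without a cache cluster.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache abstraction; a deployment would back it with a distributed cache.
public interface ICache
{
    bool TryGet(string key, out object value);
    void Put(string key, object value);
}

// In-memory stand-in so the sketch runs without a cache cluster.
public class InMemoryCache : ICache
{
    private readonly Dictionary<string, object> items = new Dictionary<string, object>();
    public bool TryGet(string key, out object value) => items.TryGetValue(key, out value);
    public void Put(string key, object value) => items[key] = value;
}

public class ProductRepository
{
    private readonly ICache cache;
    private readonly Func<int, string> loadFromDatabase; // hypothetical slow data source

    public int DatabaseCalls { get; private set; }

    public ProductRepository(ICache cache, Func<int, string> loadFromDatabase)
    {
        this.cache = cache;
        this.loadFromDatabase = loadFromDatabase;
    }

    // Cache-aside: read from the cache; on a miss, load from the source and populate the cache.
    public string GetProductName(int id)
    {
        string key = "product:" + id;
        if (cache.TryGet(key, out object cached))
            return (string)cached;

        DatabaseCalls++;
        string name = loadFromDatabase(id);
        cache.Put(key, name);
        return name;
    }
}
```

Only the first request for a given key reaches the data source; repeated requests are served from the cache, which is where the performance and scale benefits come from.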

Windows Azure AppFabric Access Control

Windows Azure AppFabric Access Control helps you build federated authorization into your applications and services, without the difficult programming that is ordinarily required to help secure applications that extend beyond organizational boundaries. By using its support for a simple declarative model of rules and claims, AppFabric Access Control rules can easily and flexibly be configured to cover a variety of security needs and different identity-management infrastructures. For more information about this service, see the AppFabric Access Control Labs release.
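The declarative rules-and-claims model maps incoming claims from an identity provider to outgoing claims that an application consumes. The sketch below models that idea with hypothetical Claim, Rule and RuleEngine types and a hypothetical "AccessControl" output issuer; these are assumptions for illustration, not the Access Control management API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical claim: who issued it, what kind of claim it is, and its value.
public class Claim
{
    public string Issuer, Type, Value;
    public Claim(string issuer, string type, string value) { Issuer = issuer; Type = type; Value = value; }
}

// Hypothetical declarative rule: if an input claim matches, emit an output claim.
public class Rule
{
    public string InputIssuer, InputType, InputValue; // InputValue == null matches any value
    public string OutputType, OutputValue;            // OutputValue == null passes the input value through

    public bool Matches(Claim c) =>
        c.Issuer == InputIssuer && c.Type == InputType &&
        (InputValue == null || c.Value == InputValue);

    public Claim Apply(Claim c) => new Claim("AccessControl", OutputType, OutputValue ?? c.Value);
}

public static class RuleEngine
{
    // Evaluates every rule against every input claim and collects the output claims.
    public static List<Claim> Transform(IEnumerable<Claim> input, IEnumerable<Rule> rules) =>
        input.SelectMany(c => rules.Where(r => r.Matches(c)).Select(r => r.Apply(c))).ToList();
}
```

Because the rules are data rather than code, an administrator can change who maps to which role without redeploying the application.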

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team posted Real World Windows Azure: Interview with Jeff Yoshimura, Head of Product Marketing, Zuora on 11/11/2010:

As part of the Real World Windows Azure series, we talked to Jeff Yoshimura, Head of Product Marketing at Zuora, about using the Windows Azure platform to create the Zuora Toolkit for Windows Azure. Here's what he had to say.

MSDN: Can you please tell us about Zuora and the services you offer?

Yoshimura: Zuora is the global leader for on-demand subscription billing and commerce solutions. Our platform gives companies the ability to launch, run, and operate their recurring revenue businesses without the need for custom-built infrastructure or costly billing systems. In June 2010, we delivered the Zuora Toolkit for Windows Azure, which provides documentation, APIs, and code samples to enable developers to quickly automate commerce from within their Windows Azure applications.

MSDN: What are the biggest challenges that Zuora's recent offerings solve for Windows Azure customers?

Yoshimura: More and more companies are finding that their trading partners and customers want greater flexibility in the way they purchase products and make payments: usage-based and pay-as-you-go. As a result, businesses want to quickly operationalize their cloud strategy or more effectively monetize their existing cloud offerings.

MSDN: Can you describe how the Zuora Toolkit for Windows Azure addresses these challenges?

Yoshimura: The Zuora Toolkit for Windows Azure helps ISVs and developers rapidly use all of the subscription capabilities described above from within their existing Windows Azure services or websites. Any company that builds a solution on Windows Azure can use the Zuora Toolkit to plug in functionality for packaging products, configuring pricing plans, and automating credit card processing with PCI Level 1 security, all within a matter of minutes.

The Zuora Toolkit for Windows Azure enables developers to rapidly add PCI Level 1 security-compliant commerce capabilities into their existing Windows Azure applications.

MSDN: What makes the Zuora platform unique?

Yoshimura: The Zuora platform is designed from the ground up, specifically for subscription businesses and it's the first online billing solution for Windows Azure. So, as more ISVs and developers build their applications using Windows Azure and want to launch them into the market for commercial success, they'll need a billing and payments platform that is tightly integrated into the Windows Azure fabric.

MSDN: What kinds of benefits are you realizing with Windows Azure?

Yoshimura: From a development perspective, Windows Azure offers us several significant advantages. Because our team already had extensive experience working in Microsoft Visual Studio, we were able to take full advantage of the Windows Azure Tools for Visual Studio. This made configuring and porting our Z-Commerce application to the cloud much simpler, which helped us complete the project two weeks ahead of schedule.

Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008478

To read more Windows Azure customer success stories, visit:

www.windowsazure.com/evidence

<Return to section navigation list>

Visual Studio LightSwitch


No significant articles today.

<Return to section navigation list>

Windows Azure Infrastructure

David Linthicum claimed “Cloud computing needs software architects, but few truly get it -- and the major cloud providers have snapped up those who do” as he asked Software architects have the best job today -- but can they adapt? in this 11/11/2010 post to InfoWorld’s Cloud Computing blog:

After looking at 100 jobs, Money magazine rated software architect as the best job in America: "Like architects who design buildings, they create the blueprints for software engineers to follow -- and pitch in with programming too. Plus, architects are often called on to work with customers and product managers, and they serve as a link between a company's tech and business staffs."

However, in recent years the role of the software architect has been morphing quickly; more and more, we're being asked to design and build complex distributed systems that exist both outside and inside of an enterprise -- meaning clouds. The challenge has been acquiring the new skills required to build infrastructure, platform, and software clouds, which often means understanding how to design and construct multitenant and virtualized systems that can manage thousands of simultaneous users.

Although being a software architect is a great job today, it's a position that will morph quickly as cloud computing begins to creep into our enterprises and as cloud providers build or expand their software architecture teams.

Truth be told, software architects who understand cloud computing and can build instances of efficient cloud/software architecture are scarce. I talk to many who believe they can easily transition into the required skill set, but they fall down quickly when it comes to the emerging patterns of core cloud computing systems such as use-based accounting, virtualization, and multitenant access to resources. And most of the major cloud providers own the competent architects, so there aren't many left for consulting organizations or enterprises.

I suspect that software architects will better understand the mechanics of cloud computing as time goes on. However, it will be interesting to see if supply can ever keep up with demand. Perhaps software architect stays Money's top job for the next few years, just considering the skyrocketing pay.

Windows Azure Compute in the South Central US data center reported a [Yellow] Partial outage in South Central US on 11/11/2010:

Nov 11 2010 11:56AM There is a contained, partial outage in the South Central US region. Most of the compute clusters in this region are not impacted. Storage clusters are not impacted. Hosted services deployed on the affected compute clusters may be impacted.

Nov 11 2010 6:19PM Service functionality has been fully restored in the cluster that was impacted in that region.

Pingdom also reported my Windows Azure Table Test Harness app, which is deployed to the South Central US data center, was down but with a different date and time:

PingdomAlert DOWN: Azure Tables (oakleaf.cloudapp.net) is down since 11/10/2010 11:58:21PM.

The problem with the test harness might be due to the fact that its StorageClient code hasn’t been updated since about the middle of 2009. The status is “Stopped” or “Busy” after suspending and restarting.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Dilip Tinnelvelly reported “New Gartner study draws focus on virtualization of security control” in a preface to his Security Needs Revamping on Delivery Front to Evolve with Private Cloud post of 11/11/2010:

According to a recent Gartner report, the shift to cloud environments must be accompanied by a parallel evolution in security procedures. Virtualization technology is generally the primary gateway through which most businesses step into private cloud. Security programs must become more "adaptive" to the cloud model, in which processes are decoupled from hardware and provisioned dynamically. Gartner predicts that by 2015, 40 percent of the security controls used within enterprise data centers will be virtualized, up from less than 5 percent in 2010.

To combat cyber threats, systems are segmented physically, by network, and through virtualization to achieve more user control. Although the essential traits and goals of IT security (ensuring the confidentiality, integrity, authenticity, access, and audit of our information and workloads) don't change, delivery methods must.

Thomas Bittman, vice president and distinguished analyst at Gartner, says, "Policies tied to physical attributes, such as the server, Internet Protocol (IP) address, Media Access Control (MAC) address or where physical host separation is used to provide isolation, break down with private cloud computing."

Aside from virtualization of security controls, Gartner says that private cloud security must be an integral but separately configurable part of the private cloud fabric, and should be designed as a set of on-demand, elastic and programmable services. In addition, security should be configured by policies tied to logical attributes to create "adaptive trust zones" capable of separating multiple tenants.

The industry analyst firm also lists six necessary attributes of private cloud security infrastructure:

- A set of on-demand and elastic services

- Programmable infrastructure

- Policies based on logical, not physical, attributes and capable of incorporating runtime context into real-time security decisions

- Adaptive trust zones capable of high-assurance separation of differing trust levels

- Separately configurable security policy management and control

- ‘Federatable’ security policy and identity
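To make the difference between physical and logical attributes concrete, here is a minimal sketch of a policy check that never consults IP or MAC addresses. Each workload carries hypothetical Tenant and Trust labels, and the hypothetical rule allows traffic only between workloads of the same tenant and the same trust level, forming one zone per tenant and trust level. The labels and rule are illustrative assumptions, not Gartner's specification.

```csharp
using System;

public enum TrustLevel { Low, Medium, High }

// A workload as the policy sees it. IpAddress is carried along but deliberately ignored.
public class Workload
{
    public string Name;
    public string Tenant;      // logical attribute
    public TrustLevel Trust;   // logical attribute
    public string IpAddress;   // physical attribute: can change when a VM is moved
}

public static class TrustZonePolicy
{
    // Hypothetical rule: a trust zone is (tenant, trust level); traffic stays inside one zone.
    public static bool Allows(Workload source, Workload destination) =>
        source.Tenant == destination.Tenant && source.Trust == destination.Trust;
}
```

Because the decision depends only on labels, moving a workload to a new host or IP address leaves its access unchanged, which is the property that physically tied policies lose in a private cloud.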

Dilip is VP of Product Management & Technology at ChannelVission Inc., a Cloud computing firm specializing in channels for global business development using SaaS/PaaS platforms.

Bruce Kyle reported White Paper Addresses Security Considerations for Client and Cloud Applications on 11/10/2010:

The increasing importance of "client and cloud" computing raises a number of important concerns about security.

A new white paper helps you understand how Microsoft addresses potential security vulnerabilities during the development of “client and cloud” applications using the Security Development Lifecycle (SDL).

Download the white paper at Security Considerations for Client and Cloud Applications.

This paper describes how Microsoft has addressed security for its “client and cloud” platform and offers some guidance to other organizations who want to host their applications “in the cloud.” The paper begins by explaining how Microsoft applied the Security Development Lifecycle (SDL) to the development of its “client and cloud” applications, and how the SDL has evolved to meet the demands of this environment. The paper then moves on to discuss the operational security policies that Microsoft applies to its cloud platform, and to explain the level of security that users can expect from Microsoft’s cloud platform.

<Return to section navigation list>

Cloud Computing Events

After a few missteps this morning, more TechEd Europe 2010 session videos were showing up in the Cloud Computing and Online Services category on 11/11/2010 in a different format:

…

The same was true for Latest Virtualization Videos earlier today, but at about 4:00 PM PST all Virtualization videos disappeared from the site.

Salesforce.com announced that Dreamforce 2010 will take place from 12/6 to 12/9/2010 at Moscone Center, San Francisco:

Digital disruption. Social media. Real-time collaboration. Data convergence. Virtual teams. Agile development. Mobile everything. Cloud computing. The way we work is fundamentally changing. Some companies will adapt and grow. Others will be left behind.

Where do you go to understand the future? Learn from all-star customers? Master the cloud? For seven years, the most successful companies in the world have come to Dreamforce.

Join us at Dreamforce 2010, the cloud computing event of the year. This December 6-9, more than 20,000 fans will gather in San Francisco for our 8th annual user and developer conference.

In four action-packed days, we’ll give you all the education, inspiration, and innovation you need to succeed. There’s no better way to get up to speed on the latest advances in Salesforce apps, the Force.com platform, and cloud computing. And this year we’ll connect you with your peers and heroes in ways you’ve never thought possible.

It’s time to dream again.

President Bill Clinton keynotes Dreamforce

Under his leadership, the country enjoyed the strongest economy in a generation and the longest economic expansion in U.S. history. Join us to hear his fascinating insights on globalization and our growing interdependence.

Celebrate with Stevie Wonder

After a full day of Dreamforce, jam to the magical sounds of the legendary Stevie Wonder! With 30+ top ten hits and 22 Grammy Awards, the man personifies success. It’s good times guaranteed: signed, sealed, delivered.

Dreamforce 2010 will also host Cloudstock on 12/6/2010.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Tim Anderson (@timanderson) posted UK business applications stagger towards the cloud on 11/10/2010:

I spent today evaluating several competing vertical applications for a small business working in a particular niche – I am not going to identify it or the vendors involved. The market is formed by a number of companies which have been serving the market for some years, and which have Windows applications born in the desktop era and still being maintained and enhanced, plus some newer companies which have entered the market more recently with web-based solutions.

Several things interested me. The desktop applications seemed to suffer from all the bad habits of application development before design for usability became fashionable, and I saw forms with a myriad of fields and controls, each one no doubt satisfying a feature request, but forming a confusing and ugly user interface when put together. The web applications were not great, but seemed more usable, because a web UI encourages a simpler page-based approach.

Next, I noticed that the companies providing desktop applications talking to on-premise servers had found a significant number of their customers asking for a web-hosted option, but were having difficulty fulfilling the request. Typically they adopted a remote application approach using something like Citrix XenApp, so that they could continue to use their desktop software. In this type of solution, a desktop application runs on a remote machine but its user interface is displayed on the user’s desktop. It is a clever solution, but it is really a desktop/web hybrid and tends to be less convenient than a true web application. I felt that they needed to discard their desktop legacy and start again, but of course that is easier said than done when you have an existing application widely deployed, and limited development resources.

Even so, my instinct is to be wary of vendors who describe desktop applications served through XenApp or similar products as cloud computing.

Finally, there was friction around integrating with Outlook and Exchange. Most users have Microsoft Office and use Outlook and Exchange for email, calendar and tasks. The vendors with web applications found their users demanding integration, but it is not easy to do this seamlessly and we saw a number of imperfect attempts at synchronisation. The vendors with desktop applications had an easier task, except when these were repurposed as remote applications on a hosted service. In that scenario the vendors insisted that customers also use their hosted Exchange, so they could make it work. In other words, customers have to build almost their entire IT infrastructure around the requirements of this single application.

It was all rather unsatisfactory. The move towards the cloud is real, but in this particular small industry sector it seems slow and painful.

Klint Finley answered How Do Public Cloud Early Adopters Think About Cloud Computing? on 11/10/2010:

Cloud management company Appirio, seeing that most surveys on the subject of cloud computing focused on the industry as a whole, sponsored a survey of public cloud early adopters to see how they view the state of the public cloud. The results are quite interesting. Early adopters are downplaying problems like security risks, vendor lock-in and availability and reporting a change in the role of IT.

- 23% found security to be somewhat or significantly worse. Fifty-three percent found security to be somewhat or significantly better. Presumably, 24% found security to be "about the same."

- 12% found vendor lock-in to be somewhat or significantly worse, with 52% responding that the situation under cloud computing is actually better.

- 8% thought availability was worse, with 63% saying it was better.

Respondents most often cited security as the biggest misperception about the cloud.

Although IT departments were seen as the biggest skeptics, and the biggest propagators of cloud misinformation, the role of IT is also seen as changing. "Cloud applications have long been portrayed as a way for business to get around IT, whereas 70% of cloud adopters actually report that IT was a driver in the decision-making process," the report says. "And they expect their role to only increase moving forward."

Survey respondents identified mobile access, adoption and integration as their top challenges moving forward.

Also of interest, MIT's Technology Review ran an article on how mobile and cloud computing are disrupting enterprise IT. The article notes how Box.net was adopted by sales and marketing staff at TaylorMade-Adidas Golf Company after the company sprang for iPads. It also covers Pandora's use of Box.net and Google Apps as central integrators between various cloud services.

Jeff Barr posted Amazon S3: Multipart Upload to the Amazon Web Services blog on 11/10/2010:

Can I ask you some questions?

- Have you ever been forced to repeatedly try to upload a file across an unreliable network connection? In most cases there's no easy way to pick up from where you left off and you need to restart the upload from the beginning.

- Are you frustrated because your company has a great connection that you can't manage to fully exploit when moving a single large file? Limitations of the TCP/IP protocol make it very difficult for a single application to saturate a network connection.

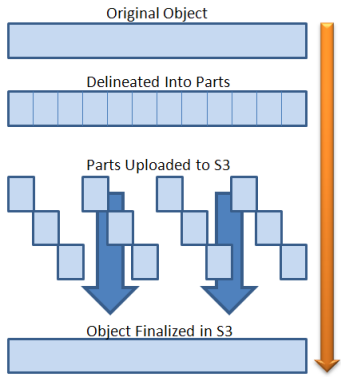

In order to make it faster and easier to upload larger (> 100 MB) objects, we've just introduced a new multipart upload feature.

You can now break your larger objects into chunks and upload a number of chunks in parallel. If the upload of a chunk fails, you can simply restart it. You'll be able to improve your overall upload speed by taking advantage of parallelism. In situations where your application is receiving (or generating) a stream of data of indeterminate length, you can initiate the upload before you have all of the data.
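The stream case in the paragraph above can be sketched in Python: re-buffer incoming data into fixed-size parts so that each part can be uploaded as soon as it fills, before the end of the stream is known. `chunk_stream` is a hypothetical helper, not part of any AWS SDK. It assumes the 5 MB minimum part size the post goes on to describe and, as S3 behaves today, that only the final part may be smaller than that minimum.

```python
from typing import Iterable, Iterator

MIN_PART_SIZE = 5 * 1024 * 1024  # 5 MB minimum from the post (binary MB assumed)


def chunk_stream(pieces: Iterable[bytes], part_size: int = MIN_PART_SIZE) -> Iterator[bytes]:
    """Re-buffer a stream of arbitrary-sized byte pieces into upload parts.

    Every yielded part is exactly part_size bytes except possibly the last,
    so parts can be handed to an uploader while data is still arriving.
    """
    buffer = bytearray()
    for piece in pieces:
        buffer.extend(piece)
        # Emit full-size parts as soon as enough data has accumulated.
        while len(buffer) >= part_size:
            yield bytes(buffer[:part_size])
            del buffer[:part_size]
    if buffer:
        # Whatever remains when the stream ends becomes the final part.
        yield bytes(buffer)
```

For example, `list(chunk_stream([b"abc", b"defg", b"h"], part_size=3))` returns `[b"abc", b"def", b"gh"]`.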

Using this new feature, you can break a 5 GB upload (the current limit on the size of an S3 object) into as many as 1024 separate parts and upload each one independently, as long as each part has a size of 5 megabytes (MB) or more. If an upload of a part fails it can be restarted without affecting any of the other parts. Once you have uploaded all of the parts you ask S3 to assemble the full object with another call to S3.
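The limits in that paragraph fit together neatly: with binary units, 5 GB divided into 1024 parts is exactly 5 MB per part. A hypothetical `plan_parts` helper can turn those limits into a logical split of (part number, offset, length) ranges, the kind of logical separation mentioned in the first step below. The sketch treats GB and MB as 2^30 and 2^20 bytes and, as an assumption, lets the final part fall below 5 MB.

```python
from typing import List, Tuple

MB = 1024 * 1024           # binary megabyte (assumption)
GB = 1024 * MB             # binary gigabyte (assumption)
MAX_OBJECT_SIZE = 5 * GB   # S3 object size limit quoted in the post
MAX_PARTS = 1024           # maximum number of parts quoted in the post
MIN_PART_SIZE = 5 * MB     # minimum part size quoted in the post


def plan_parts(object_size: int, part_size: int = MIN_PART_SIZE) -> List[Tuple[int, int, int]]:
    """Return (part_number, offset, length) ranges that cover the object."""
    if object_size > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 GB object size limit")
    if part_size < MIN_PART_SIZE:
        raise ValueError("parts must be at least 5 MB")
    # Ceiling division; since 5 GB / 5 MB = 1024, count never exceeds MAX_PARTS.
    count = -(-object_size // part_size)
    plan = []
    for index in range(count):
        offset = index * part_size
        # Every part is full size except, possibly, the last one (assumption).
        length = min(part_size, object_size - offset)
        plan.append((index + 1, offset, length))  # part numbers start at 1
    return plan
```

For instance, `plan_parts(12 * MB)` yields three ranges: two 5 MB parts followed by a 2 MB final part.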

Here's what your application needs to do:

- Separate the source object into multiple parts. This might be a logical separation where you simply decide how many parts to use and how big they'll be, or an actual physical separation accomplished using the Linux split command or similar (e.g. the hk-split command for Windows).

- Initiate the multipart upload and receive an upload id in return. This request to S3 must include all of the request headers that would usually accompany an S3 PUT operation (Content-Type, Cache-Control, and so forth).

- Upload each part (a contiguous portion of an object's data) accompanied by the upload id and a part number (1-10,000 inclusive). The part numbers need not be contiguous, but their order determines the position of each part within the object. S3 will return an ETag in response to each upload.

- Finalize the upload by providing the upload id and the part number / ETag pairs for each part of the object.
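The four steps map onto S3 calls. This sketch borrows the method names of the S3 client in boto3 (`create_multipart_upload`, `upload_part`, `complete_multipart_upload`, `abort_multipart_upload`), a Python SDK released after this post; the `multipart_upload` function and its retry policy are hypothetical. Parts are sent one at a time, and a failed part is re-sent on its own without disturbing the parts already stored. If a part still fails after `max_attempts` tries, the sketch aborts the upload so S3 can discard the stored parts.

```python
from typing import Any, Dict, List


def multipart_upload(client: Any, bucket: str, key: str, parts: List[bytes],
                     content_type: str = "application/octet-stream",
                     max_attempts: int = 3) -> Dict[str, Any]:
    """Run the multipart-upload steps, sending parts one at a time.

    Step 1 (splitting the source) is assumed done: `parts` holds the chunks.
    `client` is assumed to expose boto3's S3 client method names.
    """
    # Step 2: initiate; PUT-style headers such as Content-Type go here.
    created = client.create_multipart_upload(
        Bucket=bucket, Key=key, ContentType=content_type)
    upload_id = created["UploadId"]
    completed = []
    try:
        # Step 3: upload each part with the upload id and a part number.
        for number, body in enumerate(parts, start=1):
            for attempt in range(1, max_attempts + 1):
                try:
                    response = client.upload_part(
                        Bucket=bucket, Key=key, UploadId=upload_id,
                        PartNumber=number, Body=body)
                    break
                except Exception:
                    # Only this part is re-sent; stored parts are untouched.
                    if attempt == max_attempts:
                        raise
            completed.append({"PartNumber": number, "ETag": response["ETag"]})
        # Step 4: finalize with the part number / ETag pairs.
        return client.complete_multipart_upload(
            Bucket=bucket, Key=key, UploadId=upload_id,
            MultipartUpload={"Parts": completed})
    except Exception:
        # Abort so S3 discards the parts of an upload that cannot finish.
        client.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)
        raise
```

With a real client this might be called as `multipart_upload(boto3.client("s3"), "my-bucket", "big.bin", parts)`, where the bucket and key names are placeholders.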

You can implement the third step in several different ways. You could iterate over the parts and upload one at a time (this would be great for situations where your internet connection is intermittent or unreliable). Or, you can upload many parts in parallel (great when you have plenty of bandwidth, perhaps with higher-than-average latency to the S3 endpoint of your choice). If you choose to go the parallel route, you can use the list parts operation to track the status of your upload.
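The parallel route can be sketched with a thread pool. The hypothetical `upload_parts_parallel` helper below assumes an upload id already returned by the initiate call and a boto3-style client that is safe to share across threads. It collects ETags in whatever order the parts finish, then sorts them, because the S3 completion request expects parts listed in ascending part-number order.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Any, Dict, List


def upload_parts_parallel(client: Any, bucket: str, key: str, upload_id: str,
                          parts: List[bytes], workers: int = 4) -> Dict[str, Any]:
    """Upload parts concurrently, then finalize the multipart upload.

    Assumes `client` exposes boto3-style upload_part and
    complete_multipart_upload methods and can be shared across threads.
    """
    def send(number: int, body: bytes) -> Dict[str, Any]:
        # Each part travels with the upload id and its own part number.
        response = client.upload_part(Bucket=bucket, Key=key, UploadId=upload_id,
                                      PartNumber=number, Body=body)
        return {"PartNumber": number, "ETag": response["ETag"]}

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(send, number, body)
                   for number, body in enumerate(parts, start=1)]
        # Gather results as parts finish; result() re-raises a failed part's error.
        completed = [future.result() for future in as_completed(futures)]

    # The completion request must list parts in ascending part-number order.
    completed.sort(key=lambda part: part["PartNumber"])
    return client.complete_multipart_upload(
        Bucket=bucket, Key=key, UploadId=upload_id,
        MultipartUpload={"Parts": completed})
```

Threads suit this job because each worker spends most of its time waiting on the network. A production tool would also retry individual parts and could poll `list_parts` to report progress, as the post suggests.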

Over time we expect much of the chunking, multi-threading, and restarting logic to be embedded into tools and libraries. If you are a tool or library developer and have done this, please feel free to post a comment or to send me some email.

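Azure block blobs have offered this feature from the get-go: blocks are uploaded individually with Put Block and then committed as a single blob with Put Block List.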

Lydia Leong (@cloudpundit) analyzed Amazon’s Free Usage Tier on 11/10/2010:

Amazon recently introduced a Free Usage Tier for its Web Services. Basically, you can try Amazon EC2, with a free micro-instance (specifically, enough hours to run such an instance full-time, and have a few hours left over to run a second instance, too; or you can presumably use a bunch of micro-instances part-time), and the storage and bandwidth to go with it.

Here’s what the deal is worth at current pricing, per-month:

- Linux micro-instance – $15

- Elastic load balancing – $18.87

- EBS – $1.27

- Bandwidth – $3.60

That’s $38.74 all in all, or $464.88 over the course of the one-year free period — not too shabby. Realistically, you don’t need the load-balancing if you’re running a single instance, so that’s really $19.87/month, $238.44/year. It also proves to be an interesting illustration of how much the little incremental pennies on Amazon can really add up.
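The totals are easy to check. This short calculation uses only the four monthly figures quoted above, with `Decimal` to avoid floating-point rounding on currency values.

```python
from decimal import Decimal

# Monthly values quoted in the post (Amazon pricing as of November 2010).
monthly = {
    "Linux micro-instance": Decimal("15.00"),
    "Elastic load balancing": Decimal("18.87"),
    "EBS": Decimal("1.27"),
    "Bandwidth": Decimal("3.60"),
}

total = sum(monthly.values())
without_elb = total - monthly["Elastic load balancing"]

print(f"All items: ${total}/month, ${total * 12}/year")
# prints: All items: $38.74/month, $464.88/year
print(f"Without ELB: ${without_elb}/month, ${without_elb * 12}/year")
# prints: Without ELB: $19.87/month, $238.44/year
```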

It’s a clever and bold promotion, making it cost nothing to trial Amazon, and potentially punching Rackspace’s lowest-end Cloud Servers business in the nose. A single instance of that type is enough to run a server to play around with if you’re a hobbyist, or you’re a garage developer building an app or website. It’s this last type of customer that’s really coveted, because all cloud providers hope that whatever he’s building will become wildly popular, causing him to eventually grow to consume bucketloads of resources. Lose that garage guy, the thinking goes, and you might not be able to capture him later. (Although Rackspace’s problem at the moment is that their cloud can’t compete against Amazon’s capabilities once customers really need to get to scale.)

While most of the cloud IaaS providers are actually offering free trials to most customers they’re in discussions with, there’s still a lot to be said for just being able to sign up online and use something (although you still have to give a valid credit card number).
