Windows Azure and Cloud Computing Posts for 11/8/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

John Treadway (@cloudbzz) posted SQL In the Cloud to his CloudBzzz blog on 11/8/2010:

Despite the NoSQL hype, traditional relational databases are not going away any time soon. In fact, based on continued market evolution and development, SQL is very much alive and doing well.

I won’t debate the technical merits of SQL vs. NoSQL here, even if I were qualified to do so. Both approaches have their supporters, and both types of technologies can be used to build scalable applications. The simple fact is that a lot of people are still choosing to use MySQL, PostgreSQL, SQL Server and even Oracle to build their SaaS/Web/Social Media applications.

When choosing a SQL option for your cloud-based solution, there are typically three approaches as outlined below. One note – this analysis applies to “mass market clouds” and not the enterprise clouds from folks like AT&T, Savvis, Unisys and others. At that level you often can get standard enterprise databases as a managed service.

- Install and Manage – in this “traditional” model the developer or sysadmin selects their DBMS, creates instances in their cloud, installs it, and is then responsible for all administration tasks (backups, clustering, snapshots, tuning, and recovering from a disaster). Evidence suggests that this is still the leading model, though that could soon change. This model provides the highest level of control and flexibility, but often puts a significant burden on developers who must (typically unwillingly) become DBAs with little training or experience.

- Use a Cloud-Managed DBaaS Instance – in this model the cloud provider offers a DBMS service that developers just use. All physical administration tasks (backup, recovery, log management, etc.) are performed by the cloud provider and the developer just needs to worry about structural tuning issues (indices, tables, query optimization, etc). Generally your choice of database is MySQL, MySQL, and MySQL – though a small number of clouds provide SQL Server support. Amazon RDS and SQL Azure are the two best known in this category.

- Use an External Cloud-Agnostic DBaaS Solution – this is very much like the cloud-based DBaaS, but has a value of cloud-independence – at least in theory. In the long run you might expect to be able to use an independent DBaaS to provide multi-cloud availability and continuous operations in the event of a cloud failure. FathomDB and Xeround are two such options.

Here’s a chart summarizing some of the characteristics of each model:

In my discussions with most of the RDBMSaaS solutions I have found that user acceptance and adoption is very high. When I spoke with Joyent a couple of months ago I was told that “nearly all” of their customers who spend over $500/month with them use their DBaaS solution. And while Amazon won’t give out such specifics, I have heard from them (both corporate and field people) that adoption is “very robust and growing.” The exception is FathomDB, which was launched at DEMO2010. They seem not to have gained much traction, but I don’t get the sense they are being very aggressive. When I spoke with one of their founders I learned they were working on a whole new underlying DBMS engine that would not even be compatible with MySQL. In any event, they have only a few hundred databases at this point. Xeround is still in private beta.

The initial DBaaS value proposition of reducing the effort and cost of administration is worth something, but in some cases it might be seen to be a nice-to-have vs. a need-to-have. Inevitably, the DBaaS solutions on the market will need to go beyond this to performance, scaling and other capabilities that will be very compelling for sites that are experiencing (or expect to experience) high volumes.

Amazon RDS, for instance, just added the ability to provision read replicas for applications with a high read/write ratio. Joyent has had something similar to this since last year when they integrated Zeus Traffic Manager to automatically detect and route query strings to read replicas (your application doesn’t need to change for this to work).

Xeround has created an entirely new scale-out option with an interesting approach that alleviates many of the trade-offs of the CAP Theorem. And ScaleBase is soon launching a “database load balancer” that automatically partitions and scales your database on top of any SQL database (at least eventually – MySQL will be first, of course, but plans include PostgreSQL, SQL Server and possibly even Oracle). My friends at Akiban are also innovating in the MySQL performance space for cloud/SaaS applications.

Bottom line, SQL-based DBaaS solutions are starting to address many (though not all) of the leading reasons why developers are flocking to NoSQL solutions.

All of this leads me to the following conclusions – I’m interested if you agree or disagree:

- Cloud-based DBaaS options will continue to grow in importance and will eventually become the dominant model. Cloud vendors will have to invest in solutions that enable horizontal scaling and self-healing architectures to address the needs of their bigger customers. While most clouds today do not offer an RDS-equivalent, my conversations with cloud providers suggest that may soon change.

- Cloud-Independent DBaaS options will grow but will be a niche as most users will opt for the default database provided by their cloud provider.

- The D-I-Y model of installing/managing your own database will eventually also become a niche market where very high scaling, specialized functionality or absolute control are the requirements. For the vast majority of applications, RDBMSaaS solutions will be both easier to use and easier to scale than traditional install/manage solutions.

At some point in the future I intend to dive more into the different RDBMSaaS solutions and compare them at a feature/function level. If I’ve missed any – let me know (I’ll update this post too).

Steve Yi announced 2010 PASS Summit Sessions on SQL Azure in his 11/8/2010 post to the SQL Azure Team blog:

The 2010 PASS Summit in Seattle, WA is being held November 8th-11th. In preparation, here is a list of sessions about SQL Azure being given – almost all of them by the SQL Azure team. This is a good chance to learn more about SQL Azure and ask questions.

A lap around Microsoft SQL Azure and a discussion of what’s new: Tony Petrossian

SQL Azure provides a highly available and scalable relational database engine in the cloud. In this session we will provide an introduction to SQL Azure and the technologies that enable such functions as automatic high-availability. We will demonstrate several new enhancements we have added to SQL Azure based on the feedback we’ve received from the community since launching the service earlier this year.

Building Offline Applications for Windows Phones and Other Devices using Sync Framework and SQL Azure: Maheshwar Jayaraman

In this session you will learn how to build a client application that operates against locally stored data and uses synchronization to keep up-to-date with a SQL Azure database. See how Sync Framework can be used to build caching and offline capabilities into your client application, making your users productive when disconnected and making your user experience more compelling even when a connection is available. See how to develop offline applications for Windows Phone 7 and Silverlight, plus how the services support any other client platform, such as iPhone and HTML5 applications, using the open web-based sync protocol.

Migrating & Authoring Applications to Microsoft SQL Azure: Cihan Biyikoglu

Are you looking to migrate your on-premise applications and database from MySQL or other RDBMSs to SQL Azure? Or are you simply focused on the easiest ways to get your SQL Server database up to SQL Azure? Then, this session is for you. We cover two fundamental areas in this session: application data access tier and the database schema+data. In Part 1, we dive into the application data-access tier, covering common migration issues as well as best practices that will help make your data-access tier more resilient in the cloud and on SQL Azure. In Part 2, the focus is on database migration. We go through migrating schema and data, taking a look at tools and techniques for efficient transfer of schema through Management Studio and Data-Tier Application (DAC). Then, we discover efficient ways of moving small and large data into SQL Azure through tools like SSIS and BCP. We close the session with a glimpse into what is in store in the future for easing migration of applications into SQL Azure.

Building Large Scale Database Solutions on SQL Azure: Cihan Biyikoglu

SQL Azure is a great fit for elastic, large-scale and cost-effective database solutions with many TBs and PBs of data. In this talk we will explore the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available and scalable SQL Azure Databases. The talk will detail modern scalable application design techniques such as sharding and horizontal partitioning and dive into future enhancements to SQL Azure Databases.

Introducing SQL Azure Reporting Services: Nino Bice, Vasile Paraschiv

Introducing SQL Azure Reporting Services – An in-depth review of the recently announced SQL Azure Reporting Services feature, complete with demos, architectural review, code samples and, just as importantly, a discussion of how this new feature can provide important cloud capabilities for your company. If you are a BI professional, System Integrator, Consultant, ISV or have operational reporting needs within your organization, then you must not miss this session, which speaks to Microsoft's ongoing commitment to SQL Azure and Cloud computing.

Loading and Backing Up SQL Azure Databases: Geoff Snowman

SQL Azure provides high availability by maintaining multiple copies of your database, but that doesn't mean that you should just trust Azure and assume your data is safe. If your data is mission critical, you should maintain a backup outside the Azure infrastructure. The database is also vulnerable to administration errors. If you accidentally truncate a table in your production database, that change will immediately be copied to all replicas, and there is no way to recover that table. In this session, you'll see how to use SSIS and BCP to back up a SQL Azure database. We'll also demonstrate processes you can use to move data from an on-premise database to SQL Azure. Finally, we'll discuss procedures for migrating your database from staging to production, to avoid the risks associated with implementing DDL directly in your production database.

SQL Azure Data Sync - Integrating On-Premises Data with the Cloud: Mark Scurrell

In this session we will introduce you to the concept of "Getting Data Where You Need It". We will show you how our new cloud based SQL Azure Data Sync Service enables on-premises SQL Server data to be shared with cloud-based applications. We will then show how the Data Sync Service allows data to be synchronized across geographically dispersed SQL Azure databases.

SQLCAT: SQL Azure Learning from Real-World Deployments: Abirami Iyer, Cihan Biyikoglu, Michael Thomassy

SQL Azure was released in January 2010, and this session will discuss what we have learned from a few of our first live, real-world implementations. We will showcase a few customer implementations by discussing their architecture and the features required to make them successful on SQL Azure. The session will cover our lessons learned and best practices on SQL Azure connectivity, sharding and partitioning, database migration and troubleshooting techniques. This is a 300 level session requiring an intermediate understanding of SQL Server.

SQLCAT: Administering SQL Azure and new challenges for DBAs: George Varghese, Lubor Kollar

SQL Azure represents a significant shift in the DBA’s responsibilities. Some tasks become obsolete (e.g. disk management, HA), some need new approaches (e.g. tuning, provisioning) and some are brand new (e.g. billing). This presentation goes over the differences between administering the classic SQL Server and SQL Azure.

Cihangir Biyikoglu reported on 11/7/2010 that he’ll present two sessions at SQL PASS 2010, Nov 8th to 12th in Seattle:

Hi everyone, I’ll be presenting the following 2 sessions at PASS. If you get a chance, stop by and say hi!

Building Large Scale Database Solutions on SQL Azure

SQL Azure is a great fit for elastic, large-scale and cost-effective database solutions with many TBs and PBs of data. In this talk we will explore the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available and scalable SQL Azure Databases. The talk will detail modern scalable application design techniques such as sharding and horizontal partitioning and dive into future enhancements to SQL Azure Databases. We’ll do a deep dive into federations and see a demo of how one can take a regular application

Migrating & Authoring Applications to Microsoft SQL Azure

Are you looking to migrate your on-premise applications and database from MySQL or other RDBMSs to SQL Azure? Or are you simply focused on the easiest ways to get your SQL Server database up to SQL Azure? Then, this session is for you. We cover two fundamental areas in this session: application data access tier and the database schema+data. In Part 1, we dive into the application data-access tier, covering common migration issues as well as best practices that will help make your data-access tier more resilient in the cloud and on SQL Azure. In Part 2, the focus is on database migration. We go through migrating schema and data, taking a look at tools and techniques for efficient transfer of schema through Management Studio and Data-Tier Application (DAC). Then, we discover efficient ways of moving small and large data into SQL Azure through tools like SSIS and BCP. We close the session with a glimpse into what is in store in the future for easing migration of applications into SQL Azure.

<Return to section navigation list>

Marketplace DataMarket and OData

Mike Taulty offered a PDC 2010 Session Downloader in Silverlight on 11/7/2010:

I was struggling a little on the official PDC 2010 site to download all of the PowerPoints and videos I wanted and I scratched my head for a while before I found an OData feed which looked like it contained all the data I needed. That feed is at;

and I figured it wouldn’t be too much hassle to plug that into a Silverlight application that made it easier to do the downloading. I spent maybe a day on it during the rainy bits of the weekend and that application is running in the browser below and you should be able to click the button to install it locally.

Install the app from Mike’s blog.

I apologise that the XAP file is about 1MB, I think I could make this a lot smaller by taking out some unused styles but I haven’t figured out exactly which styles are used/un-used at this point. I also left the blue spinning balls default loader on it.

This is an elevated out of browser application because as far as I can tell the site serving the feed doesn’t have a clientaccesspolicy.xml file and also because I wanted to be able to write files straight into “My Videos” and “My Documents” without having to ask the user on a per-file basis.

Here’s the Help File

I’m not exactly the master of intuitive UI so here’s some quick instructions for use. Once you have the application installed you should see a screen like this;

with the circled tracks coming from the OData feed. When you click on a topic for the first time, the application goes back over OData;

and then should display the session list within that particular track;

You can then select which PowerPoints, High Def videos and Low Def videos you want to download.

Note – for the purposes of this app I only looked at the videos in MP4 format, I do not look for videos in WMV format and note also that not all sessions seem to have downloadable videos at this point.

You can make individual selections by just clicking on the content types – maybe I want Clemens’ session in High Def and I want the PowerPoints;

or you can use the big buttons at the bottom to try and do a “Select All” or “Clear All” on that particular content type – below I’m trying to download all the PowerPoints and all the Low Def videos from the “Framework & Tools” track;

Your selections should be remembered as you switch from track to track so you can go around this way building up a list of all the things that you want to download.

Once you’ve got that list, click the download button in the bottom right;

and the downloading dialog should pop up onto the screen and work should begin. It should progress like this;

showing you progress in terms of how many sessions it has completed, how many files (in total) it has completed, which session and file it’s working on right now and (potentially) the errors that it encounters along the way.

You can hit the Stop button and the downloads should stop with a set of “cancelled” errors for all the files/sessions that didn’t get completed.

A download should not (hopefully) leave a half-completed file on your disk and it should not (hopefully) overwrite any existing file on your disk.
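The two guarantees described above (no half-completed files, no overwriting) are commonly achieved by writing to a temporary name and renaming only on success. The following is a minimal Node.js sketch of that general pattern, not Mike's Silverlight code; `saveWithoutClobbering`, the `.partial` suffix and the `writeContents` callback are all hypothetical names chosen for illustration.

```javascript
const fs = require("fs");

// Sketch only: saveWithoutClobbering is a hypothetical helper, not taken
// from the downloader's source. writeContents(tempPath) is an assumed
// callback that writes the downloaded bytes to the path it is given.
function saveWithoutClobbering(destPath, writeContents) {
  // Never overwrite a file that is already on disk.
  if (fs.existsSync(destPath)) {
    return "skipped";
  }

  // Write under a temporary name so a failure cannot leave a
  // half-completed file at the final path.
  const tempPath = destPath + ".partial";
  try {
    writeContents(tempPath);
    fs.renameSync(tempPath, destPath);
    return "saved";
  } catch (err) {
    // Clean up whatever was written before the failure, then re-throw.
    if (fs.existsSync(tempPath)) {
      fs.unlinkSync(tempPath);
    }
    throw err;
  }
}
```

The existence check and the rename are separate steps, so this sketch does not protect against another process creating the destination file in between; a real downloader might need stricter handling.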

Where are the downloaded files going? Into your My Documents and My Videos (PDC2010Low/PDC2010High) folders;

and if you do encounter errors in there like (e.g.) here where I unplug the network cable part way through…

then you should get some attempt at error handling with a list of the problems encountered whilst doing the downloads.

Enjoy – feel free to ping me with bugs and I’ll try and fix and, remember, this is just for fun – it’s not part of the “official” PDC site in any way.

Update – a few people asked me to post the source code here and so here’s the code – some notes;

- This was written quickly. I’d guess I spent maybe 5-6 hours on it.

- I wasn’t planning to share it.

- It has some MVVM ideas in it but they’re not taken to the Nth degree. It uses some commanding from Expression Blend.

- There’s probably a race condition or two in there as well.

- There’s no unit tests. What testing I did was done in the debugger.

- There are probably a bunch of styles in there that aren’t used. I started with the JetPack styles and trimmed some bits out but I wasn’t exhaustive.

- There’s a lot of “public” that should be “internal” and the code generally needs splitting into libraries.

but you can download it from here if you still want that code to open up and poke around in after all those caveats

Mike Flasko posted a summary of Recent OData Announcements on 11/6/2010:

At this past PDC we announced a number of new OData producers (EBay, TwitPic, etc) and libraries. Check out these posts to get all the news:

- OData lib for Windows Phone 7

- New OData Producers (EBay, TwitPic, etc)

- CTP1 of the next version of WCF Data Services (update to .NET 4 / Silverlight 4)

This CTP now makes it possible to interact with the OData feed for your Windows Live account (i.e. easily get at your photos, contacts, etc.)

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Steve Peschka is on a roll with The Claims, Azure and SharePoint Integration Toolkit Part 4 post of 11/8/2010:

This is part 4 of a 5 part series on the CASI (Claims, Azure and SharePoint Integration) Kit. Part 1 was an introductory overview of the entire framework and solution and described what the series is going to try and cover. Part 2 covered the guidance to create your WCF applications and make them claims aware, then move them into Windows Azure. Part 3 walked through the base class that you’ll use to hook up your SharePoint site with Azure data, by adding a new custom control to a page in the _layouts directory. In this post I’ll cover the web part that is included as part of this framework. It’s designed specifically to work with the custom control you created and added to the layouts page in Part 3.

Using the Web Part

Before you begin using the web part, it obviously is assumed that you a) have a working WCF service hosted in Windows Azure and b) that you have created a custom control and added it to the layouts page, as described in Part 3 of this series. I’m further assuming that you have deployed both the CASI Kit base class assembly and your custom control assembly to the GAC on each server in the SharePoint farm. I’m also assuming that your custom aspx page that hosts your custom control has been deployed to the layouts directory on every web front end server in the farm. To describe how to use the web part, I’m going to use the AzureWcfPage sample project that I uploaded and attached to the Part 3 posting. So now let’s walk through how you would tie these two things together to render data from Azure in a SharePoint site.

NOTE: While not a requirement, it will typically be much easier to use the web part if the WCF methods being called return HTML so it can be displayed directly in the page without further processing.

The first step is to deploy the AzureRender.wsp solution to the farm; the wsp is included in the zip file attached to this posting. It contains the feature that deploys the Azure DataView WebPart as well as jQuery 1.4.2 to the layouts directory. Once the solution is deployed and feature activated for a site collection, you can add the web part to a page. At this point you’re almost ready to begin rendering your data from Azure, but there is a minimum of one property you need to set. So next let’s walk through what that and the other properties are for the web part.

Web Part Properties

All of the web part properties are in the Connection Properties section. At a minimum, you need to set the Data Page property to the layouts page you created and deployed. For example, /_layouts/AzureData.aspx. If the server tag for your custom control has defined at least the WcfUrl and MethodName properties, then this is all you need to do. If you did nothing else the web part would invoke the page and use the WCF endpoint and method configured in the page, and it would take whatever data the method returned (ostensibly it returns it in HTML format) and render it in the web part. In most cases though you’ll want to use some of the other web part properties for maximum flexibility, so here’s a look at each one:

- Method Name* – the name of the method on the WCF application that should be invoked. If you need to invoke the layouts page with your own javascript function the query string parameter for this property is “methodname”.

- Parameter List* – a semi-colon delimited list of parameters for the WCF method. As noted in Parts 2 and 3 of this series, only basic data types are supported – string, int, bool, long, and datetime. If you require a more complex data type then you should serialize it to a string first, then call the method, and deserialize it back to a complex type in the WCF endpoint. If you need to invoke the layouts page with your own javascript function the query string parameter for this property is “methodparams”.

- Success Callback Address – the javascript function that is called back after the jQuery request for the layouts page completes successfully. By default, this property uses the javascript function that ships with the web part. If you use your own function, the function signature should look like this: function yourFunctionName(resultData, resultCode, queryObject). For more details see the jQuery AJAX documentation at http://api.jquery.com/jQuery.ajax/.

- Error Callback Address – the javascript function that is called back if the jQuery request for the layouts page encounters an error. By default, this property uses the javascript function that ships with the web part. If you use your own function, the function signature should look like this: function yourFunctionName(XMLHttpRequest, textStatus, errorThrown). For more details see the jQuery AJAX documentation at http://api.jquery.com/jQuery.ajax/.

- Standard Error Message – the message that will be displayed in the web part if an error is encountered during the server-side processing of the web part. That means it does NOT include scenarios where data is actually being fetched from Azure.

- Access Denied Message* – this is the Access Denied error message that should be displayed if access is denied to the user for a particular method. For example, as explained in Part 2 of this series, since we are passing the user’s token along to the WCF call, we can decorate any of the methods with a PrincipalPermission demand, like “this user must be part of the Sales Managers group”. If a user does not meet a PrincipalPermission demand then the WCF call will fail with an access denied error. In that case, the web part will display whatever the Access Denied Message is. Note that you can use rich formatting in this message, to do things like set the font bold or red using HTML tags (e.g. <font color='red'>You have no access; contact your admin</font>). If you need to invoke the layouts page with your own javascript function the query string parameter for this property is “accessdenied”.

- Timeout Message* – this is the message that will be displayed if there is a timeout error trying to execute the WCF method call. It also supports rich formatting such as setting the font bold, red, etc. If you need to invoke the layouts page with your own javascript function the query string parameter for this property is “timeout”.

- Show Refresh Link – check this box in order to render a refresh icon above the Azure data results. If the icon is clicked it will re-execute the WCF method to get the latest data.

- Refresh Style – allows you to add additional style attributes to the main Style attribute on the IMG tag that is used to show the refresh link. For example, you could add “float:right;” using this property to have the refresh image align to the right.

- Cache Results – check this box to have the jQuery library cache the results of the query. That means each time the page loads it will use a cached version of the query results. This will save round trips to Azure and result in quicker performance for end users. If the data it is retrieving doesn’t change frequently then caching the results is a good option.

- Decode Results* – check this box in case your WCF application returns results that are HtmlEncoded. If you set this property to true then HtmlDecoding will be applied to the results. In most cases this is not necessary. If you need to invoke the layouts page with your own javascript function the query string parameter for this property is “encode”.

* – these properties will be ignored if the custom control’s AllowQueryStringOverride property is set to false.
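The query string parameters listed above could be assembled in script along these lines. This is a minimal sketch under stated assumptions: `buildLayoutsQuery` and its option names are hypothetical and not part of the CASI Kit; only the keys `methodname`, `methodparams`, `accessdenied` and `timeout` come from the property descriptions. Using JSON to turn a complex value into a string is also an assumption; the WCF endpoint would have to deserialize it in a matching way.

```javascript
// Sketch only: buildLayoutsQuery is a hypothetical helper, not part of the
// CASI Kit. The query string keys are the ones documented for the web part.
function buildLayoutsQuery(options) {
  var pairs = [];

  function add(key, value) {
    if (value !== undefined && value !== null) {
      pairs.push(key + "=" + encodeURIComponent(String(value)));
    }
  }

  add("methodname", options.methodName);

  if (options.params && options.params.length > 0) {
    // Only basic types are supported, so a complex value is serialized to a
    // string first (JSON is an assumption made for this sketch).
    var parts = options.params.map(function (p) {
      return (typeof p === "object" && p !== null) ? JSON.stringify(p) : String(p);
    });
    // Parameter List is semi-colon delimited. This sketch does not escape
    // semi-colons that appear inside a value.
    add("methodparams", parts.join(";"));
  }

  add("accessdenied", options.accessDeniedMessage);
  add("timeout", options.timeoutMessage);

  return pairs.join("&");
}

// Example: the same call the web part documentation uses, with encoding applied.
var query = buildLayoutsQuery({
  methodName: "GetCustomerByEmail",
  params: ["steve@contoso.local"]
});
```

The resulting string can be passed as the `data` value of a jQuery `$.ajax` GET request to the layouts page. Remember that these overrides are ignored when the custom control's AllowQueryStringOverride property is false.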

Typical Use Case

Assuming your WCF method returns HTML, in most cases you will want to add the web part to a page and set two or three properties: Data Page, Method Name and possibly Parameter List.

If you have more advanced display or processing requirements for the data that is returned by Azure then you may want to use a custom javascript function to do so. In that case you should add your javascript to the page and set the Success Callback Address property to the name of your javascript function. If your part requires additional posts back to the WCF application, for things such as adds, updates or deletes, then you should add that into your own javascript functions and call the custom layouts page with the appropriate Method Name and Parameter List values; the query string variable names to use are documented above. When invoking the ajax method in jQuery to call the layouts page you should be able to use an approach similar to what the web part uses. The calling convention it uses is based on the script function below; note that you will likely want to continue using the dataFilter property shown because it strips out all of the page output that is NOT from the WCF method:

$.ajax({
    type: "GET",
    url: "/_layouts/SomePage.aspx",
    dataType: "text",
    data: "methodname=GetCustomerByEmail&methodparams=steve@contoso.local",
    dataFilter: AZUREWCF_azureFilterResults,
    success: yourSuccessCallback,
    error: yourErrorCallback
});
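The success and error callbacks named in the call above are left to you. A minimal sketch of a matching pair follows, using the function signatures given in the web part property descriptions. `makeAzureCallbacks`, `target` and `fallbackMessage` are hypothetical names, and the sketch assumes the WCF method returns HTML that can be displayed as-is.

```javascript
// Sketch only: makeAzureCallbacks is a hypothetical helper, not part of the
// CASI Kit or the web part's own script. `target` is any object with an
// innerHTML property, such as a DOM element.
function makeAzureCallbacks(target, fallbackMessage) {
  return {
    // Same parameter order as the documented success signature.
    success: function (resultData, resultCode, queryObject) {
      // Assumes the WCF method returned HTML that needs no further processing.
      target.innerHTML = resultData;
    },
    // Same parameter order as the documented error signature.
    error: function (XMLHttpRequest, textStatus, errorThrown) {
      // textStatus is jQuery's short status string, e.g. "timeout" or "error".
      target.innerHTML = fallbackMessage + " (" + textStatus + ")";
    }
  };
}

// Usage with a stand-in for a DOM element; in a page this would be
// something like document.getElementById("results").
var fakeElement = { innerHTML: "" };
var callbacks = makeAzureCallbacks(fakeElement, "Could not load data");
callbacks.success("<b>Steve</b>", "success", null);
```

In the `$.ajax` call you would then pass `callbacks.success` and `callbacks.error` as the `success` and `error` options. The "timeout" status here refers to the browser-side jQuery request, which is separate from the web part's Timeout Message property.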

Try it Out!

You should now have all of the pieces to try out the end-to-end solution. Attached to this posting you’ll find a zip file that includes the solution for the web part. In the next and final posting in this series, I’ll describe how you can also use the custom control developed in Part 3 to retrieve data from Azure and use it in ASP.NET cache with other controls, and also how to use it in SharePoint tasks – in this case a custom SharePoint timer job.

Steve Peschka continued his The Claims, Azure and SharePoint Integration Toolkit Part 3 series on 11/8/2010:

This is part 3 of a 5 part series on the CASI (Claims, Azure and SharePoint Integration) Kit. Part 1 was an introductory overview of the entire framework and solution and described what the series is going to try and cover. Part 2 covered the guidance to create your WCF applications and make them claims aware, then move them into Windows Azure. In this posting I’ll discuss one of the big deliverables of this framework, which is a custom control base class that you use to make your connection from SharePoint to your WCF application hosted in Windows Azure. These are the items we’ll cover:

- The base class – what is it, how do you use it in your project

- A Layouts page – how to add your new control to a page in the _layouts directory

- Important properties – a discussion of some of the important properties to know about in the base class

The CASI Kit Base Class

One of the main deliverables of the CASI Kit is the base class for a custom control that connects to your WCF application and submits requests with the current user’s logon token. The base class itself is a standard ASP.NET server control, and the implementation of this development pattern requires you to build a new ASP.NET server control that inherits from this base class. For reasons that are beyond the scope of this posting, your control will really need to do two things:

- Create a service reference to your WCF application hosted in Windows Azure.

- Override the ExecuteRequest method on the base class. This is actually fairly simple because all you need to do is write about five lines of code where you create and configure the proxy that is going to connect to your WCF application, and then call the base class’ ExecuteRequest method.

To get started on this you can create a new project in Visual Studio and choose the Windows Class Library type project. After getting your namespace and class name changed to whatever you want it to be, you will add a reference to the CASI Kit base class, which is in the AzureConnect.dll assembly. You will also need to add references to the following assemblies: Microsoft.SharePoint, System.ServiceModel, System.Runtime.Serialization and System.Web.

In your new class, add a using statement for Microsoft.SharePoint, then change your class so it inherits from AzureConnect.WcfConfig. WcfConfig is the base class that contains all of the code and logic to connect to the WCF application, incorporate all of the properties to add flexibility to the implementation and eliminate the need for all of the typical web.config changes that are normally necessary to connect to a WCF service endpoint. This is important to understand – you would typically need to add nearly 100 lines of web.config changes for every WCF application to which you connect, to every web.config file on every server for every web application that used it. The WcfConfig base class wraps that all up in the control itself so you can just inherit from the control and it does the rest for you. All of those properties that would be changed in the web.config file can also be changed in the WcfConfig control, though, because it exposes properties for all of them. I’ll discuss this further in the section on important properties.

Now it’s time to add a new Service Reference to your WCF application hosted in Windows Azure. There is nothing specific to the CASI Kit that needs to be done here – just right-click on References in your project and select Add Service Reference. Plug in the Url to your Azure WCF application with the “?WSDL” at the end so it retrieves the WSDL for your service implementation. Then change the name to whatever you want, add the reference and this part is complete.

At this point you have an empty class and a service reference to your WCF application. Now comes the code writing part, which is fortunately pretty small. You need to override the ExecuteRequest method, create and configure the service class proxy, then call the base class’ ExecuteRequest method. To simplify, here is the complete code for the sample control I’m attaching to this post; I’ve highlighted in yellow the parts that you need to change to match your service reference: …

Double-spaced C# sample code omitted for brevity.

So there you have it – basically five lines of code, and you really can just copy and paste the code in the override for ExecuteRequest shown here directly into your own override. After doing so you just need to replace the parts highlighted in yellow with the appropriate class and interfaces your WCF application exposes. In the highlighted code above:

- CustomersWCF.CustomersClient: “CustomersWCF” is the name I used when I created my service reference, and CustomersClient is the name of the class I’ve exposed through my WCF application. The class name in my WCF is actually just “Customers” and the VS.NET add service reference tool adds the “Client” part at the end.

- CustomersWCF.ICustomers: The “CustomersWCF” is the same as described above. “ICustomers” is the interface that I created in my WCF application, that my WCF “Customers” class actually implements.

That’s it – that’s all the code you need to write to provide that connection back to your WCF application hosted in Windows Azure. Hopefully you’ll agree that’s pretty painless. As a little background, the code you wrote is what allows the call to the WCF application to pass along the SharePoint user’s token. This is explained in a little more detail in this other posting I did: http://blogs.technet.com/b/speschka/archive/2010/09/08/calling-a-claims-aware-wcf-service-from-a-sharepoint-2010-claims-site.aspx.

Now that the code is complete, you need to make sure you register both the base class and your new custom control in the Global Assembly Cache on each server where it will be used. This can obviously be done pretty easily with a SharePoint solution. With the control complete and registered it’s time to take a look at how you use it to retrieve and render data. In the CASI Kit I tried to address three core scenarios for using Azure data:

- Rendering content in a SharePoint page, using a web part

- Retrieving configuration data for use by one to many controls and storing it in ASP.NET cache

- Retrieving data and using it in task type executables, such as a SharePoint timer job

The first scenario is likely to be the most pervasive, so that’s the one we’ll tackle first. The easiest thing to do once this methodology was mapped out would have been to just create a custom web part that made all of these calls during a Load event or something like that, retrieved the data and rendered it out on the page. This, however, I think would be a HUGE mistake. Wrapping that code up in the web part itself, so it executes server-side during the processing of a page request, could severely bog down the overall throughput of the farm. I had serious concerns about having one to many web parts on a page that were making one to many latent calls across applications and data centers to retrieve data, and could very easily envision a scenario where broad use could literally bring an entire farm down to its knees. However, there is this requirement that some code has to run on the server, because it is needed to configure the channel to the WCF application to send the user token along with the request. My solution came to consist of two parts:

1. A custom page hosted in the _layouts folder. It will contain the custom control that we just wrote above and will actually render the data that’s returned from the WCF call.

2. A custom web part that executes NO CODE on the server side, but instead uses JavaScript and jQuery to invoke the page in the _layouts folder. It reads the data that was returned from the page and then hands it off to a JavaScript function that, by default, will just render the content in the web part. There’s a lot more to it that’s possible in the web part of course, but I’ll cover the web part in detail in the next posting. The net though is that when a user requests the page it is processed without having to make any additional latent calls to the WCF application. Instead the page goes through the processing pipeline and comes down right away to the user’s browser. Then after the page is fully loaded the call is made to retrieve only the WCF content.
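The "render the page first, fetch the WCF content afterwards" approach can be sketched roughly as follows. This is an illustration under stated assumptions rather than the web part's actual script: loadAzureContent and fakeAjax are hypothetical names, the ajax function is injected so that $.ajax can be supplied in a browser and a stub elsewhere, and the page URL and method name are simply the sample values used elsewhere in this series.

```javascript
// Sketch of the deferred-load pattern. `ajax` is injected so the function can
// be driven by $.ajax in a browser or by a stub outside of one.
function loadAzureContent(ajax, options) {
  ajax({
    type: "GET",
    url: options.pageUrl, // the custom page in the _layouts folder
    dataType: "text",
    data: "methodname=" + encodeURIComponent(options.methodName),
    success: function (content) {
      options.render(content); // hand the returned content to the renderer
    },
    error: function () {
      options.render(options.errorMessage);
    }
  });
}

// Stand-in for $.ajax that immediately "returns" canned content.
function fakeAjax(settings) {
  settings.success("<b>3 customers</b>");
}

let rendered = null;
loadAzureContent(fakeAjax, {
  pageUrl: "/_layouts/SomePage.aspx",
  methodName: "GetAllCustomersHtml",
  render: function (html) { rendered = html; },
  errorMessage: "Unable to load data"
});
console.log(rendered);
```

In a browser, the same function would be called from a page-load handler with $.ajax as the first argument, so the SharePoint page itself is never held up waiting on the call to Azure.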

The Layouts Page

The layouts page that will host your custom control is actually very easy to write. I did the whole thing in notepad in about five minutes. Hopefully it will be even quicker for you because I’m just going to copy and paste my layouts page here and show you what you need to replace in your page. …

ASP.NET code omitted for brevity.

Again, the implementation of the page itself is really pretty easy. All that absolutely has to be changed is the strong name of the assembly for your custom control. For illustration purposes, I’ve also highlighted a couple of the properties in the control tag itself. These properties are specific to my WCF service, and can be changed and in some cases removed entirely in your implementation. The properties will be discussed in more detail below. Once the layouts page is created it needs to be distributed to the _layouts directory on every web front end server in your SharePoint farm. At that point it can be called from any site in any claims-aware web application in your SharePoint farm. Obviously, you should not expect it to work in a classic authentication site, such as Central Admin. Once the page has been deployed it can be used by the CASI Kit web part, which will be described in part 4 of this series.

Important Properties

The WcfConfig contains two big categories of properties – those for configuring the channel and connection to the WCF application, and those that configure the use of the control itself.

WCF Properties

As described earlier, all of the configuration information for the WCF application that is normally contained in the web.config file has been encapsulated into the WcfConfig control. However, virtually all of those properties are exposed so that they can be modified depending on the needs of your implementation. Two important exceptions are the message security version, which is always MessageSecurityVersion.WSSecurity11WSTrust13WSSecureConversation13WSSecurityPolicy12BasicSecurityProfile10, and the transport, which is always HTTPS, not HTTP. Otherwise the control has a number of properties that may be useful to know a little better in your implementation (although in the common case you don’t need to change them).

First, there are five read-only properties to expose the top-level configuration objects used in the configuration. By read-only, I mean the objects themselves are read-only, but you can set their individual properties if working with the control programmatically. Those five properties are:

- SecurityBindingElement SecurityBinding

- BinaryMessageEncodingBindingElement BinaryMessage

- HttpsTransportBindingElement SecureTransport

- WS2007FederationHttpBinding FedBinding

- EndpointAddress WcfEndpointAddress

The other properties can all be configured in the control tag that is added to the layouts aspx page. For these properties I used a naming convention that I was hoping would make sense for compound property values. For example, the SecurityBinding property has a property called LocalClient, which has a bool property called CacheCookies. To make this as easy to understand and use as possible, I just made one property called SecurityBindingLocalClientCacheCookies. You will see several properties like that, and this is also a clue for how to find the right property if you are looking at the .NET Framework SDK and wondering how you can modify some of those property values in the base class implementation. Here is the complete list of properties: …

Another couple of pages of C# code omitted for brevity.

Again, these were all created so that they could be modified directly in the control tag in the layouts aspx page. For example, here’s how you would set the FedBindingUseDefaultWebProxy property:

<AzWcf:WcfDataControl runat="server" id="wcf" WcfUrl="https://azurewcf.vbtoys.com/Customers.svc" OutputType="Page" MethodName="GetAllCustomersHtml" FedBindingUseDefaultWebProxy="true" />
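The compound naming convention described above can be illustrated with a short sketch. It is only a model of the convention, not code from the CASI Kit: flattenPropertyNames is a hypothetical helper, and the nested object and its values are made up for the example.

```javascript
// Illustrative only: flattens nested property paths into compound names, the
// way SecurityBinding.LocalClient.CacheCookies maps to
// SecurityBindingLocalClientCacheCookies.
function flattenPropertyNames(obj, prefix) {
  const result = {};
  for (const key of Object.keys(obj)) {
    const name = (prefix || "") + key;
    const value = obj[key];
    if (value !== null && typeof value === "object") {
      // Descend into nested objects, carrying the accumulated name along.
      Object.assign(result, flattenPropertyNames(value, name));
    } else {
      result[name] = value;
    }
  }
  return result;
}

const flat = flattenPropertyNames({
  SecurityBinding: { LocalClient: { CacheCookies: true } },
  FedBinding: { UseDefaultWebProxy: true }
});
console.log(Object.keys(flat));
```

Reading the convention in reverse is what helps with the .NET Framework SDK: split the compound name at each object boundary to find the underlying property to look up.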

Usage Properties

The other properties on the control are designed to control how it’s used. While there is a somewhat lengthy list of properties, note that they are mainly for flexibility of use – in the simple case you will only need to set one or two properties, or alternatively just set them in the web part that will be described in part four of this series. Here’s a list of each property and a short description of each.

- string WcfUrl – this is the Url to the WCF service endpoint, e.g. https://azurewcf.vbtoys.com/Customers.svc.

- string MethodName – this is the name of the method that should be invoked when the page is requested. You can set this to what method will be invoked by default. In addition, you can also set the AllowQueryStringOverride property to false, which will restrict the page to ONLY using the MethodName you define in the control tag. This property can be set via query string using the CASI Kit web part.

- string MethodParams – this is a semi-colon delimited list of parameter values that should be passed to the method call. They need to be in the same order as they appear in the method signature. As explained in part 2 of this blog series, parameter values really only support simple data types, such as string, bool, int and datetime. If you wish to pass more complex objects as method parameters then you need to make the parameter a string and serialize your object to Xml before calling the method; then in your WCF application you can deserialize the string back into an object instance. If passing that as a query string parameter, though, you will be limited by the maximum query string length that your browser and IIS support. This property can be set via query string using the CASI Kit web part.

- object WcfClientProxy – the WcfClientProxy is what’s used to actually make the call to the WCF Application. It needs to be configured to support passing the user token along with the call, so that’s why the last bit of configuration code you write in your custom control in the ExecuteRequest override is to set this proxy object equal to the service application proxy you created and configured to use the current client credentials.

- string QueryResultsString – this property contains a string representation of the results returned from the method call. If your WCF method returns a simple data type like bool, int, string or datetime, then the value of this property will be the return value ToString(). If your WCF method returns a custom class that’s okay too – when the base class gets the return value it will serialize it to a string so you have an Xml representation of the data.

- object QueryResultsObject – this property contains an object representation of the results returned from the method call. It is useful when you are using the control programmatically. For example, if you are using the control to retrieve data to store in ASP.NET cache, or to use in a SharePoint timer job, the QueryResultsObject property has exactly what the WCF method call returned. If it’s a custom class, then you can just cast the results of this property to the appropriate class type to use it.

- DataOutputType OutputType – the OutputType property is an enum that can be one of four values: Page, ServerCache, Both or None. If you are using the control in the layouts page and you are going to render the results with the web part, then the OutputType should be Page or Both. If you want to retrieve the data and have it stored in ASP.NET cache then you should use ServerCache or Both. NOTE: When storing results in cache, ONLY the QueryResultsObject is stored. Obviously, Both will both render the data and store it in ASP.NET cache. If you are just using the control programmatically in something like a SharePoint timer job then you can set this property to None, because you will just read the QueryResultsString or QueryResultsObject after calling the ExecuteRequest method. This property can be set via query string using the CASI Kit web part.

- string ServerCacheName – if you chose an OutputType of ServerCache or Both, then you need to set the ServerCacheName property to a non-empty string value or an exception will be thrown. This is the key that will be used to store the results in ASP.NET cache. For example, if you set the ServerCacheName property to be “MyFooProperty”, then after calling the ExecuteRequest method you can retrieve the object that was returned from the WCF application by referring to HttpContext.Current.Cache["MyFooProperty"]. This property can be set via query string using the CASI Kit web part.

- int ServerCacheTime – this is the time, in minutes, that an item added to the ASP.NET cache should be kept. If you set the OutputType property to either ServerCache or Both then you must also set this property to a non-zero value or an exception will be thrown. This property can be set via query string using the CASI Kit web part.

- bool DecodeResults – this property is provided in case your WCF application returns results that are HtmlEncoded. If you set this property to true then HtmlDecoding will be applied to the results. In most cases this is not necessary. This property can be set via query string using the CASI Kit web part.

- string SharePointClaimsSiteUrl – this property is primarily provided for scenarios where you are creating the control programmatically outside of an Http request, such as in a SharePoint timer job. By default, when a request is made via the web part, the base class will use the Url of the current site to connect to the SharePoint STS endpoint to provide the user token to the WCF call. However, if you have created the control programmatically and don’t have an Http context, you can set this property to the Url of a claims-secured SharePoint site and that site will be used to access the SharePoint STS. So, for example, you should never need to set this property in the control tag on the layouts page because you will always have an Http context when invoking that page.

- bool AllowQueryStringOverride – this property allows administrators to effectively lock down a control tag in the layouts page. If AllowQueryStringOverride is set to false then any query string override values that are passed in from the CASI Kit web part will be ignored.

- string AccessDeniedMessage – this is the Access Denied error message that should be displayed in the CASI Kit web part if access is denied to the user for a particular method. For example, as explained in part 2 of this series, since we are passing the user’s token along to the WCF call, we can decorate any of the methods with a PrincipalPermission demand, like “this user must be part of the Sales Managers” group. If a user does not meet a PrincipalPermission demand then the WCF call will fail with an access denied error. In that case, the web part will display whatever the AccessDeniedMessage is. Note that you can use rich formatting in this message, to do things like set the font bold or red using HTML tags (e.g. <font color='red'>You have no access; contact your admin</font>). This property can be set via query string using the CASI Kit web part.

- string TimeoutMessage – this is the message that will be displayed in the CASI Kit web part if there is a timeout error trying to execute the WCF method call. It also supports rich formatting such as setting the font bold, red, etc. This property can be set via query string using the CASI Kit web part.
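The interaction between AllowQueryStringOverride, OutputType and the two cache properties can be modeled with a small sketch. This is not the base class's code; it only restates the rules listed above. resolveSettings and the "DeleteCustomer" method name are hypothetical, the override values are keyed by property name purely for illustration, and treating overrides as allowed when the property is unset is an assumption.

```javascript
// Model of the rules described in the property list; not the CASI Kit's code.
// These are the properties the list says can be set via query string.
const OVERRIDABLE = [
  "MethodName", "MethodParams", "OutputType", "ServerCacheName",
  "ServerCacheTime", "DecodeResults", "AccessDeniedMessage", "TimeoutMessage"
];

function resolveSettings(tagSettings, queryOverrides) {
  const settings = Object.assign({}, tagSettings);
  // Assumption: overrides are honored unless the tag explicitly sets false.
  if (settings.AllowQueryStringOverride !== false) {
    for (const name of OVERRIDABLE) {
      if (queryOverrides && queryOverrides[name] !== undefined) {
        settings[name] = queryOverrides[name];
      }
    }
  }
  // ServerCache and Both require a cache key and a non-zero cache time.
  const usesCache =
    settings.OutputType === "ServerCache" || settings.OutputType === "Both";
  if (usesCache && !settings.ServerCacheName) {
    throw new Error("ServerCacheName must be a non-empty string");
  }
  if (usesCache && !settings.ServerCacheTime) {
    throw new Error("ServerCacheTime must be a non-zero number of minutes");
  }
  return settings;
}

// A locked-down tag ignores the method name passed on the query string.
const locked = resolveSettings(
  { MethodName: "GetAllCustomersHtml", OutputType: "Page", AllowQueryStringOverride: false },
  { MethodName: "DeleteCustomer" }
);
console.log(locked.MethodName);
```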

Okay, this was one LONG post, but it will probably be the longest of the series because it’s where the most significant glue of the CASI Kit is. In the next posting I’ll describe the web part that’s included with the CASI Kit to render the data from your WCF application, using the custom control and layouts page you developed in this step.

Also, attached to this post you will find a zip file that contains the sample Visual Studio 2010 project that I created that includes the CASI Kit base class assembly, my custom control that inherits from the CASI Kit base class, as well as my custom layouts page.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Buck Woody offered his recommendations for Designing for the cloud in an 11/8/2010 post:

With the advent of platform as a service (Azure and paradigms like it) it isn't enough to simply write the same code patterns as we have in the past. To be sure, those patterns will still function in Azure, but we are not taking full advantage of the paradigm if we don't exercise it more fully.

I've been reading Neal Stephenson's "Snow Crash" again for the first time in a long time, and the old adage holds true - today's science fiction is tomorrow's science fact. In this story there are interesting paradigms in code - a strange mixture of "the sims" or second-life and the Internet. There is also the idea of telecommuting, remote meetings and classes, which develop into a real second economy.

Developers will bring in something similar using cloud technologies. Data marketplaces such as the one we recently released will become the norm, and the App store model from Apple will bubble down - not up - into code fragments that will allow other developers and non-developers alike to stitch together entirely new applications.

So what steps do you need to take now to get your skills and code up to speed? My recommendation is to modularize your code logic - not the code itself, but the thoughts behind it - in a more Service Oriented Architecture way. From the outset, think of the public and private methods the code provides, and most importantly, divorce it from the processing of the rest of the system as much as possible. And - this is the important part - keep the presentation layer separate.

Again, that concept isn't new. Lots of folks thought of this a long time ago. As software engineers we're always supposed to do that, but over and over again I find the code inextricably tied between the two. If you keep them separate, however, there is something else I propose you do: implement functional contracts between your front end and the presentation handler code. Let me explain.

I suggest you create three levels of code - all of them separate - one handling the processing of data and numerics à la SOA, the second handling multiple rendering properties such as text, HTML, polygons, vectors and so on, and the third for each front end you plan to address. That's right, multiple front ends. I know this is antithetical to a platform independent approach, but I think it is necessary. When I view an app designed for a win7 phone, an iPad and a web page, it is rubbish. Sorry, but there it is. Each platform has its own strengths, and you should code to those.

Keeping a three-level architecture with contracts allows you to only rewrite front ends as needed, which of course need updating often anyway.
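As a rough illustration of the three levels and the contract between them, here is a minimal sketch. All of the names (inventoryService, renderers, makeFrontEnd) and the sample data are hypothetical; the only point is that the processing level returns plain data, every rendering handler honors the same render(data) contract, and each front end chooses the handler that suits its platform.

```javascript
// Level 1: processing. Returns plain data and knows nothing about presentation.
const inventoryService = {
  getTotals(items) {
    return {
      count: items.length,
      total: items.reduce((sum, item) => sum + item.price, 0)
    };
  }
};

// Level 2: rendering handlers. Each fulfils the same contract:
// render(data) returns a string.
const renderers = {
  text: { render: (d) => `${d.count} items, total ${d.total}` },
  html: { render: (d) => `<p><b>${d.count}</b> items, total <b>${d.total}</b></p>` }
};

// Level 3: front ends. Each one picks the renderer that suits its platform.
function makeFrontEnd(format) {
  const renderer = renderers[format];
  if (!renderer || typeof renderer.render !== "function") {
    throw new Error("No renderer fulfils the contract for format: " + format);
  }
  return { show: (items) => renderer.render(inventoryService.getTotals(items)) };
}

const items = [{ price: 5 }, { price: 7 }];
console.log(makeFrontEnd("text").show(items));
console.log(makeFrontEnd("html").show(items));
```

Adding a front end for a new device then means adding one renderer and one front end, with the processing level left untouched.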

So there you have it - not necessarily a revolutionary concept or an original one, but something to keep in mind for programming against a Platform as a Service like Windows Azure.

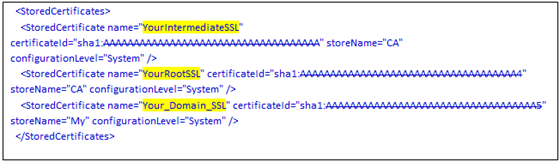

Avkash Chauhan explained Adding SSL (HTTPS) security with Tomcat/Java solution in Windows Azure in an 11/7/2010 post:

Once you have your Tomcat/Java based solution running on port 80 in Windows Azure, you may decide to add an HTTPS endpoint to it, or you may want to support both HTTP and HTTPS endpoints with your Tomcat/Java service from the start of development. Adding an HTTPS endpoint to a regular .NET based service is very easy and described everywhere; however, adding an HTTPS endpoint to a Tomcat/Java based service is a little different. The reason is that for a managed service the SSL certificate is handled by managed modules, which get the certificate from the system, whereas Tomcat manages SSL certificates in its own way. This document helps you get the HTTPS endpoint working with your Tomcat/Java service on Windows Azure.

Adding HTTPS security to any website is basically certificate-based security built on PKI concepts. To start, you first need to obtain a valid certificate for “client and server validation” from a Certificate Authority, e.g. “Verisign”, “Go Daddy” or “Thawte”. I will not discuss here which CA you should choose or how you will get this certificate. I assume that you know the concepts and have a certificate ready to add HTTPS security to your Tomcat service.

You will be given a Certificate to verify your domain name such as www.yourdomain.com so the certificate will be linked to your domain this way.

The process of adding SSL to tomcat is defined in the following steps:

- Getting certificates from CA and then creating keystore.bin file

- Adding keystore.bin file to tomcat

- Adding HTTPS endpoint to your tomcat solution

- Adding certificate to your Tomcat service at Windows Azure Portal

Here is a description of each of the above steps:

Getting certificates from CA and then creating keystore.bin file

To get these certificates you will need to create a CSR (certificate signing request) from your Tomcat/Apache server, so create a folder named keystore and save the CSR contents there.

C:\Tomcat-Azure\TomcatSetup_x64\Tomcat\keystore

Most of the time you will get a certificate chain which includes your certificate, intermediate certificate and root certificate so essentially you will have 3 certificates:

- RootCertFileName.crt

- IntermediateCertFileName.crt

- PrimaryCertFileName.crt

Now once you received the certificate please save all 3 certificates in the keystore folder.

C:\Tomcat-Azure\TomcatSetup_x64\Tomcat\keystore

The certificate will only work with the same keystore that you initially created the CSR with. The certificates must be installed to your keystore in the correct order.

We will be using Keytool.exe, a Java tool, to link these certificates with Tomcat. The tool is located at:

C:\Tomcat-Azure\Java\jre6\bin\keytool.exe

Every time you install a certificate to the keystore you must enter the keystore password that you chose when you generated it so you will keep using the same password.

Now open a command window and use the keytool binary to run the following commands.

Installing Root Certificate in keystore:

keytool -import -trustcacerts -alias root -file RootCertFileName.crt -keystore keystore.key

There are two possibilities:

- You may receive a success message, in which case we are good: "Certificate was added to keystore".

- You may also receive a message that says: "Certificate already exists in system-wide CA keystore under alias <...> Do you still want to add it to your own keystore? [no]." This is because the certificate may already be stored in the keystore, so select “Yes.”

You will see the message: "Certificate was added to keystore." Now we have added our Root certificate in the keystore.

Installing Intermediate Certificate in keystore:

keytool -import -trustcacerts -alias intermediate -file IntermediateCertFileName.crt -keystore keystore.key

You will see a message as: "Certificate was added to keystore".

We are good now.

Note: if you don’t have an intermediate certificate, that is not a problem; you can skip this step.

Installing Primary Certificate in keystore:

keytool -import -trustcacerts -alias tomcat -file PrimaryCertFileName.crt -keystore keystore.key

You will see a message as: "Certificate was added to keystore".

We are good now.

After this we can be sure that all certificates are installed in the keystore file.
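Since the import order matters and the intermediate certificate is optional, the sequence above can be summarized in a small sketch. keytoolImportCommands is a hypothetical helper that only assembles the command lines shown above (the same keytool flags, aliases and file names); it does not run keytool, and the keystore file name is passed in as given in the commands.

```javascript
// Hypothetical helper: lists the keytool import commands in the required
// order (root, then intermediate if present, then primary).
function keytoolImportCommands(keystore, certs) {
  const steps = [
    { alias: "root", file: certs.root },
    { alias: "intermediate", file: certs.intermediate }, // optional
    { alias: "tomcat", file: certs.primary }
  ];
  return steps
    .filter((step) => Boolean(step.file)) // skip certificates you do not have
    .map((step) =>
      `keytool -import -trustcacerts -alias ${step.alias} -file ${step.file} -keystore ${keystore}`
    );
}

const commands = keytoolImportCommands("keystore.key", {
  root: "RootCertFileName.crt",
  primary: "PrimaryCertFileName.crt"
});
commands.forEach((command) => console.log(command));
```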

Note you can actually see the contents of keystore.bin

Adding keystore.bin file to tomcat:

Our next step is to configure your server to use the keystore file.

Please get keystore.bin & yourdomain.key from the CSR creation location and then copy to your tomcat webapps folder:

Now open the server.xml file from Tomcat\conf\server.xml and edit it as below:

Note:

- Please be sure to have the same password as you had after CSR creation and used with keytool application.

- There are other methods for adding a keystore to Tomcat, so please look around on the Internet if you prefer a different method.

Verify that you have SSL working in Development Fabric: https://127.0.0.1:81

Adding HTTPS endpoint to your tomcat solution

Our next step is to add an HTTPS endpoint to the Tomcat solution. Please open the Tomcat solution in Visual Studio, which is located at:

C:\Tomcat-Azure\TomcatSetup_x64\Tomcat\Tomcat.sln

Once the solution is open please select the TomcatWorkerRole and open its properties dialog box as below:

Now please select the “Endpoints” tab and you will see the window as below:

In the “Endpoints” tab please select “Add Endpoint” button and add TCP endpoint with port 443.

For Tomcat, the HTTPS endpoint is defined as a “tcp” endpoint, just like the HTTP endpoint. Setting the protocol to “http” or “https” means that Azure will perform an http.sys reservation for that endpoint on the appropriate port. Since Tomcat does not use http.sys internally, we need to make sure to model Tomcat HTTPS endpoints as “tcp”.

You will also see that after setting the tomcatSSL endpoint to TCP with port 443, the SSL Certificate field is disabled, so a regular certificate cannot be used, as shown below:

To put things in full perspective, we already know that Tomcat has its SSL certificates in keystore.bin, so using a TCP endpoint with port 443 will work even though there is no certificate associated with it.

Now please save the project and verify that ServiceDefinition.csdef has the following data:

Now if you build your package using Packme.cmd these new changes will be in effect.

Adding certificate to your Tomcat service at Windows Azure Portal

Our next step is to add certificates in the Azure portal.

You need to go to the Windows Azure Portal, open your Tomcat service page where you have your production and staging slots, and go to the “Certificates – Manage” section as below:

Now please select Manage and you will see the following screen:

In the above web window please select all 2 or 3 PFX certificates (Root, Intermediate and Primary) and enter the password correctly if one is associated.

Once the upload is done you will see all the certificates listed on the portal as below:

You can also see the list of certificates installed on the server as below:

Now when you publish your service to Azure portal these certificates will be used to configure your Tomcat service and you will be able to use SSL with your Tomcat service.

<Return to section navigation list>

Visual Studio LightSwitch

Wes Yanaga announced Now available: Visual Studio LightSwitch Developer Training Kit in an 11/8/2010 post to the US ISV Evangelism blog:

The Visual Studio LightSwitch Training Kit contains demos and labs to help you learn to use and extend LightSwitch. The introductory materials walk you through the Visual Studio LightSwitch product. By following the hands-on labs, you'll build an application to inventory a library of books.

The more advanced materials will show developers how they can extend LightSwitch to make components available to the LightSwitch developer. In the advanced hands-on labs, you will build and package an extension that can be reused by the Visual Studio LightSwitch end user.

Here’s the LightSwitch Training Kit’s splash screen:

Beth Massi (@BethMassi) listed LightSwitch Team Interviews, Podcasts & Videos on 11/8/2010:

Have a long commute to work or want something to watch on your lunch break? Catch the team in these audio and video interviews!

Podcasts -

- CodeCast Episode 88: LightSwitch for .NET Developers with Jay Schmelzer

- MSDN Radio: Visual Studio LightSwitch with John Stallo and Jay Schmelzer

- MSDN Radio: Switching the Lights on with Beth Massi

- CodeCast Episode 95: LightSwitch Scenarios with Beth Massi

Video Interviews -

- Jay Schmelzer: Introducing Visual Studio LightSwitch

- Visual Studio LightSwitch - Beyond the Basics with Joe Binder

- Steve Anonsen and John Rivard: Inside LightSwitch

You can access these plus a lot more on the LightSwitch Developer Center http://msdn.com/lightswitch

<Return to section navigation list>

Windows Azure Infrastructure

Mike Wickstrand’s Windows Azure: A Year of Listening, Learning, Engineering, and Now Delivering! post of 11/2/2010 got lost in the shuffle, so better late than never here:

Although I’ve worked at Microsoft for more than 11 years, 2009 marked the first time I had the opportunity to attend Microsoft’s Professional Developers Conference. When I walked around PDC 09 in Los Angeles last year and spoke with developers, I found that I was inundated with many great ideas on how to make Windows Azure better, almost too many to sort through and prioritize. As someone who helps chart the future course for Windows Azure this was a fantastic problem to have at that point, because in late 2009 we were finalizing our priorities and engineering plans for calendar year 2010.

Energized by those developer conversations and wanting a way to capture and prioritize it all, on the flight home I launched http://www.mygreatwindowsazureidea.com (Wi-Fi on the plane helped). It’s a simple site where Windows Azure enthusiasts and customers (big or small) can tell Microsoft’s Windows Azure Team directly what they need by submitting and voting on ideas. I wasn’t sure anyone would participate, so I submitted a few ideas of my own to get things going and gauge interest in some ideas we were kicking around within the Windows Azure Team. The goal of the site was and is to better understand what you need from Windows Azure and to build plans around how we make the things that "bubble to the top" a reality for our customers in the future.

So what happened? Well, with a year now gone by and a slew of features on tap for release it’s the perfect time to reflect back. In the past 12 months, more than 2000 unique visitors to mygreatwindowsazureidea.com have submitted hundreds of feature requests and cast nearly 13,000 votes for the features that matter most to them. There were also hundreds and hundreds of valuable comments and blog posts that grew out of the ideas people were sharing on the site. Thank you for this amazing level of participation!

With the announcements last week at PDC 10 and with a look forward to things that weren’t announced, but are in the works, I am pleased to let you know that we are addressing 62% of all of the votes cast with features that are already or soon will be available. Said another way, we are addressing 8 out of the top 10 most requested ideas (and more ideas lower down on the list) that in total account for roughly 8000 of the nearly 13,000 votes cast.
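As a quick sanity check of the quoted percentage, the two vote counts can be divided directly. This is only a sketch: the variable names are illustrative, and because the post gives approximate counts ("roughly 8000" and "nearly 13,000"), the result can differ slightly from the 62% figure, which was presumably computed from exact totals.

```python
# Approximate figures quoted in the post; the exact totals are not given.
votes_addressed = 8000   # "roughly 8000"
votes_cast = 13000       # "nearly 13,000"

# Share of all votes covered by features that are shipped or planned.
share = votes_addressed / votes_cast
print(f"{share:.1%}")  # prints 61.5%
```

With the rounded inputs the share comes out just under 62%, which is consistent with the claim in the post.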

I hope that you agree that we are sincerely listening to you and knocking these high-priority ideas off one by one. I am sure there are some of you who want new features to come sooner, or perhaps you’re not happy because your requested feature isn’t yet available (or it isn’t available exactly in the way that you envisioned). With more than 2000 people participating, this is going to happen; I just hope that, with what we are releasing, you are now even more enthusiastic about keeping an active dialog going with me. Also, please realize this site is just one of many channels we use to determine our engineering and business priorities, and this one just happens to be the most public.

On that note, I received this e-mail the other day that I wanted to share with you:

From: Paul <last name and e-mail address withheld>

Sent: Saturday, October 30, 2010 4:15 AM

To: Mike Wickstrand

Subject: Windows Azure

Mike,

I’ve been keeping a close eye on Windows Azure, and so far it’s been a case of “Wow, I’d love to develop for this, but it’s too expensive”. I’ve been looking on “mygreatwindowsazureidea.com”, and I have to say, the new announcements for $0.05 per hour instances and being able to run multiple roles <web sites> per instance has tipped me in favour of Azure enough to begin learning and developing for it.

Thanks so much for listening to the feedback of the developer community. It gives me a warm feeling that we have our Microsoft of old back, who cares and listens to the developer community.

Honestly, this is great news.

Thanks,

Paul

(A born-again Microsoft fan-boi)

When I was sitting on that plane last year flying back from PDC 09 I hoped that in a year I was going to be able to look each of you in the eye and demonstrate to you that Microsoft listens and that the Windows Azure Team cares about what you need. In the best case scenario I envisioned that I would hear from customers like Paul. I gave you my assurance that if you tell us what you want, I will do my best to champion those ideas within Microsoft to make those things become a reality. I hope you feel like I’ve lived up to that and that I’ve earned the right to keep hearing your ideas on how to make Windows Azure great for you and for your companies.

So…a big thank you to the more than 2000 people who shared ideas and voted for what you want and need from Windows Azure. To the thousands of Windows Azure customers who regularly receive e-mails from me asking for your opinions, thank you and please keep the feedback coming. And lastly, to the Windows Azure Team, thanks for making all of this happen, for Paul and for our thousands of customers just like him.

In the past year we’ve also added a few more ears to my team, so along the way please don’t hesitate to share your ideas with harism@microsoft.com (Haris), adamun@microsoft.com (Adam), or rduffner@microsoft.com (Robert).

We look forward to coming back to you in another year after PDC 11 and having an even better story to tell.

Dustin Amrhein announced “The need for eventual standardization” as an introduction to his Is Standardization Right for Cloud-Based Application Environments? essay of 11/8/2010:

For me, this week has been one of those weeks that I think all technologists enjoy. You know what I'm talking about. The week has been one of those rare periods of time when you get to put day-to-day work on the backburner (or at least delay it until you get back to your hotel at night), and instead focus on learning, networking, and stepping outside of your comfort cocoon.

This week, I am getting a chance to attend Cloud Expo, two CloudCamps, and QCon all within a four-day span. In the process, I am meeting many smart folks (all the while finding out there are indeed people behind those Twitter handles) and coming across quite a few interesting cloud solutions. I could easily write a post talking about the people I met and the cool things they are doing, but instead I want to focus on the cloud solutions I came across during the week. When it comes to the solutions, rather than focusing in on one or two specific solutions, I wanted to focus in on a class of solutions, specifically cloud management solutions.

It's an obvious trend... the number of cloud management solutions on display far, far outweighs any other class of offering. To be fair, calling a particular offering a cloud management solution is to paint with a broad brush. Accordingly, these solutions vary in some respects. Some focus more on delivering capabilities to set up and configure cloud infrastructure, while others emphasize facilities to enable the effective consumption of said infrastructure. While some of the capabilities vary, there is one capability that nearly all have in common. Just about every one of these solutions that I have seen provides some sort of functionality around constructing and deploying application environments in a cloud.

Now, the approach that each solution delivers around this particular capability widely varies. Before we get into that, let's start by agreeing on what I mean by an application environment. In this context, when I say application environment, I am thinking of two main elements:

- Application infrastructure componentry: The application infrastructure componentry represents the building blocks of the application environment. These are the worker nodes (e.g., management servers, application servers, web servers) that support your application.

- Application infrastructure configuration: Application infrastructure is a means to the end of providing an application. Users always customize the configuration of the infrastructure in order to effectively deliver their application.

As I said, in tackling the pieces of these application environments, the solutions took different approaches. Nearly all had a way of representing the environment as a single logical unit. The name of that unit varied (patterns, templates, assemblies, etc.), as did the degree of abstraction. Some, but not all, provided a direct means to include configuration in that representation. Others left it up to the users to work out a construct by which they could include the configuration of their environment in the logical representation of their application environment.
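To make the idea of "a single logical unit" concrete, here is a minimal sketch of one way such a unit could bundle the two elements described above: the infrastructure components and their configuration. Everything in it is hypothetical (the class names, field names, and sample values are illustrative assumptions, not taken from any of the solutions the essay mentions).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Component:
    """One building block of the environment, such as an application server."""
    name: str
    role: str        # illustrative label, e.g. "web server"
    count: int = 1   # number of nodes of this kind


@dataclass
class ApplicationEnvironment:
    """A single logical unit: components plus their configuration."""
    name: str
    components: List[Component] = field(default_factory=list)
    # Configuration keyed by component name; keys and values are made up.
    configuration: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def unconfigured(self) -> List[str]:
        """Return names of components with no configuration in this unit."""
        return [c.name for c in self.components if c.name not in self.configuration]


env = ApplicationEnvironment(
    name="sample-env",
    components=[
        Component("web", "web server", count=2),
        Component("app", "application server"),
    ],
    configuration={"app": {"heap_size": "512m"}},
)
print(env.unconfigured())  # prints ['web']
```

A representation that carries configuration directly, as this one does, corresponds to the first group of solutions described above. Leaving `configuration` out would push that work onto the user, as in the second group.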

At this point in the cloud game, I believe most would expect this high degree of variation. In addition, I believe most would agree it is a healthy thing as it gives users a high degree of choice (even if it can be frustrating as a vendor to try to differentiate/explain your particular approach). However, as the market begins to validate the right approaches, I firmly believe we need some sort of standardization or commonality in how we approach representing application environments for the cloud.

As I see it, an eventual standardization in the space of representing application environments built for the cloud will enable several beneficial outcomes. This includes, but is not limited to:

- Multi-system management: One of the most obvious benefits of a standardized application environment description is that it sets the course for the use of these representations by multiple different management systems. Users should be able to take these patterns, templates, or assemblies and move them from one deployment management system to another.

- Policy-based management: A standard description of the environment and configuration paves the way for systems to be able to interact with the environment. Among other things, this may enable generically applicable policy-based management of the application environment.

- Sustainable PaaS systems: My last post goes into this in more detail, but it is my belief that to build sustainable PaaS platforms we need a common representation of application environments.

Admittedly, there is much more to this topic than a few words. These are just a few quick thoughts inspired by some of the emerging solutions I got an up-close look at this week. What do you think? Should we gradually move toward standardization in this space?

Dustin Amrhein joined IBM as a member of the development team for WebSphere Application Server.

Chris Dannen asserted “A new crop of mobile software startups is changing the way enterprises choose software, at the expense of big players like Microsoft” in a preface to his The Great Mobile Cloud Disruption article of 11/8/2010 for the MIT Technology Review:

Soon after Apple launched the iPad this spring, the TaylorMade-adidas Golf Company bought about 80 of the tablets for its marketing and sales departments. Before long, most of those employees began using a content-sharing tool called Box.net as a way to recommend and comment on articles about leadership and personal growth, even though the IT department never sanctioned the software. Says Jim Vaughn, TaylorMade's head of sales development: "I'm not even sure how or when Box was put into the picture." But the software is now in use among hundreds of TaylorMade employees with tablets and smart phones.

TaylorMade was able to adopt this software so quickly because it's not hosted on the servers at its Carlsbad, California, headquarters—but rather on remote computers in the cloud. It's a story that's happening over and over at many large corporations. Mobile devices are upending the way enterprisewide software is bought and run, shifting decisions from IT departments to the users themselves. This mirrors the pattern of prior sea changes in technology, most notably the PC itself, which was at first heavily resisted by IT departments.

Palo Alto, California-based Box.net—along with venture capital-backed startups with names such as Yammer, Nimbula, and Zuora—is pouncing on this latest disruption now that it's clear that iPhones, iPads, Android phones, and other gadgets are not just a consumer phenomenon but the future of collaborative work.

Globally, the enterprise software market is worth more than $200 billion a year. But more and more of that market is moving into the mobile cloud. "Mobile is very hard to do from behind a corporate firewall," says David Sacks, CEO of Yammer, a corporate social network that enables employees at companies such as Molson Coors, Cargill, AMD, and Intuit to find coworkers with the right expertise if they encounter problems in the field.

These low-cost mobile cloud services (Box.net costs $15 per user per month) are poised to displace a sizeable chunk of the larger market and to grow into a $5.2 billion sector with 240 million users by 2015, according to ABI Research.

The main incumbent for enterprise software, Microsoft, has been slow to react, largely because most of its customers for cash-cow products such as Office (for personal productivity) as well as SharePoint and Dynamics (for enterprise resource planning) are mainly using on-premise servers, not cloud storage. Such programs are generally sold to big companies under multimillion-dollar site licenses.

The new mobile-savvy cloud services can plug into one another, working together, making them de facto allies against Microsoft. For instance, Box.net and Yammer both connect with Google Apps—a set of cloud-based tools for creating and managing documents that Google sells as an annual subscription for $50 per user. For Google, the emergence of these mobile cloud startups reinforces its strategy, says Chris Vander Mey, senior product manager for Google Apps. The idea is to offer an alternative to Microsoft Office in the cloud while making it easy to share data among programs that do other tasks. "That enables us to focus on a few things and do them really well, like e-mail," says Vander Mey.