Windows Azure and Cloud Computing Posts for 11/23/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Buck Woody posted Windows Azure Learning Plan – Storage to his Carpe Data blog on 11/23/2010:

This is one in a series of posts on a Windows Azure Learning Plan. You can find the main post here. This one deals with Storage for Windows Azure.

General Information

Overview and general information about Windows Azure storage - what it is, how it works, and where you can learn more.

General Overview Of Azure Storage

Official Microsoft Site on Azure Storage

Scalability Targets for Azure Storage

Windows Azure Storage Services REST API Reference

Blobs

Blob Storage is a binary-level "container" that can hold one or more streams of data: up to 200GB each for block blobs and up to 1TB each for page blobs.

Understanding Block Blobs and Page Blobs

Blob Service Concepts

Tables

Table storage holds structured, non-relational entities, each identified by a partition key and a row key.

Understanding the Table Storage Data Model

Table Service Concepts

Querying Tables and Entities

Queues

Queues provide reliable, persistent messaging between the roles of an application.

Queue Service Concepts

Queue Service API

Azure Drive

A Windows Azure Drive is a page blob mounted as an NTFS volume that a role instance can use like a local disk.

Windows Azure Drives white paper

Content Delivery Network

The CDN is an "edge-storage" solution that moves data closer to clients as they access it.

General Overview

Delivering High-Bandwidth Content with the Windows Azure CDN

Tools and Utilities

Free and commercial tools for Azure Storage.

Windows Azure Storage Client SDK

http://msdn.microsoft.com/en-us/library/microsoft.windowsazure.storageclient.aspx

Benchmarks and Throughput tests

Mount an Azure Drive as NTFS

http://azurescope.cloudapp.net/CodeSamples/cs/792ce345-256b-4230-a62f-903f79c63a67/

Command-line tool for working with Azure Storage

Joe Giardino of the Windows Azure Storage Team posted Windows Azure Storage Client Library: Potential Deadlock When Using Synchronous Methods on 11/23/2010:

Summary

In certain scenarios, using the synchronous methods provided in the Windows Azure Storage Client Library can lead to deadlock, specifically when the system is using all of the available ThreadPool threads while performing synchronous method calls via the Windows Azure Storage Client Library. Examples of such behavior are ASP.NET requests served synchronously, or a simple work client that queues up N work items to execute on the ThreadPool. Note that if you are manually creating threads outside of the managed ThreadPool, or are otherwise executing code off a ThreadPool thread, this issue will not affect you.

When calling synchronous methods, the current implementation of the Storage Client Library blocks the calling thread while performing an asynchronous call. This blocked thread waits for the asynchronous result to return. As such, if one of the asynchronous results requires an available ThreadPool thread (e.g. MemoryStream.BeginWrite, FileStream.BeginWrite, etc.) and no ThreadPool threads are available, its callback will be added to the ThreadPool work queue to wait until a thread becomes available for it to run on. The calling thread stays blocked until that asynchronous result (callback) unblocks it, but the callback cannot execute until a thread becomes unblocked; in other words, the system is now deadlocked.

Affected Scenarios

This issue could affect you if your code is executing on a ThreadPool thread and you are using the synchronous methods from the Storage Client Library. Specifically, this issue will arise when the application has used all of its available ThreadPool threads. To find out if your code is executing on a ThreadPool thread, you can check System.Threading.Thread.CurrentThread.IsThreadPoolThread at runtime. Some specific methods in the Storage Client Library that can exhibit this issue include the various blob download methods (file, byte array, text, stream, etc.).

Example

For example, let’s say that we have a maximum of 10 ThreadPool threads in our system, which can be set using ThreadPool.SetMaxThreads. If each of those threads is blocked on a synchronous method call, waiting for an async wait handle that only a ThreadPool thread can set, we are deadlocked: there are no available threads in the ThreadPool to set the wait handle.

Workarounds

The following workarounds will avoid this issue:

- Use asynchronous methods instead of their synchronous equivalents (e.g. use BeginDownloadByteArray/EndDownloadByteArray rather than DownloadByteArray). Utilizing the Asynchronous Programming Model is really the only way to guarantee performant and scalable solutions that do not perform any blocking calls. As such, using the Asynchronous Programming Model will avoid the deadlock scenario detailed in this post.

- If you are unable to use asynchronous methods, limit the level of concurrency in the system at the application layer to reduce simultaneous in-flight operations, using a semaphore construct such as System.Threading.Semaphore or Interlocked.Increment/Decrement. For example, each incoming client could perform an Interlocked.Increment on an integer and a corresponding Interlocked.Decrement when the synchronous operation completes. With this mechanism in place, you can keep the number of simultaneous in-flight operations below the limit of the ThreadPool and return “Server Busy” instead of blocking more worker threads. When setting the maximum number of ThreadPool threads via ThreadPool.SetMaxThreads, be cognizant of any additional ThreadPool threads you are using in the app domain via ThreadPool.QueueUserWorkItem or otherwise, so as to accommodate them in your scenario. The goal is to limit the number of blocked threads in the system at any given point. Therefore, make sure that you do not block the thread prior to calling synchronous methods (for example, while waiting for a free slot), since that will result in the same number of overall blocked threads. Instead, when the application reaches its concurrency limit, you must ensure that no additional ThreadPool threads become blocked.
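The second workaround, admitting only a bounded number of synchronous operations and rejecting the rest without blocking, might look like the following Python sketch. `ConcurrencyGate` is a hypothetical helper; `threading.BoundedSemaphore` with a non-blocking `acquire` plays the role the post assigns to System.Threading.Semaphore or an Interlocked counter, and the limit would be chosen below the size of the thread pool.

```python
import threading
from typing import Callable


class ConcurrencyGate:
    """Hypothetical helper: admit at most `limit` in-flight synchronous calls."""

    def __init__(self, limit: int) -> None:
        self._slots = threading.BoundedSemaphore(limit)

    def run(self, operation: Callable[[], str]) -> str:
        # Never wait for a slot: waiting would park yet another pool thread.
        if not self._slots.acquire(blocking=False):
            return "Server Busy"
        try:
            return operation()  # the blocking, synchronous storage call
        finally:
            self._slots.release()
```

The non-blocking acquire is the important design choice: a caller waiting for a slot would itself be another blocked pool thread, which is exactly what the post warns against.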

Mitigations

If you are experiencing this issue and the options above are not viable in your scenario, you might try one of the options below. Please ensure you fully understand the implications of the actions below as they will result in additional threads being created on the system.

- Increasing the number of ThreadPool threads can mitigate this to some extent; however, deadlock will always be a possibility without a limit on simultaneous operations.

- Offload work to non-ThreadPool threads (make sure you understand the implications before doing this: the main purpose of the ThreadPool is to avoid the cost of constantly spinning up and killing off threads, which can be expensive in code that runs frequently or in a tight loop).

Summary

We are currently investigating long-term solutions for this issue for an upcoming release of the Azure SDK. As such, if you are currently affected by this issue, please follow the workarounds contained in this post until a future release of the SDK is made available. To summarize, here are some best practices that will help avoid the potential deadlock detailed above:

- Use asynchronous methods for applications that scale – simply stated, synchronous calls do not scale well because they imply the system must lock a thread for some amount of time. For applications with low demand this is acceptable; however, threads are a finite resource in a system and should be treated as such. Applications that need to scale should use simultaneous asynchronous calls so that a given thread can service many calls.

- Limit concurrent requests when using synchronous APIs – use semaphores/counters to control concurrent requests.

- Perform a stress test – purposely saturate the ThreadPool workers to ensure your application responds appropriately.
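To illustrate the first best practice, here is a sketch using Python's `asyncio` in place of the .NET Asynchronous Programming Model (a swapped-in technique, not the Begin/End pattern itself). `fake_download` is a hypothetical stand-in for a blob download; while each download is awaiting I/O, the single event-loop thread is free to start the others.

```python
import asyncio
import threading
import time
from typing import List, Tuple


async def fake_download(blob_name: str) -> Tuple[str, int]:
    # Hypothetical stand-in for network I/O: awaiting releases the thread
    # so it can start other downloads while this one is "in flight".
    await asyncio.sleep(0.1)
    return blob_name, threading.get_ident()


async def download_all(count: int) -> List[Tuple[str, int]]:
    # Start every download at once; gather returns results in request order.
    return await asyncio.gather(*(fake_download(f"blob-{i}") for i in range(count)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(download_all(100))
    elapsed = time.perf_counter() - start
    threads = {thread_id for _, thread_id in results}
    print(len(results), "downloads on", len(threads), "thread(s) in", f"{elapsed:.2f}s")
```

Run as a script, it should report 100 results, all produced on one thread, in roughly the time of a single simulated download.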

References

- MSDN ThreadPool documentation: http://msdn.microsoft.com/en-us/library/y5htx827(v=VS.90).aspx

- Implementing the CLR Asynchronous Programming Model: http://msdn.microsoft.com/en-us/magazine/cc163467.aspx

- Developing High-Performance ASP.NET Applications: http://msdn.microsoft.com/en-us/library/aa719969(v=VS.71).aspx

Michael Stiefel explained Partitioning Azure Table Storage in an 11/22/2010 post to the Reliable Software blog:

Determining how to divide your Azure table storage into multiple partitions is based on how your data is accessed. Here is an example of how to partition data assuming that reads predominate over writes.

Consider an application that sells tickets to various events. Typical questions and the attributes accessed for the queries are:

- How many tickets are left for an event? (date, location, event)

- What events occur on which date? (date, artist, location)

- When is a particular artist coming to town? (artist, location)

- When can I get a ticket for a type of event? (genre)

- Which artists are coming to town? (artist, location)

The queries are listed in frequency order. The most common query is about how many tickets are available for an event.

The most common combination of attributes is artist or date for a given location. The most common query uses event, date, and location.

With Azure tables you have only two keys: the partition key and the row key. The fastest queries always specify the partition key; fastest of all is a point query that specifies both keys.

This leads us to the suggestion that the partition key should be location since it is involved with all but one of the queries. The row key should be date concatenated with event. This gives a quick result for the most common query.
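A minimal Python sketch of the suggested scheme, assuming ISO-formatted dates so that row keys sort chronologically; the function names and the `_` separator are hypothetical choices, and the filters are OData `$filter` strings of the kind the Table service accepts. It shows why the most common query is fast (both keys are known, so it is a point lookup) and why "what events occur on which date" stays within one partition.

```python
from datetime import date, timedelta
from typing import Dict

# Printable characters the Table service rejects in PartitionKey/RowKey values.
DISALLOWED = set("/\\#?")


def _quote(value: str) -> str:
    # OData string literals escape a single quote by doubling it.
    return "'" + value.replace("'", "''") + "'"


def ticket_keys(location: str, event_date: date, event: str) -> Dict[str, str]:
    """PartitionKey = location; RowKey = ISO date + '_' + event."""
    for part in (location, event):
        if DISALLOWED & set(part):
            raise ValueError(f"key part contains a disallowed character: {part!r}")
    return {"PartitionKey": location, "RowKey": f"{event_date.isoformat()}_{event}"}


def tickets_left_filter(location: str, event_date: date, event: str) -> str:
    # Most common query: both keys are known, so this is a point lookup.
    keys = ticket_keys(location, event_date, event)
    return (f"PartitionKey eq {_quote(keys['PartitionKey'])} "
            f"and RowKey eq {_quote(keys['RowKey'])}")


def events_on_date_filter(location: str, event_date: date) -> str:
    # RowKey starts with the date, so one day's events form a contiguous
    # range scan inside a single partition.
    next_day = event_date + timedelta(days=1)
    return (f"PartitionKey eq {_quote(location)} "
            f"and RowKey ge {_quote(event_date.isoformat())} "
            f"and RowKey lt {_quote(next_day.isoformat())}")
```

For example, `tickets_left_filter("Seattle", date(2010, 12, 3), "Symphony")` yields `PartitionKey eq 'Seattle' and RowKey eq '2010-12-03_Symphony'` (the location and event names are illustrative).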

The remaining queries require scans. All but one (the genre query) are helped by the partitioning scheme, because they can be confined to a single location's partition. In reality, the genre query is probably location based as well.

The added bonus of this arrangement is that it allows for geographic distribution to data centers closest to the customers.

<Return to section navigation list>

SQL Azure Database and Reporting

Andrew J. Brust (@andrewbrust) asked on 11/23/2010 SQL Azure Federation: Is it "NoSQL" Azure too? in his Redmond Diary column for the December 2010 issue of Visual Studio Magazine:

With all the noise (not to mention the funk) around HTML5 and Silverlight at PDC 2010, we could be forgiven for missing the numerous keynote announcements about Windows Azure and SQL Azure. And with all those announcements, we could be forgiven for missing the ostensibly more arcane stuff in the breakout sessions. But one of those sessions covered material that was very important.

That session was Lev Novik's "Building Scale-out Database Applications with SQL Azure," and given the generic title, we could be further forgiven for not knowing how important the session was. But it was important. Because it covered the new Federation (aka "sharding") features coming to SQL Azure in 2011. Oh, and by the way, you'd also be forgiven for not knowing what sharding was. Perhaps a little recent history would help.

When SQL Azure was first announced, its databases were limited in size to 10GB, in the pro version of the service. That's big enough for lots of smaller Web apps, but not for bigger ones, and definitely not for Data Warehouses. Microsoft's answer at the time to criticism of this limitation was that developers were free to "shard" their databases. Translation: you could create a bunch of Azure databases, treat each one as a partition of sorts, take each query you needed to run and divide it into several sub-queries -- each one executing against the correct "shard" -- and then merge all the results back together.
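The do-it-yourself sharding described here (route each row to a shard by key, fan each query out to every shard, then merge) can be sketched in a few lines of Python. This is an illustration under stated assumptions: in-memory `sqlite3` databases stand in for separate SQL Azure databases, and the modulo routing rule and the `orders` table are hypothetical.

```python
import sqlite3
from typing import List, Tuple

SHARD_COUNT = 3
# In-memory SQLite databases stand in for separate SQL Azure databases.
shards = [sqlite3.connect(":memory:") for _ in range(SHARD_COUNT)]
for db in shards:
    db.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")


def shard_for(customer_id: int) -> sqlite3.Connection:
    # Hypothetical routing rule: sharding key modulo the number of shards.
    return shards[customer_id % SHARD_COUNT]


def insert_order(customer_id: int, amount: float) -> None:
    db = shard_for(customer_id)
    db.execute("INSERT INTO orders VALUES (?, ?)", (customer_id, amount))
    db.commit()


def big_orders(min_amount: float) -> List[Tuple[int, float]]:
    # Fan out: run the same sub-query against every shard...
    partials = [
        db.execute("SELECT customer_id, amount FROM orders WHERE amount >= ?",
                   (min_amount,)).fetchall()
        for db in shards
    ]
    # ...then merge the partial results into one rowset, ordered by customer.
    return sorted(row for part in partials for row in part)
```

Every piece of this plumbing (routing, fan-out, merge) is what the application has to own when sharding is left to the developer.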

To be honest, while that solution would work and has architectural merit, telling developers to build all that plumbing themselves was pretty glib, and not terribly actionable. Later, Microsoft upped the database size limitation to 50GB, which mitigated the criticism, but it didn't really fix the problem, so we've been in a bit of a holding pattern. Sharding is sensible, and even attractive. But the notion that a developer would have to build out all the sharding logic herself, in any environment that claims to be anything "as a service," was far-fetched at best.

That's why Novik's session was so important. In it, he outlined the explicit sharding support, to be known as Federation, coming in 2011 in SQL Azure, complete with new T-SQL keywords and commands like CREATE/USE/ALTER FEDERATION and CREATE TABLE...FEDERATE ON. Watch the session and you'll see how elegant and simple this is. Effectively, everything orbits around a Federation key, which then corresponds to a specific key in each table, and in turn determines what part of those tables' data goes in which Federation member ("shard"). Once that's set up, queries and other workloads are automatically divided and routed for you and the results are returned to you as a single rowset. That's how it should have been all along. Never mind that now.

Sharding does more than make the 50GB physical limit become a mere detail, and the logical size limit effectively go away. It also makes Azure databases more elastic, since shards can become more or less granular as query activity demands. If a shard is getting too big or queried too frequently, it can be split into two new shards. Likewise, previously separate shards can be consolidated, if the demand on them decreases. With SQL Azure Federation, we really will have Database as a Service... instead of Database as a Self-Service.
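Splitting and consolidating shards is easiest to picture with range-based members. The following Python sketch is hypothetical (it is not SQL Azure's Federation syntax or implementation): each member is identified by the lowest federation-key value it owns, a split inserts a new boundary, and a merge removes one. Keys outside the affected range keep their member.

```python
import bisect
from typing import List


class RangeShardMap:
    """Hypothetical range map: each member owns keys from its low bound up to the next one."""

    def __init__(self, lowest_key: int = 0) -> None:
        self.lows: List[int] = [lowest_key]  # start with a single member

    def member_for(self, key: int) -> int:
        """Return the low bound that identifies the member owning `key`."""
        if key < self.lows[0]:
            raise KeyError(key)
        return self.lows[bisect.bisect_right(self.lows, key) - 1]

    def split_at(self, boundary: int) -> None:
        # Keys >= boundary (up to the next existing boundary) move to a new member.
        if boundary <= self.lows[0] or boundary in self.lows:
            raise ValueError(f"cannot split at {boundary}")
        bisect.insort(self.lows, boundary)

    def merge_at(self, boundary: int) -> None:
        # Consolidate the two members that meet at `boundary`.
        if boundary == self.lows[0] or boundary not in self.lows:
            raise ValueError(f"{boundary} is not an internal boundary")
        self.lows.remove(boundary)
```

A "hot" member would be split when it grows too large or busy, and two quiet neighbors merged back when demand drops, which is the elasticity described above.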

But it goes beyond that. Because with Federation, SQL Azure gains a highly popular feature of so-called document-oriented NoSQL databases like MongoDB, which treats sharding as a foundational feature. Plus, SQL Azure's soon-to-come support for decomposing a database-wide query into a series of federation member-specific ones, and then merging the results back together, starts to look a bit like the MapReduce processing in various NoSQL products and Apache Hadoop. When you add to the mix SQL Azure's OData features and its support for a robust RESTful interface for database query and manipulation, suddenly staid old relational SQL Azure is offering many of the features people know and love about their NoSQL data stores.

While NoSQL databases proclaim their accommodation of "Internet scale," SQL Azure seems to be covering that as well, while not forgetting the importance of enterprise scale, and enterprise compatibility. Federating (ahem!) the two notions of scale in one product seems representative of the Azure approach overall: cloud computing, with enterprise values.

Full disclosure: I’m a contributing editor for Visual Studio Magazine.

Steve Yi pointed to an article that compares SQL Azure and Windows Azure Table Storage on 11/23/2010:

In the November 2010 issue of MSDN Magazine, Joseph Fultz wrote an article titled “SQL Azure and Windows Azure Table Storage.” Joseph contends that there is often confusion about the differences between Windows Azure Table Storage and SQL Azure. In the article he provides some guidelines for making the decision, weighing the needs of the solution and the solution team against the features and constraints of both Windows Azure Table Storage and SQL Azure. Additionally, there are some code samples, so you get an idea of what working with each option is like.

Read the article on MSDN: SQL Azure and Windows Azure Table Storage

<Return to section navigation list>

Marketplace DataMarket and OData

Zane Adam announced DataMarket: New Content Available and Paid Transactions Enabled! on 11/23/2010:

With the PDC’10 launch of DataMarket as a part of the Windows Azure Marketplace, we introduced the premium online marketplace to find, share, advertise, buy and sell premium data sets to customers.

Today’s update to DataMarket enables paid transactions for all our US-based customers.

Moreover, there are some great new additions to our list of content providers, including:

You can get more details about this announcement on the DataMarket blog, and explore these datasets at https://datamarket.azure.com.

Bill Zack explained Windows Azure Marketplace DataMarket–Enabling ISVs to Build Innovative Applications in an 11/23/2010 post to his Architecture & Stuff blog:

The DataMarket section of Windows Azure Marketplace enables customers to easily discover, purchase and manage premium data subscriptions. DataMarket brings data, imagery, and real-time web services from leading commercial data providers and authoritative public data sources together into a single location, under a unified provisioning and billing framework.

Windows Azure Marketplace DataMarket (previously known by the codename “Dallas”) has now been released to full production (Release to Web, RTW). The DataMarket RTW lifts the “invitation only” purchase mode and brings in some new and cool datasets from a number of content providers.

For more details see:

Windows Azure Marketplace DataMarket – Information as a Service on any platform

https://datamarket.azure.com

Resources: Whitepaper | Forums | Blog

Also, this webcast provides an overview of DataMarket and the tools and resources available in DataMarket to help ISVs build new and innovative applications and reduce time to market and cost. DataMarket also enables ISVs to access data as a service.

ISVs across industry verticals can leverage datasets from partners like D&B, Lexis Nexis, Stats, Weather Central, US Governments, UN, EEA to build innovative applications. DataMarket includes data across different categories like demographic, environmental, weather, sports, location based services etc.

Gunnar Peipman posted Creating Twitpic client using ASP.NET and OData to the DZone blog on 11/22/2010:

Open Data Protocol (OData) is one of the new HTTP-based protocols for querying and updating data. It is a simple protocol that builds on other protocols and formats such as HTTP, Atom and JSON. One of the sites that lets you consume its data over OData is Twitpic, the picture service for Twitter. In this posting I will show you how to build a simple Twitpic client using ASP.NET.

Source code

You can find the source code of this example in the Visual Studio 2010 experiments repository at GitHub.

The Twitpic client belongs to the Experiments.OData solution; the project name is Experiments.OData.TwitPicClient.

Sample application

My sample application is simple. It lists some usernames on the left side that the user can select, and there is also a textbox to filter images. If the user types something into the textbox, that string is searched for in the message part of the pictures. Results are shown as in the following screenshot.

The maximum number of images returned by one request is limited to ten. You can change the limit or remove it; it is here just to show you how queries can be done.

Querying Twitpic

I wrote this application in Visual Studio 2010. To get Twitpic data I did nothing special: I just added a service reference to the Twitpic OData service located at http://odata.twitpic.com/. Visual Studio understands the format and generates all the classes for you, so you can start using the service immediately.

Now let’s see how to query the OData service. This is the Page_Load method of my default page. Note how I built the LINQ query step by step.

My query is built by following steps:

- create a query that joins users and images and adds a condition for the username,

- if the search textbox was filled, add a search condition to the query,

- sort data by timestamp in descending order,

- make the query return only the first ten results.

Now let’s see the OData query that is sent to the Twitpic OData service.

This is the query that our service client sent to the OData service (I searched for "belgrade" in my pictures):

/Users('gpeipman')/Images()?$filter=substringof('belgrade',tolower(Message))&$orderby=Timestamp%20desc&$top=10

If you look at the request string, you can see that all the conditions I set through LINQ are represented in this URL. The URL is not very short, but it has a simple syntax and is easy to read. The answer to our query is plain XML that is mapped to our object model when the answer arrives. There is nothing complex, as you can see.
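To make the mapping from the LINQ steps to the URL concrete, here is a Python sketch that rebuilds the same request string from the same four steps. `twitpic_images_url` is a hypothetical helper, and lower-casing the search term is an assumption made so that it matches `tolower(Message)`; the path is relative to the service root mentioned above, and nothing is sent over the network.

```python
from typing import List, Optional, Tuple
from urllib.parse import quote

SAFE = "'(),"  # keep OData punctuation readable; percent-encode the rest


def _literal(value: str) -> str:
    # OData string literal: single quotes escaped by doubling them.
    return "'" + value.replace("'", "''") + "'"


def twitpic_images_url(username: str, search: Optional[str] = None, top: int = 10) -> str:
    # Step 1: the selected user's images (navigation from Users to Images).
    path = f"/Users({_literal(username)})/Images()"
    options: List[Tuple[str, str]] = []
    # Step 2: optional search condition on the lower-cased message text.
    if search:
        options.append(("$filter", f"substringof({_literal(search.lower())},tolower(Message))"))
    # Step 3: newest pictures first.
    options.append(("$orderby", "Timestamp desc"))
    # Step 4: only the first `top` results.
    options.append(("$top", str(top)))
    query = "&".join(f"{name}={quote(value, safe=SAFE)}" for name, value in options)
    return f"{path}?{query}"
```

Calling `twitpic_images_url("gpeipman", "belgrade")` reproduces the request string shown above; omitting the search term simply drops the `$filter` option, just as the LINQ query skips that step.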

Conclusion

Getting our Twitpic client working was an extremely simple task. We did nothing special: we just added a reference to the service and wrote one simple LINQ query whose results we gave to the repeater that shows the data. As we saw from the monitoring proxy server's report, the answer is a little bit messy but still easy XML that we could also read using an XML library if we didn't have any better option. You can find more OData services on the OData producers page.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Wade Wegner (@wadewegner) announced a new Hands-On Lab: Building Windows Azure Applications with the Caching Service on 11/22/2010:

Recently my team quietly released an update to the Windows Azure Platform Training Course (the training course is similar to the training kit, with minor differences) to coincide with new services released at PDC10. There are a lot of nice updates in this release, including a new hands-on lab called Building Windows Azure Applications with the Caching Service, focused on building Windows Azure applications with the new Windows Azure AppFabric Caching service.

You can get to this new Caching HOL here.

Here’s the description to the hands-on lab:

In this lab, learn how to use the Caching service for both your ASP.NET session state and to cache data from your data-tier. You will see how the Caching service provides your application with a cache that provides low latency and high throughput without having to configure, deploy, or manage the service.

There are six parts to this lab:

- Overview

- Getting Started: Provisioning the Service

- Exercise 1: Using the Windows Azure AppFabric Caching for Session State

- Exercise 2: Caching Data with the Windows Azure AppFabric Caching

- Exercise 3: Creating a Reusable and Extensible Caching Layer

- Summary

Parts 2-4 should be considered required for anyone looking to build ASP.NET web applications that run in Windows Azure. For users looking for a more advanced caching layer – with a focus on reuse and extensibility – be sure to check out part 5 / exercise 3.
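The lab's second and third exercises revolve around caching data-tier results behind a reusable layer. As a rough, hypothetical illustration of that cache-aside idea (this is not the AppFabric Caching API, which the lab itself covers), here is a minimal in-process Python sketch: look in the cache first, call a loader on a miss or expiry, and store the result with a time-to-live.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class CacheAside:
    """Hypothetical in-process cache-aside layer with per-entry expiry."""

    def __init__(self, default_ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}
        self._default_ttl = default_ttl
        self._clock = clock  # injectable so expiry can be tested

    def get_or_load(self, key: Hashable, loader: Callable[[], Any],
                    ttl: Optional[float] = None) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: skip the data tier
        value = loader()  # miss or expired: go to the data tier
        lifetime = self._default_ttl if ttl is None else ttl
        self._entries[key] = (now + lifetime, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        self._entries.pop(key, None)  # e.g. after the underlying data changes
```

Passing the loader in, rather than hard-coding a data-access call, is what keeps such a layer reusable; a distributed cache client would replace the dictionary in a real deployment.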

If you walk through exercises 1 & 2 you’ll see that the example application is the same application I demonstrated in my PDC10 talk and shared in this recent blog post.

Please send me feedback on any bugs, issues, or questions you have – thanks!

The Claims-Based Identity Blog posted AD FS 2.0 Step-by-Step Guide: Federation with Ping Identity PingFederate on 11/22/2010:

We have published a step-by-step guide on how to configure AD FS 2.0 and Ping Identity PingFederate to federate using the SAML 2.0 protocol. You can view the guide in docx, doc, or PDF formats and soon also as a web page. This is the third in a series of these guides; the guides are also available on the AD FS 2.0 Step-by-Step and How-To Guides page. Special thanks to Ping Identity for sponsoring this guide.

Brent Stineman (@BrentCodeMonkey) posted Enter the Azure AppFabric Management Service on 11/22/2010:

Before I dive into this update, I want to get a couple things out in the open. First, I’m an Azure AppFabric fan-boy. I see HUGE potential in this often overlooked silo of the Azure Platform. The PDC10 announcements reinforced for me that Microsoft is really committed to making the Azure AppFabric the glue for helping enable and connect cloud solutions.

I’m betting many of you aren’t even aware of the existence of the Azure AppFabric Management Service. Up until PDC, there was little reason for anyone to look for it outside of those seeking a way to create new issuers that could connect to Azure Service Bus endpoints. These are usually the same people who noticed that all the Service Bus sample code uses the default “owner” issuer that gets created when a new service namespace is created via the portal.

How the journey began

I’m preparing for an upcoming speaking engagement and wanted to do something more than just re-hash the same tired demos. I wanted to show how to set up new issuers, so one day I asked about this on Twitter, and @rseroter responded that he had done it. He was also kind enough to quickly post a blog update with details. I sent him a couple of follow-up questions and he pointed me to a bit of code I hadn’t noticed yet, the ACM Tool that comes as part of the Azure AppFabric SDK samples.

I spent a Saturday morning reverse engineering the ACM tool and using Fiddler to see what was going on. Finally, using a schema namespace I saw in Fiddler, I had a hit on an internet search and ran across the article "using the Azure AppFabric Management Service" on MSDN.

This was my Rosetta stone. It explained everything I was seeing with the ACM and also included some great tidbits about how authentication of requests works. It also put a post-PDC article from Will@MSFT into focus on how to manage the new Service Bus Connection Points. He was using the management service!

I could now begin to see how the Service Bus ecosystem was structured and the power that was just waiting here to be tapped into.

The Management Service

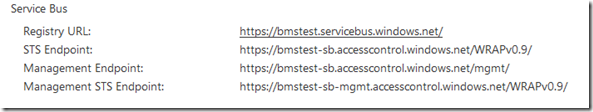

So, the Azure AppFabric Management API is a REST-based API for managing an AppFabric service namespace. When you go to the portal and create a new AppFabric service namespace, you’ll see a couple of lines listing its Registry URL, Management Endpoint, and Management STS Endpoint.

Now if you’ve worked with the AppFabric before, you’re well aware of what the Registry URL is. But you likely haven’t worked much with the Management Endpoint and Management STS Endpoint. These are the endpoints that come into play with the AppFabric Management Service.

The STS Endpoint is pretty self-explanatory. It’s a proxy for Access Control for the management service. Any attempt to work with the management service starts with us giving an issuer name and key to this STS and getting a token back that we can then pass along to the management service itself. There’s a good code snippet in the MSDN article, so I won’t dive into this much right now.
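The token exchange described above can be sketched as follows. This is a hedged illustration: the field names `wrap_name`, `wrap_password`, `wrap_scope` and `wrap_access_token`, and the `WRAP access_token="..."` header, follow the OAuth WRAP v0.9 conventions used by Access Control at the time and should be checked against the MSDN article before use. No STS URL is assumed; the sketch only builds the request body, parses a response body, and formats the header for the follow-up management call.

```python
from typing import Dict
from urllib.parse import parse_qs, urlencode


def build_token_request(issuer_name: str, issuer_key: str, scope: str) -> str:
    """Form-encoded body to POST to the STS (assumed WRAP v0.9 field names)."""
    return urlencode({"wrap_name": issuer_name,
                      "wrap_password": issuer_key,
                      "wrap_scope": scope})


def parse_token_response(body: str) -> Dict[str, str]:
    # The STS answers with a form-encoded body; parse_qs also URL-decodes the token.
    fields = parse_qs(body)
    return {"token": fields["wrap_access_token"][0],
            "expires_in": fields.get("wrap_access_token_expires_in", [""])[0]}


def authorization_header(token: str) -> Dict[str, str]:
    # Header attached to every request sent to the management service itself.
    return {"Authorization": f'WRAP access_token="{token}"'}
```

The issuer key never reaches the management endpoint; only the short-lived token does, which is the point of putting an STS in front of it.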

It’s the Management Endpoint itself that’s really my focus right now. This is the root namespace, and there are several branches off of it, each dedicated to a specific aspect of management:

- Issuer – where our users (both simple users and X.509 certs) are stored

- Scope – the service namespace (URI) that issuers will be associated with

- Token Policy – how long a token is good for, and the signature key for ACS

- Resources – new for connection point support

It’s the combination of these items that controls which parties can connect to a service bus endpoint and what operations they can perform. Properly leveraging this will let us do useful real-world things like set up sub-regions of the root namespace and assign specific rights for each sub-region to users. Maybe we could even assign management at that level, so various departments within your organization can each manage their own area of the service bus.

In a nutshell, we can define an issuer, associate it with a scope (namespace path) which then also defines the rules for that issuer (Listen, Send, Manage). Using the management service, we can add/update/delete items from each of these areas (subject to restrictions).
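The issuer/scope/rule relationship can be modeled as a small data structure. The Python sketch below is hypothetical: it does not reproduce the management service's schema, and it assumes a scope's rules apply to its path and everything beneath it, which matches the sub-region delegation idea above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Scope:
    """Hypothetical model: a namespace path plus per-issuer rules."""
    path: str
    rules: Dict[str, Set[str]] = field(default_factory=dict)  # issuer -> {"Listen", "Send", "Manage"}


def _covers(scope_path: str, target: str) -> bool:
    # Compare whole path segments so "/sales/" does not cover "/salesforce".
    base = scope_path.rstrip("/") + "/"
    return (target.rstrip("/") + "/").startswith(base)


def is_allowed(scopes: List[Scope], issuer: str, target: str, action: str) -> bool:
    """True if any scope covering `target` grants `action` to `issuer`."""
    return any(
        _covers(scope.path, target) and action in scope.rules.get(issuer, set())
        for scope in scopes
    )
```

For example, a root scope could grant an "owner" issuer all three rules while a "/sales/" scope grants a departmental issuer only Listen and Send within its own sub-region.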

How it works

Ok, this is the part where I’d normally post some really cool code snippets. Unfortunately, I spent most of a cold, icy Minnesota Sunday trying to get things working. ANYTHING working. And I struck out.

But I’m not giving up yet. I batched up a few questions and sent them to some folks I’m hoping can find me answers. Meanwhile, I’m going to keep at it. There’s some significant stuff here, and if there’s a mystery as big as what I’m doing wrong, it’s why we haven’t heard more about the Management Service yet.

So please stay tuned…

What’s next?

After a fairly unproductive weekend of playing with the Azure AppFabric Management Service, I have mixed emotions. I’m excited by the potential I see here, but at the same time, it still seems like there’s much work yet to be done. And who knows, perhaps this post and others I want to write may play a part in that work.

In the interim, you get this fairly short and theoretical update on the Azure AppFabric Management Service. But this won’t be the end of it. I’m still a huge Azure AppFabric fan-boy. I will not let a single bad day beat me. I will get this figured out and bring it to the masses. I’m still working on my upcoming presentation and I’m confident my difficulties will be sorted out by then.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

Steve Plank explained Why you SHOULD NOT deploy an AD domain controller using Azure Connect with VM Role in an 11/23/2010 post:

I’ve heard a lot of talk recently about the forthcoming Windows Azure Connect service, combined with the soon-to-be-released CTP of the VM Role, giving the possibility of hosting an Active Directory Domain Controller in the cloud. Although it is technically feasible, this post is designed to tell you why you shouldn’t do that.

The Web Role, Worker Role and VM Role all include local storage. Even a Windows Server at idle is actually doing quite a lot and making constant updates to the disks – and so it is with an instance deployed to the cloud as well. In Windows Azure, the state of a virtual machine (an instance) is not guaranteed if it ever restarts because of some sort of failure. Web and Worker Roles do persist the OS state across restarts caused by automated updates initiated by the fabric. With the VM Role there is no automated update process; it’s down to the owner of the VM Role to keep it up to date. Yes, there is a process for this, which involves a differencing disk that is added to the base VHD you supply when you first start up a VM Role in Windows Azure. However, the real problem is failures.

A failure of a VM Role can be caused by any number of things: power supply failure to the rack, a hard drive head-crash, failure of the hardware server the VM is hosted on, plus a decent range of other hardware problems. The same goes for software. As they say, “there’s no such thing as bug-free software.” Every so often the host OS or even the guest OS (the OS running in the VM you created) could happen across an unusual set of conditions while in kernel mode for which there is no handler. Well, if there is no handler, it means nobody thought such a condition would ever occur. The default behaviour of the kernel is to assume something has gone wrong with the kernel itself: it’d be dangerous to continue with a kernel in an unknown state. Think of the damage that could be caused. And so control is handed to a special handler, the one that causes the blue-screen fatal bugcheck. The resulting dump file may be useful in debugging what caused the problem after the event, after the operating system was stopped in its tracks by the bugcheck code. But this could happen to either the host OS or your VM.

When it does happen, the heartbeat that is emitted to the fabric by a special Windows Azure Agent installed in every instance managed by the cloud will stop. Eventually the fabric will recognise a timeout has occurred. Its first concern is to get a new responsive instance up and running. It is very likely the new instance won’t be on a host even in the same rack, let alone on exactly the same host. Therefore, no guarantee is ever given that state will be preserved in these situations.
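The detect-and-replace cycle described above can be sketched in a few lines of Python. This is a hypothetical model, not the actual Windows Azure Agent or fabric controller code: `HeartbeatMonitor`, `recover`, the 60-second timeout and the VHD file names are all invented for illustration. What it shows is that the replacement instance is assembled only from the stored base and differencing images, so anything the failed instance wrote to its own disks never reaches the new one.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Hypothetical fabric-side tracker; not the real Windows Azure Agent protocol."""

    timeout_s: float  # assumed: silence longer than this marks an instance as failed
    last_seen: dict = field(default_factory=dict)

    def beat(self, instance_id: str, now: float) -> None:
        # Record the time of the most recent heartbeat from an instance.
        self.last_seen[instance_id] = now

    def timed_out(self, now: float) -> list:
        # Instances whose last heartbeat is older than the timeout, in sorted order.
        return sorted(i for i, t in self.last_seen.items() if now - t > self.timeout_s)


def recover(monitor: HeartbeatMonitor, now: float, base_vhd: str, diff_vhds: list) -> dict:
    """Rebuild each timed-out instance from the stored images only.

    Whatever the failed instance wrote to its own disks is not part of the
    image chain, so the replacement starts without it.
    """
    rebuilt = {}
    for instance_id in monitor.timed_out(now):
        rebuilt[instance_id] = [base_vhd, *diff_vhds]
        monitor.beat(instance_id, now)  # the replacement begins reporting
    return rebuilt


m = HeartbeatMonitor(timeout_s=60)
m.beat("dc-0", 0)
m.beat("web-0", 0)
m.beat("web-0", 90)
print(m.timed_out(100))  # ['dc-0']
print(recover(m, 100, "base.vhd", ["update1.vhd"]))  # {'dc-0': ['base.vhd', 'update1.vhd']}
```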

The fabric will take the base VHD, plus the collection of differencing disks (the ones that contain your OS updates) and “boot” that back in to the configuration specified in your service model. This diagram explains the problem.

Use the numbered points in the diagram to follow along:

- The base VHD plus the differencing VHD is used to create…

- …a running instance of a Domain Controller as a Windows Azure VM Role

- The downward pointing green arrow represents the life of this Domain Controller. Let’s assume the life between instantiating the VM and the catastrophic failure (at step 5) is 61 days (or longer).

- As time advances, more and more changes are written to the Domain Controller. In the diagram I have shown this as being performed by a series of administrators. In reality though, it doesn’t matter how the changes get to the DC: directly, or through AD replication from, say, an on-premise DC. The rules for hanging on to objects are the same. Deleted objects are tombstoned.

- A catastrophic failure of some description occurs and the instance immediately goes offline.

- The Windows Azure Fabric recognises the absence of the heartbeat and builds a new instance from the base and differencing VHDs. These VHDs are used to create a new instance…

- …and the result is that all the changes that have accrued in the intervening 61 days are now lost. If there is another online DC, say in an on-premise environment, it will refuse to speak to this “imposter”. The password will have changed twice in the intervening 60 days and the tombstone timeout will have occurred. You therefore cannot rely on replication to get this DC back in to the state it was before the failure.
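The arithmetic behind the last step can be written as a rough staleness check. A minimal sketch, assuming the post’s figures of a 60-day tombstone lifetime and a machine-account password that changes about every 30 days; `restored_dc_status` is a hypothetical helper, and real forests may be configured differently. It does not model AD replication itself, only whether an image is old enough to land in the situation described.

```python
from datetime import date

# Figures taken from the scenario above; a real forest's settings may differ.
TOMBSTONE_LIFETIME_DAYS = 60
MACHINE_PASSWORD_CHANGE_DAYS = 30  # assumed rotation interval ("changed twice in 60 days")


def restored_dc_status(image_date: date, failure_date: date) -> dict:
    """Rough staleness check for a DC rebuilt from an image taken on image_date."""
    age_days = (failure_date - image_date).days
    return {
        "age_days": age_days,
        # Approximate number of machine-account password rotations the image missed.
        "missed_password_changes": age_days // MACHINE_PASSWORD_CHANGE_DAYS,
        # Beyond the tombstone lifetime, partners may already have purged the
        # tombstones for objects deleted since the image was taken.
        "beyond_tombstone_lifetime": age_days > TOMBSTONE_LIFETIME_DAYS,
    }


status = restored_dc_status(date(2010, 9, 1), date(2010, 11, 1))
print(status)  # {'age_days': 61, 'missed_password_changes': 2, 'beyond_tombstone_lifetime': True}
```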

Essentially, having the fabric fire up a new DC based on an out-of-date image is a bit like the not-recommended practice of running DCPromo on a virtual machine to get a copy of the domain database on to it, then taking it offline and storing the VHD as the “backup” of AD. Re-introducing that DC back in to the network after a time will cause it to be ignored in the same way, for all the same reasons.

The risk with having applications that can use Windows Integrated Authentication in the cloud is that if the network between your on-premise Domain Controllers and the apps you have in the cloud goes down, the apps can’t be used. It therefore appears to be the case that a VM Role deployed as a Domain Controller up in the cloud and using Windows Azure Connect to give full domain connectivity is a good idea. And indeed it is – until a failure occurs.

Of course if somebody could come up with a way for the DC to store its directory data in blob storage, which is persistent across instance reboots, then we’d have a neat solution. Maybe that’s an opportunity for a clever ISV partner to exploit. In the meantime – take the opposite sentiment to Nike’s strapline – “Just Don’t Do It”.

Planky

The preceding post is the basis of Tim Anderson’s The Microsoft Azure VM role and why you might not want to use it article of 11/22/2010.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Wes Yanaga reported the availability of a Windows Azure Platform Training Course November 2010 Update on 11/23/2010:

The Windows Azure Platform Training Course includes a comprehensive set of hands-on labs and videos that are designed to help you quickly learn how to use Windows Azure, SQL Azure, and the Windows Azure AppFabric.

This release provides updated content as related to the Windows Azure Tools for Microsoft Visual Studio 1.2 (June 2010) and Windows Azure platform AppFabric SDK V2.0 (October update). Some of the new hands-on labs cover the new and updated services announced at the Professional Developers Conference (http://microsoftpdc.com) including the Windows Azure AppFabric Access Control Service, Caching Service, and the Service Bus. Additional content will be included in future updates to the training course.

Victoria Chelsea posted a copy of a 3M Automates Visual Attention Service to More Quickly Test the Impact of Creative and Graphic Designs press release on 11/23/2010:

3M has added automatic face and text detection to its Visual Attention Service, a web-based scanning tool that digitally evaluates the impact of creative and graphic designs. With this latest enhancement, users simply upload images to 3M’s Web portal and within seconds, the Visual Attention Service (VAS) tool analyzes and reveals the results. The suite of enhancements for the 3M tool also includes a mobile application for the Apple iPhone, a plug-in for Adobe Photoshop, an ability to test key areas within a creative design, and new pricing options.

The new Adobe Photoshop Plug-In enables users to run an analysis inside of Photoshop CS5 in order to evaluate a design during the creative process. The mobile application further simplifies workflow and enhances accessibility to the VAS tool by allowing users to upload images and conduct a test using their iPhone.

Graphic designers and marketing professionals use 3M VAS to indicate which design elements the human eye will react to in the first three to five seconds, providing the accurate, fact-based scientific feedback needed to speed up the revision and approval processes. Launched in May of this year, the online tool is based on the company’s expertise in vision science and analyzes all the visual elements within an image, such as color, faces, shape, contrast and text, to accurately predict if a design’s visual hierarchy is strong enough to attract a viewer’s attention.

“With 30 years of vision science experience, 3M has a legacy and proven track record for innovation – bringing cutting-edge technology into easy-to-use product, software and hardware innovations,” said Terry Collier, marketing manager, 3M Digital Out of Home Department. “This scanning tool empowers designers to leverage their creative expertise and then justify their design to decision-makers by revealing why the layout will make the strongest impact.”

Websites, photos, creative content, packaging concepts, advertisements and all manner of creative media can be evaluated in different scenes or contexts. The results include a heat map and region map. The heat map highlights areas likely to receive attention within the first three to five seconds. The region map outlines these areas and reveals a score of the predicted probability that a person will look at the area along with diagnostics to show why the region is likely to get attention. As part of the new suite of enhancements to 3M’s service, users can now receive a separate score for key areas of interest.

3M’s service is globally available through the Microsoft® Windows Azure™ platform and features numerous new pricing options, including day, week and month passes. For a free trial of VAS, visit http://www.3M.com/vas.

Thomas Kruse and Jennifer Tabak of Valtech discuss with Erick Mott of Sitecore their expanded partnership in North America and provide some details about the benefits of “Sitecore by Valtech” CMS Azure Edition deployment packages in these 11/21/2010 video segments from Sitecore and Valtech Discuss Expanded Partnership at Gartner Symposium ITxpo 2010:

Sitecore’s CMS Azure Edition leverages Microsoft Windows Azure for enterprise class cloud deployment. Scale websites to new regions and respond to surges in demand with a single click. To learn more visit www.sitecore.net

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@BethMassi) explained How to Create a Screen with Multiple Search Parameters in LightSwitch in an 11/23/2010 post:

A couple weeks ago I posted about how to create a custom search screen where you could specify exactly what field to search on. I also talked about some of the search options you can set on entities themselves. If you missed it: Creating a Custom Search Screen in Visual Studio LightSwitch.

Since then a couple folks asked me how you can create a custom search screen that searches multiple fields that you specify. Turns out this is really easy to do – you can specify multiple filter criteria with parameters on a query and use that as the basis of the screen. To see how, head on over to my blog and read: How to Create a Screen with Multiple Search Parameters in LightSwitch
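To illustrate the idea outside LightSwitch, here is a small Python sketch of a search that combines several optional criteria with AND. It is not LightSwitch code: the `Customer` entity, the `search` function and the case-insensitive starts-with matching are assumptions chosen for the example. A blank parameter simply drops its condition, so the same query serves a one-field or a three-field search.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Customer:  # hypothetical entity used only for this illustration
    last_name: str
    city: str
    state: str


def search(rows: List[Customer], last_name: Optional[str] = None,
           city: Optional[str] = None, state: Optional[str] = None) -> List[Customer]:
    """AND together every criterion that was supplied; blank ones are skipped."""
    criteria = {"last_name": last_name, "city": city, "state": state}
    active = {name: value.lower() for name, value in criteria.items() if value}
    return [row for row in rows
            if all(getattr(row, name).lower().startswith(value)
                   for name, value in active.items())]


customers = [Customer("Massi", "San Francisco", "CA"),
             Customer("Smith", "Seattle", "WA"),
             Customer("Mason", "Seattle", "WA")]
print([c.last_name for c in search(customers, last_name="ma", state="wa")])  # ['Mason']
```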

Enjoy,

<Return to section navigation list>

Windows Azure Infrastructure

Andy Bailey (@AndyStratus) claims to describe How azure actually actually works in this 11/23/2010 post to his Availability Advisor blog:

Yes you read that headline correctly – two actuallies, actually …

That’s because this post is a response to this one, from IT Knowledge Exchange, entitled How Azure Actually Works.

It is a bit of a technical read, but I was interested to know a little bit more how cloud solutions, such as Azure, architect their systems to max the uptime, and there are a lot of fine learning points in it.

For the record though, if you go to the Windows Azure home page, it states a 99.9% uptime, which is the wrong side of 8.5 hrs per year. Or, to put it another way, it puts your systems at risk of a whole working day’s worth of downtime a year.

I have always advocated that this figure should be a lot better for cloud services. Fault tolerant technology is one way to start.

I love the bit about “a healthy host is a happy host.” I’ve never thought of a server being happy before but if ever a server were to be happy, it would be a fault tolerant one as it never fails!

As an availability expert, I am concerned though about the assumption that, because there are two nodes, then it is fault tolerant. Don’t think this is quite right as it goes on to mention recovery and restarts. Truly fault tolerant systems do not need to worry about recovery and restarting. Not so sure I’d run my business in the cloud based on this if I needed guaranteed uptime …
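Andy’s distinction between redundancy and fault tolerance can be made concrete with standard availability arithmetic. A minimal sketch, assuming node failures are independent (a strong assumption, since a shared rack or power supply breaks it); the 12 failures a year and 15-minute failover are hypothetical numbers, not measurements of Windows Azure. Two 99.9% nodes look like six nines on paper, but every failover that requires detection and a restart eats into that figure.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def parallel_availability(node_availability: float, nodes: int) -> float:
    """Chance that at least one node is up, assuming nodes fail independently."""
    return 1 - (1 - node_availability) ** nodes


def with_failover(availability: float, failures_per_year: float,
                  failover_minutes: float) -> float:
    """Deduct the outage spent detecting each failure and restarting the workload."""
    return availability - failures_per_year * failover_minutes / MINUTES_PER_YEAR


pair = parallel_availability(0.999, 2)
print(f"{pair:.6f}")                         # 0.999999
print(f"{with_failover(pair, 12, 15):.5f}")  # 0.99966
```

With these invented inputs the pair falls to roughly 99.966%; the shortfall comes entirely from recovery time, which is the part Andy says a truly fault-tolerant system avoids.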

Azure does sound like a great solution. But if availability is vital, then please consider hosting on a fault tolerant server.

Andy is Availability Architect at Stratus Technologies.

Carl Brooks’ (@eekygeeky) How Azure actually works, courtesy of Mark Russinovich article of 11/1/2010 for SearchCloudComputing.com’s Troposphere blog describes in summary how Windows Azure works according to the Gospel of Mark (Russinovich). Andy doesn’t refute the content of Carl’s article; instead, he argues that 99.9% uptime doesn’t provide adequate availability. Instead, Andy recommends buying a fault-tolerant server (presumably from Stratus Technologies).

Assuming that the allowed 8.5 hours of downtime per year with 99.9% availability is distributed uniformly over a 24-hour period, only 8/24 (1/3) of the downtime (2.83 hours) would occur during an 8-hour working day. 2.83 hours/year is 14.15 minutes per month.
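The downtime arithmetic above can be checked with a short calculation. A sketch under the paragraph’s own assumption that outages are spread uniformly over the 24-hour day and that every day includes an 8-hour working day; the function names are illustrative. Note that 0.1% of a 365-day year is 8.76 hours, slightly more than the 8.5-hour figure used above.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def downtime_budget_hours(availability: float) -> float:
    """Hours per year a service may be down and still meet the availability target."""
    return HOURS_PER_YEAR * (1 - availability)


def working_hours_share(annual_downtime_h: float, working_hours_per_day: float = 8):
    """Downtime falling in working hours, if outages are spread uniformly over the day.

    Returns (hours per year, minutes per month)."""
    hours = annual_downtime_h * working_hours_per_day / 24
    return hours, hours * 60 / 12


print(round(downtime_budget_hours(0.999), 2))           # 8.76
print([round(x, 2) for x in working_hours_share(8.5)])  # [2.83, 14.17]
```

Starting from 8.5 hours gives about 2.83 hours a year, or roughly 14.2 minutes a month, in working hours; starting from 8.76 hours gives 2.92 hours and 14.6 minutes.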

The latest of my monthly Windows Azure Uptime Report: OakLeaf Table Test Harness for October 2010 (99.91%) indicates that during its first 10 months of commercial operation, Windows Azure has provided my OakLeaf Systems Windows Azure Table Test Harness running in Microsoft’s South Central US (San Antonio) data center with an average of 99.83% availability. As the Uptime Report notes, I’m running only one Web Role instance, so the 99.95% compute SLA doesn’t apply to my app.

For more details from Microsoft about Windows Azure Service Level Agreements, click here.

John Treadway (@cloudbzz) asserted Cloud Core Principles – Elasticity is NOT #Cloud Computing Response in an 11/22/2010 post:

Ok, I know that this is dangerous. Randy [Bias] is a very smart guy and he has a lot more experience on the public cloud side than I probably ever will. But I do feel compelled to respond to his recent “Elasticity is NOT #Cloud Computing …. Just Ask Google” post.

On many of the key points – such as elasticity being a side-effect of how Amazon and Google built their infrastructure – I totally agree. We have defined cloud computing in our business in a similar way to how most patients define their conditions – by the symptoms (runny nose, fever, headache) and not the underlying causes (caught the flu because I didn’t get the vaccine…). Sure, the result of the infrastructure that Amazon built is that it is elastic, can be automatically provisioned by users, scales out, etc. But the reasons they have this type of infrastructure are based on their underlying drivers – the need to scale massively, at a very low cost, while achieving high performance.

Here is the diagram from Randy’s post. I put it here so I can discuss it, and then provide my own take below.

My big challenge with this is how Randy characterizes the middle tier. Sure, Amazon and Google needed unprecedented scale, efficiency and speed to do what they have done. The tactics, tools and methods they exposed in the middle tier are how they achieve this. The cause and the results are the same: scale because I need to, efficient because it has to be. These are the requirements. The middle layer here is not the results but the method chosen to achieve them. You could successfully argue that achieving their level of scale with different contents in the grey boxes would not be possible, and I would not disagree. Few need to scale to 10,000+ servers per admin today.

However, I believe that what makes an infrastructure a “cloud” is far more about the top and bottom layers than about the middle. The middle, especially the first row above, impacts the characteristics of the cloud – not its definition. Different types of automation and infrastructure will change the cost model (negatively impacting efficiency). I can achieve an environment that is fully automated from bare metal up, uses classic enterprise tools (BMC) on branded (IBM) heterogeneous infrastructure (within reason), and is built with the underlying constraints of assumed failure, distribution, self-service and some level of over-built environment. And this 2nd grey row is the key – without these core principles I agree that what you might have is a fairly uninteresting model of automated VM provisioning. Too often, as Randy points out, this is the case. But if you do build to these row 2 principles…?

Below I have switched the middle tier around to put the core principles as the hands that guide the methods and tools used to achieve the intended outcome (and the side effects).

The core difference between Amazon and an enterprise IaaS private cloud is now the grey “methods/tools” row. Again, I might use a very different set of tools here than Amazon (e.g. BMC, et al). This enterprise private cloud model may not be as cost-efficient as Amazon’s, or as scalable as Google’s, but it can still be a cloud if it meets the requirements, core principles and side-effects components. In addition, the enterprise methods/tools have other constraints that Amazon and Google don’t rank as highly, such as internal governance and risk issues, the fact that I might have regulated data, or perhaps that I already have a very large investment in the processes, tools and infrastructure needed to run my systems.

Whatever my concerns as an enterprise, the fact that I chose a different road to reach a similar (though perhaps less lofty) destination does not mean I have not achieved an environment that can rightly be called a cloud. Randy’s approach of dev/ops and homogeneous commodity hardware might be more efficient at scale, but it is simply not the case that an “internal infrastructure cloud” is not cloud by default.

John Taschek (@jtaschek) posted A debate with the Doctor of Failure to the Enterprise Irregulars blog on 11/21/2010 (missed due to RSS failure):

Failure happens. There are many issues and stakeholders in any technology implementation process and any of them adds complexity that can lead to failure. The question is how much technology, the DNA of the technology vendor, and other stakeholders contributes to the failure. Is it always a project management issue or can technology also be partially or even completely at fault? The analysis of these components is what Krigsman specializes in.

When it comes to IT failure, there is a long history of projects (in CloudBlog’s view these are all on-premise) that failed because either the technology or the way the vendor sold the technology massively melted down. For example, CloudBlog feels obligated to point out that SAP was sued by Waste Management, and that Siebel implementations have crashed numerous times: in Australia, at AT&T and at numerous other telcos. Oracle, meanwhile, was sued by the US Department of Justice not for technology failure but for contract gouging.

We asked the Doctor of IT Failure himself, Michael Krigsman, who in reality is the CEO of Asuret and the author of the ZDNet blog IT Project Failures, about this. Krigsman also refuses the title of Doctor of Failure because he is 100 percent focused on success and the prevention of failures. CloudBlog tried to break Krigsman out of his overly objective personality quirk and get him to admit the obvious, but it was tougher than it appeared. Perhaps it was the arid suburban air or the oxygen deprivation resulting from being a mile high, but Krigsman did finally admit that pure cloud companies do have certain advantages.

The debate was fun. The tension was thick. The atmosphere was dripping with humor, but here’s part 1 of the interview with Krigsman:

Here’s what we at CloudBlog hold to be true (Michael may disagree with any or all of these; it was a debate after all):

- The closer a customer is to the vendor, the better the communication, resulting in reduced chances of failure.

- The purer the vendor’s cloud strategy, the faster the access to innovation and ultimately to success.

- The best cloud multitenant infrastructures reduce failure because they are customer-tested daily on a massive scale.

- The more that cloud is part of a vendor’s DNA, the more the vendor is focused on customer success. It is inherent in the way the licenses are sold.

- The more agile a customer can be, the better the business benefit, resulting in a reduced chance of failure.

- Cloud vendors offer a faster time to benefit because software, hardware, and the complexities around acquisition and implementation are reduced. Faster time to benefit equals better chance for success.

- The closer the business units work with IT the better the result of the system.

- The more open and accessible the system, the faster the pace of non-invasive innovation, leading to increased chances of customer success. These systems by their nature are best provided by pure cloud vendors.

- Faster access to innovation and closer ties among business units, IT, and vendor result in higher productivity and a reduced chance of failure.

- True collaboration at scale can only be done in the cloud, resulting in higher productivity, faster time to benefit, and a far greater chance of success.

CloudBlog didn’t ask Krigsman about each of these issues, but we asked him overall about several. Here’s his response.

What’s yours?

John is a strategist at salesforce.com.

Jeffrey Schwartz posted 10 Noteworthy New Features Coming to Microsoft's Azure Cloud Platform as his current Schwartz Cloud Report to 1105 Media’s RedmondMag.com site on 11/15/2010 (missed due to RSS failure):

Late last month Microsoft fleshed out its Windows Azure Platform with a roadmap of new capabilities and features that the company will roll out over the coming year.

The improvements to Microsoft's cloud computing platform, revealed at its Professional Developers Conference (PDC) held in Redmond and streamed online, are substantial and underscore the company's ambitions to ensure the Windows Azure Platform ultimately achieves a wider footprint in enterprise IT.

"They are making Windows Azure more consumable and more broadly applicable for customers and developers," said IDC analyst Al Gillen.

The Windows Azure Platform, which today primarily consists of Windows Azure and SQL Azure, went live back in February. Microsoft boasts its cloud service is now being used for over 20,000 applications. In his keynote address at the PDC, Microsoft Server and Tools president Bob Muglia played up the platform as a service (PaaS) cloud infrastructure that the company is building with Windows Azure.

"I think it is very clear, that that is where the future of applications will go," Muglia told attendees at PDC in his speech. "Platform as a service will redefine the landscape and Microsoft is very focused on this. This is where we are putting the majority of our focus in terms of delivering a new platform."

Muglia recalled Microsoft's second PDC in July of 1992 when the company introduced Windows NT, a platform that would play a key role in client-server computing. "We see a new age beginning, one that will go beyond what we saw 18 years ago," he said. "Windows Azure was designed to run as the next generation platform as a service. It is an operating system that was designed for this environment."

Still, Gillen pointed out that although Windows Azure represents the future of how applications will be built and data will be managed, it will not replace traditional Windows anytime soon. "At the end of the day, there will be customers running perpetual license copies [of traditional software] 10 or 15 years from now," Gillen noted.

While PDC was targeted at developers, the company's new offerings are bound to resonate with IT pros and partners. Some of the new services coming to Windows Azure and SQL Azure are aimed at bringing more enterprise features found in Microsoft's core platforms to the cloud. Among the new cloud services announced last month at PDC:

- Windows Azure Virtual Machine Roles: Will allow organizations to move entire virtual machine images from Windows Server 2008R2 to Windows Azure. "You can take a Windows Server 2008R2 image that you've built with Hyper-V in your environment and move that into the Windows Azure environment and run it as is with no changes," Muglia explained. Microsoft will release a public beta by year's end. The company also plans to support Windows Server 2003 and Windows Server 2008 SP2 sometime next year. "That's a compatibility play and an evolutionary play so the customers can have an opportunity to bring certain applications into Windows Azure, run them in a traditional Windows Server environment," Gillen said. "Over time, they will have the ability to evolve those applications to become native Azure or potentially just leave them there [on the VM Role running on top of Azure] forever and encapsulate them in some way and access the business value that those applications contain."

- Server Application Virtualization: Will let IT take existing applications and deploy them without going through the installation process, into a Windows Azure worker role, according to Muglia. "We think it's a very exciting way to help you get compatibility with existing Windows Server applications in the cloud environment," Muglia said. Added Gillen: "It has the potential to give customers a pretty comfortable path to bring existing applications over to Windows Azure. That can be really huge because if Microsoft can do that and they can bring Windows Server applications over to Windows Azure, and let them run without dragging along a whole operating system with them, that creates an opportunity that Microsoft can exploit that no one else in the industry can match." The company will release a community technology preview (CTP) by year's end with commercial availability slated for the second half of 2011.

- Remote Desktop: Set for release later this year, IT pros will be able to connect to a running instance of an app or service to monitor activities or troubleshoot problems.

- Windows Server 2008R2 Roles: Also due out later this year, Windows Azure will support Windows Server 2008R2 in Web, worker and VM roles, Microsoft said. That will let customers and partners use features such as IIS 7.5, AppLocker and command-line management using PowerShell Version 2.0, Microsoft said.

- Full IIS support: The Web role in Windows Azure will provide full IIS functionality, Microsoft said. This will be available later this year.

- Windows Azure Connect: The technology previously known as Project Sydney, Windows Azure Connect will provide IP-based connectivity between enterprise premises-based and Windows Azure-based services. "That will connect your existing corporate datacenters and databases and information and apps on your existing corporate datacenter virtually into the Windows Azure applications that you have," Muglia said. "In part that enables hybrid cloud," Gillen said. "Hybrid cloud is going to be so important simply because customers are not going to go directly to a full native cloud. If they can have an opportunity to have a hybrid scenario it's actually very attractive for a lot of customers." The company plans a CTP by year's end with release slated for the first half of 2011.

- Windows Azure Marketplace: Much like an app store, the Windows Azure Marketplace is aimed at letting devs and IT pros share, buy and sell apps, services and various other components, including training offerings. A component of the marketplace is Microsoft's DataMarket, formerly code-named Dallas, which consists of premium apps with more than 40 data providers now on board. The Windows Azure Marketplace beta will be released by year's end.

- Multiple Admins: In a move aimed at letting various team members manage a Windows Azure account, the service will by year's end allow multiple Windows Live IDs to have administrator privileges, Microsoft said.

- Windows Azure AppFabric: The company announced the release of Windows Azure AppFabric Access Control, which helps build federated authorization to apps and services without requiring programming, Microsoft said. Also released was Windows Azure AppFabric Connect, aimed at bridging existing line-of-business apps to Windows Azure via the AppFabric Service Bus. It extends BizTalk Server 2010 to support hybrid cloud scenarios, those that use both on- and off-premises resources.

- Database Manager for SQL Azure: This Web-based database querying and management tool, formerly known as "Project Houston," will be available by year's end. Also, for those who like SQL Server Reporting Services, SQL Azure Reporting will be a welcome addition to SQL Azure, allowing users to analyze business data stored in SQL Azure databases.

Perhaps more mundane, but bound to be noticed by all Windows Azure users, is an overall facelift to the portal, with what the company describes as an improved user interface. The new portal will provide diagnostic data, a streamlined account setup and new support databases and forums.

Full disclosure: I’m a contributing editor for 1105 Media’s Visual Studio Magazine.

Microsoft published an IT as a Service: Transforming IT with the Windows Azure Platform white paper dated 11/9/2010. From the introduction:

Cloud computing is changing how all of us—Microsoft and our customers—think about information technology. New deployment options, new business models, and new opportunities abound.

To Microsoft, cloud computing represents a transformation of the industry in which we and our partners work to deliver IT as a Service. This transformation will let you focus on your business, not on running infrastructure. It will also let you create better applications, then deploy those applications wherever makes the most sense: in your own data center, at a regional service provider, or in our global cloud. In short, IT as a Service will let you deliver more business value.

To make this transformation possible, Microsoft provides the Windows Azure platform, complementing our existing Windows Server platform. Together, these two platforms allow enterprises, regional service providers, and Microsoft itself to deliver applications across private and public clouds with a consistent identity, management, and application architecture. By increasing business value and lowering costs, this foundation for IT as a Service will transform how organizations use information technology.

…

Figure 2: Microsoft is the only vendor able to provide every aspect of IT as a Service.

Microsoft is uniquely positioned to provide this. To see why, it’s useful to review Microsoft’s offerings in each column:

- As a global provider, Microsoft offers a range of SaaS applications running in its own data centers, including Exchange Online, SharePoint Online, Dynamics CRM Online, and others. Microsoft also provides PaaS (and a form of IaaS) with the Windows Azure platform.

- For regional providers, Microsoft provides hosted versions of Exchange, SharePoint, and other applications, along with the ability for hosters to offer IaaS services using Windows Server with Hyper-V, System Center, and the Dynamic Data Center Toolkit for Hosters.

- For enterprises, Microsoft provides on-premises applications and an application platform built on Windows Server and SQL Server. Customers can also implement on-premises IaaS using Windows Server with Hyper-V, System Center, and the Self-Service Portal.

…

Lowering Cost with PaaS

Over the past several years, both Microsoft and our customers have gone through a series of changes in how we use servers to support applications. To a great degree, those changes have been driven by the quest for lower costs. Figure 3 illustrates this evolution.

Figure 3: Raising the abstraction level of an application platform lowers the platform’s cost of operation.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

Charles Babcock reported “The software giant says its customers don't necessarily want a single hypervisor cloud, so it's supporting OpenStack” as a deck for his Microsoft Supporting Cloud Open Source Code For Hyper-V article of 11/22/2010 for InformationWeek:

Cloud computing has progressed on multiple fronts over the past week, and one of the most interesting advances is the fact that Microsoft has decided to support the open source project OpenStack.

OpenStack, you may remember, was the joint project announced by Rackspace and NASA last July to produce a suite of open source software to equip a cloud service provider with a standard set of services.

It’s likely to be adopted by young cloud service providers and some enterprises.

Microsoft offers its own Azure cloud, and it hopes plenty of Windows users gravitate to its cloud services. But just in case not everyone does, it’s commissioned startup Cloud.com, a supplier of cloud software, to provide support for Hyper-V in the OpenStack set of software. Cloud.com builds a variant of OpenStack, called CloudStack, which it offers to the demanding large-scale service provider market.

Tata Consulting’s cloud services are based on CloudStack, as are those of Korea Telecom, Logixworks, Profitability.net, Activa.com, and Instance Cloud Computing. CloudStack is open source code issued under GPL v3.

I sat down recently with Peder Ulander, CMO of Cloud.com, to ask about what his firm was doing for Microsoft and Hyper-V. OpenStack was announced as providing infrastructure software for a cloud environment that would run open source hypervisors Xen and KVM. By Xen, he also means XenServer from Citrix Systems, a commercial implementation.

Giving OpenStack the ability to host Hyper-V hypervisors as well will broaden the opportunity to build clouds with the project’s open source code, he noted.

“Right now, clouds are architected for each environment,” he said. That means Amazon Web Services’ EC2 runs Amazon Machine Images on a proprietary variation of the Xen hypervisor. Likewise, because VMware’s virtual machines don’t run in EC2, VMware has been busy equipping service providers with cloud infrastructures that run its virtual machines. AT&T’s Synaptic Compute Cloud, Bluelock, and Verizon Business are vCloud data centers running workloads under the VMware ESX hypervisor.

OpenStack, and its commercial implementers, such as Cloud.com, are trying to broaden the playing field. One open source stack able to run workloads from a variety of hypervisors might prove more viable than the present trend of creating hypervisor-specific cloud services, Ulander said. By integrating support for Hyper-V operations into OpenStack, the open source project will multiply the potential number of users of cloud services built on OpenStack and, hopefully, its number of adopters.


Since when did the computer press start putting halos behind journalists’ photos?


Cloud Security and Governance

K. Scott Morrison posted Virtualization vs. the Auditors on 11/22/2010:

Virtualization, in the twilight of 2010, has at last become a mainstream technology. It enjoys widespread adoption across every sector except, that is, those in which audit plays a primary role. Fortunately, 2011 may see some of these last bastions of technological conservatism finally accept what is arguably the most important development in computing in the 21st century.

The problem with virtualization, of course, is that it discards the simple and comfortable division between physical systems, replacing this with an abstraction that few of us fully understand. We can read all we want about the theory of virtualization and the design of a modern hypervisor, but in the end, accepting that there exists provable isolation between running images requires a leap of faith that has never sat well with the auditing community.

To be fair to the auditors, when an auditor’s career depends on judging the relative security risk associated with a system, there is a natural and understandable tendency toward caution. Change may be fast in matters of technology, but human process is tangled up with complexities that just take time to work through.

The payment card industry may be leading the way toward legitimizing virtualization in architectures subject to serious compliance concerns. PCI 2.0, the set of requirements for securing payment card processing, comes into effect January 1, 2011, and gives the auditing community specific guidance on how to approach virtualization rather than simply rejecting it outright.

Charles Babcock, in InformationWeek, published a good overview of the issue. He writes:

Interpretation of the (PCI) version currently in force, 1.2.1, has prompted implementers to keep distinct functions on physically separate systems, each with its own random access memory, CPUs, and storage, thus imposing a tangible separation of parts. PCI didn’t require this, because the first regulation was written before the notion of virtualization became prevalent. But the auditors of PCI, who pass your system as PCI-compliant, chose to interpret the regulation as meaning physically separate.

The word that should pique interest here is interpretation. As Babcock notes, existing (pre-2.0) PCI didn’t require physical separation between systems, but because this had been the prevailing means to implement provable isolation between applications, it became the sine qua non of every audit.

The issue of physical isolation compared to virtual isolation isn’t restricted to financial services. The military advocates similar design patterns, with tangible isolation between entire networks that carry classified or unclassified data. I attended a very interesting panel at VMworld back in September that discussed virtualization and compliance at length. One anecdote that really struck me came from Michael Berman, CTO of Catbird. Michael is a veteran of many ethical hacking jobs. In his experience, nearly every official inventory of applications his clients possessed was incomplete, and it is the overlooked applications that are most often the weakness to exploit. The virtualized environment, in contrast, offers an inventory of running images under control of the hypervisor that is necessarily complete. There may still be weak (or unaccounted for) applications residing on the images, but an accurate big picture for security and operations is nonetheless a major step forward. Ironically, virtualization may be a means to more secure architectures.

I’m encouraged by the steps taken with PCI 2.0. Fighting audits is nearly always a losing proposition, or at best a Pyrrhic victory. Changing the rules of engagement is the way to win this war.

James Urquhart (@JamesUrquhart) asserted Cloud security is dependent on the law in an article of 11/22/2010 for C|Net News’ The Wisdom of Clouds blog:

I am a true believer in the disruptive value of cloud computing, especially the long term drive towards so-called "public cloud" services. As I've noted frequently of late, the economics are just too compelling, and the issues around security and the law will eventually be addressed.

However, lately there have been some interesting claims of the superiority of public clouds over privately managed forms of IT, including private cloud environments. The latest is a statement from Gartner analyst Andrew Walls, pointing out that enterprises simply assume self-managed computing environments are more secure than shared public services:

"When you go to the private cloud they start thinking, 'this is just my standard old data centre, I just have the standard operational issues, there's been no real change in what we do', and this is a big problem because what this tells us is the data centre managers are not looking at the actual impact on the security program that the virtualisation induces."

"They see public cloud as being a little bit more risky therefore they won't go with it. Now the reality is, from my own experience in talking to security organisations and data centre managers around the world is that in many of these cases, you're far safer in the public cloud than you are on your own equipment."

So, Walls seems to be saying that many (most?) IT organizations don't understand how virtualization changes "security," much less cloud, and therefore those organizations would be better off putting their infrastructure in the hands of a public cloud provider. That, to me, is a generalization so broad it's likely useless. There are way too many variables in the equation to make a blanket statement for the applications at any one company, much less for an entire industry.

In fact, regardless of the technical and organizational realities, there is one element that is completely out of the control of both the customer and cloud provider that makes public cloud an increased risk: the law. Ignoring this means you are not completely evaluating the "security" of potential deployment environments.

Some laws affect data management and control

There are two main forms of "risk" associated with the law and the cloud. The first is explicit legal language that dictates how or where data should be stored, and penalties if those conditions aren't met. The EU's data privacy laws are one such example. The U.K.'s Data Protection Act of 1998 is another. U.S. export control laws are an especially interesting example, in my opinion. The "risk" here is that the cloud provider may not be able to guarantee that where your data resides, or how it is transported across the network, won't be in violation of one of these laws. In IaaS, the end user typically has most of the responsibility in this respect, but PaaS and SaaS options hide much more of the detail about how data is handled and where it resides. Ultimately, it's up to you to make sure your data usage remains within the bounds of the law; to the extent you don't control key factors in public clouds, that adds risk.

The cloud lacks case law

The second kind of risk that the cloud faces with the law, however, is much more nefarious. There are many "grey areas" in existing case law, across the globe, with respect to how cloud systems should be treated, and what rights a cloud user has with respect to data and intellectual property. I have written before about the unresolved issues around the U.S. Constitution's Fourth Amendment protections against unreasonable search and seizure, but there are other outstanding legal questions that threaten the cloud's ability to protect users at the same level that their own data center facilities would. One example that is just coming to a head is the case of EMI versus MP3tunes.com.

Three years ago, EMI sued the company and its founder and CEO, Michael Robertson, for willful infringement of copyright over the Internet. EMI claims that MP3tunes.com and its sister site, Sideload.com (a digital media search engine), are intentionally designed to enable users to violate music copyrights.

Robertson defends the sites as simply providing a storage service to end users, and therefore protected under the "safe harbor" provisions of the Digital Millennium Copyright Act. These provisions protect online services from liability for their users' copyright infringement as long as they remove infringing content when notified of its presence.

At stake here is whether any online storage service (aka "cloud storage provider") is protected by the DMCA's safe harbor provisions, or if the very ability of users to find, upload and store infringing content is grounds for legal action. Even if MP3tunes is indeed found to be promoting infringement, what are the legal tests for identifying other such services? Will a new feature available at your favorite storage cloud suddenly put your provider--or worse, your data--at risk?

Yet another open question concerns ownership of the physical resources, and what protections you have against losing your systems should those systems be seized for any reason. Imagine that your cloud provider was found to have been involved in violating federal law, and the FBI decided to seize all of their servers and disks for the investigation.

In this hypothetical situation, could you get your data back? What rights would you have? According to the 2009 case of a Texas colocation provider, in which 200 systems were seized--the vast majority of which belonged to the provider's clients, not the provider under investigation--very few.

There is no single "better option" for cloud

I don't want to overstate the risks here. We've worked with colocation, outsourcing and even cloud offerings for a number of years now, and there have been very few "disastrous" run-ins with the law. Providers are aware of the problem, and provide architectures or features to help stay within the law. In the long term, these issues will work themselves out, and public cloud environments will grow in popularity even before they are resolved. However, making a blanket statement that public clouds are de facto "more secure" than private clouds is just hype that ignores key realities of our fragile, nascent cloud marketplace. Until the market matures, the question of "better security" must take into account all factors that lead to risk in any given deployment scenario. With that context in mind, public and private clouds each have their weaknesses and strengths, which may vary from company to company or even application to application.

That said, Walls made one key point that I agree with emphatically. Just because a private cloud is behind your firewall doesn't mean you don't have additional work to do to ensure the security of a private cloud environment. Having a data center does not automatically make you "more secure" than a public cloud provider any more than a cloud vendor is automatically more secure than anything an enterprise could do itself.


Chris Hoff (@Beaker) posted On Security Conference Themes: Offense *Versus* Defense – Or, Can You Code? on 11/22/2010: